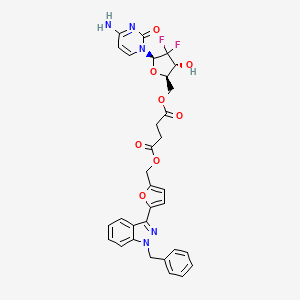

GEM-5

Description

BenchChem offers high-quality GEM-5 suitable for many research applications. Different packaging options are available to accommodate customers' requirements. For more information about GEM-5, including price, delivery time, and further details, please contact info@benchchem.com.

Properties

| Property | Value |
|---|---|
| Molecular Formula | C32H29F2N5O8 |
| Molecular Weight | 649.6 g/mol |
| IUPAC Name | 1-O-[[(2R,3R,5R)-5-(4-amino-2-oxopyrimidin-1-yl)-4,4-difluoro-3-hydroxyoxolan-2-yl]methyl] 4-O-[[5-(1-benzylindazol-3-yl)furan-2-yl]methyl] butanedioate |
| InChI | InChI=1S/C32H29F2N5O8/c33-32(34)29(42)24(47-30(32)38-15-14-25(35)36-31(38)43)18-45-27(41)13-12-26(40)44-17-20-10-11-23(46-20)28-21-8-4-5-9-22(21)39(37-28)16-19-6-2-1-3-7-19/h1-11,14-15,24,29-30,42H,12-13,16-18H2,(H2,35,36,43)/t24-,29-,30-/m1/s1 |
| InChI Key | HJFGXFTZAGKEDF-HGLPBTONSA-N |
| Isomeric SMILES | C1=CC=C(C=C1)CN2C3=CC=CC=C3C(=N2)C4=CC=C(O4)COC(=O)CCC(=O)OC[C@@H]5[C@H](C([C@@H](O5)N6C=CC(=NC6=O)N)(F)F)O |
| Canonical SMILES | C1=CC=C(C=C1)CN2C3=CC=CC=C3C(=N2)C4=CC=C(O4)COC(=O)CCC(=O)OCC5C(C(C(O5)N6C=CC(=NC6=O)N)(F)F)O |
| Origin of Product | United States |

Foundational & Exploratory

getting started with GEM-5 for computer architecture research

An In-depth Technical Guide for Researchers

Getting Started with gem5 for Computer Architecture Research

This guide serves as a comprehensive introduction to the gem5 simulator, a powerful and modular platform for computer architecture research. It is designed for researchers and scientists who are new to gem5 and aims to provide a foundational understanding of its core concepts, setup, and basic operation.

Introduction to the gem5 Simulator

The gem5 simulator is a modular, open-source platform for computer system architecture research, covering everything from system-level architecture to processor microarchitecture.[1][2] It is a discrete-event simulator, meaning it models the passage of time as a series of distinct events.[3] gem5 is highly flexible, allowing researchers to configure, extend, or replace its components to suit their specific research needs.[3] The simulator is primarily written in C++ and Python, with simulation configurations being handled by Python scripts.[3]
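To make the discrete-event idea concrete, the following is a minimal sketch in plain Python. It is not gem5 code: the `EventQueue` class, its methods, and the tick values are hypothetical names chosen for illustration. It only shows how simulated time jumps from one scheduled event to the next instead of advancing cycle by cycle.

```python
import heapq
from typing import Callable, List, Tuple


class EventQueue:
    """Toy discrete-event queue (illustrative only, not gem5's implementation)."""

    def __init__(self) -> None:
        self.now = 0  # current simulated time, in ticks
        self._events: List[Tuple[int, int, Callable[[], None]]] = []
        self._seq = 0  # tie-breaker so same-tick events run in scheduling order

    def schedule(self, delay: int, action: Callable[[], None]) -> None:
        """Schedule `action` to run `delay` ticks after the current time."""
        heapq.heappush(self._events, (self.now + delay, self._seq, action))
        self._seq += 1

    def run(self) -> None:
        """Process events in time order; time jumps straight to each event."""
        while self._events:
            self.now, _, action = heapq.heappop(self._events)
            action()


queue = EventQueue()
log: List[Tuple[int, str]] = []


def cache_miss() -> None:
    log.append((queue.now, "cache miss"))
    # Hypothetical 100-tick memory latency before the response arrives.
    queue.schedule(100, lambda: log.append((queue.now, "memory response")))


queue.schedule(10, cache_miss)
queue.schedule(5, lambda: log.append((queue.now, "fetch")))
queue.run()
print(log)
```

Nothing happens between ticks 10 and 110 in this toy run, so the simulator does no work for that interval. This is the property that lets an event-driven simulator skip idle time.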

Key features of gem5 include:

- Multiple ISAs Support: gem5 supports a variety of Instruction Set Architectures (ISAs), including x86, ARM, RISC-V, SPARC, and others, allowing for diverse and cross-architecture studies.[2][4][5]
- Interchangeable CPU Models: It provides several CPU models with varying levels of detail, such as simple functional models for speed and detailed out-of-order models for accuracy.[2][6][7]
- Detailed Memory System: gem5 includes a flexible, event-driven memory system that can model complex, multi-level cache hierarchies and various DRAM controllers.[2][8]
- Multiple Simulation Modes: gem5 can operate in two primary modes: Syscall Emulation (SE) and Full System (FS).[9][10]

Simulation Modes: SE vs. FS

gem5 offers two main simulation modes that cater to different research needs.[9]

- Syscall Emulation (SE) Mode: This mode focuses on simulating the CPU and memory system for a single user-space application.[9][10] It relies on the host operating system to handle system calls, which simplifies the simulation setup.[10][11] SE mode is ideal for studies where the detailed interaction with the operating system is not critical.
- Full System (FS) Mode: In this mode, gem5 emulates a complete hardware system, allowing an unmodified operating system and its applications to run on the simulated hardware.[6][9] This mode is akin to a virtual machine and is essential for research involving OS interactions, device drivers, and complex software stacks.[10]

Getting Started: Installation and Setup

This section provides a detailed protocol for downloading and compiling gem5 on a Unix-like operating system.

Experimental Protocol: gem5 Installation

Objective: To download the gem5 source code and compile the simulator binary.

Prerequisites: A Unix-like operating system (Linux is recommended) with necessary dependencies installed.[12]

Dependencies: Before compiling gem5, you need to install several packages. Key dependencies include:

- git: For cloning the source code repository.
- scons: The build system used by gem5.
- g++ or another C++ compiler.
- python-dev: Python development headers (packaged as python3-dev on current Debian and Ubuntu releases).
- swig: A tool that connects C/C++ programs with high-level programming languages. Older gem5 releases required it; current releases use the bundled pybind11 instead.
- Other libraries such as zlib1g-dev and libprotobuf-dev.[13][14]

Procedure:

- Clone the gem5 Repository: Download the source code from the official gem5 GitHub repository. It is recommended to use the latest stable branch for research.[12]
- Compile gem5: Use scons to build the simulator. The build process can be time-consuming and memory-intensive.[12] The command specifies the target ISA and the desired optimization level. The -j flag specifies the number of parallel compilation jobs.[11]
- Verification: Upon successful compilation, a gem5 binary will be created in the build/ALL/ directory (e.g., build/ALL/gem5.opt).[12]
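The clone and build steps can be sketched as a small Python helper that assembles the two commands. This is an illustration under stated assumptions, not an official script: the helper name `gem5_build_commands` is hypothetical, and the repository URL is assumed to be the public gem5 GitHub repository. The scons target follows the build/ALL/gem5.opt pattern mentioned above.

```python
import shlex
from typing import List

# Assumption: the public GitHub repository of the gem5 project.
GEM5_REPO_URL = "https://github.com/gem5/gem5"


def gem5_build_commands(isa: str = "ALL", variant: str = "opt",
                        jobs: int = 4) -> List[List[str]]:
    """Return the clone and build commands as argument lists.

    The scons command is meant to be run from inside the cloned directory.
    """
    if variant not in {"opt", "fast", "debug"}:
        raise ValueError(f"unknown build variant: {variant}")
    if jobs < 1:
        raise ValueError("jobs must be at least 1")
    clone = ["git", "clone", GEM5_REPO_URL]
    build = ["scons", f"build/{isa}/gem5.{variant}", f"-j{jobs}"]
    return [clone, build]


for command in gem5_build_commands(isa="ALL", variant="opt", jobs=8):
    print(shlex.join(command))
```

Returning argument lists rather than one shell string keeps the commands easy to pass to `subprocess.run` without quoting problems. Pick the job count to suit the memory available on the host, since each parallel compile job can use a lot of RAM.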

The gem5 Architecture: Core Concepts

gem5's modularity is built upon a few fundamental concepts that are crucial for users to understand.

SimObjects

The core of gem5's modular design is the SimObject.[9] Most simulated components, such as CPUs, caches, memory controllers, and buses, are implemented as SimObjects.[10][17] These C++ objects are exported to Python, allowing researchers to instantiate and configure them within a Python script to define the simulated system's architecture.[9][17]

The gem5 Standard Library

To simplify the process of creating simulation scripts, gem5 provides a standard library (stdlib).[17][18] This library offers a collection of pre-defined, high-level components that can be easily combined to build a simulated system.[17][19] The philosophy behind the stdlib is analogous to building a real computer from off-the-shelf parts.[18] It abstracts away much of the low-level configuration, reducing boilerplate code and the potential for errors.[19]

The main components of the standard library are:

- Board: The backbone of the system where other components are connected.[19]
- Processor: Contains one or more CPU cores.[18]
- MemorySystem: Defines the main memory, such as a DDR3 or DDR4 system.[18]
- CacheHierarchy: Defines the components between the processor and main memory, such as L1 and L2 caches.[18]

Your First Simulation: A "Hello World" Experiment

Running a simulation in gem5 involves executing the compiled binary with a Python configuration script as an argument.[12] This protocol details how to run a basic "Hello World" example in SE mode using the gem5 standard library.

Experimental Protocol: Running a "Hello World" Simulation

Objective: To run a pre-compiled "Hello World" binary on a simple simulated system in SE mode.

Procedure:

- Create a Configuration Script: Create a Python file (e.g., hello.py) to define the simulated system. This script will use components from the gem5 standard library.[15]
- Run the Simulation: Execute the gem5 binary with your Python script.
- Observe the Output: The simulation will run, and you should see "Hello world!" printed to your terminal, which is the output from the simulated binary.[12] An output directory named m5out will also be created.[20]
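A configuration script for this protocol might look like the sketch below. It assumes a recent gem5 release in which the standard library provides `obtain_resource` and `SimpleProcessor` accepts an `isa` argument; older releases differ. The 3 GHz clock, the 1 GiB memory size, and the `x86-hello64-static` resource name are illustrative choices, and the wrapper function `build_and_run` is a hypothetical name. The gem5 imports sit inside the function because those modules exist only inside the gem5 binary.

```python
def build_and_run() -> None:
    """Assemble a single-core system without caches and run a hello binary."""
    # These modules are only importable when the script runs inside gem5.
    from gem5.components.boards.simple_board import SimpleBoard
    from gem5.components.cachehierarchies.classic.no_cache import NoCache
    from gem5.components.memory.single_channel import SingleChannelDDR3_1600
    from gem5.components.processors.cpu_types import CPUTypes
    from gem5.components.processors.simple_processor import SimpleProcessor
    from gem5.isas import ISA
    from gem5.resources.resource import obtain_resource
    from gem5.simulate.simulator import Simulator

    # Processor: one simple timing core (assumes the binary supports X86).
    processor = SimpleProcessor(
        cpu_type=CPUTypes.TIMING, isa=ISA.X86, num_cores=1
    )

    # Board: connects the processor, memory system, and cache hierarchy.
    board = SimpleBoard(
        clk_freq="3GHz",
        processor=processor,
        memory=SingleChannelDDR3_1600(size="1GiB"),
        cache_hierarchy=NoCache(),
    )

    # SE-mode workload: a statically linked hello binary fetched by gem5.
    board.set_se_binary_workload(obtain_resource("x86-hello64-static"))

    Simulator(board=board).run()


# gem5 executes configuration scripts with __name__ set to "__m5_main__".
if __name__ == "__m5_main__":
    build_and_run()
```

Saved as hello.py, the script would be passed to the compiled binary, for example `build/ALL/gem5.opt hello.py`. Fetching the workload resource needs network access the first time it runs.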

Simulation Workflow Diagram

The following diagram illustrates the high-level workflow of a gem5 simulation.

Analyzing the Output

After a simulation completes, gem5 generates an output directory, typically named m5out, which contains detailed information about the simulation run.[20]

The key files in the m5out directory are:

- config.ini / config.json: These files contain a complete record of every SimObject created for the simulation and all of its parameters, including those set by default.[20] This is crucial for ensuring reproducibility.
- stats.txt: This file contains a dump of all the statistics collected during the simulation.[20] Statistics are registered by SimObjects and provide detailed insights into the behavior and performance of the simulated system.

Data Presentation: Key Simulation Statistics

The stats.txt file provides a wealth of quantitative data. Below is a table summarizing some of the most important high-level statistics.

| Statistic Name | Description | Example Use Case |
|---|---|---|
| sim_seconds | The total simulated time that has passed.[20] | Calculating simulated performance. |
| sim_insts | The total number of instructions committed by the CPU(s).[20] | Measuring workload progress. |
| host_inst_rate | The simulation speed, in simulated instructions per second of host time.[20] | Assessing the performance of the simulator itself. |
| system.cpu.ipc | Instructions Per Cycle for the CPU. | Core performance analysis. |
| system.cpu.dcache.miss_rate | The miss rate of the L1 data cache. | Memory system performance analysis. |
| system.mem_ctrl.bw_total | Total memory bandwidth utilized. | Analyzing memory system bottlenecks. |
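Statistics such as these can be pulled out of stats.txt with a few lines of Python. The sketch below assumes the usual layout of one statistic per line: name, value, then a `#`-prefixed description. The sample text and its values are made up for illustration, and the function name `parse_stats` is hypothetical.

```python
from typing import Dict

# Hypothetical excerpt; the values are invented for illustration.
SAMPLE_STATS = """\
---------- Begin Simulation Statistics ----------
sim_seconds                                  0.000346   # Number of seconds simulated
sim_insts                                        5712   # Number of instructions simulated
host_inst_rate                                 123456   # Simulator instruction rate (inst/s)
---------- End Simulation Statistics   ----------
"""


def parse_stats(text: str) -> Dict[str, float]:
    """Map each statistic name to the first numeric value on its line."""
    stats: Dict[str, float] = {}
    for line in text.splitlines():
        fields = line.split()
        if len(fields) < 2:
            continue  # blank or malformed line
        try:
            stats[fields[0]] = float(fields[1])
        except ValueError:
            continue  # banner lines and other non-numeric rows
    return stats


stats = parse_stats(SAMPLE_STATS)
for name, value in stats.items():
    print(f"{name} = {value}")
```

For a real run, the text would come from `open("m5out/stats.txt").read()`. A file that contains several statistics dumps would need to be split on the banner lines first, which this sketch does not do.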

Building a System: CPU, Cache, and Memory

To conduct meaningful research, you will need to move beyond the simplest configurations and build systems with more detailed components, such as caches. The modular nature of gem5 and its standard library makes this straightforward.

Experimental Protocol: Simulating a System with Caches

Objective: To configure and simulate a simple system consisting of a CPU, L1 instruction and data caches, an L2 cache, and a memory bus.

Procedure:

- Modify the Configuration Script: Start with the "Hello World" script and replace the NoCache hierarchy with a classic cache hierarchy like PrivateL1PrivateL2CacheHierarchy.
- Run and Analyze: Run the simulation as before. The new configuration will be reflected in m5out/config.ini. You can now analyze cache-related statistics (e.g., miss rates, hit latency) in m5out/stats.txt to understand the performance of your memory system.
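The change to the configuration script can be sketched as a helper that builds the new hierarchy. The cache sizes are illustrative assumptions rather than recommendations, and the function name `make_cache_hierarchy` is hypothetical. As with any gem5 standard library code, the import only resolves when the script runs inside the gem5 binary, so it is done inside the function.

```python
def make_cache_hierarchy():
    """Return a private-L1 / private-L2 classic cache hierarchy."""
    # Imported lazily: the gem5 package exists only inside the gem5 binary.
    from gem5.components.cachehierarchies.classic.private_l1_private_l2_cache_hierarchy import (
        PrivateL1PrivateL2CacheHierarchy,
    )

    # Illustrative sizes; adjust them to the system under study.
    return PrivateL1PrivateL2CacheHierarchy(
        l1d_size="32KiB",
        l1i_size="32KiB",
        l2_size="256KiB",
    )
```

In the board definition, `cache_hierarchy=make_cache_hierarchy()` would take the place of `cache_hierarchy=NoCache()`. A timing CPU model is the sensible choice here, because an atomic CPU does not model cache timing.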

System Component Connection Diagram

The following diagram illustrates the logical connections in the simple cached system you configured.

Summary of Quantitative Data

For quick reference, the following tables summarize key quantitative and categorical data about gem5's capabilities.

Table 1: Supported Instruction Set Architectures (ISAs)

| ISA | Support Status |
|---|---|
| X86 | Supports 64-bit extensions; can boot unmodified Linux kernels.[5] |
| ARM | Supports ARMv8-A profile (AArch32 and AArch64); can boot Linux.[5] |
| RISC-V | Support for privileged ISA spec is a work in progress.[2] |
| SPARC | Models a single core of an UltraSPARC T1; can boot Solaris.[5] |
| MIPS | Supported.[2] |
| Alpha | Models a DEC Tsunami system; can boot Linux 2.4/2.6 and FreeBSD.[5] |
| POWER | Limited to syscall emulation mode based on POWER ISA v3.0B.[5] |

Table 2: gem5 CPU Models

| CPU Model | Type | Key Characteristics |
|---|---|---|
| AtomicSimpleCPU | Functional | Uses atomic memory accesses for speed; not cycle-accurate for memory.[21] |
| TimingSimpleCPU | In-Order | Uses timing-based memory accesses; stalls on cache misses.[21] |
| O3CPU | Out-of-Order | A detailed, cycle-accurate model of an out-of-order processor.[7] |
| MinorCPU | In-Order | A more realistic in-order CPU model with a fixed pipeline.[6] |
| KVMCPU | KVM-based | Uses virtualization to accelerate simulation, especially for non-interesting code regions.[2] |

References

- 1. gem5: The gem5 simulator system [gem5.org]

- 2. gem5: About [gem5.org]

- 3. gem5: Learning gem5 [gem5.org]

- 4. Vayavya Labs Pvt. Ltd. - Introducing gem5 : An Open-Source Computer Architecture Simulator [vayavyalabs.com]

- 5. gem5: Architecture Support [gem5.org]

- 6. developer.arm.com [developer.arm.com]

- 7. gem5: gem5's CPU models [gem5.org]

- 8. gem5: gem5_memory_syste [gem5.org]

- 9. gem5: Creating a simple configuration script [gem5.org]

- 10. Creating a simple configuration script — gem5 Tutorial 0.1 documentation [courses.grainger.illinois.edu]

- 11. cs.pomona.edu [cs.pomona.edu]

- 12. gem5: Getting Started with gem5 [gem5.org]

- 13. eshita_omar.gitbooks.io [eshita_omar.gitbooks.io]

- 14. Gem5 and GS Gem5-Validate Tutorial [web-archive.southampton.ac.uk]

- 15. gem5: Hello World Tutorial [gem5.org]

- 16. Instruction Set Assignmnet: T · GitHub [gist.github.com]

- 17. gem5: Creating a simple configuration script [courses.grainger.illinois.edu]

- 18. gem5: Standard Library Overview [gem5.org]

- 19. scribd.com [scribd.com]

- 20. gem5: Understanding gem5 statistics and output [gem5.org]

- 21. gem5: Simple CPU Models [gem5.org]

GEM-5 tutorial for beginners in academic research

An In-Depth Guide to GEM-5 for Academic Research

Abstract

The gem5 simulator is a modular and flexible platform for computer architecture research, enabling detailed performance analysis of complex hardware systems.[1] This guide provides a comprehensive introduction for researchers and scientists new to gem5. It covers the fundamental concepts, initial setup, simulation modes, and a typical research workflow. Detailed protocols for key experiments are provided, along with visualizations of core concepts and workflows to facilitate understanding. The document aims to equip beginners with the necessary knowledge to start using gem5 effectively in their academic research.

Introduction to gem5

gem5 is a modular, discrete-event driven computer system simulator platform.[2] Its key characteristics make it an invaluable tool for academic research:

- Modularity: gem5 is composed of interchangeable components, known as SimObjects, which can be configured, extended, or replaced to model novel architectures.[2][3]
- Flexibility: It supports multiple Instruction Set Architectures (ISAs) like X86, ARM, and RISC-V.[2]
- Dual Simulation Modes: gem5 offers two primary simulation modes: Syscall Emulation (SE) and Full System (FS), catering to different research needs.[2][4]
- Collaborative and Open-Source: As a widely-used, open-source project, it benefits from a large community of academic and industrial contributors.[1]

The simulator's architecture is primarily based on C++ for performance-critical models and Python for configuration and control, a separation that allows researchers to easily define and modify complex systems.[2][3]

Getting Started: The Initial Setup

The first and often most challenging step for beginners is setting up the simulation environment.[5] This protocol outlines the standard procedure.

Experimental Protocol 1: Environment Setup

This protocol details the steps to get a working build of gem5 on a Unix-like operating system.

- System Requirements: Ensure your host system is a Unix-like OS (Linux is recommended) with necessary dependencies installed, such as git, scons, g++, and Python development libraries.[6][7]
- Clone the Repository: Download the gem5 source code from its official repository using git.[6][7]
- Compile gem5: Use scons to build the simulator. The build target specifies the ISA and the optimization level. Building can be time-consuming and memory-intensive.[6] Using multiple threads with the -j flag is recommended on multi-core machines.[7][8]

Table 1: Common gem5 Build Targets

| Target Suffix | Description | Use Case |
|---|---|---|
| .opt | An optimized build with debugging symbols. | General use, balancing performance and debuggability.[4] |
| .fast | A highly optimized build with no debugging symbols. | Maximum simulation speed for large-scale experiments.[8] |
| .debug | A build with full debugging symbols and no optimizations. | Development and debugging of new models.[9] |

Understanding gem5 Simulation Modes

gem5 provides two main modes of operation, each with distinct advantages and use cases.[4]

- Syscall Emulation (SE) Mode: In SE mode, gem5 simulates only the user-space instructions of an application.[10] System calls are trapped and handled by the host operating system.[8] This mode is faster and simpler to configure, making it ideal for research focused on CPU and memory subsystem performance without the complexity of a full OS.[4][9]
- Full System (FS) Mode: FS mode simulates a complete hardware system, including CPUs, caches, memory, and I/O devices.[11] This allows it to boot an unmodified operating system and run a full software stack.[11] It is more complex to set up, because it requires a compiled kernel and a disk image, but it is essential for research involving OS interactions or complex I/O behavior.[11]

Running Your First Simulation (SE Mode)

This protocol guides you through running a simple "Hello World" application in SE mode, which is the typical starting point for new users.[6]

Experimental Protocol 2: Executing a "Hello World" Program

- Identify the Configuration Script: gem5 uses Python scripts for simulation configuration. For this test, we use a basic script provided with the source code: configs/learning_gem5/part1/simple.py.[6] This script defines a simple system with a CPU and memory.
- Prepare the Command: The gem5 executable takes the configuration script as an argument. The script, in turn, may take its own options. For this test, no additional options are needed.
- Execute the Simulation: Run the gem5 binary with this script from the root of the gem5 directory.
- Inspect the Output: After execution, gem5 creates an output directory named m5out/.[4] This directory contains simulation statistics, configuration details, and any standard output from the simulated program. You should see "Hello world!" printed in the terminal output.[6]
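The invocation in this protocol can be sketched as a helper that assembles the command line. The binary path `build/X86/gem5.opt` is an assumption (it depends on the ISA and variant you built), and the helper name `gem5_run_command` is hypothetical. The optional `--outdir` flag is gem5's option for choosing an output directory other than the default m5out.

```python
import shlex
from typing import List, Optional


def gem5_run_command(binary: str, config: str,
                     outdir: Optional[str] = None) -> List[str]:
    """Build the argument list for one gem5 run.

    Options for gem5 itself go before the configuration script; anything
    after the script name would be passed to the script instead.
    """
    command = [binary]
    if outdir is not None:
        command.append(f"--outdir={outdir}")
    command.append(config)
    return command


# Assumed binary path; substitute the one produced by your own build.
command = gem5_run_command(
    "build/X86/gem5.opt", "configs/learning_gem5/part1/simple.py"
)
print(shlex.join(command))
```

The resulting list can be handed to `subprocess.run(command, check=True)` from the root of the gem5 directory. Giving each experiment its own output directory keeps a later run from overwriting earlier results.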

Analyzing Simulation Output

The primary source of quantitative data from a gem5 simulation is the stats.txt file located in the m5out directory.[4][8] This file contains a detailed breakdown of various metrics for every SimObject in the simulation.

Table 2: Example Performance Metrics from stats.txt

| Statistic Name | Description | Common Use |
|---|---|---|
| simSeconds | The total simulated time in seconds. | Overall simulation runtime. |
| system.cpu.numCycles | The number of CPU cycles simulated. | Core performance measurement. |
| system.cpu.committedInsts | The number of instructions committed by the CPU. | Instructions Per Cycle (IPC) calculation. |
| system.cpu.dcache.overallMisses | The total number of misses in the L1 data cache. | Memory access pattern analysis. |
| system.mem_ctrls.num_reads | The number of read requests to the memory controller. | Memory bandwidth analysis. |
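As a worked example of how these counters combine, the sketch below derives IPC, CPI, and a cache miss rate. The counter values are invented for illustration. The miss rate also needs a total access count, which is assumed here to come from a matching access counter in stats.txt.

```python
def ipc(committed_insts: int, num_cycles: int) -> float:
    """Instructions per cycle: committed instructions / simulated cycles."""
    if num_cycles <= 0:
        raise ValueError("num_cycles must be positive")
    return committed_insts / num_cycles


def miss_rate(misses: int, accesses: int) -> float:
    """Fraction of cache accesses that missed."""
    if accesses <= 0:
        raise ValueError("accesses must be positive")
    return misses / accesses


# Hypothetical counter values, not taken from a real run.
committed_insts = 5_000   # e.g. system.cpu.committedInsts
num_cycles = 20_000       # e.g. system.cpu.numCycles
dcache_misses = 150       # e.g. system.cpu.dcache.overallMisses
dcache_accesses = 2_000   # assumed access counter for the same cache

core_ipc = ipc(committed_insts, num_cycles)
print(f"IPC = {core_ipc:.2f}")
print(f"CPI = {1 / core_ipc:.2f}")
print(f"D-cache miss rate = {miss_rate(dcache_misses, dcache_accesses):.1%}")
```

CPI is the reciprocal of IPC, so either can be reported. When comparing configurations, compute both from the same region of interest so that warm-up effects do not skew the ratio.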

A Typical Academic Research Workflow

Using gem5 in academic research is an iterative process that involves modifying the simulator to model a novel idea, running experiments, and analyzing the results.

The workflow typically involves:

- Hypothesis Formulation: Define a new architectural feature or optimization to be evaluated.
- Model Development: Modify the gem5 C++ source code to implement the new feature, often by creating or extending a SimObject.[2]
- Experiment Configuration: Write or adapt a Python configuration script to integrate and parameterize the new model within a larger system.
- Simulation Execution: Run a set of benchmarks or workloads on the modified simulator.
- Data Analysis: Analyze the stats.txt output to quantify the performance, power, or thermal impact of the proposed change.
- Iteration: Refine the model based on the analysis and repeat the process.

Conclusion

gem5 is a powerful tool for modern computer architecture research. It has a steep learning curve, but its modularity and extensive capabilities support a wide range of exploration. By following a structured approach (environment setup first, then the core simulation modes, then simple experiments), beginners can build the foundational knowledge required to tackle complex research questions. For continued learning, the official gem5 documentation and community resources are invaluable.[2][12]

References

- 1. gem5.org [gem5.org]

- 2. gem5: Learning gem5 [gem5.org]

- 3. youtube.com [youtube.com]

- 4. developer.arm.com [developer.arm.com]

- 5. gem5.org [gem5.org]

- 6. gem5: Getting Started with gem5 [gem5.org]

- 7. Gem5 and GS Gem5-Validate Tutorial [web-archive.southampton.ac.uk]

- 8. cs.pomona.edu [cs.pomona.edu]

- 9. scribd.com [scribd.com]

- 10. research.cs.wisc.edu [research.cs.wisc.edu]

- 11. gem5 Full System Simulation — gem5 Tutorial 0.1 documentation [lowepower.com]

- 12. gem5: gem5 documentation [gem5.org]

An In-depth Technical Guide to the GEM-5 Architecture and its Core Components

Audience Clarification: This guide is intended for researchers and scientists. It is important to note that GEM-5 is a computer architecture simulator, a tool for designing and modeling computer hardware and software systems. While its applications can extend to accelerating scientific workloads, it is not directly a tool for drug development or the analysis of biological signaling pathways. This document will provide a comprehensive technical overview of the gem5 architecture for individuals interested in systems-level computer modeling and performance analysis.

Introduction to gem5

The gem5 simulator is a modular and flexible platform for computer-system architecture research, encompassing system-level architecture and processor microarchitecture.[1][2] It is an open-source, discrete-event simulator widely used in academia and industry for a variety of research tasks, including processor design, memory subsystem development, and application performance optimization.[3] gem5 was formed from the merger of the m5 and GEMS simulators and supports a wide range of instruction set architectures (ISAs), including x86, ARM, RISC-V, and SPARC.[1][3]

Key features of gem5 include:

- Modular Design: gem5 is built with a highly modular design, allowing researchers to interchange different models for CPUs, caches, memory, and other system components.[3]
- Multiple Simulation Modes: It supports two primary simulation modes:
  - System-call Emulation (SE) Mode: This mode simulates user-space programs, and system calls are forwarded to the host operating system. It is simpler to configure and is focused on CPU and memory system simulation.[4][5]
  - Full-System (FS) Mode: This mode emulates an entire hardware system, allowing an unmodified operating system to be booted and run. This provides a more realistic simulation environment.[4][6]
- Diverse CPU Models: gem5 provides a library of interchangeable CPU models with varying levels of detail and performance, from simple functional models to detailed out-of-order pipeline models.[1]
- Flexible Memory System: It includes a detailed and configurable memory system that can model complex cache hierarchies, interconnects, and various memory technologies.[1]
- Python Integration: Simulation configurations are written in Python, providing a powerful and flexible way to define, script, and control experiments.[3]

The Core Architecture: SimObjects

The fundamental building block of any gem5 simulation is the SimObject.[5][7] SimObjects are C++ objects that are exposed to the Python configuration scripts.[8] Most components in a simulated system, such as CPUs, caches, memory controllers, and buses, are SimObjects.[9] This object-oriented design allows for the hierarchical construction of complex systems by instantiating and connecting different SimObjects.[8]

Key characteristics of SimObjects:

- They represent physical components of a computer system.[10]
- Their parameters can be set from the Python configuration files.[8]
- They are connected via a port abstraction to form the desired system topology.

Figure 1: Basic SimObject Inheritance

CPU Models

gem5 offers several CPU models, each providing a different trade-off between simulation speed and microarchitectural detail.[1] This allows researchers to select the most appropriate model for their specific study.

| CPU Model | Description | Use Case | Memory Access Type |
|---|---|---|---|
| AtomicSimpleCPU | A simple, in-order CPU model that assumes atomic memory accesses. It is the fastest model but provides no timing information for the memory system.[11] | Functional validation, fast-forwarding simulation to a region of interest.[11] | Atomic |
| TimingSimpleCPU | An in-order CPU model that uses a timing-based memory system. It stalls on memory accesses and waits for a response, providing more accurate timing.[11] | Simulations where a simple pipeline is sufficient, but memory timing is important. | Timing |
| MinorCPU | A detailed, in-order pipelined CPU model with a fixed pipeline structure. It models pipeline hazards and stalls more accurately than TimingSimpleCPU.[4] | Research on in-order processor designs and their interaction with the memory system. | Timing |
| O3CPU (Out-of-Order) | A detailed, out-of-order CPU model that simulates a modern superscalar processor with features like instruction fetching, decoding, renaming, issuing, and committing.[12] | Detailed microarchitectural studies of out-of-order processors and their performance. | Timing |
| KVMCPU | Utilizes the host's Kernel-based Virtual Machine (KVM) to execute instructions natively, significantly speeding up simulation. This requires the guest and host ISAs to be the same.[12][13] | Fast-forwarding to a specific point in a full-system simulation or when detailed CPU timing is not required.[13] | N/A |

The Memory System

gem5's memory system is a critical component for performance analysis and is broadly divided into two main subsystems: the "Classic" memory system and "Ruby."

Classic Memory System

The Classic memory system is a flexible and relatively easy-to-configure memory hierarchy.[14] It is composed of interconnected SimObjects like caches and buses. Components communicate through a port interface, with MasterPorts initiating requests and SlavePorts receiving them (recent gem5 releases name these RequestPort and ResponsePort).[15] This allows for the construction of arbitrary, multi-level cache hierarchies.[14]

Figure 2: Classic Memory System Data Flow

Ruby Memory System

Ruby is a more detailed and powerful memory system simulator that originated from the GEMS project.[16] It is designed to model complex cache coherence protocols and interconnection networks with high fidelity.[16][17] Ruby separates the coherence protocol logic, network topology, and cache controller implementation, providing a highly modular framework for memory system research.[17] It uses a domain-specific language called SLICC (Specification Language for Implementing Cache Coherence) to define coherence protocols.[16]

Experimental Protocol: A Basic gem5 Simulation Workflow

Running a simulation in gem5 involves several key steps, from setting up the environment to executing the simulation and analyzing the results.

Methodology

- Compilation: The first step is to compile the gem5 source code for the target ISA and desired components. This is typically done using scons.[18]
- Configuration Script: A Python script is created to define the system to be simulated. This involves:
  - Importing the necessary SimObject classes from the m5.objects module.
  - Instantiating the SimObjects that will make up the system (e.g., a System, Cpu, Cache, MemBus, DDR3_1600_SingleChannel).[7][19]
  - Setting the parameters for each SimObject (e.g., clock frequency, cache size, memory range).[5][9]
  - Connecting the SimObjects together by assigning master ports to slave ports.
  - Specifying the workload (e.g., a binary to run in SE mode).[19]
- Execution: The gem5 binary is invoked with the Python configuration script as an argument.[18]
- Analysis: After the simulation completes, gem5 generates output files in the m5out directory. The primary files for analysis are stats.txt, which holds the collected statistics, and config.ini, which records the simulated configuration.

Figure 3: gem5 Simulation Workflow

Quantitative Data and Performance Metrics

gem5 simulations produce a wealth of statistical data that can be used for performance analysis.[20] The stats.txt file provides detailed metrics for each component in the simulated system.

| Statistic Category | Example Metrics |
|---|---|
| System-Wide | sim_seconds: Total simulated time.[22]; sim_insts: Total number of committed instructions.[22]; host_inst_rate: Simulation speed in instructions per second.[20] |
| CPU Core | committedInsts: Number of instructions committed; numCycles: Number of CPU cycles simulated; cpi: Cycles Per Instruction. |
| Cache | overall_hits::total: Total number of cache hits; overall_misses::total: Total number of cache misses; overall_miss_rate::total: The ratio of misses to total accesses. |
| Memory Controller | bytes_read::total: Total bytes read from main memory; bytes_written::total: Total bytes written to main memory; bw_total::total: Total memory bandwidth utilized.[20] |

References

- 1. gem5: About [gem5.org]

- 2. gem5: The gem5 simulator system [gem5.org]

- 3. gem5 - Wikipedia [en.wikipedia.org]

- 4. developer.arm.com [developer.arm.com]

- 5. gem5: Creating a simple configuration script [gem5.org]

- 6. researchgate.net [researchgate.net]

- 7. Creating a simple configuration script — gem5 Tutorial 0.1 documentation [courses.grainger.illinois.edu]

- 8. gem5: Creating a very simple SimObject [gem5.org]

- 9. gem5: Creating a simple configuration script [courses.grainger.illinois.edu]

- 10. gem5.org [gem5.org]

- 11. gem5: Simple CPU Models [gem5.org]

- 12. 01-GEM5-CPU/GEM5_CPU.md · main · Ratko Pilipovic / RS-Vaje · GitLab [repo.sling.si]

- 13. youtube.com [youtube.com]

- 14. gem5: Classic memory system coherence [gem5.org]

- 15. gem5: Memory system [gem5.org]

- 16. gem5: Introduction to Ruby [gem5.org]

- 17. gem5: Introduction [gem5.org]

- 18. gem5: Getting Started with gem5 [gem5.org]

- 19. gem5: Hello World Tutorial [gem5.org]

- 20. gem5: Understanding gem5 statistics and output [gem5.org]

- 21. gem5: Understanding gem5 statistics and output [courses.grainger.illinois.edu]

- 22. Understanding gem5 statistics and output — gem5 Tutorial 0.1 documentation [courses.grainger.illinois.edu]

A Researcher's Guide to GEM-5 Simulation: System Call Emulation (SE) vs. Full System (FS) Mode

An In-depth Technical Guide for Architectural and Systems-Level Research

The gem5 simulator is a powerful and modular open-source platform for computer architecture research, enabling detailed exploration of processor and memory system designs. A fundamental choice when initiating a gem5 simulation is the execution mode: System Call Emulation (SE) or Full System (FS). This decision profoundly impacts the simulation's scope, accuracy, speed, and setup complexity. This guide provides an in-depth technical comparison of SE and FS modes to help researchers, scientists, and professionals select the appropriate methodology for their experimental needs.

Core Concepts: Two Paradigms of Simulation

At its core, gem5 offers two distinct environments for executing and analyzing workloads.[1][2]

- System Call Emulation (SE) Mode: This mode focuses on simulating user-level code, such as a specific application or benchmark.[3] It avoids the complexity of modeling a complete operating system by intercepting and emulating system calls made by the application.[3][4] When the simulated program requests a service from the OS (e.g., file I/O), gem5 traps the call and often passes it to the host operating system to handle.[3] This approach simplifies the simulation setup and significantly speeds up execution.[5]
- Full System (FS) Mode: In contrast, FS mode simulates a complete, bare-metal machine, including CPUs, I/O devices, and interrupts.[3][4] This allows it to boot an unmodified operating system, such as Linux.[2][6] Researchers can then interact with the simulated OS to run complex, multi-process, and multi-threaded applications just as they would on a real computer.[6][7] This provides a far more realistic and accurate simulation environment, capturing the intricate interactions between hardware, the OS, and the application.[3][8]

Comparative Analysis: SE vs. FS Mode

Choosing the right mode requires a clear understanding of the trade-offs between simplicity, speed, and fidelity. The following tables summarize the key differences.

Table 1: Feature and Scope Comparison

| Feature | System Call Emulation (SE) Mode | Full System (FS) Mode |
|---|---|---|
| Simulation Scope | User-level code, CPU, and memory system.[1][9] | Complete hardware system, including devices and peripherals.[4][6] |
| Operating System | Not simulated; system calls are emulated by gem5 and the host OS.[3][4] | A full, unmodified guest OS (e.g., Linux) is booted and executed.[6][8] |
| Workloads | Typically single, statically-linked applications (e.g., SPEC CPU).[3][9] | Any unmodified binary, multi-process applications, and complex software stacks.[6] |
| I/O & Peripherals | Not modeled; I/O-intensive workloads are unsuitable.[3][4] | Models a variety of I/O devices (network, disk, etc.).[3][4] |
| Threading Model | Limited; threads are often statically mapped to cores as there is no OS scheduler.[3] | Full support for OS-level thread scheduling and management.[10] |
| Fidelity | Lower; misses OS effects like page table walks, interrupts, and scheduling. | Higher; provides a more realistic simulation by including OS interactions.[4][6][8] |

Table 2: Practical Considerations for Researchers

| Consideration | System Call Emulation (SE) Mode | Full System (FS) Mode |
|---|---|---|
| Setup Complexity | Low. Requires a compiled benchmark and a gem5 configuration script.[9] | High. Requires a compiled kernel, a disk image with applications, and a more complex configuration.[6][8] |
| Simulation Speed | Faster. No overhead from booting or running an OS.[5] | Slower. Includes the overhead of booting the OS and running background processes. |
| Use Cases | CPU and memory hierarchy studies, algorithm analysis, initial hardware design exploration. | OS-level research, complex workload analysis (e.g., web servers), device driver development, full-stack performance analysis.[3][4][6] |
| Reproducibility | High for a given setup. | High, but dependent on the exact kernel, disk image, and OS configuration. |

Logical and Workflow Diagrams

Visualizing the components and setup process for each mode clarifies their fundamental differences.

Logical Components

The diagram below illustrates the interaction of components in both SE and FS modes. In SE mode, gem5 directly emulates OS services for the application. In FS mode, the application interacts with a complete guest OS, which in turn interacts with the simulated hardware.

Experimental Workflow

The setup process for each mode differs significantly. SE mode involves a straightforward compilation and execution path, while FS mode requires substantial preparatory work to create a bootable system.

Experimental Protocols: A Methodological Overview

This section provides a generalized protocol for initiating experiments in both modes.

Protocol 1: System Call Emulation (SE) Mode Experiment

This protocol outlines the steps for running a pre-compiled "hello world" test program that ships with gem5.

- Prerequisites: A successful build of the gem5 executable (e.g., build/X86/gem5.opt).

- Identify Target Application: For this example, we use the pre-compiled binary: tests/test-progs/hello/bin/x86/linux/hello.

- Configuration Script: Use the provided example script configs/example/se.py. This script is designed to set up a simple system with a CPU and memory for SE mode execution.

- Execution Command: Navigate to the root gem5 directory and run the simulation with a command of the form build/X86/gem5.opt configs/example/se.py -c tests/test-progs/hello/bin/x86/linux/hello, where:

  - build/X86/gem5.opt: The compiled gem5 binary.

  - configs/example/se.py: The Python configuration script that defines the simulated system.

  - -c: The command-line option to specify the executable to run.

- Data Collection: Upon completion, simulation results and statistics are stored in the m5out/ directory. The primary file for analysis is m5out/stats.txt, which contains detailed metrics about the simulation run, such as the number of instructions committed and cache hit rates.
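When many SE-mode runs are scripted, it can help to assemble the command from Protocol 1 programmatically. The following is a minimal sketch, assuming the paths named in the protocol; the helper name build_se_command is hypothetical, and the location of se.py may differ between gem5 releases, so check it against your checkout.

```python
import shlex
from pathlib import Path


def build_se_command(gem5_root, binary="tests/test-progs/hello/bin/x86/linux/hello"):
    """Return the argument list for an SE-mode run (hypothetical helper)."""
    root = Path(gem5_root)
    return [
        str(root / "build/X86/gem5.opt"),     # compiled gem5 binary
        str(root / "configs/example/se.py"),  # SE-mode configuration script
        "-c",                                 # option naming the executable to run
        str(root / binary),
    ]


if __name__ == "__main__":
    # Print the command so it can be inspected before launching it.
    print(shlex.join(build_se_command(".")))
```

The returned list can be passed to subprocess.run from the root gem5 directory once the printed command looks right.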

Protocol 2: Full System (FS) Mode Experiment

This protocol describes the high-level steps to boot a Linux operating system and run a command.

- Prerequisites:

  - A compiled gem5 binary for the target architecture (e.g., build/X86/gem5.opt).[11]

  - A compiled Linux kernel binary compatible with gem5.

  - A raw disk image containing a bootable OS (e.g., Ubuntu).[12][13]

  - The m5 utility binary, which allows communication between the simulated guest and the host simulator, should be placed in the disk image (e.g., in /sbin).[8][13]

- Acquire System Files: The easiest method is to download pre-built kernels and disk images from the official gem5 resources page. Manually creating these involves using tools like QEMU to install an OS onto a raw disk file.[12]

- Configuration Script: A more complex script is needed for FS mode. The example script configs/example/fs.py or the newer library-based scripts can be used as a starting point.[11] This script must specify the paths to the kernel and disk image.

- Execution Command: The command to launch an FS simulation takes more arguments, in the form build/X86/gem5.opt configs/example/fs.py --kernel <path to kernel> --disk-image <path to disk image>, where:

  - --kernel: Specifies the Linux kernel binary.

  - --disk-image: Specifies the OS disk image file.

- Interaction and Data Collection: The simulation will boot the full operating system. To run benchmarks, you typically need to include a run script inside the disk image that gem5 can execute after the OS has booted.[7] Alternatively, you can attach to the simulated serial port to interact with the system manually. All statistics are again saved to m5out/stats.txt.
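Because an FS run depends on several files being in place, a small pre-flight check can save a failed launch. The sketch below assembles the FS command from the two options described in Protocol 2 and reports any missing prerequisite files. Both helper names and all paths are hypothetical placeholders, not part of gem5.

```python
from pathlib import Path


def build_fs_command(gem5_binary, config_script, kernel, disk_image):
    """Return the argument list for an FS-mode run (hypothetical helper)."""
    return [
        str(gem5_binary),
        str(config_script),
        "--kernel", str(kernel),          # Linux kernel binary
        "--disk-image", str(disk_image),  # OS disk image file
    ]


def missing_prerequisites(*paths):
    """Return the prerequisite files that do not exist on the host."""
    return [str(p) for p in paths if not Path(p).exists()]


if __name__ == "__main__":
    # Placeholder paths; substitute the kernel and image you downloaded or built.
    kernel, image = "path/to/vmlinux", "path/to/disk.img"
    print(build_fs_command("build/X86/gem5.opt", "configs/example/fs.py", kernel, image))
    print("missing:", missing_prerequisites(kernel, image))
```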

Conclusion: Selecting the Right Mode for Your Research

The choice between SE and FS mode is a critical first step in structuring computer architecture research with gem5.

- Choose System Call Emulation (SE) Mode when your research is focused on the performance of the CPU core and memory hierarchy, and the workload has minimal or well-understood OS interaction.[5] It is ideal for rapid prototyping and iterating on microarchitectural designs due to its speed and simplicity.

- Choose Full System (FS) Mode when accuracy is paramount and your research involves complex software, OS-level behavior, or I/O devices.[5] It is the only viable option for studying entire system performance, interactions between applications and the OS, or workloads that cannot be easily run in an emulated environment.[3][4]

For many research projects, a hybrid approach is effective: begin with SE mode for initial exploration and performance tuning, then validate the most promising results in the more realistic but slower FS mode.[5] This methodology balances the need for rapid iteration with the demand for high-fidelity, reproducible results.

References

- 1. developer.arm.com [developer.arm.com]

- 2. arxiv.org [arxiv.org]

- 3. scispace.com [scispace.com]

- 4. research.cs.wisc.edu [research.cs.wisc.edu]

- 5. When to use full system FS vs syscall emulation SE with userland programs in gem5? - Stack Overflow [stackoverflow.com]

- 6. gem5 Full System Simulation — gem5 Tutorial 0.1 documentation [lowepower.com]

- 7. Running Benchmarks on Full System Mode [groups.google.com]

- 8. gem5: SPEC Tutorial [gem5.org]

- 9. Creating a simple configuration script — gem5 Tutorial 0.1 documentation [courses.grainger.illinois.edu]

- 10. [gem5-users] Some questions regarding SE vs FS in GEM5 for multi-core applications [gem5-users.gem5.narkive.com]

- 11. gem5: X86 Full-System Tutorial [gem5.org]

- 12. Setting up gem5 full system [lowepower.com]

- 13. gem5: Creating disk images [gem5.org]

A Researcher's Guide to CPU Models in gem5: Atomic, Timing, and O3

An In-depth Technical Guide for Scientists and Drug Development Professionals

The gem5 simulator is a powerful and flexible tool for computer architecture research, offering a variety of CPU models to suit different research needs. For researchers, scientists, and drug development professionals leveraging simulation in their work, understanding the trade-offs between these models is crucial for obtaining accurate and timely results. This guide provides an in-depth technical exploration of three core CPU models in gem5: AtomicSimpleCPU, TimingSimpleCPU, and the detailed Out-of-Order (O3) CPU. We will delve into their architectures, use cases, and performance characteristics, providing detailed experimental protocols and comparative data to inform your simulation choices.

Introduction to gem5 CPU Models

The gem5 simulator's modular design allows for the interchange of various components, with the CPU model being one of the most critical choices, directly impacting simulation speed and accuracy. The selection of a CPU model should align with the specific research question. For instance, early-stage functional validation might prioritize speed over cycle-level accuracy, while detailed microarchitectural studies demand a more precise, albeit slower, model.

gem5 offers several CPU models, but this guide focuses on three fundamental types that represent a spectrum of trade-offs:

- AtomicSimpleCPU: A simple, in-order CPU model designed for the fastest possible functional simulation.[1]

- TimingSimpleCPU: An in-order CPU model that introduces timing to memory accesses, offering a balance between speed and accuracy.[1]

- O3CPU (Out-of-Order CPU): A detailed, superscalar, out-of-order processor model for high-fidelity microarchitectural exploration.[2]

Simulations in gem5 can be run in two primary modes:

- System-Call Emulation (SE) Mode: In this mode, gem5 simulates the CPU and memory system, trapping system calls made by the application and emulating them, typically by forwarding them to the host operating system. SE mode is generally faster and easier to configure.[3]

- Full System (FS) Mode: FS mode simulates a complete hardware system, allowing an unmodified operating system to boot and run. This mode is more realistic, especially for studies where OS interactions are significant, but it is also more complex to set up and slower to simulate.[4]

A Deep Dive into gem5 CPU Models

AtomicSimpleCPU: The Speed Runner

The AtomicSimpleCPU is a functional-first, in-order CPU model.[1] Its primary design goal is simulation speed. It achieves this by treating memory accesses as "atomic": each access completes within a single call and returns an estimated latency, without modeling the detailed contention and queuing delays of the memory system.[5] The CPU uses this estimate to approximate access time rather than waiting for a response event, so the model is not cycle-accurate.

Key Characteristics:

- Execution Model: In-order, single-cycle instruction execution (except for memory accesses).

- Memory Model: Atomic memory accesses. Each access returns immediately with a latency estimate; the simulation never waits for a memory response event.

- Use Cases: Ideal for fast-forwarding to a region of interest in a simulation, functional verification of code, and studies where detailed cycle-level accuracy of the CPU core is not the primary concern.

- Limitations: Not suitable for performance analysis that depends on accurate timing of CPU pipeline effects or memory system interactions.

TimingSimpleCPU: A Step Towards Realism

The TimingSimpleCPU builds upon the simplicity of the AtomicSimpleCPU by introducing a more realistic memory timing model.[1] Like its atomic counterpart, it is an in-order model. However, when a memory access is initiated, the CPU stalls and waits for a response from the memory system, accurately modeling memory access latencies.[5] This makes it more cycle-accurate than the AtomicSimpleCPU, particularly for memory-bound workloads.

Key Characteristics:

- Execution Model: In-order, single-cycle instruction execution, but with stalls on memory accesses.

- Memory Model: Timing-based memory accesses. The CPU waits for the memory system to respond before proceeding.

- Use Cases: Suitable for studies where the performance of the memory subsystem is a key factor, but a full out-of-order core model is not necessary. It offers a good balance between simulation speed and memory-related performance accuracy.

- Limitations: As an in-order model, it does not capture the complexities of modern superscalar, out-of-order processors, such as instruction-level parallelism.
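The difference between the atomic and timing memory models can be illustrated with a toy model. The sketch below is not gem5 code: the fixed latency, the one-request-at-a-time memory, and all names are assumptions made for illustration. It shows that the two styles agree when memory is idle and diverge once a request has to queue behind other traffic, which only the timing style captures.

```python
MEM_LATENCY = 10  # hypothetical memory latency, in ticks


def run_atomic(ops):
    """Atomic style: the latency estimate is returned immediately and added."""
    tick = 0
    for op in ops:
        tick += 1                # one tick per instruction
        if op == "mem":
            tick += MEM_LATENCY  # estimate only; no queuing is modeled
    return tick


class TimingMemory:
    """Toy memory that serves one request at a time."""

    def __init__(self, busy_until=0):
        self.free_at = busy_until  # tick at which earlier traffic finishes

    def response_tick(self, now):
        start = max(now, self.free_at)  # wait behind earlier requests
        self.free_at = start + MEM_LATENCY
        return self.free_at


def run_timing(ops, memory):
    """Timing style: the CPU stalls until the memory response arrives."""
    tick = 0
    for op in ops:
        tick += 1
        if op == "mem":
            tick = memory.response_tick(tick)
    return tick


ops = ["alu", "mem", "alu"]
print(run_atomic(ops))                               # 13
print(run_timing(ops, TimingMemory()))               # 13 (idle memory)
print(run_timing(ops, TimingMemory(busy_until=20)))  # 31 (queued behind other traffic)
```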

O3CPU: The Pinnacle of Detail

The O3CPU is gem5's most detailed and complex CPU model, implementing a superscalar, out-of-order execution pipeline loosely based on the Alpha 21264.[2] It models the key components of a modern high-performance CPU, including a reorder buffer (ROB), issue queues, and physical register files, enabling it to exploit instruction-level parallelism.[6] The O3CPU uses a timing-based memory model, similar to the TimingSimpleCPU.

Pipeline Stages:

The O3CPU implements a configurable pipeline, with the following key stages[2][7]:

- Fetch: Fetches instructions from the instruction cache.

- Decode: Decodes instructions into micro-operations.

- Rename: Renames architectural registers to physical registers to eliminate false dependencies.

- Issue/Execute/Writeback (IEW): Dispatches instructions to functional units, executes them, and writes back the results.

- Commit: Commits instructions in-order, making their results architecturally visible.
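To make the rename stage concrete, the following toy sketch shows how giving every destination a fresh physical register removes false dependencies. It is a textbook illustration rather than gem5's implementation, and it assumes an unlimited supply of physical registers (no free list or reclamation); the function name and instruction encoding are hypothetical.

```python
def rename(instructions, num_arch_regs=4):
    """Rename (dest, src1, src2) triples of architectural register indices.

    Every destination receives a fresh physical register, which removes
    write-after-write and write-after-read (false) dependencies.
    """
    mapping = {r: r for r in range(num_arch_regs)}  # architectural -> physical
    next_phys = num_arch_regs                       # first unused physical register
    renamed = []
    for dest, src1, src2 in instructions:
        p1, p2 = mapping[src1], mapping[src2]  # read sources before remapping dest
        mapping[dest] = next_phys              # allocate a fresh physical register
        renamed.append((next_phys, p1, p2))
        next_phys += 1
    return renamed


program = [
    (1, 2, 3),  # r1 = r2 op r3
    (2, 1, 3),  # r2 = r1 op r3  (true dependency on r1 is preserved)
    (1, 0, 3),  # r1 = r0 op r3  (reuse of r1 is a false dependency)
]
print(rename(program))  # [(4, 2, 3), (5, 4, 3), (6, 0, 3)]
```

After renaming, the third instruction writes physical register 6 instead of overwriting the register that the second instruction reads, so the two can execute in either order.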

Key Characteristics:

- Execution Model: Out-of-order, superscalar pipeline.

- Memory Model: Timing-based memory accesses.

- Use Cases: The preferred model for detailed microarchitectural studies, including research on instruction scheduling, branch prediction, cache coherence protocols, and other performance-critical aspects of modern CPUs.

- Limitations: The high level of detail makes it the slowest of the three models. Its complexity also presents a steeper learning curve for configuration and analysis.

Quantitative Performance Comparison

To illustrate the performance trade-offs between the CPU models, the following table summarizes typical results for simulation speed and simulated performance across a selection of benchmarks. The data presented here is illustrative and based on trends observed in various studies. Actual results will vary based on the specific benchmark, system configuration, and host machine.

| CPU Model | Simulation Speed (Instructions/Second) | Simulated Performance (IPC) | Cycles Per Instruction (CPI) |
|---|---|---|---|
| AtomicSimpleCPU | Very High (e.g., > 1 MIPS) | High (often unrealistic) | Low (often unrealistic) |
| TimingSimpleCPU | Moderate (e.g., 100-500 KIPS) | Moderate | Moderate |
| O3CPU | Low (e.g., 10-100 KIPS) | Realistic | Realistic |

Table 1: Illustrative Performance Comparison of gem5 CPU Models.
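The columns of this table are related by simple arithmetic: CPI is the reciprocal of IPC, and the host time for a run is the instruction count divided by the simulation speed. The short sketch below works through both, using the illustrative speed ranges from the table and an assumed workload of one billion instructions; the function names are hypothetical.

```python
def cpi_from_ipc(ipc):
    """Cycles per instruction is the reciprocal of instructions per cycle."""
    return 1.0 / ipc


def host_seconds(num_instructions, sim_speed_ips):
    """Estimated host wall-clock time at a given simulation speed."""
    return num_instructions / sim_speed_ips


print(cpi_from_ipc(0.5))         # 2.0
print(host_seconds(1e9, 100e3))  # 10000.0 seconds at 100 KIPS
print(host_seconds(1e9, 1e6))    # 1000.0 seconds at 1 MIPS
```

Under these assumptions, the same workload takes roughly ten times longer on a detailed model running at 100 KIPS than on a fast model running at 1 MIPS, which is why fast-forwarding with a simple model is common.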

O3CPU Microarchitectural Parameters

The O3CPU model is highly configurable, allowing researchers to model a wide range of out-of-order processor designs. The table below lists some of the key parameters that can be adjusted in the gem5 configuration scripts.

| Parameter | Description | Default Value (Typical) |
|---|---|---|
| fetchWidth | Number of instructions fetched per cycle. | 8 |
| decodeWidth | Number of instructions decoded per cycle. | 8 |
| renameWidth | Number of instructions renamed per cycle. | 8 |
| issueWidth | Number of instructions issued to functional units per cycle. | 8 |
| commitWidth | Number of instructions committed per cycle. | 8 |
| numROBEntries | Number of entries in the Reorder Buffer. | 192 |
| numIQEntries | Number of entries in the Instruction Queue (Issue Queue). | 64 |
| numPhysIntRegs | Number of physical integer registers. | 256 |
| numPhysFloatRegs | Number of physical floating-point registers. | 256 |
| branchPred | The branch predictor to use (e.g., TournamentBP). | TournamentBP |

Table 2: Key Microarchitectural Parameters of the O3CPU Model.

Experimental Protocols

This section provides a detailed methodology for conducting a comparative study of the three CPU models in gem5 using the SPEC CPU® 2017 benchmark suite in Full System (FS) mode. This protocol is based on established practices for running SPEC benchmarks in gem5.[4]

Prerequisites

- gem5 Installation: A working installation of gem5, compiled for the desired instruction set architecture (e.g., X86 or ARM).

- SPEC CPU 2017 Benchmark Suite: A licensed copy of the SPEC CPU 2017 benchmark suite.

- Disk Image and Kernel: A pre-compiled disk image containing the SPEC benchmarks and a compatible Linux kernel. Resources for creating these are available through the gem5 project.

Configuration Script

The following Python script (spec_cpu_comparison.py) provides a basic framework for running a SPEC benchmark with a chosen CPU model.

Running the Simulation

- Place the Configuration Script: Ensure the Python script is in a directory accessible by gem5. Configuration scripts are interpreted when gem5 starts, so no separate compilation step is needed.

- Execute the Simulation: Run the simulation from the gem5 directory by passing the configuration script to the gem5 binary. The script's --cpu-type flag determines which CPU model is used.

- Collect and Analyze Results: After the simulation completes, the statistics will be available in the m5out/stats.txt file. Key metrics to analyze include sim_seconds (simulated time, not host wall-clock time), system.cpu.ipc (Instructions Per Cycle), and system.cpu.cpi (Cycles Per Instruction).
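Extracting these metrics by hand becomes tedious across many runs. The sketch below parses text in the "name value # description" layout used by stats.txt into a dictionary. The sample values are made up for illustration, the function name is hypothetical, and statistic names differ between gem5 versions, so adjust the keys to match your output.

```python
def parse_stats(text):
    """Parse 'name value # description' lines into a dict of floats."""
    stats = {}
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop the trailing description
        parts = line.split()
        if len(parts) < 2:
            continue                          # skip blank lines
        try:
            stats[parts[0]] = float(parts[1])
        except ValueError:
            continue                          # skip banners and non-numeric rows
    return stats


# Made-up sample in the stats.txt layout, for illustration only.
sample = """
---------- Begin Simulation Statistics ----------
sim_seconds                 0.000057   # Number of seconds simulated
system.cpu.ipc              0.250000   # IPC: instructions per cycle
system.cpu.cpi              4.000000   # CPI: cycles per instruction
---------- End Simulation Statistics   ----------
"""

stats = parse_stats(sample)
print(stats["sim_seconds"], stats["system.cpu.ipc"], stats["system.cpu.cpi"])
```

For a real run, read m5out/stats.txt and pass its contents to parse_stats; running the same parser over the output of each CPU model gives directly comparable dictionaries.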

Visualizing CPU Model Workflows

The following diagrams, generated using the DOT language, illustrate the logical workflows of the AtomicSimpleCPU, TimingSimpleCPU, and O3CPU models, as well as the experimental workflow.

AtomicSimpleCPU Workflow

TimingSimpleCPU Workflow

O3CPU Pipeline Workflow

Experimental Workflow

Conclusion

Choosing the right CPU model in gem5 is a critical decision that balances simulation speed and accuracy. The AtomicSimpleCPU offers the fastest simulation times, making it ideal for functional verification and rapid exploration. The TimingSimpleCPU provides a middle ground by incorporating realistic memory timing, suitable for studies where memory performance is key. For the highest fidelity and detailed microarchitectural analysis, the O3CPU is the model of choice, despite its slower simulation speed.

For researchers, scientists, and drug development professionals, this guide provides the foundational knowledge to make informed decisions about which CPU model best suits their research objectives. By understanding the architectural nuances, performance trade-offs, and experimental methodologies, you can effectively leverage the power of gem5 for your computational research.

References

- 1. gem5: Simple CPU Models [gem5.org]

- 2. gem5: Out of order CPU model [gem5.org]

- 3. gem5: Creating a simple configuration script [gem5.org]

- 4. gem5: SPEC Tutorial [gem5.org]

- 5. ws.engr.illinois.edu [ws.engr.illinois.edu]

- 6. gem5: More complex configuration script [courses.grainger.illinois.edu]

- 7. O3CPU - gem5 [old.gem5.org]

A Deep Dive into gem5: A Technical Guide to Memory Hierarchy and System Modeling for Researchers

For Researchers, Scientists, and Drug Development Professionals

In the modern landscape of scientific discovery, high-performance computing is an indispensable tool. From molecular dynamics simulations in drug development to the analysis of vast genomic datasets, the ability to model and understand complex computational systems is paramount. This guide provides an in-depth technical overview of the gem5 simulator, a powerful open-source tool for computer architecture research. While the subject matter is deeply rooted in computer engineering, a foundational understanding of these concepts can empower researchers to better leverage computational resources and to collaborate more effectively with computer scientists in designing optimized simulation environments for their specific research needs.

Core Concepts of gem5 System Modeling

The gem5 simulator is a modular platform designed for computer system architecture research, encompassing everything from processor microarchitecture to the system-level interactions of various components.[1] At its core, gem5 is built upon the concept of SimObjects, which are C++ objects that model physical hardware components like CPUs, caches, memory controllers, and buses.[2][3] These SimObjects are then configured and interconnected using Python scripts, offering a high degree of flexibility to researchers.[2][4]

Simulation Modes: Full System (FS) vs. System-call Emulation (SE)

gem5 offers two primary simulation modes, each with its own set of trade-offs between simulation speed and fidelity.[5]

- Full System (FS) Mode: In this mode, gem5 simulates a complete hardware platform, capable of booting an unmodified operating system.[5] This provides a highly realistic simulation environment, crucial for studies where the interaction between hardware and the operating system is of interest, such as the impact of page table walks on performance.[2] However, FS mode is generally slower and more complex to configure, requiring a compiled kernel and a disk image.[5][6]

- System-call Emulation (SE) Mode: SE mode focuses on simulating a user-space application, where the simulator traps and emulates system calls made by the program.[5] This mode is significantly faster and easier to configure as it does not require a full operating system.[2] It is well-suited for studies that are primarily concerned with the performance of a specific application and its interaction with the CPU and memory hierarchy, without the overhead of simulating an entire OS.[2]

The choice between FS and SE mode depends on the specific research question. For detailed investigations into OS-level effects on drug discovery simulations, FS mode would be necessary. For rapid prototyping and analysis of a computational chemistry algorithm's memory access patterns, SE mode is often the more practical choice.

The gem5 Memory Hierarchy

A critical aspect of modern computer systems is the memory hierarchy, which consists of multiple levels of caches to bridge the speed gap between the fast processor and the slower main memory. gem5 provides two distinct and powerful memory system models to explore this hierarchy: the "Classic" model and the "Ruby" model.

The Classic Memory System

The Classic memory model provides a simplified, yet effective, framework for simulating a memory hierarchy. It is generally faster to simulate than Ruby and is a good choice when the fine-grained details of cache coherence are not the primary focus of the study.[7] The Classic model implements a standard MOESI (Modified, Owned, Exclusive, Shared, Invalid) coherence protocol.
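For readers unfamiliar with MOESI, the sketch below encodes the textbook meaning of the five states as a small enumeration. It is an illustration of the protocol's definitions, not a description of how gem5's Classic caches represent state internally; the class and property names are hypothetical.

```python
from enum import Enum


class MOESI(Enum):
    """Textbook properties: (valid, must_write_back, may_have_other_sharers)."""

    MODIFIED = (True, True, False)    # only copy, newer than memory
    OWNED = (True, True, True)        # shared, this cache supplies and writes back
    EXCLUSIVE = (True, False, False)  # only copy, matches memory
    SHARED = (True, False, True)      # read-only copy, others may exist
    INVALID = (False, False, False)   # no usable data

    @property
    def valid(self):
        return self.value[0]

    @property
    def must_write_back(self):
        return self.value[1]

    @property
    def can_write_without_request(self):
        # A store needs no coherence request only when no other copy can exist.
        return self.valid and not self.value[2]


for state in MOESI:
    print(state.name, state.must_write_back, state.can_write_without_request)
```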

The Ruby Memory System

Ruby is a more detailed and flexible memory system simulator that is designed to model cache coherence protocols with a high degree of accuracy.[8] It uses a domain-specific language called SLICC (Specification Language for Implementing Cache Coherence) to define coherence protocols. This allows researchers to design and evaluate novel coherence protocols. Ruby is the preferred model when studying the performance of multi-core systems where data sharing and communication between cores are critical factors.

The logical flow of a memory request within the gem5 memory hierarchy is a fundamental concept. The following diagram illustrates this process.

Quantitative Performance Analysis

gem5 provides a rich set of statistics to analyze the performance of a simulated system. These statistics cover various aspects of the CPU, caches, and main memory.[9][10] The following table summarizes the kinds of comparisons reported in studies using gem5, showing the memory hierarchy configurations that each one contrasts.

| Metric | Configuration A | Configuration B | Benchmark | Source |
|---|---|---|---|---|
| L1 D-Cache Miss Rate | 32kB, 2-way | 64kB, 4-way | Big Data Benchmark | [1] |
| L2 Cache Miss Rate | 256kB, 8-way | 512kB, 8-way | PARSEC | [5] |
| Average Memory Access Time (ns) | Classic Coherence | Ruby (MESI_Two_Level) | Synthetic Traffic | [11] |
| DDR4 Bandwidth (GB/s) | gem5 Simulation | Real Hardware | STREAM | [12] |
| Simulation Speed (Host Inst/sec) | 8kB L1 Caches | 32kB L1 Caches | RISC-V Core | [13] |

Note: The entries in this table are illustrative and represent the types of data that can be extracted from gem5 simulations. For precise values, refer to the cited sources.

Experimental Protocols for Memory Hierarchy Studies

Conducting a well-defined experiment is crucial for obtaining meaningful results from gem5. The following protocol outlines the key steps for setting up and running a memory-focused simulation experiment.

Protocol: Evaluating Cache Performance in SE Mode

Objective: To analyze the impact of L1 data cache size on the performance of a memory-intensive scientific application.

1. Environment Setup:

- Install gem5 and its dependencies on a Linux-based host system.[5]

- Compile gem5 for the target instruction set architecture (ISA), for example, X86 or ARM.[14]

2. Benchmark Preparation:

- Select a representative memory-intensive benchmark. For scientific applications, benchmarks like STREAM or workloads from the PARSEC suite are suitable.[10][15]

- Statically compile the benchmark for the target ISA to be used in SE mode.[2]

3. Configuration Script (.py file):

- Create a Python script to define the simulated system.[2]

- Instantiate a System SimObject.[4]

- Define a clock domain and memory mode (typically timing for performance analysis).[4]

- Instantiate a CPU model (e.g., TimingSimpleCPU for a basic timing simulation).[16]

- Define the memory hierarchy. For this experiment, you will create L1 instruction and data caches and connect them to a memory bus. The se.py script in the configs/example/ directory provides a good starting point.[16]

- Parameterize the L1 data cache size. It is good practice to make this a command-line argument for easy experimentation.

- Instantiate a memory controller and define the physical memory range.[2]

- Set up the process to be simulated by pointing to the compiled benchmark executable.[2]
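Exposing the L1 data cache size as a command-line argument, as suggested in step 3, can be done with Python's standard argparse module. The sketch below shows only the argument handling; the option name, its default, and the positional binary argument are assumptions chosen for illustration.

```python
import argparse


def parse_args(argv=None):
    """Parse the options for a cache-size experiment (hypothetical names)."""
    parser = argparse.ArgumentParser(description="L1 data cache size experiment")
    parser.add_argument("binary", help="statically compiled benchmark to run")
    parser.add_argument(
        "--l1d_size",
        default="32kB",
        help="L1 data cache size, e.g. 16kB, 32kB, 64kB",
    )
    return parser.parse_args(argv)


args = parse_args(["./stream", "--l1d_size", "64kB"])
print(args.binary, args.l1d_size)  # ./stream 64kB
```

Inside a configuration script, the parsed value would then be assigned to the size parameter of the L1 data cache object before the system is instantiated.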

4. Simulation Execution:

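- Run the gem5 executable, passing the Python configuration script and the desired L1 data cache size as arguments.

- Collect the simulation statistics from the output file (stats.txt), which gem5 writes to the m5out/ directory by default; give each run its own output directory so that results are not overwritten.[9]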

5. Data Analysis:

- Parse the stats.txt file to extract relevant performance metrics. Key statistics for this experiment include:

- sim_seconds: Total simulated time.[9]

- sim_insts: Total number of committed instructions.[9]

- system.cpu.dcache.overall_misses::total: Total number of L1 data cache misses. Statistic names vary between gem5 versions, so confirm the exact name in your stats.txt.

- system.cpu.dcache.overall_accesses::total: Total number of accesses to the L1 data cache.

- Calculate the L1 data cache miss rate (misses / accesses).

- Repeat the simulation for a range of L1 data cache sizes and plot the miss rate and simulated time to analyze the performance impact.
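The miss-rate calculation in step 5 is a single division per run. The sketch below applies it across a sweep of cache sizes. The counts are made up purely to show the mechanics; real values come from each run's stats.txt, and the function name is hypothetical.

```python
def miss_rate(misses, accesses):
    """L1 data cache miss rate; returns 0.0 when there were no accesses."""
    return misses / accesses if accesses else 0.0


# Made-up counts for illustration only.
runs = {
    "16kB": {"misses": 5000, "accesses": 100000},
    "32kB": {"misses": 3000, "accesses": 100000},
    "64kB": {"misses": 2000, "accesses": 100000},
}

for size, counts in runs.items():
    rate = miss_rate(counts["misses"], counts["accesses"])
    print(f"{size}: {rate:.2%}")
```

Guarding against a zero access count avoids a division error for runs that terminate before the region of interest is reached.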

The following diagram illustrates the workflow for this experimental protocol.

Conclusion

gem5 is a versatile and powerful tool for researchers across various scientific domains who rely on high-performance computing. By providing a flexible platform for modeling and simulating computer systems, it enables a deeper understanding of how hardware characteristics, particularly the memory hierarchy, can influence the performance of complex scientific applications. For researchers in fields like drug development, this knowledge can be instrumental in optimizing computational pipelines and accelerating the pace of discovery. While a deep expertise in computer architecture is not a prerequisite, a foundational understanding of the concepts presented in this guide can foster more effective collaboration with computer scientists and lead to more efficient and impactful computational research.

References

- 1. researchgate.net [researchgate.net]

- 2. gem5: Creating a simple configuration script [gem5.org]

- 3. gem5: gem5_memory_syste [gem5.org]

- 4. Creating a simple configuration script — gem5 Tutorial 0.1 documentation [courses.grainger.illinois.edu]

- 5. developer.arm.com [developer.arm.com]

- 6. gem5: X86 Full-System Tutorial [gem5.org]

- 7. gem5: Adding cache to configuration script [gem5.org]

- 8. youtube.com [youtube.com]

- 9. Understanding gem5 statistics and output — gem5 Tutorial 0.1 documentation [courses.grainger.illinois.edu]

- 10. gem5: Understanding gem5 statistics and output [gem5.org]

- 11. arch.cs.ucdavis.edu [arch.cs.ucdavis.edu]

- 12. researchgate.net [researchgate.net]

- 13. fires.im [fires.im]

- 14. OKLAHOMA STATE UNIVERSITY [vlsiarch.ecen.okstate.edu]

- 15. gem5: PARSEC Tutorial [gem5.org]

- 16. Lele's Memo: Gem5 [cnlelema.github.io]

An In-Depth Technical Guide to Writing a Simple Simulation Script in gem5

Introduction to gem5 Simulation

gem5 is a modular and extensible discrete-event simulator for computer architecture research. It provides a framework for modeling various hardware components, including processors, memory systems, and interconnects. At its core, a gem5 simulation is controlled by a Python configuration script, which allows researchers to define, configure, and connect different simulation components, known as SimObjects.[1][2][3] SimObjects are C++ objects exported to the Python environment, enabling detailed and flexible system configuration.[1][2]

gem5 supports two primary simulation modes: Syscall Emulation (SE) and Full System (FS).

- Syscall Emulation (SE) Mode: This mode focuses on simulating the CPU and memory system for a single user-mode application. It avoids the complexity of booting an operating system by intercepting and emulating system calls made by the application.[1] SE mode is significantly easier to configure and is ideal for architectural studies focused on application performance without the overhead of a full OS.[1][4]

- Full System (FS) Mode: In this mode, gem5 emulates a complete hardware system, including devices and interrupt controllers, allowing it to boot an unmodified operating system.[1][5] FS mode provides higher fidelity by modeling OS interactions but is considerably more complex to configure.[4][5]

This guide will focus on creating a simple simulation script using the more straightforward Syscall Emulation mode.

Experimental Protocol: Crafting a Basic SE Mode Script

The process of writing a gem5 simulation script involves defining the hardware components, configuring their parameters, and specifying the workload to be executed. The gem5 standard library simplifies this process by providing pre-defined, connectable components.[2][6]

Step 1: Importing Necessary gem5 Components

The first step is to import the required classes from the gem5 standard library. These classes represent the building blocks of our simulated system, such as the main board, processor, memory, and cache hierarchy.[6]

Step 2: Defining the System Components

Next, we instantiate the imported components to define the hardware configuration. For a simple system, we will model a processor connected directly to main memory without any caches.[6]

- Cache Hierarchy: We explicitly define that there will be no caches using the NoCache component.[6]

- Memory System: A single channel of DDR3 memory is configured.[6]

- Processor: A simple, single-core atomic CPU is chosen. An atomic CPU model is faster because memory requests complete immediately, with no timing events to wait on, which suits initial functional simulations.[6]

- Board: The SimpleBoard acts as the backbone, connecting the processor, memory, and cache hierarchy.[2][6] We must specify the clock frequency and the previously defined components for the board.

Step 3: Setting the Workload

With the hardware defined, we specify the application to be run. In SE mode, this involves pointing the simulator to a statically compiled executable.[1][3] The obtain_resource function can be used to download pre-built test binaries, such as a "Hello World" program.[6]

Step 4: Instantiating and Running the Simulation

The final step is to create a Simulator object with the configured board and launch the simulation. The run() method starts the execution, which continues until the workload completes.[1][6]
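Taken together, the four steps can be sketched as a single script. This is a minimal sketch, assuming a recent gem5 release that ships the standard library; the import paths follow the gem5 Hello World tutorial and may differ in older versions. The function name `build_and_run`, the `CONFIG` dictionary, and the resource name `x86-hello64-static` are assumptions made for illustration. The gem5 imports sit inside the function because they only resolve inside gem5's embedded interpreter.

```python
"""Minimal SE-mode sketch. Run inside gem5, e.g.: build/X86/gem5.opt simple_se.py"""

# Parameter values taken from this guide; the workload name is an assumption.
CONFIG = {
    "clk_freq": "3GHz",
    "num_cores": 1,
    "memory_size": "2GiB",
    "workload": "x86-hello64-static",  # assumed gem5-resources identifier
}


def build_and_run(config=CONFIG):
    # Step 1: import the standard-library components (gem5 interpreter only).
    from gem5.components.boards.simple_board import SimpleBoard
    from gem5.components.cachehierarchies.classic.no_cache import NoCache
    from gem5.components.memory.single_channel import SingleChannelDDR3_1600
    from gem5.components.processors.cpu_types import CPUTypes
    from gem5.components.processors.simple_processor import SimpleProcessor
    from gem5.isas import ISA
    from gem5.resources.resource import obtain_resource
    from gem5.simulate.simulator import Simulator

    # Step 2: define the hardware: no caches, one DDR3 channel, one atomic core.
    cache_hierarchy = NoCache()
    memory = SingleChannelDDR3_1600(size=config["memory_size"])
    processor = SimpleProcessor(
        cpu_type=CPUTypes.ATOMIC,
        num_cores=config["num_cores"],
        isa=ISA.X86,
    )
    board = SimpleBoard(
        clk_freq=config["clk_freq"],
        processor=processor,
        memory=memory,
        cache_hierarchy=cache_hierarchy,
    )

    # Step 3: set a statically compiled binary as the SE-mode workload.
    board.set_se_binary_workload(obtain_resource(config["workload"]))

    # Step 4: create the simulator and run until the workload exits.
    simulator = Simulator(board=board)
    simulator.run()


# gem5 executes configuration scripts with __name__ set to "__m5_main__",
# so nothing is simulated if this file is imported by plain Python.
if __name__ == "__m5_main__":
    build_and_run()
```

Keeping the parameters in a plain dictionary is a design choice for this sketch only: it makes the values easy to log or vary between runs without touching the component wiring.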

Data Presentation: Key Simulation Parameters

The following table summarizes the core quantitative parameters configured in our simple simulation script.

| Parameter | Component | Value | Description |
|---|---|---|---|
| clk_freq | SimpleBoard | "3GHz" | The clock frequency for the system board. |
| cpu_type | SimpleProcessor | CPUTypes.ATOMIC | Specifies the atomic CPU model for faster, less detailed simulation. |
| num_cores | SimpleProcessor | 1 | The number of CPU cores in the processor. |
| isa | SimpleProcessor | ISA.X86 | The instruction set architecture for the processor. |
| size | SingleChannelDDR3_1600 | "2GiB" | The total size of the main memory. |
| cache_hierarchy | SimpleBoard | NoCache | Indicates a direct connection between the processor and memory without caches. |

Visualizations

gem5 Simulation Script Workflow

The logical workflow for creating and executing a gem5 simulation script involves defining, configuring, and connecting components before instantiation and execution.

A diagram illustrating the logical workflow of a gem5 simulation script.

Simple System Component Hierarchy

This diagram illustrates the hierarchical relationship between the simulated hardware components defined in the script. The SimpleBoard acts as the top-level container for the SimpleProcessor and SingleChannelDDR3_1600 memory system, which are interconnected.

Component hierarchy for the simple gem5 simulation.

References

- 1. gem5: Creating a simple configuration script [gem5.org]

- 2. gem5: Creating a simple configuration script [courses.grainger.illinois.edu]

- 3. Creating a simple configuration script — gem5 Tutorial 0.1 documentation [courses.grainger.illinois.edu]

- 4. When to use full system FS vs syscall emulation SE with userland programs in gem5? - Stack Overflow [stackoverflow.com]

- 5. Full system configuration files — gem5 Tutorial 0.1 documentation [lowepower.com]

- 6. gem5: Hello World Tutorial [gem5.org]

Unlocking Architectural Insights: A Technical Guide to GEM-5 Statistics and Output Analysis

For Researchers, Scientists, and Drug Development Professionals

In computer architecture research, and in the fields that depend on it such as computational drug discovery, the ability to accurately model and analyze system performance is paramount. The gem5 simulator is a cornerstone for this kind of exploration, offering a powerful and flexible platform for detailed microarchitectural investigation. However, the wealth of data generated by gem5 can be as daunting as it is valuable. This guide walks through gem5's statistical output so that researchers can use this data for robust analysis and informed decision-making.

Deconstructing the gem5 Output: m5out Directory

Upon completion of a gem5 simulation, a directory named m5out is generated, containing the primary results of the experiment.[1][2] Two files within this directory are of immediate importance: config.ini and stats.txt.

- config.ini: This file serves as the definitive record of the simulated system's configuration.[1][2] It lists every simulation object (SimObject) created and its parameter values, including those set by default.[1][2] It is best practice to always review this file as a sanity check that the simulated system matches the intended experimental setup.[2]

- stats.txt: This is the focal point of our analysis, containing a detailed dump of all registered statistics for every SimObject in the simulation.[1][2] The data is presented in a human-readable text format, with each line representing a specific statistic.

Each line in stats.txt typically gives the statistic's name, followed by its value and, after a # character, a short description.
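A line generally consists of the statistic name, its value, and a `#`-prefixed description. The sketch below shows one way to parse that shape. The function names `parse_stat_line` and `parse_stats` are hypothetical helpers, not part of gem5, and the sample line is illustrative. Only the first value on a line is kept, so the extra columns of distribution statistics are ignored.

```python
def parse_stat_line(line):
    """Return (name, value, description), or None for non-statistic lines."""
    body, _, description = line.partition("#")
    fields = body.split()
    if len(fields) < 2:
        return None  # blank lines
    try:
        value = float(fields[1])  # also accepts "nan" and "inf"
    except ValueError:
        return None  # banner lines such as "---------- Begin ... ----------"
    return fields[0], value, description.strip()


def parse_stats(text):
    """Map statistic names to values. If the text holds several dumps,
    later values overwrite earlier ones."""
    stats = {}
    for line in text.splitlines():
        parsed = parse_stat_line(line)
        if parsed is not None:
            name, value, _ = parsed
            stats[name] = value
    return stats


sample = "system.cpu.numCycles        57      # number of cpu cycles simulated"
print(parse_stat_line(sample))
```

In practice the text would come from reading `m5out/stats.txt`; statistic names and the exact description text vary between gem5 versions.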

Core Performance Indicators: A Quantitative Overview

The stats.txt file is rich with data. The following tables summarize key performance indicators (KPIs) that are fundamental for most research analyses.

Global Simulation Statistics

These statistics provide a high-level summary of the entire simulation run.

| Statistic | Description |
|---|---|
| sim_seconds | The total simulated time, i.e. the time elapsed in the simulated system.[2][3] |
| sim_ticks | The total number of simulated ticks. A tick is gem5's base unit of simulated time (1 ps by default), not a CPU clock cycle. |
| host_inst_rate | The number of simulated instructions executed per second of host time, indicating the performance of the gem5 simulator itself.[2][3] |
| host_op_rate | The number of simulated operations (including micro-ops) executed per second of host time. |

CPU Core Statistics (e.g., O3CPU)

The Out-of-Order (O3) CPU model in gem5 provides a wealth of statistics for detailed pipeline analysis.[4]

| Statistic Category | Key Statistics | Description |
|---|---|---|
| Instruction-Level Parallelism | ipc | Instructions Per Cycle, a primary measure of processor performance. |
| | cpi | Cycles Per Instruction, the reciprocal of IPC. |
| | committedInsts | The total number of instructions committed. |
| Branch Prediction | branchPred.lookups | The total number of branch predictor lookups. |
| | branchPred.condPredicted | The number of conditional branches predicted. |
| | branchPred.condIncorrect | The number of conditional branches incorrectly predicted. |
| Pipeline Stages | fetch.Insts | Number of instructions fetched. |
| | decode.DecodedInsts | Number of instructions decoded. |
| | rename.RenamedInsts | Number of instructions renamed. |
| | iew.InstsIssued | Number of instructions issued to the execution units. |
| | commit.CommittedInsts | Number of instructions committed. |
| Resource Stalls | rename.RenameStalls | Number of cycles the rename stage was stalled. |
| | iew.IssueStalls | Number of cycles the issue stage was stalled due to a full instruction queue. |
| | commit.ROBStalls | Number of cycles the commit stage was stalled due to a full reorder buffer. |
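Several of these figures can be cross-checked from raw counters. The sketch below recomputes IPC, CPI, and a conditional-branch misprediction rate. It assumes a cycle counter such as `numCycles` is available alongside the counters in the table. The function name and the input values are invented for illustration.

```python
def derived_cpu_metrics(committed_insts, num_cycles, cond_predicted, cond_incorrect):
    """Recompute headline CPU metrics from raw event counts."""
    if committed_insts <= 0 or num_cycles <= 0:
        raise ValueError("instruction and cycle counts must be positive")
    metrics = {
        "ipc": committed_insts / num_cycles,
        "cpi": num_cycles / committed_insts,  # reciprocal of IPC
    }
    # Fraction of predicted conditional branches that were mispredicted.
    if cond_predicted > 0:
        metrics["cond_mispredict_rate"] = cond_incorrect / cond_predicted
    return metrics


# Invented counter values, for illustration only.
example = derived_cpu_metrics(
    committed_insts=1_000_000,
    num_cycles=800_000,
    cond_predicted=200_000,
    cond_incorrect=10_000,
)
for name, value in example.items():
    print(f"{name}: {value:.3f}")
```

Comparing recomputed values against the reported `ipc` and `cpi` is a cheap sanity check that the right counters were extracted.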

Memory Hierarchy Statistics

Understanding the memory system's behavior is critical for performance analysis.

| Statistic Category | Key Statistics | Description |
|---|---|---|
| L1 Caches (Instruction & Data) | icache.overall_miss_rate | The miss rate of the L1 instruction cache. |
| | dcache.overall_miss_rate | The miss rate of the L1 data cache. |
| | icache.avg_miss_latency | The average latency for an instruction cache miss. |
| | dcache.avg_miss_latency | The average latency for a data cache miss. |
| L2 Cache | l2.overall_miss_rate | The overall miss rate of the L2 cache. |
| | l2.avg_miss_latency | The average latency for an L2 cache miss. |
| DRAM Controller | dram.readReqs | The total number of read requests to the DRAM controller. |
| | dram.writeReqs | The total number of write requests to the DRAM controller. |
| | dram.avgMemAccLat | The average memory access latency as seen by the DRAM controller. |
| | dram.bwTotal | The total bandwidth utilized for the DRAM. |

Experimental Protocols for Research Analysis

A structured approach is essential for meaningful analysis of gem5 data. The following protocols outline common experimental workflows.

Protocol 1: Baseline Performance Characterization

Objective: To establish a baseline performance profile of an application on a specific architecture.

Methodology:

- Configuration: Define a baseline system configuration using a gem5 Python configuration script. Specify the CPU model (e.g., O3CPU), cache hierarchy (sizes, associativities), and memory technology.

- Simulation: Run the target application workload on the configured system.

- Data Extraction: From stats.txt, extract key performance indicators, including sim_seconds, system.cpu.ipc, system.cpu.cpi, and the miss rates for all cache levels.

- Analysis: Document these baseline metrics. They will serve as the reference point for all future architectural explorations.
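The extraction and documentation steps can be sketched as follows. This is a minimal sketch: `extract_kpis` is a hypothetical helper, the sample text and its values are invented, and the statistic names are the ones used in this guide (real names depend on the gem5 version and on how the system is configured). The helper raises an error when a requested statistic is absent, so a misnamed KPI is not silently dropped.

```python
import json


def extract_kpis(stats_text, wanted):
    """Return {name: value} for the requested statistics, or raise if any is absent."""
    found = {}
    for line in stats_text.splitlines():
        fields = line.split("#", 1)[0].split()  # drop the trailing description
        if len(fields) >= 2 and fields[0] in wanted:
            found[fields[0]] = float(fields[1])
    missing = sorted(set(wanted) - set(found))
    if missing:
        # Stat names differ between gem5 versions and configurations.
        raise KeyError(f"statistics not found: {missing}")
    return found


# Invented sample values, for illustration only.
SAMPLE = """\
sim_seconds                  0.002500   # Number of seconds simulated
system.cpu.ipc               1.250000   # IPC: instructions per cycle
system.cpu.cpi               0.800000   # CPI: cycles per instruction
"""

baseline = extract_kpis(SAMPLE, ["sim_seconds", "system.cpu.ipc", "system.cpu.cpi"])
print(json.dumps(baseline, indent=2))  # keep this record next to config.ini
```

Storing the baseline as JSON next to the matching config.ini keeps each set of numbers tied to the configuration that produced it.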

Protocol 2: Analyzing the Impact of Cache Size

Objective: To quantify the effect of L2 cache size on application performance.

Methodology:

- Iterative Configuration: Create a series of gem5 configuration scripts, each identical to the baseline except for the L2 cache size. For example, you might test sizes of 256kB, 512kB, 1MB, and 2MB.

- Batch Simulation: Execute the simulation for each configuration.

- Targeted Data Extraction: For each run, parse the stats.txt file to extract system.cpu.ipc, system.l2.overall_miss_rate, and system.dram.readReqs.

- Comparative Analysis: Create a table comparing the extracted metrics across the different L2 cache sizes. Visualize the relationship between L2 cache size, miss rate, and IPC to identify the point of diminishing returns.
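One way to make "point of diminishing returns" concrete is to pick a minimum IPC gain per size step and find where the sweep drops below it. The sketch below does that for already extracted metrics. The function name `knee_size`, the 2% threshold, and all values in `RUNS` are assumptions for illustration, not measured results.

```python
def knee_size(runs, min_gain_pct=2.0):
    """runs: [(l2_size_kb, ipc, l2_miss_rate), ...] in increasing size order.

    Returns the largest size whose step up from the previous size still
    improved IPC by at least min_gain_pct percent.
    """
    best_size, prev_ipc, _ = runs[0]
    for size, ipc, _ in runs[1:]:
        gain_pct = (ipc - prev_ipc) / prev_ipc * 100.0
        if gain_pct < min_gain_pct:
            break  # this step no longer pays off
        best_size, prev_ipc = size, ipc
    return best_size


# Invented sweep results, for illustration only.
RUNS = [
    (256, 1.000, 0.30),
    (512, 1.100, 0.18),
    (1024, 1.150, 0.12),
    (2048, 1.155, 0.11),
]

for size, ipc, miss in RUNS:
    print(f"{size:>5} kB  IPC={ipc:.3f}  L2 miss rate={miss:.0%}")
print("diminishing returns beyond", knee_size(RUNS), "kB")
```

The threshold is a judgment call that depends on the area and power cost of the extra capacity, so it is worth reporting alongside the result.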

Protocol 3: Power and Energy Estimation

Objective: To estimate the power and energy consumption of the simulated system.

Methodology:

- Enable Power Modeling: In your gem5 configuration, enable power modeling. This can be done using gem5's native MathExprPowerModel, which allows you to define power consumption as a mathematical expression of other statistics.[5] For more detailed analysis, gem5 can be integrated with external tools like McPAT.[1][6]

- Simulation: Run the simulation with power modeling enabled.

- Power Statistics Extraction: The stats.txt file will now contain power and energy-related statistics, such as system.cpu.power_model.dynamic_power and system.cpu.power_model.static_power.

- Energy Calculation: Calculate the total energy consumption by integrating the power over the simulated time. Analyze the energy breakdown between different components to identify power hotspots.
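The energy calculation amounts to summing power multiplied by the interval it applies to. The sketch below assumes that power statistics are reported in watts and that each sample covers a known interval of simulated time (for example, one periodic statistics dump). The function name, component names, and sample values are invented for illustration.

```python
def total_energy_joules(samples):
    """samples: iterable of (interval_seconds, dynamic_watts, static_watts).

    Approximates the integral of power over time as a sum of
    (dynamic + static) power times the interval each sample covers.
    """
    return sum(dt * (dynamic + static) for dt, dynamic, static in samples)


# Invented per-component samples, for illustration only.
COMPONENTS = {
    "cpu": [(0.001, 1.5, 0.5), (0.001, 2.5, 0.5)],
    "l2": [(0.002, 0.2, 0.3)],
}

energy = {name: total_energy_joules(s) for name, s in COMPONENTS.items()}
hotspot = max(energy, key=energy.get)

for name, joules in energy.items():
    print(f"{name}: {joules * 1e3:.2f} mJ")
print("largest consumer:", hotspot)
```

With a single statistics dump at the end of the run, the sum reduces to average power multiplied by sim_seconds.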

By leveraging the detailed statistics provided by gem5 and following structured experimental protocols, researchers can gain profound insights into the performance and power characteristics of novel computer architectures. This guide serves as a foundational resource for navigating the complexities of gem5's output, enabling more efficient and impactful research in computationally intensive domains.

References

- 1. How to get power consumption in gem5? · gem5 · Discussion #980 · GitHub [github.com]

- 2. gem5: Understanding gem5 statistics and output [gem5.org]

- 3. What is the difference between the gem5 CPU models and which one is more accurate for my simulation? - Stack Overflow [stackoverflow.com]

- 4. gem5: Out of order CPU model [gem5.org]

- 5. gem5: ARM Power Modelling [gem5.org]

- 6. eprints.soton.ac.uk [eprints.soton.ac.uk]

A Technical Guide to Supported Instruction Set Architectures in gem5: Capabilities and Research Implications

An In-depth Technical Guide for Researchers and Scientists

The gem5 simulator is a cornerstone of computer architecture research, providing a modular and flexible platform for exploring novel processor designs, memory systems, and full-system behavior.[1] A key feature of gem5 is the decoupling of Instruction Set Architecture (ISA) semantics from its detailed CPU models, enabling robust support for a diverse range of ISAs.[1][2] This guide provides a comprehensive overview of the ISAs supported by gem5, their level of maturity, and the research avenues they enable.