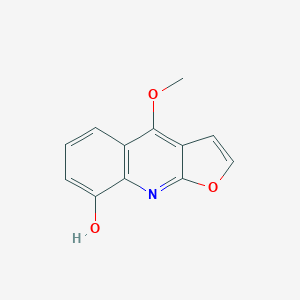

Robustine

Description

This compound has been reported in Zanthoxylum simulans, Zanthoxylum wutaiense, and other organisms with data available.

Properties

IUPAC Name |

4-methoxyfuro[2,3-b]quinolin-8-ol | |

|---|---|---|

| Source | PubChem | |

| URL | https://pubchem.ncbi.nlm.nih.gov | |

| Description | Data deposited in or computed by PubChem | |

InChI |

InChI=1S/C12H9NO3/c1-15-11-7-3-2-4-9(14)10(7)13-12-8(11)5-6-16-12/h2-6,14H,1H3 | |

| Source | PubChem | |

| URL | https://pubchem.ncbi.nlm.nih.gov | |

| Description | Data deposited in or computed by PubChem | |

InChI Key |

VGVNNMLKTSWBAR-UHFFFAOYSA-N | |

| Source | PubChem | |

| URL | https://pubchem.ncbi.nlm.nih.gov | |

| Description | Data deposited in or computed by PubChem | |

Canonical SMILES |

COC1=C2C=COC2=NC3=C1C=CC=C3O | |

| Source | PubChem | |

| URL | https://pubchem.ncbi.nlm.nih.gov | |

| Description | Data deposited in or computed by PubChem | |

Molecular Formula |

C12H9NO3 | |

| Source | PubChem | |

| URL | https://pubchem.ncbi.nlm.nih.gov | |

| Description | Data deposited in or computed by PubChem | |

DSSTOX Substance ID |

DTXSID20177086 | |

| Record name | Furo(2,3-b)quinolin-8-ol, 4-methoxy- | |

| Source | EPA DSSTox | |

| URL | https://comptox.epa.gov/dashboard/DTXSID20177086 | |

| Description | DSSTox provides a high quality public chemistry resource for supporting improved predictive toxicology. | |

Molecular Weight |

215.20 g/mol | |

| Source | PubChem | |

| URL | https://pubchem.ncbi.nlm.nih.gov | |

| Description | Data deposited in or computed by PubChem | |

CAS No. |

2255-50-7 | |

| Record name | 8-Hydroxydictamnine | |

| Source | CAS Common Chemistry | |

| URL | https://commonchemistry.cas.org/detail?cas_rn=2255-50-7 | |

| Description | CAS Common Chemistry is an open community resource for accessing chemical information. Nearly 500,000 chemical substances from CAS REGISTRY cover areas of community interest, including common and frequently regulated chemicals, and those relevant to high school and undergraduate chemistry classes. This chemical information, curated by our expert scientists, is provided in alignment with our mission as a division of the American Chemical Society. | |

| Explanation | The data from CAS Common Chemistry is provided under a CC-BY-NC 4.0 license, unless otherwise stated. | |

| Record name | Furo(2,3-b)quinolin-8-ol, 4-methoxy- | |

| Source | ChemIDplus | |

| URL | https://pubchem.ncbi.nlm.nih.gov/substance/?source=chemidplus&sourceid=0002255507 | |

| Description | ChemIDplus is a free, web search system that provides access to the structure and nomenclature authority files used for the identification of chemical substances cited in National Library of Medicine (NLM) databases, including the TOXNET system. | |

| Record name | Furo(2,3-b)quinolin-8-ol, 4-methoxy- | |

| Source | EPA DSSTox | |

| URL | https://comptox.epa.gov/dashboard/DTXSID20177086 | |

| Description | DSSTox provides a high quality public chemistry resource for supporting improved predictive toxicology. | |

Foundational & Exploratory

The Bedrock of Discovery: A Technical Guide to Research Robustness in Drug Development

For Immediate Release

In the high-stakes world of drug development, where the path from a promising molecule to a life-saving therapy is long and fraught with peril, the concept of research robustness stands as the bedrock upon which all progress is built. This in-depth guide, intended for researchers, scientists, and drug development professionals, will dissect the core tenets of research robustness, explore its critical importance, and provide actionable insights into ensuring its implementation in the laboratory and beyond.

Defining Research Robustness: More Than Just a Single Result

Research robustness is the quality of a scientific finding to hold true under a variety of conditions.[1][2] It is the manifestation of reliability and dependability in experimental outcomes.[1][3] A robust finding is not a fleeting observation tied to a specific, highly controlled setting but rather a consistent and reproducible effect that can withstand scrutiny and variations in experimental parameters.[1][2]

At its core, research robustness is a composite of several key concepts:

-

Reproducibility : This refers to the ability to obtain the same or very similar results when re-analyzing the original data using the same computational steps, methods, and code.[4][5][6] It is a test of the transparency and integrity of the data analysis process.

-

Replicability : This is the ability to achieve consistent results when an entire experiment is repeated, including the collection of new data.[4][5][6] It validates the original findings and demonstrates that they were not due to chance or specific, unrecorded experimental conditions.

-

Generalizability : This concept extends replicability further, assessing whether the findings hold true in different contexts, such as with different populations, in different settings, or with slight variations in the experimental model.[4][5][7]

A failure in any of these areas can have profound consequences, particularly in the drug development pipeline, where decisions worth millions of dollars and impacting countless lives are based on preclinical research findings.

The Imperative of Robustness in Drug Development

The journey of a drug from the lab bench to the patient's bedside is a multi-stage process, with preclinical research forming the foundation for clinical trials. A lack of robustness in these early stages can lead to a cascade of costly and ultimately fruitless efforts.

The consequences of non-robust preclinical research are stark:

-

Wasted Resources : Companies may invest heavily in developing a drug candidate based on promising preclinical data, only to find that the initial results cannot be replicated, leading to the termination of the project.

-

Ethical Concerns : Flawed research can lead to unnecessary clinical trials, exposing patients to potentially ineffective or even harmful treatments.

-

Erosion of Public Trust : The inability to replicate high-profile scientific findings can undermine public confidence in the scientific enterprise as a whole.

Landmark internal reviews by pharmaceutical companies have highlighted the severity of this issue. Famously, researchers at Amgen were able to replicate only 6 out of 53 "landmark" cancer research studies.[8][9] Similarly, a team at Bayer HealthCare reported that they could not validate the findings of approximately two-thirds of 67 published preclinical studies.[8]

These sobering statistics underscore the urgent need for a renewed focus on research robustness.

The Reproducibility Project: Cancer Biology - A Case Study

The "Reproducibility Project: Cancer Biology," a major initiative to systematically replicate key findings in preclinical cancer research, has provided invaluable quantitative data on the challenges of ensuring robustness. The project aimed to replicate 193 experiments from 53 high-impact cancer papers published between 2010 and 2012.[3][10][11]

The results of this ambitious project highlight several critical areas of concern:

| Metric | Finding | Source |

| Replication Success Rate | Of the 50 experiments that could be replicated, the median effect size was 85% smaller than the original finding. | [3][11] |

| Direction of Effect | While 79% of replications showed an effect in the same direction as the original positive findings, the magnitude was often substantially lower. | [3] |

| Statistical Significance | Only 43% of replication effects for original positive findings were statistically significant and in the same direction. | [3] |

| Protocol Availability | A significant challenge was the lack of detailed experimental protocols in the original publications, necessitating extensive communication with the original authors. | [7][10] |

These findings do not necessarily imply misconduct or flawed science in the original studies. Instead, they point to systemic issues such as publication bias towards novel and positive results, and a lack of emphasis on detailed methods reporting.

Experimental Protocols: The Blueprint for Robustness

The single most critical factor in ensuring the reproducibility and replicability of research is a detailed and transparent experimental protocol. Without a clear and comprehensive description of the methods used, other researchers cannot hope to replicate the findings.

A robust experimental protocol should include, but not be limited to:

-

Reagents : Specific details of all reagents used, including manufacturer, catalog number, and lot number.

-

Cell Lines and Animal Models : Source, authentication, and health status of all biological materials.

-

Experimental Procedures : Step-by-step instructions for all procedures, including incubation times, temperatures, and concentrations.

-

Data Collection : A clear description of how data was collected and what instruments were used.

-

Statistical Analysis Plan : The statistical methods to be used for data analysis should be predefined to avoid p-hacking and other questionable research practices.

The "Registered Reports" format, where the experimental protocol and analysis plan are peer-reviewed and accepted for publication before the research is conducted, is a powerful tool for promoting transparency and robustness. The Reproducibility Project: Cancer Biology utilized this format, and their published Registered Reports in journals like eLife serve as excellent examples of detailed protocols.

Visualizing Complexity: Workflows and Signaling Pathways

To further aid in the understanding and implementation of robust research practices, visual representations of experimental workflows and biological pathways are invaluable.

A Generalized Workflow for Preclinical Drug Discovery

The following diagram illustrates a typical, albeit simplified, workflow for preclinical drug discovery, highlighting key stages where robustness is critical.

Key Signaling Pathways in Cancer Biology

Understanding the intricate signaling pathways that drive cancer is fundamental to developing targeted therapies. The robustness of research into these pathways is paramount. Below are diagrams of several key pathways frequently implicated in cancer.

This pathway is crucial for regulating the cell cycle and is often overactive in cancer, promoting cell survival and proliferation.

The Ras/MAPK pathway is a critical signaling cascade that transmits signals from cell surface receptors to the nucleus, influencing gene expression and cell proliferation. Dysregulation of this pathway is a common feature of many cancers.

Best Practices for Fostering Research Robustness

Achieving research robustness requires a concerted effort from individual researchers, institutions, and funding agencies. Key best practices include:

-

Rigorous Experimental Design : This includes appropriate controls, randomization, and blinding to minimize bias.

-

Transparent Reporting : Detailed and transparent reporting of all methods, data, and analysis code is essential for reproducibility.

-

Data Sharing : Making raw data publicly available allows for independent verification of analyses.

-

Pre-registration of Studies : Pre-registering study protocols and analysis plans before data collection helps to prevent p-hacking and HARKing (hypothesizing after the results are known).

-

Embracing Null Results : The publication of null or negative findings is crucial for a complete and unbiased scientific record.

Conclusion: A Call for a Culture of Robustness

The challenges to research robustness are significant, but they are not insurmountable. By fostering a culture that values rigor, transparency, and collaboration, the scientific community can enhance the reliability of preclinical research. For the drug development industry, a commitment to robust research is not just good scientific practice; it is a fundamental requirement for success. By building on a foundation of robust and reproducible findings, we can accelerate the discovery of new medicines and deliver on the promise of improving human health.

References

- 1. Reproducibility in Cancer Biology: The challenges of replication | eLife [elifesciences.org]

- 2. cos.io [cos.io]

- 3. Culturing Suspension Cancer Cell Lines | Springer Nature Experiments [experiments.springernature.com]

- 4. Reproducibility Project: Cancer Biology | Collections | eLife [elifesciences.org]

- 5. osf.io [osf.io]

- 6. opticalcore.wisc.edu [opticalcore.wisc.edu]

- 7. Registered Reports | eLife [elifesciences.org]

- 8. azurebiosystems.com [azurebiosystems.com]

- 9. osf.io [osf.io]

- 10. Western Blotting: Products, Protocols, & Applications | Thermo Fisher Scientific - HK [thermofisher.com]

- 11. miltenyibiotec.com [miltenyibiotec.com]

A Technical Guide to Reproducible Research in Drug Development

For Researchers, Scientists, and Drug Development Professionals

This in-depth technical guide provides a comprehensive overview of the core principles and practices of reproducible research, tailored for professionals in the drug development pipeline. By adhering to the methodologies outlined below, researchers can enhance the reliability, transparency, and impact of their work. This guide will walk through a practical example of a reproducible bioinformatics workflow to identify markers of drug sensitivity in cancer cell lines.

The Core Principles of Reproducible Research

Reproducible research is the foundation of robust scientific discovery, ensuring that findings can be independently verified and built upon.[1] In the context of drug development, where the stakes are exceptionally high, a commitment to reproducibility is paramount for both scientific integrity and efficient translation of preclinical discoveries.[2] The core tenets of reproducible research include:

-

Transparent and Detailed Reporting: All methods, materials, and analytical procedures must be documented with sufficient detail to allow other researchers to replicate the work.[3]

-

Data and Code Accessibility: The raw and processed data, along with the code used for analysis, should be made available to the broader scientific community.[4]

-

Version Control: A systematic approach to managing changes to code and documents over time is essential for tracking the evolution of an analysis and ensuring that specific results can be regenerated.[5]

-

Environment Consistency: The computational environment, including software versions and dependencies, should be captured and shared to prevent discrepancies arising from different system configurations.

A Reproducible Workflow for Cancer Drug Sensitivity Analysis

To illustrate the practical application of these principles, this guide will outline a reproducible workflow for analyzing publicly available cancer drug sensitivity data. The goal of this analysis is to identify genomic features that are associated with a cancer cell line's response to a particular drug.

Datasets

This workflow utilizes two of the largest publicly available pharmacogenomics datasets:

-

Genomics of Drug Sensitivity in Cancer (GDSC): A comprehensive resource of drug sensitivity data for a wide range of cancer cell lines, along with their genomic characterization.[4]

-

Cancer Cell Line Encyclopedia (CCLE): A collaborative project that has characterized a large panel of human cancer cell lines at the genetic and pharmacologic levels.[6]

For this example, we will focus on a subset of the data, specifically the response of breast cancer cell lines to the MEK inhibitor, Trametinib.

Experimental Protocol: Data Acquisition and Preprocessing

The following protocol details the steps for acquiring and preparing the data for analysis.

-

Data Acquisition:

-

Download the drug sensitivity data (IC50 values) and the genomic data (gene expression and mutation data) from the GDSC and CCLE portals.

-

-

Data Integration:

-

Merge the drug sensitivity and genomic datasets for the cell lines that are common to both the GDSC and CCLE projects. This step is crucial for increasing the statistical power of the analysis.

-

-

Data Cleaning and Normalization:

-

Handle missing values in the datasets. For this protocol, cell lines with missing drug sensitivity values for Trametinib will be excluded.

-

Normalize the gene expression data to account for technical variations between experiments. A common method is to use a log2 transformation.

-

-

Feature Selection:

-

For this analysis, we will focus on the association between the expression of genes in the MAPK signaling pathway and sensitivity to Trametinib, a MEK inhibitor. A list of genes in this pathway can be obtained from publicly available pathway databases such as KEGG or Reactome.

-

The following diagram illustrates the data acquisition and preprocessing workflow:

References

- 1. GitHub - SmritiChawla/Precily [github.com]

- 2. Tutorial 2a: Digging Deeper with Cell Line and Drug Subgroups [pkimes.com]

- 3. researchgate.net [researchgate.net]

- 4. google.com [google.com]

- 5. researchgate.net [researchgate.net]

- 6. Enhancing Reproducibility in Cancer Drug Screening: How Do We Move Forward? - PMC [pmc.ncbi.nlm.nih.gov]

Foundational Concepts of Validity in Scientific Experiments: An In-depth Technical Guide

For Researchers, Scientists, and Drug Development Professionals

The Four Pillars of Experimental Validity

Internal Validity: Establishing a True Cause-and-Effect Relationship

Threats to Internal Validity:

A variety of factors can jeopardize the internal validity of an experiment. Researchers must be vigilant in identifying and mitigating these threats:

-

Confounding Variables: These are unmeasured third variables that influence both the cause and the effect.[1][6][7] For instance, in a study examining the link between a new cardiovascular drug and reduced heart attack risk, a confounding variable could be the participants' diet, which independently affects heart health.

-

History: External events that occur during the course of a study can influence the outcome.[2][8][9][10]

-

Maturation: Natural changes in the participants over time, such as aging or spontaneous remission of a condition, can be mistaken for a treatment effect.[2][8][9][10]

-

Instrumentation: Changes in the measurement instruments or their administration can lead to apparent changes in the outcome.[8][9][10]

-

Testing: The act of taking a pre-test can influence the results of a post-test, independent of the treatment.[8][9]

-

Selection Bias: If groups are not comparable at the start of the study, any observed differences in outcome may be due to pre-existing differences rather than the treatment.[1][2][8][9]

-

Attrition (Experimental Mortality): If participants drop out of a study at different rates across groups, the remaining samples may no longer be comparable.[1][2][8]

Strategies to Enhance Internal Validity:

-

Randomization: Randomly assigning participants to treatment and control groups helps to ensure that confounding variables are evenly distributed, reducing their potential to bias the results.[7][11]

-

Control Groups: A well-defined control group provides a baseline against which the effects of the treatment can be compared.

-

Blinding: Masking the treatment allocation from participants (single-blind) or from both participants and researchers (double-blind) minimizes placebo effects and observer bias.

-

Standardized Protocols: Ensuring that the experimental procedures are consistent for all participants reduces variability and the influence of extraneous factors.

External Validity: Generalizing Findings to the Real World

External validity refers to the extent to which the results of a study can be generalized to other settings, populations, and times.[1][4][12][13] In drug development, high external validity is critical for predicting a drug's effectiveness in the broader patient population beyond the controlled environment of a clinical trial.[14][15]

Threats to External Validity:

-

Sample Features: If the study sample is not representative of the target population, the findings may not be generalizable.[1] For example, a drug tested only on a specific age group may have different effects in other age groups.

-

Situational Factors: The specific setting and conditions of the experiment, such as the time of day or the characteristics of the researchers, can limit the generalizability of the findings.[1][16]

-

Pre- and Post-test Effects: The use of a pre-test can sensitize participants to the treatment, making the results not generalizable to a population that has not been pre-tested.[1]

-

Hawthorne Effect: Participants may alter their behavior simply because they are aware of being observed, leading to results that may not be replicated in a real-world setting.[17][18]

Strategies to Enhance External Validity:

-

Representative Sampling: Using random sampling techniques to select participants that accurately reflect the characteristics of the target population.

-

Replication: Conducting the study in different settings and with different populations to see if the results are consistent.[1]

-

Field Experiments: Conducting studies in real-world settings rather than highly controlled laboratory environments.[16]

-

Ecological Validity: Ensuring that the experimental conditions mimic the real-world situations to which the findings are intended to apply.[19]

Construct Validity: Measuring the Intended Concept

Construct validity is the degree to which a test or measurement tool accurately assesses the theoretical concept it is intended to measure.[10][20][21][22] In drug development, this means ensuring that the chosen endpoints and biomarkers truly reflect the underlying disease process or the drug's mechanism of action.

Threats to Construct Validity:

-

Inadequate Preoperational Explication of Constructs: A failure to clearly and comprehensively define the construct of interest before developing the measurement tool.

-

Mono-operation Bias: Using only one method to measure a construct, which may not capture all of its facets.

-

Mono-method Bias: Using only one type of measurement (e.g., self-report), which may be influenced by method-specific biases.

-

Confounding Constructs and Levels of Constructs: The measurement may inadvertently capture other related constructs.

Strategies to Enhance Construct Validity:

-

Clear Operational Definitions: Providing a precise and detailed definition of the construct and how it will be measured.[23]

-

Convergent and Discriminant Validity: Demonstrating that the measure correlates with other measures of the same construct (convergent validity) and does not correlate with measures of unrelated constructs (discriminant validity).[10][24]

-

Expert Review: Having experts in the field evaluate the relevance and appropriateness of the measurement tool.[23]

-

Pilot Testing: Conducting a small-scale trial of the measurement tool to identify any potential issues before the main study.[23][25]

Statistical Conclusion Validity: The Accuracy of Inferences

-

Fishing and the Error Rate Problem: Conducting multiple statistical tests on the same data increases the probability of finding a significant result by chance (Type I error).[7]

-

Unreliability of Measures: Inconsistent or error-prone measurement tools can obscure a true relationship between variables.[26]

-

Power Analysis: Conducting a power analysis before the study to determine the appropriate sample size.[6]

-

Appropriate Statistical Tests: Selecting and using statistical tests that are suitable for the research design and the type of data collected.[23]

-

Controlling for Multiple Comparisons: Using statistical methods to adjust for the increased risk of Type I errors when conducting multiple tests.

-

Using Reliable Measures: Employing measurement tools with established reliability.[26]

Experimental Protocol: In Vitro Efficacy of a Novel Anti-Cancer Compound (NACC-1)

To illustrate the practical application of these validity concepts, a hypothetical experimental protocol for assessing the in vitro efficacy of a novel anti-cancer compound is presented below.

2.1 Objective: To determine the dose-dependent cytotoxic effect of a novel anti-cancer compound (NACC-1) on a human breast cancer cell line (MCF-7).

2.2 Materials and Methods:

-

Cell Line: MCF-7 human breast cancer cell line.

-

Compound: Novel Anti-Cancer Compound 1 (NACC-1), dissolved in DMSO.

-

Reagents: Dulbecco's Modified Eagle Medium (DMEM), Fetal Bovine Serum (FBS), Penicillin-Streptomycin, Trypsin-EDTA, MTT (3-(4,5-dimethylthiazol-2-yl)-2,5-diphenyltetrazolium bromide) reagent, DMSO.

-

Equipment: 96-well plates, incubator (37°C, 5% CO2), microplate reader.

2.3 Experimental Procedure:

-

Cell Culture: MCF-7 cells are cultured in DMEM supplemented with 10% FBS and 1% Penicillin-Streptomycin in a humidified incubator at 37°C with 5% CO2.

-

Cell Seeding: Cells are seeded into 96-well plates at a density of 5,000 cells per well and allowed to adhere overnight.

-

Compound Treatment: NACC-1 is serially diluted in culture medium to final concentrations of 0.1, 1, 10, 50, and 100 µM. The vehicle control group receives medium with the same concentration of DMSO as the highest NACC-1 concentration. A negative control group receives only fresh medium. Each treatment is performed in triplicate.

-

Incubation: The treated cells are incubated for 48 hours.

-

MTT Assay: After 48 hours, the medium is removed, and MTT reagent is added to each well. The plates are incubated for 4 hours, allowing viable cells to convert MTT into formazan crystals.

-

Data Collection: The formazan crystals are dissolved in DMSO, and the absorbance is measured at 570 nm using a microplate reader.

2.4 Data Analysis:

Cell viability is calculated as a percentage relative to the vehicle control. The IC50 (half-maximal inhibitory concentration) value is determined by plotting the percentage of cell viability against the log of the compound concentration and fitting the data to a sigmoidal dose-response curve.

Data Presentation

The quantitative data from the hypothetical experiment is summarized in the table below.

| NACC-1 Concentration (µM) | Mean Absorbance (570 nm) | Standard Deviation | % Cell Viability |

| Vehicle Control (0) | 1.25 | 0.08 | 100 |

| 0.1 | 1.22 | 0.07 | 97.6 |

| 1 | 1.05 | 0.06 | 84.0 |

| 10 | 0.63 | 0.05 | 50.4 |

| 50 | 0.25 | 0.03 | 20.0 |

| 100 | 0.10 | 0.02 | 8.0 |

Mandatory Visualizations

Signaling Pathway: Hypothetical Mechanism of NACC-1 Action

This diagram illustrates a plausible signaling pathway through which NACC-1 might exert its anti-cancer effects.

Caption: Hypothetical signaling pathway of NACC-1 action.

Experimental Workflow: In Vitro Efficacy Assessment

This diagram outlines the logical flow of the experimental protocol described above.

Caption: Workflow for in vitro efficacy assessment of NACC-1.

Conclusion

References

- 1. Confounding Variables | Definition, Examples & Controls [enago.com]

- 2. researchgate.net [researchgate.net]

- 3. toolify.ai [toolify.ai]

- 4. General Principles of Preclinical Study Design - PMC [pmc.ncbi.nlm.nih.gov]

- 5. Cell signaling pathways step-by-step [mindthegraph.com]

- 6. communitymedicine4asses.wordpress.com [communitymedicine4asses.wordpress.com]

- 7. viares.com [viares.com]

- 8. What is a confounding variable? [dovetail.com]

- 9. Threats to validity of Research Design [web.pdx.edu]

- 10. quillbot.com [quillbot.com]

- 11. stats.libretexts.org [stats.libretexts.org]

- 12. Pre-Clinical Testing → Example of In Vitro Study for Efficacy | Developing Medicines [drugdevelopment.web.unc.edu]

- 13. DOT Language | Graphviz [graphviz.org]

- 14. researchgate.net [researchgate.net]

- 15. Assessing the validity of clinical trials - PubMed [pubmed.ncbi.nlm.nih.gov]

- 16. Introduction - Assuring Data Quality and Validity in Clinical Trials for Regulatory Decision Making - NCBI Bookshelf [ncbi.nlm.nih.gov]

- 17. Statistical Considerations for Preclinical Studies - PMC [pmc.ncbi.nlm.nih.gov]

- 18. 21 Top Threats to External Validity (2025) [helpfulprofessor.com]

- 19. Single-Case Design, Analysis, and Quality Assessment for Intervention Research - PMC [pmc.ncbi.nlm.nih.gov]

- 20. youtube.com [youtube.com]

- 21. The role of a statistician in drug development: Pre-clinical studies | by IDEAS ESRs | IDEAS Blog | Medium [medium.com]

- 22. sketchviz.com [sketchviz.com]

- 23. Addressing Methodologic Challenges and Minimizing Threats to Validity in Synthesizing Findings from Individual Level Data Across Longitudinal Randomized Trials - PMC [pmc.ncbi.nlm.nih.gov]

- 24. Construct Validity | Definition, Types, & Examples [scribbr.com]

- 25. Statistical Analysis of Preclinical Data – Pharma.Tips [pharma.tips]

- 26. researchgate.net [researchgate.net]

- 27. Confounding - PMC [pmc.ncbi.nlm.nih.gov]

A Technical Guide to Ensuring Robustness in Qualitative Research for Scientific and Drug Development Contexts

In the realms of scientific inquiry and drug development, the rigor and validity of research methodologies are paramount. While quantitative studies have long-established metrics for robustness, qualitative research employs a distinct set of principles to ensure the trustworthiness and credibility of its findings. This guide provides an in-depth exploration of the core dimensions of robustness in qualitative research, offering detailed methodologies and practical applications for researchers, scientists, and drug development professionals. Understanding and implementing these dimensions are critical for generating high-quality, dependable insights that can confidently inform subsequent research and development phases.

The trustworthiness of qualitative research is generally assessed through four key dimensions: credibility, transferability, dependability, and confirmability. These pillars ensure that the research findings are believable, applicable to other contexts, consistent over time, and free from researcher bias.

Credibility: Ensuring the "Truth" of the Findings

Methodologies for Enhancing Credibility:

-

Prolonged Engagement: This involves spending sufficient time with participants in their natural setting to build trust and gain a deep understanding of the context. The extended period allows the researcher to move beyond initial impressions and uncover more nuanced insights.

-

Triangulation: This technique involves using multiple data sources, methods, investigators, or theories to corroborate findings. For instance, a researcher might use interviews, observations, and document analysis to study a phenomenon (data triangulation) or have multiple researchers analyze the same data independently (investigator triangulation).

-

Peer Debriefing: This involves regular meetings with a disinterested peer who is familiar with the research methodology but not directly involved in the study. This process allows for an external check on the research process, challenging the researcher's assumptions and interpretations.

-

Member Checking: This is the process of sharing research findings with the participants from whom the data were originally collected. It allows them to validate the researcher's interpretations and correct any inaccuracies, ensuring the findings are grounded in their experiences.

A typical workflow for ensuring credibility through these methods can be visualized as an iterative process of data collection, analysis, and validation.

Transferability: Applicability in Other Contexts

Transferability, akin to external validity, refers to the extent to which the findings of a qualitative study can be applied to other contexts or with other participants. Unlike quantitative research, the goal is not to generalize findings to a population but to provide a rich and detailed description so that other researchers can assess the applicability to their own settings.

Methodology for Enhancing Transferability:

-

Thick Description: This is the cornerstone of ensuring transferability. The researcher must provide a detailed and vivid account of the research context, participants, and the phenomenon under investigation. This allows readers to have a comprehensive understanding of the setting and to make an informed judgment about whether the findings could be relevant to their own situations.

To demonstrate the context for a study on the adoption of a new medical device, a researcher might provide the following demographic and contextual data.

| Parameter | Description |

| Setting | Two tertiary care hospitals (one urban, one rural) |

| Participant Roles | Surgeons, surgical nurses, and hospital administrators |

| Number of Participants | 15 (8 surgeons, 5 nurses, 2 administrators) |

| Years of Experience | Range: 3-25 years; Mean: 12.5 years |

| Prior Experience with Similar Devices | 60% of clinicians had prior experience with a previous generation of the device |

Dependability: Consistency of Findings

Dependability, analogous to reliability in quantitative research, addresses the consistency and stability of the research findings over time. It seeks to ensure that if the study were to be repeated with the same participants in the same context, the findings would be similar.

Methodology for Enhancing Dependability:

-

Audit Trail: This involves creating a detailed and transparent record of the entire research process. This documentation should include all raw data, notes on data collection and analysis, and records of methodological decisions. An external auditor can then examine this trail to assess the rigor and consistency of the research process.

The logical relationship between the components of an audit trail for dependability is illustrated below.

Confirmability: Objectivity of Findings

Confirmability refers to the degree to which the research findings are a result of the participants' experiences and not the researcher's biases, motivations, or interests. It is concerned with the objectivity of the data interpretation.

Methodology for Enhancing Confirmability:

-

Reflexivity Journal: The researcher maintains a journal throughout the study, documenting their personal thoughts, feelings, assumptions, and biases. This practice helps the researcher to be aware of their own influence on the research process and to actively mitigate it.

-

Confirmability Audit: Similar to the dependability audit, a confirmability audit involves an external reviewer examining the audit trail. However, the focus here is on assessing whether the data and interpretations are logically connected and not skewed by the researcher's perspective. The auditor would trace the data from collection through analysis to the final findings to ensure a clear and unbiased path.

The interconnectedness of the four dimensions of trustworthiness is crucial. A study cannot be considered robust if it meets the criteria for one dimension but fails on another. The following diagram illustrates the supportive relationship between these dimensions.

By systematically addressing these four dimensions, researchers in scientific and drug development fields can significantly enhance the robustness and impact of their qualitative work. The detailed methodologies provided serve as a practical toolkit for designing and executing qualitative studies that are not only insightful but also stand up to rigorous scrutiny. This commitment to robustness ensures that qualitative findings can be a valuable and trusted component of the evidence base in any scientific endeavor.

Defining and Demonstrating Robustness in a Research Proposal: A Technical Guide

In the landscape of scientific research and drug development, a "robust" finding is one that is reliable, reproducible, and resilient to minor variations in experimental conditions.[1][2] For researchers, scientists, and drug development professionals, articulating a clear strategy for ensuring robustness is no longer a subsidiary component of a research proposal but a cornerstone of its credibility. A robust proposal convinces reviewers and stakeholders that the planned research has been meticulously designed to yield results that are not only statistically significant but also scientifically meaningful and translatable.[3][4]

This guide provides an in-depth framework for defining, designing, and presenting a robust research plan. It covers the conceptual pillars of robustness, details key experimental and statistical methodologies, and offers a clear visual language for representing these complex strategies.

The Pillars of a Robust Research Proposal

Robustness is a multifaceted concept built on three core pillars: conceptual strength, methodological rigor, and statistical validity. A compelling proposal must address all three.

-

Conceptual Robustness: This is the foundation of the entire project. It refers to the strength and clarity of the research question and hypothesis.[5] A conceptually robust proposal is grounded in a thorough literature review that identifies a clear gap in knowledge and presents a well-reasoned hypothesis.[6]

-

Methodological Robustness: This pillar addresses the quality and rigor of the experimental design.[7][8] It ensures that the methods are not only appropriate for the research question but are also designed to minimize bias and maximize reproducibility.[4][9] Key elements include transparent reporting, randomization, blinding, and the use of appropriate controls.[8][9]

-

Statistical Robustness: This involves the use of statistical methods that yield reliable results, even when underlying assumptions are not perfectly met.[10][11] It is about ensuring that the findings are not statistical artifacts, unduly influenced by outliers, or dependent on a single analytical approach.[10][12]

The logical relationship between these pillars forms the overall strength of the proposal.

Methodologies for Demonstrating Robustness

To move from theory to practice, a proposal must detail the specific experiments and analyses that will be used to establish robustness. This involves a proactive approach to identifying and testing potential sources of variability.

A robust experimental design anticipates challenges and incorporates strategies to mitigate them.[13] This includes validation, replication, and the use of controls to ensure that results are dependable.[14][15] The goal is to demonstrate that the findings are not an artifact of a specific model, reagent batch, or experimental condition.[4][16]

References

- 1. Robustness in Science → Term [pollution.sustainability-directory.com]

- 2. Replication Of Studies: Advancing Scientific Rigor & Reliability - Mind the Graph Blog [mindthegraph.com]

- 3. Adding robustness to rigor and reproducibility for the three Rs of improving translational medical research - PMC [pmc.ncbi.nlm.nih.gov]

- 4. How to design robust preclinical efficacy studies that make a difference [jax.org]

- 5. scholar.place [scholar.place]

- 6. writelerco.com [writelerco.com]

- 7. scholar.place [scholar.place]

- 8. Methodology Matters: Designing Robust Research Methods [falconediting.com]

- 9. Methodological Rigor in Preclinical Cardiovascular Studies: Targets to Enhance Reproducibility and Promote Research Translation - PMC [pmc.ncbi.nlm.nih.gov]

- 10. Robust statistics - Wikipedia [en.wikipedia.org]

- 11. academic.oup.com [academic.oup.com]

- 12. regression - How do Measure "Robustness" in Statistics? - Cross Validated [stats.stackexchange.com]

- 13. Mastering Research: The Principles of Experimental Design [servicescape.com]

- 14. Importance of Replication Studies - Enago Academy [enago.com]

- 15. longdom.org [longdom.org]

- 16. Robustness in experimental design: A study on the reliability of selection approaches - PMC [pmc.ncbi.nlm.nih.gov]

An In-Depth Technical Guide to the Core Principles of Reproducibility and Replicability in Scientific Research

In the realms of scientific inquiry and drug development, the concepts of reproducibility and replicability serve as the bedrock of evidentiary support.[1][2] Though often used interchangeably, they represent distinct, yet interconnected, pillars of the scientific method.[3][4][5] This guide delineates the critical differences between these two concepts, providing researchers, scientists, and drug development professionals with a comprehensive framework for ensuring the validity and reliability of their work.

Defining the Core Concepts: Reproducibility vs. Replicability

At its core, the distinction between reproducibility and replicability lies in the origin of the data being analyzed.

-

Reproducibility , in its modern, computationally focused definition, is the ability to obtain consistent results using the same input data, computational steps, methods, and code as the original study.[4][6][7][8] This is often referred to as "computational reproducibility."[4][6] A successful reproduction demonstrates that the data analysis was conducted transparently, fairly, and correctly.[3] It is a minimum necessary condition for trusting the analytical findings from a given dataset.[3]

-

Replicability is the ability to obtain consistent results across studies aimed at answering the same scientific question, each of which has obtained its own new data.[4][6][7][8] This process involves conducting the entire research process anew, from data collection to analysis.[3] A successful replication shows that the original study's findings are reliable and not just an artifact of a specific dataset or experimental condition.[3]

It is important to note that terminology can vary across different scientific communities.[4][9] However, the distinction between re-analyzing original data (reproducibility) and collecting new data (replicability) is the most widely accepted framework.[4][7]

Core Differences Summarized

The following table provides a structured comparison of the key attributes distinguishing reproducibility from replicability.

| Attribute | Reproducibility | Replicability |

| Primary Goal | To validate the correctness and transparency of the data analysis.[3] | To assess the reliability and generalizability of a scientific finding.[2] |

| Starting Materials | Original researcher's data, code, and computational methods.[4][6][7] | Original study's experimental protocol and methods. |

| Data Source | Same data as the original study.[4][6][8] | New data collected in an independent experiment.[4][6][7] |

| Expected Outcome | The same or very similar numerical results, figures, and tables. | Consistent findings and conclusions, given the inherent uncertainty.[4][6] |

| Inference from Success | The original analysis is computationally sound and was reported honestly.[3] | The scientific claim is robust and likely not due to chance or artifact. |

| Inference from Failure | Potential issues with the original analysis, code, or data handling. | The original finding may not be reliable or generalizable. |

| Synonymous Term | Computational Reproducibility.[4] | Experimental Replicability.[5] |

The "Reproducibility Crisis" in Scientific Research

The distinction between these concepts is not merely academic; it is at the heart of the "reproducibility crisis" affecting many scientific fields.[10] A 2016 survey published in Nature revealed that over 70% of researchers have tried and failed to reproduce another scientist's experiments, and more than half have failed to reproduce their own.[11]

| Metric | Finding | Source |

| Failed Replication of Another's Work | >70% of researchers reported failure to reproduce another scientist's experiments.[11] | Nature survey of 1,576 researchers.[11] |

| Failed Replication of Own Work | >50% of researchers reported failure to reproduce their own experiments.[11][12] | Nature survey of 1,576 researchers.[11] |

| Belief in a "Crisis" | 52% of surveyed researchers agree there is a significant "reproducibility crisis."[10][11] | Nature survey of 1,576 researchers.[11] |

| Replication Rates in Preclinical Research | Low replication rates found in biomedical research, with one study in cancer biology finding a rate as low as 10%.[11] | Amgen study (Begley and Ellis, 2012).[13] |

| Replication in Psychology | The Reproducibility Project: Psychology replicated 100 studies and found that only 36% had statistically significant results. | Open Science Collaboration (2015).[13] |

This lack of reproducibility and replicability can lead to wasted resources, delays in scientific progress, and an erosion of public trust in science.[14] In drug development, the inability to replicate preclinical studies can have severe consequences, contributing to the failure of clinical trials.[12]

Visualizing the Workflows and Logical Relationships

To further clarify the distinction, the following diagrams illustrate the workflows for achieving reproducibility and replicability, as well as their logical relationship.

A study can be reproducible but not replicable.[3] For example, the analysis of a dataset might be perfectly sound (reproducible), but the results might not hold up when a new experiment is conducted due to previously unrecognized variables or random chance in the original experiment.[3] This highlights that reproducibility is a necessary, but not sufficient, condition for replicability.

Detailed Methodologies for Ensuring Reproducibility and Replicability

Achieving reproducibility and replicability requires meticulous planning and documentation.[15][16][17] Below are illustrative protocols for both a computational (reproducibility) and a wet-lab (replicability) experiment.

This protocol details the steps to reproduce an analysis identifying differentially expressed genes from a public dataset.

-

1.0 Data Source & Acquisition

-

1.1 Dataset: The raw and processed RNA-seq data are available from the Gene Expression Omnibus (GEO) under accession number GSE00000.

-

1.2 Download: Data was downloaded on 2025-10-27 using the GEOquery R package (v2.74.0).

-

-

2.0 Computational Environment

-

2.1 Operating System: Ubuntu 22.04.1 LTS

-

2.2 Language: R version 4.3.1

-

2.3 Key R Packages:

-

DESeq2 (v1.42.0) for differential expression analysis.

-

ggplot2 (v3.4.4) for visualization.

-

dplyr (v1.1.3) for data manipulation.

-

-

2.4 Environment Management: A Docker container definition file (Dockerfile) is provided in the supplementary materials to reconstruct the exact computational environment.

-

-

3.0 Analysis Script

-

3.1 Script Name: run_differential_expression.R (available at GitHub: [link-to-repo]).

-

3.2 Script Execution: The entire analysis can be run from the command line using Rscript run_differential_expression.R.

-

3.3 Seed: The random seed is set to 12345 at the beginning of the script for stochastic processes to ensure identical results.

-

-

4.0 Analysis Steps

-

4.1 Data Loading: Raw count data and metadata are loaded from the downloaded GEO files.

-

4.2 Pre-processing: Genes with a total count of less than 10 across all samples are removed.

-

4.3 Differential Expression: The DESeq() function from the DESeq2 package is used with the design formula ~ condition.

-

4.4 Results Extraction: Results are extracted using the results() function with an adjusted p-value (padj) threshold of 0.05.

-

4.5 Visualization: A volcano plot is generated using ggplot2 and saved as volcano_plot.png.

-

This protocol details the steps to replicate an experiment measuring the IC50 of a novel kinase inhibitor.

-

1.0 Materials & Reagents

-

1.1 Cell Line: A549 (ATCC, Cat# CCL-185, Lot# 70000001). Cells were used between passages 5 and 10.

-

1.2 Culture Medium: F-12K Medium (Gibco, Cat# 21127022, Lot# 00001) supplemented with 10% Fetal Bovine Serum (FBS) (Sigma-Aldrich, Cat# F0926, Lot# 00002) and 1% Penicillin-Streptomycin (Thermo Fisher, Cat# 15140122, Lot# 00003).

-

1.3 Kinase Inhibitor: Compound XYZ (synthesis protocol provided in supplementary materials), dissolved in DMSO (Sigma-Aldrich, Cat# D2650, Lot# 00004) to a 10 mM stock.

-

1.4 Assay Reagent: CellTiter-Glo® Luminescent Cell Viability Assay (Promega, Cat# G7570, Lot# 00005).

-

-

2.0 Equipment

-

2.1 Plate Reader: Tecan Spark 10M with luminescence module.

-

2.2 Incubator: Heracell VIOS 160i, set to 37°C, 5% CO2.

-

2.3 Liquid Handler: Eppendorf epMotion 5075.

-

-

3.0 Experimental Procedure

-

3.1 Cell Seeding: A549 cells were seeded into a 96-well white, clear-bottom plate (Corning, Cat# 3610) at a density of 5,000 cells/well in 100 µL of culture medium. Plates were incubated for 24 hours.

-

3.2 Compound Treatment: Compound XYZ was serially diluted in culture medium to create a 10-point, 3-fold dilution series. 10 µL of each dilution was added to the respective wells. The final DMSO concentration was maintained at 0.1% across all wells.

-

3.3 Incubation: Plates were incubated for 72 hours at 37°C, 5% CO2.

-

3.4 Viability Measurement: Plates were equilibrated to room temperature for 30 minutes. 100 µL of CellTiter-Glo® reagent was added to each well. The plate was placed on an orbital shaker for 2 minutes and then incubated at room temperature for 10 minutes to stabilize the luminescent signal.

-

3.5 Data Acquisition: Luminescence was read on the Tecan Spark 10M with an integration time of 1 second.

-

-

4.0 Data Analysis

-

4.1 Normalization: Data was normalized to vehicle (0.1% DMSO) controls (100% viability) and no-cell controls (0% viability).

-

4.2 Curve Fitting: The normalized data was fitted to a four-parameter logistic (4PL) curve using GraphPad Prism (v9.5.1) to determine the IC50 value.

-

Conclusion

References

- 1. online225.psych.wisc.edu [online225.psych.wisc.edu]

- 2. fiveable.me [fiveable.me]

- 3. Reproducibility vs Replicability | Difference & Examples [scribbr.com]

- 4. Understanding Reproducibility and Replicability - Reproducibility and Replicability in Science - NCBI Bookshelf [ncbi.nlm.nih.gov]

- 5. Reproducibility | NNLM [nnlm.gov]

- 6. Summary - Reproducibility and Replicability in Science - NCBI Bookshelf [ncbi.nlm.nih.gov]

- 7. quora.com [quora.com]

- 8. gesis.org [gesis.org]

- 9. khinsen.wordpress.com [khinsen.wordpress.com]

- 10. Replication crisis - Wikipedia [en.wikipedia.org]

- 11. rr.gklab.org [rr.gklab.org]

- 12. mindwalkai.com [mindwalkai.com]

- 13. Replicability - Reproducibility and Replicability in Science - NCBI Bookshelf [ncbi.nlm.nih.gov]

- 14. Reproducibility and Replicability in Research: Challenges and Way Forward | Researcher.Life [researcher.life]

- 15. bitesizebio.com [bitesizebio.com]

- 16. Ensuring Reproducibility and Transparency in Experimental Research [statology.org]

- 17. bitesizebio.com [bitesizebio.com]

Methodological & Application

Application Notes and Protocols for Ensuring Robustness in Clinical Trial Design

For Researchers, Scientists, and Drug Development Professionals

These application notes and protocols provide a comprehensive guide to designing robust clinical trials, ensuring the reliability, validity, and reproducibility of study outcomes. Adherence to these principles is critical for generating high-quality evidence to support regulatory approval and inform clinical practice.

Core Principles of Robust Clinical Trial Design

A robust clinical trial is designed to minimize bias and produce results that are both reliable and generalizable.[1] The foundation of such a trial rests on several key principles that must be meticulously planned and executed.

A well-designed clinical trial protocol should clearly outline the study's objectives, design, ethical considerations, and regulatory requirements.[2] The research question should be well-defined, forming the basis of a successful trial.[3]

Key Principles:

-

Randomization: The random allocation of participants to different treatment groups is a cornerstone of robust trial design.[4][5] It aims to create comparable groups, minimizing selection bias and confounding.[6]

-

Blinding (or Masking): This practice prevents bias in the reporting and assessment of outcomes by ensuring that participants, investigators, and/or assessors are unaware of the treatment assignments.[5][6]

-

Well-Defined Endpoints: The selection of appropriate primary and secondary endpoints is crucial for a trial's success.[7][8] Endpoints should be clinically relevant, measurable, and sensitive to the effects of the intervention.[8][9]

-

Adequate Sample Size and Power: The trial must be planned with a sufficient number of participants to detect a clinically meaningful treatment effect with high probability (power) while controlling for the risk of false-positive results.[10][11]

-

Handling of Missing Data: Strategies for preventing and managing missing data should be established during the design phase to avoid biased results and a reduction in statistical power.[12][13]

-

Adaptive Designs: These designs allow for pre-planned modifications to the trial based on accumulating data, which can increase efficiency and the likelihood of success.[14][15][16]

Experimental Protocols

Protocol for Randomization and Blinding

Objective: To minimize selection and assessment bias through the implementation of robust randomization and blinding procedures.

Methodologies:

-

Selection of Randomization Method: Choose a randomization technique appropriate for the trial's specific needs.[17]

-

Simple Randomization: Assigns participants to treatment groups with a known probability, similar to a coin toss. Best suited for large trials.[17]

-

Block Randomization: Ensures a balance in the number of participants in each group at specific points during enrollment.[17]

-

Stratified Randomization: Used to ensure balance of important baseline characteristics (e.g., age, disease severity) across treatment groups.[17][18]

-

Adaptive Randomization: Adjusts the probability of assignment to different treatment arms based on accumulating data.[14][17]

-

-

Allocation Concealment: The process of hiding the treatment allocation sequence from those involved in participant recruitment and enrollment. This is crucial to prevent selection bias.[5] Methods include central randomization (e.g., via a telephone or web-based system) or the use of sequentially numbered, opaque, sealed envelopes.

-

Implementation of Blinding:

-

Single-Blind: The participant is unaware of the treatment assignment.[19]

-

Double-Blind: Both the participant and the investigators/assessors are unaware of the treatment assignment.[19][20] This is the "gold standard" for reducing bias.[6]

-

Triple-Blind: Participants, investigators, and data analysts are all blinded to the treatment allocation.

-

-

Maintaining the Blind: Use of identical-appearing placebo or active comparator treatments is essential. Procedures should be in place to manage situations where unblinding is necessary for patient safety.

Protocol for Sample Size Calculation

Objective: To determine the minimum number of participants required to achieve the study's objectives with adequate statistical power.

Methodologies:

-

Define Study Parameters:

-

Primary Endpoint: The main outcome used to evaluate the treatment effect.[21]

-

Clinically Meaningful Difference: The smallest treatment effect that is considered clinically important.[21]

-

Statistical Power (1-β): The probability of detecting a true treatment effect, typically set at 80% or 90%.[11][21]

-

Significance Level (α): The probability of a Type I error (false positive), usually set at 5% (0.05).[11][21]

-

Variability of the Outcome: The expected standard deviation of the primary endpoint, often estimated from previous studies.[10]

-

-

Select the Appropriate Formula: The formula for sample size calculation varies depending on the study design (e.g., superiority, non-inferiority) and the type of endpoint (e.g., continuous, binary, time-to-event).[10][22]

-

Calculate the Sample Size: Use statistical software or online calculators to perform the calculation based on the defined parameters.[10]

-

Adjust for Attrition: Account for potential dropouts by increasing the calculated sample size. A common approach is to divide the initial sample size by (1 - expected dropout rate).[10]

Protocol for Handling Missing Data

Objective: To minimize the impact of missing data on the validity and integrity of the trial results.

Methodologies:

-

Prevention of Missing Data:

-

Design a protocol that is feasible and minimizes participant burden.

-

Implement robust data collection and management systems.[3]

-

Maintain regular contact with participants to encourage retention.

-

-

Define the Missing Data Mechanism:

-

Missing Completely at Random (MCAR): The probability of data being missing is unrelated to both observed and unobserved data.[13]

-

Missing at Random (MAR): The probability of data being missing depends only on the observed data.[13]

-

Missing Not at Random (MNAR): The probability of data being missing depends on the unobserved data.[13]

-

-

Select an Appropriate Analysis Method: The choice of method depends on the assumed missing data mechanism.

-

Complete Case Analysis: Only includes participants with complete data. This can lead to biased results if data are not MCAR.[13]

-

Last Observation Carried Forward (LOCF): Imputes missing values with the last observed value. This method is generally discouraged as it can introduce bias.[12]

-

Multiple Imputation (MI): A robust method that creates multiple complete datasets by imputing missing values based on the observed data. This approach accounts for the uncertainty associated with the imputed values.[23][24]

-

Mixed Models for Repeated Measures (MMRM): A model-based approach that can handle missing data under the MAR assumption without imputation.[23]

-

Data Presentation: Summary Tables

Table 1: Comparison of Randomization Techniques

| Randomization Technique | Description | Advantages | Disadvantages | Best Suited For |

| Simple Randomization | Each participant has an equal chance of being assigned to any group.[17] | Easy to implement. | Can lead to unequal group sizes, especially in small trials.[17] | Large clinical trials. |

| Block Randomization | Participants are randomized in blocks to ensure balance between groups at the end of each block.[17] | Maintains balance in group sizes throughout the trial.[17] | Can be predictable if the block size is known. | Most clinical trials. |

| Stratified Randomization | Participants are first divided into strata based on important prognostic factors, then randomized within each stratum.[17] | Ensures balance of key prognostic factors across groups.[17] | Can be complex to implement with many strata. | Trials where specific baseline characteristics are known to influence the outcome. |

| Adaptive Randomization | The probability of assigning a participant to a particular group changes as data from the trial accumulate.[17] | Can increase the number of participants assigned to the more effective treatment.[14] | Can be complex to design and implement; potential for operational bias.[15] | Early phase or exploratory trials. |

Table 2: Key Parameters for Sample Size Calculation

| Parameter | Definition | Typical Value(s) | Impact on Sample Size |

| Significance Level (α) | Probability of a Type I error (false positive).[11] | 0.05 (two-sided)[11] | Decreasing α increases sample size. |

| Power (1-β) | Probability of detecting a true effect.[11] | 80% or 90%[11] | Increasing power increases sample size.[21] |

| Clinically Meaningful Difference (Effect Size) | The smallest difference in outcome between treatment groups that is considered clinically relevant.[21] | Varies by disease and intervention. | Detecting a smaller difference requires a larger sample size.[21] |

| Variability (Standard Deviation) | The spread of the data for the primary outcome. | Estimated from prior studies. | Higher variability increases sample size. |

| Dropout Rate | The proportion of participants expected to leave the study before completion.[10] | Varies by study duration and population. | Higher anticipated dropout rate increases the required sample size.[10] |

Mandatory Visualizations

Workflow for a Robust Randomized Controlled Trial (RCT)

Caption: Workflow of a robust randomized controlled trial.

Logical Relationships in Blinding

Caption: Levels of blinding in a clinical trial.

Decision Pathway for Handling Missing Data

Caption: Decision pathway for handling missing data.

References

- 1. Optimizing Clinical Trials with Robust Design [clinicaltrialsolutions.org]

- 2. lindushealth.com [lindushealth.com]

- 3. Best Practices for Designing Effective Clinical Drug Trials [raptimresearch.com]

- 4. Practical aspects of randomization and blinding in randomized clinical trials - PubMed [pubmed.ncbi.nlm.nih.gov]

- 5. Randomization and blinding: Significance and symbolism [wisdomlib.org]

- 6. Purpose of Randomization and Blinding in Research Studies - Academy [pubrica.com]

- 7. clinilaunchresearch.in [clinilaunchresearch.in]

- 8. bioaccessla.com [bioaccessla.com]

- 9. memoinoncology.com [memoinoncology.com]

- 10. riskcalc.org [riskcalc.org]

- 11. intuitionlabs.ai [intuitionlabs.ai]

- 12. scispace.com [scispace.com]

- 13. Strategies for Dealing with Missing Data in Clinical Trials: From Design to Analysis - PMC [pmc.ncbi.nlm.nih.gov]

- 14. veristat.com [veristat.com]

- 15. Benefits, challenges and obstacles of adaptive clinical trial designs - PMC [pmc.ncbi.nlm.nih.gov]

- 16. Key design considerations for adaptive clinical trials: a primer for clinicians | The BMJ [bmj.com]

- 17. Top Five Tips for Clinical Trial Design - Biotech at Scale [thermofisher.com]

- 18. pure.johnshopkins.edu [pure.johnshopkins.edu]

- 19. Chapter 6 Randomization and allocation, blinding and placebos | Clinical Biostatistics [bookdown.org]

- 20. clinicalpursuit.com [clinicalpursuit.com]

- 21. Sample Size Estimation in Clinical Trial - PMC [pmc.ncbi.nlm.nih.gov]

- 22. researchgate.net [researchgate.net]

- 23. quanticate.com [quanticate.com]

- 24. Robust analyzes for longitudinal clinical trials with missing and non-normal continuous outcomes - PMC [pmc.ncbi.nlm.nih.gov]

Application Notes and Protocols for Assessing the Robustness of Statistical Models

Introduction

In statistical analysis, particularly within research, drug development, and scientific studies, the robustness of a statistical model refers to its ability to remain effective and provide reliable results even when its underlying assumptions are not perfectly met.[1] Robust statistics are resistant to outliers or minor deviations from model assumptions.[1] Assessing model robustness is a critical practice to ensure the soundness, reliability, and generalizability of research findings.[2][3] This document provides detailed application notes and protocols for key methods used to evaluate the robustness of statistical models.

Cross-Validation

Application Note

Cross-validation is a powerful statistical technique for evaluating how the results of a statistical analysis will generalize to an independent dataset.[3] It is essential for assessing a model's performance on unseen data, thereby preventing issues like overfitting, where a model learns the training data too well, including its noise, and fails to generalize to new data.[3][4] The core idea is to partition the data into complementary subsets, train the model on one subset, and validate it on the other.[3] This process is repeated multiple times to obtain a more reliable estimate of the model's predictive performance.[3][4] Common types of cross-validation include K-Fold, Stratified K-Fold, and Leave-One-Out Cross-Validation (LOOCV).[5][6]

Key Applications:

-

Model Performance Estimation: Provides a more accurate measure of how a model will perform on new, unseen data.[4]

-

Model Selection: Helps in comparing the performance of different models to select the best one.[5]

-

Hyperparameter Tuning: Aids in optimizing model parameters for the best performance.[5]

-

Overfitting Prevention: Ensures the model is not just memorizing the training data but can generalize well.[4]

Experimental Protocol: K-Fold Cross-Validation

This protocol describes the steps to perform a K-Fold cross-validation to assess model robustness.

-

Data Partitioning: 1.1. Choose an integer 'K' (e.g., 5 or 10). This value represents the number of folds or subsets the data will be divided into. 1.2. Randomly shuffle the dataset. 1.3. Split the dataset into K equal-sized folds.[5]

-

Iterative Model Training and Validation: 2.1. For each of the K folds: a. Select the current fold as the validation set. b. Use the remaining K-1 folds as the training set. c. Train the statistical model on the training set. d. Evaluate the model's performance on the validation set using a chosen metric (e.g., Mean Squared Error for regression, Accuracy for classification). e. Store the performance score.

-

Performance Aggregation: 3.1. After iterating through all K folds, calculate the average of the K performance scores.[4] 3.2. This average score represents the overall performance of the model. The standard deviation of the scores can also be calculated to understand the variability of the model's performance across different data subsets.

Caption: Workflow for K-Fold Cross-Validation.

Data Presentation: Comparison of Cross-Validation Techniques

| Technique | Description | Pros | Cons |

| Hold-Out | The dataset is split into two parts: a training set and a testing set (e.g., 80%/20%).[4] | Simple and fast to implement.[4] | High variance due to a single split; performance can depend heavily on the specific split.[4] |

| K-Fold | The data is divided into K folds. The model is trained on K-1 folds and tested on the remaining one, repeated K times.[4] | Provides a more accurate performance estimate as every data point is used for testing.[4] | Can be computationally expensive for large K. |

| Leave-One-Out (LOOCV) | A special case of K-Fold where K equals the number of data points (n). Each data point is used once as a test set.[6][7] | Provides an unbiased estimate of the test error. | Computationally very expensive for large datasets.[5][7] |

| Stratified K-Fold | A variation of K-Fold that ensures each fold has the same proportion of class labels as the original dataset.[5] | Particularly useful for imbalanced datasets to ensure representative splits. | Can be complex to implement for multi-label classification. |

| Time Series | Special methods like Forward Chaining are used where the training set consists of past data and the test set consists of future data.[4] | Maintains the temporal order of data, which is crucial for time-dependent datasets. | Reduces the amount of available training data in early folds. |

Bootstrapping

Application Note

Bootstrapping is a resampling technique used to estimate the distribution of a statistic by repeatedly drawing samples from the original dataset with replacement.[8][9] In the context of model validation, bootstrapping helps assess the stability and variability of a model's performance estimates.[9][10] By creating multiple "bootstrap samples," training the model on each, and evaluating its performance, one can obtain a robust estimate of performance metrics and their confidence intervals.[8] This method is particularly useful when the underlying distribution of the data is unknown or when the sample size is limited.[8][9]

Key Applications:

-

Estimating Model Performance: Provides a robust estimate of metrics like accuracy, precision, and recall.[8]

-

Assessing Stability: Evaluates how much the model's performance varies with different training data.[10]

-

Confidence Interval Estimation: Constructs confidence intervals for model parameters and performance metrics.[9]

-

Reducing Variance: Helps in reducing the variance of performance estimates by averaging over many samples.[8]

Experimental Protocol: Bootstrap Validation

This protocol outlines the steps for performing bootstrap validation of a statistical model.

-

Bootstrap Sampling: 1.1. From the original dataset of size 'n', create a large number (B) of bootstrap samples (e.g., B=1000). 1.2. Each bootstrap sample is created by randomly selecting 'n' instances from the original dataset with replacement.[8] This means some instances may appear multiple times in a sample, while others may not appear at all.

-

Model Training and Evaluation: 2.1. For each of the B bootstrap samples: a. Train the statistical model on the bootstrap sample. b. The instances from the original dataset that were not included in the bootstrap sample form the "out-of-bag" (OOB) sample.[8] c. Evaluate the trained model on the OOB sample to get an unbiased performance estimate.[8] d. Store the performance score.

-

Result Aggregation and Analysis: 3.1. After iterating through all B bootstrap samples, you will have a distribution of B performance scores. 3.2. Calculate the mean of these scores to get the bootstrap-estimated model performance. 3.3. Calculate the standard deviation of the scores to estimate the standard error of the model's performance. 3.4. Construct a confidence interval (e.g., a 95% confidence interval) for the performance metric from the distribution of scores.

Caption: Workflow for Bootstrap Validation.

Data Presentation: Summary of Bootstrap Analysis Results

| Performance Metric | Bootstrap Mean | Standard Error | 95% Confidence Interval |

| Accuracy | 0.852 | 0.021 | [0.811, 0.893] |

| Precision | 0.789 | 0.035 | [0.720, 0.858] |

| Recall | 0.881 | 0.028 | [0.826, 0.936] |

| AUC | 0.915 | 0.015 | [0.886, 0.944] |

Sensitivity Analysis

Application Note

Key Applications:

-

Identifying Influential Variables: Determines which input variables have the greatest effect on the model's output.[11]

-

Assessing Robustness of Findings: Helps understand how sensitive the results are to changes in assumptions.[12]

-

Uncertainty Quantification: Quantifies how uncertainty in the inputs translates to uncertainty in the output.[13][14]

-

Model Simplification: Can help in identifying and potentially eliminating non-sensitive parameters.[11]

Experimental Protocol: One-at-a-Time (OAT) Sensitivity Analysis

This protocol describes a basic method for conducting a sensitivity analysis.

-

Define Baseline: 1.1. Establish a baseline scenario by setting all input variables to their central or most likely values. 1.2. Run the model with these baseline values and record the output.

-

Vary Inputs Systematically: 2.1. For each input variable, define a plausible range of values (e.g., based on confidence intervals or expert opinion). 2.2. One at a time, vary each input variable across its defined range while keeping all other variables at their baseline values.[11] 2.3. For each variation, run the model and record the output.

-

Analyze and Quantify Sensitivity: 3.1. For each input variable, analyze how the model output changes in response to the variations. 3.2. Quantify the sensitivity. This can be done by calculating the partial derivative, standardized regression coefficients, or simply the range of output change for a given input change.[12][14] 3.3. Rank the input variables based on their influence on the output to identify the most critical factors.

Caption: Conceptual flow of Sensitivity Analysis.

Data Presentation: Results of a Sensitivity Analysis

| Input Variable | Range of Variation | Baseline Output | Output Range | Sensitivity Index |

| Drug Dosage (mg) | 50 - 150 | 0.75 | 0.60 - 0.85 | 0.45 |

| Patient Age (years) | 40 - 70 | 0.75 | 0.72 - 0.78 | 0.15 |

| Biomarker X Level | 0.5 - 1.5 | 0.75 | 0.74 - 0.76 | 0.08 |

| Sensitivity Index can be a normalized measure of the output change. |

Simulation Studies

Application Note

Simulation studies are computer experiments that involve generating data through pseudo-random sampling to evaluate the performance of statistical methods.[15] They are essential for assessing the robustness of a model when its underlying assumptions are violated.[16] By creating simulated datasets where the "true" relationships are known, researchers can see how well a model performs under various conditions, including misspecification of the model, presence of outliers, or different data distributions.[15][16] This provides empirical evidence about a method's performance and helps in making informed modeling decisions.[15]

Key Applications:

-

Evaluating Method Performance: Gauges the performance of new or existing statistical methods.[15]

-

Comparing Methods: Compares how different methods perform under specific scenarios.[15]

-

Assessing Robustness to Misspecification: Tests how a model behaves when its assumptions (e.g., normality, linearity) are not met.[16]

-

Sample Size and Power Calculation: Can be used to determine the required sample size for a study.[15]

Experimental Protocol: Designing a Simulation Study

This protocol provides a framework for conducting a simulation study to test model robustness.

-

Define Objectives and Scenarios: 1.1. Clearly state the goal of the simulation. For example, to assess the robustness of a linear regression model to non-normally distributed errors. 1.2. Define the different scenarios to be tested. This includes the "correctly specified" scenario where model assumptions are met, and one or more "misspecified" scenarios where assumptions are violated.[16]

-

Data Generating Mechanism: 2.1. For each scenario, define the process for generating the simulated datasets. This involves specifying the true underlying model, the distribution of variables, and the sample size. 2.2. Generate a large number of datasets (e.g., 1000) for each scenario.

-

Model Fitting and Evaluation: 3.1. For each generated dataset, fit the statistical model being tested. 3.2. Calculate relevant performance metrics. For example, the bias and variance of parameter estimates, or the mean squared error of predictions.[15]

-

Summarize and Compare Results: 4.1. Aggregate the performance metrics across all simulated datasets for each scenario. 4.2. Compare the model's performance in the misspecified scenarios to its performance in the correctly specified scenario.[16] 4.3. A model is considered robust to a particular assumption violation if its performance does not degrade significantly in the misspecified scenario.

Caption: Workflow for a Simulation Study.

Data Presentation: Summary of Simulation Study Results

| Scenario | Assumed Error Distribution | True Error Distribution | Parameter Estimate Bias | Mean Squared Error (MSE) |

| 1 (Correctly Specified) | Normal | Normal | 0.002 | 1.05 |

| 2 (Misspecified) | Normal | Skewed (Chi-squared) | 0.150 | 2.54 |

| 3 (Misspecified) | Normal | Heavy-tailed (t-dist) | 0.005 | 1.88 |

References

- 1. What Is Robustness in Statistics? [thoughtco.com]

- 2. Robustness Checking in Regression: Ensuring Stability and Validity in Supervised Learning | by Macro Pulse - Daily 3 Miniute Brief | Medium [medium.com]

- 3. Cross-validation (statistics) - Wikipedia [en.wikipedia.org]

- 4. Cross-Validation and Its Types: A Comprehensive Guide - Data Science Courses in Edmonton, Canada [saidatascience.com]

- 5. medium.com [medium.com]

- 6. analyticsvidhya.com [analyticsvidhya.com]

- 7. 10.6 - Cross-validation | STAT 501 [online.stat.psu.edu]

- 8. bugfree.ai [bugfree.ai]

- 9. Bootstrap Method - GeeksforGeeks [geeksforgeeks.org]

- 10. Bootstrap validation [causalwizard.app]

- 11. statisticshowto.com [statisticshowto.com]