Diafen NN

Description

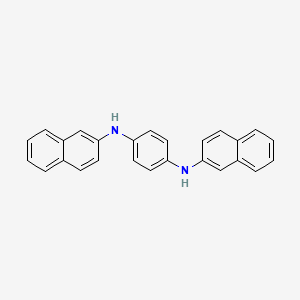

There are several compounds with the general name "Diafen"; the structure shown above is that of Diafen NN.

Properties

IUPAC Name |

1-N,4-N-dinaphthalen-2-ylbenzene-1,4-diamine | |


| Source | PubChem | |

| URL | https://pubchem.ncbi.nlm.nih.gov | |

| Description | Data deposited in or computed by PubChem | |

InChI |

InChI=1S/C26H20N2/c1-3-7-21-17-25(11-9-19(21)5-1)27-23-13-15-24(16-14-23)28-26-12-10-20-6-2-4-8-22(20)18-26/h1-18,27-28H | |

| Source | PubChem | |

| URL | https://pubchem.ncbi.nlm.nih.gov | |

InChI Key |

VETPHHXZEJAYOB-UHFFFAOYSA-N | |

| Source | PubChem | |

| URL | https://pubchem.ncbi.nlm.nih.gov | |

Canonical SMILES |

C1=CC=C2C=C(C=CC2=C1)NC3=CC=C(C=C3)NC4=CC5=CC=CC=C5C=C4 | |

| Source | PubChem | |

| URL | https://pubchem.ncbi.nlm.nih.gov | |

Molecular Formula |

C26H20N2 | |

| Record name | N,N'-DI-2-NAPHTHYL-P-PHENYLENEDIAMINE | |

| Source | CAMEO Chemicals | |

| URL | https://cameochemicals.noaa.gov/chemical/20267 | |

| Description | CAMEO Chemicals is a chemical database designed for people who are involved in hazardous material incident response and planning. CAMEO Chemicals contains a library with thousands of datasheets containing response-related information and recommendations for hazardous materials that are commonly transported, used, or stored in the United States. CAMEO Chemicals was developed by the National Oceanic and Atmospheric Administration's Office of Response and Restoration in partnership with the Environmental Protection Agency's Office of Emergency Management. | |

| Explanation | CAMEO Chemicals and all other CAMEO products are available at no charge to those organizations and individuals (recipients) responsible for the safe handling of chemicals. However, some of the chemical data itself is subject to the copyright restrictions of the companies or organizations that provided the data. | |

| Source | PubChem | |

| URL | https://pubchem.ncbi.nlm.nih.gov | |

DSSTOX Substance ID |

DTXSID3020918 | |

| Record name | N,N'-Di-2-naphthyl-p-phenylenediamine | |

| Source | EPA DSSTox | |

| URL | https://comptox.epa.gov/dashboard/DTXSID3020918 | |

| Description | DSSTox provides a high quality public chemistry resource for supporting improved predictive toxicology. | |

Molecular Weight |

360.4 g/mol | |

| Source | PubChem | |

| URL | https://pubchem.ncbi.nlm.nih.gov | |

Physical Description |

N,N'-di-2-naphthyl-p-phenylenediamine is a gray powder. (NTP, 1992) | |

| Record name | N,N'-DI-2-NAPHTHYL-P-PHENYLENEDIAMINE | |

| Source | CAMEO Chemicals | |

| URL | https://cameochemicals.noaa.gov/chemical/20267 | |

Boiling Point |

Decomposes at 450-453 °F (NTP, 1992) | |

| Record name | N,N'-DI-2-NAPHTHYL-P-PHENYLENEDIAMINE | |

| Source | CAMEO Chemicals | |

| URL | https://cameochemicals.noaa.gov/chemical/20267 | |

Solubility |

less than 1 mg/mL at 66 °F (NTP, 1992) | |

| Record name | N,N'-DI-2-NAPHTHYL-P-PHENYLENEDIAMINE | |

| Source | CAMEO Chemicals | |

| URL | https://cameochemicals.noaa.gov/chemical/20267 | |

Density |

1.25 (NTP, 1992) - Denser than water; will sink | |

| Record name | N,N'-DI-2-NAPHTHYL-P-PHENYLENEDIAMINE | |

| Source | CAMEO Chemicals | |

| URL | https://cameochemicals.noaa.gov/chemical/20267 | |

CAS No. |

93-46-9 | |

| Record name | N,N'-DI-2-NAPHTHYL-P-PHENYLENEDIAMINE | |

| Source | CAMEO Chemicals | |

| URL | https://cameochemicals.noaa.gov/chemical/20267 | |

| Record name | N,N′-Di(2-naphthyl)-p-phenylenediamine | |

| Source | CAS Common Chemistry | |

| URL | https://commonchemistry.cas.org/detail?cas_rn=93-46-9 | |

| Description | CAS Common Chemistry is an open community resource for accessing chemical information. Nearly 500,000 chemical substances from CAS REGISTRY cover areas of community interest, including common and frequently regulated chemicals, and those relevant to high school and undergraduate chemistry classes. This chemical information, curated by our expert scientists, is provided in alignment with our mission as a division of the American Chemical Society. | |

| Explanation | The data from CAS Common Chemistry is provided under a CC-BY-NC 4.0 license, unless otherwise stated. | |

| Record name | Diafen NN | |

| Source | ChemIDplus | |

| URL | https://pubchem.ncbi.nlm.nih.gov/substance/?source=chemidplus&sourceid=0000093469 | |

| Description | ChemIDplus is a free, web search system that provides access to the structure and nomenclature authority files used for the identification of chemical substances cited in National Library of Medicine (NLM) databases, including the TOXNET system. | |

| Record name | Dnpda | |

| Source | DTP/NCI | |

| URL | https://dtp.cancer.gov/dtpstandard/servlet/dwindex?searchtype=NSC&outputformat=html&searchlist=3410 | |

| Description | The NCI Development Therapeutics Program (DTP) provides services and resources to the academic and private-sector research communities worldwide to facilitate the discovery and development of new cancer therapeutic agents. | |

| Explanation | Unless otherwise indicated, all text within NCI products is free of copyright and may be reused without our permission. Credit the National Cancer Institute as the source. | |

| Record name | 1,4-Benzenediamine, N1,N4-di-2-naphthalenyl- | |

| Source | EPA Chemicals under the TSCA | |

| URL | https://www.epa.gov/chemicals-under-tsca | |

| Description | EPA Chemicals under the Toxic Substances Control Act (TSCA) collection contains information on chemicals and their regulations under TSCA, including non-confidential content from the TSCA Chemical Substance Inventory and Chemical Data Reporting. | |

| Record name | N,N'-Di-2-naphthyl-p-phenylenediamine | |

| Source | EPA DSSTox | |

| URL | https://comptox.epa.gov/dashboard/DTXSID3020918 | |

| Record name | N,N'-di-2-naphthyl-p-phenylenediamine | |

| Source | European Chemicals Agency (ECHA) | |

| URL | https://echa.europa.eu/substance-information/-/substanceinfo/100.002.046 | |

| Description | The European Chemicals Agency (ECHA) is an agency of the European Union which is the driving force among regulatory authorities in implementing the EU's groundbreaking chemicals legislation for the benefit of human health and the environment as well as for innovation and competitiveness. | |

| Explanation | Use of the information, documents and data from the ECHA website is subject to the terms and conditions of this Legal Notice, and subject to other binding limitations provided for under applicable law, the information, documents and data made available on the ECHA website may be reproduced, distributed and/or used, totally or in part, for non-commercial purposes provided that ECHA is acknowledged as the source: "Source: European Chemicals Agency, http://echa.europa.eu/". Such acknowledgement must be included in each copy of the material. ECHA permits and encourages organisations and individuals to create links to the ECHA website under the following cumulative conditions: Links can only be made to webpages that provide a link to the Legal Notice page. | |

| Record name | N,N'-DI-2-NAPHTHALENYL-1,4-BENZENEDIAMINE | |

| Source | FDA Global Substance Registration System (GSRS) | |

| URL | https://gsrs.ncats.nih.gov/ginas/app/beta/substances/EWK9V6MZH6 | |

| Description | The FDA Global Substance Registration System (GSRS) enables the efficient and accurate exchange of information on what substances are in regulated products. Instead of relying on names, which vary across regulatory domains, countries, and regions, the GSRS knowledge base makes it possible for substances to be defined by standardized, scientific descriptions. | |

| Explanation | Unless otherwise noted, the contents of the FDA website (www.fda.gov), both text and graphics, are not copyrighted. They are in the public domain and may be republished, reprinted and otherwise used freely by anyone without the need to obtain permission from FDA. Credit to the U.S. Food and Drug Administration as the source is appreciated but not required. | |

Melting Point |

437 to 444 °F (NTP, 1992) | |

| Record name | N,N'-DI-2-NAPHTHYL-P-PHENYLENEDIAMINE | |

| Source | CAMEO Chemicals | |

| URL | https://cameochemicals.noaa.gov/chemical/20267 | |

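
The NTP temperatures above are given in °F. The following minimal Python sketch converts them to °C using the standard formula C = (F - 32) × 5/9. The labels in the dictionary are illustrative names chosen for this sketch and do not come from the source records.

```python
def fahrenheit_to_celsius(temp_f: float) -> float:
    """Convert degrees Fahrenheit to degrees Celsius: C = (F - 32) * 5/9."""
    return (temp_f - 32.0) * 5.0 / 9.0


# Temperatures as listed above (NTP, 1992), in degrees Fahrenheit.
# The labels are illustrative, not field names from PubChem or CAMEO.
listed_temps_f = {
    "melting point, lower bound": 437.0,
    "melting point, upper bound": 444.0,
    "decomposition, lower bound": 450.0,
    "decomposition, upper bound": 453.0,
    "solubility measurement": 66.0,
}

if __name__ == "__main__":
    for label, temp_f in listed_temps_f.items():
        print(f"{label}: {temp_f:.0f} °F = {fahrenheit_to_celsius(temp_f):.1f} °C")
```

These values correspond to a melting range of roughly 225-229 °C and decomposition at roughly 232-234 °C. The solubility figure was measured at about 18.9 °C.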
DIA-NN: A Technical Guide to the Deep Learning-Powered Engine for Proteomics

Authored for Researchers, Scientists, and Drug Development Professionals

Executive Summary

In the landscape of mass spectrometry-based proteomics, Data-Independent Acquisition (DIA) has emerged as a powerful technique, prized for its reproducibility and comprehensive sampling of complex protein digests. However, the intricate nature of DIA data requires sophisticated software for accurate peptide identification and quantification. DIA-NN is a software suite that applies deep learning to the analysis of DIA proteomics data, and it has quickly become widely used.[1][2][3][4] It is fast, robust, and user-friendly, and it performs especially well in high-throughput applications. In benchmarks it achieves deeper and more confident proteome coverage than many earlier tools.[1][3][4][5] This guide provides a technical overview of DIA-NN's core functionality, its underlying algorithms, its benchmarked performance, and key experimental considerations.

Core Principles of DIA-NN

DIA-NN (Data-Independent Acquisition by Neural Networks) is engineered around several key principles:

- Deep Learning for Signal Processing: At its core, DIA-NN uses an ensemble of deep neural networks (DNNs) to distinguish true peptide signals from noise and interference.[4] This approach is particularly effective for deconvolving the highly multiplexed spectra generated by DIA, in which fragment ions from multiple co-eluting peptides are recorded simultaneously.

- Library-Free and Library-Based Analysis: DIA-NN supports both traditional library-based workflows (using empirically generated spectral libraries) and a library-free mode.[4] In library-free operation, DIA-NN predicts a spectral library in silico directly from a protein sequence database (FASTA file). This removes the need for separate, time-consuming data-dependent acquisition (DDA) experiments to build a library.[6]

- Automated and Robust Workflow: The software automates the optimization of critical parameters such as mass accuracy and retention time alignment.[6] As a result, it can handle data from various mass spectrometry platforms and chromatographic setups with minimal manual intervention.

- Speed and Scalability: DIA-NN is optimized for high-throughput analysis and can process large datasets from extensive sample cohorts quickly.[6]

The DIA-NN Analytical Workflow

The DIA-NN data processing pipeline is a multi-stage process that transforms raw mass spectrometry data into a quantified list of peptides and proteins. The workflow combines peptide-centric and spectrum-centric strategies to maximize identification accuracy and quantification precision.

Workflow Overview

The process begins with either an in silico generated library or a user-provided experimental library. DIA-NN then extracts chromatograms for all target precursors and their corresponding decoys (negative controls). An ensemble of deep neural networks scores putative elution peaks, and a sophisticated algorithm corrects for interferences before final quantification.

Caption: The DIA-NN data processing workflow, illustrating both library-free and library-based modes.

Key Algorithmic Steps:

- Library Generation (Library-Free Mode): When no spectral library is provided, DIA-NN performs in silico digestion of a FASTA database. It then predicts the fragmentation patterns (MS/MS spectra) and retention times of the resulting peptides to create a comprehensive theoretical library.

- Chromatogram Extraction: For each target precursor ion in the library, and for a corresponding set of decoy precursors, DIA-NN extracts elution profiles for the precursor and its major fragment ions from the raw DIA data.

- Peak Scoring and DNN Classification: DIA-NN identifies putative elution peaks and describes each one with a set of 73 distinct scores. These scores reflect characteristics such as mass accuracy, fragment co-elution, and spectral similarity to the library reference.[2] An ensemble of deep neural networks then acts as a classifier: it takes these scores as input and computes a single discriminant score for each peak. This score reflects the likelihood that the peak represents a true peptide detection, and it is the basis for assigning a statistical confidence (q-value) to each identification.

- Interference Correction and Quantification: A common challenge in DIA is signal interference, where fragment ions from multiple co-eluting peptides overlap. DIA-NN uses an algorithm that detects and removes these interferences. It identifies the fragment least affected by interference and uses that fragment as a reference for the true elution profile, which makes quantification more accurate.[4] Protein quantification is then typically performed with the MaxLFQ algorithm, the label-free quantification method originally developed for MaxQuant.[7]
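
The in silico digestion that begins library-free mode can be illustrated with a minimal Python sketch. The sketch assumes the classic simplified trypsin rule: cleave C-terminal to K or R, but not before P. It allows a configurable number of missed cleavages and a peptide length window. The function name and its default parameters are hypothetical and do not represent DIA-NN's own settings or code. Fragment and retention-time prediction, which is the harder part of library generation, is not shown.

```python
from typing import List


def tryptic_digest(protein: str, missed_cleavages: int = 1,
                   min_len: int = 7, max_len: int = 30) -> List[str]:
    """Hypothetical in silico trypsin digestion (simplified rule).

    Cleaves after K or R unless the next residue is P. Returns unique
    peptides within the length window, in order of first appearance.
    """
    # Cleavage sites are the positions at which a new peptide starts.
    sites = [0]
    for i, residue in enumerate(protein):
        next_residue = protein[i + 1] if i + 1 < len(protein) else ""
        if residue in "KR" and next_residue != "P":
            sites.append(i + 1)
    if sites[-1] != len(protein):
        sites.append(len(protein))

    peptides: List[str] = []
    seen = set()
    # Join consecutive fully cleaved segments to model missed cleavages.
    for start_idx in range(len(sites) - 1):
        for mc in range(missed_cleavages + 1):
            end_idx = start_idx + 1 + mc
            if end_idx >= len(sites):
                break
            peptide = protein[sites[start_idx]:sites[end_idx]]
            if min_len <= len(peptide) <= max_len and peptide not in seen:
                seen.add(peptide)
                peptides.append(peptide)
    return peptides


if __name__ == "__main__":
    print(tryptic_digest("GGGGGGGKLLLLLLLRPAAAAAAARVVVVVVV", missed_cleavages=0))
```

In the example, the R followed by P is not cleaved, so "LLLLLLLRPAAAAAAAR" survives as a single peptide.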

Performance Benchmarks

DIA-NN's performance has been extensively benchmarked against other leading software packages. In these comparisons it performs as well as or better than the alternatives, particularly in high-throughput applications with short chromatographic gradients.

Protein and Peptide Identifications

DIA-NN often identifies a greater number of proteins and peptides at a controlled 1% False Discovery Rate (FDR), especially in library-free mode.

| Workflow | Avg. Proteins Quantified | Avg. Peptides Quantified | Reference |
|---|---|---|---|
| DIA-NN (Library-Free) | ~2,016 | ~23,800 | [8] |
| Spectronaut (Library-Free) | ~1,817 | ~22,900 | [8] |
| OpenSWATH (Library-Based) | ~1,450 | ~16,500 | [8] |
| Skyline (Library-Based) | ~1,600 | ~19,000 | [8] |

Table 1: Comparison of protein and peptide quantification from a complex E. coli proteomic standard across different DIA software workflows. Data is averaged across four different DIA window acquisition schemes.[8]

Quantification Precision

Quantification precision is critical for detecting subtle biological changes. It is often measured by the coefficient of variation (CV) across technical replicates, with lower CVs indicating higher precision. DIA-NN consistently demonstrates excellent quantification reproducibility.

| Software | Library Mode | Median CV (%) on Yeast Proteome | Reference |
|---|---|---|---|
| DIA-NN | In Silico Predicted | ~5.5 | [9] |
| DIA-NN | Library-Free | ~6.0 | [9] |
| EncyclopeDIA | DDA-Based Library | ~7.5 | [9] |
| Spectronaut | Library-Free | ~8.0 | [9] |
| Spectronaut | DDA-Based Library | ~10.5 | [9] |

Table 2: Quantification precision (median CV) of background yeast proteins in a spike-in experiment. DIA-NN shows the highest precision (lowest median CV) across the analysis modes tested.[9]
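
The median CV in Table 2 summarizes per-protein CVs calculated across replicates. The following minimal Python sketch shows that calculation. It assumes a simple dictionary that maps each protein to its intensities across technical replicates. The protein names and numbers are invented for illustration and are not data from [9]. The sketch computes CVs on untransformed intensities, which is one common convention.

```python
from statistics import mean, median, stdev
from typing import Dict, List


def coefficient_of_variation(values: List[float]) -> float:
    """CV in percent: sample standard deviation divided by the mean."""
    return 100.0 * stdev(values) / mean(values)


def median_cv(intensities: Dict[str, List[float]]) -> float:
    """Median CV (%) across proteins, each quantified in every replicate."""
    return median(coefficient_of_variation(v) for v in intensities.values())


# Hypothetical intensities for three proteins in three technical replicates.
example = {
    "PROT_A": [1.00e6, 1.05e6, 0.95e6],
    "PROT_B": [2.0e5, 2.2e5, 1.8e5],
    "PROT_C": [5.0e4, 5.5e4, 4.5e4],
}

if __name__ == "__main__":
    print(f"median CV: {median_cv(example):.1f}%")
```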

Example Experimental Protocol: HeLa Cell Proteome Analysis

The following is a representative protocol for the preparation and analysis of a human cell line (HeLa) proteome, a common benchmark sample, for a DIA-NN workflow.

A. Cell Culture and Lysis

- Culture HeLa S3 cells to ~80% confluency in RPMI 1640 medium.

- Aspirate the medium and wash the cell monolayer twice with 10 mL of ice-cold phosphate-buffered saline (PBS).

- Add 1 mL of hot (99 °C) lysis buffer (e.g., 5% SDC [sodium deoxycholate], 100 mM Tris-HCl, pH 8.5) directly to the plate, then scrape the cells to collect the lysate in a 1.5 mL tube.[10]

- Heat the lysate at 99 °C for 10 minutes with shaking to denature proteins and inactivate proteases.

- Sonicate the lysate to shear DNA and reduce viscosity (e.g., 2 minutes with 1 s ON/OFF pulses).[10]

- Centrifuge at 16,000 x g for 10 minutes and retain the supernatant. Determine the protein concentration using a BCA assay.

B. Protein Digestion

- Reduction: Add dithiothreitol (DTT) to a final concentration of 10 mM and incubate at 56 °C for 30 minutes.

- Alkylation: Cool the sample to room temperature. Add iodoacetamide (IAA) to a final concentration of 20 mM and incubate for 30 minutes in the dark.

- Digestion: Dilute the sample 5-fold with 100 mM Tris-HCl (pH 8.5). Add sequencing-grade trypsin at a 1:50 enzyme-to-protein ratio (w/w) and incubate overnight at 37 °C.

- Cleanup: Acidify the sample with trifluoroacetic acid (TFA) to a final concentration of 1% to precipitate the SDC detergent. Centrifuge at 16,000 x g for 10 minutes.

- Desalting: Desalt the resulting peptides using a C18 solid-phase extraction (SPE) cartridge, elute with 80% acetonitrile/0.1% formic acid, and dry the peptides in a vacuum centrifuge.
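
The reagent arithmetic in the digestion steps can be made explicit with a small Python helper. The 1:50 trypsin ratio, the 5-fold dilution, and the 5% SDC starting concentration come from the steps above. The 100 µg protein input and the 100 µL sample volume in the example are hypothetical, because the protocol does not fix them. The helper also ignores the small volumes added with DTT and IAA.

```python
def digestion_plan(protein_ug: float, sample_ul: float,
                   enzyme_ratio: float = 50.0, dilution_factor: float = 5.0,
                   sdc_percent: float = 5.0) -> dict:
    """Hypothetical helper: trypsin amount and dilution volumes.

    Ignores the small volumes added with DTT and IAA.
    """
    trypsin_ug = protein_ug / enzyme_ratio          # 1:50 enzyme-to-protein (w/w)
    final_volume_ul = sample_ul * dilution_factor   # 5-fold dilution
    buffer_to_add_ul = final_volume_ul - sample_ul  # volume of 100 mM Tris-HCl to add
    return {
        "trypsin_ug": trypsin_ug,
        "final_volume_ul": final_volume_ul,
        "buffer_to_add_ul": buffer_to_add_ul,
        "sdc_percent_after_dilution": sdc_percent / dilution_factor,
    }


if __name__ == "__main__":
    # Hypothetical input: 100 µg of protein in 100 µL.
    print(digestion_plan(100.0, 100.0))
```

The 5-fold dilution also brings SDC from 5% to about 1%, which is generally considered compatible with trypsin activity.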

C. LC-MS/MS Analysis (DIA Method)

- Sample Resuspension: Reconstitute dried peptides in 0.1% formic acid.

- Chromatography: Load approximately 1 µg of peptides onto a C18 analytical column (e.g., 75 µm x 50 cm) coupled to a nano-LC system (e.g., Dionex UltiMate 3000). Separate peptides using a linear gradient of 5% to 35% acetonitrile in 0.1% formic acid over 90 minutes.

- Mass Spectrometry: Analyze the eluting peptides on a high-resolution mass spectrometer (e.g., Orbitrap Exploris 480 or timsTOF Pro).

- MS1 Scan: Acquire a survey scan from 350 to 1200 m/z at a resolution of 120,000 (Orbitrap setting).

- DIA Scans: Use a DIA method with 40-60 variable isolation windows covering 400 to 1000 m/z. Acquire MS2 spectra at a resolution of 30,000 (Orbitrap setting).
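
The protocol calls for variable-width isolation windows. As a simpler illustration, the following Python sketch tiles the 400-1000 m/z range with equal-width windows. The choice of 40 windows and the 1 m/z overlap between neighbouring windows are assumptions. Real variable-window schemes usually size each window according to local precursor density.

```python
from typing import List, Tuple


def fixed_width_windows(start_mz: float, end_mz: float, n_windows: int,
                        overlap_mz: float = 1.0) -> List[Tuple[float, float]]:
    """Split [start_mz, end_mz] into n equal DIA windows.

    Each window is widened by overlap_mz / 2 on both sides (assumed overlap).
    """
    width = (end_mz - start_mz) / n_windows
    windows = []
    for i in range(n_windows):
        lower = start_mz + i * width
        upper = lower + width
        windows.append((lower - overlap_mz / 2, upper + overlap_mz / 2))
    return windows


if __name__ == "__main__":
    # 40 windows over 400-1000 m/z gives a nominal width of 15 m/z each.
    for low, high in fixed_width_windows(400.0, 1000.0, 40)[:3]:
        print(f"{low:.1f}-{high:.1f} m/z")
```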

Application in Biological Research: TNF-α Signaling

DIA-NN is a powerful tool for systems biology, enabling the precise quantification of protein and post-translational modification changes in response to stimuli. A study benchmarking DIA software analyzed the phosphoproteome of MCF-7 cells stimulated with Tumor Necrosis Factor-alpha (TNF-α), a key inflammatory cytokine.[7] The results from DIA-NN successfully recapitulated the known signaling cascade.

The diagram below illustrates a simplified representation of the TNF-α signaling pathway leading to the activation of NF-κB, with key phosphoproteins that can be quantified using a DIA-NN workflow.

Caption: Key nodes in the TNF-α to NF-κB signaling pathway quantifiable by DIA proteomics.

In such an experiment, DIA-NN would quantify abundance changes across thousands of phosphosites, including those on IKKα/β and IκBα, and this data can be used to model the pathway's activation dynamics. Enrichment analysis of the DIA-NN results recovered known TNF-α-responsive pathways, which demonstrates the software's utility for discovering biologically relevant regulation.[7]

Conclusion

DIA-NN represents a significant advancement in the field of DIA proteomics. By integrating deep learning, it provides a powerful, fast, and accessible tool for researchers to achieve deep and reliable proteome quantification. Its robust performance in both library-based and library-free modes makes it adaptable to a wide range of experimental designs, from large-scale clinical cohort studies to fundamental cell biology. For professionals in drug development and scientific research, DIA-NN offers a scalable and high-confidence solution to translate complex biological samples into actionable proteomic insights.

References

- 1. DIA-NN: neural networks and interference correction enable deep proteome coverage in high throughput - PubMed [pubmed.ncbi.nlm.nih.gov]

- 2. researchgate.net [researchgate.net]

- 3. DIA-NN: neural networks and interference correction enable deep proteome coverage in high throughput | Springer Nature Experiments [experiments.springernature.com]

- 4. DIA-NN: Neural networks and interference correction enable deep proteome coverage in high throughput - PMC [pmc.ncbi.nlm.nih.gov]

- 5. biorxiv.org [biorxiv.org]

- 6. GitHub - vdemichev/DiaNN: DIA-NN - a universal automated software suite for DIA proteomics data analysis. [github.com]

- 7. Benchmarking commonly used software suites and analysis workflows for DIA proteomics and phosphoproteomics - PMC [pmc.ncbi.nlm.nih.gov]

- 8. biorxiv.org [biorxiv.org]

- 9. Benchmarking DIA data analysis workflows | bioRxiv [biorxiv.org]

- 10. HeLa quality control sample preparation for MS-based proteomics [protocols.io]

DIA-NN: A Deep Dive into the Engine of Modern DIA Proteomics

An In-depth Technical Guide for Researchers, Scientists, and Drug Development Professionals

Data-Independent Acquisition (DIA) mass spectrometry has emerged as a powerful technique for reproducible and comprehensive proteome quantification. Realizing the potential of complex DIA datasets depends on sophisticated analysis software. DIA-NN distinguishes itself by integrating deep learning into this analysis, which enables faster processing and deeper proteome coverage. This guide provides a detailed technical overview of DIA-NN's core components, its underlying algorithms, and the methods behind its high performance.

The Core Architecture of DIA-NN: A Hybrid Approach

DIA-NN employs a peptide-centric approach, which can be initiated with either a pre-existing spectral library or by generating one in silico from a protein sequence database (FASTA file).[1] This flexibility allows for both discovery and targeted proteomics workflows. The software's architecture is designed for automation and efficiency, minimizing the need for manual parameter optimization by automatically determining settings like retention time windows and mass accuracy.[1]

A key innovation in DIA-NN is its hybrid use of both peptide-centric and spectrum-centric strategies. This combination allows it to leverage the strengths of both approaches for improved identification and quantification.[2] The workflow is multi-staged, beginning with data extraction and culminating in robust statistical analysis.

The DIA-NN Workflow: From Raw Data to Protein Quantities

The DIA-NN data processing pipeline can be broken down into several key stages, each employing sophisticated algorithms to ensure high accuracy and sensitivity. The entire process is designed to be computationally efficient, allowing for the analysis of large-scale datasets.

Spectral Library Generation and Decoy Creation

DIA-NN can either utilize an empirical spectral library or generate a predicted one in silico from a FASTA database.[1][3] For library-free workflows, it employs prediction models for fragmentation spectra and retention times.[4] To control for false discoveries, DIA-NN generates a library of negative controls, or "decoy" precursors, for each target precursor.[1]
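
The target-decoy idea can be illustrated with pseudo-reversal, a common textbook decoy scheme. Each target peptide is reversed except for its C-terminal residue, so the decoy keeps the tryptic terminus and has the same mass and composition. This scheme was chosen only for illustration. DIA-NN's own decoy generation may differ, and both functions below are hypothetical.

```python
from typing import List, Tuple


def pseudo_reversed_decoy(peptide: str) -> str:
    """Hypothetical decoy: reverse all residues except the C-terminal one."""
    if len(peptide) < 2:
        return peptide
    return peptide[-2::-1] + peptide[-1]


def build_target_decoy_library(targets: List[str]) -> List[Tuple[str, bool]]:
    """Return (sequence, is_decoy) pairs; decoys identical to a target are dropped."""
    target_set = set(targets)
    library = [(p, False) for p in targets]
    for p in targets:
        decoy = pseudo_reversed_decoy(p)
        if decoy not in target_set:  # a decoy must not collide with a real target
            library.append((decoy, True))
    return library


if __name__ == "__main__":
    print(build_target_decoy_library(["PEPTIDEK", "AGAK"]))
```

In the example, the decoy for the palindromic core "AGAK" equals the target itself, so it is discarded.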

Chromatogram Extraction and Peak Scoring

For every target and decoy precursor, DIA-NN extracts chromatograms from the raw DIA data.[1] It then identifies putative elution peaks, which consist of the elution profiles of the precursor and its fragment ions around the expected retention time.[1] Each of these peaks is then characterized by a set of 73 distinct scores that describe various attributes, including:

- Co-elution of fragment ions: How well the elution profiles of different fragments of the same precursor correlate with each other.

- Mass accuracy: The deviation of the measured mass-to-charge ratio (m/z) from the theoretical m/z.

- Spectral similarity: The resemblance between the observed and the reference (library) spectra.[1]
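
Two of the score families listed above can be sketched in a few lines of Python. Mass accuracy is expressed as a parts-per-million (ppm) error. Fragment co-elution is expressed as the mean pairwise Pearson correlation of the fragment elution profiles. These functions are simplified, hypothetical stand-ins; DIA-NN's actual 73 scores are defined in its own implementation and are not reproduced here.

```python
from itertools import combinations
from math import sqrt
from statistics import mean
from typing import List, Sequence


def ppm_error(observed_mz: float, theoretical_mz: float) -> float:
    """Mass error in parts per million."""
    return (observed_mz - theoretical_mz) / theoretical_mz * 1e6


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation of two equal-length, non-constant sequences."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


def mean_fragment_correlation(profiles: List[Sequence[float]]) -> float:
    """Hypothetical co-elution score: mean pairwise correlation of fragment profiles."""
    return mean(pearson(a, b) for a, b in combinations(profiles, 2))


if __name__ == "__main__":
    print(ppm_error(500.0025, 500.0))
    print(mean_fragment_correlation([[1, 4, 9, 4, 1], [2, 8, 18, 8, 2], [1, 3, 9, 5, 1]]))
```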

A linear classifier is initially used to select the best candidate peak for each precursor based on these scores.[1]
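
A linear classifier selects the best candidate peak by scoring each peak as a weighted sum of its scores and keeping the highest. The Python sketch below uses hypothetical hand-set weights and a three-value feature vector. In DIA-NN, the classifier operates on all 73 scores, and its weights are learned from the data rather than fixed by hand.

```python
from typing import List, Sequence, Tuple


def linear_score(features: Sequence[float], weights: Sequence[float],
                 bias: float = 0.0) -> float:
    """Linear discriminant: bias plus the weighted sum of peak scores."""
    return bias + sum(w * f for w, f in zip(weights, features))


def best_peak(candidates: List[Sequence[float]],
              weights: Sequence[float]) -> Tuple[int, float]:
    """Return (index, score) of the highest-scoring candidate peak."""
    scores = [linear_score(c, weights) for c in candidates]
    best = max(range(len(scores)), key=scores.__getitem__)
    return best, scores[best]


# Hypothetical features per peak: [co-elution, -|ppm error|, spectral similarity]
weights = [2.0, 0.1, 1.5]
candidates = [
    [0.40, -8.0, 0.30],
    [0.95, -1.5, 0.90],
    [0.70, -3.0, 0.60],
]

if __name__ == "__main__":
    print(best_peak(candidates, weights))
```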

Deep Learning for Confident Identification

The defining feature of DIA-NN is its use of an ensemble of deep neural networks (DNNs) to distinguish true signals from noise.[1] This is a significant departure from traditional methods that often rely on linear classifiers.

The architecture of the DNNs in DIA-NN is as follows:

- Type: An ensemble of feed-forward, fully connected deep neural networks.

- Layers: Each network consists of five hidden layers with the tanh activation function and a softmax output layer.

- Input: The 73 peak scores calculated in the previous step serve as the input for the neural networks.

- Training: The networks are trained for one epoch to differentiate between target and decoy precursors, using cross-entropy as the loss function.[1]
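
The architecture described above can be illustrated with the forward pass of a small ensemble in plain Python. Each network has five tanh hidden layers followed by a two-class softmax (target vs. decoy), and the target probability serves as the discriminant score. The hidden-layer width of 8, the random initial weights, the ensemble size of 3, and averaging as the way to combine ensemble members are all assumptions. The networks here are untrained, and training with cross-entropy is not shown.

```python
import math
import random
from typing import List, Tuple

Matrix = List[List[float]]
Vector = List[float]

N_SCORES = 73        # input dimension: one value per peak score
HIDDEN = 8           # hypothetical hidden-layer width
N_HIDDEN_LAYERS = 5  # five tanh hidden layers, as described above


def dense(x: Vector, w: Matrix, b: Vector) -> Vector:
    """Fully connected layer: y = W x + b."""
    return [sum(wij * xj for wij, xj in zip(row, x)) + bi for row, bi in zip(w, b)]


def softmax(z: Vector) -> Vector:
    m = max(z)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def init_network(rng: random.Random) -> List[Tuple[Matrix, Vector]]:
    """Random (untrained) weights: 5 hidden layers plus a 2-unit output layer."""
    sizes = [N_SCORES] + [HIDDEN] * N_HIDDEN_LAYERS + [2]
    layers = []
    for n_in, n_out in zip(sizes[:-1], sizes[1:]):
        scale = 1.0 / math.sqrt(n_in)
        w = [[rng.gauss(0.0, scale) for _ in range(n_in)] for _ in range(n_out)]
        layers.append((w, [0.0] * n_out))
    return layers


def forward(layers: List[Tuple[Matrix, Vector]], scores: Vector) -> float:
    """P(target) for one peak: tanh on hidden layers, softmax on the output."""
    x = scores
    for w, b in layers[:-1]:
        x = [math.tanh(v) for v in dense(x, w, b)]
    w_out, b_out = layers[-1]
    return softmax(dense(x, w_out, b_out))[0]  # index 0 = "target" by convention


def ensemble_score(networks: List[List[Tuple[Matrix, Vector]]], scores: Vector) -> float:
    """Average the target probability over the ensemble (assumed combination rule)."""
    return sum(forward(net, scores) for net in networks) / len(networks)


rng = random.Random(0)
ensemble = [init_network(rng) for _ in range(3)]
peak_scores = [rng.random() for _ in range(N_SCORES)]

if __name__ == "__main__":
    print(round(ensemble_score(ensemble, peak_scores), 4))
```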

The output of the DNN ensemble is a discriminant score that reflects the likelihood of a peak corresponding to a target precursor. These scores are then used to calculate q-values for false discovery rate (FDR) control.[1]
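
Converting discriminant scores to q-values follows the standard target-decoy recipe. The following minimal Python sketch assumes roughly equal numbers of targets and decoys and uses the simple estimate FDR = decoys / targets above each score threshold. It ignores tied scores. DIA-NN's exact estimator may include further corrections.

```python
from typing import List, Tuple


def target_decoy_qvalues(
        scored: List[Tuple[float, bool]]) -> List[Tuple[float, bool, float]]:
    """Assign q-values to (score, is_decoy) pairs; a higher score is more confident.

    FDR at each threshold = (#decoys at or above it) / (#targets at or above it);
    the q-value is the minimum FDR at which the item would still be accepted.
    """
    ranked = sorted(scored, key=lambda item: item[0], reverse=True)
    fdrs = []
    n_target = n_decoy = 0
    for _, is_decoy in ranked:
        if is_decoy:
            n_decoy += 1
        else:
            n_target += 1
        fdrs.append(n_decoy / max(n_target, 1))
    # Make q-values monotone: take the running minimum from the bottom up.
    qvalues = [0.0] * len(fdrs)
    running_min = float("inf")
    for i in range(len(fdrs) - 1, -1, -1):
        running_min = min(running_min, fdrs[i])
        qvalues[i] = running_min
    return [(s, d, q) for (s, d), q in zip(ranked, qvalues)]


if __name__ == "__main__":
    data = [(0.99, False), (0.95, False), (0.90, False), (0.85, True),
            (0.80, False), (0.70, True), (0.60, True)]
    accepted = [s for s, d, q in target_decoy_qvalues(data) if not d and q <= 0.01]
    print(f"{len(accepted)} targets at 1% FDR")
```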

Interference Correction and Quantification

DIA data is often convoluted with interfering signals from co-eluting precursors. DIA-NN incorporates a novel algorithm to address this challenge. For each putative elution peak, it identifies the fragment ion least affected by interference, pinpointed as the one with the elution profile that best correlates with the other fragment elution profiles.[1] This reference profile is then used to subtract interferences from the other fragment ion signals, leading to more accurate quantification.[1]
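
The reference-fragment selection described above can be sketched in Python. For each fragment, the sketch sums its correlations with all the other fragments and picks the best-correlated fragment as the reference. To remove interference, the sketch then scales the reference to each fragment by the median intensity ratio and caps the fragment at that scaled reference. This capping rule is a hypothetical simplification for illustration, not DIA-NN's published correction.

```python
from math import sqrt
from statistics import mean, median
from typing import List, Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation of two equal-length, non-constant sequences."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return cov / sqrt(sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y))


def reference_fragment(profiles: List[Sequence[float]]) -> int:
    """Index of the fragment whose profile best correlates with all the others."""
    def total_corr(i: int) -> float:
        return sum(pearson(profiles[i], profiles[j])
                   for j in range(len(profiles)) if j != i)
    return max(range(len(profiles)), key=total_corr)


def cap_to_reference(profile: Sequence[float], reference: Sequence[float]) -> List[float]:
    """Hypothetical correction: clip points that exceed the scaled reference.

    The scale is the median intensity ratio, which is robust to a few
    interfered points.
    """
    ratios = [p / r for p, r in zip(profile, reference) if r > 0]
    scale = median(ratios) if ratios else 0.0
    return [min(p, scale * r) for p, r in zip(profile, reference)]


if __name__ == "__main__":
    fragments = [[1, 4, 9, 4, 1], [2, 8, 18, 8, 2], [1, 4, 9, 12, 10]]
    ref = reference_fragment(fragments)
    print(ref, cap_to_reference(fragments[2], fragments[ref]))
```

In the example, the third fragment carries interference in its trailing edge, and the correction removes it.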

For precursor quantification, DIA-NN selects the three fragment ions with the highest average correlation scores across all runs in an experiment.[1] The intensities of these three fragments are then summed to determine the total precursor ion intensity in each run.[1]
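
The precursor quantification rule can be written directly in Python. The data layout is a hypothetical structure chosen for this sketch: for each run, a mapping from fragment name to a (correlation score, intensity) pair. The sketch also assumes that only fragments observed in every run are eligible.

```python
from statistics import mean
from typing import Dict, List, Tuple

# Hypothetical layout: fragment name -> (correlation score, intensity)
RunData = Dict[str, Tuple[float, float]]


def select_top_fragments(runs: List[RunData], n: int = 3) -> List[str]:
    """Fragments with the highest correlation score averaged over all runs."""
    fragments = set.intersection(*(set(run) for run in runs))  # present in every run
    avg_corr = {f: mean(run[f][0] for run in runs) for f in fragments}
    return sorted(avg_corr, key=lambda f: (-avg_corr[f], f))[:n]


def precursor_quantities(runs: List[RunData], n: int = 3) -> List[float]:
    """Per-run precursor intensity: the sum of the selected fragments' intensities."""
    top = select_top_fragments(runs, n)
    return [sum(run[f][1] for f in top) for run in runs]


runs = [
    {"y3": (0.95, 100.0), "y4": (0.90, 200.0), "y5": (0.60, 50.0), "b2": (0.85, 80.0)},
    {"y3": (0.97, 110.0), "y4": (0.88, 190.0), "y5": (0.70, 55.0), "b2": (0.80, 70.0)},
]

if __name__ == "__main__":
    print(select_top_fragments(runs), precursor_quantities(runs))
```

Selecting the same fragments in every run keeps the summed intensities comparable across runs.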

Protein Inference and Normalization

To move from precursor to protein-level quantification, DIA-NN employs the principle of maximum parsimony, implemented through a greedy set cover algorithm.[1] This approach aims to explain the identified peptides with the minimum number of proteins.
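
Greedy set cover repeatedly selects the protein that explains the most peptides not yet explained. The following minimal Python sketch implements this. The input format and the alphabetical tie-breaking rule are assumptions, and details such as reporting protein groups are omitted.

```python
from typing import Dict, List, Set


def greedy_protein_inference(protein_to_peptides: Dict[str, Set[str]]) -> List[str]:
    """Return a small set of proteins that together explain every peptide."""
    unexplained = set().union(*protein_to_peptides.values())
    chosen: List[str] = []
    while unexplained:
        # Protein covering the most unexplained peptides; ties broken alphabetically.
        best = min(protein_to_peptides,
                   key=lambda p: (-len(protein_to_peptides[p] & unexplained), p))
        chosen.append(best)
        unexplained -= protein_to_peptides[best]
    return chosen


if __name__ == "__main__":
    evidence = {"P1": {"a", "b", "c"}, "P2": {"b", "c"}, "P3": {"d"}, "P4": {"c", "d"}}
    print(greedy_protein_inference(evidence))
```

In the example, P2 is never reported because all of its peptides are already explained by P1.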

Finally, DIA-NN performs cross-run normalization to correct for variations in sample loading and instrument performance, ensuring that protein abundance can be accurately compared across different samples.[1]
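
Cross-run normalization can be illustrated with its simplest common variant, global median normalization. Each run is scaled so that its median log2 intensity matches the median of all run medians. This stand-in was chosen for illustration only; DIA-NN's actual normalization procedure is more elaborate and is not reproduced here.

```python
from math import log2
from statistics import median
from typing import Dict, List


def median_normalize(runs: List[Dict[str, float]]) -> List[Dict[str, float]]:
    """Scale each run so that its median log2 intensity equals the median of run medians."""
    run_medians = [median(log2(v) for v in run.values() if v > 0) for run in runs]
    target = median(run_medians)
    normalized = []
    for run, run_median in zip(runs, run_medians):
        factor = 2 ** (target - run_median)  # multiplicative correction per run
        normalized.append({k: v * factor for k, v in run.items()})
    return normalized


if __name__ == "__main__":
    # Hypothetical data: the second run was loaded at twice the amount.
    runs = [
        {"p1": 100.0, "p2": 200.0, "p3": 400.0},
        {"p1": 200.0, "p2": 400.0, "p3": 800.0},
        {"p1": 100.0, "p2": 200.0, "p3": 400.0},
    ]
    print(median_normalize(runs))
```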

Visualizing the DIA-NN Workflow

The following diagrams illustrate the core logical flow of the DIA-NN software.

References

- 1. DIA-NN: Neural networks and interference correction enable deep proteome coverage in high throughput - PMC [pmc.ncbi.nlm.nih.gov]

- 2. biorxiv.org [biorxiv.org]

- 3. GitHub - vdemichev/DiaNN: DIA-NN - a universal automated software suite for DIA proteomics data analysis. [github.com]

- 4. biorxiv.org [biorxiv.org]

DIA-NN: A Technical Guide to Data-Independent Acquisition Mass Spectrometry Analysis

For Researchers, Scientists, and Drug Development Professionals

Introduction

Data-Independent Acquisition (DIA) mass spectrometry has emerged as a powerful technique for reproducible and comprehensive proteome quantification. DIA-NN is a cutting-edge software suite that leverages deep neural networks and novel algorithms to process DIA data with high speed and accuracy. This guide provides an in-depth technical overview of DIA-NN, its core functionalities, experimental considerations, and performance benchmarks, tailored for professionals in research and drug development. DIA-NN improves identification and quantification in conventional DIA applications and is particularly beneficial for high-throughput analyses due to its speed and ability to achieve deep proteome coverage with fast chromatographic methods.[1][2]

Core Concepts and Workflow

DIA-NN employs a peptide-centric approach, which can be initiated with either a pre-existing spectral library or through a library-free workflow that generates an in-silico spectral library from a protein sequence database.[2] The software is designed for ease of use, with a high degree of automation that simplifies the analysis setup to a few clicks, requiring no extensive bioinformatics expertise.[3]

The general workflow of DIA-NN involves several key stages:

- Spectral Library Generation: In the library-free mode, DIA-NN generates a predicted spectral library from a FASTA database.[3] This in-silico library can be reused for multiple experiments on the same organism. Alternatively, an empirical spectral library from a previous DIA experiment can be used.[3]

- Chromatogram Extraction: For each precursor ion and its fragment ions, DIA-NN extracts chromatograms from the raw DIA data.

- Peak Scoring and Selection: Putative elution peaks are scored based on various characteristics, including the co-elution of fragment ions and mass accuracy. An ensemble of deep neural networks is used to distinguish true signals from noise, and the best peak is selected for each precursor.[2]

- Interference Correction: A key feature of DIA-NN is its ability to detect and remove interferences from tandem mass spectra, which significantly improves quantification accuracy.[4]

- Quantification and Normalization: DIA-NN performs cross-run precursor ion quantification.[2] After quantification, cross-run normalization is applied to account for variations between samples.

- Protein Inference and Quantification: The software infers protein groups from the identified peptides and provides protein-level quantification.[2][3]

[Diagram: DIA-NN data processing workflow]

References

- 1. DIA-NN: neural networks and interference correction enable deep proteome coverage in high throughput - PubMed [pubmed.ncbi.nlm.nih.gov]

- 2. DIA-NN: Neural networks and interference correction enable deep proteome coverage in high throughput - PMC [pmc.ncbi.nlm.nih.gov]

- 3. GitHub - vdemichev/DiaNN: DIA-NN - a universal automated software suite for DIA proteomics data analysis. [github.com]

- 4. scispace.com [scispace.com]

The Core Principles of Interference Correction in DIA-NN: A Technical Guide


Data-Independent Acquisition (DIA) mass spectrometry has emerged as a powerful technique for reproducible and in-depth proteomic analysis. However, the co-fragmentation of multiple precursors in DIA scans presents a significant challenge in data analysis, leading to signal interference that can compromise quantification accuracy. DIA-NN, a state-of-the-art software suite, employs a sophisticated interference correction strategy rooted in deep learning and a novel quantification algorithm to address this challenge, enabling deep and accurate proteome coverage, particularly in high-throughput applications.[1][2][3] This technical guide provides an in-depth exploration of the core principles behind DIA-NN's interference correction, supported by experimental data and protocols.

The Peptide-Centric Approach: A Foundation for Interference Detection

DIA-NN's interference correction strategy begins with a peptide-centric approach.[1][4] The process starts by extracting chromatograms for each precursor ion and all of its fragment ions from the raw DIA data. This extraction is guided by a spectral library, which can be generated empirically or predicted in silico from a protein sequence database.[1][3] For each precursor, DIA-NN identifies putative elution peaks: localized regions of the chromatogram where the precursor and its fragments are detected.

Identifying the "Best" Fragment: A Proxy for the True Elution Profile

A core innovation in DIA-NN is its method for handling interference at the fragment ion level. For each identified elution peak of a precursor, DIA-NN assesses the elution profiles of all its fragment ions. The software then selects the fragment that is least affected by interference. This "best" fragment is identified as having the elution profile that correlates most strongly with the elution profiles of the other fragments.[1][3] The underlying assumption is that the true peptide signal will result in highly correlated fragment ion chromatograms, while interference will introduce deviations in these correlations. The elution profile of this best fragment is then considered to be the most representative proxy for the true, interference-free elution profile of the peptide.[3]

Interference Subtraction: Purifying the Signal

Once the best fragment's elution profile is established as the reference, DIA-NN proceeds to correct the signals of the other fragment ions. It compares the elution profile of each of the other fragments to this reference profile. By analyzing the differences, the software can identify and subtract the interfering signals from the chromatograms of the other fragments.[3][5] This novel quantification algorithm allows for a more accurate determination of the precursor's quantity, as it is based on purified fragment ion signals.[3] This entire process is independent of the reference fragment intensities in the spectral library, making it robust to variations in library quality.

Deep Learning Integration: Separating Signal from Noise

DIA-NN integrates deep neural networks (DNNs) to distinguish genuine peptide signals from noise and interference.[1][2] An ensemble of DNNs is trained to score the likelihood that a given elution peak corresponds to a target peptide versus a decoy. This scoring is based on a variety of features extracted from the elution peak, including the correlation of fragment ion traces. By leveraging deep learning, DIA-NN can more accurately identify true signals in the highly complex data generated by DIA-MS, especially in experiments with short chromatographic gradients where interference is more pronounced.[1]

A Spectrum-Centric Refinement

In a subsequent step, DIA-NN applies a spectrum-centric check to further refine peptide identification and reduce interference-related false positives.[1] It examines precursors that are matched to the same retention time and share interfering fragments. If the interference is deemed significant, DIA-NN reports only the precursor with the highest discriminant score as identified.[1] This strategy reduces ambiguity and improves the reliability of the final reported identifications and quantifications.
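The rule just described can be sketched in Python as a greedy pass over matches sorted by score. The text does not specify what counts as "the same retention time" or as "significant" interference. The retention-time tolerance (`rt_tol`) and the shared-fragment threshold (`min_shared`) below are therefore hypothetical parameters, as are the class and function names.

```python
from dataclasses import dataclass
from typing import FrozenSet, List

@dataclass(frozen=True)
class PeakMatch:
    precursor: str
    apex_rt: float               # apex retention time, minutes
    fragments: FrozenSet[float]  # matched fragment m/z values (assumed pre-rounded)
    score: float                 # discriminant score

def resolve_shared_signals(matches: List[PeakMatch], rt_tol: float = 0.05,
                           min_shared: int = 3) -> List[PeakMatch]:
    """Keep a match only if it does not clash with a higher-scoring match already kept."""
    kept: List[PeakMatch] = []
    # Visit matches from best to worst discriminant score
    for m in sorted(matches, key=lambda x: x.score, reverse=True):
        clashes = any(abs(m.apex_rt - k.apex_rt) <= rt_tol
                      and len(m.fragments & k.fragments) >= min_shared
                      for k in kept)
        if not clashes:
            kept.append(m)
    return kept
```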

Summary of Key Principles:

- Peptide-Centric Chromatogram Extraction: Focuses on individual precursors and their fragments.

- Best Fragment Selection: Identifies the least interfered fragment based on elution profile correlation.

- Reference-Based Interference Subtraction: Uses the best fragment's profile to correct other fragment signals.

- Deep Learning for Signal Scoring: Employs neural networks to differentiate true signals from noise.

- Spectrum-Centric Refinement: Resolves ambiguity for co-eluting, interfering precursors.

This multi-pronged approach to interference correction is a key contributor to DIA-NN's high performance in terms of identification depth, quantitative accuracy, and reproducibility, making it a valuable tool for researchers in basic science and drug development.

Quantitative Data Summary

The following tables summarize the performance of DIA-NN in various benchmark studies, highlighting its ability to handle interference and provide accurate quantification.

Table 1: Precursor Identification Performance with Short Gradients

| Software | 0.5 h Gradient (Precursors at 1% FDR) | 1 h Gradient (Precursors at 1% FDR) | 2 h Gradient (Precursors at 1% FDR) |
|---|---|---|---|
| DIA-NN | > 35,000 | > 40,000 | > 50,000 |
| OpenSWATH | Not Analyzed | < 30,000 | ~40,000 |
| Skyline | < 20,000 | ~30,000 | ~45,000 |
| Spectronaut | ~25,000 | ~35,000 | ~50,000 |

Data adapted from the original DIA-NN publication, showcasing performance on HeLa cell lysate digests. The results demonstrate DIA-NN's superior performance, especially with very short chromatographic gradients where interference is a major challenge.[1]

Table 2: LFQbench Performance Evaluation

| Software | Median CV (%) | Median Ratio Error | Number of Quantified Proteins |
|---|---|---|---|
| DIA-NN | 5.2 | 0.08 | 3,012 |
| Spectronaut | 6.1 | 0.10 | 3,058 |
| OpenSWATH | 7.5 | 0.12 | 2,890 |
| Skyline | 8.9 | 0.15 | 2,754 |

This table summarizes the performance of different software on the LFQbench dataset, which is designed to assess label-free quantification performance. DIA-NN demonstrates high precision (low CV) and accuracy (low ratio error).
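The two metrics in the table above can be computed as follows. The CV uses the usual definition: sample standard deviation over mean, per protein, across replicate runs. The table does not define "ratio error", so the sketch assumes it is the absolute deviation of the log2 measured ratio from the log2 expected ratio. Function names are hypothetical.

```python
import math
from statistics import mean, median, stdev
from typing import List, Sequence

def median_cv_percent(replicates: List[Sequence[float]]) -> float:
    """replicates[p]: intensities of protein p across replicate runs (at least 2 values each)."""
    return median([100.0 * stdev(values) / mean(values) for values in replicates])

def median_ratio_error(measured_ratios: Sequence[float], expected_ratio: float) -> float:
    """ASSUMED definition: median |log2(measured) - log2(expected)| over proteins."""
    target = math.log2(expected_ratio)
    return median([abs(math.log2(r) - target) for r in measured_ratios])
```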

Experimental Protocols

Protocol 1: HeLa Protein Digest for Benchmarking

Objective: To prepare a complex human cell line digest for evaluating DIA software performance across different gradient lengths.

Methodology:

- Cell Culture and Lysis: HeLa cells were cultured under standard conditions. Cells were harvested, washed with PBS, and lysed in a buffer containing 8 M urea.

- Protein Reduction and Alkylation: Proteins were reduced with dithiothreitol (DTT) and alkylated with iodoacetamide.

- Protein Digestion: The protein solution was diluted to reduce the urea concentration, and proteins were digested overnight with trypsin.

- Peptide Cleanup: The resulting peptide mixture was desalted using a solid-phase extraction (SPE) cartridge.

- LC-MS/MS Analysis: The cleaned peptide digest was analyzed on a Q Exactive HF mass spectrometer coupled to a nano-LC system. Analyses were performed using various chromatographic gradient lengths (e.g., 0.5 h, 1 h, 2 h, 4 h) to assess software performance under different conditions of co-elution and interference.

Protocol 2: Two-Species (Human/Maize) Library for FDR Estimation

Objective: To create a spectral library containing peptides from two different species to enable accurate False Discovery Rate (FDR) estimation.

Methodology:

- Sample Preparation: Tryptic digests of human (HeLa) and maize proteins were prepared separately as described in Protocol 1.

- DDA Analysis for Library Generation: Each digest was analyzed separately using Data-Dependent Acquisition (DDA) to generate comprehensive spectral libraries.

- Library Merging: The spectral libraries from the human and maize DDA runs were combined into a single library.

- DIA Analysis: The human (HeLa) digest was analyzed using DIA; the analyzed sample itself contains no maize proteins.

- Data Processing: The DIA data were processed using the combined two-species library. Because the sample contains no maize proteins, every maize peptide identification is a known false positive. The maize peptides therefore serve as an external negative control (an entrapment set, distinct from the decoys used for target-decoy scoring), allowing a more accurate estimate of the FDR.[4]
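This sketch assumes the DIA runs contain only the human digest, so every maize identification is a false positive. Under that assumption, the maize hits give a direct estimate of how many human identifications are false. The sketch further assumes that false matches fall on human and maize library entries in proportion to the number of entries from each species. The function name and this size-ratio correction are illustrative assumptions, not taken from the source.

```python
def entrapment_fdr(n_human: int, n_maize: int, size_ratio: float) -> float:
    """Estimated FDR among the human identifications.

    n_human, n_maize: identifications passing the cutoff for each species.
    size_ratio: number of human library entries divided by number of maize entries.
    ASSUMPTION: false matches hit each species in proportion to its library size,
    so the number of false human IDs is roughly n_maize * size_ratio.
    """
    if n_human == 0:
        return 0.0  # no human identifications, nothing to estimate
    return n_maize * size_ratio / n_human
```

For example, 50 maize hits alongside 10,000 human hits, with a human library twice the size of the maize library, suggest about 100 false human identifications. That corresponds to an FDR of roughly 1%.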

Visualizations

[Diagram: logical workflow of the interference correction process in DIA-NN]

Signaling Pathway Example (Illustrative)

While DIA-NN is a data analysis tool and does not directly model signaling pathways, the accurate protein quantification it provides is crucial for studying them.

[Diagram: illustrative example of a signaling pathway that could be studied using data processed with DIA-NN]

References

- 1. DIA-NN: Neural networks and interference correction enable deep proteome coverage in high throughput - PMC [pmc.ncbi.nlm.nih.gov]

- 2. Benchmarking of analysis strategies for data-independent acquisition proteomics using a large-scale dataset comprising inter-patient heterogeneity - PMC [pmc.ncbi.nlm.nih.gov]

- 3. biorxiv.org [biorxiv.org]

- 4. biorxiv.org [biorxiv.org]

- 5. DIA-NN: neural networks and interference correction enable deep proteome coverage in high throughput | Springer Nature Experiments [experiments.springernature.com]

Navigating the Proteomic Landscape: A Technical Guide to DIA-NN's Library-Free and Spectral Library-Based Analyses


Data-Independent Acquisition (DIA) mass spectrometry has emerged as a powerful technique for large-scale protein quantification, offering high reproducibility and deep proteome coverage.[1][2] At the heart of DIA data analysis are sophisticated software solutions, among which DIA-NN has gained prominence for its speed and accuracy, largely due to its innovative use of deep neural networks.[3][4][5][6][7] This guide provides an in-depth technical comparison of two primary analytical strategies within the DIA-NN framework: the traditional spectral library-based approach and the increasingly popular library-free methodology. Understanding the core principles, experimental protocols, and performance metrics of each is crucial for designing robust proteomic experiments and generating high-quality, actionable data in research and drug development.

Core Concepts: Two Paths to Peptide Identification

The fundamental difference between the two approaches lies in the source of the reference information used to identify and quantify peptides from complex DIA spectra.

1. Spectral Library Approach: This classic method relies on an empirically generated spectral library.[1][8] This library is a comprehensive catalog of peptide fragmentation patterns and retention times, typically created by performing Data-Dependent Acquisition (DDA) on fractionated samples representative of the study's biological context.[1][8][9] In essence, the library serves as a pre-existing "map" to navigate the complex DIA data, allowing for highly specific and sensitive targeted data extraction.[8]

2. Library-Free (Direct DIA) Approach: This newer strategy bypasses the need for a dedicated, empirically derived spectral library. Instead, it leverages in silico predicted spectral libraries.[1][10] DIA-NN employs deep learning models to predict the fragmentation patterns and retention times of peptides directly from a protein sequence database (FASTA file).[10][11] This approach offers greater flexibility and is particularly advantageous when sample material is limited or when analyzing novel proteomes.[1]

Comparative Analysis: Performance and Considerations

The choice between a spectral library and a library-free approach depends on the specific experimental goals, sample availability, and the desired balance between depth of coverage, quantitative accuracy, and workflow efficiency.

| Feature | Spectral Library Approach | Library-Free Approach |
|---|---|---|
| Reference Data Source | Empirically derived from DDA experiments of representative samples.[1][8] | In silico predicted from a protein sequence database (FASTA).[10][11] |
| Workflow | Requires upfront investment in generating and validating a spectral library.[1][12] | Streamlined workflow without the need for prior DDA experiments.[1][13] |
| Identification Confidence | High, based on matching to experimentally observed spectra.[1] | High, but dependent on the accuracy of prediction algorithms.[1] |
| Quantitative Accuracy | Generally high precision and accuracy.[5] | Comparable accuracy to library-based methods, with DIA-NN showing strong performance.[14][15] |
| Flexibility | Less flexible; changes in sample type or methodology may require a new library.[1] | Highly flexible; adaptable to different sample types and experimental conditions.[1] |
| Throughput | Lower, due to the time required for library generation.[12] | Higher, with faster analysis turnaround.[4][6][7] |
| Best Use Cases | Targeted studies, well-characterized proteomes, validation of findings.[1] | Large-scale discovery studies, analysis of novel organisms, limited sample availability.[1][16] |

Quantitative Performance Metrics: A Benchmarking Overview

Several studies have benchmarked the performance of DIA-NN's library-free and spectral library-based approaches. The following tables summarize key findings from representative studies.

Table 1: Protein and Peptide Identifications

| Study / Condition | Analysis Approach | Number of Protein Groups Identified | Number of Peptides Identified |
|---|---|---|---|
| Demichev et al. (2020) - HeLa Cells | DIA-NN (Library-Free) | ~5,500 | ~40,000 |
| Demichev et al. (2020) - HeLa Cells | Spectronaut (Library-Based) | ~5,200 | ~35,000 |
| Gessulat et al. (2019) - HEK293T Cells | DIA-NN (Library-Free) | >7,000 | >60,000 |
| Gessulat et al. (2019) - HEK293T Cells | Spectronaut (Library-Based) | ~6,500 | ~55,000 |
| Muntel et al. (2019) - Human Plasma | DIA-NN (Library-Free) | ~400 | ~3,000 |
| Muntel et al. (2019) - Human Plasma | OpenSWATH (Library-Based) | ~350 | ~2,500 |

Table 2: Quantification Precision (Coefficient of Variation - CV)

| Study / Condition | Analysis Approach | Median Peptide CV (%) | Median Protein CV (%) |
|---|---|---|---|
| Demichev et al. (2020) - Two-species mix | DIA-NN (Library-Free) | 5.6 | 3.0 |
| Demichev et al. (2020) - Two-species mix | Spectronaut (Library-Based) | 7.0 | 3.8 |
| Searle et al. (2020) - Yeast/Human/E.coli mix | DIA-NN (Library-Free) | <10 | <5 |
| Searle et al. (2020) - Yeast/Human/E.coli mix | EncyclopeDIA (Library-Based) | ~12 | ~7 |

Experimental Protocols: A Step-by-Step Guide

Detailed and standardized experimental protocols are critical for reproducible and high-quality DIA-MS results.

Protocol 1: Spectral Library Generation (DDA-based)

- Sample Preparation:
  - Pool a representative aliquot from each sample or condition in the study.
  - Perform protein extraction, reduction, alkylation, and tryptic digestion.
  - For deep libraries, perform high-pH reversed-phase fractionation of the pooled sample to reduce complexity.[15]

- DDA Mass Spectrometry:
  - Analyze each fraction using a high-resolution mass spectrometer operating in DDA mode.
  - Employ a long chromatographic gradient (e.g., 90-120 minutes) to maximize peptide separation and identification.
  - Set DDA parameters to acquire MS/MS spectra for a large number of precursor ions per cycle (e.g., top 20-30).

- Database Searching and Library Generation:
  - Search the raw DDA files against a protein sequence database (e.g., UniProt) using a search engine such as Mascot, SEQUEST, or MaxQuant (Andromeda).
  - Apply a strict false discovery rate (FDR) of 1% at both the peptide and protein levels.
  - Use the search results to generate a spectral library in a format compatible with DIA-NN (e.g., .tsv, .speclib).[17]

Protocol 2: DIA-NN Library-Free Analysis

- Sample Preparation:
  - Perform protein extraction, reduction, alkylation, and tryptic digestion for each individual sample.

- DIA Mass Spectrometry:
  - Acquire DIA data for each sample using a high-resolution mass spectrometer.
  - Optimize the DIA windowing scheme (e.g., variable windows) based on the mass range of interest and instrument capabilities.
  - Use a consistent chromatographic gradient for all samples to ensure reproducibility.

- DIA-NN Data Analysis:
  - Provide the raw DIA files and a protein sequence database (FASTA file) as input to DIA-NN.[11]
  - Enable the library-free (predicted library) search option in the software.[17]
  - DIA-NN will perform in silico digestion of the FASTA file and predict fragmentation patterns and retention times to generate a theoretical spectral library.[10]
  - The software then uses this predicted library to search the DIA data, perform peak extraction, and quantify peptides and proteins.[11]
  - Enable Match-Between-Runs (MBR) for large-scale experiments to enhance data completeness.[16]
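The in silico digestion step in the library-free protocol above can be illustrated with a short Python sketch. It applies a simple trypsin rule (cleave after K or R, with the proline rule optional), allows missed cleavages, and filters peptides by length. The default values are assumptions chosen for illustration rather than DIA-NN's settings. A real library generator would also handle modifications and charge states, and would predict spectra and retention times.

```python
import re
from typing import List

def tryptic_digest(protein: str, missed_cleavages: int = 1, min_len: int = 7,
                   max_len: int = 30, proline_rule: bool = False) -> List[str]:
    """Return sorted unique peptides from a simple in silico trypsin digest."""
    # Zero-width split after every K/R; optionally skip sites followed by P
    site = r"(?<=[KR])(?!P)" if proline_rule else r"(?<=[KR])"
    pieces = [p for p in re.split(site, protein) if p]
    peptides = set()
    for start in range(len(pieces)):
        # Join up to `missed_cleavages` additional consecutive pieces
        for end in range(start, min(start + missed_cleavages + 1, len(pieces))):
            peptide = "".join(pieces[start:end + 1])
            if min_len <= len(peptide) <= max_len:
                peptides.add(peptide)
    return sorted(peptides)
```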

Visualizing the Workflows

[Diagrams: key steps of the spectral library-based and library-free DIA workflows]

References

- 1. Library-Free vs Library-Based DIA Proteomics: Strategies, Software, and Best Use Cases - Creative Proteomics [creative-proteomics.com]

- 2. DIA Proteomics Comparison 2025: DIA-NN, Spectronaut, FragPipe - Creative Proteomics [creative-proteomics.com]

- 3. researchgate.net [researchgate.net]

- 4. DIA-NN: neural networks and interference correction enable deep proteome coverage in high throughput - PubMed [pubmed.ncbi.nlm.nih.gov]

- 5. biorxiv.org [biorxiv.org]

- 6. DIA-NN: Neural networks and interference correction enable deep proteome coverage in high throughput | Semantic Scholar [semanticscholar.org]

- 7. DIA-NN: neural networks and interference correction enable deep proteome coverage in high throughput | Springer Nature Experiments [experiments.springernature.com]

- 8. Building spectral libraries from narrow window data independent acquisition mass spectrometry data - PMC [pmc.ncbi.nlm.nih.gov]

- 9. Acquiring and Analyzing Data Independent Acquisition Proteomics Experiments without Spectrum Libraries - PMC [pmc.ncbi.nlm.nih.gov]

- 10. What Is a DIA-NN Library-Free Search in a Data-Independent Acquisition (DIA) Proteomics Workflow on ZenoTOF 7600 System? [sciex.com]

- 11. DIA-NN: Neural networks and interference correction enable deep proteome coverage in high throughput - PMC [pmc.ncbi.nlm.nih.gov]

- 12. Reddit [reddit.com]

- 13. sciex.com [sciex.com]

- 14. biorxiv.org [biorxiv.org]

- 15. Benchmarking commonly used software suites and analysis workflows for DIA proteomics and phosphoproteomics - PMC [pmc.ncbi.nlm.nih.gov]

- 16. Galaxy [usegalaxy.eu]

- 17. GitHub - vdemichev/DiaNN: DIA-NN - a universal automated software suite for DIA proteomics data analysis. [github.com]

The DIA-NN Workflow: A Technical Guide for Researchers

An in-depth examination of the key features, advantages, and practical application of the DIA-NN software suite for data-independent acquisition proteomics.

Data-Independent Acquisition (DIA) mass spectrometry has emerged as a powerful technique for reproducible and comprehensive proteome quantification. At the heart of processing this complex data is the software, and DIA-NN has rapidly gained prominence for its innovative use of deep neural networks and its robust performance. This technical guide provides researchers, scientists, and drug development professionals with a detailed overview of the DIA-NN workflow, its core features, and its advantages over other platforms.

Key Features and Advantages of DIA-NN

DIA-NN distinguishes itself through a combination of cutting-edge algorithms and user-friendly design. Its core strengths lie in its ability to deliver high-throughput analysis with exceptional identification rates and quantitative accuracy.

Deep Learning Integration: At its core, DIA-NN leverages deep neural networks (DNNs) to differentiate true signals from noise, significantly improving the accuracy of peptide identification and quantification. An ensemble of feed-forward, fully-connected neural networks is trained to distinguish between target and decoy precursors, enabling more confident proteome coverage, especially at strict false discovery rate (FDR) thresholds.[1][2]

Library-Free Workflow: A major advantage of DIA-NN is its powerful library-free capability.[3] It can generate a predicted spectral library directly from a FASTA protein sequence database in silico.[1] This eliminates the need for separate, time-consuming data-dependent acquisition (DDA) experiments to build an experimental spectral library, streamlining the entire proteomics workflow.[3] This is particularly beneficial when sample material is limited or when studying organisms with uncharacterized proteomes.

High Performance and Speed: Benchmarking studies consistently demonstrate DIA-NN's superior performance in terms of the number of identified precursors and proteins compared to other popular software such as Spectronaut, OpenSWATH, and Skyline, especially with short chromatographic gradients.[1] Furthermore, DIA-NN is designed for speed and scalability, capable of processing up to 1000 mass spectrometry runs per hour on a conventional processing PC.[4]

Interference Correction: DIA spectra are inherently complex due to the co-fragmentation of multiple precursors. DIA-NN employs a sophisticated strategy to detect and subtract signal interferences, leading to more accurate quantification.[5]

Automated and User-Friendly: DIA-NN is designed for ease of use with a high degree of automation.[4] It features an intuitive graphical user interface (GUI) and a powerful command-line interface for integration into automated pipelines.[4] Many parameters, such as mass accuracies and retention time windows, can be optimized automatically, reducing the need for extensive manual tuning.[4]

The DIA-NN Processing Workflow

The DIA-NN workflow is a multi-step process that transforms raw mass spectrometry data into a quantified list of proteins.

[Diagram: logical progression of the DIA-NN processing workflow]

Quantitative Performance Benchmarks

The performance of DIA-NN has been extensively benchmarked against other leading DIA software packages. The following tables summarize the key quantitative outcomes from these studies, highlighting DIA-NN's strengths in protein and peptide identification.

Table 1: Protein and Peptide Identifications in HeLa Cell Lysates with Varying Gradient Lengths

| Gradient Length | Software | Protein Groups Identified | Precursors Identified |
|---|---|---|---|
| 30 min | DIA-NN | ~5,500 | ~45,000 |
| 30 min | Spectronaut | ~5,200 | ~40,000 |
| 30 min | Skyline | ~4,000 | ~30,000 |
| 60 min | DIA-NN | ~6,800 | ~65,000 |
| 60 min | Spectronaut | ~6,500 | ~60,000 |
| 60 min | Skyline | ~5,500 | ~45,000 |
| 120 min | DIA-NN | ~7,800 | ~85,000 |
| 120 min | Spectronaut | ~7,500 | ~80,000 |
| 120 min | Skyline | ~6,800 | ~65,000 |

Data compiled from multiple benchmarking studies. Actual numbers may vary based on experimental conditions and specific software versions.

Table 2: Performance Comparison in a Three-Proteome Mixture (Human, Yeast, E. coli)

| Software | Correctly Identified Human Proteins | Correctly Identified Yeast Proteins | Correctly Identified E. coli Proteins | Median CV (Human Proteins) |
|---|---|---|---|---|
| DIA-NN | High | High | High | <10% |
| Spectronaut | High | High | High | <15% |
| OpenSWATH | Moderate | Moderate | Moderate | <20% |

This table provides a qualitative summary based on reported trends in benchmarking studies.

Experimental Protocols

While specific experimental parameters will vary depending on the instrument and the biological question, this section provides a generalized protocol for a typical DIA experiment analyzed with DIA-NN.

Sample Preparation (HeLa Cell Lysate)

- Cell Lysis: HeLa cells are lysed in a buffer containing a denaturing agent (e.g., urea or SDS) and protease inhibitors.

- Protein Reduction and Alkylation: Proteins are reduced with dithiothreitol (DTT) to break disulfide bonds and alkylated with iodoacetamide (IAA) to block the resulting free cysteine thiols.

- Protein Digestion: The protein mixture is digested overnight with trypsin.

- Peptide Desalting: The resulting peptide mixture is desalted using a C18 solid-phase extraction cartridge.

- Peptide Quantification: The concentration of the final peptide solution is determined using a quantitative colorimetric assay (e.g., BCA assay).

Liquid Chromatography-Mass Spectrometry (LC-MS/MS)

The following provides example settings for a Thermo Scientific Orbitrap Exploris 480 mass spectrometer.

- LC System: UltiMate 3000 RSLCnano System

- Column: 75 µm x 50 cm PepMap C18 column

- Gradient: 60-minute linear gradient from 2% to 32% acetonitrile in 0.1% formic acid.

- MS Instrument: Orbitrap Exploris 480 Mass Spectrometer

- MS1 Resolution: 120,000

- MS1 AGC Target: 3e6

- MS1 Maximum Injection Time (IT): 60 ms

- DIA Scan Range: 400-1000 m/z

- DIA Isolation Windows: 40 variable-width windows

- MS2 Resolution: 30,000

- MS2 AGC Target: 1e6

- MS2 Maximum IT: 54 ms

- Normalized Collision Energy (NCE): 27%
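As a rough plausibility check on these settings, the Python sketch below estimates the DIA cycle time and the number of data points across a chromatographic peak. The transient lengths are approximate values assumed for a high-field Orbitrap at m/z 200; check your instrument's documentation. Overheads are ignored, the 20 s peak width is likewise an assumption, and all names are hypothetical.

```python
# ASSUMPTION: approximate transient lengths (ms) for a high-field Orbitrap at m/z 200.
# Verify against your instrument's specifications before relying on them.
TRANSIENT_MS = {7_500: 16, 15_000: 32, 30_000: 64, 60_000: 128, 120_000: 256}

def scan_time_ms(resolution: int, max_it_ms: float) -> float:
    """Rough time per scan: with parallel ion accumulation, the longer of the
    Orbitrap transient and the maximum injection time (overheads ignored)."""
    return max(TRANSIENT_MS[resolution], max_it_ms)

def cycle_time_s(ms1_res: int, ms1_it_ms: float, ms2_res: int, ms2_it_ms: float,
                 n_windows: int) -> float:
    """One MS1 scan followed by one MS2 scan per DIA isolation window."""
    total_ms = scan_time_ms(ms1_res, ms1_it_ms) + n_windows * scan_time_ms(ms2_res, ms2_it_ms)
    return total_ms / 1000.0

def points_per_peak(peak_width_s: float, cycle_s: float) -> float:
    """Number of DIA cycles (data points per fragment trace) across one elution peak."""
    return peak_width_s / cycle_s

cycle = cycle_time_s(120_000, 60, 30_000, 54, 40)  # settings from the list above
points = points_per_peak(20.0, cycle)              # 20 s peak width is an assumed example
```

With the listed settings, the MS1 scan is limited by its roughly 256 ms transient. Each of the 40 MS2 scans is limited by a roughly 64 ms transient, because the 54 ms maximum injection time is shorter. Together this gives a cycle of about 2.8 s and about 7 points across an assumed 20 s wide peak.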

DIA-NN Data Analysis (Library-Free)

The following outlines the key steps and parameters for a library-free analysis using the DIA-NN command-line interface.

- Generate a Predicted Spectral Library: In this step, DIA-NN takes the human FASTA file as input and generates a predicted spectral library.

- Run the Main Analysis: In this step, DIA-NN uses the predicted library to analyze the raw files. Key parameters include:
  - --lib: Specifies the spectral library.
  - --f: Specifies an input raw file; the flag is repeated once per file.
  - --out: Specifies the output report file.
  - --threads: Sets the number of CPU threads to use.
  - --verbose: Controls the level of output to the console.
  - --qvalue: Sets the precursor q-value cutoff for filtering.
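The two steps can be scripted from Python by assembling the DIA-NN argument lists and passing them to `subprocess.run`. The main-analysis flags are the ones listed above. The library-generation flags (`--fasta`, `--fasta-search`, `--predictor`, `--gen-spec-lib`, `--out-lib`) come from our reading of the DIA-NN README and should be checked against the documentation for your installed version. The executable name `diann`, the file names, and the helper functions are hypothetical. A real run usually also needs digest and modification settings, which are omitted here.

```python
import subprocess
from typing import List, Sequence

DIANN = "diann"  # hypothetical executable name/path; adjust for your installation

def predicted_library_cmd(fasta: str, out_lib: str, threads: int = 8) -> List[str]:
    """Step 1: in silico digest of the FASTA plus deep learning-based prediction
    of a spectral library (flag names assumed from the DIA-NN README)."""
    return [DIANN, "--fasta", fasta, "--fasta-search", "--predictor",
            "--gen-spec-lib", "--out-lib", out_lib, "--threads", str(threads)]

def main_analysis_cmd(lib: str, raw_files: Sequence[str], out: str, threads: int = 8,
                      verbose: int = 1, qvalue: float = 0.01) -> List[str]:
    """Step 2: analyze the raw files against the predicted library."""
    cmd = [DIANN, "--lib", lib]
    for raw in raw_files:
        cmd += ["--f", raw]  # one --f per input run
    cmd += ["--out", out, "--threads", str(threads),
            "--verbose", str(verbose), "--qvalue", str(qvalue)]
    return cmd

def run(cmd: List[str]) -> None:
    """Execute a command; raises CalledProcessError if DIA-NN exits with an error."""
    subprocess.run(cmd, check=True)
```

Before running step 2, check which library file step 1 actually wrote. Depending on the DIA-NN version, its name may differ from the `--out-lib` value.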

Signaling Pathways and Logical Relationships

The core logic of DIA-NN's statistical validation process, which is crucial for its high accuracy, can be represented as a decision-making pathway.

[Diagram: decision pathway of DIA-NN's statistical validation]

References

- 1. DIA-NN: Neural networks and interference correction enable deep proteome coverage in high throughput - PMC [pmc.ncbi.nlm.nih.gov]

- 2. Untargeted analysis of DIA datasets using FragPipe | FragPipe-Analyst [fragpipe-analyst-doc.nesvilab.org]

- 3. What Is a DIA-NN Library-Free Search in a Data-Independent Acquisition (DIA) Proteomics Workflow on ZenoTOF 7600 System? [sciex.com]

- 4. GitHub - vdemichev/DiaNN: DIA-NN - a universal automated software suite for DIA proteomics data analysis. [github.com]

- 5. researchgate.net [researchgate.net]

DIA-NN: A Technical Guide to High-Throughput Proteomics


Data-Independent Acquisition (DIA) has emerged as a powerful technique in mass spectrometry-based proteomics, offering high reproducibility and deep proteome coverage. At the heart of DIA data analysis is the software, and DIA-NN has rapidly become a leading tool due to its speed, accuracy, and innovative use of deep learning. This technical guide provides an in-depth overview of DIA-NN's core functionalities, experimental protocols, and performance metrics, tailored for professionals in research and drug development.

Core Principles of DIA-NN

DIA-NN (Data-Independent Acquisition by Neural Networks) is a software suite designed for the processing of DIA proteomics data.[1] It distinguishes itself through a combination of novel algorithms and the integration of deep neural networks to enhance peptide identification and quantification.[2][3] Key features include:

- Deep Learning for Scoring: DIA-NN employs deep neural networks (DNNs) to discriminate between true and false signals. The DNNs are trained on a set of features calculated for each potential peptide identification, allowing for a more accurate and sensitive classification than traditional scoring algorithms.[2]
- Interference Correction: A significant challenge in DIA is the co-fragmentation of multiple precursors within the same isolation window, leading to signal interference. DIA-NN implements a sophisticated algorithm to detect and remove these interferences, thereby improving quantification accuracy.[1][2]
- Library-Based and Library-Free Workflows: DIA-NN supports both traditional library-based analysis, where experimental spectra are matched against a pre-existing spectral library, and a library-free approach.[2] In the library-free mode, an in-silico spectral library is generated from a protein sequence database (FASTA file), making it highly versatile and suitable for organisms without established spectral libraries.[4]
- High-Throughput and Speed: The software is optimized for speed and can process large datasets efficiently, a critical requirement for high-throughput proteomics in clinical research and drug development.[5]
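Library-free analysis begins by digesting the FASTA database in silico. The sketch below covers only that first step, assuming Trypsin/P rules (cleave after K or R, including before P), at most one missed cleavage, and peptide lengths of 7-30 residues; these defaults and the function names `parse_fasta`, `digest` and `build_peptide_index` are illustrative assumptions, not DIA-NN's code. DIA-NN additionally predicts fragment spectra, retention times and ion mobilities with deep learning, which this sketch does not attempt.

```python
from collections import defaultdict


def parse_fasta(text):
    """Return {accession: sequence} from FASTA-formatted text."""
    proteins = {}
    accession, chunks = None, []
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        if line.startswith(">"):
            if accession is not None:
                proteins[accession] = "".join(chunks)
            # Use the first whitespace-delimited token of the header as the ID.
            accession, chunks = line[1:].split()[0], []
        else:
            chunks.append(line.upper())
    if accession is not None:
        proteins[accession] = "".join(chunks)
    return proteins


def digest(sequence, missed_cleavages=1, min_len=7, max_len=30):
    """Trypsin/P digestion: cut after every K or R, even when followed by P."""
    sites = [i + 1 for i, aa in enumerate(sequence[:-1]) if aa in "KR"]
    bounds = [0] + sites + [len(sequence)]
    peptides = set()
    for start in range(len(bounds) - 1):
        # Spanning each additional boundary adds one missed cleavage.
        last = min(start + 1 + missed_cleavages, len(bounds) - 1)
        for end in range(start + 1, last + 1):
            peptide = sequence[bounds[start]:bounds[end]]
            if min_len <= len(peptide) <= max_len:
                peptides.add(peptide)
    return peptides


def build_peptide_index(proteins, **digest_options):
    """Map each peptide to the set of proteins that can produce it."""
    index = defaultdict(set)
    for accession, sequence in proteins.items():
        for peptide in digest(sequence, **digest_options):
            index[peptide].add(accession)
    return dict(index)


if __name__ == "__main__":
    demo = ">P1\nPEPTIDEKSAMPLERPEPTIDEK\n>P2\nSAMPLERGGGGGK\n"
    for peptide, accessions in sorted(build_peptide_index(parse_fasta(demo)).items()):
        print(peptide, sorted(accessions))
```

Keeping the peptide-to-protein mapping, rather than a flat peptide list, makes shared peptides (here `SAMPLER`, produced by both demo proteins) visible, which is what later protein grouping has to resolve.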

The DIA-NN Workflow

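The DIA-NN data analysis workflow is a multi-step process designed to be largely automated and user-friendly.[5] In outline, DIA-NN takes raw DIA files together with either a spectral library or a FASTA file (from which it generates an in-silico library), extracts the signals of each candidate precursor, scores candidate elution peaks with neural networks, corrects for interference, controls the false discovery rate using q-values, and quantifies precursors and protein groups.[1][2]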

Quantitative Performance Benchmarks

DIA-NN has been extensively benchmarked against other popular DIA software. The following tables summarize key performance indicators from various studies, highlighting its strengths in protein and peptide identification, as well as quantitative precision and accuracy.

Table 1: Protein and Peptide Identifications

This table compares the number of protein groups and peptides identified by DIA-NN with those from other software across different studies and datasets.

| Dataset/Study | Software | Library Type | Protein Groups Identified | Peptides Identified | Reference |
|---|---|---|---|---|---|
| HeLa Cells (1-hour gradient) | DIA-NN | Spectral | ~6,000 | ~55,000 | [6] |
| | Spectronaut | Spectral | ~5,500 | ~45,000 | [6] |
| | OpenSWATH | Spectral | ~4,000 | ~30,000 | [6] |
| Human/Yeast/E. coli Mixture (LFQbench) | DIA-NN | Spectral | - | - | [6] |
| | Spectronaut | Spectral | - | - | [6] |
| TIMS-PASEF (Library-Free) | DIA-NN | Library-Free | 7,606 | - | [7] |
| | Spectronaut (directDIA) | Library-Free | 4,875 | - | [7] |
| Mixed-species dataset | DIA-NN | Library-Free | Significantly outperformed | Significantly outperformed | [4] |
| | Spectronaut | Library-Free | - | - | [4] |
| | OpenSWATH | Library-Based | - | - | [4] |
| | EncyclopeDIA | Library-Based | - | - | [4] |
| | Skyline | Library-Based | - | - | [4] |

Note: The numbers are approximate and can vary with experimental conditions and software versions. A dash (-) means that no value is reported here.
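Identification counts like those in Table 1 are typically obtained by filtering the main report at 1% FDR and counting unique entries per run. The sketch below assumes a tab-separated report with the columns `Run`, `Protein.Group`, `Stripped.Sequence`, `Precursor.Id`, `Q.Value` and `PG.Q.Value`, as in the DIA-NN 1.8 `report.tsv` to our knowledge; other versions may use a different file format, so check the column names against your own output. The 1% thresholds are a common convention, not values taken from the cited studies.

```python
import csv
import io
from collections import defaultdict


def count_identifications(report_text, precursor_q=0.01, protein_q=0.01):
    """Per-run counts of unique precursors, peptides and protein groups.

    A row is counted only if both its precursor-level (Q.Value) and
    protein-group-level (PG.Q.Value) q-values pass the cut-offs.
    """
    hits = defaultdict(lambda: {"precursors": set(), "peptides": set(), "protein_groups": set()})
    for row in csv.DictReader(io.StringIO(report_text), delimiter="\t"):
        if float(row["Q.Value"]) <= precursor_q and float(row["PG.Q.Value"]) <= protein_q:
            run = hits[row["Run"]]
            run["precursors"].add(row["Precursor.Id"])
            run["peptides"].add(row["Stripped.Sequence"])
            run["protein_groups"].add(row["Protein.Group"])
    # Runs with no passing rows do not appear in the result.
    return {name: {kind: len(ids) for kind, ids in sets.items()} for name, sets in hits.items()}
```

For a file on disk, read it first, for example `with open("report.tsv", encoding="utf-8") as fh: counts = count_identifications(fh.read())`.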

Table 2: Quantitative Precision and Accuracy

This table focuses on the quantitative performance of DIA-NN, specifically the coefficient of variation (CV) and accuracy in quantifying known protein ratios.

| Metric | Dataset/Study | DIA-NN | Spectronaut | Other Software | Reference |
|---|---|---|---|---|---|
| Median CV (%) | TIMS-PASEF | 6.0 - 7.2 | 7.9 - 8.8 | - | [7][8] |
| | LFQbench (Human proteins) | 3.0 | 3.8 | - | [6] |
| Quantification Accuracy | UPS1 in E. coli background | High | High | EncyclopeDIA: High | [9] |
| Data Completeness (%) | Mouse Membrane Proteome | 16.6 - 18.7 | 4.5 - 7.2 (directDIA) | MaxDIA: 17.0 - 21.4 | [10] |
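The precision metric in Table 2 is the coefficient of variation, CV (%) = 100 × standard deviation / mean, computed per protein across replicate runs and summarized by its median; data completeness is the percentage of protein-by-run values that are not missing. The sketch below assumes a small in-memory matrix (protein → list of per-run intensities, with `None` for a missing value) and uses the sample standard deviation on linear-scale intensities. The cited studies may differ in such details, for example by requiring a minimum number of valid values per protein.

```python
from statistics import mean, median, stdev


def protein_cv(values):
    """Percent CV of the non-missing replicate intensities, or None if fewer than 2."""
    observed = [v for v in values if v is not None]
    if len(observed) < 2:
        return None
    return 100.0 * stdev(observed) / mean(observed)


def median_cv(matrix):
    """Median of per-protein CVs, skipping proteins with too few values."""
    cvs = [cv for cv in (protein_cv(vals) for vals in matrix.values()) if cv is not None]
    return median(cvs)


def data_completeness(matrix):
    """Percentage of protein-by-run cells that contain a quantity."""
    cells = [v for vals in matrix.values() for v in vals]
    return 100.0 * sum(v is not None for v in cells) / len(cells)


if __name__ == "__main__":
    demo = {"P1": [90.0, 100.0, 110.0], "P2": [200.0, 200.0, 200.0], "P3": [50.0, None, None]}
    print(median_cv(demo), data_completeness(demo))
```

In the demo matrix, P1 has a CV of 10% and P2 of 0%, so the median CV is 5%; P3 is excluded from the CV but still counts toward completeness (7 of 9 cells, about 77.8%).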

Detailed Experimental Protocol for DIA-NN Analysis

This section provides a generalized, step-by-step protocol for a typical DIA proteomics experiment analyzed with DIA-NN.

Sample Preparation

- Protein Extraction: Lyse cells or tissues in a suitable buffer containing protease and phosphatase inhibitors.
- Protein Quantification: Determine the protein concentration using a standard method (e.g., BCA assay).
- Reduction and Alkylation: Reduce disulfide bonds with dithiothreitol (DTT) and alkylate with iodoacetamide (IAA).
- Protein Digestion: Digest proteins into peptides using a protease such as trypsin. A common enzyme-to-protein ratio is 1:50 to 1:100 (w/w), with overnight incubation at 37°C.[11]
- Peptide Cleanup: Desalt the peptide mixture using C18 solid-phase extraction (SPE) to remove contaminants.
- Peptide Quantification: Quantify the peptide concentration, for example using a NanoDrop or a quantitative colorimetric peptide assay.
- Sample Normalization: Adjust the peptide concentration to a standard value (e.g., 0.5-1 µg/µL) in a buffer suitable for LC-MS injection (e.g., 0.1% formic acid in water).[11]
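The enzyme-to-protein ratio and the normalization step reduce to simple arithmetic. The two helpers below are hypothetical illustrations: one computes the trypsin mass for a 1:ratio (w/w) digestion, the other the volumes needed to dilute a peptide stock to a target concentration using C1·V1 = C2·V2. The example numbers are arbitrary.

```python
def trypsin_amount_ug(protein_ug, ratio=50):
    """Trypsin mass (µg) for a 1:ratio (w/w) enzyme-to-protein ratio."""
    return protein_ug / ratio


def dilution_volumes_ul(stock_ug_per_ul, target_ug_per_ul, final_volume_ul):
    """Return (stock volume, diluent volume) in µL from C1*V1 = C2*V2."""
    if target_ug_per_ul > stock_ug_per_ul:
        raise ValueError("target exceeds stock concentration; concentrate the sample instead")
    stock_ul = target_ug_per_ul * final_volume_ul / stock_ug_per_ul
    return stock_ul, final_volume_ul - stock_ul


if __name__ == "__main__":
    print(trypsin_amount_ug(100))                 # 1:50 digestion of 100 µg protein
    print(dilution_volumes_ul(2.0, 0.5, 20.0))    # 2 µg/µL stock -> 0.5 µg/µL in 20 µL
```

For 100 µg of protein at 1:50, this gives 2.0 µg of trypsin; diluting a 2 µg/µL stock to 0.5 µg/µL in 20 µL takes 5 µL of stock plus 15 µL of diluent (e.g., 0.1% formic acid in water).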

LC-MS/MS Analysis (DIA Method)

- Liquid Chromatography (LC):
  - Use a nano- or micro-flow HPLC system.
  - Load a defined amount of peptides (e.g., 1 µg) onto a C18 trap column.
  - Separate peptides on a C18 analytical column using a gradient of increasing acetonitrile concentration. Gradient length can vary from short (e.g., 30 minutes for high-throughput) to long (e.g., 120 minutes for deep coverage).[1][12]
- Mass Spectrometry (MS):
  - Operate the mass spectrometer in DIA mode.
  - Define the precursor mass range (e.g., 400-1200 m/z).
  - Set up a series of precursor isolation windows covering the entire mass range. The number and width of these windows can be optimized for the specific instrument and experiment.
  - Acquire a full MS1 scan followed by a series of MS2 scans, one for each isolation window.
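A fixed-width window scheme for the example 400-1200 m/z range can be generated as below. The function `isolation_windows` and its width and overlap parameters are hypothetical illustrations; real methods are often variable-width and instrument-specific. Narrower windows reduce the number of co-isolated precursors but require more MS2 scans per cycle, which lengthens the cycle time.

```python
def isolation_windows(mz_low, mz_high, width, overlap=0.0):
    """Fixed-width precursor isolation windows covering [mz_low, mz_high].

    Consecutive windows share `overlap` m/z units; the last window is
    truncated at mz_high so that no window extends past the range.
    """
    if width <= overlap:
        raise ValueError("width must exceed overlap")
    step = width - overlap
    windows = []
    start = mz_low
    while start < mz_high:
        end = min(start + width, mz_high)
        windows.append((start, end))
        if end >= mz_high:
            break
        start += step
    return windows


if __name__ == "__main__":
    scheme = isolation_windows(400, 1200, 25)
    print(len(scheme), scheme[0], scheme[-1])
```

With 25 m/z windows and no overlap, the range is covered by 32 windows from (400, 425) to (1175, 1200); adding a 1 m/z overlap raises the count to 34.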

DIA-NN Data Analysis

- Software Setup: Install DIA-NN; releases are distributed through the project's GitHub repository (vdemichev/DiaNN).[6]
- Analysis Configuration (GUI or Command Line):
  - Input Files: Select the raw DIA data files.[5]
  - FASTA File/Spectral Library: Provide a FASTA file for library-free analysis or a pre-existing spectral library.[5]
  - Main Settings:
    - Mass Accuracy: Set the MS1 and MS/MS mass accuracy in ppm according to the instrument (e.g., 15 ppm for timsTOF, 10 ppm for Orbitrap Astral).[6]
    - Scan Window: Let DIA-NN determine it automatically or set it manually.[6]
    - Library Generation: For library-free analysis, select "Prediction from FASTA".
    - Match Between Runs: Enable "Match Between Runs" (MBR) for quantitative analyses to increase data completeness.[6]
  - Advanced Settings:
    - Protease: Specify the enzyme used for digestion (e.g., Trypsin/P).
    - Modifications: Define any expected variable modifications (e.g., oxidation of methionine, phosphorylation of serine/threonine/tyrosine).
- Run Analysis: Start the DIA-NN analysis.
- Output Interpretation:
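The settings listed above can also be passed on the command line. The function below is a hypothetical helper that assembles an argument list suitable for `subprocess.run`. The flag names (`--f`, `--lib`, `--fasta`, `--fasta-search`, `--predictor`, `--out`, `--qvalue`, `--threads`, `--matrices`, `--cut`, `--unimod4`, `--mass-acc`, `--mass-acc-ms1`, `--window`, `--var-mods`, `--var-mod`, `--reanalyse`) are, to the best of our knowledge, those DIA-NN 1.8 prints in its log, but options change between releases, so verify them against the documentation or the command line the GUI prints for your version. The executable name `diann` is also an assumption (on Windows it is typically `DiaNN.exe`).

```python
import shlex


def build_diann_command(raw_files, fasta=None, library=None, out="report.tsv",
                        ms2_ppm=None, ms1_ppm=None, scan_window=None, mbr=True,
                        variable_mods=("UniMod:35,15.994915,M",), max_var_mods=1,
                        threads=8, executable="diann"):
    """Assemble a DIA-NN argument list (hypothetical helper; verify flags for your version)."""
    if fasta is None and library is None:
        raise ValueError("provide a FASTA file, a spectral library, or both")
    cmd = [executable]
    for path in raw_files:
        cmd += ["--f", path]
    if library is not None:
        cmd += ["--lib", library]
    if fasta is not None:
        cmd += ["--fasta", fasta]
        if library is None:
            # Library-free mode: digest the FASTA and predict the library in silico.
            cmd += ["--fasta-search", "--predictor"]
    cmd += ["--out", out, "--qvalue", "0.01", "--threads", str(threads), "--matrices"]
    # Trypsin/P (cleave after K or R, including before P) and fixed
    # carbamidomethylation of cysteine, matching IAA alkylation.
    cmd += ["--cut", "K*,R*", "--unimod4"]
    # Mass accuracies and scan window are added only when set explicitly.
    if ms2_ppm is not None:
        cmd += ["--mass-acc", str(ms2_ppm)]
    if ms1_ppm is not None:
        cmd += ["--mass-acc-ms1", str(ms1_ppm)]
    if scan_window is not None:
        cmd += ["--window", str(scan_window)]
    if variable_mods:
        cmd += ["--var-mods", str(max_var_mods)]
        for mod in variable_mods:
            cmd += ["--var-mod", mod]
    if mbr:
        cmd.append("--reanalyse")  # match-between-runs
    return cmd


if __name__ == "__main__":
    cmd = build_diann_command(["run1.d", "run2.d"], fasta="human.fasta", ms2_ppm=15, ms1_ppm=15)
    print(shlex.join(cmd))  # subprocess.run(cmd, check=True) would launch the search
```

For a phosphoproteomics search, a phosphorylation entry such as `"UniMod:21,79.966331,STY"` could be added to `variable_mods` (again, check the modification syntax for your DIA-NN version).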

Visualization of a Signaling Pathway Analyzed by DIA-NN

DIA-NN is particularly well suited to studying dynamic cellular processes such as signaling pathways because of its quantitative accuracy and reproducibility. For example, the TNF-α signaling pathway can be investigated with DIA-NN-based phosphoproteomics, quantifying changes in protein phosphorylation upon TNF-α stimulation.[16]

In a DIA-NN phosphoproteomics experiment, researchers can quantify the phosphorylation levels of key kinases like RIPK1, TAK1, and IKK, as well as their downstream targets, providing insights into the pathway's activation state under different conditions.[16]
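Comparing phosphorylation between conditions usually comes down to log2 fold changes of site or phosphopeptide intensities. This is a minimal sketch assuming replicate intensities per condition have already been extracted from the DIA-NN output and normalized, with missing values simply dropped; the site labels in the usage lines are placeholders, not measured data. A real analysis would add a statistical test and multiple-testing correction.

```python
from math import log2
from statistics import mean


def log2_fold_change(treated, control, min_values=2):
    """log2(mean treated / mean control), ignoring missing (None) intensities."""
    t = [v for v in treated if v is not None]
    c = [v for v in control if v is not None]
    if len(t) < min_values or len(c) < min_values:
        return None  # too few observations to compare
    return log2(mean(t) / mean(c))


def site_changes(sites, min_values=2):
    """sites: {site_id: (treated_intensities, control_intensities)}."""
    return {site: log2_fold_change(t, c, min_values) for site, (t, c) in sites.items()}


if __name__ == "__main__":
    demo = {"siteA": ([400.0, 400.0], [100.0, 100.0]), "siteB": ([None, 50.0], [100.0, 100.0])}
    print(site_changes(demo))
```

A four-fold increase in mean intensity gives a log2 fold change of 2.0; a site with fewer than two valid values in either condition is reported as `None` rather than as an unreliable ratio.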

Conclusion

DIA-NN has established itself as a cornerstone in the field of high-throughput proteomics. Its innovative use of deep learning, robust interference correction, and flexible library-free workflow empowers researchers to achieve deep and reproducible proteome coverage. For professionals in drug development and clinical research, the speed, accuracy, and scalability of DIA-NN make it an invaluable tool for biomarker discovery, pathway analysis, and understanding disease mechanisms. As the field of proteomics continues to evolve, DIA-NN is poised to remain at the forefront of DIA data analysis.

References

- 1. Discovery proteomic (DIA) LC-MS/MS data acquisition and analysis [protocols.io]

- 2. DIA-NN: Neural networks and interference correction enable deep proteome coverage in high throughput - PMC [pmc.ncbi.nlm.nih.gov]

- 3. creative-diagnostics.com [creative-diagnostics.com]

- 4. A Comparative Analysis of Data Analysis Tools for Data-Independent Acquisition Mass Spectrometry - PMC [pmc.ncbi.nlm.nih.gov]

- 5. DIA Proteomics Comparison 2025: DIA-NN, Spectronaut, FragPipe - Creative Proteomics [creative-proteomics.com]

- 6. GitHub - vdemichev/DiaNN: DIA-NN - a universal automated software suite for DIA proteomics data analysis. [github.com]

- 7. biorxiv.org [biorxiv.org]

- 8. researchgate.net [researchgate.net]

- 9. biorxiv.org [biorxiv.org]

- 10. Benchmarking commonly used software suites and analysis workflows for DIA proteomics and phosphoproteomics - PMC [pmc.ncbi.nlm.nih.gov]

- 11. Optimizing Sample Preparation for DIA Proteomics - Creative Proteomics [creative-proteomics.com]

- 12. researchgate.net [researchgate.net]

- 13. reddit.com [reddit.com]

- 14. reddit.com [reddit.com]

- 15. biorxiv.org [biorxiv.org]

- 16. researchgate.net [researchgate.net]

Comparison of DIA-NN with other DIA processing software

An In-depth Technical Guide to DIA-NN in Comparison with Other Data-Independent Acquisition (DIA) Processing Software

For Researchers, Scientists, and Drug Development Professionals

Abstract

Data-Independent Acquisition (DIA) mass spectrometry has become a cornerstone of modern proteomics, offering high reproducibility and deep proteome coverage. The choice of data processing software is critical to the success of any DIA study. This guide provides a detailed technical comparison of DIA-NN (Data-Independent Acquisition Neural Networks), a leading open-source tool, with other prominent software packages including Spectronaut, MaxQuant (MaxDIA), and Skyline. We delve into the core algorithms, experimental workflows, and performance benchmarks, presenting quantitative data in structured tables and visualizing key processes with Graphviz diagrams to aid researchers in selecting the optimal software for their specific needs.

Introduction to DIA Data Processing

In DIA, the mass spectrometer systematically fragments all precursor ions within predefined mass-to-charge (m/z) windows, generating complex MS2 spectra that are a composite of all co-eluting peptides. The computational challenge lies in deconvoluting these complex spectra to accurately identify and quantify individual peptide precursors. Various software solutions have been developed to tackle this challenge, each with its unique algorithms and workflows.

DIA-NN has emerged as a powerful, open-source software suite that leverages deep neural networks and novel quantification strategies to process DIA data.[1][2] It is particularly recognized for its high speed and performance in high-throughput applications, especially in its "library-free" mode, which generates a spectral library in silico from protein sequences.[1][2]

Spectronaut, a commercial software from Biognosys, is a mature and widely used platform for DIA analysis. It offers both library-based and "directDIA" (library-free) modes and is known for its user-friendly interface and robust performance.[3]

MaxQuant, a popular free software for proteomics data analysis, has incorporated a DIA processing module known as MaxDIA.[2][4] It leverages the well-established MaxLFQ algorithm for quantification.[2]

Skyline is a free, open-source Windows application that is widely used for targeted proteomics, but also supports DIA data analysis.[5] It excels at data visualization and manual inspection of peptide identifications.[6]

Core Algorithmic Approaches

The performance of DIA software is largely determined by its underlying algorithms for peptide identification, scoring, and quantification.

DIA-NN: Deep Learning for Enhanced Identification

DIA-NN follows a peptide-centric approach.[1] A key innovation in DIA-NN is its use of deep neural networks (DNNs) to distinguish true signals from noise.[1][7] For each potential peptide-spectrum match (PSM), DIA-NN extracts a set of features that are then used as input for an ensemble of DNNs.[7] The output of these networks provides a discriminant score that reflects the likelihood of a correct identification, which is then used to calculate q-values for false discovery rate (FDR) control.[1][7]
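The step from a discriminant score to q-values can be illustrated with the standard target-decoy procedure. This is a generic sketch, not DIA-NN's internal code: it assumes each candidate has a score (higher is better) and a flag marking decoys, estimates the FDR at each score threshold as decoys/targets, and defines a candidate's q-value as the smallest FDR at which it would still be accepted.

```python
def q_values(scores, is_decoy):
    """Target-decoy q-values for candidates scored so that higher is better."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    fdr = [0.0] * len(scores)
    targets = decoys = 0
    for i in order:
        if is_decoy[i]:
            decoys += 1
        else:
            targets += 1
        # Estimated FDR when accepting everything scored at least this high.
        fdr[i] = decoys / max(targets, 1)
    # q-value: minimum FDR over all thresholds that still accept candidate i.
    q = [0.0] * len(scores)
    running_min = float("inf")
    for i in reversed(order):
        running_min = min(running_min, fdr[i])
        q[i] = running_min
    return q


if __name__ == "__main__":
    scores = [0.9, 0.8, 0.7, 0.6, 0.5]
    decoy = [False, False, True, False, True]
    qs = q_values(scores, decoy)
    print([i for i, q in enumerate(qs) if q <= 0.01 and not decoy[i]])
```

Taking the running minimum from the bottom of the ranking makes q-values monotonic in the score, so filtering at q ≤ 0.01 always keeps a contiguous top section of the ranking (here the first two targets).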

Another critical feature of DIA-NN is its sophisticated interference correction algorithm.[1][7] For each putative elution peak, it identifies the fragment ion least affected by interference and uses its elution profile as a reference to subtract the interference from other fragment ion signals, leading to more accurate quantification.[1][7]
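A heavily simplified illustration of this idea follows; it is not DIA-NN's implementation. It assumes fragment chromatograms sampled on one shared retention-time grid, takes the fragment whose elution profile correlates best with the others as a proxy for the least-interfered one, and caps every fragment at the reference profile scaled by the median intensity ratio over the reference's apex region, on the assumption that interference only adds signal. All function names are hypothetical.

```python
from statistics import median


def pearson(x, y):
    """Pearson correlation; 0.0 if either profile is constant."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0
    return sxy / (sxx * syy) ** 0.5


def pick_reference(fragments):
    """Index of the fragment whose profile correlates best, in total, with the others."""
    def total_corr(i):
        return sum(pearson(fragments[i], f) for j, f in enumerate(fragments) if j != i)
    return max(range(len(fragments)), key=total_corr)


def remove_interference(fragments):
    """Cap each fragment at a scaled copy of the reference elution profile."""
    ref_index = pick_reference(fragments)
    ref = fragments[ref_index]
    half_max = max(ref) / 2
    apex_region = [k for k, r in enumerate(ref) if r > 0 and r >= half_max]
    if not apex_region:
        return ref_index, [list(f) for f in fragments]
    corrected = []
    for f in fragments:
        # Median ratio near the apex is robust to interference at a few points.
        scale = median(f[k] / ref[k] for k in apex_region)
        corrected.append([min(obs, scale * r) for obs, r in zip(f, ref)])
    return ref_index, corrected


if __name__ == "__main__":
    f0 = [0, 10, 50, 100, 50, 10, 0]
    f1 = [0, 5, 25, 50, 25, 5, 0]
    f2 = [0, 20, 100, 200, 100, 80, 60]  # interference on the trailing edge
    print(remove_interference([f0, f1, f2]))
```

In the demo, the interfering signal on the trailing edge of the third fragment is cut back to the scaled reference profile, while the two clean fragments are left unchanged.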

Spectronaut: Polished Workflows and directDIA