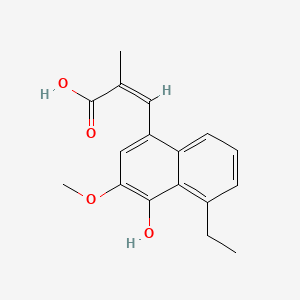

DA-E 5090

Description

Properties

| CAS No. | 131420-84-3 |
|---|---|
| Molecular Formula | C17H18O4 |
| Molecular Weight | 286.32 g/mol |
| IUPAC Name | (Z)-3-(5-ethyl-4-hydroxy-3-methoxynaphthalen-1-yl)-2-methylprop-2-enoic acid |
| InChI | InChI=1S/C17H18O4/c1-4-11-6-5-7-13-12(8-10(2)17(19)20)9-14(21-3)16(18)15(11)13/h5-9,18H,4H2,1-3H3,(H,19,20)/b10-8- |
| InChI Key | WGNQQQSMLCOUAX-NTMALXAHSA-N |
| Isomeric SMILES | CCC1=C2C(=CC=C1)C(=CC(=C2O)OC)/C=C(/C)\C(=O)O |
| Canonical SMILES | CCC1=C2C(=CC=C1)C(=CC(=C2O)OC)C=C(C)C(=O)O |
| Appearance | Solid powder |
| Purity | >98% (or refer to the Certificate of Analysis) |
| Shelf Life | >2 years if stored properly |
| Solubility | Soluble in DMSO |
| Storage | Dry, dark and at 0-4 °C for short term (days to weeks) or -20 °C for long term (months to years). |
| Synonyms | 3-(4-hydroxy-5-ethyl-3-methoxy-1-naphthalenyl)-2-methyl-2-propenoic acid; 3-(5-ethyl-4-hydroxy-3-methoxy-1-naphthalenyl)-2-methyl-2-propenoic acid; DA-E 5090; DA-E5090 |
| Origin of Product | United States |
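As a quick consistency check on the properties above, the molecular weight can be recomputed from the molecular formula. The sketch below is illustrative only: the helper name `molecular_weight` is hypothetical, the atomic weights are rounded standard values (an assumption, not taken from this page), and the parser handles only simple formulas without brackets.

```python
import re

# Rounded standard atomic weights in g/mol (assumed values, not from this page).
ATOMIC_WEIGHTS = {"C": 12.011, "H": 1.008, "O": 15.999}


def molecular_weight(formula: str) -> float:
    """Sum atomic weights for a simple formula such as 'C17H18O4' (no brackets)."""
    total = 0.0
    # Each match is (element symbol, optional count); a missing count means 1.
    for symbol, count in re.findall(r"([A-Z][a-z]?)(\d*)", formula):
        total += ATOMIC_WEIGHTS[symbol] * (int(count) if count else 1)
    return total


if __name__ == "__main__":
    print(f"C17H18O4: {molecular_weight('C17H18O4'):.2f} g/mol")
```

With these rounded weights the result agrees with the listed 286.32 g/mol to within about 0.01 g/mol; the small difference comes from the rounding of the atomic weights.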

Foundational & Exploratory

NVIDIA Blackwell Architecture: A Deep Dive for Scientific and Drug Discovery Applications

An In-depth Technical Guide on the Core Architecture, with a Focus on the GeForce RTX 5090

The NVIDIA Blackwell architecture represents a monumental leap in computational power, poised to significantly accelerate scientific research, particularly in the fields of drug development, molecular dynamics, and large-scale data analysis. This guide provides a comprehensive technical overview of the Blackwell architecture, with a specific focus on the anticipated flagship consumer GPU, the GeForce RTX 5090. The information herein is tailored for researchers, scientists, and professionals in drug development who seek to leverage cutting-edge computational hardware for their work.

Core Architectural Innovations

The Blackwell architecture, named after the distinguished mathematician and statistician David H. Blackwell, succeeds the Hopper and Ada Lovelace microarchitectures.[1] It is engineered to address the escalating demands of generative AI and high-performance computing workloads.[2] The consumer-facing GPUs, including the RTX 5090, are built on a custom TSMC 4N process.[1][3]

A key innovation in the data center-focused Blackwell GPUs is the multi-die design, where two large dies are connected via a high-speed 10 terabytes per second (TB/s) chip-to-chip interconnect, allowing them to function as a single, unified GPU.[4][5] This design packs an impressive 208 billion transistors.[4][5] While it is not confirmed that the consumer-grade RTX 5090 will feature a dual-die configuration, the underlying architectural enhancements will be present.

The Blackwell architecture introduces several key technological advancements:

- Fifth-Generation Tensor Cores: These new Tensor Cores are designed to accelerate AI and floating-point calculations.[1] A significant advancement for researchers is the introduction of new, lower-precision data formats, including 4-bit floating point (FP4).[4] Compared with 8-bit formats, FP4 can double the performance and the size of models that can be supported in memory while maintaining high accuracy, which is crucial for large-scale AI models used in drug discovery and genomic analysis.[4]
- Fourth-Generation RT Cores: These cores are specialized for hardware-accelerated real-time ray tracing, a technology that can be applied to molecular visualization and simulation for more accurate and intuitive representations of complex biological structures.[6][7]
- Second-Generation Transformer Engine: This engine utilizes custom Blackwell Tensor Core technology to accelerate both the training and inference of large language models (LLMs) and Mixture-of-Experts (MoE) models.[4][8] For researchers, this translates to faster processing of scientific literature, analysis of biological sequences, and development of novel therapeutic candidates.
- Unified INT32 and FP32 Execution: A notable architectural shift is the unification of the integer (INT32) and single-precision floating-point (FP32) execution units.[9][10] This allows for more flexible and efficient execution of diverse computational workloads.
- Enhanced Memory Subsystem: The RTX 50 series, including the RTX 5090, is expected to be the first consumer GPU to feature GDDR7 memory, offering a significant increase in memory bandwidth.[11] This is critical for handling the massive datasets common in scientific research.
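To make the memory argument for lower-precision formats concrete, the following sketch estimates how much memory model weights occupy at different precisions. It is a back-of-the-envelope illustration, not a sizing tool: the function names are hypothetical, only weights are counted (activations, optimizer state, KV caches, and per-block scaling factors are ignored), and 1 GB is taken as 10^9 bytes.

```python
# Bits used to store one parameter in each format.
BITS_PER_PARAM = {"FP32": 32, "FP16": 16, "FP8": 8, "FP4": 4}


def weight_memory_gb(num_params: float, fmt: str) -> float:
    """Memory needed for the weights alone, in GB (10^9 bytes)."""
    return num_params * BITS_PER_PARAM[fmt] / 8 / 1e9


def max_params_billion(memory_gb: float, fmt: str) -> float:
    """Largest weight count (in billions) that fits in the given memory."""
    return memory_gb * 8 / BITS_PER_PARAM[fmt]


if __name__ == "__main__":
    for fmt in BITS_PER_PARAM:
        print(f"{fmt}: 7B-parameter model needs {weight_memory_gb(7e9, fmt):.1f} GB, "
              f"32 GB holds up to {max_params_billion(32, fmt):.0f}B parameters")
```

Under these simplifying assumptions, moving from FP8 to FP4 halves the weight footprint, which is the sense in which the supported model size doubles.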

Quantitative Specifications

The following tables summarize the key quantitative specifications of the NVIDIA Blackwell architecture, comparing the data center-grade B200 GPU with the rumored specifications of the consumer-grade GeForce RTX 5090.

Data Center GPU: NVIDIA B200 Specifications

| Feature | Specification | Source(s) |
|---|---|---|
| Transistors | 208 billion (total for dual-die) | [4][5][12] |
| Manufacturing Process | Custom TSMC 4NP | [4][12] |
| AI Performance | Up to 20 petaFLOPS | [8][12] |
| Memory | 192 GB HBM3e | [5] |
| NVLink | 5th Generation, 1.8 TB/s total bandwidth | [8][12] |

Rumored GeForce RTX 5090 Specifications

| Feature | Rumored Specification | Source(s) |
|---|---|---|
| CUDA Cores | 21,760 | [13][14] |
| Memory | 32 GB GDDR7 | [6][13][15] |
| Memory Interface | 512-bit | [11][13] |
| Total Graphics Power (TGP) | ~575-600 W | [13][15] |
| PCIe Interface | PCIe 5.0 | [3][11] |

Methodologies and Performance Claims

While detailed, peer-reviewed experimental protocols are not publicly available for this commercial architecture, NVIDIA has made several performance claims based on their internal testing. For instance, the GB200 NVL72, a system integrating 72 Blackwell GPUs, is claimed to offer up to a 30x performance increase in LLM inference workloads compared to the previous generation H100 GPUs, with a 25x improvement in energy efficiency.[5][12] These gains are attributed to the new FP4 precision support and the second-generation Transformer Engine.[12]

For researchers in drug development, these performance improvements could translate to:

- Accelerated Virtual Screening: Faster and more accurate screening of vast chemical libraries to identify potential drug candidates.
- Enhanced Molecular Dynamics Simulations: Longer and more complex simulations of protein folding and drug-target interactions.
- Rapid Analysis of Large Datasets: Quicker processing of genomic, proteomic, and other large biological datasets.

Visualizing the Blackwell Architecture

The following diagrams, generated using the DOT language, illustrate key aspects of the NVIDIA Blackwell architecture.

Caption: High-level architectural hierarchy of a consumer-grade Blackwell GPU.

Caption: Simplified data flow within the Blackwell GPU architecture.

Caption: Workflow of the second-generation Transformer Engine.

References

- 1. Blackwell (microarchitecture) - Wikipedia [en.wikipedia.org]

- 2. cdn.prod.website-files.com [cdn.prod.website-files.com]

- 3. GeForce RTX 50 series - Wikipedia [en.wikipedia.org]

- 4. The Engine Behind AI Factories | NVIDIA Blackwell Architecture [nvidia.com]

- 5. Weights & Biases [wandb.ai]

- 6. GeForce RTX 5090 Graphics Cards | NVIDIA [nvidia.com]

- 7. scribd.com [scribd.com]

- 8. aspsys.com [aspsys.com]

- 9. Reddit - The heart of the internet [reddit.com]

- 10. emergentmind.com [emergentmind.com]

- 11. tomshardware.com [tomshardware.com]

- 12. nexgencloud.com [nexgencloud.com]

- 13. tomshardware.com [tomshardware.com]

- 14. scan.co.uk [scan.co.uk]

- 15. pcgamer.com [pcgamer.com]

GeForce RTX 5090: A Technical Guide for Scientific and Pharmaceutical Research

Executive Summary

The NVIDIA GeForce RTX 5090, powered by the next-generation Blackwell architecture, represents a significant leap in computational performance.[1] Engineered with substantial increases in CUDA core counts, fifth-generation Tensor Cores, and fourth-generation RT Cores, the RTX 5090 is positioned to dramatically accelerate a wide range of scientific workloads.[2] Its adoption of GDDR7 memory provides unprecedented bandwidth, crucial for handling the large datasets common in genomics, molecular dynamics, and cryogenic electron microscopy (cryo-EM) data processing.[3] For researchers in drug development, the enhanced AI capabilities, driven by the new Tensor Cores, promise to shorten timelines for virtual screening, protein folding, and generative chemistry.[1][4]

Core Architectural Enhancements

The RTX 5090 is built upon NVIDIA's Blackwell architecture, which succeeds the Ada Lovelace generation.[4] This new architecture is designed to unify high-performance graphics and AI computation, introducing significant optimizations in data paths and task allocation.[1][5] Key advancements include next-generation CUDA cores for raw parallel processing power, more efficient RT cores for simulations involving ray-based physics, and deeply enhanced Tensor Cores that introduce support for new precision formats like FP8 and FP4, which can double AI throughput for certain models with minimal accuracy impact.[4]

The Blackwell platform also introduces a hardware decompression engine that can decompress data at up to 800 GB/s, accelerating data analytics in frameworks like RAPIDS. This is a critical feature for processing the massive datasets generated in scientific experiments.[6]

Quantitative Specifications

The specifications of the GeForce RTX 5090 present a substantial upgrade over its predecessors. The following tables summarize the key quantitative data for easy comparison.

Table 1: GPU Core and Processing Units

| Feature | NVIDIA GeForce RTX 5090 | NVIDIA GeForce RTX 4090 |
|---|---|---|
| GPU Architecture | Blackwell (GB202)[7] | Ada Lovelace |
| CUDA Cores | 21,760[8] | 16,384 |
| Tensor Cores | 680 (5th Generation)[7][8] | 512 (4th Generation) |
| RT Cores | 170 (4th Generation)[7][9] | 128 (3rd Generation) |
| Boost Clock | ~2.41 GHz[4] | ~2.52 GHz |
| Transistors | 92 billion[3][4] | 76.3 billion |

Table 2: Memory and Bandwidth

| Feature | NVIDIA GeForce RTX 5090 | NVIDIA GeForce RTX 4090 |
|---|---|---|
| Memory Size | 32 GB GDDR7[2][8] | 24 GB GDDR6X |
| Memory Interface | 512-bit[8] | 384-bit |
| Memory Bandwidth | 1,792 GB/s[2][8] | 1,008 GB/s |
| L2 Cache | 98 MB[8] | 72 MB |
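The bandwidth figures in Table 2 follow from the interface width and the per-pin data rate: bandwidth (GB/s) = bus width (bits) × data rate (Gbit/s per pin) / 8. The sketch below reproduces both values. The per-pin rates used here (28 Gbit/s for the RTX 5090's GDDR7 and 21 Gbit/s for the RTX 4090's GDDR6X) do not appear in the tables above and are stated as assumptions; the function name is hypothetical.

```python
def memory_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s from bus width and per-pin data rate."""
    # Each pin moves data_rate_gbps gigabits per second; divide by 8 for bytes.
    return bus_width_bits * data_rate_gbps / 8


if __name__ == "__main__":
    # Per-pin data rates are assumptions, not values from the tables above.
    print("RTX 5090:", memory_bandwidth_gbs(512, 28.0), "GB/s")
    print("RTX 4090:", memory_bandwidth_gbs(384, 21.0), "GB/s")
```

Both results match the tabulated 1,792 GB/s and 1,008 GB/s, which shows that the gain comes from the wider 512-bit interface and the faster memory together.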

Table 3: Physical and Thermal Specifications

| Feature | NVIDIA GeForce RTX 5090 |
|---|---|
| Total Graphics Power (TGP) | 575 W[7][8] |
| Power Connectors | 1x 16-pin (12V-2x6) or 4x 8-pin adapter[8][10] |
| Form Factor | 2-Slot Founders Edition[1][8] |
| Display Outputs | 3x DisplayPort 2.1b, 1x HDMI 2.1b[7] |
| PCIe Interface | Gen 5.0[8] |

Experimental Protocols for Performance Benchmarking

To quantitatively assess the performance of the GeForce RTX 5090 in a scientific context, a standardized benchmarking protocol is essential. The following methodology outlines a procedure for evaluating performance in molecular dynamics simulations using GROMACS, a widely used application in drug discovery and materials science.

Objective: To measure and compare the simulation throughput (nanoseconds per day) of the GeForce RTX 5090 against the GeForce RTX 4090 using a standardized set of molecular dynamics benchmarks.

4.1 System Configuration

- CPU: AMD Ryzen 9 9950X
- Motherboard: X870E Chipset
- RAM: 64 GB DDR5-6000
- Storage: 4 TB NVMe PCIe 5.0 SSD
- Operating System: Ubuntu 24.04 LTS
- NVIDIA Driver: Proprietary Driver Version 570.86.16 or newer[11]
- CUDA Toolkit: Version 12.8 or newer[11]

4.2 Benchmarking Software

- Application: GROMACS 2024
- Benchmarks:
  - ApoA1: Apolipoprotein A1 (92k atoms), a standard mid-sized molecular dynamics benchmark system.
  - STMV: Satellite Tobacco Mosaic Virus (1.06M atoms), representing a large, complex biomolecular system.

4.3 Experimental Procedure

- System Preparation: Perform a clean installation of the operating system, NVIDIA drivers, and CUDA toolkit. Compile GROMACS from source with GPU acceleration enabled.
- Environment Consistency: Ensure the system is idle with no background processes. Maintain a consistent ambient temperature (22°C ± 1°C) to ensure thermal stability.
- Simulation Setup:
  - Use the standard input files for the ApoA1 and STMV benchmarks.
  - Configure the simulation to run exclusively on the GPU under test.
  - Set simulation parameters for NPT (isothermal-isobaric) ensemble with a 2 fs timestep.
- Execution and Data Collection:
  - Execute each benchmark simulation for a minimum of 10,000 steps.
  - Run each test five consecutive times to ensure statistical validity.
  - Extract the performance metric (ns/day) from the GROMACS log file at the end of each run.
- Data Analysis:
  - Discard the first run of each set as a warm-up.
  - Calculate the mean and standard deviation of the performance metric from the remaining four runs.
  - Compare the mean performance between the RTX 5090 and RTX 4090 to determine the performance uplift.
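The data collection and analysis steps above can be sketched as a small script. This is a minimal illustration under stated assumptions: it relies on the `Performance:` line that GROMACS writes near the end of its log (ns/day is the first number on that line), and the function names are hypothetical rather than part of GROMACS or the protocol itself.

```python
import re
import statistics

# GROMACS ends its log with a line such as "Performance:   102.345   0.235",
# where the first number is ns/day and the second is hour/ns.
PERF_LINE = re.compile(r"^Performance:\s+([0-9.]+)", re.MULTILINE)


def parse_ns_per_day(log_text: str) -> float:
    """Extract the ns/day figure from the text of a GROMACS log."""
    match = PERF_LINE.search(log_text)
    if match is None:
        raise ValueError("no 'Performance:' line found in log")
    return float(match.group(1))


def summarize_runs(ns_per_day: list[float]) -> tuple[float, float]:
    """Discard the first (warm-up) run, then return (mean, sample std dev)."""
    if len(ns_per_day) < 3:
        raise ValueError("need the warm-up run plus at least two measured runs")
    measured = ns_per_day[1:]
    return statistics.mean(measured), statistics.stdev(measured)


def uplift_percent(new_mean: float, old_mean: float) -> float:
    """Relative performance uplift of the new GPU over the old one, in percent."""
    return (new_mean / old_mean - 1.0) * 100.0
```

In use, each of the five log files per GPU would be read and passed to `parse_ns_per_day`, the resulting list handed to `summarize_runs`, and the two means compared with `uplift_percent`. The sample standard deviation is used because only four measured runs remain after the warm-up is discarded.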

Visualized Workflows for Scientific Computing

Diagrams are crucial for representing complex computational workflows. The following sections provide Graphviz-generated diagrams for a performance benchmarking process and a GPU-accelerated drug discovery pipeline.

5.1 Performance Benchmarking Workflow

This diagram illustrates the logical flow of the experimental protocol described in Section 4.0.

5.2 GPU-Accelerated Virtual Screening Workflow

References

- 1. easychair.org [easychair.org]

- 2. Optimizing Drug Discovery with CUDA Graphs, Coroutines, and GPU Workflows | NVIDIA Technical Blog [developer.nvidia.com]

- 3. Hands-on: Analysis of molecular dynamics simulations / Analysis of molecular dynamics simulations / Computational chemistry [training.galaxyproject.org]

- 4. preprints.org [preprints.org]

- 5. High-Performance Computing (HPC) performance and benchmarking overview | Microsoft Learn [learn.microsoft.com]

- 6. tandfonline.com [tandfonline.com]

- 7. NAMD GPU Benchmarks and Hardware Recommendations | Exxact Blog [exxactcorp.com]

- 8. Drug Discovery With Accelerated Computing Platform | NVIDIA [nvidia.com]

- 9. Hands-on: High Throughput Molecular Dynamics and Analysis / High Throughput Molecular Dynamics and Analysis / Computational chemistry [training.galaxyproject.org]

- 10. redoakconsulting.co.uk [redoakconsulting.co.uk]

- 11. researchgate.net [researchgate.net]

Core Specifications and Architectural Advancements

An In-depth Technical Guide to the NVIDIA RTX 5090 for Research Applications

For professionals engaged in the computationally intensive fields of scientific research and drug development, the advent of new hardware architectures can signify a paradigm shift in the pace and scope of discovery. The NVIDIA RTX 5090, powered by the next-generation Blackwell architecture, represents such a leap forward.[1][2] This technical guide provides an in-depth analysis of the RTX 5090's specifications and its potential applications in demanding research environments.

The RTX 5090 is built upon the NVIDIA Blackwell architecture, which introduces significant enhancements over its predecessors.[3] In its full data center implementation, manufactured using a custom TSMC 4NP process, Blackwell packs 208 billion transistors across two dies;[4] the consumer GB202 die used in the RTX 5090 is smaller but still delivers a substantial increase in processing units over the RTX 4090. For researchers, this translates to faster simulation times, the ability to train more complex AI models, and higher throughput for data processing tasks.

Quantitative Data Summary

The following tables summarize the core technical specifications of the RTX 5090 and provide a direct comparison with its predecessor, the RTX 4090, highlighting the generational improvements relevant to scientific and research workloads.

Table 1: NVIDIA GeForce RTX 5090 Technical Specifications

| Feature | Specification | Relevance to Research Applications |
|---|---|---|
| GPU Architecture | NVIDIA Blackwell | Provides foundational improvements in core processing, memory handling, and AI acceleration.[1][3] |
| Graphics Processor | GB202 | The flagship consumer die, designed for maximum performance in graphics and compute tasks.[3][5] |
| CUDA Cores | 21,760 | A significant increase in parallel processing units for general-purpose computing tasks like molecular dynamics and data analysis.[1][2][5][6] |
| Tensor Cores | 680 (5th Generation) | Accelerates AI and machine learning workloads, crucial for deep learning-based drug discovery, medical image analysis, and bioinformatics.[1][5][6] |
| RT Cores | 170 (4th Generation) | Enhances performance in ray tracing for scientific visualization and rendering of complex molecular structures.[5][6][7] |
| Memory | 32 GB GDDR7 | A large and fast memory pool allows for the analysis of larger datasets and the training of bigger AI models without performance bottlenecks.[1][5][7][8] |
| Memory Interface | 512-bit | A wider interface increases the total data throughput between the GPU and its memory.[1][5][6] |
| Memory Bandwidth | 1,792 GB/s | High bandwidth is critical for feeding the massive number of processing cores, reducing latency in data-intensive applications like genomics and cryo-EM.[1][6] |
| Boost Clock | ~2.41 GHz | Higher clock speeds result in faster execution of individual computational tasks.[2][6] |
| L2 Cache | 98 MB | A larger cache improves data access efficiency and reduces reliance on slower VRAM, speeding up repetitive calculations.[1] |
| Power Consumption (TGP) | ~575 W | A key consideration for deployment in lab or data center environments, requiring robust power and cooling solutions.[1][2][5] |
| Interconnect | PCIe 5.0 | Provides faster data transfer speeds between the GPU and the host system's CPU and main memory.[2][3][5] |

Table 2: Generational Comparison: RTX 5090 vs. RTX 4090

| Specification | NVIDIA RTX 5090 | NVIDIA RTX 4090 | Generational Improvement |
|---|---|---|---|
| Architecture | Blackwell | Ada Lovelace | Next-generation architecture with enhanced efficiency and new features like the second-generation Transformer Engine.[3][4] |
| CUDA Cores | 21,760[1][5] | 16,384 | ~33% increase in parallel processing cores. |
| Tensor Cores | 680 (5th Gen)[1][5] | 512 (4th Gen) | ~33% more Tensor Cores with architectural improvements for AI.[9] |
| Memory Size | 32 GB GDDR7[1][5] | 24 GB GDDR6X | ~33% more VRAM with a faster memory technology. |
| Memory Bandwidth | 1,792 GB/s[1][6] | 1,008 GB/s | ~78% increase in memory bandwidth. |
| L2 Cache | 98 MB[1] | 72 MB | ~36% larger L2 cache. |
| Power (TGP) | ~575 W[1][5] | 450 W | Increased power draw to fuel higher performance. |
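The percentages in the comparison table can be reproduced directly from the two columns of figures. The short sketch below does so; the dictionary and function names are illustrative, and the input values are simply those listed in the table.

```python
# (RTX 5090 value, RTX 4090 value) pairs as listed in the comparison table.
SPECS = {
    "CUDA cores": (21760, 16384),
    "Tensor Cores": (680, 512),
    "Memory size (GB)": (32, 24),
    "Memory bandwidth (GB/s)": (1792, 1008),
    "L2 cache (MB)": (98, 72),
    "TGP (W)": (575, 450),
}


def percent_increase(new: float, old: float) -> float:
    """Relative increase of new over old, in percent."""
    return (new - old) / old * 100.0


if __name__ == "__main__":
    for name, (rtx5090, rtx4090) in SPECS.items():
        print(f"{name}: +{percent_increase(rtx5090, rtx4090):.1f}%")
```

Note that the same arithmetic puts the TGP increase at roughly 28%, so the gains in core count and cache are accompanied by a comparable rise in power draw, while memory bandwidth grows considerably faster than power.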

Applications in Drug Development and Scientific Research

The specifications of the RTX 5090 translate directly into tangible benefits for several key research methodologies. The combination of increased CUDA cores, larger and faster memory, and next-generation Tensor Cores makes it a formidable tool for tasks that were previously computationally prohibitive.[10][11]

Experimental Protocols

Below are detailed methodologies for key experiments where the RTX 5090 can be leveraged.

1. Experimental Protocol: High-Throughput Molecular Dynamics (MD) Simulation

- Objective: To simulate the binding affinity of multiple ligand candidates to a target protein to identify potential drug leads.
- Methodology:
  - System Preparation: The protein-ligand complex systems are prepared using simulation packages like GROMACS or AMBER. Each system is solvated in a water box with appropriate ions to neutralize the charge.
  - Energy Minimization: Each system undergoes a steepest descent energy minimization to relax the structure and remove steric clashes.
  - Equilibration: The systems are gradually heated to the target temperature (e.g., 300 K) and equilibrated under NVT (constant volume) and then NPT (constant pressure) ensembles.
  - Production MD: Production simulations are run for an extended period (e.g., 100 ns per system). The massive parallel processing capability of the 21,760 CUDA cores is utilized to calculate intermolecular forces at each timestep.
  - Data Analysis: Trajectories are analyzed to calculate binding free energies using methods like MM/PBSA or MM/GBSA. The 32 GB GDDR7 memory allows for larger trajectories to be held and processed directly on the GPU, accelerating this analysis.

2. Experimental Protocol: Deep Learning-Based Virtual Screening

- Objective: To train a deep learning model to predict the bioactivity of small molecules against a specific target, enabling the rapid screening of vast chemical libraries.
- Methodology:
  - Dataset Curation: A large dataset of molecules with known bioactivity (active/inactive) for the target is compiled. Molecules are represented as molecular graphs or fingerprints.
  - Model Architecture: A graph neural network (GNN) or a multi-layer perceptron (MLP) is designed. The model will learn to extract features from the molecular representations that correlate with activity.
  - Model Training: The model is trained on the curated dataset. The 5th generation Tensor Cores and the second-generation Transformer Engine of the Blackwell architecture are leveraged to accelerate the training process, especially for large and complex models. The 32 GB VRAM is crucial for accommodating large batch sizes, which improves training stability and speed.
  - Validation: The model's predictive performance is evaluated on a separate test set using metrics like ROC-AUC.
  - Inference: The trained model is used to predict the activity of millions of unseen compounds from a virtual library. The high throughput of the RTX 5090 enables rapid screening.
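The validation step of the screening protocol above names ROC-AUC as a metric. As a minimal, dependency-free sketch of what that metric measures, the function below computes ROC-AUC as the probability that a randomly chosen active compound is scored higher than a randomly chosen inactive one, with ties counted as half. The function name is illustrative, and the pairwise loop is chosen for clarity; a production pipeline would normally use an optimized library routine instead.

```python
def roc_auc(labels: list[int], scores: list[float]) -> float:
    """ROC-AUC via pairwise comparison of active (1) and inactive (0) scores."""
    actives = [s for y, s in zip(labels, scores) if y == 1]
    inactives = [s for y, s in zip(labels, scores) if y == 0]
    if not actives or not inactives:
        raise ValueError("need at least one active and one inactive compound")
    wins = 0.0
    for a in actives:
        for i in inactives:
            if a > i:
                wins += 1.0   # active ranked above inactive
            elif a == i:
                wins += 0.5   # tie counts as half
    return wins / (len(actives) * len(inactives))


if __name__ == "__main__":
    # Toy test set: two actives, two inactives, one active ranked too low.
    print(roc_auc([1, 1, 0, 0], [0.9, 0.4, 0.5, 0.1]))
```

A value of 0.5 corresponds to random ranking and 1.0 to a perfect separation of actives from inactives, which is why the metric suits virtual screening, where the goal is to rank a small number of actives near the top of a large library.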

Visualizations

The following diagrams, generated using the DOT language, illustrate key concepts and workflows discussed in this guide.

Caption: High-level logical architecture of the RTX 5090 GPU.

Caption: Simplified MAPK/ERK signaling pathway, a common drug target.

References

- 1. vast.ai [vast.ai]

- 2. neoxcomputers.co.uk [neoxcomputers.co.uk]

- 3. Blackwell (microarchitecture) - Wikipedia [en.wikipedia.org]

- 4. The Engine Behind AI Factories | NVIDIA Blackwell Architecture [nvidia.com]

- 5. NVIDIA GeForce RTX 5090 Specs | TechPowerUp GPU Database [techpowerup.com]

- 6. notebookcheck.net [notebookcheck.net]

- 7. GeForce RTX 5090 Graphics Cards | NVIDIA [nvidia.com]

- 8. techradar.com [techradar.com]

- 9. pugetsystems.com [pugetsystems.com]

- 10. box.co.uk [box.co.uk]

- 11. lenovo.com [lenovo.com]

- 12. skillsgaptrainer.com [skillsgaptrainer.com]

5th Generation Tensor Cores: A Technical Deep Dive for AI Research in Drug Discovery

An In-depth Technical Guide for Researchers, Scientists, and Drug Development Professionals

The advent of NVIDIA's 5th generation Tensor Cores, integral to the Blackwell architecture, marks a significant leap forward in the computational power available for artificial intelligence (AI) research. For professionals in drug discovery, these advancements offer unprecedented opportunities to accelerate complex simulations, enhance predictive models, and ultimately, shorten the timeline for therapeutic development. This guide provides a comprehensive overview of the 5th generation Tensor Core technology, its performance capabilities, and its direct applications in drug development workflows.

Architectural Innovations of the 5th Generation Tensor Core

At the heart of the NVIDIA Blackwell architecture, the 5th generation Tensor Cores introduce several key innovations designed to dramatically boost performance and efficiency for AI workloads. These cores are engineered to accelerate the matrix-multiply-accumulate (MMA) operations that are fundamental to deep learning.

Complementing the new low-precision data formats is the second-generation Transformer Engine. This specialized hardware and software combination intelligently manages and dynamically switches between different numerical precisions to optimize performance and maintain accuracy for Transformer models, which are foundational to many modern AI applications in genomics and natural language processing.[5][6]

The Blackwell architecture itself is a feat of engineering. The GB200 "Superchip" connects two Blackwell GPUs with a Grace CPU via a high-speed interconnect.[7] Each Blackwell GPU is a dual-die design with 208 billion transistors, manufactured using a custom TSMC 4NP process.[6]

Performance and Specifications

The performance gains offered by the 5th generation Tensor Cores are substantial, enabling researchers to tackle previously intractable computational problems. The NVIDIA GB200 NVL72 system, which incorporates 72 Blackwell GPUs, serves as a prime example of the scale of performance now achievable.

Quantitative Data Summary

For ease of comparison, the following tables summarize the key technical specifications and performance metrics of the NVIDIA Blackwell GB200 and its 5th generation Tensor Cores, with comparisons to the previous generation where relevant.

NVIDIA GB200 NVL72 System Specifications

| Component | Specification |
|---|---|
| GPUs | 72 NVIDIA Blackwell GPUs |
| CPUs | 36 NVIDIA Grace CPUs |
| Total FP4 Tensor Core Performance | 1,440 PFLOPS |
| Total FP8/FP6 Tensor Core Performance | 720 PFLOPS |
| Total FP16/BF16 Tensor Core Performance | 360 PFLOPS |
| Total TF32 Tensor Core Performance | 180 PFLOPS |
| Total FP64 / FP64 Tensor Core Performance | 2,880 TFLOPS |
| Total HBM3e Memory | Up to 13.4 TB |
| Total Memory Bandwidth | Up to 576 TB/s |
| NVLink Bandwidth | 130 TB/s |

| Per-GPU Performance (Illustrative) | NVIDIA Blackwell B200 | NVIDIA Hopper H100 |
|---|---|---|
| FP4 Tensor Core (Sparse) | 20 PFLOPS | N/A |
| FP8 Tensor Core (Sparse) | 10 PFLOPS | 4 PFLOPS |
| FP16/BF16 Tensor Core (Sparse) | 5 PFLOPS | 2 PFLOPS |
| TF32 Tensor Core (Sparse) | 2.5 PFLOPS | 1 PFLOPS |
| FP64 Tensor Core | 45 TFLOPS | 60 TFLOPS |
| HBM3e Memory | Up to 192 GB | N/A (HBM3 up to 80 GB) |
| Memory Bandwidth | Up to 8 TB/s | Up to 3.35 TB/s |

Note: Performance figures, especially for sparse operations, represent theoretical peak performance and can vary based on the workload.

Application in AI Research and Drug Discovery

Accelerating Molecular Docking with AutoDock-GPU

Molecular docking is a computational method used to predict the binding orientation of a small molecule (ligand) to a larger molecule (receptor), such as a protein. This is a critical step in virtual screening for identifying potential drug candidates. AutoDock-GPU is a leading software for high-performance molecular docking that can leverage the power of GPUs.[8][9]

Recent research has demonstrated that the performance of AutoDock-GPU can be significantly enhanced by offloading specific computational tasks, such as sum reduction operations within the scoring function, to the Tensor Cores.[8][9][10] This optimization can lead to a 4-7x speedup in the reduction operation itself and a notable improvement in the overall docking simulation time.[11]
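The idea of performing a sum reduction on matrix hardware can be illustrated with a small CPU-only sketch: multiplying a tile of values by an all-ones matrix yields row sums, and multiplying an all-ones matrix by that result yields the grand total in every element. The code below is a conceptual illustration only, written in plain Python with a 4×4 tile chosen for readability; it is not AutoDock-GPU's kernel, and the function names are hypothetical.

```python
def matmul(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    """Plain matrix product, standing in for a Tensor Core MMA operation."""
    inner, cols = len(b), len(b[0])
    return [[sum(row[k] * b[k][j] for k in range(inner)) for j in range(cols)]
            for row in a]


def reduce_with_ones(values: list[float], tile: int = 4) -> float:
    """Sum tile*tile values using two multiplications by an all-ones matrix."""
    if len(values) != tile * tile:
        raise ValueError("expected exactly tile*tile values")
    a = [values[r * tile:(r + 1) * tile] for r in range(tile)]
    ones = [[1.0] * tile for _ in range(tile)]
    row_sums = matmul(a, ones)      # every column now holds the row sums
    total = matmul(ones, row_sums)  # every element now holds the grand total
    return total[0][0]


if __name__ == "__main__":
    print(reduce_with_ones([float(v) for v in range(16)]))
```

The appeal of this formulation is that the additions are expressed entirely as matrix-multiply-accumulate operations, which is the operation Tensor Cores execute natively.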

The following protocol outlines the general steps for performing a molecular docking experiment using a Tensor Core-accelerated version of AutoDock-GPU.

- System Preparation:
  - Hardware: A system equipped with an NVIDIA Blackwell (or compatible) GPU.
  - Software: A compiled version of AutoDock-GPU with Tensor Core support enabled. This typically involves using the latest CUDA toolkit and specifying the appropriate architecture during compilation.
- Input Preparation:
  - Receptor: Prepare the 3D structure of the target protein, typically in PDBQT format. This involves removing water molecules, adding hydrogen atoms, and assigning partial charges.
  - Ligand: Prepare a library of small molecules to be screened, also in PDBQT format.
  - Grid Parameter File: Define the search space for the docking simulation by creating a grid parameter file that specifies the center and dimensions of the docking box.
- Execution of Docking Simulation:
  - Execute the AutoDock-GPU binary, providing the receptor, ligand library, and grid parameter file as inputs.
  - The software will automatically leverage the Tensor Cores for the accelerated portions of the calculation.
- Analysis of Results:
  - The output will consist of a series of docked conformations for each ligand, ranked by their predicted binding affinity.
  - Further analysis can be performed to visualize the binding poses and identify promising drug candidates.

AI-Driven Analysis of Signaling Pathways

Understanding cellular signaling pathways is crucial for identifying new drug targets. The PI3K/AKT pathway, for instance, is a key regulator of cell growth, proliferation, and survival, and its dysregulation is implicated in many diseases, including cancer.[12][13][14][15][16] AI, particularly deep learning models, can be used to analyze large-scale biological data (e.g., genomics, proteomics) to model and predict the behavior of these complex pathways.

The computational intensity of training these models on vast datasets makes them ideal candidates for acceleration with 5th generation Tensor Cores. The ability to use lower precision formats like FP4 and FP8 can significantly speed up the training of graph neural networks (GNNs) and other architectures used for pathway analysis, enabling researchers to iterate on models more quickly and analyze more complex biological systems.
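To give a sense of what a 4-bit floating-point format can represent, the sketch below quantizes a block of values to the E2M1 grid (one sign bit, two exponent bits, one mantissa bit), whose representable magnitudes are 0, 0.5, 1, 1.5, 2, 3, 4, and 6. It is a simplified illustration under stated assumptions: the per-block scale is kept as an ordinary Python float, ties are rounded toward the smaller magnitude rather than following hardware rounding rules, and the function name is hypothetical. It does not reproduce the exact scaling scheme used by Blackwell hardware or the Transformer Engine.

```python
import math

# Magnitudes representable in FP4 E2M1 (sign is handled separately).
FP4_E2M1_MAGNITUDES = (0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0)


def quantize_block_fp4(values: list[float]) -> tuple[list[float], float]:
    """Quantize a block to FP4 with one shared scale; return (dequantized, scale)."""
    max_abs = max(abs(v) for v in values)
    # Map the largest magnitude in the block onto the largest FP4 value (6.0).
    scale = max_abs / 6.0 if max_abs > 0 else 1.0
    dequantized = []
    for v in values:
        target = abs(v) / scale
        # Nearest representable magnitude (ties go to the smaller one here).
        magnitude = min(FP4_E2M1_MAGNITUDES, key=lambda m: abs(target - m))
        dequantized.append(math.copysign(magnitude, v) * scale)
    return dequantized, scale


if __name__ == "__main__":
    block = [6.0, -3.0, 0.4, 0.0]
    print(quantize_block_fp4(block))
```

Because only eight magnitudes are available, accuracy depends heavily on how the scale is chosen for each block, which is one reason low-precision training and inference rely on dedicated scaling logic rather than naive casting.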

Benchmarking and Experimental Protocols

The performance of the NVIDIA Blackwell architecture has been evaluated in the industry-standard MLPerf benchmarks. These benchmarks provide a standardized way to compare the performance of different hardware and software configurations on a variety of AI workloads.

MLPerf Inference v4.1

In the MLPerf Inference v4.1 benchmarks, the NVIDIA Blackwell platform demonstrated up to a 4x performance improvement over the NVIDIA H100 Tensor Core GPU on the Llama 2 70B model, a large language model.[17][18] This gain is attributed to the second-generation Transformer Engine and the use of FP4 Tensor Cores.[17]

The following is a generalized protocol based on the MLPerf submission guidelines.

- System Configuration:
  - Hardware: A server equipped with an NVIDIA B200 GPU.
  - Software: NVIDIA's optimized software stack, including the CUDA toolkit, TensorRT-LLM, and the specific drivers used for the submission.
- Benchmark Implementation:
  - Use the official MLPerf Inference repository and the Llama 2 70B model.
  - The submission must adhere to the "Closed" division rules, meaning the model and processing pipeline cannot be substantially altered.
- Execution:
  - Run the benchmark in both "Server" and "Offline" scenarios. The Server scenario measures latency under a specific query arrival rate, while the Offline scenario measures raw throughput.
  - The benchmark is executed for a specified duration to ensure stable performance measurements.
- Validation:
  - The accuracy of the model's output must meet a predefined quality target.
  - The results are submitted to MLCommons for verification.

MLPerf Training v5.0

In the MLPerf Training v5.0 benchmarks, the NVIDIA GB200 NVL72 system showcased up to a 2.6x performance improvement per GPU compared to the previous Hopper architecture.[19] For the Llama 3.1 405B pre-training benchmark, a 2.2x speedup was observed.[19]

- System Configuration:
  - Hardware: A multi-node cluster of NVIDIA GB200 NVL72 systems connected via InfiniBand. The smallest NVIDIA submission for this benchmark utilized 256 GPUs.[20]
  - Software: A Slurm-based environment with Pyxis and Enroot for containerized execution, along with NVIDIA's optimized deep learning frameworks.[20]
- Dataset and Model:
  - The benchmark uses a specific dataset and the Llama 3.1 405B model. The dataset and model checkpoints must be downloaded and preprocessed.
- Execution:
  - The training process is launched using a Slurm script with specific hyperparameters and configuration files as provided in the submission repository.
  - The training is run until the model reaches a predefined quality target.
- Scoring:
  - The time to train is measured from the start of the training run to the point where the quality target is achieved.
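The scoring rule above can be sketched as a scan over an evaluation log. This is an illustrative helper, not part of the MLPerf reference code; the log format, the metric values, and the names `time_to_train` and `speedup` are assumptions made for the example.

```python
def time_to_train(eval_log, target, lower_is_better=True):
    """Return elapsed seconds at the first evaluation that meets the target.

    eval_log: iterable of (elapsed_seconds, metric_value) in time order.
    Returns None if the target is never reached.
    """
    for elapsed_s, metric in eval_log:
        reached = metric <= target if lower_is_better else metric >= target
        if reached:
            return elapsed_s
    return None

def speedup(baseline_seconds, new_seconds):
    """Ratio of two time-to-train results (values above 1 mean faster)."""
    return baseline_seconds / new_seconds

if __name__ == "__main__":
    # Hypothetical evaluation log: (seconds since start, validation metric).
    log = [(600, 7.0), (1200, 5.8), (1800, 5.5)]
    print(time_to_train(log, target=5.6))   # first entry at or below 5.6
    print(speedup(2600.0, 1000.0))
```

Because the score is a wall-clock duration, a per-GPU comparison such as the 2.6x figure quoted above is only meaningful when both runs use the same quality target.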

Conclusion

References

- 1. edge-ai-vision.com [edge-ai-vision.com]

- 2. Introducing NVFP4 for Efficient and Accurate Low-Precision Inference | NVIDIA Technical Blog [developer.nvidia.com]

- 3. Inside NVIDIA Blackwell Ultra: The Chip Powering the AI Factory Era | NVIDIA Technical Blog [developer.nvidia.com]

- 4. hyperstack.cloud [hyperstack.cloud]

- 5. cdn.prod.website-files.com [cdn.prod.website-files.com]

- 6. The Engine Behind AI Factories | NVIDIA Blackwell Architecture [nvidia.com]

- 7. scribd.com [scribd.com]

- 8. themoonlight.io [themoonlight.io]

- 9. [2410.10447] Accelerating Drug Discovery in AutoDock-GPU with Tensor Cores [arxiv.org]

- 10. researchgate.net [researchgate.net]

- 11. researchgate.net [researchgate.net]

- 12. Computational Modeling of PI3K/AKT and MAPK Signaling Pathways in Melanoma Cancer - PubMed [pubmed.ncbi.nlm.nih.gov]

- 13. researchgate.net [researchgate.net]

- 14. researchgate.net [researchgate.net]

- 15. direct.mit.edu [direct.mit.edu]

- 16. Mathematical modeling of PI3K/Akt pathway in microglia - CentAUR [centaur.reading.ac.uk]

- 17. NVIDIA Blackwell Sets New Standard for Gen AI in MLPerf Inference Debut | NVIDIA Blog [blogs.nvidia.com]

- 18. Deep dive into NVIDIA Blackwell Benchmarks — where does the 4x training and 30x inference performance gain, and 25x reduction in energy usage come from? | by adrian cockcroft | Medium [adrianco.medium.com]

- 19. NVIDIA Blackwell Delivers up to 2.6x Higher Performance in MLPerf Training v5.0 | NVIDIA Technical Blog [developer.nvidia.com]

- 20. Reproducing NVIDIA MLPerf v5.0 Training Scores for LLM Benchmarks | NVIDIA Technical Blog [developer.nvidia.com]

Unveiling the Engine of Discovery: A Technical Deep Dive into the 4th Generation Ray Tracing Cores of the NVIDIA RTX 5090

Aimed at the forefront of scientific and pharmaceutical research, this technical guide provides an in-depth analysis of the 4th generation Ray Tracing (RT) Cores featured in NVIDIA's latest flagship GPU, the RTX 5090. Powered by the new Blackwell architecture, these cores introduce significant advancements poised to accelerate discovery in fields ranging from drug development and molecular dynamics to advanced scientific visualization.

This document details the architectural innovations of the 4th generation RT Cores, presents quantitative performance data in relevant scientific applications, and outlines experimental methodologies for key benchmarks. Furthermore, it provides visualizations of core technological concepts and workflows to facilitate a comprehensive understanding for researchers, scientists, and drug development professionals.

Architectural Innovations of the 4th Generation RT Cores

The Blackwell architecture, at the heart of the RTX 5090, ushers in the 4th generation of RT Cores, representing a significant leap in hardware-accelerated ray tracing.[1][2] These new cores are engineered to deliver unprecedented realism and performance in complex simulations and visualizations.

A key enhancement is the doubled ray-triangle intersection throughput compared to the previous Ada Lovelace generation.[3] This fundamental improvement directly translates to faster rendering of complex geometries, a crucial aspect of visualizing large molecular structures or intricate biological systems.

Furthermore, the 4th generation RT Cores introduce two novel hardware units:

- Triangle Cluster Intersection Engine: This engine is designed for the efficient processing of "Mega Geometry," enabling the rendering of scenes with vastly increased geometric detail.[3][4] For scientific applications, this means the ability to visualize larger and more complex datasets with greater fidelity.
- Linear Swept Spheres: This feature provides hardware acceleration for ray tracing finer details, such as hair and other intricate biological filaments, which are often challenging to render accurately and efficiently.[4]

These architectural advancements are built upon a custom TSMC 4N process, which allows for greater transistor density and power efficiency.[1]

Performance Benchmarks in Scientific Applications

Alongside the RT Core changes, the RTX 5090 delivers tangible performance gains in critical scientific software. The following tables summarize its performance in molecular dynamics simulations, which run on the GPU's general-purpose compute (CUDA) hardware rather than on the RT Cores.

Table 1: NVIDIA RTX 5090 Performance in NAMD

| Simulation Benchmark (Input) | System | Performance (days/ns) |
|---|---|---|
| ATPase (327,506 Atoms) | NVIDIA GeForce RTX 5090 | Data not available in specific units |
| STMV (1,066,628 Atoms) | NVIDIA GeForce RTX 5090 | Data not available in specific units |

Note: While specific performance metrics in "days/ns" were not available in the preliminary reports, the RTX 5090 demonstrated a significant performance uplift in NAMD simulations compared to the previous generation RTX 4090, particularly in larger datasets where the Blackwell GPU and GDDR7 memory can be fully leveraged.[5]

Table 2: NVIDIA RTX 5090 Performance in GROMACS

| System Configuration | Simulation Performance (ns/day) |
|---|---|
| Intel i9-14900K, Gigabyte RTX 5090 Aorus Master | >700 |
| Intel i5-9600K, RTX 2060 (for comparison) | ~70 |

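A user report on a drug discovery simulation using GROMACS showcased a dramatic performance increase, with a system equipped with an RTX 5090 achieving over 700 ns/day, roughly a tenfold improvement over an older Intel i5-9600K and RTX 2060 system (~70 ns/day).[6] Because both the CPU and the GPU differ between the two systems, the gain cannot be attributed to the GPU alone.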

Experimental Protocols

To ensure scientific rigor and reproducibility, detailed experimental protocols are paramount. While comprehensive methodologies for the latest RTX 5090 benchmarks are still emerging, the following outlines the typical procedures for benchmarking molecular dynamics and cryo-EM applications.

Molecular Dynamics (NAMD & GROMACS) Benchmarking Protocol

A standardized approach to benchmarking molecular dynamics simulations on new hardware like the RTX 5090 involves the following steps:

- System Preparation: A clean installation of the operating system (e.g., a Linux distribution like Ubuntu) is performed. The latest NVIDIA drivers and CUDA toolkit are installed to ensure optimal performance and compatibility.[5]
- Software Compilation: The molecular dynamics software (e.g., NAMD, GROMACS) is compiled from source to ensure it is optimized for the specific hardware architecture.
- Benchmark Selection: Standard and publicly available benchmark datasets are used. For NAMD, these often include systems like the Satellite Tobacco Mosaic Virus (STMV) and ATP synthase (ATPase). For GROMACS, benchmarks might involve simulations of proteins in water boxes of varying sizes.
- Execution and Data Collection: The simulations are run for a set number of steps, and the performance is typically measured in nanoseconds of simulation per day (ns/day). Multiple runs are often performed to ensure the consistency and reliability of the results.
- System Monitoring: Throughout the benchmark, system parameters such as GPU utilization, temperature, and power consumption are monitored to ensure the hardware is performing as expected.
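The ns/day metric used in this protocol follows directly from the step count, the integration timestep, and the wall-clock time. The sketch below shows that arithmetic and a simple summary over repeated runs; the function names and the example inputs are hypothetical and are not taken from any of the cited benchmarks.

```python
from statistics import mean, stdev

SECONDS_PER_DAY = 86_400

def ns_per_day(n_steps, timestep_fs, wall_seconds):
    """Simulated nanoseconds per day of wall-clock time."""
    simulated_ns = n_steps * timestep_fs * 1e-6   # 1 fs = 1e-6 ns
    return simulated_ns * SECONDS_PER_DAY / wall_seconds

def days_per_ns(rate_ns_per_day):
    """Inverse metric, as reported by some engines (days/ns)."""
    return 1.0 / rate_ns_per_day

def summarize_runs(rates):
    """Mean and sample standard deviation over repeated runs."""
    spread = stdev(rates) if len(rates) > 1 else 0.0
    return mean(rates), spread

if __name__ == "__main__":
    # Hypothetical runs: 500,000 steps at 2 fs, three wall-clock timings.
    rates = [ns_per_day(500_000, 2.0, t) for t in (86.4, 88.0, 85.1)]
    avg, spread = summarize_runs(rates)
    print(f"{avg:.1f} ns/day (+/- {spread:.1f}), {days_per_ns(avg):.5f} days/ns")
```

Reporting the spread alongside the mean makes it easier to tell a genuine hardware difference from run-to-run noise.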

Cryo-Electron Microscopy (RELION) Benchmarking Protocol

Benchmarking cryo-EM software like RELION on a new GPU would typically follow this protocol:

- Software and System Setup: Similar to MD benchmarking, a clean OS with the latest NVIDIA drivers and CUDA toolkit is essential. RELION is then installed and configured.
- Dataset: A well-characterized, publicly available cryo-EM dataset is used for the benchmark. This allows for comparison across different hardware setups.
- Processing Workflow: The benchmark would involve running key processing steps in RELION, such as 2D classification, 3D classification, and 3D refinement.
- Performance Measurement: The primary metric for performance is the time taken to complete each processing step. This is often measured in wall-clock time.
- Parameter Consistency: It is crucial to use the same processing parameters (e.g., number of classes, particle box size, angular sampling rate) across all hardware being compared to ensure a fair and accurate assessment.
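Per-step wall-clock timing, as described above, can be collected with a small helper. The sketch below only times arbitrary Python callables; the step names are labels, and `run_step` is a hypothetical placeholder for however the actual RELION job would be launched, which is not specified here.

```python
import time
from contextlib import contextmanager

@contextmanager
def timed(step_name, results):
    """Record the wall-clock duration of the enclosed block in results."""
    start = time.perf_counter()
    try:
        yield
    finally:
        results[step_name] = time.perf_counter() - start

def run_step(name):
    """Placeholder for launching a real processing step (assumption)."""
    time.sleep(0.01)

if __name__ == "__main__":
    timings = {}
    for step in ("2D classification", "3D classification", "3D refinement"):
        with timed(step, timings):
            run_step(step)
    for step, seconds in timings.items():
        print(f"{step}: {seconds:.3f} s")
```

Keeping the timings in one dictionary per hardware configuration makes it straightforward to compare step by step, provided the processing parameters are held constant.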

Visualizing the Advancements

To better illustrate the concepts discussed, the following diagrams are provided in the DOT language for use with Graphviz.

Signaling Pathway of Ray Tracing in Scientific Visualization

Caption: A simplified workflow of ray tracing for scientific visualization on the RTX 5090.

Logical Relationship: 4th Gen RT Core Enhancements

Caption: Architectural improvements of the 4th Gen RT Core and their benefits.

Conclusion and Future Outlook

The 4th generation Ray Tracing Cores in the NVIDIA RTX 5090 represent a significant step forward in computational science. The architectural enhancements, leading to substantial performance gains in molecular dynamics and other scientific applications, will empower researchers to tackle larger, more complex problems. The ability to visualize massive datasets with unprecedented fidelity and interactivity will undoubtedly accelerate the pace of discovery in drug development and other scientific fields. As software ecosystems continue to mature and leverage the full capabilities of the Blackwell architecture, we can anticipate even greater breakthroughs in the years to come.

References

- 1. GeForce RTX 50 series - Wikipedia [en.wikipedia.org]

- 2. NVIDIA GeForce RTX 50 Technical Deep Dive | TechPowerUp [techpowerup.com]

- 3. A Deeper Analysis of Nvidia RTX 50 Blackwell GPU Architecture [guru3d.com]

- 4. Blackwell (microarchitecture) - Wikipedia [en.wikipedia.org]

- 5. phoronix.com [phoronix.com]

- 6. reddit.com [reddit.com]

Initial Impressions and Potential for Breakthroughs in Research

An In-Depth Technical Guide to the NVIDIA RTX 5090 for Academic and Scientific Applications

The release of the NVIDIA GeForce RTX 5090, powered by the "Blackwell" architecture, marks a significant leap forward in computational power, with profound implications for academic research, particularly in the fields of drug discovery, molecular dynamics, and genomics.[1] Announced at CES 2025 and launched on January 30, 2025, this flagship GPU is engineered to handle the most demanding computational tasks.[2][3][4] For researchers and scientists, the RTX 5090 offers the potential to accelerate complex simulations, train larger and more sophisticated AI models, and analyze vast datasets with unprecedented speed. This guide provides an in-depth technical overview of the RTX 5090, its performance in relevant academic workloads, and initial impressions of its utility for scientific and drug development professionals.

Core Specifications

The RTX 5090 introduces substantial upgrades over its predecessor, the RTX 4090. Key improvements include a significant increase in CUDA and Tensor cores, the adoption of next-generation GDDR7 memory, and a wider memory bus, all contributing to a considerable boost in raw computational and AI performance.[1][3][5][6]

| Specification | NVIDIA GeForce RTX 5090 | NVIDIA GeForce RTX 4090 |
|---|---|---|
| GPU Architecture | Blackwell (GB202)[1][2][5][7] | Ada Lovelace |
| Process Size | 5 nm[2] | 5 nm |
| Transistors | 92.2 Billion[2] | 76.3 Billion |
| CUDA Cores | 21,760[2][3][4][5] | 16,384 |
| Tensor Cores | 680 (5th Gen)[2][3][7] | 512 (4th Gen) |
| RT Cores | 170 (4th Gen)[2][3][7] | 128 (3rd Gen) |
| Boost Clock | 2407 MHz[2] | 2520 MHz |
| Memory Size | 32 GB GDDR7[2][4][8][9] | 24 GB GDDR6X |
| Memory Interface | 512-bit[2][3][5] | 384-bit |
| Memory Bandwidth | 1792 GB/s[1][3][4][5] | 1008 GB/s |
| TDP | 575 W[1][2][3][7] | 450 W |
| Launch Price (MSRP) | $1,999[2][3][9][10] | $1,599 |

Performance in Key Research Areas

Preliminary benchmarks indicate that the RTX 5090 offers a substantial performance uplift in a variety of compute-intensive applications relevant to academic and scientific research.

Molecular Dynamics (MD) Simulations

The RTX 5090 demonstrates a significant performance improvement in molecular dynamics simulations, a critical tool in drug discovery and materials science.[11] Benchmarks using NAMD (Nanoscale Molecular Dynamics) show a more substantial leap in performance from the RTX 4090 to the RTX 5090 than was observed between the RTX 3090 and RTX 4090.[11] This is attributed to the Blackwell architecture and the high bandwidth of the GDDR7 memory.[11] For AMBER 24 simulations, the RTX 5090 is noted as offering the best performance for its cost in single-GPU workstations.[12] However, its large physical size may limit its scalability in multi-GPU setups.[12]

| Benchmark | System | Reported Performance |
|---|---|---|
| NAMD (ATPase - 327,506 Atoms) | RTX 5090 | Data not specified, but noted as a "nice leap forward"[11] |
| NAMD (STMV - 1,066,628 Atoms) | RTX 5090 | Data not specified, but noted as a "nice uplift"[11] |
| GROMACS (~45,000 Atoms) | Ryzen Threadripper, RTX 5090, 256GB RAM | 500 ns in < 12 hours (i.e., > 1,000 ns/day)[13] |

The reported NAMD benchmarks were conducted using NAMD 3.0.1 with CUDA 12.8 on a Linux system with the NVIDIA 570.86.10 driver.[11] The tests were performed on various NVIDIA GeForce graphics cards to compare performance.[11] Two different simulation inputs were used: ATPase with 327,506 atoms and STMV with 1,066,628 atoms.[11]

AI-Driven Drug Discovery and Genomics

In genomics, GPU-accelerated tools like NVIDIA Parabricks can significantly reduce the time required for secondary analysis of sequencing data.[15][16] While direct RTX 5090 benchmarks for Parabricks are not yet widely available, the card's specifications suggest it will dramatically speed up processes like sequence alignment and variant calling.[15][17]

| AI Benchmark (UL Procyon) | Metric | RTX 5090 | RTX 4090 | RTX 6000 Ada |
|---|---|---|---|---|
| AI Text Generation (Phi) | Score | 5,749 | 4,958 | 4,508 |
| AI Text Generation (Mistral) | Score | 6,267 | 5,094 | 4,255 |
| AI Text Generation (Llama3) | Score | 6,104 | 4,849 | 4,026 |
| AI Text Generation (Llama2) | Score | 6,591 | 5,013 | 3,957 |
| AI Image Gen. (Stable Diffusion 1.5 FP16) | Time (s) | 12.204 | Not specified | Not specified |

References

- 1. beebom.com [beebom.com]

- 2. NVIDIA GeForce RTX 5090 Specs | TechPowerUp GPU Database [techpowerup.com]

- 3. Everything You Need to Know About the Nvidia RTX 5090 GPU [runpod.io]

- 4. New GeForce RTX 50 Series Graphics Cards & Laptops Powered By NVIDIA Blackwell Bring Game-Changing AI and Neural Rendering Capabilities To Gamers and Creators | GeForce News | NVIDIA [nvidia.com]

- 5. vast.ai [vast.ai]

- 6. Nvidia RTX 5090 Graphics Card Review — Get Neural Or Get Left Behind [forbes.com]

- 7. notebookcheck.net [notebookcheck.net]

- 8. Nvidia GeForce RTX 5090 Revealed And It’s Massive [forbes.com]

- 9. GeForce RTX 5090 Graphics Cards | NVIDIA [nvidia.com]

- 10. NVIDIA announces new RTX 5090 graphics card that costs $2,000 at CES [engadget.com]

- 11. phoronix.com [phoronix.com]

- 12. Exxact Corp. | Custom Computing Solutions Integrator [exxactcorp.com]

- 13. researchgate.net [researchgate.net]

- 14. Drug Discovery With Accelerated Computing Platform | NVIDIA [nvidia.com]

- 15. AI in Genomics Research | NVIDIA [nvidia.com]

- 16. blogs.oracle.com [blogs.oracle.com]

- 17. Genomics Analysis Blueprint by NVIDIA | NVIDIA NIM [build.nvidia.com]

- 18. storagereview.com [storagereview.com]

- 19. Reddit - The heart of the internet [reddit.com]

Technical Guide: Evaluating the NVIDIA RTX 5090 for High-Performance Computing Clusters in Scientific Research and Drug Development

Executive Summary

The release of the NVIDIA GeForce RTX 5090, built on the "Blackwell" architecture, presents a compelling proposition for high-performance computing (HPC) applications, particularly in budget-constrained research environments.[1][2] This guide provides a technical deep-dive into the RTX 5090's architecture, performance metrics, and its suitability for computationally intensive tasks common in scientific research and drug discovery. We will analyze its specifications in comparison to its predecessor and its professional-grade counterparts, discuss the implications of its consumer-focused design, and provide workflows for its integration into research clusters. While offering unprecedented raw performance and next-generation features, its adoption in critical research requires careful consideration of its limitations compared to workstation and data center-grade GPUs.

Core Architecture: The Blackwell Advantage

The RTX 5090 is powered by the NVIDIA Blackwell architecture, which introduces significant advancements relevant to HPC.[2] Built on a custom 4N FinFET process from TSMC, the architecture is meticulously crafted to accelerate AI workloads, large-scale HPC tasks, and advanced graphics rendering.[2][3]

Key architectural improvements include:

- Fourth-Generation Ray Tracing (RT) Cores: While primarily a gaming-focused feature, enhanced RT cores can also accelerate scientific visualization, enabling researchers to render complex molecular structures and simulations with greater fidelity and speed.[1][8]
- Enhanced CUDA Cores: The fundamental processing units of the GPU have undergone generational improvements, leading to higher instructions per clock (IPC) and overall better performance in general-purpose GPU computing.[9]
- Advanced Memory Subsystem: The RTX 5090 utilizes GDDR7 memory on a wide 512-bit bus, delivering memory bandwidth that approaches 1.8 TB/s.[3][9] This is critical for HPC workloads that are often memory-bound, such as molecular dynamics simulations, where large datasets must be rapidly accessed by the GPU cores.

Quantitative Data: Specification Comparison

To contextualize the RTX 5090's capabilities, it is essential to compare it against its predecessor, the GeForce RTX 4090, and a professional workstation card from the same Blackwell generation, the RTX PRO 6000.

| Feature | NVIDIA GeForce RTX 5090 | NVIDIA GeForce RTX 4090 | NVIDIA RTX PRO 6000 (Blackwell) |
|---|---|---|---|
| GPU Architecture | Blackwell | Ada Lovelace | Blackwell |
| CUDA Cores | 21,760[3][10][11] | 16,384 | 24,064[12][13] |
| Tensor Cores | 680 (5th Gen)[3][10] | 512 (4th Gen) | Not specified, but 5th Gen |
| RT Cores | 170 (4th Gen)[3][10] | 128 (3rd Gen) | Not specified, but 4th Gen |
| Boost Clock | ~2.41 GHz[3][6] | ~2.52 GHz | Not specified |
| Memory Size | 32 GB GDDR7[10][11][14] | 24 GB GDDR6X | 96 GB GDDR7 ECC[12][13] |
| Memory Interface | 512-bit[3][10][14] | 384-bit | 512-bit |
| Memory Bandwidth | ~1,792 GB/s[3][14] | ~1,008 GB/s | ~1,792 GB/s |
| FP32 Performance | ~103 TFLOPS (Theoretical)[12] | ~82.6 TFLOPS | ~130 TFLOPS (Theoretical)[12] |
| AI Performance | 3,352 AI TOPS[6] | ~1,321 AI TOPS | Not specified, expected higher |
| TGP (Total Graphics Power) | 575 W[3][10][11] | 450 W | 600 W[12][13] |
| ECC Memory Support | No[12] | No | Yes[12] |
| NVLink Support | No[12] | No | No (PCIe Gen 5 only)[12] |
| Driver | Game Ready / Studio | Game Ready / Studio | NVIDIA Enterprise / RTX Workstation[12] |
| Launch Price (MSRP) | $1,999[9][10] | $1,599 | ~$8,000[15] |

Table 1: Comparative analysis of key GPU specifications.
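Two rows of the comparison, theoretical FP32 throughput and memory bandwidth, can be approximated from the other rows. The sketch below uses the common rule of thumb of two floating-point operations per CUDA core per clock (one fused multiply-add). The per-pin data rates (28 Gbps for GDDR7, 21 Gbps for GDDR6X) are not listed in the table and are supplied here as assumptions; the function names are illustrative.

```python
def fp32_tflops(cuda_cores, boost_clock_ghz):
    """Theoretical FP32 throughput, assuming 2 FLOPs per core per clock (FMA)."""
    return cuda_cores * 2 * boost_clock_ghz / 1_000

def memory_bandwidth_gbs(bus_width_bits, data_rate_gbps):
    """Peak memory bandwidth in GB/s from bus width and per-pin data rate."""
    return bus_width_bits * data_rate_gbps / 8

if __name__ == "__main__":
    cards = {
        # name: (CUDA cores, boost GHz, bus bits, assumed per-pin Gbps)
        "RTX 5090": (21_760, 2.407, 512, 28),
        "RTX 4090": (16_384, 2.520, 384, 21),
    }
    for name, (cores, ghz, bus, rate) in cards.items():
        print(f"{name}: {fp32_tflops(cores, ghz):.1f} TFLOPS FP32, "
              f"{memory_bandwidth_gbs(bus, rate):.0f} GB/s")
```

With these inputs the formula lands near 104.8 TFLOPS for the RTX 5090, slightly above the ~103 TFLOPS quoted in the table; the gap presumably reflects a different clock assumption in the cited source.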

Suitability for Scientific and Drug Development Workloads

The RTX 5090's specifications make it a powerful tool for many research applications, but its suitability depends on the specific nature of the computational tasks.

Strengths

- Exceptional Price-to-Performance Ratio: For raw single-precision (FP32) and AI inference performance, the RTX 5090 offers capabilities that rival or exceed previous-generation professional cards at a fraction of the cost. This is highly advantageous for academic labs and startups where budget is a primary constraint.
- Massive Memory Bandwidth and Size: With 32 GB of GDDR7 memory and a bandwidth of nearly 1.8 TB/s, the RTX 5090 can handle significantly larger datasets and more complex models than its predecessors.[3][9][14] This is beneficial for molecular dynamics simulations of large biomolecular systems, cryo-EM data processing, and training moderately sized AI models.[16]

Limitations and Considerations

- Lack of ECC Memory: The absence of Error-Correcting Code (ECC) memory is a significant drawback for mission-critical, long-running simulations where data integrity is paramount.[12] ECC memory can detect and correct in-memory data corruption, which can otherwise lead to silent errors in scientific results.
- Double Precision (FP64) Performance: Consumer-grade GeForce cards are typically limited in their FP64 performance, often at a 1/64 ratio of their FP32 throughput. While the Blackwell architecture for data centers emphasizes AI, some traditional HPC simulations (e.g., certain quantum chemistry or fluid dynamics calculations) still rely heavily on FP64 precision. Data center GPUs such as the B200 are better suited for these tasks;[4] the RTX PRO 6000's advantages over the RTX 5090 lie mainly in ECC memory, capacity, and certified drivers rather than in FP64 throughput.
- Driver and Software Support: The RTX 5090 uses GeForce Game Ready or NVIDIA Studio drivers. While the Studio drivers are optimized for creative applications, they lack the rigorous testing, certification for scientific software (ISV certifications), and enterprise-level support that come with the RTX Workstation drivers.[12] This can lead to potential instabilities or suboptimal performance in specialized research software.
- Cooling and Power in Cluster Environments: The high Total Graphics Power (TGP) of 575W requires robust power delivery and, more importantly, effective thermal management in a dense cluster environment.[3][10] The dual-slot, flow-through cooler of the Founders Edition is designed for a standard PC case, not necessarily for rack-mounted servers where airflow is different.
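Two of the limitations above lend themselves to quick back-of-the-envelope estimates. The sketch below applies the 1/64 FP64 ratio to a theoretical FP32 figure and sums GPU power for a multi-GPU node. The `other_components_w` allowance and the four-GPU node are hypothetical values chosen only for illustration.

```python
def fp64_tflops(fp32_tflops, ratio=1 / 64):
    """Estimated FP64 throughput from FP32 throughput and an FP64:FP32 ratio."""
    return fp32_tflops * ratio

def node_power_w(n_gpus, tgp_w=575, other_components_w=0):
    """Rough sustained power budget for a node: GPUs plus everything else."""
    return n_gpus * tgp_w + other_components_w

if __name__ == "__main__":
    # ~103 TFLOPS FP32 is the theoretical figure quoted for the RTX 5090.
    print(f"Estimated FP64: {fp64_tflops(103.0):.2f} TFLOPS")
    # Hypothetical 4-GPU node with 600 W assumed for CPU, memory, and storage.
    print(f"Node budget: {node_power_w(4, other_components_w=600)} W")
```

Estimates like these are only a starting point; real power draw and FP64 behavior should be confirmed on the target hardware before committing to a cluster design.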

Experimental Protocols & Methodologies

While specific peer-reviewed experimental protocols for the RTX 5090 are emerging, methodologies from benchmark reports and early adopter experiences provide a framework for performance evaluation.

Methodology: AI Inference and Training Benchmarks

- System Configuration:
  - CPU: Intel Core i9-14900K or AMD Ryzen 9 9950X3D.[16]
  - Motherboard: Z790 or X670E chipset with PCIe 5.0 support.
  - RAM: 96GB of DDR5 @ 6000 MT/s or higher.[16]
  - GPU: NVIDIA GeForce RTX 5090 (32GB).
  - Storage: 2TB NVMe SSD (PCIe 5.0).
  - Power Supply: 1300W or higher, 80+ Platinum rated.[16]
  - OS: Ubuntu 22.04 LTS with NVIDIA Driver Version 581.80 or newer.[10]
  - Software: CUDA Toolkit, PyTorch, TensorFlow, Docker.
- Experimental Procedure (AI Text Generation):
  - Deploy large language models (LLMs) of varying sizes, such as Meta's Llama 3.1 and Microsoft's Phi-3.5, locally on the GPU.
  - Utilize frameworks like llama.cpp for inference testing.
  - Measure key performance indicators:
    - Time to First Token (TTFT): The latency from prompt input to the generation of the first token.
    - Tokens per Second (TPS): The throughput of token generation for a sustained output.
  - Run tests with different levels of model quantization (e.g., 8-bit) to assess performance trade-offs.
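The two indicators in this procedure can be measured around any streaming token source. The sketch below is a minimal illustration that does not call llama.cpp or any real inference API; `fake_stream` is a hypothetical stand-in generator that imitates prompt-processing delay followed by steady decoding.

```python
import time

def measure_stream(token_stream):
    """Measure time to first token and decode throughput for a token iterator."""
    start = time.perf_counter()
    first_token_at = None
    n_tokens = 0
    for _ in token_stream:
        if first_token_at is None:
            first_token_at = time.perf_counter()
        n_tokens += 1
    end = time.perf_counter()
    if n_tokens == 0:
        return {"ttft_s": None, "tokens_per_s": 0.0, "n_tokens": 0}
    decode_s = end - first_token_at
    # Throughput counts tokens generated after the first one arrived.
    tps = (n_tokens - 1) / decode_s if n_tokens > 1 and decode_s > 0 else 0.0
    return {"ttft_s": first_token_at - start, "tokens_per_s": tps, "n_tokens": n_tokens}

def fake_stream(n_tokens, first_delay_s=0.05, gap_s=0.01):
    """Hypothetical token source: one long initial delay, then regular gaps."""
    time.sleep(first_delay_s)
    for i in range(n_tokens):
        if i:
            time.sleep(gap_s)
        yield f"tok{i}"

if __name__ == "__main__":
    print(measure_stream(fake_stream(20)))
```

Separating TTFT from decode throughput matters because the two respond differently to prompt length and quantization level.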

Methodology: Molecular Dynamics (MD) Simulation

This protocol is based on common practices in computational drug discovery.[16]

- System Configuration: As described in the AI Inference and Training Benchmarks methodology above.
- Software Stack:
  - MD Engine: GROMACS, AMBER, or NAMD (GPU-accelerated versions).
  - Visualization: VMD or PyMOL.
  - System Preparation: Standard molecular modeling software (e.g., Maestro, ChimeraX).
- Experimental Procedure:
  - System Setup: Prepare a biomolecular system (e.g., a target protein embedded in a lipid bilayer with solvent and ions). System sizes can range from 100,000 to 300,000 atoms to test the limits of the 32GB VRAM.[16]
  - Minimization & Equilibration: Perform energy minimization followed by NVT (constant volume) and NPT (constant pressure) equilibration phases to stabilize the system.
  - Production Run: Execute a production MD simulation for a defined period (e.g., 100 nanoseconds).
  - Performance Measurement: The primary metric is simulation throughput, measured in nanoseconds per day (ns/day).
  - Analysis: Compare the ns/day performance of the RTX 5090 against other GPUs for the same molecular system.
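For the measurement and analysis steps, the throughput figure is usually read from the MD engine's log rather than computed by hand. The sketch below assumes the GROMACS convention of a `Performance:` line reporting ns/day and hour/ns at the end of the log; the sample excerpt, the GPU labels, and the numbers are hypothetical.

```python
import re

# Assumed log format: "Performance:   <ns/day>   <hour/ns>" on its own line.
_PERF_LINE = re.compile(r"^Performance:\s+([0-9.]+)\s+([0-9.]+)", re.MULTILINE)

def parse_gromacs_performance(log_text):
    """Return (ns_per_day, hours_per_ns) from log text, or None if absent."""
    match = _PERF_LINE.search(log_text)
    if match is None:
        return None
    return float(match.group(1)), float(match.group(2))

def relative_speedup(results, baseline):
    """Express each system's ns/day relative to the named baseline."""
    base = results[baseline]
    return {name: rate / base for name, rate in results.items()}

SAMPLE_LOG = """\
                 (ns/day)    (hour/ns)
Performance:      700.000        0.034
"""

if __name__ == "__main__":
    print(parse_gromacs_performance(SAMPLE_LOG))
    # Hypothetical results for the same molecular system on two GPUs.
    print(relative_speedup({"gpu_a": 70.0, "gpu_b": 700.0}, baseline="gpu_a"))
```

Comparisons are only meaningful when the input system, run parameters, and software build are identical across the GPUs being tested.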

Visualizations: Workflows and Logical Relationships

The following diagrams illustrate key concepts for integrating the RTX 5090 into a research computing environment.

Caption: GPU-accelerated drug discovery workflow.

Caption: RTX 5090 vs. RTX PRO 6000 for research.

Caption: GPU selection flowchart for research workloads.

Conclusion and Recommendation

The NVIDIA GeForce RTX 5090 is a transformative piece of hardware that significantly lowers the barrier to entry for high-performance computing. Its raw computational power, especially in AI and single-precision tasks, is undeniable.[1][17]

- For Critical, High-Precision Simulations: For long-running molecular dynamics simulations where results contribute to clinical decisions, or for quantum mechanical calculations demanding high precision and data integrity, the lack of ECC memory and certified drivers makes the RTX 5090 a riskier proposition. In these scenarios, a professional card like the RTX PRO 6000, despite its higher cost, is the more appropriate tool.[12]

Ultimately, the RTX 5090 is highly suitable for HPC clusters in research and drug development, provided its role is clearly defined. It excels as a high-density compute solution for exploratory research, AI model development, and visualization. However, for final validation and mission-critical simulations, it should be complemented by professional-grade GPUs that guarantee the highest level of reliability and data integrity.

References

- 1. box.co.uk [box.co.uk]

- 2. medium.com [medium.com]

- 3. notebookcheck.net [notebookcheck.net]

- 4. Weights & Biases [wandb.ai]

- 5. The Engine Behind AI Factories | NVIDIA Blackwell Architecture [nvidia.com]

- 6. neoxcomputers.co.uk [neoxcomputers.co.uk]

- 7. NVIDIA Blackwell GeForce RTX 50 Series Opens New World of AI Computer Graphics | NVIDIA Newsroom [nvidianews.nvidia.com]

- 8. newegg.com [newegg.com]

- 9. NVIDIA GeForce RTX 5090 Founders Edition Review - The New Flagship | TechPowerUp [techpowerup.com]

- 10. NVIDIA GeForce RTX 5090 Specs | TechPowerUp GPU Database [techpowerup.com]

- 11. pcgamer.com [pcgamer.com]

- 12. RTX PRO 6000 vs RTX 5090: Which GPU Leads the Next Era? [newegg.com]

- 13. xda-developers.com [xda-developers.com]

- 14. vast.ai [vast.ai]

- 15. reddit.com [reddit.com]

- 16. Reddit - The heart of the internet [reddit.com]

- 17. Nvidia GeForce RTX 5090 review: Brutally fast, but DLSS 4 is the game changer | PCWorld [pcworld.com]

- 18. pugetsystems.com [pugetsystems.com]

The Future of Large-Scale Data Analysis: A Technical Deep Dive into 32GB GDDR7 Memory

For Researchers, Scientists, and Drug Development Professionals

The relentless growth of data in scientific research, from genomics to molecular modeling, demands computational hardware that can keep pace. The advent of 32GB GDDR7 memory marks a pivotal moment, offering unprecedented speed and capacity for handling massive datasets. This guide explores the core technical advancements of GDDR7 and its transformative potential in accelerating large-scale data analysis, with a focus on applications in research and drug development.

GDDR7: A Generational Leap in Memory Performance

Graphics Double Data Rate 7 (GDDR7) is the next evolution in high-performance memory, engineered to eliminate data bottlenecks that can hinder complex computational workloads. Its key innovations lie in significantly higher bandwidth, improved power efficiency, and increased density, making it an ideal solution for memory-intensive applications.

Quantitative Comparison: GDDR7 vs. GDDR6

The table below summarizes the key performance metrics of GDDR7 compared to its predecessor, GDDR6, highlighting the substantial advancements of the new technology.

| Feature | GDDR6 | GDDR7 |
|---|---|---|
| Peak Bandwidth per Pin | Up to 24 Gbps[1] | Initially 32 Gbps, with a roadmap to 48 Gbps[2][3] |
| Signaling Technology | NRZ (Non-Return-to-Zero) | PAM3 (Pulse Amplitude Modulation, 3-level)[1][4] |
| System Bandwidth (Theoretical) | Up to 1.1 TB/s | Over 1.5 TB/s[5][6][7] |
| Operating Voltage | 1.25V - 1.35V | 1.1V - 1.2V[2][5] |
| Power Efficiency | Baseline | Over 50% improvement in power efficiency over GDDR6[8] |
| Maximum Density | 16Gb | 32Gb and higher[9] |
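The signaling and bandwidth rows are linked by simple arithmetic. NRZ carries one bit per symbol, while PAM3 as used in GDDR7 encodes three bits over two symbols (1.5 bits per symbol), so the same data rate needs a lower symbol rate. The sketch below illustrates this; the 384-bit bus width is an assumption chosen because it reproduces the table's system bandwidth figures, and the function names are illustrative.

```python
BITS_PER_SYMBOL = {
    "NRZ": 1.0,    # two levels, one bit per symbol
    "PAM3": 1.5,   # three levels, three bits encoded across two symbols
}

def symbol_rate_gbaud(data_rate_gbps, scheme):
    """Symbol rate needed on one pin to reach a given data rate."""
    return data_rate_gbps / BITS_PER_SYMBOL[scheme]

def system_bandwidth_gbs(data_rate_gbps, bus_width_bits):
    """Aggregate bandwidth in GB/s across the whole memory bus."""
    return data_rate_gbps * bus_width_bits / 8

if __name__ == "__main__":
    bus = 384  # assumed bus width in bits
    print(f"GDDR6 24 Gbps: {system_bandwidth_gbs(24, bus):.0f} GB/s, "
          f"{symbol_rate_gbaud(24, 'NRZ'):.1f} GBaud per pin")
    print(f"GDDR7 32 Gbps: {system_bandwidth_gbs(32, bus):.0f} GB/s, "
          f"{symbol_rate_gbaud(32, 'PAM3'):.1f} GBaud per pin")
```

On that assumed bus, 24 Gbps and 32 Gbps per pin correspond to roughly 1.15 TB/s and 1.54 TB/s, in line with the "Up to 1.1 TB/s" and "Over 1.5 TB/s" entries.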

Hypothetical Experimental Protocol: Large-Scale Genomic Data Analysis

The high bandwidth and 32GB capacity of GDDR7 can dramatically accelerate genomic workflows, which are often bottlenecked by the sheer volume of data.

Objective: To perform variant calling and downstream analysis on a cohort of 100 whole-genome sequencing (WGS) samples.

Methodology:

- Data Loading and Pre-processing:
  - Raw sequencing data (FASTQ files) for 100 samples, each approximately 100GB, are streamed in chunks from high-speed NVMe storage into the 32GB GDDR7 memory of a GPU-accelerated system. At roughly 10TB in total, the cohort cannot reside in GPU memory at once.
  - Once a chunk is on the GPU, the high bandwidth of GDDR7 keeps the cores supplied with data. Transfers from storage remain bounded by the NVMe and PCIe links, so overlapping them with computation helps minimize I/O wait times.
  - Initial quality control and adapter trimming are performed in parallel on the GPU, leveraging its massive core count.
- Alignment to Reference Genome:
  - The pre-processed reads are aligned to a human reference genome (e.g., GRCh38) using a GPU-accelerated implementation of BWA-MEM, such as the one provided in NVIDIA Parabricks.
  - The reference genome index and a working batch of reads can be held in the 32GB GDDR7 memory, reducing the need for slower system RAM access and enabling faster alignment.
- Variant Calling:
  - The aligned reads (BAM files) are processed with a GPU-accelerated variant caller such as those provided in NVIDIA's Parabricks.
  - The high bandwidth of GDDR7 is crucial here, as the variant caller needs to perform random access reads across the large alignment files to identify genetic variations.
- Joint Genotyping and Annotation:
  - Variants from all 100 samples are jointly genotyped to improve accuracy. This process is highly memory-intensive, and the 32GB capacity of GDDR7 allows for larger cohorts to be processed simultaneously.
  - The resulting VCF file is annotated with information from large databases (e.g., dbSNP, ClinVar), which can be pre-loaded into the GDDR7 memory for rapid lookups.
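The data volumes in this protocol are far larger than the GPU's memory, so it helps to estimate how the cohort would be split up. The sketch below is a planning calculation only: the usable fraction of VRAM and the storage link speed are hypothetical parameters, and the function names are illustrative.

```python
import math

def plan_chunks(n_samples, sample_gb, vram_gb, usable_fraction=0.5):
    """Estimate how many chunks are needed to stream a cohort through VRAM.

    usable_fraction is an assumed share of VRAM left for input data after
    reserving space for the reference index and working buffers.
    """
    chunk_gb = vram_gb * usable_fraction
    chunks_per_sample = math.ceil(sample_gb / chunk_gb)
    return {
        "total_gb": n_samples * sample_gb,
        "chunk_gb": chunk_gb,
        "chunks_per_sample": chunks_per_sample,
        "total_chunks": n_samples * chunks_per_sample,
    }

def min_load_time_hours(total_gb, link_gb_per_s):
    """Lower bound on transfer time if the storage link is the bottleneck."""
    return total_gb / link_gb_per_s / 3600

if __name__ == "__main__":
    plan = plan_chunks(n_samples=100, sample_gb=100, vram_gb=32)
    print(plan)
    # Hypothetical sustained storage throughput of 10 GB/s.
    print(f"{min_load_time_hours(plan['total_gb'], 10.0):.2f} h minimum transfer time")
```

A calculation like this shows why the storage and PCIe path, not GDDR7 bandwidth, tends to set the floor on end-to-end loading time for cohort-scale inputs.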

Workflow Diagram: Genomics Analysis

Caption: Genomics analysis workflow accelerated by 32GB GDDR7 memory.

Hypothetical Experimental Protocol: High-Throughput Virtual Screening for Drug Discovery

Molecular dynamics simulations and virtual screening are critical in modern drug discovery but are computationally demanding. GDDR7 can significantly reduce the time to results.

Objective: To screen a library of 1 million small molecules against a target protein to identify potential drug candidates.

Methodology:

- System Preparation:

  - The 3D structure of the target protein is loaded into the 32GB GDDR7 memory.

  - The small molecule library is also loaded. The large capacity of GDDR7 allows for a significant portion of the library to be held in memory, reducing I/O overhead.

- Molecular Docking:

  - A GPU-accelerated docking program (e.g., AutoDock-GPU) is used to predict the binding orientation of each small molecule in the active site of the protein.

  - The high bandwidth of GDDR7 enables rapid access to the protein structure and the parameters for each small molecule, allowing for a high throughput of docking calculations.

- Molecular Dynamics (MD) Simulation:

  - The most promising protein-ligand complexes identified from docking are subjected to short MD simulations to evaluate their stability.

  - GPU-accelerated MD engines like AMBER or GROMACS can leverage the 32GB GDDR7 to simulate larger and more complex systems. The high memory bandwidth is essential for the frequent updates of particle positions and forces.

- Binding Free Energy Calculation:

  - For the most stable complexes, more computationally intensive methods like MM/PBSA or free energy perturbation are used to calculate the binding free energy.

  - These calculations are highly parallelizable and benefit from the ability of GDDR7 to quickly feed the GPU cores with the necessary data from the MD trajectories.
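The four stages above form a funnel: a cheap score is applied to every ligand, and progressively more expensive methods are applied to fewer survivors. The sketch below illustrates only that control flow. The `screen` function and its `dock`, `is_stable`, and `free_energy` callables are hypothetical stand-ins for calls into real tools such as AutoDock-GPU, GROMACS, or an MM/PBSA workflow; none of them correspond to an actual API.

```python
from typing import Callable, Iterator, List, Sequence, Tuple


def batched(items: Sequence[str], batch_size: int) -> Iterator[Sequence[str]]:
    """Yield consecutive slices so only one batch of ligands is processed at a time."""
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


def screen(
    ligands: Sequence[str],
    dock: Callable[[str], float],         # hypothetical: docking score, lower is better
    is_stable: Callable[[str], bool],     # hypothetical: outcome of a short MD run
    free_energy: Callable[[str], float],  # hypothetical: binding free energy, lower is better
    batch_size: int,
    keep_after_docking: int,
) -> List[Tuple[str, float]]:
    # Stage 1: dock every ligand, batch by batch.
    docked: List[Tuple[float, str]] = []
    for batch in batched(ligands, batch_size):
        docked.extend((dock(ligand), ligand) for ligand in batch)
    # Stage 2: keep only the best-scoring ligands for the expensive stages.
    shortlist = [ligand for _, ligand in sorted(docked)[:keep_after_docking]]
    # Stage 3: discard complexes that do not remain stable during MD.
    stable = [ligand for ligand in shortlist if is_stable(ligand)]
    # Stage 4: rank the survivors by estimated binding free energy.
    ranked = [(ligand, free_energy(ligand)) for ligand in stable]
    return sorted(ranked, key=lambda pair: pair[1])


# Toy inputs, invented purely to exercise the control flow.
toy_scores = {"lig_a": -9.1, "lig_b": -4.0, "lig_c": -8.2, "lig_d": -7.5}
hits = screen(
    ligands=list(toy_scores),
    dock=toy_scores.__getitem__,
    is_stable=lambda ligand: ligand != "lig_c",
    free_energy=lambda ligand: toy_scores[ligand] - 1.0,
    batch_size=2,
    keep_after_docking=3,
)
```

In this toy run, `lig_b` is dropped after docking, `lig_c` fails the stability check, and `lig_a` ranks ahead of `lig_d`. The batch size is the knob that ties the sketch back to memory capacity: a larger on-card memory allows larger batches and fewer transfers.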

Workflow Diagram: Drug Discovery Virtual Screening

Caption: High-throughput virtual screening workflow for drug discovery.

Signaling Pathways and Logical Relationships

The advancements in computational power enabled by 32GB GDDR7 memory can also facilitate the analysis of complex biological systems, such as signaling pathways. By allowing for more comprehensive simulations and the integration of multi-omics data, researchers can build more accurate models of cellular processes.

Diagram: Simplified MAPK Signaling Pathway Analysis

Caption: Analysis of the MAPK signaling pathway using integrated multi-omics data.

Conclusion

The introduction of 32GB GDDR7 memory represents a significant milestone for computational research. Its high bandwidth, increased capacity, and improved power efficiency will empower researchers, scientists, and drug development professionals to tackle larger and more complex problems. By reducing data bottlenecks and enabling more sophisticated simulations and analyses, GDDR7 is poised to accelerate the pace of discovery and innovation across a wide range of scientific disciplines. The adoption of this technology in next-generation GPUs, such as the upcoming NVIDIA RTX 50 series[3][10], will make these capabilities more accessible to the broader research community.

References

- 1. What are the advantages of using GDDR7 memory over GDDR6 in a high-performance computing application? - Massed Compute [massedcompute.com]

- 2. rambus.com [rambus.com]

- 3. tomshardware.com [tomshardware.com]

- 4. semiengineering.com [semiengineering.com]

- 5. GDDR7 SDRAM - Wikipedia [en.wikipedia.org]

- 6. investors.micron.com [investors.micron.com]

- 7. GDDR7 - the next generation of graphics memory | Micron Technology Inc. [micron.com]

- 8. assets.micron.com [assets.micron.com]

- 9. community.cadence.com [community.cadence.com]

- 10. GeForce RTX 50 series - Wikipedia [en.wikipedia.org]

NVIDIA RTX 5090: A Technical Deep Dive into its Generative AI Core for Scientific and Drug Discovery Applications

For Researchers, Scientists, and Drug Development Professionals

The NVIDIA RTX 5090, powered by the groundbreaking Blackwell architecture, represents a significant leap forward in computational power, particularly for generative AI workloads that are becoming increasingly central to scientific research and drug development. This technical guide explores the core capabilities of the RTX 5090, focusing on its performance in generative AI tasks, the underlying architectural innovations, and detailed methodologies for reproducing key performance benchmarks.

Core Architectural Innovations of the Blackwell GPU

The Blackwell architecture, at the heart of the RTX 5090, introduces several key technologies designed to accelerate generative AI. These advancements provide substantial performance gains and new capabilities for researchers working with complex models in fields like molecular dynamics, protein folding, and drug discovery.

Two features are central here: the fifth-generation Tensor Cores, which add support for FP4 precision, and the second-generation Transformer Engine, which manages and optimizes the precision of calculations on the fly.[2] Combined with NVIDIA's TensorRT™-LLM and NeMo™ Framework, the Transformer Engine accelerates both inference and training for large language models (LLMs) and Mixture-of-Experts (MoE) models.[2]

The data flow for a generative AI inference task leveraging these new features can be conceptualized as a streamlined pipeline. Input data is fed into the model, where the Transformer Engine dynamically selects the optimal precision for different layers. The fifth-generation Tensor Cores then execute the matrix-multiply-accumulate operations at high speed, leveraging FP4 where possible to maximize throughput and minimize memory access. The results are then passed through the subsequent layers of the neural network to generate the final output.
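To make the memory argument concrete, the arithmetic below estimates weight storage at different precisions. It is a back-of-the-envelope sketch: the `weight_footprint_gb` helper and the 7-billion-parameter example are illustrative assumptions, and the estimate ignores activations, caches, and the scale factors that low-precision formats carry alongside the weights.

```python
# Bits used to store one weight at each precision.
BITS_PER_WEIGHT = {"FP16": 16, "FP8": 8, "FP4": 4}


def weight_footprint_gb(num_parameters: int, precision: str) -> float:
    """Approximate weight storage in gigabytes (10^9 bytes), weights only."""
    bits = BITS_PER_WEIGHT[precision]
    return num_parameters * bits / 8 / 1e9


# Hypothetical 7-billion-parameter model, chosen only for illustration.
params = 7_000_000_000
footprints = {name: weight_footprint_gb(params, name) for name in BITS_PER_WEIGHT}
# FP16 -> 14.0 GB, FP8 -> 7.0 GB, FP4 -> 3.5 GB
```

Each halving of the bits per weight halves the bytes that must be read from memory per forward pass, which is why lower precision helps workloads that are limited by memory bandwidth rather than by arithmetic.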

DLSS 4 for Scientific Visualization: A Technical Introduction

An In-depth Guide for Researchers, Scientists, and Drug Development Professionals

NVIDIA's Deep Learning Super Sampling (DLSS) has emerged as a transformative technology in real-time rendering, primarily within the gaming industry. However, the latest iteration, DLSS 4, with its fundamentally new AI architecture, presents a compelling case for its application in computationally intensive scientific visualization tasks. This guide provides a technical overview of the core components of DLSS 4, exploring its potential to accelerate workflows and enhance visual fidelity for researchers, scientists, and professionals in drug development. Because concrete benchmarks in scientific applications are still emerging, the guide extrapolates from existing gaming data to outline how DLSS 4 could change the visualization of complex scientific datasets.

Core Technologies of DLSS 4

DLSS 4 represents a significant leap from its predecessors, primarily due to its adoption of a transformer-based AI model. This marks a departure from the Convolutional Neural Networks (CNNs) used in previous versions. Transformers are renowned for their ability to capture long-range dependencies and contextual relationships within data, a capability that translates to more robust and accurate image reconstruction.[1][2] This new architecture underpins the two primary pillars of DLSS 4, Super Resolution and Ray Reconstruction, and introduces a novel feature called Multi-Frame Generation.

Super Resolution and Ray Reconstruction: The Power of the Transformer Model

The new transformer model in DLSS 4 significantly enhances the capabilities of Super Resolution and Ray Reconstruction.[1][2] For scientific visualization, this translates to the ability to render large and complex datasets at lower resolutions and then intelligently upscale them to high resolutions, preserving intricate details while maintaining interactive frame rates.
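The render-low, upscale-high idea can be quantified by how many pixels are actually shaded before upscaling. The sketch below uses commonly cited per-axis render scales for the DLSS quality modes; these values are assumptions for illustration rather than figures from this guide, and the helper names are hypothetical.

```python
from typing import Tuple

# Per-axis render scale for each mode; commonly cited values, treated here as assumptions.
RENDER_SCALE = {
    "Quality": 2 / 3,
    "Balanced": 0.58,
    "Performance": 0.5,
    "Ultra Performance": 1 / 3,
}


def internal_resolution(out_w: int, out_h: int, mode: str) -> Tuple[int, int]:
    """Resolution rendered internally before upscaling to (out_w, out_h)."""
    scale = RENDER_SCALE[mode]
    return round(out_w * scale), round(out_h * scale)


def shaded_pixel_fraction(out_w: int, out_h: int, mode: str) -> float:
    """Fraction of output pixels that are actually rendered rather than reconstructed."""
    w, h = internal_resolution(out_w, out_h, mode)
    return (w * h) / (out_w * out_h)


# Example: a 4K output in Performance mode renders internally at 1920x1080.
perf_resolution = internal_resolution(3840, 2160, "Performance")
perf_fraction = shaded_pixel_fraction(3840, 2160, "Performance")
```

Under these assumed scales, Performance mode shades a quarter of the output pixels. For a renderer whose cost grows with pixel count, such as a volume ray caster, that reduction is where the frame-rate headroom comes from.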

The transformer architecture allows the AI to better understand the spatial and temporal relationships within a rendered scene.[3][1] This leads to a number of key improvements relevant to scientific visualization:

- Enhanced Detail Preservation: The model can more accurately reconstruct fine details in complex structures, such as molecular bonds or intricate cellular components.

- Improved Temporal Stability: When exploring dynamic simulations, the transformer model reduces flickering and ghosting artifacts that can occur with fast-moving elements.

- Superior Handling of Translucency and Volumetric Effects: The model's ability to understand global scene context can lead to more accurate rendering of translucent surfaces and volumetric data, which are common in biological and medical imaging.

Multi-Frame Generation: A Paradigm Shift in Performance

Exclusive to the GeForce RTX 50 series and subsequent architectures, Multi-Frame Generation generates up to three additional frames for each rendered frame.[1] An AI model analyzes motion vectors and optical flow to predict and create entirely new frames. For scientific visualization, this can substantially increase perceived smoothness and interactivity, especially when dealing with very large datasets that would otherwise be rendered at low frame rates.
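The frame-rate effect of Multi-Frame Generation reduces to simple arithmetic, sketched below. The cap of three generated frames per rendered frame follows Table 2 of this guide; the function names are hypothetical, and the model ignores the overhead of running the frame-generation network itself.

```python
MAX_GENERATED_FRAMES = 3  # per rendered frame, as listed in Table 2 of this guide


def displayed_fps(rendered_fps: float, generated_per_rendered: int) -> float:
    """Frames shown per second when each rendered frame is followed by generated ones."""
    if not 0 <= generated_per_rendered <= MAX_GENERATED_FRAMES:
        raise ValueError("generated_per_rendered must be between 0 and 3")
    return rendered_fps * (1 + generated_per_rendered)


def rendered_frame_interval_ms(rendered_fps: float) -> float:
    """Time between frames that reflect a fresh render of the underlying data."""
    return 1000.0 / rendered_fps


# Example: 30 rendered frames per second with three generated frames each.
shown = displayed_fps(30.0, 3)
interval = rendered_frame_interval_ms(30.0)
```

Here 30 rendered frames per second become 120 displayed frames per second, while the interval between frames that come from an actual render stays at about 33 ms. The generated frames are predictions rather than renderings of the data, which matters when a visualization is used for measurement rather than exploration.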

Potential Applications in Scientific and Drug Development Workflows

The advancements in DLSS 4 have the potential to significantly impact various stages of the scientific research and drug development pipeline:

- Molecular Dynamics and Structural Biology: Interactively visualizing large biomolecular complexes, such as proteins and viruses, is crucial for understanding their function. DLSS 4 could enable researchers to explore these structures in high resolution and at fluid frame rates, facilitating the identification of binding sites and the analysis of molecular interactions.

- Volumetric Data Visualization: Medical imaging techniques like MRI and CT scans, as well as microscopy data, generate large volumetric datasets. DLSS 4 can accelerate the rendering of this data, allowing for real-time exploration and analysis of anatomical structures and cellular processes.

- Computational Fluid Dynamics (CFD) and Simulation: Visualizing the results of complex simulations, such as blood flow in arteries or airflow over a surface, often requires significant computational resources. DLSS 4 could provide a pathway to interactive visualization of these simulations, enabling researchers to gain insights more quickly.

Quantitative Data and Performance Metrics

While specific benchmarks for DLSS 4 in scientific visualization applications are not yet widely available, the performance gains observed in the gaming industry indicate its potential. The following tables summarize the improvements seen in demanding real-time rendering scenarios. These figures are illustrative only; actual gains in scientific applications will depend on the specific software and dataset.

Table 1: Illustrative Performance Gains with DLSS 4 Multi-Frame Generation (Gaming Scenarios)

| Game/Engine | Resolution | Settings | Native Rendering (FPS) | DLSS 4 with Multi-Frame Generation (FPS) | Performance Uplift |
|---|---|---|---|---|---|
| Cyberpunk 2077 | 4K | Max, Ray Tracing: Overdrive | ~25 | ~150 | ~6x |
| Alan Wake 2 | 4K | Max, Path Tracing | ~30 | ~180 | ~6x |
| Unreal Engine 5 Demo | 4K | High, Lumen | ~40 | ~240 | ~6x |

Note: Data is aggregated from various gaming-focused technology reviews and is intended to be illustrative of the potential performance gains.

Table 2: DLSS 4 Technical Specifications and Improvements

| Feature | DLSS 3.5 (CNN Model) | DLSS 4 (Transformer Model) | Key Advantage for Scientific Visualization |
|---|---|---|---|
| AI Architecture | Convolutional Neural Network (CNN) | Transformer | Improved understanding of global context and long-range dependencies, leading to better detail preservation. |
| Frame Generation | Single Frame Generation | Multi-Frame Generation (up to 3 additional frames) | Dramatically smoother and more interactive visualization of large datasets. |
| Ray Reconstruction | AI-based denoiser | Enhanced by Transformer model | More accurate and stable rendering of complex lighting and transparent structures. |
| Hardware Requirement | GeForce RTX 20/30/40 Series | Super Resolution/Ray Reconstruction: RTX 20/30/40/50 Series; Multi-Frame Generation: RTX 50 Series and newer | Access to foundational AI upscaling on a wider range of hardware, with the most significant performance gains on the latest generation. |

Experimental Protocols and Methodologies

As the adoption of DLSS 4 in the scientific community is still in its early stages, standardized experimental protocols for its evaluation are yet to be established. However, a robust methodology for assessing its impact on a given scientific visualization workflow would involve the following steps:

- Baseline Performance Measurement: Render a representative dataset using the native rendering capabilities of the visualization software (e.g., VMD, ChimeraX, ParaView) and record key performance metrics such as frames per second (FPS), frame time, and memory usage.

- DLSS 4 Integration and Configuration: If the software supports DLSS 4, enable it and test different quality modes (e.g., Quality, Balanced, Performance, Ultra Performance).