CS476

Description

Properties

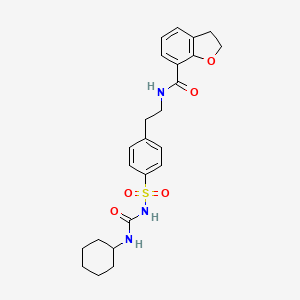

| IUPAC Name | N-[2-[4-(cyclohexylcarbamoylsulfamoyl)phenyl]ethyl]-2,3-dihydro-1-benzofuran-7-carboxamide |
|---|---|
| Source | PubChem |
| URL | https://pubchem.ncbi.nlm.nih.gov |
| Description | Data deposited in or computed by PubChem |

| InChI | InChI=1S/C24H29N3O5S/c28-23(21-8-4-5-18-14-16-32-22(18)21)25-15-13-17-9-11-20(12-10-17)33(30,31)27-24(29)26-19-6-2-1-3-7-19/h4-5,8-12,19H,1-3,6-7,13-16H2,(H,25,28)(H2,26,27,29) |
|---|---|
| Source | PubChem |
| URL | https://pubchem.ncbi.nlm.nih.gov |
| Description | Data deposited in or computed by PubChem |

| InChI Key | RNQMWIVFHOCVMM-UHFFFAOYSA-N |
|---|---|
| Source | PubChem |
| URL | https://pubchem.ncbi.nlm.nih.gov |
| Description | Data deposited in or computed by PubChem |

| Canonical SMILES | C1CCC(CC1)NC(=O)NS(=O)(=O)C2=CC=C(C=C2)CCNC(=O)C3=CC=CC4=C3OCC4 |
|---|---|
| Source | PubChem |
| URL | https://pubchem.ncbi.nlm.nih.gov |
| Description | Data deposited in or computed by PubChem |

| Molecular Formula | C24H29N3O5S |
|---|---|
| Source | PubChem |
| URL | https://pubchem.ncbi.nlm.nih.gov |
| Description | Data deposited in or computed by PubChem |

| DSSTOX Substance ID | DTXSID50194105 |
|---|---|
| Record name | CS 476 |
| Source | EPA DSSTox |
| URL | https://comptox.epa.gov/dashboard/DTXSID50194105 |
| Description | DSSTox provides a high quality public chemistry resource for supporting improved predictive toxicology. |

| Molecular Weight | 471.6 g/mol |
|---|---|
| Source | PubChem |
| URL | https://pubchem.ncbi.nlm.nih.gov |
| Description | Data deposited in or computed by PubChem |

| CAS No. | 41177-35-9 |
|---|---|
| Record name | CS 476 |
| Source | ChemIDplus |
| URL | https://pubchem.ncbi.nlm.nih.gov/substance/?source=chemidplus&sourceid=0041177359 |
| Description | ChemIDplus is a free, web search system that provides access to the structure and nomenclature authority files used for the identification of chemical substances cited in National Library of Medicine (NLM) databases, including the TOXNET system. |
| Record name | CS-476 |
| Source | DTP/NCI |
| URL | https://dtp.cancer.gov/dtpstandard/servlet/dwindex?searchtype=NSC&outputformat=html&searchlist=302998 |
| Description | The NCI Developmental Therapeutics Program (DTP) provides services and resources to the academic and private-sector research communities worldwide to facilitate the discovery and development of new cancer therapeutic agents. |
| Explanation | Unless otherwise indicated, all text within NCI products is free of copyright and may be reused without our permission. Credit the National Cancer Institute as the source. |
| Record name | CS 476 |
| Source | EPA DSSTox |
| URL | https://comptox.epa.gov/dashboard/DTXSID50194105 |
| Description | DSSTox provides a high quality public chemistry resource for supporting improved predictive toxicology. |
| Record name | CS-476 |
| Source | FDA Global Substance Registration System (GSRS) |
| URL | https://gsrs.ncats.nih.gov/ginas/app/beta/substances/G6BG4727TQ |
| Description | The FDA Global Substance Registration System (GSRS) enables the efficient and accurate exchange of information on what substances are in regulated products. Instead of relying on names, which vary across regulatory domains, countries, and regions, the GSRS knowledge base makes it possible for substances to be defined by standardized, scientific descriptions. |
| Explanation | Unless otherwise noted, the contents of the FDA website (www.fda.gov), both text and graphics, are not copyrighted. They are in the public domain and may be republished, reprinted and otherwise used freely by anyone without the need to obtain permission from FDA. Credit to the U.S. Food and Drug Administration as the source is appreciated but not required. |

Foundational & Exploratory

CS476 course syllabus and learning objectives

An In-depth Technical Guide to the Core of CS476: Programming Language Design

Introduction

This document provides a technical overview of the CS476 course on Programming Language Design. The primary objective of the course is to equip students with the foundational knowledge and practical skills to understand, describe, and reason about the features of various programming languages. The curriculum covers the theoretical frameworks used to define language behavior and includes the practical implementation of interpreters for these languages. Key paradigms covered include imperative, functional, and object-oriented programming.[1]

Core Learning Objectives

Upon successful completion of CS476, students will be able to:

- Formally Describe Language Syntax and Semantics: Develop a formal understanding of how to specify the structure and meaning of programming languages.[1]
- Implement and Extend Interpreters: Gain hands-on experience in building interpreters for new programming languages and augmenting existing ones with new features.[1]
- Utilize Mathematical Tools for Program Specification: Apply a range of mathematical and logical tools to formally specify and reason about program behavior.[1]
- Understand Diverse Language Paradigms: Comprehend the distinguishing features and underlying principles of imperative, functional, object-oriented, and logic programming paradigms.[1]
- Formalize and Implement Type Systems: Formally describe type systems and implement both type checking and type inference algorithms.[1]

Course Structure and Assessment

The course is structured to provide a balance of theoretical knowledge and practical application. Student performance is evaluated through a combination of programming and written assignments.

Assessment Methodology

A summary of the assessment components is provided in the table below.

| Assessment Component | Description |
|---|---|
| Programming Assignments | Implementation of interpreters, type checkers, and other language features using OCaml. |
| Written Assignments | Application of logical systems and formal methods to programming language design. |

Note: Specific weightings for each assignment are typically provided in the detailed course schedule.

Experimental Protocols: Assignment Methodologies

The assignments in this course are designed to provide hands-on experience with the concepts taught in lectures.

Programming Assignments Protocol:

- Specification Review: A detailed specification of a programming language feature is provided.
- Implementation in OCaml: Students implement the specified feature, such as an interpreter or a type checker, using the OCaml programming language.
- Initial Submission and Feedback: The initial implementation is submitted for review and feedback.
- Revision and Final Submission: Students incorporate the feedback to refine their implementation for the final submission.
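As a rough illustration of the kind of artifact such an assignment produces, the sketch below pairs a type checker with an interpreter for a tiny expression language. It is written in Python rather than OCaml purely for illustration, and the language itself (`Num`, `Bool`, `Add`, `If`) is a hypothetical example, not taken from the course materials.

```python
from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class Num:
    value: int


@dataclass(frozen=True)
class Bool:
    value: bool


@dataclass(frozen=True)
class Add:
    left: "Expr"
    right: "Expr"


@dataclass(frozen=True)
class If:
    cond: "Expr"
    then: "Expr"
    other: "Expr"


Expr = Union[Num, Bool, Add, If]


def type_of(e: Expr) -> str:
    """Return "int" or "bool", or raise TypeError for ill-typed terms."""
    if isinstance(e, Num):
        return "int"
    if isinstance(e, Bool):
        return "bool"
    if isinstance(e, Add):
        if type_of(e.left) == "int" and type_of(e.right) == "int":
            return "int"
        raise TypeError("Add expects two int operands")
    if isinstance(e, If):
        if type_of(e.cond) != "bool":
            raise TypeError("If condition must be bool")
        branch_type = type_of(e.then)
        if branch_type != type_of(e.other):
            raise TypeError("If branches must have the same type")
        return branch_type
    raise TypeError("unknown expression")


def evaluate(e: Expr):
    """Evaluate a well-typed expression to a Python int or bool."""
    if isinstance(e, (Num, Bool)):
        return e.value
    if isinstance(e, Add):
        return evaluate(e.left) + evaluate(e.right)
    if isinstance(e, If):
        return evaluate(e.then) if evaluate(e.cond) else evaluate(e.other)
    raise TypeError("unknown expression")


program = If(Bool(True), Add(Num(1), Num(2)), Num(0))
print(type_of(program), evaluate(program))
```

Running the type checker before the interpreter mirrors the usual division of labor: ill-typed terms such as `Add(Num(1), Bool(True))` are rejected before evaluation is attempted.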

Written Assignments Protocol:

- Problem Statement: A problem related to the logical or mathematical foundations of programming languages is presented.
- Formal Analysis: Students apply formal methods and logical systems to analyze and solve the problem.
- Submission: The formal written analysis is submitted for evaluation.

Key Conceptual Frameworks

The course covers several fundamental concepts in programming language design. The relationships between these concepts can be visualized as a logical workflow.

References

A Technical Introduction to Program Verification: Methodologies and Applications

A Whitepaper for Researchers, Scientists, and Drug Development Professionals

Abstract

Program verification, the process of formally proving the correctness of a computer program with respect to a certain formal specification, is a critical discipline for ensuring the reliability and safety of software systems. This is particularly crucial in domains such as drug development and scientific research, where software errors can have significant consequences. This technical guide provides an in-depth overview of the core principles and techniques in program verification, with a focus on methodologies relevant to a rigorous, research-oriented audience. Drawing upon concepts typically covered in advanced academic courses like CS476 (Program Verification), this paper details key experimental protocols, presents quantitative data on the performance of verification tools, and visualizes complex logical relationships and workflows.

Introduction to Formal Methods in Program Verification

Formal methods are a collection of techniques rooted in mathematics and logic for the specification, development, and verification of software and hardware systems.[1][2][3] The primary goal of formal methods is to eliminate ambiguity and errors early in the development lifecycle, thereby increasing the assurance of a system's correctness.[4] Unlike traditional testing, which can only demonstrate the presence of bugs for a finite set of inputs, formal verification aims to prove the absence of certain classes of errors for all possible inputs and system states.[2]

Formal methods encompass a variety of techniques, each with its own strengths and applications. The main categories relevant to program verification include:

- Deductive Verification: This approach uses logical reasoning to prove that a program satisfies its specification. It often involves annotating the program with formal assertions, such as preconditions, postconditions, and loop invariants.[5]
- Model Checking: This is an automated technique that systematically explores all possible states of a system to check if a given property, typically expressed in temporal logic, holds.[6][7]
- Abstract Interpretation: This technique approximates the semantics of a program to analyze its properties without executing it. It is often used to find runtime errors like null pointer dereferences or buffer overflows.

This guide will focus on deductive verification and model checking, as they form the cornerstone of many program verification curricula and are widely used in research and industry.

Core Verification Techniques

Deductive Verification and Hoare Logic

Deductive verification is a powerful technique for proving the functional correctness of sequential programs. The foundation of many deductive verification approaches is Hoare logic, a formal system developed by Tony Hoare.[8]

Hoare Triples: The central concept in Hoare logic is the Hoare triple, written as {P} C {Q}, where:

- P is the precondition, a logical formula describing the state of the program before the execution of command C.
- C is a program command (e.g., an assignment, a conditional statement, or a loop).
- Q is the postcondition, a logical formula describing the state of the program after the execution of C, assuming P was true initially.

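Under partial correctness, the usual reading in Hoare logic, a triple {P} C {Q} is said to be valid if, for any initial state in which P holds, whenever the execution of C terminates it does so in a state where Q holds. Total correctness additionally requires that C terminates from every such state.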

Inference Rules: Hoare logic provides a set of inference rules for reasoning about the correctness of programs. These rules allow for the compositional verification of complex programs by breaking them down into smaller, manageable parts. Key rules include the axiom of assignment, the rule of composition, the conditional rule, and the while rule, which requires the identification of a loop invariant.
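The axiom of assignment can be illustrated with a small sketch. The code below models states as dictionaries and predicates as Python functions, computes the weakest precondition of an assignment by substituting the assigned expression into the postcondition, and then checks a triple on a finite sample of states. The helper names (`wp_assign`, `triple_holds_on`) are hypothetical, and checking sample states is only a test, not a proof of validity.

```python
def wp_assign(var, expr, post):
    """Weakest precondition of `var := expr` with respect to `post`.

    By the assignment axiom this is `post` with `var` replaced by `expr`,
    which we model by evaluating `post` in the updated state.
    """
    return lambda state: post({**state, var: expr(state)})


def triple_holds_on(pre, var, expr, post, states):
    """Check {pre} var := expr {post} on each sample state (a test, not a proof)."""
    wp = wp_assign(var, expr, post)
    return all(wp(s) for s in states if pre(s))


sample_states = [{"x": x} for x in range(-5, 6)]
increment = lambda s: s["x"] + 1   # the expression x + 1
positive = lambda s: s["x"] > 0    # the postcondition x > 0

# {x >= 0} x := x + 1 {x > 0} holds on every sample state.
ok = triple_holds_on(lambda s: s["x"] >= 0, "x", increment, positive, sample_states)

# {true} x := x + 1 {x > 0} fails, for example when x = -5.
too_weak = triple_holds_on(lambda s: True, "x", increment, positive, sample_states)

print(ok, too_weak)
```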

Experimental Protocol: Verifying a Sorting Algorithm with Dafny

Dafny is a programming language and verifier that uses a deductive verification approach based on Hoare logic.[2][5][9] It requires programmers to annotate their code with specifications, which the Dafny verifier then attempts to prove automatically using an underlying SMT (Satisfiability Modulo Theories) solver, typically Z3.[9]

Objective: To formally verify that a given implementation of an insertion sort algorithm correctly sorts an array of integers.

Methodology:

- Specification:
  - Define a predicate sorted(a: array<int>) that returns true if and only if the array a is sorted in non-decreasing order.
  - Define a function multiset(a: array<int>) that returns the multiset of elements in the array a.
  - The InsertionSort method is specified with a precondition (requires) and a postcondition (ensures). The precondition is simply true. The postcondition states that the output array is sorted and that it is a permutation of the input array (i.e., their multisets are equal).
- Implementation with Annotations:
  - The implementation of the insertion sort algorithm consists of an outer loop that iterates through the array and an inner loop that inserts the current element into its correct position in the sorted portion of the array.
  - Loop Invariants: Crucially, both the outer and inner loops must be annotated with loop invariants.
    - The outer loop invariant states that at the beginning of each iteration i, the subarray a[0..i] is sorted, and the multiset of the entire array a is the same as the multiset of the original array.
    - The inner loop invariant for inserting the element at index i into the sorted subarray a[0..i] would state that the subarray a[0..i] remains a permutation of its original elements at the start of the outer loop iteration, and that elements from j+1 to i are greater than or equal to the element being inserted.
- Verification:
  - The Dafny verifier is run on the annotated program.
  - The verifier translates the Dafny code and its specifications into an intermediate language called Boogie, which in turn generates verification conditions (logical formulas) that are passed to the Z3 SMT solver.[10]
  - If Z3 can prove all verification conditions, Dafny reports that the program is verified. If not, it provides feedback on which assertion (precondition, postcondition, or loop invariant) could not be proven.
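The role of the loop invariants can be sketched outside Dafny by checking them at run time. The Python version below is an illustration only: assertions are tested on each execution rather than proven for all inputs, and the inner invariant is a shift-based variant (every element already shifted right is strictly greater than the saved key) rather than the exact formulation described above.

```python
from collections import Counter


def is_sorted(a, lo, hi):
    """True if a[lo:hi] is in non-decreasing order."""
    return all(a[k] <= a[k + 1] for k in range(lo, hi - 1))


def insertion_sort(a):
    """Sort the list in place, asserting the loop invariants as it runs."""
    original = Counter(a)  # multiset of the input
    i = 1
    while i < len(a):
        # Outer invariant: a[0:i] is sorted and the multiset is unchanged.
        assert is_sorted(a, 0, i) and Counter(a) == original
        key = a[i]
        j = i - 1
        while j >= 0 and a[j] > key:
            a[j + 1] = a[j]  # shift the larger element one slot right
            j -= 1
            # Inner invariant: everything shifted so far exceeds key.
            assert all(a[k] > key for k in range(j + 2, i + 1))
        a[j + 1] = key
        i += 1
    # Postcondition: sorted, and a permutation of the input.
    assert is_sorted(a, 0, len(a)) and Counter(a) == original
    return a


print(insertion_sort([3, 1, 2, 1]))
```

A verifier such as Dafny discharges the same conditions statically for every possible input, which is what distinguishes verification from this kind of run-time checking.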

Visualization of the Dafny Verification Workflow:

Model Checking and Temporal Logic

Model checking is an automated verification technique particularly well-suited for concurrent and reactive systems, where the interleaving of actions can lead to a massive number of possible executions (the "state explosion problem").[6][7] The core idea is to represent the system as a finite-state model and to check whether this model satisfies a formal property.

The Model Checking Process:

- Modeling: The system under verification is modeled as a state-transition system, often described in a specialized modeling language like Promela (for the SPIN model checker) or TLA+ (for the TLC model checker).
- Specification: The properties to be verified are specified using a formal language, typically a temporal logic.
- Verification: The model checker systematically explores the state space of the model to determine if the specification holds. If a property is violated, the model checker provides a counterexample, which is a sequence of states demonstrating the failure.

Temporal Logic: Temporal logics are used to reason about the behavior of systems over time.[1][11] They extend classical logic with operators that describe how properties change over a sequence of states. Two common types of temporal logic used in model checking are:

- Linear Temporal Logic (LTL): LTL reasons about properties of individual computation paths. Its operators include:
  - G p (Globally): p is true in all future states of the path.
  - F p (Finally/Eventually): p is true at some point in the future of the path.
  - X p (Next): p is true in the next state of the path.
  - p U q (Until): p is true until q becomes true.
- Computation Tree Logic (CTL): CTL reasons about properties of the computation tree, which represents all possible future paths from a given state. It combines path quantifiers (A - for all paths, E - for some path) with the temporal operators.
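The LTL operators can be sketched as small combinators. One important assumption: LTL is defined over infinite paths, whereas the code below evaluates formulas over finite traces (lists of sets of atomic propositions), so G and F quantify only over the remaining positions of the trace. The function names are hypothetical and chosen to mirror the operator letters.

```python
def atom(name):
    """Atomic proposition: holds at position i if `name` is in the state."""
    return lambda trace, i=0: name in trace[i]


def implies(p, q):
    return lambda trace, i=0: (not p(trace, i)) or q(trace, i)


def G(p):
    """Globally: p holds at every position from i to the end of the trace."""
    return lambda trace, i=0: all(p(trace, k) for k in range(i, len(trace)))


def F(p):
    """Finally: p holds at some position from i onward."""
    return lambda trace, i=0: any(p(trace, k) for k in range(i, len(trace)))


def X(p):
    """Next: a next position exists and p holds there."""
    return lambda trace, i=0: i + 1 < len(trace) and p(trace, i + 1)


def U(p, q):
    """Until: q holds at some position k >= i and p holds at every position before k."""
    return lambda trace, i=0: any(
        q(trace, k) and all(p(trace, m) for m in range(i, k))
        for k in range(i, len(trace))
    )


trace = [{"waiting"}, {"waiting"}, {"critical"}, set()]
waiting, critical = atom("waiting"), atom("critical")

print(G(implies(waiting, F(critical)))(trace))  # every wait is followed by entry
print(U(waiting, critical)(trace))              # waiting until critical
print(X(critical)(trace))                       # critical in the very next state?
```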

Experimental Protocol: Verifying a Mutual Exclusion Algorithm with TLA+

TLA+ is a formal specification language used to design, model, document, and verify concurrent and distributed systems.[12] It is often used with the TLC model checker.

Objective: To verify Lamport's Bakery Algorithm for mutual exclusion, ensuring that no two processes are in the critical section at the same time (safety) and that every process that wants to enter the critical section will eventually do so (liveness).[13][14]

Methodology:

- Modeling in PlusCal: The Bakery algorithm is modeled using PlusCal, a high-level algorithmic language that translates to TLA+. The model includes:
  - Variables for the state of each process (idle, waiting, critical).
  - Shared variables for the "ticket numbers" and "choosing" flags used by the algorithm.
  - The logic of the algorithm, including the process of choosing a ticket number and waiting for one's turn.
- Specification in TLA+:
  - Safety Property (Mutual Exclusion): An invariant MutualExclusion is defined, stating that for any two distinct processes i and j, it is not the case that both are in the critical state simultaneously. This is a property of the form G(MutualExclusion).
  - Liveness Property (Starvation-Freedom): A temporal property StarvationFreedom is defined, stating that if a process i is in the waiting state, it will eventually enter the critical state. This is a property of the form G(process_i_is_waiting => F(process_i_is_critical)).
- Verification with TLC:
  - The TLC model checker is configured to check the TLA+ specification. This includes defining the initial state of the system and the next-state relation.
  - TLC explores the state space of the model, checking if the MutualExclusion invariant holds in every reachable state.
  - TLC also checks for violations of the StarvationFreedom property by searching for infinite execution paths where a process remains in the waiting state forever.
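The invariant-checking part of this workflow can be sketched as a breadth-first search over reachable states. To keep the state space finite and small, the sketch below swaps in Peterson's two-process algorithm instead of the Bakery algorithm (whose ticket numbers are unbounded), and it checks only the safety property, not starvation freedom. The state encoding and function names are assumptions made for illustration; this is not how TLC is implemented.

```python
from collections import deque


def successors(state, use_guard=True):
    """Yield every state reachable in one atomic step of either process."""
    pcs, flags, turn = state
    for i in (0, 1):
        j = 1 - i
        new_pcs, new_flags, new_turn = list(pcs), list(flags), turn
        if pcs[i] == "idle":
            new_flags[i] = True           # announce interest
            new_pcs[i] = "set_turn"
        elif pcs[i] == "set_turn":
            new_turn = j                  # give priority to the other process
            new_pcs[i] = "waiting"
        elif pcs[i] == "waiting":
            if use_guard and flags[j] and turn != i:
                continue                  # blocked: no step for process i
            new_pcs[i] = "critical"
        else:                             # leaving the critical section
            new_flags[i] = False
            new_pcs[i] = "idle"
        yield (tuple(new_pcs), tuple(new_flags), new_turn)


def check_mutex(use_guard=True):
    """Return None if mutual exclusion holds in all reachable states,
    otherwise a shortest counterexample trace from the initial state."""
    init = (("idle", "idle"), (False, False), 0)
    parent = {init: None}
    queue = deque([init])
    while queue:
        state = queue.popleft()
        if state[0] == ("critical", "critical"):
            trace = []
            while state is not None:
                trace.append(state)
                state = parent[state]
            return trace[::-1]
        for nxt in successors(state, use_guard):
            if nxt not in parent:
                parent[nxt] = state
                queue.append(nxt)
    return None


print(check_mutex(use_guard=True))        # no violation found
broken = check_mutex(use_guard=False)     # waiting guard removed
print(len(broken) - 1, "steps to a violation")
```

Removing the waiting guard produces a counterexample trace, which corresponds to the sequence of states a model checker reports when an invariant fails.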

Visualization of the Model Checking Process:

Advanced Topics in Program Verification

Counterexample-Guided Abstraction Refinement (CEGAR)

The state explosion problem is a major challenge in model checking. Counterexample-Guided Abstraction Refinement (CEGAR) is a technique used to mitigate this problem by iteratively refining an abstract model of the system.[1][15]

The CEGAR Loop:

1. Abstraction: An initial, coarse-grained abstraction of the system is created. This abstract model has a smaller state space than the original system.
2. Model Checking: The model checker is run on the abstract model. If the property holds, it also holds for the concrete system, and the process terminates.
3. Counterexample Analysis: If the property is violated in the abstract model, a counterexample is generated. This abstract counterexample is then checked against the concrete system.
   - If the counterexample is valid in the concrete system, a real bug has been found.
   - If the counterexample is not valid in the concrete system, it is a spurious counterexample, resulting from the imprecision of the abstraction.
4. Refinement: The abstraction is refined to eliminate the spurious counterexample, and the process repeats from step 2.
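The loop above can be sketched on a toy explicit-state system. The assumptions here are deliberate simplifications: the concrete system is a small finite graph, the abstraction is a partition of its states into blocks, the property is reachability of a bad state, and refinement splits one block into the concretely reached states and the rest. Practical CEGAR tools typically use predicate abstraction and solver-based refinement instead; all names below are hypothetical.

```python
from collections import deque


def abstract_path(blocks, block_of, trans, init, bad):
    """BFS over blocks; return a block path to a bad block, or None."""
    edges = {b: set() for b in range(len(blocks))}
    for s, succs in trans.items():
        for t in succs:
            edges[block_of[s]].add(block_of[t])
    start = block_of[init]
    bad_blocks = {block_of[s] for s in bad}
    parent = {start: None}
    queue = deque([start])
    while queue:
        b = queue.popleft()
        if b in bad_blocks:
            path = []
            while b is not None:
                path.append(b)
                b = parent[b]
            return path[::-1]
        for nb in edges[b]:
            if nb not in parent:
                parent[nb] = b
                queue.append(nb)
    return None


def cegar(states, trans, init, bad):
    """Return ("safe" | "unsafe", number of blocks in the final abstraction)."""
    blocks = [set(states)]                        # step 1: coarsest abstraction
    while True:
        block_of = {s: i for i, blk in enumerate(blocks) for s in blk}
        path = abstract_path(blocks, block_of, trans, init, bad)  # step 2
        if path is None:
            return "safe", len(blocks)
        reach = {init}                            # step 3: replay concretely
        split_block = path[-1]
        for k in range(1, len(path)):
            nxt = {t for s in reach for t in trans.get(s, ())
                   if t in blocks[path[k]]}
            if not nxt:                           # replay stuck: spurious
                split_block = path[k - 1]
                break
            reach = nxt
        else:
            if reach & bad:
                return "unsafe", len(blocks)      # real counterexample
        rest = blocks[split_block] - reach        # step 4: refine by splitting
        blocks[split_block] = set(reach)
        blocks.append(rest)


safe_system = {0: [1], 1: [2], 2: [0], 3: [4], 4: [5]}
unsafe_system = {0: [1], 1: [2], 2: [0, 3], 3: [4], 4: [5]}
print(cegar(range(6), safe_system, 0, {5})[0])
print(cegar(range(6), unsafe_system, 0, {5})[0])
```

Each refinement strictly increases the number of blocks, so on a finite system the loop terminates once the abstraction is precise enough to either exclude the bad state or reproduce a concrete path to it.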

Visualization of the CEGAR Loop:

References

1. researchgate.net
2. homepage.cs.uiowa.edu
3. researchgate.net
4. homes.cs.washington.edu
5. leino.science
6. dmi.unict.it
7. researchgate.net
8. doc.ic.ac.uk
9. arxiv.org
10. cs.cmu.edu
11. medium.com
12. Lamport's Bakery Algorithm, tutorialspoint.com
13. Bakery Algorithm in Process Synchronization, GeeksforGeeks, geeksforgeeks.org
14. Counterexample-guided abstraction refinement, Wikipedia, en.wikipedia.org
15. [2303.06477] Reproduction Report for SV-COMP 2023, arxiv.org

A Deep Dive into the CS476 Software Development Project: A Technical Guide

This in-depth technical guide provides a comprehensive overview of a typical CS476 Software Development Project course. The content is synthesized from various university curricula and is intended for researchers, scientists, and drug development professionals seeking to understand the structured process of modern software engineering education and its practical application in team-based projects. This guide details the course structure, learning objectives, project lifecycle, and key methodologies that underpin a successful software development capstone project.

Course Philosophy and Objectives

The CS476 course is designed to provide students with a hands-on, immersive experience in developing a large-scale software system. It serves as a capstone experience, integrating the theoretical knowledge gained in previous computer science coursework into a practical, project-based setting. The primary objective is to simulate a real-world software development environment where students navigate the complexities of teamwork, project management, and technical execution.

Upon successful completion of this course, a student will have gained:

- Experience in the complete software development lifecycle, from requirements gathering to deployment and maintenance.[1]
- Proficiency in modern software engineering methodologies, such as Agile or hybrid models.[2]
- Expertise in software architecture and design, including the application of design patterns.[1]
- Skills in team collaboration, project management, and professional communication.[3][4][5]
- Practical knowledge of tools and technologies for version control, continuous integration, and quality assurance.

Quantitative Course Structure

The course is heavily weighted towards a semester-long team project. The grading is designed to reflect the multifaceted nature of software development, rewarding both individual contributions and overall team success.

Table 1: Grading Scheme

| Component | Weighting | Description |
|---|---|---|
| Semester-long Project | 70% | Comprises multiple deliverables evaluated at key milestones throughout the semester. |
| Feasibility Study & Requirements | 15% | Initial analysis of the project's viability and detailed specification of its requirements.[1][3] |
| Architectural & Detailed Design | 20% | High-level system architecture and low-level design of components and their interactions.[1] |
| Implementation & Code Quality | 25% | The functional correctness, readability, and maintainability of the source code. |
| Testing & Quality Assurance | 10% | The thoroughness of unit, integration, and system testing.[1] |
| Project Presentation & Demo | 30% | A formal presentation and live demonstration of the completed software project to an audience of peers, faculty, and potentially industry sponsors.[1] |
| Individual Contribution | (Factor) | Individual grades may be adjusted based on peer evaluations and mentor assessments to reflect personal contributions to the team effort.[3][4] |
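The arithmetic behind Table 1 can be sketched as follows. The four project deliverables (15% + 20% + 25% + 10%) make up the 70% project share, and the presentation supplies the remaining 30%. The table does not say how the individual contribution factor is applied, so the multiplicative adjustment and the cap at 100 below are assumptions, as are the dictionary keys.

```python
# Weights in percent, taken from Table 1; the keys are illustrative names.
WEIGHTS = {
    "feasibility_requirements": 15,
    "design": 20,
    "implementation": 25,
    "testing": 10,
    "presentation": 30,
}


def final_grade(scores, contribution_factor=1.0):
    """Weighted average of component scores (each 0-100).

    The multiplicative contribution factor is an assumption; the grading
    scheme only states that individual grades may be adjusted.
    """
    assert sum(WEIGHTS.values()) == 100
    team_grade = sum(WEIGHTS[name] * scores[name] for name in WEIGHTS) / 100
    return min(100.0, team_grade * contribution_factor)


scores = {
    "feasibility_requirements": 90,
    "design": 80,
    "implementation": 70,
    "testing": 60,
    "presentation": 100,
}
print(final_grade(scores))
```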

Table 2: Typical Project Timeline

| Week | Phase | Key Activities |
|---|---|---|
| 1-2 | Phase 1: Inception | Team formation, project selection, initial client meetings. |
| 3-4 | Phase 2: Elaboration | Requirements elicitation, feasibility analysis, risk assessment.[3][4] |
| 5-7 | Phase 3: Architectural Design | High-level system design, technology stack selection, creation of architectural diagrams. |
| 8-12 | Phase 4: Construction | Iterative development (sprints), implementation of features, unit and integration testing. |
| 13-14 | Phase 5: Transition | System testing, user acceptance testing, final preparations for deployment. |
| 15 | Phase 6: Deployment | Final project demonstration, code hand-off, and project retrospective. |

Experimental Protocols

To provide a clearer understanding of the practical work involved, this section details the methodologies for two key "experiments" in the software development process: Requirements Elicitation and Validation, and an Agile Sprint Cycle.

Protocol 1: Requirements Elicitation and Validation

- Objective: To accurately capture and validate the needs of the stakeholders to produce a formal requirements specification document.
- Methodology:
  - Stakeholder Identification: Identify all individuals or groups who have an interest in the project's outcome (e.g., clients, end-users).
  - Elicitation Sessions: Conduct structured interviews and brainstorming sessions with stakeholders to gather initial requirements.
  - Use Case Modeling: Translate the gathered needs into formal use cases, detailing user interactions with the system.[1]
  - Prototyping: Develop low-fidelity mockups or wireframes of the user interface to provide a visual representation of the system and gather early feedback.
  - Formal Specification: Document the validated requirements in a Software Requirements Specification (SRS) document, using a standardized template.
  - Validation Review: Hold a formal review meeting with stakeholders to walk through the SRS and obtain their sign-off.

Protocol 2: Agile Sprint Cycle

- Objective: To iteratively and incrementally develop functional software in a time-boxed period.
- Methodology:
  - Sprint Planning: At the beginning of a two-week sprint, the team selects a set of high-priority features from the project backlog to be completed within the sprint.
  - Daily Stand-ups: Each day, the team holds a brief meeting to synchronize activities, discuss progress, and identify any impediments.
  - Development: Team members work on their assigned tasks, which include design, coding, and unit testing.
  - Continuous Integration: All code is regularly merged into a central repository and automatically built and tested to ensure stability.
  - Sprint Review: At the end of the sprint, the team demonstrates the completed features to stakeholders and gathers feedback.
  - Sprint Retrospective: The team reflects on the sprint, discussing what went well, what could be improved, and actions for the next sprint.

Visualizing Workflows and Concepts

To better illustrate the logical flow and relationships within the CS476 course, the following diagrams are provided in the DOT language for Graphviz.

Caption: The waterfall-like progression of the main phases in the software development project.

Caption: The cyclical nature of the Agile sprint process used for iterative development.

Caption: Prerequisite course structure leading to the CS476 capstone project.

References

Foundational Principles of Requirements Engineering: A Technical Guide for Scientific and Drug Development Professionals

Authored for CS476

Introduction

In the realms of scientific research and pharmaceutical drug development, precision, clarity, and verifiability are paramount. The journey from a novel hypothesis to a validated discovery or a market-approved therapeutic is governed by rigorous protocols and meticulous documentation. A similar systematic approach is essential in the development of the software systems that underpin these critical endeavors. This guide delves into the foundational principles of Requirements Engineering (RE), a core discipline within software engineering, and frames them within the context of scientific and drug development workflows.

Requirements Engineering is the systematic process of defining, documenting, and maintaining the requirements for a system.[1] It is a critical phase that bridges the gap between stakeholder needs and the final software product.[1] Neglecting this phase is a primary contributor to project failure and significant cost overruns. Indeed, a staggering 80% of software project failures can be traced back to issues with requirements.

The High Cost of Inadequate Requirements

The financial and operational impact of poorly defined requirements is substantial. Research indicates that 56% of all software defects originate during the requirements phase. Furthermore, 60% of the costs associated with rework are due to incorrect or incomplete requirements. In 2022, the estimated cost of poor software quality to U.S. businesses was a monumental $2.41 trillion.

To illustrate the escalating cost of fixing errors as a project progresses, consider the following data, which highlights the relative cost of rectifying a defect at different stages of the software development lifecycle.

| Development Phase | Relative Cost to Fix a Defect |
|---|---|
| Requirements | 1x |
| Architecture | 3x |
| Construction (Coding) | 5-10x |
| System Testing | 15x |
| Post-Release (Production) | 30x+ |

This table summarizes the steep increase in the cost of fixing a software defect as it progresses through the development lifecycle, from 1x at the requirements stage to 30x or more after release.
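The multipliers in the table can be turned into a rough lower-bound estimate of rework cost. The sketch below is illustrative only: the phase keys and the function name are hypothetical, ranges are stored as (low, high) pairs with `None` for the open-ended "30x+" entry, and only the low end of each range is used.

```python
# Relative cost multipliers from the table above, as (low, high) pairs.
# None marks the open-ended upper bound of the "30x+" entry.
RELATIVE_COST = {
    "requirements": (1, 1),
    "architecture": (3, 3),
    "construction": (5, 10),
    "system_testing": (15, 15),
    "post_release": (30, None),
}


def rework_cost_lower_bound(defects_by_phase, unit_cost=1.0):
    """Lower bound on rework cost, where unit_cost is the cost of fixing
    one defect during the requirements phase."""
    return unit_cost * sum(
        RELATIVE_COST[phase][0] * count
        for phase, count in defects_by_phase.items()
    )


# The same ten defects cost at least 30 times more if found after release.
early = rework_cost_lower_bound({"requirements": 10})
late = rework_cost_lower_bound({"post_release": 10})
print(early, late)
```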

Core Principles of Requirements Engineering

The discipline of requirements engineering is founded on a set of core principles that ensure a thorough and effective process. These principles are universally applicable, whether developing a new laboratory information management system (LIMS), a data analysis pipeline for genomic sequencing, or software to manage clinical trial data.

1. Value-Orientation: Every requirement should deliver tangible value by contributing to the project's objectives and mitigating risks.[2]

2. Stakeholder Focus: The primary goal of requirements engineering is to satisfy the needs and expectations of all stakeholders, including researchers, clinicians, regulatory bodies, and patients.[2]

3. Shared Understanding: A common and unambiguous understanding of the requirements must be established among all stakeholders and the development team.[3]

4. Context Awareness: A system cannot be understood in isolation. Its operational environment, including other software, hardware, and human workflows, must be considered.[2]

5. Problem-Requirement-Solution Separation: It is crucial to distinguish between the problem to be solved, the requirements that will address the problem, and the final solution that implements those requirements.

6. Validation: Requirements must be validated to ensure they accurately reflect the stakeholders' needs and will lead to a useful system. Non-validated requirements are of little use.[2]

7. Evolution: Requirements are rarely static. The process must accommodate changes and evolve as the project progresses and understanding deepens.

8. Innovation: Requirements engineering is not merely about transcribing stakeholder requests but also about exploring innovative solutions that can provide greater value.[2]

9. Systematic and Disciplined Work: A structured and disciplined approach is essential for the quality of the final system.[3]

The Requirements Engineering Process: An Analogy to Drug Development

To further contextualize the requirements engineering process for professionals in the life sciences, a direct analogy can be drawn to the phased nature of drug development and clinical trials. Just as a new therapeutic must pass through rigorous stages of investigation and validation, so too must the requirements for a software system.

A Detailed Experimental Protocol for Requirements Elicitation

Requirements elicitation is the process of gathering requirements from stakeholders.[4] This is a critical and highly interactive phase. The following protocol outlines a systematic approach to requirements elicitation, adaptable for various scientific and clinical software projects.

Objective: To comprehensively identify, document, and prioritize the requirements for a new or updated software system from all relevant stakeholders.

Materials:

- Interview and workshop recording tools (with consent)
- Whiteboards or virtual collaboration tools
- Prototyping software
- Document analysis templates
- Requirements management software

Procedure:

Phase 1: Stakeholder Identification and Analysis

- Identify all potential stakeholder groups: This includes end-users (e.g., lab technicians, clinical research coordinators), principal investigators, IT staff, quality assurance personnel, and regulatory experts.
- Characterize each stakeholder group: Document their roles, responsibilities, and anticipated interaction with the system.
- Select representatives: For larger groups, identify individuals who can act as primary points of contact and decision-makers.

Phase 2: Elicitation Sessions

- Conduct initial interviews: Hold one-on-one or small group interviews with stakeholder representatives to gain an initial understanding of their needs, pain points, and desired outcomes.
- Organize facilitated workshops: Bring together diverse stakeholder groups for collaborative sessions to brainstorm requirements, resolve conflicts, and build consensus.
- Perform observational studies: Observe users interacting with existing systems or performing manual workflows that the new system will replace. This can reveal unstated requirements and usability issues.
- Administer surveys and questionnaires: For large and geographically dispersed stakeholder groups, use surveys to gather quantitative and qualitative data on specific features or priorities.

Phase 3: Documentation and Analysis

- Document all elicited information: Transcribe interviews and workshop notes, and consolidate survey results.
- Perform document analysis: Review existing documentation, such as standard operating procedures (SOPs), regulatory guidelines, and user manuals for current systems, to extract relevant requirements.
- Create initial requirement statements: Draft clear, concise, and unambiguous statements for each identified requirement.
- Categorize and prioritize requirements: Group requirements into logical categories (e.g., functional, non-functional, data-related) and work with stakeholders to prioritize them based on importance and urgency.

Phase 4: Validation and Refinement

- Develop prototypes and mockups: Create visual representations of the proposed system to provide stakeholders with a tangible model for feedback.
- Conduct review meetings: Present the documented requirements and prototypes to stakeholders for their review and approval.
- Iterate and refine: Based on feedback, revise the requirements documentation until a consensus is reached.


Conclusion

For researchers, scientists, and drug development professionals, the integrity of your data and the efficiency of your workflows are non-negotiable. The software you rely on must be as robust and well-validated as your scientific methods. By embracing the foundational principles of requirements engineering, you can ensure that your software development projects are built on a solid foundation of clear, complete, and correct requirements. This systematic approach not only mitigates the risk of costly errors and project delays but also leads to the creation of powerful tools that can accelerate discovery and innovation.

References

Key topics in CS476 programming language design

An In-depth Technical Guide to Core Concepts in Programming Language Design

Introduction

The design of a programming language is a discipline that blends formal logic, abstraction, and practical engineering. For researchers and scientists, understanding these principles is akin to understanding the design of a formal experimental protocol; the language provides the structure and rules within which complex processes (computations) are expressed and executed. A well-designed language ensures that instructions are unambiguous, verifiable, and efficient. This guide provides a technical overview of the core topics in programming language design, framed to be accessible to professionals in research and development fields who rely on computational tools.

Formal Syntax and Semantics: The Blueprint of a Language

Before a program can be executed, its structure and meaning must be precisely defined. This is the role of syntax and semantics.

- Syntax refers to the rules that govern the structure of a valid program. It is the "grammar" of the language. These rules are commonly defined using a formal notation called Backus-Naur Form (BNF).
- Semantics refers to the meaning of syntactically valid programs.[1] It defines what a program is supposed to do when it runs. There are several approaches to defining semantics; Operational Semantics is a common one that describes program execution as a series of computational steps.[2]

Methodology: Defining Language Structure with Operational Semantics

Operational semantics provides a rigorous, step-by-step model of program execution, much like a detailed experimental protocol.[2] It uses inference rules to define how program constructs are evaluated.

Protocol for Semantic Evaluation:

1. Define the State: The "state" represents the memory of the program at any given time, typically as a mapping from variable names to their values.
2. Establish Judgment Forms: A judgment is a formal statement about the program's behavior. A common form is ⟨C, S⟩ ⇓ S', which can be read as: "Executing command C in an initial state S results in a final state S'."

This formal approach allows for the precise and unambiguous specification of a language's behavior, which is critical for building reliable compilers and interpreters.[3]
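To make the judgment ⟨C, S⟩ ⇓ S' concrete, the following is a minimal sketch of a big-step evaluator for a toy imperative language. The language itself, the class names (Num, Var, Add, Less, Skip, Assign, Seq, While), and the functions eval_expr and execute are illustrative assumptions, not constructs taken from a specific course or textbook. Each branch of execute corresponds to one inference rule.

```python
from dataclasses import dataclass
from typing import Dict

State = Dict[str, int]  # the state S: variable names mapped to integer values

# --- Expressions of a hypothetical toy language ---
@dataclass
class Num:
    value: int

@dataclass
class Var:
    name: str

@dataclass
class Add:
    left: object
    right: object

@dataclass
class Less:
    left: object
    right: object

# --- Commands of the same toy language ---
@dataclass
class Skip:
    pass

@dataclass
class Assign:
    name: str
    expr: object

@dataclass
class Seq:
    first: object
    second: object

@dataclass
class While:
    cond: object
    body: object

def eval_expr(e, s: State) -> int:
    """Evaluate expression e in state s (booleans are encoded as 0/1)."""
    if isinstance(e, Num):
        return e.value
    if isinstance(e, Var):
        return s[e.name]
    if isinstance(e, Add):
        return eval_expr(e.left, s) + eval_expr(e.right, s)
    if isinstance(e, Less):
        return int(eval_expr(e.left, s) < eval_expr(e.right, s))
    raise TypeError(f"unknown expression: {e!r}")

def execute(c, s: State) -> State:
    """Return S' such that <c, s> evaluates to S' (big-step style)."""
    if isinstance(c, Skip):
        return s
    if isinstance(c, Assign):
        return {**s, c.name: eval_expr(c.expr, s)}
    if isinstance(c, Seq):
        return execute(c.second, execute(c.first, s))
    if isinstance(c, While):
        if eval_expr(c.cond, s) == 0:
            return s  # loop condition false: state unchanged
        return execute(c, execute(c.body, s))  # run body once, then the loop again
    raise TypeError(f"unknown command: {c!r}")

# x := 0; while x < 3 do x := x + 1
program = Seq(
    Assign("x", Num(0)),
    While(Less(Var("x"), Num(3)), Assign("x", Add(Var("x"), Num(1)))),
)
final_state = execute(program, {})
print(final_state)
```

Returning a fresh dictionary for each assignment keeps every intermediate state immutable, which mirrors the way inference rules relate one state to another rather than mutating a single store.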

The Compilation Workflow: From Source Code to Execution

A compiler is a program that translates source code written in one programming language into another language, typically machine code that a computer's processor can execute.[4][5] This process is a multi-stage workflow.

Experimental Workflow: The Phases of Compilation

The compilation process can be visualized as a pipeline where the output of one stage becomes the input for the next.[6][7]

1. Lexical Analysis (Scanning): The raw source code text is broken down into a sequence of "tokens."[4] Tokens are the smallest meaningful units of the language, such as keywords (if, while), identifiers (variable names), operators (+, =), and literals (123, "hello").
2. Syntax Analysis (Parsing): The sequence of tokens is analyzed to check if it conforms to the language's grammar. The parser typically builds a hierarchical structure called an Abstract Syntax Tree (AST), which represents the logical structure of the code.[6]
3. Semantic Analysis: The AST is traversed to check for semantic correctness. This phase ensures that the code makes sense. A key part of this is type checking, which verifies that operators are applied to compatible types (e.g., preventing the addition of a number to a text string).[5][6]
4. Intermediate Code Generation: The compiler generates a low-level, machine-independent representation of the program. This intermediate representation is easier to optimize.
5. Code Optimization: This phase analyzes the intermediate code to produce a more efficient version that runs faster or uses less memory.
6. Code Generation: The final phase translates the optimized intermediate code into the target machine code for a specific processor architecture.
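As an illustration of the first phase only, the sketch below tokenizes a small expression language with Python's standard re module. The token names, the keyword set (if, while), and the tokenize function are assumptions chosen for the example; a production scanner would also track line and column positions.

```python
import re
from typing import List, Tuple

# Hypothetical token classes; order matters because earlier patterns win.
TOKEN_SPEC = [
    ("NUMBER", r"\d+"),
    ("KEYWORD", r"\b(?:if|while)\b"),
    ("IDENT", r"[A-Za-z_]\w*"),
    ("OP", r"[+\-*/=<>]"),
    ("LPAREN", r"\("),
    ("RPAREN", r"\)"),
    ("SKIP", r"\s+"),
]
MASTER = re.compile("|".join(f"(?P<{name}>{pattern})" for name, pattern in TOKEN_SPEC))

def tokenize(source: str) -> List[Tuple[str, str]]:
    """Split source text into (kind, text) tokens, dropping whitespace."""
    tokens = []
    pos = 0
    while pos < len(source):
        match = MASTER.match(source, pos)
        if match is None:
            raise SyntaxError(f"unexpected character {source[pos]!r} at offset {pos}")
        if match.lastgroup != "SKIP":
            tokens.append((match.lastgroup, match.group()))
        pos = match.end()
    return tokens

tokens = tokenize("while x < 10")
print(tokens)
```

The resulting token list is what a parser would consume in the syntax analysis phase.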

References

- 1. Semantics (computer science) - Wikipedia [en.wikipedia.org]

- 2. Formal semantics | Programming Languages [hanielb.github.io]

- 3. CS 476: Programming Language Design · Syllabus [cs.uic.edu]

- 4. Compiler Design Tutorial - GeeksforGeeks [geeksforgeeks.org]

- 5. tutorialspoint.com [tutorialspoint.com]

- 6. Last Minute Notes - Compiler Design - GeeksforGeeks [geeksforgeeks.org]

- 7. Introduction of Compiler Design - GeeksforGeeks [geeksforgeeks.org]

CS476 numeric computation for financial modeling introduction

An In-Depth Technical Guide to Numeric Computation for Financial Modeling

Introduction to Numeric Computation in Finance

Quantitative finance bridges financial theory with mathematical models and computational techniques to analyze markets, price securities, and manage risk.[1] Many complex financial problems, particularly in derivatives pricing, do not have simple, closed-form analytical solutions.[2][3] Consequently, numerical methods are essential tools for practitioners and researchers. These methods transform complex, continuous-time financial models into discrete, solvable algorithms that can be executed by computers.[1]

This guide focuses on three fundamental numerical methods that form the core of computational finance: the Binomial Option Pricing Model, Monte Carlo Simulation, and Finite Difference Methods for solving partial differential equations (PDEs) like the Black-Scholes equation.[2][3][4] We will also discuss the critical process of model validation through backtesting. While the topic is financial, the methodologies are rooted in applied mathematics and computer science, making them accessible to a broad audience of researchers and scientists.

The Binomial Option Pricing Model

The Binomial Option Pricing Model (BOPM) is a discrete-time numerical method for valuing options.[5] First formalized by Cox, Ross, and Rubinstein in 1979, the model is intuitive and can handle a variety of conditions, such as American-style options, which can be exercised at any time before expiration.[5][6] The core assumption is that over a small time step, the price of the underlying asset can only move to one of two possible prices: an "up" movement or a "down" movement.[6][7]

By constructing a "binomial tree" of possible future asset prices, the model traces the evolution of the option's underlying variables.[5][8] Valuation is then performed iteratively, starting from the option's known payoff at expiration and working backward to the present day to find its current value.[5]

Computational Protocol: Binomial Tree Valuation

The methodology for pricing an option using a binomial tree involves the following steps:

1. Tree Generation: Construct a binomial tree representing the possible price paths of the underlying asset.[8]
   - Define the number of time steps (N).
   - Calculate the size of the up (u) and down (d) movements and the risk-neutral probability (p) of an up move. These are typically derived from the asset's volatility (σ), the risk-free interest rate (r), and the time step duration (Δt).
2. Terminal Node Valuation: Calculate the option's value at each of the final nodes of the tree (at the expiration date). For a call option, this is max(0, S_T - K), and for a put option, it is max(0, K - S_T), where S_T is the asset price at expiration and K is the strike price.[7]
3. Backward Induction: Work backward from the final nodes. At each preceding node, calculate the option value as the discounted expected value of the two possible future nodes. The formula is: Option Price = e^(-rΔt) * [p * Option_up + (1 - p) * Option_down]
   - For American options, the value at each node is compared with the intrinsic value (the value if exercised immediately), and the higher of the two is chosen.[6]
4. Initial Node Value: The value calculated at the very first node of the tree is the estimated fair price of the option today.[6]
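The four steps can be sketched in a few lines of Python. The sketch assumes the Cox-Ross-Rubinstein parameterization u = e^(σ√Δt), d = 1/u, p = (e^(rΔt) - d)/(u - d), which is one common choice and is not spelled out in the text above. The function names and the sample inputs (S0 = 100, K = 100, r = 5%, σ = 20%, T = 1 year) are hypothetical. A closed-form Black-Scholes call price is included only as a reference value.

```python
import math

def crr_binomial_price(S0, K, r, sigma, T, N, kind="call", american=False):
    """Price an option on a CRR binomial tree with N time steps (a sketch)."""
    dt = T / N
    u = math.exp(sigma * math.sqrt(dt))   # assumed CRR up factor
    d = 1.0 / u                           # assumed CRR down factor
    p = (math.exp(r * dt) - d) / (u - d)  # risk-neutral probability of an up move
    disc = math.exp(-r * dt)
    sign = 1.0 if kind == "call" else -1.0

    # Terminal node valuation: j counts the number of up moves.
    values = [max(0.0, sign * (S0 * u**j * d**(N - j) - K)) for j in range(N + 1)]

    # Backward induction toward the root node.
    for step in range(N - 1, -1, -1):
        for j in range(step + 1):
            value = disc * (p * values[j + 1] + (1.0 - p) * values[j])
            if american:
                intrinsic = max(0.0, sign * (S0 * u**j * d**(step - j) - K))
                value = max(value, intrinsic)  # early exercise check
            values[j] = value
    return values[0]

def black_scholes_call(S0, K, r, sigma, T):
    """Closed-form European call price, used here only for comparison."""
    d1 = (math.log(S0 / K) + (r + 0.5 * sigma**2) * T) / (sigma * math.sqrt(T))
    d2 = d1 - sigma * math.sqrt(T)
    cdf = lambda x: 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))
    return S0 * cdf(d1) - K * math.exp(-r * T) * cdf(d2)

if __name__ == "__main__":
    reference = black_scholes_call(100, 100, 0.05, 0.2, 1.0)
    for steps in (10, 50, 200):
        tree = crr_binomial_price(100, 100, 0.05, 0.2, 1.0, steps)
        print(steps, round(tree, 4), round(abs(tree - reference), 4))
```

The in-place update of values is safe because index j is overwritten only after both values[j] and values[j + 1] from the later time step have been read.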

Quantitative Comparison: Binomial Model vs. Black-Scholes

As the number of time steps in the binomial model increases, its result for European options converges to the value given by the continuous-time Black-Scholes model.[5]

| Number of Steps (N) | Binomial Model Price (Call) | Black-Scholes Price (Call) | Absolute Error |
|---|---|---|---|
| 10 | $6.8021 | $6.9613 | $0.1592 |
| 50 | $6.9442 | $6.9613 | $0.0171 |
| 100 | $6.9525 | $6.9613 | $0.0088 |
| 500 | $6.9596 | $6.9613 | $0.0017 |
| 1000 | $6.9604 | $6.9613 | $0.0009 |

Monte Carlo Simulation

Monte Carlo simulation is a powerful stochastic method used to model the probability of different outcomes in a process that is affected by random variables.[9][10] In finance, it is widely used for valuing complex derivatives, performing sensitivity analysis, and assessing risk.[11][12] The method relies on repeated random sampling to generate thousands of possible future price paths for an underlying asset.[9] The option's payoff is calculated for each path, and the average of these payoffs, discounted to the present value, provides the option's price.[9]

Computational Protocol: Monte Carlo Option Pricing

1. Model the Stochastic Process: Define the mathematical model that describes the evolution of the underlying asset's price. Geometric Brownian Motion (GBM) is a common model for stock prices.[13]
   - dS = rS dt + σS dX
   - Where dS is the change in stock price, r is the risk-free rate, S is the stock price, dt is the time step, σ is the volatility, and dX is the increment of a Wiener process, i.e., a normally distributed random variable with mean 0 and variance dt.
2. Discretize the Path: Convert the continuous GBM model into a discrete-time formula to simulate the price path over N time steps until the option's expiration T.
   - S(t+Δt) = S(t) * exp((r - 0.5 * σ^2)Δt + σ * sqrt(Δt) * Z)
   - Where Z is a random number drawn from a standard normal distribution.
3. Simulate Price Paths: Generate a large number (M) of independent price paths for the asset from the current time to the expiration date using the discretized formula.
4. Calculate Payoffs: For each simulated price path i, determine the option's payoff at expiration. For a European call option, this is max(0, S_i(T) - K), where S_i(T) is the asset price at expiration on path i.
5. Average and Discount: Calculate the average of all the payoffs from the M simulations. Discount this average back to the present day using the risk-free interest rate to find the option's value.
   - Option Price = e^(-rT) * (1/M) * Σ(Payoff_i)
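The protocol above can be sketched with Python's standard library alone. The function name mc_european_call, the seed handling, and the sample inputs are assumptions made for illustration. A practical implementation would usually vectorize the inner loops with a numerical library.

```python
import math
import random

def mc_european_call(S0, K, r, sigma, T, n_steps, n_paths, seed=0):
    """Estimate a European call price and its standard error by simulation."""
    rng = random.Random(seed)            # fixed seed so runs are repeatable
    dt = T / n_steps
    drift = (r - 0.5 * sigma**2) * dt    # deterministic part of the log-return
    vol = sigma * math.sqrt(dt)          # scale of the random shock per step

    payoffs = []
    for _ in range(n_paths):
        s = S0
        for _ in range(n_steps):
            z = rng.gauss(0.0, 1.0)      # Z ~ N(0, 1)
            s *= math.exp(drift + vol * z)
        payoffs.append(max(0.0, s - K))  # call payoff at expiration

    mean = sum(payoffs) / n_paths
    variance = sum((x - mean) ** 2 for x in payoffs) / (n_paths - 1)
    discount = math.exp(-r * T)
    price = discount * mean
    std_error = discount * math.sqrt(variance / n_paths)
    return price, std_error

if __name__ == "__main__":
    estimate, err = mc_european_call(100, 100, 0.05, 0.2, 1.0, n_steps=10, n_paths=5000)
    print(f"price ~ {estimate:.3f}, standard error ~ {err:.3f}")
```

Returning the standard error alongside the price makes it possible to report a confidence interval, for example price ± 1.96 × standard error for an approximate 95% interval.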

Quantitative Data: Convergence of Monte Carlo Methods

The accuracy of a Monte Carlo simulation improves as the number of simulated paths increases. The standard error of the standard Monte Carlo estimator is proportional to 1/√M, where M is the number of paths. This means that to halve the error (double the accuracy), one must quadruple the number of simulations.

| Number of Paths (M) | Estimated Price (Call) | Standard Error | 95% Confidence Interval |
|---|---|---|---|
| 10,000 | $6.981 | $0.102 | [$6.781, $7.181] |
| 100,000 | $6.955 | $0.032 | [$6.892, $7.018] |
| 1,000,000 | $6.963 | $0.010 | [$6.943, $6.983] |
| 10,000,000 | $6.961 | $0.003 | [$6.955, $6.967] |

Finite Difference Methods for PDEs

Many problems in finance, including option pricing, can be modeled by a partial differential equation (PDE).[14] The famous Black-Scholes model, for instance, is a PDE that describes how an option's value evolves over time as a function of the underlying asset's price and other parameters.[14][15] Finite Difference Methods (FDM) solve these PDEs by approximating the continuous derivatives with discrete differences.[14][15] This transforms the PDE into a system of linear algebraic equations that can be solved on a grid.[16]

Computational Protocol: FDM for Black-Scholes

1. Discretize the Domain: Create a grid in the time (t) and asset price (S) dimensions. The grid will have N time steps and M asset price steps.[17]
2. Approximate Derivatives: Replace the partial derivatives in the Black-Scholes PDE with finite difference approximations (e.g., forward, backward, or central differences). This results in a stencil that relates the option value at one grid point to the values at neighboring points.[18]
3. Set Boundary Conditions: Define the value of the option at the boundaries of the grid. For a European call, for example, the value is 0 at S = 0 and approaches S_max - K * e^(-r(T - t)) at a sufficiently large upper boundary S_max.
4. Solve the System: Starting from the known values at expiration, step backward in time, solving the system of linear equations at each time step. There are several schemes to do this:
   - Explicit Method: Simple and fast, but only stable under certain conditions relating the time and asset price step sizes.
   - Implicit Method: Unconditionally stable but more computationally intensive per time step.
   - Crank-Nicolson Method: A combination of the two, offering unconditional stability and higher accuracy.[3][14]
5. Final Value: The solution on the grid at the initial time (t=0) for the current asset price gives the estimated option value.
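As a rough illustration of these steps, the sketch below applies the explicit scheme to a European call on a uniform grid in S. The function name, the grid sizes, the call boundary values (0 at S = 0 and S_max - K * e^(-rτ) at S_max, with τ the time to expiration), and the step-size check are assumptions made for this example. The check dt ≤ 1/(σ²M² + r) keeps the central coefficient non-negative and is used here as a practical stability guard rather than a rigorous bound.

```python
import math

def explicit_fd_call(S0, K, r, sigma, T, S_max, M, N):
    """European call via the explicit finite difference scheme (a sketch)."""
    dS = S_max / M
    dt = T / N
    if dt > 1.0 / (sigma**2 * M**2 + r):
        raise ValueError("time step too large for the explicit scheme on this grid")

    # Known values at expiration: the call payoff at every price node.
    V = [max(0.0, i * dS - K) for i in range(M + 1)]

    # Step backward in calendar time (forward in time-to-expiration tau).
    for n in range(1, N + 1):
        tau = n * dt
        new = [0.0] * (M + 1)
        for i in range(1, M):
            a = 0.5 * dt * (sigma**2 * i**2 - r * i)  # weight of V[i - 1]
            b = 1.0 - dt * (sigma**2 * i**2 + r)      # weight of V[i]
            c = 0.5 * dt * (sigma**2 * i**2 + r * i)  # weight of V[i + 1]
            new[i] = a * V[i - 1] + b * V[i] + c * V[i + 1]
        new[0] = 0.0                                   # call is worthless at S = 0
        new[M] = S_max - K * math.exp(-r * tau)        # deep in-the-money boundary
        V = new

    # Linear interpolation between the two grid nodes around S0.
    x = S0 / dS
    i = min(int(x), M - 1)
    w = x - i
    return (1.0 - w) * V[i] + w * V[i + 1]

if __name__ == "__main__":
    print(round(explicit_fd_call(100, 100, 0.05, 0.2, 1.0, S_max=300, M=150, N=1000), 4))
```

Because each new value depends only on three already-known neighbors, the explicit scheme needs no linear solver; the implicit and Crank-Nicolson schemes replace the inner loop with a tridiagonal solve at every time step.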

Quantitative Data: Comparison of FDM Schemes

Different FDM schemes offer trade-offs between stability, accuracy, and computational speed. The Crank-Nicolson method is often preferred for its balance of these properties.[3][19]

| Method | Stability Condition | Order of Accuracy (Time) | Order of Accuracy (Space) | Notes |
|---|---|---|---|---|
| Explicit | Conditionally Stable | O(Δt) | O(ΔS²) | Simple to implement, but stability constraint can be restrictive. |
| Fully Implicit | Unconditionally Stable | O(Δt) | O(ΔS²) | More computationally intensive per step than the explicit method. |
| Crank-Nicolson | Unconditionally Stable | O(Δt²) | O(ΔS²) | Higher accuracy in time; can produce spurious oscillations with non-smooth payoffs.[3][20] |

Model Validation: Backtesting

Developing a financial model is only part of the process; validating its performance is critical. Backtesting is the process of applying a predictive model or trading strategy to historical data to assess how it would have performed.[21][22] It provides empirical evidence of a strategy's potential effectiveness and helps identify its weaknesses before deploying real capital.[22]

Protocol for a Basic Backtest

1. Formulate a Hypothesis: Clearly define the strategy or model to be tested. For example, "A trading strategy based on a 50-day moving average crossover will be profitable."
2. Obtain Historical Data: Acquire a clean, high-quality dataset for the relevant financial instruments and time period. The evaluation data should not have been used to develop or tune the model, otherwise the results are inflated by overfitting. Each simulated decision must also use only information that was available at that point in time, which avoids lookahead bias.[23]
3. Simulate the Strategy: Code a simulation that iterates through the historical data, day by day or bar by bar.[21]
   - At each step, check if the strategy's conditions for entering or exiting a trade are met.
   - Record all hypothetical trades, including entry/exit prices, position sizes, and transaction costs.
4. Analyze Performance Metrics: Once the simulation is complete, calculate key performance metrics to evaluate the strategy.
5. Sensitivity Analysis and Review: Test the strategy on different time periods or with slightly different parameters to check for robustness.[24] A strategy that only works on a specific historical dataset is likely overfitted.

Key Backtesting Performance Metrics

| Metric | Description | Purpose |
|---|---|---|
| Net Profit/Loss | The total monetary gain or loss over the backtesting period.[21] | Measures the absolute profitability of the strategy. |
| Sharpe Ratio | The average return earned in excess of the risk-free rate per unit of volatility. | Measures risk-adjusted return; a higher value is better. |
| Maximum Drawdown | The largest peak-to-trough decline in portfolio value. | Measures the largest loss from a peak, indicating downside risk. |
| Win/Loss Ratio | The ratio of the number of winning trades to losing trades. | Indicates the consistency of the strategy's success. |
| Volatility | The standard deviation of the returns of the strategy.[21] | Measures the degree of variation in trading returns. |
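The metrics in the table can be computed from a series of per-period returns and a list of per-trade profits. The sketch below is one possible formulation: the function names, the annualization factor of 252 trading periods, and the sample numbers are assumptions for illustration, and conventions (for example, sample versus population standard deviation) vary between practitioners.

```python
import math

def equity_curve(returns, start=1.0):
    """Compound per-period returns into a portfolio value series."""
    curve = [start]
    for r in returns:
        curve.append(curve[-1] * (1.0 + r))
    return curve

def volatility(returns):
    """Sample standard deviation of per-period returns."""
    mean = sum(returns) / len(returns)
    return math.sqrt(sum((r - mean) ** 2 for r in returns) / (len(returns) - 1))

def sharpe_ratio(returns, risk_free_per_period=0.0, periods_per_year=252):
    """Annualized mean excess return per unit of volatility."""
    excess = [r - risk_free_per_period for r in returns]
    mean_excess = sum(excess) / len(excess)
    return mean_excess / volatility(excess) * math.sqrt(periods_per_year)

def max_drawdown(curve):
    """Largest peak-to-trough decline, as a fraction of the peak."""
    peak = curve[0]
    worst = 0.0
    for value in curve:
        peak = max(peak, value)
        worst = max(worst, (peak - value) / peak)
    return worst

def win_loss_ratio(trade_pnls):
    """Number of winning trades divided by number of losing trades."""
    wins = sum(1 for pnl in trade_pnls if pnl > 0)
    losses = sum(1 for pnl in trade_pnls if pnl < 0)
    return float("inf") if losses == 0 else wins / losses

if __name__ == "__main__":
    sample_returns = [0.01, -0.005, 0.02, 0.0, -0.015, 0.007]  # hypothetical data
    curve = equity_curve(sample_returns)
    print("net return:", curve[-1] / curve[0] - 1.0)
    print("sharpe:", sharpe_ratio(sample_returns))
    print("max drawdown:", max_drawdown(curve))
    print("win/loss:", win_loss_ratio([10.0, -5.0, 3.0, -2.0, 7.0]))
```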

References

- 1. questdb.com [questdb.com]

- 2. Numerical and Analytic Methods in Option Pricing - Overleaf, Online LaTeX Editor [overleaf.com]

- 3. scienpress.com [scienpress.com]

- 4. scribd.com [scribd.com]

- 5. Binomial options pricing model - Wikipedia [en.wikipedia.org]

- 6. Understanding the Binomial Option Pricing Model for Valuing Options [investopedia.com]

- 7. forecastr.co [forecastr.co]

- 8. Binomial Option Pricing Model for Algo Traders [akashmitra.com]

- 9. corporatefinanceinstitute.com [corporatefinanceinstitute.com]

- 10. Monte Carlo Simulation: What It Is, How It Works, History, 4 Key Steps [investopedia.com]

- 11. Monte Carlo Simulation in Financial Modeling – Magnimetrics [magnimetrics.com]

- 12. projectionlab.com [projectionlab.com]

- 13. abhyankar-ameya.medium.com [abhyankar-ameya.medium.com]

- 14. Finite difference methods for option pricing - Wikipedia [en.wikipedia.org]

- 15. Finite Difference Methods: A Numerical Approach to Option Pricing and Derivatives | HackerNoon [hackernoon.com]

- 16. C++ Explicit Euler Finite Difference Method for Black Scholes | QuantStart [quantstart.com]

- 17. Finite Difference Method for the Multi-Asset Black–Scholes Equations [mdpi.com]

- 18. antonismolski.medium.com [antonismolski.medium.com]

- 19. upcommons.upc.edu [upcommons.upc.edu]

- 20. homepages.ucl.ac.uk [homepages.ucl.ac.uk]

- 21. corporatefinanceinstitute.com [corporatefinanceinstitute.com]

- 22. Backtesting Investment Strategies with Historical ... | FMP [site.financialmodelingprep.com]

- 23. Reddit - The heart of the internet [reddit.com]

- 24. m.youtube.com [m.youtube.com]

Understanding formal languages and automata CS476

An In-depth Technical Guide to Formal Languages and Automata Theory

Introduction to Formal Languages and Automata

Automata theory is a foundational pillar of theoretical computer science, dealing with the design and analysis of abstract self-propelled computing devices, known as automata.[1][2] These mathematical models are used to solve computational problems by following a predetermined sequence of operations.[1][2][3] The theory is inextricably linked to formal language theory, which provides a framework for defining and classifying languages based on the complexity of the grammars that generate them.[2][4][5] A formal language is a set of strings, where each string is a finite sequence of symbols from a specified alphabet, formed according to a specific set of rules.[5][6]

The study of formal languages and automata is crucial for understanding the limits of computation, and it has profound practical applications in various domains. These include compiler design, where automata are used for lexical analysis and parsing; natural language processing (NLP) for understanding human language syntax; artificial intelligence for modeling decision-making processes; and bioinformatics for pattern analysis in biological sequences.[1][2][7][8] The Chomsky Hierarchy, proposed by linguist Noam Chomsky, provides a critical framework that classifies formal languages into four nested types, each corresponding to a specific type of automaton.[4]

Core Concepts: The Building Blocks of Computation

Understanding formal languages begins with a few key definitions:

- Symbol: An abstract, user-defined entity. Examples include letters, digits, or special characters.[3][5]
- Alphabet (Σ): A finite, non-empty set of symbols. For example, a binary alphabet is Σ = {0, 1}.[3][6]
- String: A finite sequence of symbols chosen from an alphabet. The empty string, denoted by ε, is a string with zero symbols.[6]
- Language (L): A set of strings over a given alphabet. A language can be finite or infinite.[3][4][9]

Automata are the machines that recognize or generate these languages. They process input strings and either accept or reject them based on a set of rules.

Type 3: Regular Languages and Finite Automata

Regular languages represent the simplest class of formal languages. They can be described by regular expressions and are recognized by Finite Automata (FA). FAs have a finite number of states and transition between these states based on input symbols.[2] They are widely used in text processing, pattern matching, and the lexical analysis phase of a compiler.[1][7][10]

There are two types of Finite Automata:

- Deterministic Finite Automaton (DFA): For every state and every input symbol, there is exactly one transition to a next state.[11] DFAs are efficient for implementation.
- Non-deterministic Finite Automaton (NFA): A state can have zero, one, or multiple transitions for a given input symbol. Every NFA can be converted into an equivalent DFA.

Experimental Protocol: DFA Operation

A DFA is formally defined as a 5-tuple (Q, Σ, δ, q₀, F):

- Q: A finite set of states.
- Σ: A finite set of input symbols (the alphabet).
- δ: The transition function (δ: Q × Σ → Q).
- q₀: The initial state.
- F: A set of final or accepting states.

Methodology:

1. The DFA begins in the initial state, q₀.
2. It reads the input string one symbol at a time, from left to right.
3. For each symbol, it transitions to a new state as dictated by the transition function δ.
4. After the last symbol is read, the machine halts.
5. If the DFA is in a final state (a state in F), the input string is accepted. Otherwise, it is rejected.

Below is a diagram of a DFA that accepts binary strings containing an even number of '0's.
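The same even-number-of-0s machine can be written out as a transition table and run with a few lines of Python. The state names "even" and "odd" and the function run_dfa are hypothetical labels chosen for this sketch.

```python
def run_dfa(transitions, start, accepting, text):
    """Run a DFA given as a dict mapping (state, symbol) to the next state."""
    state = start
    for symbol in text:
        state = transitions[(state, symbol)]  # exactly one move per symbol
    return state in accepting

# Q = {"even", "odd"}, alphabet = {"0", "1"}, q0 = "even", F = {"even"}
EVEN_ZEROS = {
    ("even", "0"): "odd",
    ("even", "1"): "even",
    ("odd", "0"): "even",
    ("odd", "1"): "odd",
}

for word in ["", "1001", "10", "000"]:
    print(repr(word), run_dfa(EVEN_ZEROS, "even", {"even"}, word))
```

The empty string is accepted because it contains zero 0s, and zero is even; this follows directly from the start state also being an accepting state.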

Type 2: Context-Free Languages and Pushdown Automata

Context-Free Languages (CFLs) form a larger class of languages than regular languages and are generated by Context-Free Grammars (CFGs).[12][13] CFGs are essential for describing the syntax of most programming languages.[12][14] These languages are recognized by a more powerful automaton called a Pushdown Automaton (PDA).

A PDA is essentially a finite automaton equipped with a stack—an auxiliary memory with last-in, first-out (LIFO) access. This stack allows the PDA to "remember" an unbounded amount of information, which is necessary for recognizing languages that require counting or matching nested structures, such as balanced parentheses.
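The role of the stack can be illustrated with a balanced-parentheses recognizer. This is a simplified, deterministic sketch in the spirit of a PDA rather than a full formal PDA definition; the function name is_balanced is an assumption.

```python
def is_balanced(text):
    """Accept strings of '(' and ')' whose parentheses are properly nested."""
    stack = []
    for symbol in text:
        if symbol == "(":
            stack.append(symbol)      # push: remember an unmatched opener
        elif symbol == ")":
            if not stack:
                return False          # closer with nothing to match
            stack.pop()               # pop: match the most recent opener
        else:
            raise ValueError(f"symbol {symbol!r} is not in the alphabet")
    return not stack                  # accept only if every opener was matched

for word in ["", "(())()", "(()", ")("]:
    print(repr(word), is_balanced(word))
```

A finite automaton cannot perform this check for arbitrary nesting depth, because it would need a distinct state for every possible number of unmatched openers.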

Experimental Protocol: CFG Derivation

A CFG is formally defined as a 4-tuple (V, T, P, S):

- V: A finite set of non-terminal symbols (variables).
- T: A finite set of terminal symbols (the alphabet), disjoint from V.
- P: A finite set of production rules, where each rule is of the form A → α, with A in V and α being a string of symbols from (V ∪ T)*.
- S: The start symbol, a special non-terminal.

Methodology:

1. Begin with a string consisting only of the start symbol S.
2. Choose a non-terminal symbol in the current string.
3. Select a production rule that has this non-terminal on its left-hand side.
4. Replace the non-terminal with the right-hand side of the chosen rule.
5. Repeat steps 2-4 until the string contains only terminal symbols. This final string is a member of the language generated by the grammar.
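As a worked instance of this procedure, the sketch below derives strings of the language {aⁿbⁿ : n ≥ 0} from the grammar S → aSb | ε. The grammar choice and the function names apply_rule and derive_anbn are assumptions made for illustration.

```python
def apply_rule(current, non_terminal, replacement):
    """Replace the leftmost occurrence of non_terminal with replacement."""
    if non_terminal not in current:
        raise ValueError(f"{non_terminal!r} does not occur in {current!r}")
    return current.replace(non_terminal, replacement, 1)

def derive_anbn(n):
    """Return every sentential form in the derivation of a^n b^n."""
    current = "S"                               # step 1: start symbol
    steps = [current]
    for _ in range(n):
        current = apply_rule(current, "S", "aSb")  # rule S -> aSb
        steps.append(current)
    current = apply_rule(current, "S", "")         # rule S -> epsilon ends the derivation
    steps.append(current)
    return steps

print(" => ".join(step or "ε" for step in derive_anbn(2)))
```

Each entry in the returned list is one sentential form, so the list as a whole is a record of the derivation from the start symbol to the final terminal string.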

The following diagram illustrates the components of a Pushdown Automaton.

Type 0: Recursively Enumerable Languages and Turing Machines

The most powerful automaton is the Turing Machine (TM), conceived by Alan Turing in 1936.[15] It serves as the theoretical foundation for all modern computers.[16] A TM can simulate any computer algorithm, regardless of its complexity.[15][17] The class of languages that a Turing Machine can accept is known as the recursively enumerable languages.

A Turing Machine consists of an infinite tape that serves as its memory, a tape head that can read and write symbols on the tape, and a finite set of states that governs its behavior.[17][18] Unlike simpler automata, the tape head can move both left and right, allowing the machine to re-examine and modify any part of the input.

Experimental Protocol: Turing Machine Operation

A Turing Machine is formally a 7-tuple (Q, Σ, Γ, δ, q₀, B, F):

- Q: A finite set of states.
- Σ: The input alphabet.
- Γ: The tape alphabet (Σ ⊆ Γ).
- δ: The transition function (δ: Q × Γ → Q × Γ × {L, R}).
- q₀: The initial state.
- B: The blank symbol.
- F: The set of final or accepting states.

Methodology:

1. The input string is written on the tape, surrounded by blank symbols. The tape head starts at the first symbol of the input.
2. The machine is in the initial state q₀.
3. Based on the current state and the symbol under the tape head, the transition function δ determines:
   a. The next state to move to.
   b. The symbol to write on the tape (replacing the current one).
   c. The direction to move the tape head (Left or Right).
4. This process repeats.
5. The machine halts if it enters a final state (accepting the input) or if there is no defined transition for the current configuration (rejecting the input).
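The operation described above can be simulated directly. In this sketch the tape is a dictionary from positions to symbols, which stands in for the infinite tape; the example machine (it inverts every bit of a binary string and then accepts), the state names, the blank symbol "_", and the max_steps safety limit are all assumptions made for illustration.

```python
def tape_contents(tape, blank):
    """Read the tape left to right and trim surrounding blanks."""
    return "".join(tape[i] for i in sorted(tape)).strip(blank)

def run_tm(delta, start, accepting, blank, tape_input, max_steps=10_000):
    """Simulate a single-tape Turing Machine; return (accepted, tape contents)."""
    tape = dict(enumerate(tape_input))  # unwritten cells are implicitly blank
    state, head = start, 0
    for _ in range(max_steps):
        if state in accepting:
            return True, tape_contents(tape, blank)
        key = (state, tape.get(head, blank))
        if key not in delta:
            return False, tape_contents(tape, blank)  # no transition: reject
        state, symbol, move = delta[key]
        tape[head] = symbol                 # write
        head += 1 if move == "R" else -1    # move the head
    raise RuntimeError("step limit reached; the machine may not halt")

# Hypothetical machine: flip each bit, accept on reaching the first blank.
FLIP_BITS = {
    ("q0", "0"): ("q0", "1", "R"),
    ("q0", "1"): ("q0", "0", "R"),
    ("q0", "_"): ("q_accept", "_", "R"),
}

print(run_tm(FLIP_BITS, "q0", {"q_accept"}, "_", "1001"))
```

The step limit exists because a simulator cannot decide in general whether a given machine halts; it simply stops the experiment after a fixed budget.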

The Chomsky Hierarchy of Languages

The Chomsky Hierarchy provides a clear, nested classification of formal languages, connecting language types with the automata that recognize them and the grammars that generate them. This hierarchy is fundamental to understanding the expressive power and computational complexity of different language classes.

| Type | Language Class | Grammar | Recognizing Automaton | Examples |
|---|---|---|---|---|
| Type-3 | Regular | Regular | Finite Automaton (DFA/NFA) | ab |
| Type-2 | Context-Free | Context-Free | Pushdown Automaton (PDA) | {aⁿbⁿ : n ≥ 0} |
| Type-1 | Context-Sensitive | Context-Sensitive | Linear-Bounded Automaton (LBA) | {aⁿbⁿcⁿ : n ≥ 0} |
| Type-0 | Recursively Enumerable | Unrestricted | Turing Machine (TM) | Any language whose strings a Turing Machine can accept, even if it may run forever on strings outside the language |

This hierarchy illustrates a clear progression in computational power. Every regular language is context-free, every context-free language is context-sensitive, and every context-sensitive language is recursively enumerable.

References

- 1. quora.com [quora.com]

- 2. Automata theory - Wikipedia [en.wikipedia.org]

- 3. youtube.com [youtube.com]

- 4. Applications of Automata Theory [cs.stanford.edu]

- 5. gcekjr.ac.in [gcekjr.ac.in]

- 6. Automata Theory Tutorial [tutorialspoint.com]

- 7. Applications of various Automata - GeeksforGeeks [geeksforgeeks.org]

- 8. tutorialspoint.com [tutorialspoint.com]

- 9. youtube.com [youtube.com]

- 10. quora.com [quora.com]

- 11. Deterministic finite automaton - Wikipedia [en.wikipedia.org]

- 12. Context Free Grammars | Brilliant Math & Science Wiki [brilliant.org]

- 13. fiveable.me [fiveable.me]

- 14. medium.com [medium.com]

- 15. Department of Computer Science and Technology – Raspberry Pi: Introduction: What is a Turing machine? [cl.cam.ac.uk]

- 16. reddit.com [reddit.com]

- 17. Turing machine - Wikipedia [en.wikipedia.org]

- 18. Turing Machines | Brilliant Math & Science Wiki [brilliant.org]

Unveiling the Blueprint of Modern Software: A Technical Deep Dive into Design Patterns


Methodological & Application

Application Notes: Applying Hoare Logic for Program Verification

Introduction

This document provides an overview of and protocols for applying Hoare Logic, a foundational formal system for reasoning rigorously about the correctness of computer programs.[8][9][10] Originally developed by Tony Hoare, this logic provides a structured way to think about program behavior that should be intuitive to researchers accustomed to the rigor of the scientific method.[8][11] By viewing programs as formal protocols and their specifications as testable hypotheses, we can build a higher degree of confidence in our computational tools.

Application Notes

Core Concept: The Hoare Triple

The central concept in Hoare Logic is the Hoare Triple, denoted as {P} C {Q}.[10] This can be understood through an analogy to an experimental protocol:

- {P} - The Precondition: An assertion that describes the initial state required before the program C is executed. This is analogous to the starting conditions of an experiment, such as the purity of reagents, the initial temperature, or the format of an input data file.
- C - The Command: The program or a piece of code itself. This is the experimental procedure or the sequence of data processing steps.
- {Q} - The Postcondition: An assertion that describes the guaranteed final state after C successfully executes. This is the expected outcome of the experiment, such as the properties of the resulting compound or the expected characteristics of the output data.

A Hoare Triple {P} C {Q} expresses partial correctness. It means that if the precondition P is true before C runs, and if C terminates, then the postcondition Q will be true in the final state.[9][10] Proving termination is a separate concern.[9]

Diagram 1: Conceptual Model of a Hoare Triple

Caption: A Hoare Triple asserts that if a program C starts in a state satisfying precondition P and terminates, it will end in a state satisfying postcondition Q.
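The meaning of a triple can be illustrated, though not proven, by testing it on sample states. The sketch below assumes that a state is a Python dictionary of integer variables and that commands are functions from state to state; `holds_on_samples` and `increment_x` are hypothetical names chosen for this illustration. Because every command here terminates, the distinction between partial and total correctness does not arise.

```python
from typing import Callable, Dict, Iterable

State = Dict[str, int]
Predicate = Callable[[State], bool]
Command = Callable[[State], State]


def holds_on_samples(pre: Predicate, command: Command, post: Predicate,
                     samples: Iterable[State]) -> bool:
    """Return True if no sampled state refutes the triple {pre} command {post}."""
    for state in samples:
        if not pre(state):
            continue  # the triple makes no claim about states that violate P
        final = command(dict(state))  # run C on a copy of the state
        if not post(final):
            return False  # counterexample: P held before, Q fails after
    return True


def increment_x(state: State) -> State:
    """The command x := x + 1."""
    state["x"] = state["x"] + 1
    return state


samples = [{"x": v} for v in range(-5, 6)]

# {x >= 0} x := x + 1 {x > 0} is not refuted by any sample.
valid = holds_on_samples(lambda s: s["x"] >= 0, increment_x,
                         lambda s: s["x"] > 0, samples)

# {true} x := x + 1 {x > 0} is refuted, for example by x = -5.
invalid = holds_on_samples(lambda s: True, increment_x,
                           lambda s: s["x"] > 0, samples)
```

Passing such a test only shows that no counterexample was found among the samples; a Hoare Logic proof covers all states that satisfy the precondition.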

The Role of Inference Rules

Hoare Logic is a formal system equipped with a set of inference rules that allow for the compositional verification of programs.[8][12] This means the correctness proof of a large program is constructed from the proofs of its smaller constituent parts, mirroring the program's structure.[8][13] This structured approach is similar to how a complex synthesis is broken down into individual, verifiable reaction steps.

Loop Invariants: Reasoning About Iteration

Scientific algorithms frequently involve loops (e.g., for iterative optimization, sequence alignment, or processing large datasets). Reasoning about loops requires a special assertion called a loop invariant.[12] An invariant is a property that holds before the first loop iteration and is preserved by every execution of the loop body, so it still holds when the loop exits. It captures the essential, unchanging property of the loop's operation and is critical for proving its correctness.
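As a small illustration, a loop invariant can be written down as a runtime assertion. The sketch below is an assumption-laden example rather than a proof: `running_total` is a hypothetical function, and the assertions only check the invariant on the inputs actually supplied.

```python
from typing import List


def running_total(values: List[int]) -> int:
    """Sum a list while checking the loop invariant at run time."""
    total = 0
    i = 0
    # Invariant I: 0 <= i <= len(values) and total == sum(values[:i]).
    # It holds before the first iteration because sum([]) == 0.
    assert 0 <= i <= len(values) and total == sum(values[:i])
    while i < len(values):
        total += values[i]
        i += 1
        # The loop body preserves I.
        assert 0 <= i <= len(values) and total == sum(values[:i])
    # On exit, I holds and the loop condition is false, so i == len(values)
    # and therefore total == sum(values).
    return total
```

For example, `running_total([3, 1, 4])` returns 8. The final comment is the step a formal proof would justify: the invariant together with the negated loop condition implies the postcondition.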

Protocols

Protocol 1: General Workflow for Program Verification

This protocol outlines the high-level steps for formally verifying a program's correctness against its specification using Hoare Logic.

Methodology:

- Formal Specification:

  - Define the program's intended purpose.

  - Translate this purpose into a formal precondition {P} (what the program assumes about its inputs) and a postcondition {Q} (what it guarantees about its outputs).

- Program Annotation:

  - For programs with loops, define a suitable loop invariant for each loop. This is often the most intellectually demanding step.

  - Insert intermediate assertions between program statements to break the proof into smaller, manageable steps.

- Generate Verification Conditions:

  - Apply the inference rules of Hoare Logic (see Protocol 2) systematically, starting from the postcondition and working backward to the precondition.

  - This process generates a set of mathematical obligations (predicates) that must be proven true.

- Discharge Verification Conditions:

  - Prove that each generated predicate is a valid logical implication. For example, prove that the program's precondition implies the precondition required by the first statement.

  - This step often requires a theorem prover or logical deduction. If all conditions are proven, the program is considered verified.[12]

Diagram 2: Experimental Workflow for Program Verification

Caption: The workflow for verifying a program, moving from high-level specification to detailed logical proof.
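The workflow in Protocol 1 can be sketched for one small annotated loop. The example program, its invariant, and all function names below are assumptions made for illustration. The verification conditions are checked by exhaustive search over a small range of integers, which stands in for the theorem prover mentioned above and is not itself a proof.

```python
from itertools import product

# Annotated program (assumed example):
#   {n >= 0}
#   i := 0; s := 0;
#   while i < n do (s := s + i; i := i + 1)
#   {2*s == n*(n-1)}


def implies(a: bool, b: bool) -> bool:
    return (not a) or b


def pre(n): return n >= 0
def guard(n, i): return i < n
def post(n, s): return 2 * s == n * (n - 1)


def inv_strong(n, i, s):
    """Candidate invariant that bounds i and relates s to i."""
    return 0 <= i <= n and 2 * s == i * (i - 1)


def inv_weak(n, i, s):
    """Candidate invariant that omits the bound on i."""
    return 2 * s == i * (i - 1)


def check_vcs(inv, bound=6):
    """Check the three verification conditions on a bounded integer range."""
    ints = range(-bound, bound + 1)
    sums = range(-bound, bound * bound + 1)
    states = list(product(ints, ints, sums))
    # 1. Initiation: the precondition establishes the invariant after i := 0; s := 0.
    initiation = all(implies(pre(n), inv(n, 0, 0)) for n in ints)
    # 2. Preservation: {I and B} body {I}, using the Assignment rule for the body.
    preservation = all(
        implies(inv(n, i, s) and guard(n, i), inv(n, i + 1, s + i))
        for n, i, s in states
    )
    # 3. Exit: the invariant and the negated guard imply the postcondition.
    exit_condition = all(
        implies(inv(n, i, s) and not guard(n, i), post(n, s))
        for n, i, s in states
    )
    return initiation, preservation, exit_condition


strong_result = check_vcs(inv_strong)
weak_result = check_vcs(inv_weak)
```

Under these assumptions, `inv_strong` passes all three checks, while `inv_weak` is preserved by the loop body but fails the exit condition (for example at n = 1, i = 2, s = 1). This shows why choosing the invariant is the demanding step: it must be strong enough to imply the postcondition, not merely true throughout the loop.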

Protocol 2: Application of Core Hoare Logic Inference Rules

This protocol details the primary rules used to generate verification conditions. These rules are applied to prove that a program satisfies its specification.

Data Summary Table:

| Rule Name | Hoare Triple (Conclusion) | Premise(s) (What must be proven) | Description |
|---|---|---|---|
| Assignment | {P[E/x]} x := E {P} | None (Axiom) | To ensure P is true after assigning E to x, the precondition must be P with all free occurrences of x replaced by E.[8][11] |
| Sequence | {P} C1; C2 {Q} | {P} C1 {R} and {R} C2 {Q} | To prove correctness of a sequence, find an intermediate assertion R that is the postcondition of C1 and the precondition of C2.[12][13] |
| Conditional (If) | {P} if B then C1 else C2 {Q} | {P ∧ B} C1 {Q} and {P ∧ ¬B} C2 {Q} | One must prove that both the 'then' branch (when B is true) and the 'else' branch (when B is false) lead to the same postcondition Q.[11] |
| Loop (While) | {I} while B do C {I ∧ ¬B} | {I ∧ B} C {I} | One must find a loop invariant I and prove that it is maintained by the loop body C when the loop condition B is true.[11][14] |
| Consequence | {P} C {Q} | P ⇒ P', {P'} C {Q'}, Q' ⇒ Q | A triple proven for P' and Q' also holds for any stronger precondition P (one that implies P') and any weaker postcondition Q (one implied by Q').[9][15] |
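The Assignment and Sequence rules in the table can be read backward as predicate transformers: given a postcondition, they compute a precondition that guarantees it. The sketch below assumes that states are dictionaries and that assertions and expressions are Python functions of the state; `assign` and `sequence` are hypothetical helper names. Substituting E for x in P is modeled by evaluating P in the state where x has already been updated.

```python
from typing import Callable, Dict

State = Dict[str, int]
Predicate = Callable[[State], bool]
Expression = Callable[[State], int]
Transformer = Callable[[Predicate], Predicate]


def assign(var: str, expr: Expression) -> Transformer:
    """Assignment rule: from postcondition P, build the precondition P[E/x]."""
    def transformer(post: Predicate) -> Predicate:
        # P[E/x] holds in s exactly when P holds in s with var set to E(s).
        return lambda s: post({**s, var: expr(s)})
    return transformer


def sequence(*commands: Transformer) -> Transformer:
    """Sequence rule: push the postcondition backward, last command first."""
    def transformer(post: Predicate) -> Predicate:
        for command in reversed(commands):
            post = command(post)  # each result is the intermediate assertion R
        return post
    return transformer


# Program: t := x; x := y; y := t  (swap x and y via a temporary)
swap = sequence(
    assign("t", lambda s: s["x"]),
    assign("x", lambda s: s["y"]),
    assign("y", lambda s: s["t"]),
)

# Postcondition Q: x == 7 and y == 3
post = lambda s: s["x"] == 7 and s["y"] == 3
pre = swap(post)  # computed precondition, equivalent to y == 7 and x == 3

holds = pre({"x": 3, "y": 7, "t": 0})
fails = pre({"x": 7, "y": 3, "t": 0})
```

Here `holds` is True and `fails` is False, matching the expectation that the swap ends with x == 7 and y == 3 only when it starts with x == 3 and y == 7. The Conditional, Loop, and Consequence rules are not covered by this sketch.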

Diagram 3: Logical Structure of a Hoare Proof

Hoare Logic provides a formal, deductive framework for ensuring the correctness of software, much like how mathematical proofs provide certainty in other scientific domains. For researchers, scientists, and drug development professionals who rely on complex computational models and data analysis pipelines, embracing the principles of formal verification can significantly enhance the reliability and trustworthiness of their work.[2][16][17] While manual application of Hoare Logic can be intensive, its principles form the foundation of modern automated program verification tools that can make this level of rigor more accessible.[12] Adopting this verification-oriented mindset is a crucial step toward building more robust and dependable scientific software.

References

- 1. Verifiable biology - PubMed [pubmed.ncbi.nlm.nih.gov]

- 2. Galois - Formal Methods and Scientific Computing [galois.com]

- 3. academic.oup.com [academic.oup.com]

- 4. Verification and validation of bioinformatics software without a gold standard: a case study of BWA and Bowtie - PMC [pmc.ncbi.nlm.nih.gov]

- 5. Formal methods - Wikipedia [en.wikipedia.org]

- 6. Formal Methods and Logic – Penn Computer & Information Science Highlights [highlights.cis.upenn.edu]

- 7. Formal Methods [users.ece.cmu.edu]

- 8. Hoare: Hoare Logic, Part I [softwarefoundations.cis.upenn.edu]

- 9. users.cecs.anu.edu.au [users.cecs.anu.edu.au]

- 10. www3.risc.jku.at [www3.risc.jku.at]

- 11. Hoare logic - Wikipedia [en.wikipedia.org]

- 12. fiveable.me [fiveable.me]

- 13. cs.princeton.edu [cs.princeton.edu]

- 14. cs.utexas.edu [cs.utexas.edu]

- 15. cs.iit.edu [cs.iit.edu]

- 16. academic.oup.com [academic.oup.com]

- 17. m.youtube.com [m.youtube.com]

Application Notes & Protocols: Finite Automata in Lexical Analysis Research

Audience: Researchers, Scientists, and Drug Development Professionals

Introduction:

Lexical analysis is the foundational phase of compiling a computer program, where a stream of input characters is converted into a sequence of meaningful units called tokens.[1] This process is driven by a powerful mathematical model known as the finite automaton. For researchers in fields like bioinformatics and drug development, the principles of lexical analysis offer a robust framework for complex pattern matching. Whether searching for specific motifs in genomic sequences, identifying functional groups in chemical structure data (e.g., SMILES strings), or parsing large-scale experimental data logs, the underlying algorithms are directly analogous and immensely powerful.[2] This document provides detailed protocols and technical notes on leveraging finite automata for such research applications.

Core Concepts: From Regular Expressions to Deterministic Finite Automata (DFA)

At its heart, lexical analysis is about recognizing patterns. These patterns are formally described using regular expressions. A regular expression is a sequence of characters that specifies a search pattern. For example, in bioinformatics, a regular expression could define a DNA binding site. To use these patterns computationally, they are converted into a finite automaton.

The standard process involves a two-step conversion:

- Regular Expression to Nondeterministic Finite Automaton (NFA): NFAs are easier to derive from a regular expression, but they can be ambiguous in their operation because a state may have several possible next states for the same input symbol.[3]

- NFA to Deterministic Finite Automaton (DFA): DFAs are unambiguous and fast for recognition, as for any given state and input symbol, there is only one possible next state.[3] This makes them ideal for high-throughput scanning.

The overall workflow from a set of pattern definitions (regular expressions) to an efficient scanner is a cornerstone of computer science.
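The second conversion step is commonly done with the subset construction, in which each DFA state is a set of NFA states. The sketch below makes several assumptions: the NFA is written by hand rather than derived from a regular expression, it has no epsilon transitions, the alphabet is the four DNA letters, and the motif TATA is an arbitrary example. All names are hypothetical.

```python
from typing import Dict, FrozenSet, Set, Tuple

ALPHABET = "ACGT"

# Hand-written NFA for "the sequence contains TATA".
# Keys are (state, symbol); values are the sets of possible next states.
NFA: Dict[Tuple[int, str], Set[int]] = {
    (0, "A"): {0}, (0, "C"): {0}, (0, "G"): {0}, (0, "T"): {0, 1},
    (1, "A"): {2},
    (2, "T"): {3},
    (3, "A"): {4},
    (4, "A"): {4}, (4, "C"): {4}, (4, "G"): {4}, (4, "T"): {4},
}
NFA_START = 0
NFA_ACCEPT = {4}

DfaState = FrozenSet[int]


def subset_construction(nfa, start, accept, alphabet):
    """Build a DFA whose states are sets of NFA states (no epsilon moves)."""
    start_set: DfaState = frozenset({start})
    dfa: Dict[Tuple[DfaState, str], DfaState] = {}
    seen = {start_set}
    worklist = [start_set]
    while worklist:
        current = worklist.pop()
        for symbol in alphabet:
            # Union of all NFA moves from any state in the current set.
            target = frozenset(
                t for q in current for t in nfa.get((q, symbol), ())
            )
            dfa[(current, symbol)] = target
            if target not in seen:
                seen.add(target)
                worklist.append(target)
    # A DFA state accepts if it contains at least one accepting NFA state.
    dfa_accept = {state for state in seen if state & accept}
    return dfa, start_set, dfa_accept


def dfa_accepts(dfa, start, accept, text: str) -> bool:
    """Scan the text with exactly one table lookup per character."""
    state = start
    for ch in text:
        state = dfa[(state, ch)]  # raises KeyError for symbols outside ALPHABET
    return state in accept


DFA, DFA_START, DFA_ACCEPT = subset_construction(NFA, NFA_START, NFA_ACCEPT, ALPHABET)
found = dfa_accepts(DFA, DFA_START, DFA_ACCEPT, "GGTATAAC")
missing = dfa_accepts(DFA, DFA_START, DFA_ACCEPT, "GGTAACTA")
```

Here `found` is True and `missing` is False. The resulting table has a single entry per state and symbol, which is what makes the scan loop a fixed amount of work per input character.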


Application Notes and Protocols: Research Applications of Context-Free Grammars

This document provides detailed application notes and protocols on the research uses of Context-Free Grammars (CFGs). It is intended for researchers, scientists, and drug development professionals who are interested in the application of formal language theory to solve complex problems in bioinformatics, natural language processing, and computer science.

Application 1: Bioinformatics - RNA Secondary Structure Prediction

Application Note

Context-Free Grammars are exceptionally well-suited for modeling the secondary structure of RNA molecules. The folding of a single-stranded RNA molecule is largely determined by the formation of hydrogen bonds between complementary bases (Adenine-Uracil, Guanine-Cytosine). This base pairing creates stem-loop structures with a nested, non-crossing dependency, which is a hallmark of context-free languages. In this analogy, the nucleotides are the terminal symbols of the grammar, and the production rules define how they can pair and form structural elements like stems, loops, and bulges.[1]

Stochastic Context-Free Grammars (SCFGs) are a probabilistic extension used to score and rank potential structures.[2][3] In an SCFG, each production rule is assigned a probability, representing the likelihood of that particular structural formation.[1] By finding the parse tree with the highest probability for a given RNA sequence, researchers can predict its most likely secondary structure.[4][5] This predictive power is crucial for understanding RNA function, designing RNA-based therapeutics, and interpreting experimental data in drug development. SCFGs can be trained on databases of known RNA structures to learn the probabilities of various structural motifs.[2][6]

Experimental Protocol: RNA Folding via Nussinov's Algorithm

The Nussinov algorithm is a foundational dynamic programming method for predicting RNA secondary structure by maximizing the number of complementary base pairs. It provides a clear example of how the nested structure problem is solved computationally.[7][8]

Objective: To find an RNA secondary structure with the maximum number of non-crossing base pairs for a given RNA sequence.

Methodology:

- Initialization: Given an RNA sequence S of length L, create an L x L matrix, N. Set N[i, i] = 0 for all i from 1 to L and N[i, i-1] = 0 for all i from 2 to L.[9] This represents the base case: a subsequence of length 1 or 0 has zero base pairs.

- Matrix Filling (Recurrence): Fill the matrix so that every shorter subsequence is scored before the longer subsequences that contain it. For i from L-1 down to 1, and j from i+1 to L, set N[i, j] to the maximum of the following four cases:[10]

  - N[i+1, j] (Nucleotide i is unpaired)

  - N[i, j-1] (Nucleotide j is unpaired)

  - N[i+1, j-1] + score(S[i], S[j]) (Nucleotides i and j form a pair)

  - max(N[i, k] + N[k+1, j]) for k from i to j-1 (The structure bifurcates into two independent substructures)

  The score(S[i], S[j]) is 1 if S[i] and S[j] are complementary bases (e.g., A-U, G-C) and 0 otherwise.

-