AI-3

Description

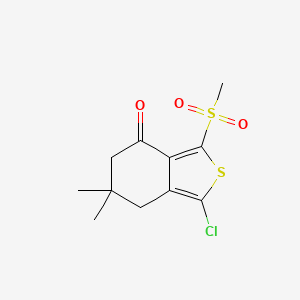


Properties

| Property | Value |
|---|---|
| IUPAC Name | 1-chloro-6,6-dimethyl-3-methylsulfonyl-5,7-dihydro-2-benzothiophen-4-one |
| InChI | InChI=1S/C11H13ClO3S2/c1-11(2)4-6-8(7(13)5-11)10(16-9(6)12)17(3,14)15/h4-5H2,1-3H3 |
| InChI Key | PVJWSALSWFDIMS-UHFFFAOYSA-N |
| Canonical SMILES | CC1(CC2=C(SC(=C2C(=O)C1)S(=O)(=O)C)Cl)C |
| Molecular Formula | C11H13ClO3S2 |
| Molecular Weight | 292.8 g/mol |

Source for all properties: PubChem (https://pubchem.ncbi.nlm.nih.gov). Data deposited in or computed by PubChem.
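As a quick consistency check, the listed molecular weight can be recomputed from the molecular formula. The sketch below is illustrative only: the atomic weights are abridged standard values supplied here as an assumption, and the helper `molecular_weight` is a hypothetical name, not part of any PubChem tooling.

```python
import re

# Abridged standard atomic weights in g/mol (assumed values for this sketch)
ATOMIC_WEIGHTS = {"C": 12.011, "H": 1.008, "Cl": 35.45, "O": 15.999, "S": 32.06}

def molecular_weight(formula: str) -> float:
    """Sum atomic weights for a simple formula such as 'C11H13ClO3S2'."""
    total = 0.0
    # Each token is an element symbol (capital plus optional lowercase) and an optional count
    for symbol, count in re.findall(r"([A-Z][a-z]?)(\d*)", formula):
        total += ATOMIC_WEIGHTS[symbol] * (int(count) if count else 1)
    return total

mw = molecular_weight("C11H13ClO3S2")
print(f"{mw:.1f} g/mol")
```

The parser handles only flat formulas without parentheses or charges, which is sufficient for the formula listed above.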


The Third Wave of AI in Scientific Research: A Technical Guide for Advancing Drug Development

The paradigm of scientific discovery is being fundamentally reshaped by the advent of the "third wave" of artificial intelligence. Moving beyond the handcrafted logic of the first wave and the powerful but often opaque statistical models of the second, this new era of AI is defined by its ability to understand context, provide explanations for its reasoning, and collaborate with human experts in a more intuitive manner. For researchers and professionals in drug development, third-wave AI offers a transformative toolkit to tackle previously intractable challenges, from hypothesis generation to clinical trial optimization.

This technical guide provides an in-depth exploration of the core principles of third-wave AI, its practical applications in scientific and pharmaceutical research, and detailed methodologies from key experiments.

From Perception to Context: Defining the Third Wave

The evolution of AI can be broadly categorized into three distinct waves, a framework notably articulated by the Defense Advanced Research Projects Agency (DARPA).

- First Wave: Handcrafted Knowledge. This era was dominated by systems with explicitly programmed rules. While effective for well-defined, narrow problems, they were brittle and incapable of handling uncertainty or learning from new data.
- Second Wave: Statistical Learning. Characterized by the rise of machine learning and deep learning, this wave excels at perception and classification tasks. These models, however, often function as "black boxes," lacking explanatory capabilities and requiring massive datasets for training.
- Third Wave: Contextual Adaptation. The current wave focuses on systems that build explanatory models of real-world phenomena. These systems can understand the context of their operations, interpret their results, and adapt to new situations with significantly less data. A key feature is the integration of symbolic reasoning with sub-symbolic machine learning, often termed neuro-symbolic AI.

Core Application: Hybrid Physics-Informed Models for Drug Discovery

A hallmark of third-wave AI in scientific research is the use of hybrid models that integrate fundamental scientific principles (e.g., physics, chemistry, biology) directly into the machine learning architecture. Physics-Informed Neural Networks (PINNs) are a prime example, where the loss function of a neural network is augmented with terms that enforce known physical laws.

This approach ensures that the model's predictions are not only data-driven but also scientifically plausible, a critical requirement in drug development where safety and efficacy are paramount.

Experimental Protocol: Physics-Informed Neural Networks for Predicting Drug-Target Binding Affinity

The following protocol outlines a generalized methodology for applying a PINN to predict the binding affinity of a small molecule to a target protein, a crucial step in lead optimization.

- Data Acquisition and Preprocessing:
  - Assemble a dataset of known drug-target pairs with experimentally determined binding affinities (e.g., from databases like BindingDB).
  - For each pair, generate 3D conformational data and compute relevant physicochemical descriptors (e.g., molecular weight, logP, number of hydrogen bond donors/acceptors).
  - Represent the protein-ligand interaction complex using a suitable format, such as a graph-based representation where nodes are atoms and edges are bonds.
- Model Architecture:
  - Construct a graph neural network (GNN) to learn a representation of the protein-ligand complex's structure.
  - Feed the output of the GNN into a feed-forward neural network that predicts the binding affinity.
- Physics-Informed Loss Function:
  - Define a standard loss function, such as Mean Squared Error (MSE), between the predicted and experimental binding affinities.
  - Introduce a "physics-based" residual term to the loss function. This term quantifies how far the model's prediction departs from a physically motivated estimate, for example an empirical scoring function for non-covalent interactions (van der Waals, electrostatic forces).
  - The total loss function becomes: L_total = L_MSE + λ * L_physics, where λ is a hyperparameter that balances the contribution of the data-driven and physics-based terms.
- Training and Validation:
  - Train the PINN model on the preprocessed dataset, minimizing L_total.
  - Employ a k-fold cross-validation strategy to assess the model's robustness and generalizability.
  - Evaluate the model's performance on a held-out test set using metrics such as Root Mean Square Error (RMSE) and Pearson correlation coefficient (r).
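The combined loss in the protocol can be sketched in a few lines. This is a minimal illustration in plain Python, not a training-ready implementation: a real PINN would compute both terms on tensors inside an automatic-differentiation framework. The name `physics_estimate` (affinities from an empirical scoring function) and the default `lam=0.1` are assumptions made for this example.

```python
from typing import Sequence

def mse(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean squared error between two equally long sequences."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)

def total_loss(pred, target, physics_estimate, lam=0.1):
    """L_total = L_MSE + lam * L_physics.

    pred             -- model-predicted binding affinities
    target           -- experimental binding affinities
    physics_estimate -- affinities from an empirical scoring function (hypothetical input)
    lam              -- weight of the physics term (hyperparameter)
    """
    l_mse = mse(pred, target)                # data-driven term
    l_physics = mse(pred, physics_estimate)  # residual against the physics-based estimate
    return l_mse + lam * l_physics

# Toy values in kcal/mol, invented for illustration
loss = total_loss(pred=[-7.0, -9.0], target=[-7.5, -8.5], physics_estimate=[-7.0, -8.0])
print(round(loss, 3))
```

Setting `lam` to zero recovers the purely data-driven loss, which makes the weight a convenient knob for ablation studies.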

Quantitative Performance Analysis

The inclusion of physical constraints often leads to improved predictive accuracy and data efficiency compared to standard second-wave models.

| Model Type | Dataset Size | RMSE (kcal/mol) | Pearson Correlation (r) |
|---|---|---|---|
| Standard GNN (Second Wave) | 10,000 | 1.35 | 0.78 |
| PINN with VdW Term (Third Wave) | 10,000 | 1.18 | 0.84 |
| Standard GNN (Second Wave) | 2,000 | 1.89 | 0.65 |
| PINN with VdW Term (Third Wave) | 2,000 | 1.52 | 0.75 |

This table represents illustrative data synthesized from typical performance improvements reported in PINN literature.
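The two metrics reported in the table can be computed as follows. This is a small standard-library sketch; the example values are invented and do not reproduce the table.

```python
import math

def rmse(pred, target):
    """Root mean square error between predictions and measurements."""
    return math.sqrt(sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred))

def pearson_r(x, y):
    """Pearson correlation coefficient between two equally long sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (spread_x * spread_y)

# Invented predicted vs. experimental affinities (kcal/mol)
predicted = [-7.1, -8.4, -6.0, -9.2]
measured = [-7.5, -8.0, -6.3, -9.9]
print(f"RMSE = {rmse(predicted, measured):.2f}, r = {pearson_r(predicted, measured):.2f}")
```

RMSE penalizes the absolute size of the errors, while Pearson r only measures how well the ranking and linear trend are preserved, so the two are usually reported together.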

Experimental Workflow: PINN for Binding Affinity Prediction

Core Application: Explainable AI (XAI) for Target Identification

A significant challenge in genomics and proteomics is identifying causal relationships from complex, high-dimensional data. Second-wave models can find correlations but cannot explain why a particular gene or protein is predicted to be a good drug target. Third-wave Explainable AI (XAI) methods, such as those using attention mechanisms or generating counterfactual explanations, provide this crucial insight.

Logical Relationship: XAI in Hypothesis Generation

XAI frameworks create a collaborative cycle between the researcher and the AI. The AI analyzes vast datasets to propose novel hypotheses, and its explanatory capabilities allow the researcher to understand, validate, and refine these hypotheses based on existing biological knowledge.

Challenges and Future Directions

Despite its promise, the third wave of AI is not without its challenges. The development of hybrid models requires deep domain expertise to correctly formulate the scientific constraints. Furthermore, ensuring the faithfulness of explanations from XAI systems remains an active area of research.

The future of scientific research will likely involve increasingly sophisticated AI collaborators that can not only analyze data but also design experiments, interpret results, and propose new research directions in a truly synergistic partnership with human scientists. The continued development of contextual, explainable, and robust AI systems is the critical next step in realizing this vision.

The Algorithmic Scientist: A Technical Guide to the History and Evolution of AI in Scientific Applications

Audience: Researchers, scientists, and drug development professionals.

Content Type: An in-depth technical guide or whitepaper.

Introduction: The Dawn of Computational Inquiry

The aspiration to automate scientific discovery is not a recent phenomenon. The conceptual seeds of artificial intelligence (AI) in science were sown in the mid-20th century, concurrent with the birth of computer science itself. Early pioneers like Alan Turing envisioned machines capable of intelligent behavior, laying the theoretical groundwork for what was to come. The 1956 Dartmouth Summer Research Project on Artificial Intelligence is widely considered the genesis of AI as a formal field, where the term "artificial intelligence" was coined and the ambitious goal of simulating human intelligence was established.

Early scientific applications were largely theoretical, exploring how machines could solve problems reserved for human intellect, such as playing chess or proving mathematical theorems. These initial forays, though rudimentary by today's standards, were crucial in establishing the fundamental principles of AI and demonstrating the potential of computational logic in solving complex problems.

The Era of Expert Systems: Codifying Human Knowledge

The 1960s and 1970s witnessed the rise of "expert systems," a significant leap forward in the practical application of AI in science. These systems aimed to capture and replicate the decision-making abilities of human experts in specific domains. One of the most influential early expert systems in a scientific context was DENDRAL.

Landmark Experiment: DENDRAL

Developed at Stanford University in 1965 by Edward Feigenbaum, Bruce Buchanan, Joshua Lederberg, and Carl Djerassi, DENDRAL was designed to assist organic chemists in identifying unknown organic molecules by analyzing their mass spectra. This was a non-trivial task that required significant human expertise to interpret the fragmentation patterns of molecules.

DENDRAL's methodology was centered around a "plan-generate-test" paradigm:

- Plan: The program first analyzed the raw mass spectrometry data to infer constraints on the possible molecular structures. This involved applying a set of heuristic rules derived from the knowledge of expert chemists about how different molecular structures fragment in a mass spectrometer.
- Generate: A structure generator (in later versions of the project, a program called CONGEN) would then produce a comprehensive list of all possible molecular structures that were consistent with the inferred constraints. This process was exhaustive and non-redundant, ensuring that no potential solution was overlooked.
- Test: Each generated structure was then subjected to a testing phase. The program would predict the mass spectrum for each candidate molecule and compare it to the experimental data. Structures whose predicted spectra did not match the actual data were discarded.
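The plan-generate-test loop can be illustrated with a deliberately simplified toy. The sketch below is not DENDRAL's algorithm: candidates are elemental compositions rather than full structures, and the fragmentation rules in `predict_peaks` are invented for illustration and do not reflect real mass-spectral chemistry. All function names are hypothetical.

```python
from itertools import product

def plan(parent_mass):
    """Plan: turn the spectrum into constraints (here, only the parent mass and crude atom bounds)."""
    return {"mass": parent_mass, "max_C": parent_mass // 12, "max_O": parent_mass // 16}

def generate(constraints):
    """Generate: enumerate each C/H/O composition that fits the constraints exactly once."""
    for c, o in product(range(1, constraints["max_C"] + 1), range(constraints["max_O"] + 1)):
        h = constraints["mass"] - 12 * c - 16 * o
        if 0 <= h <= 2 * c + 2:  # hydrogen count bounded by valence for acyclic compounds
            yield {"C": c, "H": h, "O": o}

def predict_peaks(candidate):
    """Predict a toy spectrum using invented rules (parent ion, water loss, methyl loss)."""
    mass = 12 * candidate["C"] + candidate["H"] + 16 * candidate["O"]
    peaks = {mass}
    if candidate["O"] >= 1 and candidate["H"] >= 2:
        peaks.add(mass - 18)  # toy rule: loss of water
    if candidate["C"] >= 2 and candidate["H"] >= 3:
        peaks.add(mass - 15)  # toy rule: loss of a methyl group
    return peaks

def passes_test(candidate, observed_peaks):
    """Test: keep a candidate only if its predicted spectrum matches the observed one."""
    return predict_peaks(candidate) == set(observed_peaks)

observed = {46, 31, 28}  # invented spectrum for a compound of nominal mass 46
survivors = [c for c in generate(plan(46)) if passes_test(c, observed)]
print(survivors)
```

Two compositions satisfy the mass constraint, but only one reproduces every observed peak, which mirrors how the test phase prunes the exhaustive candidate list.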

A later addition, Meta-DENDRAL, was a machine learning subsystem that could automatically induce new rules for the planning phase from examples of known structure-spectrum pairs, enabling the system to "learn" from experience.

The Rise of Machine Learning: Learning from Data

The 1980s and 1990s marked a paradigm shift from rule-based expert systems to machine learning (ML) approaches. Instead of explicitly programming knowledge, ML algorithms learn patterns directly from data. This was a pivotal development for scientific applications, where vast amounts of experimental data were becoming increasingly available.

Foundational Technique: Artificial Neural Networks and Backpropagation

Artificial Neural Networks (ANNs), inspired by the structure of the human brain, are a class of ML models that have been instrumental in the advancement of AI in science. The development of the backpropagation algorithm in the 1970s and its popularization in the 1980s was a critical breakthrough that allowed for the efficient training of multi-layered neural networks.

Key Application: Quantitative Structure-Activity Relationship (QSAR)

An early and impactful application of machine learning in drug discovery was in the development of Quantitative Structure-Activity Relationship (QSAR) models. QSAR models aim to find a mathematical relationship between the chemical structure of a molecule and its biological activity.

Support Vector Machines (SVMs) became a popular machine learning method for QSAR studies in the late 1990s and early 2000s due to their effectiveness in handling high-dimensional data. A typical experimental protocol for building a QSAR model using SVMs would involve the following steps:

- Dataset Preparation: A dataset of molecules with known biological activity (e.g., binding affinity to a target protein) is compiled.
- Molecular Descriptor Calculation: For each molecule, a set of numerical features, or "descriptors," is calculated to represent its physicochemical properties. These can include properties like molecular weight, logP (a measure of lipophilicity), number of hydrogen bond donors and acceptors, and topological indices.
- Data Splitting: The dataset is split into a training set, used to train the SVM model, and a test set, used to evaluate its predictive performance on unseen data.
- Model Training: An SVM model is trained on the training set. A crucial step here is the selection of a kernel function (e.g., linear, polynomial, or radial basis function) and the tuning of its hyperparameters. The kernel function implicitly maps the data into a higher-dimensional space where a linear separation between active and inactive compounds might be possible.
- Model Validation: The trained model's ability to predict the activity of the molecules in the test set is evaluated. Performance is typically measured using metrics like accuracy, sensitivity, specificity, and the Matthews correlation coefficient.
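The validation metrics named in the last step can be derived from the confusion matrix of a binary active/inactive classifier. The sketch below assumes labels encoded as 1 (active) and 0 (inactive); the example labels are invented.

```python
import math

def classification_metrics(y_true, y_pred):
    """Accuracy, sensitivity, specificity and Matthews correlation coefficient (MCC)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return {
        "accuracy": (tp + tn) / len(y_true),
        "sensitivity": tp / (tp + fn) if tp + fn else 0.0,  # true positive rate
        "specificity": tn / (tn + fp) if tn + fp else 0.0,  # true negative rate
        "mcc": (tp * tn - fp * fn) / denom if denom else 0.0,
    }

# Invented test-set labels: four actives followed by four inactives
y_true = [1, 1, 1, 1, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 1]
metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

MCC is often preferred for QSAR classification because activity datasets are commonly imbalanced, and accuracy alone can look good for a model that predicts the majority class.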

Data Presentation: Evolution of QSAR Models

The table below provides a conceptual overview of the evolution of machine learning methods in QSAR studies, highlighting the trend towards more complex and powerful algorithms.

| Era | Dominant Machine Learning Methods | Typical Dataset Size | Key Advantages | Limitations |
|---|---|---|---|---|
| 1990s | Multiple Linear Regression (MLR), Partial Least Squares (PLS) | Tens to hundreds of compounds | Simple to implement and interpret. | Limited to linear relationships. |
| Early 2000s | Support Vector Machines (SVM), k-Nearest Neighbors (k-NN) | Hundreds to thousands of compounds | Can model non-linear relationships, robust to high-dimensional data. | Can be computationally intensive, performance is sensitive to hyperparameter tuning. |
| Late 2000s - Early 2010s | Random Forest (RF), Gradient Boosting Machines (GBM) | Thousands to tens of thousands of compounds | High predictive accuracy, robust to overfitting, can handle a mix of feature types. | Can be a "black box" model, making interpretation difficult. |
| Mid 2010s - Present | Deep Neural Networks (DNNs), Graph Convolutional Networks (GCNs) | Tens of thousands to millions of compounds | Can learn complex hierarchical features directly from data, can operate on graph-based representations of molecules. | Requires large amounts of data, computationally expensive to train, prone to overfitting with small datasets. |

The Deep Learning Revolution and Modern Breakthroughs

The 2010s saw the advent of "deep learning," a subfield of machine learning that utilizes neural networks with many layers (deep neural networks). The availability of massive datasets and powerful computing hardware, particularly Graphics Processing Units (GPUs), fueled the success of deep learning in a wide range of scientific domains.

Landmark Experiment: AlphaFold

One of the most significant scientific breakthroughs enabled by deep learning is DeepMind's AlphaFold, a system that predicts the 3D structure of a protein from its amino acid sequence. The "protein folding problem" had been a grand challenge in biology for 50 years.

AlphaFold's success, particularly that of AlphaFold 2, is attributed to a novel deep learning architecture that incorporates biological and physical insights about protein structure. The key steps in its methodology are:

- Multiple Sequence Alignment (MSA): The input amino acid sequence is used to search against large sequence databases to identify evolutionarily related sequences. This MSA provides crucial information about which amino acid residues are likely to be in contact in the 3D structure.
- Paired Representation: The MSA is processed by a neural network to create a "pair representation," a matrix that represents the spatial relationship between pairs of amino acid residues.
- Evoformer: This novel deep learning module iteratively refines the MSA and pair representation, allowing the network to reason about the relationships between residues.
- Structure Module: The final refined representations are used by a structure module to generate the 3D coordinates of the protein's backbone and side chains. This module is trained end-to-end with the rest of the network, allowing the model to directly learn to produce accurate structures.
- Confidence Score: AlphaFold also provides a per-residue confidence score (pLDDT), which is a valuable indicator of the reliability of the predicted structure in different regions.
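The per-residue confidence score lends itself to a simple summary. The sketch below bins pLDDT values into four bands; the thresholds (90, 70, 50) follow the banding commonly shown for AlphaFold predictions and are stated here as an assumption, as are the band labels and the example scores.

```python
from collections import Counter

def plddt_band(score: float) -> str:
    """Map a pLDDT value (0-100) to a confidence band (assumed thresholds)."""
    if score > 90:
        return "very high"
    if score > 70:
        return "confident"
    if score > 50:
        return "low"
    return "very low"

def summarize(scores):
    """Fraction of residues falling into each confidence band."""
    counts = Counter(plddt_band(s) for s in scores)
    return {band: counts[band] / len(scores) for band in counts}

# Invented per-residue scores for a five-residue stretch
scores = [95.2, 88.1, 71.0, 65.4, 42.0]
summary = summarize(scores)
print(summary)
```

A summary like this helps decide which regions of a predicted structure are reliable enough for downstream work such as docking.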

Data Presentation: Performance in Protein Structure Prediction (CASP)

The Critical Assessment of protein Structure Prediction (CASP) is a community-wide experiment that has been held every two years since 1994 to benchmark the performance of protein structure prediction methods. The Global Distance Test (GDT) is a primary metric used in CASP, where a score of 90 or above is considered competitive with experimental methods.

| CASP Edition (Year) | Winning GDT Score (Median) | Key Methodological Advances |
|---|---|---|
| CASP1 (1994) | ~47 | Early template-based and ab initio methods. |
| CASP5 (2002) | ~60 | Improved template-based modeling and fragment assembly. |
| CASP13 (2018) | ~75 (AlphaFold 1) | Introduction of deep learning to predict inter-residue distances. |
| CASP14 (2020) | 92.4 (AlphaFold 2) | End-to-end deep learning with an attention-based network (Evoformer). |
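To make the GDT metric concrete, the sketch below computes a GDT_TS-style score from per-residue deviations between a predicted and an experimental structure. It is a simplification under a stated assumption: the distances are taken from a single, already computed superposition, whereas the full GDT procedure searches over many superpositions to maximize the residue count at each cutoff.

```python
def gdt_ts(distances, cutoffs=(1.0, 2.0, 4.0, 8.0)):
    """Average percentage of residues within each distance cutoff (in angstroms)."""
    n = len(distances)
    fractions = [sum(1 for d in distances if d <= c) / n for c in cutoffs]
    return 100.0 * sum(fractions) / len(cutoffs)

# Invented C-alpha deviations (angstroms) for a five-residue toy example
deviations = [0.5, 1.5, 3.0, 6.0, 10.0]
score = gdt_ts(deviations)
print(score)
```

Because the score averages four cutoffs, a model can earn partial credit for residues that are roughly, but not precisely, in the right place.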

Visualization: A Generalized Machine Learning Workflow in Drug Discovery

AI in Modern Scientific Workflows

Beyond specific applications, AI is being integrated into the very fabric of scientific research, augmenting and accelerating various stages of the discovery process.

AI-Driven Hypothesis Generation

Traditionally, hypothesis generation has been a human-centric process, relying on the intuition and expertise of researchers. AI is now being used to automate and enhance this process by identifying novel connections and patterns in vast amounts of scientific literature and data. These systems can generate testable hypotheses that may not be immediately obvious to human researchers.

Autonomous Experimentation

The concept of the "self-driving lab" is emerging, where AI algorithms not only design experiments but also control robotic systems to execute them. This creates a closed loop of hypothesis, experimentation, and analysis, dramatically accelerating the pace of discovery in fields like materials science and chemistry. Bayesian optimization is a common technique used in this context to efficiently explore large experimental parameter spaces.

Elucidating Complex Biological Systems

AI, particularly methods like Bayesian networks, is being used to model and understand complex biological systems, such as signaling pathways. These models can help to uncover the intricate web of interactions between proteins and other molecules that govern cellular processes.

The crosstalk between the Epidermal Growth Factor Receptor (EGFR) and Sonic Hedgehog (SHH) signaling pathways is implicated in several cancers. Dynamic Bayesian Networks have been used to model this interplay. The following diagram illustrates a simplified representation of this crosstalk.

Future Outlook and Conclusion

The Enigmatic Language of Pathogens: A Technical Guide to AI-3 Signaling

Core Concepts in AI-3 Signaling

The AI-3 Signaling Cascade in EHEC

The signaling process can be summarized as follows:

- Phosphorylation Cascade: Upon binding of a signaling molecule (AI-3, epinephrine, or norepinephrine), QseC undergoes autophosphorylation. The phosphate group is then transferred to the response regulator QseB.
- Transcriptional Regulation: Phosphorylated QseB acts as a transcription factor, binding to the promoter regions of target genes and regulating their expression. This includes genes involved in motility and virulence.
- The QseE/QseF System: A second sensor kinase, QseE, can also sense epinephrine and norepinephrine, as well as phosphate and sulfate. QseE activates the response regulator QseF, which in turn controls the expression of other virulence factors.

Quantitative Data on AI-3 Signaling

| Parameter | Description | Reported Values/Ranges | References |
|---|---|---|---|
| AI-3 Concentration | Concentration of AI-3 in the mammalian gut | Estimated to be in the nanomolar to low micromolar range | |
| QseC Binding Affinity | Dissociation constant (Kd) for AI-3, epinephrine, and norepinephrine binding to QseC | Not precisely determined | - |
| Gene Expression Fold Change | Change in the expression of key virulence genes (e.g., fliC, LEE operon genes) in response to AI-3 | Varies significantly depending on the gene and experimental conditions | |
| Phosphorylation Rate | Rate of QseC autophosphorylation and phosphotransfer to QseB | Not quantitatively defined in the literature | - |

Experimental Protocols for Studying AI-3 Signaling

Construction of Isogenic Mutant Strains

Methodology Overview:

- Primer Design: Design primers with homology to the regions flanking the target gene and to a selectable antibiotic resistance cassette.
- PCR Amplification: Amplify the antibiotic resistance cassette using the designed primers.
- Transformation: Electroporate the purified PCR product into the target bacterial strain expressing the lambda Red recombinase system.
- Selection: Plate the transformed cells on selective media containing the appropriate antibiotic to select for successful recombinants.
- Verification: Verify the gene knockout by PCR, sequencing, and functional assays.

Gene Expression Analysis using Reporter Fusions

Methodology Overview:

- Construct Creation: Clone the promoter region of the gene of interest upstream of the lacZ reporter gene in a suitable plasmid vector.
- Bacterial Transformation: Transform the reporter plasmid into the wild-type and mutant bacterial strains.
- β-Galactosidase Assay: Lyse the bacterial cells and measure the β-galactosidase activity using a colorimetric substrate such as o-nitrophenyl-β-D-galactopyranoside (ONPG).
- Data Analysis: Normalize the β-galactosidase activity to the cell density (OD600) to determine the specific activity, which reflects the promoter activity.
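One common way to carry out the normalization in the last step is the classic Miller unit calculation, sketched below. The protocol above does not name a formula, so the use of Miller units is an assumption, as are the example readings; the function name `miller_units` is hypothetical.

```python
def miller_units(od420, od550, od600, reaction_min, culture_ml):
    """Classic Miller formula for ONPG-based beta-galactosidase assays.

    Miller units = 1000 * (OD420 - 1.75 * OD550) / (t * v * OD600)
    od420        -- absorbance of the o-nitrophenol product
    od550        -- light scattering by cell debris (correction term)
    od600        -- cell density of the culture before lysis
    reaction_min -- reaction time t in minutes
    culture_ml   -- culture volume v used in the assay, in mL
    """
    if od600 <= 0 or reaction_min <= 0 or culture_ml <= 0:
        raise ValueError("OD600, reaction time and volume must be positive")
    return 1000.0 * (od420 - 1.75 * od550) / (reaction_min * culture_ml * od600)

# Invented readings for a single sample
units = miller_units(od420=0.9, od550=0.05, od600=0.5, reaction_min=20, culture_ml=0.1)
print(round(units, 1))
```

Comparing Miller units between wild-type and mutant strains carrying the same reporter plasmid then gives a density-independent readout of promoter activity.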

Concluding Remarks

The Convergence of Signaling Biology and Computational Power: A Technical Guide to AI-3 and the Future of Drug Discovery

An In-depth Technical Guide for Researchers, Scientists, and Drug Development Professionals

Executive Summary

Section 1: Autoinducer-3 (AI-3) and Quorum Sensing

The AI-3 Signaling Pathway

- Transcriptional Activation: These activated response regulators initiate a transcriptional cascade. A key target is the LEE1 operon, which contains the gene ler.[4][7]
- Global Virulence Regulation: The Ler protein acts as a master transcriptional activator for the other LEE operons (LEE2, LEE3, LEE4, LEE5), leading to the coordinated expression of the T3SS and associated effector proteins.[6][7][9] Studies have shown that Ler can activate transcription from the EPEC chromosomally located espC gene by 30-fold and the EHEC plasmid-located tagA gene by 20-fold.[7]

Quantitative Effects of AI-3 on Gene Expression

| Gene/Operon | Condition | Fold Change in Expression | Reference |
|---|---|---|---|
| ler (LEE1) | Late Exponential Growth + Epinephrine | > 1,000 | [4] |
| LEE2 | Late Exponential Growth + AI-3 | Significant Increase | [6] |
| LEE3 | Late Exponential Growth + AI-3 | Significant Increase | [6] |
| LEE4 | Late Exponential Growth + AI-3 | Significant Increase | [6] |
| LEE5 | Late Exponential Growth + AI-3 | Significant Increase | [6] |
| ler | Addition of AI-3 analog | ~1.5 - 2.0 | [3] |
| espA | Addition of AI-3 analog | ~2.5 - 3.0 | [3] |
| tir | Addition of AI-3 analog | ~1.5 - 2.5 | [3] |

Section 2: Methodologies for Studying AI-3 and Quorum Sensing

Experimental Protocol: Luciferase Reporter Assay for Promoter Activity

Materials:

- E. coli strain containing the LEE1 promoter fused to a luciferase reporter gene (e.g., luxCDABE) on a plasmid.
- Control E. coli strain (e.g., empty plasmid).
- Luria-Bertani (LB) broth and agar plates.
- Control buffer.
- Luminometer for plate reading.
- 96-well microplates (opaque-walled for luminescence).

Procedure:

- Strain Preparation: Grow overnight cultures of the reporter and control E. coli strains in LB broth with appropriate antibiotics at 37°C with shaking.
- Subculturing: Dilute the overnight cultures 1:100 into fresh LB broth and grow to the desired phase (e.g., mid-exponential phase, OD600 ≈ 0.5).
- Assay Setup:
  - In a 96-well opaque plate, add 100 µL of the subcultured reporter strain to multiple wells.
  - Add 10 µL of control buffer to the negative control wells.
- Incubation: Incubate the plate at 37°C for a specified period (e.g., 4-6 hours) to allow for gene expression.
- Measurement: Place the 96-well plate into a luminometer and measure the luminescence (in Relative Light Units, RLU) from each well.
- Data Normalization: Measure the optical density (OD600) of each well to normalize the luminescence signal to the number of cells (RLU/OD600).
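The normalization step, followed by a comparison against the negative control wells, can be sketched as below. The well readings are invented, and the grouping into "treated" and "control" wells is an assumption about the plate layout rather than something the protocol specifies.

```python
from statistics import mean

def normalized_signal(rlu: float, od600: float) -> float:
    """Luminescence per unit cell density (RLU/OD600)."""
    if od600 <= 0:
        raise ValueError("OD600 must be positive")
    return rlu / od600

def fold_change(treated_wells, control_wells):
    """Ratio of mean normalized signal in treated wells to that in control wells.

    Each well is a (rlu, od600) tuple.
    """
    treated = mean(normalized_signal(rlu, od) for rlu, od in treated_wells)
    control = mean(normalized_signal(rlu, od) for rlu, od in control_wells)
    return treated / control

# Invented (RLU, OD600) readings for two wells per condition
treated_wells = [(12000, 0.60), (13000, 0.65)]
control_wells = [(5000, 0.50), (4400, 0.55)]
fc = fold_change(treated_wells, control_wells)
print(round(fc, 2))
```

Normalizing each well before averaging keeps a single dense or sparse well from dominating the comparison.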

Section 3: Artificial Intelligence in Drug Discovery vs. Traditional Approaches

Traditional Artificial Intelligence in this context refers to established computational techniques that have been the mainstay of drug discovery for decades. These include methods like virtual high-throughput screening (HTS) using molecular docking and quantitative structure-activity relationship (QSAR) models.

Modern AI encompasses more advanced techniques, particularly machine learning (ML) and deep learning (DL), including generative models, which can learn from vast datasets to predict molecular properties, design novel compounds, and identify new biological targets.

Comparative Analysis: Performance and Efficiency

Modern AI approaches offer substantial, quantifiable improvements over traditional methods across the drug discovery pipeline. These advantages stem from the ability of AI to analyze massive, complex datasets, identify non-linear relationships, and generate novel chemical structures that are pre-optimized for desired properties.

| Metric | Traditional Methods (HTS, QSAR) | Modern AI Methods (Deep Learning, Generative AI) | Improvement Factor | References |
|---|---|---|---|---|
| Time to Market | 12-15 years | 7-10 years | ~1.5 - 2x Faster | [11] |
| Preclinical Discovery Time | 4-6 years | < 18 months | > 2.5x Faster | [12] |
| R&D Cost per Drug | ~$2.6 Billion | Reduction up to 40% | Up to ~1.7x Lower Cost | [11][13][14] |
| Phase 1 Success Rate | 40-65% | 80-90% | ~1.5 - 2x Higher | [15] |
| Hit Rate (Screening) | Low (requires testing thousands to millions of compounds) | High (pre-screens billions virtually, tests dozens) | Substantial Reduction in Lab Work | [12] |
| Toxicity Prediction Accuracy | Variable, lower accuracy | Up to 95% accuracy for specific endpoints (e.g., CYP450) | ~6x Reduction in Failure Rate (Example) | [13] |

Table 2: Quantitative Comparison of Traditional vs. Modern AI in Drug Discovery.

Section 4: The Future of AI in Targeting Bacterial Virulence

- Identify Novel Targets: Analyze genomic and proteomic data from pathogenic bacteria to identify previously unknown, "druggable" targets within virulence pathways.
- Design Specific Inhibitors: Use generative AI to create novel molecules specifically designed to bind and inhibit targets like the QseC sensor kinase, effectively disarming the bacteria without necessarily killing them, which may reduce selective pressure for resistance.
- Predict Off-Target Effects: Employ machine learning models to predict how a potential drug might interact with host proteins or the host microbiome, minimizing side effects.

Conclusion

References

1. AI-3 synthesis is not dependent on luxS in Escherichia coli. PubMed [pubmed.ncbi.nlm.nih.gov]
2. The Epinephrine/Norepinephrine/Autoinducer-3 Interkingdom Signaling System in Escherichia coli O157:H7. Oncohema Key [oncohemakey.com]
3. Characterization of Autoinducer-3 Structure and Biosynthesis in E. coli. PMC [pmc.ncbi.nlm.nih.gov]
4. journals.asm.org
5. Autoinducer 3 and epinephrine signaling in the kinetics of locus of enterocyte effacement gene expression in enterohemorrhagic Escherichia coli. PubMed [pubmed.ncbi.nlm.nih.gov]
6. Global Effects of the Cell-to-Cell Signaling Molecules Autoinducer-2, Autoinducer-3, and Epinephrine in a luxS Mutant of Enterohemorrhagic Escherichia coli. PMC [pmc.ncbi.nlm.nih.gov]
7. The Locus of Enterocyte Effacement (LEE)-Encoded Regulator Controls Expression of Both LEE- and Non-LEE-Encoded Virulence Factors in Enteropathogenic and Enterohemorrhagic Escherichia coli. PMC [pmc.ncbi.nlm.nih.gov]
8. Bacterial-Chromatin Structural Proteins Regulate the Bimodal Expression of the Locus of Enterocyte Effacement (LEE) Pathogenicity Island in Enteropathogenic Escherichia coli. PMC [pmc.ncbi.nlm.nih.gov]
9. Activation of enteropathogenic Escherichia coli (EPEC) LEE2 and LEE3 operons by Ler. PubMed [pubmed.ncbi.nlm.nih.gov]
10. researchgate.net
11. patentpc.com
12. 8 Ways AI in Drug Discovery Is Changing Pharma Industry. engineerbabu.com
13. naturalantibody.com
14. winfully.digital
15. AI Revolutionizes Drug Discovery and Personalized Medicine: A New Era of Healthcare. FinancialContent [markets.financialcontent.com]

Foundational Principles of Contextual AI for Science: An In-depth Technical Guide

This guide provides a comprehensive overview of the foundational principles of contextual AI and its application in scientific research and drug development. It is intended for researchers, scientists, and drug development professionals who are interested in leveraging advanced AI techniques to accelerate discovery and innovation.

Core Principles of Contextual AI in a Scientific Context

Contextual AI refers to AI systems that can understand, interpret, and utilize the context of data to make more accurate and relevant predictions and decisions. In the scientific domain, this translates to the ability to integrate and reason over vast and heterogeneous datasets, including structured experimental data, unstructured text from scientific literature, and complex biological network information.

The foundational principles of contextual AI for science are:

- Data Integration and Harmonization: The ability to ingest and harmonize data from diverse sources, such as 'omics' data (genomics, proteomics, transcriptomics), chemical compound databases, clinical trial data, and biomedical literature. This involves creating a unified representation of the data, often through the use of knowledge graphs.
- Knowledge Representation and Reasoning: The construction of comprehensive knowledge graphs that capture the relationships between biological entities, diseases, drugs, and genes. These graphs allow the AI to reason about complex biological systems and infer novel connections.
- Machine Learning on Graphs and Networks: The application of specialized machine learning algorithms, such as graph neural networks (GNNs), to analyze the interconnected data within knowledge graphs. This can be used for tasks like predicting new drug-target interactions, identifying potential biomarkers, and understanding disease mechanisms.
- Interpretability and Explainability (XAI): The development of AI models that can provide clear and understandable justifications for their predictions. In science, and particularly in medicine, it is crucial to understand why a model has made a certain prediction to build trust and validate new hypotheses.

Application in Drug Discovery: A Case Study

To illustrate the power of contextual AI, we will consider a hypothetical case study focused on identifying and validating a new therapeutic target for Alzheimer's disease.

Experimental Workflow for Contextual AI-driven Target Identification

The following diagram outlines a typical workflow for identifying and validating a novel therapeutic target using contextual AI.

Quantitative Data Summary

The following tables summarize the quantitative data from our hypothetical case study.

Table 1: Data Sources for Knowledge Graph Construction

| Data Source | Description | Volume | Key Entities Extracted |
|---|---|---|---|
| PubMed Abstracts | Scientific literature on Alzheimer's disease | 1.2 million | Genes, Proteins, Diseases, Drugs, Pathways |
| GWAS Catalog | Genome-Wide Association Studies data | 50,000 SNPs | Genes, SNPs, Phenotypes |
| Human Proteome Map | Mass spectrometry-based proteomic data | 17,000 proteins | Proteins, Tissue Expression |
| ClinicalTrials.gov | Alzheimer's disease clinical trial data | 500 trials | Drugs, Targets, Outcomes |

Table 2: Top 5 Predicted Gene-Disease Associations

| Gene | Prediction Score | Supporting Evidence (NLP) | Genomic Association (p-value) |
|---|---|---|---|
| TREM2 | 0.92 | 5,231 publications | 1.3 × 10⁻¹² |
| APOE4 | 0.89 | 12,874 publications | 4.5 × 10⁻²⁵ |
| CD33 | 0.85 | 2,145 publications | 6.7 × 10⁻⁹ |
| BIN1 | 0.81 | 1,876 publications | 2.1 × 10⁻⁸ |
| PICALM | 0.78 | 1,543 publications | 9.8 × 10⁻⁸ |

Table 3: In Vitro Validation of a Top-Ranked Predicted Target (CD33)

| Assay Type | Cell Line | Treatment | Result | Fold Change |
|---|---|---|---|---|
| siRNA Knockdown | HMC3 (Microglia) | CD33 siRNA | Reduced Aβ uptake | 2.5x |
| Overexpression | HMC3 (Microglia) | CD33 Plasmid | Increased Aβ uptake | 3.1x |
| Reporter Assay | HEK293T | CD33 Promoter-Luc | Decreased Luciferase Activity | -1.8x |

Experimental Protocols

Protocol 1: Knowledge Graph Construction

- Data Extraction:
  - Unstructured text from PubMed abstracts was processed using a pre-trained BioBERT model for named entity recognition (NER) to identify genes, diseases, drugs, and proteins.
  - Structured data from GWAS Catalog, Human Proteome Map, and ClinicalTrials.gov was parsed and mapped to a unified ontology (e.g., MeSH, HGNC).

- Entity Linking and Normalization:
  - Extracted entities were linked to canonical identifiers in public databases (e.g., Entrez Gene, UniProt).

- Relation Extraction:
  - A relation extraction model based on a convolutional neural network (CNN) was used to identify relationships between entities from the text (e.g., "gene A inhibits protein B").

- Graph Assembly:
  - The extracted entities and relations were loaded into a Neo4j graph database to form the knowledge graph.
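The graph assembly step can be pictured with a small in-memory sketch. It is not the Neo4j loading described in the protocol: a plain dictionary stands in for the database, and the triples, relation names, and helper functions (`build_graph`, `neighbors`) are hypothetical, chosen only to show how normalized (subject, relation, object) triples become a queryable graph.

```python
from collections import defaultdict

# Hypothetical (subject, relation, object) triples, standing in for the
# output of the NER, entity-linking, and relation-extraction steps.
TRIPLES = [
    ("CD33", "EXPRESSED_IN", "microglia"),
    ("CD33", "ASSOCIATED_WITH", "Alzheimer's disease"),
    ("TREM2", "ASSOCIATED_WITH", "Alzheimer's disease"),
    ("TREM2", "EXPRESSED_IN", "microglia"),
    ("CD33", "ASSOCIATED_WITH", "Alzheimer's disease"),  # repeated mention
]


def build_graph(triples):
    """Return {subject: {(relation, object): mention_count}}."""
    graph = defaultdict(lambda: defaultdict(int))
    for subj, rel, obj in triples:
        # Repeated mentions of the same edge are counted, not duplicated.
        graph[subj][(rel, obj)] += 1
    return graph


def neighbors(graph, node, relation):
    """Objects linked to `node` by `relation`, sorted for stable output."""
    return sorted(obj for (rel, obj) in graph[node] if rel == relation)


graph = build_graph(TRIPLES)
print(neighbors(graph, "CD33", "ASSOCIATED_WITH"))
print(graph["CD33"][("ASSOCIATED_WITH", "Alzheimer's disease")])
```

Counting repeated mentions per edge is one simple way to keep the literature support for a relation alongside the relation itself.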

Protocol 2: In Vitro Validation using siRNA

- Cell Culture:
  - Human microglial cells (HMC3 line) were cultured in Eagle's Minimum Essential Medium (EMEM) supplemented with 10% fetal bovine serum (FBS) and 1% penicillin-streptomycin.

- siRNA Transfection:
  - Cells were seeded in 24-well plates and transfected with either a CD33-targeting siRNA or a non-targeting control siRNA using Lipofectamine RNAiMAX.

- Aβ Uptake Assay:
  - 48 hours post-transfection, cells were incubated with fluorescently labeled amyloid-beta (Aβ42-HiLyte Fluor 488) for 3 hours.
  - Cells were then washed, and the intracellular fluorescence was measured using a flow cytometer.

- Data Analysis:
  - The mean fluorescence intensity of the CD33 siRNA-treated cells was compared to the control cells to determine the change in Aβ uptake.
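The comparison in the data analysis step amounts to a ratio of mean fluorescence intensities (MFI). A minimal sketch follows, assuming one MFI value per replicate well; the function name `fold_change` and the replicate values are made up for illustration and are not data from the case study.

```python
from statistics import mean


def fold_change(treated_mfi, control_mfi):
    """Ratio of mean MFI in treated wells to mean MFI in control wells."""
    control_mean = mean(control_mfi)
    if control_mean <= 0:
        raise ValueError("control MFI must be positive")
    return mean(treated_mfi) / control_mean


# Made-up replicate MFI values, for illustration only.
control = [1000.0, 1100.0, 900.0]
treated = [2000.0, 2200.0, 1800.0]

ratio = fold_change(treated, control)
print(f"fold change vs. control: {ratio:.2f}")
```

A ratio above 1 indicates more intracellular fluorescence than in the control and a ratio below 1 indicates less; a real analysis would also test the difference across replicates for statistical significance.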

Signaling Pathway Visualization

The contextual AI model identified the CD33 signaling pathway as a key regulator of amyloid-beta clearance in microglia. The following diagram illustrates this pathway.

Conclusion

Contextual AI represents a paradigm shift in scientific research, moving from siloed data analysis to a holistic, integrated approach. By understanding the context of scientific data, these advanced AI systems can uncover novel insights, accelerate the pace of discovery, and ultimately contribute to the development of new therapies for complex diseases. The principles and methodologies outlined in this guide provide a foundation for researchers and drug development professionals to begin harnessing the power of contextual AI in their own work.

The Role of AI-3 in an Automated Science Future: A Technical Guide

To: Researchers, Scientists, and Drug Development Professionals

Section 1: Autoinducer-3 (AI-3) as a Target in Automated Drug Discovery

AI-3 Production Across Bacterial Species

| Bacterial Species | AI-3 Production Detected | Reference |
|---|---|---|
| Escherichia coli (EHEC) | Yes | [1] |
| Salmonella enterica | Yes | [1] |
| Shigella flexneri | Yes | [1] |
| Pseudomonas aeruginosa | Yes | [1] |
| Vibrio cholerae | Yes | [3] |
| Staphylococcus aureus | Yes | [3] |

AI-3 Signaling Pathway in EHEC

Experimental Protocol: AI-3 Activity Bioassay

Materials:

- EHEC reporter strain (e.g., carrying a LEE1::lacZ fusion).

- Luria-Bertani (LB) broth.

- Test samples (e.g., supernatants from bacterial cultures, purified compounds).

- β-galactosidase assay reagents (e.g., ONPG).

- Spectrophotometer.

Methodology:

- Inoculation: Inoculate the EHEC reporter strain into LB broth and grow overnight at 37°C with shaking.

- Subculturing: Dilute the overnight culture 1:100 into fresh LB broth.

- Incubation: Incubate the plate at 37°C with shaking for a specified period (e.g., 6-8 hours) to allow for reporter gene expression.

- Measurement of Bacterial Growth: Measure the optical density at 600 nm (OD600) to assess bacterial growth.

- β-galactosidase Assay:
  - Lyse the bacterial cells using a lysis reagent.
  - Add ONPG solution to the lysed cells.
  - Incubate at room temperature until a yellow color develops.
  - Stop the reaction by adding a stop solution (e.g., sodium carbonate).

- Quantification: Measure the absorbance at 420 nm (A420).
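The protocol does not state how the A420 and OD600 readings are combined. One widely used convention for lacZ reporters is to express activity in Miller units, which normalize the A420 signal to cell density, reaction time, and culture volume. The sketch below assumes that convention; the function name, parameter names, and example readings are illustrative, and the optional OD550 scatter correction defaults to zero because the protocol does not mention an OD550 reading.

```python
def miller_units(a420, od600, minutes, volume_ml, od550=0.0):
    """Miller units = 1000 * (A420 - 1.75 * OD550) / (t * v * OD600).

    t is the ONPG reaction time in minutes and v is the volume of culture
    assayed in mL. OD550 corrects for light scattering by cell debris and
    is left at 0.0 when it was not measured.
    """
    if od600 <= 0 or minutes <= 0 or volume_ml <= 0:
        raise ValueError("od600, minutes and volume_ml must be positive")
    return 1000.0 * (a420 - 1.75 * od550) / (minutes * volume_ml * od600)


# Illustrative readings only: A420 = 0.60, OD600 = 0.50,
# 20 min reaction, 0.1 mL of culture assayed.
units = miller_units(a420=0.60, od600=0.50, minutes=20, volume_ml=0.1)
print(f"{units:.0f} Miller units")
```

Dividing by OD600 is what lets reporter activity be compared between wells that grew to different densities, which matters when a test sample also affects growth.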

Section 2: The Role of Advanced AI ("AI-3") in an Automated Science Future

Impact of AI on the Drug Development Lifecycle

| Phase of Drug Development | Traditional Challenge | AI-Driven Solution | Quantitative Impact |
|---|---|---|---|
| Target Identification | Slow, labor-intensive literature review and genomic analysis. | AI analyzes vast datasets (genomics, proteomics, literature) to identify and validate novel drug targets. | Acceleration of target discovery from years to months. |
| High-Throughput Screening | Physical screening of millions of compounds is costly and time-consuming. | AI models predict the bioactivity of virtual compounds, prioritizing the most promising candidates for synthesis and testing.[7] | Virtual screening of billions of molecules in days. |
| Clinical Trial Design | Suboptimal patient selection and protocol design leading to high failure rates. | AI optimizes trial protocols and uses predictive analytics to identify patient populations most likely to respond to treatment.[8][9] | Reduction in trial recruitment time by up to 50%.[8] |
| Data Analysis & Reporting | Manual data processing and report generation for regulatory submissions is a major bottleneck. | Natural Language Processing (NLP) automates the generation of clinical study reports and regulatory documents. | Reduction in report drafting time from over 100 days to as few as 2.[8] |

Conceptual Workflow: AI-3-Driven Drug Discovery

Experimental Protocol: Automated High-Throughput Screening (HTS)

System Components:

- Liquid Handling Robots: For dispensing reagents, compounds, and cells.

- Compound Library: A large collection of small molecules stored in microplates.

- Automated Incubators and Plate Readers: For cell culture and data acquisition.

- Data Management System: A centralized database for storing all experimental data and metadata.

Methodology:

- Plate Preparation: A robotic arm retrieves compound plates from storage. A liquid handler then "stamps" nanoliter volumes of each compound into the 1536-well assay plates.

- Incubation: The robotic arm moves the plates to an automated incubator for a pre-determined time, as defined by the AI's experimental design.

- Signal Detection: After incubation, plates are moved to an automated plate reader. Reagents for the reporter assay (e.g., a chemiluminescent substrate) are added, and the signal is read.

- Real-Time Data Analysis: The data is fed directly to the AI controller. It normalizes the data, calculates Z-prime scores to monitor assay quality, and identifies "hits" (wells where the signal is significantly reduced).

- Iterative Follow-up: The AI automatically flags hits for follow-up, such as generating dose-response curves by creating new plate layouts for the liquid handlers to execute in the next experimental run.
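The quality-control and hit-calling logic in the real-time analysis step can be sketched as follows. The Z-prime formula is the standard one, Z' = 1 - 3(σ_pos + σ_neg) / |μ_pos - μ_neg|. Everything else is an assumption made for illustration: the control layout (negative controls as uninhibited wells, positive controls as fully inhibited wells), the 3-standard-deviation hit cutoff, the function names, and the signal values.

```python
from statistics import mean, stdev


def z_prime(pos, neg):
    """Z' = 1 - 3 * (sd_pos + sd_neg) / |mean_pos - mean_neg|."""
    window = abs(mean(pos) - mean(neg))
    if window == 0:
        raise ValueError("control means are identical; Z' is undefined")
    return 1.0 - 3.0 * (stdev(pos) + stdev(neg)) / window


def call_hits(signals, neg, n_sd=3.0):
    """Indices of wells whose signal falls more than n_sd standard
    deviations below the mean of the negative (uninhibited) controls."""
    cutoff = mean(neg) - n_sd * stdev(neg)
    return [i for i, s in enumerate(signals) if s < cutoff]


# Illustrative plate data (arbitrary luminescence units).
neg_controls = [100.0, 102.0, 98.0, 100.0]   # vehicle only, full signal
pos_controls = [10.0, 12.0, 8.0, 10.0]       # reference inhibitor
sample_wells = [99.0, 101.0, 40.0, 97.0, 12.0]

quality = z_prime(pos_controls, neg_controls)
hits = call_hits(sample_wells, neg_controls)
print(f"Z' = {quality:.2f}, hit wells: {hits}")
```

A Z' above roughly 0.5 is commonly taken to indicate a robust assay, so a controller could use it to reject or repeat a plate before any hits from that plate are followed up.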

References

- 1. AI-3 Synthesis Is Not Dependent on luxS in Escherichia coli - PMC [pmc.ncbi.nlm.nih.gov]

- 2. google.com [google.com]

- 3. Characterization of Autoinducer-3 Structure and Biosynthesis in E. coli - PMC [pmc.ncbi.nlm.nih.gov]

- 4. AI3 Mission Impact | Data Science Institute [data-science.llnl.gov]

- 5. Investigating the benefits and impacts of automating science – Responsible Innovation Future Science Platform [research.csiro.au]

- 6. How AI is Changing Scientific Research Forever | Technology Networks [technologynetworks.com]

- 7. utsouthwestern.elsevierpure.com [utsouthwestern.elsevierpure.com]

- 8. Revolutionizing Drug Development: Unleashing the Power of Artificial Intelligence in Clinical Trials - Artefact [artefact.com]

- 9. linical.com [linical.com]

- 10. youtube.com [youtube.com]

A Technical Guide to AI-3: The Next Frontier in Drug Discovery

Introduction: From Prediction to Causal Understanding

For the past decade, machine learning (ML) and deep learning (DL), often collectively termed "AI 2.0," have revolutionized drug discovery. Machine learning models excel at identifying statistical correlations in large datasets, while deep learning, with its complex neural networks, has shown remarkable success in tasks like protein structure prediction and high-content image analysis. However, these systems often operate as "black boxes," providing limited insight into the causal mechanisms underlying their predictions. Furthermore, their reliance on single-modality data often fails to capture the full complexity of biological systems.

Core Concepts: How AI-3 Builds Upon ML and DL

The logical evolution can be visualized as follows:

Hypothetical Application: Identifying Novel Kinase Inhibitors for Chemoresistance in Non-Small Cell Lung Cancer (NSCLC)

1. Data Ingestion and Pre-processing:

- Genomic Data: DNA sequencing data from 500 NSCLC patient tumors and matched normal tissue were processed to identify somatic mutations.

- Phosphoproteomics Data: Mass spectrometry-based phosphoproteomics was performed on tumor biopsies from 100 "non-responder" and 100 "responder" patients to quantify kinase activity.

- Clinical Data: Longitudinal clinical data, including treatment regimens and time-to-progression, were curated and linked to molecular data.

- A causal inference model was applied to the graph to identify upstream kinases whose activity was causally linked to the chemoresistant phenotype. The model calculates a "Causal Impact Score" (CIS) for each node.

3. In Silico Validation:

4. In Vitro Validation:

- CRISPR-Cas9 was used to knock down the gene encoding K-alpha in a chemoresistant NSCLC cell line (H1975).

- The modified cell line was then treated with the standard-of-care chemotherapeutic agent, and cell viability was assessed using a standard MTT assay after 72 hours.

Table 1: Top 5 Kinase Candidates Ranked by AI-3

| Kinase Target | Causal Impact Score (CIS) | Associated Pathways | Standard Model p-value |
|---|---|---|---|
| K-alpha | 0.92 | PI3K/Akt, MAPK | 0.003 |
| SRC | 0.78 | Focal Adhesion, EGFR | < 0.001 |
| FYN | 0.75 | T-Cell Receptor, Integrin | 0.011 |
| BTK | 0.69 | B-Cell Receptor, NF-κB | 0.045 |
| ABL1 | 0.65 | BCR-ABL Fusion | 0.023 |

Table 2: In Vitro Validation of K-alpha Knockdown

| Cell Line | Treatment | Relative Cell Viability (%) | Standard Deviation |
|---|---|---|---|
| H1975 (Control) | Chemotherapy | 88.2% | 5.1% |
| H1975 (K-alpha KD) | Chemotherapy | 24.5% | 3.8% |
| H1975 (Control) | Vehicle (DMSO) | 100.0% | 4.2% |
| H1975 (K-alpha KD) | Vehicle (DMSO) | 98.9% | 4.5% |
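One way to read Table 2 is to normalize each chemotherapy value to the vehicle value for the same cell line, which separates the effect of the knockdown itself from its effect on drug sensitivity. The short sketch below does that arithmetic with the table's own numbers; the dictionary layout and the function name `viability_on_chemo` are illustrative choices.

```python
# Relative cell viability (%) taken directly from Table 2.
VIABILITY = {
    ("control", "chemo"): 88.2,
    ("control", "vehicle"): 100.0,
    ("kd", "chemo"): 24.5,
    ("kd", "vehicle"): 98.9,
}


def viability_on_chemo(cell_line):
    """Viability under chemotherapy as a fraction of the same
    cell line's viability under vehicle (DMSO)."""
    return VIABILITY[(cell_line, "chemo")] / VIABILITY[(cell_line, "vehicle")]


control_fraction = viability_on_chemo("control")
kd_fraction = viability_on_chemo("kd")
print(f"control: {control_fraction:.1%} viable on chemotherapy")
print(f"K-alpha KD: {kd_fraction:.1%} viable on chemotherapy")
```

On these numbers the knockdown alone barely changes viability (98.9% under vehicle), while under chemotherapy the knockdown line retains roughly a quarter of its vehicle-level viability compared with about 88% for the control line.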

AI-3 Elucidation of Signaling Pathways

The model hypothesizes that chemotherapy induces the expression or activity of K-alpha. This kinase then hyper-activates the Akt signaling pathway, leading to the inhibitory phosphorylation of the pro-apoptotic protein Bad. This prevents Bad from inhibiting Bcl-2, an anti-apoptotic protein, ultimately allowing the cancer cell to evade drug-induced cell death. This detailed, interpretable output provides a clear, actionable hypothesis for further experimental validation.

Conclusion

Whitepaper: The Emergence of Advanced AI in Scientific Discovery

Abstract

The integration of artificial intelligence (AI) into scientific research is catalyzing a paradigm shift, moving from data analysis to de novo hypothesis generation and accelerated discovery. This technical guide explores the potential impact of advanced AI models on scientific discovery, with a specific focus on the C2S-Scale 27B model as a case study. We delve into the in silico methodologies, experimental validation, and the resultant discovery of a novel cancer therapy pathway. This document provides researchers, scientists, and drug development professionals with an in-depth understanding of the technical underpinnings and practical applications of this transformative technology.

Introduction: AI as a Catalyst for Scientific Breakthroughs

In Silico Discovery: The Dual-Context Virtual Screen

Experimental Protocol: In Silico Virtual Screen

The virtual screen was designed with a dual-context approach to isolate the desired synergistic effect:

- Immune-Context-Positive: The model was provided with data from real-world patient samples that had intact tumor-immune interactions and low-level interferon signaling.[3] Interferon is a key immune-signaling protein.[3]

- Immune-Context-Neutral: The model was also given data from isolated cancer cell lines, which lack the broader immune context.[3]

The model was then tasked with identifying drugs that selectively increased antigen presentation only within the "immune-context-positive" setting.[3] This required a sophisticated level of conditional reasoning that was an emergent capability of the large-scale model.[3]

Experimental Validation: From Prediction to In Vitro Confirmation

Experimental Protocol: In Vitro Validation of Synergistic Effect

The following experimental conditions were established to test the model's prediction:

- Control Group: Human neuroendocrine cells were cultured without any treatment.

- Silmitasertib Only: Cells were treated with silmitasertib alone.

- Low-Dose Interferon Only: Cells were treated with a low dose of interferon.

- Combination Therapy: Cells were treated with both silmitasertib and low-dose interferon.

The primary endpoint of the experiment was the level of antigen presentation, measured by the expression of Major Histocompatibility Complex I (MHC-I) on the cell surface.

Quantitative Results

The in vitro experiments confirmed the model's prediction with high fidelity. The combination of silmitasertib and low-dose interferon produced a marked synergistic amplification of antigen presentation.[3]

| Experimental Condition | Effect on Antigen Presentation (MHC-I) | Quantitative Outcome | Citation |
|---|---|---|---|
| Silmitasertib Alone | No significant effect | - | [2][3] |
| Low-Dose Interferon Alone | Modest effect | - | [2][3] |
| Silmitasertib + Low-Dose Interferon | Synergistic amplification | ~50% increase | [3][9][10] |

Visualizing the Process and Pathway

To clearly illustrate the workflow and the discovered biological pathway, the following diagrams are provided.

Caption: Discovered Synergistic Signaling Pathway.

The Discovered Signaling Pathway

The experimental results suggest a synergistic interaction between the interferon signaling pathway and the pathway inhibited by silmitasertib. Silmitasertib is a potent inhibitor of protein kinase CK2, which is known to influence downstream pathways such as the PI3K/Akt pathway.[11]

The proposed mechanism is as follows:

- Interferon Signaling: Low-dose interferon provides a baseline pro-inflammatory signal via the JAK-STAT pathway, leading to a modest induction of antigen presentation machinery.[12]

- CK2 Inhibition: Silmitasertib inhibits protein kinase CK2.[11] The downstream effects of CK2 inhibition, likely involving the modulation of pathways like PI3K/Akt, synergize with the interferon signal.

- Synergistic Amplification: The combination of these two signals leads to a significant upregulation of MHC-I expression and antigen presentation on the tumor cell surface, an effect substantially greater than either agent alone.

Conclusion and Future Outlook

- Generate Novel Hypotheses: Move beyond data analysis to propose new, testable scientific ideas.[1]

- Accelerate Drug Discovery: Dramatically reduce the time and cost of identifying promising drug candidates and combinations by performing massive in silico screens.[1]

- Uncover Complex Biology: Elucidate synergistic relationships in complex biological systems that are difficult to identify through traditional methods.

References

- 1. Google AI model helps unmask cancer cells to the immune system: Lead scientist explains breakthrough | Explained News - The Indian Express [indianexpress.com]

- 2. Google’s Cell2Sentence C2S-Scale 27B AI Is Accelerating Cancer Therapy Discovery | Joshua Berkowitz [joshuaberkowitz.us]

- 3. Google’s Gemma AI model helps discover new potential cancer therapy pathway [blog.google]

- 4. researchgate.net [researchgate.net]

- 5. marktechpost.com [marktechpost.com]

- 6. Google’s Revolutionary AI Discovery: C2S-Scale Model and a New Cancer Treatment Breakthrough - The digital transformation Diginoron [diginoron.com]

- 7. bgr.com [bgr.com]

- 8. Scaling a cell 'language' model yields new immunotherapy leads | Digital Watch Observatory [dig.watch]

- 9. thehindu.com [thehindu.com]

- 10. datalevo.com [datalevo.com]

- 11. Silmitasertib - Wikipedia [en.wikipedia.org]

- 12. Type I interferon signaling pathway enhances immune-checkpoint inhibition in KRAS mutant lung tumors - PubMed [pubmed.ncbi.nlm.nih.gov]

Ethical Considerations for AI in Research: A Technical Guide for Scientists and Drug Development Professionals

An in-depth guide to navigating the ethical landscape of Artificial Intelligence in scientific research and drug development.

This technical guide provides researchers, scientists, and drug development professionals with a comprehensive overview of the critical ethical considerations when employing Artificial Intelligence (AI) in their work. As AI continues to revolutionize data analysis, hypothesis generation, and clinical trial optimization, it is imperative to address the inherent ethical challenges to ensure the integrity, fairness, and safety of research outcomes. This document outlines key ethical principles, presents quantitative data on the current state of AI ethics in research, provides detailed methodological frameworks for ethical AI practice, and visualizes complex processes to facilitate understanding and implementation.

Core Ethical Principles in AI-Driven Research

The responsible conduct of AI in research is anchored in a set of fundamental ethical principles that must guide the entire lifecycle of a project, from data acquisition to model deployment and interpretation of results. These principles are not merely theoretical constructs but have practical implications for ensuring that AI is used in a manner that is beneficial to science and society.

Quantitative Landscape of AI Ethics in Research

While the discourse on AI ethics is often qualitative, a growing body of evidence highlights the quantitative dimensions of these challenges. The following tables summarize key statistics related to data breaches and the adoption of ethical AI principles.

Table 1: Data Breaches in the Healthcare Sector

| Metric | Value | Source |
|---|---|---|
| Average cost of a healthcare data breach | $7.42 million | [15] |
| Percentage of healthcare organizations experiencing a data breach since 2022 | 71% | [13] |
| Percentage of data breaches in the U.S. linked to healthcare (2020) | ~28.5% | [12] |
| Number of individuals impacted by healthcare data breaches in the U.S. (2020) | >26 million | [12] |
| Average number of breached records per day in 2023 | 364,571 | [16] |
| Average number of breached records per day in 2024 | 758,288 | [16] |

Table 2: Adoption and Perception of AI Ethics

| Metric | Finding | Source |
|---|---|---|
| Belief that AI companies should be regulated | 86% of respondents | [17] |
| Belief that AI companies are not considering ethics | 55% of respondents | [17] |
| Trust in tech companies with health data | ~11% of Americans | [12] |
| Trust in doctors with health data | 72% of Americans | [12] |
| FDA-approved AI-enabled medical devices in 2023 | 223 | [18] |

Experimental Protocols for Ethical AI Implementation

To translate ethical principles into practice, researchers require robust and detailed methodologies. This section provides frameworks for conducting AI bias audits and implementing privacy-preserving AI techniques.

Protocol for an AI Bias Audit

An AI bias audit is a systematic process to identify and mitigate unfairness in machine learning models. The following protocol outlines the key steps involved.

Objective: To assess and quantify bias in an AI model based on sensitive attributes such as race, gender, or age, and to implement mitigation strategies to improve fairness.

Methodology:

- Define Fairness Metrics: Select appropriate quantitative metrics to measure fairness. Common metrics include:
  - Demographic Parity: Requires that the model's rate of positive predictions is equal across different groups.
  - Equalized Odds: Requires that the true positive rate and false positive rate are equal across groups.
  - Equal Opportunity: A relaxation of equalized odds, requiring only the true positive rate to be equal across groups.

- Data Analysis and Preparation:
  - Analyze the training data to identify potential sources of bias, such as underrepresentation of certain demographic groups.
  - Ensure the dataset is representative of the target population. If necessary, employ techniques like oversampling or synthetic data generation to balance the dataset.

- Model Training and Evaluation:
  - Train the AI model on the prepared dataset.
  - Evaluate the model's performance against the chosen fairness metrics for different demographic subgroups.

- Bias Mitigation: If significant bias is detected, apply appropriate mitigation techniques. These can be categorized as:
  - Pre-processing: Modifying the training data to remove bias (e.g., re-weighting samples).
  - In-processing: Modifying the learning algorithm to incorporate fairness constraints.
  - Post-processing: Adjusting the model's predictions to improve fairness.

- Reporting and Documentation:
  - Document the entire audit process, including the fairness metrics used, the results of the bias assessment, and the mitigation strategies implemented.
  - Provide a clear and transparent report of the model's fairness characteristics.
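The three fairness metrics named in the audit can be computed directly from predictions, true labels, and a group attribute. The sketch below is a minimal version for binary labels and exactly two groups; the function names, the choice to report each metric as an absolute between-group gap, and the toy data are assumptions made for illustration.

```python
def group_rates(y_true, y_pred):
    """Return (positive-prediction rate, TPR, FPR) for binary labels."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    pos = sum(y_true)
    neg = len(y_true) - pos
    if pos == 0 or neg == 0:
        raise ValueError("each group needs both positive and negative cases")
    return (tp + fp) / len(y_true), tp / pos, fp / neg


def fairness_gaps(y_true, y_pred, group):
    """Absolute between-group gaps; 0.0 means the criterion is met."""
    labels = sorted(set(group))
    if len(labels) != 2:
        raise ValueError("this sketch handles exactly two groups")
    per_group = []
    for g in labels:
        idx = [i for i, x in enumerate(group) if x == g]
        per_group.append(
            group_rates([y_true[i] for i in idx], [y_pred[i] for i in idx])
        )
    (ppr_a, tpr_a, fpr_a), (ppr_b, tpr_b, fpr_b) = per_group
    return {
        "demographic_parity": abs(ppr_a - ppr_b),
        "equal_opportunity": abs(tpr_a - tpr_b),
        # Equalized odds needs both TPR and FPR to match, so report the
        # larger of the two gaps.
        "equalized_odds": max(abs(tpr_a - tpr_b), abs(fpr_a - fpr_b)),
    }


# Toy data: four cases in group "A", four in group "B".
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
group = ["A", "A", "A", "A", "B", "B", "B", "B"]

gaps = fairness_gaps(y_true, y_pred, group)
print(gaps)
```

Reporting gaps rather than pass or fail leaves the acceptable threshold as an explicit, documented decision for the audit team.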

Protocol for Implementing Federated Learning

Federated learning is a privacy-preserving machine learning technique that allows multiple parties to collaboratively train a model without sharing their raw data.[19][20][21][22][23] This is particularly valuable in medical research where data is often siloed in different institutions.

Objective: To train a robust AI model on decentralized data from multiple research institutions while preserving the privacy of each institution's data.

Methodology:

1. Initialization: A central server initializes a global model and distributes it to all participating research institutions (clients).

2. Local Training: Each client trains the received model on its own local dataset for a set number of iterations. This training process only improves the local model, and the raw data never leaves the client's secure environment.

3. Model Update Transmission: After local training, each client sends only the updated model parameters (e.g., weights and biases), not the raw data, back to the central server.

4. Secure Aggregation: The central server aggregates the model updates from all clients to create an improved global model. A common aggregation algorithm is Federated Averaging (FedAvg), which computes a weighted average of the model updates. To enhance privacy, this step can be combined with techniques like secure multi-party computation.

5. Global Model Distribution: The server distributes the newly updated global model back to all clients.

6. Iteration: Steps 2-5 are repeated for multiple rounds until the global model converges and achieves the desired performance.

7. Final Model: The final, trained global model can then be used by all participating institutions for their research.
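The training loop above can be made concrete with a toy Federated Averaging sketch. It is a single-process simulation, not a networked or secure implementation: the model is a one-variable linear regression, the two "clients" are in-memory lists, and the function names, learning rate, step counts, and data are all assumptions chosen so the example runs with the standard library only. Secure aggregation is omitted.

```python
def local_train(params, data, lr=0.05, steps=20):
    """Local training: gradient descent on mean squared error for
    y = w * x + b, using only this client's (x, y) pairs."""
    w, b = params
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)


def fed_avg(updates):
    """FedAvg: average client parameters weighted by local sample count.
    `updates` is a list of (params, n_samples) pairs."""
    total = sum(n for _, n in updates)
    dim = len(updates[0][0])
    return tuple(sum(p[i] * n for p, n in updates) / total for i in range(dim))


# Two simulated institutions whose private data follow y = 2x + 1.
clients = [
    [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)],
    [(3.0, 7.0), (4.0, 9.0)],
]

global_params = (0.0, 0.0)          # server-side initialization
for _ in range(100):                # communication rounds
    # Only parameters and sample counts go back to the server,
    # never the (x, y) pairs themselves.
    updates = [(local_train(global_params, data), len(data)) for data in clients]
    global_params = fed_avg(updates)

w, b = global_params
print(f"w = {w:.3f}, b = {b:.3f}")
```

Weighting by sample count means a client with more data pulls the global model further, which is the usual FedAvg choice; an unweighted mean would treat every institution equally regardless of dataset size.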

Protocol for Adhering to GDPR in AI Research

Methodology:

- Lawful Basis for Processing: Identify and document a valid lawful basis for processing personal data under GDPR (e.g., explicit consent from the data subject, legitimate interest).

- Data Minimization: Collect and process only the personal data that is strictly necessary for the research purpose.

- Anonymization and Pseudonymization: Whenever possible, anonymize or pseudonymize personal data to reduce privacy risks.

- Data Subject Rights: Implement procedures to uphold the rights of data subjects, including the right to access, rectify, and erase their data, and the right to object to automated decision-making.

- Transparency: Provide clear and concise information to data subjects about how their data is being used in the AI model, including the logic involved and the potential consequences.

- Security: Implement robust technical and organizational measures to ensure the security of personal data throughout the research project.

- Documentation: Maintain comprehensive documentation of all data processing activities to demonstrate compliance with GDPR.
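Data minimization and pseudonymization lend themselves to a small technical illustration. The sketch below keeps only an allow-list of fields and replaces the direct identifier with a keyed hash (HMAC-SHA256), so the mapping cannot be recomputed without the secret key. The record layout, field names, and function names are hypothetical, and the hard-coded key is for demonstration only; in practice the key would be stored separately under access control. Pseudonymized data generally still counts as personal data under GDPR, so this reduces risk rather than removing obligations.

```python
import hashlib
import hmac


def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Replace a direct identifier with a keyed hash (HMAC-SHA256)."""
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def prepare_record(record: dict, secret_key: bytes, allowed_fields: set) -> dict:
    """Keep only the allow-listed fields and add a pseudonymous ID."""
    minimized = {k: v for k, v in record.items() if k in allowed_fields}
    minimized["pid"] = pseudonymize(record["patient_id"], secret_key)
    return minimized


# Hypothetical record and demo key, for illustration only.
KEY = b"demo-key-not-for-production"
record = {
    "patient_id": "patient-001",
    "name": "Jane Doe",
    "age": 71,
    "diagnosis": "Alzheimer's disease",
}

shared = prepare_record(record, KEY, allowed_fields={"age", "diagnosis"})
print(sorted(shared))
```

Because the same identifier and key always yield the same pseudonym, records for one participant can still be linked across datasets without exposing the original ID.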

Visualizing Ethical AI Workflows and Concepts

To further clarify the complex relationships and processes involved in ethical AI, the following diagrams are provided using the Graphviz DOT language.

Signaling Pathway for Ethical AI Governance

Experimental Workflow for an AI Bias Audit

Logical Relationship of Privacy-Preserving AI Techniques

Conclusion

References

- 1. 10 AI dangers and risks and how to manage them | IBM [ibm.com]

- 2. m.youtube.com [m.youtube.com]

- 3. research.aimultiple.com [research.aimultiple.com]

- 4. m.youtube.com [m.youtube.com]

- 5. orfonline.org [orfonline.org]

- 6. theaimsjournal.org [theaimsjournal.org]

- 7. Explainable Artificial Intelligence for Ovarian Cancer: Biomarker Contributions in Ensemble Models [mdpi.com]

- 8. scirp.org [scirp.org]

- 9. google.com [google.com]

- 10. 30dayscoding.com [30dayscoding.com]

- 11. The ethics of using artificial intelligence in scientific research: new guidance needed for a new tool - PMC [pmc.ncbi.nlm.nih.gov]

- 12. Exploring the Privacy Challenges of AI in Healthcare: Data Breaches and Unauthorized Access to Sensitive Medical Information | Simbo AI - Blogs [simbo.ai]

- 13. futurecarecapital.org.uk [futurecarecapital.org.uk]

- 14. ihf-fih.org [ihf-fih.org]

- 15. chiefhealthcareexecutive.com [chiefhealthcareexecutive.com]

- 16. sprinto.com [sprinto.com]

- 17. Ethics in the Age of AI - Markkula Center for Applied Ethics [scu.edu]

- 18. hai.stanford.edu [hai.stanford.edu]

- 19. Federated Learning For Healthcare Analytics [meegle.com]

- 20. kdnuggets.com [kdnuggets.com]

- 21. cdn-links.lww.com [cdn-links.lww.com]

- 22. astconsulting.in [astconsulting.in]

- 23. The Federated Learning Process: Step-by-Step | by Tech & Tales | Medium [techntales.medium.com]

- 24. redeintel.com [redeintel.com]

- 25. EDPS unveils revised Guidance on Generative AI, strengthening data protection in a rapidly changing digital era | European Data Protection Supervisor [edps.europa.eu]

- 26. youtube.com [youtube.com]

- 27. GDPR Compliance Checklist: 10 Key Steps for Full Compliance | CloudEagle.ai [cloudeagle.ai]

- 28. gdpr.eu [gdpr.eu]

Systems AI: A Technical Primer for Precision Medicine

An In-depth Guide for Researchers, Scientists, and Drug Development Professionals

The convergence of artificial intelligence and systems biology is forging a new paradigm in healthcare: Systems AI for Precision Medicine. This approach leverages computational models to integrate multi-omics data, unraveling the complex molecular networks that underpin disease and patient-specific responses to therapies. This technical guide provides an in-depth exploration of the core concepts, methodologies, and applications of systems AI, offering a blueprint for its implementation in research and drug development.

The Core Principles of Systems AI in Precision Medicine

Systems AI is predicated on the understanding that biological processes are not governed by single molecules but by intricate networks of interacting components. By modeling these networks, we can move beyond the "one-size-fits-all" approach to medicine and develop targeted therapies tailored to the individual. The core principles of this discipline include:

- Holistic Data Integration: Systems AI algorithms are designed to handle the immense complexity and heterogeneity of biological data. This includes genomics, transcriptomics, proteomics, metabolomics, and clinical data. By integrating these diverse data types, a more complete picture of the patient's disease state can be constructed.

- Network-Based Analysis: At the heart of systems AI is the concept of biological networks. These can be signaling pathways, gene regulatory networks, or protein-protein interaction networks. AI models, particularly those from the field of graph machine learning, are adept at identifying key nodes and edges within these networks that drive disease or mediate drug response.

- Predictive Modeling: A key output of systems AI is the generation of predictive models. These models can be used to forecast disease progression, identify patients who will respond to a particular therapy, or predict the potential efficacy and toxicity of novel drug candidates.

Key Applications in Drug Development

The application of systems AI spans the entire drug development pipeline, from initial target discovery to late-stage clinical trials.

| Application Area | Specific Use Case | Impact on Drug Development |
|---|---|---|
| Target Identification & Validation | Identification of novel disease-associated genes and proteins from integrated multi-omics data. | Reduces the time and cost of early-stage research by prioritizing the most promising therapeutic targets. |
| Drug Discovery & Repurposing | In silico screening of compound libraries against disease-specific network models. | Accelerates the discovery of new medicines and finds new uses for existing drugs. |
| Biomarker Discovery | Identification of molecular signatures that predict drug response or disease prognosis. | Enables the development of companion diagnostics and enriches clinical trial populations for responders. |
| Clinical Trial Optimization | Stratification of patients into subgroups based on their molecular profiles to predict treatment outcomes. | Increases the success rate of clinical trials and reduces the number of patients required. |

Experimental Workflows and Protocols

The successful implementation of systems AI relies on rigorous experimental and computational workflows. What follows are detailed protocols for two common applications: multi-omics data integration for patient stratification and network-based drug repurposing.

Protocol 1: Multi-Omics Data Integration for Patient Stratification

This protocol outlines a typical workflow for integrating genomic, transcriptomic, and clinical data to identify patient subgroups with distinct molecular characteristics and clinical outcomes.

1. Data Acquisition and Preprocessing:

- Genomic Data: Obtain somatic mutation data (e.g., from whole-exome sequencing) in Variant Call Format (VCF). Filter for high-quality calls and annotate variants using databases like dbSNP and COSMIC.

- Transcriptomic Data: Acquire RNA-sequencing data in FASTQ format. Perform quality control using tools like FastQC, align reads to a reference genome (e.g., GRCh38) with STAR, and quantify gene expression levels as Transcripts Per Million (TPM).

- Clinical Data: Collect patient demographic information, tumor characteristics, treatment history, and survival data. Ensure data is de-identified and compliant with all relevant regulations.

- Data Cleaning and Normalization: Handle missing values through imputation (e.g., k-nearest neighbors imputation). Normalize transcriptomic data to account for library size and gene length variations.
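The normalization in step 1 can be illustrated with a minimal sketch of the TPM calculation. The function name `counts_to_tpm` and the toy counts and gene lengths are assumptions made for illustration; in practice a quantification tool would produce these values.

```python
def counts_to_tpm(counts, lengths_bp):
    """Convert raw read counts for one sample to Transcripts Per Million (TPM)."""
    if len(counts) != len(lengths_bp):
        raise ValueError("counts and lengths_bp must have the same length")
    # Reads per kilobase: corrects for gene length.
    rpk = [c / (length / 1000.0) for c, length in zip(counts, lengths_bp)]
    total = sum(rpk)
    if total == 0:
        return [0.0] * len(counts)
    # Rescale so the sample sums to one million: corrects for library size.
    return [r / total * 1e6 for r in rpk]


# Hypothetical toy sample: three genes whose counts scale with their length.
sample_counts = [100, 200, 300]
gene_lengths = [1000, 2000, 3000]
tpm = counts_to_tpm(sample_counts, gene_lengths)
print(tpm)
```

Because the three toy genes have counts proportional to their lengths, they receive equal TPM values, which is the length correction at work.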

2. Feature Engineering and Selection:

- Genomic Features: Convert mutation data into a binary matrix (gene x patient), where a '1' indicates the presence of a non-synonymous mutation.

- Transcriptomic Features: Select differentially expressed genes between known clinical groups (e.g., responders vs. non-responders) using methods like DESeq2 or edgeR. These tools model raw read counts, so supply un-normalized counts rather than TPM values.

- Feature Integration: Combine the genomic and transcriptomic feature matrices with the clinical data into a single matrix with one row per patient and one column per feature.
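The feature engineering in step 2 might look roughly like the following sketch. The patient IDs, mutation records, and feature values are hypothetical, and the matrix is stored with one row per patient (the transpose of a gene-by-patient layout) so that it can be concatenated with the other feature blocks.

```python
def mutation_matrix(mutations, patients, genes):
    """Build {patient: [0/1 per gene]} from (patient, gene, effect) records."""
    gene_index = {g: i for i, g in enumerate(genes)}
    matrix = {p: [0] * len(genes) for p in patients}
    for patient, gene, effect in mutations:
        # Only non-synonymous mutations are encoded as 1.
        if effect != "synonymous" and patient in matrix and gene in gene_index:
            matrix[patient][gene_index[gene]] = 1
    return matrix


def integrate(patients, *feature_blocks):
    """Concatenate per-patient feature vectors into one row per patient."""
    return {p: [v for block in feature_blocks for v in block[p]] for p in patients}


# Hypothetical inputs for two patients.
patients = ["P1", "P2"]
genes = ["TP53", "KRAS"]
mutations = [
    ("P1", "TP53", "missense"),
    ("P1", "KRAS", "synonymous"),
    ("P2", "KRAS", "missense"),
]
expression = {"P1": [5.2, 0.1], "P2": [1.3, 7.8]}  # e.g., selected gene expression values
clinical = {"P1": [61.0], "P2": [48.0]}            # e.g., age at diagnosis

genomic = mutation_matrix(mutations, patients, genes)
integrated = integrate(patients, genomic, expression, clinical)
print(integrated)
```

In a real analysis the blocks would usually be scaled before concatenation so that no single data type dominates distance-based clustering.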

3. Unsupervised Clustering for Subgroup Discovery:

- Apply a clustering algorithm, such as hierarchical clustering or k-means clustering, to the integrated data matrix to identify patient subgroups.

- Visualize the clusters using techniques like Principal Component Analysis (PCA) or t-Distributed Stochastic Neighbor Embedding (t-SNE).

- Perform survival analysis (e.g., Kaplan-Meier plots and log-rank tests) to assess if the identified subgroups have significantly different clinical outcomes.
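As an illustration of the survival analysis mentioned in step 3, here is a minimal Kaplan-Meier estimator for a single subgroup. The follow-up times and event flags are invented for the example, and the log-rank test is not implemented here; a dedicated survival analysis library would normally be used for both.

```python
def kaplan_meier(times, events):
    """Return [(time, survival_probability)] at each distinct event time.

    times  : follow-up time for each patient
    events : 1 if the event (e.g., death) was observed, 0 if censored
    """
    at_risk = len(times)
    survival = 1.0
    curve = []
    for t in sorted(set(times)):
        deaths = sum(1 for ti, e in zip(times, events) if ti == t and e == 1)
        leaving = sum(1 for ti in times if ti == t)  # events plus censored at time t
        if deaths > 0:
            survival *= 1.0 - deaths / at_risk
            curve.append((t, survival))
        at_risk -= leaving
    return curve


# Hypothetical subgroup: follow-up in months, 1 = event observed, 0 = censored.
times = [5, 8, 12, 12, 20]
events = [1, 0, 1, 1, 0]
curve = kaplan_meier(times, events)
print(curve)
```

Running the estimator once per cluster yields the curves that a Kaplan-Meier plot compares across subgroups.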

4. Supervised Classification for Predictive Modeling:

- Train a machine learning classifier (e.g., Random Forest, Support Vector Machine, or a neural network) to predict the subgroup membership of new patients based on their molecular profiles.

- Evaluate the model's performance using cross-validation and metrics such as accuracy, precision, recall, and the Area Under the Receiver Operating Characteristic curve (AUROC).

Caption: A high-level workflow for patient stratification using multi-omics data.
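The evaluation metrics named in step 4 of this protocol can be computed as in the sketch below. The labels and scores are hypothetical, the 0.5 decision threshold is an assumption, and AUROC is computed through its pairwise-ranking interpretation rather than by tracing the ROC curve.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, precision, and recall for binary labels (1 = positive)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return {
        "accuracy": (tp + tn) / len(y_true),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


def auroc(y_true, scores):
    """AUROC as the probability that a random positive outranks a random negative."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    if not pos or not neg:
        raise ValueError("both classes must be present")
    # Ties between a positive and a negative count as half a win.
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical held-out predictions for six patients.
y_true = [1, 1, 0, 0, 1, 0]
scores = [0.9, 0.8, 0.7, 0.3, 0.6, 0.2]
y_pred = [1 if s >= 0.5 else 0 for s in scores]  # assumed threshold of 0.5

metrics = classification_metrics(y_true, y_pred)
auc = auroc(y_true, scores)
print(metrics, auc)
```

Under cross-validation these metrics would be computed on each held-out fold and then averaged.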

Protocol 2: Network-Based Drug Repurposing

This protocol describes a computational approach to identify new uses for existing drugs by analyzing their effects on disease-specific molecular networks.

1. Construction of Disease and Drug Networks:

- Disease Network: Construct a protein-protein interaction (PPI) network for the disease of interest. Use known disease-associated genes as "seed" nodes and expand the network by including their first-degree interactors from a comprehensive PPI database (e.g., STRING, BioGRID).

- Drug-Target Network: Create a bipartite graph representing the known interactions between drugs and their protein targets. This information can be obtained from databases such as DrugBank and ChEMBL.
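A minimal sketch of the network construction in step 1 follows. The node names (`G1` to `G5`), the drug names, and the edge list are placeholders rather than real interactions; real edges would come from the databases named above.

```python
def expand_seeds(edges, seeds):
    """Return the seed nodes plus their first-degree interactors, and the induced edges."""
    seeds = set(seeds)
    nodes = set(seeds)
    for a, b in edges:
        if a in seeds:
            nodes.add(b)
        if b in seeds:
            nodes.add(a)
    sub_edges = [(a, b) for a, b in edges if a in nodes and b in nodes]
    return nodes, sub_edges


def invert_drug_targets(drug_targets):
    """Turn {drug: targets} into {target: drugs}, the other side of the bipartite graph."""
    target_to_drugs = {}
    for drug, targets in drug_targets.items():
        for target in targets:
            target_to_drugs.setdefault(target, set()).add(drug)
    return target_to_drugs


# Placeholder PPI edges and drug-target annotations.
ppi_edges = [("G1", "G2"), ("G2", "G3"), ("G3", "G4"), ("G4", "G5")]
nodes, sub_edges = expand_seeds(ppi_edges, seeds={"G2"})

drug_targets = {"drug_a": {"G2"}, "drug_b": {"G2", "G5"}}
target_to_drugs = invert_drug_targets(drug_targets)
print(nodes, sub_edges, target_to_drugs)
```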

2. Network Proximity Analysis:

- For each drug in the drug-target network, calculate the "proximity" of its targets to the disease network. This can be measured using network metrics like the shortest path length between drug targets and disease proteins.

- A smaller proximity score suggests that the drug's targets are located in the same network neighborhood as the disease-associated proteins, indicating a potential therapeutic effect.
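One way to compute such a proximity score is sketched below, using the "closest" variant: for each drug target, take the shortest path length to the nearest disease protein, then average over targets. This is only one of several possible shortest-path measures, and the toy network and protein names are hypothetical.

```python
from collections import deque


def shortest_path_lengths(graph, source):
    """Breadth-first search: hop distance from source to every reachable node."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        for neighbor in graph.get(node, ()):
            if neighbor not in dist:
                dist[neighbor] = dist[node] + 1
                queue.append(neighbor)
    return dist


def closest_proximity(graph, drug_targets, disease_proteins):
    """Average, over drug targets, of the distance to the nearest disease protein."""
    per_target = []
    for target in drug_targets:
        dist = shortest_path_lengths(graph, target)
        reachable = [dist[p] for p in disease_proteins if p in dist]
        if reachable:
            per_target.append(min(reachable))
    if not per_target:
        return float("inf")  # no target can reach the disease module
    return sum(per_target) / len(per_target)


# Hypothetical undirected chain P1 - P2 - P3 - P4 - P5 as an adjacency dict.
ppi = {
    "P1": ["P2"],
    "P2": ["P1", "P3"],
    "P3": ["P2", "P4"],
    "P4": ["P3", "P5"],
    "P5": ["P4"],
}
disease = {"P1", "P2"}

print(closest_proximity(ppi, {"P3"}, disease))  # target adjacent to the disease module
print(closest_proximity(ppi, {"P5"}, disease))  # target far from the disease module
```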

3. AI-Powered Link Prediction:

- Frame the drug repurposing problem as a link prediction task in a heterogeneous network composed of drugs, proteins, and diseases.

- Utilize graph neural networks (GNNs) or other embedding techniques to learn low-dimensional representations of the nodes in the network.

- Use these embeddings to predict the probability of a therapeutic association between a drug and a disease.
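The final scoring step can be sketched as follows, assuming that node embeddings have already been learned by a GNN or another embedding method. The embedding values are made up, and the sigmoid of a dot product is only one common choice of decoder, not necessarily the one a given model uses.

```python
import math


def link_probability(u, v):
    """Score a candidate edge as the sigmoid of the embedding dot product."""
    dot = sum(a * b for a, b in zip(u, v))
    return 1.0 / (1.0 + math.exp(-dot))


# Hypothetical 2-dimensional embeddings; real ones come from the trained model.
drug_embeddings = {"drug_a": [1.0, 0.5], "drug_b": [-1.0, 0.2]}
disease_embedding = [0.8, 0.4]

scores = {
    drug: link_probability(vec, disease_embedding)
    for drug, vec in drug_embeddings.items()
}
ranked = sorted(scores, key=scores.get, reverse=True)
print(ranked, scores)
```

Sorting by these scores gives the ranked list of candidates that the validation step works from.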

4. In Silico Validation and Prioritization:

- Rank the drug-disease predictions based on the model's output scores.

- Perform enrichment analysis to determine if the predicted drugs are known to be effective in related diseases.

- Prioritize the most promising candidates for further experimental validation.
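The enrichment analysis in step 4 is often framed as a hypergeometric test: given N candidate drugs, K of which are already known to work in related diseases, how surprising is it that k of the n top-ranked predictions are among them? The sketch below assumes that framing, and the counts are invented for illustration.

```python
from math import comb


def hypergeom_pvalue(population, known_hits, selected, overlap):
    """P(X >= overlap) when drawing `selected` items without replacement."""
    total = comb(population, selected)
    upper = min(known_hits, selected)
    favorable = sum(
        comb(known_hits, i) * comb(population - known_hits, selected - i)
        for i in range(overlap, upper + 1)
    )
    return favorable / total


# Hypothetical numbers: 20 drugs scored, 5 known to be effective in related
# diseases, top 4 predictions examined, 3 of them among the known drugs.
p_value = hypergeom_pvalue(population=20, known_hits=5, selected=4, overlap=3)
print(p_value)
```

A small p-value indicates that the top of the ranking contains more known-effective drugs than chance alone would explain.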

Signaling Pathways in Focus

Systems AI is particularly well-suited for dissecting complex signaling pathways. Below are two examples of how these pathways can be modeled and analyzed.

The MAPK/ERK Pathway

The Mitogen-Activated Protein Kinase (MAPK) pathway is a crucial signaling cascade that regulates cell growth, proliferation, and survival. Dysregulation of this pathway is a hallmark of many cancers.

Caption: A simplified representation of the MAPK/ERK signaling pathway.

Systems AI models can be used to predict the effects of mutations in genes like RAS and RAF on the downstream activity of the pathway. These models can also simulate the effects of targeted therapies that inhibit key proteins in this cascade.
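To make the idea concrete, the toy Boolean model below propagates a signal down a linear RAS, RAF, MEK, ERK cascade. It is a deliberately simplified sketch: feedback loops, crosstalk, and dosage effects are ignored, and the function name and its parameters are assumptions made for illustration.

```python
CASCADE = ["RAS", "RAF", "MEK", "ERK"]


def erk_active(growth_factor, activating_mutations=(), inhibited=()):
    """Return True if ERK ends up active in a linear Boolean cascade."""
    signal = bool(growth_factor)
    for protein in CASCADE:
        if protein in activating_mutations:
            signal = True   # constitutively active, regardless of upstream input
        if protein in inhibited:
            signal = False  # a targeted therapy blocks this node's output
    return signal


# No stimulus, wild type: pathway off.
print(erk_active(False))
# An activating RAF mutation switches the pathway on without stimulus.
print(erk_active(False, activating_mutations={"RAF"}))
# Inhibiting MEK, downstream of the mutation, switches it off again.
print(erk_active(False, activating_mutations={"RAF"}, inhibited={"MEK"}))
# Inhibiting RAS, upstream of the mutation, has no effect in this model.
print(erk_active(True, activating_mutations={"RAF"}, inhibited={"RAS"}))
```

Even this minimal model shows why the position of a mutation relative to the drug target matters when simulating targeted therapies.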

The JAK-STAT Pathway

The Janus kinase (JAK) - Signal Transducer and Activator of Transcription (STAT) pathway is a key signaling hub for numerous cytokines and growth factors, playing a critical role in the immune system. Its aberrant activation is implicated in autoimmune diseases and cancers.

Caption: An overview of the JAK-STAT signaling cascade.

By integrating data from patients with autoimmune diseases, systems AI can identify biomarkers that predict response to JAK inhibitors and uncover novel therapeutic targets within this pathway.

The Future of Systems AI in Precision Medicine

Systems AI is a rapidly evolving field that holds immense promise for the future of medicine. As our ability to generate high-dimensional biological data continues to grow, so too will the sophistication of the AI models used to analyze it. The continued development of novel algorithms, coupled with a deeper understanding of the underlying biology, will undoubtedly lead to new breakthroughs in the diagnosis, treatment, and prevention of human disease. This technical guide serves as a foundational resource for researchers and drug development professionals seeking to harness the power of systems AI in their own work.

The AI Colleague: An In-depth Technical Guide to AI-Powered Scientific Research in Drug Development

For Researchers, Scientists, and Drug Development Professionals

The integration of Artificial Intelligence (AI) into scientific research is catalyzing a paradigm shift, transforming the very fabric of discovery and development. This is particularly evident in the pharmaceutical industry, where AI is no longer a mere tool but an indispensable colleague in the quest for novel therapeutics. This technical guide explores the core concepts of AI as a collaborative partner in scientific research, with a focus on its application in drug development. We will delve into the methodologies of key experiments, present quantitative data on AI's impact, and visualize complex biological and experimental workflows.

The Impact of AI on Drug Development: A Quantitative Overview

AI is making a measurable impact on the efficiency and success rates of drug development. By analyzing large datasets and identifying patterns that are difficult to detect through manual analysis, AI algorithms are shortening timelines and improving the quality of therapeutic candidates. The following tables summarize key quantitative data on the influence of AI in this domain.

| Metric | Traditional Drug Development | AI-Driven Drug Development | Source |
|---|---|---|---|
| Preclinical R&D Cost Reduction | Baseline | 25-50% reduction | [1] |
| Phase I Clinical Trial Success Rate | 40-65% | 80-90% | [1][2][3] |
| Early Design Effort Reduction | Baseline | Up to 70% with generative AI | [2][4] |
| Drug Target Identification Time | 3-6 years | Reduction of 30-50% | [5] |
| Novel Target Discovery Increase | Baseline | Up to 40% increase | [5] |

Table 1: Comparative Impact of AI on Key Drug Development Metrics

| Metric | Baseline | Reported Impact with AI | Source |
|---|---|---|---|
| Toxicity Prediction Accuracy | N/A | 75-90% | [1] |
| Efficacy Forecasting Accuracy | N/A | 60-80% | [1] |
| Protocol Development Time | Months | Reduction to minutes | [6] |
| Clinical Trial Protocol Amendments | Baseline | 60% reduction | [7] |

Table 2: Performance Metrics of Specific AI Applications in Drug Development

Key Experiments and Methodologies

The transformative power of AI is best understood through the lens of its application in specific experimental contexts. Here, we provide detailed methodologies for key experiments that have been significantly enhanced by an AI colleague.

Experimental Protocol 1: AI-Driven Virtual High-Throughput Screening

Methodology:

- Target Protein Structure Preparation:
  - Obtain the 3D structure of the target protein from the Protein Data Bank (PDB) or predict it using AI tools like AlphaFold.
  - Prepare the protein structure for docking by removing water molecules, adding hydrogen atoms, and assigning charges using molecular modeling software.
  - Identify and define the binding site for virtual screening.

- Chemical Library Preparation:
  - Acquire a large virtual library of chemical compounds (e.g., from ZINC, PubChem, or proprietary databases).
  - Prepare the library for docking by generating 3D conformers for each molecule and assigning appropriate chemical properties.

- Initial Virtual Screening (Docking):
  - Use a high-throughput virtual screening platform (e.g., AutoDock Vina, Glide) to dock the chemical library into the defined binding site of the target protein.
  - Rank the compounds based on their predicted binding affinity (docking score).

- AI-Based Re-scoring and Hit Refinement:
  - Train a machine learning model (e.g., a graph neural network or a random forest model) on a dataset of known binders and non-binders for the target protein or similar proteins.
  - Use the trained model to re-score the top-ranking compounds from the initial virtual screen, predicting their likelihood of being active.
  - Employ generative AI models to suggest novel molecular structures with improved binding affinity and drug-like properties based on the initial hits.

- In Vitro Validation:

-