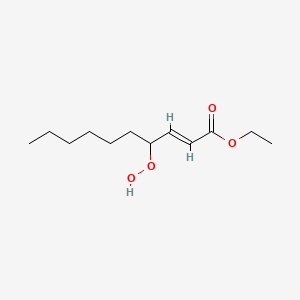

Hpo-daee

Description

BenchChem offers high-quality Hpo-daee suitable for many research applications. Different packaging options are available to accommodate customers' requirements. For more information about this compound, including price, delivery time, and further details, contact info@benchchem.com.

Properties

| Property | Value |
|---|---|
| Molecular Formula | C12H22O4 |
| Molecular Weight | 230.30 g/mol |
| IUPAC Name | ethyl (E)-4-hydroperoxydec-2-enoate |
| InChI | InChI=1S/C12H22O4/c1-3-5-6-7-8-11(16-14)9-10-12(13)15-4-2/h9-11,14H,3-8H2,1-2H3/b10-9+ |
| InChI Key | LNQJMDJQUAQRGI-MDZDMXLPSA-N |
| Isomeric SMILES | CCCCCCC(/C=C/C(=O)OCC)OO |
| Canonical SMILES | CCCCCCC(C=CC(=O)OCC)OO |
| Origin of Product | United States |

The Human Phenotype Ontology: A Technical Guide for Researchers and Drug Development Professionals

The Human Phenotype Ontology (HPO) has emerged as a critical global standard for describing phenotypic abnormalities in human disease. Its structured vocabulary and hierarchical organization provide a computational framework for a wide range of applications, from clinical diagnostics and rare disease gene discovery to the elucidation of disease mechanisms and the advancement of drug development. This guide provides a technical overview of the HPO's core components, its applications, and the methodologies that underpin its use in research and therapeutic development.

Core Concepts of the Human Phenotype Ontology

The Human Phenotype Ontology is a comprehensive and structured vocabulary of terms that describe phenotypic abnormalities encountered in human diseases.[1][2] Each term in the HPO represents a specific phenotypic feature, such as "Atrial septal defect" or "Intellectual disability, severe". The ontology is organized as a Directed Acyclic Graph (DAG), where terms are interconnected by "is a" relationships. This hierarchical structure allows for varying levels of granularity in phenotype description, from general terms like "Abnormality of the cardiovascular system" to highly specific terms like "Ostium primum atrial septal defect". A key feature of the HPO is that a term can have multiple parent terms, enabling the representation of complex and multifaceted phenotypic features.[3]
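The "is a" structure described above can be sketched with a small child-to-parents mapping. This is a minimal illustration only: the two intermediate "toy:" nodes are invented so that one term has two parents, and the edges do not reproduce the real HPO hierarchy.

```python
# Toy "is a" edges (child -> parents). The "toy:" nodes are invented for
# illustration and do not reproduce the real HPO hierarchy.
PARENTS = {
    "Ostium primum atrial septal defect": ["Atrial septal defect"],
    "Atrial septal defect": ["toy: septal abnormality", "toy: atrial abnormality"],
    "toy: septal abnormality": ["Abnormality of the cardiovascular system"],
    "toy: atrial abnormality": ["Abnormality of the cardiovascular system"],
    "Abnormality of the cardiovascular system": [],
}


def ancestors(term, parents=PARENTS):
    """Return every term reachable from `term` by following "is a" edges upward."""
    found = set()
    stack = list(parents[term])
    while stack:
        current = stack.pop()
        if current not in found:
            found.add(current)
            stack.extend(parents[current])
    return found


specific = "Ostium primum atrial septal defect"
print(sorted(ancestors(specific)))
```

Because a term may have several parents, the traversal keeps a `found` set so that a shared ancestor is visited only once.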

The development of the HPO is an ongoing international effort, with terms and annotations continually added and refined based on medical literature, and data from resources such as Online Mendelian Inheritance in Man (OMIM), Orphanet, and DECIPHER.[2]

Quantitative Growth of the Human Phenotype Ontology

The HPO has experienced substantial growth in both the number of phenotypic terms and the breadth of disease annotations since its inception. This expansion reflects its increasing adoption and utility within the biomedical community. The following table summarizes the growth of the HPO over time, based on published data.

| Metric | January 2009 - August 2013 | January 2018 | September 2020 | 2024 |
|---|---|---|---|---|
| Number of Terms | ~10,088 | ~12,000 | 15,247 | >18,000 |
| Disease Annotations | - | >123,000 (rare diseases); >132,000 (common diseases) | >205,192 (total) | >156,000 (hereditary diseases) |

As of September 2020, the annotations within the HPO were sourced from several key databases, providing a comprehensive view of the phenotypic landscape of human disease.

| Data Source | Number of Annotations | Number of Diseases Annotated |
|---|---|---|
| OMIM | 108,580 | 7,801 |
| Orphanet | 96,612 | 3,956 |
| DECIPHER | 296 | 47 |

Logical Structure of HPO Terms: The Entity-Quality (EQ) Model

To enhance computational reasoning and interoperability with other ontologies, HPO terms are increasingly being given logical definitions based on the Entity-Quality (EQ) model. This model decomposes a phenotype into an affected anatomical or physiological "Entity" and an abnormal "Quality" that inheres in that entity. The Phenotype and Trait Ontology (PATO) is used to provide the standardized "Quality" terms.

For example, the HPO term "Microcephaly" (HP:0000252) can be logically defined as:

- Entity: Cephalon (from an anatomy ontology like UBERON)
- Quality: Decreased size (from PATO)

This decomposition allows for more precise computational comparisons and facilitates cross-species phenotype analysis by mapping entities and qualities between different organisms.

Experimental and Computational Protocols

The HPO is a cornerstone of several powerful computational methods for analyzing patient data and prioritizing candidate genes. Below are detailed methodologies for some of the key applications.

HPO-based Semantic Similarity Analysis

Semantic similarity measures quantify the degree of resemblance between two HPO terms or two sets of HPO terms based on their positions within the ontology's hierarchy and their information content. These measures are fundamental for comparing patient phenotypes to known disease phenotypes.

Methodology:

- Information Content (IC) Calculation: The IC of an HPO term t is a measure of its specificity and is calculated as the negative log of its frequency of annotation in a corpus of diseases: IC(t) = -log(p(t)), where p(t) is the probability of observing term t or any of its descendants in the annotation database. More specific terms have a higher IC.
- Pairwise Term Similarity: Several methods exist to calculate the similarity between two terms, t1 and t2.
  - Resnik Similarity: The similarity is the IC of the Most Informative Common Ancestor (MICA) of the two terms. sim_Resnik(t1, t2) = IC(MICA(t1, t2))
  - Lin Similarity: This method normalizes the Resnik similarity by the IC of the two terms. sim_Lin(t1, t2) = (2 * IC(MICA(t1, t2))) / (IC(t1) + IC(t2))
  - Jiang and Conrath Similarity: This measure is based on the distance between two terms, which is then converted to a similarity score. dist_JC(t1, t2) = IC(t1) + IC(t2) - 2 * IC(MICA(t1, t2)); sim_JC(t1, t2) = 1 - dist_JC(t1, t2)
- Groupwise Similarity: To compare a patient's set of HPO terms with a disease's set of HPO terms, pairwise similarities are aggregated. Common methods include taking the average or the maximum similarity between all pairs of terms.
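The measures above can be sketched on a toy ontology. Everything in this example is an assumption made for illustration: the term names (`root`, `A`, `A1`, ...), the four disease annotation sets, and the helper names. The IC is computed with the natural logarithm. Note that, with unnormalized IC values, sim_JC as defined above can be negative.

```python
import math

# Toy ontology (child -> parents) and toy disease annotations; all hypothetical.
PARENTS = {
    "root": [],
    "A": ["root"],
    "B": ["root"],
    "A1": ["A"],
    "A2": ["A"],
    "AB": ["A", "B"],  # a term with two parents
}
DISEASE_ANNOTATIONS = {
    "d1": {"A1"},
    "d2": {"A2", "B"},
    "d3": {"AB"},
    "d4": {"B"},
}


def ancestors_inclusive(term):
    """The term itself plus all of its ancestors."""
    seen, stack = set(), [term]
    while stack:
        current = stack.pop()
        if current not in seen:
            seen.add(current)
            stack.extend(PARENTS[current])
    return seen


def information_content():
    """IC(t) = -log(p(t)), written as log(n / count) to avoid a negative zero."""
    n = len(DISEASE_ANNOTATIONS)
    counts = {term: 0 for term in PARENTS}
    for terms in DISEASE_ANNOTATIONS.values():
        # A disease counts for each annotated term and for every ancestor.
        closure = set().union(*(ancestors_inclusive(t) for t in terms))
        for term in closure:
            counts[term] += 1
    return {t: math.log(n / c) for t, c in counts.items() if c > 0}


IC = information_content()


def mica_ic(t1, t2):
    """IC of the most informative common ancestor."""
    common = ancestors_inclusive(t1) & ancestors_inclusive(t2)
    return max(IC[t] for t in common)


def sim_resnik(t1, t2):
    return mica_ic(t1, t2)


def sim_lin(t1, t2):
    denominator = IC[t1] + IC[t2]
    return 2 * mica_ic(t1, t2) / denominator if denominator > 0 else 0.0


def sim_jc(t1, t2):
    return 1 - (IC[t1] + IC[t2] - 2 * mica_ic(t1, t2))


def groupwise(patient_terms, disease_terms, sim=sim_resnik, how="average"):
    """Aggregate all pairwise similarities by their average or their maximum."""
    scores = [sim(p, d) for p in patient_terms for d in disease_terms]
    return max(scores) if how == "max" else sum(scores) / len(scores)


print(sim_resnik("A1", "A2"), sim_lin("A1", "A2"), sim_jc("A1", "A2"))
print(groupwise({"A1"}, DISEASE_ANNOTATIONS["d2"]))
```

Published tools often use refinements such as a best-match average rather than a plain average over all pairs; the plain average and maximum are used here only because they are the aggregations named above.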

HPO Term Enrichment Analysis

Enrichment analysis is used to determine if a set of genes is significantly associated with particular HPO terms. This can help in understanding the functional consequences of genetic perturbations.

Methodology:

- Input Data: A list of genes of interest (e.g., differentially expressed genes from an RNA-seq experiment) and a background set of all genes considered in the experiment.
- Statistical Test: The hypergeometric test is commonly used to calculate the probability of observing the given overlap between the gene set of interest and the set of genes annotated to a specific HPO term by chance. The test is based on the following contingency table:

  | | In Gene Set of Interest | Not in Gene Set of Interest | Total |
  |---|---|---|---|
  | Annotated to HPO term | k | K - k | K |
  | Not annotated to HPO term | n - k | N - n - K + k | N - K |
  | Total | n | N - n | N |

- P-value Calculation: The p-value is the probability of observing k or more genes from the gene set of interest annotated to the HPO term.
- Correction for Multiple Testing: Since enrichment is tested for many HPO terms simultaneously, a correction for multiple hypothesis testing, such as the Benjamini-Hochberg False Discovery Rate (FDR) correction, is applied to the p-values.
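The test and the correction can be sketched with the standard library alone. The symbols follow the contingency table (N background genes, K annotated to the term, n genes of interest, k in the overlap); the function names and the example numbers are assumptions made for illustration.

```python
from math import comb


def hypergeom_pvalue(k, K, n, N):
    """P(X >= k): chance of at least k annotated genes among n drawn from N."""
    upper = min(K, n)
    favourable = sum(comb(K, i) * comb(N - K, n - i) for i in range(k, upper + 1))
    return favourable / comb(N, n)


def benjamini_hochberg(pvalues):
    """Benjamini-Hochberg adjusted p-values, returned in the input order."""
    m = len(pvalues)
    order = sorted(range(m), key=lambda i: pvalues[i])
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest p-value down so that adjusted values stay monotone.
    for rank in range(m, 0, -1):
        index = order[rank - 1]
        running_min = min(running_min, pvalues[index] * m / rank)
        adjusted[index] = running_min
    return adjusted


# Hypothetical numbers: 100 background genes, 10 annotated to the term,
# 20 genes of interest, 5 of which carry the annotation.
p = hypergeom_pvalue(k=5, K=10, n=20, N=100)
print(p)
print(benjamini_hochberg([0.01, 0.04, 0.03, 0.005]))
```

Exact integer arithmetic via `math.comb` keeps the sketch dependency-free; for genome-scale inputs a statistics library would normally be used instead.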

Phenotype-Driven Gene and Variant Prioritization

A major application of the HPO is in prioritizing candidate genes and variants from next-generation sequencing data. Tools like Exomiser and LIRICAL leverage HPO terms to rank genes based on their phenotypic relevance to a patient's clinical presentation.

Methodology (Conceptual Workflow):

- Patient Phenotyping: The patient's clinical features are systematically recorded as a set of HPO terms.
- Variant Calling: Whole-exome or whole-genome sequencing is performed, and a list of genetic variants is generated.
- Phenotypic Matching: The patient's HPO terms are compared to a knowledge base of gene-phenotype and disease-phenotype associations. A phenotype score is calculated for each gene based on the semantic similarity between the patient's phenotype and the phenotypes associated with that gene.
- Variant Scoring: Each variant is scored based on its predicted pathogenicity (e.g., using tools like SIFT, PolyPhen-2, CADD) and its population frequency (rarer variants are prioritized).
- Integrated Ranking: The phenotype score and the variant score are combined to produce a final ranked list of candidate genes and variants.
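The final ranking step can be illustrated with a deliberately simple combination rule. The weighted mean below is an assumption chosen for clarity; it is not the scoring model of Exomiser, LIRICAL, or any other tool, and the gene names and scores are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    gene: str
    phenotype_score: float  # 0..1, similarity of patient and gene phenotypes
    variant_score: float    # 0..1, pathogenicity and rarity of the best variant


def combined_score(candidate, phenotype_weight=0.5):
    """Weighted mean of the two scores (illustrative rule only)."""
    return (phenotype_weight * candidate.phenotype_score
            + (1 - phenotype_weight) * candidate.variant_score)


def rank_candidates(candidates, phenotype_weight=0.5):
    """Sort candidates from most to least promising."""
    return sorted(candidates,
                  key=lambda c: combined_score(c, phenotype_weight),
                  reverse=True)


candidates = [
    Candidate("GENE_A", phenotype_score=0.9, variant_score=0.6),
    Candidate("GENE_B", phenotype_score=0.2, variant_score=0.95),
    Candidate("GENE_C", phenotype_score=0.7, variant_score=0.9),
]
for c in rank_candidates(candidates):
    print(c.gene, round(combined_score(c), 3))
```

A gene with a damaging variant but a poor phenotype match (GENE_B) drops below genes that score reasonably on both axes, which is the intended effect of integrating the two scores.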

HPO in the Context of Signaling Pathways and Drug Development

The HPO provides a crucial link between molecular pathways and clinical outcomes. By annotating the phenotypic consequences of mutations in genes within a specific signaling pathway, researchers can gain insights into the pathway's role in human health and disease. This is particularly valuable for drug development, as it allows for the identification of potential therapeutic targets and the prediction of on- and off-target effects based on phenotypic data.

Example: The Hippo Signaling Pathway and HPO Terms

The Hippo signaling pathway is a key regulator of organ size and tissue homeostasis, and its dysregulation is implicated in various cancers. Mutations in genes within this pathway can lead to developmental disorders with distinct phenotypic features. For example, mutations in the YAP1 gene, a downstream effector of the Hippo pathway, are associated with a range of phenotypes that can be captured by HPO terms.

By systematically mapping HPO terms to genes within various signaling pathways, researchers can build comprehensive "phenotype pathways" that can inform drug discovery efforts. For example, if a drug candidate is known to modulate a particular pathway, the associated HPO terms can help predict potential adverse effects or identify patient populations that are most likely to respond to the therapy.

Conclusion

The Human Phenotype Ontology has become an indispensable tool for researchers, clinicians, and drug development professionals. Its standardized vocabulary, hierarchical structure, and rich set of annotations provide a robust framework for integrating phenotypic data into genomic and systems biology analyses. The computational methodologies enabled by the HPO, such as semantic similarity analysis, enrichment analysis, and phenotype-driven gene prioritization, are accelerating the pace of rare disease diagnosis and the discovery of novel therapeutic targets. As the HPO continues to evolve and expand, its role in bridging the gap between genotype and phenotype will undoubtedly become even more critical in the era of precision medicine.

Human Phenotype Ontology (HPO) for Beginners in Genetic Research: An In-depth Technical Guide

For Researchers, Scientists, and Drug Development Professionals

The Human Phenotype Ontology (HPO) has emerged as a critical tool in the field of genetics, providing a standardized vocabulary for describing phenotypic abnormalities in human disease. This guide offers a comprehensive overview of the HPO, its core concepts, and its practical applications in genetic research and drug development. We provide detailed methodologies for key experiments, summarize quantitative data for easy comparison, and present visual workflows and pathways to facilitate understanding.

Introduction to the Human Phenotype Ontology (HPO)

The Human Phenotype Ontology (HPO) is a structured vocabulary of terms used to describe phenotypic abnormalities observed in human diseases.[1][2][3] It provides a standardized and computable format for clinical information, which is essential for computational analysis and data sharing in genetics. The HPO is organized as a directed acyclic graph (DAG), where each term has defined relationships with other terms, allowing for sophisticated computational analyses of phenotypic similarity.[4]

The primary goal of the HPO is to provide a standardized vocabulary for clinical annotations that can be used to improve the diagnosis and understanding of rare genetic diseases.[1] By using a consistent set of terms, researchers and clinicians can more effectively compare patient phenotypes, identify novel disease-gene associations, and improve the diagnostic yield of genomic sequencing.

Core Applications of HPO in Genetic Research

The HPO has a wide range of applications in genetic research, from improving the diagnostic process to facilitating the discovery of new disease genes.

Deep Phenotyping for Enhanced Diagnostics

"Deep phenotyping" refers to the comprehensive and precise description of a patient's phenotypic abnormalities. The HPO is the de facto standard for deep phenotyping in the field of rare disease. A detailed and accurate phenotypic profile is crucial for a successful genetic diagnosis. Providing a complete summary of a patient's condition using HPO terms is critical when analyzing genomic data, as incorrect or incomplete information can lead to missed diagnoses.

Phenotype-Driven Variant Prioritization

Whole-exome and whole-genome sequencing typically identify thousands of genetic variants, making it challenging to pinpoint the single causative variant for a rare disease. Phenotype-driven variant prioritization tools leverage HPO terms to rank variants based on their relevance to the patient's clinical presentation. These tools compare the patient's HPO profile to known gene-phenotype associations to identify the most likely disease-causing variants. This approach has been shown to significantly improve the efficiency and accuracy of genetic diagnosis.

Gene-Phenotype Association and Discovery

The HPO is instrumental in identifying new associations between genes and phenotypes. By analyzing large cohorts of patients with similar HPO profiles, researchers can identify common genetic variants and discover novel disease genes. This has been particularly successful in large-scale research projects like the Undiagnosed Diseases Network (UDN).

Quantitative Impact of HPO on Genetic Diagnostics

The use of HPO has demonstrated a significant positive impact on the diagnostic yield of genetic testing. The following tables summarize key quantitative data from various studies.

| Study/Tool | Metric | Result |
|---|---|---|
| Exomiser | Diagnostic variant rank (with HPO) | Top candidate in 74% of cases, top 5 in 94% |
| Exomiser | Diagnostic variant rank (without HPO) | Top candidate in 3% of cases, top 5 in 27% |
| EVIDENCE automated system | Diagnostic Yield | 42.7% in 330 probands |
| CincyKidsSeq Study | Diagnostic Yield | Increased with the number of HPO terms used |
| GADO | Diagnostic Yield Improvement | Identified likely causative genes in 10 out of 61 previously undiagnosed cases |

Table 1: Impact of HPO on Diagnostic Variant Prioritization and Yield. This table illustrates the significant improvement in ranking the correct disease-causing variant when HPO terms are utilized in the analysis. It also shows the overall diagnostic yield achieved in different studies using HPO-driven approaches.

| Tool | Top-1 Accuracy | Top-5 Accuracy | Top-10 Accuracy |
|---|---|---|---|
| LIRICAL | 48.2% | 72.8% | 80.7% |
| AMELIE | 46.9% | 69.5% | 77.1% |
| Phen2Gene | - | - | 85% (in DDD dataset) |
| Exomiser | 42.3% | 69.2% | 78.4% |

Table 2: Benchmarking of HPO-based Gene Prioritization Tools. This table compares the performance of several widely used tools that leverage HPO terms to prioritize candidate genes. The accuracy is measured by the percentage of cases where the correct causal gene was ranked within the top 1, 5, or 10 candidates.
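The top-k accuracy used in such benchmarks can be computed as sketched here. The list of ranks is invented for illustration and is unrelated to the figures in the table; `None` marks a case in which the causal gene was not ranked at all.

```python
def top_k_accuracy(causal_gene_ranks, k):
    """Percentage of cases whose causal gene is ranked within the top k."""
    hits = sum(1 for rank in causal_gene_ranks if rank is not None and rank <= k)
    return 100.0 * hits / len(causal_gene_ranks)


# Hypothetical 1-based ranks of the causal gene in ten solved cases.
ranks = [1, 3, 7, None, 1, 12, 2, 5, 1, 20]
for k in (1, 5, 10):
    print(f"top-{k}: {top_k_accuracy(ranks, k):.1f}%")
```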

Experimental Protocols

This section provides detailed methodologies for key experimental and computational workflows involving the HPO.

Protocol for Patient Phenotyping using HPO

Accurate and comprehensive phenotyping is the cornerstone of successful HPO-driven analysis.

Objective: To create a standardized, computable phenotypic profile for a patient using HPO terms.

Materials:

- Patient's clinical notes, medical records, and imaging reports.
- Access to the HPO website or a compatible software tool (e.g., PhenoTips).

Procedure:

- Review Clinical Documentation: Thoroughly review all available clinical information for the patient.
- Identify Phenotypic Abnormalities: Extract all terms describing the patient's physical and developmental abnormalities.
- Map to HPO Terms: Use the HPO browser or an integrated tool to find the most specific HPO term for each identified abnormality. It is recommended to use at least 5-7 major phenotypic terms if possible.
- Select the Most Specific Term: The HPO is hierarchical. Always choose the most specific term that accurately describes the phenotype. For example, instead of "Abnormality of the hand," use "Arachnodactyly" if the patient has abnormally long and slender fingers.
- Record HPO IDs: For each selected term, record the unique HPO identifier (e.g., HP:0001166 for Arachnodactyly).
- Use Negated Terms (Optional but Recommended): If a significant feature for a suspected differential diagnosis is absent, you can use a negated HPO term to indicate this. This can help to refine the computational analysis.
- Create a Phenopacket (Optional but Recommended): A Phenopacket is a standardized file format for sharing phenotypic information along with other relevant clinical and genomic data.
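The recording steps above can be sketched as a small script that validates identifiers and writes a Phenopacket-style JSON document. The field names (`subject`, `phenotypicFeatures`, `type`, `excluded`) are intended to follow the GA4GH Phenopacket Schema and should be checked against the schema itself; required metadata is omitted, so the output is a sketch rather than a schema-valid Phenopacket. The packet and patient identifiers are hypothetical.

```python
import json
import re

# HPO identifiers have the form "HP:" followed by seven digits.
HPO_ID_PATTERN = re.compile(r"^HP:\d{7}$")


def phenotypic_feature(hpo_id, label, excluded=False):
    """One observed (or explicitly absent) phenotypic feature."""
    if not HPO_ID_PATTERN.match(hpo_id):
        raise ValueError(f"Not a well-formed HPO identifier: {hpo_id!r}")
    feature = {"type": {"id": hpo_id, "label": label}}
    if excluded:
        feature["excluded"] = True  # negated term: the feature was looked for and is absent
    return feature


def build_phenopacket(packet_id, subject_id, features):
    """Assemble a minimal Phenopacket-style dictionary (metadata omitted)."""
    return {
        "id": packet_id,
        "subject": {"id": subject_id},
        "phenotypicFeatures": list(features),
    }


packet = build_phenopacket(
    "example-packet-1",
    "patient-1",
    [
        phenotypic_feature("HP:0001166", "Arachnodactyly"),
        phenotypic_feature("HP:0000252", "Microcephaly", excluded=True),
    ],
)
print(json.dumps(packet, indent=2))
```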

Protocol for HPO-Driven Variant Prioritization

This protocol outlines the steps for using HPO terms to prioritize variants from a VCF file.

Objective: To identify and rank potential disease-causing variants from whole-exome or whole-genome sequencing data.

Materials:

- Patient's VCF file.
- Patient's HPO profile (list of HPO IDs).
- A variant prioritization tool that accepts HPO terms (e.g., Exomiser, LIRICAL, AMELIE).
- A computer with sufficient processing power and memory.

Procedure:

- Install and Configure the Tool: Follow the documentation to install your chosen variant prioritization tool. This may involve downloading reference data and configuring paths.
- Prepare Input Files:
  - Ensure the VCF file is properly formatted and indexed.
  - Create a file containing the patient's HPO terms, one ID per line.
  - If analyzing a family, prepare a PED file describing the relationships between family members.
- Run the Analysis: Execute the tool from the command line, providing the VCF file, HPO file, and any other required parameters. Command-line options differ between tools and versions, so refer to the specific tool's documentation for the exact invocation.
- Review the Results: The tool will generate a ranked list of candidate genes and variants. The output is typically in a human-readable format (e.g., HTML) and a machine-readable format (e.g., JSON or TSV).
- Interpret the Top Candidates: Manually review the top-ranked variants. This involves:
  - Checking the variant's predicted pathogenicity using tools like SIFT and PolyPhen.
  - Reviewing the gene's function and its association with the patient's phenotype in databases like OMIM and GeneReviews.
  - Examining the variant's inheritance pattern in the context of the family history.
- Functional Validation (if necessary): For novel or uncertain variants, further experimental validation (e.g., Sanger sequencing, functional assays) may be required to confirm pathogenicity.

Visualization of Workflows and Pathways

The following diagrams, created using the DOT language, illustrate key workflows and concepts related to HPO in genetic research.

Figure 1: A high-level overview of the HPO-driven genetic research workflow.

Figure 2: A detailed workflow for phenotype-driven variant prioritization.

HPO in the Context of Signaling Pathways

Defects in cellular signaling pathways are often the underlying cause of genetic disorders. HPO terms can be used to describe the specific constellation of phenotypes associated with the dysregulation of a particular pathway.

Example: The mTOR Pathway and mTORopathies

The mTOR (mechanistic target of rapamycin) pathway is a crucial signaling cascade that regulates cell growth, proliferation, and survival. Germline mutations in genes encoding components of the mTOR pathway lead to a group of disorders collectively known as "mTORopathies." These disorders are often characterized by neurological manifestations such as epilepsy, intellectual disability, and cortical malformations.

Figure 3: Simplified mTOR signaling pathway and associated HPO terms in mTORopathies.

HPO in Drug Discovery and Development

The standardized phenotypic data provided by the HPO is increasingly being leveraged in the pharmaceutical industry to accelerate drug discovery and development, particularly for rare diseases.

Target Identification and Validation

By linking specific phenotypes (HPO terms) to genes, researchers can identify novel potential drug targets. If a particular HPO term is consistently associated with mutations in a specific gene across a large patient cohort, that gene and its protein product become attractive targets for therapeutic intervention.

Patient Stratification for Clinical Trials

HPO profiles can be used to stratify patients for inclusion in clinical trials. This ensures that the trial population is more homogeneous in terms of their clinical presentation, which can increase the statistical power to detect a treatment effect.

Drug Repurposing

By identifying similarities in the HPO profiles of different diseases, researchers can identify opportunities for drug repurposing. If a drug is effective for a disease with a particular set of HPO terms, it may also be effective for another disease that shares a similar phenotypic profile.
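Profile overlap of this kind can be illustrated with a plain Jaccard index over sets of phenotype labels. This is a simplification made for the example: it ignores the ontology hierarchy, which semantic similarity measures take into account, and the disease names and profiles are hypothetical.

```python
def jaccard(profile_a, profile_b):
    """Shared terms divided by all terms across both profiles."""
    a, b = set(profile_a), set(profile_b)
    union = a | b
    return len(a & b) / len(union) if union else 0.0


# Hypothetical disease profiles built from phenotype labels.
PROFILES = {
    "disease_with_approved_drug": {"Epilepsy", "Intellectual disability", "Cortical malformation"},
    "candidate_disease_1": {"Epilepsy", "Intellectual disability", "Microcephaly"},
    "candidate_disease_2": {"Arachnodactyly", "Atrial septal defect"},
}


def repurposing_candidates(reference, profiles=PROFILES):
    """Rank the other diseases by profile overlap with the reference disease."""
    scores = {
        name: jaccard(profiles[reference], terms)
        for name, terms in profiles.items()
        if name != reference
    }
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


print(repurposing_candidates("disease_with_approved_drug"))
```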

Figure 4: A workflow illustrating the use of HPO in drug discovery and development.

Conclusion

The Human Phenotype Ontology has revolutionized the way we capture, analyze, and utilize clinical information in genetic research. For beginners, understanding the core principles of HPO and its applications is essential for conducting effective and impactful research. By providing a standardized language for describing phenotypes, the HPO facilitates everything from improved diagnostic accuracy to the discovery of novel therapeutic strategies. As genomic medicine continues to advance, the importance and utility of the HPO will only continue to grow, making it an indispensable tool for researchers, scientists, and drug development professionals.

An In-depth Technical Guide to the Human Phenotype Ontology (HPO) for Researchers and Drug Development Professionals

The Human Phenotype Ontology (HPO) has emerged as a critical global standard for the comprehensive and computable representation of phenotypic abnormalities in human disease.[1][2][3] Its application spans from rare disease diagnostics and clinical research to cohort analysis and the advancement of precision medicine, making a thorough understanding of its core tenets essential for researchers, scientists, and drug development professionals.[2][4] This guide provides an in-depth exploration of HPO terms and definitions, methodologies for its application, and its intersection with relevant biological pathways.

Core Concepts of the Human Phenotype Ontology

The HPO is a structured vocabulary that provides a standardized set of terms to describe phenotypic abnormalities. Each term is assigned a unique identifier (e.g., HP:0001166 for "Arachnodactyly") and is organized in a hierarchical, directed acyclic graph. This structure allows for computational reasoning, where relationships between terms are defined, such as 'is a' relationships (e.g., "Arachnodactyly" is a type of "Abnormality of the long bones of the limbs"). This enables powerful computational analyses of phenotypic data.

The ontology is continuously expanding through community-driven efforts and collaborations with clinical experts to refine and add new terms, ensuring its relevance and accuracy. As of recent updates, the HPO contains over 18,000 terms and more than 156,000 annotations to hereditary diseases.

Data Presentation: HPO by the Numbers

The utility of the HPO can be quantified through its extensive annotation of human diseases. The following tables summarize key quantitative data related to the HPO, providing a snapshot of its scale and application.

| Metric | Value |
|---|---|
| Total HPO Terms | > 18,000 |
| Total Annotations to Hereditary Diseases | > 156,000 |
| Diseases with HPO Annotations (OMIM) | 7,801 |
| Total Annotations from OMIM | 108,580 |
| Mean Annotations per Disease (OMIM) | 13.9 |
| Diseases with HPO Annotations (Orphanet) | 3,956 |
| Total Annotations from Orphanet | 96,612 |
| Mean Annotations per Disease (Orphanet) | 24.4 |
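The two mean values follow directly from the totals in the table, as this short check shows (the variable names are chosen for the example):

```python
# (total annotations, annotated diseases) as listed in the table.
SOURCES = {
    "OMIM": (108_580, 7_801),
    "Orphanet": (96_612, 3_956),
}

means = {
    name: round(annotations / diseases, 1)
    for name, (annotations, diseases) in SOURCES.items()
}
print(means)
```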

Experimental Protocols: Methodologies for HPO-based Analysis

While the HPO is a computational resource and does not involve wet-lab experimental protocols in the traditional sense, its application in research and clinical settings follows a structured workflow. This "experimental protocol" outlines the key steps for utilizing the HPO for deep phenotyping and computational analysis.

Objective: To standardize and computationally analyze patient phenotypic data to aid in differential diagnosis, gene discovery, and cohort characterization.

Materials:

- Patient clinical data (e.g., electronic health records, clinical notes, physical examination findings).
- Access to the Human Phenotype Ontology database.
- Software tools for HPO-based analysis (e.g., Phenomizer, Exomiser).

Procedure:

- Phenotypic Data Collection (Deep Phenotyping):
  - Thoroughly document all observed phenotypic abnormalities in the patient. This includes both major and minor anomalies, as well as any relevant clinical modifiers such as age of onset, severity, and progression. The goal is to create a comprehensive "deep phenotype" profile.
- HPO Term Mapping:
  - For each documented phenotypic abnormality, identify the most specific and accurate HPO term.
  - Utilize the HPO browser or integrated search functions within clinical software to find the appropriate terms. These tools often support searching by clinical description, synonyms, and term IDs.
- Creation of a Patient-Specific HPO Profile:
  - Compile a list of all selected HPO terms for the individual patient. This list constitutes the patient's computable phenotypic profile.
- Computational Analysis:
  - Input the patient's HPO profile into a suitable analysis tool. These tools employ various algorithms to compare the patient's profile against a database of diseases annotated with HPO terms.
  - Differential Diagnosis: Tools like Phenomizer use semantic similarity algorithms to rank diseases based on the similarity of their phenotypic profiles to the patient's profile.
  - Gene Prioritization: When combined with genomic data (e.g., from whole-exome or whole-genome sequencing), tools like Exomiser can prioritize candidate genes by integrating phenotypic data with variant data.
- Interpretation of Results:
  - Analyze the ranked list of diseases or genes provided by the computational tool.
  - The output should be interpreted in the context of the patient's full clinical picture and, if applicable, genomic findings. The HPO-driven analysis serves as a powerful decision-support tool for clinicians and researchers.

Visualization: Signaling Pathways and Logical Relationships

To illustrate the biological context in which phenotypic abnormalities arise and the logical structure of analytical workflows, the following diagrams are provided.

Phenotype-Driven Diagnostics: A Technical Guide for Advancing Research and Drug Development

Authored for Researchers, Scientists, and Drug Development Professionals

Introduction

Phenotype-driven approaches are experiencing a resurgence in diagnostics and drug discovery, offering a powerful, unbiased strategy to unearth novel therapeutics and diagnostic markers. In contrast to target-based methods that begin with a known molecular target, phenotypic screening starts with the desired biological outcome—the phenotype—and works backward to identify causative genes or effective compounds.[1][2] This approach is particularly advantageous for complex diseases where the underlying molecular pathology is not fully understood. By focusing on the whole biological system, such as cells or model organisms, phenotype-driven discovery can identify compounds acting on novel targets or through unexpected mechanisms of action.[2][3] This guide provides an in-depth technical overview of the core concepts, experimental protocols, and computational methodologies that underpin modern phenotype-driven diagnostics.

Core Methodologies in Phenotypic Screening

Phenotypic screening relies on a variety of sophisticated experimental platforms to identify and characterize compounds or genetic perturbations that induce a desired change in a biological system. High-content screening (HCS) and the use of model organisms are two of the most prominent techniques.

High-Content Screening (HCS)

High-content screening combines automated microscopy with sophisticated image analysis to extract quantitative data from cells.[4] This technique allows for the simultaneous measurement of multiple cellular parameters, providing a detailed "fingerprint" of a cell's response to a given stimulus.

Experimental Protocol: High-Content Screening for Cellular Morphology

This protocol provides a generalized workflow for an image-based phenotypic screen to identify compounds that alter cellular morphology.

-

Cell Preparation:

-

Seed cells of interest (e.g., a cancer cell line, primary neurons) into 96- or 384-well microplates at a predetermined density to ensure a sub-confluent monolayer during imaging.

  - Allow cells to adhere and grow for 24 hours in a controlled incubator environment (37°C, 5% CO2).

- Compound Treatment:
  - Prepare a library of small molecules at desired concentrations.
  - Use an automated liquid handler to dispense the compounds into the wells containing the cells. Include appropriate controls (e.g., vehicle-only, positive control known to alter morphology).
  - Incubate the cells with the compounds for a predetermined period (e.g., 24-48 hours) to allow for phenotypic changes to occur.

- Cell Staining:
  - Fix the cells using a solution such as 4% paraformaldehyde.
  - Permeabilize the cells with a detergent like 0.1% Triton X-100 to allow for intracellular staining.
  - Stain specific subcellular compartments using fluorescent dyes. A common combination for morphological profiling includes:
    - Hoechst 33342: To stain the nucleus.
    - Phalloidin conjugated to a fluorophore (e.g., Alexa Fluor 488): To stain F-actin filaments and visualize the cytoskeleton.
    - MitoTracker Red CMXRos: To stain mitochondria.

- Image Acquisition:
  - Use a high-content imaging system (automated microscope) to capture images from each well.
  - Acquire images in multiple fluorescence channels corresponding to the different stains used.
  - Typically, multiple fields of view are captured per well to ensure robust statistical analysis.

- Image Analysis:
  - Utilize image analysis software to segment the images and identify individual cells and their subcellular compartments (nuclei, cytoplasm).
  - Extract a wide range of quantitative features for each cell, such as:
    - Morphological features: Cell area, perimeter, shape factor.
    - Intensity features: Mean and standard deviation of fluorescence intensity in each channel.
    - Texture features: Measures of the spatial arrangement of pixel intensities.

- Data Analysis and Hit Identification:
  - Normalize the extracted feature data to account for plate-to-plate variability.
  - Use statistical methods or machine learning algorithms to identify compounds that induce a significant deviation in the cellular phenotype compared to negative controls.
  - "Hits" can be prioritized based on the magnitude and specificity of the phenotypic change.
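The normalization and hit-identification steps can be sketched as follows. This is a minimal illustration, assuming a single feature has already been aggregated per well; the function names, the choice of a robust z-score (median and MAD of the negative controls), and the threshold of 3 are assumptions for illustration, not part of the protocol above.

```python
from statistics import median

def robust_z_scores(values, control_values):
    """Scale values by the median and MAD of the plate's negative controls."""
    center = median(control_values)
    mad = median(abs(v - center) for v in control_values)
    scale = 1.4826 * mad  # makes the MAD comparable to a standard deviation
    if scale == 0:
        raise ValueError("control wells have zero spread; cannot normalize")
    return [(v - center) / scale for v in values]

def call_hits(plate, threshold=3.0):
    """Return wells whose normalized feature deviates beyond the threshold.

    `plate` maps well ID -> (role, feature_value), where role is
    "control" (vehicle-only) or "compound". Both are hypothetical labels.
    """
    controls = [value for role, value in plate.values() if role == "control"]
    compounds = {well: value for well, (role, value) in plate.items()
                 if role == "compound"}
    wells = list(compounds)
    scores = robust_z_scores([compounds[w] for w in wells], controls)
    return {w: round(z, 2) for w, z in zip(wells, scores) if abs(z) >= threshold}

# Toy plate: five vehicle wells and three compound wells (e.g., mean cell area).
plate = {
    "A1": ("control", 100.0), "A2": ("control", 102.0),
    "A3": ("control", 98.0), "A4": ("control", 101.0),
    "A5": ("control", 99.0),
    "B1": ("compound", 100.5), "B2": ("compound", 120.0),
    "B3": ("compound", 97.0),
}
hits = call_hits(plate)
print(hits)
```

Normalizing each plate against its own controls is one simple way to reduce plate-to-plate variability; real profiling pipelines typically apply this per feature across hundreds of features before any multivariate analysis.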

Phenotypic Screening in Model Organisms: The Zebrafish Example

Zebrafish (Danio rerio) are a powerful in vivo model for phenotypic screening due to their rapid external development, optical transparency, and genetic tractability.

Experimental Protocol: Zebrafish-Based Small Molecule Screen for Angiogenesis Inhibitors

This protocol outlines a method to screen for compounds that disrupt blood vessel development in zebrafish embryos.

- Embryo Collection and Staging:
  - Set up breeding tanks with adult zebrafish.
  - Collect freshly fertilized embryos and incubate them in embryo medium at 28.5°C.
  - Use a transgenic line that expresses a fluorescent protein in the vasculature (e.g., Tg(fli1:EGFP)) to facilitate visualization of blood vessels.

- Compound Administration:
  - At 24 hours post-fertilization (hpf), array individual embryos into 96-well plates containing embryo medium.
  - Add compounds from a chemical library to each well at a final concentration typically in the micromolar range. Include vehicle controls (e.g., DMSO).

- Phenotypic Assessment:
  - Incubate the embryos for an additional 24-48 hours.
  - At 48-72 hpf, examine the embryos under a fluorescence microscope to assess the development of the intersegmental vessels (ISVs).
  - Score phenotypes based on predefined criteria, such as:
    - Complete absence of ISVs.
    - Truncated or misguided ISV growth.
    - Defects in the dorsal aorta or posterior cardinal vein.

- Hit Validation and Secondary Screens:
  - Re-test initial "hits" in a dose-response manner to confirm their activity and determine potency.
  - Perform secondary assays to rule out general toxicity, such as assessing heart rate and overall morphology.
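For the dose-response re-test, potency is often summarized as the concentration giving a half-maximal effect. The sketch below estimates that value by linear interpolation on a log10 concentration scale. It assumes a quantitative readout (here a hypothetical "ISV length as percent of vehicle control") that decreases with dose; the function name and the example numbers are illustrative, and a fitted sigmoidal model would normally be preferred when enough data points are available.

```python
import math

def estimate_ic50(concentrations_um, responses_pct):
    """Interpolate the concentration giving a 50% response on a log10 scale.

    Assumes responses (percent of vehicle control) fall as concentration rises.
    Returns None if the curve never crosses 50%.
    """
    points = sorted(zip(concentrations_um, responses_pct))
    for (c_lo, r_lo), (c_hi, r_hi) in zip(points, points[1:]):
        if r_lo >= 50.0 >= r_hi and r_lo != r_hi:
            # Fraction of the way from the lower to the higher dose.
            fraction = (r_lo - 50.0) / (r_lo - r_hi)
            log_ic50 = math.log10(c_lo) + fraction * (
                math.log10(c_hi) - math.log10(c_lo)
            )
            return 10 ** log_ic50
    return None

# Hypothetical dose series (micromolar) and readout for one compound.
doses = [0.1, 1.0, 10.0, 100.0]
isv_length_pct = [98.0, 80.0, 20.0, 5.0]
ic50 = estimate_ic50(doses, isv_length_pct)
print(ic50)
```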

Data Presentation and Quantitative Analysis

A key advantage of phenotype-driven approaches is the generation of rich, quantitative data. Presenting this data in a structured format is crucial for interpretation and comparison.

Table 1: Comparison of Drug Discovery Approaches

| Metric | Phenotypic Discovery | Target-Based Discovery |
|---|---|---|
| First-in-Class Small Molecules (1999-2008) | 28 | 17 |
| Follower Drugs (1999-2008) | 30 | Not specified, but target-based was more successful |
| Primary Advantage | Unbiased discovery of novel mechanisms. | Rational, hypothesis-driven design. |
| Primary Challenge | Target deconvolution can be time-consuming. | Potential for flawed hypotheses about the target's role in disease. |

Table 2: Performance of Phenotype-Driven Variant Prioritization Tools

| Tool | Top-Ranked Diagnostic Success Rate (Real Patient Data) | Key Features |
|---|---|---|
| LIRICAL | ~67% | Integrates clinical phenotype, inheritance pattern, and variant data. |
| Exomiser | Can increase diagnostic yield from ~3% (variant only) to 74% (with phenotype data). | Combines variant data with patient phenotypes and cross-species phenotype data. |
| FATHMM | One of the top five performers in a comparative study. | Predicts the functional consequences of protein missense mutations. |
| M-CAP | One of the top five performers in a comparative study. | A pathogenicity classifier for missense variants. |
| MetaLR | One of the top five performers in a comparative study. | An ensemble method for predicting the pathogenicity of missense variants. |

Visualization of Pathways and Workflows

Visualizing complex biological pathways and experimental workflows is essential for understanding the relationships between different components. The following diagrams were generated using the Graphviz DOT language.

Signaling Pathway Diagrams

Experimental and Logical Workflow Diagrams

Conclusion

Phenotype-driven diagnostics represents a powerful paradigm in modern biomedical research and drug development. By embracing the complexity of biological systems, these approaches offer a vital, unbiased complement to target-based methods. The integration of high-content imaging, model organism screening, and advanced computational analysis of multi-omics data is poised to accelerate the discovery of novel therapeutic strategies and diagnostic tools. As these technologies continue to evolve, they will undoubtedly play a crucial role in unraveling the intricate connections between genotype and phenotype, ultimately leading to more effective and personalized medicine.

References

1. Phenotypic vs. target-based drug discovery for first-in-class medicines - PubMed [pubmed.ncbi.nlm.nih.gov]
2. Achieving Modern Success in Phenotypic Drug Discovery [pfizer.com]
3. Drug Discovery: Target Based Versus Phenotypic Screening | Scientist.com [app.scientist.com]
4. High-content screening - Wikipedia [en.wikipedia.org]

The Human Phenotype Ontology: A Technical Guide to its History, Development, and Core Principles

A Whitepaper for Researchers, Scientists, and Drug Development Professionals

The Human Phenotype Ontology (HPO) has emerged as a critical global standard for the comprehensive and computational analysis of phenotypic abnormalities in human disease. Its structured vocabulary provides a crucial link between clinical observations and genomic data, powering advancements in rare disease diagnosis, gene discovery, and the development of targeted therapeutics. This in-depth technical guide explores the history, development, and core architectural principles of the HPO, offering researchers, clinicians, and drug development professionals a thorough understanding of this invaluable resource.

A History of Standardizing the Human Phenome

The HPO was launched in 2008 to address a significant challenge in clinical genetics: the lack of a standardized, computable vocabulary to describe human phenotypic abnormalities.[1] Prior to the HPO, clinical descriptions of patient phenotypes were often recorded in free text, making it difficult to perform large-scale computational analysis or to compare phenotypic profiles across different patients and diseases. The initial development was motivated by the need to create a resource that could be used for clinical diagnostics, mapping phenotypes to model organisms, and as a standard vocabulary for clinical databases.[2]

Developed at the Charité – Universitätsmedizin Berlin and later as a key component of the Monarch Initiative, the HPO was created by leveraging data from the Online Mendelian Inheritance in Man (OMIM) database and the medical literature.[2][3] The ontology has grown significantly since its inception, a testament to the contributions of clinical experts and researchers from a wide range of disciplines.[1] Today, the HPO is a worldwide standard for phenotype exchange and is utilized by numerous international rare disease organizations, registries, and clinical labs, facilitating global data exchange to uncover the etiologies of diseases.

Core Architectural Principles

The HPO is more than a simple vocabulary; it is a formal ontology with a robust logical structure that enables computational inference and sophisticated data analysis. Its core principles are designed to ensure consistency, interoperability, and computational tractability.

2.1. Hierarchical Structure: The Directed Acyclic Graph (DAG)

The HPO is structured as a directed acyclic graph (DAG), where each term represents a specific phenotypic abnormality. The relationships between terms are primarily "is a" (subclass) relationships, meaning that a child term is a more specific instance of its parent term. For example, Arachnodactyly (abnormally long and slender fingers and toes) "is a" type of Abnormality of the digits. This hierarchical structure allows for varying levels of granularity in phenotype description, from broad categories to highly specific features.

A key feature of the DAG structure is that a term can have multiple parent terms, allowing for the representation of complex biological relationships from different perspectives. For instance, Atrial septal defect is a child of both Abnormality of the cardiac septa and Abnormality of the cardiac atria.
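To make the multiple-parent property concrete, the sketch below stores a toy fragment of the hierarchy as a child-to-parents mapping and collects every ancestor of a term. The two parents of Atrial septal defect follow the example above; the intermediate links up to the cardiovascular root are simplified assumptions, and term labels stand in for real HPO identifiers.

```python
# Toy child -> parents mapping. Only the two parents of "Atrial septal defect"
# come from the text; the remaining edges are simplified for illustration.
PARENTS = {
    "Atrial septal defect": [
        "Abnormality of the cardiac septa",
        "Abnormality of the cardiac atria",
    ],
    "Abnormality of the cardiac septa": ["Abnormality of the heart"],
    "Abnormality of the cardiac atria": ["Abnormality of the heart"],
    "Abnormality of the heart": ["Abnormality of the cardiovascular system"],
    "Abnormality of the cardiovascular system": [],
}

def ancestors(term, parents=PARENTS):
    """Return every term reachable by following is-a edges upward."""
    seen = set()
    stack = list(parents.get(term, []))
    while stack:
        current = stack.pop()
        if current not in seen:
            seen.add(current)
            stack.extend(parents.get(current, []))
    return seen

asd_ancestors = ancestors("Atrial septal defect")
print(sorted(asd_ancestors))
```

Because "is a" is transitive, a patient annotated with Atrial septal defect is implicitly annotated with every term in `asd_ancestors`, which is what lets searches on a general term find patients described with a specific one.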

2.2. Interoperability with Other Ontologies

A fundamental strength of the HPO is its interoperability with other biomedical ontologies. Logical definitions for HPO terms are created using concepts from species-neutral ontologies such as the Gene Ontology (GO) for biological processes, Uberon for anatomy, and the Cell Ontology. This cross-ontology integration allows for more sophisticated computational analyses, including the ability to compare human phenotypes with those of model organisms, such as mice and zebrafish, which is crucial for translational research and identifying candidate genes for rare diseases.

Quantitative Growth of the Human Phenotype Ontology

The HPO has undergone substantial growth since its inception, continuously expanding its coverage of human phenotypic abnormalities. This growth is a direct result of ongoing curation efforts and contributions from the global research and clinical communities.

| Year | Number of Terms | Number of Annotations to Hereditary Diseases | Key Milestones and Publications |
|---|---|---|---|
| 2008 | > 8,000 | Annotations for all clinical entries in OMIM | Initial launch of the HPO. |
| 2014 | ~10,000 | > 100,000 | Publication detailing the HPO project and its applications. |
| 2015 | > 11,000 | > 116,000 | Expansion to include common diseases through text mining. |
| 2018 | > 13,000 | > 156,000 | Increased adoption by major international rare disease initiatives. |
| 2020 | 15,247 | > 108,000 (OMIM) + > 96,000 (Orphanet) | Major extensions for neurology, nephrology, immunology, and other areas. |
| 2024 | > 18,000 | > 156,000 | Internationalization efforts with translations into multiple languages. |

Experimental Protocols

The development and application of the HPO are guided by rigorous and standardized methodologies. The following sections detail the protocols for HPO term curation and the application of HPO in phenotype-driven analysis.

4.1. Protocol for HPO Term Curation and Addition

The addition of new terms and the refinement of existing ones is a continuous, community-driven process. The HPO team has established a systematic workflow to ensure the quality and consistency of the ontology.

Step 1: Term Proposal

- Researchers or clinicians identify the need for a new term or a modification to an existing one.
- A new term request is submitted through the HPO GitHub issue tracker. The request includes a proposed term label, a definition, synonyms, and references to relevant publications.

Step 2: Initial Curation and Review

- The HPO curation team reviews the proposal for clarity, necessity, and adherence to HPO's structural principles.
- A machine learning-based model may be used to initially extract phenotypic features from publications and map them to potential HPO terms.

Step 3: Expert Evaluation

- The proposed term and its definition are subjected to a two-tier expert evaluation process.
- Domain experts review the term for clinical and scientific accuracy.

Step 4: Integration and Quality Control

- Once a consensus is reached (often requiring at least 80% agreement among experts), the new term is integrated into the ontology.
- The HPO has a sophisticated quality control pipeline that utilizes custom software and tools like ROBOT ('ROBOT is an OBO Tool') to check for logical consistency and adherence to ontology standards.

Step 5: Release

- New terms and updates are included in the regular releases of the HPO.
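One structural property that the quality-control step in Step 4 has to preserve is that the "is a" hierarchy stays acyclic after a term is added. The sketch below shows a generic acyclicity check using Kahn's algorithm. It is not a description of how ROBOT or the HPO pipeline implements its checks; the function name and the toy graphs are assumptions for illustration.

```python
from collections import deque

def is_acyclic(parents):
    """Check that a child -> parents mapping contains no cycles (Kahn's algorithm)."""
    nodes = set(parents)
    for parent_list in parents.values():
        nodes.update(parent_list)

    # Count of not-yet-processed parents for each term.
    remaining = {n: len(set(parents.get(n, []))) for n in nodes}
    children = {n: [] for n in nodes}
    for child, parent_list in parents.items():
        for parent in set(parent_list):
            children[parent].append(child)

    # Start from terms with no parents (roots) and peel the graph downward.
    queue = deque(n for n, count in remaining.items() if count == 0)
    processed = 0
    while queue:
        node = queue.popleft()
        processed += 1
        for child in children[node]:
            remaining[child] -= 1
            if remaining[child] == 0:
                queue.append(child)

    # Any term left unprocessed sits on a cycle or below one.
    return processed == len(nodes)

valid = {"Arachnodactyly": ["Abnormality of the digits"],
         "Abnormality of the digits": []}
broken = {"Term A": ["Term B"], "Term B": ["Term A"]}  # hypothetical bad edit
print(is_acyclic(valid), is_acyclic(broken))
```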

4.2. Protocol for Phenotype-Driven Analysis using HPO

The HPO is a cornerstone of phenotype-driven genomic analysis, a powerful approach for identifying disease-causing variants. The following protocol outlines the general steps for using HPO in conjunction with tools like Exomiser for variant prioritization.

Step 1: Deep Phenotyping of the Patient

- A clinician performs a thorough clinical examination of the patient to identify all phenotypic abnormalities.
- Using the HPO browser or integrated clinical software, the clinician selects the most specific HPO terms that accurately describe the patient's phenotype. It is crucial to capture a comprehensive set of abnormalities.

Step 2: Input Data Preparation

- The patient's genomic data, typically in a Variant Call Format (VCF) file from whole-exome or whole-genome sequencing, is prepared.
- A list of the selected HPO terms for the patient is compiled.

Step 3: Variant Prioritization with Exomiser

- The VCF file and the list of HPO terms are provided as input to the Exomiser tool.
- Filtering: Exomiser first filters the variants based on criteria such as allele frequency in population databases, predicted pathogenicity, and mode of inheritance.
- Phenotype-based Scoring: The tool then calculates a "phenotype score" for each remaining gene. This score reflects the semantic similarity between the patient's HPO terms and the known phenotype annotations for that gene in various databases (including human diseases and model organisms).
- Combined Scoring: A final "Exomiser score" is calculated by combining the variant's pathogenicity score with the gene's phenotype score.

Step 4: Candidate Gene Ranking and Interpretation

- Exomiser generates a ranked list of candidate genes and variants based on the combined score.
- The top-ranked candidates are then reviewed by clinicians and researchers to determine the most likely disease-causing variant.
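The combined scoring and ranking in Steps 3 and 4 can be illustrated with a small sketch. The guide does not specify how Exomiser combines the two scores, so a plain average is used here purely as a stand-in; the class name, gene names, and score values are all hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Candidate:
    gene: str
    variant_score: float    # 0..1, assumed to reflect pathogenicity and rarity
    phenotype_score: float  # 0..1, assumed similarity of patient HPO terms to the gene

    @property
    def combined_score(self) -> float:
        # Stand-in combination: simple mean of the two scores (an assumption).
        return (self.variant_score + self.phenotype_score) / 2

def rank(candidates):
    """Order candidates from most to least promising by combined score."""
    return sorted(candidates, key=lambda c: c.combined_score, reverse=True)

candidates = [
    Candidate("GENE_A", variant_score=0.95, phenotype_score=0.20),
    Candidate("GENE_B", variant_score=0.80, phenotype_score=0.90),
    Candidate("GENE_C", variant_score=0.40, phenotype_score=0.85),
]
ranked = [c.gene for c in rank(candidates)]
print(ranked)
```

The point of the example is that GENE_A, which has the most damaging-looking variant, drops below genes whose known phenotypes match the patient; this is the behavior that phenotype data adds on top of variant-only analysis.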

Applications in Research and Drug Development

The HPO has become an indispensable tool across the spectrum of biomedical research and drug development.

- Accelerating Rare Disease Diagnosis: By providing a standardized language for phenotypes, the HPO enables computational tools to compare a patient's clinical presentation to a vast database of known genetic disorders, significantly shortening the diagnostic odyssey for many individuals with rare diseases.
- Novel Disease Gene Discovery: Phenotype-driven analysis using the HPO can help identify novel gene-disease relationships by prioritizing candidate genes that have phenotypic profiles consistent with a patient's clinical features, even if the gene has not been previously associated with a human disease.
- Improving Clinical Trial Design: The HPO can be used to define more precise patient cohorts for clinical trials by ensuring that participants share a common and well-defined set of phenotypic characteristics. This can lead to more statistically powerful studies and a higher likelihood of success.
- Facilitating Drug Repurposing: By identifying phenotypic similarities between different diseases, the HPO can suggest potential new applications for existing drugs. If two diseases share overlapping phenotypic features, a drug effective for one may also be beneficial for the other.
- Enhancing Pharmacogenomics: The HPO can be used to correlate specific phenotypic abnormalities with responses to drug treatments, helping to identify genetic variants that influence drug efficacy and adverse events.

Conclusion and Future Directions

The Human Phenotype Ontology has fundamentally transformed the way we describe, analyze, and compute over human phenotypic data. Its continued development, driven by a collaborative global community, will further enhance its utility in both clinical and research settings. Future directions for the HPO include expanding its coverage to more clinical domains, improving its integration with electronic health records, and developing more sophisticated algorithms for phenotype-driven analysis. As we move further into the era of precision medicine, the HPO will undoubtedly remain a cornerstone of efforts to unravel the complexities of human disease and to develop more effective, personalized therapies.

References

The Human Phenotype Ontology: A Technical Guide to Describing Clinical Abnormalities for Researchers and Drug Development Professionals

An in-depth exploration of the Human Phenotype Ontology (HPO) reveals its pivotal role in standardizing the description of clinical abnormalities, thereby enhancing genomic diagnostics, translational research, and drug development. This guide provides a comprehensive overview of the HPO's core principles, detailed methodologies for its application, and a summary of its quantitative impact.

The Human Phenotype Ontology (HPO) has emerged as a global standard for the comprehensive and computable description of phenotypic abnormalities in human disease.[1][2][3] Its hierarchical structure and standardized vocabulary enable precise and consistent documentation of clinical features, moving beyond ambiguous clinical narratives to a structured format amenable to computational analysis.[4][5] This facilitates a deeper understanding of the genotype-phenotype relationship, which is crucial for advancing rare disease diagnostics, stratifying patient cohorts for clinical trials, and identifying novel therapeutic targets.

Core Concepts of the Human Phenotype Ontology

The HPO is a directed acyclic graph (DAG) where each node represents a specific phenotypic abnormality. Terms are arranged hierarchically, with more general terms (e.g., "Abnormality of the cardiovascular system") branching into more specific terms (e.g., "Atrial septal defect"). This structure allows for computational reasoning and semantic similarity calculations, enabling algorithms to identify relationships between different phenotypes and diseases.
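One widely used family of semantic similarity measures weights each term by its information content (IC), the negative log of the fraction of diseases annotated with the term or any of its descendants, and scores two terms by the IC of their most informative common ancestor (Resnik similarity). The sketch below is a minimal illustration on a toy hierarchy; the disease names and annotation sets are invented, and the tools discussed in this guide may use different measures.

```python
import math

# Toy is-a hierarchy (child -> parents); simplified for illustration.
PARENTS = {
    "Phenotypic abnormality": [],
    "Abnormality of the cardiovascular system": ["Phenotypic abnormality"],
    "Abnormality of the nervous system": ["Phenotypic abnormality"],
    "Atrial septal defect": ["Abnormality of the cardiovascular system"],
    "Ventricular septal defect": ["Abnormality of the cardiovascular system"],
    "Seizure": ["Abnormality of the nervous system"],
}

# Hypothetical disease annotations.
DISEASE_ANNOTATIONS = {
    "disease_1": {"Atrial septal defect"},
    "disease_2": {"Ventricular septal defect"},
    "disease_3": {"Seizure"},
    "disease_4": {"Atrial septal defect", "Seizure"},
}

def ancestors_and_self(term):
    result = {term}
    for parent in PARENTS[term]:
        result |= ancestors_and_self(parent)
    return result

def information_content(term):
    """-log of the fraction of diseases annotated with the term or a descendant.

    Assumes the term has at least one annotated disease, as in this toy data.
    """
    annotated = sum(
        1 for terms in DISEASE_ANNOTATIONS.values()
        if any(term in ancestors_and_self(t) for t in terms)
    )
    return -math.log(annotated / len(DISEASE_ANNOTATIONS))

def resnik(term_a, term_b):
    """IC of the most informative common ancestor of two terms."""
    common = ancestors_and_self(term_a) & ancestors_and_self(term_b)
    return max(information_content(t) for t in common)

same_system = resnik("Atrial septal defect", "Ventricular septal defect")
different_system = resnik("Atrial septal defect", "Seizure")
print(same_system, different_system)
```

Two septal defects share a cardiovascular ancestor that only some diseases carry, so they score above zero; a septal defect and a seizure share only the root, which every disease carries, so they score zero.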

The HPO currently contains over 18,000 terms and is continually expanding through community curation and literature review. These terms are used to create detailed phenotypic profiles for patients, which can then be compared to a vast knowledge base of over 156,000 annotations to hereditary diseases.

Applications in Clinical Research and Drug Development

The primary application of HPO is in "deep phenotyping," the precise and comprehensive analysis of an individual's phenotypic abnormalities. This detailed phenotypic description is a critical component in modern genomics, significantly improving the diagnostic yield of whole-exome and whole-genome sequencing. By integrating HPO-based phenotype data with genomic data, researchers and clinicians can more effectively prioritize candidate genes and identify disease-causing variants.

In the realm of drug development, HPO facilitates the identification and stratification of patient cohorts for clinical trials. By using a standardized vocabulary, researchers can define inclusion and exclusion criteria with greater precision, leading to more homogenous study populations. Furthermore, HPO can be used to identify novel drug indications by finding phenotypic overlaps between different diseases.

Quantitative Overview of the Human Phenotype Ontology

The growth and application of the HPO can be quantified in several ways, highlighting its increasing adoption and impact on the field.

| Metric | Value |
|---|---|
| Total Terms | > 18,000 |
| Disease Annotations | > 156,000 |
| Annotated Hereditary Syndromes | 7,278 |
| Common Disease Annotations | 132,006 (for 3,145 diseases) |

Distribution of HPO Annotations by Organ System

The distribution of HPO annotations across different organ systems reflects the prevalence of well-described phenotypic abnormalities in various medical specialties.

| Top-Level HPO Category | Percentage of Annotations |
|---|---|
| Abnormality of the nervous system | 30.36% |
| Neoplasm | 22.50% |
| Abnormality of the integument | 16.60% |
| Abnormality of the skeletal system | 15.62% |

Source: Adapted from Groza et al. (2015)

Impact on Diagnostic Yield

The integration of HPO-based deep phenotyping into diagnostic workflows has demonstrated a significant improvement in the diagnostic yield of genetic testing.

| Study | Diagnostic Yield with HPO | Improvement over Variant-Only Analysis |
|---|---|---|
| A study on a cohort of 330 patients | 42.7% | Not explicitly stated, but similarity scores were crucial for diagnosis. |
| Another automated variant interpretation system study | 51% improvement in diagnostic yield | The system facilitated the diagnosis of various genetic diseases. |

Experimental Protocols and Methodologies

The application of HPO in research and clinical settings follows structured protocols to ensure consistency and comparability of data.

Protocol for Deep Phenotyping using HPO

This protocol outlines the steps for creating a standardized phenotypic profile for a patient.

- Clinical Examination and Data Collection: A thorough clinical examination is performed, and all observed clinical abnormalities are documented. This includes physical examination findings, imaging results, and laboratory data.
- HPO Term Selection: The documented clinical abnormalities are translated into the most specific HPO terms available. Tools such as the HPO Browser can be used to search for and select appropriate terms. It is crucial to select the most precise term that accurately describes the finding.
- Inclusion of Negative Findings: To increase the specificity of the phenotypic profile, it is important to also document relevant normal findings by negating HPO terms.
- Data Entry and Storage: The selected HPO terms, along with patient identifiers, are entered into a database or electronic health record that supports HPO encoding.
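A phenotypic profile produced by this protocol, including negated terms, could be represented along the following lines. This is a minimal sketch; the class and field names are assumptions for illustration and do not correspond to a specific exchange standard, and the patient identifier and findings are invented.

```python
from dataclasses import dataclass, field
from typing import List

@dataclass(frozen=True)
class PhenotypicFeature:
    hpo_id: str
    label: str
    excluded: bool = False  # True when the finding was looked for and found absent

@dataclass
class PatientProfile:
    patient_id: str
    features: List[PhenotypicFeature] = field(default_factory=list)

    def observed_ids(self):
        return [f.hpo_id for f in self.features if not f.excluded]

    def excluded_ids(self):
        return [f.hpo_id for f in self.features if f.excluded]

# Hypothetical patient: two observed abnormalities and one negated finding.
profile = PatientProfile(
    patient_id="patient-001",
    features=[
        PhenotypicFeature("HP:0001631", "Atrial septal defect"),
        PhenotypicFeature("HP:0001166", "Arachnodactyly"),
        PhenotypicFeature("HP:0001250", "Seizure", excluded=True),
    ],
)
print(profile.observed_ids(), profile.excluded_ids())
```

Keeping the negation as a flag on the feature, rather than simply omitting the term, preserves the difference between "not present" and "not assessed", which matters when ruling out candidate diseases.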

Deep Phenotyping Workflow

HPO-based Patient Cohort Analysis Workflow

This workflow describes the process of analyzing a cohort of patients who have been phenotypically characterized using HPO.

- Cohort Definition: Define the patient cohort based on the research question (e.g., patients with a specific disease, patients with a particular set of phenotypes).
- Phenotypic Data Aggregation: Collect the HPO-based phenotypic profiles for all individuals in the cohort.
- Phenotypic Similarity Analysis: Use semantic similarity algorithms to calculate the phenotypic similarity between patients within the cohort. This can be used to identify subgroups of patients with similar clinical presentations.
- Enrichment Analysis: Perform HPO term enrichment analysis to identify phenotypic features that are overrepresented in the cohort or in specific subgroups compared to a background population.
- Genotype-Phenotype Correlation: If genomic data is available, correlate the identified phenotypic subgroups with specific genetic variants or genes to uncover novel genotype-phenotype associations.
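The enrichment step can be illustrated with a one-sided hypergeometric test, which asks how likely it is to see at least the observed number of patients with a given term if the cohort were a random draw from the background population. This is a minimal sketch with invented counts; the function name is hypothetical, and a real analysis would test many terms and correct for multiple comparisons.

```python
from math import comb

def hypergeom_upper_tail(k, n, K, N):
    """P(X >= k) when drawing n patients from N, of whom K carry the term.

    k: patients in the cohort annotated with the term
    n: cohort size
    K: patients in the background annotated with the term
    N: background size (cohort included)
    """
    upper = min(n, K)
    favourable = sum(comb(K, i) * comb(N - K, n - i) for i in range(k, upper + 1))
    return favourable / comb(N, n)

# Hypothetical counts: 5 of 10 cohort patients carry a term that is
# present in 10 of 100 background patients.
p_value = hypergeom_upper_tail(k=5, n=10, K=10, N=100)
print(p_value)
```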

Patient Cohort Analysis Workflow

HPO-driven Variant Prioritization Workflow (e.g., using Exomiser)

This workflow illustrates how HPO terms are used in variant prioritization tools like Exomiser to identify disease-causing genes.

- Input Data: Provide the patient's genomic data (in VCF format) and their HPO-based phenotypic profile.
- Variant Annotation and Filtering: The tool annotates the variants with information such as gene function, population frequency, and predicted pathogenicity. It then filters out common and low-impact variants.
- Phenotype-based Gene Scoring: The tool calculates a "phenotype score" for each gene harboring a candidate variant. This score reflects the semantic similarity between the patient's HPO terms and the known phenotypes associated with that gene in human and model organism databases.
- Combined Scoring and Ranking: The phenotype score is combined with a "variant score" (based on pathogenicity predictions and rarity) to generate a final ranked list of candidate genes.
- Review and Interpretation: The top-ranked genes and variants are then reviewed by a clinician or researcher to determine the final diagnosis.
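The filtering step of this workflow, removing common and low-impact variants, can be sketched as follows. The consequence categories, the 0.1% frequency cutoff, and the class and function names are assumptions for illustration and are not taken from Exomiser's configuration.

```python
from dataclasses import dataclass

# Illustrative set of consequences treated as potentially high impact.
HIGH_IMPACT = {"missense", "stop_gained", "frameshift", "splice_site"}

@dataclass
class Variant:
    gene: str
    consequence: str
    population_frequency: float  # highest allele frequency across reference populations

def filter_variants(variants, max_frequency=0.001):
    """Keep rare variants with a potentially damaging consequence."""
    return [
        v for v in variants
        if v.population_frequency <= max_frequency and v.consequence in HIGH_IMPACT
    ]

variants = [
    Variant("GENE_A", "missense", 0.00001),    # rare and damaging: kept
    Variant("GENE_B", "synonymous", 0.00001),  # rare but low impact: removed
    Variant("GENE_C", "stop_gained", 0.05),    # damaging but common: removed
]
kept = [v.gene for v in filter_variants(variants)]
print(kept)
```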

References

1. Diagnostic yield and clinical utility of whole exome sequencing using an automated variant prioritization system, EVIDENCE - PMC [pmc.ncbi.nlm.nih.gov]
2. researchgate.net [researchgate.net]
3. Introduction — HPO Workshop 1 documentation [hpoworkshop.readthedocs.io]
4. Encoding Clinical Data with the Human Phenotype Ontology for Computational Differential Diagnostics - PMC [pmc.ncbi.nlm.nih.gov]
5. Human Phenotype Ontology (HPO) — Knowledge Hub [genomicseducation.hee.nhs.uk]

The Human Phenotype Ontology: A Technical Guide for Researchers and Drug Development Professionals

An in-depth exploration of the core concepts, applications, and methodologies of the Human Phenotype Ontology (HPO), a critical tool in modern genomics and precision medicine.

The Human Phenotype Ontology (HPO) has emerged as a cornerstone of contemporary genetics and translational research, providing a standardized vocabulary for describing the clinical abnormalities associated with human diseases. This guide offers a comprehensive overview of the HPO's fundamental principles, its practical applications in research and drug development, and detailed methodologies for its use. It is intended for researchers, scientists, and professionals in the pharmaceutical industry who are leveraging phenotypic data to advance our understanding of disease and develop novel therapeutics.

Core Concepts of the Human Phenotype Ontology

The Human Phenotype Ontology is a structured vocabulary of terms that represent phenotypic abnormalities encountered in human disease. Each term is a specific, computer-readable concept, such as "Atrial septal defect."[1] The HPO's power lies in its hierarchical organization, which is structured as a directed acyclic graph (DAG). This structure allows for the representation of relationships between phenotypic terms, where more general terms (e.g., "Abnormality of the cardiovascular system") are parents to more specific terms (e.g., "Abnormality of the heart"). This hierarchical nature enables computational reasoning and the ability to perform "fuzzy" searches, which are crucial for comparing the phenotypic profiles of patients and diseases whose symptoms may not have an exact one-to-one correspondence.

The HPO is continuously expanding through curation from medical literature, as well as integration with other major clinical and genetic resources like OMIM, Orphanet, and DECIPHER.[1] This ongoing development ensures that the HPO remains a comprehensive and up-to-date resource for the scientific and medical communities.

A key concept in the application of the HPO is the use of logical definitions based on the Entity-Quality (EQ) model. This approach provides a computer-interpretable definition for HPO terms by linking them to anatomical entities and the qualities that describe their abnormality. For example, the term "Arachnodactyly" (abnormally long and slender fingers) can be decomposed into the entity "finger" and the quality "long and slender." This structured approach enhances computational analysis and facilitates cross-species phenotype comparisons.
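The Entity-Quality decomposition can be pictured as a small data structure. The sketch below uses plain strings and exact matching to show why a shared entity and quality make phenotypes from different species comparable. Real logical definitions reference ontology identifiers and are compared by a reasoner, and the model-organism phenotype names here are hypothetical.

```python
from dataclasses import dataclass
from typing import Tuple

@dataclass(frozen=True)
class EQDefinition:
    entity: str                 # the affected anatomical structure
    qualities: Tuple[str, ...]  # how that structure is abnormal

# Decomposition of the example from the text.
HUMAN_TERMS = {
    "Arachnodactyly": EQDefinition("finger", ("long", "slender")),
}

# Hypothetical model-organism phenotypes expressed in the same terms.
MODEL_ORGANISM_TERMS = {
    "hypothetical long slender digit phenotype": EQDefinition("finger", ("long", "slender")),
    "hypothetical short digit phenotype": EQDefinition("finger", ("short",)),
}

def matching_terms(definition, candidates):
    """Return candidate terms with the same entity and the same set of qualities."""
    return [
        name for name, other in candidates.items()
        if other.entity == definition.entity
        and set(other.qualities) == set(definition.qualities)
    ]

matches = matching_terms(HUMAN_TERMS["Arachnodactyly"], MODEL_ORGANISM_TERMS)
print(matches)
```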

Quantitative Data and Metrics

The Human Phenotype Ontology is a large and growing resource. The table below summarizes some key quantitative metrics of the HPO, providing a snapshot of its scale and complexity.

| Metric | Value |
|---|---|
| Number of Terms | > 18,000 |
| Annotations to Diseases | > 156,000 |
| Annotated Hereditary Diseases | > 7,000 |
| Annotated Genes | > 4,000 |

Logical Structure and Relationships

The logical foundation of the HPO is its directed acyclic graph (DAG) structure, where terms are interconnected by "is a" relationships. This means that a more specific term is a subtype of its parent term. This hierarchical organization is fundamental to many of the computational analyses performed using the HPO.

Figure 1: A simplified representation of the HPO's hierarchical structure.

Experimental Protocols and Methodologies

The HPO is integral to a variety of experimental and computational workflows in modern genetics. Below are detailed methodologies for some of the key applications.

Phenotype-Driven Variant Prioritization with Exomiser

Exomiser is a powerful tool that integrates variant data from whole-exome or whole-genome sequencing with a patient's phenotypic profile (encoded as HPO terms) to prioritize candidate disease-causing variants.

Methodology:

- Input Files:
  - VCF file: A standard Variant Call Format file containing the patient's genetic variants. For family-based analysis (e.g., a trio), a multi-sample VCF is used.
  - PED file: A pedigree file describing the relationships between individuals in a multi-sample VCF. The format is: family_id individual_id paternal_id maternal_id sex phenotype.
  - Analysis Configuration File (analysis.yml): A YAML file specifying the patient's HPO terms, inheritance patterns to consider, and various filtering and prioritization parameters.

- Exomiser Command-Line Execution (Trio Analysis Example):

- Example analysis.yml for Autosomal Dominant Analysis:

Figure 2: High-level workflow for phenotype-driven variant prioritization using Exomiser.
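The six-column PED layout listed under Input Files can be read with a few lines of code. The sketch below is a minimal parser, assuming whitespace-separated fields and the conventional coding in which 0 means an unknown parent, sex is 1 (male) or 2 (female), and phenotype is 1 (unaffected) or 2 (affected). The family and sample names are invented, and the helper names are hypothetical.

```python
from typing import NamedTuple

class PedRecord(NamedTuple):
    family_id: str
    individual_id: str
    paternal_id: str
    maternal_id: str
    sex: str
    phenotype: str

def parse_ped(text):
    """Parse whitespace-separated PED lines, skipping blanks and comments."""
    records = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        fields = line.split()
        if len(fields) != 6:
            raise ValueError(f"expected 6 columns, got {len(fields)}: {line!r}")
        records.append(PedRecord(*fields))
    return records

# Hypothetical trio: affected child with two unaffected parents.
trio_ped = """\
FAM1 child father mother 1 2
FAM1 father 0 0 1 1
FAM1 mother 0 0 2 1
"""
records = parse_ped(trio_ped)
affected = [r.individual_id for r in records if r.phenotype == "2"]
print(affected)
```

The individual IDs in the PED file need to match the sample names in the multi-sample VCF so that each genotype column can be tied to the right family member.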

Patient Cohort Analysis using HPO

Identifying patient cohorts with similar phenotypic profiles is crucial for understanding disease heterogeneity, identifying novel disease subtypes, and for patient stratification in clinical trials.

Methodology using R:

This protocol outlines a conceptual workflow for performing cohort analysis in R.

- Data Preparation: Load patient data into a data frame. This should include a unique patient identifier and a list of HPO terms for each patient.
- Semantic Similarity Calculation: Utilize an R package such as ontologySimilarity to calculate a pairwise similarity matrix for all patients based on their HPO profiles.
- Clustering: Apply a clustering algorithm (e.g., hierarchical clustering) to the similarity matrix to group patients with similar phenotypes.
- Visualization: Visualize the results using heatmaps and dendrograms to interpret the patient clusters.
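The same workflow can be sketched in a language-neutral way. The Python example below is not the R workflow described above and does not use ontologySimilarity: it substitutes a simple Jaccard index over ancestor-expanded term sets for a semantic similarity measure, and a single-linkage threshold grouping for hierarchical clustering. The hierarchy, patient IDs, and threshold are all assumptions for illustration.

```python
from itertools import combinations

# Toy is-a hierarchy (child -> parents); simplified for illustration.
PARENTS = {
    "Phenotypic abnormality": [],
    "Abnormality of the cardiovascular system": ["Phenotypic abnormality"],
    "Abnormality of the nervous system": ["Phenotypic abnormality"],
    "Atrial septal defect": ["Abnormality of the cardiovascular system"],
    "Ventricular septal defect": ["Abnormality of the cardiovascular system"],
    "Seizure": ["Abnormality of the nervous system"],
}

def with_ancestors(terms):
    """Expand a set of terms with all of their ancestors."""
    closed, stack = set(), list(terms)
    while stack:
        term = stack.pop()
        if term not in closed:
            closed.add(term)
            stack.extend(PARENTS.get(term, []))
    return closed

def jaccard(a, b):
    return len(a & b) / len(a | b)

def group_patients(profiles, threshold=0.5):
    """Merge patients whose expanded profiles reach the similarity threshold."""
    expanded = {pid: with_ancestors(terms) for pid, terms in profiles.items()}
    group_of = {pid: {pid} for pid in profiles}
    for a, b in combinations(profiles, 2):
        similar = jaccard(expanded[a], expanded[b]) >= threshold
        if similar and group_of[a] is not group_of[b]:
            merged = group_of[a] | group_of[b]
            for pid in merged:
                group_of[pid] = merged
    unique = {id(group): group for group in group_of.values()}
    return sorted(sorted(group) for group in unique.values())

profiles = {
    "P1": {"Atrial septal defect"},
    "P2": {"Ventricular septal defect"},
    "P3": {"Seizure"},
}
groups = group_patients(profiles)
print(groups)
```

Expanding each profile with its ancestors is what lets P1 and P2 be grouped even though they share no term directly: both carry a cardiovascular abnormality once the hierarchy is taken into account.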

Figure 3: Workflow for identifying patient cohorts based on HPO term similarity.

Signaling Pathways and HPO

Mutations in genes that are components of critical signaling pathways often lead to developmental disorders with characteristic phenotypic profiles. The HPO can be used to describe these phenotypes, providing a bridge between molecular mechanisms and clinical manifestations.

Example: TGF-β Signaling Pathway in Loeys-Dietz and Marfan Syndromes

Mutations in genes of the Transforming Growth Factor-beta (TGF-β) signaling pathway, such as TGFBR1, TGFBR2, and FBN1 (which influences TGF-β activity), cause connective tissue disorders like Loeys-Dietz syndrome (LDS) and Marfan syndrome (MFS).[2][3]

Figure 4: Dysregulation of the TGF-β signaling pathway and associated HPO phenotypes.

Applications in Drug Development

The HPO is increasingly being recognized as a valuable tool in the drug development pipeline, from target identification to clinical trial design.

- Target Identification and Validation: By linking specific phenotypes to genes and pathways, the HPO can help researchers identify and validate novel drug targets. A deep understanding of the phenotypic consequences of a gene's dysfunction can provide strong evidence for its role in disease and its potential as a therapeutic target.
- Drug Repurposing: HPO-based phenotypic similarity analysis can be used to identify new indications for existing drugs. If a drug is effective for a disease with a particular phenotypic profile, it may also be effective for other diseases that share similar HPO-defined clinical features.
- Patient Stratification for Clinical Trials: The HPO enables a more precise definition of patient populations for clinical trials. By using HPO terms to define inclusion and exclusion criteria, researchers can select a more homogeneous group of patients who are more likely to respond to a specific therapy. This can lead to smaller, more efficient, and more successful clinical trials.

Conclusion

The Human Phenotype Ontology provides a powerful framework for the standardized description and computational analysis of human phenotypic abnormalities. Its hierarchical structure and comprehensive content make it an indispensable tool for researchers, clinicians, and drug development professionals. By enabling a more precise and computable representation of clinical data, the HPO is accelerating the pace of gene discovery, improving diagnostic accuracy, and paving the way for the development of targeted therapies. As the fields of genomics and personalized medicine continue to evolve, the importance of the HPO in translating genomic information into clinical action will only continue to grow.

References

- 1. Human Phenotype Ontology [hpo.jax.org]

- 2. TGFBR1 and TGFBR2 mutations in patients with features of Marfan syndrome and Loeys-Dietz syndrome - PubMed [pubmed.ncbi.nlm.nih.gov]

- 3. Frontiers | Genotype-phenotype correlations of marfan syndrome and related fibrillinopathies: Phenomenon and molecular relevance [frontiersin.org]

The Human Phenotype Ontology (HPO) in Rare Disease Research: An In-depth Technical Guide

Audience: Researchers, scientists, and drug development professionals.

Introduction

The diagnosis and study of rare diseases present a significant challenge due to their vast number, clinical heterogeneity, and the often-complex relationship between genotype and phenotype. The Human Phenotype Ontology (HPO) has emerged as a critical tool to address these challenges by providing a standardized, controlled vocabulary for describing phenotypic abnormalities encountered in human disease.[1][2] This structured approach allows for the computational analysis of patient phenotypes, facilitating improved diagnostic accuracy, gene discovery, and the development of targeted therapies. This technical guide provides an in-depth overview of the application of HPO in rare disease research, including quantitative data on its impact, detailed experimental protocols, and visualizations of key workflows.

The Core of HPO: A Standardized Language for Phenotypes

The HPO is a hierarchically organized ontology where each term represents a specific phenotypic abnormality.[1][2] This structure allows for the computation of semantic similarity between patients and diseases, forming the basis for many phenotype-driven analysis tools.[3] The ontology is continuously updated and expanded, incorporating information from sources like OMIM, Orphanet, and DECIPHER.

Quantitative Impact of HPO on Rare Disease Diagnostics

The integration of HPO into diagnostic workflows has demonstrably improved the ability to identify causative genes in rare diseases. Several benchmarking studies have evaluated the performance of HPO-driven gene and variant prioritization tools.

Performance of Phenotype-Driven Gene Prioritization Tools

The following table summarizes the performance of various tools that utilize HPO terms to rank candidate genes. The metrics reported include the percentage of cases where the correct causative gene was ranked within the top 1, 3, 5, or 10, and the Mean Reciprocal Rank (MRR), which assesses the overall ranking performance.

| Tool/Study | Top 1 (%) | Top 3 (%) | Top 5 (%) | Top 10 (%) | MRR | Cohort/Notes |
|---|---|---|---|---|---|---|
| Exomiser (100,000 Genomes Project) | 82.6 | 91.3 | 92.4 | 93.6 | - | 4,877 diagnosed cases. Demonstrates high efficacy in a large-scale clinical setting. |
| LIRICAL & AMELIE (DDD & KMCGD cohorts) | - | - | - | - | - | Benchmarked on 305 DDD cases and 209 in-house cases. Tools using HPO and VCF files outperformed those with phenotype data alone. |
| PhenIX (Simulated & Real Data) | 71.4 | - | - | 85 | - | Evaluated on simulated data and 20 Japanese patients. |
| Exomiser (Retinal Disorders) | 74 | - | 94 | - | - | 134 cases with known causal variants in retinal disorders. |
| Phen-Gen (Simulated Exomes) | 88 | - | - | - | - | Benchmarked using simulated exomes based on 1000 Genomes Project data. |
| GADO (OMIM Benchmark) | - | - | 49% in top 5% | - | - | Retrospectively ranked 3,382 OMIM disease genes based on their HPO annotations. |
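
To make the metrics in the table concrete, the following minimal sketch computes Top-k percentages and the Mean Reciprocal Rank from a list of ranks. The ranks are invented for illustration and do not come from any of the studies above; the function names are likewise hypothetical. A rank of `None` is assumed to mean that the causal gene did not appear in the tool's output, in which case it contributes zero to the MRR.

```python
from typing import List, Optional, Sequence


def top_k_rate(ranks: Sequence[Optional[int]], k: int) -> float:
    """Percentage of cases whose causal gene is ranked at position k or better."""
    if not ranks:
        raise ValueError("at least one case is required")
    hits = sum(1 for r in ranks if r is not None and r <= k)
    return 100.0 * hits / len(ranks)


def mean_reciprocal_rank(ranks: Sequence[Optional[int]]) -> float:
    """Average of 1/rank over all cases; unranked cases contribute 0."""
    if not ranks:
        raise ValueError("at least one case is required")
    return sum(1.0 / r for r in ranks if r is not None) / len(ranks)


# Invented ranks of the causal gene in eight benchmark cases.
example_ranks: List[Optional[int]] = [1, 1, 2, 4, None, 10, 1, 3]

for k in (1, 3, 5, 10):
    print(f"Top {k}: {top_k_rate(example_ranks, k):.1f}%")
print(f"MRR: {mean_reciprocal_rank(example_ranks):.3f}")
```

Top-k rates describe how often a clinician would find the answer within the first k candidates, whereas the MRR summarizes the whole ranking in a single number that rewards placing the causal gene near the top.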

Impact of HPO Term Number on Diagnostic Yield

The completeness of phenotypic information, as captured by the number of HPO terms, can influence diagnostic success. While more detailed phenotyping is generally beneficial, some studies suggest a point of diminishing returns: using more than five HPO terms per patient has been shown to improve the performance of some prioritization tools only slightly. Nevertheless, the probability of reaching a diagnosis generally increases with the number of HPO terms provided.

Experimental Protocols

This section provides detailed methodologies for key experiments and workflows that leverage the HPO in rare disease research.

Protocol 1: Deep Phenotyping of Patients with Rare Diseases using HPO

Objective: To accurately and comprehensively capture a patient's phenotypic abnormalities using the standardized HPO vocabulary.

Materials:

- Patient's clinical records (e.g., clinical notes, imaging reports, laboratory results).

- Access to an HPO browser or tool (e.g., Phenotips, SAMS, HPO website).

Procedure:

- Review Clinical Data: Thoroughly review all available clinical information for the patient. This includes the presenting symptoms, physical examination findings, developmental history, family history, and results from any diagnostic investigations.

- Extract Phenotypic Terms: Identify and list all abnormal clinical features. Use the patient's specific terminology as a starting point.

- Map to HPO Terms: For each identified phenotypic feature, search for the most specific and accurate HPO term using an HPO browser or tool.

  - Utilize the search function with synonyms and alternative descriptions.

  - Browse the ontology's hierarchical structure to find the most appropriate level of specificity. For example, instead of a general term like "Arrhythmia," a more specific term like "Bradycardia" should be used if applicable.

- Record HPO IDs: For each selected HPO term, record its unique identifier (e.g., HP:0001662 for Bradycardia).

- Indicate Presence or Absence: For each HPO term, indicate whether the phenotype is present or absent in the patient. Documenting absent but relevant phenotypes can be crucial for differential diagnosis.

- Review and Refine: Review the complete list of HPO terms to ensure it accurately reflects the patient's overall clinical presentation. This list of HPO terms constitutes the patient's "phenotypic profile."
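
One possible way to store the resulting phenotypic profile is sketched below. The class and method names (`PhenotypeObservation`, `PhenotypicProfile`, `present_ids`, `absent_ids`) are assumptions made for this example and do not correspond to any particular tool's data model. The only HPO identifier taken from the text is HP:0001662 (Bradycardia); the second entry is included purely to illustrate the recording of an absent phenotype.

```python
import re
from dataclasses import dataclass, field
from typing import List

# HPO identifiers have the form "HP:" followed by seven digits.
HPO_ID_PATTERN = re.compile(r"HP:\d{7}")


@dataclass(frozen=True)
class PhenotypeObservation:
    """One HPO term together with its presence/absence status."""
    hpo_id: str
    label: str
    present: bool = True

    def __post_init__(self) -> None:
        if not HPO_ID_PATTERN.fullmatch(self.hpo_id):
            raise ValueError(f"not a well-formed HPO ID: {self.hpo_id!r}")


@dataclass
class PhenotypicProfile:
    """A patient's list of observed and explicitly excluded phenotypes."""
    patient_id: str
    observations: List[PhenotypeObservation] = field(default_factory=list)

    def add(self, hpo_id: str, label: str, present: bool = True) -> None:
        self.observations.append(PhenotypeObservation(hpo_id, label, present))

    def present_ids(self) -> List[str]:
        return [o.hpo_id for o in self.observations if o.present]

    def absent_ids(self) -> List[str]:
        return [o.hpo_id for o in self.observations if not o.present]


# Hypothetical patient: bradycardia observed, seizures explicitly excluded.
profile = PhenotypicProfile(patient_id="patient-001")
profile.add("HP:0001662", "Bradycardia", present=True)
profile.add("HP:0001250", "Seizure", present=False)

print("Present:", profile.present_ids())
print("Absent: ", profile.absent_ids())
```

Validating the identifier format at construction time catches transcription errors early, and keeping absent phenotypes in the same structure preserves the information needed for differential diagnosis.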

Protocol 2: Phenotype-Driven Variant Prioritization using HPO and Exome/Genome Data

Objective: To identify the most likely disease-causing genetic variant(s) from a patient's exome or genome data by integrating their phenotypic profile.

Materials:

- Patient's phenotypic profile (list of HPO terms) generated from Protocol 1.

- Patient's genetic data in Variant Call Format (VCF).

- A variant prioritization tool that accepts HPO terms and VCF files as input (e.g., Exomiser, LIRICAL).

Procedure:

- Input Data: Provide the patient's VCF file and their list of HPO terms as input to the chosen software tool. If family data is available (e.g., a trio), a PED file describing the family structure should also be provided.

- Variant Filtering: The software will first filter the variants in the VCF file based on several criteria:

  - Quality: Remove low-quality variant calls.

  - Allele Frequency: Filter out common variants present above a certain frequency in population databases (e.g., gnomAD).

  - Predicted Pathogenicity: Retain variants predicted to be deleterious by in silico tools (e.g., SIFT, PolyPhen).

- Phenotype-Based Gene Ranking: The tool will then rank the remaining candidate genes based on the semantic similarity between the patient's HPO profile and the known phenotype associations for each gene. This is often achieved through algorithms that calculate a "phenotype score."

- Combined Scoring: A final score is typically calculated for each variant by combining the variant's predicted pathogenicity score and the gene's phenotype score.

- Output and Interpretation: The tool will output a ranked list of candidate variants. The top-ranked variants are the most likely to be causative and should be prioritized for further investigation and clinical interpretation. The output is often provided in user-friendly formats like HTML or machine-readable formats like JSON for integration into bioinformatics pipelines.
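
The filter-then-rank logic of this protocol can be sketched in a few lines. This is a simplified illustration, not the algorithm of Exomiser, LIRICAL, or any other tool: the thresholds, the gene names, the precomputed phenotype scores, and the use of a plain average as the combined score are all assumptions chosen for readability. Real tools apply their own scoring models.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Variant:
    gene: str
    quality: float           # call quality, e.g. the VCF QUAL value
    allele_frequency: float  # frequency in a population database
    pathogenicity: float     # in silico deleteriousness score in [0, 1]


def passes_filters(v: Variant, min_quality: float = 30.0,
                   max_af: float = 0.001, min_pathogenicity: float = 0.5) -> bool:
    """Apply the quality, allele-frequency, and pathogenicity filters.
    The default thresholds are hypothetical."""
    return (v.quality >= min_quality
            and v.allele_frequency <= max_af
            and v.pathogenicity >= min_pathogenicity)


def prioritize(variants: List[Variant],
               phenotype_scores: Dict[str, float]) -> List[Tuple[Variant, float]]:
    """Rank filtered variants by the mean of the variant's pathogenicity
    score and its gene's phenotype score (an illustrative choice)."""
    ranked = []
    for v in variants:
        if not passes_filters(v):
            continue
        phenotype = phenotype_scores.get(v.gene, 0.0)  # unknown gene: no support
        ranked.append((v, 0.5 * (v.pathogenicity + phenotype)))
    ranked.sort(key=lambda pair: pair[1], reverse=True)
    return ranked


# Invented variants and gene-level phenotype scores.
variants = [
    Variant("GENE_A", quality=50, allele_frequency=0.0001, pathogenicity=0.90),
    Variant("GENE_B", quality=60, allele_frequency=0.0, pathogenicity=0.95),
    Variant("GENE_C", quality=10, allele_frequency=0.0, pathogenicity=0.99),  # low quality
    Variant("GENE_D", quality=80, allele_frequency=0.05, pathogenicity=0.90),  # too common
    Variant("GENE_E", quality=80, allele_frequency=0.0, pathogenicity=0.10),  # likely benign
]
phenotype_scores = {"GENE_A": 0.8, "GENE_B": 0.2, "GENE_C": 0.9}

for variant, score in prioritize(variants, phenotype_scores):
    print(f"{variant.gene}: {score:.3f}")
```

In this toy example the variant in GENE_B has the higher pathogenicity score, yet GENE_A ranks first because its known phenotypes match the patient better, which is the effect that phenotype-driven prioritization is designed to achieve.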

Protocol 3: HPO-Based Patient Similarity Analysis

Objective: To identify patients with similar phenotypic profiles for cohort building, differential diagnosis, or novel disease gene discovery.

Materials:

- Phenotypic profiles (lists of HPO terms) for a cohort of patients.

- A computational environment with libraries for semantic similarity calculations (e.g., HPOSim R package).

Procedure:

- Data Preparation: Ensure all patient phenotypes are consistently represented as lists of HPO IDs.

- Pairwise Similarity Calculation: For each pair of patients in the cohort, calculate a semantic similarity score based on their HPO profiles. Several methods can be used for this calculation, often based on the information content of the most informative common ancestor (MICA) of the HPO terms in the two profiles.

- Similarity Matrix Generation: The pairwise similarity scores are used to generate a similarity matrix, where each cell (i, j) represents the similarity between patient i and patient j.

- Clustering and Network Analysis: Apply clustering algorithms (e.g., k-means) to the similarity matrix to identify groups of patients with highly similar phenotypes. These clusters can represent distinct disease entities or subtypes. The similarity data can also be used to construct a patient network graph for visualization and analysis.

- Applications:

  - Differential Diagnosis: Compare a new patient's profile to the clusters to aid in diagnosis.

  - Gene Discovery: Identify shared genetic variants within a cluster of phenotypically similar, undiagnosed patients.
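
The pairwise similarity and matrix steps of this protocol can be illustrated with a small, self-contained sketch. It uses a Resnik-style score, in which the similarity of two terms is the information content (IC) of their MICA, and combines term scores into a profile score with a symmetric best-match average. The toy hierarchy, the term frequencies, and all function names are assumptions made for this example; they are not taken from the HPO or from the HPOSim package.

```python
import math
from typing import Dict, List, Set, Tuple

# Toy "is a" hierarchy (child -> parents) and hypothetical term frequencies
# (fraction of annotated diseases carrying the term or one of its descendants).
PARENTS: Dict[str, List[str]] = {
    "root": [], "cardio": ["root"], "neuro": ["root"],
    "arrhythmia": ["cardio"], "bradycardia": ["arrhythmia"],
    "septal_defect": ["cardio"], "seizure": ["neuro"],
}
FREQ = {"root": 1.0, "cardio": 0.4, "neuro": 0.5, "arrhythmia": 0.1,
        "bradycardia": 0.02, "septal_defect": 0.05, "seizure": 0.2}

# Information content: rarer terms are more informative.
IC = {term: -math.log(p) for term, p in FREQ.items()}


def ancestors(term: str) -> Set[str]:
    """Return the term itself plus all of its ancestors."""
    seen: Set[str] = set()
    stack = [term]
    while stack:
        current = stack.pop()
        if current not in seen:
            seen.add(current)
            stack.extend(PARENTS[current])
    return seen


def mica_ic(a: str, b: str) -> float:
    """IC of the most informative common ancestor of two terms."""
    return max(IC[t] for t in ancestors(a) & ancestors(b))


def profile_similarity(p: List[str], q: List[str]) -> float:
    """Symmetric best-match average over two non-empty term lists."""
    def directed(src: List[str], dst: List[str]) -> float:
        return sum(max(mica_ic(s, d) for d in dst) for s in src) / len(src)
    return 0.5 * (directed(p, q) + directed(q, p))


def similarity_matrix(profiles: Dict[str, List[str]]) -> Tuple[List[str], List[List[float]]]:
    """Return patient IDs and the matrix whose cell (i, j) holds their similarity."""
    ids = sorted(profiles)
    matrix = [[profile_similarity(profiles[a], profiles[b]) for b in ids] for a in ids]
    return ids, matrix


# Hypothetical cohort of three patients.
cohort = {"P1": ["bradycardia"], "P2": ["arrhythmia", "septal_defect"], "P3": ["seizure"]}
ids, matrix = similarity_matrix(cohort)
for pid, row in zip(ids, matrix):
    print(pid, [round(value, 3) for value in row])
```

The resulting matrix can then be passed to a clustering or graph library of choice. Note that many clustering routines expect distances rather than similarities, so a conversion step is usually needed first.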


Protocol 4: Automated HPO Term Extraction from Clinical Notes using NLP

Objective: To automate the process of deep phenotyping by extracting HPO terms from unstructured clinical text.

Materials:

- A corpus of clinical notes in digital format.

- A Natural Language Processing (NLP) pipeline designed for clinical text (e.g., cTAKES, John Snow Labs' Healthcare NLP).

Procedure:

- Text Preprocessing: The NLP pipeline first preprocesses the raw clinical text, which may include sentence segmentation, tokenization, and part-of-speech tagging.

- Named Entity Recognition (NER): A trained NER model identifies mentions of clinical phenotypes within the text.

- Assertion Status Detection: The system determines the status of each identified phenotype (e.g., present, absent, suspected, or conditional).

- Concept Normalization: The extracted phenotype mentions are then mapped to the most appropriate HPO terms and their corresponding IDs. This step often involves embedding-based similarity measures.

- Output: The pipeline outputs a structured list of HPO terms with their assertion status, effectively creating a machine-readable phenotypic profile from the unstructured text.
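
The overall shape of such a pipeline can be conveyed with a deliberately simple, rule-based sketch. It is not how cTAKES or any production system works: the dictionary lookup stands in for a trained NER model and for embedding-based normalization, the negation cue list is a crude substitute for assertion detection, and only the "present" and "absent" statuses are handled. The lexicon, the cue list, and the function names are assumptions made for this example.

```python
import re
from typing import Dict, List

# Tiny illustrative lexicon mapping surface forms to HPO IDs.
LEXICON: Dict[str, str] = {
    "bradycardia": "HP:0001662",
    "slow heart rate": "HP:0001662",
    "seizures": "HP:0001250",
    "seizure": "HP:0001250",
    "microcephaly": "HP:0000252",
}

# A mention is treated as negated if one of these cues precedes it
# within the same sentence.
NEGATION_PATTERN = re.compile(r"\b(no|denies|without|negative for)\b")


def split_sentences(text: str) -> List[str]:
    """Naive sentence segmentation on terminal punctuation."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def extract_hpo_terms(text: str) -> List[Dict[str, str]]:
    """Return one record per lexicon mention with its assertion status."""
    results: List[Dict[str, str]] = []
    for sentence in split_sentences(text):
        lowered = sentence.lower()
        for mention, hpo_id in LEXICON.items():
            match = re.search(r"\b" + re.escape(mention) + r"\b", lowered)
            if match is None:
                continue
            preceding = lowered[:match.start()]
            status = "absent" if NEGATION_PATTERN.search(preceding) else "present"
            results.append({"mention": mention, "hpo_id": hpo_id, "status": status})
    return results


note = ("The patient has a slow heart rate. No seizures were reported. "
        "Head circumference suggests microcephaly.")
for record in extract_hpo_terms(note):
    print(record)
```

The output has the same structure as the phenotypic profile described above: a list of HPO identifiers, each with an assertion status, ready for use in downstream prioritization or similarity analysis.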

Visualizations

The following diagrams, generated using the DOT language, illustrate key workflows in HPO-based rare disease research.

Caption: A high-level workflow for HPO-driven rare disease diagnosis.

References

The Unseen Engine of Discovery: A Technical Guide to Standardized Phenotype Vocabularies in Research and Drug Development

For Immediate Release