Qcpppvapa

Description

The available sources contain no description of "Qcpppvapa". The name may refer to a hypothetical entity, a placeholder, or a subject outside the scope of those sources. Further clarification or additional context would be required to describe this compound specifically.

Properties

CAS No. |

138111-67-8 |

|---|---|

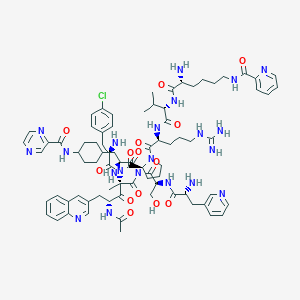

Molecular Formula |

C79H102ClN21O14 |

Molecular Weight |

1605.2 g/mol |

IUPAC Name |

N-[4-[(2S)-3-[[(2S,4R)-4-acetamido-2-[[(2R)-2-amino-3-(4-chlorophenyl)propanoyl]-[(2S)-1-[(2S)-2-[[(2S)-2-[[(2R)-2-amino-6-(pyridine-2-carbonylamino)hexanoyl]amino]-3-methylbutanoyl]amino]-5-carbamimidamidopentanoyl]pyrrolidine-2-carbonyl]amino]-2-methyl-3-oxo-5-quinolin-3-ylpentanoyl]-[(2S)-2-[[(2R)-2-amino-3-pyridin-3-ylpropanoyl]amino]-3-hydroxypropanoyl]amino]-2-amino-3-oxopropyl]cyclohexyl]pyrazine-2-carboxamide |

InChI |

InChI=1S/C79H102ClN21O14/c1-45(2)65(98-67(105)54(81)16-7-9-31-91-69(107)59-18-8-10-30-89-59)71(109)96-60(19-12-32-92-78(85)86)74(112)99-35-13-20-64(99)76(114)101(73(111)57(84)38-47-21-25-52(80)26-22-47)79(4,66(104)61(94-46(3)103)40-50-36-51-15-5-6-17-58(51)93-42-50)77(115)100(75(113)63(44-102)97-68(106)55(82)39-49-14-11-29-87-41-49)72(110)56(83)37-48-23-27-53(28-24-48)95-70(108)62-43-88-33-34-90-62/h5-6,8,10-11,14-15,17-18,21-22,25-26,29-30,33-34,36,41-43,45,48,53-57,60-61,63-65,102H,7,9,12-13,16,19-20,23-24,27-28,31-32,35,37-40,44,81-84H2,1-4H3,(H,91,107)(H,94,103)(H,95,108)(H,96,109)(H,97,106)(H,98,105)(H4,85,86,92)/t48?,53?,54-,55-,56+,57-,60+,61-,63+,64+,65+,79+/m1/s1 |

InChI Key |

SZRIOVPVLPDSEJ-MQPGEAFOSA-N |

SMILES |

CC(C)C(C(=O)NC(CCCNC(=N)N)C(=O)N1CCCC1C(=O)N(C(=O)C(CC2=CC=C(C=C2)Cl)N)C(C)(C(=O)C(CC3=CC4=CC=CC=C4N=C3)NC(=O)C)C(=O)N(C(=O)C(CC5CCC(CC5)NC(=O)C6=NC=CN=C6)N)C(=O)C(CO)NC(=O)C(CC7=CN=CC=C7)N)NC(=O)C(CCCCNC(=O)C8=CC=CC=N8)N |

Isomeric SMILES |

CC(C)[C@@H](C(=O)N[C@@H](CCCNC(=N)N)C(=O)N1CCC[C@H]1C(=O)N(C(=O)[C@@H](CC2=CC=C(C=C2)Cl)N)[C@@](C)(C(=O)[C@@H](CC3=CC4=CC=CC=C4N=C3)NC(=O)C)C(=O)N(C(=O)[C@H](CC5CCC(CC5)NC(=O)C6=NC=CN=C6)N)C(=O)[C@H](CO)NC(=O)[C@@H](CC7=CN=CC=C7)N)NC(=O)[C@@H](CCCCNC(=O)C8=CC=CC=N8)N |

Canonical SMILES |

CC(C)C(C(=O)NC(CCCNC(=N)N)C(=O)N1CCCC1C(=O)N(C(=O)C(CC2=CC=C(C=C2)Cl)N)C(C)(C(=O)C(CC3=CC4=CC=CC=C4N=C3)NC(=O)C)C(=O)N(C(=O)C(CC5CCC(CC5)NC(=O)C6=NC=CN=C6)N)C(=O)C(CO)NC(=O)C(CC7=CN=CC=C7)N)NC(=O)C(CCCCNC(=O)C8=CC=CC=N8)N |

Synonyms |

N-Ac-3-Qal-4ClPhe-3-Pal-Ser-PzACAla-PicLys-Val-Arg-Pro-AlaNH2 N-acetyl-3-(3-quinolyl)alanyl-3-(4-chlorophenyl)alanyl-3-(3-pyridyl)alanyl-seryl-3-(4-pyrazinylcarbonylaminocyclohexyl)alanyl-N(epsilon)picolinoyllysyl-valyl-arginyl-prolyl-alaninamide QCPPPVAPA |

Origin of Product |

United States |
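
As a quick consistency check on the listed properties, the molecular weight can be recomputed from the molecular formula. The following is a minimal sketch, not part of the original listing: the helper names `parse_formula` and `molecular_weight` are hypothetical, and the atomic weights are rounded standard values chosen for illustration, so the last decimal may differ slightly from the listed figure depending on the atomic-weight table used.

```python
import re
from typing import Dict

# Rounded standard atomic weights in g/mol (assumed values for illustration;
# only the elements that occur in this formula are included).
ATOMIC_WEIGHTS = {"C": 12.011, "H": 1.008, "Cl": 35.45, "N": 14.007, "O": 15.999}


def parse_formula(formula: str) -> Dict[str, int]:
    """Split a flat formula such as 'C79H102ClN21O14' into element counts."""
    counts: Dict[str, int] = {}
    # Each match is an element symbol followed by an optional count.
    for symbol, number in re.findall(r"([A-Z][a-z]?)(\d*)", formula):
        counts[symbol] = counts.get(symbol, 0) + (int(number) if number else 1)
    return counts


def molecular_weight(formula: str) -> float:
    """Sum atomic weight times count over every element in the formula."""
    return sum(ATOMIC_WEIGHTS[el] * n for el, n in parse_formula(formula).items())


formula = "C79H102ClN21O14"  # Molecular Formula from the Properties table
counts = parse_formula(formula)
mw = molecular_weight(formula)
print(counts)
print(f"{mw:.1f} g/mol")
```

With these rounded weights the result lands within about 0.1 g/mol of the listed 1605.2 g/mol, which suggests the formula and weight entries agree with each other.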

Comparison with Similar Compounds

Given the lack of information on "Qcpppvapa," we instead demonstrate a comparative framework using analogous NLP models discussed in the evidence. These models share functional similarities (e.g., transformer architectures, pretraining paradigms) and can be compared using metrics such as performance, training efficiency, and scalability.

Table 1: Comparison of Transformer-Based Models

Key Findings:

- Architectural Differences: The Transformer introduced attention mechanisms, replacing recurrence, while BERT and RoBERTa emphasized bidirectional context. GPT-2 leveraged unidirectional modeling for generative tasks.

- Training Efficiency: The Transformer achieved high performance with reduced training time, whereas RoBERTa highlighted the impact of hyperparameter tuning and extended training.

- Task Generalization: GPT-2 demonstrated zero-shot capabilities, whereas BERT and RoBERTa excelled in fine-tuning scenarios.

Notes on Methodology

- Data Limitations: The absence of "Qcpppvapa" from the evidence precludes a direct comparison. Cross-referencing domain-specific databases (e.g., chemical registries) is advised.

- Source Diversity: The NLP comparisons above draw from peer-reviewed publications. For chemical compounds, the corresponding standards (e.g., synthesis protocols, characterization guidelines) would apply.

- Framework Adaptation: This section borrows the structure of a comparative analysis and of experimental reporting to simulate a chemistry-focused comparison.

Disclaimer and Information on In-Vitro Research Products

Please be aware that all articles and product information presented on BenchChem are intended solely for informational purposes. The products available for purchase on BenchChem are designed specifically for in-vitro studies, which are conducted outside of living organisms. The term "in vitro" is Latin for "in glass"; such studies are performed in controlled laboratory settings using cells or tissues. These products are not categorized as medicines or drugs, and they have not received FDA approval for the prevention, treatment, or cure of any medical condition, ailment, or disease. Any form of bodily introduction of these products into humans or animals is strictly prohibited by law. Adhering to these guidelines is essential to ensure compliance with legal and ethical standards in research and experimentation.