RL


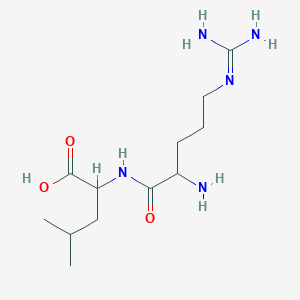

| Property | Value | Details |
|---|---|---|
| IUPAC Name | 2-[[2-amino-5-(diaminomethylideneamino)pentanoyl]amino]-4-methylpentanoic acid | Computed by LexiChem 2.6.6 (PubChem release 2019.06.18) |
| InChI | InChI=1S/C12H25N5O3/c1-7(2)6-9(11(19)20)17-10(18)8(13)4-3-5-16-12(14)15/h7-9H,3-6,13H2,1-2H3,(H,17,18)(H,19,20)(H4,14,15,16) | Computed by InChI 1.0.5 (PubChem release 2019.06.18) |
| InChI Key | WYBVBIHNJWOLCJ-UHFFFAOYSA-N | Computed by InChI 1.0.5 (PubChem release 2019.06.18) |
| Canonical SMILES | CC(C)CC(C(=O)O)NC(=O)C(CCCN=C(N)N)N | Computed by OEChem 2.1.5 (PubChem release 2019.06.18) |
| Molecular Formula | C12H25N5O3 | Computed by PubChem 2.1 (PubChem release 2019.06.18) |
| Molecular Weight | 287.36 g/mol | Computed by PubChem 2.1 (PubChem release 2021.05.07) |

Source: PubChem (https://pubchem.ncbi.nlm.nih.gov). Data deposited in or computed by PubChem.


Reinforcement Learning for Scientific Research: An In-depth Technical Guide

For Researchers, Scientists, and Drug Development Professionals

The integration of artificial intelligence, particularly reinforcement learning (RL), into scientific research promises to automate complex decision-making and accelerate discovery in fields ranging from drug development to materials science. This guide provides a technical introduction to the core concepts of RL and its practical applications in scientific domains. It is designed for researchers and professionals who want to understand and apply RL to complex research problems.

Core Concepts of Reinforcement Learning

Reinforcement learning is a paradigm of machine learning in which an "agent" learns to make a sequence of decisions in an "environment" to maximize a cumulative "reward".[1] Unlike supervised learning, which relies on labeled data, RL agents learn from the consequences of their actions through a trial-and-error process.[2]

The fundamental components of an RL framework are:

- Agent: The learner or decision-maker that interacts with the environment. In a scientific context, the agent could be a computational model that suggests new molecules, experimental parameters, or treatment strategies.[2]
- Environment: The external world with which the agent interacts. This could be a chemical reaction simulator, a model of a biological system, or a real-world laboratory setup.[2]
- State (s): A representation of the environment at a specific point in time, for example the current set of reactants and products in a chemical synthesis or the current health status of a patient in a clinical trial.
- Action (a): A decision made by the agent to interact with the environment. This could be adding a specific molecule, changing the temperature of a reaction, or administering a particular drug dosage.
- Reward (r): A scalar feedback signal that indicates how well the agent is performing. The goal of the agent is to maximize the cumulative reward over time. Rewards can be designed to represent desired outcomes, such as high yield in a chemical reaction or tumor reduction in a cancer treatment model.[3]
- Policy (π): The strategy that the agent uses to select actions based on the current state. The policy is what the RL algorithm learns.

This iterative process of observing a state, taking an action, and receiving a reward is the foundation of how an RL agent learns to achieve its goals.
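The observe-act-reward cycle can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `ToyReactionEnv`, its temperature state, the target of 70, and both policies are hypothetical stand-ins chosen to make the cycle concrete, not a real laboratory or simulator interface.

```python
import random


class ToyReactionEnv:
    """Hypothetical environment: the state is a temperature setting (0-100),
    and the reward is highest (zero) when the temperature equals 70."""

    def reset(self):
        self.state = 50
        return self.state

    def step(self, action):
        # The action is a temperature change of -10, 0, or +10.
        self.state = max(0, min(100, self.state + action))
        reward = -abs(self.state - 70) / 10.0
        return self.state, reward


def random_policy(state, rng):
    """Pure exploration: ignore the state and pick any action."""
    return rng.choice([-10, 0, 10])


def toward_target_policy(state, rng):
    """Hand-written policy that moves the temperature toward 70."""
    if state < 70:
        return 10
    if state > 70:
        return -10
    return 0


def run_episode(env, policy, n_steps=20, seed=0):
    """Run one episode and return the cumulative (undiscounted) reward."""
    rng = random.Random(seed)
    state = env.reset()
    total_reward = 0.0
    for _ in range(n_steps):
        action = policy(state, rng)        # agent chooses an action
        state, reward = env.step(action)   # environment returns state and reward
        total_reward += reward
    return total_reward
```

An RL algorithm would replace the hand-written policy with one that is adjusted from the observed rewards.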

The Mathematical Foundation: Markov Decision Processes

The interaction between the agent and the environment is formally described by a Markov Decision Process (MDP) . An MDP is a mathematical framework for modeling sequential decision-making under uncertainty.[4] It is defined by a tuple (S, A, P, R, γ), where:

- S is the set of all possible states.
- A is the set of all possible actions.
- P(s' | s, a) is the state transition probability, which is the probability of transitioning to state s' from state s after taking action a.
- R(s, a, s') is the reward function, which defines the immediate reward received after transitioning from state s to s' as a result of action a.
- γ is the discount factor (0 ≤ γ ≤ 1), which determines the importance of future rewards. A value of 0 makes the agent "myopic" by only considering immediate rewards, while a value closer to 1 makes it strive for long-term high rewards.

The core assumption of an MDP is the Markov property , which states that the future is independent of the past given the present. In other words, the current state s provides all the necessary information for the agent to make an optimal decision, without needing to know the history of all previous states and actions.
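The effect of the discount factor can be made concrete by computing the discounted return G = r_1 + γ r_2 + γ² r_3 + ... for a short reward sequence. The helper below is a minimal sketch; the function name and the example rewards are illustrative assumptions.

```python
def discounted_return(rewards, gamma):
    """Sum of rewards where the k-th future reward is weighted by gamma**k."""
    g = 0.0
    # Work backwards so each step applies G_t = r_t + gamma * G_{t+1}.
    for r in reversed(rewards):
        g = r + gamma * g
    return g


rewards = [1.0, 1.0, 1.0]
myopic = discounted_return(rewards, gamma=0.0)       # only the first reward counts
balanced = discounted_return(rewards, gamma=0.5)     # 1 + 0.5 + 0.25
far_sighted = discounted_return(rewards, gamma=1.0)  # plain sum of rewards
```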

The following diagram illustrates the fundamental workflow of a Reinforcement Learning agent interacting with its environment, which is modeled as a Markov Decision Process.

Reinforcement Learning in Drug Discovery and Development

One of the most promising areas for the application of RL in scientific research is drug discovery and development. Finding a new drug is a long and expensive process, and RL offers a way to accelerate and optimize several stages of this pipeline.

De Novo Molecular Design

De novo drug design aims to generate novel molecules with desired pharmacological properties. RL can be used to guide the generation of molecules towards specific objectives, such as high binding affinity to a target protein, desirable pharmacokinetic properties (ADMET: Absorption, Distribution, Metabolism, Excretion, and Toxicity), and synthetic accessibility.[5]

The general workflow for de novo molecular design using RL is as follows:

- Generative Model: A deep learning model, such as a Recurrent Neural Network (RNN) or a Generative Adversarial Network (GAN), is pre-trained on a large dataset of known molecules to learn the rules of chemical structure and syntax (e.g., using the SMILES string representation).[5]
- RL Fine-Tuning: The pre-trained generative model acts as the agent's policy. The agent generates a molecule (an action).
- Reward Function: The generated molecule is then evaluated by a reward function, which can be a composite of several desired properties. This often involves computational or machine learning-based predictions of:
  - Binding Affinity: Docking scores or more sophisticated binding free energy calculations.
  - Drug-likeness: Metrics like the Quantitative Estimation of Drug-likeness (QED).
  - Physicochemical Properties: Molecular weight, LogP (lipophilicity), etc.
  - Synthetic Accessibility: Scores that estimate how easily a molecule can be synthesized.
- Policy Update: The reward is used to update the policy of the generative model, encouraging it to generate more molecules with desirable properties. Policy gradient methods are commonly used for this update.
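A composite reward of the kind described in the workflow can be sketched as a weighted sum of normalized property scores. Everything specific here is an assumption made for illustration: the weights, the docking-score normalization constant of 12, and the input dictionary keys. In practice the property values would come from docking software and cheminformatics tools rather than being passed in by hand.

```python
def composite_reward(props, weights=None):
    """Combine several molecular property predictions into one scalar reward.

    props is a dict with hypothetical keys:
      'docking_score': docking score, more negative means stronger predicted binding
      'qed':           drug-likeness in [0, 1], higher is better
      'sa_score':      synthetic accessibility in [1, 10], lower is easier to make
    """
    if weights is None:
        # Assumed weights; a real project would tune these.
        weights = {"binding": 0.5, "qed": 0.3, "sa": 0.2}

    # Map each property to [0, 1] so that higher is always better.
    binding = min(max(-props["docking_score"] / 12.0, 0.0), 1.0)
    qed = props["qed"]
    sa = (10.0 - props["sa_score"]) / 9.0

    return (weights["binding"] * binding
            + weights["qed"] * qed
            + weights["sa"] * sa)


# Example with made-up property values for a single generated molecule.
example = composite_reward({"docking_score": -9.0, "qed": 0.8, "sa_score": 3.0})
```

A weighted sum is only one option; multiplying the normalized terms, or thresholding some of them, changes how strongly the agent is pushed to satisfy every objective at once.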

The following diagram illustrates a typical workflow for de novo molecular design using reinforcement learning.

Quantitative Data on RL for Molecular Optimization

The following table summarizes the performance of different RL-based approaches in optimizing various molecular properties. The metrics include Quantitative Estimation of Drug-likeness (QED), penalized LogP, and docking scores against specific protein targets.

| Model/Algorithm | Target Property | Initial Value (Mean) | Optimized Value (Mean) | Reference |
|---|---|---|---|---|
| ReLeaSE | JAK2 Inhibition | - | Generated novel, active compounds | [5] |
| MolDQN | QED | 0.45 | 0.948 | [6] |
| Augmented Hill-Climb | Docking Score (DRD2) | - | -8.5 | [7] |
| REINVENT 2.0 | Docking Score (DRD2) | - | -9.0 | [7] |
| FREED++ | Docking Score (USP7) | -8.3 | -10.2 | [8] |

Experimental Protocol: De Novo Design of JAK2 Inhibitors with ReLeaSE

This section outlines a detailed methodology for using the ReLeaSE (Reinforcement Learning for Structural Evolution) framework to generate novel Janus protein kinase 2 (JAK2) inhibitors.[5]

1. Model Architecture:

- Generative Model: A stack-augmented Recurrent Neural Network (RNN) with Gated Recurrent Units (GRUs). The model is trained to generate valid SMILES strings.
- Predictive Model: A separate deep neural network (DNN) trained to predict the bioactivity of a molecule against JAK2 based on its SMILES string.

2. Training Data:

- Generative Model Pre-training: A large dataset of drug-like molecules from a database like ChEMBL is used to teach the model the grammar of SMILES and the general characteristics of drug-like molecules.
- Predictive Model Training: A dataset of known JAK2 inhibitors and non-inhibitors with their corresponding activity values (e.g., IC50) is used to train the predictive model.

3. Reinforcement Learning Phase:

- Agent: The pre-trained generative model.
- Action: The generation of a complete SMILES string representing a molecule.
- Environment: The predictive model for JAK2 activity.
- Reward Function: A reward is calculated based on the predicted activity of the generated molecule from the predictive model. A higher predicted activity results in a higher reward. Additional rewards can be incorporated for other desired properties like chemical diversity or novelty.
- Policy Update: A policy gradient method, such as REINFORCE, is used to update the weights of the generative model. The update rule is designed to increase the probability of generating molecules that receive high rewards.
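The REINFORCE update can be illustrated on a much smaller problem than SMILES generation. The sketch below is not the ReLeaSE implementation: it replaces the RNN with a single categorical policy over four hypothetical "molecule candidates", replaces the predictive model with a fixed `toy_rewards` table, and subtracts a batch-mean baseline (a common variance-reduction choice that the protocol above does not specify). The learning rate is chosen for this toy problem, not taken from the protocol.

```python
import math
import random


def softmax(logits):
    """Convert unnormalized scores into action probabilities."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def reinforce_step(logits, reward_fn, rng, batch_size=64, lr=0.5):
    """One REINFORCE update for a categorical policy parameterized by logits."""
    probs = softmax(logits)
    actions = rng.choices(range(len(logits)), weights=probs, k=batch_size)
    rewards = [reward_fn(a) for a in actions]
    baseline = sum(rewards) / batch_size  # batch-mean baseline (assumption)

    grad = [0.0] * len(logits)
    for a, r in zip(actions, rewards):
        for i in range(len(logits)):
            # d/d logit_i of log pi(a) for a softmax policy: 1[i == a] - pi(i)
            indicator = 1.0 if i == a else 0.0
            grad[i] += (r - baseline) * (indicator - probs[i])

    # Gradient ascent on the expected reward.
    return [z + lr * g / batch_size for z, g in zip(logits, grad)]


def train(n_steps=300, seed=0):
    """Train the toy policy and return its final action probabilities."""
    rng = random.Random(seed)
    toy_rewards = [0.0, 0.2, 1.0, 0.1]  # hypothetical "predicted activity" per candidate
    logits = [0.0] * len(toy_rewards)
    for _ in range(n_steps):
        logits = reinforce_step(logits, lambda a: toy_rewards[a], rng)
    return softmax(logits)
```

After training, the probability mass concentrates on the candidate with the highest reward, which is the same mechanism that shifts a generative model toward molecules the predictive model scores highly.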

4. Hyperparameters:

- Generative Model:
  - Number of GRU layers: 3
  - Hidden layer size: 512
  - Embedding size: 256
- Predictive Model:
  - Number of dense layers: 2
  - Hidden layer size: 256
  - Activation function: ReLU
- RL Training:
  - Learning rate: 0.001
  - Discount factor (γ): 0.99
  - Batch size: 64

5. Experimental Workflow:

- Pre-train the generative model on the ChEMBL dataset until it can generate a high percentage of valid and unique SMILES strings.
- Train the predictive model on the JAK2 activity dataset and validate its performance using cross-validation.
- Initialize the RL agent with the weights of the pre-trained generative model.
- In each epoch of RL training:
  - a. The agent generates a batch of molecules.
  - b. For each molecule, the predictive model calculates the predicted activity.
  - c. A reward is computed based on the predicted activity.
  - d. The policy gradient is calculated and used to update the weights of the generative model.
- After training, generate a large library of molecules and filter them based on predicted activity and other desired properties for further in silico and experimental validation.

Reinforcement Learning for Optimizing Chemical Processes

Beyond molecular design, RL is being applied to optimize entire chemical processes, from identifying optimal reaction pathways to controlling reactors in real time.

Chemical Reaction Pathway Optimization

Discovering the most efficient and highest-yielding pathway to synthesize a target molecule is a complex combinatorial problem. RL can be used to navigate the vast space of possible reactions and intermediates to find optimal synthesis routes.[9]

The workflow for reaction pathway optimization using RL can be conceptualized as follows:

References

- 1. Reinforcement Learning for Precision Oncology - PMC [pmc.ncbi.nlm.nih.gov]

- 2. Drug Development Levels Up with Reinforcement Learning - PharmaFeatures [pharmafeatures.com]

- 3. youtube.com [youtube.com]

- 4. arxiv.org [arxiv.org]

- 5. Deep reinforcement learning for de novo drug design - PMC [pmc.ncbi.nlm.nih.gov]

- 6. DOT Language | Graphviz [graphviz.org]

- 7. google.com [google.com]

- 8. researchgate.net [researchgate.net]

- 9. chemrxiv.org [chemrxiv.org]


A Technical Guide to Reinforcement Learning in Computational Biology

Audience: Researchers, scientists, and drug development professionals.

Executive Summary

Reinforcement Learning (RL), a paradigm of machine learning, is increasingly used in computational biology and drug discovery. Unlike traditional supervised learning, RL enables an agent to learn optimal decision-making policies through interaction with a dynamic environment, guided by a system of rewards and penalties. This approach is particularly well-suited for complex biological problems characterized by vast search spaces and intricate, often non-linear, relationships. This guide provides an in-depth overview of the fundamental concepts of RL and explores its application in key areas of computational biology, including de novo drug design and bioprocess optimization. We present detailed methodologies for cited experiments, quantitative data in structured tables, and visualizations of core concepts and workflows to offer a comprehensive technical resource for professionals in the field.

Fundamental Concepts of Reinforcement Learning

The core components of an RL system are:

- State (s): A representation of the environment's condition at a specific time, for instance the current molecular structure being built or the current measurements (e.g., pH, glucose concentration) in a bioreactor.
- Action (a): A decision made by the agent to interact with the environment, such as adding a specific atom to a molecule or adjusting the temperature in a cell culture.
- Reward (r): A scalar feedback signal from the environment that indicates the desirability of the agent's action in a given state. The agent's goal is to learn a policy that maximizes the cumulative reward over time.[2][3]
- Policy (π): The strategy or mapping that the agent uses to select actions based on the current state. The policy is what the agent aims to optimize.[2]

The Markov Decision Process (MDP)

Most RL problems are formalized as Markov Decision Processes (MDPs). An MDP is a mathematical framework for modeling decision-making where outcomes are partly random and partly under the control of the agent.[2] A key assumption of an MDP is the Markov property, which states that the future state depends only on the current state and action, not on the sequence of events that preceded it.

The logical relationship of an MDP is visualized below.

References

The Strategic Application of Markov Decision Processes in Experimental Science: A Technical Guide for Researchers and Drug Development Professionals

An in-depth technical guide on the core principles of Markov decision processes and their application in experimental science, with a focus on drug development and clinical research.

Introduction

In the landscape of experimental science, particularly within drug discovery and development, researchers are constantly faced with sequential decision-making under uncertainty. The process of identifying a drug candidate, optimizing its dosage, and designing clinical trials involves a series of choices where the outcome of each step influences the next. Markov decision processes (MDPs) offer a robust mathematical framework for modeling and solving such sequential decision problems.[1][2][3] By conceptualizing experimental workflows as a series of states, actions, and rewards, MDPs, and by extension, reinforcement learning (RL), provide a powerful tool to optimize these processes for desired outcomes. This guide delves into the core principles of MDPs and illustrates their practical application in experimental science, providing researchers, scientists, and drug development professionals with the foundational knowledge to leverage these techniques in their work.

Core Principles of Markov Decision Processes

An MDP is a discrete-time stochastic control process that provides a mathematical framework for modeling decision-making in situations where outcomes are partly random and partly under the control of a decision-maker.[2][4] The core components of an MDP are:

- States (S): A set of states representing the different situations or configurations of the system being modeled. In drug development, a state could represent the current stage of a clinical trial, the health status of a patient, or the molecular configuration of a compound.
- Actions (A): A set of actions available to the decision-maker in each state. Actions could include advancing to the next phase of a trial, administering a specific drug dosage, or modifying a molecule's structure.
- Transition Probabilities (P(s'|s, a)): The probability of transitioning from state s to state s' after taking action a. This captures the stochastic nature of experimental outcomes.
- Rewards (R(s, a, s')): A scalar value representing the immediate reward (or cost) of transitioning from state s to s' as a result of action a. Rewards are defined to align with the goals of the experiment, such as maximizing therapeutic efficacy or minimizing toxicity.
- Policy (π): A strategy that specifies the action to take in each state. The goal of an MDP is to find an optimal policy that maximizes the cumulative reward over time.

A fundamental assumption of MDPs is the Markov property , which states that the future is independent of the past given the present. In other words, the transition to the next state depends only on the current state and the action taken, not on the sequence of states and actions that preceded it.

Solving Markov Decision Processes

The primary objective in an MDP is to find an optimal policy, denoted as π*, which maximizes the expected cumulative reward. Two common algorithms for solving MDPs are:

- Value Iteration: This algorithm iteratively computes the optimal state-value function, which represents the maximum expected cumulative reward achievable from each state.
- Policy Iteration: This algorithm starts with an arbitrary policy and iteratively improves it until it converges to the optimal policy.
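Value iteration can be sketched on a very small MDP. The two-state "sick/healthy" model, its transition probabilities, the reward values, and the discount factor below are all hypothetical numbers chosen for illustration; only the algorithm itself (repeated Bellman optimality backups until the values stop changing) follows the description above.

```python
def value_iteration(states, actions, P, R, gamma=0.9, tol=1e-8):
    """Compute optimal state values and a greedy policy for a finite MDP.

    P[(s, a)] maps each next state to its probability; R(s, a, s2) is the reward.
    """
    V = {s: 0.0 for s in states}

    def q_value(s, a):
        # Expected immediate reward plus discounted value of the next state.
        return sum(p * (R(s, a, s2) + gamma * V[s2]) for s2, p in P[(s, a)].items())

    while True:
        delta = 0.0
        for s in states:
            best = max(q_value(s, a) for a in actions)
            delta = max(delta, abs(best - V[s]))
            V[s] = best
        if delta < tol:  # values have converged
            break

    policy = {s: max(actions, key=lambda a: q_value(s, a)) for s in states}
    return V, policy


# Hypothetical toy model: treating costs 0.2 per step, being healthy earns 1.
STATES = ["sick", "healthy"]
ACTIONS = ["treat", "wait"]
P = {
    ("sick", "treat"): {"healthy": 0.8, "sick": 0.2},
    ("sick", "wait"): {"sick": 1.0},
    ("healthy", "treat"): {"healthy": 1.0},
    ("healthy", "wait"): {"healthy": 0.9, "sick": 0.1},
}


def reward(s, a, s2):
    return (1.0 if s2 == "healthy" else 0.0) - (0.2 if a == "treat" else 0.0)


V, policy = value_iteration(STATES, ACTIONS, P, reward)
```

Note that this requires the full transition table `P` and reward function, which is exactly the information that is often unavailable in experimental settings.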

In many real-world applications, especially in drug development, the transition probabilities and reward functions are not known in advance. This is where reinforcement learning (RL) becomes particularly valuable. RL algorithms can learn the optimal policy through direct interaction with the environment (i.e., through experiments or simulations) without a complete mathematical model of the system.

Applications in Drug Development and Experimental Science

MDPs and RL are increasingly being applied to various stages of the drug development pipeline to enhance efficiency and success rates.

Optimizing Dosing Regimens

Determining the optimal dosing schedule for a new therapeutic is a critical challenge. An incorrect dosage can lead to inefficacy or unacceptable toxicity. RL, with the dosing problem modeled as an MDP, can be used to derive patient-specific dosing schedules that adapt to individual responses to treatment.

For instance, researchers have demonstrated that chemotherapeutic dosing strategies learned via RL methods are more robust to variations in patient-specific parameters compared to traditional optimal control methods.[5][6] In one study, an RL agent was trained on a model of tumor growth and drug response to determine the optimal chemotherapy schedule. The state was defined by the tumor size and the patient's bone marrow density (a proxy for toxicity), the actions were the different possible drug doses, and the reward was a function that balanced tumor reduction with minimizing toxicity.

Adaptive Clinical Trial Design

Traditional clinical trials follow a rigid protocol. Adaptive trial designs, in contrast, allow for pre-specified modifications to the trial based on interim data. This flexibility can lead to more efficient and ethical trials. MDPs provide a formal framework for optimizing these adaptations.

Disease Progression Modeling

Understanding the natural history of a disease is crucial for developing effective treatments. Markov models are widely used to represent the progression of chronic diseases through different health states over time. By incorporating actions (treatments) and rewards (quality-adjusted life years), these models can be extended into MDPs to identify optimal long-term treatment strategies.

Quantitative Data Presentation

The following tables summarize quantitative data from studies that have applied MDP-based approaches in a scientific context.

Table 1: Comparison of Dosing Strategies for Sepsis Treatment

| Dosing Recommendation | Deep Deterministic Policy Gradient (DDPG) Model | Deep Q-Network (DQN) Model |
|---|---|---|
| Percentage of patients recommended for a higher dose | 54.76% | 34.82% |
| Number of patients with dose difference within 2 units | ~42,000 (74.5% of total) | ~42,000 (74.5% of total) |

This data is derived from a study that used reinforcement learning to determine optimal dosing for sepsis patients. The DDPG model, an actor-critic RL algorithm that operates on continuous action spaces, recommended a higher dosage for a larger proportion of patients than the DQN model.

Table 2: Cost-Effectiveness of Osimertinib for Non-Small Cell Lung Cancer

| Metric | Osimertinib | Placebo | Incremental Difference |
|---|---|---|---|
| Cost (USA) | $898,107 | - | $178,953 |
| Quality-Adjusted Life Years (QALYs) (USA) | 3.70 | - | 0.56 |
| Incremental Cost-Effectiveness Ratio (ICER) (USA) | - | - | $322,308/QALY |
| Cost (China) | $49,565 | - | $17,872 |
| Quality-Adjusted Life Years (QALYs) (China) | 3.49 | - | 0.51 |
| Incremental Cost-Effectiveness Ratio (ICER) (China) | - | - | $35,186/QALY |

This table presents the results of a cost-effectiveness analysis using a Markov model to simulate the disease course of patients with non-small cell lung cancer. The ICER represents the additional cost for each additional QALY gained with the new treatment.
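The ICER is the incremental cost divided by the incremental QALYs. The short calculation below applies that definition to the incremental values in Table 2. The results come out close to, but not exactly equal to, the tabulated ICERs; a plausible explanation, stated here as an assumption, is that the published QALY differences are rounded to two decimals while the ICERs were computed from unrounded values.

```python
def icer(incremental_cost, incremental_qalys):
    """Incremental cost-effectiveness ratio: extra cost per extra QALY gained."""
    if incremental_qalys == 0:
        raise ValueError("ICER is undefined when the QALY difference is zero")
    return incremental_cost / incremental_qalys


# Incremental values taken from Table 2.
icer_usa = icer(178_953, 0.56)    # roughly 319,559 USD per QALY
icer_china = icer(17_872, 0.51)   # roughly 35,043 USD per QALY
```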

Experimental Protocols

This section provides a detailed methodology for implementing an MDP/RL approach to optimize a chemotherapy dosing schedule, based on the principles outlined in the cited literature.

Protocol: Reinforcement Learning for Chemotherapy Dosing Optimization

1. Define the MDP Components:

- States (S): The state at time t is a vector representing the patient's condition. This should include:
  - Tumor size (e.g., number of cancer cells or tumor volume).
  - A measure of patient health/toxicity (e.g., bone marrow density, absolute neutrophil count).
- Actions (A): A discrete set of possible chemotherapy doses that can be administered at each time step (e.g., 0 mg/kg, 10 mg/kg, 20 mg/kg).
- Reward Function (R): The reward function is designed to achieve the therapeutic goal. A common approach is a weighted sum of terms:
  - A negative reward for the tumor size (to incentivize its reduction).
  - A negative reward for toxicity (to penalize harm to the patient).
  - A small negative reward for the administered dose (to discourage excessive drug use).
  - The reward at each step is calculated based on the change in state.
- Transition Probabilities (P): The transition probabilities are governed by a mathematical model of tumor growth and drug pharmacodynamics. This model simulates how the tumor and patient health evolve in response to a given dose. This is often a system of ordinary differential equations.

2. In Silico Environment:

- Develop a simulation of the patient's physiology based on the tumor growth and drug effect models. This simulator will serve as the environment for the RL agent to learn in.
- The simulator takes the current state and an action (dose) as input and outputs the next state and the reward.
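A simulator with this input/output contract can be sketched with a single Euler integration step of a small ODE model. The model form (logistic tumor growth, dose-proportional cell kill, dose-dependent health damage with slow recovery) and every coefficient below are hypothetical placeholders, not values from the cited studies. For simplicity the reward here is computed from the new state's levels rather than from the change in state.

```python
def simulate_step(state, dose, dt=1.0):
    """Advance a toy patient model by one time step.

    state is (tumor, health): tumor burden on a 0-100 scale and a health
    index in [0, 1]. Returns (next_state, reward).
    """
    tumor, health = state

    # Tumor dynamics: logistic growth minus drug-induced kill.
    growth = 0.1 * tumor * (1.0 - tumor / 100.0)
    kill = 0.02 * dose * tumor
    new_tumor = max(tumor + dt * (growth - kill), 0.0)

    # Health dynamics: slow recovery toward 1 minus dose-dependent damage.
    recovery = 0.05 * (1.0 - health)
    damage = 0.01 * dose * health
    new_health = min(max(health + dt * (recovery - damage), 0.0), 1.0)

    # Weighted sum of penalties for tumor burden, toxicity, and dose.
    reward = -0.01 * new_tumor - 1.0 * (1.0 - new_health) - 0.001 * dose
    return (new_tumor, new_health), reward


untreated, r_untreated = simulate_step((50.0, 1.0), dose=0)
treated, r_treated = simulate_step((50.0, 1.0), dose=20)
```

A production simulator would use a validated pharmacodynamic model and a proper ODE solver; the point here is only the `(state, action) -> (next_state, reward)` interface.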

3. Reinforcement Learning Algorithm:

- Q-learning, a model-free RL algorithm, is a suitable choice for this problem. Q-learning aims to learn a Q-function, Q(s, a), which represents the expected cumulative reward of taking action a in state s and following the optimal policy thereafter. A tabular implementation requires discretizing continuous state variables such as tumor size.
- The Q-learning update rule is:

  Q(s, a) ← Q(s, a) + α * [r + γ * max_a' Q(s', a') - Q(s, a)]

  where:
  - α is the learning rate.
  - γ is the discount factor.
  - r is the immediate reward.
  - s' is the next state.
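The update rule translates almost directly into code. The sketch below stores the Q-table in a dictionary keyed by (state, action) pairs; the function name, the `done` flag for terminal transitions, and the default values of α and γ are illustrative assumptions.

```python
from collections import defaultdict


def q_update(Q, s, a, r, s_next, actions, alpha=0.1, gamma=0.99, done=False):
    """Apply one Q-learning update to the table Q and return the new Q(s, a)."""
    # No future value is bootstrapped from a terminal state.
    best_next = 0.0 if done else max(Q[(s_next, a2)] for a2 in actions)
    td_target = r + gamma * best_next
    Q[(s, a)] += alpha * (td_target - Q[(s, a)])
    return Q[(s, a)]


Q = defaultdict(float)  # unseen state-action pairs default to 0
actions = ["low_dose", "high_dose"]
first = q_update(Q, "s0", "low_dose", 1.0, "s1", actions, alpha=0.5, gamma=0.9)
```

The bracketed term `td_target - Q[(s, a)]` is the temporal-difference error; α controls how far each update moves the stored estimate toward the target.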

4. Training the Agent:

- Initialize the Q-table (a table storing the Q-values for all state-action pairs) with zeros.
- For a large number of episodes (simulated patients):
  - Initialize the patient's state (e.g., with a certain tumor size and healthy bone marrow).
  - For each time step in the episode:
    - Choose an action a based on the current state s. An ε-greedy policy is often used, where the agent chooses the action with the highest Q-value with probability 1-ε and a random action with probability ε. This balances exploration and exploitation.
    - Simulate the effect of the action in the environment to get the next state s' and the reward r.
    - Update the Q-value for the state-action pair (s, a) using the Q-learning update rule.
    - Set the current state to the next state: s ← s'.
  - The episode ends when a terminal state is reached (e.g., the tumor is eradicated, or the patient's health falls below a critical threshold).
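The training procedure can be sketched end to end on a deliberately tiny, discretized patient model. The environment `toy_env_step`, its tumor and health levels, the reward values, and all hyperparameters are hypothetical; only the loop structure (ε-greedy action selection, simulation, Q-update, state advance, termination) mirrors the steps listed above.

```python
import random
from collections import defaultdict

DOSES = [0, 10, 20]  # discrete action set in mg/kg, as in the example above


def toy_env_step(state, dose):
    """Hypothetical discretized patient: state = (tumor level 0-5, health level 0-3)."""
    tumor, health = state
    if dose == 0:      # no treatment: tumor grows, health recovers
        tumor, health = min(tumor + 1, 5), min(health + 1, 3)
    elif dose == 10:   # moderate dose: tumor shrinks, health unchanged
        tumor = max(tumor - 1, 0)
    else:              # high dose: tumor shrinks faster, health is damaged
        tumor, health = max(tumor - 2, 0), max(health - 1, 0)

    reward = -0.1 * tumor - 0.01 * dose
    done = tumor == 0 or health == 0
    if health == 0:
        reward -= 10.0   # terminal penalty: critical toxicity
    elif tumor == 0:
        reward += 10.0   # terminal bonus: tumor eradicated
    return (tumor, health), reward, done


def epsilon_greedy(Q, state, rng, epsilon):
    """Random action with probability epsilon, otherwise the best known action."""
    if rng.random() < epsilon:
        return rng.choice(DOSES)
    return max(DOSES, key=lambda a: Q[(state, a)])


def train(n_episodes=3000, alpha=0.2, gamma=0.99, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    Q = defaultdict(float)               # Q-table initialized with zeros
    for _ in range(n_episodes):          # each episode is one simulated patient
        state = (4, 3)                   # large tumor, full health
        for _ in range(20):              # cap on episode length
            action = epsilon_greedy(Q, state, rng, epsilon)
            next_state, reward, done = toy_env_step(state, action)
            best_next = 0.0 if done else max(Q[(next_state, a)] for a in DOSES)
            Q[(state, action)] += alpha * (reward + gamma * best_next - Q[(state, action)])
            state = next_state
            if done:
                break
    return Q
```

With a continuous simulator, the state returned by the environment would need to be binned before it is used as a Q-table key.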

5. Deriving the Optimal Policy:

- After training, the optimal policy π* is to choose the action a that maximizes the Q-value for the current state s:

  π*(s) = argmax_a Q(s, a)
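Extracting the greedy policy from a learned Q-table is a one-line argmax per state. The sketch assumes the Q-table is a dictionary keyed by (state, action) pairs; the state and action names in the example are made up.

```python
def greedy_policy(Q, states, actions):
    """Return a dict mapping each state to the action with the highest Q-value."""
    return {s: max(actions, key=lambda a: Q[(s, a)]) for s in states}


# Example with a hand-filled Q-table.
example_Q = {
    ("large_tumor", "low_dose"): 0.2,
    ("large_tumor", "high_dose"): 0.9,
    ("small_tumor", "low_dose"): 0.5,
    ("small_tumor", "high_dose"): 0.1,
}
example_policy = greedy_policy(
    example_Q, ["large_tumor", "small_tumor"], ["low_dose", "high_dose"]
)
```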

6. Evaluation:

- Evaluate the learned policy on a separate set of simulated patients with varying initial conditions and model parameters to assess its robustness and performance compared to standard-of-care dosing regimens.

Visualization

The following diagrams, created using the DOT language, illustrate key concepts and workflows related to the application of MDPs in experimental science.

Caption: Core components of a Markov Decision Process framework.

References

- 1. btdenton.engin.umich.edu [btdenton.engin.umich.edu]

- 2. Markov Decision Processes: A Tool for Sequential Decision Making under Uncertainty - PMC [pmc.ncbi.nlm.nih.gov]

- 3. researchgate.net [researchgate.net]

- 4. Markov Decision Process - GeeksforGeeks [geeksforgeeks.org]

- 5. Reinforcement learning derived chemotherapeutic schedules for robust patient-specific therapy - PubMed [pubmed.ncbi.nlm.nih.gov]

- 6. researchgate.net [researchgate.net]

The Nexus of Intelligence and Experimentation: A Technical Guide to Reinforcement Learning in Drug Discovery

An In-depth Guide for Researchers, Scientists, and Drug Development Professionals on the Core Principles and Applications of Reinforcement Learning Agents and Environments in Scientific Research.

The confluence of artificial intelligence and the life sciences has opened new routes to therapeutic innovation. Among the most promising of these computational tools is Reinforcement Learning (RL), a paradigm of machine learning that enables an "agent" to learn optimal behaviors through trial-and-error interactions with a dynamic "environment." This technical guide provides an overview of the fundamental concepts of RL agents and their environments, with a specific focus on their application in drug discovery and development. We will delve into the detailed methodologies of key experiments, present quantitative data from seminal studies, and visualize the workflows and logical relationships that underpin this technology.

Core Concepts: The Agent and the Environment in Drug Discovery

At its heart, reinforcement learning is a framework for sequential decision-making. The two primary components are:

- The Agent: This is the computational entity that learns to make decisions. In the context of drug discovery, the agent is an algorithm that proposes new molecules, suggests modifications to existing ones, or determines optimal reaction conditions. The agent's goal is to maximize a cumulative reward signal.
- The Environment: This is the world in which the agent operates and from which it receives feedback. In drug discovery, the environment can be a simulated chemical space, a predictive model that estimates the properties of a molecule (e.g., a Quantitative Structure-Activity Relationship or QSAR model), or even a real-world automated laboratory setup.

The interaction between the agent and the environment is cyclical. The agent takes an action (e.g., adding an atom to a molecule), the environment transitions to a new state (the modified molecule), and the agent receives a reward (a score based on the molecule's desired properties). The agent's internal "strategy" for choosing actions is called a policy . Through repeated interactions, the agent refines its policy to take actions that lead to higher cumulative rewards.

Key Applications of Reinforcement Learning in Drug Discovery

Reinforcement learning is being applied across the drug discovery pipeline to accelerate and improve various stages:

- De Novo Drug Design: Generating entirely new molecules with desired properties. The agent explores the vast chemical space to design novel compounds that are predicted to be active against a specific biological target.
- Lead Optimization: Iteratively modifying a known active compound (a "lead") to improve its properties, such as potency, selectivity, and pharmacokinetic profile.
- Chemical Synthesis Planning: Devising the most efficient and cost-effective synthetic routes for a target molecule. The agent learns to navigate the complex web of possible chemical reactions.
- Reaction Optimization: Determining the optimal experimental conditions (e.g., temperature, catalysts, solvents) to maximize the yield and purity of a chemical reaction.

Experimental Protocols: A Closer Look at RL in Action

To provide a practical understanding of how RL is implemented in research, we detail the methodologies of two influential studies.

Experimental Protocol 1: De Novo Design of DDR1 Kinase Inhibitors with GENTRL

A significant milestone in RL-driven drug discovery was the development of Generative Tensorial Reinforcement Learning (GENTRL), which was used to design potent inhibitors of Discoidin Domain Receptor 1 (DDR1), a kinase implicated in fibrosis. According to the authors, potent inhibitors were discovered in 21 days.[1]

Methodology:

- Agent: The agent in GENTRL is a deep generative model, specifically a Variational Autoencoder (VAE), which learns a compressed representation of molecules in a continuous "latent space."

- Environment: The environment is a combination of a predefined chemical space and a reward function. The agent explores the latent space, and for each point it samples, a molecule is generated.

- State: The state is the current position of the agent in the latent space.

- Action: An action consists of moving to a new point in the latent space, which corresponds to generating a new molecule.

- Reward Function: The reward function is a multi-objective score that incorporates:

  - Predicted biological activity: A predictive model estimates the potency of the generated molecule against DDR1.

  - Synthetic feasibility: A score that assesses how easily the molecule can be synthesized.

  - Novelty: A measure to encourage the generation of new chemical scaffolds.

- Training Procedure:

  - Pre-training: The VAE is first pre-trained on a large database of known molecules to learn the fundamental rules of chemistry and molecular structure.

  - Reinforcement Learning: The pre-trained agent is then fine-tuned using RL. The agent explores the latent space, generates molecules, and receives rewards. The policy of the agent is updated to favor the generation of molecules with higher rewards.

- Molecule Selection and Validation: After training, the agent generates a library of novel molecules. The top-scoring compounds are then synthesized and tested in biochemical and cell-based assays to validate their activity.
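One simple way to combine the three reward components listed above is a weighted sum. The sketch below is only an illustration under stated assumptions: the `Scores` container, the `multi_objective_reward` function, the weights, and the scaling of each score to the range 0 to 1 are all hypothetical, and this is not the scoring scheme used in the cited study.

```python
from dataclasses import dataclass


@dataclass
class Scores:
    """Hypothetical per-molecule scores, each assumed to be scaled to [0, 1]."""
    activity: float          # predicted potency against the target
    synthesizability: float  # higher means easier to synthesize
    novelty: float           # higher means less similar to known scaffolds


def multi_objective_reward(scores, weights=(0.5, 0.3, 0.2)):
    """Weighted sum of the three objectives; the weights are illustrative."""
    w_activity, w_synth, w_novelty = weights
    return (w_activity * scores.activity
            + w_synth * scores.synthesizability
            + w_novelty * scores.novelty)


example = Scores(activity=0.8, synthesizability=0.5, novelty=1.0)
reward = multi_objective_reward(example)
```

A weighted sum lets a strong score on one objective offset a weak score on another. If every objective must clear a minimum, a product of scores or hard thresholds may be a better fit.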

Experimental Protocol 2: QSAR-Guided Reinforcement Learning for Syk Inhibitor Discovery

This study integrated a Quantitative Structure-Activity Relationship (QSAR) model with a deep reinforcement learning framework to discover novel inhibitors for Spleen Tyrosine Kinase (Syk), a target for autoimmune diseases.[2][3]

Methodology:

- Agent: The agent is a generative model, likely a Recurrent Neural Network (RNN) trained to generate molecules represented as SMILES strings.

- Environment: The environment is defined by the rules of chemical valence and a composite reward function.

- State: The current partially generated SMILES string.

- Action: Appending a new character to the SMILES string to build the molecule.

- Reward Function: The reward is a multi-component score that includes:

  - QSAR-predicted potency: A pre-trained QSAR model predicts the inhibitory activity (pIC50) of the generated molecule against Syk.

  - Binding affinity: A docking score from molecular simulations to predict how well the molecule binds to the Syk protein.

  - Drug-likeness: Properties like the Quantitative Estimate of Drug-likeness (QED) and synthetic accessibility (SA) score.

- Training Procedure:

  - QSAR Model Training: A stacking-ensemble QSAR model is trained on a dataset of known Syk inhibitors to predict their pIC50 values from their molecular structures.

  - Generative Model Pre-training: The RNN-based agent is pre-trained on a large chemical database to learn the grammar of SMILES strings.

  - Reinforcement Learning Fine-tuning: The pre-trained agent is then fine-tuned using a policy gradient method (e.g., REINFORCE). The agent generates molecules, and the reward function, incorporating the predictions from the QSAR model, guides the agent towards generating potent and drug-like Syk inhibitors.

- Candidate Selection: From over 78,000 generated molecules, candidates were filtered based on high predicted potency, favorable binding affinity, and optimal drug-like properties, resulting in 139 promising compounds.[2][3]
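The candidate selection step can be pictured as a chain of threshold filters over the scored library. The sketch below assumes each molecule is a dictionary with hypothetical keys (`pic50`, `docking`, `qed`, `sa`); the cut-off values are placeholders rather than those used in the study. It also assumes the common conventions that more negative docking scores and lower SA scores are better.

```python
def select_candidates(molecules, min_pic50=7.0, max_docking=-8.0,
                      min_qed=0.5, max_sa=4.0):
    """Keep molecules that pass every (illustrative) threshold.

    Results are sorted by predicted potency, highest first.
    """
    selected = [
        m for m in molecules
        if m["pic50"] >= min_pic50      # predicted potency
        and m["docking"] <= max_docking  # more negative = stronger predicted binding
        and m["qed"] >= min_qed          # drug-likeness
        and m["sa"] <= max_sa            # lower = easier to synthesize
    ]
    return sorted(selected, key=lambda m: m["pic50"], reverse=True)


# Hypothetical scored library (identifiers and values are made up).
library = [
    {"id": "mol_a", "pic50": 7.9, "docking": -9.1, "qed": 0.71, "sa": 2.8},
    {"id": "mol_b", "pic50": 8.4, "docking": -6.0, "qed": 0.66, "sa": 3.1},
    {"id": "mol_c", "pic50": 7.2, "docking": -8.5, "qed": 0.55, "sa": 3.9},
]
shortlist = [m["id"] for m in select_candidates(library)]
```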

Quantitative Data Summary

The following table summarizes the quantitative outcomes from various reinforcement learning applications in drug discovery, showcasing the performance and efficiency of these methods.

| Experiment/Model | Target | Key Performance Metric | Result | Reference |
|---|---|---|---|---|
| GENTRL | DDR1 Kinase | Time to discover potent inhibitors | 21 days | [1] |
| QSAR-guided RL | Syk Kinase | Number of promising candidates generated | 139 (from >78,000) | [2][3] |
| REINVENT-based study | EGFR | Percentage of generated molecules with predicted activity | >95% for DRD2 activity | [4] |
| Language Model with RL | General Proteins | Improvement in drug-likeness (QED) | Mean QED increased from 0.5705 to 0.6537 | [5] |
| MolRL-MGPT | GuacaMol Benchmark | Novelty and Effectiveness | Close to 100% | [6] |
| POLO | Lead Optimization | Average success rate on single-property tasks | 84% | [7] |
| 3D-MCTS | Structure-based design | Hit rate compared to virtual screening | 30 times more hits with high binding affinity | [8] |
| MolDQN | Multi-objective optimization | Chemical validity of generated molecules | 100% | [9] |

Visualizing the Reinforcement Learning Workflow in Drug Discovery

The following diagrams, created using the DOT language for Graphviz, illustrate the logical flow of reinforcement learning in drug discovery.

Caption: General workflow of reinforcement learning for de novo drug design.

The diagram above illustrates the typical three-phase process. It begins with pre-training a generative model on a large dataset of known molecules. This is followed by a cyclical reinforcement learning phase where the agent generates or modifies molecules, receives a reward from the environment based on predicted properties, and updates its policy. Finally, the optimized agent generates a library of candidate molecules for synthesis and experimental validation.

Caption: RL workflow for kinase inhibitor design with a multi-objective reward.

This diagram details a more specific application of reinforcement learning for designing kinase inhibitors. The agent, a recurrent neural network, generates molecules as SMILES strings. These molecules are then evaluated by a multi-objective reward function that integrates predictions from a QSAR model for potency, results from docking simulations for binding affinity, and other calculated drug-like properties. The agent's policy is updated based on this comprehensive reward, guiding it to generate more effective and developable kinase inhibitor candidates.

Conclusion

Reinforcement learning represents a paradigm shift in computational drug discovery, moving from static predictions to dynamic, goal-oriented generation and optimization. By framing molecular design and optimization as a sequential decision-making problem, RL agents can intelligently explore the vast and complex chemical space to identify novel therapeutic candidates with desired properties. The experimental protocols and quantitative results presented herein demonstrate the tangible successes of this approach. As RL algorithms become more sophisticated and their integration with predictive models and automated experimental platforms deepens, we can expect a further acceleration in the discovery and development of new medicines, ultimately benefiting patients with a wide range of diseases.

References

- 1. unopertutto.unige.net [unopertutto.unige.net]

- 2. Integrating QSAR modelling with reinforcement learning for Syk inhibitor discovery - PMC [pmc.ncbi.nlm.nih.gov]

- 3. researchgate.net [researchgate.net]

- 4. Reddit - The heart of the internet [reddit.com]

- 5. chemrxiv.org [chemrxiv.org]

- 6. researchgate.net [researchgate.net]

- 7. Leveraging artificial intelligence and machine learning in kinase inhibitor development: advances, challenges, and future prospects - RSC Medicinal Chemistry (RSC Publishing) DOI:10.1039/D5MD00494B [pubs.rsc.org]

- 8. academic.oup.com [academic.oup.com]

- 9. [1810.08678] Optimization of Molecules via Deep Reinforcement Learning [arxiv.org]

The Symbiotic Revolution: Reinforcement Learning in Scientific Discovery

An In-depth Technical Guide for Researchers, Scientists, and Drug Development Professionals

In the relentless pursuit of scientific advancement, a new paradigm is emerging at the intersection of artificial intelligence and empirical research. Reinforcement Learning (RL), a sophisticated subset of machine learning, is transcending its origins in game playing and robotics to become a pivotal tool in accelerating scientific discovery. From the rational design of novel therapeutics to the automated orchestration of complex experiments, RL is empowering researchers to navigate vast and intricate parameter spaces with unprecedented efficiency and ingenuity. This technical guide delves into the core applications of reinforcement learning across key scientific domains, providing a comprehensive overview of its methodologies, quantitative impact, and the transformative potential it holds for the future of research and development.

De Novo Drug Design: Crafting Molecules with Purpose

The challenge of designing novel molecules with specific desired properties lies at the heart of drug discovery. Traditional methods often involve laborious and costly screening of vast chemical libraries. Reinforcement learning offers a powerful alternative by reframing molecule generation as a sequential decision-making process.

Methodology: The ReLeaSE (Reinforcement Learning for Structural Evolution) Approach

A prominent example of RL in de novo drug design is the ReLeaSE framework. This method utilizes a two-phase learning process to generate novel, synthesizable molecules with desired biological activities.[1][2]

Phase 1: Supervised Pre-training

- Generative Model Training: A generative model, typically a Recurrent Neural Network (RNN) with Long Short-Term Memory (LSTM) or Gated Recurrent Unit (GRU) cells, is trained on a large database of known chemical structures (e.g., ChEMBL). The model learns the grammatical rules of chemical representation, such as the Simplified Molecular Input Line Entry System (SMILES), enabling it to generate valid chemical structures.[3][4]

- Predictive Model Training: A separate predictive model, often a deep neural network, is trained on a dataset of molecules with known properties (e.g., binding affinity to a target protein). This model learns to predict the desired property of a given molecule based on its SMILES representation.[3]

Phase 2: Reinforcement Learning-based Fine-tuning

- Agent-Environment Setup: The pre-trained generative model acts as the "agent," and the "environment" is a chemical space. The agent's "actions" are the sequential generation of characters in a SMILES string.

- Reward Function: The predictive model serves as a "critic," evaluating the molecules generated by the agent. A reward is calculated based on the predicted property of the generated molecule. For instance, in the design of an inhibitor, a higher predicted binding affinity would result in a higher reward.[1]

- Policy Gradient Optimization: The agent's policy (its strategy for generating molecules) is updated using a policy gradient algorithm, such as REINFORCE. The goal is to maximize the expected reward, thereby biasing the generation process towards molecules with the desired properties.[1]
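The REINFORCE update described above can be illustrated on a deliberately tiny problem. The sketch below is a toy under stated assumptions, not the ReLeaSE implementation. The policy is a single softmax over a three-token vocabulary rather than an RNN. The "predictive model" is a hypothetical `toy_reward` that returns the fraction of "N" tokens. A running-average baseline is used to reduce variance.

```python
import math
import random

VOCAB = ["C", "N", "O"]  # toy token set, not a full SMILES alphabet
SEQ_LEN = 5


def softmax(logits):
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def toy_reward(tokens):
    """Hypothetical stand-in for the predictive model: fraction of 'N' tokens."""
    return tokens.count("N") / len(tokens)


def train_reinforce(steps=3000, lr=0.1, seed=0):
    rng = random.Random(seed)
    logits = [0.0] * len(VOCAB)  # one shared categorical policy, for brevity
    baseline = 0.0               # running average of past rewards
    for _ in range(steps):
        probs = softmax(logits)
        idxs = rng.choices(range(len(VOCAB)), weights=probs, k=SEQ_LEN)
        reward = toy_reward([VOCAB[i] for i in idxs])
        advantage = reward - baseline
        baseline += 0.05 * (reward - baseline)
        # Gradient of sum_t log pi(a_t) w.r.t. logit j is sum_t (1[a_t == j] - probs[j]).
        for j in range(len(VOCAB)):
            grad = sum((1.0 if i == j else 0.0) - probs[j] for i in idxs)
            logits[j] += lr * advantage * grad
    return softmax(logits)


final_probs = train_reinforce()
```

After training, the probability assigned to "N" should rise well above its initial value of one third, mirroring how the generator is biased towards high-reward molecules.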

Experimental Workflow: De Novo Design of JAK2 Inhibitors

The following diagram illustrates the experimental workflow for designing Janus kinase 2 (JAK2) inhibitors using the ReLeaSE methodology.

Quantitative Performance

The application of RL in de novo drug design has yielded promising quantitative results.

| Metric | Value | Reference |
|---|---|---|
| Validity of Generated Molecules | 95% of generated structures were chemically valid. | [5] |
| Novelty of Generated Molecules | In a JAK2 inhibitor design task, only 13 out of 10,000 RL-generated molecules showed high similarity to known inhibitors, indicating a high degree of novelty. | [6] |
| Retrospective Discovery | The ReLeaSE model retrospectively discovered 793 commercially available compounds in the ZINC database, which accounted for approximately 5% of the total generated library. | [5] |
| Hit Rate (Q-learning approach) | A Q-learning-based approach for designing inhibitors of an influenza A virus protein achieved a hit rate of 22% (2 out of 9 synthesized compounds showed activity). | [4] |

Chemical Synthesis: Automating the Path to Discovery

Optimizing chemical reactions is a fundamental aspect of chemical synthesis, often requiring extensive experimentation to determine the ideal conditions for maximizing yield and purity. Reinforcement learning is emerging as a powerful tool for automating this optimization process.

Methodology: The Deep Reaction Optimizer (DRO)

The Deep Reaction Optimizer (DRO) is an RL-based system that iteratively explores experimental conditions to find the optimal parameters for a chemical reaction.[7][8]

- State Representation: The "state" is defined by the current experimental conditions, such as temperature, reaction time, and reactant concentrations.

- Action Space: The "actions" are the adjustments made to the experimental conditions in the next iteration.

- Reward Function: The "reward" is a function of the reaction outcome, typically the yield of the desired product. An increase in yield from the previous experiment results in a positive reward.

- Policy Network: A Recurrent Neural Network (RNN) is used as the policy network. It takes the history of experimental conditions and outcomes as input and outputs the next set of conditions to be tested.[9]

- Training: The DRO is trained to maximize the cumulative reward, effectively learning a policy that efficiently navigates the parameter space to find the optimal reaction conditions.[7]
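A reward defined as the change in yield between consecutive experiments has a useful property: the cumulative reward telescopes to the final yield minus the initial yield. The short sketch below illustrates this. The `improvement_rewards` function and the yield values are hypothetical, and the exact reward used by the DRO authors may differ.

```python
def improvement_rewards(yields):
    """Reward for each step = yield of this experiment minus the previous one."""
    return [curr - prev for prev, curr in zip(yields, yields[1:])]


# Hypothetical yields (as fractions) from four consecutive experiments.
observed_yields = [0.10, 0.25, 0.20, 0.40]
rewards = improvement_rewards(observed_yields)
cumulative = sum(rewards)  # equals final yield minus initial yield
```

Maximizing this cumulative reward is therefore equivalent to maximizing the final yield. Discounting or a limit on the number of steps is what pushes the agent to get there in as few experiments as possible.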

Experimental Workflow: Automated Reaction Optimization

The following diagram illustrates the workflow of the Deep Reaction Optimizer in an automated chemical synthesis setup.

Quantitative Performance

The DRO has demonstrated significant improvements in efficiency compared to traditional and other black-box optimization methods.

| Metric | DRO Performance | Comparison Method | Reference |
|---|---|---|---|
| Number of Experiments | Required 71% fewer steps to reach the optimal yield. | State-of-the-art blackbox optimization algorithm. | [7][8] |
| Optimization Time | Optimized a microdroplet reaction in 30 minutes. | - | [8] |
| Steps to Reach Target Yield | Reached the target yield in significantly fewer steps. | CMA-ES algorithm required over 120 steps for the same yield. | [5] |

Automation of Scientific Experiments: The Self-Driving Laboratory

Beyond specific applications in chemistry, reinforcement learning is poised to revolutionize the very process of scientific experimentation by enabling the creation of "self-driving laboratories." These autonomous systems can design, execute, and analyze experiments with minimal human intervention.

Methodology: RL for Optimal Experimental Design (OED)

In the context of biological research, RL can be used for Optimal Experimental Design (OED) to efficiently parameterize models of biological systems.[9][10]

- Environment: The environment is the biological system under investigation, which can be a real-world experiment or a simulation.

- Agent: The RL agent is a controller that decides the next experimental action.

- State: The state is the current set of observations from the experiment (e.g., cell density, protein concentration).

- Actions: The actions are the experimental parameters that can be controlled (e.g., nutrient concentration, temperature).

- Reward: The reward function is designed to maximize the information gained from each experiment. This is often based on metrics like the Fisher Information Matrix, which quantifies the amount of information an observation carries about the unknown parameters of a model.

- Goal: The agent learns a policy to select a sequence of experiments that will most rapidly and accurately determine the parameters of the underlying biological model.
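To make the information-based reward concrete, consider a deliberately simple one-parameter model that is not taken from the cited work: a signal y(t) = exp(-k t) measured with Gaussian noise of standard deviation sigma. For a single measurement at time t, the Fisher information about k is (dy/dk)^2 / sigma^2 = t^2 exp(-2 k t) / sigma^2, which peaks at t = 1/k. The sketch below picks the most informative sampling time from a candidate grid; all names and values are illustrative assumptions.

```python
import math


def fisher_information(t, k, sigma=0.05):
    """Fisher information about k from one noisy measurement of exp(-k * t)."""
    sensitivity = -t * math.exp(-k * t)  # derivative of exp(-k t) with respect to k
    return sensitivity ** 2 / sigma ** 2


def most_informative_time(candidate_times, k_guess, sigma=0.05):
    """Choose the sampling time that maximizes the Fisher information."""
    return max(candidate_times,
               key=lambda t: fisher_information(t, k_guess, sigma))


times = [0.5 * i for i in range(1, 11)]  # 0.5, 1.0, ..., 5.0
best_time = most_informative_time(times, k_guess=0.5)
```

With the current estimate k = 0.5, the selected time matches the analytical optimum 1/k = 2.0. In the multi-parameter case, a scalar summary of the Fisher Information Matrix (for example its determinant) would play the role of this scalar quantity.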

Experimental Workflow: Automated Characterization of Bacterial Growth

The following diagram illustrates an RL-driven workflow for the automated characterization of a bacterial growth model.

Genomics and Materials Science: Expanding the Frontiers

The applications of reinforcement learning in scientific discovery extend to other data-rich and complex domains such as genomics and materials science.

Genomics: Unraveling Gene Regulatory Networks

In genomics, RL is being explored for the inference of gene regulatory networks (GRNs). The intricate web of interactions between genes can be modeled as a graph, and RL agents can be trained to predict the regulatory links between genes based on gene expression data. While still an emerging area, the use of metrics like the Area Under the Precision-Recall Curve (AUPRC) is crucial for evaluating the performance of these models, especially in the context of imbalanced datasets typical of GRNs.[11]

Materials Science: Accelerating the Discovery of Novel Materials

In materials science, RL is being used to accelerate the discovery of new materials with desired properties, such as high conductivity or thermal stability. The vast combinatorial space of possible material compositions makes exhaustive searches infeasible. RL agents can intelligently explore this space, guided by reward functions that are based on predicted material properties. Performance in this domain is often measured by metrics such as "Discovery Yield" (the number of high-performing materials found) and "Discovery Probability" (the likelihood of finding a high-performing material).

Conclusion

Reinforcement learning is rapidly transitioning from a theoretical concept to a practical and powerful tool in the arsenal of the modern scientist. By enabling the autonomous exploration of complex scientific landscapes, RL is not only accelerating the pace of discovery but also uncovering novel solutions that may have been missed by traditional methods. As algorithms become more sophisticated and the integration with automated experimental platforms becomes more seamless, the symbiotic relationship between artificial intelligence and scientific inquiry is set to redefine the boundaries of what is possible, ushering in a new era of data-driven discovery.

References

- 1. Create a Flowchart using Graphviz Dot - Prashant Mhatre - Medium [medium.com]

- 2. researchgate.net [researchgate.net]

- 3. sketchviz.com [sketchviz.com]

- 4. GitHub - GuoJeff/generative-drug-design-with-experimental-validation: Compilation of literature examples of generative drug design that demonstrate experimental validation [github.com]

- 5. Deep reinforcement learning for de novo drug design - PMC [pmc.ncbi.nlm.nih.gov]

- 6. portal.fis.tum.de [portal.fis.tum.de]

- 7. pubs.acs.org [pubs.acs.org]

- 8. Optimizing Chemical Reactions with Deep Reinforcement Learning - PMC [pmc.ncbi.nlm.nih.gov]

- 9. discovery.ucl.ac.uk [discovery.ucl.ac.uk]

- 10. biorxiv.org [biorxiv.org]

- 11. Improved gene regulatory network inference from single cell data with dropout augmentation | PLOS Computational Biology [journals.plos.org]

Getting Started with Reinforcement Learning in a Laboratory Setting: An In-depth Technical Guide

For Researchers, Scientists, and Drug Development Professionals

The integration of artificial intelligence, particularly reinforcement learning (RL), into the laboratory setting is poised to revolutionize drug discovery and development. By enabling autonomous optimization of experiments and molecular design, RL offers the potential to accelerate research, reduce costs, and uncover novel therapeutic candidates. This guide provides a comprehensive technical overview for researchers, scientists, and drug development professionals on the core principles of RL and its practical application in a laboratory environment.

Core Concepts of Reinforcement Learning in the Laboratory

Reinforcement learning is a machine learning paradigm where an "agent" learns to make a sequence of decisions in an "environment" to maximize a cumulative "reward".[1] In a laboratory context, the agent is a computational model, the environment is the experimental setup (e.g., a chemical reaction or a cell culture), the actions are the experimental parameters to be adjusted (e.g., temperature, concentration, dosage), and the reward is a quantifiable measure of the desired outcome (e.g., reaction yield, cell viability, binding affinity).[2][3]

The interaction between the agent and the environment is typically modeled as a Markov Decision Process (MDP), a mathematical framework for sequential decision-making under uncertainty.[4] An MDP is defined by a set of states, actions, transition probabilities between states, and a reward function.[4] The goal of the RL agent is to learn a "policy," which is a strategy for choosing actions in each state to maximize the total expected reward over time.[4]

Several RL algorithms can be employed to learn this optimal policy. A foundational value-based method is Q-learning, where the agent learns a Q-function that estimates the expected future reward for taking a specific action in a given state.[1] More advanced methods, often falling under the umbrella of Deep Reinforcement Learning (DRL), utilize deep neural networks to approximate the Q-function or the policy directly, enabling them to handle complex, high-dimensional state and action spaces common in biological and chemical experiments.[5]
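For small, discrete problems, the Q-function can be stored as a table and updated after every experiment. The sketch below shows one such tabular Q-learning update. The state and action names, the learning rate `alpha`, and the discount factor `gamma` are illustrative assumptions rather than values from any cited study.

```python
from collections import defaultdict


def q_update(q, state, action, reward, next_state, actions,
             alpha=0.1, gamma=0.9):
    """One tabular Q-learning step; returns the updated Q(state, action)."""
    best_next = max(q[(next_state, a)] for a in actions)
    td_target = reward + gamma * best_next
    q[(state, action)] += alpha * (td_target - q[(state, action)])
    return q[(state, action)]


actions = ["heat", "cool"]
q_table = defaultdict(float)  # unseen (state, action) pairs start at 0.0

# Hypothetical transition: heating from a low temperature gave a reward of 1.0.
first = q_update(q_table, "low_temp", "heat", 1.0, "high_temp", actions)

# Suppose the agent has since learned that cooling from "high_temp" is worth 2.0.
q_table[("high_temp", "cool")] = 2.0
second = q_update(q_table, "low_temp", "heat", 1.0, "high_temp", actions)
```

The second update is larger than the first because the estimated value of the next state now contributes to the target, which is how information about later outcomes propagates back to earlier decisions.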

Experimental Protocols: Integrating RL with Laboratory Automation

The successful implementation of RL in a laboratory setting hinges on the seamless integration of computational algorithms with physical experimental platforms. This "closed-loop" system allows the RL agent to autonomously design, execute, and learn from experiments. Below are detailed methodologies for key applications.

Automated Chemical Synthesis

Objective: To optimize the reaction conditions for a chemical synthesis to maximize the yield of the desired product.

Methodology:

- Environment Setup:

  - A robotic synthesis platform capable of dispensing reagents, controlling temperature and pressure, and monitoring the reaction progress in real time (e.g., using HPLC or mass spectrometry).

  - The state of the environment is defined by the current reaction conditions (e.g., temperature, pressure, concentrations of reactants and catalysts) and the measured reaction yield at a given time.

- RL Agent and Action Space:

  - A DRL agent, such as a Proximal Policy Optimization (PPO) or Deep Q-Network (DQN) algorithm, is implemented.

  - The action space consists of the controllable experimental parameters, such as adjustments to temperature, stirring speed, and the rate of addition of a reagent.

- Reward Function:

  - The reward is directly proportional to the measured yield of the target molecule. A penalty can be introduced for the formation of undesirable byproducts.

- Training and Optimization Loop:

  - The RL agent proposes an initial set of reaction conditions (an action).

  - The robotic platform executes the experiment with these conditions.

  - Real-time data on reaction progress is fed back to the agent.

  - The agent calculates the reward based on the final yield.

  - The agent updates its policy based on the state, action, and reward.

  - This loop is repeated, with the agent continuously proposing new experiments to explore the reaction space and exploit promising conditions to maximize the yield.
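The propose, execute, reward, update cycle above can be sketched with a much simpler learner than PPO or DQN. The example below uses an epsilon-greedy bandit over a handful of temperature settings, and it replaces the robotic platform with a hypothetical `simulated_yield` function whose optimum is placed at 80 °C. Both choices are assumptions made so that the loop is self-contained and runnable.

```python
import random


def simulated_yield(temp_c, rng):
    """Hypothetical stand-in for the robotic platform: yield peaks near 80 °C."""
    ideal = max(0.0, 1.0 - ((temp_c - 80) / 40) ** 2)
    return max(0.0, min(1.0, ideal + rng.gauss(0, 0.02)))  # add measurement noise


def optimize(temps=(40, 60, 80, 100, 120), n_experiments=200,
             epsilon=0.1, seed=0):
    rng = random.Random(seed)
    value = {t: 0.0 for t in temps}  # running mean yield per condition
    count = {t: 0 for t in temps}
    for i in range(n_experiments):
        if i < len(temps):
            t = temps[i]                              # try each condition once
        elif rng.random() < epsilon:
            t = rng.choice(temps)                     # explore
        else:
            t = max(temps, key=lambda c: value[c])    # exploit best estimate
        reward = simulated_yield(t, rng)              # "execute the experiment"
        count[t] += 1
        value[t] += (reward - value[t]) / count[t]    # update the estimate
    return max(temps, key=lambda c: value[c])


best_temperature = optimize()
```

A bandit ignores how conditions evolve within a run. A full RL agent would also condition its choice on the current state, which matters when parameters are adjusted during the reaction.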

Optimization of Cell Culture Protocols

Objective: To optimize the feeding strategy in a fed-batch bioreactor to maximize the production of a therapeutic protein from a cell culture.

Methodology:

- Environment Setup:

  - An automated bioreactor system with pumps for nutrient feeding, sensors for monitoring critical process parameters (e.g., glucose concentration, cell density, product titer), and a control interface.

  - The state is defined by the measurements from the bioreactor sensors at discrete time intervals.

- RL Agent and Action Space:

  - An RL agent, potentially a model-based RL algorithm that can learn the dynamics of the cell culture, is used.

  - The action space is the amount of each nutrient to be fed to the bioreactor at each time step.

- Reward Function:

  - The reward function is designed to maximize the final product titer while potentially penalizing the excessive use of expensive nutrients or conditions that lead to cell death.

- Training and Optimization Loop:

  - The RL agent, based on the current state of the bioreactor, decides on the feeding action.

  - The automated system implements the feeding strategy.

  - The bioreactor state is monitored, and the data is used to update the agent's model of the environment.

  - A reward is calculated based on the change in product concentration.

  - The agent's policy is updated to improve future feeding decisions. This process continues for the duration of the fed-batch culture.
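A per-step reward that follows the description above might combine the gain in product titer with penalties for nutrient use and low viability. The sketch below is one hypothetical formulation. The function name, the units, the cost per millilitre, and the viability threshold are all assumptions chosen for illustration.

```python
def feeding_reward(titer_before, titer_after, feed_volume_ml, viability,
                   feed_cost_per_ml=0.01, min_viability=0.8,
                   viability_penalty=1.0):
    """Reward for one feeding step in a fed-batch culture (illustrative)."""
    reward = titer_after - titer_before            # gain in product concentration
    reward -= feed_cost_per_ml * feed_volume_ml    # penalize nutrient consumption
    if viability < min_viability:                  # penalize conditions harming cells
        reward -= viability_penalty * (min_viability - viability)
    return reward


healthy_step = feeding_reward(1.0, 1.5, feed_volume_ml=10.0, viability=0.9)
stressed_step = feeding_reward(1.0, 1.5, feed_volume_ml=10.0, viability=0.7)
```

The relative size of the penalty terms determines how strongly the agent trades titer against cost and cell health, so in practice these coefficients need tuning for the specific process.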

Data Presentation: Benchmarking RL Performance

The evaluation of RL agents in a laboratory setting requires well-defined metrics to compare their performance against traditional methods or other algorithms. Key performance indicators include the final optimized value (e.g., yield, titer), the number of experiments required to reach that optimum (sample efficiency), and the stability of the learning process.

| Application Area | RL Algorithm | Key Performance Metric | Reported Improvement | Reference |
|---|---|---|---|---|
| De Novo Drug Design | Reinforcement Learning for Structural Evolution (ReLeaSE) | Generation of novel, valid, and synthesizable molecules with desired properties. | Successfully generated novel molecules with specific desired properties (e.g., melting point, hydrophobicity). | [5][6] |
| De Novo Drug Design | MolRL-MGPT (Multiple GPT Agents) | Performance on GuacaMol benchmark for goal-directed molecular generation. | Showed promising results on the GuacaMol benchmark and in designing inhibitors for SARS-CoV-2 targets. | [7] |
| De Novo Drug Design | RLDV (RL with Dynamic Vocabulary) | Performance on GuacaMol benchmark. | Outperformed existing models on multiple tasks in the GuacaMol benchmark. | [8] |
| Antibody Design | Structured Q-learning (SQL) | Binding energy of designed antibody sequences to target pathogens. | Found high binding energy sequences and performed favorably against baselines on eight antibody design tasks. | [1] |
| Clinical Trial Design | Q-learning | Discovery of optimal individualized treatment regimens. | Simulation studies show the ability to extract optimal strategies directly from clinical data. | [9][10] |
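The sample-efficiency indicator mentioned above can be computed directly from a log of experimental outcomes. The sketch below counts how many experiments were needed before the best observed outcome first reached a target value. The function name and the example values are hypothetical.

```python
def experiments_to_target(outcomes, target):
    """Number of experiments until the best-so-far outcome reaches the target.

    Returns None if the target is never reached.
    """
    best = float("-inf")
    for i, value in enumerate(outcomes, start=1):
        best = max(best, value)
        if best >= target:
            return i
    return None


# Hypothetical yields from five consecutive experiments.
yield_log = [0.20, 0.50, 0.45, 0.80, 0.90]
steps_needed = experiments_to_target(yield_log, target=0.75)
```

Running the same calculation on logs from an RL agent and from a baseline optimizer gives a like-for-like comparison of how many experiments each one consumed.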

Visualizations: Pathways and Workflows

Visualizing the complex logical relationships and workflows in RL-driven laboratory research is crucial for understanding and communication. The following diagrams are generated using the Graphviz DOT language.

The Core Reinforcement Learning Loop

This diagram illustrates the fundamental interaction between the RL agent and the laboratory environment.

Closed-Loop Workflow for Automated Synthesis

This diagram outlines the practical workflow of integrating an RL agent with a robotic platform for optimizing a chemical synthesis.

MAPK Signaling Pathway in Cancer

The Mitogen-Activated Protein Kinase (MAPK) pathway is a critical signaling cascade that is often dysregulated in cancer, making it a key target for drug discovery.[11][12] This diagram illustrates a simplified view of the core MAPK/ERK pathway.

JAK-STAT Signaling Pathway

The Janus kinase (JAK)-signal transducer and activator of transcription (STAT) pathway is another crucial signaling cascade involved in immunity, cell proliferation, and differentiation, and its dysregulation is implicated in various diseases, including cancer and autoimmune disorders.[13][14][15]

References

- 1. researchgate.net [researchgate.net]

- 2. Deep reinforcement learning for optimal experimental design in biology - PMC [pmc.ncbi.nlm.nih.gov]

- 3. Deep reinforcement learning for optimal experimental design in biology | PLOS Computational Biology [journals.plos.org]

- 4. arxiv.org [arxiv.org]

- 5. Deep reinforcement learning for de novo drug design - PMC [pmc.ncbi.nlm.nih.gov]

- 6. [1711.10907] Deep Reinforcement Learning for De-Novo Drug Design [arxiv.org]

- 7. arxiv.org [arxiv.org]

- 8. De novo Drug Design using Reinforcement Learning with Dynamic Vocabulary | OpenReview [openreview.net]

- 9. Reinforcement learning design for cancer clinical trials - PMC [pmc.ncbi.nlm.nih.gov]

- 10. Reinforcement Learning Strategies for Clinical Trials in Non-small Cell Lung Cancer - PMC [pmc.ncbi.nlm.nih.gov]

- 11. MAPK Pathway in Cancer: What's New In Treatment? - Cancer Commons [cancercommons.org]

- 12. ERK/MAPK signalling pathway and tumorigenesis - PMC [pmc.ncbi.nlm.nih.gov]

- 13. creative-diagnostics.com [creative-diagnostics.com]

- 14. cusabio.com [cusabio.com]

- 15. The JAK/STAT signaling pathway: from bench to clinic - PMC [pmc.ncbi.nlm.nih.gov]

The Symbiotic Revolution: A Technical Guide to Reinforcement Learning in Applied Science

For Researchers, Scientists, and Drug Development Professionals

In the intricate landscape of scientific discovery and drug development, the quest for novel solutions and optimized processes is perpetual. Traditional methodologies, often guided by heuristics and extensive trial-and-error, are increasingly being augmented by the power of artificial intelligence. Among the vanguards of this transformation is Reinforcement Learning (RL), a paradigm of machine learning that learns to make optimal sequential decisions in complex and uncertain environments. This in-depth technical guide delves into the theoretical foundations of reinforcement learning and explores its practical applications in applied science, with a particular focus on the multifaceted challenges of drug discovery.

The Core Engine: Understanding Reinforcement Learning

At its heart, reinforcement learning is a computational framework for goal-oriented learning from interaction. An autonomous agent learns to make decisions by taking actions in an environment to maximize a cumulative reward. This learning process is fundamentally different from supervised learning, as the agent is not told which actions to take but must discover which actions yield the most reward by trying them.

The interaction between the agent and the environment is typically modeled as a Markov Decision Process (MDP), a mathematical framework for modeling decision-making in situations where outcomes are partly random and partly under the control of a decision-maker. The core components of an MDP are:

- States (S): A set of states representing the condition of the environment.

- Actions (A): A set of actions that the agent can take.

- Transition Function (T): The probability of transitioning from one state to another after taking a specific action.

- Reward Function (R): A function that provides a scalar reward to the agent for being in a state or for taking an action in a state.

- Discount Factor (γ): A value between 0 and 1 that discounts future rewards, reflecting the preference for immediate rewards over delayed ones.

The agent's goal is to learn a policy (π), which is a mapping from states to actions, that maximizes the expected cumulative discounted reward.
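The cumulative discounted reward for a single trajectory is the sum of each reward multiplied by γ raised to the number of steps until it is received. The sketch below computes it by working backwards through the rewards; the function name and the example values are illustrative.

```python
def discounted_return(rewards, gamma=0.9):
    """Sum of gamma**t * r_t over a trajectory, computed from the last step back."""
    g = 0.0
    for r in reversed(rewards):
        g = r + gamma * g
    return g


# Three steps with reward 1 each and gamma = 0.5: 1 + 0.5 + 0.25.
example_return = discounted_return([1.0, 1.0, 1.0], gamma=0.5)
```

With γ close to 0 the agent values almost only the immediate reward, while γ close to 1 weights distant rewards nearly as much as immediate ones.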

Key Theoretical Concepts in Reinforcement Learning

To navigate the complexities of scientific problems, several key theoretical concepts and algorithms in reinforcement learning are employed.

Value Functions and Bellman Equations

A central concept in RL is the value function, which estimates the expected cumulative reward from a given state or a state-action pair.

- State-Value Function (Vπ(s)): The expected return when starting in state s and following policy π.

- Action-Value Function (Qπ(s, a)): The expected return when starting in state s, taking action a, and then following policy π.

The Bellman equations provide a recursive relationship for the value functions, forming the basis for many RL algorithms. They express the value of a state as a combination of the immediate reward and the discounted value of the subsequent state.
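Written out with the MDP components defined earlier (S, A, T, R, γ), the Bellman expectation equations take the following standard form. Two notational assumptions are made here that the text does not fix: the policy is written in its probabilistic form π(a | s), and the reward is taken to depend on the state and action, R(s, a).

```latex
\begin{aligned}
V^{\pi}(s) &= \sum_{a \in A} \pi(a \mid s) \sum_{s' \in S} T(s' \mid s, a)\,
              \bigl[ R(s, a) + \gamma\, V^{\pi}(s') \bigr] \\
Q^{\pi}(s, a) &= \sum_{s' \in S} T(s' \mid s, a)\,
              \Bigl[ R(s, a) + \gamma \sum_{a' \in A} \pi(a' \mid s')\, Q^{\pi}(s', a') \Bigr]
\end{aligned}
```

For a deterministic policy, π(a | s) places all probability on a single action and the outer sum over actions collapses to one term.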

Q-Learning: Learning the Optimal Action-Value Function

Q-learning is a model-free RL algorithm that aims to learn the optimal action-value function, denoted as Q*(s, a). This function represents the maximum expected cumulative reward achievable from a given state-action pair. The learning process involves iteratively updating the Q-values using the Bellman equation as an update rule.
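In its standard tabular form, the update applied after observing a transition from state s_t to s_{t+1} with reward r_{t+1} is shown below. The learning rate α is an additional symbol not defined in the text; it controls how far each estimate moves towards the new target.

```latex
Q(s_t, a_t) \leftarrow Q(s_t, a_t)
  + \alpha \Bigl[ r_{t+1} + \gamma \max_{a'} Q(s_{t+1}, a') - Q(s_t, a_t) \Bigr]
```

The term in brackets is the temporal-difference error: the gap between the current estimate and a one-step target built from the observed reward and the best estimated value of the next state.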

Policy Gradients: Directly Optimizing the Policy

Instead of learning a value function, policy gradient methods directly learn the policy by parameterizing it and optimizing the parameters using gradient ascent.[1] The gradient of the expected reward with respect to the policy parameters is estimated and used to update the policy in the direction of higher reward. This approach is particularly useful in continuous action spaces.
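
The idea can be illustrated with a REINFORCE-style update on a toy problem. The sketch below assumes a two-armed bandit with a softmax policy and a running-average baseline; the discrete action space, reward means, noise level, and step size are simplifications chosen for illustration, and a continuous-action policy would typically parameterize a distribution such as a Gaussian instead:

```python
import math
import random


def softmax(prefs):
    """Convert action preferences into a probability distribution."""
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def reinforce_bandit(mean_rewards, steps=2000, lr=0.1, seed=0):
    """Gradient ascent on expected reward for a softmax policy over bandit arms."""
    rng = random.Random(seed)
    theta = [0.0] * len(mean_rewards)   # policy parameters (one preference per arm)
    baseline = 0.0                      # running average reward, reduces variance
    for t in range(1, steps + 1):
        probs = softmax(theta)
        action = rng.choices(range(len(theta)), weights=probs)[0]
        reward = rng.gauss(mean_rewards[action], 0.1)
        baseline += (reward - baseline) / t
        advantage = reward - baseline
        for a in range(len(theta)):
            # Gradient of log pi(action) with respect to theta[a] for a softmax policy.
            grad_log = (1.0 if a == action else 0.0) - probs[a]
            theta[a] += lr * advantage * grad_log
    return softmax(theta)


# Hypothetical arms: the second arm pays more on average.
final_probs = reinforce_bandit([0.2, 1.0])
```

After training, the policy should place most of its probability on the higher-paying arm, which is the "direction of higher reward" described above.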

Application in Drug Discovery: De Novo Molecular Design

One of the most promising applications of reinforcement learning in drug discovery is de novo molecular design, where the goal is to generate novel molecules with desired chemical and biological properties. The Molecule Deep Q-Networks (MolDQN) framework is a prime example of this application.

Experimental Protocol for MolDQN

The MolDQN framework formulates the process of molecule generation as a Markov Decision Process.

- State: A state is represented by the current molecule, which is featurized using Morgan fingerprints. These fingerprints are a method of encoding molecular structures into a numerical vector. Specifically, extended-connectivity fingerprints of radius 2 are commonly used.[2] The input to the deep Q-network is this fingerprint vector.
- Action: The set of actions includes chemically valid modifications to the current molecule, such as adding or removing specific atoms and bonds. To ensure chemical validity, a set of predefined rules and heuristics is applied. For instance, these rules prevent the formation of unstable chemical structures or violations of atomic valency.
- Reward: The reward function is designed to guide the generation process towards molecules with desired properties. For multi-objective optimization, the reward is often a weighted sum of different property scores, such as the Quantitative Estimate of Drug-likeness (QED) and the similarity to a known active molecule.
- Q-Network Architecture: A deep neural network is used to approximate the Q-function. The input to this network is the Morgan fingerprint of the molecule, and the output is a vector of Q-values for each possible action.
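
The weighted-sum reward described in the list above can be sketched in a few lines. This is an illustrative stand-in, not the MolDQN implementation: fingerprints are represented as plain sets of on-bit indices, the QED value is a placeholder number, and the function names and default weight are hypothetical. In practice both the fingerprints and the QED score would come from a cheminformatics toolkit:

```python
def tanimoto(bits_a, bits_b):
    """Tanimoto similarity between two fingerprints given as sets of on-bit indices."""
    union = len(bits_a | bits_b)
    return len(bits_a & bits_b) / union if union else 0.0


def multi_objective_reward(qed_score, similarity, weight=0.5):
    """Weighted sum of a drug-likeness score and a similarity score, both assumed in [0, 1]."""
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must lie in [0, 1]")
    return weight * similarity + (1.0 - weight) * qed_score


# Placeholder fingerprints: 3 shared bits out of 5 distinct bits overall.
candidate_bits = {1, 5, 9, 42}
reference_bits = {1, 5, 7, 42}
sim = tanimoto(candidate_bits, reference_bits)
reward = multi_objective_reward(qed_score=0.8, similarity=sim, weight=0.5)
```

Shifting `weight` toward 1 favors staying close to the known active molecule, while shifting it toward 0 favors drug-likeness, so the weight encodes the trade-off between the two objectives.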

Quantitative Performance of Reinforcement Learning in Molecular Design

The effectiveness of reinforcement learning in generating molecules with desired properties has been demonstrated in various studies. The following table summarizes the performance of two RL-based models (MolDQN and GCPN), alongside the generative baseline JT-VAE, on benchmark tasks for molecular optimization.

| Model | Task | Metric | Value |
|---|---|---|---|
| MolDQN | Penalized logP Optimization | Mean Improvement | 5.23 |
| GCPN | Penalized logP Optimization | Mean Improvement | 4.87 |
| JT-VAE | Penalized logP Optimization | Mean Improvement | 3.84 |
| MolDQN | QED Optimization | Success Rate | 85% |
| GCPN | QED Optimization | Success Rate | 81% |
| JT-VAE | QED Optimization | Success Rate | 75% |

Data compiled from various benchmark studies in de novo drug design.

Application in Preclinical Research: Optimizing Dosing Regimens

Beyond molecular design, reinforcement learning holds significant potential for optimizing various stages of preclinical research, such as determining optimal dosing regimens for novel drug candidates.

MDP Formulation for Preclinical Dosing Optimization

An MDP can be formulated to find a dosing strategy that maximizes therapeutic efficacy while minimizing toxicity.

- State: The state can be a vector of clinically relevant biomarkers, pharmacokinetic (PK) and pharmacodynamic (PD) parameters, and patient-specific information. For instance, in an oncology setting, this could include tumor size, concentration of the drug in the blood, and liver enzyme levels.
- Action: The action space consists of different dosing decisions, such as increasing, decreasing, or maintaining the current dose, or changing the dosing frequency.
- Reward: The reward function is crucial and must be carefully designed to balance competing objectives. A positive reward could be given for a reduction in tumor size, while a negative reward (penalty) would be associated with exceeding toxicity thresholds.
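
One possible shape for such a reward is sketched below. The function name, the linear penalty, and the default penalty weight are assumptions made for illustration; a real study would derive thresholds and weights from the toxicology data at hand:

```python
def dosing_reward(prev_tumor_size, tumor_size, toxicity_marker,
                  toxicity_limit, penalty=10.0):
    """Reward tumor shrinkage and penalize any excess over a toxicity threshold."""
    shrinkage = prev_tumor_size - tumor_size              # positive if the tumor shrank
    over_limit = max(0.0, toxicity_marker - toxicity_limit)  # zero while within limits
    return shrinkage - penalty * over_limit


# Hypothetical values: same shrinkage, with and without a toxicity excursion.
safe_reward = dosing_reward(100.0, 90.0, toxicity_marker=0.8, toxicity_limit=1.0)
toxic_reward = dosing_reward(100.0, 90.0, toxicity_marker=1.2, toxicity_limit=1.0)
```

The penalty only activates above the threshold, so the agent is not discouraged from dosing within the tolerated range, and the size of `penalty` controls how strongly safety outweighs efficacy.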

Experimental Protocol for RL-based Dosing Optimization

- Environment Simulation: A significant challenge in applying RL to clinical scenarios is the need for a reliable simulation of the patient's physiological response to the drug. This can be achieved by developing a pharmacokinetic/pharmacodynamic (PK/PD) model based on preclinical experimental data.
- Agent Training: An RL agent, often based on an actor-critic architecture, is trained within this simulated environment. The actor (policy) proposes a dosing action based on the current state, and the critic (value function) evaluates the long-term value of that action.
- Policy Evaluation: The learned dosing policy is then evaluated through extensive in-silico trials to assess its robustness and safety before any potential application in real-world preclinical studies.
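
To make the simulation and evaluation steps concrete, the sketch below builds a deliberately simple environment and rolls out a fixed policy in it. Everything here is hypothetical: the one-compartment elimination model, the exponential tumor-response term, all parameter values, and the class and function names. Rates are expressed per dosing interval to keep units simple, and a fixed "maintain dose" policy stands in for a trained actor-critic agent:

```python
import math


class ToyDosingEnv:
    """Hypothetical one-compartment PK model with a simple tumor-response term."""

    def __init__(self, k_elim=0.5, volume=10.0, growth=0.05, kill=0.03,
                 tox_limit=8.0, dose_step=10.0):
        self.k_elim, self.volume = k_elim, volume
        self.growth, self.kill = growth, kill
        self.tox_limit, self.dose_step = tox_limit, dose_step
        self.reset()

    def reset(self):
        self.conc, self.tumor, self.dose = 0.0, 100.0, 20.0
        return (self.tumor, self.conc, self.dose)

    def step(self, action):
        """Apply one dosing decision: 0 = decrease, 1 = maintain, 2 = increase."""
        self.dose = max(0.0, self.dose + (action - 1) * self.dose_step)
        peak = self.conc + self.dose / self.volume       # concentration right after dosing
        self.conc = peak * math.exp(-self.k_elim)        # first-order elimination
        previous_tumor = self.tumor
        self.tumor = previous_tumor * math.exp(self.growth - self.kill * peak)
        reward = (previous_tumor - self.tumor) - 10.0 * max(0.0, peak - self.tox_limit)
        return (self.tumor, self.conc, self.dose), reward


def evaluate_policy(env, policy, n_steps=10):
    """Roll out a policy in simulation and return the total reward and final state."""
    state = env.reset()
    total = 0.0
    for _ in range(n_steps):
        state, reward = env.step(policy(state))
        total += reward
    return total, state


# Fixed "maintain dose" policy used as a placeholder for a learned one.
total_reward, final_state = evaluate_policy(ToyDosingEnv(), lambda state: 1)
```

Swapping the lambda for a learned policy leaves `evaluate_policy` unchanged, which is the main reason to keep the environment behind a small `reset`/`step` interface.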

Conclusion and Future Directions

Reinforcement learning offers a powerful and flexible framework for tackling complex decision-making problems in applied science and drug development. From designing novel molecules with desired properties to optimizing preclinical experimental protocols, the potential applications are vast and transformative. As our ability to generate high-quality data and develop more sophisticated algorithms grows, we can expect RL to play an increasingly integral role in accelerating the pace of scientific discovery and bringing new therapies to patients faster. Future research will likely focus on developing more sample-efficient and interpretable RL algorithms, as well as integrating them more seamlessly into existing scientific workflows. The symbiotic relationship between artificial intelligence and scientific inquiry is poised to unlock new frontiers of knowledge and innovation.

References

Methodological & Application

Application Notes and Protocols for Deep Q-Networks in Experimental Parameter Tuning

Audience: Researchers, scientists, and drug development professionals.

Introduction to Deep Q-Networks for Experimental Optimization

Deep Reinforcement Learning (DRL), particularly the Deep Q-Network (DQN) algorithm, offers a powerful paradigm for optimizing complex experimental parameters in real time. Unlike traditional methods such as Design of Experiments (DoE), which often require a complete set of experiments to be planned in advance, a DQN agent learns from interactions with the experimental system, continuously adapting its strategy to maximize a desired outcome. This approach is particularly advantageous in scenarios with large parameter spaces and non-linear relationships, both common in drug discovery and chemical synthesis. By learning a policy that maps the current state of an experiment to the most promising next action, DQNs can efficiently navigate the parameter landscape to find optimal conditions with fewer experiments, saving time and resources.

Core Concepts: State, Action, and Reward

To apply a DQN to an experimental problem, the process must be framed in the context of reinforcement learning. This involves defining the following key components:

- State (s): The state is a representation of the current condition of the experimental system. This can include a combination of controllable parameters and observable outcomes. For example, in a chemical reaction, the state could be a vector representing the current temperature, pressure, catalyst concentration, and the measured yield of the product.
- Action (a): An action is a modification made to the controllable parameters of the experiment. The agent chooses an action from a predefined set of possibilities. For instance, in a cell culture experiment, an action could be to increase or decrease the concentration of a specific nutrient in the medium.
- Reward (r): The reward is a scalar value that provides feedback to the agent on the quality of its action. The goal of the agent is to maximize the cumulative reward over time. The reward function is designed to reflect the experimental objective. A higher product yield in a chemical reaction or increased cell viability in a cell culture would typically result in a positive reward.

General Workflow for DQN-based Experimental Parameter Tuning

The application of a DQN to optimize experimental parameters follows a cyclical process of interaction between the DQN agent and the experimental setup, which can be either a real-world laboratory system or a simulation.

Application Case Study 1: Optimizing CHO Cell Culture for Monoclonal Antibody Production

Background

Chinese Hamster Ovary (CHO) cells are a primary platform for producing monoclonal antibodies (mAbs). Optimizing the fed-batch cell culture process is critical for maximizing product titer and quality. This involves tuning numerous parameters, including the composition of the culture medium and feeding strategy. A Deep Reinforcement Learning approach has been shown to significantly improve these outcomes.[1][2]

Quantitative Data Summary

| Metric | Traditional Method | DQN-based Optimization | Improvement |
|---|---|---|---|
| Product Titer | Baseline | Baseline + 25-35%[1][2] | 25-35% Increase |
| Process Parameter Variability | Baseline | Baseline - 40-50%[1][2] | 40-50% Reduction |

Experimental Protocol

1. Define the State, Action, and Reward:

- State (s): A vector representing the current culture conditions, including:
  - Viable cell density
  - Concentrations of key metabolites (e.g., glucose, lactate, amino acids)
  - Product (mAb) concentration
  - Current values of controllable parameters (e.g., pH, temperature, feed rates)
- Action (a): A discrete set of adjustments to the feeding strategy, for example:
  - Increase/decrease the feed rate of a specific nutrient solution by a predefined step.
  - Maintain the current feed rates.
- Reward (r): A function designed to maximize productivity and maintain cell health. For example:
  - Reward = (change in mAb concentration) - (penalty for high lactate concentration)
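
The definitions in step 1 could be encoded roughly as follows. The field names, units, lactate threshold, penalty weight, and feed-rate step are all hypothetical placeholders chosen for illustration; only the overall structure (state vector, discrete feed adjustments, titer gain minus lactate penalty) follows the protocol described here:

```python
from dataclasses import dataclass, astuple


@dataclass
class CultureState:
    viable_cell_density: float  # assumed unit: 1e6 cells/mL
    glucose: float              # assumed unit: g/L
    lactate: float              # assumed unit: g/L
    mab: float                  # product concentration, assumed unit: g/L
    ph: float
    temperature: float          # degrees Celsius
    feed_rate: float            # assumed unit: L/day

    def to_vector(self):
        """Flatten the state into the numeric vector fed to the Q-network."""
        return list(astuple(self))


# Discrete actions: decrease, maintain, or increase the feed rate by one step.
ACTIONS = (-1, 0, 1)


def apply_action(feed_rate, action_index, step=0.1):
    """Return the new feed rate after applying a discrete action (never negative)."""
    return max(0.0, feed_rate + ACTIONS[action_index] * step)


def culture_reward(prev, curr, lactate_limit=2.0, penalty_weight=0.5):
    """Change in mAb concentration minus a penalty for lactate above a threshold."""
    titer_gain = curr.mab - prev.mab
    lactate_penalty = penalty_weight * max(0.0, curr.lactate - lactate_limit)
    return titer_gain - lactate_penalty


prev_state = CultureState(5.0, 4.0, 1.0, 1.0, 7.0, 36.5, 0.2)
curr_state = CultureState(6.0, 3.5, 3.0, 1.5, 7.0, 36.5, 0.3)
reward = culture_reward(prev_state, curr_state)
```

In this example the titer gain of 0.5 is exactly cancelled by the lactate penalty, which shows how the penalty weight sets the exchange rate between productivity and cell health.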

2. DQN Agent Setup:

- Network Architecture: A multi-layer perceptron (MLP) with several hidden layers. The input layer receives the state vector, and the output layer provides the Q-value for each possible action.
- Hyperparameters:
  - Learning rate (e.g., 0.001)
  - Discount factor (gamma) (e.g., 0.9)
  - Epsilon (for epsilon-greedy exploration), with a decay schedule.
  - Replay buffer size (e.g., 10,000)
  - Batch size (e.g., 64)
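
The hyperparameters listed in step 2 can be gathered into a single configuration object, together with one possible epsilon decay schedule. The learning rate, discount factor, buffer size, and batch size mirror the example values given here; the epsilon start, floor, and multiplicative decay rate are assumptions, since no specific schedule is stated:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DQNConfig:
    learning_rate: float = 1e-3
    gamma: float = 0.9
    epsilon_start: float = 1.0    # assumed: begin fully exploratory
    epsilon_end: float = 0.05     # assumed: exploration floor
    epsilon_decay: float = 0.99   # assumed: multiplicative decay per step
    replay_buffer_size: int = 10_000
    batch_size: int = 64


def epsilon_at(step, cfg):
    """Exponentially decayed exploration rate, clipped at the configured floor."""
    return max(cfg.epsilon_end, cfg.epsilon_start * cfg.epsilon_decay ** step)


cfg = DQNConfig()
schedule = [epsilon_at(s, cfg) for s in (0, 100, 1000)]
```

Because each real bioreactor step is expensive, the decay rate would likely need to be tuned to the number of experiments available rather than copied from simulation-heavy settings.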

3. Training Loop:

- Initialize the CHO cell culture in a bioreactor with a standard starting medium.
- At each time step (e.g., every 12 or 24 hours):
  - a. Measure the parameters to define the current state (s).
  - b. The DQN agent selects an action (a) based on the current state using an epsilon-greedy policy.
  - c. Apply the chosen action to the bioreactor (adjust feed rates).
  - d. After a defined interval, measure the new state (s') and calculate the reward (r).
  - e. Store the transition (s, a, r, s') in the replay buffer.
  - f. Sample a mini-batch of transitions from the replay buffer to train the DQN.
- Continue this process for the duration of the fed-batch culture.
- Repeat with multiple bioreactor runs to improve the agent's policy.
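
The loop in step 3 can be sketched end to end under strong simplifications. In the code below a tabular Q-table stands in for the neural network, and `toy_bioreactor_step` is a made-up simulator in which the state is a single discrete feed level and the reward peaks at level 3. None of this models a real CHO culture; it only shows how steps a to f fit together:

```python
import random
from collections import defaultdict, deque

N_ACTIONS = 3  # 0 = decrease feed, 1 = maintain, 2 = increase


def select_action(q, state, epsilon, rng):
    """Epsilon-greedy selection: explore with probability epsilon, else act greedily."""
    if rng.random() < epsilon:
        return rng.randrange(N_ACTIONS)
    return max(range(N_ACTIONS), key=lambda a: q[(state, a)])


def train_on_batch(q, batch, alpha, gamma):
    """Q-learning update on each sampled transition (tabular stand-in for a DQN step)."""
    for s, a, r, s_next in batch:
        target = r + gamma * max(q[(s_next, b)] for b in range(N_ACTIONS))
        q[(s, a)] += alpha * (target - q[(s, a)])


def toy_bioreactor_step(feed_level, action):
    """Hypothetical simulator: feed levels 0..5, reward is highest at level 3."""
    new_level = min(5, max(0, feed_level + action - 1))
    return new_level, -abs(new_level - 3)


def run_training(runs=200, steps_per_run=14, seed=0):
    rng = random.Random(seed)
    q = defaultdict(float)
    buffer = deque(maxlen=10_000)                      # replay buffer
    epsilon = 1.0
    for _ in range(runs):                              # repeated bioreactor runs
        state = 0                                      # (a) initial measured state
        for _ in range(steps_per_run):
            action = select_action(q, state, epsilon, rng)            # (b)
            next_state, reward = toy_bioreactor_step(state, action)   # (c), (d)
            buffer.append((state, action, reward, next_state))        # (e)
            batch = rng.sample(list(buffer), min(64, len(buffer)))    # (f)
            train_on_batch(q, batch, alpha=0.1, gamma=0.9)
            state = next_state
        epsilon = max(0.05, epsilon * 0.98)            # decay exploration between runs
    return q


q_values = run_training()
greedy_from_start = max(range(N_ACTIONS), key=lambda a: q_values[(0, a)])
```

Starting from feed level 0, the learned greedy action is to increase the feed, since that moves the culture toward the rewarded level. A full DQN would replace the table with a network and typically add a separate target network for stability.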

Signaling Pathways in CHO Cell Growth

The composition of the cell culture medium directly impacts intracellular signaling pathways that regulate cell growth, proliferation, and survival. The DQN agent, by optimizing the nutrient concentrations, is indirectly influencing these pathways to achieve the desired outcome. Key pathways include:

Application Case Study 2: Optimization of Suzuki-Miyaura Cross-Coupling Reaction

Background