Eec8DZ2V4P

Description

The Transformer architecture, introduced by Vaswani et al. (2017), revolutionized NLP by replacing recurrent and convolutional layers with self-attention mechanisms. This made training highly parallelizable and improved performance on tasks such as machine translation, where it achieved 28.4 BLEU on WMT 2014 English-to-German and 41.8 BLEU on WMT 2014 English-to-French. Subsequent models such as BERT, RoBERTa, T5, and GPT-2 built on this foundation, refining pretraining objectives, scalability, and task generalization.
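To make the self-attention mechanism described above concrete, here is a minimal pure-Python sketch of scaled dot-product attention, softmax(QKᵀ/√d_k)V, as defined by Vaswani et al. (2017). As a simplifying assumption it omits the learned query, key, and value projections and the multi-head split, and the function names are illustrative. Each query row is computed independently of the others, which is what allows the work to be parallelized, unlike a recurrent layer that must step through positions in order.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(row: List[float]) -> List[float]:
    """Numerically stable softmax over one row of scores."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Return softmax(Q K^T / sqrt(d_k)) V for row-major matrices.

    q: one row per query position; k and v: one row per key/value position.
    """
    d_k = len(k[0])
    scale = 1.0 / math.sqrt(d_k)
    output: Matrix = []
    for q_row in q:  # query rows are independent, so they could run in parallel
        scores = [scale * sum(a * b for a, b in zip(q_row, k_row)) for k_row in k]
        weights = softmax(scores)
        # Weighted sum of the value rows, one output column at a time.
        output.append(
            [sum(w * v_row[j] for w, v_row in zip(weights, v)) for j in range(len(v[0]))]
        )
    return output


# Self-attention: queries, keys, and values all come from the same sequence x.
# (Simplification: identity projections instead of learned W_Q, W_K, W_V.)
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
print(scaled_dot_product_attention(x, x, x))
```

When all keys are identical the weights are uniform, so the output is simply the mean of the value rows; this is a quick sanity check on any attention implementation.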

Properties

CAS No. |

869184-36-1 |

|---|---|

Molecular Formula |

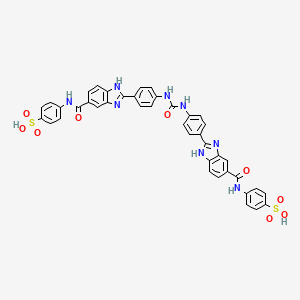

C41H30N8O9S2 |

Molecular Weight |

842.9 g/mol |

IUPAC Name |

4-[[2-[4-[[4-[5-[(4-sulfophenyl)carbamoyl]-1H-benzimidazol-2-yl]phenyl]carbamoylamino]phenyl]-1H-benzimidazole-5-carbonyl]amino]benzenesulfonic acid |

InChI |

InChI=1S/C41H30N8O9S2/c50-39(42-27-11-15-31(16-12-27)59(53,54)55)25-5-19-33-35(21-25)48-37(46-33)23-1-7-29(8-2-23)44-41(52)45-30-9-3-24(4-10-30)38-47-34-20-6-26(22-36(34)49-38)40(51)43-28-13-17-32(18-14-28)60(56,57)58/h1-22H,(H,42,50)(H,43,51)(H,46,48)(H,47,49)(H2,44,45,52)(H,53,54,55)(H,56,57,58) |

InChI Key |

FGRDETPXVJYJTR-UHFFFAOYSA-N |

Canonical SMILES |

C1=CC(=CC=C1C2=NC3=C(N2)C=CC(=C3)C(=O)NC4=CC=C(C=C4)S(=O)(=O)O)NC(=O)NC5=CC=C(C=C5)C6=NC7=C(N6)C=CC(=C7)C(=O)NC8=CC=C(C=C8)S(=O)(=O)O |

Origin of Product |

United States |
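As a consistency check on the Properties section, the molecular weight can be recomputed from the molecular formula. The sketch below parses a flat formula (element symbols followed by optional counts, no parentheses) and sums standard atomic weights; the small weight table and the function names are assumptions for illustration and cover only the elements present in this compound.

```python
import re
from collections import Counter

# Standard atomic weights (g/mol), limited to the elements in this compound.
ATOMIC_WEIGHTS = {"C": 12.011, "H": 1.008, "N": 14.007, "O": 15.999, "S": 32.06}


def parse_formula(formula: str) -> Counter:
    """Count atoms in a flat formula such as 'C41H30N8O9S2' (no parentheses)."""
    counts: Counter = Counter()
    for symbol, number in re.findall(r"([A-Z][a-z]?)(\d*)", formula):
        counts[symbol] += int(number) if number else 1
    return counts


def molecular_weight(formula: str) -> float:
    """Sum atomic weights over all atoms in the formula."""
    return sum(ATOMIC_WEIGHTS[el] * n for el, n in parse_formula(formula).items())


print(f"{molecular_weight('C41H30N8O9S2'):.1f} g/mol")  # prints 842.9 g/mol
```

The result, about 842.86 g/mol before rounding, agrees with the tabulated 842.9 g/mol.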

Preparation Methods

Synthetic Routes and Reaction Conditions

The synthesis of Eec8DZ2V4P involves multiple steps, starting with the preparation of intermediate compounds that are subsequently combined to form the final product. The key steps include:

Formation of benzimidazole derivatives: This involves the reaction of o-phenylenediamine with carboxylic acids under acidic conditions to form benzimidazole rings.

Coupling reactions: The benzimidazole carboxylic acid groups are then coupled with sulfonated anilines (the 4-sulfophenyl groups in the IUPAC name) through amide-forming condensation reactions, often facilitated by dehydrating agents such as phosphorus oxychloride.
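Both condensation steps above can be checked for atom balance with a short script. The reactions used here are simplified model reactions chosen as assumptions for illustration, not the actual intermediates of this synthesis: o-phenylenediamine (C6H8N2) condensing with benzoic acid (C7H6O2) to give 2-phenylbenzimidazole (C13H10N2) plus two molecules of water, and benzoic acid condensing with sulfanilic acid (4-aminobenzenesulfonic acid, C6H7NO3S) to give the amide N-(4-sulfophenyl)benzamide (C13H11NO4S) plus one water, mirroring the (4-sulfophenyl)carbamoyl groups named in the IUPAC name. A condensation is balanced when the reactant atoms equal the product atoms plus the water released.

```python
import re
from collections import Counter


def parse_formula(formula: str) -> Counter:
    """Count atoms in a flat formula such as 'C6H8N2' (no parentheses)."""
    counts: Counter = Counter()
    for symbol, number in re.findall(r"([A-Z][a-z]?)(\d*)", formula):
        counts[symbol] += int(number) if number else 1
    return counts


def total_atoms(formulas: list) -> Counter:
    """Combine atom counts for a list of formulas (repeat an entry for stoichiometry)."""
    total: Counter = Counter()
    for f in formulas:
        total += parse_formula(f)
    return total


def is_balanced(reactants: list, products: list) -> bool:
    """True when both sides contain exactly the same atoms."""
    return total_atoms(reactants) == total_atoms(products)


# Benzimidazole ring formation (Phillips-type condensation), releasing 2 H2O.
benzimidazole_ok = is_balanced(["C6H8N2", "C7H6O2"], ["C13H10N2", "H2O", "H2O"])

# Amide coupling with a sulfonated aniline, releasing 1 H2O.
amide_ok = is_balanced(["C7H6O2", "C6H7NO3S"], ["C13H11NO4S", "H2O"])

print(benzimidazole_ok, amide_ok)  # prints True True
```

The water count is the point of the check: ring closure to a benzimidazole removes two waters, while each amide bond removes one, which is why a dehydrating agent helps drive the coupling.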

Industrial Production Methods

Industrial production of Eec8DZ2V4P follows similar synthetic routes on a larger scale. The process involves:

Batch reactors: Large-scale batch reactors are used to carry out the multi-step synthesis, ensuring precise control over reaction conditions.

Purification: The crude product is purified using techniques such as recrystallization and chromatography to achieve the desired purity levels.

Quality control: Rigorous quality control measures are implemented to ensure consistency and compliance with industry standards.

Chemical Reactions Analysis

Types of Reactions

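Eec8DZ2V4P undergoes various chemical reactions, including:

Oxidation: Strong oxidizing agents such as potassium permanganate can attack the compound. Its two sulfur atoms, however, are already present as fully oxidized sulfonic acid groups, and the molecule contains no sulfide or sulfoxide sulfur, so oxidation is not expected to yield sulfone derivatives.

Reduction: Strong hydride reagents such as lithium aluminum hydride can reduce the amide carbonyl groups; amides are typically reduced to amines rather than alcohols under these conditions.

Substitution: The aromatic rings in Eec8DZ2V4P can undergo electrophilic aromatic substitution reactions, such as nitration and halogenation.

Common Reagents and Conditions

Oxidation: Potassium permanganate in acidic or basic medium.

Reduction: Lithium aluminum hydride in anhydrous ether.

Substitution: Typically nitric acid with sulfuric acid for nitration, or a halogen with a Lewis acid catalyst for halogenation.

Major Products

Oxidation: Sulfone derivatives are not expected, for the reason given above.

Reduction: Amine derivatives.

Substitution: Nitro and halogenated derivatives.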

Scientific Research Applications

Eec8DZ2V4P has a range of applications in scientific research, including:

Chemistry: Used as a reagent in organic synthesis and as a building block for more complex molecules.

Biology: Investigated for its potential as a biochemical probe due to its ability to interact with various biomolecules.

Medicine: Explored for its potential therapeutic properties, including anti-inflammatory and anticancer activities.

Industry: Utilized in the production of specialty chemicals and materials.

Mechanism of Action

The mechanism of action of Eec8DZ2V4P involves its interaction with specific molecular targets, such as enzymes and receptors. The compound’s structure allows it to bind to active sites, inhibiting or modulating the activity of these targets. For example, it may inhibit an enzyme by forming a stable complex at the enzyme’s active site, thereby preventing substrate binding and subsequent catalysis.
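The mechanism described above, an inhibitor occupying the active site and blocking substrate binding, corresponds to the textbook model of competitive inhibition, in which the inhibitor raises the apparent Michaelis constant without changing the maximum rate: v = Vmax[S] / (Km(1 + [I]/Ki) + [S]). The sketch below evaluates that rate law as a generic illustration; the source gives no kinetic constants for Eec8DZ2V4P, so the parameter values in the usage lines are hypothetical.

```python
def competitive_inhibition_rate(
    s: float, vmax: float, km: float, inhibitor: float, ki: float
) -> float:
    """Michaelis-Menten rate in the presence of a competitive inhibitor.

    s: substrate concentration; vmax: maximum rate; km: Michaelis constant;
    inhibitor: inhibitor concentration; ki: inhibitor dissociation constant.
    All concentrations must use the same units.
    """
    km_apparent = km * (1.0 + inhibitor / ki)  # inhibitor raises the apparent Km
    return vmax * s / (km_apparent + s)


# Hypothetical values in arbitrary units, for illustration only.
no_inhibitor = competitive_inhibition_rate(s=1.0, vmax=1.0, km=1.0, inhibitor=0.0, ki=1.0)
with_inhibitor = competitive_inhibition_rate(s=1.0, vmax=1.0, km=1.0, inhibitor=1.0, ki=1.0)
print(no_inhibitor, with_inhibitor)  # prints 0.5 0.3333333333333333
```

Because a competitive inhibitor only shifts the apparent Km, raising the substrate concentration drives the rate back toward Vmax, which is the classic signature distinguishing competitive from noncompetitive inhibition.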

Comparison with Similar Compounds

Comparison with Similar Models

The following table summarizes key differences among transformer-based models:

Key Findings:

- Training Efficiency: The Transformer reduced training costs substantially compared to recurrent models; its big model reached its reported BLEU scores after 3.5 days of training on 8 GPUs. BERT and RoBERTa highlighted the importance of hyperparameter tuning and extended training, with RoBERTa outperforming BERT by removing the Next Sentence Prediction objective and training longer on more data.

- Task Generalization: T5 unified diverse tasks into a text-to-text framework, demonstrating versatility across summarization, QA, and classification. GPT-2 performed strongly in zero-shot settings, using its largest (1.5B-parameter) version to generate coherent text without task-specific fine-tuning.

- Bidirectionality vs. Autoregression: BERT’s bidirectional context improved its modeling of word relationships, while GPT-2’s autoregressive design suits generative tasks.
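The bidirectional-versus-autoregressive contrast in the list above comes down to the attention mask. In a bidirectional encoder such as BERT, every position may attend to every other position; in an autoregressive decoder such as GPT-2, position i may attend only to positions 0 through i, so each next token can be predicted without the model seeing it. The sketch below builds both masks and applies one to a row of attention scores; the function names are illustrative, and padding masks are ignored for simplicity.

```python
import math
from typing import List


def attention_mask(n: int, causal: bool) -> List[List[bool]]:
    """mask[i][j] is True when position i may attend to position j."""
    return [[(j <= i) if causal else True for j in range(n)] for i in range(n)]


def masked_softmax(scores: List[float], allowed: List[bool]) -> List[float]:
    """Softmax that gives zero weight to disallowed positions (like a -inf score)."""
    m = max(s for s, ok in zip(scores, allowed) if ok)
    exps = [math.exp(s - m) if ok else 0.0 for s, ok in zip(scores, allowed)]
    total = sum(exps)
    return [e / total for e in exps]


n = 3
full_mask = attention_mask(n, causal=False)   # BERT-style: full context
causal_mask = attention_mask(n, causal=True)  # GPT-2-style: left context only

scores = [0.0, 0.0, 0.0]  # equal raw scores for a 3-token sequence
print(masked_softmax(scores, full_mask[0]))    # first token attends to all 3 tokens
print(masked_softmax(scores, causal_mask[0]))  # first token attends only to itself
```

Because the causal mask is lower-triangular, all positions of a training sequence can still be processed in one parallel pass, which preserves the Transformer's training efficiency for generative models.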

Critical Analysis of Limitations


Disclaimer and Information on In-Vitro Research Products

Please be aware that all articles and product information presented on BenchChem are intended solely for informational purposes. The products available for purchase on BenchChem are specifically designed for in-vitro studies, which are conducted outside of living organisms. In-vitro studies, derived from the Latin term "in glass," involve experiments performed in controlled laboratory settings using cells or tissues. It is important to note that these products are not categorized as medicines or drugs, and they have not received approval from the FDA for the prevention, treatment, or cure of any medical condition, ailment, or disease. We must emphasize that any form of bodily introduction of these products into humans or animals is strictly prohibited by law. It is essential to adhere to these guidelines to ensure compliance with legal and ethical standards in research and experimentation.