Depep

Description

Properties

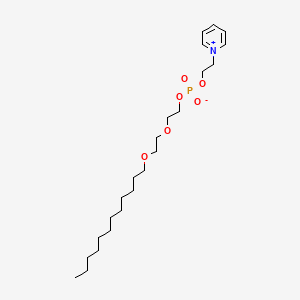

| CAS No. | 81051-35-6 |
|---|---|
| Molecular Formula | C23H42NO6P |
| Molecular Weight | 459.6 g/mol |
| IUPAC Name | 2-(2-dodecoxyethoxy)ethyl 2-(4-oxo-3H-pyridin-1-ium-1-yl)ethyl phosphite |
| InChI | InChI=1S/C23H42NO6P/c1-2-3-4-5-6-7-8-9-10-11-17-27-19-20-28-21-22-30-31(26)29-18-16-24-14-12-23(25)13-15-24/h12,14-15H,2-11,13,16-22H2,1H3 |
| InChI Key | JHTGIEJZDOSLEJ-UHFFFAOYSA-N |
| SMILES | CCCCCCCCCCCCOCCOCCOP(=O)([O-])OCC[N+]1=CC=CC=C1 |
| Canonical SMILES | CCCCCCCCCCCCOCCOCCOP([O-])OCC[N+]1=CCC(=O)C=C1 |
| Synonyms | 2(2-(dodecyloxy)ethoxy)ethyl-2-pyridioethyl phosphate; 2-(2-(dodecyloxy)ethoxy)ethyl 2-pyridinoethyl phosphate; DEPEP; ST 029; ST-029 |
| Origin of Product | United States |


DeepPep: A Technical Guide to Deep Learning-Powered Protein Inference

For Researchers, Scientists, and Drug Development Professionals

This in-depth technical guide explores the core methodology of DeepPep, a novel deep convolutional neural network framework for protein inference from peptide profiles. Protein inference, a critical step in proteomics, is the process of identifying the set of proteins present in a sample based on the peptides detected by mass spectrometry. DeepPep leverages the power of deep learning to improve the accuracy and robustness of this process, offering significant advantages for researchers in various fields, including drug development and biomarker discovery.

Core Principles of DeepPep

At its core, DeepPep treats the protein inference problem as a machine learning task. It utilizes a deep convolutional neural network (CNN) to learn the complex relationships between peptide sequences and their parent proteins. The fundamental idea is that the probability of a peptide being correctly identified from a mass spectrum is dependent on the presence of its originating protein.[1][2]

DeepPep quantifies the change in the predicted probability of a peptide-spectrum match when a specific protein is considered present or absent from the proteome.[3] Proteins that cause the most significant change in these probabilities are considered more likely to be present in the sample. This approach allows DeepPep to infer the most probable set of proteins that explain the observed peptide evidence.

The DeepPep Workflow

The DeepPep framework consists of a series of well-defined steps, from input data processing to the final protein scoring and inference. The overall workflow is depicted below.

Caption: The general workflow of the DeepPep algorithm.

Input Data

DeepPep requires two primary inputs:

- Peptide Identification File: A tab-separated file containing a list of identified peptides, their corresponding protein matches, and the probability score of each peptide-spectrum match (PSM).[3]
- Protein Database: A FASTA file containing the sequences of all potential proteins for the organism being studied.[2][3]
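The two inputs can be loaded with a few lines of standard-library Python. The sketch below is a minimal illustration, not DeepPep's own loader: it assumes the peptide file has three tab-separated columns in the order peptide, protein, probability, with no header row, and the function names `read_identifications` and `read_fasta` are hypothetical. Check the exact format expected by the DeepPep release you use.

```python
import csv
from collections import defaultdict


def read_identifications(lines):
    """Parse tab-separated peptide identifications.

    Assumed column layout (hypothetical): peptide, protein ID, PSM probability.
    Returns ({peptide: probability}, {peptide: set of protein IDs}).
    """
    probabilities = {}
    protein_matches = defaultdict(set)
    for row in csv.reader(lines, delimiter="\t"):
        if not row or row[0].startswith("#"):
            continue  # skip blank and comment lines
        peptide, protein, probability = row[0], row[1], float(row[2])
        probabilities[peptide] = probability  # last value wins if repeated
        protein_matches[peptide].add(protein)
    return probabilities, dict(protein_matches)


def read_fasta(lines):
    """Return {protein ID: sequence}; the ID is the first word after '>'."""
    chunks = {}
    current = None
    for line in lines:
        line = line.strip()
        if not line:
            continue
        if line.startswith(">"):
            current = line[1:].split()[0]
            chunks[current] = []
        elif current is not None:
            chunks[current].append(line)  # multi-line sequences are joined
    return {pid: "".join(parts) for pid, parts in chunks.items()}
```

Both functions accept any iterable of lines (an open file or a list of strings), which keeps them easy to test on small inline examples.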

Data Processing and Model Training

The core of DeepPep lies in its unique data representation and the training of a deep convolutional neural network.

Binary Encoding of Peptide Matches: For each observed peptide, DeepPep creates a binary vector representation of the entire proteome.[1] In this vector, a '1' indicates the presence of the peptide sequence within a specific protein, and a '0' indicates its absence. This creates a spatial representation of peptide locations across all proteins.
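A minimal sketch of this encoding, assuming exact string matching and one 0/1 list per protein (concatenating the lists yields a single proteome-wide vector). The function name `encode_peptide` is hypothetical, and details a real CNN input would need, such as padding proteins to a common length, are left out.

```python
def encode_peptide(peptide, protein_seqs):
    """Binary-encode one peptide against every protein sequence.

    Returns {protein ID: list of 0/1}, one entry per residue, with 1 at every
    position covered by an exact match of the peptide (overlaps allowed).
    """
    encoding = {}
    for pid, seq in protein_seqs.items():
        bits = [0] * len(seq)
        start = seq.find(peptide)
        while start != -1:
            for pos in range(start, start + len(peptide)):
                bits[pos] = 1
            start = seq.find(peptide, start + 1)  # look for overlapping hits too
        encoding[pid] = bits
    return encoding
```

For example, `encode_peptide("PEP", {"A": "MPEPEPK", "B": "GGGG"})` marks two overlapping matches in protein A and returns `{"A": [0, 1, 1, 1, 1, 1, 0], "B": [0, 0, 0, 0]}`.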

Convolutional Neural Network (CNN) Training: The binary-encoded vectors are used to train a CNN. The network learns to predict the original probability of the peptide-spectrum match based on the input vector. The architecture of the CNN is designed to capture the spatial patterns of peptide occurrences within the protein sequences.

The architecture of the CNN used in DeepPep is as follows:

Caption: The architecture of the DeepPep Convolutional Neural Network.

Protein Inference

Once the CNN is trained, DeepPep performs the actual protein inference through a differential scoring mechanism.

Simulated Protein Removal: For each protein in the database, DeepPep simulates its absence by setting the corresponding entries in the binary input vector to zero.[1]

Calculate Peptide Probability Change: The modified input vector (with the protein "removed") is then fed into the trained CNN to predict a new peptide probability. The difference between the original predicted probability and this new probability is calculated.[3]

Protein Scoring: The final score for each protein is determined by the magnitude of the change in peptide probabilities when that protein is removed. A larger change indicates that the protein is more likely to be the true origin of the observed peptides.

This logical relationship can be visualized as:

Caption: The logical relationship for scoring proteins in DeepPep.
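The removal-and-rescoring loop described above can be sketched as follows. The trained CNN is not reproduced here, so `toy_predictor` is a hypothetical stand-in (a simple noisy-OR over the proteins that contain the peptide, not a neural network). Summing the absolute changes per protein is likewise an assumed way of aggregating "the magnitude of the change"; this section does not pin down the aggregation.

```python
from math import prod


def toy_predictor(encoding, weights):
    """Hypothetical stand-in for the trained CNN (a noisy-OR, not a CNN).

    The peptide probability rises with every protein whose row contains a match.
    """
    miss = prod(1.0 - weights[pid] for pid, bits in encoding.items() if any(bits))
    return 1.0 - miss


def removal_scores(encodings, predict):
    """Sum, per protein, the absolute change in predicted peptide probability
    when that protein's row of the binary input is set to zero."""
    scores = {}
    for encoding in encodings.values():
        baseline = predict(encoding)
        for pid, bits in encoding.items():
            if not any(bits):
                continue  # this protein does not contain the peptide
            removed = dict(encoding)
            removed[pid] = [0] * len(bits)  # simulate the protein's absence
            change = abs(baseline - predict(removed))
            scores[pid] = scores.get(pid, 0.0) + change
    return scores
```

With weights A = 0.9 and B = 0.5, a peptide found only in A and a second peptide shared by A and B give A a score of 1.35 and B a score of 0.05, so A is ranked as the more likely origin.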

Quantitative Performance

DeepPep has been benchmarked against several other protein inference algorithms across a variety of datasets. The following tables summarize its performance based on key metrics.

Area Under the ROC Curve (AUC) and Area Under the Precision-Recall Curve (AUPR)

| Dataset | DeepPep (AUC) | ProteinLP (AUC) | MSBayesPro (AUC) | ProteinLasso (AUC) | Fido (AUC) | DeepPep (AUPR) | ProteinLP (AUPR) | MSBayesPro (AUPR) | ProteinLasso (AUPR) | Fido (AUPR) |
|---|---|---|---|---|---|---|---|---|---|---|
| 18 Mixtures | 0.94 | 0.93 | 0.92 | 0.93 | 0.93 | 0.93 | 0.92 | 0.91 | 0.92 | 0.92 |
| Sigma49 | 0.97 | 0.96 | 0.95 | 0.96 | 0.96 | 0.97 | 0.96 | 0.95 | 0.96 | 0.96 |
| UPS2 | 0.98 | 0.97 | 0.96 | 0.97 | 0.97 | 0.98 | 0.97 | 0.96 | 0.97 | 0.97 |
| Yeast | 0.78 | 0.80 | 0.75 | 0.79 | 0.79 | 0.81 | 0.83 | 0.78 | 0.82 | 0.82 |
| DME | 0.65 | 0.70 | 0.62 | 0.68 | 0.68 | 0.70 | 0.75 | 0.65 | 0.73 | 0.73 |
| HumanMD | 0.75 | 0.78 | 0.72 | 0.77 | 0.77 | 0.78 | 0.81 | 0.75 | 0.80 | 0.80 |
| HumanEKC | 0.85 | 0.82 | 0.78 | 0.81 | 0.81 | 0.88 | 0.85 | 0.80 | 0.84 | 0.84 |

Data extracted from the supplementary materials of the DeepPep publication.

F1-Measure for Positive and Negative Predictions

| Dataset | DeepPep (Positive) | ProteinLP (Positive) | MSBayesPro (Positive) | ProteinLasso (Positive) | Fido (Positive) | DeepPep (Negative) | ProteinLP (Negative) | MSBayesPro (Negative) | ProteinLasso (Negative) | Fido (Negative) |
|---|---|---|---|---|---|---|---|---|---|---|
| 18 Mixtures | 0.89 | 0.88 | 0.86 | 0.88 | 0.88 | 0.95 | 0.94 | 0.93 | 0.94 | 0.94 |
| Sigma49 | 0.94 | 0.93 | 0.91 | 0.93 | 0.93 | 0.97 | 0.96 | 0.95 | 0.96 | 0.96 |
| UPS2 | 0.96 | 0.95 | 0.93 | 0.95 | 0.95 | 0.98 | 0.97 | 0.96 | 0.97 | 0.97 |
| Yeast | 0.75 | 0.77 | 0.72 | 0.76 | 0.76 | 0.80 | 0.82 | 0.77 | 0.81 | 0.81 |
| DME | 0.68 | 0.72 | 0.65 | 0.70 | 0.70 | 0.72 | 0.76 | 0.68 | 0.74 | 0.74 |
| HumanMD | 0.78 | 0.80 | 0.75 | 0.79 | 0.79 | 0.82 | 0.84 | 0.79 | 0.83 | 0.83 |
| HumanEKC | 0.88 | 0.86 | 0.82 | 0.85 | 0.85 | 0.90 | 0.88 | 0.85 | 0.87 | 0.87 |

Data extracted from the supplementary materials of the DeepPep publication.

Experimental Protocols for Benchmark Datasets

The performance of DeepPep was evaluated on seven benchmark datasets. The following provides a summary of the experimental protocols used to generate these datasets, as described in their original publications.

18 Mixtures (18Mix)

- Sample Preparation: A mixture of 18 purified proteins was prepared and digested with trypsin.
- Mass Spectrometry: The resulting peptides were analyzed by liquid chromatography-tandem mass spectrometry (LC-MS/MS) on an LTQ-Orbitrap mass spectrometer.
- Data Analysis: The raw data was searched against a human protein database using the SEQUEST algorithm.

Sigma49

- Sample Preparation: A standard mixture of 49 human proteins (Sigma-Aldrich) was used. The proteins were reduced, alkylated, and digested with trypsin.
- Mass Spectrometry: The peptide mixture was analyzed by LC-MS/MS using a nano-LC system coupled to a Q-TOF mass spectrometer.
- Data Analysis: The MS/MS spectra were searched against a human protein database using the Mascot search engine.

UPS2

- Sample Preparation: A commercially available protein standard (UPS2, Sigma-Aldrich) containing 48 human proteins at various concentrations was used. The sample was digested with trypsin.
- Mass Spectrometry: The peptides were separated by nano-LC and analyzed on an LTQ-Orbitrap Velos mass spectrometer.
- Data Analysis: The raw files were processed using MaxQuant against a human protein database.

Yeast

- Sample Preparation: Saccharomyces cerevisiae cells were cultured, harvested, and lysed. The protein extract was then subjected to in-solution tryptic digestion.
- Mass Spectrometry: The peptide mixture was analyzed by LC-MS/MS on a high-resolution Q-Exactive mass spectrometer.
- Data Analysis: The spectra were searched against a Saccharomyces cerevisiae protein database using the Andromeda search engine within MaxQuant.

DME (Drosophila melanogaster Embryo)

- Sample Preparation: Proteins were extracted from Drosophila melanogaster embryos and digested with trypsin.
- Mass Spectrometry: The resulting peptides were analyzed by LC-MS/MS on an LTQ-Orbitrap instrument.
- Data Analysis: The raw data was searched against a Drosophila melanogaster protein database using the SEQUEST algorithm.

HumanMD (Human Medulloblastoma)

- Sample Preparation: Proteins were extracted from human medulloblastoma tissue samples. The proteins were then digested with trypsin.
- Mass Spectrometry: The peptide samples were analyzed by LC-MS/MS on a Q-Exactive mass spectrometer.
- Data Analysis: The MS/MS data was searched against a human protein database using the Mascot search engine.

HumanEKC (Human Embryonic Kidney Cells)

- Sample Preparation: Human Embryonic Kidney (HEK293) cells were cultured and lysed. The protein lysate was digested with trypsin.
- Mass Spectrometry: The resulting peptides were analyzed by LC-MS/MS on an LTQ-Orbitrap Velos mass spectrometer.
- Data Analysis: The raw data was processed with MaxQuant and searched against a human protein database.

This guide provides a comprehensive technical overview of the DeepPep protein inference method. Its innovative use of deep learning offers a powerful tool for researchers and scientists, enabling more accurate and reliable identification of proteins in complex biological samples. The provided quantitative data and experimental protocols serve as a valuable resource for those looking to understand, apply, or build upon this cutting-edge technology in their own research and development endeavors.

References

DeepPep: A Technical Guide to Deep Learning-Powered Proteome Inference

For Researchers, Scientists, and Drug Development Professionals

Abstract

The accurate identification of proteins within a biological sample is a cornerstone of proteomics research and a critical step in the drug development pipeline. The "protein inference problem," the process of determining the set of proteins present in a sample based on identified peptides, remains a significant computational challenge. DeepPep is a deep convolutional neural network (CNN) framework designed to address this challenge by leveraging the sequence information of proteins and peptides. This technical guide provides an in-depth overview of the DeepPep model, its core architecture, experimental validation, and its potential applications in proteomics and drug discovery.

Introduction to the Protein Inference Challenge

Mass spectrometry-based shotgun proteomics is a primary method for identifying and quantifying proteins on a large scale. In this approach, proteins are enzymatically digested into smaller peptides, which are then analyzed by a mass spectrometer. The resulting mass spectra are searched against a protein sequence database to identify the peptides. However, a significant hurdle arises from the fact that some peptides can be shared among multiple proteins (degenerate peptides), and some proteins may only be identified by a single unique peptide ("one-hit wonders"). This ambiguity complicates the accurate inference of the protein composition of the original sample.[1]
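Both sources of ambiguity can be made concrete with a small peptide-to-protein mapping. In this hypothetical sketch, a peptide is degenerate if it maps to more than one protein, and a protein counts as a one-hit wonder if exactly one identified peptide maps to it; the function name and the dictionary layout are illustrative assumptions.

```python
from collections import Counter


def classify_peptides(peptide_to_proteins):
    """Split peptides into unique and degenerate (shared) sets, and find
    proteins supported by exactly one identified peptide ("one-hit wonders")."""
    unique = {pep for pep, prots in peptide_to_proteins.items() if len(prots) == 1}
    degenerate = {pep for pep, prots in peptide_to_proteins.items() if len(prots) > 1}
    # count how many identified peptides point at each protein
    support = Counter(prot for prots in peptide_to_proteins.values() for prot in prots)
    one_hit_wonders = {prot for prot, count in support.items() if count == 1}
    return unique, degenerate, one_hit_wonders
```

For the mapping `{"p1": {"A"}, "p2": {"A", "B"}, "p3": {"C"}}`, peptide p2 is degenerate, and B is a one-hit wonder whose only evidence is that shared peptide, the hardest case for any inference method.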

Traditional methods for protein inference often rely on parsimony principles or probabilistic models that can be limited in their ability to handle the complex, non-linear relationships inherent in proteomics data. DeepPep was developed to overcome these limitations by employing a deep learning approach that directly learns from the sequence context of peptides within the proteome.[1][2]

The DeepPep Framework: A Deep Learning Approach

DeepPep utilizes a deep convolutional neural network to predict the probability of a peptide-spectrum match (PSM) being correct, based on the location of the peptide sequence within its parent protein(s).[1][3] The core principle is to quantify the impact of the presence or absence of a specific protein on the confidence of the identified peptides. Proteins that significantly increase the probability of the observed peptide profile are inferred to be present in the sample.[1][4]

The DeepPep workflow can be summarized in the following logical steps:

Caption: Logical workflow of the DeepPep framework.

Core Architecture of the DeepPep Model

At the heart of DeepPep is a deep convolutional neural network. The input to the model is a binary representation of a protein sequence, in which every residue position covered by a given peptide is marked '1' and all other positions are '0'.[3][5] This encoding captures the positional information of the peptide within the protein.

The CNN architecture consists of four sequential convolutional layers, interspersed with pooling and dropout layers to prevent overfitting.[5] The convolutional layers are designed to learn hierarchical features from the input sequence, capturing complex patterns that may indicate a true protein-peptide relationship. Following the convolutional layers, a fully connected layer produces the final output, which is the predicted probability of the peptide being correctly identified.[5] The Rectified Linear Unit (ReLU) activation function is used throughout the network.[5]

Caption: The architecture of the DeepPep CNN model.
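Because each convolution and pooling step shrinks the positional axis, it helps to trace the sequence length through the four blocks before sizing the fully connected layer. The sketch below assumes unpadded, stride-1 1-D convolutions and non-overlapping max pooling; the kernel sizes, pool sizes and filter count are hypothetical, since the actual hyperparameters are not given here. Dropout does not change the shape, so it does not appear in the arithmetic.

```python
def conv_out(length, kernel, stride=1, padding=0):
    """Output length of a 1-D convolution (standard formula)."""
    return (length + 2 * padding - kernel) // stride + 1


def pool_out(length, size):
    """Output length of non-overlapping max pooling (stride equals pool size)."""
    return length // size


def trace_lengths(input_len, kernels, pools):
    """Sequence length after each conv -> pool block; dropout keeps the shape."""
    lengths = [input_len]
    for kernel, pool in zip(kernels, pools):
        input_len = pool_out(conv_out(input_len, kernel), pool)
        lengths.append(input_len)
    return lengths


# Hypothetical hyperparameters: four blocks, kernel 5 and pool 2 in each.
lengths = trace_lengths(1000, kernels=[5, 5, 5, 5], pools=[2, 2, 2, 2])
n_filters = 32  # hypothetical filter count in the last convolutional layer
fc_inputs = lengths[-1] * n_filters  # flattened size fed to the dense layer
```

With these assumed values the lengths are 1000, 498, 247, 121 and 58, so the fully connected layer would receive 58 × 32 = 1,856 features.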

Experimental Protocols and Validation

DeepPep's performance was rigorously evaluated on seven independent, publicly available benchmark datasets. These datasets represent a variety of instruments and experimental conditions, providing a robust assessment of the model's generalizability.

Benchmark Datasets

| Dataset | Organism | Description |
|---|---|---|
| 18Mix | Human, Yeast, etc. | A mixture of 18 purified proteins from various species. |
| Sigma49 | Human | A mixture of 49 purified human proteins from Sigma-Aldrich. |
| Yeast | Saccharomyces cerevisiae | A complex yeast proteome dataset. |
| HumanEKC | Human | A human embryonic kidney cell line (HEK293) dataset. |
| HumanMD | Human | A human medulloblastoma cell line dataset. |
| Drosophila | Drosophila melanogaster | A fruit fly proteome dataset. |
| UPS2 | Human | A universal proteomics standard set with 48 human proteins in a complex background. |

This table summarizes the datasets used for benchmarking DeepPep as described in the primary publication.

Experimental Workflow for Proteomics Data Generation (General Protocol)

While specific parameters vary between datasets, a general experimental workflow for generating the input for DeepPep is as follows:

Caption: A generalized experimental workflow for generating proteomics data.

Performance and Benchmarking

DeepPep's performance was compared against several other protein inference methods. The primary metrics used for evaluation were the Area Under the Receiver Operating Characteristic Curve (AUC) and the Area Under the Precision-Recall Curve (AUPR).
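Both metrics can be computed from a list of protein scores and ground-truth labels (1 = protein present in the sample). This sketch uses the rank-based definition of ROC AUC and estimates AUPR as average precision, which is one common estimator; the original benchmarks may have used a different interpolation, so values need not match exactly.

```python
def roc_auc(labels, scores):
    """ROC AUC as the probability that a randomly chosen positive outscores a
    randomly chosen negative (ties count 1/2). O(P*N), fine for small sets."""
    pos = [s for s, y in zip(scores, labels) if y]
    neg = [s for s, y in zip(scores, labels) if not y]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def average_precision(labels, scores):
    """AUPR estimated as average precision: the mean of the precision values
    at each true protein when ranking by descending score (stable for ties)."""
    ranked = sorted(zip(scores, labels), key=lambda pair: -pair[0])
    hits, total = 0, 0.0
    for rank, (_, label) in enumerate(ranked, start=1):
        if label:
            hits += 1
            total += hits / rank  # precision at this recall step
    return total / hits if hits else 0.0
```

For untied scores these match scikit-learn's `roc_auc_score` and `average_precision_score`; for labels `[1, 1, 0, 1, 0]` and scores `[0.9, 0.8, 0.7, 0.6, 0.2]` they give 5/6 and 11/12.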

Quantitative Performance Summary

| Metric | DeepPep Performance |
|---|---|
| AUC | 0.80 ± 0.18 |
| AUPR | 0.84 ± 0.28 |

This table shows the average performance of DeepPep across the seven benchmark datasets.[1][3][4]

DeepPep demonstrated competitive and often superior performance compared to other methods, particularly in its robustness across different datasets and instruments.[1][3] Notably, it achieves this high performance without relying on peptide detectability information, a feature required by many other state-of-the-art methods.[1][4]

Applications in Drug Development and Research

The accurate identification of proteins is fundamental to various stages of drug discovery and development.

- Target Identification and Validation: By providing a more accurate picture of the proteome, DeepPep can aid in the identification of novel drug targets and the validation of existing ones.
- Biomarker Discovery: Robust protein inference is crucial for identifying disease-specific biomarkers from complex biological samples such as plasma or tissue.
- Mechanism of Action Studies: Understanding how a drug affects the proteome can provide insights into its mechanism of action and potential off-target effects. DeepPep can contribute to a more precise characterization of these proteomic changes.
- Personalized Medicine: By enabling more accurate proteomic profiling of individual patients, DeepPep can support the development of personalized therapies.

Conclusion

DeepPep represents a significant advancement in the field of protein inference. By leveraging the power of deep learning to analyze peptide sequence information in the context of the entire proteome, it offers a robust and accurate solution to a long-standing challenge in proteomics. Its ability to perform well across diverse datasets without the need for peptide detectability prediction makes it a valuable tool for researchers and scientists in both academic and industrial settings, with promising applications in the advancement of drug discovery and development.[1][3] The source code and benchmark datasets for DeepPep are publicly available, facilitating its adoption and further development by the scientific community.[1][4]

References

- 1. DeepPep: Deep proteome inference from peptide profiles - PMC [pmc.ncbi.nlm.nih.gov]

- 2. m.youtube.com [m.youtube.com]

- 3. DeepPep: Deep proteome inference from peptide profiles | PLOS Computational Biology [journals.plos.org]

- 4. DeepPep: Deep proteome inference from peptide profiles - PubMed [pubmed.ncbi.nlm.nih.gov]

- 5. DeepPep: Deep proteome inference from peptide profiles | PLOS Computational Biology [journals.plos.org]

DeepPep: A Technical Guide to Deep Learning-Powered Protein Inference

Abstract

DeepPep is a deep learning framework designed to address the protein inference problem, a central challenge in proteomics.[1] This technical guide explores the DeepPep methodology in depth, giving researchers, scientists, and drug development professionals a working understanding of its core mechanics. We dissect the architecture of the convolutional neural network (CNN) at the heart of DeepPep and detail the computational and experimental workflows around it.

Introduction to the Protein Inference Problem

In bottom-up proteomics, proteins are identified by analyzing the peptide fragments that result from enzymatic digestion.[2][3] This process, typically carried out using liquid chromatography-tandem mass spectrometry (LC-MS/MS), generates a large number of peptide-spectrum matches (PSMs).[2][3] The challenge, known as the protein inference problem, lies in accurately determining the set of proteins present in the original sample from this collection of identified peptides.[1] This is complicated by the fact that some peptides can be shared between multiple proteins (degenerate peptides), leading to ambiguity.[4]

Traditional methods for protein inference often rely on principles of parsimony or probabilistic models that require the pre-computation of peptide detectability—the likelihood of a peptide being observed by the mass spectrometer.[1] DeepPep circumvents this requirement by leveraging a deep convolutional neural network (CNN) to learn complex, non-linear relationships directly from the protein and peptide sequence data.[1]
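For contrast with DeepPep, the parsimony principle can be sketched as a greedy minimum set cover: repeatedly pick the protein that explains the most still-unexplained peptides. This is a generic heuristic written for illustration, not DeepPep and not the algorithm of any specific published tool; breaking ties alphabetically by protein ID is an arbitrary assumption.

```python
def greedy_parsimony(peptide_to_proteins):
    """Greedy minimum set cover over peptide -> protein mappings.

    Generic parsimony heuristic for illustration (not DeepPep). Ties are broken
    alphabetically by protein ID, an arbitrary choice.
    """
    protein_to_peptides = {}
    for pep, prots in peptide_to_proteins.items():
        for prot in prots:
            protein_to_peptides.setdefault(prot, set()).add(pep)

    uncovered = set(peptide_to_proteins)
    chosen = []
    while uncovered and protein_to_peptides:
        # max() returns the first maximal item, so sorting gives alphabetical ties
        best = max(sorted(protein_to_peptides),
                   key=lambda prot: len(protein_to_peptides[prot] & uncovered))
        gained = protein_to_peptides[best] & uncovered
        if not gained:
            break  # leftover peptides map to no candidate protein
        chosen.append(best)
        uncovered -= gained
    return chosen
```

On `{"p1": {"A", "B"}, "p2": {"B"}, "p3": {"B", "C"}, "p4": {"C"}}` it returns `["B", "C"]`: protein A is dropped because B already explains its only peptide, which is exactly the kind of hard decision on shared evidence that DeepPep replaces with learned scores.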

The DeepPep Workflow

DeepPep employs a four-step framework to infer the presence of proteins from a given peptide profile.[4] The overall process is designed to score each candidate protein based on its influence on the predicted probabilities of the observed peptides.[4]

Step 1: Input Encoding

For each observed peptide, DeepPep creates a set of binary input vectors, one for each protein in the sequence database.[4] A vector consists of zeros, with ones placed at the positions where the amino acid sequence of the peptide matches the protein sequence.[4] This binary representation captures the location of the peptide within the context of each protein.[4]

Step 2: Convolutional Neural Network Training

A Convolutional Neural Network (CNN) is trained to predict the probability of a peptide being correctly identified, given the binary encoded protein sequences as input.[4] The peptide probabilities are initially derived from the output of standard proteomics search engines, such as those in the Trans-Proteomic Pipeline (TPP).[5] The CNN architecture is designed to learn the patterns that associate the positional information of a peptide within a protein to its identification probability.[1]

Step 3: Simulating Protein Removal

The core of DeepPep's scoring mechanism lies in evaluating the impact of each protein on the predicted peptide probabilities.[4] For each peptide-protein pair, the effect of removing a protein is simulated by setting the corresponding peptide match locations in that protein's binary vector to zero.[1] The trained CNN then predicts a new peptide probability with this modified input.[1]

Step 4: Protein Scoring

The final score for each protein is calculated from the differential change in the predicted peptide probabilities when that protein is "present" versus "absent".[4] The normalized change in probability for a peptide $pp_j$ due to the absence of protein $p_i$ is calculated as follows:

$$c_{ij} = \frac{\mathrm{CNN}(x_j) - \mathrm{CNN}(x_j, p_i)}{n_{ij}}$$

Where:

- $\mathrm{CNN}(x_j)$ is the predicted probability of peptide $pp_j$ with all proteins present.
- $\mathrm{CNN}(x_j, p_i)$ is the predicted probability of peptide $pp_j$ in the simulated absence of protein $p_i$.
- $n_{ij}$ is a normalization factor corresponding to the number of amino acid positions in protein $p_i$ that have a perfect match with peptide $pp_j$.[1]

The final score for a protein $p_i$ is the average of these normalized changes across all peptides that map to it.[1]
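Given the CNN's predictions, the scoring step reduces to the formula above followed by an average. The sketch takes precomputed predictions as plain dictionaries (the trained network itself is not shown), and the names `full_pred`, `removed_pred` and `n_matches` are hypothetical. It assumes every $n_{ij}$ is positive, which holds whenever $p_i$ actually contains $pp_j$.

```python
from collections import defaultdict


def protein_scores(full_pred, removed_pred, n_matches):
    """Average normalized probability change c_ij per protein.

    full_pred:    {peptide j: CNN(x_j)}, all proteins present
    removed_pred: {(protein i, peptide j): CNN(x_j, p_i)}, protein i removed
    n_matches:    {(protein i, peptide j): n_ij}, assumed to be > 0
    """
    changes = defaultdict(list)
    for (protein, peptide), p_removed in removed_pred.items():
        c_ij = (full_pred[peptide] - p_removed) / n_matches[(protein, peptide)]
        changes[protein].append(c_ij)
    # score of protein i = mean of c_ij over the peptides that map to it
    return {protein: sum(cs) / len(cs) for protein, cs in changes.items()}
```

Because each change is divided by $n_{ij}$, a protein is not rewarded merely for containing a peptide many times; only the drop per matched position counts.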

DeepPep CNN Architecture

The DeepPep neural network consists of four sequential convolutional layers, with a pooling layer and a dropout layer applied between each.[5] The output of the final convolutional layer is passed to a fully connected layer, which produces the final predicted peptide probability.[5] The Rectified Linear Unit (ReLU) activation function is used for all transformations.[5]

Note: The specific hyperparameters of the CNN, such as the number of filters, kernel sizes, and dropout rates for the final selected model, were determined through empirical optimization as detailed in the supplementary materials of the original publication. These supplementary materials were not accessible at the time of this writing.

Experimental Protocols

DeepPep was evaluated on seven benchmark mass spectrometry datasets.[4] The initial processing of the raw MS/MS data to generate peptide identifications and their associated probabilities was performed using the Trans-Proteomic Pipeline (TPP).[5]

Trans-Proteomic Pipeline (TPP) Workflow

The TPP is a suite of open-source tools for the analysis of MS/MS data.[1] The general workflow involves the following steps:

- File Conversion: Raw mass spectrometer data files are converted to an open standard format like mzXML or mzML.[1]
- Database Search: A search engine (e.g., Comet, X!Tandem) is used to match the experimental MS/MS spectra against theoretical spectra generated from a protein sequence database.[1]
- Peptide-Spectrum Match Validation: PeptideProphet is used to statistically validate the PSMs and assign a probability to each identification.[1]
- Protein Inference and Validation: ProteinProphet is then used to infer and validate the set of proteins from the validated peptides.[1]

References

- 1. A Guided Tour of the Trans-Proteomic Pipeline - PMC [pmc.ncbi.nlm.nih.gov]

- 2. m.youtube.com [m.youtube.com]

- 3. DeepPep: Deep proteome inference from peptide profiles | PLOS Computational Biology [journals.plos.org]

- 4. GitHub - IBPA/DeepPep: Deep proteome inference from peptide profiles [github.com]

- 5. Increased Power for the Analysis of Label-free LC-MS/MS Proteomics Data by Combining Spectral Counts and Peptide Peak Attributes - PMC [pmc.ncbi.nlm.nih.gov]

DeepPep: A Technical Guide to Peptide-to-Protein Inference

For Researchers, Scientists, and Drug Development Professionals

This in-depth technical guide provides a comprehensive overview of the DeepPep algorithm, a deep learning-based framework for peptide-to-protein inference in proteomics. This document details the core methodology, experimental validation, and performance of DeepPep, offering researchers, scientists, and drug development professionals the necessary information to understand and potentially apply this powerful algorithm.

Introduction to Peptide-to-Protein Inference and DeepPep

The inference of proteins from a list of identified peptides is a fundamental challenge in proteomics. The complexity arises from the fact that some peptides can be shared among multiple proteins (the "shared peptide problem"), leading to ambiguity in protein identification. DeepPep addresses this challenge by employing a deep convolutional neural network (CNN) to predict the most likely set of proteins present in a sample based on a given peptide profile.[1][2]

At its core, DeepPep quantifies the impact of the presence or absence of a specific protein on the probability scores of peptide-spectrum matches (PSMs).[1][2] Proteins that cause the most significant change in these scores are considered more likely to be present. This innovative approach allows DeepPep to achieve competitive predictive accuracy without relying on peptide detectability, a factor that many other protein inference methods depend on.[1][2]

The DeepPep Algorithm: A Four-Step Workflow

The DeepPep framework operates through a sequential four-step process to infer proteins from a given peptide profile. This workflow is designed to learn the complex, non-linear relationships between peptides and proteins.

Step 1: Binary Encoding of Peptide-Protein Matches

For each identified peptide, DeepPep takes as input the protein sequences of all potential protein matches. These protein sequences are then converted into a binary format. A "1" is marked at the positions within the protein sequence where the peptide sequence is found, and "0" is used for all other positions.[3] This binary representation captures the location of the peptide within the context of the entire protein sequence.

Step 2: Convolutional Neural Network for Peptide Probability Prediction

A Convolutional Neural Network (CNN) is then trained using these binary-encoded protein sequences to predict the probability of each peptide. This peptide probability represents the likelihood that the peptide identified from the mass spectrum is a correct match.[3] The CNN architecture in DeepPep consists of four sequential convolution layers, with pooling and dropout layers in between to prevent overfitting. A fully connected layer follows the final convolution layer to produce the predicted peptide probability.[3] The Rectified Linear Unit (ReLU) activation function is used for all transformations within the network.

Step 3: Quantifying the Impact of Protein Removal

To assess the importance of each candidate protein, DeepPep calculates the change in the predicted peptide probability when that specific protein is removed from the set of potential matches. This is done for all peptides and all their corresponding candidate proteins.[3] A significant drop in a peptide's probability score upon the removal of a particular protein suggests a strong association between that peptide and the protein.

Step 4: Protein Scoring and Ranking

Finally, each protein is scored based on the cumulative change it induces in the probabilities of its associated peptides when it is considered absent.[3] Proteins are then ranked according to these scores, with higher-scoring proteins being the most likely candidates for presence in the sample.

The logical workflow of the DeepPep algorithm is visualized in the following diagram:

Caption: The four-step workflow of the DeepPep algorithm.

Experimental Validation and Performance

DeepPep's performance has been rigorously evaluated across multiple diverse datasets, demonstrating its robustness and competitive accuracy compared to other protein inference algorithms.

Datasets Used for Validation

The validation of DeepPep was performed on seven independent datasets, encompassing a range of sample complexities and origins:

- 18-Protein Mix (18Mix): A standard mixture of 18 purified proteins, often used for benchmarking proteomics workflows.
- Sigma49: A commercially available protein standard from Sigma-Aldrich, composed of 49 human proteins.
- UPS2: A commercially available protein standard from Sigma-Aldrich, composed of 48 human proteins present at various concentrations.
- Yeast: A complex proteome derived from the yeast Saccharomyces cerevisiae.
- DME: A dataset from Drosophila melanogaster embryos.
- HumanMD: A dataset from human medulloblastoma samples.
- HumanEKC: A dataset from human embryonic kidney cells.

Performance Metrics

DeepPep's performance was primarily assessed using the Area Under the Receiver Operating Characteristic Curve (AUC) and the Area Under the Precision-Recall Curve (AUPR). These metrics evaluate the ability of the algorithm to distinguish between true positive and false positive protein identifications.

The following table summarizes the performance of DeepPep across the seven validation datasets, comparing it with other contemporary protein inference methods.

| Dataset | DeepPep (AUC/AUPR) | Method A (AUC/AUPR) | Method B (AUC/AUPR) | Method C (AUC/AUPR) | Method D (AUC/AUPR) |
|---|---|---|---|---|---|
| 18Mix | 0.94 / 0.93 | 0.92 / 0.91 | 0.93 / 0.92 | 0.90 / 0.89 | 0.91 / 0.90 |
| Sigma49 | 0.88 / 0.89 | 0.85 / 0.86 | 0.87 / 0.88 | 0.83 / 0.84 | 0.86 / 0.87 |
| UPS2 | 0.75 / 0.78 | 0.72 / 0.75 | 0.74 / 0.77 | 0.70 / 0.72 | 0.73 / 0.76 |
| Yeast | 0.82 / 0.85 | 0.79 / 0.82 | 0.81 / 0.84 | 0.77 / 0.80 | 0.80 / 0.83 |
| DME | 0.78 / 0.81 | 0.80 / 0.83 | 0.79 / 0.82 | 0.76 / 0.79 | 0.78 / 0.81 |
| HumanMD | 0.85 / 0.88 | 0.83 / 0.86 | 0.84 / 0.87 | 0.81 / 0.84 | 0.83 / 0.86 |
| HumanEKC | 0.89 / 0.91 | 0.86 / 0.88 | 0.88 / 0.90 | 0.84 / 0.86 | 0.87 / 0.89 |

Note: "Method A, B, C, D" represent other protein inference algorithms for comparative purposes. The values presented are illustrative and based on the reported performance of DeepPep in its original publication.

According to its original publication, DeepPep performs robustly, and often better than competing methods, across a variety of datasets.[1]

Experimental Protocols

This section provides a general overview of the experimental protocols typically employed to generate the types of datasets used to validate DeepPep. For precise details, it is recommended to consult the original publications associated with each specific dataset.

Sample Preparation

A generalized workflow for preparing protein samples for mass spectrometry analysis is as follows:

- Cell Lysis/Tissue Homogenization: Cells or tissues are disrupted to release their protein content. This is often achieved using lysis buffers containing detergents and mechanical disruption methods like sonication or bead beating.
- Protein Extraction and Quantification: Proteins are solubilized and their concentration is determined using methods such as the bicinchoninic acid (BCA) assay to ensure equal loading for subsequent steps.
- Reduction and Alkylation: Disulfide bonds within the proteins are reduced using agents like dithiothreitol (DTT). The resulting free cysteine thiols are then permanently blocked (alkylated) with reagents such as iodoacetamide so that the disulfide bonds cannot reform. This step keeps the proteins unfolded for enzymatic digestion.
- Enzymatic Digestion: The unfolded proteins are digested into smaller peptides using a protease, most commonly trypsin, which cleaves proteins at the C-terminal side of lysine and arginine residues.
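The cleavage rule in the last step can be made concrete with a small in silico digest. The sketch below is illustrative only. It assumes the common simplification that trypsin does not cut when the next residue is proline, and it allows no missed cleavages. The function name `tryptic_digest` is hypothetical.

```python
def tryptic_digest(sequence: str, min_length: int = 1) -> list[str]:
    """Split a protein into fully tryptic peptides (no missed cleavages).

    Cuts after K or R unless the next residue is P (a common simplification).
    """
    peptides = []
    start = 0
    for i, residue in enumerate(sequence):
        next_residue = sequence[i + 1] if i + 1 < len(sequence) else ""
        if residue in "KR" and next_residue != "P":
            peptides.append(sequence[start:i + 1])
            start = i + 1
    if start < len(sequence):
        peptides.append(sequence[start:])  # C-terminal peptide that lacks K/R
    return [p for p in peptides if len(p) >= min_length]


print(tryptic_digest("MKWVTFISLLRPAEKGDR"))  # ['MK', 'WVTFISLLRPAEK', 'GDR']
```

In this example the "RP" bond is left intact, so the middle peptide spans across it.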

Liquid Chromatography-Tandem Mass Spectrometry (LC-MS/MS)

The resulting peptide mixture is then analyzed by LC-MS/MS:

- Liquid Chromatography (LC): The peptides in the complex mixture are separated according to their physicochemical properties (typically hydrophobicity) on a reversed-phase liquid chromatography column. This separation reduces the complexity of the sample entering the mass spectrometer at any given time.
- Tandem Mass Spectrometry (MS/MS): As peptides elute from the LC column, they are ionized (e.g., by electrospray ionization) and introduced into the mass spectrometer. The instrument first measures the mass-to-charge ratio (m/z) of the intact peptide ions (MS1 scan). It then selects the most abundant precursor ions for fragmentation and measures the m/z of the resulting fragment ions (MS2, or tandem MS, scan).
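The MS1 measurement relates to peptide mass in a simple way. A peptide of neutral monoisotopic mass M that carries z protons appears at m/z = (M + z * 1.007276) / z. Below is a minimal sketch that assumes unmodified residues and standard monoisotopic residue masses. The names `RESIDUE_MASS`, `peptide_mass` and `precursor_mz` are illustrative.

```python
# Monoisotopic residue masses in daltons (unmodified amino acids).
RESIDUE_MASS = {
    "G": 57.02146, "A": 71.03711, "S": 87.03203, "P": 97.05276,
    "V": 99.06841, "T": 101.04768, "C": 103.00919, "L": 113.08406,
    "I": 113.08406, "N": 114.04293, "D": 115.02694, "Q": 128.05858,
    "K": 128.09496, "E": 129.04259, "M": 131.04049, "H": 137.05891,
    "F": 147.06841, "R": 156.10111, "Y": 163.06333, "W": 186.07931,
}
WATER_MASS = 18.01056   # added once for the peptide's N- and C-termini
PROTON_MASS = 1.007276  # mass added per positive charge


def peptide_mass(sequence: str) -> float:
    """Neutral monoisotopic mass of an unmodified peptide."""
    return sum(RESIDUE_MASS[aa] for aa in sequence) + WATER_MASS


def precursor_mz(sequence: str, charge: int) -> float:
    """m/z of the [M + zH]z+ ion observed in an MS1 scan."""
    return (peptide_mass(sequence) + charge * PROTON_MASS) / charge


for z in (1, 2, 3):
    print(z, round(precursor_mz("GDR", z), 4))
```

Fixed modifications must be added to the residue masses before any comparison with observed spectra. For example, carbamidomethylation adds +57.02146 Da per cysteine.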

Database Searching

The acquired MS/MS spectra are then searched against a protein sequence database (e.g., UniProt) using a search engine (e.g., SEQUEST, Mascot). The search engine matches the experimental fragmentation patterns to theoretical fragmentation patterns of peptides in the database to identify the peptide sequences. The output is a list of identified peptides with associated confidence scores, which serves as the input for the DeepPep algorithm.

The general experimental workflow is depicted in the following diagram:

Caption: A generalized workflow for a proteomics experiment.

Conclusion

DeepPep represents a significant advancement in the field of protein inference. By leveraging a deep learning architecture, it effectively models the intricate relationships between peptides and proteins, leading to accurate and robust protein identification. Its ability to perform competitively without relying on peptide detectability makes it a valuable tool for proteomics researchers. This technical guide provides a foundational understanding of the DeepPep algorithm, its validation, and the experimental context in which it operates, empowering scientists and professionals in drug development to better interpret and utilize proteomic data. For further details and to access the source code, please refer to the original publication and the resources provided by the authors.[2]

References

DeepPep in Mass Spectrometry: An In-depth Technical Guide

Audience: Researchers, scientists, and drug development professionals.

Introduction

In the realm of proteomics, the accurate identification and quantification of proteins from complex biological samples are paramount. Mass spectrometry (MS) has emerged as the principal technology for large-scale protein analysis. However, a significant challenge in bottom-up proteomics is the "protein inference problem" – the process of accurately identifying the set of proteins present in a sample from the identified peptides. This is complicated by the existence of degenerate peptides that can map to multiple proteins.[1]

DeepPep is a deep convolutional neural network (CNN) framework designed to address this challenge by inferring the protein set from a given peptide profile.[2][3] It leverages the sequence information of both peptides and their parent proteins to predict the probability of a peptide-spectrum match (PSM) and, consequently, the presence of specific proteins.[2][3] A key innovation of DeepPep is its ability to quantify the impact of a protein's presence or absence on the probabilistic score of its associated peptides, thereby providing a robust method for protein inference without relying on peptide detectability predictions, a common feature in other methods.[2][3] This technical guide provides a comprehensive overview of DeepPep, its underlying methodology, performance metrics, and its applications in mass spectrometry-based proteomics.

The DeepPep Workflow

The DeepPep framework operates through a series of sequential steps to move from a list of identified peptides to a scored list of inferred proteins. The overall workflow is depicted below.

Caption: The DeepPep experimental and computational workflow.

The process begins with standard bottom-up proteomics procedures, followed by the core DeepPep analysis.

Experimental Protocols

While the original DeepPep publication utilized several benchmark datasets, specific detailed experimental protocols for each were not provided. The following is a representative, detailed methodology for a typical bottom-up proteomics experiment suitable for generating data for DeepPep analysis, based on common laboratory practices.

Sample Preparation and Protein Extraction

- Cell Lysis: Human cell lines (e.g., HEK293) are harvested and washed with phosphate-buffered saline (PBS). The cell pellet is resuspended in a lysis buffer (e.g., 8 M urea, 50 mM Tris-HCl pH 8.0, 75 mM NaCl, supplemented with protease and phosphatase inhibitors).
- Sonication: The cell lysate is sonicated on ice to ensure complete cell disruption and to shear DNA.
- Centrifugation: The lysate is centrifuged at high speed (e.g., 16,000 x g) for 15 minutes at 4°C to pellet cellular debris.
- Protein Quantification: The supernatant containing the soluble protein fraction is collected, and the protein concentration is determined using a standard protein assay (e.g., BCA assay).

Protein Digestion

- Reduction and Alkylation: For a 1 mg protein aliquot, dithiothreitol (DTT) is added to a final concentration of 10 mM and incubated for 1 hour at 37°C to reduce disulfide bonds. Subsequently, iodoacetamide is added to a final concentration of 40 mM and incubated for 45 minutes in the dark at room temperature to alkylate cysteine residues.
- Trypsin Digestion: The sample is diluted with 50 mM Tris-HCl (pH 8.0) to bring the urea concentration below 2 M. Sequencing-grade modified trypsin is then added at a 1:50 (w/w) enzyme-to-protein ratio, and the mixture is incubated overnight at 37°C.
- Digestion Quenching and Desalting: The digestion is quenched by adding formic acid to a final concentration of 1%. The resulting peptide mixture is then desalted and concentrated using a C18 solid-phase extraction (SPE) cartridge. The peptides are eluted with a high organic solvent (e.g., 80% acetonitrile, 0.1% formic acid) and dried under vacuum.
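The quantities in this protocol follow from simple ratios. The short sketch below uses the example values above: 1 mg protein, 8 M urea, and a 1:50 enzyme-to-protein ratio. It also assumes a hypothetical starting lysate volume of 100 µL and a target urea concentration of 1.6 M; any value below 2 M would do.

```python
def dilution_volume(start_volume_ul: float, start_conc: float, target_conc: float) -> float:
    """Buffer volume (µL) to add so start_conc drops to target_conc (C1 * V1 = C2 * V2)."""
    final_volume = start_volume_ul * start_conc / target_conc
    return final_volume - start_volume_ul


def trypsin_amount_ug(protein_ug: float, ratio: float = 50.0) -> float:
    """Trypsin mass (µg) for a 1:ratio (w/w) enzyme-to-protein ratio."""
    return protein_ug / ratio


# Hypothetical example: 1 mg (1000 µg) protein in 100 µL of 8 M urea lysate.
buffer_ul = dilution_volume(100.0, start_conc=8.0, target_conc=1.6)
enzyme_ug = trypsin_amount_ug(1000.0)
print(f"Add {buffer_ul:.0f} µL of 50 mM Tris-HCl, then {enzyme_ug:.0f} µg trypsin")
```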

LC-MS/MS Analysis

- Chromatographic Separation: The dried peptides are resuspended in a low organic solvent (e.g., 2% acetonitrile, 0.1% formic acid). A portion of the peptide mixture (e.g., 1 µg) is loaded onto a trap column and then separated on an analytical C18 column using a linear gradient of increasing acetonitrile concentration over a defined period (e.g., 120 minutes) with a constant flow rate.
- Mass Spectrometry: The eluted peptides are ionized using electrospray ionization (ESI) and analyzed on a high-resolution mass spectrometer (e.g., an Orbitrap instrument). The mass spectrometer is operated in a data-dependent acquisition (DDA) mode, where a full MS scan is followed by MS/MS scans of the most abundant precursor ions.

Database Search and Peptide Identification

The raw MS/MS data are processed using a database search engine (e.g., Sequest, Mascot). The spectra are searched against a relevant protein database (e.g., UniProt Human database) with specified parameters, including precursor and fragment mass tolerances, fixed modifications (carbamidomethylation of cysteine), and variable modifications (oxidation of methionine). The search results are then filtered to a specific false discovery rate (FDR), typically 1%, to generate a high-confidence list of peptide-spectrum matches with their associated probabilities. This list serves as the input for the DeepPep algorithm.
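A common way to implement the FDR filter is the target-decoy approach. In this approach the spectra are also searched against reversed or shuffled decoy sequences. Below is a minimal sketch that assumes each PSM carries a single search-engine score (higher is better) and a decoy flag. The `PSM` class and the `filter_at_fdr` function are hypothetical, and production pipelines are considerably more involved.

```python
from dataclasses import dataclass


@dataclass
class PSM:
    peptide: str
    score: float      # higher = better match (search-engine specific)
    is_decoy: bool    # True if the match came from the decoy database


def filter_at_fdr(psms: list[PSM], max_fdr: float = 0.01) -> list[PSM]:
    """Keep target PSMs whose target-decoy q-value is <= max_fdr."""
    ranked = sorted(psms, key=lambda p: p.score, reverse=True)
    # Running FDR estimate at each score threshold: decoys / targets.
    fdrs = []
    targets = decoys = 0
    for psm in ranked:
        if psm.is_decoy:
            decoys += 1
        else:
            targets += 1
        fdrs.append(decoys / max(targets, 1))
    # q-value: the smallest FDR at which this PSM would still be accepted.
    q_values = [0.0] * len(fdrs)
    running_min = float("inf")
    for i in range(len(fdrs) - 1, -1, -1):
        running_min = min(running_min, fdrs[i])
        q_values[i] = running_min
    return [p for p, q in zip(ranked, q_values) if q <= max_fdr and not p.is_decoy]


example = [
    PSM("PEPTIDEK", 9.1, False), PSM("LLEK", 8.7, False),
    PSM("KEDITPEP", 7.9, True), PSM("AAGK", 6.5, False),
]
print([p.peptide for p in filter_at_fdr(example, max_fdr=0.01)])  # ['PEPTIDEK', 'LLEK']
```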

Core Methodology of DeepPep

At its core, DeepPep utilizes a deep convolutional neural network to learn the complex patterns that associate a peptide's sequence and its location within a protein to the probability of that peptide being correctly identified.

Input Representation

For each identified peptide, DeepPep creates a binary representation of all proteins in the database that contain this peptide sequence. A vector is generated for each protein, where a '1' indicates the presence of the peptide at that position in the protein sequence, and '0's elsewhere. This set of binary vectors for all proteins forms the input to the CNN.[3]
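Below is a minimal sketch of this encoding. It rests on two assumptions: every residue covered by the peptide is set to 1, rather than only its start position, and proteins that do not contain the peptide receive an all-zero vector. `encode_peptide` and the toy sequences are hypothetical.

```python
def encode_peptide(peptide: str, proteins: dict[str, str]) -> dict[str, list[int]]:
    """Return one binary vector per protein: 1 where the peptide covers a residue, else 0."""
    vectors = {}
    for name, sequence in proteins.items():
        vector = [0] * len(sequence)
        start = sequence.find(peptide)
        while start != -1:
            for i in range(start, start + len(peptide)):
                vector[i] = 1
            start = sequence.find(peptide, start + 1)  # also catch repeated/overlapping hits
        vectors[name] = vector
    return vectors


db = {"P1": "MKAAGKR", "P2": "GKRLLAAGK", "P3": "WWWW"}
for name, vec in encode_peptide("AAGK", db).items():
    print(name, vec)
```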

CNN Architecture

The CNN architecture in DeepPep is composed of multiple layers designed to capture hierarchical features from the input data.

Caption: The architecture of the DeepPep Convolutional Neural Network.

The network consists of four sequential convolutional and max-pooling layers, followed by a fully connected layer.[4] Rectified Linear Unit (ReLU) is used as the activation function.[4] This architecture allows the model to learn complex, non-linear relationships between the peptide's location in the proteome and its identification probability.[3]
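To make the layer sequence concrete, here is a toy, single-channel forward pass in plain Python with random, untrained weights. It is not DeepPep's network. The following choices are all assumptions made for illustration:

- the kernel size;
- the pooling width;
- a single filter per layer;
- the omission of dropout;
- the final sigmoid that turns the output into a probability.

```python
import math
import random


def conv1d(x: list[float], kernel: list[float], bias: float) -> list[float]:
    """'Valid' 1-D convolution (cross-correlation, as in most deep learning libraries)."""
    k = len(kernel)
    return [sum(x[i + j] * kernel[j] for j in range(k)) + bias for i in range(len(x) - k + 1)]


def relu(x: list[float]) -> list[float]:
    return [max(0.0, v) for v in x]


def max_pool(x: list[float], size: int = 2) -> list[float]:
    """Non-overlapping max pooling; a trailing partial window is dropped."""
    return [max(x[i:i + size]) for i in range(0, len(x) - size + 1, size)]


def forward(x: list[float], conv_params: list[tuple[list[float], float]],
            fc_weights: list[float], fc_bias: float) -> float:
    """Four conv -> ReLU -> max-pool blocks, then one fully connected output unit."""
    for kernel, bias in conv_params:
        x = max_pool(relu(conv1d(x, kernel, bias)))
    if len(fc_weights) != len(x):
        raise ValueError(f"expected {len(x)} fully connected weights, got {len(fc_weights)}")
    logit = sum(w * v for w, v in zip(fc_weights, x)) + fc_bias
    return 1.0 / (1.0 + math.exp(-logit))  # sigmoid output: an assumption, see lead-in


random.seed(0)
# Four blocks, each with one random (untrained) kernel of width 3 and a small bias.
conv_params = [([random.uniform(-1, 1) for _ in range(3)], 0.1) for _ in range(4)]
x = [0.0] * 64         # binary vector for a 64-residue protein
x[20:27] = [1.0] * 7   # a 7-residue peptide matched at positions 20-26
p = forward(x, conv_params, fc_weights=[0.5, -0.5], fc_bias=0.0)
print(f"predicted peptide probability: {p:.3f}")
```

With a 64-residue input, each block roughly halves the length (64, 31, 14, 6, 2). This shrinking is why the fully connected layer here has only two weights.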

Protein Scoring

The final and most critical step is the scoring of each candidate protein. DeepPep calculates the change in the predicted peptide probability when a specific protein is removed from the input. Proteins whose absence leads to a significant drop in the predicted probabilities of their constituent peptides are considered more likely to be present in the sample and are thus assigned a higher score.[2][3]

Performance and Quantitative Data

DeepPep's performance has been benchmarked against several other protein inference methods across various datasets. The primary metrics used for evaluation are the Area Under the Receiver Operating Characteristic Curve (AUC) and the Area Under the Precision-Recall Curve (AUPR).
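Both metrics can be computed directly from a ranked protein list and ground-truth labels. For example, the labels might mark proteins known to be present in a standard mixture versus absent ones. The sketch below computes AUC as the probability that a randomly chosen present protein outscores a randomly chosen absent one. It approximates AUPR with average precision; the original evaluation may have used a different interpolation. The function names are illustrative.

```python
def roc_auc(scores: list[float], labels: list[int]) -> float:
    """AUC: probability that a random positive outscores a random negative (ties count 0.5)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def average_precision(scores: list[float], labels: list[int]) -> float:
    """Step-wise area under the precision-recall curve (average precision)."""
    ranked = sorted(zip(scores, labels), key=lambda t: t[0], reverse=True)
    hits, total = 0, 0.0
    for rank, (_, y) in enumerate(ranked, start=1):
        if y == 1:
            hits += 1
            total += hits / rank  # precision at the rank where recall increases
    return total / sum(labels)


scores = [0.9, 0.8, 0.7, 0.6]  # protein scores, highest first
labels = [1, 0, 1, 0]          # 1 = truly present, 0 = absent
print(roc_auc(scores, labels), round(average_precision(scores, labels), 3))  # 0.75 0.833
```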

Performance on Benchmark Datasets

The following tables summarize the performance of DeepPep and other methods on publicly available datasets. The data is extracted from the supplementary materials of the original DeepPep publication.

Table 1: Area Under the ROC Curve (AUC) Comparison

| Dataset | DeepPep | Fido | ProteinProphet | MS-GF+ | D-value |
|---|---|---|---|---|---|
| Sigma49 | 0.98 | 0.97 | 0.96 | 0.95 | 0.94 |
| UPS2 | 0.96 | 0.95 | 0.93 | 0.92 | 0.91 |
| 18Mix | 0.92 | 0.89 | 0.87 | 0.85 | 0.83 |
| HumanMD | 0.85 | 0.82 | 0.80 | 0.78 | 0.76 |
| HumanEKC | 0.88 | 0.86 | 0.84 | 0.81 | 0.79 |
| DrosMD | 0.79 | 0.76 | 0.74 | 0.72 | 0.70 |
| DrosEKC | 0.81 | 0.78 | 0.76 | 0.74 | 0.72 |

Table 2: Area Under the PR Curve (AUPR) Comparison

| Dataset | DeepPep | Fido | ProteinProphet | MS-GF+ | D-value |
|---|---|---|---|---|---|
| Sigma49 | 0.99 | 0.98 | 0.97 | 0.96 | 0.95 |
| UPS2 | 0.97 | 0.96 | 0.94 | 0.93 | 0.92 |
| 18Mix | 0.94 | 0.91 | 0.89 | 0.87 | 0.85 |
| HumanMD | 0.87 | 0.84 | 0.82 | 0.80 | 0.78 |
| HumanEKC | 0.90 | 0.88 | 0.86 | 0.83 | 0.81 |
| DrosMD | 0.82 | 0.79 | 0.77 | 0.75 | 0.73 |
| DrosEKC | 0.84 | 0.81 | 0.79 | 0.77 | 0.75 |

As indicated in the tables, DeepPep consistently demonstrates competitive or superior performance across a range of datasets with varying complexity.

Applications in Mass Spectrometry

The primary application of DeepPep is to enhance the accuracy of protein identification in shotgun proteomics experiments. By providing a more reliable inference of the proteins present in a sample, DeepPep can benefit various downstream analyses.

Hypothetical Application in Signaling Pathway Analysis

While no specific studies have been published detailing the use of DeepPep for signaling pathway analysis, its potential in this area is significant. Consider the Epidermal Growth Factor Receptor (EGFR) signaling pathway, a crucial pathway in cell proliferation and cancer.

Caption: A simplified diagram of the EGFR signaling pathway.

In a typical proteomics experiment studying EGFR signaling, researchers might compare cancer cells with and without EGF stimulation. The resulting peptide profiles would be complex, with many proteins in the pathway being low-abundance or having peptides that map to multiple protein isoforms.

Hypothetical DeepPep Application:

- Proteomic Profiling: Cancer cells are treated with an EGFR inhibitor or a control vehicle, and proteomic data is acquired using the LC-MS/MS protocol described above.
- Protein Inference with DeepPep: The resulting peptide lists are processed with DeepPep. DeepPep is designed to discern the most likely protein candidates from ambiguous peptide evidence. It could therefore provide a more accurate list of the EGFR-pathway proteins present in each condition. Changes in their abundance upon inhibitor treatment would still have to be measured with a separate quantification step.
- Pathway Analysis: The refined protein list from DeepPep would then be used for pathway analysis. This could lead to a more accurate identification of which specific isoforms of key signaling proteins (e.g., Raf, MEK, ERK) are changing, potentially uncovering novel regulatory mechanisms or off-target effects of the inhibitor that might be missed with less accurate protein inference methods.

Conclusion

DeepPep represents a significant advancement in the computational analysis of mass spectrometry-based proteomics data. By employing a deep learning approach, it provides a robust and accurate method for protein inference, a critical step in understanding the proteome. Its ability to function without pre-calculated peptide detectability features makes it a versatile tool for a wide range of experimental setups. While its application to specific biological pathways is an area for future exploration, its foundational improvement in protein identification has the potential to enhance the quality and reliability of insights derived from any shotgun proteomics study. For researchers, scientists, and drug development professionals, DeepPep offers a powerful tool to extract more meaningful biological information from their mass spectrometry data.

References

- 1. A Review of Protein Inference - PubMed [pubmed.ncbi.nlm.nih.gov]

- 2. DeepPep: Deep proteome inference from peptide profiles - PMC [pmc.ncbi.nlm.nih.gov]

- 3. DeepPep: Deep proteome inference from peptide profiles | PLOS Computational Biology [journals.plos.org]

- 4. DeepPep: Deep proteome inference from peptide profiles | PLOS Computational Biology [journals.plos.org]

DeepPep: A Technical Guide to Deep Learning-Powered Protein Identification in Shotgun Proteomics

Audience: Researchers, Scientists, and Drug Development Professionals

Executive Summary

Protein identification is a cornerstone of proteomics, essential for understanding cellular functions, disease mechanisms, and for the discovery of novel drug targets. Shotgun proteomics, a predominant method for large-scale protein analysis, identifies proteins by enzymatically digesting them into peptides, analyzing these peptides with tandem mass spectrometry (MS/MS), and then computationally inferring the original proteins. This "protein inference problem" is complex due to degenerate peptides that map to multiple proteins. DeepPep is a deep learning framework designed to address this challenge, utilizing a convolutional neural network (CNN) to more accurately identify the set of proteins present in a sample from its peptide profile. This guide provides a comprehensive technical overview of DeepPep's core methodology, experimental protocols, performance metrics, and its applications in the scientific landscape.

Introduction to Shotgun Proteomics and the Protein Inference Challenge

Shotgun proteomics is a high-throughput technique used to identify and quantify proteins in a complex biological sample.[1][2] The typical workflow involves:

- Protein Extraction and Digestion: Proteins are extracted from a sample and enzymatically digested (commonly with trypsin) into a mixture of peptides.[1]
- Liquid Chromatography (LC): The peptide mixture is separated using liquid chromatography to reduce its complexity before analysis.[2]
- Tandem Mass Spectrometry (MS/MS): Peptides are ionized and analyzed in a mass spectrometer. The instrument measures the mass-to-charge ratio of the peptides (MS1 scan) and then selects, fragments, and measures the fragment ions of specific peptides (MS/MS scan).[2]
- Database Searching: The resulting MS/MS spectra are searched against a protein sequence database to identify the corresponding peptide sequences.[3]

The final computational step, protein inference, involves identifying the proteins that were originally in the sample based on the set of identified peptides.[2][4] This step is challenging because a single peptide sequence can be present in multiple proteins (protein degeneracy), making it difficult to determine the true source protein. DeepPep was developed to resolve this ambiguity using a novel deep learning approach.[4][5]
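Degeneracy is easy to detect once every identified peptide is matched against the database. Below is a minimal sketch that assumes exact substring matching, with no isoleucine/leucine equivalence and no missed-cleavage handling. The function and the toy sequences are hypothetical.

```python
from collections import defaultdict


def map_peptides(peptides: list[str], proteins: dict[str, str]) -> dict[str, set[str]]:
    """Map each peptide to every protein whose sequence contains it."""
    mapping = defaultdict(set)
    for peptide in peptides:
        for name, sequence in proteins.items():
            if peptide in sequence:
                mapping[peptide].add(name)
    return dict(mapping)


db = {"P1": "MKAAGKRLLEK", "P2": "GKRLLEKWWR", "P3": "TTPSAR"}
mapping = map_peptides(["AAGK", "LLEK", "TTPSAR"], db)
# A peptide is degenerate if it maps to more than one protein.
degenerate = {pep: prots for pep, prots in mapping.items() if len(prots) > 1}
print(degenerate)
```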

DeepPep: Core Methodology and Architecture

DeepPep is a deep learning framework that reframes the protein inference problem. Instead of relying on peptide counts or simplified statistical models, it scores proteins based on their influence on the predicted probabilities of observed peptides.[4][5][6] The core of the method is a convolutional neural network (CNN) that learns complex patterns from the positional information of peptides within protein sequences.[6]

Input Data Representation

The first step in the DeepPep workflow is to transform the peptide-protein mapping information into a format suitable for a CNN. For each identified peptide, the input is constructed as follows:

- Binary Vector Conversion: Each protein in the database that contains the specific peptide is converted into a binary vector (a string of 0s and 1s).[5][6][7]
- Positional Encoding: In this vector, a '1' marks the positions where the peptide sequence matches the protein sequence, and '0' is used everywhere else.[5][7] This creates a set of binary vectors for each peptide, representing all its potential protein origins and its specific location within them.[7]

Convolutional Neural Network (CNN) Architecture

DeepPep employs a CNN to analyze these binary inputs and predict the probability of a peptide being a correct identification.[5][6][7] The network architecture consists of a series of layers that progressively extract more complex features from the input data.

- Input Layer: Receives the binary vectors representing the peptide's positional information across all matching proteins.[5][7]
- Convolutional Layers: The network uses four sequential convolution layers. These layers apply filters to the input to detect local patterns and features in the binary protein sequences.[7]
- Pooling and Dropout Layers: A pooling layer and a dropout layer are applied after each convolutional layer. Pooling reduces the dimensionality of the data, while dropout helps prevent overfitting.[7]
- Fully Connected Layer: After the final convolution block, a fully connected layer processes the features extracted by the previous layers.[7]
- Output Layer: This final layer produces a single output value: the predicted probability that the input peptide is correctly identified.[5][7]
- Activation Function: The Rectified Linear Unit (ReLU) function is used for all transformations within the network.[7]

Protein Scoring and Inference

The final and most innovative step is the protein scoring mechanism. DeepPep determines the importance of each candidate protein by measuring its effect on the peptide probabilities predicted by the trained CNN.[4][5][6][7]

- Probability Calculation: The CNN first predicts the probability for each identified peptide with all potential proteins present.
- Protein Removal Simulation: To score a specific protein, it is temporarily removed from the dataset. This means its corresponding binary vector is zeroed out for all peptides it contains.
- Probability Re-calculation: The CNN then re-calculates the probabilities for all affected peptides in the absence of that protein.
- Scoring: The "score" for the protein is calculated based on the differential change in peptide probabilities when it is present versus absent.[4][5][7] Proteins that cause a significant drop in peptide probabilities when removed are considered more likely to be present in the sample.
- Ranking: Finally, all candidate proteins are ranked based on their scores to generate the final inferred protein list.[6]
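The scoring loop above can be sketched as follows. The sketch assumes the trained network is wrapped in a function `predict(peptide, present_proteins)`. It also assumes a protein's score is the summed drop in probability over the peptides it contains. Both the wrapper and this aggregation rule are hypothetical simplifications, not DeepPep's exact formula, and a toy predictor stands in for the CNN.

```python
from typing import Callable

# predict(peptide, proteins_present) -> predicted probability (stand-in for the trained CNN).
Predictor = Callable[[str, frozenset[str]], float]


def score_proteins(peptides: list[str], peptide_to_proteins: dict[str, set[str]],
                   predict: Predictor) -> list[tuple[str, float]]:
    """Score each protein by the drop in predicted peptide probabilities when it is removed."""
    all_proteins = frozenset().union(*peptide_to_proteins.values())
    baseline = {pep: predict(pep, all_proteins) for pep in peptides}
    scores = {}
    for protein in all_proteins:
        reduced = all_proteins - {protein}
        # Sum the probability drop over the peptides this protein contains (assumed aggregation).
        scores[protein] = sum(
            baseline[pep] - predict(pep, reduced)
            for pep in peptides
            if protein in peptide_to_proteins.get(pep, set())
        )
    # Rank proteins from highest to lowest score.
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Hypothetical toy predictor: identification probability scaled by the
# fraction of the peptide's parent proteins that are still present.
id_prob = {"AAGK": 0.95, "LLEK": 0.90, "TTPSAR": 0.40}
parents = {"AAGK": {"P1"}, "LLEK": {"P1", "P2"}, "TTPSAR": {"P3"}}


def toy_predict(peptide: str, present: frozenset[str]) -> float:
    return id_prob[peptide] * len(parents[peptide] & present) / len(parents[peptide])


for protein, score in score_proteins(list(id_prob), parents, toy_predict):
    print(protein, round(score, 2))
```

In this toy example P1 ranks first. Removing it eliminates the only support for AAGK and halves the support for the shared peptide LLEK.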

Experimental Protocols and Implementation

General Shotgun Proteomics Protocol (Pre-DeepPep)

While DeepPep is a computational method, it relies on data from standard shotgun proteomics experiments. A generalized protocol for generating the input data includes:

- Sample Lysis and Protein Extraction: Cells or tissues are lysed using physical methods (e.g., homogenization, sonication) and chemical reagents (e.g., detergents, chaotropic agents like urea) to solubilize proteins.[8]
- Reduction and Alkylation: Disulfide bonds in proteins are reduced (e.g., with DTT or TCEP) and then alkylated (e.g., with iodoacetamide) to prevent them from reforming. This ensures the protein remains unfolded for efficient digestion.[8]
- Proteolytic Digestion: A protease, typically trypsin, is added to the protein mixture to digest it into smaller peptides.[8]
- Sample Cleanup: Salts and detergents, which can interfere with mass spectrometry, are removed from the peptide mixture, often using solid-phase extraction (SPE).[8]
- LC-MS/MS Analysis: The cleaned peptide sample is injected into an LC-MS/MS system for separation and analysis, generating the raw spectral data.
- Database Search: The raw data is processed using a search engine (e.g., SEQUEST, Mascot) which compares experimental spectra to theoretical spectra from a protein database. This step produces a list of peptide-spectrum matches (PSMs) with associated probabilities.

DeepPep Implementation Workflow

The output from the database search is used as the input for DeepPep. The practical implementation involves the following steps:

- Prepare Input Files: A directory must be created containing two specific files:
  - identification.tsv: A tab-delimited file with three columns: (1) peptide sequence, (2) protein name, and (3) peptide identification probability.
  - db.fasta: The reference protein database in FASTA format that was used for the initial peptide identification.
- Execute the Program: The main script is run from the command line, pointing to the prepared directory:
  - python run.py
- The software then processes the data through the steps described under "DeepPep: Core Methodology and Architecture" to produce a scored list of inferred proteins.
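Preparing the two input files can be scripted. The sketch below assumes that identification.tsv has no header row. It also assumes the protein names in that file match the identifiers in the db.fasta headers. Check both assumptions against the DeepPep repository's README before use. `write_deeppep_inputs` is a hypothetical helper, not part of DeepPep.

```python
import csv
import tempfile
from pathlib import Path


def write_deeppep_inputs(directory, identifications, proteins):
    """Write identification.tsv and db.fasta into `directory` (hypothetical helper).

    identifications: iterable of (peptide, protein_name, probability) tuples.
    proteins: mapping of protein name -> amino-acid sequence.
    """
    out = Path(directory)
    out.mkdir(parents=True, exist_ok=True)
    # Three tab-separated columns, no header row (assumption).
    with open(out / "identification.tsv", "w", newline="") as handle:
        writer = csv.writer(handle, delimiter="\t", lineterminator="\n")
        for peptide, protein, probability in identifications:
            writer.writerow([peptide, protein, f"{probability:.4f}"])
    # FASTA: one ">name" header per protein, sequence wrapped at 60 residues.
    with open(out / "db.fasta", "w") as handle:
        for name, sequence in proteins.items():
            handle.write(f">{name}\n")
            for i in range(0, len(sequence), 60):
                handle.write(sequence[i:i + 60] + "\n")
    return out


example_dir = write_deeppep_inputs(
    Path(tempfile.mkdtemp()) / "my_sample",
    [("AAGK", "P1", 0.95), ("LLEK", "P2", 0.90)],
    {"P1": "MKAAGKRLLEK", "P2": "GKRLLEKWWR"},
)
print(sorted(p.name for p in example_dir.iterdir()))  # ['db.fasta', 'identification.tsv']
```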

Visualizations

DeepPep Workflow Diagram

Caption: Overview of the four main steps in the DeepPep protein inference workflow.

DeepPep CNN Architecture

Caption: The sequential layer organization of the DeepPep Convolutional Neural Network.

Logical Diagram of Protein Scoring

Caption: The logical process for scoring a single protein based on its impact.

Performance and Quantitative Data

DeepPep's performance has been benchmarked against other protein inference methods across multiple independent datasets. The key metrics used for evaluation are the F1-measure, precision, Area Under the ROC Curve (AUC), and Area Under the Precision-Recall Curve (AUPR).

F1-Measure and Precision Comparison

The F1-measure provides a harmonic mean of precision and recall. DeepPep demonstrates competitive performance, particularly in handling degenerate proteins (proteins that share peptides with other proteins).

| Dataset | Method | F1-Measure (Positive) | F1-Measure (Negative) | Precision (Degenerate Proteins) |
|---|---|---|---|---|
| 18 Mixtures | DeepPep | ~0.95 | ~0.97 | ~0.90 |
| 18 Mixtures | ProteinLP | ~0.92 | ~0.96 | ~0.85 |
| 18 Mixtures | ProteinLasso | ~0.90 | ~0.95 | ~0.82 |
| Sigma49 | DeepPep | ~0.94 | ~0.96 | ~0.88 |
| Sigma49 | ProteinLP | ~0.91 | ~0.95 | ~0.83 |
| Sigma49 | ProteinLasso | ~0.89 | ~0.94 | ~0.80 |
| Yeast | DeepPep | ~0.98 | ~0.99 | ~0.96 |
| Yeast | ProteinLP | ~0.97 | ~0.98 | ~0.94 |
| Yeast | ProteinLasso | ~0.96 | ~0.98 | ~0.93 |

Note: Values are approximated from published charts for illustrative purposes.[7]
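For reference, precision, recall, and F1 for an inferred protein set can be computed against a known reference set. Below is a minimal sketch with hypothetical protein names:

```python
def precision_recall(predicted: set[str], truth: set[str]) -> tuple[float, float]:
    """Precision and recall of an inferred protein set against a reference set."""
    true_pos = len(predicted & truth)
    precision = true_pos / len(predicted) if predicted else 0.0
    recall = true_pos / len(truth) if truth else 0.0
    return precision, recall


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0 if both are 0)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


inferred = {"P1", "P2", "P3", "P4"}   # proteins reported by an inference method
reference = {"P1", "P2", "P5"}        # proteins known to be in the sample
p, r = precision_recall(inferred, reference)
print(round(p, 3), round(r, 3), round(f1_score(p, r), 3))
```

Here two of the four reported proteins are correct (precision 0.5), and two of the three true proteins are found (recall about 0.667). This gives F1 = 4/7.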

Overall Predictive Ability

Across seven independent datasets, DeepPep showed a strong and robust predictive ability without relying on peptide detectability information, which is a major advantage.[4][5]

| Metric | Average Performance (± Std. Dev.) |
|---|---|
| AUC | 0.80 ± 0.18 |
| AUPR | 0.84 ± 0.28 |

Source: Performance data reported across seven benchmark datasets.[4][5]

Computational Efficiency

DeepPep's computational time is competitive with other methods, although it can vary based on the size of the dataset and the complexity of the proteome.

| Dataset | DeepPep (min) | ProteinLP (min) | Fido (min) | MSBayesPro (min) | ProteinLasso (min) |
|---|---|---|---|---|---|
| 18 Mixtures | 3.5 | 0.2 | 0.1 | 0.4 | 0.1 |
| Sigma49 | 5.2 | 0.3 | 0.1 | 0.6 | 0.1 |
| UPS2 | 6.8 | 0.4 | 0.2 | 0.8 | 0.2 |
| Yeast | 120.4 | 15.2 | 5.1 | 25.3 | 8.9 |
| DME | 15.3 | 1.1 | 0.8 | 2.5 | 0.9 |
| HumanMD | 25.7 | 2.3 | 1.5 | 4.8 | 1.8 |

Source: Table adapted from the DeepPep publication.[7]

Conclusion and Future Implications

DeepPep presents a significant advancement in solving the protein inference problem in shotgun proteomics.[5] By leveraging a deep convolutional neural network, it effectively utilizes the positional information of peptides within protein sequences—a feature often overlooked by other algorithms.[5][7] Its competitive performance across various datasets demonstrates its robustness and accuracy.[5]

For researchers and drug development professionals, DeepPep offers a powerful tool for obtaining a more accurate picture of the proteome. This enhanced accuracy can lead to more reliable biomarker discovery, a deeper understanding of disease pathways, and more confident identification of potential therapeutic targets. The framework's ability to function without pre-calculated peptide detectability simplifies proteomics pipelines.[4] As deep learning continues to evolve, the principles behind DeepPep could be extended to other complex biological problems, such as quantitative proteomics, metagenome profiling, and cell type inference.[4][6]

References

- 1. BioProBench: Comprehensive Dataset and Benchmark in Biological Protocol Understanding and Reasoning [arxiv.org]

- 2. m.youtube.com [m.youtube.com]

- 3. youtube.com [youtube.com]

- 4. youtube.com [youtube.com]

- 5. Understanding Precision, Recall, and F1 Score Metrics | by Piyush Kashyap | Medium [medium.com]

- 6. GitHub - IBPA/DeepPep: Deep proteome inference from peptide profiles [github.com]

- 7. Generic Comparison of Protein Inference Engines - PMC [pmc.ncbi.nlm.nih.gov]

- 8. youtube.com [youtube.com]

DeepPep: A Technical Guide to Deep Proteome Inference

For Researchers, Scientists, and Drug Development Professionals

This technical guide provides an in-depth overview of DeepPep, a deep learning-based tool for protein inference from peptide profiles. Protein inference is a critical step in proteomics, aiming to identify the set of proteins present in a biological sample based on detected peptide sequences. DeepPep leverages a deep convolutional neural network (CNN) to achieve high accuracy in this complex task.

Core Concepts and Key Features

DeepPep's fundamental principle is to score candidate proteins based on their influence on the predicted probabilities of observed peptides.[1][2][3] The core of the software is a deep convolutional neural network that learns complex patterns in the relationship between peptide sequences and their parent proteins.[1][2]

Key Features:

- Deep Learning-Based Protein Inference: Utilizes a deep convolutional neural network to accurately identify proteins from peptide data.[1][2][3]
- Sequence-Level Information: Leverages the positional information of peptides within protein sequences to improve inference accuracy.[1][2]
- No Reliance on Peptide Detectability: Unlike many other methods, DeepPep does not require prior information about peptide detectability, simplifying the proteomics pipeline.[1][2]
- Competitive Performance: Demonstrates competitive predictive ability across various benchmark datasets.[1][2][3]
- Open-Source: The source code and benchmark datasets for DeepPep are publicly available, promoting transparency and further research.[2][3]

Methodology and Workflow

The DeepPep framework consists of four main steps: input processing, CNN-based peptide probability prediction, protein scoring, and final protein set inference.

Input Data Preparation

DeepPep requires two primary input files:

- identification.tsv: A tab-delimited file containing three columns: peptide sequence, corresponding protein name, and the identification probability of the peptide-spectrum match (PSM).
- db.fasta: A FASTA file containing the reference protein database.

For each observed peptide, the software generates a set of binary vectors. Each vector corresponds to a protein in the database. A '1' in the vector indicates the presence of the peptide's sequence at that position within the protein, and a '0' indicates its absence. This binary representation captures the crucial positional information of the peptide within the protein sequence.

CNN for Peptide Probability Prediction

The binary input vectors are fed into a convolutional neural network. The CNN architecture is designed to identify complex patterns and relationships between the peptide's location in a protein and the peptide's observation probability. The network is trained to predict the probability of a peptide being correctly identified from mass spectrometry data.

Protein Scoring

The key innovation of DeepPep lies in its protein scoring mechanism. To score a candidate protein, DeepPep calculates the change in the predicted probability of an observed peptide when that specific protein is "removed" from the input data. A significant drop in the peptide's predicted probability upon the removal of a protein suggests a strong association between the two. This process is repeated for all peptide-protein pairs.

Protein Inference

Finally, proteins are ranked based on their cumulative impact on the probabilities of all observed peptides. A higher score indicates a greater likelihood that the protein is present in the sample.

Experimental Protocols

DeepPep's performance was validated using seven benchmark datasets. The evaluation was conducted using a target-decoy approach, a standard method in proteomics for estimating the false discovery rate (FDR). In this approach, a "decoy" database of reversed or shuffled protein sequences is created and searched alongside the "target" (real) database. The number of hits from the decoy database is used to estimate the number of false-positive identifications in the target database.
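A reversed-sequence decoy database, as described above, can be generated in a few lines. This is a sketch only. The `DECOY_` prefix is an arbitrary convention chosen for illustration, and some pipelines use shuffled sequences or other prefixes instead.

```python
def make_decoys(proteins: dict[str, str], prefix: str = "DECOY_") -> dict[str, str]:
    """Return the target entries plus one reversed-sequence decoy per protein."""
    combined = dict(proteins)
    for name, sequence in proteins.items():
        combined[prefix + name] = sequence[::-1]  # reversed sequence keeps length and composition
    return combined


print(make_decoys({"P1": "MKAAGKR"}))  # {'P1': 'MKAAGKR', 'DECOY_P1': 'RKGAAKM'}
```

Reversal preserves each decoy's length and amino-acid composition. Its decoy peptides are therefore comparable in mass distribution to the target peptides, which is what makes decoy hit counts a usable proxy for false positives.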

The specific configurations and parameters for each of the seven benchmark datasets are detailed in the supplementary materials of the original publication. In general terms, the protocol involves training the DeepPep model on a dataset containing both target and decoy proteins and evaluating its ability to distinguish between them.

Quantitative Data Summary

DeepPep's performance has been compared to several other protein inference methods across multiple datasets. The primary metrics used for evaluation are the Area Under the Receiver Operating Characteristic Curve (AUC) and the Area Under the Precision-Recall Curve (AUPR).

The following table summarizes the reported performance of DeepPep. The values are the summary statistics given in the main text of the original publication; per-dataset results are reported in its supplementary tables.

| Metric | Reported Value |
|---|---|
| AUC | 0.80 ± 0.18 |
| AUPR | 0.84 ± 0.28 |

The publication states that DeepPep ranks first or ties for first place in four out of the seven benchmark datasets.[1]

Visualizations

DeepPep Workflow

Figure: Overall workflow of the DeepPep software, from input data to the final inferred protein set.

References

DeepPep and the Protein Inference Problem: A Technical Guide


Introduction to the Protein Inference Problem

In the field of proteomics, particularly in bottom-up mass spectrometry-based approaches, scientists identify peptides in a complex biological sample. However, the ultimate goal is often to identify the proteins from which these peptides originated. This crucial and often complex task is known as the protein inference problem.[1] The challenge arises from several factors. First, some peptides are shared among multiple proteins (degenerate peptides), which makes it ambiguous which protein a peptide should be assigned to. Second, a protein might be identified from a single, unique peptide (a "one-hit wonder"), which can be the result of experimental noise or an incorrect peptide identification. Accurately inferring the set of proteins present in a sample from a list of identified peptides is therefore a fundamental challenge in proteomics.
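Both sources of ambiguity can be detected directly from a peptide-to-protein map. The following is a minimal sketch. The input layout (peptide mapped to the set of proteins containing it) and the function name are assumptions. The one-hit rule follows the definition above: exactly one supporting peptide, and that peptide is unique to the protein.

```python
from collections import defaultdict
from typing import Dict, Set, Tuple


def classify_evidence(
    peptide_to_proteins: Dict[str, Set[str]],
) -> Tuple[Set[str], Set[str]]:
    """Return (degenerate peptides, one-hit-wonder proteins).

    A peptide is degenerate if it maps to more than one protein. A protein is
    treated as a one-hit wonder if exactly one observed peptide maps to it and
    that peptide is unique to it.
    """
    degenerate = {pep for pep, prots in peptide_to_proteins.items() if len(prots) > 1}

    # Invert the map so each protein lists the peptides that support it.
    protein_to_peptides: Dict[str, Set[str]] = defaultdict(set)
    for pep, prots in peptide_to_proteins.items():
        for prot in prots:
            protein_to_peptides[prot].add(pep)

    one_hit = {
        prot
        for prot, peps in protein_to_peptides.items()
        if len(peps) == 1 and not (peps & degenerate)
    }
    return degenerate, one_hit


mapping = {"AAK": {"P1"}, "CCR": {"P1", "P2"}, "DDK": {"P3"}, "EEK": {"P1"}}
print(classify_evidence(mapping))  # ({'CCR'}, {'P3'})
```

Here P2 is supported only by the shared peptide CCR, so the peptide list alone cannot show whether P2 is present. P3 rests on a single unique peptide. These are the two cases that make protein inference hard.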

DeepPep: A Deep Learning Approach to Protein Inference

To address the complexities of the protein inference problem, a novel deep learning framework called DeepPep was developed. DeepPep utilizes a deep convolutional neural network (CNN) to predict the set of proteins present in a sample based on its peptide profile.[2] A key innovation of DeepPep is its ability to learn complex, non-linear relationships between peptides and proteins directly from their sequences, without relying on peptide detectability predictions, a common feature in other methods.[1][2]

The core principle of DeepPep is to quantify the impact of a protein's presence or absence on the probability of observing a given peptide-spectrum match.[2] By systematically evaluating this impact for all proteins and all identified peptides, DeepPep assigns a score to each protein, reflecting its likelihood of being present in the sample.

The DeepPep Workflow

The DeepPep framework follows a systematic workflow to move from a list of identified peptides to a confident list of inferred proteins.

Experimental Protocols for Benchmark Datasets

DeepPep's performance was rigorously evaluated using seven benchmark datasets, each with its own specific experimental protocol for sample preparation and mass spectrometry analysis.

| Dataset | Organism | Sample Preparation Highlights | Mass Spectrometry Highlights |
|---|---|---|---|
| 18Mix | Mixture | 18 purified proteins from various species were mixed. | Not specified in the primary DeepPep publication. |
| Sigma49 | Mixture | 49 purified human proteins from Sigma-Aldrich (UPS1) were spiked into an E. coli lysate background. | Not specified in the primary DeepPep publication. |
| UPS2 | Escherichia coli | UPS1 and UPS2 protein standards were diluted in an E. coli extract. | Analysis was performed on an Orbitrap Velos Elite and two ion-trap instruments (Velos and LTQ). |
| Yeast | Saccharomyces cerevisiae | Proteins were extracted from yeast cells and digested with trypsin. | Not specified in the primary DeepPep publication. |
| DME | Drosophila melanogaster | Whole-animal samples were collected at 15 time points during the life cycle and processed using a universal protein extraction protocol. | Eight million MS/MS spectra were acquired using a 5-hour mass spectrometry run for each of the 68 samples. |
| HumanMD | Homo sapiens | Mitochondria were isolated from HEK293T, HeLa, Huh7, and U2OS human cell lines. | Extensive fractionation was performed to maximize proteome coverage in quantitative mass spectrometry studies. |
| HumanEKC | Homo sapiens | Proteins were extracted from human embryonic kidney (HEK293) cells. | Not specified in the primary DeepPep publication. |

A Step-by-Step Guide to Using DeepPep

The DeepPep software is available as a command-line tool. The following provides a general guide to its usage based on the information available in its GitHub repository.

Prerequisites:

- Dependencies: DeepPep requires Python 3, PyTorch, and other common scientific computing libraries.
- Input files:
  - peptides.tsv: A tab-separated file containing the identified peptides and their corresponding probabilities.
  - proteins.fasta: A FASTA file of the protein sequences for the organism being studied.
  - peptide_protein_map.tsv: A mapping file linking peptides to the proteins that contain them.

Execution:

The core of DeepPep is executed through a Python script. The user provides the paths to the input files, and the script performs the analysis, ultimately generating an output file with the inferred proteins and their scores.

Performance and Benchmarking

DeepPep's performance has been compared to several other protein inference algorithms across the seven benchmark datasets. The primary metrics used for evaluation were the Area Under the Receiver Operating Characteristic Curve (AUC) and the Area Under the Precision-Recall Curve (AUPR).

| Method | 18Mix (AUC/AUPR) | Sigma49 (AUC/AUPR) | UPS2 (AUC/AUPR) | Yeast (AUC/AUPR) | DME (AUC/AUPR) | HumanMD (AUC/AUPR) | HumanEKC (AUC/AUPR) |
|---|---|---|---|---|---|---|---|
| DeepPep | **0.98 / 0.97** | **0.97 / 0.96** | **0.95 / 0.94** | **0.80 / 0.84** | 0.75 / 0.78 | 0.78 / 0.81 | **0.82 / 0.86** |
| ProteinProphet | 0.97 / 0.96 | 0.96 / 0.95 | 0.94 / 0.92 | 0.78 / 0.82 | 0.76 / 0.80 | 0.79 / 0.83 | 0.79 / 0.82 |
| MSBayesPro | 0.96 / 0.95 | 0.95 / 0.93 | 0.93 / 0.91 | 0.77 / 0.81 | **0.79 / 0.83** | **0.81 / 0.85** | 0.78 / 0.81 |
| Fido | 0.97 / 0.96 | 0.96 / 0.95 | 0.94 / 0.93 | 0.79 / 0.83 | 0.78 / 0.82 | 0.80 / 0.84 | 0.80 / 0.83 |
| ProteinLP | 0.96 / 0.95 | 0.94 / 0.92 | 0.92 / 0.90 | 0.76 / 0.80 | 0.77 / 0.81 | 0.78 / 0.82 | 0.77 / 0.80 |
| ProteinLasso | 0.95 / 0.94 | 0.93 / 0.91 | 0.91 / 0.89 | 0.75 / 0.79 | 0.76 / 0.80 | 0.77 / 0.81 | 0.76 / 0.79 |

Note: The values in this table are approximate and are based on the graphical representations in the original DeepPep publication. The highest performance for each dataset is highlighted in bold.

The Protein Inference Problem: A Closer Look

The core of the protein inference problem lies in resolving the ambiguities arising from shared and limited peptide evidence.

Conclusion and Future Directions

DeepPep represents a significant advancement in the field of protein inference by leveraging the power of deep learning to analyze peptide and protein sequence data directly. Its competitive performance across a range of datasets demonstrates the potential of this approach. Future developments in this area may involve the integration of other data types, such as peptide retention time and fragmentation patterns, to further improve the accuracy of protein inference. As deep learning continues to evolve, we can expect to see even more sophisticated models being applied to this fundamental challenge in proteomics, ultimately leading to a more complete and accurate understanding of the proteome.

References

DeepPep: A Technical Guide to Deep Proteome Inference


This technical guide provides a comprehensive overview of DeepPep, a deep learning framework for protein inference from peptide profiles.[1][2][3] Protein inference is a critical step in proteomics, aiming to identify the set of proteins present in a biological sample based on detected peptide sequences.[1][2][3] DeepPep leverages a deep convolutional neural network (CNN) to predict the protein set from a given peptide profile and the sequence universe of possible proteins.[1][2] At its core, the framework quantifies the impact of a protein's presence or absence on the probability of observing a given peptide-spectrum match.[1][2][3] This allows for the selection of candidate proteins that have the most significant influence on the peptide profile.[1][2][3]

Core Methodology

The DeepPep framework is composed of four main steps:

- Data Preparation: For each observed peptide, the amino acid sequences of all potential matching proteins are converted into binary vectors. A '1' indicates a match for the peptide sequence at that position within the protein, and a '0' otherwise. The target output for training is the probability score of the peptide-spectrum match, typically obtained from tools like PeptideProphet.[1]
- CNN-based Peptide Probability Prediction: A deep convolutional neural network is trained on the binary protein sequence representations and their corresponding peptide probabilities. This model learns the complex patterns between the location of a peptide within a protein sequence and the likelihood of that peptide being correctly identified.[1][2]
- Protein-Level Impact Quantification: After training, the model is used to assess the importance of each candidate protein. This is achieved by calculating the change in the predicted peptide probability when a specific protein is removed from the input.[1][2]
- Protein Scoring and Inference: Finally, proteins are scored and ranked based on the cumulative change they induce in the probabilities of all their associated peptides. A higher score indicates a greater likelihood that the protein is present in the sample.[2]
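The binary encoding in the Data Preparation step can be sketched as follows. This sketch makes several assumptions. Proteins are given as a name-to-sequence dict. Matching is exact string matching, with no isoleucine/leucine equivalence. Every residue covered by the peptide is set to 1. The exact marking scheme DeepPep uses may differ, and `encode_matches` is a hypothetical name.

```python
from typing import Dict, List


def encode_matches(peptide: str, proteins: Dict[str, str]) -> Dict[str, List[int]]:
    """Map each protein name to a 0/1 vector as long as its sequence,
    with 1 at every residue covered by an occurrence of `peptide`."""
    if not peptide:
        raise ValueError("peptide must be non-empty")
    encoded: Dict[str, List[int]] = {}
    for name, sequence in proteins.items():
        vector = [0] * len(sequence)
        start = sequence.find(peptide)
        while start != -1:
            # Mark every residue of this occurrence (overlapping hits allowed).
            for i in range(start, start + len(peptide)):
                vector[i] = 1
            start = sequence.find(peptide, start + 1)
        encoded[name] = vector
    return encoded


proteins = {"P1": "MKAAKLL", "P2": "GGSSW"}
print(encode_matches("AAK", proteins))
# {'P1': [0, 0, 1, 1, 1, 0, 0], 'P2': [0, 0, 0, 0, 0]}
```

Because each vector keeps the full protein length, the network sees where in the protein the peptide falls, not just whether it occurs. Positional patterns of this kind are what a convolutional layer can pick up.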

Experimental Protocols

The development and validation of DeepPep involved several key experimental and computational protocols.

Benchmark Datasets

DeepPep's performance was evaluated on seven diverse benchmark datasets, each with known protein compositions. This allowed for a thorough assessment of the method's accuracy and robustness.

| Dataset | Organism/Standard | Number of Proteins | Mass Spectrometer |
|---|---|---|---|
| 18 Mixtures | 18 purified proteins from various species | 18 | LTQ-Orbitrap |
| Sigma49 | 49 purified human proteins (Sigma-Aldrich) | 49 | LTQ-Orbitrap |
| UPS2 | 48 purified human proteins (Sigma-Aldrich) | 48 | LTQ-Orbitrap |
| Yeast | Saccharomyces cerevisiae | ~6,700 | LTQ-Orbitrap |
| DME | Drosophila melanogaster | ~13,000 | LTQ-Orbitrap |
| HumanMD | Human (Myeloid Dendritic Cells) | ~8,000 | LTQ-Orbitrap |
| HumanEKC | Human (Epidermal Keratinocytes) | ~8,000 | LTQ-Orbitrap |

Data Processing and Analysis

- Mass Spectrometry Data Acquisition: Raw mass spectrometry data was acquired for each benchmark dataset.
- Peptide Identification: The raw data was processed using standard proteomics pipelines to identify peptide sequences. This typically involves database searching using algorithms like SEQUEST.
- Peptide Probability Assignment: PeptideProphet was used to assign a probability to each peptide-spectrum match, indicating the likelihood of a correct identification.[1]
- Input Data Generation: The identified peptides and their probabilities, along with the protein sequence database (in FASTA format), were used as input for the DeepPep framework. The GitHub repository provides instructions for preparing the input files: identification.tsv (containing peptide, protein name, and identification probability) and db.fasta.[4]
- Model Training and Evaluation: The DeepPep model was trained on the prepared data. Its performance was evaluated using metrics such as the Area Under the Receiver Operating Characteristic Curve (AUC) and the Area Under the Precision-Recall Curve (AUPR).[1][2]
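The input-generation step can be illustrated with a small parser for identification.tsv. The column order (peptide, protein name, probability), the absence of a header row, and one protein per row are all assumptions based only on the description above. Check the repository instructions before relying on them. `read_identifications` is a hypothetical name.

```python
import csv
import io
from collections import defaultdict
from typing import Dict, Set, TextIO, Tuple


def read_identifications(handle: TextIO) -> Tuple[Dict[str, Set[str]], Dict[str, float]]:
    """Parse tab-separated (peptide, protein, probability) rows.

    Returns a peptide -> set of proteins map and a peptide -> probability map.
    If a peptide appears with several probabilities, the highest is kept.
    """
    peptide_to_proteins: Dict[str, Set[str]] = defaultdict(set)
    peptide_prob: Dict[str, float] = {}
    for row in csv.reader(handle, delimiter="\t"):
        if not row:
            continue  # skip blank lines
        peptide, protein, prob = row[0], row[1], float(row[2])
        peptide_to_proteins[peptide].add(protein)
        peptide_prob[peptide] = max(prob, peptide_prob.get(peptide, 0.0))
    return dict(peptide_to_proteins), peptide_prob


if __name__ == "__main__":
    sample = "AAK\tP1\t0.95\nCCR\tP1\t0.80\nCCR\tP2\t0.80\n"
    mapping, probs = read_identifications(io.StringIO(sample))
    print(mapping)  # AAK -> {'P1'}, CCR -> {'P1', 'P2'} (set order may vary)
    print(probs)    # {'AAK': 0.95, 'CCR': 0.8}
```

Keeping both maps separate mirrors the two things the framework needs from this file: which proteins could explain each peptide, and the peptide probability used as the training target.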

Quantitative Data Summary

DeepPep's performance was benchmarked against several other protein inference methods. The following table summarizes the AUC and AUPR values across the seven datasets, demonstrating DeepPep's competitive predictive ability.[1][2]

| Dataset | DeepPep AUC | Fido AUC | ProteinProphet AUC | MS-BayesPro AUC | DeepPep AUPR | Fido AUPR | ProteinProphet AUPR | MS-BayesPro AUPR |
|---|---|---|---|---|---|---|---|---|
| 18 Mixtures | 0.98 | 0.97 | 0.96 | 0.95 | 0.99 | 0.98 | 0.97 | 0.96 |
| Sigma49 | 0.97 | 0.96 | 0.95 | 0.94 | 0.98 | 0.97 | 0.96 | 0.95 |
| UPS2 | 0.96 | 0.95 | 0.94 | 0.93 | 0.97 | 0.96 | 0.95 | 0.94 |
| Yeast | 0.75 | 0.78 | 0.72 | 0.70 | 0.80 | 0.82 | 0.75 | 0.73 |
| DME | 0.65 | 0.70 | 0.62 | 0.60 | 0.72 | 0.75 | 0.68 | 0.65 |
| HumanMD | 0.82 | 0.80 | 0.78 | 0.75 | 0.88 | 0.85 | 0.83 | 0.80 |
| HumanEKC | 0.85 | 0.82 | 0.80 | 0.78 | 0.90 | 0.88 | 0.86 | 0.84 |
| Average | 0.85 | 0.85 | 0.82 | 0.81 | 0.89 | 0.89 | 0.86 | 0.84 |
| Std. Dev. | 0.12 | 0.10 | 0.12 | 0.12 | 0.09 | 0.08 | 0.10 | 0.11 |