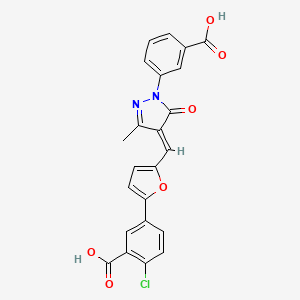

Tdrl-X80

Description

BenchChem offers high-quality Tdrl-X80 suitable for many research applications. Different packaging options are available to accommodate customers' requirements. For pricing, delivery time, and more detailed information about this compound, please contact info@benchchem.com.

Properties

| Property | Value |
|---|---|
| Molecular Formula | C23H15ClN2O6 |
| Molecular Weight | 450.8 g/mol |
| IUPAC Name | 5-[5-[(E)-[1-(3-carboxyphenyl)-3-methyl-5-oxopyrazol-4-ylidene]methyl]furan-2-yl]-2-chlorobenzoic acid |
| InChI | InChI=1S/C23H15ClN2O6/c1-12-17(21(27)26(25-12)15-4-2-3-14(9-15)22(28)29)11-16-6-8-20(32-16)13-5-7-19(24)18(10-13)23(30)31/h2-11H,1H3,(H,28,29)(H,30,31)/b17-11+ |
| InChI Key | XZRBYOCWWJMJRZ-GZTJUZNOSA-N |
| Isomeric SMILES | CC\1=NN(C(=O)/C1=C/C2=CC=C(O2)C3=CC(=C(C=C3)Cl)C(=O)O)C4=CC=CC(=C4)C(=O)O |
| Canonical SMILES | CC1=NN(C(=O)C1=CC2=CC=C(O2)C3=CC(=C(C=C3)Cl)C(=O)O)C4=CC=CC(=C4)C(=O)O |
| Origin of Product | United States |

Foundational & Exploratory

The Neural Blueprint of Expectation: A Technical Guide to Temporal Difference Reinforcement Learning in Neuroscience

For Researchers, Scientists, and Drug Development Professionals

The brain's remarkable ability to learn from experience and predict future outcomes is a cornerstone of adaptive behavior. A pivotal breakthrough in understanding the neural mechanisms of this process has been the application of Temporal Difference Reinforcement Learning (TDRL), a computational framework that has found a striking biological parallel in the brain's reward system. This in-depth guide explores the core principles of TDRL in neuroscience, providing a technical overview of the key experimental findings, methodologies, and the intricate signaling pathways that govern how we learn from the discrepancy between what we expect and what we get.

Core Principles of Temporal Difference Reinforcement Learning

At its heart, TDRL is a model-free reinforcement learning algorithm that learns to predict the expected value of a future reward from a given state.[1] A central concept in TDRL is the Reward Prediction Error (RPE), which is the difference between the actual reward received and the predicted reward.[2][3][4] This error signal is then used to update the value of the preceding state or action, effectively "teaching" the system to make better predictions in the future. The fundamental equation for the TD error (δ) is:

$$\delta_t = R_{t+1} + \gamma V(S_{t+1}) - V(S_t)$$

Where:

- $R_{t+1}$ is the reward received at the next time step.
- $V(S_t)$ is the predicted value of the current state.
- $V(S_{t+1})$ is the predicted value of the next state.
- $\gamma$ (gamma) is a discount factor that determines the importance of future rewards.

A positive TD error signals that the outcome was better than expected, strengthening the association that led to it. Conversely, a negative TD error indicates a worse-than-expected outcome, weakening the preceding association. When an outcome is exactly as predicted, the TD error is zero, and no learning occurs.
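The three cases above can be made concrete with a minimal sketch of the TD error and the resulting value update. The function names, the learning rate `alpha`, and the example state labels are illustrative assumptions, not part of any specific published model.

```python
def td_error(reward, v_next, v_current, gamma=0.9):
    """TD error: delta_t = R_{t+1} + gamma * V(S_{t+1}) - V(S_t)."""
    return reward + gamma * v_next - v_current


def td_update(values, state, next_state, reward, alpha=0.1, gamma=0.9):
    """Move V(state) a fraction alpha of the way toward the TD target.

    `values` is a dict mapping state labels to value estimates (hypothetical layout).
    """
    delta = td_error(reward, values[next_state], values[state], gamma)
    values[state] += alpha * delta
    return delta


# Hypothetical two-state example: a cue state followed by a terminal state.
values = {"cue": 0.0, "end": 0.0}
better = td_update(values, "cue", "end", reward=1.0)   # unexpected reward
values["cue"] = 1.0                                     # pretend learning is complete
as_expected = td_error(1.0, values["end"], values["cue"])
worse = td_error(0.0, values["end"], values["cue"])     # reward omitted
print(better, as_expected, worse)
```

The sign of the returned error maps directly onto the cases in the text: positive for a better-than-expected outcome, zero for a fully predicted one, and negative when an expected reward is omitted.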

The Neural Correlate: Dopamine and the Reward Prediction Error

A significant body of evidence points to the phasic activity of midbrain dopamine neurons, primarily in the Ventral Tegmental Area (VTA) and Substantia Nigra pars compacta (SNc), as the neural instantiation of the TDRL RPE signal.[5] Seminal work by Schultz and colleagues demonstrated that these neurons exhibit firing patterns that closely mirror the TD error.

- Positive Prediction Error: An unexpected reward elicits a burst of firing in dopamine neurons.
- No Prediction Error: A fully predicted reward causes no change in the baseline firing rate of these neurons.
- Negative Prediction Error: The omission of an expected reward leads to a pause in dopamine neuron firing, dropping below their baseline rate.

This discovery provided a powerful bridge between a computational theory of learning and its biological substrate, suggesting that dopamine acts as a global teaching signal to guide reward-based learning and decision-making.

Quantitative Data on Dopamine Neuron Firing

The relationship between dopamine neuron activity and RPE has been quantified in numerous studies. The following table summarizes typical firing rate changes in primate dopamine neurons under different reward conditions, as reported in the literature.

| Condition | Stimulus | Reward | Dopamine Response to Stimulus | Dopamine Response at Reward Time | Reward Prediction Error |
|---|---|---|---|---|---|
| Before Learning | Neutral Cue (CS) | Unpredicted Reward (R) | Baseline | ↑ Phasic Burst | Positive |
| After Learning | Conditioned Cue (CS) | Predicted Reward (R) | ↑ Phasic Burst | Baseline | Zero |
| Reward Omission | Conditioned Cue (CS) | No Reward | ↑ Phasic Burst | ↓ Pause Below Baseline | Negative |

Note: The firing rates are illustrative and can vary based on the specific experimental parameters.

Key Experimental Protocols

The foundational experiments establishing the link between dopamine and TDRL were conducted in non-human primates. Below is a generalized methodology for a typical classical conditioning experiment.

Experimental Protocol: Single-Unit Recording of Dopamine Neurons in Behaving Monkeys

1. Animal Subjects and Surgical Preparation:

- Species: Rhesus monkeys (Macaca mulatta).
- Housing: Housed in individual primate chairs with controlled access to food and water to maintain motivation for juice rewards.
- Surgical Implantation: Under general anesthesia and sterile surgical conditions, a recording chamber is implanted over a craniotomy targeting the VTA and SNc. A head-restraint post is also implanted to allow for stable head fixation during recording sessions.

2. Behavioral Task: Classical Conditioning:

- Apparatus: The monkey is seated in a primate chair in front of a computer screen. A lick tube is positioned in front of the monkey's mouth to deliver liquid rewards.
- Stimuli: Visual cues (e.g., geometric shapes) are presented on the screen.
- Reward: A small amount of juice or water is delivered as a reward.
- Procedure:
  - Pre-training: The animal is habituated to the experimental setup.
  - Conditioning: A neutral visual stimulus (Conditioned Stimulus, CS) is repeatedly paired with the delivery of a juice reward (Unconditioned Stimulus, US). The CS is presented for a fixed duration (e.g., 1-2 seconds), followed by the reward.
  - Probe Trials: To test for learning, trials are included where the predicted reward is omitted.

3. Electrophysiological Recording:

- Technique: Single-unit extracellular recordings are performed using tungsten microelectrodes.
- Procedure: The microelectrode is lowered into the target brain region using a microdrive. The electrical signals are amplified, filtered, and recorded.
- Neuron Identification: Dopamine neurons are identified based on their characteristic electrophysiological properties: a long-duration, broad action potential waveform and a low baseline firing rate (typically < 10 Hz).

4. Data Analysis:

- Spike Sorting: The recorded signals are sorted to isolate the activity of individual neurons.
- Peri-Stimulus Time Histograms (PSTHs): The firing rate of each neuron is aligned to the onset of the CS and the delivery of the reward to create PSTHs, which show the average firing rate over time.
- Statistical Analysis: Statistical tests are used to compare the firing rates of neurons during different trial types (e.g., rewarded vs. unrewarded trials) to determine if there are significant changes in activity.
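The PSTH step of the analysis can be sketched as follows. This is a minimal illustration assuming spike and event times are given in seconds; the function name, the default window, and the bin width are hypothetical choices rather than values from any particular study.

```python
import numpy as np


def psth(spike_times, event_times, window=(-0.5, 1.0), bin_width=0.05):
    """Average firing rate (spikes/s) around events such as CS onset or reward.

    Returns the start time of each bin (relative to the event) and the rate per bin.
    """
    spike_times = np.asarray(spike_times, dtype=float)
    n_bins = int(round((window[1] - window[0]) / bin_width))
    edges = np.linspace(window[0], window[1], n_bins + 1)
    counts = np.zeros(n_bins)
    for t0 in event_times:
        # Re-express spike times relative to this event, then bin them.
        counts += np.histogram(spike_times - t0, bins=edges)[0]
    rates = counts / (len(event_times) * bin_width)
    return edges[:-1], rates


# Synthetic example: two spikes shortly after each of three cue onsets.
cue_onsets = [1.0, 3.0, 5.0]
spikes = [t + lag for t in cue_onsets for lag in (0.11, 0.13)]
bin_starts, rates = psth(spikes, cue_onsets)
print(bin_starts[rates.argmax()], rates.max())
```

Calling the same function once with CS onsets and once with reward times gives the two alignments described above.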

Signaling Pathways and Logical Relationships

The computation and dissemination of the TDRL signal involve a complex and well-defined neural circuit centered on the VTA.

The Core TDRL Circuit: VTA and Nucleus Accumbens

The VTA sends dense dopaminergic projections to the Nucleus Accumbens (NAc), a key structure in the ventral striatum. The NAc is primarily composed of medium spiny neurons (MSNs), which are divided into two main populations based on their dopamine receptor expression: D1 receptor-expressing MSNs (D1-MSNs) and D2 receptor-expressing MSNs (D2-MSNs).

- D1-MSNs: Associated with the "direct pathway" and are thought to be involved in promoting actions that lead to reward.
- D2-MSNs: Associated with the "indirect pathway" and are thought to be involved in inhibiting actions that do not lead to reward.

The phasic release of dopamine in the NAc, driven by a positive RPE, is thought to potentiate the synapses on D1-MSNs, making it more likely that the animal will repeat the action that led to the reward. Conversely, a pause in dopamine firing, corresponding to a negative RPE, is hypothesized to weaken these connections.

Visualizing the TDRL Framework and Neural Circuitry

The following diagrams, generated using the DOT language, illustrate the core concepts of TDRL and the underlying neural circuitry.

Caption: A simplified logical flow of the Temporal Difference Reinforcement Learning algorithm.

Caption: The core neural circuit for TDRL involving the VTA and the Nucleus Accumbens.

Caption: A typical experimental workflow for investigating TDRL in non-human primates.

Implications for Drug Development

The central role of the dopaminergic system in TDRL has profound implications for understanding and treating a range of neuropsychiatric disorders. Dysregulation of the dopamine system is implicated in conditions such as addiction, depression, and schizophrenia. For drug development professionals, understanding the computational principles of TDRL provides a framework for:

- Identifying Novel Drug Targets: By dissecting the specific components of the TDRL circuit, new molecular targets for therapeutic intervention can be identified.
- Developing More Effective Treatments: A deeper understanding of how drugs of abuse hijack the TDRL system can inform the development of more effective treatments for addiction.
- Predicting Treatment Response: Computational models based on TDRL could potentially be used to predict how individual patients might respond to different pharmacological interventions.

References

1. mdpi.com
2. Frontiers | The Dopamine Prediction Error: Contributions to Associative Models of Reward Learning [frontiersin.org]
3. Dopamine prediction errors in reward learning and addiction: from theory to neural circuitry - PMC [pmc.ncbi.nlm.nih.gov]
4. Prediction Error: The expanding role of dopamine | eLife [elifesciences.org]
5. Midbrain Dopamine Neurons Encode a Quantitative Reward Prediction Error Signal - PMC [pmc.ncbi.nlm.nih.gov]

An In-depth Technical Guide to Reinforcement Learning for Neuroscientists

Introduction

Reinforcement Learning (RL) provides a powerful computational framework for understanding how biological agents learn to make decisions to maximize rewards and minimize punishments.[1][2] This guide delves into the core principles of RL, explores its neural underpinnings, details relevant experimental protocols, and examines its applications in neuroscience research and drug development. The convergence of RL theory with neuroscientific evidence has provided a robust model for explaining the functions of key brain structures like the basal ganglia and the role of neuromodulators such as dopamine.[1][3][4]

Core Concepts of Reinforcement Learning

At its heart, RL is about an agent learning to interact with an environment to achieve a goal. The agent makes decisions through a series of actions, observes the state of the environment, and receives a scalar reward or punishment signal as feedback. The fundamental goal of the agent is to learn a policy (a strategy for choosing actions in different states) that maximizes the cumulative reward over time.

The interaction between the agent and the environment is typically modeled as a Markov Decision Process (MDP). This process involves a continuous loop where the agent observes a state, takes an action, receives a reward, and transitions to a new state.
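The observe, act, reward, transition loop can be sketched in a few lines. The two-state environment, its state and action labels, and the policies below are hypothetical and chosen only to make the loop visible.

```python
import random

# Hypothetical deterministic environment:
# TRANSITIONS[state][action] -> (next_state, reward)
TRANSITIONS = {
    "hungry": {"forage": ("fed", 1.0), "rest": ("hungry", 0.0)},
    "fed": {"forage": ("fed", 0.0), "rest": ("hungry", 0.0)},
}


def step(state, action):
    """Environment dynamics: return the next state and the scalar reward."""
    return TRANSITIONS[state][action]


def random_policy(state, rng):
    """Choose uniformly among the actions available in the current state."""
    return rng.choice(sorted(TRANSITIONS[state]))


def run_episode(policy, n_steps=10, seed=0):
    """Run the agent-environment loop and return total reward plus the trajectory."""
    rng = random.Random(seed)
    state, total, history = "hungry", 0.0, []
    for _ in range(n_steps):
        action = policy(state, rng)               # agent acts on the observed state
        next_state, reward = step(state, action)  # environment responds
        history.append((state, action, reward, next_state))
        total += reward
        state = next_state
    return total, history


total, history = run_episode(random_policy)
print(total, history[:3])
```

A learning algorithm would replace `random_policy` with a policy that improves as rewards are observed.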

References

1. princeton.edu
2. Reinforcement learning: Computational theory and biological mechanisms - PMC [pmc.ncbi.nlm.nih.gov]
3. From Reinforcement Learning Models of the Basal Ganglia to the Pathophysiology of Psychiatric and Neurological Disorders - PMC [pmc.ncbi.nlm.nih.gov]
4. researchgate.net

The Algorithmic Brain: A Technical Guide to Temporal Difference Reinforcement Learning in Cognitive Science

Audience: Researchers, scientists, and drug development professionals.

This guide provides an in-depth exploration of Temporal Difference Reinforcement Learning (TDRL), a cornerstone of modern computational neuroscience and a powerful framework for understanding learning and decision-making. We will delve into the core assumptions of TDRL, examine the key experimental evidence that supports it, and provide detailed protocols for replicating seminal findings.

Core Assumptions of Temporal Difference Reinforcement Learning

TDRL is a class of model-free reinforcement learning algorithms that learn to predict the expected value of future rewards.[1] It is distinguished by its ability to update a value estimate from the next reward and the value estimate of the following state, without waiting for the final outcome, a process known as "bootstrapping."[2][3] The central assumptions of TDRL as a model of cognitive processes are:

- Reward Prediction Error (RPE) as a Learning Signal: The core of TDRL is the concept of a reward prediction error (RPE), which is the discrepancy between the expected and the actual reward received.[4][5] This error signal is thought to be a primary driver of learning, with positive RPEs strengthening associations that lead to better-than-expected outcomes and negative RPEs weakening associations that lead to worse-than-expected outcomes.
- Value Representation: TDRL models posit that the brain learns and stores a "value" for different states or state-action pairs. This value represents the expected cumulative future reward that can be obtained from that state or by taking a particular action in that state.
- Dopaminergic System as the Neural Substrate of RPE: A key assumption in the cognitive science application of TDRL is that the phasic activity of midbrain dopamine neurons, particularly in the ventral tegmental area (VTA) and substantia nigra pars compacta (SNc), encodes the RPE signal. Unexpected rewards or cues that predict reward elicit a burst of dopamine neuron firing (positive RPE), while the omission of an expected reward leads to a pause in their firing (negative RPE).
- State Representation: The effectiveness of TDRL is heavily dependent on how the environment is represented as a set of discrete "states." The model's ability to learn is influenced by the granularity and features of this state representation.
- Model-Free Learning: In its classic form, TDRL is considered a "model-free" learning algorithm. This means that the agent learns the value of states and actions through direct experience and trial-and-error, without constructing an explicit model of the environment's dynamics (i.e., the probabilities of transitioning between states).

Key Experiments and Quantitative Findings

The validation of TDRL in cognitive science has been driven by a series of landmark experiments, primarily involving single-unit recordings in non-human primates and functional magnetic resonance imaging (fMRI) in humans.

Primate Electrophysiology: The Dopamine Reward Prediction Error Signal

The foundational evidence for the role of dopamine in RPE signaling comes from the work of Wolfram Schultz and colleagues. Their experiments demonstrated that the firing of dopamine neurons in the VTA and SNc of macaque monkeys closely matches the RPE signal predicted by TDRL models.

Table 1: Summary of Key Findings from Primate Electrophysiology Studies

| Experimental Condition | Predicted Dopamine Neuron Activity (TDRL) | Observed Dopamine Neuron Activity |
|---|---|---|
| Unexpected primary reward (e.g., juice) | Positive RPE (burst of firing) | Phasic burst of firing |
| Conditioned stimulus predicts reward | RPE shifts to the conditioned stimulus | Phasic burst of firing shifts to the onset of the conditioned stimulus |
| Predicted reward is delivered | No RPE (baseline firing) | No change from baseline firing |
| Predicted reward is omitted | Negative RPE (pause in firing) | Pause in firing below baseline |

Human fMRI: Reward Prediction Error in the Striatum

Functional MRI studies in humans have provided converging evidence for the neural encoding of RPEs. These studies consistently show that the blood-oxygen-level-dependent (BOLD) signal in the striatum, a major target of dopamine neurons, correlates with the TDRL-derived RPE signal.

Table 2: Summary of Key Findings from Human fMRI Studies on RPE

| Brain Region | Correlate of BOLD Signal Activity | Experimental Paradigm |
|---|---|---|
| Ventral Striatum (including Nucleus Accumbens) | Positive correlation with reward prediction error | Classical and instrumental conditioning tasks with monetary or primary rewards |
| Dorsal Striatum | Positive correlation with reward prediction error, particularly in action-contingent tasks | Instrumental conditioning and decision-making tasks |
| Ventromedial Prefrontal Cortex (vmPFC) | Positive correlation with the subjective value of expected rewards | Decision-making tasks involving choices between different rewards |

Detailed Experimental Protocols

To facilitate replication and further research, this section provides detailed methodologies for two key experimental paradigms used to study TDRL in cognitive science.

Primate Electrophysiology: Classical Conditioning

This protocol is a synthesis of the methods described in the seminal works of Schultz and colleagues.

- Subjects: Two adult male macaque monkeys (Macaca mulatta).
- Apparatus: The monkeys are seated in a primate chair with their head restrained. A computer-controlled system delivers liquid rewards (e.g., juice) through a spout placed in front of the monkey's mouth. Visual and auditory stimuli are presented on a screen and through speakers.
- Electrophysiological Recordings: Single-unit extracellular recordings are performed using tungsten microelectrodes lowered into the VTA and SNc. The location of these areas is confirmed with MRI and histological analysis post-experiment.
- Experimental Procedure:
  - Pre-training: Monkeys are habituated to the experimental setup.
  - Classical Conditioning:
    - Phase 1 (No Prediction): A primary liquid reward is delivered at random intervals. The firing of dopamine neurons is recorded.
    - Phase 2 (Learning): A neutral sensory cue (e.g., a light or a tone) is presented for a fixed duration (e.g., 1 second) and is immediately followed by the delivery of the liquid reward. This pairing is repeated over multiple trials.
    - Phase 3 (Established Prediction): After learning is established (as indicated by the monkey's anticipatory licking of the spout after the cue), the cue-reward pairing continues.
    - Probe Trials: On a subset of trials, the predicted reward is omitted.
- Data Analysis: The firing rate of individual dopamine neurons is calculated in peristimulus time histograms (PSTHs) aligned to the onset of the cue and the reward. Statistical tests (e.g., t-tests or ANOVAs) are used to compare the firing rates across different experimental conditions (e.g., unexpected reward vs. predicted reward).

Human fMRI: Instrumental Conditioning Task

This protocol is based on typical model-based fMRI studies of reward learning.

- Participants: Healthy, right-handed adult volunteers (e.g., n=20, age 18-35). Participants are screened for any contraindications to MRI.
- Task: A probabilistic instrumental learning task is presented using stimulus presentation software. On each trial, participants choose between two abstract symbols. One symbol is associated with a high probability of receiving a monetary reward (e.g., 70%), and the other with a low probability (e.g., 30%). The reward contingencies are not disclosed to the participants and must be learned through trial and error.
- fMRI Data Acquisition:
  - Scanner: 3 Tesla MRI scanner.
  - Sequence: T2*-weighted echo-planar imaging (EPI) sequence.
  - Parameters (example): Repetition Time (TR) = 2000 ms, Echo Time (TE) = 30 ms, flip angle = 90°, voxel size = 3x3x3 mm.
- Experimental Procedure:
  - Instructions: Participants are instructed to choose the symbol that they believe will lead to the most rewards.
  - Task Performance: The task is performed inside the MRI scanner. Each trial consists of the presentation of the two symbols, a response period, and feedback indicating whether a reward was received.
- Data Analysis:
  - Preprocessing: fMRI data are preprocessed with motion correction, slice-timing correction, and normalization to a standard brain template.
  - TDRL Model: A TDRL model (e.g., a Q-learning model) is used to generate a trial-by-trial RPE regressor. The model takes the participant's choices and the received rewards as input and calculates the RPE for each trial.
  - Statistical Analysis: A general linear model (GLM) is used to analyze the fMRI data. The TDRL-derived RPE regressor is included in the GLM to identify brain regions where the BOLD signal significantly correlates with the RPE. Statistical maps are corrected for multiple comparisons.
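The model step of this analysis, generating a trial-by-trial RPE regressor from choices and rewards, might look like the sketch below. It assumes a simple Q-learning rule for a two-option task with no state transitions within a trial; the learning rate `alpha` is fixed here for illustration, whereas in practice it is typically fitted to each participant's choices.

```python
import numpy as np


def q_learning_rpe(choices, rewards, alpha=0.2, n_options=2):
    """Return the RPE on every trial and the final action values.

    choices: index of the chosen symbol on each trial (0 or 1).
    rewards: reward received on each trial (e.g., 1 for a win, 0 otherwise).
    """
    q = np.zeros(n_options)
    rpe = np.zeros(len(choices))
    for t, (choice, reward) in enumerate(zip(choices, rewards)):
        rpe[t] = reward - q[choice]      # outcome minus current expectation
        q[choice] += alpha * rpe[t]      # update only the chosen option
    return rpe, q


# Hypothetical four-trial session.
rpe, q = q_learning_rpe(choices=[0, 0, 1, 0], rewards=[1, 1, 0, 0])
print(rpe, q)
```

The resulting `rpe` vector would then be aligned to feedback onsets and convolved with a hemodynamic response function before entering the GLM; that step depends on the analysis package and is not shown.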

Visualizing TDRL Concepts

The following diagrams, generated using the DOT language, illustrate key aspects of TDRL in cognitive science.

Signaling Pathway of the Dopamine Reward Prediction Error

Caption: Mesolimbic and mesocortical dopamine pathways involved in reward prediction error signaling.

Experimental Workflow of a TDRL-based fMRI Study

Caption: A typical workflow for a model-based fMRI study investigating TDRL.

Logical Relationship of the Core TDRL Algorithm

Caption: The core computational steps of the Temporal Difference learning algorithm.

Conclusion

Temporal Difference Reinforcement Learning provides a computationally precise and neurobiologically plausible framework for understanding how organisms learn from experience to maximize rewards. The core assumptions of TDRL, particularly the role of the dopamine-mediated reward prediction error, are supported by a wealth of experimental data from both animal and human studies. This guide has provided a technical overview of these assumptions, the key experimental findings, and detailed protocols to encourage further research and application in cognitive science and drug development. The continued refinement of TDRL models, informed by new experimental techniques, promises to further unravel the complexities of the learning brain.

References

1. journals.physiology.org
2. [PDF] A Neural Substrate of Prediction and Reward | Semantic Scholar [semanticscholar.org]
3. Publications [wolframschultz.org]
4. researchgate.net
5. Arithmetic and local circuitry underlying dopamine prediction errors - PMC [pmc.ncbi.nlm.nih.gov]

The Temporal Difference Reinforcement Learning (TDRL) Framework for Understanding Reward Prediction Error: A Technical Guide

For Researchers, Scientists, and Drug Development Professionals

This in-depth technical guide explores the core principles of the Temporal Difference Reinforcement Learning (TDRL) framework as a powerful model for understanding the neural mechanisms of reward prediction error (RPE). It delves into the pivotal role of dopamine in encoding this signal and provides a detailed overview of key experimental techniques used to investigate these processes, offering valuable insights for research and therapeutic development.

The Theoretical Foundation: Temporal Difference Reinforcement Learning

Temporal Difference (TD) learning is a model-free reinforcement learning algorithm that enables an agent to learn how to predict the long-term reward expected from a given state or action. A key feature of TD learning is its ability to update predictions at each step of a process rather than waiting for the final outcome, a method known as bootstrapping. This iterative updating process is central to its biological plausibility.

The two core components of the TDRL framework are the value function and the TD error.

- Value Function (V(s)): This function represents the expected cumulative future reward that can be obtained starting from a particular state, s. The goal of the learning agent is to develop an accurate value function for all possible states.
- Temporal Difference (TD) Error (δ): The TD error is the discrepancy between the predicted value of the current state and the actual reward received plus the discounted value of the next state. It serves as a crucial teaching signal to update the value function. The formula for the TD error is:

$$\delta_t = R_{t+1} + \gamma V(s_{t+1}) - V(s_t)$$

Where:

- $\delta_t$ is the TD error at time t.
- $R_{t+1}$ is the reward received at the next time step, t+1.
- $\gamma$ (gamma) is a discount factor (between 0 and 1) that determines the present value of future rewards.
- $V(s_{t+1})$ is the predicted value of the next state.
- $V(s_t)$ is the predicted value of the current state.

A positive TD error occurs when an outcome is better than expected, strengthening the association between the preceding state/action and the reward. Conversely, a negative TD error signifies a worse-than-expected outcome, weakening the association. When an outcome is exactly as expected, the TD error is zero, and no learning occurs.

Figure 1: A conceptual diagram of the Temporal Difference Reinforcement Learning (TDRL) model.

The Biological Implementation: Dopamine as the Reward Prediction Error Signal

A substantial body of evidence suggests that the phasic activity of midbrain dopamine neurons, primarily in the ventral tegmental area (VTA) and substantia nigra pars compacta (SNc), serves as the neural correlate of the TD error signal. These neurons exhibit firing patterns that strikingly mirror the three key states of the RPE:

- Unexpected Reward: When an animal receives an unexpected reward, there is a phasic burst of activity in dopamine neurons, corresponding to a positive RPE.
- Predicted Reward: Once an association between a cue (e.g., a light or a tone) and a reward is learned, the dopamine neurons fire in response to the cue but not to the now-predicted reward.
- Omission of a Predicted Reward: If a predicted reward is withheld, there is a pause in the baseline firing of dopamine neurons at the time the reward was expected, signaling a negative RPE.

This dopaminergic RPE signal is thought to be broadcast to downstream brain regions, such as the nucleus accumbens and prefrontal cortex, where it modulates synaptic plasticity and guides learning and decision-making.
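The three firing patterns fall out of a small TD simulation of a cue-reward trial. This is a sketch under stated assumptions: time after cue onset is divided into five discrete states, the pre-cue state is held at zero value because cue timing is taken to be unpredictable, and `alpha`, `gamma`, and the number of training trials are arbitrary illustrative choices.

```python
import numpy as np


def run_trial(values, reward, alpha=0.1, gamma=0.98, learn=True):
    """Simulate one trial and return the TD error at every step.

    values[0] is the pre-cue state (never updated, an assumption standing in
    for unpredictable cue timing); values[1:] are time steps after cue onset.
    The reward, if any, arrives on leaving the last state.
    """
    n = len(values) - 1
    delta = np.zeros(n + 1)
    for k in range(n + 1):
        r = reward if k == n else 0.0
        v_next = values[k + 1] if k < n else 0.0   # terminal value is zero
        delta[k] = r + gamma * v_next - values[k]
        if learn and k > 0:
            values[k] += alpha * delta[k]
    return delta


values = np.zeros(6)
naive = run_trial(values, reward=1.0, learn=False)    # before learning
for _ in range(2000):                                 # cue-reward pairings
    run_trial(values, reward=1.0)
trained = run_trial(values, reward=1.0, learn=False)  # predicted reward
omitted = run_trial(values, reward=0.0, learn=False)  # reward withheld

# delta[0] is the error at cue onset, delta[-1] the error at reward time.
print(naive[0], naive[-1])
print(trained[0], trained[-1])
print(omitted[-1])
```

Before learning the error appears only at reward time; after learning it appears at cue onset and vanishes at reward time; on omission trials it is negative at the expected reward time.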

Quantitative Data on Dopaminergic Responses to RPE

The following tables summarize quantitative data from studies investigating the relationship between dopamine neuron activity, dopamine concentration, and reward prediction error.

| Condition | Baseline Firing Rate (Hz) | Phasic Firing Rate (Hz) during RPE |
|---|---|---|
| Unexpected Reward | ~3-5 | ~15-30 |
| Fully Predicted Reward | ~3-5 | No significant change from baseline |
| Omission of Predicted Reward | ~3-5 | Decrease below baseline (~0-2) |

Table 1: Phasic Firing Rates of VTA Dopamine Neurons in Response to Reward Prediction Errors

| Condition | Peak Phasic Dopamine Concentration Change (nM) |
|---|---|
| Unexpected Reward | ~100-200 |
| Predicted Reward Cue | ~50-100 |
| Reward Following Cue | No significant change |

Table 2: Phasic Dopamine Concentration Changes in the Nucleus Accumbens Measured by FSCV

Key Experimental Protocols for Investigating the TDRL-Dopamine System

Two powerful techniques have been instrumental in elucidating the causal role of dopamine in RPE-driven learning: Fast-Scan Cyclic Voltammetry (FSCV) and optogenetics.

Fast-Scan Cyclic Voltammetry (FSCV) for Real-Time Dopamine Measurement

FSCV is an electrochemical technique that allows for the sub-second measurement of neurotransmitter concentrations in vivo.

Methodology:

- Electrode Fabrication: Carbon-fiber microelectrodes (typically 5-7 µm in diameter) are fabricated by aspirating a single carbon fiber into a glass capillary, which is then pulled to a fine tip and sealed with epoxy. The exposed carbon fiber is cut to a specific length (e.g., 100-200 µm).
- Surgical Implantation: Under anesthesia, the animal (e.g., a rat or mouse) is placed in a stereotaxic frame. A craniotomy is performed over the target brain region (e.g., the nucleus accumbens). The FSCV electrode and a reference electrode (Ag/AgCl) are slowly lowered to the precise stereotaxic coordinates.
- Data Acquisition: A triangular voltage waveform is applied to the carbon-fiber electrode at a high scan rate (e.g., 400 V/s) and frequency (e.g., 10 Hz). For dopamine detection, the potential is typically ramped from -0.4 V to +1.3 V and back. This causes the oxidation and subsequent reduction of dopamine at the electrode surface, generating a current that is proportional to its concentration. The resulting cyclic voltammogram provides a chemical signature for identifying the analyte.
- Behavioral Task: The animal is engaged in a behavioral task, such as a classical conditioning paradigm where a neutral cue is paired with a reward.
- Data Analysis: The recorded current is converted to dopamine concentration based on post-experiment calibration of the electrode with known concentrations of dopamine. Background-subtracted data reveals phasic changes in dopamine release time-locked to specific events in the behavioral task.
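Two of the steps above lend themselves to a short numerical sketch: building the triangular waveform and converting a background-subtracted current into a concentration. The sampling rate and the calibration factor (nA per µM) are hypothetical placeholders; real values come from the acquisition hardware and the post-experiment electrode calibration.

```python
import numpy as np


def triangular_waveform(v_min=-0.4, v_max=1.3, scan_rate=400.0, sample_rate=100_000):
    """One voltage sweep from v_min up to v_max and back, sampled at sample_rate (Hz)."""
    ramp_time = (v_max - v_min) / scan_rate          # seconds per ramp
    n = int(round(ramp_time * sample_rate))
    up = np.linspace(v_min, v_max, n, endpoint=False)
    down = np.linspace(v_max, v_min, n + 1)
    return np.concatenate([up, down])


def current_to_concentration(current_nA, background_nA, calibration_nA_per_uM):
    """Background-subtract the current and scale by the calibration factor (result in µM)."""
    return (np.asarray(current_nA) - background_nA) / calibration_nA_per_uM


wave = triangular_waveform()
sweep_ms = 1000.0 * (len(wave) - 1) / 100_000        # duration of one sweep
conc_uM = current_to_concentration([12.0, 10.0], background_nA=10.0,
                                   calibration_nA_per_uM=20.0)
print(sweep_ms, conc_uM)
```

With the example parameters from the text, each sweep lasts 8.5 ms, so at a 10 Hz repetition rate the electrode sits at the holding potential for most of each 100 ms cycle.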

The Genesis of Self-Learning Machines: A Technical Guide to Foundational Papers on Temporal Difference Learning

For Researchers, Scientists, and Drug Development Professionals

This in-depth technical guide delves into the seminal papers that laid the groundwork for Temporal Difference (TD) learning, a cornerstone of modern reinforcement learning. By examining the foundational concepts, experimental designs, and quantitative results from these pioneering works, we aim to provide a comprehensive resource for researchers and professionals seeking to understand the core principles that drive many contemporary AI applications, from game playing to molecular design.

Introduction: The Core Idea of Temporal Difference Learning

Temporal Difference (TD) learning is a class of model-free reinforcement learning methods that learn by bootstrapping from the current estimate of the value function.[1] Unlike Monte Carlo methods, which wait until the end of an episode to update value estimates, TD methods update their predictions at each time step. This approach allows TD algorithms to learn from incomplete episodes and has been shown to be more efficient in many applications. The central idea is to update a prediction based on the difference between temporally successive predictions.[2]

The foundational work in this area is largely attributed to Richard S. Sutton and his 1988 paper, "Learning to Predict by the Methods of Temporal Differences," which introduced the TD(λ) algorithm.[2][3][4] This was followed by another landmark contribution from Christopher Watkins, whose 1989 Ph.D. thesis, "Learning from Delayed Rewards," introduced the Q-learning algorithm.

Foundational Paper: "Learning to Predict by the Methods of Temporal Differences" (Sutton, 1988)

Richard Sutton's 1988 paper is a pivotal publication that formally introduced the concept of Temporal Difference learning. It presented a new class of incremental learning procedures for prediction problems. The key innovation was to use the difference between successive predictions as an error signal to drive learning, a departure from traditional supervised learning methods that rely on the difference between a prediction and the final actual outcome.

Core Concept: The TD(λ) Algorithm

The paper introduces the TD(λ) algorithm, which elegantly unifies Monte Carlo methods and one-step TD learning. The parameter λ (lambda) ranges from 0 to 1 and controls the degree to which credit is assigned to past predictions.

- TD(0): This is the one-step TD method, where the value of a state is updated based on the value of the immediately following state.
- TD(1): This is equivalent to a Monte Carlo method, where credit is assigned based on the entire sequence of future rewards until the end of an episode.
- 0 < λ < 1: This represents a spectrum between one-step TD and Monte Carlo, where credit is assigned to past states with an exponentially decaying weight.

The update rule for the weight vector w in TD(λ) is given by:

$$w_{t+1} = w_t + \alpha \,(P_{t+1} - P_t)\, e_t$$

where:

- α is the learning rate.
- P_t is the prediction at time t.
- e_t is the eligibility trace at time t, an exponentially decaying record of the gradients of past predictions: $e_t = \sum_{k=1}^{t} \lambda^{t-k} \nabla_w P_k$.
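As a minimal sketch of the update rule above, the following function applies a single TD(λ) step. It assumes a linear predictor (P_t = w · x_t), so the gradient of the prediction is simply the feature vector x_t. The function name `td_lambda_step` and the default parameter values are illustrative choices, not taken from Sutton's paper.

```python
def td_lambda_step(w, trace, x_t, p_next, alpha=0.1, lam=0.5):
    """One TD(lambda) update for a linear predictor P_t = w . x_t.

    w, trace and x_t are equal-length lists of floats. p_next is P_{t+1},
    or the actual outcome on the final step of a sequence.
    Returns the updated (w, trace).
    """
    # Current prediction P_t.
    p_t = sum(wi * xi for wi, xi in zip(w, x_t))
    # e_t = lam * e_{t-1} + grad_w P_t; for a linear predictor the gradient is x_t.
    trace = [lam * e + xi for e, xi in zip(trace, x_t)]
    # Temporal difference between successive predictions, (P_{t+1} - P_t).
    td_error = p_next - p_t
    # w_{t+1} = w_t + alpha * (P_{t+1} - P_t) * e_t
    w = [wi + alpha * td_error * e for wi, e in zip(w, trace)]
    return w, trace
```

Setting `lam=0.0` reduces the trace to the current feature vector, which recovers the one-step TD(0) update.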

Key Experiments: The Random Walk

Sutton demonstrated the efficacy of TD(λ) through a simple random walk prediction problem. The goal was to predict the probability of a random walk ending in a terminal state on the right, given the current state.

The experiment involved a one-dimensional random walk with 7 states (A, B, C, D, E, F, G), where A and G are terminal states. The walk starts in the center state D. At each step, there is an equal probability of moving left or right. The outcome is 1 if the walk terminates in state G and 0 if it terminates in state A.

Two main experimental setups were used:

- Repeated Presentations (Batch Learning): Training sets of 10 sequences were presented repeatedly to the learning algorithm until the weight vector converged. The weight updates were accumulated over a training set and applied at the end.
- Single Presentation (Online Learning): Each training set was presented only once. The weight vector was updated after each sequence.

For both experiments, 100 training sets were generated, and the root-mean-squared (RMS) error between the learned predictions and the true probabilities was calculated and averaged over these 100 sets.
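The single-presentation setup can be sketched roughly as follows. This is an illustrative reconstruction under stated assumptions, not Sutton's original code: states B to F are encoded as one-hot features (indices 0 to 4), all weights start at 0.5, and the function names, seed, and default values are hypothetical.

```python
import math
import random

TRUE_P = [(i + 1) / 6 for i in range(5)]   # true P(end in G) for states B..F

def generate_walk(rng):
    """Return (visited non-terminal indices, outcome); the walk starts in D."""
    pos, visited = 2, []                   # index 2 corresponds to state D
    while 0 <= pos <= 4:
        visited.append(pos)
        pos += rng.choice((-1, 1))         # equal probability left or right
    return visited, (1.0 if pos > 4 else 0.0)   # 1 if ended in G, 0 if in A

def train_on_sequence(w, visited, outcome, alpha, lam):
    """TD(lambda) with one-hot features; updates are applied at sequence end."""
    trace = [0.0] * 5
    delta = [0.0] * 5
    for t, s in enumerate(visited):
        trace = [lam * e for e in trace]
        trace[s] += 1.0                    # gradient of a one-hot linear prediction
        last = t == len(visited) - 1
        p_next = outcome if last else w[visited[t + 1]]
        td_error = p_next - w[s]
        delta = [d + alpha * td_error * e for d, e in zip(delta, trace)]
    return [wi + d for wi, d in zip(w, delta)]

def rms_error(w):
    """Root-mean-squared error between predictions and true probabilities."""
    return math.sqrt(sum((wi - p) ** 2 for wi, p in zip(w, TRUE_P)) / 5)

def single_presentation_error(lam, alpha, n_sets=100, n_seqs=10, seed=0):
    """Average RMS error after one pass through each training set."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_sets):
        w = [0.5] * 5                      # assumed initial weights
        for _ in range(n_seqs):
            visited, outcome = generate_walk(rng)
            w = train_on_sequence(w, visited, outcome, alpha, lam)
        total += rms_error(w)
    return total / n_sets
```

Calling `single_presentation_error` over a grid of λ and α values gives error curves of the kind the experiment reports. The exact numbers depend on the random seed and on the assumptions above.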

Quantitative Data

The following tables summarize the quantitative results from Sutton's 1988 paper, showing the average RMS error for different values of λ.

Table 1: Average RMS Error on the Random Walk Problem under Repeated Presentations

| λ | Average Error |
|---|---|
| 1.0 | 0.25 |
| 0.9 | 0.22 |
| 0.7 | 0.18 |
| 0.5 | 0.15 |
| 0.3 | 0.13 |
| 0.1 | 0.12 |
| 0.0 | 0.12 |

Table 2: Average RMS Error on the Random Walk Problem after a Single Presentation of 10 Sequences

| λ | Average Error at Best α |
|---|---|
| 1.0 | 0.43 |
| 0.9 | 0.35 |
| 0.7 | 0.27 |
| 0.5 | 0.22 |
| 0.3 | 0.19 |
| 0.1 | 0.17 |
| 0.0 | 0.17 |

These results demonstrate that for this problem, smaller values of λ generally lead to lower error, indicating that the TD methods learned more efficiently than the Monte Carlo method (λ=1).

Signaling Pathway and Logical Relationships

The following diagrams illustrate the core logical flows of the TD(λ) algorithm.

Caption: The TD(λ) update rule calculation flow.

Caption: The fundamental agent-environment interaction loop in reinforcement learning.

Foundational Paper: "Learning from Delayed Rewards" (Watkins, 1989)

Christopher Watkins' 1989 Ph.D. thesis introduced Q-learning, a significant breakthrough in reinforcement learning. Q-learning is an off-policy TD control algorithm that directly learns the optimal action-value function, independent of the policy being followed. This allows for more flexible exploration strategies.

Core Concept: The Q-learning Algorithm

Q-learning learns a function, Q(s, a), which represents the expected future reward for taking action a in state s and following an optimal policy thereafter. The update rule for Q-learning is:

$$Q(S_t, A_t) \leftarrow Q(S_t, A_t) + \alpha \left[ R_{t+1} + \gamma \max_a Q(S_{t+1}, a) - Q(S_t, A_t) \right]$$

where:

- S_t is the state at time t.
- A_t is the action at time t.
- R_{t+1} is the reward at time t+1.
- α is the learning rate.
- γ is the discount factor.
- max_a Q(S_{t+1}, a) is the maximum Q-value for the next state over all possible actions.
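A minimal sketch of this update for a tabular Q-function is given here. It assumes Q-values are stored in a dictionary keyed by (state, action) pairs; the function name, the `terminal` flag, and the default parameter values are illustrative assumptions.

```python
from collections import defaultdict

def q_learning_update(Q, state, action, reward, next_state, actions,
                      alpha=0.1, gamma=0.9, terminal=False):
    """Apply one Q-learning update in place and return the TD error."""
    # max_a Q(S_{t+1}, a); a terminal next state has no future value.
    best_next = 0.0 if terminal else max(Q[(next_state, a)] for a in actions)
    # R_{t+1} + gamma * max_a Q(S_{t+1}, a) - Q(S_t, A_t)
    td_error = reward + gamma * best_next - Q[(state, action)]
    Q[(state, action)] += alpha * td_error
    return td_error

# Usage: unseen state-action pairs default to a Q-value of 0.0.
Q = defaultdict(float)
q_learning_update(Q, "s0", "left", 1.0, "s1", ["left", "right"])
```

Because the target uses the maximum over next actions rather than the action actually taken next, the update is independent of the behavior policy.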

A formal proof of the convergence of Q-learning was later provided in a 1992 paper by Watkins and Dayan. The proof establishes that tabular Q-learning converges to the optimal action-values with probability 1, provided that rewards are bounded, all state-action pairs are visited infinitely often, and the learning rates decay appropriately (their sum diverges while the sum of their squares remains finite).

Key Experiments

Watkins' thesis focused more on the theoretical development and illustration of Q-learning than on extensive quantitative experiments of the kind reported in Sutton's paper. He used examples like a simple maze problem to illustrate how Q-learning could find an optimal policy.

A common illustrative example for Q-learning involves a simple grid world or maze. The agent's goal is to find the shortest path from a starting state to a goal state.

- States: The agent's position in the grid.
- Actions: Move up, down, left, or right.
- Rewards: A positive reward for reaching the goal state and a small negative reward (or zero) for all other transitions to encourage finding a shorter path.

The Q-table, which stores the Q-values for all state-action pairs, is initialized to zero. The agent then explores the environment, and at each step, the Q-value for the taken action is updated using the Q-learning rule. An epsilon-greedy policy is often used for exploration, where the agent chooses a random action with a small probability (epsilon) and the action with the highest Q-value otherwise.
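The grid-world setup described above might be sketched as follows. The 3x3 grid size, the start and goal positions, the reward values (+1 at the goal, -0.01 per step), and all function names are assumptions chosen for illustration; they are not taken from Watkins' thesis.

```python
import random

ACTIONS = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}
SIZE, START, GOAL = 3, (0, 0), (2, 2)      # hypothetical 3x3 grid

def step(state, action):
    """Move within the grid (walls block movement); return (next, reward, done)."""
    dr, dc = ACTIONS[action]
    row = min(max(state[0] + dr, 0), SIZE - 1)
    col = min(max(state[1] + dc, 0), SIZE - 1)
    nxt = (row, col)
    return nxt, (1.0 if nxt == GOAL else -0.01), nxt == GOAL

def epsilon_greedy(Q, state, epsilon, rng):
    """Random action with probability epsilon, otherwise the highest-valued one."""
    if rng.random() < epsilon:
        return rng.choice(list(ACTIONS))
    return max(ACTIONS, key=lambda a: Q[(state, a)])

def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, max_steps=100, seed=0):
    """Run tabular Q-learning and return the learned Q-table."""
    rng = random.Random(seed)
    # Q-table initialized to zero for every state-action pair.
    Q = {((r, c), a): 0.0 for r in range(SIZE) for c in range(SIZE) for a in ACTIONS}
    for _ in range(episodes):
        state = START
        for _ in range(max_steps):
            action = epsilon_greedy(Q, state, epsilon, rng)
            nxt, reward, done = step(state, action)
            best_next = 0.0 if done else max(Q[(nxt, a)] for a in ACTIONS)
            Q[(state, action)] += alpha * (reward + gamma * best_next - Q[(state, action)])
            state = nxt
            if done:
                break
    return Q

def greedy_path(Q, max_steps=20):
    """Follow the highest-valued action from START for at most max_steps moves."""
    state, path = START, [START]
    for _ in range(max_steps):
        action = max(ACTIONS, key=lambda a: Q[(state, a)])
        state, _reward, done = step(state, action)
        path.append(state)
        if done:
            break
    return path
```

After training, `greedy_path(train())` traces the route the learned Q-table prefers from the start state. With enough episodes this would be expected to approach a shortest path to the goal.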

Signaling Pathway and Logical Relationships

The following diagram illustrates the logical flow of the Q-learning update process.

Caption: The logical flow of a single Q-learning update step.

Conclusion

The foundational papers by Sutton and Watkins laid the essential groundwork for temporal difference learning, introducing the core algorithms of TD(λ) and Q-learning that continue to be influential in the field of reinforcement learning. Sutton's work provided a robust framework for prediction learning and demonstrated its efficiency through empirical results. Watkins' development of Q-learning offered a powerful and flexible method for control problems. Understanding these seminal works is crucial for any researcher or professional aiming to apply or advance reinforcement learning techniques in their respective fields, including the complex and dynamic challenges present in drug development and scientific research.

References

- 1. semanticscholar.org [semanticscholar.org]

- 2. incompleteideas.net [incompleteideas.net]

- 3. (PDF) Learning to Predict by the Methods of Temporal Differences (1988) | Richard S. Sutton | 5175 Citations [scispace.com]

- 4. Publications learning to predict by the methods of temporal differences [cs.utexas.edu]

The Convergence of Learning Models: A Technical Guide to the Relationship Between Temporal Difference Reinforcement Learning and Pavlovian Conditioning

For Researchers, Scientists, and Drug Development Professionals

Abstract

The seemingly disparate fields of computational reinforcement learning and classical animal learning theory have found a remarkable point of convergence in the study of reward, prediction, and behavior. This technical guide explores the deep and intricate relationship between Temporal Difference Reinforcement Learning (TDRL), a powerful algorithmic framework for goal-directed learning, and Pavlovian conditioning, the fundamental process of associative learning. We delve into the core computational principles, the underlying neurobiological mechanisms centered on dopamine and reward prediction errors, and the key experimental methodologies that have illuminated this connection. This document provides a comprehensive overview for researchers and professionals seeking to understand how these two paradigms inform and enrich one another, with significant implications for neuroscience, psychology, and the development of novel therapeutics for disorders of learning and motivation.

Introduction: Bridging Computational Theory and Biological Learning

For over a century, Pavlovian conditioning has been a cornerstone of learning theory, describing how organisms learn to associate neutral stimuli with significant events.[1][2][3] In his seminal experiments, Ivan Pavlov demonstrated that a dog could learn to salivate at the sound of a bell (a conditioned stimulus, CS) if the bell was repeatedly paired with the presentation of food (an unconditioned stimulus, US).[1] This form of learning is not merely a passive association but a predictive one; the CS comes to signal the impending arrival of the US.

In parallel, the field of artificial intelligence has developed sophisticated algorithms for learning and decision-making. Among the most influential is Temporal Difference Reinforcement Learning (TDRL).[4] TDRL provides a mathematical framework for an agent to learn how to predict future rewards in a given state by continuously updating its predictions based on the difference between expected and actual outcomes. This difference is known as the reward prediction error (RPE).

The critical insight that connects these two domains is the discovery that the phasic activity of midbrain dopamine neurons appears to encode a biological instantiation of the RPE signal central to TDRL models. This guide will explore this profound connection, examining the theoretical underpinnings, the neural circuits, and the experimental evidence that together paint a picture of the brain as a sophisticated prediction machine.

Theoretical Frameworks: From Pavlov to TDRL

The Rescorla-Wagner Model: A Precursor to Prediction Error

The Rescorla-Wagner model, developed in the early 1970s, was a significant step forward in understanding Pavlovian conditioning. It moved beyond simple contiguity and proposed that learning is driven by the surprisingness of the US. The model's core equation describes the change in associative strength (ΔV) of a CS on a given trial:

ΔV = αβ(λ - ΣV)

Where:

- α and β are learning rate parameters related to the salience of the CS and US, respectively.
- λ is the maximum associative strength that the US can support.
- ΣV is the summed associative strength of all CSs present on that trial.

The term (λ - ΣV) represents the prediction error: the discrepancy between the actual outcome (λ) and the expected outcome (ΣV). This model successfully explains key Pavlovian phenomena like blocking, where the presence of a previously conditioned CS prevents learning about a new CS presented simultaneously.
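Blocking can be reproduced with a short simulation of the Rescorla-Wagner equation. This is a sketch under assumed parameter values (α = 0.3 for each CS, β = 1, λ = 1, 50 trials per phase); the function names and the cue labels "A" and "B" are illustrative.

```python
def rescorla_wagner_trial(V, present, lam, alpha, beta):
    """Update associative strengths V in place for the CSs present on one trial."""
    # Shared prediction error (lambda - sum of V over all CSs present).
    error = lam - sum(V[cs] for cs in present)
    for cs in present:
        V[cs] += alpha[cs] * beta * error
    return error

def run_blocking(n_trials=50, lam=1.0, beta=1.0):
    """Return (V for the blocking group, V for a compound-only control group)."""
    alpha = {"A": 0.3, "B": 0.3}
    blocked = {"A": 0.0, "B": 0.0}
    for _ in range(n_trials):              # phase 1: CS A alone is paired with the US
        rescorla_wagner_trial(blocked, ["A"], lam, alpha, beta)
    for _ in range(n_trials):              # phase 2: compound A+B is paired with the US
        rescorla_wagner_trial(blocked, ["A", "B"], lam, alpha, beta)
    control = {"A": 0.0, "B": 0.0}
    for _ in range(n_trials):              # control: compound A+B only, no pretraining
        rescorla_wagner_trial(control, ["A", "B"], lam, alpha, beta)
    return blocked, control
```

In the blocking group, A already predicts the US by the start of phase 2, so the error term is near zero and B gains almost no strength. In the control group, A and B share the available strength equally.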

Temporal Difference Reinforcement Learning: Incorporating Time

TDRL extends the concept of prediction error to incorporate the temporal dimension of reward prediction. A key algorithm in TDRL is the TD(0) update rule, which updates the value of a state (V(s)) based on the immediate reward (r) and the estimated value of the next state (V(s')):

V(s) ← V(s) + α[r + γV(s') - V(s)]

The term [r + γV(s') - V(s)] is the TD error (δ). It represents the difference between the received reward plus the discounted future reward expectation and the current reward expectation. In this rule:

- α is the learning rate.
- γ is the discount factor, which determines the present value of future rewards.

Crucially, TDRL posits that the prediction error signal is used to update value predictions not just at the time of the reward, but also for the preceding cues, effectively propagating the value information backward in time. This temporal aspect is a key feature that aligns TDRL with the observed dynamics of dopamine neuron activity.
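The backward propagation of value can be illustrated with a small TD(0) simulation of a conditioning trial. This sketch makes several simplifying assumptions: the interval between cue onset and reward is divided into a chain of discrete time-step states, the pre-cue baseline has a fixed value of 0 because the cue arrives unpredictably, and α = 0.2 and γ = 1 are arbitrary choices. The function name is hypothetical.

```python
def run_trial(V, alpha=0.2, gamma=1.0, reward=1.0):
    """Simulate one cue-reward trial with TD(0), updating V in place.

    V[t] is the value of the t-th time step after cue onset; the reward
    arrives on leaving the last step. Returns the TD errors, starting with
    the error at cue onset followed by one error per time step.
    """
    n = len(V)
    # Cue onset: transition from the baseline state (value fixed at 0, no reward).
    deltas = [gamma * V[0] - 0.0]
    for t in range(n):
        r = reward if t == n - 1 else 0.0
        v_next = V[t + 1] if t < n - 1 else 0.0
        delta = r + gamma * v_next - V[t]      # TD error: r + gamma*V(s') - V(s)
        V[t] += alpha * delta                  # V(s) <- V(s) + alpha * delta
        deltas.append(delta)
    return deltas

# Usage: on the first trial the error occurs at reward delivery;
# after many trials it occurs at cue onset instead.
V = [0.0] * 5
first = run_trial(V)
for _ in range(500):
    last = run_trial(V)
omitted = run_trial(list(V), reward=0.0)       # reward withheld after learning
```

In this sketch, `first` is zero everywhere except at the time of reward, while `last` is close to 1 at cue onset and close to 0 at the time of reward. Withholding the reward after learning produces a negative error at the time the reward was expected.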

The Neurobiology of Prediction Error: The Role of Dopamine

The convergence of TDRL and Pavlovian conditioning is most evident in the neurobiology of the midbrain dopamine system.

Dopamine Neurons Encode a Reward Prediction Error

A wealth of evidence from single-unit recordings in behaving animals has demonstrated that the phasic firing of dopamine neurons in the ventral tegmental area (VTA) and substantia nigra pars compacta (SNc) closely mirrors the TD error signal.

- Positive Prediction Error: An unexpected reward elicits a burst of dopamine neuron firing.
- No Prediction Error: A fully predicted reward elicits no change in baseline firing.
- Negative Prediction Error: The omission of an expected reward leads to a pause in dopamine neuron firing below their baseline rate.

Over the course of Pavlovian conditioning, the dopamine response transfers from the US to the earliest reliable CS. This is consistent with the TDRL model, where the prediction error signal propagates back in time to the earliest predictor of reward.

Key Brain Circuits: The Basal Ganglia and Prefrontal Cortex

The dopamine prediction error signal is broadcast to several key brain regions, most notably the basal ganglia and the prefrontal cortex (PFC), where it is thought to drive synaptic plasticity and guide learning.

The basal ganglia, a collection of subcortical nuclei, are critically involved in action selection and reinforcement learning. The striatum, the main input nucleus of the basal ganglia, receives dense dopaminergic input from the VTA and SNc. Dopamine, acting on D1 and D2 receptors, modulates the strength of corticostriatal synapses, providing a mechanism for updating action-value associations based on the RPE signal.

The prefrontal cortex is involved in higher-order cognitive functions, including working memory and decision-making. Dopaminergic input to the PFC is thought to play a crucial role in updating goal-directed behaviors and adapting to changing environmental contingencies.

Experimental Methodologies

The link between TDRL and Pavlovian conditioning has been forged through a variety of sophisticated experimental techniques.

Optogenetics

Optogenetics allows for the precise temporal control of genetically defined neuron populations using light. This technique has been instrumental in establishing a causal link between dopamine neuron activity and learning.

- Experimental Protocol: Optogenetic Stimulation of Dopamine Neurons in a Pavlovian Conditioning Task
  - Animal Model: TH-cre rats or mice, which express Cre recombinase specifically in tyrosine hydroxylase-positive (dopaminergic) neurons.
  - Viral Vector: An adeno-associated virus (AAV) carrying a Cre-dependent channelrhodopsin-2 (ChR2) or other light-sensitive opsin is injected into the VTA.
  - Optic Fiber Implantation: An optic fiber is implanted above the VTA to deliver light.
  - Behavioral Paradigm:
    - Habituation: Animals are habituated to a novel, neutral cue (e.g., a tone or light).
    - Conditioning: In the "paired" group, the presentation of the cue is temporally coupled with optogenetic stimulation of VTA dopamine neurons (e.g., 25 Hz train of 5 ms light pulses). In the "unpaired" control group, the cue and stimulation are presented at different times.
  - Data Acquisition and Analysis: Conditioned responses, such as locomotion or magazine approach, are measured during cue presentation.

Functional Magnetic Resonance Imaging (fMRI)

fMRI in awake rodents allows for the non-invasive measurement of brain-wide activity during learning tasks.

- Experimental Protocol: Awake Rodent fMRI during Pavlovian Fear Conditioning
  - Animal Model: Rats or mice.
  - Acclimatization: Animals are gradually acclimatized to the MRI scanner environment and a head-fixation apparatus to minimize stress and motion artifacts.
  - Behavioral Paradigm:
    - Conditioning: In a separate chamber, animals in the "paired" group receive a neutral cue (CS; e.g., a flashing light) that co-terminates with a mild foot shock (US). The "unpaired" group receives the CS and US at different times.
    - fMRI Session: 24 hours later, the conscious and restrained animal is presented with the CS in the MRI scanner.
  - Data Acquisition and Analysis: Blood-oxygen-level-dependent (BOLD) signals are acquired and analyzed to identify brain regions showing differential activation to the CS in the paired versus unpaired groups.

Single-Unit Recording

Single-unit recording provides high temporal and spatial resolution data on the firing patterns of individual neurons.

- Experimental Protocol: Single-Unit Recording of Dopamine Neurons in a Learning Task
  - Animal Model: Non-human primates or rodents.
  - Surgical Preparation: A recording chamber and head-post are surgically implanted to allow for stable recordings.
  - Electrode Placement: Microelectrodes are lowered into the VTA or SNc to isolate the activity of individual putative dopamine neurons, identified by their characteristic electrophysiological properties (e.g., long-duration action potentials and low baseline firing rates).
  - Behavioral Paradigm: Animals perform a task where they learn to associate visual or auditory cues with the delivery of a reward (e.g., a drop of juice).
  - Data Acquisition and Analysis: The firing rate of isolated neurons is recorded and aligned to task events (cue onset, reward delivery). The change in firing rate relative to baseline is calculated to determine the neuron's response to prediction errors.
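The event-aligned analysis in the last step of the protocol could be sketched as follows. The window boundaries (a 0 to 200 ms response window and a 500 ms pre-event baseline) and the function name are assumptions for illustration, not values taken from any particular study.

```python
def event_aligned_rates(spike_times, event_times,
                        response=(0.0, 0.2), baseline=(-0.5, 0.0)):
    """Mean firing rates (Hz) around task events, with all times in seconds.

    Each window is given as (start, end) relative to the event time.
    Returns the baseline rate, the response rate, and their difference.
    """
    if not event_times:
        raise ValueError("need at least one event")

    def mean_rate(window):
        lo, hi = window
        # Count spikes falling inside the window, pooled across all events.
        n_spikes = sum(1 for ev in event_times for s in spike_times
                       if ev + lo <= s < ev + hi)
        return n_spikes / (len(event_times) * (hi - lo))

    base = mean_rate(baseline)
    resp = mean_rate(response)
    return {"baseline_hz": base, "response_hz": resp, "change_hz": resp - base}
```

A positive `change_hz` at reward delivery would correspond to a burst above baseline, and a negative value to a pause in firing.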

Quantitative Data Summary

The following tables summarize key quantitative data from representative studies investigating the relationship between dopamine neuron activity, prediction errors, and Pavlovian conditioning.

| Parameter | Experimental Condition | Value/Observation | Reference |
|---|---|---|---|
| Dopamine Neuron Firing Rate | Baseline (pre-cue) | 3-5 Hz | |
| | Unpredicted Reward | Increase to 20-30 Hz | |
| | Omission of Predicted Reward | Decrease to 0 Hz | |
| Behavioral Response Rate (Pavlovian Conditioning) | High Reinforcement Rate | Higher response rate | |
| | Low Reinforcement Rate | Lower response rate | |
| TDRL Model Parameters | Learning Rate (α) | Typically 0 to 1 | |
| | Discount Factor (γ) | Typically 0 to 1 | |
| Rescorla-Wagner Model Parameters | Learning Rate (c) | 0 to 1.0 (product of CS and US intensity) | |
| | Maximum Associative Strength (Vmax) | Arbitrary value based on US strength (e.g., 100) | |

| Experiment Type | Animal Model | Key Finding | Quantitative Measure | Reference |
|---|---|---|---|---|
| Optogenetic Stimulation | TH-cre Rat | Phasic dopamine stimulation is sufficient to create a conditioned stimulus. | Significant increase in locomotion in paired vs. unpaired group (p < 0.0001). | |
| fMRI | Awake Rat | Conditioned fear stimulus activates the amygdala. | Significant BOLD signal increase in the amygdala in the paired group. | |
| Single-Unit Recording | Macaque Monkey | Dopamine neurons show prediction error signals to aversive stimuli. | Significant difference in firing rate between predicted and unpredicted air puff (p < 0.05). | |

Visualizing the Connections: Signaling Pathways and Logical Relationships

The following diagrams, generated using the DOT language, illustrate the key signaling pathways and logical relationships discussed in this guide.

Figure 1: Conceptual relationship between TDRL and Pavlovian Conditioning, highlighting the role of the TD Error (dopamine signal) in updating associative strength.

Figure 2: Simplified diagram of the key neural circuits involved in reward learning, showing dopaminergic projections from the VTA/SNc to the striatum and prefrontal cortex.

Figure 3: A simplified schematic of the direct ('Go') and indirect ('NoGo') pathways of the basal ganglia, and the modulatory role of dopamine.

Conclusion and Future Directions

The relationship between TDRL and Pavlovian conditioning represents a powerful example of how computational theory can provide a formal framework for understanding complex biological processes. The discovery that dopamine neurons encode a reward prediction error has provided a unifying principle that links associative learning at the behavioral level with synaptic plasticity at the cellular level.

For researchers and drug development professionals, this convergence offers several key takeaways:

- A Quantitative Framework for Learning: TDRL provides a quantitative framework for modeling and predicting learning deficits in various neurological and psychiatric disorders.
- Target for Therapeutic Intervention: The dopaminergic system and its downstream targets in the basal ganglia and prefrontal cortex represent key nodes for therapeutic intervention to modulate learning and motivation.
- Translational Models: The experimental paradigms described here, particularly awake rodent fMRI, offer powerful translational tools for evaluating the effects of novel compounds on the neural circuits underlying learning and reward processing.

Future research will likely focus on further refining our understanding of the heterogeneity of dopamine neuron responses, the role of other neurotransmitter systems in modulating prediction error signals, and the application of these principles to understand more complex forms of learning and decision-making. The continued dialogue between computational modeling and experimental neuroscience promises to yield deeper insights into the mechanisms of learning and provide new avenues for treating disorders of the brain.

References

- 1. researchgate.net [researchgate.net]

- 2. Both probability and rate of reinforcement can affect the acquisition and maintenance of conditioned responses - PMC [pmc.ncbi.nlm.nih.gov]

- 3. Both probability and rate of reinforcement can affect the acquisition and maintenance of conditioned responses - PubMed [pubmed.ncbi.nlm.nih.gov]

- 4. tutorialspoint.com [tutorialspoint.com]

The Architecture of Intelligence in Oncology: An In-depth Technical Guide to State Space Representations in Dynamic Treatment Regimes

For Researchers, Scientists, and Drug Development Professionals

The emergence of drug resistance remains a formidable challenge in oncology, often leading to treatment failure and disease progression. Dynamic Treatment Regimes (DTRs), a paradigm of adaptive therapy guided by the principles of reinforcement learning (RL), offer a promising approach to circumventing resistance by tailoring treatment strategies to the evolving state of a patient's cancer. At the core of these sophisticated models lies the concept of the "state space representation"—a meticulously defined set of variables that encapsulates the current condition of the patient and their disease. This technical guide provides an in-depth exploration of the construction and components of state space representations in DTR models for targeted drug resistance, summarizing key quantitative data, detailing experimental protocols, and visualizing critical pathways and workflows.

Foundational Concepts: The State-Action-Reward Cycle in Oncology

Reinforcement learning models operate on a simple yet powerful feedback loop: the agent (the DTR model) observes the state of the environment (the patient), takes an action (a treatment decision), and receives a reward (a measure of the outcome). This cycle iteratively informs the model's policy to maximize a cumulative reward over time, effectively learning an optimal treatment strategy.

The efficacy of a DTR model is fundamentally dependent on the quality and comprehensiveness of its state space representation. An inadequately defined state can lead to suboptimal or even detrimental treatment decisions. Conversely, a well-defined state space that captures the multifaceted nature of the patient's condition and the cancer's dynamics is the cornerstone of a successful adaptive therapy strategy.

Deconstructing the State Space: Key Components and Representations

The state in a DTR for oncology is a high-dimensional vector comprising various data modalities that collectively describe the patient's health and disease status at a specific point in time. The selection of these features is a critical step in model development, balancing the need for comprehensive information with the practicalities of clinical data acquisition.

Tumor-Specific Characteristics

Direct measures of the tumor's status are fundamental components of the state space. These include:

- Tumor Burden: Often quantified by imaging techniques (e.g., RECIST criteria) or tumor biomarkers. For instance, in prostate cancer, the Prostate-Specific Antigen (PSA) level is a critical state variable.[1][2]
- Resistant Cell Population Dynamics: Mathematical models are often employed to estimate the proportion of drug-sensitive and drug-resistant cells within the tumor. These estimations, derived from longitudinal biomarker data, form a crucial part of the state.
- Genomic and Molecular Profiles: Information on specific mutations (e.g., EGFR mutations in non-small cell lung cancer), gene expression patterns, and other molecular markers can be incorporated into the state to personalize treatment selection.[3]

Patient-Specific Clinical Data

A holistic view of the patient's health is essential for balancing treatment efficacy with toxicity. Key clinical variables include:

- Performance Status: Standardized scores like the ECOG performance status quantify a patient's functional well-being.
- Laboratory Values: Hematological and biochemical markers (e.g., neutrophil counts, liver function tests) provide insights into organ function and potential treatment-related toxicities.
- Treatment History: The sequence and dosage of prior therapies are vital for understanding the evolution of drug resistance and predicting future responses.
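One way to picture how tumor-specific and patient-specific variables combine into a single state is a small container that flattens its fields into a numeric vector. The class name, the field names, and the choice of features here are purely hypothetical and are not drawn from any published DTR model.

```python
from dataclasses import dataclass, astuple

@dataclass
class PatientState:
    """Hypothetical snapshot of a patient at one decision point."""
    psa: float                  # tumor burden marker
    resistant_fraction: float   # model-estimated share of resistant cells, 0 to 1
    egfr_mutant: bool           # presence of a given mutation
    ecog: int                   # ECOG performance status
    neutrophils: float          # laboratory value
    prior_cycles: int           # summary of treatment history

    def to_vector(self):
        """Flatten all fields into a list of floats for use as model input."""
        return [float(value) for value in astuple(self)]

# Usage with made-up values:
state = PatientState(psa=12.5, resistant_fraction=0.2, egfr_mutant=True,
                     ecog=1, neutrophils=3.4, prior_cycles=2)
vector = state.to_vector()
```

A real model would typically also scale or encode these features, which is omitted here for brevity.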

Biomarker Dynamics

The temporal evolution of biomarkers provides a dynamic and responsive component of the state space. For example, the rate of change of a tumor marker can be more informative than its absolute value at a single time point. In studies of metastatic melanoma, lactate dehydrogenase (LDH) levels are monitored as a key biomarker.[4]
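A simple way to turn a biomarker time series into a rate-of-change feature is a least-squares slope over recent measurements. The sketch here assumes that approach; the function name is illustrative, and other estimators (for example, differences on a log scale) may be more appropriate for a given marker.

```python
def biomarker_slope(times, values):
    """Least-squares slope of biomarker values against time.

    The result is in biomarker units per unit of time (e.g., U/L per week).
    """
    n = len(times)
    if n < 2 or n != len(values):
        raise ValueError("need at least two paired measurements")
    mean_t = sum(times) / n
    mean_v = sum(values) / n
    sxx = sum((t - mean_t) ** 2 for t in times)
    if sxx == 0:
        raise ValueError("measurement times must not all be identical")
    sxy = sum((t - mean_t) * (v - mean_v) for t, v in zip(times, values))
    return sxy / sxx
```

The returned slope could then be appended to the state vector alongside the most recent absolute value.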

Quantitative Data from Adaptive Therapy Clinical Trials

The following tables summarize key quantitative data from clinical trials investigating adaptive therapy strategies, which provide the foundational evidence and data for training and validating DTR models.

| Cancer Type | Clinical Trial | Treatment Regimen | Key Outcome Metric | Result |
|---|---|---|---|---|
| Prostate Cancer | NCT02415621 | Adaptive Abiraterone | Time to Progression | Median time to progression was significantly longer in the adaptive therapy arm compared to continuous therapy.[1] |
| | | | Cumulative Drug Dose | Patients on adaptive therapy received a substantially lower cumulative dose of abiraterone. |
| Melanoma | ADAPT-IT (NCT03122522) | Adaptive Nivolumab + Ipilimumab | Overall Response Rate (ORR) at 12 weeks | 47% (95% CI, 35%-59%) |
| | | | Progression-Free Survival (PFS) | Median PFS was 21 months (95% CI, 10-not reached). |
| | Mathematical Modeling Study | Adaptive BRAF/MEK inhibitors | Predicted Time to Progression | Delayed time to progression by 6–25 months compared to continuous therapy. |
| | | | Relative Dose Rate | 6–74% relative to continuous therapy. |

Experimental Protocols in Adaptive Therapy

The design of clinical trials for adaptive therapies, which generate the data for DTR models, involves specific protocols for monitoring and treatment adaptation.

Prostate Cancer (NCT02415621)

- Patient Population: Metastatic castrate-resistant prostate cancer patients.
- Biomarker Monitoring: Serum PSA levels were measured at regular intervals.
- Treatment Adaptation Rule:
  - Treatment with abiraterone was initiated.
  - Treatment was continued until the PSA level decreased to 50% of the initial value.
  - Treatment was then halted.
  - Treatment was re-initiated when the PSA level returned to the initial baseline value.
- Data Collection: Longitudinal PSA data, imaging scans, and clinical assessments were collected to inform the patient's state.
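The treatment adaptation rule above amounts to a two-threshold switch on the PSA level, which can be sketched as a small decision function. The function names and the example PSA values are illustrative assumptions; this is a simplified reading of the rule as described here, not the trial's actual protocol logic.

```python
def should_treat(psa, baseline_psa, on_treatment):
    """Decide whether abiraterone is given for the next monitoring interval."""
    if on_treatment:
        # Continue until PSA has fallen to 50% of the initial value.
        return psa > 0.5 * baseline_psa
    # While off treatment, resume once PSA returns to the initial baseline.
    return psa >= baseline_psa

def simulate_schedule(psa_series, baseline_psa):
    """Apply the rule to a series of PSA measurements, starting on treatment."""
    on_treatment, schedule = True, []
    for psa in psa_series:
        on_treatment = should_treat(psa, baseline_psa, on_treatment)
        schedule.append(on_treatment)
    return schedule

# Usage with made-up PSA values and a baseline of 100:
schedule = simulate_schedule([100, 80, 55, 50, 60, 90, 100, 70], baseline_psa=100)
```

In this example the schedule stays on treatment until the PSA reaches 50, pauses while it climbs back, and resumes once it reaches 100 again.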

Melanoma (ADAPT-IT - NCT03122522)

- Patient Population: Patients with unresectable melanoma.
- Treatment Protocol:
  - Patients received two initial doses of nivolumab and ipilimumab.
  - A radiographic assessment was performed at Week 6.
  - Based on the tumor response, a decision was made to either continue or discontinue ipilimumab before proceeding with nivolumab maintenance.
- Biomarker Analysis: Plasma was collected for circulating tumor DNA (ctDNA) and cytokine analysis (e.g., IL-6) to identify predictive biomarkers of response.

The Role of Signaling Pathways in State Space Representation

While not always explicitly included as direct features in current DTR models due to challenges in real-time measurement, cellular signaling pathways are fundamental to understanding and predicting drug resistance. Their dysregulation is a hallmark of cancer and a primary mechanism of resistance to targeted therapies.

EGFR Signaling Pathway in Non-Small Cell Lung Cancer (NSCLC)

The Epidermal Growth Factor Receptor (EGFR) pathway is a critical driver of cell proliferation and survival in NSCLC. Mutations in the EGFR gene can lead to constitutive activation of the pathway, making it a key therapeutic target. However, resistance to EGFR inhibitors frequently develops through secondary mutations or activation of bypass pathways. A DTR model for EGFR-mutant NSCLC could theoretically incorporate the activation status of key downstream nodes (e.g., phosphorylated ERK, Akt) into its state representation to predict the emergence of resistance and guide the sequencing of different generations of EGFR inhibitors or combination therapies.

Caption: Simplified EGFR signaling pathway leading to cell proliferation and survival.

PI3K/Akt Signaling Pathway

The PI3K/Akt pathway is another crucial signaling cascade that regulates cell growth, survival, and metabolism. Its aberrant activation is common in many cancers and is a known mechanism of resistance to various therapies, including those targeting the EGFR pathway. Incorporating markers of PI3K/Akt pathway activity into the state space could enable a DTR model to anticipate and counteract this form of resistance, for example, by suggesting the addition of a PI3K or Akt inhibitor to the treatment regimen.

Caption: The PI3K/Akt signaling pathway, a key regulator of cell growth and survival.

Logical Workflow for a DTR Model in Clinical Practice

The implementation of a DTR model in a clinical setting would follow a structured workflow, integrating real-time patient data to generate personalized treatment recommendations.

Caption: A logical workflow for a Dynamic Treatment Regime in a clinical setting.

Conclusion and Future Directions

The development of robust state space representations is paramount to the success of Dynamic Treatment Regimes in oncology. By integrating diverse data streams—from macroscopic tumor burden to microscopic signaling pathway activity—these models hold the potential to transform cancer treatment from a static, one-size-fits-all approach to a dynamic, personalized strategy that can adapt to and overcome the challenge of drug resistance.

Future research will need to focus on several key areas:

- Integration of Multi-omics Data: Incorporating high-dimensional data from genomics, proteomics, and metabolomics into the state space in a computationally tractable manner.
- Real-time Monitoring of Signaling Pathways: Developing novel technologies to measure the activity of key signaling pathways in real-time to provide a more dynamic and predictive state representation.
- Standardization of Data Collection: Establishing standardized protocols for collecting and reporting the data necessary to build and validate DTR models across different institutions and clinical trials.

As our ability to measure and model the complexities of cancer biology improves, so too will the sophistication and efficacy of DTRs, heralding a new era of intelligent, adaptive cancer therapy.

References

- 1. academic.oup.com [academic.oup.com]

- 2. Deep reinforcement learning identifies personalized intermittent androgen deprivation therapy for prostate cancer - PubMed [pubmed.ncbi.nlm.nih.gov]

- 3. Enhancing Treatment Decisions for Advanced Non-Small Cell Lung Cancer with Epidermal Growth Factor Receptor Mutations: A Reinforcement Learning Approach - PMC [pmc.ncbi.nlm.nih.gov]

- 4. researchgate.net [researchgate.net]

Methodological & Application

Application Notes and Protocols for Implementing Temporal Difference Reinforcement Learning (TDRL) Models in Python for Behavioral Data

Audience: Researchers, scientists, and drug development professionals.

Objective: This document provides a comprehensive guide to implementing Temporal Difference Reinforcement Learning (TDRL) models in Python for the analysis of behavioral data. It covers the theoretical background, data preparation, model implementation, and interpretation of results, with a focus on practical application in research and drug development.

Introduction to TDRL for Behavioral Analysis

At its core, reinforcement learning involves an agent interacting with an environment. The agent takes actions in different states of the environment and receives rewards (or punishments) in return.[1][4] The goal of the agent is to learn a policy, a strategy for choosing actions, that maximizes its cumulative reward over time. TDRL algorithms, such as Q-learning and SARSA, are foundational methods that enable agents to learn optimal behaviors through these interactions.

Key TDRL Concepts:

- Temporal Difference (TD) Learning: A method that combines ideas from Monte Carlo methods and Dynamic Programming. It updates value estimates based on the difference between the estimated values of two successive states.

- Q-learning: An off-policy TDRL algorithm that learns the value of taking a particular action in a particular state (the Q-value). It directly approximates the optimal action-value function, regardless of the policy being followed.

- SARSA (State-Action-Reward-State-Action): An on-policy TDRL algorithm that updates Q-values based on the action taken by the current policy. The name reflects the quintuple of events that make up the update rule: (S, A, R, S', A').
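The practical difference between the off-policy and on-policy updates is easiest to see side by side. The sketch below is illustrative only: the Q-table, the transition, and the function names `q_learning_target` and `sarsa_target` are hypothetical and not taken from any particular library.

```python
import numpy as np

def q_learning_target(q, reward, next_state, gamma):
    """Off-policy target: bootstrap from the best action in the next state."""
    return reward + gamma * np.max(q[next_state])

def sarsa_target(q, reward, next_state, next_action, gamma):
    """On-policy target: bootstrap from the action actually taken next."""
    return reward + gamma * q[next_state, next_action]

# Hypothetical Q-table with 2 states (rows) and 2 actions (columns).
q = np.array([[0.0, 0.0],
              [0.2, 0.8]])
alpha, gamma = 0.1, 0.9

# One observed transition (S, A, R, S', A'); values chosen for illustration.
state, action, reward, next_state, next_action = 0, 1, 1.0, 1, 0

td_error_q = q_learning_target(q, reward, next_state, gamma) - q[state, action]
td_error_sarsa = sarsa_target(q, reward, next_state, next_action, gamma) - q[state, action]

# Both rules move Q(s, a) a fraction alpha toward their respective target.
q_after_q_learning = q[state, action] + alpha * td_error_q
q_after_sarsa = q[state, action] + alpha * td_error_sarsa
```

Because the next action taken here (action 0) is not the highest-valued one in the next state, the SARSA error is smaller than the Q-learning error. The two rules coincide whenever the agent acts greedily.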

Experimental Protocols: Applying TDRL to Behavioral Data

This section outlines the general protocol for applying a TDRL model to a behavioral dataset.

Data Acquisition and Preprocessing

The first step is to collect and structure the behavioral data in a format suitable for a TDRL model. This typically involves a sequence of trials where an agent makes a choice and receives an outcome.

Example Experimental Paradigm: Two-Armed Bandit Task

A common paradigm in behavioral research is the two-armed bandit task, where a subject must choose between two options (e.g., levers or images on a screen), each associated with a certain probability of reward. This task is well-suited for TDRL modeling.

Data Structuring:

The data should be organized in a trial-by-trial format, with each row representing a single trial and columns containing the following information:

- Subject ID: Identifier for the individual participant or animal.

- Trial Number: The sequential order of the trial.

- State: The current state of the agent. In a simple bandit task, the state can be considered constant (e.g., state = 0) as the available actions do not change. In more complex tasks like a maze, the state would be the agent's location.

- Action: The choice made by the agent (e.g., 0 for left lever, 1 for right lever).

- Reward: The outcome of the action (e.g., 1 for reward, 0 for no reward).

Data Preprocessing Steps:

- Data Cleaning: Handle missing values and remove any invalid data points.

- Data Transformation: Convert categorical variables (e.g., 'left', 'right') into numerical representations.

- Normalization (if necessary): For more complex state representations with continuous features, scaling the features to a similar range (e.g., using StandardScaler or MinMaxScaler from scikit-learn) can improve model performance.
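As a minimal sketch of the structuring, cleaning, and transformation steps, the example below parses a small trial-by-trial table using only the Python standard library. The column names, the inline data, and the helper `load_trials` are assumptions made for illustration; a real export will differ.

```python
import csv
import io

# Hypothetical raw export; column names and values are illustrative only.
RAW_CSV = """subject_id,trial,state,action,reward
s01,1,0,left,1
s01,2,0,right,0
s01,3,0,,1
s01,4,0,left,0
s01,5,0,up,1
"""

# Data transformation: categorical choices mapped to integer action codes.
ACTION_CODES = {"left": 0, "right": 1}

def load_trials(text):
    """Parse trial rows, dropping rows with missing or invalid entries."""
    trials = []
    for row in csv.DictReader(io.StringIO(text)):
        action_label = (row["action"] or "").strip().lower()
        if action_label not in ACTION_CODES:
            continue  # data cleaning: missing or unrecognized choice
        try:
            trial = {
                "subject_id": row["subject_id"],
                "trial": int(row["trial"]),
                "state": int(row["state"]),
                "action": ACTION_CODES[action_label],
                "reward": float(row["reward"]),
            }
        except (TypeError, ValueError):
            continue  # data cleaning: non-numeric trial, state, or reward
        trials.append(trial)
    return trials

trials = load_trials(RAW_CSV)
```

Rows 3 and 5 are dropped here because the choice is missing or not one of the two levers. Whether to drop or impute such trials is a study-specific decision.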

Defining States, Actions, and Rewards

The core of applying TDRL to behavioral data is defining the states, actions, and rewards from the experimental data.

| Component | Definition in a Two-Armed Bandit Task |
|---|---|
| State (S) | The context in which a decision is made. In a simple bandit task, there is often only one state (e.g., S = 0), representing the choice point. |
| Action (A) | The set of possible choices for the agent. For a two-armed bandit, the action space would be A = {0, 1} (left or right). |
| Reward (R) | The feedback received after an action. This is typically a numerical value, such as R = 1 for a reward and R = 0 or R = -1 for no reward or punishment. |

TDRL Model Implementation in Python

This section provides a step-by-step guide to implementing a Q-learning model in Python using the NumPy library.

The Q-learning Algorithm

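Q-learning is based on the Bellman optimality equation. Its update rule iteratively moves the Q-value for a given state-action pair toward the observed reward plus the discounted value of the best next action:

$$Q(s, a) \leftarrow Q(s, a) + \alpha \left[ r + \gamma \max_{a'} Q(s', a') - Q(s, a) \right]$$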

Where:

- Q(s, a) is the Q-value for state s and action a.

- α is the learning rate.

- r is the reward.

- γ is the discount factor.

- s' is the next state.

- $\max_{a'} Q(s', a')$ is the maximum Q-value for the next state over all possible actions a'.

Python Implementation

Here is a Python implementation of a Q-learning model for a two-armed bandit task.
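A minimal sketch of such a model follows. It assumes a single constant state, epsilon-greedy action selection, and Bernoulli rewards. The function name `simulate_q_learning_bandit`, the default parameter values, and the reward probabilities are illustrative assumptions. The discount factor defaults to 0 because each bandit trial is treated here as a one-step episode; this is a modeling choice, not a requirement of Q-learning.

```python
import numpy as np

def simulate_q_learning_bandit(reward_probs, n_trials=500, alpha=0.1,
                               gamma=0.0, epsilon=0.1, seed=0):
    """Simulate an epsilon-greedy Q-learning agent on a multi-armed bandit."""
    rng = np.random.default_rng(seed)
    n_actions = len(reward_probs)
    q = np.zeros((1, n_actions))  # one state, n_actions actions
    state = 0
    actions = np.zeros(n_trials, dtype=int)
    rewards = np.zeros(n_trials)

    for t in range(n_trials):
        # Explore with probability epsilon, otherwise exploit the best-known action.
        if rng.random() < epsilon:
            action = int(rng.integers(n_actions))
        else:
            action = int(np.argmax(q[state]))

        # Bernoulli reward drawn from the chosen arm's reward probability.
        reward = float(rng.random() < reward_probs[action])
        next_state = 0  # the bandit always returns to the same choice point

        # Q-learning update: Q(s,a) <- Q(s,a) + alpha * TD error.
        td_error = reward + gamma * np.max(q[next_state]) - q[state, action]
        q[state, action] += alpha * td_error

        actions[t], rewards[t] = action, reward

    return q, actions, rewards

# Hypothetical task: left lever pays off 20% of the time, right lever 80%.
q_values, actions, rewards = simulate_q_learning_bandit([0.2, 0.8])
```

With `gamma=0`, each Q-value is a running average of that arm's rewards and therefore stays between 0 and 1.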

Fitting the Model to Behavioral Data

To fit the TDRL model to actual behavioral data, we can use techniques like Maximum Likelihood Estimation (MLE) to find the model parameters (e.g., learning rate and exploration rate) that best explain the observed choices.
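One way to sketch this is to score each candidate parameter pair by the negative log-likelihood of the observed choices and keep the best pair. The example below assumes an epsilon-greedy choice rule, a single state with no discounting, and a coarse grid search standing in for a numerical optimizer. The data arrays and the function names `negative_log_likelihood` and `fit_grid` are hypothetical.

```python
import numpy as np

def negative_log_likelihood(alpha, epsilon, actions, rewards, n_actions=2):
    """NLL of observed choices under epsilon-greedy Q-learning (single state)."""
    q = np.zeros(n_actions)
    nll = 0.0
    for a, r in zip(actions, rewards):
        # Probability of each action: epsilon spread uniformly over all actions,
        # the remaining mass shared among the currently greedy action(s).
        greedy = (q == q.max())
        p = epsilon / n_actions + (1.0 - epsilon) * greedy / greedy.sum()
        nll -= np.log(max(p[a], 1e-12))  # guard against log(0)
        q[a] += alpha * (r - q[a])       # value update for the chosen action
    return nll

def fit_grid(actions, rewards, alphas, epsilons):
    """Return (best_nll, best_alpha, best_epsilon) over a parameter grid."""
    best = (np.inf, None, None)
    for alpha in alphas:
        for epsilon in epsilons:
            nll = negative_log_likelihood(alpha, epsilon, actions, rewards)
            if nll < best[0]:
                best = (nll, float(alpha), float(epsilon))
    return best

# Hypothetical choice and outcome sequences for one subject.
actions = np.array([0, 1, 1, 1, 0, 1, 1, 1, 1, 0, 1, 1])
rewards = np.array([0, 1, 1, 0, 0, 1, 1, 1, 0, 1, 1, 1])

grid = np.linspace(0.05, 1.0, 20)
best_nll, best_alpha, best_epsilon = fit_grid(actions, rewards, grid, grid)
```

Setting epsilon to 1 makes every choice equally likely, so the fitted likelihood can never be worse than chance. In practice a softmax choice rule with an inverse temperature is often preferred because its likelihood is smooth in the parameters.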

Data Presentation and Interpretation

Summarizing the model's parameters and outputs in a structured format is crucial for comparison and interpretation.

Model Parameters

The key parameters of the TDRL model provide insights into the learning process.

| Parameter | Symbol | Description | Typical Range | Interpretation |
|---|---|---|---|---|
| Learning Rate | α | Determines how much new information overrides old information. | 0 to 1 | A higher α means faster learning, but can be more sensitive to noise. |
| Discount Factor | γ | The importance of future rewards. | 0 to 1 | A value closer to 1 indicates a greater emphasis on long-term rewards. |
| Exploration Rate | ε | The probability of choosing a random action (exploration) versus the best-known action (exploitation). | 0 to 1 | A higher ε encourages more exploration of the environment. |

Model Output: Q-values

The learned Q-values represent the agent's valuation of each action in each state.

| State | Action | Learned Q-value |
|---|---|---|
| 0 | 0 (Left) | [Insert learned Q-value for action 0] |
| 0 | 1 (Right) | [Insert learned Q-value for action 1] |

A higher Q-value for a particular action indicates that the agent has learned that this action is more likely to lead to a reward.

Visualizations

Visualizations are essential for understanding the logical flow of the TDRL model and its potential neural underpinnings.

TDRL Experimental Workflow

This diagram illustrates the process of applying a TDRL model to behavioral data.

References

Application Notes and Protocols for Applying TDRL to fMRI Data Analysis

Audience: Researchers, scientists, and drug development professionals.

Introduction

Temporal Difference Reinforcement Learning (TDRL) provides a powerful computational framework for understanding how the brain learns from rewards and punishments. When combined with functional Magnetic Resonance Imaging (fMRI), TDRL models allow researchers to move beyond simply identifying brain regions activated by a task to understanding the underlying computations being performed. This model-based fMRI approach involves developing a formal model of a cognitive process, fitting that model to behavioral data, and then using the model's internal variables, such as prediction errors and value signals, as regressors to analyze fMRI data.[1][2] This methodology has proven particularly fruitful in elucidating the neural mechanisms of reward processing, decision-making, and their disturbances in psychiatric and neurological disorders.[3] Consequently, it is a valuable tool in drug development for assessing the effects of compounds on specific neural computations.[3]

These application notes provide a detailed overview of the application of TDRL to fMRI data analysis, including experimental protocols and data presentation guidelines.

Core Concepts: Temporal Difference Reinforcement Learning

TDRL is a model-free reinforcement learning method where an agent learns to predict the expected value of a future reward for a given state or state-action pair.[3] A key concept in TDRL is the Reward Prediction Error (RPE), which is the difference between the actual outcome and the expected outcome. A positive RPE signals a better-than-expected outcome and a negative RPE a worse-than-expected one. This RPE signal is thought to be a crucial learning signal in the brain, with phasic dopamine responses closely mirroring its dynamics.
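In its standard state-value form, the prediction error and the resulting value update can be written as below. Here V denotes the value estimate, α the learning rate, and γ the discount factor. The time-indexed notation is a common textbook convention adopted for illustration rather than one taken from a specific study.

```latex
\delta_t = r_{t+1} + \gamma V(s_{t+1}) - V(s_t), \qquad
V(s_t) \leftarrow V(s_t) + \alpha \, \delta_t
```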

The two most common TDRL algorithms used in fMRI studies are:

- Q-learning: An off-policy algorithm that learns the value of taking a particular action in a particular state.

- SARSA (State-Action-Reward-State-Action): An on-policy algorithm that also learns action-values but takes into account the current policy of the agent when updating these values.

Application in fMRI Data Analysis: Model-Based fMRI

The integration of TDRL models with fMRI data analysis follows a "model-based" approach. This involves three main steps:

- Computational Modeling of Behavior: A TDRL model is chosen and its free parameters (e.g., learning rate, inverse temperature) are fitted to the participant's behavioral data from the experimental task.

- Generation of TDRL Regressors: The fitted model is then used to generate time-series of its internal variables, most notably the trial-by-trial prediction errors and expected values.

- General Linear Model (GLM) Analysis: These generated time-series are convolved with a hemodynamic response function (HRF) and used as regressors in a GLM analysis of the preprocessed fMRI data. This allows for the identification of brain regions where the BOLD signal significantly correlates with the TDRL-derived variables.
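The second and third steps can be sketched as follows, assuming the learning rate has already been fitted. The double-gamma HRF shape (peak and undershoot terms), the sampling of the HRF at TR resolution, and all function names and example values are simplifying assumptions for illustration. Dedicated fMRI packages build regressors at a finer temporal resolution.

```python
import math
import numpy as np

def double_gamma_hrf(tr, duration=32.0):
    """Illustrative double-gamma HRF sampled every `tr` seconds."""
    t = np.arange(0.0, duration, tr)
    peak = t ** 5 * np.exp(-t) / math.gamma(6)          # positive response
    undershoot = t ** 15 * np.exp(-t) / math.gamma(16)  # late undershoot
    hrf = peak - undershoot / 6.0
    return hrf / hrf.sum()

def rpe_series(actions, rewards, alpha, n_actions=2):
    """Trial-by-trial prediction errors from a fitted single-state model."""
    q = np.zeros(n_actions)
    rpe = np.zeros(len(actions))
    for t, (a, r) in enumerate(zip(actions, rewards)):
        rpe[t] = r - q[a]        # actual minus expected outcome
        q[a] += alpha * rpe[t]
    return rpe

def parametric_regressor(onsets_s, modulator, n_scans, tr):
    """Place mean-centered modulator values at event onsets, then convolve."""
    modulator = np.asarray(modulator, dtype=float)
    modulator = modulator - modulator.mean()
    sticks = np.zeros(n_scans)
    scan_idx = np.round(np.asarray(onsets_s) / tr).astype(int)
    np.add.at(sticks, scan_idx, modulator)
    return np.convolve(sticks, double_gamma_hrf(tr))[:n_scans]

# Hypothetical session: four trials with feedback onsets in seconds.
actions = [0, 1, 1, 0]
rewards = [1.0, 0.0, 1.0, 1.0]
feedback_onsets = [0.0, 10.0, 20.0, 30.0]

rpe = rpe_series(actions, rewards, alpha=0.5)
regressor = parametric_regressor(feedback_onsets, rpe, n_scans=30, tr=2.0)
```

The resulting `regressor` would enter the GLM design matrix as one column alongside an unmodulated feedback regressor and nuisance regressors.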

Experimental Protocols

Experimental Task Design: Probabilistic Reward Task

A common experimental paradigm used in TDRL-fMRI studies is the probabilistic reward task. This task is designed to elicit reward prediction errors as participants learn to associate stimuli with probabilistic rewards.

Objective: To identify the neural correlates of reward prediction errors and value signals during reinforcement learning.

Task Procedure:

- Stimulus Presentation: On each trial, the participant is presented with two or more abstract visual stimuli (e.g., different colored shapes).

- Choice: The participant chooses one of the stimuli.

- Outcome: Following the choice, a probabilistic reward (e.g., monetary gain) or no reward is delivered. The probability of receiving a reward is fixed for each stimulus but unknown to the participant initially.

- Learning: Over many trials, the participant learns the reward probabilities associated with each stimulus and aims to maximize their total reward.

Example Trial Structure:

| Event | Duration | Description |
|---|---|---|
| Fixation Cross | 2-4 seconds (jittered) | Baseline period. |
| Stimulus Presentation & Choice | 2 seconds | Participant sees two stimuli and makes a choice. |
| Feedback | 1 second | Outcome (e.g., "+ |
| Inter-Trial Interval (ITI) | 2-6 seconds (jittered) | Period before the next trial begins. |
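The timings in the table can be turned into event onsets for later use as fMRI regressors. The sketch below assumes that the jittered durations are drawn uniformly from the stated ranges, which the table does not specify. The function name `make_trial_onsets` and the trial count are hypothetical.

```python
import numpy as np

def make_trial_onsets(n_trials, seed=0):
    """Generate stimulus and feedback onsets (seconds) for a jittered design."""
    rng = np.random.default_rng(seed)
    fixation = rng.uniform(2.0, 4.0, n_trials)  # jittered fixation, 2-4 s
    iti = rng.uniform(2.0, 6.0, n_trials)       # jittered ITI, 2-6 s
    choice_dur, feedback_dur = 2.0, 1.0         # fixed durations from the table

    trial_len = fixation + choice_dur + feedback_dur + iti
    # Each trial starts when the previous one ends; the first starts at 0 s.
    trial_start = np.concatenate(([0.0], np.cumsum(trial_len)[:-1]))
    stimulus_onset = trial_start + fixation
    feedback_onset = stimulus_onset + choice_dur
    return stimulus_onset, feedback_onset, float(trial_len.sum())

stimulus_onset, feedback_onset, total_s = make_trial_onsets(n_trials=60)
```

Each trial lasts between 7 and 13 seconds under these ranges, so 60 trials take roughly 7 to 13 minutes.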

fMRI Data Acquisition

Scanner: 3T MRI scanner.

Pulse Sequence: T2*-weighted echo-planar imaging (EPI) sequence.

Acquisition Parameters (Example):

| Parameter | Value |
|---|---|
| Repetition Time (TR) | 2000 ms |
| Echo Time (TE) | 30 ms |
| Flip Angle | 90° |
| Field of View (FOV) | 192 x 192 mm |
| Matrix Size | 64 x 64 |
| Slice Thickness | 3 mm (no gap) |
| Number of Slices | 35 (interleaved) |
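A few derived quantities follow directly from these example parameters and are worth checking before scanning. The short calculation below uses only the values in the table, plus a hypothetical 10-minute run length for the volume count.

```python
# Example acquisition parameters taken from the table above.
fov_mm = 192.0
matrix_size = 64
slice_thickness_mm = 3.0
n_slices = 35
tr_s = 2.0

# In-plane voxel size is the field of view divided by the matrix size.
in_plane_mm = fov_mm / matrix_size                        # 3.0 mm
voxel_volume_mm3 = in_plane_mm ** 2 * slice_thickness_mm  # 27.0 mm^3

# Coverage along the slice direction (no gap between slices).
coverage_mm = n_slices * slice_thickness_mm               # 105.0 mm

# Number of volumes in a hypothetical 10-minute run.
run_length_s = 600.0
n_volumes = int(run_length_s / tr_s)                      # 300
```

These settings give 3 mm isotropic voxels and 105 mm of coverage along the slice direction.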

Anatomical Scan: A high-resolution T1-weighted anatomical scan (e.g., MPRAGE) should also be acquired for registration and normalization of the functional data.

fMRI Data Preprocessing