DQn-1

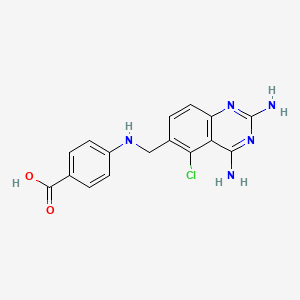

Description

BenchChem offers this high-quality compound, suitable for many research applications. Different packaging options are available to accommodate customers' requirements. Please inquire for more information about this compound, including the price, delivery time, and more detailed information, at info@benchchem.com.

Properties

| CAS No. | 57343-54-1 |
|---|---|
| Molecular Formula | C16H14ClN5O2 |
| Molecular Weight | 343.77 g/mol |
| IUPAC Name | 4-[(2,4-diamino-5-chloroquinazolin-6-yl)methylamino]benzoic acid |
| InChI | InChI=1S/C16H14ClN5O2/c17-13-9(3-6-11-12(13)14(18)22-16(19)21-11)7-20-10-4-1-8(2-5-10)15(23)24/h1-6,20H,7H2,(H,23,24)(H4,18,19,21,22) |
| InChI Key | OARHSEZBVKKLFI-UHFFFAOYSA-N |
| Canonical SMILES | C1=CC(=CC=C1C(=O)O)NCC2=C(C3=C(C=C2)N=C(N=C3N)N)Cl |
| Origin of Product | United States |


The Core Theory of Deep Q-Networks: A Technical Guide

For Researchers, Scientists, and Drug Development Professionals

Deep Q-Networks (DQN) represent a pivotal advancement in the field of reinforcement learning (RL), demonstrating the capacity of artificial agents to achieve human-level performance in complex tasks with high-dimensional sensory inputs. This technical guide provides an in-depth exploration of the foundational theory of DQN, its key components, and the experimental validation that established it as a cornerstone of modern artificial intelligence. The principles outlined herein have significant implications for various research and development domains, including the potential for optimizing complex decision-making processes in drug discovery and development.

Foundational Concepts: From Reinforcement Learning to Q-Learning

Q-learning is a model-free RL algorithm that learns a function, Q(s, a), which represents the expected cumulative discounted reward for taking a specific action 'a' in a given state 's' and acting optimally thereafter.[4] This function is often referred to as the action-value function. In traditional Q-learning, these Q-values are stored in a table, with an entry for every state-action pair. The learning process iteratively updates these Q-values using the Bellman equation, which expresses the value of a state-action pair in terms of the immediate reward and the discounted value of the best action in the next state.[4]
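To make the tabular update concrete, here is a minimal sketch of one Q-learning step using the standard rule Q(s, a) ← Q(s, a) + α[r + γ max_a' Q(s', a') − Q(s, a)]. The learning rate α, the discount γ, the `done` flag for terminal transitions, and the toy state and action names are illustrative assumptions rather than values from the cited sources.

```python
from collections import defaultdict


def q_learning_update(q_table, state, action, reward, next_state, actions,
                      alpha=0.1, gamma=0.99, done=False):
    """Apply one tabular Q-learning update in place and return the TD error."""
    # Bellman target: immediate reward plus the discounted value of the best next action.
    # A terminal transition has no future value, so its target is just the reward.
    best_next = 0.0 if done else max(q_table[(next_state, a)] for a in actions)
    target = reward + gamma * best_next
    td_error = target - q_table[(state, action)]
    q_table[(state, action)] += alpha * td_error
    return td_error


# Q-table with one entry per (state, action) pair; unseen pairs default to 0.0.
q = defaultdict(float)
actions = ["left", "right"]
q[("s1", "right")] = 2.0
err = q_learning_update(q, "s0", "right", reward=1.0, next_state="s1",
                        actions=actions, alpha=0.5, gamma=0.9)
print(round(q[("s0", "right")], 6))  # 0.5 * (1.0 + 0.9 * 2.0 - 0.0) = 1.4
```

The dictionary keyed by (state, action) is exactly the "one entry per state-action pair" table, which is what stops scaling once the state space becomes large.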

The Advent of Deep Q-Networks: Merging Q-Learning with Deep Neural Networks

Deep Q-Networks overcome the main limitation of tabular Q-learning, namely that a table with one entry per state-action pair becomes infeasible for large or continuous state spaces, by using a deep neural network to approximate the Q-value function, Q(s, a; θ), where θ represents the weights of the network.[5][6] This innovation allows the agent to handle high-dimensional inputs, such as images, and to generalize to unseen states.[7] The input to the DQN is the state of the environment, and the output is a vector containing one Q-value for each possible action in that state, so a single forward pass scores every action.[7]

The training of the Q-network is framed as a regression problem, similar to supervised learning. The network learns by minimizing a loss function that represents the difference between the predicted Q-values and a target Q-value derived from the Bellman equation.[8] Unlike ordinary supervised learning, however, these targets are computed from the network's own estimates, so they change as training proceeds. The loss function is typically the mean squared error (MSE) between the target and predicted Q-values.[8]
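The loss described above can be sketched for a single minibatch as follows. The sketch assumes the Q-values of each next state (`next_q`) have already been produced by a forward pass; the minibatch values and the `done` flags are made up for illustration.

```python
def dqn_targets(rewards, next_q_values, dones, gamma=0.99):
    """Bellman targets y = r + gamma * max_a' Q(s', a'), or y = r for terminal transitions."""
    return [r if done else r + gamma * max(q_next)
            for r, q_next, done in zip(rewards, next_q_values, dones)]


def mse_loss(predicted, targets):
    """Mean squared error between predicted Q(s, a) and the Bellman targets."""
    return sum((y - p) ** 2 for p, y in zip(predicted, targets)) / len(targets)


# Hypothetical minibatch of two transitions in an environment with two actions.
rewards = [1.0, 0.0]
next_q = [[0.5, 2.0], [3.0, 1.0]]   # Q-values of each next state s'
dones = [False, True]               # the second transition ends the episode
predicted = [2.0, 0.5]              # Q(s, a) for the actions that were actually taken

targets = dqn_targets(rewards, next_q, dones, gamma=0.9)   # approximately [2.8, 0.0]
loss = mse_loss(predicted, targets)                        # (0.8**2 + 0.5**2) / 2 = 0.445
```

In a full implementation, gradients flow only through the predicted values; the targets are treated as constants for each update.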

Figure: From Reinforcement Learning to Deep Q-Networks.

Key Innovations of Deep Q-Networks

The successful application of deep neural networks to Q-learning required two key innovations to stabilize the learning process: Experience Replay and the use of a Target Network.

Experience Replay

In standard online Q-learning, the agent learns from consecutive experiences, which are highly correlated. This correlation can lead to inefficient learning and instability in the neural network.[9] Experience replay addresses this by storing the agent's experiences—tuples of (state, action, reward, next state)—in a large memory buffer.[9] During training, the Q-network is updated by sampling random mini-batches of experiences from this buffer.[9]

This technique has several advantages:

- Breaks Correlations: Random sampling breaks the temporal correlations between consecutive experiences, leading to more stable training.
- Increases Data Efficiency: Each experience can be used for multiple weight updates, making the learning process more efficient.
- Smooths Learning: By averaging over a diverse set of past experiences, the updates are less prone to oscillations.
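A replay buffer of this kind can be sketched as a fixed-capacity ring buffer with uniform random sampling. The class name, the `Transition` record (which adds a `done` flag to the tuple in the text), and the small capacity in the example are assumptions for illustration; the Atari experiments used a capacity of 1,000,000 with minibatches of 32.

```python
import random
from collections import namedtuple

Transition = namedtuple("Transition", ["state", "action", "reward", "next_state", "done"])


class ReplayBuffer:
    """Fixed-capacity memory; once full, each new transition overwrites the oldest one."""

    def __init__(self, capacity, seed=None):
        self.capacity = capacity
        self.memory = []
        self.position = 0                      # index of the slot to write next
        self.rng = random.Random(seed)

    def push(self, state, action, reward, next_state, done):
        transition = Transition(state, action, reward, next_state, done)
        if len(self.memory) < self.capacity:
            self.memory.append(transition)
        else:
            self.memory[self.position] = transition
        self.position = (self.position + 1) % self.capacity

    def sample(self, batch_size):
        # Uniform sampling without replacement decorrelates consecutive transitions.
        return self.rng.sample(self.memory, batch_size)

    def __len__(self):
        return len(self.memory)


buffer = ReplayBuffer(capacity=1000, seed=0)
for t in range(1500):                          # stand-in trajectory: state t -> t + 1
    buffer.push(t, t % 4, 0.0, t + 1, False)
batch = buffer.sample(32)                      # 32 distinct transitions from t = 500..1499
```

Writing into a list by index keeps both insertion and random access O(1), which matters when the buffer holds a million transitions.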

Figure: The Experience Replay Mechanism.

Target Network

The second innovation is the use of a separate "target" network to generate the target Q-values for the loss function.[10] Without it, the network being trained would also compute its own targets, so every weight update would shift the targets along with the predictions. The network would then be chasing a moving target, which can cause oscillations or divergence.

To mitigate this, a second neural network, the target network, is introduced. The target network is a clone of the main Q-network, but its weights are updated only periodically with the weights of the main network.[10] Between updates the targets stay fixed, which gives the Q-network a more stable objective and reduces the risk of oscillation and divergence during training.
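The periodic update can be sketched with a small helper that keeps a frozen copy of the online parameters and overwrites it every C gradient steps (C = 10,000 in the Atari setup). The `TargetNetworkSync` class and the dictionary-of-lists parameter format are hypothetical stand-ins for a real network's weights.

```python
import copy


class TargetNetworkSync:
    """Holds a frozen copy of the online parameters, refreshed every `update_every` steps."""

    def __init__(self, online_params, update_every):
        self.online = online_params
        self.target = copy.deepcopy(online_params)   # independent copy, not an alias
        self.update_every = update_every
        self.steps = 0

    def after_gradient_step(self):
        self.steps += 1
        if self.steps % self.update_every == 0:
            # Hard update: overwrite the target weights with the current online weights.
            self.target = copy.deepcopy(self.online)


params = {"w": [0.0, 0.0]}
sync = TargetNetworkSync(params, update_every=3)
history = []
for _ in range(4):
    params["w"][0] += 1.0           # stand-in for an optimizer step on the online network
    sync.after_gradient_step()
    history.append(sync.target["w"][0])
# history == [0.0, 0.0, 3.0, 3.0]: the target stays fixed between synchronizations.
```

The deep copy is the important detail: if the target merely referenced the online parameters, it would move with every update and provide no stability.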

Figure: The Target Network Architecture.

Experimental Validation: The Atari 2600 Benchmark

Experimental Protocol

The experimental setup for the Atari benchmark was designed to be as general as possible, with the same network architecture and hyperparameters used across all games.[7]

| Parameter | Description | Value |
|---|---|---|
| Input | Raw pixel frames from the Atari emulator, preprocessed to 84x84 grayscale images and stacked over 4 consecutive frames to capture temporal information. | 84x84x4 image |
| Network Architecture | A convolutional neural network (CNN) with three convolutional layers followed by two fully connected layers. | - |
| Conv Layer 1 | 32 filters of 8x8 with stride 4, followed by a ReLU activation. | - |
| Conv Layer 2 | 64 filters of 4x4 with stride 2, followed by a ReLU activation. | - |
| Conv Layer 3 | 64 filters of 3x3 with stride 1, followed by a ReLU activation. | - |
| Fully Connected 1 | 512 rectifier units. | - |
| Output Layer | A fully connected linear layer with an output for each valid action (between 4 and 18 depending on the game). | - |
| Replay Memory Size | The number of recent experiences stored in the replay buffer. | 1,000,000 frames |
| Minibatch Size | The number of experiences sampled from the replay memory for each training update. | 32 |
| Optimizer | The gradient-based optimization algorithm. | RMSProp |
| Learning Rate | The step size for updating the network weights. | 0.00025 |
| Discount Factor (γ) | The factor by which future rewards are discounted. | 0.99 |
| Exploration (ε-greedy) | The agent's policy for balancing exploration and exploitation. Epsilon was annealed linearly from 1.0 to 0.1 over the first million frames, and then fixed at 0.1. | - |
| Target Network Update Freq. | The number of updates to the main Q-network before the target network's weights are updated. | 10,000 |

Table 1: Hyperparameters and Network Architecture for the DQN Atari Experiments.[7]
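The layer sizes in Table 1 can be checked with the usual convolution output-size formula. This sketch assumes unpadded ("valid") convolutions, which is the common reading of the original architecture; the function and variable names are illustrative.

```python
def conv_output_size(size, kernel, stride, padding=0):
    """Spatial output size of a convolution: floor((size + 2*padding - kernel) / stride) + 1."""
    return (size + 2 * padding - kernel) // stride + 1


# (filters, kernel size, stride) for the three convolutional layers in Table 1.
conv_layers = [(32, 8, 4), (64, 4, 2), (64, 3, 1)]

size, channels = 84, 4                 # 84x84 input with 4 stacked frames
shapes = []
for filters, kernel, stride in conv_layers:
    size = conv_output_size(size, kernel, stride)
    channels = filters
    shapes.append((channels, size, size))

flattened = channels * size * size     # features fed to the 512-unit fully connected layer
print(shapes)     # [(32, 20, 20), (64, 9, 9), (64, 7, 7)]
print(flattened)  # 3136
```

Under this assumption the first fully connected layer maps 3,136 features to 512 units, about 1.6 million weights, which is most of the network's parameters.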

Quantitative Results

The DQN agent's performance was evaluated against other reinforcement learning methods and a professional human games tester. The results demonstrated that DQN could achieve superhuman performance on many of the games.

| Game | Random Play | Human Tester | DQN |
|---|---|---|---|
| Breakout | 1.2 | 30.5 | 404.7 |
| Pong | -20.7 | 14.6 | 20.9 |
| Space Invaders | 148 | 1,669 | 1,976 |
| Seaquest | 68.4 | 28,010 | 5,286 |
| Beam Rider | 363.9 | 16,926.5 | 10,036 |
| Q*bert | 163.9 | 13,455 | 18,989 |
| Enduro | 0 | 860.5 | 831.6 |

Logical Workflow of the Deep Q-Network Algorithm

The overall logic of the DQN algorithm can be summarized in the following workflow:

Figure: The Deep Q-Network Training Algorithm.
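The workflow can be sketched end to end on a deliberately tiny problem. Everything environment-specific here is hypothetical: a five-state chain in which moving right from the fourth state earns reward 1, one-hot state features (so the linear "network" is effectively a table), and small hyperparameters chosen for speed, including an epsilon schedule annealed per episode rather than per frame. The loop still follows the DQN structure: ε-greedy acting, storing transitions in a replay memory, minibatch gradient steps toward targets from a periodically synchronized target network.

```python
import random

N_STATES = 5        # hypothetical chain: states 0..4, reaching state 4 ends the episode
ACTIONS = (0, 1)    # 0 = move left, 1 = move right
GAMMA = 0.9


def env_step(state, action):
    """Toy environment: reward 1 for reaching the right-hand end, 0 otherwise."""
    next_state = max(0, state - 1) if action == 0 else state + 1
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def greedy(q_row):
    return max(ACTIONS, key=lambda a: q_row[a])


def train_dqn(episodes=300, batch_size=16, lr=0.5, capacity=1000,
              sync_every=50, anneal_episodes=150, max_steps=100, seed=0):
    rng = random.Random(seed)
    # One-hot state features: the linear Q-network has one weight per (state, action).
    online = [[0.0 for _ in ACTIONS] for _ in range(N_STATES)]
    target = [row[:] for row in online]
    replay, updates = [], 0
    for episode in range(episodes):
        # Anneal epsilon linearly from 1.0 to 0.1, then hold it at 0.1.
        epsilon = max(0.1, 1.0 - 0.9 * episode / anneal_episodes)
        state = 0
        for _ in range(max_steps):
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = greedy(online[state])
            next_state, reward, done = env_step(state, action)
            replay.append((state, action, reward, next_state, done))
            if len(replay) > capacity:
                replay.pop(0)                        # discard the oldest transition
            if len(replay) >= batch_size:
                # Descent direction of 0.5 * mean squared TD error over a random minibatch.
                grads = [[0.0 for _ in ACTIONS] for _ in range(N_STATES)]
                for s, a, r, s2, d in rng.sample(replay, batch_size):
                    y = r if d else r + GAMMA * max(target[s2])
                    grads[s][a] += (y - online[s][a]) / batch_size
                for s in range(N_STATES):
                    for a in ACTIONS:
                        online[s][a] += lr * grads[s][a]
                updates += 1
                if updates % sync_every == 0:
                    target = [row[:] for row in online]   # periodic hard update
            state = next_state
            if done:
                break
    return online


q = train_dqn()
policy = [greedy(q[s]) for s in range(N_STATES - 1)]   # expected: move right everywhere
```

With deep networks the table is replaced by a forward pass and the manual update by an optimizer step, but the order of operations in the loop stays the same.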

Implications for Drug Discovery and Development

The principles underlying Deep Q-Networks have the potential to be applied to complex decision-making problems in drug discovery and development. For instance, DQNs could be used to optimize treatment strategies by learning from patient data and clinical outcomes. The ability to learn from high-dimensional data makes it suitable for integrating various data types, such as genomic data, patient history, and treatment responses, to personalize therapeutic regimens. Furthermore, the concept of learning a value function to guide decisions could be applied to optimizing molecular design or planning multi-step chemical syntheses.

Conclusion

Deep Q-Networks represent a significant leap forward in reinforcement learning, demonstrating the power of combining deep neural networks with traditional RL algorithms. The key innovations of experience replay and target networks were crucial in stabilizing the learning process and enabling the agent to learn from high-dimensional sensory input. The successful application of DQN to the Atari 2600 benchmark not only set a new standard for AI performance in complex tasks but also opened up new avenues for applying reinforcement learning to a wide range of real-world problems, including those in the scientific and pharmaceutical domains.

References

- 1. youtube.com [youtube.com]

- 2. newatlas.com [newatlas.com]

- 3. Mastering Atari with Deep Q-Learning | by Beyond the Horizon | Medium [medium.com]

- 4. GitHub - danielegrattarola/deep-q-atari: Keras and OpenAI Gym implementation of the Deep Q-learning algorithm to play Atari games. [github.com]

- 5. GitHub - adhiiisetiawan/atari-dqn: Implementation Deep Q Network to play Atari Games [github.com]

- 6. cs.toronto.edu [cs.toronto.edu]

- 7. towardsdatascience.com [towardsdatascience.com]

- 8. Step-by-Step Deep Q-Networks (DQN) Tutorial: From Atari Games to Bioengineering Research | by Yinxuan Li | Medium [medium.com]

- 9. GitHub - google-deepmind/dqn: Lua/Torch implementation of DQN (Nature, 2015) [github.com]

- 10. Reinforcement Learning: Deep Q-Learning with Atari games | by Cheng Xi Tsou | Nerd For Tech | Medium [medium.com]

The Core Principles of Deep Q-Networks: A Technical Guide for Scientific Professionals

An In-depth Technical Guide on the Core Principles of Deep Q-Network Models

For researchers, scientists, and professionals in drug development, understanding the frontiers of artificial intelligence is paramount for driving innovation. Among the groundbreaking advancements in machine learning, Deep Q-Networks (DQNs) represent a significant leap in reinforcement learning, enabling agents to learn complex behaviors in high-dimensional environments. This guide provides a comprehensive technical overview of the core principles of DQNs, their foundational experiments, and the methodologies that underpin their success.

Introduction to Reinforcement Learning and Q-Learning

Reinforcement learning (RL) is a paradigm of machine learning where an agent learns to make decisions by interacting with an environment.[1] The agent receives feedback in the form of rewards or penalties for its actions, with the objective of maximizing its cumulative reward over time.

At the heart of many RL algorithms is the concept of a Q-function, which represents the "quality" of taking a certain action in a given state. The optimal Q-function, denoted as Q*(s, a), gives the maximum expected cumulative discounted reward achievable by taking action 'a' in state 's' and continuing optimally thereafter.

Q-learning is a model-free RL algorithm that aims to learn this optimal Q-function.[2][3] For environments with a finite and manageable number of states and actions, Q-learning can be implemented using a simple lookup table, known as a Q-table. The algorithm iteratively updates the Q-values in this table using the Bellman equation.[4]

However, in many real-world scenarios, such as analyzing complex biological systems or navigating the vast chemical space for drug discovery, the number of possible states can be astronomically large or even continuous.[2][5] This "curse of dimensionality" renders the use of a Q-table computationally infeasible.

The Advent of Deep Q-Networks

Deep Q-Networks solve this challenge by approximating the Q-function using a deep neural network.[2][5][6] This innovation allows the agent to handle high-dimensional inputs, such as raw pixel data from a video game or complex molecular representations, and generalize its learned experiences to new, unseen states.[2][7][8]

The core idea of a DQN is to use a neural network that takes the state of the environment as input and outputs the Q-values for all possible actions in that state.[4][7][9] This approach transforms the problem of finding the optimal Q-function into a supervised learning problem where the network is trained to predict the target Q-values.

Key Innovations of Deep Q-Networks

The successful application of deep neural networks to Q-learning was made possible by two key innovations that address the instability often encountered when training neural networks with reinforcement learning signals: Experience Replay and the use of a Target Network.[5][7][10]

- Experience Replay: Instead of training the network on consecutive experiences as they occur, which can lead to highly correlated and non-stationary training data, DQN stores the agent's experiences, tuples of (state, action, reward, next state), in a large memory buffer.[3][7][10][11] During training, mini-batches of experiences are randomly sampled from this buffer.[11] This technique breaks the temporal correlations between experiences, leading to more stable and efficient learning.[10][11]
- Target Network: To further improve stability, DQN employs a second, separate neural network called the target network.[1][6][7] This network has the same architecture as the main Q-network, but its weights are held constant for a period of time. The target network is used to generate the target Q-values for the Bellman equation during the training of the main Q-network. The weights of the target network are periodically updated with the weights of the main network.[6][10][12] This approach provides a more stable target for the Q-value updates, preventing the rapid oscillations that can occur when a single network is used to both predict and update its own target values.

Foundational Experiments: Mastering Atari Games

The groundbreaking success of Deep Q-Networks was demonstrated in experiments on 49 classic Atari 2600 games, in which the same network architecture, learning algorithm, and hyperparameters were used to train a separate agent on each game; in many cases the agent surpassed human-level performance.[7][13] This was a landmark achievement, as the agent learned directly from raw pixel data and the game score, with no prior knowledge of the game rules.[7][14]

Experimental Protocol

The experimental setup for the Atari experiments provides a clear methodology for applying DQNs to complex problems:

- Input Preprocessing: To reduce the dimensionality of the input, the raw 210x160 pixel frames from the Atari emulator were preprocessed. Each frame was converted to grayscale and down-sampled to an 84x84 image.[5] To capture temporal information, such as the movement of objects, the final state representation was created by stacking the last four preprocessed frames.
- Network Architecture: A convolutional neural network (CNN) was used to process the stacked frames.[7][9] The initial layers of the CNN were convolutional, designed to extract spatial features from the images. These were followed by fully connected layers that output a Q-value for each possible action in the game.[7]
- Training and Hyperparameters: The network was trained using the RMSProp optimization algorithm, for a total of 50 million frames on each game. An epsilon-greedy policy was used for action selection, where the agent would choose a random action with a probability that was annealed over time, encouraging exploration early in training and exploitation of learned knowledge later on.
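The preprocessing pipeline can be sketched in plain Python. This is an illustrative approximation, not the original code: it assumes frames arrive as 210x160 grids of RGB tuples, uses ITU-R BT.601 luma weights for the grayscale conversion and nearest-neighbour resampling for the down-sampling, and fills the stack with copies of the first frame at the start of an episode. A production pipeline would typically use an array or image library with interpolated resizing.

```python
from collections import deque


def to_grayscale(frame):
    """Convert an H x W grid of (r, g, b) tuples to luminance using BT.601 weights."""
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row] for row in frame]


def resize_nearest(image, out_h=84, out_w=84):
    """Nearest-neighbour down-sampling of an H x W grid to out_h x out_w."""
    in_h, in_w = len(image), len(image[0])
    return [[image[i * in_h // out_h][j * in_w // out_w] for j in range(out_w)]
            for i in range(out_h)]


def preprocess(frame):
    return resize_nearest(to_grayscale(frame))


class FrameStack:
    """Keeps the k most recent preprocessed frames; the stack is the agent's state."""

    def __init__(self, k=4):
        self.frames = deque(maxlen=k)

    def reset(self, frame):
        # Assumption: the first frame of an episode is repeated to fill the stack.
        first = preprocess(frame)
        self.frames.extend([first] * self.frames.maxlen)
        return list(self.frames)

    def step(self, frame):
        self.frames.append(preprocess(frame))   # the oldest frame drops off automatically
        return list(self.frames)


black = [[(0, 0, 0)] * 160 for _ in range(210)]          # 210 x 160 RGB frame
white = [[(255, 255, 255)] * 160 for _ in range(210)]
stack = FrameStack(k=4)
state = stack.reset(black)
state = stack.step(white)       # three black frames followed by one white frame
```

A single frame cannot show which way a ball is moving; the difference between stacked frames is what gives the network access to velocity.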

Quantitative Results

The performance of the DQN agent was evaluated against other reinforcement learning methods and an expert human player. The following table summarizes the average scores achieved by DQN on the seven games evaluated in the original 2013 DeepMind publication; DQN surpassed the human expert on three of them (Breakout, Enduro, and Pong).

| Game | Random Play | Human | DQN |
|---|---|---|---|
| Beam Rider | 354 | 7456 | 4092 |
| Breakout | 1.2 | 31 | 168 |
| Enduro | 0 | 368 | 470 |
| Pong | -20.4 | -3 | 20 |
| Q*bert | 157 | 18900 | 1952 |
| Seaquest | 110 | 28010 | 1705 |
| Space Invaders | 179 | 3690 | 581 |

Data sourced from "Playing Atari with Deep Reinforcement Learning" (Mnih et al., 2013).

Visualizing the Core Concepts of DQN

Figure: Diagrams of the key logical relationships and workflows of DQN, originally generated with the Graphviz DOT language.

Conclusion and Future Directions

Deep Q-Networks represent a pivotal development in the field of reinforcement learning, demonstrating the power of deep learning to solve complex decision-making problems. The core principles of using a neural network as a function approximator, combined with the stabilizing techniques of experience replay and a target network, have laid the foundation for many subsequent advancements in the field.

For professionals in scientific research and drug development, these concepts offer a powerful toolkit. The ability of DQNs and their successors to learn from complex, high-dimensional data opens up new avenues for exploring vast parameter spaces, optimizing experimental designs, and discovering novel molecular compounds. As the field of deep reinforcement learning continues to evolve, its applications in solving real-world scientific challenges are poised to expand significantly.

References

- 1. Human-level control through deep reinforcement learning | The WAIM RCN [waim.network]

- 2. Human-level control through deep reinforcement learning. [junshern.github.io]

- 3. GitHub - adhiiisetiawan/atari-dqn: Implementation Deep Q Network to play Atari Games [github.com]

- 4. A Deep Q-Network learns to play Enduro [kyscg.github.io]

- 5. Step-by-Step Deep Q-Networks (DQN) Tutorial: From Atari Games to Bioengineering Research | by Yinxuan Li | Medium [medium.com]

- 6. Deep Q-Learning for Atari Breakout [keras.io]

- 7. cs.toronto.edu [cs.toronto.edu]

- 8. researchgate.net [researchgate.net]

- 9. [PDF] Human-level control through deep reinforcement learning | Semantic Scholar [semanticscholar.org]

- 10. [PDF] Playing Atari with Deep Reinforcement Learning | Semantic Scholar [semanticscholar.org]

- 11. scribd.com [scribd.com]

- 12. fanpu.io [fanpu.io]

- 13. web.stanford.edu [web.stanford.edu]

- 14. semanticscholar.org [semanticscholar.org]

The Evolution of Deep Q-Learning: A Technical Guide for Scientific Application

A comprehensive overview of the development of Deep Q-Learning algorithms, from the foundational Deep Q-Network to its advanced successors. This guide details the core mechanisms, experimental validation, and applications in scientific domains, particularly drug discovery, for researchers, scientists, and drug development professionals.

Introduction

Deep Q-Learning has marked a significant milestone in the field of artificial intelligence, demonstrating the ability of autonomous agents to achieve superhuman performance in complex decision-making tasks. By combining the principles of reinforcement learning with the representational power of deep neural networks, these algorithms can learn effective policies directly from high-dimensional sensory inputs. This technical guide provides an in-depth exploration of the history and evolution of Deep Q-Learning, detailing the seminal algorithms that have defined its trajectory and their applications in scientific research, with a particular focus on drug development.

The Genesis: Deep Q-Network (DQN)

The Deep Q-Network (DQN), introduced in 2013 by Mnih et al. at DeepMind, is widely considered the starting point of the deep reinforcement learning revolution.[1][2] Before DQN, Q-learning was mostly applied in its tabular form, which stores and updates an action-value (Q-value) for every state-action pair and is therefore practical only for small, discrete state spaces.[3] DQN overcame this limitation by employing a deep convolutional neural network to approximate the Q-value function, enabling it to process high-dimensional inputs like raw pixel data from Atari 2600 games.[4]

Core Concepts

The DQN algorithm introduced two key innovations to stabilize the learning process when using a non-linear function approximator like a neural network:

- Experience Replay: This technique stores the agent's experiences, each comprising a state, action, reward, and next state, in a replay memory.[4][5] During training, mini-batches of experiences are randomly sampled from this memory to update the network's weights. This breaks the temporal correlations between consecutive experiences, leading to more stable and efficient learning.
- Target Network: To further enhance stability, DQN uses a separate "target" network to generate the target Q-values for the Bellman equation. The weights of this target network are periodically updated with the weights of the online Q-network, providing a stable target for the Q-value updates and reducing oscillations and the risk of divergence.[6]

Experimental Protocol: Atari 2600 Benchmark

The original DQN paper demonstrated its capabilities on the Atari 2600 benchmark, a suite of diverse video games.[4]

- Input Preprocessing: Raw game frames (210x160 pixels) were preprocessed by converting them to grayscale, down-sampling to 84x84, and stacking four consecutive frames to provide the network with temporal information.[4]
- Network Architecture: The network consisted of three convolutional layers followed by two fully connected layers. The input was the 84x84x4 preprocessed image, and the output was a set of Q-values, one for each possible action in the game.[4]
- Training: The network was trained using the RMSProp optimizer with a batch size of 32. An ε-greedy policy was used for action selection, where ε was annealed from 1.0 to 0.1 over the first million frames.[2]
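The ε schedule described above (linear annealing from 1.0 to 0.1 over the first million frames, then constant) can be sketched as follows. The function names are illustrative, and ties in the greedy branch are broken toward the lowest action index, which is an arbitrary choice.

```python
import random


def linear_epsilon(frame, start=1.0, end=0.1, anneal_frames=1_000_000):
    """Linearly anneal epsilon from `start` to `end` over `anneal_frames`, then hold at `end`."""
    fraction = min(frame / anneal_frames, 1.0)
    return start + fraction * (end - start)


def epsilon_greedy(q_values, epsilon, rng):
    """With probability epsilon pick a uniformly random action, otherwise the greedy one."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda a: q_values[a])


rng = random.Random(0)
for frame in (0, 500_000, 1_000_000, 5_000_000):
    print(frame, round(linear_epsilon(frame), 3))
# 0 1.0
# 500000 0.55
# 1000000 0.1
# 5000000 0.1
```

Keeping ε at 0.1 rather than decaying it to zero means the agent never stops exploring entirely, which helps it keep refreshing its estimates for rarely chosen actions.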

Addressing Overestimation: Double DQN (DDQN)

A key issue identified in the original DQN algorithm is the overestimation of Q-values. This occurs because the max operator in the Q-learning update rule uses the same network to both select the best action and evaluate its value. This can lead to a positive bias and suboptimal policies.[7] Double Deep Q-Network (DDQN), introduced by van Hasselt et al. in 2015, addresses this problem by decoupling the action selection and evaluation.[8][9]

Core Mechanism

DDQN modifies the target Q-value calculation. Instead of using the target network to find the maximum Q-value of the next state, the online network is used to select the best action for the next state, and the target network is then used to evaluate the Q-value of that chosen action.[6][10] This separation helps to mitigate the overestimation bias.[7]

DQN Target Q-value: Y_t^DQN = r_t + γ * max_a' Q(s_{t+1}, a'; θ⁻)

Double DQN Target Q-value: Y_t^DDQN = r_t + γ * Q(s_{t+1}, argmax_a' Q(s_{t+1}, a'; θ); θ⁻)
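The two targets can be compared on a single transition. The Q-values below are invented to show a case where the target network's largest estimate is not the action the online network prefers; the function names are illustrative.

```python
def argmax(values):
    return max(range(len(values)), key=lambda i: values[i])


def dqn_target(reward, next_q_target, gamma, done=False):
    """Y^DQN: the target network both selects and evaluates the next action."""
    return reward if done else reward + gamma * max(next_q_target)


def double_dqn_target(reward, next_q_online, next_q_target, gamma, done=False):
    """Y^DDQN: the online network (theta) selects, the target network (theta-) evaluates."""
    if done:
        return reward
    best_action = argmax(next_q_online)
    return reward + gamma * next_q_target[best_action]


next_q_online = [1.0, 3.0, 2.0]   # Q(s', a'; theta)
next_q_target = [1.5, 2.0, 4.0]   # Q(s', a'; theta-)
y_dqn = dqn_target(0.0, next_q_target, gamma=0.9)                          # 0.9 * 4.0 = 3.6
y_ddqn = double_dqn_target(0.0, next_q_online, next_q_target, gamma=0.9)  # 0.9 * 2.0 = 1.8
```

Because a maximum over noisy estimates tends to pick out values that happen to be overestimated, letting a second set of weights evaluate the selected action typically produces a less optimistic target, as in this example.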

Experimental Protocol

The experimental setup for DDQN was largely consistent with the original DQN experiments on the Atari 2600 benchmark to allow for direct comparison. The primary change was the modification in the target Q-value calculation. The same network architecture and hyperparameters were used.[9]

Decomposing the Q-value: Dueling DQN

Introduced by Wang et al. in 2016, the Dueling Network Architecture provides a more nuanced estimation of Q-values by explicitly decoupling the value of a state from the advantage of each action in that state.[11] This allows the network to learn which states are valuable without having to learn the effect of each action for each state, leading to better policy evaluation in the presence of many similar-valued actions.[11][12]

Network Architecture

The Dueling DQN architecture features two separate streams of fully connected layers after the convolutional layers. One stream estimates the state-value function V(s), while the other estimates the advantage function A(s, a) for each action. These two streams are then combined to produce the final Q-values.[11]

Q-value Combination: Q(s, a) = V(s) + (A(s, a) - mean_a'(A(s, a')))

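Subtracting the mean advantage forces the advantages to average to zero across actions, so that V(s) equals the mean of Q(s, ·). Without such a constraint, V and A are not identifiable: a constant could be added to V and subtracted from every A without changing Q. Wang et al. also report that the mean-based form improves the stability of the optimization compared with subtracting the maximum advantage.[13]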
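The aggregation step can be written directly from the combination formula. The stream outputs here are made-up numbers; in the real architecture they come from the two fully connected heads.

```python
def dueling_q_values(value, advantages):
    """Q(s, a) = V(s) + (A(s, a) - mean over a' of A(s, a'))."""
    mean_advantage = sum(advantages) / len(advantages)
    return [value + (adv - mean_advantage) for adv in advantages]


q1 = dueling_q_values(2.0, [1.0, 3.0, 2.0])
q2 = dueling_q_values(2.0, [11.0, 13.0, 12.0])
print(q1, q2)  # [1.0, 3.0, 2.0] [1.0, 3.0, 2.0]
# The average of Q over actions equals V(s) = 2.0, and a constant offset
# on every advantage cancels out, so only relative advantages matter.
```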

Experimental Protocol

The Dueling DQN was also evaluated on the Atari 2600 benchmark, using a similar experimental setup to the original DQN. The key difference was the modified network architecture. The authors demonstrated that combining Dueling DQN with Prioritized Experience Replay (discussed next) achieved state-of-the-art performance.[11]

Focusing on Important Experiences: Prioritized Experience Replay (PER)

Proposed by Schaul et al. in 2015, Prioritized Experience Replay (PER) improves upon the uniform sampling of experiences from the replay memory by prioritizing transitions from which the agent can learn the most.[5] The intuition is that agents learn more from "surprising" events where their prediction is far from the actual outcome.[14]

Core Mechanism

PER assigns a priority to each transition in the replay memory, typically proportional to the magnitude of its temporal-difference (TD) error. Transitions with higher TD error are more likely to be sampled for training. To avoid exclusively sampling high-error transitions, a stochastic sampling method is used that gives all transitions a non-zero probability of being sampled.[14]

To correct for the bias introduced by this non-uniform sampling, PER uses importance-sampling (IS) weights in the Q-learning update. These weights down-weight the updates for transitions that are sampled more frequently; the correction fully compensates for the non-uniform sampling only when the IS exponent β equals 1.[4]

Experimental Protocol

PER was evaluated by integrating it into both the standard DQN and Double DQN algorithms on the Atari 2600 benchmark. The results showed that PER significantly improved the performance and data efficiency of both algorithms.[14] The prioritization exponent α was held fixed during training, while the importance-sampling correction exponent β was annealed linearly from an initial value towards 1.[4]
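The proportional variant can be sketched in a few functions. Several details are assumptions for illustration: α = 0.6 and β₀ = 0.4 are example defaults, the small constant `eps` keeps zero-error transitions sampleable, and the IS weights are normalized by the largest weight in the sampled batch (implementations differ on whether this maximum is taken over the batch or over the whole buffer). A real implementation would also use a sum-tree so that sampling does not scan every stored transition.

```python
import random


def per_probabilities(td_errors, alpha=0.6, eps=1e-6):
    """Proportional prioritization: p_i = |delta_i| + eps, P(i) = p_i**alpha / sum_k p_k**alpha."""
    priorities = [(abs(delta) + eps) ** alpha for delta in td_errors]
    total = sum(priorities)
    return [p / total for p in priorities]


def importance_weights(probs, indices, beta):
    """w_i = (N * P(i))**(-beta), scaled so the largest weight in the batch is 1."""
    n = len(probs)
    weights = [(n * probs[i]) ** (-beta) for i in indices]
    max_weight = max(weights)
    return [w / max_weight for w in weights]


def beta_schedule(step, total_steps, beta0=0.4):
    """Anneal beta linearly from beta0 to 1 over training."""
    return beta0 + (1.0 - beta0) * min(step / total_steps, 1.0)


td_errors = [0.0, 0.5, 2.0, 0.1]            # hypothetical TD errors of four stored transitions
probs = per_probabilities(td_errors)
rng = random.Random(0)
batch = rng.choices(range(len(td_errors)), weights=probs, k=8)   # sampling with replacement
weights = importance_weights(probs, batch, beta=beta_schedule(0, 1000))
```

Each weight multiplies the corresponding transition's TD error in the loss, so frequently sampled, high-priority transitions contribute smaller individual updates.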

Performance Comparison on Atari 2600 Benchmark

The following table summarizes aggregate results for the different Deep Q-Learning algorithms across the Atari 2600 benchmark, as reported in their respective original publications. Normalized scores are computed per game and then summarized by their mean and median across games; the per-game scores are typically averaged over a number of evaluation episodes after a fixed number of training frames.

| Metric | DQN[4] | Double DQN[9] | Dueling DQN (with PER)[11] | Prioritized Replay (with Double DQN)[14] |
|---|---|---|---|---|
| Mean Normalized Score | 122% | - | 591.9% | 551% |
| Median Normalized Score | 48% | 111% | 172.1% | 128% |
| Games > Human Level | 15 | - | - | 33 |

Note: The performance metrics are based on different sets of games and evaluation protocols, so direct comparison should be made with caution. The "Normalized Score" is typically calculated as 100% × (agent_score - random_score) / (human_score - random_score).
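The normalization and the mean and median summaries can be sketched as below. The three (random, human, agent) triples are copied from the first results table earlier in this document purely to illustrate the arithmetic; they are not the per-game data behind the aggregate table above.

```python
from statistics import mean, median


def human_normalized_score(agent, random_score, human):
    """Percentage of the random-to-human gap closed by the agent."""
    return 100.0 * (agent - random_score) / (human - random_score)


# (random, human, agent) score triples for three games.
games = {
    "Breakout": (1.2, 30.5, 404.7),
    "Pong": (-20.7, 14.6, 20.9),
    "Seaquest": (68.4, 28010, 5286),
}
normalized = {name: human_normalized_score(agent, rnd, hum)
              for name, (rnd, hum, agent) in games.items()}
print({name: round(score, 1) for name, score in normalized.items()})
# {'Breakout': 1377.1, 'Pong': 117.8, 'Seaquest': 18.7}
print(round(mean(normalized.values()), 1), round(median(normalized.values()), 1))
# 504.6 117.8
```

A single outlier such as Breakout pulls the mean far above the median, which is one reason both statistics are reported.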

Application in Drug Discovery and Development

Deep Q-Learning and its variants have found promising applications in the field of drug discovery, particularly in the area of de novo molecule generation. The goal is to design novel molecules with desired pharmacological properties.[15][16]

Methodology: Graph-Based Molecular Generation

In this context, the process of generating a molecule is framed as a sequential decision-making problem, making it amenable to reinforcement learning.[17] The state is the current molecular graph, and the actions are modifications to this graph, such as adding or removing atoms and bonds.[18][19] A deep Q-network is trained to predict the value of each possible modification, guiding the generation process towards molecules with high reward.[20]

The reward function is typically a composite of several desired properties, including:

- Binding Affinity: Predicted binding strength to a target protein.[20]
- Drug-likeness (QED): A quantitative estimate of how "drug-like" a molecule is.[21][22]
- Synthetic Accessibility: A score indicating how easy the molecule is to synthesize.
- Other Physicochemical Properties: Such as solubility and molecular weight.[21]
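A composite reward of this kind can be sketched as a weighted sum of normalized property scores. Everything specific here is an assumption for illustration: the property names, the predictor outputs, the normalization ranges, the weights, and the penalty for invalid molecules. In practice the property values would come from trained predictors or cheminformatics tools (RDKit, for example, provides a QED implementation).

```python
def scale(value, low, high):
    """Map a raw value onto [0, 1] linearly, clipping outside [low, high]."""
    return min(max((value - low) / (high - low), 0.0), 1.0)


def composite_reward(props, weights, invalid_penalty=-1.0):
    """Weighted sum of property scores; `props` is None for a chemically invalid molecule."""
    if props is None:
        return invalid_penalty
    terms = {
        # Docking scores are negative, and more negative means stronger predicted binding.
        "affinity": scale(-props["docking_score"], 5.0, 12.0),
        # QED already lies in [0, 1].
        "qed": props["qed"],
        # Synthetic accessibility scores run from 1 (easy) to 10 (hard); invert so easy is high.
        "sa": scale(10.0 - props["sa_score"], 0.0, 9.0),
    }
    return sum(weights[name] * terms[name] for name in weights)


weights = {"affinity": 0.5, "qed": 0.3, "sa": 0.2}
# Hypothetical predictor outputs for one candidate molecule.
props = {"docking_score": -8.5, "qed": 0.7, "sa_score": 2.8}
reward = composite_reward(props, weights)   # 0.5*0.5 + 0.3*0.7 + 0.2*0.8 = 0.62
```

Scaling every term to [0, 1] before weighting keeps one property with a large numeric range from dominating the reward.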

Graph neural networks (GNNs) are often used as the function approximator for the Q-network, as they are well-suited for learning representations of molecular graphs.[17]

Experimental Protocols in De Novo Drug Design

A typical experimental setup for de novo drug design using Deep Q-Learning involves the following steps:

- Environment: A molecular environment is defined where states are molecular graphs and actions are valid chemical modifications.
- Reward Function: A reward function is designed to score molecules based on a combination of desired properties. This often involves using pre-trained predictive models for properties like binding affinity and drug-likeness.[20]
- Agent: A DQN agent, often with a GNN-based Q-network, is trained to interact with the molecular environment.
- Training: The agent generates molecules, receives rewards, and updates its Q-network to maximize the expected cumulative reward. Techniques like experience replay are often employed.
- Evaluation: The generated molecules are evaluated based on the desired properties, and their novelty and diversity are assessed.
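The evaluation step can be sketched with three commonly reported statistics: uniqueness, novelty relative to the training set, and internal diversity (here one minus the mean pairwise Tanimoto similarity, one common choice among several). The fingerprints are hypothetical sets of on-bit indices; a real pipeline would canonicalize SMILES and compute fingerprints with a cheminformatics toolkit.

```python
from itertools import combinations


def tanimoto(fp_a, fp_b):
    """Tanimoto (Jaccard) similarity between fingerprints given as sets of on-bit indices."""
    union = len(fp_a | fp_b)
    return len(fp_a & fp_b) / union if union else 1.0


def evaluate_generated(generated, training_set, fingerprints):
    """Uniqueness, novelty and internal diversity for a list of generated SMILES strings."""
    unique = set(generated)
    pairs = list(combinations(sorted(unique), 2))
    if pairs:
        mean_similarity = sum(tanimoto(fingerprints[a], fingerprints[b])
                              for a, b in pairs) / len(pairs)
        diversity = 1.0 - mean_similarity
    else:
        diversity = 0.0     # undefined for fewer than two unique molecules
    return {
        "uniqueness": len(unique) / len(generated),
        "novelty": len(unique - set(training_set)) / len(unique),
        "diversity": diversity,
    }


generated = ["CCO", "CCN", "CCO", "c1ccccc1"]
training_set = ["CCO"]
fingerprints = {"CCO": {1, 2, 3}, "CCN": {1, 2, 4}, "c1ccccc1": {5, 6}}  # hypothetical bits
metrics = evaluate_generated(generated, training_set, fingerprints)
# uniqueness 0.75, novelty about 0.667, diversity about 0.833
```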

Conclusion

The evolution of Deep Q-Learning algorithms has been a story of continuous innovation, with each new development addressing fundamental challenges and pushing the boundaries of what autonomous agents can achieve. From the foundational Deep Q-Network that first successfully combined deep learning with reinforcement learning, to Double DQN's decoupled target and the Dueling architecture, which improve learning stability and efficiency, and the prioritized sampling of Prioritized Experience Replay, these advancements have significantly enhanced the capabilities of AI. The application of these algorithms to scientific domains such as drug discovery shows their potential to accelerate research and development by automating complex design and optimization tasks. As research in this area continues, more powerful and versatile Deep Q-Learning variants are likely to emerge and to be applied to increasingly challenging scientific problems.

References

- 1. [PDF] Playing Atari with Deep Reinforcement Learning | Semantic Scholar [semanticscholar.org]

- 2. Reinforcement Learning: Deep Q-Learning with Atari games | by Cheng Xi Tsou | Nerd For Tech | Medium [medium.com]

- 3. researchgate.net [researchgate.net]

- 4. cs.toronto.edu [cs.toronto.edu]

- 5. [1511.05952] Prioritized Experience Replay [arxiv.org]

- 6. cs230.stanford.edu [cs230.stanford.edu]

- 7. Reddit - The heart of the internet [reddit.com]

- 8. Learning To Play Atari Games Using Dueling Q-Learning and Hebbian Plasticity [arxiv.org]

- 9. reinforcement learning - Performance Comparison between DoubleDQN & DQN - Stack Overflow [stackoverflow.com]

- 10. proceedings.mlr.press [proceedings.mlr.press]

- 11. atlantis-press.com [atlantis-press.com]

- 12. A COMPARATIVE STUDY OF DEEP REINFORCEMENT LEARNING MODELS: DQN VS PPO VS A2C [arxiv.org]

- 13. arxiv.org [arxiv.org]

- 14. Deep reinforcement learning for de novo drug design - PMC [pmc.ncbi.nlm.nih.gov]

- 15. researchgate.net [researchgate.net]

- 16. Molecule generation toward target protein (SARS-CoV-2) using reinforcement learning-based graph neural network via knowledge graph - PMC [pmc.ncbi.nlm.nih.gov]

- 17. Enhancing Molecular Design through Graph-based Topological Reinforcement Learning [arxiv.org]

- 18. researchgate.net [researchgate.net]

- 19. academic.oup.com [academic.oup.com]

- 20. Reinforcement Learning for Enhanced Targeted Molecule Generation Via Language Models [arxiv.org]

- 21. Reinforcement Learning for Enhanced Targeted Molecule Generation Via Language Models | OpenReview [openreview.net]

- 22. themoonlight.io [themoonlight.io]

The Cornerstone of Deep Q-Networks: An In-depth Technical Guide to Experience Replay

For Researchers, Scientists, and Drug Development Professionals

In the landscape of deep reinforcement learning, the advent of Deep Q-Networks (DQNs) marked a pivotal moment, enabling agents to achieve human-level performance on complex tasks, such as playing Atari 2600 games, directly from raw pixel inputs. A critical innovation underpinning this success is experience replay, a mechanism that fundamentally addresses the challenges of training deep neural networks with correlated and non-stationary data generated from reinforcement learning interactions. This technical guide provides an in-depth exploration of the foundational concepts of experience replay, its evolution, and its profound impact on the stability and efficiency of DQNs.

The Core Concept: Breaking the Chains of Correlation

At its heart, experience replay introduces a simple yet powerful idea: instead of using the most recent experience for training, the agent stores its experiences in a large memory buffer, often referred to as a replay buffer.[1][2] An "experience" is typically a tuple representing a single transition: (state, action, reward, next_state).[3]

The learning process is then decoupled from the data collection process. During training, instead of using the latest transition, the algorithm samples a minibatch of transitions randomly from this replay buffer. This random sampling is the key to breaking the temporal correlations inherent in sequential experience, thereby better approximating the independent and identically distributed (i.i.d.) data assumption required for stable training of deep neural networks with stochastic gradient descent.[2][4]

Key Advantages of Experience Replay

The introduction of a replay buffer offers several significant advantages:

- Breaking Temporal Correlations: By randomly sampling from a large history of transitions, the updates are based on a diverse set of experiences, which significantly stabilizes the learning process.[2][4]
- Increased Data Efficiency: Each experience can be reused multiple times for network updates, allowing the agent to extract more learning value from each interaction. This is particularly beneficial in environments where data collection is costly or time-consuming.[1][4]
- Smoother Learning: Averaging over a minibatch of diverse past experiences can smooth out the learning updates, reducing oscillations and preventing the agent from getting stuck in short-sighted policies.[1]

The Mechanics of Experience Replay

The implementation of experience replay involves two primary components: the replay buffer itself and the sampling strategy.

The Replay Buffer: A Repository of Past Experiences

The replay buffer is typically implemented as a fixed-size circular buffer.[1][5] As the agent interacts with the environment, new experiences are added to the buffer. When the buffer reaches its capacity, the oldest experiences are discarded to make room for new ones. The size of this buffer is a crucial hyperparameter; a larger buffer can store a more diverse range of experiences but requires more memory.[1][4]
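A minimal sketch of a fixed-size circular buffer with uniform sampling follows, assuming each transition also carries a `done` flag marking a terminal next state (a common addition not listed in the tuple above). `Transition` and `ReplayBuffer` are illustrative names, not a specific library's API.

```python
import random
from collections import deque
from typing import Any, List, NamedTuple


class Transition(NamedTuple):
    """One environment step. `done` marks a terminal next_state (an added assumption)."""
    state: Any
    action: int
    reward: float
    next_state: Any
    done: bool


class ReplayBuffer:
    """Fixed-capacity replay memory with uniform random minibatch sampling."""

    def __init__(self, capacity: int, seed: int = 0) -> None:
        # A deque with maxlen drops the oldest transition once full,
        # which reproduces the circular-buffer behaviour described above.
        self.memory: deque = deque(maxlen=capacity)
        self.rng = random.Random(seed)

    def push(self, state: Any, action: int, reward: float,
             next_state: Any, done: bool) -> None:
        self.memory.append(Transition(state, action, reward, next_state, done))

    def sample(self, batch_size: int) -> List[Transition]:
        # Every stored transition is equally likely; no duplicates within a batch.
        return self.rng.sample(list(self.memory), batch_size)

    def __len__(self) -> int:
        return len(self.memory)


# Usage: capacity 3, five pushes -> only the three newest transitions survive.
buffer = ReplayBuffer(capacity=3)
for t in range(5):
    buffer.push(t, 0, 0.0, t + 1, False)
print(len(buffer))                                   # 3
print(sorted(tr.state for tr in buffer.sample(3)))   # [2, 3, 4]
```

Converting the deque to a list on every call keeps the sketch short; implementations that store millions of Atari frames typically preallocate arrays and sample indices instead.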

Sampling Strategies: From Uniform to Prioritized

The most straightforward sampling strategy is uniform random sampling, where every experience in the replay buffer has an equal probability of being selected for a training minibatch.[1] While effective, this approach treats all experiences as equally important.

A significant advancement in experience replay is Prioritized Experience Replay (PER).[6][7] The core idea behind PER is that an agent can learn more effectively from some transitions than from others.[6][7] Experiences that are "surprising" or where the agent's prediction was highly inaccurate are considered more valuable for learning.

PER assigns a priority to each transition, typically proportional to the magnitude of its Temporal-Difference (TD) error. Transitions with higher TD errors are more likely to be sampled for training.[7][8] To avoid exclusively replaying a small subset of experiences, a stochastic sampling method is used that interpolates between uniform sampling and greedy prioritization. To correct for the bias introduced by this non-uniform sampling, PER uses importance sampling weights in the Q-learning update.[8]
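The proportional variant of PER can be sketched as below, assuming the TD errors of all stored transitions are available as a list. Priorities are p_i = |δ_i| + ε, sampling probabilities are P(i) = p_i^α / Σ_k p_k^α, and importance-sampling weights are w_i = (N · P(i))^(-β), normalized so the largest is 1. `per_sample` is a hypothetical helper, and the default `alpha`/`beta` values are only illustrative (in the PER paper, β is annealed toward 1 over training).

```python
import random
from typing import List, Optional, Sequence, Tuple


def per_sample(td_errors: Sequence[float], batch_size: int,
               alpha: float = 0.6, beta: float = 0.4, eps: float = 1e-6,
               rng: Optional[random.Random] = None) -> Tuple[List[int], List[float]]:
    """Proportional prioritized sampling with importance-sampling (IS) weights.

    Returns (indices, weights). This linear-time version recomputes all
    probabilities on every call; efficient implementations use a sum-tree.
    """
    rng = rng if rng is not None else random.Random()
    n = len(td_errors)
    # Priority p_i = |delta_i| + eps, raised to alpha. eps keeps zero-error
    # transitions sampleable; alpha = 0 recovers uniform sampling.
    priorities = [(abs(d) + eps) ** alpha for d in td_errors]
    total = sum(priorities)
    probs = [p / total for p in priorities]
    # Draw with replacement, proportional to P(i).
    indices = rng.choices(range(n), weights=probs, k=batch_size)
    # w_i = (N * P(i))^(-beta), divided by the largest possible weight so w_i <= 1.
    max_weight = (n * min(probs)) ** (-beta)
    weights = [(n * probs[i]) ** (-beta) / max_weight for i in indices]
    return indices, weights


idx, w = per_sample([0.1, 2.0, 0.5], batch_size=4, rng=random.Random(0))
# Each sampled transition's TD error (or loss term) is scaled by its weight
# before the gradient step, which corrects the bias of non-uniform sampling.
```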

Experimental Analysis: The Impact of Experience Replay

The efficacy of experience replay and its variants has been extensively demonstrated on the Atari 2600 benchmark. The following tables summarize the performance improvements observed.

Quantitative Comparison of Uniform vs. Prioritized Experience Replay

The introduction of Prioritized Experience Replay led to a substantial improvement in the performance of DQN across a wide range of Atari games.

| Metric | Uniform DQN | Prioritized DQN |
|---|---|---|
| Median Normalized Performance | 47.5% | 123.3% |
| Mean Normalized Performance | 118.0% | 431.1% |
| Games where prioritized replay outperforms uniform replay | N/A | 41 out of 49 |

Table 1: Summary of normalized scores on 49 Atari games, comparing a DQN with uniform experience replay to one with prioritized experience replay. The normalized score is calculated as (agent_score - random_score) / (human_score - random_score). Data sourced from the "Prioritized Experience Replay" paper by Schaul et al.[1][8]
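The normalization in the caption, and the median/mean summaries in Table 1, can be computed as in this sketch. The per-game raw scores below are hypothetical placeholders, and `human_normalized`/`summarize` are illustrative names.

```python
from statistics import mean, median
from typing import Dict, Tuple


def human_normalized(agent: float, random_score: float, human: float) -> float:
    """(agent - random) / (human - random), expressed as a percentage."""
    return 100.0 * (agent - random_score) / (human - random_score)


def summarize(scores: Dict[str, Tuple[float, float, float]]) -> Tuple[float, float]:
    """scores maps game -> (agent, random, human); returns (median %, mean %)."""
    normalized = [human_normalized(*triple) for triple in scores.values()]
    return median(normalized), mean(normalized)


# Hypothetical raw scores for three games, for illustration only.
example = {"game_a": (60.0, 10.0, 110.0),   # 50%
           "game_b": (300.0, 0.0, 100.0),   # 300% (superhuman)
           "game_c": (5.0, 5.0, 50.0)}      # 0% (no better than random)
print(summarize(example))
```

Because one superhuman game pulls the mean well above the median here, reporting both statistics (as Table 1 does) gives a more honest picture than the mean alone.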

A subsequent study on Prioritized Sequence Experience Replay (PSER) also provided a direct comparison between uniform and prioritized replay on a larger set of 60 Atari games.

| Metric | Uniform DQN | Prioritized DQN (PER) |
|---|---|---|
| Mean Score | 1297 | 2249 |
| Median Score | 249 | 450 |

Table 2: Mean and median scores across 60 Atari 2600 games, comparing DQN with uniform sampling to DQN with Prioritized Experience Replay (PER). Data sourced from the "Prioritized Sequence Experience Replay" paper.[6]

Detailed Experimental Protocols

The following protocol outlines the typical experimental setup used in the evaluation of DQN with experience replay on the Atari 2600 benchmark, as detailed in the foundational papers.

| Parameter | Value | Description |
|---|---|---|
| Replay Memory Size | 1,000,000 frames | The capacity of the replay buffer.[9] |
| Minibatch Size | 32 | The number of transitions sampled from the replay buffer for each training update.[9] |
| Optimizer | RMSProp | The optimization algorithm used to update the network weights.[9] |
| Learning Rate | 0.00025 | The step size for the optimizer. |
| Discount Factor (γ) | 0.99 | The factor by which future rewards are discounted. |
| Exploration Strategy | ε-greedy | The agent chooses a random action with probability ε and the greedy action with probability 1-ε. |
| Initial ε | 1.0 | The initial probability of choosing a random action. |
| Final ε | 0.1 | The final probability of choosing a random action. |
| ε-decay Frames | 1,000,000 | The number of frames over which ε is linearly annealed from its initial to its final value. |
| Target Network Update Frequency | 10,000 steps | The frequency (in number of parameter updates) with which the target network is updated. |
| Action Repetition | 4 | The agent's selected action is repeated for 4 consecutive frames. |
| Preprocessing | Grayscale, Down-sampling, Stacking | Raw frames are converted to grayscale, down-sampled to 84x84, and 4 consecutive frames are stacked to create the state representation. |

Table 3: Typical hyperparameters and experimental settings for training a DQN with experience replay on the Atari 2600 environment.
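One way to keep the Table 3 settings together in code is a frozen dataclass, sketched below together with the linear ε schedule (annealed over the first million frames, then held at its final value). The class and field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AtariDQNConfig:
    """Values from Table 3; field names are hypothetical."""
    replay_capacity: int = 1_000_000
    batch_size: int = 32
    learning_rate: float = 0.00025        # used with RMSProp
    gamma: float = 0.99
    epsilon_start: float = 1.0
    epsilon_end: float = 0.1
    epsilon_decay_frames: int = 1_000_000
    target_update_period: int = 10_000    # counted in parameter updates
    action_repeat: int = 4
    frame_size: int = 84                  # frames resized to 84x84 grayscale
    frame_stack: int = 4


def epsilon_at(frame: int, cfg: AtariDQNConfig) -> float:
    """Linearly anneal epsilon from epsilon_start to epsilon_end, then hold it."""
    if frame >= cfg.epsilon_decay_frames:
        return cfg.epsilon_end
    fraction = frame / cfg.epsilon_decay_frames
    return cfg.epsilon_start + fraction * (cfg.epsilon_end - cfg.epsilon_start)


cfg = AtariDQNConfig()
for frame in (0, 500_000, 1_000_000, 5_000_000):
    print(frame, round(epsilon_at(frame, cfg), 3))
```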

Visualizing the Core Concepts

To further elucidate the foundational concepts, the following diagrams, generated using the DOT language, illustrate the logical flow and relationships within the experience replay mechanism.

Caption: The workflow of a DQN agent with experience replay.

Caption: A comparison of uniform and prioritized sampling strategies.

Conclusion

Experience replay is a foundational and indispensable component of Deep Q-Networks. By creating a buffer of past experiences and sampling from it to train the neural network, it effectively mitigates the issues of correlated data and non-stationary distributions that arise in online reinforcement learning. The evolution from uniform to prioritized sampling has further enhanced the efficiency and performance of DQNs, demonstrating that not all experiences are created equal. For researchers and professionals in fields such as drug development, where understanding and modeling complex sequential decision-making processes is crucial, a deep grasp of these core reinforcement learning concepts is invaluable for developing more intelligent and adaptive systems.

References

- 1. arxiv.org [arxiv.org]

- 2. researchgate.net [researchgate.net]

- 3. researchgate.net [researchgate.net]

- 4. apxml.com [apxml.com]

- 5. proceedings.neurips.cc [proceedings.neurips.cc]

- 6. arxiv.org [arxiv.org]

- 7. Prioritized Experience Replay :: Reinforcement Learning Playbook [rlplaybook.com]

- 8. [PDF] Prioritized Experience Replay | Semantic Scholar [semanticscholar.org]

- 9. cs.toronto.edu [cs.toronto.edu]

The Stabilizing Force: Understanding the Role of Target Networks in Deep Q-Networks

An In-depth Technical Guide for Researchers and Drug Development Professionals

Abstract

Deep Q-Networks (DQN) marked a significant breakthrough in reinforcement learning, demonstrating the ability to achieve human-level performance in complex tasks directly from high-dimensional sensory inputs. A cornerstone of this success is the introduction of a target network, a simple yet powerful mechanism designed to stabilize the learning process. This technical guide provides a comprehensive examination of the target network's role, the problem of non-stationary targets it solves, and its practical implementation. We will delve into the underlying theory, detail common experimental protocols for its evaluation, and present quantitative data on its impact, offering researchers and professionals a thorough understanding of this critical component in modern reinforcement learning.

The Core Challenge: The "Moving Target" Problem

In standard Q-learning, the goal is to learn a Q-function, Q(s, a), which estimates the expected future reward for taking an action 'a' in a state 's'. The Q-values are updated iteratively using the Bellman equation. When a neural network is used to approximate this Q-function, as in DQN, the network's weights (let's call them θ) are updated at each step to minimize a loss function, typically the Mean Squared Error (MSE) between the predicted Q-value and a target Q-value.

The target Q-value is calculated as: $y = r + \gamma \max_{a'} Q(s', a'; \theta)$

Here, r is the reward, γ is the discount factor, and s' is the next state. The critical issue arises from the fact that the same network with weights θ is used to compute both the predicted Q-value, Q(s, a; θ), and the target Q-value.[1] This means that with every update to the weights θ, the target y also changes.[2] This phenomenon is known as the "moving target" problem.[3]

Trying to train a network to converge to a target that is constantly shifting creates significant instability, leading to oscillations in performance and, in many cases, divergence of the learning process.[2][4][5] Conceptually, this is like trying to hit a target that moves every time you adjust your aim based on your last shot.[1]

The Solution: Decoupling with a Target Network

To mitigate this instability, the DQN algorithm introduces a second neural network called the target network.[6][7]

- Architecture: The target network has the same architecture as the main network (often called the "online" or "policy" network) but keeps its own, separate set of weights, which is copied from the online network only periodically.[8]
- Function: Its purpose is to provide a stable and consistent target for the online network's updates.[7][9] During the loss calculation for a training step, the target network's parameters (θ⁻) are held fixed.[8]

The Bellman equation is modified to use the target network for calculating the future Q-value component:

$y = r + \gamma \max_{a'} Q(s', a'; \theta^{-})$

By using the fixed parameters θ⁻ to generate the target, the online network (with parameters θ) has a stable objective to learn from for a period, breaking the destructive feedback loop and significantly improving training stability.[3][8]
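The effect of freezing θ⁻ can be seen in a toy sketch. Here a linear function stands in for the Q-network (a hypothetical simplification), the target network is an independent copy made with `copy.deepcopy`, and transitions carry a `done` flag (an added assumption) so that terminal states use y = r.

```python
import copy
from typing import List, Sequence, Tuple

# (state, action, reward, next_state, done); `done` is an added assumption.
Transition = Tuple[Sequence[float], int, float, Sequence[float], bool]


class LinearQ:
    """Toy stand-in for a Q-network: Q(s, a) = dot(w_a, s). Hypothetical."""

    def __init__(self, weights: List[List[float]]) -> None:
        self.weights = weights  # one weight row per action

    def q_values(self, state: Sequence[float]) -> List[float]:
        return [sum(w * x for w, x in zip(row, state)) for row in self.weights]


def td_targets(batch: List[Transition], target_net: LinearQ, gamma: float) -> List[float]:
    """y = r + gamma * max_a' Q(s', a'; theta_minus), or y = r if s' is terminal."""
    targets = []
    for _state, _action, reward, next_state, done in batch:
        bootstrap = 0.0 if done else max(target_net.q_values(next_state))
        targets.append(reward + gamma * bootstrap)
    return targets


online = LinearQ([[1.0, 0.0], [0.0, 2.0]])
target = copy.deepcopy(online)      # theta_minus <- theta (independent copy)
batch = [((1.0, 0.0), 0, 1.0, (1.0, 1.0), False),
         ((0.0, 1.0), 1, 5.0, (0.0, 0.0), True)]

before = td_targets(batch, target, gamma=0.9)
online.weights[1][1] = 100.0        # stand-in for a gradient step on the online net
after = td_targets(batch, target, gamma=0.9)
print(before == after)              # True: the frozen target network did not move
```

Had the targets been computed with `online` instead of `target`, the second call would have returned a different value for the first transition, which is exactly the moving-target effect described above.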

The DQN Training Workflow with Target Networks

The introduction of the target network, combined with another key DQN innovation—Experience Replay—creates a robust training loop.[9]

- Action Selection: The agent uses the online network (θ) to select an action based on the current state, typically using an ε-greedy policy.
- Experience Storage: The resulting transition (state, action, reward, next state) is stored in a replay buffer.
- Batch Sampling: A mini-batch of experiences is randomly sampled from the replay buffer. This breaks the temporal correlation between consecutive experiences.[2]
- Target Calculation: For each experience in the batch, the target network (θ⁻) is used to calculate the target Q-value y.
- Gradient Descent: A gradient descent step is performed on the online network (θ) to minimize the loss (e.g., MSE) between its predicted Q-values and the stable targets calculated in the previous step.
- Target Network Update: Periodically, the weights of the target network (θ⁻) are updated with the weights from the online network (θ).
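The six steps above can be sketched as a loop skeleton that focuses on the periodic "hard" target update. Parameters are kept in a plain dictionary and the gradient step is a placeholder increment; both are hypothetical simplifications so the sketch stays self-contained.

```python
import copy
from typing import Dict, List, Tuple

Params = Dict[str, List[float]]  # hypothetical flat parameter store


def hard_update(online: Params) -> Params:
    """theta_minus <- theta: an independent copy, so later online updates don't leak in."""
    return copy.deepcopy(online)


def train(online: Params, num_updates: int, period: int) -> Tuple[Params, List[int]]:
    """Skeleton of the update loop; returns the final target params and sync steps."""
    target = hard_update(online)
    sync_steps: List[int] = []
    for step in range(1, num_updates + 1):
        # Steps 1-4 (act, store, sample, compute targets with `target`) would go here.
        online["w"][0] += 0.01            # step 5: stand-in for a gradient step
        if step % period == 0:            # step 6: periodic target refresh
            target = hard_update(online)
            sync_steps.append(step)
    return target, sync_steps


online_params: Params = {"w": [0.0]}
target_params, syncs = train(online_params, num_updates=25, period=10)
print(syncs)  # [10, 20]
```

After 25 updates the target still holds the weights from step 20, so it lags the online network by up to `period` updates; that lag is what keeps the regression targets stable between refreshes.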

The following diagram illustrates this logical workflow.

References

- 1. Monte Carlo method - Wikipedia [en.wikipedia.org]

- 2. Kaustab Pal [kaustabpal.github.io]

- 3. Solving CartPole-v0 with DQN · GitHub [gist.github.com]

- 4. researchgate.net [researchgate.net]

- 5. proceedings.neurips.cc [proceedings.neurips.cc]

- 6. itm-conferences.org [itm-conferences.org]

- 7. reddit.com [reddit.com]

- 8. DDQN hyperparameter tuning using Open AI gym Cartpole - ADG Efficiency [adgefficiency.com]

- 9. m.youtube.com [m.youtube.com]

The Quantum Leap in Drug Discovery: A Technical Guide to Q-Learning and Deep Q-Learning

An In-depth Technical Guide for Researchers, Scientists, and Drug Development Professionals

In the ever-evolving landscape of drug discovery, the integration of artificial intelligence, particularly reinforcement learning (RL), has emerged as a transformative approach. This guide provides a deep dive into two pivotal RL algorithms, Q-Learning and its advanced successor, Deep Q-Learning (DQN). Understanding the nuances of these methods is crucial for leveraging their power to navigate the vast chemical space and accelerate the identification of novel therapeutic candidates.

Core Concepts: From Tabular to Deep Reinforcement Learning

At its heart, reinforcement learning revolves around an "agent" that learns to make optimal decisions by interacting with an "environment". The agent's goal is to maximize a cumulative "reward" signal it receives for its actions.

Q-Learning: The Foundation

Q-Learning is a model-free RL algorithm that learns the value of an action in a particular state. It does this without needing a model of the environment's dynamics. The core of Q-Learning is the Q-table, a lookup table where each entry, Q(s, a), represents the expected future reward for taking action 'a' in state 's'.

The agent updates the Q-values iteratively using the Bellman equation, which considers the immediate reward and the discounted maximum expected future reward. This trial-and-error process allows the agent to build a "cheat sheet" that guides it toward the most rewarding sequences of actions.

However, the reliance on a Q-table is also its primary limitation. The table needs one entry per state-action pair, so for large state or action spaces it quickly becomes computationally intractable, and for continuous spaces it cannot be built at all without discretization. This is often referred to as the "curse of dimensionality".[1][2]
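A minimal sketch of the tabular update described above, Q(s, a) ← Q(s, a) + α [r + γ max_a' Q(s', a') − Q(s, a)], using a `defaultdict` as the Q-table. The state and action labels are hypothetical. Note that with n binary state features such a table needs 2^n × |A| entries, which is the blow-up the paragraph describes.

```python
from collections import defaultdict
from typing import DefaultDict, Hashable, Sequence, Tuple

QTable = DefaultDict[Tuple[Hashable, Hashable], float]


def q_learning_update(q: QTable, state: Hashable, action: Hashable, reward: float,
                      next_state: Hashable, actions: Sequence[Hashable],
                      alpha: float = 0.1, gamma: float = 0.99,
                      done: bool = False) -> float:
    """Apply one tabular Q-learning update in place and return the TD error."""
    # Bootstrapped estimate of future value; zero at terminal states.
    best_next = 0.0 if done else max(q[(next_state, a)] for a in actions)
    td_error = reward + gamma * best_next - q[(state, action)]
    q[(state, action)] += alpha * td_error
    return td_error


q: QTable = defaultdict(float)          # unseen (state, action) pairs start at 0.0
q[("s1", "right")] = 2.0
q_learning_update(q, "s0", "right", reward=1.0, next_state="s1",
                  actions=["left", "right"], alpha=0.5, gamma=0.9)
print(q[("s0", "right")])               # 0.5 * (1.0 + 0.9 * 2.0)
```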

Deep Q-Learning: Overcoming Scalability with Neural Networks

Deep Q-Learning (DQN) addresses the limitations of traditional Q-Learning by replacing the Q-table with a deep neural network.[1][3] This neural network, known as a Deep Q-Network, takes the state as input and outputs the Q-values for all possible actions in that state.[1] This function approximation allows DQN to handle high-dimensional and continuous state spaces, making it applicable to complex problems like molecule generation.

To stabilize the learning process, which can be notoriously unstable when using non-linear function approximators like neural networks, DQN introduces two key techniques:

- Experience Replay: The agent stores its experiences (state, action, reward, next state) in a replay memory. During training, it samples random mini-batches of these experiences to update the Q-network. This breaks the correlation between consecutive experiences, leading to more stable and robust learning.
- Target Network: DQN uses a second, separate neural network called the target network to calculate the target Q-values in the Bellman equation. The weights of this target network are updated less frequently than the main Q-network, providing a more stable target for the updates and preventing oscillations in the learning process.[1]

Quantitative Comparison: Q-Learning vs. Deep Q-Learning

The choice between Q-Learning and Deep Q-Learning hinges on the complexity of the problem at hand. The following table summarizes their key quantitative and qualitative differences, particularly in the context of drug discovery applications.

| Feature | Q-Learning | Deep Q-Learning (DQN) |
|---|---|---|
| State-Action Space | Small, discrete states and actions | Large or continuous states; discrete actions |
| Data Structure | Q-table (lookup table) | Deep Neural Network |
| Memory Requirement | Proportional to the number of state-action pairs | Proportional to the number of network parameters |
| Computational Cost | Low for small state spaces, intractable for large spaces | High; typically requires significant computational resources (GPUs) |
| Generalization | None (cannot estimate values for unseen states) | Can generalize to unseen states that resemble those seen in training |
| Convergence Time | Faster for simple problems with small state spaces | Slower to converge due to the complexity of training a deep neural network, but feasible for complex problems where Q-learning would not converge at all[4] |
| Stability | Generally stable for tabular cases | Prone to instability; requires techniques like experience replay and target networks |
| Example Application | Simple grid-world navigation | De novo molecule generation, optimizing chemical properties |

Experimental Protocols: A Step-by-Step Guide to Deep Q-Learning for Molecule Generation

This section outlines a detailed methodology for applying Deep Q-Learning to the task of de novo molecule generation, inspired by frameworks such as MolDQN.

Environment and State Representation

- Molecule Representation: Represent molecules as graphs, where atoms are nodes and bonds are edges. For input to the neural network, these graphs can be converted into a fixed-size vector representation, such as a molecular fingerprint.
- State Definition: The "state" is the current molecule being generated.
- Action Space: The "actions" are chemical modifications that can be applied to the current molecule. This can include adding or removing atoms, changing bond types, or adding functional groups.

Reward Function Design

The reward function is critical for guiding the generation process towards molecules with desired properties. A composite reward function is often used, combining multiple objectives:

- Drug-likeness (QED): A quantitative estimate of how "drug-like" a molecule is, on a scale from 0 to 1.
- Synthetic Accessibility (SA) Score: An estimate of how easily a molecule can be synthesized, typically on a scale from 1 (easy) to 10 (very difficult).
- Binding Affinity: A predicted binding affinity to a specific biological target (e.g., a protein kinase). This can be obtained from a separate predictive model, such as a Quantitative Structure-Activity Relationship (QSAR) model.
- Similarity to a Reference Molecule: A similarity score (for example, Tanimoto similarity between fingerprints) that guides generation toward analogues of a known active compound.
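The composite reward can be sketched as a weighted sum in which every objective is oriented so that higher is better. The weights, the [0, 1] scaling of the affinity prediction, and the representation of fingerprints as sets of "on" bit indices are all assumptions for illustration; in practice these values would come from cheminformatics tooling (RDKit, for instance, provides a QED implementation and Morgan fingerprints).

```python
from typing import AbstractSet, Mapping


def tanimoto(fp_a: AbstractSet[int], fp_b: AbstractSet[int]) -> float:
    """Tanimoto similarity of two fingerprints given as sets of 'on' bit indices."""
    if not fp_a and not fp_b:
        return 0.0  # convention chosen here for two empty fingerprints
    shared = len(fp_a & fp_b)
    return shared / (len(fp_a) + len(fp_b) - shared)


def composite_reward(qed: float, sa_score: float, affinity: float,
                     fp: AbstractSet[int], ref_fp: AbstractSet[int],
                     weights: Mapping[str, float]) -> float:
    """Weighted sum of objectives, each oriented so that higher is better.

    qed: drug-likeness in [0, 1].
    sa_score: synthetic accessibility, 1 (easy) to 10 (hard); rescaled below.
    affinity: output of a hypothetical predictive model, assumed scaled to [0, 1].
    """
    sa_term = (10.0 - sa_score) / 9.0        # 1.0 = easiest, 0.0 = hardest
    sim_term = tanimoto(fp, ref_fp)
    return (weights["qed"] * qed
            + weights["sa"] * sa_term
            + weights["affinity"] * affinity
            + weights["similarity"] * sim_term)


# Hypothetical weights and inputs, for illustration only.
w = {"qed": 0.4, "sa": 0.2, "affinity": 0.3, "similarity": 0.1}
r = composite_reward(qed=0.8, sa_score=2.8, affinity=0.6,
                     fp={1, 5, 9, 12}, ref_fp={1, 5, 9, 40}, weights=w)
```

Rescaling the SA score matters: it is "lower is better", so adding it unmodified would reward hard-to-synthesize molecules.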

Deep Q-Network Architecture

A multi-layer perceptron (MLP) is a common choice for the Q-network architecture. The input to the network is the fingerprint of the current molecule, and the output layer has a neuron for each possible action, representing the predicted Q-value for that action.
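A pure-Python sketch of such an MLP forward pass is shown below: fingerprint in, one hidden ReLU layer, one Q-value per action out. The layer sizes and uniform initialization are hypothetical choices, and a real implementation would use a deep learning framework with trained weights; the point is only the input/output shape of the Q-network.

```python
import random
from typing import List, Sequence


class MLPQNetwork:
    """Fingerprint -> hidden ReLU layer -> one Q-value per action (toy sketch)."""

    def __init__(self, n_bits: int, n_hidden: int, n_actions: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        s1, s2 = 1.0 / n_bits ** 0.5, 1.0 / n_hidden ** 0.5
        # Small random weights, zero biases (an arbitrary illustrative init).
        self.w1 = [[rng.uniform(-s1, s1) for _ in range(n_bits)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [[rng.uniform(-s2, s2) for _ in range(n_hidden)] for _ in range(n_actions)]
        self.b2 = [0.0] * n_actions

    def forward(self, fingerprint: Sequence[float]) -> List[float]:
        # Hidden layer: ReLU(W1 x + b1)
        hidden = [max(0.0, sum(w * x for w, x in zip(row, fingerprint)) + b)
                  for row, b in zip(self.w1, self.b1)]
        # Output layer: one linear unit per action, i.e. Q(s, a) for every a
        return [sum(w * h for w, h in zip(row, hidden)) + b
                for row, b in zip(self.w2, self.b2)]


net = MLPQNetwork(n_bits=16, n_hidden=8, n_actions=5)
q = net.forward([1.0 if i % 3 == 0 else 0.0 for i in range(16)])
greedy_action = max(range(len(q)), key=q.__getitem__)
```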

Training Protocol

- Initialization: Initialize the main Q-network and the target network with the same random weights. Initialize the replay memory buffer.
- Episode Loop: An episode consists of a series of steps to modify an initial molecule. At each step:
  - Action Selection: For the current state (molecule), select an action using an epsilon-greedy policy. With probability epsilon, choose a random action (exploration); otherwise, choose the action with the highest predicted Q-value from the main network (exploitation).
  - Environment Step: Apply the selected action to the molecule to get the next state (the modified molecule).
  - Reward Calculation: Calculate the reward for the new molecule based on the defined reward function.
  - Store Experience: Store the transition (state, action, reward, next state) in the replay memory.
  - Network Training:
    - Sample a random mini-batch of transitions from the replay memory.
    - For each transition in the batch, calculate the target Q-value using the target network: target_Q = reward + gamma * max_a' Q_target(next_state, a'), or simply target_Q = reward if next_state is terminal.
    - Calculate the loss as the mean squared error between the main network's predicted Q-values for the actions actually taken and the target Q-values.
    - Update the weights of the main Q-network using backpropagation.
  - Update Target Network: Periodically (e.g., every N steps), copy the weights from the main Q-network to the target network.
- Termination: The episode ends after a fixed number of modification steps or when a desired property threshold is reached.
- Repeat: Repeat the episode loop until the model converges.
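The epsilon-greedy action-selection step of this protocol can be sketched as follows. The optional `valid` mask is an added assumption reflecting that some molecular modifications may be chemically invalid in a given state; `epsilon_greedy` is an illustrative helper, not part of MolDQN's published code.

```python
import random
from typing import Optional, Sequence


def epsilon_greedy(q_values: Sequence[float], epsilon: float,
                   valid: Optional[Sequence[bool]] = None,
                   rng: Optional[random.Random] = None) -> int:
    """Pick a random valid action with probability epsilon, else the greedy valid action."""
    rng = rng if rng is not None else random.Random()
    candidates = [i for i in range(len(q_values)) if valid is None or valid[i]]
    if not candidates:
        raise ValueError("no valid actions in this state")
    if rng.random() < epsilon:
        return rng.choice(candidates)                   # exploration
    return max(candidates, key=lambda i: q_values[i])   # exploitation


rng = random.Random(0)
q = [0.2, 0.9, 0.5]
print(epsilon_greedy(q, epsilon=0.0, rng=rng))                             # 1
print(epsilon_greedy(q, epsilon=0.0, valid=[True, False, True], rng=rng))  # 2
```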

Visualizing the Workflow and Signaling Pathways

To effectively apply these reinforcement learning techniques in drug discovery, it is essential to understand the overall workflow and the biological context.

Drug Discovery Workflow with Deep Q-Learning

The following diagram illustrates a typical workflow for de novo drug design using Deep Q-Learning.

Caption: A workflow for de novo drug design using a Deep Q-Learning agent.

Signaling Pathway Example: JAK-STAT Pathway

The Janus kinase (JAK) and Signal Transducer and Activator of Transcription (STAT) signaling pathway is a critical pathway in cytokine signaling and is a well-established target for drugs treating inflammatory diseases and cancers.[5][6][7][9] The diagram below illustrates a simplified representation of the JAK-STAT pathway, a potential target for inhibitors designed using reinforcement learning.

Caption: A simplified diagram of the JAK-STAT signaling pathway.

Conclusion

References

- 1. baeldung.com [baeldung.com]

- 2. De Novo Molecular Design Enabled by Direct Preference Optimization and Curriculum Learning [arxiv.org]

- 3. quora.com [quora.com]

- 4. Convergence time of Q-learning Vs Deep Q-learning - Stack Overflow [stackoverflow.com]

- 5. aacrjournals.org [aacrjournals.org]

- 6. Sometimes Small Is Beautiful: Discovery of the Janus Kinases (JAK) and Signal Transducer and Activator of Transcription (STAT) Pathways and the Initial Development of JAK Inhibitors for IBD Treatment - PMC [pmc.ncbi.nlm.nih.gov]

- 7. Small molecule drug discovery targeting the JAK-STAT pathway - PubMed [pubmed.ncbi.nlm.nih.gov]

- 9. JAK-STAT signaling pathway - Wikipedia [en.wikipedia.org]

Unraveling the Theoretical Constraints of Deep Q-Network Models: A Technical Guide

For Researchers, Scientists, and Drug Development Professionals

Deep Q-Network (DQN) models marked a significant breakthrough in the field of reinforcement learning, demonstrating the ability to achieve human-level performance in complex tasks, such as playing Atari 2600 games, directly from raw pixel data. However, the fusion of deep neural networks with traditional Q-learning introduces several theoretical limitations that can impede training stability and lead to suboptimal policies. This technical guide provides an in-depth exploration of these core limitations, detailing the experimental protocols that have been established to identify and mitigate these challenges, and presenting the quantitative outcomes of these innovations.

The Challenge of Instability: The "Deadly Triad"

A primary limitation of DQN models is the potential for training instability. This arises from the interplay of three components, often referred to as the "deadly triad" in reinforcement learning: function approximation, bootstrapping, and off-policy learning.[1][2]

- Function Approximation: DQNs use deep neural networks to approximate the action-value function, Q(s, a). This is essential for handling large, high-dimensional state spaces, but the non-linear nature of neural networks can lead to instability when combined with the other two elements.[3]
- Bootstrapping: DQN updates its Q-value estimates based on other Q-value estimates (i.e., it "bootstraps"). Specifically, the target Q-value is calculated using the estimated Q-value of the next state. This can lead to the propagation and magnification of errors.[4]
- Off-Policy Learning: DQN learns about the greedy policy while acting with an ε-greedy policy, and its replay memory additionally supplies transitions generated by older policies. This improves data efficiency, but it means updates are made on a data distribution that differs from that of the current policy, a hallmark of off-policy learning that can contribute to divergence.[3][5]

The combination of these three factors can cause the Q-value estimates to oscillate or even diverge, preventing the agent from learning an effective policy.[6]
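The textbook "w → 2w" example from Sutton and Barto's *Reinforcement Learning: An Introduction* (2nd ed., Chapter 11) shows all three ingredients at work in the smallest possible setting. Two states share one weight w, with linear values w and 2w (function approximation); the update on the transition between them uses the estimate of the second state (bootstrapping); and only this transition is ever updated, as an off-policy data distribution might do (off-policy learning). Each update multiplies w by 1 + α(2γ − 1), so w diverges whenever γ > 0.5.

```python
def w_to_2w(w0: float = 1.0, alpha: float = 0.1, gamma: float = 0.99,
            steps: int = 100) -> float:
    """Repeat the semi-gradient TD(0) update on the single transition s -> s'.

    V(s) = 1 * w and V(s') = 2 * w share one weight; the reward is 0.
    """
    w = w0
    for _ in range(steps):
        v_s, v_next = 1.0 * w, 2.0 * w          # linear values, features 1 and 2
        td_error = 0.0 + gamma * v_next - v_s     # bootstrapped target minus estimate
        w += alpha * td_error * 1.0               # gradient of V(s) w.r.t. w is 1
    return w


print(w_to_2w(gamma=0.99))   # grows: each step multiplies w by 1 + alpha * (2*gamma - 1)
print(w_to_2w(gamma=0.4))    # shrinks toward 0 when gamma < 0.5
```

Under an on-policy distribution, the transition out of the second state would also be updated and would pull w back down; removing any one leg of the triad removes the divergence in this example.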

Experimental Protocol: The Original DQN

The foundational experiments that highlighted both the promise and the challenges of DQNs were conducted on the Atari 2600 benchmark.

Methodology:

- Environment: A suite of 49 Atari 2600 games from the Arcade Learning Environment.[7]
- Input: Raw 210x160 pixel frames were preprocessed into 84x84 grayscale images. Four consecutive frames were stacked to provide the network with a sense of motion.[7]
- Network Architecture:
  - Input Layer: 84x84x4 image
  - Layer 1 (Convolutional): 32 filters of 8x8 with a stride of 4, followed by a ReLU activation.
  - Layer 2 (Convolutional): 64 filters of 4x4 with a stride of 2, followed by a ReLU activation.
  - Layer 3 (Convolutional): 64 filters of 3x3 with a stride of 1, followed by a ReLU activation.
  - Layer 4 (Fully Connected): 512 rectifier units.
  - Output Layer (Fully Connected): A single output for each valid action (between 4 and 18 depending on the game).[7]
- Key Hyperparameters:
  - Replay Memory Size: 1,000,000 recent frames.
  - Minibatch Size: 32.
  - Optimizer: RMSProp.
  - Learning Rate: 0.00025.
  - Discount Factor (γ): 0.99.
  - Target Network Update Frequency: Every 10,000 steps.[8]
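The layer list above determines the network's tensor shapes and size, which this sketch computes. It assumes unpadded ("valid") convolutions and a bias term in every layer; under those assumptions the 84x84 input shrinks to 20x20, 9x9, and finally 7x7, giving 7 × 7 × 64 = 3,136 features feeding the 512-unit layer.

```python
from typing import List, Tuple

# (filters, kernel, stride) for the three convolutional layers listed above
CONV_LAYERS: List[Tuple[int, int, int]] = [(32, 8, 4), (64, 4, 2), (64, 3, 1)]


def conv_out(size: int, kernel: int, stride: int) -> int:
    """Output width of an unpadded ('valid') convolution."""
    return (size - kernel) // stride + 1


def dqn_shapes_and_params(n_actions: int, size: int = 84, channels: int = 4,
                          hidden: int = 512) -> Tuple[int, int]:
    """Return (flattened conv features, total trainable parameters)."""
    params = 0
    for filters, kernel, stride in CONV_LAYERS:
        params += kernel * kernel * channels * filters + filters  # weights + biases
        size = conv_out(size, kernel, stride)
        channels = filters
    flat = size * size * channels
    params += flat * hidden + hidden          # fully connected layer
    params += hidden * n_actions + n_actions  # one output per valid action
    return flat, params


print(dqn_shapes_and_params(18))  # (3136, 1693362)
```

Almost all of the roughly 1.7 million parameters sit in the first fully connected layer (3,136 × 512 weights), not in the convolutions.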

Mitigation of Instability: The Target Network

To combat the instability caused by a constantly changing target Q-value, the original DQN introduced a target network.[9] This is a separate, periodically updated copy of the main Q-network. The target network is used to generate the target Q-values for the Bellman equation, providing a stable target for a fixed number of training steps. This helps to prevent the feedback loop where an update to the Q-network immediately changes the target, which can lead to oscillations.[3][4]

Overestimation of Q-Values

A significant theoretical limitation inherent to Q-learning, and consequently to DQN, is the systematic overestimation of Q-values.[4][10] This overestimation arises from the use of the max operator in the Bellman equation to select the action for the next state. When the Q-value estimates are noisy or inaccurate (which is always the case during training), the max operator is more likely to select an action whose Q-value is overestimated than one that is underestimated.[10] This can lead to a positive bias in the learned Q-values, which can result in suboptimal policies if the agent learns to favor actions that lead to states with inaccurately high Q-value estimates.[7][10]
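A small Monte Carlo sketch makes the max-operator bias concrete. It assumes every action's true Q-value is 0 and that each estimate adds independent, zero-mean Gaussian noise; each estimate is unbiased on its own, yet their maximum is not.

```python
import random
from statistics import mean


def mean_max_estimate(n_actions: int, noise_std: float = 1.0,
                      trials: int = 20_000, seed: int = 0) -> float:
    """Average of max_a Q_hat(s, a) when every true Q(s, a) is 0.

    Each estimate is true value (0) plus zero-mean Gaussian noise, so each
    individual estimate is unbiased, yet their maximum is biased upward.
    """
    rng = random.Random(seed)
    return mean(max(rng.gauss(0.0, noise_std) for _ in range(n_actions))
                for _ in range(trials))


for n in (1, 2, 10):
    print(n, round(mean_max_estimate(n), 2))
```

With unit noise the average maximum is close to 0 for one action, roughly 0.56 for two, and about 1.54 for ten, so the bias grows with the number of actions even though the true value of every action is 0.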

Mitigation: Double Deep Q-Network (Double DQN)

To address the overestimation bias, the Double DQN (DDQN) algorithm was introduced.[4] DDQN decouples the action selection from the action evaluation in the target Q-value calculation. It uses the main Q-network to select the best action for the next state, but then uses the target network to evaluate the Q-value of that chosen action.[11][12] This prevents the same network from being responsible for both selecting and evaluating the action, which helps to reduce the upward bias.[13]
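The difference between the two targets fits in a few lines. In this sketch the next-state Q-values of both networks are passed in as plain lists (a simplification), and the example numbers are hypothetical, chosen so that the two networks disagree about the best next action.

```python
from typing import Sequence


def dqn_target(r: float, q_target_next: Sequence[float], gamma: float, done: bool) -> float:
    """Standard DQN: the target network both selects and evaluates a'."""
    return r if done else r + gamma * max(q_target_next)


def double_dqn_target(r: float, q_online_next: Sequence[float],
                      q_target_next: Sequence[float], gamma: float, done: bool) -> float:
    """Double DQN: the online network selects a', the target network evaluates it."""
    if done:
        return r
    best = max(range(len(q_online_next)), key=lambda a: q_online_next[a])  # selection (theta)
    return r + gamma * q_target_next[best]                                  # evaluation (theta_minus)


q_online_next = [1.0, 3.0]   # online network prefers action 1
q_target_next = [2.0, 0.5]   # target network rates action 0 higher
print(dqn_target(1.0, q_target_next, gamma=0.5, done=False))                        # 2.0
print(double_dqn_target(1.0, q_online_next, q_target_next, gamma=0.5, done=False))  # 1.25
```

Because an action must look good to both networks before its value enters the target, a single network's noisy overestimate is less likely to be propagated.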

Experimental Protocol: Double DQN vs. DQN

The effectiveness of Double DQN was demonstrated by comparing its performance against the standard DQN on the same Atari 2600 benchmark.

Methodology:

The experimental setup was largely identical to the original DQN experiments to ensure a fair comparison. The key difference was the modification in the calculation of the target Q-value.[4]

Quantitative Data Summary:

The following table presents a comparison of the mean scores achieved by DQN and Double DQN on a selection of Atari games after 200 million frames of training.

| Game | DQN | Double DQN |
|---|---|---|
| Alien | 771 | 2735 |
| Asterix | 538 | 2401 |
| Atlantis | 13410 | 22485 |
| Crazy Climber | 107805 | 114104 |
| Double Dunk | -17.8 | -1.2 |
| Enduro | 831 | 1006 |
| Gopher | 2321 | 8520 |
| James Bond | 408 | 577 |
| Krull | 2395 | 3805 |
| Ms. Pacman | 1629 | 2311 |
| Q*bert | 10596 | 19538 |
| Seaquest | 2895 | 10032 |
| Space Invaders | 1423 | 1976 |

Data sourced from the original Double DQN paper.[14]

Inefficient Exploration

The exploration-exploitation dilemma is a fundamental challenge in reinforcement learning.[15] DQN typically relies on a simple ε-greedy strategy for exploration, where the agent selects a random action with a probability of ε and the greedy action (the one with the highest estimated Q-value) with a probability of 1-ε.[16] While easy to implement, this approach has limitations:

- Uniform Exploration: It does not distinguish between actions that are promising and those that are clearly suboptimal, leading to inefficient exploration.
- Sample Inefficiency: It can take a very long time to explore the state-action space, especially in environments with sparse rewards.[12]

Mitigation Strategies:

Several techniques have been developed to make learning in DQNs more efficient. The two below do not replace ε-greedy exploration; instead, they make better use of the experience the agent has already collected.

- Prioritized Experience Replay (PER): Instead of sampling uniformly from the replay memory, PER prioritizes transitions from which the agent is expected to learn the most.[17] The "surprise" or learning potential of a transition is measured by the magnitude of its temporal-difference (TD) error. By replaying these high-error transitions more frequently, the agent can learn more efficiently.[15][18]
- Dueling Network Architecture: This architecture uses two separate streams to estimate the state value function V(s) and the action advantage function A(s, a).[1] An aggregation layer then recombines the two streams into Q-values. This allows the network to learn the value of a state without having to learn the effect of each action in that state, which is particularly useful in states where the actions have little consequence.[6][19]
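The dueling aggregation step can be sketched as Q(s, a) = V(s) + (A(s, a) − mean over a' of A(s, a')). Subtracting the mean advantage addresses an identifiability problem: adding a constant to V and subtracting it from every A would give the same Q, so the mean-centring pins the decomposition down (the dueling paper uses this mean form in practice). `dueling_q` is an illustrative helper operating on plain numbers rather than network outputs.

```python
from typing import List, Sequence


def dueling_q(value: float, advantages: Sequence[float]) -> List[float]:
    """Q(s, a) = V(s) + (A(s, a) - mean over a' of A(s, a'))."""
    mean_adv = sum(advantages) / len(advantages)
    return [value + (adv - mean_adv) for adv in advantages]


print(dueling_q(10.0, [1.0, 2.0, 3.0]))   # [9.0, 10.0, 11.0]
```

A side effect of this choice is that the average of the resulting Q-values always equals V(s), so the value stream carries the state's overall worth while the advantage stream only ranks actions.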

These advancements, along with others, have significantly improved the performance and stability of DQN models, making them more robust and applicable to a wider range of complex sequential decision-making problems. Understanding these foundational limitations and their corresponding solutions is crucial for researchers and professionals aiming to leverage deep reinforcement learning in their respective domains.

References

- 1. proceedings.mlr.press [proceedings.mlr.press]

- 2. Dueling Network Architectures for Deep Reinforcement Learning [pemami4911.github.io]

- 3. researchgate.net [researchgate.net]

- 4. ojs.aaai.org [ojs.aaai.org]

- 5. eudl.eu [eudl.eu]

- 6. semanticscholar.org [semanticscholar.org]

- 7. Human-level control through deep reinforcement learning [jhamrick.github.io]

- 8. Human-level control through deep reinforcement learning | Neural Aspect [neuralaspect.com]

- 9. Deep Q Learning: A critique. This article is mainly a critique of… | by Shubham Jha | Medium [medium.com]

- 10. atlantis-press.com [atlantis-press.com]

- 11. apxml.com [apxml.com]

- 12. Deep Q-Learning, Part2: Double Deep Q Network, (Double DQN) | by Amber | Medium [medium.com]

- 13. Double Deep Q-Networks Tutorial: A General Guide to Solving Atari Games with DDQN | by Nameless | Medium [medium.com]

- 14. [1509.06461] Deep Reinforcement Learning with Double Q-learning [arxiv.org]

- 15. arxiv.org [arxiv.org]

- 16. reinforcement learning - Performance Comparison between DoubleDQN & DQN - Stack Overflow [stackoverflow.com]

- 17. Prioritized experience replay based on dynamics priority - PMC [pmc.ncbi.nlm.nih.gov]

- 18. [1511.05952] Prioritized Experience Replay [arxiv.org]

- 19. arxiv.org [arxiv.org]

The Nexus of Decision-Making: A Deep Dive into the State-Action Value Function in Deep Q-Networks

An In-depth Technical Guide for Researchers, Scientists, and Drug Development Professionals

In the landscape of artificial intelligence, Deep Q-Networks (DQNs) represent a pivotal advancement in reinforcement learning, enabling agents to learn complex behaviors in high-dimensional environments. At the heart of this powerful algorithm lies the state-action value function, or Q-function, a critical component that quantifies the value of taking a specific action in a given state. This technical guide provides a comprehensive exploration of the Q-function within DQNs, detailing its theoretical underpinnings, practical implementation, and the experimental protocols used to validate its performance.

The Core Concept: Approximating the Optimal Action-Value Function

In reinforcement learning, the goal of an agent is to learn a policy that maximizes its cumulative reward over time. The state-action value function, denoted Q(s, a), is central to achieving this. It represents the expected total discounted future reward the agent receives by taking action a in state s and following an optimal policy thereafter (strictly, this is the optimal action-value function; for a fixed policy π, Q^π(s, a) is defined the same way with π followed after the first action).[1][2] Traditional Q-learning stores these Q-values in a table with one entry for every state-action pair. In complex environments with vast or continuous state spaces, such as those encountered in drug discovery simulations or robotic control, such a table becomes computationally infeasible.[3][4]

Deep Q-Networks overcome this limitation by employing a deep neural network to approximate the Q-function, Q(s, a; θ), where θ represents the network's weights.[3][5] This neural network takes the state of the environment as input and outputs the corresponding Q-values for all possible actions in that state.[6][7] The use of a neural network allows for generalization across states, enabling the agent to make informed decisions even in situations it has not encountered before.

The foundational principle for training this network is the Bellman equation, which expresses a recursive relationship for the optimal action-value function.[5][8] The Bellman equation for Q-learning is:

$$Q(s, a) = \mathbb{E}\left[ r + \gamma \max_{a'} Q(s', a') \mid s, a \right]$$

Here, r is the immediate reward, γ is the discount factor that weighs future rewards against immediate ones, s′ is the next state, and a′ ranges over the actions available in s′.[6][9] The equation states that the optimal Q-value of a state-action pair equals the expected immediate reward plus the discounted maximum Q-value over all actions in the next state.
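Before a neural network enters the picture, the Bellman equation can be turned into the classic tabular Q-learning update, Q(s, a) ← Q(s, a) + α [r + γ max_a′ Q(s′, a′) − Q(s, a)]. The sketch below is a minimal illustration. The `done` flag, which drops the future term when s′ is terminal, is an assumption not shown in the equation above. The function name and the toy states are hypothetical.

```python
from collections import defaultdict
from typing import DefaultDict, Hashable, List


def q_learning_update(
    q_table: DefaultDict[Hashable, List[float]],
    s: Hashable, a: int, r: float, s_next: Hashable,
    done: bool, gamma: float = 0.99, lr: float = 0.1,
) -> float:
    """One tabular Q-learning step; returns the Bellman target r + gamma * max_a' Q(s', a')."""
    # A terminal next state has no future reward, so the target is just r.
    target = r if done else r + gamma * max(q_table[s_next])
    # Move Q(s, a) a fraction lr of the way toward the target.
    q_table[s][a] += lr * (target - q_table[s][a])
    return target


n_actions = 2
q_table: DefaultDict[Hashable, List[float]] = defaultdict(lambda: [0.0] * n_actions)
q_table["s1"] = [2.0, 5.0]
target = q_learning_update(q_table, "s0", a=0, r=1.0, s_next="s1", done=False, gamma=0.9, lr=0.5)
print(target)         # 1.0 + 0.9 * 5.0 = 5.5
print(q_table["s0"])  # [2.75, 0.0]: moved halfway from 0.0 toward 5.5
```

The table needs one entry per state-action pair, and that requirement is exactly what becomes infeasible in large state spaces.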

The DQN Algorithm: Learning the Q-function

The DQN algorithm leverages the Bellman equation to create a loss function for training the neural network. The loss is typically the mean squared error (MSE) between the Q-value predicted by the network and a target Q-value derived from the Bellman equation.[3][10]

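The training process relies on two key stabilizing components:

- Experience Replay: To break the correlation between consecutive experiences and stabilize training, the agent's experiences (state, action, reward, next state) are stored in a replay buffer.[4][11] During training, mini-batches of these experiences are randomly sampled to update the network.[9]

- Target Network: A separate copy of the Q-network, with weights θ', computes the target Q-values. Its weights are copied from the main network only periodically, which keeps the targets from shifting with every update.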
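A replay buffer can be sketched with a bounded `collections.deque` and `random.sample`, under these assumptions. The class and field names are hypothetical, and the `done` field is an addition to the (state, action, reward, next state) tuple that the target computation usually needs. Converting the deque to a list on every call is O(N); it keeps the sketch short, but a large buffer would use an indexable ring buffer instead.

```python
import random
from collections import deque
from typing import Deque, List, NamedTuple, Tuple


class Transition(NamedTuple):
    state: Tuple[float, ...]
    action: int
    reward: float
    next_state: Tuple[float, ...]
    done: bool  # assumption: marks the end of an episode


class ReplayBuffer:
    """Fixed-capacity FIFO memory; the oldest transitions are evicted first."""

    def __init__(self, capacity: int, seed: int = 0) -> None:
        self.memory: Deque[Transition] = deque(maxlen=capacity)
        self.rng = random.Random(seed)

    def push(self, transition: Transition) -> None:
        self.memory.append(transition)

    def sample(self, batch_size: int) -> List[Transition]:
        # Uniform sampling without replacement breaks up runs of consecutive, correlated steps.
        return self.rng.sample(list(self.memory), batch_size)

    def __len__(self) -> int:
        return len(self.memory)


buf = ReplayBuffer(capacity=1000)
for t in range(1500):
    buf.push(Transition((float(t),), t % 2, 1.0, (float(t + 1),), False))
print(len(buf))  # 1000: the first 500 transitions were evicted
batch = buf.sample(32)
print(min(tr.state[0] for tr in batch) >= 500.0)  # True
```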

The loss function is then defined as:

$$L(\theta) = \mathbb{E}_{(s, a, r, s') \sim D}\left[\left(r + \gamma \max_{a'} Q(s', a'; \theta') - Q(s, a; \theta)\right)^2\right]$$

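where D is the replay buffer and θ' denotes the weights of the target network, a separate copy of the Q-network that is updated only periodically. The gradient of this loss with respect to θ, with the target term held fixed, is then used to update the weights of the main Q-network through stochastic gradient descent.[8][10]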
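The loss can be checked by hand on a tiny batch. The sketch below works on plain lists that stand in for network outputs: `q_online` plays the role of Q(s, ·; θ) and `next_q_target` plays the role of Q(s′, ·; θ′). As in the tabular case, using r alone as the target when s′ is terminal is an assumption not written in the loss above. In a TensorFlow implementation the targets would come from the target model and be wrapped in `tf.stop_gradient`, so that only θ receives gradients. All names here are hypothetical.

```python
from typing import List, Sequence


def dqn_targets(rewards: Sequence[float], next_q_target: Sequence[Sequence[float]],
                dones: Sequence[bool], gamma: float = 0.99) -> List[float]:
    """y = r + gamma * max_a' Q(s', a'; theta'), or just r when s' is terminal."""
    return [r if done else r + gamma * max(q_next)
            for r, q_next, done in zip(rewards, next_q_target, dones)]


def dqn_loss(q_online: Sequence[Sequence[float]], actions: Sequence[int],
             targets: Sequence[float]) -> float:
    """Mean squared TD error, using Q(s, a; theta) only for the action actually taken."""
    errors = [y - q[a] for q, a, y in zip(q_online, actions, targets)]
    return sum(e * e for e in errors) / len(errors)


# A batch of two transitions; the Q-values stand in for network outputs.
q_online = [[1.0, 2.0], [0.5, 0.0]]       # Q(s, ·; θ) from the main network
actions = [1, 0]
rewards = [1.0, 0.0]
next_q_target = [[3.0, 1.0], [4.0, 4.0]]  # Q(s', ·; θ') from the target network
dones = [False, True]

targets = dqn_targets(rewards, next_q_target, dones, gamma=0.5)
print(targets)                               # [2.5, 0.0]
print(dqn_loss(q_online, actions, targets))  # ((2.5 - 2.0)^2 + (0.0 - 0.5)^2) / 2 = 0.25
```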

Experimental Protocols and Performance

The performance of DQNs is typically evaluated on a set of benchmark environments, with the Atari 2600 games and classic control tasks like CartPole being prominent examples.[13][14]

Detailed Methodologies for Key Experiments

Atari 2600 Environment:

- Preprocessing: Raw game frames (210×160 pixels) are typically preprocessed by converting them to grayscale and down-sampling to a smaller square image (e.g., 84×84).[1] To capture temporal information, a stack of the last four frames is used as the input to the neural network.[15]

- Network Architecture: The original DeepMind paper utilized a convolutional neural network (CNN) with three convolutional layers followed by two fully connected layers.[15]

- Training: The network is trained using the RMSProp optimizer with mini-batches of size 32. The exploration-exploitation trade-off is managed using an ε-greedy policy, where ε is annealed from 1.0 to 0.1 over a set number of frames.[15]

- Evaluation: The agent's performance is evaluated by averaging the total reward over a number of episodes, with a fixed ε-greedy policy (e.g., ε = 0.05).[15]
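Two details of this setup are easy to get wrong in code: the frame stack and the size of the feature vector that the convolutional layers hand to the fully connected layers. The sketch below covers both. The layer specification (32 filters of 8×8 with stride 4, 64 of 4×4 with stride 2, 64 of 3×3 with stride 1) follows the commonly cited Nature DQN configuration and is an assumption here, because the text gives only the layer count. Repeating the first frame on reset is also a common convention rather than something stated above, and frames are represented by placeholder strings instead of 84×84 arrays.

```python
from collections import deque
from typing import Deque, List, Tuple

# (filters, kernel size, stride) per convolutional layer; assumed, not taken from the text.
CONV_LAYERS: List[Tuple[int, int, int]] = [(32, 8, 4), (64, 4, 2), (64, 3, 1)]


def conv_out(size: int, kernel: int, stride: int) -> int:
    """Spatial output size of an unpadded ('valid') convolution."""
    return (size - kernel) // stride + 1


def flattened_features(input_size: int = 84) -> int:
    """Number of features fed to the first fully connected layer."""
    size = input_size
    for _, kernel, stride in CONV_LAYERS:
        size = conv_out(size, kernel, stride)
    return size * size * CONV_LAYERS[-1][0]


class FrameStack:
    """Keeps the last k preprocessed frames; together they form one network input."""

    def __init__(self, k: int = 4) -> None:
        self.k = k
        self.frames: Deque[object] = deque(maxlen=k)

    def reset(self, first_frame: object) -> List[object]:
        # Repeat the first frame so the stack is full from the first step.
        self.frames.clear()
        self.frames.extend([first_frame] * self.k)
        return list(self.frames)

    def step(self, frame: object) -> List[object]:
        self.frames.append(frame)  # the oldest frame drops out automatically
        return list(self.frames)


print(flattened_features())  # 84 -> 20 -> 9 -> 7, so 7 * 7 * 64 = 3136
stack = FrameStack()
print(stack.reset("f0"))     # ['f0', 'f0', 'f0', 'f0']
print(stack.step("f1"))      # ['f0', 'f0', 'f0', 'f1']
```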

Classic Control Tasks (e.g., CartPole):

- State Representation: The state is a low-dimensional vector of physical quantities; in CartPole it has four components: cart position, cart velocity, pole angle, and pole angular velocity.[16]

- Network Architecture: A smaller, fully connected neural network is usually sufficient for these tasks.[17]

- Training and Evaluation: As in the Atari setup, training uses experience replay and a target network. Performance is often measured by the number of time steps the pole remains balanced, which CartPole-v1 caps at 500 per episode.[17]
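The shape of such a network can be illustrated without any framework: a four-value state goes in and one Q-value per action comes out. The dependency-free forward pass below assumes two hidden layers of 64 ReLU units and uniform initialization; both are illustrative choices, not values from the text, and the class and function names are hypothetical. In TensorFlow the same structure would typically be assembled from `tf.keras.layers.Dense` layers and trained with the loss described earlier.

```python
import math
import random
from typing import List, Sequence, Tuple

Matrix = List[List[float]]
Layer = Tuple[Matrix, List[float]]


def init_layer(n_in: int, n_out: int, rng: random.Random) -> Layer:
    """Uniform init in [-1/sqrt(n_in), 1/sqrt(n_in)] with zero biases (one common choice)."""
    bound = 1.0 / math.sqrt(n_in)
    weights = [[rng.uniform(-bound, bound) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def dense(x: Sequence[float], weights: Matrix, bias: List[float], relu: bool) -> List[float]:
    """Fully connected layer: y = W x + b, optionally followed by ReLU."""
    y = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]
    return [max(0.0, v) for v in y] if relu else y


class MLPQNetwork:
    """State vector in, one Q-value per action out."""

    def __init__(self, state_dim: int = 4, hidden: int = 64, n_actions: int = 2, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.hidden1 = init_layer(state_dim, hidden, rng)
        self.hidden2 = init_layer(hidden, hidden, rng)
        self.output = init_layer(hidden, n_actions, rng)

    def __call__(self, state: Sequence[float]) -> List[float]:
        h = dense(state, *self.hidden1, relu=True)
        h = dense(h, *self.hidden2, relu=True)
        # Linear output layer: Q-values can be negative, so no activation here.
        return dense(h, *self.output, relu=False)


net = MLPQNetwork()
state = [0.01, -0.02, 0.03, 0.0]  # cart position, cart velocity, pole angle, pole angular velocity
q_values = net(state)
greedy_action = max(range(len(q_values)), key=lambda a: q_values[a])
print(len(q_values), greedy_action)  # two Q-values (push left / push right) and the index of the larger
```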

Quantitative Data Summary

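The following tables summarize reported results for the original DQN and its key variants on benchmark tasks (PPO, a policy-gradient method, is included on CartPole for comparison), followed by the hyperparameters used for Atari.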

| Algorithm | Environment | Metric | Score | Source |
|---|---|---|---|---|
| DQN | Atari: Breakout | Average Reward | 400+ | [18] |
| DQN | Atari: Pong | Average Reward | 20 | [19] |
| DQN | Atari: Space Invaders | Average Reward | 1,976 | [20] |
| Double DQN | Atari: Space Invaders | Average Reward | 3,974 | [20] |
| Dueling DQN | Atari (Mean Normalized) | Performance | ~1200% Human | [21] |
| DQN | CartPole-v1 | Average Timesteps | ~500 | [13] |
| PPO | CartPole-v1 | Average Timesteps | ~500 | [13] |

| Hyperparameter | Value (Atari) | Source |
|---|---|---|
| Optimizer | RMSProp | [15] |
| Minibatch Size | 32 | [15] |
| Replay Memory Size | 1,000,000 | [15] |
| Target Network Update Freq. | 10,000 | [22] |
| Discount Factor (γ) | 0.99 | [15] |
| Learning Rate | 0.00025 | [15] |
| Initial Exploration (ε) | 1.0 | [15] |
| Final Exploration (ε) | 0.1 | [15] |
| Exploration Annealing Frames | 1,000,000 | [15] |
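One way to keep the Atari settings from the hyperparameter table in a single place, together with the linear ε schedule they imply, is a frozen dataclass. This is a hypothetical sketch: the class and field names are illustrative, and the table does not state whether the target-update frequency counts environment steps or parameter updates, so that field is left unit-agnostic.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AtariDQNConfig:
    optimizer: str = "RMSProp"
    minibatch_size: int = 32
    replay_memory_size: int = 1_000_000
    target_update_freq: int = 10_000  # unit (steps vs. updates) not stated in the table
    gamma: float = 0.99
    learning_rate: float = 0.00025
    initial_epsilon: float = 1.0
    final_epsilon: float = 0.1
    epsilon_anneal_frames: int = 1_000_000


def epsilon_at(frame: int, cfg: AtariDQNConfig) -> float:
    """Linear annealing from initial to final epsilon, then held constant."""
    fraction = min(frame / cfg.epsilon_anneal_frames, 1.0)
    return cfg.initial_epsilon + fraction * (cfg.final_epsilon - cfg.initial_epsilon)


cfg = AtariDQNConfig()
for f in (0, 500_000, 1_000_000, 5_000_000):
    print(f, round(epsilon_at(f, cfg), 3))
# 0 1.0 / 500000 0.55 / 1000000 0.1 / 5000000 0.1
```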

Visualizing the Core Processes

To better understand the logical flow and relationships within the DQN framework, the following diagrams are provided in the DOT language for Graphviz.

Conclusion

The state-action value function is the cornerstone of Deep Q-Networks, providing the essential mechanism for an agent to learn and make optimal decisions in complex environments. By approximating this function with a deep neural network and employing techniques like experience replay and target networks, DQNs have achieved remarkable success in a variety of domains. The continued refinement of the DQN architecture and training methodologies, as seen in extensions like Double and Dueling DQNs, further underscores the power and flexibility of this approach. For researchers and professionals in fields such as drug development, understanding the intricacies of the Q-function in DQNs opens up new avenues for tackling complex simulation and optimization problems.

References

- 1. Step-by-Step Deep Q-Networks (DQN) Tutorial: From Atari Games to Bioengineering Research | by Yinxuan Li | Medium [medium.com]

- 2. atlantis-press.com [atlantis-press.com]

- 3. An Evaluation Methodology for Interactive Reinforcement Learning with Simulated Users - PMC [pmc.ncbi.nlm.nih.gov]

- 4. openreview.net [openreview.net]

- 5. bugfree.ai [bugfree.ai]

- 6. freecodecamp.org [freecodecamp.org]

- 7. Mastering Atari Game: Deep Q-Network (DQN) Agents in Reinforcement Learning | by Kabila MD Musa | Medium [medium.com]

- 8. artificial intelligence - How do you evaluate a trained reinforcement learning agent whether it is trained or not? - Stack Overflow [stackoverflow.com]

- 9. jetir.org [jetir.org]

- 10. Deep Reinforcement Learning - Google DeepMind [deepmind.google]

- 11. How do you measure the performance of an RL agent? [milvus.io]

- 12. How do you evaluate the performance of a reinforcement learning agent? - Zilliz Vector Database [zilliz.com]

- 13. researchgate.net [researchgate.net]

- 14. DQN arXiv 10-year anniversary: What are the outstanding problems being actively researched in deep Q-learning since 2019? - Artificial Intelligence Stack Exchange [ai.stackexchange.com]

- 15. cs.toronto.edu [cs.toronto.edu]

- 16. Weights & Biases [wandb.ai]

- 17. Deep Q Learning for the CartPole. The purpose of this post is to… | by Rita Kurban | TDS Archive | Medium [medium.com]

- 18. Human-level control through deep reinforcement learning | Neural Aspect [neuralaspect.com]

- 19. medium.com [medium.com]

- 20. slm-lab.gitbook.io [slm-lab.gitbook.io]

- 21. [RL] Deep Q-Learning: DQN Extensions [leeyngdo.github.io]

- 22. docs.cleanrl.dev [docs.cleanrl.dev]

Methodological & Application

Application Notes and Protocols: Implementing a Deep Q-Network (DQN) in Python with TensorFlow

Audience: Researchers, scientists, and drug development professionals.

Objective: To provide a detailed guide on the principles and practical implementation of a Deep Q-Network (DQN), a foundational reinforcement learning algorithm. This document outlines the theoretical basis, experimental protocols for a Python-based implementation using TensorFlow, and potential applications in the field of drug discovery and development.

Introduction to Deep Q-Networks

Reinforcement Learning (RL) is a paradigm of machine learning where an "agent" learns to make decisions by performing actions in an "environment" to maximize a cumulative reward.[1][2] This approach is particularly powerful for solving dynamic decision problems where the optimal path is not known beforehand.[3] In drug discovery, RL can be applied to complex challenges such as de novo molecular design, optimizing synthetic pathways, or personalizing treatment regimens.[2][3][4]

A Deep Q-Network (DQN) is a type of RL algorithm that combines Q-learning with deep neural networks.[5][6] Traditional Q-learning uses a table to store the expected rewards (Q-values) for each action in every possible state.[7] This becomes infeasible for complex problems with large or continuous state spaces.[7][8] DQNs overcome this limitation by using a neural network to approximate the Q-value function, which lets them handle high-dimensional inputs such as molecular structures or biological system states.[6][7][8]

The key innovations of the DQN algorithm that stabilize training are:

- Experience Replay: A memory buffer stores the agent's experiences (state, action, reward, next state). During training, mini-batches are randomly sampled from this buffer, which breaks the correlation between consecutive samples and improves data efficiency.[1][5][8]

- Target Network: A separate copy of the main Q-network is used to calculate the target Q-values.[1] Its weights are held fixed between periodic updates from the main network, which stabilizes learning by preventing rapid shifts in the target values.[1][8]
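The periodic ("hard") target update can be sketched independently of any framework, assuming network parameters are represented as a plain dictionary of weight lists. The class name, the `period` parameter, and the weight representation are all hypothetical. With TensorFlow's Keras API the copy step would typically be written as `target_model.set_weights(online_model.get_weights())`, where `target_model` and `online_model` are illustrative names.

```python
import copy
from typing import Dict, List, Tuple

# Hypothetical stand-in for network parameters: layer name -> flat list of weights.
Weights = Dict[str, List[float]]


class TargetNetworkSync:
    """Hard target update: copy the online weights into the target every `period` training steps."""

    def __init__(self, online: Weights, period: int) -> None:
        self.online = online
        self.target: Weights = copy.deepcopy(online)  # independent copy, not an alias
        self.period = period
        self.steps = 0

    def after_train_step(self) -> bool:
        """Call once per gradient update; returns True when a sync happened."""
        self.steps += 1
        if self.steps % self.period == 0:
            self.target = copy.deepcopy(self.online)
            return True
        return False


online: Weights = {"w": [0.0]}
sync = TargetNetworkSync(online, period=3)
log: List[Tuple[int, float, float]] = []
for step in range(1, 7):
    online["w"][0] += 1.0  # stand-in for a gradient step on the online network
    sync.after_train_step()
    log.append((step, online["w"][0], sync.target["w"][0]))
print(log)
# The target lags behind the online weights and catches up at steps 3 and 6.
```

The deep copy matters: if the target merely referenced the online weights, every gradient step would move the targets too, and the stabilizing effect would disappear.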

Core Concepts and Methodology