SAINT-2

Description

BenchChem offers this high-quality compound, which is suitable for many research applications. Different packaging options are available to accommodate customers' requirements. For more information about this compound, including price and delivery time, please contact info@benchchem.com.

Properties

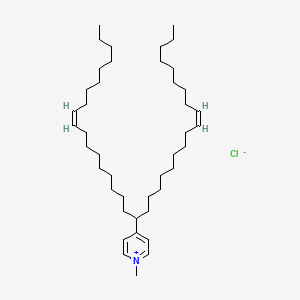

| Property | Value |
|---|---|
| Molecular Formula | C43H78ClN |
| Molecular Weight | 644.5 g/mol |
| IUPAC Name | 4-[(9Z,28Z)-heptatriaconta-9,28-dien-19-yl]-1-methylpyridin-1-ium;chloride |
| InChI | InChI=1S/C43H78N.ClH/c1-4-6-8-10-12-14-16-18-20-22-24-26-28-30-32-34-36-42(43-38-40-44(3)41-39-43)37-35-33-31-29-27-25-23-21-19-17-15-13-11-9-7-5-2;/h18-21,38-42H,4-17,22-37H2,1-3H3;1H/q+1;/p-1/b20-18-,21-19-; |
| InChI Key | FQKXELHZMFODBN-YIQDKWKASA-M |
| Isomeric SMILES | CCCCCCCC/C=C\CCCCCCCCC(C1=CC=[N+](C=C1)C)CCCCCCCC/C=C\CCCCCCCC.[Cl-] |
| Canonical SMILES | CCCCCCCCC=CCCCCCCCCC(CCCCCCCCC=CCCCCCCCC)C1=CC=[N+](C=C1)C.[Cl-] |
| Origin of Product | United States |


Unveiling Protein-Protein Interactions: A Technical Guide to SAINT Analysis in Proteomics

For Researchers, Scientists, and Drug Development Professionals

Understanding the web of protein-protein interactions (PPIs) in cells is central to deciphering biological processes and advancing drug discovery. Affinity Purification followed by Mass Spectrometry (AP-MS) has become a powerful technique for identifying these interactions. However, a significant challenge lies in distinguishing genuine biological interactors from a large background of non-specific binders. The Significance Analysis of INTeractome (SAINT) algorithm was developed to address this problem.[1] SAINT is a computational tool that provides a statistical framework for scoring the confidence of PPIs identified in AP-MS experiments, enabling researchers to focus on high-probability interactions.[1]

This in-depth technical guide provides a comprehensive overview of SAINT analysis, from the underlying statistical principles to detailed experimental protocols and data interpretation.

Core Principles of SAINT Analysis

The fundamental principle of SAINT is to assign a probability score to each potential protein-protein interaction.[2] It does this by modeling the quantitative data from AP-MS experiments, such as spectral counts or peptide intensities, as a mixture of two distinct distributions: one representing true, bona fide interactions and another representing false, non-specific interactions.[3][4][5] SAINT compares the observed data for a specific "bait" (the protein of interest) and "prey" (a potential interactor) pair against these two distributions. From this comparison it calculates the posterior probability that the pair represents a true interaction.[3][4][5]

The Statistical Foundation of SAINT

SAINT's statistical model is the cornerstone of its ability to differentiate true interactors from background noise. For each potential bait-prey interaction, the observed quantitative measurement (e.g., spectral count, denoted as X) is assumed to have arisen from one of two states: a true interaction (T) or a false interaction (F).[2]

The probability of observing a certain spectral count X for a given bait-prey pair is modeled as a mixture of two probability distributions:

- P(X|T): The probability of observing spectral count X given a true interaction.
- P(X|F): The probability of observing spectral count X given a false interaction.

For spectral count data, these distributions are often modeled using the Poisson distribution, which is well-suited for count data.[6] When the variance of the data is substantially larger than the mean (a phenomenon known as overdispersion), the Negative Binomial distribution may provide a better fit.
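Whether a Poisson model is adequate can be checked by comparing the sample variance of a prey's spectral counts with their mean. The following is a minimal sketch, not part of SAINT itself. It computes two quantities:

- The variance-to-mean ratio (the index of dispersion), which is about 1 for Poisson data.
- A method-of-moments estimate of a Negative Binomial dispersion parameter α. This assumes the parameterization variance = µ + αµ².

The function name and example counts are hypothetical.

```python
import statistics


def dispersion_summary(counts: list[int]) -> tuple[float, float]:
    """Return (index of dispersion, method-of-moments NB alpha) for spectral counts.

    Assumes the Negative Binomial parameterization Var = mu + alpha * mu**2,
    so alpha = (Var - mu) / mu**2. Under-dispersed data are clamped to alpha = 0.
    """
    if len(counts) < 2:
        raise ValueError("need at least two counts to estimate a variance")
    mean = statistics.mean(counts)
    if mean == 0:
        raise ValueError("dispersion is undefined when all counts are zero")
    variance = statistics.variance(counts)  # sample variance (n - 1 denominator)
    index = variance / mean                 # close to 1 for Poisson-distributed counts
    alpha = max(0.0, (variance - mean) / mean ** 2)
    return index, alpha


# Hypothetical spectral counts for one prey across four purifications.
index, alpha = dispersion_summary([0, 10, 0, 10])
print(f"variance/mean = {index:.2f}, alpha = {alpha:.2f}")
```

For these example counts the ratio is about 6.7, far above 1, which is the kind of pattern that motivates a Negative Binomial model.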

Using Bayes' theorem, SAINT calculates the posterior probability of a true interaction, which is the SAINT score, P(T|X):[2]

P(T|X) = [P(X|T) * P(T)] / [P(X|T) * P(T) + P(X|F) * P(F)]

Where:

- P(T|X) is the posterior probability of a true interaction given the observed spectral count X (the SAINT score).
- P(X|T) and P(X|F) are the probabilities of observing the spectral count X under the true and false interaction models, respectively.
- P(T) is the prior probability of a true interaction.
- P(F) is the prior probability of a false interaction, which is 1 - P(T).

The parameters for the true and false distributions are estimated from the entire dataset, often incorporating information from negative control experiments.[3][4]
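The posterior formula can be evaluated directly once the likelihoods and the prior are known. This sketch makes an illustrative assumption: both the true and false components are Poisson with fixed, already-estimated means. The parameter values (`lam_true`, `lam_false`, `prior_true`) are hypothetical. SAINT implementations estimate such parameters from the data rather than taking them as inputs. The computation is done in log space so that extreme counts do not underflow.

```python
import math


def poisson_logpmf(k: int, lam: float) -> float:
    """log P(X = k) for a Poisson distribution with mean lam (> 0)."""
    return k * math.log(lam) - lam - math.lgamma(k + 1)


def posterior_true(x: int, lam_true: float, lam_false: float, prior_true: float) -> float:
    """P(T | X = x) from Bayes' theorem with Poisson likelihoods for T and F."""
    log_joint_true = math.log(prior_true) + poisson_logpmf(x, lam_true)            # log P(X|T)P(T)
    log_joint_false = math.log(1.0 - prior_true) + poisson_logpmf(x, lam_false)    # log P(X|F)P(F)
    # P(T|X) = 1 / (1 + exp(log_joint_false - log_joint_true)), evaluated stably.
    d = log_joint_false - log_joint_true
    if d > 0:
        e = math.exp(-d)
        return e / (1.0 + e)
    return 1.0 / (1.0 + math.exp(d))


# Hypothetical parameters: true interactors average 12 spectra, background 1,
# and 10% of candidate pairs are assumed to be true a priori.
for count in (0, 2, 5, 10):
    print(count, round(posterior_true(count, 12.0, 1.0, 0.1), 4))
```

Counts near the background mean give posteriors near zero, and counts near the true-interaction mean approach one. The prior P(T) shifts where that transition happens.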

Experimental Protocol: Affinity Purification-Mass Spectrometry (AP-MS)

A robust SAINT analysis begins with a well-designed and meticulously executed AP-MS experiment. The goal is to isolate the bait protein and its interacting partners from a complex cellular lysate.

Key Methodologies

- Bait Protein Expression and Tagging:
  - The bait protein is typically fused with an epitope tag (e.g., FLAG, HA, GFP) to facilitate its specific capture.
  - Expression levels of the bait protein should be near-physiological to minimize non-specific interactions that can arise from overexpression.[7]

- Cell Lysis:
  - Cells expressing the tagged bait protein are harvested and lysed under non-denaturing conditions to preserve protein complexes.
  - Lysis buffers should contain protease and phosphatase inhibitors to prevent protein degradation and loss of phosphorylation.

- Immunoprecipitation (IP):
  - The cell lysate is incubated with beads coated with an antibody that specifically recognizes the epitope tag on the bait protein. This captures the bait protein together with its associated interactors.
  - Incubation is typically performed at 4°C for 1-4 hours with gentle rotation.

- Washing:
  - The beads are washed multiple times with a wash buffer to remove non-specifically bound proteins. The stringency of the washes (e.g., salt and detergent concentrations) is a critical parameter that needs to be optimized to reduce background without disrupting true interactions.[1]

- Elution:
  - The bait protein and its interacting partners are eluted from the beads using one of several methods:
    - Acidic Elution: A low-pH buffer (e.g., 0.1 M glycine, pH 2.5-3.0).
    - Denaturing Elution: Boiling the beads in SDS-PAGE sample buffer (e.g., Laemmli buffer). This is a harsh method that disrupts protein complexes.[8]
    - Competitive Elution: A high concentration of the epitope tag peptide, which competes with the tagged bait for binding to the antibody.
    - Detergent-based "Soft" Elution: A buffer containing a low concentration of SDS and a non-ionic detergent (e.g., 0.2% SDS, 0.1% Tween-20) can effectively elute the complex while leaving a significant portion of the antibody on the beads.[9]

- Protein Digestion and Mass Spectrometry:
  - The eluted proteins are typically separated by SDS-PAGE, and the gel lane is excised and cut into slices. The proteins within each slice are then subjected to in-gel digestion with a protease, most commonly trypsin.[1]
  - The resulting peptide mixture is analyzed by liquid chromatography-tandem mass spectrometry (LC-MS/MS).[2] The mass spectrometer measures the mass-to-charge ratios of the peptides and fragments them to determine their amino acid sequences.[2]

- Protein Identification and Quantification:
  - The acquired MS/MS spectra are searched against a protein sequence database to identify the proteins present in the sample.
  - Label-free quantification methods, such as spectral counting (the number of MS/MS spectra identified for a protein) or precursor ion intensity, are used to determine the relative abundance of each protein.
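Spectral counting, as described above, amounts to tallying identified MS/MS spectra per protein. This is a minimal sketch. It assumes the search results have already been reduced to (spectrum ID, protein) pairs, and this input format is hypothetical. Real pipelines must also decide how to credit peptides shared between proteins. This sketch simply counts a spectrum for every protein it is listed under.

```python
from collections import defaultdict


def spectral_counts(psms):
    """Count distinct identified MS/MS spectra per protein.

    psms: iterable of (spectrum_id, protein_accession) pairs, e.g. parsed
    from a search engine's peptide-spectrum match (PSM) report.
    A spectrum listed twice for the same protein is counted once.
    """
    spectra_by_protein = defaultdict(set)
    for spectrum_id, protein in psms:
        spectra_by_protein[protein].add(spectrum_id)
    return {protein: len(ids) for protein, ids in spectra_by_protein.items()}


# Hypothetical PSMs from one purification.
psms = [("scan_101", "PREY_A"), ("scan_102", "PREY_A"),
        ("scan_102", "PREY_A"), ("scan_250", "PREY_B")]
print(spectral_counts(psms))  # {'PREY_A': 2, 'PREY_B': 1}
```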

Data Presentation: SAINT Input and Output

SAINT analysis requires three specifically formatted tab-delimited input files. It is crucial that the identifiers for baits and preys are consistent across all three files.

SAINT Input Files

| File Name | Column 1 | Column 2 | Column 3 | Column 4 |
|---|---|---|---|---|
| interaction.dat | IP Name | Bait Name | Prey Name | Spectral Count/Intensity |
| prey.dat | Prey Name | Protein Length | Gene Name | |
| bait.dat | IP Name | Bait Name | Test (T) or Control (C) | |
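Mismatched identifiers between the three files are a common source of errors, so it can help to check them before running SAINT. The following is a hypothetical helper, not part of any SAINT distribution. It takes the rows of interaction.dat, prey.dat, and bait.dat as tuples in the column order of the table above. It reports identifiers that do not line up and serializes rows as tab-delimited text. Writing no header line is an assumption; check the documentation of the SAINT version you use.

```python
import csv
import io


def check_saint_inputs(interactions, preys, baits):
    """Return a list of human-readable problems (empty if the files agree).

    interactions: (ip_name, bait_name, prey_name, quantity) tuples
    preys:        (prey_name, protein_length, gene_name) tuples
    baits:        (ip_name, bait_name, "T" or "C") tuples
    """
    problems = []
    bait_by_ip = {ip: bait for ip, bait, _ in baits}
    known_preys = {prey for prey, _, _ in preys}
    for ip, _, kind in baits:
        if kind not in ("T", "C"):
            problems.append(f"bait.dat: IP {ip} has type {kind!r}, expected 'T' or 'C'")
    for ip, bait, prey, _ in interactions:
        if ip not in bait_by_ip:
            problems.append(f"interaction.dat: IP {ip} is missing from bait.dat")
        elif bait_by_ip[ip] != bait:
            problems.append(f"interaction.dat: IP {ip} lists bait {bait}, "
                            f"but bait.dat lists {bait_by_ip[ip]}")
        if prey not in known_preys:
            problems.append(f"interaction.dat: prey {prey} is missing from prey.dat")
    return problems


def to_tsv(rows):
    """Serialize rows as tab-delimited text with no header line."""
    buffer = io.StringIO()
    csv.writer(buffer, delimiter="\t", lineterminator="\n").writerows(rows)
    return buffer.getvalue()


# Hypothetical toy dataset: one test IP and one control IP.
baits = [("IP1", "BAIT_X", "T"), ("CTRL1", "CTRL", "C")]
preys = [("PREY_A", 350, "GENEA"), ("PREY_B", 1200, "GENEB")]
interactions = [("IP1", "BAIT_X", "PREY_A", 12), ("CTRL1", "CTRL", "PREY_B", 3)]

print(check_saint_inputs(interactions, preys, baits))  # []
print(to_tsv(baits), end="")
```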

Interpreting SAINT Output

The primary output of a SAINT analysis is a list of all potential bait-prey interactions with their corresponding scores. This allows for the ranking of interactions by confidence.

| Column Header | Description | Interpretation |
|---|---|---|
| Bait | The identifier for the bait protein. | |
| Prey | The identifier for the prey protein. | |
| PreyGene | The gene name of the prey protein. | |
| Spec | The spectral counts of the prey in the individual purifications of the bait. | A raw measure of abundance. |
| SpecSum | The sum of spectral counts for the prey across all purifications of the bait. | A measure of total abundance for the interaction. |
| AvgSpec | The average spectral count of the prey across all purifications of the bait. | A replicate-averaged measure of abundance. |
| NumReplicates | The number of replicate purifications in which the interaction was observed. | Indicates the reproducibility of the interaction. |
| ctrlCounts | The spectral counts of the prey in the control purifications. | Used to assess background binding. |
| FoldChange | The ratio of the average spectral count in the bait purifications to the average in the control purifications. | A measure of enrichment. |
| iProb | The individual probability score for the interaction in each replicate. | |
| AvgP | The average probability score for the interaction across all replicates.[10] | The primary SAINT score, indicating the overall confidence in the interaction. A score closer to 1 signifies a higher probability of a true interaction. |
| MaxP | The maximum probability score for the interaction from any single replicate.[10] | Useful for identifying strong but potentially less consistently observed interactions. |
| TopoAvgP | A topology-aware probability score that incorporates information about known interactions between prey proteins. | Can help identify members of a protein complex. |
| SaintScore | The final confidence score, often the maximum of AvgP and TopoAvgP. | A composite score for ranking interactions. |
| BFDR | Bayesian False Discovery Rate: an estimate of the false discovery rate among interactions at or above the given SaintScore. | Helps in setting a threshold for high-confidence interactions. |
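Several of these columns are simple summaries that can be recomputed from per-replicate values. The following sketch covers AvgP, MaxP, and FoldChange, plus a Bayesian FDR. The Bayesian FDR is computed as the average of (1 - probability) over all interactions scoring at or above a threshold. This is a simplified illustration with hypothetical inputs, not SAINT's exact code. The FoldChange pseudocount in particular is an assumption, and SAINT versions differ in details such as control normalization.

```python
def summarize_replicates(iprobs, bait_counts, control_counts, pseudocount=0.1):
    """Return (AvgP, MaxP, FoldChange) for one bait-prey pair.

    iprobs:         per-replicate probabilities (the iProb values)
    bait_counts:    prey spectral counts in each bait purification
    control_counts: prey spectral counts in each control purification
    pseudocount:    hypothetical value added to the control mean so the ratio
                    stays finite when the prey never appears in controls
    """
    avg_p = sum(iprobs) / len(iprobs)
    max_p = max(iprobs)
    avg_spec = sum(bait_counts) / len(bait_counts)
    avg_ctrl = sum(control_counts) / len(control_counts)
    fold_change = avg_spec / (avg_ctrl + pseudocount)
    return avg_p, max_p, fold_change


def bayesian_fdr(scores, threshold):
    """Expected fraction of false interactions among those with score >= threshold."""
    selected = [s for s in scores if s >= threshold]
    if not selected:
        return 0.0
    return sum(1.0 - s for s in selected) / len(selected)


avg_p, max_p, fc = summarize_replicates([0.97, 0.99, 0.95], [12, 15, 10], [0, 1, 0])
scores = [1.00, 0.99, 0.98, 0.50, 0.10]
print(round(avg_p, 3), max_p, round(fc, 1))
print(round(bayesian_fdr(scores, 0.95), 3))  # 0.01
```

Raising the threshold keeps only interactions whose probabilities are close to 1, so the estimated BFDR falls. This is why BFDR is convenient for choosing a cutoff.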

Visualizations

AP-MS Experimental Workflow

References

- 1. wp.unil.ch [wp.unil.ch]

- 2. Mass spectrometry‐based protein–protein interaction networks for the study of human diseases - PMC [pmc.ncbi.nlm.nih.gov]

- 3. SAINT: Probabilistic Scoring of Affinity Purification - Mass Spectrometry Data - PMC [pmc.ncbi.nlm.nih.gov]

- 4. genepath.med.harvard.edu [genepath.med.harvard.edu]

- 5. researchgate.net [researchgate.net]

- 6. SAINT-MS1: protein-protein interaction scoring using label-free intensity data in affinity purification – mass spectrometry experiments - PMC [pmc.ncbi.nlm.nih.gov]

- 7. fiveable.me [fiveable.me]

- 8. Immunoprecipitation (IP) and co-immunoprecipitation protocol | Abcam [abcam.com]

- 9. Improved Elution Conditions for Native Co-Immunoprecipitation - PMC [pmc.ncbi.nlm.nih.gov]

- 10. reprint-apms.org [reprint-apms.org]

An In-Depth Technical Guide to SAINT for Protein Interaction Analysis


This guide provides a comprehensive technical overview of the Significance Analysis of INTeractome (SAINT) algorithm, a powerful statistical tool for analyzing protein-protein interaction (PPI) data derived from affinity purification-mass spectrometry (AP-MS) experiments. It details the core principles of SAINT and the experimental protocols for generating high-quality input data. It also presents quantitative data from a landmark study and provides visualizations of experimental workflows and of a signaling pathway elucidated using this methodology.

Introduction to SAINT: A Probabilistic Approach to Scoring Protein Interactions

SAINT is a computational tool developed to assign confidence scores to PPIs identified through AP-MS experiments.[1] AP-MS is a widely used technique to isolate a protein of interest (the "bait") along with its interacting partners (the "prey") from a complex mixture of cellular proteins.[2] However, a significant challenge in AP-MS is distinguishing bona fide interactors from non-specific background proteins that co-purify with the bait.[1]

SAINT addresses this challenge by applying a probabilistic scoring model to quantitative data from AP-MS experiments, such as spectral counts or peptide/protein intensities.[1] The algorithm models the distributions of true and false interactions separately to calculate the probability of a genuine interaction between a bait and a prey protein.[1] This statistical rigor allows for a more objective and reproducible analysis than arbitrary filtering methods.

Several versions of the SAINT algorithm have been developed to accommodate different types of quantitative data and experimental designs:

- SAINT: The original version, often used for spectral count data.[1]
- SAINT-MS1: An adaptation for label-free MS1 intensity data.[2]
- SAINTexpress: A faster version that is particularly effective when good negative controls are available.
- SAINTq: Designed for fragment or peptide intensity data, often from data-independent acquisition (DIA) mass spectrometry.

A key feature of SAINT is its ability to incorporate data from negative control purifications, which are experiments performed without the bait protein or with an unrelated control protein.[3] This allows SAINT to build a more accurate model of the background noise and improve the discrimination of true interactors.[3]

Experimental Protocols: Affinity Purification-Mass Spectrometry (AP-MS)

The quality of SAINT analysis is highly dependent on the quality of the input AP-MS data. A well-designed and executed AP-MS experiment is crucial for obtaining reliable results. The following is a detailed, step-by-step protocol for a typical AP-MS experiment.

- Cell Culture and Harvest: Culture cells expressing the epitope-tagged bait protein to a sufficient density. Harvest the cells by centrifugation and wash them with ice-cold phosphate-buffered saline (PBS).

- Lysis Buffer Preparation: Prepare a lysis buffer that maintains protein-protein interactions. A common lysis buffer contains:
  - 50 mM Tris-HCl, pH 7.4
  - 150 mM NaCl
  - 1 mM EDTA
  - 1% Triton X-100 or 0.5% NP-40
  - Protease and phosphatase inhibitor cocktails (added fresh)

- Cell Lysis: Resuspend the cell pellet in the lysis buffer and incubate on ice for 30 minutes with occasional vortexing to ensure complete lysis.
- Clarification of Lysate: Centrifuge the lysate at high speed (e.g., 14,000 x g) for 15 minutes at 4°C to pellet cellular debris. The supernatant containing the soluble proteins is the clarified lysate.
- Antibody-Bead Conjugation: Couple an antibody specific to the epitope tag on the bait protein to agarose or magnetic beads. One option is to bind it to Protein A/G beads and, optionally, crosslink it covalently. Alternatively, use commercially available pre-conjugated beads.
- Incubation: Add the antibody-conjugated beads to the clarified cell lysate and incubate for 2-4 hours or overnight at 4°C with gentle rotation to allow the antibody-bait-prey complexes to form.
- Washing: Pellet the beads by centrifugation or with a magnetic rack and discard the supernatant. Wash the beads extensively with lysis buffer (typically 3-5 times) to remove non-specifically bound proteins.

- Elution: Elute the bound proteins from the beads, or process them directly on the beads, using one of the following approaches:
  - Competitive Elution: Using a peptide that corresponds to the epitope tag.
  - pH Elution: Using a low-pH buffer (e.g., 0.1 M glycine, pH 2.5).
  - On-Bead Digestion: Digesting the proteins directly while they are still bound to the beads.

- Reduction and Alkylation: Reduce the disulfide bonds in the eluted proteins using a reducing agent such as dithiothreitol (DTT) at 56°C for 1 hour. Alkylate the free cysteine residues with iodoacetamide at room temperature in the dark for 45 minutes to prevent the disulfide bonds from re-forming.
- In-Solution or In-Gel Digestion:
  - In-Solution: Dilute the protein sample to reduce the concentration of denaturants and add a protease, typically trypsin, at a 1:50 to 1:100 enzyme-to-protein ratio. Incubate overnight at 37°C.
  - In-Gel: Run the eluted proteins on an SDS-PAGE gel. Excise the protein bands, destain, and perform in-gel digestion with trypsin.

- Peptide Desalting: Desalt the digested peptide mixture using a C18 StageTip or a similar reverse-phase chromatography medium to remove salts and detergents that can interfere with mass spectrometry.
- LC Separation: Load the desalted peptides onto a reverse-phase analytical column connected to a high-performance liquid chromatography (HPLC) system. Separate the peptides using a gradient of increasing organic solvent (e.g., acetonitrile) concentration.
- Mass Spectrometry: As the peptides elute from the LC column, they are ionized (typically by electrospray ionization) and introduced into a tandem mass spectrometer.
  - MS1 Scan: The mass spectrometer performs a full scan to determine the mass-to-charge ratio (m/z) of the intact peptide ions.
  - MS2 Scan (Tandem MS): The most abundant peptide ions from the MS1 scan are selected for fragmentation (e.g., by collision-induced dissociation), and the m/z values of the resulting fragment ions are measured.
- Data Acquisition: The mass spectrometer cycles through MS1 and MS2 scans to acquire data for a large number of peptides in the sample.
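The MS1/MS2 cycle described in these steps is commonly run in a data-dependent "top-N" mode. The following sketch shows only the precursor-selection logic for a single cycle. It assumes an MS1 scan represented as hypothetical (m/z, intensity) pairs and a chosen N. Real acquisition methods apply additional selection rules that are omitted here.

```python
def select_top_n(ms1_peaks, n):
    """Return the m/z values of the n most intense precursor ions.

    ms1_peaks: list of (mz, intensity) pairs from one MS1 survey scan.
    The returned order is the order in which MS2 scans would be triggered.
    """
    ranked = sorted(ms1_peaks, key=lambda peak: peak[1], reverse=True)
    return [mz for mz, _ in ranked[:n]]


def acquisition_cycle(ms1_peaks, n):
    """List the scan events of one cycle: one MS1 scan followed by up to n MS2 scans."""
    events = [("MS1", None)]
    events += [("MS2", mz) for mz in select_top_n(ms1_peaks, n)]
    return events


survey = [(400.21, 1.2e5), (512.77, 5.0e6), (650.33, 2.1e6), (720.40, 3.0e4)]
print(acquisition_cycle(survey, n=2))
# [('MS1', None), ('MS2', 512.77), ('MS2', 650.33)]
```

Because only the most intense ions are fragmented in each cycle, abundant proteins accumulate more identified spectra. This is the property that spectral counting exploits.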

Data Presentation: Quantitative Analysis of the Human Deubiquitinating Enzyme (DUB) Interactome

To illustrate the kind of output an interaction-scoring analysis produces, the following table summarizes a subset of the high-confidence interactions from the study by Sowa et al. (2009), which mapped the interactome of human deubiquitinating enzymes. That study scored interactions with CompPASS, a comparative scoring method, rather than with SAINT. The table includes the bait DUB, the interacting prey protein, the spectral counts observed in the AP-MS experiments, and a confidence score.

| Bait Protein | Prey Protein | Spectral Count (Replicate 1) | Spectral Count (Replicate 2) | Confidence Score |
|---|---|---|---|---|
| USP7 | GMPS | 15 | 12 | 0.98 |
| USP7 | UHRF1 | 10 | 8 | 0.95 |
| USP9X | MARK4 | 25 | 21 | 0.99 |
| USP9X | AFDN | 18 | 15 | 0.97 |
| USP11 | ZNF278 | 30 | 28 | 1.00 |
| USP11 | DDX39A | 22 | 19 | 0.98 |
| ATXN3 | RAD23B | 45 | 41 | 1.00 |
| ATXN3 | UBQLN1 | 38 | 35 | 0.99 |

Note: The confidence scores are illustrative and based on the high-confidence interactions reported in the original publication. The Sowa et al. study scored interactions with the CompPASS system. Like SAINT, CompPASS assesses each bait-prey pair against data from many other purifications, but it does not compute a posterior probability of interaction.

Visualization

This section provides diagrams created using the Graphviz DOT language, illustrating key workflows and a signaling pathway relevant to SAINT analysis.

Caption: A high-level overview of the Affinity Purification-Mass Spectrometry (AP-MS) experimental workflow.

Caption: The logical flow of the SAINT algorithm for scoring protein-protein interactions.

The following diagram illustrates a portion of the Drosophila Insulin/TOR signaling pathway, with high-confidence protein-protein interactions identified through quantitative AP-MS and analyzed with a SAINT-like statistical framework. This network is based on the findings of Glatter et al. (2011).

Caption: A simplified network of the Drosophila Insulin/TOR signaling pathway based on AP-MS data.

Conclusion

SAINT and its derivatives have become indispensable tools for the analysis of protein-protein interaction data from AP-MS experiments. By providing a robust statistical framework for assigning confidence scores to interactions, SAINT enables researchers to more reliably identify true biological interactions from a background of non-specific binders. The combination of meticulous experimental design and sophisticated computational analysis, as exemplified by the SAINT workflow, is crucial for unraveling the complex protein interaction networks that underpin cellular processes and disease. This guide provides the foundational knowledge for researchers and drug development professionals to effectively utilize and interpret the results from SAINT-based proteomics studies.


Unveiling Protein Alliances: A Technical Guide to the SAINT Algorithm

The Significance Analysis of INTeractome (SAINT) algorithm is a cornerstone in the field of proteomics, providing a robust statistical framework to assign confidence scores to protein-protein interactions (PPIs) identified through affinity purification-mass spectrometry (AP-MS) experiments. For researchers, scientists, and drug development professionals, SAINT is an indispensable tool for distinguishing bona fide biological interactions from the background of non-specific binders inherent in AP-MS data. This in-depth technical guide elucidates the core mechanics of the SAINT algorithm, from the underlying statistical models to practical data formatting and experimental considerations.

Core Principles of the SAINT Algorithm

At its heart, SAINT is a computational method that calculates the probability of a true interaction between a "bait" protein and its co-purified "prey" proteins.[1][2][3] It leverages quantitative data from label-free AP-MS experiments, such as spectral counts or peptide intensities, to model the distributions of true and false interactions separately.[1][4] This probabilistic approach provides a more nuanced and statistically grounded assessment of interaction confidence compared to arbitrary fold-change cutoffs.

The fundamental premise is that, for any given bait-prey pair, the observed quantitative measurement arises from one of two distinct sources: a genuine biological interaction or a non-specific background association. The measurements across all pairs therefore form a mixture of these two populations.[3] By statistically modeling the two populations, SAINT can calculate the posterior probability of a true interaction for each observed bait-prey pair.[3]

Several versions of the SAINT algorithm have been developed to cater to different data types and experimental designs, including the original SAINT, SAINT-MS1 for intensity data, and the faster SAINTexpress.[5]

The Statistical Foundation of SAINT

SAINT employs a Bayesian framework to model the quantitative data from AP-MS experiments.[2] The choice of statistical distribution depends on the nature of the quantitative data.

Modeling Spectral Count Data

For spectral count data, which are discrete counts, SAINT typically uses either the Poisson distribution or the Negative Binomial distribution.

- Poisson Distribution: This distribution is suitable when the mean and variance of the spectral counts are approximately equal. The probability mass function (PMF) for a Poisson distribution is given by:

  P(k; λ) = (λ^k * e^(-λ)) / k!

  Here, k is the observed spectral count and λ is the expected (mean) spectral count. In the SAINT model, separate Poisson distributions are fitted for true interactions (with mean λ_true) and false interactions (with mean λ_false).
- Negative Binomial Distribution: AP-MS data often exhibit overdispersion, where the variance in spectral counts is greater than the mean. In such cases, the Negative Binomial distribution provides a more appropriate model. The variance of the Negative Binomial distribution is a function of its mean (µ) and a dispersion parameter (α), given by µ + αµ². A smaller α indicates that the distribution is closer to a Poisson distribution.
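Both count models can be written down in a few lines. The following sketch evaluates both probability mass functions in log space. It uses the mean/dispersion parameterization given above, converting (µ, α) to the standard size and probability parameters r = 1/α and p = r/(r + µ). The function names and example values are illustrative assumptions.

```python
import math


def poisson_pmf(k, lam):
    """P(X = k) for a Poisson distribution with mean lam (> 0)."""
    return math.exp(k * math.log(lam) - lam - math.lgamma(k + 1))


def neg_binom_pmf(k, mu, alpha):
    """P(X = k) for a Negative Binomial with mean mu (> 0) and dispersion alpha (> 0).

    Uses size r = 1/alpha and success probability p = r / (r + mu),
    which gives mean mu and variance mu + alpha * mu**2.
    """
    r = 1.0 / alpha
    log_pmf = (math.lgamma(k + r) - math.lgamma(r) - math.lgamma(k + 1)
               - r * math.log1p(mu / r)          # r * log(p)
               + k * math.log(mu / (r + mu)))    # k * log(1 - p)
    return math.exp(log_pmf)


# Probability of observing 5 spectra when the mean is 4, for decreasing alpha.
for alpha in (1.0, 0.1, 0.001):
    print(alpha, round(neg_binom_pmf(5, 4.0, alpha), 4), round(poisson_pmf(5, 4.0), 4))
```

With α = 1, the probability of exactly 5 spectra is well below the Poisson value, because the extra variance spreads probability mass into the tails. As α shrinks, the two values converge.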

Bayesian Mixture Model

SAINT utilizes a mixture model to represent the bimodal distribution of true and false interactions. The algorithm estimates the parameters for these distributions (e.g., λ_true, λ_false) for each potential interaction by leveraging the entire dataset, including data from negative control purifications.[2] This global modeling approach enhances statistical power, particularly for datasets with a limited number of replicates.[6]

Using Bayes' theorem, the posterior probability of a true interaction, given the observed spectral count, is calculated. This posterior probability is the SAINT score.
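Estimating the mixture from the whole dataset can be illustrated by fitting a two-component Poisson mixture with expectation-maximization (EM). EM is a swapped-in, deliberately simple technique chosen for this sketch. It is not the estimation procedure used by SAINT or its variants, which rely on their own Bayesian models and on negative-control data where available. The starting values, iteration count, and example counts are hypothetical. The returned posteriors are the per-observation values of P(T|X) under the fitted mixture.

```python
import math


def poisson_logpmf(k, lam):
    """log P(X = k) for a Poisson distribution with mean lam (> 0)."""
    return k * math.log(lam) - lam - math.lgamma(k + 1)


def fit_poisson_mixture(counts, lam_true=10.0, lam_false=1.0, prior_true=0.5, iters=200):
    """Fit a two-component ("true" vs "false") Poisson mixture to counts by EM.

    Returns (lam_true, lam_false, prior_true, posteriors), where posteriors[i]
    is P(T | X = counts[i]) from the final E-step.
    """
    eps = 1e-9  # keeps rates positive and the prior strictly inside (0, 1)
    n = len(counts)
    posteriors = []
    for _ in range(iters):
        # E-step: posterior probability that each count came from the true component.
        posteriors = []
        for k in counts:
            log_t = math.log(prior_true) + poisson_logpmf(k, lam_true)
            log_f = math.log(1.0 - prior_true) + poisson_logpmf(k, lam_false)
            top = max(log_t, log_f)  # subtract the max before exponentiating
            w_t, w_f = math.exp(log_t - top), math.exp(log_f - top)
            posteriors.append(w_t / (w_t + w_f))
        # M-step: posterior-weighted means update the rates and the prior.
        weight_true = sum(posteriors)
        weight_false = n - weight_true
        lam_true = max(eps, sum(p * k for p, k in zip(posteriors, counts))
                       / max(weight_true, eps))
        lam_false = max(eps, sum((1.0 - p) * k for p, k in zip(posteriors, counts))
                        / max(weight_false, eps))
        prior_true = min(max(weight_true / n, eps), 1.0 - eps)
    return lam_true, lam_false, prior_true, posteriors


# Hypothetical spectral counts: mostly background, plus a few enriched preys.
counts = [0, 1, 0, 2, 1, 0, 1, 0, 12, 15, 11, 14, 13]
lam_t, lam_f, pi_t, post = fit_poisson_mixture(counts)
print(round(lam_t, 2), round(lam_f, 2), round(pi_t, 2))
```

Pooling all observations lets the background component be estimated from many low counts at once. This is the intuition behind the global modeling described above.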

Data Input Requirements for SAINT Analysis

A standard SAINT analysis requires three tab-delimited input files: the interaction file, the prey file, and the bait file.[7]

Data Presentation: Input File Structure

| File Name | Column 1 | Column 2 | Column 3 | Column 4 |
|---|---|---|---|---|
| interaction.dat | IP Name | Bait Name | Prey Name | Spectral Count/Intensity |
| prey.dat | Prey Name | Protein Length | Gene Name | |
| bait.dat | IP Name | Bait Name | Test (T) or Control (C) | |

Table 1: Required format for the three input files for SAINT and SAINTexpress.

Experimental Protocols: A Detailed AP-MS Methodology

The quality of the input data is paramount for a successful SAINT analysis. A well-designed and executed AP-MS experiment is crucial. The following is a detailed methodology for a typical AP-MS experiment aimed at generating data for SAINT analysis.

Bait Protein Expression and Cell Culture

- Cloning and Expression: The gene encoding the bait protein is cloned into an expression vector containing an affinity tag (e.g., FLAG, HA, GFP). This vector is then transfected into a suitable cell line (e.g., HEK293T, HeLa). Stable cell lines expressing the tagged bait protein are often generated to ensure consistent expression levels.
- Cell Culture: Cells are cultured in appropriate media and conditions to the desired confluence. For a typical experiment, cells are expanded to multiple 15-cm plates to ensure sufficient starting material.

Cell Lysis and Affinity Purification

- Cell Lysis: Cells are harvested and washed with ice-cold phosphate-buffered saline (PBS). The cell pellet is then resuspended in a non-denaturing lysis buffer (e.g., 50 mM Tris-HCl pH 7.4, 150 mM NaCl, 1 mM EDTA, 0.5% NP-40, and a cocktail of protease and phosphatase inhibitors). The lysate is incubated on ice and then clarified by centrifugation to remove cellular debris.
- Affinity Purification: The clarified lysate is incubated with beads conjugated to an antibody that specifically recognizes the affinity tag (e.g., anti-FLAG M2 affinity gel). This incubation is typically performed for 2-4 hours at 4°C with gentle rotation.

Washing and Elution

- Washing: The beads are washed extensively with the lysis buffer (or a wash buffer with slightly different salt concentrations) to remove non-specifically bound proteins. This is a critical step to reduce background contaminants. Typically, 3-5 washes are performed.
- Elution: The bait protein and its interacting partners are eluted from the beads. For FLAG-tagged proteins, this is often achieved by competitive elution with a solution of 3X FLAG peptide.

Sample Preparation for Mass Spectrometry

- Protein Digestion: The eluted protein complexes are denatured, reduced with DTT, and alkylated with iodoacetamide. The proteins are then digested into smaller peptides using a protease, most commonly trypsin.
- Desalting: The resulting peptide mixture is desalted using a C18 solid-phase extraction column to remove contaminants that could interfere with mass spectrometry analysis.

Mass Spectrometry Analysis

- LC-MS/MS: The peptide mixture is analyzed by liquid chromatography-tandem mass spectrometry (LC-MS/MS). The peptides are separated by reverse-phase liquid chromatography and then ionized and introduced into the mass spectrometer. The instrument measures the mass-to-charge ratio of the peptides (MS1 scan) and then fragments the most abundant peptides to determine their amino acid sequence (MS2 or MS/MS scan).

Data Processing and Protein Identification

- Database Searching: The acquired MS/MS spectra are searched against a protein sequence database (e.g., UniProt) using a search engine such as Sequest, Mascot, or Andromeda (the search engine integrated into MaxQuant).
- Protein Identification and Quantification: The search engine identifies the peptides and, by inference, the proteins present in the sample. It also provides quantitative information, such as spectral counts or peptide intensities, for each identified protein.

Interpreting SAINT Output

The primary output of a SAINT analysis is a comprehensive table that provides quantitative metrics for each potential protein-protein interaction. This structured format allows for easy comparison and prioritization of high-confidence interactors.

Data Presentation: Example SAINT Output

| Bait | Prey | Spec | FoldChange | SaintScore | BFDR |
|---|---|---|---|---|---|
| BCL2L1 | BID | 15 | 15.0 | 1.00 | 0.00 |
| BCL2L1 | BAD | 12 | 12.0 | 0.99 | 0.00 |
| BCL2L1 | BAX | 10 | 10.0 | 0.98 | 0.01 |
| BCL2L1 | HSP90AA1 | 50 | 2.5 | 0.50 | 0.15 |
| BCL2L1 | TUBA1A | 100 | 1.1 | 0.10 | 0.85 |

Table 2: A simplified, illustrative example of a SAINT output file. Spec refers to the spectral count in the bait purification. FoldChange is the enrichment over control purifications. SaintScore is the probability of a true interaction. BFDR is the Bayesian False Discovery Rate.
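Output like Table 2 is usually filtered with a BFDR cutoff before follow-up work. The following sketch applies a cutoff of 0.01 to the rows of Table 2 and ranks the surviving interactions by SaintScore. The cutoff is an illustrative choice, not a documented SAINT default. Each row is held in a hypothetical `SaintRow` record.

```python
from dataclasses import dataclass


@dataclass
class SaintRow:
    bait: str
    prey: str
    spec: int
    fold_change: float
    saint_score: float
    bfdr: float


# Rows copied from Table 2 (illustrative values).
rows = [
    SaintRow("BCL2L1", "BID", 15, 15.0, 1.00, 0.00),
    SaintRow("BCL2L1", "BAD", 12, 12.0, 0.99, 0.00),
    SaintRow("BCL2L1", "BAX", 10, 10.0, 0.98, 0.01),
    SaintRow("BCL2L1", "HSP90AA1", 50, 2.5, 0.50, 0.15),
    SaintRow("BCL2L1", "TUBA1A", 100, 1.1, 0.10, 0.85),
]


def high_confidence(rows, max_bfdr=0.01):
    """Keep rows at or below the BFDR cutoff, highest SaintScore first."""
    kept = [row for row in rows if row.bfdr <= max_bfdr]
    return sorted(kept, key=lambda row: row.saint_score, reverse=True)


print([row.prey for row in high_confidence(rows)])  # ['BID', 'BAD', 'BAX']
```

By spectral count, the two most abundant preys are HSP90AA1 and TUBA1A, yet both are excluded. This is consistent with their low FoldChange values: enrichment over controls, not raw abundance, separates them from the retained interactors.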

Visualizations

Experimental and Computational Workflow

A high-level workflow for an AP-MS experiment coupled with SAINT analysis.

Logical Flow of the SAINT Algorithm

The logical flow of the SAINT algorithm for scoring protein-protein interactions.

Example Signaling Pathway: Wnt Signaling

The Wnt signaling pathway is a crucial regulator of cell fate, proliferation, and migration. The following diagram illustrates how high-confidence interactions for key Wnt pathway components, as identified by SAINT, could be visualized to map out protein complexes and signaling cascades.

Hypothetical Wnt signaling pathway with SAINT-identified interactors.

References

- 1. Analyzing protein-protein interactions from affinity purification-mass spectrometry data with SAINT - PMC [pmc.ncbi.nlm.nih.gov]

- 2. reprint-apms.org [reprint-apms.org]

- 3. genepath.med.harvard.edu [genepath.med.harvard.edu]

- 4. saint-apms.sourceforge.net [saint-apms.sourceforge.net]

- 5. SAINT-MS1: protein-protein interaction scoring using label-free intensity data in affinity purification – mass spectrometry experiments - PMC [pmc.ncbi.nlm.nih.gov]

- 6. Integrated analysis of the Wnt responsive proteome in human cells reveals diverse and cell-type specific networks - PMC [pmc.ncbi.nlm.nih.gov]

- 7. raw.githubusercontent.com [raw.githubusercontent.com]

Principles of Significance Analysis of INTeractome (SAINT): A Technical Guide

Authored for Researchers, Scientists, and Drug Development Professionals

Introduction

In the landscape of proteomics and systems biology, understanding the intricate web of protein-protein interactions (PPIs) is paramount to elucidating cellular function, disease mechanisms, and identifying novel therapeutic targets. Affinity Purification coupled with Mass Spectrometry (AP-MS) has emerged as a powerful technique for identifying putative protein interactions. However, a significant challenge in AP-MS is distinguishing bona fide interactors from non-specific background contaminants. The Significance Analysis of INTeractome (SAINT) is a computational tool developed to address this challenge by assigning a probability score to each potential PPI, thereby enabling a more rigorous and objective assessment of interaction data.[1][2][3] This in-depth technical guide provides a comprehensive overview of the core principles of SAINT, detailed experimental protocols, and the interpretation of its quantitative outputs.

Core Principles of SAINT

SAINT is a statistical method that analyzes quantitative data from AP-MS experiments to differentiate true interactions from noise.[2][3] Its fundamental principle is to model the distribution of prey abundance (e.g., spectral counts) separately for true and false interactions.[3] It then calculates the posterior probability of a true interaction for each bait-prey pair.[3]

The algorithm leverages quantitative information, such as spectral counts or peptide intensities, derived from label-free quantification.[1] By comparing the abundance of a prey protein in purifications with a specific "bait" protein against its abundance in negative control purifications, SAINT can statistically assess the likelihood of a genuine interaction.

Several versions of the SAINT software have been developed, each with specific features:

- SAINT: The original implementation, which laid the groundwork for probabilistic scoring.[3]

- SAINTexpress: An improved and faster version with a simpler statistical model, making it a popular choice for many researchers.[4]

- SAINT-MS1: An extension of SAINT tailored for analyzing MS1 intensity data.[5]

- SAINTq: A version designed to handle peptide- or fragment-level intensity data, particularly from Data-Independent Acquisition (DIA) workflows.[6]

The core output of a SAINT analysis is a list of putative PPIs, each assigned several scores to help researchers prioritize high-confidence interactions for further investigation.

Experimental Protocols

A robust SAINT analysis is predicated on a well-designed and executed AP-MS experiment. The following is a detailed methodology for a typical AP-MS experiment geared for SAINT analysis.

Bait Protein Expression and Cell Culture

- Vector Construction: The gene encoding the "bait" protein of interest is cloned into an expression vector containing an affinity tag (e.g., FLAG, HA, Strep-tag II, GFP).

- Cell Line Transfection/Transduction: The expression vector is introduced into a suitable cell line (e.g., HEK293T, HeLa) using standard transfection or viral transduction methods. Stable cell lines expressing the tagged bait protein are often preferred for consistency.

- Control Samples: It is crucial to generate appropriate negative control samples. A common control is a cell line expressing the affinity tag alone.

- Cell Culture and Expansion: The bait-expressing and control cell lines are cultured and expanded to generate sufficient biomass for affinity purification.

Cell Lysis and Lysate Preparation

- Cell Harvest: Cells are harvested from culture plates, typically by scraping, and washed with ice-cold phosphate-buffered saline (PBS).

- Lysis Buffer: Cells are lysed in a non-denaturing lysis buffer to preserve protein complexes. A typical lysis buffer contains:

  - 50 mM Tris-HCl, pH 7.4
  - 150 mM NaCl
  - 1 mM EDTA
  - 1% Triton X-100 or 0.5% NP-40
  - Protease and phosphatase inhibitor cocktails (added fresh)

- Lysis Procedure: The cell pellet is resuspended in lysis buffer and incubated on ice with occasional vortexing. The lysate is then centrifuged at high speed (e.g., 14,000 × g) to pellet cell debris, and the supernatant (clarified lysate) is collected.[7]
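Preparing the buffer above from concentrated stocks is a C1V1 = C2V2 dilution calculation. The sketch below assumes hypothetical stock concentrations (1 M Tris-HCl pH 7.4, 5 M NaCl, 0.5 M EDTA, 10% v/v Triton X-100) and a 50 mL final volume; substitute your own stocks. Inhibitor cocktails are omitted because they are added fresh just before use.

```python
# Minimal C1V1 = C2V2 sketch for the lysis buffer listed above.
# Stock concentrations are hypothetical; each stock/final pair must share a unit.
LYSIS_BUFFER = [
    # (component, stock concentration, final concentration)
    ("Tris-HCl pH 7.4 (mM)", 1000.0, 50.0),
    ("NaCl (mM)", 5000.0, 150.0),
    ("EDTA (mM)", 500.0, 1.0),
    ("Triton X-100 (% v/v)", 10.0, 1.0),
]


def stock_volumes(recipe, final_volume_ml):
    """Return the volume (mL) of each stock plus the water needed to reach final_volume_ml."""
    volumes = {}
    for name, stock, final in recipe:
        if final > stock:
            raise ValueError(f"{name}: final concentration exceeds stock concentration")
        volumes[name] = final * final_volume_ml / stock  # V1 = C2 * V2 / C1
    volumes["water"] = final_volume_ml - sum(volumes.values())
    return volumes


if __name__ == "__main__":
    for component, ml in stock_volumes(LYSIS_BUFFER, 50.0).items():
        print(f"{component:<22} {ml:6.2f} mL")
```

Keeping each stock and final concentration in the same unit avoids unit-conversion errors; the function simply scales the final volume by the dilution factor for each component.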

Affinity Purification

- Bead Preparation: Agarose or magnetic beads conjugated with an antibody or affinity resin that specifically recognizes the affinity tag (e.g., anti-FLAG agarose) are washed and equilibrated with lysis buffer.

- Immunoprecipitation: The clarified cell lysate is incubated with the prepared beads to allow the bait protein and its interacting partners to bind to the beads. This incubation is typically performed for several hours to overnight at 4°C with gentle rotation.[8]

- Washing: The beads are extensively washed with lysis buffer (or a wash buffer with slightly different salt and detergent concentrations) to remove non-specifically bound proteins. This is a critical step to reduce background contamination.[9]

Elution and Sample Preparation for Mass Spectrometry

- Elution: The bound protein complexes are eluted from the beads. Common elution methods include:

  - Competitive Elution: Using a peptide that competes with the affinity tag for binding to the beads (e.g., 3xFLAG peptide for FLAG-tagged proteins). This is a gentle elution method.
  - pH Elution: Using a low-pH buffer (e.g., glycine-HCl, pH 2.5) to disrupt the antibody-antigen interaction, after which the pH is neutralized.

- Protein Digestion: The eluted protein sample is denatured, reduced, and alkylated. The proteins are then digested into peptides using a protease, most commonly trypsin.
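Trypsin cleaves C-terminal to lysine (K) and arginine (R), generally not when the next residue is proline. A minimal in-silico digest under that simplified rule (no missed cleavages; the example sequence is hypothetical) looks like this:

```python
import re

# Zero-width split point: immediately after K or R, unless the next residue is P.
TRYPSIN_SITE = re.compile(r"(?<=[KR])(?!P)")


def tryptic_digest(sequence: str, min_length: int = 1) -> list[str]:
    """Fully cleaved tryptic peptides (no missed cleavages) of a protein sequence."""
    peptides = TRYPSIN_SITE.split(sequence.upper())
    # A C-terminal K/R leaves an empty trailing string; the length filter drops it.
    return [p for p in peptides if len(p) >= min_length]


if __name__ == "__main__":
    print(tryptic_digest("MAKPLRGESKAR"))
```

Real search engines also allow a configurable number of missed cleavages and a minimum peptide length; the `min_length` parameter covers only the latter.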

LC-MS/MS Analysis

- Liquid Chromatography (LC): The peptide mixture is separated by reverse-phase liquid chromatography, which resolves peptides by hydrophobicity.

- Tandem Mass Spectrometry (MS/MS): The separated peptides are ionized and analyzed in a tandem mass spectrometer. In data-dependent acquisition, the instrument first measures the mass-to-charge ratio (m/z) of the intact peptide ions (MS1 scan), then selects the most abundant precursor ions for fragmentation and measures the resulting fragment ions (MS2 scan).
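The m/z recorded in the MS1 scan follows directly from a peptide's composition and charge state: m/z = (M + z × 1.007276) / z, where M is the neutral monoisotopic mass. The sketch below assumes carbamidomethylated cysteines (the usual result of iodoacetamide alkylation) as the only modification; the peptide "GESK" is a hypothetical example.

```python
# Monoisotopic residue masses (Da) of the 20 standard amino acids.
RESIDUE_MASS = {
    "G": 57.02146, "A": 71.03711, "S": 87.03203, "P": 97.05276, "V": 99.06841,
    "T": 101.04768, "C": 103.00919, "L": 113.08406, "I": 113.08406, "N": 114.04293,
    "D": 115.02694, "Q": 128.05858, "K": 128.09496, "E": 129.04259, "M": 131.04049,
    "H": 137.05891, "F": 147.06841, "R": 156.10111, "Y": 163.06333, "W": 186.07931,
}
WATER = 18.010565            # added once per peptide (free N- and C-termini)
PROTON = 1.007276            # mass of a proton
CARBAMIDOMETHYL = 57.021464  # assumed fixed modification on Cys after IAA alkylation


def precursor_mz(peptide: str, charge: int, alkylated_cys: bool = True) -> float:
    """m/z of the [M + zH]z+ precursor ion of a peptide."""
    if charge < 1:
        raise ValueError("charge must be a positive integer")
    mass = WATER + sum(RESIDUE_MASS[aa] for aa in peptide)
    if alkylated_cys:
        mass += CARBAMIDOMETHYL * peptide.count("C")
    return (mass + charge * PROTON) / charge


if __name__ == "__main__":
    for z in (1, 2):
        print(z, round(precursor_mz("GESK", z), 4))
```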

Data Processing and Quantification

- Database Searching: The acquired MS/MS spectra are searched against a protein sequence database (e.g., UniProt, RefSeq) using a search engine such as Mascot, SEQUEST, or Andromeda (integrated in MaxQuant) to identify peptides and, from them, proteins.[1]

- Label-Free Quantification: The abundance of each identified protein is quantified. The two most common methods are:

  - Spectral Counting: The number of MS/MS spectra identified for a given protein is used as a proxy for its abundance.
  - Peptide Intensity: The area under the peptide's chromatographic elution peak, reconstructed from the MS1 scans, is used as a measure of its abundance.

- Data Formatting for SAINT: The quantitative data are then formatted into three tab-delimited input files for SAINTexpress: interaction.txt, prey.txt, and bait.txt.[2][10]
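Spectral counting amounts to tallying identified MS/MS spectra per prey and per purification. The sketch below assumes the search-engine output has already been reduced to a hypothetical list of (IP name, prey) pairs, one per confidently identified spectrum, and that the mapping from IP name to bait is known; it emits rows in the interaction-file column order.

```python
from collections import Counter

# Hypothetical PSM list: one (IP name, prey protein) pair per identified MS/MS spectrum.
PSMS = [
    ("BaitA_rep1", "PreyX"), ("BaitA_rep1", "PreyX"), ("BaitA_rep1", "PreyY"),
    ("Ctrl_rep1", "PreyX"),
]
IP_TO_BAIT = {"BaitA_rep1": "BaitA", "Ctrl_rep1": "Control"}


def interaction_rows(psms, ip_to_bait):
    """Collapse PSMs into (IP name, bait name, prey name, spectral count) rows."""
    counts = Counter(psms)  # key: (ip, prey); value: number of spectra
    return [(ip, ip_to_bait[ip], prey, n) for (ip, prey), n in sorted(counts.items())]


if __name__ == "__main__":
    for row in interaction_rows(PSMS, IP_TO_BAIT):
        print("\t".join(map(str, row)))  # tab-delimited, interaction-file column order
```

This deliberately ignores how spectra from peptides shared between proteins are assigned; in practice that decision is made by the search or protein-inference software upstream.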

Data Presentation: Quantitative Analysis of the HDAC1/2 Interactome

The following table presents a summarized and representative example of a SAINTexpress output for the analysis of the Histone Deacetylase 1 (HDAC1) and HDAC2 interactome.[11][12] This table highlights the key quantitative metrics used to assess the confidence of interactions.

| Bait | Prey | AvgSpec (Bait) | AvgSpec (Control) | Fold Change | AvgP | BFDR |
|---|---|---|---|---|---|---|
| HDAC1 | HDAC2 | 152.5 | 1.2 | 127.1 | 1.00 | 0.00 |
| HDAC1 | MTA2 | 85.3 | 0.5 | 170.6 | 1.00 | 0.00 |
| HDAC1 | RBBP4 | 210.1 | 5.8 | 36.2 | 1.00 | 0.00 |
| HDAC1 | SIN3A | 45.7 | 0.1 | 457.0 | 0.98 | 0.01 |
| HDAC1 | RCOR1 | 33.2 | 0.0 | 332.0 | 0.95 | 0.01 |
| HDAC1 | TBL1XR1 | 15.8 | 2.1 | 7.5 | 0.85 | 0.03 |
| HDAC2 | HDAC1 | 148.9 | 1.2 | 124.1 | 1.00 | 0.00 |
| HDAC2 | MTA1 | 78.6 | 0.3 | 262.0 | 1.00 | 0.00 |
| HDAC2 | RBBP7 | 189.4 | 4.5 | 42.1 | 1.00 | 0.00 |
| HDAC2 | SIN3A | 42.1 | 0.1 | 421.0 | 0.97 | 0.01 |
| HDAC2 | NCOR1 | 25.6 | 0.2 | 128.0 | 0.92 | 0.02 |

Table 1: Representative SAINTexpress Output for HDAC1 and HDAC2 Interactome Analysis. This table shows a curated list of high-confidence interactors for HDAC1 and HDAC2. AvgSpec (Bait) and AvgSpec (Control) represent the average spectral counts for the prey protein in the bait and control purifications, respectively. Fold Change is the ratio of AvgSpec (Bait) to AvgSpec (Control). AvgP is the average probability of a true interaction, and BFDR is the Bayesian False Discovery Rate. A higher AvgP and lower BFDR indicate a higher confidence interaction.
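The Fold Change column can be recomputed from the two AvgSpec columns. Note that the RCOR1 row (33.2 over a control average of 0.0, reported as 332.0) implies that a floor of 0.1 was substituted for the zero control value; that floor is inferred from the table, not a documented SAINTexpress setting, and `fold_change` is a hypothetical helper.

```python
def fold_change(avg_bait: float, avg_ctrl: float, floor: float = 0.1) -> float:
    """Ratio of average bait counts to average control counts, rounded to one decimal.

    Assumption: control averages below `floor` (including zero) are replaced by `floor`
    so that the ratio stays finite, as the RCOR1 row of Table 1 suggests.
    """
    return round(avg_bait / max(avg_ctrl, floor), 1)


if __name__ == "__main__":
    rows = [("HDAC1", "HDAC2", 152.5, 1.2), ("HDAC1", "RCOR1", 33.2, 0.0),
            ("HDAC1", "TBL1XR1", 15.8, 2.1)]
    for bait, prey, bait_avg, ctrl_avg in rows:
        print(bait, prey, fold_change(bait_avg, ctrl_avg))
```

Fold change alone says nothing about replicate consistency or count magnitude, which is why the table reports it alongside AvgP and BFDR rather than using it as the filtering criterion.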

Visualizations

Figure: Simplified representation of the Insulin/TOR signaling pathway, a crucial regulator of cell growth and metabolism whose interactions can be studied using AP-MS and SAINT analysis.[13][14]

Figure: Major steps in an AP-MS experiment designed for subsequent SAINT analysis.

Figure: Logical flow of the SAINT algorithm, from input data to the final scored list of interactions.

Conclusion

The Significance Analysis of INTeractome (SAINT) provides a robust statistical framework for assigning confidence scores to protein-protein interactions identified through AP-MS experiments. By leveraging quantitative data and appropriate negative controls, SAINT enables researchers to distinguish true biological interactions from non-specific background, thereby generating higher-confidence interactome datasets. A thorough understanding of the underlying principles of SAINT, coupled with meticulously executed experimental protocols, is essential for obtaining reliable and insightful results. Continued development and application of SAINT and its variants should further deepen our understanding of the protein interaction networks that govern cellular life.

References

- 1. Analyzing protein-protein interactions from affinity purification-mass spectrometry data with SAINT - PMC [pmc.ncbi.nlm.nih.gov]

- 2. raw.githubusercontent.com [raw.githubusercontent.com]

- 3. SAINT: Probabilistic Scoring of Affinity Purification - Mass Spectrometry Data - PMC [pmc.ncbi.nlm.nih.gov]

- 4. SAINTexpress: improvements and additional features in Significance Analysis of Interactome software - PMC [pmc.ncbi.nlm.nih.gov]

- 5. pubs.acs.org [pubs.acs.org]

- 6. saint-apms.sourceforge.net [saint-apms.sourceforge.net]

- 7. Immunoprecipitation Mass Spectrometry Protocol | MtoZ Biolabs [mtoz-biolabs.com]

- 8. Identifying Novel Protein-Protein Interactions Using Co-Immunoprecipitation and Mass Spectroscopy - PMC [pmc.ncbi.nlm.nih.gov]

- 9. Co-immunoprecipitation Mass Spectrometry: Unraveling Protein Interactions - Creative Proteomics [creative-proteomics.com]

- 10. benchchem.com [benchchem.com]

- 11. deepblue.lib.umich.edu [deepblue.lib.umich.edu]

- 12. The functional interactome landscape of the human histone deacetylase family - PMC [pmc.ncbi.nlm.nih.gov]

- 13. sdbonline.org [sdbonline.org]

- 14. Insulin/TOR signaling in growth and homeostasis: a view from the fly world - PubMed [pubmed.ncbi.nlm.nih.gov]

Understanding SAINT Scores in AP-MS Data: An In-depth Technical Guide

For Researchers, Scientists, and Drug Development Professionals

This guide provides a comprehensive technical overview of the Significance Analysis of INTeractome (SAINT) algorithm, a pivotal tool for assigning confidence scores to protein-protein interactions (PPIs) identified through Affinity Purification-Mass Spectrometry (AP-MS) experiments. By employing a probabilistic model, SAINT distinguishes bona fide interactions from non-specific background contaminants, enabling researchers to focus on high-confidence candidates for further investigation.

Core Principles of SAINT

The fundamental premise of SAINT is to model the quantitative data from AP-MS experiments, such as spectral counts or peptide intensities, as a mixture of two distributions: one representing true interactions and another representing false or non-specific interactions.[1] By comparing the observed data for a specific bait-prey pair to these distributions, SAINT calculates the posterior probability of it being a genuine interaction.[1] This probabilistic approach offers a more statistically robust and objective assessment of interaction confidence compared to traditional methods that rely on arbitrary fold-change cutoffs.

SAINT is adaptable to various experimental designs, including those with and without negative controls, and can handle different types of quantitative data.[1][2] The algorithm normalizes spectral counts to account for protein length and the total number of spectra in the purification run.[1] For experiments with biological replicates, SAINT can compute a combined probability score, enhancing the reliability of the results.[1]
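To illustrate what normalizing for protein length and run size means, the sketch below computes a normalized spectral abundance factor (NSAF). NSAF is a widely used stand-in chosen here for illustration; it is not SAINT's internal normalization formula. The counts and lengths in the usage block are hypothetical.

```python
def nsaf(spectral_counts: dict[str, int], lengths: dict[str, int]) -> dict[str, float]:
    """Normalized spectral abundance factor: (SpC / L) / sum over proteins of (SpC / L).

    Dividing by length corrects for longer proteins yielding more peptides; dividing by
    the run-wide sum corrects for differences in the total number of spectra per IP.
    """
    saf = {prot: spectral_counts[prot] / lengths[prot] for prot in spectral_counts}
    total = sum(saf.values())
    return {prot: value / total for prot, value in saf.items()}


if __name__ == "__main__":
    counts = {"PreyX": 25, "PreyY": 12, "PreyZ": 5}       # hypothetical spectra in one IP
    lengths = {"PreyX": 450, "PreyY": 620, "PreyZ": 310}  # hypothetical lengths (aa)
    for prot, value in nsaf(counts, lengths).items():
        print(prot, round(value, 3))
```

Because the values in one run sum to 1, a prey's normalized abundance can be compared across purifications that produced very different total spectral counts.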

Experimental Protocol: Affinity Purification-Mass Spectrometry (AP-MS)

A well-executed AP-MS experiment is critical for generating high-quality data amenable to SAINT analysis. The following protocol outlines a generalized workflow.[3]

- Bait Protein Expression and Tagging: The protein of interest (the "bait") is tagged with an epitope (e.g., FLAG, HA, GFP) to facilitate its purification. This is typically achieved by cloning the bait's gene into an expression vector. It is crucial to establish stable cell lines expressing the tagged bait protein and to include appropriate negative controls, such as cells expressing the tag alone.[3]

- Cell Lysis: Cells are harvested and lysed under non-denaturing conditions to preserve the integrity of protein complexes. The lysis buffer is supplemented with protease and phosphatase inhibitors to prevent protein degradation.[3]

- Affinity Purification: The cell lysate is incubated with beads coated with antibodies specific to the epitope tag.[3] This allows for the capture of the bait protein along with its interacting partners ("prey"). The beads are then washed multiple times to remove non-specifically bound proteins.[3]

- Elution: The bound protein complexes are eluted from the beads.[3]

- Protein Digestion: The eluted proteins are typically digested with trypsin to generate peptides suitable for mass spectrometry analysis.

- LC-MS/MS Analysis: The peptide mixture is separated by liquid chromatography (LC) and analyzed by tandem mass spectrometry (MS/MS).[3][4] The mass spectrometer measures the mass-to-charge ratio of the peptides and fragments them to determine their amino acid sequence.

- Protein Identification and Quantification: The MS/MS spectra are searched against a protein sequence database to identify the peptides and, consequently, the proteins present in the sample. Label-free quantification methods, such as spectral counting (the number of MS/MS spectra identified for a protein) or measuring peptide ion intensities, are used to determine the relative abundance of each protein.[2][5]

Data Presentation: Interpreting SAINT Output

The output of a SAINT analysis is a list of potential protein-protein interactions, each with an associated confidence score. Understanding these quantitative metrics is key to prioritizing candidates for further study.

| Score/Metric | Description | Interpretation | Common Threshold |
|---|---|---|---|
| SAINT Score / AvgP | The primary score representing the average probability of a true interaction across replicates. It ranges from 0 to 1. | A higher score indicates a greater confidence in the interaction. | ≥ 0.8 for high-confidence |
| MaxP | The maximum probability of a true interaction from any single replicate. | Useful for identifying strong but potentially less consistently observed interactions. | Varies based on experimental goals |
| BFDR (Bayesian False Discovery Rate) | An estimate of the false discovery rate for interactions at or above a given SAINT Score. | Provides a statistical measure of the expected proportion of false positives. | ≤ 0.01 or 0.05 |
| Spectral Counts / Intensity | The raw quantitative value for a given prey protein in a specific bait purification. | Provides the underlying quantitative evidence for the interaction. | Not used as a direct cutoff in SAINT |
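The relationship between per-replicate probabilities, AvgP, and MaxP can be sketched as follows. The probabilities are hypothetical, and the sketch assumes a replicate in which a prey was not detected contributes a probability of 0.0 (as for PreyZ below); it then applies the common AvgP ≥ 0.8 cutoff from the table.

```python
from statistics import mean

# Hypothetical per-replicate interaction probabilities for each (bait, prey) pair.
REPLICATE_P = {
    ("BaitA", "PreyX"): [0.95, 0.90],
    ("BaitA", "PreyY"): [0.90, 0.30],
    ("BaitA", "PreyZ"): [0.20, 0.00],
}


def summarize(replicate_p):
    """AvgP = mean probability across replicates; MaxP = best single replicate."""
    return {pair: {"AvgP": mean(ps), "MaxP": max(ps)} for pair, ps in replicate_p.items()}


def high_confidence(summary, min_avgp=0.8):
    """Pairs whose AvgP meets the cutoff, sorted for stable output."""
    return sorted(pair for pair, s in summary.items() if s["AvgP"] >= min_avgp)


if __name__ == "__main__":
    summary = summarize(REPLICATE_P)
    for pair, s in summary.items():
        print(pair, round(s["AvgP"], 3), s["MaxP"])
    print("AvgP >= 0.8:", high_confidence(summary))
```

PreyY illustrates the MaxP use case: strong in one replicate (0.90) but inconsistent overall (AvgP 0.60), so it fails the AvgP cutoff while still being flagged by MaxP.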

Visualizing the Process and Pathways

Figure: Experimental and computational workflows for AP-MS and SAINT analysis (originally rendered with the DOT language).

References

- 1. genepath.med.harvard.edu [genepath.med.harvard.edu]

- 2. Analyzing protein-protein interactions from affinity purification-mass spectrometry data with SAINT - PMC [pmc.ncbi.nlm.nih.gov]

- 3. benchchem.com [benchchem.com]

- 4. Affinity purification–mass spectrometry and network analysis to understand protein-protein interactions - PMC [pmc.ncbi.nlm.nih.gov]

- 5. pubs.acs.org [pubs.acs.org]

Decoding Protein Interactions: A Technical Guide to SAINT

For Researchers, Scientists, and Drug Development Professionals

This in-depth technical guide explores the core principles and practical application of Significance Analysis of INTeractome (SAINT), a robust statistical method for identifying bona fide protein-protein interactions from Affinity Purification-Mass Spectrometry (AP-MS) data. By providing a probabilistic framework, SAINT distinguishes genuine interactors from non-specific background contaminants, a critical step in mapping cellular signaling pathways and identifying potential drug targets.

Core Principles of SAINT

SAINT is a computational tool that assigns a confidence score to each potential protein-protein interaction detected in an AP-MS experiment. The fundamental concept behind SAINT is the statistical modeling of quantitative data, such as spectral counts or peptide intensities, for both true and false interactions. By establishing separate probability distributions for bona fide interactors and background contaminants, SAINT calculates the posterior probability of a genuine interaction for each bait-prey pair. This probabilistic approach provides a more objective and reliable method for identifying high-confidence interactions compared to arbitrary fold-change cutoffs.

Several versions of the SAINT algorithm have been developed, including the original SAINT, the faster SAINTexpress, and SAINTq for handling data from data-independent acquisition (DIA) mass spectrometry.[1] While the underlying statistical models may vary slightly, the core principle of probabilistically scoring interactions remains the same.

The SAINT Scoring Algorithm

At its core, SAINT models the distribution of quantitative measurements (e.g., spectral counts) for each bait-prey pair as a mixture of two distributions: one representing true interactions and the other representing false or non-specific interactions. For spectral count data, the Poisson distribution is often used.[2]

The algorithm then uses Bayes' theorem to calculate the posterior probability of a true interaction given the observed quantitative data. This posterior probability is the primary SAINT score (often denoted as SAINTscore or AvgP). A higher score indicates a greater likelihood of a true interaction.
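A toy version of this Bayes step, assuming fixed and purely hypothetical Poisson means for the true (20 spectra) and false (2 spectra) components and a 10% prior probability of a true interaction, shows how the posterior rises with the observed count. SAINT itself estimates these distributions from the data, including control purifications where available, so this is an illustration rather than SAINT's implementation.

```python
import math


def log_poisson(k: int, lam: float) -> float:
    """Log of the Poisson probability mass function P(K = k | lam)."""
    return k * math.log(lam) - lam - math.lgamma(k + 1)


def posterior_true(count: int, lam_true: float, lam_false: float, prior_true: float) -> float:
    """P(true interaction | count) for a two-component Poisson mixture via Bayes' theorem."""
    log_t = math.log(prior_true) + log_poisson(count, lam_true)
    log_f = math.log(1.0 - prior_true) + log_poisson(count, lam_false)
    # Normalize in log space (log-sum-exp) to avoid underflow for large counts.
    m = max(log_t, log_f)
    return math.exp(log_t - m) / (math.exp(log_t - m) + math.exp(log_f - m))


if __name__ == "__main__":
    for c in (1, 10, 25):
        print(c, round(posterior_true(c, 20.0, 2.0, 0.1), 3))
```

Working with log-probabilities keeps the calculation stable even for preys with hundreds of spectra, where the raw Poisson probabilities would underflow to zero.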

The final output of a SAINT analysis is a list of potential protein-protein interactions, each with an associated confidence score. Researchers can then apply a false discovery rate (FDR) threshold to this list to select a set of high-confidence interactions for further investigation.[3]
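One common way to turn posterior probabilities into a Bayesian FDR, in the spirit of the SAINT papers, is to average (1 - p) over all pairs at or above a probability cutoff: each selected pair contributes its own probability of being false. The probabilities below and both function names are hypothetical.

```python
def bayesian_fdr(probabilities, threshold):
    """Expected fraction of false positives among pairs with probability >= threshold."""
    selected = [p for p in probabilities if p >= threshold]
    if not selected:
        return 0.0
    return sum(1.0 - p for p in selected) / len(selected)


def threshold_for_fdr(probabilities, max_fdr=0.05):
    """Lowest observed probability cutoff whose Bayesian FDR stays <= max_fdr (None if none)."""
    best = None
    for t in sorted(set(probabilities), reverse=True):
        if bayesian_fdr(probabilities, t) <= max_fdr:
            best = t
    return best


if __name__ == "__main__":
    probs = [1.0, 0.98, 0.95, 0.85, 0.4, 0.1]
    print(round(bayesian_fdr(probs, 0.8), 3), threshold_for_fdr(probs, 0.05))
```

Lowering the cutoff can only add pairs with lower probabilities, so the estimated FDR never decreases as the cutoff drops; the scan therefore returns the most permissive cutoff that still meets the target.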

Experimental Protocol: Affinity Purification-Mass Spectrometry (AP-MS)

A successful SAINT analysis is predicated on a well-designed and meticulously executed AP-MS experiment. The following protocol outlines the key steps for isolating protein complexes for subsequent mass spectrometry and SAINT analysis.

1. Bait Protein Expression and Cell Culture:

- Vector Construction: The gene encoding the bait protein is cloned into an expression vector containing an affinity tag (e.g., FLAG, HA, GFP).

- Cell Line Transfection/Transduction: The expression vector is introduced into a suitable cell line using standard transfection or viral transduction methods. A stable cell line expressing the tagged bait protein is often preferred for consistency.

- Cell Culture and Expansion: The engineered cell line is cultured under appropriate conditions to generate sufficient biomass for the experiment. A parallel culture of parental cells or cells expressing an unrelated tagged protein should be prepared as a negative control.

2. Cell Lysis and Protein Extraction:

- Cell Harvesting: Cells are harvested by centrifugation and washed with ice-cold phosphate-buffered saline (PBS).

- Lysis Buffer Preparation: A lysis buffer containing a mild non-ionic detergent (e.g., NP-40 or Triton X-100), protease inhibitors, and phosphatase inhibitors is prepared. The buffer composition should be optimized to maintain protein complex integrity while efficiently solubilizing cellular proteins.

- Cell Lysis: The cell pellet is resuspended in lysis buffer and incubated on ice with gentle agitation to lyse the cells and release protein complexes.

- Clarification of Lysate: The cell lysate is centrifuged at high speed to pellet cellular debris. The clarified supernatant containing the soluble protein complexes is transferred to a new tube.

3. Affinity Purification:

- Bead Preparation: Affinity beads coupled to an antibody or other high-affinity reagent that specifically recognizes the affinity tag (e.g., anti-FLAG agarose beads) are washed and equilibrated with lysis buffer.

- Immunoprecipitation: The clarified cell lysate is incubated with the prepared affinity beads with gentle rotation at 4°C to allow the tagged bait protein and its interacting partners to bind to the beads.

- Washing: The beads are washed extensively with lysis buffer to remove non-specifically bound proteins. This is a critical step to reduce background noise.

- Elution: The bound protein complexes are eluted from the affinity beads. This can be achieved by competitive elution with a peptide corresponding to the affinity tag (e.g., 3xFLAG peptide) or by changing the buffer conditions (e.g., low pH).

4. Sample Preparation for Mass Spectrometry:

- Protein Denaturation, Reduction, and Alkylation: The eluted protein complexes are denatured with a chaotropic agent (e.g., urea), reduced with dithiothreitol (DTT) to break disulfide bonds, and then alkylated with iodoacetamide (IAA) to prevent the reformation of disulfide bonds.

- Proteolytic Digestion: The proteins are digested into smaller peptides using a sequence-specific protease, most commonly trypsin.

- Peptide Desalting and Cleanup: The resulting peptide mixture is desalted and purified using a solid-phase extraction method (e.g., C18 StageTips) to remove detergents and other contaminants that can interfere with mass spectrometry analysis.

5. Liquid Chromatography-Tandem Mass Spectrometry (LC-MS/MS):

- Peptide Separation: The cleaned peptide mixture is injected onto a reverse-phase liquid chromatography system coupled to the mass spectrometer. Peptides are separated based on their hydrophobicity.

- Mass Spectrometry Analysis: As peptides elute from the chromatography column, they are ionized and introduced into the mass spectrometer. The mass spectrometer measures the mass-to-charge ratio of the intact peptides (MS1 scan) and then selects precursor ions for fragmentation, generating tandem mass spectra (MS/MS scans) that provide information about the amino acid sequence of the peptides.

6. Data Analysis:

- Database Searching: The acquired MS/MS spectra are searched against a protein sequence database to identify the peptides and, consequently, the proteins present in the sample.

- Protein Quantification: The relative abundance of each identified protein is quantified. For label-free quantification, this is often done by counting the number of MS/MS spectra matched to a particular protein (spectral counting) or by measuring the integrated signal intensity of the peptides belonging to that protein.

- SAINT Analysis: The quantitative protein data are then used as input for the SAINT algorithm to score the protein-protein interactions.

Data Presentation for SAINT Analysis

SAINT requires specific input files that detail the interactions, prey proteins, and bait proteins. The quantitative data from the AP-MS experiments are summarized in these files.

Table 1: Hypothetical Interaction Data (interaction.tsv)

This file contains the core quantitative data, linking each prey protein to a specific bait purification and providing a measure of its abundance.

| IP_name | Bait_name | Prey_name | SpectralCount |
|---|---|---|---|
| BaitA_rep1 | BaitA | PreyX | 25 |
| BaitA_rep1 | BaitA | PreyY | 12 |
| BaitA_rep1 | BaitA | PreyZ | 5 |
| BaitA_rep2 | BaitA | PreyX | 30 |
| BaitA_rep2 | BaitA | PreyY | 15 |
| Ctrl_rep1 | Control | PreyX | 2 |
| Ctrl_rep1 | Control | PreyZ | 4 |
| Ctrl_rep2 | Control | PreyX | 1 |
| Ctrl_rep2 | Control | PreyY | 1 |

Table 2: Prey Protein Information (prey.tsv)

This file lists all identified prey proteins and their corresponding sequence lengths.

| Prey_name | SequenceLength | GeneName |
|---|---|---|
| PreyX | 450 | GENEX |
| PreyY | 620 | GENEY |
| PreyZ | 310 | GENEZ |

Table 3: Bait Protein Information (bait.tsv)

This file describes the bait proteins used in the purifications and indicates whether each experiment was a test ('T') or a control ('C') pulldown.

| IP_name | Bait_name | Test/Control |
|---|---|---|
| BaitA_rep1 | BaitA | T |
| BaitA_rep2 | BaitA | T |
| Ctrl_rep1 | Control | C |
| Ctrl_rep2 | Control | C |
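The three example tables can be written to disk as tab-delimited files with Python's standard csv module. This is a sketch: it writes no header line, which is an assumption to confirm against the documentation of the SAINT version in use, and the file names (interaction.txt, prey.txt, bait.txt) and the `write_saint_inputs` helper are illustrative.

```python
import csv
import os
import tempfile

# Example rows copied from Tables 1-3 above.
INTERACTIONS = [
    ("BaitA_rep1", "BaitA", "PreyX", 25), ("BaitA_rep1", "BaitA", "PreyY", 12),
    ("BaitA_rep1", "BaitA", "PreyZ", 5), ("BaitA_rep2", "BaitA", "PreyX", 30),
    ("BaitA_rep2", "BaitA", "PreyY", 15), ("Ctrl_rep1", "Control", "PreyX", 2),
    ("Ctrl_rep1", "Control", "PreyZ", 4), ("Ctrl_rep2", "Control", "PreyX", 1),
    ("Ctrl_rep2", "Control", "PreyY", 1),
]
PREYS = [("PreyX", 450, "GENEX"), ("PreyY", 620, "GENEY"), ("PreyZ", 310, "GENEZ")]
BAITS = [
    ("BaitA_rep1", "BaitA", "T"), ("BaitA_rep2", "BaitA", "T"),
    ("Ctrl_rep1", "Control", "C"), ("Ctrl_rep2", "Control", "C"),
]


def write_saint_inputs(outdir: str) -> dict[str, str]:
    """Write the three tab-delimited input tables (no header line assumed); return paths."""
    tables = {"interaction.txt": INTERACTIONS, "prey.txt": PREYS, "bait.txt": BAITS}
    paths = {}
    for filename, rows in tables.items():
        path = os.path.join(outdir, filename)
        with open(path, "w", newline="") as handle:
            csv.writer(handle, delimiter="\t", lineterminator="\n").writerows(rows)
        paths[filename] = path
    return paths


if __name__ == "__main__":
    for name, path in write_saint_inputs(tempfile.mkdtemp()).items():
        print(name, "->", path)
```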

Visualizing Workflows and Logical Relationships

Figure: Experimental workflow for AP-MS.


Navigating the Interactome: A Technical Guide to SAINTexpress and SAINT 2.0

For Researchers, Scientists, and Drug Development Professionals

This in-depth technical guide provides a comprehensive overview of SAINTexpress and its predecessor, SAINT 2.0, powerful computational tools for the analysis of protein-protein interaction (PPI) data derived from affinity purification-mass spectrometry (AP-MS) experiments. This document details the core functionalities, experimental considerations, and key differences between these platforms, enabling researchers to effectively leverage these tools for the confident identification of bona fide protein interactions.

Introduction to Significance Analysis of INTeractome (SAINT)

The Significance Analysis of INTeractome (SAINT) algorithm is a foundational tool in the field of proteomics, designed to assign confidence scores to PPIs identified through AP-MS.[1][2][3] By employing a probabilistic model, SAINT distinguishes genuine interactions from background contaminants and non-specific binders, a critical step in interpreting complex AP-MS datasets.[1][2][3] It utilizes quantitative data from label-free AP-MS experiments, such as spectral counts or peptide intensities, to model the distributions of true and false interactions separately.[1][2][3] This statistical rigor provides a more objective and transparent analysis compared to arbitrary fold-change cutoffs.[4]

Core Platforms: SAINT 2.0 and SAINTexpress

While both platforms share the same fundamental goal of scoring PPIs, they differ significantly in their underlying algorithms, performance, and user experience. For the purpose of this guide, "SAINT 2.0" refers to the earlier, more flexible versions of the SAINT algorithm (e.g., v2.3.4), which offered a higher degree of customization. SAINTexpress was subsequently developed as a faster, streamlined version with a simplified statistical model.[5][6][7]

Key Distinctions

SAINTexpress was engineered to address some of the practical drawbacks of its predecessor, primarily the time-consuming nature of the Markov Chain Monte Carlo (MCMC) sampling-based estimation used in SAINT 2.0.[6] This earlier approach, while flexible, could take a significant amount of time for large datasets.[6] In contrast, SAINTexpress utilizes a quicker scoring algorithm, leading to substantial improvements in computational speed.[5]

Another key difference lies in the statistical model. SAINTexpress employs a simpler model, which, in many cases, improves the sensitivity of scoring.[5] However, this simplification comes at the cost of the extensive customization options available in SAINT 2.0, which allowed users to tailor the statistical model for specific datasets.[4][6]

| Feature | SAINT 2.0 (e.g., v2.3.4) | SAINTexpress |
|---|---|---|
| Scoring Algorithm | Markov Chain Monte Carlo (MCMC) sampling | Faster, direct computation |
| Computational Speed | Slower, can take minutes to hours for large datasets | Significantly faster, often completing in seconds to minutes |
| Statistical Model | More complex and customizable with various options (e.g., lowMode, minFold, normalize) | Simpler, more streamlined model |
| Flexibility | High degree of user-defined parameters for model tuning | Less flexible, with fewer user-adjustable options |
| Primary Use Case | Datasets requiring specific statistical tailoring and fine-tuning | Rapid and robust scoring of standard AP-MS datasets |

Quantitative Performance Comparison

A direct comparison of SAINT (v2.3.4) and SAINTexpress on a published dataset demonstrates their relative performance in identifying high-confidence interactions.

| Metric | SAINT (v2.3.4) | SAINTexpress |
|---|---|---|
| High-Confidence Interactions (AvgP ≥ 0.8) | 697 | 639 |
| Shared High-Confidence Interactions | 584 (about 84% of SAINT calls) | 584 (about 91% of SAINTexpress calls) |
| Unique High-Confidence Interactions | 113 | 55 |
| Reported FDR at AvgP ≥ 0.8 | 5.4% | 5.4% |
| Computational Time (Example Dataset) | ~37 minutes (with 12,000 iterations) | ~20 seconds |

Data synthesized from the SAINTexpress publication, which analyzed a dataset of 10 bait proteins and 2,496 prey proteins.[5]

The high degree of overlap in identified interactions indicates a good concordance between the two methods.[5] The interactions uniquely identified by SAINTexpress were often those penalized in the earlier SAINT model due to high spectral counts in other unrelated baits, a scenario the simplified model of SAINTexpress is designed to handle more effectively.[5] Conversely, interactions uniquely identified by the older SAINT version were often borderline cases that did not meet the stricter criteria of the SAINTexpress model.[5]
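The overlap figures above can be checked, and the same comparison repeated on your own results, with simple set operations. The sketch assumes each tool's high-confidence calls have been loaded as a set of (bait, prey) tuples; `compare_calls` is a hypothetical helper, and the usage block only re-derives the unique counts and shared fractions from the reported totals (697, 639, 584).

```python
def compare_calls(calls_a: set, calls_b: set) -> dict:
    """Overlap statistics between two sets of high-confidence (bait, prey) pairs."""
    shared = calls_a & calls_b
    union = calls_a | calls_b
    return {
        "shared": len(shared),
        "only_a": len(calls_a - calls_b),
        "only_b": len(calls_b - calls_a),
        "frac_a_shared": len(shared) / len(calls_a) if calls_a else 0.0,
        "frac_b_shared": len(shared) / len(calls_b) if calls_b else 0.0,
        "jaccard": len(shared) / len(union) if union else 0.0,
    }


if __name__ == "__main__":
    # Reported totals: 697 SAINT calls, 639 SAINTexpress calls, 584 shared.
    print(697 - 584, 639 - 584)                      # unique to each tool
    print(round(584 / 697, 3), round(584 / 639, 3))  # shared fraction of each call set
```

Reporting the shared fraction relative to each call set separately avoids the ambiguity of a single overlap percentage when the two sets differ in size.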

Experimental Protocols

A robust AP-MS experiment is the foundation for reliable SAINT analysis. The following sections outline generalized protocols for two common experimental approaches: traditional AP-MS and Proximity-Dependent Biotinylation (BioID).

Affinity Purification-Mass Spectrometry (AP-MS) Protocol

This protocol describes the general workflow for isolating a "bait" protein and its interacting "prey" proteins.

1. Bait Protein Expression and Cell Lysis:

- Vector Construction: The gene encoding the bait protein is cloned into a mammalian expression vector, typically with an affinity tag (e.g., FLAG, HA, Strep-tag) at the N- or C-terminus.

- Cell Culture and Transfection: A suitable cell line (e.g., HEK293T) is cultured and transfected with the bait protein expression vector. Stable cell lines are often generated for consistent expression.

- Cell Lysis: Cells are harvested and lysed in a buffer containing detergents to solubilize proteins and protease/phosphatase inhibitors to prevent degradation.

2. Affinity Purification:

- Bead Preparation: Affinity beads (e.g., anti-FLAG agarose, streptavidin-sepharose) are equilibrated with lysis buffer.

- Incubation: The cell lysate is incubated with the prepared beads to allow the tagged bait protein and its interactors to bind.

- Washing: The beads are washed multiple times with lysis buffer to remove non-specifically bound proteins.

3. Elution and Sample Preparation:

- Elution: The bound protein complexes are eluted from the beads, often by competition with a high concentration of the affinity tag peptide or by changing buffer conditions.

- Protein Digestion: The eluted proteins are typically denatured, reduced, alkylated, and then digested into peptides using an enzyme like trypsin.

4. Mass Spectrometry Analysis:

- LC-MS/MS: The digested peptides are separated by liquid chromatography and analyzed by tandem mass spectrometry.

- Protein Identification and Quantification: The resulting MS/MS spectra are searched against a protein database to identify peptides and infer the corresponding proteins. The abundance of each protein is quantified using methods like spectral counting or precursor ion intensity.

Proximity-Dependent Biotinylation (BioID) Protocol

BioID is a technique that identifies proteins in close proximity to a protein of interest in living cells.[8][9]

1. Fusion Protein Expression:

- A promiscuous biotin ligase (e.g., BirA*) is fused to the bait protein of interest.

- The fusion protein is expressed in the chosen cell line, often as a stable cell line.

2. Biotin Labeling:

- The cells are incubated with an excess of biotin for a defined period (e.g., 16-24 hours).

- During this time, the biotin ligase biotinylates proteins in its immediate vicinity (typically within a 10-20 nm radius).

3. Cell Lysis and Protein Denaturation:

- Cells are lysed under denaturing conditions to disrupt protein-protein interactions while preserving the covalent biotin tags.

4. Affinity Capture of Biotinylated Proteins:

-

The biotinylated proteins are captured using streptavidin-coated beads.

-

Extensive washing is performed to remove non-biotinylated proteins.

5. Elution and Mass Spectrometry:

-

The captured proteins are eluted and processed for mass spectrometry analysis as described in the AP-MS protocol.

Data Formatting for SAINT Analysis

Both SAINT 2.0 and SAINTexpress require three tab-delimited input files:

- interactions.txt: This file contains the quantitative data for each observed interaction.
  - Column 1: IP name (unique identifier for each purification)
  - Column 2: Bait name
  - Column 3: Prey name
  - Column 4: Spectral count or intensity value
- prey.txt: This file provides information about the identified prey proteins.
  - Column 1: Prey name (must match the prey names in interactions.txt)
  - Column 2: Protein length (in amino acids)
  - Column 3: Prey gene name
- bait.txt: This file defines the experimental design, specifying which purifications are tests and which are controls.
  - Column 1: IP name (must match the IP names in interactions.txt)
  - Column 2: Bait name
  - Column 3: 'T' for test purification or 'C' for control purification
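A minimal Python sketch of assembling these three files follows. It writes plain tab-delimited text with no header row and first checks that every IP and prey referenced in the interaction table is defined in the other two tables. The helper names (`check_consistency`, `write_tsv`, `write_saint_inputs`) are hypothetical, and whether a given SAINT version tolerates a header row is an assumption to verify against its documentation.

```python
import csv
import tempfile
from pathlib import Path

# Hypothetical example rows following the three-file layout described above.
interactions = [
    ("BaitA_rep1", "BaitA", "PreyX", 25),
    ("BaitA_rep1", "BaitA", "PreyY", 5),
    ("Control_rep1", "GFP", "PreyX", 2),
]
preys = [("PreyX", 550, "genex"), ("PreyY", 320, "geney")]
baits = [("BaitA_rep1", "BaitA", "T"), ("Control_rep1", "GFP", "C")]

def check_consistency(interactions, preys, baits):
    """Raise ValueError if the three tables do not reference each other correctly."""
    bait_of_ip = {ip: bait for ip, bait, _role in baits}
    prey_names = {name for name, _length, _gene in preys}
    for ip, bait, prey, _value in interactions:
        if bait_of_ip.get(ip) != bait:
            raise ValueError(f"IP {ip!r} with bait {bait!r} has no matching row in the bait table")
        if prey not in prey_names:
            raise ValueError(f"prey {prey!r} is missing from the prey table")
    for ip, _bait, role in baits:
        if role not in ("T", "C"):
            raise ValueError(f"IP {ip!r} must be marked 'T' or 'C', got {role!r}")

def write_tsv(path, rows):
    """Write rows as tab-delimited text without a header line."""
    with open(path, "w", newline="") as handle:
        csv.writer(handle, delimiter="\t", lineterminator="\n").writerows(rows)

def write_saint_inputs(outdir, interactions, preys, baits):
    """Validate the tables, then write interactions.txt, prey.txt, and bait.txt."""
    check_consistency(interactions, preys, baits)
    outdir = Path(outdir)
    outdir.mkdir(parents=True, exist_ok=True)
    write_tsv(outdir / "interactions.txt", interactions)
    write_tsv(outdir / "prey.txt", preys)
    write_tsv(outdir / "bait.txt", baits)

# Write to a temporary directory and read one file back.
with tempfile.TemporaryDirectory() as tmp:
    write_saint_inputs(tmp, interactions, preys, baits)
    bait_text = (Path(tmp) / "bait.txt").read_text()
print(bait_text)
```

Validating before writing catches the most common formatting problem, mismatched IP or prey names across files, before the files reach SAINT.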

Visualization of Workflows and Pathways

Experimental and Computational Workflow

The following diagram illustrates the general workflow from an AP-MS experiment to the identification of high-confidence protein-protein interactions using SAINT.

Example Signaling Pathway: EGFR Interactome

The Epidermal Growth Factor Receptor (EGFR) signaling pathway is a well-studied network that is frequently investigated using AP-MS. The following diagram illustrates a simplified view of key EGFR interactions that can be identified using these methods.[10][11]

Conclusion

SAINTexpress and its predecessor SAINT 2.0 are widely used tools for analyzing AP-MS data, providing a statistical framework for identifying protein-protein interactions with quantified confidence. While SAINT 2.0 offers greater flexibility for specialized datasets, SAINTexpress is a faster option suited to high-throughput interactome analysis. By understanding the principles behind these tools and following rigorous experimental protocols, researchers can map protein interaction networks and open the way to new discoveries in cellular biology and drug development.

References

- 1. researchgate.net [researchgate.net]

- 2. BioID: A Screen for Protein-Protein Interactions - PMC [pmc.ncbi.nlm.nih.gov]

- 3. genepath.med.harvard.edu [genepath.med.harvard.edu]

- 4. reprint-apms.org [reprint-apms.org]

- 5. SAINTexpress: improvements and additional features in Significance Analysis of Interactome software - PMC [pmc.ncbi.nlm.nih.gov]

- 6. saint-apms.sourceforge.net [saint-apms.sourceforge.net]

- 7. researchgate.net [researchgate.net]

- 8. BioID as a Tool for Protein-Proximity Labeling in Living Cells - PMC [pmc.ncbi.nlm.nih.gov]

- 9. pubs.acs.org [pubs.acs.org]

- 10. Proteomic Analysis of the Epidermal Growth Factor Receptor (EGFR) Interactome and Post-translational Modifications Associated with Receptor Endocytosis in Response to EGF and Stress - PMC [pmc.ncbi.nlm.nih.gov]

- 11. Characterization of the EGFR interactome reveals associated protein complex networks and intracellular receptor dynamics - PubMed [pubmed.ncbi.nlm.nih.gov]

The Theoretical Cornerstone of Protein Interaction Analysis: A Technical Guide to SAINT in Mass Spectrometry


Introduction

In the intricate landscape of systems biology and drug discovery, understanding the complex web of protein-protein interactions (PPIs) is paramount. Affinity Purification coupled with Mass Spectrometry (AP-MS) has emerged as a powerful technique to elucidate these interactions. However, a significant challenge in AP-MS is distinguishing bona fide interactors from a vast background of non-specific proteins. The Significance Analysis of INTeractome (SAINT) algorithm provides a robust statistical framework to address this challenge, enabling researchers to assign a probability of interaction to each identified protein. This technical guide delves into the theoretical underpinnings of SAINT, its various iterations, and the experimental and computational workflows that leverage its power.

Core Principles of the SAINT Algorithm

SAINT is a computational tool designed to score protein-protein interactions from label-free quantitative proteomics data, such as spectral counts or protein intensities, derived from AP-MS experiments. Its fundamental principle is to model the observed protein quantification data as a mixture of two distributions: one representing true, specific interactions and the other representing false, non-specific interactions. By fitting this mixture model to the data, SAINT calculates the posterior probability of a true interaction for each bait-prey pair.

A key feature of SAINT is its ability to incorporate data from negative control purifications. These controls, which typically involve expressing an unrelated protein or no bait at all, are crucial for accurately modeling the distribution of background contaminants. This semi-supervised approach allows for a more stringent and reliable identification of high-confidence interactions.

Statistical Foundation

The statistical model at the heart of SAINT assumes that the quantitative measurement for a given prey protein in a specific bait purification is drawn from one of two distinct distributions:

- True Interaction Distribution: The quantitative value is expected to be significantly higher than in control purifications.
- False Interaction Distribution: The quantitative value is expected to be comparable to that observed in control purifications.

For its original implementation, which uses spectral count data, SAINT models both distributions as Poisson distributions. The mean of the true-interaction distribution is modeled as a product of the bait's and prey's individual abundance levels, which allows the model to share information across purifications.

The probability of a true interaction is then calculated using Bayes' rule. For experiments with multiple replicates, the final probability is typically an average of the probabilities from each individual replicate.
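The mixture logic can be illustrated with a deliberately simplified Python sketch. It is not the SAINT implementation: the parameters are fixed by hand rather than estimated, the true-interaction mean is simply the product of two hypothetical abundance values, the background mean is the average count of the prey across the control purifications, and the prior probability of a true interaction (`prior_true`) is an assumed constant. Each replicate is scored with Bayes' rule, and the replicate probabilities are then averaged. The counts are the PreyX values used in the example interaction table later in this guide.

```python
import math

def poisson_pmf(k, lam):
    """P(X = k) for X ~ Poisson(lam), computed in log space to avoid overflow."""
    return math.exp(k * math.log(lam) - lam - math.lgamma(k + 1))

def posterior_true(count, lam_true, lam_false, prior_true):
    """Bayes' rule for a two-component Poisson mixture (true vs. false interaction)."""
    true_term = prior_true * poisson_pmf(count, lam_true)
    false_term = (1.0 - prior_true) * poisson_pmf(count, lam_false)
    return true_term / (true_term + false_term)

def average_probability(replicate_counts, lam_true, lam_false, prior_true):
    """Score each replicate separately, then average the probabilities (cf. AvgP)."""
    probs = [posterior_true(c, lam_true, lam_false, prior_true) for c in replicate_counts]
    return sum(probs) / len(probs)

# Hypothetical abundance terms whose product gives the true-interaction mean.
bait_abundance, prey_abundance = 5.0, 5.5
lam_true = bait_abundance * prey_abundance   # 27.5
# Background mean: PreyX counts in the two control purifications (2 and 1).
lam_false = (2 + 1) / 2
prior_true = 0.2                             # assumed prior, not estimated

avg_p = average_probability([25, 30], lam_true, lam_false, prior_true)
print(f"AvgP for PreyX: {avg_p:.3f}")
print(f"P(true | 1 spectrum): {posterior_true(1, lam_true, lam_false, prior_true):.2e}")
```

A prey seen at counts near the control level gets a posterior close to zero, while counts near the true-interaction mean push the posterior close to one; the prior only matters when the two likelihoods are comparable.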

Evolution of the SAINT Algorithm

Over time, the SAINT algorithm has evolved to accommodate different types of quantitative data and to improve computational efficiency:

- SAINT: The original implementation designed for spectral count data.
- SAINT-MS1: An extension that reformulates the statistical model for log-transformed MS1 intensity data, which can provide more accurate quantification, especially for low-abundance proteins.
- SAINTexpress: A faster implementation with a simplified statistical model that is particularly well-suited for datasets with negative controls.
- SAINTq: Developed to handle data from Data Independent Acquisition (DIA) workflows, utilizing fragment or peptide intensity data and the reproducibility of these measurements as a key scoring criterion.
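To make the SAINT-MS1 idea more concrete, the sketch below replaces the Poisson components with normal distributions on the log2 scale and computes a posterior for a single intensity value. The means, the shared standard deviation, and the prior are hypothetical; the actual SAINT-MS1 model estimates its parameters from the data, which this sketch does not.

```python
import math
from statistics import NormalDist

def posterior_true_log_intensity(intensity, mu_true, mu_false, sigma, prior_true):
    """Posterior of a true interaction for one MS1 intensity, modeling log2
    intensities as normal under both hypotheses (hypothetical parameters)."""
    x = math.log2(intensity)
    true_term = prior_true * NormalDist(mu_true, sigma).pdf(x)
    false_term = (1.0 - prior_true) * NormalDist(mu_false, sigma).pdf(x)
    return true_term / (true_term + false_term)

# Hypothetical: background preys centered near 2**20, true interactors near 2**26.
for intensity in (2**20, 2**23, 2**26):
    p = posterior_true_log_intensity(intensity, mu_true=26.0, mu_false=20.0,
                                     sigma=1.5, prior_true=0.5)
    print(f"log2 intensity {math.log2(intensity):.0f}: P(true) = {p:.3f}")
```

With equal variances and a prior of 0.5, an intensity exactly halfway between the two means on the log2 scale receives a posterior of 0.5.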

Experimental Protocol: Affinity Purification-Mass Spectrometry (AP-MS)

A well-designed AP-MS experiment is critical for a successful SAINT analysis. The following protocol outlines the key steps:

- Bait Protein and Tagging: The protein of interest (the "bait") is tagged with an epitope (e.g., FLAG, HA, GFP) to facilitate its specific capture.
- Cell Lysis: Cells expressing the tagged bait protein are lysed to release protein complexes.
- Immunoprecipitation: The cell lysate is incubated with beads coated with an antibody that specifically recognizes the epitope tag. This captures the bait protein along with its interacting partners ("prey").
- Washing: The beads are washed to remove non-specifically bound proteins.
- Elution: The bait and its interacting prey proteins are eluted from the beads.
- Protein Digestion: The eluted protein complexes are denatured, reduced, alkylated, and then digested into smaller peptides, typically using the enzyme trypsin.
- LC-MS/MS Analysis: The resulting peptide mixture is separated by liquid chromatography and analyzed by tandem mass spectrometry (LC-MS/MS). The mass spectrometer measures the mass-to-charge ratio of the peptides and fragments them to determine their amino acid sequences.
- Protein Identification and Quantification: The acquired MS/MS spectra are searched against a protein sequence database to identify the proteins present in the sample. Label-free quantification methods, such as spectral counting or precursor ion intensity measurement, are then used to determine the relative abundance of each identified protein.

Caption: A generalized workflow for an Affinity Purification-Mass Spectrometry (AP-MS) experiment.
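Longer proteins yield more peptides and therefore more spectra, so raw spectral counts overstate their relative abundance. One common length-aware normalization is the normalized spectral abundance factor (NSAF), which divides each protein's count by its length and rescales the results to sum to 1 within a run. NSAF is shown here only to illustrate why protein length matters for spectral counting; it is not part of SAINT. The counts and lengths are the example values for BaitA_rep1 from Tables 1 and 2, and the normalization runs over only the proteins listed.

```python
def nsaf(spectral_counts, lengths):
    """Normalized spectral abundance factor for every protein in one run."""
    saf = {protein: spectral_counts[protein] / lengths[protein] for protein in spectral_counts}
    total = sum(saf.values())
    return {protein: value / total for protein, value in saf.items()}

# Example values for BaitA_rep1 (spectral counts) and prey lengths in amino acids.
counts_rep1 = {"PreyX": 25, "PreyY": 5}
lengths = {"PreyX": 550, "PreyY": 320}

for protein, value in nsaf(counts_rep1, lengths).items():
    print(f"{protein}\t{value:.3f}")
```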

Data Formatting and Presentation for SAINT Analysis

SAINT requires the input data to be formatted into three specific tab-delimited files:

- interaction.dat: This file contains the core quantitative data for each prey protein in each AP-MS experiment.
- prey.dat: This file contains information about the prey proteins, such as their sequence length.
- bait.dat: This file defines the bait proteins and specifies which experiments are test purifications and which are negative controls.

Input Data Tables

Table 1: Example interaction.dat file

| IP Name | Bait Name | Prey Name | Spectral Count |
|---|---|---|---|
| BaitA_rep1 | BaitA | PreyX | 25 |
| BaitA_rep1 | BaitA | PreyY | 5 |
| BaitA_rep2 | BaitA | PreyX | 30 |
| BaitA_rep2 | BaitA | PreyZ | 2 |
| Control_rep1 | GFP | PreyX | 2 |
| Control_rep1 | GFP | PreyY | 4 |
| Control_rep2 | GFP | PreyX | 1 |

Table 2: Example prey.dat file

| Prey Name | Sequence Length (aa) | Gene Name |
|---|---|---|
| PreyX | 550 | genex |
| PreyY | 320 | geney |
| PreyZ | 780 | genez |

Table 3: Example bait.dat file

| IP Name | Bait Name | Test/Control |
|---|---|---|
| BaitA_rep1 | BaitA | T |
| BaitA_rep2 | BaitA | T |
| Control_rep1 | GFP | C |
| Control_rep2 | GFP | C |

Output Data Presentation

The primary output of a SAINT analysis is a list of all potential bait-prey interactions, each with several calculated scores. This output should be organized into a clear table for interpretation.

Table 4: Example SAINT Output

| Bait | Prey | Spec | AvgP | SaintScore | FoldChange | BFDR |
|---|---|---|---|---|---|---|
| BaitA | PreyX | 27.5 | 0.98 | 0.99 | 18.3 | 0.01 |
| BaitA | PreyY | 4.5 | 0.55 | 0.60 | 1.1 | 0.25 |
| BaitA | PreyZ | 1.0 | 0.20 | 0.22 | 2.0 | 0.68 |

- Spec: The average spectral count of the prey in the bait purifications.
- AvgP: The average probability of a true interaction across replicates.
- SaintScore: The final probability score.
- FoldChange: The fold change in abundance of the prey in the bait purifications relative to the control purifications.
- BFDR (Bayesian False Discovery Rate): An estimate of the false discovery rate at a given SaintScore threshold.
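The Spec, FoldChange, and BFDR columns can be approximated with a short Python sketch using the example data from Tables 1, 3, and 4. Preys not detected in a purification are counted as zero. The `CONTROL_FLOOR` that avoids dividing by zero for preys absent from all controls is a hypothetical choice, and SAINTexpress's exact fold-change handling may differ. The `bfdr` function uses a common Bayesian estimate: the mean of (1 - SaintScore) over all interactions at or above the threshold. For PreyX, the sketch gives an average count of 27.5 and a fold change of about 18.3, matching Table 4.

```python
from collections import defaultdict

# Table 1: (IP name, bait, prey, spectral count).
interactions = [
    ("BaitA_rep1", "BaitA", "PreyX", 25),
    ("BaitA_rep1", "BaitA", "PreyY", 5),
    ("BaitA_rep2", "BaitA", "PreyX", 30),
    ("BaitA_rep2", "BaitA", "PreyZ", 2),
    ("Control_rep1", "GFP", "PreyX", 2),
    ("Control_rep1", "GFP", "PreyY", 4),
    ("Control_rep2", "GFP", "PreyX", 1),
]
# Table 3: IP name -> (bait, 'T' or 'C').
design = {
    "BaitA_rep1": ("BaitA", "T"),
    "BaitA_rep2": ("BaitA", "T"),
    "Control_rep1": ("GFP", "C"),
    "Control_rep2": ("GFP", "C"),
}
CONTROL_FLOOR = 0.5  # hypothetical floor for preys never seen in controls

def mean_counts(ips):
    """Average count per prey over the given IPs; undetected preys count as 0."""
    totals = defaultdict(float)
    for ip, _bait, prey, count in interactions:
        if ip in ips:
            totals[prey] += count
    return {prey: total / len(ips) for prey, total in totals.items()}

def bfdr(scores, threshold):
    """Bayesian FDR: mean of (1 - score) over interactions scoring >= threshold."""
    passing = [s for s in scores if s >= threshold]
    if not passing:
        return 0.0
    return sum(1.0 - s for s in passing) / len(passing)

test_ips = {ip for ip, (bait, role) in design.items() if bait == "BaitA" and role == "T"}
control_ips = {ip for ip, (_bait, role) in design.items() if role == "C"}
avg_spec = mean_counts(test_ips)
avg_ctrl = mean_counts(control_ips)
fold_change = {
    prey: spec / max(avg_ctrl.get(prey, 0.0), CONTROL_FLOOR)
    for prey, spec in avg_spec.items()
}
for prey in sorted(avg_spec):
    print(f"BaitA\t{prey}\tSpec={avg_spec[prey]:.1f}\tFoldChange={fold_change[prey]:.1f}")

# SaintScore column of Table 4.
saint_scores = {"PreyX": 0.99, "PreyY": 0.60, "PreyZ": 0.22}
print(f"BFDR at SaintScore >= 0.9: {bfdr(saint_scores.values(), 0.9):.2f}")
```

Lowering the threshold admits lower-scoring interactions, and each one adds its (1 - SaintScore) to the average, so the estimated BFDR rises as the list grows.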

Logical and Signaling Pathway Visualizations

Visualizing the logical flow of the SAINT algorithm and the biological context of the identified interactions is crucial for a comprehensive understanding.

Caption: The logical flow of the SAINT (Significance Analysis of INTeractome) algorithm.

Application to a Signaling Pathway: The mTOR Pathway

SAINT has been successfully applied to elucidate the protein interaction networks of various signaling pathways. For instance, in a study of the insulin receptor/target of rapamycin (mTOR) signaling pathway in Drosophila, SAINT was used to identify high-confidence interactors of key pathway components. The mTOR pathway is a central regulator of cell growth, proliferation, and metabolism.

Caption: A simplified overview of the mTOR signaling pathway.