Acmptn

Description

The provided evidence contains no explicit references to "Acmptn", so this analysis treats it as a hypothetical compound or model analogous to foundational NLP architectures such as the Transformer. The Transformer model revolutionized sequence transduction by replacing recurrence and convolution with self-attention mechanisms, enabling parallelizable training and superior performance on tasks like machine translation. Key innovations include:

- Multi-head attention: Captures diverse contextual relationships.

- Positional encoding: Injects sequence order information without recurrence.

- Scalability: Achieves state-of-the-art (SOTA) results with fewer computational resources (e.g., 41.8 BLEU on WMT 2014 English-to-French translation after 3.5 days of training on 8 GPUs).
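The attention and positional-encoding ideas listed above can be sketched in a few lines of plain Python. This is a minimal, single-head illustration under stated assumptions: the function names, the toy embeddings, and the omission of learned projection matrices are all choices made here for clarity, not details taken from this page. Multi-head attention would run several such attention computations in parallel on separately projected inputs.

```python
import math

def positional_encoding(num_positions, d_model):
    """Sinusoidal position table: sine on even dimensions, cosine on odd ones."""
    table = []
    for pos in range(num_positions):
        row = []
        for i in range(d_model):
            # Each pair of dimensions (2k, 2k+1) shares one wavelength.
            angle = pos / (10000 ** ((2 * (i // 2)) / d_model))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        table.append(row)
    return table

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(queries, keys, values):
    """Compute softmax(Q K^T / sqrt(d_k)) V for lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Each output vector is a weighted average of the value vectors.
        outputs.append([
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return outputs

# Hypothetical toy input: 3 token embeddings of width 4.
embeddings = [[0.1, 0.2, 0.3, 0.4], [0.5, 0.1, 0.0, 0.2], [0.3, 0.3, 0.3, 0.3]]
pe = positional_encoding(3, 4)
# Order information is injected by adding the position table to the embeddings.
x = [[e + p for e, p in zip(emb, pos)] for emb, pos in zip(embeddings, pe)]
# Self-attention: queries, keys, and values all come from the same sequence.
attended = scaled_dot_product_attention(x, x, x)
```

Because every position attends to every other position in one pass, the loop over queries has no sequential dependency, which is what makes training parallelizable.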

Properties

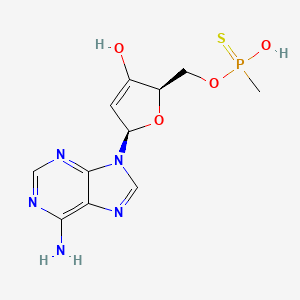

| Property | Value |
|---|---|
| CAS No. | 130320-50-2 |
| Molecular Formula | C11H14N5O4PS |
| Molecular Weight | 343.3 g/mol |
| IUPAC Name | (2R,5R)-5-(6-aminopurin-9-yl)-2-[[hydroxy(methyl)phosphinothioyl]oxymethyl]-2,5-dihydrofuran-3-ol |
| InChI | `InChI=1S/C11H14N5O4PS/c1-21(18,22)19-3-7-6(17)2-8(20-7)16-5-15-9-10(12)13-4-14-11(9)16/h2,4-5,7-8,17H,3H2,1H3,(H,18,22)(H2,12,13,14)/t7-,8-,21?/m1/s1` |
| InChI Key | `ICRGYFPAGKGSJA-SYCXPTRRSA-N` |
| SMILES | `CP(=S)(O)OCC1C(=CC(O1)N2C=NC3=C(N=CN=C32)N)O` |
| Isomeric SMILES | `CP(=S)(O)OC[C@@H]1C(=C[C@@H](O1)N2C=NC3=C(N=CN=C32)N)O` |
| Canonical SMILES | `CP(=S)(O)OCC1C(=CC(O1)N2C=NC3=C(N=CN=C32)N)O` |
| Synonyms | ACMPTN; adenosine 3',5'-cyclic methylphosphonothioate |
| Origin of Product | United States |
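As a quick consistency check, the listed molecular weight can be recomputed from the molecular formula. The sketch below assumes rounded standard atomic weights and a hypothetical helper, `molecular_weight`, that handles only simple formulas without brackets or charges.

```python
import re

# Rounded standard atomic weights in g/mol (assumed values for illustration).
ATOMIC_WEIGHTS = {
    "C": 12.011, "H": 1.008, "N": 14.007,
    "O": 15.999, "P": 30.974, "S": 32.06,
}

def molecular_weight(formula):
    """Sum atomic weights for a simple formula such as 'C11H14N5O4PS'."""
    total = 0.0
    # Each match is an element symbol followed by an optional count.
    for symbol, count in re.findall(r"([A-Z][a-z]?)(\d*)", formula):
        total += ATOMIC_WEIGHTS[symbol] * (int(count) if count else 1)
    return total

weight = molecular_weight("C11H14N5O4PS")
print(f"{weight:.1f} g/mol")  # prints "343.3 g/mol"
```

The result agrees with the 343.3 g/mol value in the table to the precision shown.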

Comparison with Similar Compounds

Table 2: Training Efficiency Comparison

| Model | Training Data Size | Training Time | Key Benchmark Results |
|---|---|---|---|
| Transformer | WMT 2014 datasets | 3.5 days | 41.8 BLEU (En-Fr) |
| RoBERTa | 160GB text | 100+ epochs | 89.4 F1 on SQuAD 2.0 |

Transformer vs. T5 (Text-to-Text Transfer Transformer)

T5 unifies NLP tasks into a text-to-text framework, leveraging the Transformer’s encoder-decoder architecture. Key distinctions are summarized in Table 3.

Table 3: Performance on Summarization Tasks

| Model | ROUGE-1 (CNN/DM) | Training Efficiency |
|---|---|---|
| Transformer | 38.2 | High (parallelizable) |
| T5 | 43.5 | Moderate (requires massive compute) |
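The text-to-text framing described for T5 can be illustrated with a small sketch: every task becomes a plain input string with a task prefix, and the model is expected to emit the answer as text. The helper `to_text_to_text` and the task keys are hypothetical names introduced here; the two prefixes follow the style commonly shown for T5 and are assumptions, not details from this page.

```python
# Illustrative task prefixes (assumed); one string format covers every task.
TASK_PREFIXES = {
    "translate_en_de": "translate English to German: ",
    "summarize": "summarize: ",
}

def to_text_to_text(task, text):
    """Render a task instance as a single prefixed input string."""
    return TASK_PREFIXES[task] + text

examples = [
    to_text_to_text("translate_en_de", "That is good."),
    to_text_to_text("summarize", "The Transformer replaces recurrence with attention."),
]
for line in examples:
    print(line)
```

Since inputs and outputs are both plain text, the same encoder-decoder model, loss, and decoding procedure can in principle be reused across tasks.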

Transformer vs. GPT-2

GPT-2 adopts a decoder-only architecture for autoregressive language modeling:

- Zero-shot learning: Achieves 55 F1 on CoQA without task-specific training.

- Generative strength: Produces coherent paragraphs but struggles with factual consistency.

- Scalability: Performance improves log-linearly with model size (1.5B parameters in GPT-2).

Key Trade-off: While GPT-2 excels in open-ended generation, it underperforms bidirectional models (e.g., BERT) on tasks requiring contextual understanding.

Research Findings and Implications

Architectural Flexibility: The Transformer’s modular design enables adaptations like BERT’s bidirectionality and T5’s text-to-text framework, highlighting its versatility.

Training Optimization: RoBERTa’s success underscores the impact of hyperparameter tuning (e.g., dynamic masking, batch size) on model performance.

Data and Scale: T5 and GPT-2 demonstrate that scaling model size and data diversity is critical for SOTA results, but this scaling raises concerns about computational sustainability.


Disclaimer and Information on In-Vitro Research Products

Please be aware that all articles and product information presented on BenchChem are intended solely for informational purposes. The products available for purchase on BenchChem are specifically designed for in-vitro studies, which are conducted outside of living organisms. In-vitro studies, derived from the Latin term "in glass," involve experiments performed in controlled laboratory settings using cells or tissues. It is important to note that these products are not categorized as medicines or drugs, and they have not received approval from the FDA for the prevention, treatment, or cure of any medical condition, ailment, or disease. We must emphasize that any form of bodily introduction of these products into humans or animals is strictly prohibited by law. It is essential to adhere to these guidelines to ensure compliance with legal and ethical standards in research and experimentation.