Orestrate

Description

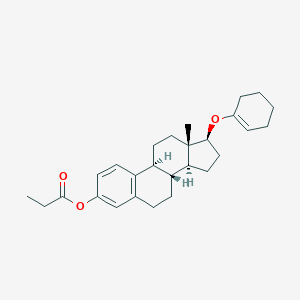


Properties

| Property | Value | Source |
|---|---|---|
| IUPAC Name | [(8R,9S,13S,14S,17S)-17-(cyclohexen-1-yloxy)-13-methyl-6,7,8,9,11,12,14,15,16,17-decahydrocyclopenta[a]phenanthren-3-yl] propanoate | PubChem |
| InChI | InChI=1S/C27H36O3/c1-3-26(28)30-20-10-12-21-18(17-20)9-11-23-22(21)15-16-27(2)24(23)13-14-25(27)29-19-7-5-4-6-8-19/h7,10,12,17,22-25H,3-6,8-9,11,13-16H2,1-2H3/t22-,23-,24+,25+,27+/m1/s1 | PubChem |
| InChI Key | VYAXJSIVAVEVHF-RYIFMDQWSA-N | PubChem |
| Canonical SMILES | CCC(=O)OC1=CC2=C(C=C1)C3CCC4(C(C3CC2)CCC4OC5=CCCCC5)C | PubChem |
| Isomeric SMILES | CCC(=O)OC1=CC2=C(C=C1)[C@H]3CC[C@]4([C@H]([C@@H]3CC2)CC[C@@H]4OC5=CCCCC5)C | PubChem |
| Molecular Formula | C27H36O3 | PubChem |
| DSSTOX Substance ID | DTXSID80930230 | EPA DSSTox (record name: Orestrate) |
| Molecular Weight | 408.6 g/mol | PubChem |
| CAS No. | 13885-31-9 | CAS Common Chemistry (record name: Estra-1,3,5(10)-trien-3-ol, 17-(1-cyclohexen-1-yloxy)-, 3-propanoate, (17β)-); ChemIDplus (record name: Orestrate [INN]); EPA DSSTox (record name: Orestrate); FDA GSRS (record name: ORESTRATE) |

Sources:

- PubChem (https://pubchem.ncbi.nlm.nih.gov): Data deposited in or computed by PubChem.
- EPA DSSTox (https://comptox.epa.gov/dashboard/DTXSID80930230): DSSTox provides a high quality public chemistry resource for supporting improved predictive toxicology.
- CAS Common Chemistry (https://commonchemistry.cas.org/detail?cas_rn=13885-31-9): CAS Common Chemistry is an open community resource for accessing chemical information. Nearly 500,000 chemical substances from CAS REGISTRY cover areas of community interest, including common and frequently regulated chemicals, and those relevant to high school and undergraduate chemistry classes. This chemical information, curated by our expert scientists, is provided in alignment with our mission as a division of the American Chemical Society. The data from CAS Common Chemistry is provided under a CC-BY-NC 4.0 license, unless otherwise stated.
- ChemIDplus (https://pubchem.ncbi.nlm.nih.gov/substance/?source=chemidplus&sourceid=0013885319): ChemIDplus is a free, web search system that provides access to the structure and nomenclature authority files used for the identification of chemical substances cited in National Library of Medicine (NLM) databases, including the TOXNET system.
- FDA Global Substance Registration System (GSRS) (https://gsrs.ncats.nih.gov/ginas/app/beta/substances/G9VC23W7W0): The FDA Global Substance Registration System (GSRS) enables the efficient and accurate exchange of information on what substances are in regulated products. Instead of relying on names, which vary across regulatory domains, countries, and regions, the GSRS knowledge base makes it possible for substances to be defined by standardized, scientific descriptions. Unless otherwise noted, the contents of the FDA website (www.fda.gov), both text and graphics, are not copyrighted. They are in the public domain and may be republished, reprinted and otherwise used freely by anyone without the need to obtain permission from FDA. Credit to the U.S. Food and Drug Administration as the source is appreciated but not required.
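
The molecular formula and the molecular weight listed above are linked by simple arithmetic, and the formula is also embedded as the second layer of the InChI string. The sketch below checks both; the rounded atomic weights are conventional values assumed for illustration, and the parser only handles plain formulas without brackets or charges.

```python
import re

# Rounded conventional atomic weights in g/mol (assumed values for this sketch).
ATOMIC_WEIGHT = {"C": 12.011, "H": 1.008, "N": 14.007, "O": 15.999}

INCHI = ("InChI=1S/C27H36O3/c1-3-26(28)30-20-10-12-21-18(17-20)9-11-23-22(21)15-16-27(2)"
         "24(23)13-14-25(27)29-19-7-5-4-6-8-19/h7,10,12,17,22-25H,3-6,8-9,11,13-16H2,1-2H3/"
         "t22-,23-,24+,25+,27+/m1/s1")

def parse_formula(formula: str) -> dict[str, int]:
    """Count atoms in a plain formula such as 'C27H36O3'."""
    counts: dict[str, int] = {}
    for element, number in re.findall(r"([A-Z][a-z]?)(\d*)", formula):
        counts[element] = counts.get(element, 0) + (int(number) if number else 1)
    return counts

def molecular_weight(formula: str) -> float:
    """Sum of atom counts times atomic weights."""
    return sum(ATOMIC_WEIGHT[el] * n for el, n in parse_formula(formula).items())

def formula_from_inchi(inchi: str) -> str:
    """The formula is the field after the 'InChI=1S' prefix, separated by '/'."""
    return inchi.split("/")[1]

print(parse_formula("C27H36O3"))               # {'C': 27, 'H': 36, 'O': 3}
print(round(molecular_weight("C27H36O3"), 1))  # 408.6
```

27 × 12.011 + 36 × 1.008 + 3 × 15.999 = 408.582 g/mol, which rounds to the 408.6 g/mol reported by PubChem.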


The Orchestration of Gene Expression: A Technical Guide for Researchers

Audience: Researchers, scientists, and drug development professionals.

Introduction: The Symphony of the Genome

Gene expression is the intricate process by which the genetic information encoded in DNA is converted into functional products, such as proteins or functional RNA molecules. This process is not a simple linear pathway but a highly regulated and dynamic symphony of molecular interactions that orchestrate cellular function, differentiation, and response to the environment. Understanding the precise control of gene expression is fundamental to unraveling the complexities of biology and disease, and it is a cornerstone of modern drug discovery and development.

This technical guide provides an in-depth exploration of the core mechanisms governing the orchestration of gene expression. We will delve into the roles of signaling pathways in transmitting extracellular cues to the nucleus, the epigenetic modifications that shape the chromatin landscape, and the experimental techniques that allow us to interrogate these complex regulatory networks.

Core Mechanisms of Gene Expression Regulation

The regulation of gene expression occurs at multiple levels, from the accessibility of DNA to the final post-translational modification of proteins. Key control points include chromatin accessibility, transcription initiation, and post-transcriptional modifications.

Chromatin Accessibility and Epigenetic Modifications

In eukaryotic cells, DNA is packaged into a highly condensed structure called chromatin. For a gene to be transcribed, the chromatin must be in an "open" or accessible state, allowing transcription factors and RNA polymerase to bind to the DNA. The accessibility of chromatin is dynamically regulated by epigenetic modifications, which are chemical alterations to DNA or histone proteins that do not change the underlying DNA sequence.

Key epigenetic modifications include:

- Histone Acetylation: Generally associated with active transcription. Adding acetyl groups to lysine residues on histone tails neutralizes their positive charge, weakening their interaction with negatively charged DNA and leading to a more relaxed chromatin structure.
- Histone Methylation: The effect of histone methylation depends on the specific lysine or arginine residue that is methylated and the number of methyl groups added. For example, H3K4me3 is a mark of active promoters, while H3K27me3 is associated with transcriptional repression.[1][2][3]
- DNA Methylation: The addition of a methyl group to cytosine bases, typically in the context of CpG dinucleotides, is often associated with gene silencing.[2]
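
Because DNA methylation in mammals occurs mostly at CpG dinucleotides, a first step in many methylation analyses is simply locating the candidate sites in a sequence. The minimal sketch below does that; the function name and example sequence are hypothetical, and it reports only the positions of the C in each 5'-CG-3' pair on the given strand.

```python
def cpg_positions(seq: str) -> list[int]:
    """Return 0-based positions of the cytosine in every CpG (5'-CG-3') dinucleotide."""
    seq = seq.upper()
    return [i for i in range(len(seq) - 1) if seq[i:i + 2] == "CG"]

# Hypothetical sequence with two CpG sites.
print(cpg_positions("ACGTTCGA"))  # [1, 5]
```

Since CG is its own reverse complement, every CpG on one strand is paired with a CpG on the other, which is why CpG methylation is typically symmetric across the two strands.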

Transcription Factors: The Master Conductors

Transcription factors (TFs) are proteins that bind to specific DNA sequence motifs, typically located in promoter and enhancer regions, to control the rate of transcription. TFs can act as activators, recruiting co-activators and RNA polymerase to the transcription start site, or as repressors, blocking the binding of the transcriptional machinery. The activity of transcription factors is often regulated by upstream signaling pathways that are initiated by extracellular stimuli.
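
To make "binding to specific DNA sequence motifs" concrete, the sketch below scans both strands of a sequence for an exact motif. It uses the canonical E-box (CACGTG), a site bound by c-Myc, as an example; the function names and test sequences are hypothetical. Real TF binding sites are degenerate, so practical tools score position weight matrices rather than requiring exact matches.

```python
COMPLEMENT = str.maketrans("ACGT", "TGCA")

def reverse_complement(seq: str) -> str:
    """Reverse complement of an A/C/G/T sequence."""
    return seq.upper().translate(COMPLEMENT)[::-1]

def find_motif(seq: str, motif: str) -> list[tuple[int, str]]:
    """Return (0-based start, strand) for exact matches of motif on either strand."""
    seq, motif = seq.upper(), motif.upper()
    rc = reverse_complement(motif)
    hits = []
    for i in range(len(seq) - len(motif) + 1):
        window = seq[i:i + len(motif)]
        if window == motif:
            hits.append((i, "+"))
        elif window == rc:  # palindromic motifs such as CACGTG are reported once, on "+"
            hits.append((i, "-"))
    return hits

print(find_motif("TTCACGTGAA", "CACGTG"))  # [(2, '+')]
```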

Signaling Pathways: Relaying the Message to the Nucleus

Cells constantly receive signals from their environment in the form of hormones, growth factors, and other signaling molecules. These signals are transmitted into the cell and ultimately to the nucleus through complex signaling pathways, which are cascades of protein-protein interactions and modifications. These pathways converge on transcription factors, altering their activity and leading to changes in gene expression.

The MAPK Signaling Pathway

The Mitogen-Activated Protein Kinase (MAPK) pathway is a key signaling cascade involved in regulating a wide range of cellular processes, including proliferation, differentiation, and apoptosis.[4][5] The pathway consists of a three-tiered kinase module: a MAP kinase kinase kinase (MAPKKK), a MAP kinase kinase (MAPKK), and a MAPK.[4][6] Activation of this cascade by extracellular signals, such as growth factors binding to receptor tyrosine kinases, leads to the phosphorylation and activation of a terminal MAPK (e.g., ERK). Activated MAPK then translocates to the nucleus and phosphorylates various transcription factors, such as c-Myc and CREB, thereby modulating the expression of target genes.[5]
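
The three-tiered structure means that each kinase's activity sets the input to the next tier. The toy model below illustrates how gains can compound down such a cascade; it is a deliberately simplified sketch (one activation step per tier, steady state only, hypothetical rate constants) and not a faithful model of MAPK kinetics, where MAPKK and MAPK each require dual phosphorylation.

```python
def tier_steady_state(upstream: float, k_act: float, k_inact: float) -> float:
    """Active fraction x solving k_act*u*(1 - x) = k_inact*x, i.e. x = k_act*u / (k_act*u + k_inact)."""
    return k_act * upstream / (k_act * upstream + k_inact)

def mapk_cascade(signal: float, k_act: float = 5.0, k_inact: float = 1.0) -> tuple[float, float, float]:
    """Steady-state active fractions of MAPKKK, MAPKK and MAPK for a given input signal."""
    mapkkk = tier_steady_state(signal, k_act, k_inact)
    mapkk = tier_steady_state(mapkkk, k_act, k_inact)
    mapk = tier_steady_state(mapkk, k_act, k_inact)
    return mapkkk, mapkk, mapk

# With these hypothetical constants, a weak input (0.1) yields about 33%, 63% and 76% activation per tier.
print([round(x, 2) for x in mapk_cascade(0.1)])
```

Because each tier's activation rate exceeds its inactivation rate here, the active fraction grows from one tier to the next; with the opposite balance the same structure would attenuate the signal.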

Figure 1: The MAPK signaling pathway.

The PI3K/AKT Signaling Pathway

The Phosphoinositide 3-kinase (PI3K)/AKT pathway is another critical signaling cascade that regulates cell survival, growth, and proliferation.[7][8] Upon activation by growth factors, PI3K phosphorylates phosphatidylinositol (4,5)-bisphosphate (PIP2) to generate phosphatidylinositol (3,4,5)-trisphosphate (PIP3).[9][10][11] PIP3 acts as a second messenger, recruiting AKT to the cell membrane where it is activated through phosphorylation.[10][11] Activated AKT then phosphorylates a variety of downstream targets, including the transcription factor FOXO, leading to its cytoplasmic localization and the subsequent regulation of genes involved in apoptosis and cell cycle progression.[8]

References

- 1. ChIP-Seq Histone Modification Data | Genome Sciences Centre [bcgsc.ca]

- 2. news-medical.net [news-medical.net]

- 3. genome.ucsc.edu [genome.ucsc.edu]

- 4. creative-diagnostics.com [creative-diagnostics.com]

- 5. MAPK/ERK pathway - Wikipedia [en.wikipedia.org]

- 6. Function and Regulation in MAPK Signaling Pathways: Lessons Learned from the Yeast Saccharomyces cerevisiae - PMC [pmc.ncbi.nlm.nih.gov]

- 7. anygenes.com [anygenes.com]

- 8. KEGG PATHWAY: PI3K-Akt signaling pathway - Homo sapiens (human) [kegg.jp]

- 9. researchgate.net [researchgate.net]

- 10. researchgate.net [researchgate.net]

- 11. creative-diagnostics.com [creative-diagnostics.com]

Orchestrating Cellular Destiny: A Technical Guide to Signaling Pathways in Cell Fate Determination

For Researchers, Scientists, and Drug Development Professionals

Abstract

The determination of cell fate is a fundamental process in developmental biology and tissue homeostasis, governed by a complex interplay of intercellular signaling pathways. These pathways act as intricate communication networks, translating extracellular cues into specific programs of gene expression that dictate whether a cell will proliferate, differentiate, apoptose, or maintain a quiescent state. Dysregulation of these critical signaling networks is a hallmark of numerous diseases, including cancer and developmental disorders. This technical guide provides an in-depth exploration of three core signaling pathways—Notch, Wnt/β-catenin, and TGF-β—that are instrumental in orchestrating cell fate decisions. We will dissect their molecular mechanisms, present key quantitative data, and provide detailed experimental protocols for their study, offering a comprehensive resource for researchers and drug development professionals seeking to understand and manipulate these pivotal cellular processes.

Core Signaling Pathways in Cell Fate

The Notch Signaling Pathway: A Master of Binary Decisions

The Notch signaling pathway is a highly conserved, juxtacrine signaling system essential for cell-cell communication. It plays a critical role in determining binary cell fate decisions, a process known as lateral inhibition, where a cell adopting a specific fate inhibits its immediate neighbors from doing the same.[1] This mechanism is crucial for the precise patterning of tissues during embryonic development and for maintaining homeostasis in adult tissues.[1][2]

Mechanism of Action: The pathway is initiated when a transmembrane ligand (e.g., Delta-like [Dll] or Jagged [Jag]) on a "sending" cell binds to a Notch receptor (NOTCH1-4) on an adjacent "receiving" cell.[3] This interaction triggers a cascade of proteolytic cleavages of the Notch receptor. The first cleavage occurs in the Golgi apparatus, and the subsequent cleavages are ligand-dependent.[3] An ADAM metalloprotease performs the second cleavage (S2), followed by a third cleavage (S3) within the transmembrane domain by the γ-secretase complex.[3] This S3 cleavage releases the Notch Intracellular Domain (NICD) into the cytoplasm.[4] The NICD then translocates to the nucleus, where it forms a complex with the DNA-binding protein CSL (CBF1/Su(H)/Lag-1) and a coactivator of the Mastermind-like (MAML) family. This transcriptional activation complex drives the expression of Notch target genes, most notably the Hes and Hey families of transcriptional repressors, which in turn regulate downstream genes to execute a specific cell fate program.[1][4]
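
The lateral inhibition described above arises from a feedback loop: Delta on one cell activates Notch on its neighbour, and Notch activity represses that neighbour's own Delta. The sketch below integrates a two-cell model of this loop in the form proposed by Collier et al. (1996); the parameter values and initial conditions are illustrative assumptions. Starting from nearly equal cells, small differences are amplified until one cell becomes the high-Delta "sending" cell and the other the high-Notch "receiving" cell.

```python
def notch_activation(delta_neighbour: float, a: float = 0.01, k: int = 2) -> float:
    """Notch activity rises with the neighbour's Delta level (increasing Hill function)."""
    return delta_neighbour ** k / (a + delta_neighbour ** k)

def delta_production(notch: float, b: float = 100.0, h: int = 2) -> float:
    """Delta production is repressed by the cell's own Notch activity (decreasing Hill function)."""
    return 1.0 / (1.0 + b * notch ** h)

def simulate_two_cells(d0=(0.12, 0.10), n0=(0.1, 0.1), dt=0.01, t_end=50.0):
    """Forward-Euler integration for two cells that are each other's only neighbour."""
    d, n = list(d0), list(n0)
    for _ in range(int(t_end / dt)):
        dn = [notch_activation(d[1]) - n[0], notch_activation(d[0]) - n[1]]
        dd = [delta_production(n[0]) - d[0], delta_production(n[1]) - d[1]]
        n = [n[i] + dt * dn[i] for i in range(2)]
        d = [d[i] + dt * dd[i] for i in range(2)]
    return d, n

delta, notch = simulate_two_cells()
print([round(x, 2) for x in delta], [round(x, 2) for x in notch])
```

With these parameters the symmetric state is unstable, so the two cells diverge: one ends with high Delta and low Notch, the other with the reverse, which is the binary fate split that lateral inhibition produces.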

Quantitative Data Summary: Notch Pathway

| Parameter | Interacting Molecules | KD (Dissociation Constant) | Cell Type/System | Notes |
|---|---|---|---|---|
| Binding Affinity | Notch1 / Delta-like 4 (Dll4) | ~270 nM | In vitro (biolayer interferometry) | Dll4 binds to Notch1 with over 10-fold higher affinity than Dll1.[5] |
| Binding Affinity | Notch1 / Delta-like 1 (Dll1) | ~3.4 µM | In vitro (biolayer interferometry) | Weaker affinity compared to Dll4.[5] |
| Binding Affinity | Notch1 / Dll1 | ~10 µM | In solution (analytical ultracentrifugation) | Demonstrates that apparent affinities can vary by technique.[6] |
| Signaling Potency | Dll1 vs. Dll4 on Notch1 | - | Cell-based reporter assays | Dll4 is a more potent activator of Notch1 signaling than Dll1.[7][8] |
| Fringe Modulation | Dll1-Notch1 | - | Cell-based reporter assays | Lfng glycosyltransferase can increase Dll1-Notch1 signaling up to 3-fold.[7][8] |
| Fringe Modulation | Jag1-Notch1 | - | Cell-based reporter assays | Lfng can decrease Jag1-Notch1 signaling by over 2.5-fold.[7][8] |
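
The KD values in the table above can be turned into a fold difference and into the fraction of receptor expected to be bound at a given ligand concentration. The sketch assumes simple 1:1 equilibrium binding with ligand in excess (θ = [L] / ([L] + KD)) and a hypothetical 1 µM ligand concentration; Notch-ligand binding actually occurs between apposed cell membranes, so these numbers are only a rough guide.

```python
def fractional_occupancy(ligand_nm: float, kd_nm: float) -> float:
    """Equilibrium 1:1 binding with ligand in excess: theta = [L] / ([L] + KD)."""
    return ligand_nm / (ligand_nm + kd_nm)

KD_NM = {"Dll4": 270.0, "Dll1": 3400.0}  # biolayer interferometry values from the table

fold = KD_NM["Dll1"] / KD_NM["Dll4"]
print(round(fold, 1))  # 12.6

LIGAND_NM = 1000.0  # hypothetical ligand concentration (1 µM)
for ligand, kd in KD_NM.items():
    print(ligand, round(fractional_occupancy(LIGAND_NM, kd), 3))  # Dll4 0.787, Dll1 0.227
```

The roughly 12.6-fold ratio is consistent with the "over 10-fold" difference noted in the table.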

The Wnt/β-catenin Pathway: A Key Regulator of Development and Stem Cells

The Wnt signaling pathways are a group of signal transduction pathways activated by the binding of Wnt proteins to Frizzled (Fzd) family receptors.[9] The canonical Wnt/β-catenin pathway is crucial for regulating cell fate specification, proliferation, and body axis patterning during embryonic development and for maintaining adult tissue homeostasis.[9][10][11]

Mechanism of Action: In the absence of a Wnt ligand ("Off-state"), cytoplasmic β-catenin is targeted for degradation. It is phosphorylated by a "destruction complex" consisting of Axin, Adenomatous Polyposis Coli (APC), Casein Kinase 1 (CK1), and Glycogen Synthase Kinase 3β (GSK-3β).[10] Phosphorylated β-catenin is then ubiquitinated and degraded by the proteasome.[10]

Pathway activation ("On-state") occurs when a Wnt ligand binds to an Fzd receptor and its co-receptor, LRP5/6.[10] This binding event leads to the recruitment of the Dishevelled (Dvl) protein and the destruction complex to the plasma membrane. Sequestration at the membrane inhibits GSK-3β activity, so β-catenin is stabilized and accumulates in the cytoplasm.[10] Accumulated β-catenin translocates to the nucleus, where it displaces co-repressors (such as Groucho) from T-Cell Factor/Lymphoid Enhancer Factor (TCF/LEF) transcription factors and recruits co-activators to initiate the transcription of Wnt target genes, such as c-Myc and Cyclin D1.[10]
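
The off/on switch described above is essentially a change in β-catenin's degradation rate. A minimal sketch, assuming constant synthesis and first-order degradation (d[B]/dt = s − k·[B], steady state s/k) with hypothetical rate values, shows how inhibiting the destruction complex raises the steady-state β-catenin level.

```python
def simulate_beta_catenin(synthesis: float, k_deg: float, b0: float = 0.0,
                          dt: float = 0.01, t_end: float = 100.0) -> float:
    """Forward-Euler integration of d[B]/dt = synthesis - k_deg * [B]; returns [B] at t_end."""
    b = b0
    for _ in range(int(t_end / dt)):
        b += dt * (synthesis - k_deg * b)
    return b

SYNTHESIS = 1.0   # hypothetical constant production rate (arbitrary units)
K_DEG_OFF = 1.0   # hypothetical: destruction complex active (no Wnt)
K_DEG_ON = 0.1    # hypothetical: destruction complex inhibited (Wnt present)

off_level = simulate_beta_catenin(SYNTHESIS, K_DEG_OFF)               # approaches 1.0
on_level = simulate_beta_catenin(SYNTHESIS, K_DEG_ON, b0=off_level)   # approaches 10.0
print(round(off_level, 3), round(on_level, 3))
```

In this model the fold increase in steady-state β-catenin equals the fold decrease in the degradation rate, here 10-fold.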

References

- 1. Video: Genome Editing in Mammalian Cell Lines using CRISPR-Cas [jove.com]

- 2. genemedi.net [genemedi.net]

- 3. TGF-β family ligands exhibit distinct signalling dynamics that are driven by receptor localisation - PMC [pmc.ncbi.nlm.nih.gov]

- 4. bpsbioscience.com [bpsbioscience.com]

- 5. Intrinsic Selectivity of Notch 1 for Delta-like 4 Over Delta-like 1 - PMC [pmc.ncbi.nlm.nih.gov]

- 6. researchgate.net [researchgate.net]

- 7. Diversity in Notch ligand-receptor signaling interactions [elifesciences.org]

- 8. Diversity in Notch ligand-receptor signaling interactions | eLife [elifesciences.org]

- 9. scienceopen.com [scienceopen.com]

- 10. researchgate.net [researchgate.net]

- 11. Development of a Bioassay for Detection of Wnt-Binding Affinities for Individual Frizzled Receptors - PMC [pmc.ncbi.nlm.nih.gov]

Revolutionizing Research: A Technical Guide to Laboratory Workflow Orchestration

For Researchers, Scientists, and Drug Development Professionals

In the fast-paced world of scientific research and drug development, efficiency, reproducibility, and data integrity are paramount. As laboratories handle increasingly complex workflows and generate vast amounts of data, the need for intelligent automation has never been more critical. Laboratory workflow orchestration emerges as a transformative solution, moving beyond simple automation to create a seamlessly integrated and highly efficient research ecosystem. This in-depth technical guide explores the core principles of laboratory workflow orchestration, its tangible benefits, practical implementation strategies, and its application in critical experimental procedures.

The Core of Laboratory Orchestration: From Automation to Intelligent Coordination

Laboratory workflow orchestration is the centralized and intelligent management of all components of a laboratory's processes, including instruments, software, data, and personnel.[1] It differs from simple automation, which typically focuses on automating a single task or a small series of tasks. Orchestration, in contrast, creates a dynamic and interconnected system where every step of a complex workflow is coordinated and optimized.[2]

At its heart, orchestration leverages a sophisticated software layer that acts as a central "conductor" for the entire laboratory. This platform communicates with a diverse range of instruments from various manufacturers, standardizes data formats, and manages the flow of samples and information.[3][4] This integration eliminates data silos and manual handoffs, which are often sources of errors and inefficiencies.[2]

The core components of a laboratory orchestration platform typically include:

- Centralized Scheduler: To manage and prioritize tasks across multiple instruments and workflows.
- Instrument Integration Framework: To enable communication and control of a wide variety of laboratory devices.
- Data Management System: To capture, store, and contextualize experimental data in a standardized format.[2]
- Workflow Engine: To define, execute, and monitor complex experimental protocols.
- User Interface: To provide a centralized point of control and visibility into all laboratory operations.
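
The scheduler and workflow engine listed above can be pictured as a dependency graph of protocol steps plus a rule for choosing among steps that are ready to run. The sketch below uses Python's standard-library `graphlib.TopologicalSorter` (Python 3.9+); the step names, dependencies, and priorities are hypothetical and loosely follow the MTT protocol in this guide, and a real platform would call instrument drivers where the comment indicates.

```python
from graphlib import TopologicalSorter

# Hypothetical protocol: each step maps to the set of steps it depends on.
PROTOCOL = {
    "seed_cells": set(),
    "incubate_overnight": {"seed_cells"},
    "prepare_dilutions": set(),
    "add_compounds": {"incubate_overnight", "prepare_dilutions"},
    "incubate_48h": {"add_compounds"},
    "add_mtt": {"incubate_48h"},
    "read_absorbance": {"add_mtt"},
}

# Hypothetical priorities: lower number is dispatched first when several steps are ready.
PRIORITY = {"prepare_dilutions": 0, "seed_cells": 1}

def run_workflow(protocol: dict[str, set[str]], priority: dict[str, int]) -> list[str]:
    """Return the order in which steps would be dispatched."""
    sorter = TopologicalSorter(protocol)
    sorter.prepare()
    order = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready(), key=lambda s: (priority.get(s, 10), s))
        for step in ready:
            order.append(step)  # a real engine would dispatch to an instrument here
            sorter.done(step)
    return order

print(run_workflow(PROTOCOL, PRIORITY))
```

Separating the dependency graph (what must happen before what) from the priority rule (what to run first among ready steps) lets the same protocol definition be reused while scheduling policy changes.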

Quantifiable Impact: The Benefits of an Orchestrated Laboratory

The adoption of laboratory workflow orchestration delivers significant and measurable improvements across various aspects of laboratory operations. These benefits translate into accelerated research timelines, reduced costs, and more reliable scientific outcomes.

| Metric | Reported Improvement | Study Context |
|---|---|---|
| Error Rate Reduction | Up to 95% reduction in pre-analytical errors | A study on an automated pre-analytical system in a clinical lab demonstrated a significant decrease in errors.[5] |
| Turnaround Time (TAT) | Mean TAT decreased by 6.1%; 99th percentile TAT decreased by 13.3% | An economic evaluation of total laboratory automation showed significant improvements in the timeliness of result reporting.[6] |
| Productivity and Throughput | Projected: pharmaceutical companies could bring medicines to market more than 500 days faster | A McKinsey report highlighted the potential for automation to accelerate drug development.[7] |
| Staff Efficiency | Reduction in labor costs of $232,650 per year | A time-motion study in a laboratory showed significant labor cost savings after automation.[8] |
| Cost Savings | Total cost per test decreased from $0.79 to $0.15 | A review of clinical chemistry laboratory automation reported a substantial reduction in the cost per test.[8] |
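
Some rows in the table report absolute values rather than percentages. As a worked example, the cost-per-test figures convert to a relative reduction as follows.

```python
def percent_reduction(before: float, after: float) -> float:
    """Relative decrease from `before` to `after`, in percent."""
    return (before - after) / before * 100.0

cost_drop = percent_reduction(0.79, 0.15)  # cost per test in USD, from the table
print(f"{cost_drop:.1f}%")                 # 81.0%
```

A drop from $0.79 to $0.15 per test is therefore about an 81% reduction, leaving roughly one fifth of the original cost.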

Visualizing a High-Throughput Screening Workflow

High-throughput screening (HTS) is a cornerstone of modern drug discovery, enabling the rapid testing of thousands of compounds. Orchestrating an HTS workflow is critical for ensuring efficiency and accuracy. The following diagram illustrates a typical automated HTS workflow for identifying kinase inhibitors.
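
At the end of an automated HTS run, raw plate signals are normalized to on-plate controls and compounds above a cutoff are flagged as hits. The sketch below assumes a kinase assay whose signal falls as inhibition rises, a hypothetical 50% inhibition threshold, and hypothetical well values. It also computes the widely used Z' factor, Z' = 1 − 3(σ_neg + σ_pos) / |μ_neg − μ_pos|, as a plate-quality check.

```python
from statistics import mean, stdev

def percent_inhibition(signal: float, neg_mean: float, pos_mean: float) -> float:
    """0% = uninhibited (DMSO) control level, 100% = fully inhibited control level."""
    return (neg_mean - signal) / (neg_mean - pos_mean) * 100.0

def z_prime(neg_ctrl: list[float], pos_ctrl: list[float]) -> float:
    """Plate quality: separation of control distributions relative to their spread."""
    return 1.0 - 3.0 * (stdev(neg_ctrl) + stdev(pos_ctrl)) / abs(mean(neg_ctrl) - mean(pos_ctrl))

def call_hits(wells: dict[str, float], neg_ctrl: list[float], pos_ctrl: list[float],
              threshold: float = 50.0) -> dict[str, float]:
    """Return compounds whose percent inhibition meets the (hypothetical) threshold."""
    neg, pos = mean(neg_ctrl), mean(pos_ctrl)
    scores = {cpd: percent_inhibition(s, neg, pos) for cpd, s in wells.items()}
    return {cpd: round(p, 1) for cpd, p in scores.items() if p >= threshold}

NEG = [1000, 1020, 980]  # hypothetical DMSO (no inhibitor) wells
POS = [100, 110, 90]     # hypothetical fully inhibited control wells
WELLS = {"cpd_A": 400, "cpd_B": 900, "cpd_C": 150}  # hypothetical compound wells

print(round(z_prime(NEG, POS), 2))   # 0.9
print(call_hits(WELLS, NEG, POS))    # {'cpd_A': 66.7, 'cpd_C': 94.4}
```

Normalizing each plate to its own controls lets results from many plates, processed at different times by the orchestrated system, be compared on a common scale.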

Detailed Experimental Protocol: Automated Cell Viability (MTT) Assay

Cell viability assays are fundamental in drug discovery for assessing the cytotoxic effects of compounds. The MTT (3-(4,5-dimethylthiazol-2-yl)-2,5-diphenyltetrazolium bromide) assay is a colorimetric assay that measures cellular metabolic activity. Automating this assay enhances reproducibility and throughput.

Objective: To determine the IC50 value of a test compound on a cancer cell line using an orchestrated workflow.

Materials:

- Cancer cell line (e.g., HeLa)
- Complete culture medium (e.g., DMEM with 10% FBS)
- Test compound stock solution (in DMSO)
- MTT solution (5 mg/mL in PBS)
- Solubilization solution (e.g., 10% SDS in 0.01 M HCl)[9]
- Sterile 96-well plates
- Automated liquid handler
- Automated plate incubator (37°C, 5% CO2)
- Microplate reader

Methodology:

- Cell Seeding (Day 1):
  - Harvest and count the cells, confirming >90% viability.
  - Using an automated liquid handler, seed 1 x 10^4 cells in 100 µL of complete culture medium into each well of a 96-well plate.
  - Incubate the plate overnight in an automated incubator.
- Compound Treatment (Day 2):
  - Prepare serial dilutions of the test compound in culture medium using the automated liquid handler.
  - Remove the old medium from the cell plate and add 100 µL of the diluted compound solutions to the respective wells. Include vehicle control (DMSO) and untreated control wells.
  - Return the plate to the automated incubator for 48 hours.
- MTT Assay (Day 4):
  - After incubation, add 10 µL of MTT solution to each well using the automated liquid handler.
  - Incubate the plate for 4 hours in the automated incubator.[9]
  - Add 100 µL of solubilization solution to each well to dissolve the formazan crystals.
  - Place the plate on an orbital shaker for 15 minutes to ensure complete solubilization.
- Data Acquisition:
  - Measure the absorbance at 570 nm using a microplate reader.[9]
  - The orchestration software automatically captures the absorbance data and associates it with the corresponding compound concentrations.
- Data Analysis:
  - The software calculates the percentage of cell viability for each concentration relative to the untreated control.
  - A dose-response curve is generated, and the IC50 value is determined.
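
The data-analysis step above can be sketched as two small functions: convert absorbance to percent viability relative to control wells, then estimate the IC50. This sketch uses log-linear interpolation between the two concentrations that bracket 50% viability, a simpler stand-in for the four-parameter logistic fit that dose-response software typically uses. The concentrations and absorbances are hypothetical values generated from a curve with an IC50 of 1 µM.

```python
import math
from typing import Optional

def percent_viability(abs_sample: float, abs_control: float, abs_blank: float = 0.0) -> float:
    """Viability relative to control wells, after optional blank (medium-only) subtraction."""
    return (abs_sample - abs_blank) / (abs_control - abs_blank) * 100.0

def ic50_interpolate(concs: list[float], viabilities: list[float]) -> Optional[float]:
    """Log-linear interpolation between the concentrations bracketing 50% viability."""
    pairs = sorted(zip(concs, viabilities))  # ascending concentration
    for (c1, v1), (c2, v2) in zip(pairs, pairs[1:]):
        if v1 >= 50.0 >= v2:
            if v1 == v2:
                return c1
            frac = (v1 - 50.0) / (v1 - v2)
            log_ic50 = math.log10(c1) + frac * (math.log10(c2) - math.log10(c1))
            return 10 ** log_ic50
    return None  # 50% viability is not bracketed by the tested range

CONCS_UM = [0.03, 0.3, 3.0, 30.0]         # hypothetical concentrations (µM)
ABSORBANCE = [0.971, 0.769, 0.250, 0.032]  # hypothetical A570 readings
viability = [percent_viability(a, abs_control=1.0) for a in ABSORBANCE]
ic50 = ic50_interpolate(CONCS_UM, viability)
print(f"IC50 ~ {ic50:.2f} µM")  # about 0.99 µM
```

Interpolation is sensitive to the spacing of the dilution series; a fitted curve uses all points and also yields the Hill slope and confidence intervals.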

Signaling Pathway Visualization: The MAPK/ERK Pathway in Cancer

The Mitogen-Activated Protein Kinase (MAPK)/Extracellular signal-Regulated Kinase (ERK) pathway is a crucial signaling cascade that regulates cell proliferation, differentiation, and survival.[10] Its dysregulation is a hallmark of many cancers, making it a key target for drug development.[11] The following diagram illustrates the core components of the MAPK/ERK pathway.

The Future of Research: Towards the Self-Driving Laboratory

Laboratory workflow orchestration is a foundational step towards the vision of a "self-driving" or "closed-loop" laboratory. In this paradigm, artificial intelligence and machine learning algorithms analyze experimental data in real-time to design and execute the next round of experiments automatically. This iterative process of designing, building, testing, and learning has the potential to dramatically accelerate scientific discovery.
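
A closed loop can be illustrated with a very small "design, test, learn" cycle in which each result determines the next experiment. In the sketch below, a simulated assay (a hypothetical Hill curve with an IC50 of 2 µM) stands in for a real orchestrated experiment, and the loop chooses each next concentration by bisection in log space. Real self-driving labs typically use richer strategies such as Bayesian optimization; bisection is used here only because it is easy to verify.

```python
import math

def simulated_assay(conc_um: float, ic50_um: float = 2.0, hill: float = 1.0) -> float:
    """Stand-in for a real experiment: % viability from a hypothetical Hill curve."""
    return 100.0 / (1.0 + (conc_um / ic50_um) ** hill)

def closed_loop_ic50(assay, low_um: float = 0.001, high_um: float = 1000.0, rounds: int = 20) -> float:
    """Each round tests the log-midpoint of the current bracket and narrows it."""
    lo, hi = math.log10(low_um), math.log10(high_um)
    for _ in range(rounds):
        mid = (lo + hi) / 2
        viability = assay(10 ** mid)  # design the next experiment and run it
        if viability > 50.0:          # learn: the IC50 lies at a higher concentration
            lo = mid
        else:
            hi = mid
    return 10 ** ((lo + hi) / 2)

print(f"{closed_loop_ic50(simulated_assay):.3f} µM")
```

Each round halves the uncertainty in log concentration, so 20 rounds narrow a six-decade search range to a few millionths of a decade.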

By embracing laboratory workflow orchestration, research organizations can unlock new levels of efficiency, improve the quality and reliability of their data, and free their scientists to focus on innovation rather than manual tasks. As this technology continues to evolve, it is likely to play an increasingly central role in research and drug development.

References

- 1. Cost-Benefit Analysis of Laboratory Automation [wakoautomation.com]

- 2. Lab Orchestration: Streamlining Lab Workflows to Enhance Efficiency, Quality and User Experience [thermofisher.com]

- 3. ijcmph.com [ijcmph.com]

- 4. researchgate.net [researchgate.net]

- 5. How to reduce laboratory errors with automation [biosero.com]

- 6. Economic Evaluation of Total Laboratory Automation in the Clinical Laboratory of a Tertiary Care Hospital - PMC [pmc.ncbi.nlm.nih.gov]

- 7. arobs.com [arobs.com]

- 8. media.neliti.com [media.neliti.com]

- 9. CyQUANT MTT Cell Proliferation Assay Kit Protocol | Thermo Fisher Scientific - US [thermofisher.com]

- 10. MAPK/ERK pathway - Wikipedia [en.wikipedia.org]

- 11. mdpi.com [mdpi.com]

Orchestration of Protein-Protein Interaction Networks: A Technical Guide for Researchers

For Researchers, Scientists, and Drug Development Professionals

Introduction

Protein-protein interactions (PPIs) are the cornerstone of cellular function, forming intricate and dynamic networks that govern nearly every biological process. From signal transduction and gene expression to metabolic regulation and immune responses, the precise orchestration of these interactions is paramount to cellular homeostasis. Dysregulation of PPI networks is a hallmark of numerous diseases, including cancer, neurodegenerative disorders, and infectious diseases, making them a critical area of study for drug development and therapeutic intervention.

This technical guide provides an in-depth exploration of the core principles and methodologies for studying the orchestration of PPI networks. It is designed to serve as a comprehensive resource for researchers, scientists, and drug development professionals, offering detailed experimental protocols, quantitative data analysis, and visual representations of key signaling pathways.

I. Key Signaling Pathways in PPI Networks

Signal transduction pathways are exemplary models of orchestrated PPIs, where a cascade of specific binding events transmits information from the cell surface to the nucleus, culminating in a cellular response. Understanding the intricate connections within these pathways is crucial for identifying potential therapeutic targets.
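
A common first pass at finding candidate targets in a PPI network is to represent interactions as a graph and rank proteins by degree (number of partners), since highly connected "hubs" often coordinate many processes. The sketch below builds such a graph from a small, hypothetical edge list drawn from interactions mentioned in this document (Wnt destruction complex, TCF/LEF, IKK/IκB/NF-κB, TGF-β receptors and Smads); a real analysis would use curated interaction databases.

```python
from collections import Counter

# Hypothetical edge list (undirected protein pairs) for illustration only.
INTERACTIONS = [
    ("Axin", "beta-catenin"), ("APC", "beta-catenin"), ("GSK3B", "beta-catenin"),
    ("CK1", "beta-catenin"), ("beta-catenin", "TCF/LEF"),
    ("IKK", "IkB"), ("IkB", "NF-kB"),
    ("TGFBR2", "TGFBR1"), ("TGFBR1", "R-Smad"), ("R-Smad", "Smad4"),
]

def degree(edges: list[tuple[str, str]]) -> Counter:
    """Number of distinct interaction partners per protein in an undirected edge list."""
    counts: Counter = Counter()
    for a, b in edges:
        counts[a] += 1
        counts[b] += 1
    return counts

deg = degree(INTERACTIONS)
print(deg.most_common(1))  # [('beta-catenin', 5)]
```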

Mitogen-Activated Protein Kinase (MAPK) Signaling Pathway

The MAPK signaling cascade is a highly conserved pathway that regulates a wide array of cellular processes, including proliferation, differentiation, and apoptosis.[1] The pathway is organized into a three-tiered kinase module: a MAP Kinase Kinase Kinase (MAPKKK), a MAP Kinase Kinase (MAPKK), and a MAP Kinase (MAPK).[1][2] Scaffold proteins play a crucial role in assembling these kinase complexes, ensuring signaling specificity and efficiency.[1]

Wnt/β-catenin Signaling Pathway

The Wnt signaling pathway is critical for embryonic development and adult tissue homeostasis.[3] In the canonical pathway, the binding of a Wnt ligand to its receptor complex leads to the stabilization and nuclear translocation of β-catenin, which then acts as a transcriptional co-activator.[4] The interactions between β-catenin and its binding partners are crucial for regulating the expression of target genes involved in cell proliferation and differentiation.[5]

NF-κB Signaling Pathway

The NF-κB (nuclear factor kappa-light-chain-enhancer of activated B cells) signaling pathway is a key regulator of the immune and inflammatory responses, cell survival, and proliferation.[6] In its inactive state, NF-κB is sequestered in the cytoplasm by inhibitor of κB (IκB) proteins.[6] Upon stimulation by various signals, the IκB kinase (IKK) complex is activated, which then phosphorylates IκB, leading to its ubiquitination and proteasomal degradation.[7][8] This allows NF-κB to translocate to the nucleus and activate the transcription of target genes.[6]

Epidermal Growth Factor Receptor (EGFR) Signaling Pathway

The EGFR signaling pathway is crucial for regulating cell growth, survival, and differentiation.[9] Binding of epidermal growth factor (EGF) to EGFR induces receptor dimerization and autophosphorylation, creating docking sites for various adaptor proteins and signaling molecules.[10] These interactions initiate downstream signaling cascades, including the MAPK and PI3K/AKT pathways.[9][10]

Transforming Growth Factor-beta (TGF-β) Signaling Pathway

The TGF-β signaling pathway is involved in a wide range of cellular processes, including cell growth, differentiation, and apoptosis.[11] TGF-β ligands bind to a complex of type I and type II serine/threonine kinase receptors.[12] The type II receptor phosphorylates and activates the type I receptor, which in turn phosphorylates receptor-regulated Smads (R-Smads).[12][13] Activated R-Smads then form a complex with a common-mediator Smad (Co-Smad), Smad4, and translocate to the nucleus to regulate target gene expression.[13][14]

II. Experimental Protocols for Studying PPIs

A variety of experimental techniques are available to identify and characterize protein-protein interactions. The choice of method depends on the specific research question, the nature of the interacting proteins, and the desired level of detail.

Co-Immunoprecipitation (Co-IP)

Co-IP is a widely used technique to study PPIs in their native cellular environment.[15] It involves using an antibody to specifically pull down a protein of interest (the "bait") from a cell lysate, along with any proteins that are bound to it (the "prey").
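
Co-IP results are usually interpreted against a negative control, such as an isotype-matched IgG pull-down, because some proteins bind beads or antibodies nonspecifically. The sketch below scores each prey by its log2 enrichment in the bait IP over the control IP. The prey names, signal values, pseudocount, and the 2-fold (log2 ≥ 1) cutoff are hypothetical choices for illustration.

```python
import math

def log2_enrichment(ip_signal: float, ctrl_signal: float, pseudocount: float = 1.0) -> float:
    """log2 of bait-IP signal over control-IP signal; the pseudocount avoids division by zero."""
    return math.log2((ip_signal + pseudocount) / (ctrl_signal + pseudocount))

def candidate_interactors(prey: dict[str, tuple[float, float]], min_log2fc: float = 1.0) -> list[str]:
    """Prey whose enrichment over the control IP meets the cutoff."""
    return sorted(p for p, (ip, ctrl) in prey.items() if log2_enrichment(ip, ctrl) >= min_log2fc)

# Hypothetical (bait IP signal, IgG control signal) pairs, e.g. band or MS intensities.
PREY = {"prey_X": (1500.0, 100.0), "prey_Y": (210.0, 190.0), "prey_Z": (63.0, 15.0)}
print(candidate_interactors(PREY))  # ['prey_X', 'prey_Z']
```

Enrichment over a control identifies candidates only; interactions are usually confirmed by reciprocal Co-IP or an orthogonal method.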

References

- 1. MAPK signaling pathway | Abcam [abcam.com]

- 2. academic.oup.com [academic.oup.com]

- 3. bioinformation.net [bioinformation.net]

- 4. researchgate.net [researchgate.net]

- 5. Decoding β-catenin associated protein-protein interactions: Emerging cancer therapeutic opportunities - PubMed [pubmed.ncbi.nlm.nih.gov]

- 6. NF-κB - Wikipedia [en.wikipedia.org]

- 7. NF-κB Signaling | Cell Signaling Technology [cellsignal.com]

- 8. The IκB kinase complex: master regulator of NF-κB signaling - PMC [pmc.ncbi.nlm.nih.gov]

- 9. nanobioletters.com [nanobioletters.com]

- 10. A comprehensive pathway map of epidermal growth factor receptor signaling | Molecular Systems Biology [link.springer.com]

- 11. TGF beta signaling pathway - Wikipedia [en.wikipedia.org]

- 12. TGF-β Signaling - PMC [pmc.ncbi.nlm.nih.gov]

- 13. TGF Beta Signaling Pathway | Thermo Fisher Scientific - JP [thermofisher.com]

- 14. TGF-β Signaling | Cell Signaling Technology [cellsignal.com]

- 15. bitesizebio.com [bitesizebio.com]

Orchestration of the Immune Response: A Technical Guide to Core Foundational Concepts

Authored for: Researchers, Scientists, and Drug Development Professionals

Introduction

The orchestration of an effective immune response is a highly complex and dynamic process, involving a symphony of cellular and molecular players that communicate and coordinate to eliminate pathogens and maintain homeostasis. This process is broadly divided into two interconnected arms: the rapid, non-specific innate immune system and the slower, highly specific adaptive immune system.[1][2][3] The innate system acts as the first line of defense, recognizing conserved molecular patterns on pathogens and initiating an inflammatory response.[1][3] Crucially, the innate response shapes the subsequent adaptive response, which provides long-lasting, specific memory.[1][4][5] This guide provides an in-depth technical overview of the foundational concepts governing this intricate orchestration, from initial antigen presentation to the generation of effector and memory cells.

The Innate-Adaptive Immune Interface: Antigen Presentation

The critical link between the innate and adaptive immune systems is the process of antigen presentation.[4] Professional antigen-presenting cells (APCs), primarily dendritic cells (DCs), but also macrophages and B cells, are central to this process.[6][7][8]

- Antigen Capture and Processing: Following a pathogenic encounter, innate cells like DCs capture antigens.[9] The antigens are internalized and proteolytically processed into smaller peptide fragments.[8][10]

- MHC Loading and Presentation: These peptides are then loaded onto Major Histocompatibility Complex (MHC) molecules.[7][8] Endogenous antigens (e.g., from virus-infected cells) are typically presented on MHC class I molecules, while exogenous antigens (from extracellular pathogens) are presented on MHC class II molecules.[11]

- APC Maturation and Migration: Concurrently, the APC receives signals from the pathogen (e.g., via Toll-like receptors) that induce its maturation. Maturation involves the upregulation of co-stimulatory molecules and migration from the site of infection to secondary lymphoid organs such as lymph nodes.[12] It is within these lymphoid tissues that the APC presents the antigen to naive T cells.[12][13]

T Cell Activation and Differentiation

The activation of a naive T cell is a pivotal event, requiring a precise sequence of three signals delivered by the APC.[14]

- Signal 1 (Activation): The T cell receptor (TCR) on a CD4+ helper T cell or a CD8+ cytotoxic T cell specifically recognizes and binds to the peptide-MHC complex on the surface of an APC.[7][14] This interaction provides antigen specificity.

- Signal 2 (Survival/Co-stimulation): Full T cell activation requires a second, co-stimulatory signal.[6][7] This is typically delivered when the CD28 protein on the T cell surface binds to B7 molecules (CD80/CD86) on the APC.[14][15] This second signal helps ensure that T cells are activated only in the context of a genuine threat recognized by the innate system.[14]

- Signal 3 (Differentiation): The local cytokine environment, largely produced by the APC and other innate cells, provides the third signal.[6][14] This signal determines which specialized effector subset the activated CD4+ T cell differentiates into, each subset having distinct functions.[6][13][16]
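The three-signal requirement can be read as a sequence of checkpoints. The toy function below is a minimal sketch of that logic, not a model of real T cell biology: the name `t_cell_fate` and its outcome labels are hypothetical, and the rule that Signal 1 without Signal 2 yields an anergic (unresponsive) cell is a textbook simplification added here as an assumption.

```python
from typing import FrozenSet

def t_cell_fate(tcr_engaged: bool, cd28_b7_bound: bool,
                cytokines: FrozenSet[str] = frozenset()) -> str:
    """Toy three-signal checkpoint for a naive T cell (illustrative only)."""
    if not tcr_engaged:
        # Signal 1 missing: no peptide-MHC complex recognized.
        return "naive"
    if not cd28_b7_bound:
        # Signal 1 without Signal 2: simplified here as anergy (assumed rule).
        return "anergic"
    if not cytokines:
        # Signals 1 and 2 only: activated, but no polarizing cue yet.
        return "activated"
    # All three signals: the cytokine milieu directs differentiation.
    return "activated, polarizing via " + ", ".join(sorted(cytokines))

print(t_cell_fate(True, False))                       # anergic
print(t_cell_fate(True, True, frozenset({"IL-12"})))  # activated, polarizing via IL-12
```

Real activation is graded by signal strength and duration; the Boolean inputs only make the ordering of the checkpoints explicit.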

CD4+ T Helper Cell Differentiation

Upon activation, naive CD4+ T cells (Th0) can differentiate into several subsets, guided by specific cytokines and master transcription factors.[13][15]

- Th1 Cells: Driven by IL-12 and IFN-γ, they activate the transcription factors T-bet and STAT4.[13][16][17] Th1 cells are crucial for cell-mediated immunity against intracellular pathogens.[6]

- Th2 Cells: Driven by IL-4, they activate GATA3 and STAT6.[13][16][17] Th2 cells orchestrate humoral immunity and defense against helminths.[13]

- Th17 Cells: Differentiation is induced by TGF-β and IL-6 (or IL-21), activating RORγt and STAT3.[13][16] They are important in combating extracellular bacteria and fungi.

- Regulatory T (Treg) Cells: Induced by TGF-β and IL-2, they activate the master transcription factor Foxp3 and STAT5.[13][17][18] Tregs are critical for maintaining immune tolerance and suppressing excessive immune responses.[6]
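The subset list above is essentially a lookup from polarizing cytokines to a master transcription factor and STAT protein. The sketch below encodes it as ordered rules; `TH_RULES` and `predict_subset` are hypothetical names. Checking Th17 before Treg is an assumption reflecting the well-documented observation that IL-6 diverts TGF-β-driven differentiation away from Tregs toward Th17 cells, which this guide does not itself state.

```python
from typing import Iterable, Optional, Tuple

# (required polarizing cytokines, subset, master transcription factor, STAT),
# transcribed from the list above. Rules are checked in order.
TH_RULES = [
    (frozenset({"IL-12", "IFN-γ"}), "Th1", "T-bet", "STAT4"),
    (frozenset({"IL-4"}), "Th2", "GATA3", "STAT6"),
    (frozenset({"TGF-β", "IL-6"}), "Th17", "RORγt", "STAT3"),
    (frozenset({"TGF-β", "IL-21"}), "Th17", "RORγt", "STAT3"),
    (frozenset({"TGF-β", "IL-2"}), "Treg", "Foxp3", "STAT5"),
]

def predict_subset(cytokines: Iterable[str]) -> Optional[Tuple[str, str, str]]:
    """Return (subset, master TF, STAT) for the first rule whose cytokines are all present."""
    present = frozenset(cytokines)
    for required, subset, tf, stat in TH_RULES:
        if required <= present:  # every required cytokine is present
            return subset, tf, stat
    return None  # no recognized polarizing combination

print(predict_subset({"TGF-β", "IL-2"}))          # ('Treg', 'Foxp3', 'STAT5')
print(predict_subset({"TGF-β", "IL-6", "IL-2"}))  # ('Th17', 'RORγt', 'STAT3')
```

In vivo, these programs are cross-regulating and quantitative rather than all-or-nothing; the rule table only captures the cytokine-to-transcription-factor pairings listed in the text.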

IL-2 and STAT5 Signaling

The Interleukin-2 (IL-2) signaling pathway is a prime example of a critical cytokine cascade. IL-2 signaling via its receptor activates the transcription factor STAT5.[19][20] This pathway is essential for the proliferation of activated T cells and plays a pivotal role in directing the balance between effector T cells and regulatory T cells, making it a key regulator of immune homeostasis.[18][19][21] Upon IL-2 binding, receptor-associated Janus kinases (JAKs) phosphorylate STAT5, leading to its dimerization, nuclear translocation, and regulation of target gene expression.[19]

B Cell Activation and the Humoral Response

Humoral immunity, mediated by antibodies produced by B cells, is essential for combating extracellular microbes and their toxins.[22][23][24] B cell activation can occur through two main pathways.

- T-Dependent (TD) Activation: This is the most common pathway for protein antigens.[11][24] B cells act as APCs, internalizing an antigen via their B cell receptor (BCR), processing it, and presenting it on MHC class II to an already activated helper T cell (often a specialized T follicular helper, Tfh, cell).[11][25] The T cell then provides co-stimulation via CD40L-CD40 interaction and releases cytokines (like IL-4 and IL-21) that fully activate the B cell.[23][25] This leads to clonal expansion, isotype switching, affinity maturation within germinal centers, and the generation of long-lived plasma cells and memory B cells.[22][25]

- T-Independent (TI) Activation: Certain antigens with highly repetitive structures, like bacterial polysaccharides, can directly activate B cells without T cell help.[11][25] This is achieved by extensively cross-linking multiple BCRs on the B cell surface, providing a strong enough signal for activation.[11][24] This response is faster but generally results in lower-affinity antibodies (primarily IgM) and does not produce robust immunological memory.[25]

Lymphocyte Trafficking and Homing

The immune system's effectiveness relies on the ability of lymphocytes to continuously circulate and home to specific locations.[26] This trafficking is a highly regulated, multi-step process controlled by adhesion molecules and chemical messengers called chemokines.[12][26][27]

- Homing to Secondary Lymphoid Organs (SLOs): Naive T and B cells constantly recirculate between the blood and SLOs (like lymph nodes and Peyer's patches) in a process of immune surveillance.[12][28] This process is initiated by the binding of L-selectin on the lymphocyte to its ligand on specialized high endothelial venules (HEVs) in the SLO, causing the cell to slow down and roll.[28] Subsequent activation by chemokines (like CCL19 and CCL21) triggers integrin-mediated firm adhesion and transmigration into the lymph node.[28]

- Homing to Inflamed Tissues: Upon activation, effector and memory lymphocytes downregulate SLO-homing receptors and upregulate receptors that direct them to sites of inflammation in peripheral tissues.[12][27] For example, T cells destined for the skin may express CCR4, which binds to chemokines present in inflamed skin.[27]
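The HEV entry sequence described above is strictly ordered: each step must succeed before the next can occur. The toy function below sketches that gating under simplifying assumptions; `homing_steps_completed` and its Boolean inputs are hypothetical, and treating each step as a yes/no event ignores the kinetics and receptor densities that govern real adhesion.

```python
from typing import List

def homing_steps_completed(l_selectin_binds_hev: bool,
                           chemokine_signal: bool,
                           integrin_firm_adhesion: bool) -> List[str]:
    """Return the HEV entry steps a lymphocyte completes; each step gates the next."""
    steps = [
        ("tethering and rolling (L-selectin)", l_selectin_binds_hev),
        ("chemokine activation (CCL19/CCL21)", chemokine_signal),
        ("integrin-mediated firm adhesion", integrin_firm_adhesion),
    ]
    completed = []
    for name, ok in steps:
        if not ok:
            break  # the cascade stops at the first missing step
        completed.append(name)
    if len(completed) == len(steps):
        completed.append("transmigration into the lymph node")
    return completed

print(homing_steps_completed(True, False, True))  # only rolling occurs
```

The usage line shows why the order matters: without the chemokine signal, a rolling cell does not reach firm adhesion even if integrins are present.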

Quantitative Analysis of Immune Responses

Quantifying the components of the immune system is crucial for establishing baseline values and understanding deviations in disease states. Multiplexed imaging and flow cytometry have enabled detailed censuses of immune cell populations across various tissues.[29]

Table 1: Representative Distribution of Immune Cells in an Adult Human

| Tissue / Compartment | Predominant Immune Cell Types | Estimated Number of Cells | Percentage of Total Immune Cells |
|---|---|---|---|
| Bone Marrow | Myeloid precursors, B cell precursors, Plasma cells | ~1.0 × 10¹² | ~50% |
| Lymphatic System (Lymph Nodes, Spleen) | T cells, B cells, Dendritic cells | ~0.6 × 10¹² | ~30% |
| Blood | Neutrophils, T cells, Monocytes, B cells, NK cells | ~5.0 × 10¹⁰ | ~2-3% |
| Gut | T cells, Plasma cells, Macrophages | ~1.5 × 10¹¹ | ~7-8% |
| Skin | T cells, Langerhans cells, Macrophages | ~2.0 × 10¹⁰ | ~1% |
| Lungs | Alveolar Macrophages, T cells | ~1.0 × 10¹⁰ | ~0.5% |

Note: Data are compiled estimates and can vary significantly based on age, sex, health status, and the methodologies used for quantification.[29] Studies have shown that sex is a primary factor influencing blood immune cell composition, with females generally exhibiting more robust immune responses.[29]
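As a consistency check on Table 1, the count and percentage columns agree if the total is taken as roughly 2 × 10¹² immune cells. That total is inferred here from the bone-marrow row (~1.0 × 10¹² ≈ 50%) and is not stated in the source; the sketch recomputes each compartment's share under that assumption.

```python
# Estimated cell counts from Table 1.
counts = {
    "Bone Marrow": 1.0e12,
    "Lymphatic System": 0.6e12,
    "Blood": 5.0e10,
    "Gut": 1.5e11,
    "Skin": 2.0e10,
    "Lungs": 1.0e10,
}
# Assumed total, inferred from Bone Marrow being ~50%; not given in the source.
ASSUMED_TOTAL = 2.0e12

shares = {tissue: 100 * n / ASSUMED_TOTAL for tissue, n in counts.items()}
for tissue, pct in shares.items():
    print(f"{tissue:<17} {pct:5.1f}%")

covered = 100 * sum(counts.values()) / ASSUMED_TOTAL
print(f"Listed compartments: {covered:.1f}% of the assumed total")
```

Under this assumption the listed compartments sum to about 91.5% of the total, so the remainder would reside in tissues the table does not list.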

Key Experimental Methodologies

A. Flow Cytometry: Immune Cell Phenotyping

Flow cytometry is a powerful technique for identifying and quantifying immune cell populations based on their expression of specific cell surface and intracellular markers.[30][31]

Detailed Protocol:

- Single-Cell Suspension Preparation: Prepare a single-cell suspension from blood (e.g., via density gradient centrifugation) or non-lymphoid tissues (e.g., via enzymatic digestion and mechanical disruption).[30][32]

- Fc Receptor Blocking: Incubate cells with an Fc block reagent to prevent non-specific antibody binding to Fc receptors on cells like macrophages and B cells.[32]

- Surface Marker Staining: Incubate cells with a cocktail of fluorochrome-conjugated antibodies specific for cell surface markers (e.g., CD3 for T cells, CD19 for B cells, and CD4 and CD8 for helper and cytotoxic T cell subsets).[31] Staining is typically performed for 20-30 minutes at 4°C in the dark.

- Viability Staining: Add a viability dye to distinguish live cells from dead cells, which can non-specifically bind antibodies.[30] If the cells will be fixed for intracellular staining, use a fixable viability dye and apply it before fixation.

- (Optional) Intracellular/Intranuclear Staining: For intracellular cytokines or transcription factors (like Foxp3), cells are first fixed and then permeabilized to allow antibodies to access intracellular targets.

- Data Acquisition: Analyze the stained cells on a flow cytometer. The instrument uses lasers to excite the fluorochromes, and detectors measure the emitted light, allowing simultaneous analysis of multiple parameters on thousands of cells per second.[31]

- Data Analysis: Use specialized software to "gate" on specific cell populations based on their fluorescence patterns to determine their relative percentages and absolute numbers.[30]
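The gating step above is a hierarchy of filters: each gate keeps a subset of its parent population, and frequencies are reported relative to that parent. The sketch below shows the idea on a handful of made-up events. The `Event` fields, the cutoffs, and the event values are all hypothetical, and the scatter, doublet-exclusion, and compensation steps a real analysis needs are omitted.

```python
from dataclasses import dataclass
from typing import Callable, List

@dataclass
class Event:
    viability: float  # viability-dye signal; high = dead (dye-permeable) cell
    cd3: float
    cd19: float
    cd4: float
    cd8: float

# Hypothetical cutoffs; real gates are set from unstained or FMO controls.
DEAD_CUTOFF = 1000.0
POS = 500.0

def gate(events: List[Event], keep: Callable[[Event], bool]) -> List[Event]:
    """Return the subset of events that fall inside a gate."""
    return [e for e in events if keep(e)]

def pct(child: List[Event], parent: List[Event]) -> float:
    """Percentage of the parent population that falls in the child gate."""
    return 100.0 * len(child) / len(parent) if parent else 0.0

events = [
    Event(5000, 800, 10, 700, 10),  # dead
    Event(3000, 10, 900, 10, 10),   # dead
    Event(100, 900, 20, 800, 30),   # CD4+ T
    Event(150, 1200, 10, 950, 40),  # CD4+ T
    Event(80, 700, 30, 600, 20),    # CD4+ T
    Event(90, 1000, 15, 30, 900),   # CD8+ T
    Event(120, 20, 1500, 10, 10),   # B
    Event(60, 40, 800, 20, 15),     # B
    Event(70, 30, 25, 10, 20),      # CD3- CD19-
    Event(110, 850, 5, 40, 1100),   # CD8+ T
]

live = gate(events, lambda e: e.viability < DEAD_CUTOFF)
t_cells = gate(live, lambda e: e.cd3 >= POS and e.cd19 < POS)
b_cells = gate(live, lambda e: e.cd19 >= POS and e.cd3 < POS)
cd4_t = gate(t_cells, lambda e: e.cd4 >= POS and e.cd8 < POS)
cd8_t = gate(t_cells, lambda e: e.cd8 >= POS and e.cd4 < POS)

print(f"T cells: {pct(t_cells, live):.1f}% of live")     # 62.5
print(f"B cells: {pct(b_cells, live):.1f}% of live")     # 25.0
print(f"CD4+: {pct(cd4_t, t_cells):.1f}% of T cells")    # 60.0
print(f"CD8+: {pct(cd8_t, t_cells):.1f}% of T cells")    # 40.0
```

Reporting each gate against its parent (CD4+ as a percentage of T cells, not of all events) mirrors how gating hierarchies are usually presented.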

B. ELISA: Cytokine Quantification

The Enzyme-Linked Immunosorbent Assay (ELISA) is a plate-based assay used to detect and quantify soluble substances such as cytokines, chemokines, and antibodies in biological fluids.[33][34] The sandwich ELISA is the most common format for cytokine measurement.[34][35]

Detailed Protocol:

- Plate Coating: Coat the wells of a 96-well microplate with a "capture" antibody specific for the cytokine of interest.[33][35] Incubate overnight at 4°C.

- Washing and Blocking: Wash the plate to remove unbound antibody.[35] Block the remaining protein-binding sites in the wells with an inert protein solution (e.g., bovine serum albumin) to prevent non-specific binding.[33]

- Sample and Standard Incubation: Add standards (recombinant cytokine of known concentrations) and samples (e.g., cell culture supernatant, serum) to the wells.[33][36] Incubate for 1-2 hours to allow the cytokine to bind to the capture antibody.

- Detection Antibody: Wash the plate and add a biotinylated "detection" antibody, which binds to a different epitope on the captured cytokine.[35] Incubate for 1 hour.

- Enzyme Conjugate: Wash the plate and add an enzyme-conjugated streptavidin (e.g., streptavidin-horseradish peroxidase), which binds to the biotin on the detection antibody.[36]

- Substrate Addition: Wash the plate and add a chromogenic substrate (e.g., TMB).[36] The enzyme converts the substrate into a colored product.

- Analysis: Stop the reaction with an acid (e.g., H₂SO₄) and measure the absorbance (optical density) of each well using a microplate reader (at 450 nm for stopped TMB).[35] The cytokine concentration in each sample is determined by interpolating its absorbance on the standard curve generated from the standards.[34]
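The final step reads sample concentrations off the standard curve. ELISA analysis software commonly fits a four-parameter logistic (4PL) curve; this sketch swaps in the simpler approach of blank subtraction followed by linear interpolation between the two bracketing standards. The standard concentrations, OD values, sample OD, and dilution factor are all hypothetical.

```python
from bisect import bisect_left
from typing import List, Tuple

# Hypothetical standard curve: (concentration in pg/mL, raw OD450).
STANDARDS = [(0.0, 0.05), (31.25, 0.12), (62.5, 0.21), (125.0, 0.38),
             (250.0, 0.70), (500.0, 1.25), (1000.0, 2.10)]
BLANK_OD = 0.05  # OD of the zero standard, subtracted from every well

def blank_correct(curve: List[Tuple[float, float]]) -> List[Tuple[float, float]]:
    return [(c, od - BLANK_OD) for c, od in curve]

def od_to_concentration(od: float, curve: List[Tuple[float, float]]) -> float:
    """Linearly interpolate a blank-corrected OD between bracketing standards."""
    ods = [o for _, o in curve]  # must increase with concentration
    if not ods[0] <= od <= ods[-1]:
        raise ValueError("OD outside the standard range; dilute and re-assay")
    i = bisect_left(ods, od)
    if ods[i] == od:
        return curve[i][0]
    (c0, o0), (c1, o1) = curve[i - 1], curve[i]
    return c0 + (od - o0) * (c1 - c0) / (o1 - o0)

curve = blank_correct(STANDARDS)
sample_od = 0.75     # raw OD450 of a hypothetical sample well
dilution_factor = 2  # sample diluted 1:2 before loading
conc = od_to_concentration(sample_od - BLANK_OD, curve) * dilution_factor
print(f"{conc:.1f} pg/mL")  # about 545 pg/mL
```

Rejecting ODs outside the standard range reflects the usual practice of not extrapolating beyond the curve.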

C. ChIP-seq: Mapping Protein-DNA Interactions

Chromatin Immunoprecipitation followed by Sequencing (ChIP-seq) is a method used to identify the genome-wide binding sites of DNA-associated proteins, such as transcription factors (e.g., STAT5, T-bet) or modified histones.[37][38][39]

Detailed Protocol:

- Cross-linking: Treat cells with a reagent (typically formaldehyde) to create reversible covalent cross-links between proteins and the DNA they are bound to.[39][40] This step is omitted in Native ChIP (NChIP).[38]

- Cell Lysis and Chromatin Fragmentation: Lyse the cells and shear the chromatin into smaller fragments (typically 200-600 bp) using sonication or enzymatic digestion (e.g., micrococcal nuclease).[37][39][40]

- Immunoprecipitation (IP): Incubate the sheared chromatin with an antibody specific to the protein of interest (e.g., anti-STAT5). The antibody-protein-DNA complexes are then captured, often using antibody-binding magnetic beads.[37][39]

- Washing: Wash the captured complexes to remove non-specifically bound chromatin fragments.[38]

- Elution and Reverse Cross-linking: Elute the complexes from the antibody/beads and reverse the formaldehyde cross-links by heating.[38] Digest the proteins using proteinase K.[40]

- DNA Purification: Purify the enriched DNA fragments.[39][40]

- Library Preparation and Sequencing: Prepare a DNA library from the purified fragments and sequence it using a next-generation sequencing (NGS) platform.[37]

- Bioinformatic Analysis: Align the sequence reads to a reference genome, then use a peak-calling algorithm, typically comparing against an input-DNA control, to identify "peaks": regions with significant read enrichment that correspond to the protein's binding sites.[37]
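Peak calling asks whether the ChIP read count in a region is improbably high given a background rate. The sketch below is loosely modeled on the Poisson-background idea used by peak callers such as MACS, but it is greatly simplified: fixed-width bins, made-up counts, a hypothetical p-value cutoff, and no multiple-testing correction. `poisson_sf` and `call_peaks` are illustrative names.

```python
import math
from typing import List

def poisson_sf(k: int, lam: float) -> float:
    """P(X >= k) for X ~ Poisson(lam), computed as 1 - CDF(k - 1)."""
    term = math.exp(-lam)  # P(X = 0)
    cdf = 0.0
    for i in range(k):
        cdf += term
        term *= lam / (i + 1)  # P(X = i + 1) from P(X = i)
    return max(0.0, 1.0 - cdf)

def call_peaks(chip: List[int], control: List[int], p_cutoff: float = 1e-5) -> List[int]:
    """Indices of bins whose ChIP count is improbably high under a Poisson background."""
    scale = sum(chip) / sum(control)      # normalize control to ChIP sequencing depth
    genome_lambda = sum(chip) / len(chip)  # genome-wide average reads per bin
    peaks = []
    for i, (k, c) in enumerate(zip(chip, control)):
        # Local background: the larger of the genome-wide and scaled-control rates.
        lam = max(genome_lambda, c * scale)
        if poisson_sf(k, lam) < p_cutoff:
            peaks.append(i)
    return peaks

# Hypothetical read counts per fixed-width genomic bin.
chip_counts = [3, 4, 2, 30, 28, 5, 3, 2, 4, 3]
control_counts = [3, 3, 2, 4, 3, 4, 3, 2, 3, 3]
print(call_peaks(chip_counts, control_counts))  # [3, 4]
```

Taking the larger of the two background estimates makes the test conservative in regions where the input control itself has elevated coverage.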

References

- 1. creative-diagnostics.com [creative-diagnostics.com]

- 2. In brief: The innate and adaptive immune systems - InformedHealth.org - NCBI Bookshelf [ncbi.nlm.nih.gov]

- 3. criver.com [criver.com]

- 4. Interactions Between the Innate and Adaptive Immune Responses - PubMed [pubmed.ncbi.nlm.nih.gov]

- 5. Innate and Adaptive Immunity | Emory University | Atlanta GA [vaccines.emory.edu]

- 6. T helper cell - Wikipedia [en.wikipedia.org]

- 7. akadeum.com [akadeum.com]

- 8. T Cells and MHC Proteins - Molecular Biology of the Cell - NCBI Bookshelf [ncbi.nlm.nih.gov]

- 9. Frontiers | A Novel Cellular Pathway of Antigen Presentation and CD4 T Cell Activation in vivo [frontiersin.org]

- 10. researchgate.net [researchgate.net]

- 11. B Lymphocytes and Humoral Immunity | Microbiology [courses.lumenlearning.com]

- 12. Lymphocyte trafficking and immunesurveillance [jstage.jst.go.jp]

- 13. Molecular Mechanisms of T Helper Cell Differentiation and Functional Specialization - PMC [pmc.ncbi.nlm.nih.gov]

- 14. T-cell activation | British Society for Immunology [immunology.org]

- 15. geneglobe.qiagen.com [geneglobe.qiagen.com]

- 16. beckman.com [beckman.com]

- 17. Helper T cell differentiation - PMC [pmc.ncbi.nlm.nih.gov]

- 18. Interleukin-2 and STAT5 in regulatory T cell development and function - PMC [pmc.ncbi.nlm.nih.gov]

- 19. Dynamic roles for IL-2-STAT5 signaling in effector and regulatory CD4+ T cell populations - PMC [pmc.ncbi.nlm.nih.gov]

- 20. researchgate.net [researchgate.net]

- 21. Dynamic Roles for IL-2-STAT5 Signaling in Effector and Regulatory CD4+ T Cell Populations - PubMed [pubmed.ncbi.nlm.nih.gov]

- 22. Humoral Immune Responses: Activation of B Lymphocytes and Production of Antibodies | Basicmedical Key [basicmedicalkey.com]

- 23. microrao.com [microrao.com]

- 24. bio.libretexts.org [bio.libretexts.org]

- 25. Cell Activation and Antibody Production in the Humoral Immune Response | Immunostep Biotech [immunostep.com]

- 26. Lymphocyte homing and homeostasis - PubMed [pubmed.ncbi.nlm.nih.gov]

- 27. storage.imrpress.com [storage.imrpress.com]

- 28. Chemokine control of lymphocyte trafficking: a general overview - PMC [pmc.ncbi.nlm.nih.gov]

- 29. The total mass, number, and distribution of immune cells in the human body - PMC [pmc.ncbi.nlm.nih.gov]

- 30. A Protocol for the Comprehensive Flow Cytometric Analysis of Immune Cells in Normal and Inflamed Murine Non-Lymphoid Tissues | PLOS One [journals.plos.org]

- 31. bitesizebio.com [bitesizebio.com]

- 32. low input ChIP-sequencing of immune cells [protocols.io]

- 33. Detection and Quantification of Cytokines and Other Biomarkers - PMC [pmc.ncbi.nlm.nih.gov]

- 34. biomatik.com [biomatik.com]

- 35. Cytokine Elisa [bdbiosciences.com]

- 36. bowdish.ca [bowdish.ca]

- 37. mcw.edu [mcw.edu]

- 38. Chromatin immunoprecipitation - Wikipedia [en.wikipedia.org]

- 39. Overview of Chromatin Immunoprecipitation (ChIP) | Cell Signaling Technology [cellsignal.com]

- 40. Chromatin Immunoprecipitation Sequencing (ChIP-seq) Protocol - CD Genomics [cd-genomics.com]

The Orchestration of Metabolic Pathways: An In-depth Technical Guide

For Researchers, Scientists, and Drug Development Professionals

This technical guide provides a comprehensive overview of the intricate orchestration of metabolic pathways, designed for researchers, scientists, and professionals in drug development. We delve into the core regulatory mechanisms, key signaling cascades, and the advanced experimental and computational methodologies used to elucidate metabolic networks. This guide emphasizes quantitative data presentation, detailed experimental protocols, and clear visual representations of complex biological processes to facilitate a deeper understanding and application in research and therapeutic development.

Core Principles of Metabolic Regulation

The intricate network of metabolic pathways within a cell is exquisitely regulated to maintain homeostasis, respond to environmental cues, and fulfill the bioenergetic and biosynthetic demands of the cell. The primary mechanisms of control can be categorized as follows:

- Allosteric Regulation: This is a rapid control mechanism in which a regulatory molecule binds to an enzyme at a site distinct from the active site, inducing a conformational change that either activates or inhibits the enzyme's activity.[1] A classic example is the feedback inhibition of phosphofructokinase, a key enzyme in glycolysis, by high levels of ATP, which signal an energy-replete state.[1][2]

- Covalent Modification: The activity of metabolic enzymes can be modulated by the addition or removal of chemical groups, such as phosphate, acetyl, or methyl groups.[1][3] A prominent example is glycogen phosphorylase, which is activated by phosphorylation to initiate the breakdown of glycogen into glucose-1-phosphate.[1][3]

- Genetic Control: Regulating the expression of genes encoding metabolic enzymes is a longer-term strategy.[1] It involves the transcriptional upregulation or downregulation of enzymes in response to cellular needs. For instance, in the presence of lactose, bacterial cells induce the expression of genes for lactose-metabolizing enzymes.[1]

- Substrate Availability: The rate of many metabolic pathways is directly influenced by the concentration of substrates. For example, the rate of fatty acid oxidation is largely dependent on the plasma concentration of fatty acids.[4]

Key Signaling Pathways in Metabolic Orchestration

Cellular metabolism is tightly integrated with signaling networks that translate extracellular cues into metabolic responses. Two of the most critical signaling pathways in this context are the PI3K/Akt/mTOR and AMPK pathways.

The PI3K/Akt/mTOR Pathway

The Phosphoinositide 3-kinase (PI3K)/Akt/mammalian Target of Rapamycin (mTOR) pathway is a central regulator of cell growth, proliferation, and metabolism.[3][4][5][6] Activated by growth factors and hormones like insulin, this pathway promotes anabolic processes such as protein and lipid synthesis, while inhibiting catabolic processes like autophagy.[3][4][5][6]

```dot
digraph PI3K_Akt_mTOR {
  // Default node style (fillcolor is only drawn when style=filled)
  node [shape=box, style=filled];

  // Node Definitions
  GF [label="Growth Factor /\nInsulin", fillcolor="#FBBC05", fontcolor="#202124"];
  Receptor [label="Receptor Tyrosine\nKinase (RTK)", fillcolor="#F1F3F4", fontcolor="#202124"];
  PI3K [label="PI3K", fillcolor="#4285F4", fontcolor="#FFFFFF"];
  PIP2 [label="PIP2", fillcolor="#FFFFFF", fontcolor="#202124", shape=ellipse];
  PIP3 [label="PIP3", fillcolor="#FFFFFF", fontcolor="#202124", shape=ellipse];
  PTEN [label="PTEN", fillcolor="#EA4335", fontcolor="#FFFFFF"];
  PDK1 [label="PDK1", fillcolor="#34A853", fontcolor="#FFFFFF"];
  Akt [label="Akt", fillcolor="#4285F4", fontcolor="#FFFFFF"];
  mTORC2 [label="mTORC2", fillcolor="#34A853", fontcolor="#FFFFFF"];
  TSC1_2 [label="TSC1/TSC2", fillcolor="#EA4335", fontcolor="#FFFFFF"];
  Rheb [label="Rheb", fillcolor="#34A853", fontcolor="#FFFFFF"];
  mTORC1 [label="mTORC1", fillcolor="#4285F4", fontcolor="#FFFFFF"];
  S6K1 [label="S6K1", fillcolor="#FBBC05", fontcolor="#202124"];
  fourEBP1 [label="4E-BP1", fillcolor="#FBBC05", fontcolor="#202124"];
  Protein_Synthesis [label="Protein Synthesis", fillcolor="#F1F3F4", fontcolor="#202124"];
  Lipid_Synthesis [label="Lipid Synthesis", fillcolor="#F1F3F4", fontcolor="#202124"];
  Autophagy [label="Autophagy", fillcolor="#F1F3F4", fontcolor="#202124"];

  // Edges
  GF -> Receptor [color="#5F6368"];
  Receptor -> PI3K [color="#34A853"];
  PI3K -> PIP2 [label=" P", arrowhead=none, style=dashed, color="#5F6368"];
  PIP2 -> PIP3 [label=" P", color="#34A853"];
  PIP3 -> PDK1 [color="#34A853"];
  PIP3 -> Akt [color="#34A853"];
  PTEN -> PIP3 [arrowhead=tee, color="#EA4335"];
  PDK1 -> Akt [color="#34A853"];
  mTORC2 -> Akt [color="#34A853"];
  Akt -> TSC1_2 [arrowhead=tee, color="#EA4335"];
  TSC1_2 -> Rheb [arrowhead=tee, color="#EA4335"];
  Rheb -> mTORC1 [color="#34A853"];
  mTORC1 -> S6K1 [color="#34A853"];
  mTORC1 -> fourEBP1 [arrowhead=tee, color="#EA4335"];
  mTORC1 -> Lipid_Synthesis [color="#34A853"];
  mTORC1 -> Autophagy [arrowhead=tee, color="#EA4335"];
  S6K1 -> Protein_Synthesis [color="#34A853"];
  fourEBP1 -> Protein_Synthesis [arrowhead=tee, style=dashed, color="#EA4335"];
}
```

Figure 1: The PI3K/Akt/mTOR signaling pathway.
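Figure 1 mixes activating and inhibitory edges, so the net effect of one node on another is the product of the edge signs along each path. For example, Akt inhibits TSC1/TSC2, which inhibits Rheb, so Akt activates mTORC1 through a double negative. The sketch below transcribes the figure into a signed edge table; the names `EDGES`, `path_sign`, and `signed_paths` are hypothetical, and collapsing the PIP2-to-PIP3 conversion into a single PI3K -> PIP3 activating edge is a simplification.

```python
from typing import Dict, Iterator, List, Optional, Tuple

# Signed edges transcribed from Figure 1: +1 = activates, -1 = inhibits.
EDGES: Dict[Tuple[str, str], int] = {
    ("Growth Factor", "RTK"): +1,
    ("RTK", "PI3K"): +1,
    ("PI3K", "PIP3"): +1,  # simplification of PI3K converting PIP2 to PIP3
    ("PTEN", "PIP3"): -1,
    ("PIP3", "PDK1"): +1,
    ("PIP3", "Akt"): +1,
    ("PDK1", "Akt"): +1,
    ("mTORC2", "Akt"): +1,
    ("Akt", "TSC1/TSC2"): -1,
    ("TSC1/TSC2", "Rheb"): -1,
    ("Rheb", "mTORC1"): +1,
    ("mTORC1", "S6K1"): +1,
    ("mTORC1", "4E-BP1"): -1,
    ("mTORC1", "Lipid Synthesis"): +1,
    ("mTORC1", "Autophagy"): -1,
    ("S6K1", "Protein Synthesis"): +1,
    ("4E-BP1", "Protein Synthesis"): -1,
}

def path_sign(path: List[str]) -> int:
    """Net effect along a path: the product of its edge signs."""
    sign = 1
    for a, b in zip(path, path[1:]):
        sign *= EDGES[(a, b)]
    return sign

def signed_paths(source: str, target: str,
                 path: Optional[List[str]] = None) -> Iterator[Tuple[List[str], int]]:
    """Depth-first enumeration of all simple paths from source to target, with signs."""
    path = (path or []) + [source]
    if source == target:
        yield path, path_sign(path)
        return
    for (a, b) in EDGES:
        if a == source and b not in path:
            yield from signed_paths(b, target, path)

for p, s in signed_paths("Akt", "Protein Synthesis"):
    print(" -> ".join(p), "(activates)" if s > 0 else "(inhibits)")
```

Both routes from Akt to protein synthesis (via S6K1 and via 4E-BP1) come out net-activating, and any path from PTEN to mTORC1 comes out net-inhibitory, matching PTEN's role as a brake on this pathway.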

The AMPK Pathway

The AMP-activated protein kinase (AMPK) pathway acts as a cellular energy sensor.[7][8] It is activated under conditions of low energy, such as nutrient deprivation or hypoxia, which lead to an increased AMP/ATP ratio.[7][8] Activated AMPK shifts the cellular metabolism from anabolic to catabolic processes, promoting ATP production through fatty acid oxidation and glycolysis, while inhibiting ATP-consuming processes like protein and lipid synthesis.[7][8]

```dot
digraph AMPK {
  // Default node style (fillcolor is only drawn when style=filled)
  node [shape=box, style=filled];

  // Node Definitions
  Low_Energy [label="Low Energy State\n(High AMP/ATP)", fillcolor="#FBBC05", fontcolor="#202124"];
  LKB1 [label="LKB1", fillcolor="#34A853", fontcolor="#FFFFFF"];
  CaMKK2 [label="CaMKKβ", fillcolor="#34A853", fontcolor="#FFFFFF"];
  Calcium [label="Increased\nIntracellular Ca2+", fillcolor="#FBBC05", fontcolor="#202124"];
  AMPK [label="AMPK", fillcolor="#4285F4", fontcolor="#FFFFFF"];
  ACC [label="ACC", fillcolor="#EA4335", fontcolor="#FFFFFF"];
  mTORC1 [label="mTORC1", fillcolor="#EA4335", fontcolor="#FFFFFF"];
  ULK1 [label="ULK1", fillcolor="#34A853", fontcolor="#FFFFFF"];
  Fatty_Acid_Oxidation [label="Fatty Acid\nOxidation", fillcolor="#F1F3F4", fontcolor="#202124"];
  Fatty_Acid_Synthesis [label="Fatty Acid\nSynthesis", fillcolor="#F1F3F4", fontcolor="#202124"];
  Protein_Synthesis [label="Protein Synthesis", fillcolor="#F1F3F4", fontcolor="#202124"];
  Autophagy [label="Autophagy", fillcolor="#F1F3F4", fontcolor="#202124"];

  // Edges
  // Dashed edges show the net downstream effect when AMPK is active
  // (e.g., ACC inhibited by AMPK -> less fatty acid synthesis, more oxidation)
  Low_Energy -> LKB1 [color="#5F6368"];
  Calcium -> CaMKK2 [color="#5F6368"];
  LKB1 -> AMPK [label=" P", color="#34A853"];
  CaMKK2 -> AMPK [label=" P", color="#34A853"];
  AMPK -> ACC [arrowhead=tee, color="#EA4335"];
  AMPK -> mTORC1 [arrowhead=tee, color="#EA4335"];
  AMPK -> ULK1 [color="#34A853"];
  ACC -> Fatty_Acid_Synthesis [arrowhead=tee, style=dashed, color="#EA4335"];
  ACC -> Fatty_Acid_Oxidation [style=dashed, color="#34A853"];
  mTORC1 -> Protein_Synthesis [arrowhead=tee, style=dashed, color="#EA4335"];
  ULK1 -> Autophagy [color="#34A853"];
}
```

Figure 2: The AMPK signaling pathway.

Quantitative Analysis of Metabolic Pathways

A quantitative understanding of metabolic pathways is essential for building predictive models and for identifying potential targets for therapeutic intervention. This involves the measurement of enzyme kinetics, metabolite concentrations, and metabolic fluxes.

Enzyme Kinetics

The kinetic properties of enzymes, such as the Michaelis constant (Km) and the maximum reaction velocity (Vmax), are fundamental parameters that govern the flux through a metabolic pathway.[9] Km represents the substrate concentration at which the reaction rate is half of Vmax and is an inverse measure of the enzyme's affinity for its substrate.[9]

| Enzyme (Glycolysis) | Substrate | Km (mM) | Vmax (µmol/min/mg protein) |
|---|---|---|---|
| Hexokinase | Glucose | 0.1 | 10 |
| Phosphofructokinase | Fructose-6-phosphate | 0.05 | 15 |
| Pyruvate Kinase | Phosphoenolpyruvate | 0.3 | 200 |

Table 1: Representative kinetic parameters for key glycolytic enzymes. Values are illustrative and can vary depending on the organism and experimental conditions.
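The Michaelis-Menten relation behind these parameters is v = Vmax·[S] / (Km + [S]). The sketch applies it to the illustrative values in Table 1, confirming that v = Vmax/2 when [S] = Km and showing how saturated each enzyme would be at a hypothetical 1 mM substrate concentration. Rearranging the equation also gives the substrate concentration needed for a chosen fraction f of Vmax: [S] = f·Km / (1 − f).

```python
# Km (mM) and Vmax (µmol/min/mg protein) from Table 1 (illustrative values).
ENZYMES = {
    "Hexokinase": (0.1, 10.0),
    "Phosphofructokinase": (0.05, 15.0),
    "Pyruvate Kinase": (0.3, 200.0),
}

def mm_rate(s: float, km: float, vmax: float) -> float:
    """Michaelis-Menten rate v = Vmax * [S] / (Km + [S])."""
    return vmax * s / (km + s)

def substrate_for_fraction(f: float, km: float) -> float:
    """[S] that gives v = f * Vmax, from rearranging: [S] = f * Km / (1 - f)."""
    return f * km / (1.0 - f)

for name, (km, vmax) in ENZYMES.items():
    half = mm_rate(km, km, vmax)                # at [S] = Km, v = Vmax / 2
    sat_1mM = mm_rate(1.0, km, vmax) / vmax     # fraction of Vmax at a hypothetical 1 mM
    s90 = substrate_for_fraction(0.9, km)       # [S] for 90% of Vmax
    print(f"{name}: v([S]=Km) = {half:.2f}, v/Vmax at 1 mM = {sat_1mM:.0%}, "
          f"[S] for 90% Vmax = {s90:.2f} mM")
```

Because [S] for 90% of Vmax is 9·Km, an enzyme with a low Km such as phosphofructokinase in this table is near saturation at sub-millimolar substrate levels, which is why its regulation relies on allosteric effectors rather than substrate supply.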

Intracellular Metabolite Concentrations

The steady-state concentrations of intracellular metabolites provide a snapshot of the metabolic state of a cell. These concentrations are highly dynamic and can change in response to various stimuli. Mass spectrometry-based metabolomics is a powerful technique for the comprehensive measurement of these small molecules.

| Metabolite | Concentration in E. coli (mM) | Concentration in Mammalian Cells (mM) |
|---|---|---|
| Glucose-6-phosphate | 0.2 - 0.5 | 0.05 - 0.2 |
| Fructose-1,6-bisphosphate | 0.1 - 1.0 | 0.01 - 0.05 |
| ATP | 5.0 - 10.0 | 2.0 - 5.0 |
| NAD+ | 1.0 - 3.0 | 0.3 - 1.0 |
| NADH | 0.05 - 0.1 | 0.001 - 0.01 |
| Glutamate | 10 - 100 | 2 - 20 |

Table 2: Typical intracellular concentrations of key metabolites in E. coli and mammalian cells.[10] Concentrations can vary significantly based on cell type, growth conditions, and analytical methods.

Metabolic Flux Analysis

Metabolic flux analysis (MFA) is a powerful technique used to quantify the rates of metabolic reactions within a cell.[11][12][13] ¹³C-MFA, a common approach, involves feeding cells a ¹³C-labeled substrate and measuring the incorporation of the label into downstream metabolites using mass spectrometry or NMR.[12][13]

| Metabolic Flux | E. coli (mmol/gDW/h) | Mammalian Cell (mmol/gDW/h) |
|---|---|---|
| Glucose Uptake Rate | 10.0 | 1.0 |
| Glycolysis | 8.5 | 0.8 |
| Pentose Phosphate Pathway | 1.5 | 0.1 |
| TCA Cycle | 5.0 | 0.5 |
| Acetate Secretion | 3.0 | 0.05 |

Table 3: Representative metabolic flux values in central carbon metabolism. Values are illustrative and depend on the specific strain/cell line and culture conditions.[14]
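The raw input to ¹³C-MFA is the label pattern measured by mass spectrometry. A common first summary is the mass isotopomer distribution (MID): the fractions of a metabolite fragment carrying 0, 1, 2, ... ¹³C atoms (M+0, M+1, ...). From it one can compute the average ¹³C enrichment, Σ i·M(i) / n for an n-carbon fragment. The sketch below shows only this input-side arithmetic. The intensities are hypothetical, the natural-isotope-abundance correction that real workflows apply is omitted, and estimating fluxes from many MIDs requires dedicated fitting software.

```python
from typing import List

def mass_isotopomer_distribution(intensities: List[float]) -> List[float]:
    """Normalize M+0..M+n peak intensities to fractions that sum to 1."""
    total = sum(intensities)
    return [x / total for x in intensities]

def mean_13c_enrichment(mid: List[float]) -> float:
    """Average fraction of labeled carbons: sum(i * M_i) / n for an n-carbon fragment."""
    n_carbons = len(mid) - 1
    return sum(i * f for i, f in enumerate(mid)) / n_carbons

# Hypothetical M+0..M+3 intensities for a 3-carbon fragment (e.g., pyruvate).
pyruvate = [4000.0, 500.0, 500.0, 5000.0]
mid = mass_isotopomer_distribution(pyruvate)
print([round(f, 3) for f in mid])          # [0.4, 0.05, 0.05, 0.5]
print(round(mean_13c_enrichment(mid), 3))  # 0.55
```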

Experimental Protocols

The following sections provide detailed methodologies for key experiments in the study of metabolic pathways.

Untargeted Metabolomics using LC-MS

This protocol outlines a general workflow for the analysis of polar metabolites in mammalian cells using liquid chromatography-mass spectrometry (LC-MS).

```dot
digraph Untargeted_Metabolomics {
  // Default node style (fillcolor is only drawn when style=filled)
  node [shape=box, style=filled];

  // Node Definitions
  Sample_Collection [label="1. Sample Collection\n(e.g., Adherent Cells)", fillcolor="#F1F3F4", fontcolor="#202124"];
  Quenching [label="2. Quenching\n(e.g., Cold Methanol)", fillcolor="#FBBC05", fontcolor="#202124"];
  Extraction [label="3. Metabolite Extraction\n(e.g., Methanol/Water)", fillcolor="#4285F4", fontcolor="#FFFFFF"];
  LC_MS_Analysis [label="4. LC-MS Analysis", fillcolor="#34A853", fontcolor="#FFFFFF"];
  Data_Processing [label="5. Data Processing\n(Peak Picking, Alignment)", fillcolor="#EA4335", fontcolor="#FFFFFF"];
  Statistical_Analysis [label="6. Statistical Analysis\n(PCA, PLS-DA)", fillcolor="#FBBC05", fontcolor="#202124"];
  Metabolite_ID [label="7. Metabolite Identification", fillcolor="#4285F4", fontcolor="#FFFFFF"];
  Pathway_Analysis [label="8. Pathway Analysis", fillcolor="#34A853", fontcolor="#FFFFFF"];

  // Edges
  Sample_Collection -> Quenching [color="#5F6368"];
  Quenching -> Extraction [color="#5F6368"];
  Extraction -> LC_MS_Analysis [color="#5F6368"];
  LC_MS_Analysis -> Data_Processing [color="#5F6368"];
  Data_Processing -> Statistical_Analysis [color="#5F6368"];
  Statistical_Analysis -> Metabolite_ID [color="#5F6368"];
  Metabolite_ID -> Pathway_Analysis [color="#5F6368"];
}
```

Figure 3: General workflow for untargeted metabolomics.

4.1.1 Sample Preparation and Metabolite Extraction

- Cell Culture: Culture adherent mammalian cells to ~80-90% confluency in a 6-well plate.

- Quenching: Rapidly aspirate the culture medium. Immediately wash the cells with 1 mL of ice-cold 0.9% NaCl solution. Aspirate the saline and add 1 mL of 80% methanol pre-chilled to -80°C to each well to quench metabolic activity.

- Scraping and Collection: Place the plate on dry ice and scrape the cells in the methanol solution using a cell scraper. Transfer the cell lysate to a pre-chilled microcentrifuge tube.

- Extraction: Vortex the cell lysate vigorously for 1 minute. Centrifuge at 14,000 x g for 10 minutes at 4°C to pellet cell debris.

- Supernatant Transfer: Carefully transfer the supernatant containing the metabolites to a new pre-chilled microcentrifuge tube.

- Drying: Dry the metabolite extract completely using a vacuum concentrator (e.g., SpeedVac).

- Storage: Store the dried metabolite pellet at -80°C until LC-MS analysis.

4.1.2 LC-MS Data Acquisition

- Reconstitution: Reconstitute the dried metabolite pellet in a suitable volume (e.g., 100 µL) of a solvent matching the initial mobile phase. For HILIC, this is an acetonitrile-rich solvent; injecting a highly aqueous sample onto a HILIC column distorts peak shapes.

- Chromatography: Inject the sample onto a hydrophilic interaction liquid chromatography (HILIC) column for separation of polar metabolites.

- Mass Spectrometry: Analyze the eluent using a high-resolution mass spectrometer (e.g., Q-TOF or Orbitrap) in both positive and negative ionization modes to cover a wide range of metabolites.
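High-resolution instruments report accurate m/z values, which are later matched to candidate metabolites within a mass tolerance expressed in parts per million (ppm). The sketch shows that matching for singly charged [M+H]+ (positive mode) and [M-H]- (negative mode) ions. The five-entry `REFERENCE` dictionary, the 5 ppm tolerance, and the observed m/z are hypothetical stand-ins for a real database query (e.g., METLIN or HMDB).

```python
from typing import List

PROTON_MASS = 1.007276  # Da

# Tiny hypothetical reference list of monoisotopic neutral masses (Da).
REFERENCE = {
    "lactate": 90.031694,
    "glutamate": 147.053158,
    "glucose": 180.063388,
    "fructose": 180.063388,  # isomer of glucose: identical accurate mass
    "citrate": 192.027003,
}

def ion_mz(neutral_mass: float, mode: str) -> float:
    """m/z of the singly charged [M+H]+ (positive) or [M-H]- (negative) ion."""
    if mode == "positive":
        return neutral_mass + PROTON_MASS
    if mode == "negative":
        return neutral_mass - PROTON_MASS
    raise ValueError(f"unknown ionization mode: {mode}")

def ppm_error(observed: float, theoretical: float) -> float:
    return (observed - theoretical) / theoretical * 1e6

def match_feature(observed_mz: float, mode: str, tol_ppm: float = 5.0) -> List[str]:
    """Candidate names whose expected ion m/z lies within tol_ppm of the observed m/z."""
    return [name for name, mass in REFERENCE.items()
            if abs(ppm_error(observed_mz, ion_mz(mass, mode))) <= tol_ppm]

print(match_feature(179.0555, "negative"))  # ['glucose', 'fructose']
```

The glucose/fructose tie illustrates why accurate mass alone is not enough: isomers must be resolved with MS/MS fragmentation patterns or chromatographic retention time.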

4.1.3 Data Analysis

- Data Preprocessing: Use software such as XCMS or MZmine for peak picking, feature detection, retention time correction, and alignment across samples.[15]

- Statistical Analysis: Apply multivariate methods such as Principal Component Analysis (PCA), an unsupervised overview of sample grouping and outliers, and Partial Least Squares-Discriminant Analysis (PLS-DA), a supervised method that ranks features discriminating between experimental groups.[16] Confirm individual differences with univariate tests (e.g., t-tests with false discovery rate correction).

- Metabolite Identification: Identify significant metabolites by matching their accurate mass and fragmentation patterns (MS/MS spectra) to metabolite databases (e.g., METLIN, HMDB).

- Pathway Analysis: Use tools like MetaboAnalyst or Ingenuity Pathway Analysis (IPA) to map the identified metabolites to metabolic pathways and identify perturbed pathways.[17]
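PCA, the first statistical step above, can be computed directly from a singular value decomposition of the preprocessed feature table. The sketch uses a hypothetical 6-sample × 4-feature intensity matrix (three control and three treated samples) in which only feature 0 differs between groups. The log2 transform and mean-centering are assumed preprocessing choices; autoscaling or Pareto scaling are common alternatives and can change which features dominate the leading components.

```python
import numpy as np

# Hypothetical peak intensities: 6 samples (3 control, 3 treated) x 4 features.
intensities = np.array([
    [100, 50, 20, 80],
    [110, 52, 19, 82],
    [95, 48, 21, 79],
    [400, 51, 20, 81],
    [380, 49, 22, 80],
    [420, 50, 19, 78],
], dtype=float)

def pca(X: np.ndarray, n_components: int = 2):
    """PCA via SVD of the log2-transformed, mean-centered data matrix."""
    Xl = np.log2(X)
    Xc = Xl - Xl.mean(axis=0)                       # center each feature
    U, S, Vt = np.linalg.svd(Xc, full_matrices=False)
    scores = U[:, :n_components] * S[:n_components]  # sample coordinates
    loadings = Vt[:n_components].T                   # feature weights
    explained = S**2 / np.sum(S**2)                  # variance fraction per PC
    return scores, loadings, explained[:n_components]

scores, loadings, explained = pca(intensities)
print("PC1 scores:", np.round(scores[:, 0], 2))
print("variance explained by PC1:", round(float(explained[0]), 3))
print("feature with largest |PC1 loading|:", int(np.argmax(np.abs(loadings[:, 0]))))
```

PC1 separates the two groups and is dominated by feature 0. Because PCA never sees the group labels, this separation is descriptive; it does not by itself establish that feature 0 differs significantly.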

Flux Balance Analysis (FBA)

Flux Balance Analysis (FBA) is a computational method used to predict metabolic flux distributions in a genome-scale metabolic model.[18][19] It relies on the assumption of a steady state, where the production and consumption of each internal metabolite are balanced.[18]

```dot
digraph Flux_Balance_Analysis {
  // Default node style (fillcolor is only drawn when style=filled)
  node [shape=box, style=filled];

  // Node Definitions
  Model_Reconstruction [label="1. Genome-Scale Metabolic\nModel Reconstruction", fillcolor="#F1F3F4", fontcolor="#202124"];
  Stoichiometric_Matrix [label="2. Define Stoichiometric Matrix (S)", fillcolor="#FBBC05", fontcolor="#202124"];
  Constraints [label="3. Set Constraints\n(Uptake/Secretion Rates)", fillcolor="#4285F4", fontcolor="#FFFFFF"];
  Objective_Function [label="4. Define Objective Function\n(e.g., Maximize Biomass)", fillcolor="#34A853", fontcolor="#FFFFFF"];
  Linear_Programming [label="5. Solve using Linear Programming", fillcolor="#EA4335", fontcolor="#FFFFFF"];
  Flux_Distribution [label="6. Analyze Predicted\nFlux Distribution", fillcolor="#FBBC05", fontcolor="#202124"];

  // Edges
  Model_Reconstruction -> Stoichiometric_Matrix [color="#5F6368"];
  Stoichiometric_Matrix -> Constraints [color="#5F6368"];
  Constraints -> Objective_Function [color="#5F6368"];
  Objective_Function -> Linear_Programming [color="#5F6368"];
  Linear_Programming -> Flux_Distribution [color="#5F6368"];
}
```

Figure 4: Workflow for Flux Balance Analysis.

4.2.1 Step-by-Step Protocol

- Obtain a Genome-Scale Metabolic Model: Start with a curated, genome-scale metabolic reconstruction of the organism of interest. These models are often available in public databases (e.g., BiGG Models).

- Define the Stoichiometric Matrix (S): The model is represented by a stoichiometric matrix (S), in which rows correspond to metabolites and columns to reactions. Each entry is the stoichiometric coefficient of a metabolite in a reaction (negative for a metabolite consumed, positive for one produced).[18]

- Set Constraints (Bounds): Define the lower and upper bounds for each reaction flux (v). This includes setting nutrient uptake rates (e.g., glucose, oxygen) and byproduct secretion rates based on experimental measurements. Irreversible reactions have a lower bound of 0.[18]

- Define an Objective Function (Z): Specify a biological objective to be optimized. A common objective is maximization of the biomass production rate, which represents cell growth.[18] The objective function is a linear combination of fluxes, Z = cᵀv, where c is a vector of weights indicating how much each reaction contributes to the objective.[18]

- Solve the Linear Programming Problem: Use linear programming to find a flux distribution (v) that maximizes or minimizes the objective function (Z) subject to the steady-state mass-balance constraint (S · v = 0) and the defined flux bounds.[18]

- Analyze the Flux Distribution: The solution provides a predicted flux value for every reaction in the metabolic network, offering insight into the activity of different metabolic pathways under the simulated conditions.
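The protocol can be illustrated end to end on a toy network. The sketch below is a minimal example, assuming SciPy (1.6 or later, for the `highs` solver) is installed; the network, its reaction names (`R_uptake`, `R_convert`, `R_secrete`, `R_biomass`) and the bound values are hypothetical and chosen only for illustration, not taken from any real model. Because `scipy.optimize.linprog` minimizes, the biomass objective is maximized by minimizing its negative.

```python
import numpy as np
from scipy.optimize import linprog

# Hypothetical toy network (not a real organism model):
#   R_uptake:  -> A   (nutrient uptake, capped by an assumed measured rate)
#   R_convert: A -> B
#   R_secrete: A ->   (byproduct secretion)
#   R_biomass: B ->   (biomass drain, used as the objective)
reactions = ["R_uptake", "R_convert", "R_secrete", "R_biomass"]
metabolites = ["A", "B"]

# Stoichiometric matrix S: rows = metabolites, columns = reactions.
# Negative entries = consumed, positive entries = produced.
S = np.array([
    [1, -1, -1, 0],   # A
    [0, 1, 0, -1],    # B
])
assert S.shape == (len(metabolites), len(reactions))

# Flux bounds (lower, upper); all reactions are irreversible, so lower = 0.
bounds = [
    (0, 10),    # R_uptake: assumed maximum uptake rate of 10 units
    (0, 1000),  # R_convert
    (0, 1000),  # R_secrete
    (0, 1000),  # R_biomass
]

# Objective Z = c^T v, with c selecting the biomass reaction.
c = np.zeros(len(reactions))
c[reactions.index("R_biomass")] = 1.0

def run_fba(S, c, bounds):
    """Maximize c^T v subject to S v = 0 and the flux bounds."""
    # linprog minimizes, so negate the objective to maximize it.
    result = linprog(-c, A_eq=S, b_eq=np.zeros(S.shape[0]),
                     bounds=bounds, method="highs")
    if not result.success:
        raise RuntimeError(result.message)
    return result.x, -result.fun

fluxes, z_opt = run_fba(S, c, bounds)
for name, v in zip(reactions, fluxes):
    print(f"{name:10s} {v:8.3f}")
print(f"Optimal biomass flux Z = {z_opt:.3f}")
```

In this toy case all uptake flux is routed to biomass and none is secreted. Forcing a minimum secretion flux (for example, raising the lower bound of `R_secrete` to 2) would lower the predicted biomass flux to 8, which is the kind of what-if comparison the final analysis step supports.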

Conclusion

The orchestration of metabolic pathways is a highly complex and dynamic process that is fundamental to cellular function. This guide has provided an in-depth overview of the key regulatory principles, signaling networks, and analytical techniques that are at the forefront of metabolic research. The ability to quantitatively measure and model metabolic networks is crucial for advancing our understanding of disease and for the development of novel therapeutic strategies. By integrating the experimental and computational approaches outlined here, researchers can continue to unravel the complexities of metabolic regulation and harness this knowledge for the benefit of human health.

References

- 1. researchgate.net [researchgate.net]

- 2. The GC-MS workflow | workflow4metabolomics [workflow4metabolomics.org]

- 3. geneglobe.qiagen.com [geneglobe.qiagen.com]

- 4. PI3K/AKT/mTOR Signaling Network in Human Health and Diseases - PMC [pmc.ncbi.nlm.nih.gov]

- 5. PI3K/AKT/mTOR pathway - Wikipedia [en.wikipedia.org]

- 6. encyclopedia.pub [encyclopedia.pub]

- 7. AMPK Signaling | Cell Signaling Technology [cellsignal.com]

- 8. AMPK – sensing energy while talking to other signaling pathways - PMC [pmc.ncbi.nlm.nih.gov]

- 9. teachmephysiology.com [teachmephysiology.com]

- 10. What are the concentrations of free metabolites in cells? [book.bionumbers.org]

- 11. shimadzu.com [shimadzu.com]

- 12. Guidelines for metabolic flux analysis in metabolic engineering: methods, tools, and applications - CD Biosynsis [biosynsis.com]

- 13. 13C metabolic flux analysis: Classification and characterization from the perspective of mathematical modeling and application in physiological research of neural cell - PMC [pmc.ncbi.nlm.nih.gov]

- 14. researchgate.net [researchgate.net]

- 15. The LC-MS workflow | workflow4metabolomics [workflow4metabolomics.org]

- 16. Processing and Analysis of GC/LC-MS-Based Metabolomics Data | Springer Nature Experiments [experiments.springernature.com]

- 17. LC-MS-based metabolomics - PMC [pmc.ncbi.nlm.nih.gov]

- 18. What is flux balance analysis? - PMC [pmc.ncbi.nlm.nih.gov]

- 19. researchgate.net [researchgate.net]

Orchestrating Development: A Technical Guide to the Core Principles of Developmental Biology

For Researchers, Scientists, and Drug Development Professionals

Introduction

Developmental biology is the study of the processes by which a single cell develops into a complex multicellular organism. This intricate orchestration involves a precise interplay of genetic and environmental cues that guide cells to proliferate, differentiate, and organize into tissues and organs.[1] Understanding these fundamental principles is not only crucial for advancing our knowledge of life but also holds immense potential for regenerative medicine and the development of novel therapeutics. This technical guide provides an in-depth exploration of the core pillars of developmental biology: cellular differentiation, pattern formation, and morphogenesis. It further details key signaling pathways that govern these processes and provides comprehensive protocols for essential experimental techniques in the field.

Core Principles of Developmental Orchestration

The development of a multicellular organism is underpinned by three fundamental processes:

- Cellular Differentiation: This is the process by which a less specialized cell becomes a more specialized cell type.[2] Starting from a single totipotent zygote, a series of cell divisions and differentiation events gives rise to the vast array of specialized cells that make up a complete organism. This process is driven by changes in gene expression, in which specific sets of genes are switched on or off to determine a cell's function and identity.[3]

- Pattern Formation: This refers to the process by which cells in a developing embryo acquire distinct identities in a spatially organized manner, leading to the formation of a structured body plan.[4][5] A key mechanism in pattern formation is the establishment of morphogen gradients. Morphogens are signaling molecules that emanate from a source and form a concentration gradient across a field of cells. Cells respond to different concentrations of the morphogen by activating distinct sets of genes, thereby acquiring different fates based on their position.[6][7][8]

- Morphogenesis: This is the biological process that causes an organism to develop its shape.[9][10] It involves the coordinated movement and rearrangement of cells and tissues to form the three-dimensional structures of organs and the overall body plan.[9][11][12] Morphogenesis is a highly dynamic process involving cell adhesion, migration, and changes in cell shape.[9][12]
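The morphogen-gradient mechanism described under Pattern Formation can be made concrete with a simple threshold model, in the spirit of Wolpert's "French flag" model. This sketch assumes a steady-state exponential gradient C(x) = C0 · exp(−x/λ); the source concentration C0, the decay length λ, the two thresholds, and the fate labels are all hypothetical values chosen for illustration.

```python
import math

# Hypothetical parameters (illustrative only, not measured values)
C0 = 100.0            # morphogen concentration at the source (x = 0)
DECAY_LENGTH = 20.0   # decay length lambda, in arbitrary distance units
HIGH_THRESHOLD = 50.0
LOW_THRESHOLD = 10.0

def concentration(x: float) -> float:
    """Steady-state exponential gradient C(x) = C0 * exp(-x / lambda)."""
    return C0 * math.exp(-x / DECAY_LENGTH)

def cell_fate(c: float) -> str:
    """Assign a fate by comparing the local concentration with two thresholds."""
    if c >= HIGH_THRESHOLD:
        return "fate_A"   # high concentration, close to the source
    if c >= LOW_THRESHOLD:
        return "fate_B"   # intermediate concentration
    return "fate_C"       # low concentration, far from the source

positions = range(0, 100, 10)
fates = [cell_fate(concentration(x)) for x in positions]
for x, fate in zip(positions, fates):
    print(f"x={x:3d}  C={concentration(x):7.2f}  {fate}")
```

With these values the high/intermediate boundary falls at x = λ · ln(C0/50) ≈ 13.9 and the intermediate/low boundary at x = λ · ln(C0/10) ≈ 46.1, so a single graded signal yields three spatially ordered domains.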

Key Signaling Pathways in Development

A small number of highly conserved signaling pathways are repeatedly used throughout development to regulate cell fate decisions, proliferation, and movement. Understanding these pathways is critical for deciphering the logic of development and for identifying potential targets for therapeutic intervention.

Wnt Signaling Pathway

The Wnt signaling pathway is a crucial regulator of a wide range of developmental processes, including cell fate specification, proliferation, and migration.[13][14] The pathway can be broadly divided into two main branches: the canonical (β-catenin-dependent) and non-canonical (β-catenin-independent) pathways.[2][4][15]

- Canonical Wnt Pathway: In the absence of a Wnt ligand, a "destruction complex" phosphorylates β-catenin, targeting it for degradation. Binding of a Wnt ligand to its Frizzled (Fzd) receptor and LRP5/6 co-receptor disrupts the destruction complex, leading to the accumulation of β-catenin in the cytoplasm and its subsequent translocation to the nucleus.[9][12][15] In the nucleus, β-catenin acts as a co-activator for TCF/LEF transcription factors to regulate the expression of Wnt target genes.[4][9][12]

- Non-canonical Wnt Pathways: These pathways operate independently of β-catenin. The two major non-canonical pathways are the Planar Cell Polarity (PCP) pathway and the Wnt/Ca²⁺ pathway.[2][4] The PCP pathway regulates cell polarity and coordinated cell movements, while the Wnt/Ca²⁺ pathway modulates intracellular calcium levels to influence processes such as cell adhesion and migration.[2][4][15]

Caption: Wnt Signaling Pathways.
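The on/off logic of the canonical branch can be summarized as a toy Boolean model. This is a deliberate simplification, assuming each component is either fully active or inactive; real signaling is graded and involves many more components, and the class and function names here are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class CanonicalWntState:
    destruction_complex_active: bool
    beta_catenin_nuclear: bool
    target_genes_on: bool

def canonical_wnt(wnt_ligand_bound: bool) -> CanonicalWntState:
    """Toy Boolean model of canonical Wnt/beta-catenin signaling.

    wnt_ligand_bound: True if a Wnt ligand is bound to Frizzled and LRP5/6.
    """
    # Ligand binding to Frizzled/LRP5/6 disrupts the destruction complex.
    destruction_complex_active = not wnt_ligand_bound
    # An active destruction complex marks beta-catenin for degradation;
    # otherwise beta-catenin accumulates and enters the nucleus.
    beta_catenin_nuclear = not destruction_complex_active
    # Nuclear beta-catenin co-activates TCF/LEF, switching on target genes.
    target_genes_on = beta_catenin_nuclear
    return CanonicalWntState(destruction_complex_active,
                             beta_catenin_nuclear, target_genes_on)

for ligand in (False, True):
    print(f"Wnt bound={ligand}: {canonical_wnt(ligand)}")
```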

TGF-β Signaling Pathway

The Transforming Growth Factor-beta (TGF-β) superfamily of ligands regulates a diverse array of cellular processes, including proliferation, differentiation, apoptosis, and cell migration.[16] The signaling cascade can be initiated through both SMAD-dependent and SMAD-independent pathways.

- SMAD-Dependent Pathway: Binding of a TGF-β ligand to its type II receptor recruits and phosphorylates a type I receptor.[10][16] The activated type I receptor then phosphorylates receptor-regulated SMADs (R-SMADs), which subsequently bind to the common mediator SMAD4.[10][16] This SMAD complex translocates to the nucleus to regulate target gene expression.[10][17]

- SMAD-Independent Pathways: TGF-β receptors can also activate other signaling pathways, such as the MAPK/ERK, PI3K/AKT, and Rho-like GTPase pathways, to regulate a variety of cellular responses.[10][16][18]
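The SMAD-dependent branch is an ordered cascade, so losing any one component blocks every step after it. The sketch below illustrates this with a strictly sequential, all-or-nothing model; the component and step names are hypothetical labels, and the model ignores the SMAD-independent branches and any graded signaling.

```python
# Ordered steps of the SMAD-dependent cascade; each step lists the
# (hypothetically named) components it requires.
STEPS = [
    ("ligand binds type II receptor", {"TGFb_ligand", "type_II_receptor"}),
    ("type I receptor recruited and phosphorylated", {"type_I_receptor"}),
    ("R-SMADs phosphorylated", {"R_SMAD"}),
    ("R-SMAD/SMAD4 complex formed", {"SMAD4"}),
    ("complex enters nucleus and regulates target genes", set()),
]

ALL_COMPONENTS = {"TGFb_ligand", "type_II_receptor", "type_I_receptor",
                  "R_SMAD", "SMAD4"}

def run_cascade(available: set[str]) -> list[str]:
    """Return the steps completed before a missing component blocks the cascade."""
    completed = []
    for step, required in STEPS:
        if not required <= available:  # at least one required component is absent
            break
        completed.append(step)
    return completed

print(run_cascade(ALL_COMPONENTS))
print(run_cascade(ALL_COMPONENTS - {"SMAD4"}))  # simulated SMAD4 loss
```

In the simulated SMAD4 loss, R-SMADs are still phosphorylated but no complex forms, so no nuclear target-gene regulation occurs through this branch.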

References

- 1. journals.plos.org [journals.plos.org]

- 2. Wnt signal transduction pathways - PMC [pmc.ncbi.nlm.nih.gov]

- 3. Whole Mount In Situ Hybridization in Zebrafish [protocols.io]

- 4. researchgate.net [researchgate.net]

- 5. researchgate.net [researchgate.net]

- 6. Experiment < Expression Atlas < EMBL-EBI [ebi.ac.uk]

- 7. ChIP-seq Protocols and Methods | Springer Nature Experiments [experiments.springernature.com]

- 8. journals.biologists.com [journals.biologists.com]

- 9. zfin.org [zfin.org]

- 10. researchgate.net [researchgate.net]

- 11. Genome Editing in Mice Using CRISPR/Cas9 Technology - PMC [pmc.ncbi.nlm.nih.gov]

- 12. Genome Editing in Mice Using CRISPR/Cas9 Technology | Semantic Scholar [semanticscholar.org]

- 13. Video: Genome Editing in Mammalian Cell Lines using CRISPR-Cas [jove.com]

- 14. Canonical and Non-Canonical Wnt Signaling in Immune Cells - PMC [pmc.ncbi.nlm.nih.gov]

- 15. researchgate.net [researchgate.net]

- 16. creative-diagnostics.com [creative-diagnostics.com]

- 17. researchgate.net [researchgate.net]

- 18. bosterbio.com [bosterbio.com]

The Conductor of Discovery: A Technical Guide to Data Orchestration in Biomedical Research

For Researchers, Scientists, and Drug Development Professionals

In the era of big data, biomedical research is undergoing a profound transformation. The sheer volume, variety, and velocity of data generated from genomics, proteomics, high-throughput screening, and clinical trials present both unprecedented opportunities and significant challenges. To harness the full potential of this data deluge, researchers and drug development professionals are increasingly turning to data orchestration. This in-depth technical guide explores the core principles of data orchestration, its practical applications in biomedical research, and the tangible benefits it delivers in accelerating discovery and improving patient outcomes.

The Core of Data Orchestration: From Chaos to Cohesion

Data orchestration is the automated process of coordinating and managing complex data workflows across multiple systems and services. It goes beyond simple data integration: rather than only connecting data sources, it automates the entire pipeline, from data ingestion and processing to analysis and reporting. In the context of biomedical research, this means moving large datasets between analytical tools, computational environments (such as high-performance computing clusters and cloud platforms), and storage systems in a reproducible and scalable manner.[1][2][3]

At its heart, data orchestration in biomedical research is about creating a unified and automated data ecosystem that breaks down data silos.[4] Traditionally, research labs and different departments within a pharmaceutical company often operate in isolation, with genomics, proteomics, and clinical data residing in disparate systems.[5] Data orchestration brings these together, enabling a more holistic and integrated approach to research.

The key components of a data orchestration framework typically include:

- Workflow Management Systems (WMS): These are the engines of data orchestration, allowing researchers to define, execute, and monitor complex computational pipelines.[1][6] Popular WMS in the bioinformatics community include Nextflow, Snakemake, and platforms that support the Common Workflow Language (CWL).[7][8]

- Containerization: Technologies such as Docker and Singularity package software together with its dependencies into portable containers. This ensures that analysis pipelines run consistently across different computing environments, a cornerstone of reproducible research.

- Scalable Infrastructure: Data orchestration platforms are often deployed on cloud computing platforms (e.g., Amazon Web Services, Google Cloud) or on-premises high-performance computing (HPC) clusters to handle the heavy computational demands of biomedical data analysis.
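At its core, a workflow management system resolves the dependencies between pipeline steps and runs each step only after its inputs are ready. The sketch below illustrates that idea with Python's standard-library `graphlib.TopologicalSorter` (Python 3.9+); the step names are hypothetical, and production systems such as Nextflow or Snakemake layer features like parallel scheduling, resumable execution, container support, and cluster or cloud execution on top of this dependency-resolution core.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each step maps to the set of steps it depends on.
pipeline = {
    "fetch_reads": set(),
    "quality_control": {"fetch_reads"},
    "align_reads": {"quality_control"},
    "fetch_clinical_data": set(),
    "call_variants": {"align_reads"},
    "integrate_and_report": {"call_variants", "fetch_clinical_data"},
}

def run_step(name: str, log: list[str]) -> None:
    # Placeholder for real work (e.g., launching a containerized tool).
    log.append(name)

def run_pipeline(graph: dict[str, set[str]]) -> list[str]:
    """Run steps in dependency order; steps in one batch could run in parallel."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError if dependencies form a cycle
    log: list[str] = []
    while sorter.is_active():
        ready = sorter.get_ready()  # steps whose dependencies have all finished
        for step in sorted(ready):  # sorted only to make the output deterministic
            run_step(step, log)
            sorter.done(step)
    return log

order = run_pipeline(pipeline)
print(" -> ".join(order))
```

The two `fetch_*` steps have no dependencies and are released in the same batch, which is where a real system would exploit parallelism.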

The Quantitative Impact of Orchestration

The adoption of data orchestration yields significant, measurable benefits in biomedical research. By automating and optimizing data workflows, research organizations can achieve greater efficiency, reproducibility, and scalability.

A key advantage is the enhanced reproducibility of scientific findings.[2][6] By defining an entire analysis pipeline in a workflow language, every step, parameter, and software version is explicitly recorded, allowing other researchers to replicate the analysis precisely. This is crucial for validating research outcomes and building upon previous work.
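The reproducibility benefit comes from recording exactly what ran. The sketch below shows one hypothetical way to capture a provenance record for a single step using only the Python standard library; the field names, the tool name `example-aligner`, its version strings, and the parameters are illustrative assumptions, not the record format of any particular workflow system.

```python
import hashlib
import json

def provenance_record(step: str, tool: str, version: str, params: dict) -> dict:
    """Build a provenance entry whose fingerprint changes if any field changes."""
    record = {"step": step, "tool": tool, "version": version, "params": params}
    # Canonical JSON (sorted keys, fixed separators) so equal content hashes equally.
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    record["fingerprint"] = hashlib.sha256(canonical.encode("utf-8")).hexdigest()
    return record

# Hypothetical step, tool, versions, and parameters.
a = provenance_record("align_reads", "example-aligner", "1.2.3",
                      {"threads": 8, "min_quality": 20})
b = provenance_record("align_reads", "example-aligner", "1.2.3",
                      {"min_quality": 20, "threads": 8})
c = provenance_record("align_reads", "example-aligner", "1.2.4",
                      {"threads": 8, "min_quality": 20})

print(a["fingerprint"] == b["fingerprint"])  # True: same content, different key order
print(a["fingerprint"] == c["fingerprint"])  # False: different software version
```

Comparing fingerprints gives a quick check of whether two runs used identical steps, parameters, and software versions.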

Data orchestration can also improve computational efficiency, and the choice of workflow management system itself affects resource use. A comparative analysis of popular bioinformatics workflow management systems reported the following figures:

| Workflow Management System | Elapsed Time (minutes) | CPU Time (minutes) | Max. Memory (GB) |
|---|---|---|---|
| Pegasus-mpi-cluster | 130.5 | 10,783 | 11.5 |
| Snakemake | 132.2 | 10,812 | 12.1 |
| Nextflow | 135.8 | 10,925 | 12.3 |
| Cromwell-WDL | 145.1 | 11,041 | 13.0 |
| Toil-CWL | 155.6 | 11,234 | 13.5 |
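The tabulated values can be compared directly. As a worked example, the sketch below computes each system's elapsed-time overhead relative to the fastest entry and the average parallelism implied by the ratio of CPU time to elapsed time; these derived quantities are simple arithmetic on the tabulated values, not figures reported by the original benchmark.

```python
# Values transcribed from the table: (elapsed min, CPU min, max memory GB)
benchmarks = {
    "Pegasus-mpi-cluster": (130.5, 10_783, 11.5),
    "Snakemake": (132.2, 10_812, 12.1),
    "Nextflow": (135.8, 10_925, 12.3),
    "Cromwell-WDL": (145.1, 11_041, 13.0),
    "Toil-CWL": (155.6, 11_234, 13.5),
}

fastest = min(elapsed for elapsed, _, _ in benchmarks.values())

summary = {}
for name, (elapsed, cpu, mem) in benchmarks.items():
    overhead_pct = 100.0 * (elapsed - fastest) / fastest  # slowdown vs. fastest
    parallelism = cpu / elapsed  # average number of CPU cores kept busy
    summary[name] = (overhead_pct, parallelism)
    print(f"{name:20s} +{overhead_pct:5.1f}% elapsed  "
          f"~{parallelism:5.1f}x parallel  {mem} GB peak")
```

By this arithmetic, Toil-CWL takes about 19% longer than Pegasus-mpi-cluster, and every system keeps roughly 72 to 83 cores busy on average, so the differences in wall-clock time are modest relative to the total compute.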