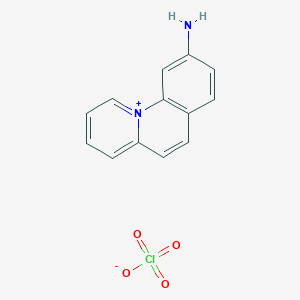

CID 23723895

Description

BenchChem offers this compound in high quality, suitable for many research applications. Different packaging options are available to meet customer requirements. For pricing, delivery times, and more detailed information about this compound, please contact info@benchchem.com.

Properties

| Property | Value | Details | Source |
|---|---|---|---|
| IUPAC Name | benzo[c]quinolizin-11-ium-9-amine;perchlorate | Computed by LexiChem 2.6.6 (PubChem release 2019.06.18) | PubChem |
| InChI | InChI=1S/C13H10N2.ClHO4/c14-11-6-4-10-5-7-12-3-1-2-8-15(12)13(10)9-11;2-1(3,4)5/h1-9,14H;(H,2,3,4,5) | Computed by InChI 1.0.5 (PubChem release 2019.06.18) | PubChem |
| InChI Key | RIQVDGHIUAGSRR-UHFFFAOYSA-N | Computed by InChI 1.0.5 (PubChem release 2019.06.18) | PubChem |
| Canonical SMILES | C1=CC=[N+]2C(=C1)C=CC3=C2C=C(C=C3)N.[O-]Cl(=O)(=O)=O | Computed by OEChem 2.1.5 (PubChem release 2019.06.18) | PubChem |
| Molecular Formula | C13H11ClN2O4 | Computed by PubChem 2.1 (PubChem release 2019.06.18) | PubChem |
| Molecular Weight | 294.69 g/mol | Computed by PubChem 2.1 (PubChem release 2021.05.07) | PubChem |
| Solubility | >44.2 µg/mL (mean of the results at pH 7.4) | Aqueous solubility in buffer at pH 7.4; record name SID47199273 | Burnham Center for Chemical Genomics (https://pubchem.ncbi.nlm.nih.gov/bioassay/1996#section=Data-Table) |

All values sourced from PubChem are data deposited in or computed by PubChem (https://pubchem.ncbi.nlm.nih.gov).
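As a consistency check on the table, the sketch below sums the two components listed in the InChI (C13H10N2 and ClHO4), confirms they match the molecular formula, and recomputes the molecular weight from rounded standard atomic weights. The values in `ATOMIC_WEIGHTS` and the helper names are assumptions for illustration, and the simple parser handles only formulas without parentheses or charges. It also converts the reported solubility bound from µg/mL to µM.

```python
import re
from collections import Counter

# Rounded standard atomic weights in g/mol (assumed values, for illustration).
ATOMIC_WEIGHTS = {"C": 12.011, "H": 1.008, "Cl": 35.45, "N": 14.007, "O": 15.999}

def parse_formula(formula: str) -> Counter:
    """Count atoms in a flat formula such as 'C13H10N2' (no parentheses or charges)."""
    counts = Counter()
    for symbol, digits in re.findall(r"([A-Z][a-z]?)(\d*)", formula):
        counts[symbol] += int(digits) if digits else 1
    return counts

def molecular_weight(counts: Counter) -> float:
    """Sum atomic weight x atom count over all elements."""
    return sum(ATOMIC_WEIGHTS[element] * n for element, n in counts.items())

# The InChI lists the salt as two components: C13H10N2 and ClHO4 (perchloric acid).
salt = parse_formula("C13H10N2") + parse_formula("ClHO4")
assert salt == parse_formula("C13H11ClN2O4")  # matches the tabulated formula

mw = molecular_weight(salt)
print(f"MW = {mw:.2f} g/mol")

# Reported solubility lower bound: >44.2 ug/mL.
solubility_ug_per_ml = 44.2
# ug/mL equals mg/L; dividing by g/mol gives mmol/L; x1000 gives umol/L.
solubility_um = solubility_ug_per_ml / mw * 1000
print(f"solubility > {solubility_um:.0f} uM")
```

With these weights the formula gives about 294.69 g/mol, so the >44.2 µg/mL bound corresponds to roughly 150 µM.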

Q & A

Q. How should experiments be designed to evaluate Transformer-based models on NLP tasks?

- Methodological Answer: Experimental design should include:

- Objective Definition: Specify the task (e.g., translation, parsing) and the evaluation metrics (BLEU, F1, accuracy).

- Dataset Selection: Use standardized benchmarks (e.g., WMT for translation, GLUE for language understanding) to ensure comparability.

- Architecture Configuration: Compare attention mechanisms, layer depth, and parallelization efficiency against baselines (e.g., RNNs, CNNs).

- Training Protocols: Report batch size, optimizer settings, and hardware details (e.g., the original Transformer study trained its big model on 8 GPUs for 3.5 days).

- Statistical Validation: Use cross-validation or multiple random seeds to quantify run-to-run variability.
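The statistical-validation step can be sketched as repeating the full train/evaluate cycle under several seeds and reporting the spread rather than a single number. `evaluate_over_seeds` and `fake_train_and_eval` are hypothetical names; the latter only draws a noisy score from a seeded RNG so that the example runs, and would be replaced by real training code.

```python
import random
import statistics
from typing import Callable, Iterable

def evaluate_over_seeds(train_and_eval: Callable[[int], float], seeds: Iterable[int]) -> dict:
    """Run one full train/eval cycle per seed and summarize score variability."""
    scores = [train_and_eval(seed) for seed in seeds]
    return {
        "scores": scores,
        "mean": statistics.mean(scores),
        "stdev": statistics.stdev(scores) if len(scores) > 1 else 0.0,
        "min": min(scores),
        "max": max(scores),
    }

# Hypothetical stand-in for a real training run: it returns a noisy score
# drawn from a seeded RNG, so the same seed always yields the same score.
def fake_train_and_eval(seed: int) -> float:
    rng = random.Random(seed)
    return 0.80 + rng.gauss(0.0, 0.01)

summary = evaluate_over_seeds(fake_train_and_eval, seeds=[0, 1, 2, 3, 4])
print(f"{summary['mean']:.4f} ± {summary['stdev']:.4f} over {len(summary['scores'])} seeds")
```

Reporting mean ± standard deviation across seeds, together with the seeds themselves, lets readers judge whether a difference between two models exceeds run-to-run noise.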

Q. What are the key methodological considerations when formulating research questions for deep learning studies?

- Methodological Answer: Use frameworks such as PICO (Population, Intervention, Comparison, Outcome) or FINER (Feasible, Interesting, Novel, Ethical, Relevant) to structure questions. For example:

- Complexity: Avoid yes/no questions; ask instead: "How do bidirectional attention mechanisms in BERT improve cross-task generalization compared to unidirectional models?"

- Gap Identification: Conduct a systematic literature review to pinpoint unresolved issues (e.g., the undertraining of the original BERT models identified by the RoBERTa study).

Q. How can a literature review identify gaps in Transformer model applications?

- Methodological Answer:

- Source Selection: Prioritize peer-reviewed journals and conference proceedings (e.g., NeurIPS, ACL).

- Keyword Strategy: Combine terms such as "attention mechanisms," "pretraining," and "task-specific fine-tuning."

- Contradiction Analysis: Compare findings across studies (e.g., BERT's static masking vs. RoBERTa's dynamic masking).
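One common way to apply the keyword strategy is to OR together synonyms within each concept and AND the concepts together. This is a minimal sketch: exact query syntax varies between search engines and bibliographic databases, and the extra synonyms (e.g., "self-attention", "pre-training") are illustrative additions, not part of the original list.

```python
from typing import Sequence

def build_boolean_query(concept_groups: Sequence[Sequence[str]]) -> str:
    """OR together the quoted synonyms within each group, then AND the groups."""
    clauses = []
    for group in concept_groups:
        quoted = [f'"{term}"' for term in group]
        clauses.append("(" + " OR ".join(quoted) + ")")
    return " AND ".join(clauses)

query = build_boolean_query([
    ["Transformer", "self-attention", "attention mechanisms"],
    ["pretraining", "pre-training"],
    ["task-specific fine-tuning", "fine-tuning"],
])
print(query)
```

Grouping synonyms this way widens recall within a concept while the AND between groups keeps results on topic.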

Advanced Research Questions

Q. How can hyperparameters of Transformer-based models be optimized to address undertraining?

- Methodological Answer:

- Grid Search: Systematically test learning rates (e.g., 1e-5 to 3e-4), batch sizes, and dropout rates.

- Dynamic Masking: Adopt RoBERTa's dynamic masking, which generates a new masking pattern each time a sequence is fed to the model, to make pretraining more robust.

- Training Duration: Train longer than the original BERT schedule; the RoBERTa study reported gains from more pretraining steps, larger batches, and more data.

- Resource Allocation: Use distributed training (e.g., PyTorch's DistributedDataParallel or TensorFlow's tf.distribute) to manage computational costs.
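A grid search evaluates every combination of candidate values and keeps the best. In this sketch the learning-rate endpoints come from the range above, while the intermediate values, batch sizes, dropout rates, and the `toy_objective` function are hypothetical; in practice the objective would fine-tune the model and return a validation score.

```python
import itertools
import math
from typing import Callable, Dict, List, Tuple

def grid_search(objective: Callable[..., float], grid: Dict[str, List]) -> Tuple[dict, float]:
    """Evaluate every combination in the grid and return the best (params, score)."""
    names = list(grid)
    best_params, best_score = None, -math.inf
    for values in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        score = objective(**params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score

# Learning-rate endpoints follow the 1e-5 to 3e-4 range; all other values are assumptions.
GRID = {
    "learning_rate": [1e-5, 3e-5, 1e-4, 3e-4],
    "batch_size": [16, 32],
    "dropout": [0.0, 0.1],
}

# Hypothetical objective standing in for "fine-tune, then score on validation data".
# It is a made-up function that peaks at lr=1e-4, batch size 32, dropout 0.1.
def toy_objective(learning_rate: float, batch_size: int, dropout: float) -> float:
    return (-abs(math.log10(learning_rate) + 4)
            - abs(batch_size - 32) / 32
            - abs(dropout - 0.1))

best, score = grid_search(toy_objective, GRID)
print(best, score)
```

Because the grid grows multiplicatively (here 4 × 2 × 2 = 16 runs), random search or early stopping is often preferred when each run is expensive.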

Q. What strategies resolve contradictions between the original BERT pretraining recipe and RoBERTa's robustly optimized approach?

- Methodological Answer:

- Ablation Studies: Isolate individual variables (e.g., static vs. dynamic masking) to quantify their impact.

- Cross-Task Evaluation: Evaluate models on diverse benchmarks (e.g., GLUE, SQuAD) to identify task-specific strengths.

- Meta-Analysis: Aggregate results from multiple pretraining regimes to derive generalizable insights.
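For a static-vs.-dynamic masking ablation, the difference between the two regimes can be sketched in plain Python. `mask_tokens` follows the 80/10/10 replacement scheme described for BERT ([MASK], random token, unchanged); selecting exactly 15% of positions, the `MASK_ID` and `VOCAB_SIZE` values, and the `-100` ignore label are simplifying assumptions. The static variant masks once and reuses that pattern every epoch (the original BERT setup additionally duplicated the data 10 times with different masks, which this sketch omits); the dynamic variant draws a new mask on every pass, as RoBERTa does.

```python
import random
from typing import List, Tuple

MASK_ID = 103        # hypothetical [MASK] token id
VOCAB_SIZE = 30000   # hypothetical vocabulary size
IGNORE = -100        # label for positions that are not predicted (assumed convention)

def mask_tokens(tokens: List[int], rng: random.Random,
                mask_prob: float = 0.15) -> Tuple[List[int], List[int]]:
    """Select ~15% of positions; replace 80% with [MASK], 10% with a random token, keep 10%."""
    n_pred = max(1, round(len(tokens) * mask_prob))
    positions = rng.sample(range(len(tokens)), n_pred)
    inputs = list(tokens)
    labels = [IGNORE] * len(tokens)
    for i in positions:
        labels[i] = tokens[i]            # the model must recover the original token
        r = rng.random()
        if r < 0.8:
            inputs[i] = MASK_ID
        elif r < 0.9:
            inputs[i] = rng.randrange(VOCAB_SIZE)
        # else: leave the original token in place
    return inputs, labels

def static_masking(tokens: List[int], epochs: int, seed: int = 0):
    """Mask once during preprocessing and reuse the same pattern every epoch."""
    fixed = mask_tokens(tokens, random.Random(seed))
    return [fixed for _ in range(epochs)]

def dynamic_masking(tokens: List[int], epochs: int, seed: int = 0):
    """Draw a fresh mask each time the sequence is fed to the model."""
    rng = random.Random(seed)
    return [mask_tokens(tokens, rng) for _ in range(epochs)]

sequence = list(range(1000, 1100))  # 100 hypothetical token ids
static = static_masking(sequence, epochs=3)
dynamic = dynamic_masking(sequence, epochs=3)
print("static patterns identical:", static[0] == static[1] == static[2])
print("dynamic patterns identical:", dynamic[0] == dynamic[1] == dynamic[2])
```

Swapping only the masking function while holding data, schedule, and seeds fixed is what lets an ablation attribute a score difference to masking alone.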

Q. How can dataset biases that affect reproducibility in NLP research be analyzed?

- Methodological Answer:

- Bias Audits: Use tools such as the Language Interpretability Tool (LIT) to detect demographic or syntactic biases.

- Robustness Testing: Apply adversarial examples or synthetic data to test model robustness.

- Ethical Frameworks: Align data collection with ethical guidelines (e.g., informed consent for human-authored text).
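LIT is an interactive tool, and the code below does not use its API. It is a plain-Python sketch of the slice-based comparison that such audits rely on: compute a metric per subgroup and flag large gaps. The slice names and predictions are hypothetical placeholders, not real data.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

def accuracy_by_slice(records: Iterable[Tuple[str, int, int]]) -> Dict[str, float]:
    """records are (slice_name, gold_label, predicted_label); returns accuracy per slice."""
    correct: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for slice_name, gold, pred in records:
        total[slice_name] += 1
        correct[slice_name] += int(gold == pred)
    return {name: correct[name] / total[name] for name in total}

def max_gap(per_slice: Dict[str, float]) -> float:
    """Largest accuracy difference between any two slices; a large gap flags possible bias."""
    return max(per_slice.values()) - min(per_slice.values())

# Hypothetical predictions for two made-up slices, for illustration only.
records = [
    ("group_a", 1, 1), ("group_a", 0, 0), ("group_a", 1, 1), ("group_a", 0, 1),
    ("group_b", 1, 0), ("group_b", 0, 0), ("group_b", 1, 0), ("group_b", 0, 0),
]
per_slice = accuracy_by_slice(records)
print(per_slice, max_gap(per_slice))
```

What counts as a large gap depends on the task and on slice sizes; with only a few examples per slice, a gap can arise by chance.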

Methodological Resources

- Experimental Design: Refer to the Transformer's encoder-decoder architecture and BERT's pretraining protocols.

- Contradiction Analysis: Use RoBERTa's replication study as a guide for hyperparameter tuning.

- Ethical Compliance: Follow international publishing standards (e.g., COPE guidelines) for data and authorship.


Disclaimer and Information on In-Vitro Research Products

Please note that all articles and product information presented on BenchChem are intended for informational purposes only. The products offered for purchase on BenchChem are designed specifically for in-vitro studies, which are conducted outside living organisms. In-vitro studies, a term derived from the Latin for "in glass", involve experiments performed in controlled laboratory environments using cells or tissues. It is important to note that these products are not classified as drugs or medicines and have not received FDA approval for the prevention, treatment, or cure of any medical condition, ailment, or disease. We must emphasize that any form of bodily introduction of these products into humans or animals is strictly prohibited by law. Adherence to these guidelines is essential to ensure compliance with legal and ethical standards in research and experimentation.