Discontinued

Description

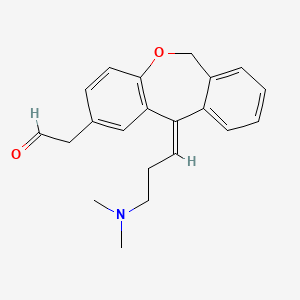


Properties

| IUPAC Name | 2-[(11Z)-11-[3-(dimethylamino)propylidene]-6H-benzo[c][1]benzoxepin-2-yl]acetaldehyde | |

|---|---|---|

| Details | Computed by Lexichem TK 2.7.0 (PubChem release 2021.05.07) | |

| Source | PubChem | |

| URL | https://pubchem.ncbi.nlm.nih.gov | |

| Description | Data deposited in or computed by PubChem | |

| InChI | InChI=1S/C21H23NO2/c1-22(2)12-5-8-19-18-7-4-3-6-17(18)15-24-21-10-9-16(11-13-23)14-20(19)21/h3-4,6-10,13-14H,5,11-12,15H2,1-2H3/b19-8- | |

| Details | Computed by InChI 1.0.6 (PubChem release 2021.05.07) | |

| Source | PubChem | |

| URL | https://pubchem.ncbi.nlm.nih.gov | |

| Description | Data deposited in or computed by PubChem | |

| InChI Key | CTBUVTVWLYTOGO-UWVJOHFNSA-N | |

| Details | Computed by InChI 1.0.6 (PubChem release 2021.05.07) | |

| Source | PubChem | |

| URL | https://pubchem.ncbi.nlm.nih.gov | |

| Description | Data deposited in or computed by PubChem | |

| Canonical SMILES | CN(C)CCC=C1C2=CC=CC=C2COC3=C1C=C(C=C3)CC=O | |

| Details | Computed by OEChem 2.3.0 (PubChem release 2021.05.07) | |

| Source | PubChem | |

| URL | https://pubchem.ncbi.nlm.nih.gov | |

| Description | Data deposited in or computed by PubChem | |

| Isomeric SMILES | CN(C)CC/C=C\1/C2=CC=CC=C2COC3=C1C=C(C=C3)CC=O | |

| Details | Computed by OEChem 2.3.0 (PubChem release 2021.05.07) | |

| Source | PubChem | |

| URL | https://pubchem.ncbi.nlm.nih.gov | |

| Description | Data deposited in or computed by PubChem | |

| Molecular Formula | C21H23NO2 | |

| Details | Computed by PubChem 2.1 (PubChem release 2021.05.07) | |

| Source | PubChem | |

| URL | https://pubchem.ncbi.nlm.nih.gov | |

| Description | Data deposited in or computed by PubChem | |

| DSSTOX Substance ID | DTXSID70728683 | |

| Record name | {(11Z)-11-[3-(Dimethylamino)propylidene]-6,11-dihydrodibenzo[b,e]oxepin-2-yl}acetaldehyde | |

| Source | EPA DSSTox | |

| URL | https://comptox.epa.gov/dashboard/DTXSID70728683 | |

| Description | DSSTox provides a high quality public chemistry resource for supporting improved predictive toxicology. | |

| Molecular Weight | 321.4 g/mol | |

| Details | Computed by PubChem 2.1 (PubChem release 2021.05.07) | |

| Source | PubChem | |

| URL | https://pubchem.ncbi.nlm.nih.gov | |

| Description | Data deposited in or computed by PubChem | |
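The molecular weight listed above can be cross-checked against the molecular formula. The following is a minimal sketch, assuming standard atomic masses rounded to three decimals; the function name and the small mass table are illustrative and are not part of the PubChem record.

```python
import re

# Standard atomic masses (g/mol), rounded; only the elements needed here.
ATOMIC_MASS = {"C": 12.011, "H": 1.008, "N": 14.007, "O": 15.999, "S": 32.06}

def molecular_weight(formula: str) -> float:
    """Sum atomic masses for a simple formula such as 'C21H23NO2'.

    Handles element symbols followed by an optional count; it does not
    handle parentheses, charges, or isotopes.
    """
    total = 0.0
    for symbol, count in re.findall(r"([A-Z][a-z]?)(\d*)", formula):
        total += ATOMIC_MASS[symbol] * (int(count) if count else 1)
    return total

mw = molecular_weight("C21H23NO2")
print(f"{mw:.1f} g/mol")
```

The result agrees with the tabulated 321.4 g/mol to one decimal place.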

| CAS No. | 1376615-97-2 | |

| Record name | (11Z)-11-[3-(Dimethylamino)propylidene]-6,11-dihydrodibenz[b,e]oxepin-2-acetaldehyde | |

| Source | CAS Common Chemistry | |

| URL | https://commonchemistry.cas.org/detail?cas_rn=1376615-97-2 | |

| Description | CAS Common Chemistry is an open community resource for accessing chemical information. Nearly 500,000 chemical substances from CAS REGISTRY cover areas of community interest, including common and frequently regulated chemicals, and those relevant to high school and undergraduate chemistry classes. This chemical information, curated by our expert scientists, is provided in alignment with our mission as a division of the American Chemical Society. | |

| Explanation | The data from CAS Common Chemistry is provided under a CC-BY-NC 4.0 license, unless otherwise stated. | |

| Record name | {(11Z)-11-[3-(Dimethylamino)propylidene]-6,11-dihydrodibenzo[b,e]oxepin-2-yl}acetaldehyde | |

| Source | EPA DSSTox | |

| URL | https://comptox.epa.gov/dashboard/DTXSID70728683 | |

| Description | DSSTox provides a high quality public chemistry resource for supporting improved predictive toxicology. | |

| Record name | Cis-[11-(3-Dimethylamino-propylidene)-6,11-dihydro-dibenzo[b,e]-oxepin-2-yl]-acetic acid | |

| Source | European Chemicals Agency (ECHA) | |

| URL | https://echa.europa.eu/information-on-chemicals | |

| Description | The European Chemicals Agency (ECHA) is an agency of the European Union which is the driving force among regulatory authorities in implementing the EU's groundbreaking chemicals legislation for the benefit of human health and the environment as well as for innovation and competitiveness. | |

| Explanation | Use of the information, documents and data from the ECHA website is subject to the terms and conditions of this Legal Notice, and subject to other binding limitations provided for under applicable law, the information, documents and data made available on the ECHA website may be reproduced, distributed and/or used, totally or in part, for non-commercial purposes provided that ECHA is acknowledged as the source: "Source: European Chemicals Agency, http://echa.europa.eu/". Such acknowledgement must be included in each copy of the material. ECHA permits and encourages organisations and individuals to create links to the ECHA website under the following cumulative conditions: Links can only be made to webpages that provide a link to the Legal Notice page. | |

Foundational & Exploratory

Rofecoxib: A Technical Guide to a Discontinued COX-2 Inhibitor

For Researchers, Scientists, and Drug Development Professionals

Introduction

Rofecoxib, formerly marketed as Vioxx, is a nonsteroidal anti-inflammatory drug (NSAID) that was designed as a selective inhibitor of the cyclooxygenase-2 (COX-2) enzyme.[1][2] It was developed to provide the analgesic and anti-inflammatory benefits of traditional NSAIDs while minimizing the gastrointestinal side effects associated with the inhibition of cyclooxygenase-1 (COX-1).[1][2] Rofecoxib gained widespread use for the treatment of osteoarthritis, rheumatoid arthritis, acute pain, and dysmenorrhea.[1] However, it was voluntarily withdrawn from the market in 2004 due to an increased risk of cardiovascular events, including heart attack and stroke, associated with long-term use.[1][3][4] This guide provides a comprehensive technical overview of rofecoxib, including its chemical and physical properties, mechanism of action, pharmacokinetic profile, and the key experimental findings that led to its withdrawal.

Chemical and Physical Properties

Rofecoxib is a butenolide, specifically a furan-2(5H)-one substituted with a phenyl group at position 3 and a p-(methylsulfonyl)phenyl group at position 4.[5]

| Property | Value | Reference |

| IUPAC Name | 4-(4-methylsulfonylphenyl)-3-phenyl-5H-furan-2-one | [1] |

| Molecular Formula | C₁₇H₁₄O₄S | [1] |

| Molar Mass | 314.36 g/mol | [1] |

| CAS Number | 162011-90-7 | [1] |

| Appearance | Solid | [5] |

| Solubility | | |

| DMSO | ~63 mg/mL (200.4 mM) | [6] |

| Dimethylformamide (DMF) | ~25 mg/mL | [6] |

| Ethanol | ~0.1 mg/mL | [6] |

| Water | Insoluble | [6] |
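The molar concentration quoted for DMSO follows directly from the mass concentration and the molar mass in the table above. A minimal sketch of that conversion (the function name is illustrative, not taken from the source):

```python
def mg_per_ml_to_millimolar(mg_per_ml: float, molar_mass_g_per_mol: float) -> float:
    """Convert a mass concentration (mg/mL) to a molar concentration (mM)."""
    # mg/mL is numerically equal to g/L; dividing by g/mol gives mol/L,
    # and multiplying by 1000 gives mmol/L.
    return mg_per_ml / molar_mass_g_per_mol * 1000.0

# Rofecoxib in DMSO: ~63 mg/mL at 314.36 g/mol
dmso_mm = mg_per_ml_to_millimolar(63.0, 314.36)
print(f"{dmso_mm:.1f} mM")  # 200.4 mM
```

The same helper can be applied to the DMF and ethanol rows to compare solvents on a molar basis.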

Mechanism of Action

Rofecoxib's primary mechanism of action is the selective inhibition of the COX-2 enzyme.[5][7] The cyclooxygenase enzyme has two main isoforms, COX-1 and COX-2. COX-1 is constitutively expressed in many tissues and is responsible for the production of prostaglandins that protect the stomach lining.[1] In contrast, COX-2 is an inducible enzyme that is upregulated at sites of inflammation and mediates the synthesis of prostaglandins involved in pain and inflammation.[1][2]

By selectively inhibiting COX-2, rofecoxib reduces the production of these pro-inflammatory prostaglandins, thereby exerting its analgesic and anti-inflammatory effects.[2][7] This selectivity for COX-2 over COX-1 was the basis for its improved gastrointestinal safety profile compared to non-selective NSAIDs.[1][2] The crystal structure of rofecoxib bound to human COX-2 reveals that its methyl sulfone moiety fits into a side pocket of the cyclooxygenase channel, which is believed to contribute to its isoform selectivity.[8][9]

Pharmacokinetic Properties

| Parameter | Value | Reference |

| Bioavailability | ~93% | [1][5] |

| Protein Binding | 87% | [1] |

| Metabolism | Primarily hepatic, involving both oxidative and reductive reactions. Key metabolizing enzymes include CYP3A4 and CYP1A2. | [5][7] |

| Elimination Half-life | Approximately 17 hours | [1][10] |

| Excretion | Predominantly via urine (71.5%) and a smaller amount in feces (14.2%). | [11] |
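To give a feel for what a 17-hour half-life implies, the sketch below computes the elimination rate constant and the fraction of drug remaining over time. It assumes simple first-order, one-compartment elimination, which is a simplification introduced here for illustration and not a model stated in the source.

```python
import math

def elimination_rate_constant(half_life_h: float) -> float:
    """First-order rate constant k (per hour) from the half-life: k = ln(2) / t_half."""
    return math.log(2) / half_life_h

def fraction_remaining(hours: float, half_life_h: float) -> float:
    """Fraction of the initial amount left after `hours`, assuming first-order decay."""
    return 0.5 ** (hours / half_life_h)

HALF_LIFE_H = 17.0  # approximate elimination half-life from the table above

k = elimination_rate_constant(HALF_LIFE_H)
left_after_24h = fraction_remaining(24.0, HALF_LIFE_H)
print(f"k = {k:.4f} per hour; fraction remaining at 24 h = {left_after_24h:.2f}")
```

Under this assumption, a bit more than a third of a dose would remain at the end of a 24-hour dosing interval.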

Experimental Protocols

In Vitro COX-2 Inhibition Assay

A common method to determine the inhibitory activity of compounds against COX enzymes is to measure the production of prostaglandin E₂ (PGE₂) in a cell-based assay.

Materials:

- Chinese Hamster Ovary (CHO) cells recombinantly expressing human COX-2
- Human osteosarcoma cells
- U937 cells (for COX-1)
- Rofecoxib
- Lipopolysaccharide (LPS) for stimulation
- PGE₂ ELISA kit

Procedure:

- Culture the respective cell lines in appropriate media.
- Pre-incubate the cells with varying concentrations of rofecoxib for a specified time (e.g., 2 hours).
- Stimulate the cells with an inflammatory agent like LPS to induce prostaglandin production.
- After incubation, collect the cell culture supernatant.
- Quantify the concentration of PGE₂ in the supernatant using a competitive ELISA kit.
- Calculate the IC₅₀ value, which is the concentration of rofecoxib required to inhibit PGE₂ production by 50%.
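The final calculation step can be sketched as follows. This is a simplified illustration that converts PGE₂ readings to percent inhibition and estimates the IC₅₀ by linear interpolation on a log-concentration scale; it is not the curve-fitting method used in the cited work (a four-parameter logistic fit is the more usual choice), and the data values are hypothetical.

```python
import math

def percent_inhibition(pge2_treated: float, pge2_vehicle: float) -> float:
    """Percent reduction in PGE2 relative to the vehicle-treated control."""
    return 100.0 * (1.0 - pge2_treated / pge2_vehicle)

def estimate_ic50(concentrations, inhibition_pct):
    """Estimate IC50 by interpolating between the two points that bracket 50%.

    Interpolation is linear in log10(concentration). Raises ValueError if the
    data never cross 50% inhibition.
    """
    pairs = sorted(zip(concentrations, inhibition_pct))
    for (c_lo, i_lo), (c_hi, i_hi) in zip(pairs, pairs[1:]):
        if i_lo <= 50.0 <= i_hi and i_hi > i_lo:
            frac = (50.0 - i_lo) / (i_hi - i_lo)
            log_ic50 = math.log10(c_lo) + frac * (math.log10(c_hi) - math.log10(c_lo))
            return 10 ** log_ic50
    raise ValueError("inhibition data do not bracket 50%")

# Hypothetical dose-response data (concentration in nM, inhibition in %)
conc_nm = [1, 10, 100, 1000]
inhib = [5, 30, 70, 95]
ic50_nm = estimate_ic50(conc_nm, inhib)
print(f"Estimated IC50: {ic50_nm:.1f} nM")
```

Interpolation is only reasonable when the tested concentrations bracket 50% inhibition closely; with sparse data, a fitted dose-response curve gives a more defensible estimate.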

Animal Model of Arthritis

The anti-inflammatory efficacy of rofecoxib can be evaluated in animal models of arthritis, such as the adjuvant-induced arthritis model in rats.

Materials:

- Male Wistar rats
- Complete Freund's Adjuvant (CFA)
- Rofecoxib
- Vehicle control (e.g., 1% hydroxyethyl cellulose)

Procedure:

- Induce arthritis in the rats by injecting CFA into the paw.
- Administer rofecoxib or a vehicle control orally to the rats daily for a specified period (e.g., 28 days).[12]
- Monitor the progression of arthritis by measuring paw volume and assigning an arthritis score.
- At the end of the study, sacrifice the animals and collect tissues for histopathological and biochemical analysis.

Quantitative Data

In Vitro Inhibitory Activity (IC₅₀ Values)

| Target/Assay | Cell Line/System | IC₅₀ Value | Reference |

| COX-2 (PGE₂ Production) | CHO cells (recombinant human) | 18 nM | [6] |

| COX-2 (PGE₂ Production) | Human osteosarcoma cells | 26 nM | [6] |

| COX-2 (Purified human recombinant) | Purified Enzyme | 0.34 µM | [6] |

| COX-2 (Whole blood assay) | Human whole blood | 0.53 µM | [6] |

| COX-1 (PGE₂ Production) | U937 cells | >50 µM | [6] |

| COX-1 (Whole blood assay) | Human whole blood | 18.8 µM | [6] |
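A common way to summarize isoform selectivity is the ratio of the COX-1 IC₅₀ to the COX-2 IC₅₀ measured in the same assay system. The sketch below applies this to the two whole-blood values in the table; the function name is illustrative, and ratios should not be formed across different assay systems.

```python
def selectivity_ratio(ic50_cox1_um: float, ic50_cox2_um: float) -> float:
    """COX-1/COX-2 IC50 ratio; values above 1 indicate COX-2 selectivity."""
    return ic50_cox1_um / ic50_cox2_um

# Human whole blood assay values from the table above (both in µM)
whole_blood_ratio = selectivity_ratio(18.8, 0.53)
print(f"COX-1/COX-2 selectivity (whole blood): ~{whole_blood_ratio:.1f}-fold")
```

On these figures, rofecoxib is roughly 35-fold more potent against COX-2 than COX-1 in human whole blood.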

Clinical Trial Data on Cardiovascular Risk (APPROVe Study)

The Adenomatous Polyp Prevention on Vioxx (APPROVe) study was a key clinical trial that demonstrated the increased cardiovascular risk associated with long-term rofecoxib use.[1][3]

| Outcome | Rofecoxib | Placebo | Relative Risk |

| Thrombotic Cardiovascular Events | 1.50 events per 100 patient-years | 0.78 events per 100 patient-years | 1.92 |

Data from the APPROVe study; the excess cardiovascular risk became apparent after 18 months of treatment.
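The relative risk in the table is consistent with the ratio of the two event rates, and the same rates give the absolute excess risk. The sketch below shows both calculations; it works from the crude rates only, whereas the trial's published estimate may derive from a different statistical model.

```python
def rate_ratio(rate_treated: float, rate_control: float) -> float:
    """Ratio of event rates (treated / control)."""
    return rate_treated / rate_control

def rate_difference(rate_treated: float, rate_control: float) -> float:
    """Absolute excess event rate (treated - control), in the same units as the inputs."""
    return rate_treated - rate_control

# Thrombotic cardiovascular events per 100 patient-years (from the table above)
rofecoxib_rate, placebo_rate = 1.50, 0.78

rr = rate_ratio(rofecoxib_rate, placebo_rate)
excess = rate_difference(rofecoxib_rate, placebo_rate)
print(f"Rate ratio: {rr:.2f}; excess: {excess:.2f} events per 100 patient-years")
```

Reporting the absolute difference alongside the ratio helps put the risk in context: the rate nearly doubled, which corresponds to roughly 0.7 additional events per 100 patient-years.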

Signaling Pathways and Workflows

COX-2 Signaling Pathway in Inflammation

Caption: Rofecoxib inhibits the COX-2 enzyme, blocking prostaglandin synthesis.

Experimental Workflow for In Vitro COX-2 Inhibition Assay

Caption: Workflow for determining Rofecoxib's in vitro COX-2 inhibitory activity.

Rationale for Rofecoxib's Withdrawal

Caption: The cardiovascular risks of Rofecoxib led to its market withdrawal.

Conclusion

Rofecoxib represents a significant case study in drug development, highlighting the importance of balancing efficacy with long-term safety. While its selective inhibition of COX-2 offered a clear therapeutic advantage in terms of gastrointestinal tolerability, the unforeseen cardiovascular risks ultimately led to its discontinuation. The extensive research conducted on rofecoxib, both pre- and post-marketing, has provided valuable insights into the complex roles of cyclooxygenase enzymes in human physiology and pathology. This technical guide serves as a comprehensive resource for understanding the chemical, pharmacological, and clinical properties of this discontinued compound, offering important lessons for the future of drug design and development.

References

- 1. Rofecoxib - Wikipedia [en.wikipedia.org]

- 2. rofecoxib (Vioxx): Drug Facts, Side Effects and Dosing [medicinenet.com]

- 3. Rofecoxib (Vioxx) voluntarily withdrawn from market - PMC [pmc.ncbi.nlm.nih.gov]

- 4. clinician.nejm.org [clinician.nejm.org]

- 5. Rofecoxib | C17H14O4S | CID 5090 - PubChem [pubchem.ncbi.nlm.nih.gov]

- 6. benchchem.com [benchchem.com]

- 7. ClinPGx [clinpgx.org]

- 8. Crystal structure of rofecoxib bound to human cyclooxygenase-2 - PMC [pmc.ncbi.nlm.nih.gov]

- 9. journals.iucr.org [journals.iucr.org]

- 10. Discovery and development of cyclooxygenase 2 inhibitors - Wikipedia [en.wikipedia.org]

- 11. The disposition and metabolism of rofecoxib, a potent and selective cyclooxygenase-2 inhibitor, in human subjects - PubMed [pubmed.ncbi.nlm.nih.gov]

- 12. researchgate.net [researchgate.net]

Navigating the Data Void: A Technical Guide to Safety Data for Discontinued Research Chemicals

For researchers, scientists, and professionals in drug development, the discontinuation of a research chemical can create a significant information gap, particularly concerning its safety data. When a manufacturer ceases production of a substance, the corresponding Safety Data Sheet (SDS), the primary source of hazard information, can become difficult or impossible to obtain. This guide provides a comprehensive framework for systematically addressing the challenge of absent safety data for discontinued research chemicals, enabling organizations to maintain a high standard of safety and regulatory compliance.

The Regulatory Landscape: An Overview

The obligation for manufacturers to provide and maintain SDSs for discontinued products varies globally. In the United States, the Occupational Safety and Health Administration (OSHA) does not mandate that a manufacturer provide an SDS after a company ceases operations or discontinues a product line.[1] This contrasts with regulations in the European Union, where suppliers are required to keep all information used for classification and labelling for at least 10 years after the substance or mixture was last supplied.[1] Similarly, Canada requires suppliers to maintain these records for six years.[1] This regulatory divergence underscores the potential for significant data gaps, particularly for older or less common research chemicals.

A Systematic Approach to Locating Existing Safety Data

Before attempting to reconstruct a safety profile, a thorough search for any existing documentation is paramount. The following workflow outlines a systematic approach to this search.

Caption: Workflow for locating safety data for a discontinued research chemical.

Reconstructing a Chemical Safety Profile

In the absence of a formal SDS, a safety profile must be reconstructed based on available data and, where necessary, through testing or computational modeling. This process should be systematic and well-documented, focusing on three key areas: physicochemical properties, toxicological data, and ecological data.

Physicochemical Properties

A chemical's physicochemical properties are fundamental to understanding its hazards. The following table summarizes the key properties required for a comprehensive safety assessment, along with references to standardized test guidelines.

| Property | Description | Standardized Test Guideline (Example) |

| Appearance | Physical state (solid, liquid, gas) and color. | Visual Inspection |

| Odor | Description of the odor, if any. | Subjective evaluation under controlled conditions. |

| pH | The pH of the substance in solution. | OECD Guideline 122 |

| Melting/Freezing Point | The temperature at which the substance changes from solid to liquid. | OECD Guideline 102 |

| Boiling Point | The temperature at which the substance changes from liquid to gas. | OECD Guideline 103 |

| Flash Point | The lowest temperature at which a liquid can form an ignitable mixture in air. | ASTM D93 or ISO 2719 (no dedicated OECD guideline) |

| Vapor Pressure | The pressure exerted by a vapor in thermodynamic equilibrium with its condensed phases. | OECD Guideline 104 |

| Vapor Density | The density of a vapor in relation to that of air. | Calculation based on molecular weight. |

| Relative Density | The ratio of the density of a substance to the density of a reference substance. | OECD Guideline 109 |

| Solubility | The ability of a substance to dissolve in a solvent. | OECD Guideline 105 |

| Partition Coefficient (n-octanol/water) | A measure of a chemical's lipophilicity. | OECD Guideline 107, 117, 123 |

| Auto-ignition Temperature | The lowest temperature at which a substance will spontaneously ignite. | ASTM E659 |

| Decomposition Temperature | The temperature at which a substance chemically decomposes. | Thermogravimetric Analysis (TGA) |
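The vapor density entry in the table above is the one property that can be estimated by calculation rather than testing. A minimal sketch, assuming ideal-gas behavior and a mean molar mass of dry air of about 28.97 g/mol; ethanol is used purely as an illustrative input.

```python
AIR_MOLAR_MASS = 28.97  # g/mol, approximate mean molar mass of dry air

def relative_vapor_density(molar_mass_g_per_mol: float) -> float:
    """Vapor density relative to air (air = 1), assuming ideal-gas behavior."""
    return molar_mass_g_per_mol / AIR_MOLAR_MASS

# Illustrative example: ethanol, 46.07 g/mol
ethanol_vd = relative_vapor_density(46.07)
print(f"Relative vapor density: {ethanol_vd:.2f}")
```

A value above 1 indicates a vapor heavier than air, which tends to accumulate in low-lying areas; this is relevant to ventilation and storage decisions.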

Toxicological Data

Toxicological data is essential for understanding the potential health effects of a chemical. The Globally Harmonized System of Classification and Labelling of Chemicals (GHS) provides a framework for classifying chemical hazards based on toxicological endpoints.[2][3]

| Toxicological Endpoint | Description | Standardized Test Guideline (Example) |

| Acute Toxicity (Oral, Dermal, Inhalation) | Adverse effects occurring after a single dose or short-term exposure.[4] | OECD Guidelines 401, 402, 403, 420, 423, 425 |

| Skin Corrosion/Irritation | The production of reversible or irreversible skin damage.[3] | OECD Guidelines 404, 430, 431, 435, 439 |

| Serious Eye Damage/Eye Irritation | The production of tissue damage in the eye, or serious physical decay of vision. | OECD Guidelines 405, 437, 438, 492 |

| Respiratory or Skin Sensitization | An allergic response following skin contact or inhalation. | OECD Guidelines 406, 429, 442A, 442B, 442C, 442D, 442E |

| Germ Cell Mutagenicity | The potential to cause mutations in the germ cells. | OECD Guidelines 471, 473, 474, 475, 487, 488 |

| Carcinogenicity | The potential to cause cancer. | OECD Guidelines 451, 453 |

| Reproductive Toxicity | Adverse effects on sexual function and fertility in males and females, and developmental toxicity in the offspring. | OECD Guidelines 414, 415, 416, 421, 422, 443 |

| Specific Target Organ Toxicity (Single Exposure) | Specific, non-lethal target organ toxicity arising from a single exposure.[4] | Evaluated from acute toxicity studies (e.g., OECD Guidelines 402, 403, 420, 423, 425) |

| Specific Target Organ Toxicity (Repeated Exposure) | Specific target organ toxicity arising from repeated exposure. | OECD Guidelines 407, 408, 409, 410, 411, 412, 413, 452 |

| Aspiration Hazard | Severe acute effects such as chemical pneumonia, varying degrees of pulmonary injury or death following aspiration. | Based on physicochemical properties (e.g., viscosity). |

Ecological Data

Understanding the potential environmental impact of a chemical is a critical component of its safety profile.

| Ecotoxicological Endpoint | Description | Standardized Test Guideline (Example) |

| Toxicity to Fish | Acute and chronic effects on fish species. | OECD Guidelines 203, 210, 212, 215 |

| Toxicity to Aquatic Invertebrates | Acute and chronic effects on aquatic invertebrates (e.g., Daphnia). | OECD Guidelines 202, 211 |

| Toxicity to Aquatic Algae and Cyanobacteria | Effects on the growth of algae and cyanobacteria. | OECD Guideline 201 |

| Toxicity to Terrestrial Organisms | Effects on soil microorganisms, plants, and terrestrial invertebrates. | OECD Guidelines 207, 208, 216, 217, 222 |

| Persistence and Degradability | The potential for a chemical to persist in the environment. | OECD Guidelines 301, 302, 303, 304, 306, 307, 308, 309, 310 |

| Bioaccumulation Potential | The potential for a chemical to accumulate in living organisms. | OECD Guidelines 305, 315, 317 |

| Mobility in Soil | The potential for a chemical to move through the soil. | OECD Guidelines 106, 121 |

Experimental Protocols: A Summary of Key Methodologies

When experimental data must be generated, adherence to standardized protocols is crucial for data quality and acceptance. The OECD Guidelines for the Testing of Chemicals are internationally recognized and provide detailed methodologies.[5][6] The following provides a high-level summary of the principles behind some key test categories.

Physicochemical Property Determination

- Melting Point (OECD 102): This is typically determined using the capillary method, where a small sample of the substance is heated in a capillary tube and the temperature range over which it melts is observed. Other methods include hot-stage apparatus and differential scanning calorimetry (DSC).
- Water Solubility (OECD 105): The column elution method or the flask method can be used. In the flask method, the substance is dissolved in water at a specific temperature until saturation is reached. The concentration of the substance in the aqueous solution is then determined analytically.
- Partition Coefficient (n-octanol/water) (OECD 107, 117): The shake-flask method involves dissolving the substance in a mixture of n-octanol and water and measuring its concentration in each phase after equilibrium is reached. High-Performance Liquid Chromatography (HPLC) is another common method (OECD 117).

Toxicological Testing

- Acute Oral Toxicity (OECD 420, 423, 425): These are in vivo tests in rodents. The "Up-and-Down Procedure" (OECD 425) is a sequential dosing method that uses a minimum number of animals to estimate the LD50 (the dose lethal to 50% of the test population). The "Acute Toxic Class Method" (OECD 423) involves dosing groups of animals at defined dose levels to identify a dose range that causes mortality. The "Fixed Dose Procedure" (OECD 420) uses a series of pre-determined dose levels and observes for signs of toxicity to classify the substance.[7]
- Skin Irritation/Corrosion (OECD 404, 439): In vivo testing (OECD 404) involves applying the substance to the skin of an animal (typically a rabbit) and observing for signs of irritation or corrosion over a period of time. In vitro methods (OECD 439) use reconstructed human epidermis models to assess skin irritation potential.
- Mutagenicity (Ames Test - OECD 471): This is an in vitro test that uses strains of the bacterium Salmonella typhimurium carrying mutations that leave them unable to synthesize the amino acid histidine. The test substance is incubated with the bacteria; if it is a mutagen, it can cause a reverse mutation that restores histidine synthesis, allowing the bacteria to grow on a histidine-free medium.
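Once an oral LD50 estimate is available from one of the acute toxicity methods above, it can be mapped to a GHS acute oral toxicity category. The sketch below uses the GHS cut-off values in mg/kg body weight; the function and constant names are illustrative. Note that Category 5 is optional under GHS and is not adopted in every jurisdiction.

```python
# GHS acute oral toxicity categories: (category, upper LD50 bound in mg/kg, inclusive)
GHS_ORAL_UPPER_BOUNDS = [(1, 5.0), (2, 50.0), (3, 300.0), (4, 2000.0), (5, 5000.0)]

def ghs_acute_oral_category(ld50_mg_per_kg: float):
    """Return the GHS acute oral toxicity category for an LD50 value.

    Returns None when the LD50 exceeds 5000 mg/kg (not classified).
    """
    if ld50_mg_per_kg <= 0:
        raise ValueError("LD50 must be a positive value")
    for category, upper_bound in GHS_ORAL_UPPER_BOUNDS:
        if ld50_mg_per_kg <= upper_bound:
            return category
    return None

for ld50 in (3, 250, 1500, 6000):
    print(ld50, "mg/kg ->", ghs_acute_oral_category(ld50))
```

In practice, classification also weighs the quality and relevance of the underlying study, so a lookup like this is a starting point rather than a final determination.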

Ecotoxicological Testing

- Acute Toxicity to Daphnia (OECD 202): This test exposes the freshwater invertebrate Daphnia magna to various concentrations of the test substance for 48 hours. The endpoint is immobilization of the daphnids.
- Alga, Growth Inhibition Test (OECD 201): This test exposes a population of a selected green algae species to the test substance in a nutrient-rich medium for 72 hours. The endpoint is the inhibition of growth, measured by cell count or another biomass surrogate.
- Ready Biodegradability (OECD 301): This series of tests assesses the potential for a chemical to be rapidly biodegraded by microorganisms under aerobic conditions. Methods include monitoring oxygen consumption or carbon dioxide evolution over a 28-day period.
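For the ready biodegradability tests, the result is usually judged against a pass level (60% of theoretical oxygen demand or CO₂ evolution for respirometric methods, 70% for dissolved organic carbon removal) that must be reached within a 10-day window starting when degradation first reaches 10%, and within the 28-day test. The sketch below applies that rule to a hypothetical time series; it checks only the measured sampling days and does not interpolate between them.

```python
def passes_ready_biodegradability(days, degradation_pct, pass_level=60.0):
    """Check the pass level and 10-day window on measured sampling points.

    days: sampling days in ascending order.
    degradation_pct: percent degradation measured on each sampling day.
    pass_level: 60.0 for respirometric methods, 70.0 for DOC removal.
    """
    window_start = None
    for day, pct in zip(days, degradation_pct):
        if day > 28:
            break  # the test period is 28 days
        if window_start is None and pct >= 10.0:
            window_start = day  # the 10-day window opens at 10% degradation
        if window_start is not None and pct >= pass_level:
            return (day - window_start) <= 10
    return False

# Hypothetical CO2-evolution data (percent of theoretical maximum)
sampling_days = [0, 3, 7, 10, 14, 21, 28]
fast = [0, 5, 12, 35, 65, 80, 85]
slow = [0, 5, 12, 20, 30, 45, 65]
print(passes_ready_biodegradability(sampling_days, fast))  # True
print(passes_ready_biodegradability(sampling_days, slow))  # False
```

The second series reaches 60% by day 28 but misses the 10-day window, which is why it fails under this rule.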

The Role of In Silico Methods

When experimental testing is not feasible due to cost, time, or ethical considerations, in silico (computational) methods can provide valuable estimations of a chemical's properties and potential toxicity.[8][9][10]

- Quantitative Structure-Activity Relationships (QSARs): These are mathematical models that relate the chemical structure of a substance to its biological activity or a specific property.[8] By inputting the molecular structure of the discontinued research chemical, it is possible to predict various endpoints.
- Read-Across: This approach involves identifying structurally similar chemicals for which safety data is available and using that data to infer the properties of the substance of interest.
- Expert Systems: These are software tools that incorporate toxicological knowledge and rules to predict the potential hazards of a chemical based on its structure.
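Read-across depends on a defensible measure of structural similarity. One widely used measure is the Tanimoto coefficient computed on structural fingerprints. The sketch below represents each fingerprint as a set of feature identifiers; the feature sets and compound names are hypothetical, and a real workflow would generate fingerprints with a cheminformatics toolkit.

```python
def tanimoto(features_a: set, features_b: set) -> float:
    """Tanimoto (Jaccard) similarity: shared features / total distinct features."""
    union = features_a | features_b
    if not union:
        return 0.0  # two empty fingerprints: treat as no evidence of similarity
    return len(features_a & features_b) / len(union)

# Hypothetical fingerprints: integers stand in for structural feature bits.
target = {1, 4, 7, 9, 12, 15}
candidates = {
    "analogue_A": {1, 4, 7, 9, 12, 18},
    "analogue_B": {2, 4, 20, 21},
}

# Rank candidate source chemicals by similarity to the target.
ranked = sorted(candidates, key=lambda name: tanimoto(target, candidates[name]), reverse=True)
for name in ranked:
    print(name, round(tanimoto(target, candidates[name]), 2))
```

Similarity scores only shortlist candidates; a read-across argument still needs expert justification that the analogue shares the relevant mechanism and metabolism.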

Caption: A logical workflow for reconstructing a chemical safety profile.

Conclusion: A Commitment to Safety

The absence of a manufacturer's SDS for a discontinued research chemical presents a significant challenge but does not absolve researchers and organizations of their responsibility to ensure a safe working environment. By following a systematic process of data retrieval, and where necessary, reconstructing a comprehensive safety profile through standardized testing and validated in silico methods, the information void can be filled. This proactive approach to chemical safety management is essential for protecting personnel, ensuring regulatory compliance, and fostering a culture of safety in research and development.

References

- 1. ghsnotebook.wordpress.com [ghsnotebook.wordpress.com]

- 2. ilo.org [ilo.org]

- 3. Globally Harmonized System of Classification and Labelling of Chemicals - Wikipedia [en.wikipedia.org]

- 4. nationalacademies.org [nationalacademies.org]

- 5. OECD Guidelines for the Testing of Chemicals - Wikipedia [en.wikipedia.org]

- 6. oecd.org [oecd.org]

- 7. researchgate.net [researchgate.net]

- 8. In silico toxicology: computational methods for the prediction of chemical toxicity - PMC [pmc.ncbi.nlm.nih.gov]

- 9. researchgate.net [researchgate.net]

- 10. labcorp.com [labcorp.com]

Revitalizing Discovery: A Technical Guide to Documenting Legacy Scientific Software

For Researchers, Scientists, and Drug Development Professionals

This guide provides a comprehensive framework for documenting legacy scientific software, a critical task for ensuring the reproducibility, extensibility, and long-term value of computational research. In an environment where researchers spend a significant portion of their time on administrative and documentation-related tasks, adopting a structured approach to understanding and documenting existing software is paramount.[1] This document outlines the core principles, experimental protocols for software analysis, and best practices for data presentation and visualization to transform opaque legacy systems into transparent, manageable assets.

The Challenge of Legacy Scientific Software

Legacy scientific software, often characterized by a lack of comprehensive documentation and the departure of original developers, presents significant challenges.[2][3][4] These systems can be difficult to understand, maintain, and adapt, hindering new research and slowing down the onboarding of new team members.[2][5] The primary obstacles include poorly commented code, missing architectural diagrams, and scattered, outdated notes.[2][3][5] Without a clear understanding of the software's inner workings, the risk of introducing errors during modifications is high, and the valuable scientific knowledge embedded within the code remains inaccessible.[2][3]

Core Principles of Legacy Documentation

A successful documentation effort for legacy scientific software should be guided by the following principles:

- Centralization and Standardization: Documentation should be centralized in a single, searchable platform to prevent information silos.[5][6] Adopting standardized templates for different types of documentation (e.g., user guides, API documentation, process guides) ensures consistency and readability.[5]
- Living Documents: Documentation should be treated as a living entity, continuously updated as the software is understood and modified.[6] Linking documentation to the current state of the system, where possible, prevents it from becoming obsolete.[5]
- Audience-Centric Approach: The needs of different audiences, such as end-users, developers, and maintainers, should be considered.[7] This may require creating various types of documentation, from high-level user guides to detailed developer documentation.[7]
- Incremental Documentation: The process of documenting a large, complex system can be daunting. An incremental approach, starting with the most critical components, can make the task more manageable.[7]

A Phased Approach to Documentation

A structured, phased approach is essential for systematically documenting legacy scientific software. The initial and most critical phase is a comprehensive audit of the existing system.

Experimental Protocol: Comprehensive Codebase Audit

This protocol outlines a systematic process for analyzing a legacy scientific software system to extract the necessary information for documentation.

Objective: To systematically analyze a legacy scientific software application to understand its architecture, functionality, data flow, and dependencies.

Materials:

- Access to the source code repository.
- Disassemblers and decompilers (if source code is unavailable).[8][9]
- A centralized documentation platform (e.g., a wiki or a modern documentation tool).
- Version control system (e.g., Git).

Methodology:

- Initial Reconnaissance:
- Static Analysis:
  - Use automated tools to analyze the source code without executing it.[9]
  - Generate a high-level architecture diagram showing the relationships between different components.[10]
  - Map out dependencies, both internal and external.
  - Identify and document key algorithms and data structures.
- Dynamic Analysis:
- Function-Level Documentation:
  - For critical functions and methods, add clear and concise comments explaining their purpose, parameters, and return values.
  - Give important functions clear, descriptive names.[11]
- Documentation Synthesis and Review:
  - Consolidate all findings into the centralized documentation platform.
  - Create a "Getting Started" guide for new developers and users.
  - Conduct a peer review of the documentation for clarity and accuracy.
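As one illustration of the static analysis step, external dependencies can be mapped without running the code. The sketch below assumes the legacy code is written in Python (an assumption made here for illustration; the protocol itself is language-agnostic) and uses the standard-library `ast` module to collect the top-level modules a source file imports.

```python
import ast

def list_imports(source: str) -> set:
    """Return the top-level module names imported by a Python source string.

    The source is parsed, never executed. Relative imports (from . import x)
    are skipped because they refer to the project's own packages.
    """
    modules = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            for alias in node.names:
                modules.add(alias.name.split(".")[0])
        elif isinstance(node, ast.ImportFrom):
            if node.module and node.level == 0:
                modules.add(node.module.split(".")[0])
    return modules

# Hypothetical legacy module contents
legacy_source = """
import os.path
from numpy import array
from . import local_helpers

def load(path):
    return array(open(os.path.join(path, "data.txt")).read().split())
"""
print(sorted(list_imports(legacy_source)))  # ['numpy', 'os']
```

Running a helper like this over every file in the repository gives a first-pass dependency inventory that can be recorded in the centralized documentation.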

Data Presentation: Quantifying the Impact

Clear and concise data presentation is crucial for highlighting the importance and impact of documentation efforts. The following table provides a representative summary of how a documentation initiative can improve research efficiency.

| Metric | Before Documentation Initiative | After 6 Months of Documentation | Percentage Improvement |

| Average Onboarding Time for New Researchers (days) | 45 | 15 | 66.7% |

| Time Spent by Senior Researchers on Routine Support (hours/week) | 10 | 3 | 70.0% |

| Frequency of Bugs Introduced During Updates (per release) | 8 | 2 | 75.0% |

| Time to Isolate and Fix Bugs (hours) | 24 | 6 | 75.0% |
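The percentage improvement column can be reproduced from the before and after values. A minimal sketch (the function name is illustrative), for metrics where a lower value is better:

```python
def percent_improvement(before: float, after: float) -> float:
    """Percent reduction from `before` to `after` (for metrics where lower is better)."""
    return 100.0 * (before - after) / before

# (before, after) values from the table above
metrics = {
    "Onboarding time (days)": (45, 15),
    "Senior researcher support (hours/week)": (10, 3),
    "Bugs introduced per release": (8, 2),
    "Time to isolate and fix bugs (hours)": (24, 6),
}
for name, (before, after) in metrics.items():
    print(f"{name}: {percent_improvement(before, after):.1f}%")
```

Keeping the calculation explicit makes it easy to update the table as new measurements are collected.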

Visualization

Visual diagrams are indispensable for conveying complex information about software systems. The following diagrams, created using the DOT language, illustrate key aspects of a legacy scientific software system and the documentation process itself.

Signaling Pathway: Data Flow in a Legacy Bioinformatics Application

This diagram illustrates the flow of data through a hypothetical legacy bioinformatics application, from raw sequencing data to final analysis results.

Data flow in a legacy bioinformatics application.
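A data-flow diagram of this kind can be generated from a simple list of edges. The sketch below emits DOT source text from Python; the stage names and the `to_dot` helper are hypothetical and do not reproduce the original diagram.

```python
def to_dot(graph_name: str, edges) -> str:
    """Build DOT source for a left-to-right directed graph from (source, target) pairs."""
    lines = [f"digraph {graph_name} {{", "    rankdir=LR;"]
    for source, target in edges:
        lines.append(f'    "{source}" -> "{target}";')
    lines.append("}")
    return "\n".join(lines)

# Hypothetical pipeline stages, from raw sequencing data to analysis results
pipeline_edges = [
    ("Raw sequencing data", "Quality control"),
    ("Quality control", "Alignment"),
    ("Alignment", "Variant calling"),
    ("Variant calling", "Analysis results"),
]
dot_text = to_dot("legacy_pipeline", pipeline_edges)
print(dot_text)
```

The resulting text can be saved to a `.dot` file and rendered with Graphviz, and keeping the edge list under version control lets the diagram evolve with the documentation.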

Experimental Workflow: The Legacy Software Documentation Process

This diagram outlines the workflow for documenting legacy scientific software, from the initial assessment to ongoing maintenance.

The legacy software documentation workflow.

Logical Relationships: Architecture of a Legacy Simulation Platform

This diagram illustrates the high-level architecture of a legacy scientific simulation platform, showing the relationships between its main components.

High-level architecture of a legacy simulation platform.

References

- 1. paperguide.ai [paperguide.ai]

- 2. quora.com [quora.com]

- 3. droptica.com [droptica.com]

- 4. appinstitute.com [appinstitute.com]

- 5. kodesage.ai [kodesage.ai]

- 6. understandlegacycode.com [understandlegacycode.com]

- 7. What are best practices for research software documentation? | Software Sustainability Institute [software.ac.uk]

- 8. scnsoft.com [scnsoft.com]

- 9. The Reverse Engineering Process: Tools and Techniques You Need to Know [axiomq.com]

- 10. modlogix.com [modlogix.com]

- 11. reverseengineering.stackexchange.com [reverseengineering.stackexchange.com]

The Impact of Discontinued Reagents on Historical Data: A Technical Guide for Ensuring Data Integrity

For Researchers, Scientists, and Drug Development Professionals

Introduction

This technical guide provides a comprehensive framework for managing the transition from a discontinued reagent to a suitable replacement. It outlines the necessary risk assessments, details robust experimental protocols for bridging studies, and presents best practices for data analysis and interpretation to ensure the continued validity and comparability of scientific data over time. Adherence to these principles is crucial for maintaining the quality and reliability of research findings and supporting regulatory requirements.[1]

Chapter 1: The Core Challenge: Reagent Variability

Critical reagents, such as binding proteins, antibodies, or conjugated antibodies, directly influence assay results.[1] Their consistent quality is paramount.[1] However, variability can arise from numerous sources:

- Lot-to-Lot Differences: Even within the same product line, minor changes in the manufacturing process can lead to variations in reagent performance.[2][3]

- Manufacturing Process Changes: A change in the production method of a reagent, such as an antibody, is considered a major change that can significantly influence assay performance.[1]

- Supplier Changes: A switch in the supplier of a key component can introduce unforeseen variability.[1]

- Degradation Over Time: Improper storage or handling can lead to the degradation of reagents, affecting their stability and performance.[4][5]

This variability can manifest as changes in:

- Affinity and Potency: Alterations in the binding strength of antibodies or the activity of enzymes.[1]

- Specificity: Changes in the target-binding capabilities, potentially leading to increased cross-reactivity.[1]

- Assay Performance: Shifts in sensitivity, precision, accuracy, and linearity.[6]

Chapter 2: Initial Assessment and Risk Management

When a critical reagent is discontinued, a structured risk assessment is the first essential step. This process helps to determine the potential impact on data and to define the necessary validation and bridging activities.

Risk Assessment Workflow

The decision-making process can be visualized as a logical workflow. The primary goal is to classify the reagent change and determine the subsequent level of validation required.

Caption: Risk assessment workflow for a discontinued reagent.

Chapter 3: Experimental Protocols for Bridging Studies

To ensure data comparability between a discontinued ("old") and a replacement ("new") reagent, a bridging study is essential.[7][8] The goal is to demonstrate that the new reagent provides equivalent results to the old one.

Protocol 1: Head-to-Head Comparison

This protocol directly compares the performance of the old and new reagents using the same set of samples.

Objective: To assess the comparability of the new reagent with the old reagent using a panel of well-characterized samples.

Methodology:

- Sample Selection:

- Assay Execution:

- Data Analysis:

  - Calculate the percentage difference between the results obtained with the old and new reagents for each sample.

  - Perform regression analysis (e.g., Deming or Passing-Bablok) to determine the slope, intercept, and coefficient of determination (R²).[2][9][10]

  - The slope should be close to 1, the intercept close to 0, and R² > 0.95 for acceptable agreement.

- Acceptance Criteria:

  - Pre-define acceptance criteria for the study.[7] For example, the percentage difference for at least 80% of samples should be within ±20% of the old reagent's results.

  - The means of the QC samples should be within ±15% of their nominal values for both reagents.
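The regression step in the data analysis above can be sketched in a few lines of Python. This is a minimal illustration of Deming regression, not the guide's own implementation: the function name `deming_regression`, the default error-variance ratio `delta=1.0` (equal measurement error in both reagents), and the example values are all assumptions made for the sketch.

```python
import math
from statistics import mean


def deming_regression(x, y, delta=1.0):
    """Deming regression of y (new reagent) on x (old reagent).

    delta is the assumed ratio of the error variance in y to the error
    variance in x; delta=1.0 treats both reagents as equally noisy.
    Returns (slope, intercept, r_squared).
    """
    if len(x) != len(y) or len(x) < 3:
        raise ValueError("need at least three paired results")
    n = len(x)
    x_bar, y_bar = mean(x), mean(y)
    s_xx = sum((xi - x_bar) ** 2 for xi in x) / (n - 1)
    s_yy = sum((yi - y_bar) ** 2 for yi in y) / (n - 1)
    s_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / (n - 1)
    if s_xy == 0:
        raise ValueError("x and y are uncorrelated; slope is undefined")
    diff = s_yy - delta * s_xx
    slope = (diff + math.sqrt(diff ** 2 + 4 * delta * s_xy ** 2)) / (2 * s_xy)
    intercept = y_bar - slope * x_bar
    r_squared = s_xy ** 2 / (s_xx * s_yy)
    return slope, intercept, r_squared


# Hypothetical paired results (ng/mL), for illustration only.
old_results = [5.0, 10.0, 25.0, 50.0, 95.0]
new_results = [5.3, 10.4, 24.6, 51.0, 94.0]

slope, intercept, r_squared = deming_regression(old_results, new_results)
print(f"slope={slope:.3f} intercept={intercept:.3f} R2={r_squared:.4f}")
```

Unlike ordinary least squares, Deming regression allows for measurement error in both methods, which is why it is commonly preferred for reagent comparisons. Passing-Bablok is a non-parametric alternative and is not shown here.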

Experimental Workflow Diagram

Caption: Workflow for a head-to-head bridging study.

Chapter 4: Data Presentation and Interpretation

Clear and concise presentation of quantitative data is critical for evaluating the success of a bridging study.

Table 1: Head-to-Head Comparison of an ELISA Kit

| Sample ID | Old Kit Result (ng/mL) | New Kit Result (ng/mL) | % Difference |
|---|---|---|---|
| 1 | 5.2 | 5.5 | 5.8% |
| 2 | 10.8 | 11.2 | 3.7% |
| 3 | 25.1 | 24.5 | -2.4% |
| 4 | 48.9 | 50.1 | 2.5% |
| 5 | 95.6 | 93.8 | -1.9% |
| ... | ... | ... | ... |
| 20 | 1.5 | 1.6 | 6.7% |
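The % Difference column in Table 1 is the new result minus the old result, divided by the old result. The sketch below recomputes it for the rows that are actually shown and applies the example acceptance rule from Protocol 1 (at least 80% of samples within ±20%). The function names are illustrative assumptions, and the elided rows of the table are deliberately not reconstructed.

```python
def percent_difference(old: float, new: float) -> float:
    """Difference of the new result relative to the old result, in percent."""
    return (new - old) / old * 100.0


def passes_bridging(pairs, limit_pct=20.0, min_fraction=0.80):
    """True if enough samples fall within +/- limit_pct of the old result."""
    within = sum(
        1 for old, new in pairs if abs(percent_difference(old, new)) <= limit_pct
    )
    return within / len(pairs) >= min_fraction


# Only the rows shown in Table 1 (samples 1-5 and 20), as (old, new) in ng/mL.
shown_rows = [
    (5.2, 5.5),
    (10.8, 11.2),
    (25.1, 24.5),
    (48.9, 50.1),
    (95.6, 93.8),
    (1.5, 1.6),
]

diffs = [round(percent_difference(old, new), 1) for old, new in shown_rows]
print(diffs)
print("acceptance met for shown rows:", passes_bridging(shown_rows))
```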

Table 2: Quality Control Performance

| QC Level | Reagent | N | Mean (ng/mL) | %CV | Acceptance Criteria (Mean) |
|---|---|---|---|---|---|
| Low | Old | 6 | 4.9 | 4.1% | 5.0 ± 0.75 |
| Low | New | 6 | 5.1 | 3.8% | 5.0 ± 0.75 |
| Medium | Old | 6 | 24.8 | 3.5% | 25.0 ± 3.75 |
| Medium | New | 6 | 25.3 | 3.2% | 25.0 ± 3.75 |
| High | Old | 6 | 76.2 | 2.9% | 75.0 ± 11.25 |
| High | New | 6 | 74.9 | 2.5% | 75.0 ± 11.25 |
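The acceptance ranges in Table 2 correspond to the ±15% rule for QC means (for example, 5.0 ± 0.75), and %CV is the standard deviation divided by the mean. The following sketch checks the reported means against their nominal values; the function names are assumptions for illustration, and because the individual replicate values are not given in the guide, the %CV helper is shown without being applied to the table.

```python
from statistics import mean, stdev


def cv_percent(replicates):
    """Coefficient of variation of replicate measurements, in percent."""
    return stdev(replicates) / mean(replicates) * 100.0


def mean_within_tolerance(observed_mean, nominal, tolerance_pct=15.0):
    """True if the QC mean lies within +/- tolerance_pct of the nominal value."""
    return abs(observed_mean - nominal) <= nominal * tolerance_pct / 100.0


# (QC level, reagent, reported mean, nominal value) taken from Table 2.
table_2 = [
    ("Low", "Old", 4.9, 5.0),
    ("Low", "New", 5.1, 5.0),
    ("Medium", "Old", 24.8, 25.0),
    ("Medium", "New", 25.3, 25.0),
    ("High", "Old", 76.2, 75.0),
    ("High", "New", 74.9, 75.0),
]

results = {
    (level, reagent): mean_within_tolerance(observed, nominal)
    for level, reagent, observed, nominal in table_2
}

for (level, reagent), ok in results.items():
    print(f"{level:6s} {reagent}: {'pass' if ok else 'fail'}")
```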

Table 3: Regression Analysis Summary

| Parameter | Value | Acceptance Criteria |
|---|---|---|
| Slope | 0.98 | 0.9 - 1.1 |
| Intercept | 0.15 | -5% to +5% of mean |
| R² | 0.992 | > 0.95 |

Chapter 5: Impact on Signaling Pathway Interpretation

A change in a critical reagent, such as a primary antibody for Western blotting, can significantly alter the interpretation of signaling pathway data. For instance, a new antibody may have a different affinity or specificity, leading to apparent changes in protein expression or phosphorylation that are artifacts of the reagent change, not biological reality.

Signaling Pathway Diagram: Potential Impact of Reagent Change

Caption: Impact of antibody change on pathway analysis.

Chapter 6: Best Practices and Mitigation Strategies

A proactive approach to reagent management can minimize disruptions caused by discontinuations.

- Reagent Lifecycle Management: Implement a robust strategy for managing critical reagents from their initial qualification to their eventual replacement.[1] This includes thorough documentation of lot numbers, expiration dates, and performance characteristics.[4]

- Reagent Banking: For long-term studies, consider purchasing a large single lot of a critical reagent and storing it under validated conditions to ensure consistency over the study's duration.[11]

- Communication with Suppliers: Maintain open communication with reagent manufacturers to stay informed about potential discontinuations and to understand the nature of any changes to their products.

- Thorough Validation: All new lots of critical reagents, even from the same supplier, should undergo some level of validation before being put into routine use.[6][12]

- Statistical Process Control: Monitor the performance of assays over time using statistical process control charts to detect any drift or shift that may be related to reagent changes.[13][14]
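One common form of the statistical process control mentioned above is a Shewhart-style (Levey-Jennings) chart: control limits are set at the baseline mean plus or minus a multiple of the baseline standard deviation, and later QC results outside those limits are flagged. The sketch below assumes 3-SD limits and uses hypothetical QC values; the guide itself does not prescribe a specific rule set.

```python
from statistics import mean, stdev


def control_limits(baseline, k=3.0):
    """Return (lower, center, upper) limits from baseline QC results."""
    center = mean(baseline)
    spread = stdev(baseline)
    return center - k * spread, center, center + k * spread


def out_of_control(values, limits):
    """Indices of results that fall outside the control limits."""
    lower, _, upper = limits
    return [i for i, v in enumerate(values) if v < lower or v > upper]


# Hypothetical medium-QC results (ng/mL) collected with the old reagent lot.
baseline = [24.8, 25.3, 25.0, 24.9, 25.1, 24.7, 25.2, 25.0]
limits = control_limits(baseline)

# Hypothetical results after switching to the replacement reagent.
after_change = [25.1, 24.9, 26.2, 25.0]
flagged = out_of_control(after_change, limits)

print("limits:", tuple(round(v, 2) for v in limits))
print("flagged run indices:", flagged)
```

A single-limit check like this detects large shifts; gradual drift is usually caught with additional run rules (for example, several consecutive points on one side of the center line), which are omitted here.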

Conclusion

The discontinuation of a critical reagent poses a significant threat to the integrity of historical and ongoing research data. However, by implementing a systematic approach that includes risk assessment, rigorous bridging studies, and proactive lifecycle management, researchers can effectively manage these transitions. Ensuring the comparability of data across different reagent lots is fundamental to the principles of scientific reproducibility and is essential for making sound scientific and clinical decisions. A well-documented and scientifically sound bridging strategy is not merely a quality control exercise; it is a critical component of robust and reliable research.

References

- 1. drugdiscoverytrends.com [drugdiscoverytrends.com]

- 2. tandfonline.com [tandfonline.com]

- 3. myadlm.org [myadlm.org]

- 4. sapiosciences.com [sapiosciences.com]

- 5. How to Handle Expired Lab Reagents: Tips for Safe and Effective Use | Lab Manager [labmanager.com]

- 6. How we validate new laboratory reagents - Ambar Lab [ambar-lab.com]

- 7. Recommendations and Best Practices for Reference Standards and Reagents Used in Bioanalytical Method Validation - PMC [pmc.ncbi.nlm.nih.gov]

- 8. bioprocessintl.com [bioprocessintl.com]

- 9. Methods and reagent-lot comparisons by regression analysis: Sample size considerations - PubMed [pubmed.ncbi.nlm.nih.gov]

- 10. scispace.com [scispace.com]

- 11. tandfonline.com [tandfonline.com]

- 12. studylib.net [studylib.net]

- 13. researchgate.net [researchgate.net]

- 14. researchgate.net [researchgate.net]

Unearthing the Past: A Technical Guide to Identifying Original Manufacturers of Discontinued Laboratory Equipment

For researchers, scientists, and drug development professionals, the breakdown of a trusted, long-discontinued piece of laboratory equipment can feel like a significant setback. Without access to the original manufacturer, sourcing replacement parts, service manuals, or even understanding the instrument's full capabilities can be a daunting task. This in-depth guide provides a systematic approach to uncovering the origins of your legacy lab equipment, ensuring its continued service in your critical research.

I. The Archival Deep Dive: Leveraging Historical Documents

The first step in your investigation is to consult a variety of archival resources that hold catalogs, manuals, and other historical documents from scientific instrument manufacturers.

Experimental Protocol: Archival Research Workflow

- Initial Physical Inspection: Thoroughly examine the equipment for any identifying marks. Look for logos, model numbers, serial numbers, and any manufacturing location information. Photograph these details for reference.

- Online Trade Catalog Databases: Begin your search with digitized collections of scientific instrument trade catalogs. These are often the most direct way to identify a manufacturer from a model number or image.

- Targeted Keyword Searching: Use a combination of keywords in your searches, including the equipment type, model number (if available), and any observed markings. Broaden your search to include variations in company names.

- Contacting Curators and Historians: If you find a likely match in a museum or university collection, do not hesitate to contact the curator or archivist. They can often provide additional, non-digitized information.[1]

Data Presentation: Key Archival Resources

| Resource | Description | Access Method | Key Features |
|---|---|---|---|
| Smithsonian Institution Libraries | An extensive collection of American and European trade catalogs for a wide range of scientific instruments.[2] | Online Digital Library | Keyword searchable, high-resolution scans of historical documents. |
| Scientific Instrument Commission | Provides links to numerous online collections of scientific instrument trade catalogs from institutions worldwide.[1] | Centralized Web Portal | Aggregates resources, including those from the Whipple Museum and the Internet Archive. |
| The Internet Archive | A vast digital library that includes a collection of instrument catalogs and archived manufacturer websites via the Wayback Machine.[3] | Website Search | The Wayback Machine allows you to view historical versions of a manufacturer's website, which may contain information on discontinued products.[3] |
| Office of NIH History and Stetten Museum | A collection of trade catalogs and literature on biomedical equipment and laboratory supplies.[4] | Online Catalog with Email Request | Contains materials from the early 1900s to the present; direct contact is required for copies.[4] |

II. Community Knowledge: Tapping into Specialized Forums

Online forums dedicated to laboratory equipment are invaluable resources. These communities are often populated by experienced technicians, retired scientists, and enthusiasts who may have direct experience with the equipment you are researching.

Experimental Protocol: Forum Engagement Strategy

- Account Creation: Register for an account on relevant forums to gain full access to their features, including the ability to post questions and download documents.[3]

- Thorough Searching: Before posting a new query, exhaust the forum's search function. It is highly likely that another user has inquired about the same or similar equipment in the past.

- Detailed Inquiry Posts: When creating a new post, provide as much detail as possible. Include the information gathered during your physical inspection, clear photographs of the equipment and any markings, and a description of the problem you are trying to solve.

- Engage with the Community: Respond to follow-up questions from other users and share any new information you discover. This collaborative approach increases your chances of a successful identification.

Data Presentation: Leading Laboratory Equipment Forums

| Forum | Primary Focus | Key Features |
|---|---|---|
| LabWrench | General laboratory and research equipment.[3] | Extensive instrument directory, Q&A sections, document repositories, and third-party service vendor lists.[3][5] |
| MedWrench | Diagnostic, surgical, and patient care equipment.[3] | Similar functionality to LabWrench but focused on the medical and clinical laboratory sector.[3] |
| Science Forums | Broad scientific discussion, including a dedicated section for laboratory equipment.[6] | Discussions on equipment properties, creative uses, and potential modifications.[6] |
| /r/labrats on Reddit | A general forum for laboratory professionals to discuss all aspects of lab life, including equipment.[7] | A large and active community that can offer practical advice and shared experiences.[7] |

III. The Digital Trail: Uncovering Online Remnants

Even for long-defunct companies, a digital footprint may still exist. This section details how to use web archives and search engine techniques to find this information.

Experimental Protocol: Digital Forensics for Lab Equipment

- Wayback Machine Exploration: Use the Internet Archive's Wayback Machine to search for the suspected manufacturer's website.[3] Browse through different snapshots in time to find product pages, manuals, and contact information.[3]

- Advanced Google Searching: Utilize advanced search operators to refine your queries. For example, use "filetype:pdf" in conjunction with the equipment name to find online manuals.

- Patent Search: If you have a unique mechanism or design, a search of patent databases (e.g., Google Patents, USPTO) can sometimes reveal the original inventor and assignee (the manufacturer).

- Used Equipment Listings: Websites that sell used lab equipment often retain the original manufacturer's name and model number in their listings.[7][8][9] This can be a quick way to confirm a manufacturer's identity.
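The Wayback Machine step above can also be scripted. The sketch below uses the Internet Archive's public availability endpoint (`https://archive.org/wayback/available`) to look up the snapshot closest to a given date. The domain `example.com`, the helper names, and the sample response are placeholders chosen for illustration; the live lookup function is defined but not called, so the block runs without network access.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

WAYBACK_AVAILABILITY = "https://archive.org/wayback/available"


def availability_url(site, timestamp=None):
    """Build the query URL; timestamp is an optional YYYYMMDD-style string."""
    params = {"url": site}
    if timestamp:
        params["timestamp"] = timestamp
    return f"{WAYBACK_AVAILABILITY}?{urlencode(params)}"


def closest_snapshot(payload):
    """Extract the closest archived snapshot URL from a response, if any."""
    closest = payload.get("archived_snapshots", {}).get("closest")
    if closest and closest.get("available"):
        return closest.get("url")
    return None


def lookup(site, timestamp=None):
    """Query the live service (requires network access)."""
    with urlopen(availability_url(site, timestamp), timeout=30) as response:
        return closest_snapshot(json.load(response))


# Placeholder response in the shape the endpoint returns, for illustration.
sample_payload = {
    "archived_snapshots": {
        "closest": {
            "available": True,
            "url": "http://web.archive.org/web/20050101000000/http://example.com/",
            "timestamp": "20050101000000",
            "status": "200",
        }
    }
}

print(availability_url("example.com", "20050101"))
print(closest_snapshot(sample_payload))
```

Replacing `example.com` with the suspected manufacturer's former domain and calling `lookup` returns a snapshot URL to browse, or `None` if nothing was archived.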

IV. Visualizing the Search Process

To aid in your research, the following diagrams illustrate the logical workflows for identifying the original manufacturer of discontinued lab equipment.

Caption: A workflow for identifying the original manufacturer of discontinued lab equipment.

Caption: The relationship between information sources and successful manufacturer identification.

References

- 1. scientific-instrument-commission.org [scientific-instrument-commission.org]

- 2. Scientific Instruments [library.si.edu]

- 3. newlifescientific.com [newlifescientific.com]

- 4. ONHM Trade Catalog Collection [history.nih.gov]

- 5. labwrench.com [labwrench.com]

- 6. scienceforums.net [scienceforums.net]

- 7. reddit.com [reddit.com]

- 8. arcscientific.com [arcscientific.com]

- 9. shop.labexchange.com [shop.labexchange.com]

An In-Depth Technical Guide to Understanding Product Discontinuation in Pharmaceutical Research

Introduction

The journey of a novel therapeutic from initial discovery to market approval is a long, costly, and high-risk endeavor. A staggering majority of drug candidates, estimated to be around 90%, fail to navigate the complex path of clinical development successfully.[1][2][3][4] This high attrition rate underscores the critical need for researchers, scientists, and drug development professionals to deeply understand the multifaceted reasons behind product discontinuation. A thorough analysis of these failures provides invaluable lessons that can inform and de-risk future drug development programs, ultimately leading to safer and more effective medicines.

This technical guide provides a comprehensive overview of the primary reasons for product discontinuation in research, details key experimental protocols used to identify potential liabilities, and presents case studies of notable drug withdrawals. Furthermore, it introduces frameworks for conducting a systematic root cause analysis of these failures.

Primary Reasons for Product Discontinuation

The decision to halt the development of a drug candidate can stem from a variety of factors, which can be broadly categorized into scientific/clinical and strategic/commercial reasons.

Lack of Clinical Efficacy

A primary reason for the failure of a drug candidate is the inability to demonstrate a statistically significant and clinically meaningful therapeutic effect in human subjects.[4] This accounts for approximately 40-50% of all clinical trial failures.[1][2][3][4] A lack of efficacy can arise from several factors, including:

- Poorly validated drug targets: The biological target of the drug may not be as critical to the disease pathology as initially hypothesized.

- Suboptimal drug-target engagement: The drug may not bind to its target with sufficient affinity or specificity in vivo.

- Flawed clinical trial design: The trial may be underpowered, have inappropriate endpoints, or enroll a patient population that is not suitable for demonstrating the drug's effect.[5]

- High placebo response: In some therapeutic areas, a significant improvement in the placebo group can mask the true effect of the investigational drug.[6]

Unmanageable Toxicity and Safety Concerns

Ensuring patient safety is paramount in drug development. Unforeseen toxicity is a major hurdle, contributing to about 30% of drug discontinuations.[1][3] Safety issues can manifest at any stage of development and include:

- On-target toxicity: The drug's mechanism of action, while targeting the desired pathway, may also produce adverse effects.

- Off-target toxicity: The drug may interact with unintended biological targets, leading to unexpected side effects.

- Metabolite-induced toxicity: The metabolic byproducts of the drug, rather than the parent compound, may be toxic.

- Idiosyncratic toxicity: The drug may cause rare but severe adverse reactions in a small subset of the population.

Cardiotoxicity, particularly the prolongation of the QT interval, is a significant safety concern that has led to the discontinuation of many drug candidates.

Poor Pharmacokinetics and Drug-Like Properties

A compound's Absorption, Distribution, Metabolism, and Excretion (ADME) profile, collectively known as its pharmacokinetics, is a critical determinant of its success as a drug. Poor "drug-like" properties account for 10-15% of failures.[1][3][4] Key pharmacokinetic challenges include:

- Low bioavailability: The drug is not sufficiently absorbed into the systemic circulation to reach therapeutic concentrations.

- Unfavorable distribution: The drug may not adequately reach the target tissue or may accumulate in non-target tissues, leading to toxicity.

- Rapid metabolism and clearance: The drug is broken down and eliminated from the body too quickly, requiring frequent and high doses.

- Formation of reactive metabolites: The drug's metabolites may be chemically reactive and cause toxicity.

- Potential for drug-drug interactions: The drug may inhibit or induce metabolic enzymes (like Cytochrome P450s), affecting the plasma levels of co-administered medications.

Commercial and Strategic Reasons

Beyond the scientific and clinical hurdles, a significant number of drug development programs are terminated for commercial or strategic reasons, accounting for roughly 10% of discontinuations.[1][2][3][7] These factors include:

- Lack of commercial viability: The potential market for the drug may be too small to justify the high cost of development.[8]

- Shifting market landscape: The emergence of a competitor with a superior product can render a drug candidate obsolete.

- Insufficient funding: The high cost of late-stage clinical trials can be a significant barrier, particularly for smaller companies.[9]

- Portfolio prioritization: Pharmaceutical companies may decide to allocate resources to more promising projects in their pipeline.

Quantitative Data on Discontinuation Rates

The following table summarizes the approximate contribution of various factors to the discontinuation of drug candidates during clinical development, based on analyses of clinical trial data from recent years.[1][2][3][4]

| Reason for Discontinuation | Approximate Percentage of Failures |
|---|---|
| Lack of Clinical Efficacy | 40% - 50% |
| Unmanageable Toxicity / Safety Concerns | 30% |
| Poor Pharmacokinetics / Drug-like Properties | 10% - 15% |
| Commercial / Strategic Reasons | 10% |

Methodologies for Investigating Discontinuation

A robust preclinical and clinical testing strategy is essential to identify potential reasons for discontinuation as early as possible. This section details the protocols for some of the key experiments conducted to assess the safety, efficacy, and pharmacokinetic properties of a drug candidate.

Preclinical Safety and Toxicity Assessment

Preclinical safety testing aims to identify potential toxicities before a drug is administered to humans. These studies are typically conducted in compliance with Good Laboratory Practice (GLP) regulations.[10][11][12]

4.1.1 hERG Assay for Cardiotoxicity

Objective: To assess the potential of a compound to inhibit the hERG (human Ether-à-go-go-Related Gene) potassium ion channel, which can lead to QT interval prolongation and potentially fatal cardiac arrhythmias.[13][14]

Methodology: Automated patch-clamp electrophysiology is a common method.[13][14][15][16]

Experimental Protocol:

- Cell Line: A mammalian cell line (e.g., HEK293 or CHO) stably expressing the hERG channel is used.[13][14]

- Compound Preparation: The test compound is prepared at a range of concentrations. A known hERG inhibitor (e.g., E-4031) is used as a positive control, and the vehicle (e.g., DMSO) is used as a negative control.[13]

- Patch-Clamp Recording: The whole-cell patch-clamp technique is used to measure the electrical current flowing through the hERG channels in individual cells.[13]

- Data Acquisition: The cells are exposed to the test compound, and the hERG current is recorded before and after exposure.

- Data Analysis: The percentage of inhibition of the hERG current is calculated for each concentration of the test compound. An IC50 value (the concentration at which 50% of the channel activity is inhibited) is then determined.[13][14]
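The data analysis step above can be illustrated with a small calculation. Percent inhibition is one minus the ratio of the current after compound exposure to the control current. In practice the IC50 is usually obtained by fitting a sigmoidal (Hill) dose-response curve; the sketch below substitutes a simpler log-linear interpolation between the two concentrations that bracket 50% inhibition, which is an assumption made to keep the example dependency-free. All numeric values are hypothetical.

```python
import math


def percent_inhibition(control_current, treated_current):
    """Inhibition of the current relative to the pre-compound control, in percent."""
    return (1.0 - treated_current / control_current) * 100.0


def estimate_ic50(concentrations, inhibitions):
    """Interpolate the concentration giving 50% inhibition on a log scale.

    Returns None if the measured range does not bracket 50% inhibition.
    """
    points = sorted(zip(concentrations, inhibitions))
    for (c_lo, i_lo), (c_hi, i_hi) in zip(points, points[1:]):
        if i_lo <= 50.0 <= i_hi and i_hi > i_lo:
            fraction = (50.0 - i_lo) / (i_hi - i_lo)
            log_ic50 = math.log10(c_lo) + fraction * (
                math.log10(c_hi) - math.log10(c_lo)
            )
            return 10 ** log_ic50
    return None


# Hypothetical hERG tail currents (pA): control, then after each concentration (uM).
control = 1000.0
currents = {0.1: 950.0, 1.0: 750.0, 10.0: 300.0, 100.0: 80.0}

inhibitions = {c: percent_inhibition(control, i) for c, i in currents.items()}
ic50 = estimate_ic50(list(inhibitions), list(inhibitions.values()))

print({c: round(v, 1) for c, v in inhibitions.items()})
print("estimated IC50 (uM):", None if ic50 is None else round(ic50, 2))
```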

4.1.2 Cytochrome P450 (CYP) Inhibition Assay

Objective: To evaluate the potential of a compound to inhibit the activity of major cytochrome P450 enzymes, which are responsible for the metabolism of a vast number of drugs. Inhibition of these enzymes can lead to drug-drug interactions.[2][9][17][18][19]

Methodology: An in vitro assay using human liver microsomes or recombinant CYP enzymes.[2][17][18]

Experimental Protocol:

- Enzyme Source: Human liver microsomes, which contain a mixture of CYP enzymes, or specific recombinant human CYP enzymes (e.g., CYP1A2, CYP2C9, CYP2C19, CYP2D6, CYP3A4) are used.[17][18]

- Substrate and Inhibitor: A specific substrate for each CYP isoform is incubated with the enzyme source in the presence and absence of the test compound (the potential inhibitor).[2]

- Incubation: The reaction mixture, containing the enzyme, substrate, test compound, and necessary cofactors (e.g., NADPH), is incubated at 37°C.[2]

- Metabolite Quantification: The reaction is stopped, and the amount of metabolite formed from the substrate is quantified using liquid chromatography-tandem mass spectrometry (LC-MS/MS).[2][17]

- Data Analysis: The rate of metabolite formation in the presence of the test compound is compared to the control (without the test compound). The IC50 value for the inhibition of each CYP isoform is then calculated.[2][19]

Pharmacokinetics (ADME) Assessment

ADME studies are crucial for understanding how a drug is handled by the body.[4][6][18][20][21]

4.2.1 Caco-2 Permeability Assay

Objective: To predict the in vivo absorption of a drug across the intestinal wall by measuring its transport across a monolayer of human Caco-2 cells.[3][7][20][22]

Methodology: An in vitro cell-based assay using Caco-2 cells grown on a semi-permeable membrane.[20][22]

Experimental Protocol:

- Cell Culture: Caco-2 cells are seeded onto a semi-permeable membrane in a Transwell® plate and cultured for approximately 21 days to form a differentiated and polarized monolayer with tight junctions.[20]

- Monolayer Integrity Check: The integrity of the cell monolayer is verified by measuring the transepithelial electrical resistance (TEER) and/or the permeability of a fluorescent marker like Lucifer yellow.[20][22]

- Transport Study: The test compound is added to either the apical (A) or basolateral (B) side of the monolayer. Samples are taken from the opposite side at various time points.

- Quantification: The concentration of the test compound in the collected samples is determined by LC-MS/MS.

- Data Analysis: The apparent permeability coefficient (Papp) is calculated for both the apical-to-basolateral (A-to-B) and basolateral-to-apical (B-to-A) directions. The efflux ratio (Papp B-to-A / Papp A-to-B) is also calculated to determine if the compound is a substrate for efflux transporters like P-glycoprotein.[20]
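The Papp and efflux ratio calculations in the last step can be sketched as follows, using the standard relation Papp = (dQ/dt) / (A × C0), where dQ/dt is the rate at which compound appears in the receiver compartment, A is the membrane area, and C0 is the initial donor concentration. The function names, units, and numeric values are illustrative assumptions. The cut-off of 2 for the efflux ratio is a commonly used convention rather than something stated in this guide.

```python
def apparent_permeability(dq_dt, area_cm2, c0):
    """Papp in cm/s.

    dq_dt    : appearance rate in the receiver compartment (nmol/s)
    area_cm2 : surface area of the membrane insert (cm^2)
    c0       : initial donor concentration (nmol/mL, i.e. nmol/cm^3)
    """
    if area_cm2 <= 0 or c0 <= 0:
        raise ValueError("area and initial concentration must be positive")
    return dq_dt / (area_cm2 * c0)


def efflux_ratio(papp_b_to_a, papp_a_to_b):
    """Ratio of basolateral-to-apical over apical-to-basolateral permeability."""
    return papp_b_to_a / papp_a_to_b


# Hypothetical measurements on a 1.12 cm^2 insert with a 10 nmol/mL donor solution.
papp_ab = apparent_permeability(dq_dt=1.12e-4, area_cm2=1.12, c0=10.0)
papp_ba = apparent_permeability(dq_dt=3.36e-4, area_cm2=1.12, c0=10.0)
ratio = efflux_ratio(papp_ba, papp_ab)

print(f"Papp A-to-B: {papp_ab:.2e} cm/s")
print(f"Papp B-to-A: {papp_ba:.2e} cm/s")
print(f"Efflux ratio: {ratio:.1f} (values above about 2 often suggest active efflux)")
```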

Clinical Trial Protocols for Efficacy and Safety

Clinical trials are conducted in a series of phases to rigorously evaluate the efficacy and safety of a new drug in humans.[3][23]

- Phase I: The primary goal is to assess the safety, tolerability, and pharmacokinetic profile of the drug in a small group of healthy volunteers.[3]

- Phase II: The drug is administered to a larger group of patients with the target disease to evaluate its efficacy and further assess its safety.[3][24]

- Phase III: Large-scale, multicenter, randomized, and controlled trials are conducted to confirm the drug's efficacy, monitor side effects, and compare it to standard treatments.[3][23]

- Phase IV: Post-marketing studies are conducted after the drug is approved to gather additional information on its long-term safety and effectiveness.[3]

Case Studies in Product Discontinuation

Examining specific cases of drug discontinuation provides valuable insights into the practical application of the principles discussed above.

Case Study 1: Rofecoxib (Vioxx®) - Discontinuation Due to Cardiovascular Toxicity

Background: Rofecoxib was a selective cyclooxygenase-2 (COX-2) inhibitor marketed for the treatment of arthritis and acute pain.[25][26] It was voluntarily withdrawn from the market in 2004 due to an increased risk of heart attack and stroke.[7][19][25]

Mechanism of Toxicity: Rofecoxib's selective inhibition of COX-2, without a corresponding inhibition of COX-1, led to an imbalance in the production of prostanoids.[7][27] Specifically, it suppressed the production of prostacyclin (a vasodilator and inhibitor of platelet aggregation) by the vascular endothelium, while leaving the COX-1-mediated production of thromboxane A2 (a vasoconstrictor and promoter of platelet aggregation) in platelets unchecked.[7] This imbalance created a prothrombotic state, increasing the risk of cardiovascular events.

Caption: Rofecoxib's selective COX-2 inhibition pathway.

Case Study 2: Torcetrapib - Discontinuation Due to Off-Target Toxicity

Background: Torcetrapib was a cholesteryl ester transfer protein (CETP) inhibitor developed to raise high-density lipoprotein (HDL) cholesterol. Its development was halted in 2006 due to an increase in mortality and cardiovascular events in the treatment group of a large clinical trial.[28][29]

Mechanism of Toxicity: The adverse effects of torcetrapib were found to be off-target and not related to its CETP inhibition.[28][30] Torcetrapib was shown to increase the production of aldosterone and cortisol from the adrenal glands.[21][30][31] This led to an increase in blood pressure and other adverse cardiovascular effects.[21][30] The mechanism was found to be independent of the renin-angiotensin system and was instead mediated by an increase in intracellular calcium in adrenal cells.[31]

Caption: Off-target toxicity pathway of Torcetrapib.

Case Study 3: Tarenflurbil - Discontinuation Due to Lack of Efficacy

Background: Tarenflurbil was developed as a γ-secretase modulator for the treatment of Alzheimer's disease.[4][8][5][24] The hypothesis was that by modulating γ-secretase, it would selectively reduce the production of the toxic amyloid-β 42 (Aβ42) peptide.[5] However, a large Phase III clinical trial failed to show any clinical benefit, and its development was discontinued in 2008.[4][9]

Reason for Failure: The primary reason for the failure of tarenflurbil was a lack of efficacy.[4][8] Several factors may have contributed to this:

- Weak pharmacological activity: Tarenflurbil was found to be a weak modulator of γ-secretase.[4][8]

- Poor brain penetration: The concentration of the drug that reached the brain was likely insufficient to have a meaningful effect on Aβ42 production.[4][8][32]

- Target validity: It is also possible that targeting γ-secretase to reduce Aβ42 is not a viable therapeutic strategy for Alzheimer's disease.[4][8]

Caption: Tarenflurbil's intended mechanism of action.

Framework for Post-Discontinuation Analysis

A systematic approach to analyzing the reasons for a product's discontinuation is crucial for organizational learning and improving future drug development efforts.

Root Cause Analysis (RCA)

Root Cause Analysis is a structured method used to identify the underlying causes of a problem.[30][33][34] Instead of just addressing the immediate symptoms, RCA seeks to find the fundamental reasons for a failure to prevent its recurrence.[35] In the context of a clinical trial failure, an RCA would involve a multidisciplinary team systematically investigating all potential contributing factors, from the initial target validation to the design and execution of the clinical trial.

Failure Mode and Effects Analysis (FMEA) in Clinical Trials

Failure Mode and Effects Analysis (FMEA) is a proactive risk assessment tool that can be applied to clinical trials to identify potential failure modes, their causes, and their effects on the trial's outcome.[11][12][36][37][38] By prospectively identifying and prioritizing risks, study teams can implement mitigation strategies to reduce the likelihood of trial failure.
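In conventional FMEA practice, risks are prioritized with a Risk Priority Number (RPN), the product of severity, occurrence, and detection ratings, each typically scored from 1 to 10. The guide does not spell out a scoring scheme, so the sketch below should be read as one common convention; the failure modes and ratings are hypothetical examples.

```python
from dataclasses import dataclass


@dataclass
class FailureMode:
    description: str
    severity: int    # 1 (negligible) to 10 (catastrophic)
    occurrence: int  # 1 (rare) to 10 (almost certain)
    detection: int   # 1 (easily detected) to 10 (unlikely to be detected)

    @property
    def rpn(self) -> int:
        """Risk Priority Number: severity x occurrence x detection."""
        return self.severity * self.occurrence * self.detection


def prioritize(modes):
    """Sort failure modes from highest to lowest RPN."""
    return sorted(modes, key=lambda mode: mode.rpn, reverse=True)


# Hypothetical failure modes for a clinical trial, for illustration only.
modes = [
    FailureMode("Slow patient enrollment", severity=6, occurrence=7, detection=3),
    FailureMode("Primary endpoint insensitive to drug effect", severity=9, occurrence=4, detection=6),
    FailureMode("Sample mislabeling at a site", severity=7, occurrence=2, detection=2),
]

ranked = prioritize(modes)
for mode in ranked:
    print(f"RPN {mode.rpn:3d}  {mode.description}")
```

Ranking by RPN gives the study team an ordered list of where mitigation effort is likely to matter most, though the ratings themselves remain expert judgments.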

Caption: A simplified workflow for Root Cause Analysis.

Conclusion

The high rate of product discontinuation in pharmaceutical research is a significant challenge, but each failure presents a valuable learning opportunity. A comprehensive understanding of the reasons for these discontinuations (spanning clinical efficacy, safety, pharmacokinetics, and commercial factors) is essential for improving the efficiency and success rate of drug development. By employing rigorous preclinical and clinical experimental protocols to identify potential liabilities early and conducting thorough post-mortem analyses of failed programs, the pharmaceutical industry can continue to innovate and deliver safe and effective therapies to patients in need.

References

- 1. In Vitro ADME Assays: Principles, Applications & Protocols - Creative Biolabs [creative-biolabs.com]

- 2. creative-bioarray.com [creative-bioarray.com]

- 3. jeodpp.jrc.ec.europa.eu [jeodpp.jrc.ec.europa.eu]

- 4. Why did tarenflurbil fail in Alzheimer's disease? - PubMed [pubmed.ncbi.nlm.nih.gov]

- 5. γ-Secretase modulator in Alzheimer's disease: shifting the end - PubMed [pubmed.ncbi.nlm.nih.gov]

- 6. dda.creative-bioarray.com [dda.creative-bioarray.com]

- 7. Permeability Assessment Using 5-day Cultured Caco-2 Cell Monolayers | Springer Nature Experiments [experiments.springernature.com]

- 8. static1.1.sqspcdn.com [static1.1.sqspcdn.com]

- 9. lnhlifesciences.org [lnhlifesciences.org]

- 10. labs.iqvia.com [labs.iqvia.com]

- 11. tandfonline.com [tandfonline.com]

- 12. The Application of Failure Modes and Effects Analysis Methodology to Intrathecal Drug Delivery for Pain Management - PMC [pmc.ncbi.nlm.nih.gov]

- 13. hERG Safety | Cyprotex ADME-Tox Solutions - Evotec [evotec.com]

- 14. creative-bioarray.com [creative-bioarray.com]

- 15. researchgate.net [researchgate.net]

- 16. hERG Channel-Related Cardiotoxicity Assessment of 13 Herbal Medicines [jkom.org]

- 17. Cytochrome P450 (CYP) Inhibition Assay (IC50) Test | AxisPharm [axispharm.com]

- 18. criver.com [criver.com]

- 19. CYP Inhibition (IC50) | Cyprotex ADME-Tox Solutions | Evotec [evotec.com]

- 20. creative-bioarray.com [creative-bioarray.com]

- 21. Torcetrapib-induced blood pressure elevation is independent of CETP inhibition and is accompanied by increased circulating levels of aldosterone - PubMed [pubmed.ncbi.nlm.nih.gov]

- 22. enamine.net [enamine.net]

- 23. Tarenflurbil: Mechanisms and Myths - PMC [pmc.ncbi.nlm.nih.gov]

- 24. Novel Mechanism of γ-Secretase Modulation – Drug Discovery Opinion [drugdiscoveryopinion.com]

- 25. researchgate.net [researchgate.net]

- 26. Rofecoxib - Wikipedia [en.wikipedia.org]

- 27. ClinPGx [clinpgx.org]

- 28. Investigating Failure For Future Success: Root Cause Analysis - Carmody QS [carmodyqs.com]

- 29. ijrpr.com [ijrpr.com]

- 30. The failure of torcetrapib: what have we learned? - PMC [pmc.ncbi.nlm.nih.gov]

- 31. Torcetrapib induces aldosterone and cortisol production by an intracellular calcium-mediated mechanism independently of cholesteryl ester transfer protein inhibition - PubMed [pubmed.ncbi.nlm.nih.gov]

- 32. Modulation of γ-Secretase Activity by a Carborane-Based Flurbiprofen Analogue - PMC [pmc.ncbi.nlm.nih.gov]

- 33. Application of system-level root cause analysis for drug quality and safety problems: a case study - PubMed [pubmed.ncbi.nlm.nih.gov]

- 34. Clarifying off-target effects for torcetrapib using network pharmacology and reverse docking approach - PMC [pmc.ncbi.nlm.nih.gov]

- 35. Root Cause Analysis in Pharmacovigilance - Freyr [freyrsolutions.com]

- 36. mdpi.com [mdpi.com]

- 37. ihi.org [ihi.org]

- 38. Overview of Failure Mode and Effects Analysis (FMEA): A Patient Safety Tool - PMC [pmc.ncbi.nlm.nih.gov]

Navigating the Scarcity: An In-depth Technical Guide to Sourcing Rare and Discontinued Chemicals for Academic Research

For Researchers, Scientists, and Drug Development Professionals

In the landscape of academic and industrial research, the availability of specific chemical compounds is paramount to the advancement of scientific discovery. However, researchers often face the significant challenge of sourcing chemicals that are either rare, no longer in production, or available only in limited quantities. This guide provides a comprehensive overview of the strategies, services, and analytical techniques essential for successfully acquiring and verifying these critical research materials.

Sourcing Strategies for Rare and Discontinued Chemicals

The approach to sourcing a rare or discontinued chemical is multifaceted, involving a combination of searching existing inventories, commissioning custom synthesis, and exploring alternative compounds. The optimal strategy depends on factors such as the chemical's complexity, the quantity required, the urgency of the need, and budgetary constraints.

Custom Synthesis: The Bespoke Solution

When a chemical is not commercially available, custom synthesis by a specialized contract research organization (CRO) is often the most viable option. This approach offers the synthesis of specific molecules tailored to the researcher's requirements, including desired purity levels and isotopic labeling.[1][2]

Key Considerations for Custom Synthesis:

- Expertise and Capabilities: CROs vary in their expertise. Some may specialize in particular classes of compounds or reaction types, such as complex organic synthesis, chiral molecules, or peptide synthesis.

- Scale of Production: The required quantity, from milligrams for initial screening to kilograms for later-stage development, will influence the choice of a synthesis partner.[3]

- Cost and Contract Models: The cost of custom synthesis is influenced by the complexity of the synthesis, the number of steps, and the cost of starting materials.[1][4] Common contract models include Fee-for-Service (FFS), where payment is contingent on successful synthesis, and Full-Time Equivalent (FTE), which involves a dedicated team for a set period.[4][5]

- Intellectual Property (IP): Clear agreements regarding the ownership of the synthesized compound and any novel synthetic routes developed are crucial.

Decision Framework for Sourcing Rare Chemicals

The following diagram illustrates a logical workflow for deciding the most appropriate sourcing strategy.

Caption: A decision-making workflow for sourcing rare or discontinued chemicals.
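One possible reading of such a workflow is sketched below as a small function. The order of the checks (commercial catalog, then surplus inventory, then custom synthesis, then alternative compounds) and all field names are assumptions made for illustration; they are not taken from the original diagram.

```python
from dataclasses import dataclass


@dataclass
class SourcingRequest:
    """Hypothetical inputs describing what is known about a chemical."""
    in_commercial_catalog: bool
    in_surplus_inventory: bool
    synthesis_feasible: bool          # a synthetic route is known or plausible
    synthesis_within_budget: bool     # quoted cost and lead time are acceptable


def choose_strategy(req: SourcingRequest) -> str:
    """Return a suggested sourcing strategy (assumed ordering of checks)."""
    if req.in_commercial_catalog:
        return "purchase from commercial supplier"
    if req.in_surplus_inventory:
        # Surplus material should always be verified on receipt.
        return "purchase from surplus supplier and verify identity and purity"
    if req.synthesis_feasible and req.synthesis_within_budget:
        return "commission custom synthesis"
    return "explore alternative compounds"


request = SourcingRequest(
    in_commercial_catalog=False,
    in_surplus_inventory=False,
    synthesis_feasible=True,
    synthesis_within_budget=True,
)
print(choose_strategy(request))
```

In practice the factors named earlier (complexity, quantity, urgency, budget) interact, so a real decision would weigh them rather than apply a fixed sequence of yes/no checks.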

Surplus Chemical Suppliers: A Second Life for Chemicals

For discontinued chemicals, surplus chemical suppliers can be an invaluable resource. These companies purchase and resell excess, off-spec, or expired chemicals from other organizations.[6][7][8] This can be a cost-effective way to obtain chemicals that are no longer in production. However, it is crucial to verify the identity and purity of these materials upon receipt.

Online Chemical Marketplaces

Several online platforms aggregate the catalogs of numerous chemical suppliers, providing a centralized search engine for rare and commercially available compounds.[9][10][11][12][13] These marketplaces can be an efficient starting point for sourcing, offering transparency in pricing and availability from a global network of suppliers.

Chemical Archaeology: Uncovering Historical Sources

In some instances, particularly for older or more obscure compounds, "chemical archaeology" may be necessary. This involves delving into historical laboratory notebooks, patents, and scientific literature to identify the original synthetic methods and potential sources of the compound.[3][9] This approach can be time-consuming but may be the only option for extremely rare or historically significant chemicals.

Quantitative Data on Sourcing Options

The following tables provide a summary of quantitative data to aid in the decision-making process for sourcing rare and discontinued chemicals.

Table 1: Cost Comparison of Sourcing Strategies

| Sourcing Strategy | Typical Cost Range | Key Cost-Influencing Factors |
|---|---|---|
| Purchasing from Commercial Supplier | $ - $$ | Purity grade, quantity, supplier markup. |
| Purchasing from Surplus Supplier | $ - $$$ | Rarity of the chemical, remaining shelf life, quantity available.[7] |
| Custom Synthesis | $$$ - $$$$$ | Complexity of the molecule, number of synthetic steps, cost of starting materials, required purity, scale of synthesis.[1][3][4][5][14][15] |

Note: Cost ranges are relative and can vary significantly based on the specific chemical.

Table 2: Typical Purity Levels of Sourced Chemicals

| Chemical Grade | Typical Purity | Common Applications |
|---|---|---|
| Technical Grade | 90-95% | Industrial applications, not suitable for most research.[16][17] |
| Laboratory Grade | >95% | Educational and general laboratory use.[18] |
| Reagent Grade / ACS Grade | >98% | Analytical and research applications meeting American Chemical Society standards.[17][18] |
| Pharmaceutical Grade (USP/BP) | >99% | Meets standards of the United States Pharmacopeia or British Pharmacopoeia for use in drug products.[18][19] |
| High-Purity / HPLC Grade | >99.9% | High-performance liquid chromatography and other sensitive analytical techniques.[17] |

Verification of Sourced Chemicals: Experimental Protocols

Independent verification of the identity and purity of any sourced rare or discontinued chemical is a critical step to ensure the reliability of research data. The following are detailed methodologies for key analytical techniques.

High-Performance Liquid Chromatography-Mass Spectrometry (HPLC-MS)

HPLC-MS is a powerful technique for separating, identifying, and quantifying components in a mixture. It is particularly useful for assessing the purity of a sample.[20][21][22]

Experimental Protocol for Purity Assessment:

- Sample Preparation:
  - Accurately weigh approximately 1-5 mg of the chemical sample.
  - Dissolve the sample in a suitable solvent (e.g., acetonitrile, methanol, or a mixture with water) to a final concentration of approximately 1 mg/mL.
  - Filter the sample solution through a 0.22 µm syringe filter into an HPLC vial.

- Instrumentation and Conditions:
  - HPLC System: A standard HPLC system equipped with a UV detector and coupled to a mass spectrometer.
  - Column: A C18 reversed-phase column is commonly used for small molecules.
  - Mobile Phase: A gradient of water (A) and acetonitrile (B), both containing 0.1% formic acid, is a common starting point.
  - Flow Rate: Typically 0.2-1.0 mL/min.
  - Injection Volume: 5-10 µL.
  - Mass Spectrometer: An electrospray ionization (ESI) source in both positive and negative ion modes.

- Data Analysis:
  - The purity of the sample is determined by integrating the peak area of the main compound and any impurities in the chromatogram.
  - The mass spectrum of the main peak should be analyzed to confirm the molecular weight of the desired compound.
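The two data-analysis steps can be sketched numerically. The example below assumes an area-percent purity calculation (which treats all peaks as having the same detector response) and simple protonation or deprotonation in ESI; the peak areas are made-up values, and the formula C21H23NO2 is the one listed for this record. The element table covers only C, H, N, and O, so other elements would raise a `KeyError`.

```python
import re

# Monoisotopic masses (Da) for the few elements needed in this sketch.
MONOISOTOPIC = {"C": 12.0, "H": 1.00782503, "N": 14.00307401, "O": 15.99491462}
PROTON = 1.00727646  # mass of a proton in Da


def area_percent_purity(main_area: float, impurity_areas: list) -> float:
    """Main peak area as a percentage of the total integrated area."""
    total = main_area + sum(impurity_areas)
    if total <= 0:
        raise ValueError("total peak area must be positive")
    return 100.0 * main_area / total


def monoisotopic_mass(formula: str) -> float:
    """Monoisotopic mass of a simple formula such as 'C21H23NO2'."""
    total = 0.0
    for element, count in re.findall(r"([A-Z][a-z]?)(\d*)", formula):
        total += MONOISOTOPIC[element] * (int(count) if count else 1)
    return total


def expected_mz(formula: str, mode: str = "positive") -> float:
    """Expected m/z for [M+H]+ (positive) or [M-H]- (negative) ions."""
    mass = monoisotopic_mass(formula)
    return mass + PROTON if mode == "positive" else mass - PROTON


# Hypothetical integrated peak areas (arbitrary units).
purity = area_percent_purity(9850.0, [100.0, 50.0])
print(f"Area-percent purity: {purity:.1f}%")
print(f"Expected [M+H]+ m/z: {expected_mz('C21H23NO2'):.4f}")
print(f"Expected [M-H]- m/z: {expected_mz('C21H23NO2', 'negative'):.4f}")
```

Because UV response factors differ between compounds, area percent is an estimate; quantitative purity generally requires a reference standard.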

Workflow for Chemical Identity and Purity Verification

This diagram outlines the typical workflow for verifying the identity and purity of a sourced chemical.

Caption: A standard workflow for the analytical verification of sourced chemicals.

Gas Chromatography-Mass Spectrometry (GC-MS)

GC-MS is ideal for the analysis of volatile and thermally stable compounds. It provides excellent separation and structural information.[23][24][25][26]

Experimental Protocol for Identity and Purity Analysis:

- Sample Preparation:
  - Prepare a dilute solution of the sample (typically 10-100 µg/mL) in a volatile organic solvent such as dichloromethane or hexane.[24]
  - Ensure the sample is free of non-volatile residues.

- Instrumentation and Conditions:
  - GC System: A gas chromatograph with a capillary column (e.g., DB-5ms).
  - Injection: Split or splitless injection, depending on the sample concentration.
  - Carrier Gas: Helium at a constant flow rate.
  - Oven Temperature Program: A temperature gradient is used to separate compounds based on their boiling points. A typical program might start at 50°C and ramp up to 300°C.[27]
  - Mass Spectrometer: An electron ionization (EI) source is commonly used, which generates a reproducible fragmentation pattern.[23]

- Data Analysis:
  - The retention time of the main peak can be compared to a known standard for identification.
  - The mass spectrum of the peak is compared to a spectral library (e.g., NIST) to confirm the identity of the compound.
  - Purity can be estimated by the relative area percentages of the peaks in the chromatogram.
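The library-comparison step can be illustrated with a simplified similarity score. The sketch below uses plain cosine similarity between two spectra stored as m/z-to-intensity dictionaries; this is a stand-in chosen for clarity, not the scoring used by the NIST search software, and the spectra and compound names are invented toy data.

```python
import math


def cosine_similarity(spec_a: dict, spec_b: dict) -> float:
    """Cosine similarity between two spectra given as {m/z: intensity}."""
    mz_values = set(spec_a) | set(spec_b)
    dot = sum(spec_a.get(mz, 0.0) * spec_b.get(mz, 0.0) for mz in mz_values)
    norm_a = math.sqrt(sum(v * v for v in spec_a.values()))
    norm_b = math.sqrt(sum(v * v for v in spec_b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an empty spectrum matches nothing
    return dot / (norm_a * norm_b)


def best_match(query: dict, library: dict) -> tuple:
    """Return (name, score) of the library entry most similar to the query."""
    scored = ((name, cosine_similarity(query, ref)) for name, ref in library.items())
    return max(scored, key=lambda item: item[1])


# Toy spectra with nominal m/z values; not real reference data.
library = {
    "compound_A": {43: 100.0, 58: 40.0, 71: 20.0},
    "compound_B": {51: 30.0, 77: 100.0, 105: 80.0},
}
query = {43: 95.0, 58: 45.0, 71: 15.0}

name, score = best_match(query, library)
print(f"Best match: {name} (similarity {score:.3f})")
```

A high similarity score supports an identification but does not prove it; isomers often give near-identical EI spectra, which is why the retention-time comparison against a known standard remains useful.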

Nuclear Magnetic Resonance (NMR) Spectroscopy