Not found

Description

BenchChem offers this compound in high quality, suitable for many research applications. Different packaging options are available to accommodate customers' requirements. For pricing, delivery time, and more detailed information about this compound, contact info@benchchem.com.

属性

分子式 |

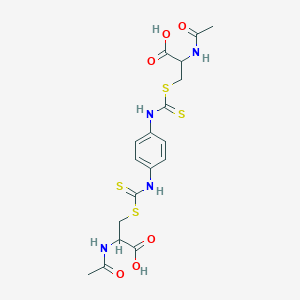

C18H22N4O6S4 |

|---|---|

分子量 |

518.7 g/mol |

IUPAC 名称 |

2-acetamido-3-[[4-[(2-acetamido-2-carboxyethyl)sulfanylcarbothioylamino]phenyl]carbamothioylsulfanyl]propanoic acid |

InChI |

InChI=1S/C18H22N4O6S4/c1-9(23)19-13(15(25)26)7-31-17(29)21-11-3-5-12(6-4-11)22-18(30)32-8-14(16(27)28)20-10(2)24/h3-6,13-14H,7-8H2,1-2H3,(H,19,23)(H,20,24)(H,21,29)(H,22,30)(H,25,26)(H,27,28) |

InChI 键 |

HTRJZMPLPYYXIN-UHFFFAOYSA-N |

规范 SMILES |

CC(=O)NC(CSC(=S)NC1=CC=C(C=C1)NC(=S)SCC(C(=O)O)NC(=O)C)C(=O)O |

同义词 |

2-AAPA cpd 2-acetylamino-3-(4-(2-acetylamino-2-carboxyethylsulfanylthiocarbonylamino)phenylthiocarbamoylsulfanyl)propionic acid |

产品来源 |

United States |

Foundational & Exploratory

Whitepaper: Strategies for Identification and Characterization of Proteins with No Apparent Homologs in Existing Databases

A Technical Guide for Researchers in Life Sciences and Drug Discovery

Introduction

The identification of protein homologs is a cornerstone of modern biological research, enabling functional annotation, evolutionary analysis, and the identification of potential drug targets. However, it is not uncommon for researchers to encounter proteins with no readily identifiable homologs in public or proprietary databases. This situation can arise from a multitude of factors, ranging from the limitations of sequence-based search algorithms to the genuine novelty of the protein.

This technical guide provides an in-depth exploration of the reasons why a protein homolog may not be found and presents a comprehensive overview of advanced computational and experimental strategies to address this challenge. We will delve into the nuances of sequence and profile-based search methods, detail experimental workflows for de novo protein identification and sequencing, and provide structured data and visualizations to aid in understanding these complex processes.

Why Homologs Go Undetected: The Limitations of Sequence-Based Searches

The most common starting point for finding protein homologs is a sequence similarity search using tools like BLAST (Basic Local Alignment Search Tool). While powerful, these methods have inherent limitations, particularly when dealing with distantly related proteins.

The "Twilight Zone" of Sequence Similarity

Evolutionary divergence can lead to sequences that share a common ancestor and three-dimensional structure but have very low sequence identity. For protein pairs with less than 25-35% sequence identity, it becomes statistically challenging to distinguish true homologs from random alignments, a concept often referred to as the "twilight zone" of sequence alignment. Standard BLAST searches may fail to detect these remote homologs.[1][2]

Limitations of Standard Search Algorithms

Standard search algorithms like BLAST are not always sensitive enough to detect distant evolutionary relationships.[1][3] Sequences can degrade significantly over evolutionary time, yet the proteins can still fold into similar 3D structures and perform similar functions.[1] More advanced methods are often required to uncover these remote homologies.

Rapid Evolution and Novel Protein Functions

In some biological contexts, such as viral evolution, proteins can evolve so rapidly that their sequences diverge beyond the point of easy recognition by standard tools.[3] Furthermore, a protein may have a genuinely novel function or belong to a newly evolved protein family that is not yet represented in the databases.

Advanced Computational Strategies for Detecting Remote Homologs

When standard searches fail, more sensitive computational methods can be employed to detect distant evolutionary relationships. These methods often build statistical models or profiles from multiple sequences to enhance search sensitivity.

Position-Specific Iterated BLAST (PSI-BLAST)

PSI-BLAST is an iterative search method that builds a position-specific scoring matrix (PSSM) from an initial BLAST search.[4][5][6][7] This PSSM captures the sequence variability at each position in a multiple sequence alignment of the top hits. In subsequent iterations, the PSSM is used to search the database, which can uncover more distant homologs.[4][5][6][7] This iterative refinement of the profile significantly increases the sensitivity of the search.

- Initial BLASTp: Start with a standard protein-protein BLAST (BLASTp) search against a protein database (e.g., NCBI's non-redundant protein database).[4]
- First Iteration: Review the initial results. PSI-BLAST will automatically generate a multiple sequence alignment of significant hits and create a PSSM.[5]
- Subsequent Iterations: Use the PSSM to search the database again. New sequences found in this iteration are added to the alignment, and the PSSM is refined.[4]
- Convergence: Repeat the iterations until no new significant hits are found.
- Analysis: Carefully examine the results for conserved domains and functional motifs that may indicate homology.
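To make the idea of a PSSM concrete, the following minimal sketch builds log-odds column scores from a small gapless alignment. It is an illustration only: the uniform background frequency, the flat pseudocount, and the toy `hits` alignment are assumptions made here, whereas PSI-BLAST itself applies sequence weighting and substitution-matrix-derived pseudocounts.

```python
import math
from collections import Counter

AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"


def build_pssm(alignment, pseudocount=1.0):
    """Return one {residue: log2-odds score} dict per alignment column."""
    if len({len(seq) for seq in alignment}) != 1:
        raise ValueError("all aligned sequences must have the same length")
    background = 1.0 / len(AMINO_ACIDS)  # assumption: uniform background
    pssm = []
    for column in zip(*alignment):
        # Gap characters and unknown symbols are simply ignored.
        counts = Counter(res for res in column if res in AMINO_ACIDS)
        total = sum(counts.values()) + pseudocount * len(AMINO_ACIDS)
        pssm.append({
            aa: math.log2(((counts[aa] + pseudocount) / total) / background)
            for aa in AMINO_ACIDS
        })
    return pssm


def score(pssm, peptide):
    """Sum the column scores for a peptide aligned to the profile start."""
    return sum(col[res] for col, res in zip(pssm, peptide))


# Hypothetical aligned hits from a first search round
hits = ["MKVL", "MKIL", "MRVL", "MKVI"]
pssm = build_pssm(hits)
```

A sequence resembling the family (for example `"MKVL"`) scores higher against this profile than an unrelated one, which is the property the iterative search exploits.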

Profile Hidden Markov Models (HMMs)

Profile HMMs are statistical models that represent the consensus sequence of a protein family.[8] Tools like HMMER use profile HMMs to search for remote homologs with high sensitivity.[9] They are particularly effective because they model not only conserved residues but also insertions and deletions within the protein family.[10] For sequences with less than 30% identity, profile-based methods can detect up to three times more homologs than pairwise alignments.[10]

- Obtain a Multiple Sequence Alignment (MSA): If you have a set of related sequences, create an MSA using tools like ClustalW.
- Build the Profile HMM: Use the hmmbuild program from the HMMER package to create a profile HMM from your MSA.
- Calibrate the HMM (HMMER2 only): In HMMER2, hmmcalibrate fitted the statistical significance parameters used for E-values. HMMER3 no longer provides hmmcalibrate because hmmbuild calibrates the model automatically, so this step can be skipped with current releases.
- Search the Database: Use hmmsearch to search a sequence database with your profile HMM.
- Analyze the Results: Examine the E-values and bit scores to identify potential homologs.
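The build and search steps can be scripted. The sketch below assumes HMMER 3.x is installed and on the PATH (where hmmbuild calibrates the model itself); the file names and the E-value cutoff are hypothetical placeholders rather than recommended settings.

```python
import shutil
import subprocess


def hmmbuild_cmd(msa_path, hmm_path):
    # Usage: hmmbuild <hmmfile_out> <msafile>
    return ["hmmbuild", hmm_path, msa_path]


def hmmsearch_cmd(hmm_path, seqdb_path, tblout_path, evalue=1e-3):
    # Usage: hmmsearch [options] <hmmfile> <seqdb>
    # --tblout writes a per-target summary table; -E sets the reporting E-value threshold.
    return ["hmmsearch", "--tblout", tblout_path, "-E", str(evalue), hmm_path, seqdb_path]


def run_pipeline(msa_path, seqdb_path, hmm_path="family.hmm", tblout_path="hits.tbl"):
    """Build a profile HMM from an MSA and search a sequence database with it."""
    if shutil.which("hmmbuild") is None or shutil.which("hmmsearch") is None:
        raise RuntimeError("HMMER does not appear to be installed")
    subprocess.run(hmmbuild_cmd(msa_path, hmm_path), check=True)
    subprocess.run(hmmsearch_cmd(hmm_path, seqdb_path, tblout_path), check=True)
    return tblout_path
```

Calling `run_pipeline("family.sto", "proteins.fasta")` would leave the hit table in `hits.tbl` for inspection of E-values and bit scores.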

Comparison of Search Method Sensitivities

The choice of search method can significantly impact the ability to detect remote homologs. The following table summarizes the relative sensitivities of different methods.

| Method | Principle | Relative Sensitivity | Typical Use Case |
|---|---|---|---|
| BLASTp | Local sequence alignment | Low to Moderate | Finding closely related homologs. |
| PSI-BLAST | Iterative profile-based search | High | Finding moderately to distantly related homologs.[4] |
| HMMER | Profile Hidden Markov Models | Very High | Detecting remote homologs and classifying proteins into families.[9][10] |
| HHblits/HHpred | HMM-HMM comparison | Very High | Finding very distant homologs by comparing profiles.[3] |

Experimental Approaches for Novel Protein Discovery and Sequencing

When computational methods fail to identify homologs, it may be necessary to turn to experimental techniques to characterize the protein. Mass spectrometry-based proteomics is a powerful tool for identifying and sequencing unknown proteins.

"Bottom-Up" Proteomics for Protein Identification

The most common approach for protein identification is "bottom-up" proteomics.[11] In this workflow, proteins are enzymatically digested into smaller peptides, which are then analyzed by tandem mass spectrometry (LC-MS/MS).[11][12] The resulting peptide fragmentation spectra are then matched against a sequence database to identify the protein.

- Sample Preparation: Isolate and purify the protein of interest. This can involve techniques like gel electrophoresis.[11][12]
- Enzymatic Digestion: Digest the protein into peptides using a protease such as trypsin.[11][12]
- Liquid Chromatography (LC) Separation: Separate the complex mixture of peptides using high-performance liquid chromatography (HPLC).[12]
- Tandem Mass Spectrometry (MS/MS): Analyze the separated peptides using a mass spectrometer.[13] The instrument first measures the mass-to-charge ratio (m/z) of the intact peptides (MS1 scan) and then selects and fragments individual peptides to generate fragmentation spectra (MS2 scan).[12]
- Database Searching: Use a search algorithm (e.g., SEQUEST, Mascot) to compare the experimental fragmentation spectra to theoretical spectra generated from a protein sequence database.
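The theoretical side of a database search starts from an in silico digest. The following sketch, which is a simplified illustration rather than what SEQUEST or Mascot implement, cleaves a sequence with the common trypsin rule (after K or R, not before P) and computes the precursor m/z an MS1 scan would be expected to show. Missed cleavages and modifications are deliberately ignored, and the example sequence is made up.

```python
import re

# Monoisotopic residue masses in Da
RESIDUE_MASS = {
    "G": 57.02146, "A": 71.03711, "S": 87.03203, "P": 97.05276, "V": 99.06841,
    "T": 101.04768, "C": 103.00919, "L": 113.08406, "I": 113.08406, "N": 114.04293,
    "D": 115.02694, "Q": 128.05858, "K": 128.09496, "E": 129.04259, "M": 131.04049,
    "H": 137.05891, "F": 147.06841, "R": 156.10111, "Y": 163.06333, "W": 186.07931,
}
WATER = 18.010565   # added once per peptide (free N- and C-termini)
PROTON = 1.007276   # added once per positive charge


def trypsin_digest(protein):
    """Cleave C-terminal to K or R, except when the next residue is P."""
    return [pep for pep in re.split(r"(?<=[KR])(?!P)", protein) if pep]


def peptide_mz(peptide, charge=1):
    """Monoisotopic m/z of an unmodified peptide at the given charge state."""
    neutral_mass = sum(RESIDUE_MASS[aa] for aa in peptide) + WATER
    return (neutral_mass + charge * PROTON) / charge


# Hypothetical sequence, for illustration only
for pep in trypsin_digest("AKRPGKC"):
    print(pep, round(peptide_mz(pep, charge=2), 4))
```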

De Novo Protein Sequencing

When a protein is not present in any database, de novo sequencing is required.[14][15][16] This technique determines the amino acid sequence of a peptide directly from its fragmentation spectrum without relying on a database.[14][15][16] Algorithms for de novo sequencing analyze the mass differences between peaks in the MS/MS spectrum to deduce the amino acid sequence.[14]

- Sample Preparation and MS/MS Analysis: Follow the same initial steps as in bottom-up proteomics to generate high-quality fragmentation spectra.
- Spectral Interpretation: Use de novo sequencing software (e.g., PEAKS, Novor) to analyze the MS/MS spectra. The software identifies ion series (typically b- and y-ions) and calculates the mass differences between adjacent peaks to determine the amino acid sequence.[15]
- Sequence Assembly: The sequences of multiple overlapping peptides are assembled to reconstruct the full-length protein sequence.
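The mass-difference principle behind spectral interpretation can be shown in a few lines. This sketch assumes an idealized, complete, singly charged ion series from one ion type with no noise peaks; real tools such as PEAKS or Novor must deal with missing peaks, mixed series, and modifications. The tolerance value is an arbitrary choice for illustration.

```python
# Monoisotopic residue masses in Da; leucine and isoleucine are isobaric
RESIDUE_MASS = {
    "G": 57.02146, "A": 71.03711, "S": 87.03203, "P": 97.05276, "V": 99.06841,
    "T": 101.04768, "C": 103.00919, "L/I": 113.08406, "N": 114.04293,
    "D": 115.02694, "Q": 128.05858, "K": 128.09496, "E": 129.04259, "M": 131.04049,
    "H": 137.05891, "F": 147.06841, "R": 156.10111, "Y": 163.06333, "W": 186.07931,
}


def read_ladder(peaks, tol=0.01):
    """Assign a residue to each gap between adjacent peaks of one ion series.

    `peaks` must be sorted m/z values of singly charged ions. Gaps that match
    no residue mass within `tol` Da are reported as "?".
    """
    residues = []
    for lower, upper in zip(peaks, peaks[1:]):
        delta = upper - lower
        matches = [aa for aa, mass in RESIDUE_MASS.items() if abs(mass - delta) <= tol]
        residues.append("/".join(matches) if matches else "?")
    return residues


# b1, b2, b3 ions of a peptide starting G-A-S (computed from the table above)
b_ions = [58.028736, 129.065846, 216.097876]
print(read_ladder(b_ions))
```

The ladder yields the residues after the first one; the first residue is inferred from the mass of the lowest peak itself. The `L/I` entry reflects a real limitation: leucine and isoleucine have identical masses and cannot be separated by this approach.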

| Sequencing Approach | Principle | Advantages | Limitations |
|---|---|---|---|
| Database-Dependent | Matches experimental spectra to theoretical spectra from a database. | High-throughput and computationally efficient. | Cannot identify proteins not in the database.[17] |
| De Novo Sequencing | Deduces peptide sequence directly from fragmentation spectra. | Enables sequencing of novel proteins and those from unsequenced organisms.[15][16] | Computationally intensive and can be less accurate than database searches.[15] |

Integrating Genomics, Transcriptomics, and Proteomics

A comprehensive approach to identifying and characterizing novel proteins involves the integration of data from genomics, transcriptomics, and proteomics. This "proteogenomics" workflow can provide strong evidence for the existence and function of a novel protein.

By creating a custom protein database from genomic and transcriptomic data, it is possible to identify peptides that map to previously unannotated open reading frames (ORFs).[18][19] This provides strong evidence that a predicted gene is indeed expressed as a protein.
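A custom search database of this kind is often derived from a six-frame translation. The sketch below is one minimal way to do that under simplifying assumptions: the standard genetic code, ORFs taken from the first methionine to the next stop codon, no handling of introns or ambiguous bases, and an arbitrary `min_len` cutoff. The function names are illustrative, not taken from any cited workflow.

```python
from itertools import product

# Standard genetic code, codons enumerated in TCAG order
BASES = "TCAG"
AMINO = "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"
CODON_TABLE = {"".join(c): aa for c, aa in zip(product(BASES, repeat=3), AMINO)}


def reverse_complement(dna):
    return dna.translate(str.maketrans("ACGT", "TGCA"))[::-1]


def translate(dna):
    """Translate in frame 0; unknown codons become 'X', trailing bases are dropped."""
    return "".join(CODON_TABLE.get(dna[i:i + 3], "X") for i in range(0, len(dna) - 2, 3))


def six_frame_orfs(dna, min_len=10):
    """Return (strand, frame, protein) for each M-initiated ORF of at least min_len residues.

    Segments at the end of the sequence are kept even if no stop codon follows.
    """
    orfs = []
    for strand, seq in (("+", dna), ("-", reverse_complement(dna))):
        for frame in range(3):
            for segment in translate(seq[frame:]).split("*"):
                start = segment.find("M")
                if start != -1 and len(segment) - start >= min_len:
                    orfs.append((strand, frame, segment[start:]))
    return orfs


# Toy sequence encoding M-K-F-G followed by a stop codon
print(six_frame_orfs("ATGAAATTTGGGTAA", min_len=4))
```

The resulting protein strings can be written to a FASTA file and supplied to the search engine alongside the reference proteome.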

Conclusion

The inability to find a protein homolog in a database is not a dead end but rather an entry point into a deeper investigation of a potentially novel protein. By employing advanced computational search strategies and leveraging powerful experimental techniques like mass spectrometry and de novo sequencing, researchers can overcome the limitations of standard homology-based annotation. The integrated approach of proteogenomics offers a robust framework for the discovery and validation of novel proteins, paving the way for new insights into biological function and the identification of new therapeutic targets.

References

- 1. Is Protein BLAST a thing of the past? - PMC [pmc.ncbi.nlm.nih.gov]

- 2. The limits of protein sequence comparison? - PMC [pmc.ncbi.nlm.nih.gov]

- 3. Powerful Sequence Similarity Search Methods and In-Depth Manual Analyses Can Identify Remote Homologs in Many Apparently “Orphan” Viral Proteins - PMC [pmc.ncbi.nlm.nih.gov]

- 4. Understanding PSI-BLAST: A Comprehensive Guide - Omics tutorials [omicstutorials.com]

- 5. PSI-BLAST Tutorial - Comparative Genomics - NCBI Bookshelf [ncbi.nlm.nih.gov]

- 6. PSI-BLAST tutorial - PubMed [pubmed.ncbi.nlm.nih.gov]

- 7. PSI-BLAST tutorial - PMC [pmc.ncbi.nlm.nih.gov]

- 8. stat.purdue.edu [stat.purdue.edu]

- 9. HMMER [hmmer.org]

- 10. Rational Design of Profile HMMs for Sensitive and Specific Sequence Detection with Case Studies Applied to Viruses, Bacteriophages, and Casposons - PMC [pmc.ncbi.nlm.nih.gov]

- 11. Protein Mass Spectrometry Made Simple - PMC [pmc.ncbi.nlm.nih.gov]

- 12. allumiqs.com [allumiqs.com]

- 13. Protein Identification by Tandem Mass Spectrometry - Creative Proteomics [creative-proteomics.com]

- 14. creative-biolabs.com [creative-biolabs.com]

- 15. De Novo Protein Sequencing: How to Improve Accuracy and Data Analysis Efficiency | MtoZ Biolabs [mtoz-biolabs.com]

- 16. medium.com [medium.com]

- 17. De novo sequencing methods in proteomics - PubMed [pubmed.ncbi.nlm.nih.gov]

- 18. A workflow to identify novel proteins based on the direct mapping of peptide-spectrum-matches to genomic locations - PMC [pmc.ncbi.nlm.nih.gov]

- 19. researchgate.net [researchgate.net]

Technical Guide: Establishing a Foundational Identity and History for Cell Lines with Missing Data

Audience: Researchers, scientists, and drug development professionals.

When the historical data for a cell line is missing, this document outlines a systematic approach to rectify the situation by establishing a new, verified historical record through rigorous authentication, characterization, and documentation.

The Core Problem: Consequences of an Unknown History

When historical data for a cell line is unavailable, it is impossible to ensure the two most critical attributes of a reliable in vitro model: its identity and its stability. The consequences are significant:

- Misidentification and Cross-Contamination: Many cell lines in circulation are misidentified. The International Cell Line Authentication Committee (ICLAC) maintains a register of hundreds of misidentified cell lines, with HeLa being a common contaminant.[1][3] Using the wrong cell line renders all experimental data irrelevant to the intended biological model.
- Genetic and Phenotypic Drift: Continuous passaging exerts selective pressure on cell populations, leading to changes in growth rate, morphology, gene expression, and response to stimuli.[4][5][6][7] Data from a low-passage culture may not be comparable to that from a high-passage culture, even if the line is "correct".[2][5]
- Irreproducible Research: The use of unauthenticated or overly passaged cell lines is a major contributor to the reproducibility crisis in biomedical research.[2][8] Without a baseline, results cannot be reliably reproduced within or between labs.
- Wasted Resources: Experiments conducted on poorly characterized cell lines can lead to incorrect theories and derail scientific progress, wasting significant time, funding, and effort.[1]

The logical workflow upon discovering a lack of historical data is not to search for lost records, but to assume the current cell stock is unverified and initiate a process of re-authentication and characterization.

References

- 1. biocompare.com [biocompare.com]

- 2. The costs of using unauthenticated, over-passaged cell lines: how much more data do we need? - PubMed [pubmed.ncbi.nlm.nih.gov]

- 3. The repercussions of using misidentified cell lines | Culture Collections [culturecollections.org.uk]

- 4. Passage number of cancer cell lines: Importance, intricacies, and way-forward - PubMed [pubmed.ncbi.nlm.nih.gov]

- 5. researchgate.net [researchgate.net]

- 6. cellculturecompany.com [cellculturecompany.com]

- 7. Impact of Passage Number on Cell Line Phenotypes [cytion.com]

- 8. Incorrect cell line validation and verification - PMC [pmc.ncbi.nlm.nih.gov]

A Researcher's Guide to Elucidating the Mechanism of Action for a Novel Compound

For Researchers, Scientists, and Drug Development Professionals

Introduction

The identification of a novel compound with promising therapeutic activity is a significant milestone in drug discovery. However, a critical subsequent step is the elucidation of its mechanism of action (MoA). A thorough understanding of how a compound exerts its effects at the molecular, cellular, and organismal levels is paramount for its optimization, preclinical development, and ultimately, its clinical success. A well-defined MoA can help predict potential side effects, identify patient populations who are most likely to respond, and uncover new therapeutic indications.

This guide provides an in-depth overview of the core experimental strategies and computational approaches employed to unravel the MoA of a novel compound. It is designed to be a technical resource for researchers, scientists, and drug development professionals, offering detailed experimental protocols, structured data presentation, and visualizations of key concepts and workflows.

Phase 1: Target Identification and Engagement

The initial phase of MoA elucidation focuses on identifying the direct molecular target(s) of the novel compound and confirming physical engagement in a relevant biological context.

Experimental Protocols

1. Affinity Chromatography coupled with Mass Spectrometry (AC-MS)

This technique is a cornerstone for identifying the protein targets of a small molecule.

- Principle: The novel compound is immobilized on a solid support (e.g., beads) to create an affinity matrix. This matrix is then incubated with a complex protein mixture, such as a cell lysate. Proteins that bind to the compound are "captured" and subsequently eluted and identified by mass spectrometry.
- Protocol Outline:
  - Compound Immobilization: Covalently attach the novel compound to a solid support (e.g., NHS-activated sepharose beads) via a functional group on the compound. A linker may be used to minimize steric hindrance.
  - Cell Lysis: Prepare a cell lysate from a relevant cell line or tissue under non-denaturing conditions to preserve protein structure and interactions.
  - Affinity Purification: Incubate the cell lysate with the compound-immobilized beads. Include a control with beads that have been treated with a blocking agent but without the compound to identify non-specific binders.
  - Washing: Wash the beads extensively with a series of buffers of increasing stringency to remove non-specifically bound proteins.
  - Elution: Elute the specifically bound proteins from the beads. This can be achieved by changing the pH, increasing the ionic strength, or by competitive elution with an excess of the free compound.
  - Sample Preparation for Mass Spectrometry: The eluted proteins are typically separated by SDS-PAGE, followed by in-gel digestion with trypsin.
  - LC-MS/MS Analysis: The resulting peptides are analyzed by liquid chromatography-tandem mass spectrometry (LC-MS/MS) to determine their amino acid sequences.
  - Data Analysis: The identified proteins are cross-referenced with protein databases to identify the potential targets. Candidates are prioritized based on their enrichment in the compound-treated sample compared to the control.[1][2]
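The enrichment-based prioritization in the data analysis step can be sketched as a log2 fold change of spectral counts between compound beads and control beads. The pseudocount, the use of raw spectral counts, and all protein names and numbers below are illustrative assumptions; published workflows typically add replicate statistics.

```python
import math


def rank_by_enrichment(compound_counts, control_counts, pseudocount=1.0):
    """Rank proteins by log2(compound / control) spectral-count enrichment.

    The pseudocount avoids division by zero for proteins absent from one sample.
    """
    proteins = set(compound_counts) | set(control_counts)
    scored = {
        protein: math.log2(
            (compound_counts.get(protein, 0) + pseudocount)
            / (control_counts.get(protein, 0) + pseudocount)
        )
        for protein in proteins
    }
    return sorted(scored.items(), key=lambda item: item[1], reverse=True)


# Hypothetical spectral counts
compound_beads = {"Protein X": 42, "Protein Y": 15, "HSP70": 30}
control_beads = {"Protein Y": 2, "HSP70": 28}

for protein, log2_fc in rank_by_enrichment(compound_beads, control_beads):
    print(f"{protein}: {log2_fc:.2f}")
```

Abundant background binders such as chaperones appear in both samples and fall to the bottom of the ranking, while proteins captured only by the compound matrix rise to the top.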

2. Cellular Thermal Shift Assay (CETSA)

CETSA is a powerful method to confirm target engagement in intact cells or tissue samples.[3][4]

- Principle: The binding of a ligand (the novel compound) to its target protein often increases the thermal stability of the protein. CETSA measures this change in thermal stability.[4]
- Protocol Outline:
  - Cell Treatment: Treat intact cells with the novel compound at various concentrations. A vehicle-treated control is essential.
  - Heating: Heat the cell suspensions at a range of temperatures in a thermocycler.
  - Cell Lysis and Fractionation: Lyse the cells and separate the soluble protein fraction (containing folded, non-denatured proteins) from the precipitated fraction by centrifugation.
  - Protein Quantification: Quantify the amount of the putative target protein remaining in the soluble fraction at each temperature using techniques like Western blotting or ELISA.
  - Data Analysis: Plot the amount of soluble protein as a function of temperature to generate a melting curve. A shift in the melting curve to a higher temperature in the presence of the compound indicates target engagement.[4] An isothermal dose-response fingerprint can also be generated by heating all samples at a single, optimized temperature and varying the compound concentration to determine the cellular EC50.[5]
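As a rough illustration of the melting-curve analysis, the sketch below estimates the apparent melting temperature by linear interpolation at the point where the normalized soluble fraction drops through 0.5, then takes the difference between treated and vehicle curves. In practice a sigmoidal fit is more common; interpolation is used here only to keep the example dependency-free, and the data points are invented.

```python
def melting_temperature(temps, soluble_fraction):
    """Temperature at which the normalized soluble fraction first falls below 0.5.

    Uses linear interpolation between the two bracketing measurements.
    """
    points = list(zip(temps, soluble_fraction))
    for (t0, f0), (t1, f1) in zip(points, points[1:]):
        if f0 >= 0.5 > f1:
            return t0 + (f0 - 0.5) * (t1 - t0) / (f0 - f1)
    raise ValueError("curve does not cross 0.5 within the measured range")


# Hypothetical band intensities normalized to the lowest temperature
temps_c = [40, 44, 48, 52, 56, 60]
vehicle = [1.00, 0.90, 0.60, 0.20, 0.05, 0.00]
treated = [1.00, 0.98, 0.90, 0.60, 0.20, 0.05]

delta_tm = melting_temperature(temps_c, treated) - melting_temperature(temps_c, vehicle)
print(f"Apparent thermal shift: {delta_tm:.1f} °C")
```

A positive shift is consistent with ligand-induced stabilization of the target.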

Data Presentation

Table 1: Summary of Target Identification and Engagement Data

| Parameter | Experimental Method | Result | Interpretation |
|---|---|---|---|
| Putative Target(s) | Affinity Chromatography-Mass Spectrometry | Protein X, Protein Y | The compound directly binds to these proteins in a cellular context. |
| Binding Affinity (Kd) | Surface Plasmon Resonance (SPR) | 50 nM (for Protein X) | High-affinity interaction with Protein X. |
| Thermal Shift (ΔTm) | Cellular Thermal Shift Assay (CETSA) | +4.2 °C (for Protein X) | The compound stabilizes Protein X in intact cells, confirming engagement. |
| Cellular EC50 | Isothermal Dose-Response CETSA | 200 nM (for Protein X) | Concentration of the compound required for 50% of maximal target engagement in cells. |

Phase 2: Elucidation of Cellular and Pathway Effects

Once the direct target is identified and engagement is confirmed, the next phase is to understand the downstream consequences of this interaction on cellular signaling pathways and phenotypes.

Experimental Protocols

1. Luciferase Reporter Gene Assay

This is a widely used method to investigate the effect of a compound on the activity of a specific signaling pathway.[6][7][8]

- Principle: A reporter gene (e.g., luciferase) is placed under the control of a promoter that is regulated by a transcription factor of interest. The activity of the luciferase enzyme, which produces a luminescent signal in the presence of its substrate, serves as a readout for the activity of the signaling pathway.[6][7]
- Protocol Outline:
  - Cell Transfection: Transfect a suitable cell line with a plasmid containing the luciferase reporter construct. A control plasmid with a constitutively active promoter (e.g., CMV) can be co-transfected to normalize for transfection efficiency.
  - Compound Treatment: Treat the transfected cells with the novel compound at various concentrations.
  - Cell Lysis: After an appropriate incubation period, lyse the cells to release the luciferase enzyme.[6][9]
  - Luminescence Measurement: Add the luciferase substrate (luciferin) to the cell lysate and measure the resulting luminescence using a luminometer.[6][10]
  - Data Analysis: Normalize the luciferase activity of the experimental reporter to that of the control reporter. A change in luminescence in response to the compound indicates modulation of the signaling pathway.
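The normalization described in the data analysis step amounts to a ratio of ratios. A minimal sketch follows, with invented luminescence readings and function names chosen here for illustration:

```python
def normalized_activity(reporter_signal, control_signal):
    """Experimental reporter luminescence divided by the co-transfected control."""
    if control_signal <= 0:
        raise ValueError("control reporter signal must be positive")
    return reporter_signal / control_signal


def fold_change_vs_vehicle(treated, vehicle):
    """Each argument is a (reporter_signal, control_signal) pair from one well."""
    return normalized_activity(*treated) / normalized_activity(*vehicle)


# Hypothetical relative light units
vehicle_well = (20000.0, 1000.0)
treated_well = (5000.0, 1000.0)

print(fold_change_vs_vehicle(treated_well, vehicle_well))
```

A fold change below 1 suggests pathway inhibition and above 1 suggests activation, provided the control reporter itself is unaffected by the compound.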

2. High-Content Imaging and Analysis

This technique allows for the quantitative analysis of multiple cellular parameters in response to compound treatment.

- Principle: Automated microscopy and image analysis are used to measure changes in cell morphology, protein localization, and the expression of specific biomarkers.
- Protocol Outline:
  - Cell Plating and Treatment: Plate cells in multi-well plates and treat with the novel compound.
  - Staining: Stain the cells with fluorescent dyes or antibodies to visualize specific cellular components (e.g., nucleus, cytoskeleton, target protein).
  - Image Acquisition: Acquire images using a high-content imaging system.
  - Image Analysis: Use image analysis software to quantify various cellular features, such as nuclear translocation of a transcription factor, changes in cell shape, or the intensity of a fluorescently labeled protein.

Data Presentation

Table 2: Summary of Cellular and Pathway Effects

| Parameter | Experimental Method | Result | Interpretation |
|---|---|---|---|
| Pathway Activity (IC50) | Luciferase Reporter Assay (NF-κB) | 150 nM | The compound inhibits the NF-κB signaling pathway. |
| Protein Phosphorylation | Western Blot (p-ERK) | Decreased by 80% at 1 µM | The compound inhibits the MAPK/ERK signaling pathway. |
| Transcription Factor Localization | High-Content Imaging (NFAT) | 90% nuclear exclusion at 500 nM | The compound prevents the activation and nuclear translocation of NFAT. |
| Cell Viability (GI50) | CellTiter-Glo Assay | 5 µM (in cancer cell line A) | The compound has a growth-inhibitory effect on cancer cells. |

Phase 3: In Vivo Validation

The final phase involves validating the MoA in a living organism to understand the compound's physiological effects.

Experimental Protocols

1. Animal Models of Disease

- Principle: Use an appropriate animal model that recapitulates key aspects of the human disease to evaluate the in vivo efficacy and MoA of the novel compound.
- Protocol Outline:
  - Model Selection: Choose a relevant animal model (e.g., a xenograft model for cancer, a transgenic model for a genetic disease).
  - Compound Administration: Administer the novel compound to the animals via a clinically relevant route.
  - Efficacy Assessment: Monitor disease progression and assess the therapeutic effect of the compound.
  - Pharmacodynamic (PD) Biomarker Analysis: Collect tissue or blood samples to measure biomarkers that reflect the engagement of the target and modulation of the downstream pathway in vivo.

Data Presentation

Table 3: Summary of In Vivo Validation Data

| Parameter | Experimental Model | Result | Interpretation |
|---|---|---|---|
| Tumor Growth Inhibition | Mouse Xenograft Model | 75% reduction in tumor volume | The compound has significant anti-tumor efficacy in vivo. |
| Target Engagement (in vivo) | In vivo CETSA on tumor tissue | Significant thermal stabilization of Protein X | The compound engages its target in the tumor tissue. |
| Pathway Modulation (in vivo) | Immunohistochemistry (p-STAT3) | 85% decrease in p-STAT3 staining in tumors | The compound inhibits the target pathway in the in vivo setting. |
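A tumor-volume reduction such as the one reported in the table can be derived from endpoint measurements. The sketch below uses one simple definition, the relative difference in mean endpoint volume between treated and control groups; other definitions (for example, based on change from baseline) are also in use, so the formula and the volumes here are assumptions for illustration.

```python
from statistics import mean


def tumor_growth_inhibition(treated_volumes, control_volumes):
    """Percent reduction in mean endpoint tumor volume relative to control."""
    return (1 - mean(treated_volumes) / mean(control_volumes)) * 100


# Hypothetical endpoint tumor volumes in mm^3
control_group = [950, 1000, 1050]
treated_group = [240, 250, 260]

print(f"{tumor_growth_inhibition(treated_group, control_group):.0f}% reduction")
```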


References

- 1. researchgate.net [researchgate.net]

- 2. wp.unil.ch [wp.unil.ch]

- 3. Screening for Target Engagement using the Cellular Thermal Shift Assay - CETSA - Assay Guidance Manual - NCBI Bookshelf [ncbi.nlm.nih.gov]

- 4. benchchem.com [benchchem.com]

- 5. bio-protocol.org [bio-protocol.org]

- 6. indigobiosciences.com [indigobiosciences.com]

- 7. bitesizebio.com [bitesizebio.com]

- 8. bioagilytix.com [bioagilytix.com]

- 9. assaygenie.com [assaygenie.com]

- 10. youtube.com [youtube.com]

Unraveling a Novel Inborn Error of Metabolism: A Technical Guide to the ACAA2 Gain-of-Function Variant

A Whitepaper for Researchers, Scientists, and Drug Development Professionals

Abstract

The landscape of rare diseases is continually expanding with the advent of advanced genomic sequencing technologies. A recently identified autosomal dominant disorder, stemming from a recurrent gain-of-function variant in the ACAA2 gene, presents a novel challenge in the field of inborn errors of metabolism. This technical guide provides a comprehensive overview of the current, albeit limited, understanding of this emerging disease, characterized by a complex phenotype including familial partial lipodystrophy, lipomatosis, infantile steatohepatitis, and hypoglycemia. We consolidate the sparse existing data, propose a pathogenic mechanism, and offer a detailed compendium of experimental protocols to facilitate further research. This document is intended to serve as a foundational resource for researchers, clinicians, and industry professionals dedicated to elucidating the pathophysiology of this rare condition and developing potential therapeutic interventions.

Introduction to the ACAA2-Related Metabolic Disorder

Acetyl-CoA Acyltransferase 2 (ACAA2) is a mitochondrial enzyme that catalyzes the final step of the fatty acid β-oxidation spiral, the thiolytic cleavage of 3-ketoacyl-CoA into acetyl-CoA and a shortened acyl-CoA. This process is fundamental for energy production from fatty acids, particularly during periods of fasting.

A newly described rare genetic disorder has been linked to a recurrent heterozygous missense variant in the ACAA2 gene (c.688G>A; p.Glu230Lys).[1] Unlike typical inborn errors of metabolism that arise from loss-of-function mutations, this disorder is hypothesized to be caused by a pathological gain-of-function in the ACAA2 enzyme.[1] The precise molecular consequences of this enhanced enzymatic activity are still under investigation, but it is speculated to disrupt the delicate balance of mitochondrial lipid and energy metabolism.
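The variant notation can be checked with simple codon arithmetic. This sketch assumes standard coding-sequence numbering starting at the A of the start codon and uses only the relevant subset of the standard genetic code; which of the two glutamate codons the reference transcript carries is not stated here, so both are tested.

```python
# Subset of the standard genetic code relevant to p.Glu230Lys
CODONS = {"GAA": "Glu", "GAG": "Glu", "AAA": "Lys", "AAG": "Lys"}


def locate(cdna_pos):
    """Map a 1-based coding position to (codon number, position within codon)."""
    return (cdna_pos - 1) // 3 + 1, (cdna_pos - 1) % 3 + 1


def apply_substitution(codon, pos_in_codon, alt_base):
    """Replace the base at a 1-based position within a codon."""
    return codon[:pos_in_codon - 1] + alt_base + codon[pos_in_codon:]


codon_number, pos_in_codon = locate(688)
print(codon_number, pos_in_codon)

for ref_codon in ("GAA", "GAG"):
    alt_codon = apply_substitution(ref_codon, pos_in_codon, "A")
    print(ref_codon, CODONS[ref_codon], "->", alt_codon, CODONS[alt_codon])
```

Position 688 falls on the first base of codon 230, and a G>A change at the first base of either glutamate codon yields a lysine codon, which agrees with the protein-level description.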

Clinically, affected individuals present with a multisystemic phenotype that emerges from infancy to adulthood. Key features include:

- Familial Partial Lipodystrophy (FPL): Characterized by the loss of subcutaneous adipose tissue from the limbs and gluteal region, with variable accumulation of fat in the face, neck, and intra-abdominal areas.[1][2]
- Lipomatosis: The formation of benign tumors composed of adipose tissue.[1]
- Infantile Steatohepatitis: Fatty infiltration and inflammation of the liver occurring in infancy.[1]
- Hypoglycemia: Episodes of abnormally low blood sugar, which can be severe enough to cause neurological damage, particularly in infants.[1]

A critical diagnostic marker for this condition is the presence of elevated levels of long-chain acylcarnitines in plasma, which is paradoxical for a disorder involving an enzyme at the end of the beta-oxidation pathway and points towards a complex disruption of mitochondrial acyl-CoA metabolism.[1]

Summarized Patient Data

The following table summarizes the key clinical and biochemical findings from the initial reported cohort of individuals with the ACAA2 p.Glu230Lys variant. This data is compiled from the limited existing literature.[1]

| Parameter | Finding |
|---|---|
| Genetics | |
| Gene | ACAA2 |
| Variant | c.688G>A; p.Glu230Lys |
| Inheritance | Autosomal Dominant (including de novo cases) |
| Clinical Phenotype | |
| Adipose Tissue | Familial Partial Lipodystrophy, Pubic and Dorsocervical Lipomatosis |
| Hepatic | Infantile Steatohepatitis, Hepatomegaly, Steatosis, Fibrosis |
| Metabolic | Hypoglycemia (especially in infancy) |
| Biochemical Markers | |
| Plasma Acylcarnitines | Elevated long-chain species (e.g., C14, C16, C18) |
| Liver Function Tests | Elevated transaminases (in infancy) |
| Histopathology (Liver) | Micro- and macrovesicular steatosis, periportal fibrosis, damaged mitochondria |

Hypothesized Pathogenic Mechanism and Signaling Pathway

The ACAA2 enzyme catalyzes the conversion of 3-ketoacyl-CoA to acetyl-CoA and a shortened acyl-CoA. The p.Glu230Lys gain-of-function variant is thought to enhance this catalytic activity. The precise downstream consequences are yet to be fully elucidated, but a plausible hypothesis is that the overactive enzyme rapidly depletes the pool of 3-ketoacyl-CoA, leading to an increased "pull" on the entire β-oxidation pathway. This could paradoxically lead to an accumulation of upstream long-chain acyl-CoAs that cannot be processed efficiently, which are then shunted into acylcarnitine formation. This disruption in mitochondrial fatty acid homeostasis could lead to lipotoxicity in hepatocytes, impaired adipocyte function and storage, and dysregulated energy balance leading to hypoglycemia.

Caption: Hypothesized pathogenic mechanism of the ACAA2 gain-of-function variant.

Experimental Protocols

To facilitate research into this novel disorder, we provide detailed methodologies for key experiments. These protocols are adapted from established procedures for related metabolic diseases.

Patient-Derived Fibroblast Culture

- Objective: To establish a renewable source of patient cells for biochemical and functional studies.
- Methodology: Adapted from primary human fibroblast culture protocols.[3][4]
  - Biopsy Collection: Obtain a 3-4 mm skin punch biopsy from the patient under sterile conditions. Place the biopsy in a sterile tube containing transport medium (e.g., DMEM with 10% FBS and antibiotics).
  - Explant Preparation: In a biosafety cabinet, wash the biopsy with sterile PBS. Mince the tissue into 1-2 mm pieces using sterile scalpels.
  - Culture Initiation: Place the tissue pieces into a 6-well plate, ensuring they adhere to the bottom. Add a minimal amount of complete DMEM (high glucose, 20% FBS, penicillin/streptomycin) to just cover the tissue.
  - Cell Outgrowth: Incubate at 37°C in a 5% CO₂ incubator. Fibroblasts will begin to migrate from the explants within 7-14 days.
  - Expansion: Once a sufficient number of fibroblasts have emerged, trypsinize the cells and transfer them to a larger flask for expansion. Subsequent passages can be performed in DMEM with 10% FBS.

Acylcarnitine Profiling by Tandem Mass Spectrometry

- Objective: To quantify the levels of long-chain acylcarnitines, the key diagnostic biomarker.
- Methodology: Based on established LC-MS/MS protocols for acylcarnitine analysis.[5]
- Sample Preparation (Plasma):
  - To 50 µL of patient plasma, add an internal standard solution containing stable isotope-labeled acylcarnitines.
  - Precipitate proteins by adding 200 µL of cold acetonitrile. Vortex and centrifuge.
  - Transfer the supernatant to a new tube and evaporate to dryness under a nitrogen stream.
- Derivatization (Butylation):
  - Reconstitute the dried extract in 100 µL of 3N HCl in n-butanol.
  - Incubate at 65°C for 15 minutes.
  - Evaporate the butanolic HCl to dryness and reconstitute in the initial mobile phase.
- LC-MS/MS Analysis:
  - Chromatography: Use a C18 column with a gradient elution of water and acetonitrile, both containing 0.1% formic acid.
  - Mass Spectrometry: Operate in positive electrospray ionization (ESI) mode with multiple reaction monitoring (MRM) to detect specific acylcarnitine species.
  - Quantification: Calculate the concentration of each acylcarnitine species by comparing its peak area to that of the corresponding internal standard.
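The quantification step can be sketched as a simple peak-area ratio calculation. This is a minimal illustration, not a validated method: the function name, the peak areas, the internal standard concentrations, and the default response factor of 1.0 are all hypothetical placeholders.

```python
def quantify_by_internal_standard(analyte_area, istd_area, istd_conc_um,
                                  response_factor=1.0):
    """Return the analyte concentration (µM) from the analyte / internal-standard
    peak-area ratio. response_factor = 1.0 assumes the labeled standard
    responds identically to the analyte (an assumption)."""
    if istd_area <= 0:
        raise ValueError("internal standard peak area must be positive")
    return (analyte_area / istd_area) * istd_conc_um / response_factor


# Hypothetical MRM peak areas: (analyte area, internal standard area, standard µM)
peaks = {
    "C14": (42000.0, 60000.0, 0.5),
    "C16": (90000.0, 60000.0, 0.5),
    "C18": (30000.0, 60000.0, 0.5),
}

for species, (area, istd_area, istd_conc) in peaks.items():
    conc = quantify_by_internal_standard(area, istd_area, istd_conc)
    print(f"{species}: {conc:.3f} µM")
```

In practice each species is paired with its own labeled standard, and any response factor would come from calibration rather than being assumed.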

ACAA2 (3-Ketoacyl-CoA Thiolase) Enzymatic Assay

- Objective: To measure the enzymatic activity of ACAA2 in patient-derived cells and to characterize the kinetic properties of the gain-of-function variant.
- Methodology: A spectrophotometric assay adapted from protocols for thiolase activity.[6][7] Thiolase activity can be measured in either direction. The reverse (condensation) reaction is usually followed with a coupled assay that produces a detectable change in absorbance, whereas the forward (thiolytic cleavage) reaction can be followed directly as the loss of the 3-ketoacyl-CoA substrate (e.g., acetoacetyl-CoA). The direct forward assay is described below.
- Principle (Forward Reaction): The cleavage of the 3-ketoacyl-CoA substrate by ACAA2 results in the disappearance of the enolate form of the substrate, which can be monitored by a decrease in absorbance at ~305-310 nm.
- Reaction Mixture:
  - 50 mM Tris-HCl buffer, pH 8.0
  - 20 mM MgCl₂
  - 50 µM Coenzyme A (CoA)
  - 50 µM 3-ketoacyl-CoA substrate (e.g., acetoacetyl-CoA or a longer-chain substrate)
  - Cell or mitochondrial lysate (containing the ACAA2 enzyme)
- Procedure:
  - Prepare cell or mitochondrial lysates from patient-derived fibroblasts and control cell lines.
  - In a quartz cuvette, combine the buffer, MgCl₂, and CoA.
  - Add the cell lysate and equilibrate to 37°C.
  - Initiate the reaction by adding the 3-ketoacyl-CoA substrate.
  - Immediately monitor the decrease in absorbance at 305 nm using a spectrophotometer.
- Data Analysis:
  - Calculate the rate of reaction (enzyme activity) from the linear portion of the absorbance vs. time plot, using the molar extinction coefficient of the substrate.
  - To determine kinetic parameters (Vmax and Km) for the wild-type and mutant enzymes, repeat the assay with varying substrate concentrations and fit the data to the Michaelis-Menten equation.[8][9] A higher Vmax or a lower Km for the variant would be consistent with a gain of function. Because Vmax measured in lysates scales with enzyme abundance, normalize it to the ACAA2 protein level where possible.
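The kinetic analysis can be sketched as follows. A nonlinear least-squares fit to the Michaelis-Menten equation is generally preferred; to stay dependency-free, this sketch swaps in the Hanes-Woolf linearization, [S]/v = [S]/Vmax + Km/Vmax. The function name and the synthetic, noise-free data are hypothetical.

```python
def hanes_woolf_fit(substrate, rates):
    """Estimate (Vmax, Km) from initial rates via the Hanes-Woolf linearization.

    A straight-line fit of [S]/v against [S] has slope 1/Vmax and
    intercept Km/Vmax.
    """
    if len(substrate) != len(rates) or len(rates) < 3:
        raise ValueError("need at least three matched ([S], v) pairs")
    xs = list(substrate)
    ys = [s / v for s, v in zip(substrate, rates)]
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    sxx = sum((x - x_mean) ** 2 for x in xs)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    slope = sxy / sxx                     # ordinary least-squares slope
    intercept = y_mean - slope * x_mean
    vmax = 1.0 / slope
    km = intercept * vmax
    return vmax, km


# Hypothetical noise-free rates generated from Vmax = 100, Km = 20 (arbitrary units).
substrate = [5.0, 10.0, 20.0, 50.0, 100.0]
rates = [100.0 * s / (20.0 + s) for s in substrate]
vmax, km = hanes_woolf_fit(substrate, rates)
print(f"Vmax ~ {vmax:.1f}, Km ~ {km:.1f}")
```

Running the same fit on wild-type and variant data gives Vmax and Km values that can be compared directly. With real, noisy data the linearization weights points unevenly, which is one reason to prefer a nonlinear fit for final values.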

Cellular Model Generation using CRISPR-Cas9

- Objective: To create isogenic cell lines (e.g., in HEK293 or HepG2 cells) with the ACAA2 p.Glu230Lys variant to study the disease mechanism in a controlled genetic background.
- Methodology: A CRISPR-Cas9 knock-in strategy.[9]
- Component Design:
  - sgRNA: Design a single guide RNA that directs the Cas9 nuclease to a site as close as possible to the target mutation site (c.688) in the ACAA2 gene.
  - Cas9: Utilize a high-fidelity Cas9 nuclease to minimize off-target effects.
  - Donor Template: Synthesize a single-stranded oligodeoxynucleotide (ssODN) donor template containing the desired G-to-A point mutation. The ssODN should have 40-60 base pair homology arms flanking the mutation site. Introduce a silent mutation in the PAM sequence within the donor template to prevent re-cutting by Cas9 after successful editing.
- Delivery: Co-transfect the sgRNA, Cas9 (as plasmid or RNP complex), and the ssODN donor template into the target cells using electroporation or a lipid-based transfection reagent.
- Clonal Selection: After transfection, seed the cells at a low density to allow for the growth of single-cell-derived colonies.
- Screening and Validation:
  - Expand individual clones and extract genomic DNA.
  - Screen for the desired knock-in mutation using PCR followed by Sanger sequencing or restriction fragment length polymorphism (RFLP) analysis if the mutation creates or destroys a restriction site.
  - Confirm the expression of the mutant ACAA2 protein by Western blot and validate the functional consequences using the enzymatic and mitochondrial function assays described herein.
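The donor template design can be illustrated with a short string-manipulation sketch. It assumes a reference sequence is available as a plain string; the function name, the placeholder sequence, and the chosen positions are hypothetical and do not correspond to the real ACAA2 locus. The sketch does not check that a PAM edit is actually silent, which depends on the reading frame.

```python
def build_ssodn(reference, edit_index, new_base, arm_length=50, extra_edits=None):
    """Return a donor sequence centered on edit_index with symmetric homology arms.

    extra_edits maps 0-based reference positions to bases, e.g. a PAM-disrupting
    change; whether that change is silent must be verified separately.
    """
    reference = reference.upper()
    if not 40 <= arm_length <= 60:
        raise ValueError("arm_length should be 40-60 nt, per the protocol")
    start = edit_index - arm_length
    end = edit_index + arm_length + 1
    if start < 0 or end > len(reference):
        raise ValueError("reference too short for the requested homology arms")
    if reference[edit_index] == new_base.upper():
        raise ValueError("new_base matches the reference; nothing to edit")
    edits = {edit_index: new_base}
    edits.update(extra_edits or {})
    donor = list(reference[start:end])
    for pos, base in edits.items():
        if not start <= pos < end:
            raise ValueError(f"edit at {pos} falls outside the donor window")
        donor[pos - start] = base.upper()
    return "".join(donor)


# Placeholder 120-nt sequence (NOT the ACAA2 gene); index 62 holds a G.
reference = "ACGT" * 30
donor = build_ssodn(reference, edit_index=62, new_base="A", arm_length=40)
print(len(donor), donor[40])
```

For a real design, the reference window would come from the genomic sequence around c.688, and the strand of the ssODN relative to the sgRNA would need to be chosen separately.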

Caption: Workflow for generating an ACAA2 gain-of-function cellular model.

Analysis of Mitochondrial Respiration

- Objective: To assess the impact of the ACAA2 gain-of-function mutation on mitochondrial function.
- Methodology: The Seahorse XF Cell Mito Stress Test.[10]
- Cell Seeding: Seed control and patient-derived fibroblasts (or CRISPR-edited cells) in a Seahorse XF cell culture microplate. Allow cells to adhere and form a monolayer.
- Assay Preparation: The day before the assay, hydrate the sensor cartridge. On the day of the assay, replace the culture medium with Seahorse XF assay medium supplemented with glucose, pyruvate, and glutamine, and incubate in a non-CO₂ incubator at 37°C.
- Mito Stress Test: Load the sensor cartridge with compounds that modulate mitochondrial respiration (oligomycin, FCCP, and a mixture of rotenone/antimycin A). The Seahorse XF Analyzer will sequentially inject these compounds and measure the oxygen consumption rate (OCR) in real-time.
- Data Analysis: The assay provides key parameters of mitochondrial function:
  - Basal Respiration: The baseline oxygen consumption of the cells.
  - ATP Production: The portion of basal respiration used to generate ATP.
  - Maximal Respiration: The maximum OCR the cells can achieve.
  - Spare Respiratory Capacity: The ability of the cell to respond to an energetic demand.

An increase in basal and maximal respiration might be expected in cells with an ACAA2 gain-of-function, reflecting an increased flux through the β-oxidation pathway.
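The parameters listed above can be derived from the OCR trace with simple arithmetic. The sketch below assumes the conventional Mito Stress Test definitions: non-mitochondrial OCR is the minimum after rotenone/antimycin A, basal respiration is the last pre-injection reading minus non-mitochondrial OCR, and so on. The function name and the OCR values are hypothetical.

```python
def mito_stress_parameters(basal, post_oligomycin, post_fccp, post_rot_aa):
    """Derive Mito Stress Test parameters from OCR readings (pmol O2/min).

    Each argument is the list of readings taken in one phase of the assay.
    """
    non_mito = min(post_rot_aa)                    # residual OCR after Rot/AA
    basal_resp = basal[-1] - non_mito              # last reading before oligomycin
    atp_linked = basal[-1] - min(post_oligomycin)  # oligomycin-sensitive OCR
    maximal = max(post_fccp) - non_mito            # uncoupled (FCCP) capacity
    spare = maximal - basal_resp
    return {
        "non_mitochondrial": non_mito,
        "basal_respiration": basal_resp,
        "atp_production": atp_linked,
        "maximal_respiration": maximal,
        "spare_respiratory_capacity": spare,
    }


# Hypothetical OCR readings for a single well.
params = mito_stress_parameters(
    basal=[100.0, 102.0, 101.0],
    post_oligomycin=[40.0, 38.0, 39.0],
    post_fccp=[200.0, 210.0, 205.0],
    post_rot_aa=[20.0, 18.0, 19.0],
)
for name, value in params.items():
    print(f"{name}: {value:.1f}")
```

Values are usually normalized to cell number or protein content per well before control and variant lines are compared.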

Future Directions and Conclusion

The discovery of the ACAA2 gain-of-function disorder opens up a new area of research in metabolic diseases. The immediate priorities should be to further characterize the clinical spectrum of the disease, understand the precise molecular consequences of the enhanced enzyme activity, and develop robust cellular and animal models. The experimental protocols detailed in this guide provide a roadmap for the scientific community to begin addressing these critical questions.

Key future research should focus on:

- Developing a mouse model with the p.Glu230Lys knock-in mutation to study the systemic effects of the disorder and test therapeutic strategies.
- Performing detailed lipidomic and metabolomic analyses on patient samples and model systems to understand the full scope of metabolic dysregulation.
- Investigating potential therapeutic approaches, such as small molecule inhibitors that could normalize ACAA2 activity or strategies to mitigate the downstream effects of metabolic dysregulation.

This technical guide serves as a starting point for a collaborative effort to unravel the complexities of this rare disease. By providing a consolidated resource of current knowledge and actionable experimental plans, we hope to accelerate the pace of discovery and ultimately improve the lives of individuals affected by this novel ACAA2-related disorder.

References

- 1. A spectrophotometric assay for measuring acetyl-coenzyme A carboxylase. | Semantic Scholar [semanticscholar.org]

- 2. researchgate.net [researchgate.net]

- 3. A spectrophotometric assay for measuring acetyl-coenzyme A carboxylase - PubMed [pubmed.ncbi.nlm.nih.gov]

- 4. Peroxisomal Plant 3-Ketoacyl-CoA Thiolase Structure and Activity Are Regulated by a Sensitive Redox Switch - PMC [pmc.ncbi.nlm.nih.gov]

- 5. Practical assay method of cytosolic acetoacetyl-CoA thiolase by rapid release of cytosolic enzymes from cultured lymphocytes using digitonin - PubMed [pubmed.ncbi.nlm.nih.gov]

- 6. Impairment of mitochondrial acetoacetyl CoA thiolase activity in the colonic mucosa of patients with ulcerative colitis - PMC [pmc.ncbi.nlm.nih.gov]

- 7. Cloning, Expression and Purification of an Acetoacetyl CoA Thiolase from Sunflower Cotyledon - PMC [pmc.ncbi.nlm.nih.gov]

- 8. Basics of Enzymatic Assays for HTS - Assay Guidance Manual - NCBI Bookshelf [ncbi.nlm.nih.gov]

- 9. chem.libretexts.org [chem.libretexts.org]

- 10. youtube.com [youtube.com]

Navigating the Terra Incognita of Metabolism: A Technical Guide to Characterizing the Methylaspartate Cycle

For Researchers, Scientists, and Drug Development Professionals

Abstract: The landscape of microbial metabolism is vast and continues to reveal novel pathways with significant implications for biotechnology and drug development. The methylaspartate cycle, a recently elucidated anaplerotic pathway for acetate assimilation, is a prime example of this metabolic diversity[1][2] and an alternative to the better-known glyoxylate cycle.[2][3] This technical guide explores the methylaspartate cycle, a pathway absent from most model organisms but crucial for the survival of certain extremophiles, particularly haloarchaea.[1][4][5] We present an overview of the cycle's enzymatic steps, quantitative data from key studies, detailed experimental protocols for its characterization, and diagrams of its metabolic network. This document is intended as a resource for researchers aiming to understand, engineer, or target this metabolic route.

Introduction: The Methylaspartate Cycle, a Novel Anaplerotic Pathway

Growth on two-carbon compounds like acetate requires a metabolic strategy to replenish the intermediates of the central carbon metabolism, a process known as anaplerosis. While the glyoxylate cycle is a well-established anaplerotic pathway in many organisms, it is not universally conserved.[6] Haloarchaea, a group of extremophilic archaea, have evolved a distinct solution: the methylaspartate cycle.[1][2][3] This pathway allows for the net conversion of acetyl-CoA to malate, a key precursor for biosynthesis.[1][2]

The discovery of the methylaspartate cycle in organisms like Haloarcula marismortui highlights the remarkable adaptability of microbial metabolism.[2][3] Unlike the glyoxylate cycle, the methylaspartate cycle involves a unique set of enzymatic reactions, including the key intermediate for which it is named, methylaspartate.[1][3] Understanding this pathway is not only fundamental to comprehending the metabolic capabilities of these organisms but also opens avenues for novel biotechnological applications and the development of targeted antimicrobial strategies.

Organisms: The methylaspartate cycle has been experimentally confirmed in haloarchaea such as Haloarcula marismortui and Haloarcula hispanica.[4] Bioinformatic analyses suggest its presence in approximately 40% of sequenced haloarchaea.[4][5] Notably, this pathway is absent in commonly studied model organisms like Escherichia coli and Saccharomyces cerevisiae, which typically utilize the glyoxylate cycle for growth on acetate.

The Methylaspartate Cycle: A Step-by-Step Enzymatic Journey

The methylaspartate cycle is intricately linked with the tricarboxylic acid (TCA) cycle. It effectively converts two molecules of acetyl-CoA into one molecule of malate.[4] The core of the pathway begins with the conversion of glutamate to methylaspartate and proceeds through a series of unique enzymatic transformations.

The key enzymatic steps of the methylaspartate cycle are:

- Glutamate Mutase (MamAB): Glutamate is isomerized to (2S,3S)-3-methylaspartate.
- Methylaspartate Ammonia-Lyase (Mal): This enzyme catalyzes the elimination of ammonia from methylaspartate to form mesaconate.
- Succinyl-CoA:Mesaconate CoA-Transferase (Mct): Mesaconate is activated to mesaconyl-CoA.[5]
- Mesaconyl-CoA Hydratase (Mch): Mesaconyl-CoA is hydrated to form β-methylmalyl-CoA.[5]
- β-Methylmalyl-CoA Lyase (Mcl): This enzyme cleaves β-methylmalyl-CoA into glyoxylate and propionyl-CoA.
- Propionyl-CoA Carboxylase: Propionyl-CoA is carboxylated to methylmalonyl-CoA.
- Methylmalonyl-CoA Mutase: Methylmalonyl-CoA is isomerized to succinyl-CoA, which then enters the TCA cycle to regenerate the initial acceptor molecule.
- Malate Synthase (Ms): Glyoxylate condenses with a second molecule of acetyl-CoA to form malate, the net product of the cycle.
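The enzymatic steps listed above can be encoded as a small reaction table, which makes the dependencies between steps easy to check. This is an illustrative sketch only: cofactors such as water, CO₂, and ATP are omitted, succinate is included as the co-product implied by the CoA-transferase name, and the function name is hypothetical.

```python
# (enzyme, substrates, products); cofactors and TCA reactions are omitted.
CYCLE_STEPS = [
    ("Glutamate mutase (MamAB)", {"glutamate"}, {"methylaspartate"}),
    ("Methylaspartate ammonia-lyase (Mal)", {"methylaspartate"}, {"mesaconate", "NH3"}),
    ("Succinyl-CoA:mesaconate CoA-transferase (Mct)",
     {"mesaconate", "succinyl-CoA"}, {"mesaconyl-CoA", "succinate"}),
    ("Mesaconyl-CoA hydratase (Mch)", {"mesaconyl-CoA"}, {"beta-methylmalyl-CoA"}),
    ("beta-Methylmalyl-CoA lyase (Mcl)",
     {"beta-methylmalyl-CoA"}, {"glyoxylate", "propionyl-CoA"}),
    ("Propionyl-CoA carboxylase", {"propionyl-CoA"}, {"methylmalonyl-CoA"}),
    ("Methylmalonyl-CoA mutase", {"methylmalonyl-CoA"}, {"succinyl-CoA"}),
    ("Malate synthase (Ms)", {"glyoxylate", "acetyl-CoA"}, {"malate"}),
]


def producible(seed_metabolites):
    """Return every metabolite reachable from the seed set via CYCLE_STEPS."""
    available = set(seed_metabolites)
    changed = True
    while changed:
        changed = False
        for _enzyme, substrates, products in CYCLE_STEPS:
            # A step can fire once all of its substrates are available.
            if substrates <= available and not products <= available:
                available |= products
                changed = True
    return available


with_acetyl_coa = producible({"glutamate", "succinyl-CoA", "acetyl-CoA"})
without_acetyl_coa = producible({"glutamate", "succinyl-CoA"})
print("malate" in with_acetyl_coa, "malate" in without_acetyl_coa)
```

The reachability check shows that malate forms only when a second acetyl-CoA is available for malate synthase, which matches the final step in the list.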

Caption: The methylaspartate cycle.

Quantitative Data

The characterization of the methylaspartate cycle has been supported by quantitative measurements of enzyme activities and metabolite concentrations. The following tables summarize key data from studies on Haloarcula hispanica.

Table 1: Specific Activities of Key Enzymes in the Methylaspartate Cycle in Haloarcula hispanica

| Enzyme | Substrate | Specific Activity (nmol min⁻¹ mg⁻¹ protein) |
|---|---|---|
| Methylaspartate ammonia-lyase | (2S,3S)-3-Methylaspartate | 130 ± 10 |
| Mesaconate CoA-transferase | Mesaconate | 50 ± 5 |
| Mesaconyl-CoA hydratase | Mesaconyl-CoA | 250 ± 20 |
| β-Methylmalyl-CoA lyase | β-Methylmalyl-CoA | 180 ± 15 |
| Malate synthase | Glyoxylate + Acetyl-CoA | 70 ± 8 |

Data are representative values from published studies and may vary based on experimental conditions.

Table 2: Intracellular Metabolite Concentrations in Acetate-Grown Haloarcula hispanica

| Metabolite | Concentration (mM) |
|---|---|
| Glutamate | 150 - 200 |
| Mesaconate | 0.5 - 1.0 |

These high concentrations of glutamate are a notable feature of haloarchaea utilizing this pathway.[2][3]

Experimental Protocols

The elucidation of the methylaspartate cycle has relied on a combination of proteomics, enzyme assays, and metabolite analysis. Below are detailed methodologies for key experiments.

Proteomic Analysis of Acetate-Grown vs. Succinate-Grown Cells

Objective: To identify proteins that are upregulated during growth on acetate, a substrate that necessitates an anaplerotic pathway.

Methodology:

- Cell Culture: Grow Haloarcula marismortui in a defined medium with either acetate or succinate as the sole carbon source.
- Protein Extraction: Harvest cells in the exponential growth phase, lyse them by sonication in a suitable buffer, and centrifuge to remove cell debris.
- Two-Dimensional Gel Electrophoresis (2D-PAGE):
  - Separate proteins in the first dimension by isoelectric focusing (IEF).
  - Separate proteins in the second dimension by SDS-polyacrylamide gel electrophoresis (SDS-PAGE).
- Protein Identification:
  - Excise protein spots that are significantly upregulated in acetate-grown cells.
  - Perform in-gel digestion with trypsin.
  - Analyze the resulting peptides by matrix-assisted laser desorption/ionization-time of flight (MALDI-TOF) mass spectrometry.
  - Identify the proteins by comparing the peptide mass fingerprints to a protein database.

Enzyme Assays

Objective: To measure the specific activities of the enzymes involved in the methylaspartate cycle.

General Considerations: Assays are typically performed spectrophotometrically by monitoring the change in absorbance of a substrate or product.

Example: β-Methylmalyl-CoA Lyase Assay

- Reaction Mixture: Prepare a reaction mixture containing buffer, β-methylmalyl-CoA, and cell-free extract or purified enzyme.
- Detection: The cleavage of β-methylmalyl-CoA to glyoxylate and propionyl-CoA can be coupled to the oxidation of NADH by lactate dehydrogenase, which reduces the glyoxylate formed to glycolate. Alternatively, the formation of propionyl-CoA can be monitored by HPLC.
- Measurement: Monitor the decrease in absorbance at 340 nm (for the coupled assay) or quantify the peak corresponding to propionyl-CoA by HPLC.
- Calculation: Calculate the specific activity based on the rate of product formation, the amount of protein in the assay, and the molar extinction coefficient of NADH.
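The calculation step can be sketched with the Beer-Lambert law, using the standard NADH extinction coefficient at 340 nm (6.22 mM⁻¹ cm⁻¹). The function name, blank rate, assay volume, protein amount, and replicate slopes below are hypothetical values chosen for illustration.

```python
from statistics import mean, stdev

NADH_EPSILON_340 = 6.22  # mM^-1 cm^-1, molar extinction coefficient of NADH


def specific_activity(delta_a340_per_min, blank_per_min, assay_volume_ml,
                      protein_mg, path_cm=1.0):
    """Return specific activity in nmol min^-1 mg^-1 protein.

    delta_a340_per_min and blank_per_min are absorbance slopes (per minute);
    the blank (e.g., a no-substrate control) is subtracted before conversion.
    """
    corrected = abs(delta_a340_per_min) - abs(blank_per_min)
    rate_mm_per_min = corrected / (NADH_EPSILON_340 * path_cm)  # Beer-Lambert
    nmol_per_min = rate_mm_per_min * assay_volume_ml * 1000.0   # mM x mL = µmol
    return nmol_per_min / protein_mg


# Hypothetical replicate slopes from a 1 mL assay containing 0.05 mg protein.
replicates = [-0.058, -0.061, -0.056]
activities = [specific_activity(slope, 0.002, 1.0, 0.05) for slope in replicates]
print(f"{mean(activities):.0f} ± {stdev(activities):.0f} nmol min^-1 mg^-1")
```

Reporting the mean and standard deviation across replicates gives values in the same form as Table 1.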

Metabolite Analysis by High-Performance Liquid Chromatography (HPLC)

Objective: To identify and quantify the intermediates of the methylaspartate cycle.

Methodology:

- Metabolite Extraction: Quench the metabolism of cell cultures rapidly (e.g., with cold methanol) and extract metabolites using a suitable solvent (e.g., a chloroform/methanol/water mixture).
- HPLC Separation:
  - Use a reversed-phase C18 column.
  - Employ a gradient of a suitable mobile phase (e.g., acetonitrile and a buffered aqueous solution) to separate the metabolites.
- Detection:
  - Detect CoA esters by their UV absorbance at 260 nm.
  - Detect organic acids by UV absorbance at a lower wavelength (e.g., 210 nm) or by mass spectrometry.
- Quantification: Quantify the metabolites by comparing their peak areas to those of known standards.
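Quantification against known standards is commonly done with an external calibration curve. The sketch below assumes a linear detector response and fits the curve by ordinary least squares. The function names, standard concentrations, and peak areas are hypothetical.

```python
def fit_calibration(concentrations, peak_areas):
    """Least-squares line through standards: area = slope * conc + intercept."""
    if len(concentrations) != len(peak_areas) or len(concentrations) < 2:
        raise ValueError("need at least two matched standards")
    n = len(concentrations)
    c_mean = sum(concentrations) / n
    a_mean = sum(peak_areas) / n
    scc = sum((c - c_mean) ** 2 for c in concentrations)
    sca = sum((c - c_mean) * (a - a_mean)
              for c, a in zip(concentrations, peak_areas))
    slope = sca / scc
    intercept = a_mean - slope * c_mean
    return slope, intercept


def concentration_from_area(area, slope, intercept):
    """Invert the calibration line to get a concentration from a peak area."""
    return (area - intercept) / slope


# Hypothetical mesaconate standards (mM) and their peak areas at 210 nm.
standards_mm = [0.1, 0.5, 1.0, 2.0]
areas = [150.0, 550.0, 1050.0, 2050.0]
slope, intercept = fit_calibration(standards_mm, areas)
sample_mm = concentration_from_area(800.0, slope, intercept)
print(f"sample: {sample_mm:.2f} mM")
```

Samples whose peak areas fall outside the range of the standards should be diluted or re-run with additional standards rather than extrapolated.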

Caption: Experimental workflow for pathway elucidation. Hypothesis of a novel acetate assimilation pathway → comparative proteomics (acetate vs. succinate) → identification of upregulated proteins → gene identification and operon analysis → enzyme assays and metabolite analysis (HPLC) → pathway reconstruction.

Logical Relationships and Regulation

The methylaspartate cycle is not an isolated pathway but is integrated with the broader metabolic network of the cell.

- Link to TCA Cycle: The cycle depends on the TCA cycle for the initial conversion of acetyl-CoA to glutamate and for recycling the succinyl-CoA it produces into the acceptor molecule.[6]
- Nitrogen Metabolism: The involvement of glutamate and the release of ammonia by methylaspartate ammonia-lyase create a direct link to nitrogen metabolism.[2][3]
- Regulation: The genes encoding the key enzymes of the methylaspartate cycle in Haloarcula marismortui are organized in an operon, suggesting coordinate regulation at the transcriptional level.[3] Their expression is induced by acetate and repressed by other carbon sources like succinate.

Caption: Metabolic network integration. Acetate enters the TCA cycle as acetyl-CoA; the TCA cycle supplies glutamate to the methylaspartate cycle, which returns succinyl-CoA to the TCA cycle, delivers malate to biosynthesis, and releases NH₃ to nitrogen metabolism, which in turn supplies glutamate.

Implications for Drug Development

The unique nature of the methylaspartate cycle and its restriction to specific groups of microorganisms make it a potential target for selective antimicrobial agents. Enzymes that are essential for the cycle and are absent in humans and other non-target organisms could be attractive targets for inhibitor screening and design. For instance, methylaspartate ammonia-lyase and β-methylmalyl-CoA lyase are candidate targets in any pathogenic organism found to rely on this pathway.

Conclusion

The methylaspartate cycle is a testament to the metabolic ingenuity of life in extreme environments. Its elucidation has not only expanded our fundamental understanding of carbon metabolism but also provided a new set of tools and targets for biotechnological and pharmaceutical research. This technical guide offers a comprehensive resource for scientists and researchers to delve into the intricacies of this fascinating pathway, from its molecular mechanisms to its broader physiological and evolutionary context. The continued exploration of such unique metabolic pathways will undoubtedly uncover further opportunities for innovation in science and medicine.

References

- 1. The methylaspartate cycle in haloarchaea and its possible role in carbon metabolism - PubMed [pubmed.ncbi.nlm.nih.gov]

- 2. A methylaspartate cycle in haloarchaea - PubMed [pubmed.ncbi.nlm.nih.gov]

- 3. researchgate.net [researchgate.net]

- 4. researchgate.net [researchgate.net]

- 5. Frontiers | Succinyl-CoA:Mesaconate CoA-Transferase and Mesaconyl-CoA Hydratase, Enzymes of the Methylaspartate Cycle in Haloarcula hispanica [frontiersin.org]

- 6. The methylaspartate cycle in haloarchaea and its possible role in carbon metabolism - PMC [pmc.ncbi.nlm.nih.gov]

Overcoming the Crystallization Barrier: A Technical Guide to the Structure and Function of the Cav1.1 Channel Complex

A Whitepaper for Researchers, Scientists, and Drug Development Professionals

Abstract: For decades, the atomic-resolution structure of the voltage-gated calcium channel Cav1.1, a cornerstone of skeletal muscle excitation-contraction coupling, remained elusive to X-ray crystallography. This guide explores the profound challenges inherent in crystallizing large, multi-subunit membrane proteins and details the revolutionary impact of single-particle cryo-electron microscopy (cryo-EM) in finally elucidating its architecture. We provide an in-depth overview of the Cav1.1 signaling pathway, a detailed cryo-EM workflow, and quantitative data derived from these breakthrough structural studies, offering a blueprint for tackling similarly challenging macromolecular complexes.

Introduction to the Cav1.1 Channel

The voltage-gated calcium channel Cav1.1 (also known as the dihydropyridine receptor, DHPR) is a heteromultimeric protein complex essential for life.[1] Located in the transverse tubules of skeletal muscle, its primary role is to convert the electrical signal of a neuronal action potential into an intracellular calcium release, the direct trigger for muscle contraction.[2][3][4] This process, known as excitation-contraction (EC) coupling, makes Cav1.1 a critical component of all voluntary movement.[2][5]

The complex consists of a central pore-forming α1-subunit and auxiliary α2δ, β, and γ subunits, each contributing to its function and regulation.[6][7] Beyond its physiological role, mutations in the gene encoding Cav1.1 are linked to debilitating channelopathies such as hypokalemic periodic paralysis and malignant hyperthermia, making it a significant target for drug development.[1][8] Despite its importance, the complete, high-resolution crystal structure of the Cav1.1 complex proved unattainable for many years.

The Crystallization Challenge

The journey to determine the structure of Cav1.1 highlights the significant hurdles faced when studying complex membrane proteins via X-ray crystallography.[9][10]

Core Challenges Include:

- Inherent Flexibility: Membrane proteins like Cav1.1 are dynamic and adopt multiple conformations, which impedes the formation of the highly ordered, rigid crystal lattice required for X-ray diffraction.[9][11]
- Hydrophobicity and Stability: Cav1.1 is embedded within a lipid bilayer.[11] Extracting it requires detergents that can disrupt its native structure and lead to aggregation or misfolding.[9][12] Maintaining stability outside the membrane environment is a major bottleneck.[9]
- Size and Complexity: The Cav1.1 complex is large and composed of multiple subunits, adding layers of complexity to expression, purification, and crystallization.[6] Eukaryotic expression systems are often necessary to ensure proper folding and post-translational modifications.[9]
- Crystal Quality: Even when crystals are obtained, they are often small, thin, or poorly ordered, making it difficult to collect high-quality diffraction data.[12]

These combined difficulties meant that for a long time, our understanding of Cav1.1 was based on lower-resolution data and homology models.[13]

Methodological Breakthrough: Cryo-Electron Microscopy (Cryo-EM)

The "resolution revolution" in cryo-EM provided the breakthrough needed to overcome the crystallization barrier for membrane proteins.[14] Cryo-EM does not require crystallization; instead, it involves flash-freezing purified protein complexes in a thin layer of vitreous ice and imaging millions of individual particles with an electron microscope.[15] This approach is exceptionally well-suited for large, flexible complexes like Cav1.1.[16]

Cryo-EM Experimental Workflow

The general workflow for determining the structure of a membrane protein complex like Cav1.1 using single-particle cryo-EM involves several key stages, from protein production to final 3D model.[14][16]

Experimental Protocol: Membrane Protein Purification & Grid Preparation

This protocol provides a generalized methodology for preparing a large membrane protein complex for cryo-EM analysis.

- Expression and Harvest: The target protein (e.g., rabbit Cav1.1) is overexpressed in a suitable eukaryotic cell line (e.g., HEK293 cells). Cells are harvested and cell pellets are flash-frozen and stored at -80°C.
- Membrane Preparation: Cell pellets are resuspended in a hypotonic lysis buffer containing protease inhibitors. Cells are lysed via dounce homogenization, and the lysate is centrifuged to pellet nuclei and debris. The supernatant is then ultracentrifuged to pellet the cell membranes.
- Solubilization: The membrane pellet is resuspended in a solubilization buffer containing a mild detergent (e.g., DDM or LMNG), cholesterol analogue (e.g., CHS), and protease inhibitors.[17] The mixture is stirred for 1-2 hours at 4°C to extract the protein from the lipid bilayer.
- Affinity Chromatography: The solubilized extract is clarified by ultracentrifugation, and the supernatant is incubated with an affinity resin (e.g., Strep-Tactin for a Strep-tagged protein). The resin is washed extensively with a buffer containing a lower concentration of detergent to remove non-specific binders. The protein is then eluted.
- Size Exclusion Chromatography (SEC): The eluate from the affinity step is concentrated and injected onto a size exclusion chromatography column. This step is crucial for separating the intact complex from aggregates and smaller contaminants, ensuring sample homogeneity.[16] The buffer for SEC contains a detergent suitable for cryo-EM (e.g., DDM/CHS).
- Quality Control: Fractions from the SEC peak corresponding to the complex are analyzed by SDS-PAGE to verify the presence of all subunits and by negative-stain EM to assess particle integrity and homogeneity.
- Cryo-EM Grid Preparation: The purified, concentrated protein (typically 1-5 mg/mL) is applied to a glow-discharged cryo-EM grid (e.g., Quantifoil R1.2/1.3). Using a vitrification robot (e.g., Vitrobot), the grid is blotted to create a thin film of the sample, which is then plunge-frozen into liquid ethane. This rapid freezing traps the protein complexes in a layer of non-crystalline (vitreous) ice.

The Cav1.1 Signaling Pathway in Skeletal Muscle

Cav1.1 is the voltage sensor in skeletal muscle EC coupling.[4][5] It physically interacts with the ryanodine receptor (RyR1), a calcium release channel on the sarcoplasmic reticulum (SR), to trigger muscle contraction in a process that does not strictly require calcium influx through the Cav1.1 pore itself.[1][5][8]

Pathway Steps:

- A nerve impulse triggers an action potential that travels down the neuron and depolarizes the muscle cell membrane (sarcolemma).[2][18]
- This depolarization propagates into the T-tubules, where Cav1.1 resides.[19] The voltage-sensing domains of Cav1.1 detect this change.[20]
- The voltage-induced conformational change in Cav1.1 is mechanically transmitted to the RyR1 channel on the SR.[1][3]
- This allosteric activation opens the RyR1 channel, causing a massive release of stored Ca²⁺ ions from the SR into the cytosol.[2][19]
- The sharp increase in cytosolic Ca²⁺ concentration allows calcium to bind to the regulatory protein troponin.[18][21]
- This binding event moves another protein, tropomyosin, exposing myosin-binding sites on actin filaments and initiating the cross-bridge cycling that results in muscle contraction.[21]

Quantitative Data Summary

The cryo-EM structures of the Cav1.1 complex have provided unprecedented, near-atomic detail.[6][13][22] This has allowed for precise measurements and a deeper understanding of its architecture and drug interactions.

Table 1: Cryo-EM Structural Data for Rabbit Cav1.1 Complex

| PDB ID | Publication Year | Resolution (Å) | Ligand/State | Key Findings | Reference |
|---|---|---|---|---|---|
| 5GJW | 2016 | 3.6 | Apo (putative inactivated) | Revealed overall architecture, subunit arrangement, and interactions. The inner gate is closed. | [13][22] |
| 5GJV | 2015 | 4.2 | Apo | First overall structure of the Cav1.1 complex, showing the arrangement of α1, α2δ, β, and γ subunits. | [6][7] |
| 6JP8 | 2021 | 2.9 | Amlodipine (Antagonist) | High-resolution view of a DHP drug binding site, explaining its inhibitory mechanism. | [23] |
| 6JPA | 2021 | 3.2 | (R)-(+)-Bay K8644 (Antagonist) | Shows binding mode of a chiral antagonist, contributing to understanding of drug stereospecificity. | [23] |
| 6JPB | 2021 | 3.4 | (S)-(-)-Bay K8644 (Agonist) | Structure in the presence of an agonist, which surprisingly still showed an inactivated conformation. | [23] |

Conclusion and Future Directions

The successful determination of the Cav1.1 structure via cryo-EM marks a pivotal moment in the study of voltage-gated ion channels. It provides a definitive atomic framework for understanding the molecular basis of excitation-contraction coupling and the pathology of related diseases.[6][13] For drug development professionals, these structures offer a precise template for the rational design of novel therapeutics targeting Cav channels with higher specificity and efficacy.

Future research will likely focus on capturing the Cav1.1 complex in different functional states (e.g., open/activated) to create a dynamic "movie" of its action. Further investigation into its interaction with regulatory proteins and lipids will continue to refine our understanding of this essential molecular machine. The workflows and protocols established during the study of Cav1.1 now serve as a valuable guide for researchers tackling other large, challenging protein complexes that have long resisted crystallographic approaches.

References

- 1. Cav1.1 - Wikipedia [en.wikipedia.org]

- 2. bio.libretexts.org [bio.libretexts.org]

- 3. Voltage-gated calcium channel Cav1.1 complex - Proteopedia, life in 3D [proteopedia.org]

- 4. researchgate.net [researchgate.net]

- 5. CaV1.1: The atypical prototypical voltage-gated Ca2+ channel - PMC [pmc.ncbi.nlm.nih.gov]

- 6. molbio.princeton.edu [molbio.princeton.edu]

- 7. Structure of the voltage-gated calcium channel Cav1.1 complex - PubMed [pubmed.ncbi.nlm.nih.gov]

- 8. Skeletal muscle CaV1.1 channelopathies - PMC [pmc.ncbi.nlm.nih.gov]

- 9. Overcoming the challenges of membrane protein crystallography - PMC [pmc.ncbi.nlm.nih.gov]

- 10. sciencedaily.com [sciencedaily.com]

- 11. Membrane Protein Research: Challenges & New Solutions - Creative Proteomics [creative-proteomics.com]

- 12. biology.stackexchange.com [biology.stackexchange.com]

- 13. researchgate.net [researchgate.net]

- 14. pubs.acs.org [pubs.acs.org]

- 15. encyclopedia.pub [encyclopedia.pub]

- 16. cytivalifesciences.com [cytivalifesciences.com]

- 17. Overview of Membrane Protein Sample Preparation for Single-Particle Cryo-Electron Microscopy Analysis - PMC [pmc.ncbi.nlm.nih.gov]

- 18. study.com [study.com]

- 19. derangedphysiology.com [derangedphysiology.com]

- 20. Voltage sensor movements of CaV1.1 during an action potential in skeletal muscle fibers - PMC [pmc.ncbi.nlm.nih.gov]

- 21. Excitation-Contraction Coupling | Definition, Purpose & Diagram - Video | Study.com [study.com]

- 22. discovery.researcher.life [discovery.researcher.life]

- 23. Structural Basis of the Modulation of the Voltage-Gated Calcium Ion Channel Cav 1.1 by Dihydropyridine Compounds* - PubMed [pubmed.ncbi.nlm.nih.gov]

Whitepaper: A Strategic Approach to the Synthesis of Novel Chemical Entities

Audience: Researchers, scientists, and drug development professionals.

Abstract: The design and synthesis of novel chemical entities for which no established protocols exist is a cornerstone of modern drug discovery and materials science. This guide outlines a systematic, multi-phase approach for navigating the complex process of planning, executing, and validating the synthesis of a new molecule. We will use the hypothetical target molecule "Targesyn-1," a novel kinase inhibitor, as a case study to illustrate key principles, from initial retrosynthetic analysis to final biological evaluation. This document provides detailed experimental frameworks, data presentation standards, and logical workflow diagrams to support researchers in this endeavor.

Phase 1: Design and Retrosynthetic Analysis

The journey to a new molecule begins not in the lab, but in conceptual design and strategic planning. Before any reaction is attempted, a viable synthetic pathway must be developed. Retrosynthetic analysis is a technique used to deconstruct the target molecule into simpler, commercially available precursors. This process involves breaking key chemical bonds and applying known chemical transformations in reverse.

The primary goal is to identify a logical and efficient sequence of reactions that will allow for the construction of the target molecule from simple starting materials. This analysis forms the blueprint for the entire synthesis.

Caption: Retrosynthetic analysis of the hypothetical molecule Targesyn-1.
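As a rough illustration of the idea, a retrosynthetic plan can be held as a small tree whose leaves are the purchasable precursors. The sketch below is an assumption-laden example, not the actual route: the text only states that Intermediate B arises from Precursor 2 and Precursor 3 by Suzuki coupling, so the other connections, "Precursor 1", and the names `Molecule`, `starting_materials`, and `forward_steps` are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Molecule:
    """One node of a retrosynthetic tree; a node without precursors is a starting material."""
    name: str
    formed_by: str = ""  # reaction that forms this molecule in the forward direction
    precursors: List["Molecule"] = field(default_factory=list)


def starting_materials(node: Molecule) -> List[str]:
    """Walk the disconnections depth-first and collect the leaf precursors."""
    if not node.precursors:
        return [node.name]
    leaves: List[str] = []
    for child in node.precursors:
        leaves.extend(starting_materials(child))
    return leaves


def forward_steps(node: Molecule) -> List[str]:
    """Read the tree bottom-up to obtain the forward synthesis order."""
    steps: List[str] = []
    for child in node.precursors:
        steps.extend(forward_steps(child))
    if node.precursors:
        steps.append(f"{node.formed_by} -> {node.name}")
    return steps


# Hypothetical connectivity, for illustration only.
targesyn_1 = Molecule(
    "Targesyn-1",
    "Step 3 (assumed final coupling)",
    [
        Molecule("Intermediate A", "Step 1 (assumed)", [Molecule("Precursor 1")]),
        Molecule(
            "Intermediate B",
            "Step 2 (Suzuki coupling)",
            [Molecule("Precursor 2"), Molecule("Precursor 3")],
        ),
    ],
)

if __name__ == "__main__":
    print("Starting materials:", starting_materials(targesyn_1))
    for step in forward_steps(targesyn_1):
        print(step)
```

Reading the same tree from the leaves upward yields the forward synthesis order, which is why the retrosynthetic plan can serve as the blueprint for the later phases.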

Phase 2: Forward Synthesis Workflow

With a retrosynthetic plan in place, the forward synthesis can be executed. This phase involves the stepwise construction of the target molecule from the identified precursors. Each step must be carefully optimized to maximize yield and purity. This is often an iterative process, requiring adjustments to reaction conditions, catalysts, and reagents based on the experimental outcomes.

A typical workflow involves performing a reaction, followed by workup (quenching the reaction and initial separation), purification of the intermediate product, and finally, characterization to confirm its identity and purity before proceeding to the next step.

Caption: General workflow for a multi-step chemical synthesis.

Experimental Protocols: A Template

Detailed and reproducible protocols are essential. Below is a hypothetical protocol for a key Suzuki coupling step in the synthesis of Targesyn-1, illustrating the necessary level of detail.

Protocol: Synthesis of Intermediate B via Suzuki Coupling

- Materials & Reagents:
  - Precursor 2 (1.0 eq, 2.5 mmol, 500 mg)
  - Precursor 3 (1.1 eq, 2.75 mmol, 680 mg)
  - Palladium(II) Acetate (Pd(OAc)₂, 0.02 eq, 0.05 mmol, 11.2 mg)
  - SPhos (ligand, 0.04 eq, 0.10 mmol, 41.0 mg)
  - Potassium Carbonate (K₂CO₃, 3.0 eq, 7.5 mmol, 1.04 g)
  - 1,4-Dioxane (15 mL)
  - Water (5 mL)
  - Nitrogen (inert gas)
- Procedure:
  - To a 100 mL round-bottom flask equipped with a magnetic stir bar, add Precursor 2, Precursor 3, and Potassium Carbonate.
  - Evacuate and backfill the flask with nitrogen three times to establish an inert atmosphere.
  - Add Palladium(II) Acetate and SPhos to the flask.
  - Add the degassed solvents (1,4-Dioxane and water).
  - Heat the reaction mixture to 90°C and stir vigorously for 12 hours. Monitor reaction progress by TLC or LC-MS.
  - Upon completion, cool the mixture to room temperature.
  - Dilute the mixture with Ethyl Acetate (50 mL) and water (50 mL).
  - Separate the organic layer. Wash with brine (2 x 25 mL), dry over anhydrous sodium sulfate, filter, and concentrate under reduced pressure.
  - Purify the crude residue by flash column chromatography (Silica gel, 20% Ethyl Acetate in Hexanes) to yield the pure Intermediate B.
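The reagent quantities in a protocol like this follow from the equivalents and the reaction scale, so they can be cross-checked with a few lines of arithmetic. The sketch below assumes molar masses that are not given in the text: the values for Pd(OAc)₂, SPhos, and K₂CO₃ are standard ones, while those for Precursor 2 and Precursor 3 are simply back-calculated from the listed masses and are hypothetical. The names `MOLAR_MASS`, `EQUIVALENTS`, and `reagent_amounts` are illustrative.

```python
# Molar masses in g/mol. The two precursor values are hypothetical,
# back-calculated from the protocol (500 mg / 2.5 mmol and 680 mg / 2.75 mmol).
MOLAR_MASS = {
    "Precursor 2": 200.0,
    "Precursor 3": 247.3,
    "Pd(OAc)2": 224.51,
    "SPhos": 410.53,
    "K2CO3": 138.21,
}

# Equivalents relative to the limiting reagent (Precursor 2), as listed in the protocol.
EQUIVALENTS = {
    "Precursor 2": 1.0,
    "Precursor 3": 1.1,
    "Pd(OAc)2": 0.02,
    "SPhos": 0.04,
    "K2CO3": 3.0,
}


def reagent_amounts(scale_mmol):
    """Return {reagent: (mmol, mg)} for a given amount of limiting reagent."""
    amounts = {}
    for name, eq in EQUIVALENTS.items():
        mmol = eq * scale_mmol
        mg = mmol * MOLAR_MASS[name]  # mmol x g/mol = mg
        amounts[name] = (mmol, mg)
    return amounts


amounts = reagent_amounts(2.5)  # 2.5 mmol scale, as in the protocol

if __name__ == "__main__":
    for name, (mmol, mg) in amounts.items():
        print(f"{name:12s} {mmol:6.3f} mmol {mg:8.1f} mg")
```

Calling `reagent_amounts` with a different scale gives the amounts for a scaled-up or scaled-down run; solvent volumes are not covered here and would need to be adjusted separately.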

Data Presentation: Purification and Characterization

All newly synthesized compounds must be rigorously characterized to confirm their identity, structure, and purity. Quantitative data should be summarized in a clear, tabular format.

Table 1: Analytical Characterization Data for Targesyn-1 and Key Intermediates

| Compound | Synthetic Step | Yield (%) | Purity (HPLC, %) | Mass (m/z) [M+H]⁺ | ¹H NMR |
|---|---|---|---|---|---|
| Intermediate A | Step 1 | 85 | 98.2 | 212.14 | Conforms to expected structure. |
| Intermediate B | Step 2 | 78 | 99.1 | 354.28 | Conforms to expected structure. |
| Targesyn-1 | Step 3 | 91 | >99.5 | 482.35 | Conforms to final product structure. |
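Per-step yields are often combined into an overall yield when comparing routes. A minimal sketch follows, assuming the three steps in Table 1 form a single linear sequence (the text does not say whether the route is linear or convergent); `step_yields_percent` and `overall_yield_percent` are illustrative names.

```python
from math import prod

# Isolated yields from Table 1, in percent.
step_yields_percent = {
    "Intermediate A (Step 1)": 85,
    "Intermediate B (Step 2)": 78,
    "Targesyn-1 (Step 3)": 91,
}


def overall_yield_percent(yields_percent):
    """Multiply fractional step yields; valid only for a linear sequence."""
    return 100.0 * prod(y / 100.0 for y in yields_percent)


overall = overall_yield_percent(step_yields_percent.values())

if __name__ == "__main__":
    print(f"Overall yield over {len(step_yields_percent)} steps: {overall:.1f} %")
```

Under that assumption, the overall yield is about 60%, which shows how quickly material is lost even when every individual step performs well.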

Phase 3: Biological Evaluation

For drug development, the newly synthesized compound must be tested for its intended biological activity. For a kinase inhibitor like Targesyn-1, a biochemical assay would be performed to measure its ability to inhibit the target kinase.

The workflow involves preparing the compound, performing the assay across a range of concentrations, and analyzing the data to determine key metrics like the half-maximal inhibitory concentration (IC₅₀).

Caption: Workflow for a typical in vitro kinase inhibition assay.
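To make the data-analysis step concrete, the sketch below estimates an IC₅₀ by interpolating between the two concentrations that bracket 50% inhibition on a log-concentration axis. This is a simplified stand-in for the nonlinear (for example four-parameter logistic) regression typically used in practice, and the dose-response data are synthetic, generated from a Hill model purely for illustration. The function names are assumptions.

```python
import math


def hill_inhibition(conc_nm, ic50_nm, hill=1.0):
    """Percent inhibition (0-100) predicted by a simple Hill model."""
    return 100.0 / (1.0 + (ic50_nm / conc_nm) ** hill)


def estimate_ic50(concs_nm, inhibition_pct):
    """Log-linear interpolation of the concentration giving 50 % inhibition."""
    points = sorted(zip(concs_nm, inhibition_pct))
    for (c_lo, y_lo), (c_hi, y_hi) in zip(points, points[1:]):
        if y_lo <= 50.0 <= y_hi and y_hi > y_lo:
            frac = (50.0 - y_lo) / (y_hi - y_lo)
            log_ic50 = math.log10(c_lo) + frac * (math.log10(c_hi) - math.log10(c_lo))
            return 10 ** log_ic50
    raise ValueError("50 % inhibition is not bracketed by the tested concentrations")


# Synthetic half-log dilution series (nM) and noise-free responses.
concentrations = [1, 3, 10, 30, 100, 300, 1000]
responses = [hill_inhibition(c, ic50_nm=15.2) for c in concentrations]
estimate = estimate_ic50(concentrations, responses)

if __name__ == "__main__":
    print(f"Estimated IC50: {estimate:.1f} nM")
```

Because the interpolation uses only two data points, it is sensitive to noise; a full curve fit across all concentrations is the more robust choice for reported values.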

Data Presentation: Biological Activity

The quantitative results from biological assays are crucial for evaluating the compound's efficacy and selectivity.

Table 2: Kinase Inhibition Profile of Targesyn-1

| Kinase Target | IC₅₀ (nM) | Description |
|---|---|---|
| Target Kinase 1 | 15.2 | Primary target of interest |
| Off-Target Kinase A | 850.6 | Structurally related kinase |
| Off-Target Kinase B | >10,000 | Unrelated kinase (selectivity) |
| Off-Target Kinase C | 2,100 | Structurally related kinase |
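Selectivity is commonly expressed as the ratio of an off-target IC₅₀ to the primary-target IC₅₀. The short sketch below applies that ratio to the values in Table 2; the names `ic50_nm` and `fold_selectivity` are illustrative, and the entry for Off-Target Kinase B uses the reported limit of 10,000 nM, so its ratio is only a lower bound.

```python
# IC50 values from Table 2, in nM.
ic50_nm = {
    "Target Kinase 1": 15.2,
    "Off-Target Kinase A": 850.6,
    "Off-Target Kinase B": 10_000.0,  # reported as >10,000 nM: ratio is a lower bound
    "Off-Target Kinase C": 2_100.0,
}


def fold_selectivity(ic50, primary):
    """Ratio of each off-target IC50 to the primary-target IC50."""
    reference = ic50[primary]
    return {name: value / reference for name, value in ic50.items() if name != primary}


selectivity = fold_selectivity(ic50_nm, "Target Kinase 1")

if __name__ == "__main__":
    for name, fold in selectivity.items():
        print(f"{name}: {fold:.0f}-fold")
```

On these numbers, Targesyn-1 is roughly 56-fold selective over Off-Target Kinase A and about 138-fold selective over Off-Target Kinase C.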

This systematic approach, combining careful planning, precise execution, and rigorous analysis, provides a robust framework for the successful synthesis and evaluation of novel chemical compounds.

Navigating the Uncharted: A Technical Guide to Antibody Generation When Commercial Options Are Exhausted

For researchers, scientists, and drug development professionals, the absence of a commercially available antibody against a specific target of interest presents a significant roadblock. This in-depth guide provides a technical roadmap for navigating this challenge, outlining the primary strategies for custom antibody development, from traditional methods to cutting-edge recombinant technologies. We will delve into detailed experimental protocols, present comparative data to inform your strategic decisions, and visualize complex workflows to demystify the process of generating a novel antibody tailored to your specific research needs.

Section 1: Strategic Considerations: Choosing Your Path

When a commercial antibody is not available, several avenues can be explored. The optimal choice depends on factors such as the intended application, required specificity, timeline, and budget. The main strategies include generating custom polyclonal or monoclonal antibodies, leveraging recombinant antibody technologies, or exploring antibody alternatives.

An Overview of Antibody Generation Strategies

Custom antibody development is a multi-stage process that begins with careful antigen design and preparation, followed by immunization, screening, and finally, antibody production and purification.[1] This approach allows for the creation of antibodies with high specificity and affinity for novel targets.[2]

- Polyclonal Antibodies: Produced by different B cells in an immunized animal, polyclonal antibodies are a heterogeneous mixture of immunoglobulins that recognize multiple epitopes on a single antigen. They are relatively quick and inexpensive to produce.
- Monoclonal Antibodies: Originating from a single B cell clone, monoclonal antibodies are homogeneous and recognize a single epitope.[3] They offer high specificity and batch-to-batch consistency, which is crucial for diagnostics, therapeutics, and quantitative assays.[4]
- Recombinant Antibodies: Generated in vitro using synthetic genes, recombinant antibodies offer high reproducibility and flexibility for engineering. Technologies like phage display allow for the rapid discovery of antibodies without the need for animal immunization.

Comparative Analysis of Antibody Generation Methods

Choosing the right method for antibody generation is a critical first step. The following table summarizes key quantitative metrics to aid in this decision-making process.

| Parameter | Polyclonal Antibodies | Monoclonal Antibodies (Hybridoma) | Recombinant Antibodies (Phage Display) |
|---|---|---|---|
| Development Time | 2-3 months | 4-6 months | 1-2 months |
| Specificity | Lower (recognizes multiple epitopes) | Higher (recognizes a single epitope) | High (can be selected for single epitope) |