Pegasus

Description

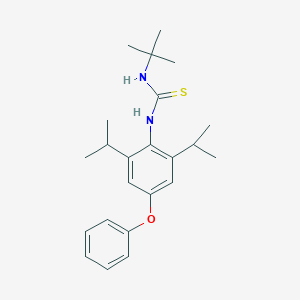

A pro-pesticide whose carbodiimide product inhibits mitochondrial ATPase in vitro and in vivo.


Properties

| Property | Value | Record name | Source | URL | Description |
|---|---|---|---|---|---|
| IUPAC Name | 1-tert-butyl-3-[4-phenoxy-2,6-di(propan-2-yl)phenyl]thiourea | | PubChem | https://pubchem.ncbi.nlm.nih.gov | Data deposited in or computed by PubChem |
| InChI | InChI=1S/C23H32N2OS/c1-15(2)19-13-18(26-17-11-9-8-10-12-17)14-20(16(3)4)21(19)24-22(27)25-23(5,6)7/h8-16H,1-7H3,(H2,24,25,27) | | PubChem | https://pubchem.ncbi.nlm.nih.gov | Data deposited in or computed by PubChem |
| InChI Key | WOWBFOBYOAGEEA-UHFFFAOYSA-N | | PubChem | https://pubchem.ncbi.nlm.nih.gov | Data deposited in or computed by PubChem |
| Canonical SMILES | CC(C)C1=CC(=CC(=C1NC(=S)NC(C)(C)C)C(C)C)OC2=CC=CC=C2 | | PubChem | https://pubchem.ncbi.nlm.nih.gov | Data deposited in or computed by PubChem |
| Molecular Formula | C23H32N2OS | | PubChem | https://pubchem.ncbi.nlm.nih.gov | Data deposited in or computed by PubChem |
| DSSTOX Substance ID | DTXSID1041845 | Diafenthiuron | EPA DSSTox | https://comptox.epa.gov/dashboard/DTXSID1041845 | DSSTox provides a high quality public chemistry resource for supporting improved predictive toxicology. |
| Molecular Weight | 384.6 g/mol | | PubChem | https://pubchem.ncbi.nlm.nih.gov | Data deposited in or computed by PubChem |

CAS No.: 80060-09-9

| Record name | Source | URL | Description | Explanation |
|---|---|---|---|---|
| Diafenthiuron | CAS Common Chemistry | https://commonchemistry.cas.org/detail?cas_rn=80060-09-9 | CAS Common Chemistry is an open community resource for accessing chemical information. Nearly 500,000 chemical substances from CAS REGISTRY cover areas of community interest, including common and frequently regulated chemicals, and those relevant to high school and undergraduate chemistry classes. This chemical information, curated by our expert scientists, is provided in alignment with our mission as a division of the American Chemical Society. | The data from CAS Common Chemistry is provided under a CC-BY-NC 4.0 license, unless otherwise stated. |
| Diafenthiuron [ISO] | ChemIDplus | https://pubchem.ncbi.nlm.nih.gov/substance/?source=chemidplus&sourceid=0080060099 | ChemIDplus is a free, web search system that provides access to the structure and nomenclature authority files used for the identification of chemical substances cited in National Library of Medicine (NLM) databases, including the TOXNET system. | |
| Diafenthiuron | EPA DSSTox | https://comptox.epa.gov/dashboard/DTXSID1041845 | DSSTox provides a high quality public chemistry resource for supporting improved predictive toxicology. | |
| Thiourea, N'-[2,6-bis(1-methylethyl)-4-phenoxyphenyl]-N-(1,1-dimethylethyl) | European Chemicals Agency (ECHA) | https://echa.europa.eu/substance-information/-/substanceinfo/100.113.249 | The European Chemicals Agency (ECHA) is an agency of the European Union which is the driving force among regulatory authorities in implementing the EU's groundbreaking chemicals legislation for the benefit of human health and the environment as well as for innovation and competitiveness. | Use of the information, documents and data from the ECHA website is subject to the terms and conditions of this Legal Notice, and subject to other binding limitations provided for under applicable law, the information, documents and data made available on the ECHA website may be reproduced, distributed and/or used, totally or in part, for non-commercial purposes provided that ECHA is acknowledged as the source: "Source: European Chemicals Agency, http://echa.europa.eu/". Such acknowledgement must be included in each copy of the material. ECHA permits and encourages organisations and individuals to create links to the ECHA website under the following cumulative conditions: Links can only be made to webpages that provide a link to the Legal Notice page. |
| DIAFENTHIURON | FDA Global Substance Registration System (GSRS) | https://gsrs.ncats.nih.gov/ginas/app/beta/substances/22W5MDB01G | The FDA Global Substance Registration System (GSRS) enables the efficient and accurate exchange of information on what substances are in regulated products. Instead of relying on names, which vary across regulatory domains, countries, and regions, the GSRS knowledge base makes it possible for substances to be defined by standardized, scientific descriptions. | Unless otherwise noted, the contents of the FDA website (www.fda.gov), both text and graphics, are not copyrighted. They are in the public domain and may be republished, reprinted and otherwise used freely by anyone without the need to obtain permission from FDA. Credit to the U.S. Food and Drug Administration as the source is appreciated but not required. |


Pegasus Workflow Management System: A Technical Guide for Scientific Computing in Drug Development and Research

An In-depth Whitepaper for Researchers, Scientists, and Drug Development Professionals

Modern scientific research, particularly in fields like drug development, relies on increasingly complex and data-intensive computational analyses. From molecular simulations to high-throughput screening and cryogenic electron microscopy (cryo-EM) data processing, the scale and complexity of these tasks demand robust, automated solutions. The Pegasus Workflow Management System (WMS) is an open-source platform designed to orchestrate such computations across a wide range of computing environments, from local clusters to national supercomputing centers and commercial clouds. This guide provides a technical deep dive into the core functionality of Pegasus, its architecture, and its practical applications in scientific domains relevant to drug discovery and development.

Core Concepts and Architecture of Pegasus WMS

Pegasus is engineered to bridge the gap between the high-level description of a scientific process and the low-level details of its execution on diverse, distributed computational infrastructures. Pegasus lets scientists define their computational pipelines as abstract workflows, focusing on the scientific logic rather than the underlying execution environment.

Abstract Workflows: Describing the Science

Pegasus represents workflows as Directed Acyclic Graphs (DAGs), where nodes are computational tasks and directed edges are the dependencies between them. Researchers can define these abstract workflows using APIs in popular languages like Python, R, or Java, or through Jupyter Notebooks. The key components of an abstract workflow are:

- Transformations: The logical name for an executable program or script that performs a specific task.

- Files: Logical names for the input and output data of the transformations.

- Dependencies: The relationships that define the order of execution, with the output of one task serving as the input for another.

This abstraction is a cornerstone of Pegasus, making workflows portable and reusable across different computational platforms.
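The three components above can be illustrated with a minimal sketch in plain Python. This is not the Pegasus API: the `Task` class, the task names, and the file names are hypothetical, and the sketch only shows how dependencies can be derived from logical file names and turned into an execution order.

```python
from dataclasses import dataclass, field
from graphlib import TopologicalSorter  # standard library, Python 3.9+


@dataclass
class Task:
    name: str                                    # unique task id (hypothetical)
    transformation: str                          # logical name of the executable
    inputs: set = field(default_factory=set)     # logical input file names
    outputs: set = field(default_factory=set)    # logical output file names


def infer_dependencies(tasks):
    """Map each task to the set of tasks that produce its input files."""
    producer = {f: t.name for t in tasks for f in t.outputs}
    return {t.name: {producer[f] for f in t.inputs if f in producer} for t in tasks}


tasks = [
    Task("prepare", "prepare_inputs", {"raw.dat"}, {"clean.dat"}),
    Task("simulate", "run_simulation", {"clean.dat"}, {"traj.dat"}),
    Task("analyze", "analyze_traj", {"traj.dat"}, {"report.txt"}),
]
deps = infer_dependencies(tasks)
# TopologicalSorter takes a mapping of node -> predecessors.
order = list(TopologicalSorter(deps).static_order())
print(order)
```

Because edges are inferred from which task produces which file, the workflow description never mentions hosts, paths, or schedulers, which is what keeps it portable.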

The Pegasus Mapper: From Abstract to Executable

The core of Pegasus is its Mapper (also referred to as the planner), which transforms the abstract workflow into a concrete, executable workflow. This process involves several key steps:

- Resource Discovery: Pegasus queries information services to identify available computational resources, such as clusters, grids, or cloud services.

- Data Discovery: It consults replica catalogs to find the physical locations of the input data files.

- Job Prioritization and Optimization: The mapper can reorder, group (cluster), and prioritize tasks to improve overall workflow performance. For instance, it can bundle many short-duration jobs into a single larger job to reduce scheduling overhead.

- Data Management Job Creation: Pegasus automatically adds the jobs needed for stage-in (transferring input files to the execution site) and stage-out (moving output files to a desired storage location). It also creates jobs to clean up intermediate data, which is crucial for managing storage in data-intensive workflows.

- Provenance Tracking: Jobs are wrapped with a tool called "kickstart", which captures detailed runtime information, including the exact software versions used, command-line arguments, and resource consumption. This information is stored for later analysis and supports the reproducibility of the scientific results.
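As a rough illustration of the data management step, the sketch below adds stage-in and stage-out nodes around a small abstract workflow. It is a simplified model in plain Python, not the Pegasus planner; the `plan` function, the job names, and the example URL are assumptions made for illustration.

```python
def plan(jobs, replica_catalog, final_outputs):
    """Return an executable DAG as a mapping of node -> set of parent nodes.

    jobs: name -> {"inputs": [...], "outputs": [...]} using logical file names.
    replica_catalog: logical file name -> physical location of existing data.
    """
    produced = {f: name for name, j in jobs.items() for f in j["outputs"]}
    dag = {}
    for name, job in jobs.items():
        parents = set()
        for f in job["inputs"]:
            if f in produced:
                parents.add(produced[f])        # depends on another compute job
            elif f in replica_catalog:
                stage = f"stage_in_{f}"         # transfer job added by the planner
                dag.setdefault(stage, set())
                parents.add(stage)
            else:
                raise KeyError(f"no producer or replica for {f}")
        dag[name] = parents
    for f in final_outputs:
        dag[f"stage_out_{f}"] = {produced[f]}   # ship results to final storage
    return dag


jobs = {
    "simulate": {"inputs": ["params.cfg"], "outputs": ["traj.dat"]},
    "analyze": {"inputs": ["traj.dat"], "outputs": ["report.txt"]},
}
replica_catalog = {"params.cfg": "https://example.org/data/params.cfg"}  # hypothetical
dag = plan(jobs, replica_catalog, ["report.txt"])
print(dag)
```

The user-defined workflow has two jobs; the planned workflow has four nodes, with the transfer jobs wired in as ordinary dependencies.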

Execution and Monitoring

The executable workflow is typically managed by HTCondor's DAGMan (Directed Acyclic Graph Manager), a workflow engine that enforces job dependencies and handles job reliability. HTCondor also acts as a broker, interfacing with batch schedulers such as SLURM and PBS on different computational resources. Pegasus provides a suite of tools for real-time monitoring of workflow execution, including a web-based dashboard and command-line utilities for checking status and debugging failures.

Caption: High-level architecture of the Pegasus Workflow Management System.

Quantitative Analysis of Pegasus-managed Workflows

The scalability and performance of Pegasus have been demonstrated in a variety of large-scale scientific applications. The following table summarizes key metrics from several notable use cases, illustrating the system's capability to handle diverse and demanding computational workloads.

| Workflow Application | Scientific Domain | Number of Tasks | Input Data Size | Output Data Size | Computational Resources Used | Key Pegasus Features Utilized |
|---|---|---|---|---|---|---|
| LIGO PyCBC | Gravitational Wave Physics | ~60,000 per workflow | ~10 GB | ~60 GB | LIGO Data Grid, OSG, XSEDE | Data Reuse, Cross-site Execution, Monitoring Dashboard |
| CyberShake | Earthquake Science | ~420,000 per site model | Terabytes | Terabytes | Titan, Blue Waters Supercomputers | High-throughput Scheduling, Large-scale Data Management |
| Cryo-EM Pre-processing | Structural Biology | 9 per micrograph | Terabytes | Terabytes | High-Performance Computing (HPC) Clusters | Task Clustering, Automated Data Transfer, Real-time Feedback |
| Molecular Dynamics (SNS) | Drug Delivery Research | Parameter Sweep | - | ~3 TB | Cray XE6 at NERSC (~400,000 CPU hours) | Parameter Sweeps, Large-scale Simulation Management |
| Montage | Astronomy | Variable | Gigabytes to Terabytes | Gigabytes to Terabytes | TeraGrid Clusters | Task Clustering (up to 97% reduction in completion time) |

Experimental Protocols: Pegasus in Action

To show how Pegasus is applied in practice, this section details the methodologies for two experimental workflows relevant to drug development and the life sciences.

Automated Cryo-EM Image Pre-processing

Cryogenic electron microscopy is a pivotal technique in structural biology for determining high-resolution 3D structures of biomolecules, a critical step in modern drug design. The raw data from a cryo-EM experiment consists of thousands of micrograph "movies" that must pass through a computationally intensive pre-processing pipeline before they can be used for structure determination. Pegasus is used to automate and orchestrate this entire pipeline.

Methodology:

- Data Ingestion: As new micrograph movies are generated by the electron microscope, they are automatically transferred to a high-performance computing (HPC) cluster.

- Workflow Triggering: A service continuously monitors the arrival of new data and triggers a Pegasus workflow for each micrograph.

- Motion Correction: The first computational step corrects for beam-induced motion in the raw movie frames. The MotionCor2 software is typically used for this task.

- CTF Estimation: The contrast transfer function (CTF) of the microscope, which distorts the images, is estimated for each motion-corrected micrograph using software like Gctf.

- Image Conversion and Cleanup: Pegasus manages the conversion of images between the formats required by the various software tools, using utilities like E2proc2d from the EMAN2 package. Pegasus also schedules cleanup jobs to remove large intermediate files as soon as they are no longer needed, minimizing the storage footprint of the workflow.

- Real-time Feedback: The results of the pre-processing, such as CTF estimation plots, are sent back to the researchers in near real-time. This allows them to assess the quality of their data collection session and make adjustments on the fly.

- Task Clustering: Since many of the pre-processing steps for a single micrograph are computationally inexpensive, Pegasus clusters these tasks together to reduce the scheduling overhead on the HPC system, leading to more efficient use of resources.

Caption: Automated Cryo-EM pre-processing workflow managed by Pegasus.
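The effect of task clustering can be sketched with a few lines of plain Python. The helper names, the cluster size, and the per-job scheduling overhead below are hypothetical values chosen for illustration, not measurements from a Pegasus run.

```python
def cluster_tasks(tasks, cluster_size):
    """Group independent short tasks into bundles of at most cluster_size."""
    if cluster_size < 1:
        raise ValueError("cluster_size must be >= 1")
    return [tasks[i:i + cluster_size] for i in range(0, len(tasks), cluster_size)]


def overhead_saved(n_tasks, cluster_size, per_job_overhead_s):
    """Scheduling overhead avoided when each bundle is submitted as one job."""
    n_jobs = -(-n_tasks // cluster_size)  # ceiling division
    return (n_tasks - n_jobs) * per_job_overhead_s


# Ten CTF-estimation tasks, one per micrograph (names are hypothetical).
ctf_tasks = [f"ctf_micrograph_{i:02d}" for i in range(10)]
bundles = cluster_tasks(ctf_tasks, cluster_size=4)
saved = overhead_saved(len(ctf_tasks), 4, per_job_overhead_s=30)
print(len(bundles), saved)
```

Only tasks with no dependencies among themselves are bundled here, for example the same step applied to different micrographs, so running a bundle as one job does not change the result.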

Large-Scale Molecular Dynamics Simulations for Drug Discovery

Molecular dynamics (MD) simulations are a powerful computational tool in drug development for studying the physical movements of atoms and molecules. They can be used to investigate protein dynamics, ligand binding, and other molecular phenomena. Long-timescale MD simulations are often computationally prohibitive to run as a single, monolithic job. Pegasus can be used to break these long simulations into a series of shorter, sequential jobs.

Methodology:

- Workflow Definition: The long-timescale simulation is divided into N sequential, shorter-timescale simulations. An abstract workflow is created where each job represents one of these shorter simulations.

- Initial Setup: The first job in the workflow takes the initial protein structure and simulation parameters as input and runs the first segment of the MD simulation using a package like NAMD (Nanoscale Molecular Dynamics).

- Sequential Execution and State Passing: The output of the first simulation (the final coordinates and velocities of the atoms) serves as the input for the second simulation job. Pegasus manages this dependency, ensuring that each subsequent job starts with the correct state from the previous one.

- Parallel Trajectories: For more comprehensive sampling of the conformational space, multiple parallel workflows can be executed, each starting with slightly different initial conditions. Pegasus can manage these parallel executions simultaneously.

- Trajectory Analysis: After all the simulation segments are complete, a final set of jobs in the workflow can concatenate the individual trajectory files and perform analysis, such as calculating root-mean-square deviation (RMSD) or performing principal component analysis (PCA).

- Resource Management: Pegasus submits each simulation job to the appropriate computational resources, which could be a local cluster or a supercomputer. It handles the staging of input files and the retrieval of output trajectories for each step.

Caption: Sequential molecular dynamics simulation workflow using Pegasus.
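The state-passing pattern in the methodology above can be sketched as follows. This is plain Python rather than Pegasus or NAMD code, and the function name, job names, and file names are hypothetical; it only shows how each segment consumes the restart file written by the previous one.

```python
def build_md_chain(n_segments, initial_state="initial.coor"):
    """Describe N sequential MD segments plus one final analysis job."""
    jobs = []
    previous_state = initial_state
    for i in range(1, n_segments + 1):
        restart = f"segment_{i:03d}.restart"   # final coordinates and velocities
        trajectory = f"segment_{i:03d}.dcd"    # frames written by this segment
        jobs.append({
            "name": f"md_{i:03d}",
            "inputs": [previous_state],
            "outputs": [restart, trajectory],
        })
        previous_state = restart               # next segment resumes from here
    # The analysis job needs every trajectory, so it runs after all segments.
    trajectories = [job["outputs"][1] for job in jobs]
    jobs.append({"name": "analyze", "inputs": trajectories, "outputs": ["rmsd.dat"]})
    return jobs


chain = build_md_chain(3)
for job in chain:
    print(job["name"], job["inputs"], "->", job["outputs"])
```

Running several such chains with different `initial_state` files would correspond to the parallel trajectories described above.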

Conclusion: Accelerating Scientific Discovery

The Pegasus Workflow Management System provides a robust and flexible framework for automating, managing, and executing complex scientific computations. For researchers and professionals in the drug development sector, Pegasus offers a way to tackle data-intensive and computationally demanding tasks. By abstracting the complexities of the underlying computational infrastructure, Pegasus allows scientists to focus on their research questions. The system's features for performance optimization, data management, fault tolerance, and provenance tracking support the efficiency, reliability, and reproducibility of scientific workflows. As the scale and complexity of scientific computing continue to grow, workflow management systems like Pegasus will play an increasingly critical role in research.

Pegasus WMS: A Technical Guide for Bioinformatics Workflows

An In-depth Technical Guide for Researchers, Scientists, and Drug Development Professionals

Introduction to Pegasus WMS

Pegasus Workflow Management System (WMS) is a robust and scalable open-source platform designed to orchestrate complex, multi-stage computational workflows.[1] It lets scientists define their computational pipelines at a high level of abstraction, shielding them from the complexities of the underlying heterogeneous and distributed computing environments.[2][3] Pegasus automates the reliable and efficient execution of these workflows on a variety of resources, including high-performance computing (HPC) clusters, cloud platforms, and national cyberinfrastructures.[1][4] This automation is particularly beneficial in bioinformatics, where research and drug development often involve data-intensive analyses composed of numerous interdependent steps.[5][6]

Pegasus achieves this by taking an abstract workflow description, typically a Directed Acyclic Graph (DAG) where nodes represent computational tasks and edges represent dependencies, and mapping it to an executable workflow tailored for the target execution environment.[2] This mapping process involves automatically locating necessary input data and computational resources.[4] Key features of Pegasus that are particularly advantageous for bioinformatics workflows include:

- Portability and Reuse: Workflows defined in an abstract manner can be executed on different computational infrastructures with minimal to no modification.[7][8]

- Scalability: Pegasus can manage workflows ranging from a few tasks to over a million, scaling the execution across a large number of resources.[7]

- Data Management: It handles the complexities of data movement, including staging input data to compute resources and registering output data in catalogs.[9]

- Fault Tolerance and Reliability: Pegasus automatically retries failed tasks and can provide rescue workflows to recover from non-recoverable errors, ensuring the robustness of long-running analyses.[9]

- Provenance Tracking: Detailed information about the workflow execution, including the software and parameters used, is captured, which is crucial for the reproducibility of scientific results.[7]

- Container Support: Pegasus integrates with container technologies like Docker and Singularity, enabling the packaging of software dependencies and ensuring a consistent execution environment, a critical aspect of reproducible bioinformatics.[7]
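The retry and rescue behavior in the fault tolerance item can be modeled with a short plain-Python sketch. This is a toy model, not how Pegasus or DAGMan implement it: the function name, the retry limit, and the linear task order are assumptions, and tasks are represented as Python callables that raise on failure.

```python
def run_with_retries(tasks, max_retries=2):
    """Run tasks in order; retry failures up to max_retries times each.

    tasks: name -> zero-argument callable that raises on failure.
    Returns (completed, rescue), where rescue lists the failed task and
    everything after it, i.e. the work left for a later resubmission.
    """
    names = list(tasks)
    for i, name in enumerate(names):
        for _ in range(max_retries + 1):
            try:
                tasks[name]()
                break                      # task succeeded, move to the next one
            except Exception:
                pass                       # transient or permanent: try again
        else:
            return names[:i], names[i:]    # retries exhausted
    return names, []


attempts = {"flaky": 0}


def flaky():
    attempts["flaky"] += 1
    if attempts["flaky"] < 2:
        raise RuntimeError("transient failure")


def broken():
    raise RuntimeError("permanent failure")


completed, rescue = run_with_retries(
    {"align": lambda: None, "call": flaky, "annotate": broken, "report": lambda: None}
)
print(completed, rescue)
```

Resubmitting only the `rescue` list mirrors the idea of a rescue workflow: completed work is not repeated after the underlying problem has been fixed.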

Core Architecture of Pegasus WMS

The architecture of Pegasus WMS is designed to separate the logical description of a workflow from its physical execution. This is achieved through a series of components that work together to plan, execute, and monitor the workflow.

Pegasus takes an abstract workflow description, often in the form of a DAX (Directed Acyclic Graph in XML) file, and compiles it into an executable workflow.[2] This process involves several key components:

- Mapper: The Mapper is the central planner in Pegasus. It takes the abstract workflow and, using information from various catalogs, maps it to the available computational resources. It adds necessary tasks for data staging (transferring input files), data registration (cataloging output files), and data cleanup.

- Catalogs: Pegasus relies on a set of catalogs to bridge the gap between the abstract workflow and the concrete execution environment:

  - Replica Catalog: Keeps track of the physical locations of input files.

  - Transformation Catalog: Describes the logical application names and where the corresponding executables are located on different systems.

  - Site Catalog: Provides information about the execution sites, such as the available schedulers (e.g., SLURM, HTCondor) and the paths to storage and scratch directories.

- Execution Engine (HTCondor DAGMan): Pegasus generates a submit file for HTCondor's DAGMan (Directed Acyclic Graph Manager), which is responsible for submitting the individual jobs of the workflow in the correct order of dependency and managing their execution.

This architecture allows for a high degree of automation and optimization. For instance, the Mapper can restructure the workflow for better performance by clustering small, short-running jobs into a single larger job, thereby reducing the overhead of submitting many individual jobs to a scheduler.[10]
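How the three catalogs combine during planning can be sketched with dictionaries. The layout below is a hypothetical simplification and not the Pegasus catalog format; the site name, paths, and the `resolve` helper are invented for illustration.

```python
# Hypothetical catalog contents; real Pegasus catalogs carry far more detail.
replica_catalog = {"reads.fastq": "file:///data/run1/reads.fastq"}
transformation_catalog = {("bwa", "cluster_a"): "/opt/bwa/bin/bwa"}
site_catalog = {"cluster_a": {"scheduler": "slurm", "scratch": "/scratch/workflows"}}


def resolve(job, site):
    """Turn a logical job description into concrete execution details."""
    site_info = site_catalog[site]
    return {
        "executable": transformation_catalog[(job["transformation"], site)],
        "scheduler": site_info["scheduler"],
        "workdir": site_info["scratch"],
        # Logical file name -> physical source to stage in from.
        "stage_in": {lfn: replica_catalog[lfn] for lfn in job["inputs"]},
    }


abstract_job = {"transformation": "bwa", "inputs": ["reads.fastq"]}
concrete_job = resolve(abstract_job, "cluster_a")
print(concrete_job)
```

Moving the workflow to another site would mean adding entries to the catalogs, while `abstract_job` stays unchanged.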

A Case Study: The PGen Workflow for Soybean Genomic Variation Analysis

A prominent example of Pegasus WMS in bioinformatics is the PGen workflow, developed for large-scale genomic variation analysis of soybean germplasm.[1][10] This workflow is a critical component of the Soybean Knowledge Base (SoyKB) and is designed to process next-generation sequencing (NGS) data to identify Single Nucleotide Polymorphisms (SNPs) and insertions-deletions (indels).[1][10]

The PGen workflow automates a complex series of tasks, leveraging the power of high-performance computing resources to analyze large datasets efficiently.[1][10] The core scientific objective is to link genotypic variations to phenotypic traits for crop improvement.

Experimental Protocol: The PGen Workflow

The PGen workflow is structured as a series of interdependent computational jobs that process raw sequencing reads to produce a set of annotated genetic variations. The general methodology is as follows:

- Data Staging: Raw NGS data, stored in a remote data store, is transferred to the scratch filesystem of the HPC cluster where the computation will take place. This is handled automatically by Pegasus.

- Sequence Alignment: The raw sequencing reads are aligned to a reference soybean genome using the Burrows-Wheeler Aligner (BWA).

- Variant Calling: The aligned reads are then processed using the Genome Analysis Toolkit (GATK) to identify SNPs and indels.

- Variant Annotation: The identified variants are annotated using tools like SnpEff and SnpSift to predict their functional effects (e.g., whether a SNP results in an amino acid change).

- Copy Number Variation (CNV) Analysis: The workflow also includes steps for identifying larger structural variations, such as CNVs, using tools like cn.MOPS.

- Data Cleanup and Staging Out: Intermediate files generated during the workflow are cleaned up to manage storage space, and the final results are transferred back to a designated output directory in the data store.

While the specific command-line arguments for each tool can be customized, the workflow provides a standardized and reproducible pipeline for genomic variation analysis.
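One way to reason about the cleanup step is to find, for each file, the last job that touches it. The sketch below does this for a linear pipeline in plain Python; it is an illustration under that assumption, not the Pegasus cleanup algorithm, and the job and file names are hypothetical.

```python
def cleanup_points(ordered_jobs, keep):
    """Map each job to the scratch files that can be deleted once it finishes.

    ordered_jobs: list of (name, inputs, outputs) in execution order.
    keep: files that must survive, for example final results to stage out.
    """
    last_use = {}
    for name, inputs, outputs in ordered_jobs:
        for f in list(inputs) + list(outputs):
            last_use[f] = name             # later jobs overwrite earlier ones
    points = {}
    for f, name in last_use.items():
        if f not in keep:
            points.setdefault(name, []).append(f)
    return points


pipeline = [
    ("align", ["reads.fastq"], ["aligned.bam"]),
    ("call_variants", ["aligned.bam"], ["raw.vcf"]),
    ("annotate", ["raw.vcf"], ["annotated.vcf"]),
]
points = cleanup_points(pipeline, keep={"annotated.vcf"})
print(points)
```

In this example the staged copy of the reads can be removed right after alignment, long before the workflow ends, which is where the storage savings come from. A workflow with parallel branches would need the dependency graph rather than a simple list.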

Quantitative Data from the PGen Workflow

The execution of the PGen workflow on a dataset of 106 soybean lines sequenced at 15X coverage yielded significant scientific results. The following table summarizes the key findings from this analysis.[1][10]

| Data Type | Quantity |
|---|---|
| Soybean Lines Analyzed | 106 |
| Sequencing Coverage | 15X |
| Identified Single Nucleotide Polymorphisms (SNPs) | 10,218,140 |
| Identified Insertions-Deletions (indels) | 1,398,982 |
| Identified Non-synonymous SNPs | 297,245 |
| Identified Copy Number Variation (CNV) Regions | 3,330 |

These figures show the scale of the analysis and the volume of information that can be generated and managed using a Pegasus-driven workflow.
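A couple of ratios derived from the table help put the counts in proportion. The short calculation below uses only the totals reported above; the variable names are arbitrary, and the ratios describe the whole 106-line panel, not any individual line.

```python
# Totals taken from the table above.
snps = 10_218_140
indels = 1_398_982
nonsynonymous_snps = 297_245

# Share of all SNPs predicted to change an amino acid.
nonsynonymous_fraction = nonsynonymous_snps / snps
# How many SNPs were found for every indel.
snps_per_indel = snps / indels

print(f"non-synonymous share of SNPs: {nonsynonymous_fraction:.2%}")
print(f"SNPs per indel: {snps_per_indel:.1f}")
```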

Hypothetical Signaling Pathway Analysis Workflow

While the PGen workflow focuses on genomic variation, Pegasus is equally well-suited for other types of bioinformatics analyses, such as signaling pathway analysis. This type of analysis is crucial in drug development for understanding how a disease or a potential therapeutic affects cellular processes. A typical signaling pathway analysis workflow might involve the following steps:

- Differential Gene Expression Analysis: Starting with RNA-seq data from control and treated samples, this step identifies genes that are up- or down-regulated in response to the treatment.

- Pathway Enrichment Analysis: The list of differentially expressed genes is then used to identify biological pathways that are significantly enriched with these genes. This is often done using databases such as KEGG or Gene Ontology (GO).

- Network Analysis: The enriched pathways and the corresponding genes are used to construct interaction networks to visualize the relationships between the affected genes and pathways.

- Drug Target Identification: By analyzing the perturbed pathways, potential drug targets can be identified.

Pegasus can manage the execution of the various tools required for each of these steps, ensuring that the analysis is reproducible and scalable.
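The pathway enrichment step is commonly framed as an over-representation test. The sketch below uses a one-sided hypergeometric test written with the standard library; the text does not say which statistic such a workflow would use, so this choice, the function name, and the gene counts are assumptions for illustration.

```python
from math import comb


def enrichment_p_value(population, pathway_size, n_selected, n_overlap):
    """P(X >= n_overlap) for X ~ Hypergeometric(population, pathway_size, n_selected).

    population: number of genes tested in the experiment
    pathway_size: how many of those genes belong to the pathway
    n_selected: number of differentially expressed genes
    n_overlap: differentially expressed genes that are in the pathway
    """
    upper = min(pathway_size, n_selected)
    total = comb(population, n_selected)
    tail = sum(
        comb(pathway_size, i) * comb(population - pathway_size, n_selected - i)
        for i in range(n_overlap, upper + 1)
    )
    return tail / total


# Hypothetical counts: 20,000 genes, a 150-gene pathway, 400 DE genes, 12 shared.
p = enrichment_p_value(20_000, 150, 400, 12)
print(f"enrichment p-value: {p:.3g}")
```

In a real analysis this test is repeated for many pathways, so the resulting p-values would also need a multiple-testing correction.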

Conclusion

Pegasus WMS provides a powerful and flexible framework for managing complex bioinformatics workflows. By abstracting away the complexities of the underlying computational infrastructure, it lets researchers focus on the science while keeping their analyses portable, scalable, and reproducible. The PGen workflow for soybean genomics is a real-world example of how Pegasus can be used to manage large-scale data analysis in a production environment. As bioinformatics research becomes increasingly data-intensive and collaborative, tools like Pegasus WMS will be important for accelerating scientific discovery and innovation in drug development.

References

- 1. PGen: large-scale genomic variations analysis workflow and browser in SoyKB - PubMed [pubmed.ncbi.nlm.nih.gov]

- 2. rafaelsilva.com [rafaelsilva.com]

- 3. marketing.globuscs.info [marketing.globuscs.info]

- 4. Pegasus WMS – Automate, recover, and debug scientific computations [pegasus.isi.edu]

- 5. researchgate.net [researchgate.net]

- 6. isi.edu [isi.edu]

- 7. Pegasus Workflows with Application Containers - CyVerse Container Camp: Container Technology for Scientific Research 0.1.0 documentation [cyverse-container-camp-workshop-2018.readthedocs-hosted.com]

- 8. uct-cbio.github.io [uct-cbio.github.io]

- 9. arokem.github.io [arokem.github.io]

- 10. researchgate.net [researchgate.net]

Pegasus WMS for High-Throughput Computing: An In-Depth Technical Guide

Audience: Researchers, scientists, and drug development professionals.

This technical guide provides a comprehensive overview of the Pegasus Workflow Management System (WMS), a robust solution for managing and executing complex, high-throughput computational workflows. Pegasus is designed to automate workflows, recover from failures, and record detailed provenance for scientific computations, making it a valuable tool for researchers in domains including drug development, genomics, and large-scale data analysis.

Core Concepts of Pegasus WMS

Pegasus enables scientists to create abstract workflows that are independent of the underlying execution environment.[1][2][3] This abstraction allows for portability and scalability, as the same workflow can be executed on a personal laptop, a campus cluster, a grid, or a cloud environment without modification.[1][2]

The system is built upon a few key concepts:

- Abstract Workflow: A high-level, portable description of the scientific workflow, defining the computational tasks and their dependencies as a Directed Acyclic Graph (DAG).[4] This is typically created using the Pegasus Python, Java, or R APIs.[4]

- Executable Workflow: The result of Pegasus planning and mapping the abstract workflow onto specific resources. This concrete plan includes data transfer, job submission, and cleanup tasks.

- Catalogs: Pegasus uses a set of catalogs to manage information about data, transformations, and resources.

  - Replica Catalog: Maps logical file names to physical file locations.

  - Transformation Catalog: Describes the logical application names, the physical locations of the executables, and the required environment.

  - Site Catalog: Defines the execution sites and their configurations.[4]

- Provenance: Pegasus automatically captures detailed provenance information about the workflow execution, including the data used, the software versions, and the execution environment. This information is stored in a database and can be queried for analysis and reproducibility.[2]

Pegasus WMS Architecture

The Pegasus architecture is designed to separate workflow definition from execution. It consists of several key components that work together to manage the entire workflow lifecycle.

The core of Pegasus is the Mapper (or planner), which takes the abstract workflow (in DAX or YAML format) and maps it to the available resources.[5] This process involves:

- Site Selection: Choosing the best execution sites for each task based on resource availability and user preferences.

- Data Staging: Planning the transfer of input data to the execution sites and the staging of output data to desired locations.[1]

- Job Clustering: Grouping small, short-running jobs into larger jobs to reduce the overhead of scheduling and execution.[6]

- Task Prioritization: Optimizing the order of job execution to improve performance.

Once the executable workflow is generated, it is handed over to a workflow execution engine, typically HTCondor's DAGMan, which manages the submission of jobs to the target resources and handles dependencies.[5]

Data Management in Pegasus

Pegasus provides a robust data management system that handles the complexities of data movement in distributed environments.[7] It automates data staging, replica selection, and data cleanup.[7] Pegasus can use a variety of transfer protocols, including GridFTP, HTTP, and S3, to move data between storage and compute resources.[7]

One of the key features of Pegasus is its ability to perform data reuse. If an intermediate data product already exists from a previous workflow run, Pegasus can reuse it, saving significant computation time.[7]
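Data reuse can be pictured as pruning the workflow before it runs. The sketch below is a simplified model in plain Python, not the Pegasus implementation: starting from the requested outputs, it walks backwards and keeps only the jobs whose products are not already recorded in a (hypothetical) replica catalog.

```python
def prune_for_reuse(jobs, replica_catalog, requested):
    """Return the names of jobs that still have to run.

    jobs: name -> {"inputs": [...], "outputs": [...]} using logical file names.
    replica_catalog: logical file names that already exist somewhere.
    requested: final files the user wants.
    """
    producer = {f: name for name, j in jobs.items() for f in j["outputs"]}
    needed = set()
    pending = [f for f in requested if f not in replica_catalog]
    while pending:
        f = pending.pop()
        name = producer.get(f)
        if name is None or name in needed:
            continue                       # raw input, or job already scheduled
        needed.add(name)
        # Only chase inputs that are not available from a previous run.
        pending.extend(i for i in jobs[name]["inputs"] if i not in replica_catalog)
    return needed


jobs = {
    "filter": {"inputs": ["raw.dat"], "outputs": ["filtered.dat"]},
    "summarize": {"inputs": ["filtered.dat"], "outputs": ["summary.txt"]},
}
first_run = prune_for_reuse(jobs, {"raw.dat": "..."}, ["summary.txt"])
rerun = prune_for_reuse(jobs, {"raw.dat": "...", "filtered.dat": "..."}, ["summary.txt"])
print(sorted(first_run), sorted(rerun))
```

On the rerun, the `filter` job is skipped because its output is already cataloged, which is the saving described above.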

Experimental Protocols and Use Cases

Pegasus has been successfully employed in a wide range of scientific domains, from astrophysics to earthquake science and bioinformatics.[8]

Use Case 1: LIGO Gravitational Wave Analysis

The Laser Interferometer Gravitational-Wave Observatory (LIGO) uses Pegasus to manage the complex data analysis pipelines for detecting gravitational waves.[9] The PyCBC (Compact Binary Coalescence) workflow is one of the primary analysis pipelines used in the discovery of gravitational waves.[10]

Experimental Protocol:

- Data Acquisition: Raw data from the LIGO detectors is collected and pre-processed.

- Template Matching: The data is searched for signals that match theoretical models of gravitational waves from binary inspirals. This involves running thousands of matched-filtering jobs.

- Signal Coincidence: Candidate signals from multiple detectors are compared to identify coincident events.

- Parameter Estimation: For candidate events, a follow-up analysis is performed to estimate the parameters of the source, such as the masses and spins of the black holes.

- Statistical Significance: The statistical significance of the candidate events is assessed to distinguish true signals from noise.

References

- 1. Workflow gallery – Pegasus WMS [pegasus.isi.edu]

- 2. cyverse-container-camp-workshop-2018.readthedocs-hosted.com [cyverse-container-camp-workshop-2018.readthedocs-hosted.com]

- 3. GitHub - pegasus-isi/pegasus: Pegasus Workflow Management System - Automate, recover, and debug scientific computations. [github.com]

- 4. 6. Creating Workflows — Pegasus WMS 5.1.2-dev.0 documentation [pegasus.isi.edu]

- 5. scitech.group [scitech.group]

- 6. 12. Optimizing Workflows for Efficiency and Scalability — Pegasus WMS 5.1.2-dev.0 documentation [pegasus.isi.edu]

- 7. Advanced LIGO – Laser Interferometer Gravitational Wave Observatory – Pegasus WMS [pegasus.isi.edu]

- 8. Pegasus, a Workflow Management System for Large-Scale Science | Statewide California Earthquake Center [central.scec.org]

- 9. Pegasus powers LIGO gravitational wave detection analysis – Pegasus WMS [pegasus.isi.edu]

- 10. research.cs.wisc.edu [research.cs.wisc.edu]

Getting Started with Pegasus for Computational Science: An In-depth Technical Guide

For Researchers, Scientists, and Drug Development Professionals

This guide provides a comprehensive overview of the Pegasus Workflow Management System, offering a deep dive into its core functionalities and applications in computational science, with a particular focus on bioinformatics and drug development. Pegasus is an open-source platform that enables scientists to design, execute, and manage complex scientific workflows across diverse computing environments, from local clusters to national supercomputers and cloud infrastructures.[1] Its ability to abstract scientific processes into portable and scalable workflows makes it an invaluable tool for data-intensive research.

Core Concepts of Pegasus

Pegasus workflows are defined as Directed Acyclic Graphs (DAGs), where nodes represent computational tasks and edges define the dependencies between them.[1] This structure allows for the clear representation of complex multi-step analyses. The system operates on the principle of abstracting the workflow from the underlying execution environment. Scientists can define their computational pipeline in a resource-independent manner, and Pegasus handles the mapping of this abstract workflow onto the available computational resources.[1]
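To make the DAG idea concrete, the sketch below uses Python's standard-library `graphlib` module (Python 3.9+) rather than any Pegasus API. The task names are hypothetical; the point is only that a valid execution order follows from the dependency edges.

```python
from graphlib import TopologicalSorter

# Each key is a task; its value is the set of tasks it depends on.
dependencies = {
    "align": {"quality_control"},
    "call_variants": {"align"},
    "annotate": {"call_variants"},
    "report": {"annotate", "quality_control"},
}

# static_order() yields every task after all of its dependencies.
order = list(TopologicalSorter(dependencies).static_order())
print(order)
```

A cycle in `dependencies` would raise `graphlib.CycleError`, which mirrors the requirement that a workflow graph be acyclic.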

Key features of the Pegasus platform include:

- Automation: Pegasus automates the execution of complex workflows, managing job submission, data movement, and error recovery.

- Portability: Workflows defined in an abstract manner can be executed on different computational platforms without modification.

- Scalability: Pegasus is designed to handle large-scale workflows with thousands of tasks and massive datasets.

- Provenance Tracking: The system automatically captures detailed provenance information, recording the steps, software, and data used in a computation, which is crucial for reproducibility.

- Error Recovery: Pegasus provides robust fault-tolerance mechanisms, automatically retrying failed tasks and enabling the recovery of workflows.
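The automatic-retry behavior listed above can be illustrated with a small, generic helper. This is a sketch of the general pattern only; `run_with_retries` and the flaky task are hypothetical and do not reflect how Pegasus or HTCondor implement retries internally.

```python
def run_with_retries(task, max_retries=3):
    """Call task() until it succeeds or the retry budget is used up.

    Returns (result, number_of_attempts).
    """
    for attempt in range(1, max_retries + 2):
        try:
            return task(), attempt
        except RuntimeError:
            if attempt > max_retries:
                raise  # persistent failure: give up and report it


calls = {"n": 0}

def flaky_task():
    # Hypothetical task that fails twice before succeeding.
    calls["n"] += 1
    if calls["n"] < 3:
        raise RuntimeError("transient failure")
    return "done"

result, attempts = run_with_retries(flaky_task)
```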

Experimental Protocols

This section details the methodologies for two key computational biology workflows that can be orchestrated using Pegasus: Germline Variant Calling and Ab Initio Protein Structure Prediction.

Germline Variant Calling Workflow (GATK Best Practices)

This protocol outlines the steps for identifying single nucleotide polymorphisms (SNPs) and small insertions/deletions (indels) in whole-genome sequencing data, following the GATK Best Practices.[2][3][4][5]

1. Data Pre-processing:

- Quality Control (FastQC): Raw sequencing reads in FASTQ format are assessed for quality.

- Alignment (BWA-MEM): Reads are aligned to a reference genome.

- Mark Duplicate Reads (GATK MarkDuplicatesSpark): PCR duplicates are identified and marked to avoid biases in variant calling.

- Base Quality Score Recalibration (GATK BaseRecalibrator & ApplyBQSR): Systematic errors in base quality scores are corrected.[2]

2. Variant Discovery:

- HaplotypeCaller (GATK): The core variant calling step, which identifies potential variants in the aligned reads.

3. Variant Filtering and Annotation:

- Variant Filtering: Raw variant calls are filtered to remove artifacts.

- Variant Annotation: Variants are annotated with information about their potential functional consequences.

Ab Initio Protein Structure Prediction (Rosetta)

This protocol describes the process of predicting the three-dimensional structure of a protein from its amino acid sequence using the Rosetta software suite, a workflow well-suited for management by Pegasus.[1][6][7][8][9]

1. Input Preparation:

- Sequence File (FASTA): The primary amino acid sequence of the target protein.

- Fragment Libraries: Libraries of short structural fragments from known proteins that are used to build the initial models.

2. Structure Prediction Protocol:

- Fragment Insertion (Monte Carlo Assembly): The Rosetta algorithm iteratively assembles protein structures by inserting fragments from the pre-computed libraries.

- Scoring Function: A sophisticated energy function is used to evaluate the quality of the generated structures.

- Refinement: The most promising structures undergo a refinement process to improve their atomic details.

3. Output Analysis:

- Model Selection: The final predicted structures are clustered and ranked based on their energy scores.

- Structure Validation: The quality of the predicted models is assessed using various validation tools.
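The Monte Carlo assembly step relies on an accept/reject rule for trial moves. The sketch below shows the standard Metropolis criterion on a toy one-dimensional energy function. It is not Rosetta code: `toy_energy`, the step size, and the temperature are assumptions chosen only for illustration.

```python
import math
import random

def metropolis_accept(delta_energy, temperature, rng=random):
    """Always accept downhill moves; accept uphill moves with Boltzmann probability."""
    if delta_energy <= 0:
        return True
    return rng.random() < math.exp(-delta_energy / temperature)

def toy_energy(x):
    # Hypothetical energy landscape with its minimum at x = 2.
    return (x - 2.0) ** 2

rng = random.Random(0)
x = 10.0
best_x = x
for _ in range(2000):
    candidate = x + rng.uniform(-0.5, 0.5)  # stands in for a fragment insertion
    if metropolis_accept(toy_energy(candidate) - toy_energy(x), 0.1, rng):
        x = candidate
    if toy_energy(x) < toy_energy(best_x):
        best_x = x  # keep the lowest-energy state seen so far
```

In a workflow setting, many such independent trajectories would run as separate tasks, and the lowest-energy results would be collected for the model selection step.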

Data Presentation

The following table presents hypothetical quantitative data of the kind produced by a proteomics experiment, which could be processed and analyzed using a Pegasus workflow. The values are illustrative; their general pattern follows a study on optimizing proteomics sample preparation.

| Sample Group | Protein Extraction Method | Number of Protein IDs | Gram-Positive Bacteria IDs | Non-abundant Phyla IDs |

| Control | Standard Lysis Buffer | 1500 | 300 | 50 |

| Optimized | SDS + Urea in Tris-HCl | 2500 | 600 | 150 |

This table illustrates how quantitative data from a proteomics experiment can be structured for comparison. A Pegasus workflow could automate the analysis pipeline from raw mass spectrometry data to the generation of such tables.

Visualizations

Signaling Pathway Representation of a Bioinformatics Workflow

This diagram illustrates a conceptual bioinformatics workflow, such as variant calling, in the style of a signaling pathway.

Caption: A conceptual signaling pathway of a bioinformatics workflow.

Experimental Workflow: Germline Variant Calling

This diagram details the GATK-based germline variant calling workflow.

Caption: A detailed workflow for germline variant calling using GATK.

Experimental Workflow: Rosetta Protein Structure Prediction

This diagram illustrates the workflow for ab initio protein structure prediction using Rosetta.

Caption: A workflow for protein structure prediction using Rosetta.

Logical Relationship: Virtual Screening for Drug Discovery

This diagram shows the logical steps in a virtual screening workflow, a common task in drug discovery that can be managed with Pegasus.

Caption: Logical flow of a virtual screening process in drug discovery.

References

- 1. medium.com [medium.com]

- 2. Chapter 2 GATK practice workflow | A practical introduction to GATK 4 on Biowulf (NIH HPC) [hpc.nih.gov]

- 3. edu.abi.am [edu.abi.am]

- 4. Variant Calling Workflow [nbisweden.github.io]

- 5. gatk.broadinstitute.org [gatk.broadinstitute.org]

- 6. Structure Prediction Applications [docs.rosettacommons.org]

- 7. Protein structure prediction with a focus on Rosetta | PDF [slideshare.net]

- 8. researchgate.net [researchgate.net]

- 9. Abinitio [docs.rosettacommons.org]

Pegasus: A Technical Guide to Automating Scientific Workflows for Researchers and Drug Development Professionals

An in-depth technical guide on the core of the Pegasus Workflow Management System, tailored for researchers, scientists, and drug development professionals. This guide explores the architecture, capabilities, and practical applications of Pegasus for automating complex, large-scale scientific computations.

Introduction to Pegasus: Orchestrating Complex Scientific Discovery

Pegasus is a robust Workflow Management System (WMS) designed to automate, manage, and execute complex scientific workflows across a wide range of heterogeneous and distributed computing environments.[1][2] For researchers and professionals in fields like bioinformatics, genomics, and drug discovery, where multi-stage data analysis pipelines are the norm, Pegasus provides a powerful framework to manage computational tasks, ensuring portability, scalability, performance, and reliability.[2][3]

At its core, Pegasus abstracts the scientific workflow from the underlying computational infrastructure.[4][5] This separation allows scientists to define their computational pipelines in a portable manner, focusing on the scientific logic rather than the intricacies of the execution environment. Pegasus then maps this abstract workflow onto available resources, which can include local clusters, national supercomputing centers, or commercial clouds, and manages its execution, including data transfers and error recovery.[2][3][4]

Core Architecture and Concepts

Pegasus's architecture is designed to be modular and flexible, enabling the execution of workflows ranging from a few tasks to over a million.[3][4] The system is built upon several key concepts that are crucial for its operation.

Abstract Workflows (DAX)

Scientists define their workflows using a high-level, resource-independent XML format called DAX (Directed Acyclic Graph in XML); Pegasus 5.x releases use a YAML representation of the same abstract workflow.[2] A DAX file describes the computational tasks as jobs and the dependencies between them as a Directed Acyclic Graph (DAG).[2] Each job in the DAX is a logical representation of a task, specifying its inputs, outputs, and the transformation (the executable) to be run.

The Pegasus Mapper: From Abstract to Executable

The heart of Pegasus is its "just-in-time" planner or mapper.[2][3] The mapper takes the abstract workflow (DAX) and compiles it into an executable workflow tailored for a specific execution environment.[2] This process involves several key steps:

- Resource Discovery: Identifying the available computational and storage resources.

- Data Discovery: Locating the physical locations of input data files.

- Task Mapping: Assigning individual jobs to appropriate computational resources.

- Data Management Job Insertion: Adding necessary jobs for data staging (transferring input data to the execution site) and stage-out (transferring output data to a storage location).

- Workflow Refinement: Applying optimizations such as job clustering (grouping small, short-running jobs into a single larger job to reduce overhead), task reordering, and prioritization to enhance performance and scalability.[3]

The output of the mapper is a concrete, executable workflow that can be submitted to a workflow engine for execution.
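The job clustering refinement mentioned above can be pictured as simple batching. The sketch below groups independent jobs into fixed-size clusters; the helper name and the cluster size are assumptions, and Pegasus's own clustering strategies are more elaborate than this.

```python
def cluster_jobs(job_names, jobs_per_cluster):
    """Group independent jobs into batches that are each submitted as one larger job."""
    return [
        job_names[i:i + jobs_per_cluster]
        for i in range(0, len(job_names), jobs_per_cluster)
    ]

# Ten hypothetical short-running jobs, batched four at a time.
small_jobs = [f"filter_{i}" for i in range(10)]
clusters = cluster_jobs(small_jobs, 4)
print([len(c) for c in clusters])
```

Ten scheduler submissions become three, which is where the reduction in per-job overhead comes from.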

Execution and Monitoring

Pegasus uses HTCondor's DAGMan (Directed Acyclic Graph Manager) as its primary workflow execution engine. DAGMan manages the dependencies between jobs and submits them to the underlying resource managers (e.g., Slurm, Torque/PBS, or HTCondor itself) on the target compute resources.

Pegasus provides comprehensive monitoring and debugging tools.[4] The pegasus-status command allows users to monitor the progress of their workflows in real-time. In case of failures, pegasus-analyzer helps in diagnosing the root cause of the error.[4] All runtime provenance, including information about the execution environment, job performance, and data usage, is captured and stored in a database, which can be queried for detailed analysis.[3][4]

Key Features and Capabilities

Pegasus offers a rich set of features designed to meet the demands of modern scientific research:

| Feature | Description |

| Portability & Reuse | Workflows are defined abstractly, allowing them to be executed on different computational infrastructures without modification.[3] |

| Scalability | Capable of managing workflows with up to a million tasks and processing petabytes of data.[4] |

| Performance | Employs various optimization techniques like job clustering, data reuse, and resource co-allocation to improve workflow performance. |

| Reliability & Fault Tolerance | Automatically retries failed tasks and data transfers. In case of persistent failures, it can generate a "rescue DAG" containing only the remaining tasks to be executed.[4] |

| Data Management | Automates the management of the entire data lifecycle within a workflow, including replica selection, data transfers, and cleanup of intermediate data.[3] |

| Provenance Tracking | Captures detailed provenance information about every aspect of the workflow execution, including the software used, input data, parameters, and the execution environment. This is crucial for reproducibility and validation of scientific results.[3][4] |

| Container Support | Seamlessly integrates with container technologies like Docker and Singularity, enabling reproducible computational environments for workflow tasks. |
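The "rescue DAG" entry in the table can be illustrated with a short sketch: given the dependency graph and the set of tasks that already finished, keep only the unfinished tasks and drop dependencies that are already satisfied. The task names and the `rescue_dag` helper are hypothetical, and the actual rescue file format used by DAGMan is not reproduced here.

```python
def rescue_dag(dependencies, completed):
    """Return the remaining sub-graph after removing completed tasks."""
    return {
        task: deps - completed
        for task, deps in dependencies.items()
        if task not in completed
    }

workflow = {
    "stage_in": set(),
    "compute": {"stage_in"},
    "stage_out": {"compute"},
}
remaining = rescue_dag(workflow, completed={"stage_in"})
print(remaining)
```

Resubmitting `remaining` resumes the workflow from the point of failure instead of repeating the finished stage-in step.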

Experimental Protocols and Workflows in Practice

Pegasus has been successfully applied to a wide range of scientific domains. Below are detailed overviews of representative workflows.

Bioinformatics: RNA-Seq Analysis

A common application of Pegasus in bioinformatics is the automation of RNA sequencing (RNA-Seq) analysis pipelines. These workflows typically involve multiple stages of data processing and analysis.

Experimental Protocol:

- Quality Control (QC): Raw sequencing reads (in FASTQ format) are assessed for quality using tools like FastQC.

- Adapter Trimming: Sequencing adapters and low-quality bases are removed from the reads using tools like Trimmomatic.

- Genome Alignment: The cleaned reads are aligned to a reference genome using a splice-aware aligner such as STAR or HISAT2.

- Quantification: The number of reads mapping to each gene or transcript is counted to estimate its expression level. Tools like featureCounts or HTSeq are used for this step.

- Differential Expression Analysis: Statistical analysis is performed to identify genes that are differentially expressed between different experimental conditions. This is often done using R packages like DESeq2 or edgeR.

- Downstream Analysis: Further analysis, such as gene set enrichment analysis or pathway analysis, is performed on the list of differentially expressed genes.

Seismology: The CyberShake Workflow

The Southern California Earthquake Center (SCEC) uses Pegasus to run its CyberShake workflows, which are computationally intensive simulations to characterize earthquake hazards.

Experimental Protocol:

- Extract Rupture Variations: For a given earthquake rupture, generate a set of rupture variations with different slip distributions and hypocenter locations.

- Generate Strain Green Tensors (SGTs): For each site of interest, pre-calculate and store the SGTs, which represent the fundamental response of the Earth's structure to a point source. This is a highly parallel and computationally expensive step.

- Synthesize Seismograms: Combine the SGTs with the rupture variations to generate synthetic seismograms for each site.

- Measure Peak Spectral Acceleration: From the synthetic seismograms, calculate various intensity measures, such as peak spectral acceleration at different periods.

- Calculate Hazard Curves: For each site, aggregate the intensity measures from all rupture variations and all relevant earthquake sources to compute a probabilistic seismic hazard curve.
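The final aggregation step can be sketched with the textbook probabilistic seismic hazard formulation. The code below assumes Poissonian earthquake occurrence and equally weighted rupture variations, and every number in it is invented for illustration; it is not taken from the CyberShake codebase.

```python
import math

def exceedance_probability(ruptures, threshold, years=1.0):
    """Probability that shaking exceeds `threshold` at one site within `years`.

    ruptures: list of (annual_rate, [intensity for each rupture variation]).
    """
    total_rate = 0.0
    for annual_rate, intensities in ruptures:
        fraction = sum(1 for v in intensities if v > threshold) / len(intensities)
        total_rate += annual_rate * fraction
    # Poisson assumption: P(at least one exceedance) = 1 - exp(-rate * T).
    return 1.0 - math.exp(-total_rate * years)

# Hypothetical site with two ruptures (spectral acceleration in g).
site_ruptures = [
    (0.01, [0.1, 0.3, 0.5, 0.7]),
    (0.002, [0.6, 0.9]),
]
p50 = exceedance_probability(site_ruptures, threshold=0.4, years=50)
curve = [exceedance_probability(site_ruptures, x, 50) for x in (0.2, 0.4, 0.8)]
```

Evaluating the function over a range of thresholds, as in `curve`, traces out one hazard curve for the site.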

Astronomy: The Montage Image Mosaic Workflow

The Montage application, developed by NASA/IPAC, is used to create custom mosaics of the sky from multiple input images. Pegasus is often used to orchestrate the execution of Montage workflows.

Experimental Protocol:

- Reprojection: The input images, which may have different projections, scales, and orientations, are reprojected to a common coordinate system and pixel scale.

- Background Rectification: The background levels of the reprojected images are matched to each other to create a seamless mosaic.

- Co-addition: The reprojected and background-corrected images are co-added to create the final mosaic.
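The co-addition step can be illustrated with a toy example that averages overlapping pixels on a shared grid. This is a simplification under stated assumptions: images are plain nested lists, `None` marks pixels an image does not cover, and all pixels are weighted equally, whereas Montage itself applies area weighting.

```python
def coadd(images):
    """Average the covered pixels of same-shaped images; None means no coverage."""
    rows, cols = len(images[0]), len(images[0][0])
    mosaic = [[None] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            values = [img[r][c] for img in images if img[r][c] is not None]
            if values:
                mosaic[r][c] = sum(values) / len(values)
    return mosaic

# Two hypothetical reprojected tiles that overlap in the middle column.
tile_a = [[1.0, 2.0, None]]
tile_b = [[None, 4.0, 5.0]]
mosaic = coadd([tile_a, tile_b])
print(mosaic)
```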

Quantitative Data and Performance

Pegasus has demonstrated its ability to handle extremely large and complex scientific workflows. The following tables summarize some of the key performance and scalability metrics from published case studies.

Table 1: CyberShake Workflow Scalability

| Metric | Value |

| Number of Tasks | Up to 1 million |

| Data Managed | 2.5 PB |

| Execution Time | 10 weeks (continuous) |

| Computational Resources | Oak Ridge Leadership Computing Facility (Summit) |

Data from the CyberShake 22.12 study.

Table 2: Montage Workflow Performance

| Metric | Value |

| Number of Tasks | 387 |

| Workflow Runtime | 7 minutes, 21 seconds |

| Cumulative Job Wall Time | 5 minutes, 36 seconds |

Data from a representative Montage workflow run.[6]

Conclusion

Pegasus provides a mature, feature-rich, and highly capable workflow management system that empowers researchers, scientists, and drug development professionals to tackle complex computational challenges. By abstracting workflow logic from the execution environment, Pegasus enables the creation of portable, scalable, and reproducible scientific pipelines. Its robust data management, fault tolerance, and provenance tracking capabilities are essential for ensuring the integrity and reliability of scientific results in an increasingly data-intensive research landscape. As scientific discovery becomes more reliant on the automated analysis of massive datasets, tools like Pegasus will continue to be indispensable for accelerating research and innovation.

References

- 1. olcf.ornl.gov [olcf.ornl.gov]

- 2. Frontiers | Using open-science workflow tools to produce SCEC CyberShake physics-based probabilistic seismic hazard models [frontiersin.org]

- 3. CyberShake Workflow Framework - SCECpedia [strike.scec.org]

- 4. kbolsen.sdsu.edu [kbolsen.sdsu.edu]

- 5. GitHub - pegasus-isi/ACCESS-Pegasus-Examples: Pegasus Workflows examples including the Pegasus tutorial, to run on ACCESS resources. [github.com]

- 6. Workflow gallery – Pegasus WMS [pegasus.isi.edu]

Pegasus: An In-Depth Technical Guide to Single-Cell Analysis

For Researchers, Scientists, and Drug Development Professionals

This technical guide provides a comprehensive overview of the Pegasus Python package, a powerful and scalable tool for single-cell RNA sequencing (scRNA-seq) data analysis. Pegasus, developed as part of the Cumulus project, offers a rich set of functionalities for processing, analyzing, and visualizing large-scale single-cell datasets.[1] This document details the core workflow, experimental protocols, and data presentation, enabling users to effectively leverage Pegasus for their research and development needs.

Introduction to Pegasus

Pegasus is a command-line tool and a Python package designed for the analysis of transcriptomes from millions of single cells.[2] It is built upon the popular AnnData data structure, ensuring interoperability with the broader scverse ecosystem. Pegasus provides a comprehensive suite of tools covering the entire scRNA-seq analysis pipeline, from initial data loading and quality control to advanced analyses like differential gene expression and gene set enrichment.

The Pegasus Workflow

The standard Pegasus workflow encompasses several key stages, each with dedicated functions to ensure robust and reproducible analysis. The typical progression involves data loading, quality control and filtering, normalization, identification of highly variable genes, dimensionality reduction, cell clustering, and differential gene expression analysis to identify cluster-specific markers.

Experimental Protocols & Quantitative Data

This section provides detailed methodologies for the core steps in the Pegasus workflow, accompanied by tables summarizing key quantitative parameters.

Data Loading

Pegasus supports various input formats, including 10x Genomics' Cell Ranger output, MTX, CSV, and TSV files. The pegasus.read_input function is the primary entry point for loading data into an AnnData object. The package is conventionally imported as pg, which is the prefix used for function names in the rest of this guide.

Experimental Protocol: Data Loading

- Purpose: To load the gene expression count matrix and associated metadata into memory.

- Methodology: Utilize the pegasus.read_input() function, specifying the file path and format. For 10x Genomics data, provide the path to the directory containing the matrix.mtx.gz, barcodes.tsv.gz, and features.tsv.gz files.

- Example Code:
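As a library-independent sketch (it does not call Pegasus), the following shows what the matrix.mtx file of a 10x-style directory contains once decompressed: a Matrix Market coordinate listing with 1-based feature and barcode indices. The `parse_mtx` helper and the tiny inline matrix are hypothetical; in practice pegasus.read_input() handles this parsing.

```python
def parse_mtx(text):
    """Parse Matrix Market coordinate text into (n_rows, n_cols, {(row, col): count})."""
    lines = [ln for ln in text.splitlines() if ln.strip() and not ln.startswith("%")]
    n_rows, n_cols, n_entries = map(int, lines[0].split())
    counts = {}
    for ln in lines[1:1 + n_entries]:
        i, j, value = ln.split()
        counts[(int(i) - 1, int(j) - 1)] = int(value)  # convert to 0-based indices
    return n_rows, n_cols, counts

# Toy matrix: 3 features (rows) by 2 barcodes (columns), 3 non-zero entries.
example = """%%MatrixMarket matrix coordinate integer general
3 2 3
1 1 5
3 1 2
2 2 7
"""
n_features, n_barcodes, counts = parse_mtx(example)
```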

Quality Control and Filtering

Quality control (QC) is a critical step to remove low-quality cells and genes that could otherwise introduce noise into downstream analyses. Pegasus provides the pg.qc_metrics and pg.filter_data functions for this purpose.

Experimental Protocol: Quality Control and Filtering

- Purpose: To calculate QC metrics and filter out cells and genes based on these metrics.

- Methodology:

  - Calculate QC metrics using pg.qc_metrics(). This function computes metrics such as the number of genes detected per cell (n_genes), the total number of UMIs per cell (n_counts), and the percentage of mitochondrial gene expression (percent_mito).

  - Filter the data using pg.filter_data(). This function applies user-defined thresholds to remove cells and genes that do not meet the quality criteria.

- Example Code:
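As a library-independent sketch of what these metrics mean (it does not call Pegasus), the code below computes n_genes, n_counts, and percent_mito for a toy cell-by-gene count matrix and applies thresholds. The gene names, the "MT-" prefix convention, the helper names, and the threshold values are assumptions chosen for the toy data.

```python
def qc_metrics(counts, gene_names, mito_prefix="MT-"):
    """Compute per-cell QC metrics from a cell-by-gene list of count rows."""
    metrics = []
    for cell in counts:
        n_counts = sum(cell)
        n_genes = sum(1 for v in cell if v > 0)
        mito = sum(v for v, g in zip(cell, gene_names) if g.startswith(mito_prefix))
        percent_mito = 100.0 * mito / n_counts if n_counts else 0.0
        metrics.append(
            {"n_genes": n_genes, "n_counts": n_counts, "percent_mito": percent_mito}
        )
    return metrics

def passes_qc(m, min_genes, max_genes, max_percent_mito):
    return min_genes <= m["n_genes"] < max_genes and m["percent_mito"] < max_percent_mito

genes = ["GeneA", "GeneB", "MT-CO1"]
cells = [[3, 1, 0], [0, 0, 9], [2, 0, 2]]
metrics = qc_metrics(cells, genes)
# Toy thresholds; real data would use values like those in Table 1.
keep = [passes_qc(m, min_genes=2, max_genes=10, max_percent_mito=20) for m in metrics]
```

Only the first cell survives: the second expresses a single gene and the third is dominated by mitochondrial counts.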

Table 1: Recommended Filtering Parameters

| Parameter | pegasus.qc_metrics argument | Description | Recommended Range |

| Minimum Genes per Cell | min_genes | The minimum number of genes detected in a cell. | 200 - 1000 |

| Maximum Genes per Cell | max_genes | The maximum number of genes detected in a cell to filter out potential doublets. | 3000 - 8000 |

| Mitochondrial Percentage | percent_mito | The maximum percentage of mitochondrial gene content. | 5 - 20 |

| Minimum Cells per Gene | (within pg.filter_data) | The minimum number of cells a gene must be expressed in to be retained. | 3 - 10 |

Normalization and Highly Variable Gene Selection

Normalization adjusts for differences in sequencing depth between cells. Subsequently, identifying highly variable genes (HVGs) focuses the analysis on biologically meaningful variation.

Experimental Protocol: Normalization and HVG Selection

- Purpose: To normalize the data and identify genes with high variance across cells.

- Methodology:

  - Normalize the data using pg.log_norm(). This function performs total-count normalization and log-transforms the data.

  - Identify HVGs using pg.highly_variable_features(). Pegasus offers methods similar to Seurat for HVG selection.

- Example Code:
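As a library-independent sketch of the two ideas (it does not call Pegasus), the code below applies total-count normalization followed by log(1 + x) and then ranks genes by variance. The scale factor of 1e5 and the plain-variance ranking are assumptions for illustration; real HVG methods model the mean-variance trend rather than using raw variance.

```python
import math
from statistics import pvariance

def log_norm(cell, scale=1e5):
    """Scale one cell's counts to a common total, then apply log(1 + x)."""
    total = sum(cell)
    return [math.log1p(v * scale / total) for v in cell]

def top_variable_genes(matrix, gene_names, n_top):
    """Rank genes by variance across cells (a deliberately simple criterion)."""
    variances = [pvariance(column) for column in zip(*matrix)]
    ranked = sorted(range(len(gene_names)), key=lambda g: variances[g], reverse=True)
    return [gene_names[g] for g in ranked[:n_top]]

genes = ["A", "B", "C"]
raw = [[4, 6, 0], [4, 0, 6], [4, 3, 3]]  # gene A is constant across cells
normalized = [log_norm(cell) for cell in raw]
hvgs = top_variable_genes(normalized, genes, n_top=2)
```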

Table 2: Highly Variable Gene Selection Parameters

| Parameter | pegasus.highly_variable_features argument | Description | Default Value |

| Flavor | flavor | The method for HVG selection. | "seurat_v3" |

| Number of Top Genes | n_top_genes | The number of highly variable genes to select. | 2000 |

Dimensionality Reduction and Clustering

Principal Component Analysis (PCA) is used to reduce the dimensionality of the data, followed by graph-based clustering to group cells with similar expression profiles.

Experimental Protocol: PCA and Clustering

- Purpose: To reduce the dimensionality of the data and identify cell clusters.

- Methodology:

  - Perform PCA on the highly variable genes using pg.pca().

  - Construct a k-nearest neighbor (kNN) graph using pg.neighbors().

  - Perform clustering on the kNN graph using algorithms like Louvain or Leiden (pg.louvain() or pg.leiden()).

- Example Code:
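As a library-independent sketch of the kNN graph step (it does not call Pegasus), the code below builds a brute-force nearest-neighbor graph over a handful of points standing in for cells in PCA space. The coordinates are invented, and production tools use approximate nearest-neighbor search rather than this quadratic loop.

```python
import math

def knn_graph(points, k):
    """Map each point index to the indices of its k nearest neighbors (Euclidean)."""
    graph = {}
    for i, p in enumerate(points):
        distances = sorted(
            (math.dist(p, q), j) for j, q in enumerate(points) if j != i
        )
        graph[i] = [j for _, j in distances[:k]]
    return graph

# Four hypothetical cells forming two well-separated pairs.
embedding = [(0.0, 0.0), (0.0, 1.0), (5.0, 5.0), (5.0, 6.0)]
graph = knn_graph(embedding, k=1)
print(graph)
```

Community detection algorithms such as Louvain or Leiden then operate on this graph, grouping cells that are densely connected to each other.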

Table 3: PCA and Clustering Parameters

| Parameter | Function | Description | Default Value |

| Number of Principal Components | pg.pca | The number of principal components to compute. | 50 |

| Number of Neighbors | pg.neighbors | The number of nearest neighbors to use for building the kNN graph. | 15 |

| Resolution | pg.louvain / pg.leiden | The resolution parameter for clustering, which influences the number of clusters. | 1.0 |

Differential Gene Expression and Visualization

Differential expression (DE) analysis identifies genes that are significantly upregulated in each cluster compared to all other cells. The results are often visualized using UMAP or t-SNE plots.

Experimental Protocol: DE Analysis and Visualization

- Purpose: To find marker genes for each cluster and visualize the cell populations.

- Methodology:

  - Perform DE analysis using pg.de_analysis(), specifying the cluster annotation.

  - Generate a UMAP embedding using pg.umap().

  - Visualize the clusters and gene expression on the UMAP plot using pg.scatter().

- Example Code:
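As a library-independent sketch of the "one cluster versus all other cells" comparison (it does not call Pegasus), the code below computes a log2 fold change per gene. The helper name, pseudocount, and toy expression values are assumptions; a real DE analysis also reports statistical tests and multiple-testing corrections, which are omitted here.

```python
import math

def log2_fold_changes(expr, labels, cluster, pseudocount=1.0):
    """Per-gene log2 ratio of mean expression inside `cluster` versus all other cells."""
    inside = [row for row, lab in zip(expr, labels) if lab == cluster]
    outside = [row for row, lab in zip(expr, labels) if lab != cluster]
    result = []
    for g in range(len(expr[0])):
        mean_in = sum(row[g] for row in inside) / len(inside)
        mean_out = sum(row[g] for row in outside) / len(outside)
        result.append(math.log2((mean_in + pseudocount) / (mean_out + pseudocount)))
    return result

# Four hypothetical cells, two genes, two clusters.
expression = [[7, 1], [7, 1], [1, 3], [1, 3]]
cluster_labels = ["a", "a", "b", "b"]
lfc = log2_fold_changes(expression, cluster_labels, cluster="a")
print(lfc)
```

The first gene is a candidate marker for cluster "a" (positive fold change), while the second is depleted there.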

Signaling Pathway Analysis

Pegasus facilitates the analysis of signaling pathways and other gene sets through its gene set enrichment analysis (GSEA) and signature score calculation functionalities.

Gene Set Enrichment Analysis (GSEA)

The pegasus.gsea() function allows for the identification of enriched pathways in the differentially expressed genes of each cluster.

Experimental Protocol: Gene Set Enrichment Analysis

- Purpose: To identify biological pathways that are significantly enriched in each cell cluster.

- Methodology:

  - Perform differential expression analysis as described in the Differential Gene Expression and Visualization section.

  - Run pg.gsea(), providing the DE results and a gene set file in GMT format (e.g., from MSigDB).

- Example Code:

Signature Score Calculation for a Signaling Pathway

The pegasus.calc_signature_score() function can be used to calculate a score for a given gene set (e.g., a signaling pathway) for each cell. This allows for the visualization of pathway activity across the dataset.

Hypothetical Example: Analysis of the TGF-β Signaling Pathway

The TGF-β signaling pathway plays a crucial role in various cellular processes. We can define a gene set representing this pathway and analyze its activity.

Experimental Protocol: TGF-β Pathway Activity Score

- Purpose: To quantify the activity of the TGF-β signaling pathway in each cell.

- Methodology:

  - Define a list of genes belonging to the TGF-β pathway.

  - Use pegasus.calc_signature_score() to calculate a score for this gene set.

  - Visualize the signature score on a UMAP plot using pg.scatter().

- Example Code:
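As a library-independent sketch of a signature score (it does not call Pegasus), the code below scores each cell as the mean expression of the signature genes minus the mean over all genes. This simple definition, the short gene list, and the toy values are assumptions; the scoring used by pegasus.calc_signature_score() is more refined than this.

```python
def signature_score(expr, gene_names, signature):
    """Mean expression of signature genes minus mean expression of all genes, per cell."""
    idx = [gene_names.index(g) for g in signature if g in gene_names]
    scores = []
    for row in expr:
        signature_mean = sum(row[i] for i in idx) / len(idx)
        overall_mean = sum(row) / len(row)
        scores.append(signature_mean - overall_mean)
    return scores

# Small illustrative subset of TGF-beta pathway genes plus one unrelated gene.
gene_names = ["TGFB1", "SMAD2", "SMAD3", "ACTB"]
tgfb_signature = ["TGFB1", "SMAD2", "SMAD3"]
expression = [[4, 4, 4, 0], [0, 0, 0, 4]]
scores = signature_score(expression, gene_names, tgfb_signature)
print(scores)
```

Coloring a UMAP by such a per-cell score highlights the populations where the pathway genes are jointly elevated.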

Conclusion

Pegasus provides a robust and user-friendly framework for the analysis of large-scale single-cell RNA sequencing data. Its comprehensive functionalities, scalability, and integration with the Python ecosystem make it an invaluable tool for researchers and scientists in both academic and industrial settings. This guide has outlined the core workflow and provided detailed protocols to enable users to effectively apply Pegasus to their own single-cell datasets. For more detailed information, users are encouraged to consult the official Pegasus documentation.

References

In-Depth Technical Guide to Pegasus for Astrophysical Plasma Simulation

For Researchers, Scientists, and Drug Development Professionals

This guide provides a comprehensive technical overview of the Pegasus code, a sophisticated tool for simulating astrophysical plasma dynamics. Pegasus is a hybrid-kinetic, particle-in-cell (PIC) code that offers a powerful approach to modeling complex plasma phenomena where a purely fluid or fully kinetic description is insufficient.[1][2] This document details the core functionalities of Pegasus, presents quantitative data from validation tests in a structured format, outlines the methodologies for key experiments, and provides visualizations of its core logical workflows.

Core Architecture and Numerical Methods

Pegasus is engineered with a modular architecture, drawing inspiration from the well-established Athena magnetohydrodynamics (MHD) code.[1][2] This design promotes flexibility and ease of use, allowing researchers to adapt the code for a wide range of astrophysical scenarios. At its heart, Pegasus employs a hybrid model that treats ions as kinetic particles and electrons as a fluid. This approach is particularly well-suited for problems where ion kinetic effects are crucial, while the electron dynamics can be approximated as a charge-neutralizing fluid.[3]

The core numerical methods implemented in this compound are summarized in the table below:

| Feature | Description | Reference |

| Model | Hybrid-Kinetic Particle-in-Cell (PIC) | [1][4] |

| Ion Treatment | Kinetic (Particle-in-Cell) | [3] |

| Electron Treatment | Massless, charge-neutralizing fluid | [3] |

| Integration Algorithm | Second-order accurate, three-stage predictor-corrector | [1][4] |

| Particle Integrator | Energy-conserving | [1][2] |

| Magnetic Field Solver | Constrained Transport Method (ensures ∇ ⋅ B = 0) | [1][2] |

| Noise Reduction | Delta-f (δf) scheme | [1][2][5] |

| Coordinate Systems | Cartesian, Cylindrical, Spherical | [2] |

| Parallelization | MPI-based domain decomposition | [1][2] |

Hybrid-Kinetic Particle-in-Cell (PIC) Method

The PIC method in Pegasus tracks the trajectories of a large number of computational "macro-particles," which represent a multitude of real ions. The motion of these particles is governed by the Lorentz force, where the electric and magnetic fields are calculated on a grid.[6] The fields are sourced from the moments of the particle distribution (density and current). This particle-grid coupling allows for the self-consistent evolution of the plasma.
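To illustrate how a macro-particle is advanced under the Lorentz force, the sketch below implements the widely used Boris velocity update. It is offered as a generic example of a PIC particle pusher; the source does not state that this is the integrator used in the code described here, and the units (q/m = 1, unit magnetic field) are chosen only for the demonstration.

```python
def cross(a, b):
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )

def boris_push(v, E, B, q_over_m, dt):
    """Advance velocity v by one step dt under fields E and B (Boris scheme)."""
    h = 0.5 * q_over_m * dt
    v_minus = tuple(vi + h * Ei for vi, Ei in zip(v, E))       # first half electric kick
    t = tuple(h * Bi for Bi in B)
    s = tuple(2.0 * ti / (1.0 + sum(x * x for x in t)) for ti in t)
    v_prime = tuple(a + b for a, b in zip(v_minus, cross(v_minus, t)))
    v_plus = tuple(a + b for a, b in zip(v_minus, cross(v_prime, s)))  # magnetic rotation
    return tuple(vi + h * Ei for vi, Ei in zip(v_plus, E))     # second half electric kick

# Pure gyration: no electric field, uniform B along z.
velocity = (1.0, 0.0, 0.0)
for _ in range(1000):
    velocity = boris_push(velocity, (0.0, 0.0, 0.0), (0.0, 0.0, 1.0), 1.0, 0.1)
speed = sum(c * c for c in velocity) ** 0.5
```

With no electric field the update is a pure rotation, so the particle's speed (and hence kinetic energy) stays constant to round-off.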

Constrained Transport Method

To maintain the divergence-free constraint of the magnetic field (∇ ⋅ B = 0), which is a fundamental property of Maxwell's equations, Pegasus employs the constrained transport method. This method evolves the magnetic field components on a staggered mesh, ensuring that the numerical representation of the divergence of the magnetic field remains zero to machine precision throughout the simulation.[1][2]
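The staggered-mesh idea can be demonstrated in two dimensions. In the sketch below, Bx and By live on cell faces and Ez on cell corners of a periodic grid; the grid size, time step, and random electric field are assumptions for the demonstration and have no physical meaning.

```python
import random

def ct_update(bx, by, ez, dt, dx=1.0, dy=1.0):
    """Faraday's law on a staggered periodic grid: dBx/dt = -dEz/dy, dBy/dt = +dEz/dx."""
    n = len(ez)
    for i in range(n):
        for j in range(n):
            bx[i][j] -= dt / dy * (ez[i][(j + 1) % n] - ez[i][j])
            by[i][j] += dt / dx * (ez[(i + 1) % n][j] - ez[i][j])

def max_divergence(bx, by, dx=1.0, dy=1.0):
    """Largest |div B| over all cells, using face-centered differences."""
    n = len(bx)
    return max(
        abs((bx[(i + 1) % n][j] - bx[i][j]) / dx + (by[i][(j + 1) % n] - by[i][j]) / dy)
        for i in range(n)
        for j in range(n)
    )

n = 8
rng = random.Random(1)
bx = [[0.0] * n for _ in range(n)]
by = [[0.0] * n for _ in range(n)]
for _ in range(10):
    ez = [[rng.uniform(-1.0, 1.0) for _ in range(n)] for _ in range(n)]
    ct_update(bx, by, ez, dt=0.1)
divergence = max_divergence(bx, by)
```

Each corner value of Ez enters the divergence of a cell twice with opposite signs, so the contributions cancel regardless of what the electric field is; only round-off remains.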

Delta-f (δf) Scheme

For simulations where the plasma distribution function only slightly deviates from a known equilibrium, the delta-f (δf) scheme is a powerful variance-reduction technique.[1][2][5] Instead of simulating the full distribution function f, the δf method evolves only the perturbation, δf = f - f₀, where f₀ is the background distribution. This significantly reduces the statistical noise associated with the PIC method, enabling more accurate simulations of low-amplitude waves and instabilities.

Data Presentation: Validation Test Results

Pegasus has been rigorously tested against a suite of standard plasma physics problems to validate its accuracy and robustness. The following tables summarize the key parameters and results from some of these validation tests.

Linear Landau Damping

Linear Landau damping is a fundamental collisionless damping process in plasmas. The simulation results from Pegasus show excellent agreement with the theoretical predictions for the damping rate and frequency of electrostatic waves.

| Parameter | Value |
|---|---|
| Wavenumber (kλ_D) | 0.5 |
| Initial Perturbation Amplitude (α) | 0.01 |
| Number of Particles per Cell | 256 |
| Grid Resolution | 128 cells |
| Result | |
| Damping Rate (γ/ω_p) | -0.153 |
| Wave Frequency (ω/ω_p) | 1.41 |

Alfven Waves

Alfven waves are low-frequency electromagnetic waves that propagate in magnetized plasmas. Pegasus accurately captures their propagation characteristics.

| Parameter | Value |
|---|---|
| Plasma Beta (β) | 1.0 |
| Wave Amplitude (δB/B₀) | 10⁻⁶ |
| Propagation Angle (θ) | 45° |
| Grid Resolution | 128 x 128 |
| Result | |
| Propagation Speed | Matches theoretical Alfven speed |

Cyclotron Waves

Cyclotron waves are associated with the gyromotion of charged particles around magnetic field lines. Pegasus simulations of these waves demonstrate the code's ability to handle kinetic ion physics accurately.

| Parameter | Value |
|---|---|
| Magnetic Field Strength (B₀) | 1.0 |
| Ion Temperature (Tᵢ) | 0.1 |
| Wave Propagation | Parallel to B₀ |
| Grid Resolution | 256 cells |
| Result | |
| Dispersion Relation | Agrees with theoretical predictions for ion cyclotron waves |

Experimental Protocols

This section provides detailed methodologies for the key validation tests cited above. These protocols can serve as a template for researchers looking to replicate these results or design new simulations with Pegasus.

Protocol for Linear Landau Damping Simulation

- Initialization:
  - Define a one-dimensional, periodic simulation domain.
  - Initialize a uniform, Maxwellian distribution of ions with a specified thermal velocity.
  - Introduce a small sinusoidal perturbation to the ion distribution function in both space and velocity, consistent with the desired wave mode.
  - The electrons are treated as a charge-neutralizing fluid.
- Field Solver Configuration:
  - Use the electrostatic solver to compute the electric field from the ion charge density at each time step.
- Particle Pusher Configuration:
  - Use the energy-conserving particle pusher to advance the ion positions and velocities based on the calculated electric field.
- Time Evolution:
  - Evolve the system for a sufficient number of plasma periods to observe the damping of the electric field energy.
- Diagnostics:
  - Record the time history of the electric field energy and the spatial Fourier modes of the electric field.
  - Analyze the recorded data to determine the damping rate and frequency of the wave.
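The final diagnostic step, extracting the damping rate and frequency from the field-energy history, could be sketched as follows. The approach assumes the energy behaves like exp(2γt) cos²(ωt); the function name `fit_damping` and the synthetic test signal are hypothetical, and the input values are simply those quoted in the Linear Landau Damping table.

```python
import math


def fit_damping(times, energy):
    """Estimate (gamma, omega) from an energy history ~ exp(2*gamma*t) * cos^2(omega*t)."""
    # Locate interior local maxima of the energy.
    peaks = [i for i in range(1, len(energy) - 1)
             if energy[i - 1] < energy[i] >= energy[i + 1]]
    tp = [times[i] for i in peaks]
    lp = [math.log(energy[i]) for i in peaks]
    # Least-squares slope of log(peak energy) versus time equals 2 * gamma.
    n = len(tp)
    t_mean, l_mean = sum(tp) / n, sum(lp) / n
    slope = (sum((t - t_mean) * (l - l_mean) for t, l in zip(tp, lp))
             / sum((t - t_mean) ** 2 for t in tp))
    # Energy peaks twice per wave period, so the mean spacing is pi / omega.
    spacing = (tp[-1] - tp[0]) / (n - 1)
    return slope / 2.0, math.pi / spacing


# Usage on a synthetic signal built from the values in the validation table.
gamma_true, omega_true = -0.153, 1.41
ts = [0.01 * i for i in range(2001)]
es = [math.exp(2 * gamma_true * t) * math.cos(omega_true * t) ** 2 for t in ts]
gamma_fit, omega_fit = fit_damping(ts, es)
```

In a real run the energy history is noisy, so the fit window is usually restricted to the interval before particle noise or nonlinear trapping dominates.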

Protocol for Alfven Wave Simulation

- Initialization:
  - Define a two-dimensional, periodic simulation domain with a uniform background magnetic field, B₀.
  - Initialize a uniform plasma with a specified density and pressure (defining the plasma beta).
  - Introduce a small-amplitude, sinusoidal perturbation to the magnetic and velocity fields corresponding to a shear Alfven wave.
- Field Solver Configuration:
  - Use the constrained transport method to evolve the magnetic field.
  - The electric field is determined from the ideal Ohm's law, consistent with the electron fluid model.
- Particle Pusher Configuration:
  - Advance the ion positions and velocities using the Lorentz force from the evolving electromagnetic fields.
- Time Evolution:
  - Evolve the system and observe the propagation of the wave packet.
- Diagnostics:
  - Record the spatial and temporal evolution of the magnetic and velocity field components.
  - Measure the propagation speed of the wave and compare it to the theoretical Alfven speed.
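The reference value for the last diagnostic is the Alfven speed, v_A = B₀ / sqrt(μ₀ρ). The sketch below evaluates it in SI units and also gives the phase speed ω/k = v_A |cos θ| of a shear Alfven wave propagating at an angle θ to B₀. The function names and the numerical inputs are illustrative assumptions, not parameters from the validation test.

```python
import math

MU_0 = 4.0e-7 * math.pi        # vacuum permeability [H/m]
PROTON_MASS = 1.67262192e-27   # [kg]


def alfven_speed(b0, number_density, ion_mass=PROTON_MASS):
    """Alfven speed v_A = B0 / sqrt(mu_0 * rho) in SI units."""
    return b0 / math.sqrt(MU_0 * number_density * ion_mass)


def shear_alfven_phase_speed(v_a, theta_deg):
    """Phase speed omega / k of a shear Alfven wave at angle theta to B0."""
    return v_a * abs(math.cos(math.radians(theta_deg)))


# Usage with illustrative parameters: a 5 nT field and 5 protons per cm^3.
v_a = alfven_speed(5.0e-9, 5.0e6)
v_phase = shear_alfven_phase_speed(v_a, 45.0)
```

For oblique propagation the wave energy still travels along B₀ at v_A, so it matters whether the measured quantity is the phase speed along the wavevector or the speed along the field.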

Visualization: Workflows and Logical Relationships

The following diagram, generated using the DOT language, illustrates the core logical flow within the Pegasus simulation code.

Caption: High-level flowchart of the main simulation loop in Pegasus.

References

- 1. [PDF] Hybrid simulation of Alfvén wave parametric decay instability in a laboratory relevant plasma | Semantic Scholar [semanticscholar.org]
- 2. [1311.4865] Pegasus: A New Hybrid-Kinetic Particle-in-Cell Code for Astrophysical Plasma Dynamics [arxiv.org]
- 3. researchgate.net [researchgate.net]
- 4. [PDF] Pegasus: A new hybrid-kinetic particle-in-cell code for astrophysical plasma dynamics | Semantic Scholar [semanticscholar.org]
- 5. Using delta f | EPOCH [epochpic.github.io]
- 6. pst.hfcas.ac.cn [pst.hfcas.ac.cn]

Pegasus Workflow Management System: A Technical Guide for Scientific and Drug Development Applications

This in-depth technical guide explores the core features of the Pegasus Workflow Management System (WMS), a robust and scalable solution for automating, managing, and executing complex scientific workflows. Designed for researchers, scientists, and professionals in fields like drug development, Pegasus provides a framework for orchestrating computationally intensive tasks across diverse and distributed computing environments. This document details the system's architecture and key functionalities and provides insight into its application in real-world scientific projects.

Core Features of the Pegasus Workflow Management System

Pegasus is engineered to address the challenges of modern scientific computing, offering a suite of features that promote efficiency, reliability, and reproducibility.[1]

- Portability and Reuse: A cornerstone of Pegasus is the abstraction of workflow descriptions from the underlying execution environment.[2][3][4][5] This allows researchers to define a workflow once and execute it on various resources, including local clusters, grids, and clouds, without modification.[3][4][5]
- Scalability: Pegasus is designed to handle workflows of varying scales, from a few tasks to over a million. It can efficiently manage large numbers of tasks and distribute them across many computational resources.[3][4][6]
- Performance Optimization: The Pegasus mapper can reorder, group, and prioritize tasks to improve the overall performance of a workflow.[3][4][5][7] A key optimization technique is job clustering, in which multiple short-running jobs are grouped into a single, larger job to reduce the overhead of job submission and scheduling.[3]
- Data Management: Pegasus provides comprehensive data management capabilities, including replica selection, data transfers, and output registration in data catalogs.[4][7] It automatically stages necessary input data to execution sites and registers output data for future use.[7]
- Provenance Tracking: Detailed provenance information is automatically captured for every workflow execution. This includes the data used and produced, the software executed with its specific parameters, and the execution environment.[7] This record-keeping is crucial for the reproducibility of scientific results.
- Reliability and Fault Tolerance: Pegasus incorporates several mechanisms to ensure the reliable execution of workflows. Jobs and data transfers are automatically retried in case of failures.[7] For unrecoverable errors, Pegasus can generate a "rescue workflow" that allows the user to resume the workflow from the point of failure.[8][9]
- Monitoring and Debugging: A suite of tools is provided for monitoring the progress of workflows in real time and for debugging failures.[5][10] The pegasus-status command offers a high-level overview of the workflow's state, while pegasus-analyzer helps pinpoint the cause of failures.[10][11]
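The effect of job clustering on submission overhead can be sketched with a few lines of arithmetic. This is not the Pegasus API: the function names, the cluster size, and the 30-second per-submission overhead are hypothetical values chosen only to show the idea.

```python
def cluster_jobs(tasks, cluster_size):
    """Group independent short tasks into batches of at most cluster_size."""
    if cluster_size < 1:
        raise ValueError("cluster_size must be at least 1")
    return [tasks[i:i + cluster_size] for i in range(0, len(tasks), cluster_size)]


def total_overhead(n_submissions, overhead_per_submission):
    """Scheduling overhead when every submission pays a fixed cost."""
    return n_submissions * overhead_per_submission


# Usage: 1,000 short tasks with an assumed 30 s overhead per submission.
tasks = [f"task_{i}" for i in range(1000)]
clusters = cluster_jobs(tasks, cluster_size=50)
unclustered = total_overhead(len(tasks), 30)    # seconds, one job per task
clustered = total_overhead(len(clusters), 30)   # seconds, one job per cluster
```

The trade-off is that tasks inside one clustered job typically run on a single resource, so very large clusters reduce the parallelism available to the scheduler.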

System Architecture

The architecture of the Pegasus WMS is designed to decouple the logical description of a workflow from its physical execution. This is achieved through a multi-stage process that transforms an abstract workflow into an executable workflow tailored for a specific computational environment.

The core components of the Pegasus architecture include:

- Workflow Mapper: This is the central component of Pegasus. It takes a high-level, abstract workflow description (in XML or YAML format) and "compiles" it into an executable workflow. During this process, it performs several key functions:
  - Resource Selection: It identifies suitable computational resources for executing the workflow tasks based on information from various catalogs.
  - Data Staging: It plans the necessary data transfers to move input files to the execution sites and to stage out output files.
  - Task Clustering: It groups smaller tasks into larger jobs to optimize performance.
  - Adding Auxiliary Jobs: It adds jobs for tasks such as directory creation, data registration, and cleanup.
- Execution Engine (HTCondor DAGMan): Pegasus leverages HTCondor's DAGMan (Directed Acyclic Graph Manager) as its primary workflow execution engine. DAGMan is responsible for submitting jobs in the correct order based on their dependencies and for managing job retries.
- Information Catalogs: Pegasus relies on a set of catalogs to obtain information about the available resources and data:
  - Site Catalog: Describes the physical and logical properties of the execution sites.
  - Replica Catalog: Maps logical file names to their physical locations.
  - Transformation Catalog: Describes the logical application names and their physical locations on different sites.
- Monitoring and Debugging Tools: These tools interact with a workflow-specific database that is populated with real-time monitoring information and provenance data.
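The division of labor between the mapper and the execution engine can be sketched in plain Python. The code below is a toy model, not the Pegasus or DAGMan API: `plan` wraps an abstract task graph with a few auxiliary jobs, and `release_order` releases jobs in dependency order using the standard-library `graphlib` module. The job and task names are hypothetical.

```python
from graphlib import TopologicalSorter


def plan(abstract_workflow):
    """Wrap compute tasks with auxiliary jobs, mimicking the mapping step.

    abstract_workflow maps each task name to the set of tasks it depends on.
    """
    executable = {"create_dir": set(), "stage_in": {"create_dir"}}
    for task, parents in abstract_workflow.items():
        # Tasks without parents wait for input data to be staged in.
        executable[task] = set(parents) or {"stage_in"}
    # Stage-out waits for tasks that no other task depends on (the leaves).
    has_children = set().union(*abstract_workflow.values())
    leaves = set(abstract_workflow) - has_children
    executable["stage_out"] = leaves
    executable["cleanup"] = {"stage_out"}
    return executable


def release_order(executable):
    """Release jobs in waves: a job is ready once all its parents are done."""
    sorter = TopologicalSorter(executable)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)
    return waves


# Usage: a diamond-shaped abstract workflow.
abstract = {"split": set(), "align_a": {"split"}, "align_b": {"split"},
            "merge": {"align_a", "align_b"}}
waves = release_order(plan(abstract))
```

Jobs that appear in the same wave have no dependencies on each other and could be submitted concurrently; a real engine would also handle retries and failures, which this sketch omits.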

Below is a diagram illustrating the high-level architecture of the Pegasus WMS.

Quantitative Performance Data

Pegasus has been successfully employed in numerous large-scale scientific projects, demonstrating its scalability and performance. Below are tables summarizing quantitative data from two prominent use cases: the LIGO gravitational wave search and the SPLINTER drug discovery project.

LIGO Gravitational Wave Search Workflow

The Laser Interferometer Gravitational-Wave Observatory (LIGO) collaboration has extensively used Pegasus to manage the complex workflows for analyzing gravitational wave data.[2][12]

| Metric | Value | Reference |
|---|---|---|
| Number of Compute Tasks per Workflow | ~60,000 | [13] |
| Input Data per Workflow | ~5,000 files (10 GB total) | [13] |
| Output Data per Workflow | ~60,000 files (60 GB total) | [13] |
| Total Workflows (August 2017) | ~4,000 | [14] |
| Total Tasks (August 2017) | > 9 million | [14] |
| Turnaround Time for Offline Analysis | Days (previously weeks) | [14] |

SPLINTER Drug Discovery Workflow

The Structural Protein-Ligand Interactome (SPLINTER) project uses Pegasus to manage millions of molecular docking simulations for predicting interactions between small molecules and proteins.[15]

| Metric | Value | Reference |
|---|---|---|
| Number of Docking Simulations (Jan-Feb 2013) | > 19 million | [15] |
| Number of Proteins | ~3,900 | [15] |
| Number of Ligands | ~5,000 | [15] |
| Total Core Hours | 1.42 million | [15] |
| Completion Time | 27 days | [15] |
| Average Daily Wall Clock Time | 52,593 core hours | [15] |
| Peak Daily Wall Clock Time | > 100,000 core hours | [15] |
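The SPLINTER figures are mutually consistent, as a quick check shows: dividing the total core hours by the completion time reproduces the average daily figure, and the product of the approximate protein and ligand counts matches the stated number of docking simulations. The variable names below are illustrative only.

```python
total_core_hours = 1.42e6           # "Total Core Hours" from the table
completion_days = 27                # "Completion Time" from the table
proteins, ligands = 3_900, 5_000    # approximate counts from the table

average_daily_core_hours = total_core_hours / completion_days
protein_ligand_pairs = proteins * ligands

print(f"average per day: {average_daily_core_hours:,.0f} core hours")
# average per day: 52,593 core hours
print(f"protein-ligand pairs: {protein_ligand_pairs:,}")
# protein-ligand pairs: 19,500,000
```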

Experimental Protocols

This section provides detailed methodologies for two representative scientific workflows managed by Pegasus.

LIGO PyCBC Gravitational Wave Search

The PyCBC (Python Compact Binary Coalescence) workflow is a key pipeline used by the LIGO Scientific Collaboration to search for gravitational waves from the merger of compact binary systems like black holes and neutron stars.

Objective : To identify statistically significant gravitational-wave signals in the data from the LIGO detectors.

Methodology :

-

Data Preparation : The workflow begins by identifying and preparing the input data, which consists of time-series strain data from the LIGO detectors.

-

Template Bank Generation : A large bank of theoretical gravitational waveform templates is generated, each corresponding to a different set of binary system parameters (e.g., masses, spins).

-