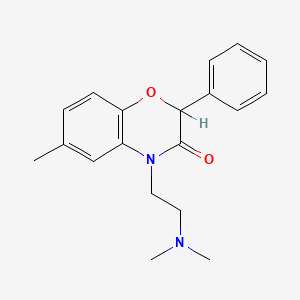

AR 17048

Description

Properties

| Property | Value |
|---|---|
| CAS Number | 65792-35-0 |
| Molecular Formula | C19H22N2O2 |
| Molecular Weight | 310.4 g/mol |
| IUPAC Name | 4-[2-(dimethylamino)ethyl]-6-methyl-2-phenyl-1,4-benzoxazin-3-one |
| InChI | InChI=1S/C19H22N2O2/c1-14-9-10-17-16(13-14)21(12-11-20(2)3)19(22)18(23-17)15-7-5-4-6-8-15/h4-10,13,18H,11-12H2,1-3H3 |
| InChI Key | WAMJTKQWKFBKKP-UHFFFAOYSA-N |
| Canonical SMILES | CC1=CC2=C(C=C1)OC(C(=O)N2CCN(C)C)C3=CC=CC=C3 |
| Appearance | Solid powder |
| Purity | >98% (or refer to the Certificate of Analysis) |
| Shelf Life | >2 years if stored properly |
| Solubility | Soluble in DMSO, not in water |
| Storage | Dry, dark, and at 0-4 °C for short term (days to weeks) or -20 °C for long term (months to years). |
| Synonyms | 2-phenyl-4-(beta-dimethylaminoethyl)-6-methyl-2,3-dihydro-1,4-benzoxazin-3-one; AR 17048; AR-17048 |
| Product Source | United States |
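
The listed molecular weight can be cross-checked against the molecular formula. The following is a minimal sketch, assuming rounded standard atomic weights for C, H, N, and O; the function name `molecular_weight` is illustrative and handles only simple formulas without brackets or charges.

```python
import re

# Rounded standard atomic weights in g/mol (assumed values, limited to the
# elements that appear in this compound).
ATOMIC_WEIGHTS = {"C": 12.011, "H": 1.008, "N": 14.007, "O": 15.999}


def molecular_weight(formula: str) -> float:
    """Sum atomic weights for a simple formula such as 'C19H22N2O2'."""
    total = 0.0
    # Each match is an element symbol followed by an optional count.
    for element, count in re.findall(r"([A-Z][a-z]?)(\d*)", formula):
        total += ATOMIC_WEIGHTS[element] * (int(count) if count else 1)
    return total


mw = molecular_weight("C19H22N2O2")
print(f"{mw:.1f} g/mol")  # prints "310.4 g/mol"
```

The result agrees with the 310.4 g/mol stated in the table.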

Foundational & Exploratory

Augmented Reality in the Laboratory: A Technical Guide to Enhancing Research and Development

An In-depth Technical Guide for Researchers, Scientists, and Drug Development Professionals

Introduction

Augmented Reality (AR) is rapidly transitioning from a niche technology to a powerful tool within the laboratory setting, poised to revolutionize scientific research, drug discovery, and quality control. By overlaying digital information—such as instructions, data, and 3D models—onto the physical world, AR provides scientists and researchers with a more intuitive and interactive way to engage with their experiments and data. This guide explores the core technical aspects of AR in the laboratory, detailing its applications, quantifiable benefits, and practical implementation for experimental protocols.

Augmented reality enhances the real world by adding digital elements, unlike virtual reality (VR), which creates a completely simulated environment.[1] In a laboratory context, this lets researchers stay connected to their physical workspace while accessing digital information, ranging from hands-free protocols and data to three-dimensional visualizations of complex molecular structures.[2][3] Integrating AR into laboratory workflows promises to enhance efficiency, reduce errors, and foster a deeper understanding of complex biological and chemical processes.

Core Applications of Augmented Reality in the Laboratory

The applications of AR in a laboratory setting are diverse, spanning the entire research and development pipeline. Key areas where AR is making a significant impact include:

- Data Visualization: AR enables scientists to visualize complex, multidimensional datasets in an immersive 3D space.[4] For instance, researchers can interact with 3D models of proteins, molecules, or cellular structures, leading to a more profound understanding of their form and function.[2][4] This is a significant leap from traditional 2D representations on a computer screen.[4]

- Experimental Guidance and Protocol Adherence: AR headsets and smart glasses can provide researchers with step-by-step instructions overlaid directly onto their field of view.[5][6] This hands-free guidance ensures that complex protocols are followed precisely, minimizing the risk of human error.[3] The system can provide real-time feedback and alerts, for example, if a wrong reagent is selected or a step is missed.[7]

- Inventory and Equipment Management: AR applications can streamline laboratory inventory management.[5][8] By simply looking at a reagent or piece of equipment, a researcher can view relevant information such as expiration dates, safety data, and maintenance schedules.[8] This can significantly reduce the time spent on manual inventory checks and searches.[5]

- Training and Education: AR provides an immersive and interactive platform for training new laboratory personnel.[2][9] Trainees can practice complex procedures in a simulated environment without using expensive reagents or posing a risk to sensitive equipment.[2][9] Studies have shown that AR-based training can be more effective than traditional paper-based methods.[10]

Quantitative Impact of Augmented Reality in Laboratory Settings

The adoption of AR in laboratories is driven by its potential to deliver measurable improvements in efficiency, accuracy, and safety. While the field is still emerging, several studies have started to quantify the benefits of AR implementation.

| Metric | Change with AR | Context | Source |
|---|---|---|---|
| Accuracy | 62.3% increase | AR-based training for a procedural task compared to paper-based training. | [10] |
| User Frustration | 32.14% reduction | AR-based training for a procedural task compared to paper-based training. | [10] |
| System Latency | Reduced from 2126 ms to 296 ms | Real-time AI-powered Augmented Reality Microscope (ARM) for cancer diagnosis. | [11] |
| Cognitive Load | Significant reduction | AR-guided assembly tasks compared to traditional video tutorials. | [10] |
| Task Time (Pointing) | Significantly higher | Pointing at virtual targets in an AR environment compared to physical targets. | [12] |

It is important to note that while AR shows significant promise, there can be a learning curve and potential for increased task time in certain scenarios, such as direct interaction with virtual objects compared to physical ones.[12]

Experimental Protocols: AR-Guided Cell Passaging

To illustrate the practical application of AR in the laboratory, this section provides a detailed methodology for a common cell culture procedure: passaging adherent cells, using a hypothetical AR system.

Objective: To provide a hands-free, interactive guide for the subculturing of an adherent cell line (e.g., AR42J) to ensure protocol adherence and minimize contamination risk.

Materials and Equipment:

- AR Headset (e.g., Microsoft HoloLens 2) with pre-loaded "AR-Lab-Assist" software
- T25 flask with confluent AR42J cells
- Complete growth medium (pre-warmed to 37°C)
- Phosphate-Buffered Saline (PBS) (pre-warmed to 37°C)
- Trypsin-EDTA solution (pre-warmed to 37°C)
- Sterile serological pipettes and pipette aid
- Sterile 15 mL conical tube
- New T25 culture flask
- 70% ethanol
- Biological safety cabinet (BSC)
- Incubator at 37°C, 5% CO₂
- Inverted microscope

AR System Features:

- Voice Commands: The entire protocol can be navigated using voice commands such as "Next Step," "Previous Step," "Show Timer," and "Record Observation."

- Visual Overlays: The AR headset will display text instructions, timers, and highlight specific equipment and areas within the user's field of view.

- Object Recognition: The system can identify flasks, pipettes, and reagents to confirm correct selection.

- Automated Data Logging: The system can record timestamps for critical steps and allow for voice-to-text annotation of observations.

Methodology:

- Preparation and Sterilization:
  - AR Prompt: "Disinfect the biological safety cabinet and all required materials with 70% ethanol." A visual overlay will highlight the spray bottle and wipes.
  - The user confirms completion by saying, "Task complete."

- Observation of Cells:
  - AR Prompt: "Observe the cell confluency under the inverted microscope. The confluency should be greater than 80%." A reference image of 80% confluency is displayed in the user's peripheral vision.
  - The user observes the cells and can say, "Record observation: Cells are approximately 90% confluent and appear healthy," which is automatically logged.

- Aspiration of Old Medium:
  - AR Prompt: "Aspirate the old medium from the T25 flask." An arrow will point to the waste container for the aspirator tube.

- Washing with PBS:
  - AR Prompt: "Gently add 2 mL of pre-warmed PBS to the side of the flask." The system will highlight the PBS bottle.
  - AR Prompt: "Rock the flask gently to wash the cell monolayer." A short animation demonstrating the rocking motion is displayed.
  - AR Prompt: "Aspirate the PBS."

- Cell Detachment with Trypsin:
  - AR Prompt: "Add 1 mL of pre-warmed trypsin to the flask and distribute evenly." The system recognizes the trypsin bottle and provides a green checkmark for confirmation.
  - AR Prompt: "Incubate in the incubator for 2-5 minutes. Start timer." A timer is displayed in the top right corner of the user's view.
  - The user can periodically check the cells under the microscope. AR Prompt: "Look for cell rounding and detachment."

- Neutralization of Trypsin:
  - AR Prompt: "Once 70-80% of cells are detached, add 3 mL of complete medium to neutralize the trypsin." An arrow points to the complete medium bottle.

- Cell Collection and Centrifugation:
  - AR Prompt: "Gently pipette the cell suspension to break up clumps and transfer to a 15 mL conical tube." The system highlights the conical tube.
  - AR Prompt: "Centrifuge at 500 × g for 5 minutes."

- Resuspension and Seeding:
  - AR Prompt: "Aspirate the supernatant and resuspend the cell pellet in 1 mL of fresh complete medium."
  - AR Prompt: "Transfer the cell suspension to a new T25 flask containing 4 mL of complete medium." An arrow points to the new flask.

- Incubation:
  - AR Prompt: "Gently swirl the flask to ensure even cell distribution and place in the incubator." A diagram showing the correct swirling motion appears.
  - AR Prompt: "Label the flask with the cell line name, passage number, and date." A virtual keyboard can be used for data entry, which is then logged.
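
The voice-driven navigation and automated logging described above can be sketched as a small state machine. This is an illustrative assumption only: `ProtocolSession`, its `handle` method, and the log format are hypothetical names invented for this example and do not correspond to the API of HoloLens, "AR-Lab-Assist", or any real AR product. Speech recognition is assumed to have already produced the command text.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Step titles taken from the methodology above.
PASSAGING_STEPS = [
    "Preparation and Sterilization",
    "Observation of Cells",
    "Aspiration of Old Medium",
    "Washing with PBS",
    "Cell Detachment with Trypsin",
    "Neutralization of Trypsin",
    "Cell Collection and Centrifugation",
    "Resuspension and Seeding",
    "Incubation",
]


@dataclass
class ProtocolSession:
    """Hypothetical step navigator with a timestamped log."""
    steps: list
    index: int = 0
    log: list = field(default_factory=list)

    def _record(self, message: str) -> None:
        # Store (UTC ISO timestamp, message) pairs.
        self.log.append((datetime.now(timezone.utc).isoformat(), message))

    def handle(self, command: str) -> str:
        """Apply one recognized voice command and return the current step."""
        text = command.strip()
        lowered = text.lower()
        if lowered in ("next step", "task complete"):
            self._record(f"completed: {self.steps[self.index]}")
            self.index = min(self.index + 1, len(self.steps) - 1)
        elif lowered == "previous step":
            self.index = max(self.index - 1, 0)
        elif lowered.startswith("record observation:"):
            self._record("observation: " + text.split(":", 1)[1].strip())
        else:
            self._record(f"unrecognized command: {text}")
        return self.steps[self.index]


session = ProtocolSession(PASSAGING_STEPS)
print(session.handle("Task complete"))  # prints "Observation of Cells"
session.handle("Record observation: Cells are approximately 90% confluent and appear healthy")
print(session.handle("Next Step"))      # prints "Aspiration of Old Medium"
```

Keeping the step list as plain data means the same navigator could, in principle, drive any of the protocols in this guide; timers and object recognition are deliberately left out of the sketch.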

Visualizations

AR-Assisted Laboratory Workflow

Caption: A logical workflow diagram illustrating the interaction between a researcher and an AR system during a laboratory experiment.

Signaling Pathway for AR-Enhanced Data Interpretation

Caption: A signaling pathway demonstrating how raw experimental data is transformed into an interactive 3D model for enhanced interpretation using an AR platform.

Conclusion

Augmented reality is set to become an indispensable tool in the modern laboratory.[5][6] By seamlessly integrating digital information with the physical research environment, AR technology offers a new paradigm for conducting experiments, analyzing data, and managing laboratory resources. The quantitative and qualitative data emerging from early adoptions point towards significant improvements in efficiency, accuracy, and user experience. As the hardware becomes more accessible and the software more sophisticated, we can expect to see even more innovative applications of AR that will continue to push the boundaries of scientific discovery. For research organizations and pharmaceutical companies, investing in and integrating AR technologies will be crucial for staying at the forefront of innovation and maintaining a competitive edge.

References

- 1. A Review Article of the Reduce Errors in Medical Laboratories - PMC [pmc.ncbi.nlm.nih.gov]

- 2. Introducing Augmented Reality to Optical Coherence Tomography in Ophthalmic Microsurgery | IEEE Conference Publication | IEEE Xplore [ieeexplore.ieee.org]

- 3. Augmented Reality in the Pharmaceutical Industry - BrandXR [brandxr.io]

- 4. augmentiqs.com [augmentiqs.com]

- 5. An augmented reality microscope with real-time artificial intelligence integration for cancer diagnosis - PubMed [pubmed.ncbi.nlm.nih.gov]

- 6. analyticalscience.wiley.com [analyticalscience.wiley.com]

- 7. Augmented Reality for better laboratory results [medica-tradefair.com]

- 8. [1812.00825] Microscope 2.0: An Augmented Reality Microscope with Real-time Artificial Intelligence Integration [arxiv.org]

- 9. Augmented Reality in Pharmaceutical Industry - Plutomen [pluto-men.com]

- 10. Frontiers | Acceptance of augmented reality for laboratory safety training: methodology and an evaluation study [frontiersin.org]

- 11. helios2.mi.parisdescartes.fr [helios2.mi.parisdescartes.fr]

- 12. mdpi.com [mdpi.com]

Principles of Augmented Reality for Scientific Visualization: An In-depth Technical Guide

For Researchers, Scientists, and Drug Development Professionals

Abstract

Augmented Reality (AR) is poised to revolutionize scientific visualization by overlaying interactive, three-dimensional digital information onto the real-world environment. This guide delves into the core principles of applying AR to scientific visualization, with a specific focus on applications within research, drug discovery, and development. We will explore methodologies for data presentation, detail experimental protocols for creating AR experiences, and illustrate key workflows and pathways using Graphviz diagrams. This document serves as a technical resource for professionals seeking to leverage AR for more intuitive data interaction, enhanced collaboration, and accelerated discovery.

Core Principles of Augmented Reality in a Scientific Context

Augmented reality systems fundamentally consist of three key components: a combination of real and virtual worlds, real-time interaction, and accurate 3D registration of virtual and real objects.[1] In a laboratory or research setting, this translates to the ability to visualize and interact with complex datasets, such as molecular structures or cellular pathways, as if they were physically present in the workspace.[2] This immersive approach can significantly enhance the understanding of intricate biological and chemical systems, which are often difficult to interpret from traditional 2D screens.[3][4]

The primary advantage of AR in scientific visualization is its capacity to present data in a spatial context, allowing researchers to walk around a virtual protein, manipulate a signaling pathway with hand gestures, or observe the simulated interaction of a drug candidate with its target in three dimensions.[5][6] This technology facilitates a more profound comprehension of spatial relationships and complex structural features.[3][7]

Data Presentation in Augmented Reality

While AR excels at visualizing qualitative, 3D data, it can also be a powerful tool for presenting quantitative information in a more intuitive and contextual manner. Instead of being confined to static tables and charts, quantitative data can be overlaid onto 3D models, providing real-time feedback and a deeper understanding of the data's significance.

Table 1: Quantitative Data Integration in AR Visualizations

| Data Type | AR Presentation Method | Example Application in Drug Development |
|---|---|---|
| Binding Affinity Data (e.g., Ki, IC50) | Numeric labels attached to specific binding sites on a 3D protein model. Color-coding of the molecular surface to represent affinity gradients. | A researcher visualizes a target protein and several lead compounds. As they manipulate a virtual compound near the binding pocket, the corresponding binding affinity values are displayed in real-time. |
| Gene Expression Levels | Heatmap textures applied to a 3D model of a cell or tissue. Bar charts or graphs that appear when a specific cellular component is selected. | When viewing a 3D model of a cancerous tissue, genes that are upregulated by a potential drug are highlighted in green, while downregulated genes are shown in red. |
| Pharmacokinetic (PK) Data (e.g., Cmax, Tmax) | Animated particles or colored flows within a 3D anatomical model to represent drug concentration over time. Interactive graphs that display the PK curve when a specific organ is selected. | A scientist can visualize the absorption, distribution, metabolism, and excretion (ADME) of a new drug within a virtual human body, with concentrations changing dynamically over time. |
| Clinical Trial Data | Geographic mapping of trial sites with interactive data points. 3D scatter plots to visualize patient responses and adverse events. | A clinical trial manager can see a world map with holographic representations of enrollment numbers and key efficacy results at each clinical site.[5] |
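
As one way to picture the color-coding of affinity gradients mentioned in the first row of Table 1, the sketch below converts an IC50 value to pIC50 and maps it linearly onto a blue-to-red gradient. The function names, the pIC50 range of 5 to 9, and the color scheme are all assumptions chosen for illustration; a real application would pick its own scale.

```python
import math


def pic50(ic50_nm: float) -> float:
    """Convert an IC50 in nanomolar to pIC50 = -log10(IC50 in molar)."""
    return -math.log10(ic50_nm * 1e-9)


def affinity_to_rgb(ic50_nm: float, weak_pic50: float = 5.0,
                    strong_pic50: float = 9.0) -> tuple:
    """Map pIC50 onto a blue (weak binder) to red (strong binder) gradient."""
    t = (pic50(ic50_nm) - weak_pic50) / (strong_pic50 - weak_pic50)
    t = min(max(t, 0.0), 1.0)  # clamp values outside the assumed range
    return (round(255 * t), 0, round(255 * (1 - t)))


# Hypothetical IC50 values in nM, used only to exercise the mapping.
for ic50 in (1.0, 100.0, 10000.0):
    print(ic50, affinity_to_rgb(ic50))
```

The resulting RGB tuple could then be applied as a per-residue or per-compound tint by whatever rendering engine hosts the 3D model.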

Experimental Protocols for Augmented Reality Visualization

Creating a compelling and accurate scientific visualization in AR involves a series of steps to process and render the data. Below are detailed methodologies for key applications.

Protocol: Molecular Structure Visualization with a Head-Mounted Display (e.g., Microsoft HoloLens)

This protocol outlines the general steps to import and visualize a protein structure in an AR environment.[3][7]

- Data Acquisition: Obtain the 3D coordinates of the desired molecule from a database such as the Protein Data Bank (PDB). The data is typically in a .pdb or .cif file format.

- 3D Model Preparation:
  - Use molecular visualization software (e.g., PyMOL, Chimera) to clean up the PDB file, select the desired chains, and represent the molecule in a suitable format (e.g., surface, ribbon, ball-and-stick).
  - Export the prepared structure as a 3D model file compatible with game engines, such as .obj or .fbx.

- AR Environment Development:
  - Import the 3D model into a game engine that supports AR development, such as Unity or Unreal Engine.[8]
  - Utilize the appropriate AR software development kit (SDK) for the target device (e.g., Mixed Reality Toolkit for HoloLens).

- Interaction and Feature Implementation:
  - Program interactions such as rotation, scaling, and translation of the molecule using hand gestures or controllers.[8]
  - Add features to display annotations, highlight specific residues, or measure distances between atoms.

- Deployment: Build and deploy the application to the AR headset.
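
To make the data acquisition and export steps more concrete, the sketch below reads atom coordinates from the fixed-column ATOM/HETATM records of a .pdb file and writes them as bare OBJ vertex lines. It is a simplified illustration under stated assumptions: it emits points only, whereas a tool such as PyMOL or Chimera would export actual surface or ribbon geometry, and `make_atom_record` is a hypothetical helper that exists only to build test data.

```python
def parse_pdb_atoms(pdb_text: str) -> list:
    """Extract (atom name, x, y, z) from ATOM/HETATM records.

    Uses the fixed PDB columns: atom name in columns 13-16 and the
    x, y, z coordinates in columns 31-38, 39-46, and 47-54.
    """
    atoms = []
    for line in pdb_text.splitlines():
        if line.startswith(("ATOM", "HETATM")):
            name = line[12:16].strip()
            x = float(line[30:38])
            y = float(line[38:46])
            z = float(line[46:54])
            atoms.append((name, x, y, z))
    return atoms


def atoms_to_obj(atoms: list) -> str:
    """Write one OBJ vertex line ('v x y z') per atom; no faces are produced."""
    return "\n".join(f"v {x:.3f} {y:.3f} {z:.3f}" for _, x, y, z in atoms)


def make_atom_record(serial, name, res_name, chain, res_seq, x, y, z) -> str:
    """Build a minimal fixed-column ATOM record (illustrative test data only)."""
    return (f"ATOM  {serial:>5} {name:<4} {res_name:>3} {chain}{res_seq:>4}    "
            f"{x:8.3f}{y:8.3f}{z:8.3f}")


# Two made-up atoms, not taken from any real PDB entry.
sample = "\n".join([
    make_atom_record(1, "N", "MET", "A", 1, 27.340, 24.430, 2.614),
    make_atom_record(2, "CA", "MET", "A", 1, 26.266, 25.413, 2.842),
])
print(atoms_to_obj(parse_pdb_atoms(sample)))
```

An engine such as Unity can import OBJ geometry, but a points-only file like this one is mainly useful for checking coordinates before a full mesh export.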

Protocol: Real-time Molecular Visualization from 2D Input (MolAR Application Workflow)

This protocol describes the workflow of the MolAR application, which uses machine learning to generate AR visualizations from 2D images.[4][9]

- Input: The user provides a 2D representation of a molecule. This can be a hand-drawn structure on paper or a chemical name.

- Image Recognition/Name Parsing:
  - If a drawing is provided, the application uses a machine learning model (a convolutional neural network) to recognize the chemical structure.
  - If a name is provided, it is parsed to identify the molecule.

- 3D Model Generation: The application queries a chemical database (like PubChem) to retrieve the 3D coordinates of the recognized molecule.

- AR Visualization: The 3D model is then rendered in the user's real-world environment through their smartphone or tablet camera. The user can interact with the virtual molecule.[4]
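
The 3D model generation step can be illustrated with PubChem's public PUG REST interface, which serves 3D records in SDF format by compound name. The sketch below only builds the request URL and parses the atom block of a V2000 molfile; it makes no network call, and it is an assumption about how such a lookup might be written, not a description of MolAR's actual code. The water coordinates are approximate illustrative values.

```python
from urllib.parse import quote

PUG_REST = "https://pubchem.ncbi.nlm.nih.gov/rest/pug"


def pubchem_3d_sdf_url(name: str) -> str:
    """Build a PUG REST URL requesting a 3D SDF record for a compound name."""
    return f"{PUG_REST}/compound/name/{quote(name)}/SDF?record_type=3d"


def parse_sdf_atoms(sdf_text: str) -> list:
    """Read (element, x, y, z) tuples from the atom block of a V2000 molfile."""
    lines = sdf_text.splitlines()
    # Line 4 is the counts line; its first three characters hold the atom count.
    atom_count = int(lines[3][:3])
    atoms = []
    for line in lines[4:4 + atom_count]:
        x, y, z, element = line.split()[:4]
        atoms.append((element, float(x), float(y), float(z)))
    return atoms


# Minimal hand-written molfile for water (approximate geometry, for illustration).
WATER_SDF = "\n".join([
    "water",
    "  illustrative",
    "",
    "  3  2  0  0  0  0  0  0  0  0999 V2000",
    "    0.0000    0.0000    0.0000 O   0  0",
    "    0.9572    0.0000    0.0000 H   0  0",
    "   -0.2400    0.9266    0.0000 H   0  0",
    "  1  2  1  0",
    "  1  3  1  0",
    "M  END",
])

print(pubchem_3d_sdf_url("acetic acid"))
print(parse_sdf_atoms(WATER_SDF))
```

The parsed coordinates are what a renderer would turn into spheres and bonds for display through the device camera.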

Visualizing Pathways and Workflows

AR provides an unparalleled opportunity to visualize not just static structures, but also dynamic processes and complex relationships.

Signaling Pathway Visualization

Understanding the intricate network of interactions in a biological signaling pathway is crucial for drug discovery. AR can transform these complex 2D diagrams into interactive 3D networks.[10]

Caption: A generic G-protein coupled receptor (GPCR) signaling pathway.

Experimental Workflow in an AR-Enhanced Laboratory

AR can streamline laboratory workflows by providing hands-free access to information and step-by-step guidance.[11]

Caption: A typical laboratory workflow enhanced with augmented reality guidance.

Logical Relationship: AR Data Visualization Pipeline

This diagram illustrates the logical flow of data from its raw form to an interactive AR visualization.[12]

Caption: The logical pipeline for creating scientific AR visualizations.

Conclusion and Future Outlook

Augmented reality holds immense potential to transform scientific research, particularly in fields like drug development that rely heavily on the interpretation of complex, multi-dimensional data. By moving beyond the limitations of 2D screens, AR offers a more intuitive, interactive, and collaborative platform for scientific discovery.[13][14] As AR hardware becomes more accessible and software tools more sophisticated, we can anticipate its integration into routine laboratory and clinical practices, from visualizing molecular interactions to guiding complex procedures and enhancing scientific education.[5][15] The principles and protocols outlined in this guide provide a foundation for researchers and scientists to begin exploring and implementing this transformative technology in their own work.

References

- 1. Augmented reality - Wikipedia [en.wikipedia.org]

- 2. The Role of Augmented Reality in Scientific Visualization [falconediting.com]

- 3. Visualization of molecular structures using HoloLens-based augmented reality - PMC [pmc.ncbi.nlm.nih.gov]

- 4. towardsdatascience.com [towardsdatascience.com]

- 5. impactcare.co.in [impactcare.co.in]

- 6. allerin.com [allerin.com]

- 7. researchgate.net [researchgate.net]

- 8. paxcom.ai [paxcom.ai]

- 9. pubs.aip.org [pubs.aip.org]

- 10. Augmented reality revolutionizes surgery and data visualization for VCU researchers - VCU News - Virginia Commonwealth University [news.vcu.edu]

- 11. analyticalscience.wiley.com [analyticalscience.wiley.com]

- 12. m.youtube.com [m.youtube.com]

- 13. Augmented Reality in the Pharmaceutical Industry - BrandXR [brandxr.io]

- 14. editverse.com [editverse.com]

- 15. artixio.com [artixio.com]

Getting Started with Augmented Reality in Life Sciences Research: An In-depth Technical Guide

For Researchers, Scientists, and Drug Development Professionals

Introduction: A New Dimension in Life Sciences Research

Augmented Reality (AR) is rapidly transitioning from a futuristic concept to a practical tool poised to revolutionize life sciences research. By overlaying digital information—such as 3D molecular models, interactive protocols, and real-time data—onto the physical world, AR offers an intuitive and immersive way to interact with complex biological data and streamline laboratory workflows. This guide provides a technical deep-dive for researchers, scientists, and drug development professionals on how to begin leveraging AR to enhance data visualization, improve experimental accuracy, and accelerate discovery. From molecular modeling in drug discovery to guided laboratory procedures, AR is creating a more interactive, informative, and efficient research environment.[1][2][3][4]

Core Applications of Augmented Reality in the Research Lifecycle

Augmented reality is being applied across various facets of life sciences research, demonstrating significant potential to enhance efficiency and comprehension.[2][3] Key areas of impact include:

- Enhanced Molecular Visualization and Drug Discovery: AR allows researchers to move beyond 2D screens and interact with 3D molecular structures in a shared physical space.[1][3] This spatial interaction can lead to a deeper understanding of protein-ligand binding, complex molecular geometries, and drug-target interactions.[5] By simulating and manipulating virtual models of molecules and proteins, scientists can expedite the identification of potential drug candidates and optimize their design.[1][2][4]

- Streamlined Laboratory Workflows and Training: AR can provide researchers with hands-free, heads-up access to protocols, notes, and instrument controls. This can be particularly valuable in sterile environments or when performing complex, multi-step procedures. For training purposes, AR can offer guided, step-by-step instructions overlaid on the actual instruments and equipment, reducing errors and improving learning curves for new techniques.[6][7][8]

- Advanced Surgical and Medical Training: In the realm of medical research, AR is being used to create highly realistic surgical simulations.[9][10] Trainees can practice complex procedures on virtual anatomical models overlaid on physical manikins, gaining valuable experience in a risk-free setting.[9]

- Immersive Genomics and Proteomics Data Visualization: The sheer volume and complexity of omics data present a significant visualization challenge. AR offers a new paradigm for exploring these datasets in 3D, potentially revealing patterns and relationships that are not apparent in traditional 2D representations.

Quantitative Impact of Augmented Reality in Life Sciences

While still an emerging field, early studies and use cases are beginning to provide quantitative evidence of AR's benefits. The following table summarizes some of the key findings.

| Application Area | Metric | AR-Assisted Performance | Traditional Method Performance | Improvement with AR | Source/Study |
|---|---|---|---|---|---|
| Surgical Training | Mean Procedure Time (seconds) | 97.62 ± 35.59 | 121.34 ± 12.17 | 19.5% faster | Systematic Review on VR/AR in Medical Education |
| Laboratory Safety Training | Accuracy Rate | 62.3% more accurate | Baseline | 62.3% | Werrlich et al. (2018) |
| Molecular Docking | Binding Pose Prediction Success Rate (RMSD < 2 Å) | 82% (ChemPLP scoring function in GOLD) | Varies by software (59% - 100%) | N/A (Comparative Study) | Benchmarking Docking Protocols for COX Enzymes |
| Genomics Data Analysis | F1-Score for Single Nucleotide Variant (SNV) Calling (DRAGEN platform) | 0.985 to 0.992 | Comparable to CPU-based GATK | N/A (Comparable Performance) | Benchmarking Accelerated NGS Pipelines |

Note: The data presented is from various studies with different methodologies and may not be directly comparable. It serves to illustrate the potential quantitative benefits of AR.
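
The "19.5% faster" figure in the surgical training row follows directly from the two mean procedure times. A minimal check, assuming the improvement is computed as the relative reduction against the traditional-method mean (the helper name `percent_reduction` is illustrative):

```python
def percent_reduction(baseline: float, new: float) -> float:
    """Relative reduction of `new` compared with `baseline`, in percent."""
    return (baseline - new) / baseline * 100.0


# Mean procedure times in seconds, as listed in the table above.
print(f"{percent_reduction(121.34, 97.62):.1f}% faster")  # prints "19.5% faster"
```

This calculation uses the means only; it does not account for the reported standard deviations, so it says nothing about statistical significance.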

Technical Implementation: Integrating AR into Your Lab

Successfully integrating AR into a life sciences research environment requires careful consideration of both hardware and software components.

Hardware Requirements

The choice of hardware will depend on the specific application, but generally falls into two categories:

| Hardware Type | Description | Key Specifications | Examples |
|---|---|---|---|
| Head-Mounted Displays (HMDs) | Wearable devices that provide an immersive, hands-free AR experience. They contain the necessary sensors and displays to overlay digital content onto the user's view of the real world. | High-resolution display (at least 1080p), powerful processor (e.g., quad-core), sufficient RAM (minimum 4-6 GB), advanced sensors (accelerometer, gyroscope, depth sensors), and a capable GPU. | Microsoft HoloLens 2, Magic Leap 2, Varjo XR-3 |
| Handheld Devices (Smartphones and Tablets) | Utilize the device's camera and screen to display AR content. They are more accessible and widely available than HMDs. | Modern processor (e.g., Apple A-series, Qualcomm Snapdragon 8-series), high-quality camera, and support for AR frameworks like ARKit or ARCore. | Latest iPhones and iPads, high-end Android devices from Google, Samsung, etc. |

Software and Development

The software ecosystem for AR is rapidly evolving. Researchers have several options for developing and deploying AR applications:

| Software/Platform | Description | Key Features |
|---|---|---|
| AR Frameworks (ARKit and ARCore) | Software Development Kits (SDKs) from Apple and Google, respectively, that provide the foundational tools for creating AR experiences on iOS and Android devices. | World tracking, plane detection, light estimation, and image tracking. |
| Game Engines (Unity and Unreal Engine) | Powerful 3D development platforms that are widely used for creating interactive AR and VR applications. They offer a rich set of tools for 3D modeling, animation, physics simulation, and cross-platform deployment. | Visual scripting, extensive asset stores, and strong community support. |
| Specialized Scientific Visualization Software | A growing number of software tools are being developed specifically for scientific and medical AR applications. | Integration with scientific data formats (e.g., PDB for protein structures), advanced rendering capabilities for scientific data, and tools for collaborative visualization. |

Experimental Protocols

The following protocols provide detailed methodologies for implementing AR in different life sciences research contexts.

Protocol 1: AR-Guided Western Blot

This protocol adapts a standard western blot procedure to incorporate AR for guidance and data logging.

Objective: To demonstrate the use of an AR headset to guide a researcher through a western blot protocol, reducing the potential for error and creating an automatic digital record of the experiment.

Materials:

- Microsoft HoloLens 2 or similar AR headset
- Custom AR application for the western blot protocol
- Standard western blot equipment and reagents (electrophoresis chamber, transfer system, PVDF membrane, blocking buffer, primary and secondary antibodies, ECL substrate, imaging system)

Methodology:

- Preparation and Setup:
  - The researcher puts on the AR headset and launches the "AR Western Blot" application.
  - The application displays a virtual checklist of all necessary reagents and equipment. The researcher confirms the presence of each item using voice commands or gestures.
  - The AR application visually highlights the correct placement of the gel in the electrophoresis chamber.

- Gel Electrophoresis:
  - The AR application displays a virtual timer for the gel run, which is initiated by a voice command.
  - Visual cues appear on the bench to guide the researcher through the preparation of the transfer buffer.

- Protein Transfer:
  - The application provides a step-by-step 3D animated guide on how to assemble the transfer stack (filter paper, gel, membrane, filter paper). Each component is virtually highlighted in the correct order.
  - A virtual timer for the transfer is displayed and initiated by voice command.

- Blocking and Antibody Incubation:
  - The application displays the recipe for the blocking buffer and highlights the correct reagents on the shelf.
  - Virtual timers are used for the blocking and antibody incubation steps.
  - The researcher can use voice commands to record the lot numbers of the primary and secondary antibodies, which are automatically added to the digital lab notebook associated with the experiment.

- Detection and Imaging:
  - The application provides instructions for preparing and applying the ECL substrate.
  - The researcher uses voice commands to capture an image of the final blot with the AR headset's camera, which is automatically saved with a timestamp.
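
The automatic digital record mentioned in the objective could take the form of a small structured entry that collects antibody lot numbers and timestamped events and serializes them for a lab notebook. The sketch below is hypothetical: `BlotRecord`, its field names, and the JSON layout are assumptions made for illustration, not the schema of any particular electronic lab notebook or AR application.

```python
import json
from dataclasses import dataclass, field, asdict
from datetime import datetime, timezone


@dataclass
class BlotRecord:
    """Hypothetical notebook entry for one western blot run."""
    experiment_id: str
    antibody_lots: dict = field(default_factory=dict)
    events: list = field(default_factory=list)

    def log_event(self, description: str) -> None:
        # Each event carries a UTC ISO 8601 timestamp.
        self.events.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "event": description,
        })

    def record_lot(self, antibody: str, lot_number: str) -> None:
        """Store a lot number dictated by the researcher and log the action."""
        self.antibody_lots[antibody] = lot_number
        self.log_event(f"lot recorded for {antibody} antibody")

    def to_json(self) -> str:
        return json.dumps(asdict(self), indent=2)


# Placeholder identifiers, not real lot numbers.
record = BlotRecord("WB-EXAMPLE-001")
record.log_event("gel run timer started")
record.record_lot("primary", "LOT-PLACEHOLDER-A")
record.record_lot("secondary", "LOT-PLACEHOLDER-B")
print(record.to_json())
```

Serializing to JSON keeps the record readable by both people and downstream tools; image capture and timer handling are outside the scope of this sketch.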

Protocol 2: AR-Assisted 3D Protein Structure Visualization and Analysis

Objective: To utilize a handheld AR application for the interactive visualization and analysis of a protein structure from the Protein Data Bank (PDB).

Materials:

- Smartphone or tablet with a compatible AR application (e.g., a custom app built with ARKit/ARCore and Unity)
- PDB file of the target protein

Methodology:

- Data Import and Initialization:
  - The researcher downloads the desired PDB file onto their device.
  - The AR application is launched, and the PDB file is imported.
  - The application prompts the user to scan a flat surface (e.g., a lab bench or desk).

- AR Visualization:
  - Once a surface is detected, the 3D model of the protein is rendered in the real-world environment.
  - The researcher can walk around the virtual protein to view it from all angles.
  - Standard touch gestures (pinch to zoom, two-finger rotate) are used to manipulate the size and orientation of the model.

- Interactive Analysis:
  - The application provides a menu with options to change the protein's representation (e.g., cartoon, surface, ball and stick).
  - The researcher can select specific residues or chains to highlight them with different colors.
  - A measurement tool allows the user to calculate the distance between two selected atoms.
  - If the PDB file contains a ligand, the researcher can toggle its visibility and analyze its position within the binding pocket.

- Collaboration and Data Capture:
  - The application allows for collaborative viewing, where multiple users can see and interact with the same virtual model in a shared physical space.
  - The researcher can capture screenshots and videos of the AR visualization for inclusion in presentations or publications.
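
The measurement tool and ligand analysis in the Interactive Analysis step reduce to Euclidean distances between atom coordinates. A minimal sketch, assuming coordinates in ångströms as in PDB files; the function names, the 4.0 Å contact cutoff, and the sample coordinates are illustrative assumptions rather than values from any real structure.

```python
import math


def atom_distance(a, b) -> float:
    """Euclidean distance between two (x, y, z) points, in the input units."""
    return math.dist(a, b)


def atoms_within(ligand_atoms, protein_atoms, cutoff: float = 4.0) -> list:
    """Return names of protein atoms within `cutoff` of any ligand atom."""
    return [
        name
        for name, xyz in protein_atoms
        if any(atom_distance(xyz, lig) <= cutoff for lig in ligand_atoms)
    ]


# Made-up coordinates for illustration only.
ligand = [(0.0, 0.0, 0.0)]
protein = [
    ("SER195:OG", (3.0, 0.0, 0.0)),
    ("HIS57:NE2", (0.0, 3.5, 0.0)),
    ("ASP102:OD1", (9.0, 0.0, 0.0)),
]

print(atom_distance((0.0, 0.0, 0.0), (3.0, 4.0, 0.0)))  # prints 5.0
print(atoms_within(ligand, protein))  # prints ['SER195:OG', 'HIS57:NE2']
```

In an AR application the two selected points would come from the user's tap or gaze selection, and the same distance would be shown as a label next to the model.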

Mandatory Visualizations

Signaling Pathway: Mitogen-Activated Protein Kinase (MAPK) Cascade

The following diagram illustrates the MAPK signaling pathway, a crucial cascade involved in cell proliferation, differentiation, and survival. AR can be used to visualize this pathway in 3D, showing the spatial relationships between the interacting proteins and how signals are transduced from the cell membrane to the nucleus.

Caption: The MAPK signaling cascade, a key pathway in cellular regulation.

Experimental Workflow: AR-Assisted Cell Culture

This diagram outlines a typical cell culture workflow enhanced with AR guidance. The AR system provides step-by-step instructions, timers, and data logging capabilities, improving consistency and reducing the risk of contamination.

Caption: An AR-assisted workflow for a typical cell culture experiment.

Logical Relationship: Drug Discovery Funnel with AR Integration

This diagram illustrates the traditional drug discovery funnel, highlighting where AR can be integrated to improve efficiency and decision-making.

Caption: Integration of AR into the drug discovery and development pipeline.

Conclusion and Future Outlook

Augmented reality is set to become an indispensable tool in the life sciences research landscape. Its ability to merge the digital and physical worlds offers unprecedented opportunities to enhance our understanding of complex biological systems, streamline laboratory processes, and accelerate the pace of discovery. While the technology is still evolving, the early applications and quantitative data demonstrate a clear potential for significant impact. As AR hardware becomes more powerful and accessible, and as the software ecosystem matures, we can expect to see even more innovative and transformative applications emerge. For researchers and drug development professionals, now is the time to begin exploring the possibilities of augmented reality and to consider how this powerful new technology can be integrated into their own work to push the boundaries of scientific knowledge.

References

- 1. advanced-medicinal-chemistry.peersalleyconferences.com [advanced-medicinal-chemistry.peersalleyconferences.com]

- 2. provenreality.com [provenreality.com]

- 3. Augmented Reality in the Pharmaceutical Industry - BrandXR [brandxr.io]

- 4. impactcare.co.in [impactcare.co.in]

- 5. The Role of Virtual and Augmented Reality in Advancing Drug Discovery in Dermatology - PMC [pmc.ncbi.nlm.nih.gov]

- 6. analyticalscience.wiley.com [analyticalscience.wiley.com]

- 7. Fostering Performance in Hands-On Laboratory Work with the Use of Mobile Augmented Reality (AR) Glasses [mdpi.com]

- 8. researchgate.net [researchgate.net]

- 9. Reporting reproducible imaging protocols - PubMed [pubmed.ncbi.nlm.nih.gov]

- 10. researchgate.net [researchgate.net]

The Convergence of Real and Virtual: A Technical Guide to the History and Evolution of Augmented Reality in Scientific Discovery

For Researchers, Scientists, and Drug Development Professionals

Abstract

Augmented Reality (AR) is rapidly transcending its origins in gaming and entertainment to become a transformative tool in scientific discovery. By overlaying digital information onto the physical world, AR offers researchers unprecedented opportunities to visualize complex data, interact with virtual models in a real-world context, and enhance experimental procedures. This technical guide provides an in-depth exploration of the history and evolution of AR in scientific research, with a particular focus on its applications in medicine, chemistry, and drug development. It details key technological milestones, presents quantitative data on the impact of AR, and provides detailed experimental protocols for its implementation. Through a comprehensive analysis of the core technologies and practical use cases, this paper serves as a vital resource for scientists and researchers seeking to leverage the power of augmented reality to accelerate discovery and innovation.

A Journey Through Time: The Evolution of Augmented Reality

The concept of blending digital information with the real world predates the term "augmented reality." Early explorations in the mid-20th century laid the groundwork for the immersive technologies we see today.

Foundational Concepts and Early Milestones

The intellectual seeds of AR can be traced back to the 1960s with Ivan Sutherland's invention of the first head-mounted display (HMD), "The Sword of Damocles," in 1968.[1] This device, though cumbersome, was the first to present computer-generated graphics that overlaid the user's view of the real world. The term "augmented reality" itself was coined in 1990 by Thomas Caudell, a researcher at Boeing, who was working on a system to guide workers in assembling aircraft wiring harnesses.[1][2]

One of the first functional AR systems, called Virtual Fixtures, was developed in 1992 by Louis Rosenberg at the U.S. Air Force's Armstrong Laboratory.[1] This system demonstrated that overlaying virtual information on a real-world view could enhance human performance in complex tasks. These early systems were often large, expensive, and limited in their capabilities, but they established the fundamental principles of AR.

The Rise of Mobile and Wearable AR

The proliferation of smartphones in the late 2000s marked a turning point for AR, making it accessible to a mass audience. The release of AR software development kits (SDKs) like ARToolKit in 2000, and later Apple's ARKit and Google's ARCore in 2017, democratized the development of AR applications. These platforms provided the tools for developers to create sophisticated AR experiences on consumer-grade hardware. The launch of devices like Google Glass in 2013, while not a commercial success, spurred further interest and development in wearable AR displays.

Core Technologies Powering Augmented Reality in Science

The magic of AR is enabled by a confluence of hardware and software technologies working in concert to create a seamless blend of the real and virtual.

Hardware: The Window to the Augmented World

AR experiences are delivered through a variety of hardware, each with its own strengths and limitations.

- Head-Mounted Displays (HMDs): These devices, such as Microsoft's HoloLens, provide the most immersive AR experience by overlaying high-definition 3D graphics directly onto the user's field of view. They allow for hands-free operation, which is crucial in many scientific and medical applications.
- Handheld Devices: Smartphones and tablets are the most common platforms for AR due to their widespread availability. They utilize their cameras to capture the real world and then display the augmented view on their screens.
- Projection-Based AR: This approach, also known as Spatial Augmented Reality (SAR), projects digital information directly onto physical objects in the environment. This is particularly useful for collaborative work and large-scale visualizations.

Software: The Brains Behind the Illusion

The software component of AR is responsible for understanding the real world and correctly placing virtual objects within it. Key software technologies include:

- Simultaneous Localization and Mapping (SLAM): This is a critical algorithm that allows a device to build a map of its surroundings while simultaneously tracking its own position within that map. This is essential for anchoring virtual objects to the real world.
- 3D Object Recognition and Tracking: This technology enables the AR system to identify and track specific objects in the real world, allowing for context-aware augmentation.
- Rendering Engines: These engines are responsible for generating the 3D graphics that are overlaid on the real world, ensuring they are realistic and correctly lit.

Augmented Reality in Action: Transforming Scientific Disciplines

AR is no longer a futuristic concept but a practical tool being applied across a range of scientific fields to enhance research and discovery.

Revolutionizing Medical Training and Surgical Procedures

The medical field has been an early and enthusiastic adopter of AR technology. One of the most significant impacts has been in surgical training and execution. AR allows surgeons to overlay 3D models of a patient's anatomy, derived from CT or MRI scans, directly onto the patient's body during a procedure. This provides a "superhuman" view, enhancing precision and reducing the risk of errors.

Several studies have demonstrated the quantitative benefits of using AR in surgical training. These studies often compare the performance of trainees using AR systems with those using traditional training methods.

| Metric | AR Group Improvement over Traditional Methods | Source |
|---|---|---|
| Technical Performance | 35% mean improvement (95% CI: 28%-42%) | [1][3][4][5] |
| Accuracy | 29% mean improvement (95% CI: 23%-35%) | [1][3][4][5] |
| Procedural Knowledge | 32% mean improvement (95% CI: 25%-39%) | [1][3][4][5] |
| Student Engagement | Mean score of 4.5/5 (SD = 0.6) | [1][3][4][5] |
| Student Satisfaction | Mean score of 4.7/5 (SD = 0.5) | [1][3][4][5] |
| Confidence | 30% mean improvement (95% CI: 24%-36%) | [1][3][4][5] |

A typical experimental setup to evaluate the effectiveness of AR in surgical training involves the following steps:

- Participant Recruitment: A cohort of surgical trainees with similar levels of experience is recruited.
- Pre-Test Assessment: All participants undergo a baseline assessment of their surgical skills on a standardized task (e.g., suturing, dissection) using traditional methods. Performance is measured using metrics such as time to completion, number of errors, and accuracy.
- Group Allocation: Participants are randomly assigned to either a control group (traditional training) or an experimental group (AR-assisted training).
- Training Intervention:
  - The control group receives standard surgical training, which may include lectures, video tutorials, and practice on physical models.
  - The experimental group receives training using an AR system that overlays procedural guidance, anatomical information, or real-time feedback onto their view of the surgical task.
- Post-Test Assessment: After the training period, all participants are re-assessed on the same standardized surgical task.
- Data Analysis: The performance metrics from the pre-test and post-test are statistically analyzed to determine if there is a significant difference in skill improvement between the two groups. Subjective measures, such as confidence and satisfaction, are also collected through questionnaires.
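The Data Analysis step can be sketched as a comparison of per-participant gains (post-test minus pre-test) between the two groups. The example below is a minimal illustration using only the Python standard library. The scores are invented for demonstration, `gains` and `welch_t` are hypothetical helper names, and Welch's t-test is one reasonable choice of test rather than the method used in the cited studies.

```python
import math
from statistics import mean, variance

def gains(pre, post):
    """Per-participant improvement: post-test score minus pre-test score."""
    return [after - before for before, after in zip(pre, post)]

def welch_t(x, y):
    """Welch's t statistic and approximate degrees of freedom for two samples."""
    vx = variance(x) / len(x)
    vy = variance(y) / len(y)
    t = (mean(x) - mean(y)) / math.sqrt(vx + vy)
    df = (vx + vy) ** 2 / (vx ** 2 / (len(x) - 1) + vy ** 2 / (len(y) - 1))
    return t, df

# Invented skill scores (0-100) for four trainees per group.
control_gain = gains(pre=[50, 55, 60, 52], post=[58, 60, 66, 59])
ar_gain = gains(pre=[51, 54, 61, 53], post=[70, 72, 80, 69])

t_stat, dof = welch_t(ar_gain, control_gain)
print(f"mean gain: AR={mean(ar_gain):.1f}, control={mean(control_gain):.1f}")
print(f"Welch t={t_stat:.2f}, df={dof:.1f}")
```

Converting the statistic to a p-value requires the t distribution, which is usually taken from a statistics package. With cohorts this small, effect sizes and confidence intervals deserve as much attention as the significance test.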

Enhancing Visualization and Interaction in Chemistry and Drug Discovery

In the fields of chemistry and drug discovery, understanding the three-dimensional structure of molecules is paramount. Traditional 2D representations on computer screens can often be limiting. AR provides an intuitive way to visualize and interact with complex molecular structures in 3D space.

AR applications like "MolAR" and "BioSIMAR" allow researchers and students to view and manipulate 3D models of molecules as if they were real objects in the room.[6][7] This can lead to a deeper understanding of molecular geometry, bonding, and intermolecular interactions. Some advanced systems even allow for real-time quantum chemistry calculations to be performed on the visualized molecules.

Here is a general protocol for using an AR application for molecular visualization, based on the functionalities of apps like MolAR and BioSIMAR:

- Software and Hardware Setup:
  - Install the chosen AR molecular visualization application (e.g., MolAR, BioSIMAR) on a compatible smartphone or tablet.
  - Ensure the device's camera is functioning correctly.
- Molecule Selection and Input:
  - From a Database: Many applications allow you to search for molecules by name or by their Protein Data Bank (PDB) ID.
  - From a 2D Drawing: Some applications, like MolAR, use machine learning to recognize and convert a hand-drawn 2D chemical structure into a 3D model.[6]
  - From a QR Code: Applications like BioSIMAR use QR codes to trigger the display of specific molecules.
- AR Visualization and Interaction:
  - Point the device's camera at a flat surface or the designated marker (e.g., a QR code or a drawing).
  - The 3D model of the molecule will appear overlaid on the real-world view.
  - Use touch gestures on the screen to rotate, zoom, and pan the molecule to view it from different angles.
  - Some applications may offer additional features, such as displaying molecular orbitals, measuring bond lengths and angles, or simulating molecular dynamics.
- Data Analysis and Exploration:
  - Visually inspect the 3D structure to understand its spatial arrangement.
  - Identify key functional groups and their orientations.
  - Explore potential binding sites and interactions with other molecules.
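Measuring a bond angle, one of the additional features mentioned in the protocol, comes down to the angle between two vectors that share the central atom. The sketch below is illustrative and is not taken from MolAR or BioSIMAR. `bond_angle` is a hypothetical helper, and the water-like coordinates are approximate values chosen for the example.

```python
import math

def bond_angle(a, b, c):
    """Angle a-b-c in degrees, where b is the central atom."""
    v1 = [p - q for p, q in zip(a, b)]
    v2 = [p - q for p, q in zip(c, b)]
    dot = sum(p * q for p, q in zip(v1, v2))
    norm = math.hypot(*v1) * math.hypot(*v2)
    # Clamp to [-1, 1] so rounding error cannot push acos out of its domain.
    cos_theta = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_theta))

# Approximate water-like geometry (angstroms): O at the origin, two H atoms.
oxygen = (0.0, 0.0, 0.0)
hydrogen_1 = (0.9572, 0.0, 0.0)
hydrogen_2 = (-0.2397, 0.9267, 0.0)

angle = bond_angle(hydrogen_1, oxygen, hydrogen_2)
print(f"H-O-H angle: {angle:.1f} degrees")
```

A bond length is the norm of one of the two vectors, so a single coordinate lookup can serve both measurements.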

The Future of Augmented Reality in Scientific Discovery

The integration of AR into scientific research is still in its early stages, but the potential for future advancements is immense. As hardware becomes more powerful and comfortable, and as software becomes more intelligent, we can expect to see even more sophisticated applications of AR in the laboratory and in the field.

Future developments may include:

- Haptic Feedback: The addition of haptic feedback to AR systems will allow researchers to "feel" virtual objects, providing a more immersive and intuitive experience.
- Collaborative AR Environments: Multi-user AR platforms will enable researchers from around the world to collaborate in a shared virtual space, interacting with the same data and models.

Conclusion

Augmented reality is poised to become an indispensable tool for scientific discovery. From its humble beginnings as a bulky, experimental technology, AR has evolved into a powerful and accessible platform that is already making a significant impact in fields such as medicine and chemistry. By providing a more intuitive and immersive way to visualize and interact with complex data, AR is empowering researchers to ask new questions, explore new possibilities, and ultimately, accelerate the pace of scientific innovation. As the technology continues to mature, we can expect to see even more groundbreaking applications of augmented reality that will reshape our understanding of the world around us.

References

- 1. researchgate.net [researchgate.net]

- 2. researchgate.net [researchgate.net]

- 3. researchgate.net [researchgate.net]

- 4. The Role of Augmented Reality in Surgical Training: A Systematic Review - PMC [pmc.ncbi.nlm.nih.gov]

- 5. The Increasing Use of Augmented Reality in Surgery Training [kirbysurgicalcenter.com]

- 6. pubs.acs.org [pubs.acs.org]

- 7. researchgate.net [researchgate.net]

The Immersive Leap: Gauging the Potential of Augmented Reality for Accelerated Molecular Modeling

A Technical Guide for Researchers and Drug Development Professionals

The paradigm of molecular modeling is shifting. For decades, researchers have navigated the complex, three-dimensional world of proteins and ligands through the flat plane of a 2D screen. While powerful, this method imposes a significant cognitive load, requiring users to mentally reconstruct 3D relationships from 2D representations. Augmented Reality (AR) and its immersive counterpart, Virtual Reality (VR), offer a transformative alternative, allowing scientists to step directly into the molecular world. This guide explores the potential of AR in molecular modeling, drawing on quantitative data from immersive VR studies to benchmark its capabilities against traditional methods and detailing experimental protocols for evaluating these burgeoning technologies.

The Promise of Spatial Interaction

Augmented reality overlays interactive, 3D molecular models onto the user's real-world environment.[1][2][3][4] This allows for intuitive, direct manipulation of virtual objects, an experience fundamentally different from the indirect interaction paradigm of a mouse and keyboard.[1] In drug discovery, where understanding the nuanced fit between a ligand and a protein's binding pocket is paramount, this immersive perspective can be invaluable.[5] Companies like Nanome have developed collaborative VR/AR platforms that enable scientists to visualize, modify, and simulate molecular structures in a shared 3D space, aiming to accelerate decision-making and shorten drug development timelines.[5][6] The core hypothesis is that by reducing the cognitive barrier between the scientist and the molecule, immersive technologies can enhance spatial understanding, foster creativity, and improve the efficiency of complex molecular design tasks.[6][7]

Quantitative Evaluation: Immersive Reality vs. Traditional Desktops

While AR-specific quantitative studies in professional drug discovery are still emerging, research in the closely related field of interactive Molecular Dynamics in Virtual Reality (iMD-VR) provides compelling benchmarks that illuminate the potential of immersive systems. A seminal study explored the use of iMD-VR for the complex task of flexible protein-ligand docking, a critical step in structure-based drug design.

The study directly compared the performance of users in an immersive environment with established crystallographic data. The results demonstrate that iMD-VR allows users to intuitively and rapidly carry out the detailed atomic manipulations required to dock flexible ligands into dynamic enzyme active sites.[8][9]

| Task | System/Platform | Participant Group | Key Performance Metric | Time to Complete | Source |
|---|---|---|---|---|---|
| Flexible Docking | iMD-VR (Narupa) | Expert Users | Recreate crystallographic binding pose | 5-10 minutes | [8][9] |
| Flexible Docking | iMD-VR (Narupa) | Novice Users (Post-training) | Recover binding pose within 2.15 Å RMSD of crystallographic pose | 5-10 minutes | [8][9] |
| Ligand Unbinding | iMD-VR (Narupa) | Expert Users | Guide benzamidine out of trypsin binding pocket | < 5 picoseconds (simulation time) | [9] |
| Ligand Rebinding | iMD-VR (Narupa) | Expert Users | Guide benzamidine into trypsin binding pocket | < 5 picoseconds (simulation time) | [9] |

Table 1: Quantitative performance metrics for protein-ligand docking tasks performed in an immersive Virtual Reality environment. The data highlights the speed and accuracy achievable by both expert and novice users for tasks crucial to drug discovery.

Experimental Protocols for Evaluating Immersive Modeling Systems

To rigorously assess the utility of AR and VR platforms in a research context, structured experimental protocols are essential. The following methodology is adapted from user studies in immersive molecular dynamics and provides a framework for comparing AR/VR systems against traditional desktop setups.[8][9]

Objective:

To evaluate the effectiveness, efficiency, and user experience of an AR/VR molecular modeling platform for flexible protein-ligand docking compared to a standard 2D desktop interface.

Participants:

- Expert Group: 5-10 computational chemists or structural biologists with extensive experience in traditional molecular docking software.
- Novice Group: 10-15 graduate students or researchers in biochemistry or a related field with theoretical knowledge of protein-ligand interactions but limited experience with docking software.

Hardware and Software:

- Immersive System: An AR or VR headset (e.g., Microsoft HoloLens 2, HTC Vive) running a compatible molecular modeling application (e.g., Nanome, Narupa iMD-VR).[8]
- Desktop System: A high-performance workstation with a standard 2D monitor, keyboard, and mouse, running industry-standard modeling software (e.g., PyMOL, ChimeraX, Maestro).

Experimental Design:

A within-subjects or between-subjects design can be used. A within-subjects design, where each participant completes tasks on both systems, is powerful for comparison but requires counterbalancing to avoid learning effects.
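Counterbalancing in a within-subjects design means that half of the participants start on the immersive system and half on the desktop, so that learning effects are spread evenly across conditions. The following is a minimal sketch. `counterbalance` is a hypothetical helper, and the fixed seed is an assumption made only so that the assignment is reproducible.

```python
import random

def counterbalance(participant_ids, conditions=("immersive", "desktop"), seed=0):
    """Randomly split participants between the two possible condition orders."""
    ids = list(participant_ids)
    random.Random(seed).shuffle(ids)  # randomize who receives which order
    orders = [tuple(conditions), tuple(reversed(conditions))]
    # Alternate orders over the shuffled list so both orders are used equally.
    return {pid: orders[i % 2] for i, pid in enumerate(ids)}

schedule = counterbalance(range(1, 11))
immersive_first = sum(1 for order in schedule.values() if order[0] == "immersive")
for pid in sorted(schedule):
    print(pid, " -> ".join(schedule[pid]))
```

With an odd number of participants the two orders differ in size by one. Balancing separately within the expert and novice groups keeps experience level from being confounded with order.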

Procedure:

- Pre-Experiment:
  - Administer a background questionnaire to capture participants' experience with molecular modeling, 3D visualization, and AR/VR technologies.
  - For the novice group, provide a standardized, hour-long training session on the principles of protein-ligand binding and the use of both the immersive and desktop systems.[8]
- Task Execution:
  - Assign participants a series of protein-ligand docking tasks using well-characterized systems (e.g., trypsin/benzamidine, neuraminidase/oseltamivir, HIV-1 protease/amprenavir).[8][9]
  - Task 1 (Pose Recreation): Provide participants with a protein and a separated ligand. Instruct them to dock the ligand into the binding pocket and manipulate it to achieve the most stable, realistic binding pose.
  - Task 2 (Unbinding/Rebinding): Provide participants with the bound protein-ligand complex. Instruct them to guide the ligand out of the binding pocket and then re-dock it. This tests the ability to explore binding pathways.[8][9]
- Data Collection:
  - Performance Metrics:
    - Task Completion Time: Record the time taken to complete each docking task.
    - Accuracy (RMSD): Calculate the Root-Mean-Square Deviation of the final ligand pose generated by the user compared to the known crystallographic pose.
  - User Experience Metrics:
    - System Usability Scale (SUS): Administer this standardized questionnaire to assess perceived usability.
    - NASA Task Load Index (NASA-TLX): Use this tool to evaluate the perceived workload across dimensions like mental demand, physical demand, and frustration.
    - Qualitative Feedback: Conduct a post-experiment interview to gather subjective feedback on the intuitiveness, sense of immersion, and perceived benefits or drawbacks of each system.
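Two of the metrics listed under Data Collection have simple closed forms. For a ligand of $N$ atoms, $\mathrm{RMSD} = \sqrt{\tfrac{1}{N}\sum_{i=1}^{N} \lVert \mathbf{p}_i - \mathbf{r}_i \rVert^2}$, where $\mathbf{p}_i$ and $\mathbf{r}_i$ are the user's and the crystallographic coordinates of atom $i$. The sketch below assumes both poses share the protein's coordinate frame and atom ordering, so no superposition is applied. `rmsd` and `sus_score` are hypothetical helper names and the coordinates are invented. SUS scoring follows the standard rule: odd-numbered items contribute (response - 1), even-numbered items contribute (5 - response), and the sum is multiplied by 2.5.

```python
import math

def rmsd(pose, reference):
    """Root-mean-square deviation between matched atom coordinates (angstroms)."""
    if len(pose) != len(reference):
        raise ValueError("poses must contain the same atoms in the same order")
    squared = sum(math.dist(p, r) ** 2 for p, r in zip(pose, reference))
    return math.sqrt(squared / len(pose))

def sus_score(responses):
    """System Usability Scale score (0-100) from ten responses on a 1-5 scale."""
    if len(responses) != 10:
        raise ValueError("SUS requires exactly ten responses")
    total = 0
    for item, response in enumerate(responses, start=1):
        total += (response - 1) if item % 2 == 1 else (5 - response)
    return total * 2.5

# Invented three-atom ligand: the user's pose is shifted 2 angstroms along z.
crystal_pose = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
user_pose = [(x, y, z + 2.0) for x, y, z in crystal_pose]

deviation = rmsd(user_pose, crystal_pose)
print(f"RMSD = {deviation:.2f} angstroms, within 2.15: {deviation <= 2.15}")
print("SUS =", sus_score([4, 2, 5, 1, 4, 2, 5, 2, 4, 1]))
```

The 2.15 Å threshold in the usage lines is the novice-group figure reported in Table 1. Symmetric ligands may need symmetry-aware atom matching before RMSD is meaningful, which this sketch does not attempt.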

Visualizing the Workflow

To better understand the logical flow of such a comparative study, the following diagrams illustrate the key processes.

References

- 1. Visualization of molecular structures using HoloLens-based augmented reality - PMC [pmc.ncbi.nlm.nih.gov]

- 2. Interactive Molecular Graphics for Augmented Reality Using HoloLens - PMC [pmc.ncbi.nlm.nih.gov]

- 3. researchgate.net [researchgate.net]

- 4. Visualization of molecular structures using HoloLens-based augmented reality - PubMed [pubmed.ncbi.nlm.nih.gov]

- 5. New case study shows virtual reality tools could save biopharmaceutical companies tens of thousands per year [prnewswire.com]

- 6. VR Software wiki - Nanome's Evaluation [vrwiki.cs.brown.edu]

- 7. meet.nanome.ai [meet.nanome.ai]

- 8. Interactive molecular dynamics in virtual reality for accurate flexible protein-ligand docking - PubMed [pubmed.ncbi.nlm.nih.gov]

- 9. Interactive molecular dynamics in virtual reality for accurate flexible protein-ligand docking | PLOS One [journals.plos.org]

A Technical Guide to Marker-Based vs. Markerless Augmented Reality in the Laboratory

For Researchers, Scientists, and Drug Development Professionals

This in-depth technical guide explores the core principles, comparative advantages, and practical applications of marker-based and markerless Augmented Reality (AR) systems within the laboratory environment. As laboratories increasingly adopt digital technologies to enhance efficiency, accuracy, and safety, understanding the nuances of different AR approaches is crucial for making informed implementation decisions. This guide provides a detailed comparison of the two primary AR technologies, supported by quantitative data, experimental protocols, and workflow visualizations to aid researchers, scientists, and drug development professionals in leveraging AR for their specific needs.

Core Principles: Marker-Based vs. Markerless AR

Augmented reality overlays computer-generated information onto the real world. The primary distinction between marker-based and markerless AR lies in how the system tracks the user's viewpoint and anchors digital content to the physical environment.

Marker-Based AR utilizes predefined visual cues, or markers, to trigger and position digital content.[1] These markers can range from simple QR codes and barcodes to more complex image targets like logos or custom-designed fiducial markers.[2][3] The AR application's camera recognizes these markers, calculates their position and orientation in 3D space, and overlays the corresponding digital information.[1] This method is known for its high precision and stability, as the marker provides a constant and reliable reference point.[1]

Markerless AR, also known as Simultaneous Localization and Mapping (SLAM)-based AR, does not require predefined markers. Instead, it employs advanced computer vision algorithms to analyze the real-world environment in real-time, identifying natural features such as points, edges, and textures on surfaces like walls and floors.[4] The system builds a 3D map of the surroundings while simultaneously tracking the device's position within that map, allowing for the placement of virtual objects in the environment. This approach offers greater flexibility and a more seamless user experience, as it is not constrained by the presence of physical markers.[1]

Quantitative Comparison of AR Technologies

The choice between marker-based and markerless AR often depends on the specific requirements of the laboratory application, including the need for accuracy, the nature of the environment, and budget constraints. The following tables summarize key quantitative data to facilitate a direct comparison.

| Performance Metric | Marker-Based AR | Markerless AR (SLAM) | Notes |
|---|---|---|---|
| Positional Accuracy | Sub-millimeter precision possible | Generally less precise than marker-based, with potential for slight "drift"[1] | Marker-based AR excels in applications requiring exact alignment of virtual content with physical objects. |
| Recognition/Tracking Success Rate (Optimal Conditions) | High, can achieve 99.7% tracking reliability with well-designed markers | High, can achieve a 94.4% success rate in varied conditions[2][5] | Performance of both systems is dependent on factors like lighting and camera quality. |
| Initialization Time | Rapid, can be sub-100ms | Can be slightly longer as the system needs to analyze the environment | Marker-based systems can be faster to start as they only need to detect a known pattern. |
| Effective Range | Dependent on marker size and camera resolution; can be effective up to several meters with large markers | Generally more flexible, not limited by the line of sight to a specific marker | Markerless AR is more scalable for larger laboratory spaces.[1] |
| Computational Overhead | Lower, as it relies on recognizing predefined patterns[1] | Higher, due to real-time environmental mapping and feature tracking[1] | This can impact device choice and battery consumption. |
| Cost of Implementation | Generally lower for software development and can utilize standard printed markers[1][6] | Can be more cost-effective in the long run as it doesn't require the production and placement of physical markers[6][7] | Hardware costs (e.g., smartphones, tablets, AR glasses) are a factor for both. |

| Environmental Factor | Marker-Based AR Performance | Markerless AR Performance | Key Takeaway |
|---|---|---|---|
| Lighting Conditions | More robust in varied lighting as long as the marker is visible[1] | Can be sensitive to very low light or highly reflective surfaces that lack distinct features[1] | Controlled lighting in a lab environment benefits both, but marker-based may be more reliable in challenging lighting. |
| Surface Texture | Not dependent on surface texture, only on marker visibility | Requires surfaces with sufficient texture and feature points for stable tracking | Markerless AR may struggle on plain, uniform surfaces often found in labs (e.g., stainless steel benches). |
| Occlusion | Tracking is lost if the marker is obscured from the camera's view[1] | More resilient to partial occlusion as it tracks multiple environmental features | In a cluttered lab environment, markerless AR may offer more consistent tracking. |

Experimental Protocols for Lab Use

The following are detailed methodologies for implementing AR in common laboratory scenarios.

Marker-Based AR for Instrument Operation Guidance

This protocol outlines the use of marker-based AR to provide step-by-step instructions for operating a piece of laboratory equipment, such as a centrifuge or a spectrophotometer.

Objective: To guide a user through the standard operating procedure (SOP) of a laboratory instrument, reducing errors and training time.

Materials:

- AR-enabled device (smartphone or tablet) with the guidance application installed.
- Laminated AR markers with clear, high-contrast patterns.
- The laboratory instrument to be operated.

Methodology:

- Marker Placement: Affix AR markers to key interaction points on the instrument (e.g., power button, sample loading area, control panel).
- Initiate AR Application: Launch the AR guidance application on the smart device.
- Initial Scan: Point the device's camera at the primary marker on the instrument to initiate the workflow.
- Step-by-Step Guidance: The application will overlay a 3D animation or text instruction for the first step (e.g., "Press the power button").
- Task Completion and Next Step: Once the user completes the action, they point the camera at the next designated marker in the sequence to trigger the instructions for the subsequent step.
- Interactive Information: At any point, scanning a specific "help" marker can bring up additional information, such as safety warnings or troubleshooting tips.
- Workflow Completion: The AR application will indicate the completion of the SOP once all steps have been successfully executed.
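The marker-driven sequence above behaves like a small state machine: each detected marker either shows help, advances the SOP, or is rejected as out of order. The sketch below models only that logic and leaves marker detection to whichever AR SDK is used. `Step` and `MarkerGuidedSOP` are hypothetical names, and the marker IDs and most instruction texts are invented for illustration.

```python
from dataclasses import dataclass

@dataclass
class Step:
    marker_id: str
    instruction: str

class MarkerGuidedSOP:
    """Tracks progress through an SOP whose steps are triggered by markers."""

    def __init__(self, steps, help_text):
        self.steps = steps
        self.help_text = help_text  # marker_id -> safety or troubleshooting text
        self.index = 0              # position of the next step to show

    @property
    def complete(self):
        return self.index >= len(self.steps)

    def on_marker_detected(self, marker_id):
        """Return the overlay text for a marker reported by the tracking layer."""
        if marker_id in self.help_text:
            return self.help_text[marker_id]  # help never changes progress
        if self.complete:
            return "SOP complete"
        expected = self.steps[self.index]
        if marker_id != expected.marker_id:
            return f"Out of sequence: scan marker '{expected.marker_id}' next"
        self.index += 1
        return expected.instruction

sop = MarkerGuidedSOP(
    steps=[Step("power", "Press the power button"),
           Step("rotor", "Load balanced tubes into the rotor"),
           Step("panel", "Set speed and time, then start the run")],
    help_text={"help": "Safety: never open the lid while the rotor is spinning"},
)
shown = [sop.on_marker_detected(m) for m in ["rotor", "power", "help", "rotor", "panel"]]
print("\n".join(shown))
```

Rejecting out-of-order markers is a design choice that enforces the SOP sequence. In this sketch `complete` becomes true once the last instruction has been shown, so a stricter version would add an explicit confirmation that each action was carried out.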

Markerless AR for Navigating a Biosafety Cabinet Workflow

This protocol describes the use of markerless AR to guide a researcher through an aseptic workflow within a Class II biosafety cabinet (BSC).

Objective: To ensure proper aseptic technique and adherence to the experimental protocol within the sterile environment of a BSC, minimizing the risk of contamination.

Materials:

- AR-enabled smart glasses (e.g., Microsoft HoloLens, Vuzix Blade).
- The AR application for the specific cell culture protocol.
- All necessary sterile reagents and consumables for the experiment.

Methodology:

- Environment Mapping: The user, wearing the AR smart glasses, looks around the inside of the BSC to allow the markerless AR system to map the surfaces and create a 3D understanding of the workspace.
- Protocol Initiation: The user initiates the desired protocol through a voice command or gesture.
- Virtual Object Placement: The AR application overlays virtual representations of the required items (e.g., media bottles, pipette tips, cell culture flasks) in their designated locations within the mapped BSC environment.
- Sequential Guidance: The application highlights the first item to be used and displays the corresponding action (e.g., "Add 10 mL of media to the flask").
- Hands-Free Interaction: The user performs the task with both hands, as the instructions are displayed in their field of view. They can proceed to the next step using a voice command (e.g., "Next step").
- Integrated Timers and Alerts: For incubation steps, a virtual timer is displayed. The system can also provide alerts for critical steps, such as mixing reagents or changing pipette tips.
- Data Logging: The user can verbally log observations or deviations from the protocol, which are automatically transcribed and saved.
- Protocol Completion: The application confirms the completion of the workflow and can provide instructions for waste disposal and cleaning the BSC.
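The hands-free part of this workflow (sequential guidance, "Next step" commands, timers, and spoken log entries) can be modeled independently of any headset. The sketch below assumes speech has already been transcribed to text by the device. `ProtocolStep` and `ProtocolSession` are hypothetical names, the "log ..." command prefix is an invented convention, and the incubation step and its duration are illustrative.

```python
from dataclasses import dataclass, field

@dataclass
class ProtocolStep:
    action: str
    timer_s: int = 0  # 0 means the step has no timer

@dataclass
class ProtocolSession:
    steps: list
    index: int = 0
    log: list = field(default_factory=list)

    def display(self):
        """Text the headset would show for the current state."""
        if self.index >= len(self.steps):
            return "Protocol complete"
        step = self.steps[self.index]
        suffix = f" (timer: {step.timer_s} s)" if step.timer_s else ""
        return f"Step {self.index + 1}: {step.action}{suffix}"

    def handle_command(self, utterance):
        """Interpret transcribed speech and return the updated display text."""
        text = utterance.strip()
        if text.lower() == "next step":
            self.index = min(self.index + 1, len(self.steps))
        elif text.lower().startswith("log "):
            # Store the observation together with the step number it refers to.
            self.log.append((self.index + 1, text[4:]))
        return self.display()

session = ProtocolSession([
    ProtocolStep("Add 10 mL of media to the flask"),
    ProtocolStep("Incubate the flask", timer_s=300),
])
print(session.display())
print(session.handle_command("log slight turbidity in flask"))
print(session.handle_command("Next step"))
print(session.handle_command("Next step"))
```

Tagging each log entry with its step number keeps spoken observations traceable to the point in the protocol where they were made. Unrecognized utterances are ignored here, whereas a production system would likely ask the user to repeat.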

Visualization of Laboratory Workflows

The following diagrams, created using the DOT language, illustrate logical relationships and workflows in a laboratory setting where AR can be implemented.

Caption: A generalized experimental workflow guided by Augmented Reality.

Caption: An AR-assisted decision tree for a diagnostic workflow.
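Because DOT is a plain-text format, a linear workflow graph can be generated directly from a list of step names. The sketch below is illustrative only and does not reproduce the captioned diagrams. `workflow_to_dot` is a hypothetical helper, the step names are borrowed from the markerless protocol above, and labels are assumed to contain no double quotes.

```python
def workflow_to_dot(name, steps):
    """Return DOT source for a left-to-right chain of boxed workflow steps."""
    lines = [f"digraph {name} {{", "  rankdir=LR;", "  node [shape=box];"]
    for i, label in enumerate(steps):
        lines.append(f'  s{i} [label="{label}"];')
    for i in range(len(steps) - 1):
        lines.append(f"  s{i} -> s{i + 1};")
    lines.append("}")
    return "\n".join(lines)

dot_source = workflow_to_dot("ar_workflow", [
    "Environment Mapping",
    "Protocol Initiation",
    "Sequential Guidance",
    "Data Logging",
    "Protocol Completion",
])
print(dot_source)
```

The resulting text can be rendered with any Graphviz-compatible tool. A decision tree would need branching edges, which this linear sketch does not cover.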

Conclusion

Both marker-based and markerless AR offer significant potential to revolutionize laboratory work by improving efficiency, reducing errors, and enhancing safety. Marker-based AR is a robust and precise solution, ideal for tasks requiring high accuracy in controlled environments, such as instrument operation and training.[1] Its lower computational requirements also make it accessible on a wider range of devices. In contrast, markerless AR provides unparalleled flexibility and a more intuitive user experience, making it well-suited for dynamic workflows and larger lab spaces.[1] The choice between the two will ultimately depend on the specific application, environmental conditions, and project goals. As the technology continues to mature, hybrid approaches that leverage the strengths of both systems may become the standard for AR implementation in the multifaceted laboratory environment.

References

- 1. Types of AR: Marker-Based vs. Markerless [qodequay.com]

- 2. shmpublisher.com [shmpublisher.com]

- 3. navajyotijournal.org [navajyotijournal.org]

- 4. A Survey of Marker-Less Tracking and Registration Techniques for Health & Environmental Applications to Augmented Reality and Ubiquitous Geospatial Information Systems - PMC [pmc.ncbi.nlm.nih.gov]

- 5. researchgate.net [researchgate.net]

- 6. analyticalscience.wiley.com [analyticalscience.wiley.com]

- 7. comparison of markerless and marker-based motion capture system [remocapp.com]

Augmented Reality in Scientific Education and Training: A Technical Guide

Introduction

Augmented Reality (AR) is rapidly emerging as a transformative technology in scientific education and training, offering immersive and interactive experiences that enhance understanding and skill acquisition. By overlaying computer-generated information onto the real world, AR provides a powerful tool for visualizing complex data, simulating intricate procedures, and providing real-time guidance. This technical guide explores the core applications of AR across various scientific disciplines, focusing on quantitative outcomes and detailed experimental methodologies. It is intended for researchers, scientists, and drug development professionals seeking to understand and leverage the potential of AR in their respective fields.

Augmented Reality in Medical and Surgical Training

AR is making significant inroads in medical education, particularly in surgical training, where it offers a safe and controlled environment for trainees to develop critical skills.[1][2] By overlaying 3D anatomical models onto a patient manikin or the trainee's own view, AR systems provide unprecedented insights into human anatomy and allow for the practice of complex surgical procedures without risk to patients.[3]

Quantitative Outcomes

Studies of AR-based surgical training report improvements in performance metrics over traditional training methods. One study reported gains in accuracy, efficiency, and procedural success rates.[4] Another, using a laparoscopic appendectomy simulation, found that all trainees improved their performance time, by 55% on average.[5]

| Performance Metric | Improvement with AR | Study Reference |
|---|---|---|
| Accuracy | 20% increase | [4] |
| Time Efficiency | 33% improvement | [4] |
| Error Reduction | 60% decrease | [4] |
| Procedural Success Rate | 21% increase | [4] |
| Laparoscopic Appendectomy Time | 55% average improvement | [5] |
| Laparoscopic Instrument Travel | 39% average improvement | [5] |
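
The cited studies' exact formulas are not given here, but percentages like those in the table are commonly computed as a change relative to the baseline value. The sketch below assumes that convention; the function name `percent_improvement` and the input numbers are placeholders chosen for illustration, not data from the referenced studies.

```python
def percent_improvement(before: float, after: float, lower_is_better: bool) -> float:
    """Relative improvement over the baseline, in percent.

    For metrics such as completion time or error count, a smaller value is
    better, so the improvement is (before - after) / before. For metrics such
    as accuracy, a larger value is better, so it is (after - before) / before.
    """
    if before == 0:
        raise ValueError("baseline must be non-zero")
    change = (before - after) if lower_is_better else (after - before)
    return 100.0 * change / before


# Placeholder values only (seconds, then an accuracy score).
print(percent_improvement(200.0, 90.0, lower_is_better=True))   # 55.0
print(percent_improvement(50.0, 60.0, lower_is_better=False))   # 20.0
```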

Experimental Protocol: AR-Based Surgical Skill Assessment

A typical experimental protocol to assess the effectiveness of an AR surgical training module involves a pre-test/post-test control group design.

- Participant Recruitment: A cohort of surgical trainees with similar experience levels is recruited.

- Group Allocation: Participants are randomly assigned to either an experimental group (AR training) or a control group (traditional training, e.g., video tutorials).

- Pre-Test: All participants perform a standardized surgical task (e.g., suturing, dissection) on a simulator, and baseline performance metrics are recorded. These metrics often include task completion time, instrument path length, number of errors, and accuracy of movements.

- Intervention:

  - The experimental group receives training using an AR headset (e.g., Microsoft HoloLens) that overlays 3D anatomical models, procedural steps, and real-time feedback onto the physical simulator.[6]

  - The control group receives training through traditional methods, such as watching an instructional video of the same procedure.

- Post-Test: After the training session, all participants repeat the same surgical task from the pre-test. Performance metrics are recorded again.

- Data Analysis: Statistical analysis is performed to compare the improvement in performance metrics between the experimental and control groups.
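
The allocation and analysis steps of this protocol can be sketched in a few lines. The protocol does not name a statistical test, so Welch's t statistic on per-participant gain scores is used here purely as one reasonable choice; the helper names `allocate` and `welch_t` and all numbers are hypothetical.

```python
import math
import random
from statistics import mean, variance
from typing import Dict, List, Sequence, Tuple


def allocate(participants: Sequence[str], seed: int = 0) -> Dict[str, List[str]]:
    """Shuffle participants and split them into two near-equal groups."""
    shuffled = list(participants)
    random.Random(seed).shuffle(shuffled)
    half = len(shuffled) // 2
    return {"ar": shuffled[:half], "control": shuffled[half:]}


def welch_t(a: Sequence[float], b: Sequence[float]) -> Tuple[float, float]:
    """Return Welch's t statistic and its approximate degrees of freedom."""
    va = variance(a) / len(a)   # squared standard error of group a
    vb = variance(b) / len(b)   # squared standard error of group b
    t = (mean(a) - mean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df


# Hypothetical (pre, post) task completion times in seconds; not study data.
ar_times = [(300, 200), (280, 190), (320, 210), (310, 220)]
control_times = [(305, 270), (290, 260), (315, 280), (300, 275)]

# Gain score: seconds saved between pre-test and post-test.
ar_gain = [pre - post for pre, post in ar_times]
control_gain = [pre - post for pre, post in control_times]

if __name__ == "__main__":
    print(allocate([f"P{i}" for i in range(8)], seed=42))
    t, df = welch_t(ar_gain, control_gain)
    print(f"t = {t:.2f}, df = {df:.1f}")
```

Comparing gain scores rather than raw post-test values accounts for differences in baseline skill. Converting `t` and `df` into a p-value requires a t-distribution, which a statistics package would normally supply.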

Experimental Workflow Diagram

Augmented Reality in Biology Education

In biology, AR offers a unique opportunity to visualize and interact with complex biological structures and processes that are otherwise invisible to the naked eye.[7][8] From cellular mechanisms to entire ecosystems, AR applications can bring abstract concepts to life, leading to improved student engagement and learning outcomes.[9][10]

Quantitative Outcomes

Studies have shown that AR can significantly enhance students' understanding of complex biological topics. For instance, a study on the use of an AR application for learning about the human respiratory system showed that the experimental group achieved a significantly higher average post-test score compared to the control group.[11] Another study in a secondary school biology class found that students using an AR-enhanced curriculum outperformed their peers who received traditional instruction.[12][13]

| Study Focus | AR Group (Post-Test Score) | Control Group (Post-Test Score) | Key Finding | Study Reference |
|---|---|---|---|---|
| Human Respiratory System | 90.60 | 76.07 | Significant improvement in learning outcomes. | [11] |
| Secondary School Biology | 81.0% | 76.1% | AR group significantly outperformed the control group. | [12][13] |

Experimental Protocol: AR for Cellular Biology Education

The following protocol outlines a typical experiment to evaluate the impact of an AR application on learning cellular biology.

- Participants: High school or undergraduate students enrolled in a biology course.

- Design: A quasi-experimental design with a pre-test and a post-test.

- Materials:

  - An AR application (e.g., "AR Sinaps") installed on mobile devices.[11]

  - Pre-test and post-test questionnaires covering key concepts of cellular biology.

  - Traditional learning materials (e.g., textbooks, PowerPoint slides) for the control group.

- Procedure:

  - Pre-Test: All students complete a pre-test to assess their prior knowledge.

  - Intervention (2 weeks):

    - Experimental Group: Students use the AR application to explore 3D models of cells, organelles, and cellular processes. The application allows for interactive manipulation and exploration of these virtual objects.

    - Control Group: Students learn the same topics using traditional methods.

  - Post-Test: All students complete a post-test to measure their knowledge gain.

- Data Analysis: The pre-test and post-test scores are compared between the two groups to determine the effectiveness of the AR intervention.
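
One way to compare pre-test and post-test scores across groups that start from different baselines is the normalized gain, the fraction of the available improvement that a student actually achieved. This measure is not named in the protocol above; it is offered as an assumption, and the function names and scores below are hypothetical.

```python
from statistics import mean
from typing import Sequence, Tuple


def normalized_gain(pre: float, post: float, max_score: float = 100.0) -> float:
    """Fraction of the possible improvement achieved: (post - pre) / (max - pre)."""
    if pre >= max_score:
        raise ValueError("pre-test score leaves no room for gain")
    return (post - pre) / (max_score - pre)


def mean_gain(scores: Sequence[Tuple[float, float]], max_score: float = 100.0) -> float:
    """Average normalized gain over (pre, post) score pairs."""
    return mean(normalized_gain(pre, post, max_score) for pre, post in scores)


# Hypothetical (pre, post) scores out of 100; not data from the cited studies.
ar_group = [(50, 90), (60, 90), (40, 85)]
control_group = [(50, 75), (60, 80), (40, 70)]

if __name__ == "__main__":
    print(f"AR group mean gain:      {mean_gain(ar_group):.2f}")
    print(f"Control group mean gain: {mean_gain(control_group):.2f}")
```

Because the design is quasi-experimental, the groups are not randomized, so a baseline-adjusted measure of this kind is one way to avoid crediting the intervention for differences that existed before it.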

Logical Relationship in AR Biology Learning

Augmented Reality in Chemistry Education

Chemistry education often involves the challenge of visualizing three-dimensional molecular structures and complex reaction mechanisms from two-dimensional representations.[14][15] AR provides a powerful solution by allowing students to interact with and manipulate virtual 3D models of molecules, enhancing their spatial reasoning and conceptual understanding.[16][17]

Quantitative Outcomes

Research in chemistry education has shown the positive effects of AR on student learning and engagement. Studies have reported that interactive, hands-on AR experiences lead to better conceptual understanding and increased interest in the subject.[18]

While many studies focus on qualitative feedback, some provide quantitative evidence of AR's effectiveness. For example, a study comparing an AR-based learning group with a traditional demonstration group found that the hands-on AR group performed significantly better on a chemical reactions concept test.[18]

| Learning Approach | Post-Test Performance (Chemical Reactions) | Key Finding | Study Reference |
|---|---|---|---|
| Hands-on AR Learning | Significantly Higher | Outperformed demonstration group | [18] |
| Demonstration-based Learning | Lower | - | [18] |

Experimental Protocol: AR for Molecular Structure Visualization

This protocol describes an experiment to assess the impact of an AR application on students' ability to understand and visualize molecular structures.

- Participants: Undergraduate chemistry students.

- Design: A comparative study between a hands-on AR group and a passive AR demonstration group.

- Materials:

  - An AR application that displays 3D molecular models when a device's camera is pointed at specific markers (e.g., printed images in a textbook).

  - Mobile devices (smartphones or tablets) for the students.

  - A pre-test and post-test to assess understanding of molecular geometry and isomerism.

- Procedure:

  - Pre-Test: All students complete a pre-test.

  - Intervention:

    - Hands-on AR Group: Students individually use the AR application to explore and interact with 3D molecular models.

    - Demonstration Group: The instructor uses the AR application to demonstrate the 3D molecular models to the class.

  - Post-Test: All students complete a post-test.

- Data Analysis: The results of the post-test are compared between the two groups to evaluate the effectiveness of the hands-on AR approach.
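
For small classroom samples, a permutation test is one assumption-light way to compare post-test scores between two groups. The protocol does not specify a test, so the sketch below is only an illustration; `permutation_p_value` is a hypothetical helper and the scores are invented for the example.

```python
import random
from statistics import mean
from typing import Sequence


def permutation_p_value(a: Sequence[float], b: Sequence[float],
                        n_resamples: int = 2000, seed: int = 0) -> float:
    """Two-sided permutation test on the difference of group means.

    Group labels are shuffled repeatedly; the p-value is the share of
    shuffles whose mean difference is at least as large as the observed one.
    """
    rng = random.Random(seed)
    observed = abs(mean(a) - mean(b))
    pooled = list(a) + list(b)
    hits = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)
        diff = abs(mean(pooled[:len(a)]) - mean(pooled[len(a):]))
        if diff >= observed:
            hits += 1
    # Add-one correction so the estimate is never exactly zero.
    return (hits + 1) / (n_resamples + 1)


# Hypothetical post-test scores; not data from the cited study.
hands_on = [82, 88, 91, 79, 85, 90]
demonstration = [70, 75, 68, 80, 72, 74]

if __name__ == "__main__":
    p = permutation_p_value(hands_on, demonstration)
    print(f"estimated p-value: {p:.4f}")
```

The fixed `seed` makes the estimate reproducible; a larger `n_resamples` narrows its Monte Carlo error at the cost of run time.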

Signaling Pathway for AR Chemistry Application

Conclusion

Augmented reality holds considerable promise for scientific education and training. The studies summarized in this guide indicate that AR can produce measurable improvements in learning outcomes, skill acquisition, and student engagement across diverse scientific fields. As AR technology matures and becomes more accessible, its integration into scientific curricula and training programs is expected to grow. Further research with rigorous experimental designs and larger sample sizes is needed to validate and refine the application of AR in these domains.

References

- 1. Molecular Data Visualization with Augmented Reality (AR) on Mobile Devices - PubMed [pubmed.ncbi.nlm.nih.gov]

- 2. The Increasing Use of Augmented Reality in Surgery Training [kirbysurgicalcenter.com]

- 3. researchgate.net [researchgate.net]

- 4. researchgate.net [researchgate.net]

- 5. academic.oup.com [academic.oup.com]

- 6. The Role of Augmented Reality in Surgical Training: A Systematic Review - PMC [pmc.ncbi.nlm.nih.gov]

- 7. mdpi.com [mdpi.com]

- 8. mdpi.com [mdpi.com]

- 9. blazingprojects.com [blazingprojects.com]

- 10. kwpublications.com [kwpublications.com]

- 11. pubs.aip.org [pubs.aip.org]

- 12. Frontiers | Enhancing student engagement through augmented reality in secondary biology education [frontiersin.org]

- 13. ltu.diva-portal.org [ltu.diva-portal.org]

- 14. Application of Augmented Reality in Chemistry Education: A Systemic Review Based on Bibliometric Analysis from 2002 to 2023 | Semantic Scholar [semanticscholar.org]

- 15. researchgate.net [researchgate.net]

- 16. mdpi.com [mdpi.com]

- 17. pubs.aip.org [pubs.aip.org]