ACHP

Description

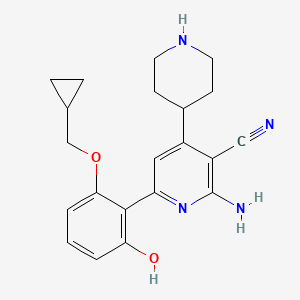


Properties

| Property | Value | Source |
|---|---|---|
| IUPAC Name | 2-amino-6-[2-(cyclopropylmethoxy)-6-hydroxyphenyl]-4-piperidin-4-ylpyridine-3-carbonitrile | PubChem |
| InChI | InChI=1S/C21H24N4O2/c22-11-16-15(14-6-8-24-9-7-14)10-17(25-21(16)23)20-18(26)2-1-3-19(20)27-12-13-4-5-13/h1-3,10,13-14,24,26H,4-9,12H2,(H2,23,25) | PubChem |
| InChI Key | DYVFBWXIOCLHPP-UHFFFAOYSA-N | PubChem |
| Canonical SMILES | C1CC1COC2=CC=CC(=C2C3=NC(=C(C(=C3)C4CCNCC4)C#N)N)O | PubChem |
| Molecular Formula | C21H24N4O2 | PubChem |
| DSSTOX Substance ID | DTXSID401025613 | EPA DSSTox |
| Molecular Weight | 364.4 g/mol | PubChem |
| CAS No. | 1844858-31-6 | EPA DSSTox |

EPA DSSTox record name: 2-Amino-6-[2-(cyclopropylmethoxy)-6-oxo-2,4-cyclohexadien-1-ylidene]-1,6-dihydro-4-(4-piperidinyl)-3-pyridinecarbonitrile

| Source | URL | Description |
|---|---|---|
| PubChem | https://pubchem.ncbi.nlm.nih.gov | Data deposited in or computed by PubChem |
| EPA DSSTox | https://comptox.epa.gov/dashboard/DTXSID401025613 | DSSTox provides a high quality public chemistry resource for supporting improved predictive toxicology. |

Foundational & Exploratory

A Technical Guide to the ACHP Guidelines for Archaeological Site Identification and Evaluation

This guide provides an in-depth overview of the frameworks and methodologies stipulated by the Advisory Council on Historic Preservation (ACHP) for the identification and evaluation of archaeological sites, primarily within the context of Section 106 of the National Historic Preservation Act (NHPA).[1][2] It is intended for researchers, scientists, and cultural resource management professionals involved in land use planning and development.

Introduction to Section 106 and the Role of the ACHP

Section 106 of the NHPA requires federal agencies to consider the effects of their projects on historic properties.[2][3] The ACHP is an independent federal agency that promotes the preservation, enhancement, and productive use of our nation's historic resources and advises the President and Congress on national historic preservation policy. The ACHP's regulations implement Section 106 and guide federal agencies in fulfilling their responsibilities. Archaeological sites are a key component of these historic properties, and their identification and evaluation are critical steps in the Section 106 review process. It is estimated that over 90% of archaeological excavations in the United States are conducted in compliance with Section 106.

The process of identifying and evaluating archaeological sites is not intended to be an exhaustive search for every artifact of the past. Instead, federal agencies are required to make a "reasonable and good faith effort" to identify historic properties, including archaeological sites that are listed on or eligible for listing on the National Register of Historic Places, within a project's Area of Potential Effects (APE).

Core Concepts in Archaeological Site Identification and Evaluation

Several key concepts, defined by the National Park Service and integral to the ACHP guidelines, underpin the process of identifying and evaluating archaeological sites.

| Term | Definition | Source |
|---|---|---|
| Archaeological Site | A location that contains the physical evidence of past human behavior that allows for its interpretation. | National Register Bulletin No. 36 |
| Historic Property | Any prehistoric or historic district, site, building, structure, or object included in, or eligible for inclusion on, the National Register of Historic Places. | National Historic Preservation Act |
| Significance | The importance of a property, measured by its ability to meet one or more of the four National Register criteria (A-D). | National Register Bulletin No. 15 |
| Integrity | The ability of a property to convey its significance through its physical features and context. This includes seven aspects: location, design, setting, materials, workmanship, feeling, and association. | National Register Bulletin No. 15 |
| Area of Potential Effects (APE) | The geographic area or areas within which an undertaking may directly or indirectly cause alterations in the character or use of historic properties. | 36 CFR § 800.16(d) |
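The interplay between significance and integrity in the table above can be sketched as a small check. This is an illustrative simplification, not an official eligibility test: the function name `is_potentially_eligible` and the `min_aspects` threshold are hypothetical, and in practice the judgment about how many aspects of integrity must survive is made by qualified professionals in consultation.

```python
from typing import Iterable

# The four National Register criteria and seven aspects of integrity
# named in the table above.
NATIONAL_REGISTER_CRITERIA = frozenset({"A", "B", "C", "D"})
INTEGRITY_ASPECTS = frozenset({
    "location", "design", "setting", "materials",
    "workmanship", "feeling", "association",
})


def is_potentially_eligible(criteria_met: Iterable[str],
                            aspects_retained: Iterable[str],
                            min_aspects: int = 1) -> bool:
    """Return True if a property meets at least one criterion and retains
    at least `min_aspects` aspects of integrity.

    `min_aspects` is a hypothetical threshold used only for illustration.
    """
    criteria = set(criteria_met)
    aspects = set(aspects_retained)
    unknown = (criteria - NATIONAL_REGISTER_CRITERIA) | (aspects - INTEGRITY_ASPECTS)
    if unknown:
        raise ValueError(f"Unrecognized criteria or aspects: {sorted(unknown)}")
    return len(criteria) >= 1 and len(aspects) >= min_aspects


# Example: a site significant under Criterion D that retains two aspects.
print(is_potentially_eligible({"D"}, {"location", "materials"}))
```

A site that meets no criterion, or that meets a criterion but retains no integrity, returns `False` under this sketch.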

Methodologies for Archaeological Site Identification (Phase I)

The initial stage of locating archaeological sites is the Phase I investigation. This involves a combination of background research and fieldwork designed to identify resources and define site boundaries within the APE.

Background Research

Before fieldwork commences, a thorough literature review is essential. This includes:

- Record Checks: Consulting the appropriate state historic preservation office (SHPO) or tribal historic preservation office (THPO) for records of previously identified historic properties and surveys.

- Review of Pertinent Materials: Examining historical maps (such as Sanborn maps and historic topographic maps), aerial photographs, and gray literature (unpublished archaeological reports).

- Local Sources: Gathering information from local historical societies, public libraries, and through informant interviews.

- Predictive Modeling: In areas where the archaeology is well-known, predictive models may be used to identify locations with a high probability of containing archaeological sites.

Field Survey Methods

The selection of field methods depends on the specific environmental conditions and the nature of the project.

| Method | Description | Application |
|---|---|---|
| Pedestrian Survey | Systematic walking of the APE in transects to visually identify artifacts and surface features. | Areas with good ground surface visibility. |
| Shovel Test Pits (STPs) | Excavation of small, standardized pits at regular intervals along a grid to identify subsurface artifacts. Soil is typically screened through 1/4-inch mesh. | Areas with low surface visibility or where buried sites are expected. |
| Augering/Coring | Use of a hand or mechanical auger to examine deeper soil stratigraphy and identify buried cultural layers. | Environments with significant soil deposition. |
| Mechanical Trenching | Excavation of trenches with a backhoe to expose soil profiles and identify deeply buried sites. | Used judiciously in areas with high potential for deeply buried, significant deposits. |
| Remote Sensing | Non-invasive techniques such as ground-penetrating radar (GPR), magnetometry, and soil resistivity to detect subsurface anomalies that may represent archaeological features. | To identify features without excavation and to guide the placement of test units. |
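As a rough illustration of how the "Application" column might drive method selection, the sketch below maps site conditions to candidate methods. The function name and its boolean parameters are hypothetical; actual method selection follows agency and SHPO/THPO survey standards and professional judgment.

```python
from typing import List


def suggest_field_methods(good_surface_visibility: bool,
                          buried_sites_expected: bool,
                          significant_soil_deposition: bool,
                          deeply_buried_significant_deposits: bool,
                          prefer_non_invasive: bool) -> List[str]:
    """Map site conditions to the survey methods listed in the table above."""
    methods: List[str] = []
    if good_surface_visibility:
        methods.append("Pedestrian Survey")
    if not good_surface_visibility or buried_sites_expected:
        methods.append("Shovel Test Pits (STPs)")
    if significant_soil_deposition:
        methods.append("Augering/Coring")
    if deeply_buried_significant_deposits:
        methods.append("Mechanical Trenching")
    if prefer_non_invasive:
        methods.append("Remote Sensing")
    return methods


# Example: a wooded parcel with poor visibility on a floodplain.
print(suggest_field_methods(
    good_surface_visibility=False,
    buried_sites_expected=True,
    significant_soil_deposition=True,
    deeply_buried_significant_deposits=False,
    prefer_non_invasive=False,
))
```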

The following diagram illustrates the workflow for a Phase I archaeological investigation.


Navigating the Nexus of Science and Heritage: A Technical Guide to the Section 106 Process for Researchers

For Immediate Release

Washington, D.C. – For researchers, scientists, and drug development professionals whose work intersects with federal lands, funding, or permits, a critical but often overlooked regulatory framework is Section 106 of the National Historic Preservation Act (NHPA). This in-depth guide provides a technical overview of the Section 106 process, offering clarity on its requirements, from initial project conception to the resolution of potential impacts on historic properties. Understanding this process is crucial for ensuring compliance and the successful execution of scientific endeavors.

The Core of Section 106: A Consultation-Based Process

Section 106 of the NHPA mandates that federal agencies consider the effects of their undertakings on historic properties.[1][2][3][4][5] An "undertaking" is broadly defined as any project, activity, or program funded in whole or in part under the direct or indirect jurisdiction of a federal agency, including those requiring a federal permit, license, or approval. For the scientific community, this can encompass a wide range of activities, from archaeological excavations to biological surveys and the installation of monitoring equipment on federal lands.

The Section 106 process is not designed to halt projects but to encourage a consultative approach to identify, assess, and seek ways to avoid, minimize, or mitigate any adverse effects on historic properties. Historic properties are those that are listed in or eligible for listing in the National Register of Historic Places.

The key participants in the Section 106 process include:

- The Federal Agency: The lead agency responsible for the undertaking and for initiating and overseeing the Section 106 process.

- State Historic Preservation Officer (SHPO): Appointed by the governor in each state, the SHPO and their staff have expertise in the historic resources of their state and consult with federal agencies.

- Tribal Historic Preservation Officer (THPO): Federally recognized Native American tribes may have a THPO who assumes the responsibilities of the SHPO on tribal lands. Government-to-government consultation with tribes is a critical component of the process.

- The Advisory Council on Historic Preservation (ACHP): An independent federal agency that oversees the Section 106 process and may participate in consultation, particularly in complex or contentious cases.

- Consulting Parties: These can include local governments, applicants for federal assistance or permits, and other individuals and organizations with a demonstrated interest in the undertaking.

- The Public: The public must be given an opportunity to provide their views and concerns.

The Four-Step Section 106 Process

The Section 106 process is a sequential, four-step framework designed to ensure that historic preservation is considered in project planning.

Step 1: Initiate the Process

The federal agency first determines if its proposed action constitutes an "undertaking" with the potential to affect historic properties. If so, the agency identifies the appropriate SHPO/THPO and other potential consulting parties and plans for public involvement.

Step 2: Identify Historic Properties

The agency, in consultation with the SHPO/THPO, defines the "Area of Potential Effects" (APE), which is the geographic area within which an undertaking may directly or indirectly cause changes to the character or use of historic properties. A reasonable and good faith effort must be made to identify any historic properties within the APE. This often involves background research, field surveys, and consultation with knowledgeable parties.

Step 3: Assess Adverse Effects

If historic properties are identified within the APE, the agency, in consultation with the SHPO/THPO and other consulting parties, assesses whether the undertaking will have an "adverse effect." An adverse effect occurs when an undertaking may alter, directly or indirectly, any of the characteristics of a historic property that qualify it for inclusion in the National Register in a manner that would diminish the integrity of the property.

Step 4: Resolve Adverse Effects

If it is determined that the undertaking will have an adverse effect, the federal agency must consult with the SHPO/THPO and other consulting parties to seek ways to avoid, minimize, or mitigate the adverse effects. This consultation can result in a Memorandum of Agreement (MOA) or a Programmatic Agreement (PA), which are legally binding documents outlining the agreed-upon measures.
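The four steps above form a short decision sequence, which can be sketched as follows. This is a simplified model for orientation only: the `Outcome` enum, the function name, and its three inputs are hypothetical, and each real determination is reached through consultation rather than a single flag.

```python
from enum import Enum


class Outcome(Enum):
    NO_REVIEW_REQUIRED = "Not an undertaking with potential to affect historic properties"
    NO_HISTORIC_PROPERTIES = "No historic properties identified in the APE"
    NO_ADVERSE_EFFECT = "Historic properties present, no adverse effect"
    RESOLVE_ADVERSE_EFFECT = "Adverse effect: consult to avoid, minimize, or mitigate (MOA or PA)"


def section_106_outcome(is_undertaking: bool,
                        historic_properties_in_ape: int,
                        adverse_effect: bool) -> Outcome:
    """Walk the four steps in order and return where the review ends."""
    # Step 1: Initiate the process.
    if not is_undertaking:
        return Outcome.NO_REVIEW_REQUIRED
    # Step 2: Identify historic properties within the APE.
    if historic_properties_in_ape == 0:
        return Outcome.NO_HISTORIC_PROPERTIES
    # Step 3: Assess adverse effects.
    if not adverse_effect:
        return Outcome.NO_ADVERSE_EFFECT
    # Step 4: Resolve adverse effects.
    return Outcome.RESOLVE_ADVERSE_EFFECT


# Example: an undertaking with two eligible sites that would be disturbed.
print(section_106_outcome(True, 2, True).value)
```

Modeling the steps as early returns mirrors the sequential nature of the process: a later step is reached only when the earlier one does not conclude the review.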

Data Presentation: Understanding the Metrics of Section 106

Comprehensive, nationwide quantitative data on the timelines and costs of the Section 106 process are neither systematically collected nor publicly available, but some insights can be gleaned from various sources. Costs and timelines are highly project-specific and depend on the complexity of the undertaking and the nature of the historic properties involved.

| Metric | Available Data & Observations | Caveats |
|---|---|---|
| Typical Timelines | The SHPO/THPO generally has 30 days to review a federal agency's findings and determinations at each step of the process. The overall timeline can range from a few weeks for simple projects with no adverse effects to a year or more for complex projects requiring extensive consultation and mitigation. | These are general timeframes and can be extended by requests for additional information or prolonged consultation. |
| Costs | The cost of archaeological investigations is highly variable. For federally funded projects, the responsible federal agency typically covers the costs of surveys and mitigation. For federally permitted projects, the applicant is usually responsible. The Moss-Bennett Act (the Archeological and Historic Preservation Act of 1974) authorizes agencies to spend up to 1% of a project's authorized funds on archaeological data recovery for projects over $50,000. | The 1% figure is a funding ceiling, not a typical cost. Actual costs can vary significantly with the scope of work required. |
| Outcomes | The majority of Section 106 reviews do not result in a finding of adverse effect. When adverse effects are identified, resolution is typically achieved through a Memorandum of Agreement (MOA). The process is designed to be collaborative and does not mandate a specific preservation outcome. | Data on the specific outcomes of all Section 106 agreements (e.g., number of properties preserved vs. mitigated through data recovery) is not centrally compiled. |
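The 1% figure in the Costs row can be turned into a quick reference number for early budgeting. The sketch below is illustrative arithmetic only: the function name is hypothetical, the $50,000 threshold is taken from the table, and the result is not a prediction of actual survey or mitigation costs.

```python
from typing import Optional

THRESHOLD_USD = 50_000  # project size above which the 1% figure is applied


def one_percent_reference(total_project_cost_usd: float) -> Optional[float]:
    """Return 1% of the project cost for projects over $50,000, else None."""
    if total_project_cost_usd <= THRESHOLD_USD:
        return None
    return total_project_cost_usd / 100


# Example: a hypothetical $2,000,000 project.
print(one_percent_reference(2_000_000))
```

Returning `None` for smaller projects keeps the caller from treating the 1% figure as meaningful where the table does not apply it.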

Experimental Protocols: Methodologies in Section 106 Investigations

For many scientific researchers, particularly in the field of archaeology, the Section 106 process necessitates specific fieldwork and reporting. The following are detailed methodologies for the phased archaeological investigations commonly required for compliance.

Phase I: Archaeological Identification Survey

Objective: To determine the presence or absence of archaeological resources within the Area of Potential Effects (APE).

Methodology:

- Archival Research: A thorough review of existing records, including state archaeological site files, historic maps, land use records, and previous cultural resource surveys in the vicinity.

- Field Reconnaissance: A systematic pedestrian survey of the APE. The intensity of the survey is determined by factors such as ground visibility and the potential for buried sites.

  - Surface Inspection: In areas with good ground visibility, the surface is systematically walked in transects to identify artifacts or surface features.

  - Subsurface Testing: In areas with low surface visibility, shovel test pits (STPs) are excavated at regular intervals along a grid. STPs are typically 30-50 cm in diameter and are excavated to sterile subsoil. All excavated soil is screened through 1/4-inch mesh to recover artifacts.

- Documentation: All findings, including negative results, are thoroughly documented with notes, photographs, and maps. Any identified archaeological sites are recorded on state-specific site forms.

- Reporting: A comprehensive report is prepared that details the research design, field methodology, findings, and recommendations for further investigation if necessary.
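The subsurface testing step places shovel test pits at regular intervals along a grid. A minimal sketch of generating such a layout is shown below, assuming a rectangular survey area measured in whole meters. The function name and the 15 m interval in the example are hypothetical; required intervals are set by the applicable state or agency survey standards.

```python
from typing import List, Tuple


def shovel_test_grid(width_m: int, length_m: int,
                     interval_m: int) -> List[Tuple[int, int]]:
    """Return (x, y) offsets in meters for STPs on a regular grid.

    The origin (0, 0) is one corner of a rectangular survey area.
    """
    if interval_m <= 0:
        raise ValueError("interval_m must be positive")
    return [
        (x, y)
        for y in range(0, length_m + 1, interval_m)
        for x in range(0, width_m + 1, interval_m)
    ]


# Example: a 30 m x 30 m block tested at a hypothetical 15 m interval.
points = shovel_test_grid(30, 30, 15)
print(len(points), points[0], points[-1])
```

Each generated point would then be recorded in the field notes whether or not it yields artifacts, since negative results are also documented.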

Phase II: Archaeological Evaluation

Objective: To determine if an identified archaeological site is eligible for listing in the National Register of Historic Places (NRHP).

Methodology:

- Development of a Research Design: A formal research design is created to guide the evaluation, outlining the specific questions that will be addressed.

- More Intensive Fieldwork:

  - Controlled Surface Collection: A systematic collection of artifacts from the surface of the site to understand the types and distribution of cultural materials.

  - Test Unit Excavation: The excavation of larger, formal test units (e.g., 1x1 meter or 2x2 meter squares) to investigate the vertical and horizontal extent of cultural deposits, identify features, and assess the integrity of the site. Excavation proceeds in natural or arbitrary levels, and all soil is screened.

  - Geophysical Surveys: Non-invasive techniques such as ground-penetrating radar (GPR), magnetometry, or electrical resistivity may be used to identify subsurface features without excavation.

- Artifact Analysis: Recovered artifacts are cleaned, cataloged, and analyzed to determine their age, function, and cultural affiliation.

- Reporting: A detailed report is prepared that presents the results of the fieldwork and analysis and provides a formal determination of the site's eligibility for the NRHP.

Phase III: Archaeological Data Recovery (Mitigation)

Objective: To mitigate the adverse effects of an undertaking on a significant archaeological site by recovering the important information it contains before it is destroyed.

Methodology:

- Data Recovery Plan: A detailed plan is developed in consultation with the SHPO/THPO and other consulting parties that outlines the research questions, excavation strategy, sampling methods, and analysis plan.

- Large-Scale Excavation: Extensive, systematic excavation of the portions of the site that will be impacted by the project. This may involve the use of heavy machinery to remove sterile overburden, followed by careful hand excavation of cultural layers and features.

- Specialized Analyses: In addition to standard artifact analysis, specialized studies may be conducted, such as radiocarbon dating, faunal and floral analysis, soil chemistry, and geoarchaeology.

- Curation: All recovered artifacts and records are prepared for long-term curation at an approved facility.

- Public Outreach: Mitigation often includes a public outreach component, such as exhibits, publications, or educational programs.

- Final Report: A comprehensive final report is prepared that presents the results of the data recovery and contributes to our understanding of the past.

Section 106 and Non-Archaeological Scientific Research

While archaeology is the most common scientific discipline involved in Section 106, other fields of research can also trigger the process. Any scientific activity that is considered a federal "undertaking" and has the potential to affect historic properties is subject to review. This could include:

- Biological and Ecological Surveys: Research involving ground disturbance, such as the installation of monitoring wells, soil sampling, or the establishment of long-term study plots in areas with potential archaeological or cultural significance.

- Geological Investigations: Projects that involve trenching, drilling, or other subsurface disturbances.

- Installation of Scientific Equipment: The construction of towers, weather stations, or other research infrastructure that could have a visual or physical impact on historic properties or landscapes.

For these disciplines, the Section 106 process follows the same four steps. The key is early consultation with the lead federal agency and the SHPO/THPO to determine if the research activities constitute an undertaking and to define the APE. In many cases, a programmatic agreement can be developed for repetitive research activities to streamline the review process.

Mandatory Visualizations

To further elucidate the Section 106 process, the following diagrams illustrate key workflows and relationships.

Caption: A high-level overview of the four-step Section 106 workflow.

Caption: The communication pathways between key parties in the Section 106 process.

Caption: The logical decision-making process for assessing adverse effects.

By understanding the intricacies of the Section 106 process, scientific researchers can better navigate their responsibilities, foster positive relationships with regulatory agencies and consulting parties, and contribute to both the advancement of knowledge and the preservation of our nation's rich cultural heritage. Early and proactive engagement is the key to a successful and efficient review.

References

- 1. dot.alaska.gov [dot.alaska.gov]

- 2. Frequently Asked Questions About Lead Federal Agencies in Section 106 Review | Advisory Council on Historic Preservation [achp.gov]

- 3. ACHP.gov [achp.gov]

- 4. ACHP Regulations Implementing Section 106 | DSC Workflows - (U.S. National Park Service) [nps.gov]

- 5. allstarecology.com [allstarecology.com]

Navigating the Future of Preservation: A Technical Guide to the ACHP's Research Priorities

For Immediate Release

Washington, D.C. - The Advisory Council on Historic Preservation (ACHP) has outlined a forward-looking agenda that, while not rooted in traditional laboratory science, sets a clear course for research and development in the heritage sector. This technical guide provides researchers, scientists, and preservation professionals with an in-depth look at the ACHP's strategic direction, translating their policy objectives into actionable research priorities. The focus is on leveraging technology, addressing climate change, and ensuring a more inclusive and equitable approach to preserving the nation's diverse heritage.

The ACHP's role is not that of a scientific research agency but a policy and oversight body.[1][2][3] Therefore, this guide synthesizes the council's strategic goals into a framework that can inform and guide scientific and technical research in the field of heritage preservation. The following sections detail these priorities, offering a roadmap for innovation in preservation practices.

Core Research and Development Pillars

The ACHP's strategic objectives can be distilled into three core pillars for research and development in heritage science and preservation:

- Climate Change and Environmental Sustainability: Developing and implementing strategies to mitigate the impacts of climate change on historic properties is a paramount concern.[4][5]

- Technological Advancement and Digital Integration: Harnessing modern technology to improve the efficiency and effectiveness of preservation processes is a key goal.

- Equity and Inclusive Preservation: Ensuring that the national historic preservation program reflects the full diversity of the American story is a fundamental objective.

These pillars are interconnected and represent a holistic approach to the future of historic preservation.

I. Climate Change and Environmental Sustainability

The ACHP has formally recognized the escalating threat of climate change to historic properties and has issued a policy statement to guide federal agencies and other stakeholders. Research in this area should focus on developing innovative and practical solutions for climate adaptation and mitigation.

Key Research Priorities:

| Priority Area | Research Focus | Potential Methodologies |
|---|---|---|
| Material Science and Conservation | Development of new materials and techniques for the conservation of historic materials exposed to extreme weather events (e.g., increased precipitation, temperature fluctuations, saltwater intrusion). | Accelerated weathering studies, non-destructive testing and evaluation, development of reversible and compatible conservation treatments. |
| Energy Efficiency and Renewable Energy Integration | Investigating and promoting the sensitive integration of renewable energy technologies and energy efficiency upgrades in historic buildings without compromising their historic character. | Building performance simulation, life cycle assessment, case study analysis of successful retrofitting projects. |
| Climate Adaptation Strategies | Researching and developing best practices for adapting historic properties and cultural landscapes to the impacts of climate change, such as sea-level rise, flooding, and wildfires. | Vulnerability assessments, risk mapping, development of adaptation planning frameworks, documentation and analysis of traditional and indigenous knowledge of resilience. |

Experimental Workflow: Climate Adaptation Strategy Development

The following diagram illustrates a potential workflow for developing and implementing climate adaptation strategies for a historic coastal property.

II. Technological Advancement and Digital Integration

The ACHP advocates for the use of modern technology and digital tools to streamline preservation processes, particularly the Section 106 review, and to enhance public engagement. Research in this area should focus on the development and application of innovative digital technologies for the documentation, analysis, and management of heritage resources.

Key Research Priorities:

| Priority Area | Research Focus | Potential Methodologies |
|---|---|---|
| Digital Documentation and Survey | Advancing the use of remote sensing, GIS, 3D laser scanning, and photogrammetry for the rapid and accurate documentation of historic properties and cultural landscapes. | Development of standardized data acquisition and processing protocols, integration of digital data into preservation planning and review processes. |
| Data Management and Accessibility | Creating robust and interoperable digital platforms for the management, sharing, and long-term preservation of heritage data. | Development of linked open data models, cloud-based data management systems, and public-facing web portals for accessing heritage information. |
| Predictive Modeling and Analysis | Utilizing data analytics and machine learning to model the potential impacts of development projects on historic resources and to identify areas at risk from environmental and other threats. | Development of spatial analysis models, machine learning algorithms for feature recognition, and decision support tools for preservation planning. |

Logical Relationship: Integrated Heritage Data Management

The following diagram illustrates the logical relationship between different components of an integrated digital heritage management system.

III. Equity and Inclusive Preservation

A central theme in the ACHP's recent strategic planning is the commitment to a more equitable and inclusive preservation practice that recognizes the full range of the nation's heritage. Research in this area should focus on developing methodologies and frameworks for identifying, documenting, and preserving the heritage of underrepresented communities.

Key Research Priorities:

| Priority Area | Research Focus | Potential Methodologies |
|---|---|---|
| Community-Based Heritage Documentation | Developing and implementing participatory methods for documenting the tangible and intangible heritage of diverse communities. | Oral history, community mapping, collaborative digital storytelling, ethnographic research. |
| Re-evaluation of Significance Criteria | Critically examining and proposing revisions to existing criteria for evaluating the significance of historic properties to ensure they are more inclusive of diverse cultural values and narratives. | Comparative analysis of national and international significance criteria, case study analysis of properties associated with underrepresented communities. |
| Equitable Mitigation and Community Benefits | Investigating and promoting mitigation strategies for adverse effects on historic properties that provide direct and tangible benefits to affected communities. | Development of community benefit agreement frameworks, research on the socio-economic impacts of preservation in diverse neighborhoods. |

Signaling Pathway: Achieving Equitable Preservation Outcomes

The following diagram illustrates the signaling pathway from policy to equitable outcomes in historic preservation.

Conclusion

While the Advisory Council on Historic Preservation does not conduct scientific research in a traditional sense, its strategic priorities provide a clear and compelling agenda for the heritage science and preservation community. By focusing on the critical challenges of climate change, embracing the potential of new technologies, and committing to a more equitable and inclusive approach, the ACHP is paving the way for a more resilient and relevant future for historic preservation. Researchers, scientists, and preservation professionals are encouraged to align their work with these priorities to contribute to the ongoing evolution of this vital field.

References

- 1. procurementsciences.com [procurementsciences.com]

- 2. Advisory Council on Historic Preservation (ACHP) | National Preservation Institute [npi.org]

- 3. Federal Register :: Agencies - Advisory Council on Historic Preservation [federalregister.gov]

- 4. Climate Resilience & Sustainability | Advisory Council on Historic Preservation [achp.gov]

- 5. ACHP.gov [achp.gov]

Navigating the Past, Building the Future: A Scientist's Technical Guide to the National Historic Preservation Act

An In-depth Technical Guide on the Core Principles of the National Historic Preservation Act for Researchers, Scientists, and Drug Development Professionals

Introduction: Bridging Scientific Advancement and Historic Preservation

For researchers, scientists, and professionals in drug development, the focus is firmly on the future: pioneering new technologies, discovering life-saving therapies, and expanding the boundaries of human knowledge. However, the physical spaces where this innovation occurs—be it a university campus, a corporate research park, or a manufacturing facility—are often situated within a rich historical context. The National Historic Preservation Act (NHPA) of 1966 is a key piece of federal legislation that ensures this historical context is considered as we build for the future.[1][2] This guide provides a technical overview of the foundational principles of the NHPA, with a specific focus on its relevance to the scientific and research community.

The NHPA establishes a partnership between the federal government and state, tribal, and local governments to preserve the nation's historic and cultural resources.[2] For scientists and developers, the most critical component of the NHPA is Section 106.[3][4] This section requires federal agencies to consider the effects of their undertakings on historic properties. An "undertaking" is a broad term that includes any project, activity, or program funded, permitted, licensed, or approved by a federal agency. This means that if your research facility receives federal grants, requires a federal permit for construction or expansion, or is located on federal land, the Section 106 review process will likely apply.

This guide will demystify the Section 106 process, providing a clear "experimental protocol" for compliance, presenting key data on review timelines, and offering case studies relevant to scientific facilities. By understanding the principles of the NHPA, the scientific community can proactively integrate historic preservation into project planning, ensuring that the quest for future innovation respects and preserves the significant threads of our past.

Quantitative Data on the Section 106 Process

While a comprehensive, nationwide database on all Section 106 reviews is not publicly available, data from various sources provides insight into the typical timelines and outcomes of the process. It is important to note that these figures can vary significantly based on the complexity of the project, the state, and the specific consulting parties involved.

| Metric | Reported Figure(s) | Source / Notes |
|---|---|---|
| SHPO/THPO Response Time | 30 calendar days for review of a finding or determination upon receipt of adequate information. | This 30-day clock can reset if the SHPO/THPO requests additional information. |
| Average SHPO Review Time | Varies by state; for example, in FY2024, Kentucky reported an average turnaround of 11 days. | This demonstrates that many reviews are completed much faster than the statutory 30-day period. |
| Finding of "No Historic Properties Affected" | A 2010 study indicated that of 114,000 eligibility actions reviewed annually, approximately 85% were found not to involve historic properties. | This suggests that a large majority of projects undergoing Section 106 review do not have an impact on historic properties. |
| Finding of "Adverse Effect" | Varies by state; for example, in FY2025, Washington state reported that only 8% of over 5,000 reviews found adverse effects. | This indicates that a finding of "adverse effect" is not the most common outcome of a Section 106 review. |
| Timeline for Memorandum of Agreement (MOA) | Varies significantly depending on the complexity of the adverse effect and the number of consulting parties. | The process of resolving adverse effects through an MOA is consultative and does not have a fixed timeline. |
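For project scheduling, the 30-day review clock in the table above can be sketched as a simple date calculation. This is a minimal illustration, not an official tool: the function name `shpo_review_deadline` is hypothetical, and the sketch assumes the clock restarts on the date the requested additional information is received. How a particular SHPO/THPO counts days should be confirmed with that office.

```python
from datetime import date, timedelta

REVIEW_PERIOD_DAYS = 30  # calendar days, as reported in the table above


def shpo_review_deadline(submitted, resubmissions=()):
    """Return the date on which the 30-day review window closes.

    `submitted` is the date the finding was first received.
    `resubmissions` lists dates on which additional information was
    delivered after a SHPO/THPO request. The clock is assumed to restart
    from the latest of these dates (an assumption of this sketch).
    """
    start = max([submitted, *resubmissions])
    return start + timedelta(days=REVIEW_PERIOD_DAYS)


first_deadline = shpo_review_deadline(date(2024, 3, 1))
reset_deadline = shpo_review_deadline(date(2024, 3, 1), [date(2024, 3, 20)])
print(first_deadline, reset_deadline)
```

A single request for more information three weeks into the review pushes the projected response date out by almost three weeks, which is why complete initial submissions matter for planning.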

Experimental Protocol: The Section 106 Review Process

For a scientist or research professional, the Section 106 process can be viewed as a structured protocol with distinct phases and required documentation. Adhering to this protocol early in the project planning phase is the most effective way to ensure compliance and avoid delays.

Phase 1: Initiation and Identification

- Determine if the Project is a Federal "Undertaking": The first step is to ascertain if the project falls under the purview of Section 106. This is triggered if the project is funded, licensed, or permitted, in whole or in part, by a federal agency.

- Initiate Consultation: The federal agency (or the applicant on their behalf) must initiate consultation with the relevant State Historic Preservation Officer (SHPO) or Tribal Historic Preservation Officer (THPO). This involves notifying them of the undertaking and providing initial project information.

- Define the Area of Potential Effects (APE): The APE is the geographic area within which an undertaking may directly or indirectly cause alterations in the character or use of historic properties. The APE should be defined in consultation with the SHPO/THPO.

- Identify Historic Properties: A "good faith effort" must be made to identify historic properties within the APE. This involves:

  - Reviewing existing records, such as the National Register of Historic Places.
  - Conducting field surveys and investigations.
  - Consulting with the SHPO/THPO and other interested parties.

Phase 2: Assessment of Effects

- Apply the Criteria of Adverse Effect: For each identified historic property, the federal agency must assess the potential effects of the undertaking by applying the Criteria of Adverse Effect (36 CFR § 800.5). An adverse effect occurs when an undertaking may alter, directly or indirectly, any of the characteristics of a historic property that qualify it for inclusion in the National Register in a manner that would diminish the integrity of the property's location, design, setting, materials, workmanship, feeling, or association.

- Make a Finding of Effect: Based on the assessment, one of three findings must be made:

  - No Historic Properties Affected: This finding is appropriate when there are no historic properties in the APE, or the project will have no effect on any historic properties present.
  - No Adverse Effect: This finding is made when there are historic properties present, but the project's effects are not detrimental.
  - Adverse Effect: This finding is made when the project will have a detrimental impact on one or more historic properties.

- Submit Findings to SHPO/THPO: The finding of effect, along with supporting documentation, is submitted to the SHPO/THPO for their review and concurrence. The SHPO/THPO generally has 30 days to respond.
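The order in which the three findings are considered can be sketched as a small decision function. This is only an illustration of the logic described above: actual findings are professional judgments reached in consultation, and the field names `affected` and `adverse` are hypothetical.

```python
from enum import Enum


class Finding(Enum):
    NO_HISTORIC_PROPERTIES_AFFECTED = "No Historic Properties Affected"
    NO_ADVERSE_EFFECT = "No Adverse Effect"
    ADVERSE_EFFECT = "Adverse Effect"


def finding_of_effect(properties):
    """Map per-property assessments to one of the three findings.

    `properties` holds one dict per historic property identified in the
    APE, with boolean keys "affected" and "adverse" (hypothetical names).
    An empty list means no historic properties were identified.
    """
    affected = [p for p in properties if p["affected"]]
    if not affected:
        return Finding.NO_HISTORIC_PROPERTIES_AFFECTED
    if any(p["adverse"] for p in affected):
        return Finding.ADVERSE_EFFECT
    return Finding.NO_ADVERSE_EFFECT


example = [
    {"affected": True, "adverse": False},
    {"affected": False, "adverse": False},
]
print(finding_of_effect(example).value)
```

A single property with an adverse effect is enough to move the whole undertaking into Phase 3, regardless of how many other properties are unaffected.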

Phase 3: Resolution of Adverse Effects

- Continue Consultation: If a finding of "Adverse Effect" is made, the federal agency must continue to consult with the SHPO/THPO and other consulting parties to find ways to avoid, minimize, or mitigate the adverse effects.

- Develop a Memorandum of Agreement (MOA): The consultation typically results in a legally binding Memorandum of Agreement (MOA) that outlines the agreed-upon measures to resolve the adverse effects.

- Implement the MOA: The federal agency is responsible for ensuring that the stipulations of the MOA are carried out.

Mandatory Visualizations

The Section 106 Review Process Workflow

Caption: A flowchart illustrating the four-step Section 106 review process.

Criteria of Adverse Effect

Caption: The criteria for determining an adverse effect on a historic property.

Case Study: NASA's Glenn Research Center and the NHPA

A relevant case study for the scientific community is the experience of the National Aeronautics and Space Administration (NASA) with the NHPA. NASA's Glenn Research Center, established in 1941, is home to numerous historic facilities that were crucial to the advancement of aerospace technology. These include the Altitude Wind Tunnel, used in the development of early jet engines, and the Rocket Engine Test Facility, a National Historic Landmark.

As NASA's research needs evolved, many of these facilities required modification or were slated for decommissioning. These actions constituted federal undertakings and triggered the Section 106 process. For example, modifications to historic wind tunnels to accommodate new research programs required careful consideration of their impact on the character-defining features of these structures.

In cases where adverse effects could not be avoided, NASA entered into Memoranda of Agreement with the relevant SHPOs. These agreements stipulated mitigation measures, such as:

- Documentation: Creating detailed architectural and engineering records of the historic facilities before their alteration or demolition.

- Interpretive Displays: Installing historical markers and exhibits to educate the public about the historical significance of the facilities.

- Salvage and Preservation: Carefully removing and preserving key artifacts and components of the historic facilities for future display or study.

The NASA experience demonstrates that the NHPA does not prohibit scientific advancement at historic sites. Instead, it provides a flexible framework for balancing the needs of cutting-edge research with the responsibility of preserving our nation's scientific heritage. This approach allows for the continued use and adaptation of historic research facilities while ensuring that their important contributions to science and technology are not forgotten. For drug development professionals, this case study offers a model for how to approach the expansion or modification of existing manufacturing plants or research campuses that may have historical significance. By proactively engaging in the Section 106 process, it is possible to achieve both research objectives and preservation goals.

Conclusion: A Framework for Responsible Innovation

The National Historic Preservation Act and its Section 106 review process are not impediments to scientific progress. Rather, they provide a structured and collaborative framework for ensuring that the development of new research facilities and the advancement of science and technology are undertaken with a conscious regard for our nation's history. For researchers, scientists, and drug development professionals, understanding the core principles of the NHPA is the first step toward successful project planning and execution. By initiating consultation early, making a good faith effort to identify and assess effects on historic properties, and collaborating with SHPOs and other stakeholders to resolve adverse effects, the scientific community can continue to build the future without erasing the invaluable legacy of the past.

References

The Digital Frontier of Preservation: A Technical Guide to the ACHP's Embrace of New Technologies

For Immediate Release

Washington, D.C. - The Advisory Council on Historic Preservation (ACHP) today released a comprehensive technical guide detailing its position on the integration of new technologies in the field of historic preservation. This document, aimed at researchers, scientists, and drug development professionals, outlines the agency's strategic approach to leveraging technological innovation to enhance the efficiency and effectiveness of the Section 106 review process and address contemporary challenges such as climate change.

The guide emphasizes the ACHP's commitment to fostering a forward-thinking preservation landscape where digital tools and data-driven methodologies play a pivotal role. It highlights the agency's focus on improving access to reliable digital and geospatial information to inform federal project planning and streamline environmental reviews.[1]

Key Tenets of the ACHP's Technological Stance:

The ACHP's approach to new technologies is guided by a set of core principles aimed at modernizing the federal historic preservation process while upholding the tenets of the National Historic Preservation Act (NHPA).

- Enhancing Data Accessibility and Management: A central pillar of the ACHP's strategy is the improvement of digital information infrastructure. The agency's Digital Information Task Force has put forth recommendations to enhance the availability of geospatial data on historic properties, thereby facilitating more informed and efficient project planning and Section 106 reviews.[1] This initiative is further bolstered by recent funding to develop a centralized geolocation database of U.S. historic properties, which will accelerate permitting for thousands of federal undertakings annually.[2]

- Streamlining Regulatory Processes: The ACHP advocates for and utilizes program alternatives, such as programmatic agreements and program comments, to tailor and streamline the Section 106 review process, particularly for large-scale infrastructure projects like broadband and wireless telecommunications.[3][4] This pragmatic approach seeks to balance the need for technological advancement with the imperative of historic preservation.

- Addressing Climate Change with Innovation: Recognizing the escalating threat of climate change to historic properties, the ACHP has issued a policy statement calling for the accelerated development of guidance on acceptable treatments for at-risk resources. This includes the incorporation of the latest technological innovations and material treatments to enhance the resilience of historic buildings and sites.

- Modernizing Preservation Standards: The ACHP is actively engaged in a critical review of federal historic preservation standards to ensure they are responsive to modern challenges and opportunities. This includes a more flexible application of guidance to accommodate new technologies and sustainable practices in the rehabilitation of historic structures.

Data Presentation: A Move Towards Standardization

While specific quantitative data on the adoption rates of new technologies in preservation is not centrally compiled by the ACHP, the agency strongly supports the development and use of standardized data for cultural resource management. The following table illustrates the types of data the ACHP encourages federal and state agencies to collect and manage, drawing from the principles outlined in the National Cultural Resource Management Data Standard.

| Data Category | Key Attributes | Rationale for Collection and Standardization |
|---|---|---|
| Geospatial Data | Site/Property Boundaries, District Polygons, Location Coordinates | To create an integrated, nationwide map of historic and cultural resources for early planning and impact avoidance. |
| Investigation Data | Survey Areas, Dates of Investigation, Methods Used | To track where and when cultural resource investigations have occurred, reducing redundancy and improving efficiency. |
| Resource Status | National Register Eligibility, Condition Assessments | To provide up-to-date information for project reviews and long-term management of historic properties. |
| Digital Documentation | 3D Laser Scans, Photogrammetric Models, Digital Twins | To create high-fidelity records of historic properties for conservation, research, and public engagement. |
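To make the four data categories concrete, the sketch below shows one way a single property record could be structured in code. All class and field names are hypothetical and chosen for illustration; they are not taken from the National Cultural Resource Management Data Standard, and the identifiers and coordinates are placeholders.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Optional


@dataclass
class Investigation:
    """One survey or study covering a property (Investigation Data)."""
    survey_area_id: str
    investigated_on: date
    methods: list[str]


@dataclass
class HistoricPropertyRecord:
    """Hypothetical record combining the four data categories."""
    property_id: str
    latitude: float                             # Geospatial Data
    longitude: float
    boundary_wkt: Optional[str] = None          # boundary polygon as well-known text
    register_eligibility: str = "unevaluated"   # Resource Status
    condition: Optional[str] = None
    investigations: list[Investigation] = field(default_factory=list)
    documentation_uris: list[str] = field(default_factory=list)  # Digital Documentation

    def last_investigated(self) -> Optional[date]:
        """Return the most recent investigation date, or None if never surveyed."""
        if not self.investigations:
            return None
        return max(i.investigated_on for i in self.investigations)


record = HistoricPropertyRecord("EX-0001", latitude=40.0, longitude=-80.0)
record.investigations.append(Investigation("SA-12", date(2019, 6, 4), ["pedestrian survey"]))
record.investigations.append(Investigation("SA-30", date(2023, 9, 18), ["LiDAR"]))
print(record.last_investigated())
```

Keeping investigation dates alongside the property makes it straightforward to check whether an area has already been surveyed before commissioning new fieldwork, which is the redundancy-reduction rationale given in the table.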

Methodologies and Workflows

The ACHP's role is primarily that of policy and oversight; therefore, it does not prescribe detailed experimental protocols for specific preservation technologies. Instead, the agency provides guidance on the process for incorporating new technologies within the existing regulatory framework of Section 106.

The Section 106 Review Process: A Framework for Technological Integration

The four-step Section 106 review process provides a structured methodology for federal agencies to consider the effects of their undertakings on historic properties. New technologies can be integrated at various stages of this process:

- Initiate the Process: Utilize GIS and digital databases to identify consulting parties and areas of potential effect.

- Identify Historic Properties: Employ technologies such as LiDAR, ground-penetrating radar, and remote sensing to identify and delineate historic properties, including archaeological sites.

- Assess Adverse Effects: Use 3D modeling and simulations to visualize the potential impacts of a project on the character-defining features of a historic property.

- Resolve Adverse Effects: Develop mitigation measures that may include the use of new technologies, such as high-resolution digital documentation of a property to be altered or demolished.

The following diagram illustrates the logical workflow for integrating new technologies into the Section 106 process.

ACHP's Digital Information Task Force Workflow

The ACHP's Digital Information Task Force has established a logical workflow for improving the use of digital and geospatial data in preservation. This workflow emphasizes collaboration and feedback among key stakeholders.

Conclusion

The Advisory Council on Historic Preservation is actively fostering an environment where new technologies are thoughtfully and effectively integrated into the practice of historic preservation. By promoting robust data management, streamlining regulatory processes, and embracing innovative solutions to contemporary challenges, the ACHP is ensuring that the nation's rich cultural heritage is preserved for future generations in an ever-evolving digital world. The agency will continue to provide guidance and support to federal agencies and preservation partners in harnessing the power of technology to achieve our common preservation goals.

References

- 1. Digital Information Task Force Recommendations and Action Plan | Advisory Council on Historic Preservation [achp.gov]

- 2. ACHP Receives $750,000 in Funding for Innovative Information Technology | Advisory Council on Historic Preservation [achp.gov]

- 3. Broadband Infrastructure and Section 106 Review | Advisory Council on Historic Preservation [achp.gov]

- 4. Telecommunications | Advisory Council on Historic Preservation [achp.gov]

Navigating the Nexus of Research and Preservation: A Technical Guide to the ACHP Initial Consultation Process

For Researchers, Scientists, and Drug Development Professionals

Introduction

In the intricate landscape of scientific research and development, particularly for projects with a federal nexus, a critical yet often unfamiliar regulatory pathway emerges: the Section 106 consultation process, overseen by the Advisory Council on Historic Preservation (ACHP). While seemingly distant from the laboratory or clinical trial, this process is a vital component of project approval for any federally funded, licensed, or permitted research activity that has the potential to affect historic properties. This guide provides an in-depth technical overview of the initial consultation process with the ACHP, tailored for a scientific audience. By drawing parallels with the structured, methodical approach of scientific inquiry, this document aims to demystify the process, enabling researchers and drug development professionals to navigate it effectively, ensuring both scientific advancement and the preservation of our nation's heritage.

The Section 106 review process is analogous to a preclinical safety assessment for a new therapeutic. Just as a preclinical study identifies potential adverse effects on a biological system, the Section 106 process identifies potential adverse effects on our historical and cultural landscape. Both processes are systematic, involve expert consultation, and aim to mitigate negative impacts before a project proceeds. Understanding this framework is crucial for efficient project planning and execution.

The Core of the Matter: The Section 106 Process

Section 106 of the National Historic Preservation Act of 1966 (NHPA) mandates that federal agencies consider the effects of their projects (referred to as "undertakings") on historic properties.[1][2] The process is implemented through regulations issued by the ACHP, an independent federal agency that promotes the preservation, enhancement, and sustainable use of the nation's diverse historic resources.[3] The goal of the Section 106 process is to identify and assess the effects of a proposed project on historic properties and to seek ways to avoid, minimize, or mitigate any adverse effects.[4]

What Constitutes a "Research Project" Undertaking?

For the purposes of Section 106, a "research project" is not limited to laboratory work. It encompasses any scientific investigation that is a federal undertaking and has the potential to affect historic properties.[5] This most commonly includes, but is not limited to:

- Archaeological Surveys and Excavations: A significant portion of research projects subject to Section 106 are archaeological in nature, with estimates suggesting over 90% of archaeological excavations in the United States are conducted under this provision.

- Environmental Impact Studies: Research involving ground disturbance, construction of monitoring stations, or other activities on federal lands.

- Infrastructure for Research: The construction or modification of research facilities, access roads, or other infrastructure that may be located in or near historic sites.

Quantitative Overview of the Section 106 Consultation Process

While comprehensive, granular data on all Section 106 consultations is not centralized in a single public repository, available information provides insight into the process's timelines and outcomes.

| Metric | Value/Range | Source/Comment |
|---|---|---|
| Annual Federal Undertakings Reviewed | ~100,000 - 120,000 | This figure represents the approximate number of federal projects reviewed by State and Tribal Historic Preservation Officers each year. |
| Projects with Adverse Effects | Very small percentage | The vast majority of federal projects are found to have no adverse effect on historic properties. |
| SHPO/THPO Review Period for Findings | 30 calendar days | State Historic Preservation Officers (SHPOs) and Tribal Historic Preservation Officers (THPOs) have a standard 30-day window to review and comment on a federal agency's findings and determinations. |
| Average Time to Finalize a Section 106 Agreement | Increased by 90 days (a 20% increase) in two years | A study of Section 106 agreements (which are typically required for projects with adverse effects) indicates a trend of increasing timelines for resolution. |
| Shortest Average Timescale for Agreement | 192 days | This highlights that even in the best-case scenarios, resolving adverse effects can be a lengthy process. |
| Maximum Recorded Timescale for Agreement | 2,679 days (> 7 years) | Illustrates the potential for significant delays in complex or contentious projects. |
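The agreement-timeline figures in the table above can be put on a common scale with a little arithmetic. The sketch below assumes that the 90-day increase and the 20% increase describe the same average, which the source does not state explicitly; the baseline and current averages it derives are therefore implied values, not reported ones.

```python
# Figures taken from the table above.
increase_days = 90
increase_percent = 20
shortest_average_days = 192
maximum_days = 2679

# If 90 days is a 20% increase, the earlier average follows directly.
implied_baseline_days = increase_days * 100 / increase_percent
implied_current_days = implied_baseline_days + increase_days

# Express the extremes in more familiar units.
shortest_average_months = shortest_average_days / 30.44  # mean month length in days
maximum_years = maximum_days / 365.25

print(f"Implied earlier average: {implied_baseline_days:.0f} days")
print(f"Implied current average: {implied_current_days:.0f} days")
print(f"Shortest average: about {shortest_average_months:.1f} months")
print(f"Maximum recorded: about {maximum_years:.1f} years")
```

Under this reading, a typical agreement takes roughly a year and a half to finalize, which is a useful planning figure for any project expected to reach the adverse-effect resolution stage.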

Experimental Protocols: Methodologies in Cultural Resource Investigation

The "experimental protocols" in the context of Section 106 consultation for research projects are the systematic methodologies employed to identify, evaluate, and mitigate effects on historic properties. These are most clearly defined in the realm of archaeological and cultural resource surveys.

Phase I: Identification Survey

- Objective: To determine the presence or absence of historic properties within the project's Area of Potential Effects (APE).

- Methodology:

  - Literature Review: A comprehensive review of existing records, including historical maps, previous survey reports, and state archaeological site files.
  - Systematic Field Survey: This typically involves a pedestrian survey where the ground surface is systematically walked and visually inspected for artifacts or features. In areas with low surface visibility, subsurface testing is conducted through the excavation of shovel tests at regular intervals (e.g., 30-meter intervals in high-probability areas). All excavated soil is screened through a 1/4-inch mesh to recover artifacts.
  - Deep Testing: In areas with the potential for buried archaeological sites, techniques such as augering, coring, or mechanical trenching may be employed.
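The regular-interval shovel testing described above amounts to laying a grid over the survey area. The sketch below generates test locations for a rectangular area, assuming a square grid anchored at one corner; the function name and the rectangular simplification are illustrative, and real survey designs follow state-specific guidelines and the shape of the APE.

```python
def shovel_test_grid(width_m, height_m, interval_m=30.0):
    """Return (x, y) offsets in meters for shovel tests on a square grid.

    The grid starts at the (0, 0) corner of a rectangular survey area
    and places a test every `interval_m` meters in both directions.
    """
    columns = int(width_m // interval_m) + 1
    rows = int(height_m // interval_m) + 1
    return [
        (col * interval_m, row * interval_m)
        for row in range(rows)
        for col in range(columns)
    ]


tests = shovel_test_grid(width_m=90, height_m=60)
print(len(tests), "shovel tests; first", tests[0], "last", tests[-1])
```

Halving the interval roughly quadruples the number of tests, so the choice of interval for high- and low-probability areas has a direct effect on field effort.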

Phase II: Evaluation

- Objective: To determine the significance of a located property and its eligibility for the National Register of Historic Places.

- Methodology:

  - Intensive Testing: This may involve the excavation of additional, more closely spaced shovel tests or larger test units to define the boundaries of the site and to understand its structure and integrity.
  - Artifact Analysis: Detailed analysis of recovered artifacts to determine the age, function, and cultural affiliation of the site.
  - Feature Documentation: The mapping and documentation of any identified archaeological features, such as hearths or building foundations.

Phase III: Mitigation/Data Recovery

- Objective: To mitigate the adverse effects of the project on a significant historic property, often through the systematic recovery of important information.

- Methodology:

  - Development of a Research Design and Data Recovery Plan: This plan outlines the specific research questions to be addressed and the methods for excavation and analysis.
  - Large-Scale Excavation: The systematic excavation of large areas of the site to recover a representative sample of artifacts and features.
  - Specialized Analyses: This may include radiocarbon dating, soil analysis, and other specialized studies to extract as much information as possible from the archaeological record.
  - Reporting and Curation: The preparation of a detailed technical report on the findings and the curation of all recovered artifacts and records in a recognized repository.

Visualizing the Process: Workflows and Pathways

To further clarify the initial consultation process, the following diagrams illustrate the key workflows and logical relationships using the DOT language.

Caption: The initial steps taken by a federal agency to begin the Section 106 consultation process.

Caption: The workflow for identifying and evaluating historic properties within the project area.

Caption: The communication and consultation pathways between the key participants in the Section 106 process.

Conclusion

The initial consultation process with the ACHP under Section 106 is a structured, multi-step process that is integral to responsible project planning for any research undertaking with federal involvement. For researchers, scientists, and drug development professionals, understanding this process is not an ancillary task but a core component of project management. By appreciating the parallels between the systematic methodologies of scientific research and the procedural requirements of Section 106, the scientific community can effectively engage in this process, fostering a collaborative environment where scientific progress and historic preservation can coexist and mutually inform one another. Early and proactive consultation is paramount to avoiding delays and ensuring successful project outcomes.

References

- 1. Section 106: National Historic Preservation Act of 1966 | GSA [gsa.gov]

- 2. Section 106 Review Fact Sheet | Advisory Council on Historic Preservation [achp.gov]

- 3. epa.gov [epa.gov]

- 4. Section 106 Tutorial: Roles and Responsibilities - Consulting Parties [environment.fhwa.dot.gov]

- 5. 30-Day Review Timeframes: When are They Applicable in Section 106 Review? | Advisory Council on Historic Preservation [achp.gov]

A Technical Guide to the Scientific Analysis of Historic Properties

For Researchers, Scientists, and Drug Development Professionals

This guide provides a comprehensive overview of the definition of "historic property" as established by the Advisory Council on Historic Preservation (ACHP) and delves into the scientific methodologies employed for the analysis of materials from such properties. The content is structured to offer researchers, scientists, and professionals in drug development a foundational understanding of the interdisciplinary nature of cultural heritage science, detailing experimental protocols, data presentation, and logical workflows.

Defining a "Historic Property"

The ACHP, an independent federal agency, oversees the implementation of Section 106 of the National Historic Preservation Act (NHPA).[1][2] This legislation requires federal agencies to consider the effects of their projects on historic properties.[3][4] The formal definition of a "historic property" is codified in federal regulations at 36 CFR § 800.16(l)(1).[5]

A historic property is defined as any prehistoric or historic district, site, building, structure, or object that is included in, or eligible for inclusion in, the National Register of Historic Places. This designation is significant as it extends protection to properties that have not yet been formally listed but meet the criteria for inclusion. The term also encompasses artifacts, records, and archaeological remains associated with such properties. Furthermore, it includes properties of traditional religious and cultural importance to Native American tribes or Native Hawaiian organizations that satisfy the National Register criteria.

Properties are evaluated for the National Register based on four main criteria:

- Criterion A: Association with events that have made a significant contribution to the broad patterns of our history.

- Criterion B: Association with the lives of persons significant in our past.

- Criterion C: Embodiment of the distinctive characteristics of a type, period, or method of construction; representation of the work of a master; or possession of high artistic values.

- Criterion D: Having yielded, or being likely to yield, information important in prehistory or history.

Methodologies for Scientific Analysis

The scientific analysis of materials from historic properties is a multidisciplinary field that draws from chemistry, physics, geology, and biology to characterize the composition, provenance, and degradation of cultural heritage materials. These investigations provide invaluable insights for conservation, historical interpretation, and authentication.

Elemental Analysis

Elemental analysis techniques are fundamental in determining the constituent elements of inorganic materials such as metals, glass, ceramics, and pigments.

X-ray fluorescence (XRF) spectroscopy is a non-destructive technique that bombards a sample with X-rays, causing the elements within the sample to emit fluorescent (or secondary) X-rays at characteristic energies. By measuring these energies, the elemental composition can be determined. Portable XRF (pXRF) instruments are frequently used for in-situ analysis.

Experimental Protocol for XRF Analysis of Archaeological Metals:

- Instrument Calibration: Calibrate the XRF spectrometer using certified reference materials that are matrix-matched to the artifacts being analyzed (e.g., bronze, silver alloys).

- Surface Preparation: Gently clean the surface of the metal artifact to remove any superficial dirt or corrosion that may interfere with the analysis. This should be done with care to not damage the original surface. For quantitative analysis, a small, flat area is ideal.

- Data Acquisition: Position the XRF instrument's measurement window directly on the prepared surface of the artifact. Ensure a consistent distance and angle for all measurements.

- Measurement Parameters: Set the appropriate analytical parameters on the instrument, including the voltage, current, and acquisition time. Typical acquisition times range from 30 to 120 seconds per measurement point.

- Data Analysis: Process the resulting spectra using the instrument's software. This will involve peak identification and quantification to determine the elemental concentrations.
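The peak identification step can be illustrated with a short sketch that matches measured peak energies to tabulated characteristic lines. This is not how any particular instrument's software works: the line table is a small, rounded subset of standard tabulated emission energies, the tolerance is an assumed value, and the measured peaks are hypothetical. Real processing also handles overlapping peaks, escape and sum peaks, and calibration-based quantification.

```python
# Rounded characteristic emission energies in keV (small illustrative subset).
CHARACTERISTIC_LINES_KEV = {
    "Fe Ka": 6.40,
    "Cu Ka": 8.05,
    "Zn Ka": 8.64,
    "Pb La": 10.55,
    "Ag Ka": 22.16,
    "Sn Ka": 25.27,
}


def identify_peaks(peak_energies_kev, tolerance_kev=0.05):
    """Match each measured peak to the nearest tabulated line.

    Returns a dict mapping the measured energy to a line label, or to
    None when no tabulated line lies within `tolerance_kev`.
    """
    matches = {}
    for energy in peak_energies_kev:
        label, reference = min(
            CHARACTERISTIC_LINES_KEV.items(),
            key=lambda item: abs(item[1] - energy),
        )
        within = abs(reference - energy) <= tolerance_kev
        matches[energy] = label if within else None
    return matches


# Hypothetical peak positions from a bronze artifact.
result = identify_peaks([8.04, 10.56, 25.29, 12.00])
print(result)
```

Copper and tin peaks together would be consistent with a bronze, while a lead peak may indicate a leaded alloy. The unmatched peak shows why a fuller line table, including L-beta and K-beta lines, is needed in practice.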

Scanning electron microscopy with energy-dispersive X-ray spectroscopy (SEM-EDS) provides high-resolution imaging of a sample's surface and localized elemental analysis. A focused beam of electrons is scanned across the sample, generating various signals, including secondary electrons for imaging and characteristic X-rays for elemental analysis.

Experimental Protocol for SEM-EDS Analysis of Historic Pigments:

- Sample Preparation: A minute sample of the pigment is carefully removed from the historic object using a scalpel under a microscope. The sample is then mounted on an aluminum stub using a carbon adhesive tab and sputter-coated with a thin layer of carbon to make it conductive.

- Instrument Setup: The prepared sample is placed into the SEM chamber, and a vacuum is created. The electron beam is generated and focused on the sample.

- Imaging: Secondary electron or backscattered electron detectors are used to obtain high-magnification images of the pigment particles, revealing their morphology and texture.

- EDS Analysis: The electron beam is focused on a specific point of interest on the pigment particle, or an area is mapped. The emitted X-rays are collected by the EDS detector.

- Spectral Analysis: The EDS software generates a spectrum showing the characteristic X-ray peaks of the elements present. This allows for the qualitative and semi-quantitative determination of the elemental composition of the pigment.
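One common way to interpret semi-quantitative EDS output is to convert weight percent to atomic percent, since atomic ratios can hint at the underlying compound. The sketch below does this conversion for a hypothetical reading. The weight percentages are invented for illustration, the atomic masses are standard values, and any pigment assignment from such ratios is tentative given the semi-quantitative nature of EDS.

```python
# Standard atomic masses in g/mol for the elements used in the example.
ATOMIC_MASS = {"Hg": 200.59, "S": 32.06, "Pb": 207.2, "O": 15.999, "Fe": 55.845}


def weight_to_atomic_percent(weight_percent):
    """Convert a {element: wt%} mapping to {element: at%}.

    Each weight percent is divided by the element's atomic mass to give
    relative moles, which are then normalized to sum to 100.
    """
    moles = {el: wt / ATOMIC_MASS[el] for el, wt in weight_percent.items()}
    total = sum(moles.values())
    return {el: 100.0 * n / total for el, n in moles.items()}


# Hypothetical EDS reading from a red pigment particle.
atomic = weight_to_atomic_percent({"Hg": 86.2, "S": 13.8})
print({el: round(value, 1) for el, value in atomic.items()})
```

A mercury-to-sulfur atomic ratio close to 1:1 would be consistent with mercury sulfide (vermilion), though confirmation with a structural technique is advisable.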

Molecular and Structural Analysis

These techniques are crucial for identifying the molecular composition and crystalline structure of both organic and inorganic materials.

Fourier-transform infrared (FTIR) spectroscopy identifies chemical bonds in a molecule by producing an infrared absorption spectrum. It is particularly useful for characterizing organic materials like textiles, binding media, and resins, as well as some inorganic compounds.

Experimental Protocol for FTIR Analysis of Historical Textiles:

- Sample Preparation: A small fiber sample is carefully removed from the textile. For Attenuated Total Reflectance (ATR)-FTIR, the fiber can be placed directly on the ATR crystal.

- Data Acquisition: The sample is placed in the FTIR spectrometer. The infrared beam interacts with the sample (passing through it in transmission mode, or probing its surface via the crystal in ATR mode), and the instrument measures how much of the infrared radiation is absorbed at each wavenumber.

- Spectral Collection: The spectrum is typically collected over a range of 4000 to 400 cm⁻¹. Multiple scans are often averaged to improve the signal-to-noise ratio.

- Data Analysis: The resulting spectrum is a plot of absorbance or transmittance versus wavenumber. The peaks in the spectrum correspond to the vibrational frequencies of the chemical bonds in the sample, allowing for the identification of the material (e.g., cellulose for cotton or linen, protein for wool or silk).
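Two quantities in the protocol above lend themselves to a quick calculation: the wavelength range corresponding to the 4000 to 400 cm⁻¹ window, and the benefit of averaging scans. The sketch below assumes uncorrelated random noise, in which case the signal-to-noise ratio improves with the square root of the number of scans; the function names are illustrative.

```python
import math


def wavenumber_to_wavelength_um(wavenumber_cm1):
    """Convert a wavenumber in cm^-1 to a wavelength in micrometers."""
    return 10_000.0 / wavenumber_cm1


def snr_gain_from_averaging(n_scans):
    """Expected SNR improvement from averaging, assuming random noise."""
    return math.sqrt(n_scans)


short_end = wavenumber_to_wavelength_um(4000)
long_end = wavenumber_to_wavelength_um(400)
gain = snr_gain_from_averaging(64)
print(f"Spectral window: {short_end} to {long_end} micrometers")
print(f"Averaging 64 scans improves SNR by a factor of about {gain:.0f}")
```

The square-root relationship means that each doubling of acquisition time yields a diminishing return, which is worth weighing when only a fragile or very small fiber sample is available.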

Organic Residue Analysis

The analysis of organic residues preserved in or on artifacts can provide direct evidence of past human activities, such as diet, food preparation techniques, and the use of various natural products.

Gas chromatography-mass spectrometry (GC-MS) is a powerful technique for separating, identifying, and quantifying the components of complex mixtures of volatile organic compounds. It is widely used for the analysis of lipids, waxes, and resins from archaeological contexts.

Experimental Protocol for GC-MS Analysis of Organic Residues in Ceramics:

- Sample Preparation: A small fragment of the ceramic sherd is ground into a fine powder.
- Lipid Extraction: The powdered ceramic is subjected to solvent extraction (e.g., using a mixture of chloroform and methanol) to dissolve the absorbed organic residues.
- Derivatization: The extracted lipids are chemically modified (derivatized) to make them more volatile and suitable for GC analysis. A common method is transesterification to form fatty acid methyl esters (FAMEs).
- GC-MS Analysis: The derivatized extract is injected into the gas chromatograph. The different compounds in the mixture are separated based on their boiling points and interaction with the stationary phase of the GC column. As each compound elutes from the column, it enters the mass spectrometer, which ionizes the molecules and separates the ions based on their mass-to-charge ratio, providing a unique mass spectrum for each compound.
- Data Interpretation: The resulting chromatogram shows a series of peaks, each representing a different compound. By comparing the retention times and mass spectra of the peaks to those of known standards and library databases, the organic compounds present in the residue can be identified.
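The data interpretation step can be sketched as a lookup against a table of standards. In the example below the retention times are invented for illustration and depend entirely on the column and temperature program; the molecular ion values are the nominal masses of the named FAMEs. Actual identification compares the full mass spectrum, not only the molecular ion, so this is a simplified stand-in.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Peak:
    retention_min: float   # retention time in minutes
    molecular_ion_mz: int  # nominal m/z of the molecular ion


# Hypothetical standards: retention times are made up for this sketch.
STANDARDS = {
    "methyl palmitate (C16:0)": Peak(16.20, 270),
    "methyl oleate (C18:1)": Peak(18.60, 296),
    "methyl stearate (C18:0)": Peak(18.90, 298),
}


def identify(peak, rt_tolerance_min=0.1):
    """Return the name of the first standard matching both criteria, else None."""
    for name, reference in STANDARDS.items():
        rt_match = abs(peak.retention_min - reference.retention_min) <= rt_tolerance_min
        mz_match = peak.molecular_ion_mz == reference.molecular_ion_mz
        if rt_match and mz_match:
            return name
    return None


# Hypothetical peaks picked from a chromatogram.
sample_peaks = [Peak(16.23, 270), Peak(18.88, 298), Peak(12.00, 214)]
for peak in sample_peaks:
    print(peak, "->", identify(peak) or "unidentified")
```

Requiring agreement on both retention time and mass helps separate compounds that elute close together, such as the C18:1 and C18:0 esters in this made-up table.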

Quantitative Data Presentation

The presentation of quantitative data in a structured format is essential for the comparison and interpretation of analytical results. The following tables provide examples of how elemental composition data from the analysis of historic artifacts can be presented.

Table 1: Elemental Composition of Roman Coins by SEM-EDS (wt%)

| Coin ID | Cu | Pb | Sn | Fe | Other |
|---|---|---|---|---|---|
| RC-01 | 92.13 | - | 2.26 | - | Ag: 5.61 |
| RC-02 | 97.25 | - | - | - | Ag: 2.75 |
| RC-03 | 85.2 | 10.5 | 3.1 | 1.2 | - |
| RC-04 | 98.12 | 1.11 | - | - | - |
| RC-05 | 65.0 | 11.59 | 12.0 | - | - |

Data compiled from multiple sources.
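When weight-percent data are compiled from several sources, a quick closure check (do the reported values sum to roughly 100 wt%?) helps flag rows where elements may be unreported. The sketch below uses the values transcribed from Table 1; the 1 wt% tolerance is an assumption, and a low total is only a prompt to revisit the source, not proof of an error.

```python
# Reported weight-percent values per coin, transcribed from Table 1.
TABLE_1_WT_PERCENT = {
    "RC-01": [92.13, 2.26, 5.61],
    "RC-02": [97.25, 2.75],
    "RC-03": [85.2, 10.5, 3.1, 1.2],
    "RC-04": [98.12, 1.11],
    "RC-05": [65.0, 11.59, 12.0],
}


def closure_report(rows, tolerance=1.0):
    """Return {coin: (total, closes)}; closes is True when |total - 100| <= tolerance."""
    report = {}
    for coin, values in rows.items():
        total = round(sum(values), 2)
        report[coin] = (total, abs(total - 100.0) <= tolerance)
    return report


report = closure_report(TABLE_1_WT_PERCENT)
for coin, (total, closes) in report.items():
    status = "ok" if closes else "check source"
    print(f"{coin}: {total:6.2f} wt%  {status}")
```

With the values as listed, every row closes within the tolerance except RC-05, whose reported elements account for 88.59 wt%.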

Table 2: Chemical Composition of Roman Coins by Electron Microprobe Analysis (EMPA) (mass %)

| Coin ID | Cu (wt.%) | Sn (wt.%) | Pb (wt.%) | Fe (wt.%) | Zn (wt.%) |
|---|---|---|---|---|---|
| Augustus As (#4) | 96.5 - 99 | < 1 | < 1 | < 0.5 | < 0.5 |
| Quadrans of Caligula (#6) | 97 - 99 | < 1 | < 1 | < 0.5 | < 0.5 |
| Nummus radians of Galerius Caesar (#10) | 96.5 - 99 | < 1 | < 1 | < 0.5 | < 0.5 |
| Aes Litra (#1) | 0 - 35 | 52 - 94 | < 2 | < 1 | - |
| Claudius As (#5) | 70 - 85 | < 2 | 10 - 25 | < 1 | < 1 |

Data adapted from a study on Roman coins.

Visualization of Workflows and Relationships

Diagrams are essential for visualizing complex processes and relationships in the scientific analysis of historic properties. The following diagrams, created using the DOT language, illustrate a general workflow and a more specific decision-making process.

References

- 1. researchgate.net
- 2. researchgate.net
- 3. inis.iaea.org
- 4. Different Analytical Procedures for the Study of Organic Residues in Archeological Ceramic Samples with the Use of Gas Chromatography-mass Spectrometry. PubMed (pubmed.ncbi.nlm.nih.gov)
- 5. researchgate.net

Methodological & Application

Application Notes and Protocols for Remote Sensing in ACHP Section 106 Surveys

Audience: Researchers and scientists in archaeology, historic preservation, and cultural resource management.

Introduction: Section 106 of the National Historic Preservation Act of 1966 (NHPA) requires federal agencies to consider the effects of their projects on historic properties. This process involves a series of steps, including identifying historic properties and assessing the effects of the undertaking. Remote sensing technologies offer a powerful, non-invasive toolkit for conducting Section 106 surveys, enabling large-scale analysis, enhancing discovery of archaeological sites and historic features, and providing valuable data for decision-making. These application notes and protocols provide a detailed guide to integrating various remote sensing techniques into the Section 106 review process.

Application Notes

Integrating Remote Sensing into the Four-Step Section 106 Process

Remote sensing can be effectively applied at each stage of the Section 106 process:

- Step 1: Initiate the Section 106 Process: While remote sensing is not directly used in the initial administrative steps, the data it can provide should be considered during the planning phase to scope the potential need for surveys.
- Step 2: Identify Historic Properties: This is where remote sensing is most impactful. It aids in defining the Area of Potential Effects (APE) and in the identification of previously unknown archaeological sites and historic structures.[1][2]
- Step 3: Assess Adverse Effects: Remote sensing can be used to monitor changes to historic properties over time and to assess the potential visual and physical impacts of a project.
- Step 4: Resolve Adverse Effects: Data from remote sensing can inform the development of mitigation measures, such as project redesign to avoid sensitive areas.

Defining the Area of Potential Effects (APE) with Remote Sensing

The APE is the geographic area within which an undertaking may directly or indirectly cause alterations in the character or use of historic properties.[1] Remote sensing helps in defining a more accurate and comprehensive APE by:

- Landscape-Level Analysis: Satellite imagery and LiDAR can be used to analyze broad landscapes to understand the geomorphological and environmental context of potential historic properties.[3]
- Viewshed Analysis: For projects with the potential for visual effects, LiDAR-derived Digital Elevation Models (DEMs) can be used to conduct viewshed analysis to determine the area from which the project will be visible.
- Predictive Modeling: By combining remote sensing data with other geographic information in a GIS, predictive models can be developed to identify areas with a high potential for containing archaeological sites, thus helping to refine the APE.
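The viewshed analysis described above reduces to repeated line-of-sight tests across a DEM. The following is a minimal sketch on a small grid stored as nested lists, assuming a flat earth, nearest-cell sampling along the sight line, and hypothetical observer and target heights. GIS packages use more careful interpolation and account for earth curvature, so this illustrates the principle only.

```python
def is_visible(dem, observer, target, observer_height=1.7, target_height=0.0):
    """Return True if no intermediate cell rises above the observer-target sight line.

    dem is a list of rows of elevations; observer and target are (row, col) cells.
    Heights are in the same units as the DEM and are assumed values.
    """
    (r0, c0), (r1, c1) = observer, target
    z0 = dem[r0][c0] + observer_height
    z1 = dem[r1][c1] + target_height
    steps = max(abs(r1 - r0), abs(c1 - c0))
    for i in range(1, steps):
        t = i / steps
        r = round(r0 + t * (r1 - r0))
        c = round(c0 + t * (c1 - c0))
        sight_line_z = z0 + t * (z1 - z0)
        if dem[r][c] > sight_line_z:
            return False
    return True


def viewshed(dem, observer, **kwargs):
    """Boolean grid: True where the cell is visible from the observer."""
    return [
        [is_visible(dem, observer, (r, c), **kwargs) for c in range(len(dem[0]))]
        for r in range(len(dem))
    ]


# Hypothetical one-row DEM with a 10 m ridge in the middle.
dem = [[0.0, 0.0, 10.0, 0.0, 0.0]]
print(viewshed(dem, (0, 0)))
# A tall proposed structure behind the ridge can still be visible.
print(is_visible(dem, (0, 0), (0, 4), target_height=20.0))
```

Setting `target_height` to the height of a proposed structure is one way to model visual effects: ground behind the ridge is hidden, but a sufficiently tall structure at the same location is not.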

Identification of Historic Properties

A variety of remote sensing techniques can be employed to identify potential historic properties:

- Aerial and Satellite Imagery: High-resolution satellite imagery can reveal features such as ancient roads, agricultural fields, and even the outlines of buried structures through soil marks and vegetation anomalies.[4] The analysis of multi-temporal imagery can show changes in the landscape that may indicate the presence of historic properties.
- Light Detection and Ranging (LiDAR): LiDAR is particularly effective in forested areas, where it can "see" through the tree canopy to create high-resolution models of the ground surface, revealing subtle earthworks, mounds, and other archaeological features that are not visible in traditional aerial photography.
- Geophysical Surveys: Techniques such as Ground Penetrating Radar (GPR), magnetometry, and electrical resistivity are used to investigate subsurface features without excavation. These methods can detect buried walls, foundations, pits, and other archaeological remains.
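One common way to look for the vegetation anomalies mentioned above is a vegetation index computed from multispectral bands. The sketch below uses the Normalized Difference Vegetation Index (NDVI) and a simple z-score rule to flag outliers along a transect. The reflectance values and the threshold of 2 standard deviations are assumptions for illustration; the source does not prescribe a specific index or threshold.

```python
from statistics import mean, pstdev


def ndvi(nir, red):
    """Normalized Difference Vegetation Index: (NIR - Red) / (NIR + Red)."""
    total = nir + red
    return (nir - red) / total if total else 0.0


def flag_anomalies(values, z_threshold=2.0):
    """Flag values more than z_threshold standard deviations from the mean."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return [False] * len(values)
    return [abs(v - mu) / sigma > z_threshold for v in values]


# Hypothetical (NIR, red) reflectance pairs along a transect across a field.
# The seventh sample imitates stressed vegetation over a buried wall.
transect = [(0.50, 0.05)] * 6 + [(0.30, 0.15)] + [(0.50, 0.05)] * 3
ndvi_values = [ndvi(nir, red) for nir, red in transect]
flags = flag_anomalies(ndvi_values)
print([round(v, 2) for v in ndvi_values])
print(flags)
```

Flagged cells are candidates only; differences in soil moisture, recent ploughing, or crop type can produce similar signals, which is why anomalies are followed up with field verification.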

Data Presentation and Management

All data collected through remote sensing should be managed within a Geographic Information System (GIS). This allows for the integration of different datasets, spatial analysis, and the creation of maps and models to support the Section 106 review process.

Quantitative Data Summary

The following tables provide a summary of quantitative data for various remote sensing techniques applicable to Section 106 surveys.

Table 1: Comparison of Common Satellite Sensors for Archaeological Prospection

| Satellite/Sensor | Spatial Resolution (Panchromatic) | Spatial Resolution (Multispectral) | Revisit Time | Cost | Key Applications in Section 106 |
|---|---|---|---|---|---|
| Pleiades Neo | 30 cm | 1.2 m | Daily | High | Detailed site identification, feature mapping, monitoring. |
| WorldView-3 | 31 cm | 1.24 m | <1 day | High | High-resolution site discovery, vegetation analysis for crop marks. |
| IKONOS | 82 cm | 3.2 m | 1-3 days | Moderate to High | Regional surveys, identification of larger archaeological features. |
| QuickBird | 61 cm | 2.4 m | 1-3.5 days | Moderate to High | Detailed site mapping and analysis. |
| Sentinel-2 | N/A (no panchromatic band) | 10 m, 20 m, 60 m | 5 days | Free | Large-scale landscape analysis, monitoring environmental changes around sites. |
| Landsat 8/9 | 15 m | 30 m | 16 days | Free | Regional landscape characterization, long-term environmental monitoring. |
| CORONA | ~1.8 m | N/A (B&W Film) | Historical | Low (declassified) | Historical landscape analysis, identifying sites disturbed by modern activity. |
| COSMO-SkyMed (SAR) | 1 m (Spotlight mode) | N/A | Variable | Moderate to High | Detection of subsurface features, imaging through cloud cover. |
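The resolutions in Table 1 translate directly into how many pixels span a feature of a given width, which is a rough guide to whether that feature could be recognized. The sketch below uses panchromatic values transcribed from the table; the 2 m ditch width and the three-pixel minimum are assumptions, since detectability also depends on contrast, feature shape, and image quality.

```python
# Panchromatic ground sample distances in meters, transcribed from Table 1.
PAN_GSD_M = {
    "WorldView-3": 0.31,
    "QuickBird": 0.61,
    "IKONOS": 0.82,
    "Landsat 8/9": 15.0,
}


def pixels_across(feature_width_m, gsd_m):
    """Number of pixels spanning a feature of the given width."""
    return feature_width_m / gsd_m


def likely_resolvable(feature_width_m, gsd_m, min_pixels=3.0):
    """Assumed rule of thumb: a feature needs at least min_pixels across it."""
    return pixels_across(feature_width_m, gsd_m) >= min_pixels


ditch_width_m = 2.0  # hypothetical feature width
for sensor, gsd in PAN_GSD_M.items():
    n = pixels_across(ditch_width_m, gsd)
    print(f"{sensor:12s} {n:5.2f} px  resolvable={likely_resolvable(ditch_width_m, gsd)}")
```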

Table 2: Typical Geophysical Properties of Archaeological Features and Surrounding Soils

| Material/Feature | Magnetic Susceptibility (10⁻⁸ m³/kg) | Electrical Resistivity (ohm-m) | GPR Reflection Potential |
|---|---|---|---|
| Topsoil | 20-100 | 50-200 | Variable |
| Subsoil | 5-30 | 100-500 | Variable |
| Fired Clay (hearth, kiln) | 100-2000 | 100-1000 | Moderate to High |
| Ditch/Pit Fill (organic) | 50-200 | 10-100 | High |
| Stone Foundation/Wall | Low | 500-10,000+ | High |
| Buried Metal Objects | Very High | Very Low (<1) | Very High |
| Compacted Earth (floor) | Slightly higher than surrounding soil | Higher than surrounding soil | Moderate |

Note: These values are approximate and can vary significantly depending on soil type, moisture content, and other environmental factors.
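One way to read Table 2 when choosing a survey method is to ask whether the typical range for a feature overlaps the range for the surrounding soil: non-overlapping ranges suggest a contrast the method can detect. The sketch below applies that test to ranges transcribed from the table. Treating non-overlap as "clear contrast" is a simplifying assumption, and site-specific measurements take precedence over these approximate values.

```python
# (low, high) ranges transcribed from Table 2.
# Magnetic susceptibility is in the units of the table's second column.
MAG_SUSCEPTIBILITY = {
    "Topsoil": (20, 100),
    "Subsoil": (5, 30),
    "Fired Clay": (100, 2000),
    "Ditch/Pit Fill": (50, 200),
}

# Electrical resistivity in ohm-m.
RESISTIVITY = {
    "Topsoil": (50, 200),
    "Subsoil": (100, 500),
    "Fired Clay": (100, 1000),
    "Ditch/Pit Fill": (10, 100),
    "Stone Foundation/Wall": (500, 10_000),
}


def ranges_overlap(a, b):
    """True if closed intervals a and b share at least one value."""
    return a[0] <= b[1] and b[0] <= a[1]


def has_clear_contrast(table, feature, background):
    """Assumed criterion: contrast is 'clear' when the typical ranges do not overlap."""
    return not ranges_overlap(table[feature], table[background])


print(has_clear_contrast(MAG_SUSCEPTIBILITY, "Fired Clay", "Subsoil"))
print(has_clear_contrast(RESISTIVITY, "Fired Clay", "Subsoil"))
print(has_clear_contrast(RESISTIVITY, "Stone Foundation/Wall", "Topsoil"))
```

On these values a hearth or kiln cut into subsoil stands out magnetically but not in resistivity, while a stone wall under topsoil stands out in resistivity, which matches the usual pairing of magnetometry with fired features and resistivity with masonry.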

Experimental Protocols

Protocol 1: Archaeological Survey using Airborne LiDAR

1. Objective: To identify and map potential archaeological earthworks and other topographic features within the Area of Potential Effects (APE).

2. Methodology:

- 2.1. Data Acquisition:
  - Specify a high point density for the LiDAR survey (e.g., >8 points per square meter) to ensure adequate resolution for detecting subtle archaeological features.
  - Plan the flight mission for "leaf-off" conditions (late fall to early spring) in deciduous forest areas to maximize ground penetration.
  - Ensure the collection of both first and last return data.
- 2.2. Data Processing:
  - 2.2.1. Point Cloud Classification: Classify the raw LiDAR point cloud data to separate ground points from vegetation and buildings. This is a critical step for creating a "bare-earth" Digital Elevation Model (DEM).
  - 2.2.2. DEM Generation: Create a high-resolution DEM (e.g., 0.5 to 1-meter resolution) from the classified ground points.
- 2.3. Data Visualization and Analysis:
  - Generate various visualizations from the DEM to enhance the visibility of archaeological features. Common techniques include:
    - Hillshade: Simulates the sun's illumination of the terrain from different angles.
    - Slope: Highlights changes in terrain steepness.
    - Local Relief Model (LRM): Removes large-scale topographic trends to emphasize small-scale features.
  - Systematically review the visualizations to identify potential archaeological features such as mounds, earthworks, and old roadbeds.
- 2.4. Ground-Truthing:
  - Conduct field verification of identified anomalies to confirm their archaeological nature.
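The three visualizations named in step 2.3 can be sketched with NumPy on a bare-earth DEM array. This is an illustrative implementation under stated assumptions, not the method of any specific GIS package: row 0 is taken as the northern edge, the hillshade is a simple Lambertian model, and `local_relief` subtracts a moving-average surface, which is a simplified stand-in for a full Local Relief Model workflow. The function names, the default 315° azimuth, and the 45° sun altitude are choices made for this example.

```python
import numpy as np


def terrain_gradients(dem, cell_size=1.0):
    """Return (dz/d_east, dz/d_north), assuming row 0 is the northern edge."""
    dz_drow, dz_dcol = np.gradient(dem.astype(float), cell_size)
    return dz_dcol, -dz_drow


def slope_degrees(dem, cell_size=1.0):
    """Terrain steepness in degrees."""
    dz_de, dz_dn = terrain_gradients(dem, cell_size)
    return np.degrees(np.arctan(np.hypot(dz_de, dz_dn)))


def hillshade(dem, cell_size=1.0, azimuth_deg=315.0, altitude_deg=45.0):
    """Lambertian shading in [0, 1]; azimuth is clockwise from north."""
    dz_de, dz_dn = terrain_gradients(dem, cell_size)
    az, alt = np.radians(azimuth_deg), np.radians(altitude_deg)
    # Unit vector pointing from the ground toward the light source.
    light = (np.sin(az) * np.cos(alt), np.cos(az) * np.cos(alt), np.sin(alt))
    # Surface normal is (-dz/de, -dz/dn, 1) before normalization.
    norm = np.sqrt(dz_de**2 + dz_dn**2 + 1.0)
    shade = (-dz_de * light[0] - dz_dn * light[1] + light[2]) / norm
    return np.clip(shade, 0.0, 1.0)


def local_relief(dem, radius=2):
    """Simplified trend removal: DEM minus its moving-average surface."""
    dem = dem.astype(float)
    padded = np.pad(dem, radius, mode="edge")
    size = 2 * radius + 1
    rows, cols = dem.shape
    total = np.zeros_like(dem)
    for dr in range(size):
        for dc in range(size):
            total += padded[dr:dr + rows, dc:dc + cols]
    return dem - total / size**2


# Hypothetical 7 x 7 DEM: flat ground with a low 0.5 m mound in the center.
dem = np.zeros((7, 7))
dem[3, 3] = 0.5
print(np.round(hillshade(dem), 2))
print(np.round(local_relief(dem, radius=1), 2))
```

Computing the hillshade for several azimuths is advisable, because linear features aligned with the light direction cast little shadow and can be missed in a single rendering.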

Protocol 2: Ground Penetrating Radar (GPR) Survey for Site-Specific Investigation

1. Objective: To detect and map subsurface archaeological features within a specific area of interest identified through other survey methods or historical documentation.

2. Methodology:

- 2.1. Site Preparation:
  - Establish a georeferenced survey grid over the area of interest. The grid size will depend on the expected size and density of features.
  - Clear the survey area of any surface obstructions that may interfere with the GPR antenna.
- 2.2. GPR Data Acquisition:

-