qc1


Properties

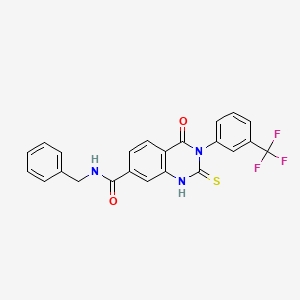

| Property | Value | Computed by |
|---|---|---|
| IUPAC Name | N-benzyl-4-oxo-2-sulfanylidene-3-[3-(trifluoromethyl)phenyl]-1H-quinazoline-7-carboxamide | Lexichem TK 2.7.0 (PubChem release 2021.05.07) |
| InChI | InChI=1S/C23H16F3N3O2S/c24-23(25,26)16-7-4-8-17(12-16)29-21(31)18-10-9-15(11-19(18)28-22(29)32)20(30)27-13-14-5-2-1-3-6-14/h1-12H,13H2,(H,27,30)(H,28,32) | InChI 1.0.6 (PubChem release 2021.05.07) |
| InChI Key | IFNVTSPDMUUAFY-UHFFFAOYSA-N | InChI 1.0.6 (PubChem release 2021.05.07) |
| Canonical SMILES | C1=CC=C(C=C1)CNC(=O)C2=CC3=C(C=C2)C(=O)N(C(=S)N3)C4=CC=CC(=C4)C(F)(F)F | OEChem 2.3.0 (PubChem release 2021.05.07) |
| Molecular Formula | C23H16F3N3O2S | PubChem 2.1 (PubChem release 2021.05.07) |
| Molecular Weight | 455.5 g/mol | PubChem 2.1 (PubChem release 2021.05.07) |

Source: PubChem (https://pubchem.ncbi.nlm.nih.gov). Data deposited in or computed by PubChem.


Understanding QC1 in Astronomical Data Analysis: A Technical Guide

In astronomical data analysis, ensuring the integrity and quality of observational data is paramount for producing scientifically robust results. A crucial step in this process is the implementation of a multi-tiered quality control (QC) system. This guide provides an in-depth technical overview of Quality Control Level 1 (QC1), a fundamental stage in the data processing pipeline of major astronomical observatories, particularly the European Southern Observatory (ESO). This guide is intended for researchers, scientists, and professionals involved in astronomical data analysis and interpretation.

The Role of Quality Control in Astronomical Data

Astronomical data, from raw frames captured by telescopes to final science-ready products, undergoes a series of processing steps. Each step has the potential to introduce errors or artifacts. A structured quality control process is therefore essential to identify and flag data that does not meet predefined standards. This process is often categorized into different levels, starting from immediate on-site checks to more detailed offline analysis.

Defining Quality Control Level 1 (QC1)

QC1 is an offline quality control procedure that uses automated data reduction pipelines to extract key parameters from the observational data.[1] These parameters provide quantitative measures of the data's quality and the instrument's performance. The primary goals of QC1 are to:

- Assess Data Quality: Systematically evaluate the quality of both raw science and calibration data.
- Monitor Instrument Health: Track the performance of the telescope and its instruments over time by trending key QC parameters.[1]
- Provide Rapid Feedback: Offer a "quick look" at the data quality, enabling timely identification of potential issues.[1]

The QC1 process compares the extracted parameters against established thresholds and historical data to identify deviations that might indicate a problem with the observation or the instrument.[1]
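
The comparison against thresholds and historical data can be sketched as follows. This is a minimal illustration, not the ESO implementation: the function name `qc1_flag`, the fixed limits, the 3-sigma trending rule, and the sample values are all assumptions made for the example.

```python
from statistics import mean, stdev

def qc1_flag(value, history, lower, upper, n_sigma=3.0):
    """Classify one QC1 parameter value (illustrative rule set).

    lower/upper are fixed thresholds; history is a list of past values.
    The n_sigma trending rule is an assumption, not a documented ESO rule.
    """
    if not (lower <= value <= upper):
        return "out_of_threshold"
    if len(history) >= 2:
        mu, sigma = mean(history), stdev(history)
        if sigma > 0 and abs(value - mu) > n_sigma * sigma:
            return "trend_outlier"
    return "ok"

# Hypothetical past read-out noise values for one detector.
history = [2.50, 2.52, 2.49, 2.51, 2.50]
flag_nominal = qc1_flag(2.51, history, lower=2.0, upper=3.0)
flag_drift = qc1_flag(2.90, history, lower=2.0, upper=3.0)
flag_bad = qc1_flag(3.40, history, lower=2.0, upper=3.0)
print(flag_nominal, flag_drift, flag_bad)
```

The second value lies inside the fixed thresholds but far from the historical distribution, which is the kind of deviation that trending is meant to catch.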

The QC1 Workflow

The QC1 process is an integral part of the overall data flow from the telescope to the archive. While the initial QC Level 0 (QC0) involves real-time checks during the observation, QC1 is the first stage of offline analysis.[1] Subsequent levels, such as QC Level 2 (QC2), involve more intensive processing to generate science-grade data products for the archive.[1]

In outline, the QC1 workflow runs from pipeline processing of the raw data, through extraction of QC parameters and their comparison with thresholds and historical data, to storage of the results in the QC database.

Key Experiments and Methodologies

The core of the QC1 process lies in the automated extraction and analysis of specific parameters from various types of astronomical data. The methodologies for some key "experiments" or checks are detailed below.

1. Calibration Frame Analysis:

- Methodology: For calibration frames such as biases, darks, and flats, the data reduction pipeline calculates statistical properties for each detector. For instance, in the case of the VIRCAM instrument, the pipeline measures the median dark level, read-out noise (RON), and noise from any stripe pattern for each of the sixteen detectors.[2] These measured values are the QC1 parameters.
- Data Presentation: The extracted QC1 parameters are then compared against predefined thresholds. A scoring system is often employed to flag any deviations.[2] These scores are stored in a QC database for further analysis and trending.

2. Science Frame Analysis:

- Methodology: For science frames, the QC1 process may involve checks on parameters such as background levels, seeing conditions (a measure of atmospheric turbulence), and photometric zero points. For spectroscopic data, this could include measures of spectral resolution and signal-to-noise ratio. For example, the Gaia-ESO Survey has a dedicated quality control procedure for its UVES spectra.[3]
- Data Presentation: The results of these checks are often presented in reports or "health check" plots that allow scientists to quickly assess the quality of an observation.[2] These reports and the associated QC1 parameters are ingested into the observatory's archive system.
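
A per-detector scoring step of the kind described above might look like the following sketch. The 0/1 score convention, the threshold values, and the sixteen dark-level values are hypothetical and chosen only to illustrate the idea.

```python
def score_detectors(values, lower, upper):
    """Return {detector_number: score}; 0 = within thresholds, 1 = flagged.

    The 0/1 convention is an assumption made for this sketch.
    """
    return {i: 0 if lower <= v <= upper else 1
            for i, v in enumerate(values, start=1)}

# Hypothetical median dark levels (ADU) for sixteen detectors.
dark_levels = [10.2, 9.8, 10.1, 10.0, 25.3, 9.9, 10.4, 10.0,
               10.1, 9.7, 10.3, 10.0, 9.9, 10.2, 3.1, 10.0]

scores = score_detectors(dark_levels, lower=5.0, upper=15.0)
flagged = [det for det, score in scores.items() if score == 1]
print("Flagged detectors:", flagged)
```

Storing one score per detector, rather than a single pass/fail per frame, keeps the information needed to trend an individual detector over time.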

Quantitative Data Summary

The different levels of quality control can be summarized as follows, primarily based on the ESO framework:

| Quality Control Level | Location | Timing | Key Activities | Output |
|---|---|---|---|---|
| QC0 | On-site | During or immediately after observation | Monitoring of ambient conditions (e.g., seeing, humidity) against user constraints; flux level checks.[1] | Real-time feedback to observers. |
| QC1 | On-site and Offline | Offline, shortly after observation | Pipeline-based extraction of QC parameters; comparison with reference and historical data (trending); quick-look data quality assessment.[1] | QC parameters stored in a database; quality-flagged data for further processing. |
| QC2 | Offline (Data Center) | Offline, typically later than QC1 | Generation and ingestion of science-grade data products into the science archive.[1] | Calibrated and processed data ready for scientific analysis. |

Signaling Pathways and Logical Relationships

The QC1 decision-making process follows a simple logical flow: raw data is processed by the pipeline, and the extracted parameters are evaluated to determine the quality status of the data.

Conclusion

Quality Control Level 1 is a critical, automated step in the processing of astronomical data. It provides a systematic and quantitative assessment of data quality and instrument performance, serving as a vital link between raw observations and science-ready data products. By flagging potential issues early in the data processing chain, QC1 helps ensure the reliability and integrity of the data that ultimately fuels astronomical research and discovery. Researchers using data from large surveys and observatories benefit from the rigor of the QC1 process, which provides a foundational level of confidence in the quality of the data they analyze.


A Technical Guide to Quality Control (QC) Level 1 Parameters in ESO Pipelines

This in-depth technical guide provides a comprehensive overview of the core principles and practical applications of Quality Control Level 1 (QC1) parameters within the European Southern Observatory (ESO) data reduction pipelines. Tailored for researchers, scientists, and drug development professionals who may be utilizing advanced imaging and spectroscopic data, this document outlines the generation, significance, and interpretation of key QC1 parameters.

The ESO pipelines are a suite of sophisticated software tools designed to process raw data from the various instruments on the Very Large Telescope (VLT) and other ESO facilities. A fundamental output of these pipelines is a set of QC1 parameters, which are quantitative metrics that assess the quality of the data at different stages of the reduction process. These parameters are crucial for monitoring instrument health, verifying the accuracy of the calibration process, and ensuring the scientific validity of the final data products. QC1 parameters are stored in the FITS headers of the processed files and are accessible through the ESO Science Archive Facility.
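
As a rough illustration of how QC1 values might be pulled out of a FITS header, the sketch below parses header cards given as plain strings. It assumes the QC keywords appear under a `HIERARCH ESO QC` prefix; the card contents and the helper name `parse_qc_cards` are hypothetical, and a real workflow would normally use a FITS library rather than string handling.

```python
def parse_qc_cards(cards):
    """Collect QC keywords from header cards given as strings.

    Assumes cards of the form 'HIERARCH ESO QC <NAME> = <value> / <comment>'.
    String values that themselves contain '/' are not handled by this sketch.
    """
    prefix = "HIERARCH ESO "
    qc = {}
    for card in cards:
        if not card.startswith(prefix + "QC"):
            continue
        key_part, _, rest = card.partition("=")
        value_text = rest.split("/", 1)[0].strip()
        key = key_part[len(prefix):].strip()
        try:
            value = float(value_text)
        except ValueError:
            value = value_text.strip("'").strip()
        qc[key] = value
    return qc

# Hypothetical header cards for a master bias product.
cards = [
    "EXPTIME = 0.0 / exposure time",
    "HIERARCH ESO QC BIAS MASTER1 RON = 2.61 / read-out noise",
    "HIERARCH ESO QC BIAS MASTER NBADPIX = 12 / bad pixels",
]
qc_values = parse_qc_cards(cards)
print(qc_values)
```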

Data Presentation: Key QC1 Parameters

The following tables summarize a selection of important QC1 parameters for different types of calibrations and science data products across various ESO instrument pipelines, such as the FOcal Reducer/low dispersion Spectrograph 2 (FORS2) and the Multi Unit Spectroscopic Explorer (MUSE). These parameters provide a snapshot of the data quality and the performance of the instrument.

Table 1: Master Bias Frame QC1 Parameters

| Parameter Name | Description | Instrument Example |
|---|---|---|
| QC.BIAS.MASTERn.RON | Read-out noise in quadrant 'n' determined from difference images of each adjacent pair of biases. | MUSE |
| QC.BIAS.MASTERn.RONERR | Error on the read-out noise in quadrant 'n'. | MUSE |
| QC.BIAS.MASTERn.MEAN | Mean value of the master bias in quadrant 'n'. | MUSE |
| QC.BIAS.MASTERn.STDEV | Standard deviation of the master bias in quadrant 'n'. | MUSE |
| QC.BIAS.MASTER.NBADPIX | Number of bad pixels found in the master bias. | MUSE |

Table 2: Spectroscopic Data QC1 Parameters

| Parameter Name | Description | Instrument Example |
|---|---|---|
| QC LSS RESOLUTION | Mean spectral resolution for Long-Slit Spectroscopy (LSS) mode. | FORS2 |
| QC LSS RESOLUTION RMS | Root mean square of the spectral resolution measurements. | FORS2 |
| QC LSS RESOLUTION NLINES | Number of arc lamp lines used to compute the mean resolution. | FORS2 |
| QC LSS CENTRAL WAVELENGTH | Wavelength at the center of the CCD for LSS mode. | FORS2 |
| QC.NLINE.CAT | Number of lines in the input catalog for wavelength calibration. | X-shooter |
| QC.NLINE.FOUND | Number of lines found and used for the wavelength solution. | X-shooter |

Table 3: Imaging Data QC1 Parameters

| Parameter Name | Description | Instrument Example |
|---|---|---|
| QC INSTRUMENT ZEROPOINT | The instrumental zeropoint, a measure of the instrument's throughput. | FORS2 |
| QC INSTRUMENT ZEROPOINT ERROR | The error on the instrumental zeropoint. | FORS2 |
| QC ATMOSPHERIC EXTINCTION | The atmospheric extinction coefficient. | FORS2 |
| QC ATMOSPHERIC EXTINCTION ERROR | The error on the atmospheric extinction coefficient. | FORS2 |
| QC IMGQU | The image quality (seeing) of the scientific exposure, measured as the median FWHM of stars. | FORS2 |
| QC IMGQUERR | The uncertainty in the image quality. | FORS2 |

Experimental Protocols: Methodologies for QC1 Parameter Generation

The generation of QC1 parameters is intrinsically linked to the data reduction recipes within the ESO pipelines. These recipes are the "experimental protocols" that process the raw data. Below are detailed methodologies for two key calibration recipes.

Protocol 1: Master Bias Frame Creation (muse_bias)

Objective: To create a low-noise master bias frame and to measure the detector characteristics, such as read-out noise and fixed pattern noise.

Methodology:

- Input Data: A series of raw bias frames (typically 5 or more) taken with the shutter closed and zero exposure time.
- Processing Steps:
  - Each raw bias frame is trimmed to remove the overscan regions.
  - The pipeline calculates the median value of each frame.
  - A master bias frame is created by taking a median of the individual bias frames. This process effectively removes cosmic rays and reduces random noise.
  - The read-out noise (RON) is calculated from the difference between pairs of consecutive bias frames.
  - The final master bias frame and its associated error map are saved as a FITS file.
- Output QC1 Parameters: The recipe calculates a suite of QC1 parameters that are written to the header of the master bias FITS file. These include the mean, median, and standard deviation of the master bias for each quadrant of the detector, as well as the read-out noise and its error (as detailed in Table 1).
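
The median combination and the difference-image estimate of the read-out noise can be sketched in a few lines. This is not the `muse_bias` code: it works on tiny hypothetical 2x2 frames held as nested lists, and it uses the common estimate RON = std(difference) / sqrt(2), which assumes both frames carry the same independent noise.

```python
from math import sqrt
from statistics import median, pstdev

def master_bias(frames):
    """Pixel-wise median of equally sized 2D frames (lists of rows)."""
    rows, cols = len(frames[0]), len(frames[0][0])
    return [[median(f[r][c] for f in frames) for c in range(cols)]
            for r in range(rows)]

def read_out_noise(frame_a, frame_b):
    """RON from one pair of bias frames: std of the difference image / sqrt(2)."""
    diff = [a - b
            for row_a, row_b in zip(frame_a, frame_b)
            for a, b in zip(row_a, row_b)]
    return pstdev(diff) / sqrt(2)

# Hypothetical 2x2 bias frames; f2 contains one cosmic-ray-like outlier (500).
f1 = [[100, 102], [98, 101]]
f2 = [[101, 100], [99, 500]]
f3 = [[99, 101], [100, 100]]

master = master_bias([f1, f2, f3])
ron = read_out_noise(f1, f3)  # pair chosen to avoid the outlier pixel
print(master, ron)
```

The outlier in `f2` does not reach the master frame, which is the property of the median that the protocol relies on for cosmic-ray rejection.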

Protocol 2: Photometric Calibration (fors_photometry)

Objective: To determine the photometric properties of the instrument and the atmosphere, such as the instrumental zeropoint and the atmospheric extinction.

Methodology:

- Input Data:
  - A raw science image of a standard star field.
  - A master bias frame.
  - A master flat field frame.
  - A catalog of standard stars with their known magnitudes and colors.
- Processing Steps:
  - The raw science frame is bias-subtracted and flat-fielded.
  - The pipeline performs source detection on the calibrated image to identify the standard stars.
  - The instrumental magnitudes of the detected standard stars are measured.
  - By comparing the instrumental magnitudes with the catalog magnitudes, the pipeline fits a model that solves for the instrumental zeropoint and the atmospheric extinction coefficient.
- Output QC1 Parameters: The key QC1 parameters derived from this recipe include the instrumental zeropoint, its error, the atmospheric extinction, and its error (as detailed in Table 3). These are crucial for the flux calibration of science data.
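
To make the fitting step concrete, the sketch below assumes the simplest common model, m_cat - m_inst = ZP - k * X, where X is the airmass, and solves it by ordinary least squares. The model form (no color term), the function name, and the synthetic measurements are assumptions for illustration; the actual recipe may use a different parameterization.

```python
def fit_zeropoint(airmass, m_inst, m_cat):
    """Least-squares fit of m_cat - m_inst = ZP - k * X.

    Returns (ZP, k). Color terms are ignored in this sketch.
    """
    y = [c - i for c, i in zip(m_cat, m_inst)]
    n = len(y)
    mean_x = sum(airmass) / n
    mean_y = sum(y) / n
    sxx = sum((x - mean_x) ** 2 for x in airmass)
    sxy = sum((x - mean_x) * (v - mean_y) for x, v in zip(airmass, y))
    slope = sxy / sxx
    zeropoint = mean_y - slope * mean_x
    return zeropoint, -slope

# Synthetic standard-star measurements built from ZP = 27.0 and k = 0.12.
airmass = [1.0, 1.2, 1.5, 2.0]
m_cat = [15.0, 16.0, 14.5, 17.0]
m_inst = [c - (27.0 - 0.12 * x) for c, x in zip(m_cat, airmass)]

zp, k = fit_zeropoint(airmass, m_inst, m_cat)
print(zp, k)
```

Separating the two quantities requires standard stars observed over a range of airmass; with a single airmass only their combination is constrained.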


An In-depth Technical Guide to Primary Quality Control (QC1) Data in Drug Development

Audience: Researchers, scientists, and drug development professionals.

The Core Purpose of Quality Control 1 (QC1) Data

In the landscape of drug development, Quality Control (QC) is a comprehensive set of practices designed to ensure the consistent quality, safety, and efficacy of pharmaceutical products.[1][2] QC testing is performed at multiple stages of the manufacturing process, from the initial assessment of raw materials to the final release of the drug product.[3] This guide focuses on Primary Quality Control (QC1) data, which we define as the foundational data generated from the initial quality assessments of raw materials, in-process materials, and the final drug substance and product. This initial tier of data is critical for making informed decisions throughout the development lifecycle and for ensuring regulatory compliance with standards such as Good Manufacturing Practices (GMP).[4]

The fundamental role of QC1 data is to verify the identity, purity, potency, and stability of materials, ensuring they meet predetermined specifications.[3][5] This data forms the basis for batch release, provides insights into the consistency of the manufacturing process, and is a crucial component of the documentation submitted to regulatory agencies like the FDA and EMA.[6] Ultimately, robust QC1 data de-risks the drug development process by identifying potential issues early, thereby preventing costly delays and ensuring patient safety.[1]

Key Stages and Data Presentation of QC1

QC1 data is generated at three primary stages of the manufacturing process: Raw Material Testing, In-Process Quality Control (IPQC), and Finished Product Testing. The following tables summarize the key quantitative data collected at each stage.

Raw Material QC1 Data

This stage involves the testing of all incoming materials, including Active Pharmaceutical Ingredients (APIs), excipients, and solvents, to confirm their identity and quality before they are used in production.[7][8]

| Parameter | Typical Analytical Method | Acceptance Criteria (Example) |
|---|---|---|
| Identity | FTIR/Raman Spectroscopy | Spectrum conforms to reference standard |
| Purity | HPLC, Gas Chromatography (GC) | ≥ 99.0% |
| Moisture Content | Karl Fischer Titration | ≤ 0.5% |
| Microbial Load | Microbial Limit Test | Total Aerobic Microbial Count: ≤ 100 CFU/g |

In-Process Quality Control (IPQC) QC1 Data

IPQC tests are conducted during the manufacturing process to monitor and, if necessary, adapt the process to ensure the final product will meet its specifications.[9][10]

| Dosage Form | Parameter | Typical Analytical Method | Acceptance Criteria (Example) |
|---|---|---|---|
| Tablets | Weight Variation | Gravimetric | ± 5% of average weight (for tablets > 324 mg)[8] |
| Tablets | Hardness | Hardness Tester | 4 - 10 kg |
| Tablets | Friability | Friability Tester | ≤ 1.0% weight loss |
| Liquids/Solutions | pH | pH Meter | 6.8 - 7.2 |
| Liquids/Solutions | Viscosity | Viscometer | 15 - 25 cP |

Finished Product QC1 Data

This is the final stage of QC testing before the drug product is released for distribution. It ensures that the finished product meets all its quality attributes.[7]

| Parameter | Typical Analytical Method | Acceptance Criteria (Example) |
|---|---|---|
| Assay (Potency) | HPLC, UV-Vis Spectroscopy | 90.0% - 110.0% of label claim |
| Content Uniformity | HPLC | USP <905> requirements |
| Purity/Impurity Profile | HPLC | Individual impurity ≤ 0.1%, Total impurities ≤ 1.0% |
| Dissolution | Dissolution Apparatus (USP I/II) | ≥ 80% (Q) of drug dissolved in 45 minutes |
| Stability | Stability Chambers (ICH conditions) | Meets all specifications throughout shelf-life |

Experimental Protocols

Detailed methodologies for key QC1 experiments are provided below.

Raw Material Identity Verification via FTIR Spectroscopy

Objective: To confirm the identity of a raw material by comparing its infrared spectrum to that of a known reference standard.

Methodology:

- Instrument Preparation: Ensure the Fourier Transform Infrared (FTIR) spectrometer is calibrated and the sample stage is clean.
- Background Scan: Perform a background scan to capture the spectrum of the ambient environment, which will be subtracted from the sample spectrum.
- Sample Preparation: Place a small amount of the raw material powder directly onto the attenuated total reflectance (ATR) crystal.
- Sample Analysis: Apply pressure to ensure good contact between the sample and the ATR crystal. Initiate the scan over a range of 4000 to 400 cm⁻¹.[11]
- Data Interpretation: The resulting spectrum is compared to a reference spectrum of the material stored in a spectral library.[11]
- Acceptance Criteria: The sample spectrum must show a high correlation (e.g., >95% match) with the reference spectrum for the material to be accepted.
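
One way to picture the spectral comparison is as a correlation between two absorbance vectors sampled at the same wavenumbers. The sketch below assumes that the "match" is a Pearson correlation coefficient and that 0.95 is the acceptance limit; instrument software may compute its match score differently, and the five-point spectra are hypothetical.

```python
from math import sqrt

def spectral_correlation(sample, reference):
    """Pearson correlation between two spectra on the same wavenumber grid."""
    n = len(sample)
    mean_s = sum(sample) / n
    mean_r = sum(reference) / n
    cov = sum((s - mean_s) * (r - mean_r) for s, r in zip(sample, reference))
    var_s = sum((s - mean_s) ** 2 for s in sample)
    var_r = sum((r - mean_r) ** 2 for r in reference)
    return cov / sqrt(var_s * var_r)

def identity_passes(sample, reference, threshold=0.95):
    """Accept the material if the correlation exceeds the assumed threshold."""
    return spectral_correlation(sample, reference) > threshold

# Hypothetical absorbance values at five wavenumbers.
reference = [0.10, 0.40, 0.90, 0.30, 0.05]
good_lot = [0.12, 0.38, 0.88, 0.33, 0.06]
wrong_material = [0.90, 0.30, 0.05, 0.10, 0.40]

print(identity_passes(good_lot, reference))
print(identity_passes(wrong_material, reference))
```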

In-Process Control: Tablet Weight Variation and Hardness

Objective: To ensure uniformity of dosage units and appropriate mechanical strength of tablets during a compression run.

Methodology:

- Sampling: At regular intervals (e.g., every 15-30 minutes), collect a sample of 20 tablets from the tablet press.
- Weight Variation Test:
  - Individually weigh each of the 20 tablets and record the weights.
  - Calculate the average weight of the 20 tablets.
  - Determine the percentage deviation of each individual tablet's weight from the average weight.
  - Acceptance Criteria: As per USP, for tablets with an average weight greater than 324 mg, not more than two tablets should deviate from the average weight by more than ±5%, and no tablet should deviate by more than ±10%.[8][12]
- Hardness Test:
  - Take 10 of the sampled tablets and measure the hardness of each using a calibrated hardness tester.
  - Record the individual hardness values and calculate the average.
  - Acceptance Criteria: The hardness should fall within the range specified in the batch manufacturing record (e.g., 4-10 kg).[13]
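
The weight variation criterion stated above translates directly into a short check. The sketch below hard-codes the limits quoted in the text for tablets above 324 mg (5% and 10%, at most two tablets outside the inner limit); the function name and the example weights are hypothetical.

```python
def weight_variation_passes(weights_mg, inner_pct=5.0, outer_pct=10.0,
                            max_outside_inner=2):
    """Apply the two-tier weight variation rule to a sample of tablets."""
    average = sum(weights_mg) / len(weights_mg)
    deviations = [abs(w - average) / average * 100 for w in weights_mg]
    outside_inner = sum(d > inner_pct for d in deviations)
    outside_outer = sum(d > outer_pct for d in deviations)
    return outside_inner <= max_outside_inner and outside_outer == 0

# Hypothetical samples of 20 tablets with a 500 mg target weight.
two_marginal = [500] * 18 + [530, 470]        # two tablets at 6% deviation
one_gross = [500] * 18 + [560, 440]           # two tablets at 12% deviation
three_marginal = [500] * 17 + [530, 530, 470]  # three tablets beyond 5%

print(weight_variation_passes(two_marginal))
print(weight_variation_passes(one_gross))
print(weight_variation_passes(three_marginal))
```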

Finished Product Purity and Potency via High-Performance Liquid Chromatography (HPLC)

Objective: To determine the purity of the Active Pharmaceutical Ingredient (API) in the finished product by separating it from any impurities and to quantify its concentration (potency).

Methodology:

- Mobile Phase Preparation: Prepare the mobile phase as specified in the analytical method (e.g., a mixture of acetonitrile and water). Degas the mobile phase to remove dissolved gases.
- Standard Solution Preparation: Accurately weigh a known amount of a reference standard of the API and dissolve it in a suitable diluent to create a standard solution of known concentration.
- Sample Preparation: Take a representative sample of the finished product (e.g., a crushed tablet or a volume of liquid) and dissolve it in the diluent to achieve a target concentration of the API. Filter the sample solution to remove any particulates.[14]
- Chromatographic System Setup:
  - Install the appropriate HPLC column (e.g., a C18 column).
  - Set the mobile phase flow rate (e.g., 1.0 mL/min).
  - Set the column temperature (e.g., 30°C).
  - Set the detector wavelength to the absorbance maximum of the API.
- Analysis:
  - Inject a blank (diluent) to ensure no interfering peaks are present.
  - Inject the standard solution multiple times to establish system suitability (e.g., repeatability of peak area and retention time).
  - Inject the sample solution.
- Data Analysis:
  - Purity: Identify and quantify any impurity peaks in the chromatogram based on their retention times and peak areas relative to the main API peak.
  - Potency (Assay): Compare the peak area of the API in the sample solution to the peak area of the API in the standard solution to calculate the concentration of the API in the sample.
- Acceptance Criteria: The purity and potency results must fall within the specifications set for the finished product.
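
The two calculations in the data analysis step can be illustrated with a single-point external standard and simple area percentages. Both are simplifying assumptions: a validated method may use a calibration curve, dilution and purity corrections, and relative response factors. All peak areas and concentrations below are hypothetical.

```python
def assay_percent_of_label(area_sample, area_standard,
                           conc_standard, conc_sample_nominal):
    """Assay by single-point external standard, assuming a linear response."""
    conc_found = area_sample / area_standard * conc_standard
    return conc_found / conc_sample_nominal * 100

def impurity_area_percents(api_area, impurity_areas):
    """Area percent of each impurity, assuming equal detector response."""
    total = api_area + sum(impurity_areas)
    return [area / total * 100 for area in impurity_areas]

# Hypothetical peak areas and concentrations (mg/mL).
assay = assay_percent_of_label(area_sample=980_000, area_standard=1_000_000,
                               conc_standard=0.100, conc_sample_nominal=0.100)
impurities = impurity_area_percents(api_area=998_000,
                                    impurity_areas=[800, 1_200])
print(assay, impurities, sum(impurities))
```

With these numbers the assay falls inside the 90.0% to 110.0% example range, while the second impurity (0.12%) would exceed the 0.1% individual limit quoted in the finished product table.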

Stability Testing of a New Drug Product

Objective: To evaluate how the quality of a drug product varies over time under the influence of environmental factors such as temperature, humidity, and light. This data is used to establish a shelf-life for the product.[15]

Methodology:

- Protocol Design: Based on ICH guidelines, design a stability study protocol that specifies the batches to be tested, storage conditions, testing frequency, and analytical tests to be performed.[7]
- Sample Storage: Place at least three primary batches of the drug product in stability chambers under the following long-term and accelerated conditions:
  - Long-term: 25°C ± 2°C / 60% RH ± 5% RH
  - Accelerated: 40°C ± 2°C / 75% RH ± 5% RH
- Testing Schedule: Pull samples from the stability chambers at specified time points (e.g., 0, 3, 6, 9, 12, 18, 24, and 36 months for long-term; 0, 3, and 6 months for accelerated).
- Analytical Testing: At each time point, perform a full suite of finished product QC tests, including:
  - Appearance
  - Assay (Potency)
  - Purity/Impurity Profile
  - Dissolution
- Data Evaluation: Analyze the data for any trends in the degradation of the API or changes in the product's performance over time.
- Shelf-Life Determination: Based on the long-term stability data, determine the time period during which the drug product is expected to remain within its specifications. This period defines the product's shelf-life.
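
The trend evaluation can be illustrated with a straight-line fit of assay against time and the point where that line meets the lower specification limit. This is a deliberately simplified sketch: it uses only the fitted line, whereas a formal evaluation would typically work with confidence limits on the regression, and the data and the 90.0% limit are hypothetical.

```python
def fit_line(x, y):
    """Ordinary least-squares line; returns (slope, intercept)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def months_to_limit(months, assay_pct, lower_limit):
    """Time at which the fitted line reaches lower_limit, or None if no decline."""
    slope, intercept = fit_line(months, assay_pct)
    if slope >= 0:
        return None
    return (lower_limit - intercept) / slope

# Hypothetical long-term assay results (% of label claim).
months = [0, 3, 6, 9, 12]
assay_pct = [100.0, 99.4, 98.8, 98.2, 97.6]

crossing = months_to_limit(months, assay_pct, lower_limit=90.0)
print(crossing)
```

The crossing time here is an extrapolation well beyond the observed 12 months, which is exactly why a real shelf-life claim rests on the long-term data and a statistical bound rather than on the fitted line alone.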

Cell-Based Potency Assay for a Biologic Drug

Objective: To measure the biological activity of a biologic drug by assessing its effect on a cellular process, which is indicative of its therapeutic mechanism of action.

Methodology:

- Cell Culture: Culture a suitable cell line that responds to the biologic drug. For example, for an antibody that blocks a growth factor receptor, use a cell line that proliferates in response to that growth factor.
- Assay Plate Preparation:
  - Seed the cells into a 96-well microplate at a predetermined density and allow them to adhere overnight.
  - Prepare a serial dilution of a reference standard of the biologic drug.
  - Prepare serial dilutions of the test sample of the biologic drug.
- Cell Treatment:
  - Remove the cell culture medium from the plate.
  - Add the dilutions of the reference standard and test sample to the appropriate wells.
  - Add a constant, predetermined concentration of the growth factor to stimulate cell proliferation.
  - Include negative controls (cells with growth factor but no antibody) and positive controls (cells with a known concentration of reference standard).
- Incubation: Incubate the plate for a specified period (e.g., 48-72 hours) to allow the antibody to inhibit cell proliferation.
- Cell Viability Readout: Add a reagent that measures cell viability (e.g., a reagent that produces a colorimetric or luminescent signal in proportion to the number of living cells).
- Data Acquisition: Read the plate using a plate reader at the appropriate wavelength.
- Data Analysis:
  - Plot the cell viability signal against the log of the drug concentration for both the reference standard and the test sample to generate dose-response curves.
  - Use a four-parameter logistic (4PL) model to fit the curves and determine the IC50 (the concentration that causes 50% inhibition of proliferation) for both the reference and the test sample.
  - Calculate the relative potency of the test sample compared to the reference standard.
- Acceptance Criteria: The relative potency of the test sample must fall within a prespecified range (e.g., 80-125% of the reference standard).
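
For reference, the 4PL model and the relative potency calculation can be written out as below. The sketch does not perform the curve fit itself (in practice a nonlinear least-squares routine would estimate the four parameters); it assumes the IC50 values are already available, that the two curves are parallel, and that relative potency is the ratio IC50(reference) / IC50(test). All parameter values are hypothetical.

```python
def four_pl(x, bottom, top, ic50, hill):
    """Four-parameter logistic response at concentration x (x > 0).

    For an inhibition assay the signal falls from `top` to `bottom`
    as the concentration increases.
    """
    return bottom + (top - bottom) / (1 + (x / ic50) ** hill)

def relative_potency_percent(ic50_reference, ic50_test):
    """Relative potency of the test sample, assuming parallel curves."""
    return ic50_reference / ic50_test * 100

# Hypothetical fitted parameters (signal in arbitrary units, IC50 in ng/mL).
midpoint_signal = four_pl(10.0, bottom=0.1, top=1.1, ic50=10.0, hill=1.5)
potency = relative_potency_percent(ic50_reference=10.0, ic50_test=11.0)
within_range = 80.0 <= potency <= 125.0
print(midpoint_signal, potency, within_range)
```

A test sample with a higher IC50 than the reference needs more drug for the same effect, so its relative potency comes out below 100%.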

Logical Workflow for QC1 Data

QC1 data is generated at each point in the flow of materials, from raw material receipt through in-process controls to finished product release.

References

1. apexinstrument.me
2. debian - How do I add color to a graphviz graph node? - Unix & Linux Stack Exchange. unix.stackexchange.com
3. documentation.tokens.studio
4. How Transformers Work: A Detailed Exploration of Transformer Architecture | DataCamp. datacamp.com
5. pharmatimesofficial.com
6. ICH Official web site : ICH. ich.org
7. youtube.com
8. m.youtube.com
9. Raw Materials Identification Testing by NIR Spectroscopy and Raman Spectroscopy : SHIMADZU (Shimadzu Corporation). shimadzu.com
10. SOP for Checking Material Identity Using FTIR or Raman Spectroscopy – V 2.0 – SOP Guide for Pharma. pharmasop.in
11. m.youtube.com
12. m.youtube.com
13. youtube.com
14. m.youtube.com
15. google.com

The Trasis QC1 System: An In-depth Technical Guide to its Core Principles for PET Tracer Quality Control

For Researchers, Scientists, and Drug Development Professionals

The Trasis QC1 is a compact, automated system designed to streamline the quality control (QC) of Positron Emission Tomography (PET) tracers. This guide provides a detailed overview of its basic principles, operational workflow, and the analytical technologies it integrates. The system is designed for compliance with both European and US pharmacopeia, offering a "one sample, one click, one report" solution that significantly enhances efficiency and safety in radiopharmaceutical production.[1][2][3][4][5] A complete quality control report can be generated from a single sample in approximately 30 minutes.[2][6][7]

Core Principles and Integrated Technologies

The fundamental principle of the Trasis QC1 system is the integration and miniaturization of multiple analytical instruments into a single, self-shielded unit.[8] This approach addresses several challenges in traditional PET tracer QC, including the need for multiple, bulky instruments, significant lab space, and extensive manual sample handling, which increases radiation exposure to personnel.

Based on available information and the typical requirements for PET tracer QC, the Trasis QC1 likely integrates the following core analytical capabilities:

- Chromatography: For the separation and identification of the radiolabeled tracer from chemical and radiochemical impurities. This is likely achieved through:
  - High-Performance Liquid Chromatography (HPLC): A radio-HPLC system is a cornerstone of PET QC for determining radiochemical purity and identity.
  - Gas Chromatography (GC): Essential for the detection of residual solvents from the synthesis process.
- Radiodetection: To measure the radioactivity of the tracer and any radiochemical impurities. This would involve a gamma detector, likely integrated with the HPLC system.
- Spectrometry: A gamma spectrometer may be included for radionuclidic identity and purity testing.
- Sample Hub: A centralized module for performing simpler, compendial tests. This may include:
  - pH measurement: To ensure the final product is within a physiologically acceptable range.
  - Colorimetric Assays: For tests like the Kryptofix 222 spot test to quantify residual catalyst.
  - Thin Layer Chromatography (TLC): A simpler method for radiochemical purity assessment.

The system's design focuses on automation to enhance reproducibility and reduce operator-dependent variability.[9]

Quantitative Data and Performance

While specific performance data for the Trasis QC1 system has not been extensively published, the following table summarizes the key operational parameters and the standard quality control tests it is expected to perform based on its design and intended use.

| Parameter | Specification / Test | Significance in PET Tracer QC |
|---|---|---|
| Operational Parameters | | |
| Analysis Time | Approx. 30 minutes | Rapid analysis is crucial for short-lived PET radionuclides, allowing for timely release of the tracer for clinical use. |
| Sample Volume | Approx. 300 µL | A small sample volume minimizes waste of the valuable radiotracer.[7] |
| Quality Control Tests | | |
| Identity | | |
| Radiochemical Identity | Comparison of retention time with a known standard (HPLC) | Confirms that the detected radioactivity corresponds to the intended PET tracer. |
| Radionuclidic Identity | Half-life determination or gamma spectrum analysis | Verifies that the radioactivity is from the correct radionuclide (e.g., Fluorine-18). |
| Purity | | |
| Radiochemical Purity | HPLC or TLC analysis | Determines the percentage of the total radioactivity that is in the desired chemical form of the tracer. |
| Radionuclidic Purity | Gamma spectroscopy | Ensures the absence of other radioactive isotopes. |
| Chemical Purity | HPLC with UV or other chemical detectors | Quantifies non-radioactive chemical impurities that may be present from the synthesis. |
| Safety and Formulation | | |
| pH | Potentiometric measurement | Ensures the final product is suitable for injection and will not cause patient discomfort or physiological issues. |
| Residual Solvents | Gas Chromatography (GC) | Detects and quantifies any remaining solvents from the synthesis process to ensure they are below safety limits. |
| Kryptofix 222 Concentration | Colorimetric spot test or other quantitative method | Kryptofix 222 is a common but potentially toxic catalyst used in 18F-radiochemistry; its concentration must be strictly controlled. |
| Endotoxin Level | Limulus Amebocyte Lysate (LAL) test or equivalent | (If integrated) Ensures the absence of bacterial endotoxins, which can cause a pyrogenic response in patients. The Trasis ecosystem includes a separate device, Sterinow, for sterility testing.[2] |
| Visual Inspection | Automated visual/optical analysis | Checks for the absence of visible particles and ensures the solution is clear. |
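The half-life determination listed under Radionuclidic Identity can be illustrated with a short calculation. The following is a minimal Python sketch, not the QC1 software: the function names are hypothetical, and the 105 to 115 minute acceptance window for Fluorine-18 is an assumed example value that should be replaced by the limits in the applicable monograph.

```python
import math


def half_life_minutes(a0: float, a1: float, elapsed_min: float) -> float:
    """Estimate the half-life from two activity readings of the same sample.

    a0 is the first reading, a1 the later reading (same units, not decay
    corrected), and elapsed_min is the time between them in minutes.
    """
    if a0 <= 0 or a1 <= 0 or a1 >= a0 or elapsed_min <= 0:
        raise ValueError("need a0 > a1 > 0 and a positive elapsed time")
    # A(t) = A0 * exp(-ln2 * t / T_half)  =>  T_half = t * ln2 / ln(A0 / A1)
    return elapsed_min * math.log(2) / math.log(a0 / a1)


def matches_f18(t_half_min: float, low: float = 105.0, high: float = 115.0) -> bool:
    """Check the estimate against an assumed acceptance window for F-18."""
    return low <= t_half_min <= high
```

For example, a reading that halves over 109.8 minutes gives an estimate of 109.8 minutes, which falls inside the assumed window.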

Experimental Protocols and Methodologies

Detailed experimental protocols for the Trasis QC1 are proprietary and specific to the tracer being analyzed. However, the underlying methodologies for the key experiments are based on standard pharmacopeial methods. A generalized workflow is as follows:

- Sample Introduction: A single sample of the final PET tracer product is introduced into the QC1 system.
- Automated Aliquoting: The system internally divides the sample for parallel or sequential analysis by the different integrated modules.
- Radio-HPLC Analysis:
  - An aliquot is injected onto an appropriate HPLC column.
  - A mobile phase (a solvent mixture) flows through the column, separating the components of the sample based on their affinity for the column material.
  - A UV detector (or other chemical detector) and a radioactivity detector are connected in series to detect both chemical and radiochemical species as they elute from the column.
  - The resulting data are used to determine radiochemical identity and purity.
- Gas Chromatography Analysis:
  - Another aliquot is injected into the GC.
  - The sample is vaporized and carried by an inert gas through a column.
  - Different solvents travel through the column at different rates and are detected as they exit, allowing for their identification and quantification.
- "Sample Hub" Assays:
  - A portion of the sample is used for pH measurement via an integrated pH probe.
  - Another portion may be spotted onto a plate or mixed with reagents for a colorimetric determination of Kryptofix 222.
- Data Integration and Reporting: The software of the QC1 system collects and analyzes the data from all the individual tests and compiles a single, comprehensive report.[6]
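The radiochemical purity determined in the radio-HPLC step is, by definition, the fraction of total detected radioactivity found in the product peak. A minimal Python sketch of that arithmetic follows; the function name and the peak labels in the example are hypothetical, and peak integration itself is assumed to have been done already.

```python
def radiochemical_purity(peak_areas: dict[str, float], product: str) -> float:
    """Return radiochemical purity in percent.

    peak_areas maps each integrated radio-detector peak to its area;
    product is the key of the peak assigned to the intended tracer.
    """
    total = sum(peak_areas.values())
    if total <= 0:
        raise ValueError("total integrated radioactivity must be positive")
    return 100.0 * peak_areas[product] / total
```

With areas of 980 for the product peak and 20 for a single radioactive impurity, the function returns 98.0 percent.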

Visualizing the Workflow and Logical Relationships

The following diagrams illustrate the logical workflow of the Trasis QC1 system and the interrelationship of the quality control tests.

Caption: High-level workflow of the Trasis QC1 system.

Caption: Logical relationship of PET tracer quality control tests.

References

- 1. Tracer-QC Automated, universal testing for PET-QC | MetorX | measuring tools for radiationX [metorx.com]

- 2. jnm.snmjournals.org [jnm.snmjournals.org]

- 3. m.youtube.com [m.youtube.com]

- 4. trasis.com [trasis.com]

- 5. trasis.com [trasis.com]

- 6. medicalexpo.com [medicalexpo.com]

- 7. m.youtube.com [m.youtube.com]

- 8. QC1 | IOL [iol.be]

- 9. van Dam Lab - Miniaturized QC testing of PET tracers [vandamlab.org]

The QC1 Device: An In-depth Technical Guide to Automated Radiopharmaceutical Quality Control

The QC1 device by Trasis is an automated, compact, and integrated system designed to streamline the quality control (QC) of radiopharmaceuticals, particularly for Positron Emission Tomography (PET) tracers.[1][2][3] This guide provides a comprehensive overview of the QC1, its core functionalities, and its role in ensuring the safety and efficacy of radiopharmaceuticals for researchers, scientists, and drug development professionals.

Overview

The quality control of radiopharmaceuticals is a critical and resource-intensive step in their production, requiring multiple analytical instruments and significant manual handling.[4] The Trasis QC1 is engineered to address these challenges by consolidating essential QC tests into a single, self-shielded unit.[1][3] This integration aims to reduce the laboratory footprint, shorten the time to release a batch, and minimize radiation exposure for operators.[4][5] The system is designed to be compliant with both the European and United States Pharmacopeias (EP and USP).[1][4] The technology was originally conceived by QC1 GmbH and later acquired and further developed by Trasis.[3]

Key Features

The QC1 system is characterized by several key features that enhance the efficiency and safety of radiopharmaceutical QC:

- Integration: It combines multiple analytical instruments into one compact device, including modules for High-Performance Liquid Chromatography (HPLC), Gas Chromatography (GC), Thin-Layer Chromatography (TLC), pH measurement, and radionuclide identification.[3][5]
- Automation: The QC process is fully automated, from sample injection to the generation of a comprehensive report, reducing the potential for human error.[4]
- Speed: A complete QC report can be generated in approximately 30 minutes, depending on the specific radiopharmaceutical being analyzed.[1]
- Safety: The device is self-shielded, significantly reducing the radiation dose to laboratory personnel.[5]
- Compact Footprint: Its integrated design saves valuable laboratory space.[1]
- Simplified Workflow: The system operates on a "one sample, one click, one report" principle, simplifying the entire QC process.[6] A sample volume of 300 µL is required.[7]

Integrated Quality Control Modules

The QC1 integrates several analytical modules to perform a comprehensive suite of QC tests as required by pharmacopeial standards.

Radio-High-Performance Liquid Chromatography (Radio-HPLC)

The integrated radio-HPLC system is essential for determining the radiochemical purity and identity of the radiopharmaceutical. It separates the desired radiolabeled compound from any radioactive impurities. The system would typically include a pump, injector, column, a UV detector (for identifying non-radioactive chemical impurities), and a radioactivity detector.[3]

Gas Chromatography (GC)

A miniaturized GC module is incorporated for the analysis of residual solvents in the final radiopharmaceutical preparation. This is a critical safety parameter to ensure that solvents used during the synthesis process are below acceptable limits.[3]

Radio-Thin-Layer Chromatography (Radio-TLC)

The radio-TLC scanner provides an orthogonal method for assessing radiochemical purity. It is a rapid technique to separate and quantify different radioactive species in the sample.[3]

Gamma Spectrometer/Dose Calibrator

This component is responsible for confirming the radionuclidic identity and purity of the sample. It measures the gamma-ray energy spectrum to identify the radionuclide and to detect any radionuclidic impurities. It also quantifies the total radioactivity of the sample.[3]

pH Meter

An integrated pH meter measures the pH of the final radiopharmaceutical solution to ensure it is within a physiologically acceptable range for injection.[3]

Data Presentation

The following tables summarize the quality control tests performed by the QC1 device and its general specifications based on available information.

Table 1: Quality Control Tests Performed by the QC1 Device

| Parameter | Purpose | Integrated Module |
|---|---|---|
| Radiochemical Purity & Identity | To ensure the radioactivity is bound to the correct chemical compound and to quantify radiochemical impurities. | Radio-HPLC, Radio-TLC |
| Chemical Purity | To identify and quantify non-radioactive chemical impurities. | HPLC (with UV detector) |
| Radionuclidic Purity & Identity | To confirm the correct radionuclide is present and to quantify any radionuclidic impurities. | Gamma Spectrometer |
| Residual Solvents | To quantify the amount of residual solvents from the synthesis process. | Gas Chromatography (GC) |
| pH | To ensure the final product is within a physiologically acceptable pH range. | pH Meter |
| Radioactivity Concentration | To measure the amount of radioactivity per unit volume. | Dose Calibrator |

Table 2: General Specifications of the QC1 Device

| Specification | Description |
|---|---|
| System Type | Automated, integrated radiopharmaceutical quality control system |
| Key Features | Compact, self-shielded, compliant with EP/USP |
| Analysis Time | Approximately 30 minutes per sample |
| Sample Volume | 300 µL |
| Integrated Modules | Radio-HPLC, GC, Radio-TLC, Gamma Spectrometer, pH Meter, Dose Calibrator |
| User Interface | Touch screen with a user-friendly interface |
| Reporting | Generates a single, comprehensive report for all tests |

Disclaimer: Detailed quantitative specifications for the individual analytical modules are not publicly available and should be requested directly from the manufacturer, Trasis.

Experimental Protocols

Although detailed experimental protocols for individual radiopharmaceuticals on the QC1 are proprietary and not publicly available, a general experimental workflow can be outlined. The user would typically follow the on-screen instructions provided by the QC1's software.

General Experimental Workflow

- System Initialization and Calibration: The operator powers on the QC1 device and performs any required daily system suitability tests or calibrations as prompted by the software. This ensures that all integrated modules are functioning within specified parameters.
- Sample Preparation: A 300 µL aliquot of the final radiopharmaceutical product is drawn into a suitable vial.[7]
- Sample Introduction: The sample vial is placed into the designated port on the QC1 device.
- Initiation of the QC Sequence: Using the touchscreen interface, the operator selects the appropriate pre-programmed QC method for the specific radiopharmaceutical being tested and initiates the automated analysis.
- Automated Analysis: The QC1 system automatically performs the following steps:
  - Aliquoting and distribution of the sample to the various analytical modules (HPLC, GC, TLC, etc.).
  - Execution of the pre-defined analytical methods for each module.
  - Data acquisition from all detectors.
- Data Processing and Report Generation: The system's software processes the raw data from all analyses, performs the necessary calculations, and compares the results against the predefined acceptance criteria for the specific radiopharmaceutical. A single, comprehensive report is generated that includes the results of all tests.
- Review and Batch Release: The operator reviews the final report to ensure all specifications are met before releasing the radiopharmaceutical batch for clinical use.
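The comparison of results against predefined acceptance criteria in the report-generation step can be pictured as a simple range check per test. The sketch below is a hypothetical Python illustration and does not reflect the actual QC1 software; the `Criterion` class, the function names, and every limit used in the example are placeholders.

```python
from dataclasses import dataclass


@dataclass
class Criterion:
    """Inclusive acceptance range for one QC test (placeholder values only)."""
    low: float
    high: float


def evaluate(results: dict[str, float], criteria: dict[str, Criterion]) -> dict[str, bool]:
    """Return pass/fail per test; a test with no result counts as failed."""
    report = {}
    for name, limit in criteria.items():
        value = results.get(name)
        report[name] = value is not None and limit.low <= value <= limit.high
    return report


def batch_passes(report: dict[str, bool]) -> bool:
    """A batch is releasable only if every required test passed."""
    return all(report.values())
```

Treating a missing result as a failure is a deliberate design choice in this sketch: a report should not pass simply because a module produced no data.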

Visualizations

The following diagrams illustrate the logical relationships and workflows of the QC1 device.

Caption: General experimental workflow for the Trasis QC1 device.

Caption: Logical relationship of the integrated modules within the QC1 device.

The Role of MSK-QC1-1 in Ensuring Data Integrity in Mass Spectrometry-Based Metabolomics

An In-depth Technical Guide for Researchers, Scientists, and Drug Development Professionals

In the landscape of mass spectrometry-based metabolomics, the pursuit of high-quality, reproducible, and reliable data is paramount. The inherent complexity of biological systems and the sensitivity of analytical instrumentation necessitate rigorous quality control (QC) measures. The MSK-QC1-1 Metabolomics QC Standard Mix 1, developed by Cambridge Isotope Laboratories, Inc., serves as a critical tool for researchers to monitor and validate the performance of their analytical workflows. This technical guide provides a comprehensive overview of the purpose, composition, and application of MSK-QC1-1, empowering researchers to enhance the robustness and confidence of their metabolomics data.

Core Purpose and Applications of MSK-QC1-1

MSK-QC1-1 is a quality control standard mix composed of five ¹³C-labeled amino acids designed for use in mass spectrometry (MS) based metabolomics.[1] Its primary purpose is to provide a defined and consistent reference material to evaluate the performance of the entire analytical workflow, from sample preparation to data acquisition and analysis. The use of stable isotope-labeled internal standards is a widely accepted practice to normalize variations in sample preparation, injection volume, and mass spectrometry ionization.

The key applications of MSK-QC1-1 include:

- System Suitability Assessment: Regular injection of MSK-QC1-1 allows researchers to monitor key performance indicators of their LC-MS system, such as retention time stability, peak shape, and signal intensity.[2] This ensures that the instrument is performing optimally before and during the analysis of precious biological samples.
- Evaluation of Analytical Precision: By analyzing MSK-QC1-1 multiple times throughout a sample batch, researchers can determine the analytical precision of their method, typically expressed as the coefficient of variation (CV) for peak area and retention time. This is crucial for distinguishing true biological variation from analytical noise.
- Identification of Performance Deficits: Deviations in the expected signal or retention times of the standards in MSK-QC1-1 can indicate issues with the LC-MS system, such as a dirty ion source, column degradation, or problems with the mobile phase. Early detection of such issues can prevent the generation of unreliable data.
- Enhancing Inter-Laboratory Reproducibility: The use of a standardized QC material like MSK-QC1-1 can help to diminish inter-laboratory variability, making it easier to compare and combine data from different studies and laboratories.[2]
- Spike-in Standard for Quantitation: Beyond its role in quality control, the stable isotope-labeled compounds in MSK-QC1-1 can also be used as internal standards for the relative or absolute quantification of their unlabeled counterparts in biological samples.

Composition and Quantitative Data

MSK-QC1-1 is a lyophilized mixture of five ¹³C-labeled amino acids. Upon reconstitution in 1 mL of solvent, the following concentrations are achieved:

| Compound Name | Isotopic Label | Concentration (µg/mL) |
|---|---|---|
| L-Alanine | ¹³C₃, 99% | 4 |
| L-Leucine | ¹³C₆, 99% | 4 |
| L-Phenylalanine | ¹³C₆, 99% | 4 |
| L-Tryptophan | ¹³C₁₁, 99% | 40 |
| L-Tyrosine | ¹³C₆, 99% | 4 |

Table 1: Composition of MSK-QC1-1 upon reconstitution in 1 mL of solvent.[2]

While specific performance data such as coefficients of variation (CVs) can be system and method-dependent, the use of such standards aims to achieve low CVs for key metrics. In well-controlled LC-MS metabolomics experiments, CVs for retention time are typically expected to be below 1-2%, while peak area CVs for internal standards are often targeted to be below 15-20%. Monitoring these values for the components of MSK-QC1-1 provides a clear indication of the stability and reproducibility of the analytical run.
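The coefficient of variation mentioned above is the sample standard deviation divided by the mean, expressed as a percentage. A minimal Python sketch, using only the standard library and a hypothetical function name, is:

```python
import statistics


def cv_percent(values: list[float]) -> float:
    """Coefficient of variation in percent (sample standard deviation / mean)."""
    mean = statistics.mean(values)
    if mean == 0:
        raise ValueError("CV is undefined for a mean of zero")
    return 100.0 * statistics.stdev(values) / mean
```

Applied to the peak areas of one labeled amino acid across all QC injections in a batch, the result can be compared directly with the targets quoted above, for example a peak-area CV below 15-20%.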

Experimental Protocol for Utilization

The following provides a detailed methodology for the integration of MSK-QC1-1 into a typical LC-MS metabolomics workflow.

Preparation of the QC Standard

- Reconstitution: Carefully reconstitute the lyophilized MSK-QC1-1 standard in 1 mL of a suitable solvent. A common choice is a solvent that is compatible with the initial mobile phase conditions of the liquid chromatography method (e.g., 50:50 methanol:water).
- Vortexing and Sonication: Vortex the vial for at least 30 seconds to ensure complete dissolution. A brief sonication in a water bath can further aid in dissolving the standards.
- Storage: Store the reconstituted stock solution at -20°C or below in an amber vial to protect it from light.

Integration into the Analytical Run

- System Conditioning: At the beginning of each analytical batch, inject the MSK-QC1-1 standard multiple times (e.g., 3-5 times) to condition the LC-MS system and ensure stable performance.
- Periodic QC Injections: Throughout the analytical run, inject the MSK-QC1-1 standard at regular intervals. A common practice is to inject the QC sample after every 8-10 biological samples. This allows for the monitoring of instrument performance over time and can be used to correct for analytical drift.
- Post-Batch QC: It is also advisable to inject the MSK-QC1-1 standard at the end of the analytical batch to assess the performance of the system throughout the entire run.
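The three injection rules above (conditioning, periodic, and post-batch QC) can be turned into an injection list programmatically. The following Python sketch is one possible arrangement under assumed defaults (3 conditioning injections, one QC after every 10 samples); the function name and sample labels are hypothetical.

```python
def build_sequence(samples: list[str], conditioning: int = 3,
                   interval: int = 10, qc: str = "MSK-QC1-1") -> list[str]:
    """Interleave QC injections with biological samples.

    Starts with `conditioning` QC injections, adds one QC after every
    `interval` samples, and always ends with a single post-batch QC.
    """
    sequence = [qc] * conditioning
    for i, sample in enumerate(samples, start=1):
        sequence.append(sample)
        # Skip the periodic QC after the final sample; the post-batch QC covers it.
        if i % interval == 0 and i != len(samples):
            sequence.append(qc)
    sequence.append(qc)
    return sequence
```

For 20 samples with the defaults, this yields 25 injections, 5 of which are QC injections.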

Data Analysis and Interpretation

- Monitor Key Metrics: For each injection of MSK-QC1-1, monitor the following parameters for each of the five amino acids:
  - Retention Time (RT): The RT should remain consistent throughout the run. A significant drift in RT may indicate a problem with the LC column or mobile phase composition.
  - Peak Area: The peak area should be reproducible across all QC injections. A gradual decrease in peak area may suggest a dirty ion source or detector fatigue, while erratic peak areas could indicate injection problems.
  - Peak Shape: The chromatographic peak shape should be symmetrical and consistent. Poor peak shape can affect the accuracy of integration and may indicate column degradation.
  - Signal-to-Noise Ratio (S/N): Monitoring the S/N can provide an indication of the instrument's sensitivity.
- Establish Acceptance Criteria: Before starting a study, it is important to establish acceptance criteria for the QC metrics. For example, a common criterion is that the CV for the peak area of the internal standards in the QC samples should be less than 20%. If the QC samples fall outside of these predefined limits, the data from the surrounding biological samples may need to be re-analyzed or flagged as potentially unreliable.

Visualization of Experimental Workflow and a Relevant Metabolic Pathway

Experimental Workflow

The following diagram illustrates the integration of MSK-QC1-1 into a standard metabolomics workflow.

The Shikimate Pathway: Biosynthesis of Aromatic Amino Acids

Three of the five amino acids present in MSK-QC1-1 (phenylalanine, tryptophan, and tyrosine) are aromatic amino acids. In plants, bacteria, fungi, and algae, these amino acids are synthesized via the shikimate pathway.[3][4] The pathway is absent in animals, so phenylalanine and tryptophan must be obtained from the diet, while tyrosine can be made from dietary phenylalanine. The shikimate pathway serves as an excellent example of a metabolic route where some of the components of MSK-QC1-1 are central.

The Gatekeeper of Bioanalytical Validity: An In-depth Technical Guide to the Role of Low-Level QC Samples (QC1)

For Researchers, Scientists, and Drug Development Professionals

In the rigorous landscape of drug development, the integrity of bioanalytical data is paramount. Ensuring that a method for quantifying a drug or its metabolites in a biological matrix is reliable and reproducible is the central objective of bioanalytical method validation. Within this critical process, Quality Control (QC) samples serve as the sentinels of accuracy and precision. This technical guide delves into the specific and crucial role of the low-level QC sample (QC1), often the first line of defense against erroneous data at the lower end of the quantification range.

The Foundation: Bioanalytical Method Validation and the QC Framework

Bioanalytical method validation is the process of establishing, through documented evidence, that a specific analytical method is suitable for its intended purpose.[1] This involves a series of experiments designed to assess the method's performance characteristics.[2] A cornerstone of this validation is the use of QC samples, which are prepared by spiking a known concentration of the analyte into the same biological matrix as the study samples.[3][4]

Guidance from regulatory bodies such as the U.S. Food and Drug Administration (FDA) and the European Medicines Agency (EMA), now harmonized under the International Council for Harmonisation (ICH) M10 guideline, requires QC samples at multiple concentration levels that span the calibration curve range.[5][6][7] Typically, this means a minimum of three levels: low, medium, and high.

The low QC sample, often referred to as QC1 or LQC, holds a position of particular importance. It is typically prepared at a concentration no more than three times the Lower Limit of Quantification (LLOQ).[8][9] The LLOQ represents the lowest concentration of an analyte that can be measured with acceptable accuracy and precision.[2][8] Therefore, the performance of the QC1 sample provides a critical assessment of the method's reliability at the lower boundary of its quantitative range.

Core Functions of the QC1 Sample

The QC1 sample is instrumental in evaluating several key validation parameters:

- Accuracy: This measures the closeness of the mean test results to the true (nominal) concentration of the analyte. The accuracy of the QC1 sample demonstrates the method's ability to provide unbiased results at low concentrations.
- Precision: This assesses the degree of scatter between a series of measurements. Precision is typically expressed as the coefficient of variation (CV). The precision of the QC1 sample indicates the method's reproducibility at the lower end of the calibration range.
- Stability: The QC1 sample is used in various stability tests to ensure that the analyte's concentration does not change under different storage and handling conditions. This is crucial for maintaining sample integrity from collection to analysis.[10][11]
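Accuracy and precision for a set of low QC replicates reduce to two short calculations: the percent bias of the mean against the nominal concentration, and the CV of the replicates. The Python sketch below uses hypothetical function names; the commonly applied chromatographic limits of ±15% bias and 15% CV for QC samples come from ICH M10 rather than from this text, and are mentioned here only as an assumed point of comparison.

```python
import statistics


def accuracy_bias_percent(measured: list[float], nominal: float) -> float:
    """Percent deviation of the mean measured concentration from nominal."""
    return 100.0 * (statistics.mean(measured) - nominal) / nominal


def precision_cv_percent(measured: list[float]) -> float:
    """Coefficient of variation of the replicates, in percent."""
    return 100.0 * statistics.stdev(measured) / statistics.mean(measured)


def lqc_within_range(lqc_conc: float, lloq: float) -> bool:
    """Check that the low QC sits above the LLOQ and at most three times it."""
    return lloq < lqc_conc <= 3 * lloq
```

For replicates of 2.8, 3.0, and 3.2 against a nominal 3.0, the bias is approximately zero and the CV is about 6.7%.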

The workflow for incorporating QC samples into the validation process is a systematic one, ensuring that each analytical run is performed under controlled and monitored conditions.

References

- 1. youtube.com [youtube.com]

- 2. ajpsonline.com [ajpsonline.com]

- 3. pharmoutsource.com [pharmoutsource.com]

- 4. FDA Bioanalytical method validation guidlines- summary – Nazmul Alam [nalam.ca]

- 5. fda.gov [fda.gov]

- 6. database.ich.org [database.ich.org]

- 7. openpr.com [openpr.com]

- 8. Bioanalytical method validation: An updated review - PMC [pmc.ncbi.nlm.nih.gov]

- 9. ema.europa.eu [ema.europa.eu]

- 10. Stability: Recommendation for Best Practices and Harmonization from the Global Bioanalysis Consortium Harmonization Team - PMC [pmc.ncbi.nlm.nih.gov]

- 11. Stability Assessments in Bioanalytical Method Validation [celegence.com]

Foundational Concepts of Quality Control Level 1 in Analytical Chemistry: An In-depth Technical Guide

For Researchers, Scientists, and Drug Development Professionals

Introduction to Quality Control in Analytical Chemistry

In the realm of analytical chemistry, particularly within the pharmaceutical and drug development sectors, the reliability and accuracy of data are paramount. Quality Control (QC) encompasses a set of procedures and practices designed to ensure that analytical results are precise, accurate, and reproducible.[1][2][3] Level 1 Quality Control represents the fundamental, routine checks and measures implemented during analytical testing to monitor the performance of the analytical system and validate the results of each analytical run.[4][5] This guide provides an in-depth overview of the core foundational concepts of QC Level 1, offering detailed methodologies and data presentation to support researchers, scientists, and drug development professionals in maintaining the integrity of their analytical data.

Quality Assurance (QA) and Quality Control (QC) are often used interchangeably, but they represent distinct concepts. QA is a broad, systematic approach that ensures a product or service will meet quality requirements, focusing on preventing defects.[2][4] QC, on the other hand, is a subset of QA and involves the operational techniques and activities used to fulfill requirements for quality by monitoring and identifying any defects in the final product.[2]

Core Components of QC Level 1

The foundational level of quality control in an analytical laboratory is built upon three principal pillars:

- System Suitability Testing (SST): A series of tests to ensure the analytical equipment and method are performing correctly before and during the analysis of samples.[6][7]
- Calibration: The process of configuring an instrument to provide a result for a sample within an acceptable range.[8]
- Control Charting: A graphical tool used to monitor the stability and consistency of an analytical method over time.[9][10]

These components work in concert to provide a robust framework for ensuring the validity of analytical results.

System Suitability Testing (SST)

System Suitability Testing is an integral part of any analytical procedure and is designed to evaluate the performance of the entire analytical system, from the instrument to the reagents and the analytical column.[6] SST is performed prior to the analysis of any samples to confirm that the system is adequate for the intended analysis.[6]

Key SST Parameters and Acceptance Criteria

The specific parameters and their acceptance criteria for SST can vary depending on the analytical technique (e.g., HPLC, GC) and the specific method. However, some common parameters are universally applied.

| Parameter | Description | Typical Acceptance Criteria |
|---|---|---|
| Tailing Factor (T) | A measure of peak symmetry. | T ≤ 2 |
| Resolution (Rs) | The separation between two adjacent peaks. | Rs ≥ 2 |
| Relative Standard Deviation (RSD) / Precision | The precision of replicate injections of a standard. | RSD ≤ 2.0% |
| Theoretical Plates (N) | A measure of column efficiency. | N > 2000 |
| Capacity Factor (k') | A measure of the retention of an analyte on the column. | 2 < k' < 10 |
| Signal-to-Noise Ratio (S/N) | For determining the limit of detection (LOD) and quantitation (LOQ). | S/N ≥ 3 for LOD, S/N ≥ 10 for LOQ[11] |

Experimental Protocol: Performing a System Suitability Test for HPLC

- Prepare a System Suitability Solution: This solution should contain the analyte(s) of interest at a known concentration, and potentially other compounds to challenge the system's resolution.
- Equilibrate the HPLC System: Run the mobile phase through the system until a stable baseline is achieved.
- Perform Replicate Injections: Inject the system suitability solution a minimum of five times.
- Data Analysis: From the resulting chromatograms, calculate the Tailing Factor, Resolution, RSD of the peak areas, and Theoretical Plates.
- Compare to Acceptance Criteria: Verify that all calculated parameters meet the pre-defined acceptance criteria as outlined in the method's Standard Operating Procedure (SOP).
- Proceed with Sample Analysis: If all SST parameters pass, the system is deemed suitable for sample analysis. If any parameter fails, troubleshooting must be performed, and the SST must be repeated until it passes.
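The data-analysis step above can be sketched with the conventional pharmacopeial formulas: T = W₀.₀₅ / (2f), Rs = 2(t₂ − t₁) / (w₁ + w₂), and N = 16(tR / w)², where widths are baseline (tangent) widths. The Python below is an illustrative sketch with hypothetical function names; the default limits in `sst_passes` are the typical criteria from the table above and should be replaced by the method's own SOP values.

```python
import statistics


def tailing_factor(w_005: float, f: float) -> float:
    """T = W0.05 / (2f): peak width at 5% height over twice the front half-width."""
    return w_005 / (2 * f)


def resolution(t1: float, t2: float, w1: float, w2: float) -> float:
    """Rs = 2 (t2 - t1) / (w1 + w2), using baseline peak widths."""
    return 2 * (t2 - t1) / (w1 + w2)


def theoretical_plates(t_r: float, w: float) -> float:
    """N = 16 (tR / w)^2, using the baseline peak width."""
    return 16 * (t_r / w) ** 2


def rsd_percent(areas: list[float]) -> float:
    """Relative standard deviation of replicate peak areas, in percent."""
    return 100.0 * statistics.stdev(areas) / statistics.mean(areas)


def sst_passes(t: float, rs: float, rsd: float, n: float) -> bool:
    """Apply the typical criteria: T <= 2, Rs >= 2, RSD <= 2.0%, N > 2000."""
    return t <= 2 and rs >= 2 and rsd <= 2.0 and n > 2000
```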

Calibration

Calibration determines the relationship between the analytical response of an instrument and the concentration of an analyte.[8] This is a critical step for ensuring the accuracy of quantitative measurements.

Types of Calibration

- Single-Point Calibration: Uses a single standard to establish the relationship. It is less common and assumes a linear response through the origin.
- Multi-Point Calibration (Calibration Curve): Uses a series of standards of known concentrations to construct a calibration curve. This is the most common and reliable method.

Experimental Protocol: Creating and Using a Multi-Point Calibration Curve

- Prepare a Stock Standard Solution: Accurately prepare a concentrated solution of the analyte of interest.
- Prepare a Series of Calibration Standards: Dilute the stock solution to create a series of at least five standards that bracket the expected concentration range of the unknown samples.
- Analyze the Calibration Standards: Analyze each calibration standard using the analytical method and record the instrument response (e.g., peak area).
- Construct the Calibration Curve: Plot the instrument response (y-axis) versus the known concentration of the standards (x-axis).
- Perform Linear Regression: Fit a linear regression line to the data points. The equation of the line will be in the form y = mx + c, where 'y' is the response, 'x' is the concentration, 'm' is the slope, and 'c' is the y-intercept.
- Evaluate the Calibration Curve: The quality of the calibration curve is assessed by the coefficient of determination (r²).

| Parameter | Description | Acceptance Criteria |
|---|---|---|
| Coefficient of Determination (r²) | A measure of how well the regression line fits the data points. | r² ≥ 0.995 |

- Analyze Unknown Samples: Analyze the unknown samples using the same analytical method.
- Determine Unknown Concentrations: Use the equation of the calibration curve to calculate the concentration of the analyte in the unknown samples from their measured responses.
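The regression, evaluation, and back-calculation steps above can be sketched in a few lines of Python. This is an unweighted ordinary least-squares fit written with the standard library only; the function names are hypothetical, and weighted regression (often used in practice) is not shown.

```python
def fit_line(x: list[float], y: list[float]) -> tuple[float, float, float]:
    """Ordinary least-squares fit of y = m*x + c; returns (m, c, r_squared)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    m = sxy / sxx
    c = mean_y - m * mean_x
    ss_res = sum((yi - (m * xi + c)) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return m, c, 1 - ss_res / ss_tot


def concentration(response: float, m: float, c: float) -> float:
    """Invert the calibration line: x = (y - c) / m."""
    return (response - c) / m
```

A curve would be accepted under the criterion above when the returned r² is at least 0.995; unknowns are then read back through `concentration`.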

Control Charting

Control charts are a powerful statistical process control tool used to monitor the stability of an analytical method over time.[9][10] The most common type of control chart used in analytical laboratories is the Levey-Jennings chart.[12][13][14][15]

Constructing a Levey-Jennings Chart

- Select a Quality Control (QC) Sample: The QC sample should be a stable, homogenous material that is representative of the samples being analyzed.[16] It is often prepared from a bulk pool of a representative matrix or a certified reference material.
- Establish the Mean and Standard Deviation: Analyze the QC sample a minimum of 20 times over a period of time when the method is known to be in control. Calculate the mean (x̄) and standard deviation (s) of these measurements.
- Define Control Limits:
  - Center Line (CL): The calculated mean (x̄).
  - Warning Limits (UWL/LWL): x̄ ± 2s.
  - Action Limits (UAL/LAL): x̄ ± 3s.

| Control Limit | Formula | Statistical Probability (within limits) |
|---|---|---|
| Center Line (CL) | x̄ | - |
| Warning Limits (WL) | x̄ ± 2s | ~95%[12] |
| Action Limits (AL) | x̄ ± 3s | ~99.7%[15] |

- Plot the Chart: Create a chart with the control limits and plot the results of the QC sample for each analytical run.
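The control limits defined above follow directly from the baseline mean and standard deviation. A minimal Python sketch, with a hypothetical function name and the 20-measurement minimum taken from the steps above, is:

```python
import statistics


def control_limits(baseline: list[float]) -> dict[str, float]:
    """Compute Levey-Jennings limits from at least 20 in-control QC results."""
    if len(baseline) < 20:
        raise ValueError("at least 20 baseline measurements are required")
    mean = statistics.mean(baseline)
    s = statistics.stdev(baseline)
    return {
        "CL": mean,
        "LWL": mean - 2 * s, "UWL": mean + 2 * s,  # warning limits
        "LAL": mean - 3 * s, "UAL": mean + 3 * s,  # action limits
    }
```

The returned values can be drawn as horizontal lines on the chart, with each new QC result plotted against them.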

Interpreting Control Charts: Westgard Rules

A set of rules, known as the Westgard rules, is applied to the control chart to determine if the analytical method is in a state of statistical control.[12]

| Rule | Description | Interpretation |
|---|---|---|
| 1₂ₛ | One control measurement exceeds the ±2s warning limits. | Warning - potential random error. |
| 1₃ₛ | One control measurement exceeds the ±3s action limits. | Rejection - indicates a significant random error or a large systematic error. |
| 2₂ₛ | Two consecutive control measurements exceed the same ±2s warning limit. | Rejection - indicates a systematic error.[12] |
| R₄ₛ | The range between two consecutive control measurements exceeds 4s. | Rejection - indicates random error. |
| 4₁ₛ | Four consecutive control measurements exceed the same 1s limit (all above x̄ + 1s or all below x̄ − 1s). | Rejection - indicates a small systematic error.[12] |
| 10ₓ | Ten consecutive control measurements fall on the same side of the mean. | Rejection - indicates a systematic error. |
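The rules in the table can be checked mechanically once each QC result is expressed as a z-score, z = (value − x̄) / s. The Python sketch below evaluates the most recent result against the rules as they are described in the table; the function name and the rule labels it returns are hypothetical, and laboratories often apply variants of these rules (for example across several control levels) that are not covered here.

```python
def westgard_violations(z: list[float]) -> list[str]:
    """Return the rules triggered by the latest point; z is ordered oldest first."""
    found: list[str] = []
    if not z:
        return found
    last = z[-1]
    if abs(last) > 3:
        found.append("1_3s")
    elif abs(last) > 2:
        found.append("1_2s")  # warning only, not a rejection
    if len(z) >= 2:
        a, b = z[-2], z[-1]
        if (a > 2 and b > 2) or (a < -2 and b < -2):
            found.append("2_2s")
        if abs(a - b) > 4:
            found.append("R_4s")
    if len(z) >= 4:
        window = z[-4:]
        if all(v > 1 for v in window) or all(v < -1 for v in window):
            found.append("4_1s")
    if len(z) >= 10:
        window = z[-10:]
        if all(v > 0 for v in window) or all(v < 0 for v in window):
            found.append("10_x")
    return found
```

A run would be rejected if the returned list contains anything other than the `1_2s` warning.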

Experimental Protocol: Implementing a Levey-Jennings Chart

- Establish Baseline Data: As described under "Constructing a Levey-Jennings Chart", analyze the QC sample at least 20 times to establish the mean and standard deviation.
- Construct the Chart: Draw the center line, warning limits, and action limits on a chart.
- Routine QC Analysis: Include the QC sample in every analytical run.
- Plot QC Results: Plot the result of the QC sample on the chart immediately after each run.
- Apply Westgard Rules: Evaluate the plotted point and recent data points against the Westgard rules.
- Take Action:
  - In Control: If no rules are violated, the analytical run is considered valid, and the results for the unknown samples can be reported.
  - Out of Control: If any of the rejection rules are violated, the analytical run is considered invalid. Do not report patient or product results. Investigate the cause of the error, take corrective action, and re-analyze the QC sample and all unknown samples from that run.

Conclusion

References

1. qasac-americas.org
2. youtube.com
3. eurachem.org
4. scribd.com
5. youtube.com
6. youtube.com
7. m.youtube.com
8. mpcb.gov.in
9. scribd.com
10. scribd.com
11. youtube.com
12. m.youtube.com
13. youtube.com
14. youtube.com
15. youtube.com
16. A Detailed Guide Regarding Quality Control Samples | Torrent Laboratory, torrentlab.com


Accessing and Utilizing the ESO QC1 Database: Application Notes and Protocols for Researchers

For Researchers, Scientists, and Drug Development Professionals

These application notes provide a comprehensive guide to accessing and utilizing the European Southern Observatory (ESO) Quality Control Level 1 (QC1) database. This database is a critical resource for researchers, offering detailed information on the performance and calibration of ESO's world-class astronomical instruments. Understanding and effectively using the QC1 database can significantly enhance the quality and reliability of scientific data analysis.

Introduction to the ESO QC1 Database

The ESO QC1 database is a relational database that stores a wide array of quality control parameters derived from the routine calibration and processing of data from ESO's instruments.[1][2] These parameters are essential for monitoring the health and performance of the instruments over time, a process known as trending.[3] The QC1 database is populated by automated pipelines that process calibration data, ensuring a consistent and reliable source of information.[4][5]

The primary purposes of the QC1 database are to:

- Monitor Instrument Health: Track key performance indicators to detect any changes or anomalies in instrument behavior.
- Assess Data Quality: Provide quantitative metrics on the quality of calibration data, which directly impacts the quality of scientific observations.
- Enable Trend Analysis: Allow for the long-term study of instrument performance, aiding in predictive maintenance and a deeper understanding of instrument characteristics.[3]
- Support Scientific Analysis: Offer valuable metadata that can be used to select the best quality data for a specific scientific goal and to understand potential systematic effects.

Accessing the QC1 Database

There are two primary methods for accessing the ESO QC1 database: user-friendly web interfaces and direct access via Structured Query Language (SQL).

Web-Based Interfaces

For most users, the web-based interfaces provide a convenient way to browse and visualize the QC1 data without needing to write complex queries.

- qc1_browser: This tool allows users to view the contents of specific QC1 tables. You can select an instrument and a corresponding data table (e.g., uves_bias for the UVES instrument's bias frames) to see the recorded QC1 parameters. The browser also offers filtering capabilities to narrow down the data by date or other parameters.
- qc1_plotter: This interactive tool enables the visualization of QC1 parameters. Users can plot one parameter against another (e.g., a specific QC parameter against time) to identify trends and outliers. The plotter also provides basic statistical analysis of the selected data.

Direct SQL Access

For advanced users who need more complex data retrieval and analysis, the QC1 database can be queried directly using SQL. This method offers the most flexibility in data selection and manipulation.

To access the database via SQL, you will need a command-line tool such as isql. The connection parameters are specific to the ESO environment. A simple example is a query against the uves_bias table that selects cdbfile (calibration data file), mjd_obs (Modified Julian Date of observation), and median_master (median value of the master bias frame) for all entries where the median master bias is greater than 150.
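The query described above can be reconstructed from its description and tried out locally. The sketch below uses Python's built-in `sqlite3` module with an in-memory table as a stand-in for the ESO server. The column types, file names, and values are invented for illustration, and the real database is reached through isql with ESO-specific connection parameters that are not reproduced here.

```python
import sqlite3

# In-memory stand-in for the uves_bias table; the schema is an assumption.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE uves_bias (cdbfile TEXT, mjd_obs REAL, median_master REAL)"
)
rows = [
    ("hypothetical_bias_001.fits", 55000.10, 148.7),
    ("hypothetical_bias_002.fits", 55001.12, 151.3),
    ("hypothetical_bias_003.fits", 55002.09, 163.9),
]
conn.executemany("INSERT INTO uves_bias VALUES (?, ?, ?)", rows)

# Select file, observation date and median level where the level exceeds 150.
QUERY = """
SELECT cdbfile, mjd_obs, median_master
FROM uves_bias
WHERE median_master > 150
"""
result = conn.execute(QUERY).fetchall()
conn.close()

for cdbfile, mjd_obs, median_master in result:
    print(cdbfile, mjd_obs, median_master)
```

Only the `SELECT ... FROM ... WHERE` statement is meant to carry over to the real database; everything around it exists to make the example runnable.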

Data Presentation: QC1 Parameters for Key Instruments

The QC1 database contains a vast number of parameters for each instrument. Below are tables summarizing some of the key QC1 parameters for two widely used VLT instruments: UVES and FORS1.

UVES (Ultraviolet and Visual Echelle Spectrograph) QC1 Parameters

| Parameter Name | Description | Typical Use |
|---|---|---|
| resolving_power | The spectral resolving power (R = λ/Δλ) measured from calibration lamp exposures. | Monitoring the instrument's ability to distinguish fine spectral features. |
| dispersion_rms | The root mean square of the wavelength calibration solution. | Assessing the accuracy of the wavelength calibration. |
| bias_level | The median level of the master bias frame. | Monitoring the baseline electronic offset of the detector. |
| read_noise | The read-out noise of the detector measured from bias frames. | Characterizing the detector noise, which impacts the signal-to-noise ratio of faint targets. |
| flat_field_flux | The mean flux in a master flat-field frame. | Tracking the stability of the calibration lamps and the throughput of the instrument. |

FORS1 (FOcal Reducer/low dispersion Spectrograph 1) QC1 Parameters

| Parameter Name | Description | Typical Use |
|---|---|---|
| zeropoint | The photometric zero point, which relates instrumental magnitudes to a standard magnitude system. | Monitoring the overall throughput of the telescope and instrument system. |
| seeing | The atmospheric seeing measured from standard star observations. | Characterizing the image quality delivered by the telescope and atmosphere. |
| strehl_ratio | The ratio of the observed peak intensity of a point source to the theoretical maximum peak intensity of a perfect telescope. | Assessing the performance of the adaptive optics system (if used). |
| dark_current | The rate at which charge is generated in the detector in the absence of light. | Monitoring the detector's thermal noise. |
| gain | The conversion factor between electrons and Analog-to-Digital Units (ADUs). | Characterizing the detector's electronic response. |

Experimental Protocols

This section provides detailed protocols for two common use cases of the ESO QC1 database: long-term instrument performance monitoring and data quality assessment for a specific scientific observation.

Protocol 1: Long-Term Monitoring of UVES Resolving Power

Objective: To monitor the spectral resolving power of the UVES instrument over a period of several years to identify any long-term trends or sudden changes that might indicate an instrument problem.

Methodology:

- Access the QC1 Database: Connect to the QC1 database using the qc1_plotter web interface.
- Select Instrument and Table: Choose the UVES instrument and the uves_wave table, which contains parameters from wavelength calibration frames.
- Select Parameters for Plotting:
  - Set the X-axis to mjd_obs (Modified Julian Date of observation) to plot against time.
  - Set the Y-axis to resolving_power.
- Filter the Data: To ensure a consistent dataset, apply filters based on instrument settings. For example, select a specific central wavelength setting and slit width that are frequently used for calibration.
- Generate the Plot: Execute the plotting function to visualize the resolving power as a function of time.
- Analyze the Trend:
  - Visually inspect the plot for any long-term drifts, periodic variations, or abrupt jumps in the resolving power.
  - Use the statistical tools in qc1_plotter to calculate the mean and standard deviation of the resolving power over different time intervals.
  - If a significant change is detected, investigate further by correlating it with instrument intervention logs or other QC1 parameters.
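The trend analysis in this protocol can also be run offline on exported (mjd_obs, resolving_power) pairs. The sketch below groups measurements into fixed time intervals, computes the mean and standard deviation per interval, and flags abrupt jumps between consecutive intervals. It does not reproduce the statistics built into qc1_plotter. The function names, the 3-sigma jump criterion, and the data are assumptions made for illustration.

```python
from statistics import mean, stdev


def interval_stats(points, interval_days):
    """Summarize (mjd_obs, value) pairs as (interval_start, mean, sd) per interval."""
    start = min(mjd for mjd, _ in points)
    bins = {}
    for mjd, value in points:
        index = int((mjd - start) // interval_days)
        bins.setdefault(index, []).append(value)
    return [
        (start + i * interval_days, mean(vals), stdev(vals) if len(vals) > 1 else 0.0)
        for i, vals in sorted(bins.items())
    ]


def find_jumps(stats, n_sigma=3.0):
    """Return start times of intervals whose mean shifted by more than
    n_sigma standard deviations of the preceding interval."""
    jumps = []
    for (_, prev_mean, prev_sd), (t, cur_mean, _) in zip(stats, stats[1:]):
        if prev_sd > 0 and abs(cur_mean - prev_mean) > n_sigma * prev_sd:
            jumps.append(t)
    return jumps


# Hypothetical data: resolving power near 42000, dropping to about 40000 at MJD 55090.
points = [(55000 + 10 * i, 42000 + (-1) ** i * 100) for i in range(9)]
points += [(55090 + 10 * i, 40000 + (-1) ** i * 100) for i in range(9)]

stats = interval_stats(points, interval_days=30)
jumps = find_jumps(stats)
print(jumps)
```

Any flagged interval start is a candidate date to compare against instrument intervention logs.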

Protocol 2: Assessing Data Quality for a FORS1 Science Observation

Objective: To assess the quality of the calibration data associated with a set of FORS1 science observations to ensure that the science data can be accurately calibrated.

Methodology:

- Identify Relevant Calibration Data: From the science observation's FITS header, identify the associated calibration files (e.g., bias, flat-field, and standard star observations).
- Access the QC1 Database: Use the qc1_browser to query the relevant QC1 tables for FORS1 (e.g., fors1_bias, fors1_img_flat, fors1_img_zerop).
- Retrieve QC1 Parameters for Bias Frames:
  - Query the fors1_bias table for the specific master bias frame used to calibrate the science data.
  - Check the read_noise and bias_level parameters. Compare them to the typical values for the FORS1 detector to ensure there were no electronic issues.
- Retrieve QC1 Parameters for Flat-Field Frames:
  - Query the fors1_img_flat table for the master flat-field frame.
  - Examine the flat_field_flux to check the stability of the calibration lamp.
  - Look for any quality flags or comments that might indicate issues with the flat-field.
- Retrieve QC1 Parameters for Photometric Standard Star Observations:
  - Query the fors1_img_zerop table for the photometric zero point measurements taken on the same night as the science observations.
  - Check the zeropoint value and its uncertainty. A stable and well-determined zero point is crucial for accurate flux calibration.
  - Note the measured seeing during the standard star observation as an indicator of the atmospheric conditions.
- Synthesize the Information: Based on the retrieved QC1 parameters, make an informed decision about the quality of the calibration data. If any parameters are outside the expected range, it may be necessary to use alternative calibration data or to flag the science data as potentially having calibration issues.
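The final synthesis step amounts to comparing each retrieved parameter against an expected range. A minimal sketch of that comparison follows. The ranges and measured values are placeholders, not real FORS1 limits, and the function name `assess_calibration` is hypothetical; actual limits would have to come from the instrument's quality control documentation.

```python
# Placeholder ranges for illustration only; these are NOT real FORS1 limits.
EXPECTED_RANGES = {
    "read_noise": (4.0, 6.0),
    "bias_level": (190.0, 210.0),
    "zeropoint": (27.0, 28.5),
}


def assess_calibration(measured, expected=EXPECTED_RANGES):
    """Map each missing or out-of-range parameter to a short reason."""
    problems = {}
    for name, (low, high) in expected.items():
        value = measured.get(name)
        if value is None:
            problems[name] = "missing"
        elif not (low <= value <= high):
            problems[name] = f"{value} outside [{low}, {high}]"
    return problems


# Hypothetical values retrieved for one night's calibration frames.
measured = {"read_noise": 5.1, "bias_level": 225.0, "zeropoint": 27.8}
problems = assess_calibration(measured)
print(problems or "all checked parameters within expected ranges")
```

An empty result means every checked parameter was present and in range; any entry is a prompt to look for alternative calibration data or to flag the science data.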


References

Application Notes and Protocols: A Step-by-Step Guide for Primary Next-Generation Sequencing (NGS) Data Quality Control (QC1), Retrieval, and Analysis

Audience: Researchers, scientists, and drug development professionals.

Introduction:

This document provides a comprehensive, step-by-step guide for the initial quality control (QC1) of raw next-generation sequencing (NGS) data. This foundational analysis is critical for ensuring the reliability and reproducibility of downstream applications, including variant calling, RNA sequencing analysis, and epigenetic studies. Adherence to these protocols will enable researchers to identify potential issues with sequencing data at the earliest stage, saving valuable time and resources.

QC1 Data Retrieval

The first step in any NGS data analysis pipeline is to retrieve the raw sequencing data, which is typically in the FASTQ format. FASTQ files contain the nucleotide sequence of each read and a corresponding quality score for every base.
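To make the FASTQ structure concrete, the sketch below reads records and decodes their quality strings. It assumes the common four-lines-per-record layout and Phred+33 quality encoding; files with wrapped sequence lines or an older encoding would need different handling. The function name `read_fastq` and the example record are invented for illustration.

```python
import io


def read_fastq(handle):
    """Yield (read_id, sequence, qualities) from a four-line-per-record FASTQ stream."""
    while True:
        header = handle.readline().rstrip("\n")
        if not header:
            break  # end of input
        sequence = handle.readline().rstrip("\n")
        handle.readline()  # separator line beginning with '+'
        quality = handle.readline().rstrip("\n")
        if not header.startswith("@") or len(sequence) != len(quality):
            raise ValueError(f"malformed FASTQ record: {header!r}")
        # Phred+33 (assumed): quality score = ASCII code of the character - 33
        yield header[1:], sequence, [ord(c) - 33 for c in quality]


# One hypothetical record: four bases with qualities 40, 40, 20 and 0.
example = io.StringIO("@read1\nACGT\n+\nII5!\n")
records = list(read_fastq(example))
print(records)
```

The same function works on a file opened in text mode, or on a gzip-compressed file opened with `gzip.open(path, "rt")`.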

Protocol for Data Retrieval:

-