TDBIA

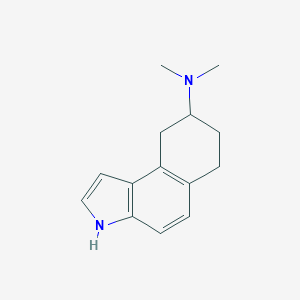

Description

The exact mass and the complexity rating of 6,7,8,9-tetrahydro-N,N-dimethyl-3H-benz(e)indol-8-amine are not reported. Its Medical Subject Headings (MeSH) classification is Chemicals and Drugs Category > Heterocyclic Compounds > Heterocyclic Compounds, Fused-Ring > Heterocyclic Compounds, 2-Ring > Indoles > Supplementary Records. The storage condition is not specified; please store according to the label instructions upon receipt of goods.

BenchChem offers this compound in high quality, suitable for many research applications. Different packaging options are available to accommodate customers' requirements. For the price, delivery time, and more detailed information about this compound, please contact info@benchchem.com.


Properties

| Property | Value |
|---|---|
| CAS No. | 121784-56-3 |
| Molecular Formula | C14H18N2 |
| Molecular Weight | 214.31 g/mol |
| IUPAC Name | N,N-dimethyl-6,7,8,9-tetrahydro-3H-benzo[e]indol-8-amine |
| InChI | InChI=1S/C14H18N2/c1-16(2)11-5-3-10-4-6-14-12(7-8-15-14)13(10)9-11/h4,6-8,11,15H,3,5,9H2,1-2H3 |
| InChI Key | HENBLLXAHPTGHX-UHFFFAOYSA-N |
| SMILES | CN(C)C1CCC2=C(C1)C3=C(C=C2)NC=C3 |
| Canonical SMILES | CN(C)C1CCC2=C(C1)C3=C(C=C2)NC=C3 |
| Synonyms | 6,7,8,9-tetrahydro-N,N-dimethyl-3H-benz(e)indol-8-amine; 6,7,8,9-tetrahydro-N,N-dimethyl-3H-benz(e)indol-8-amine, (+)-(R)-isomer; 6,7,8,9-tetrahydro-N,N-dimethyl-3H-benz(e)indol-8-amine, (-)-(S)-isomer; TDBIA |
| Origin of Product | United States |


A Technical Guide to Quantitative Tibial Bone Assessment for Early-Stage Osteoporosis Detection

An In-depth Analysis of Methodologies and Clinical Data for Researchers and Drug Development Professionals

Introduction

The early and accurate detection of osteoporosis is critical for preventing debilitating fractures and managing bone health. While Dual-Energy X-ray Absorptiometry (DXA) is the current gold standard for measuring bone mineral density (BMD), its accessibility can be limited. This has spurred research into alternative, cost-effective, and readily available screening methods. This technical guide explores the use of quantitative assessment of tibial bone characteristics as a promising avenue for the early detection of osteoporosis. This approach focuses on analyzing parameters such as cortical thickness and the speed of sound through the tibia, offering valuable insights into bone health.

Osteoporosis is a progressive systemic skeletal disease characterized by low bone mass and the deterioration of bone tissue's microarchitecture, leading to increased bone fragility and a higher risk of fractures. The condition is often "silent," with no symptoms until a fracture occurs. Therefore, early identification of individuals with low bone density (osteopenia) or osteoporosis is crucial for timely intervention.

Quantitative Data Summary

The following tables summarize key quantitative data from studies evaluating tibial bone assessment in relation to established osteoporosis diagnostic criteria.

Table 1: Tibial Cortical Thickness (TCT) in Different Bone Density Categories

| Bone Density Category | Mean Tibial Cortical Thickness (mm) |
|---|---|
| Normal | Not reported in this summary |
| Osteopenia | Not reported in this summary |
| Osteoporosis | Not reported in this summary; significantly lower than in the normal and osteopenic groups (P < 0.0001)[1] |

The mean TCT for the total study population (all categories combined) was 3.98 mm[1]. The study, which involved 62 patients (90% female) with a mean age of 57 years, found a significant difference in mean Tibial Cortical Thickness (TCT) among the normal, osteopenic, and osteoporotic groups[1].

Table 2: Correlation of Tibial Cortical Thickness with Bone Mineral Density (BMD) T-scores

| Parameter 1 | Parameter 2 | Correlation | Significance |
|---|---|---|---|
| Tibial Cortical Thickness (TCT) | Spine T-score (from DXA) | Direct and significant (r not reported in this summary) | P < 0.0001[1] |

TCT was directly and significantly correlated with the T-scores obtained from DXA, indicating that thinner tibial cortices are associated with lower bone mineral density[1].

Table 3: Diagnostic Accuracy of Tibial Cortical Thickness for Osteoporosis

| Diagnostic Test | Area Under the Curve (AUC) | Interpretation |
|---|---|---|
| Tibial Cortical Thickness (TCT) | Not reported in this summary | TCT can be a relatively accurate diagnostic tool for predicting osteoporosis[1] |

AUC values were graded on the following scale[1]: 0.9 or above is excellent, 0.8-0.89 is good, and 0.7-0.79 is fair.

Receiver Operating Characteristic (ROC) curve analysis was used to determine the optimal TCT cutoff for predicting osteoporosis[1].
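The ROC step can be sketched as follows. This is a minimal illustration, not the cited study's analysis: it assumes that a thinner cortex indicates disease (a patient is called positive when TCT is at or below the cutoff), computes the AUC as the Mann-Whitney probability, and picks the cutoff that maximizes Youden's J (sensitivity + specificity - 1), which is one common choice of "optimal" cutoff. The measurements and labels are hypothetical.

```python
# Sketch: ROC analysis for tibial cortical thickness (TCT) against DXA-based labels.
# Assumption: osteoporosis is predicted when TCT <= cutoff. Data are hypothetical.


def roc_points(tct_mm, is_osteoporotic):
    """Return (cutoff, sensitivity, specificity) for every observed TCT value."""
    positives = sum(is_osteoporotic)
    negatives = len(is_osteoporotic) - positives
    points = []
    for cutoff in sorted(set(tct_mm)):
        tp = sum(1 for t, y in zip(tct_mm, is_osteoporotic) if y and t <= cutoff)
        tn = sum(1 for t, y in zip(tct_mm, is_osteoporotic) if not y and t > cutoff)
        points.append((cutoff, tp / positives, tn / negatives))
    return points


def auc_mann_whitney(tct_mm, is_osteoporotic):
    """AUC = probability that a random osteoporotic case has a thinner cortex
    than a random non-osteoporotic case (ties count as 0.5)."""
    cases = [t for t, y in zip(tct_mm, is_osteoporotic) if y]
    controls = [t for t, y in zip(tct_mm, is_osteoporotic) if not y]
    score = 0.0
    for c in cases:
        for n in controls:
            score += 1.0 if c < n else (0.5 if c == n else 0.0)
    return score / (len(cases) * len(controls))


def youden_cutoff(tct_mm, is_osteoporotic):
    """Return the (cutoff, sensitivity, specificity) maximizing Youden's J."""
    return max(roc_points(tct_mm, is_osteoporotic), key=lambda p: p[1] + p[2] - 1)


# Hypothetical TCT values (mm) and labels (True = osteoporosis on DXA).
tct = [2.9, 3.1, 3.4, 3.6, 3.8, 4.0, 4.2, 4.5, 4.7, 5.0]
label = [True, True, True, False, True, False, False, False, False, False]

print(round(auc_mann_whitney(tct, label), 3))
print(youden_cutoff(tct, label))
```

Other cutoff criteria (for example, a fixed minimum sensitivity for screening) would select a different point on the same curve.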

Experimental Protocols

Detailed methodologies are crucial for the replication and validation of research findings. The following sections outline the key experimental protocols for assessing tibial bone characteristics.

Protocol 1: Measurement of Tibial Cortical Thickness (TCT) using Plain Radiography

Objective: To measure the cortical thickness of the tibia from standard anteroposterior (AP) knee radiographs.

Materials:

- Standard X-ray machine
- Digital imaging software with measurement tools

Procedure:

- Patient Positioning: The patient is positioned for a standard AP radiograph of the knee.
- Image Acquisition: A plain AP radiograph of the knee is taken.
- Measurement Location: The medial and lateral tibial cortices are measured at a point 10 cm distal to the proximal tibial joint line[1].
- Calculation: The sum of the medial and lateral cortical thicknesses is recorded as the total TCT[1]; alternatively, the mean of the two measurements can be used as the TCT[1]. Whichever convention is chosen should be applied consistently within a study.
- Data Analysis: The measured TCT values are then correlated with the patient's BMD T-scores obtained from DXA.
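The calculation and correlation steps can be sketched as below. The measurements and T-scores are hypothetical, and a hand-written Pearson coefficient is used so the sketch runs on any Python 3 version. Because the mean convention is simply the total divided by 2, the choice between "sum" and "mean" does not change the correlation coefficient, although it does change the numerical value of any diagnostic cutoff.

```python
import math


def total_tct(medial_mm, lateral_mm):
    """Total TCT: sum of the medial and lateral cortical thicknesses (mm)."""
    return medial_mm + lateral_mm


def mean_tct(medial_mm, lateral_mm):
    """Alternative convention: mean of the two cortical thicknesses (mm)."""
    return (medial_mm + lateral_mm) / 2


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical cortical measurements 10 cm distal to the proximal joint line (mm).
medial = [1.6, 1.8, 2.0, 2.2]
lateral = [1.4, 1.6, 1.8, 2.0]
spine_t_scores = [-3.0, -2.2, -1.6, -0.6]  # hypothetical DXA spine T-scores

totals = [total_tct(m, l) for m, l in zip(medial, lateral)]
means = [mean_tct(m, l) for m, l in zip(medial, lateral)]

r_total = pearson_r(totals, spine_t_scores)
r_mean = pearson_r(means, spine_t_scores)
print(f"r (total TCT) = {r_total:.3f}, r (mean TCT) = {r_mean:.3f}")
```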

Protocol 2: Dual-Energy X-ray Absorptiometry (DXA)

Objective: To measure bone mineral density (BMD) of the lumbar spine and femur, which serves as the gold standard for osteoporosis diagnosis.

Materials:

- DXA scanner (e.g., Osteosys Dexum T)[1]

Procedure:

- Patient Preparation: The patient lies on the DXA table.
- Scanning: The DXA scanner's arm passes over the areas of interest, typically the lumbar spine and hip[2]. The scanner emits two low-dose X-ray beams at different energy levels[2].
- Data Acquisition: The detector measures how much of each beam passes through the body[2].
- BMD Calculation: The software calculates areal BMD (g/cm²) from the difference in attenuation between the two beams, which allows the bone signal to be separated from that of the surrounding soft tissue.
- T-score Interpretation: The BMD measurement is reported as a T-score, the number of standard deviations by which the patient's BMD differs from the mean of a healthy young adult reference population[3].
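The T-score step, followed by the World Health Organization thresholds (normal at T ≥ -1.0, osteopenia between -1.0 and -2.5, osteoporosis at T ≤ -2.5), can be sketched as follows. The young-adult reference mean and standard deviation are hypothetical placeholders; real values come from the scanner manufacturer's site-specific reference database. The WHO categories are intended for postmenopausal women and men aged 50 and over.

```python
def t_score(bmd_g_cm2, young_adult_mean, young_adult_sd):
    """Standard deviations between the patient's BMD and the young-adult mean."""
    return (bmd_g_cm2 - young_adult_mean) / young_adult_sd


def who_category(t):
    """WHO densitometric classification from a T-score."""
    if t >= -1.0:
        return "normal"
    if t > -2.5:
        return "osteopenia"
    return "osteoporosis"


# Hypothetical reference values for one skeletal site (g/cm²).
REF_MEAN, REF_SD = 1.000, 0.110

for bmd in (0.95, 0.78, 0.70):
    t = t_score(bmd, REF_MEAN, REF_SD)
    print(f"BMD {bmd:.2f} g/cm² -> T = {t:+.2f} ({who_category(t)})")
```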

Protocol 3: Quantitative Ultrasound (QUS) of the Tibia

Objective: To assess bone properties, such as the speed of sound (SOS), in the tibia as an indicator of bone health.

Materials:

- Quantitative ultrasound scanner with a dual-transducer probe

Procedure:

- Patient Positioning: The patient is seated or lying down with the leg accessible.
- Probe Placement: A dual-transducer ultrasound probe is placed on the tibial shaft.
- Measurement: The device measures the propagation speed of the ultrasound wave through both the cortical and cancellous layers of the bone[4].
- Data Analysis: The measured SOS is used as an indicator of bone density and quality. One study reported a high correlation (r = 0.93) between ultrasound measurements of the tibia and BMD from DXA[4].
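The speed-of-sound measurement can be illustrated with a simple two-receiver geometry. This is an assumption for illustration, not a description of any particular device: if the same pulse reaches two receivers placed at different distances along the tibia, dividing the distance difference by the arrival-time difference cancels delays common to both paths (for example, the soft tissue under the probe and the electronics). The distances and times below are hypothetical.

```python
def speed_of_sound(d1_mm, t1_us, d2_mm, t2_us):
    """SOS in m/s from arrival times at two receivers along the bone axis.

    d1_mm, d2_mm: transmitter-to-receiver distances (mm), with d2 > d1.
    t1_us, t2_us: first-arrival times at each receiver (microseconds).
    """
    if t2_us <= t1_us or d2_mm <= d1_mm:
        raise ValueError("the farther receiver must be hit later")
    mm_per_us = (d2_mm - d1_mm) / (t2_us - t1_us)
    return mm_per_us * 1000.0  # 1 mm/µs = 1000 m/s


# Hypothetical receivers 20 mm and 40 mm from the transmitter.
print(speed_of_sound(20.0, 5.0, 40.0, 10.0))
```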

Signaling Pathways and Logical Relationships

The underlying biological mechanisms and the logical framework for using tibial assessment in osteoporosis detection are illustrated below.

References

- 1. Evaluation of the tibial cortical thickness accuracy in osteoporosis diagnosis in comparison with dual energy X-ray absorptiometry - PMC [pmc.ncbi.nlm.nih.gov]

- 2. The Importance of DEXA Imaging in Early Detection of Osteoporosis — Imaging Specialists [imagingsc.com]

- 3. mdpi.com [mdpi.com]

- 4. Quantitative assessment of osteoporosis from the tibia shaft by ultrasound techniques - PubMed [pubmed.ncbi.nlm.nih.gov]

Unveiling the Intricacies of Distal Tibia Microarchitecture: A Technical Guide for Researchers


This technical guide provides a comprehensive overview of the microarchitecture of the distal tibia, an area of significant interest in bone research and drug development due to its susceptibility to fracture and its role in weight-bearing. This document is intended for researchers, scientists, and professionals in the pharmaceutical industry, offering a detailed exploration of quantitative data, experimental protocols, and key signaling pathways that govern the structural integrity of this critical anatomical site.

Quantitative Analysis of Distal Tibia Microarchitecture

The microarchitecture of the distal tibia is a critical determinant of its mechanical competence. High-resolution peripheral quantitative computed tomography (HR-pQCT) is a non-invasive imaging technique that allows for the in vivo assessment of bone microarchitecture. The following tables summarize key quantitative parameters of the distal tibia trabecular and cortical bone from various studies, providing a comparative reference for researchers.

Table 1: Trabecular Bone Microarchitecture of the Human Distal Tibia

| Parameter | Description | Reported Range |
|---|---|---|
| Bone Volume Fraction (BV/TV) | The fraction of the total tissue volume occupied by bone. | 0.13 - 0.25 |
| Trabecular Number (Tb.N) | The average number of trabeculae per unit length. | 1.5 - 2.5 mm⁻¹ |
| Trabecular Thickness (Tb.Th) | The average thickness of the trabeculae. | 0.08 - 0.15 mm |
| Trabecular Separation (Tb.Sp) | The average distance between trabeculae. | 0.4 - 0.7 mm |
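The four trabecular parameters are not independent. Under the classical parallel-plate model (an idealization that treats trabeculae as evenly spaced plates; direct 3D measurements do not rely on it), Tb.Th = BV/TV ÷ Tb.N and Tb.Sp = (1 - BV/TV) ÷ Tb.N, so that Tb.Th + Tb.Sp = 1/Tb.N. The sketch below applies these relations to values inside the ranges in the table; it is a consistency check, not how the tabulated values were obtained.

```python
def plate_model(bv_tv, tb_n_per_mm):
    """Parallel-plate estimates of trabecular thickness and separation (mm)."""
    tb_th = bv_tv / tb_n_per_mm
    tb_sp = (1.0 - bv_tv) / tb_n_per_mm
    return tb_th, tb_sp


# Example inside the tabulated ranges: BV/TV = 0.20, Tb.N = 2.0 mm^-1.
th, sp = plate_model(0.20, 2.0)
print(f"Tb.Th = {th:.2f} mm, Tb.Sp = {sp:.2f} mm, Tb.Th + Tb.Sp = {th + sp:.2f} mm")
```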

Table 2: Cortical Bone Microarchitecture of the Human Distal Tibia

| Parameter | Description | Reported Range |
|---|---|---|
| Cortical Thickness (Ct.Th) | The average thickness of the cortical shell. | 0.8 - 1.5 mm |
| Cortical Porosity (Ct.Po) | The fraction of the cortical bone volume that is porous. | 1.0 - 4.0 % |
| Cortical Bone Mineral Density (Ct.BMD) | The density of the cortical bone. | 800 - 950 mg HA/cm³ |

Experimental Protocols for Assessing Distal Tibia Microarchitecture

A thorough understanding of the distal tibia's microarchitecture requires a multi-faceted approach, combining advanced imaging techniques with traditional histological and biomechanical assessments. This section provides detailed methodologies for key experiments.

Micro-Computed Tomography (µCT) Analysis

Micro-computed tomography (µCT) is a high-resolution ex vivo imaging technique that provides detailed three-dimensional information about bone microarchitecture.

Experimental Workflow for µCT Analysis

Caption: Workflow for µCT analysis of the distal tibia.

Detailed Protocol:

- Sample Preparation:
  - Fixation: Immediately following extraction, fix distal tibia samples in 10% neutral buffered formalin for 48-72 hours at 4°C.
  - Storage: After fixation, transfer the samples to 70% ethanol for long-term storage at 4°C, ensuring they are fully submerged.
- Image Acquisition:
  - Scanning: Scan the samples using a high-resolution µCT system. Typical scanning parameters for a human distal tibia might include an isotropic voxel size of 10-20 µm, a tube voltage of 55-70 kVp, and a current of 100-145 µA.
  - Region of Interest (ROI): Define a standardized region of interest for analysis. A common approach for the distal tibia is to start the scan at a fixed distance (e.g., 22.5 mm) proximal to the tibial plafond and acquire a set number of slices (e.g., 110 slices, corresponding to a 9.02 mm section).[1] Note that 110 slices span 9.02 mm only at a slice spacing of 82 µm; at the 10-20 µm voxel sizes above, the same 9.02 mm section corresponds to roughly 450-900 slices.
- Image Reconstruction and Analysis:
  - Reconstruction: Reconstruct the acquired 2D projection images into a 3D volumetric dataset using the manufacturer's software.
  - Segmentation: Segment the bone from the bone marrow using a global thresholding algorithm.
  - Quantitative Analysis: Perform a 3D analysis on the segmented bone volume to calculate microarchitectural parameters such as Bone Volume Fraction (BV/TV), Trabecular Number (Tb.N), Trabecular Thickness (Tb.Th), and Trabecular Separation (Tb.Sp).[1]
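The segmentation and BV/TV steps can be sketched as follows. The protocol only specifies "a global thresholding algorithm"; Otsu's method (choose the threshold that maximizes the between-class variance of grey values) is used here as one common example of such an algorithm. The voxel values are a hypothetical, flattened list of grey values from inside the trabecular volume of interest; many laboratories instead apply a fixed, density-calibrated threshold.

```python
def otsu_threshold(values):
    """Global threshold maximizing between-class variance (Otsu's method)."""
    n = len(values)
    best_t, best_var = None, -1.0
    # Skip the smallest value so both classes are always non-empty.
    for t in sorted(set(values))[1:]:
        low = [v for v in values if v < t]
        high = [v for v in values if v >= t]
        w0, w1 = len(low) / n, len(high) / n
        m0, m1 = sum(low) / len(low), sum(high) / len(high)
        between = w0 * w1 * (m0 - m1) ** 2
        if between > best_var:
            best_t, best_var = t, between
    return best_t


def bone_volume_fraction(values, threshold):
    """BV/TV: fraction of voxels in the volume of interest classified as bone."""
    return sum(1 for v in values if v >= threshold) / len(values)


# Hypothetical grey values: six marrow voxels and four bone voxels.
voxels = [100, 110, 120, 105, 115, 95, 800, 820, 790, 810]
t = otsu_threshold(voxels)
print(t, bone_volume_fraction(voxels, t))
```

A full analysis would apply the same threshold to a 3D array and compute Tb.N, Tb.Th, and Tb.Sp from the binary image; only the thresholding and BV/TV logic is shown here.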

Undecalcified Bone Histomorphometry

Histomorphometry of undecalcified bone sections provides crucial information on cellular activity and bone matrix composition.

Experimental Workflow for Undecalcified Bone Histomorphometry

Caption: Workflow for undecalcified bone histomorphometry.

Detailed Protocol:

- Sample Preparation:
  - Sectioning: Using a heavy-duty microtome equipped with a tungsten carbide knife, cut 5-10 µm thick sections.
- Staining:
  - Goldner's Trichrome Stain: This stain differentiates mineralized bone, osteoid, and cellular components.[2][4]
    - Stain with Weigert's iron hematoxylin.
    - Differentiate in acid alcohol.
    - Stain with a solution containing Ponceau de Xylidine and Acid Fuchsin.
    - Treat with phosphomolybdic acid.
    - Counterstain with Light Green or Fast Green.
    - Results: Mineralized bone stains green, osteoid stains red/orange, and cell nuclei stain dark blue/black.[5]
  - Von Kossa Stain: This method detects mineralized bone by staining the phosphate in the hydroxyapatite.[6][7]
    - Incubate sections in a silver nitrate solution under a bright light.
    - Rinse with distilled water.
    - Treat with sodium thiosulfate to remove unreacted silver.
    - Counterstain with a nuclear stain such as Nuclear Fast Red.
    - Results: Mineralized bone appears black.
- Histomorphometric Analysis:
  - Acquire images of the stained sections using a light microscope equipped with a digital camera.
  - Use image analysis software to quantify static and dynamic parameters of bone remodeling, such as osteoid volume/bone volume (OV/BV), osteoclast surface/bone surface (Oc.S/BS), and mineral apposition rate (MAR) if fluorochrome labels were administered in vivo.
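Two of the parameters named above reduce to simple arithmetic once the image measurements are available. MAR is the mean distance between the two fluorochrome labels divided by the time between label administrations, and OV/BV is a ratio usually reported as a percentage (on 2D sections, areas are used as estimates of volumes). The measurement values and function names below are hypothetical.

```python
def mineral_apposition_rate(interlabel_distances_um, interlabel_days):
    """MAR (µm/day): mean double-label separation divided by the label interval."""
    mean_distance = sum(interlabel_distances_um) / len(interlabel_distances_um)
    return mean_distance / interlabel_days


def ratio_percent(numerator, denominator):
    """Express a histomorphometric ratio such as OV/BV or Oc.S/BS in percent."""
    return 100.0 * numerator / denominator


# Hypothetical measurements from one section.
mar = mineral_apposition_rate([7.0, 8.0, 9.0], interlabel_days=10)
ov_bv = ratio_percent(0.5, 20.0)  # osteoid area vs. bone area, mm²
print(f"MAR = {mar:.2f} µm/day, OV/BV = {ov_bv:.1f} %")
```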

Biomechanical Testing

Biomechanical testing provides a direct measure of the mechanical properties of the distal tibia, such as its strength and stiffness.

Experimental Workflow for Biomechanical Testing

Caption: Workflow for biomechanical testing of the distal tibia.

Detailed Protocols:

- Uniaxial Compression Testing:
  - Sample Preparation: Prepare cylindrical or cubic bone samples from the distal tibia. The ends of the samples should be made parallel and smooth. Embed the ends in a potting material such as PMMA to ensure a flat loading surface.
  - Testing: Use a universal testing machine to apply a compressive load at a constant strain rate (e.g., 0.5% per second) until failure.
  - Data Analysis: Record the load and displacement data to generate a load-displacement curve. From this curve, calculate the ultimate load (divide it by the specimen's cross-sectional area to obtain the ultimate compressive strength), the stiffness (slope of the linear portion), and the energy to failure (area under the curve).
- Torsion Testing:
  - Sample Preparation: Prepare standardized bone samples, often with a defined gauge length. Securely fix the ends of the sample in grips, preventing rotation at the interface.
  - Testing: Apply a torsional load at a constant angular displacement rate until the sample fractures.
  - Data Analysis: Record the torque and angular displacement to create a torque-rotation curve. From this, determine the torsional rigidity, maximum torque, and energy to failure.
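The curve analysis in both tests can be sketched with the same few functions. This assumes the curve is sampled at discrete points and that the analyst chooses the index window of the linear region (the hypothetical `fit_start`/`fit_end` parameters); stiffness is a least-squares slope over that window, and energy is the trapezoidal area under the recorded curve. Applied to torque (N·mm) and rotation (rad), the same functions give maximum torque, a rotational stiffness, and energy to failure. All data are hypothetical.

```python
def linear_slope(x, y):
    """Least-squares slope of y against x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((a - mx) ** 2 for a in x)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return sxy / sxx


def trapezoid_area(x, y):
    """Area under a sampled curve using the trapezoidal rule."""
    return sum((x[i + 1] - x[i]) * (y[i + 1] + y[i]) / 2 for i in range(len(x) - 1))


def analyze_curve(displacement_mm, load_n, fit_start, fit_end):
    """Ultimate load (N), stiffness (N/mm), and energy to failure (N·mm = mJ)."""
    return {
        "ultimate_load_N": max(load_n),
        "stiffness_N_per_mm": linear_slope(displacement_mm[fit_start:fit_end],
                                           load_n[fit_start:fit_end]),
        "energy_to_failure_mJ": trapezoid_area(displacement_mm, load_n),
    }


def ultimate_strength_mpa(ultimate_load_n, cross_section_mm2):
    """Ultimate compressive strength: N/mm² equals MPa."""
    return ultimate_load_n / cross_section_mm2


# Hypothetical compression test on a specimen with a 50 mm² cross-section.
disp = [0.0, 0.1, 0.2, 0.3, 0.4, 0.5]
load = [0.0, 200.0, 400.0, 600.0, 650.0, 500.0]
result = analyze_curve(disp, load, fit_start=0, fit_end=4)
print(result, ultimate_strength_mpa(result["ultimate_load_N"], 50.0))
```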

Key Signaling Pathways in Bone Microarchitecture Regulation

The microarchitecture of the distal tibia is dynamically maintained through a complex interplay of signaling pathways that regulate the activity of bone-forming osteoblasts and bone-resorbing osteoclasts.

Wnt Signaling Pathway

The Wnt signaling pathway is a crucial regulator of bone formation and homeostasis.[8]

Caption: Canonical Wnt signaling pathway in bone formation.

RANK/RANKL/OPG Signaling Pathway

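The RANK/RANKL/OPG signaling axis is the primary regulator of osteoclast differentiation and activity, and thus of bone resorption.[9][10] RANKL, produced mainly by osteoblast-lineage cells, binds its receptor RANK on osteoclast precursors to drive osteoclastogenesis, whereas osteoprotegerin (OPG) acts as a soluble decoy receptor that binds RANKL and blocks this interaction.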

Caption: RANK/RANKL/OPG signaling in osteoclastogenesis.

Bone Morphogenetic Protein (BMP) Signaling Pathway

BMPs are growth factors that play a pivotal role in bone formation by inducing the differentiation of mesenchymal stem cells into osteoblasts.[7][11]

Caption: BMP signaling pathway in osteoblast differentiation.

References

- 1. Bone microarchitectural analysis using ultra-high-resolution CT in tiger vertebra and human tibia - PMC [pmc.ncbi.nlm.nih.gov]

- 2. Undecalcified Bone Preparation for Histology, Histomorphometry and Fluorochrome Analysis - PMC [pmc.ncbi.nlm.nih.gov]

- 3. m.youtube.com [m.youtube.com]

- 4. researchgate.net [researchgate.net]

- 5. researchgate.net [researchgate.net]

- 6. researchgate.net [researchgate.net]

- 7. TGF-β and BMP Signaling in Osteoblast Differentiation and Bone Formation - PMC [pmc.ncbi.nlm.nih.gov]

- 8. Role of Wnt Signaling in Bone Remodeling and Repair - PMC [pmc.ncbi.nlm.nih.gov]

- 9. researchgate.net [researchgate.net]

- 10. RANKL/RANK signaling pathway | Pathway - PubChem [pubchem.ncbi.nlm.nih.gov]

- 11. TGF-β and BMP Signaling in Osteoblast Differentiation and Bone Formation [ijbs.com]

An In-depth Technical Guide to Imaging Techniques for the Distal Tibia

For Researchers, Scientists, and Drug Development Professionals

This guide provides a comprehensive review of established and emerging imaging modalities for the assessment of the distal tibia. It is designed to offer researchers, scientists, and professionals in drug development a detailed technical understanding of these techniques, facilitating their application in preclinical and clinical research.

Overview of Imaging Modalities

The distal tibia, a critical weight-bearing structure of the ankle joint, is susceptible to a range of pathologies, from acute fractures to chronic degenerative conditions. Accurate and detailed imaging is paramount for diagnosis, treatment planning, and the evaluation of therapeutic interventions. This guide explores the core imaging techniques: X-ray radiography, computed tomography (CT), magnetic resonance imaging (MRI), and ultrasound (US), with a focus on their technical specifications, experimental protocols, and quantitative performance.

Data Presentation: Quantitative Comparison of Imaging Modalities

The selection of an appropriate imaging modality is contingent on the specific clinical or research question. The following tables summarize the quantitative performance of each technique for different pathologies of the distal tibia.

| Pathology | Imaging Modality | Sensitivity | Specificity | Key Advantages | Limitations | Citation(s) |
|---|---|---|---|---|---|---|
| Distal Tibia Fractures | X-ray Radiography | Moderate | Moderate-High | Widely available, low cost, rapid acquisition | Limited visualization of complex fracture patterns and soft tissues | [1] |
| | Computed Tomography (CT) | High (diagnostic yield of 23% for articular fractures in distal-third tibial shaft fractures) | High | Excellent for detailed fracture characterization and pre-operative planning | Ionizing radiation; higher cost than X-ray | [2][3][4] |
| Syndesmotic Injury | Weight-Bearing CT (WBCT) | High (95.8% for volumetric measurement) | High (83.3% for volumetric measurement) | Allows functional assessment of joint stability under physiological load | Limited availability; ionizing radiation (cone-beam WBCT doses are generally lower than those of conventional CT) | [5][6][7][8] |
| Soft Tissue & Ligamentous Injury | Ultrasound (US) | High (up to 90% for lateral ankle ligaments) | High | Real-time dynamic imaging, no ionizing radiation, cost-effective | Operator-dependent; limited visualization of intra-articular structures | [9][10][11][12][13] |
| | Magnetic Resonance Imaging (MRI) | High | High | Superior soft tissue contrast; excellent for ligament, tendon, and cartilage assessment | Higher cost, longer acquisition time, contraindications (e.g., some pacemakers) | [14][15] |
| Articular Cartilage Lesions | Magnetic Resonance Imaging (MRI) (3T with 3D-DESS) | Grade I: 8.8%, Grade II: 67.9%, Grade III: 74.1%, Grade IV: 83.3% | High (99.2% - 99.8%) | Non-invasive, detailed assessment of cartilage morphology and some compositional information | Lower sensitivity for early-stage (Grade I) lesions | [16][17][18] |
| | Weight-Bearing CT Arthrography (WBCTa) | High (used as the reference standard in some studies) | High | Provides imaging under load, potentially revealing lesions not seen on non-weight-bearing MRI | Invasive (requires contrast injection); ionizing radiation | [19] |

The cartilage figures in the last two rows come from knee studies[17][19].
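The sensitivity and specificity values in the table are ratios taken from a 2×2 table comparing each modality against a reference standard. The sketch below shows the definitions with hypothetical counts; the positive and negative predictive values are included because, unlike sensitivity and specificity, they depend on disease prevalence in the study sample.

```python
def diagnostic_accuracy(tp, fp, tn, fn):
    """Accuracy metrics from true/false positives and negatives."""
    return {
        "sensitivity": tp / (tp + fn),  # diseased cases correctly detected
        "specificity": tn / (tn + fp),  # non-diseased cases correctly cleared
        "ppv": tp / (tp + fp),          # probability of disease given a positive test
        "npv": tn / (tn + fn),          # probability of no disease given a negative test
    }


# Hypothetical counts against a reference standard (e.g., arthroscopy).
metrics = diagnostic_accuracy(tp=45, fp=10, tn=90, fn=5)
for name, value in metrics.items():
    print(f"{name}: {value:.1%}")
```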

Experimental Protocols

Detailed and standardized experimental protocols are crucial for reproducible and comparable research outcomes. This section outlines key protocols for each imaging modality.

X-ray Radiography for Distal Tibia Fractures

- Standard Views:
  - Anteroposterior (AP)
  - Lateral
  - Mortise (AP with 15-20 degrees of internal rotation)
- Procedure:
  - Position the patient supine on the imaging table.
  - For the AP view, ensure the foot is in a neutral position with the ankle at 90 degrees.
  - For the lateral view, turn the patient onto the affected side with the knee slightly flexed.
  - For the mortise view, internally rotate the entire leg and foot approximately 15-20 degrees to bring the intermalleolar plane parallel to the detector.
  - Center the X-ray beam on the ankle joint.
- Exposure Parameters:
  - kVp: 60-70
  - mAs: 3-5 (varies with patient size and equipment)

Computed Tomography (CT) for Pilon Fractures

- Patient Positioning: Supine, feet first.
- Scan Range: From the tibial tuberosity to the plantar aspect of the foot.
- Acquisition:
  - Helical acquisition with thin slices (≤ 1.0 mm).
  - Tube voltage: 120 kVp
  - Tube current: Automated dose modulation or a fixed 150-250 mAs.
- Reconstruction:

Magnetic Resonance Imaging (MRI) of the Ankle at 3T

- Patient Positioning: Supine, feet first, with the ankle in a dedicated ankle coil at a 90-degree angle.
- Standard Sequences:
  - Sagittal T1-weighted or Proton Density (PD)-weighted: Provides excellent anatomical detail.
  - Sagittal T2-weighted with fat saturation or STIR: Sensitive for detecting fluid and edema.
  - Axial PD-weighted with and without fat saturation: Useful for assessing tendons and ligaments in cross-section.
  - Coronal PD-weighted with fat saturation: Provides a comprehensive view of the articular surfaces and collateral ligaments.
- Advanced Sequences for Cartilage Assessment:
  - 3D Double-Echo Steady-State (3D-DESS): High-resolution imaging for detailed morphological assessment of articular cartilage.[17]
  - T2 Mapping: Quantitative assessment of cartilage matrix composition.
- Typical Parameters (3T):
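For the T2 mapping sequence listed above, each voxel's signal across echo times is commonly modeled as a mono-exponential decay, S(TE) = S0·exp(-TE/T2). The sketch below fits that model by linear regression of ln(S) on TE (slope = -1/T2) using a synthetic, noise-free signal with hypothetical values. With real data, low-signal late echoes are distorted by noise, so many pipelines prefer a nonlinear fit or exclude such echoes; this is only the simplest form of the fit.

```python
import math


def fit_t2(echo_times_ms, signals):
    """Fit S(TE) = S0 * exp(-TE / T2) via linear regression of ln(S) on TE.

    Returns (S0, T2 in ms). All signals must be positive.
    """
    logs = [math.log(s) for s in signals]
    n = len(echo_times_ms)
    mean_te = sum(echo_times_ms) / n
    mean_log = sum(logs) / n
    sxx = sum((te - mean_te) ** 2 for te in echo_times_ms)
    sxy = sum((te - mean_te) * (lg - mean_log) for te, lg in zip(echo_times_ms, logs))
    slope = sxy / sxx                        # equals -1 / T2
    intercept = mean_log - slope * mean_te   # equals ln(S0)
    return math.exp(intercept), -1.0 / slope


# Synthetic single-voxel decay (hypothetical S0 = 1000, T2 = 40 ms).
tes = [10.0, 20.0, 30.0, 40.0, 50.0]
sig = [1000.0 * math.exp(-te / 40.0) for te in tes]
s0, t2 = fit_t2(tes, sig)
print(f"S0 = {s0:.1f}, T2 = {t2:.1f} ms")
```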

Ultrasound (US) for Soft Tissue and Ligamentous Injury

- Transducer: High-frequency linear array transducer (10-18 MHz).
- Patient Positioning:
  - Anterior Talofibular Ligament (ATFL): Patient supine with the foot in slight plantar flexion and internal rotation.
  - Calcaneofibular Ligament (CFL): Patient supine with the foot in dorsiflexion.
  - Posterior Talofibular Ligament (PTFL): Patient prone with the foot hanging off the edge of the examination table.
  - Syndesmosis: Patient supine with the foot in a neutral position. Dynamic assessment with external rotation stress can be performed.
- Imaging Protocol:
  - Begin with a survey scan in both the longitudinal and transverse planes.
  - Perform a systematic evaluation of all relevant ligaments and tendons.
  - Use dynamic imaging with passive or active range of motion to assess for ligamentous instability and tendon subluxation.
  - Compare with the contralateral, asymptomatic side.

Visualization of Signaling Pathways and Experimental Workflows

The following diagrams, generated using the DOT language, illustrate key biological pathways involved in distal tibia pathology and standardized workflows for imaging-based research.

Signaling Pathways in Bone Fracture Healing

The healing of a distal tibial fracture is a complex biological process involving a cascade of signaling molecules. Two of the most critical pathways are the Transforming Growth Factor-beta (TGF-β) and Vascular Endothelial Growth Factor (VEGF) signaling pathways.

Caption: TGF-β signaling pathway in fracture healing.[24][25][26][27][28][29]

Caption: VEGF signaling pathway in fracture healing.[30][31][32][33][34]

Experimental Workflow for Imaging-Based Assessment

A standardized workflow is essential for conducting rigorous imaging-based research on the distal tibia. The following diagram outlines a typical experimental workflow.

Caption: A standardized experimental workflow for imaging-based research of the distal tibia.

References

- 1. Diagnosis of union of distal tibia fractures: accuracy and interobserver reliability - PubMed [pubmed.ncbi.nlm.nih.gov]

- 2. researchgate.net [researchgate.net]

- 3. Diagnosing Fractures of the Distal Tibial Articular Surface in Tibia Shaft Fractures: Is Computed Tomography Always Necessary? - PubMed [pubmed.ncbi.nlm.nih.gov]

- 4. researchgate.net [researchgate.net]

- 5. Volume Measurements on Weightbearing Computed Tomography Can Detect Subtle Syndesmotic Instability - PMC [pmc.ncbi.nlm.nih.gov]

- 6. Weight-bearing CT Useful for Diagnosis of Subtle Syndesmotic Instability - Mass General Advances in Motion [advances.massgeneral.org]

- 7. Diagnostic Accuracy of Weightbearing CT in Detecting Subtle Chronic Syndesmotic Instability: A Prospective Comparative Study - PMC [pmc.ncbi.nlm.nih.gov]

- 8. Can Weightbearing Cone-beam CT Reliably Differentiate Between Stable and Unstable Syndesmotic Ankle Injuries? A Systematic Review and Meta-analysis - PMC [pmc.ncbi.nlm.nih.gov]

- 9. The accuracy of diagnostic ultrasound imaging for musculoskeletal soft tissue pathology of the extremities: a comprehensive review of the literature - PMC [pmc.ncbi.nlm.nih.gov]

- 10. Ultrasound imaging in musculoskeletal injuries-What the Orthopaedic surgeon needs to know - PMC [pmc.ncbi.nlm.nih.gov]

- 11. How Accurate Is Ultrasound for Diagnosing Soft Tissue Injuries? [ultrasoundphysio.ie]

- 12. What Are the Benefits of Using Ultrasound for Diagnosing Soft Tissue Injuries? | Our Blog [glmi.com]

- 13. Research Portal [researchportal.murdoch.edu.au]

- 14. The value of magnetic resonance imaging in the preoperative diagnosis of tibial plateau fractures: a systematic literature review - PMC [pmc.ncbi.nlm.nih.gov]

- 15. ajronline.org [ajronline.org]

- 16. mdpi.com [mdpi.com]

- 17. Accuracy of cartilage-specific 3-Tesla 3D-DESS magnetic resonance imaging in the diagnosis of chondral lesions: comparison with knee arthroscopy - PMC [pmc.ncbi.nlm.nih.gov]

- 18. radiopaedia.org [radiopaedia.org]

- 19. Diagnostic value of MRI for detection of knee cartilage lesions vs. weight-bearing CT arthrography (WBCTa) - PubMed [pubmed.ncbi.nlm.nih.gov]

- 20. ota.org [ota.org]

- 21. MR imaging of the ankle at 3 Tesla and 1.5 Tesla: protocol optimization and application to cartilage, ligament and tendon pathology in cadaver specimens - PubMed [pubmed.ncbi.nlm.nih.gov]

- 22. mrimaster.com [mrimaster.com]

- 23. researchgate.net [researchgate.net]

- 24. Molecular signaling in bone fracture healing and distraction osteogenesis. | Semantic Scholar [semanticscholar.org]

- 25. journals.biologists.com [journals.biologists.com]

- 26. TGF‐β/Smad2 signalling regulates enchondral bone formation of Gli1+ periosteal cells during fracture healing - PMC [pmc.ncbi.nlm.nih.gov]

- 27. Transforming Growth Factor Beta Family: Insight into the Role of Growth Factors in Regulation of Fracture Healing Biology and Potential Clinical Applications - PMC [pmc.ncbi.nlm.nih.gov]

- 28. academic.oup.com [academic.oup.com]

- 29. Imaging transforming growth factor-beta signaling dynamics and therapeutic response in breast cancer bone metastasis - PubMed [pubmed.ncbi.nlm.nih.gov]

- 30. Angiogenesis in Bone Regeneration - PMC [pmc.ncbi.nlm.nih.gov]

- 31. The roles of vascular endothelial growth factor in bone repair and regeneration - PMC [pmc.ncbi.nlm.nih.gov]

- 32. JCI - Osteoblast-derived VEGF regulates osteoblast differentiation and bone formation during bone repair [jci.org]

- 33. JCI - Synergistic enhancement of bone formation and healing by stem cell–expressed VEGF and bone morphogenetic protein-4 [jci.org]

- 34. Synergistic Effects of Vascular Endothelial Growth Factor on Bone Morphogenetic Proteins Induced Bone Formation In Vivo: Influencing Factors and Future Research Directions - PMC [pmc.ncbi.nlm.nih.gov]

An In-depth Technical Guide on the Wnt/β-catenin Signaling Pathway and its Relevance in Pediatric Bone Development

For Researchers, Scientists, and Drug Development Professionals

Introduction

Pediatric bone development is a complex and highly regulated process involving the coordinated actions of numerous signaling pathways. Among these, the canonical Wnt/β-catenin signaling pathway has emerged as a critical regulator of skeletal patterning, bone formation, and homeostasis. Dysregulation of this pathway is implicated in a variety of skeletal diseases, making it a key area of research for the development of novel therapeutic interventions. This guide provides a comprehensive overview of the Wnt/β-catenin pathway's role in pediatric bone development, with a focus on quantitative data, detailed experimental protocols, and visual representations of key processes.

The Wnt signaling pathway is integral to skeletal biology, influencing processes from embryonic development through to bone maintenance and repair. The canonical Wnt/β-catenin pathway, in particular, is essential for directing the fate of mesenchymal stem cells towards the osteoblast (bone-forming) lineage. It does so by suppressing adipogenic transcription factors such as PPARγ while inducing key osteogenic transcription factors such as Runx2 and osterix.

1. The Canonical Wnt/β-catenin Signaling Pathway in Bone Development

The Wnt pathway is broadly divided into the canonical (β-catenin-dependent) and non-canonical (β-catenin-independent) pathways. The canonical pathway is the primary focus of this guide due to its well-established role in osteoblast differentiation.

In the absence of a Wnt ligand, cytoplasmic β-catenin is targeted for degradation by a "destruction complex" consisting of Axin, Adenomatous Polyposis Coli (APC), Casein Kinase 1α (CK1α), and Glycogen Synthase Kinase 3β (GSK3β). Within this complex, CK1α and GSK3β sequentially phosphorylate β-catenin, marking it for ubiquitination and subsequent proteasomal degradation. This keeps intracellular β-catenin levels low.

The pathway is activated when a Wnt ligand binds to a Frizzled (Fz) receptor and its co-receptor, Low-density lipoprotein receptor-related protein 5 or 6 (LRP5/6). This binding event leads to the recruitment of the Dishevelled (Dvl) protein, which in turn inhibits the destruction complex. As a result, β-catenin is no longer phosphorylated and accumulates in the cytoplasm. This stabilized β-catenin then translocates to the nucleus, where it partners with T-cell factor/lymphoid enhancer factor (TCF/LEF) transcription factors to activate the expression of Wnt target genes, many of which are crucial for osteoblast differentiation and function.
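The on/off logic described in the two paragraphs above can be written as a qualitative Boolean sketch. It is a teaching aid, not a kinetic model: each variable is simply "active" or "inactive", and the `gsk3b_active` flag is a hypothetical perturbation showing that, by the same logic, losing GSK3β activity stabilizes β-catenin even without a Wnt ligand.

```python
from dataclasses import dataclass


@dataclass
class WntReadout:
    destruction_complex_active: bool
    beta_catenin_degraded: bool
    tcf_lef_targets_on: bool


def canonical_wnt(wnt_bound, gsk3b_active=True):
    """Boolean sketch of canonical Wnt/β-catenin signaling.

    wnt_bound: a Wnt ligand is bound to Frizzled and LRP5/6.
    gsk3b_active: hypothetical perturbation flag for GSK3β activity.
    """
    # Wnt binding recruits Dishevelled, which inhibits the destruction complex.
    complex_active = not wnt_bound
    # β-catenin is phosphorylated (and then degraded) only if the complex and GSK3β act.
    degraded = complex_active and gsk3b_active
    # Stabilized β-catenin enters the nucleus and partners with TCF/LEF.
    targets_on = not degraded
    return WntReadout(complex_active, degraded, targets_on)


for wnt, gsk in [(False, True), (True, True), (False, False)]:
    print(f"Wnt bound={wnt}, GSK3β active={gsk}: {canonical_wnt(wnt, gsk)}")
```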

A Technical Guide to High-Resolution Peripheral Quantitative Computed Tomography (HR-pQCT) for Trabecular and Dense Bone Image Analysis

For Researchers, Scientists, and Drug Development Professionals

High-Resolution Peripheral Quantitative Computed Tomography (HR-pQCT) is a non-invasive, low-radiation imaging modality that provides detailed three-dimensional assessment of bone microstructure at peripheral skeletal sites, most commonly the distal radius and tibia.[1][2] Its ability to separately analyze cortical and trabecular bone compartments makes it an invaluable tool in osteoporosis research, clinical trials for metabolic bone diseases, and the development of novel therapeutics targeting skeletal health.[2][3][4][5] This guide provides an in-depth overview of the technical aspects of HR-pQCT for Trabecular and Dense Bone Image Analysis (TDBIA), including experimental protocols, quantitative data presentation, and visualization of key workflows.

Core Principles of HR-pQCT

HR-pQCT operates on the same principles as conventional computed tomography (CT) but achieves a significantly higher spatial resolution, with an isotropic voxel size typically ranging from 61 to 82 μm.[4] This high resolution allows for the direct measurement and quantification of various microstructural parameters of both trabecular and cortical bone. The effective radiation dose from a standard HR-pQCT scan is low, typically around 3-5 μSv, which is comparable to or lower than other common bone densitometry techniques like dual-energy X-ray absorptiometry (DXA).[1][6]

The technology has evolved from first-generation to second-generation scanners, with the latter offering improved resolution and a larger scan region.[1] It is important to note that direct comparison of some parameters across different scanner generations should be done with caution, particularly for metrics highly dependent on resolution like trabecular thickness.[1]

Experimental Protocols

Standardized protocols for image acquisition and analysis are crucial for ensuring the comparability of data across different studies and clinical trials.[7][8] The International Osteoporosis Foundation (IOF), the American Society for Bone and Mineral Research (ASBMR), and the European Calcified Tissue Society (ECTS) have jointly published guidelines to promote standardization.[7][8]

Key Experimental Steps:

- Patient Positioning and Scan Site Selection:
  - The most common scanning sites are the non-dominant distal radius and tibia.[9] In cases of previous fracture or surgery at the non-dominant site, the contralateral limb is scanned.[9]
  - The limb is immobilized in a carbon fiber cast to minimize motion artifacts during the scan.[1]
  - A reference line is established at the distal endplate of the radius or tibia.[1]
  - The scan region is then defined by a fixed offset proximal to this reference line. For second-generation scanners, this is typically 9.0 mm for the radius and 22.0 mm for the tibia.[1]
- Image Acquisition:
  - A scout view is performed to accurately position the scan region.
  - The scanner acquires a series of parallel CT slices covering a defined length of the bone (e.g., 10.20 mm for second-generation scanners).[1]
  - The total scan time is typically a few minutes per site.
- Image Processing and Segmentation:
  - The acquired grayscale images are processed to segment the bone from soft tissue.
  - The periosteal surface is contoured, and automated algorithms are used to separate the cortical and trabecular bone compartments.[1][10]
  - Visual inspection and manual correction of the contours may be necessary to ensure accurate segmentation, especially in cases of severe bone deterioration.[1]
- Data Analysis: Densitometric and microstructural parameters (Table 1) are computed for each compartment, and finite element models may be used to estimate mechanical properties.
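The segmentation and analysis steps can be illustrated with a deliberately simplified sketch. It assumes the region of interest (for example, the trabecular compartment) is already available as a binary mask, labels bone voxels with a single global density threshold, and then computes the bone volume fraction (BV/TV). The threshold of 320 mg HA/cm³ and the tiny 3×4 "slice" are hypothetical placeholders; scanner software applies filtering and validated, vendor-specific thresholds, so this shows the idea only.

```python
from typing import List

Image = List[List[float]]  # 2D slice of density values (mg HA/cm^3)
Mask = List[List[bool]]    # True where a voxel belongs to the labelled set


def threshold_bone(image: Image, threshold: float) -> Mask:
    """Label a voxel as bone when its density exceeds a global threshold."""
    return [[value > threshold for value in row] for row in image]


def bone_volume_fraction(bone: Mask, region: Mask) -> float:
    """BV/TV: bone voxels inside the region divided by all voxels in the region."""
    bone_voxels = 0
    total_voxels = 0
    for bone_row, region_row in zip(bone, region):
        for is_bone, in_region in zip(bone_row, region_row):
            if in_region:
                total_voxels += 1
                bone_voxels += int(is_bone)
    if total_voxels == 0:
        raise ValueError("region mask is empty")
    return bone_voxels / total_voxels


# Hypothetical densities and trabecular-region mask; values are illustrative only.
slice_densities = [
    [100.0, 450.0, 120.0, 90.0],
    [500.0, 80.0, 60.0, 410.0],
    [70.0, 95.0, 480.0, 110.0],
]
trabecular_region = [
    [True, True, True, False],
    [True, True, True, True],
    [False, True, True, True],
]

# HYPOTHETICAL threshold; real analyses use validated scanner-specific settings.
bone = threshold_bone(slice_densities, threshold=320.0)
print(f"BV/TV = {bone_volume_fraction(bone, trabecular_region):.3f}")  # BV/TV = 0.400
```

Here 4 of the 10 voxels inside the mask exceed the threshold, giving BV/TV = 0.400.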

Quantitative Data Presentation

HR-pQCT provides a comprehensive set of quantitative parameters to characterize bone health. These can be broadly categorized into densitometric, morphometric, and mechanical properties for both trabecular and cortical bone.

Table 1: Key Densitometric and Morphometric Parameters from HR-pQCT

| Parameter | Abbreviation | Description | Compartment |
|---|---|---|---|
| Densitometric | | | |
| Total Volumetric Bone Mineral Density | Tt.vBMD | The average mineral density of the entire bone region (cortical + trabecular). | Total |
| Trabecular Volumetric Bone Mineral Density | Tb.vBMD | The average mineral density within the trabecular compartment. | Trabecular |
| Cortical Volumetric Bone Mineral Density | Ct.vBMD | The average mineral density within the cortical compartment. | Cortical |
| Trabecular Microstructure | | | |
| Bone Volume Fraction | BV/TV | The ratio of trabecular bone volume to the total volume of the trabecular compartment. | Trabecular |
| Trabecular Number | Tb.N | The average number of trabeculae per unit length. | Trabecular |
| Trabecular Thickness | Tb.Th | The average thickness of the trabeculae. | Trabecular |
| Trabecular Separation | Tb.Sp | The average distance between trabeculae. | Trabecular |
| Cortical Microstructure | | | |
| Cortical Thickness | Ct.Th | The average thickness of the cortical shell. | Cortical |
| Cortical Porosity | Ct.Po | The volume of pores within the cortical compartment as a percentage of the total cortical compartment volume (bone plus pores). | Cortical |
| Cortical Area | Ct.Ar | The cross-sectional area of the cortical bone. | Cortical |

Note: The methods for deriving some trabecular parameters can differ between first and second-generation scanners. For instance, with first-generation scanners, trabecular thickness and separation are often derived from trabecular number and bone volume fraction, assuming a plate-like model. Second-generation scanners with higher resolution allow for more direct measurement of these parameters.[1]
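For first-generation scanners, the plate-model relations used to derive these parameters are Tb.Th = BV/TV ÷ Tb.N and Tb.Sp = (1 − BV/TV) ÷ Tb.N. The short sketch below applies them; the function names are hypothetical, and BV/TV is expressed as a fraction rather than a percentage. The example inputs (BV/TV = 15.9%, Tb.N = 2.11 mm⁻¹) reproduce the young-adult female distal tibia means for Tb.Th and Tb.Sp reported in the normative table later in this document.

```python
def _validate(bv_tv: float, tb_n: float) -> None:
    """Reject inputs outside the physically meaningful range."""
    if not 0.0 <= bv_tv <= 1.0:
        raise ValueError("BV/TV must be a fraction between 0 and 1")
    if tb_n <= 0.0:
        raise ValueError("Tb.N must be positive (1/mm)")


def plate_model_tb_th(bv_tv: float, tb_n: float) -> float:
    """Trabecular thickness (mm) under the plate model: Tb.Th = BV/TV / Tb.N."""
    _validate(bv_tv, tb_n)
    return bv_tv / tb_n


def plate_model_tb_sp(bv_tv: float, tb_n: float) -> float:
    """Trabecular separation (mm) under the plate model: Tb.Sp = (1 - BV/TV) / Tb.N."""
    _validate(bv_tv, tb_n)
    return (1.0 - bv_tv) / tb_n


bv_tv = 0.159  # 15.9 %
tb_n = 2.11    # trabeculae per mm
print(f"Tb.Th = {plate_model_tb_th(bv_tv, tb_n):.3f} mm")  # Tb.Th = 0.075 mm
print(f"Tb.Sp = {plate_model_tb_sp(bv_tv, tb_n):.3f} mm")  # Tb.Sp = 0.399 mm
```

Because both values are algebraic functions of BV/TV and Tb.N, they are not independent measurements on first-generation data; this is one reason cross-generation comparisons of Tb.Th need care.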

Table 2: Application of HR-pQCT in Monitoring Therapeutic Interventions

| Therapeutic Agent | Key Findings from HR-pQCT Studies |
|---|---|
| Antiresorptive Agents (e.g., Alendronate) | Studies have shown maintenance or small increases in cortical thickness and density.[5][11] |
| Anabolic Agents (e.g., Teriparatide) | Increases in trabecular thickness and bone volume fraction have been observed.[5][11] Some studies report a transient increase in cortical porosity.[5] |
| Strontium Ranelate | Increases in cortical thickness, cortical BMD, and trabecular bone volume fraction have been reported.[11] |

Visualizations

[Figure: Standard HR-pQCT experimental workflow]

[Figure: Hierarchical structure of HR-pQCT data]
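The hierarchical organization of HR-pQCT outputs (scan and site, then compartment, then parameter group) can be expressed as a small data structure. The sketch below is one possible layout, not a vendor export format: the class names are hypothetical, parameters are keyed by the standard abbreviations listed in Table 1, and the example values are illustrative only.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class CompartmentResults:
    """Outputs for one compartment (total, trabecular or cortical)."""
    densitometric: Dict[str, float] = field(default_factory=dict)
    morphometric: Dict[str, float] = field(default_factory=dict)


@dataclass
class ScanResults:
    """Outputs of one HR-pQCT scan at one skeletal site."""
    site: str
    scanner_generation: int
    total: CompartmentResults = field(default_factory=CompartmentResults)
    trabecular: CompartmentResults = field(default_factory=CompartmentResults)
    cortical: CompartmentResults = field(default_factory=CompartmentResults)
    mechanical: Dict[str, float] = field(default_factory=dict)  # whole-bone FE estimates

    def flatten(self) -> Dict[str, float]:
        """Collapse the hierarchy into one {abbreviation: value} row for tabulation."""
        row: Dict[str, float] = {}
        for compartment in (self.total, self.trabecular, self.cortical):
            row.update(compartment.densitometric)
            row.update(compartment.morphometric)
        row.update(self.mechanical)
        return row


# Hypothetical values for illustration only.
scan = ScanResults(site="distal tibia", scanner_generation=2)
scan.total.densitometric["Tt.vBMD"] = 315.8
scan.trabecular.densitometric["Tb.vBMD"] = 192.4
scan.trabecular.morphometric.update({"BV/TV": 0.159, "Tb.N": 2.11})
scan.cortical.morphometric["Ct.Th"] = 1.2
print(scan.flatten())
```

Keeping the nested form preserves which compartment each value came from, while `flatten` gives the one-row-per-scan layout typically needed for statistical analysis; this relies on the abbreviations being unique across compartments.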

Conclusion

HR-pQCT is a powerful imaging tool that provides detailed in vivo insights into bone microarchitecture.[7][8] For researchers, scientists, and drug development professionals, it offers the ability to non-invasively monitor changes in both trabecular and cortical bone in response to disease progression and therapeutic intervention.[4][5] By adhering to standardized protocols and leveraging the comprehensive quantitative data provided by this technology, the scientific community can continue to advance our understanding of skeletal health and develop more effective treatments for bone disorders. The use of HR-pQCT in clinical trials is expanding, and its role in personalized medicine and fracture risk assessment is expected to grow.[2][12]

References

- 1. Guidelines for Assessment of Bone Density and Microarchitecture In Vivo Using High-Resolution Peripheral Quantitative Computed Tomography - PMC [pmc.ncbi.nlm.nih.gov]

- 2. academic.oup.com [academic.oup.com]

- 3. Frontiers | High-Resolution Peripheral Quantitative Computed Tomography for Bone Evaluation in Inflammatory Rheumatic Disease [frontiersin.org]

- 4. Frontiers | Utility of HR-pQCT in detecting training-induced changes in healthy adult bone morphology and microstructure [frontiersin.org]

- 5. Osteoporosis drug effects on cortical and trabecular bone microstructure: a review of HR-pQCT analyses - PMC [pmc.ncbi.nlm.nih.gov]

- 6. The clinical application of high-resolution peripheral computed tomography (HR-pQCT) in adults: state of the art and future directions - PMC [pmc.ncbi.nlm.nih.gov]

- 7. New guidelines for assessment of bone density and microarchitecture in vivo with HR-pQCT - American Society for Bone and Mineral Research [asbmr.org]

- 8. New guidelines for assessment of bone density and microarchitecture in vivo with HR-pQCT | International Osteoporosis Foundation [osteoporosis.foundation]

- 9. researchgate.net [researchgate.net]

- 10. High-Resolution Peripheral Quantitative Computed Tomography Can Assess Microstructural and Mechanical Properties of Human Distal Tibial Bone - PMC [pmc.ncbi.nlm.nih.gov]

- 11. Clinical Imaging of Bone Microarchitecture with HR-pQCT - PMC [pmc.ncbi.nlm.nih.gov]

- 12. deepblue.lib.umich.edu [deepblue.lib.umich.edu]

The Significance of Trabecular Bone Microarchitecture Assessment in the Distal Tibia: An In-depth Technical Guide

For Researchers, Scientists, and Drug Development Professionals

Executive Summary

The assessment of bone health has traditionally relied on areal bone mineral density (aBMD) measurements obtained through dual-energy X-ray absorptiometry (DXA). However, a substantial number of fragility fractures occur in individuals with non-osteoporotic aBMD values, highlighting the critical role of bone microarchitecture in determining bone strength.[1][2] The distal tibia, a weight-bearing site rich in trabecular bone, has emerged as a key location for the detailed evaluation of bone quality. High-Resolution Peripheral Quantitative Computed Tomography (HR-pQCT) is the gold-standard non-invasive imaging modality for the three-dimensional assessment of bone microarchitecture at the distal tibia.[3][4][5] This technical guide provides a comprehensive overview of the significance of assessing trabecular bone in the distal tibia, with a primary focus on the methodologies and applications of HR-pQCT. While the Trabecular Bone Score (TBS) is a well-established tool for evaluating trabecular microarchitecture from lumbar spine DXA scans, its application to the distal tibia is not a current standard clinical practice and lacks validation. This document will explore the established principles of distal tibia microarchitectural analysis and the key signaling pathways that govern its integrity, providing a robust resource for researchers and professionals in the field of bone health.

Introduction to Trabecular Bone and its Importance

Trabecular bone, also known as cancellous or spongy bone, is one of the two main types of bone tissue. It has a porous, honeycomb-like structure composed of a network of interconnected rods and plates called trabeculae.[6][7] This intricate architecture provides a large surface area for metabolic activity and contributes significantly to bone strength and flexibility, particularly at the ends of long bones and in the vertebrae.[6][7] Deterioration of the trabecular microarchitecture, characterized by a loss of trabeculae, decreased connectivity, and a shift from plate-like to rod-like structures, can severely compromise bone's mechanical integrity, leading to an increased risk of fracture, independent of bone mass.[1]

High-Resolution Peripheral Quantitative Computed Tomography (HR-pQCT) of the Distal Tibia

HR-pQCT is a state-of-the-art, non-invasive imaging technique that provides in-vivo three-dimensional images of the distal radius and tibia with high resolution, allowing for the separate analysis of cortical and trabecular compartments.[3][4][5]

Experimental Protocol for HR-pQCT of the Distal Tibia

A standardized protocol is crucial for ensuring the accuracy and reproducibility of HR-pQCT measurements. The following outlines a typical experimental workflow:

- Patient Positioning: The patient is seated with their lower leg extended and placed in a carbon fiber cast to immobilize the foot and ankle. The cast is then secured within the gantry of the HR-pQCT scanner.
- Scout View: A two-dimensional scout view (projection image) of the distal tibia is acquired to define the region of interest.
- Reference Line Placement: A reference line is manually placed at the tibial pilon, a consistent anatomical landmark at the distal end of the tibia.
- Image Acquisition: The scan is initiated at a predefined distance proximal to the reference line (typically 22.5 mm for the first-generation and 22.0 mm for the second-generation XtremeCT scanners) and extends proximally for 9.02 mm (first generation) or 10.20 mm (second generation). The scan takes approximately 3 minutes and delivers a low effective radiation dose of around 3-5 µSv.
- Image Reconstruction: The acquired raw data are reconstructed into a three-dimensional image with an isotropic voxel size (typically 82 µm for the first-generation and 61 µm for the second-generation scanners).
- Image Quality Control: The reconstructed images are visually inspected for motion artifacts. Scans with significant artifacts are typically excluded from analysis.
- Image Analysis:
  - Contouring: The periosteal surface of the tibia is semi-automatically contoured.
  - Segmentation: An automated algorithm separates the cortical and trabecular bone compartments.
  - Parameter Quantification: A comprehensive set of quantitative parameters describing the density, microarchitecture, and geometry of both trabecular and cortical bone is calculated.
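The acquisition geometry above can be checked with simple arithmetic: the number of slices is the stack length divided by the voxel size, and the scanned region spans from the offset to the offset plus the stack length. The sketch below uses the offsets and stack lengths given in this guide (22.5 mm and 9.02 mm for first generation; 22.0 mm and 10.20 mm for second generation). The second-generation voxel size of 0.0607 mm is an assumption: it is the commonly reported nominal value behind the rounded 61 µm, and should be checked against scanner documentation.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class TibiaScanProtocol:
    generation: int
    offset_mm: float        # start of the scan region, proximal to the reference line
    stack_length_mm: float  # axial extent of the scan region
    voxel_mm: float         # isotropic voxel size

    def slice_count(self) -> int:
        """Number of slices needed to cover the stack at this voxel size."""
        return round(self.stack_length_mm / self.voxel_mm)

    def region_mm(self) -> Tuple[float, float]:
        """Distal and proximal ends of the scan region, in mm from the reference line."""
        return self.offset_mm, self.offset_mm + self.stack_length_mm


# Offsets and stack lengths as given in this guide; voxel sizes are ASSUMED nominal values.
FIRST_GEN = TibiaScanProtocol(generation=1, offset_mm=22.5, stack_length_mm=9.02, voxel_mm=0.082)
SECOND_GEN = TibiaScanProtocol(generation=2, offset_mm=22.0, stack_length_mm=10.20, voxel_mm=0.0607)

for protocol in (FIRST_GEN, SECOND_GEN):
    start, end = protocol.region_mm()
    print(f"Gen {protocol.generation}: {protocol.slice_count()} slices, "
          f"{start:.2f}-{end:.2f} mm proximal to the reference line")
```

Under these assumptions the first-generation protocol corresponds to 110 slices and the second-generation protocol to 168 slices, and the two scan regions overlap but do not coincide, which is one reason some parameters are not directly comparable across generations.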

Key Trabecular Bone Microarchitecture Parameters from HR-pQCT

The following table summarizes the key quantitative parameters for trabecular bone at the distal tibia derived from HR-pQCT analysis.

| Parameter | Abbreviation | Description | Clinical Significance |
|---|---|---|---|
| Volumetric Bone Mineral Density | | | |
| Total Volumetric BMD | Tt.vBMD (mg HA/cm³) | Average volumetric bone mineral density of the entire bone region (cortical + trabecular). | Reflects overall bone density at the site. |
| Trabecular Volumetric BMD | Tb.vBMD (mg HA/cm³) | Average volumetric bone mineral density of the trabecular compartment. | A direct measure of trabecular bone mass. |
| Trabecular Microarchitecture | | | |
| Bone Volume Fraction | BV/TV (%) | The ratio of trabecular bone volume to the total volume of the trabecular compartment. | A primary indicator of trabecular bone quantity. |
| Trabecular Number | Tb.N (1/mm) | The average number of trabeculae per unit length. | Reflects the density of the trabecular network. |
| Trabecular Thickness | Tb.Th (mm) | The average thickness of the trabeculae. | Thinner trabeculae are associated with reduced bone strength. |
| Trabecular Separation | Tb.Sp (mm) | The average distance between trabeculae. | Increased separation indicates a more porous and weaker structure. |
| Finite Element Analysis | | | |
| Stiffness | (N/mm) | The resistance of the bone to deformation under a simulated axial load. | A biomechanical measure of bone strength. |
| Failure Load | (N) | The estimated load at which the bone would fracture under simulated compression. | A direct prediction of bone's load-bearing capacity. |
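Stiffness and failure load are obtained by post-processing a micro-finite element (µFE) solution. The sketch below assumes a linear µFE model has already been solved for a known applied load, and that its per-element strains and the resulting axial displacement are available. Stiffness is then load divided by displacement. Because strains scale linearly with load in such a model, failure load can be estimated by scaling until a set fraction of elements reaches a critical strain; the defaults here (2% of bone tissue at 0.7% strain) follow one widely used criterion for HR-pQCT µFE models and are assumptions to verify against the analysis software actually used. The FE solve itself is out of scope, and all input values are hypothetical.

```python
from typing import Sequence


def axial_stiffness(applied_load_n: float, displacement_mm: float) -> float:
    """Stiffness (N/mm) of a linear model: applied load divided by axial displacement."""
    if displacement_mm <= 0.0:
        raise ValueError("displacement must be positive")
    return applied_load_n / displacement_mm


def estimated_failure_load(
    applied_load_n: float,
    element_strains: Sequence[float],
    critical_strain: float = 0.007,   # ASSUMED criterion: 0.7 % strain
    critical_fraction: float = 0.02,  # ASSUMED criterion: 2 % of elements
) -> float:
    """Scale a linear solution to the load at which `critical_fraction` of elements
    reach `critical_strain`."""
    if not element_strains:
        raise ValueError("no element strains supplied")
    magnitudes = sorted(abs(s) for s in element_strains)
    n = len(magnitudes)
    # Number of elements allowed to reach the critical strain (at least one).
    k = max(1, round(critical_fraction * n))
    # Smallest strain among the k most-strained elements governs failure.
    governing_strain = magnitudes[n - k]
    if governing_strain == 0.0:
        raise ValueError("all strains are zero; the model carries no load")
    # Linear model: strains scale proportionally with the applied load.
    return applied_load_n * critical_strain / governing_strain


# Hypothetical linear solution: 1000 N applied, 100 element strains, 0.02 mm displacement.
strains = [0.0001 * (i + 1) for i in range(100)]
print(f"Stiffness    = {axial_stiffness(1000.0, 0.02):.0f} N/mm")
print(f"Failure load = {estimated_failure_load(1000.0, strains):.0f} N")
```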

Quantitative Data from HR-pQCT Studies of the Distal Tibia

The following tables present normative data and data showing the association of HR-pQCT parameters with fracture risk.

Table 1: Normative HR-pQCT Data for the Distal Tibia in Young Adults (16-29 years) [8]

| Parameter | Females (Mean ± SD) | Males (Mean ± SD) |
|---|---|---|
| Tt.vBMD (mg HA/cm³) | 315.8 ± 44.5 | 344.1 ± 45.9 |
| Tb.vBMD (mg HA/cm³) | 192.4 ± 35.8 | 195.9 ± 37.5 |
| BV/TV (%) | 15.9 ± 3.0 | 16.2 ± 3.1 |
| Tb.N (1/mm) | 2.11 ± 0.25 | 2.05 ± 0.23 |
| Tb.Th (mm) | 0.075 ± 0.007 | 0.079 ± 0.008 |
| Tb.Sp (mm) | 0.399 ± 0.055 | 0.410 ± 0.052 |
| Stiffness (kN/mm) | 48.7 ± 11.8 | 65.5 ± 14.5 |
| Failure Load (kN) | 9.0 ± 2.1 | 12.0 ± 2.6 |
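Normative data of this kind are often used to express an individual measurement as a standardized score, (value − mean) ÷ SD, relative to the young-adult reference. The sketch below does this for a few parameters taken from the table above (units: mg HA/cm³, %, kN). The function name is hypothetical, and the resulting score is a descriptive comparison, not a validated diagnostic threshold. For parameters where higher values indicate worse structure (e.g., Tb.Sp), the sign should be read accordingly.

```python
from typing import Dict, Tuple

# Young-adult (16-29 y) distal tibia reference values (mean, SD) from the table above.
REFERENCE: Dict[str, Dict[str, Tuple[float, float]]] = {
    "Tb.vBMD": {"F": (192.4, 35.8), "M": (195.9, 37.5)},
    "BV/TV": {"F": (15.9, 3.0), "M": (16.2, 3.1)},
    "Failure Load": {"F": (9.0, 2.1), "M": (12.0, 2.6)},
}


def reference_z_score(parameter: str, sex: str, value: float) -> float:
    """Standardized difference of `value` from the young-adult reference mean."""
    mean, sd = REFERENCE[parameter][sex]
    return (value - mean) / sd


# Hypothetical measurement: a woman with Tb.vBMD of 156.6 mg HA/cm^3.
print(f"Tb.vBMD score: {reference_z_score('Tb.vBMD', 'F', 156.6):.2f}")  # Tb.vBMD score: -1.00
```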

Table 2: Association between Distal Tibia HR-pQCT Parameters and Incident Fracture Risk [9]

| Parameter (per SD decrease) | Hazard Ratio (95% CI) for any Incident Fracture |
|---|---|
| Tt.vBMD | 1.40 (1.29 - 1.52) |
| Tb.vBMD | 1.34 (1.24 - 1.45) |
| BV/TV | 1.34 (1.24 - 1.45) |
| Tb.N | 1.30 (1.20 - 1.41) |
| Tb.Th | 1.22 (1.13 - 1.32) |
| Failure Load | 1.46 (1.34 - 1.59) |
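A hazard ratio reported "per SD decrease" can be extended to larger differences if the log-hazard is assumed to be linear in the parameter, as in a standard Cox model with a linear term: the hazard ratio for a k-SD decrease is HR^k, and the confidence bounds transform the same way. The sketch below (hypothetical function names) applies this to the failure load row. The linearity assumption is not established by the table itself, and extrapolation beyond the range of the study data is unreliable.

```python
from typing import Tuple


def hr_for_sd_decrease(hr_per_sd: float, n_sd: float) -> float:
    """Hazard ratio for an n-SD decrease, assuming log-hazard is linear in the parameter."""
    return hr_per_sd ** n_sd


def ci_for_sd_decrease(ci_per_sd: Tuple[float, float], n_sd: float) -> Tuple[float, ...]:
    """Rescale a per-SD 95% CI the same way (bounds re-sorted if n_sd is negative)."""
    lower, upper = ci_per_sd
    return tuple(sorted((lower ** n_sd, upper ** n_sd)))


# Failure load: HR 1.46 (1.34 - 1.59) per SD decrease; evaluate a 2-SD decrease.
hr = hr_for_sd_decrease(1.46, 2)
lo, hi = ci_for_sd_decrease((1.34, 1.59), 2)
print(f"HR for a 2-SD lower failure load: {hr:.2f} ({lo:.2f} - {hi:.2f})")
```

Under this assumption, a failure load 2 SD below the mean corresponds to roughly a 2.1-fold hazard of any incident fracture (95% CI about 1.80 to 2.53).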

Trabecular Bone Score (TBS) and its Potential Application to the Distal Tibia

TBS is a texture analysis tool that is applied to 2D lumbar spine DXA images to provide an indirect measure of trabecular microarchitecture.[1][10][11][12] It quantifies the gray-level variations in the DXA image, with a higher TBS value indicating a more homogeneous and well-connected trabecular structure, and a lower value suggesting a degraded and fracture-prone microarchitecture.[1][6][11] TBS has been shown to predict fracture risk independently of aBMD and clinical risk factors.[1][10]
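TBS is commonly described as being derived from the experimental variogram of the gray-level image, that is, from the slope of its log-log representation near the origin. The sketch below computes that kind of slope for a toy 2D array using horizontal lags only. It is an illustration of variogram-based texture analysis under stated simplifications: it is not the proprietary TBS algorithm, applies no calibration, and its output is not a TBS value. The function names are hypothetical.

```python
import math
from typing import List, Sequence

Image = Sequence[Sequence[float]]


def horizontal_semivariogram(image: Image, max_lag: int) -> List[float]:
    """gamma(h) = 0.5 * mean((I[r][c + h] - I[r][c])^2) for lags h = 1..max_lag."""
    gammas: List[float] = []
    for h in range(1, max_lag + 1):
        squared_diffs = [
            (row[c + h] - row[c]) ** 2
            for row in image
            for c in range(len(row) - h)
        ]
        if not squared_diffs:
            raise ValueError("max_lag exceeds the image width")
        gammas.append(0.5 * sum(squared_diffs) / len(squared_diffs))
    return gammas


def log_log_slope(gammas: Sequence[float]) -> float:
    """Least-squares slope of log(gamma) against log(lag)."""
    if len(gammas) < 2:
        raise ValueError("need at least two lags to fit a slope")
    if any(g <= 0.0 for g in gammas):
        raise ValueError("variogram values must be positive to take logarithms")
    xs = [math.log(h) for h in range(1, len(gammas) + 1)]
    ys = [math.log(g) for g in gammas]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    numerator = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    denominator = sum((x - mean_x) ** 2 for x in xs)
    return numerator / denominator


# Toy "image": a smooth horizontal ramp, for which gamma(h) = h^2 / 2 and the slope is 2.
ramp = [[float(c) for c in range(10)] for _ in range(5)]
print(f"slope = {log_log_slope(horizontal_semivariogram(ramp, max_lag=4)):.3f}")
```

A smooth ramp gives a slope of 2, while finer, noisier gray-level texture gives a flatter log-log curve; real implementations also average over directions and work on calibrated DXA pixel data.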

Currently, the application of TBS is validated and widely used for the lumbar spine. There is a lack of validated software and normative data for calculating TBS from DXA scans of the distal tibia. While some research has explored TBS at the proximal tibia in specific populations, this is not a standard or clinically accepted practice for the distal tibia.[13] Therefore, while the concept of analyzing texture to infer microarchitecture is appealing, its application to the distal tibia via DXA remains an area for future research and is not a substitute for the detailed 3D assessment provided by HR-pQCT.

Molecular Regulation of Trabecular Bone Remodeling

The integrity of trabecular bone is maintained through a continuous process of bone remodeling, which involves the coordinated actions of bone-resorbing osteoclasts and bone-forming osteoblasts.[14][15] This process is tightly regulated by a complex network of signaling pathways. Understanding these pathways is crucial for identifying therapeutic targets for bone diseases.

The RANK/RANKL/OPG Signaling Pathway

This pathway is the principal regulator of osteoclast differentiation and activity.

- RANKL (Receptor Activator of Nuclear factor Kappa-B Ligand): A cytokine produced by osteoblasts and osteocytes that binds to its receptor, RANK, on the surface of osteoclast precursors.[16][17][18]
- RANK (Receptor Activator of Nuclear factor Kappa-B): Binding of RANKL to RANK triggers a signaling cascade that leads to the differentiation and activation of osteoclasts.[16][17][18]
- OPG (Osteoprotegerin): A soluble decoy receptor also produced by osteoblasts that binds to RANKL, preventing it from interacting with RANK and thereby inhibiting osteoclastogenesis.[19][20][21][22][23]

The balance between RANKL and OPG is a critical determinant of bone resorption.[18] An increase in the RANKL/OPG ratio leads to increased osteoclast activity and bone loss.
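Why the RANKL/OPG ratio matters can be illustrated with a toy equilibrium calculation. The sketch below assumes simple reversible 1:1 binding of RANKL to OPG (the real stoichiometry is more complex) and solves the standard mass-action quadratic for the free RANKL that remains available to engage RANK. All concentrations and the dissociation constant are hypothetical, in one arbitrary unit.

```python
import math


def free_rankl(total_rankl: float, total_opg: float, kd: float) -> float:
    """Equilibrium free RANKL for reversible 1:1 binding RANKL + OPG <-> RANKL:OPG.

    Solves C^2 - (R_t + O_t + Kd) C + R_t O_t = 0 for the complex concentration C,
    taking the physically valid (smaller) root. Units are arbitrary but shared.
    """
    if total_rankl < 0 or total_opg < 0 or kd <= 0:
        raise ValueError("concentrations must be non-negative and Kd positive")
    b = total_rankl + total_opg + kd
    complex_conc = (b - math.sqrt(b * b - 4.0 * total_rankl * total_opg)) / 2.0
    return total_rankl - complex_conc


# Hypothetical values: fixed RANKL, increasing OPG (arbitrary units, Kd = 0.1).
for opg in (0.0, 0.5, 1.0, 2.0):
    free = free_rankl(total_rankl=1.0, total_opg=opg, kd=0.1)
    ratio = "inf" if opg == 0 else f"{1.0 / opg:.1f}"
    print(f"OPG={opg:.1f}  RANKL/OPG={ratio}  free RANKL={free:.3f}")
```

As the RANKL/OPG ratio falls, free RANKL drops steeply (from 1.000 with no OPG to about 0.270 at equimolar OPG in this toy setting), which is the qualitative behavior the decoy-receptor model predicts.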

The Wnt/β-catenin Signaling Pathway

The Wnt/β-catenin pathway is a crucial regulator of osteoblast differentiation, proliferation, and survival, and thus plays a key role in bone formation.[24][25][26][27][28]

- Wnt Proteins: A family of secreted signaling molecules that bind to Frizzled (Fzd) receptors and LRP5/6 co-receptors on the surface of osteoprogenitor cells.[25][27]
- β-catenin: In the absence of Wnt signaling, β-catenin is targeted for degradation. Wnt binding leads to the stabilization and accumulation of β-catenin in the cytoplasm.
- Gene Transcription: Accumulated β-catenin translocates to the nucleus, where it partners with TCF/LEF transcription factors to activate the expression of genes that promote osteoblastogenesis and bone formation.[28]

The Role of Sclerostin

Sclerostin is a protein secreted primarily by osteocytes that acts as a potent inhibitor of the Wnt/β-catenin signaling pathway.[29][30][31][32][33]

- Inhibition of Wnt Signaling: Sclerostin binds to the LRP5/6 co-receptors, preventing the formation of the Wnt-Fzd-LRP5/6 complex and thereby inhibiting the downstream signaling cascade that leads to bone formation.[29][30][31]
- Regulation of Bone Remodeling: By inhibiting bone formation, sclerostin plays a crucial role in maintaining the balance of bone remodeling. Mechanical loading on bone suppresses sclerostin expression, allowing bone formation to occur where it is needed.
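The Wnt/β-catenin and sclerostin logic described above can be caricatured as a Boolean toy model: loading suppresses sclerostin; Wnt forms a signaling complex with Frizzled and LRP5/6 only if sclerostin is not blocking LRP5/6; the active complex (via Dishevelled) inhibits the destruction complex; and stabilized β-catenin then drives TCF/LEF target genes. The names below are hypothetical, and real signaling is graded and dose-dependent rather than on/off.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BoneCellInputs:
    wnt_ligand_present: bool
    mechanical_loading: bool


def sclerostin_secreted(inputs: BoneCellInputs) -> bool:
    # Toy rule: mechanical loading suppresses osteocyte sclerostin expression.
    return not inputs.mechanical_loading


def wnt_receptor_complex_forms(inputs: BoneCellInputs) -> bool:
    # Wnt must engage Frizzled + LRP5/6; sclerostin bound to LRP5/6 prevents this.
    return inputs.wnt_ligand_present and not sclerostin_secreted(inputs)


def beta_catenin_accumulates(inputs: BoneCellInputs) -> bool:
    # Active receptor complex -> Dishevelled recruited -> destruction complex inhibited.
    destruction_complex_active = not wnt_receptor_complex_forms(inputs)
    return not destruction_complex_active


def osteogenic_genes_on(inputs: BoneCellInputs) -> bool:
    # Stabilized beta-catenin enters the nucleus and partners with TCF/LEF.
    return beta_catenin_accumulates(inputs)


for wnt in (False, True):
    for load in (False, True):
        state = BoneCellInputs(wnt, load)
        print(f"Wnt={wnt!s:5} loading={load!s:5} -> target genes on: {osteogenic_genes_on(state)}")
```

In this caricature, target genes switch on only when Wnt is present and sclerostin is absent, mirroring how sclerostin inhibition (or loading-induced suppression of sclerostin) permits bone formation.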

Clinical and Research Applications

The detailed assessment of distal tibia trabecular bone microarchitecture using HR-pQCT has significant implications for both clinical research and drug development.

- Improved Fracture Risk Prediction: HR-pQCT-derived parameters of the distal tibia have been shown to predict incident fractures independently of aBMD and the FRAX tool, allowing for a more accurate identification of individuals at high risk.[9][34][35]
- Understanding Disease Pathophysiology: HR-pQCT enables the characterization of bone microarchitectural deterioration in various diseases, including osteoporosis, chronic kidney disease, and diabetes mellitus, providing insights into the underlying mechanisms of skeletal fragility.
- Monitoring Therapeutic Interventions: The high reproducibility of HR-pQCT allows for the sensitive detection of changes in bone microarchitecture in response to anabolic and anti-resorptive therapies, making it a valuable tool in clinical trials for new osteoporosis treatments.
- Preclinical Research: In preclinical studies, micro-CT, the laboratory counterpart to HR-pQCT (used on excised specimens and, in small animals, in vivo), is extensively used to evaluate the effects of new compounds on bone structure in animal models.

Future Directions and Conclusion

The assessment of trabecular bone microarchitecture at the distal tibia using HR-pQCT provides invaluable information beyond what can be obtained from standard aBMD measurements. It offers a more complete picture of bone strength and fracture risk. While the application of TBS to the distal tibia is not currently established, the principle of texture analysis from 2D images may hold future promise, pending further research and validation. For now, HR-pQCT remains the cornerstone for the detailed, non-invasive evaluation of trabecular bone at this critical weight-bearing site. A deeper understanding of the molecular pathways that regulate trabecular bone remodeling will continue to drive the development of novel therapeutic strategies to preserve bone microarchitecture and prevent fragility fractures. This guide serves as a foundational resource for leveraging these advanced assessment techniques and molecular insights in the pursuit of improved bone health.

References

- 1. DXA parameters, Trabecular Bone Score (TBS) and Bone Mineral Density (BMD), in fracture risk prediction in endocrine-mediated secondary osteoporosis - PMC [pmc.ncbi.nlm.nih.gov]

- 2. Noninvasive imaging of bone microarchitecture - PMC [pmc.ncbi.nlm.nih.gov]

- 3. High-Resolution Peripheral Quantitative Computed Tomography Can Assess Microstructural and Mechanical Properties of Human Distal Tibial Bone - PMC [pmc.ncbi.nlm.nih.gov]

- 4. Prediction of bone strength at the distal tibia by HR-pQCT and DXA - PubMed [pubmed.ncbi.nlm.nih.gov]

- 5. Prediction of bone strength at the distal tibia by HR-pQCT and DXA [boris-portal.unibe.ch]

- 6. Trabecular Bone Score (TBS) + DEXA — Joan Pagano Fitness [joanpaganofitness.com]

- 7. Osteoporosis - Wikipedia [en.wikipedia.org]

- 8. Bone microarchitecture and strength of the radius and tibia in a reference population of young adults: an HR-pQCT study - PubMed [pubmed.ncbi.nlm.nih.gov]

- 9. deepblue.lib.umich.edu [deepblue.lib.umich.edu]

- 10. Trabecular Bone Score: A New DXA-Derived Measurement for Fracture Risk Assessment - PubMed [pubmed.ncbi.nlm.nih.gov]

- 11. researchgate.net [researchgate.net]

- 12. taylorandfrancis.com [taylorandfrancis.com]

- 13. Evaluation of the tibial cortical thickness accuracy in osteoporosis diagnosis in comparison with dual energy X-ray absorptiometry - PMC [pmc.ncbi.nlm.nih.gov]

- 14. Biomechanical and molecular regulation of bone remodeling - PubMed [pubmed.ncbi.nlm.nih.gov]

- 15. Cellular mechanisms of bone remodeling - PMC [pmc.ncbi.nlm.nih.gov]

- 16. The RANK–RANKL–OPG System: A Multifaceted Regulator of Homeostasis, Immunity, and Cancer - PMC [pmc.ncbi.nlm.nih.gov]

- 17. Functions of RANKL/RANK/OPG in bone modeling and remodeling - PMC [pmc.ncbi.nlm.nih.gov]

- 18. cancernetwork.com [cancernetwork.com]

- 19. Osteoprotegerin - Wikipedia [en.wikipedia.org]

- 20. Osteoprotegerin holds potential as a therapeutic agent for bone disorders | McGraw Hill's AccessScience [accessscience.com]

- 21. Osteoprotegerin produced by osteoblasts is an important regulator in osteoclast development and function - PubMed [pubmed.ncbi.nlm.nih.gov]

- 22. Osteoprotegerin regulates bone formation through a coupling mechanism with bone resorption - PubMed [pubmed.ncbi.nlm.nih.gov]

- 23. academic.oup.com [academic.oup.com]

- 24. Wnt signaling in bone formation and its therapeutic potential for bone diseases - PMC [pmc.ncbi.nlm.nih.gov]

- 25. Frontiers | Wnt Pathway in Bone Repair and Regeneration – What Do We Know So Far [frontiersin.org]

- 26. Wnt Signaling in Bone Development and Disease: Making Stronger Bone with Wnts - PMC [pmc.ncbi.nlm.nih.gov]

- 27. JCI - Regulation of bone mass by Wnt signaling [jci.org]

- 28. The Role of the Wnt/β-catenin Signaling Pathway in Formation and Maintenance of Bone and Teeth - PMC [pmc.ncbi.nlm.nih.gov]

- 29. joe.bioscientifica.com [joe.bioscientifica.com]

- 30. Role and mechanism of action of Sclerostin in bone - PMC [pmc.ncbi.nlm.nih.gov]

- 31. mdpi.com [mdpi.com]

- 32. researchgate.net [researchgate.net]

- 33. Role of Wnt signaling and sclerostin in bone and as therapeutic targets in skeletal disorders - PubMed [pubmed.ncbi.nlm.nih.gov]

- 34. Best Performance Parameters of HR‐pQCT to Predict Fragility Fracture: Systematic Review and Meta‐Analysis - PMC [pmc.ncbi.nlm.nih.gov]

- 35. Cortical and trabecular bone microarchitecture predicts incident fracture independently of DXA bone mineral density and FRAX in older women and men: The Bone Microarchitecture International Consortium (BoMIC) - PMC [pmc.ncbi.nlm.nih.gov]

Unraveling the Genetic Blueprint of Distal Tibia Bone Mineral Density: A Technical Guide

This technical guide offers researchers, scientists, and drug development professionals an overview of the genetic determinants of distal tibia bone mineral density (BMD). It covers the key genes, signaling pathways, and experimental methodologies relevant to understanding bone health and developing novel therapeutics for osteoporosis and other skeletal diseases.

The distal tibia, a site of significant clinical relevance for fracture risk, possesses a complex genetic architecture. This guide synthesizes current knowledge to provide a clear and actionable resource for the scientific community.

Key Genetic Loci Influencing Distal Tibia BMD

Genome-wide association studies (GWAS) have been instrumental in identifying genetic variants associated with BMD at various skeletal sites. While large-scale GWAS specifically for distal tibia BMD are less common than for sites like the femoral neck and lumbar spine, several studies utilizing peripheral quantitative computed tomography (pQCT) have pinpointed key loci influencing volumetric BMD (vBMD) in the tibia. These findings are critical for understanding the specific genetic factors that regulate bone density in this weight-bearing region.

A meta-analysis of GWAS for pQCT-derived tibial bone traits has identified several single nucleotide polymorphisms (SNPs) significantly associated with both cortical and trabecular vBMD[1][2]. These findings underscore the distinct genetic regulation of different bone compartments.

| Locus (Nearest Gene) | SNP | Risk Allele | Effect on vBMD | P-value | Bone Compartment |
|---|---|---|---|---|---|
| 13q14 (RANKL) | rs1021188 | C | Decrease | 3.6 × 10⁻¹⁴ | Cortical |
| 6q25.1 (ESR1/C6orf97) | rs6909279 | G | Decrease | 1.1 × 10⁻⁹ | Cortical |
| 8q24.12 (TNFRSF11B/OPG) | rs7839059 | A | Decrease | 1.2 × 10⁻¹⁰ | Cortical |
| 1p34.3 (FMN2/GREM2) | rs9287237 | T | Decrease | 4.9 × 10⁻⁹ | Trabecular |
| 1p36.12 (WNT4/ZBTB40) | - | - | Association noted | - | Trabecular |

Table 1: Summary of key genetic variants associated with distal tibia volumetric bone mineral density (vBMD) as measured by pQCT. Data compiled from multiple sources[1][2][3].

Core Signaling Pathways in Bone Homeostasis

The genetic determinants of distal tibia BMD exert their effects through complex signaling networks that regulate the balance between bone formation by osteoblasts and bone resorption by osteoclasts. Three principal pathways are central to this process: the WNT/β-catenin pathway, the RANK/RANKL/OPG pathway, and the Bone Morphogenetic Protein (BMP) pathway.

WNT/β-catenin Signaling Pathway

The canonical WNT signaling pathway is a critical regulator of osteoblast differentiation and bone formation. The binding of WNT ligands to Frizzled (FZD) receptors and LRP5/6 co-receptors initiates a cascade that leads to the accumulation of β-catenin in the cytoplasm. Subsequently, β-catenin translocates to the nucleus, where it activates the transcription of genes essential for osteoblastogenesis.

RANK/RANKL/OPG Signaling Pathway

The RANK/RANKL/OPG axis is the primary regulator of osteoclast formation and activity, and thus bone resorption. Receptor Activator of Nuclear Factor kappa-B Ligand (RANKL), expressed by osteoblasts and other cells, binds to its receptor RANK on the surface of osteoclast precursors. This interaction triggers their differentiation into mature osteoclasts. Osteoprotegerin (OPG), also secreted by osteoblasts, acts as a decoy receptor for RANKL, preventing it from binding to RANK and thereby inhibiting osteoclastogenesis. The balance between RANKL and OPG is a critical determinant of bone mass.

Bone Morphogenetic Protein (BMP) Signaling Pathway

BMPs, members of the TGF-β superfamily, are potent inducers of osteoblast differentiation from mesenchymal stem cells. BMPs bind to type I and type II serine/threonine kinase receptors on the cell surface. This leads to the phosphorylation and activation of SMAD proteins (SMAD1/5/8), which then complex with SMAD4 and translocate to the nucleus to regulate the expression of osteogenic genes, such as Runx2.

Experimental Protocols

The accurate assessment of distal tibia BMD and the identification of its genetic determinants rely on robust and standardized experimental protocols.

Phenotyping: Distal Tibia BMD Measurement with pQCT/HR-pQCT

Peripheral quantitative computed tomography (pQCT) and high-resolution pQCT (HR-pQCT) are the standard techniques for measuring volumetric BMD at the distal tibia and, in the case of HR-pQCT, for assessing bone microarchitecture.

1. Subject Positioning and Scout View:

- The subject is seated with their lower leg placed in a carbon fiber cast to ensure immobilization.
- A scout view (a 2D projection image) of the tibia is acquired to define the reference line at the distal tibia endplate.

2. Scan Acquisition:

- For trabecular bone analysis, a standard region of interest is typically defined at 4% of the tibial length proximal to the reference line.
- For cortical bone analysis, a scan is usually performed at 38% or 66% of the tibial length from the distal end.
- The scanner acquires a series of cross-sectional images (slices) through the specified region.

3. Image Analysis:

- Specialized software is used to segment the bone from the surrounding soft tissue.
- The cortical and trabecular bone compartments are then separated using density-based thresholds.
- Key parameters are calculated, including:
  - Total, cortical, and trabecular volumetric bone mineral density (Tt.vBMD, Ct.vBMD, Tb.vBMD) in mg/cm³.
  - Bone geometry (cross-sectional area, cortical thickness).
  - Trabecular microarchitecture (trabecular number, thickness, and separation), primarily with HR-pQCT.
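Because the pQCT scan sites are defined relative to tibial length, their positions scale with each subject's anatomy. The sketch below converts the percentages given above (4%, 38%, 66%) into distances from the distal reference. It assumes tibial length has already been measured according to the study protocol; the function name and the 400 mm example length are hypothetical.

```python
from typing import Dict, Iterable


def pqct_site_positions(
    tibia_length_mm: float,
    percentages: Iterable[float] = (4, 38, 66),
) -> Dict[float, float]:
    """Distance (mm) of each scan site from the distal reference, as a % of tibial length."""
    if tibia_length_mm <= 0:
        raise ValueError("tibial length must be positive")
    return {p: tibia_length_mm * p / 100.0 for p in percentages}


# Hypothetical subject with a 400 mm tibia.
for percent, distance in pqct_site_positions(400.0).items():
    print(f"{percent:>3}% site: {distance:.1f} mm from the distal reference")
```

By contrast, the HR-pQCT protocols described earlier use fixed offsets (e.g., 22.0 mm) rather than length-relative sites, so the two approaches sample slightly different regions in subjects of different stature.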

Genotyping and Genome-Wide Association Study (GWAS)

A typical GWAS workflow for identifying genetic variants associated with distal tibia BMD involves several key stages.

1. Cohort Recruitment: Large, well-characterized populations are essential.

2. Phenotyping: Distal tibia BMD is measured using pQCT or HR-pQCT as described above.

3. DNA Sample Collection and Genotyping: DNA is extracted from blood or saliva and genotyped using high-density SNP arrays.

4. Quality Control (QC): Rigorous QC is applied to both genotype and phenotype data to remove low-quality samples and markers.

5. Genotype Imputation: Untyped SNPs are statistically inferred using a reference panel (e.g., 1000 Genomes Project) to increase genome coverage.

6. Statistical Analysis: Association between each SNP and distal tibia BMD is tested, typically using a linear regression model adjusted for covariates such as age, sex, and weight, with principal components of genetic ancestry included to control for population stratification.

7. Replication: Significant findings from the discovery GWAS are validated in one or more independent cohorts.

8. Functional Annotation: The biological function of identified variants and genes is investigated to understand their role in bone metabolism.
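The statistical analysis step can be sketched as a single-SNP test under an additive genetic model: genotype dosage (0, 1 or 2 copies of the effect allele) enters an ordinary least-squares regression of vBMD alongside covariates, and the dosage coefficient is tested. The sketch below is a minimal pure-Python version under stated assumptions: function names are hypothetical, the p-value uses a normal approximation to the t statistic (reasonable for the large samples typical of GWAS), and production analyses use dedicated software such as PLINK. The conventional genome-wide significance threshold is p < 5 × 10⁻⁸. The example data are synthetic.

```python
import math
from typing import List, Sequence, Tuple


def solve_linear(matrix: List[List[float]], rhs: List[float]) -> List[float]:
    """Solve a small dense system by Gauss-Jordan elimination with partial pivoting."""
    n = len(rhs)
    aug = [list(row) + [value] for row, value in zip(matrix, rhs)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("design matrix is singular (e.g. monomorphic SNP)")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(n):
            if r != col:
                factor = aug[r][col] / aug[col][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [aug[i][n] / aug[i][i] for i in range(n)]


def snp_association(
    dosage: Sequence[float],
    phenotype: Sequence[float],
    covariates: Sequence[Sequence[float]],
) -> Tuple[float, float, float]:
    """Fit phenotype ~ 1 + dosage + covariates by OLS; return (beta, SE, p) for dosage."""
    if len(dosage) != len(phenotype) or len(dosage) != len(covariates):
        raise ValueError("inputs must have one entry per individual")
    rows = [[1.0, float(g)] + [float(c) for c in cov] for g, cov in zip(dosage, covariates)]
    n, k = len(rows), len(rows[0])
    if n <= k:
        raise ValueError("need more individuals than model terms")
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * y for r, y in zip(rows, phenotype)) for i in range(k)]
    coef = solve_linear(xtx, xty)
    residuals = [y - sum(b * x for b, x in zip(coef, r)) for r, y in zip(rows, phenotype)]
    sigma2 = sum(e * e for e in residuals) / (n - k)
    # Var(beta_dosage) = sigma^2 * [(X'X)^-1]_11; get that entry by solving X'X v = e_1.
    unit = [0.0] * k
    unit[1] = 1.0
    se = math.sqrt(sigma2 * solve_linear(xtx, unit)[1])
    z = coef[1] / se
    p_value = math.erfc(abs(z) / math.sqrt(2.0))  # two-sided, normal approximation
    return coef[1], se, p_value


# Synthetic example: vBMD rises 5 units per effect allele, adjusted for age.
genotypes = [0, 1, 2] * 4
ages = [30] * 3 + [40] * 3 + [50] * 3 + [60] * 3
noise = [0.3, -0.2, 0.1, -0.1, 0.2, -0.3, 0.25, -0.15, 0.05, -0.05, 0.1, -0.2]
vbmd = [100.0 + 5.0 * g + 0.5 * a + e for g, a, e in zip(genotypes, ages, noise)]

GENOME_WIDE_SIGNIFICANCE = 5e-8
beta, se, p = snp_association(genotypes, vbmd, [(a,) for a in ages])
print(f"beta={beta:.2f} SE={se:.3f} p={p:.2e} significant={p < GENOME_WIDE_SIGNIFICANCE}")
```

In a real GWAS this test is repeated for every imputed SNP, with sex, weight and ancestry principal components added as further covariate columns.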

Future Directions and Implications for Drug Development

The identification of genes and pathways influencing distal tibia BMD opens new avenues for developing targeted therapies for osteoporosis. Once it is clear how specific genetic variants affect bone cell function, it may be possible to design drugs that modulate these pathways to enhance bone formation or inhibit bone resorption. Therapies targeting the WNT and RANKL/OPG signaling pathways are already in clinical use or under development. For example, the anti-RANKL antibody denosumab inhibits bone resorption, and the anti-sclerostin antibody romosozumab, which enhances WNT signaling, promotes bone formation.

Further research, including larger GWAS specifically focused on distal tibia microarchitecture and the integration of multi-omics data (e.g., transcriptomics, proteomics), will be crucial for a more complete understanding of the genetic regulation of bone strength at this critical skeletal site. This will ultimately pave the way for personalized medicine approaches to prevent and treat osteoporosis.

References

- 1. Genetic Determinants of Trabecular and Cortical Volumetric Bone Mineral Densities and Bone Microstructure - PMC [pmc.ncbi.nlm.nih.gov]

- 2. Genetic Determinants of Trabecular and Cortical Volumetric Bone Mineral Densities and Bone Microstructure | PLOS Genetics [journals.plos.org]

- 3. Genetic analysis of osteoblast activity identifies Zbtb40 as a regulator of osteoblast activity and bone mass - PMC [pmc.ncbi.nlm.nih.gov]

Animal Models for Studying Distal Tibia Bone Loss: An In-depth Technical Guide

For Researchers, Scientists, and Drug Development Professionals

This guide provides a comprehensive overview of established and emerging animal models used to investigate the mechanisms of distal tibia bone loss. Understanding these models is crucial for developing novel therapeutic strategies for a range of clinical conditions, including traumatic injuries, osteoporosis, and post-traumatic osteoarthritis. This document details experimental protocols, presents quantitative data for comparative analysis, and summarizes key signaling pathways involved in bone regeneration and resorption.

Introduction to Animal Models

The choice of an appropriate animal model is paramount for translational research in bone biology. The distal tibia, with its unique anatomical and biomechanical properties, presents specific challenges in modeling bone loss. Researchers utilize a variety of species, from small rodents to large animals, to recapitulate different aspects of human conditions.

- Rodent Models (Rats and Mice): Widely used due to their cost-effectiveness, rapid breeding cycles, and the availability of transgenic strains.[1] They are particularly valuable for studying the fundamental cellular and molecular mechanisms of bone healing and for initial drug screening.[2][3] Common models include surgically created defects, fractures, and models of osteoporosis.[3][4]
- Rabbit Models: Often serve as an intermediate model between rodents and larger animals. Their larger size allows for more complex surgical procedures and the use of orthopedic implants designed for humans.[5][6] Rabbit models are frequently employed to study physeal injuries, bone defects, and the efficacy of bone grafting materials.[5]
- Large Animal Models (Sheep and Pigs): These models offer the closest approximation to human bone physiology, biomechanics, and fracture healing processes.[7][8] Sheep are particularly favored for studies on osteoporosis and fracture fixation techniques due to similarities in bone composition and remodeling.[7][9] Porcine models are also valuable, especially for intra-articular fracture studies, due to the anatomical similarities of their joints to humans.

Quantitative Data on Distal Tibia Bone Loss in Animal Models

The following tables summarize key quantitative parameters from various studies, providing a basis for comparing the extent of bone loss and the efficacy of treatments across different models.

Table 1: Ovine (Sheep) Models of Distal Tibia Bone Loss

| Parameter | Model Details | Results | Reference |
|---|---|---|---|
| Bone Mineral Density (BMD) | Osteoporosis induced by ovariectomy, calcium/vitamin D-restricted diet, and steroids for 6 months. | Cancellous bone: 55% decrease; cortical bone: 7% decrease. | [9] |
| Bone Mineral Density (BMD) | Osteoporosis induced by ovariectomy, low-calcium diet, and steroid injection for 6 months. | Lumbar spine and proximal femur: >25% decrease. | [10] |
| Bone Volume Fraction (BV/TV) | Iliac crest biopsy from osteoporotic sheep (as above). | 37% decrease over 6 months. | [9] |
| Trabecular Number (Tb.N) | Iliac crest biopsy from osteoporotic sheep. | 19% decrease. | [9] |
| Trabecular Thickness (Tb.Th) | Iliac crest biopsy from osteoporotic sheep. | 22% decrease. | [9] |
| Torsional Strength & Stiffness | Tibia from osteoporotic sheep. | Approximately 50% lower than in the control group. | [9] |
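The three microarchitecture changes reported for the osteoporotic sheep in Table 1 can be cross-checked against each other. Under the parallel-plate model used in bone histomorphometry, Tb.N = (BV/TV) / Tb.Th and Tb.Sp = 1/Tb.N - Tb.Th. The sketch below assumes that model holds. Many micro-CT studies instead report model-independent 3D measures. The helper names are hypothetical.

```python
def implied_tbn_change(bvtv_change_pct: float, tbth_change_pct: float) -> float:
    """Percent change in Tb.N implied by the plate model, Tb.N = (BV/TV) / Tb.Th."""
    bvtv_ratio = 1 + bvtv_change_pct / 100
    tbth_ratio = 1 + tbth_change_pct / 100
    return (bvtv_ratio / tbth_ratio - 1) * 100


def plate_model_tbsp(bvtv: float, tbth: float) -> float:
    """Trabecular separation (same unit as Tb.Th) from the plate model: Tb.Sp = 1/Tb.N - Tb.Th."""
    tbn = bvtv / tbth  # trabecular number, per unit length
    return 1 / tbn - tbth


if __name__ == "__main__":
    # Sheep iliac crest changes from Table 1: BV/TV -37%, Tb.Th -22%.
    print(round(implied_tbn_change(-37, -22), 1))  # -19.2
```

The implied change of about -19.2% is close to the reported 19% decrease in Tb.N. Under this model, the three reported values are therefore mutually consistent.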

Table 2: Rodent (Rat and Mouse) Models of Distal Tibia Bone Loss

| Parameter | Model Details | Results | Reference |
|---|---|---|---|
| Bone Volume Fraction (BV/TV) | Unilateral open transverse tibial fractures in chondrocyte-specific Bmp2 cKO mice vs. controls. | Significantly decreased at days 7, 10, 14, and 21 post-fracture in cKO mice. | [11] |
| Bone Volume Fraction (BV/TV) | Volumetric muscle loss (VML) injury adjacent to the tibia in male mice. | Trabecular BV/TV lower in VML-injured mice than in uninjured controls. | [12] |
| Trabecular Number (Tb.N) | VML injury in male mice. | Lower in VML-injured mice than in uninjured controls. | [12] |
| Trabecular Spacing (Tb.Sp) | VML injury in male mice. | Greater in VML-injured mice than in uninjured controls. | [12] |
| Bone Mineral Density (BMD) | VML injury in male mice. | Trabecular BMD lower in VML-injured mice than in uninjured controls. | [12] |
| Cortical Bone Thickness | VML injury in male mice. | 6% lower in tibias of VML-injured limbs than in uninjured controls. | [12] |
| Ultimate Load | Tibias from VML-injured limbs. | 10% lower than in tibias from uninjured controls. | [12] |

Table 3: Lagomorph (Rabbit) Models of Distal Tibia Bone Loss

| Parameter | Model Details | Results | Reference |
|---|---|---|---|
| Energy to Failure | Unicortical defect in the mid-diaphysis (MD) of the tibia. | Significantly reduced (0.18 ± 0.07 J) compared with intact tibiae (0.31 ± 0.14 J). | [13] |
| Angle at Failure | Unicortical defect in the MD of the tibia. | Significantly reduced (0.17 ± 0.05 rad) compared with intact tibiae (0.23 ± 0.07 rad). | [13] |
| Peak Torque & Stiffness | Unicortical defect in the MD or distal metaphysis (DM) of the tibia. | No significant difference detected between defect groups and intact tibiae. | [13] |
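Table 3 reports absolute means rather than relative changes. Converting them to percent differences makes them comparable with the percentage-based results in Tables 1 and 2. This minimal sketch uses only the mean values from [13] and ignores the reported standard deviations, so it says nothing about statistical significance.

```python
def percent_change(treated: float, control: float) -> float:
    """Relative difference of a treated mean versus a control mean, in percent."""
    if control == 0:
        raise ValueError("control mean must be non-zero")
    return (treated - control) / control * 100


if __name__ == "__main__":
    # Mid-diaphyseal unicortical defect vs. intact rabbit tibiae (means from Table 3).
    energy = percent_change(0.18, 0.31)  # energy to failure, J
    angle = percent_change(0.17, 0.23)   # angle at failure, rad
    print(f"Energy to failure: {energy:.1f}%")
    print(f"Angle at failure: {angle:.1f}%")
```

By this measure, the defect reduced energy to failure by about 41.9% and angle at failure by about 26.1%.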

Experimental Protocols

Detailed and standardized experimental protocols are essential for the reproducibility of animal studies. Below are representative protocols for creating distal tibia bone loss models.

Rat Tibial Defect Model

This model is commonly used to evaluate bone regeneration and the efficacy of biomaterials.

- Animal Preparation:
  - Use adult male Sprague-Dawley rats (250-300 g).
  - Anesthetize the animal using isoflurane inhalation or an intraperitoneal injection of a ketamine/xylazine cocktail.
  - Shave the hair from the anteromedial aspect of the right hindlimb and disinfect the surgical site with povidone-iodine and alcohol.
  - Administer pre-operative analgesics (e.g., buprenorphine) to minimize pain.

- Surgical Procedure:
  - Make a 1.5-2.0 cm longitudinal incision over the anteromedial aspect of the proximal tibia.
  - Carefully dissect the soft tissues to expose the periosteum of the tibia.
  - Make a longitudinal incision in the periosteum and elevate it to expose the underlying bone.
  - Create a critical-sized defect (typically 5-8 mm in length) in the mid-diaphysis of the tibia using a dental burr or a Gigli saw under constant saline irrigation to prevent thermal necrosis.[14]
  - The defect should be a full-thickness segmental osteotomy. Because a segmental defect leaves the tibia mechanically unstable, the bone must be stabilized with internal fixation (e.g., an intramedullary pin or a plate).
  - If testing a biomaterial, implant it into the defect site.
  - Close the periosteum and overlying soft tissues in layers using absorbable sutures.
  - Close the skin with non-absorbable sutures or surgical staples.

- Post-Operative Care:
  - Administer post-operative analgesics for 48-72 hours.
  - House the animals individually to prevent injury to the surgical site.
  - Monitor the animals daily for signs of pain, infection, or distress.
  - Take radiographs at specified time points (e.g., 2, 4, and 8 weeks) to monitor bone healing.[3]

Sheep Model of Osteoporosis-Induced Bone Loss

This large animal model is valuable for preclinical testing of orthopedic implants and therapies for osteoporotic fractures.

- Animal Preparation:
- Induction of Osteoporosis:
  - Combine ovariectomy with a calcium- and vitamin D-restricted diet and steroid administration for approximately 6 months, as in the studies summarized in Table 1.[9][10]
- Assessment of Bone Loss:
  - Monitor bone mineral density (BMD) of the distal tibia and other sites (e.g., lumbar spine, proximal femur) using quantitative computed tomography (QCT) or dual-energy X-ray absorptiometry (DXA) at baseline and at regular intervals.[9][10]
  - Collect blood and urine samples to analyze biochemical markers of bone turnover (e.g., serum CTX for resorption and P1NP for formation).
- Post-Induction Procedures:
  - Once significant bone loss is confirmed, the animals can be used for subsequent studies, such as creating fractures in the osteoporotic distal tibia to test fixation devices.
- Post-Operative Care:
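The post-induction step requires "significant bone loss" to be confirmed but does not define it. Densitometry commonly makes this operational with the least significant change (LSC): LSC = 2.77 × precision error. Here the precision error is the root-mean-square SD of duplicate baseline scans, and 2.77 ≈ 1.96 × √2 corresponds to 95% confidence for the difference between two measurements. This convention comes from human DXA monitoring, so applying it to sheep QCT or DXA data is an assumption. The function names and scan values below are hypothetical.

```python
import math


def rms_sd_precision(duplicate_scans: list[tuple[float, float]]) -> float:
    """Root-mean-square SD from duplicate measurements (one pair per animal)."""
    if not duplicate_scans:
        raise ValueError("need at least one pair of scans")
    # For a duplicate pair (a, b), the per-subject variance is (a - b)^2 / 2.
    variances = [(a - b) ** 2 / 2 for a, b in duplicate_scans]
    return math.sqrt(sum(variances) / len(variances))


def least_significant_change(precision_sd: float, z_factor: float = 2.77) -> float:
    """LSC at ~95% confidence: 1.96 * sqrt(2) * precision error."""
    return z_factor * precision_sd


def is_significant_loss(baseline: float, follow_up: float, lsc: float) -> bool:
    """True if BMD fell by more than the LSC (gains are not counted as loss)."""
    return baseline - follow_up > lsc


if __name__ == "__main__":
    # Hypothetical duplicate distal tibia vBMD scans (mg/cm^3) from three animals.
    pairs = [(250.0, 254.0), (241.0, 239.0), (262.0, 262.0)]
    lsc = least_significant_change(rms_sd_precision(pairs))
    print(f"LSC = {lsc:.1f} mg/cm^3")
    print(is_significant_loss(250.0, 235.0, lsc))  # 15 mg/cm^3 drop
    print(is_significant_loss(250.0, 246.0, lsc))  # 4 mg/cm^3 drop
```

With these hypothetical pairs, the LSC is about 5.1 mg/cm³. A 15 mg/cm³ drop would count as significant loss, and a 4 mg/cm³ drop would not.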

Key Signaling Pathways in Distal Tibia Bone Loss and Regeneration

The processes of bone loss and formation are tightly regulated by a complex network of signaling pathways. Understanding these pathways is critical for identifying novel therapeutic targets.

BMP Signaling Pathway

The Bone Morphogenetic Protein (BMP) signaling pathway is a crucial regulator of osteoblast differentiation and bone formation, playing a vital role in fracture healing.[11][17]

Wnt/β-catenin Signaling Pathway

The canonical Wnt/β-catenin pathway is a master regulator of bone mass. Its activation promotes osteoblastogenesis and bone formation, while its inhibition can lead to bone loss.[18][19]

RANKL/RANK/OPG Signaling Pathway

This pathway is the principal regulator of osteoclast differentiation and activity, and thus bone resorption. An imbalance in this system, with an increased RANKL/OPG ratio, is a hallmark of many bone loss conditions, including osteoporosis.[20][21]

Conclusion

The selection of an appropriate animal model is a critical decision in the preclinical study of distal tibia bone loss. This guide has provided a comparative overview of commonly used models, presenting quantitative data to aid in this selection process. The detailed experimental protocols offer a foundation for reproducible study design, and the overview of key signaling pathways highlights potential targets for therapeutic intervention. By leveraging these models and a thorough understanding of the underlying molecular mechanisms, researchers can accelerate the development of effective treatments for debilitating bone conditions.

References

- 1. A Mouse Model of Orthopedic Surgery to Study Postoperative Cognitive Dysfunction and Tissue Regeneration - PMC [pmc.ncbi.nlm.nih.gov]

- 2. A longitudinal rat model for assessing postoperative recovery and bone healing following tibial osteotomy and plate fixation - PMC [pmc.ncbi.nlm.nih.gov]

- 3. Development of a tibial experimental non-union model in rats - PMC [pmc.ncbi.nlm.nih.gov]

- 4. A Rat Tibial Growth Plate Injury Model to Characterize Repair Mechanisms and Evaluate Growth Plate Regeneration Strategies - PMC [pmc.ncbi.nlm.nih.gov]

- 5. researchgate.net [researchgate.net]