Agromet

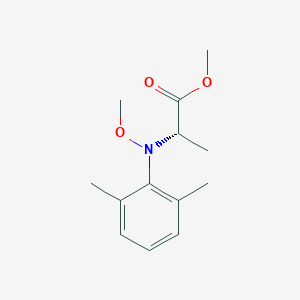

Description

Properties

| CAS No. | 123298-28-2 |
|---|---|
| Molecular Formula | C13H19NO3 |
| Molecular Weight | 237.29 g/mol |
| IUPAC Name | methyl (2S)-2-(N-methoxy-2,6-dimethylanilino)propanoate |
| InChI | InChI=1S/C13H19NO3/c1-9-7-6-8-10(2)12(9)14(17-5)11(3)13(15)16-4/h6-8,11H,1-5H3/t11-/m0/s1 |
| InChI Key | OABBFKUSJCCJFO-NSHDSACASA-N |
| SMILES | CC1=C(C(=CC=C1)C)N(C(C)C(=O)OC)OC |
| Isomeric SMILES | CC1=C(C(=CC=C1)C)N([C@@H](C)C(=O)OC)OC |
| Canonical SMILES | CC1=C(C(=CC=C1)C)N(C(C)C(=O)OC)OC |
| Other CAS No. | 123298-28-2 |
| Synonyms | N-(2,6-Dimethylphenyl)-N-methoxyalanine methyl ester |
| Origin of Product | United States |


Key Research Questions in Agrometeorology: A Technical Guide

An In-depth Technical Guide for Researchers, Scientists, and Drug Development Professionals

The escalating challenges of climate change, a growing global population, and the need for sustainable agricultural practices have placed agrometeorology at the forefront of scientific research. This technical guide delves into the core research questions driving the field, providing an in-depth look at the experimental protocols and quantitative data that are shaping our understanding of the intricate relationships between weather, climate, and agricultural systems. The following sections address key research questions, detail the methodologies used to investigate them, and present pertinent data in a structured format to facilitate comparison and further inquiry.

Quantifying the Impact of Climate Change on Crop Yields

A primary research question in agrometeorology is to precisely quantify the effects of climate change on the productivity of major staple crops. This involves understanding the isolated and combined impacts of rising temperatures, altered precipitation patterns, and increased atmospheric carbon dioxide concentrations.

Data Presentation:

The following table summarizes the projected impact of climate change on the yields of major crops under different warming scenarios.

| Crop | Scenario | Projected Yield Change | Key Climatic Drivers | Geographic Region of Study | Reference |
|---|---|---|---|---|---|
| Maize | RCP 8.5 (High Emissions) | -24% by late century | Increased temperature, changes in rainfall | Global | [1] |
| Wheat | RCP 8.5 (High Emissions) | +17% by late century | Elevated CO2, expanded growing range | Global | [1] |
| Wheat | 2°C Warming | +1.7% (with CO2 fertilization) | Temperature, CO2 | Global | [2] |
| Wheat | 2°C Warming | -6.6% (without CO2 fertilization) | Temperature | Global | [2] |
| Soybean | Elevated CO2 (550 ppm) | +15% | Elevated CO2 | SoyFACE Experiment, USA | [3] |
| Winter Wheat | 1°C Temperature Increase | Yield decrease | Temperature | North China Plain | [4] |
| Winter Wheat | 10% Precipitation Increase | General yield increase | Precipitation | North China Plain | [4] |

Experimental Protocols:

Free-Air Carbon Dioxide Enrichment (FACE) Experiments: These experiments are critical for understanding crop responses to elevated CO2 in real-world field conditions.

Detailed Methodology for a Soybean FACE Experiment:

- Experimental Setup: Large octagonal rings of pipes are constructed in a soybean field to release CO2 into the air, creating an environment with elevated CO2 concentrations (e.g., 550 ppm) within each ring. Control plots with ambient CO2 levels are also established.[3]
- Treatments: The experiment can include treatments for elevated CO2, elevated ozone, and their interaction, alongside control plots.[3]
- Monitoring: Throughout the growing season, a suite of measurements is taken, including:
  - Leaf-level gas exchange: To determine photosynthetic rates and stomatal conductance.
  - Canopy temperature: Measured with infrared thermometry to assess the impact of altered transpiration rates.[5]
  - Plant growth and development: Regular measurements of plant height, node number, and phenological stages (e.g., flowering, pod fill).[5]
  - Biomass and yield: At the end of the season, plants are harvested to determine total biomass, seed yield, and harvest index.[3]
- Data Analysis: Statistical analysis is performed to compare the measured parameters between the elevated CO2 and ambient plots and to determine the significance of any observed differences.
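The Data Analysis step does not name a specific test. As one illustration, assuming a small number of elevated-CO2 and ambient rings with one summary value per ring (for example, seed yield), an exact two-sided permutation test can be sketched in Python as follows. The function name and the yield values are hypothetical and are not taken from the cited study.

```python
from itertools import combinations
from statistics import mean


def exact_permutation_test(elevated, ambient):
    """Two-sided exact permutation test for a difference in group means.

    Every relabelling of the pooled plot values into groups of the original
    sizes is enumerated, so this is only practical for a few plots per group.
    Returns (observed mean difference, p-value).
    """
    pooled = list(elevated) + list(ambient)
    n_elev = len(elevated)
    observed = mean(elevated) - mean(ambient)

    extreme = 0
    total = 0
    for idx in combinations(range(len(pooled)), n_elev):
        chosen = set(idx)
        group_a = [pooled[i] for i in chosen]
        group_b = [pooled[i] for i in range(len(pooled)) if i not in chosen]
        diff = mean(group_a) - mean(group_b)
        # Count relabellings at least as extreme as the observed difference
        # (small tolerance so that ties are counted despite rounding).
        if abs(diff) >= abs(observed) - 1e-12:
            extreme += 1
        total += 1
    return observed, extreme / total


if __name__ == "__main__":
    # Hypothetical per-ring seed yields (t/ha).
    elevated_rings = [3.9, 4.1, 4.3, 4.0]
    ambient_rings = [3.5, 3.6, 3.7, 3.4]
    diff, p = exact_permutation_test(elevated_rings, ambient_rings)
    print(f"mean difference = {diff:.3f} t/ha, two-sided p = {p:.4f}")
```

This treats rings as independent units. If elevated and ambient rings are paired within blocks, as is common in FACE layouts, a paired or blocked analysis would be the more appropriate choice.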

Visualization:

Caption: Workflow of a Free-Air Carbon Dioxide Enrichment (FACE) experiment.

Enhancing the Accuracy of Agrometeorological Models

Crop simulation models are indispensable tools for predicting crop growth and yield under various environmental conditions. A key research question is how to improve the accuracy of these models through robust calibration and validation procedures.

Experimental Protocols:

Detailed Methodology for DSSAT Crop Model Calibration:

The Decision Support System for Agrotechnology Transfer (DSSAT) is a widely used suite of crop models.[6][7] Calibrating these models for specific cultivars and environments is crucial for their accuracy.[8][9]

- Data Collection: A minimum dataset is required, including:
  - Weather Data: Daily maximum and minimum temperature, solar radiation, and precipitation.[8]
  - Soil Data: Soil texture, organic carbon, pH, and hydraulic properties for different soil layers.
  - Crop Management Data: Sowing date, plant density, irrigation, and fertilizer application details.[8]
  - Observed Crop Data: Phenological dates (e.g., flowering, maturity), final yield, and biomass.
- Model Input File Preparation: The collected data is formatted into specific input files for the DSSAT model.[6]
- Genotype Coefficient Estimation (Calibration):
  - The model is run with initial genotype coefficients for the specific cultivar.
  - The simulated outputs (e.g., flowering date, maturity date, yield) are compared to the observed data.
  - The genotype coefficients are iteratively adjusted to minimize the difference between simulated and observed values. This is often done using a tool like the Generalized Likelihood Uncertainty Estimation (GLUE) module within DSSAT.[9]
- Model Validation:
  - The calibrated model is then run using an independent dataset (i.e., data not used for calibration).
  - The simulated outputs are compared to the observed data from the validation dataset.
  - Statistical metrics such as Root Mean Square Error (RMSE) and Normalized Root Mean Square Error (nRMSE) are used to evaluate the model's performance.
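The calibration and validation loop above can be illustrated with a deliberately tiny stand-in for a crop model. The sketch assumes a hypothetical one-parameter phenology model (flowering occurs on the first day that accumulated growing degree-days reach a cultivar coefficient, playing the role of a genotype coefficient such as P1), estimates that coefficient with a simplified GLUE-style Monte Carlo procedure (uniform prior, Gaussian likelihood, likelihood-weighted posterior mean), and then scores the calibrated model on independent seasons with RMSE and nRMSE. It is not the DSSAT GLUE module; the base temperature, prior range, error scale, and all weather and observation values are assumptions.

```python
import math
import random


def simulate_flowering_day(daily_temps, coeff, t_base=8.0):
    """Toy phenology model (hypothetical): first day on which cumulative GDD >= coeff."""
    gdd = 0.0
    for day, t_mean in enumerate(daily_temps, start=1):
        gdd += max(0.0, t_mean - t_base)
        if gdd >= coeff:
            return day
    return len(daily_temps)  # requirement not met: cap at season length


def rmse(sim, obs):
    """Root mean square error between simulated and observed values."""
    return math.sqrt(sum((s - o) ** 2 for s, o in zip(sim, obs)) / len(obs))


def nrmse(sim, obs):
    """RMSE normalised by the observed mean, in percent."""
    return 100.0 * rmse(sim, obs) / (sum(obs) / len(obs))


def glue_calibrate(seasons, observed_days, prior=(300.0, 1200.0),
                   n_samples=2000, sigma=3.0, seed=1):
    """Simplified GLUE-style estimate of a single coefficient.

    Samples the coefficient from a uniform prior, scores each sample with a
    Gaussian likelihood of the observed days, and returns the
    likelihood-weighted (posterior) mean.
    """
    rng = random.Random(seed)
    samples, log_likes = [], []
    for _ in range(n_samples):
        coeff = rng.uniform(*prior)
        sims = [simulate_flowering_day(temps, coeff) for temps in seasons]
        log_likes.append(sum(-((s - o) ** 2) / (2.0 * sigma ** 2)
                             for s, o in zip(sims, observed_days)))
        samples.append(coeff)
    # Subtract the maximum before exponentiating to avoid underflow.
    top = max(log_likes)
    weights = [math.exp(ll - top) for ll in log_likes]
    return sum(c * w for c, w in zip(samples, weights)) / sum(weights)


if __name__ == "__main__":
    # Synthetic seasons with constant daily mean temperatures (degC).
    calib_seasons = [[18.0] * 150, [20.0] * 150, [22.0] * 150]
    calib_obs = [60, 50, 43]          # hypothetical observed flowering days
    valid_seasons = [[19.0] * 150, [21.0] * 150]
    valid_obs = [55, 47]              # independent, hypothetical seasons

    coeff = glue_calibrate(calib_seasons, calib_obs)
    valid_sim = [simulate_flowering_day(t, coeff) for t in valid_seasons]
    print(f"calibrated coefficient: {coeff:.0f} degC d")
    print(f"validation RMSE: {rmse(valid_sim, valid_obs):.2f} d, "
          f"nRMSE: {nrmse(valid_sim, valid_obs):.1f} %")
```

The key point the sketch preserves is the separation of roles: the coefficient is estimated only from the calibration seasons, and RMSE and nRMSE are computed only on the seasons held out for validation.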

Data Presentation:

The following table provides an example of genotype coefficients for a rice cultivar in the DSSAT CERES-Rice model.

| Coefficient | Description | Value |
|---|---|---|
| P1 | Basic vegetative phase duration (°C d) | 550 |
| P2O | Critical photoperiod (h) | 12.5 |
| P5 | Grain filling duration (°C d) | 600 |
| G1 | Potential spikelet number coefficient | 60 |
| G2 | Single grain weight (g) | 0.022 |
| G3 | Tillering coefficient | 1.0 |
| PHINT | Phylochron interval (°C d) | 95 |

Visualization:

Caption: Workflow for the calibration and validation of the DSSAT crop model.

Improving Agrometeorological Forecasting for Pest and Disease Management

A critical area of research is the development of accurate forecasting models for crop pests and diseases based on meteorological data. This allows for timely and targeted interventions, reducing crop losses and the environmental impact of pesticides.

Data Presentation:

The following table shows the correlation of weather parameters with the severity of potato late blight.

| Weather Parameter | Correlation with Disease Severity | Significance (p-value) |
|---|---|---|
| Maximum Temperature | 0.751 | <0.05 |
| Minimum Temperature | 0.001 | Not Significant |
| Rainfall | 0.0565 | <0.05 |
| Relative Humidity | 0.673 | <0.05 |
| Wind Speed | 0.332 | <0.05 |

Source: Adapted from a study on potato late blight in the Northern Himalayas of India.[10]

Experimental Protocols:

Development of a Weather-Based Forecasting Model for Potato Late Blight:

- Data Collection:
  - Meteorological Data: Daily records of maximum and minimum temperature, rainfall, relative humidity, and wind speed are collected from weather stations near the experimental plots.[10]
  - Disease Severity Data: Regular field surveys are conducted to assess the severity of potato late blight, often using a standardized rating scale.
- Statistical Analysis:
  - Correlation Analysis: The relationship between each weather parameter and disease severity is determined using correlation analysis.[10]
  - Regression Analysis: Stepwise multiple regression analysis is used to develop a predictive model, where disease severity is the dependent variable and the significant weather parameters are the independent variables.
- Model Validation: The developed model is validated using an independent dataset to assess its predictive accuracy.
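The correlation and regression steps can be sketched with NumPy. The example assumes the weather variables for each observation are already aligned with a disease-severity score, uses Pearson correlation for the screening step, and uses forward selection by Akaike's information criterion (AIC) as one concrete form of stepwise regression; many studies instead add or drop terms using p-value thresholds. The function names, variable names, and synthetic data are hypothetical.

```python
import numpy as np


def pearson_r(x, y):
    """Pearson correlation coefficient between two 1-D arrays."""
    return float(np.corrcoef(x, y)[0, 1])


def ols_rss(X, y):
    """Fit y = b0 + X b by least squares; return coefficients and residual sum of squares."""
    design = np.column_stack([np.ones(len(y)), X])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    resid = y - design @ coef
    return coef, float(resid @ resid)


def forward_stepwise_aic(X, y):
    """Greedy forward selection: add the predictor that lowers AIC most; stop when none does."""
    n, p = X.shape
    selected = []
    _, rss = ols_rss(X[:, []], y)          # intercept-only model
    best_aic = n * np.log(rss / n) + 2 * 1
    while len(selected) < p:
        candidates = []
        for j in range(p):
            if j in selected:
                continue
            cols = selected + [j]
            _, rss = ols_rss(X[:, cols], y)
            k = len(cols) + 1              # slopes plus intercept
            candidates.append((n * np.log(rss / n) + 2 * k, j))
        aic, j = min(candidates)
        if aic >= best_aic:
            break
        best_aic = aic
        selected.append(j)
    coef, _ = ols_rss(X[:, selected], y)
    return selected, coef


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n = 60
    names = ["Tmax", "Tmin", "Rain", "RH", "Wind"]   # hypothetical standardised predictors
    X = rng.normal(size=(n, len(names)))
    severity = 2.0 * X[:, 0] + 0.8 * X[:, 3] + rng.normal(scale=0.3, size=n)
    for name, col in zip(names, X.T):
        print(f"{name}: r = {pearson_r(col, severity):+.2f}")
    selected, coef = forward_stepwise_aic(X, severity)
    print("selected:", [names[j] for j in selected], "coefficients:", np.round(coef, 2))
```

The returned coefficients are ordered as intercept first, then one slope per selected predictor in the order of selection. Validation on an independent dataset would reuse these coefficients without refitting.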

Visualization:

Caption: Influence of meteorological factors on potato late blight development.

Downscaling Climate Model Projections for Local Agricultural Impact Assessment

Global Climate Models (GCMs) provide projections of future climate, but their spatial resolution is too coarse for local agricultural impact studies. Downscaling techniques are essential to translate these large-scale projections to a finer, more relevant scale.

Experimental Protocols:

Step-by-Step Protocol for Statistical Downscaling of Precipitation Data (Delta Method):

The delta method, a common statistical downscaling technique, is used to apply the change in a climate variable from a GCM to a high-resolution observed climate dataset.[11][12]

- Data Acquisition:
  - GCM Data: Obtain monthly precipitation projections from a GCM for a historical baseline period and a future period.
  - Observed Data: Acquire a high-resolution gridded dataset of observed monthly precipitation for the same historical baseline period.
- Calculate GCM Anomalies: For each calendar month, calculate the ratio of the mean future GCM precipitation to the mean historical (baseline) GCM precipitation. This ratio represents the projected change, or anomaly.
- Interpolate Anomalies: Interpolate the coarse-resolution GCM anomalies to the same high-resolution grid as the observed data.
- Apply Anomalies to Observed Data: For each grid cell and each month, multiply the observed historical precipitation by the interpolated anomaly ratio to obtain the downscaled future precipitation.
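The delta-method steps above can be sketched with NumPy, assuming the GCM and observed fields have already been reduced to monthly climatologies on regular, increasing latitude/longitude axes. The interpolation here is a simple separable (bilinear) scheme built on `np.interp`; operational workflows often use dedicated regridding tools instead. The function names, grid sizes, and the `eps` guard value are assumptions for illustration.

```python
import numpy as np


def regrid_linear(coarse, y_c, x_c, y_f, x_f):
    """Separable (bilinear) interpolation of a 2-D field from a coarse to a fine grid.

    Coordinates must be increasing. Points outside the coarse grid take the
    nearest edge value (np.interp's default behaviour).
    """
    # Interpolate each coarse row along x: shape (len(y_c), len(x_f)).
    along_x = np.array([np.interp(x_f, x_c, row) for row in coarse])
    # Then interpolate each fine column along y: result shape (len(y_f), len(x_f)).
    return np.array([np.interp(y_f, y_c, along_x[:, j]) for j in range(len(x_f))]).T


def delta_downscale(obs_hist, gcm_hist, gcm_fut, y_c, x_c, y_f, x_f, eps=1e-6):
    """Delta (change-factor) downscaling of monthly precipitation.

    obs_hist: (months, ny_f, nx_f) observed baseline monthly means on the fine grid.
    gcm_hist, gcm_fut: (months, ny_c, nx_c) GCM baseline and future monthly means.
    """
    out = np.empty_like(obs_hist, dtype=float)
    for m in range(obs_hist.shape[0]):
        # Multiplicative anomaly (future / baseline); eps guards against division by zero.
        ratio = gcm_fut[m] / np.maximum(gcm_hist[m], eps)
        # Interpolate the anomaly ratio to the fine grid.
        ratio_fine = regrid_linear(ratio, y_c, x_c, y_f, x_f)
        # Scale the observed baseline by the interpolated anomaly.
        out[m] = obs_hist[m] * ratio_fine
    return out


if __name__ == "__main__":
    y_c = x_c = np.array([0.0, 1.0])            # hypothetical 2 x 2 GCM grid
    y_f = x_f = np.linspace(0.0, 1.0, 5)        # hypothetical 5 x 5 observation grid
    obs = np.full((12, 5, 5), 80.0)             # mm/month, illustrative
    gcm_hist = np.full((12, 2, 2), 100.0)
    gcm_fut = np.full((12, 2, 2), 90.0)         # a 10% drying signal
    print(delta_downscale(obs, gcm_hist, gcm_fut, y_c, x_c, y_f, x_f)[0, 0, 0])
```

Ratios can become unstable where baseline GCM precipitation is near zero, which is why a floor (`eps` here) or a cap on the ratio is usually applied. For temperature, the same workflow typically uses a difference (future minus baseline) rather than a ratio.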

Visualization:

Caption: Logical relationship of the statistical downscaling (delta method) process.

References

- 1. NASA SVS | Impact of Climate Change on Global Wheat Yields [svs.gsfc.nasa.gov]

- 2. ourworldindata.org [ourworldindata.org]

- 3. researchgate.net [researchgate.net]

- 4. mdpi.com [mdpi.com]

- 5. Elevated CO2 significantly delays reproductive development of soybean under Free-Air Concentration Enrichment (FACE) - PubMed [pubmed.ncbi.nlm.nih.gov]

- 6. ars.usda.gov [ars.usda.gov]

- 7. researchgate.net [researchgate.net]

- 8. renupublishers.com [renupublishers.com]

- 9. mdpi.com [mdpi.com]

- 10. pdfs.semanticscholar.org [pdfs.semanticscholar.org]

- 11. uaf-snap.org [uaf-snap.org]

- 12. academicjournals.org [academicjournals.org]

A Technical Guide to the Historical Development of Agricultural Meteorology

Audience: Researchers, scientists, and drug development professionals.

Core Content: This whitepaper provides an in-depth exploration of the historical evolution of agricultural meteorology, detailing key milestones, foundational experiments, and the development of methodologies that form the bedrock of modern agrometeorology.

The Dawn of Agricultural Meteorology: Early Observations and Instrumentation

The genesis of agricultural meteorology lies in the fundamental human need to understand the relationship between weather and food production. Early agricultural societies relied on empirical observations and phenological records to guide their farming practices. However, the 18th century marked a significant turning point with the advent of systematic scientific inquiry and the development of meteorological instruments.

A pivotal figure of this era was René Antoine Ferchault de Réaumur, a French scientist who introduced the concept of thermal time, or growing degree-days, in the 1730s. He posited that the cumulative heat units required for a plant to reach a specific developmental stage were constant. This concept laid the groundwork for predicting crop phenology based on temperature data.

Experimental Protocols: Réaumur's Thermal Time Concept

Réaumur's experiments were conceptually simple yet profound. The detailed methodology involved:

- Observation: Meticulous observation of the life cycles of various plants and insects.
- Instrumentation: Utilization of his own invention, the Réaumur thermometer, to record daily air temperatures. The Réaumur scale sets the freezing point of water at 0°Ré and the boiling point at 80°Ré.[1][2][3]
- Data Collection: Daily temperature readings were taken at the same time and location to ensure consistency.
- Calculation: The sum of the mean daily temperatures above a certain baseline (the temperature below which the organism's development ceases) was calculated for each stage of the organism's life cycle.
- Hypothesis: Réaumur hypothesized that the total "heat" required for a specific developmental stage (e.g., from planting to flowering) was a constant value, regardless of the year-to-year variations in weather.
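In modern notation, this summation is a growing degree-day (GDD) sum: each day contributes its mean temperature minus the base temperature, or zero when the mean falls below the base, and a stage is reached when the running total meets that stage's requirement. A minimal Python sketch follows; the base temperature, requirement, and temperatures are illustrative assumptions, and the simple daily-mean method shown is only one of several GDD formulas in current use.

```python
def daily_gdd(t_mean, t_base):
    """Degree-days contributed by one day; no development below the base temperature."""
    return max(0.0, t_mean - t_base)


def cumulative_gdd(daily_means, t_base):
    """Running total of degree-days, one entry per day."""
    total, out = 0.0, []
    for t in daily_means:
        total += daily_gdd(t, t_base)
        out.append(total)
    return out


def day_stage_reached(daily_means, t_base, requirement):
    """First day (1-based) on which accumulated degree-days meet the requirement, or None."""
    for day, total in enumerate(cumulative_gdd(daily_means, t_base), start=1):
        if total >= requirement:
            return day
    return None


if __name__ == "__main__":
    temps = [10.0, 12.0, 4.0, 15.0]   # hypothetical daily mean temperatures (degC)
    print(cumulative_gdd(temps, t_base=5.0))                       # [5.0, 12.0, 12.0, 22.0]
    print(day_stage_reached(temps, t_base=5.0, requirement=12.0))  # 2
```

Note that the cold third day adds nothing to the total, which is exactly the behaviour Réaumur's constant-heat-sum hypothesis relies on: development pauses rather than reverses when temperatures drop below the base.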

The Chemical Revolution and its Impact on Agricultural Science

The 19th century witnessed a surge in the application of chemistry to agriculture, a period largely defined by the work of Jean-Baptiste Boussingault. A French chemist, Boussingault is often regarded as one of the founders of modern agricultural science. He was the first to conduct systematic field experiments to understand the nutritional requirements of crops.[4][5]

Experimental Protocols: Boussingault's Nitrogen Fixation Experiments

Boussingault's most notable experiments focused on the source of nitrogen for plants. He meticulously designed his experiments to control for variables and obtain quantitative results.[6][7][8][9] The methodology for his nitrogen balance studies was as follows:

- Objective: To determine whether plants could assimilate atmospheric nitrogen.
- Experimental Setup:
  - Plants, particularly legumes and cereals, were grown in pots containing sterilized sand or calcined soil to eliminate any initial nitrogen content.[6][7][8][9]
  - The experiments were conducted in a glazed conservatory to protect the plants from atmospheric deposition of nitrogen compounds from rain or dust.
  - A known quantity of seeds with a predetermined nitrogen content was planted.
  - The plants were irrigated with distilled water.
- Data Collection:
  - At the end of the growing season, the entire plant (roots, stems, leaves, and seeds) was harvested.
  - The total dry matter and nitrogen content of the harvested plants were determined using analytical chemistry methods of the time.
- Analysis: The nitrogen content of the harvested plants was compared to the initial nitrogen content of the seeds.

Data Presentation: Boussingault's Nitrogen Balance in Legumes vs. Cereals (Hypothetical Data)

| Crop | Initial Nitrogen in Seed (g) | Final Nitrogen in Plant (g) | Net Nitrogen Gain (g) |
|---|---|---|---|
| Clover (Legume) | 0.05 | 0.55 | 0.50 |
| Wheat (Cereal) | 0.05 | 0.06 | 0.01 |
| Peas (Legume) | 0.10 | 1.10 | 1.00 |
| Oats (Cereal) | 0.10 | 0.11 | 0.01 |

This table is a representative illustration of Boussingault's findings and not a direct reproduction of his original data.

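Boussingault's early experiments indicated that legumes could acquire significant amounts of nitrogen from a source other than the soil, whereas cereals could not, which pointed to atmospheric nitrogen as the source.[1][4][5] His later, more rigorously controlled experiments found no such gain, and the role of legume root nodules in fixing atmospheric nitrogen was not established until the work of Hellriegel and Wilfarth in the 1880s.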

The Statistical Era: Rigor and Design in Agricultural Experiments

From the mid-19th century onward, long-term agricultural experiment stations were established, most notably Rothamsted Experimental Station in the United Kingdom, founded in 1843.[10][11] These stations generated vast amounts of data, but the methods for analyzing it were often inadequate. This changed with the arrival of R.A. Fisher at Rothamsted in 1919.

Experimental Protocols: Fisher's Principles of Experimental Design

Fisher's methodology was not about a single experiment but a new philosophy of conducting and analyzing experiments. The core principles are:

- Replication: Repeating each treatment multiple times to estimate experimental error and increase the precision of the results.
- Randomization: Assigning treatments to experimental units randomly to avoid bias.
- Blocking (Local Control): Grouping experimental units into blocks where the units within a block are more similar to each other than to units in other blocks. This helps to reduce the effect of known sources of variation, such as soil heterogeneity.
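All three principles meet in the randomized complete block design (RCBD): each block contains every treatment once (blocking), the blocks serve as replicates (replication), and the order of plots within each block is drawn at random (randomization). The sketch below generates such a layout and computes the standard two-way analysis of variance for it, showing how block-to-block variation is removed from the error term. The treatment names, seed, and yield values are hypothetical.

```python
import random


def rcbd_layout(treatments, n_blocks, seed=None):
    """Randomized complete block design: every treatment once per block, in random order."""
    rng = random.Random(seed)
    layout = []
    for _ in range(n_blocks):
        order = list(treatments)
        rng.shuffle(order)  # randomization within each block
        layout.append(order)
    return layout  # the number of blocks equals the number of replicates


def rcbd_anova(yields):
    """Two-way ANOVA without interaction for an RCBD.

    yields[b][t] is the response of treatment t in block b.
    Returns sums of squares, degrees of freedom, and the treatment F ratio.
    """
    b, t = len(yields), len(yields[0])
    grand = sum(map(sum, yields)) / (b * t)
    treat_means = [sum(row[j] for row in yields) / b for j in range(t)]
    block_means = [sum(row) / t for row in yields]
    ss_total = sum((y - grand) ** 2 for row in yields for y in row)
    ss_treat = b * sum((m - grand) ** 2 for m in treat_means)
    ss_block = t * sum((m - grand) ** 2 for m in block_means)
    # Variation between blocks (e.g., soil heterogeneity) is removed from the error term.
    ss_error = ss_total - ss_treat - ss_block
    df_treat, df_block, df_error = t - 1, b - 1, (t - 1) * (b - 1)
    f_treat = (ss_treat / df_treat) / (ss_error / df_error)
    return {"ss_treat": ss_treat, "ss_block": ss_block, "ss_error": ss_error,
            "df": (df_treat, df_block, df_error), "F_treat": f_treat}


if __name__ == "__main__":
    plan = rcbd_layout(["Control", "N", "PK", "NPK"], n_blocks=3, seed=7)
    for block, plots in enumerate(plan, start=1):
        print(f"Block {block}: {plots}")
    # Hypothetical yields (t/ha): rows are blocks, columns are two treatments.
    print(rcbd_anova([[1.0, 3.0], [2.0, 5.0], [4.0, 6.0]]))
```

In the example data the blocks differ strongly, so most of the non-treatment variation ends up in the block sum of squares rather than in the error term, which is what makes the treatment F ratio large.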

Data Presentation: Rothamsted Broadbalk Winter Wheat Experiment (1852-1861)

The following table presents a summary of early yield data from the Broadbalk experiment at Rothamsted, which was initiated by Lawes and Gilbert in 1843. This long-term experiment provided much of the data that Fisher later used to develop his statistical methods. The data shows the average wheat grain yield under different fertilizer treatments.

| Treatment | Average Yield (tonnes/hectare) |
|---|---|
| Unmanured | 0.91 |
| Farmyard Manure | 2.36 |
| NPK | 2.22 |
| PK only | 1.15 |
| N only | 1.48 |

Data adapted from Johnston, A. E., & Poulton, P. R. (2018). The importance of long-term experiments in agricultural science. European Journal of Soil Science, 69(1), 11-21.

The 20th Century and Beyond: Technological Advancements

The mid-20th century saw the integration of new technologies into agricultural meteorology. The development of computers allowed for the creation of complex crop simulation models that could predict growth and yield based on weather inputs. The latter half of the century was marked by the advent of remote sensing, with satellites providing unprecedented data on crop health, soil moisture, and weather patterns on a global scale.

Evolution of Crop Yield Forecasting

Crop yield forecasting has evolved from simple empirical observations to sophisticated, multi-faceted systems.

Caption: Evolution of Crop Yield Forecasting Methods.

Logical Relationships in Modern Agrometeorological Data Integration

Modern agricultural meteorology relies on the integration of data from various sources to provide actionable insights for farmers and policymakers.

Caption: Integration of Data in Modern Agrometeorology.

Conclusion

The historical development of agricultural meteorology is a testament to the power of scientific inquiry, from the early, meticulous observations of naturalists to the complex, data-driven models of the modern era. The foundational work of pioneers like Réaumur, Boussingault, and Fisher provided the intellectual and methodological framework upon which the field is built. Today, agricultural meteorology continues to evolve, driven by technological innovation and the pressing need to ensure global food security in a changing climate. For researchers and scientists, understanding this historical trajectory provides a crucial context for current research and future advancements in this vital field.

References

- 1. earthwormexpress.com [earthwormexpress.com]

- 2. agronomy.org [agronomy.org]

- 3. fao.org [fao.org]

- 4. encyclopedia.com [encyclopedia.com]

- 5. redalyc.org [redalyc.org]

- 6. Mr Lawes' experiments [repository.rothamsted.ac.uk]

- 7. FG 180: 180 years of Rothamsted Research - what has it done for farming? | Farm News | Farmers Guardian [farmersguardian.com]

- 8. Iizumi, T (2019): Global dataset of historical yields v1.2 and v1.3 aligned version [doi.pangaea.de]

- 9. researchgate.net [researchgate.net]

- 10. The Long Term Experiments | Rothamsted Research [rothamsted.ac.uk]

- 11. rothamsted.ac.uk [rothamsted.ac.uk]

- 12. From complex histories to cohesive data, a long-term agricultural dataset from the Morrow Plots - PMC [pmc.ncbi.nlm.nih.gov]

In-depth Technical Guide: The Role of Agrometeorology in Climate Change Adaptation

For Researchers and Scientists

The escalating climate crisis necessitates robust and innovative strategies to ensure global food security. Agrometeorology, the science of applying meteorological and climatological data to agriculture, stands at the forefront of developing climate change adaptation measures. By providing critical insights into weather patterns and their effects on agricultural systems, agrometeorology empowers farmers, policymakers, and researchers to make informed decisions that enhance resilience and sustainability.[1][2] This technical guide explores the pivotal role of agrometeorology in climate change adaptation, detailing key applications, methodologies, and the logical frameworks that underpin this critical field.

Core Principles of Agrometeorological Adaptation

Agrometeorology facilitates climate change adaptation through a multi-faceted approach that integrates weather and climate information into agricultural practices.[1] The primary objective is to optimize crop production, manage risks associated with climate variability, and minimize environmental impacts.[1] This involves a continuous cycle of monitoring, forecasting, and advising on agricultural operations.

Key applications include:

- Optimizing Crop Management: Agrometeorological data informs decisions on planting dates, irrigation scheduling, and fertilizer application, leading to improved crop yields and resource efficiency.[3][4]
- Pest and Disease Management: Weather conditions significantly influence the lifecycle of pests and the spread of diseases. Agrometeorological forecasting enables early warning systems and targeted interventions.[3]
- Disaster Risk Reduction: Early warnings for extreme weather events such as droughts, floods, and heatwaves allow for preemptive measures to protect crops and livestock.[4][5]
- Development of Climate-Resilient Crops: Agrometeorological data is crucial for identifying and breeding crop varieties that are better suited to changing climatic conditions.[6]

The logical flow of agrometeorological services for climate adaptation begins with data collection and culminates in on-farm decision-making.

References

- 1. studysmarter.co.uk [studysmarter.co.uk]

- 2. Frontiers | Agro-meteorological services in the era of climate change: a bibliometric review of research trends, knowledge gaps, and global collaboration [frontiersin.org]

- 3. Importance of agricultural meteorology [niubol.com]

- 4. agristudoc.com [agristudoc.com]

- 5. ler.esalq.usp.br [ler.esalq.usp.br]

- 6. Adaptive Strategies - Agricultural Practices | METEO 469: From Meteorology to Mitigation: Understanding Global Warming [courses.ems.psu.edu]

A Deep Dive into the Soil-Plant-Atmosphere Continuum: A Technical Guide

Abstract

The intricate relationship between soil, plants, and the atmosphere governs terrestrial life. This technical guide provides an in-depth exploration of the fundamental principles underpinning these interactions, collectively known as the Soil-Plant-Atmosphere Continuum (SPAC). We delve into the biophysical and biochemical processes that drive water and nutrient transport, gas exchange, and the complex signaling networks that allow plants to respond to their environment. This document synthesizes quantitative data, details key experimental protocols, and provides visual representations of critical signaling pathways to serve as a comprehensive resource for researchers in plant science, environmental science, and related fields.

The Soil-Plant-Atmosphere Continuum (SPAC)

The Soil-Plant-Atmosphere Continuum (SPAC) describes the continuous pathway of water movement from the soil, through the plant, and into the atmosphere.[1][2] This movement is not an active process but is passively driven by a gradient in water potential (Ψ), a measure of the potential energy of water. Water flows from areas of higher (less negative) water potential to areas of lower (more negative) water potential.[3][4] The atmosphere typically has an extremely low water potential, creating a strong driving force for water to be pulled from the soil through the plant, a process known as transpiration.[3][5]

Water Potential Gradients

The steep gradient in water potential across the SPAC is the primary driver of water transport in plants. This gradient must overcome various resistances, including the hydraulic conductivity of the soil and the resistance to flow within the plant's xylem.

| Component | Typical Water Potential (Ψ) Range (MPa) | Conditions |
|---|---|---|
| Soil | -0.01 to -0.3 | Saturated to Field Capacity[5][6] |
| Soil | -1.5 | Permanent Wilting Point[6] |
| Root | -0.2 to -0.5 | Normal Conditions[3] |
| Stem | Varies, less negative than leaves | Dependent on height and transpiration rate |
| Leaf | -1.0 to -3.0 | Transpiring Leaf[3] |
| Atmosphere | -80 to -200 | Humid to Dry Air[3][7] |
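The strongly negative atmospheric values follow from the relative humidity of the air. A common approximation is Ψ = (RT / V̄w) ln(RH), where R is the gas constant, T the absolute temperature, V̄w the partial molar volume of liquid water (about 18 × 10⁻⁶ m³ mol⁻¹), and RH the relative humidity as a fraction. A minimal Python sketch; the constants are rounded and the temperatures and humidities are illustrative.

```python
import math

R = 8.314          # gas constant, J mol^-1 K^-1
V_W = 18.05e-6     # partial molar volume of liquid water, m^3 mol^-1 (approx.)


def air_water_potential_mpa(rel_humidity, temp_c=20.0):
    """Water potential of air (MPa) from relative humidity (0-1] and air temperature (degC)."""
    if not 0.0 < rel_humidity <= 1.0:
        raise ValueError("relative humidity must be in (0, 1]")
    temp_k = temp_c + 273.15
    psi_pa = (R * temp_k / V_W) * math.log(rel_humidity)  # J m^-3 = Pa
    return psi_pa / 1e6


if __name__ == "__main__":
    for rh in (0.99, 0.5, 0.23):
        print(f"RH {rh:.0%}: {air_water_potential_mpa(rh):.1f} MPa")
```

At 20 °C, air at 50% relative humidity comes out near -94 MPa, and even air at 99% relative humidity is roughly -1.4 MPa, comparable to a transpiring leaf. This is why the atmosphere is usually the strongest sink in the continuum.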

Nutrient Acquisition from the Soil

Plants absorb essential mineral nutrients from the soil solution through their roots. This uptake can occur through several mechanisms, broadly categorized as passive and active transport.[8]

- Passive Transport: This process does not require metabolic energy.
  - Mass Flow: Nutrients are carried along with the flow of water into the roots, driven by transpiration. This is a major pathway for mobile nutrients like nitrate.[9]
  - Diffusion: Nutrients move from an area of higher concentration in the soil solution to an area of lower concentration at the root surface. This is important for less mobile nutrients like phosphate.[9]
  - Root Interception: Roots physically contact soil particles and absorb the nutrients adsorbed to them. This accounts for a small percentage of total nutrient uptake.[9]
- Active Transport: This process requires energy (ATP) to move nutrients against their concentration gradient. It involves carrier proteins and pumps embedded in the root cell membranes.[8] An electrochemical gradient, established by proton pumps (H+-ATPases), drives the transport of many ions.[10]
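Whether mass flow alone can meet a crop's demand for a nutrient is often judged with a simple supply estimate: the amount delivered to the root surface by mass flow equals the volume of water taken up multiplied by the nutrient concentration in the soil solution. Any shortfall relative to actual uptake must then come from diffusion (and, to a small extent, root interception). A minimal sketch; the transpiration total, solution concentrations, and uptake figures are hypothetical.

```python
def mass_flow_supply(transpiration_mm, conc_mg_per_l):
    """Nutrient carried to roots by mass flow, in kg per hectare.

    1 mm of transpired water over 1 ha = 10,000 L, so
    supply (kg/ha) = mm * 10,000 L/mm * mg/L / 1e6 mg/kg = mm * mg/L / 100.
    """
    return transpiration_mm * conc_mg_per_l / 100.0


def diffusion_share(supply_kg, uptake_kg):
    """Fraction of uptake that mass flow cannot explain (attributed to diffusion/interception)."""
    if uptake_kg <= 0:
        raise ValueError("uptake must be positive")
    return max(0.0, 1.0 - supply_kg / uptake_kg)


if __name__ == "__main__":
    # Hypothetical season: 300 mm transpired; concentrations and uptake are illustrative.
    for nutrient, conc, uptake in [("nitrate-N", 50.0, 150.0), ("phosphate-P", 0.05, 25.0)]:
        supply = mass_flow_supply(300.0, conc)
        print(f"{nutrient}: mass flow {supply:.2f} kg/ha, "
              f"diffusion share {diffusion_share(supply, uptake):.0%}")
```

With these illustrative numbers, mass flow covers the whole nitrate demand but less than 1% of the phosphate demand, mirroring the contrast between mobile and less mobile nutrients described above.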

Plant Nutrient Sufficiency Ranges

Monitoring the concentration of nutrients in plant tissues is a key diagnostic tool. The following table provides typical sufficiency ranges for key macronutrients in the dry matter of mature plant leaves. Concentrations below this range may indicate a deficiency.

| Nutrient | Chemical Symbol | Sufficiency Range (% of Dry Weight) | Primary Functions |
|---|---|---|---|
| Nitrogen | N | 2.5 - 4.0 | Component of proteins, nucleic acids, chlorophyll |
| Phosphorus | P | 0.2 - 0.5 | Energy transfer (ATP), DNA/RNA structure |
| Potassium | K | 2.0 - 5.0 | Enzyme activation, stomatal regulation, osmoregulation |

(Source: Data compiled from multiple agricultural extension resources)

Gas Exchange and Stomatal Regulation

Stomata, microscopic pores on the leaf surface, are the primary sites for gas exchange (CO2 uptake for photosynthesis and O2 release) and water vapor loss (transpiration).[11][12] Each stoma is surrounded by a pair of specialized guard cells that regulate its aperture, balancing the need for CO2 uptake with the prevention of excessive water loss.[13][14]

Stomatal opening is triggered by factors such as light and low internal CO2 concentrations, while closure is induced by darkness, water stress (mediated by the hormone abscisic acid), and high CO2 levels.[12][14]

Stomatal Conductance

Stomatal conductance (g_s) quantifies the rate of gas diffusion through stomata. It is a critical parameter in models of plant water use and photosynthesis. C4 plants, with their more efficient carbon fixation mechanism, often exhibit lower stomatal conductance than C3 plants under similar conditions.[15]

| Plant Type | Light Condition | Typical Stomatal Conductance (mol H₂O m⁻² s⁻¹) |
|---|---|---|
| C3 (e.g., Tarenaya hassleriana) | High Light (800 µmol m⁻² s⁻¹) | ~0.42 |
| | Low Light (200 µmol m⁻² s⁻¹) | ~0.26 |
| C4 (e.g., Gynandropsis gynandra) | High Light (800 µmol m⁻² s⁻¹) | ~0.15 |
| | Low Light (200 µmol m⁻² s⁻¹) | ~0.12 |

(Data adapted from a study on Cleomaceae species)[3][16]

Signaling Pathways in Plant Responses

Plants have evolved sophisticated signaling networks to perceive and respond to environmental cues, including nutrient availability and abiotic stress.

ABA-Dependent Drought Stress Signaling

Drought stress is a major limiting factor for plant growth. The phytohormone abscisic acid (ABA) is a central regulator of the drought response.[17][18]

Under normal conditions, PP2C phosphatases actively inhibit SnRK2 kinases.[19][20] When drought stress triggers ABA synthesis, ABA binds to the PYR/PYL/RCAR receptors, and the ABA-receptor complex then binds to and inactivates PP2Cs.[19][20] Released from this inhibition, SnRK2 kinases become active and phosphorylate downstream targets. These targets include AREB/ABF transcription factors, which activate the expression of numerous stress-responsive genes, such as those encoding protective proteins.[20][21] In guard cells, SnRK2s also drive rapid stomatal closure by phosphorylating ion channels such as SLAC1.

Rhizosphere Signaling: Legume-Rhizobia Symbiosis

The rhizosphere, the narrow zone of soil surrounding plant roots, is a hub of chemical communication. A classic example is the symbiotic relationship between legumes and nitrogen-fixing rhizobia bacteria. This interaction is initiated by a molecular dialogue mediated by flavonoids secreted by the plant roots.[11][22]

Under nitrogen-limiting conditions, legume roots secrete specific flavonoid compounds into the rhizosphere.[22] These flavonoids are perceived by compatible rhizobia and bind to the bacterial transcriptional activator protein, NodD.[9][11] The activated NodD-flavonoid complex then induces the expression of a suite of bacterial nod (nodulation) genes.[9] These genes produce and secrete lipo-chitooligosaccharide signaling molecules known as Nod factors. When perceived by the host plant's root hairs, Nod factors trigger a signaling cascade that leads to root hair curling, infection thread formation, and ultimately, the development of a new organ, the nitrogen-fixing root nodule.[11]

Key Experimental Protocols

Protocol: Measurement of Stomatal Conductance using an Infrared Gas Analyzer (IRGA)

Objective: To quantify leaf-level stomatal conductance (g_s) and transpiration rate (E).

Materials:

- Portable infrared gas analyzer (IRGA) system (e.g., LI-COR LI-6800).
- Calibration gas cylinders (CO2-free air and a known CO2 concentration).
- The plant of interest.

Procedure:

- System Warm-up and Calibration: Power on the IRGA and let it warm up for at least 30 minutes so the sensors stabilize. Perform the manufacturer's recommended zero and span calibrations for the CO2 and H2O analyzers.[23]
- Set Chamber Conditions: Configure the IRGA's leaf cuvette to the desired environmental conditions. For a standard light-response curve, hold temperature (e.g., 25°C), relative humidity, and the reference CO2 concentration (e.g., near-ambient 400 µmol mol⁻¹) constant while varying light levels systematically.[1]
- Leaf Selection: Choose a healthy, fully expanded, mature leaf that has been exposed to the ambient light conditions of the experiment.[23]
- Clamping the Leaf: Gently enclose the selected leaf in the IRGA cuvette and make sure the gaskets seal well to prevent leaks. The leaf should fill as much of the chamber area as possible.
- Acclimation and Measurement: Let the leaf acclimate to the chamber conditions until the gas exchange parameters (photosynthesis, transpiration, and stomatal conductance) stabilize, which may take several minutes. Once they are stable, log the measurement.[12]
- Varying Conditions: For a light-response curve, decrease the light intensity stepwise. At each new light level, let the leaf acclimate and stabilize before logging the data.[16]
- Data Analysis: The IRGA software calculates the transpiration rate from the airflow rate through the chamber and the difference in water vapor concentration between the air entering and leaving it. It then derives stomatal conductance from the transpiration rate and the water vapor gradient between the leaf's intercellular air spaces and the chamber air, after correcting for boundary-layer conductance.[23]
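The calculation in the last step can be sketched as follows. This is a simplified illustration, not the algorithm of any particular instrument. It assumes:

- an open (flow-through) chamber;
- water vapor expressed as mole fractions;
- intercellular air saturated at leaf temperature;
- a known boundary-layer conductance `g_bw`.

It omits the stomatal-ratio and other corrections that commercial systems apply. The function names and example readings are hypothetical.

```python
import math


def sat_vapour_pressure_kpa(t_c):
    """Saturation vapour pressure (kPa) over water, Tetens form (as in FAO-56)."""
    return 0.6108 * math.exp(17.27 * t_c / (t_c + 237.3))


def leaf_gas_exchange(flow_mol_s, area_m2, w_in, w_out,
                      t_leaf_c, pressure_kpa, g_bw):
    """Return (E, g_sw) in mol m-2 s-1 for an open gas-exchange chamber.

    w_in / w_out: water vapour mole fractions (mol mol-1) of incoming and
    outgoing air. g_bw: assumed boundary-layer conductance (mol m-2 s-1).
    """
    # Transpiration from the chamber water mass balance; (1 - w_out) corrects
    # for the dilution of the air stream by the vapour the leaf adds.
    e = flow_mol_s * (w_out - w_in) / (area_m2 * (1.0 - w_out))

    # Assumption: intercellular air is saturated at leaf temperature.
    w_leaf = sat_vapour_pressure_kpa(t_leaf_c) / pressure_kpa

    # Total (stomatal + boundary-layer) conductance from E and the
    # leaf-to-air mole-fraction gradient, with a mass-flow correction.
    g_tw = e * (1.0 - (w_leaf + w_out) / 2.0) / (w_leaf - w_out)

    # Resistances add in series, so remove the boundary-layer part.
    g_sw = 1.0 / (1.0 / g_tw - 1.0 / g_bw)
    return e, g_sw


# Hypothetical readings: 500 umol s-1 flow, 6 cm2 of leaf in the cuvette.
E, gs = leaf_gas_exchange(flow_mol_s=500e-6, area_m2=6e-4,
                          w_in=0.015, w_out=0.018,
                          t_leaf_c=25.0, pressure_kpa=100.0, g_bw=3.0)
print(f"E = {E * 1e3:.2f} mmol m-2 s-1, g_sw = {gs:.3f} mol m-2 s-1")
```

Transpiration depends only on the chamber mass balance. Stomatal conductance, however, also depends on leaf temperature through `w_leaf`, so leaf temperature errors propagate directly into g_s.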

Protocol: Determination of Soil Water Potential using a Pressure Plate Apparatus

Objective: To determine the relationship between soil water content and soil water potential (i.e., the soil water retention curve).

Materials:

- Pressure plate extractor apparatus.
- Porous ceramic plates (e.g., 1-bar and 15-bar plates).
- Undisturbed soil core samples in retaining rings.
- Source of compressed air or nitrogen with a precision regulator.
- Balance for weighing samples.
- Drying oven.

Procedure:

- Plate Saturation: Thoroughly saturate the ceramic plate by submerging it in deionized water for at least 24 hours.[2]
- Sample Preparation: Place the undisturbed soil cores, still in their metal or plastic rings, on the saturated ceramic plate. Add water to the plate to ensure good hydraulic contact between the soil samples and the plate surface.[24]
- Sample Saturation: Place the plate with the samples into the pressure vessel. Add water until it is just below the top of the sample rings, and allow the samples to saturate for 24-48 hours.
- Applying Pressure: Remove excess standing water and securely seal the lid of the pressure vessel. Connect the outflow tube from the plate to a collection vessel outside the chamber. Apply the first desired pressure (e.g., 0.1 bar, or 10 kPa) using the compressed gas and regulator.[25]
- Equilibration: Allow water to drain from the samples through the porous plate until outflow ceases. This indicates that the water potential within the soil samples has equilibrated with the applied gas pressure. Equilibration can take from several days to weeks, depending on the soil type and pressure.[24]
- Measurement: Once the samples have equilibrated, release the pressure, quickly remove them, and weigh them to determine their moist weight.
- Repeat: Return the samples to the plate and equilibrate them at the next, higher pressure level (e.g., 0.33 bar, 1 bar, 5 bar, 15 bar). Use the 15-bar plate for pressures above 1 bar.[24]
- Oven Drying: After the final pressure step, dry the soil samples at 105°C for 24-48 hours until they reach a constant weight, and record the dry weight. The final step is typically 15 bar (1,500 kPa), conventionally taken as the permanent wilting point.
- Calculation: Calculate the gravimetric water content at each pressure step as (moist weight - oven-dry weight) / oven-dry weight. Subtract the ring tare from the oven-dry weight in the denominator so that it refers to soil only. Plotting water content against matric potential (equal in magnitude, and opposite in sign, to the applied pressure) gives the soil water retention curve.
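As a sketch of the calculation above, the snippet below turns a set of equilibrated weights into retention-curve points. The sample masses, ring tare, and bulk density are hypothetical. Converting to volumetric water content requires a separately measured bulk density. Treating -33 kPa as field capacity is a common convention assumed here, not something stated in the protocol.

```python
BAR_TO_KPA = 100.0  # 1 bar = 100 kPa

# Hypothetical sample: every mass (g) includes the same retaining-ring tare.
TARE_G = 50.0
OVEN_DRY_G = 150.0  # sample + ring after drying at 105 °C
MOIST_G = {0.1: 185.0, 0.33: 180.0, 1.0: 174.0, 5.0: 166.0, 15.0: 162.0}  # bar -> g
BULK_DENSITY_G_CM3 = 1.30  # assumed to be measured separately on the same core


def gravimetric_water_content(moist_g, oven_dry_g, tare_g):
    """Water mass divided by oven-dry soil mass (ring tare removed)."""
    return (moist_g - oven_dry_g) / (oven_dry_g - tare_g)


def retention_curve(moist_by_bar, oven_dry_g, tare_g, bulk_density):
    """Return (matric potential in kPa, gravimetric, volumetric) tuples."""
    points = []
    for bar, moist in sorted(moist_by_bar.items()):
        theta_g = gravimetric_water_content(moist, oven_dry_g, tare_g)
        theta_v = theta_g * bulk_density  # water density taken as 1 g cm-3
        # Matric potential has the same magnitude as the applied pressure.
        points.append((-bar * BAR_TO_KPA, theta_g, theta_v))
    return points


curve = retention_curve(MOIST_G, OVEN_DRY_G, TARE_G, BULK_DENSITY_G_CM3)
for psi_kpa, theta_g, theta_v in curve:
    print(f"{psi_kpa:8.0f} kPa  theta_g={theta_g:.3f}  theta_v={theta_v:.3f}")

# Plant-available water, assuming field capacity at -33 kPa (a common
# convention) and the permanent wilting point at -1500 kPa (15 bar).
theta_v_at = {round(psi): tv for psi, _, tv in curve}
paw = theta_v_at[-33] - theta_v_at[-1500]
print(f"Plant-available water ~ {paw:.3f} cm3 cm-3")
```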

Protocol: Collection and Analysis of Root Exudates

Objective: To collect and analyze the low-molecular-weight compounds released by plant roots.

Materials:

- Hydroponic or aeroponic plant growth system, or a system for growing plants in sterile sand or glass beads.
- Collection solution (e.g., sterile deionized water or a simple nutrient solution).
- Filtration apparatus (e.g., 0.22 µm syringe filters).
- Lyophilizer (freeze-dryer) or rotary evaporator for sample concentration.
- Analytical instrumentation (e.g., High-Performance Liquid Chromatography (HPLC) or Gas Chromatography-Mass Spectrometry (GC-MS)).

Procedure:

- Plant Growth: Grow plants in a system where the roots can be accessed without damage and with minimal contamination. A hydroponic system is often used.
- Exudate Collection: Gently remove the plants from their growth medium and carefully rinse the roots with sterile water to remove debris and residual nutrients. Place the root system in a beaker or vessel containing a known volume of sterile collection solution for a defined period (e.g., 2-8 hours).[26]
- Control Sample: Prepare a control vessel with the same collection solution but no plant, to account for background contamination.
- Filtration: After the collection period, immediately filter the exudate solution through a 0.22 µm filter to remove root border cells, microorganisms, and other particulates.[27]
- Concentration: Because exudate concentrations are low, the sample usually needs to be concentrated. Freeze-drying (lyophilization) is a common method that avoids heat degradation of the compounds.[27]
- Reconstitution and Analysis: Reconstitute the dried exudate powder in a small, known volume of an appropriate solvent. Then analyze the sample to identify and quantify its components, using HPLC (for sugars, organic acids, and amino acids) or GC-MS (for volatile compounds, or for non-volatile compounds after derivatization).[27]
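Once concentrations are quantified, the plant-free control and the known volumes allow them to be converted into exudation rates. The sketch below assumes the plant and control extracts were processed identically and reconstituted in the same volume. It normalizes by root dry mass and collection time; that normalization is an illustrative assumption (fresh mass or root length are also used). The parameter names and example values are likewise hypothetical.

```python
def exudation_rate(conc_sample_umol_l, conc_control_umol_l,
                   recon_volume_ml, root_dry_mass_g, hours):
    """Background-corrected exudation rate in umol per g root dry mass per hour.

    Concentrations refer to the reconstituted extracts of the plant vessel
    and the plant-free control, assumed to be processed identically.
    """
    if root_dry_mass_g <= 0 or hours <= 0:
        raise ValueError("root mass and collection time must be positive")
    # Subtract the background; negative differences are treated as not detected.
    net_conc = max(conc_sample_umol_l - conc_control_umol_l, 0.0)
    amount_umol = net_conc * recon_volume_ml / 1000.0  # umol L-1 x L
    return amount_umol / (root_dry_mass_g * hours)


# Hypothetical organic-acid measurement: 4 h collection, reconstituted in 1 mL.
rate = exudation_rate(conc_sample_umol_l=120.0, conc_control_umol_l=20.0,
                      recon_volume_ml=1.0, root_dry_mass_g=0.05, hours=4.0)
print(f"Exudation rate: {rate:.2f} umol g-1 DW h-1")
```

Clipping negative differences to zero is one design choice. Some workflows instead report them as below the detection limit.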

References

- 1. researchgate.net [researchgate.net]

- 2. researchgate.net [researchgate.net]

- 3. A comparison of stomatal conductance responses to blue and red light between C3 and C4 photosynthetic species in three phylogenetically-controlled experiments - PMC [pmc.ncbi.nlm.nih.gov]

- 4. ctahr.hawaii.edu [ctahr.hawaii.edu]

- 5. eagri.org [eagri.org]

- 6. Certified Crop Advisor study resources (Northeast region) [nrcca.cals.cornell.edu]

- 7. Nighttime Stomatal Conductance and Transpiration in C3 and C4 Plants - PMC [pmc.ncbi.nlm.nih.gov]

- 8. Methods for Root Exudate Collection and Analysis - PubMed [pubmed.ncbi.nlm.nih.gov]

- 9. researchgate.net [researchgate.net]

- 10. academic.oup.com [academic.oup.com]

- 11. researchgate.net [researchgate.net]

- 12. protocols.io [protocols.io]

- 13. mdpi.com [mdpi.com]

- 14. Nitrate Signaling, Functions, and Regulation of Root System Architecture: Insights from Arabidopsis thaliana - PMC [pmc.ncbi.nlm.nih.gov]

- 15. researchgate.net [researchgate.net]

- 16. Frontiers | A comparison of stomatal conductance responses to blue and red light between C3 and C4 photosynthetic species in three phylogenetically-controlled experiments [frontiersin.org]

- 17. Mechanisms of Abscisic Acid-Mediated Drought Stress Responses in Plants - PMC [pmc.ncbi.nlm.nih.gov]

- 18. Signaling in Legume–Rhizobia Symbiosis [mdpi.com]

- 19. researchgate.net [researchgate.net]

- 20. Insights into Drought Stress Signaling in Plants and the Molecular Genetic Basis of Cotton Drought Tolerance - PMC [pmc.ncbi.nlm.nih.gov]

- 21. Frontiers | Core Components of Abscisic Acid Signaling and Their Post-translational Modification [frontiersin.org]

- 22. The Role of Flavonoids in Nodulation Host-Range Specificity: An Update - PMC [pmc.ncbi.nlm.nih.gov]

- 23. 5.7 Stomatal conductance – ClimEx Handbook [climexhandbook.w.uib.no]

- 24. cdpr.ca.gov [cdpr.ca.gov]

- 25. Documentation:Soil Water Retention - Ceramic Pressure Plates - UBC Wiki [wiki.ubc.ca]

- 26. Unraveling the secrets of plant roots: Simplified method for large scale root exudate sampling and analysis in Arabidopsis thaliana - PMC [pmc.ncbi.nlm.nih.gov]

- 27. researchgate.net [researchgate.net]

A Technical Guide to Agrometeorological Forecasting Models: A Comprehensive Review

For Researchers, Scientists, and Drug Development Professionals

This in-depth technical guide provides a comprehensive review of agrometeorological forecasting models. The content delves into the core methodologies, data requirements, and comparative performance of various modeling approaches. This document is intended to serve as a valuable resource for researchers and scientists in the fields of agriculture, meteorology, and environmental science, providing the foundational knowledge required to select, implement, and interpret the results of agrometeorological forecasts.

Introduction to Agrometeorological Forecasting

Agrometeorological forecasting is a critical scientific discipline that integrates meteorology, soil science, and crop physiology to predict the impact of weather and climate on agricultural production.[1] Accurate and timely forecasts are essential for a multitude of applications, including optimizing crop management practices, mitigating the impacts of extreme weather events, ensuring food security, and informing agricultural policy.[1] The evolution of forecasting methodologies has seen a progression from simple empirical observations to sophisticated, data-driven models that leverage advanced computational techniques.

The primary objective of agrometeorological forecasting is to provide quantitative estimates of crop yields and other agriculturally relevant parameters in advance of harvest.[1] These forecasts are crucial for farmers in making tactical decisions regarding planting dates, irrigation scheduling, and pest and disease management. At a broader scale, they are indispensable for governmental and non-governmental organizations for regional planning, resource allocation, and early warning systems for food shortages.

Core Methodologies in Agrometeorological Forecasting

Agrometeorological forecasting models can be broadly categorized into three main types: empirical-statistical models, crop simulation models (mechanistic models), and machine learning/artificial intelligence-based models. Each of these approaches has its own set of strengths, weaknesses, and data requirements.

Empirical-Statistical Models

Statistical models are the most traditional approach to agrometeorological forecasting. They rely on statistical relationships established between historical weather data and crop yields.[2] These models are generally simpler to develop and implement, and they require fewer computational resources than other methods.

Key Characteristics:

- Data-Driven: Primarily based on historical time-series data of meteorological variables and crop yields.
- Regression-Based: Often employ multiple linear regression, time-series analysis (e.g., ARIMA), and other statistical techniques to establish predictive equations.[2]
- Location-Specific: The derived statistical relationships are often specific to the region for which they were developed.

Commonly Used Models:

- Multiple Linear Regression (MLR): Relates crop yield to several meteorological variables (e.g., temperature, precipitation) through a linear equation.
- Time-Series Models (ARIMA, SARIMA): Analyze the temporal patterns in historical data to forecast future values.[2]
- Descriptive Methods: Classify weather conditions based on certain thresholds to identify conditions associated with significantly different yields.[1]
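A multiple linear regression of the kind described above can be sketched in plain Python by solving the normal equations. The two predictors (seasonal rainfall and mean maximum temperature) and the yield values are hypothetical. They are generated without noise so the fitted coefficients can be checked. In practice, a library routine such as `numpy.linalg.lstsq` or a statistics package would be used, and the fit would be checked for collinearity and technology trends.

```python
def solve_linear_system(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):  # back substitution
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x


def fit_mlr(predictors, yields):
    """Ordinary least squares; returns [intercept, b1, b2, ...]."""
    rows = [[1.0] + list(r) for r in predictors]  # prepend intercept column
    p = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(p)] for i in range(p)]
    xty = [sum(r[i] * y for r, y in zip(rows, yields)) for i in range(p)]
    return solve_linear_system(xtx, xty)


# Hypothetical seasons: (rainfall mm, mean Tmax °C). Yields come from an
# assumed relation 1.5 + 0.02 * rain - 0.25 * tmax (t/ha), with no noise.
seasons = [(420, 29.0), (510, 30.5), (380, 31.0), (600, 28.5), (470, 30.0), (550, 29.5)]
yields = [1.5 + 0.02 * rain - 0.25 * tmax for rain, tmax in seasons]

coef = fit_mlr(seasons, yields)
print("intercept={:.3f}, rain={:.4f}, tmax={:.3f}".format(*coef))
```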

Crop Simulation Models (Mechanistic Models)

Crop simulation models (CSMs), also known as mechanistic or process-based models, are more complex and aim to mimic the physiological processes of crop growth and development.[1] These models are built on a scientific understanding of how crops respond to environmental factors.

Key Characteristics:

- Process-Oriented: Simulate daily plant growth based on inputs of weather, soil conditions, and management practices.[3]
- Biologically-Based: Incorporate mathematical equations that describe key physiological processes such as photosynthesis, respiration, and water uptake.
- Greater Generalizability: Can be adapted to different environments and management scenarios with proper calibration.

Prominent Examples:

- DSSAT (Decision Support System for Agrotechnology Transfer): A widely used suite of crop models for simulating the growth of over 40 different crops.
- APSIM (Agricultural Production Systems sIMulator): A modular modeling framework that can simulate a wide range of agricultural systems.
- WOFOST (WOrld FOod STudies): A simulation model for the quantitative analysis of the growth and production of annual field crops.
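To make the idea of a process-based model concrete, the sketch below implements one building block used in several crop models. Daily biomass growth is computed as radiation-use efficiency (RUE) times the photosynthetically active radiation (PAR) intercepted by the canopy, with interception following Beer's law. It is a toy, not DSSAT, APSIM, or WOFOST code. Leaf area is supplied as an input rather than simulated. The RUE, the extinction coefficient `k`, and the optional daily water-stress factor are illustrative assumptions.

```python
import math


def simulate_biomass(par_mj, lai, rue_g_per_mj=3.0, k=0.65, stress=None):
    """Return cumulative above-ground biomass (g m-2) for each day.

    par_mj: daily incident PAR (MJ m-2); lai: daily leaf area index;
    stress: optional daily growth-reduction factors in [0, 1] (1 = no stress).
    """
    if stress is None:
        stress = [1.0] * len(par_mj)
    biomass = 0.0
    trajectory = []
    for par, leaf_area, factor in zip(par_mj, lai, stress):
        intercepted = 1.0 - math.exp(-k * leaf_area)  # Beer's-law interception
        biomass += rue_g_per_mj * intercepted * par * factor
        trajectory.append(biomass)
    return trajectory


# Hypothetical five-day run with a developing canopy.
par = [10.0, 9.0, 11.0, 10.5, 8.0]
lai = [0.0, 0.5, 1.5, 2.5, 3.5]
unstressed = simulate_biomass(par, lai)
stressed = simulate_biomass(par, lai, stress=[0.5] * 5)
print([round(b, 1) for b in unstressed])
```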

Machine Learning and AI-Based Models

In recent years, machine learning (ML) and artificial intelligence (AI) have emerged as powerful tools in agrometeorological forecasting. These models can capture complex, non-linear relationships in large datasets that may be missed by traditional statistical methods.

Key Characteristics:

- Algorithmic Learning: Learn patterns directly from data without being explicitly programmed with physiological processes.
- Handling Complexity: Capable of handling high-dimensional and heterogeneous data from various sources (e.g., satellite imagery, IoT sensors).
- Improved Accuracy: Often demonstrate superior predictive performance compared to traditional models, especially when large datasets are available.[4]

Frequently Employed Algorithms:

- Random Forest (RF): An ensemble learning method that builds multiple decision trees and merges their predictions to improve accuracy.[5]
- Support Vector Machines (SVM): A supervised learning model that finds the optimal hyperplane to separate data points into different classes or to perform regression.
- Artificial Neural Networks (ANN): A set of algorithms, modeled loosely after the human brain, that are designed to recognize patterns.[4]
- Long Short-Term Memory (LSTM) Networks: A type of recurrent neural network (RNN) well-suited for time-series forecasting.[2]
- Convolutional Neural Networks (CNN): Primarily used for analyzing imagery data, such as satellite images for crop monitoring.

Data Presentation: A Comparative Analysis of Model Performance

The performance of agrometeorological forecasting models is typically evaluated using a range of statistical metrics. The most suitable model often depends on the specific application, data availability, and required accuracy. The following tables summarize reported performance figures for different model types from several comparative studies. These studies used different crops, datasets, and targets; accuracy, for example, applies to classification rather than regression. The figures are therefore not directly comparable across rows.

Table 1: Performance Metrics for Different Machine Learning Models in Crop Yield Prediction

| Model | R-squared (R²) | Root Mean Square Error (RMSE) | Mean Absolute Error (MAE) | Accuracy | Reference |
|---|---|---|---|---|---|
| Random Forest | 0.88 | - | - | 99% | [5][6] |
| Extra Trees | - | - | 5249.03 | 97.5% | [4] |
| Artificial Neural Network | 0.9873 | - | - | - | [4] |
| K-Nearest Neighbor | - | - | - | 97% | [5] |
| Logistic Regression | - | - | - | 96% | [5] |
| Deep Learning Model | 0.94 | 227.99 | - | - | [6] |
| Gradient Boosting Regressor | 0.84 | - | - | - | [6] |

Table 2: Comparative Performance of Different Regression Models for Crop Yield Prediction

| Model | R² Score | Root Mean Square Error (RMSE) | Mean Squared Error (MSE) | Mean Absolute Error (MAE) | Reference |
|---|---|---|---|---|---|
| Random Forest Regressor | Best Performance | - | - | - | [7] |
| K-Neighbors Regressor | Second Best Performance | - | - | - | [7] |
| Decision Tree Regressor | Third Best Performance | - | - | - | [7] |
| Linear Regression | - | - | - | - | [8] |
| Support Vector Regressor | - | - | - | - | [8] |

Experimental Protocols: Methodologies for Key Experiments

The reliability of any forecasting model is contingent upon a robust experimental design for its development and validation. This section outlines a generalized experimental protocol for developing a machine learning-based agrometeorological forecasting model.

Data Acquisition and Preprocessing

- Data Collection: Gather historical data from various sources, including:
  - Meteorological Data: Daily records of maximum and minimum temperature, precipitation, solar radiation, wind speed, and humidity from weather stations or gridded climate datasets.[9]
  - Soil Data: Information on soil type, texture, depth, and water holding capacity.[9]
  - Crop Data: Historical crop yield data at the desired spatial resolution (e.g., county, district).
  - Remote Sensing Data: Satellite imagery (e.g., NDVI, EVI) to monitor crop health and growth stages.[10]
- Data Cleaning: Handle missing values through imputation techniques (e.g., mean, median, or model-based imputation).[11] Address outliers and inconsistencies in the data.
- Data Integration: Merge data from different sources based on common spatial and temporal identifiers.
- Feature Engineering: Create new variables from the existing data that may have better predictive power. This can include calculating growing degree days, water balance indices, or other agronomic indicators.
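The cleaning and feature-engineering steps can be illustrated with daily temperature records. The sketch fills gaps with the median, the simplest option listed above; interpolation or model-based imputation is often preferable for time series. It then computes growing degree days with the averaging method. The base and upper thresholds of 10°C and 30°C are assumptions (a convention often used for maize) and should be set for the crop in question.

```python
from itertools import accumulate
from statistics import median


def impute_median(values):
    """Replace missing entries (None) with the median of the observed values."""
    fill = median(v for v in values if v is not None)
    return [fill if v is None else v for v in values]


def daily_gdd(tmax, tmin, t_base=10.0, t_upper=30.0):
    """Daily growing degree days; temperatures are clipped to [t_base, t_upper]."""
    gdd = []
    for hi, lo in zip(tmax, tmin):
        hi = min(max(hi, t_base), t_upper)
        lo = min(max(lo, t_base), t_upper)
        gdd.append((hi + lo) / 2.0 - t_base)
    return gdd


# Hypothetical station record with gaps (None = missing reading).
tmax = impute_median([28.0, None, 33.0, 25.0])
tmin = impute_median([15.0, 16.0, None, 8.0])
gdd = daily_gdd(tmax, tmin)
cumulative_gdd = list(accumulate(gdd))
print(gdd, cumulative_gdd)
```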

Model Training and Validation

- Data Splitting: Divide the preprocessed dataset into training, validation, and testing sets. A common split is 70% for training, 15% for validation, and 15% for testing.
- Model Selection: Choose one or more machine learning algorithms to train on the dataset.
- Model Training: Train the selected model(s) on the training dataset. This involves the model learning the relationships between the input features and the target variable (e.g., crop yield).
- Hyperparameter Tuning: Optimize the model's hyperparameters using the validation set to improve its performance. This can be done using techniques like grid search or random search.
- Model Evaluation: Evaluate the performance of the trained model on the unseen test dataset using various statistical metrics such as R², RMSE, and MAE.[8]
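The splitting and evaluation steps can be sketched as below. The split keeps records in their original order, assumed here to be chronological, so that the most recent seasons are held out and no future information leaks into training; random splits are also common. The metric functions follow the standard definitions of R², RMSE, and MAE. The observed and predicted yields are hypothetical.

```python
import math


def split_train_val_test(records, train_frac=0.70, val_frac=0.15):
    """Split an ordered sequence into training, validation, and test parts."""
    n_train = round(train_frac * len(records))
    n_val = round(val_frac * len(records))
    return (records[:n_train],
            records[n_train:n_train + n_val],
            records[n_train + n_val:])


def rmse(obs, pred):
    """Root mean square error."""
    return math.sqrt(sum((o - p) ** 2 for o, p in zip(obs, pred)) / len(obs))


def mae(obs, pred):
    """Mean absolute error."""
    return sum(abs(o - p) for o, p in zip(obs, pred)) / len(obs)


def r_squared(obs, pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_obs = sum(obs) / len(obs)
    ss_res = sum((o - p) ** 2 for o, p in zip(obs, pred))
    ss_tot = sum((o - mean_obs) ** 2 for o in obs)
    return 1.0 - ss_res / ss_tot


train, val, test = split_train_val_test(list(range(20)))  # e.g. 20 seasons
observed = [3.1, 4.0, 2.5, 5.2]   # hypothetical test-set yields (t/ha)
predicted = [3.0, 4.2, 2.7, 4.9]
print(len(train), len(val), len(test))
print(f"R2={r_squared(observed, predicted):.3f} "
      f"RMSE={rmse(observed, predicted):.3f} MAE={mae(observed, predicted):.3f}")
```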

Experimental Setup for a Comparative Study

To compare the performance of different models, the following steps are crucial:

- Standardized Dataset: Use the exact same training, validation, and testing datasets for all models being compared.
- Consistent Evaluation Metrics: Apply the same set of performance metrics to evaluate all models.[7]
- Cross-Validation: Employ k-fold cross-validation to ensure that the model's performance is robust and not dependent on a particular random split of the data.[12]
- Statistical Significance Testing: If possible, perform statistical tests to determine if the differences in performance between models are statistically significant.
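A minimal k-fold procedure that gives every model the same folds, via a fixed random seed, can be sketched as follows. The generic `fit`/`predict` callables, the mean-yield baseline, and the yields are hypothetical. For multi-year yield data, grouping folds by year (for example, leave-one-year-out) is often preferred to random folds, so that a season is never split between training and testing.

```python
import math
import random


def kfold_indices(n, k, seed=0):
    """Yield (train, test) index lists for k folds of a shuffled range(n)."""
    order = list(range(n))
    random.Random(seed).shuffle(order)  # fixed seed -> identical folds for every model
    folds = [order[i::k] for i in range(k)]
    for i in range(k):
        train = sorted(j for f, fold in enumerate(folds) if f != i for j in fold)
        yield train, sorted(folds[i])


def cross_validate(fit, predict, X, y, k=5, seed=0):
    """Return the test-fold RMSE for each of the k folds."""
    scores = []
    for train, test in kfold_indices(len(y), k, seed):
        model = fit([X[i] for i in train], [y[i] for i in train])
        errors = [y[i] - predict(model, X[i]) for i in test]
        scores.append(math.sqrt(sum(e * e for e in errors) / len(errors)))
    return scores


# Hypothetical baseline model: always predict the mean training yield.
def fit_mean(X, y):
    return sum(y) / len(y)


def predict_mean(model, x):
    return model


X = [[float(i)] for i in range(10)]
y = [4.1, 4.5, 3.9, 5.0, 4.8, 4.2, 5.3, 4.7, 4.4, 5.1]
scores = cross_validate(fit_mean, predict_mean, X, y, k=3)
print([round(s, 3) for s in scores])
```

Comparing a candidate model against a trivial baseline on the same folds makes differences in fold-level scores suitable for paired significance testing.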

Visualizations

Diagrams (not reproduced here): Graphviz (DOT) diagrams illustrating key workflows and logical relationships in agrometeorological forecasting.

Conclusion

The field of agrometeorological forecasting is continuously evolving, with a clear trend towards the integration of diverse data sources and the adoption of more sophisticated modeling techniques. While empirical-statistical models remain valuable for their simplicity and interpretability, crop simulation models offer deeper insights into the mechanisms of crop-weather interactions. The advent of machine learning and AI has opened up new frontiers, enabling the development of highly accurate and robust forecasting systems.

Future research should focus on the development of hybrid models that combine the strengths of different approaches. For instance, integrating the outputs of crop simulation models as input features for machine learning algorithms has shown promise in improving prediction accuracy.[13] Furthermore, the increasing availability of real-time data from IoT devices and remote sensing platforms will continue to drive innovation in this critical field, ultimately contributing to more resilient and sustainable agricultural systems.

References

- 1. fao.org [fao.org]

- 2. biochemjournal.com [biochemjournal.com]

- 3. researchgate.net [researchgate.net]

- 4. researchgate.net [researchgate.net]

- 5. Comparative Analysis of Classification Algorithms for Crop Yield Prediction | Atlantis Press [atlantis-press.com]

- 6. GitHub - ShubhamKJ123/Crop-Yield-Prediction-using-Machine-Learning-Algorithms [github.com]

- 7. Predictive Modeling of Crop Yields: A Comparative Analysis of Regression Techniques for Agricultural Yield Prediction | Agricultural Engineering International: CIGR Journal [cigrjournal.org]

- 8. A Comparative Analysis of Regression Models for Crop Yield Prediction Based on Rainfall Data: Experimental Study and Future Perspective | IEEE Conference Publication | IEEE Xplore [ieeexplore.ieee.org]

- 9. View of Benchmark data set for wheat growth models: field experiments and AgMIP multi-model simulations [odjar.org]

- 10. researchgate.net [researchgate.net]

- 11. mdpi.com [mdpi.com]

- 12. mdpi.com [mdpi.com]

- 13. Frontiers | County-scale crop yield prediction by integrating crop simulation with machine learning models [frontiersin.org]

The Unseen Architects: A Technical Guide to the Impact of Microclimates on Crop Production

For Researchers, Scientists, and Drug Development Professionals

Introduction

Microclimates, the localized conditions that differ from the surrounding macroclimate, are critical determinants of agricultural productivity.[1] Variations in temperature, humidity, light, wind, and soil moisture within and around a crop canopy can significantly influence plant physiological processes, from photosynthesis to stress responses, and ultimately shape yield and quality.[1][2] For researchers and scientists, understanding and manipulating these micro-environments is key to developing climate-resilient crops and sustainable agricultural practices. For drug development professionals, the signaling pathways activated by microclimatic stressors offer potential targets for novel agrochemicals designed to enhance crop resilience and productivity. This guide gives a technical overview of the core impacts of microclimates on crop production: their quantitative effects, the experimental methods used to measure them, and the underlying biological signaling networks.

Temperature: The Primary Driver of Plant Metabolism

Temperature is arguably the most influential microclimatic factor, directly regulating the rate of biochemical reactions, including photosynthesis and respiration.[3][4] Both extreme heat and cold can cause irreversible damage to plant cells and disrupt critical growth stages, leading to significant yield losses.[3][5]

Quantitative Impact of Temperature on Crop Yield

Global and localized studies consistently demonstrate a strong correlation between temperature variations and crop productivity. Yields often increase with temperature up to an optimal threshold, beyond which they decline sharply.[6]

| Crop | Temperature Change | Reported Impact | Geographic Region/Condition | Reference |
|---|---|---|---|---|
| Maize | +1°C increase in global mean temperature | -7.4% yield | Global Average | [7] |
| Wheat | +1°C increase in global mean temperature | -6.0% yield | Global Average | [7] |
| Rice | +1°C increase in global mean temperature | -3.2% yield | Global Average | [7] |
| Soybean | +1°C increase in global mean temperature | -3.1% yield | Global Average | [7] |
| Rice | +1°C increase in seasonal average temperature | -9% yield | Crop Growth Simulation | [4] |
| Wheat | Temperature increase > 2.38°C threshold | Yield loss accelerates to 8.2% per 1°C warming | Meta-analysis | [8] |
| Rice | Temperature increase > 3.13°C threshold | Yield loss accelerates to 7.1% per 1°C warming | Meta-analysis | [8] |
| Maize | Heat stress (38°C vs 30°C) for 28 days | 72.5% reduction in net photosynthetic rate | Controlled Environment | [5] |
| Potato | Soil temperature > 29°C | Tuber formation practically absent | General Observation | [9] |
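As a worked example of reading the per-degree sensitivities in the table, the sketch applies them linearly to a hypothetical baseline yield. This first-order calculation is an assumption. It ignores the threshold behavior reported in the meta-analysis rows (losses accelerating beyond 2.38°C for wheat and 3.13°C for rice), and it ignores regional and management differences.

```python
# Global-average yield sensitivities (% change per +1 °C) from the table above.
SENSITIVITY_PCT_PER_C = {"maize": -7.4, "wheat": -6.0, "rice": -3.2, "soybean": -3.1}


def projected_yield(baseline_t_ha, crop, warming_c):
    """Linear projection: baseline * (1 + sensitivity * warming / 100)."""
    return baseline_t_ha * (1.0 + SENSITIVITY_PCT_PER_C[crop] * warming_c / 100.0)


# Hypothetical baseline of 10 t/ha maize under +2 °C of warming.
maize = projected_yield(10.0, "maize", 2.0)
print(f"{maize:.2f} t/ha")
```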

Experimental Protocols for Temperature Impact Analysis

1.2.1 Controlled Environment Studies

Controlled Environment Agriculture (CEA) systems, such as growth chambers and greenhouses, are invaluable for dissecting the specific effects of temperature on crop physiology.[10][11][12]

- Objective: To determine the effect of specific temperature regimes on plant growth, physiology, and yield, while keeping other variables (light, humidity, CO2) constant.
- Methodology:
  - Plant Material: Use genetically uniform plant material (e.g., a specific cultivar or inbred line) to minimize biological variability.
  - Acclimation: Grow plants in a standardized "control" environment (e.g., 23°C/18°C day/night) to a specific developmental stage (e.g., V4 stage in maize).[13]
  - Treatment Application: Transfer subsets of plants to different controlled environments set at the target temperatures (e.g., low, optimal, high stress).[13] Make the transition gradual if studying acclimation.
  - Environmental Monitoring: Independently monitor and log air and canopy temperature at multiple locations within each environment using calibrated sensors (e.g., thermocouples, infrared thermometers). Report averages and standard deviations.[11]
  - Data Collection: At regular intervals and at the end of the experiment, collect data on:
    - physiological parameters (photosynthetic rate, stomatal conductance);
    - morphological traits (plant height, leaf area);
    - yield components (grain weight, fruit number).
  - Statistical Analysis: Use appropriate statistical models (e.g., ANOVA) to determine the significance of temperature effects. A randomized complete block design is often used, with each chamber or greenhouse bench treated as a block.[11] When whole chambers are held at different temperatures, however, the chamber is the experimental unit for temperature. True replication then requires repeating the experiment with temperatures re-randomized among chambers.

1.2.2 Field-Based Canopy Temperature Measurement

Measuring canopy temperature (CT) in the field provides a real-time indicator of plant water stress, as stomatal closure under drought leads to reduced transpirational cooling and thus, higher leaf temperatures.[14][15][16]

- Objective: To assess crop water stress and genetic variation in cooling capacity across a large number of plots in a field trial.
- Methodology (Airborne Thermography):
  - Platform: Mount a high-resolution thermal infrared camera on an unmanned aerial vehicle (UAV).
  - Image Acquisition: Fly the UAV over the experimental field at a consistent altitude and speed, typically on a clear, sunny day when water stress is most likely to be expressed (e.g., post-anthesis).[15][16]
  - Ground Truthing: Place temperature references (blackbody calibrators or plates of known emissivity and temperature) within the field for post-flight image correction. Record ambient air temperature, relative humidity, and wind speed at the time of the flight.[15]
  - Image Processing: Stitch the captured thermal images into a single orthomosaic. Use specialized software to extract the average temperature for each individual plot, excluding the soil background.[15]
  - Data Analysis: Calculate CT for each plot and test for significant differences between genotypes or irrigation treatments. The heritability of the CT trait can also be calculated to assess its usefulness in breeding programs.[15][16]
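The plot-level extraction and heritability steps can be sketched as below. Separating soil from canopy with a single temperature threshold is a simplifying assumption. Sunlit soil is often warmer than transpiring leaves, but vegetation-index or segmentation masks are more robust. The heritability function uses the standard entry-mean formula for a replicated trial with genotypes treated as random:

H² = σ²g / (σ²g + σ²e/r), with σ²g = (MS_genotype - MS_error)/r.

The pixel values and mean squares are hypothetical.

```python
from statistics import mean


def plot_canopy_temperature(pixels_c, soil_threshold_c):
    """Mean temperature of pixels at or below the threshold (treated as canopy)."""
    canopy = [t for t in pixels_c if t <= soil_threshold_c]
    if not canopy:
        raise ValueError("no canopy pixels found below the soil threshold")
    return mean(canopy)


def broad_sense_heritability(ms_genotype, ms_error, n_reps):
    """Entry-mean broad-sense heritability from ANOVA mean squares."""
    var_g = max((ms_genotype - ms_error) / n_reps, 0.0)  # genotypic variance
    var_mean_error = ms_error / n_reps                    # error variance of an entry mean
    total = var_g + var_mean_error
    return var_g / total if total > 0 else 0.0


# Hypothetical plot: canopy pixels near 27 °C, soil pixels above 35 °C.
ct = plot_canopy_temperature([27.1, 27.4, 26.9, 35.2, 27.0, 36.8], soil_threshold_c=32.0)
h2 = broad_sense_heritability(ms_genotype=4.0, ms_error=1.0, n_reps=3)
print(f"CT = {ct:.2f} °C, H2 = {h2:.2f}")
```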

Visualization: Heat Stress Signaling Pathway

When plants are exposed to high temperatures, a conserved molecular signaling cascade known as the Heat Shock Response (HSR) is activated to protect cellular components from damage.[3][17][18] This involves the activation of Heat Shock Transcription Factors (HSFs) and the production of Heat Shock Proteins (HSPs).[3][17]

Light: The Energy Source and Developmental Signal

Light intensity, quality (wavelength), and duration (photoperiod) are fundamental microclimatic variables that drive photosynthesis and regulate plant development (photomorphogenesis).[19][20] Fluctuations in light within the canopy can lead to significant inefficiencies in carbon gain.[18]

Quantitative Impact of Light Intensity on Crop Performance

The relationship between light intensity and photosynthesis is not linear; it reaches a saturation point where further increases in light do not increase carbon fixation.[20]

| Crop | Light Intensity Change | Impact on Performance | Experimental Condition | Reference |
|---|---|---|---|---|
| Lettuce | Optimal: 350-600 µmol·m⁻²·s⁻¹ at 23°C | Highest photosynthetic rate and yield | Controlled Environment | [13] |
| Lettuce | High light (600 µmol·m⁻²·s⁻¹) at low temp (15°C) | Decreased chlorophyll and photosynthetic efficiency | Controlled Environment | [13] |
| Tomato | +30% increase in light intensity (within optimal range) | +14% increase in fruit yield | Greenhouse with LEDs | [21] |
| Various C3 & C4 Crops | Transition from low to high light | 10-15% limitation to photosynthesis during induction | Laboratory Measurement | [18] |
| Intercropped Corn | Shading by trees (59.9% solar radiation decrease) | Yield reduction, but improved plant growth characteristics | Agroforestry System | [22] |

Experimental Protocols for Light Response Analysis

2.2.1 Photosynthesis-Irradiance (PI) Curve Measurement

- Objective: To characterize the photosynthetic response of a leaf to a range of light intensities and determine key parameters like the light compensation point, light saturation point, and maximum photosynthetic rate (Amax).
- Methodology:
  - Instrumentation: Use a portable gas exchange system (e.g., LI-COR LI-6800) with an integrated light source (fluorometer chamber).
  - Leaf Selection: Choose a recently fully expanded, healthy leaf that has been acclimated to the ambient growth conditions.
  - Environmental Control: Clamp the leaf into the chamber. Set the chamber conditions to mimic the plant's growth environment (e.g., constant CO2 concentration of 400 ppm, temperature of 25°C, and controlled humidity).
  - Light Curve Program: Program the instrument to step through a series of light intensities (Photosynthetic Photon Flux Density, PPFD), for example: 2000, 1500, 1000, 500, 200, 100, 50, 20, 0 µmol·m⁻²·s⁻¹. Allow the leaf to stabilize at each light level before logging the gas exchange data (CO2 assimilation, stomatal conductance).
  - Data Analysis: Plot the net photosynthetic rate (A) against the PPFD. Fit a non-rectangular hyperbola model to the data to derive parameters such as Amax, quantum yield, and the light saturation point.
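The non-rectangular hyperbola in the final step can be written down directly. In one common parameterization, gross assimilation A_g is the smaller root of θA_g² - (φI + P_max)A_g + φI·P_max = 0, where:

- I is PPFD;
- φ is the quantum yield;
- P_max is the light-saturated gross rate, so net assimilation saturates near P_max - R_d;
- θ is the curvature.

Net assimilation is A = A_g - R_d. The sketch evaluates this model and its light compensation point. The parameter values are illustrative. In practice, the parameters would be estimated from the measured (PPFD, A) pairs by nonlinear least squares, for example with `scipy.optimize.curve_fit`.

```python
import math


def net_assimilation(ppfd, phi, p_max, theta, r_d):
    """Net CO2 assimilation (umol m-2 s-1) from a non-rectangular hyperbola."""
    if not 0.0 < theta <= 1.0:
        raise ValueError("curvature theta must lie in (0, 1]")
    b = phi * ppfd + p_max
    # Smaller root of theta*A^2 - b*A + phi*I*p_max = 0 (gross assimilation).
    gross = (b - math.sqrt(b * b - 4.0 * theta * phi * ppfd * p_max)) / (2.0 * theta)
    return gross - r_d


def light_compensation_point(phi, p_max, theta, r_d):
    """PPFD at which gross assimilation equals r_d, i.e. net assimilation is zero."""
    return r_d * (p_max - theta * r_d) / (phi * (p_max - r_d))


# Illustrative parameters and the PPFD steps listed in the protocol.
params = dict(phi=0.05, p_max=30.0, theta=0.7, r_d=1.5)
for ppfd in [2000, 1500, 1000, 500, 200, 100, 50, 20, 0]:
    print(ppfd, round(net_assimilation(ppfd, **params), 2))
i_c = light_compensation_point(**params)
print(f"light compensation point ~ {i_c:.1f} umol m-2 s-1")
```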

Visualization: Light Signaling and Photomorphogenesis

Plants perceive light through a suite of photoreceptors, including phytochromes (sensing red/far-red light) and cryptochromes (sensing blue light).[23][24] In darkness, transcription factors called PHYTOCHROME INTERACTING FACTORs (PIFs) accumulate and suppress light-induced genes. Upon light exposure, activated photoreceptors trigger the rapid degradation of PIFs, allowing for photomorphogenesis (e.g., cotyledon expansion, chlorophyll synthesis) to proceed.[19]

Wind and Humidity: Regulators of Water Relations and Disease

Wind speed and atmospheric humidity are intrinsically linked, co-regulating canopy gas exchange, transpiration rates, and the incidence of fungal diseases.[24][25][26]

Quantitative Impact of Wind and Humidity

Modifying wind speed through practices such as shelterbelts can create a more favorable microclimate and improve yields. High humidity can promote fungal pathogens, and it also affects plant physiology directly.

| Microclimate Factor | Modification | Impact on Crop/Environment | Quantitative Effect | Reference |
|---|---|---|---|---|
| Wind Speed | Shelterbelt (Medium-High Density) | Corn Yield | +2.21% (before cutting) | [27] |
| Wind Speed | Shelterbelt (Post-Cutting Legacy) | Corn Yield (Soil Effect) | +0.98% (average legacy effect) | [9][27] |
| Wind Speed | Greenhouse Ventilation | Sweet Pepper Yield | +24.4% (at 0.8-1.0 m/s) | [27] |
| Wind Speed | Greenhouse Ventilation | Sweet Pepper Transpiration | "Inefficient" transpiration increases at excessive speeds | [27] |
| Relative Humidity | Maintained < 85% | Botrytis Incidence in Flowers | 98% reduction | [28] |
| Relative Humidity | High Humidity Treatment | Ethylene Production in Arabidopsis | Rapid induction | [7] |
| Relative Humidity | Intermediate RH (50-56%) | Fungal Disease Progression | Maximal rate of development | [26] |

Experimental Protocols for Microclimate Modification Analysis

3.2.1 Assessing Shelterbelt Effects on Microclimate and Yield

Objective: To quantify the impact of a shelterbelt on wind speed, microclimate, and the yield of an adjacent crop.

Methodology:

- Site Selection: Choose a site with a mature shelterbelt adjacent to a uniformly managed crop field. The control area should be in the same field but far enough from the shelterbelt to be unaffected by it (more than 30 times the shelterbelt height, H).
- Instrumentation: Place anemometers at crop canopy height along transects perpendicular to the shelterbelt on both the leeward (downwind) and windward (upwind) sides.[6] Position them at standardized distances expressed as multiples of the shelterbelt height (e.g., 1H, 3H, 5H, 10H, 20H).[6] Place an identical anemometer at the open-field control, and deploy sensors for air temperature and relative humidity at all of these locations.
- Data Logging: Record data continuously over a substantial part of the growing season.
- Yield Measurement: At harvest, collect yield samples along the same transects, for example by hand-harvesting defined quadrats (e.g., 1 m²) at each measurement point.[29]
- Data Analysis: Calculate the relative wind speed reduction at each distance compared with the open-field control. Correlate the changes in wind speed, temperature, and humidity with the observed changes in crop yield to map the shelterbelt's zone of influence.[21]
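The transect layout and the first analysis step can be sketched in a few lines of Python. This is a minimal illustration, not the cited studies' method: the function names are hypothetical, the shelterbelt height, wind speeds, and yield changes in the example are invented, and Pearson's correlation coefficient is assumed as the measure for "correlate".

```python
from statistics import mean


def sensor_distances(height_m, multiples=(1, 3, 5, 10, 20)):
    """Distances (m) from the shelterbelt for each multiple of its height H."""
    return [m * height_m for m in multiples]


def relative_reduction(u_sheltered, u_open):
    """Wind speed reduction (%) at a sheltered point relative to the open-field control."""
    if u_open <= 0:
        raise ValueError("open-field wind speed must be positive")
    return 100.0 * (1.0 - u_sheltered / u_open)


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length sequences with at least two values")
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


# Invented values for a hypothetical 10 m tall shelterbelt (leeward transect).
height = 10.0
distances = sensor_distances(height)          # [10.0, 30.0, 50.0, 100.0, 200.0]
u_leeward = [2.0, 2.4, 2.8, 3.4, 3.9]         # mean canopy-height wind speed (m/s)
u_control = 4.0                               # open-field control wind speed (m/s)
reductions = [relative_reduction(u, u_control) for u in u_leeward]
yield_change = [-3.0, 4.0, 3.5, 1.5, 0.2]     # % yield difference vs control (invented)

for d, r in zip(distances, reductions):
    print(f"{d:6.1f} m: {r:5.1f}% wind reduction")
print("r(wind reduction, yield change) =", round(pearson_r(reductions, yield_change), 3))
```

A single correlation coefficient summarizes the whole transect; mapping the zone of influence usually also means inspecting the reduction and yield values distance by distance, as the loop above prints them.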

3.2.2 Analyzing Mulching Effects on Soil Microclimate

Objective: To determine how different mulch materials alter soil temperature and moisture, and how these changes affect crop growth.

Experimental Design:

- Treatments: Establish plots with different mulch treatments (e.g., black polyethylene, transparent polyethylene, straw mulch) and a bare soil control.[30]
- Layout: Arrange plots in a randomized complete block design with at least three replications (blocks) to account for field variability.[30]
- Instrumentation: Install soil temperature and moisture sensors (e.g., thermocouples, time-domain reflectometry probes) at a consistent depth (e.g., 10-15 cm) in the center of each plot.
- Measurements: Record soil temperature and moisture at least daily, either manually or continuously with a data logger. Periodically measure crop growth parameters (plant height, leaf area index) and weed biomass.[30]
- Yield Assessment: Harvest the central rows of each plot to determine the final crop yield.
- Analysis: Compare the microclimate data, growth parameters, and yield across the mulch treatments using an ANOVA that matches the randomized complete block design, with treatment and block as factors.
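The randomized layout and the block-aware ANOVA can be sketched in plain Python. This is a minimal illustration under stated assumptions: `rcbd_layout` and `rcbd_anova` are hypothetical helper names, the analysis uses the standard additive RCBD model (treatment + block, no interaction, one observation per plot), and the example yields are invented. The function returns the treatment F ratio; its p-value comes from the F distribution with (df_trt, df_err) degrees of freedom, for example via `scipy.stats.f.sf` if SciPy is available.

```python
import random


def rcbd_layout(treatments, n_blocks, seed=None):
    """Randomize the order of all treatments independently within each block."""
    rng = random.Random(seed)
    layout = []
    for _ in range(n_blocks):
        order = list(treatments)
        rng.shuffle(order)
        layout.append(order)
    return layout


def rcbd_anova(data):
    """Two-way ANOVA without interaction for a randomized complete block design.

    data[i][j] is the response (e.g., yield) of treatment i in block j.
    Returns sums of squares, degrees of freedom, and the treatment F ratio.
    """
    t, b = len(data), len(data[0])
    if t < 2 or b < 2 or any(len(row) != b for row in data):
        raise ValueError("need >= 2 treatments, >= 2 blocks, and a complete table")
    grand = sum(map(sum, data)) / (t * b)
    trt_means = [sum(row) / b for row in data]
    blk_means = [sum(data[i][j] for i in range(t)) / t for j in range(b)]
    ss_total = sum((y - grand) ** 2 for row in data for y in row)
    ss_trt = b * sum((m - grand) ** 2 for m in trt_means)   # between treatments
    ss_blk = t * sum((m - grand) ** 2 for m in blk_means)   # between blocks
    ss_err = ss_total - ss_trt - ss_blk                      # residual
    df_trt, df_blk, df_err = t - 1, b - 1, (t - 1) * (b - 1)
    if ss_err <= 0:
        raise ValueError("residual sum of squares is zero; F is undefined")
    f_trt = (ss_trt / df_trt) / (ss_err / df_err)
    return {"ss_trt": ss_trt, "ss_blk": ss_blk, "ss_err": ss_err,
            "df_trt": df_trt, "df_blk": df_blk, "df_err": df_err, "F_trt": f_trt}


# Invented plot yields (t/ha): rows = treatments, columns = blocks.
yields = [
    [6.1, 5.8, 6.4],   # black polyethylene
    [5.9, 5.5, 6.0],   # transparent polyethylene
    [5.6, 5.2, 5.9],   # straw
    [4.8, 4.5, 5.1],   # bare soil
]
print(rcbd_layout(["black PE", "clear PE", "straw", "bare soil"], n_blocks=3, seed=42))
print(rcbd_anova(yields))
```

Removing the block sum of squares from the residual is what distinguishes this from a one-way ANOVA; with strong field gradients it can substantially increase the power to detect mulch effects.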

Visualization: Experimental Workflow for Shelterbelt Analysis

This diagram illustrates the logical flow of an experiment designed to quantify the multifaceted impact of a shelterbelt on the agricultural micro-environment and resulting crop yield.

References

- 1. researchgate.net

- 2. Deficit Irrigation and Mulching Impacts on Major Crop Yield and Water Efficiency: A Review, Hydrology, Science Publishing Group [sciencepublishinggroup.com]

- 3. researchgate.net

- 4. cabidigitallibrary.org

- 5. mdpi.com

- 6. organicresearchcentre.com

- 7. Ethylene signaling modulates air humidity responses in plants - PubMed [pubmed.ncbi.nlm.nih.gov]

- 8. Exploring the Mechanisms of Humidity Responsiveness in Plants and Their Potential Applications [mdpi.com]

- 9. Estimating the Legacy Effect of Post-Cutting Shelterbelt on Crop Yield Using Google Earth and Sentinel-2 Data [diva-portal.org]

- 10. agtech.folio3.com

- 11. sciencesocieties.org

- 12. caes.ucdavis.edu

- 13. The productive performance of intercropping - PMC [pmc.ncbi.nlm.nih.gov]

- 14. G2301 [extensionpubs.unl.edu]

- 15. Methodology for High-Throughput Field Phenotyping of Canopy Temperature Using Airborne Thermography - PMC [pmc.ncbi.nlm.nih.gov]

- 16. Frontiers | Methodology for High-Throughput Field Phenotyping of Canopy Temperature Using Airborne Thermography [frontiersin.org]

- 17. Beat the Heat: Signaling Pathway-Mediated Strategies for Plant Thermotolerance [mdpi.com]

- 18. academic.oup.com

- 19. mdpi.com

- 20. fs.usda.gov

- 21. researchgate.net

- 22. researchgate.net

- 23. Light signaling in plants—a selective history - PMC [pmc.ncbi.nlm.nih.gov]

- 24. academic.oup.com

- 25. Heat-Responsive Photosynthetic and Signaling Pathways in Plants: Insight from Proteomics - PMC [pmc.ncbi.nlm.nih.gov]

- 26. researchgate.net

- 27. mdpi.com

- 28. researchgate.net

- 29. organicresearchcentre.com

- 30. researchgate.net

The Convergence of Earth Observation and Agricultural Meteorology: A Technical Guide to State-of-the-Art Remote Sensing


Written for researchers, scientists, and agricultural stakeholders, this whitepaper covers current applications of remote sensing in agrometeorology. It gives an overview of the core methodologies, summarizes key data in structured tables, and sets out detailed experimental protocols. Visualizations of key workflows and signaling pathways are included to clarify the interplay between remote sensing data and agrometeorological phenomena.

Advances in satellite technology have transformed our ability to monitor the Earth system. In agrometeorology, remote sensing has become an essential tool, providing spatially extensive and frequently repeated observations of crop health, soil conditions, and water resources. This guide explores state-of-the-art techniques that support precision agriculture, improve crop yield forecasts, and enable more effective water resource management in a changing climate.

Core Applications in Agrometeorology

Key applications of remote sensing in agrometeorology include:

- Crop Yield Forecasting: By analyzing time series of vegetation indices, researchers can model and predict crop yields with increasing accuracy, providing vital information for food security and market planning.[1][2]
- Evapotranspiration (ET) Estimation: Satellite data, particularly thermal imagery used to derive land surface temperature, feed models that estimate the water transferred to the atmosphere by evaporation from the soil and transpiration from plants. These estimates are critical for irrigation scheduling and water resource management.
- Soil Moisture Monitoring: Microwave remote sensing is particularly suited to estimating soil moisture content, a key variable in drought assessment and agricultural water management.[3][4][5][6][7]
- Drought and Stress Detection: Spectral and thermal remote sensing can detect early signs of plant stress caused by water scarcity or disease, enabling timely intervention to limit crop damage.[8][9][10][11][12]
- Integration with Crop Models: Remote sensing data can be assimilated into crop growth models to improve the accuracy of simulations and forecasts.

Data Presentation: A Comparative Look at Key Satellite Sensors

The selection of an appropriate satellite sensor is contingent on the specific agrometeorological application, considering factors such as spatial, spectral, and temporal resolution. The table below provides a comparison of commonly used satellite sensors in agriculture.

| Sensor | Satellite Platform(s) | Spatial Resolution | Temporal Resolution | Key Spectral Bands for Agrometeorology | Primary Applications |
|---|---|---|---|---|---|
| OLI / TIRS (OLI-2 / TIRS-2 on Landsat 9) | Landsat 8, Landsat 9 | 30 m (Visible, NIR, SWIR), 100 m (Thermal, TIRS) | 16 days per satellite (8 days combined) | Blue, Green, Red, Near-Infrared (NIR), Shortwave Infrared (SWIR), Thermal Infrared (TIR) | Field-scale crop monitoring, water management, land cover mapping |
| MSI | Sentinel-2A, Sentinel-2B | 10 m (Blue, Green, Red, NIR), 20 m (Red Edge, narrow NIR, SWIR), 60 m (Coastal aerosol, water vapour, cirrus) | 10 days per satellite (5 days combined) | Blue, Green, Red, 3 Red Edge bands, NIR, narrow NIR, SWIR | Precision agriculture, crop type mapping, vegetation stress analysis |
| MODIS | Terra, Aqua | 250 m to 1 km | 1-2 days | Red, NIR, Blue, Green, SWIR, TIR | Regional to global crop monitoring, drought assessment, ET estimation |
| VIIRS | Suomi-NPP, NOAA-20 | 375 m, 750 m | Daily | Red, NIR, SWIR, TIR | Global vegetation monitoring, active fire detection, sea surface temperature |

Experimental Protocols: Methodologies for Key Agrometeorological Assessments

This section details the methodologies for several key remote sensing applications in agrometeorology, providing a step-by-step guide for researchers.

Protocol 1: Crop Yield Forecasting using Machine Learning

Objective: To predict crop yield using satellite-derived vegetation indices and machine learning algorithms.

Methodology:

- Data Acquisition and Pre-processing:
  - Acquire time-series satellite imagery (e.g., Landsat, Sentinel-2) covering the growing season.
  - Obtain historical crop yield data for the study area.
  - Collect relevant meteorological data (e.g., precipitation, temperature).
  - Pre-process the satellite imagery, including atmospheric correction, cloud masking, and geometric correction.
- Feature Extraction:
  - Calculate vegetation indices such as the Normalized Difference Vegetation Index, NDVI = (NIR - Red) / (NIR + Red), for each image in the time series.
  - Extract other relevant features from the satellite data and meteorological records.
- Model Training and Hyperparameter Tuning:
  - Select appropriate machine learning algorithms, such as Support Vector Machines (SVM), Random Forest (RF), Gradient Boosting Machines (GBM), or Artificial Neural Networks (ANN).[2]
  - Split the data into training and testing sets.
  - Train the selected models on the training data.
  - Optimize model performance by tuning hyperparameters on the training data only (e.g., with cross-validation), using techniques such as grid search or frameworks such as Optuna.[1][13]
- Model Validation and Prediction:
  - Evaluate the trained models on the held-out testing set using metrics such as Root Mean Square Error (RMSE), Mean Absolute Error (MAE), and the coefficient of determination (R²).
  - Use the best-performing model to predict crop yield for new, unseen data.
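The feature-extraction and validation steps can be illustrated with a few standard-library Python helpers. This is a sketch under assumptions: the function names are hypothetical, reflectances are assumed to be atmospherically corrected surface reflectance for a cloud-free pixel or field mean, and the seasonal features (peak, mean, and trapezoid-integrated NDVI) are common choices rather than ones prescribed by this protocol. The machine learning models themselves are not reimplemented; in practice the features would be passed to a library such as scikit-learn, whose `sklearn.metrics` module also provides `mean_squared_error`, `mean_absolute_error`, and `r2_score`.

```python
from math import sqrt


def ndvi(nir, red):
    """NDVI = (NIR - Red) / (NIR + Red) from surface reflectance values."""
    total = nir + red
    if total == 0:
        raise ValueError("NIR + Red must be non-zero")
    return (nir - red) / total


def season_features(days, values):
    """Peak, mean, and time-integrated NDVI (trapezoidal rule) for one season."""
    if len(days) != len(values) or len(days) < 2:
        raise ValueError("need matching day/NDVI series with at least two points")
    integral = sum(
        (days[k + 1] - days[k]) * (values[k] + values[k + 1]) / 2
        for k in range(len(days) - 1)
    )
    return {"peak": max(values), "mean": sum(values) / len(values), "integral": integral}


def rmse(obs, pred):
    """Root mean square error."""
    return sqrt(sum((o - p) ** 2 for o, p in zip(obs, pred)) / len(obs))


def mae(obs, pred):
    """Mean absolute error."""
    return sum(abs(o - p) for o, p in zip(obs, pred)) / len(obs)


def r2(obs, pred):
    """Coefficient of determination, 1 - SS_res / SS_tot (can be negative)."""
    mean_obs = sum(obs) / len(obs)
    ss_res = sum((o - p) ** 2 for o, p in zip(obs, pred))
    ss_tot = sum((o - mean_obs) ** 2 for o in obs)
    if ss_tot == 0:
        raise ValueError("observed values have zero variance")
    return 1.0 - ss_res / ss_tot


# Invented NDVI values on days after sowing, and invented yields (t/ha).
print(season_features([0, 10, 20], [0.2, 0.6, 0.4]))
observed, predicted = [3.1, 4.0, 5.2], [3.4, 3.8, 4.9]
print(rmse(observed, predicted), mae(observed, predicted), r2(observed, predicted))
```

The integrated NDVI is often used as a proxy for season-long canopy activity, which tends to relate to yield more closely than any single-date value.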

Protocol 2: Evapotranspiration Estimation using the Surface Energy Balance System (SEBS)

Objective: To estimate actual evapotranspiration using satellite data and meteorological information within the SEBS framework.

Methodology:

- Input Data Preparation:
  - Remote Sensing Data: Acquire satellite imagery with thermal bands (e.g., Landsat, MODIS) to derive land surface temperature, albedo, and emissivity.[14][15]
  - Meteorological Data: Obtain ground-based measurements of air pressure, temperature, humidity, and wind speed.
  - Radiation Data: Collect data on incoming solar (shortwave) and longwave radiation.
- Derivation of Land Surface Parameters:
  - Calculate land surface temperature from the thermal bands.
  - Compute surface albedo from the visible, near-infrared, and shortwave infrared reflectance bands.
  - Estimate surface emissivity and fractional vegetation cover from NDVI.
- SEBS Model Implementation:
  - Energy Balance Calculation: The model first calculates net radiation (Rn), the balance between incoming and outgoing shortwave and longwave radiation, and the soil heat flux (G0), which SEBS estimates as a fraction of Rn that depends on fractional vegetation cover.
  - Sensible Heat Flux Estimation: SEBS estimates the sensible heat flux (H), the heat transferred between the surface and the atmosphere, from the surface-air temperature difference using Monin-Obukhov similarity theory, which also accounts for wind speed and surface roughness.
  - Latent Heat Flux as a Residual: The latent heat flux (λE), the energy used for evapotranspiration, is the residual of the surface energy balance, λE = Rn - G0 - H. SEBS bounds H between a dry limit (λE = 0) and a wet limit (evaporation limited only by available energy) to derive the evaporative fraction, λE / (Rn - G0).
- Evapotranspiration Calculation and Validation:
  - Convert the latent heat flux to actual evapotranspiration in millimeters per day by dividing by the latent heat of vaporization (about 2.45 MJ kg⁻¹). Because satellite overpasses give instantaneous values, the daily total is commonly obtained by assuming the evaporative fraction stays constant through the day.
  - Validate the SEBS-derived ET estimates against ground-based measurements from flux towers or lysimeters.
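The residual, evaporative-fraction, and conversion steps can be written out as a short Python sketch. It assumes H (computed in SEBS from similarity theory) and the wet-limit sensible heat flux H_wet are already available and does not reproduce those calculations. The function names, the example fluxes, and the constant-evaporative-fraction scaling from overpass to daily values are illustrative assumptions; the latent heat of vaporization is taken as 2.45 MJ kg⁻¹, the FAO-56 value.

```python
LAMBDA_V = 2.45e6        # latent heat of vaporization (J/kg), FAO-56 value near 20 °C
SECONDS_PER_DAY = 86_400


def latent_heat_flux(rn, g0, h):
    """Energy-balance residual: lambda*E = Rn - G0 - H (all in W/m^2)."""
    return rn - g0 - h


def evaporative_fraction(rn, g0, h, h_wet=0.0):
    """Evaporative fraction with H bounded between wet and dry limits.

    Follows the SEBS idea of scaling H between a dry limit (H_dry = Rn - G0,
    no evaporation) and a wet limit H_wet supplied by the caller.
    """
    h_dry = rn - g0
    if h_dry <= h_wet:
        raise ValueError("dry-limit H must exceed wet-limit H")
    h = min(max(h, h_wet), h_dry)                      # keep H inside the limits
    relative_evap = 1.0 - (h - h_wet) / (h_dry - h_wet)
    le_wet = h_dry - h_wet                             # latent heat flux at the wet limit
    return relative_evap * le_wet / h_dry


def daily_et_mm(ef, rn_daily, g0_daily):
    """Daily ET (mm/day), assuming the evaporative fraction is constant over the day.

    1 kg of water spread over 1 m^2 forms a 1 mm layer, so mm = J m^-2 / LAMBDA_V.
    """
    return ef * (rn_daily - g0_daily) * SECONDS_PER_DAY / LAMBDA_V


# Invented instantaneous fluxes at overpass time and daily means (W/m^2).
print(latent_heat_flux(500.0, 50.0, 150.0))                # 300.0
ef = evaporative_fraction(500.0, 50.0, 150.0, h_wet=50.0)
print(round(ef, 3))                                        # 0.667
print(round(daily_et_mm(ef, 180.0, 30.0), 2))              # 3.53
```

With the dry limit set to Rn - G0, the evaporative fraction reduces algebraically to (Rn - G0 - H) / (Rn - G0); the wet and dry limits matter because clipping H between them keeps the evaporative fraction within physically meaningful bounds.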

Protocol 3: Data Assimilation in Crop Models using the Ensemble Kalman Filter (EnKF)

Objective: To improve the accuracy of crop growth model simulations by assimilating remote sensing observations.

Methodology:

- Model and Observation Preparation:
  - Crop Model: Select a suitable crop growth model (e.g., WOFOST, DSSAT).
  - Remote Sensing Observations: Acquire and process satellite data to derive variables that can be assimilated, such as Leaf Area Index (LAI) or soil moisture.
- Ensemble Generation:

-