SPPC

Description

Properties

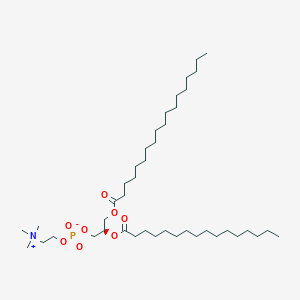

| Property | Value | Details |
|---|---|---|
| IUPAC Name | [(2R)-2-hexadecanoyloxy-3-octadecanoyloxypropyl] 2-(trimethylazaniumyl)ethyl phosphate | Computed by LexiChem 2.6.6 (PubChem release 2019.06.18) |
| InChI | InChI=1S/C42H84NO8P/c1-6-8-10-12-14-16-18-20-21-23-24-26-28-30-32-34-41(44)48-38-40(39-50-52(46,47)49-37-36-43(3,4)5)51-42(45)35-33-31-29-27-25-22-19-17-15-13-11-9-7-2/h40H,6-39H2,1-5H3/t40-/m1/s1 | Computed by InChI 1.0.5 (PubChem release 2019.06.18) |
| InChI Key | BYSIMVBIJVBVPA-RRHRGVEJSA-N | Computed by InChI 1.0.5 (PubChem release 2019.06.18) |
| Canonical SMILES | CCCCCCCCCCCCCCCCCC(=O)OCC(COP(=O)([O-])OCC[N+](C)(C)C)OC(=O)CCCCCCCCCCCCCCC | Computed by OEChem 2.1.5 (PubChem release 2019.06.18) |
| Isomeric SMILES | CCCCCCCCCCCCCCCCCC(=O)OC[C@H](COP(=O)([O-])OCC[N+](C)(C)C)OC(=O)CCCCCCCCCCCCCCC | Computed by OEChem 2.1.5 (PubChem release 2019.06.18) |
| Molecular Formula | C42H84NO8P | Computed by PubChem 2.1 (PubChem release 2019.06.18) |
| DSSTox Substance ID | DTXSID201336101 | Record name: 1-stearoyl-2-palmitoyl-sn-glycero-3-phosphocholine (EPA DSSTox) |
| Molecular Weight | 762.1 g/mol | Computed by PubChem 2.1 (PubChem release 2021.05.07) |
| Physical Description | Solid | Record name: PC(18:0/16:0) (Human Metabolome Database, HMDB) |

Sources:

- PubChem, https://pubchem.ncbi.nlm.nih.gov. Data deposited in or computed by PubChem.

- EPA DSSTox, https://comptox.epa.gov/dashboard/DTXSID201336101. DSSTox provides a high quality public chemistry resource for supporting improved predictive toxicology.

- Human Metabolome Database (HMDB), http://www.hmdb.ca/metabolites/HMDB0008034. The Human Metabolome Database (HMDB) is a freely available electronic database containing detailed information about small molecule metabolites found in the human body. HMDB is offered to the public as a freely available resource. Use and re-distribution of the data, in whole or in part, for commercial purposes requires explicit permission of the authors and explicit acknowledgment of the source material (HMDB) and the original publication (see the HMDB citing page). We ask that users who download significant portions of the database cite the HMDB paper in any resulting publications.
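As a quick consistency check, the molecular weight can be recomputed from the molecular formula C42H84NO8P. The sketch below assumes rounded standard atomic weights and a simple formula string without brackets or charges; it is an illustration, not the procedure PubChem uses.

```python
import re

# Rounded standard atomic weights in g/mol (assumed values for this check)
ATOMIC_WEIGHTS = {"C": 12.011, "H": 1.008, "N": 14.007, "O": 15.999, "P": 30.974}

def parse_formula(formula: str) -> dict[str, int]:
    """Parse a simple formula such as 'C42H84NO8P' into element counts."""
    counts: dict[str, int] = {}
    for element, digits in re.findall(r"([A-Z][a-z]?)(\d*)", formula):
        counts[element] = counts.get(element, 0) + (int(digits) if digits else 1)
    return counts

def molecular_weight(formula: str) -> float:
    """Sum atomic weights over all atoms in the formula."""
    return sum(ATOMIC_WEIGHTS[el] * n for el, n in parse_formula(formula).items())

counts = parse_formula("C42H84NO8P")
mw = molecular_weight("C42H84NO8P")
print(counts)
print(f"{mw:.1f} g/mol")
```

The result rounds to the tabulated 762.1 g/mol.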


A Technical Guide to the Future Circular Collider (FCC-hh): A New Frontier in High-Energy Physics and its Applications for Medical Research and Development

Introduction

This technical guide provides a comprehensive overview of the Future Circular Collider (FCC), with a specific focus on its proton-proton collider stage, the FCC-hh. The FCC-hh is not the same machine as the Super Proton-Proton Collider (SPPC), a separate proposal in China, but both are designed as next-generation hadron colliders to succeed the Large Hadron Collider (LHC). This document is intended for researchers, scientists, and professionals in drug development; it details the technical specifications of the FCC-hh and explores its potential applications in the medical field. Technologies developed for high-energy physics have historically driven significant advancements in medical diagnostics, therapy, and imaging, and the FCC is expected to continue this legacy.[1][2][3]

Core Project: The Future Circular Collider (FCC)

The Future Circular Collider is a proposed next-generation particle accelerator complex to be built at CERN.[4][5] The project envisions a new ~91-kilometer circumference tunnel that would house different types of colliders in stages.[6][7] The ultimate goal is the FCC-hh, a hadron collider designed to achieve a center-of-mass collision energy of 100 TeV, a significant leap from the 14 TeV of the LHC.[4][8] This leap in energy will allow physicists to probe new realms of physics, study the Higgs boson in unprecedented detail, and search for new particles that could explain mysteries such as dark matter.[4][9]

The FCC program is planned in two main stages:

- FCC-ee: An electron-positron collider that would serve as a "Higgs factory," producing millions of Higgs bosons for precise measurement.[6][10]
- FCC-hh: A proton-proton and heavy-ion collider that would reuse the FCC-ee tunnel and infrastructure to reach unprecedented energy levels.[7][11]

Technical Specifications of the FCC-hh

The design of the FCC-hh is based on extending the technologies developed for the LHC and its high-luminosity upgrade (HL-LHC).[8][11][12] The key parameters of the FCC-hh are summarized in the table below, with a comparison to the LHC for context.

| Parameter | Large Hadron Collider (LHC) | Future Circular Collider (FCC-hh) |
|---|---|---|
| Circumference | 27 km | ~90.7 km[7] |
| Center-of-Mass Energy (p-p) | 14 TeV | 100 TeV[8][9] |
| Peak Luminosity | 2 x 10^34 cm^-2 s^-1 | >5 x 10^34 cm^-2 s^-1 |
| Injection Energy | 450 GeV | 3.3 TeV[9] |
| Dipole Magnet Field Strength | 8.3 T | 16 T[4] |
| Number of Bunches | 2808 | ~9450[13] |
| Bunch Spacing | 25 ns | 25 ns[13] |
| Beam Current | 0.58 A | 0.5 A[13] |
| Stored Energy per Beam | 362 MJ | 16.7 GJ[4] |
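The stored-energy row can be cross-checked against the beam current and circumference. For ultra-relativistic protons the revolution time is C/c, so the number of circulating protons is I·(C/c)/e and the stored energy is that number times the energy per proton. The sketch below assumes the table's currents, the LHC's 26.7 km circumference, 7 TeV per LHC proton, and 50 TeV per FCC-hh proton (half the 100 TeV centre-of-mass energy).

```python
E_CHARGE = 1.602176634e-19   # elementary charge, C
C_LIGHT = 299_792_458.0      # speed of light, m/s

def stored_energy_per_beam_j(current_a: float, circumference_m: float,
                             proton_energy_tev: float) -> float:
    """Stored beam energy, assuming ultra-relativistic protons (revolution time = C / c)."""
    revolution_time_s = circumference_m / C_LIGHT
    n_protons = current_a * revolution_time_s / E_CHARGE
    return n_protons * proton_energy_tev * 1e12 * E_CHARGE  # eV per proton -> J

lhc = stored_energy_per_beam_j(0.58, 26_659.0, 7.0)
fcc_hh = stored_energy_per_beam_j(0.5, 90_700.0, 50.0)
print(f"LHC: {lhc / 1e6:.0f} MJ, FCC-hh: {fcc_hh / 1e9:.1f} GJ")
```

The LHC estimate (about 361 MJ) reproduces the tabulated 362 MJ. The FCC-hh estimate comes out near 7.6 GJ per beam, roughly half the listed 16.7 GJ. This suggests the 16.7 GJ figure may refer to both beams combined or to different design parameters, so it is worth checking against the conceptual design report.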

Relevance to Drug Development and Medical Research

While the primary mission of the FCC-hh is fundamental physics research, the technological advancements required for its construction and operation have significant potential to translate into medical applications.[3] Particle accelerator technologies have historically been pivotal in healthcare, from cancer therapy to medical imaging.[14][15]

Production of Novel Radioisotopes for Theranostics

High-energy proton beams can be used to produce a wide range of radioisotopes, some of which are not accessible with lower-energy cyclotrons typically found in hospitals.[16] Facilities at CERN, such as MEDICIS (Medical Isotopes Collected from ISOLDE), are already dedicated to producing unconventional radioisotopes for medical research, with a focus on precision medicine and theranostics.[1][17] The high-energy and high-intensity proton beams available from the FCC injector chain could significantly expand the variety and quantity of novel isotopes for targeted radionuclide therapy and advanced diagnostic imaging.[3]

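An illustrative, hypothetical workflow for producing an Astatine-211-labeled radiopharmaceutical is outlined below.

- Target Preparation: A target is prepared and mounted in a target station. Astatine-211 is conventionally produced from bismuth-209 targets through the 209Bi(α,2n)211At reaction, which uses an alpha-particle beam of roughly 28 MeV. Because bismuth (Z = 83) lies two elements below astatine (Z = 85), proton irradiation of bismuth is not a practical route; a proton-driven scheme would instead rely on spallation of heavier actinide targets such as thorium or uranium carbide.
- Beam Irradiation: The target is irradiated with the chosen beam. The beam energy is selected to maximize the production cross-section of Astatine-211 while limiting co-production of contaminants such as Astatine-210.
- Target Processing: After irradiation, the target is transferred remotely to a hot cell for chemical processing.
- Isotope Separation: Astatine-211 is separated from the target material and other byproducts, for example by dry distillation or chemical extraction.
- Radiolabeling: The purified Astatine-211 is attached to a monoclonal antibody or peptide that specifically targets a tumor antigen. Because astatine behaves chemically as a halogen, it is usually bound through a carbon-astatine bond (for example by astatodestannylation of a tin precursor) rather than by a conventional metal chelator.
- Quality Control: The final radiopharmaceutical undergoes rigorous quality control to confirm radiochemical purity, stability, and specific activity.
- Preclinical Evaluation: The therapeutic efficacy and dosimetry of the Astatine-211-labeled drug are evaluated in preclinical cancer models.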
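Astatine-211 has a physical half-life of about 7.2 hours, so every hour spent on separation, labeling, quality control, and transport costs a noticeable fraction of the activity. The sketch below applies the standard exponential-decay law; the timeline durations and the 500 MBq target activity are hypothetical values chosen only for illustration.

```python
import math

AT211_HALF_LIFE_H = 7.214  # physical half-life of astatine-211, hours

def decay_factor(elapsed_h: float, half_life_h: float = AT211_HALF_LIFE_H) -> float:
    """Fraction of activity remaining after elapsed_h: exp(-ln 2 * t / T_half)."""
    return math.exp(-math.log(2) * elapsed_h / half_life_h)

def activity_needed_at_eob(dose_activity_mbq: float, elapsed_h: float) -> float:
    """Activity required at end of bombardment (EOB) to still have dose_activity_mbq later."""
    return dose_activity_mbq / decay_factor(elapsed_h)

# Hypothetical processing timeline, hours
timeline_h = {"separation": 1.5, "radiolabeling": 1.0, "quality control": 1.0, "transport": 4.0}
total_h = sum(timeline_h.values())
print(f"{total_h} h elapsed, {decay_factor(total_h):.1%} of EOB activity remains")
print(f"{activity_needed_at_eob(500.0, total_h):.0f} MBq needed at EOB for 500 MBq at use")
```

Under these assumptions, roughly half the activity is lost before use, which is one reason short-lived alpha emitters are best produced close to where they are administered.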

Advancements in Particle Therapy

Particle therapy, particularly with protons and heavy ions, offers a more precise way to target tumors while minimizing damage to surrounding healthy tissue.[18] The research and development for the FCC-hh will drive innovations in accelerator technology, such as high-gradient radiofrequency cavities and high-field superconducting magnets, which could lead to more compact and cost-effective accelerators for medical use.[19][20] This could make advanced cancer therapies like carbon-ion therapy more widely accessible.
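The link between magnet field strength and accelerator size can be made concrete with the magnetic rigidity Bρ = p/q. In practical units, Bρ [T·m] = p [GeV/c] / (0.2998 × Z). At a fixed rigidity, doubling the dipole field halves the bending radius. The sketch below assumes a fully stripped carbon-12 ion at 430 MeV/u, a typical upper energy for carbon-ion therapy, and compares two assumed dipole fields. It approximates the nucleon mass by the atomic mass unit.

```python
import math

AMU_MEV = 931.494  # atomic mass unit in MeV/c^2, used as an approximate mass per nucleon

def magnetic_rigidity_tm(kinetic_mev_per_u: float, mass_number: int, charge: int) -> float:
    """B*rho in T*m for a fully stripped ion; binding energy and electron masses are ignored."""
    kinetic = kinetic_mev_per_u * mass_number          # total kinetic energy, MeV
    rest = AMU_MEV * mass_number                       # approximate rest energy, MeV
    pc = math.sqrt(kinetic**2 + 2.0 * kinetic * rest)  # momentum times c, MeV
    return pc / (299.792458 * charge)                  # p [MeV/c] / (299.79 * q) gives T*m

brho = magnetic_rigidity_tm(430.0, 12, 6)  # carbon-12 at 430 MeV/u
for field_t in (1.5, 4.0):  # assumed normal-conducting vs superconducting dipole fields
    print(f"B = {field_t} T -> bending radius {brho / field_t:.2f} m")
```

With these assumptions, Bρ is about 6.6 T·m. The bending radius shrinks from about 4.4 m at 1.5 T to about 1.7 m at 4 T, which illustrates why higher-field magnets could make gantries and synchrotrons more compact.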

Development of Next-Generation Medical Imaging Detectors

The detectors required for the FCC experiments will need to be more advanced than any currently in existence, capable of handling immense amounts of data with high spatial and temporal resolution. This drives innovation in detector technology, which has a history of being adapted for medical imaging.[21] For example, the Medipix family of detectors, developed at CERN, has enabled high-resolution, color (spectral) X-ray imaging.[1][22] Future detector technologies developed for the FCC could further advance imaging modalities such as photon-counting CT, which has recently entered clinical use and offers higher resolution and better material differentiation at lower radiation doses.
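Photon-counting detectors register individual X-ray photons and sort each one into an energy bin by comparing it against adjustable thresholds. This energy information underpins spectral ("color") imaging and material differentiation. The sketch below illustrates the binning idea only. The threshold values and photon energies are hypothetical, and real detectors compare collected charge in front-end electronics rather than energies in software.

```python
from bisect import bisect_right

def bin_photons(energies_kev: list[float], thresholds_kev: list[float]) -> list[int]:
    """Count photons per energy bin [t_i, t_{i+1}); photons below the lowest threshold are rejected as noise."""
    counts = [0] * len(thresholds_kev)
    for energy in energies_kev:
        idx = bisect_right(thresholds_kev, energy) - 1
        if idx >= 0:
            counts[idx] += 1
    return counts

thresholds = [20.0, 35.0, 50.0, 70.0]                       # hypothetical thresholds, keV
photons = [12.0, 22.0, 30.0, 36.0, 49.0, 55.0, 72.0, 90.0]  # hypothetical photon energies, keV
print(bin_photons(photons, thresholds))
```

The 12 keV photon falls below the lowest threshold and is discarded. The remaining photons populate the four bins as [2, 2, 1, 2].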

Visualizations

Logical Workflow: CERN Accelerator Complex to FCC-hh

Caption: The CERN accelerator complex injection chain for the FCC-hh.
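The injection chain can be summarized as a sequence of stages, each raising the beam energy by a limited factor. The sketch below uses approximate energies for the existing CERN injectors (Linac4, PS Booster, PS, SPS). It assumes a 3.3 TeV high-energy booster (for example, a reused LHC), matching the FCC-hh injection energy in the table. The mix of kinetic and total energies makes these round numbers illustrative only.

```python
# Approximate beam energies in GeV at the exit of each stage (assumed values)
INJECTION_CHAIN = [
    ("Linac4", 0.16),
    ("PS Booster", 2.0),
    ("PS", 26.0),
    ("SPS", 450.0),
    ("High-energy booster", 3300.0),  # assumed 3.3 TeV, matching the FCC-hh injection energy
    ("FCC-hh", 50000.0),              # 50 TeV per beam at collision
]

def stage_gains(chain: list[tuple[str, float]]) -> dict[str, float]:
    """Energy ratio of each stage relative to the output of the previous stage."""
    return {name: e_out / e_in for (_, e_in), (name, e_out) in zip(chain, chain[1:])}

gains = stage_gains(INJECTION_CHAIN)
for name, gain in gains.items():
    print(f"{name:>20}: x{gain:.1f}")
```

Each synchrotron spans an energy range of roughly one order of magnitude. This is why a multi-TeV injector is needed between the SPS and a 50 TeV-per-beam collider.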

Workflow: From Proton Beam to Therapeutic Application

Caption: Workflow for producing a targeted radiopharmaceutical.

Experimental Workflow: Medical Detector Development

Caption: From high-energy physics R&D to clinical imaging detectors.

References

- 1. Healthcare | Knowledge Transfer [kt.cern]

- 2. lettersinhighenergyphysics.com [lettersinhighenergyphysics.com]

- 3. Medical Applications at CERN and the ENLIGHT Network - PMC [pmc.ncbi.nlm.nih.gov]

- 4. Future Circular Collider - Wikipedia [en.wikipedia.org]

- 5. Future Circular Collider (FCC) - Istituto Nazionale di Fisica Nucleare [infn.it]

- 6. CERN releases report on the feasibility of a possible Future Circular Collider | CERN [home.cern]

- 7. The Future Circular Collider | CERN [home.cern]

- 8. researchgate.net [researchgate.net]

- 9. arxiv.org [arxiv.org]

- 10. FCC CDR [fcc-cdr.web.cern.ch]

- 11. research.monash.edu [research.monash.edu]

- 12. FCC-hh: The Hadron Collider: Future Circular Collider Conceptual Design Report Volume 3 [infoscience.epfl.ch]

- 13. meow.elettra.eu [meow.elettra.eu]

- 14. Fermilab | Science | Particle Physics | Benefits of Particle Physics | Medicine [fnal.gov]

- 15. Particle accelerator - Wikipedia [en.wikipedia.org]

- 16. What Are Particle Accelerators, and How Do They Support Cancer Treatment? [mayomagazine.mayoclinic.org]

- 17. CERN accelerates medical applications | CERN [home.cern]

- 18. Applications of Particle Accelerators [arxiv.org]

- 19. aitanatop.ific.uv.es [aitanatop.ific.uv.es]

- 20. m.youtube.com [m.youtube.com]

- 21. From particle physics to medicine – CERN70 [cern70.cern]

- 22. General Medical Applications | Knowledge Transfer [knowledgetransfer.web.cern.ch]

The Super Proton-Proton Collider: A Technical Guide to the Successor of the LHC

A Whitepaper for Researchers, Scientists, and Drug Development Professionals

The Super Proton-Proton Collider (SPPC) represents a monumental leap forward in high-energy physics, poised to succeed the Large Hadron Collider (LHC) and delve deeper into the fundamental fabric of the universe. This technical guide provides a comprehensive overview of the SPPC's core components, experimental objectives, and the methodologies that will be employed to explore new frontiers in science. The SPPC is the second phase of a larger project that begins with the Circular Electron-Positron Collider (CEPC), a Higgs factory designed for high-precision measurements of the Higgs boson and other Standard Model particles.[1][2]

Introduction: The Post-LHC Era

The discovery of the Higgs boson at the LHC was a landmark achievement, completing the particle content of the Standard Model. However, many fundamental questions remain unanswered, including the nature of dark matter, the origin of the matter-antimatter asymmetry, and the hierarchy problem. The SPPC is designed to address these questions by colliding protons at unprecedented center-of-mass energies, opening a new window onto the high-energy frontier.

The CEPC-SPPC project is a two-stage endeavor. The first stage, the CEPC, will serve as a "Higgs factory" for precise measurements of the Higgs boson's properties.[3] The second stage, the SPPC, will be a discovery machine, searching for new physics beyond the Standard Model.[1][2] Both colliders will be housed in the same 100-kilometer circumference tunnel.[1][3]

Quantitative Data Summary

The following tables summarize the key design parameters of the CEPC and SPPC.

Table 1: CEPC Key Parameters [3][4]

| Parameter | Value |
|---|---|
| Circumference | 100 km |
| Center-of-Mass Energy (Higgs) | 240 GeV |
| Center-of-Mass Energy (Z-pole) | 91.2 GeV |
| Center-of-Mass Energy (W-pair) | 160 GeV |
| Luminosity (Higgs) | 5 x 10³⁴ cm⁻²s⁻¹ |
| Luminosity (Z-pole) | 115 x 10³⁴ cm⁻²s⁻¹ |
| Number of Interaction Points | 2 |
| Expected Higgs Bosons (10 years) | ~2.6 million (baseline) |
| Expected Z Bosons (1 year) | > 1 trillion |

Table 2: SPPC Key Parameters [1][5][6]

| Parameter | Value |
|---|---|
| Circumference | 100 km |
| Center-of-Mass Energy | 125 TeV (baseline) |
| Intermediate Run Energy | 75 TeV |
| Peak Luminosity per IP | 1.1 x 10³⁵ cm⁻²s⁻¹ |
| Dipole Magnetic Field | 20 T (baseline) |
| Injection Energy | 2.1 TeV |
| Number of Interaction Points | 2 (4 possible) |
| Circulating Beam Current | 1.0 A |
| Bunch Separation | 25 ns |
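The collision energy of a circular hadron collider is set by its dipole field B and bending radius ρ. For unit-charge, ultra-relativistic particles, p [TeV/c] ≈ 0.2998 × B [T] × ρ [km]. The sketch below inverts this relation to find the bending radius implied by the baseline (125 TeV, 20 T) and by the intermediate 75 TeV run, assuming 12 T dipoles for the latter. The implied dipole fill fraction of the 100 km ring is an output of this estimate, not a published design value.

```python
import math

GEV_PER_T_M = 0.299792458  # p [GeV/c] per (B [T] * rho [m]) for unit charge

def beam_energy_tev(dipole_field_t: float, radius_km: float) -> float:
    """p [TeV/c] = 0.2998 * B [T] * rho [km] for unit-charge, ultra-relativistic particles."""
    return GEV_PER_T_M * dipole_field_t * radius_km

def bending_radius_km(energy_per_beam_tev: float, dipole_field_t: float) -> float:
    """Bending radius needed to hold a beam of the given energy in the given field."""
    return energy_per_beam_tev / (GEV_PER_T_M * dipole_field_t)

CIRCUMFERENCE_KM = 100.0
rho_baseline = bending_radius_km(125.0 / 2, 20.0)      # 125 TeV c.o.m. with 20 T dipoles
rho_intermediate = bending_radius_km(75.0 / 2, 12.0)   # 75 TeV c.o.m. with assumed 12 T dipoles
dipole_fill = 2 * math.pi * rho_baseline / CIRCUMFERENCE_KM
print(f"rho = {rho_baseline:.2f} km and {rho_intermediate:.2f} km; dipole fill ~ {dipole_fill:.0%}")
```

Both runs imply the same bending radius of about 10.4 km, so the 75 TeV and 125 TeV stages are consistent with one tunnel whose dipoles fill roughly 65% of the circumference. The energy scales directly with the field.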

Experimental Program and Methodologies

The experimental program at the CEPC-SPPC facility is designed to be comprehensive, covering both precision measurements and direct searches for new phenomena.

CEPC: The Higgs Factory

The primary goal of the CEPC is the precise measurement of the Higgs boson's properties. The vast number of Higgs bosons produced will allow for detailed studies of its couplings to other particles, its self-coupling, and its decay modes.

Key Experiments:

- Higgs Coupling Measurements: By analyzing the production and decay rates of the Higgs boson in various channels (e.g., H → ZZ, H → WW, H → bb, H → ττ, H → γγ), the couplings to different particles can be determined with high precision.
- Search for Invisible Higgs Decays: The CEPC's clean experimental environment will enable sensitive searches for Higgs decays into particles that do not interact with the detector, which could be a signature of dark matter.[7]
- Electroweak Precision Measurements: Operating as a Z and W factory, the CEPC will produce enormous numbers of Z and W bosons, allowing extremely precise measurements of their properties, which can indirectly probe for new physics.

Experimental Methodology: A Generalized Workflow

The experimental workflow for Higgs boson studies at the CEPC will generally follow these steps:

- Event Generation: Monte Carlo event generators, based on theoretical models, simulate electron-positron collisions and the subsequent production and decay of particles.
- Detector Simulation: The generated particles are passed through a detailed simulation of the CEPC detector to model their interactions and the detector's response. The CEPC software chain uses tools such as Geant4 for this purpose.[8]
- Event Reconstruction: The raw detector data are processed to reconstruct the trajectories and energies of the particles produced in the collision. The CEPC reconstruction software is based on the Particle Flow principle, which aims to reconstruct each final-state particle using the most precise sub-detector system.[8]
- Event Selection: Specific criteria are applied to select events consistent with the signal of interest (e.g., a Higgs boson decay) while rejecting background events.
- Signal Extraction and Analysis: Statistical methods are used to extract the signal from the remaining background and to measure the properties of the Higgs boson.
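One common technique at electron-positron Higgs factories is the recoil-mass method for e+e- → ZH with Z → ℓ+ℓ-. Because the initial state is known, the mass recoiling against the lepton pair is m²_rec = (√s − E_ℓℓ)² − |p_ℓℓ|². The Higgs appears as a peak near 125 GeV regardless of how it decays. The sketch below assumes head-on beams with no crossing angle and no initial-state radiation. It builds an idealized ZH event at √s = 240 GeV to check the formula.

```python
import math

def recoil_mass(sqrt_s: float, lep1: tuple, lep2: tuple) -> float:
    """Mass recoiling against a lepton pair; leptons are (E, px, py, pz) in GeV.

    Assumes the initial state is (sqrt_s, 0, 0, 0): no crossing angle, no initial-state radiation."""
    e = lep1[0] + lep2[0]
    px, py, pz = (lep1[i] + lep2[i] for i in (1, 2, 3))
    m2 = (sqrt_s - e) ** 2 - (px**2 + py**2 + pz**2)
    return math.sqrt(max(m2, 0.0))

# Idealized ZH event: Z moves along +z and decays to two massless leptons
SQRT_S, M_Z, M_H = 240.0, 91.1876, 125.0
e_z = (SQRT_S**2 + M_Z**2 - M_H**2) / (2 * SQRT_S)  # Z energy from two-body kinematics
p_z = math.sqrt(e_z**2 - M_Z**2)
lep_plus = (e_z / 2, M_Z / 2, 0.0, p_z / 2)
lep_minus = (e_z / 2, -M_Z / 2, 0.0, p_z / 2)
print(f"{recoil_mass(SQRT_S, lep_plus, lep_minus):.3f} GeV")
```

In a real analysis, the reconstructed leptons carry resolution effects, so the recoil peak is fitted over a background distribution rather than read off a single event.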

SPPC: The Discovery Machine

The SPPC will push the energy frontier to 125 TeV, enabling searches for new particles and phenomena far beyond the reach of the LHC. The primary physics goals of the SPPC include:

- Searches for Supersymmetry (SUSY): SUSY is a theoretical framework that predicts a partner particle for each particle in the Standard Model. The SPPC will have a vast discovery potential for a wide range of SUSY models.[9][10][11] Searches will focus on signatures with large missing transverse energy (from the stable lightest supersymmetric particle, a dark matter candidate) and multiple jets or leptons.
- Searches for Dark Matter: In addition to SUSY-related dark matter candidates, the SPPC will search for other forms of dark matter that could be produced in high-energy collisions.[12][13][14]
- Searches for New Gauge Bosons and Extra Dimensions: Many theories beyond the Standard Model predict new force carriers (W' and Z' bosons) or additional spatial dimensions. The SPPC will be able to search for these phenomena at mass scales roughly an order of magnitude higher than the LHC.[15][16][17][18]
- Precision Higgs Physics at High Energy: The SPPC will also contribute to Higgs physics by enabling the study of rare Higgs production and decay modes and by providing a precise measurement of the Higgs self-coupling.

Experimental Methodology: Search for Supersymmetry

The search for SUSY particles at the SPPC will involve a sophisticated data analysis pipeline:

- Signal and Background Modeling: Monte Carlo simulations will be used to generate large samples of both the expected SUSY signal events and the various Standard Model background processes.
- Detector Simulation and Reconstruction: As with the CEPC, a detailed detector simulation will be crucial for understanding the detector's response to the complex events produced at the SPPC.
- Event Selection: A set of stringent selection criteria will be applied to isolate potential SUSY events. These criteria typically include:
  - High missing transverse energy (MET), a key signature of the escaping lightest supersymmetric particles.
  - A high multiplicity of jets, often including jets originating from bottom quarks (b-jets).
  - The presence of one or more leptons (electrons or muons).
- Background Estimation: The contribution from Standard Model background processes will be carefully estimated using a combination of simulation and data-driven techniques.
- Statistical Analysis: A statistical analysis will be performed to search for an excess of events in the data over the expected background. A significant excess would be evidence for new physics, such as supersymmetry.
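The selection and statistical steps above can be sketched in a few lines. MET is computed as the magnitude of the negative vector sum of the visible transverse momenta. Events are then kept if they pass MET, jet, b-jet, and lepton requirements. Finally, the expected significance of a cut-and-count excess is estimated with the common Asimov formula Z = √(2[(s + b) ln(1 + s/b) − s]), which ignores systematic uncertainties. The `Event` structure and all cut values are hypothetical and are not taken from any SPPC study.

```python
import math
from dataclasses import dataclass

@dataclass
class Event:
    """Minimal reconstructed-event summary (hypothetical structure for illustration)."""
    visible_px: list[float]  # x-momenta of reconstructed visible objects, GeV
    visible_py: list[float]  # y-momenta of reconstructed visible objects, GeV
    n_jets: int
    n_bjets: int
    n_leptons: int

def missing_et(event: Event) -> float:
    """MET: magnitude of the negative vector sum of visible transverse momenta."""
    return math.hypot(-sum(event.visible_px), -sum(event.visible_py))

# Hypothetical cut values, for illustration only
MET_MIN_GEV = 1000.0
N_JETS_MIN = 4
N_BJETS_MIN = 1
N_LEPTONS_MIN = 1

def passes_selection(event: Event) -> bool:
    """Apply the MET, jet, b-jet, and lepton requirements."""
    return (
        missing_et(event) > MET_MIN_GEV
        and event.n_jets >= N_JETS_MIN
        and event.n_bjets >= N_BJETS_MIN
        and event.n_leptons >= N_LEPTONS_MIN
    )

def asimov_significance(s: float, b: float) -> float:
    """Median expected discovery significance for s signal on b background (no systematics)."""
    return math.sqrt(2.0 * ((s + b) * math.log(1.0 + s / b) - s))

event = Event(visible_px=[-800.0, -400.0], visible_py=[300.0, -300.0],
              n_jets=5, n_bjets=2, n_leptons=1)
print(missing_et(event), passes_selection(event))
print(f"{asimov_significance(10.0, 100.0):.3f}")
```

For s much smaller than b, the Asimov formula reduces to the familiar s/√b. At larger signal-to-background ratios it avoids overstating the significance.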

Particle Decay Chains

Understanding the decay patterns of known and hypothetical particles is crucial for designing search strategies. The decay chains most relevant to the SPPC physics program are outlined below.

Higgs Boson Decay Channels
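The relative importance of the Higgs decay channels can be illustrated by multiplying approximate Standard Model branching ratios for a 125 GeV Higgs boson by the ~2.6 million Higgs bosons in the CEPC baseline (Table 1). The branching ratios below are rounded values of the kind compiled by the LHC Higgs Cross Section Working Group and should be treated as approximate. The counts are produced decays, before any selection efficiency or detector acceptance.

```python
# Approximate SM branching ratios for m_H = 125 GeV (rounded; minor modes omitted)
HIGGS_BR = {
    "b bbar": 0.582,
    "W W*": 0.214,
    "g g": 0.0819,
    "tau tau": 0.0627,
    "c cbar": 0.0289,
    "Z Z*": 0.0262,
    "gamma gamma": 0.00227,
    "Z gamma": 0.00153,
    "mu mu": 0.000218,
}
N_HIGGS = 2.6e6  # CEPC baseline, 10 years (Table 1)

def expected_decays(n_higgs: float, branching_ratios: dict[str, float]) -> dict[str, float]:
    """Produced decays per channel, before selection efficiency or acceptance."""
    return {channel: n_higgs * br for channel, br in branching_ratios.items()}

yields = expected_decays(N_HIGGS, HIGGS_BR)
for channel, n in sorted(yields.items(), key=lambda kv: -kv[1]):
    print(f"H -> {channel:<12} {n:>12,.0f}")
```

Even the rare diphoton channel yields several thousand decays under these assumptions, while H → μμ yields only a few hundred.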

A Representative Supersymmetric Particle Decay Chain

In many SUSY models, the gluino (the superpartner of the gluon) is produced in pairs and decays through a cascade to lighter supersymmetric particles, ultimately producing Standard Model particles and the lightest supersymmetric particle (LSP), which is a dark matter candidate.
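A cascade like this can be represented as a decay table and followed recursively to obtain the final state. The sketch below uses a hypothetical, simplified chain in which each particle has a single decay mode: g̃ → q q̄ χ̃₂⁰, then χ̃₂⁰ → Z χ̃₁⁰, then Z → ℓ+ℓ-. It shows how pair-produced gluinos yield jets, leptons, and two invisible LSPs. Real SUSY spectra have many competing branches.

```python
from collections import Counter

# Hypothetical simplified decay table: one decay mode per particle (100% branching ratio)
DECAYS = {
    "gluino": ["q", "qbar", "neutralino2"],
    "neutralino2": ["Z", "neutralino1"],
    "Z": ["l+", "l-"],
}
INVISIBLE = {"neutralino1"}  # the LSP escapes the detector and produces missing energy

def final_state(particle: str) -> list[str]:
    """Recursively follow the decay table until only stable particles remain."""
    if particle not in DECAYS:
        return [particle]
    products: list[str] = []
    for daughter in DECAYS[particle]:
        products.extend(final_state(daughter))
    return products

# Gluinos are produced in pairs
event = final_state("gluino") + final_state("gluino")
counts = Counter(event)
n_invisible = sum(n for p, n in counts.items() if p in INVISIBLE)
print(dict(counts), "invisible:", n_invisible)
```

Each event in this toy chain contains four quarks (jets), four leptons, and two LSPs. This is the jets-plus-leptons-plus-MET pattern that the event selection targets.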

Conclusion

The Super Proton-Proton Collider, in conjunction with its predecessor the Circular Electron-Positron Collider, represents a comprehensive and ambitious program to address the most pressing questions in fundamental physics. Through a combination of high-precision measurements and high-energy searches, the CEPC-SPPC project has the potential to revolutionize our understanding of the universe. The CEPC accelerator design has progressed to the technical design report stage, while the SPPC remains at the conceptual stage and depends on the development of high-field superconducting dipole magnets; the physics case for both is compelling.

References

- 1. slac.stanford.edu [slac.stanford.edu]

- 2. researchgate.net [researchgate.net]

- 3. slac.stanford.edu [slac.stanford.edu]

- 4. [2203.09451] Snowmass2021 White Paper AF3-CEPC [arxiv.org]

- 5. ias.ust.hk [ias.ust.hk]

- 6. proceedings.jacow.org [proceedings.jacow.org]

- 7. cepc.ihep.ac.cn [cepc.ihep.ac.cn]

- 8. Software | CEPC Software [cepcsoft.ihep.ac.cn]

- 9. [2404.16922] Searches for Supersymmetry (SUSY) at the Large Hadron Collider [arxiv.org]

- 10. ATLAS strengthens its search for supersymmetry | ATLAS Experiment at CERN [atlas.cern]

- 11. Seeking Susy | CMS Experiment [cms.cern]

- 12. Scientists propose a new way to search for dark matter [www6.slac.stanford.edu]

- 13. [1211.7090] Galactic Searches for Dark Matter [arxiv.org]

- 14. sciencedaily.com [sciencedaily.com]

- 15. ATLAS and CMS search for new gauge bosons – CERN Courier [cerncourier.com]

- 16. pdg.lbl.gov [pdg.lbl.gov]

- 17. moriond.in2p3.fr [moriond.in2p3.fr]

- 18. [PDF] Prospects for discovering new gauge bosons , extra dimensions and contact interaction at the LHC | Semantic Scholar [semanticscholar.org]

Physics Beyond the Standard Model at the Super Proton-Proton Collider: A Technical Guide

Abstract: The Standard Model of particle physics, despite its remarkable success, leaves several fundamental questions unanswered, pointing towards the existence of new physics. The proposed Super Proton-Proton Collider (SPPC) is a next-generation hadron collider designed to explore the energy frontier and directly probe physics beyond the Standard Model (BSM). With a design center-of-mass energy of approximately 125 TeV, the SPPC will provide an unprecedented opportunity to search for new particles and interactions. This technical guide provides an in-depth overview of the core BSM physics program at the SPPC, summarizing key quantitative projections, outlining experimental strategies, and describing the logical workflows for discovery.

Introduction: The Imperative for Physics Beyond the Standard Model

The Standard Model (SM) of particle physics provides a remarkably successful description of the fundamental particles and their interactions. However, it fails to address several profound questions, including the nature of dark matter, the origin of neutrino masses, the hierarchy problem, and the matter-antimatter asymmetry in the universe. These unresolved puzzles strongly suggest that the SM is an effective theory that will be superseded by a more fundamental description of nature at higher energy scales.

The Super Proton-Proton Collider (SPPC) is a proposed circular proton-proton collider with a circumference of 100 km, designed to reach a center-of-mass energy of around 125 TeV.[1][2] As the second stage of the CEPC-SPPC project, which begins with the Circular Electron-Positron Collider (CEPC), the SPPC is poised to be a discovery machine at the energy frontier, directly searching for new particles and phenomena that could revolutionize our understanding of the fundamental laws of physics.[1][3] This document outlines the key areas of BSM physics that the SPPC will investigate, presenting the projected sensitivity and experimental approaches.

Key Accelerator and Detector Parameters

The physics reach of the SPPC is determined by its key design parameters, which have evolved through various conceptual design stages. The ultimate goal is to achieve a significant leap in energy and luminosity compared to the Large Hadron Collider (LHC).

| Parameter | Pre-CDR Value | CDR Value | Ultimate Goal | Reference |
|---|---|---|---|---|
| Circumference | 54.4 km | 100 km | 100 km | [2][4] |
| Center-of-Mass Energy | 70.6 TeV | 75 TeV | 125-150 TeV | [2][4] |
| Dipole Magnetic Field | 20 T | 12 T | 20-24 T | [2][4] |
| Nominal Luminosity per IP | 1.2 x 10^35 cm⁻²s⁻¹ | 1.0 x 10^35 cm⁻²s⁻¹ | - | [2][4] |
| Injection Energy | 2.1 TeV | 2.1 TeV | 4.2 TeV | [2][4] |
| Number of Interaction Points | 2 | 2 | 2 | [2][4] |
| Bunch Separation | 25 ns | 25 ns | - | [2][4] |
| Stored Energy per Beam | - | - | 9.1 GJ | [5] |
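The luminosity figures translate into event counts through N = σ × ∫L dt, using 1 fb⁻¹ = 10³⁹ cm⁻². The sketch below assumes 10⁷ seconds of running at the CDR nominal 1.0 x 10^35 cm⁻²s⁻¹ per interaction point, a common rule-of-thumb "physics year" that overestimates real delivered luminosity. It also uses a hypothetical 1 fb signal cross-section.

```python
CM2_PER_INVERSE_FB = 1e39  # 1 fb^-1 corresponds to 1e39 cm^-2

def integrated_luminosity_fb(lumi_cm2_s: float, seconds: float) -> float:
    """Integrated luminosity in fb^-1 for a constant instantaneous luminosity."""
    return lumi_cm2_s * seconds / CM2_PER_INVERSE_FB

def expected_events(cross_section_fb: float, int_lumi_fb: float) -> float:
    """N = sigma x integrated luminosity, before efficiencies and acceptance."""
    return cross_section_fb * int_lumi_fb

# Assumption: 1e7 s at the CDR nominal luminosity per IP
lumi_per_year = integrated_luminosity_fb(1.0e35, 1.0e7)
n_signal = expected_events(1.0, lumi_per_year)  # hypothetical 1 fb signal process
print(f"{lumi_per_year:.0f} fb^-1 per year, {n_signal:.0f} signal events")
```

Under these assumptions, each interaction point would collect about 1 ab⁻¹ per year. Even a 1 fb process, typical of very heavy new particles, would then produce on the order of a thousand events before selection.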

The SPPC detectors will need to be designed for a high-energy, high-luminosity environment. Key requirements include excellent momentum and energy resolution for charged particles and photons, efficient identification of heavy-flavor jets (b-tagging), and robust tracking and calorimetry to reconstruct the complex events produced in 125 TeV collisions. The ability to identify long-lived particles and measure missing transverse energy will be crucial for many BSM searches.[6]

Core Physics Program: Probing the Unknown

The SPPC's primary mission is to explore the vast landscape of BSM physics. The following sections detail the key theoretical frameworks and the experimental strategies to test them.

Supersymmetry (SUSY)

Supersymmetry is a well-motivated extension of the Standard Model that posits a symmetry between fermions and bosons, introducing a superpartner for each SM particle. SUSY can provide a solution to the hierarchy problem, offer a natural dark matter candidate (the lightest supersymmetric particle, or LSP), and lead to the unification of gauge couplings at high energies.

Experimental Strategy: The SPPC will search for the production of supersymmetric particles, such as squarks (superpartners of quarks) and gluinos (superpartners of gluons), which are expected to be produced with large cross-sections if their masses are in the TeV range. These heavy particles would then decay through a cascade of lighter SUSY particles, ultimately producing SM particles and the stable LSP, which would escape detection and produce a large missing transverse energy signature. Searches will focus on final states with multiple jets, leptons, and significant missing transverse energy.

Projected Sensitivity: While specific projections for the SPPC are still under development, studies for a generic 100 TeV pp collider indicate a significant increase in discovery reach compared to the LHC.

| BSM Scenario | Particle | Projected Mass Reach (100 TeV pp collider) |
|---|---|---|
| Supersymmetry | Gluino | ~10-15 TeV |
| Supersymmetry | Squark | ~8-10 TeV |
| Supersymmetry | Wino (LSP) | up to ~3 TeV |

Note: These are indicative values for a generic 100 TeV collider; the final sensitivity of the SPPC will depend on the ultimate machine and detector performance.

New Gauge Bosons (Z' and W')

Many BSM theories predict the existence of new gauge bosons, often denoted as Z' and W', which are heavier cousins of the SM Z and W bosons. These particles could arise from extended gauge symmetries, such as new U(1) groups. The discovery of a Z' or W' boson would be a clear sign of new physics and would provide insights into the underlying symmetry structure of a more fundamental theory.

Experimental Strategy: The primary search channel for a Z' boson is the "bump hunt" in the dilepton (electron-positron or muon-antimuon) or dijet invariant mass spectrum. A new, heavy particle would appear as a resonance (a "bump") on top of the smoothly falling background from SM processes. Similarly, a W' boson could be searched for in the lepton-plus-missing-energy final state.
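The bump hunt starts from the invariant mass of the lepton (or jet) pair, m² = (E₁ + E₂)² − |p₁ + p₂|². A resonance would show up as an excess concentrated in a few bins of the resulting histogram. The sketch below computes the invariant mass from (E, px, py, pz) four-vectors in GeV and fills a fixed-width histogram. The example momenta and histogram range are hypothetical.

```python
import math

def invariant_mass(p1: tuple, p2: tuple) -> float:
    """m^2 = (E1 + E2)^2 - |p1 + p2|^2 for four-vectors (E, px, py, pz) in GeV."""
    e = p1[0] + p2[0]
    px, py, pz = (p1[i] + p2[i] for i in (1, 2, 3))
    return math.sqrt(max(e**2 - (px**2 + py**2 + pz**2), 0.0))

def histogram(values: list[float], lo: float, hi: float, n_bins: int) -> list[int]:
    """Fixed-width histogram; values outside [lo, hi) are dropped."""
    width = (hi - lo) / n_bins
    counts = [0] * n_bins
    for v in values:
        if lo <= v < hi:
            counts[min(int((v - lo) / width), n_bins - 1)] += 1  # guard against rounding at hi
    return counts

# Two back-to-back massless leptons of 1 TeV each form a 2 TeV system
m = invariant_mass((1000.0, 0.0, 0.0, 1000.0), (1000.0, 0.0, 0.0, -1000.0))
print(m)
print(histogram([100.0, 250.0, 260.0, 990.0], 0.0, 1000.0, 4))
```

In practice, the smoothly falling background is modeled with a fit function or simulation. A candidate signal is then tested as a localized excess over that model.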

Projected Sensitivity: The SPPC will be able to probe Z' and W' bosons with masses far beyond the reach of the LHC.

| BSM Scenario | Particle | Projected Mass Reach (100 TeV pp collider) |
|---|---|---|
| Extended Gauge Symmetries | Z' Boson | up to ~40 TeV |
| Extended Gauge Symmetries | W' Boson | up to ~25 TeV |

Note: These are indicative values for a generic 100 TeV collider; the final sensitivity of the SPPC will depend on the ultimate machine and detector performance.

Composite Higgs Models

Composite Higgs models propose that the Higgs boson is not a fundamental particle but a composite state, bound together from new, strongly interacting fermions. This framework offers a natural way to address the hierarchy problem. A key prediction of these models is the existence of new, heavy particles called "top partners," which are fermionic partners of the top quark.

Experimental Strategy: The search for composite Higgs models at the SPPC will focus on the direct production of top partners. These particles are expected to decay predominantly to a top quark and a W, Z, or Higgs boson. Searches will target final states with multiple top quarks, heavy bosons, and potentially high jet multiplicity. Precision measurements of Higgs boson couplings can also provide indirect evidence for compositeness, as these models predict deviations from the SM predictions.

Projected Sensitivity: The SPPC will have a significant discovery potential for top partners with masses in the multi-TeV range.

| BSM Scenario | Particle | Projected Mass Reach (100 TeV pp collider) |
|---|---|---|
| Composite Higgs | Top Partner (T) | up to ~10-12 TeV |

Note: These are indicative values for a generic 100 TeV collider; the final sensitivity of the SPPC will depend on the ultimate machine and detector performance.

References

- 1. [2203.07987] Study Overview for Super Proton-Proton Collider [arxiv.org]

- 2. indico.cern.ch [indico.cern.ch]

- 3. researchgate.net [researchgate.net]

- 4. indico.ihep.ac.cn [indico.ihep.ac.cn]

- 5. Frontiers | Design Concept for a Future Super Proton-Proton Collider [frontiersin.org]

- 6. indico.cern.ch [indico.cern.ch]

Advancing Precision Oncology: A Technical Overview of the Key Design Goals of Sharing Progress in Cancer Care (SPCC) Projects

A Technical Guide for Researchers, Scientists, and Drug Development Professionals

Sharing Progress in Cancer Care (SPCC) is an independent, non-profit organization dedicated to accelerating advancements in oncology through education and the dissemination of innovative practices. The core of SPCC's mission is to bridge the gap between cutting-edge research and clinical application, ultimately improving patient outcomes. This technical guide delves into the key design goals of SPCC's flagship projects, with a focus on personalized medicine, predictive diagnostics, and supportive care. We will explore the methodologies, quantitative data, and underlying biological pathways that form the foundation of their initiatives.

Core Principle: Personalizing Treatment Through Advanced Diagnostics in HER2-Positive Breast Cancer

A primary design goal of SPCC is to foster the adoption of personalized medicine by promoting the use of advanced diagnostic tools to tailor treatment strategies. This is prominently exemplified by its support for, and dissemination of information about, the DEFINITIVE (Diagnostic HER2DX-guided Treatment for patIents wIth Early-stage HER2-positive Breast Cancer) trial.

The central aim of this project is to move beyond a "one-size-fits-all" approach to HER2-positive breast cancer, which, despite being a well-defined subtype, exhibits significant clinical and biological heterogeneity. The project's design is centered on validating the clinical utility of the HER2DX® genomic assay to guide therapeutic decisions, with the goal of improving quality of life by de-escalating treatment for low-risk patients and identifying high-risk patients who may benefit from escalated therapeutic strategies.

Experimental Protocols

HER2DX® Genomic Assay Methodology

The HER2DX® test is a sophisticated in vitro diagnostic assay that provides a multi-faceted view of a patient's tumor biology. The protocol is designed to be robust and reproducible, utilizing standard clinical samples.

- Sample Collection and Preparation: The assay is performed on formalin-fixed, paraffin-embedded (FFPE) breast cancer tissue obtained from a core biopsy before treatment, so the tumor is profiled before any therapy has been given.
- RNA Extraction: Total RNA is extracted from the FFPE tissue sections using standardized protocols optimized to recover RNA of sufficient quality from the preserved tissue.
- Gene Expression Quantification: The expression levels of a panel of 27 specific genes are measured. While the precise platform can vary, this is typically done with a sensitive, targeted method such as the nCounter system (NanoString Technologies), which counts individual mRNA molecules digitally using hybridization probes rather than amplification or sequencing.
- Algorithmic Analysis: The quantified gene expression data are integrated with two key clinical features (tumor size and nodal status) in a proprietary, supervised learning algorithm. This algorithm generates three distinct scores: a risk-of-relapse score, a pathological complete response (pCR) likelihood score, and an ERBB2 gene expression score.

Data Presentation: Efficacy of the HER2DX® Assay

The HER2DX® assay has been evaluated in multiple studies, yielding quantitative data that underscores its predictive power. The tables below summarize key findings from correlative analyses of clinical trials.

| HER2DX® pCR Score Category | pCR Rate (HP-based therapy) | Odds Ratio (pCR-high vs. pCR-low) |
|---|---|---|
| High | 50.4% | 3.27 |
| Medium | 35.8% | - |
| Low | 23.2% | - |

Table 1: Pathological complete response (pCR) rates in patients with HER2+ early breast cancer treated with trastuzumab and pertuzumab (HP)-based therapy, stratified by the HER2DX® pCR score.[1]

| HER2DX® pCR Score Category (ESD Patients) | pCR Rate (Letrozole + Trastuzumab/Pertuzumab) |
|---|---|
| High | 100.0% |
| Medium | 46.2% |
| Low | 7.7% |

Table 2: pCR rates in endocrine-sensitive disease (ESD) patients treated with a chemotherapy-free regimen, demonstrating the assay's ability to predict response to de-escalated therapy.[2][3]

Signaling Pathways and Logical Relationships

The 27 genes analyzed by the HER2DX® assay are not randomly selected; they represent four crucial biological processes that dictate tumor behavior and response to therapy. Understanding these pathways is key to interpreting the assay's results.

- HER2 Amplicon Expression: This signature directly measures the expression of ERBB2 and other genes in the HER2 amplicon, such as GRB7. The HER2 signaling pathway is a critical driver of cell proliferation and survival in these tumors.

- Luminal Differentiation: This signature includes genes like ESR1 (Estrogen Receptor 1) and BCL2. It reflects the tumor's reliance on estrogen-driven pathways. There is often an inverse relationship between the HER2 and ER signaling pathways; tumors with high luminal signaling may be less dependent on HER2 signaling for their growth.[3]

- Tumor Cell Proliferation: Genes in this signature are associated with cell cycle progression. High expression indicates a rapidly dividing, aggressive tumor.

- Immune Infiltration: The presence of immune cells, as indicated by this gene signature, is often associated with a better prognosis and a higher likelihood of response to HER2-targeted therapies, which can induce an anti-tumor immune response.

The logical workflow of the DEFINITIVE trial is designed to rigorously assess the clinical impact of using the HER2DX® assay to guide treatment decisions.

Enhancing Supportive Care: Evidence-Based Approaches in Geriatric Oncology

Another key design goal for SPCC is to improve the quality of life and treatment tolerance of cancer patients through better supportive care. This is particularly critical in geriatric oncology, where patients are more vulnerable to the side effects of treatment. SPCC's "Project on the Impact of Nutritional Status in Geriatric Oncology" is a prime example of this focus.

The project aims to synthesize expert consensus and to promote the integration of systematic nutritional screening and assessment into routine oncology practice for older adults. Malnutrition is a prevalent and serious comorbidity in this population: it affects an estimated 30% to 80% of older cancer patients and is linked to increased frailty, poor treatment outcomes, and reduced survival.[4]

Experimental Protocols

Nutritional Status Assessment

The project advocates for a two-step process: initial screening followed by a comprehensive assessment for those identified as at-risk. While a single, universal protocol is not mandated, the project highlights several validated tools.

- Screening: Simple, rapid tools are recommended for initial screening in a clinical setting. Examples include the Geriatric 8 (G8) questionnaire and the Mini Nutritional Assessment Short Form (MNA-SF).

- Assessment: For patients who screen positive for malnutrition risk, a more detailed assessment is performed. This can involve the full Mini Nutritional Assessment (MNA), the Patient-Generated Subjective Global Assessment (PG-SGA), or the Geriatric Nutritional Risk Index (GNRI), which combines serum albumin with the ratio of actual to ideal body weight.
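The GNRI named in the assessment step is a closed-form score, so it can be sketched directly. The version below assumes the commonly published formulation (1.489 × albumin in g/L + 41.7 × actual/ideal weight, with ideal weight from the Lorentz equation and the weight ratio capped at 1) and the usual risk bands (< 82, 82 to < 92, 92 to 98, > 98). The function names are hypothetical, and the source does not state which GNRI variant the SPCC project recommends.

```python
def ideal_weight_lorentz(height_cm: float, sex: str) -> float:
    """Ideal body weight in kg from the Lorentz equation (assumed GNRI variant)."""
    if sex == "male":
        return height_cm - 100 - (height_cm - 150) / 4
    if sex == "female":
        return height_cm - 100 - (height_cm - 150) / 2.5
    raise ValueError("sex must be 'male' or 'female'")


def gnri(albumin_g_per_l: float, weight_kg: float, height_cm: float, sex: str) -> float:
    """GNRI = 1.489 * albumin (g/L) + 41.7 * (actual weight / ideal weight)."""
    weight_ratio = weight_kg / ideal_weight_lorentz(height_cm, sex)
    # A weight above the ideal weight is conventionally capped at a ratio of 1.
    weight_ratio = min(weight_ratio, 1.0)
    return 1.489 * albumin_g_per_l + 41.7 * weight_ratio


def gnri_risk_category(score: float) -> str:
    """Map a GNRI score to the commonly used nutrition-related risk bands."""
    if score < 82:
        return "major risk"
    if score < 92:
        return "moderate risk"
    if score <= 98:
        return "low risk"
    return "no risk"


# Hypothetical patient: a 170 cm man weighing 58.5 kg with serum albumin of 35 g/L.
score = gnri(albumin_g_per_l=35.0, weight_kg=58.5, height_cm=170.0, sex="male")
print(round(score, 1), gnri_risk_category(score))
```

For this hypothetical patient the ideal weight is 65 kg, the score is about 89.6, and the patient falls in the moderate-risk band.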

Data Presentation: Prognostic Value of Nutritional Screening Tools

Research has validated the independent prognostic value of various nutritional tools in older adults with cancer. The following table compares the performance of several tools in predicting 1-year mortality.

| Nutritional Assessment Tool | Hazard Ratio (for mortality) | C-index (Discriminant Ability) |
|---|---|---|
| Body Mass Index (BMI) | Varies by category | 0.748 |
| Weight Loss (WL) > 10% | Significant increase | 0.752 |
| Mini Nutritional Assessment (MNA) | Progressive increase with risk | 0.761 |
| Geriatric Nutritional Risk Index (GNRI) | Progressive increase with risk | 0.761 |
| Glasgow Prognostic Score (GPS) | Progressive increase with risk | 0.762 |

Table 3: Comparative prognostic performance of various nutrition-related assessment tools in predicting 1-year mortality in older patients with cancer, adjusted for other prognostic factors.[5]
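The C-index column in Table 3 measures how well a score ranks patients by survival time: 0.5 is random ranking and 1.0 is perfect ranking. A minimal sketch of Harrell's concordance index follows. It is a simplified version (pairs with tied follow-up times are skipped) and is not the specific software or adjustment model used in the cited study; the patient data are hypothetical.

```python
from itertools import combinations
from typing import Sequence


def harrell_c_index(times: Sequence[float], events: Sequence[bool], risk: Sequence[float]) -> float:
    """Simplified Harrell's C for right-censored data (higher risk = earlier expected death)."""
    concordant = 0.0
    comparable = 0
    for i, j in combinations(range(len(times)), 2):
        if times[i] == times[j]:
            continue  # simplification: pairs with tied follow-up times are skipped
        # `first` is the patient with the shorter follow-up time.
        first, second = (i, j) if times[i] < times[j] else (j, i)
        if not events[first]:
            continue  # censored before the other patient's time: true ordering unknown
        comparable += 1
        if risk[first] > risk[second]:
            concordant += 1.0
        elif risk[first] == risk[second]:
            concordant += 0.5  # tied predictions count as half-concordant
    if comparable == 0:
        raise ValueError("no comparable pairs")
    return concordant / comparable


# Hypothetical follow-up (months), death indicator, and nutritional risk score.
times = [2, 4, 6, 8]
events = [True, True, False, True]
risk = [0.9, 0.6, 0.7, 0.2]
print(harrell_c_index(times, events, risk))  # prints 0.8
```

Here four of the five comparable pairs are ranked correctly; the pair involving the censored patient at 6 months as the earlier time is excluded.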

Future Directions: Personalized Diagnostics in Prostate Cancer

Building on the principles of personalized medicine, SPCC is also engaged in projects aimed at "Improving quality in prostate cancer care through personalised diagnostic testing." This initiative is designed to address the significant challenge of overdiagnosis and overtreatment in prostate cancer. The goal is to promote the use of diagnostic tests that can more accurately stratify patients based on their risk of developing clinically significant disease, thereby guiding decisions about the necessity and intensity of interventions like biopsies and active treatment.

While specific SPCC-funded trials with published quantitative data are still emerging in this area, the design goal is to support the integration of tools that combine clinical data with biomarker information. This includes advanced imaging techniques like multi-parametric MRI (mpMRI) and various molecular diagnostic tests. The logical framework for such an approach is to create a more personalized and less invasive diagnostic pathway.

Conclusion

The key design goals of Sharing Progress in Cancer Care's projects are deeply rooted in the principles of evidence-based medicine, personalization, and a holistic approach to patient care. By championing the integration of advanced genomic assays like HER2DX®, promoting systematic supportive care assessments in vulnerable populations, and advocating for more precise diagnostic pathways in diseases like prostate cancer, SPCC is actively shaping a future where cancer treatment is more effective, less toxic, and tailored to the individual needs of each patient. The methodologies and data presented herein provide a technical foundation for understanding the scientific rigor and forward-thinking vision that drive these crucial initiatives.

References

- 1. researchgate.net [researchgate.net]

- 2. Application and challenge of HER2DX genomic assay in HER2+ breast cancer treatment - PMC [pmc.ncbi.nlm.nih.gov]

- 3. HER2DX genomic test in HER2-positive/hormone receptor-positive breast cancer treated with neoadjuvant trastuzumab and pertuzumab: A correlative analysis from the PerELISA trial - PMC [pmc.ncbi.nlm.nih.gov]

- 4. Nutritional Challenges in Older Cancer Patients: A Narrative Review of Assessment Tools and Management Strategies - PubMed [pubmed.ncbi.nlm.nih.gov]

- 5. Comparison of the prognostic value of eight nutrition-related tools in older patients with cancer: A prospective study - PubMed [pubmed.ncbi.nlm.nih.gov]

A Technical Guide to New Particle Discovery at the Super Proton-Proton Collider (SPPC)


Executive Summary

The Super Proton-Proton Collider (SPPC) represents a major leap in high-energy physics, intended to search for the next generation of fundamental particles and forces. With a planned center-of-mass energy of up to 125 TeV, the SPPC would provide an unprecedented window into the electroweak scale and beyond.[1][2] This technical guide provides an overview of the core methodologies and quantitative projections for the discovery of new particles at the SPPC, with a focus on Higgs boson self-coupling, supersymmetry, and dark matter.

The Super Proton-Proton Collider: A New Frontier

The SPPC is a proposed hadron collider with a circumference of 100 kilometers, designed to succeed the Large Hadron Collider (LHC).[1][2] It is the second phase of the CEPC-SPPC project, whose first phase is the Circular Electron-Positron Collider (CEPC).[1][2] The primary objective of the SPPC is to explore physics at the energy frontier, directly probing for new particles and phenomena beyond the Standard Model.

Key Design and Performance Parameters

The SPPC is envisioned to be built in stages, with an initial center-of-mass energy of 75 TeV and an ultimate target of 125 TeV.[1][2] The design relies on high-field superconducting magnets, a key technological challenge and an area of ongoing research and development.[2]

| Parameter | SPPC (Phase 1) | SPPC (Ultimate) |
|---|---|---|
| Center-of-Mass Energy (√s) | 75 TeV | 125 TeV |
| Circumference | 100 km | 100 km |
| Peak Luminosity per IP | 1.0 × 10³⁵ cm⁻²s⁻¹ | > 1.0 × 10³⁵ cm⁻²s⁻¹ |
| Integrated Luminosity (10-15 years) | ~30 ab⁻¹ | > 30 ab⁻¹ |
| Dipole Magnetic Field | 12 T | 20 T |
| Number of Interaction Points (IPs) | 2 | 2 |

Table 1: Key design parameters of the Super Proton-Proton Collider.[1][2]

Probing the Higgs Sector: The Higgs Self-Coupling

A primary scientific goal of the SPPC is the precise measurement of the Higgs boson's properties, particularly its self-coupling. This measurement directly probes the shape of the Higgs potential, which is fundamental to understanding the mechanism of electroweak symmetry breaking.[1][3][4] A deviation from the Standard Model prediction for the Higgs self-coupling would be a clear indication of new physics.

Experimental Protocol: Measuring the Trilinear Higgs Self-Coupling

The most direct way to measure the trilinear Higgs self-coupling is through the production of Higgs boson pairs (di-Higgs production). At the SPPC, as at the LHC, the dominant production mode is gluon-gluon fusion.

Experimental Steps:

- Event Selection: Identify collision events with signatures corresponding to the decay of two Higgs bosons. Promising decay channels include:
  - bbγγ: One Higgs decays to a pair of bottom quarks, and the other to a pair of photons. This channel offers a clean signature with good mass resolution for the diphoton system.
  - bbττ: One Higgs decays to bottom quarks and the other to a pair of tau leptons.
  - 4b: Both Higgs bosons decay to bottom quarks, providing the largest branching ratio but suffering from a large quantum chromodynamics (QCD) background.

- Background Rejection: Apply stringent selection criteria to suppress the large backgrounds from Standard Model processes. This involves:
  - b-tagging: Identifying jets originating from bottom quarks.
  - Photon Identification: Distinguishing prompt photons from those produced in jet fragmentation.
  - Kinematic Cuts: Applying cuts on the transverse momentum (pT), pseudorapidity (η), and angular separation of the final-state particles.

- Signal Extraction: Perform a statistical analysis of the invariant mass distributions of the di-Higgs system to extract the signal yield above the background.

- Coupling Measurement: The measured di-Higgs production cross-section is then used to constrain the trilinear Higgs self-coupling. This works because, in gluon fusion, the diagram containing the trilinear Higgs vertex interferes with diagrams that do not contain it, so the cross-section changes with the value of the coupling.
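The selection and signal-extraction steps above rest on two kinematic building blocks: the invariant mass of a set of reconstructed objects and their angular separation ΔR = √(Δη² + Δφ²). The sketch below builds four-vectors from (pT, η, φ, m) and applies a hypothetical bbγγ selection. The thresholds (30 GeV, ΔR > 0.4, a 120-130 GeV diphoton window) and the function names are illustrative assumptions rather than SPPC analysis cuts, and b-tagging is assumed to have been done upstream.

```python
import math
from dataclasses import dataclass


@dataclass
class FourVector:
    e: float
    px: float
    py: float
    pz: float

    @classmethod
    def from_pt_eta_phi_m(cls, pt: float, eta: float, phi: float, m: float = 0.0) -> "FourVector":
        """Build a four-vector (GeV) from collider coordinates."""
        px, py, pz = pt * math.cos(phi), pt * math.sin(phi), pt * math.sinh(eta)
        return cls(math.sqrt(px**2 + py**2 + pz**2 + m**2), px, py, pz)

    def __add__(self, other: "FourVector") -> "FourVector":
        return FourVector(self.e + other.e, self.px + other.px, self.py + other.py, self.pz + other.pz)

    @property
    def mass(self) -> float:
        m2 = self.e**2 - self.px**2 - self.py**2 - self.pz**2
        return math.sqrt(max(m2, 0.0))  # clamp tiny negative values from rounding


def delta_r(eta1: float, phi1: float, eta2: float, phi2: float) -> float:
    """Angular separation, with the azimuthal difference wrapped into [-pi, pi)."""
    dphi = (phi1 - phi2 + math.pi) % (2 * math.pi) - math.pi
    return math.hypot(eta1 - eta2, dphi)


def passes_bbyy_selection(photons, bjets, min_pt=30.0, min_dr=0.4, window=(120.0, 130.0)) -> bool:
    """Hypothetical bbγγ selection; photons and bjets are lists of (pt, eta, phi) tuples."""
    if len(photons) != 2 or len(bjets) != 2:
        return False
    objects = photons + bjets
    if any(pt < min_pt for pt, _, _ in objects):
        return False
    for a in range(len(objects)):
        for b in range(a + 1, len(objects)):
            if delta_r(objects[a][1], objects[a][2], objects[b][1], objects[b][2]) < min_dr:
                return False  # overlapping objects are rejected
    diphoton = FourVector.from_pt_eta_phi_m(*photons[0]) + FourVector.from_pt_eta_phi_m(*photons[1])
    return window[0] <= diphoton.mass <= window[1]


photons = [(62.5, 0.0, 0.0), (62.5, 0.0, math.pi)]
bjets = [(60.0, 1.0, 1.5), (55.0, -1.0, -1.5)]
print(passes_bbyy_selection(photons, bjets))  # prints True
```

Two back-to-back 62.5 GeV photons at η = 0 reconstruct to a diphoton mass of 125 GeV, the Higgs boson mass, so this toy event lands inside the window.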

Projected Sensitivity

Simulation studies for a 100 TeV proton-proton collider indicate that a machine such as the SPPC could measure the Higgs self-coupling with a precision of several percent, a significant improvement over the roughly 50% expected at the HL-LHC.

| Collider | Integrated Luminosity | Decay Channel | Projected Precision on Higgs Self-Coupling (68% CL) |
|---|---|---|---|
| HL-LHC (14 TeV) | 3 ab⁻¹ | Combination | ~50% |
| 100 TeV pp collider (e.g., SPPC) | 30 ab⁻¹ | bbγγ | ~8% |
| 100 TeV pp collider (e.g., SPPC) | 30 ab⁻¹ | Combination | 4-8% |

Table 2: Projected precision for the measurement of the trilinear Higgs self-coupling at the HL-LHC and at a 100 TeV proton-proton collider such as the SPPC.[3][5][6]

Supersymmetry: A Solution to the Hierarchy Problem

Supersymmetry (SUSY) is a well-motivated extension of the Standard Model that postulates a symmetry between fermions and bosons. It provides a potential solution to the hierarchy problem and offers a natural candidate for dark matter. The SPPC's high energy will allow searches for supersymmetric particles (sparticles) over a wide mass range.

Experimental Protocol: Searching for Gluinos and Squarks

Gluinos (the superpartners of gluons) and squarks (the superpartners of quarks) are expected to be produced copiously at a hadron collider if their masses are within the collider's reach.

Experimental Steps:

- Signature Definition: Searches typically target final states with multiple high-pT jets and significant missing transverse energy (MET), which arises from the escape of the lightest supersymmetric particle (LSP), a stable, weakly interacting particle that is a prime dark matter candidate.

- Event Selection:
  - Select events with a high number of jets (e.g., ≥ 4).
  - Require large MET.
  - Veto events containing identified leptons (electrons or muons) to reduce backgrounds from W and Z boson decays.

- Background Suppression: The main backgrounds are top quark pair production (ttbar), W+jets, and Z+jets. These are suppressed by the high jet multiplicity and large MET requirements. Advanced techniques such as machine-learning classifiers can further improve signal-to-background discrimination.

- Signal Region Definition: Define signal regions using kinematic variables such as the effective mass (the scalar sum of the jet pT values and the MET) to enhance sensitivity to different SUSY models.

- Statistical Analysis: Compare the observed event yields in the signal regions with the predicted background rates. A significant excess of events would be evidence for new physics.
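The selection, signal-region, and statistical steps above can be sketched in a few lines. The code computes the effective mass as defined above, applies a hypothetical jets + MET preselection with a lepton veto, and estimates the median discovery significance of a counting experiment with the widely used Asimov formula Z = √(2[(s + b) ln(1 + s/b) − s]), assuming a perfectly known background. The thresholds and yields are illustrative assumptions, not SPPC projections.

```python
import math


def effective_mass(jet_pts, met):
    """m_eff: scalar sum of jet transverse momenta plus missing transverse energy (GeV)."""
    return sum(jet_pts) + met


def passes_susy_selection(jet_pts, met, n_leptons, min_jets=4, min_jet_pt=50.0, min_met=500.0):
    """Hypothetical jets + MET preselection with a lepton veto; thresholds are illustrative."""
    n_good_jets = sum(1 for pt in jet_pts if pt >= min_jet_pt)
    return n_good_jets >= min_jets and met >= min_met and n_leptons == 0


def asimov_significance(s, b):
    """Median discovery significance Z for s expected signal events over b background events."""
    if s <= 0 or b <= 0:
        raise ValueError("s and b must be positive")
    return math.sqrt(2.0 * ((s + b) * math.log1p(s / b) - s))


# Illustrative event and signal-region yields.
jets, met = [1200.0, 900.0, 400.0, 150.0], 1500.0
if passes_susy_selection(jets, met, n_leptons=0):
    print("m_eff =", effective_mass(jets, met), "GeV")
print("Z =", round(asimov_significance(s=20.0, b=10.0), 2))
```

For s much smaller than b the formula reduces to the familiar s/√b, which is why low-background, high-m_eff signal regions are so valuable for heavy sparticles.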

Projected Discovery Reach

The SPPC will significantly extend the discovery reach for supersymmetric particles compared to the LHC.

| Sparticle | LHC (14 TeV, 300 fb⁻¹) | SPPC (100 TeV, 3 ab⁻¹) |
|---|---|---|
| Gluino Mass | ~2.5 TeV | ~15 TeV |
| Squark Mass | ~2 TeV | ~10 TeV |

Table 3: Estimated discovery reach for gluinos and squarks at the LHC and SPPC.

Unveiling the Dark Sector: The Search for Dark Matter

The nature of dark matter is one of the most profound mysteries in modern physics. The SPPC will be a powerful tool in the search for Weakly Interacting Massive Particles (WIMPs), a leading class of dark matter candidates.

Experimental Protocol: Mono-X Searches

At a hadron collider, dark matter particles would be produced in pairs and, being weakly interacting, would escape detection, leading to a signature of large missing transverse energy. To trigger and reconstruct such events, the production of dark matter must be accompanied by a visible particle (X), such as a jet, photon, or W/Z boson, recoiling against the invisible dark matter particles.

Experimental Steps:

- Signature Selection:
  - Mono-jet/Mono-photon: Select events with a single high-pT jet or photon and large MET.
  - Mono-W/Z: Select events where a W or Z boson is produced in association with MET. The W and Z bosons are identified through their leptonic or hadronic decays.

- Background Rejection: The primary backgrounds are from Z(→νν)+jet/photon and W(→lν)+jet/photon production, where the neutrinos are invisible and the lepton from the W decay may not be identified. Careful background estimation using data-driven methods in control regions is crucial.

- Signal Extraction: The signal is extracted by analyzing the shape of the MET distribution. A WIMP signal would appear as a broad excess at high MET values.

- Model Interpretation: The results are interpreted in the context of various dark matter models, such as those with new mediators that couple the Standard Model particles to the dark sector.
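Every step above depends on MET, which is reconstructed as the negative vector sum of the visible transverse momenta. A minimal sketch, assuming MET is built only from the listed objects (real reconstructions also include leptons, photons, and soft unclustered energy), with a hypothetical mono-jet selection whose thresholds are illustrative rather than SPPC values:

```python
import math


def missing_transverse_momentum(visible):
    """Return (MET, phi_MET) from (pt, phi) pairs of the reconstructed visible objects.

    MET is the magnitude of the negative vector sum of the visible transverse momenta.
    """
    met_x = -sum(pt * math.cos(phi) for pt, phi in visible)
    met_y = -sum(pt * math.sin(phi) for pt, phi in visible)
    return math.hypot(met_x, met_y), math.atan2(met_y, met_x)


def is_monojet_candidate(jets, n_leptons, min_lead_pt=1000.0, min_met=1000.0, max_jets=2):
    """Hypothetical mono-jet selection on (pt, phi) jets with a lepton veto."""
    if not jets or n_leptons > 0 or len(jets) > max_jets:
        return False
    met, _ = missing_transverse_momentum(jets)
    lead_pt = max(pt for pt, _ in jets)
    return lead_pt >= min_lead_pt and met >= min_met


# A single 1.5 TeV jet recoiling against invisible particles.
print(is_monojet_candidate([(1500.0, 0.0)], n_leptons=0))
# Two balanced back-to-back jets: almost no MET, so the event is rejected.
print(is_monojet_candidate([(1500.0, 0.0), (1500.0, math.pi)], n_leptons=0))
```

The first event passes because the jet has nothing visible to balance it; the second fails because the two jets cancel each other in the transverse plane.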

Projected Sensitivity

The SPPC will have unprecedented sensitivity to WIMP-nucleon scattering cross-sections, complementing and extending the reach of direct detection experiments.

| Dark Matter Mass | Mediator Mass Reach (Simplified Model) |
|---|---|
| 100 GeV | Up to 10 TeV |
| 1 TeV | Up to 20 TeV |

Table 4: Estimated reach for dark matter mediator masses in a simplified model framework at the SPPC.

Conclusion

The Super Proton-Proton Collider stands as a beacon for the future of fundamental physics. Its unprecedented energy and luminosity will enable scientists to address some of the most pressing questions in the field, from the nature of the Higgs boson to the identity of dark matter. The experimental protocols and projected sensitivities outlined in this guide demonstrate the discovery potential of the SPPC. The data and insights gleaned from this next-generation collider could reshape our understanding of the fundamental laws of nature and catalyze unforeseen technological advances across various scientific disciplines.

References

- 1. [2004.03505] Measuring the Higgs self-coupling via Higgs-pair production at a 100 TeV p-p collider [arxiv.org]

- 2. ams02.org [ams02.org]

- 3. Physics at 100 TeV | EP News [ep-news.web.cern.ch]

- 4. Higgs self-coupling measurements at a 100 TeV hadron collider (Journal Article) | OSTI.GOV [osti.gov]

- 5. lup.lub.lu.se [lup.lub.lu.se]

- 6. researchgate.net [researchgate.net]


An In-depth Technical Guide to the Super Proton-Proton Collider (SPPC)

Introduction

The Super Proton-Proton Collider (SPPC) represents a major step forward in the exploration of high-energy physics. As the proposed successor to the Large Hadron Collider (LHC), the SPPC is designed to be a discovery machine, pushing the energy frontier to unprecedented levels.[1][2] It constitutes the second phase of the CEPC-SPPC project, a two-stage plan hosted by China to probe the fundamental structure of the universe.[1][3][4] The first stage, the Circular Electron-Positron Collider (CEPC), will serve as a "Higgs factory" for precision measurements, while the SPPC will search for new physics beyond the Standard Model.[1][3] Both colliders are planned to share the same 100-kilometer-circumference tunnel.[1][2] This guide provides a technical overview of the SPPC's core design, proposed experimental capabilities, and the logical workflows that will underpin its operation.

Core Design and Performance

The design of the SPPC is centered on achieving a center-of-mass collision energy significantly higher than that of the LHC. The project has outlined a phased approach, with the ultimate goal of reaching energies in the range of 125-150 TeV.[1][3] This objective depends critically on the development of high-field superconducting magnet technology.[1]

Key Design Parameters

The main design parameters for the SPPC have evolved through several conceptual stages. The current baseline design aims for a center-of-mass energy of 125 TeV, using 20 T superconducting dipole magnets.[1][2] An intermediate stage with 12 T magnets could reach 75 TeV.[1]

Table 1: SPPC General Design Parameters

| Parameter | Value | Unit |
|---|---|---|
| Circumference | 100 | km |
| Beam Energy | 62.5 | TeV |
| Center-of-Mass Energy (Ultimate) | 125 | TeV |
| Center-of-Mass Energy (Phase 1) | 75 | TeV |
| Dipole Field (Ultimate) | 20 | T |
| Dipole Field (Phase 1) | 12 | T |
| Injection Energy | 2.1-4.2 | TeV |
| Number of Interaction Points | 2 | |
| Nominal Luminosity per IP | 1.0 × 10³⁵ | cm⁻²s⁻¹ |
| Bunch Separation | 25 | ns |

Data sourced from multiple conceptual design reports.[1][3]

Accelerator Complex and Injection Workflow

To achieve the target collision energy of 125 TeV, a sophisticated injector chain is required to pre-accelerate the proton beams.[5] This multi-stage process ensures the beam has the necessary energy and quality before being injected into the main collider rings.

Experimental Protocol: Beam Acceleration

The proposed injector chain for the SPPC consists of four main stages in cascade:

- p-Linac: A linear accelerator will be the initial source of protons, accelerating them to an energy of 1.2 GeV.[5]

- p-RCS (Proton Rapid Cycling Synchrotron): The beam from the Linac is then transferred to a rapid cycling synchrotron, which will boost its energy to 10 GeV.[5]

- MSS (Medium Stage Synchrotron): Following the p-RCS, a medium-stage synchrotron will take the beam energy up to 180 GeV.[5]

- SS (Super Synchrotron): The final stage of the injector complex is a large synchrotron that will accelerate the protons to the SPPC's injection energy of 2.1 TeV (or potentially higher in later stages).[1][5]

After this sequence, the proton beams are injected into the two main collider rings of the SPPC, where they are accelerated to their final collision energy and brought into collision at the designated interaction points.

Physics Goals and Experimental Methodology

The primary objective of the SPPC is to explore physics at the energy frontier, searching for new particles and phenomena that lie beyond the scope of the Standard Model.[2][6] The high collision energy will enable searches for heavy new particles predicted by theories such as supersymmetry and extra dimensions. Furthermore, the SPPC will allow more precise measurements of Higgs boson properties, including its self-coupling, which is a crucial test of the Higgs mechanism.[7]

Experimental Protocol: General-Purpose Detector and Data Acquisition

While specific detector designs for the SPPC are still at the conceptual stage, they are expected to follow the general principles of modern high-energy physics experiments such as ATLAS and CMS at the LHC.[8][9] A general-purpose detector at the SPPC would be a complex, multi-layered device designed to track the paths, measure the energies, and identify the types of particles emerging from the high-energy collisions.

The experimental workflow can be summarized as follows:

- Proton-Proton Collision: Bunches of protons collide at the interaction point inside the detector.

- Particle Detection: The collision products travel through various sub-detectors (e.g., tracker, calorimeters, muon chambers) that record their properties.

- Trigger System: An initial, rapid data-filtering system (the trigger) selects potentially interesting events from the immense number of collisions (up to billions per second) and discards the rest. This is crucial for managing the data volume.

- Data Acquisition (DAQ): The data from the selected events are collected from all detector components and assembled.

- Data Reconstruction: Sophisticated algorithms process the raw detector data to reconstruct the trajectories and energies of the particles, creating a complete picture of the collision event.

- Data Analysis: Physicists analyze the reconstructed event data to search for signatures of new physics or to make precise measurements of known processes.
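The need for a trigger follows from a back-of-the-envelope rate estimate. The inelastic collision rate is the instantaneous luminosity times the inelastic cross-section, and dividing it by the bunch-crossing rate gives the mean number of simultaneous collisions per crossing (pileup). The sketch uses the nominal 1.0 × 10³⁵ cm⁻²s⁻¹ and 25 ns spacing from the design table; the ~100 mb inelastic cross-section, the fully filled bunch train, and the 10 kHz storage rate are illustrative assumptions.

```python
MB_TO_CM2 = 1e-27  # 1 millibarn = 1e-27 cm^2


def interaction_rate_hz(luminosity_cm2_s: float, sigma_inel_mb: float) -> float:
    """Inelastic pp collision rate = instantaneous luminosity x inelastic cross-section."""
    return luminosity_cm2_s * sigma_inel_mb * MB_TO_CM2


def crossing_rate_hz(bunch_spacing_ns: float, filled_fraction: float = 1.0) -> float:
    """Bunch-crossing rate; filled_fraction < 1 models gaps in the bunch train."""
    return filled_fraction * 1e9 / bunch_spacing_ns


def mean_pileup(luminosity_cm2_s, sigma_inel_mb, bunch_spacing_ns, filled_fraction=1.0):
    """Average number of simultaneous inelastic collisions per bunch crossing."""
    return interaction_rate_hz(luminosity_cm2_s, sigma_inel_mb) / crossing_rate_hz(
        bunch_spacing_ns, filled_fraction
    )


rate = interaction_rate_hz(1.0e35, 100.0)
pileup = mean_pileup(1.0e35, 100.0, 25.0)
stored_rate_hz = 1.0e4  # hypothetical rate of events written to storage after the trigger
rejection = crossing_rate_hz(25.0) / stored_rate_hz
print(f"{rate:.1e} collisions/s, pileup ~{pileup:.0f}, trigger rejection ~{rejection:.0f}")
```

Under these assumptions there are about 10¹⁰ collisions per second, roughly 250 per bunch crossing, and the trigger must discard about 4,000 crossings for every one it keeps.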

Key Technological Challenges

The realization of the SPPC hinges on significant advances in several key technologies. The most critical of these is the development of high-field superconducting magnets. Achieving a 20 T dipole field for the main collider ring is a formidable challenge that requires extensive research and development in new superconducting materials and magnet design.[1][3] Other challenges include managing the intense synchrotron radiation produced by the high-energy beams and the associated heat load on the beam screen, as well as developing robust beam collimation systems to protect the machine components.[3]
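The synchrotron-radiation challenge can be quantified with the standard energy-loss-per-turn formula U₀ = e²β³γ⁴ / (3ε₀ρ), applied here to 62.5 TeV protons on the 10415.4 m dipole curvature radius listed in the SPPC main-ring parameters. The beam current needed to turn U₀ into radiated power is not given in this guide, so 0.5 A below is a placeholder assumption, not an SPPC design value.

```python
import math

E_CHARGE_C = 1.602176634e-19      # elementary charge (C)
EPSILON_0 = 8.8541878128e-12      # vacuum permittivity (F/m)
PROTON_MASS_GEV = 0.93827208816   # proton rest energy (GeV)


def energy_loss_per_turn_ev(beam_energy_gev: float, bending_radius_m: float) -> float:
    """Synchrotron-radiation energy loss per turn, U0 = e^2 beta^3 gamma^4 / (3 eps0 rho), in eV."""
    gamma = beam_energy_gev / PROTON_MASS_GEV
    beta = math.sqrt(1.0 - 1.0 / gamma**2)
    u0_joule = E_CHARGE_C**2 * beta**3 * gamma**4 / (3.0 * EPSILON_0 * bending_radius_m)
    return u0_joule / E_CHARGE_C


def radiated_power_w(u0_ev: float, beam_current_a: float) -> float:
    """Power radiated by one beam: (energy loss per turn in eV) x (beam current in A)."""
    return u0_ev * beam_current_a


u0 = energy_loss_per_turn_ev(62_500.0, 10_415.4)
power = radiated_power_w(u0, beam_current_a=0.5)  # 0.5 A is a placeholder, not an SPPC value
print(f"U0 ~ {u0 / 1e6:.1f} MeV per turn, P ~ {power / 1e6:.1f} MW per beam")
```

Each proton loses about 11 MeV per turn, more than a thousand times the roughly 6.7 keV per turn at the 7 TeV LHC, because the loss grows with the fourth power of γ. This radiation is deposited in the cold magnet bore, which is why the beam-screen heat load is a design driver.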

Conclusion

The Super Proton-Proton Collider is a visionary project that promises to redefine the boundaries of high-energy physics. By achieving collision energies an order of magnitude greater than the LHC, it will provide a unique window into the fundamental laws of nature, potentially uncovering new particles, new forces, and a deeper understanding of the universe's structure and evolution. While significant technological hurdles remain, the ongoing research and development, driven by a global collaboration of scientists and engineers, pave the way for this next-generation discovery machine.

References

- 1. slac.stanford.edu [slac.stanford.edu]

- 2. [2203.07987] Study Overview for Super Proton-Proton Collider [arxiv.org]

- 3. Frontiers | Design Concept for a Future Super Proton-Proton Collider [frontiersin.org]

- 4. [1507.03224] Concept for a Future Super Proton-Proton Collider [arxiv.org]

- 5. proceedings.jacow.org [proceedings.jacow.org]

- 6. [2101.10623] Optimization of Design Parameters for SPPC Longitudinal Dynamics [arxiv.org]

- 7. researchgate.net [researchgate.net]

- 8. Experiments | CERN [home.cern]

- 9. Detector | CMS Experiment [cms.cern]


An In-depth Technical Guide to the Conceptual Design of the Super Proton-Proton Collider (SPPC)

Introduction

The Super Proton-Proton Collider (SPPC) represents a major step forward in the global pursuit of fundamental physics. Envisioned as the successor to the Large Hadron Collider (LHC), the SPPC is the second phase of the ambitious CEPC-SPPC project initiated by China[1][2]. Designed as a discovery machine, its primary objective is to explore the energy frontier well beyond the Standard Model, investigating new physics phenomena[3][4]. The SPPC will be a proton-proton collider housed in the same 100-kilometer-circumference tunnel as the Circular Electron-Positron Collider (CEPC), a Higgs factory[1][5][6]. This shared tunnel allows a phased, synergistic approach to high-energy physics research over the coming decades.

The conceptual design of the SPPC is centered on achieving an unprecedented center-of-mass collision energy of up to 125 TeV, roughly an order of magnitude greater than that of the LHC[1][3]. This leap in energy depends on significant advances in key accelerator technologies, most notably very high-field superconducting magnets.

Overall Design and Staging

The SPPC's design is planned in two major phases to manage technical challenges and optimize for both high luminosity and high energy[1].

- Phase I: This stage targets a center-of-mass energy of 75 TeV. It will use 12 Tesla (T) superconducting dipole magnets, a technology that is a more direct successor to existing accelerator magnets. This phase can serve as an intermediate operational run, similar to the initial runs of the LHC at lower energies[1].

- Phase II: This is the ultimate goal of the project, aiming for a center-of-mass energy of 125 TeV[1][3]. Achieving it requires the successful research, development, and industrialization of 20 T dipole magnets, which represents a significant technological challenge[1][5].

The current baseline design is focused on the 125 TeV goal, which dictates the specifications for the accelerator complex and its components[1].

SPPC Collider: Core Parameters

The main collider ring is the centerpiece of the SPPC project. Its design is optimized to achieve the highest possible energy and luminosity within the 100 km tunnel. The table below summarizes the primary design parameters for the 125 TeV baseline configuration.

| Parameter | Value | Unit |
|---|---|---|
| General Design | | |
| Circumference | 100 | km |
| Center-of-Mass Energy (CM) | 125 | TeV |
| Beam Energy | 62.5 | TeV |
| Injection Energy | 3.2 | TeV |
| Number of Interaction Points | 2 | |
| Magnet System | | |
| Dipole Field Strength | 20 | T |
| Dipole Curvature Radius | 10415.4 | m |
| Arc Filling Factor | 0.78 | |
| Beam & Luminosity | | |
| Peak Luminosity (per IP) | 1.3 × 10³⁵ | cm⁻²s⁻¹ |
| Lorentz Gamma (at collision) | 66631 | |

Table 1: Key design parameters for the SPPC main collider ring at its 125 TeV baseline.[1][7]
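The dipole field and curvature radius in the main-ring table fix the beam energy through the magnetic-rigidity relation p [GeV/c] ≈ 0.2998 · B [T] · ρ [m] for a singly charged particle. The short sketch below checks the tabulated numbers; it assumes E ≈ pc, which holds to high accuracy at these energies, and the function name is illustrative.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s


def beam_momentum_tev(dipole_field_t: float, bending_radius_m: float) -> float:
    """Momentum (TeV/c) of a unit-charge particle on radius rho in field B: pc[eV] = c * B * rho."""
    p_gev = SPEED_OF_LIGHT * 1e-9 * dipole_field_t * bending_radius_m  # 0.2998 GeV/(T*m)
    return p_gev / 1e3


RHO_M = 10_415.4  # dipole curvature radius from the main-ring table
for field_t in (12.0, 20.0):
    p = beam_momentum_tev(field_t, RHO_M)
    # For two equal-energy colliding beams, the collision energy is about 2p.
    print(f"B = {field_t:4.1f} T -> {p:5.2f} TeV per beam, ~{2 * p:5.1f} TeV in collision")
```

With ρ = 10415.4 m, a 20 T field holds about 62.45 TeV per beam (about 125 TeV in collision), and the same bending radius with 12 T magnets gives about 37.5 TeV per beam, matching the 75 TeV Phase I target. This is why the dipole field, rather than the tunnel, sets the energy reach once the circumference is fixed.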

The SPPC Injector Chain

To accelerate protons to the required 3.2 TeV injection energy for the main collider ring, a sophisticated, multi-stage injector chain is required. This cascaded series of accelerators ensures that the proton beam has the necessary properties, such as bunch structure and emittance, at each stage before being passed to the next[4][8]. The injector complex is designed to be a powerful facility in its own right, with the potential to support its own physics programs when not actively filling the SPPC[5].

The injector chain consists of four main accelerator stages:

- p-Linac: A proton linear accelerator that provides the initial acceleration.

- p-RCS: A proton Rapid Cycling Synchrotron.

- MSS: The Medium-stage Synchrotron.

- SS: The Super Synchrotron, the final and largest synchrotron in the injector chain, responsible for accelerating the beam to the SPPC's injection energy[1][8].

Caption: The sequential workflow of the SPPC injector chain.

The table below outlines the energy progression through the injector complex.

| Accelerator Stage | Output Energy |
|---|---|
| p-Linac (proton Linear Accelerator) | 1.2 GeV |
| p-RCS (proton Rapid Cycling Synchrotron) | 10 GeV |
| MSS (Medium-stage Synchrotron) | 180 GeV |
| SS (Super Synchrotron) | 3.2 TeV |

Table 2: Energy stages of the SPPC injector chain.[1][8]
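The cascade in Table 2 can be summarized by the energy gain factor of each stage, that is, its output energy divided by the energy it receives. The sketch takes the quoted output energies at face value and appends the 62.5 TeV top beam energy of the main ring; it does not distinguish kinetic from total energy, which matters mainly for the 1.2 GeV Linac stage, where the proton rest energy (0.938 GeV) is not negligible.

```python
# Output energies (GeV) quoted in Table 2, plus the collider's 62.5 TeV top beam energy.
STAGES = [
    ("p-Linac", 1.2),
    ("p-RCS", 10.0),
    ("MSS", 180.0),
    ("SS", 3_200.0),
    ("SPPC collider ring", 62_500.0),
]


def energy_gain_factors(stages):
    """Ratio of each synchrotron's output energy to the energy it receives from the previous stage."""
    return [
        (name, energy / prev_energy)
        for (_, prev_energy), (name, energy) in zip(stages, stages[1:])
    ]


for name, factor in energy_gain_factors(STAGES):
    print(f"{name:>20}: x{factor:.1f}")
```

Each synchrotron covers an energy ratio of roughly 8 to 20, with the main ring spanning about 19.5 from injection to top energy. Keeping each ring's energy swing moderate is one common reason accelerator complexes are built as cascades, since magnet field quality is hard to maintain over a very wide range of fields.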

Methodologies and Key Technical Challenges

The conceptual design of the SPPC is built on specific methodologies aimed at overcoming significant technological hurdles. The feasibility of the entire project hinges on successful R&D in these areas.

High-Field Superconducting Magnets

The core technological challenge for the 125 TeV SPPC is the development of 20 T accelerator-quality dipole magnets[1][6]. The methodology involves a robust R&D program focused on advanced superconducting materials.

- Design Principle: To bend the 62.5 TeV proton beams around a 100 km ring, an exceptionally strong magnetic field is required. The 20 T target necessitates moving beyond the traditional Niobium-Titanium (Nb-Ti) superconductors used in the LHC.
- Materials R&D: The primary candidates are Niobium-Tin (Nb₃Sn) and High-Temperature Superconductors (HTS). The design methodology involves creating a hybrid magnet structure, potentially using HTS coils to augment the field generated by Nb₃Sn coils[9].
- Key Protocols: This effort includes extensive testing of conductor cables, short-model magnet prototyping, and studies of stress management within the magnet coils, as the electromagnetic forces at 20 T are immense[9].
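The link between beam energy, dipole field, and ring size follows from magnetic rigidity, Bρ [T·m] = p [GeV/c] / 0.2998. This is a minimal sketch assuming an ultrarelativistic beam (p ≈ E/c), a 100 km circumference, and that all bending is done by 20 T dipoles. The resulting arc-length fraction ("fill factor") is an illustrative estimate, not a published SPPC lattice value.

```python
import math

C_LIGHT = 299_792_458.0  # speed of light in m/s


def rigidity_tm(momentum_gev):
    """Magnetic rigidity B*rho in T*m for a singly charged particle: p[GeV/c] / 0.299792458."""
    return momentum_gev / (C_LIGHT * 1e-9)


def bending_radius_m(momentum_gev, field_t):
    """Orbit radius inside a dipole of the given field."""
    return rigidity_tm(momentum_gev) / field_t


def dipole_fill_factor(momentum_gev, field_t, circumference_m):
    """Fraction of the circumference that must be occupied by dipoles to close the orbit."""
    return 2.0 * math.pi * bending_radius_m(momentum_gev, field_t) / circumference_m


if __name__ == "__main__":
    p = 62_500.0  # 62.5 TeV per beam, expressed in GeV/c
    rho = bending_radius_m(p, 20.0)
    fill = dipole_fill_factor(p, 20.0, 100e3)
    print(f"B*rho = {rigidity_tm(p):.0f} T*m, rho = {rho:.0f} m, dipole fill factor = {fill:.3f}")
```

Roughly two thirds of the 100 km ring would have to be 20 T dipoles, which is why the field target and the tunnel length are tied together so tightly.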

Longitudinal Beam Dynamics and RF System

Maintaining beam stability and achieving high luminosity requires precise control over the proton bunches.

- Design Principle: The SPPC design employs a sophisticated Radio Frequency (RF) system to control the longitudinal profile of the proton bunches. Shorter bunches lead to a higher probability of collision at the interaction points, thus increasing luminosity[4].
- Methodology: A dual-harmonic RF system is proposed. This system combines a fundamental frequency of 400 MHz with a higher-harmonic system at 800 MHz. The superposition of these two RF waves creates a wider and flatter potential well, which helps to lengthen the bunch core slightly while shortening the overall bunch length, mitigating collective instabilities and beam-beam effects[4].
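The flattening effect of adding an 800 MHz harmonic to a 400 MHz system can be illustrated with a normalized stationary-bucket model. This sketch rests on assumptions that are not taken from [4]: no acceleration, phase φ measured from the bunch center in units of the 400 MHz phase, a restoring voltage V(φ)/V₁ = sin φ − r·sin(nφ) with n = 2, and a voltage ratio r = 1/n = 0.5, which is the textbook flat-bottom choice rather than the SPPC design value.

```python
import math

# Harmonic number of the higher RF system relative to the fundamental: 800 MHz / 400 MHz.
N_HARMONIC = 800.0 / 400.0


def rf_voltage(phi, r, n=N_HARMONIC):
    """Normalised restoring voltage V(phi)/V1 = sin(phi) - r*sin(n*phi) (assumed sign convention)."""
    return math.sin(phi) - r * math.sin(n * phi)


def potential_well(phi, r, n=N_HARMONIC):
    """Normalised potential U(phi) = integral of V from 0 to phi, in closed form."""
    return (1.0 - math.cos(phi)) - r * (1.0 - math.cos(n * phi)) / n


def central_slope(r, n=N_HARMONIC, h=1e-6):
    """Central-difference estimate of dV/dphi at phi = 0; analytically 1 - r*n."""
    return (rf_voltage(h, r, n) - rf_voltage(-h, r, n)) / (2.0 * h)


if __name__ == "__main__":
    for label, r in (("single harmonic (r=0)", 0.0), ("dual harmonic (r=0.5)", 0.5)):
        print(f"{label}: slope at centre = {central_slope(r):.3f}")
        for phi in (0.2, 0.4, 0.8):
            print(f"  U({phi:.1f}) = {potential_well(phi, r):.5f}")
```

With r = 0.5 the slope dV/dφ at the center vanishes, so the small-amplitude synchrotron frequency goes to zero and the bottom of the well becomes quartic (U ≈ φ⁴/8) instead of quadratic (U ≈ φ²/2). This is the "wider and flatter potential well" in idealized form.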

Beam Collimation and Machine Protection

The total stored energy in the SPPC beams will be enormous, on the order of gigajoules.

- Design Principle: A tiny fraction of this beam hitting a magnet could cause a quench (a loss of superconductivity) or permanent damage. Therefore, a highly efficient beam collimation system is critical for both machine protection and detector background control.
- Methodology: The design involves a multi-stage collimation system to safely absorb stray protons far from the interaction points and superconducting components. This is a crucial area of study, as the mechanisms for beam loss and collimation at these unprecedented energies present new challenges compared to lower-energy colliders[9].
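The gigajoule scale can be checked from the circulating current. The number of protons is I·T_rev/e, and each carries E[eV]·e joules, so the stored energy per beam is simply I·(C/c)·E[eV]. This sketch assumes β ≈ 1 and a 100 km circumference. It also applies a 0.7 A circulating current (the CDR value) to a 62.5 TeV beam; that pairing is a hypothetical illustration, since the source gives no current for the 125 TeV design.

```python
C_LIGHT = 299_792_458.0  # speed of light in m/s


def stored_energy_joules(current_a, circumference_m, beam_energy_ev):
    """Stored energy of one beam in joules.

    N_protons = I * T_rev / e and each proton carries E[eV] * e joules,
    so the elementary charge cancels: W = I * T_rev * E[eV].
    """
    t_rev = circumference_m / C_LIGHT  # revolution period, assuming beta ~ 1
    return current_a * t_rev * beam_energy_ev


if __name__ == "__main__":
    # Hypothetical inputs: 0.7 A circulating current with 62.5 TeV per beam.
    w = stored_energy_joules(0.7, 100e3, 62.5e12)
    print(f"Stored energy per beam ~ {w / 1e9:.1f} GJ")
```

Under these assumptions each beam stores roughly 15 GJ, which is why even a tiny uncontrolled loss fraction matters for the superconducting magnets.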

Caption: Logical relationship between key systems and performance goals.

Conclusion

The conceptual design of the Super Proton-Proton Collider outlines a clear, albeit challenging, path toward the next frontier in high-energy physics. As the second phase of the CEPC-SPPC project, it leverages a 100 km tunnel to aim for a 125 TeV center-of-mass energy. The design is characterized by a powerful four-stage injector chain and relies on the successful development of 20 T superconducting magnets. Through detailed methodologies addressing beam dynamics, collimation, and other accelerator physics issues, the SPPC is poised to become the world's premier facility for fundamental particle research in the decades to come.

References

- 1. slac.stanford.edu [slac.stanford.edu]

- 2. cepc.ihep.ac.cn [cepc.ihep.ac.cn]

- 3. [2203.07987] Study Overview for Super Proton-Proton Collider [arxiv.org]

- 4. [2101.10623] Optimization of Design Parameters for SPPC Longitudinal Dynamics [arxiv.org]

- 5. Frontiers | Design Concept for a Future Super Proton-Proton Collider [frontiersin.org]

- 6. slac.stanford.edu [slac.stanford.edu]

- 7. indico.fnal.gov [indico.fnal.gov]

- 8. proceedings.jacow.org [proceedings.jacow.org]

- 9. ias.ust.hk [ias.ust.hk]

timeline for the SPPC and CEPC-SPPC project

An In-depth Technical Guide to the Circular Electron Positron Collider (CEPC) and Super Proton-Proton Collider (SPPC) Projects

This technical guide provides a comprehensive overview of the Circular Electron Positron Collider (CEPC) and the subsequent Super Proton-Proton Collider (SPPC) projects. It is intended for researchers, scientists, and drug development professionals interested in the timeline, technical specifications, and scientific goals of these future large-scale particle physics facilities.

Project Timeline and Key Milestones

The CEPC project, first proposed by the Chinese particle physics community in 2012, has a multi-stage timeline leading to its operation and eventual upgrade to the SPPC.[1][2] The project has progressed through several key design and review phases, with construction anticipated to begin in the coming years.

Key Phases and Milestones:

- 2012: The concept of the CEPC was proposed by Chinese high-energy physicists.[2][3]
- 2015: The Preliminary Conceptual Design Report (Pre-CDR) was completed.[4]
- 2018: The Conceptual Design Report (CDR) was released in November.[1][2]
- 2023: The Technical Design Report (TDR) for the accelerator complex was officially released on December 25th, marking a significant milestone.[5][6]
- 2024-2027: The project is currently in the Engineering Design Report (EDR) phase.[3][6] This phase includes finalizing the engineering design, site selection, and industrialization of key components.[5][7]
- 2025: A formal proposal is scheduled to be submitted to the Chinese government.[5][6] The reference Technical Design Report for the detector is also expected to be released in June 2025.[8]
- 2027 (Projected): Construction is anticipated to start around this year, during China's "15th Five-Year Plan".[5][8]
- Post-2050 (Projected): The SPPC era is expected to begin, following the completion of the CEPC's primary physics program and the readiness of high-field superconducting magnets for installation.[9]

Quantitative Data: Accelerator and Detector Parameters

The CEPC is designed to operate at several center-of-mass energies to function as a Higgs, Z, and W factory, with a potential upgrade to study the top quark.[4] The SPPC will use the same tunnel to achieve unprecedented proton-proton collision energies.[10]

Table 1: CEPC Main Accelerator Parameters at Different Operating Modes

| Parameter | Higgs Factory | Z Factory | W Factory | t-tbar (Upgrade) |
|---|---|---|---|---|
| Center-of-Mass Energy (GeV) | 240 | 91.2 | 160 | 360 |
| Circumference (km) | 100 | 100 | 100 | 100 |
| Luminosity per IP (10³⁴ cm⁻²s⁻¹) | 5.0 | 115 | 16 | 0.5 |
| Synchrotron Radiation Power/beam (MW) | 30 | 30 | 30 | 30 |
| Number of Interaction Points (IPs) | 2 | 2 | 2 | 2 |

Data sourced from multiple references, including[5][9].

Table 2: SPPC Main Design Parameters

| Parameter | Pre-CDR | CDR | Ultimate |
|---|---|---|---|
| Circumference (km) | 54.4 | 100 | 100 |
| Center-of-Mass Energy (TeV) | 70.6 | 75 | 125-150 |
| Dipole Field (T) | 20 | 12 | 20-24 |
| Injection Energy (TeV) | 2.1 | 2.1 | 4.2 |
| Number of IPs | 2 | 2 | 2 |
| Nominal Luminosity per IP (cm⁻²s⁻¹) | 1.2 × 10³⁵ | 1.0 × 10³⁵ | - |
| Circulating Beam Current (A) | 1.0 | 0.7 | - |

This table shows the evolution of the SPPC design parameters from the Pre-CDR to the CDR. The "Ultimate" column reflects the long-term goals for the project.[10][11]
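At a fixed bending radius, beam momentum scales linearly with dipole field (p ∝ Bρ), so the "Ultimate" energies can be cross-checked against the CDR column. This is a simple proportionality sketch. It assumes the Ultimate design keeps the CDR ring geometry. The Pre-CDR column uses a different circumference and cannot be compared this way.

```python
# Reference point from the CDR column: 12 T dipoles giving a 75 TeV centre-of-mass energy.
CDR_FIELD_T = 12.0
CDR_ECM_TEV = 75.0


def scaled_ecm_tev(field_t, ref_field_t=CDR_FIELD_T, ref_ecm_tev=CDR_ECM_TEV):
    """Centre-of-mass energy at the same bending radius, scaled linearly with dipole field."""
    return ref_ecm_tev * field_t / ref_field_t


if __name__ == "__main__":
    for field in (12.0, 20.0, 24.0):
        print(f"{field:4.0f} T -> {scaled_ecm_tev(field):6.1f} TeV")
```

The 20 T and 24 T cases give 125.0 and 150.0 TeV, reproducing the bounds of the Ultimate column. The energy upgrade therefore comes entirely from stronger magnets in the same tunnel.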

Table 3: CEPC Baseline Detector Performance Requirements

| Performance Metric | Requirement | Physics Goal |
|---|---|---|
| Lepton Identification Efficiency | > 99.5% | Precision Higgs and Electroweak measurements |
| b-tagging Efficiency | ~80% | Separation of Higgs decays to b, c, and gluons |
| Jet Energy Resolution | BMR < 4% | Separation of W/Z/Higgs with hadronic final states |
| Luminosity Measurement Accuracy | 10⁻³ (Higgs), 10⁻⁴ (Z) | Precision cross-section measurements |
| Beam Energy Measurement Accuracy | 1 MeV (Higgs), 100 keV (Z) | Precise mass measurements of Higgs and Z bosons |

Data compiled from various sources detailing detector performance studies.[8][12][13]

Experimental Protocols and Physics Goals

The primary physics goals of the CEPC are to perform high-precision measurements of the Higgs boson, Z and W bosons, and to search for physics beyond the Standard Model.[5][14] The vast datasets will allow for unprecedented tests of the Standard Model.[15]

Higgs Factory Operation (240 GeV): The main objective is the precise measurement of the Higgs boson's properties, including its mass, width, and couplings to other particles.[14] The CEPC is expected to produce over one million Higgs bosons, enabling the study of rare decay modes.[16] The primary production mechanism at this energy is the Higgs-strahlung process (e⁺e⁻ → ZH).

Z Factory Operation (91.2 GeV): Operating at the Z-pole, the CEPC will produce trillions of Z bosons.[16] This will allow for extremely precise measurements of electroweak parameters, such as the Z boson mass and width, and various asymmetry parameters.[17] These measurements will be sensitive to subtle effects of new physics at higher energy scales. The potential for a polarized beam at the Z-pole is also being investigated to enhance the physics reach.[18]
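The event counts quoted for the Higgs and Z modes follow from N = σ ∫L dt. This sketch uses the per-IP luminosities and the two IPs from Table 1. It also relies on inputs not given in this document, which are assumptions: a conventional 10⁷ s of physics running per year, and approximate cross-sections of about 200 fb for Higgs production at 240 GeV and about 41.5 nb for e⁺e⁻ → Z → hadrons at the Z pole.

```python
FB_TO_CM2 = 1e-39  # 1 femtobarn in cm^2
NB_TO_CM2 = 1e-33  # 1 nanobarn in cm^2

SECONDS_PER_YEAR = 1.0e7  # assumed "physics year" of running
N_IP = 2                  # interaction points, from Table 1

# Approximate cross-sections (assumed values, not taken from this document).
SIGMA_HIGGS_240_CM2 = 200.0 * FB_TO_CM2
SIGMA_Z_HADRONS_CM2 = 41.5 * NB_TO_CM2


def integrated_luminosity(lumi_per_ip, n_ip=N_IP, seconds=SECONDS_PER_YEAR):
    """Integrated luminosity in cm^-2, summed over all interaction points."""
    return lumi_per_ip * n_ip * seconds


def expected_events(sigma_cm2, int_lumi_cm2):
    """Expected event count N = sigma * integral(L dt)."""
    return sigma_cm2 * int_lumi_cm2


if __name__ == "__main__":
    higgs = expected_events(SIGMA_HIGGS_240_CM2, integrated_luminosity(5.0e34))
    z = expected_events(SIGMA_Z_HADRONS_CM2, integrated_luminosity(115e34))
    print(f"Higgs bosons per year: {higgs:.2e}")
    print(f"Hadronic Z decays per year: {z:.2e}")
```

Under these assumptions the Higgs mode gives about 2 × 10⁵ Higgs bosons per year, so a sample of over one million requires several years of running. The Z mode gives roughly 10¹² hadronic Z decays per year, consistent with "trillions" of Z bosons over the Z-pole run.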

W Factory Operation (~160 GeV): By performing scans around the W-pair production threshold, the CEPC will be able to measure the mass of the W boson with very high precision.[15] This is a crucial parameter within the Standard Model, and a precise measurement can provide stringent consistency checks.

SPPC Physics Program: As the second phase, the SPPC will be a discovery machine, aiming to explore the energy frontier far beyond the reach of the Large Hadron Collider.[10] With a center-of-mass energy of up to 125-150 TeV, its primary goal will be to search for new heavy particles and phenomena that could provide answers to fundamental questions, such as the nature of dark matter and the hierarchy problem.[11]

Detailed experimental methodologies are still under development and will be finalized by the respective international collaborations. The general approach will involve analyzing the final state particles from the electron-positron or proton-proton collisions using the hermetic detectors to reconstruct the properties of the produced particles and search for new phenomena.

Visualizations

Project Timeline and Phases

Caption: High-level timeline of the CEPC and SPPC projects.

CEPC Accelerator Complex Workflow

Caption: Simplified workflow of the CEPC accelerator complex.

CEPC to SPPC Transition Logic

Caption: Logical relationship between the CEPC and SPPC projects.

References

- 1. indico.in2p3.fr [indico.in2p3.fr]

- 2. indico.ihep.ac.cn [indico.ihep.ac.cn]

- 3. arxiv.org [arxiv.org]

- 4. arxiv.org [arxiv.org]

- 5. pos.sissa.it [pos.sissa.it]

- 6. lomcon.ru [lomcon.ru]

- 7. indico.ihep.ac.cn [indico.ihep.ac.cn]

- 8. indico.cern.ch [indico.cern.ch]

- 9. slac.stanford.edu [slac.stanford.edu]

- 10. indico.ihep.ac.cn [indico.ihep.ac.cn]

- 11. slac.stanford.edu [slac.stanford.edu]

- 12. indico.global [indico.global]

- 13. researchgate.net [researchgate.net]

- 14. [1810.09037] Precision Higgs Physics at CEPC [arxiv.org]

- 15. worldscientific.com [worldscientific.com]

- 16. [1811.10545] CEPC Conceptual Design Report: Volume 2 - Physics & Detector [arxiv.org]

- 17. indico.ihep.ac.cn [indico.ihep.ac.cn]

- 18. [2204.12664] Investigation of spin rotators in CEPC at the Z-pole [arxiv.org]

scientific motivation for a 100 TeV proton collider

An in-depth technical guide on the scientific motivation for a 100 TeV proton collider, prepared for researchers, scientists, and drug development professionals.

Executive Summary

The Standard Model of particle physics, despite its remarkable success, leaves several fundamental questions unanswered, including the nature of dark matter, the origin of matter-antimatter asymmetry, and the hierarchy problem. The Large Hadron Collider (LHC) completed the Standard Model's particle content by discovering the Higgs boson in 2012, but it has not yet revealed physics beyond the Standard Model.[1][2] A next-generation 100 TeV proton-proton collider, such as the proposed Future Circular Collider (FCC-hh), represents a transformative leap in energy and luminosity, providing the necessary power to probe these profound mysteries.[3][4][5] This machine would be both a "discovery machine" and a precision instrument.[6] Its primary scientific motivations are to perform high-precision studies of the Higgs boson, including the first direct measurement of its self-coupling; to conduct a comprehensive search for the particles that constitute dark matter; to explore new physics paradigms such as supersymmetry and extra dimensions at unprecedented mass scales; and to test the fundamental structure of the Standard Model at the highest achievable energies.[4][7][8][9] The technological advancements required for such a collider, in areas such as high-field magnets, detector technology, and large-scale data analysis, will also drive innovation across numerous scientific and industrial domains.