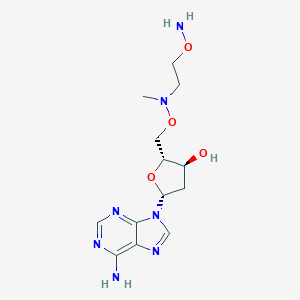

MAOEA

Description

The exact mass of the compound (2R,3S,5R)-2-[[2-aminooxyethyl(methyl)amino]oxymethyl]-5-(6-aminopurin-9-yl)oxolan-3-ol is unknown and the complexity rating of the compound is unknown. Its Medical Subject Headings (MeSH) category is Chemicals and Drugs Category - Carbohydrates - Glycosides - Nucleosides - Deoxyribonucleosides - Deoxyadenosines - Supplementary Records. The storage condition is unknown. Please store according to label instructions upon receipt of goods.

BenchChem offers this compound in high quality, suitable for many research applications. Different packaging options are available to accommodate customers' requirements. For more information about this compound, including price, delivery time, and further details, please inquire at info@benchchem.com.

Properties

| Property | Value | Source |
|---|---|---|
| IUPAC Name | (2R,3S,5R)-2-[[2-aminooxyethyl(methyl)amino]oxymethyl]-5-(6-aminopurin-9-yl)oxolan-3-ol | PubChem |
| InChI | InChI=1S/C13H21N7O4/c1-19(2-3-22-15)23-5-9-8(21)4-10(24-9)20-7-18-11-12(14)16-6-17-13(11)20/h6-10,21H,2-5,15H2,1H3,(H2,14,16,17)/t8-,9+,10+/m0/s1 | PubChem |
| InChI Key | VRAHREWXGGWUKL-IVZWLZJFSA-N | PubChem |
| Canonical SMILES | CN(CCON)OCC1C(CC(O1)N2C=NC3=C(N=CN=C32)N)O | PubChem |
| Isomeric SMILES | CN(CCON)OC[C@@H]1[C@H](C[C@@H](O1)N2C=NC3=C(N=CN=C32)N)O | PubChem |
| Molecular Formula | C13H21N7O4 | PubChem |
| DSSTOX Substance ID | DTXSID70920847 | EPA DSSTox |
| Molecular Weight | 339.35 g/mol | PubChem |
| CAS No. | 112621-39-3 | ChemIDplus; EPA DSSTox |

| Source | Record name | URL | Description |
|---|---|---|---|
| PubChem | | https://pubchem.ncbi.nlm.nih.gov | Data deposited in or computed by PubChem |
| EPA DSSTox | 9-(5-O-{[2-(Aminooxy)ethyl](methyl)amino}-2-deoxypentofuranosyl)-9H-purin-6-amine | https://comptox.epa.gov/dashboard/DTXSID70920847 | DSSTox provides a high quality public chemistry resource for supporting improved predictive toxicology. |
| ChemIDplus | 5'-Deoxy-5'-(N-methyl-N-(2-(aminooxy)ethyl)amino)adenosine | https://pubchem.ncbi.nlm.nih.gov/substance/?source=chemidplus&sourceid=0112621393 | ChemIDplus is a free, web search system that provides access to the structure and nomenclature authority files used for the identification of chemical substances cited in National Library of Medicine (NLM) databases, including the TOXNET system. |

Foundational & Exploratory

What are many-objective evolutionary algorithms?

An In-Depth Technical Guide to Many-Objective Evolutionary Algorithms for Researchers, Scientists, and Drug Development Professionals

Introduction to Many-Objective Optimization

In many scientific and industrial fields, particularly in drug development, optimization problems rarely involve a single objective. More often, we face multi-objective optimization problems (MOPs), where two or more conflicting objectives must be optimized simultaneously.[1] For example, in designing a new drug, researchers aim to maximize therapeutic efficacy while minimizing toxicity and production cost.[2] A solution is considered Pareto optimal if no single objective can be improved without degrading at least one other objective. The set of all such solutions forms the Pareto front, which represents the optimal trade-off between the conflicting objectives.[1]
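The dominance relation behind this definition can be written in a few lines. The following is a minimal sketch, assuming every objective is minimized; the candidate scores and the `(toxicity, cost, -efficacy)` layout are hypothetical values chosen only for illustration.

```python
from typing import List, Sequence


def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    """Return True if a Pareto-dominates b (all objectives minimized)."""
    no_worse = all(x <= y for x, y in zip(a, b))
    strictly_better = any(x < y for x, y in zip(a, b))
    return no_worse and strictly_better


def pareto_front(points: List[Sequence[float]]) -> List[Sequence[float]]:
    """Keep the points that no other point dominates."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


# Hypothetical candidates scored as (toxicity, cost, -efficacy); lower is better.
candidates = [
    (0.2, 5.0, -0.9),
    (0.1, 7.0, -0.8),
    (0.3, 6.0, -0.7),  # worse than the first candidate on every objective
    (0.1, 9.0, -0.8),  # matches the second candidate but costs more
]
front = pareto_front(candidates)
print(front)
```

Efficacy is negated so that all three objectives point in the same direction, which keeps the dominance test to a single comparison rule.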

Evolutionary algorithms (EAs) are well-suited for these problems because their population-based approach allows them to find a set of diverse, non-dominated solutions in a single run. However, when the number of objectives increases beyond three, these problems are classified as many-objective optimization problems (MaOPs).[3] In this high-dimensional objective space, traditional Pareto-based EAs begin to fail due to a phenomenon known as the "curse of dimensionality."[4]

The primary challenges that arise in MaOPs include:

- Dominance Resistance: As the number of objectives grows, the probability of one solution dominating another decreases exponentially.[5][6] This leads to a situation where a very large proportion of the solutions in the population are non-dominated, making it difficult for the algorithm to exert sufficient selection pressure to guide the search toward the true Pareto front.[5][7]

- Loss of Selection Pressure: With an overwhelming number of non-dominated solutions, the Pareto-based ranking used by algorithms like the Non-dominated Sorting Genetic Algorithm II (NSGA-II) loses its effectiveness.[8] The algorithm struggles to distinguish between superior and inferior solutions, slowing or stalling convergence.

- Difficulty in Visualization: While a two- or three-dimensional Pareto front can be plotted directly and read by a decision-maker, a front with four or more dimensions cannot, which makes the trade-offs difficult to analyze.[3]

- High Computational Cost: Maintaining diversity along a high-dimensional Pareto front requires a larger population size and more complex diversity preservation mechanisms, increasing computational expense.[8]
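The loss of selection pressure described above can be observed with a small simulation. This is a rough sketch, assuming uniformly random objective vectors as a stand-in for an unconverged population; the population size of 200 and the seed are arbitrary choices.

```python
import random


def dominates(a, b):
    """True if a Pareto-dominates b (all objectives minimized)."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def nondominated_fraction(n_points: int, n_objectives: int, rng: random.Random) -> float:
    """Share of a random population that no other member dominates."""
    pop = [[rng.random() for _ in range(n_objectives)] for _ in range(n_points)]
    nondominated = sum(1 for p in pop if not any(dominates(q, p) for q in pop))
    return nondominated / n_points


rng = random.Random(0)  # fixed seed so the run is repeatable
fractions = {m: nondominated_fraction(200, m, rng) for m in (2, 3, 5, 10)}
for m, frac in fractions.items():
    print(f"{m:>2} objectives: {frac:.0%} of the population is non-dominated")
```

With only a handful of objectives most random points are dominated by some other point, whereas with ten objectives nearly all of them are mutually incomparable, so Pareto ranking alone gives the algorithm little to select on.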

Many-objective evolutionary algorithms (MaOEAs) are a specialized class of EAs designed specifically to overcome these challenges.[5][7] They incorporate advanced mechanisms to maintain selection pressure and guide the population toward a well-distributed set of solutions, even in the presence of a large number of objectives.

Core Methodologies of Many-Objective Evolutionary Algorithms

To address the failure of traditional Pareto dominance, MaOEAs employ several innovative strategies. These strategies can be broadly categorized into three main approaches: relaxed dominance, decomposition, and indicator-based methods.[5][7]

- Relaxed Dominance and Diversity Promotion: Some algorithms in this family modify the concept of Pareto dominance to increase selection pressure, while others focus on enhancing diversity using novel techniques. The popular algorithm NSGA-III (Non-dominated Sorting Genetic Algorithm III) is a prime example of the latter. It extends NSGA-II by using a set of pre-defined, uniformly distributed reference points to manage diversity in high-dimensional space. Instead of relying on the crowding distance used in NSGA-II, which discriminates poorly in high-dimensional objective spaces, NSGA-III associates individuals with reference lines through these points and gives priority to solutions in sparsely populated regions, ensuring a well-distributed set of final solutions.[8]

- Decomposition-Based Methods: This strategy, exemplified by MOEA/D (Multi-Objective Evolutionary Algorithm based on Decomposition), transforms the many-objective problem into a number of single-objective subproblems.[9] It uses a set of weight vectors to define these subproblems, which are then optimized simultaneously. Each subproblem is solved using information from its neighboring subproblems, which promotes a balance between convergence and diversity across the entire Pareto front.[5]

- Indicator-Based Methods: These algorithms use performance indicators, such as Hypervolume (HV), as the selection criterion. The hypervolume indicator measures the volume of the objective space dominated by a set of solutions. By maximizing this value, the algorithm is implicitly driven toward a set of solutions that is both close to the true Pareto front and well-distributed.
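To make the decomposition idea concrete, the sketch below scores a tiny population against three weight vectors. It assumes the weighted Tchebycheff function as the scalarization, which is one common choice and is not prescribed by the text above; the population, the weights, and the omission of MOEA/D's neighborhood mechanism are all simplifications for illustration.

```python
from typing import Sequence


def tchebycheff(f: Sequence[float], weights: Sequence[float], ideal: Sequence[float]) -> float:
    """Weighted Tchebycheff value: the largest weighted gap to the ideal point."""
    return max(w * abs(fi - zi) for fi, w, zi in zip(f, weights, ideal))


# Hypothetical objective vectors (both minimized).
population = [(0.1, 0.9), (0.5, 0.5), (0.9, 0.1)]

# Ideal point: the best value seen so far for each objective.
ideal = [min(p[i] for p in population) for i in range(2)]

# Each weight vector defines one single-objective subproblem.
weight_vectors = [(0.9, 0.1), (0.5, 0.5), (0.1, 0.9)]
best_per_subproblem = [
    min(population, key=lambda p: tchebycheff(p, w, ideal)) for w in weight_vectors
]
print(best_per_subproblem)
```

Each subproblem ends up preferring a different region of the trade-off curve, which is how a spread of weight vectors translates into a spread of solutions.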

Performance Evaluation and Quantitative Analysis

To empirically assess the performance of MaOEAs, standardized benchmark problems and performance metrics are used. The DTLZ and WFG test suites are commonly employed as they are scalable to any number of objectives and present various challenges like complex Pareto set shapes and multimodality.[2][5]

Key performance metrics include:

- Inverted Generational Distance (IGD): This metric calculates the average distance from a set of points on the true Pareto front to the nearest solution found by the algorithm.[6][10] A lower IGD value indicates better performance, signifying that the found solutions are both close to the true front (convergence) and well-distributed across its entire extent (diversity).

- Hypervolume (HV): This metric computes the volume of the objective space that is dominated by the solution set and bounded by a reference point.[11][12] A larger HV value is preferable, indicating a solution set that achieves better convergence and diversity. It is one of the few metrics that is Pareto-compliant.[12]
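The IGD definition above maps directly onto code. The following is a minimal sketch using Euclidean distance; the linear reference front and the two solution sets are hypothetical and serve only to show why IGD penalizes poor coverage.

```python
import math
from typing import Sequence


def igd(reference_front: Sequence[Sequence[float]], solutions: Sequence[Sequence[float]]) -> float:
    """Mean distance from each reference point to its nearest obtained solution."""
    total = sum(min(math.dist(r, s) for s in solutions) for r in reference_front)
    return total / len(reference_front)


# Hypothetical reference points sampled from a linear front f1 + f2 = 1.
reference_front = [(i / 10, 1 - i / 10) for i in range(11)]

well_spread = [(0.0, 1.0), (0.5, 0.5), (1.0, 0.0)]
clustered = [(0.45, 0.55), (0.5, 0.5), (0.55, 0.45)]

print("well spread:", igd(reference_front, well_spread))
print("clustered:  ", igd(reference_front, clustered))
```

Both solution sets lie exactly on the front, yet the clustered set scores worse because the ends of the reference front are far from any solution.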

Comparative Performance on Benchmark Problems

The following table summarizes the comparative performance of NSGA-III and MOEA/D on the DTLZ benchmark suite, a standard for evaluating MaOEAs. The values represent the mean IGD achieved over multiple runs, with lower values indicating better performance.

| Problem | Objectives | NSGA-III (IGD) | MOEA/D (IGD) | Superior Algorithm |
|---|---|---|---|---|
| DTLZ1 | 4 | 0.0032 | 0.0028 | MOEA/D |
| DTLZ1 | 8 | 0.0415 | 0.0391 | MOEA/D |
| DTLZ1 | 10 | 0.0987 | 0.0855 | MOEA/D |
| DTLZ2 | 4 | 0.0276 | 0.0311 | NSGA-III |
| DTLZ2 | 8 | 0.0654 | 0.0732 | NSGA-III |
| DTLZ2 | 10 | 0.0881 | 0.1023 | NSGA-III |
| DTLZ4 | 4 | 0.0289 | 0.0345 | NSGA-III |
| DTLZ4 | 8 | 0.0698 | 0.0817 | NSGA-III |
| DTLZ4 | 10 | 0.0912 | 0.1156 | NSGA-III |

Data synthesized from representative performance studies in the literature. Actual values can vary based on specific experimental setups.

As the table indicates, neither algorithm is universally superior. MOEA/D shows stronger convergence on DTLZ1, whose Pareto front is linear, while NSGA-III's reference-point-based diversity mechanism gives it an edge on the curved (spherical) fronts of DTLZ2 and DTLZ4, the latter of which also biases the density of solutions.[13]

Application in De Novo Drug Design

De novo drug design—the creation of novel molecules with desired biological activities—is a quintessential many-objective problem. A successful drug candidate must simultaneously satisfy a multitude of criteria:

- High Binding Affinity: Strong interaction with the biological target.

- High Selectivity: Minimal interaction with off-targets to reduce side effects.

- Favorable ADME Properties: Absorption, Distribution, Metabolism, and Excretion profile.

- Low Toxicity: Minimal adverse effects.

- Synthesizability: The molecule must be chemically synthesizable.

- Novelty: The molecular structure should be novel to be patentable.

MaOEAs are powerful tools for navigating the vast chemical space to identify molecules that represent optimal trade-offs among these conflicting objectives.[8]

Experimental Protocol: MaOEA for Drug Design using GuacaMol

The GuacaMol benchmark provides a standardized framework for evaluating molecular generation models, including those driven by MaOEAs.[7][15] A typical experimental protocol for generating molecules targeting a specific profile involves the following steps:

- Molecular Representation: Molecules are encoded as strings using representations like SMILES or SELFIES. SELFIES (SELF-referencing Embedded Strings) are often preferred as they are more robust, ensuring a higher percentage of valid chemical structures after genetic operations.[9]

- Objective Function Definition: A set of objectives is defined to guide the search. These often include:

  - Quantitative Estimate of Drug-likeness (QED): A score from 0 to 1 indicating how "drug-like" a molecule is.

  - Synthetic Accessibility (SA) Score: An estimate of how difficult the molecule is to synthesize.

  - Target-Specific Properties: Similarity to a known active ligand, docking scores against a protein target, or predicted activity from a QSAR model.

- Algorithm Configuration: A MaOEA such as NSGA-III or MOEA/D is configured.

  - Population Size: Typically between 100 and 500 individuals.[9]

  - Generations: The algorithm is run for a fixed number of generations (e.g., 100 to 1000).

  - Genetic Operators: Crossover and mutation operators are defined to work on the chosen molecular string representation. For instance, single-point crossover and random character mutation can be applied to SELFIES strings.

  - Algorithm-Specific Parameters: For NSGA-III, the number of divisions for reference points is set (e.g., p=12 for 3 objectives).[16] For MOEA/D, the neighborhood size and decomposition method are chosen.

- Execution and Analysis: The algorithm is executed for a pre-defined number of function evaluations. The final output is a set of non-dominated molecules (the approximated Pareto front). These molecules are then analyzed by chemists to select the most promising candidates for synthesis and experimental validation.
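The genetic operators named in the configuration step can be sketched on token lists. This is an illustrative sketch only: the five-token alphabet is a hypothetical stand-in for a real SELFIES alphabet, no chemical validity check or decoding is performed, and no cheminformatics library is called.

```python
import random
from typing import List, Tuple

# Hypothetical toy alphabet of SELFIES-style tokens; a real run would derive
# the alphabet from the molecules being optimized.
ALPHABET = ["[C]", "[N]", "[O]", "[=C]", "[F]"]


def single_point_crossover(
    a: List[str], b: List[str], rng: random.Random
) -> Tuple[List[str], List[str]]:
    """Swap the tails of two token lists at one random cut point."""
    cut = rng.randint(1, min(len(a), len(b)) - 1)
    return a[:cut] + b[cut:], b[:cut] + a[cut:]


def random_token_mutation(tokens: List[str], rate: float, rng: random.Random) -> List[str]:
    """Replace each token by a random alphabet token with probability `rate`."""
    return [rng.choice(ALPHABET) if rng.random() < rate else t for t in tokens]


rng = random.Random(42)
parent_a = ["[C]", "[C]", "[O]", "[C]", "[N]"]
parent_b = ["[N]", "[C]", "[=C]", "[F]", "[C]"]

child_a, child_b = single_point_crossover(parent_a, parent_b, rng)
child_a = random_token_mutation(child_a, rate=0.1, rng=rng)
print("".join(child_a), "".join(child_b))
```

Operating on whole tokens rather than individual characters keeps bracketed symbols intact, which is the property that makes string-level operators practical on this kind of representation.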

Case Study: Overcoming Cisplatin Resistance by Inhibiting DNA Polymerase η

Cisplatin is a widely used chemotherapy drug, but its effectiveness is often limited by tumor resistance. One mechanism of resistance involves translesion DNA synthesis (TLS), where specialized polymerases like human DNA polymerase η (hpol η) bypass cisplatin-induced DNA damage, allowing cancer cells to survive and proliferate.[17]

Developing a small-molecule inhibitor of hpol η is a promising strategy to re-sensitize resistant tumors to cisplatin. This is a many-objective optimization task: an ideal inhibitor must have high potency against hpol η, high selectivity over other polymerases, good cell permeability, and low toxicity.

MaOEAs can be employed to design such inhibitors in silico. The algorithm would search the chemical space for molecules that optimize a set of objectives, including predicted IC₅₀ against hpol η, selectivity scores, and ADME-Tox profiles. The resulting Pareto front would provide medicinal chemists with a diverse set of novel, potent, and selective inhibitor candidates for further investigation.

Conclusion and Future Directions

Many-objective evolutionary algorithms represent a critical advancement in the field of computational optimization, providing robust tools to solve problems with more than three conflicting objectives. By moving beyond traditional Pareto dominance, methods like NSGA-III and MOEA/D have enabled researchers to tackle complex, real-world challenges that were previously intractable.

In drug development, the ability of MaOEAs to explore vast chemical spaces and identify novel molecules with a balanced profile of multiple desired properties is accelerating the discovery pipeline. The integration of MaOEAs with machine learning and artificial intelligence for more accurate property prediction and generative chemistry promises to further enhance their power and efficiency.[8] As computational resources grow and our understanding of biological systems deepens, MaOEAs will continue to be indispensable tools for scientists and researchers aiming to design the next generation of therapeutics.

References

- 1. [1811.09621] GuacaMol: Benchmarking Models for De Novo Molecular Design [arxiv.org]

- 2. scispace.com [scispace.com]

- 3. [PDF] GuacaMol: Benchmarking Models for De Novo Molecular Design | Semantic Scholar [semanticscholar.org]

- 4. researchgate.net [researchgate.net]

- 5. researchgate.net [researchgate.net]

- 6. researchgate.net [researchgate.net]

- 7. emergentmind.com [emergentmind.com]

- 8. ci-labo-omu.github.io [ci-labo-omu.github.io]

- 9. Optimized Drug Design using Multi-Objective Evolutionary Algorithms with SELFIES [arxiv.org]

- 10. mdpi.com [mdpi.com]

- 11. researchgate.net [researchgate.net]

- 12. arxiv.org [arxiv.org]

- 13. researchgate.net [researchgate.net]

- 14. consensus.app [consensus.app]

- 15. De novo molecular drug design benchmarking - PMC [pmc.ncbi.nlm.nih.gov]

- 16. sol.sbc.org.br [sol.sbc.org.br]

- 17. Machine Learning–Enhanced Quantitative Structure-Activity Relationship Modeling for DNA Polymerase Inhibitor Discovery: Algorithm Development and Validation - PMC [pmc.ncbi.nlm.nih.gov]

Principles of Many-Objective Evolutionary Algorithms (MaOEAs) for Complex Systems: A Technical Guide for Drug Development

For Researchers, Scientists, and Drug Development Professionals

This in-depth technical guide explores the core principles of Many-Objective Evolutionary Algorithms (MaOEAs) and their application to the complex systems encountered in drug discovery and development. MaOEAs are powerful optimization techniques that can navigate the high-dimensional and often conflicting objectives inherent in designing effective and safe therapeutics. This guide provides a detailed overview of MaOEA principles, experimental protocols for their implementation, and visualizations of relevant biological pathways and computational workflows.

Core Principles of MaOEAs for Complex Systems

Many-Objective Evolutionary Algorithms (MaOEAs) are a class of population-based optimization algorithms that extend traditional evolutionary algorithms to handle problems with four or more objectives. In the context of complex systems like drug discovery, these objectives can include maximizing binding affinity, minimizing toxicity, optimizing ADME (absorption, distribution, metabolism, and excretion) properties, and ensuring synthetic accessibility.

The primary challenge in many-objective optimization is the "curse of dimensionality": the proportion of mutually non-dominated solutions in a population rises rapidly with the number of objectives, and the number of points needed to cover the Pareto front grows exponentially, making it difficult for the algorithm to maintain selection pressure towards the true optimal solutions. MaOEAs employ several key principles to address this challenge:

- Relaxed Dominance: Traditional Pareto dominance becomes less effective in high-dimensional objective spaces. MaOEAs often employ relaxed dominance relations, such as ε-dominance, grid-based dominance, or fuzzy dominance, to increase selection pressure and guide the search more effectively.

- Diversity Maintenance: As the Pareto front expands, maintaining a diverse set of solutions becomes crucial to explore the entire trade-off surface. MaOEAs utilize various diversity maintenance techniques, including clustering, niching, and reference-point-based methods, to ensure a well-distributed set of solutions.

- Decomposition: Decomposition-based MaOEAs, such as MOEA/D, decompose a many-objective problem into a number of single-objective or simpler multi-objective subproblems. By optimizing these subproblems simultaneously, the algorithm can effectively approximate the entire Pareto front.

- Indicator-Based Selection: Some MaOEAs use performance indicators, such as the hypervolume indicator or the inverted generational distance (IGD), to guide the selection process. These indicators provide a quantitative measure of the quality of a set of solutions, allowing for a more direct and effective search.

- Reference-Point-Based Approaches: Algorithms like NSGA-III use a set of user-defined or adaptively generated reference points to guide the search towards different regions of the Pareto front. This helps in maintaining diversity and convergence, especially in high-dimensional objective spaces.
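A common way to generate uniformly spread reference points is the simplex-lattice construction usually attributed to Das and Dennis. The sketch below assumes that construction: it enumerates every point whose coordinates are multiples of 1/p and sum to 1, where `divisions` plays the role of p. The function name and the stars-and-bars enumeration are choices made here for illustration.

```python
from itertools import combinations
from math import comb
from typing import List, Tuple


def das_dennis(n_objectives: int, divisions: int) -> List[Tuple[float, ...]]:
    """All points with coordinates k/divisions that sum to 1 (stars and bars)."""
    points = []
    slots = divisions + n_objectives - 1
    for bars in combinations(range(slots), n_objectives - 1):
        edges = (-1,) + bars + (slots,)
        # The number of "stars" between consecutive bars is one coordinate.
        counts = [edges[i + 1] - edges[i] - 1 for i in range(n_objectives)]
        points.append(tuple(c / divisions for c in counts))
    return points


points = das_dennis(n_objectives=3, divisions=12)
print(len(points))                  # 91 reference points for 3 objectives, p = 12
print(comb(12 + 3 - 1, 3 - 1))      # closed-form count C(M + p - 1, M - 1), also 91
print(comb(12 + 10 - 1, 10 - 1))    # the same p with 10 objectives
```

The count grows combinatorially with the number of objectives, so the number of divisions generally has to be reduced as objectives are added if the reference set is to stay near the population size.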

Logical Relationship of MaOEA Components

Data Presentation: Performance of MaOEAs in Drug Discovery

Note: The data in these tables are representative and for illustrative purposes. Actual performance can vary significantly based on the specific problem, parameter settings, and implementation of the algorithms.

Table 1: Performance on a Quantitative Structure-Activity Relationship (QSAR) Benchmark

This hypothetical benchmark involves predicting the biological activity of a set of compounds based on their molecular descriptors, with objectives such as maximizing predicted activity, minimizing predicted toxicity, and optimizing druglikeness properties.

| MaOEA | Inverted Generational Distance (IGD) | Hypervolume (HV) |
|---|---|---|
| NSGA-III | 0.25 | 0.85 |
| MOEA/D | 0.30 | 0.82 |
| IBEA | 0.28 | 0.84 |
| SPEA2+SDE | 0.32 | 0.80 |

Table 2: Performance on a Molecular Docking Benchmark

This hypothetical benchmark focuses on finding the optimal binding pose of a ligand to a protein target, with objectives including minimizing binding energy, maximizing favorable interactions, and minimizing clashes.

| MaOEA | Inverted Generational Distance (IGD) | Hypervolume (HV) |
|---|---|---|
| NSGA-III | 0.18 | 0.92 |
| MOEA/D | 0.22 | 0.89 |
| IBEA | 0.20 | 0.90 |
| SPEA2+SDE | 0.25 | 0.87 |

Experimental Protocols

This section outlines a detailed methodology for applying MaOEAs in a typical drug discovery workflow, from initial virtual screening to lead optimization.

MaOEA-based Virtual Screening for Lead Identification

This protocol describes a step-by-step workflow for using a MaOEA to screen a large compound library to identify potential lead compounds.

Objective: To identify a diverse set of compounds from a large virtual library that exhibit a favorable balance of predicted binding affinity, ADME properties, and low toxicity.

Methodology:

- Library Preparation:

  - Acquire a large virtual compound library (e.g., ZINC, ChEMBL).

  - Standardize and prepare the library by removing duplicates, adding hydrogens, and generating 3D conformers for each molecule.

- Objective Function Definition:

  - Binding Affinity: Use a molecular docking program (e.g., AutoDock Vina, Glide) to predict the binding energy of each compound to the target protein. This will serve as the first objective to be minimized.

  - ADME Properties: Use predictive models (e.g., QSAR models, machine learning models) to estimate key ADME properties such as solubility, permeability, and metabolic stability. These will form multiple objectives to be optimized.

  - Toxicity: Employ toxicity prediction models to assess potential adverse effects. This will be another objective to be minimized.

- MaOEA Configuration:

  - Select an appropriate MaOEA (e.g., NSGA-III, MOEA/D).

  - Define the population size, number of generations, crossover and mutation operators, and other algorithm-specific parameters.

- Execution of MaOEA:

  - Initialize the population with a random subset of the compound library.

  - Iteratively apply the genetic operators (selection, crossover, and mutation) to evolve the population over a set number of generations. In each generation, evaluate the objective functions for each new compound.

- Pareto Front Analysis:

  - After the final generation, extract the set of non-dominated solutions (the Pareto front).

  - Analyze the trade-offs between the different objectives to select a diverse set of promising lead candidates for further experimental validation.

MaOEA-based Virtual Screening Workflow

Multi-Objective Lead Optimization

This protocol details how to use a MaOEA to optimize a promising lead compound to improve its overall pharmacological profile.

Objective: To generate a set of analogs of a lead compound with improved potency, selectivity, and ADME/Tox properties.

Methodology:

- Lead Compound Selection:

  - Start with a validated lead compound identified from virtual or experimental screening.

- Analog Generation:

  - Define a set of possible chemical modifications to the lead scaffold (e.g., R-group substitutions, scaffold hopping).

  - Generate a virtual library of analogs based on these modifications.

- Objective Function Refinement:

  - In addition to the objectives from virtual screening, consider more specific and computationally intensive objectives:

    - Selectivity: Dock the analogs against off-target proteins to predict selectivity.

    - Free Energy Calculations: Use more accurate methods like Free Energy Perturbation (FEP) or Thermodynamic Integration (TI) to estimate binding free energy.

    - Synthetic Accessibility: Include a score to estimate the ease of synthesis for each analog.

- MaOEA Execution and Analysis:

  - Run the MaOEA on the analog library with the refined objective functions.

  - Analyze the resulting Pareto front to identify a small set of high-priority analogs for synthesis and experimental testing.

Visualization: Signaling Pathway

The Epidermal Growth Factor Receptor (EGFR) signaling pathway is a critical regulator of cell proliferation, survival, and differentiation, and its dysregulation is implicated in many cancers. Optimizing the robustness and response of this pathway is a relevant problem for drug development. The following diagram illustrates a simplified representation of the EGFR signaling pathway, which can be a target for multi-objective optimization studies.[1]

EGFR Signaling Pathway

References

The Ascent of Harmony: A Technical Guide to the History and Evolution of Many-Objective Optimization

For Researchers, Scientists, and Drug Development Professionals

In the intricate landscape of scientific discovery and engineering, progress is often defined by the simultaneous pursuit of multiple, often conflicting, goals. Whether designing a novel therapeutic agent, optimizing a complex biological process, or refining a scientific experiment, the need to navigate trade-offs is paramount. This technical guide delves into the history and evolution of many-objective optimization (MaOO), a powerful computational paradigm that has emerged to address these multifaceted challenges. From its conceptual origins to its sophisticated modern algorithms, we explore the key milestones, methodologies, and applications that have shaped this dynamic field, with a particular focus on its relevance to drug development and scientific research.

From a Single Goal to a Spectrum of Solutions: The Genesis of Multi-Objective Optimization

The concept of optimizing for more than one objective has its roots in 19th-century economics, with the pioneering work of Francis Y. Edgeworth and Vilfredo Pareto. They introduced the idea of "non-inferiority," now famously known as Pareto optimality.[1] A solution is considered Pareto optimal if no single objective can be improved without degrading at least one other objective. This foundational concept laid the groundwork for a new way of thinking about optimization, moving away from a single "best" solution to a set of equally valid trade-off solutions, collectively known as the Pareto front.

Early attempts to tackle multi-objective problems (MOPs), typically those with two or three objectives, relied on classical optimization methods.[2] These "scalarization" techniques sought to convert the MOP into a single-objective problem, most commonly through the weighted sum method.[2][3] This approach assigns a weight to each objective and then optimizes the resulting linear combination. However, this method is highly sensitive to the chosen weights and cannot reach solutions on non-convex regions of the Pareto front.[3] Another classical approach is the ε-constraint method, where one objective is optimized while the others are treated as constraints.[4] While more effective than the weighted sum method for non-convex problems, it requires careful selection of the constraint bounds.[4]
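The contrast between the two classical methods shows up clearly on a small example. The sketch below assumes a hypothetical non-convex front for two minimized objectives, sampled from a quarter circle, and applies each method by brute force over the sampled points rather than with a real optimizer.

```python
import math

# Hypothetical non-convex (concave) front for minimization: a quarter circle
# sampled at 21 points from (1, 0) to (0, 1).
front = [(math.cos(i * math.pi / 40), math.sin(i * math.pi / 40)) for i in range(21)]


def weighted_sum_choice(w1: float) -> tuple:
    """Point that minimizes w1*f1 + (1 - w1)*f2 over the sampled front."""
    return min(front, key=lambda f: w1 * f[0] + (1 - w1) * f[1])


def epsilon_constraint_choice(eps: float) -> tuple:
    """Point that minimizes f1 subject to the constraint f2 <= eps."""
    feasible = [f for f in front if f[1] <= eps]
    return min(feasible, key=lambda f: f[0])


ws = {weighted_sum_choice(w / 10) for w in range(1, 10)}
ec = {epsilon_constraint_choice(e / 10) for e in range(1, 10)}
print(len(ws), "distinct points found by the weighted sum")
print(len(ec), "distinct points found by the epsilon-constraint method")
```

On this front every weight setting lands on one of the two extreme points, whereas varying the constraint bound walks along the interior of the front.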

The limitations of these classical methods became increasingly apparent as the complexity of real-world problems grew. The need for techniques that could explore the entire Pareto front in a single run, without prior knowledge of the problem's characteristics, paved the way for a new class of algorithms.

The Evolutionary Leap: The Rise of Multi-Objective Evolutionary Algorithms (MOEAs)

The 1980s and 1990s witnessed a paradigm shift with the application of evolutionary algorithms (EAs) to MOPs. EAs, inspired by the principles of natural selection, are population-based search methods that are well-suited for complex and high-dimensional problems. Their ability to work with a population of solutions simultaneously made them a natural fit for approximating the entire Pareto front.

The first generation of Multi-Objective Evolutionary Algorithms (MOEAs) began to emerge, marking a significant departure from classical techniques.

- Vector Evaluated Genetic Algorithm (VEGA): Proposed by David Schaffer in 1984, VEGA was one of the earliest attempts to adapt a genetic algorithm for multi-objective optimization. However, it had a tendency to favor solutions that were exceptional in one objective at the expense of others.

- Non-dominated Sorting Genetic Algorithm (NSGA): Introduced by Srinivas and Deb in 1994, NSGA was a landmark development. It introduced the concept of non-dominated sorting to rank solutions based on their Pareto dominance. This allowed for a more direct and effective search for non-dominated solutions.
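Non-dominated sorting can be illustrated by repeatedly peeling off the current non-dominated set. This is a straightforward sketch of the ranking idea under the assumption that all objectives are minimized; it is not the optimized bookkeeping used in later algorithms, and the five points are hypothetical.

```python
from typing import List, Sequence


def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    """True if a Pareto-dominates b (all objectives minimized)."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def non_dominated_sort(points: List[Sequence[float]]) -> List[List[int]]:
    """Peel off successive non-dominated fronts; returns lists of indices."""
    remaining = set(range(len(points)))
    fronts = []
    while remaining:
        front = sorted(
            i for i in remaining
            if not any(dominates(points[j], points[i]) for j in remaining)
        )
        fronts.append(front)
        remaining -= set(front)
    return fronts


points = [(1, 5), (2, 3), (4, 1), (3, 4), (5, 5)]
print(non_dominated_sort(points))
```

The index of the front a solution falls into serves as its rank, so selection can prefer lower-ranked fronts without ever combining the objectives into one number.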

These early MOEAs laid the foundation for a "second generation" of more sophisticated algorithms that incorporated elitism, a mechanism to preserve the best solutions found so far, and techniques to promote diversity among the solutions along the Pareto front.

- NSGA-II: An improvement upon its predecessor, NSGA-II, developed by Deb et al. in 2002, introduced a faster non-dominated sorting procedure, elitism, and a crowding distance metric to maintain diversity. It remains one of the most widely used and benchmarked MOEAs.[5]

- Strength Pareto Evolutionary Algorithm 2 (SPEA2): Proposed by Zitzler, Laumanns, and Thiele in 2001, SPEA2 uses a fine-grained fitness assignment strategy that considers both the number of solutions an individual dominates and is dominated by. It also employs a nearest-neighbor density estimation technique for diversity preservation.
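The crowding distance used for diversity in NSGA-II can be sketched as follows. The code assumes the usual formulation: within one front, each interior point accumulates the normalized gap between its two neighbors along every objective, and boundary points receive an infinite value. The example front is hypothetical.

```python
from typing import List, Sequence


def crowding_distance(front: List[Sequence[float]]) -> List[float]:
    """Crowding distance of each point within a single non-dominated front."""
    n = len(front)
    distance = [0.0] * n
    for m in range(len(front[0])):
        order = sorted(range(n), key=lambda i: front[i][m])
        f_min, f_max = front[order[0]][m], front[order[-1]][m]
        # Boundary points get infinite distance so they are always preferred.
        distance[order[0]] = distance[order[-1]] = float("inf")
        if f_max == f_min:
            continue  # this objective cannot separate the points
        for k in range(1, n - 1):
            gap = front[order[k + 1]][m] - front[order[k - 1]][m]
            distance[order[k]] += gap / (f_max - f_min)
    return distance


front = [(0.0, 1.0), (0.1, 0.9), (0.5, 0.5), (1.0, 0.0)]
distances = crowding_distance(front)
print(distances)
```

The point at (0.1, 0.9) sits close to a neighbor and receives a smaller distance than the more isolated point at (0.5, 0.5), so it would be discarded first when the front has to be truncated.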

The following diagram illustrates the conceptual evolution of these algorithmic approaches.

The Curse of Dimensionality: The Emergence of Many-Objective Optimization

As researchers began to tackle problems with more than three objectives, they encountered a significant challenge known as "dominance resistance."[6] In high-dimensional objective spaces, the proportion of non-dominated solutions in a population increases dramatically. This weakens the selection pressure of Pareto-based ranking, making it difficult for the algorithm to converge towards the true Pareto front. This challenge gave rise to the field of many-objective optimization (MaOO), which specifically deals with problems having four or more objectives.

To address the shortcomings of traditional Pareto-based MOEAs in the many-objective context, new classes of algorithms were developed:

- Decomposition-based MOEAs: These algorithms, exemplified by MOEA/D (Multi-Objective Evolutionary Algorithm based on Decomposition), transform a many-objective problem into a set of single-objective or simpler multi-objective subproblems.[3] The solutions to these subproblems are then evolved collaboratively. MOEA/D has been shown to be very effective for many-objective problems.[3]

- Indicator-based MOEAs: These algorithms use performance metrics, or indicators, to guide the search process. Instead of relying solely on Pareto dominance, they select solutions that contribute most to the quality of the solution set as measured by a specific indicator, such as the hypervolume (HV). The Indicator-Based Evolutionary Algorithm (IBEA) is a well-known example.

Benchmarking and Performance Evaluation: A Rigorous Approach

The proliferation of MOEAs and MaOO algorithms necessitated standardized methods for their evaluation and comparison. This led to the development of benchmark test problem suites and performance metrics.

Benchmark Problems

A number of scalable test problem suites have been designed to assess the performance of algorithms on problems with varying characteristics, such as the shape of the Pareto front, the number of objectives, and the complexity of the search space. The most widely used suites include:

- ZDT: Developed by Zitzler, Deb, and Thiele, this suite consists of six bi-objective problems with scalable numbers of decision variables.[7]

- DTLZ: The Deb-Thiele-Laumanns-Zitzler test suite offers problems that are scalable in the number of objectives.[6][7]

- WFG: The Walking Fish Group toolkit provides a more comprehensive set of problems with features like multi-modality, bias, and separability.[7]

Performance Metrics

Several metrics are used to quantify the quality of the obtained Pareto front approximation in terms of convergence (closeness to the true Pareto front) and diversity (spread of solutions). Common metrics include:

- Generational Distance (GD): Measures the average distance from the solutions in the obtained set to the nearest solution in the true Pareto front.

- Inverted Generational Distance (IGD): Calculates the average distance from each solution in the true Pareto front to the nearest solution in the obtained set. This metric can measure both convergence and diversity.

- Hypervolume (HV): Computes the volume of the objective space dominated by the obtained non-dominated set and bounded by a reference point. A larger hypervolume is generally better.
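The two distance-based metrics above can be sketched in a few lines. This is a minimal illustration, assuming NumPy is available and using the plain mean of Euclidean distances (some papers use a power-mean variant instead). The function names and the toy reference front are hypothetical and chosen only for this example.

```python
import numpy as np

def _mean_nearest_distance(from_pts, to_pts):
    """Average Euclidean distance from each row of from_pts to its nearest row in to_pts."""
    a = np.asarray(from_pts, dtype=float)
    b = np.asarray(to_pts, dtype=float)
    # Pairwise distance matrix of shape (len(a), len(b)).
    d = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=2)
    return d.min(axis=1).mean()

def generational_distance(obtained, reference_front):
    # GD: obtained set -> reference front (convergence only).
    return _mean_nearest_distance(obtained, reference_front)

def inverted_generational_distance(obtained, reference_front):
    # IGD: reference front -> obtained set (convergence and coverage).
    return _mean_nearest_distance(reference_front, obtained)

# Toy reference front: samples of the line f1 + f2 = 1.
reference = np.array([[t, 1.0 - t] for t in np.linspace(0.0, 1.0, 11)])
# A converged but poorly spread approximation: two points lying on the front.
clustered = np.array([[0.0, 1.0], [0.1, 0.9]])

gd = generational_distance(clustered, reference)
igd = inverted_generational_distance(clustered, reference)
print(f"GD = {gd:.3f}, IGD = {igd:.3f}")
```

In this toy case GD is essentially zero because both points sit on the front, while IGD is large because most of the front is left uncovered. This is why IGD is said to reflect diversity as well as convergence.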

Experimental Protocols

A typical experimental setup for evaluating a many-objective optimization algorithm involves the following steps:

- Problem Selection: Choose a set of benchmark problems from suites like DTLZ or WFG with a varying number of objectives (e.g., 3, 5, 8, 10).

- Algorithm Selection: Select the algorithms to be compared, including the new proposed algorithm and state-of-the-art competitors.

- Parameter Setting: Define the parameters for each algorithm, such as population size, number of generations or function evaluations, and genetic operators (crossover and mutation probabilities and distributions). These are often determined through preliminary tuning or based on common practices in the literature.

- Execution: For each algorithm and each test problem, perform multiple independent runs (e.g., 30) to account for the stochastic nature of the algorithms.

- Performance Measurement: For each run, calculate the chosen performance metrics (e.g., IGD, HV) on the final population.

- Statistical Analysis: Apply statistical tests (e.g., Wilcoxon rank-sum test) to determine if the observed differences in performance between algorithms are statistically significant.
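The statistical analysis step can be illustrated with a small, dependency-free sketch of the two-sided Wilcoxon rank-sum test. It uses the normal approximation without tie or continuity corrections, which is a simplification; a statistics library would normally be preferred in practice. The IGD samples are synthetic and generated only for illustration.

```python
import math
import random

def rank_sum_test(sample_a, sample_b):
    """Two-sided Wilcoxon rank-sum test (normal approximation). Returns (z, p_value)."""
    n_a, n_b = len(sample_a), len(sample_b)
    pooled = sorted([(v, 0) for v in sample_a] + [(v, 1) for v in sample_b])
    # Assign 1-based ranks, averaging over runs of tied values.
    ranks = [0.0] * len(pooled)
    i = 0
    while i < len(pooled):
        j = i
        while j + 1 < len(pooled) and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        average_rank = (i + j) / 2.0 + 1.0
        for k in range(i, j + 1):
            ranks[k] = average_rank
        i = j + 1
    rank_sum_a = sum(r for r, (_, label) in zip(ranks, pooled) if label == 0)
    mean = n_a * (n_a + n_b + 1) / 2.0
    std = math.sqrt(n_a * n_b * (n_a + n_b + 1) / 12.0)
    z = (rank_sum_a - mean) / std
    p_value = math.erfc(abs(z) / math.sqrt(2.0))  # two-sided tail probability
    return z, p_value

# Synthetic IGD values for 30 independent runs of two hypothetical algorithms.
rng = random.Random(0)
igd_a = [rng.gauss(0.25, 0.02) for _ in range(30)]
igd_b = [rng.gauss(0.30, 0.02) for _ in range(30)]

z, p = rank_sum_test(igd_a, igd_b)
print(f"z = {z:.2f}, p = {p:.2e}")
```

A negative z here means the first sample tends to have lower (better) IGD values; the difference is usually called significant when p falls below a chosen threshold such as 0.05.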

Quantitative Performance Comparison

The following table summarizes hypothetical performance results (mean IGD values) of several many-objective optimization algorithms on the DTLZ benchmark suite. Lower IGD values indicate better performance.

| Algorithm | DTLZ1 (5 obj) | DTLZ2 (5 obj) | DTLZ1 (10 obj) | DTLZ2 (10 obj) |
|---|---|---|---|---|
| NSGA-III | 0.28 | 0.35 | 0.52 | 0.61 |
| MOEA/D | 0.25 | 0.32 | 0.48 | 0.55 |
| IBEA | 0.31 | 0.38 | 0.58 | 0.65 |
| Hypothetical New Algorithm | 0.22 | 0.29 | 0.45 | 0.51 |

Application in Drug Discovery: A Multi-Objective Endeavor

Drug discovery is an inherently multi-objective problem.[8][9] The goal is to identify a compound that not only exhibits high potency against a specific biological target but also possesses a favorable profile of Absorption, Distribution, Metabolism, Excretion, and Toxicity (ADMET) properties, is synthetically accessible, and is novel.[9][10] Many-objective optimization has emerged as a powerful tool to navigate these complex and often conflicting requirements.

One of the key areas where MaOO is applied is in Quantitative Structure-Activity Relationship (QSAR) modeling.[2][11] QSAR models are mathematical equations that relate the chemical structure of a molecule to its biological activity. In a multi-objective QSAR approach, the goal is to simultaneously optimize several objectives, such as:

- Model Accuracy: How well the model predicts the activity of new compounds.

- Model Complexity: A simpler model with fewer descriptors is often more interpretable and less prone to overfitting.

- Chemical Interpretability/Desirability: Favoring models that use descriptors that are easily understood by medicinal chemists.[2]

The following diagram illustrates a typical workflow for multi-objective QSAR in drug design.

By employing many-objective optimization, researchers can generate a diverse set of QSAR models, each representing a different trade-off between accuracy, complexity, and interpretability. This allows medicinal chemists to select the model that best aligns with their specific goals for a particular drug discovery project.

The broader drug discovery process can also be viewed through a many-objective lens, where the aim is to optimize a candidate molecule across multiple stages.

Future Directions and Conclusion

The field of many-objective optimization continues to evolve, with active research in areas such as:

- Scalability: Developing algorithms that can handle an even larger number of objectives and decision variables.

- Hybridization: Combining the strengths of different algorithmic approaches (e.g., evolutionary algorithms with local search methods).

- Preference Incorporation: Developing more effective ways for decision-makers to articulate their preferences and guide the search towards regions of interest on the Pareto front.

- Real-world Applications: Expanding the application of MaOO to new and challenging problems in science and engineering.

From its theoretical origins to its indispensable role in modern research and development, many-objective optimization has proven to be a powerful and versatile tool. For researchers, scientists, and drug development professionals, a deep understanding of its principles and methodologies is crucial for tackling the complex, multi-faceted challenges that define the frontiers of innovation. By embracing the concept of a "spectrum of solutions" rather than a single optimum, we can unlock new possibilities and accelerate the pace of discovery.

References

- 1. isc.okstate.edu [isc.okstate.edu]

- 2. pubs.acs.org [pubs.acs.org]

- 3. [2502.21108] Large Language Model-Based Benchmarking Experiment Settings for Evolutionary Multi-Objective Optimization [arxiv.org]

- 4. research.manchester.ac.uk [research.manchester.ac.uk]

- 5. researchgate.net [researchgate.net]

- 6. soft-computing.de [soft-computing.de]

- 7. eprints.whiterose.ac.uk [eprints.whiterose.ac.uk]

- 8. csmres.co.uk [csmres.co.uk]

- 9. researchgate.net [researchgate.net]

- 10. Multi-and many-objective optimization: present and future in de novo drug design - PMC [pmc.ncbi.nlm.nih.gov]

- 11. QSAR (Quantitative Structure-Activity Relationship) - Computational Chemistry Glossary [deeporigin.com]

A Comprehensive Whitepaper on the Core Distinctions, Applications, and Performance Metrics of MOEAs and MaOEAs in Scientific Research

An In-depth Technical Guide to Multi-Objective and Many-Objective Evolutionary Algorithms for Drug Development Professionals

For researchers, scientists, and professionals in the field of drug development, the use of sophisticated optimization techniques is paramount. Multi-Objective Evolutionary Algorithms (MOEAs) and their advanced counterparts, Many-Objective Evolutionary Algorithms (MaOEAs), have emerged as powerful tools for navigating the complex, multi-dimensional search spaces inherent in drug discovery. This guide provides a detailed technical overview of the key differences between MOEAs and MaOEAs, their underlying mechanisms, and their practical applications in the pharmaceutical landscape.

Introduction: From Multi-Objective to Many-Objective Optimization

In the realm of computational drug design, the goal is often to optimize multiple, frequently conflicting, objectives simultaneously. These can include maximizing binding affinity, minimizing toxicity, optimizing pharmacokinetic properties (ADME - absorption, distribution, metabolism, and excretion), and ensuring synthetic accessibility.

- Multi-Objective Evolutionary Algorithms (MOEAs) are a class of population-based optimization algorithms inspired by Darwinian evolution. They are well-suited for problems with two or three conflicting objectives. MOEAs aim to find a set of non-dominated solutions, known as the Pareto front, which represents the optimal trade-offs between the different objectives.[1][2]

- Many-Objective Evolutionary Algorithms (MaOEAs) are an evolution of MOEAs, specifically designed to handle optimization problems with four or more objectives. As the number of objectives increases, traditional MOEAs face significant challenges, primarily due to the "curse of dimensionality," where the proportion of non-dominated solutions in a population grows exponentially.[1] This makes it difficult for Pareto-based selection methods to distinguish between solutions, leading to a loss of selection pressure towards the true Pareto front.[1]

The transition from MOEAs to MaOEAs is therefore necessitated by the increasing complexity of real-world optimization problems in drug discovery, where a larger number of performance criteria need to be simultaneously considered.

Core Algorithmic Differences: Selection and Diversity Maintenance

The fundamental distinction between MOEAs and MaOEAs lies in their strategies for selection and diversity maintenance in the face of a high-dimensional objective space.

2.1. Pareto Dominance and the Challenge of High-Dimensionality

Traditional MOEAs heavily rely on the concept of Pareto dominance for selection. A solution is said to dominate another if it is better in at least one objective and not worse in any other. However, as the number of objectives grows, the probability of one solution dominating another decreases significantly. This results in a large portion of the population becoming non-dominated, rendering the selection process ineffective.[1]
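The loss of selection pressure is easy to observe numerically. The sketch below, assuming NumPy and a purely random population with all objectives minimized, measures the fraction of mutually non-dominated points as the number of objectives grows. The population size of 200 and the uniform sampling are arbitrary choices made for illustration.

```python
import numpy as np

def non_dominated_fraction(points):
    """Fraction of rows not Pareto-dominated by any other row (minimization)."""
    pts = np.asarray(points, dtype=float)
    count = 0
    for i in range(len(pts)):
        # Rows that are no worse than row i everywhere and strictly better somewhere.
        no_worse = np.all(pts <= pts[i], axis=1)
        strictly_better = np.any(pts < pts[i], axis=1)
        if not np.any(no_worse & strictly_better):
            count += 1
    return count / len(pts)

rng = np.random.default_rng(0)
fractions = {m: non_dominated_fraction(rng.random((200, m))) for m in (2, 3, 5, 10)}
for m, frac in fractions.items():
    print(f"{m:2d} objectives: {frac:.2f} of the population is non-dominated")
```

With only a handful of objectives, most random points are dominated by something; with ten, nearly every point is non-dominated, so a ranking based on dominance alone can no longer separate good solutions from poor ones.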

2.2. Evolved Mechanisms in MaOEAs

To counteract this, MaOEAs employ more sophisticated mechanisms:

- Decomposition-Based Approaches (e.g., MOEA/D): MOEA/D, or Multi-Objective Evolutionary Algorithm based on Decomposition, transforms a multi-objective optimization problem into a number of single-objective sub-problems.[3][4] It then solves these sub-problems simultaneously by evolving a population of solutions, where each solution is optimized for a specific sub-problem. This approach maintains diversity by ensuring that different solutions are focused on different regions of the Pareto front.[4]

- Reference-Point-Based Approaches (e.g., NSGA-III): The Non-dominated Sorting Genetic Algorithm III (NSGA-III) is an extension of its predecessor, NSGA-II, designed for many-objective optimization.[5] Instead of the crowding distance mechanism used in NSGA-II to maintain diversity, NSGA-III uses a set of pre-defined reference points to ensure a well-distributed set of solutions along the Pareto front.[5][6] This makes it more effective in handling a larger number of conflicting objectives.[5][6]

- Indicator-Based Approaches: Some MaOEAs use performance indicators, such as the hypervolume indicator, to guide the search process. These indicators provide a quantitative measure of the quality of a set of solutions, considering both convergence to the Pareto front and the diversity of the solutions.

The logical relationship between these concepts can be visualized as follows:

Theoretical foundations of decomposition-based MaOEAs

An In-depth Technical Guide on the Theoretical Foundations of Decomposition-based Many-Objective Evolutionary Algorithms (MaOEAs)

For Researchers, Scientists, and Drug Development Professionals

Decomposition-based Many-Objective Evolutionary Algorithms (MaOEAs) have emerged as a powerful paradigm for solving complex optimization problems with more than three conflicting objectives, a common scenario in fields like drug discovery and development. By decomposing a many-objective problem into a set of simpler single-objective subproblems, these algorithms can efficiently approximate the Pareto-optimal front. This technical guide delves into the core theoretical foundations that underpin this class of algorithms.

The Core Concept: Decomposition

The fundamental idea behind decomposition-based MaOEAs is to transform a multi-objective optimization problem (MOP) into a number of single-objective optimization problems and then solve them in a collaborative manner.[1] The most prominent framework in this category is the Multi-Objective Evolutionary Algorithm based on Decomposition (MOEA/D), which utilizes a set of uniformly distributed weight vectors to define the subproblems.[2][3][4] Each weight vector corresponds to a specific direction in the objective space, and the algorithm aims to find the best possible solution along each of these directions.

The collaboration between subproblems is a key feature. Each subproblem is optimized by utilizing information from its neighboring subproblems.[2][5][6] This neighborhood relationship allows for the efficient exploration of the search space and the sharing of good solutions, which accelerates convergence towards the Pareto front.[2][7]

Scalarizing Functions: The Engine of Decomposition

Scalarizing functions play a crucial role in converting the multi-objective problem into single-objective subproblems.[1][8][9] The choice of scalarizing function significantly impacts the algorithm's search behavior, particularly the balance between convergence and diversity.[7][8] Three commonly used scalarizing functions are:

- Weighted Sum (WS): This is one of the simplest approaches, where the objectives are linearly combined using a weight vector. While computationally efficient, the weighted sum approach is known to have difficulties with non-convex Pareto fronts.[5][10]

- Tchebycheff (TCH): The Tchebycheff approach minimizes the maximum weighted difference between the objective function and a reference point.[5][10] It can handle convex and non-convex Pareto fronts but can be sensitive to the choice of the reference point.

- Penalty-Based Boundary Intersection (PBI): The PBI approach aims to minimize the distance to a reference point while also penalizing solutions that are far from the corresponding weight vector.[5][10] It offers a good balance between convergence and diversity but introduces a penalty parameter that may require tuning.[1]

A theoretical analysis has shown that these three typical decomposition approaches are essentially interconnected.[10][11]
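A minimal sketch of the three scalarizing functions may help make the differences concrete. It assumes NumPy, minimization of all objectives, and an ideal point `z_star`; the penalty value `theta=5.0` is a commonly used setting rather than a prescribed one. The three-point concave front is invented for illustration.

```python
import numpy as np

def weighted_sum(f, w):
    return float(np.dot(w, f))

def tchebycheff(f, w, z_star):
    # Largest weighted deviation from the ideal point.
    return float(np.max(w * np.abs(f - z_star)))

def pbi(f, w, z_star, theta=5.0):
    direction = w / np.linalg.norm(w)
    diff = f - z_star
    d1 = float(np.dot(diff, direction))                 # distance along the weight direction
    d2 = float(np.linalg.norm(diff - d1 * direction))   # perpendicular distance to that direction
    return d1 + theta * d2

# Three points on a concave (non-convex) front: the unit circle arc, minimization.
front = np.array([[1.0, 0.0], [np.sqrt(0.5), np.sqrt(0.5)], [0.0, 1.0]])
w = np.array([0.5, 0.5])
z_star = np.zeros(2)

best_ws = int(np.argmin([weighted_sum(f, w) for f in front]))
best_tch = int(np.argmin([tchebycheff(f, w, z_star) for f in front]))
best_pbi = int(np.argmin([pbi(f, w, z_star) for f in front]))
print(best_ws, best_tch, best_pbi)
```

For the balanced weight vector, the weighted sum prefers an extreme point of the concave front, whereas the Tchebycheff and PBI functions both select the middle point (index 1). This matches the known difficulty of the weighted sum on non-convex fronts.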

Weight Vector Generation: Guiding the Search

The set of weight vectors determines the distribution of the solutions that the algorithm will search for. Therefore, the generation of a set of uniform and well-spread weight vectors is critical for obtaining a good approximation of the entire Pareto front.[12]

Several methods for generating weight vectors exist:

- Simplex-Lattice Design: The original MOEA/D used this method, but it has weaknesses for problems with three or more objectives, as the number of weight vectors, C(H + m - 1, m - 1) for m objectives and H divisions per axis, increases non-linearly with the number of objectives.[12]

- Uniform Design: This method can generate a more uniform set of weight vectors.[12]

- Latin Hypercube Sampling: This statistical method can also be used to generate a set of evenly distributed weight vectors.[12]
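The simplex-lattice construction can be sketched with the standard "stars and bars" enumeration. The function name and parameters below are hypothetical. The count of generated vectors equals the binomial coefficient C(H + m - 1, m - 1), where m is the number of objectives and H the number of divisions per axis.

```python
from itertools import combinations
from math import comb

def simplex_lattice(num_objectives, divisions):
    """All weight vectors whose components are multiples of 1/divisions and sum to 1."""
    weights = []
    # Stars and bars: place (num_objectives - 1) bars among the slots;
    # the gaps between consecutive bars give the integer numerators.
    slots = divisions + num_objectives - 1
    for bars in combinations(range(slots), num_objectives - 1):
        edges = (-1,) + bars + (slots,)
        parts = [edges[i + 1] - edges[i] - 1 for i in range(num_objectives)]
        weights.append([p / divisions for p in parts])
    return weights

for m, h in [(3, 12), (5, 6), (10, 3)]:
    vectors = simplex_lattice(m, h)
    # The generated count always matches the closed-form binomial coefficient.
    print(m, h, len(vectors), comb(h + m - 1, m - 1))
```

Because the population size is tied to the number of weight vectors, the designer cannot choose it freely: keeping a fine lattice (large H) with many objectives quickly produces an impractically large population.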

Recent research has focused on adaptive and dynamic weight vector adjustment strategies.[12][13][14][15] These methods aim to modify the weight vectors during the evolutionary process to better match the shape of the true Pareto front, especially for problems with complex and irregular fronts.[13][16]

Neighborhood and Mating Selection: Fostering Collaboration

In decomposition-based MaOEAs, the concept of a neighborhood is crucial for balancing convergence and diversity.[7] Each subproblem is associated with a neighborhood of other subproblems, defined by the proximity of their corresponding weight vectors. When generating a new solution for a particular subproblem, the parent solutions are typically selected from its neighborhood.[2]

The size of the neighborhood plays a significant role. A larger neighborhood can lead to better convergence but may come at a higher computational cost, while a smaller neighborhood might be more efficient but could slow down convergence.[5][6][17] Dynamic and adaptive neighborhood adjustment strategies have been proposed to address this trade-off and improve algorithm performance.[5][6][17]
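As a rough sketch of how neighborhoods are typically built, each subproblem can be assigned the indices of its closest weight vectors by Euclidean distance, and parents are then drawn from that index set. The helper name, the bi-objective weight set, and the neighborhood size of 3 are illustrative assumptions.

```python
import numpy as np

def build_neighborhoods(weights, size):
    """For every weight vector, return the indices of its `size` nearest weight vectors."""
    w = np.asarray(weights, dtype=float)
    dist = np.linalg.norm(w[:, None, :] - w[None, :, :], axis=2)
    # A vector is always its own nearest neighbour (distance 0), so it appears first.
    return np.argsort(dist, axis=1, kind="stable")[:, :size]

# Eleven evenly spaced weight vectors for a bi-objective problem.
weights = np.array([[i / 10, 1 - i / 10] for i in range(11)])
neighbors = build_neighborhoods(weights, size=3)

# Mating selection for subproblem 5: draw two distinct parents from its neighborhood.
rng = np.random.default_rng(1)
parents = rng.choice(neighbors[5], size=2, replace=False)
print(neighbors[5], parents)
```

Changing `size` is the knob discussed above: it controls how far afield a subproblem looks for mating partners and how many neighboring subproblems a new solution may update.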

Logical Relationships and Workflows

The following diagrams illustrate the core logical relationships and a typical experimental workflow in the context of decomposition-based MaOEAs.

Experimental Protocols and Performance Metrics

To rigorously evaluate and compare the performance of different decomposition-based MaOEAs, a standardized experimental protocol is essential.

Key Experimental Protocols

A typical experimental setup involves the following steps:

- Benchmark Problem Selection: A comprehensive set of benchmark problems with varying characteristics (e.g., DTLZ, WFG test suites) is chosen to assess the algorithms' performance on different types of Pareto fronts.[18][19]

- Algorithm Selection: The proposed algorithm is compared against several state-of-the-art MaOEAs.[19][20]

- Parameter Settings: Common parameters such as population size, number of generations (or function evaluations), and genetic operators (e.g., simulated binary crossover, polynomial mutation) are kept consistent across all algorithms for a fair comparison.

- Multiple Runs: To account for the stochastic nature of evolutionary algorithms, each algorithm is run multiple times (e.g., 30 independent runs) for each test problem.

- Performance Metrics: Quantitative performance metrics are used to evaluate the quality of the obtained solutions in terms of convergence and diversity.

Performance Metrics

Several metrics are commonly used to assess the performance of MaOEAs:[21][22][23][24][25]

- Inverted Generational Distance (IGD): This metric measures both the convergence and diversity of the obtained solution set by calculating the average distance from points in the true Pareto front to the nearest solution in the obtained set.[22][23]

- Hypervolume (HV): The HV metric calculates the volume of the objective space dominated by the obtained solution set and bounded by a reference point. It is one of the few metrics that are Pareto-compliant.[23][24]
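For two objectives the hypervolume reduces to an area that can be computed with a simple sweep. The sketch below assumes both objectives are minimized and that the reference point is worse than every point of interest; the example points are invented. Exact computation in higher dimensions is considerably more expensive and is not shown.

```python
def hypervolume_2d(points, reference):
    """Area dominated by `points` and bounded by `reference` (both objectives minimized)."""
    # Keep only points strictly better than the reference point in both objectives.
    inside = sorted((f1, f2) for f1, f2 in points
                    if f1 < reference[0] and f2 < reference[1])
    area = 0.0
    best_f2 = reference[1]
    for f1, f2 in inside:      # sweep in order of increasing f1
        if f2 < best_f2:       # dominated points add no new area
            area += (reference[0] - f1) * (best_f2 - f2)
            best_f2 = f2
    return area

front = [(1.0, 3.0), (2.0, 2.0), (3.0, 1.0)]
hv = hypervolume_2d(front, reference=(4.0, 4.0))
hv_with_dominated = hypervolume_2d(front + [(3.0, 3.0)], reference=(4.0, 4.0))
print(hv, hv_with_dominated)
```

Adding the dominated point (3, 3) leaves the value unchanged, which illustrates the Pareto-compliance property: the indicator never rewards a set for containing dominated solutions.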

Quantitative Data Summary

The following tables summarize the performance of various decomposition-based algorithms on benchmark test suites, as reported in the literature. The values represent the mean IGD of multiple runs, with lower values indicating better performance.

Table 1: Mean IGD values for three-objective DTLZ problems

| Algorithm | DTLZ1 | DTLZ2 | DTLZ3 | DTLZ4 | DTLZ5 | DTLZ6 | DTLZ7 |
|---|---|---|---|---|---|---|---|
| MOEA/D | 7. | 12. | 12. | 12. | 12. | 12. | 22. |
| DEAGNG | 7. | 12. | 12. | 12. | 12. | 12. | 22. |
| DMOEAeC | 7. | 12. | 12. | 12. | 12. | 12. | 22. |
| IDBEA | 7. | 12. | 12. | 12. | 12. | 12. | 22. |
| MPSOD | 7. | 12. | 12. | 12. | 12. | 12. | 22. |

Data extracted from a comparative study on decomposition-based algorithms.[19] Note: The identical values reported in the source suggest a potential issue with the original data presentation; however, they are reported here as found in the reference.

Table 2: Comparison of MOEA/D, MOEA/DD, and NSGA-III on DTLZ and WFG problems (Average IGD)

| Problem | MOEA/D | MOEA/DD | NSGA-III |
|---|---|---|---|
| DTLZ1 | Fair | Best | Good |
| DTLZ3 | Fair | Best | Good |
| DTLZ7 | Good | Fair | Best |
| WFG1 | Good | Fair | Best |

Qualitative summary based on graphical data presented in a survey paper.[3] "Best" indicates the algorithm with the lowest average IGD, "Good" is the next best, and "Fair" is the third.

Conclusion

The theoretical foundations of decomposition-based MaOEAs provide a robust framework for tackling complex optimization problems. The interplay between scalarizing functions, weight vector generation, and neighborhood definitions is central to their success. While the original MOEA/D framework has proven to be highly effective, ongoing research into adaptive and dynamic components continues to push the boundaries of performance, particularly for problems with highly complex or irregular Pareto fronts. For researchers and professionals in fields like drug development, a solid understanding of these core principles is crucial for effectively applying and tailoring these powerful optimization tools to specific real-world challenges.

References

- 1. IEEE Xplore Full-Text PDF: [ieeexplore.ieee.org]

- 2. medium.com [medium.com]

- 3. IEEE Xplore Full-Text PDF: [ieeexplore.ieee.org]

- 4. IEEE Xplore Full-Text PDF: [ieeexplore.ieee.org]

- 5. researchgate.net [researchgate.net]

- 6. IEEE Xplore Full-Text PDF: [ieeexplore.ieee.org]

- 7. On Balancing Neighborhood and Global Replacement Strategies in MOEA/D | IEEE Journals & Magazine | IEEE Xplore [ieeexplore.ieee.org]

- 8. abdn.elsevierpure.com [abdn.elsevierpure.com]

- 9. researchgate.net [researchgate.net]

- 10. On the Convergence of Typical Decomposition Approaches in MOEA/D for Many-objective Optimization Problems - Microsoft Research [microsoft.com]

- 11. ieeexplore.ieee.org [ieeexplore.ieee.org]

- 12. mdpi.com [mdpi.com]

- 13. Local Neighborhood-Based Adaptation of Weights in Multi-Objective Evolutionary Algorithms Based on Decomposition | IEEE Conference Publication | IEEE Xplore [ieeexplore.ieee.org]

- 14. arxiv.org [arxiv.org]

- 15. researchgate.net [researchgate.net]

- 16. researchgate.net [researchgate.net]

- 17. IEEE Xplore Full-Text PDF: [ieeexplore.ieee.org]

- 18. mdpi.com [mdpi.com]

- 19. researchgate.net [researchgate.net]

- 20. researchgate.net [researchgate.net]

- 21. A Running Performance Metric and Termination Criterion for Evaluating Evolutionary Multi- and Many-objective Optimization Algorithms | IEEE Conference Publication | IEEE Xplore [ieeexplore.ieee.org]

- 22. Performance metrics in multi-objective optimization - BayesJump [davidwalz.github.io]

- 23. arxiv.org [arxiv.org]

- 24. researchgate.net [researchgate.net]

- 25. Performance metrics in multi-objective optimization | IEEE Conference Publication | IEEE Xplore [ieeexplore.ieee.org]

Navigating the Labyrinth of Drug Discovery: A Technical Guide to Pareto Dominance in Many-Objective Problems

For Researchers, Scientists, and Drug Development Professionals

In the intricate and high-stakes world of drug discovery, the path to a successful therapeutic is paved with a multitude of conflicting objectives. A potential drug candidate must not only exhibit high potency against its target but also possess a favorable profile across a wide range of Absorption, Distribution, Metabolism, Excretion, and Toxicity (ADMET) properties. Optimizing one of these parameters often comes at the expense of another, creating a complex, multi-dimensional challenge. This guide delves into the core concept of Pareto dominance, a cornerstone of multi-objective optimization, and its application in navigating the vast chemical space to identify promising drug candidates. We will explore the theoretical underpinnings, practical challenges, and computational strategies for tackling many-objective problems in drug development, providing a technical roadmap for researchers in the field.

The Core Concept: Understanding Pareto Dominance

In a multi-objective optimization problem, there is seldom a single solution that is optimal for all objectives. Instead, we seek a set of solutions that represent the best possible trade-offs. This is where the concept of Pareto dominance, introduced by the economist Vilfredo Pareto, becomes essential.[1][2]

A solution x is said to Pareto dominate another solution y if and only if:

- Solution x is no worse than solution y in all objectives.

- Solution x is strictly better than solution y in at least one objective.

The set of all non-dominated solutions in the search space is known as the Pareto set, and its image in the objective space is the Pareto front.[2][3] These are the "best" possible solutions, where any improvement in one objective can only be achieved by degrading at least one other objective. The goal of a many-objective optimization algorithm is to find a set of solutions that is a good approximation of the true Pareto front.

In the diagram above, solutions A, B, and C form the Pareto front. Solution A dominates E, as it is better in both objectives. Solution B also dominates E. Solution C dominates D. No solution on the Pareto front dominates another solution on the front.
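The dominance definition translates directly into code. In the sketch below both objectives are minimized, and the coordinates assigned to A through E are invented so that they reproduce the relationships described in the text; they are not taken from the original figure.

```python
def dominates(x, y):
    """True if objective vector x Pareto-dominates y (all objectives minimized)."""
    no_worse = all(a <= b for a, b in zip(x, y))
    strictly_better = any(a < b for a, b in zip(x, y))
    return no_worse and strictly_better

def non_dominated(solutions):
    """Keep the entries of a {name: objectives} dict that no other entry dominates."""
    return {
        name: f
        for name, f in solutions.items()
        if not any(dominates(g, f) for other, g in solutions.items() if other != name)
    }

# Hypothetical coordinates (f1, f2) for the five solutions.
points = {"A": (1, 5), "B": (2, 3), "C": (4, 1), "D": (5, 2), "E": (3, 6)}

front = non_dominated(points)
print(sorted(front))
print(dominates(points["A"], points["E"]), dominates(points["C"], points["D"]))
```

Note that dominance is only a partial order: A and B, for example, are incomparable because each is better than the other in one objective.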

The Challenge of Many Objectives: The Curse of Dimensionality

While the concept of Pareto dominance is straightforward in two or three dimensions, its effectiveness diminishes as the number of objectives increases—a phenomenon known as the "curse of dimensionality."[2] In high-dimensional objective spaces (typically more than three), several challenges arise:

- Proportion of Non-Dominated Solutions: As the number of objectives grows, the proportion of non-dominated solutions in a randomly generated population increases exponentially.[4] This makes it difficult for Pareto-based selection methods to distinguish between solutions and apply sufficient selection pressure towards the true Pareto front.

- Visualization: Visualizing and interpreting a high-dimensional Pareto front is a significant challenge, making it difficult for decision-makers to select a final solution.[5][6][7][8]

- Computational Cost: The computational effort required to find and maintain a well-distributed set of non-dominated solutions increases significantly with the number of objectives.

Strategies for Handling Many-Objective Problems in Drug Discovery

To address the challenges of high-dimensional objective spaces, several strategies have been developed and applied in the field of drug discovery.

Scalarization Methods

Scalarization techniques transform a multi-objective problem into a single-objective one by aggregating the objectives into a single function.[9][10] This allows the use of traditional single-objective optimization algorithms. Common scalarization methods include:

- Weighted Sum: This is the simplest approach, where each objective is assigned a weight, and the goal is to optimize the weighted sum of all objectives. The main drawback is that it can only find solutions on the convex parts of the Pareto front and requires a priori knowledge to set the weights appropriately.

- Epsilon-Constraint Method: In this method, one objective is optimized while the others are converted into constraints, with a permissible upper or lower bound (epsilon). By systematically varying the epsilon values, different points on the Pareto front can be obtained.

While straightforward to implement, scalarization methods can be sensitive to the choice of weights or constraints and may not be able to capture the full diversity of the Pareto front.[3]
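The contrast between the two scalarization methods can be shown on a small finite candidate set. The sketch assumes two objectives to be minimized and five invented points lying roughly on a concave front; the epsilon and weight values are arbitrary choices for illustration.

```python
def epsilon_constraint(candidates, epsilon):
    """Minimize f1 subject to f2 <= epsilon over a finite candidate list."""
    feasible = [c for c in candidates if c[1] <= epsilon]
    return min(feasible, key=lambda c: c[0]) if feasible else None

def weighted_sum_pick(candidates, w):
    """Minimize w * f1 + (1 - w) * f2 over a finite candidate list."""
    return min(candidates, key=lambda c: w * c[0] + (1 - w) * c[1])

# Hypothetical non-dominated points on a concave front (close to the unit circle).
candidates = [(0.0, 1.0), (0.38, 0.92), (0.71, 0.71), (0.92, 0.38), (1.0, 0.0)]

eps_picked = [epsilon_constraint(candidates, eps) for eps in (1.0, 0.95, 0.75, 0.4, 0.1)]
ws_picked = {weighted_sum_pick(candidates, w) for w in (0.1, 0.3, 0.5, 0.7, 0.9)}

print("epsilon-constraint:", eps_picked)
print("weighted sum:      ", sorted(ws_picked))
```

Sweeping epsilon recovers every point of the front, while sweeping the weights only ever returns the two extreme points, because the interior of a concave front is never optimal for a linear combination of the objectives.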

Pareto-based Approaches

Pareto-based methods directly use the concept of dominance to guide the search. Evolutionary algorithms, such as the Non-dominated Sorting Genetic Algorithm II (NSGA-II), are popular in this category.[11][12] These algorithms maintain a population of solutions and use Pareto ranking and diversity preservation mechanisms to evolve the population towards the Pareto front.

Dimensionality Reduction

Dimensionality reduction techniques aim to reduce the number of objectives by identifying and removing redundant or less important ones.[4][13][14][15][16] This can be achieved through methods like Principal Component Analysis (PCA), which transforms the original set of correlated objectives into a smaller set of uncorrelated principal components. By focusing on the most significant components, the complexity of the problem can be reduced, making it more amenable to traditional multi-objective optimization algorithms.

Quality Indicators

Given that a many-objective optimization algorithm produces a set of solutions, it is crucial to have metrics to evaluate the quality of this set. Quality indicators provide a quantitative measure of how well an approximated Pareto front represents the true Pareto front. The hypervolume indicator is one of the most widely used metrics.[17][18][19][20][21] It calculates the volume of the objective space dominated by the obtained set of non-dominated solutions and bounded by a reference point. A larger hypervolume generally indicates a better approximation of the Pareto front in terms of both convergence and diversity.

Case Study: Multi-Objective Optimization of PARP-1 Inhibitors for ADMET Profile Improvement

To illustrate the practical application of these concepts, we present a synthesized case study based on the optimization of Poly (ADP-ribose) polymerase-1 (PARP-1) inhibitors. PARP-1 is a key enzyme in DNA repair, and its inhibitors have shown significant promise in cancer therapy.[22][23][24][25][26] However, early-generation PARP-1 inhibitors often suffer from suboptimal ADMET properties, limiting their clinical utility.

In this case study, we employ a multi-objective particle swarm optimization (MOPSO) algorithm to simultaneously optimize a set of conflicting objectives for a series of PARP-1 inhibitor analogues.[1][27][28][29][30]

Experimental Protocol: Multi-Objective Particle Swarm Optimization (MOPSO)

The following protocol outlines the key steps in the MOPSO-based optimization of PARP-1 inhibitors. This protocol is based on the general framework described in the ChemMORT platform.[1]

- Objective Definition: Five objectives were defined for optimization:

  - Maximization of predicted PARP-1 inhibitory activity (pIC50).

  - Maximization of aqueous solubility (LogS).

  - Minimization of predicted cardiotoxicity (hERG inhibition).

  - Maximization of metabolic stability (i.e., minimization of microsomal clearance).

  - Maximization of synthetic accessibility (i.e., minimization of the SA score).

- Dataset Preparation: A dataset of known PARP-1 inhibitors with experimentally determined pIC50 values and ADMET properties was compiled from the ChEMBL database. This dataset was used to train quantitative structure-activity relationship (QSAR) models for each objective.

- QSAR Model Development: For each of the five objectives, a gradient boosting machine learning model was trained to predict the property based on a set of 2D molecular descriptors calculated using the RDKit library.

- MOPSO Algorithm Implementation:

  - Initialization: A swarm of 100 particles (molecules) was initialized. Each particle represents a candidate molecule encoded as a SMILES string. The initial population was generated by randomly modifying a known active PARP-1 inhibitor scaffold.

  - Evaluation: For each particle in the swarm, the five objective functions (QSAR models) were evaluated to determine its position in the objective space.

  - Non-Dominated Sorting and Leader Selection: The entire swarm was ranked using fast non-dominated sorting. The leaders (non-dominated solutions) were stored in an external archive.

  - Velocity and Position Update: The velocity and position of each particle were updated based on its own best-known position and the position of a leader selected from the archive. A crowding distance mechanism was used to promote diversity in leader selection.

  - Mutation: A small probability of mutation (e.g., random modification of the SMILES string) was applied to each particle to maintain diversity and escape local optima.

  - Termination: The algorithm was run for a fixed number of iterations (e.g., 500 generations).

- Pareto Front Analysis: The final set of non-dominated solutions from the archive was analyzed to identify promising candidates with a balanced profile of high potency and favorable ADMET properties.

Data Presentation: Representative Pareto Front Solutions

The following table summarizes a selection of non-dominated solutions from the final Pareto front, showcasing the trade-offs between the different objectives.

| Compound ID | Predicted pIC50 | Predicted LogS | Predicted hERG Inhibition (µM) | Predicted Microsomal Clearance (µL/min/mg) | SA Score |
|---|---|---|---|---|---|
| Parent | 8.2 | -4.5 | 5.2 | 85 | 2.8 |
| Candidate 1 | 8.9 | -4.8 | 7.1 | 75 | 3.1 |
| Candidate 2 | 8.5 | -3.2 | 6.5 | 68 | 3.0 |
| Candidate 3 | 8.1 | -3.5 | >10 | 55 | 2.9 |
| Candidate 4 | 8.6 | -4.1 | 8.9 | 45 | 3.2 |
| Candidate 5 | 8.4 | -3.8 | 9.5 | 50 | 2.5 |

Higher pIC50, higher LogS, and a higher hERG value (a higher inhibitory concentration in µM indicates weaker predicted hERG inhibition) are desirable, as are lower microsomal clearance and a lower SA score.

This table illustrates the trade-offs inherent in the optimization process. For instance, Candidate 1 shows the highest predicted potency but has slightly worse solubility than the parent compound. Candidate 3 exhibits the most favorable hERG profile but at the cost of reduced potency. Such a diverse set of solutions allows medicinal chemists to make informed decisions based on the specific goals of their drug discovery program.
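To make these trade-offs concrete, the tabulated values can be checked for Pareto dominance. The sketch below is illustrative only: it enters ">10" for Candidate 3 as 10.0 (an assumption, chosen as a conservative lower bound), multiplies the objectives that should be maximized by -1 so that every objective is minimized, and uses hypothetical helper names (`as_minimization`, `dominates`, `dominators`).

```python
# Rows copied from the table: (pIC50, LogS, hERG value in µM, clearance, SA score).
# Assumption: ">10" for Candidate 3 is entered as 10.0, a conservative lower bound.
compounds = {
    "Parent":      (8.2, -4.5, 5.2, 85, 2.8),
    "Candidate 1": (8.9, -4.8, 7.1, 75, 3.1),
    "Candidate 2": (8.5, -3.2, 6.5, 68, 3.0),
    "Candidate 3": (8.1, -3.5, 10.0, 55, 2.9),
    "Candidate 4": (8.6, -4.1, 8.9, 45, 3.2),
    "Candidate 5": (8.4, -3.8, 9.5, 50, 2.5),
}

# -1 marks objectives where higher is better; multiplying by the sign
# turns every objective into "lower is better".
SIGNS = (-1, -1, -1, 1, 1)

def as_minimization(values):
    return tuple(sign * value for sign, value in zip(SIGNS, values))

def dominates(a, b):
    """a dominates b: no worse in every objective, strictly better in at least one."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))

def dominators(name):
    """Names of all compounds that dominate the given compound."""
    target = as_minimization(compounds[name])
    return [other for other, values in compounds.items()
            if other != name and dominates(as_minimization(values), target)]

front = [name for name in compounds if not dominators(name)]
print("Non-dominated:", front)
print("Parent is dominated by:", dominators("Parent"))
```

With these inputs the five candidates are mutually non-dominated, because each one is the best on a different objective, while the parent compound is dominated by Candidate 5, which is better on every objective.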

Visualizing the Biological Context: Signaling Pathways

Understanding the biological context of the drug target is crucial for rational drug design. PARP-1 is a key player in the DNA damage response (DDR) pathway. Visualizing this pathway can help researchers understand the mechanism of action of PARP inhibitors and potential avenues for combination therapies. The Mitogen-Activated Protein Kinase (MAPK) signaling pathway is another critical pathway often implicated in the cellular processes that PARP inhibitors affect, such as cell proliferation and apoptosis.[31][32][33]

This diagram illustrates how extracellular signals, such as growth factors, can activate the MAPK cascade, ultimately leading to changes in gene expression that promote cell proliferation. The diagram also shows the role of PARP-1 in the DNA damage response, highlighting a key area of crosstalk between these fundamental cellular processes. Understanding these connections can inform the development of combination therapies, for example, by targeting both a signaling pathway and a DNA repair mechanism.

Conclusion

Pareto dominance provides a powerful framework for addressing the multi-objective nature of drug discovery. By embracing the concept of finding a set of optimal trade-off solutions, researchers can move beyond the limitations of single-objective optimization and explore the chemical space more effectively. The challenges posed by a high number of objectives are significant, but with the strategic application of advanced computational techniques such as Pareto-based evolutionary algorithms, dimensionality reduction, and robust quality indicators, these hurdles can be overcome. As the complexity of drug discovery continues to grow, a deep understanding and application of Pareto dominance and many-objective optimization will be indispensable for the rational design of the next generation of therapeutics.

References

- 1. academic.oup.com [academic.oup.com]

- 2. Multi-and many-objective optimization: present and future in de novo drug design - PMC [pmc.ncbi.nlm.nih.gov]

- 3. Pareto optimization to accelerate multi-objective virtual screening - Digital Discovery (RSC Publishing) DOI:10.1039/D3DD00227F [pubs.rsc.org]

- 4. researchgate.net [researchgate.net]

- 5. egr.msu.edu [egr.msu.edu]

- 6. How do you visualize and compare the Pareto fronts of different multi-objective optimization algorithms? - Infermatic [infermatic.ai]

- 7. [PDF] 3D-RadVis: Visualization of Pareto front in many-objective optimization | Semantic Scholar [semanticscholar.org]

- 8. researchgate.net [researchgate.net]

- 9. [2405.10221] Scalarisation-based risk concepts for robust multi-objective optimisation [arxiv.org]

- 10. researchgate.net [researchgate.net]

- 11. mdpi.com [mdpi.com]

- 12. sci2s.ugr.es [sci2s.ugr.es]

- 13. Exploring Dimensionality Reduction Techniques for Deep Learning Driven QSAR Models of Mutagenicity - PMC [pmc.ncbi.nlm.nih.gov]

- 14. Dimensionality reduction approach for many-objective epistasis analysis - PubMed [pubmed.ncbi.nlm.nih.gov]

- 15. researchgate.net [researchgate.net]

- 16. Exploring Dimensionality Reduction Techniques for Deep Learning Driven QSAR Models of Mutagenicity - PubMed [pubmed.ncbi.nlm.nih.gov]

- 17. proceedings.neurips.cc [proceedings.neurips.cc]

- 18. research-repository.uwa.edu.au [research-repository.uwa.edu.au]

- 19. hpi.de [hpi.de]

- 20. asmedigitalcollection.asme.org [asmedigitalcollection.asme.org]

- 21. researchgate.net [researchgate.net]

- 22. Computational design of PARP-1 inhibitors: QSAR, molecular docking, virtual screening, ADMET, and molecular dynamics simulations for targeted drug development - PubMed [pubmed.ncbi.nlm.nih.gov]

- 23. Improved QSAR models for PARP-1 inhibition using data balancing, interpretable machine learning, and matched molecular pair analysis - PubMed [pubmed.ncbi.nlm.nih.gov]

- 24. Computational Chemistry Advances in the Development of PARP1 Inhibitors for Breast Cancer Therapy - PMC [pmc.ncbi.nlm.nih.gov]

- 25. aacrjournals.org [aacrjournals.org]

- 26. researchgate.net [researchgate.net]

- 27. idus.us.es [idus.us.es]

- 28. youtube.com [youtube.com]

- 29. A Multiobjective Particle Swarm Optimizer for Constrained Optimization [ideas.repec.org]

- 30. mdpi.com [mdpi.com]

- 31. MAP Kinase Pathways - PMC [pmc.ncbi.nlm.nih.gov]

- 32. scispace.com [scispace.com]

- 33. cusabio.com [cusabio.com]

The Convergence of Complexity and Optimization: A Technical Guide to the Role of Many-Objective Evolutionary Algorithms in Solving NP-hard Problems

For Researchers, Scientists, and Drug Development Professionals

Abstract

Many-Objective Evolutionary Algorithms (MaOEAs) are at the forefront of computational intelligence, offering robust methodologies for solving optimization problems with four or more conflicting objectives. This technical guide provides an in-depth exploration of the critical role MaOEAs play in tackling NP-hard problems, a class of computationally intractable challenges prevalent across various scientific and industrial domains. We delve into the theoretical underpinnings of MaOEAs, their practical applications in classic combinatorial optimization problems such as the Traveling Salesman Problem (TSP) and the Vehicle Routing Problem (VRP), and their emerging significance in the field of drug development for tasks like Quantitative Structure-Activity Relationship (QSAR) modeling and molecular docking. This paper presents detailed experimental protocols, comparative performance data, and visual workflows to equip researchers, scientists, and drug development professionals with a comprehensive understanding of MaOEA-based problem-solving paradigms.

Introduction to NP-hard Problems

In computational complexity theory, problems are often categorized by how their solving time scales with the size of the input. NP-hard (Non-deterministic Polynomial-time hard) problems are a class of problems for which no polynomial-time algorithm for finding an optimal solution is known.[1][2] For the best known exact algorithms, the time required grows exponentially with the size of the problem in the worst case, so even moderately sized instances can be computationally infeasible to solve exactly.[3]

Many real-world challenges in logistics, planning, and science are NP-hard.[4] Two classic examples include:

- Traveling Salesman Problem (TSP): Given a list of cities and the distances between them, find the shortest possible route that visits each city exactly once and returns to the origin city.[1] The number of possible routes grows factorially with the number of cities, making a brute-force search impractical for all but the smallest instances.[5]

- Vehicle Routing Problem (VRP): An extension of the TSP, the VRP aims to find the optimal set of routes for a fleet of vehicles to serve a given set of customers, often with constraints such as vehicle capacity and time windows.[4][6]

The inherent difficulty of these problems necessitates the use of heuristic and metaheuristic approaches, like evolutionary algorithms, which can find high-quality, near-optimal solutions in a reasonable amount of time.[7][8]
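The gap between exact and heuristic approaches can be illustrated on a tiny TSP instance. The sketch below is a hedged example, not a recommended solver: the city coordinates are made up, distances are assumed to be Euclidean, and a simple nearest-neighbour heuristic stands in for the far more capable metaheuristics discussed in this guide.

```python
import itertools
import math

def tour_length(tour, pts):
    """Length of the closed tour that visits pts in the given order."""
    return sum(math.dist(pts[tour[i]], pts[tour[(i + 1) % len(tour)]])
               for i in range(len(tour)))

def brute_force(pts):
    """Exact search: fix city 0 as the start and try all (n-1)! orderings."""
    rest = range(1, len(pts))
    candidates = ((0,) + perm for perm in itertools.permutations(rest))
    return min(candidates, key=lambda t: tour_length(t, pts))

def nearest_neighbour(pts):
    """Greedy heuristic: always move to the closest unvisited city."""
    tour, unvisited = [0], set(range(1, len(pts)))
    while unvisited:
        nxt = min(unvisited, key=lambda c: math.dist(pts[tour[-1]], pts[c]))
        tour.append(nxt)
        unvisited.remove(nxt)
    return tuple(tour)

# Hypothetical coordinates, small enough for exhaustive search.
cities = [(0, 0), (3, 0), (3, 4), (0, 4), (1, 2), (5, 2)]
exact = brute_force(cities)
greedy = nearest_neighbour(cities)
print("exact :", exact, round(tour_length(exact, cities), 3))
print("greedy:", greedy, round(tour_length(greedy, cities), 3))

# Orderings an exhaustive search would have to examine for 20 cities.
print(math.factorial(19))
```

The exhaustive search examines only 120 orderings for six cities, but the same approach would need 19! orderings for twenty cities, whereas the heuristic runs in quadratic time with no guarantee of optimality.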

Many-Objective Evolutionary Algorithms (MaOEAs)

Evolutionary algorithms are population-based metaheuristics inspired by natural selection. When applied to problems with multiple conflicting objectives (Multi-objective Optimization Problems, or MOPs), they are known as Multi-objective Evolutionary Algorithms (MOEAs). These algorithms do not find a single optimal solution but rather a set of trade-off solutions, known as the Pareto optimal set.[9]

From Multi- to Many-Objective Optimization: Problems with four or more objectives are classified as Many-Objective Optimization Problems (MaOPs).[9][10] As the number of objectives increases, traditional MOEAs face significant challenges, a phenomenon often called the "curse of dimensionality".[11] The primary issues include:

- Dominance Resistance: In high-dimensional objective spaces, a large proportion of the solutions in the population become non-dominated with respect to each other. This weakens the selection pressure based on Pareto dominance, making it difficult for the algorithm to guide the search toward the true Pareto front.[10][11]

- Diversity Maintenance: Maintaining a well-distributed set of solutions across the entire high-dimensional Pareto front becomes increasingly difficult.[10]

- Visualization: Visualizing and interpreting a Pareto front with more than three dimensions is challenging for decision-makers.[9]

MaOEAs are a specialized class of MOEAs designed to overcome these challenges. They employ modified selection criteria, enhanced diversity preservation mechanisms, and/or decomposition strategies to maintain search pressure and effectively explore high-dimensional objective spaces.[9][11]
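The loss of dominance-based selection pressure described above is easy to observe numerically. The following sketch is an assumption-laden illustration rather than a benchmark: it draws objective vectors uniformly at random (real populations are not uniform), treats all objectives as minimized, and reports the share of points that no other point dominates; `nondominated_fraction` is a hypothetical helper name.

```python
import random

def dominates(a, b):
    """a dominates b under minimization."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))

def nondominated_fraction(n_points, n_objectives, rng):
    """Share of a random population that is not dominated by any other member."""
    population = [tuple(rng.random() for _ in range(n_objectives))
                  for _ in range(n_points)]
    survivors = sum(1 for p in population
                    if not any(dominates(q, p) for q in population))
    return survivors / n_points

rng = random.Random(0)  # fixed seed so the run is repeatable
for m in (2, 3, 5, 10):
    print(f"{m:2d} objectives: {nondominated_fraction(200, m, rng):.2f} non-dominated")
```

As the number of objectives grows, the non-dominated share of the population rises toward one, which is why Pareto ranking alone can no longer distinguish good solutions from poor ones.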

Core Workflow of an MaOEA

The fundamental workflow of a typical MaOEA involves an iterative process of selection, variation, and replacement to evolve a population of candidate solutions.
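The selection, variation, and replacement loop can be sketched in a few lines. Everything in the example below is an assumption made for illustration: the four-objective toy problem, the uniform random parent selection, the Gaussian mutation, and the replacement rule that keeps non-dominated individuals first. Practical MaOEAs replace these pieces with modified dominance relations, indicators, or decomposition strategies.

```python
import random

TARGETS = (0.0, 0.25, 0.75, 1.0)  # hypothetical toy problem with four objectives

def evaluate(x):
    """Four conflicting objectives: squared distance of x to each target value."""
    return tuple(sum((xi - t) ** 2 for xi in x) for t in TARGETS)

def dominates(a, b):
    """a dominates b under minimization."""
    return all(p <= q for p, q in zip(a, b)) and any(p < q for p, q in zip(a, b))

def vary(parent, rng, sigma=0.1):
    """Variation: Gaussian mutation, clipped to the interval [0, 1]."""
    return tuple(min(1.0, max(0.0, xi + rng.gauss(0.0, sigma))) for xi in parent)

def replace(pool, size, rng):
    """Replacement: keep non-dominated individuals first, fill the rest at random."""
    scored = [(x, evaluate(x)) for x in pool]
    front = [x for x, f in scored if not any(dominates(g, f) for _, g in scored)]
    rest = [x for x in pool if x not in front]
    rng.shuffle(front)
    rng.shuffle(rest)
    return (front + rest)[:size]

def evolve(pop_size=20, n_vars=3, generations=30, seed=0):
    rng = random.Random(seed)
    population = [tuple(rng.random() for _ in range(n_vars)) for _ in range(pop_size)]
    for _ in range(generations):
        parents = [rng.choice(population) for _ in range(pop_size)]  # selection
        offspring = [vary(p, rng) for p in parents]                  # variation
        population = replace(population + offspring, pop_size, rng)  # replacement
    return population

final = evolve()
print(len(final), "individuals; first objective vector:", evaluate(final[0]))
```

The loop is deliberately generic, so the three commented lines in `evolve` map directly onto the three workflow stages named in the text.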

References

- 1. Travelling salesman problem - Wikipedia [en.wikipedia.org]

- 2. wseas.com [wseas.com]

- 3. Recent Advances in Combinatorial Optimization - PMC [pmc.ncbi.nlm.nih.gov]

- 4. Vehicle routing problem - Wikipedia [en.wikipedia.org]

- 5. Algorithms for the Travelling Salesman Problem [routific.com]

- 6. Vehicle Routing Problem | OR-Tools | Google for Developers [developers.google.com]

- 7. internationalpubls.com [internationalpubls.com]

- 8. arxiv.org [arxiv.org]

- 9. agriscigroup.us [agriscigroup.us]

- 10. mdpi.com [mdpi.com]

- 11. researchgate.net [researchgate.net]

Foundational Concepts of Indicator-Based Many-Objective Evolutionary Algorithms: A Technical Guide for Drug Development Professionals

A Whitepaper for Researchers, Scientists, and Drug Development Professionals

Abstract

The simultaneous optimization of multiple, often conflicting, objectives is a cornerstone of modern drug discovery. Researchers aim to design molecules that exhibit high potency and selectivity while minimizing toxicity and ensuring favorable pharmacokinetic profiles (Absorption, Distribution, Metabolism, Excretion, and Toxicity - ADMET). As the number of objectives increases, this becomes a many-objective optimization problem (MaOP), posing significant challenges to traditional optimization techniques. Indicator-based Many-Objective Evolutionary Algorithms (MaOEAs) have emerged as a powerful class of stochastic optimization algorithms capable of navigating these complex, high-dimensional search spaces. This technical guide provides an in-depth exploration of the foundational concepts of indicator-based MaOEAs, detailing the core indicators, selection mechanisms, and experimental protocols relevant to their application in drug development.

Introduction to Many-Objective Optimization in Drug Discovery

The discovery of a new drug is a multi-faceted challenge requiring the careful balancing of numerous properties.[1][2][3] A candidate molecule must not only bind effectively to its target (potency) but also avoid binding to other targets (selectivity) to prevent side effects.[1] Furthermore, its ADMET properties are critical for its success as a therapeutic agent.[1] These objectives are often in conflict; for instance, increasing a molecule's potency might inadvertently increase its toxicity.