QF-Erp7

Description

The Transformer architecture, introduced in 2017, reshaped natural language processing (NLP) by replacing recurrent and convolutional layers with self-attention mechanisms. This allowed training to be parallelized and improved performance on tasks such as translation and parsing. Subsequent models such as BERT, RoBERTa, and BART built on this foundation, refining pretraining objectives, data efficiency, and task adaptability.
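
As a rough illustration of the self-attention mechanism mentioned above, the following is a minimal sketch of single-head scaled dot-product attention in plain Python. The function names and the toy input are assumptions made for this example; it omits learned projections, multiple heads, and masking, so it is not the implementation of any particular model.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors.

    Each output row is a weighted average of the value vectors, with
    weights given by softmax(q . k / sqrt(d_k)).
    """
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        weights = softmax(scores)
        # Weighted sum of the value vectors, one output dimension at a time.
        row = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(row)
    return outputs

# Toy input (hypothetical): two tokens with 2-dimensional embeddings,
# used as queries, keys, and values at once.
tokens = [[1.0, 0.0], [0.0, 1.0]]
attended = self_attention(tokens, tokens, tokens)
print(attended)
```

Every position is computed independently of the others, which is what makes the operation easy to parallelize compared with a recurrent layer.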

Properties

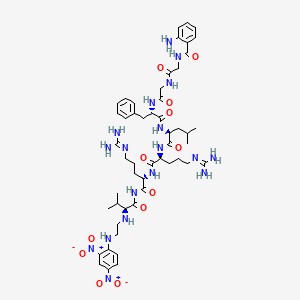

| CAS No. | 132472-84-5 |
|---|---|
| Molecular Formula | C51H74N18O12 |
| Molecular Weight | 1131.2 g/mol |
| IUPAC Name | 2-amino-N-[2-[[2-[[(2S)-1-[[(2S)-1-[[(2S)-5-(diaminomethylideneamino)-1-[[(2S)-5-(diaminomethylideneamino)-1-[[(2S)-2-[2-(2,4-dinitroanilino)ethylamino]-3-methylbutanoyl]amino]-1-oxopentan-2-yl]amino]-1-oxopentan-2-yl]amino]-4-methyl-1-oxopentan-2-yl]amino]-1-oxo-3-phenylpropan-2-yl]amino]-2-oxoethyl]amino]-2-oxoethyl]benzamide |
| InChI | InChI=1S/C51H74N18O12/c1-29(2)24-38(66-48(76)39(25-31-12-6-5-7-13-31)63-42(71)28-61-41(70)27-62-44(72)33-14-8-9-15-34(33)52)47(75)65-36(16-10-20-59-50(53)54)45(73)64-37(17-11-21-60-51(55)56)46(74)67-49(77)43(30(3)4)58-23-22-57-35-19-18-32(68(78)79)26-40(35)69(80)81/h5-9,12-15,18-19,26,29-30,36-39,43,57-58H,10-11,16-17,20-25,27-28,52H2,1-4H3,(H,61,70)(H,62,72)(H,63,71)(H,64,73)(H,65,75)(H,66,76)(H4,53,54,59)(H4,55,56,60)(H,67,74,77)/t36-,37-,38-,39-,43-/m0/s1 |
| InChI Key | JDYFMQPAPMPKCG-WWFQFRPWSA-N |
| SMILES | CC(C)CC(C(=O)NC(CCCN=C(N)N)C(=O)NC(CCCN=C(N)N)C(=O)NC(=O)C(C(C)C)NCCNC1=C(C=C(C=C1)[N+](=O)[O-])[N+](=O)[O-])NC(=O)C(CC2=CC=CC=C2)NC(=O)CNC(=O)CNC(=O)C3=CC=CC=C3N |
| Isomeric SMILES | CC(C)C[C@@H](C(=O)N[C@@H](CCCN=C(N)N)C(=O)N[C@@H](CCCN=C(N)N)C(=O)NC(=O)[C@H](C(C)C)NCCNC1=C(C=C(C=C1)[N+](=O)[O-])[N+](=O)[O-])NC(=O)[C@H](CC2=CC=CC=C2)NC(=O)CNC(=O)CNC(=O)C3=CC=CC=C3N |
| Canonical SMILES | CC(C)CC(C(=O)NC(CCCN=C(N)N)C(=O)NC(CCCN=C(N)N)C(=O)NC(=O)C(C(C)C)NCCNC1=C(C=C(C=C1)[N+](=O)[O-])[N+](=O)[O-])NC(=O)C(CC2=CC=CC=C2)NC(=O)CNC(=O)CNC(=O)C3=CC=CC=C3N |
| Appearance | Solid powder |
| Other CAS No. | 132472-84-5 |
| Purity | >98% (or refer to the Certificate of Analysis) |
| Shelf Life | >3 years if stored properly |
| Solubility | Soluble in DMSO |
| Storage | Dry, dark, and at 0 to 4 °C for short term (days to weeks) or -20 °C for long term (months to years). |
| Synonyms | 2-aminobenzoyl-glycyl-glycyl-phenylalanyl-leucyl-arginyl-arginyl-valyl-N-(2,4-dinitrophenyl)ethylenediamine; Abz-G-G-F-L-R-R-V-EDDn; Abz-Gly-Gly-Phe-Leu-Arg-Arg-Val-EDDn; QF-ERP7 |
| Origin of Product | United States |
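
The listed molecular weight can be cross-checked against the molecular formula. The sketch below is one simple way to do that, assuming rounded standard atomic weights for C, H, N, and O; the helper names `parse_formula` and `molecular_weight` are illustrative and not part of any vendor tool. It handles only flat formulas without parentheses or charges.

```python
import re

# Rounded standard atomic weights in g/mol (assumed values; only the
# elements that occur in this formula are included).
ATOMIC_WEIGHTS = {"C": 12.011, "H": 1.008, "N": 14.007, "O": 15.999}

def parse_formula(formula):
    """Return {element: count} for a flat formula such as 'C51H74N18O12'."""
    counts = {}
    for symbol, number in re.findall(r"([A-Z][a-z]?)(\d*)", formula):
        # A missing count means a single atom of that element.
        counts[symbol] = counts.get(symbol, 0) + (int(number) if number else 1)
    return counts

def molecular_weight(formula):
    """Sum atomic weight times atom count over all elements in the formula."""
    return sum(ATOMIC_WEIGHTS[element] * count
               for element, count in parse_formula(formula).items())

weight = molecular_weight("C51H74N18O12")
print(f"{weight:.2f} g/mol")
```

With these rounded atomic weights the result falls within about 0.1 g/mol of the listed 1131.2 g/mol; small differences of that size come from rounding conventions.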

Comparison with Similar Compounds

Comparison with Similar Models

The following table compares key Transformer-based models using metrics and methodologies from the evidence:

Critical Analysis of Research Findings

- Efficiency vs. Performance: The Transformer reduced training time by 75% compared to prior models but required extensive data. BART and T5 later emphasized scalability, with T5 leveraging a 750 GB corpus for multitask learning.

- Bidirectional Context: BERT’s bidirectional approach outperformed unidirectional models like GPT but was initially undertrained, a flaw that RoBERTa corrected through longer training.

- Task Generalization: BART demonstrated versatility by performing well in both generation (summarization) and comprehension (GLUE), whereas BERT and RoBERTa focused primarily on comprehension.

Practical Implications

- Industry Adoption: BERT and RoBERTa are widely used for tasks like sentiment analysis and named entity recognition, while BART is often preferred for summarization and dialogue systems.

- Resource Considerations: Training T5 or RoBERTa demands significant computational resources (e.g., hundreds of GPUs), making them less accessible for small-scale projects.

Retrosynthesis Analysis

AI-Powered Synthesis Planning: Our tool employs the Template_relevance models trained on the Pistachio, Bkms_metabolic, Pistachio_ringbreaker, Reaxys, and Reaxys_biocatalysis template sets, drawing on a large database of chemical reactions to predict feasible synthetic routes.

One-Step Synthesis Focus: The tool is designed specifically for one-step synthesis and returns concise, direct routes to the target compound.

Accurate Predictions: Predictions draw on the PISTACHIO, BKMS_METABOLIC, PISTACHIO_RINGBREAKER, REAXYS, and REAXYS_BIOCATALYSIS databases, which reflect current chemical research and data.

Strategy Settings

| Precursor scoring | Relevance Heuristic |
|---|---|
| Min. plausibility | 0.01 |
| Model | Template_relevance |
| Template Set | Pistachio/Bkms_metabolic/Pistachio_ringbreaker/Reaxys/Reaxys_biocatalysis |
| Top-N result to add to graph | 6 |
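
To make the role of the minimum plausibility and Top-N settings concrete, here is a hypothetical sketch of how such thresholds might be applied to a list of predicted precursors. The `Candidate` class, the `select_candidates` function, and the sample scores are assumptions made for illustration; the tool's actual scoring and selection logic is not documented on this page.

```python
from dataclasses import dataclass

@dataclass
class Candidate:
    precursor: str       # placeholder label, not a real prediction
    plausibility: float  # assumed score in [0, 1]
    relevance: float     # assumed heuristic score used for ranking

def select_candidates(candidates, min_plausibility=0.01, top_n=6):
    """Drop candidates below the plausibility cutoff, then keep the
    top_n remaining candidates ranked by relevance (highest first)."""
    kept = [c for c in candidates if c.plausibility >= min_plausibility]
    kept.sort(key=lambda c: c.relevance, reverse=True)
    return kept[:top_n]

# Made-up scores purely to exercise the function.
sample = [
    Candidate("route-A", 0.80, 0.40),
    Candidate("route-B", 0.005, 0.95),  # removed: below the 0.01 cutoff
    Candidate("route-C", 0.30, 0.70),
]
for candidate in select_candidates(sample):
    print(candidate.precursor, candidate.relevance)
```

The defaults mirror the values in the table above (0.01 and 6). Lowering the cutoff or raising Top-N would admit more speculative candidates into the graph.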

Feasible Synthetic Routes


Disclaimer and Information on In-Vitro Research Products

Please be aware that all articles and product information presented on BenchChem are intended solely for informational purposes. The products available for purchase on BenchChem are designed specifically for in-vitro studies, which are conducted outside of living organisms. The term "in vitro," Latin for "in glass," refers to experiments performed in controlled laboratory settings using cells or tissues. These products are not categorized as medicines or drugs, and they have not received approval from the FDA for the prevention, treatment, or cure of any medical condition, ailment, or disease. Any form of bodily introduction of these products into humans or animals is strictly prohibited by law. Adhering to these guidelines is essential to ensure compliance with legal and ethical standards in research and experimentation.