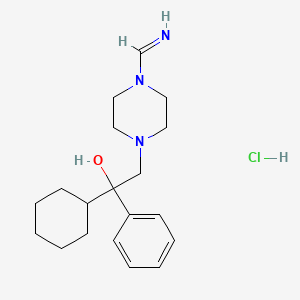

Dac 5945

Description

Properties

| CAS No. | 124065-13-0 |
|---|---|
| Molecular Formula | C19H30ClN3O |
| Molecular Weight | 351.9 g/mol |
| IUPAC Name | 1-cyclohexyl-2-(4-methanimidoylpiperazin-1-yl)-1-phenylethanol;hydrochloride |
| InChI | InChI=1S/C19H29N3O.ClH/c20-16-22-13-11-21(12-14-22)15-19(23,17-7-3-1-4-8-17)18-9-5-2-6-10-18;/h1,3-4,7-8,16,18,20,23H,2,5-6,9-15H2;1H |
| InChI Key | ABECNUXOXSXJCR-UHFFFAOYSA-N |
| Canonical SMILES | C1CCC(CC1)C(CN2CCN(CC2)C=N)(C3=CC=CC=C3)O.Cl |
| Appearance | Solid powder |
| Purity | >98% (or refer to the Certificate of Analysis) |
| Shelf Life | >2 years if stored properly |
| Solubility | Soluble in DMSO |
| Storage | Dry, dark, and at 0 to 4 °C for short term (days to weeks) or -20 °C for long term (months to years). |
| Synonyms | DAC 5945; DAC-5945; N-iminomethyl-N'-((2-hydroxy-2-phenyl-2-cyclohexyl)ethyl)piperazine hydrochloride |
| Origin of Product | United States |
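As a quick consistency check on the properties listed above, the molecular weight can be recomputed from the molecular formula. The following is a minimal sketch; the atomic weights are rounded standard values supplied here as an assumption, and the helper `molecular_weight` is a hypothetical name that only handles simple formulas without parentheses.

```python
import re

# Rounded standard atomic weights in g/mol (assumed values for this sketch).
ATOMIC_WEIGHTS = {"C": 12.011, "H": 1.008, "Cl": 35.45, "N": 14.007, "O": 15.999}


def molecular_weight(formula: str) -> float:
    """Sum atomic weights for a simple formula such as 'C19H30ClN3O'."""
    total = 0.0
    # Each match is an element symbol followed by an optional atom count.
    for symbol, count in re.findall(r"([A-Z][a-z]?)(\d*)", formula):
        total += ATOMIC_WEIGHTS[symbol] * (int(count) if count else 1)
    return total


mw = molecular_weight("C19H30ClN3O")
print(f"{mw:.1f} g/mol")
```

The result agrees with the listed 351.9 g/mol to one decimal place.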

Foundational & Exploratory

An In-depth Technical Guide to the HPE FlexFabric 5945 Switch Series for High-Performance Computing Environments

The HPE FlexFabric 5945 Switch Series represents a line of high-density, low-latency Top-of-Rack (ToR) switches engineered for the demanding requirements of modern data centers.[1][2][3][4] For researchers, scientists, and drug development professionals leveraging high-performance computing (HPC) for complex data analysis and simulations, the network infrastructure is a critical component for timely and efficient data processing. This guide provides a detailed technical overview of the HPE FlexFabric 5945 series, focusing on its capabilities to support data-intensive scientific workflows.

Core Capabilities and Performance

The HPE FlexFabric 5945 series is designed to provide high-performance switching to eliminate network bottlenecks in computationally intensive environments. These switches offer a combination of high port density and wire-speed performance, crucial for large-scale data transfers common in genomics, molecular modeling, and other research areas.

Performance Specifications

The following table summarizes the key performance metrics across different models in the HPE FlexFabric 5945 series. This data is essential for designing a network architecture that can handle large datasets and high-speed interconnects between compute and storage nodes.

| Feature | HPE FlexFabric 5945 48SFP28 8QSFP28 (JQ074A) | HPE FlexFabric 5945 2-slot Switch (JQ075A) | HPE FlexFabric 5945 4-slot Switch (JQ076A) | HPE FlexFabric 5945 32QSFP28 (JQ077A) |
|---|---|---|---|---|
| Switching Capacity | 4 Tb/s[5] | 3.6 Tb/s[5] | 6.4 Tb/s[5] | 6.4 Tb/s[5] |
| Throughput | 2024 Mpps[5] | 2024 Mpps[5] | 2024 Mpps[5] | 2024 Mpps[5] |
| Latency | < 1 µs (64-byte packets)[5] | < 1 µs (64-byte packets)[5] | < 1 µs (64-byte packets)[5] | < 1 µs (64-byte packets)[5] |
| MAC Address Table Size | 288K Entries[5] | 288K Entries[5] | 288K Entries[5] | 288K Entries[5] |
| Routing Table Size (IPv4/IPv6) | 324K / 162K Entries[5] | 324K / 162K Entries[5] | 324K / 162K Entries[5] | 324K / 162K Entries[5] |
| Packet Buffer Size | 32 MB | 32 MB[5] | 16 MB[5] | 32 MB[5] |
| Flash Memory | 1 GB[5] | 1 GB[5] | 1 GB[5] | 1 GB[5] |
| SDRAM | 8 GB | 8 GB[5] | 4 GB[5] | 8 GB[5] |

Port Configurations

The series offers various models with flexible port configurations to accommodate diverse connectivity requirements, from 10GbE to 100GbE, allowing for the aggregation of numerous servers and high-speed uplinks to the core network.

| Model | I/O Ports and Slots |
|---|---|
| HPE FlexFabric 5945 48SFP28 8QSFP28 (JQ074A) | 48 x 25G SFP28 ports, 8 x 100G QSFP28 ports, 2 x 1G SFP ports.[5] Supports up to 80 x 10GbE ports with splitter cables. |
| HPE FlexFabric 5945 2-slot Switch (JQ075A) | 2 module slots, 2 x 100G QSFP28 ports. Supports a maximum of 48 x 10/25 GbE and 4 x 100 GbE ports, or up to 16 x 100 GbE ports.[5] |
| HPE FlexFabric 5945 4-slot Switch (JQ076A) | 4 module slots, 2 x 1G SFP ports.[5] Supports a maximum of 96 x 10/25 GbE and 8 x 100 GbE ports, or up to 32 x 100 GbE ports.[5] |
| HPE FlexFabric 5945 32QSFP28 (JQ077A) | 32 x 100G QSFP28 ports, 2 x 1G SFP ports.[5] |
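The port counts for the two fixed-port models can be related to the quoted switching capacities with simple arithmetic. The sketch below assumes that switching capacity is counted full duplex (both directions of every data port) and that the 1G SFP ports are excluded; both are assumptions made for illustration, not statements from the data sheet. The names `MODELS` and `switching_capacity_tbps` are hypothetical.

```python
# Data-port counts per model, keyed by port speed in Gb/s (from the port table).
# Assumption: the 2 x 1G SFP ports are not counted towards switching capacity.
MODELS = {
    "JQ074A": {25: 48, 100: 8},
    "JQ077A": {100: 32},
}


def switching_capacity_tbps(ports: dict[int, int]) -> float:
    """Full-duplex capacity in Tb/s: one-way port bandwidth counted twice."""
    one_way_gbps = sum(speed * count for speed, count in ports.items())
    return 2 * one_way_gbps / 1000


capacities = {model: switching_capacity_tbps(p) for model, p in MODELS.items()}
for model, tbps in capacities.items():
    print(f"{model}: {tbps:.1f} Tb/s")

# Each QSFP28 port split four ways, added to the 48 SFP28 ports.
max_10gbe_ports = 48 + 8 * 4
print(f"JQ074A maximum 10GbE ports with splitter cables: {max_10gbe_ports}")
```

Under these assumptions the arithmetic reproduces the 4 Tb/s and 6.4 Tb/s figures for JQ074A and JQ077A, and the 80-port 10GbE maximum for JQ074A.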

Methodologies for Performance Verification

In a research context, it is imperative to validate the performance claims of network hardware. The following methodologies outline standard procedures for testing the key performance indicators of the HPE FlexFabric 5945 switch series.

Experimental Protocol: Latency Measurement

- Objective: To measure the port-to-port latency of the switch for various packet sizes.
- Apparatus:
  - Two high-performance servers with network interface cards (NICs) supporting the desired speed (e.g., 100GbE).
  - HPE FlexFabric 5945 switch.
  - High-precision network traffic generator and analyzer (e.g., Ixia, Spirent).
  - Appropriate cabling (e.g., QSFP28 DACs or transceivers with fiber).
- Procedure:
  - Connect the two servers to two ports on the 5945 switch.
  - Configure the traffic generator to send a stream of packets of a specific size (e.g., 64, 128, 256, 512, 1024, 1518 bytes) from one server to the other.
  - The analyzer measures the time from the last bit of the packet leaving the transmitting port to the first bit arriving at the receiving port.
  - Repeat the measurement for a statistically significant number of packets to calculate the average latency.
  - Vary the packet sizes and traffic rates to characterize the latency under different load conditions.
- Data Analysis: Plot latency as a function of packet size and throughput.

Experimental Protocol: Throughput and Switching Capacity Verification

- Objective: To verify the maximum forwarding rate and non-blocking switching capacity of the switch.
- Apparatus:
  - Multiple high-performance servers or a multi-port network traffic generator.
  - HPE FlexFabric 5945 switch with all ports to be tested populated with appropriate transceivers.
  - Cabling for all connected ports.
- Procedure:
  - Connect the traffic generator ports to the switch ports in a full mesh or a pattern that exercises the switch fabric comprehensively.
  - Configure the traffic generator to send traffic at the maximum line rate for each port simultaneously. The traffic pattern should be designed to avoid congestion at a single egress port (e.g., a "many-to-many" traffic pattern).
  - The analyzer on the receiving ports measures the aggregate traffic received.
  - The throughput is calculated in millions of packets per second (Mpps) and the switching capacity in gigabits per second (Gbps) or terabits per second (Tbps).
- Data Analysis: Compare the measured throughput and switching capacity against the manufacturer's specifications. The switch is considered non-blocking if the measured throughput equals the theoretical maximum based on the number of ports and their line rates.
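The "theoretical maximum" used in this comparison follows from the Ethernet framing overhead: every frame on the wire is accompanied by a 7-byte preamble, a 1-byte start-of-frame delimiter, and a 12-byte minimum inter-frame gap. The sketch below computes the per-port line-rate frame rate; the function names are hypothetical and the example values are illustrative only.

```python
# Per-frame overhead on the wire: 7-byte preamble + 1-byte SFD + 12-byte inter-frame gap.
ETHERNET_OVERHEAD_BYTES = 20


def line_rate_pps(link_bps: float, frame_bytes: int) -> float:
    """Maximum frames per second a link can carry for a given frame size (FCS included)."""
    return link_bps / ((frame_bytes + ETHERNET_OVERHEAD_BYTES) * 8)


def fraction_of_line_rate(measured_pps: float, link_bps: float, frame_bytes: int) -> float:
    """Measured forwarding rate as a fraction of the theoretical maximum."""
    return measured_pps / line_rate_pps(link_bps, frame_bytes)


for size in (64, 512, 1518):
    mpps = line_rate_pps(100e9, size) / 1e6
    print(f"100GbE, {size}-byte frames: {mpps:.2f} Mpps per port")
```

Because the per-frame overhead is fixed, the packet rate required for line rate is highest for the smallest frames, which is why throughput tests are repeated across frame sizes.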

Logical Architecture and Data Flow

Understanding the logical architecture of how the HPE FlexFabric 5945 can be deployed is crucial for network design. The following diagrams illustrate key concepts and a typical deployment scenario.

Caption: High-level data flow within the HPE FlexFabric 5945 switch.

The above diagram illustrates the simplified internal data path of a packet transiting the switch. Upon arrival at an ingress port, the packet processor makes a forwarding decision based on Layer 2 (MAC address) or Layer 3 (IP address) information. The high-speed switch fabric then directs the packet to the appropriate egress port. Packet buffers are utilized to handle temporary congestion.

Caption: Typical Top-of-Rack deployment in a high-performance computing cluster.

This diagram shows the HPE FlexFabric 5945 switches deployed as ToR switches, aggregating connections from servers and storage within a rack and providing high-speed uplinks to the core network. This architecture is common in HPC environments to minimize latency between compute nodes.

Advanced Features for Demanding Environments

The HPE FlexFabric 5945 series is equipped with a range of advanced features that are particularly beneficial for scientific and research computing.

- Virtual Extensible LAN (VXLAN): Support for VXLAN allows for the creation of virtualized network overlays, which can improve flexibility and scalability in large, multi-tenant research environments.[6]
- Intelligent Resilient Framework (IRF): IRF technology enables multiple 5945 switches to be virtualized and managed as a single logical device.[2] This simplifies network management and improves resiliency, as a failure of one switch in the IRF fabric does not lead to a complete network outage.
- Data Center Bridging (DCB): DCB protocols, including Priority-based Flow Control (PFC) and Enhanced Transmission Selection (ETS), are supported to provide a lossless Ethernet fabric, which is critical for storage traffic such as iSCSI and RoCE (RDMA over Converged Ethernet).[2]
- Low Latency Cut-Through Switching: The switches utilize cut-through switching, which begins forwarding a packet before it is fully received, significantly reducing latency for demanding applications.[6]

Management and Automation

The HPE FlexFabric 5945 series supports a comprehensive set of management interfaces and protocols, including a command-line interface (CLI), SNMP, and out-of-band management.[5][6] For large-scale environments, automation is key. The switches can be managed through HPE's Intelligent Management Center (IMC), which provides a centralized platform for network monitoring, configuration, and automation.


HPE 5945 Switch: An In-depth Technical Guide to Ultra-Low-Latency Performance for Research and Drug Development

Authored for Researchers, Scientists, and Drug Development Professionals, this guide provides a comprehensive technical overview of the HPE 5945 switch series, with a core focus on its ultra-low-latency performance capabilities. This document delves into the switch's key performance metrics, the architectural features that enable high-speed data transfer, and its application in demanding research environments.

The relentless pace of innovation in fields such as genomics, computational chemistry, and artificial intelligence-driven drug discovery necessitates a network infrastructure that can keep pace with the exponential growth in data volumes and the demand for real-time processing. The HPE 5945 switch series is engineered to meet these challenges, offering a high-density, ultra-low-latency solution ideal for the aggregation or server access layer of large enterprise data centers and high-performance computing (HPC) clusters.

Core Performance Characteristics

The HPE 5945 switch series is built to deliver consistent, high-speed performance for data-intensive workloads. Its architecture is optimized for minimizing the time it takes for data packets to traverse the network, a critical factor in HPC and real-time analytics environments.

Quantitative Performance Data

The following tables summarize the key performance specifications of the HPE 5945 switch series, providing a clear comparison of its capabilities.

| Metric | Performance Specification | Source(s) |
|---|---|---|
| Latency | < 1 µs (for 64-byte packets) | [1][2] |
| Switching Capacity | Up to 6.4 Tb/s (model dependent) | [2] |
| Throughput | Up to 2024 Mpps (million packets per second) | [1] |
| MAC Address Table Size | 288,000 entries | [2] |
| IPv4 Routing Table Size | 324,000 entries | [2] |
| IPv6 Routing Table Size | 162,000 entries | [2] |
| Packet Buffer Size | Up to 32 MB | |

These specifications underscore the switch's capacity to handle massive data flows with minimal delay, a crucial requirement for the large datasets and iterative computational processes common in drug development and scientific research.

Experimental Protocols for Performance Validation

While specific internal HPE testing methodologies for the HPE 5945 are not publicly detailed, the performance of network switching hardware is typically validated using standardized testing procedures. The most common of these is the RFC 2544 benchmark suite from the Internet Engineering Task Force (IETF). This suite of tests provides a framework for measuring key performance metrics in a consistent and reproducible manner.

RFC 2544 Benchmarking: A General Overview

For the benefit of researchers and scientists who value methodological rigor, this section outlines the typical tests included in an RFC 2544 evaluation. These tests are designed to stress the device under test (DUT) and measure its performance under various load conditions.

- Throughput: This test determines the maximum rate at which the switch can forward frames without any loss. The test is conducted with various frame sizes, as performance can vary depending on the packet size.
- Latency: Latency is the time a frame takes to traverse the switch from the ingress port to the egress port. In RFC 2544 it is measured one-way: a tagged frame is timestamped when it is transmitted and again when it is received, and the latency is the difference between the two timestamps. The test is run at the throughput rate determined in the throughput test. The sub-microsecond latency of the HPE 5945 is a key indicator of its high-performance design, likely achieved through a cut-through switching architecture.
- Frame Loss Rate: This test measures the percentage of frames that are dropped by the switch at various load levels. The test helps to understand the switch's behavior under congestion.
- Back-to-Back Frames (Burstability): This test sends bursts of frames with the minimum inter-frame gap and finds the longest burst the switch can forward without any loss. It is a good indicator of the switch's buffering capacity.
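The frame loss rate in the list above is a simple percentage of the frames offered to the switch. As a minimal sketch, with a hypothetical function name and made-up example counts:

```python
def frame_loss_rate(frames_sent: int, frames_received: int) -> float:
    """Percentage of offered frames that the device failed to forward."""
    if frames_sent <= 0:
        raise ValueError("frames_sent must be positive")
    return (frames_sent - frames_received) * 100 / frames_sent


# Illustrative trial: 1,000,000 frames offered, 999,000 forwarded.
loss = frame_loss_rate(1_000_000, 999_000)
print(f"Frame loss rate: {loss:.2f}%")
```

In a full test run this calculation would be repeated at each offered load and frame size, giving a loss curve rather than a single number.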

While a specific RFC 2544 test report for the HPE 5945 is not publicly available, the advertised performance figures suggest that the switch has undergone rigorous testing to validate its ultra-low-latency capabilities.[3][4][5]

Architectural Deep Dive: Enabling Ultra-Low Latency

The HPE 5945's impressive performance is not merely a result of powerful silicon, but also a combination of architectural features designed to minimize packet processing overhead and maximize data transfer efficiency.

Cut-Through Switching

The HPE 5945 utilizes a cut-through switching architecture.[1][6] In this mode, the switch begins forwarding a frame as soon as it has read the destination MAC address, without waiting for the entire frame to be received. This significantly reduces the latency compared to the traditional store-and-forward method, where the entire frame is buffered before being forwarded. For latency-sensitive applications prevalent in research, this architectural choice is paramount.
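The latency difference between the two modes can be approximated by the serialization delay, that is, the time it takes to clock a given number of bytes off the wire. The sketch below is illustrative only: it ignores lookup and fabric delays, and it assumes the cut-through decision needs only the 6-byte destination MAC address, whereas a real switch may inspect more of the header. The function name is hypothetical.

```python
def serialization_delay_ns(num_bytes: int, link_bps: float) -> float:
    """Time in nanoseconds to receive num_bytes at the given link speed."""
    return num_bytes * 8 / link_bps * 1e9


LINK_BPS = 100e9       # 100GbE
DST_MAC_BYTES = 6      # assumption: forwarding decision needs only the destination MAC

for frame_bytes in (64, 1518):
    store_and_forward = serialization_delay_ns(frame_bytes, LINK_BPS)
    cut_through = serialization_delay_ns(DST_MAC_BYTES, LINK_BPS)
    print(f"{frame_bytes}-byte frame: store-and-forward waits {store_and_forward:.2f} ns, "
          f"cut-through waits {cut_through:.2f} ns before forwarding can start")
```

The wait before forwarding grows with frame size for store-and-forward but stays constant for cut-through, which is why the benefit is largest for large frames and slower links.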

High-Density, High-Speed Connectivity

The HPE 5945 series offers a variety of high-density port configurations, including 10GbE, 25GbE, 40GbE, and 100GbE ports.[1][6] This flexibility allows for the creation of high-bandwidth connections to servers, storage, and other network infrastructure, ensuring that the network does not become a bottleneck for data-intensive applications.

Logical Workflows and Signaling Pathways

Understanding the logical workflows and signaling pathways within a network is crucial for designing and troubleshooting high-performance environments. The HPE 5945 supports several key technologies that enable resilient and scalable network architectures.

HPE Intelligent Resilient Framework (IRF)

HPE's Intelligent Resilient Framework (IRF) is a virtualization technology that allows multiple HPE 5945 switches to be interconnected and managed as a single logical device.[7][8] This simplifies network administration and enhances resiliency.

Caption: A logical diagram of an HPE IRF ring topology, showcasing the master and subordinate switch roles.

In an IRF fabric, one switch is elected as the "master" and is responsible for managing the entire virtual switch. The other switches act as "subordinate" members, providing additional ports and forwarding capacity. If the master switch fails, a new master is elected from the remaining members, so the fabric continues to operate.

IRF Failover Signaling Pathway:

Caption: A simplified workflow illustrating the IRF failover process upon master switch failure.

Spine-and-Leaf Architecture for HPC

In HPC environments, a spine-and-leaf network architecture is often employed to provide high-bandwidth, low-latency connectivity between compute nodes and storage. The HPE 5945 is well-suited for both the "leaf" (server access) and "spine" (aggregation) layers of such an architecture.

Caption: A typical spine-and-leaf network architecture using HPE 5945 switches for HPC.

This architecture ensures that traffic between servers on different leaf switches always follows a leaf-spine-leaf path, crossing only two inter-switch links ("hops"), which minimizes latency and keeps performance predictable.
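When sizing a leaf switch in such a design, a common check is the oversubscription ratio: server-facing bandwidth divided by uplink bandwidth. The sketch below assumes a JQ074A used as a leaf with all 48 SFP28 ports facing servers at 25 Gb/s and all 8 QSFP28 ports used as 100 Gb/s uplinks; that port assignment and the function name are assumptions for illustration.

```python
def oversubscription_ratio(downlinks: dict[int, int], uplinks: dict[int, int]) -> float:
    """Ratio of server-facing bandwidth to spine-facing bandwidth (speeds in Gb/s)."""
    down_gbps = sum(speed * count for speed, count in downlinks.items())
    up_gbps = sum(speed * count for speed, count in uplinks.items())
    if up_gbps == 0:
        raise ValueError("at least one uplink is required")
    return down_gbps / up_gbps


# Assumed leaf layout: 48 x 25G to servers, 8 x 100G to the spine layer.
ratio = oversubscription_ratio({25: 48}, {100: 8})
print(f"Leaf oversubscription: {ratio:.1f}:1")
```

A ratio of 1:1 is non-blocking; higher ratios trade uplink cost for the risk of congestion when many servers transmit across racks at once.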

Application in Drug Discovery and Genomics Research

Logical Workflow in a Drug Discovery HPC Environment:

References

- 1. au-cloud-production-media.s3.ap-southeast-2.amazonaws.com [au-cloud-production-media.s3.ap-southeast-2.amazonaws.com]

- 2. support.hpe.com [support.hpe.com]

- 3. scribd.com [scribd.com]

- 4. solwise.co.uk [solwise.co.uk]

- 5. scribd.com [scribd.com]

- 6. etevers-marketing.com [etevers-marketing.com]

- 7. arubanetworking.hpe.com [arubanetworking.hpe.com]

- 8. medium.com [medium.com]

- 9. biosolveit.de [biosolveit.de]

HPE FlexFabric 5945: An In-Depth Technical Guide for High-Performance Computing Environments

For Researchers, Scientists, and Drug Development Professionals

This guide provides a comprehensive technical overview of the HPE FlexFabric 5945 switch series, focusing on the core features and performance metrics relevant to demanding research and high-performance computing (HPC) environments. The information is presented to align with the data-centric and methodological expectations of the scientific community.

Core Performance Capabilities

The HPE FlexFabric 5945 series is engineered for high-density, ultra-low-latency data center environments, making it well-suited for computationally intensive workloads characteristic of scientific research and drug development. Key performance indicators are summarized below.

Performance and Throughput Specifications

The following table outlines the key performance metrics of the HPE FlexFabric 5945 switch series. These specifications are critical for understanding the switch's capacity to handle large data flows and minimize processing delays.

| Metric | Value | Significance in a Research Context |
|---|---|---|
| Switching Capacity | Up to 2.56 Tb/s[1] | Enables the transfer of large datasets, such as genomic sequences or molecular modeling outputs, without bottlenecks. |
| Throughput | Up to 1904 MPPS (Million Packets Per Second)[1] | Ensures that a high volume of smaller data packets, common in certain analytical workflows, can be processed efficiently. |
| Latency | Under 1 µs for 40 GbE[1] | Crucial for latency-sensitive applications, such as real-time data analysis and synchronized computational clusters. |
| MAC Address Table Size | 288K Entries | Supports large and complex network topologies with numerous connected devices. |
| IPv4/IPv6 Routing Table Size | 324K/162K Entries | Facilitates operation in large, routed networks, ensuring scalability for growing research infrastructures. |
| Packet Buffer Size | 16 MB / 32 MB (model dependent) | Helps to absorb bursts of traffic without dropping packets, ensuring data integrity during periods of high network load. |

High-Density Port Configurations

The 5945 series offers a variety of high-density port configurations to accommodate diverse and expanding laboratory and data center needs. This flexibility allows for the aggregation of numerous servers and storage devices, essential for large-scale data analysis.

| Model Variant | Port Configuration |
|---|---|
| Fixed Port Models | 48 x 10GbE (SFP or BASE-T) with 6 x 40GbE ports[1] |
| | 48 x 10GbE (SFP or BASE-T) with 6 x 100GbE ports[1] |
| | 32 x 40GbE ports |
| Modular Models | 2-slot modular version with two 40GbE ports |
| | 4-slot modular version with four 40GbE ports |

Methodologies for Performance Benchmarking

While specific, in-house experimental protocols for the HPE FlexFabric 5945 are not publicly detailed, performance metrics of this kind are typically determined using standardized industry methodologies. A key standard in this domain is RFC 2544, "Benchmarking Methodology for Network Interconnect Devices." This standard provides a framework for testing the performance of network devices in a repeatable and comparable manner.

Key RFC 2544 Test Parameters:

- Throughput: Measures the maximum rate at which frames can be forwarded without any loss. This is determined by sending a specific number of frames at a defined rate and verifying that all frames are received.
- Latency: Characterizes the time delay for a frame to travel from the source to the destination through the device under test.
- Frame Loss Rate: Reports the percentage of frames that are lost at various load levels, which is particularly important for understanding behavior under network congestion.
- Back-to-Back Frames: Measures the maximum number of frames that can be sent in a burst without any frame loss.

These standardized tests ensure that the reported performance metrics are reliable and can be used to accurately predict the switch's behavior under demanding workloads.

Core Technologies for Research Environments

The HPE FlexFabric 5945 incorporates several key technologies that are particularly beneficial for scientific and research applications.

Intelligent Resilient Framework (IRF)

IRF technology allows multiple HPE FlexFabric 5945 switches to be virtualized and managed as a single logical device. This simplifies network administration and enhances resiliency. In a research setting, this means that the network can tolerate the failure of a single switch without disrupting critical computational tasks.

Caption: Logical diagram of an HPE IRF virtual device.

Virtual Extensible LAN (VXLAN)

VXLAN is a network virtualization technology that allows for the creation of a large number of isolated virtual networks over a physical network infrastructure. This is highly beneficial for multi-tenant research environments where different research groups or projects require secure and isolated network segments for their data.

Caption: VXLAN logical overlay network.
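The "large number of isolated virtual networks" comes from the 24-bit VXLAN Network Identifier (VNI) carried in the 8-byte VXLAN header defined in RFC 7348. The sketch below packs and parses that header to make the field layout concrete; the function names are hypothetical, and the code only handles the VXLAN header itself, not the outer UDP/IP encapsulation.

```python
import struct

VXLAN_FLAG_VNI_VALID = 0x08  # "I" flag: the VNI field is valid
MAX_VNI = 2**24 - 1


def pack_vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header: flags, 24 reserved bits, 24-bit VNI, 8 reserved bits."""
    if not 0 <= vni <= MAX_VNI:
        raise ValueError("VNI must fit in 24 bits")
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)


def unpack_vni(header: bytes) -> int:
    """Extract the VNI from a VXLAN header, checking that the I flag is set."""
    flags_word, vni_word = struct.unpack("!II", header[:8])
    if not (flags_word >> 24) & VXLAN_FLAG_VNI_VALID:
        raise ValueError("VNI flag not set")
    return vni_word >> 8


header = pack_vxlan_header(5000)
print(header.hex(), unpack_vni(header))
print(f"Possible segments: {MAX_VNI + 1:,} (a 12-bit VLAN ID allows 4,094 usable VLANs)")
```

A 24-bit identifier gives about 16.7 million segments, which is what makes per-project or per-group isolation practical at scale.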

Data Center Bridging (DCB)

Data Center Bridging (DCB) is a suite of IEEE standards that enhances Ethernet for use in data center environments. Key features include Priority-based Flow Control (PFC), Enhanced Transmission Selection (ETS), and Data Center Bridging Exchange Protocol (DCBX). For research applications, DCB ensures lossless data transmission for storage protocols like iSCSI and RoCE, which is critical for the integrity of large datasets.

High Availability and Environmental Specifications

Ensuring uptime is critical in research environments where long-running computations can be costly to restart. The HPE FlexFabric 5945 is designed with redundant, hot-swappable power supplies and fans to mitigate hardware failures. Additionally, its reversible airflow design allows for flexible deployment in hot-aisle/cold-aisle data center layouts, contributing to efficient cooling and operational stability.

Environmental and Physical Specifications

| Specification | Value |
|---|---|
| Operating Temperature | 32°F to 113°F (0°C to 45°C) |
| Operating Relative Humidity | 10% to 90% (noncondensing) |
| Acoustic Noise | Varies by model and fan speed, typically in the range of 60-70 dBA |
| Power Supply | Dual, hot-swappable AC or DC power supplies |
| Airflow | Front-to-back or back-to-front (reversible) |

Management and Monitoring

The HPE FlexFabric 5945 series supports a comprehensive set of management and monitoring tools. These include a full-featured command-line interface (CLI), SNMP v1, v2c, and v3, and sFlow (RFC 3176) for traffic monitoring. For large-scale environments, integration with HPE's Intelligent Management Center (IMC) provides centralized control and visibility.

Of particular interest to data-intensive research is the support for HPE FlexFabric Network Analytics, which provides real-time telemetry and microburst detection. This allows network administrators to identify and troubleshoot transient network congestion that could impact the performance of sensitive applications.

Caption: Monitoring and management workflow.


HPE 5945 switch for top-of-rack deployment

An In-depth Technical Guide to the HPE 5945 Switch for Top-of-Rack Deployments in Research and Drug Development

For researchers, scientists, and drug development professionals, the efficient transfer and processing of large datasets are paramount. The network infrastructure forms the backbone of these data-intensive workflows, directly impacting the speed of research and discovery. The HPE 5945 Switch Series, designed for top-of-rack (ToR) data center deployments, offers high-performance, low-latency connectivity essential for demanding computational and data-driven environments. This guide provides a technical deep dive into the capabilities of the HPE 5945 switch, tailored for a scientific audience.

Core Performance and Scalability

The HPE 5945 series is engineered to handle the rigorous demands of high-performance computing (HPC) clusters, next-generation sequencing (NGS) data analysis, and other data-intensive scientific applications. Its architecture is optimized for minimizing latency and maximizing throughput, critical factors in accelerating research timelines.

Quantitative Performance Specifications

The following tables summarize the key performance metrics of the HPE 5945 switch series, providing a clear comparison of its capabilities.

| Performance Metric | HPE FlexFabric 5945 Switch Series | Significance in Research Environments |
|---|---|---|
| Switching Capacity | Up to 6.4 Tb/s[1][2] | Enables wire-speed performance for large data transfers between servers, crucial for genomic data processing and large-scale simulations. |
| Throughput | Up to 2024 Mpps (Million packets per second)[1][2] | Ensures that a high volume of network packets can be processed without degradation, important for applications with high-frequency, small-packet traffic. |
| Latency | < 1 µs (64-byte packets)[1] | Ultra-low latency is critical for tightly coupled HPC applications and real-time data analysis, reducing computational wait times. |
| MAC Address Table Size | 288K Entries[1] | Supports a large number of connected devices, accommodating dense server racks and virtualized environments common in research data centers. |
| Routing Table Size | 324K Entries (IPv4), 162K Entries (IPv6)[1] | Provides scalability for large and complex network topologies, ensuring efficient routing of data across different subnets and research groups. |

| Model Specific Port Configurations | Description |
|---|---|
| JQ074A | 48 x 1/10/25GbE SFP28 ports and 8 x 40/100GbE QSFP28 ports[3] |
| JQ075A | 2-slot modular switch[3][4] |
| JQ076A | 4-slot modular switch[3][4] |
| JQ077A | 32 x 40/100GbE QSFP28 ports[3] |

These specifications highlight the switch's capacity to serve as a high-density, high-speed aggregation point for servers in a ToR architecture, directly connecting powerful computational resources.

Key Features for Scientific Workflows

The HPE 5945 switch series incorporates several advanced features that are particularly beneficial for research and drug development environments.

- Data Center Bridging (DCB): Provides a set of enhancements to Ethernet to support lossless data transmission, which is critical for storage traffic (iSCSI, FCoE) and other sensitive data streams often found in scientific computing.[3][5] DCB ensures that no data packets are dropped during periods of network congestion.
- Virtual Extensible LAN (VXLAN): Enables the creation of virtualized overlay networks on top of the physical infrastructure.[5][6] This is highly valuable for securely isolating different research projects or datasets, allowing for multi-tenant environments within the same physical network.
- Intelligent Resilient Framework (IRF): Allows multiple HPE 5945 switches to be virtualized and managed as a single logical device.[5] This simplifies network management and enhances resiliency, as the failure of one switch in the IRF fabric does not lead to a complete network outage.
- Low Latency Cut-Through Switching: The switch can forward a packet as soon as the destination MAC address is read, without waiting for the entire packet to be received.[3][5] This significantly reduces latency, a key advantage for HPC applications that rely on rapid communication between nodes.

Experimental Protocols: Measuring Network Performance

To substantiate the performance claims of network hardware, standardized testing methodologies are employed. While specific internal testing protocols from HPE are proprietary, the following outlines the general experimental procedures for measuring key network switch performance metrics, relevant for any researcher looking to validate their network infrastructure.

Latency Measurement (RFC 2544)

Objective: To measure the time delay a packet experiences as it traverses the switch.

Methodology:

- Test Setup: A dedicated network traffic generator/analyzer is connected to two ports on the HPE 5945 switch.
- Frame Size: The test is repeated for various frame sizes (e.g., 64, 128, 256, 512, 1024, 1280, 1518 bytes) to understand the latency characteristics across different packet types.
- Traffic Generation: The traffic generator sends a known number of frames at a specific rate through one port of the switch.
- Timestamping: The analyzer records the timestamp of when each frame is sent and when it is received on the second port.
- Calculation: Latency is calculated as the difference between the receive time and the send time for each frame. The average, minimum, and maximum latency values are reported. For store-and-forward devices, latency will vary with frame size. For cut-through devices, latency should remain relatively constant.
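The calculation step above can be sketched as follows. The timestamp pairs are made-up illustrative values, not measurements of an HPE 5945, and `latency_summary` is a hypothetical helper name; in practice the pairs would be exported by the traffic analyzer.

```python
from statistics import mean


def latency_summary(timestamps_ns: list[tuple[int, int]]) -> dict[str, float]:
    """Reduce (send_time, receive_time) pairs in nanoseconds to min/avg/max latency."""
    latencies = [received - sent for sent, received in timestamps_ns]
    return {"min": min(latencies), "avg": mean(latencies), "max": max(latencies)}


# Illustrative (send, receive) timestamps in nanoseconds, keyed by frame size in bytes.
samples = {
    64: [(0, 850), (1000, 1900), (2000, 2890)],
    1518: [(0, 870), (1000, 1910), (2000, 2920)],
}

summaries = {size: latency_summary(pairs) for size, pairs in samples.items()}
for size, s in summaries.items():
    print(f"{size}-byte frames: min {s['min']} ns, avg {s['avg']:.0f} ns, max {s['max']} ns")
```

Reporting the minimum and maximum alongside the average matters because a tight spread is often as important to tightly coupled workloads as a low mean.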

Throughput Measurement (RFC 2544)

Objective: To determine the maximum rate at which the switch can forward packets without any drops.

Methodology:

- Test Setup: Similar to the latency test, a traffic generator/analyzer is connected to two ports on the switch.
- Traffic Profile: A continuous stream of frames of a specific size is sent from the generator to the switch.
- Iterative Testing: The offered load (traffic rate) is increased incrementally. At each step, the number of frames sent by the generator is compared to the number of frames received by the analyzer.
- Throughput Determination: The throughput is the highest rate at which the number of received frames equals the number of sent frames (i.e., zero frame loss). This test is repeated for different frame sizes.
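The search for the zero-loss rate can be sketched in code. Note that this example uses a binary search between a passing and a failing rate rather than the incremental increase described above; it is a common variation that converges in fewer trials. The `send_trial` callback stands in for a real traffic generator and is entirely hypothetical, as is the simulated device used to exercise it.

```python
from typing import Callable

# A trial takes an offered rate (frames/s) and returns (frames_sent, frames_received).
Trial = Callable[[float], tuple[int, int]]


def find_throughput(send_trial: Trial, max_rate_pps: float, resolution_pps: float) -> float:
    """Return the highest zero-loss rate found, accurate to within resolution_pps."""
    sent, received = send_trial(max_rate_pps)
    if sent == received:
        return max_rate_pps  # no loss even at line rate
    low, high = 0.0, max_rate_pps  # low never loses frames, high always does
    while high - low > resolution_pps:
        mid = (low + high) / 2
        sent, received = send_trial(mid)
        if sent == received:
            low = mid
        else:
            high = mid
    return low


def simulated_dut(rate_pps: float) -> tuple[int, int]:
    """Stand-in device that forwards at most 1,000,000 frames in a one-second trial."""
    sent = int(rate_pps)
    return sent, min(sent, 1_000_000)


throughput = find_throughput(simulated_dut, max_rate_pps=1_488_095, resolution_pps=100)
print(f"Zero-loss throughput: about {throughput:,.0f} frames/s")
```

With a real generator, each trial would run for a fixed duration and the whole search would be repeated per frame size.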

Visualizing Network Architecture and Workflows

The following diagrams, generated using the DOT language, illustrate key concepts and workflows related to the HPE 5945 switch in a research environment.

Top-of-Rack Deployment Architecture

This diagram shows a typical ToR deployment where HPE 5945 switches connect servers within a rack and then aggregate uplinks to the core network.

Caption: Typical Top-of-Rack data center design with HPE 5945 switches.

Logical Workflow for HPC Data Processing

This diagram illustrates a simplified logical workflow for processing large datasets in an HPC environment, highlighting the role of the network.


Navigating Lossless Data Transmission: A Technical Guide to HPE 5945 Data Center Bridging Capabilities

For Researchers, Scientists, and Drug Development Professionals

In the data-intensive realms of scientific research and drug development, the integrity and speed of data transmission are paramount. The HPE FlexFabric 5945 Switch Series, a line of high-density, ultra-low-latency switches, addresses these critical needs through its robust implementation of Data Center Bridging (DCB). This technical guide provides an in-depth exploration of the HPE 5945's DCB capabilities, offering a comprehensive resource for professionals who rely on seamless and efficient data flow for their mission-critical applications.

The HPE 5945 Switch Series is engineered for deployment in the aggregation or server access layer of large enterprise data centers, and it is also well-suited for the core layer of medium-sized enterprises.[1] With support for high-density 10/25/40/100GbE connectivity, these switches are designed to handle the demanding throughput requirements of virtualized environments and server-to-server traffic.[1][2]

Core Data Center Bridging Technologies

Data Center Bridging is a suite of IEEE standards that enhances Ethernet to support converged networks, where storage, data networking, and management traffic can coexist on a single fabric without compromising performance or reliability. The HPE 5945 Switch Series implements the key DCB protocols to enable a lossless and efficient network.[1]

Priority-based Flow Control (PFC) - IEEE 802.1Qbb

Priority-based Flow Control is a mechanism that prevents packet loss due to network congestion. Unlike traditional Ethernet flow control, which pauses all traffic on a link, PFC operates on individual priority levels. When the receive buffer for one priority approaches its limit, the receiver pauses only that priority, so loss-sensitive traffic such as iSCSI or RoCE (RDMA over Converged Ethernet) is held back rather than dropped while traffic in the other priorities continues to flow.

Enhanced Transmission Selection (ETS) - IEEE 802.1Qaz

Enhanced Transmission Selection provides a method for allocating bandwidth to different traffic classes. This ensures that critical applications receive a guaranteed portion of the network bandwidth, while also allowing other traffic to utilize any unused bandwidth. This is crucial in a converged network to prevent a high-volume data stream from starving other essential applications.
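The sharing behavior described above can be illustrated with a small model. This is a minimal sketch of guarantee-plus-redistribution arithmetic, not the switch's actual scheduler: the function name, the class names, and the assumption that spare bandwidth is shared in proportion to each class's configured percentage are all illustrative.

```python
def ets_allocate(link_gbps, shares, demand_gbps):
    """Split link bandwidth between traffic classes in an ETS-like way.

    shares: class name -> guaranteed percentage (assumed positive).
    demand_gbps: class name -> offered load in Gb/s.
    Returns class name -> allocated Gb/s.
    """
    alloc = {c: 0.0 for c in shares}
    active = {c for c in shares if demand_gbps.get(c, 0.0) > 0}
    remaining = float(link_gbps)
    while active and remaining > 1e-9:
        total_weight = sum(shares[c] for c in active)
        if total_weight <= 0:
            break
        satisfied = set()
        handed_out = 0.0
        for c in active:
            # Offer each still-hungry class its weighted slice of what is left.
            offer = remaining * shares[c] / total_weight
            need = demand_gbps[c] - alloc[c]
            grant = min(offer, need)
            alloc[c] += grant
            handed_out += grant
            if need <= offer:
                satisfied.add(c)
        remaining -= handed_out
        if not satisfied:
            break  # every active class used its full slice: the link is full
        active -= satisfied
    return alloc


if __name__ == "__main__":
    shares = {"storage": 50, "hpc": 30, "mgmt": 20}
    demand = {"storage": 80, "hpc": 10, "mgmt": 5}
    print(ets_allocate(100, shares, demand))
```

In the example, the storage class exceeds its 50% guarantee because the other two classes leave bandwidth unused; when every class is oversubscribed, each one falls back to its guaranteed share.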

Data Center Bridging Capability Exchange Protocol (DCBX) - IEEE 802.1Qaz

DCBX is a discovery and configuration exchange protocol that allows network devices to communicate their DCB capabilities and configurations to their peers. It ensures consistent configuration of PFC and ETS across the network, which is vital for the proper functioning of a lossless fabric. DCBX leverages the Link Layer Discovery Protocol (LLDP) for this exchange.
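The consistency check that DCBX enables can be pictured as a comparison of the settings each peer advertises. The sketch below does not implement DCBX or LLDP; it only compares two hypothetical configuration dictionaries, and the keys `pfc_priorities` and `ets_shares` are invented for illustration.

```python
def dcb_mismatches(local: dict, peer: dict) -> dict:
    """Return {setting: (local_value, peer_value)} for every differing setting."""
    differences = {}
    for key in sorted(set(local) | set(peer)):
        if local.get(key) != peer.get(key):
            differences[key] = (local.get(key), peer.get(key))
    return differences


if __name__ == "__main__":
    # Hypothetical advertised settings for two directly connected devices.
    switch_a = {"pfc_priorities": [3], "ets_shares": {"storage": 50, "lan": 50}}
    switch_b = {"pfc_priorities": [3, 4], "ets_shares": {"storage": 50, "lan": 50}}
    print(dcb_mismatches(switch_a, switch_b))
```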

Quantitative Data and Specifications

The following tables summarize the key quantitative specifications of the HPE FlexFabric 5945 Switch Series, providing a clear overview of its performance and capabilities.

| Performance Metric | Specification |
|---|---|
| Switching Capacity | Up to 2.56 Tb/s |
| Throughput | Up to 1904 Mpps |
| Latency | Under 1 µs for 40 GbE |
| Jumbo Frames | Up to 9416 bytes |

| Hardware Specification | Details |
|---|---|
| Ports | High-density 10/25/40/100GbE options |
| Modularity | 2-slot and 4-slot modular versions available |
| Memory and Processor | Varies by model, e.g., 1 GB flash, 16 MB packet buffer, 4 GB SDRAM |
| MAC Address Table Size | Up to 288K entries |
| Routing Table Size | Up to 324K entries (IPv4), 162K entries (IPv6) |

Signaling Pathways and Logical Workflows

To visualize the operational flow of Data Center Bridging protocols on the HPE 5945, the following diagrams illustrate the key signaling pathways and logical relationships.

Caption: PFC prevents packet loss by sending a pause frame for a specific priority when the receiver's buffer is congested.

Caption: ETS allocates guaranteed bandwidth to different traffic classes, ensuring quality of service.

Caption: DCBX uses LLDP to exchange and negotiate DCB capabilities and configurations between peer devices.

Experimental Protocols and Methodologies

While specific, detailed experimental protocols from HPE for the 5945 Switch Series are not publicly available, the following outlines a general methodology for validating the performance of Data Center Bridging in a lab environment. This approach is standard in the industry for testing the efficacy of lossless networking configurations.

Objective:

To verify the lossless nature of the network under congestion and to measure the performance of priority-based flow control and enhanced transmission selection.

Materials:

- Two or more HPE FlexFabric 5945 Switches.
- Servers with network interface cards (NICs) that support DCB, particularly for RoCE or iSCSI traffic.
- A high-speed traffic generator and analyzer (e.g., Ixia, Spirent).
- Cabling appropriate for the port speeds being tested (e.g., 100GbE QSFP28).

Methodology:

- Baseline Performance Measurement:
  - Establish a baseline of network performance without DCB enabled. Measure latency and packet loss under normal and congested conditions.
- DCB Configuration:
  - Enable DCB features on the HPE 5945 switches, including PFC for the desired priority queues and ETS to allocate bandwidth.
  - Configure the server NICs to trust the DCB settings from the switch and to tag traffic with the appropriate priority levels (e.g., using DSCP or CoS values).
- Lossless Verification with PFC:
  - Configure the traffic generator to send a high-priority, loss-sensitive traffic stream (e.g., simulating RoCE) and a lower-priority, best-effort traffic stream.
  - Create congestion by oversubscribing a link.
  - Monitor the traffic analyzer for any packet drops in the high-priority stream. The expectation is zero packet loss.
  - Observe the PFC pause frames being sent from the receiving switch to the sending device.
- Bandwidth Allocation Verification with ETS:
  - Configure ETS to assign specific bandwidth percentages to different traffic classes.
  - Use the traffic generator to send traffic for each class at a rate that exceeds its guaranteed bandwidth.
  - Verify that each traffic class receives its allocated bandwidth and that unused bandwidth is fairly distributed among other traffic classes.
- DCBX Verification:
  - Connect two HPE 5945 switches and enable DCBX.
  - Verify that the switches exchange their DCB capabilities and that the operational state of the DCB features is consistent between the two devices.
  - Introduce a configuration mismatch on one switch and verify that DCBX identifies and flags the inconsistency.

Conclusion

The HPE FlexFabric 5945 Switch Series provides a robust and feature-rich platform for building high-performance, lossless data center networks. For researchers, scientists, and drug development professionals, leveraging the Data Center Bridging capabilities of the HPE 5945 can significantly enhance the reliability and efficiency of critical data workflows. By understanding and implementing Priority-based Flow Control, Enhanced Transmission Selection, and the Data Center Bridging Capability Exchange Protocol, organizations can create a converged network infrastructure that meets the stringent demands of modern scientific computing.

HPE FlexFabric 5945 Switch Series: A Technical Guide for Data-Intensive Research

In the realms of modern scientific research, particularly in genomics, computational biology, and drug discovery, the ability to process and move massive datasets quickly and efficiently is paramount. The network infrastructure forms the backbone of these high-performance computing (HPC) environments, where bottlenecks can significantly hinder research progress. The HPE FlexFabric 5945 Switch Series offers a family of high-density, ultra-low-latency switches designed to meet the demanding requirements of these data-intensive applications. This guide provides a technical overview of the HPE FlexFabric 5945 series, with a focus on its relevance to researchers, scientists, and drug development professionals.

Core Capabilities for Research Environments

The HPE FlexFabric 5945 Switch Series is engineered for deployment in aggregation or server access layers of large enterprise data centers and is also robust enough for the core layer of medium-sized enterprises.[1][2][3] For research computing clusters, this translates to a versatile platform that can function as a high-speed top-of-rack (ToR) switch, connecting servers and storage, or as a spine switch in a leaf-spine architecture, providing a high-bandwidth, low-latency fabric for east-west traffic. The switches' support for cut-through switching ensures minimal delay in data transmission, a critical factor in tightly coupled HPC clusters.[2][4]

A key advantage for scientific workflows is the switch's high-performance data center switching capabilities, with a switching capacity of up to 6.4 Tb/s and throughput of up to 2024 Mpps, depending on the model. This high throughput is essential for data-intensive environments. Furthermore, with latency under 1 microsecond for 40GbE, the 5945 series helps to accelerate applications that are sensitive to delays, such as those involving real-time data analysis or complex simulations.

Quantitative Specifications

The following tables summarize the key quantitative specifications of the HPE FlexFabric 5945 Switch Series, allowing for easy comparison between different models.

Performance and Capacity

| Feature | Specification | Models |
|---|---|---|
| Switching Capacity | Up to 6.4 Tb/s | JQ076A, JQ077A |
| Switching Capacity | Up to 4.0 Tb/s | JQ074A |
| Switching Capacity | Up to 3.6 Tb/s | JQ075A |
| Throughput | Up to 2024 Mpps | All Models |
| Latency | < 1 µs (64-byte packets) | All Models |
| MAC Address Table Size | 288,000 Entries | All Models |
| Routing Table Size (IPv4/IPv6) | 324,000 / 162,000 Entries | All Models |

Memory and Processor

| Component | Specification | Models |
|---|---|---|
| Flash Memory | 1 GB | All Models |
| SDRAM | 8 GB | JQ075A, JQ077A |
| SDRAM | 4 GB | JQ074A, JQ076A |
| Packet Buffer Size | 32 MB | JQ075A, JQ077A |
| Packet Buffer Size | 16 MB | JQ074A, JQ076A |

Port Configurations

| Model | Description | Ports |
|---|---|---|
| JQ074A | HPE FlexFabric 5945 48SFP28 8QSFP28 Switch | 48 x 1/10/25G SFP28, 8 x 40/100G QSFP28 |
| JQ075A | HPE FlexFabric 5945 2-slot Switch | 2 module slots, 2 x 40/100G QSFP28 |
| JQ076A | HPE FlexFabric 5945 4-slot Switch | 4 module slots |
| JQ077A | HPE FlexFabric 5945 32QSFP28 Switch | 32 x 40/100G QSFP28 |

Network Performance Benchmarking Methodology

To quantify the performance of the HPE FlexFabric 5945 switch in a research context, a standardized testing methodology is crucial. The following protocols, based on industry-standard practices, can be employed to evaluate key performance indicators.

Throughput Test

- Objective: To determine the maximum rate at which the switch can forward frames without any loss.
- Methodology:
  - Connect a traffic generator/analyzer to two ports on the switch.
  - Configure a test stream of frames of a specific size (e.g., 64, 128, 256, 512, 1024, 1280, 1518 bytes).
  - Transmit the stream from the source port to the destination port at a known rate, starting at 100% of the theoretical maximum for the link speed.
  - At the destination port, measure the number of frames received.
  - If any frames are lost, reduce the transmission rate and repeat the test.
  - The throughput is the highest rate at which no frames are lost.
  - Repeat this procedure for each frame size.
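The rate search in the steps above can be automated. The sketch below is one possible approach: it uses a binary search rather than a fixed step-down, which is an assumption and not something the procedure prescribes. `send_trial` is a hypothetical hook standing in for whatever API the traffic generator exposes; it runs one trial at a given frame rate and returns the frames sent and received.

```python
from typing import Callable, Tuple


def find_throughput(send_trial: Callable[[float], Tuple[int, int]],
                    line_rate_fps: float,
                    resolution: float = 0.001) -> float:
    """Search for the highest frame rate that shows zero frame loss.

    resolution is the search precision, as a fraction of line rate.
    """
    sent, received = send_trial(line_rate_fps)
    if received == sent:
        return line_rate_fps          # loss-free at full line rate
    low, high = 0.0, 1.0              # fractions of line rate: low passes, high fails
    while high - low > resolution:
        mid = (low + high) / 2
        sent, received = send_trial(mid * line_rate_fps)
        if received == sent:
            low = mid                 # no loss: try a higher rate
        else:
            high = mid                # loss seen: back off
    return low * line_rate_fps


if __name__ == "__main__":
    # Simulated device that forwards at most 9,000,000 frames per second.
    def fake_trial(rate_fps: float) -> Tuple[int, int]:
        sent = int(rate_fps)
        return sent, min(sent, 9_000_000)

    print(f"{find_throughput(fake_trial, 14_880_952):,.0f} fps")
```

Running the search once per frame size yields the throughput curve that the last step of the procedure calls for.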

Latency Test

- Objective: To measure the time delay a frame experiences as it is forwarded through the switch.
- Methodology:
  - Use the same setup as the throughput test.
  - For each frame size, set the transmission rate to the maximum throughput determined in the previous test.
  - The traffic generator sends a frame and records a timestamp upon transmission.
  - Upon receiving the same frame, the analyzer records a second timestamp.
  - The latency is the difference between the two timestamps.
  - This test should be repeated multiple times to determine an average latency.
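The timestamp arithmetic in the latency procedure can be sketched as follows. The function name is illustrative, and the inputs are assumed to be matching lists of transmit and receive timestamps in nanoseconds, taken from the same clock.

```python
import statistics


def summarize_latency(tx_ns, rx_ns):
    """Compute per-frame latency (rx - tx) and return min/avg/max in ns."""
    if len(tx_ns) != len(rx_ns) or not tx_ns:
        raise ValueError("need two non-empty timestamp lists of equal length")
    samples = [rx - tx for tx, rx in zip(tx_ns, rx_ns)]
    return {
        "min": min(samples),
        "avg": statistics.mean(samples),
        "max": max(samples),
    }


if __name__ == "__main__":
    tx = [0, 1000, 2000]
    rx = [800, 1900, 2700]
    print(summarize_latency(tx, rx))
```

Reporting the minimum and maximum alongside the average makes outliers visible that an average alone would hide.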

Frame Loss Rate Test

- Objective: To determine the percentage of frames lost at various load conditions.
- Methodology:
  - Use the same setup as the throughput test.
  - Configure a stream of frames of a specific size.
  - Transmit the stream at a rate higher than the determined throughput to induce congestion.
  - Measure the number of frames transmitted and the number of frames received.
  - The frame loss rate is calculated as: ((Frames Transmitted - Frames Received) / Frames Transmitted) * 100%.
  - Repeat this test for various transmission rates and frame sizes.
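The frame loss formula from the procedure translates directly into code. This is a minimal sketch; the function name is illustrative.

```python
def frame_loss_rate(frames_tx: int, frames_rx: int) -> float:
    """Return the percentage of transmitted frames that were not received."""
    if frames_tx <= 0:
        raise ValueError("frames_tx must be positive")
    return (frames_tx - frames_rx) / frames_tx * 100.0


if __name__ == "__main__":
    # 10,000 of 1,000,000 frames were dropped under overload.
    print(f"{frame_loss_rate(1_000_000, 990_000):.2f}% loss")
```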

Visualizing Network Architecture and Data Workflows

The following diagrams illustrate how the HPE FlexFabric 5945 can be integrated into a research computing environment and the logical flow of data in a drug discovery pipeline.

Caption: A leaf-spine network architecture for a research computing cluster.

Caption: Data flow in a computational drug discovery workflow.

Advanced Features for Resilient and Scalable Research Networks

Beyond raw performance, the HPE FlexFabric 5945 series incorporates several features that are critical for the demanding nature of research environments:

- Intelligent Resilient Fabric (IRF): This technology allows up to ten 5945 switches to be virtualized and managed as a single logical device. For research computing, this simplifies network management, enhances scalability, and provides high availability with rapid convergence times in the event of a link or device failure.
- Data Center Bridging (DCB): DCB protocols are essential for converged network environments where storage traffic (like iSCSI or FCoE) and traditional Ethernet traffic share the same fabric. This is particularly relevant for research clusters that rely on high-performance, low-latency access to shared storage.
- VXLAN Support: Virtual Extensible LAN (VXLAN) allows for the creation of virtualized Layer 2 networks over a Layer 3 infrastructure. This enables researchers to create isolated and secure network segments for different projects or user groups, enhancing flexibility and security within a shared computing environment.
- Flexible High Port Density: The 5945 series offers a variety of high-density port configurations, including 10GbE, 25GbE, 40GbE, and 100GbE ports. This allows for the creation of scalable networks that can accommodate the ever-increasing bandwidth demands of modern scientific instruments and computational workloads.

An In-depth Technical Guide to Direct Attach Copper (DAC) Cables for High-Speed Switching Environments

Authored for Researchers, Scientists, and Drug Development Professionals

In modern high-performance computing (HPC) and data-intensive scientific research, the efficiency and reliability of the underlying network infrastructure are paramount. The rapid transfer of large datasets, such as those generated in genomic sequencing, molecular modeling, and clinical trial data analysis, necessitates a robust and low-latency interconnect solution. Direct Attach Copper (DAC) cables have emerged as a critical component in data center and laboratory networks, offering a cost-effective and high-performance alternative to traditional fiber optic solutions for short-reach applications. This guide provides a comprehensive technical overview of DAC cable technology, its performance characteristics, and the rigorous experimental protocols used for its validation.

Core Principles of Direct Attach Copper (DAC) Cables

A Direct Attach Copper (DAC) cable is a high-speed, twinaxial copper cable assembly with integrated transceiver modules on both ends.[1][2] These modules, which come in various form factors such as SFP+, QSFP+, and QSFP28, allow the cable to be plugged directly into the ports of network switches, servers, and storage devices, bypassing the need for separate optical transceivers.[3][4] The core of a DAC cable consists of shielded copper wires, typically with American Wire Gauge (AWG) ratings of 24, 28, or 30, which transmit data as electrical signals.[3]

DAC cables are broadly categorized into two main types:

- Passive DAC Cables: These cables do not contain any active electronic components for signal conditioning. They rely on the host device's signal processing capabilities to ensure signal integrity. Consequently, passive DACs have extremely low power consumption (typically less than 0.15W) and offer the lowest latency. However, their transmission distance is limited, generally up to 7 meters for 10G and progressively shorter for higher data rates.
- Active DAC Cables (ADCs): Also known as Active Copper Cables (ACCs), these assemblies incorporate electronic circuitry within the transceiver modules to amplify and equalize the signal. This signal conditioning allows for longer transmission distances, typically up to 15 meters, and can accommodate thinner gauge wires. The trade-off is slightly higher power consumption (around 0.5W to 1.5W) and a marginal increase in latency compared to their passive counterparts.

A key advantage of DAC cables is their direct electrical signaling path, which eliminates the electro-optical conversion process inherent in fiber optic systems. This results in significantly lower latency, a critical factor in latency-sensitive applications.

Breakout DAC Cables

Breakout DACs, also known as fanout or splitter cables, feature a higher-speed connector on one end (e.g., 40G QSFP+) that is split into multiple lower-speed connectors on the other end (e.g., four 10G SFP+). This configuration is highly efficient for connecting a high-bandwidth switch port to multiple lower-bandwidth server or storage ports, thereby increasing port density and simplifying cabling.

Quantitative Performance Metrics

The selection of an appropriate interconnect solution requires a thorough comparison of key performance indicators. The following tables summarize the quantitative data for DAC cables in comparison to Active Optical Cables (AOCs) and traditional fiber optic transceivers.

| Parameter | Passive DAC | Active DAC | Active Optical Cable (AOC) | Fiber Optic Transceiver |
|---|---|---|---|---|
| Latency | Lowest (<1 µs) | Very Low (<1 µs) | Low | Higher (due to E/O conversion) |
| Power Consumption | < 0.15 W | 0.5 - 1.5 W | 1 - 2 W | 1 - 4 W (per transceiver) |
| Bit Error Rate (BER) | Typically < 10⁻¹² | Typically < 10⁻¹² | Typically < 10⁻¹² | Typically < 10⁻¹² |
| Bend Radius | Less sensitive | Less sensitive | More sensitive | More sensitive |
| EMI Susceptibility | Susceptible | Less Susceptible | Immune | Immune |

Table 1: Comparative Analysis of Interconnect Technologies

| Data Rate | Passive DAC Max. Length | Active DAC Max. Length | Typical Wire Gauge (AWG) |
|---|---|---|---|
| 10 Gbps | 7 m | 15 m | 24, 28, 30 |
| 25 Gbps | 5 m | 10 m | 24, 28, 30 |
| 40 Gbps | 5 m | 10 m | 24, 28, 30 |
| 100 Gbps | 3 m | 5 m | 24, 26 |
| 200 Gbps | 3 m | 5 m | 24, 26 |
| 400 Gbps | 2 m | 5 m | 24, 26 |

Table 2: Maximum Length of DAC Cables by Data Rate
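The reach limits in Table 2 lend themselves to a simple lookup when planning rack cabling. The sketch below copies the table's values into a dictionary; the function name and the rule of preferring passive copper, then active copper, then optics are illustrative assumptions, not a vendor recommendation.

```python
# Maximum reach in metres per data rate (Gb/s), copied from Table 2:
# rate -> (passive DAC limit, active DAC limit).
MAX_REACH_M = {
    10: (7, 15),
    25: (5, 10),
    40: (5, 10),
    100: (3, 5),
    200: (3, 5),
    400: (2, 5),
}


def pick_cable(rate_gbps: int, length_m: float) -> str:
    """Suggest the simplest cable type that covers the required length."""
    if rate_gbps not in MAX_REACH_M:
        raise ValueError(f"no reach data for {rate_gbps} Gb/s")
    passive_max, active_max = MAX_REACH_M[rate_gbps]
    if length_m <= passive_max:
        return "passive DAC"
    if length_m <= active_max:
        return "active DAC"
    return "optical (AOC or transceivers with fiber)"


if __name__ == "__main__":
    for length in (2, 4, 12):
        print(f"100 Gb/s over {length} m: {pick_cable(100, length)}")
```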

Experimental Protocols for Performance Validation

To ensure the reliability and performance of DAC cables in critical applications, a series of rigorous experimental tests are conducted. These tests validate the signal integrity and compliance with industry standards.

Bit Error Rate (BER) Testing

The Bit Error Rate (BER) is a fundamental metric of transmission quality, representing the ratio of erroneously received bits to the total number of transmitted bits. A lower BER indicates a more reliable link. For data communications, a BER of 10⁻¹² or lower is generally considered acceptable.

Methodology for BER Testing:

- Equipment Setup: A Bit Error Rate Tester (BERT) is used. The BERT consists of a pattern generator and an error detector. For DAC cable testing, a specialized tester with SFP/QSFP ports is often employed.
- Pattern Generation: The pattern generator transmits a predefined, complex data pattern, typically a Pseudo-Random Binary Sequence (PRBS), through the DAC cable. The PRBS pattern is designed to simulate a wide range of bit combinations to effectively stress the communication link.
- Error Detection: The DAC cable is connected in a loopback configuration or between two BERT units. The error detector at the receiving end compares the incoming data stream with the known transmitted PRBS pattern.
- Data Analysis: Any discrepancies between the received and expected patterns are counted as bit errors. The BER is calculated by dividing the total number of bit errors by the total number of bits transmitted over a specified period.
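The pattern generation and error counting steps can be sketched in software. The example assumes PRBS7 (generator polynomial x^7 + x^6 + 1, period 127 bits) as the test pattern; real BERTs often use longer sequences such as PRBS31, and the function names here are illustrative.

```python
def prbs7(n_bits: int, seed: int = 0x7F):
    """Generate n_bits of PRBS7 from a 7-bit linear feedback shift register."""
    if not 0 < seed < 128:
        raise ValueError("seed must be a non-zero 7-bit value")
    state = seed
    bits = []
    for _ in range(n_bits):
        new_bit = ((state >> 6) ^ (state >> 5)) & 1  # XOR of the two tap bits
        state = ((state << 1) | new_bit) & 0x7F      # shift and keep 7 bits
        bits.append(new_bit)
    return bits


def bit_error_rate(sent, received) -> float:
    """Return bit errors divided by total bits compared."""
    if len(sent) != len(received) or not sent:
        raise ValueError("need two non-empty bit sequences of equal length")
    errors = sum(s != r for s, r in zip(sent, received))
    return errors / len(sent)


if __name__ == "__main__":
    tx = prbs7(1000)
    rx = list(tx)
    rx[10] ^= 1    # inject two bit errors to stand in for a noisy link
    rx[500] ^= 1
    print(f"BER = {bit_error_rate(tx, rx):.3e}")
```

Demonstrating a BER of 10⁻¹² with confidence requires transmitting on the order of trillions of bits, which is why hardware testers run these comparisons at line rate rather than in software.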

Signal Integrity Analysis using Eye Diagrams

An eye diagram is a powerful visualization tool used to assess the quality of a digital signal. It is generated by overlaying multiple segments of a digital waveform, creating a pattern that resembles an eye.

Methodology for Eye Diagram Analysis:

- Equipment Setup: A high-bandwidth oscilloscope is the primary instrument for generating eye diagrams. A signal source, such as a pattern generator, provides the input signal, and differential probes are used to connect to the DAC cable.
- Signal Acquisition: The oscilloscope samples the signal at the receiving end of the DAC cable over many bit intervals.
- Diagram Generation: The oscilloscope's software superimposes these sampled waveforms, aligning them based on the clock cycle. The resulting image is the eye diagram.
- Interpretation:
  - Eye Opening: A wide and tall eye opening indicates a high-quality signal with good timing and voltage margins.
  - Eye Height: Reflects the voltage margin left after noise and amplitude distortion. A larger height signifies a cleaner signal.
  - Eye Width: Indicates the timing margin and the extent of jitter (timing variations). A wider eye is desirable.
  - Eye Closure: A closed or constricted eye suggests significant signal degradation due to factors like noise, jitter, and inter-symbol interference (ISI), which can lead to a high BER.

Time-Domain Reflectometry (TDR) for Fault Localization

Time-Domain Reflectometry (TDR) is a diagnostic technique used to locate faults and impedance discontinuities in metallic cables. A TDR instrument sends a low-voltage pulse down the cable and analyzes the reflections that occur at any point where the cable's impedance changes.

Methodology for TDR Testing:

- Equipment Setup: A TDR instrument is connected to one end of the DAC cable.
- Pulse Injection: The TDR injects a fast-rise time pulse into the cable.
- Reflection Analysis: The instrument monitors for reflected pulses. The shape and polarity of the reflection indicate the nature of the impedance change (e.g., an open circuit, a short circuit, or a crimp).
- Distance Calculation: By measuring the time it takes for the reflection to return and knowing the velocity of propagation (VoP) of the signal in the cable, the TDR can calculate the precise distance to the fault. This is invaluable for troubleshooting and quality control.
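The distance calculation reduces to one formula: distance = VoP × c × t / 2, where t is the round-trip time of the reflection and the division by two accounts for the pulse travelling to the fault and back. The sketch below assumes VoP is given as a fraction of the speed of light; the value 0.7 in the example is an illustrative assumption, not a measured figure for any particular cable.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458


def distance_to_fault_m(round_trip_s: float, vop: float) -> float:
    """Return the one-way distance to an impedance discontinuity in metres."""
    if not 0 < vop <= 1:
        raise ValueError("vop must be a fraction of c in the range (0, 1]")
    if round_trip_s < 0:
        raise ValueError("round_trip_s must not be negative")
    return vop * SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2


if __name__ == "__main__":
    # A reflection that returns after 20 ns on a cable with an assumed VoP of 0.7.
    print(f"{distance_to_fault_m(20e-9, 0.7):.2f} m")
```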

Navigating High-Speed Data: A Technical Guide to HPE 5945 Switch Series Transceivers

In the realm of modern scientific research and drug development, the ability to rapidly process and transfer vast datasets is paramount. The HPE 5945 Switch Series, a family of high-density, low-latency switches, provides the foundational network infrastructure required for these demanding environments.[1][2] A critical component of this infrastructure is the selection of appropriate transceivers, which enable connectivity between the switch and other network devices. This guide provides a comprehensive overview of the supported transceivers for the HPE 5945 Switch Series, designed to assist network architects and IT professionals in building robust and high-performance networks for research applications.

Understanding Transceiver Compatibility

The HPE 5945 Switch Series supports a wide array of transceivers to accommodate diverse networking requirements, from 1 Gigabit Ethernet (GbE) to 100 GbE.[1][3] The compatibility of a transceiver is determined by its form factor (e.g., SFP, QSFP), data rate, and the specific HPE-validated firmware. It is crucial to use HPE-approved transceivers to ensure optimal performance, reliability, and support.

While detailed experimental protocols for transceiver validation are proprietary to Hewlett Packard Enterprise and not publicly available, the supported transceivers listed in this guide have undergone rigorous testing to ensure interoperability with the 5945 series switches. This testing encompasses signal integrity, power consumption, and thermal performance to guarantee stable operation in a production environment.

Supported Transceiver Modules

The following tables summarize the supported transceivers for the HPE 5945 Switch Series, categorized by their form factor and data rate.

SFP (Small Form-factor Pluggable) Transceivers

These transceivers are typically used for management ports and lower-speed data connections.

| Part Number | Description | Data Rate |
|---|---|---|
| JD102B | HPE Networking X115 100M SFP LC FX Transceiver | 100 Mbps |
| JD120B | HPE Networking X110 100M SFP LC LX Transceiver | 100 Mbps |
| JD090A | HPE X110 100M SFP LC LH40 Transceiver | 100 Mbps |
| JD089B | HPE X120 1G SFP RJ45 T Transceiver | 1 Gbps |
| JD118B | HPE X120 1G SFP LC SX Transceiver | 1 Gbps |
| JD119B | HPE X120 1G SFP LC LX Transceiver | 1 Gbps |
| JD103A | HPE Networking X120 1G SFP LC LH100 Transceiver | 1 Gbps |
| JD061A | HPE X125 1G SFP LC LH40 1310nm Transceiver | 1 Gbps |
| JD062A | HPE X120 1G SFP LC LH40 1550nm Transceiver | 1 Gbps |
| JD063B | HPE X125 1G SFP LC LH80 Transceiver | 1 Gbps |

SFP+ (Enhanced Small Form-factor Pluggable) Transceivers

SFP+ transceivers are used for 10 Gigabit Ethernet connections.

| Part Number | Description | Data Rate |
|---|---|---|
| S2N61A | HPE Networking Comware 10GBASE-T SFP+ RJ45 30m Cat6A Transceiver | 10 Gbps |
| JD092B | HPE X130 10G SFP+ LC SR Transceiver | 10 Gbps |
| JD094B | HPE X130 10G SFP+ LC LR Transceiver | 10 Gbps |
| JG234A | HPE Networking X130 10G SFP+ LC ER 40km Transceiver | 10 Gbps |
| JG915A | HPE Networking X130 10G SFP+ LC LH 80km Transceiver | 10 Gbps |
| JL737A | HPE X130 10G SFP+ LC BiDi 10 km Uplink transceiver | 10 Gbps |
| JL738A | HPE X130 10G SFP+ LC BiDi 10 km Downlink transceiver | 10 Gbps |
| JL739A | HPE X130 10G SFP+ LC BiDi 40 km Uplink transceiver | 10 Gbps |
| JL740A | HPE X130 10G SFP+ LC BiDi 40 km Downlink transceiver | 10 Gbps |

SFP28 (Small Form-factor Pluggable 28) Transceivers

SFP28 transceivers support 25 Gigabit Ethernet, providing a high-density, high-bandwidth solution.

| Part Number | Description | Data Rate |
|---|---|---|
| JL293A | HPE X190 25G SFP28 LC SR 100m MM Transceiver | 25 Gbps |
| JL855A | HPE Networking 25G SFP28 LC LR 10km SMF Transceiver | 25 Gbps |

QSFP+ (Quad Small Form-factor Pluggable Plus) Transceivers

QSFP+ transceivers are used for 40 Gigabit Ethernet connectivity.

| Part Number | Description | Data Rate |
|---|---|---|
| JG325B | HPE X140 40G QSFP+ MPO SR4 Transceiver | 40 Gbps |
| JG709A | HPE X140 40G QSFP+ MPO MM 850nm CSR4 300-m Transceiver | 40 Gbps |
| JL251A | HPE X140 40G QSFP+ LC BiDi 100 m MM Transceiver | 40 Gbps |
| JG661A | HPE X140 40G QSFP+ LC LR4 SM 10 km 1310nm Transceiver | 40 Gbps |
| JL286A | HPE X140 40G QSFP+ LC LR4L 2 km SM Transceiver | 40 Gbps |

QSFP28 (Quad Small Form-factor Pluggable 28) Transceivers

QSFP28 transceivers are the standard for 100 Gigabit Ethernet, offering the highest bandwidth available on the 5945 series.

| Part Number | Description | Data Rate |
|---|---|---|
| JL274A | HPE Networking X150 100G QSFP28 MPO SR4 100m MM Transceiver | 100 Gbps |
| JH419A | HPE X150 100G QSFP28 LC SWDM4 100m MM Transceiver | 100 Gbps |
| JQ344A | HPE X150 100G QSFP28 LC BiDi 100m MM Transceiver | 100 Gbps |
| JH672A | HPE X150 100G QSFP28 eSR4 300m MM Transceiver | 100 Gbps |
| JH420A | HPE X150 100G QSFP28 MPO PSM4 500m SM Transceiver | 100 Gbps |
| S4J89A | HPE Networking Comware 100G DR QSFP28 LC 500m SM Transceiver | 100 Gbps |
| JH673A | HPE X150 100G QSFP28 CWDM4 2km SM Transceiver | 100 Gbps |
| S2P29A | HPE Networking Comware 100G FR1 QSFP28 LC 2km SMF Transceiver | 100 Gbps |
| JL275A | HPE X150 100G QSFP28 LC LR4 10km SM Transceiver | 100 Gbps |

Direct Attach and Active Optical Cables

In addition to transceivers, the HPE 5945 series supports various Direct Attach Copper (DAC) and Active Optical Cables (AOC) for short-distance interconnects.

| Part Number | Description |
|---|---|
| JD095C | HPE FlexNetwork X240 10G SFP+ to SFP+ 0.65m Direct Attach Copper Cable |
| JL290A | HPE X2A0 10G SFP+ to SFP+ 7m Active Optical Cable |
| JL291A | HPE X2A0 10G SFP+ to SFP+ 10m Active Optical Cable |
| JL292A | HPE X2A0 10G SFP+ to SFP+ 20m Active Optical Cable |
| JG326A | HPE FlexNetwork X240 40G QSFP+ QSFP+ 1m Direct Attach Copper Cable |
| JG327A | HPE FlexNetwork X240 40G QSFP+ QSFP+ 3m Direct Attach Copper Cable |
| JG328A | HPE FlexNetwork X240 40G QSFP+ QSFP+ 5m Direct Attach Copper Cable |
| JG329A | HPE FlexNetwork X240 40G QSFP+ to 4x10G SFP+ 1m Direct Attach Copper Splitter Cable |
| JG330A | HPE FlexNetwork X240 40G QSFP+ to 4x10G SFP+ 3m Direct Attach Copper Splitter Cable |
| JG331A | HPE FlexNetwork X240 40G QSFP+ to 4x10G SFP+ 5m Direct Attach Copper Splitter Cable |
| JL271A | HPE X240 100G QSFP28 to QSFP28 1m Direct Attach Copper Cable |
| JL272A | HPE X240 100G QSFP28 to QSFP28 3m Direct Attach Copper Cable |
| JL273A | HPE X240 100G QSFP28 to QSFP28 5m Direct Attach Copper Cable |
| JL276A | HPE X2A0 100G QSFP28 to QSFP28 7m Active Optical Cable |
| JL277A | HPE X2A0 100G QSFP28 to QSFP28 10m Active Optical Cable |
| JL278A | HPE X2A0 100G QSFP28 to QSFP28 20m Active Optical Cable |
| JL282A | HPE X240 QSFP28 4xSFP28 1m Direct Attach Copper Cable |
| JL283A | HPE X240 QSFP28 4xSFP28 3m Direct Attach Copper Cable |
| JL284A | HPE X240 QSFP28 4xSFP28 5m Direct Attach Copper Cable |

Logical Connectivity Workflow

The following diagram illustrates the logical connectivity options for an HPE 5945 switch, showcasing how different transceivers and cables can be used to connect to various network components such as servers, storage arrays, and other switches.

HPE 5945 Switch: A Technical Deep Dive for High-Performance Computing Environments

For Researchers, Scientists, and Drug Development Professionals

In the data-intensive realms of scientific research and drug development, the network infrastructure is a critical component that dictates the pace of discovery. The Hewlett Packard Enterprise (HPE) 5945 Switch series is engineered to meet the demanding requirements of these environments, offering a high-performance, low-latency platform for data center and high-performance computing (HPC) workloads. This technical guide provides an in-depth look at the architecture and design of the HPE 5945 switch, tailored for professionals who rely on robust and efficient data transit for their research.

Core Architecture: A High-Throughput, Low-Latency Design

The HPE 5945 switch series is designed as a top-of-rack (ToR) or aggregation layer switch, providing high-density 10/25/40/100GbE connectivity. At its core, the architecture is built to deliver wirespeed performance with ultra-low latency, a crucial requirement for HPC clusters and large-scale data analysis.

Control Plane and Data Plane Separation

The HPE 5945 operates on the Comware 7 operating system, which employs a modular design that separates the control plane and data plane. This separation is fundamental to the switch's stability and performance. The control plane is responsible for routing protocols, network management, and other control functions, while the data plane is dedicated to the high-speed forwarding of packets. This ensures that control plane tasks do not impact the performance of data forwarding.

A logical representation of this separation is illustrated below:

Hardware and ASIC Architecture

While HPE does not publicly disclose the specific ASIC (Application-Specific Integrated Circuit) vendor and model used in the 5945 series, its performance characteristics suggest a modern, high-capacity, and programmable chipset. This ASIC is the heart of the data plane: it performs line-rate packet processing and supports cut-through switching to minimize latency. The hardware is designed for data center environments, with front-to-back or back-to-front airflow for efficient cooling and redundant, hot-swappable power supplies and fans for high availability.

Performance and Scalability

The HPE 5945 series is engineered for high performance and scalability, making it suitable for demanding research and development environments. Key performance metrics are summarized in the table below.

| Metric | HPE 5945 Series Specifications | Relevance to Research & Drug Development |
|---|---|---|
| Switching Capacity | Up to 6.4 Tbps | Enables the transfer of massive datasets, such as genomic sequences or molecular simulation results, without bottlenecks. |
| Throughput | Up to 2024 Mpps (million packets per second)[1] | Ensures high-speed processing of transactional workloads and real-time data streams common in laboratory environments. |
| Latency | Sub-microsecond (< 1 µs)[1][2] | Critical for tightly coupled HPC clusters where inter-node communication speed directly impacts application performance. |
| MAC Address Table Size | Up to 288,000 entries | Supports large Layer 2 domains with a high number of connected devices, typical in extensive research networks. |
| IPv4/IPv6 Routing Table Size | Up to 324,000/162,000 entries | Allows for complex network designs and scaling of routed networks across large research campuses or data centers. |
| Port Density | High-density 10/25/40/100GbE configurations | Provides flexible and high-speed connectivity for a diverse range of servers, storage, and scientific instruments. |

Key Features for Research Environments

The HPE 5945 switch incorporates several features that are particularly beneficial for scientific and research workloads.

Intelligent Resilient Framework (IRF)

HPE's IRF technology allows multiple 5945 switches to be virtualized and managed as a single logical device. This simplifies network design and management, enhances resiliency, and enables load balancing across the members of the IRF fabric. For critical research workloads, an IRF setup provides high availability by ensuring that the failure of a single switch does not disrupt the network.

Virtual Extensible LAN (VXLAN)

For environments with large-scale virtualization or multi-tenant research groups, VXLAN provides a mechanism to create isolated Layer 2 networks over a Layer 3 infrastructure. The HPE 5945 has hardware support for VXLAN, offloading the encapsulation and decapsulation of VXLAN packets to the ASIC for line-rate performance. This is particularly useful for creating secure, isolated networks for different research projects or collaborations.
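To make the encapsulation step concrete, here is a minimal Python sketch of the 8-byte VXLAN header defined in RFC 7348. It only illustrates the header layout that the switch handles in hardware. The function names are hypothetical, and the outer Ethernet, IP, and UDP headers that a real VXLAN tunnel endpoint also adds are omitted.

```python
import struct

VXLAN_UDP_PORT = 4789        # IANA-assigned destination UDP port (RFC 7348)
VXLAN_FLAG_VNI_VALID = 0x08  # "I" flag: the VNI field is valid


def vxlan_encapsulate(vni, inner_frame):
    """Prepend the 8-byte VXLAN header to an inner Ethernet frame."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: flags (8 bits) + 24 reserved bits.
    # Word 2: VNI (24 bits) + 8 reserved bits.
    header = struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)
    return header + inner_frame


def vxlan_decapsulate(payload):
    """Return (vni, inner_frame) from the payload of a VXLAN UDP datagram."""
    word1, word2 = struct.unpack("!II", payload[:8])
    if not (word1 >> 24) & VXLAN_FLAG_VNI_VALID:
        raise ValueError("VNI flag not set")
    return word2 >> 8, payload[8:]


# Hypothetical VNI and placeholder frame bytes, for illustration only.
packet = vxlan_encapsulate(5001, b"inner-ethernet-frame")
vni, frame = vxlan_decapsulate(packet)
print(vni, frame)
```

The 24-bit VNI is what allows roughly 16 million isolated segments, compared with the 4094 usable IDs of a 12-bit VLAN tag.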

Packet Forwarding Pipeline

Understanding the packet forwarding pipeline is essential for comprehending how the switch processes data. While the exact internal pipeline of the proprietary ASIC is not public, a logical representation can be constructed based on standard switch architectures and the features of the 5945.

Experimental Protocols: Methodologies for performance testing of network switches typically involve specialized hardware and software for traffic generation and analysis. Key tests include:

- RFC 2544: A standard set of tests that measure throughput, latency, frame loss rate, and back-to-back frames.
- RFC 2889: A suite of tests for measuring the performance of LAN switching devices.
- RFC 3918: Defines methodologies for testing multicast forwarding performance.

These tests are conducted using traffic generators from vendors like Ixia or Spirent, which can create line-rate traffic and measure performance with high precision.
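As a rough illustration of how an RFC 2544-style throughput test proceeds, the sketch below computes the theoretical maximum frame rate of a link and then binary-searches for the highest offered rate that a device forwards with zero frame loss. It is a simplified model, not a traffic generator's actual API: `run_trial` is a hypothetical callback standing in for a real test run, and the simulated device that starts dropping above 80% of line rate is invented for the demonstration.

```python
ETHERNET_OVERHEAD_BYTES = 20  # 7-byte preamble + 1-byte SFD + 12-byte inter-frame gap


def max_frame_rate(link_bps, frame_bytes):
    """Theoretical maximum frames per second for a given frame size."""
    return link_bps / ((frame_bytes + ETHERNET_OVERHEAD_BYTES) * 8)


def find_throughput(run_trial, line_rate_fps, resolution=0.001):
    """Binary-search the highest offered rate forwarded with zero loss.

    run_trial(rate_fps) is a hypothetical callback that offers traffic at
    rate_fps and returns the number of frames lost.
    """
    if run_trial(line_rate_fps) == 0:
        return line_rate_fps  # device forwards at full line rate
    low, high = 0.0, 1.0  # fractions of line rate: low passes, high fails
    while high - low > resolution:
        mid = (low + high) / 2
        if run_trial(mid * line_rate_fps) == 0:
            low = mid
        else:
            high = mid
    return low * line_rate_fps


line_rate = max_frame_rate(100e9, 64)  # 100GbE with 64-byte frames
capacity = 0.8 * line_rate             # invented limit of the simulated device


def simulated_trial(rate_fps):
    return 0 if rate_fps <= capacity else 1


result = find_throughput(simulated_trial, line_rate)
print(f"line rate: {line_rate / 1e6:.1f} Mfps, measured: {result / 1e6:.1f} Mfps")
```

A real test repeats this search for each frame size in the test plan, since the per-frame overhead makes small frames the hardest case for a forwarding engine.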

Conclusion

The HPE 5945 switch series provides a robust, high-performance, and scalable networking foundation for data-intensive research and drug development. Its low-latency, high-throughput architecture, combined with key features like IRF and VXLAN, enables the rapid and efficient movement of large datasets, which is paramount for accelerating the pace of scientific discovery. Understanding the core architectural principles of this switch allows researchers and IT professionals to better design and optimize their network infrastructure to meet the unique demands of their work.

Revolutionizing Research Data Centers: A Technical Guide to the HPE 5945 Switch Series

In the fast-paced world of scientific research, drug development, and high-performance computing (HPC), the ability to rapidly process and analyze massive datasets is paramount. The network infrastructure of a research data center forms the bedrock of these capabilities. The HPE FlexFabric 5945 Switch Series emerges as a pivotal technology, engineered to meet the stringent demands of modern research environments by delivering high-density, ultra-low-latency, and scalable connectivity. This guide provides an in-depth technical overview of the HPE 5945, tailored for researchers, scientists, and drug development professionals.

Core Capabilities for Research and Development

Research data centers are characterized by their need for high-bandwidth, low-latency communication to support data-intensive applications, large-scale simulations, and collaborative research. The HPE 5945 switch series is specifically designed for deployment at the aggregation or server access layer of large enterprise data centers, and is also robust enough for the core layer of medium-sized enterprises.[1][2][3] Its architecture addresses the critical requirements of these environments.

A key advantage of the HPE 5945 is its cut-through, nonblocking switching architecture, which delivers latency of approximately 1 microsecond for 100GbE.[1] Latency this low matters for HPC clusters and storage networks, where communication overhead directly limits application performance. The switch series also supports high-density 10GbE, 25GbE, 40GbE, and 100GbE deployments, providing flexible and scalable connectivity at the server edge.[1][4]
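One way to see why cut-through forwarding matters is to compute serialization delay, the time needed to clock a whole frame onto the wire. A store-and-forward switch must receive the entire frame before it can forward it, so it adds at least this delay at every hop, whereas a cut-through switch can begin forwarding once the header has been read. The short Python sketch below is simple arithmetic, not a measurement of the 5945, and the frame sizes are chosen only for illustration.

```python
def serialization_delay_ns(frame_bytes, link_gbps):
    """Time to clock a frame onto the wire, in nanoseconds."""
    return frame_bytes * 8 / link_gbps  # bits / (Gbit/s) = ns


for speed in (10, 25, 40, 100):
    small = serialization_delay_ns(64, speed)
    large = serialization_delay_ns(1518, speed)
    print(f"{speed:>3} GbE: 64 B = {small:7.2f} ns, 1518 B = {large:8.2f} ns")
```

At 10GbE a 1518-byte frame takes more than a microsecond to serialize, so a store-and-forward hop alone would exceed the latency figure quoted above. At 100GbE the same frame takes roughly a tenth of that.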

Quantitative Performance Metrics

The HPE 5945 series offers a range of models with varying specifications to suit different deployment scales and performance requirements. The following tables summarize the key quantitative data for easy comparison.

Table 1: Performance Specifications of HPE FlexFabric 5945 Switch Models

| Feature | HPE FlexFabric 5945 48SFP28 8QSFP28 (JQ074A) | HPE FlexFabric 5945 2-slot Switch (JQ075A) | HPE FlexFabric 5945 4-slot Switch (JQ076A) | HPE FlexFabric 5945 32QSFP28 (JQ077A) |
|---|---|---|---|---|
| Switching Capacity | 4 Tb/s[5] | 3.6 Tb/s[5] | 6.4 Tb/s[5] | 6.4 Tb/s[5] |
| Throughput | Up to 2024 Mpps[5] | Up to 2024 Mpps[5] | Up to 2024 Mpps[5] | Up to 2024 Mpps[5] |
| Latency | < 1 µs (64-byte packets)[5] | < 1 µs (64-byte packets)[5] | < 1 µs (64-byte packets)[5] | < 1 µs (64-byte packets)[5] |
| MAC Address Table Size | 288K Entries[5] | 288K Entries[5] | 288K Entries[5] | 288K Entries[5] |
| IPv4 Routing Table Size | 324K Entries[5] | 324K Entries[5] | 324K Entries[5] | 324K Entries[5] |
| IPv6 Routing Table Size | 162K Entries[5] | 162K Entries[5] | 162K Entries[5] | 162K Entries[5] |

Table 2: Port Configurations of HPE FlexFabric 5945 Switch Models

| Model | I/O Ports and Slots |
|---|---|
| HPE FlexFabric 5945 48SFP28 8QSFP28 (JQ074A) | 48 x 25G SFP28 Ports, 8 x 100G QSFP28 Ports, 2 x 1G SFP ports.[5] Supports up to 80 x 10GbE ports with splitter cables.[6] |
| HPE FlexFabric 5945 2-slot Switch (JQ075A) | 2 module slots, 2 x 100G QSFP28 ports. Supports up to 48 x 10/25 GbE and 4 x 100 GbE ports, or up to 16 x 100 GbE ports.[5] |
| HPE FlexFabric 5945 4-slot Switch (JQ076A) | 4 Module slots, 2 x 1G SFP ports. Supports up to 96 x 10/25 GbE and 8 x 100 GbE ports, or up to 32 x 100 GbE ports.[5] |
| HPE FlexFabric 5945 32QSFP28 (JQ077A) | 32 x 100G QSFP28 ports. |
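The switching capacity figures in Table 1 can be related to the port counts in Table 2 with simple arithmetic. The Python sketch below assumes the common vendor convention of counting both directions of every data port (full duplex) and leaves out the 1G SFP ports. That convention is an assumption, not something the cited datasheets state here. Only the two fixed-port models are shown, because the capacity of the modular models depends on which modules are installed.

```python
def switching_capacity_tbps(ports):
    """ports maps port speed in Gb/s to port count; both directions are counted."""
    one_way_gbps = sum(speed * count for speed, count in ports.items())
    return one_way_gbps * 2 / 1000


fixed_models = {
    "JQ074A": {25: 48, 100: 8},  # 48 x 25G SFP28 + 8 x 100G QSFP28
    "JQ077A": {100: 32},         # 32 x 100G QSFP28
}

for model, ports in fixed_models.items():
    print(model, switching_capacity_tbps(ports), "Tb/s")
```

Under this assumption the results agree with the 4 Tb/s and 6.4 Tb/s listed for these two models in Table 1.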

Advanced Features for Research Data Centers

The HPE 5945 series is equipped with a comprehensive set of features that are highly beneficial for research data centers.

High-Performance Computing and Storage Networking

For research workloads that rely on high-performance computing, such as genomics sequencing, molecular modeling, and computational fluid dynamics, the HPE 5945 provides support for RoCE v1/v2 (RDMA over Converged Ethernet).[7] RoCE enables remote direct memory access over an Ethernet network, significantly reducing CPU overhead and improving application performance. The switch series also supports Fibre Channel over Ethernet (FCoE) and NVMe over Fabrics, making it a versatile solution for modern storage networks.[1]

Virtualization and Cloud-Native Environments

Modern research often involves virtualized environments and containerized workloads. The HPE 5945 supports VXLAN (Virtual Extensible LAN) and EVPN (Ethernet VPN), which are essential technologies for building scalable and flexible network overlays in virtualized data centers.[4][8] This allows for the creation of isolated logical networks for different research projects or tenants, enhancing security and manageability.

High Availability and Resiliency

To ensure uninterrupted research operations, the HPE 5945 incorporates several high-availability features. HPE Intelligent Resilient Fabric (IRF) technology allows up to 10 HPE 5945 switches to be combined and managed as a single virtual switch, simplifying network architecture and improving resiliency.[1] Additionally, features like Distributed Resilient Network Interconnect (DRNI) offer high availability by combining multiple physical switches into one virtual distributed-relay system.[1] Redundant, hot-swappable power supplies and fans further enhance the reliability of the platform.

Methodologies for Key Experiments and Protocols

While this document does not detail specific biological or chemical experiments, the networking protocols and technologies mentioned are based on well-defined industry standards. For instance, the implementation of RoCE follows the specifications set by the InfiniBand Trade Association. Similarly, VXLAN and EVPN are standardized by the Internet Engineering Task Force (IETF). The performance metrics cited, such as latency and throughput, are typically measured in controlled lab environments using industry-standard traffic generation and analysis tools. These tests involve sending a high volume of packets of a specific size (e.g., 64-byte packets for latency measurements) between ports on the switch and measuring the time taken for the packets to be forwarded.

Conclusion

The HPE 5945 Switch Series provides a robust, high-performance, and scalable networking foundation for modern research data centers. Its combination of ultra-low latency, high-density port configurations, and advanced features for HPC, storage, and virtualization directly addresses the demanding requirements of data-intensive scientific and drug development workflows. By leveraging the capabilities of the HPE 5945, research institutions can accelerate their discovery processes and drive innovation.

References

1. etevers-marketing.com
2. HPE Networking Comware Switch Series 5945, HPE Store US [buy.hpe.com]
3. andovercg.com
4. HPE FlexFabric 5945 Switch Series [hpe.com]
5. support.hpe.com
6. Options, HPE Store EMEA [buy.hpe.com]
7. 2nd-source.de
8. support.hpe.com

HPE FlexFabric 5945: A Technical Guide for High-Performance Computing in Scientific Research

An In-depth Technical Guide for Researchers, Scientists, and Drug Development Professionals