CCMI

Description

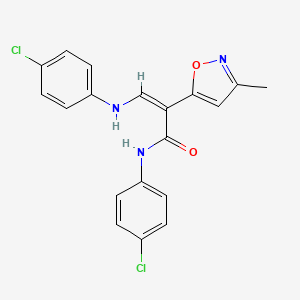

Structure

2D Structure

3D Structure

Properties

| Property | Value | Source |
|---|---|---|
| IUPAC Name | (Z)-3-(4-chloroanilino)-N-(4-chlorophenyl)-2-(3-methyl-1,2-oxazol-5-yl)prop-2-enamide | PubChem |
| InChI | InChI=1S/C19H15Cl2N3O2/c1-12-10-18(26-24-12)17(11-22-15-6-2-13(20)3-7-15)19(25)23-16-8-4-14(21)5-9-16/h2-11,22H,1H3,(H,23,25)/b17-11- | PubChem |
| InChI Key | VMAKIACTLSBBIY-BOPFTXTBSA-N | PubChem |
| Canonical SMILES | CC1=NOC(=C1)C(=CNC2=CC=C(C=C2)Cl)C(=O)NC3=CC=C(C=C3)Cl | PubChem |
| Isomeric SMILES | CC1=NOC(=C1)/C(=C/NC2=CC=C(C=C2)Cl)/C(=O)NC3=CC=C(C=C3)Cl | PubChem |
| Molecular Formula | C19H15Cl2N3O2 | PubChem |
| Molecular Weight | 388.2 g/mol | PubChem |
| CAS No. | 917837-54-8 (record name: AVL-3288) | ChemIDplus; FDA Global Substance Registration System (GSRS) |

| Source | URL | Description |
|---|---|---|
| PubChem | https://pubchem.ncbi.nlm.nih.gov | Data deposited in or computed by PubChem |
| ChemIDplus | https://pubchem.ncbi.nlm.nih.gov/substance/?source=chemidplus&sourceid=0917837548 | ChemIDplus is a free, web search system that provides access to the structure and nomenclature authority files used for the identification of chemical substances cited in National Library of Medicine (NLM) databases, including the TOXNET system. |
| FDA Global Substance Registration System (GSRS) | https://gsrs.ncats.nih.gov/ginas/app/beta/substances/VA80VAX4WF | The FDA Global Substance Registration System (GSRS) enables the efficient and accurate exchange of information on what substances are in regulated products. Instead of relying on names, which vary across regulatory domains, countries, and regions, the GSRS knowledge base makes it possible for substances to be defined by standardized, scientific descriptions. |

Explanation (FDA GSRS): Unless otherwise noted, the contents of the FDA website (www.fda.gov), both text and graphics, are not copyrighted. They are in the public domain and may be republished, reprinted and otherwise used freely by anyone without the need to obtain permission from FDA. Credit to the U.S. Food and Drug Administration as the source is appreciated but not required.
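The listed molecular weight can be cross-checked against the molecular formula with a few lines of arithmetic. The sketch below is only an illustration: the helper names are made up for this example, the atomic weights are rounded standard values, and the parser assumes a simple formula without parentheses.

```python
import re

# Rounded standard atomic weights (g/mol) for the elements in this formula.
ATOMIC_WEIGHTS = {"C": 12.011, "H": 1.008, "Cl": 35.45, "N": 14.007, "O": 15.999}

def parse_formula(formula: str) -> dict:
    """Parse a simple formula (no parentheses) into element counts."""
    counts = {}
    for symbol, number in re.findall(r"([A-Z][a-z]?)(\d*)", formula):
        counts[symbol] = counts.get(symbol, 0) + (int(number) if number else 1)
    return counts

def molecular_weight(formula: str) -> float:
    """Sum atomic weight times count over all elements in the formula."""
    return sum(ATOMIC_WEIGHTS[el] * n for el, n in parse_formula(formula).items())

counts = parse_formula("C19H15Cl2N3O2")
mw = molecular_weight("C19H15Cl2N3O2")
print(counts)
print(f"{mw:.1f} g/mol")  # agrees with the listed 388.2 g/mol
```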

Foundational & Exploratory

The Cancer Cell Map Initiative: A Technical Guide to Unraveling the Complexity of Cancer Networks

For Researchers, Scientists, and Drug Development Professionals

The Cancer Cell Map Initiative (CCMI) is a collaborative research effort dedicated to shifting the paradigm of cancer research from a gene-centric view to a comprehensive understanding of the intricate network of protein-protein interactions (PPIs) that drive tumorigenesis.[1][2] By systematically mapping these complex interactions, the CCMI aims to elucidate how genetic alterations in cancer ultimately manifest as functional changes at the protein level, thereby revealing novel therapeutic targets and biomarkers.[1][3] This guide provides an in-depth technical overview of the CCMI's core methodologies, data, and key findings.

Core Principles of the Cancer Cell Map Initiative

The central tenet of the CCMI is that the functional consequences of diverse and often rare cancer mutations converge on a smaller number of protein complexes and pathways.[4] By focusing on the protein interaction landscape, the initiative seeks to:

- Move Beyond Single-Gene Analyses: While genomic sequencing has identified a vast number of mutations associated with cancer, the functional impact of many of these mutations remains unclear. The CCMI contextualizes these mutations by examining their effect on protein interaction networks.[1][4]
- Identify Novel Therapeutic Targets: By uncovering previously unknown protein interactions that are specific to cancer cells, the CCMI pinpoints new nodes in the cancer network that can be targeted for therapeutic intervention.[2][3]
- Discover New Biomarkers: Protein complexes and interaction signatures can serve as more robust biomarkers for patient stratification and predicting treatment response than individual gene mutations.[3]
- Create a Public Resource: The data and maps generated by the CCMI are made publicly available to the research community to accelerate cancer research and drug discovery.

Data Presentation: Quantitative Overview of Key Findings

The CCMI has generated extensive data on the protein interactomes of breast and head and neck cancers. The following table summarizes the key quantitative findings from its initial landmark studies.

| Metric | Head and Neck Squamous Cell Carcinoma (HNSCC) | Breast Cancer | Reference |
|---|---|---|---|
| Genes/Proteins Studied ("Baits") | 31 frequently altered genes | 40 significantly altered proteins | [1] |
| Cell Lines Used | 3 (cancerous and non-cancerous) | 3 (MCF7, MDA-MB-231, and non-tumorigenic MCF10A) | [1] |
| Total Protein-Protein Interactions (PPIs) Identified | 771 | Hundreds | [1][2] |
| Percentage of Novel PPIs (not previously reported) | 84% | ~79% | [1][2] |

Experimental Protocols: A Detailed Look at the Core Methodology

The primary experimental approach employed by the Cancer Cell Map Initiative is Affinity Purification followed by Mass Spectrometry (AP-MS). This powerful technique allows for the isolation and identification of proteins that interact with a specific protein of interest (the "bait") within a cellular context.

Affinity Purification-Mass Spectrometry (AP-MS) Workflow

The general AP-MS workflow used in the CCMI's research is outlined below.

Detailed Methodological Steps:

1. Generation of Bait-Expressing Cell Lines:

   - The open reading frame (ORF) of a gene of interest (the "bait") is cloned into a lentiviral expression vector.
   - An affinity tag (e.g., FLAG, HA, or a tandem tag like SFB) is fused to the N- or C-terminus of the bait protein. This tag allows for the specific purification of the bait and its interacting partners.
   - Lentivirus is produced and used to transduce the desired mammalian cell lines (e.g., HEK293T for initial testing, followed by cancer-relevant lines like MCF7 or HNSCC cell lines).
   - Stable cell lines expressing the tagged bait protein are selected using an appropriate antibiotic resistance marker (e.g., puromycin).

2. Cell Culture and Lysis:

   - The engineered cell lines are grown in large-scale culture to generate sufficient biomass for protein purification.
   - Cells are harvested and then lysed in a buffer containing detergents and protease inhibitors to solubilize proteins and prevent their degradation, while aiming to keep native protein complexes intact.

3. Affinity Purification:

   - The cell lysate is cleared by centrifugation to remove cellular debris.
   - The cleared lysate is incubated with beads (e.g., magnetic or agarose) that are coated with antibodies specific to the affinity tag (e.g., anti-FLAG M2 beads).
   - The bait protein, along with its interacting "prey" proteins, binds to the beads.
   - The beads are washed several times with lysis buffer to remove proteins that non-specifically bind to the beads or the antibody.
   - The purified protein complexes are eluted from the beads, often by competition with a peptide corresponding to the affinity tag or by changing the pH.

4. Protein Digestion and Mass Spectrometry:

   - The eluted proteins are denatured, reduced, and alkylated.
   - The proteins are then digested into smaller peptides using a protease, most commonly trypsin.
   - The resulting peptide mixture is analyzed by liquid chromatography-tandem mass spectrometry (LC-MS/MS). The peptides are separated by liquid chromatography and then ionized and fragmented in the mass spectrometer to determine their amino acid sequences.

5. Computational Analysis of Mass Spectrometry Data:

   - The raw mass spectrometry data is processed using a search algorithm (e.g., MaxQuant) to identify the peptides and, by extension, the proteins present in the sample.
   - To distinguish true interaction partners from background contaminants, scoring algorithms such as SAINT (Significance Analysis of INTeractome) and CompPASS (Comparative Proteomic Analysis Software Suite) are employed. These tools use quantitative data (e.g., spectral counts) from replicate experiments and negative controls to calculate a confidence score for each potential PPI.
   - High-confidence interactions are then used to construct protein-protein interaction networks, which can be visualized and further analyzed using software like Cytoscape.
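The idea behind the computational step, comparing prey abundance in bait purifications with negative controls, can be sketched in a few lines. This is a deliberately simplified fold-enrichment filter and not an implementation of SAINT or CompPASS. The spectral counts, the pseudocount, the `min_fold` threshold, and the function names are all hypothetical values chosen for illustration.

```python
from statistics import mean

def enrichment_scores(bait_counts, control_counts, pseudocount=1.0):
    """Return prey -> fold enrichment of mean spectral counts over controls."""
    scores = {}
    for prey, replicates in bait_counts.items():
        controls = control_counts.get(prey, [0])  # prey never seen in controls
        scores[prey] = (mean(replicates) + pseudocount) / (mean(controls) + pseudocount)
    return scores

def build_network(bait, scores, min_fold=5.0):
    """Keep preys at or above the fold threshold and return a sorted edge list."""
    return sorted((bait, prey) for prey, score in scores.items() if score >= min_fold)

# Hypothetical spectral counts from three replicate purifications of one bait.
bait_counts = {
    "PIK3R1": [40, 38, 45],
    "IRS1": [12, 15, 9],
    "HSPA8": [50, 47, 55],  # abundant chaperone, also present in controls
    "TUBB": [8, 6, 7],
}
# Hypothetical counts from tag-only negative control purifications.
control_counts = {"HSPA8": [48, 52, 50], "TUBB": [7, 9, 8]}

scores = enrichment_scores(bait_counts, control_counts)
edges = build_network("PIK3CA", scores)
print(edges)
```

Proteins that are equally abundant in controls score close to 1 and drop out, while preys absent from controls are retained as network edges. Real scoring tools model replicate variability statistically rather than relying on a fixed fold cutoff.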

Visualization: Signaling Pathways and Logical Relationships

The CCMI's work has shed light on the rewiring of key signaling pathways in cancer. Some of these findings are summarized below; the accompanying diagrams were generated using the DOT language.

The PI3K-AKT Signaling Pathway and Novel Regulators in Breast Cancer

The PI3K-AKT pathway is one of the most frequently dysregulated pathways in human cancers. The CCMI's investigation into the interactome of PIK3CA (the catalytic subunit of PI3K) in breast cancer cells identified novel negative regulators of this pathway.

In the core PI3K-AKT signaling cascade, activation of receptor tyrosine kinases leads to the activation of PIK3CA, which then phosphorylates PIP2 to generate PIP3, a key second messenger that activates AKT and promotes cell growth and survival. The CCMI discovered that in breast cancer cells, the proteins BPIFA1 and SCGB2A1 interact with PIK3CA and act as potent negative regulators of this pathway.[1]
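Since the guide states that its diagrams were generated in the DOT language, the cascade described above can be written out as a small edge list and rendered to DOT text. This is a sketch of one possible encoding, not the original figure: the node names, edge labels, and the decision to draw BPIFA1 and SCGB2A1 as inhibitory edges onto PIK3CA are assumptions based on the paragraph above.

```python
# (source, target, relationship) triples taken from the pathway description.
EDGES = [
    ("RTK", "PIK3CA", "activates"),
    ("PIK3CA", "PIP3", "generates from PIP2"),
    ("PIP3", "AKT", "activates"),
    ("AKT", "Cell growth and survival", "promotes"),
    ("BPIFA1", "PIK3CA", "inhibits"),
    ("SCGB2A1", "PIK3CA", "inhibits"),
]

def to_dot(edges, name="PI3K_AKT"):
    """Render an edge list as Graphviz DOT text; inhibitory edges get a flat arrowhead."""
    lines = [f"digraph {name} {{", "  rankdir=TB;"]
    for src, dst, label in edges:
        arrow = "tee" if label == "inhibits" else "normal"
        lines.append(f'  "{src}" -> "{dst}" [label="{label}", arrowhead={arrow}];')
    lines.append("}")
    return "\n".join(lines)

dot_text = to_dot(EDGES)
print(dot_text)
```

The printed text can be saved to a `.gv` file and rendered with the Graphviz `dot` command-line tool.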

A Novel Interaction in Head and Neck Cancer Promoting Cell Migration

In its study of head and neck squamous cell carcinoma (HNSCC), the CCMI uncovered a previously unknown interaction between the fibroblast growth factor receptor 3 (FGFR3) and Daple, a guanine-nucleotide exchange factor. This interaction was shown to activate a signaling cascade that promotes cancer cell migration.

FGFR3, a receptor tyrosine kinase, interacts with Daple. This interaction leads to the activation of the G-protein subunit Gαi, which in turn activates the PAK1/2 kinases, ultimately promoting cancer cell migration.[1] This finding provides a new potential therapeutic avenue for HNSCC by targeting components of this novel pathway.
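One way to reason about which components lie downstream of a candidate drug target is to treat the cascade as a directed graph and traverse it. The sketch below encodes only the linear chain described above; the dictionary layout and the `downstream` helper are illustrative assumptions.

```python
from collections import deque

# Directed edges of the FGFR3-Daple cascade as described in the text.
PATHWAY = {
    "FGFR3": ["Daple"],
    "Daple": ["Gαi"],
    "Gαi": ["PAK1/2"],
    "PAK1/2": ["Cell migration"],
}

def downstream(graph, start):
    """Breadth-first traversal returning every node reachable from start, in visit order."""
    seen, order, queue = {start}, [], deque([start])
    while queue:
        node = queue.popleft()
        for nxt in graph.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                order.append(nxt)
                queue.append(nxt)
    return order

print(downstream(PATHWAY, "FGFR3"))
print(downstream(PATHWAY, "Gαi"))
```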

Conclusion

The Cancer Cell Map Initiative represents a significant advancement in our approach to understanding and treating cancer. By moving beyond the linear analysis of gene mutations to the complex, interconnected web of protein interactions, the CCMI is providing a more holistic view of cancer biology. The data and methodologies presented in this guide offer a powerful resource for researchers and drug development professionals, paving the way for the discovery of new therapeutic targets, the development of more effective combination therapies, and the identification of novel biomarkers for precision medicine. The continued expansion of these cancer cell maps to other tumor types is expected to remain central to cancer systems biology.


A Researcher's Technical Guide to the Cancer Cell Map Initiative (CCMI) Data Portal

An In-depth Whitepaper for Researchers, Scientists, and Drug Development Professionals

The Cancer Cell Map Initiative (CCMI) is a collaborative effort to comprehensively map the complex network of protein-protein and genetic interactions that drive cancer. This initiative provides a rich resource for researchers, scientists, and drug development professionals to explore the molecular underpinnings of cancer, identify novel therapeutic targets, and understand mechanisms of drug resistance. The primary access point to this wealth of data is through dedicated portals integrated within the cBioPortal for Cancer Genomics.

This technical guide provides a detailed overview of the CCMI data portal, focusing on the types of data available, the experimental methodologies employed, and how to visualize and interpret the complex biological networks.

Data Presentation

The CCMI generates a variety of quantitative data from high-throughput experiments. Below are summary tables of representative data from key CCMI projects, providing insights into protein-protein interactions and genetic dependencies in different cancer types.

Table 1: Protein-Protein Interactions (PPIs) in Breast Cancer Cells

This table summarizes a subset of high-confidence protein-protein interactions identified in breast cancer cell lines using affinity purification-mass spectrometry (AP-MS). The "bait" protein is the protein that was targeted for purification, and the "prey" proteins are the interacting partners that were identified.

| Bait Protein | Prey Protein | Cell Line | MIST Score |
|---|---|---|---|
| PIK3CA | IRS1 | MCF7 | 0.89 |
| PIK3CA | PIK3R1 | MCF7 | 0.95 |
| PIK3CA | PIK3R2 | MCF7 | 0.92 |
| PIK3CA | PIK3R3 | MCF7 | 0.85 |
| TP53 | MDM2 | MCF7 | 0.98 |
| TP53 | TP53BP1 | MCF7 | 0.91 |
| BRCA1 | BARD1 | T47D | 0.99 |
| BRCA1 | PALB2 | T47D | 0.93 |

The MIST (Mass spectrometry interaction statistics) score represents the confidence of the interaction.
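A table like this is typically filtered by a score cutoff before network construction. The sketch below applies a cutoff to the rows of Table 1 and groups the surviving preys by bait. The threshold of 0.90 is an assumption chosen for illustration, not a value stated by the CCMI.

```python
from collections import defaultdict

# Rows of Table 1: (bait, prey, cell line, MIST score).
PPIS = [
    ("PIK3CA", "IRS1", "MCF7", 0.89),
    ("PIK3CA", "PIK3R1", "MCF7", 0.95),
    ("PIK3CA", "PIK3R2", "MCF7", 0.92),
    ("PIK3CA", "PIK3R3", "MCF7", 0.85),
    ("TP53", "MDM2", "MCF7", 0.98),
    ("TP53", "TP53BP1", "MCF7", 0.91),
    ("BRCA1", "BARD1", "T47D", 0.99),
    ("BRCA1", "PALB2", "T47D", 0.93),
]

MIST_THRESHOLD = 0.90  # assumed cutoff, for illustration only

def high_confidence_by_bait(ppis, threshold):
    """Group preys by bait, keeping only interactions at or above the threshold."""
    grouped = defaultdict(list)
    for bait, prey, _cell_line, score in ppis:
        if score >= threshold:
            grouped[bait].append(prey)
    return dict(grouped)

filtered = high_confidence_by_bait(PPIS, MIST_THRESHOLD)
print(filtered)
```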

Table 2: Genetic Dependencies in Head and Neck Squamous Cell Carcinoma (HNSCC)

This table presents a selection of genes identified as essential for the survival or "fitness" of HNSCC cell lines, as determined by genome-wide CRISPR-Cas9 screens. A more negative CRISPR score indicates a higher dependency of the cancer cells on that particular gene.

| Gene | Cell Line | CRISPR Score (CERES) |
|---|---|---|
| EGFR | FaDu | -1.25 |
| PIK3CA | FaDu | -0.98 |
| TP53 | Cal27 | -1.15 |
| MYC | Cal27 | -1.02 |
| UCHL5 | MOC1 | -0.89 |
| YAP1 | SCC-4 | -0.95 |
| TAZ | SCC-4 | -0.91 |

CERES is a computational method that estimates gene dependency levels from CRISPR-Cas9 screens.
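Because a more negative score means a stronger dependency, the rows of Table 2 can be ranked by sorting in ascending order. The sketch below does this and flags genes at or below a cutoff. The cutoff of -1.0 is an assumed illustrative value rather than one given in the source.

```python
# Rows of Table 2: (gene, cell line, CERES score).
DEPENDENCIES = [
    ("EGFR", "FaDu", -1.25),
    ("PIK3CA", "FaDu", -0.98),
    ("TP53", "Cal27", -1.15),
    ("MYC", "Cal27", -1.02),
    ("UCHL5", "MOC1", -0.89),
    ("YAP1", "SCC-4", -0.95),
    ("TAZ", "SCC-4", -0.91),
]

DEPENDENCY_CUTOFF = -1.0  # assumed cutoff, for illustration only

# Most negative (strongest dependency) first.
ranked = sorted(DEPENDENCIES, key=lambda row: row[2])
strong = [gene for gene, _cell_line, score in ranked if score <= DEPENDENCY_CUTOFF]

for gene, cell_line, score in ranked:
    print(f"{gene:7s} {cell_line:6s} {score:+.2f}")
print("Strong dependencies:", strong)
```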

Experimental Protocols

The data generated by the CCMI relies on state-of-the-art experimental techniques. The following sections provide detailed methodologies for the key experiments cited.

Affinity Purification-Mass Spectrometry (AP-MS)

AP-MS is a powerful technique used to identify protein-protein interactions. The general workflow involves expressing a "bait" protein with an affinity tag, purifying the bait and its interacting "prey" proteins, and identifying the proteins using mass spectrometry.[1]

1. Cell Culture and Lentiviral Transduction:

- Human cancer cell lines (e.g., MCF7 for breast cancer, FaDu for head and neck cancer) are cultured in appropriate media.
- Lentiviral vectors carrying the bait protein fused to an affinity tag (e.g., Strep-FLAG) are used to transduce the cells.
- Stable cell lines expressing the tagged protein are selected using an appropriate antibiotic.

2. Cell Lysis and Affinity Purification:

- Cells are harvested and lysed in a buffer that preserves protein-protein interactions.
- The cell lysate is incubated with affinity beads (e.g., anti-FLAG agarose) to capture the bait protein and its interacting partners.
- The beads are washed multiple times with lysis buffer to remove non-specific binders.

3. Protein Elution and Digestion:

- The bound protein complexes are eluted from the beads using a competitive peptide (e.g., 3xFLAG peptide).
- The eluted proteins are denatured, reduced, and alkylated.
- The proteins are then digested into smaller peptides using trypsin.

4. Liquid Chromatography-Tandem Mass Spectrometry (LC-MS/MS):

- The resulting peptide mixture is separated by reverse-phase liquid chromatography.
- The separated peptides are ionized and analyzed by a high-resolution mass spectrometer (e.g., Orbitrap).
- The mass spectrometer acquires both MS1 spectra (masses of the intact peptide ions) and MS2 spectra (fragment ions used for peptide sequencing).

5. Data Analysis:

- The raw mass spectrometry data is processed using a search algorithm (e.g., MaxQuant) to identify the peptides and proteins.
- The identified proteins are filtered against a database of common contaminants.
- Statistical scoring algorithms like MIST (Mass spectrometry interaction statistics) or SAINT (Significance Analysis of INTeractome) are used to assign confidence scores to the identified protein-protein interactions.[1]

CRISPR-Cas9 Loss-of-Function Screening

CRISPR-Cas9 screens are used to systematically knock out genes to identify those that are essential for cancer cell survival or other phenotypes of interest.[2]

1. Library Design and Preparation:

- A pooled library of single-guide RNAs (sgRNAs) targeting thousands of genes in the human genome is designed.
- The sgRNA library is synthesized as a pool of oligonucleotides and cloned into a lentiviral vector.
- The lentiviral library is packaged into viral particles.

2. Cell Transduction and Selection:

- Cancer cells stably expressing the Cas9 nuclease are transduced with the pooled sgRNA lentiviral library at a low multiplicity of infection (MOI) to ensure that most cells receive only one sgRNA.
- Transduced cells are selected with an appropriate antibiotic (e.g., puromycin) to eliminate non-transduced cells.

3. Cell Culture and Phenotypic Selection:

- The population of cells with gene knockouts is cultured for a defined period.
- During this time, cells with knockouts of essential genes will be depleted from the population.
- A "time 0" reference cell pellet is collected at the beginning of the screen.

4. Genomic DNA Extraction and sgRNA Sequencing:

- Genomic DNA is extracted from the "time 0" and final cell populations.
- The sgRNA sequences integrated into the genome are amplified by PCR.
- The amplified sgRNAs are sequenced using next-generation sequencing.

5. Data Analysis:

- The sequencing reads are aligned to the sgRNA library to determine the abundance of each sgRNA in the initial and final cell populations.
- The change in abundance of each sgRNA is calculated.
- Statistical methods, such as MAGeCK or CERES, are used to identify genes whose knockout leads to a significant change in cell fitness.[3]
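The abundance-change calculation in the data analysis step can be illustrated with a minimal log2 fold-change computation. This sketch is not MAGeCK or CERES, both of which apply proper statistical models. The read counts, sgRNA names, pseudocount, and the median-based gene summary are assumptions made for illustration.

```python
import math
from statistics import median

def normalize(counts):
    """Scale raw read counts to counts per million to correct for sequencing depth."""
    total = sum(counts.values())
    return {sg: c * 1e6 / total for sg, c in counts.items()}

def sgrna_log2fc(time0, final, pseudocount=1.0):
    """log2 fold change of each sgRNA between the final and time 0 populations."""
    t0_norm, final_norm = normalize(time0), normalize(final)
    return {
        sg: math.log2((final_norm[sg] + pseudocount) / (t0_norm[sg] + pseudocount))
        for sg in time0
    }

def gene_scores(log2fc, sgrna_to_gene):
    """Summarize each gene as the median log2 fold change of its sgRNAs."""
    per_gene = {}
    for sg, value in log2fc.items():
        per_gene.setdefault(sgrna_to_gene[sg], []).append(value)
    return {gene: median(values) for gene, values in per_gene.items()}

# Hypothetical read counts for two sgRNAs per gene.
time0 = {"EGFR_sg1": 500, "EGFR_sg2": 500, "CTRL_sg1": 500, "CTRL_sg2": 500}
final = {"EGFR_sg1": 100, "EGFR_sg2": 150, "CTRL_sg1": 900, "CTRL_sg2": 850}
sgrna_to_gene = {"EGFR_sg1": "EGFR", "EGFR_sg2": "EGFR", "CTRL_sg1": "CTRL", "CTRL_sg2": "CTRL"}

scores = gene_scores(sgrna_log2fc(time0, final), sgrna_to_gene)
print(scores)
```

In this toy data the sgRNAs targeting the essential gene are depleted, so its score is negative. The control score is positive only because counts are normalized to a fixed total.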


References

- 1. Affinity purification–mass spectrometry and network analysis to understand protein-protein interactions - PMC [pmc.ncbi.nlm.nih.gov]

- 2. Affinity Purification Mass Spectrometry | Thermo Fisher Scientific - US [thermofisher.com]

- 3. Genome-wide CRISPR screens of oral squamous cell carcinoma reveal fitness genes in the Hippo pathway - PMC [pmc.ncbi.nlm.nih.gov]

Understanding Protein Interaction Networks in Cancer: A Technical Guide

For Researchers, Scientists, and Drug Development Professionals

Introduction

The intricate dance of proteins within a cell governs its every function, from growth and proliferation to apoptosis. In the context of cancer, this choreography is often disrupted. Aberrant protein-protein interactions (PPIs) can hijack signaling pathways, leading to uncontrolled cell growth, evasion of cell death, and metastasis. Understanding the complex web of these interactions, known as the protein interaction network or interactome, is paramount for elucidating cancer biology and developing novel therapeutic strategies.[1] This in-depth technical guide provides a comprehensive overview of the core concepts, experimental methodologies, and key signaling pathways central to the study of protein interaction networks in cancer.

Core Concepts in Protein Interaction Networks

Protein-protein interactions are the physical contacts of high specificity established between two or more protein molecules as a result of biochemical events steered by interactions that include electrostatic forces, hydrogen bonding, and the hydrophobic effect. These interactions are fundamental to virtually all cellular processes.

Key Terminology:

- Interactome: The complete set of protein-protein interactions within a cell, organism, or specific biological context.[1]
- Hub Proteins: Highly connected proteins within an interaction network that often play critical roles in cellular function and disease.
- Bait and Prey: In experimental contexts, the "bait" is the protein of interest used to "capture" its interacting partners, the "prey."[2][3]
- Binary Interactions: Direct physical interactions between two proteins.
- Co-complex Interactions: Associations of multiple proteins within a stable complex, which may not all have direct binary interactions.
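The notion of a hub protein can be made concrete by counting how many interaction partners each protein has (its degree) in an undirected network. The edge list below is a small toy network and the degree cutoff of 3 is an arbitrary assumption. Real interactomes are far larger and hub definitions vary between studies.

```python
from collections import Counter

# Toy undirected interaction network (illustrative edges only).
EDGES = [
    ("PIK3CA", "PIK3R1"),
    ("PIK3CA", "PIK3R2"),
    ("PIK3CA", "IRS1"),
    ("TP53", "MDM2"),
    ("TP53", "TP53BP1"),
    ("BRCA1", "BARD1"),
]

def degrees(edges):
    """Count interaction partners per protein; each edge contributes to both ends."""
    degree = Counter()
    for a, b in edges:
        degree[a] += 1
        degree[b] += 1
    return degree

HUB_MIN_DEGREE = 3  # assumed cutoff for calling a protein a hub

degree = degrees(EDGES)
hubs = sorted(p for p, d in degree.items() if d >= HUB_MIN_DEGREE)
print(degree.most_common(3))
print("Hubs:", hubs)
```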

Experimental Methodologies for Studying Protein Interactions

A variety of experimental techniques are employed to identify and characterize protein-protein interactions. The choice of method depends on the specific research question, the nature of the proteins being studied, and the desired level of detail.

Co-Immunoprecipitation (Co-IP)

Co-immunoprecipitation is a widely used antibody-based technique to isolate a specific protein (the "bait") and its binding partners (the "prey") from a cell lysate.[4][5]

Detailed Protocol:

1. Cell Lysis:

   - Harvest cultured cells and wash with ice-cold phosphate-buffered saline (PBS).
   - Lyse the cells in a non-denaturing lysis buffer to preserve protein interactions. A common lysis buffer composition is:
     - 50 mM Tris-HCl, pH 7.4
     - 150 mM NaCl
     - 1 mM EDTA
     - 1% NP-40 or Triton X-100
     - Protease and phosphatase inhibitor cocktail (added fresh)
   - Incubate the lysate on ice to facilitate cell disruption.
   - Centrifuge the lysate to pellet cellular debris and collect the supernatant containing the protein mixture.

2. Pre-clearing the Lysate (Optional but Recommended):

   - Incubate the cell lysate with protein A/G beads (without the primary antibody) to reduce non-specific binding of proteins to the beads.
   - Centrifuge and collect the supernatant.

3. Immunoprecipitation:

   - Incubate the pre-cleared lysate with a primary antibody specific to the bait protein with gentle rotation at 4°C. The incubation time can range from 1 hour to overnight.
   - Add protein A/G-coupled agarose or magnetic beads to the lysate-antibody mixture and continue to incubate with gentle rotation at 4°C for 1-4 hours. These beads bind to the Fc region of the primary antibody.

4. Washing:

   - Pellet the beads by centrifugation and discard the supernatant.
   - Wash the beads multiple times with a wash buffer (often the lysis buffer with a lower detergent concentration) to remove non-specifically bound proteins.

5. Elution:

   - Elute the protein complexes from the beads using an elution buffer. This can be a low-pH buffer (e.g., glycine-HCl, pH 2.5-3.0) or a buffer containing a denaturing agent (e.g., SDS-PAGE sample buffer).

6. Analysis:

   - The eluted proteins are typically analyzed by Western blotting to confirm the presence of the bait and expected prey proteins.
   - For the identification of novel interaction partners, the eluate can be subjected to mass spectrometry analysis.
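Preparing the lysis buffer listed in step 1 from concentrated stocks is a direct application of the dilution relation C1·V1 = C2·V2. The sketch below works this out for a 50 mL batch. The stock concentrations (1 M Tris-HCl, 5 M NaCl, 0.5 M EDTA, 10% NP-40) and the batch size are assumptions typical of a lab bench, not values from the protocol. Inhibitor cocktails are omitted because they are added fresh according to the supplier's instructions.

```python
def stock_volume_ml(final_conc, stock_conc, final_volume_ml):
    """Solve C1*V1 = C2*V2 for V1; both concentrations must share the same unit."""
    return final_conc * final_volume_ml / stock_conc

FINAL_VOLUME_ML = 50.0  # assumed batch size

# component: (final concentration, assumed stock concentration), same unit per row
RECIPE = {
    "Tris-HCl pH 7.4 (mM)": (50, 1000),
    "NaCl (mM)": (150, 5000),
    "EDTA (mM)": (1, 500),
    "NP-40 (% v/v)": (1, 10),
}

volumes = {
    name: stock_volume_ml(final, stock, FINAL_VOLUME_ML)
    for name, (final, stock) in RECIPE.items()
}
water_ml = FINAL_VOLUME_ML - sum(volumes.values())

for name, volume in volumes.items():
    print(f"{name:22s} {volume:5.2f} mL")
print(f"{'Water':22s} {water_ml:5.2f} mL")
```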


Affinity Purification-Mass Spectrometry (AP-MS)

AP-MS is a high-throughput technique that combines affinity purification of a protein of interest with mass spectrometry to identify its interaction partners on a large scale.[6][7]

Detailed Protocol:

1. Bait Protein Expression:

   - The bait protein is typically expressed with an affinity tag (e.g., FLAG, HA, Strep-tag) in a suitable cell line.

2. Cell Lysis and Affinity Purification:

   - Cells are lysed under non-denaturing conditions.
   - The cell lysate is incubated with beads coated with an antibody or other affinity reagent that specifically binds to the tag on the bait protein.
   - The beads are washed to remove non-specifically bound proteins.

3. Elution and Protein Digestion:

   - The protein complexes are eluted from the beads.
   - The eluted proteins are then digested into smaller peptides, typically using the enzyme trypsin.

4. Liquid Chromatography-Tandem Mass Spectrometry (LC-MS/MS):

   - The peptide mixture is separated by liquid chromatography.
   - The separated peptides are then ionized and analyzed by a mass spectrometer. The mass spectrometer measures the mass-to-charge ratio of the peptides and then fragments them to determine their amino acid sequence.

5. Data Analysis and Protein Identification:

   - The fragmentation spectra are searched against a protein sequence database to identify the proteins present in the original complex.
   - Computational methods are used to score the interactions and distinguish true interactors from background contaminants.
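Database search engines match spectra against peptides predicted from protein sequences, which requires digesting each sequence in silico. The sketch below applies the commonly used trypsin rule (cleave after lysine or arginine, but not when the next residue is proline) to a made-up sequence. It ignores missed cleavages and modifications, and the function name and example sequence are hypothetical.

```python
import re

def trypsin_digest(sequence, min_length=1):
    """Cleave C-terminal to K or R unless the next residue is P (no missed cleavages)."""
    # Zero-width split: positions preceded by K/R and not followed by P.
    peptides = re.split(r"(?<=[KR])(?!P)", sequence)
    return [p for p in peptides if len(p) >= min_length]

# Hypothetical sequence chosen to show both a cleavage and a proline-blocked site.
peptides = trypsin_digest("MKRPLAKGR")
print(peptides)
```

In this example the bond after the first arginine is not cut because a proline follows it, so `RPLAK` stays intact.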


MAPK/ERK Signaling Pathway

The Mitogen-Activated Protein Kinase (MAPK) pathway, also known as the Ras-Raf-MEK-ERK pathway, is a chain of proteins that communicates a signal from a receptor on the surface of the cell to the DNA in the nucleus.[8] This pathway is involved in cell proliferation, differentiation, and survival.

Key Protein Interactions:

- Growth Factor Receptors and GRB2/SOS: Activation of growth factor receptors leads to the recruitment of the adaptor protein GRB2 and the guanine nucleotide exchange factor SOS.
- SOS and Ras: SOS activates the small GTPase Ras by promoting the exchange of GDP for GTP.
- Ras and Raf: Activated, GTP-bound Ras recruits and activates the serine/threonine kinase Raf (a MAPKKK).
- Raf and MEK: Raf phosphorylates and activates MEK (a MAPKK).
- MEK and ERK: MEK, a dual-specificity kinase, phosphorylates and activates ERK (a MAPK).
- ERK and Transcription Factors: Activated ERK translocates to the nucleus and phosphorylates transcription factors such as c-Myc and ELK-1, leading to changes in gene expression that promote cell proliferation.[9]

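Because each step in the MAPK/ERK cascade depends on the one before it, blocking a single component silences everything downstream. The sketch below models the cascade as a strictly linear chain, which is a strong simplification that ignores feedback and crosstalk. The component labels and the `propagate` helper are illustrative assumptions.

```python
# Ordered components of the cascade, from the cell surface to the nucleus.
CASCADE = [
    "Growth factor receptor",
    "GRB2/SOS",
    "Ras",
    "Raf",
    "MEK",
    "ERK",
    "Transcription factors",
]

def propagate(cascade, blocked=()):
    """Return the components activated in order, stopping at the first blocked one."""
    active = []
    for component in cascade:
        if component in blocked:
            break
        active.append(component)
    return active

print(propagate(CASCADE))                   # signal reaches the transcription factors
print(propagate(CASCADE, blocked={"MEK"}))  # signal stops before MEK
```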

Wnt/β-catenin Signaling Pathway

The Wnt signaling pathway plays a critical role in embryonic development and adult tissue homeostasis. Aberrant activation of the canonical Wnt/β-catenin pathway is a hallmark of several cancers, particularly colorectal cancer.

Key Protein Interactions:

- Wnt, Frizzled, and LRP5/6: In the "on" state, Wnt ligands bind to Frizzled (FZD) receptors and LRP5/6 co-receptors.
- FZD/LRP5/6 and Dishevelled (DVL): This binding leads to the recruitment and activation of the cytoplasmic protein Dishevelled.
- DVL and the Destruction Complex: Activated DVL inhibits the "destruction complex," which consists of Axin, Adenomatous Polyposis Coli (APC), Glycogen Synthase Kinase 3 (GSK3), and Casein Kinase 1 (CK1).
- Destruction Complex and β-catenin: In the "off" state (absence of Wnt), the destruction complex phosphorylates β-catenin, targeting it for ubiquitination and proteasomal degradation.
- β-catenin and TCF/LEF: When the destruction complex is inhibited, β-catenin accumulates in the cytoplasm and translocates to the nucleus, where it binds to TCF/LEF transcription factors to activate the transcription of target genes, such as MYC and CCND1 (cyclin D1).[10]

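The "on" and "off" states of the canonical Wnt pathway can be summarized as a small Boolean model. The sketch below is a coarse abstraction with yes/no states and no kinetics. The `apc_functional` switch is an added assumption to represent loss of a destruction-complex component such as APC, which would disable the complex regardless of Wnt.

```python
def wnt_state(wnt_present: bool, apc_functional: bool = True) -> dict:
    """Boolean sketch of canonical Wnt signaling following the interactions listed above."""
    dvl_active = wnt_present  # Wnt binding recruits and activates DVL
    destruction_complex_active = apc_functional and not dvl_active
    beta_catenin_stable = not destruction_complex_active  # otherwise it is degraded
    return {
        "dvl_active": dvl_active,
        "destruction_complex_active": destruction_complex_active,
        "beta_catenin_stable": beta_catenin_stable,
        "target_genes_on": beta_catenin_stable,  # via TCF/LEF, e.g. MYC and CCND1
    }

print(wnt_state(wnt_present=False))                        # "off" state
print(wnt_state(wnt_present=True))                         # "on" state
print(wnt_state(wnt_present=False, apc_functional=False))  # aberrant activation
```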

Quantitative Data on Protein Interactions in Cancer

The study of protein interaction networks generates vast amounts of data. Publicly available databases serve as crucial repositories for this information, enabling researchers to analyze and interpret complex interaction networks.

| Database | Description | Approximate Number of Protein-Protein Interactions (Human) |
|---|---|---|
| BioGRID | A comprehensive database of protein and genetic interactions curated from the primary biomedical literature for all major model organism species.[11] | > 1,000,000 |
| IntAct | An open-source, open data molecular interaction database populated by data curated from literature or from direct data depositions.[12][13] | > 800,000 |
| STRING | A database of known and predicted protein-protein interactions, including both direct (physical) and indirect (functional) associations.[14][15] | > 19,000,000 (including predicted) |

Interaction Data for Key Cancer-Associated Proteins:

| Protein | Function | Approximate Number of Known Interactors (BioGRID) |
|---|---|---|
| TP53 | Tumor suppressor, transcription factor | > 4,000 |
| EGFR | Receptor tyrosine kinase, cell surface receptor | > 2,000 |
| KRAS | Small GTPase, signal transducer | > 500 |

Note: The number of interactions is constantly being updated as new research is published.

Conclusion and Future Directions

The mapping and analysis of protein interaction networks have revolutionized our understanding of cancer. These networks provide a systems-level view of the molecular alterations that drive tumorigenesis and have unveiled a plethora of potential therapeutic targets. The continued development of high-throughput experimental techniques, coupled with advanced computational and bioinformatic tools, will undoubtedly lead to a more comprehensive and dynamic picture of the cancer interactome. This will pave the way for the development of more effective and personalized cancer therapies that specifically target the aberrant protein-protein interactions at the heart of the disease.

References

- 1. Co-immunoprecipitation (Co-IP): The Complete Guide | Antibodies.com [antibodies.com]

- 2. researchgate.net [researchgate.net]

- 3. kaggle.com [kaggle.com]

- 4. researchgate.net [researchgate.net]

- 5. researchgate.net [researchgate.net]

- 6. researchgate.net [researchgate.net]

- 7. fiveable.me [fiveable.me]

- 8. How to conduct a Co-immunoprecipitation (Co-IP) | Proteintech Group [ptglab.com]

- 9. researchgate.net [researchgate.net]

- 10. researchgate.net [researchgate.net]

- 11. p14arf - Wikipedia [en.wikipedia.org]

- 12. researchgate.net [researchgate.net]

- 13. researchgate.net [researchgate.net]

- 14. STRING v11: protein–protein association networks with increased coverage, supporting functional discovery in genome-wide experimental datasets - PMC [pmc.ncbi.nlm.nih.gov]

- 15. The STRING database in 2023: protein-protein association networks and functional enrichment analyses for any sequenced genome of interest - PubMed [pubmed.ncbi.nlm.nih.gov]

Mapping the Cancer Interactome: A Technical Guide to the Cancer Cell Map Initiative's Key Publications

The Cancer Cell Map Initiative (CCMI) is a collaborative effort to systematically define the molecular networks that underlie cancer. By mapping the intricate web of protein-protein interactions (PPIs), the CCMI aims to provide a deeper understanding of how genetic alterations drive cancer progression and to identify novel therapeutic targets. This technical guide delves into the core findings and methodologies of three key publications from the CCMI, published in Science in October 2021, which lay the groundwork for a systems-level understanding of head and neck and breast cancers.

Core Publications

The foundation of this guide is built upon the following publications:

- "A protein network map of head and neck cancer reveals PIK3CA mutant drug sensitivity" by Swaney, D.L., Ramms, D.J., Wang, Z., et al. (2021).
- "A protein interaction landscape of breast cancer" by Kim, M., Park, J., Bouhaddou, M., et al. (2021).
- "Interpretation of cancer mutations using a multiscale map of protein systems" by Zheng, F., Kelly, M.R., Ramms, D.J., et al. (2021).[1]

These papers present a comprehensive analysis of the protein interaction networks in head and neck squamous cell carcinoma (HNSCC) and breast cancer, utilizing affinity purification-mass spectrometry (AP-MS) to chart the landscape of interactions in both healthy and cancerous states.[2]

Experimental Protocols: Affinity Purification-Mass Spectrometry (AP-MS)

The primary experimental technique employed in these studies is affinity purification coupled with mass spectrometry (AP-MS). This powerful method allows for the isolation and identification of proteins that interact with a specific "bait" protein. The general workflow is as follows:

Experimental Workflow: AP-MS

Detailed Methodologies:

- Cell Lines and Culture: The studies utilized human embryonic kidney (HEK293T) cells as a general human cell context, alongside specific cancer cell lines for head and neck squamous cell carcinoma (HNSCC) and breast cancer.

- Construct Design and Transfection: Bait proteins of interest, including wild-type and mutant versions, were cloned into expression vectors with N-terminal Strep-HA tags. These plasmids were then transiently transfected into the chosen cell lines.

- Affinity Purification:

  - Lysis: Cells were harvested and lysed to release cellular proteins.

  - Binding: The cell lysates were incubated with Strep-Tactin beads, which have a high affinity for the Strep-tag on the bait protein.

  - Washing: A series of washing steps was performed to remove non-specifically bound proteins.

  - Elution: The bait protein and its interacting partners were eluted from the beads.

- Mass Spectrometry:

  - Sample Preparation: The eluted protein complexes were reduced, alkylated, and digested with trypsin to generate peptides.

  - LC-MS/MS: The peptide mixtures were separated by liquid chromatography and analyzed by tandem mass spectrometry.

- Data Analysis:

  - Protein Identification: The resulting spectra were searched against a human protein database to identify the peptides and, subsequently, the proteins present in the sample.

  - Interaction Scoring: To distinguish true interactors from background contaminants, two scoring algorithms were used:

    - SAINTexpress: This algorithm calculates the probability of a true interaction based on spectral counts.

    - MiST (Mass spectrometry interaction STatistics): This tool also uses spectral counts to assign a confidence score to each interaction.

  - Differential Analysis: To identify cancer-specific or mutation-specific interactions, a differential interaction score was calculated to compare interactions across different conditions.
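The differential analysis step can be made concrete with a small sketch. The function below is an illustrative assumption, not the formula from the publications: it takes an interaction probability (for example, a SAINTexpress-style score) for the same bait-prey pair in two conditions and returns a signed score that is large when the interaction is likely present in one condition and likely absent in the other. The name `differential_interaction_score` and the tie-handling rule are hypothetical.

```python
def differential_interaction_score(p_a: float, p_b: float) -> float:
    """Signed score in [-1, 1] for one bait-prey pair.

    p_a, p_b: probability that the interaction is real in condition A / B.
    Positive -> more specific to A; negative -> more specific to B.
    Illustrative definition only (assumption, not the published method).
    """
    for p in (p_a, p_b):
        if not 0.0 <= p <= 1.0:
            raise ValueError("probabilities must lie in [0, 1]")
    if p_a == p_b:
        return 0.0  # no evidence for a condition-specific difference
    present_a_only = p_a * (1.0 - p_b)  # present in A and absent in B
    present_b_only = p_b * (1.0 - p_a)  # present in B and absent in A
    if present_a_only > present_b_only:
        return present_a_only
    return -present_b_only


if __name__ == "__main__":
    # Hypothetical probabilities for a cancer line (A) vs. a control line (B).
    print(differential_interaction_score(0.95, 0.10))
    print(differential_interaction_score(0.20, 0.90))
```

A product of "present here" and "absent there" rewards pairs that are confidently detected in one context only, whereas a pair scored highly in both contexts stays close to zero.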

Quantitative Data Summary

The AP-MS experiments generated a vast amount of quantitative data on protein-protein interactions. The following tables summarize the key findings from the Swaney et al. (HNSCC) and Kim et al. (Breast Cancer) publications.

Table 1: Summary of Protein-Protein Interactions in Head and Neck Squamous Cell Carcinoma (HNSCC)

| Condition | Number of Bait Proteins | Total High-Confidence PPIs Identified | Novelty of Interactions |
|---|---|---|---|
| HNSCC vs. Non-cancerous cells | 31 | 771 | ~84% not previously reported |

Data from Swaney, D.L., et al. (2021). Science.

Table 2: Summary of Protein-Protein Interactions in Breast Cancer

| Cell Line Context | Number of Bait Proteins | Total High-Confidence PPIs Identified | Novelty of Interactions |
|---|---|---|---|
| Breast Cancer vs. Non-tumorigenic cells | 40 | Hundreds | ~79% not previously reported |

Data from Kim, M., et al. (2021). Science.

Key Signaling Pathways and Networks

The CCMI publications shed light on how cancer-associated mutations rewire cellular signaling pathways. A significant focus was placed on the PI3K/AKT pathway, which is frequently mutated in various cancers.

PI3K/AKT Signaling Pathway in Cancer

The studies revealed novel protein interactions that modulate the activity of the PI3K/AKT pathway, a critical regulator of cell growth, proliferation, and survival. For instance, in breast cancer, the proteins BPIFA1 and SCGB2A1 were identified as novel interactors of PIK3CA (a subunit of PI3K) that act as negative regulators of the pathway.[3]

PI3K/AKT Signaling Pathway

BRCA1 Interactome in Breast Cancer

In the context of breast cancer, the researchers mapped the interaction network of the tumor suppressor protein BRCA1. They identified UBE2N as a functionally relevant interactor, suggesting its potential as a biomarker for therapies targeting DNA repair pathways.

BRCA1 Interaction Network

Pan-Cancer Analysis and Future Directions

The third key publication by Zheng et al. integrated the newly generated PPI data with existing multi-omic datasets to create a comprehensive, multi-scale map of protein systems in cancer.[1] This "pan-cancer" approach allows for the identification of common and distinct molecular mechanisms across different tumor types. The study developed a statistical model to pinpoint specific protein systems that are under mutational selection in various cancers. This integrated map provides a powerful resource for interpreting the functional consequences of cancer mutations and for identifying new therapeutic vulnerabilities.

The work of the Cancer Cell Map Initiative, as highlighted in these seminal publications, provides a rich, systems-level view of the molecular alterations that drive cancer. The detailed experimental protocols and extensive datasets serve as a valuable resource for the cancer research community, paving the way for the development of more targeted and effective cancer therapies.

References

Accessing the Public Data of the Cancer Cell Map Initiative: A Technical Guide for Researchers

This in-depth guide provides researchers, scientists, and drug development professionals with a comprehensive overview of how to access and utilize the public data generated by the Cancer Cell Map Initiative (CCMI). The CCMI is a collaborative effort to construct comprehensive maps of the protein-protein and genetic interactions within cancer cells to accelerate the development of precision medicine.[1][2][3][4]

Overview of CCMI Data

The primary data generated by the CCMI are "Cell Maps," which are comprehensive network models of genetic and physical interactions between genes and their protein products.[5][6] These maps are crucial for understanding how cellular networks are altered in cancer. The CCMI focuses on several cancer types, with a significant emphasis on breast cancer and head and neck cancers, particularly investigating the PI3K/AKT/mTOR and TP53 signaling pathways.[7]

The data is generated using cutting-edge experimental techniques, primarily:

- Affinity Purification-Mass Spectrometry (AP-MS): To identify protein-protein interactions.

- CRISPR (Clustered Regularly Interspaced Short Palindromic Repeats): Including CRISPR knockout (CRISPRko), CRISPR interference (CRISPRi), and CRISPR activation (CRISPRa) screens to probe gene function.[8]

Accessing CCMI Data via the Network Data Exchange (NDEx)

The primary distribution channel for CCMI's Cell Maps is the Network Data Exchange (NDEx), an online commons for biological network data.[5][6][9]

Step-by-Step Data Access Workflow

To access CCMI data on NDEx, follow these steps:

- Create an NDEx Account:

  - Navigate to the --INVALID-LINK--.

  - Click on "Login/Register" in the top right corner.

  - You can sign up using a Google account or create a new account.[9]

- Request Access to the CCMI Project Group:

  - Once logged in, use the search bar to find the group named "CCMI Project".

  - Request access to this group with at least "can read" permission.[9]

- Browsing and Downloading CCMI Networks:

  - Within the CCMI Project group, you will find a collection of network datasets.

  - You can browse, query, and download these networks in various formats for further analysis.

The following diagram illustrates the general workflow for accessing CCMI data through NDEx.

Caption: Workflow for accessing and utilizing CCMI data from the NDEx platform.

Programmatic Access

For more advanced users, NDEx provides APIs for programmatic access to the data, which can be integrated into analysis pipelines using languages like Python and R.[9] This allows for automated downloading and processing of multiple network files.
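As a rough illustration of programmatic access, the sketch below downloads a network as CX JSON and flattens it into an edge list using only the Python standard library. The endpoint template `NDEX_V2` is an assumption based on the public NDEx v2 REST layout, the UUID must be supplied by the reader, and group-restricted networks would additionally need authentication, which is not shown. The helper names `fetch_cx` and `cx_to_edges` are hypothetical.

```python
import json
from urllib.request import urlopen

# Assumed public endpoint layout; verify against the NDEx documentation.
NDEX_V2 = "https://www.ndexbio.org/v2/network/{uuid}"


def fetch_cx(uuid: str) -> list:
    """Download a publicly readable network as CX JSON (needs network access)."""
    with urlopen(NDEX_V2.format(uuid=uuid)) as resp:
        return json.load(resp)


def cx_to_edges(cx: list) -> list:
    """Turn CX aspect fragments into (source, interaction, target) tuples."""
    names = {}
    raw_edges = []
    for fragment in cx:
        if not isinstance(fragment, dict):
            continue
        # "nodes" fragments map internal ids ("@id") to names ("n").
        for node in fragment.get("nodes", []):
            names[node["@id"]] = node.get("n", str(node["@id"]))
        # "edges" fragments reference nodes by id via "s" and "t".
        raw_edges.extend(fragment.get("edges", []))
    return [
        (names.get(e["s"], str(e["s"])), e.get("i", ""), names.get(e["t"], str(e["t"])))
        for e in raw_edges
    ]


if __name__ == "__main__":
    # Tiny in-memory CX document so the parser can be tried offline.
    sample = [
        {"nodes": [{"@id": 0, "n": "PIK3CA"}, {"@id": 1, "n": "ERBB3"}]},
        {"edges": [{"@id": 2, "s": 0, "t": 1, "i": "interacts-with"}]},
    ]
    print(cx_to_edges(sample))
```

Edges are resolved only after all fragments have been read because CX does not guarantee that node fragments precede edge fragments.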

Experimental Protocols

The CCMI employs standardized and rigorous experimental protocols to generate high-quality data. Below are overviews of the key methodologies.

Affinity Purification-Mass Spectrometry (AP-MS)

AP-MS is used to identify the interacting partners of a protein of interest (the "bait").

General Protocol Outline:

- Bait Protein Expression: The gene encoding the bait protein is tagged with an epitope (e.g., FLAG, HA) and expressed in a relevant cell line.

- Cell Lysis: Cells are lysed under conditions that preserve protein-protein interactions.

- Immunoprecipitation: An antibody specific to the epitope tag is used to "pull down" the bait protein and its interacting partners.

- Elution: The protein complexes are eluted from the antibody.

- Mass Spectrometry: The eluted proteins are identified and quantified using mass spectrometry.

The following diagram outlines the AP-MS experimental workflow.

Caption: A simplified workflow for Affinity Purification-Mass Spectrometry (AP-MS).

CRISPR-Based Functional Genomics Screens

The CCMI utilizes pooled CRISPR-based screens to systematically assess the function of a large number of genes.[10]

General Protocol Outline:

- Library Preparation: A pooled library of single-guide RNAs (sgRNAs) targeting a set of genes is generated.

- Lentiviral Production: The sgRNA library is packaged into lentiviral particles.

- Cell Transduction: A population of cells is transduced with the lentiviral library at a low multiplicity of infection to ensure that most cells receive only one sgRNA.

- Selection/Screening: The transduced cells are subjected to a selection pressure (e.g., drug treatment) or screened for a specific phenotype.

- Genomic DNA Extraction and Sequencing: Genomic DNA is extracted from the surviving or selected cells, and the sgRNA sequences are amplified and sequenced.

- Data Analysis: The abundance of each sgRNA is compared between the initial and final cell populations to identify genes that, when perturbed, affect the phenotype of interest.
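The final comparison of sgRNA abundance can be sketched as a log2 fold change on depth-normalized read counts. This is a minimal, generic illustration rather than the CCMI analysis pipeline; the function name, the counts-per-million normalization, and the pseudocount of 1 are all assumptions chosen for clarity.

```python
import math


def sgrna_log2_fold_changes(initial_counts, final_counts, pseudocount=1.0):
    """Log2 fold change per sgRNA between two time points.

    initial_counts / final_counts: dict mapping sgRNA id -> raw read count.
    Counts are scaled to counts-per-million so that sequencing depth does
    not masquerade as enrichment or depletion.
    """
    total_initial = sum(initial_counts.values())
    total_final = sum(final_counts.values())
    if total_initial == 0 or total_final == 0:
        raise ValueError("each sample needs at least one read")
    scale = 1_000_000
    result = {}
    for guide, n_initial in initial_counts.items():
        n_final = final_counts.get(guide, 0)  # a missing guide counts as zero reads
        cpm_initial = n_initial / total_initial * scale
        cpm_final = n_final / total_final * scale
        result[guide] = math.log2((cpm_final + pseudocount) / (cpm_initial + pseudocount))
    return result


if __name__ == "__main__":
    # Hypothetical read counts for three guides.
    t0 = {"sgGENE1-1": 500, "sgGENE2-1": 500, "sgCTRL-1": 500}
    t1 = {"sgGENE1-1": 50, "sgGENE2-1": 700, "sgCTRL-1": 750}
    for guide, lfc in sgrna_log2_fold_changes(t0, t1).items():
        print(guide, round(lfc, 2))
```

Negative values mark guides that dropped out during the screen, and positive values mark guides that were enriched. Gene-level calls would normally combine several guides per gene.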

The following diagram shows the workflow for a pooled CRISPR screen.

Caption: Workflow for a pooled CRISPR-based functional genomics screen.

For more detailed information on CCMI's CRISPR screening methodologies, refer to the materials from their CRISPR Screening Workshop.[10]

Key Signaling Pathways Investigated by CCMI

The CCMI has a strong focus on elucidating the alterations in key cancer-related signaling pathways.

The PI3K/AKT/mTOR Pathway

This pathway is a critical regulator of cell growth, proliferation, and survival, and it is frequently hyperactivated in cancer. The diagram below provides a simplified representation of this pathway, highlighting key components often studied by CCMI.

Caption: A simplified diagram of the PI3K/AKT/mTOR signaling pathway.

The TP53 Signaling Pathway

The TP53 gene encodes the p53 tumor suppressor protein, often referred to as the "guardian of the genome."[11] Mutations in TP53 are among the most common in human cancers. The pathway diagram below illustrates the central role of p53 in response to cellular stress.

References

- 1. The Cancer Cell Map Initiative: Defining the Hallmark Networks of Cancer [escholarship.org]

- 2. The Cancer Cell Map Initiative: Defining the Hallmark Networks of Cancer - PMC [pmc.ncbi.nlm.nih.gov]

- 3. CCMI | home [ccmi.org]

- 4. idekerlab.ucsd.edu [idekerlab.ucsd.edu]

- 5. CCMI | CCMI [ccmi.org]

- 6. HPMI | Cell Maps [hpmi.ucsf.edu]

- 7. onclive.com [onclive.com]

- 8. CCMI | CORES [ccmi.org]

- 9. CCMI | Cell Maps FAQ [ccmi.org]

- 10. CCMI | CRISPR Screening Workshop [ccmi.org]

- 11. The p53 network: Cellular and systemic DNA damage responses in aging and cancer - PMC [pmc.ncbi.nlm.nih.gov]

A Technical Guide to Exploring Genetic Interactions with the Cancer Cell Map Initiative (CCMI)

For Researchers, Scientists, and Drug Development Professionals

The Cancer Cell Map Initiative (CCMI) is a collaborative effort to create comprehensive maps of the genetic and protein-protein interactions that underpin cancer.[1] By systematically elucidating the complex networks that are rewired in cancer cells, the CCMI aims to identify novel therapeutic targets and patient stratification strategies. This guide provides an in-depth overview of the core methodologies, data, and key findings from the CCMI, with a focus on their work in breast and head and neck cancers.

Core Experimental Approach: Affinity Purification-Mass Spectrometry (AP-MS)

The primary experimental strategy employed by the CCMI to map protein-protein interactions (PPIs) is affinity purification coupled with mass spectrometry (AP-MS).[1][2] This technique allows for the isolation and identification of proteins that interact with a specific "bait" protein, providing a snapshot of the protein complexes within a cell.

Experimental Protocol: AP-MS for Mapping Differential PPI Networks

The following is a generalized protocol for AP-MS as utilized in CCMI studies to map differential PPI networks between wild-type and mutant proteins in various cellular contexts.

1. Cell Line Engineering and Bait Expression:

- Cell Lines: Human Embryonic Kidney (HEK293T) cells are commonly used for their high transfectability and protein expression levels. For cancer-specific studies, relevant cancer cell lines such as those for breast cancer (e.g., MCF7) and head and neck squamous cell carcinoma (HNSCC) are utilized.

- Vector Construction: The gene encoding the "bait" protein of interest (both wild-type and mutant versions) is cloned into a mammalian expression vector. This vector typically includes a dual affinity tag, such as the 2xStrep-HA tag, fused to the N- or C-terminus of the bait protein to facilitate purification.

- Transfection: The expression vectors are transfected into the chosen cell line. For stable expression, lentiviral transduction is often employed, followed by selection with an appropriate antibiotic (e.g., puromycin) to generate stable cell lines.

2. Cell Lysis and Protein Extraction:

- Cell Harvesting: Cells are harvested, washed with phosphate-buffered saline (PBS), and pelleted by centrifugation.

- Lysis: The cell pellet is resuspended in a lysis buffer containing detergents (e.g., Triton X-100 or NP-40) to solubilize proteins and disrupt cell membranes. The buffer is supplemented with protease and phosphatase inhibitors to prevent protein degradation and maintain post-translational modifications.

- Clarification: The lysate is centrifuged at high speed to pellet cellular debris, and the supernatant containing the soluble proteins is collected.

3. Affinity Purification:

- Bead Preparation: Streptactin- or HA-conjugated magnetic beads are washed and equilibrated with the lysis buffer.

- Incubation: The clarified cell lysate is incubated with the prepared beads to allow the tagged "bait" protein and its interacting partners to bind to the beads. This incubation is typically performed for several hours at 4°C with gentle rotation.

- Washing: The beads are washed multiple times with the lysis buffer to remove non-specifically bound proteins.

4. Elution and Sample Preparation for Mass Spectrometry:

- Elution: The bound protein complexes are eluted from the beads. For Strep-tagged proteins, elution is often performed with a buffer containing biotin, which competes with the Strep-tag for binding to the streptactin beads.

- Protein Digestion: The eluted proteins are denatured, reduced, and alkylated. They are then digested into smaller peptides using a protease, most commonly trypsin.

- Peptide Desalting: The resulting peptides are desalted and concentrated using a C18 solid-phase extraction column (e.g., a ZipTip).

5. Mass Spectrometry and Data Analysis:

- LC-MS/MS Analysis: The desalted peptides are analyzed by liquid chromatography-tandem mass spectrometry (LC-MS/MS). The peptides are separated by reverse-phase chromatography and then ionized and fragmented in the mass spectrometer.

- Protein Identification: The resulting MS/MS spectra are searched against a human protein database (e.g., UniProt) using a search engine like MaxQuant to identify the peptides and, by extension, the proteins in the sample.

- Quantitative Analysis: The abundance of each identified protein is quantified based on the intensity of its corresponding peptides. To identify specific interactors, the abundance of each protein in the bait pulldown is compared to its abundance in control pulldowns (e.g., from cells expressing an empty vector or a non-interacting protein). Statistical significance is determined using methods like the SAINT (Significance Analysis of INTeractome) algorithm.
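The bait-versus-control comparison in the last step can be illustrated with a simple enrichment calculation. The sketch below is not SAINT and makes no probabilistic claim: it only averages replicate abundances and reports a log2 ratio of bait over control. The function name, the input layout (one dict per replicate), and the pseudocount are assumptions for illustration.

```python
import math
from statistics import mean


def enrichment_over_control(bait_runs, control_runs, pseudocount=1.0):
    """Log2 ratio of mean abundance in bait pulldowns vs. control pulldowns.

    bait_runs / control_runs: non-empty lists with one dict per replicate,
    each mapping protein name -> abundance (intensity or spectral count).
    Proteins absent from a replicate are treated as abundance 0.
    """
    if not bait_runs or not control_runs:
        raise ValueError("need at least one bait and one control replicate")
    proteins = set().union(*bait_runs, *control_runs)
    result = {}
    for protein in proteins:
        bait_mean = mean(run.get(protein, 0) for run in bait_runs)
        control_mean = mean(run.get(protein, 0) for run in control_runs)
        result[protein] = math.log2((bait_mean + pseudocount) / (control_mean + pseudocount))
    return result


if __name__ == "__main__":
    # Hypothetical spectral counts: one candidate interactor, one sticky background protein.
    bait = [{"ERBB3": 30, "HSP90": 10}, {"ERBB3": 34, "HSP90": 10}]
    control = [{"HSP90": 10}, {"HSP90": 10}]
    for protein, score in sorted(enrichment_over_control(bait, control).items()):
        print(protein, round(score, 2))
```

A background protein that appears equally in bait and control pulldowns scores near zero, which is the intuition behind control-based filtering. Dedicated tools additionally model replicate variability.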

Quantitative Data: Protein-Protein Interactions in Cancer

The CCMI has generated extensive datasets of PPIs for various cancers. This data reveals how cancer-associated mutations alter protein interaction networks. Below are summary tables of newly identified protein-protein interactions in head and neck and breast cancer from key CCMI publications.

Table 1: Novel Protein-Protein Interactions in Head and Neck Squamous Cell Carcinoma (HNSCC)

| Bait Protein (Gene) | Interacting Protein | Biological Context/Significance |
|---|---|---|
| PIK3CA (mutant) | ERBB3 | Enhanced interaction in mutant PIK3CA, suggesting a mechanism of pathway activation. |
| PIK3CA (mutant) | GRB2 | Altered interaction with a key adaptor protein in receptor tyrosine kinase signaling. |
| NOTCH1 | MAML1 | Known co-activator, with altered interactions in specific NOTCH1 mutants. |
| TP53 (mutant) | MDM2 | Differential binding of mutant p53 to its negative regulator. |
| FAT1 | DVL1 | Connection to the Wnt signaling pathway. |

Note: This table represents a summary of findings. For a comprehensive list of interactions and quantitative scores, refer to the supplementary data of the relevant CCMI publications.

Table 2: Novel Protein-Protein Interactions in Breast Cancer

| Bait Protein (Gene) | Interacting Protein | Biological Context/Significance |
|---|---|---|
| PIK3CA (mutant) | BPIFA1 | Newly identified negative regulator of the PI3K-AKT pathway.[1] |
| PIK3CA (mutant) | SCGB2A1 | Newly identified negative regulator of the PI3K-AKT pathway.[1] |
| GATA3 | ZNF354C | Interaction with a zinc finger protein, potentially modulating GATA3's transcriptional activity. |
| CDH1 | CTNND1 | Altered interaction with p120-catenin in specific E-cadherin mutants. |
| MAP2K4 | JNK1 | Altered kinase-substrate interaction in the context of MAP2K4 mutations. |

Note: This table represents a summary of findings. For a comprehensive list of interactions and quantitative scores, refer to the supplementary data of the relevant CCMI publications.

Signaling Pathways and Experimental Workflows

The CCMI's work provides a systems-level view of how mutations impact cellular signaling. The following diagrams, rendered in Graphviz DOT language, illustrate key concepts and workflows.

PI3K Signaling Pathway with Mutant-Specific Interactions

This diagram depicts a simplified PI3K signaling pathway, highlighting how mutations in PIK3CA can lead to altered protein interactions and downstream signaling.

References

Unveiling the Architecture of Cancer: A Technical Guide to CCMI Resources for Systems Biology

For Researchers, Scientists, and Drug Development Professionals

The Cancer Cell Map Initiative (CCMI) is at the forefront of a paradigm shift in cancer research. By moving beyond single-gene analyses to a comprehensive, network-level understanding of cancer, the CCMI is generating invaluable resources for the scientific community. This technical guide provides an in-depth overview of the core methodologies, data, and biological networks being mapped by the CCMI and its collaborators, with a focus on their applications in cancer systems biology and drug development.

The mission of the CCMI is to construct comprehensive maps of the protein-protein and genetic interactions that orchestrate the cancer cell's machinery.[1] This network-based approach is critical for deciphering the complexity of cancer, where tumors with diverse mutational landscapes often converge on disrupting the same core molecular pathways.[2][3] By elucidating these "hallmark networks," the CCMI aims to provide a foundational framework for interpreting cancer genomes and identifying novel therapeutic targets.[2][3]

Mapping the Genetic Interaction Landscape with CRISPR Technology

A cornerstone of the CCMI's efforts is the systematic mapping of genetic interactions in human cancer cells. This is achieved through innovative combinatorial screening platforms utilizing CRISPR-Cas9 and CRISPR interference (CRISPRi) technologies.[1][4][5][6][7][8] These powerful techniques allow for the simultaneous perturbation of gene pairs to identify synthetic lethal and other epistatic relationships, revealing the functional wiring of cancer cells.

Experimental Protocol: Combinatorial CRISPRi/Cas9 Screening

The following protocol outlines the key steps in performing a combinatorial CRISPR screen to map genetic interactions, based on methodologies reported by CCMI-affiliated researchers.[1][6][8]

- Library Design and Construction: A lentiviral library of dual guide RNAs (gRNAs) is designed to target a specific set of genes (e.g., chromatin-regulating factors, known cancer genes).[4][9] Each vector in the library contains two gRNA expression cassettes, enabling the simultaneous knockout or knockdown of two distinct genes.

- Cell Line Transduction: A population of cancer cells stably expressing the Cas9 nuclease (for CRISPR knockout) or dCas9-KRAB (for CRISPRi) is transduced with the dual-gRNA library at a low multiplicity of infection to ensure that most cells receive a single viral particle.

- Growth Competition Assay: The transduced cell population is cultured for a defined period (e.g., 14-21 days), allowing for the depletion of cells with dual-gRNA perturbations that are detrimental to cell fitness.

- Next-Generation Sequencing (NGS): Genomic DNA is isolated from the cell population at initial and final time points. The gRNA cassettes are amplified by PCR and subjected to high-throughput sequencing to determine the relative abundance of each dual-gRNA construct.

- Data Analysis: The sequencing data is analyzed to calculate a genetic interaction score for each gene pair. This score quantifies the extent to which the fitness effect of the double perturbation deviates from the expected effect of the individual perturbations.
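The genetic interaction score described in the last step can be sketched as a deviation from an expected double-perturbation effect. The example assumes that fitness effects are expressed as log2 fold changes and that independent effects add in log space, which is a common modeling choice but not necessarily the exact model used in the cited screens. Function names and the classification threshold are hypothetical.

```python
def genetic_interaction_score(fitness_a, fitness_b, fitness_ab):
    """Observed double-perturbation fitness minus the additive expectation.

    All inputs are log2 fold changes relative to unperturbed cells.
    Assumption: non-interacting genes combine additively in log space.
    """
    expected = fitness_a + fitness_b
    return fitness_ab - expected


def classify_interaction(score, threshold=0.5):
    """Label a score; the threshold is an arbitrary illustrative cut-off."""
    if score <= -threshold:
        return "negative (synthetic sick/lethal)"
    if score >= threshold:
        return "positive (buffering)"
    return "no interaction"


if __name__ == "__main__":
    # Hypothetical fitness values for gene A alone, gene B alone, and both together.
    score = genetic_interaction_score(-0.5, -0.3, -2.0)
    print(round(score, 2), classify_interaction(score))
```

A double perturbation that is much worse than the sum of its parts yields a negative score, the signature of a synthetic lethal pair. A double perturbation no worse than the single ones yields a positive score.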

Quantitative Data: Genetic Interactions in Cancer Cell Lines

The following table summarizes a subset of synthetic lethal interactions identified in a combinatorial CRISPR-Cas9 screen targeting 73 cancer-related genes in HeLa, A549, and 293T cell lines.[9] A negative interaction score indicates a synthetic lethal relationship, where the simultaneous knockout of both genes results in a greater fitness defect than expected.

| Gene A | Gene B | Cell Line | Interaction Score |
|---|---|---|---|
| TP53 | BRCA1 | HeLa | -1.2 |
| TP53 | BRCA2 | HeLa | -1.1 |
| KRAS | BRAF | A549 | -0.9 |
| MYC | MAX | 293T | -1.5 |
| PTEN | PIK3CA | HeLa | -0.8 |
| RB1 | E2F1 | A549 | -1.3 |

Note: The interaction scores presented here are illustrative and based on the findings reported in the cited literature. For a comprehensive dataset, please refer to the supplementary materials of the original publication.

Charting the Protein Interactome: A Blueprint of the Cancer Cell

In parallel with genetic interaction mapping, the CCMI is dedicated to charting the protein-protein interaction (PPI) networks that form the physical backbone of cellular processes. Understanding how these interactions are rewired in cancer is crucial for identifying key protein complexes and signaling hubs that drive tumorigenesis. Methodologies such as affinity purification coupled with mass spectrometry (AP-MS) and yeast two-hybrid (Y2H) screens are employed to systematically map these physical interactions on a proteome-wide scale.[10][11][12][13]

Experimental Workflow: Affinity Purification-Mass Spectrometry (AP-MS)

The AP-MS workflow is a powerful approach to identify the components of protein complexes.

Visualizing Cancer's Logic: Signaling Pathways and Networks

The ultimate goal of the CCMI is to integrate genetic and physical interaction data to construct comprehensive models of cancer cell signaling networks. These models can reveal how oncogenic mutations perturb cellular pathways and suggest novel strategies for therapeutic intervention.

The MAPK/ERK Signaling Pathway: A Key Cancer Network

The Ras-MAPK signaling pathway is a critical regulator of cell proliferation, differentiation, and survival, and it is frequently dysregulated in cancer.[13][14] The following diagram illustrates a simplified representation of this pathway, highlighting key components that are often mutated or hyperactivated in tumors.

The resources and methodologies developed by the Cancer Cell Map Initiative are empowering researchers to delve deeper into the intricate wiring of cancer cells. By providing comprehensive maps of genetic and physical interactions, the CCMI is paving the way for a new era of systems-level cancer biology and the development of more effective and personalized cancer therapies. The data and protocols highlighted in this guide serve as a starting point for leveraging these valuable resources in your own research endeavors.

References

- 1. Genetic interaction mapping in mammalian cells using CRISPR interference - PMC [pmc.ncbi.nlm.nih.gov]

- 2. embopress.org [embopress.org]

- 3. researchgate.net [researchgate.net]

- 4. Genetic interaction mapping in mammalian cells using CRISPR interference | Springer Nature Experiments [experiments.springernature.com]

- 5. Combinatorial CRISPR–Cas9 screens for de novo mapping of genetic interactions | Springer Nature Experiments [experiments.springernature.com]

- 6. aacrjournals.org [aacrjournals.org]

- 7. dash.harvard.edu [dash.harvard.edu]

- 8. Combinatorial CRISPR-Cas9 screens for de novo mapping of genetic interactions - PMC [pmc.ncbi.nlm.nih.gov]

- 9. researchgate.net [researchgate.net]

- 10. The Cancer Genome Atlas Program (TCGA) - NCI [cancer.gov]

- 11. m.youtube.com [m.youtube.com]

- 12. wjgnet.com [wjgnet.com]

- 13. m.youtube.com [m.youtube.com]

- 14. youtube.com [youtube.com]

The Convergence of Metabolism and Precision Oncology: A Technical Guide

Authored for Researchers, Scientists, and Drug Development Professionals

Introduction

The landscape of cancer treatment is undergoing a paradigm shift, moving away from cytotoxic chemotherapies towards a more nuanced, individualized approach known as precision oncology. This strategy hinges on the molecular characterization of a patient's tumor to guide targeted therapies. Concurrently, a deeper understanding of the metabolic reprogramming inherent in cancer cells has unveiled a rich landscape of therapeutic targets. This technical guide explores the pivotal role of research centers at the forefront of cancer metabolism and innovation in advancing precision oncology. By dissecting the intricate metabolic pathways that fuel cancer progression and developing novel therapeutic strategies to exploit these metabolic vulnerabilities, these centers are paving the way for a new generation of cancer treatments. This document will delve into the core scientific principles, experimental methodologies, and clinical data underpinning this exciting field, with a focus on key research emanating from leading institutions such as The Ohio State University Comprehensive Cancer Center and the University of California, Irvine's Chao Family Comprehensive Cancer Center.

Key Metabolic Pathways in Cancer

Cancer cells exhibit profound metabolic alterations to support their rapid proliferation and survival. Three central pillars of this metabolic reprogramming are the Warburg effect, altered glutamine metabolism, and the dysregulation of the PI3K/Akt/mTOR signaling pathway.

The Warburg Effect: Aerobic Glycolysis

A hallmark of many cancer cells is their reliance on aerobic glycolysis, a phenomenon first described by Otto Warburg. Unlike normal cells, which primarily utilize mitochondrial oxidative phosphorylation for energy production in the presence of oxygen, cancer cells favor converting glucose to lactate.[1] This metabolic switch provides a rapid source of ATP and metabolic intermediates necessary for the synthesis of nucleotides, lipids, and amino acids, thereby fueling cell growth and division.[2][3]

Caption: The Warburg Effect signaling pathway in cancer cells.

Glutamine Metabolism: Fueling the Krebs Cycle and Biosynthesis

Glutamine is another critical nutrient for cancer cells, serving as a key source of carbon and nitrogen.[4] It replenishes the tricarboxylic acid (TCA) cycle, a process known as anaplerosis, and provides the nitrogen required for nucleotide and amino acid synthesis.[5] The enzyme glutaminase (GLS) catalyzes the conversion of glutamine to glutamate, which is then converted to the TCA cycle intermediate α-ketoglutarate.[4] Many cancer cells exhibit a strong dependence on glutamine, making its metabolic pathway an attractive therapeutic target.[6][7]

References

- 1. Enhancing the efficacy of glutamine metabolism inhibitors in cancer therapy - PMC [pmc.ncbi.nlm.nih.gov]

- 2. researchgate.net [researchgate.net]

- 3. Metabolite Profiling in Anticancer Drug Development: A Systematic Review - PMC [pmc.ncbi.nlm.nih.gov]

- 4. Clinical Trials Home | UCI Health | Orange County, CA [ucihealth.org]

- 5. mdpi.com [mdpi.com]

- 6. Advancing Cancer Treatment by Targeting Glutamine Metabolism—A Roadmap - PMC [pmc.ncbi.nlm.nih.gov]

- 7. Enhancing the Efficacy of Glutamine Metabolism Inhibitors in Cancer Therapy - PubMed [pubmed.ncbi.nlm.nih.gov]

Methodological & Application

Unlocking New Cancer Drug Targets: A Guide to Leveraging CCMI Data

Application Note: Utilizing Cancer Cell Map Initiative (CCMI) Data for Novel Target Discovery

The Cancer Cell Map Initiative (CCMI) is a collaborative effort to map the complex network of protein-protein and genetic interactions within cancer cells. This rich dataset provides an unprecedented opportunity for researchers, scientists, and drug development professionals to identify and validate novel therapeutic targets. By understanding the intricate molecular machinery of cancer, we can uncover vulnerabilities that can be exploited for targeted therapies.

This document provides detailed application notes and protocols for utilizing CCMI data in your target discovery workflow. We will cover the conceptual framework, experimental design, data analysis, and target validation, empowering your research to translate CCMI's comprehensive datasets into actionable therapeutic strategies.

Conceptual Framework: From Interaction Maps to Drug Targets

The central premise of using CCMI data for target discovery lies in identifying nodes and pathways within the cancer interactome that are critical for tumor cell survival and proliferation. These "cancer dependencies" can be revealed by analyzing the vast network of protein-protein interactions (PPIs) and genetic interactions.

A typical workflow involves several key stages, from initial data exploration to preclinical validation.

Data Presentation: Quantitative Insights from CCMI-driven Research

A key aspect of using CCMI data is the ability to quantify changes in protein interactions and cellular dependencies. Below are examples of how quantitative data can be structured to inform target discovery.

Table 1: Differentially Interacting Proteins in a Cancer Cell Line

This table showcases a hypothetical list of proteins with significantly altered interactions in a cancer cell line compared to a non-cancerous control, as might be determined by affinity purification-mass spectrometry (AP-MS).

| Bait Protein | Interacting Protein | Log2 Fold Change (Cancer vs. Control) | p-value | Potential Role in Cancer |
|---|---|---|---|---|
| EGFR | GRB2 | 1.8 | 0.001 | Signal Transduction |
| EGFR | SHC1 | 1.5 | 0.005 | Signal Transduction |
| PIK3CA | p85a | 2.1 | <0.001 | PI3K/AKT Signaling |
| PIK3CA | IRS1 | -1.2 | 0.01 | Negative Regulation |
| TP53 | MDM2 | 3.5 | <0.0001 | Inhibition of Apoptosis |
| BRCA1 | BARD1 | -2.0 | 0.002 | DNA Repair |
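A table like this is usually reduced to a shortlist by thresholding on effect size and significance. The sketch below shows one way that could look in Python. The thresholds, the `classify_interactions` name, and the choice to store "<0.001"-style p-values as their upper bound are all assumptions made for illustration, not part of the source data.

```python
# Rows mirror the hypothetical Table 1: (bait, prey, log2 fold change, p-value).
# p-values reported as "<x" are stored as the upper bound x (an assumption).
interactions = [
    ("EGFR", "GRB2", 1.8, 0.001),
    ("EGFR", "SHC1", 1.5, 0.005),
    ("PIK3CA", "p85a", 2.1, 0.001),
    ("PIK3CA", "IRS1", -1.2, 0.01),
    ("TP53", "MDM2", 3.5, 0.0001),
    ("BRCA1", "BARD1", -2.0, 0.002),
]


def classify_interactions(rows, min_abs_log2fc=1.5, max_p=0.005):
    """Split rows into gained and lost interactions.

    A row is kept when |log2FC| >= min_abs_log2fc and p <= max_p.
    Both thresholds are illustrative defaults, not recommended values.
    """
    gained, lost = [], []
    for bait, prey, log2fc, p_value in rows:
        if p_value > max_p or abs(log2fc) < min_abs_log2fc:
            continue  # fails the significance or effect-size filter
        if log2fc > 0:
            gained.append((bait, prey))
        else:
            lost.append((bait, prey))
    return gained, lost


gained, lost = classify_interactions(interactions)
```

With these illustrative thresholds, the PIK3CA-IRS1 row is filtered out, while BRCA1-BARD1 is reported as a lost interaction.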

Table 2: Top Candidate Genes from a Genome-Wide CRISPR-Cas9 Screen

This table presents a sample of high-confidence "hits" from a CRISPR screen designed to identify genes essential for the survival of a specific cancer cell line. The "viability score" is reported as a z-score: more negative values indicate stronger loss of cells carrying that knockout.

| Gene | Guide RNA ID | Viability Score (z-score) | False Discovery Rate (FDR) | Associated Pathway |
|---|---|---|---|---|
| KRAS | sgRNA-KRAS-1 | -3.2 | <0.01 | Ras/MAPK Signaling |
| PIK3CA | sgRNA-PIK3CA-2 | -2.9 | <0.01 | PI3K/AKT Signaling |
| MYC | sgRNA-MYC-3 | -3.5 | <0.01 | Transcription Factor |
| BCL2L1 | sgRNA-BCL2L1-1 | -2.5 | 0.02 | Apoptosis Regulation |
| PARP1 | sgRNA-PARP1-4 | -2.8 | 0.01 | DNA Repair |
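One common way to arrive at a z-score like the one in Table 2 is to scale each sgRNA's log2 fold change by the spread of non-targeting control guides. The sketch below assumes that convention. The source does not say how its scores were derived, and the control values and the `viability_z_score` name are hypothetical.

```python
from statistics import mean, stdev


def viability_z_score(guide_log2fc, control_log2fcs):
    """Z-score of one guide's log2 fold change relative to control guides.

    Assumes the controls are non-targeting sgRNAs whose log2 fold changes
    approximate the null distribution of the screen.
    """
    mu = mean(control_log2fcs)
    sigma = stdev(control_log2fcs)  # sample standard deviation
    return (guide_log2fc - mu) / sigma


# Hypothetical log2 fold changes for five non-targeting control guides.
controls = [0.1, -0.1, 0.2, -0.2, 0.0]

z_depleted = viability_z_score(-0.5, controls)  # a strongly depleted guide
z_neutral = viability_z_score(0.0, controls)    # a guide behaving like controls
```

A guide that drops out of the population gets a clearly negative score, while a guide that tracks the controls scores near zero.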

Experimental Protocols: Methodologies for Target Discovery and Validation

The following protocols provide an overview of key experimental techniques used in conjunction with CCMI data.

Protocol: Affinity Purification-Mass Spectrometry (AP-MS) for Identifying Protein-Protein Interactions

Objective: To identify the interacting partners of a protein of interest (bait) in a cancer cell line.

Materials:

- Cancer cell line of interest
- Lentiviral vector for expressing a tagged (e.g., FLAG, HA) bait protein
- Lysis buffer (e.g., RIPA buffer with protease and phosphatase inhibitors)
- Antibody-conjugated magnetic beads (e.g., anti-FLAG M2 magnetic beads)
- Wash buffers (e.g., TBS)
- Elution buffer (e.g., 3xFLAG peptide solution)
- Mass spectrometer (e.g., Orbitrap)

Procedure:

- Cell Line Transduction: Transduce the cancer cell line with the lentiviral vector expressing the tagged bait protein. Select for successfully transduced cells.
- Cell Lysis: Harvest cells and lyse them on ice with lysis buffer to release cellular proteins.
- Immunoprecipitation: Incubate the cell lysate with antibody-conjugated magnetic beads to capture the bait protein and its interacting partners.
- Washing: Wash the beads several times with wash buffer to remove non-specific binders.
- Elution: Elute the protein complexes from the beads using an appropriate elution buffer.
- Sample Preparation for Mass Spectrometry: Reduce, alkylate, and digest the eluted proteins into peptides using trypsin.
- LC-MS/MS Analysis: Analyze the peptide mixture using liquid chromatography-tandem mass spectrometry (LC-MS/MS).
- Data Analysis: Use a database search engine (e.g., Mascot, MaxQuant) to identify the proteins from the MS/MS spectra. Quantify the relative abundance of interacting proteins between cancer and control samples.
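The final quantification step can be illustrated with a minimal calculation of a log2 fold change from replicate intensities. This is only a sketch: the `log2_fold_change` helper, the pseudocount, and the use of a plain mean across replicates are assumptions, and a real analysis would also need a statistical test and a scoring method for non-specific binders.

```python
import math
from statistics import mean


def log2_fold_change(cancer_intensities, control_intensities, pseudocount=1.0):
    """log2 ratio of mean prey intensity in cancer vs. control pulldowns.

    The pseudocount keeps the ratio defined when a prey is undetected
    (intensity 0) in one condition; its value here is arbitrary.
    """
    cancer = mean(cancer_intensities) + pseudocount
    control = mean(control_intensities) + pseudocount
    return math.log2(cancer / control)


# Hypothetical replicate intensities for one prey protein.
enriched = log2_fold_change([7.0, 7.0, 7.0], [1.0, 1.0, 1.0])   # (7+1)/(1+1) = 4x
unchanged = log2_fold_change([5.0, 6.0, 4.0], [4.0, 5.0, 6.0])  # equal means
```

A positive value corresponds to an interaction that is stronger in the cancer sample, and a negative value to one that is weaker, matching the sign convention used in Table 1.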

Protocol: Genome-Wide CRISPR-Cas9 Knockout Screen for Identifying Cancer Dependencies

Objective: To identify genes that are essential for the survival and proliferation of a cancer cell line.[1][2][3]

Materials:

- Cas9-expressing cancer cell line
- Pooled lentiviral sgRNA library targeting the human genome
- HEK293T cells for lentivirus production
- Transfection reagent
- Polybrene
- Puromycin (or other selection antibiotic)
- Genomic DNA extraction kit
- PCR reagents for sgRNA amplification
- Next-generation sequencing (NGS) platform

Procedure:

- Lentivirus Production: Produce the pooled sgRNA lentiviral library by transfecting HEK293T cells.
- Cell Transduction: Transduce the Cas9-expressing cancer cell line with the sgRNA library at a low multiplicity of infection (MOI) to ensure that most cells receive only one sgRNA.
- Antibiotic Selection: Select for successfully transduced cells using puromycin.
- Baseline (T0) Sample Collection: Collect a sample of cells to determine the initial representation of each sgRNA.
- Cell Culture and Screening: Culture the transduced cells for a period of time (e.g., 14-21 days) to allow for the depletion of cells with knockouts of essential genes.
- Final (T_final) Sample Collection: Collect a sample of cells at the end of the screen.
- Genomic DNA Extraction: Extract genomic DNA from the T0 and T_final cell populations.
- sgRNA Amplification and Sequencing: Amplify the sgRNA sequences from the genomic DNA using PCR and sequence them using an NGS platform.
- Data Analysis: Determine the abundance of each sgRNA in the T0 and T_final samples. Identify sgRNAs that are significantly depleted in the T_final sample, as these target essential genes.
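Two steps of this procedure lend themselves to a short calculation: why a low MOI favors one sgRNA per cell, and how depletion between T0 and T_final can be quantified. The sketch below assumes that integrations per cell follow a Poisson distribution and that read counts are scaled to reads per million before comparison. The function names, the pseudocount, the read counts, and the `sgRNA-CTRL-1` guide are hypothetical.

```python
import math


def single_sgrna_fraction(moi):
    """Fraction of transduced cells carrying exactly one integration.

    Assumes integrations per cell are Poisson distributed with mean `moi`,
    so P(k=1) / P(k>=1) = moi * exp(-moi) / (1 - exp(-moi)).
    """
    p_zero = math.exp(-moi)
    p_one = moi * math.exp(-moi)
    return p_one / (1.0 - p_zero)


def log2_depletion(t0_counts, tfinal_counts, pseudocount=1.0):
    """Per-sgRNA log2(T_final / T0) after reads-per-million scaling."""
    t0_total = sum(t0_counts.values())
    tfinal_total = sum(tfinal_counts.values())
    result = {}
    for guide, t0_reads in t0_counts.items():
        t0_rpm = t0_reads / t0_total * 1e6
        # A guide missing from the final sample is treated as zero reads.
        tfinal_rpm = tfinal_counts.get(guide, 0) / tfinal_total * 1e6
        result[guide] = math.log2((tfinal_rpm + pseudocount) / (t0_rpm + pseudocount))
    return result


# Hypothetical read counts for one targeting and one control guide.
t0 = {"sgRNA-KRAS-1": 500, "sgRNA-CTRL-1": 500}
tfinal = {"sgRNA-KRAS-1": 50, "sgRNA-CTRL-1": 950}

lfc = log2_depletion(t0, tfinal)
fraction_at_low_moi = single_sgrna_fraction(0.3)
fraction_at_high_moi = single_sgrna_fraction(1.0)
```

Under the Poisson assumption, lowering the MOI raises the share of transduced cells with a single sgRNA, at the cost of transducing fewer cells overall. Guides with strongly negative log2 values are candidates for targeting essential genes, subject to a significance test across replicates and across guides for the same gene.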

Visualizations: Signaling Pathways and Experimental Workflows

PI3K/AKT Signaling Pathway

The PI3K/AKT pathway is a critical regulator of cell growth, proliferation, and survival, and it is frequently hyperactivated in cancer. The following diagram illustrates key protein-protein interactions within this pathway.

References

Application Notes and Protocols: Leveraging CCMI Networks in Breast Cancer Research

Introduction

The study of breast cancer is shifting from a focus on single gene mutations to a systems-level understanding of the disease. Central to this shift is the analysis of Cell-Cell and Cell-Matrix Interaction (CCMI) networks. These networks, composed of protein-protein interactions (PPIs) and genetic interactions, govern the signaling pathways that drive tumor initiation, progression, and response to therapy. The Cancer Cell Map Initiative (also abbreviated CCMI) is generating comprehensive maps of these interactions to elucidate the molecular underpinnings of cancer. By analyzing the architecture of these networks, researchers can identify critical signaling hubs, uncover mechanisms of drug resistance, and discover novel therapeutic targets. These application notes provide an overview and detailed protocols for applying CCMI network principles to breast cancer research, and are intended for scientists and drug development professionals.

Application Note 1: Identifying Novel Therapeutic Targets and Biomarkers

The heterogeneity of breast cancer means that few patients share identical mutation profiles, making it challenging to link specific mutations to disease outcomes through traditional statistical association. CCMI network analysis addresses this by integrating protein interaction data to identify entire protein assemblies or functional modules that are under selection in cancer. Mutations occurring in any gene within a specific protein assembly can collectively predict disease outcome, providing a more robust biomarker than single-gene analysis.
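The idea of pooling mutations across a protein assembly can be sketched in a few lines. In the example below, a patient counts as "assembly-mutated" if any gene in the assembly is mutated. The patient identifiers, mutation calls, assembly membership, and function names are all hypothetical and chosen only to show how assembly-level frequency can exceed any single-gene frequency.

```python
def assembly_mutated(patient_mutations, assembly_genes):
    """Flag each patient whose mutated genes overlap the assembly."""
    return {
        patient: bool(genes & assembly_genes)
        for patient, genes in patient_mutations.items()
    }


def gene_frequency(patient_mutations, gene):
    """Fraction of patients carrying a mutation in one gene."""
    hits = sum(1 for genes in patient_mutations.values() if gene in genes)
    return hits / len(patient_mutations)


# Hypothetical assembly and mutation calls (sets of mutated gene symbols).
assembly = {"PIK3CA", "PIK3R1", "AKT1", "PTEN"}
patients = {
    "P1": {"PIK3CA", "TP53"},
    "P2": {"AKT1"},
    "P3": {"PTEN", "BRCA1"},
    "P4": {"TP53"},
}

status = assembly_mutated(patients, assembly)
assembly_frequency = sum(status.values()) / len(status)
pik3ca_frequency = gene_frequency(patients, "PIK3CA")
```

In this toy cohort each assembly gene is mutated in at most one patient, yet three of four patients carry a mutation somewhere in the assembly, which is what gives the assembly-level feature more statistical power than a single gene.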

Comprehensive mapping of these interactomes can shed light on the mechanisms underlying cancer initiation and progression, informing novel therapeutic strategies. By identifying the key nodes and pathways that are rewired in cancer cells, researchers can pinpoint novel drug targets. This approach is critical for intractable subtypes like triple-negative breast cancer (TNBC), where a lack of well-defined targets has hindered the development of effective therapies.

Caption: Workflow for CCMI-based target and biomarker discovery.

Application Note 2: Understanding Drug Resistance Mechanisms

A major challenge in breast cancer treatment is the development of resistance to targeted therapies. Protein-protein interaction networks are highly dynamic and can be extensively rewired in response to therapeutic agents, leading to adaptive resistance. For example, in response to PI3K inhibitors in HER2+ breast cancer, compensatory signaling through receptor tyrosine kinase (RTK)-dependent complexes can reactivate downstream pathways, limiting the drug's efficacy.

By systematically profiling how targeted inhibitors remodel protein complexes, researchers can gain mechanistic insights into these adaptive responses. This knowledge is crucial for designing rational combination therapies that can overcome or prevent resistance. For instance, identifying the specific signaling assemblies, such as mTOR-containing complexes, that are reorganized following treatment can reveal secondary targets to inhibit alongside the primary driver oncogene.

Caption: The PI3K/AKT/mTOR signaling pathway in breast cancer.

Quantitative Data Summary

Quantitative analysis is essential for validating the findings from CCMI networks and translating them into clinical applications. The following tables summarize relevant data from studies on breast cancer analysis.

Table 1: Elastographic Measures vs. Breast Cancer Prognostic Factors

This table presents the diagnostic performance of sonographic elastography, a technique that measures tissue stiffness—a key feature of the cell matrix. Higher stiffness values are strongly associated with malignancy and adverse prognostic factors.

| Measure | Cut-off Value | Sensitivity | Specificity | Application | Associated Negative Prognostic Factors |
|---|---|---|---|---|---|
| Strain Ratio (SR) | 2.42 | 96.0% | 98.5% | Differentiating benign vs. malignant lesions | High Nuclear Grade, Lymph Node Metastasis, ER-negative, PR-negative, HER2-negative |
| Tsukuba Score (TS) | 2.5 | 93.8% | 80.6% | Differentiating benign vs. malignant lesions | High Nuclear Grade, Lymph Node Metastasis, ER-negative, PR-negative, HER2-negative |

Data sourced from a study on sonographic elastography in breast cancer.
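Sensitivity and specificity at a cut-off follow directly from the counts of true and false positives and negatives. The sketch below shows the arithmetic on made-up strain ratio values. It assumes a lesion is called malignant when its value is at or above the cut-off, which the source does not specify, and the measurements and function name are hypothetical rather than taken from the cited study.

```python
def sensitivity_specificity(values, is_malignant, cutoff):
    """Return (sensitivity, specificity) for calling value >= cutoff malignant."""
    tp = fn = tn = fp = 0
    for value, malignant in zip(values, is_malignant):
        predicted_malignant = value >= cutoff
        if malignant and predicted_malignant:
            tp += 1
        elif malignant:
            fn += 1
        elif predicted_malignant:
            fp += 1
        else:
            tn += 1
    return tp / (tp + fn), tn / (tn + fp)


# Hypothetical strain ratios: four malignant lesions, then four benign.
strain_ratios = [3.1, 2.8, 2.0, 4.5, 1.2, 1.9, 2.6, 0.9]
labels = [True, True, True, True, False, False, False, False]

sens, spec = sensitivity_specificity(strain_ratios, labels, cutoff=2.42)
```

Moving the cut-off trades one measure against the other: a lower cut-off catches more malignant lesions but misclassifies more benign ones.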

Table 2: Performance of Machine Learning Models in Predicting Pathological Complete Response (pCR) to Neoadjuvant Therapy

This table shows the performance, measured by the Area Under the Curve (AUC), of machine learning models trained on clinical and radiomics data to predict treatment response.

| Patient Subgroup | Best Model Input | AUC |
|---|---|---|
| All Subtypes | Radiomics Features | 0.72 |
| Triple-Negative | Radiomics Features | 0.80 |
| HER2-Positive | Radiomics Features | 0.65 |

Data from a study on predicting breast cancer response using machine learning.
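For readers less familiar with the metric, the AUC equals the probability that a randomly chosen responder receives a higher model score than a randomly chosen non-responder, with ties counted as one half. The sketch below computes it by direct pairwise comparison. The scores, labels, and `roc_auc` name are hypothetical and unrelated to the models in the cited study.

```python
def roc_auc(scores, labels):
    """AUC as the fraction of (positive, negative) pairs ranked correctly."""
    positives = [s for s, y in zip(scores, labels) if y]
    negatives = [s for s, y in zip(scores, labels) if not y]
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a correct ranking
    return wins / (len(positives) * len(negatives))


# Hypothetical predicted pCR probabilities; 1 = pCR achieved, 0 = not.
scores = [0.9, 0.8, 0.4, 0.7, 0.3, 0.2]
labels = [1, 1, 1, 0, 0, 0]

auc = roc_auc(scores, labels)
perfect = roc_auc([0.9, 0.8, 0.2, 0.1], [1, 1, 0, 0])
```

An AUC of 0.5 corresponds to random ranking and 1.0 to perfect separation, which gives a scale for reading the subgroup values in Table 2.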

Experimental Protocols

Detailed methodologies are crucial for the reproducible application of CCMI network studies. The following are summarized protocols for key experimental models.

Protocol 1: Establishment and Analysis of Patient-Derived Xenograft (PDX) Models

PDX models, created by transplanting primary human tumor samples into immune-compromised mice, are invaluable for modeling the clinical diversity of breast cancer and for in vivo therapeutic testing.

1. Tissue Collection and Processing:

- Collect fresh human breast tumor tissue from surgical resection in a sterile collection medium on ice.