GEO

Properties

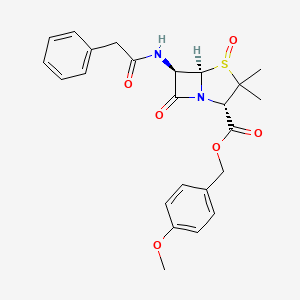

| Property | Value | Source |
|---|---|---|
| IUPAC Name | (4-methoxyphenyl)methyl (2S,5R,6R)-3,3-dimethyl-4,7-dioxo-6-[(2-phenylacetyl)amino]-4λ4-thia-1-azabicyclo[3.2.0]heptane-2-carboxylate | PubChem |
| InChI | InChI=1S/C24H26N2O6S/c1-24(2)20(23(29)32-14-16-9-11-17(31-3)12-10-16)26-21(28)19(22(26)33(24)30)25-18(27)13-15-7-5-4-6-8-15/h4-12,19-20,22H,13-14H2,1-3H3,(H,25,27)/t19-,20+,22-,33?/m1/s1 | PubChem |
| InChI Key | HSSBYPUKMZQQKS-LPGANTDJSA-N | PubChem |
| Canonical SMILES | CC1(C(N2C(S1=O)C(C2=O)NC(=O)CC3=CC=CC=C3)C(=O)OCC4=CC=C(C=C4)OC)C | PubChem |
| Isomeric SMILES | CC1([C@@H](N2[C@H](S1=O)[C@@H](C2=O)NC(=O)CC3=CC=CC=C3)C(=O)OCC4=CC=C(C=C4)OC)C | PubChem |
| Molecular Formula | C24H26N2O6S | PubChem |
| DSSTOX Substance ID | DTXSID301099502 | EPA DSSTox |
| Molecular Weight | 470.5 g/mol | PubChem |
| CAS No. | 53956-74-4 | CAS Common Chemistry; EPA DSSTox; European Chemicals Agency (ECHA) |

Record name (EPA DSSTox, CAS Common Chemistry, ECHA): 4-Thia-1-azabicyclo[3.2.0]heptane-2-carboxylic acid, 3,3-dimethyl-7-oxo-6-[(2-phenylacetyl)amino]- (2S,5R,6R)-, (4-methoxyphenyl)methyl ester, 4-oxide, (2S,5R,6R)-

| Source | URL | Description |
|---|---|---|
| PubChem | https://pubchem.ncbi.nlm.nih.gov | Data deposited in or computed by PubChem. |
| EPA DSSTox | https://comptox.epa.gov/dashboard/DTXSID301099502 | DSSTox provides a high quality public chemistry resource for supporting improved predictive toxicology. |
| CAS Common Chemistry | https://commonchemistry.cas.org/detail?cas_rn=53956-74-4 | CAS Common Chemistry is an open community resource for accessing chemical information. Nearly 500,000 chemical substances from CAS REGISTRY cover areas of community interest, including common and frequently regulated chemicals, and those relevant to high school and undergraduate chemistry classes. This chemical information, curated by our expert scientists, is provided in alignment with our mission as a division of the American Chemical Society. The data from CAS Common Chemistry is provided under a CC-BY-NC 4.0 license, unless otherwise stated. |
| European Chemicals Agency (ECHA) | https://echa.europa.eu/information-on-chemicals | The European Chemicals Agency (ECHA) is an agency of the European Union which is the driving force among regulatory authorities in implementing the EU's groundbreaking chemicals legislation for the benefit of human health and the environment as well as for innovation and competitiveness. Use of the information, documents and data from the ECHA website is subject to the terms and conditions of this Legal Notice, and subject to other binding limitations provided for under applicable law, the information, documents and data made available on the ECHA website may be reproduced, distributed and/or used, totally or in part, for non-commercial purposes provided that ECHA is acknowledged as the source: "Source: European Chemicals Agency, http://echa.europa.eu/". Such acknowledgement must be included in each copy of the material. ECHA permits and encourages organisations and individuals to create links to the ECHA website under the following cumulative conditions: Links can only be made to webpages that provide a link to the Legal Notice page. |


The Gene Expression Omnibus (GEO): A Technical Guide for Researchers

The Gene Expression Omnibus (GEO) is a public repository of functional genomics data managed by the National Center for Biotechnology Information (NCBI).[1] It serves as a critical resource for the scientific community, archiving and freely distributing high-throughput gene expression and other functional genomics data. This guide provides an in-depth technical overview of the GEO database, tailored for researchers, scientists, and drug development professionals.

Understanding the GEO Data Structure

GEO organizes data into four main record types: Platforms, Samples, Series, and DataSets. This hierarchical structure ensures that data are well annotated and easy to navigate.[2]

| Data Record Type | Accession Prefix | Description |
|---|---|---|
| Platform (GPL) | GPL | Describes the array or sequencing technology used to generate the data. This includes details about the physical array design or the sequencing instrument and protocol.[3] |
| Sample (GSM) | GSM | Contains information about an individual sample, including its source, the experimental treatments it underwent, and the resulting data. Each Sample record is linked to a single Platform.[3] |
| Series (GSE) | GSE | Groups together a set of related Samples that constitute a single experiment. The Series record provides a description of the overall study.[3] |
| DataSet (GDS) | GDS | A curated collection of biologically and statistically comparable Samples from a Series. DataSets are organized to facilitate analysis and visualization of gene expression data.[3] |
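Because each record type has its own three-letter accession prefix, a script that handles mixed accessions can dispatch on the prefix alone. The Python sketch below is a minimal illustration, assuming an accession is always one of the four prefixes followed only by digits; `record_type` is a hypothetical helper, and GSM1234 is a made-up example.

```python
import re

# Accession prefixes and record types, as listed in the table above.
RECORD_TYPES = {
    "GPL": "Platform",
    "GSM": "Sample",
    "GSE": "Series",
    "GDS": "DataSet",
}

# Assumed format: prefix followed by one or more digits, e.g. "GSE5281".
ACCESSION_RE = re.compile(r"^(GPL|GSM|GSE|GDS)(\d+)$")


def record_type(accession: str) -> str:
    """Return the GEO record type for an accession such as 'GSE5281'."""
    match = ACCESSION_RE.match(accession.strip().upper())
    if match is None:
        raise ValueError(f"not a recognised GEO accession: {accession!r}")
    return RECORD_TYPES[match.group(1)]


if __name__ == "__main__":
    for acc in ["GSE5281", "GPL570", "GSM1234", "GDS3128"]:
        print(acc, "->", record_type(acc))
```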

Data Submission to GEO: A Step-by-Step Overview

Submitting data to GEO involves preparing three key components: a metadata spreadsheet, processed data files, and raw data files.[1] The submission requirements are designed to ensure that the data comply with MIAME (Minimum Information About a Microarray Experiment) for microarray studies and with the analogous MINSEQE guidelines for sequencing studies.[4]

Required Data Components

A complete GEO submission consists of the following:

- Metadata Spreadsheet: A template Excel file provided by GEO must be filled out with detailed information about the study, samples, and protocols.[1] All required fields, marked with an asterisk, must be completed.[5]
- Processed Data Files: Files derived from the raw data, such as tables of raw or normalized read counts per gene.
- Raw Data Files: These are the original files generated by the sequencing instrument, typically in FASTQ or BAM format.[1] GEO deposits these raw files into the Sequence Read Archive (SRA) on behalf of the submitter.[1]
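Before uploading, it can help to confirm locally that all three components are present. The sketch below sorts file names by extension; the suffix lists, the rule that any other file counts as processed data, and the helper names `check_submission` and `missing_components` are assumptions for illustration, not GEO's own validation logic.

```python
# Suffixes treated as raw sequencing files; an assumption for illustration,
# not GEO's own validation rules.
RAW_SUFFIXES = (".fastq", ".fastq.gz", ".fq", ".fq.gz", ".bam")
METADATA_SUFFIXES = (".xlsx", ".xls")


def check_submission(files: list[str]) -> dict[str, list[str]]:
    """Sort file names into metadata, raw and processed groups.

    Any file that is neither a spreadsheet nor a raw read file is assumed
    to be processed data (e.g. a count matrix).
    """
    groups: dict[str, list[str]] = {"metadata": [], "raw": [], "processed": []}
    for name in files:
        lower = name.lower()
        if lower.endswith(METADATA_SUFFIXES):
            groups["metadata"].append(name)
        elif lower.endswith(RAW_SUFFIXES):
            groups["raw"].append(name)
        else:
            groups["processed"].append(name)
    return groups


def missing_components(files: list[str]) -> list[str]:
    """Names of submission components with no matching file."""
    return [part for part, names in check_submission(files).items() if not names]


if __name__ == "__main__":
    # Hypothetical file names.
    print(missing_components(["seq_template.xlsx", "S1_R1.fastq.gz", "counts.txt"]))
```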

Data Submission Workflow

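In outline, a high-throughput sequencing submission to GEO involves preparing the metadata spreadsheet, processed data files, and raw data files described above, uploading them to GEO, and waiting for GEO staff to review the submission before accession numbers are assigned.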

Experimental Protocols

Detailed experimental protocols are crucial for the reproducibility and interpretation of submitted data. Below are generalized protocols for two common types of experiments found in GEO.

RNA-Seq Experimental Protocol

RNA sequencing (RNA-seq) is a powerful method for transcriptome profiling. A typical RNA-seq workflow involves the following steps:

- RNA Isolation: Extract total RNA from the biological samples of interest.
- RNA Quality Control: Assess the quantity and quality of the extracted RNA using spectrophotometry and capillary electrophoresis.
- Library Preparation:
  - Deplete ribosomal RNA (rRNA) or enrich for messenger RNA (mRNA) using poly-A selection.
  - Fragment the RNA.
  - Synthesize first-strand cDNA using reverse transcriptase and random primers.
  - Synthesize second-strand cDNA.
  - Perform end-repair, A-tailing, and adapter ligation.
  - Amplify the library using PCR.
- Library Quality Control: Validate the size and concentration of the sequencing library.
- Sequencing: Sequence the prepared libraries on a high-throughput sequencing platform.
- Data Analysis:
  - Perform quality control on the raw sequencing reads (FASTQ files).
  - Align reads to a reference genome or transcriptome.
  - Quantify gene or transcript expression to generate a count matrix.
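Raw counts from the quantification step are not directly comparable between samples sequenced to different depths. The sketch below shows one simple normalization, counts per million (CPM), assuming a gene-by-sample count table held in a plain dictionary; `counts_per_million` is a hypothetical helper, and real pipelines typically use more robust approaches such as the size factors computed by DESeq2.

```python
def counts_per_million(counts: dict[str, list[int]]) -> dict[str, list[float]]:
    """Scale raw counts by each sample's library size (counts per million).

    `counts` maps a gene ID to its raw counts, one value per sample,
    with samples in the same order for every gene.
    """
    n_samples = len(next(iter(counts.values())))
    # Library size: total reads assigned to genes in each sample (column sums).
    lib_sizes = [sum(row[j] for row in counts.values()) for j in range(n_samples)]
    return {
        gene: [row[j] / lib_sizes[j] * 1_000_000 for j in range(n_samples)]
        for gene, row in counts.items()
    }


if __name__ == "__main__":
    toy = {"geneA": [10, 20], "geneB": [90, 180]}  # hypothetical counts
    print(counts_per_million(toy))
```

In this toy example the second sample has twice the depth of the first, so CPM makes the two samples identical.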

ChIP-Seq Experimental Protocol

Chromatin Immunoprecipitation followed by sequencing (ChIP-seq) is used to identify the binding sites of DNA-associated proteins.

- Cross-linking: Treat cells with formaldehyde to cross-link proteins to DNA.
- Chromatin Shearing: Lyse the cells and shear the chromatin into small fragments using sonication or enzymatic digestion.
- Immunoprecipitation: Incubate the sheared chromatin with an antibody specific to the protein of interest. The antibody-protein-DNA complexes are then captured using magnetic beads.
- Washing and Elution: Wash the beads to remove non-specifically bound chromatin. Elute the immunoprecipitated chromatin from the beads.
- Reverse Cross-linking: Reverse the protein-DNA cross-links and purify the DNA.
- Library Preparation: Prepare a sequencing library from the purified DNA fragments.
- Sequencing: Sequence the prepared libraries.
- Data Analysis:
  - Perform quality control on the raw sequencing reads.
  - Align reads to a reference genome.
  - Perform peak calling to identify regions of enrichment.
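Peak calling asks which genomic regions contain more reads than expected by chance. The toy sketch below assumes reads have already been counted in fixed-width bins and flags bins whose count is improbable under a Poisson background with a single region-wide rate. This is a deliberate simplification for illustration: dedicated peak callers such as MACS2 also model local background and use a control (input) sample. The function names are hypothetical.

```python
import math


def poisson_sf(k: int, lam: float) -> float:
    """P(X >= k) for X ~ Poisson(lam), computed as 1 - P(X <= k - 1)."""
    cdf = sum(math.exp(-lam) * lam**i / math.factorial(i) for i in range(k))
    return max(0.0, 1.0 - cdf)


def call_enriched_bins(bin_counts: list[int], p_cutoff: float = 1e-3) -> list[int]:
    """Return indices of bins with significantly more reads than background.

    The background rate is simply the mean count per bin across the region.
    """
    lam = sum(bin_counts) / len(bin_counts)
    return [i for i, c in enumerate(bin_counts) if poisson_sf(c, lam) < p_cutoff]


if __name__ == "__main__":
    # Hypothetical read counts in ten adjacent bins; bin 4 is a clear pile-up.
    print(call_enriched_bins([2, 3, 1, 2, 40, 3, 2, 1, 2, 3]))
```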

Data Analysis with GEO2R

GEO2R is an interactive web tool that allows users to perform differential expression analysis on GEO data without needing programming expertise.[6] It uses the R packages GEOquery and limma for microarray data and DESeq2 for RNA-seq data.[6]

GEO2R Analysis Workflow

- Select a GEO Series: Choose a GSE accession number to analyze.
- Define Groups: Assign samples from the Series into two or more experimental groups for comparison.
- Perform Analysis: GEO2R performs a statistical comparison between the defined groups to identify differentially expressed genes.
- View Results: The results are presented as a table of genes ranked by p-value, along with visualizations such as volcano plots and mean-difference plots.

| GEO2R Feature | Description |
|---|---|
| Input | A GEO Series (GSE) accession number. |
| Statistical Packages | limma for microarray data, DESeq2 for RNA-seq data.[6] |
| Output | A table of differentially expressed genes with associated statistics (log2 fold change, p-value, adjusted p-value). |
| Visualizations | Volcano plots, mean-difference plots, box plots, and other diagnostic plots. |
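To make the output columns more concrete, the sketch below computes, for a single gene, a log2 fold change and a Welch t-statistic from log2-scale expression values in two groups. It is a simplification assumed for illustration, not GEO2R's method: limma uses moderated t-statistics that borrow variance information across genes, and p-values would additionally require the t distribution (for example, from SciPy). `log2fc_and_welch_t` is a hypothetical helper.

```python
import math
from statistics import mean, variance


def log2fc_and_welch_t(control: list[float], treated: list[float]) -> tuple[float, float]:
    """Return (logFC, t) for one gene from log2-scale values in two groups.

    logFC is mean(treated) - mean(control); because the inputs are already
    log2-transformed, this difference is a log2 fold change. t is Welch's
    t-statistic, which does not assume equal group variances.
    Each group needs at least two values.
    """
    diff = mean(treated) - mean(control)
    std_err = math.sqrt(variance(control) / len(control)
                        + variance(treated) / len(treated))
    return diff, diff / std_err


if __name__ == "__main__":
    # Hypothetical log2 expression values for a single gene.
    fc, t = log2fc_and_welch_t([8.0, 8.2, 7.9, 8.1], [9.0, 9.3, 9.1, 9.2])
    print(f"logFC={fc:.2f}, t={t:.2f}")
```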

Signaling Pathways Investigated with GEO Data

GEO datasets are frequently used to investigate the role of signaling pathways in different biological contexts. Here are a few examples of signaling pathways that have been studied using GEO data.

p53 Signaling Pathway

The p53 signaling pathway plays a crucial role in tumor suppression by regulating cell cycle arrest, apoptosis, and DNA repair.[7] Studies using GEO datasets have identified key genes in the p53 pathway that are dysregulated in various cancers.[8]

TGF-beta Signaling Pathway

The Transforming Growth Factor-beta (TGF-β) signaling pathway is involved in many cellular processes, including cell growth, differentiation, and apoptosis.[9] Its dysregulation is implicated in cancer and other diseases.[9]

NF-κB Signaling Pathway

The NF-κB (nuclear factor kappa-light-chain-enhancer of activated B cells) signaling pathway is a key regulator of the immune response, inflammation, and cell survival.[10] Analysis of GEO data has provided insights into the role of NF-κB in various inflammatory diseases and cancers.[10]

MAPK/ERK Signaling Pathway

The Mitogen-Activated Protein Kinase (MAPK) pathway, which includes the Extracellular signal-Regulated Kinase (ERK), is a crucial signaling cascade that regulates cell proliferation, differentiation, and survival.[11] Its aberrant activation is a common feature of many cancers.

Conclusion

The Gene Expression Omnibus is an indispensable resource for the scientific community, providing a vast and freely accessible collection of functional genomics data. This guide has provided a technical overview of the GEO database, from its fundamental data structures and submission procedures to the analysis tools it offers. By understanding how GEO is organized, researchers can effectively leverage this resource to advance their own research and contribute to the collective body of scientific knowledge.

References

1. Submitting high-throughput sequence data to GEO - GEO - NCBI [ncbi.nlm.nih.gov]
2. KEGG_MAPK_SIGNALING_PATHWAY [gsea-msigdb.org]
3. Gene Set - erk1/erk2 mapk signaling pathway [maayanlab.cloud]
4. encodeproject.org [encodeproject.org]
5. researchgate.net [researchgate.net]
6. BIOCARTA_NFKB_PATHWAY [gsea-msigdb.org]
7. Integrated analysis of cell cycle and p53 signaling pathways related genes in breast, colorectal, lung, and pancreatic cancers: implications for prognosis and drug sensitivity for therapeutic potential - PMC [pmc.ncbi.nlm.nih.gov]
8. Identification and validation of three core genes in p53 signaling pathway in hepatitis B virus-related hepatocellular carcinoma - PMC [pmc.ncbi.nlm.nih.gov]
9. Expression profiling of genes regulated by TGF-beta: Differential regulation in normal and tumour cells - PMC [pmc.ncbi.nlm.nih.gov]
10. Identification of Potential Key Genes and Pathways for Inflammatory Breast Cancer Based on GEO and TCGA Databases - PMC [pmc.ncbi.nlm.nih.gov]
11. GEO Accession viewer [ncbi.nlm.nih.gov]

A Researcher's Guide to Navigating the Gene Expression Omnibus (GEO)

An In-depth Technical Guide to Understanding and Utilizing GEO Datasets for Drug Discovery and Scientific Research

The Gene Expression Omnibus (GEO) is a vast, publicly accessible repository of high-throughput functional genomics data.[1][2] For researchers, scientists, and drug development professionals, GEO provides an invaluable resource for exploring the molecular underpinnings of disease, identifying potential therapeutic targets, and validating experimental findings.[3] This guide offers an overview of GEO datasets, their structure, and a workflow for their analysis, using a real-world example to illustrate key concepts.

Understanding the Structure of GEO Datasets

GEO datasets are organized in a hierarchical structure comprising four main record types:

- Platforms (GPL): These records describe the technology used to generate the data, such as a specific microarray design or sequencing instrument.
- Samples (GSM): These records contain the data from a single sample, including the gene expression values and descriptive information about the sample.
- Series (GSE): These records group together a set of related samples that constitute a single experiment.
- DataSets (GDS): These are curated collections of biologically and statistically comparable samples from a single experiment.

Understanding this structure is fundamental to effectively searching for and using the data available in the GEO repository.

Case Study: Alzheimer's Disease Gene Expression (GSE5281)

To provide practical context for understanding GEO datasets, this guide uses the publicly available dataset GSE5281, which examines gene expression profiles in different brain regions of individuals with Alzheimer's disease and of normal aged individuals.[4]

Data Presentation: Quantitative Gene Expression

The core of a GEO dataset is its quantitative gene expression data. The following table presents a summarized, illustrative view of normalized gene expression values from GSE5281 for a selection of genes implicated in the FoxO signaling pathway. Each value represents the relative mRNA abundance of a gene in one brain region, for Alzheimer's disease (AD) patients and control subjects.

| Gene Symbol | Entorhinal Cortex (AD) | Hippocampus (AD) | Medial Temporal Gyrus (AD) | Posterior Cingulate (AD) | Entorhinal Cortex (Control) | Hippocampus (Control) | Medial Temporal Gyrus (Control) | Posterior Cingulate (Control) |
|---|---|---|---|---|---|---|---|---|
| FOXO1 | 7.8 | 7.5 | 7.9 | 8.1 | 8.5 | 8.3 | 8.6 | 8.8 |
| FOXO3 | 9.2 | 9.0 | 9.3 | 9.5 | 9.8 | 9.6 | 9.9 | 10.1 |
| PIK3CA | 10.1 | 10.3 | 10.0 | 9.8 | 9.5 | 9.7 | 9.4 | 9.2 |
| AKT1 | 11.5 | 11.2 | 11.6 | 11.8 | 10.9 | 11.1 | 10.8 | 10.6 |
| SGK1 | 6.5 | 6.8 | 6.4 | 6.2 | 7.2 | 7.0 | 7.3 | 7.5 |

Note: The values presented here are a representative sample for illustrative purposes and do not encompass the full dataset.
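As a worked example of reading the table, the sketch below averages each gene's four regional values per group and takes the AD-minus-control difference. It assumes the values are on a log2 scale (not stated above), in which case the difference is a log2 fold change. Because the table is illustrative and pooling regions ignores regional structure, the output is arithmetic on the table only, not a finding; `ad_minus_control` is a hypothetical helper.

```python
from statistics import mean

# Values copied from the illustrative table above: (AD values, control values),
# each in the order entorhinal cortex, hippocampus, medial temporal gyrus,
# posterior cingulate.
TABLE = {
    "FOXO1": ([7.8, 7.5, 7.9, 8.1], [8.5, 8.3, 8.6, 8.8]),
    "FOXO3": ([9.2, 9.0, 9.3, 9.5], [9.8, 9.6, 9.9, 10.1]),
    "PIK3CA": ([10.1, 10.3, 10.0, 9.8], [9.5, 9.7, 9.4, 9.2]),
    "AKT1": ([11.5, 11.2, 11.6, 11.8], [10.9, 11.1, 10.8, 10.6]),
    "SGK1": ([6.5, 6.8, 6.4, 6.2], [7.2, 7.0, 7.3, 7.5]),
}


def ad_minus_control(table: dict[str, tuple[list[float], list[float]]]) -> dict[str, float]:
    """Mean AD value minus mean control value for each gene."""
    return {gene: mean(ad) - mean(ctrl) for gene, (ad, ctrl) in table.items()}


if __name__ == "__main__":
    for gene, diff in ad_minus_control(TABLE).items():
        print(f"{gene}: {diff:+.3f}")
```

With these numbers, FOXO1, FOXO3, and SGK1 come out lower in the AD columns, while PIK3CA and AKT1 come out higher.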

Experimental Protocols: A Detailed Look at Methodology

A crucial aspect of interpreting, and potentially replicating, findings from a GEO dataset is a thorough understanding of the experimental methodology. The following is a detailed protocol for the GSE5281 study.[5]

1. Sample Collection and Preparation:

- Brain samples were collected from individuals with Alzheimer's disease and age-matched controls from three Alzheimer's Disease Centers (ADCs).[5]
- Samples were obtained from six distinct brain regions: entorhinal cortex, hippocampus, medial temporal gyrus, posterior cingulate, superior frontal gyrus, and primary visual cortex.[5]
- Frozen and fixed tissue samples were sectioned in a standardized manner.[5]

2. Laser Capture Microdissection (LCM):

- To ensure cellular homogeneity, LCM was performed on all brain tissue sections.[5]
- Layer III pyramidal cells were specifically collected from the gray matter of each brain region.[5]

3. RNA Isolation and Amplification:

- Total RNA was isolated from the laser-captured cell lysates.
- Two rounds of RNA amplification were performed for each sample to obtain sufficient material for array analysis.[5]

4. Microarray Analysis:

- The amplified RNA was hybridized to Affymetrix Human Genome U133 Plus 2.0 arrays, which contain more than 54,000 probe sets.
- The arrays were scanned, and the raw data were processed to generate gene expression values.

A Visual Guide to GEO Dataset Analysis

To further illustrate the process of working with GEO datasets, this section outlines a typical experimental workflow and a relevant biological pathway for the case study.

Experimental Workflow for GEO Dataset Analysis
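A workflow diagram of this kind can be produced by writing a Graphviz DOT description. The Python sketch below emits one for the GSE5281 steps described in the protocol above; the last two nodes (deposition and re-analysis) are generic steps assumed for illustration, and `workflow_dot` is a hypothetical helper. Saved to a file, the output can be rendered with Graphviz, for example `dot -Tpng workflow.dot -o workflow.png`.

```python
# Steps from the GSE5281 protocol above; the last two steps are generic
# additions assumed for illustration.
STEPS = [
    "Brain tissue collection (AD and control)",
    "Laser capture microdissection",
    "RNA isolation and two-round amplification",
    "Hybridization to U133 Plus 2.0 arrays",
    "Scanning and expression processing",
    "Deposition in GEO (GSE5281)",
    "Re-analysis (e.g. GEO2R)",
]


def workflow_dot(steps: list[str], name: str = "workflow") -> str:
    """Return a Graphviz DOT digraph chaining the steps from top to bottom."""
    lines = [f"digraph {name} {{", "  rankdir=TB;", "  node [shape=box];"]
    for i, label in enumerate(steps):
        safe = label.replace('"', '\\"')  # escape quotes inside DOT labels
        lines.append(f'  step{i} [label="{safe}"];')
    for i in range(len(steps) - 1):
        lines.append(f"  step{i} -> step{i + 1};")
    lines.append("}")
    return "\n".join(lines)


if __name__ == "__main__":
    print(workflow_dot(STEPS))
```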

FoxO Signaling Pathway in the Context of Alzheimer's Disease

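The FoxO signaling pathway is a crucial regulator of cellular processes such as apoptosis, cell cycle control, and resistance to oxidative stress.[3][6] Its dysregulation has been implicated in neurodegenerative diseases, including Alzheimer's disease. In this pathway, PI3K signaling (PIK3CA) activates AKT1, which, together with SGK1, phosphorylates FOXO transcription factors such as FOXO1 and FOXO3 and excludes them from the nucleus, suppressing FOXO-dependent transcription.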
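The core relationships of this pathway can be written down as signed edges and rendered with Graphviz. In the sketch below, "+" edges (normal arrowheads) denote activation and "-" edges (flat "tee" arrowheads) denote inhibition. The edge list is a simplified assumption limited to the genes in the table above plus the processes named in the text; intermediate kinases are omitted, and `pathway_dot` is a hypothetical helper.

```python
# Simplified signed edges; "+" = activation, "-" = inhibition
# (AKT1/SGK1 phosphorylation excludes FOXO proteins from the nucleus).
EDGES = [
    ("PIK3CA", "AKT1", "+"),
    ("AKT1", "FOXO1", "-"),
    ("AKT1", "FOXO3", "-"),
    ("SGK1", "FOXO3", "-"),
    ("FOXO1", "Apoptosis", "+"),
    ("FOXO3", "Apoptosis", "+"),
    ("FOXO3", "Cell cycle arrest", "+"),
    ("FOXO3", "Oxidative stress resistance", "+"),
]


def pathway_dot(edges: list[tuple[str, str, str]], name: str = "foxo") -> str:
    """Render signed edges as a Graphviz DOT digraph.

    Activation edges get the default arrowhead; inhibition edges get a
    flat "tee" arrowhead, a common convention for repression.
    """
    lines = [f"digraph {name} {{", "  node [shape=box];"]
    for source, target, sign in edges:
        if sign not in ("+", "-"):
            raise ValueError(f"unknown edge sign: {sign!r}")
        head = "normal" if sign == "+" else "tee"
        lines.append(f'  "{source}" -> "{target}" [arrowhead={head}];')
    lines.append("}")
    return "\n".join(lines)


if __name__ == "__main__":
    print(pathway_dot(EDGES))
```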

Conclusion

The Gene Expression Omnibus is a powerful resource for researchers and drug development professionals. By understanding the structure of GEO datasets and following a systematic analysis workflow, scientists can unlock valuable insights into the molecular basis of disease and identify promising avenues for therapeutic intervention. The case study of GSE5281 demonstrates how these datasets can be leveraged to investigate complex neurological disorders like Alzheimer's disease, providing a foundation for future research and the development of novel treatments.


An In-Depth Technical Guide to GEO2R for Data Analysis

For Researchers, Scientists, and Drug Development Professionals

This guide provides a comprehensive overview of GEO2R, an interactive web tool that enables the analysis of GEO datasets. GEO2R is a valuable resource for identifying differentially expressed genes and gaining insights into the molecular underpinnings of various biological conditions, making it a useful tool for hypothesis generation and target discovery in drug development.

Introduction to GEO2R

GEO2R is an interactive web tool built by the National Center for Biotechnology Information (NCBI) that allows users to perform differential expression analysis on data from the Gene Expression Omnibus (GEO) repository.[1] It provides a user-friendly interface to compare two or more groups of samples within a GEO Series, identifying genes that are differentially expressed across experimental conditions.[1][2] This tool is particularly useful for researchers who may not have expertise in command-line statistical analysis.[2]

The core of GEO2R's analytical power comes from well-established R packages from the Bioconductor project.[1] For microarray data, GEO2R uses the GEOquery and limma packages.[1] GEOquery parses GEO data into R data structures, while limma (Linear Models for Microarray Analysis) fits linear models and uses empirical Bayes moderated t-statistics to identify differentially expressed genes.[1] For RNA-seq data, GEO2R uses the DESeq2 package, which fits negative binomial generalized linear models.[1]

A key feature of GEO2R is its reproducibility; the tool provides the complete R script used for the analysis, allowing for transparency and further customization.[3][4]

The GEO2R Analysis Workflow

Analyzing data with GEO2R follows a straightforward workflow: select a Series, define sample groups, assign samples to those groups, run the analysis, and interpret the results.
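In the R scripts that GEO2R generates, group membership is typically recorded as a string with one character per sample, with "X" marking samples excluded from the comparison (check the R script tab of your own analysis for the exact form). The Python sketch below mimics that convention to show how the group-definition and sample-assignment steps map onto data; `parse_group_string` and the sample labels are hypothetical.

```python
def parse_group_string(samples: list[str], codes: str,
                       names: dict[str, str]) -> dict[str, list[str]]:
    """Split samples into named groups using one code character per sample.

    'X' excludes a sample from the comparison; any other character is looked
    up in `names`, e.g. {"0": "control", "1": "space-flown"}.
    """
    if len(samples) != len(codes):
        raise ValueError("need exactly one group code per sample")
    groups: dict[str, list[str]] = {label: [] for label in names.values()}
    for sample, code in zip(samples, codes):
        if code == "X":
            continue  # sample left out of the analysis
        groups[names[code]].append(sample)
    return groups


if __name__ == "__main__":
    # Hypothetical sample labels; real GEO2R runs use GSM accessions.
    samples = [f"S{i}" for i in range(1, 9)]
    print(parse_group_string(samples, "11110000", {"0": "control", "1": "space-flown"}))
```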

Experimental Protocols: A Case Study with GSE18388

To illustrate the practical application of GEO2R, we use the GEO Series GSE18388, which investigates gene expression changes in the thymus of mice subjected to spaceflight.[4]

Experimental Design

The experiment aims to identify genes that are differentially expressed in the thymus of mice that have been in space compared to ground-based controls.

| Parameter | Description |
|---|---|
| Organism | Mus musculus (Mouse) |
| Tissue | Thymus |
| Experimental Groups | 1. Space-flown mice; 2. Ground control mice |
| Number of Samples | 8 (4 per group) |
| Microarray Platform | Affymetrix Mouse Genome 430 2.0 Array |

Step-by-Step GEO2R Analysis of GSE18388

- Access the Dataset: Search GEO for GSE18388 and, on the Series page, click the "Analyze with GEO2R" button.[4]
- Define Groups: Create two groups: "space-flown" and "control".[4]
- Assign Samples: Select the four samples corresponding to the space-flown mice and assign them to the "space-flown" group. Do the same for the four ground control samples and the "control" group.[4]
- Value Distribution Check: Before analysis, it is good practice to check the distribution of expression values for the selected samples using the "Value distribution" tab. The box plots should be median-centered, indicating that the data are comparable across samples.[4]
- Perform Analysis: Click the "Top 250" button to perform the differential expression analysis with default settings.[4]
- Interpret Results: GEO2R displays a table of the top 250 differentially expressed genes, sorted by p-value.[4] Key columns in the results table include:
  - logFC: The log2 fold change, which represents the magnitude of the expression difference between the two groups.
  - P.Value: The nominal p-value for the differential expression.
  - adj.P.Val: The adjusted p-value, corrected for multiple testing (e.g., using the Benjamini & Hochberg method). This is the recommended value for determining statistical significance.[1]
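The Benjamini & Hochberg adjustment behind adj.P.Val can be sketched in a few lines: sort the p-values, scale each by m/rank, and enforce monotonicity from the largest down. This follows the standard BH procedure (the same one as R's `p.adjust(method = "BH")`), but it is a minimal sketch rather than GEO2R's own code; `benjamini_hochberg` is a hypothetical helper.

```python
def benjamini_hochberg(pvalues: list[float]) -> list[float]:
    """Return Benjamini-Hochberg adjusted p-values in the input order."""
    m = len(pvalues)
    order = sorted(range(m), key=lambda i: pvalues[i])  # indices, smallest p first
    adjusted = [0.0] * m
    running_min = 1.0  # adjusted values are capped at 1
    # Walk from the largest p-value down so adjusted values never decrease
    # as the raw p-values increase (the step-up monotonicity rule).
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, pvalues[i] * m / rank)
        adjusted[i] = running_min
    return adjusted


if __name__ == "__main__":
    print(benjamini_hochberg([0.01, 0.04, 0.03, 0.005]))
```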

Data Presentation and Interpretation

The primary output of a GEO2R analysis is a table of differentially expressed genes. Below is a mock table representing the kind of data you would obtain, structured for clarity and easy comparison.

| Gene Symbol | Gene Title | logFC | t-statistic | P.Value | adj.P.Val |
|---|---|---|---|---|---|
| RBM3 | RNA binding motif protein 3 | 2.15 | 8.42 | 1.28E-05 | 0.001 |
| FOS | Fos proto-oncogene, AP-1 transcription factor subunit | -1.89 | -7.98 | 2.11E-05 | 0.001 |
| JUN | Jun proto-oncogene, AP-1 transcription factor subunit | -1.75 | -7.55 | 3.54E-05 | 0.002 |
| EGR1 | Early growth response 1 | -1.62 | -7.12 | 5.96E-05 | 0.003 |
| ... | ... | ... | ... | ... | ... |

Note: This is a representative table. Actual values will be generated by the GEO2R analysis.

A positive logFC indicates up-regulation in the experimental group (e.g., space-flown) compared to the control group, while a negative logFC indicates down-regulation. The adj.P.Val is the most critical metric for determining the significance of the results.
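Because logFC is on a log2 scale, converting it back to a linear ratio often helps when reporting results: a logFC of 1 means twice the expression, and -1 means half. A minimal sketch using the mock values from the table above (which are not real GSE18388 results); the helper names are hypothetical.

```python
def linear_fold_change(log2fc: float) -> float:
    """Convert a log2 fold change to a linear ratio (experimental / control)."""
    return 2.0 ** log2fc


def describe_change(log2fc: float) -> str:
    """Phrase a log2 fold change as 'N-fold higher' or 'N-fold lower'."""
    ratio = linear_fold_change(log2fc)
    if ratio >= 1:
        return f"{ratio:.2f}-fold higher"
    return f"{1 / ratio:.2f}-fold lower"


if __name__ == "__main__":
    for gene, lfc in [("RBM3", 2.15), ("FOS", -1.89)]:  # mock values from the table
        print(gene, describe_change(lfc))
```

Here RBM3 (logFC 2.15) is about 4.44-fold higher, and FOS (logFC -1.89) about 3.71-fold lower.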

Visualization of Results and Downstream Analysis

GEO2R provides several visualization tools to help interpret the results, including volcano plots and mean-difference plots.[3] These plots can help to quickly identify genes with both large-magnitude fold changes and high statistical significance.

The list of differentially expressed genes from GEO2R can be used for downstream functional analysis, such as pathway analysis, to understand the biological context of the gene expression changes. For example, a set of differentially expressed genes might be enriched in a particular signaling pathway, such as the NF-κB signaling pathway, which is known to be involved in cellular responses to stress.
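One common way to test whether differentially expressed genes are over-represented in a pathway is a one-sided hypergeometric test (equivalent to a one-sided Fisher's exact test). The sketch below implements it with exact integer arithmetic; `enrichment_p` is a hypothetical helper, and all gene counts in the usage line are hypothetical.

```python
from math import comb


def enrichment_p(n_universe: int, n_pathway: int, n_degs: int, n_overlap: int) -> float:
    """One-sided hypergeometric P(overlap >= n_overlap).

    n_universe: all genes tested; n_pathway: pathway genes among them;
    n_degs: differentially expressed genes; n_overlap: DEGs in the pathway.
    """
    total = comb(n_universe, n_degs)
    upper = min(n_pathway, n_degs)
    # Sum the probabilities of every overlap at least as large as the observed one.
    tail = sum(
        comb(n_pathway, k) * comb(n_universe - n_pathway, n_degs - k)
        for k in range(n_overlap, upper + 1)
    )
    return tail / total


if __name__ == "__main__":
    # Hypothetical: 20,000 genes tested, a 100-gene pathway, 250 DEGs, 8 shared.
    print(enrichment_p(20_000, 100, 250, 8))
```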

Limitations and Considerations

While GEO2R is a powerful tool, it is important to be aware of its limitations:

- Within-Series Restriction: Analyses are restricted to samples within a single GEO Series; cross-Series comparisons are not possible.[2]
- Data Quality: GEO2R analyzes the data as they were submitted, so the quality of the results depends on the quality of the original experiment and data submission.
- Sample Size: The statistical power of the analysis depends on the number of samples in each group. Studies with small sample sizes may not yield robust results.[5]

Conclusion

GEO2R is an invaluable tool for researchers, scientists, and drug development professionals, providing a user-friendly platform for the analysis of publicly available gene expression data. By following a systematic workflow and carefully interpreting the results, users can uncover significant gene expression changes and gain deeper insights into the molecular mechanisms of disease and drug action. The ability to generate reproducible R scripts further enhances its utility, allowing for more advanced and customized analyses.


Unveiling the Trove: A Technical Guide to the Data Landscape of NCBI GEO

For Immediate Release

A comprehensive guide for researchers, scientists, and drug development professionals on the vast repository of functional genomics data within the National Center for Biotechnology Information (NCBI) Gene Expression Omnibus (GEO).

The NCBI Gene Expression Omnibus (GEO) serves as a critical public repository, archiving and freely distributing high-throughput functional genomics data from the global scientific community. This technical guide explores the diverse data types housed within GEO, their organization, and the required experimental details, helping researchers leverage this resource for their work, including target discovery and biomarker identification in drug development.

The Core of GEO: A Multi-faceted Data Repository

GEO accommodates a wide array of data generated by both microarray and next-generation sequencing (NGS) technologies. The data can be broadly categorized into three main components: raw data, processed data, and metadata. Submissions are expected to be complete and unfiltered to allow comprehensive re-analysis by the scientific community.[1]

Quantitative Data Summary

The quantitative data within this compound is diverse and dependent on the experimental platform. The following tables summarize the key quantitative data types for major experimental categories.

Table 1: Quantitative Data in Gene Expression Profiling

| Experiment Type | Raw Data | Processed Data |
|---|---|---|
| Microarray (Expression) | Raw intensity files (e.g., .CEL, .GPR) | Normalized expression values (e.g., normalized log2 signal intensities, or log2 ratios for two-color arrays) |
| RNA-Seq | Sequence read files (e.g., FASTQ) | Raw read counts, Normalized counts (e.g., FPKM, RPKM, TPM) |
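The normalized-count units in Table 1 differ in the order of operations. RPKM divides by library size first and then by gene length, while TPM divides by length first and rescales so that every sample sums to one million; FPKM is the same calculation as RPKM but counts fragments rather than reads. A minimal sketch for a single sample, using hypothetical counts and gene lengths:

```python
def rpkm(counts: list[int], lengths_bp: list[int]) -> list[float]:
    """Reads per kilobase of gene per million mapped reads, for one sample."""
    total = sum(counts)
    return [c * 1e9 / (length * total) for c, length in zip(counts, lengths_bp)]


def tpm(counts: list[int], lengths_bp: list[int]) -> list[float]:
    """Transcripts per million, for one sample: length-normalize, then rescale."""
    per_kb = [c / (length / 1_000) for c, length in zip(counts, lengths_bp)]
    scale = sum(per_kb)
    return [r / scale * 1e6 for r in per_kb]


if __name__ == "__main__":
    counts = [100, 200, 700]         # hypothetical reads per gene
    lengths = [1_000, 2_000, 3_500]  # hypothetical gene lengths in bp
    print("RPKM:", rpkm(counts, lengths))
    print("TPM: ", tpm(counts, lengths))
```

TPM values always sum to one million per sample, which makes proportions comparable across samples; RPKM values generally do not.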

Table 2: Quantitative Data in Epigenomics

| Experiment Type | Raw Data | Processed Data |
|---|---|---|
| ChIP-Seq | Sequence read files (e.g., FASTQ) | Peak scores, Signal intensity/density tracks (e.g., WIG, bigWig, bedGraph) |
| DNA Methylation Array | Raw intensity files (e.g., .IDAT) | Beta (β) values, M-values |
| Bisulfite-Seq | Sequence read files (e.g., FASTQ) | Methylation ratios per CpG site |
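For methylation arrays, the beta value is the methylated fraction of the total signal, and the M-value is the log2 ratio of methylated to unmethylated signal. The sketch below uses the offset of 100 commonly applied to Illumina beta values and an offset of 1 for M-values; both offsets, and the function names, are assumptions for illustration.

```python
import math


def beta_value(meth: float, unmeth: float, offset: float = 100.0) -> float:
    """Methylated fraction of total signal; the offset stabilizes low intensities."""
    return meth / (meth + unmeth + offset)


def m_value(meth: float, unmeth: float, alpha: float = 1.0) -> float:
    """log2 ratio of methylated to unmethylated signal."""
    return math.log2((meth + alpha) / (unmeth + alpha))


def beta_to_m(beta: float) -> float:
    """Convert a beta value (0 < beta < 1) to an M-value, ignoring offsets."""
    return math.log2(beta / (1.0 - beta))


if __name__ == "__main__":
    print(beta_value(1000, 1000), m_value(1000, 1000), beta_to_m(0.8))
```

Beta values are easier to interpret as percentages, while M-values are better behaved for statistical testing because they are not bounded between 0 and 1.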

Table 3: Quantitative Data in Other Functional Genomics Studies

| Experiment Type | Raw Data | Processed Data |
|---|---|---|
| SNP Array | Raw intensity files (e.g., .CEL) | Genotype calls, Allele frequencies, Copy number variation (CNV) |
| Non-coding RNA Profiling | Sequence read files (e.g., FASTQ) or raw intensity files | Normalized expression values or read counts |
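Allele frequencies in Table 3 are derived from genotype calls by counting alleles. A minimal sketch for one SNP, assuming calls are coded as AA/AB/BB (a common convention on genotyping arrays, though platforms differ) and that anything else, such as a hypothetical "NC" no-call code, is skipped:

```python
from collections import Counter


def b_allele_frequency(genotypes: list[str]) -> float:
    """Frequency of the B allele from AA/AB/BB genotype calls.

    Calls other than AA, AB or BB (e.g. 'NC' for no call, an assumed
    placeholder) are skipped. Each diploid individual contributes two alleles.
    """
    counts = Counter(g for g in genotypes if g in ("AA", "AB", "BB"))
    called = sum(counts.values())
    if called == 0:
        raise ValueError("no usable genotype calls")
    return (2 * counts["BB"] + counts["AB"]) / (2 * called)


if __name__ == "__main__":
    print(b_allele_frequency(["AA", "AB", "BB", "AB", "NC"]))
```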

Experimental Protocols: Ensuring Reproducibility and Transparency

To ensure data interpretability and reproducibility, GEO submissions follow the principles of the Minimum Information About a Microarray Experiment (MIAME) and Minimum Information About a Next-Generation Sequencing Experiment (MINSEQE) guidelines.[2] These standards require detailed experimental protocols and metadata to be submitted alongside the data.

Key Components of Submitted Experimental Protocols:

- Sample Preparation: Detailed descriptions of the biological source, including organism, tissue, and cell type. This includes protocols for nucleic acid extraction, purification, and quality control.
- Library Preparation (for NGS): Comprehensive information on the library construction process, including fragmentation, adapter ligation, size selection, and amplification methods.
- Hybridization (for Microarrays): For microarray experiments, this includes details on probe labeling, hybridization conditions (temperature, time), and washing procedures.
- Sequencing/Array Scanning: Information on the sequencing instrument and platform (e.g., Illumina, PacBio) or the microarray scanner and its settings.
- Data Processing and Analysis: A thorough description of the data processing pipeline, including software used, alignment algorithms, normalization methods, and statistical analyses performed to generate the processed data.

Data Organization and Submission Workflow

Understanding the logical structure of GEO data is crucial for effective data retrieval and interpretation. A clear view of the submission process is also useful for researchers planning to contribute their own data.

Logical Relationships of GEO Data

Submitters provide data as three record types: Platform, Sample, and Series (curated DataSets are assembled from these by GEO staff). A Series groups the related Samples that make up one study, and each Sample references the single Platform on which it was generated.
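These relationships map naturally onto a small data model. The Python sketch below is a simplified, hypothetical representation, not GEO's internal schema and with far fewer fields than real records; the Series and Sample accessions are made up, while GPL570 is the GEO Platform for the Affymetrix Human Genome U133 Plus 2.0 Array.

```python
from dataclasses import dataclass, field


@dataclass
class Platform:
    accession: str  # GPL accession
    title: str


@dataclass
class Sample:
    accession: str      # GSM accession
    platform: Platform  # each Sample references exactly one Platform


@dataclass
class Series:
    accession: str                                       # GSE accession
    samples: list[Sample] = field(default_factory=list)  # related Samples

    def platform_accessions(self) -> set[str]:
        """GPL accessions used by the Samples in this Series."""
        return {s.platform.accession for s in self.samples}


if __name__ == "__main__":
    gpl = Platform("GPL570", "Affymetrix Human Genome U133 Plus 2.0 Array")
    series = Series("GSE0000", [Sample("GSM0001", gpl), Sample("GSM0002", gpl)])
    print(series.platform_accessions())
```

`platform_accessions` returns a set because a single Series can include Samples run on more than one Platform.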


Unraveling Neurodegeneration: A Technical Guide to Microarray Data Analysis


This technical guide provides researchers, scientists, and drug development professionals with a comprehensive framework for identifying and analyzing microarray data related to Alzheimer's, Parkinson's, and Huntington's diseases. By offering detailed experimental protocols, quantitative data summaries, and visual representations of key signaling pathways, this document aims to accelerate research and development in neurodegenerative disorders.

Introduction to Microarray Data in Neurodegenerative Disease Research

Microarray technology remains a powerful tool for simultaneously examining the expression levels of thousands of genes. In the context of neurodegenerative diseases, it allows the identification of transcriptional changes associated with disease pathogenesis and progression, as well as potential therapeutic targets. This guide focuses on publicly available datasets so that the findings presented can be reproduced and extended.

Selected Microarray Datasets

To illustrate the process of microarray data analysis, we have selected the following datasets from the Gene Expression Omnibus (GEO) and ArrayExpress repositories:

| Disease | Dataset ID | Repository | Platform |
|---|---|---|---|
| Alzheimer's Disease | GSE48350 | GEO | Affymetrix Human Genome U133 Plus 2.0 Array |
| Parkinson's Disease | GDS3128 | GEO | Affymetrix Human Genome U133A Array |
| Huntington's Disease | E-GEOD-39765 | ArrayExpress | Agilent-014850 Whole Human Genome Microarray 4x44K G4112F |

These datasets were chosen based on the availability of raw and processed data, detailed sample information, and associated publications that provide insights into the experimental design.

Quantitative Data Summary

The following tables summarize the top differentially expressed genes (DEGs) identified from the analysis of the selected datasets. The data were obtained using the GEO2R tool for the GEO datasets and by analyzing the processed data from ArrayExpress.[1][2][3] The tables highlight the genes with the most significant changes in expression, providing a starting point for further investigation.
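As a rough illustration of the two numeric columns below, this sketch computes a log2 fold change as the difference of group means on log2-scale data and applies the Benjamini-Hochberg false-discovery-rate adjustment, which is GEO2R's default p-value adjustment. It is a simplified standard-library stand-in: GEO2R itself fits limma linear models with moderated statistics, which this code does not reproduce, and all input values are hypothetical.

```python
from statistics import mean


def log2_fold_change(case_log2, control_log2):
    """Difference of group means for values already on a log2 scale."""
    return mean(case_log2) - mean(control_log2)


def benjamini_hochberg(pvalues):
    """Return BH-adjusted p-values in the original input order."""
    n = len(pvalues)
    # Walk from the largest p-value down so a running minimum keeps the
    # adjusted values monotone; values are capped at 1.0.
    order = sorted(range(n), key=lambda i: pvalues[i], reverse=True)
    adjusted = [0.0] * n
    running_min = 1.0
    for position, i in enumerate(order):
        rank = n - position  # 1-based rank in ascending order
        running_min = min(running_min, pvalues[i] * n / rank)
        adjusted[i] = running_min
    return adjusted


# Hypothetical values for illustration only.
print(log2_fold_change([8.0, 8.5, 9.0], [6.0, 6.5, 7.0]))  # 2.0
print(benjamini_hochberg([0.01, 0.04, 0.03, 0.20]))
```

A positive log2 fold change of 2.0 means roughly fourfold higher expression in the case group.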

Alzheimer's Disease (GSE48350) - Top Differentially Expressed Genes

| Gene Symbol | Log2 Fold Change | Adjusted P-value |
|---|---|---|
| CD2 | 2.58 | 1.05E-08 |
| FCGR1A | 2.45 | 1.05E-08 |
| LILRA2 | 2.39 | 1.05E-08 |
| TREM2 | 2.31 | 1.05E-08 |
| GPNMB | 2.25 | 1.05E-08 |
| ANK1 | -2.15 | 1.05E-08 |
| SLC6A1 | -2.01 | 1.05E-08 |
| CAMK2A | -1.98 | 1.05E-08 |
| SYT1 | -1.95 | 1.05E-08 |
| GABRA1 | -1.92 | 1.05E-08 |

Parkinson's Disease (GDS3128) - Top Differentially Expressed Genes

| Gene Symbol | Log2 Fold Change | P-value |
|---|---|---|
| ALDH1A1 | -1.85 | 2.01E-06 |
| FGF20 | -1.72 | 3.15E-06 |
| PITX3 | -1.68 | 5.25E-06 |
| LINGO2 | -1.65 | 7.89E-06 |
| EN1 | -1.61 | 1.12E-05 |
| SNCA | 1.58 | 1.58E-05 |
| UCHL1 | 1.55 | 2.24E-05 |
| GCH1 | 1.52 | 3.16E-05 |
| PARK7 | 1.49 | 4.47E-05 |
| PINK1 | 1.46 | 6.31E-05 |

Huntington's Disease (E-GEOD-39765) - Top Differentially Expressed Genes

| Gene Symbol | Log2 Fold Change | P-value |
|---|---|---|
| PDE10A | -2.12 | 4.51E-07 |
| RGS2 | -1.98 | 6.32E-07 |
| DRD2 | -1.85 | 8.91E-07 |
| ADORA2A | -1.76 | 1.25E-06 |
| GPR88 | -1.69 | 1.78E-06 |
| HTT | 1.55 | 2.51E-06 |
| CHL1 | 1.48 | 3.55E-06 |
| GRIK2 | 1.42 | 5.01E-06 |
| DCLK1 | 1.37 | 7.08E-06 |
| FOXP1 | 1.32 | 1.00E-05 |

Experimental Protocols

Detailed methodologies for the key experiments are crucial for the replication and validation of research findings. Below are the experimental protocols for the selected microarray datasets.

General Microarray Experimental Workflow

The following diagram illustrates a generalized workflow for a typical microarray experiment, from sample collection to data analysis.

Alzheimer's Disease (GSE48350) - Affymetrix Human Genome U133 Plus 2.0 Array

- Sample Preparation: Post-mortem brain tissue from the hippocampus, entorhinal cortex, superior frontal gyrus, and post-central gyrus was obtained from Alzheimer's disease patients and age-matched controls.

- RNA Extraction: Total RNA was extracted from the brain tissue samples using TRIzol reagent (Invitrogen) according to the manufacturer's protocol. RNA quality and integrity were assessed using the Agilent 2100 Bioanalyzer.

- Microarray Platform: Gene expression profiling was performed using the Affymetrix Human Genome U133 Plus 2.0 Array.

- Target Preparation and Hybridization: Biotinylated cRNA was prepared from 5 µg of total RNA using the GeneChip Expression 3'-Amplification Reagents One-Cycle cDNA Synthesis Kit and IVT Labeling Kit (Affymetrix). The labeled cRNA was then fragmented and hybridized to the microarray for 16 hours at 45°C.

- Data Processing: The arrays were washed and stained using the Affymetrix Fluidics Station 450 and scanned with the GeneChip Scanner 3000. The raw data (CEL files) were processed and normalized using the Robust Multi-array Average (RMA) algorithm.
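RMA has three stages: background correction, quantile normalization, and median-polish summarization of probe sets. In R it is usually run through the affy or oligo packages. The quantile-normalization stage, which forces every array to share the same intensity distribution, can be sketched in plain Python as follows. This is a simplified illustration rather than the RMA implementation: it breaks ties by position instead of averaging them, omits the other two stages, and uses hypothetical intensities.

```python
def quantile_normalize(arrays):
    """Quantile-normalize equal-length intensity vectors (one per array).

    Each value is replaced by the mean, across arrays, of the values that
    share its rank. Ties are broken by position (a simplification).
    """
    n_probes = len(arrays[0])
    if any(len(a) != n_probes for a in arrays):
        raise ValueError("all arrays must have the same number of probes")
    # Reference distribution: mean of the k-th smallest value across arrays.
    sorted_arrays = [sorted(a) for a in arrays]
    reference = [sum(col) / len(arrays) for col in zip(*sorted_arrays)]
    normalized = []
    for a in arrays:
        # Probe indices ordered from smallest to largest value in this array.
        order = sorted(range(n_probes), key=lambda i: a[i])
        out = [0.0] * n_probes
        for rank, probe in enumerate(order):
            out[probe] = reference[rank]
        normalized.append(out)
    return normalized


# Two hypothetical arrays measuring the same three probes in the same order.
print(quantile_normalize([[5.0, 2.0, 3.0], [4.0, 1.0, 6.0]]))
# [[5.5, 1.5, 3.5], [3.5, 1.5, 5.5]]
```

After normalization both arrays contain exactly the same set of values; only which probe holds which value differs.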

Parkinson's Disease (GDS3128) - Affymetrix Human Genome U133A Array

- Sample Preparation: Post-mortem substantia nigra tissue was collected from individuals with Parkinson's disease and healthy controls.

- RNA Extraction: Total RNA was isolated from the tissue samples. The quality of the RNA was verified to ensure it met the standards for microarray analysis.

- Microarray Platform: The Affymetrix Human Genome U133A Array was used for gene expression analysis.

- Target Preparation and Hybridization: cRNA was synthesized from total RNA, labeled with biotin, and then fragmented. The fragmented and labeled cRNA was hybridized to the GeneChip arrays.

- Data Processing: After hybridization, the arrays were washed, stained with streptavidin-phycoerythrin, and scanned. The resulting image data were converted into gene expression values. Data normalization was performed to allow comparison across arrays.

Huntington's Disease (E-GEOD-39765) - Agilent Whole Human Genome Microarray

- Sample Preparation: Post-mortem caudate nucleus brain tissue was obtained from Huntington's disease patients and control subjects.

- RNA Extraction: Total RNA was extracted and purified from the brain tissue. RNA integrity was assessed to ensure high-quality input for the microarray experiment.

- Microarray Platform: The Agilent-014850 Whole Human Genome Microarray 4x44K G4112F was used for this study.

- Target Preparation and Hybridization: Cyanine-3 (Cy3) labeled cRNA was synthesized from the total RNA samples. The labeled cRNA was then hybridized to the Agilent microarrays.

- Data Processing: The hybridized arrays were scanned using an Agilent DNA Microarray Scanner. The raw data were extracted using Agilent's Feature Extraction software and then normalized to correct for systematic variation.

Signaling Pathways in Neurodegenerative Diseases

Understanding the molecular pathways disrupted in neurodegenerative diseases is critical for developing targeted therapies. The following diagrams, generated using Graphviz (DOT language), illustrate key signaling pathways implicated in Alzheimer's, Parkinson's, and Huntington's diseases.

Alzheimer's Disease: Amyloid Beta Signaling Pathway

This diagram depicts the amyloidogenic pathway, where the amyloid precursor protein (APP) is cleaved to produce amyloid-beta (Aβ) peptides, which can aggregate and lead to neuronal dysfunction.
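The DOT source of the original figure is not reproduced in this text. As a hedged sketch of how such a Graphviz diagram can be generated, the function below emits DOT source for a simplified amyloidogenic cascade: APP is cleaved by β-secretase (BACE1) into sAPPβ and C99, and γ-secretase then cleaves C99 into Aβ and AICD, with Aβ aggregating into oligomers and plaques. The node names, labels, and layout are illustrative choices, not the original figure; the output can be rendered with Graphviz, for example dot -Tpng pathway.dot -o pathway.png.

```python
def pathway_to_dot(name, edges):
    """Build Graphviz DOT source for a directed pathway.

    edges: iterable of (source, target, label) tuples; label may be "".
    """
    lines = [f'digraph "{name}" {{', "  rankdir=LR;", "  node [shape=box];"]
    for source, target, label in edges:
        attr = f' [label="{label}"]' if label else ""
        lines.append(f'  "{source}" -> "{target}"{attr};')
    lines.append("}")
    return "\n".join(lines)


# Simplified amyloidogenic processing of APP (illustrative node names only).
AMYLOIDOGENIC = [
    ("APP", "C99", "beta-secretase (BACE1)"),
    ("APP", "sAPPbeta", "beta-secretase (BACE1)"),
    ("C99", "Abeta", "gamma-secretase"),
    ("C99", "AICD", "gamma-secretase"),
    ("Abeta", "Oligomers", "aggregation"),
    ("Oligomers", "Plaques", "aggregation"),
    ("Oligomers", "Neuronal dysfunction", ""),
]

print(pathway_to_dot("Amyloidogenic pathway", AMYLOIDOGENIC))
```

Keeping the pathway as a plain edge list makes it easy to reuse the same function for the other disease pathways by swapping in a different list.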

Parkinson's Disease: Alpha-Synuclein Aggregation and Neurotoxicity

This diagram illustrates the misfolding and aggregation of alpha-synuclein, a key pathological event in Parkinson's disease, leading to the formation of Lewy bodies and subsequent neuronal cell death.

Huntington's Disease: Mutant Huntingtin Protein Signaling

This diagram outlines some of the key cellular disruptions caused by the mutant huntingtin (mHTT) protein, including transcriptional dysregulation and impaired protein degradation, which contribute to neuronal cell death in Huntington's disease.

Conclusion

This technical guide provides a foundational resource for researchers working on Alzheimer's, Parkinson's, and Huntington's diseases. By presenting a clear methodology for accessing and analyzing publicly available microarray data, summarizing key quantitative findings, and visualizing the underlying signaling pathways, we hope to facilitate new discoveries and the development of effective therapeutic strategies for these devastating neurodegenerative conditions. The provided datasets and protocols should serve as a valuable starting point for in-depth exploration and validation studies.

References

Navigating the Gene Expression Omnibus: A Technical Guide to GEO Datasets and Profiles

For Researchers, Scientists, and Drug Development Professionals

The Gene Expression Omnibus (GEO) is an invaluable public repository of high-throughput functional genomics data. Navigating this vast resource effectively, however, requires a clear understanding of its core data structures, primarily the distinction between GEO DataSets (GDS) and GEO Series (GSE). This technical guide explores these entities, the experimental protocols behind them, and their application in elucidating complex biological pathways.

Core Concepts: GEO Series (GSE) vs. GEO DataSets (GDS)

The key distinction between a GEO Series and a GEO DataSet lies in the level of curation and standardization.

- GEO Series (GSE): A GSE record represents a collection of related samples from a single submitter-supplied study. It is the original collection of data and metadata exactly as provided by the researchers, without GEO curation. Each GSE record is assigned a unique accession number beginning with "GSE". These records provide a detailed description of the overall experiment and link to the individual Sample (GSM) and Platform (GPL) records.[1][2][3]

- GEO DataSets (GDS): A GDS record is a curated and standardized collection of biologically and statistically comparable samples.[1][2][4] GEO staff compile GDS records from the original GSE submissions. Curation involves reorganizing the data into a more structured format, defining experimental variables, and ensuring consistency across the dataset. This standardization enables DataSet-specific analysis and visualization tools within the GEO interface, such as cluster heatmaps and comparisons between sample subsets, as well as the generation of gene-centric GEO Profiles.[4][5] (GEO2R, by contrast, works directly on Series records and does not require a GDS.) Not all GSE records are converted into GDS records.

- GEO Profiles: GEO Profiles provide a gene-centric view of the data within a GEO DataSet. Each profile displays the expression level of a single gene across all samples in a given GDS, offering a quick way to see how that gene's expression changes under different experimental conditions.[6][7]

The relationship between these entities can be visualized as a hierarchy, where a curated DataSet (GDS) is derived from a user-submitted Series (GSE), which in turn is composed of individual Samples (GSM) analyzed on a specific Platform (GPL).
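One way to make this hierarchy concrete is to model it as plain data classes. The sketch below is a hypothetical in-memory representation (the class and field names are ours, not a GEO or library API, and the GSExxx-style accessions are placeholders): a Series holds Samples, each Sample points to the Platform it was run on, and a DataSet references the Series it was curated from.

```python
from dataclasses import dataclass, field


@dataclass
class Platform:  # GPL: array or sequencing platform
    accession: str
    title: str


@dataclass
class Sample:  # GSM: one sample, run on one platform
    accession: str
    platform: Platform


@dataclass
class Series:  # GSE: submitter-defined study grouping many samples
    accession: str
    samples: list = field(default_factory=list)

    def platform_accessions(self):
        """Distinct platforms used by this Series' samples, sorted."""
        return sorted({s.platform.accession for s in self.samples})


@dataclass
class DataSet:  # GDS: curated by GEO staff from one Series
    accession: str
    source_series: Series
    # Curated sample groupings: subset label -> sample accessions (simplified).
    subsets: dict = field(default_factory=dict)


# Hypothetical records; GPL570 is the U133 Plus 2.0 platform accession.
gpl = Platform("GPL570", "Affymetrix Human Genome U133 Plus 2.0 Array")
gse = Series("GSExxx", [Sample("GSMxxx1", gpl), Sample("GSMxxx2", gpl)])
gds = DataSet("GDSxxx", gse, {"control": ["GSMxxx1"], "disease": ["GSMxxx2"]})
print(gds.source_series.platform_accessions())  # ['GPL570']
```

Because a Series can mix platforms, the platform is stored per Sample rather than per Series in this sketch.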

Feature Comparison: GSE vs. GDS

| Feature | GEO Series (GSE) | GEO DataSet (GDS) | GEO Profiles |
|---|---|---|---|
| Primary Identifier | GSExxx | GDSxxx | (Implicitly linked to GDS) |
| Data Origin | Directly submitted by researcher | Curated by NCBI/GEO staff from a GSE | Derived from a GDS |
| Data Structure | Submitter-defined, often as a collection of individual sample files | Standardized matrix format with defined experimental variables | Gene-centric view of expression across all samples in a GDS |
| Metadata | Provided by the submitter; variable in completeness and format | Standardized and curated for consistency | Gene annotation and links to the parent GDS |
| Analysis Tools | Search, download, and GEO2R differential expression analysis | DataSet tools such as cluster heatmaps and comparisons between sample subsets | Visualization of individual gene expression patterns |
| Data Content | Raw and processed data, protocols, and experimental design | Reorganized and uniformly processed data, curated sample groupings | Expression values (e.g., signal counts, log ratios) for a single gene |
| MIAME/MINSEQE Compliance | Encouraged and facilitated, but adherence varies | Generally compliant due to curation | N/A |

Experimental Protocols: From Sample to Submission

The data within GEO originate from a variety of high-throughput experimental techniques. The two most common are microarrays and next-generation sequencing (NGS), particularly RNA-Seq. Adherence to community standards such as MIAME (Minimum Information About a Microarray Experiment) and MINSEQE (Minimum Information about a high-throughput SEQuencing Experiment) is crucial for ensuring data quality and reusability.[8]

Microarray Experimental Workflow

Microarray experiments measure the abundance of thousands of nucleic acid sequences simultaneously. The general workflow is as follows:

- Sample Preparation: Biological samples (e.g., tissue, cells) are collected, and RNA is extracted. The quality and quantity of the RNA are assessed.

- Labeling and Hybridization: The extracted RNA is reverse transcribed into cDNA and labeled with a fluorescent dye (on platforms such as Affymetrix, the cDNA is further transcribed into labeled cRNA). The labeled target is then hybridized to a microarray, which carries thousands of known DNA probes.

- Scanning and Image Analysis: The microarray is scanned to detect the fluorescent signals from the labeled target bound to the probes. Within the array's dynamic range, the fluorescence intensity at each probe location is approximately proportional to the abundance of the corresponding RNA in the sample.

- Data Extraction and Normalization: The raw image data are processed to quantify the fluorescence intensity for each probe. These raw values are then normalized to correct for systematic variation and to allow comparison between arrays.

- GEO Submission: A submission to GEO requires the raw data files (e.g., CEL files for Affymetrix arrays), the final processed (normalized) data matrix, and detailed metadata compliant with MIAME guidelines.[8][9][10] This includes information about the samples, experimental design, protocols, and array platform.[8]

RNA-Seq Experimental Workflow

RNA-Sequencing (RNA-Seq) provides a comprehensive and quantitative view of the transcriptome. The typical workflow includes:

- RNA Isolation and QC: Total RNA is extracted from the biological samples, and its integrity and purity are assessed.

- Library Preparation: The RNA is converted into a cDNA library. This process typically involves RNA fragmentation, reverse transcription to cDNA, adapter ligation, and amplification.[11] Depending on the research question, specific types of RNA, such as mRNA (poly-A selected) or total RNA (rRNA depleted), may be targeted.[11][12]

- Sequencing: The prepared library is sequenced on a high-throughput sequencing platform (e.g., Illumina), generating millions of short reads.

- Data Processing and Analysis: The raw sequencing reads (in FASTQ format) undergo quality control. They are then aligned to a reference genome or transcriptome, and the number of reads mapping to each gene is counted. These counts are then normalized to account for differences in sequencing depth and gene length.

- GEO Submission: A complete submission includes the raw sequencing data (e.g., FASTQ or BAM files), the processed data (e.g., a matrix of normalized gene counts), and detailed MINSEQE-compliant metadata.[13] This metadata describes the samples, experimental procedures, sequencing protocols, and data analysis methods.[8]
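One common way to normalize counts for both sequencing depth and gene length is transcripts per million (TPM): divide each gene's count by its length in kilobases, then rescale so that each sample sums to one million. A minimal sketch, assuming raw counts and gene lengths are already available; the counts reuse the GeneA to GeneC figures from this guide's example count table, while the gene lengths are hypothetical. Other schemes, such as the size-factor normalization used by DESeq2, are often preferred for differential expression testing.

```python
def tpm(counts, lengths_kb):
    """Transcripts per million for one sample.

    counts: gene -> read count; lengths_kb: gene -> length in kilobases.
    """
    # Step 1: reads per kilobase (corrects for gene length).
    rpk = {g: counts[g] / lengths_kb[g] for g in counts}
    # Step 2: rescale so the sample sums to one million (corrects for depth).
    scale = sum(rpk.values()) / 1e6
    return {g: v / scale for g, v in rpk.items()}


sample = {"GeneA": 150, "GeneB": 300, "GeneC": 50}
lengths = {"GeneA": 1.5, "GeneB": 3.0, "GeneC": 0.5}  # hypothetical lengths
print(tpm(sample, lengths))
```

Here all three genes have the same reads per kilobase, so each receives one third of a million TPM despite very different raw counts.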

Application in Signaling Pathway Analysis

GEO data are a powerful resource for investigating the activity of signaling pathways in various biological contexts, such as disease states or responses to drug treatment. By analyzing the differential expression of genes within a known pathway, researchers can make inferences about whether the pathway is activated or inhibited.
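A simple, widely used first step is to ask whether a pathway's genes are over-represented among the differentially expressed genes, using a one-sided hypergeometric test (equivalent to a one-sided Fisher's exact test). The sketch below assumes you already know the size of the measured gene universe, the number of pathway genes in it, the number of DEGs, and the overlap; the numbers in the usage line are hypothetical. Over-representation alone does not say whether a pathway is activated or inhibited, which requires looking at the direction of the changes.

```python
from math import comb


def hypergeom_enrichment_p(universe, pathway_size, n_degs, overlap):
    """P(X >= overlap) when n_degs genes are drawn from a universe that
    contains pathway_size pathway genes (one-sided over-representation)."""
    total = comb(universe, n_degs)
    upper = min(pathway_size, n_degs)
    hits = sum(
        comb(pathway_size, k) * comb(universe - pathway_size, n_degs - k)
        for k in range(overlap, upper + 1)
    )
    return hits / total  # exact integer arithmetic until this division


# Hypothetical: 20,000 genes measured, 300 DEGs, 150-gene pathway, 12 shared.
print(hypergeom_enrichment_p(20000, 150, 300, 12))
```

With these numbers only about 2.25 overlapping genes would be expected by chance, so an overlap of 12 yields a very small p-value.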

PI3K/Akt Signaling Pathway

The PI3K/Akt pathway is a crucial intracellular signaling cascade that regulates cell growth, proliferation, survival, and metabolism.[14][15] Dysregulation of this pathway is frequently observed in cancer.

TNF/NF-κB Signaling Pathway

The TNF/NF-κB signaling pathway plays a central role in inflammation, immunity, and cell survival.[16] Tumor Necrosis Factor (TNF) is a pro-inflammatory cytokine that activates the transcription factor NF-κB.

References

- 1. mathworks.com [mathworks.com]

- 2. GEO Overview - GEO - NCBI [ncbi.nlm.nih.gov]

- 3. ffli.dev: GEO DataSets: Experiment Selection and Initial Processing [ffli.dev]

- 4. Frequently Asked Questions - GEO - NCBI [ncbi.nlm.nih.gov]

- 5. About GEO DataSets - GEO - NCBI [ncbi.nlm.nih.gov]

- 6. Genes & Expression - Site Guide - NCBI [ncbi.nlm.nih.gov]

- 7. researchgate.net [researchgate.net]

- 8. GEO and MIAME - GEO - NCBI [ncbi.nlm.nih.gov]

- 9. GEOarchive submission instructions - GEO - NCBI [ncbi.nlm.nih.gov]

- 10. mskcc.org [mskcc.org]

- 11. youtube.com [youtube.com]

- 12. RNA Library Preparation for Next Generation Sequencing - CD Genomics [rna.cd-genomics.com]

- 13. youtube.com [youtube.com]

- 14. PI3K / Akt Signaling | Cell Signaling Technology [cellsignal.com]

- 15. creative-diagnostics.com [creative-diagnostics.com]

- 16. creative-diagnostics.com [creative-diagnostics.com]

Citing GEO Datasets: A Technical Guide for Researchers

Core Components of a GEO Dataset Citation

When citing a dataset from the GEO database, include several key pieces of information so that the citation is complete and the data can be retrieved easily. NCBI strongly recommends that submitters and users cite the Series accession number (e.g., GSExxx), as this record provides a comprehensive overview of the experiment and links to all associated data.[1][3]

The following table summarizes the essential and recommended components of a GEO dataset citation.

| Component | Description | Example | Location on GEO Record |
|---|---|---|---|
| Author(s)/Creator(s) | The individuals or group responsible for generating the data, often the authors of the associated publication. | Smith J, Doe A, et al. | "Citation" or "Submitter" section |
| Year of Publication | The year the associated paper was published or the data were made public. | 2023 | "Citation" section or submission date |
| Dataset Title | The title of the GEO Series or DataSet record. | "The effect of compound X on gene expression in neurons" | Top of the GEO record page |
| Repository | The name of the database where the data are archived. | NCBI Gene Expression Omnibus | Standard for all GEO datasets |
| Accession Number | The unique and stable identifier for the dataset. The Series (GSE) number is preferred.[3] | GSExxx | Top of the GEO record page |
| URL/Link | A direct and persistent link to the dataset. | --INVALID-LINK-- | The URL in your browser when viewing the record |

In-Text vs. Full Reference List Citations

The format of your citation will differ depending on whether it is an in-text citation or a full citation in your reference list.

| Citation Type | Format and Examples |
|---|---|
| In-Text Citation | In-text citations should be brief and direct the reader to the full citation in the reference list. It is good practice to mention the database and the accession number. Example 1: "...we analyzed the microarray data from Smith et al. (2023), which is publicly available in the NCBI GEO database under accession number GSExxx."[1][3] Example 2: "The gene expression data (NCBI GEO, accession GSExxx) were used to..."[3] |
| Reference List Citation | The full citation in the reference list should contain all the core components. While specific formatting varies by journal style (e.g., APA, MLA), the following provides a general, comprehensive template. Template: Author(s). (Year). Title of dataset [Data set]. NCBI Gene Expression Omnibus. GSExxx. --INVALID-LINK-- Example: Smith J, Doe A. (2023). The effect of compound X on gene expression in neurons [Data set]. NCBI Gene Expression Omnibus. GSE12345. --INVALID-LINK-- |

Experimental Protocol: Locating and Formatting a GEO Dataset Citation

This section details the step-by-step methodology for finding the necessary information on the this compound website and constructing a proper citation.

- Navigate to the GEO Dataset Record: Access the specific GEO record you wish to cite by searching the GEO DataSets database with keywords, authors, or the accession number if you already have it.[4]

- Identify the Series Accession Number (GSE): The GSE number is typically displayed prominently at the top of the record page. This is the preferred accession number for citation.[3]

- Locate the Associated Publication: Scroll down the record page to the "Citation" section. If a paper has been published and linked to the dataset, its full citation is provided here. This is the primary source for the author(s) and year.[1][3]

- Note the Dataset Title: The title of the GEO record is also found at the top of the page.

- Construct the Full Citation: Assemble the information gathered in the previous steps into the recommended format for your reference list.

- Formulate the In-Text Citation: When discussing the data in the body of your paper, use the in-text citation format to refer to the dataset and its accession number.

Note that some datasets in GEO have no associated publication.[5] In such cases, you should still cite the dataset using the GEO accession number, the creators listed on the record, and the year of submission.[5]
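The reference-list template given earlier in this guide is mechanical enough to script. The function below is a hypothetical helper (not an NCBI tool) that assembles an entry following that template; when no publication year is available it falls back to the year of submission, as recommended for unpublished datasets. It assumes GEO's standard accession display page, https://www.ncbi.nlm.nih.gov/geo/query/acc.cgi?acc= followed by the accession, as the persistent link.

```python
# Assumed URL pattern for GEO's accession display page.
GEO_ACC_URL = "https://www.ncbi.nlm.nih.gov/geo/query/acc.cgi?acc="


def format_geo_citation(authors, title, accession, pub_year=None,
                        submission_year=None):
    """Author(s). (Year). Title [Data set]. NCBI Gene Expression Omnibus.
    Accession. URL"""
    year = pub_year if pub_year is not None else submission_year
    if year is None:
        raise ValueError("need a publication or submission year")
    author_text = ", ".join(authors)
    return (f"{author_text}. ({year}). {title} [Data set]. "
            f"NCBI Gene Expression Omnibus. {accession}. "
            f"{GEO_ACC_URL}{accession}")


print(format_geo_citation(
    ["Smith J", "Doe A"],
    "The effect of compound X on gene expression in neurons",
    "GSE12345", pub_year=2023))
```

Journal styles differ in punctuation and author formatting, so treat the output as a starting point to adjust to the target style.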

Visualizing the GEO Dataset Citation Workflow

The following diagram illustrates the logical workflow for citing a GEO dataset in a research paper.

Caption: Workflow for citing a GEO dataset in a research paper.

By following these guidelines, researchers can ensure that their use of GEO datasets is properly attributed, enhancing the transparency and integrity of their work.

References

- 1. researchgate.net [researchgate.net]

- 2. How to Cite Datasets and Link to Publications | DCC [dcc.ac.uk]

- 3. Citing and linking - GEO - NCBI [ncbi.nlm.nih.gov]

- 4. About GEO DataSets - GEO - NCBI [ncbi.nlm.nih.gov]

- 5. Is It Possible To Use And Cite NCBI GEO Datasets That Don't Have A Already-Published Citation? [biostars.org]

Methodological & Application

Application Notes and Protocols: Downloading Data from the Gene Expression Omnibus (GEO) Database

Audience: Researchers, scientists, and drug development professionals.

Introduction

The Gene Expression Omnibus (GEO) is a public repository that archives and freely distributes high-throughput gene expression and other functional genomics data.[1][2] This document provides detailed protocols for downloading data from the GEO database for a range of technical expertise, from manual web-based downloads to programmatic and command-line approaches. Understanding how GEO data are structured is fundamental to retrieving them efficiently.[1]

Understanding GEO Data Organization

GEO data are organized into four main record types. A clear understanding of this organization is crucial for locating and downloading the correct data for your research.[1][3]

| Record Type | Accession Prefix | Description |
|---|---|---|
| Platform (GPL) | GPL | Describes the array or sequencing platform used, including the probes or features. |
| Sample (GSM) | GSM | Contains data from an individual sample, including experimental conditions and results. |
| Series (GSE) | GSE | A collection of related samples (GSMs) that constitute a single experiment or study.[1][3] |
| DataSet (GDS) | GDS | Curated collections of biologically and statistically comparable GEO samples.[1] |
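Because each record type has its own prefix, a list of accessions can be sorted by type before any download. The helper below is a hypothetical sketch that assumes an accession is exactly one of these four prefixes followed by digits; anything else, such as an SRA run ID, is rejected.

```python
import re

RECORD_TYPES = {
    "GPL": "Platform",
    "GSM": "Sample",
    "GSE": "Series",
    "GDS": "DataSet",
}

# Assumed format: one of the four prefixes followed only by digits.
_ACCESSION = re.compile(r"^(GPL|GSM|GSE|GDS)(\d+)$")


def classify_accession(accession):
    """Return (record type, numeric part) for a GEO accession string."""
    match = _ACCESSION.match(accession.strip().upper())
    if match is None:
        raise ValueError(f"not a GEO accession: {accession!r}")
    prefix, number = match.groups()
    return RECORD_TYPES[prefix], int(number)


for acc in ["GSE48350", "GPL570", "gsm123456", "GDS3128"]:
    print(acc, "->", classify_accession(acc))
```

Normalizing case and whitespace first lets the helper accept accessions copied from spreadsheets or free text.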

Protocols for Data Download

There are several methods for downloading data from GEO, each with its own advantages depending on the scale and reproducibility requirements of your project.

Manual Download from the GEO Website

This is the most straightforward method for downloading data for a single study.

Protocol:

- Navigate to the GEO website: Open a web browser and go to the Gene Expression Omnibus homepage.[4][5]

- Search for a dataset: Use the search bar to find a dataset of interest. You can search by keyword (e.g., "Alzheimer's disease"), GEO accession number (e.g., GSE150910), or author.[5][6]

- Select the Series (GSE) record: From the search results, click the relevant GSE accession number to view the experiment details.

- Locate the download links: Scroll to the bottom of the Series page, where you will find the "Download family" and "Supplementary file" sections.[5][7]

- Download the data:

  - Processed Data: The Series Matrix File(s) link provides a tab-delimited text file containing the processed, normalized expression data for all samples in the series. This is often the easiest format to work with for immediate analysis.

  - Raw Data: The (ftp) link in the "Download family" section takes you to the FTP directory containing the raw data files (e.g., CEL files for Affymetrix arrays). For sequencing studies, the FASTQ files are usually held in the Sequence Read Archive (SRA) and linked from the record.[5][7] Raw data allow custom processing and normalization workflows.[8]

  - Supplementary Files: This section may contain additional files provided by the authors, such as gene-level count matrices or other relevant data.[7]
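The links on a Series page point into a predictable directory layout on NCBI's GEO FTP site, which is also served over HTTPS. Assuming the layout commonly seen there, in which a Series folder sits inside a parent folder named after the accession with its last three digits replaced by "nnn" (GSE150910 lives under GSE150nnn), the sketch below builds the expected Series Matrix and raw-archive URLs. It only constructs strings: confirm them against the record page, because Series spanning several platforms have one matrix file per platform with different names, and not every Series has a _RAW.tar archive.

```python
# Assumed base location of GEO Series folders on NCBI's FTP site (HTTPS).
FTP_BASE = "https://ftp.ncbi.nlm.nih.gov/geo/series"


def series_dir(gse):
    """Folder URL for a Series, e.g. GSE150910 -> .../GSE150nnn/GSE150910."""
    gse = gse.upper()
    if not (gse.startswith("GSE") and gse[3:].isdigit()):
        raise ValueError(f"expected a GSE accession, got {gse!r}")
    digits = gse[3:]
    parent = "GSE" + digits[:-3] + "nnn"  # last three digits become 'nnn'
    return f"{FTP_BASE}/{parent}/{gse}"


def series_matrix_url(gse):
    # Single-platform naming; multi-platform Series use per-GPL file names.
    return f"{series_dir(gse)}/matrix/{gse.upper()}_series_matrix.txt.gz"


def raw_archive_url(gse):
    # Present only when the submitters provided raw files as supplementary data.
    return f"{series_dir(gse)}/suppl/{gse.upper()}_RAW.tar"


print(series_matrix_url("GSE150910"))
# https://ftp.ncbi.nlm.nih.gov/geo/series/GSE150nnn/GSE150910/matrix/GSE150910_series_matrix.txt.gz
```

The resulting URLs can be fetched with any HTTP client, such as urllib.request or wget.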

Programmatic Access with R (GEOquery)

For reproducible and scalable data downloads, the GEOquery package in R is a powerful tool.[1][3] It allows you to download and parse GEO data directly into R data structures.[3][9]

Protocol:

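- Install and load GEOquery: If you haven't already, install the package from Bioconductor (for example, with BiocManager::install("GEOquery")) and load it with library(GEOquery).[1][10]

- Download a GSE record: Use the getGEO() function with the GSE accession number, for example getGEO("GSE150910", GSEMatrix = TRUE).[3][10] The GSEMatrix = TRUE argument downloads the processed Series Matrix data and returns it as a list of ExpressionSet objects (one per platform); ExpressionSet is the standard Bioconductor data structure for storing high-throughput assay data.

- Access the expression data and metadata: For an element of that list, exprs() returns the expression matrix and pData() returns the sample metadata.

- Download raw data: To get the raw data files, use the getGEOSuppFiles() function with the GSE accession.[8] This downloads the supplementary files, which often include the raw data, into your current working directory (by default, into a subdirectory named after the accession).[8]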
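GEOquery's getGEO() with GSEMatrix = TRUE parses the Series Matrix file for you. Outside R, the file is simple enough to read directly: metadata lines start with "!Series_" or "!Sample_", and the expression table sits between the !series_matrix_table_begin and !series_matrix_table_end markers. A minimal standard-library sketch, assuming you have the text of an already downloaded and decompressed file; the inline snippet is a toy example, not real data.

```python
import csv
import io


def parse_series_matrix(text):
    """Split Series Matrix text into (metadata, header, rows).

    metadata: key -> list of value lists (keys such as
              '!Sample_characteristics_ch1' repeat, so every
              occurrence is kept)
    header:   table column names, starting with 'ID_REF'
    rows:     one list of strings per probe or feature
    """
    metadata, header, rows = {}, None, []
    in_table = False
    # Fields are tab-separated and usually double-quoted; csv strips quotes.
    for fields in csv.reader(io.StringIO(text), delimiter="\t"):
        if not fields:
            continue
        key = fields[0]
        if key == "!series_matrix_table_begin":
            in_table = True
        elif key == "!series_matrix_table_end":
            in_table = False
        elif in_table and header is None:
            header = fields
        elif in_table:
            rows.append(fields)
        elif key.startswith("!"):
            metadata.setdefault(key, []).append(fields[1:])
    return metadata, header, rows


TOY = (
    '!Series_title\t"Toy series"\n'
    '!Sample_geo_accession\t"GSM1"\t"GSM2"\n'
    "!series_matrix_table_begin\n"
    '"ID_REF"\t"GSM1"\t"GSM2"\n'
    '"1007_s_at"\t8.1\t7.9\n'
    "!series_matrix_table_end\n"
)
meta, header, rows = parse_series_matrix(TOY)
print(header, rows)  # ['ID_REF', 'GSM1', 'GSM2'] [['1007_s_at', '8.1', '7.9']]
```

Values are left as strings so that missing entries and non-numeric annotations survive; convert them with float() once you know the table's contents.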

Programmatic Access with Python (GEOparse)

GEOparse is a Python library that provides functionality similar to R's GEOquery, allowing programmatic download and parsing of GEO data.

Protocol:

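- Install GEOparse: for example, with pip install GEOparse.

- Download a GSE record: Call GEOparse.get_GEO(geo="GSE150910", destdir="./"). This downloads the GSE SOFT file and parses it into a GSE object.

- Access the expression data and metadata: The GSE object's gsms and gpls dictionaries hold the parsed Sample and Platform records, and its pivot_samples("VALUE") method assembles the sample values into a single table.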
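GEOparse reads GEO's SOFT format, in which a line starting with ^ opens a new entity (for example ^SAMPLE = GSM...), lines starting with ! are attributes, lines starting with # describe table columns, and data tables sit between !..._table_begin and !..._table_end markers. The sketch below is a deliberately small stand-in that shows the structure GEOparse handles for you; it is not GEOparse's implementation, it skips column descriptions, and the input is a toy example.

```python
def parse_soft(text):
    """Parse SOFT text into {accession: {"type", "attributes", "table"}}.

    Simplified: column-description lines (starting with '#') are skipped,
    and table rows are returned as lists of strings.
    """
    entities, current, in_table = {}, None, False
    for line in text.splitlines():
        if not line or line.startswith("#"):
            continue
        if line.startswith("^"):
            # Entity line, e.g. "^SAMPLE = GSM1".
            kind, _, accession = line[1:].partition(" = ")
            current = {"type": kind, "attributes": {}, "table": []}
            entities[accession] = current
            in_table = False
            continue
        if current is None:
            raise ValueError("SOFT text must start with an ^entity line")
        lowered = line.lower()
        if lowered.endswith("_table_begin"):
            in_table = True
        elif lowered.endswith("_table_end"):
            in_table = False
        elif in_table:
            current["table"].append(line.split("\t"))
        elif line.startswith("!"):
            # Attribute line, e.g. "!Sample_title = ..."; keys may repeat.
            key, _, value = line[1:].partition(" = ")
            current["attributes"].setdefault(key, []).append(value)
    return entities


TOY_SOFT = (
    "^SAMPLE = GSM1\n"
    "!Sample_title = Control replicate 1\n"
    "!Sample_characteristics_ch1 = tissue: liver\n"
    "!Sample_characteristics_ch1 = treatment: vehicle\n"
    "#ID_REF = probe identifier\n"
    "#VALUE = normalized signal\n"
    "!sample_table_begin\n"
    "ID_REF\tVALUE\n"
    "1007_s_at\t8.1\n"
    "!sample_table_end\n"
)
sample = parse_soft(TOY_SOFT)["GSM1"]
print(sample["attributes"]["Sample_characteristics_ch1"])
# ['tissue: liver', 'treatment: vehicle']
```

Keeping repeated attributes as lists matters in practice, since sample characteristics are usually spread over several lines with the same key.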

Command-Line Access with NCBI Entrez Direct and SRA Toolkit

For users comfortable with the command line, NCBI's Entrez Direct (a command-line interface to the E-utilities) and the SRA Toolkit provide a powerful way to automate data downloads.[11][12] This is particularly useful for downloading raw sequencing data from the Sequence Read Archive (SRA), to which GEO links for high-throughput sequencing studies.[7][13]

Protocol:

- Install Entrez Direct and SRA Toolkit: Follow the installation instructions on the NCBI website.[7]

- Find SRA runs associated with a GEO study: Use the E-utilities to search for the SRA runs linked to a GSE accession.

- Download the raw FASTQ files: Use the fastq-dump command from the SRA Toolkit with the SRA run accession numbers obtained in the previous step.[13] For example: `fastq-dump SRR1234567`
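Finding the runs for a GSE is typically done with Entrez Direct, for example esearch -db sra -query GSExxx | efetch -format runinfo, which returns CSV text with one row per sequencing run and a Run column of run accessions. The same search can be made against the E-utilities HTTP endpoint directly. The sketch below only builds the esearch URL and parses runinfo-style CSV text; the sample text is a trimmed, hypothetical example with only a few of the real columns, and it assumes the GSE accession is indexed in the SRA database, which is usually but not always the case.

```python
import csv
import io
from urllib.parse import urlencode

EUTILS = "https://eutils.ncbi.nlm.nih.gov/entrez/eutils"


def sra_esearch_url(gse):
    """E-utilities esearch URL that looks up SRA records for a GSE accession."""
    return f"{EUTILS}/esearch.fcgi?" + urlencode({"db": "sra", "term": gse})


def run_accessions(runinfo_csv):
    """Return run accessions (the 'Run' column) from runinfo-style CSV.

    Blank lines and repeated header rows are skipped.
    """
    reader = csv.DictReader(io.StringIO(runinfo_csv))
    return [row["Run"] for row in reader
            if row.get("Run") not in (None, "", "Run")]


# Trimmed, hypothetical runinfo text (real output has many more columns).
RUNINFO = (
    "Run,spots,LibraryStrategy\n"
    "SRR1234567,1000,RNA-Seq\n"
    "SRR1234568,1200,RNA-Seq\n"
)
print(sra_esearch_url("GSE150910"))
print(run_accessions(RUNINFO))  # ['SRR1234567', 'SRR1234568']
```

Each accession in the resulting list can then be passed to fastq-dump, one run at a time or in a batch loop.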

Data Presentation

The following table summarizes the different download methods and the typical data formats obtained.

| Download Method | Data Type | Typical Format | Use Case |
|---|---|---|---|
| Manual (Website) | Processed | .txt (Series Matrix) | Quick analysis of a single study. |
| Manual (Website) | Raw | .CEL, .idat, .fastq.gz | Re-analysis with custom workflows. |
| R (GEOquery) | Processed | ExpressionSet object | Reproducible analysis within the R/Bioconductor ecosystem. |
| R (GEOquery) | Raw | .tar archive containing raw files | Programmatic access to raw data for custom pipelines. |
| Python (GEOparse) | Metadata & Processed | Parsed Python objects | Integration into Python-based analysis pipelines. |
| Command-Line (Entrez Direct & SRA Toolkit) | Raw Sequencing | .fastq | Batch download of raw sequencing data for large-scale studies. |

Visualizing Download Workflows

The following diagrams illustrate the logical steps involved in the different data download methods.

Caption: Manual data download workflow from the GEO website.

Caption: Programmatic data download using R (GEOquery) and Python (GEOparse).

Caption: Command-line download of raw sequencing data using Entrez Direct and SRA Toolkit.

References

- 1. Using the GEOquery Package • GEOquery [seandavi.github.io]

- 2. Home - GEO - NCBI [ncbi.nlm.nih.gov]

- 3. Using the GEOquery Package [bioconductor.org]

- 4. ncbi.nlm.nih.gov [ncbi.nlm.nih.gov]

- 5. m.youtube.com [m.youtube.com]

- 6. google.com [google.com]

- 7. youtube.com [youtube.com]

- 8. GEOquery [kasperdanielhansen.github.io]

- 9. youtube.com [youtube.com]

- 10. Analysing data from GEO - Work in Progress [sbc.shef.ac.uk]

- 11. All Resources - Site Guide - NCBI [ncbi.nlm.nih.gov]

- 12. youtube.com [youtube.com]

- 13. youtube.com [youtube.com]

Application Notes and Protocols for Submitting High-Throughput Sequencing Data to the Gene Expression Omnibus (GEO)

Audience: Researchers, scientists, and drug development professionals.

Introduction:

The Gene Expression Omnibus (GEO), maintained by the National Center for Biotechnology Information (NCBI), is a public repository for functional genomics data.[1][2] Submitting your high-throughput sequencing data to GEO is a critical step in the research publication process, ensuring data accessibility and reproducibility. This guide provides a detailed, step-by-step protocol for preparing and submitting your data to GEO so that the submission goes smoothly.

Part 1: Data Preparation and Organization

Before starting the submission, carefully prepare your data and metadata. This ensures compliance with GEO's standards and streamlines the review process.

Understand GEO Submission Requirements

First, familiarize yourself with the types of data GEO accepts. The repository accommodates a wide range of high-throughput data, including but not limited to RNA-seq, ChIP-seq, and bisulfite sequencing.[3] A complete submission to GEO consists of three main components: metadata, processed data, and raw data files.[4]

Prepare the Metadata Spreadsheet

The metadata spreadsheet is a critical component of your submission, providing detailed information about your study, samples, and experimental protocols.

- Download the Template: Obtain the most current metadata spreadsheet template directly from the GEO website.[5]

- Complete All Sections: The spreadsheet contains multiple tabs that require comprehensive information. Key sections include:

  - Study: Overall description of your experiment, including title, summary, and design.

  - Samples: Detailed information for each sample, including source, organism, and experimental variables.

  - Protocols: Step-by-step descriptions of your experimental and data processing protocols.

  - Data Processing: Information on the software and methods used to process the raw data.

  - Files: A list of all submitted files and their corresponding samples.

Table 1: Example Metadata - Sample Information

| Sample Name | Organism | Tissue | Treatment | Time Point |
|---|---|---|---|---|
| GSM123456 | Homo sapiens | Liver | Drug A | 24h |
| GSM123457 | Homo sapiens | Liver | Vehicle | 24h |
| GSM123458 | Mus musculus | Brain | Knockout | 48h |
| GSM123459 | Mus musculus | Brain | Wild-type | 48h |

Format Data Files

Properly formatted raw and processed data files are required for a successful submission.

- Raw Data Files: These are the original, unprocessed files from the sequencing instrument (e.g., FASTQ files). It is crucial to calculate MD5 checksums for each raw data file to ensure data integrity during transfer.[1][3]
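The checksum step is easy to script. The Python sketch below is an illustration rather than part of GEO's tooling: it assumes the raw files sit in a single local directory (the directory name raw_data and the *.fastq.gz pattern are hypothetical) and streams each file through hashlib in 1 MiB chunks, so large FASTQ files are never loaded into memory at once.

```python
import hashlib
from pathlib import Path


def md5sum(path, chunk_size=1 << 20):
    """Return the hex MD5 digest of a file, reading it in 1 MiB chunks."""
    digest = hashlib.md5()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def checksum_table(directory, pattern="*.fastq.gz"):
    """Map each matching file name to its MD5 digest (the pattern is an assumption)."""
    return {p.name: md5sum(p) for p in sorted(Path(directory).glob(pattern))}


if __name__ == "__main__":
    # Hypothetical directory holding the raw FASTQ files to be submitted.
    for name, digest in checksum_table("raw_data").items():
        print(f"{name}\t{digest}")
```

The digests are the same hexadecimal strings that the md5sum command-line tool reports, so either can be used to record the values.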

Table 2: Example Processed Data File (RNA-seq Counts)

| GeneID | Sample1_count | Sample2_count | Sample3_count |
|---|---|---|---|
| GeneA | 150 | 200 | 175 |
| GeneB | 300 | 350 | 325 |
| GeneC | 50 | 75 | 60 |

Part 2: The GEO Submission Workflow

The submission process involves transferring your data files via FTP and then submitting the metadata through the GEO submission portal.

File Transfer via FTP

- Log in to the GEO FTP Server: Use the credentials provided by GEO to log in to their FTP server. Be aware of the 30-second timeout for logins.[3]
- Create a Submission Directory: Navigate to the designated directory and create a new folder for your submission.[3]
- Upload Data Files: Transfer your raw and processed data files to the newly created directory. The mput * command can transfer multiple files at once.[3] Do not upload the metadata spreadsheet via FTP.[6]
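For many files, a scripted upload can replace an interactive session with mput *. The sketch below uses Python's standard ftplib; the host, user name, password, and upload directory passed to connect are placeholders for the values GEO supplies, and the submission folder name is hypothetical. Because upload_files relies only on the mkd, cwd, and storbinary methods, it can be exercised against a stand-in object without network access.

```python
import ftplib
from pathlib import Path


def connect(host, user, password, upload_dir):
    """Log in and change to the personal upload directory (all values are placeholders)."""
    ftp = ftplib.FTP(host)
    ftp.login(user, password)
    ftp.cwd(upload_dir)
    return ftp


def upload_files(ftp, remote_dir, paths):
    """Create remote_dir if needed, enter it, and upload each file in binary mode.

    `ftp` can be any object with ftplib.FTP's mkd/cwd/storbinary methods.
    """
    try:
        ftp.mkd(remote_dir)
    except ftplib.error_perm:
        pass  # assume the folder already exists from an earlier attempt
    ftp.cwd(remote_dir)
    uploaded = []
    for path in map(Path, paths):
        with open(path, "rb") as fh:
            ftp.storbinary(f"STOR {path.name}", fh)
        uploaded.append(path.name)
    return uploaded
```

A typical call is upload_files(connect(host, user, password, upload_dir), "my_submission", fastq_paths) followed by quit() on the connection; start the script promptly once credentials are entered, given the login timeout mentioned in the step above.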

Metadata and Final Submission

- Navigate to the GEO Submission Portal: Access the submission portal through the NCBI website.[1]
- Upload Metadata: Select the subfolder on the FTP server containing your data files, then upload your completed metadata spreadsheet.[5]
- Submit: After reviewing all information, click the "Submit" button. GEO will then perform an automated validation of your metadata file.[5]

GEO Submission Workflow Diagram

Caption: A flowchart illustrating the major steps in the this compound data submission process.

Part 3: Experimental Protocols

Detailed and accurate descriptions of your experimental protocols are essential for the reproducibility of your research.

Example Protocol: RNA Sequencing

- RNA Extraction: Total RNA was extracted from cultured cells using the RNeasy Mini Kit (Qiagen) according to the manufacturer's instructions. RNA quality and quantity were assessed using the Agilent 2100 Bioanalyzer.
- Library Preparation: RNA-seq libraries were prepared from 1 µg of total RNA using the NEBNext Ultra II RNA Library Prep Kit for Illumina (New England Biolabs).
- Sequencing: Libraries were sequenced on an Illumina NovaSeq 6000 platform, generating 150 bp paired-end reads.
- Data Processing: Raw sequencing reads were quality-checked using FastQC. Adapters and low-quality bases were trimmed using Trimmomatic. The trimmed reads were then aligned to the human reference genome (GRCh38) using the STAR aligner. Gene expression levels were quantified using featureCounts.

Experimental Workflow for RNA-Seq Data Generation

Caption: A diagram showing a typical experimental workflow for generating RNA-seq data.

Part 4: Post-Submission

After your submission is processed and approved, you will receive an email containing the GEO accession numbers assigned to your series (GSE), which represents the overall study, and to your samples (GSM).[5] Include these accession numbers in your manuscript so that reviewers and readers can access your data. You will also be provided with a private reviewer access link that can be shared with journal editors and reviewers before the public release date.

References

- 1. GitHub - PaoyangLab/GEO_submission: The guideline for GEO submission [github.com]

- 2. Home - GEO - NCBI [ncbi.nlm.nih.gov]

- 3. Submitting High-throughput Data to GEO - CD Genomics [bioinfo.cd-genomics.com]

- 4. Submitting High-Throughput Sequence Data to GEO (Gene Expression Omnibus) - Omics tutorials [omicstutorials.com]

- 5. youtube.com [youtube.com]

- 6. Submitting high-throughput sequence data to GEO - GEO - NCBI [ncbi.nlm.nih.gov]

Application Notes and Protocols for Analyzing RNA-seq Data from the Gene Expression Omnibus (GEO)

Audience: Researchers, scientists, and drug development professionals.

Introduction: The Gene Expression Omnibus (GEO) is a vast public repository of high-throughput functional genomics data, including a wealth of RNA sequencing (RNA-seq) datasets.[1][2] Analyzing this publicly available data allows researchers to explore gene expression patterns, validate experimental findings, and generate new hypotheses without the cost of generating new data.[1][2] This document provides a detailed workflow and protocols for analyzing RNA-seq data obtained from GEO, from raw data retrieval to biological interpretation.

Data Acquisition from GEO and SRA

Application Notes: Raw sequencing reads for GEO datasets are typically stored in the Sequence Read Archive (SRA).[3][4] To analyze them, they must first be downloaded and converted into FASTQ format, which contains the raw sequence reads and their corresponding quality scores. The NCBI SRA Toolkit is a collection of command-line tools that facilitates this process.[3][5]

Experimental Protocol: Downloading SRA data and converting to FASTQ

- Identify the dataset of interest on the GEO website. For a given GEO series accession (e.g., GSE48213), open the "SRA Run Selector" to find the list of SRA run accessions (SRR...).
- Install the NCBI SRA Toolkit. Instructions can be found on the NCBI website.
- Use the prefetch command to download the SRA file. This command downloads the compressed SRA data.
- Use the fastq-dump command to convert the SRA file to FASTQ format. For paired-end sequencing data, the --split-files option writes the forward and reverse reads to two separate files.[3]
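The two SRA Toolkit commands can be wrapped in a short Python script to process several runs in a batch. This sketch only assembles and runs the command lines; it assumes prefetch and fastq-dump are on PATH, and the output directory name is an arbitrary choice. Whether fastq-dump reuses the prefetched copy or streams the data again depends on the toolkit version and configuration; newer releases also ship fasterq-dump, which splits paired-end reads by default.

```python
import shutil
import subprocess


def sra_commands(run_accession, outdir="fastq"):
    """Build the prefetch and fastq-dump command lines for one SRR run."""
    return [
        ["prefetch", run_accession],
        # --split-files writes separate _1/_2 FASTQ files for paired-end runs.
        ["fastq-dump", "--split-files", "--outdir", outdir, run_accession],
    ]


def download_runs(run_accessions, outdir="fastq"):
    """Run both commands for each accession; requires the SRA Toolkit on PATH."""
    if shutil.which("prefetch") is None or shutil.which("fastq-dump") is None:
        raise RuntimeError("SRA Toolkit not found on PATH")
    for accession in run_accessions:
        for cmd in sra_commands(accession, outdir):
            subprocess.run(cmd, check=True)  # stop on the first failing command
```

The accession list passed to download_runs would be the SRR run accessions exported from the SRA Run Selector.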

Quality Control of Raw Sequencing Data

Application Notes: Before proceeding with analysis, it is crucial to assess the quality of the raw sequencing reads. FastQC is a widely used tool that provides a comprehensive report on various quality metrics, such as per-base sequence quality, GC content, and adapter content.[6][7][8] This step helps identify potential issues with the sequencing data that may need to be addressed, for instance, by trimming low-quality bases or removing adapter sequences.

Experimental Protocol: Running FastQC

- Install FastQC, which can be downloaded from the Babraham Bioinformatics website.
- Run FastQC on the FASTQ files.
- Review the generated HTML report. Pay close attention to warnings or failures, which may indicate problems with data quality.
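To make the first FastQC module concrete, the sketch below computes the mean Phred quality at each read position, the quantity summarized in the per-base sequence quality plot. It is a simplified illustration, not FastQC's implementation (FastQC also reports quartiles and detects the quality encoding itself); it assumes standard four-line FASTQ records with Phred+33 quality strings.

```python
from itertools import islice


def per_base_mean_quality(fastq_lines, offset=33):
    """Mean Phred score at each read position (Phred+33 encoding assumed)."""
    totals, counts = [], []
    lines = iter(fastq_lines)
    while True:
        record = list(islice(lines, 4))  # header, sequence, '+', quality
        if len(record) < 4:
            break
        quality = record[3].rstrip("\n")
        for i, ch in enumerate(quality):
            if i == len(totals):  # first read reaching this position
                totals.append(0)
                counts.append(0)
            totals[i] += ord(ch) - offset
            counts[i] += 1
    return [t / c for t, c in zip(totals, counts)]
```

For a gzipped file, pass the lines of gzip.open(path, "rt"); positions near the 3' end whose means drop sharply are the usual candidates for trimming.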

Data Presentation: Example FastQC Summary

| Metric | Status |
|---|---|
| Per base sequence quality | PASS |
| Per tile sequence quality | PASS |
| Per sequence quality scores | PASS |
| Per base sequence content | WARN |
| Per sequence GC content | PASS |
| Per base N content | PASS |
| Sequence Length Distribution | PASS |
| Sequence Duplication Levels | WARN |
| Overrepresented sequences | FAIL |
| Adapter Content | PASS |
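When many samples are processed, it helps to collect the PASS/WARN/FAIL calls programmatically instead of opening each HTML report. FastQC writes them to a tab-separated summary.txt (status, module name, file name) inside each *_fastqc.zip archive; the sketch below assumes that layout and lists the modules that did not pass, such as the WARN and FAIL rows in the example summary.

```python
import zipfile


def parse_fastqc_summary(text):
    """Return {module: status} from the tab-separated summary.txt content."""
    result = {}
    for line in text.splitlines():
        if line.strip():
            status, module, _filename = line.split("\t", 2)
            result[module] = status
    return result


def flagged_modules(summary):
    """Modules whose status is not PASS (i.e. WARN or FAIL)."""
    return {module: status for module, status in summary.items() if status != "PASS"}


def read_summary_from_zip(zip_path):
    """Read summary.txt from a FastQC *_fastqc.zip archive."""
    with zipfile.ZipFile(zip_path) as zf:
        name = next(n for n in zf.namelist() if n.endswith("/summary.txt"))
        return parse_fastqc_summary(zf.read(name).decode("utf-8"))
```

Note that a WARN on per-base sequence content or duplication levels is common for RNA-seq libraries, so flagged modules are a prompt for review rather than an automatic reason to discard a sample.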

Read Alignment to a Reference Genome

Application Notes: The next step is to align the quality-controlled sequencing reads to a reference genome. For RNA-seq data, it is important to use a splice-aware aligner that can handle reads that span across exons. STAR (Spliced Transcripts Alignment to a Reference) is a popular, fast, and accurate RNA-seq aligner.[9][10][11][12] The output of the alignment is typically a BAM (Binary Alignment Map) file, which contains the mapping information for each read.

Experimental Protocol: Aligning reads with STAR

- Download the reference genome (FASTA) and gene annotation (GTF) files. These can be obtained from sources such as Ensembl or UCSC.
- Generate a genome index for STAR. This only needs to be done once per reference genome.
- Align the reads to the indexed genome.
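Both STAR steps are sketched below as Python functions that build command lines for subprocess.run. The options are standard STAR parameters, but the thread count, file names, and output prefix are assumptions. The --sjdbOverhang value is set to read length minus one, as the STAR manual recommends; for the 150 bp reads in the example protocol this gives 149.

```python
def star_index_cmd(genome_dir, fasta, gtf, read_length, threads=8):
    """STAR genome index command; sjdbOverhang = read length - 1."""
    return [
        "STAR", "--runMode", "genomeGenerate",
        "--runThreadN", str(threads),
        "--genomeDir", genome_dir,
        "--genomeFastaFiles", fasta,
        "--sjdbGTFfile", gtf,
        "--sjdbOverhang", str(read_length - 1),
    ]


def star_align_cmd(genome_dir, read1, read2=None, prefix="sample_", threads=8):
    """STAR alignment command producing a coordinate-sorted BAM file."""
    reads = [read1] + ([read2] if read2 else [])
    cmd = [
        "STAR", "--runThreadN", str(threads),
        "--genomeDir", genome_dir,
        "--readFilesIn", *reads,
        "--outSAMtype", "BAM", "SortedByCoordinate",
        "--outFileNamePrefix", prefix,
    ]
    if read1.endswith(".gz"):
        # Decompress gzipped FASTQ on the fly.
        cmd += ["--readFilesCommand", "zcat"]
    return cmd
```

With --outSAMtype BAM SortedByCoordinate, STAR names the output <prefix>Aligned.sortedByCoord.out.bam, which is the file passed to the quantification step.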

Gene Expression Quantification

Application Notes: After alignment, the number of reads that map to each gene needs to be counted. This process, known as feature quantification, results in a count matrix where rows represent genes and columns represent samples. featureCounts is a highly efficient and accurate tool for this purpose.[13][14][15][16][17] This count matrix is the primary input for differential expression analysis.

Experimental Protocol: Quantifying gene expression with featureCounts

- Install featureCounts (part of the Subread package).[13]
- Run featureCounts on the BAM files.
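featureCounts is usually run on all BAM files at once, for example featureCounts -T 8 -p -a genes.gtf -o counts.txt *.bam (-T threads, -p paired-end, -a annotation, -o output); recent Subread releases additionally expect --countReadPairs to count fragments rather than reads, so check the documentation for your version. The output begins with a comment line, then a header of Geneid, Chr, Start, End, Strand, Length, and one column per BAM file. The Python sketch below assumes that layout and drops the annotation columns to produce a gene-by-sample matrix like Table 2.

```python
import csv
import io


def read_featurecounts(text):
    """Parse featureCounts output into (sample_names, {gene_id: [counts...]}).

    Skips the leading '#' comment line and the five annotation columns
    (Chr, Start, End, Strand, Length) that precede the per-BAM counts.
    """
    rows = [r for r in csv.reader(io.StringIO(text), delimiter="\t")
            if r and not r[0].startswith("#")]
    header, body = rows[0], rows[1:]
    samples = header[6:]
    counts = {row[0]: [int(x) for x in row[6:]] for row in body}
    return samples, counts


def to_tsv(samples, counts):
    """Write a GeneID x sample count matrix as tab-separated text."""
    out = io.StringIO()
    writer = csv.writer(out, delimiter="\t", lineterminator="\n")
    writer.writerow(["GeneID", *samples])
    for gene, values in counts.items():
        writer.writerow([gene, *values])
    return out.getvalue()
```

Keeping raw integer counts, rather than normalized values, matters here because DESeq2 expects unnormalized counts as input.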

Differential Gene Expression Analysis

Application Notes: Differential expression analysis aims to identify genes whose expression levels change significantly between experimental conditions.[18] DESeq2 is a popular R/Bioconductor package for this analysis that models raw counts with a negative binomial distribution.[18][19][20][21] It normalizes for differences in library size (sequencing depth) and RNA composition between samples, estimates gene-wise dispersions, and fits a generalized linear model to test for differential expression.[21]

Experimental Protocol: Using DESeq2 for differential expression

- Install and load the DESeq2 package in R.
- Prepare the count matrix and metadata. The count matrix should have genes as rows and samples as columns. The metadata table should describe the experimental conditions for each sample.
- Run the DESeq2 analysis.
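In R, the core calls are DESeqDataSetFromMatrix(countData = counts, colData = coldata, design = ~ condition), then DESeq(dds) and results(dds), where counts, coldata, and the condition column are names you supply. To show what the normalization step does, the Python sketch below reproduces DESeq2's median-of-ratios size factors: for sample j, the factor is the median over genes i of k_ij divided by the geometric mean of gene i across all samples, using only genes with no zero counts. It covers normalization only; dispersion estimation and the negative binomial model are left to DESeq2.

```python
import math
from statistics import median


def size_factors(counts):
    """DESeq2-style median-of-ratios size factors.

    counts: list of rows (genes), each a list of raw counts per sample.
    Genes with a zero in any sample are skipped (their geometric mean is 0).
    """
    n_samples = len(counts[0])
    log_ratios = [[] for _ in range(n_samples)]
    for row in counts:
        if min(row) <= 0:
            continue
        log_geo_mean = sum(math.log(c) for c in row) / n_samples
        for j, c in enumerate(row):
            log_ratios[j].append(math.log(c) - log_geo_mean)
    # Median taken on the log scale, then exponentiated.
    return [math.exp(median(r)) for r in log_ratios]


def normalize(counts, factors):
    """Divide each sample's counts by its size factor."""
    return [[c / f for c, f in zip(row, factors)] for row in counts]
```

If one library was sequenced twice as deeply as another, its size factor comes out twice as large, so the normalized counts become comparable across samples.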

Data Presentation: Example DESeq2 Results

| Gene ID | baseMean | log2FoldChange | lfcSE | stat | pvalue | padj |
|---|---|---|---|---|---|---|
| ENSG0000012345 | 150.2 | 1.58 | 0.25 | 6.32 | 2.61e-10 | 7.89e-08 |
| ENSG0000067890 | 897.6 | -2.1 | 0.31 | -6.77 | 1.28e-11 | 4.56e-09 |
| ENSG0000011121 | 45.1 | 0.5 | 0.45 | 1.11 | 0.26 | 0.54 |
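The padj column holds p-values adjusted for multiple testing. By default, DESeq2's results() uses the Benjamini-Hochberg procedure, applied after independent filtering of low-count genes (filtered genes receive NA). The Python sketch below implements Benjamini-Hochberg on its own, so it matches padj only when no genes were filtered out.

```python
def benjamini_hochberg(pvalues):
    """Benjamini-Hochberg adjusted p-values, returned in the input order."""
    n = len(pvalues)
    order = sorted(range(n), key=lambda i: pvalues[i])
    adjusted = [0.0] * n
    running_min = 1.0
    # Walk from the largest p-value down, keeping the adjusted values monotone.
    for rank in range(n, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, pvalues[i] * n / rank)
        adjusted[i] = running_min
    return adjusted
```

For example, p-values of 0.01, 0.04, 0.03, and 0.005 adjust to 0.02, 0.04, 0.04, and 0.02: each p-value is scaled by n divided by its rank, and the cumulative minimum from the top rank down prevents a smaller p-value from receiving a larger adjusted value.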

Pathway and Gene Set Enrichment Analysis

Application Notes: To gain biological insight from differential expression results, pathway analysis or gene set enrichment analysis (GSEA) is performed.[22][23] Over-representation analysis tests whether pathways or gene sets are significantly over-represented in a list of differentially expressed genes,[22][24] whereas GSEA ranks all genes and tests whether the members of a gene set are concentrated toward the top or bottom of that ranking. Both approaches help reveal the biological processes affected by the experimental conditions.[22][23][24][25]

Experimental Protocol: Gene Set Enrichment Analysis (GSEA)

- Prepare a ranked list of genes. This is typically the list of all genes ranked by a metric from the differential expression analysis (e.g., the 'stat' column from DESeq2).
- Obtain gene sets. These can be downloaded from databases like MSigDB, which contains collections of gene sets based on pathways (e.g., KEGG, Reactome) and other biological knowledge.[26]
- Run GSEA using a suitable tool (e.g., the GSEA software from the Broad Institute or R packages like fgsea or clusterProfiler).
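The statistic at the heart of GSEA is the running-sum enrichment score. The Python sketch below computes it for a single gene set using the weighted form from the original GSEA method (weight 1): walking down the ranked list, a gene in the set raises the running sum in proportion to the absolute value of its ranking metric, and any other gene lowers it by a constant. The ES is the largest deviation from zero. It is a teaching sketch only: the normalized ES, p-values, and FDR q-values in the example results require permutation testing, which GSEA, fgsea, and clusterProfiler handle.

```python
def rank_by_stat(stats):
    """Sort {gene: stat} from highest to lowest; returns (genes, scores)."""
    items = sorted(stats.items(), key=lambda kv: kv[1], reverse=True)
    return [g for g, _ in items], [s for _, s in items]


def enrichment_score(ranked_genes, scores, gene_set, weight=1.0):
    """Running-sum enrichment score for one gene set.

    ranked_genes and scores must be sorted by score, highest first.
    """
    in_set = [g in gene_set for g in ranked_genes]
    n_hits = sum(in_set)
    n_miss = len(ranked_genes) - n_hits
    hit_norm = sum(abs(s) ** weight for s, hit in zip(scores, in_set) if hit)
    if n_hits == 0 or n_miss == 0 or hit_norm == 0:
        raise ValueError("gene set must cover some, but not all, ranked genes")
    running, best = 0.0, 0.0
    for s, hit in zip(scores, in_set):
        step = (abs(s) ** weight / hit_norm) if hit else (-1.0 / n_miss)
        running += step
        if abs(running) > abs(best):
            best = running  # keep the signed maximum deviation
    return best
```

A positive ES means the set is concentrated among up-regulated genes at the top of the ranking, and a negative ES (as for KEGG_CELL_CYCLE in the example) means it is concentrated at the bottom.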

Data Presentation: Example GSEA Results

| Pathway Name | Enrichment Score (ES) | Normalized ES (NES) | p-value | FDR q-val |
|---|---|---|---|---|
| HALLMARK_INFLAMMATORY_RESPONSE | 0.68 | 2.15 | <0.001 | <0.001 |
| KEGG_CELL_CYCLE | -0.45 | -1.78 | 0.005 | 0.012 |
| REACTOME_SIGNALING_BY_GPCR | 0.52 | 1.65 | 0.011 | 0.025 |

Visualizations

Experimental Workflow

References

- 1. Analyzing Transcriptomics Data from GEO Datasets [elucidata.io]

- 2. Preprocessing of Bulk RNA-seq GEO Datasets for Accurate Analysis [elucidata.io]

- 3. Batch downloading FASTQ files using the SRA toolkit, fastq-dump, and Python [erilu.github.io]

- 4. How to download raw sequence data from GEO/SRA [biostars.org]

- 5. Babraham Bioinformatics - SRA downloader - easily download fastq files from GEO and SRA [bioinformatics.babraham.ac.uk]

- 6. Babraham Bioinformatics - FastQC A Quality Control tool for High Throughput Sequence Data [bioinformatics.babraham.ac.uk]

- 7. youtube.com [youtube.com]

- 8. youtube.com [youtube.com]