PHM16

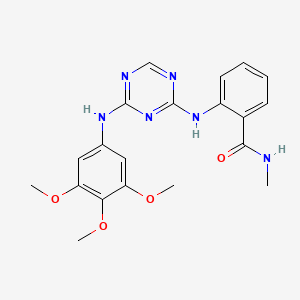

Description

BenchChem offers high-quality PHM16 suitable for many research applications. Different packaging options are available to accommodate customers' requirements. For the price, delivery time, and more detailed information about this compound, please inquire at info@benchchem.com.

Properties

| Property | Value |
|---|---|
| Molecular Formula | C20H22N6O4 |
| Molecular Weight | 410.4 g/mol |
| IUPAC Name | N-methyl-2-[[4-(3,4,5-trimethoxyanilino)-1,3,5-triazin-2-yl]amino]benzamide |
| InChI | InChI=1S/C20H22N6O4/c1-21-18(27)13-7-5-6-8-14(13)25-20-23-11-22-19(26-20)24-12-9-15(28-2)17(30-4)16(10-12)29-3/h5-11H,1-4H3,(H,21,27)(H2,22,23,24,25,26) |
| InChI Key | UQGQBHYGCQYHMP-UHFFFAOYSA-N |
| Canonical SMILES | CNC(=O)C1=CC=CC=C1NC2=NC(=NC=N2)NC3=CC(=C(C(=C3)OC)OC)OC |
| Origin of Product | United States |

Foundational & Exploratory

What are the core principles of prognostics and health management?

An In-depth Technical Guide to the Core Principles of Prognostics and Health Management (PHM)

Introduction to Prognostics and Health Management (PHM)

The PHM framework is a continuous and systematic process that integrates several key activities to provide a comprehensive understanding of a system's health.[7] It leverages data from sensors, operational history, and physics-based models to move beyond simple fault detection to provide actionable insights into future performance.[1]

The Core Principles and Workflow of PHM

The PHM process can be systematically broken down into four primary stages: Data Acquisition, Diagnostics, Prognostics, and Health Management.[8][9] This cyclical process ensures that decisions are based on the most current health status of the system.

Data Acquisition and Processing

The foundation of any PHM system is the ability to acquire and process high-quality data that accurately reflects the system's condition.[8][10] This stage involves the collection of data from various sources and its subsequent manipulation to prepare it for analysis.[9]

Key Methodologies:

- Sensing: This involves the use of various sensors to monitor the physical state of a system.[2] Common sensors include those for vibration, temperature, pressure, acoustic emissions, and electrical signals. For specialized applications, advanced sensors like wireless, low-power nodes may be developed for retrofitting existing systems.[2]
- Data Collection: Data can be acquired in different modes. Static acquisition captures a snapshot of the system at a point in time, while dynamic acquisition records data over a period to observe trends.[11] In complex scenarios, data acquisition may be synchronized with physiological or mechanical cycles, a technique known as gated acquisition.[11]
- Data Pre-processing: Raw sensor data is often noisy and may contain irrelevant information.[12] Pre-processing is a critical step to clean the data and extract meaningful features. This can involve:
  - Noise Filtering: Removing external noise to improve the signal-to-noise ratio.
  - Feature Extraction: Deriving specific metrics or "health indicators" from the raw data that are sensitive to system degradation.
  - Data Fusion: Combining data from multiple sensors to create a more comprehensive and reliable health assessment.

Diagnostics: Fault Detection and Isolation

Diagnostics focuses on identifying that a fault has occurred, isolating its location, and determining its root cause.[7] It answers the question, "What is wrong with the system now?".[13] This is a crucial step that precedes prognostics, as understanding the current fault is necessary to predict its future progression.[14]

Key Methodologies:

- Fault Detection: This is the initial step of identifying an anomaly or deviation from normal operating conditions.[14] It often involves setting thresholds for sensor readings or using statistical models to detect outliers.
- Fault Isolation: Once a fault is detected, this process pinpoints the specific component or subsystem that is failing.
- Fault Identification: This step determines the nature and cause of the fault.
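The threshold-based detection step described above can be sketched as a simple z-score test against a healthy baseline. This is a minimal illustration and not a method taken from the cited sources: the function name `detect_anomalies`, the 3-sigma limit, and the temperature-like sample values are all assumptions chosen for the example.

```python
from statistics import mean, stdev

def detect_anomalies(baseline, readings, z_limit=3.0):
    """Return indices of readings more than z_limit standard deviations
    away from the mean of a healthy baseline (illustrative rule only)."""
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        raise ValueError("baseline has zero variance; cannot compute z-scores")
    return [i for i, x in enumerate(readings) if abs(x - mu) / sigma > z_limit]

# Hypothetical sensor values recorded during normal operation
baseline = [70.1, 69.8, 70.3, 70.0, 69.9, 70.2, 70.1, 69.7]
# New readings to screen; the third one is deliberately far from the baseline
readings = [70.0, 70.4, 73.5, 69.9]

flagged = detect_anomalies(baseline, readings)
print(flagged)
```

In practice the limit would be tuned to balance false alarms against missed detections, and a fixed baseline would likely be replaced by one that accounts for operating conditions.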

Experimental Protocol: Fault Diagnosis using Deep Learning

An example of a diagnostic methodology involves using Convolutional Neural Networks (CNNs) for fault detection in industrial robots, particularly in settings with imbalanced or scarce data.[14]

- Data Acquisition: Collect data from the system under various operating conditions (e.g., normal and multiple fault states).
- Signal Processing: Convert raw time-series data (e.g., vibration signals) into a 2D format like spectrograms or scalograms, which can be used as image inputs for CNNs.
- Model Training: Train a benchmark CNN model (e.g., GoogLeNet, SqueezeNet, VGG16) on the labeled image dataset. The model learns to classify the images corresponding to different health states.
- Validation: Test the trained model on a separate validation dataset to assess its accuracy in diagnosing known faults.[14]
- Novelty Detection: For real-world scenarios, where not all fault conditions are known in advance, advanced techniques may be used to detect "unknown" faults, that is, conditions that were not part of the training data.[8]

The following table summarizes the performance of several benchmark CNN models in a fault diagnosis task on an industrial robot, demonstrating the high accuracy achievable with deep learning approaches.[14]

| Model | Accuracy (%) |
|---|---|
| GoogLeNet | 99.7 |
| SqueezeNet | 99.6 |
| VGG16 | 99.3 |
| AlexNet | 98.0 |
| Inceptionv3 | 97.9 |
| ResNet50 | 95.7 |

Table 1: Performance of CNN benchmark models in fault detection and diagnosis. Data sourced from a study on industrial robot fault diagnosis.[14]

Prognostics: Predicting Remaining Useful Life (RUL)

Prognostics is the predictive element of PHM, focused on forecasting the future health of a system and estimating its Remaining Useful Life (RUL).[4][13] RUL is the predicted time left before a component or system will no longer be able to perform its intended function.[15] This forward-looking capability is what distinguishes PHM from traditional diagnostics.[13]

Methodologies for Prognostics:

- Data-Driven Approaches: These methods use historical run-to-failure data and machine learning or statistical techniques to model degradation patterns.[15] They do not require deep knowledge of the system's physics but depend heavily on the availability of large, relevant datasets.[15]
- Model-Based (Physics-of-Failure) Approaches: These approaches use mathematical models based on the physical principles of how a component degrades and fails.[4] They are often more accurate when the failure mechanisms are well understood but can be complex and computationally expensive to develop.
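To make the idea of RUL estimation concrete, the sketch below fits a straight line to a health indicator and extrapolates it to a failure threshold. It is one of the simplest possible data-driven schemes and is shown only as an illustration: the linear degradation assumption, the helper names `fit_line` and `estimate_rul`, the threshold of 5.0, and the sample data are all hypothetical.

```python
def fit_line(times, values):
    """Ordinary least-squares fit of values = slope * time + intercept."""
    n = len(times)
    t_mean = sum(times) / n
    v_mean = sum(values) / n
    sxx = sum((t - t_mean) ** 2 for t in times)
    sxy = sum((t - t_mean) * (v - v_mean) for t, v in zip(times, values))
    slope = sxy / sxx
    return slope, v_mean - slope * t_mean

def estimate_rul(times, values, failure_threshold):
    """Extrapolate the fitted trend to the failure threshold.

    Returns the time remaining after the last observation, or None when
    the indicator shows no upward trend (no crossing can be predicted).
    """
    slope, intercept = fit_line(times, values)
    if slope <= 0:
        return None
    t_fail = (failure_threshold - intercept) / slope
    return max(0.0, t_fail - times[-1])

# Hypothetical health indicator (e.g., vibration RMS) sampled every 100 hours
hours = [0, 100, 200, 300, 400]
indicator = [1.0, 1.5, 2.0, 2.5, 3.0]

rul = estimate_rul(hours, indicator, failure_threshold=5.0)
print(f"Estimated RUL: {rul:.0f} hours")
```

Real degradation is rarely linear, so practical implementations typically use exponential or physics-informed trend models and report an uncertainty interval alongside the point estimate.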

Health Management: Decision Support

The final principle, Health Management, involves using the information from diagnostics and prognostics to make informed decisions about maintenance, logistics, and operations.[4][7] The goal is to translate the predictive insights into actions that optimize the system's lifecycle.[3]

Key Decision Support Activities:

- Logistics and Supply Chain Management: Using RUL predictions to ensure that spare parts and personnel are available when and where they are needed.[3]
- Operational Adjustments: Modifying how a system is used (e.g., reducing its load) to extend its life until maintenance can be performed.[1]

This decision-making process is often automated or semi-automated through a decision support system, which weighs the RUL prediction against operational constraints and objectives to recommend the optimal course of action.[2][17]

Conclusion

The core principles of Prognostics and Health Management—Data Acquisition, Diagnostics, Prognostics, and Health Management—provide a robust framework for moving from a reactive to a predictive and proactive approach to system maintenance and lifecycle management. By leveraging advanced sensor technologies, data processing, and predictive modeling, PHM offers a powerful methodology to enhance reliability, safety, and efficiency across a wide range of technical and scientific domains. The systematic application of these principles enables organizations to anticipate failures, optimize operations, and make intelligent, data-driven decisions.

References

- 1. researchgate.net [researchgate.net]

- 2. Prognostics and Health Management – Center for Systems Reliability [sandia.gov]

- 3. dau.edu [dau.edu]

- 4. Prognostics and Health Management | Center for Advanced Life Cycle Engineering [calce.umd.edu]

- 5. taylorfrancis.com [taylorfrancis.com]

- 6. routledge.com [routledge.com]

- 7. mathworks.com [mathworks.com]

- 8. mdpi.com [mdpi.com]

- 9. researchgate.net [researchgate.net]

- 10. youtube.com [youtube.com]

- 11. European Nuclear Medicine Guide [nucmed-guide.app]

- 12. A Data Processing Method for CBM for PHM [ijpe-online.com]

- 13. learning.modapto.eu [learning.modapto.eu]

- 14. mdpi.com [mdpi.com]

- 15. Prognostics - Wikipedia [en.wikipedia.org]

- 16. Prognostics and Remaining Useful Life Prediction of Machinery: Advances, Opportunities and Challenges | Journal of Dynamics, Monitoring and Diagnostics [ojs.istp-press.com]

- 17. Improving Patient Engagement Through Patient Decision Support - PMC [pmc.ncbi.nlm.nih.gov]

An In-Depth Technical Guide to Fault Diagnostics and Prognostics in Engineering Systems

Abstract: The increasing complexity of modern engineering systems necessitates robust methodologies for ensuring their reliability and safety. Fault diagnostics and prognostics are critical disciplines that address this need by enabling the detection, isolation, and prediction of failures. This technical guide provides a comprehensive overview of the core principles, methodologies, and applications of fault diagnostics and prognostics. It is intended for researchers and professionals seeking a deeper understanding of these fields, with a focus on data-driven and model-based approaches, signal processing techniques, and the estimation of Remaining Useful Life (RUL). This guide incorporates detailed experimental protocols, quantitative data summaries, and visual representations of key workflows and logical relationships to facilitate a thorough understanding of the subject matter.

Introduction to Fault Diagnostics and Prognostics

Fault diagnostics is the process of detecting and identifying the root cause of a fault after it has occurred in a system.[1] It goes beyond simple fault detection by providing insights into the nature and location of the anomaly.[1] Prognostics, on the other hand, is the prediction of a system's future health state and the estimation of its Remaining Useful Life (RUL) before a failure occurs.[2] Together, diagnostics and prognostics form the cornerstone of Prognostics and Health Management (PHM), a comprehensive maintenance strategy that aims to reduce unscheduled downtime, optimize maintenance schedules, and enhance operational efficiency.[3]

The primary objectives of a robust PHM system are to:

- Diagnose Faults: Determine the root cause, location, and severity of a detected fault.
- Prognosticate Failures: Predict the future degradation of a component or system and estimate its RUL.

Core Methodologies

The methodologies for fault diagnostics and prognostics can be broadly categorized into three main approaches: data-driven, model-based, and hybrid methods.

Data-Driven Approaches

Data-driven methods leverage historical and real-time operational data from sensors to identify patterns and trends indicative of faulty behavior.[5] These approaches do not require an in-depth understanding of the system's physical principles.[5] They are particularly effective for complex systems where developing an accurate physical model is challenging.[6]

Key Techniques:

- Statistical Methods: These methods employ statistical models to monitor deviations from normal operating conditions. Techniques include Statistical Process Control (SPC), which uses control charts to track process parameters, and time-series analysis to model the temporal behavior of sensor data.
- Machine Learning (ML): ML algorithms are increasingly used for their ability to learn complex patterns from large datasets.[7] Supervised learning algorithms like Support Vector Machines (SVM) and Decision Trees are used for fault classification, while unsupervised methods like clustering and anomaly detection can identify novel fault conditions.[8][9] Deep learning models, such as Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs), have shown significant promise in RUL estimation.[10][11]

Model-Based Approaches

Model-based techniques utilize a mathematical representation of the system's physical behavior to detect and diagnose faults.[12] These models are derived from first principles and engineering knowledge.[13] The core idea is to compare the actual system output with the model's predicted output; a significant discrepancy, or "residual," indicates a fault.[14]

Key Techniques:

- Parameter Estimation: This method involves estimating the physical parameters of the system model from sensor data. Deviations in these parameters from their nominal values can indicate a fault.
- State Observers: Observers, such as Kalman filters and Luenberger observers, are used to estimate the internal state of a system. The residual between the observed and estimated states is used for fault detection.
- Parity Space Relations: This approach uses analytical redundancy by checking for consistency among a set of sensor measurements based on the system's mathematical model.
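The residual idea behind these model-based techniques can be illustrated with a one-step-ahead prediction check. The sketch assumes a hypothetical first-order discrete-time model y[k] = a*y[k-1] + b*u[k-1]; the parameters, the injected sensor bias, and the threshold are invented for the example and do not come from the cited sources.

```python
def residuals(measured, inputs, a, b):
    """One-step-ahead residuals r[k] = y[k] - (a*y[k-1] + b*u[k-1]) for k >= 1."""
    return [
        measured[k] - (a * measured[k - 1] + b * inputs[k - 1])
        for k in range(1, len(measured))
    ]

def first_exceedance(res, threshold):
    """Return the sample index k of the first residual above the threshold, else None."""
    for k, r in enumerate(res, start=1):  # residual list starts at k = 1
        if abs(r) > threshold:
            return k
    return None

# Hypothetical model parameters and a constant input signal
a, b = 0.9, 0.1
u = [1.0] * 10

# Simulate the healthy response, then inject a sensor bias of 0.5 from sample 6 on
y = [0.0]
for k in range(1, 10):
    y.append(a * y[-1] + b * u[k - 1])
measured = [v + (0.5 if k >= 6 else 0.0) for k, v in enumerate(y)]

fault_at = first_exceedance(residuals(measured, u, a, b), threshold=0.2)
print(fault_at)
```

With noisy measurements the residual is never exactly zero in the healthy state, so the threshold is usually derived from the residual's statistics under normal operation.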

Signal Processing Techniques

Signal processing is a crucial precursor to both data-driven and model-based methods, as it involves extracting relevant features from raw sensor data.[15] The quality of these extracted features significantly impacts the performance of diagnostic and prognostic algorithms.[16]

Key Techniques:

- Time-Domain Analysis: Involves calculating statistical features from the signal waveform, such as root mean square (RMS), kurtosis, and crest factor.[17]
- Frequency-Domain Analysis: Utilizes techniques like the Fast Fourier Transform (FFT) to analyze the frequency content of the signal.[15] Faults in rotating machinery often manifest as specific frequency components.[15]
- Time-Frequency Analysis: Methods like the wavelet transform and the short-time Fourier transform (STFT) are used to analyze non-stationary signals where the frequency content changes over time.
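The time-domain features named above are straightforward to compute. The following sketch uses only the Python standard library; the function names and the synthetic test signals are assumptions for illustration, and kurtosis is computed in its non-excess (Pearson) form, for which a pure sine wave gives 1.5.

```python
import math

def rms(x):
    """Root mean square of a signal."""
    return math.sqrt(sum(v * v for v in x) / len(x))

def crest_factor(x):
    """Ratio of peak absolute amplitude to RMS."""
    return max(abs(v) for v in x) / rms(x)

def kurtosis(x):
    """Pearson (non-excess) kurtosis: fourth central moment over squared variance."""
    n = len(x)
    m = sum(x) / n
    m2 = sum((v - m) ** 2 for v in x) / n
    m4 = sum((v - m) ** 4 for v in x) / n
    return m4 / (m2 ** 2)

# Synthetic smooth signal: five full sine periods over 1000 samples
sine = [math.sin(2 * math.pi * 5 * i / 1000) for i in range(1000)]

# Same signal with a single hypothetical impact added at sample 100
impulsive = list(sine)
impulsive[100] += 5.0

print(rms(sine), crest_factor(sine), kurtosis(sine))
print(kurtosis(impulsive))
```

Kurtosis and crest factor rise sharply when impacts appear in the waveform, which is why they are often tracked as early indicators of localized defects.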

Quantitative Data Summary

The performance of different diagnostic and prognostic algorithms can be evaluated using various metrics. The following tables summarize the performance of several machine learning and deep learning models on benchmark datasets.

Table 1: Performance Comparison of Machine Learning Algorithms for Fault Diagnosis

| Algorithm | Dataset | Accuracy (%) | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|---|---|
| Support Vector Machine (SVM) | Manufacturing System | 91.62 | - | - | - |
| K-Nearest Neighbors (KNN) | Photovoltaic System | 99.2 | 99.2 | - | - |
| Decision Tree (DT) | Photovoltaic System | - | 98.6 | - | - |
| Random Forest | Electric Motor | High | - | - | - |
| Ensemble Bagged Trees | Photovoltaic System | 92.2 | - | - | - |

Data compiled from multiple sources.[3][7][8][18] Note: "-" indicates that the specific metric was not reported in the cited source.
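For reference, the four classification metrics in the table header can be computed from predicted and true labels as sketched below. The function name `classification_metrics` and the label data are hypothetical and are not drawn from any of the cited studies.

```python
def classification_metrics(y_true, y_pred, positive):
    """Accuracy, precision, recall and F1 for one class treated as positive."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = len(pairs) - tp - fp - fn
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }

# Hypothetical ground truth and classifier output for eight test segments
y_true = ["fault", "fault", "fault", "healthy", "healthy", "healthy", "healthy", "fault"]
y_pred = ["fault", "fault", "healthy", "healthy", "healthy", "fault", "healthy", "fault"]

metrics = classification_metrics(y_true, y_pred, positive="fault")
print(metrics)
```

Reporting precision and recall alongside accuracy matters for fault data, which is often imbalanced: a classifier that always predicts "healthy" can score high accuracy while detecting no faults.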

Table 2: Performance Comparison of Deep Learning Models for RUL Estimation

| Model | Dataset | MAE | MSE | R² Score |
|---|---|---|---|---|
| LSTM | CALCE Battery | - | - | - |
| CNN | CALCE Battery | - | - | - |
| LSTM + Autoencoder | CALCE Battery | 1.29% | 32.12% | - |
| Transformer-based Model | CALCE Battery | - | - | - |

Data from a comparative analysis of deep learning models for RUL estimation.[19][20] Note: "-" indicates that the specific metric was not reported in the cited source.
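The regression metrics used in Table 2 follow standard definitions, sketched here for clarity. The function name `regression_metrics` and the RUL values are made up for illustration and do not reproduce any reported result.

```python
def regression_metrics(y_true, y_pred):
    """Mean absolute error, mean squared error and coefficient of determination."""
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]
    mae = sum(abs(e) for e in errors) / n
    mse = sum(e * e for e in errors) / n
    mean_true = sum(y_true) / n
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    ss_res = sum(e * e for e in errors)
    r2 = 1.0 - ss_res / ss_tot
    return {"mae": mae, "mse": mse, "r2": r2}

# Hypothetical true and predicted RUL values (in cycles)
rul_true = [100, 80, 60, 40]
rul_pred = [90, 85, 60, 30]

scores = regression_metrics(rul_true, rul_pred)
print(scores)
```

MAE and MSE carry the units of the target (or its square), so values expressed as percentages, as in the table, imply that the underlying study normalized its errors in some way.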

Experimental Protocols

This section provides detailed methodologies for key experiments in fault diagnostics and prognostics.

Experimental Protocol for Bearing Fault Diagnosis

This protocol describes a typical experimental setup for collecting vibration data for bearing fault diagnosis.

Objective: To acquire vibration signals from bearings under healthy and various fault conditions to train and validate a fault diagnosis model.

Materials:

- Electric motor (e.g., 2 horsepower induction motor)[21]
- Test bearings (healthy and with seeded faults such as inner race, outer race, and ball defects)
- Accelerometers (e.g., three-axis)
- Data acquisition system
- Shaft and coupling
- Loading mechanism

Procedure:

- Test Rig Assembly: Mount the test bearing on the shaft, which is driven by the electric motor.[21] Apply a radial load to the bearing using the loading mechanism.[22]
- Sensor Installation: Place accelerometers on the motor housing near the test bearing to capture vibration signals in the axial, radial, and tangential directions.
- Data Acquisition:
  - Set the motor to a constant rotational speed (e.g., 2000 rpm).[22]
  - Set the sampling frequency of the data acquisition system to a high rate (e.g., 20 kHz) to capture the high-frequency signatures of bearing faults.[22]
  - Record vibration data for a sufficient duration for each of the following conditions:
    - Healthy bearing
    - Bearing with an inner race fault
    - Bearing with an outer race fault
    - Bearing with a ball fault
- Data Preprocessing and Feature Extraction:
  - Divide the raw vibration signals into smaller segments.
  - Apply signal processing techniques (e.g., FFT, wavelet transform) to extract relevant features from each segment.
- Model Training and Validation:
  - Use the extracted features and corresponding fault labels to train a machine learning classifier (e.g., SVM, KNN).
  - Evaluate the performance of the trained model using a separate test dataset.
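The segmentation and classification steps of this protocol can be sketched with a small k-nearest-neighbors example. Everything here is illustrative: the helper names `segment` and `knn_predict`, the two-dimensional feature vectors (imagined as RMS and kurtosis per segment), and the labels are assumptions, and a real study would use far more data and a held-out test set. The only values taken from the protocol are the example speed of 2000 rpm and sampling rate of 20 kHz.

```python
import math
from collections import Counter

def segment(signal, length):
    """Split a signal into non-overlapping segments, dropping any remainder."""
    return [signal[i:i + length] for i in range(0, len(signal) - length + 1, length)]

def knn_predict(train_features, train_labels, query, k=3):
    """Classify a feature vector by majority vote among its k nearest neighbors."""
    dists = sorted(
        (math.dist(f, query), label) for f, label in zip(train_features, train_labels)
    )
    votes = Counter(label for _, label in dists[:k])
    return votes.most_common(1)[0][0]

# With the protocol's example settings, one shaft revolution spans this many samples
samples_per_rev = 20_000 * 60 / 2000  # 20 kHz sampling at 2000 rpm

# Segmentation demo on a short placeholder signal
segments = segment(list(range(10)), length=4)

# Hypothetical (RMS, kurtosis) features extracted from labeled training segments
train_features = [(0.10, 3.0), (0.12, 3.1), (0.11, 2.9),
                  (0.45, 7.8), (0.50, 8.2), (0.48, 8.0)]
train_labels = ["healthy", "healthy", "healthy",
                "outer_race", "outer_race", "outer_race"]

predicted = knn_predict(train_features, train_labels, query=(0.47, 7.9))
print(samples_per_rev, segments, predicted)
```

Choosing a segment length that covers several shaft revolutions helps each segment contain multiple repetitions of any periodic fault impact; features on very different scales would normally be standardized before computing distances.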

Protocol for Implementing Machine Learning-Based Predictive Maintenance

This protocol outlines the steps for developing and deploying a machine learning model for predictive maintenance.

Objective: To create a predictive model that can estimate the RUL of a component or system based on sensor data.

Procedure:

- Data Acquisition and Preparation:
- Feature Engineering:
  - Extract meaningful features from the raw data that are indicative of the system's health. This may involve time-domain, frequency-domain, or time-frequency analysis.
  - Select the most informative features to reduce the dimensionality of the data and improve model performance.
- Model Selection and Training:
  - Choose an appropriate machine learning algorithm based on the problem (e.g., regression for RUL estimation, classification for fault diagnosis).[9]
- Model Evaluation and Validation:
- Deployment and Monitoring:
  - Deploy the trained model for real-time monitoring and prediction.
  - Continuously monitor the model's performance and retrain it periodically with new data to maintain its accuracy.[23]

Visualizing Workflows and Logical Relationships

The following diagrams, created using the DOT language, illustrate key workflows and logical relationships in fault diagnostics and prognostics.

Data-Driven Fault Diagnosis Workflow

This diagram outlines the typical steps involved in a data-driven approach to fault diagnosis.

Model-Based Fault Prognosis Workflow

This diagram illustrates the logical flow of a model-based approach to fault prognosis.

References

- 1. researchgate.net [researchgate.net]

- 2. scispace.com [scispace.com]

- 3. Performance Optimization of Machine-Learning Algorithms for Fault Detection and Diagnosis in PV Systems | MDPI [mdpi.com]

- 4. Predictive Maintenance Machine Learning: A Practical Guide | Neural Concept [neuralconcept.com]

- 5. re.public.polimi.it [re.public.polimi.it]

- 6. The Fault Diagnosis Model of FMS Workflow Based on Adaptive Weighted Fuzzy Petri Net | Scientific.Net [scientific.net]

- 7. Machine Learning approach for Predictive Maintenance in Industry 4.0 | IEEE Conference Publication | IEEE Xplore [ieeexplore.ieee.org]

- 8. mdpi.com [mdpi.com]

- 9. How to Implement Predictive Maintenance Using Machine Learning? [neurosys.com]

- 10. researchgate.net [researchgate.net]

- 11. Remaining Useful Life Prediction Based on Deep Learning: A Survey - PMC [pmc.ncbi.nlm.nih.gov]

- 12. mdpi.com [mdpi.com]

- 13. Prognostic and Health Management of Critical Aircraft Systems and Components: An Overview - PMC [pmc.ncbi.nlm.nih.gov]

- 14. Data-Driven Fault Diagnosis for Electric Drives: A Review - PMC [pmc.ncbi.nlm.nih.gov]

- 15. mdpi.com [mdpi.com]

- 16. Machine Learning Predictive Maintenance: How to Implement It? | Lemberg Solutions [lembergsolutions.com]

- 17. arxiv.org [arxiv.org]

- 18. Comparative analysis of the performance of supervised learning algorithms for photovoltaic system fault diagnosis | Science and Technology for Energy Transition (STET) [stet-review.org]

- 19. kuey.net [kuey.net]

- 20. kuey.net [kuey.net]

- 21. mdpi.com [mdpi.com]

- 22. Enhanced Rolling Bearing Fault Diagnosis Combining Novel Fluctuation Entropy Guided-VMD with Neighborhood Statistical Model | MDPI [mdpi.com]

- 23. oxmaint.com [oxmaint.com]

Key concepts in condition-based maintenance for industrial machinery.

An In-depth Technical Guide to Condition-Based Maintenance for Industrial Machinery

Introduction: The Evolution of Maintenance Philosophies

In the landscape of industrial operations, maintenance strategies have evolved significantly, moving from reactive repairs to data-driven, proactive interventions. The primary goal is to enhance equipment reliability, improve safety, and optimize operational costs.[1][2] Condition-Based Maintenance (CBM) represents a sophisticated approach that leverages real-time asset health data to guide maintenance decisions.[1][3][4][5] This strategy contrasts sharply with traditional methods.

Table 1: Comparison of Core Maintenance Philosophies

| Maintenance Strategy | Core Principle | Pros | Cons |
|---|---|---|---|
| Reactive Maintenance | "Run-to-failure." Action is taken only after a breakdown occurs. | No initial cost. | High unplanned downtime, expensive emergency repairs, potential for catastrophic failure. |
| Preventive Maintenance | "Calendar-based." Maintenance is performed at predetermined intervals (time or usage).[1] | Reduces likelihood of failure compared to reactive. | Can lead to unnecessary maintenance, risk of introducing new faults during service.[1][6] |
| Condition-Based Maintenance (CBM) | "Monitor and act." Maintenance is triggered by the actual condition of the asset.[1][3][4][5] | Optimizes scheduling, prolongs asset life, reduces costs by avoiding unnecessary work.[1][4][5] | Requires initial investment in monitoring technology and expertise.[1] |
| Predictive Maintenance (PdM) | "Predict and prevent." Uses historical and real-time data with advanced analytics to forecast future failures.[1] | Maximizes uptime, minimizes maintenance costs by intervening at the optimal moment.[4] | Highest initial investment, requires significant data and analytical capabilities. |

CBM and Predictive Maintenance (PdM) are closely related, with CBM forming the foundation for PdM. CBM answers the question, "Is something wrong?", while PdM seeks to answer, "When will it go wrong?".[7] This guide focuses on the core tenets of CBM, from data acquisition to decision-making, providing a technical framework for its implementation.

The Core Workflow of Condition-Based Maintenance

The CBM process is a systematic, data-driven cycle designed to transform raw sensor data into actionable maintenance intelligence. This workflow is standardized by ISO 13374, which outlines a modular architecture for condition monitoring and diagnostics systems.[8][9] The key stages involve acquiring data related to system health, processing that data to extract meaningful features, and making informed maintenance decisions.[6]

Caption: A logical flow diagram of the CBM process based on the ISO 13374 standard.

The process begins with Data Acquisition from sensors and ends with Advisory Generation that guides maintenance personnel.[8][9] This structured approach ensures that maintenance activities are directly linked to the evidenced health of the machinery.

Prognostics and Health Management (PHM)

Prognostics and Health Management (PHM) is a comprehensive engineering discipline that provides the analytical power behind advanced CBM and predictive maintenance.[7][10] Its purpose is to assess the current health of a component and predict its remaining useful life (RUL).[11] PHM integrates diagnostics (detecting and identifying faults) with prognostics (predicting fault progression).[10][12]

Caption: Relationship between Diagnostics, Prognostics, and Decision Support in PHM.

By implementing PHM, organizations can move beyond simply detecting a fault to understanding its trajectory, enabling just-in-time maintenance that maximizes component life while minimizing the risk of unexpected failure.[12]

Key Condition Monitoring Techniques: Methodologies

The efficacy of a CBM program hinges on the quality and relevance of the data collected. Various monitoring techniques are employed to track different physical parameters, each providing unique insights into machinery health.[13][14]

Table 2: Mapping of Monitoring Techniques to Common Industrial Faults

| Monitoring Technique | Detectable Faults | Applicable Machinery |
|---|---|---|
| Vibration Analysis | Imbalance, misalignment, bearing wear, gear tooth defects, looseness.[13] | Rotating machinery: motors, pumps, compressors, turbines, gearboxes.[13][15] |
| Infrared Thermography | Overheating in electrical connections, bearing friction, insulation breakdown, cooling issues.[14] | Electrical cabinets, motors, bearings, steam systems.[15] |
| Oil Analysis | Component wear (via particle analysis), fluid contamination (water, coolant), lubricant degradation.[16] | Engines, gearboxes, hydraulic systems, transformers. |
| Ultrasonic Analysis | High-frequency sounds from bearing friction, pressure/vacuum leaks, electrical arcing. | Bearings, steam traps, compressed air systems, electrical panels. |
| Electrical Monitoring | Motor winding faults, rotor bar issues, power quality problems.[15] | Electric motors, generators, transformers.[14] |

Experimental Protocol: Vibration Analysis

Vibration analysis is a cornerstone of CBM for rotating machinery, based on the principle that all machines produce a characteristic vibration "signature" during normal operation.[13][15] Deviations from this signature indicate developing faults.

- Principle: Measures the oscillation of machine components. Changes in vibration amplitude or frequency directly correlate to changes in the machine's dynamic forces, which are altered by faults like imbalance or bearing wear.[15]
- Instrumentation: Accelerometers are the most common sensors. They are mounted directly onto the machine's bearing housings or other critical points to convert mechanical vibration into an electrical signal.
- Data Acquisition:
  - Sensor Placement: Sensors are placed at strategic locations, typically in the horizontal, vertical, and axial directions, to capture the full range of motion.
  - Data Collection: Data is captured as a time-domain waveform. The sampling rate must be more than twice the highest frequency of interest, as required by the Nyquist sampling theorem.
- Data Analysis:
  - Time Waveform Analysis: The raw signal is observed to detect transient events like impacts or rubbing.
  - Spectral Analysis (FFT): The Fast Fourier Transform (FFT) algorithm is applied to the time waveform to convert it into the frequency domain. This spectrum separates the overall vibration into its constituent frequencies, allowing for the precise identification of faults, as different faults manifest at specific frequencies.[16]
- Interpretation: Specific frequencies in the spectrum are linked to specific components. For example, a high peak at 1x the rotational speed often indicates imbalance, while specific higher frequencies can be matched to the unique geometric properties of a bearing to diagnose inner or outer race defects.
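The link between bearing geometry and fault frequencies mentioned above is usually expressed through the classical kinematic defect-frequency formulas, sketched below. They assume a stationary outer race, a rotating inner race, and no rolling-element slip. The bearing dimensions and the shaft speed used in the example are hypothetical and should be replaced with data for the actual bearing.

```python
import math

def bearing_fault_frequencies(shaft_hz, n_balls, ball_d, pitch_d, contact_angle_deg=0.0):
    """Kinematic defect frequencies in Hz (outer race fixed, no slip assumed).

    ftf  : fundamental train (cage) frequency
    bpfo : ball pass frequency, outer race
    bpfi : ball pass frequency, inner race
    bsf  : ball spin frequency
    """
    ratio = (ball_d / pitch_d) * math.cos(math.radians(contact_angle_deg))
    return {
        "ftf": 0.5 * shaft_hz * (1 - ratio),
        "bpfo": 0.5 * n_balls * shaft_hz * (1 - ratio),
        "bpfi": 0.5 * n_balls * shaft_hz * (1 + ratio),
        "bsf": 0.5 * (pitch_d / ball_d) * shaft_hz * (1 - ratio ** 2),
    }

def nyquist_ok(sampling_hz, max_freq_hz):
    """True when the sampling rate exceeds twice the highest frequency of interest."""
    return sampling_hz > 2 * max_freq_hz

# Hypothetical operating point and geometry: 2000 rpm shaft, 9 balls,
# 7.94 mm ball diameter, 39.04 mm pitch diameter, zero contact angle
shaft_hz = 2000 / 60
freqs = bearing_fault_frequencies(shaft_hz, n_balls=9, ball_d=7.94, pitch_d=39.04)

for name, value in freqs.items():
    print(f"{name}: {value:.1f} Hz")

# Check that a 20 kHz sampling rate covers ten harmonics of the inner race frequency
print(nyquist_ok(20_000, 10 * freqs["bpfi"]))
```

Measured peaks typically sit slightly below these values because of slip, so analysts usually search a small band around each calculated frequency and its harmonics.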

Experimental Protocol: Oil Analysis

Oil analysis provides a deep insight into the internal condition of machinery by examining the properties of its lubricating oil.[15][16] The lubricant acts as a diagnostic medium, carrying evidence of wear and contamination.

- Principle: Assesses the health of both the machinery and the lubricant itself by analyzing the physical and chemical properties of the oil and identifying the type and quantity of suspended particles.[16]
- Instrumentation: Laboratory-based instruments such as spectrometers, viscometers, and particle counters are used.
- Data Acquisition (Sampling):
  - A representative oil sample (typically 100-200 mL) is drawn from the machine while it is operating or shortly after shutdown to ensure particles are still in suspension.
  - Samples are taken from a consistent point in the system (e.g., a dedicated sample valve) to ensure data trendability.
  - The sample container must be clean to avoid external contamination.
- Data Analysis:
  - Spectrometric Analysis: Techniques like Inductively Coupled Plasma (ICP) spectrometry measure the concentration of various metallic elements (e.g., iron, copper, aluminum) in parts per million (PPM), indicating which specific components are wearing.
  - Viscosity Measurement: Checks if the oil's viscosity is within the specified range. Significant changes can indicate oxidation, thermal breakdown, or contamination.
  - Particle Counting (e.g., ISO 4406): Quantifies the number of particles in different size ranges to assess the overall cleanliness of the fluid.
- Interpretation: High levels of a specific metal can pinpoint the wearing component (e.g., high iron suggests gear or bearing wear). The presence of contaminants like water or silicon (dirt) indicates seal failure or improper filtration, which can accelerate wear.

Implementation and Benefits

Adopting a CBM strategy offers substantial advantages by transforming maintenance from a cost center into a value-added activity.[1] The primary goals are to predict and prevent equipment failures, enhance reliability, optimize resources, and improve safety.[1]

- Reduced Downtime: By identifying potential issues before they escalate, CBM minimizes unplanned downtime and production disruptions.[4][17]
- Cost Savings: Maintenance is performed only when necessary, which reduces labor costs, minimizes the consumption of spare parts, and lowers the risk of expensive secondary damage from catastrophic failures.[4][5]
- Extended Asset Life: By monitoring and maintaining optimal operating conditions, CBM reduces stress and premature wear on machinery, extending its operational lifespan.[1][5][17]
- Improved Safety: Proactively addressing mechanical issues helps prevent dangerous equipment failures that could pose a risk to personnel.[1][4][5]

Despite these benefits, implementation requires an initial investment in sensor technology, data acquisition systems, and personnel training.[1] However, for critical assets where failure carries significant financial or safety risks, the return on investment is typically high.[3][17]

References

- 1. Condition-Based Maintenance Explained: A Data-Driven Approach [voltainsite.com]

- 2. tsapps.nist.gov [tsapps.nist.gov]

- 3. Condition-based Maintenance (CBM) Explained | Reliable Plant [reliableplant.com]

- 4. fiixsoftware.com [fiixsoftware.com]

- 5. What is Condition-based Maintenance? | IBM [ibm.com]

- 6. files.core.ac.uk [files.core.ac.uk]

- 7. Prognostics and Health Management (PHM) - MATLAB & Simulink [it.mathworks.com]

- 8. cdn.standards.iteh.ai [cdn.standards.iteh.ai]

- 9. researchgate.net [researchgate.net]

- 10. Prognostics and Health Management of Industrial Assets: Current Progress and Road Ahead - PMC [pmc.ncbi.nlm.nih.gov]

- 11. research.polyu.edu.hk [research.polyu.edu.hk]

- 12. sal.aalto.fi [sal.aalto.fi]

- 13. clickmaint.com [clickmaint.com]

- 14. samotics.com [samotics.com]

- 15. advancedtech.com [advancedtech.com]

- 16. 15 Condition-Based Monitoring Techniques (2025) | Inframatrix [inframatrix.com.my]

- 17. The Complete Condition-Based Maintenance (CBM) Guide - FieldEx [fieldex.com]

A Technical Guide to Remaining Useful Life (RUL) Prediction Models for Researchers, Scientists, and Drug Development Professionals

An In-depth Technical Guide on the Core Principles, Methodologies, and Applications of Remaining Useful Life (RUL) Prediction Models.

This guide provides a comprehensive overview of Remaining Useful Life (RUL) prediction models, tailored for an audience of researchers, scientists, and professionals in drug development and pharmaceutical manufacturing. The principles of prognostics and health management (PHM), central to RUL prediction, offer a powerful paradigm for enhancing the reliability and efficiency of critical equipment in laboratory and manufacturing environments. By anticipating equipment failures, researchers can safeguard valuable experiments, ensure data integrity, and maintain the stringent quality control required in pharmaceutical production.[1][2][3][4]

Core Concepts of Remaining Useful Life (RUL) Prediction

Remaining Useful Life (RUL) is the estimated time an asset can operate before it requires repair or replacement.[5][6] RUL prediction is a key component of prognostics and health management (PHM), a discipline focused on predicting the future health state of a system.[6][7] In the context of drug development and pharmaceutical manufacturing, this translates to predicting the failure of critical equipment such as bioreactors, chromatography systems, and lyophilizers. Accurate RUL prediction enables a shift from reactive or preventive maintenance to a more efficient predictive maintenance strategy, minimizing downtime and ensuring operational consistency.[1][2][3]

The core workflow of developing an RUL prediction model involves several key stages, from data acquisition to model deployment.

Methodologies for RUL Prediction

RUL prediction models can be broadly categorized into three main approaches: model-based, data-driven, and hybrid models.[8][9]

Model-Based Approaches

Model-based approaches, also known as physics-based models, utilize a deep understanding of the system's physical failure mechanisms to predict its RUL.[5][8] These models are based on mathematical equations that describe the degradation process.

Advantages:

- Can be highly accurate if the underlying physics of failure are well understood.

- Require less historical data for training compared to data-driven models.

Disadvantages:

- Developing an accurate physical model can be complex and time-consuming.

- The model may not be generalizable to other systems with different failure modes.

A conceptual representation of a model-based approach involves mapping physical principles to a degradation model.
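As a minimal sketch of this mapping, assume a hypothetical exponential degradation law h(t) = h_now * exp(rate * t) and a fixed failure threshold. The function name, the law itself, and the numbers are illustrative assumptions, not taken from any cited model; a real physics-based model would derive the law and its parameters from the failure mechanism.

```python
import math

def rul_exponential(h_now: float, rate: float, threshold: float) -> float:
    """Time until h(t) = h_now * exp(rate * t) reaches the failure threshold.

    h_now     : current value of the (hypothetical) degradation indicator
    rate      : assumed exponential growth rate per unit time (> 0)
    threshold : indicator value that defines failure
    """
    if h_now <= 0 or rate <= 0:
        raise ValueError("h_now and rate must be positive")
    if h_now >= threshold:
        return 0.0  # already at or beyond the failure threshold
    # Solve h_now * exp(rate * t) = threshold for t.
    return math.log(threshold / h_now) / rate

# Illustrative values only: indicator at 0.2, failure at 1.0, rate 0.01 per hour.
print(round(rul_exponential(0.2, 0.01, 1.0), 1))
```

The closed-form inversion is only possible because the assumed law is simple; more realistic degradation equations usually need numerical root finding.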

Data-Driven Approaches

Data-driven methods use historical data from sensors and operational logs to learn the degradation patterns of a system and predict its RUL.[5][9] These approaches have gained significant popularity with the rise of machine learning and deep learning.

Common Data-Driven Models:

- Artificial Neural Networks (ANNs): Including Multi-Layer Perceptrons (MLPs), ANNs can model complex non-linear relationships between sensor data and RUL.[10][11]

- Recurrent Neural Networks (RNNs): RNNs, particularly Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) networks, are well suited to time-series data because they capture temporal dependencies in the degradation process.

- Convolutional Neural Networks (CNNs): CNNs can automatically extract hierarchical features from sensor data, which is beneficial for RUL prediction.[12]

- Support Vector Machines (SVM): A classification and regression technique that can be used for RUL estimation.

- Gaussian Process Regression (GPR): A probabilistic model that provides a distribution over the possible RUL values, capturing uncertainty in the prediction.

Advantages:

- Do not require in-depth knowledge of the system's physics.

- Can be applied to a wide range of systems.

Disadvantages:

- Require a large amount of historical run-to-failure data.

- The performance is highly dependent on the quality and quantity of the data.

Hybrid Approaches

Hybrid models combine elements of both model-based and data-driven approaches to leverage their respective strengths.[8] For instance, a physical model might be used to estimate an unobservable degradation state, which is then used as an input to a data-driven model for RUL prediction.

Quantitative Performance of RUL Prediction Models

The performance of RUL prediction models is typically evaluated using metrics such as Mean Absolute Error (MAE), Root Mean Squared Error (RMSE), and R-squared (R²). The following tables summarize the performance of various models on the well-established NASA C-MAPSS and CALCE battery datasets.

Table 1: Performance of RUL Prediction Models on NASA C-MAPSS Dataset (Turbofan Engines)

| Model | RMSE | MAE | R² | Reference |
|---|---|---|---|---|
| TCN-BiLSTM-Attention | 12.33 (FD001) | - | - | [13] |
| LSTM | 11.76 (FD003) | - | - | [14] |
| PSO-LSTM | - | 0.67% | 0.9298 | [12] |
| Hybrid (EEMD & KAN-LSTM) | - | - | > 0.96 | [3] |

Table 2: Performance of RUL Prediction Models on CALCE Dataset (Li-ion Batteries)

| Model | RUL Error (cycles) | R² | Reference |
|---|---|---|---|
| Hybrid (EEMD & KAN-LSTM) | 7-15 | > 0.91 | [3] |
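For reference, the three metrics named above can be computed as in the following sketch. It uses the standard textbook definitions; the RUL values in the example are made up for illustration and are not drawn from C-MAPSS or CALCE.

```python
import math

def mae(y_true, y_pred):
    """Mean Absolute Error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

def rmse(y_true, y_pred):
    """Root Mean Squared Error."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))

def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot

# Made-up true and predicted RUL values (in cycles), for illustration only.
true_rul = [100, 80, 60, 40]
pred_rul = [90, 85, 60, 45]
print(mae(true_rul, pred_rul), rmse(true_rul, pred_rul), r_squared(true_rul, pred_rul))
```

RMSE penalizes large errors more heavily than MAE, which is one reason the two are often reported together.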

Experimental Protocols for RUL Model Validation

A robust experimental protocol is crucial for validating the performance of RUL prediction models. The following outlines a generalized protocol based on the methodologies used for the NASA C-MAPSS and CALCE battery datasets.

Data Acquisition and Preprocessing

- Data Source: Utilize run-to-failure data from a fleet of similar assets. For instance, the C-MAPSS dataset contains simulated data for turbofan engines, while the CALCE dataset provides experimental data for Li-ion batteries.[15][16][17]

- Sensor Data: Collect multivariate time-series data from various sensors monitoring key operational parameters (e.g., temperature, pressure, voltage, current).[16]

- Data Cleaning: Handle missing values and remove noise from the sensor signals using techniques like moving averages or Kalman filters.

- Normalization: Scale the sensor data to a common range (e.g., 0 to 1) to ensure that all features contribute equally to the model's training.
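The cleaning and normalization steps above can be sketched as follows. This is a minimal illustration using a trailing moving average and min-max scaling; the function names are hypothetical, and a Kalman filter or another scaler could be substituted.

```python
def moving_average(signal, window):
    """Trailing moving average; the first window-1 outputs average fewer samples."""
    smoothed = []
    running = 0.0
    for i, x in enumerate(signal):
        running += x
        if i >= window:
            running -= signal[i - window]  # drop the sample that left the window
        smoothed.append(running / min(i + 1, window))
    return smoothed

def min_max_scale(values, lo=None, hi=None):
    """Scale values to [0, 1]; pass lo/hi to reuse bounds from another data set."""
    lo = min(values) if lo is None else lo
    hi = max(values) if hi is None else hi
    if hi == lo:
        return [0.0 for _ in values]  # constant signal: no spread to scale
    return [(v - lo) / (hi - lo) for v in values]

print(moving_average([1, 2, 3, 4], window=2))
print(min_max_scale([10, 20, 30]))
```

A reasonable convention, assumed here rather than stated in the source, is to compute `lo` and `hi` on the training data only and reuse them for validation and test data.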

Feature Engineering and Selection

- Feature Extraction: Create meaningful features from the raw sensor data. This can include statistical features (e.g., mean, standard deviation, skewness, kurtosis) over a time window, or frequency-domain features from techniques like Fast Fourier Transform (FFT).

- Feature Selection: Select the most relevant features that are highly correlated with the degradation process. This can be done using techniques like correlation analysis, principal component analysis (PCA), or more advanced methods like recursive feature elimination.[8][18]
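The statistical features mentioned above can be computed per time window as in this sketch. It uses population (biased) moments and non-excess kurtosis; the function names, window size, and step are illustrative assumptions.

```python
import math

def window_features(window):
    """Mean, standard deviation, skewness, and kurtosis of one window."""
    n = len(window)
    mean = sum(window) / n
    var = sum((x - mean) ** 2 for x in window) / n  # population variance
    std = math.sqrt(var)
    if std == 0:
        skew, kurt = 0.0, 0.0  # undefined for a constant window; 0.0 by convention here
    else:
        skew = sum((x - mean) ** 3 for x in window) / n / std ** 3
        kurt = sum((x - mean) ** 4 for x in window) / n / std ** 4
    return {"mean": mean, "std": std, "skewness": skew, "kurtosis": kurt}

def sliding_features(signal, size, step):
    """Apply window_features to every full window of `size` samples."""
    return [window_features(signal[i:i + size])
            for i in range(0, len(signal) - size + 1, step)]

features = sliding_features(list(range(10)), size=4, step=2)
print(len(features), features[0])
```

Each dictionary becomes one feature row; feature selection then operates on these rows rather than on the raw samples.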

Model Training and Validation

- Data Splitting: Divide the dataset into training, validation, and testing sets. The training set is used to train the model, the validation set to tune hyperparameters, and the testing set to evaluate the final model's performance on unseen data.

- Model Training: Train the selected RUL prediction model on the training data.

- Hyperparameter Tuning: Optimize the model's hyperparameters (e.g., learning rate, number of layers in a neural network) using the validation set.

- Performance Evaluation: Evaluate the trained model on the test set using metrics like RMSE, MAE, and R².
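For run-to-failure data, one common precaution (an assumption here, not something the cited protocols prescribe) is to split by asset rather than by individual row, so that cycles from the same unit never appear in more than one set. A minimal sketch, with hypothetical names and split fractions:

```python
import random

def split_units(unit_ids, train=0.7, val=0.15, seed=0):
    """Partition distinct asset IDs into train, validation, and test sets."""
    units = sorted(set(unit_ids))
    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    rng.shuffle(units)
    n_train = round(len(units) * train)
    n_val = round(len(units) * val)
    train_units = set(units[:n_train])
    val_units = set(units[n_train:n_train + n_val])
    test_units = set(units[n_train + n_val:])
    return train_units, val_units, test_units

# Illustrative: 20 units, each contributing several rows of sensor data.
row_unit_ids = [u for u in range(1, 21) for _ in range(5)]
train_u, val_u, test_u = split_units(row_unit_ids)
train_rows = [i for i, u in enumerate(row_unit_ids) if u in train_u]
print(len(train_u), len(val_u), len(test_u), len(train_rows))
```

Rows are then assigned to a set according to the unit they belong to, as shown for `train_rows`.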

The experimental workflow can be visualized as follows:

Degradation Pathway Modeling

In the context of physical assets, a "signaling pathway" can be conceptualized as a "degradation pathway," which illustrates the chain of events leading from an initial fault to system failure. Understanding these pathways is crucial for selecting the right sensors and features for RUL prediction.

For example, in a Li-ion battery, a common degradation pathway involves the growth of the Solid Electrolyte Interphase (SEI) layer, which leads to an increase in internal resistance and a decrease in capacity.

For rotating machinery, such as a centrifuge in a lab, a degradation pathway might start with a bearing fault.

References

- 1. llumin.com [llumin.com]

- 2. All About Pharmaceutical Predictive Maintenance | Nanoprecise [nanoprecise.io]

- 3. Predictive Maintenance for Pharma | AspenTech [aspentech.com]

- 4. Pi Pharma Intelligence | Integrating AI for Predictive Maintenance in Pharmaceutical Manufacturing [pipharmaintelligence.com]

- 5. mdpi.com [mdpi.com]

- 6. Prognostics and Health Management (PHM) - MATLAB & Simulink [mathworks.com]

- 7. dau.edu [dau.edu]

- 8. Remaining Useful Life Estimation for Predictive Maintenance Using Feature Engineering | IEEE Conference Publication | IEEE Xplore [ieeexplore.ieee.org]

- 9. mdpi.com [mdpi.com]

- 10. mdpi.com [mdpi.com]

- 11. researchgate.net [researchgate.net]

- 12. icimpe2024.sciencesconf.org [icimpe2024.sciencesconf.org]

- 13. mdpi.com [mdpi.com]

- 14. researchgate.net [researchgate.net]

- 15. Comparison of Open Datasets for Lithium-ion Battery Testing | by BatteryBits Editors | BatteryBits (Volta Foundation) | Medium [medium.com]

- 16. Predicting Jet Engine Failures with NASA’s C-MAPSS Dataset and LSTM | by Mihai Timoficiuc | Oct, 2025 | Medium [medium.com]

- 17. kaggle.com [kaggle.com]

- 18. Optimizing Remaining Useful Life Prediction: A Feature Engineering Approach | IEEE Conference Publication | IEEE Xplore [ieeexplore.ieee.org]

The Convergence of Data and Diagnostics: A Technical Guide to Data-Driven Prognostics and Health Management

For Researchers, Scientists, and Drug Development Professionals

In the intricate landscape of modern engineering and industrial systems, the ability to predict and prevent failures is paramount. Prognostics and Health Management (PHM) has emerged as a critical discipline to ensure the reliability, safety, and operational efficiency of complex machinery.[1][2] At the heart of this discipline lies a powerful and evolving paradigm: data-driven approaches. By harnessing the vast amounts of data generated by sensors and operational logs, these methods employ statistical analysis and machine learning to detect anomalies, diagnose faults, and predict the remaining useful life (RUL) of components and systems.[1][3][4] This technical guide provides an in-depth exploration of the core principles, methodologies, and applications of data-driven PHM.

The Data-Driven PHM Framework: An Overview

Data-driven PHM methodologies are broadly categorized into statistical and machine learning approaches.[1] These techniques are prized for their ability to model complex, non-linear degradation patterns without requiring an in-depth understanding of the underlying physics of failure.[5][6] However, their efficacy is intrinsically linked to the availability and quality of historical data.[1]

The typical workflow of a data-driven PHM system can be visualized as a sequential process, starting from data acquisition and culminating in actionable insights for maintenance and operational decision-making.

References

- 1. mdpi.com [mdpi.com]

- 2. Deep Learning Techniques in Intelligent Fault Diagnosis and Prognosis for Industrial Systems: A Review [mdpi.com]

- 3. asmedigitalcollection.asme.org [asmedigitalcollection.asme.org]

- 4. Advancing aircraft engine RUL predictions: an interpretable integrated approach of feature engineering and aggregated feature importance - PMC [pmc.ncbi.nlm.nih.gov]

- 5. Deep learning and similarity-based models for predicting turbofan engine remaining useful life: insights from the CMAPSS dataset | The Aeronautical Journal | Cambridge Core [cambridge.org]

- 6. arxiv.org [arxiv.org]

An In-depth Technical Guide to Physics-Based Models for Failure Prediction in Mechanical Systems

Audience: Researchers, Scientists, and Engineers in Mechanical and Materials Science

Executive Summary

The increasing demand for reliability and safety in mechanical systems necessitates a deep understanding and accurate prediction of failure mechanisms. Physics-based models, grounded in the fundamental principles of mechanics and materials science, offer a robust framework for forecasting the initiation and propagation of failures. This technical guide provides a comprehensive overview of the core physics-based models employed for failure prediction in mechanical systems, with a focus on fatigue, fracture mechanics, and creep. It details the theoretical underpinnings of these models, outlines the experimental protocols for their validation, and presents a quantitative comparison of their predictive performance. Furthermore, this guide illustrates key workflows and relationships using logical diagrams to enhance comprehension for researchers and professionals in the field.

Introduction to Physics-of-Failure (PoF)

The Physics-of-Failure (PoF) approach is a science-based methodology that utilizes knowledge of failure mechanisms to predict the reliability of a product.[1][2] It focuses on understanding the physical, chemical, mechanical, and thermal processes that lead to material degradation and eventual failure.[1] Unlike purely data-driven or statistical methods, PoF models are built upon first principles, relating the applied stresses and material properties to the time-to-failure.[3] This approach allows for more accurate predictions, especially in scenarios with limited historical data, and provides a deeper understanding of the root causes of failure.[1][2]

The primary failure mechanisms in mechanical systems include fatigue, fracture, and creep. Each of these phenomena is described by a distinct set of physics-based models.

Fatigue Failure Prediction Models

Fatigue is the progressive and localized structural damage that occurs when a material is subjected to cyclic loading.[4] The prediction of fatigue life is crucial in the design of components subjected to repeated operational stresses.

Stress-Life (S-N) and Strain-Life (ε-N) Models

The Stress-Life (S-N) approach, the earliest fatigue model, relates the nominal stress amplitude (S) to the number of cycles to failure (N).[4] It is most suitable for high-cycle fatigue (HCF) regimes where plastic deformation is minimal.

The Strain-Life (ε-N) approach provides a more detailed description of fatigue behavior, particularly in the low-cycle fatigue (LCF) regime where plastic strain is significant. This model separately considers the elastic and plastic strain components.[5]
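For orientation, the two approaches are commonly written in the following standard textbook forms (Basquin for stress-life, Coffin-Manson-Basquin for strain-life). The symbols are not defined in the text above and are introduced here as assumptions: σ_a is the stress amplitude, Δε/2 the total strain amplitude, N_f the cycles to failure, E the elastic modulus, σ_f' and b the fatigue strength coefficient and exponent, and ε_f' and c the fatigue ductility coefficient and exponent.

```latex
% Stress-life (Basquin) relation
\sigma_a = \sigma_f' \,(2N_f)^{b}

% Strain-life relation: elastic term plus plastic term
\frac{\Delta\varepsilon}{2}
  = \underbrace{\frac{\sigma_f'}{E}\,(2N_f)^{b}}_{\text{elastic}}
  + \underbrace{\varepsilon_f'\,(2N_f)^{c}}_{\text{plastic}}
```

The plastic term dominates at short lives (LCF) and the elastic term at long lives (HCF), which is why the strain-life form covers both regimes.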

Linear Elastic Fracture Mechanics (LEFM) Models

LEFM-based models assume that fatigue failure is a consequence of the propagation of pre-existing cracks or defects.[6][7] The central parameter in LEFM is the stress intensity factor (K), which quantifies the stress state at the crack tip.[7]

The Paris Law is a fundamental LEFM model that relates the fatigue crack growth rate (da/dN) to the stress intensity factor range (ΔK):

da/dN = C(ΔK)^m

where C and m are material constants.[6]
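A short sketch of how the Paris Law is typically used to estimate crack growth life follows. It assumes ΔK = Y·Δσ·√(πa) with a constant geometry factor Y, which is a simplification; the material constants and loads in the example are illustrative, not values from the cited sources.

```python
import math

def paris_cycles_closed_form(a0, af, C, m, delta_sigma, Y=1.0):
    """Cycles to grow a crack from a0 to af, assuming constant Y and m != 2."""
    if m == 2:
        raise ValueError("closed form used here requires m != 2")
    k = C * (Y * delta_sigma * math.sqrt(math.pi)) ** m
    p = 1.0 - m / 2.0
    # N = integral of da / (k * a^(m/2)) from a0 to af
    return (af ** p - a0 ** p) / (k * p)

def paris_cycles_numeric(a0, af, C, m, delta_sigma, Y=1.0, steps=10000):
    """Same integral evaluated with the midpoint rule (works for any m)."""
    da = (af - a0) / steps
    cycles = 0.0
    for i in range(steps):
        a = a0 + (i + 0.5) * da
        delta_k = Y * delta_sigma * math.sqrt(math.pi * a)
        cycles += da / (C * delta_k ** m)
    return cycles

# Illustrative inputs: crack length in m, stress range in MPa,
# C in (m/cycle)/(MPa*sqrt(m))^m.
n_closed = paris_cycles_closed_form(1e-3, 1e-2, C=1e-11, m=3.0, delta_sigma=100.0)
n_numeric = paris_cycles_numeric(1e-3, 1e-2, C=1e-11, m=3.0, delta_sigma=100.0)
print(round(n_closed), round(n_numeric))
```

The numerical version is the one that generalizes: when Y depends on crack length, as it does for real specimen geometries, the closed form no longer applies.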

Energy-Based Models

Energy-based models propose that fatigue damage is proportional to the energy dissipated during cyclic loading. These models can be particularly useful for complex loading scenarios and materials with significant plastic deformation.

Fracture Mechanics-Based Failure Prediction

Fracture mechanics is the field of mechanics concerned with the study of the propagation of cracks in materials.[6] It is a critical tool for predicting the failure of components containing flaws.

Linear Elastic Fracture Mechanics (LEFM)

As mentioned in the context of fatigue, LEFM is applicable when the plastic deformation at the crack tip is small compared to the crack size and specimen dimensions.[7] The critical stress intensity factor, K_Ic, also known as fracture toughness, is a material property that defines the critical value of K at which a crack will propagate catastrophically.[6][7]

Elastic-Plastic Fracture Mechanics (EPFM)

When significant plastic deformation occurs at the crack tip, LEFM is no longer valid, and Elastic-Plastic Fracture Mechanics (EPFM) must be employed. EPFM uses parameters such as the J-integral and the Crack Tip Opening Displacement (CTOD) to characterize the fracture behavior.

Creep Failure Prediction Models

Creep is the time-dependent, permanent deformation of a material subjected to a constant load or stress at elevated temperatures.[8] It is a primary failure mechanism in components operating in high-temperature environments, such as gas turbine blades and power plant piping.[8][9]

Empirical Models

Several empirical models have been developed to predict creep life based on experimental data. These include:

- Larson-Miller Parameter (LMP): Relates stress, temperature, and rupture time.[8][9]

- Orr-Sherby-Dorn (OSD) Parameter: Similar to LMP but with a different formulation.

- Manson-Haferd Parameter: Another time-temperature parameter used for creep life prediction.
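As an illustration of how a time-temperature parameter is applied, the sketch below uses the usual Larson-Miller form LMP = T·(C + log10 t_r), with T in kelvin and t_r in hours. The constant C = 20 is a commonly quoted default and is an assumption here; it should be fitted per material. The temperatures and rupture time are made-up example values.

```python
import math

def larson_miller(temp_k, rupture_hours, C=20.0):
    """Larson-Miller parameter for one creep-rupture test point."""
    return temp_k * (C + math.log10(rupture_hours))

def rupture_time(lmp, temp_k, C=20.0):
    """Invert the LMP for rupture time (hours) at another temperature, same stress."""
    return 10.0 ** (lmp / temp_k - C)

# Illustrative: a test at 923 K ruptured after 1000 h; estimate life at 873 K
# under the same stress, assuming the LMP is a function of stress only.
lmp = larson_miller(923.0, 1000.0)
print(round(lmp), round(rupture_time(lmp, 873.0)))
```

This is what makes short, hot tests useful: a single LMP value at a given stress links rupture times across temperatures.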

Continuum Damage Mechanics (CDM) Models

CDM models describe the progressive degradation of material properties due to the accumulation of micro-damage during the creep process. The Kachanov-Rabotnov model is a well-known CDM model for creep.
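One common uniaxial form of the Kachanov-Rabotnov equations is given below as a reference sketch. Notation varies between authors; the symbols A, n, B, χ, and φ are material constants introduced here as assumptions, σ is the applied stress, and ω is the damage variable running from 0 (undamaged) to 1 (rupture).

```latex
% Creep strain rate accelerated by damage
\dot{\varepsilon} = A \left( \frac{\sigma}{1-\omega} \right)^{n}

% Damage evolution
\dot{\omega} = \frac{B\,\sigma^{\chi}}{(1-\omega)^{\phi}}

% Integrating the damage equation from \omega = 0 to \omega = 1 at constant stress
t_r = \frac{1}{B\,(1+\phi)\,\sigma^{\chi}}
```

The rupture time follows directly from separating variables in the damage equation, since the integral of (1-ω)^φ from 0 to 1 equals 1/(1+φ).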

Hybrid Physics-Data-Driven Models

In recent years, there has been a growing trend towards combining physics-based models with data-driven techniques, such as machine learning, to improve prediction accuracy.[10] These hybrid models can leverage the fundamental understanding of failure mechanisms from physics-based models while capturing complex, non-linear relationships from experimental data.[11] Physics-Informed Neural Networks (PINNs), for example, embed physical laws into the neural network architecture to enhance predictive capabilities, especially with limited data.[12][13]

Experimental Protocols for Model Validation

The validation of physics-based models against experimental data is crucial to ensure their accuracy and reliability.[14][15] Standardized testing procedures are essential for obtaining consistent and comparable results.

Fatigue Testing

Standard: ASTM E647 - Standard Test Method for Measurement of Fatigue Crack Growth Rates.[12][13][16][17]

Methodology:

- Specimen Preparation: Notched specimens, typically compact tension C(T) or middle-cracked tension M(T) geometries, are used.[13] A sharp fatigue pre-crack is introduced at the notch tip.

- Cyclic Loading: The specimen is subjected to cyclic loading with a constant amplitude or under a programmed load sequence.

- Crack Growth Monitoring: The crack length is measured as a function of the number of fatigue cycles using methods such as visual inspection, compliance techniques, or machine vision systems.[13][18]

- Data Analysis: The crack growth rate (da/dN) is calculated from the crack length versus cycles data. The stress intensity factor range (ΔK) is calculated based on the applied load, crack length, and specimen geometry. The results are typically presented as a da/dN vs. ΔK curve.
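The data analysis step can be sketched as follows: a simple point-to-point (secant) estimate of da/dN, followed by a log-log least-squares fit of the Paris constants. The function names are hypothetical, the ΔK values are assumed to have been computed separately for the specimen geometry, and the numbers are synthetic.

```python
import math

def secant_growth_rates(cycles, crack_lengths):
    """(mean crack length, da/dN) for each pair of adjacent readings."""
    points = []
    for i in range(len(cycles) - 1):
        d_n = cycles[i + 1] - cycles[i]
        d_a = crack_lengths[i + 1] - crack_lengths[i]
        mean_a = (crack_lengths[i] + crack_lengths[i + 1]) / 2
        points.append((mean_a, d_a / d_n))
    return points

def fit_paris(delta_k, rates):
    """Least-squares fit of log10(da/dN) = log10(C) + m * log10(dK)."""
    xs = [math.log10(k) for k in delta_k]
    ys = [math.log10(r) for r in rates]
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    m = (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
         / sum((x - mx) ** 2 for x in xs))
    C = 10.0 ** (my - m * mx)
    return C, m

# Synthetic readings (crack length in mm) and synthetic Paris-law data.
print(secant_growth_rates([0, 1000, 2000], [10.0, 10.5, 11.5]))
dk = [10.0, 15.0, 20.0, 30.0]
print(fit_paris(dk, [1e-11 * k ** 3 for k in dk]))
```

The fit is only meaningful in the stable growth region of the da/dN vs. ΔK curve, so near-threshold and near-fracture points would normally be excluded first.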

Creep Testing

Standard: ASTM E139 - Standard Test Methods for Conducting Creep, Creep-Rupture, and Stress-Rupture Tests of Metallic Materials.

Methodology:

- Specimen Preparation: A standard tensile specimen is machined from the material to be tested.

- Constant Load and Temperature: The specimen is placed in a furnace and subjected to a constant tensile load at a specified elevated temperature.

- Strain Measurement: The elongation of the specimen is measured over time using an extensometer.

- Data Analysis: The creep strain is plotted against time to generate a creep curve, which typically shows three stages: primary, secondary (steady-state), and tertiary creep leading to rupture. The minimum creep rate and time to rupture are key parameters extracted from this curve.
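A minimal sketch of extracting the minimum creep rate from strain-time readings is shown below, using finite differences between adjacent points. The data are synthetic, and real measurements would usually be smoothed first because differencing amplifies noise.

```python
def creep_rates(times, strains):
    """Finite-difference creep rate between each pair of adjacent readings."""
    return [(strains[i + 1] - strains[i]) / (times[i + 1] - times[i])
            for i in range(len(times) - 1)]

def minimum_creep_rate(times, strains):
    """Return (minimum rate, midpoint time of the interval where it occurs)."""
    rates = creep_rates(times, strains)
    i = min(range(len(rates)), key=rates.__getitem__)
    return rates[i], (times[i] + times[i + 1]) / 2

# Synthetic creep curve: fast primary stage, slow secondary, accelerating tertiary.
hours = [0, 10, 20, 30, 40, 50]
strain = [0.0, 0.010, 0.015, 0.018, 0.026, 0.050]
print(minimum_creep_rate(hours, strain))
```

The interval with the lowest rate marks the secondary (steady-state) stage; the time to rupture is simply the last recorded time before failure.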

Quantitative Data Presentation

The performance of different physics-based models can be compared using various metrics. The following tables summarize some of the available quantitative data from the literature.

Table 1: Comparison of Creep Life Prediction Models for Ferritic Heat Resistant Steels

| Model | Root Mean Squared Error (log rupture time) | Prediction Error Factor |
|---|---|---|
| Support Vector Regression (SVR) | 0.14 | 1.38 |
| Random Forest | Data not available | Data not available |
| Gradient Tree Boosting | Data not available | Data not available |

Source: Adapted from a study on creep life predictions for ferritic heat resistant steels.[19]

Table 2: Performance of a Physics-Informed Neural Network (PINN) Model for Multiaxial Fatigue Life Prediction of Aluminum Alloy 7075-T6

| Model | Prediction Performance |
|---|---|
| GMFL-PINN | Outperforms FS, SWT, and LZH models |
| Fatemi-Socie (FS) | Baseline for comparison |
| Smith-Watson-Topper (SWT) | Baseline for comparison |
| Li-Zhang (LZH) | Baseline for comparison |

Source: Based on a study on PINN for multiaxial fatigue life prediction.[12][13]

Visualization of Workflows and Relationships

Diagrams are essential for visualizing the logical flow of processes and the relationships between different concepts in failure prediction.

Caption: Experimental workflow for fatigue crack growth testing and model validation.

Caption: Relationship between different physics-based failure prediction models.

Conclusion

Physics-based models provide an indispensable framework for predicting the failure of mechanical systems. By grounding predictions in the fundamental principles of mechanics and materials science, these models offer insights that are often unattainable with purely empirical approaches. The continued development of these models, particularly through their integration with data-driven techniques, promises to further enhance the accuracy and reliability of failure prediction, thereby contributing to the design of safer and more durable mechanical systems. The experimental validation of these models, following standardized protocols, remains a cornerstone of this endeavor, ensuring that theoretical advancements are robustly translated into practical engineering solutions.

References

- 1. mdpi.com [mdpi.com]

- 2. Fatigue Failure and Fracture Mechanics | Book | Scientific.Net [scientific.net]

- 3. ntrs.nasa.gov [ntrs.nasa.gov]

- 4. researchgate.net [researchgate.net]

- 5. Fracture toughness and deformation of titanium alloys at low temperatures | Semantic Scholar [semanticscholar.org]

- 6. mdpi.com [mdpi.com]

- 7. ntrs.nasa.gov [ntrs.nasa.gov]

- 8. Investigation of Creep Behavior of a Gas Turbine Bade with a Visco Plastic FEM Model to Estimate the Blade Life [tmachineintelligence.ir]

- 9. dspace.lib.cranfield.ac.uk [dspace.lib.cranfield.ac.uk]

- 10. tandfonline.com [tandfonline.com]

- 11. researchgate.net [researchgate.net]

- 12. CMES | Free Full-Text | Physics-Informed Neural Networks for Multiaxial Fatigue Life Prediction of Aluminum Alloy [techscience.com]

- 13. researchgate.net [researchgate.net]

- 14. etheses.whiterose.ac.uk [etheses.whiterose.ac.uk]

- 15. soaneemrana.org [soaneemrana.org]

- 16. Recent Advances in Creep Modelling of the Nickel Base Superalloy, Alloy 720Li - PMC [pmc.ncbi.nlm.nih.gov]

- 17. tandfonline.com [tandfonline.com]

- 18. researchgate.net [researchgate.net]

- 19. tms.org [tms.org]

The Cornerstone of Intelligent Maintenance: A Deep Dive into Sensor Data for Prognostics and Health Management (PHM)

An In-depth Technical Guide for Researchers, Scientists, and Drug Development Professionals

Prognostics and Health Management (PHM) is a critical enabler of modern reliability and maintenance strategies, providing the foresight needed to prevent failures, optimize performance, and extend the operational life of critical assets. At the heart of every effective PHM system lies the intelligent acquisition and analysis of sensor data. This guide delves into the fundamental principles of leveraging sensor data for PHM applications, offering a comprehensive overview for researchers and professionals seeking to harness its power. We will explore the journey from raw sensor signals to actionable maintenance decisions, detailing experimental protocols, data presentation standards, and the intricate signaling pathways that underpin this transformative technology.

The PHM Workflow: From Sensor to Decision

The effective implementation of a PHM system follows a structured workflow that transforms raw sensor data into predictive insights. This process can be broadly categorized into several key stages, each playing a crucial role in the overall accuracy and reliability of the prognostic and diagnostic capabilities.

A typical PHM workflow begins with data acquisition, where sensors are deployed to monitor the operational and environmental conditions of a system.[1] This is followed by data preprocessing, which involves cleaning and preparing the raw data for analysis. Feature extraction and selection are then employed to identify the most salient indicators of system health. These features are used to construct health indicators (HIs), which provide a quantitative measure of the system's degradation. Finally, prognostic models use these HIs to predict the Remaining Useful Life (RUL) of the asset, enabling informed maintenance decisions.[2]

Sensor Technologies: The Vanguard of Data Acquisition

The selection of appropriate sensors is a critical first step in any PHM implementation. A wide array of sensor technologies is available, each suited to monitoring specific physical parameters that can be indicative of system health. The choice of sensor depends on the nature of the equipment, the potential failure modes, and the operating environment.

Vibration sensors, such as accelerometers, are widely used for monitoring rotating machinery like bearings and gearboxes, as they can detect subtle changes in vibration patterns that often precede a failure.[3] Temperature sensors are essential for monitoring thermal stresses, which can be a significant factor in the degradation of electronic components and mechanical systems.[3] Other important sensor types include pressure sensors for fluid and gas systems, acoustic emission sensors for detecting crack propagation, and current sensors for monitoring electrical equipment.[3][4]

| Sensor Type | Measured Parameter | Typical Application in PHM | Key Specifications |
|---|---|---|---|
| Vibration Sensor (Accelerometer) | Acceleration, Velocity, Displacement | Rotating machinery (bearings, gears, motors) | Sensitivity, Frequency Range, Number of Axes |
| Temperature Sensor | Temperature | Electronics, engines, industrial processes | Accuracy, Operating Range, Response Time |
| Pressure Sensor | Pressure | Hydraulic and pneumatic systems, pipelines | Pressure Range, Accuracy, Media Compatibility |
| Acoustic Emission Sensor | High-frequency stress waves | Crack detection in structures, bearings | Frequency Range, Sensitivity, Durability |
| Current Sensor | Electrical Current | Electric motors, power systems | Current Range, Accuracy, Bandwidth |
| Humidity Sensor | Relative Humidity | Environmental monitoring for electronics | Measurement Range, Accuracy, Stability |
| pH Sensor | Acidity or Alkalinity | Chemical processing, water treatment | pH Range, Accuracy, Electrode Type |

Experimental Protocols for PHM Data Acquisition

The quality and relevance of sensor data are paramount for the success of any PHM endeavor. Therefore, well-designed experiments are crucial for collecting high-fidelity data that accurately reflects the degradation processes of the equipment under study. The following protocols outline the methodologies for two common scenarios in PHM research: bearing fault diagnosis and accelerated life testing of electronic components.

Experimental Protocol 1: Bearing Fault Diagnosis Data Collection

This protocol is based on the well-established methodology used for the Case Western Reserve University (CWRU) bearing dataset, a benchmark for bearing fault diagnosis algorithms.[5]

Objective: To collect vibration data from healthy and faulty rolling element bearings under various operating conditions.

Experimental Setup:

- Test Rig: A 2 horsepower (hp) induction motor, a torque transducer/encoder, a dynamometer, and control electronics. The test bearings support the motor shaft.

- Sensors: Accelerometers mounted on the motor housing at the drive end and fan end.

- Data Acquisition System: A 16-channel DAT recorder with a sampling frequency of 12 kHz or 48 kHz.

- Test Bearings: Deep groove ball bearings. Faults of different diameters (e.g., 0.007, 0.014, and 0.021 inches) are seeded onto the inner race, outer race, and rolling elements using electro-discharge machining (EDM).

Procedure:

- Baseline Data Collection:
  - Install a healthy bearing in the test rig.
  - Record vibration data at different motor loads (0 to 3 hp) and speeds (e.g., 1797, 1772, 1750, and 1730 RPM).

- Faulty Bearing Data Collection:
  - Replace the healthy bearing with a bearing containing a single-point fault.
  - Repeat the data collection process for each fault type (inner race, outer race, ball) and each fault severity (diameter).

- Data Segmentation: The continuous vibration signals are typically segmented into smaller, manageable files for analysis.
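The segmentation step can be sketched as below. The segment length and overlap are illustrative choices, not values prescribed by the CWRU methodology; only the 12 kHz sampling rate comes from the setup described above.

```python
def segment_signal(signal, length, overlap=0):
    """Split a signal into fixed-length segments, optionally overlapping.

    Trailing samples that do not fill a whole segment are discarded.
    """
    if not 0 <= overlap < length:
        raise ValueError("overlap must satisfy 0 <= overlap < length")
    step = length - overlap
    return [signal[i:i + length]
            for i in range(0, len(signal) - length + 1, step)]

# One second of data at 12 kHz (placeholder samples), cut into 2048-sample segments.
record = [0.0] * 12000
print(len(segment_signal(record, 2048)))                # no overlap
print(len(segment_signal(record, 2048, overlap=1024)))  # 50% overlap
```

Overlapping segments increase the number of training examples from a fixed recording, at the cost of correlation between neighboring segments.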

Experimental Protocol 2: Accelerated Life Testing (ALT) of Electronic Components

This protocol provides a general framework for conducting accelerated life testing to assess the reliability and predict the lifetime of electronic components.[6][7]

Objective: To accelerate the failure mechanisms of electronic components to estimate their lifetime under normal operating conditions in a reduced timeframe.

Experimental Setup:

- Environmental Chamber: A chamber capable of controlling temperature and humidity over a wide range.

- Power Supplies and Monitoring Equipment: To power the components under test and monitor their performance parameters.

- Test Articles: A statistically significant number of the electronic components to be tested.

Procedure:

- Identify Stress Factors and Failure Mechanisms: Determine the key environmental and operational stresses that are likely to cause degradation and failure (e.g., temperature, voltage, humidity).

- Define Test Conditions: Select accelerated stress levels that are higher than the normal operating conditions but do not introduce unrealistic failure modes. The Arrhenius model is often used for temperature-related acceleration.

- Conduct the Test:
  - Place the test articles in the environmental chamber.
  - Apply the accelerated stress conditions.
  - Continuously or periodically monitor the performance of the components until a predefined failure criterion is met.

- Data Analysis:
  - Record the time-to-failure for each component.
  - Use statistical models (e.g., Weibull, Lognormal) to analyze the failure data and extrapolate the lifetime under normal operating conditions.
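To illustrate the temperature acceleration mentioned in the procedure, the sketch below computes an Arrhenius acceleration factor, AF = exp[(Ea/k)·(1/T_use − 1/T_stress)], with temperatures in kelvin. The activation energy and temperatures are illustrative assumptions; Ea depends on the failure mechanism and must be determined for the component under test.

```python
import math

BOLTZMANN_EV_PER_K = 8.617333262e-5  # Boltzmann constant in eV/K

def arrhenius_acceleration_factor(ea_ev, t_use_c, t_stress_c):
    """Acceleration factor between a use temperature and a stress temperature (deg C)."""
    t_use_k = t_use_c + 273.15
    t_stress_k = t_stress_c + 273.15
    return math.exp(ea_ev / BOLTZMANN_EV_PER_K * (1.0 / t_use_k - 1.0 / t_stress_k))

# Illustrative: Ea = 0.7 eV, use at 55 C, stress test at 125 C.
af = arrhenius_acceleration_factor(0.7, 55.0, 125.0)
test_hours = 1000.0
print(round(af, 1), round(af * test_hours))  # equivalent hours at use conditions
```

Multiplying the test duration by the acceleration factor gives the equivalent time at use conditions, valid only if the elevated temperature triggers the same failure mechanism.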

The Data Processing and Analysis Pathway

Once high-quality sensor data has been acquired, it must be processed and analyzed to extract meaningful information about the health of the system. This involves a series of steps that transform raw signals into actionable prognostic insights.

References

1. papers.phmsociety.org
2. nvlpubs.nist.gov
3. advancedtech.com
4. swiftsensors.com
5. scispace.com
6. Accelerated Life Testing – Classic or CALT « Electronic Environment [electronic.se]
7. Accelerated lifetime tests based on the Physics of Failure [forcetechnology.com]

Basic principles of signal processing for fault detection.

An In-Depth Technical Guide to Signal Processing for Fault Detection

Introduction

The early detection and diagnosis of faults in complex systems are paramount for ensuring operational reliability, safety, and efficiency. Unforeseen failures can lead to significant downtime, economic losses, and catastrophic events. Condition monitoring, which involves tracking the health of machinery, relies heavily on the analysis of signals to identify the signatures of incipient faults.[1] Signal processing provides a powerful suite of tools to extract meaningful information from raw sensor data, transforming it into a format that reveals the operational state of a system.[2] This guide delves into the fundamental principles of signal processing for fault detection, outlining the core methodologies in the time, frequency, and time-frequency domains. It is intended for researchers, scientists, and professionals who require a technical understanding of these foundational techniques.

The General Workflow of Fault Detection

The process of detecting a fault using signal processing follows a structured methodology, beginning with data acquisition and culminating in a decision.[3] This workflow involves several key stages: signal acquisition, preprocessing, feature extraction, and finally, fault detection and classification.[3] Preprocessing is a crucial step that can include filtering, smoothing, and normalization to improve the quality of the raw signal.[4] The subsequent feature extraction phase is critical, as it aims to identify and select the relevant signal characteristics that can distinguish between different types of faults.[5]

Caption: A generalized workflow for fault detection using signal processing.
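As a rough illustration of the preprocessing stage in this workflow, the sketch below applies two of the operations mentioned above: smoothing and normalization. The function names, the window length, and the sample values are assumptions made for this example; a real pipeline would choose its filter type and window from the sensor bandwidth and noise characteristics.

```python
import statistics


def moving_average(signal, window):
    """Smooth a signal with a simple moving average (returns len - window + 1 samples)."""
    if window < 1 or window > len(signal):
        raise ValueError("window must be between 1 and len(signal)")
    return [sum(signal[i:i + window]) / window
            for i in range(len(signal) - window + 1)]


def zscore_normalize(signal):
    """Rescale a signal to zero mean and unit (population) standard deviation."""
    mean = statistics.fmean(signal)
    std = statistics.pstdev(signal)
    if std == 0:
        return [0.0 for _ in signal]  # a constant signal carries no variation to scale
    return [(x - mean) / std for x in signal]


# Hypothetical noisy sensor readings.
raw = [0.9, 1.4, 0.8, 1.6, 1.1, 1.9, 1.2, 2.1, 1.5, 2.4]

smoothed = moving_average(raw, window=3)
normalized = zscore_normalize(smoothed)
print([round(x, 3) for x in normalized])
```

Normalizing after smoothing keeps features extracted from different sensors on a comparable scale before they reach the detection or classification stage.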

Signal Analysis Domains

The core of fault detection lies in analyzing the signal in different domains to extract fault-related features. The choice of domain—time, frequency, or a combination of both—depends on the nature of the signal and the fault characteristics.

Time-Domain Analysis

Time-domain analysis directly examines the signal's waveform and its characteristics over time. This approach is often computationally efficient and effective for detecting faults that cause significant changes in the signal's energy or amplitude distribution.[6] Statistical features are commonly calculated to quantify these changes.[6]

Key time-domain features include:

- Root Mean Square (RMS): Indicates the energy content of the signal.[6]
- Peak Value: The maximum amplitude of the signal, which can be sensitive to immediate impacts.[6]
- Kurtosis: Measures the "tailedness" of the signal's probability distribution. It is particularly sensitive to sharp impulses, which are often indicative of bearing faults.[6]
- Skewness: Measures the asymmetry of the signal's distribution.
- Crest Factor: The ratio of the peak value to the RMS value, which can indicate the presence of impulsive vibrations.

| Time-Domain Feature | Description | Typical Application |
|---|---|---|
| Root Mean Square (RMS) | Represents the power or energy content of the signal. | Detecting overall increases in vibration or current levels. |
| Peak Value | The maximum absolute amplitude in the signal waveform.[6] | Useful for detecting sudden impacts or transient events.[6] |
| Kurtosis | A statistical measure of the "tailedness", and hence the impulsiveness, of a signal's amplitude distribution.[6] | Highly effective for detecting incipient bearing faults that generate sharp spikes.[6] |
| Skewness | Measures the asymmetry of the signal's probability distribution. | Can indicate non-linearities or specific types of wear. |
| Crest Factor | The ratio of the peak value to the RMS value. | Sensitive to the presence of impulsive noise or impacts in a signal. |
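The features in this table follow directly from their definitions. The sketch below computes them for a list of samples; the function name and the test signals are assumptions made for illustration, and kurtosis is reported in its non-excess form (a Gaussian signal gives a value near 3), which is one of two common conventions.

```python
import math


def time_domain_features(signal):
    """Compute RMS, peak, skewness, kurtosis and crest factor of a sampled signal."""
    n = len(signal)
    if n == 0:
        raise ValueError("signal must not be empty")
    mean = sum(signal) / n
    m2 = sum((x - mean) ** 2 for x in signal) / n  # second central moment (variance)
    if m2 == 0:
        raise ValueError("skewness and kurtosis are undefined for a constant signal")
    m3 = sum((x - mean) ** 3 for x in signal) / n
    m4 = sum((x - mean) ** 4 for x in signal) / n
    rms = math.sqrt(sum(x * x for x in signal) / n)
    peak = max(abs(x) for x in signal)
    return {
        "rms": rms,
        "peak": peak,
        "skewness": m3 / m2 ** 1.5,
        "kurtosis": m4 / m2 ** 2,  # non-excess kurtosis
        "crest_factor": peak / rms,
    }


# Hypothetical signals: a clean sine wave, and the same wave with one sharp impulse.
healthy = [math.sin(2 * math.pi * k / 32) for k in range(256)]
impulsive = list(healthy)
impulsive[100] = 5.0  # injected spike standing in for a bearing impact

for name, sig in (("healthy", healthy), ("impulsive", impulsive)):
    feats = time_domain_features(sig)
    print(name, {key: round(value, 3) for key, value in feats.items()})
```

A single impulse barely changes the RMS but raises both kurtosis and crest factor sharply, which is why those two features are favoured for early bearing-fault detection.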

Frequency-Domain Analysis

Frequency-domain analysis transforms a time-domain signal into its constituent frequency components. This is particularly powerful for diagnosing faults in rotating machinery, as many faults manifest as distinct periodic components in the frequency spectrum.[7] The most common tool for this transformation is the Fast Fourier Transform (FFT).[8] Analyzing the signal's spectrum can reveal characteristic frequencies associated with issues like unbalance, misalignment, and bearing defects.[7]
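To make the transformation concrete, the following sketch implements a textbook recursive radix-2 FFT and uses it to find the strongest spectral component of a synthetic signal. The 30 Hz and 157 Hz tones, the sample rate, and the function names are assumptions chosen for illustration; production code would normally rely on an optimized FFT library and apply a window function before transforming.

```python
import cmath
import math


def fft(samples):
    """Recursive radix-2 Cooley-Tukey FFT; len(samples) must be a power of two."""
    n = len(samples)
    if n == 0 or n & (n - 1):
        raise ValueError("length must be a non-zero power of two")
    if n == 1:
        return [complex(samples[0])]
    even = fft(samples[0::2])
    odd = fft(samples[1::2])
    out = [0j] * n
    for k in range(n // 2):
        twiddle = cmath.exp(-2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + twiddle
        out[k + n // 2] = even[k] - twiddle
    return out


def dominant_frequency(samples, sample_rate_hz):
    """Return the frequency (Hz) of the largest non-DC spectral peak."""
    n = len(samples)
    spectrum = fft(samples)
    magnitudes = [abs(c) for c in spectrum[1:n // 2]]  # skip DC, keep positive frequencies
    peak_bin = magnitudes.index(max(magnitudes)) + 1
    return peak_bin * sample_rate_hz / n


# Hypothetical vibration signal: a 30 Hz rotation component plus a weaker 157 Hz tone.
SAMPLE_RATE_HZ = 1024
N_SAMPLES = 1024
signal = [
    math.sin(2 * math.pi * 30 * k / SAMPLE_RATE_HZ)
    + 0.4 * math.sin(2 * math.pi * 157 * k / SAMPLE_RATE_HZ)
    for k in range(N_SAMPLES)
]

print(dominant_frequency(signal, SAMPLE_RATE_HZ))
```

With 1024 samples at 1024 Hz each spectral bin is 1 Hz wide, so both tones fall exactly on a bin; real measurements rarely align this neatly, and the resulting spectral leakage is the reason windowing is usually applied first.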

References

1. mdpi.com
2. researchgate.net
3. digital-library.theiet.org
4. Improved signal preprocessing techniques for machine fault diagnosis | IEEE Conference Publication | IEEE Xplore [ieeexplore.ieee.org]
5. mdpi.com
6. researchgate.net
7. emerald.com
8. irjet.net

An In-depth Technical Guide to Machine Learning in Predictive Maintenance

Introduction to Predictive Maintenance (PdM)

Predictive Maintenance (PdM) is a proactive strategy that leverages data analysis and machine learning techniques to predict equipment failures before they occur.[1][2] Unlike reactive maintenance (fixing components after they break) or preventive maintenance (servicing equipment on a fixed schedule), PdM aims to perform maintenance only when necessary, thereby reducing costs, minimizing downtime, and extending the lifespan of assets.[3] The foundation of modern PdM is the continuous monitoring of equipment health using data from various sources, including IoT sensors tracking parameters like vibration, temperature, pressure, and power consumption.[4][5] By analyzing this data, machine learning algorithms can identify patterns indicative of degradation or impending failure, enabling proactive intervention.[6]

Core Machine Learning Applications in Predictive Maintenance

Machine learning's role in PdM can be categorized into three primary applications:

- Anomaly Detection: This involves identifying data points or patterns that deviate from the expected normal behavior of a system.[7] Unsupervised learning models are often used to establish a baseline of normal operation and flag outliers, such as unusual temperature spikes or vibration frequencies, which can be early indicators of a fault.[8]
- Remaining Useful Life (RUL) Prediction: RUL is the estimated time left before a component or system fails.[9][10] This is typically framed as a regression problem where models, often based on deep learning, are trained on historical sensor data from equipment run to failure to predict the remaining operational lifespan of in-service assets.[11][12]
- Fault Diagnosis and Classification: When an anomaly is detected, the next step is to identify the specific type and cause of the fault.[13][14] This is a classification task where supervised learning models are trained on labeled data of different fault conditions to automatically diagnose issues as they arise.[15]
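The baseline-and-outlier idea behind anomaly detection can be shown with a deliberately simple statistical stand-in. The sketch below learns the mean and standard deviation of readings assumed to be healthy, then flags new readings that fall more than three standard deviations away. The temperature values, the 3-sigma threshold, and the function names are all assumptions for illustration; practical systems typically use richer unsupervised models over many sensor channels.

```python
import statistics


def fit_baseline(healthy_readings):
    """Learn the mean and sample standard deviation of normal operation."""
    return statistics.fmean(healthy_readings), statistics.stdev(healthy_readings)


def flag_anomalies(readings, mean, std, z_threshold=3.0):
    """Return the indices of readings that deviate from the baseline by more than the threshold."""
    return [i for i, x in enumerate(readings)
            if abs(x - mean) > z_threshold * std]


# Hypothetical bearing temperatures (deg C) recorded during known-healthy operation.
healthy = [70.1, 69.8, 70.3, 70.0, 69.9, 70.2, 70.1, 69.7]
# Hypothetical new readings, one of which contains a temperature spike.
incoming = [70.0, 70.4, 74.5, 69.9]

mean, std = fit_baseline(healthy)
anomalies = flag_anomalies(incoming, mean, std)
print(anomalies)
```

Because no fault labels are needed, a detector of this kind can be deployed before any failures have been observed, with the flagged readings then passed on to fault diagnosis.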

Logical Framework for ML in Predictive Maintenance

The core tasks in predictive maintenance are logically interconnected, starting from detecting an issue to predicting its timeline and identifying its root cause.

References

1. Predictive maintenance on NASA’s Turbofan Engine Degradation dataset (CMAPSS) | by Rohit Malhotra | Medium [medium.com]
2. researchgate.net
3. Predictive Maintenance of Pumps. Leveraging Machine Learning for… | by Sunilkumar | Medium [medium.com]
4. Lite and Efficient Deep Learning Model for Bearing Fault Diagnosis Using the CWRU Dataset [mdpi.com]
5. Machine learning techniques applied to mechanical fault diagnosis and fault prognosis in the context of real industrial manufacturing use-cases: a systematic literature review - PMC [pmc.ncbi.nlm.nih.gov]
6. routledge.com
7. Comparative Analysis of Machine Learning Models for Predictive Maintenance of Ball Bearing Systems | MDPI [mdpi.com]
8. Remaining Useful Life Prediction in Turbofan Engines: PCA and Machine Learing Approach | IEEE Conference Publication | IEEE Xplore [ieeexplore.ieee.org]
9. kaggle.com
10. arxiv.org
11. Prediction of Remaining Useful Lifetime (RUL) of turbofan engine using machine learning | IEEE Conference Publication | IEEE Xplore [ieeexplore.ieee.org]
12. arno.uvt.nl
13. GitHub - UtkarshPanara/Remaining-Useful-Life-Prediction-for-Turbofan-Engines: RUL prediction for Turbofan Engine (CMAPSS dataset) using CNN [github.com]
14. researchgate.net
15. mdpi.com

The Evolution and Technical Core of Prognostics and Health Management: An In-depth Guide

An ever-evolving discipline, Prognostics and Health Management (PHM) has transitioned from a reactive maintenance philosophy to a proactive, data-driven strategy crucial for ensuring the reliability, safety, and efficiency of critical systems. This technical guide delves into the historical context of PHM, its core methodologies, and its burgeoning applications, with a particular focus on its relevance to researchers, scientists, and drug development professionals.

Historical Context and the Evolution of Maintenance Strategies

The journey of PHM is intrinsically linked to the evolution of maintenance practices over the last century. Initially, maintenance was purely reactive, addressing failures only after they occurred. This "run-to-failure" approach, while simple, often resulted in catastrophic downtimes and unforeseen costs.[1][2][3][4][5]

The mid-20th century saw the rise of preventive maintenance, a time-based strategy where maintenance tasks are performed at predetermined intervals.[6] This marked a shift towards proactive thinking but was often inefficient, leading to unnecessary maintenance on healthy components or failing to prevent unforeseen failures.

The 1970s witnessed the emergence of predictive maintenance (PdM), a more sophisticated approach that utilizes condition-monitoring techniques to assess the health of equipment in real time.[7] This laid the groundwork for modern PHM by focusing on predicting failures based on the actual condition of an asset.

The advent of advanced sensor technologies, computational power, and the Internet of Things (IoT) has propelled the evolution towards a more holistic and integrated approach known as Prognostics and Health Management. PHM encompasses not only the prediction of failures but also the management of a system's overall health throughout its lifecycle.[8][9][10] The U.S. Department of Defense has been a key driver in the formalization of PHM, particularly through its Condition-Based Maintenance Plus (CBM+) initiatives.[10]

Core Methodologies in Prognostics and Health Management

PHM methodologies can be broadly categorized into three main approaches: data-driven, physics-based (or model-based), and hybrid models. The choice of methodology depends on the system's complexity, the availability of data, and the understanding of its failure mechanisms.

Data-Driven Approaches

Data-driven methods rely on historical and real-time data collected from sensors to learn the degradation patterns of a system and predict its Remaining Useful Life (RUL). These approaches are particularly useful when the underlying physics of failure are not well understood or are too complex to model. Key steps in a data-driven approach include:

- Data Acquisition: Gathering relevant data from various sensors, such as vibration, temperature, pressure, and acoustic sensors.
- Feature Extraction and Selection: Identifying and selecting the most informative features from the raw sensor data that are indicative of the system's health.
- Model Training and Prediction: Utilizing machine learning and deep learning algorithms to train a model on the extracted features and predict the RUL.
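The final step, turning an extracted feature into an RUL estimate, can be illustrated with the simplest possible model. The sketch below fits a least-squares line to a single health indicator and extrapolates it to a failure threshold. It is a stand-in for the machine learning models described here, not a recommended method: the indicator values, the threshold, and the assumption of linear degradation are all hypothetical.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    sxx = sum((x - x_mean) ** 2 for x in xs)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, y_mean - slope * x_mean


def estimate_rul(cycles, health_indicator, failure_threshold):
    """Extrapolate a rising health indicator to the threshold; return remaining cycles."""
    slope, intercept = fit_line(cycles, health_indicator)
    if slope <= 0:
        return None  # no degradation trend, so no failure time can be extrapolated
    cycle_at_failure = (failure_threshold - intercept) / slope
    return max(0.0, cycle_at_failure - cycles[-1])


# Hypothetical history: a vibration RMS feature sampled every 10 operating cycles.
cycles = [0, 10, 20, 30, 40, 50, 60, 70, 80, 90]
vibration_rms = [1.0 + 0.02 * c for c in cycles]  # idealized linear degradation

rul = estimate_rul(cycles, vibration_rms, failure_threshold=3.0)
print(f"estimated RUL: {rul:.1f} cycles")
```

Real degradation is rarely linear, which is one reason the sequence models and ensemble methods used in data-driven PHM are trained on full run-to-failure histories rather than on a single trend line.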

Commonly used algorithms in data-driven PHM include:

- Traditional Machine Learning: Support Vector Machines (SVM), Random Forests, and Gradient Boosting.
- Deep Learning: Recurrent Neural Networks (RNNs), Long Short-Term Memory (LSTM) networks, and Convolutional Neural Networks (CNNs).

Physics-Based (Model-Based) Approaches