Eblsp

Description

BenchChem offers this compound in high quality, suitable for many research applications. Different packaging options are available to accommodate customers' requirements. For more information about this compound, including price and delivery time, please contact info@benchchem.com.

Properties

CAS No. |

87468-59-5 |

|---|---|

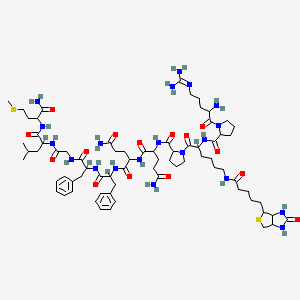

Molecular Formula |

C73H112N20O15S2 |

Molecular Weight |

1573.9 g/mol |

IUPAC Name |

N-[5-amino-1-[[1-[[1-[[2-[[1-[(1-amino-4-methylsulfanyl-1-oxobutan-2-yl)amino]-4-methyl-1-oxopentan-2-yl]amino]-2-oxoethyl]amino]-1-oxo-3-phenylpropan-2-yl]amino]-1-oxo-3-phenylpropan-2-yl]amino]-1,5-dioxopentan-2-yl]-2-[[1-[2-[[1-[2-amino-5-(diaminomethylideneamino)pentanoyl]pyrrolidine-2-carbonyl]amino]-6-[5-(2-oxo-1,3,3a,4,6,6a-hexahydrothieno[3,4-d]imidazol-4-yl)pentanoylamino]hexanoyl]pyrrolidine-2-carbonyl]amino]pentanediamide |

InChI |

InChI=1S/C73H112N20O15S2/c1-42(2)37-50(66(102)84-46(62(77)98)31-36-109-3)83-60(97)40-82-63(99)51(38-43-17-6-4-7-18-43)88-67(103)52(39-44-19-8-5-9-20-44)89-65(101)47(27-29-57(75)94)85-64(100)48(28-30-58(76)95)86-68(104)55-24-16-35-93(55)71(107)49(87-69(105)54-23-15-34-92(54)70(106)45(74)21-14-33-81-72(78)79)22-12-13-32-80-59(96)26-11-10-25-56-61-53(41-110-56)90-73(108)91-61/h4-9,17-20,42,45-56,61H,10-16,21-41,74H2,1-3H3,(H2,75,94)(H2,76,95)(H2,77,98)(H,80,96)(H,82,99)(H,83,97)(H,84,102)(H,85,100)(H,86,104)(H,87,105)(H,88,103)(H,89,101)(H4,78,79,81)(H2,90,91,108) |

InChI Key |

KSIKYPVWKBFHBT-UHFFFAOYSA-N |

Canonical SMILES |

CC(C)CC(C(=O)NC(CCSC)C(=O)N)NC(=O)CNC(=O)C(CC1=CC=CC=C1)NC(=O)C(CC2=CC=CC=C2)NC(=O)C(CCC(=O)N)NC(=O)C(CCC(=O)N)NC(=O)C3CCCN3C(=O)C(CCCCNC(=O)CCCCC4C5C(CS4)NC(=O)N5)NC(=O)C6CCCN6C(=O)C(CCCN=C(N)N)N |

Origin of Product |

United States |

Foundational & Exploratory

Unable to Identify "EBLS Software" for Neuroscience Research

Following a comprehensive search for "EBLS software" targeted at neuroscience researchers, no specific software, platform, or tool publicly identified by this name could be located. The search results did not yield any technical guides, whitepapers, or research articles pertaining to a software with this designation within the neuroscience field.

It is possible that "EBLS software" may be:

- An internal or proprietary tool not available in the public domain.
- An acronym for a highly specialized or emerging software that is not yet widely documented.
- A possible typographical error of another software's name.

The search did, however, yield information on tangentially related topics, which may assist in clarifying the intended subject:

- Extended-Spectrum Beta-Lactamase (ESBL): In the field of microbiology, ESBL refers to enzymes that mediate resistance to certain antibiotics. Research articles discuss ESBL-producing Enterobacterales, but this is not related to neuroscience software.
- Evoke Neuroscience: This is a company that produces an FDA-cleared medical device and software system used to assess cognitive function by measuring electroencephalography (EEG) and event-related potentials (ERP). While relevant to neuroscience, "Evoke" is distinct from "EBLS."
- General Neuroscience Software and Data Platforms: The search also brought up various tools and platforms used in neuroscience research for data visualization, analysis, and sharing, such as open-source tools discussed in eNeuro and data standards like Neurodata Without Borders (NWB). However, none of these are referred to as "EBLS."

Without a clear identification of the "EBLS software," it is not possible to proceed with the creation of an in-depth technical guide, including data presentation, experimental protocols, and signaling pathway diagrams as requested.

Further clarification on the full name of the software or the specific context of its use is required to fulfill this request.

Event-Based Logic and State (EBLS) Software in Neuroscience: A Technical Guide

A Note on Terminology: The term "EBLS Software" is not a standardized identifier for a specific software package in the field of neuroscience. However, it aptly describes a class of powerful simulation tools that operate on the principles of event-based logic and state management. This guide provides an in-depth technical overview of the core concepts, architecture, and application of these event-driven simulators in neuroscience research and drug development.

At its core, event-driven simulation in neuroscience is a computational methodology for modeling spiking neural networks (SNNs). Unlike traditional clock-driven simulators that update the state of every neuron at fixed time intervals, event-driven simulators only perform calculations when a specific event occurs, primarily the firing of a neuron (a "spike"). This approach offers significant advantages in terms of computational efficiency and accuracy, especially for models with sparse firing activity, which is characteristic of biological neural networks.

Core Principles of Event-Driven Simulation

Event-driven simulation revolves around the concept of advancing the simulation time to the next scheduled event. The state of the system is defined by the collective states of its neurons and synapses. A change in state is triggered by an event, which is typically the generation of a spike.

The fundamental logic of an event-driven simulator can be summarized as follows:

1. Event Scheduling: When a neuron fires, the simulator calculates when this spike will arrive at its post-synaptic targets. These future spike arrivals are then placed in a time-ordered event queue.
2. State Update: The simulator advances to the time of the next event in the queue. The state of the receiving neuron is then updated based on the incoming spike and its synaptic weight.
3. Threshold Check: After the state update, the neuron's membrane potential is checked against its firing threshold. If the threshold is crossed, a new spike event is generated, and the process returns to step one.

This contrasts with clock-driven simulators, which iterate through every neuron at each time step, regardless of whether they are receiving or sending a spike. This can lead to unnecessary computations, especially in large, sparsely firing networks.
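
The three steps above can be sketched in a few lines of Python. This is a minimal illustration, not the implementation used by any of the simulators named in this guide: the function name `simulate`, the dense `weights` and `delays` matrices, and the non-leaky neuron with reset to zero are all simplifying assumptions made for the example.

```python
import heapq

def simulate(weights, delays, initial_spikes, threshold=1.0, t_max=100.0):
    """Event-driven simulation of non-leaky integrate-and-fire units.

    weights[i][j] and delays[i][j] describe the synapse from neuron i to
    neuron j (a weight of 0.0 means "no synapse"). initial_spikes is a list
    of (arrival_time, target_neuron, weight) input events.
    Returns the list of (time, neuron) output spikes.
    """
    n = len(weights)
    potential = [0.0] * n
    queue = list(initial_spikes)
    heapq.heapify(queue)              # time-ordered event queue
    spikes = []
    while queue:
        t, target, w = heapq.heappop(queue)   # jump to the next event
        if t > t_max:
            break
        potential[target] += w                # state update
        if potential[target] >= threshold:    # threshold check
            potential[target] = 0.0
            spikes.append((t, target))
            # event scheduling: queue arrivals at all post-synaptic targets
            for post in range(n):
                if weights[target][post] != 0.0:
                    heapq.heappush(
                        queue,
                        (t + delays[target][post], post, weights[target][post]),
                    )
    return spikes

# Two-neuron chain: neuron 0 drives neuron 1 with a 2 ms delay.
chain_spikes = simulate(
    weights=[[0.0, 1.0], [0.0, 0.0]],
    delays=[[0.0, 2.0], [0.0, 0.0]],
    initial_spikes=[(1.0, 0, 1.0)],
)
```

No work is done between events: the loop touches only the neuron that receives a spike, which is where the savings over a clock-driven loop come from when activity is sparse.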

Quantitative Performance Data

The efficiency of event-driven simulators is particularly evident in tasks such as image classification using spiking neural networks. Below are tables summarizing the performance of two event-driven simulators, EDHA (Event-Driven High Accuracy) and EvtSNN (Event SNN), on the MNIST handwritten digit classification task.

Table 1: Performance on Unsupervised MNIST Classification (Single Epoch)

| Simulator | Simulation Method | Training Time (seconds) | Test Accuracy (%) |
|---|---|---|---|
| EvtSNN | Event-Driven | 56 | 89.56 |
| EDHA | Event-Driven | 642 | ~89.5 |

Data sourced from Mo and Tao, 2022.[1]

Table 2: Simulation Speed Comparison of EvtSNN and EDHA

| Network Scale (Neurons) | Input Firing Rate (Hz) | Speed-up of EvtSNN over EDHA |
|---|---|---|
| Small (e.g., hundreds) | Low (e.g., 2-5) | 2.9x - 14.0x |
| Large (e.g., thousands) | High (e.g., 10-20) | Performance advantage varies |

Data summarized from benchmark experiments in Mo and Tao, 2022.[1]

Table 3: Performance Comparison on MNIST Recognition Network

| Simulator | Simulation Method | Training Time (hours) | Evaluation Time (hours) |
|---|---|---|---|
| Brian2 | Clock-Driven | 228.33 | 18 |
| Bindsnet | Clock-Driven | 1094.1 | 18.0 |
| EDHA | Event-Driven | 17.3 | 3.93 |

Data sourced from Mo et al., 2021.[2]

Experimental Protocols

Unsupervised MNIST Classification with a Spiking Neural Network

This protocol outlines the methodology for training and evaluating a two-layer spiking neural network on the MNIST dataset using an event-driven simulator, as described in the literature for simulators like EDHA and EvtSNN.[1][2]

1. Network Architecture:

- Input Layer: 784 neurons, corresponding to the 28x28 pixels of the MNIST images.
- Excitatory Layer: A population of excitatory neurons (e.g., 400 or more).
- Inhibitory Layer: A corresponding population of inhibitory neurons for lateral inhibition.
- Connectivity:
  - All-to-all connections between the input layer and the excitatory layer.
  - One-to-one connections between the excitatory and inhibitory neurons.
  - Inhibitory connections from each inhibitory neuron to all excitatory neurons except the one it is paired with.

2. Neuron and Synapse Models:

- Neuron Model: Leaky Integrate-and-Fire (LIF) neurons are commonly used for both excitatory and inhibitory populations.
- Synaptic Plasticity: Spike-Timing-Dependent Plasticity (STDP) is employed to update the synaptic weights between the input and excitatory layers.[3] This learning rule strengthens or weakens synapses based on the relative timing of pre- and post-synaptic spikes.

3. Input Encoding:

- The pixel intensity of the MNIST images is converted into spike trains. A common method is rate-based encoding, where higher pixel intensity corresponds to a higher firing rate of the corresponding input neuron.

4. Simulation and Training Procedure:

- Each of the 60,000 training images from the MNIST dataset is presented to the network for a short duration (e.g., 250-350 ms).
- During the presentation of each image, the network evolves according to the event-driven simulation principles.
- The STDP rule is applied to update the synaptic weights based on the spike timing.
- After training on the entire dataset for one or more epochs, the learning is turned off.

5. Evaluation:

- The 10,000 test images are presented to the trained network.
- The response of the excitatory neurons is recorded for each image.
- Each excitatory neuron is assigned to the digit class that it fires for most frequently across the training set.
- The network's prediction for a test image is the class of the neuron that fires most strongly.
- The classification accuracy is calculated by comparing the network's predictions to the true labels of the test images.
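
The input encoding (step 3) and the evaluation (step 5) of the protocol above can be sketched with NumPy. This is an illustrative sketch, not code from EDHA or EvtSNN: the function names, the 1 ms time step, and the default maximum rate of 60 Hz are assumptions, and the Poisson process is approximated by one Bernoulli draw per time step.

```python
import numpy as np

def rate_encode(image, duration_ms=350, max_rate_hz=60.0, dt_ms=1.0, rng=None):
    """Rate-based encoding: brighter pixels spike with higher probability.

    Returns a boolean array of shape (time_steps, n_pixels).
    """
    rng = np.random.default_rng() if rng is None else rng
    rates_hz = image.reshape(-1).astype(float) / 255.0 * max_rate_hz
    p_spike = rates_hz * dt_ms / 1000.0       # spike probability per step
    steps = int(duration_ms / dt_ms)
    return rng.random((steps, p_spike.size)) < p_spike

def assign_labels(spike_counts, labels, n_classes=10):
    """Assign each neuron to the class it responds to most on average.

    spike_counts has shape (n_images, n_neurons); labels has shape (n_images,).
    """
    mean_response = np.zeros((n_classes, spike_counts.shape[1]))
    for c in range(n_classes):
        mask = labels == c
        if mask.any():
            mean_response[c] = spike_counts[mask].mean(axis=0)
    return mean_response.argmax(axis=0)

def predict(spike_counts, neuron_labels):
    """Predict the class of the neuron that fires most strongly per image."""
    return neuron_labels[spike_counts.argmax(axis=1)]

def accuracy(predictions, true_labels):
    return float((predictions == true_labels).mean())

# Toy check with two neurons and two classes.
train_counts = np.array([[5, 0], [0, 4]])
train_labels = np.array([0, 1])
neuron_labels = assign_labels(train_counts, train_labels)
test_predictions = predict(np.array([[1, 3], [6, 2]]), neuron_labels)
```

In practice `spike_counts` would come from running the trained network on each encoded image with plasticity switched off.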

Visualizations

Signaling Pathways and Workflows

The following diagrams, generated using Graphviz, illustrate the core concepts of event-driven simulation and a typical network model.

Caption: Core loop of an event-driven neural simulator.

Caption: Event-driven vs. Clock-driven simulation workflows.

Caption: A simple spiking neuron model with STDP learning.


An In-depth Technical Guide to Dynamic Vision Sensor (DVS) Data Formats

For Researchers, Scientists, and Drug Development Professionals

This guide provides a comprehensive technical overview of Dynamic Vision Sensor (DVS) technology, with a focus on its data formats. DVS, also known as event-based cameras, are bio-inspired sensors that capture changes in brightness at the pixel level, offering significant advantages in scenarios with high-speed motion and challenging lighting conditions. This document details the structure of DVS data, common data formats, and essential experimental protocols for its effective utilization.

Data Presentation

The data generated by a Dynamic Vision Sensor is fundamentally different from that of traditional frame-based cameras. Instead of capturing entire images at a fixed rate, DVS cameras asynchronously output a stream of "events" whenever a pixel detects a significant change in illumination. This event-based approach leads to a sparse and low-latency data stream.

Core DVS Event Data Structure

Each event in a DVS data stream is a discrete piece of information with the following core components:

| Component | Data Type | Description |
|---|---|---|
| Timestamp (t) | 64-bit integer | The time at which the event occurred, typically with microsecond precision. This high temporal resolution is a key advantage of DVS technology. |
| X-coordinate (x) | 16-bit integer | The horizontal position of the pixel that triggered the event. |
| Y-coordinate (y) | 16-bit integer | The vertical position of the pixel that triggered the event. |
| Polarity (p) | 1-bit boolean | Indicates the direction of the brightness change. A value of '1' typically represents an increase in brightness (ON event), while '0' signifies a decrease (OFF event). |
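
One convenient in-memory representation of the four fields in the table is a NumPy structured array. The sketch below is an assumption about how such data might be held after decoding, not the on-disk layout of any particular file format. The field names and sample values are illustrative, and NumPy stores the polarity in a whole byte rather than a single bit.

```python
import numpy as np

# One record per event: timestamp (microseconds), pixel address, polarity.
EVENT_DTYPE = np.dtype([
    ("t", np.int64),   # timestamp in microseconds
    ("x", np.int16),   # column of the pixel
    ("y", np.int16),   # row of the pixel
    ("p", np.bool_),   # True = ON (brighter), False = OFF (darker)
])

events = np.array(
    [(1000, 12, 40, True), (1015, 13, 40, False), (1032, 12, 41, True)],
    dtype=EVENT_DTYPE,
)

on_events = events[events["p"]]                       # keep ON events only
duration_us = int(events["t"][-1] - events["t"][0])   # span of the recording
```

Field access by name (`events["t"]`, `events["x"]`) returns ordinary arrays, so the usual vectorized NumPy operations apply to each component.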

The AEDAT4 Data Format

The Address-Event Data (AEDAT) format is a widely used standard for storing data from neuromorphic sensors, including DVS cameras. The latest version, AEDAT4, is a flexible container format capable of storing various types of data streams beyond just events.

An AEDAT4 file consists of a header followed by a series of data packets. Each packet has a header that specifies its stream ID and size, followed by the payload containing the actual data.

| AEDAT4 Stream Type | Description |
|---|---|
| Events | A stream of polarity events, as described in the table above. This is the primary data type for DVS cameras. |
| Frames | For hybrid sensors (DAVIS), this stream contains standard image frames, often synchronized with the event stream. |
| IMU (Inertial Measurement Unit) | Contains data from an integrated IMU, such as accelerometer and gyroscope readings, with timestamps. |
| Triggers | Records external trigger signals, allowing for synchronization with other sensors or systems. |

Experimental Protocols

To effectively utilize DVS data, it is crucial to follow specific experimental protocols for tasks such as camera calibration and noise reduction.

DVS Camera Calibration

Standard checkerboard-based calibration methods used for traditional cameras are not directly applicable to DVS cameras due to their asynchronous nature. A common and effective alternative is to use a flickering pattern displayed on a screen.

Methodology for DVS Calibration with a Flickering Pattern:

1. Pattern Display: A high-contrast pattern, such as a checkerboard or a series of sinusoidal gradients, is displayed on a monitor.
2. Flickering: The displayed pattern is flickered at a known frequency (e.g., 50-100 Hz). This rapid change in brightness across the pattern reliably generates events from the DVS camera.
3. Camera Positioning: The DVS camera is positioned to view the entire flickering pattern.
4. Data Acquisition: Event data is recorded as the camera is moved through a variety of poses (different angles and distances) relative to the screen. It is essential to capture a diverse set of viewpoints to ensure a robust calibration.
5. Event Accumulation: The recorded events are accumulated over short time windows to reconstruct frames that represent the calibration pattern.
6. Corner Detection: Standard corner detection algorithms are then applied to these reconstructed frames to identify the corners of the checkerboard pattern.
7. Parameter Estimation: With a sufficient number of corner detections from various poses, the intrinsic (focal length, principal point, distortion coefficients) and extrinsic (rotation and translation) parameters of the camera can be estimated using established camera calibration algorithms.
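
The event accumulation step of the methodology above can be sketched as follows. The function name `accumulate_events` and its parameters are hypothetical, and the sketch assumes the timestamps are sorted in ascending order and that polarity is ignored, so each frame is simply a per-pixel event count.

```python
import numpy as np

def accumulate_events(t, x, y, width, height, window_us):
    """Bin events into count images, one image per time window.

    t, x, y are equal-length integer sequences (t in microseconds, sorted).
    Returns an array of shape (n_windows, height, width).
    """
    t = np.asarray(t, dtype=np.int64)
    x = np.asarray(x, dtype=np.int64)
    y = np.asarray(y, dtype=np.int64)
    if t.size == 0:
        return np.zeros((0, height, width), dtype=np.uint16)
    window_idx = (t - t[0]) // window_us            # window of each event
    n_windows = int(window_idx[-1]) + 1
    frames = np.zeros((n_windows, height, width), dtype=np.uint16)
    # np.add.at handles repeated (window, y, x) indices correctly
    np.add.at(frames, (window_idx, y, x), 1)
    return frames

frames = accumulate_events(
    t=[0, 10, 20, 1000, 1010],
    x=[1, 1, 2, 0, 0],
    y=[0, 0, 1, 2, 2],
    width=3, height=3, window_us=1000,
)
```

Each resulting frame can be normalized to 8-bit and passed to a standard corner detector; with OpenCV, for instance, `cv2.findChessboardCorners` would be a natural candidate for the corner detection step.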

DVS Data Noise Filtering

DVS sensors can produce a significant amount of background activity (noise), especially in low-light conditions. The Background Activity Filter is a common and effective algorithm for reducing this noise.

Methodology for Background Activity Noise Filtering:

- Principle: The filter operates on the principle that true events caused by moving objects will have spatio-temporal correlation with neighboring events, while noise events are typically isolated in both space and time.
- Neighborhood Definition: For each incoming event, a small spatial neighborhood (e.g., a 3x3 or 5x5 pixel area) is defined around its location.
- Temporal Correlation: The filter checks the timestamps of the most recent events that occurred within this spatial neighborhood.
- Noise Identification: If no other event has occurred in the neighborhood within a predefined time window (a few milliseconds), the current event is considered to be noise and is discarded.
- Signal Preservation: If there are recent neighboring events, the current event is considered part of a correlated signal and is passed through the filter.
- Parameter Tuning: The size of the spatial neighborhood and the duration of the temporal window are key parameters that need to be tuned based on the specific sensor and the dynamics of the scene.
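
The filtering logic described above can be sketched with a per-pixel map of the most recent timestamp. This is one possible variant, written for clarity rather than speed: the function name, the 5 ms default window, and the decision to exclude the event's own pixel from the neighborhood check are assumptions, and published implementations differ on that last point.

```python
import numpy as np

NEVER = -10**15  # sentinel timestamp meaning "no event seen yet"

def background_activity_filter(t, x, y, width, height, dt_us=5000, radius=1):
    """Return a boolean mask: True for events supported by a recent neighbor.

    t must be sorted in ascending order (microseconds). radius=1 gives a
    3x3 neighborhood, radius=2 a 5x5 neighborhood.
    """
    last = np.full((height, width), NEVER, dtype=np.int64)
    keep = np.zeros(len(t), dtype=bool)
    for i in range(len(t)):
        ti, xi, yi = int(t[i]), int(x[i]), int(y[i])
        y0, y1 = max(0, yi - radius), min(height, yi + radius + 1)
        x0, x1 = max(0, xi - radius), min(width, xi + radius + 1)
        patch = last[y0:y1, x0:x1].copy()
        patch[yi - y0, xi - x0] = NEVER          # ignore the pixel itself
        keep[i] = (ti - int(patch.max())) <= dt_us
        last[yi, xi] = ti                        # remember this event
    return keep

mask = background_activity_filter(
    t=[0, 1000, 100000, 100500],
    x=[5, 6, 20, 5],
    y=[5, 5, 20, 5],
    width=32, height=32,
)
```

The first event of any burst is always dropped because it has no predecessor, which is the usual price of this kind of filter; widening `dt_us` or `radius` keeps more signal at the cost of passing more noise.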

Visualization

The following diagrams illustrate key workflows and logical relationships in DVS data processing.

Caption: A typical workflow for processing DVS data, from sensor to application.

Caption: The logical flow of a Background Activity Filter for DVS noise reduction.

The Dawn of a New Sense: A Technical Guide to Event-Based Learning Systems in Neuromorphic Sensing

For Researchers, Scientists, and Drug Development Professionals

In the quest for more efficient and biologically plausible computing, the field of neuromorphic sensing has emerged as a revolutionary paradigm. Inspired by the human brain's ability to process information with remarkable speed and energy efficiency, neuromorphic systems offer a fundamental shift from traditional, frame-based data acquisition to an event-driven approach. This guide provides an in-depth technical overview of the core principles, software, and applications of what can be conceptualized as Event-Based Learning Systems (EBLS), with a particular focus on their potential implications for scientific research and drug development.

At the heart of neuromorphic sensing are event-based sensors, such as Dynamic Vision Sensors (DVS). Unlike conventional cameras that capture entire frames at a fixed rate, these sensors have independent pixels that only report changes in brightness, generating a sparse stream of asynchronous "events." This data-on-demand approach drastically reduces data redundancy and power consumption, making it ideal for a new class of intelligent, low-latency applications.

Processing this event-based data requires a departure from traditional deep learning frameworks. Spiking Neural Networks (SNNs), often hailed as the third generation of neural networks, are inherently suited for this task. SNNs communicate through discrete "spikes," mirroring the behavior of biological neurons. This event-driven processing in SNNs, when paired with neuromorphic hardware, unlocks significant gains in computational speed and energy efficiency.

While a single, universally recognized "EBLS software" suite does not exist, the field is supported by a growing ecosystem of powerful open-source SNN simulation frameworks. These tools, predominantly based on Python, provide the necessary components to build, train, and deploy SNNs for a variety of tasks.

Core Software Frameworks for Event-Based Learning

For beginners and seasoned researchers alike, a number of software libraries provide accessible entry points into the world of neuromorphic computing. These frameworks allow for the definition of neuron models, synaptic connections, and learning rules, enabling the simulation of complex SNNs.

| Software Framework | Primary Language | Key Features |
|---|---|---|
| Brian | Python | Focuses on ease of use and flexibility, allowing for the definition of neuron models with simple mathematical equations. |
| NEST | Python (PyNEST) | Designed for the simulation of large-scale SNNs, with a focus on performance and scalability. |
| Nengo | Python | A versatile framework that supports the creation of large-scale cognitive and neural models, and can be run on various hardware backends, including neuromorphic chips. |
| Lava | Python | An open-source software framework for developing neuro-inspired applications and mapping them to neuromorphic hardware. |
| snnTorch | Python (PyTorch-based) | Integrates SNN components into the popular PyTorch deep learning framework, facilitating gradient-based training of SNNs. |

A General Workflow for Neuromorphic Sensing

The processing pipeline in a typical neuromorphic system follows a logical progression from data acquisition to intelligent output. This workflow is designed to handle the asynchronous and sparse nature of event-based data efficiently.

An In-depth Technical Guide to Event-Based Logic Simulation (EBLS) Software in Computational Neuroscience

For Researchers, Scientists, and Drug Development Professionals

This guide provides a comprehensive overview of Event-Based Logic Simulation (EBLS) software, a cornerstone of modern computational neuroscience. We delve into the core principles of this simulation paradigm, explore its implementation in leading software packages, and present detailed experimental protocols and quantitative data from seminal research in the field.

Introduction to Event-Based Simulation in Computational Neuroscience

In computational neuroscience, simulating the brain's intricate network of neurons is a formidable challenge. Two primary simulation strategies have emerged: clock-driven and event-driven. While clock-driven simulators update the state of every neuron at fixed time steps, event-driven simulators, the focus of this guide, operate on a more efficient principle. They only perform computations when a significant event occurs, typically the firing of a neuron (a "spike"). This approach can lead to substantial gains in simulation speed and efficiency, particularly for the sparse and asynchronous firing patterns observed in biological neural networks[1][2][3].

The core idea behind event-driven simulation is that the state of a neuron can be predicted analytically between incoming spikes. Therefore, the simulator can calculate the exact time of the next spike for each neuron and advance the simulation time to the next scheduled event. This avoids the unnecessary computations of clock-driven methods, which must check every neuron at every time step, regardless of its activity level.
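
As a concrete instance of "predicted analytically", consider a leaky integrate-and-fire neuron with no input between spikes: its membrane potential relaxes as V(t) = V_rest + (V(t0) - V_rest) * exp(-(t - t0) / tau). The sketch below applies this closed form only at spike arrival times. The function names, the 20 ms time constant, and the instantaneous (delta) synapse are assumptions made for the example; with delta synapses the threshold can only be crossed at the moment an excitatory spike arrives, so no separate search for the next firing time is needed.

```python
import math

def decay_to(v, t_last, t_now, tau_ms=20.0, v_rest=0.0):
    """Closed-form membrane potential after (t_now - t_last) ms without input."""
    return v_rest + (v - v_rest) * math.exp(-(t_now - t_last) / tau_ms)

def deliver_spike(v, t_last, t_now, weight, threshold=1.0, v_reset=0.0):
    """Advance the neuron to t_now, apply the spike, and test the threshold.

    Returns (new_potential, fired).
    """
    v = decay_to(v, t_last, t_now) + weight
    fired = v >= threshold
    if fired:
        v = v_reset
    return v, fired

# A neuron at 0.8 receives a spike 20 ms later: the leak matters.
v_weak, fired_weak = deliver_spike(0.8, t_last=0.0, t_now=20.0, weight=0.5)
v_strong, fired_strong = deliver_spike(0.8, t_last=0.0, t_now=20.0, weight=0.8)
```

The cost of an update is one exponential per received spike, independent of how much simulated time has passed since the previous one.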

Leading Event-Based Simulation Software

Several powerful and flexible software packages have been developed to implement event-driven simulations of spiking neural networks. This guide focuses on two of the most prominent and widely used simulators: NEST and Brian. Both communicate spikes as discrete events, although by default each advances neuron state on a fixed time grid.

- NEST (NEural Simulation Tool): A simulator designed for large-scale spiking neural network models, focusing on the dynamics, size, and structure of neural systems[4][5]. It is highly efficient and scalable, making it suitable for simulations on high-performance computing (HPC) systems. NEST provides a rich set of pre-defined neuron and synapse models, including models of synaptic plasticity like Spike-Timing-Dependent Plasticity (STDP)[5][6][7].
- Brian: A simulator that prioritizes flexibility and ease of use, allowing researchers to define neuron and synapse models using their mathematical equations directly in Python code[8][9][10][11]. Brian's use of code generation allows for both high performance and the ability to implement novel and complex models and experimental protocols[8][12][13].

Quantitative Data from Event-Based Simulations

The efficiency of event-driven simulators is a key advantage. The following table summarizes performance benchmark data comparing event-driven and clock-driven simulation approaches.

| Performance Metric | Event-Driven (AER-based) | Clock-Driven (Serial) | Conditions | Source |
|---|---|---|---|---|
| Energy Consumption | Increases with the number of active inputs | Relatively stable | 100 time-steps, 8 input channels | [1] |
| Latency | Generally lower, especially with sparse inputs | Higher, as it processes all neurons at each time step | Varying input sparsity | [1] |
| Resource Usage (FPGA) | Dependent on the complexity of the event-handling logic | Dependent on the number of neurons and the complexity of their models | Hardware implementation on FPGA | [1] |

The next table presents quantitative results from a simulation of a working memory model implemented in the NEST simulator, demonstrating the biological realism that can be achieved.

| Parameter | Value | Description | Source |
|---|---|---|---|
| Network Size | 8,000 excitatory neurons, 2,000 inhibitory neurons | The total number of neurons in the simulated network. | [14] |
| Neuron Model | Leaky integrate-and-fire (LIF) with exponential postsynaptic currents | The mathematical model used to describe the dynamics of individual neurons. | [14] |
| Synaptic Plasticity | Short-term synaptic facilitation | The mechanism by which the strength of synapses changes over short timescales, crucial for working memory. | [14] |
| WM Maintenance | Sustained spiking activity in specific neuron populations | The neural correlate of holding information in working memory, achieved through synaptic facilitation. | [14] |

Detailed Experimental Protocols

This section provides detailed methodologies for key experiments performed using event-based simulators.

STDP is a form of Hebbian learning where the precise timing of pre- and post-synaptic spikes determines the change in synaptic strength[15]. This protocol outlines how to simulate a classic STDP experiment using the NEST simulator.

Objective: To replicate the bimodal distribution of synaptic weights that emerges from an exponential STDP rule with all-to-all spike pairing.

Experimental Workflow:

Caption: Experimental workflow for simulating STDP in NEST.

Methodology:

1. Network Definition:
   - Create a population of presynaptic neurons and a single postsynaptic neuron using nest.Create().
   - Connect the presynaptic population to the postsynaptic neuron using the stdp_synapse model. The connectivity can be all-to-all.
2. Neuron and Synapse Model Specification:
   - Use a simple neuron model like the leaky integrate-and-fire (iaf_psc_alpha).
   - For the stdp_synapse, specify the parameters for the exponential STDP rule, including the learning rates for potentiation (LTP) and depression (LTD), and the time constants of the STDP window.
3. Stimulation Protocol:
   - Create a poisson_generator for each presynaptic neuron to generate random spike trains with a specified firing rate.
   - Connect the Poisson generators to the presynaptic neurons.
4. Simulation Execution:
   - Run the simulation for a sufficiently long duration to allow the synaptic weights to converge to a stable distribution using nest.Simulate().
5. Data Recording and Analysis:
   - Read the synaptic weights at regular intervals, for example by querying the connections returned by nest.GetConnections() between successive simulation segments. A multimeter records neuron state variables such as the membrane potential, not synaptic weights.
   - After the simulation, retrieve the recorded data and plot a histogram of the final synaptic weights to observe the bimodal distribution.
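
To make the learning rule in this protocol concrete, the following sketch implements an additive, exponential pair-based STDP update with all-to-all spike pairing in plain Python. It does not use the NEST API, and NEST's stdp_synapse has its own parameterization; the function name, the amplitudes `a_plus` and `a_minus`, the 20 ms time constants, and the hard weight bounds are assumptions chosen for illustration. All-to-all pairing is obtained with two exponentially decaying traces, one per side of the synapse.

```python
import math

def stdp_weight(pre_times, post_times, w=0.5, a_plus=0.01, a_minus=0.012,
                tau_plus=20.0, tau_minus=20.0, w_max=1.0):
    """Final weight after applying pair-based STDP to two spike trains (ms)."""
    # Merge both trains into one time-ordered event list (0 = pre, 1 = post).
    events = sorted([(t, 0) for t in pre_times] + [(t, 1) for t in post_times])
    pre_trace = post_trace = 0.0
    t_last = events[0][0] if events else 0.0
    for t, kind in events:
        # Decay both traces analytically since the previous event.
        pre_trace *= math.exp(-(t - t_last) / tau_plus)
        post_trace *= math.exp(-(t - t_last) / tau_minus)
        t_last = t
        if kind == 0:   # pre spike: depress in proportion to recent post activity
            w = max(0.0, w - a_minus * post_trace)
            pre_trace += 1.0
        else:           # post spike: potentiate in proportion to recent pre activity
            w = min(w_max, w + a_plus * pre_trace)
            post_trace += 1.0
    return w

w_causal = stdp_weight(pre_times=[10.0], post_times=[15.0])   # pre before post
w_acausal = stdp_weight(pre_times=[15.0], post_times=[10.0])  # post before pre
```

Because each trace sums the contributions of all earlier spikes, every pre/post pair influences the weight, which is what all-to-all pairing means here.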

Computational models of the basal ganglia are crucial for understanding its role in action selection and decision-making[16][17][18][19][20]. This protocol describes the setup of a large-scale model of the cortico-basal ganglia-thalamic (CBT) circuit.

Objective: To simulate the interaction between different brain regions involved in motor control and decision-making.

Signaling Pathway of the Basal Ganglia:

Caption: Simplified diagram of the direct, indirect, and hyperdirect pathways of the basal ganglia. '+' denotes excitatory connections, and '-' denotes inhibitory connections.

Methodology:

1. Model Implementation: The entire model is implemented in the NEST simulator[17].
2. Neuron Models: The model uses conductance-based and current-based leaky integrate-and-fire (LIF) neurons for different brain regions[17].
3. Network Structure:
   - Cortex: Modeled with six layers containing various neuron types.
   - Basal Ganglia (BG): Includes the striatum (with medium spiny neurons and fast-spiking interneurons), external and internal globus pallidus (GPe and GPi), and the subthalamic nucleus (STN).
   - Thalamus (TH): Divided into excitatory and inhibitory zones.
4. Connectivity and Parameters: Axonal and synaptic delays, synaptic weights, time constants, and the number of neurons are based on experimental data[17].
5. Simulation Environment: The simulation can be run on multi-core processors or supercomputers like Fugaku, demonstrating the scalability of NEST[17].

The Brian simulator excels at allowing researchers to define complex and interactive experimental protocols directly within their Python scripts[8][12][13].

Objective: To create a simulation where the stimulus presented to a neuron model can be interactively controlled by the user during the simulation.

Logical Relationship for Interactive Simulation:

Caption: Diagram showing the interaction between the Brian simulation loop and a user-defined Python function for interactive control.

Methodology:

1. Model Definition: Define a neuron model using standard mathematical equations in a string format, for instance, a simple leaky integrate-and-fire neuron with a variable input current I.
2. Simulation Setup: Create a NeuronGroup with the defined model.
3. Interactive Control Function: Write a Python function that can be called at each time step of the simulation. This function can, for example, read a value from a graphical user interface (GUI) slider or a text input and update the I parameter of the NeuronGroup.
4. Running the Simulation: Use a custom simulation loop in Python that, at each step, calls the run() function of the Brian simulation for a single time step and then calls the user-defined control function to update the stimulus. This "mixed" approach provides immense flexibility for creating closed-loop experiments and interactive visualizations[8][12].
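
The control pattern described in this methodology, alternating one simulation step with one call to a user-supplied function, can be illustrated without any simulator at all. The sketch below is plain Python and deliberately does not use the Brian API: the function `run_closed_loop`, the forward-Euler update of dv/dt = (I - v) / tau, and the `control` callback signature are all assumptions made for the example.

```python
def run_closed_loop(control, n_steps=1000, dt_ms=0.1, tau_ms=10.0, threshold=1.0):
    """Step a single LIF neuron, letting `control` set the input each step.

    control(t_ms, v, current) must return the input current for this step.
    Returns the list of spike times in ms.
    """
    v, current, spikes = 0.0, 0.0, []
    for step in range(n_steps):
        t = step * dt_ms
        current = control(t, v, current)        # user hook: update the stimulus
        v += dt_ms * (current - v) / tau_ms     # one Euler step of the model
        if v >= threshold:
            spikes.append(t)
            v = 0.0                             # reset after a spike
    return spikes

# Stimulus on for the first 50 ms, then off. In an interactive setting the
# callback would read a GUI slider instead of the clock.
pulse_spikes = run_closed_loop(lambda t, v, i: 2.0 if t < 50.0 else 0.0)
silent_spikes = run_closed_loop(lambda t, v, i: 0.0)
```

Because the callback also receives the current membrane potential, the same loop supports closed-loop protocols in which the stimulus depends on the neuron's own state.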

Conclusion

Event-based logic simulation software like NEST and Brian are indispensable tools in computational neuroscience. They provide the means to simulate large, biologically realistic neural networks with high efficiency and flexibility. This guide has offered a glimpse into the technical depth of these simulators, providing concrete examples of their application in modeling fundamental neural processes. For researchers and professionals in drug development, understanding and utilizing these powerful simulation tools can accelerate the exploration of neural circuit function in both health and disease, paving the way for novel therapeutic strategies.

References

- 1. Energy-Efficient Digital Design: A Comparative Study of Event-Driven and Clock-Driven Spiking Neurons [arxiv.org]

- 2. mdpi.com [mdpi.com]

- 3. Frontiers | EvtSNN: Event-driven SNN simulator optimized by population and pre-filtering [frontiersin.org]

- 4. NEST Simulator [nest-simulator.org]

- 5. NEST (NEural Simulation Tool) - Scholarpedia [scholarpedia.org]

- 6. Spike-timing dependent plasticity (STDP) synapse models — NEST simulator user documentation 1.0.0 documentation [nest-simulator.readthedocs.io]

- 7. stdp_synapse – Synapse type for spike-timing dependent plasticity — NEST Simulator Documentation [nest-simulator.readthedocs.io]

- 8. Brian 2, an intuitive and efficient neural simulator | eLife [elifesciences.org]

- 9. Frontiers | The Brian simulator [frontiersin.org]

- 10. The Brian Simulator | The Brian spiking neural network simulator [briansimulator.org]

- 11. researchgate.net [researchgate.net]

- 12. Brian 2, an intuitive and efficient neural simulator - PMC [pmc.ncbi.nlm.nih.gov]

- 13. Brian 2, an intuitive and efficient neural simulator [agris.fao.org]

- 14. iris.unica.it [iris.unica.it]

- 15. lcnepfl.ch [lcnepfl.ch]

- 16. Welcome to Basal Ganglia model's documentation — basal_ganglia_model_doc 1.0.0 documentation [basal-ganglia-model.readthedocs.io]

- 17. Embodied bidirectional simulation of a spiking cortico-basal ganglia-cerebellar-thalamic brain model and a mouse musculoskeletal body model distributed across computers including the supercomputer Fugaku - PMC [pmc.ncbi.nlm.nih.gov]

- 18. Frontiers | Computational models of basal-ganglia pathway functions: focus on functional neuroanatomy [frontiersin.org]

- 19. A neural network model of basal ganglia’s decision-making circuitry - PMC [pmc.ncbi.nlm.nih.gov]

- 20. researchgate.net [researchgate.net]

getting started with event-based data processing

An In-Depth Technical Guide to Event-Based Data Processing for Scientific Research and Drug Development

Introduction to Event-Based Data Processing

In the realms of scientific research and drug development, the volume, velocity, and variety of data generated from experiments are ever-increasing. Traditional batch processing methods, where data is collected and processed in large chunks, are often inefficient for handling this continuous stream of information, leading to delays in obtaining critical insights. Event-based data processing, underpinned by an Event-Driven Architecture (EDA), offers a paradigm shift. Instead of periodically polling for new data, systems built on EDA react to events as they occur. An event represents a significant change in state, such as the completion of a sequencing run, the generation of an image from a microscope, or the output of an analytical instrument.[1][2] This approach enables real-time data processing, enhances scalability, and promotes loose coupling between different components of a research pipeline.[1][3]

The core components of an event-driven system are event producers, event consumers, and an event broker (or event bus).[2]

- Event Producers: These are the sources of events, such as laboratory instruments, sequencing machines, or software applications that generate data.

- Event Consumers: These are services that subscribe to specific types of events and perform actions based on them, such as data analysis, storage, or visualization.

- Event Broker: This is the intermediary that receives events from producers and routes them to the appropriate consumers. This decoupling means that producers don't need to know about the consumers, and vice-versa, which greatly increases the flexibility and scalability of the system.[1][2]
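The relationship between the three components can be sketched in a few lines of Python. This is a toy, in-process illustration only: the `EventBroker` class, the handler lists, and the run ID are hypothetical names chosen for the example, and a production system would use a real broker such as Kafka or Pulsar rather than direct function calls.

```python
from collections import defaultdict
from typing import Callable, Dict, List


class EventBroker:
    """Toy in-process broker: routes events to subscribers by event type."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Callable[[dict], None]]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Callable[[dict], None]) -> None:
        self._subscribers[event_type].append(handler)

    def publish(self, event_type: str, payload: dict) -> None:
        # The producer never learns who (if anyone) consumes the event.
        for handler in list(self._subscribers[event_type]):
            handler(payload)


broker = EventBroker()
stored_runs: List[str] = []      # stands in for a storage consumer
notifications: List[str] = []    # stands in for a notification consumer

# Two independent consumers subscribe to the same event type.
broker.subscribe("SequencingRunCompleted", lambda e: stored_runs.append(e["run_id"]))
broker.subscribe(
    "SequencingRunCompleted",
    lambda e: notifications.append(f"run {e['run_id']} ready"),
)

# The producer (for example an instrument adapter) publishes once.
broker.publish("SequencingRunCompleted", {"run_id": "RUN-001", "path": "/data/RUN-001"})
```

Adding a third consumer requires only another `subscribe` call; the producer code does not change, which is the decoupling described above.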

This guide provides a comprehensive overview of event-based data processing, its core concepts, and its practical applications in scientific and drug development workflows.

Core Concepts: A Shift from Batch to Real-Time

The fundamental difference between batch and event-based processing lies in how data is handled. Batch processing operates on a fixed, large dataset at rest, often on a predetermined schedule. In contrast, stream processing, which is central to event-driven architecture, processes data in motion, as it is generated.[4][5] This allows for near-instantaneous analysis and reaction to new information.[6]

An EDA can be implemented using different topologies, with the two primary ones being the broker topology and the mediator topology. The broker topology is highly decentralized, with events broadcast to all interested consumers, offering high performance and scalability. The mediator topology uses a central orchestrator to control the flow of events, which can simplify complex workflows and error handling.

For more complex scenarios, patterns like Event Sourcing and Command Query Responsibility Segregation (CQRS) can be employed. Event Sourcing involves storing the entire history of state changes as a sequence of events.[7][8] This provides a complete audit trail and allows the state of a system to be reconstructed at any point in time. CQRS separates the models for reading and writing data, which can optimize performance and scalability for each.[7][8]
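As a minimal sketch of Event Sourcing, the example below rebuilds the state of a laboratory sample by replaying its event history. The `SampleEvent` type, the event kinds, and the volumes are assumptions made up for illustration; they are not taken from any particular framework.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class SampleEvent:
    sequence: int            # position in the append-only log
    kind: str                # "Registered", "VolumeUsed", or "Discarded"
    amount_ul: float = 0.0   # volume in microliters, where applicable


def replay(events: List[SampleEvent], up_to: Optional[int] = None) -> dict:
    """Rebuild a sample's state by folding over its event history."""
    state = {"volume_ul": 0.0, "discarded": False}
    for event in sorted(events, key=lambda e: e.sequence):
        if up_to is not None and event.sequence > up_to:
            break
        if event.kind == "Registered":
            state["volume_ul"] = event.amount_ul
        elif event.kind == "VolumeUsed":
            state["volume_ul"] -= event.amount_ul
        elif event.kind == "Discarded":
            state["discarded"] = True
    return state


log = [
    SampleEvent(1, "Registered", 100.0),
    SampleEvent(2, "VolumeUsed", 20.0),
    SampleEvent(3, "VolumeUsed", 30.0),
    SampleEvent(4, "Discarded"),
]

current_state = replay(log)                   # state after all four events
state_after_first_use = replay(log, up_to=2)  # state as of event 2
```

Because the log is never modified, the same `replay` function serves both as the audit trail and as the way to reconstruct any earlier state. In a CQRS design, the result of `replay` would typically be cached as a separate read model.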

Quantitative Data Summary

The adoption of an event-driven architecture and the choice of underlying technologies can have a significant impact on the performance and efficiency of data processing pipelines. The following tables summarize key quantitative data points to aid in decision-making.

Performance Comparison: Apache Kafka vs. Apache Pulsar

Apache Kafka and Apache Pulsar are two of the most popular open-source platforms for event streaming. While both are highly performant, they have different architectural designs that can lead to performance differences depending on the use case.

| Metric | Apache Kafka | Apache Pulsar | Source(s) |
|---|---|---|---|
| Maximum Throughput | Lower than Pulsar in some benchmark scenarios. | Can be up to 2.5x higher than Kafka in some benchmarks. | [9] |
| Publish Latency | Higher publish latency in the same benchmark. | Single-digit publish latency, reported as up to 100x lower than Kafka's. | [9] |
| Historical Read Rate | Slower for historical data reads. | Can be up to 1.5x faster for historical data reads. | [9] |
| Architecture | Stateful brokers where data is stored. | Stateless brokers with a separate storage layer (Apache BookKeeper). | [8] |
| Multi-tenancy | Not natively supported. | Native multi-tenancy support. | [10] |
| Messaging Patterns | Primarily publish-subscribe to topics. | Supports publish-subscribe, queues, and fan-out patterns. | [10] |

Impact of Event-Driven Architecture on System Performance

Migrating from a traditional monolithic or batch-oriented architecture to an event-driven one can yield significant performance improvements, though it may also introduce complexities.

| Metric | Traditional Architecture (Monolithic/Batch) | Event-Driven Architecture | Source(s) |
|---|---|---|---|
| Response Time | Can be higher due to synchronous processing and resource contention. | Can be significantly lower; one study showed a 76% reduction in response time in a cloud computing environment. | [1][11][12] |
| Resource Utilization | Can be inefficient, with resources idle between batch jobs. | More efficient; the same study showed an 82% improvement in resource utilization through optimized event processing. | [12] |
| Process Agility | Lower, as changes to one part of the system can impact the entire workflow. | Higher; organizations typically experience a 35% improvement in process agility. | [12] |
| Time-to-Market for New Features | Slower, due to tightly coupled components. | Faster; can be reduced by approximately 40%. | [12] |
| System Availability | Lower; a failure in one component can bring down the entire system. | Higher; decoupled nature can lead to 99.95% availability even during partial outages. | [12] |
| Computational Resource Usage | Can be lower for simple, non-distributed tasks. | May consume more computational resources due to the overhead of the event broker and distributed nature. | [1][11] |

Experimental Protocols and Event-Driven Workflows

To illustrate the practical application of event-based data processing, this section details the methodologies for two common, data-intensive experimental workflows in life sciences and proposes an event-driven alternative for each.

Next-Generation Sequencing (NGS) Data Analysis

Next-Generation Sequencing (NGS) technologies generate massive amounts of genomic data that require a multi-step analysis pipeline to derive meaningful biological insights.

Traditional Batch-Based NGS Workflow Protocol

The standard NGS data analysis workflow is often executed as a series of sequential batch jobs.

- Sequencing & Base Calling: The sequencing instrument generates raw image data, which is converted into base calls and stored as BCL (Binary Base Call) files.

- Demultiplexing: The BCL files for a sequencing run, which contain data from multiple samples, are processed to separate the reads for each sample based on their unique barcodes. This process generates FASTQ files for each sample.[13]

- Quality Control (QC): The raw FASTQ files are analyzed using tools like FastQC to assess the quality of the sequencing reads.

- Adapter Trimming and Filtering: Low-quality reads and adapter sequences are removed from the FASTQ files.

- Alignment: The cleaned reads are aligned to a reference genome using an aligner such as BWA (Burrows-Wheeler Aligner).[13] This produces a BAM (Binary Alignment Map) file.

- Post-Alignment Processing: The BAM files are sorted and indexed, and duplicate reads are marked.

- Variant Calling: Variants (SNPs, indels) are identified from the processed BAM files using tools like GATK. The output is a VCF (Variant Call Format) file.

- Annotation: The identified variants are annotated with information about their potential functional impact.

Event-Driven NGS Workflow

An event-driven approach can significantly accelerate this process by parallelizing and automating the workflow.

- SequencingRunCompleted Event: The sequencing instrument, upon completing a run, publishes a SequencingRunCompleted event to the event broker. This event contains metadata such as the run ID and the location of the raw BCL files.

- Demultiplexing Service: A DemultiplexingService consumes the SequencingRunCompleted event and initiates the demultiplexing process. For each sample, as a FASTQ file is generated, it publishes a FastqFileCreated event.

- QC and Preprocessing Services: A QCService and a PreprocessingService listen for FastqFileCreated events. They can run in parallel. Upon completion, they publish QCFinished and ReadsCleaned events, respectively.

- Alignment Service: An AlignmentService listens for both QCFinished and ReadsCleaned events for the same sample. Once both are received, it triggers the alignment process. Upon completion, it publishes a BamFileCreated event.

- Downstream Analysis Services: A VariantCallingService and other downstream services can then consume the BamFileCreated event to perform their respective tasks in parallel.

This event-driven workflow allows for immediate processing of data as it becomes available, reduces idle time, and makes the entire pipeline more resilient and scalable.
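The one non-trivial piece of coordination in this workflow is the AlignmentService, which must wait for two different events per sample. A minimal sketch of that join logic follows, assuming events carry a sample ID and that the `published` list stands in for the event broker; the alignment itself is a placeholder.

```python
from collections import defaultdict
from typing import Dict, List, Set

REQUIRED = {"QCFinished", "ReadsCleaned"}


class AlignmentService:
    """Starts alignment for a sample only after both prerequisite events arrive."""

    def __init__(self) -> None:
        self._seen: Dict[str, Set[str]] = defaultdict(set)
        self._aligned: Set[str] = set()   # samples already handed to the aligner
        self.published: List[dict] = []   # stands in for the event broker

    def handle(self, event_type: str, sample_id: str) -> None:
        if event_type not in REQUIRED or sample_id in self._aligned:
            return  # irrelevant event, or a duplicate for a finished sample
        self._seen[sample_id].add(event_type)
        if self._seen[sample_id] == REQUIRED:
            self._aligned.add(sample_id)
            self._align(sample_id)

    def _align(self, sample_id: str) -> None:
        # Placeholder: a real service would invoke an aligner such as BWA here.
        self.published.append({"type": "BamFileCreated", "sample_id": sample_id})


service = AlignmentService()
service.handle("QCFinished", "S1")
service.handle("ReadsCleaned", "S2")
service.handle("ReadsCleaned", "S1")   # S1 now has both events
service.handle("ReadsCleaned", "S1")   # duplicate delivery is ignored
bam_events = service.published
```

Tracking completed samples separately makes the handler idempotent, which matters because many brokers deliver messages at least once rather than exactly once.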

Cryo-Electron Microscopy (Cryo-EM) Single Particle Analysis

Cryo-EM has become a cornerstone of structural biology, but the data processing pipeline is computationally intensive and consists of several distinct stages.

Traditional Cryo-EM Workflow Protocol

The typical workflow for single-particle cryo-EM data processing is a linear sequence of steps.

- Data Acquisition: A cryo-electron microscope collects a series of "movies" of a frozen-hydrated biological sample.[14]

- Motion Correction: The frames of each movie are aligned to correct for beam-induced motion, producing a micrograph.[15]

- CTF Estimation: The Contrast Transfer Function (CTF) of the microscope is estimated for each micrograph to correct for optical aberrations.[15]

- Particle Picking: Individual particles (projections of the macromolecule) are identified and selected from the micrographs.[15]

- Particle Extraction: The selected particles are extracted into a stack of smaller images.

- 2D Classification: The particle images are classified into different 2D classes to remove noise and select good particles.[15]

- Ab Initio 3D Reconstruction: An initial 3D model is generated from the cleaned particle stack.

- 3D Classification and Refinement: The particles are classified into different 3D classes, and the 3D reconstructions are refined to high resolution.[15]

Event-Driven Cryo-EM Workflow

An event-driven workflow can introduce parallelism and real-time feedback into the cryo-EM data processing pipeline.

- MovieAcquired Event: As the microscope acquires each movie, an event MovieAcquired is published, containing the path to the raw movie file.

- Motion Correction and CTF Estimation Services: A MotionCorrectionService and a CTFEstimationService consume MovieAcquired events and process the movies in parallel. Upon completion, they publish MicrographCreated and CTFEstimated events, respectively.

- Particle Picking Service: A ParticlePickingService listens for both MicrographCreated and CTFEstimated events for the same micrograph. Once both are available, it initiates particle picking and publishes a ParticlesPicked event with the coordinates of the particles.

- Particle Extraction Service: This service consumes ParticlesPicked events and extracts the particle images, publishing a ParticlesExtracted event.

- Real-time 2D Classification: A 2DClassificationService can consume ParticlesExtracted events and perform 2D classification on-the-fly as data is being collected. This allows researchers to monitor the quality of their data in real-time and make adjustments to the data collection process if necessary.

- Downstream Processing: Once a sufficient number of particles have been extracted and classified, a Start3DReconstruction event can be triggered to initiate the final stages of the analysis.

This event-driven approach provides immediate feedback on data quality and can significantly reduce the total time from data collection to a high-resolution structure.
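The final step of the workflow above, triggering reconstruction once enough particles are available, can be sketched as a small stateful consumer. The class name, the threshold of 1000 particles, and the per-micrograph counts are illustrative assumptions; for simplicity the sketch counts extracted particles only and does not model the 2D classification step.

```python
from typing import List


class ReconstructionTrigger:
    """Counts extracted particles and publishes Start3DReconstruction once."""

    def __init__(self, min_particles: int) -> None:
        self.min_particles = min_particles
        self.total = 0
        self.fired = False
        self.published: List[dict] = []   # stands in for the event broker

    def on_particles_extracted(self, micrograph_id: str, n_particles: int) -> None:
        self.total += n_particles
        if not self.fired and self.total >= self.min_particles:
            self.fired = True
            self.published.append(
                {"type": "Start3DReconstruction", "particle_count": self.total}
            )


trigger = ReconstructionTrigger(min_particles=1000)
incoming = [("mic_001", 400), ("mic_002", 450), ("mic_003", 300), ("mic_004", 500)]
for micrograph_id, count in incoming:
    trigger.on_particles_extracted(micrograph_id, count)
```

The `fired` flag ensures the downstream stage starts exactly once, even though particles continue to arrive after the threshold is crossed.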

Visualizations: Signaling Pathways and Experimental Workflows

Diagrams are essential for understanding the complex relationships in biological systems and data processing pipelines. The following visualizations are created using the Graphviz DOT language.

Biological Signaling Pathway: MAPK Signaling Cascade

The Mitogen-Activated Protein Kinase (MAPK) pathway is a crucial signaling cascade that regulates a wide variety of cellular processes, including proliferation, differentiation, and apoptosis. It can be modeled as a series of events where the activation of one protein triggers the activation of the next.
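As a rough illustration of this event view, the sketch below encodes the canonical three-tier Raf, MEK, ERK core of the cascade as a list of edges, derives the ordered activation events, and emits a Graphviz DOT description. The function names are hypothetical, upstream receptor and Ras steps are omitted, and the code assumes the edges form a single linear chain.

```python
from typing import List, Tuple

# Canonical three-tier core of the cascade (upstream steps omitted for brevity).
CASCADE: List[Tuple[str, str]] = [
    ("Raf (MAPKKK)", "MEK (MAPKK)"),
    ("MEK (MAPKK)", "ERK (MAPK)"),
]


def to_dot(edges: List[Tuple[str, str]], name: str = "MAPK") -> str:
    """Render the cascade as a Graphviz DOT digraph."""
    lines = [f"digraph {name} {{", "  rankdir=TB;"]
    for source, target in edges:
        lines.append(f'  "{source}" -> "{target}" [label="phosphorylates"];')
    lines.append("}")
    return "\n".join(lines)


def activation_events(edges: List[Tuple[str, str]]) -> List[str]:
    """List activation events in order, assuming a linear chain of edges."""
    order = [edges[0][0]] + [target for _, target in edges]
    return [f"{kinase} activated" for kinase in order]


dot_source = to_dot(CASCADE)
events = activation_events(CASCADE)
```

The resulting `dot_source` string can be saved to a file and rendered with the Graphviz `dot` command-line tool.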

References

- 1. kleinnerfarias.github.io [kleinnerfarias.github.io]

- 2. estuary.dev [estuary.dev]

- 3. arxiv.org [arxiv.org]

- 4. researchgate.net [researchgate.net]

- 5. google.com [google.com]

- 6. Modeling signaling pathways in biology with MaBoSS: From one single cell to a dynamic population of heterogeneous interacting cells - PMC [pmc.ncbi.nlm.nih.gov]

- 7. youtube.com [youtube.com]

- 8. m.youtube.com [m.youtube.com]

- 9. Apache Pulsar vs. Apache Kafka 2022 Benchmark | Hacker News [news.ycombinator.com]

- 10. Kafka or Pulsar? A guide to choosing the right event streaming platform | by Maria Hatfield, PhD | Medium [medium.com]

- 11. On the impact of event-driven architecture on performance: An exploratory study | Kleinner Farias [kleinnerfarias.github.io]

- 12. researchgate.net [researchgate.net]

- 13. google.com [google.com]

- 14. JEOL USA blog | A Walkthrough of the Cryo-EM Workflow [jeolusa.com]

- 15. creative-biostructure.com [creative-biostructure.com]

Methodological & Application

Application Notes and Protocols for EBLS Data Conversion

For Researchers, Scientists, and Drug Development Professionals

This document provides a detailed guide on the data conversion process for Electron Beam Lithography (EBL) systems, often referred to as EBLS. This process is critical for translating design files into a format that EBL hardware can interpret to fabricate micro- and nano-scale structures. Such structures are integral to a range of research and development applications, including the creation of biosensors, microfluidic devices for drug screening, and templates for tissue engineering.

Introduction to EBL Data Conversion

Electron Beam Lithography is a technique that uses a focused beam of electrons to draw custom patterns on a surface covered with an electron-sensitive film (resist).[1] The fidelity of the final fabricated pattern is highly dependent on the quality of the input data and the corrections applied during the data preparation stage. EBL software, such as BEAMER or custom academic software, provides the necessary tools for this conversion and correction process.[2][3]

The primary function of an EBL data converter is to translate common Computer-Aided Design (CAD) formats into a machine-specific format that controls the electron beam's deflection and dosage. This process, known as fracturing, breaks down complex polygons from the design file into simpler shapes (rectangles and trapezoids) that the EBL system can write.
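To make the idea of fracturing concrete, the sketch below splits a single axis-aligned rectangle into tiles no larger than a given maximum size. It is a deliberately simplified illustration: real EBL software fractures arbitrary polygons into rectangles and trapezoids, and the `fracture_rectangle` function and its units are assumptions made for this example.

```python
from typing import List, Tuple

# (x_min, y_min, x_max, y_max); all values share one length unit, e.g. micrometers.
Rect = Tuple[float, float, float, float]


def fracture_rectangle(rect: Rect, max_size: float) -> List[Rect]:
    """Split an axis-aligned rectangle into tiles no larger than max_size per side."""
    if max_size <= 0:
        raise ValueError("max_size must be positive")
    x_min, y_min, x_max, y_max = rect
    tiles: List[Rect] = []
    y = y_min
    while y < y_max:
        y_next = min(y + max_size, y_max)
        x = x_min
        while x < x_max:
            x_next = min(x + max_size, x_max)
            tiles.append((x, y, x_next, y_next))
            x = x_next
        y = y_next
    return tiles


# A 2.5 x 1.0 rectangle with a 1.0 maximum tile size yields three tiles.
tiles = fracture_rectangle((0.0, 0.0, 2.5, 1.0), max_size=1.0)
```

The tiles cover the original shape exactly and without overlap, which is the property a fracturing step has to preserve so that no area is exposed twice.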

Key Data Formats in EBL

A variety of data formats are used in the EBL workflow, from initial design to the final machine-readable file. Understanding these formats is crucial for a smooth data conversion process.

| Data Format | Type | Description | Common Use |
|---|---|---|---|
| GDSII | CAD | A binary file format representing planar geometric shapes, text labels, and other information about the layout in hierarchical form. It is the industry standard for data exchange of integrated circuit or micro-nano device layouts.[2] | Initial design of complex microfluidic channels, sensor arrays, and other micro-devices. |
| CIF | CAD | Caltech Intermediate Form is a text-based format for describing integrated circuits. It is less common than GDSII but still supported by some systems. | Design of simple electronic components or test patterns. |
| DXF | CAD | Drawing Exchange Format is a CAD data file format developed by Autodesk. While versatile, it is not strongly standardized for microelectronics, which can sometimes lead to compatibility issues.[4] | Importing designs from general-purpose CAD software like AutoCAD. |
| ASCII Text (e.g., JEOL J01, TXL) | Text | Simple text-based formats that are useful for creating repeating patterns like gratings or dot arrays.[4] They offer precise control at the pixel level and are easy to generate programmatically.[4] | Algorithmic generation of diffraction gratings, photonic crystals, or large arrays of nanoparticles. |
| Machine Specific Formats (e.g., JEOL, Elionix, Raith) | Machine | Binary formats tailored to the specific EBL hardware. These files contain the fractured pattern data and instructions for the electron beam controller.[3] | Final output of the data conversion process, ready for exposure. |

Experimental Protocol: Converting a GDSII Design for Biosensor Fabrication

This protocol outlines the steps to convert a GDSII file, containing the layout of a micro-array biosensor, into a machine-readable format for an EBL system.

Objective: To prepare a GDSII design file of a biosensor for fabrication using EBL, including proximity effect correction.

Materials:

- Workstation with EBL data preparation software (e.g., BEAMER, or a similar package).

- GDSII design file of the biosensor.

- Process parameters for the specific EBL system and resist (e.g., acceleration voltage, resist sensitivity, substrate material).

Methodology:

- Import GDSII File: Launch the EBL software and import the GDSII layout file for the biosensor.

- Layer and Pattern Inspection: Visually inspect the imported layers and patterns to ensure the design has been imported correctly. Check for any unintended gaps or overlaps in the geometry.

- Proximity Effect Correction (PEC): The scattering of electrons in the resist and substrate can unintentionally expose areas adjacent to the intended pattern. This is known as the proximity effect.[2]
  - Monte Carlo Simulation: Many EBL software packages include a Monte Carlo simulation module to model the electron scattering and energy deposition in the resist and substrate.[2]
  - Dose Correction: Based on the simulation, the software will modulate the electron dose for different parts of the design. Denser areas will receive a lower dose, while isolated features will receive a higher dose to ensure uniform feature size after development.

- Data Fracturing: The software will fracture the complex polygons in the GDSII file into simpler shapes that the EBL pattern generator can handle. The user can often define parameters such as the maximum trapezoid size.

- Export Machine Format: Export the corrected and fractured data into the specific format required by the EBL system.

Quantitative Data Summary

The following table provides a hypothetical example of parameters used and the results of a proximity effect correction for two different feature sizes in a biosensor design.

| Parameter | Value |
|---|---|
| EBL System | JEOL JBX-6300FS |
| Acceleration Voltage | 100 kV |
| Resist | PMMA A4 |
| Substrate | Silicon |
| Initial GDSII Feature Size 1 (Dense Array) | 50 nm lines, 100 nm pitch |
| Initial GDSII Feature Size 2 (Isolated Line) | 50 nm |
| Base Electron Dose | 500 µC/cm² |
| Corrected Dose (Feature 1) | 450 µC/cm² |
| Corrected Dose (Feature 2) | 580 µC/cm² |
| Simulated Final Feature Size (with PEC) | 50 ± 2 nm |
| Simulated Final Feature Size (without PEC) | 65 nm (dense), 40 nm (isolated) |

Visualizations

Caption: Workflow for EBL data conversion from design to fabrication.

Caption: Logic diagram for the Proximity Effect Correction (PEC) process.

These protocols and visualizations provide a foundational understanding of the EBLS data conversion process. For specific applications, it is crucial to consult the documentation for the particular EBL software and hardware being used, as parameters and capabilities can vary significantly.

References

Application Notes and Protocols for EBLS Data Analyzer with MATLAB

Disclaimer: The term "EBLS Data Analyzer" does not correspond to a standardized, commercially available software or tool. Therefore, this document provides a generalized framework assuming "EBLS" refers to "Event-Based Luminescence Signals," a common data type in biological research and drug development. The following protocols and notes describe a comprehensive workflow for analyzing such data using MATLAB.

Introduction

Event-Based Luminescence Signal (EBLS) analysis is a critical component in many biological assays, particularly in drug discovery and cell signaling research. These assays often involve measuring the light output from a biological sample over time, where changes in luminescence correspond to a specific molecular event (e.g., enzyme activity, protein-protein interaction, or changes in gene expression). MATLAB provides a powerful and flexible environment for importing, processing, analyzing, and visualizing EBLS data.

This guide provides detailed protocols for a hypothetical cell-based EBLS experiment and the subsequent data analysis workflow in MATLAB. The target audience includes researchers and scientists who are looking to establish a robust and reproducible method for analyzing their EBLS data.

Experimental Protocol: Cell-Based EBLS Assay

This protocol describes a typical experiment to measure the effect of a compound on a specific signaling pathway using a luminescence-based reporter assay.

Objective: To quantify the dose-dependent effect of a test compound on the activity of a target signaling pathway.

Materials:

- Cells engineered with a luciferase reporter gene downstream of a promoter responsive to the signaling pathway of interest.

- Cell culture medium and supplements.

- White, opaque 96-well or 384-well microplates.

- Test compound at various concentrations.

- Luciferase substrate (e.g., luciferin).

- Luminometer capable of kinetic reads.

Methodology:

- Cell Plating:
  - Trypsinize and count the reporter cells.
  - Seed the cells into the wells of a microplate at a predetermined density.
  - Incubate the plate overnight at 37°C and 5% CO2 to allow for cell attachment.

- Compound Treatment:
  - Prepare serial dilutions of the test compound.
  - Add the compound dilutions to the appropriate wells. Include vehicle-only wells as a negative control.
  - Incubate the plate for a duration determined by the kinetics of the signaling pathway.

- Luminescence Reading:
  - Add the luciferase substrate to all wells.
  - Immediately place the plate in a luminometer.
  - Perform kinetic luminescence readings at regular intervals (e.g., every 5 minutes) for a specified duration (e.g., 2 hours).

Data Analysis Protocol with MATLAB

This protocol outlines the steps to import and analyze the kinetic luminescence data generated from the experiment described above.

3.1. Data Import and Organization

The first step is to import the data from the luminometer into MATLAB. Data is often exported as a CSV or Excel file.

- Using the Import Tool:

- Programmatic Import: For better reproducibility, it is recommended to write a script to import the data.

3.2. Data Preprocessing

Raw luminescence data often needs to be preprocessed to remove noise and correct for background.

- Background Subtraction: Subtract the average luminescence of the blank (no cells) wells from all other wells.

- Normalization: To compare between different experiments or plates, data can be normalized. A common method is to normalize to the vehicle control.

- Smoothing: To reduce noise, a moving average filter can be applied.
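The arithmetic behind these three steps is language-neutral. The sketch below shows it in plain Python rather than MATLAB so that all examples in this document share one language; the function names, the per-time-point averaging of blank wells, the fold-change normalization, and the numbers are assumptions chosen for illustration.

```python
from statistics import mean
from typing import List


def subtract_background(trace: List[float], blank_wells: List[List[float]]) -> List[float]:
    """Subtract the average blank-well signal at each time point."""
    background = [mean(values) for values in zip(*blank_wells)]
    return [value - b for value, b in zip(trace, background)]


def normalize_to_vehicle(trace: List[float], vehicle: List[float]) -> List[float]:
    """Express each time point as a fold change over the vehicle control."""
    return [value / ref for value, ref in zip(trace, vehicle)]


def moving_average(trace: List[float], window: int = 3) -> List[float]:
    """Centered moving average; the window shrinks at the edges of the trace."""
    half = window // 2
    smoothed = []
    for i in range(len(trace)):
        lo, hi = max(0, i - half), min(len(trace), i + half + 1)
        smoothed.append(mean(trace[lo:hi]))
    return smoothed


# Hypothetical kinetic reads (RLU) at three time points.
blank_wells = [[100.0, 110.0, 90.0], [100.0, 90.0, 110.0]]
treated_raw = [1100.0, 2100.0, 4100.0]
vehicle_raw = [600.0, 1100.0, 2100.0]

treated = subtract_background(treated_raw, blank_wells)
vehicle = subtract_background(vehicle_raw, blank_wells)
fold_change = normalize_to_vehicle(treated, vehicle)
smoothed = moving_average(treated, window=3)
```

Background subtraction is applied to the vehicle wells as well, so that the normalization compares like with like.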

3.3. Feature Extraction

The goal of feature extraction is to quantify the biological response from the kinetic curves. Common features include:

- Peak Luminescence: The maximum luminescence value.

- Time to Peak: The time at which the peak luminescence occurs.

- Area Under the Curve (AUC): The integral of the luminescence curve over time.
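These three features can be computed directly from a kinetic trace. The sketch below, again in plain Python for consistency with the other examples, uses the trapezoidal rule for the AUC; the `extract_features` name and the sample trace are hypothetical.

```python
from typing import Dict, List


def extract_features(times_min: List[float], signal: List[float]) -> Dict[str, float]:
    """Return peak value, time to peak, and trapezoidal AUC for one kinetic trace."""
    if len(times_min) != len(signal) or len(signal) < 2:
        raise ValueError("need two or more matching time and signal points")
    # Index of the first maximum in the trace.
    peak_index = max(range(len(signal)), key=signal.__getitem__)
    # Trapezoidal rule: sum of interval width times mean height.
    auc = sum(
        (times_min[i + 1] - times_min[i]) * (signal[i] + signal[i + 1]) / 2.0
        for i in range(len(signal) - 1)
    )
    return {
        "peak": signal[peak_index],
        "time_to_peak": times_min[peak_index],
        "auc": auc,
    }


times = [0.0, 5.0, 10.0, 15.0, 20.0]          # minutes
rlu = [0.0, 100.0, 300.0, 200.0, 100.0]       # background-corrected RLU
features = extract_features(times, rlu)
```

With a 5-minute read interval, the AUC has units of RLU*min, matching the summary table format used for reporting.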

Data Presentation

The extracted features should be summarized in a table for easy comparison.

| Compound Conc. (µM) | Peak Luminescence (RLU) | Time to Peak (min) | AUC (RLU*min) |
|---|---|---|---|
| 0 (Vehicle) | 150,000 | 60 | 6,000,000 |
| 0.1 | 250,000 | 55 | 9,000,000 |
| 1 | 500,000 | 50 | 15,000,000 |
| 10 | 800,000 | 45 | 24,000,000 |
| 100 | 820,000 | 45 | 25,000,000 |

Visualization with Graphviz

5.1. Experimental Workflow

References

Application Notes and Protocols: A Step-by-Step Guide to Cytoscape Installation and Usage for Pathway Analysis

For Researchers, Scientists, and Drug Development Professionals

Introduction

In biological research and drug development, understanding the complex interplay of molecules within signaling pathways is crucial for deciphering disease mechanisms and identifying potential therapeutic targets. While the specific software "EBLS" could not be identified as a publicly available tool, this guide provides a comprehensive walkthrough for the installation and basic usage of Cytoscape, a powerful, open-source, and widely-used platform for visualizing, analyzing, and interpreting biological networks and pathways. Cytoscape's extensive features and active community make it an invaluable tool for researchers in the life sciences.

System Requirements

Successful installation and optimal performance of Cytoscape depend on meeting the following system requirements. Two sets of recommendations are provided: minimum for basic functionality and recommended for a smoother experience, especially when working with large networks.

| Component | Minimum Requirements | Recommended Requirements |
|---|---|---|
| Operating System | Windows 10, macOS 11 (Big Sur) or later, Linux (Ubuntu 20.04 or later) | Windows 11, latest macOS, latest stable Linux distribution |
| Processor | Intel i3 CPU or equivalent | Intel i5/i7/i9 or equivalent AMD processor |
| RAM | 4 GB | 8 GB or more |
| Disk Space | 500 MB of free space | 1 GB or more of free space |
| Java | Java 11 (bundled with the installer) | Java 11 (bundled with the installer) |

Installation Guide

This section provides a step-by-step guide for installing Cytoscape on your operating system.

Step 1: Download the Installer

- Navigate to the official Cytoscape website.

- Click on the "Download" button. The website will automatically detect your operating system and suggest the appropriate installer.

- Select the installer for your operating system (Windows, macOS, or Linux).

Step 2: Run the Installer

- Windows:
  - Locate the downloaded .exe file and double-click to launch the installation wizard.
  - Follow the on-screen instructions. You can typically accept the default settings.
  - The installer includes the necessary Java environment, so a separate Java installation is not required.

- macOS:
  - Locate the downloaded .dmg file and double-click to open it.
  - Drag the Cytoscape application icon into your "Applications" folder.

- Linux:
  - Open a terminal window.
  - Navigate to the directory where you downloaded the installer (.sh file).
  - Make the installer executable by running the command: chmod +x Cytoscape_*.sh
  - Run the installer with the command: ./Cytoscape_*.sh
  - Follow the instructions in the installation wizard.

Step 3: Launch Cytoscape

Once the installation is complete, you can launch Cytoscape by:

- Windows: Double-clicking the Cytoscape shortcut on your desktop or finding it in the Start Menu.

- macOS: Double-clicking the Cytoscape icon in your "Applications" folder.

- Linux: Executing the cytoscape.sh script from the installation directory or using the desktop launcher if one was created.

Experimental Protocols: Basic Workflow for Pathway Analysis

This protocol outlines a fundamental workflow for visualizing and analyzing a signaling pathway using Cytoscape.

Protocol 1: Loading a Signaling Pathway from a Database

- Objective: To import a known signaling pathway from an external database.

- Procedure:
  - Launch Cytoscape.
  - In the "Network Search" tool at the top of the "Network" panel, select a database from the dropdown menu (e.g., "NDEx" or "WikiPathways").
  - Enter the name of a signaling pathway of interest (e.g., "EGFR signaling pathway") into the search bar and press Enter.
  - From the search results, select a pathway and click the "Import" button.
  - The pathway will be loaded and displayed in the main network view window.

Protocol 2: Visualizing Data on a Pathway

- Objective: To map experimental data (e.g., gene expression levels) onto the nodes of a pathway.

- Prerequisites: A data file (e.g., CSV or TXT) containing at least two columns: one with gene/protein identifiers that match the nodes in your network and another with the corresponding numerical data (e.g., log2 fold change).

- Procedure:
  - With your network open, go to File > Import > Table from File....
  - Select your data file.
  - In the "Import Table" dialog, ensure the correct "Key Column for Network" (the column with identifiers) is selected.
  - Click "OK". The data will be imported into Cytoscape's "Node Table".
  - To visualize the data, go to the "Style" tab in the "Control Panel".
  - Select "Node" at the bottom of the "Style" panel.
  - Find the property you want to modify (e.g., "Fill Color").
  - Click the dropdown menu for "Column" and select your imported data column.
  - For "Mapping Type", choose "Continuous Mapping". This will map the numerical data to a color gradient.
  - You can customize the color gradient to represent your data (e.g., red for up-regulation, blue for down-regulation).
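What a continuous mapping does can be illustrated outside Cytoscape with a short conceptual sketch: a numerical value is clamped to a range and linearly interpolated between anchor colors. This is not Cytoscape's API; the function names, the blue-white-red anchors, and the limit of 2.0 on the log2 fold change are assumptions for illustration.

```python
from typing import Tuple

RGB = Tuple[int, int, int]
BLUE: RGB = (0, 0, 255)
WHITE: RGB = (255, 255, 255)
RED: RGB = (255, 0, 0)


def lerp_color(start: RGB, end: RGB, t: float) -> RGB:
    """Linearly interpolate between two RGB colors for t in [0, 1]."""
    return tuple(round(a + (b - a) * t) for a, b in zip(start, end))


def fold_change_to_hex(log2_fc: float, limit: float = 2.0) -> str:
    """Map a log2 fold change to a blue-white-red gradient, clamped to +/- limit."""
    value = max(-limit, min(limit, log2_fc))
    if value < 0:
        rgb = lerp_color(WHITE, BLUE, -value / limit)   # down-regulation
    else:
        rgb = lerp_color(WHITE, RED, value / limit)     # up-regulation
    return "#{:02X}{:02X}{:02X}".format(*rgb)


unchanged = fold_change_to_hex(0.0)
strongly_up = fold_change_to_hex(5.0)      # clamped to the upper limit
strongly_down = fold_change_to_hex(-2.0)
```

Clamping keeps a few extreme values from compressing the rest of the gradient, which is the same reason to set explicit minimum and maximum points when editing a continuous mapping.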

Visualizations

Signaling Pathway Diagram

The following diagram illustrates a simplified generic signaling pathway, which can be visualized and analyzed using software like Cytoscape.

A simplified diagram of a generic signaling pathway.

Experimental Workflow Diagram

The diagram below outlines the general workflow for pathway analysis using experimental data in Cytoscape.

A general workflow for pathway analysis in Cytoscape.

Application Notes & Protocols for LC-MS Data Conversion

Audience: Researchers, scientists, and drug development professionals.

These application notes provide a detailed protocol for converting raw Liquid Chromatography-Mass Spectrometry (LC-MS) data from proprietary vendor formats into open, analyzable formats. This process is a critical first step in many drug discovery and development workflows, including metabolomics, proteomics, and pharmacokinetic studies.[1][2][3][4]

Proper data conversion ensures data integrity and compatibility with a wide range of downstream analysis software.[5] This protocol focuses on the use of ProteoWizard, a widely used open-source software suite, to achieve this conversion.[6]

Introduction to LC-MS Data Conversion

LC-MS instruments generate complex, high-dimensional data that is typically stored in proprietary binary files.[5][6] These vendor-specific formats (e.g., .RAW for Thermo, .d for Bruker/Agilent, .WIFF for Sciex) are not universally compatible with analysis software.[5] Therefore, a crucial step in the analytical pipeline is the conversion of this raw data into an open-standard format, such as mzML or mzXML.[5][6][7] This conversion process, often referred to as "data preprocessing," involves extracting key information from the raw files, such as retention time, mass-to-charge ratio (m/z), and intensity, and structuring it in a standardized way.[5][8]

The primary goals of LC-MS data conversion are:

- Interoperability: To enable the use of various software tools for data analysis, regardless of the instrument vendor.
- Data Reduction: To convert profile data, which contains many data points across each peak, into centroid data, which represents each peak by a single m/z value and its intensity. This significantly reduces file size.[9]
- Data Quality Improvement: To apply filters and algorithms that can improve the quality of the data by, for example, recalculating precursor m/z and charge states.[6]
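The data-reduction goal can be illustrated with a deliberately simplified centroiding routine. This is not the algorithm used by ProteoWizard or by any vendor library; it is a minimal sketch that assumes clean, well-separated peaks, finds each local maximum, and replaces the surrounding points with one intensity-weighted m/z value and the apex intensity. The function name `centroid_profile` and the sample spectrum are hypothetical.

```python
def centroid_profile(mz, intensity, min_intensity=0.0):
    """Collapse each local-maximum region of a profile spectrum into one (m/z, intensity) pair."""
    peaks = []
    n = len(mz)
    for apex in range(1, n - 1):
        is_apex = intensity[apex - 1] < intensity[apex] >= intensity[apex + 1]
        if not is_apex or intensity[apex] <= min_intensity:
            continue
        # Walk outward while the signal keeps falling to find the extent of the peak.
        left = apex
        while left > 0 and intensity[left - 1] < intensity[left]:
            left -= 1
        right = apex
        while right < n - 1 and intensity[right + 1] < intensity[right]:
            right += 1
        window_mz = mz[left:right + 1]
        window_int = intensity[left:right + 1]
        # Centroid m/z is the intensity-weighted mean over the peak window.
        weighted_mz = sum(m * h for m, h in zip(window_mz, window_int)) / sum(window_int)
        peaks.append((weighted_mz, intensity[apex]))
    return peaks


# Hypothetical profile spectrum with two peaks sampled at 0.01 m/z spacing.
profile_mz = [500.00, 500.01, 500.02, 500.03, 500.04, 500.05,
              500.06, 500.07, 500.08, 500.09, 500.10]
profile_int = [0, 10, 50, 10, 0, 0, 0, 20, 80, 20, 0]

centroids = centroid_profile(profile_mz, profile_int)
print(len(profile_mz), "profile points ->", len(centroids), "centroids")
```

Here eleven profile points collapse to two centroids, which is the source of the file-size reduction described above. Production peak pickers also handle noise, overlapping peaks, and instrument-specific peak shapes.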

Experimental Protocols

This section details the protocol for converting raw LC-MS data using the MSConvert tool within the ProteoWizard software suite. This can be performed using either a Graphical User Interface (GUI) for ease of use or a command-line interface for batch processing and automation.[6]

2.1. Materials and Equipment

- Computer with ProteoWizard software installed.
- Raw LC-MS data files from the instrument.

2.2. Protocol using MSConvert GUI

- Launch MSConvert GUI: Open the ProteoWizard application and launch the MSConvert GUI.
- Load Raw Data:
- Configure Output Format and Location:
- Apply Data Processing Filters:
  - Select the "Filters" tab to apply data processing options. The order of filters can be important.
  - Peak Picking: This is the most common filter and is used to convert profile data to centroid data. Select "Peak Picking" and ensure it is the first filter in the list.[6][10]
  - Other optional filters can be applied as needed (see Table 2 for common parameters).
- Start Conversion: Click the "Start" button to begin the conversion process.

2.3. Protocol using MSConvert Command Line

For automated processing of multiple files, the command-line interface is more efficient.

- Open a Command Prompt or Terminal.
- Navigate to the ProteoWizard directory.
- Construct the Command: The basic command structure is as follows: msconvert.exe [options] [filemasks]
- Specify Output Format and Filters: Use flags to specify the output format and filters. For example: msconvert.exe --mzML --filter "peakPicking true 1-" C:\data\*.raw This command converts all .raw files in the C:\data\ directory to mzML format and applies peak picking to MS levels 1 and above.[6]
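For batch automation, the same command can be assembled and launched from a script. The following is a minimal sketch, assuming msconvert.exe is on the system PATH and that its `-o` option sets the output directory; the helper names `build_msconvert_command` and `convert_directory`, the file names, and the `converted` folder are hypothetical. Passing the arguments as a list avoids shell-quoting problems with the filter string.

```python
import subprocess
from pathlib import Path


def build_msconvert_command(raw_files, out_dir, executable="msconvert.exe"):
    """Return the argument list for one msconvert call over the given files."""
    return [
        executable,
        "--mzML",                            # open-standard output format
        "--filter", "peakPicking true 1-",   # centroid MS levels 1 and above
        "-o", str(out_dir),                  # output directory (assumed option)
        *[str(path) for path in raw_files],
    ]


def convert_directory(data_dir, out_dir):
    """Convert every .raw entry in data_dir; raises CalledProcessError on failure."""
    raw_files = sorted(Path(data_dir).glob("*.raw"))
    if not raw_files:
        return []
    subprocess.run(build_msconvert_command(raw_files, out_dir), check=True)
    return raw_files


# Building the command does not require msconvert to be installed.
command = build_msconvert_command(["sample_01.raw", "sample_02.raw"], "converted")
print(" ".join(command))
```

Calling `convert_directory(r"C:\data", r"C:\data\converted")` would then run the conversion for a whole folder, provided the vendor libraries required for that raw format are available on the machine.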

Data Presentation: Conversion Parameters

The following tables summarize the key parameters and options available during the data conversion process.

Table 1: Supported Input Raw Data Formats and their Origin

| Vendor/Creator | Format |
|---|---|
| AB SCIEX | .wiff, .t2d |
| Agilent | .d directories |
| Bruker | .d directories, .fid, XMASS XML |
| Thermo | .raw |
| Waters | .raw directories |
| HUPO PSI | .mzML |
| ISB Seattle | .mzXML |
| Matrix Science | .mgf |

This table is based on information from ProteoWizard documentation.[6]

Table 2: Key Data Conversion and Filtering Parameters in MSConvert

| Parameter | Description | Recommended Setting/Value |
|---|---|---|
| Output Format | The file format for the converted data. | mzML (recommended), mzXML, mgf, mz5 |
| Binary Encoding | The precision for storing binary data like m/z and intensity. | 64-bit for m/z, 32-bit for intensity |
| Peak Picking | Converts profile mode spectra to centroided spectra. | true 1- (apply to MS levels 1 and up) |
| Scan Number Filter | Selects a specific range of scan numbers for conversion. | e.g., [100, 500] |
| Scan Time Filter | Selects spectra within a specified retention time range. | e.g., [300, 600] (in seconds, i.e., 5 to 10 min) |
| Precursor Recalculator | Recalculates the precursor m/z and charge for MS2 spectra based on MS1 data. | true |
| Charge State Predictor | Predicts charge states for spectra where the charge is unknown. | true |
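Several of the settings in Table 2 can be expressed as command-line arguments. The sketch below maps a small settings dictionary onto an argument list and keeps peak picking as the first filter. The dictionary keys and the function name `build_filter_args` are hypothetical. The option and filter names (`--mz64`, `--inten32`, `peakPicking`, `scanNumber`, `scanTime`, `precursorRecalculation`) reflect msconvert's documented names as best understood here and should be confirmed against the output of `msconvert --help` for the installed version. The scan time range is assumed to be given in seconds.

```python
def build_filter_args(settings):
    """Translate a settings dict into msconvert arguments, with peak picking placed first."""
    args = ["--" + settings.get("output_format", "mzML")]
    # Binary precision: 64-bit m/z and 32-bit intensity arrays.
    args += ["--mz64", "--inten32"]

    filters = []
    if "peak_picking_levels" in settings:
        filters.append("peakPicking true " + settings["peak_picking_levels"])
    if "scan_numbers" in settings:
        low, high = settings["scan_numbers"]
        filters.append("scanNumber [%d,%d]" % (low, high))
    if "scan_time_seconds" in settings:
        low, high = settings["scan_time_seconds"]
        filters.append("scanTime [%g,%g]" % (low, high))
    if settings.get("recalculate_precursor"):
        filters.append("precursorRecalculation")

    # Each filter becomes its own --filter argument, in the order collected above.
    for text in filters:
        args += ["--filter", text]
    return args


example_settings = {
    "peak_picking_levels": "1-",
    "scan_numbers": (100, 500),
    "scan_time_seconds": (300, 600),
    "recalculate_precursor": True,
}
filter_args = build_filter_args(example_settings)
print(" ".join(filter_args))
```

Keeping the settings in one dictionary also makes it straightforward to save them alongside the converted files as a record of how the conversion was performed.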

Visualization of the Data Conversion Workflow

The following diagram illustrates the logical workflow for converting raw LC-MS data to an analysis-ready format.

Caption: LC-MS raw data conversion workflow.

Conclusion

The conversion of raw LC-MS data to an open-standard format is a fundamental and essential step in the data analysis pipeline for drug development and other life science research. By following a standardized protocol using tools like ProteoWizard's MSConvert, researchers can ensure their data is interoperable, reduced in complexity, and ready for downstream quantitative and qualitative analysis. The parameters chosen during conversion can significantly impact the final results, and therefore should be carefully considered and documented.

References

1. news-medical.net
2. LC/MS applications in drug development - PubMed [pubmed.ncbi.nlm.nih.gov]
3. Current developments in LC-MS for pharmaceutical analysis - Analyst (RSC Publishing) [pubs.rsc.org]
4. longdom.org
5. bigomics.ch
6. Employing ProteoWizard to Convert Raw Mass Spectrometry Data - PMC [pmc.ncbi.nlm.nih.gov]
7. Mass spectrometry data format - Wikipedia [en.wikipedia.org]
8. Untargeted LC-MS workflow - mzmine documentation [mzmine.github.io]
9. matrixscience.com
10. Data conversion - mzmine documentation [mzmine.github.io]

Application Notes and Protocols for Processing Dynamic Vision Sensor (DVS) Recordings with Event-Based Light Stimulation (EBLS)

For Researchers, Scientists, and Drug Development Professionals

Introduction

Dynamic Vision Sensors (DVS), a form of neuromorphic imaging technology, offer a paradigm shift in capturing dynamic biological processes. Unlike traditional frame-based cameras, DVS cameras feature independent pixels that asynchronously report changes in brightness, generating a sparse stream of "events" with microsecond temporal resolution.[1][2][3] This high temporal precision and data efficiency make DVS an ideal tool for monitoring rapid cellular and network-level activity in biological systems.
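The event-generation principle can be sketched with the idealized pixel model commonly used to describe these sensors: a pixel emits an event each time its log-brightness has changed by a fixed contrast threshold since its last event. The code below simulates a single pixel under that assumption. The function name `pixel_events`, the threshold value, and the brightness trace are hypothetical, and real sensors add noise, refractory periods, and per-pixel threshold mismatch that are not modelled here.

```python
import math


def pixel_events(times_us, brightness, threshold=0.2):
    """Emit (timestamp_us, polarity) events for one pixel.

    An event fires whenever log-brightness has moved by at least `threshold`
    since the last event: polarity +1 for brighter, -1 for darker.
    """
    events = []
    reference = math.log(brightness[0])
    for t, value in zip(times_us[1:], brightness[1:]):
        change = math.log(value) - reference
        # A large step can cross the threshold several times at one sample.
        while abs(change) >= threshold:
            polarity = 1 if change > 0 else -1
            events.append((t, polarity))
            reference += polarity * threshold
            change = math.log(value) - reference
    return events


# Hypothetical brightness trace: constant, step up, constant, step down.
sample_times_us = [0, 100, 200, 300, 400]
sample_brightness = [100, 100, 150, 150, 90]

pixel_stream = pixel_events(sample_times_us, sample_brightness)
print(pixel_stream)
```

No events are produced while the brightness is constant, which is why the resulting data stream is sparse compared with frame-based recording.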

Event-Based Light Stimulation (EBLS) leverages precise, patterned light delivery to modulate the activity of photosensitive cells, a cornerstone of optogenetics.[4][5][6] By combining DVS with EBLS, researchers can place a light-based stimulus and the resulting biological response on a common timeline with microsecond-resolution timestamps, opening new avenues for high-throughput drug screening, neuroethology studies, and fundamental research in cellular signaling.

This document provides a detailed protocol for designing, executing, and analyzing experiments that involve the processing of DVS recordings in conjunction with EBLS.

Experimental Design and Setup

A successful DVS-EBLS experiment relies on the precise synchronization of the light stimulation source and the DVS camera. The following protocol outlines a typical setup for an in vitro experiment, such as monitoring the response of cultured neurons expressing channelrhodopsin to patterned light stimulation.

Materials and Equipment

- DVS Camera: (e.g., iniVation DVXplorer, Prophesee Metavision sensor)
- Light Source for EBLS: LED or laser with appropriate wavelength for the specific opsin, coupled to a digital micromirror device (DMD) or a programmable pattern projector.
- Synchronization Hardware: A microcontroller (e.g., Arduino, Raspberry Pi) or a dedicated data acquisition (DAQ) card to generate and send trigger signals to both the DVS camera and the EBLS light source.[7]
- Microscope: An inverted microscope suitable for cell culture imaging.
- Cell Culture System: Incubator, cell culture dishes, and reagents.
- Software:
  - DVS data acquisition and visualization software (e.g., DV, proprietary SDKs).
  - EBLS pattern generation and control software.
  - Data analysis software (e.g., Python with libraries such as NumPy, SciPy, and OpenCV; MATLAB).

Experimental Workflow Diagram

Caption: High-level experimental workflow for a DVS-EBLS experiment.

Detailed Protocols

Protocol 1: System Synchronization and Data Acquisition

- Hardware Connection:
  - Connect the trigger output from the synchronization hardware to the external trigger input (e.g., SIGNAL_IN) of the DVS camera.
  - Connect another trigger output from the synchronization hardware to the trigger input of the EBLS light source.
  - Ensure a common ground connection between all devices.
- Trigger Signal Configuration:
  - Program the synchronization hardware to generate a periodic trigger signal (e.g., a 30 Hz square wave). This signal will serve as a precise timestamp for the start of each light stimulation pulse.
- DVS Camera Configuration:
  - Using the camera's software, enable the external trigger input. This will embed the trigger events directly into the DVS data stream, allowing for precise temporal alignment during analysis.
- EBLS Configuration:
  - Program the desired light stimulation pattern (e.g., a series of light pulses directed at specific regions of the cell culture).
  - Configure the EBLS system to advance to the next step in the pattern upon receiving a trigger signal.
- Data Recording:
  - Start the DVS recording.
  - Initiate the trigger signal from the synchronization hardware. This will simultaneously start the EBLS pattern and the recording of trigger markers in the DVS data stream.
  - Record for the desired duration of the experiment.
  - Save the DVS data in a suitable format (e.g., AEDAT 4.0).
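Before analysis, it can be useful to confirm that the trigger markers embedded in the recording match the programmed trigger signal. The sketch below is one possible check, assuming the trigger timestamps have already been extracted as a list of microsecond values and that the trigger was the 30 Hz example signal above. The function name `check_trigger_train` and the sample timestamps are hypothetical.

```python
def check_trigger_train(trigger_us, rate_hz=30.0):
    """Compare recorded trigger intervals with the programmed trigger period."""
    period_us = 1_000_000 / rate_hz
    intervals = [later - earlier for earlier, later in zip(trigger_us, trigger_us[1:])]
    missed = 0
    jitter = []
    for interval in intervals:
        # An interval close to 2, 3, ... periods suggests dropped trigger markers.
        periods = max(1, round(interval / period_us))
        missed += periods - 1
        jitter.append(abs(interval - periods * period_us))
    return {
        "period_us": period_us,
        "missed": missed,
        "max_jitter_us": max(jitter) if jitter else 0.0,
    }


# Hypothetical recorded trigger timestamps with one marker missing near 100 ms.
recorded_triggers_us = [0, 33_333, 66_667, 133_333, 166_666]
trigger_report = check_trigger_train(recorded_triggers_us)
print(trigger_report)
```

A non-zero count of missed markers or a jitter value that is large relative to the planned analysis bin width would indicate that the wiring or trigger configuration should be revisited before running the full experiment.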

Protocol 2: DVS Data Processing and Analysis

- Data Loading and Pre-processing:
  - Load the recorded DVS data file into your analysis environment (e.g., Python).
  - Separate the event stream into individual data packets: polarity events, frame events (if any), and special events (including external triggers).
- Noise Filtering:
  - Apply a spatio-temporal correlation filter to remove background activity noise, which is common in DVS recordings. This filter removes events that do not have a sufficient number of neighboring events within a defined spatial and temporal window.
- Synchronization of EBLS and DVS Data:
  - Extract the timestamps of the external trigger events from the DVS data. These timestamps correspond to the onset of each light stimulus.
  - Align the polarity event data relative to the trigger timestamps. This allows for the analysis of the neural response as a function of time from stimulus onset.
- Event-to-Frame Conversion for Visualization and ROI Selection:
  - To visualize the neural activity and define regions of interest (ROIs), convert the event stream into a series of frames. A common method is to create a "surface of active events" (SAE), where each frame represents the timestamps of the most recent events at each pixel.
  - From the generated frames, manually or algorithmically define ROIs corresponding to the locations of individual cells or cell clusters.
- Quantitative Analysis:
  - For each ROI, calculate the event rate (number of events per unit time) as a primary measure of neural activity.
  - Plot the event rate as a function of time, aligned to the EBLS trigger events, to generate a peri-stimulus time histogram (PSTH).
  - From the PSTH, extract key metrics such as:
    - Baseline Firing Rate: The average event rate before the stimulus.
    - Peak Response: The maximum event rate after the stimulus.
    - Latency to Peak: The time from stimulus onset to the peak response.
    - Response Duration: The time the event rate remains significantly above the baseline.
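The filtering, alignment, and quantification steps above can be sketched in plain Python. This is an illustrative outline rather than a reference implementation. It assumes events have already been loaded as time-sorted (x, y, timestamp_us, polarity) tuples and that trigger timestamps are available as a list. The function names, the 8-neighbour support rule, the 5 ms window, the rectangular ROI, and the "twice baseline" criterion for response duration are all assumptions chosen for illustration, and the data are synthetic.

```python
def filter_background_activity(events, window_us=5000):
    """Keep events that have a recent event at one of the 8 neighbouring pixels."""
    last_seen = {}  # (x, y) -> most recent timestamp; in effect a surface of active events
    kept = []
    for x, y, t, polarity in events:  # events must be sorted by timestamp
        supported = any(
            t - last_seen.get((x + dx, y + dy), float("-inf")) <= window_us
            for dx in (-1, 0, 1)
            for dy in (-1, 0, 1)
            if (dx, dy) != (0, 0)
        )
        if supported:
            kept.append((x, y, t, polarity))
        last_seen[(x, y)] = t
    return kept


def psth(events, triggers_us, roi, pre_us, post_us, bin_us):
    """Trial-averaged event rate (events per second) per bin, relative to stimulus onset."""
    x_min, y_min, x_max, y_max = roi
    counts = [0] * ((pre_us + post_us) // bin_us)
    for x, y, t, _ in events:
        if not (x_min <= x <= x_max and y_min <= y <= y_max):
            continue
        for onset in triggers_us:
            rel = t - onset  # time of the event relative to this stimulus onset
            if -pre_us <= rel < post_us:
                counts[(rel + pre_us) // bin_us] += 1
    return [c * 1_000_000 / (len(triggers_us) * bin_us) for c in counts]


def response_metrics(rates, pre_us, bin_us, threshold_factor=2.0):
    """Baseline, peak, latency to peak, and duration above threshold_factor x baseline."""
    n_pre = pre_us // bin_us
    baseline = sum(rates[:n_pre]) / n_pre
    post = rates[n_pre:]
    peak = max(post)
    above = [r for r in post if r > threshold_factor * baseline]
    return {
        "baseline_rate": baseline,
        "peak_rate": peak,
        "latency_to_peak_us": post.index(peak) * bin_us,
        "response_duration_us": len(above) * bin_us,
    }


# Synthetic recording: two stimuli, each followed by a short burst at two adjacent pixels.
triggers_us = [100_000, 200_000]
events = []
for onset in triggers_us:
    events.append((0, 0, onset - 15_000, 1))    # pre-stimulus activity
    events.append((1, 0, onset - 14_000, 1))
    for k in range(6):                           # stimulus-evoked burst
        events.append((k % 2, 0, onset + 12_000 + 1_000 * k, 1))
    events.append((10, 10, onset + 30_000, 1))   # isolated noise event
events.sort(key=lambda e: e[2])

clean = filter_background_activity(events)
rates = psth(clean, triggers_us, roi=(0, 0, 1, 1),
             pre_us=20_000, post_us=40_000, bin_us=10_000)
metrics = response_metrics(rates, pre_us=20_000, bin_us=10_000)
print(len(events), "events ->", len(clean), "after filtering")
print(rates)
print(metrics)
```

With this synthetic input, the filter discards the isolated events and the first event of each burst, since none of them has a recent neighbour. For real recordings, an array-based implementation (for example with NumPy) and a statistically motivated threshold for response duration would be more appropriate than the fixed factor used here.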