Lexil

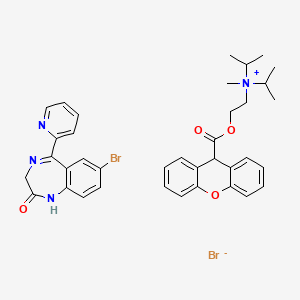

Description

BenchChem offers high-quality Lexil suitable for many research applications. Different packaging options are available to accommodate customers' requirements. For more information about this compound, including price, delivery time, and further details, please inquire at info@benchchem.com.

Properties

| CAS No. | 63280-97-7 |
|---|---|
| Molecular Formula | C37H40Br2N4O4 |
| Molecular Weight | 764.5 g/mol |
| IUPAC Name | 7-bromo-5-pyridin-2-yl-1,3-dihydro-1,4-benzodiazepin-2-one;methyl-di(propan-2-yl)-[2-(9H-xanthene-9-carbonyloxy)ethyl]azanium;bromide |
| InChI | InChI=1S/C23H30NO3.C14H10BrN3O.BrH/c1-16(2)24(5,17(3)4)14-15-26-23(25)22-18-10-6-8-12-20(18)27-21-13-9-7-11-19(21)22;15-9-4-5-11-10(7-9)14(17-8-13(19)18-11)12-3-1-2-6-16-12;/h6-13,16-17,22H,14-15H2,1-5H3;1-7H,8H2,(H,18,19);1H/q+1;;/p-1 |
| InChI Key | VJPXJCQAEYLZCE-UHFFFAOYSA-M |
| SMILES | CC(C)[N+](C)(CCOC(=O)C1C2=CC=CC=C2OC3=CC=CC=C13)C(C)C.C1C(=O)NC2=C(C=C(C=C2)Br)C(=N1)C3=CC=CC=N3.[Br-] |
| Canonical SMILES | CC(C)[N+](C)(CCOC(=O)C1C2=CC=CC=C2OC3=CC=CC=C13)C(C)C.C1C(=O)NC2=C(C=C(C=C2)Br)C(=N1)C3=CC=CC=N3.[Br-] |
| Synonyms | Lexil; Ro 10-9618 |
| Origin of Product | United States |

Foundational & Exploratory

The Theoretical Underpinnings of Lexile Measures: A Technical Guide

The Lexile® Framework for Reading, developed by MetaMetrics®, provides a scientific approach to measuring both a reader's ability and the difficulty of a text on the same scale. This allows for a more precise matching of readers with texts, aiming to optimize reading comprehension and growth. This in-depth technical guide explores the core theoretical basis of Lexile measures, designed for researchers, scientists, and professionals in drug development who require a thorough understanding of its psychometric and linguistic foundations.

Core Principles of the Lexile Framework

The fundamental concept behind the Lexile Framework is to place both reader ability and text complexity onto a single, developmental scale.[1][2][3][4][5][6][7] This shared scale enables a direct comparison between a reader's proficiency and a text's difficulty, facilitating the selection of appropriately challenging reading material.[1][8][9] The two key measures within this framework are:

- Lexile Reader Measure: This quantifies a person's reading ability. It is determined through a reading comprehension test or program that has been linked to the Lexile Framework.[2][3][8][10][11] A higher Lexile reader measure signifies a greater reading ability.[8]

- Lexile Text Measure: This indicates the reading demand of a text.[2][3][12] It is calculated by analyzing the semantic and syntactic characteristics of the text using the Lexile Analyzer®.[1][13][14] A higher Lexile text measure corresponds to a more challenging text.[8]

The Lexile scale is a developmental scale ranging from below 0L for beginning readers and texts (designated with a "BR" for Beginning Reader) to over 1600L for advanced readers and texts.[1][3][4]

Psychometric Foundation: The Rasch Model

The Lexile Framework is built upon a strong psychometric foundation, specifically the Rasch model.[1][15][16][17][18] The Rasch model is a form of conjoint measurement, which means it allows for the measurement of two different elements—in this case, reader ability and text difficulty—on the same scale and in the same units.[1] This is a crucial aspect of the framework, as it provides the theoretical basis for matching readers and texts.

The application of the Rasch model allows for the creation of a linear measurement scale where the difference between any two points on the scale has the same meaning, regardless of where on the scale it occurs. This is in contrast to raw scores on a test, where the difference in ability between scores at the extremes of the scale may not be the same as the difference between scores in the middle.[16]

Quantifying Text Complexity: The Lexile Analyzer®

The Lexile text measure is determined by proprietary software called the Lexile Analyzer®.[1][12][13] This tool analyzes the full text to evaluate its complexity based on two well-established predictors of reading difficulty: syntactic complexity and semantic difficulty.[8][10][19][20]

- Syntactic Complexity (Sentence Length): This is measured by the mean sentence length. Longer sentences are generally more grammatically complex and place a greater demand on a reader's short-term memory, thus indicating a higher level of syntactic difficulty.[1][10]

- Semantic Difficulty (Word Frequency): This is determined by the frequency of words in a large corpus of text. The Lexile Framework uses the mean log word frequency.[1][13] Words that appear less frequently in the corpus are considered more challenging, indicating greater semantic difficulty.[10]

The combination of these two factors in the Lexile equation produces the Lexile measure for a text.[1][13] Longer sentences and lower-frequency words result in a higher Lexile measure, while shorter sentences and higher-frequency words lead to a lower measure.[1][14]

Experimental Protocol for Lexile Text Measurement

The process of analyzing a text with the Lexile Analyzer® follows a detailed protocol to ensure accuracy and consistency:

- Text Preparation: The text must be in a plain text format. Any non-prose elements such as poetry, lists, or song lyrics are removed as they lack conventional punctuation and can skew the analysis.[1][12][14][21]

- Slicing the Text: The text is divided into 125-word slices.[17][19]

- Analysis of Slices: Each slice is analyzed for its sentence length and word frequency against the Lexile corpus, which contains nearly 600 million words from a wide variety of sources and genres.[17][19]

- Application of the Lexile Equation: The calculations for sentence length and word frequency are input into the Lexile equation to determine a Lexile measure for each slice.

- Rasch Model Application: The Lexile measures of all the slices are then applied to the Rasch psychometric model to calculate the final Lexile measure for the entire text.[17][19]
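The slicing step in the protocol above can be sketched in Python. This is a minimal illustration and not the Lexile Analyzer's implementation: the function name `slice_words`, the whitespace tokenization, and the decision to keep a shorter final slice are all assumptions. Only the 125-word slice size comes from the protocol.

```python
def slice_words(text, slice_size=125):
    """Split text into consecutive slices of at most `slice_size` words.

    Whitespace tokenization and keeping a shorter final slice are
    illustrative assumptions, not documented Lexile Analyzer behavior.
    """
    words = text.split()
    return [words[i:i + slice_size] for i in range(0, len(words), slice_size)]


# A 300-word placeholder text yields two full slices and one partial slice.
sample = "word " * 300
print([len(s) for s in slice_words(sample)])  # [125, 125, 50]
```

Each slice would then be analyzed separately for sentence length and word frequency, as the later steps describe.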

For texts intended for early readers (650L and below), the Lexile Analyzer® incorporates nine variables, including decoding, patterning, and structural variables, to provide a more nuanced assessment of text complexity.[22]

Data Presentation

The quantitative data associated with the Lexile Framework is summarized in the tables below for easy comparison.

| Lexile Scale Range | Reader/Text Description |
|---|---|
| BR (Beginning Reader) | Measures below 0L for emergent readers and texts.[3][4] |
| 200L - 1600L+ | The typical range of the Lexile scale.[18] |
| Above 1700L | Advanced readers and texts.[23] |

| Lexile Range for Optimal Reading | Forecasted Comprehension Rate | Description |
|---|---|---|
| Reader's Lexile Measure -100L to +50L | Approximately 75% | This range is considered the "sweet spot" for reading growth, providing enough challenge to encourage progress without causing frustration.[8][11][19][24] |
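The optimal reading range in the table above is simple arithmetic on the reader measure. The sketch below shows it in Python. The function names are hypothetical, and measures are treated as plain numbers with the trailing "L" dropped.

```python
def targeted_reading_range(reader_measure):
    """Return (low, high): 100L below to 50L above the reader's measure."""
    return reader_measure - 100, reader_measure + 50


def in_targeted_range(reader_measure, text_measure):
    """True if the text falls inside the reader's targeted range."""
    low, high = targeted_reading_range(reader_measure)
    return low <= text_measure <= high


print(targeted_reading_range(1000))   # (900, 1050)
print(in_targeted_range(1000, 1100))  # False
```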


References

- 1. metametricsinc.com [metametricsinc.com]

- 2. researchgate.net [researchgate.net]

- 3. Lexile Framework for Reading [partnerhelp.metametricsinc.com]

- 4. lexile.com [lexile.com]

- 5. youtube.com [youtube.com]

- 6. m.youtube.com [m.youtube.com]

- 7. youtube.com [youtube.com]

- 8. bjupresshomeschool.com [bjupresshomeschool.com]

- 9. scispace.com [scispace.com]

- 10. Understanding the Lexile Framework- the Pros & Cons — Reading Rev [readingrev.com]

- 11. Lexile Measures [help.turnitin.com]

- 12. Lexile Analyzer® [la-tools.lexile.com]

- 13. cdn.lexile.com [cdn.lexile.com]

- 14. help.activelylearn.com [help.activelylearn.com]

- 15. Using Lexile Reading Measures to Improve Literacy [rasch.org]

- 16. scribd.com [scribd.com]

- 17. renaissance.com [renaissance.com]

- 18. cdn.edmentum.com [cdn.edmentum.com]

- 19. cdn.lexile.com [cdn.lexile.com]

- 20. scholastic.com [scholastic.com]

- 21. youtube.com [youtube.com]

- 22. Lexile Reading and Text Measures [partnerhelp.metametricsinc.com]

- 23. files.eric.ed.gov [files.eric.ed.gov]

- 24. m.youtube.com [m.youtube.com]


An In-depth Technical Guide on the Core Principles of the Lexile Framework

The Lexile® Framework for Reading provides a quantitative system for measuring text complexity and a reader's comprehension ability on the same developmental scale.[1][2][3] Developed by MetaMetrics, this framework is rooted in over 30 years of research and is widely used to match readers with texts that are appropriately challenging, thereby fostering reading growth.[4][5] The core of the framework lies in its ability to express both the difficulty of a text and the ability of a reader using a common metric: the Lexile.[5][6]

Core Principles: A Dual-Component System

The Lexile Framework is constructed upon two fundamental components: the Lexile Text Measure and the Lexile Reader Measure.[1][7][8] Both are reported on the Lexile scale, which typically ranges from below 0L for beginning readers (designated with a "BR" code) to over 1600L for advanced readers and complex texts.[2][4][9] This unified scale is a key principle, allowing for a direct comparison and matching between a reader's ability and a text's difficulty.[4][5] The goal is to find a "targeted reading experience" where the reader can comprehend approximately 75% of the material, a level considered optimal for skill development without causing frustration.[4][10]

The Scientific Foundation of Text Complexity

A Lexile text measure is derived from a quantitative analysis of two well-established predictors of text difficulty: syntactic complexity and semantic difficulty.[2][4][6][11] These factors are evaluated algorithmically, independent of qualitative aspects like age-appropriateness or the complexity of themes.[3][7]

- Syntactic Complexity (Sentence Structure): The framework uses the average sentence length as a powerful and reliable proxy for syntactic complexity.[4][12] Longer sentences are generally more complex, containing more clauses and requiring the reader to process more information in short-term memory.[4][12]

- Semantic Difficulty (Word Familiarity): This is determined by word frequency.[4][6][11] The framework assesses the familiarity of words based on their prevalence within a massive, nearly 600-million-word corpus of texts.[4][6][11] Words that appear less frequently in this corpus are considered more challenging. The specific metric used is the mean log word frequency, which had the highest correlation with text difficulty (r = -0.779) among the variables studied by MetaMetrics.[4][12]

These two variables—mean sentence length and mean log word frequency—are combined in a proprietary mathematical equation to calculate the final Lexile measure for a text.[4][12]
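The two predictors can be computed for a passage roughly as follows. This is a sketch under stated assumptions: the sentence splitter, the tokenizer, the base-10 logarithm, the default count for unseen words, and the toy frequency table are all illustrative choices that the source does not specify. The proprietary equation that combines the two values is deliberately not reproduced.

```python
import math
import re


def mean_sentence_length(text):
    """Average words per sentence, splitting naively on '.', '!' and '?'."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return sum(len(s.split()) for s in sentences) / len(sentences)


def mean_log_word_frequency(text, corpus_freq, default=1):
    """Average log10 corpus count of each word; unseen words get `default`."""
    words = re.findall(r"[a-z']+", text.lower())
    return sum(math.log10(corpus_freq.get(w, default)) for w in words) / len(words)


# Hypothetical corpus counts, for illustration only.
toy_freq = {"the": 1_000_000, "cat": 10_000, "sat": 1_000}
passage = "The cat sat. The cat sat."

print(mean_sentence_length(passage))  # 3.0
print(round(mean_log_word_frequency(passage, toy_freq), 3))  # 4.333
```

Rarer words lower the mean log word frequency, and longer sentences raise the mean sentence length. Both changes push a text toward a higher Lexile measure.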

Methodologies and Protocols

The determination of Lexile measures for both texts and readers follows distinct, scientifically grounded protocols.

Experimental Protocol: Lexile Text Measure Determination

The Lexile text measure is calculated using an automated software program called the Lexile® Text Analyzer.[4][9] The protocol is as follows:

- Text Slicing: The full text of a book or article is partitioned into 125-word slices.[3][6][11] This slicing method ensures that variations in complexity throughout the text are accounted for and prevents shorter, simpler sections from disproportionately influencing the overall measure.[4]

- Syntactic and Semantic Analysis: Within each slice, the software calculates the two core variables: mean sentence length (MSL) and mean log word frequency (MLF).

- Lexile Equation Application: The MSL and MLF values for each slice are entered into the Lexile equation to produce a Lexile measure for that slice.

- Rasch Model Calibration: The Lexile measures from all the individual slices are then applied to the Rasch psychometric model.[6][11] This conjoint measurement model places the text's difficulty onto the common Lexile scale, resulting in a single, holistic Lexile measure for the entire text.[4][6][11]

For texts intended for early readers (generally below 650L), the analyzer also evaluates four "Early Reading Indicators": Structure, Syntactic, Semantic, and Decoding demands, to provide a more nuanced assessment.[9]

Protocol: Lexile Reader Measure Determination

A reader's Lexile measure is not determined by analyzing their speech or writing but is obtained from their performance on a standardized reading comprehension test that has been linked to the Lexile framework.[1][2] Many prominent educational assessments report student reading scores as Lexile measures.[1][13] The Rasch model is fundamental here as well, as it allows student abilities to be placed on the same scale as the text difficulties, providing a direct link between assessment performance and text complexity.[4][14][15]

Data Presentation

The quantitative nature of the Lexile framework is summarized in the following tables.

Lexile Scale and Reader/Text Designations

| Lexile Measure | Description |
|---|---|
| Above 1600L | Advanced readers and texts.[2][9] |
| 900L - 1050L | The recommended reading range for a reader with a 1000L measure.[1][12] |
| Below 650L | Texts where Early Reading Indicators are applied for enhanced analysis.[9] |
| 0L and Below | Beginning Reader (BR). A higher number after BR indicates a less complex text (e.g., BR300L is easier than BR100L).[7][9] |
| AD | Adult Directed: Text is best read aloud to a student.[9] |
| NC | Non-Conforming: Content is age-appropriate for readers whose ability is lower than the text's complexity suggests.[9] |
| HL | High-Low: High-interest content at a lower reading level.[9] |
| GN | Graphic Novel.[9] |
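The designations in the table above can be handled in code with a small parser. The sketch below is illustrative only. The function name `parse_lexile` and the label format it accepts (an optional two-letter code, an optional BR marker, digits, then "L") are assumptions. So is the choice to represent BR measures as negative numbers so that BR300L sorts below BR100L.

```python
import re

# Optional code (AD, NC, HL, GN), optional BR marker, digits, trailing "L".
LEXILE_PATTERN = re.compile(r"^(AD|NC|HL|GN)?(BR)?(\d+)L$")


def parse_lexile(label):
    """Parse labels such as '980L', 'BR300L' or 'AD580L' into (code, value).

    BR measures are returned as negative values; this is an illustrative
    convention for ordering, not an official representation.
    """
    match = LEXILE_PATTERN.match(label.strip().upper())
    if match is None:
        raise ValueError(f"not a recognised Lexile label: {label!r}")
    code, br, digits = match.groups()
    value = int(digits)
    return code, (-value if br else value)


print(parse_lexile("980L"))    # (None, 980)
print(parse_lexile("BR300L"))  # (None, -300)
print(parse_lexile("AD580L"))  # ('AD', 580)
```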

Key Variables in Lexile Text Analysis

| Variable | Description | Example Data |
|---|---|---|
| Mean Sentence Length (MSL) | A proxy for syntactic complexity.[4] Longer sentences increase the Lexile measure. | Example Text 1 (420L): MSL = 6.95[9]; Example Text 2 (980L): MSL = 13.78[9] |
| Mean Log Word Frequency (MLF) | A proxy for semantic difficulty, based on a ~600-million-word corpus.[4][6] Lower frequency words (and thus a lower MLF) increase the Lexile measure. | Example Text 1 (420L): MLF = 3.43[9]; Example Text 2 (980L): MLF = 3.28[9] |
| Correlation of MLF to Text Difficulty | r = -0.779[4][12] | |


References

- 1. bjupresshomeschool.com [bjupresshomeschool.com]

- 2. Lexile Framework for Reading [schooldataleadership.org]

- 3. renaissance.com [renaissance.com]

- 4. metametricsinc.com [metametricsinc.com]

- 5. m.youtube.com [m.youtube.com]

- 6. usg.edu [usg.edu]

- 7. Lexile - Wikipedia [en.wikipedia.org]

- 8. Understanding the Lexile Framework- the Pros & Cons — Reading Rev [readingrev.com]

- 9. metametricsinc.com [metametricsinc.com]

- 10. youtube.com [youtube.com]

- 11. cdn.lexile.com [cdn.lexile.com]

- 12. cdn.lexile.com [cdn.lexile.com]

- 13. youtube.com [youtube.com]

- 14. Using Lexile Reading Measures to Improve Literacy [rasch.org]

- 15. scribd.com [scribd.com]

The Foundational Research Behind the Lexile Framework: A Technical Guide

For Researchers, Scientists, and Drug Development Professionals

This in-depth technical guide delves into the foundational research and core methodologies that underpin the Lexile Framework for Reading. Developed by MetaMetrics, the Lexile Framework provides a scientific approach to measuring reading ability and text complexity on the same scale. This allows for the precise matching of readers with texts, a critical component in educational and professional settings where clear comprehension of technical documents is paramount. This guide will explore the psychometric underpinnings, key experimental validations, and the analytical processes at the heart of the Lexile Framework.

Core Principles: The Rasch Model and Key Predictors

The Lexile Framework is built upon a strong psychometric foundation, primarily the Rasch model, a one-parameter logistic item response theory model.[1][2][3] This model establishes a common scale for both reader ability and text difficulty, allowing for a direct comparison between the two. The fundamental principle is that the probability of a reader correctly comprehending a text is a function of the difference between the reader's ability measure and the text's difficulty measure.
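The generic Rasch relationship described above can be written as a short Python function. This sketch uses the textbook one-parameter logistic form with both quantities in logits. It does not model the conversion between Lexile units and logits, or any offset MetaMetrics applies when forecasting comprehension, because the source does not give those details.

```python
import math


def rasch_probability(ability, difficulty):
    """Textbook Rasch (1PL) success probability; both arguments in logits."""
    return 1.0 / (1.0 + math.exp(-(ability - difficulty)))


print(rasch_probability(0.0, 0.0))      # 0.5
print(rasch_probability(1.0, 0.0) > 0.5)  # True: ability exceeds difficulty
```

Only the difference between the two arguments matters. That is why reader and text measures can share one scale.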

The difficulty of a text, its Lexile measure, is determined by two key linguistic features that have been consistently shown to be powerful predictors of text complexity:

- Semantic Difficulty (Word Frequency): This refers to the novelty of the words in a text. The Lexile Framework operationalizes this by calculating the frequency of words against a massive corpus of written material.[4] Words that appear less frequently in the corpus are considered more challenging.

- Syntactic Complexity (Sentence Length): This refers to the complexity of the sentence structures. The framework uses the length of sentences as a proxy for syntactic complexity, with longer sentences generally indicating more complex grammatical structures.[4]

The Lexile Analyzer: The Engine of Text Measurement

The Lexile Analyzer is the software that calculates the Lexile measure for a given text.[5] The process involves several key steps:

- Text Preparation: The text is first prepared for analysis. This includes removing extraneous elements like titles, headings, and incomplete sentences that could skew the measurement.

- Slicing: The entire text is divided into 125-word slices. This ensures that the analysis captures the complexity across the entire document and is not overly influenced by any single section.[6]

- Analysis of Slices: Each 125-word slice is analyzed for its semantic and syntactic characteristics.

- Application of the Lexile Equation: The data from the analysis of the slices are then input into a proprietary algebraic equation, known as the "Lexile specification equation," to determine the Lexile measure for the entire text.[1][4] While the exact formula is proprietary, it is a regression equation that combines the measures of word frequency and sentence length.[7]

The Lexile Corpus

A critical component of the Lexile Analyzer is its extensive corpus of text. Initially developed with a 600-million-word corpus, it has since expanded to over 1.4 billion words.[7] This corpus is compiled from thousands of published texts across a wide range of genres and subject areas, providing a robust baseline for determining word frequencies.[7]

Quantitative Data and Validation Studies

The validity of the Lexile Framework has been established through numerous studies that correlate Lexile measures with other established measures of reading comprehension and text difficulty.

| Validation Study Type | Key Finding | Source |
|---|---|---|
| Correlation with Text Difficulty | The mean log word frequency, a measure of semantic difficulty, was found to have a high correlation with text difficulty. | [4] |
| Correlation with Observed Item Difficulty | The mean log word frequency showed a high correlation with the rank order of reading comprehension test items. | [7] |
| Linking with Standardized Tests | A study combining nine standardized reading tests found a high correlation between the observed logit difficulties of reading passages and the theoretical calibrations from the Lexile equation after correction for range restriction and measurement error. | [7] |
| Internal Consistency | Analysis of basal reader series demonstrated that the Lexile Framework could consistently account for the sequential difficulty of the units within the series. | [8] |

Experimental Protocols

While detailed, step-by-step protocols for the initial development of the Lexile Analyzer are proprietary, the general methodologies for key validation and linking studies are available.

Protocol for Linking Standardized Tests to the Lexile Scale

The process of linking a standardized reading test to the Lexile Framework involves a rigorous psychometric study to establish a statistical relationship between the two scales.

- Content Analysis: A thorough analysis of the content and format of the standardized test is conducted.

- Development of a Linking Instrument: A custom reading assessment, often referred to as a "Lexile linking test," is developed. This test is designed to be content-neutral and to measure reading ability across a wide range of the Lexile scale.

- Co-administration Study: A sample of students takes both the standardized test and the Lexile linking test.

- Psychometric Analysis: The data from the co-administration study is analyzed using the Rasch model to place both the items from the standardized test and the linking test onto a common scale.

- Development of Conversion Tables: Based on the analysis, a set of conversion tables or equations is created that allows for the translation of scores from the standardized test into Lexile measures.

Protocol for Lexile Analyzer Validation

The validation of the Lexile Analyzer is an ongoing process that involves multiple approaches:

- Correlation with External Measures: Lexile measures of texts are correlated with other established readability formulas and with expert judgments of text difficulty.

- Predictive Validity Studies: Studies are conducted to determine how well Lexile measures predict actual reader comprehension. This often involves having students read texts at various Lexile levels and then assessing their comprehension.

- Analysis of Fit to the Rasch Model: The extent to which the data from reader-text interactions fit the predictions of the Rasch model is continuously evaluated.

Visualizing the Lexile Framework

The following diagrams, created using the DOT language, illustrate the core concepts and workflows of the Lexile Framework.

Caption: Core components of the Lexile Framework.

Caption: Workflow of the Lexile Analyzer.

Caption: Process of linking a standardized test to the Lexile Framework.

References

- 1. metametricsinc.com [metametricsinc.com]

- 2. Using Lexile Reading Measures to Improve Literacy [rasch.org]

- 3. scribd.com [scribd.com]

- 4. scispace.com [scispace.com]

- 5. m.youtube.com [m.youtube.com]

- 6. renaissance.com [renaissance.com]

- 7. metametricsinc.com [metametricsinc.com]

- 8. levelupreader.net [levelupreader.net]

The Application of Lexile Measures in Enhancing Clarity and Comprehension of Clinical and Pharmaceutical Documentation: A Technical Guide

For Immediate Release

DURHAM, NC – October 30, 2025 – This technical guide explores the application of Lexile® measures, a widely recognized framework for assessing text complexity, to the domain of scientific and drug development documentation. Primarily directed at researchers, scientists, and drug development professionals, this document outlines the imperative for clear, comprehensible technical literature and proposes a framework for the exploratory application of Lexile measures to enhance the efficacy of communication within the pharmaceutical industry.

The development of novel therapeutics is contingent on the precise and unambiguous communication of complex scientific and clinical information. From clinical trial protocols to regulatory submissions, the clarity of these documents can significantly impact the efficiency of drug development, the integrity of clinical trials, and ultimately, patient safety. While the Lexile framework has been extensively validated in educational settings, its application to the specialized and technical language of pharmaceutical science remains a nascent yet promising field of inquiry.

This guide will provide a comprehensive overview of the Lexile framework, detail methodologies for its application in a pharmaceutical context, present quantitative data from related fields, and propose workflows for integrating readability analysis into the drug development lifecycle.

The Lexile® Framework for Reading: A Primer

The Lexile Framework for Reading provides a quantitative measure of text complexity and reader ability on the same scale.[1] A Lexile text measure is calculated based on two key indicators of text difficulty:

- Sentence Length: Longer sentences are generally more complex and place a higher demand on the reader's short-term memory.

- Word Frequency: Words that appear less frequently in a large corpus of text are considered more challenging.

The resulting Lexile score is a number followed by an "L" (e.g., 1200L), which allows for the matching of readers with texts at an appropriate level of difficulty.

The Case for Readability in Drug Development

While the primary audience for much of the documentation in drug development consists of highly trained professionals, the clarity and readability of these materials are nonetheless critical. A discussion forum hosted by the Association for Applied Human Pharmacology (AGAH e.V.) underscored the need for improvement in the readability and comprehensibility of Investigator's Brochures (IBs) for meaningful risk assessment in early clinical trials.[2] The overall consensus was that an optimized presentation of data is crucial for the best possible understanding of a compound's characteristics and for safeguarding clinical trial participants.[2]

Complex or unclear language in documents such as clinical trial protocols can lead to misinterpretations, protocol deviations, and compromised data integrity. Similarly, the readability of regulatory submissions can impact the efficiency of the review process.

Exploratory Application of Lexile Measures in Pharmaceutical Documentation

While direct, published exploratory studies on the application of Lexile measures to internal drug development documentation are limited, the principles and methodologies are transferable from studies on patient-facing materials and other technical documents. The following sections outline a proposed approach for such exploratory studies.

Experimental Protocol: Assessing the Readability of Technical Documents

A proposed study to assess the readability of technical documents within a pharmaceutical setting would involve the following steps:

- Document Selection: A representative sample of key documents from the drug development lifecycle would be selected. This could include:
  - Clinical Trial Protocols
  - Investigator's Brochures (IBs)
  - Informed Consent Forms (ICFs)
  - Standard Operating Procedures (SOPs)
  - Regulatory Submission Dossiers (e.g., sections of a New Drug Application)

- Text Preparation: The selected documents would be converted to plain text format to be processed by the Lexile Analyzer®, a software tool that calculates the Lexile measure of a text.[3]

- Lexile Analysis: Each document would be analyzed to determine its Lexile score. The analysis would also yield data on mean sentence length and word frequency.

- Data Analysis and Benchmarking: The Lexile scores of the documents would be analyzed to establish a baseline readability level for different types of pharmaceutical documentation. This data could be compared to established Lexile benchmarks for other types of complex texts, such as scientific articles or legal documents.

- Correlation with Outcomes (Exploratory): In a more advanced study, the Lexile scores of documents could be correlated with relevant outcomes. For example:
  - Clinical Trial Protocols: Correlation with the rate of protocol deviations or amendments.
  - Investigator's Brochures: Correlation with the time required for investigator training or the number of queries from clinical sites.
  - Regulatory Submissions: Correlation with the number of review cycles or requests for information from regulatory agencies.
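The exploratory correlation step could start from a plain Pearson coefficient, sketched below in Python. The function name `pearson_r` is hypothetical, and the numbers are made-up placeholders with no empirical meaning. They are not study data. A real analysis would also need to address sample size and confounding factors.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length samples."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equal-length samples with at least 2 points")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


# Placeholder values only: hypothetical protocol Lexile scores and
# hypothetical deviation counts, invented to show the call shape.
protocol_lexile = [1250, 1310, 1400, 1480, 1520]
deviation_counts = [3, 2, 5, 4, 7]
print(pearson_r(protocol_lexile, deviation_counts))
```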

Quantitative Data from Related Fields

While awaiting direct studies in the pharmaceutical industry, valuable insights can be drawn from research on the readability of patient-facing medical information. The following tables summarize quantitative data from studies that have used readability formulas, including Lexile, to assess such materials.

Table 1: Readability of Prescription Drug Warning Labels

| Warning Label Text | Lexile Score | Correct Interpretation Rate |
|---|---|---|
| Take with Food | Beginning | 83.7% |
| Do not take dairy products, antacids, or iron preparations within 1 hour of this medication | 1110L | 7.6% |

Source: Adapted from a study on the impact of low literacy on the comprehension of prescription drug warning labels.[4]

Table 2: Readability of Clinical Research Patient Information Leaflets/Informed Consent Forms (PILs/ICFs)

| Readability Metric | Mean Score/Level | Recommended Level |
|---|---|---|
| Flesch Reading Ease | 49.6 | 60-70 |
| Flesch-Kincaid Grade Level | 11.3 | 6th |
| Gunning Fog | 12.1 | <8 |
| Simplified Measure of Gobbledegook (SMOG) | 13.0 | <6 |

Source: Adapted from a retrospective quantitative analysis of clinical research PILs/ICFs in Ireland and the UK.[5]

These data clearly indicate a significant gap between the complexity of patient-facing medical documents and the recommended reading levels for the general public. A similar gap may exist for professional-facing documentation, hindering effective communication even among experts.
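For orientation, the first two metrics in Table 2 follow the standard published Flesch formulas, sketched below in Python. The functions take pre-computed counts, because syllable counting needs its own heuristic. The example counts are hypothetical and are not taken from the cited study. Gunning Fog and SMOG are not shown.

```python
def flesch_reading_ease(words, sentences, syllables):
    """Flesch Reading Ease: higher scores indicate easier text."""
    return 206.835 - 1.015 * (words / sentences) - 84.6 * (syllables / words)


def flesch_kincaid_grade(words, sentences, syllables):
    """Flesch-Kincaid Grade Level: approximate US school grade."""
    return 0.39 * (words / sentences) + 11.8 * (syllables / words) - 15.59


# Hypothetical passage: 100 words, 5 sentences, 150 syllables.
fre = flesch_reading_ease(100, 5, 150)
fkgl = flesch_kincaid_grade(100, 5, 150)
print(f"{fre:.1f} {fkgl:.1f}")  # 59.6 9.9
```

Like the Lexile measure, both formulas rely on sentence length. They use syllables per word where Lexile uses corpus word frequency.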

Proposed Workflow for Integrating Readability Analysis

The integration of readability analysis into the document development workflow can be a proactive step towards improving the clarity of scientific and clinical communications. The following diagram illustrates a proposed workflow.

Caption: A proposed workflow for integrating Lexile analysis into the technical document lifecycle.

This iterative process ensures that readability is considered a key quality attribute of the documentation, alongside scientific accuracy and regulatory compliance.

Signaling Pathway for Improved Comprehension and Efficiency

The application of readability metrics can be conceptualized as a signaling pathway that leads to improved comprehension and, consequently, enhanced efficiency in the drug development process.

Caption: A signaling pathway illustrating how readability analysis can lead to improved outcomes in drug development.

Conclusion and Future Directions

The application of Lexile measures to the internal documentation of the drug development process presents a significant opportunity to enhance communication, improve efficiency, and reduce errors. While the direct evidence base is still emerging, the foundational principles of readability and the methodologies for its assessment are well-established.

Future exploratory studies should focus on:

- Establishing Lexile benchmarks for a wide range of internal pharmaceutical documents.

- Investigating the correlation between the readability of these documents and key performance indicators in drug development.

- Developing best practice guidelines for writing and formatting technical documents to optimize for clarity and comprehension.

By embracing a culture of clear communication, the pharmaceutical industry can streamline its processes, foster better collaboration, and ultimately, accelerate the delivery of safe and effective medicines to patients.

References

- 1. m.youtube.com [m.youtube.com]

- 2. How to Interpret an Investigator’s Brochure for Meaningful Risk Assessment: Results of an AGAH Discussion Forum - PMC [pmc.ncbi.nlm.nih.gov]

- 3. What is an Investigator’s Brochure (IB) in Clinical Trials? | Novotech CRO [novotech-cro.com]

- 4. Reliability of Wikipedia - Wikipedia [en.wikipedia.org]

- 5. Readability and understandability of clinical research patient information leaflets and consent forms in Ireland and the UK: a retrospective quantitative analysis - PMC [pmc.ncbi.nlm.nih.gov]

The Genesis of Text Complexity: A Technical Deep Dive into the Lexile Framework

Durham, NC - For researchers, scientists, and professionals in drug development, the precise and consistent communication of complex information is paramount. The texts they produce and consume demand a nuanced understanding of readability and comprehension. The Lexile Framework for Reading, a ubiquitous tool in education and publishing, offers a standardized approach to measuring text complexity. This technical guide delves into the origins of the Lexile scale, its foundational methodologies, and the empirical data that underpins its validity, providing a comprehensive resource for those who require a granular understanding of text measurement.

The Lexile Framework was developed by MetaMetrics, Inc., an educational measurement company, with its origins tracing back to the early 1980s and the work of its co-founders, A. Jackson Stenner and Malbert Smith III.[1][2] The development was significantly propelled by a series of Small Business Innovation Research (SBIR) grants from the National Institute of Child Health and Human Development (NICHD) starting in 1984.[3] The central goal was to create a scientific, universal scale for measuring both a reader's ability and the difficulty of a text, allowing for a more precise matching of readers to appropriate reading materials.[2][4]

At its core, the Lexile Framework is built upon the principle that text complexity can be quantified by analyzing two key linguistic features: syntactic complexity and semantic difficulty.[5][6] This dual-component model forms the basis of the proprietary "Lexile equation" or "Lexile specification equation" used to calculate the Lexile measure of a text.[5][6]

The Core Algorithm: Deconstructing the Lexile Equation

The Lexile Analyzer, a proprietary software tool, is the engine that calculates Lexile measures.[5] The process begins by breaking down a text into 125-word "slices."[5][7] This slicing method ensures that the analysis is not skewed by variations in sentence length across a long text. For each slice, the analyzer calculates the mean sentence length and the mean log word frequency.

Syntactic Complexity: The Role of Sentence Length

A primary and powerful indicator of the syntactic challenge a text presents is the average length of its sentences.[5] Longer sentences are more likely to contain multiple clauses and complex grammatical structures, placing a greater demand on a reader's short-term memory and information processing capabilities.[5] The Lexile model uses the mean sentence length as its measure of syntactic complexity.
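
The mean sentence length described above can be approximated in a few lines. This is a minimal sketch, not the Lexile Analyzer's method: the function names are hypothetical, and the sentence splitter is a naive assumption that breaks on end punctuation followed by whitespace.

```python
import re


def split_sentences(text: str) -> list[str]:
    """Naive splitter (assumption): break after '.', '!' or '?' plus whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]


def mean_sentence_length(text: str) -> float:
    """Average number of whitespace-separated words per sentence."""
    sentences = split_sentences(text)
    if not sentences:
        return 0.0
    word_counts = [len(s.split()) for s in sentences]
    return sum(word_counts) / len(word_counts)


sample = (
    "The drug was well tolerated. "
    "Adverse events were mild, transient, and resolved without intervention."
)
print(mean_sentence_length(sample))  # 7.0 (sentences of 5 and 9 words)
```

Abbreviations such as "e.g." or "Dr." would be split incorrectly by this rule; a production tool would need more careful sentence boundary detection.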

Semantic Difficulty: The Power of Word Frequency

To quantify the semantic difficulty of a text, MetaMetrics turned to the concept of word frequency. The underlying theory is that words that appear more frequently in a language are more familiar to readers and therefore present less of a comprehension challenge.[5] After analyzing over 50 different semantic variables, researchers at MetaMetrics determined that the mean log word frequency had the highest correlation with text difficulty.[5]

The word frequency is not determined from the text being analyzed itself, but rather by comparing the words in the text to a massive, 600-million-word corpus of written material.[8] This extensive corpus provides a stable and representative baseline of word frequencies in the English language. The logarithm of the word frequency is used to account for the vast differences in the occurrences of common and rare words.
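
To illustrate the idea of a mean log word frequency, the sketch below looks each word up in a reference table and averages the logarithms. Everything specific here is an assumption for illustration: the tiny frequency table is invented (it is not the MetaMetrics corpus), the base-10 logarithm is an arbitrary choice, and the floor value for unseen words is hypothetical.

```python
import math
import re

# Invented counts per million words; NOT values from the MetaMetrics corpus.
TOY_FREQUENCIES = {
    "the": 60000.0,
    "patient": 120.0,
    "took": 300.0,
    "anticoagulant": 0.5,
}
UNSEEN_FREQUENCY = 0.1  # assumed floor for words missing from the toy table


def mean_log_word_frequency(text: str, frequencies=TOY_FREQUENCIES) -> float:
    """Average log10 reference frequency of the words in `text`."""
    words = re.findall(r"[a-z']+", text.lower())
    if not words:
        raise ValueError("text contains no words")
    logs = [math.log10(frequencies.get(w, UNSEEN_FREQUENCY)) for w in words]
    return sum(logs) / len(logs)


common = mean_log_word_frequency("The patient took the")
rare = mean_log_word_frequency("The patient took the anticoagulant")
print(rare < common)  # True: the rare word pulls the mean down
```

The logarithm compresses the range between very common and very rare words, so a single rare term lowers the mean noticeably without dominating it.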

The Psychometric Foundation: The Rasch Model

A key innovation of the Lexile Framework is its ability to place both reader ability and text difficulty on the same measurement scale.[1][9] This is achieved through the application of the Rasch model, a psychometric model from the field of item response theory.[1] The Rasch model allows for the creation of a "conjoint measurement" system, where the probability of a particular outcome (in this case, a reader successfully comprehending a text) is a function of the difference between the person's ability and the item's difficulty.[1]

In the context of the Lexile Framework, the Rasch model was used to calibrate a common scale, the Lexile scale, where a reader's ability and a text's difficulty are both expressed in Lexile units (L).[9] The scale typically ranges from below 0L for beginning readers and texts (designated as BR for Beginning Reader) to over 1700L for advanced readers and complex texts. A key benchmark of the scale is that a reader is expected to have a 75% comprehension rate with a text that has the same Lexile measure as their reading ability.[5]
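
The relationship between the Rasch model and the 75% benchmark can be sketched numerically. In the standard Rasch model a reader whose ability equals the item difficulty has a 50% chance of success, so reaching 75% at a matched measure requires a constant shift of ln 3 (about 1.1 logits). The sketch below assumes such a shift; how the Lexile Framework implements it, and how logits are converted to Lexile units, is not stated in the text above, so both are left out or labeled as assumptions.

```python
import math

# Assumed constant shift (in logits) so that a matched reader scores 0.75.
LOGIT_OFFSET = math.log(3)


def rasch_probability(ability: float, difficulty: float, offset: float = 0.0) -> float:
    """Rasch success probability for ability and difficulty given in logits."""
    z = ability - difficulty + offset
    return 1.0 / (1.0 + math.exp(-z))


print(rasch_probability(0.0, 0.0))                          # 0.5 in the plain model
print(round(rasch_probability(0.0, 0.0, LOGIT_OFFSET), 2))  # 0.75 with the shift
```

Because the probability depends only on the difference between ability and difficulty, the same function applies anywhere on the scale.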

Experimental Validation

The validity of the Lexile Framework has been established through numerous studies that demonstrate a strong correlation between Lexile measures and other established measures of reading comprehension and text difficulty.[3] Initial validation efforts involved linking the Lexile scale to standardized reading tests such as The Iowa Tests of Basic Skills and the Stanford Achievement Test.[3] These studies consistently showed a strong positive relationship between a student's reading comprehension score on these tests and their Lexile measure.[3]

Further validation has been an ongoing process, with hundreds of independent research studies utilizing Lexile measures as a metric for text complexity.[10] One notable finding from the early research was the high negative correlation (r = -0.779) between mean log word frequency and text difficulty, underscoring the significance of this variable in the Lexile equation.[5][8]

Below is a summary of the core components of the Lexile Framework's development:

| Component | Description |
|---|---|
| Theoretical Basis | Text complexity is a function of syntactic and semantic difficulty. |
| Syntactic Variable | Mean Sentence Length |
| Semantic Variable | Mean Log Word Frequency (derived from a 600-million-word corpus) |
| Psychometric Model | Rasch Model for conjoint measurement of reader ability and text difficulty |
| Unit of Measurement | Lexile (L) |
| Target Comprehension | 75% comprehension when reader's Lexile measure matches the text's Lexile measure |

The Lexile Analysis Workflow

The process of determining a Lexile measure for a given text follows a structured workflow, which can be visualized as follows:

Logical Relationship of Core Components

The fundamental relationship between the core components of the Lexile Framework can be illustrated as follows:

References

- 1. metametricsinc.com [metametricsinc.com]

- 2. cdn.lexile.com [cdn.lexile.com]

- 3. metametricsinc.com [metametricsinc.com]

- 4. researchgate.net [researchgate.net]

- 5. cdn.lexile.com [cdn.lexile.com]

- 6. metametricsinc.com [metametricsinc.com]

- 7. cheshirelibraryblog.com [cheshirelibraryblog.com]

- 8. metametricsinc.com [metametricsinc.com]

- 9. advancebookreaders.com [advancebookreaders.com]

- 10. metametrics.com [metametrics.com]

A Technical Deep Dive: The Genesis and Funding of the Lexile Framework

A Whitepaper on the Foundational Development and Initial Financial Backing of the Lexile Framework for Reading

This technical guide provides an in-depth exploration of the initial development and funding of the Lexile Framework for Reading. It is intended for researchers, psychometricians, and educational technology professionals interested in the scientific underpinnings of widely adopted educational tools. This document details the core methodologies, the pivotal role of federal grant funding, and the early validation protocols that established the framework as a durable measure of reading ability and text complexity.

Introduction: The Scientific Pursuit of a Common Metric

The Lexile Framework for Reading was conceived to address a fundamental challenge in education: accurately matching readers with texts of appropriate difficulty to optimize learning and growth. Developed by Dr. A. Jackson Stenner and Dr. Malbert Smith III, co-founders of MetaMetrics, Inc., the framework introduced a common scale for measuring both reader ability and text complexity in the same units, known as Lexiles (L).[1][2][3] The core innovation was the application of a scientific, psychometric model to create an objective, scalable system, moving beyond traditional, often subjective, leveling methods.[3] This guide focuses on the seminal period of its creation, tracing its journey from a research concept to a validated, operational framework.

Initial Funding: The Role of Federal Research Grants

The foundational research and development of the Lexile Framework were substantially supported by the U.S. government, primarily through the Small Business Innovation Research (SBIR) program.[4] The National Institute of Child Health and Human Development (NICHD), a division of the National Institutes of Health (NIH), provided the critical early-stage funding that enabled MetaMetrics to pursue its long-term research agenda.[4]

Quantitative Funding Data

Between 1984 and 1996, MetaMetrics was the recipient of five separate SBIR grants from the NICHD. This sustained funding was instrumental in the multi-year research effort required to develop and validate the framework. While a comprehensive public database detailing the precise award dates and amounts for these specific historical grants is not readily accessible, the SBIR program operates in distinct phases, with Phase I grants focused on establishing technical merit and feasibility and Phase II grants supporting full-scale research and development.

| Funding Body | Grant Program | Number of Grants | Time Period | Stated Purpose |
|---|---|---|---|---|
| National Institute of Child Health and Human Development (NICHD) | Small Business Innovation Research (SBIR) | 5 | 1984–1996 | To develop a universal measurement system for reading and writing.[5] |

Core Methodology: A Psychometric Approach

The technical core of the Lexile Framework is its basis in psychometric theory, specifically the Rasch model—a one-parameter logistic model from Item Response Theory.[6][7] This model allows for the placement of both reader ability and text difficulty onto a single, equal-interval scale.[8][9] This conjoint measurement is the key feature that enables the direct comparison and matching of readers and texts.[8]

The initial Lexile equation was a regression formula designed to predict the difficulty of a text based on two core linguistic features that decades of readability research had identified as powerful and reliable predictors of text complexity.[1]

- Syntactic Complexity (Sentence Length): This variable measures the average length of sentences in a text. Longer sentences are hypothesized to place a greater load on a reader's short-term memory, thereby increasing the cognitive demand of the reading task.[1]

- Semantic Difficulty (Word Frequency): This variable is an indicator of vocabulary difficulty. The framework operates on the principle that words appearing less frequently in a large corpus of written language are less likely to be familiar to a reader, thus making the text more challenging.[1]

Experimental Protocol: Text Calibration

The process of assigning a Lexile measure to a text, known as calibration, follows a defined protocol.

- Text Sampling: The text is first parsed into 125-word slices. If the 125th word falls mid-sentence, the slice is extended to the end of that sentence. This slicing method ensures that local variations in text complexity are accounted for and prevents shorter, simpler sentences from being disproportionately weighted against longer, more complex ones in the overall analysis.[9]

- Variable Analysis: For each slice, the two core variables are measured:

  - The average sentence length is calculated.

  - The frequency of each word is determined by referencing a large, proprietary corpus of text. The initial development relied on the best available corpora of the time, which have since been expanded. The modern Lexile corpus contains over 600 million words.[1]

- Application of the Lexile Equation: The values for mean sentence length and mean word frequency are entered into the Lexile equation to produce a Lexile measure for each slice.

- Averaging: The Lexile measures of all slices are then averaged to determine the final Lexile measure for the entire text.

- Rasch Modeling: The entire process is anchored by the Rasch model, which converts the raw text characteristics into measurements on a consistent, linear scale.[6]
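
The sampling and averaging steps above can be sketched as follows. This is an illustration under stated assumptions, not the Lexile Analyzer: the function names are hypothetical, the sentence splitter is naive, the treatment of a short trailing slice is a guess, and the per-slice scoring function is passed in by the caller because the actual Lexile equation is proprietary.

```python
import re
from typing import Callable


def split_sentences(text: str) -> list[str]:
    """Naive splitter (assumption): break after '.', '!' or '?' plus whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def slice_text(text: str, target_words: int = 125) -> list[str]:
    """Group whole sentences into slices of at least `target_words` words.

    A slice closes at the end of the sentence that contains the target-th word.
    """
    slices, current, count = [], [], 0
    for sentence in split_sentences(text):
        current.append(sentence)
        count += len(sentence.split())
        if count >= target_words:
            slices.append(" ".join(current))
            current, count = [], 0
    if current:  # trailing partial slice is kept here; the real tool may differ
        slices.append(" ".join(current))
    return slices


def text_measure(text: str, score_slice: Callable[[str], float],
                 target_words: int = 125) -> float:
    """Average a caller-supplied per-slice score over all slices."""
    slices = slice_text(text, target_words)
    if not slices:
        raise ValueError("text contains no sentences")
    scores = [score_slice(s) for s in slices]
    return sum(scores) / len(scores)


demo = "One two three. Four five six seven. Eight nine."
print(slice_text(demo, target_words=5))
# ['One two three. Four five six seven.', 'Eight nine.']
```

Passing the scoring function as a parameter keeps the slicing logic independent of whatever equation is applied to each slice.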

Initial Validation Studies

A critical component of the framework's development was a series of validation studies to establish its construct validity. This involved demonstrating a strong correlation between the Lexile Framework's measurements and the difficulty of texts as determined by other established, independent measures, such as nationally normed reading comprehension tests.

Methodology

The primary validation protocol involved the following steps:

- Selection of Standardized Tests: A set of widely used, nationally normed reading comprehension tests was selected.

- Item Difficulty Analysis: The Rasch item difficulties for the reading passages on these tests were obtained, often from the test publishers themselves. This provided an empirical measure of each passage's difficulty based on actual student performance data.

- Lexile Calibration: The text of each reading passage was then analyzed using the Lexile Analyzer to generate a "theory-based" Lexile measure.

- Correlational Analysis: The empirical Rasch difficulties (observed difficulty) were correlated with the Lexile measures (predicted difficulty).
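
The final step, correlating observed with predicted difficulty, amounts to a Pearson correlation. The sketch below uses invented numbers purely to show the calculation; it does not reproduce any data from the validation studies, and it omits the corrections for measurement error and range restriction that those studies applied.

```python
import math


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson product-moment correlation between two equal-length samples."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two samples of equal length >= 2")
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return sxy / math.sqrt(sxx * syy)


# Invented illustration data, one value per test passage.
observed = [-1.2, -0.4, 0.1, 0.9, 1.6]   # empirical Rasch difficulties
predicted = [-1.0, -0.6, 0.3, 0.7, 1.8]  # theory-based measures, same scale
print(round(pearson_r(observed, predicted), 2))
```

A value close to 1 indicates that passages predicted to be harder were also harder for students in practice.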

Quantitative Results

These studies demonstrated a very strong positive relationship between the Lexile measures and the observed difficulties of the test passages. A key 1987 study by Stenner, Smith, Horabin, and Smith analyzed 1,780 items from nine nationally normed tests.[9] The resulting correlation between the Lexile calibrations and the Rasch item difficulties was 0.93 after correcting for measurement error and range restriction, providing powerful evidence for the framework's validity.[1]

While a detailed breakdown of the correlations for each individual test from the initial studies is not available in the reviewed literature, the high overall correlation across a wide range of established assessments was a pivotal result. The table below summarizes the types of tests used in these linking studies over the years, illustrating the breadth of the validation effort.

| Standardized Test Type | Grades Studied | Typical Correlation with Lexile Measures |
|---|---|---|
| Norm-Referenced Reading Comprehension Tests | K-12 | Strong Positive (typically >0.70) |
| State-Mandated Achievement Tests | Various | Strong Positive |
| Interim/Benchmark Assessments | K-12 | Strong Positive |

Note: The correlations in the table above are representative of the strong positive relationships found in numerous linking studies reported in MetaMetrics' technical documents.[5] Specific values vary by test and student population.

Visualizing the Development and Workflow

The following diagrams illustrate the key relationships and processes in the initial development of the Lexile Framework.

Conclusion

The initial development of the Lexile Framework for Reading represents a significant application of psychometric principles to the field of literacy. Its creation was not an overnight endeavor but a deliberate, multi-year research and development effort made possible by crucial seed funding from the National Institute of Child Health and Human Development. By grounding the framework in the Rasch model and focusing on robust, objective indicators of text complexity—sentence length and word frequency—the developers created a scientifically defensible and highly scalable tool. The strong results of early validation studies provided the empirical evidence necessary for its widespread adoption by state departments of education, assessment publishers, and curriculum developers, fundamentally shaping the landscape of reading assessment and instruction.

References

- 1. metametricsinc.com [metametricsinc.com]

- 2. m.youtube.com [m.youtube.com]

- 3. levelupreader.net [levelupreader.net]

- 4. researchgate.net [researchgate.net]

- 5. cdn.lexile.com [cdn.lexile.com]

- 6. Home | RePORT [report.nih.gov]

- 7. scispace.com [scispace.com]

- 8. youtube.com [youtube.com]

- 9. partnerhelp.metametricsinc.com [partnerhelp.metametricsinc.com]

Methodological & Application

Utilizing the Lexile Framework for Advanced Reading Research: Application Notes and Protocols for Scientific and Clinical Contexts

For Immediate Release

These application notes provide a detailed guide for researchers, scientists, and drug development professionals on leveraging the Lexile Framework for Reading to enhance the clarity and effectiveness of scientific and clinical communication. By applying a standardized metric to text complexity, researchers can improve patient comprehension, ensure the accessibility of clinical trial materials, and contribute to more robust research outcomes in health literacy.

Introduction to the Lexile Framework in a Research Context

The Lexile Framework for Reading offers a scientific approach to measuring both the complexity of a text and the reading ability of an individual on the same scale.[1][2] A Lexile measure is a number followed by an "L" (e.g., 1000L), which indicates the reading demand of a text or a person's reading ability.[2] This framework is predicated on over three decades of research and is widely adopted for its accuracy in matching readers with appropriate texts.[3][4]

While traditionally used in educational settings, the principles of the Lexile Framework are increasingly relevant in scientific and clinical research. Ensuring that patient-facing materials, such as informed consent forms, patient education brochures, and drug information leaflets, are at an appropriate reading level is crucial for patient understanding, adherence, and informed decision-making.[5] Research has consistently shown that a significant portion of the adult population has limited health literacy, making the assessment of text complexity a critical component of ethical and effective research.

Core Principles of the Lexile Framework

The Lexile Framework is based on two primary predictors of text difficulty:

- Semantic Difficulty (Word Frequency): This refers to the complexity of the vocabulary used. The Lexile Framework analyzes the frequency of words in a vast corpus of text; less frequent words are considered more challenging.[4]

- Syntactic Complexity (Sentence Length): This relates to the structure of sentences. Longer, more complex sentence structures are more demanding for a reader to process.[6]

A proprietary algorithm combines these two factors to produce a single Lexile measure for a given text.[7]

Application in a Scientific and Drug Development Context

The Lexile Framework can be a valuable tool for:

- Assessing the Readability of Clinical Trial Materials: Evaluating the complexity of informed consent forms, study protocols, and patient-reported outcome measures to ensure they are understandable to the target participant population.[5]

- Developing Patient Education Materials: Creating patient-friendly content that aligns with the average reading ability of the general public, which is often cited as being around the 8th-grade level.[8]

- Health Literacy Research: Investigating the relationship between the Lexile level of health information and patient comprehension, adherence to treatment, and health outcomes.

- Standardizing Text Complexity in Research: Providing an objective measure of text complexity in studies that use written materials as part of the experimental design.

Experimental Protocols

Protocol for Assessing the Lexile Level of Research Materials

This protocol outlines the steps to determine the Lexile measure of written materials, such as informed consent forms or patient education documents.

Objective: To quantitatively assess the reading complexity of a text using the Lexile Framework.

Materials:

- The text to be analyzed in a plain text format (.txt).

- Access to the Lexile Analyzer® tool.

Procedure:

- Prepare the Text: Convert the document into a plain text file. Ensure that any extraneous text not intended for the reader (e.g., version numbers, internal review notes) is removed.

- Access the Lexile Analyzer: Navigate to the official Lexile website and access the Lexile Analyzer tool. This may require creating a free account.[9]

- Upload the Text: Upload the prepared plain text file into the analyzer.

- Analyze the Text: Initiate the analysis process. The tool will process the text based on its semantic and syntactic characteristics.[4]

- Record the Lexile Measure: The Lexile Analyzer will output a Lexile measure for the text. Record this measure for your research records.

- Interpret the Results: Compare the obtained Lexile measure to established benchmarks for different populations (see Table 1). For patient-facing materials in the United States, a target Lexile range is often recommended to align with the average adult reading level.

Protocol for a Comparative Readability Study

This protocol describes a methodology for comparing the readability of different versions of a document or documents from different sources.

Objective: To compare the Lexile measures of two or more texts to determine their relative readability.

Materials:

- All text documents to be compared, each in a plain text format.

- Access to the Lexile Analyzer®.

- Statistical software for data analysis.

Procedure:

- Text Selection: Clearly define the criteria for selecting the texts for comparison. For example, you might compare an original informed consent form with a revised, "plain language" version.

- Lexile Measurement: Follow the Protocol for Assessing the Lexile Level of Research Materials above to determine the Lexile measure for each document.

- Data Tabulation: Record the Lexile measures for all documents in a structured table for easy comparison.

- Statistical Analysis: If comparing groups of documents, consider using appropriate statistical tests (e.g., t-test, ANOVA) to determine if there are significant differences in the mean Lexile measures between the groups.

- Reporting: Report the Lexile measures for each document or the mean and standard deviation for each group of documents. Clearly state the findings of your comparative analysis.
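
For the statistical analysis step, a two-group comparison can be sketched with the standard library alone. The example below computes Welch's unequal-variance t statistic and its degrees of freedom, which is one variant of the t-test mentioned above and is chosen here as an assumption; the Lexile values are invented. Obtaining a p-value requires the t distribution, for which a statistics package is the practical route (for example, SciPy's `scipy.stats.ttest_ind` with `equal_var=False`).

```python
import math
from statistics import mean, variance


def welch_t(a: list[float], b: list[float]) -> tuple[float, float]:
    """Return (t statistic, degrees of freedom) for Welch's t-test."""
    na, nb = len(a), len(b)
    if na < 2 or nb < 2:
        raise ValueError("each group needs at least two documents")
    va, vb = variance(a) / na, variance(b) / nb  # squared standard errors
    if va + vb == 0:
        raise ValueError("both groups have zero variance")
    t = (mean(a) - mean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (na - 1) + vb ** 2 / (nb - 1))
    return t, df


# Invented Lexile measures for two groups of documents.
original_forms = [1200.0, 1250.0, 1300.0]
plain_language_forms = [900.0, 950.0, 1000.0]
t_stat, dof = welch_t(original_forms, plain_language_forms)
print(round(t_stat, 2), round(dof, 1))  # 7.35 4.0
```

Reporting the group means and standard deviations alongside the test statistic, as the final step recommends, lets readers judge the size of the difference as well as its significance.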

Data Presentation

Quantitative data from readability studies should be presented in a clear and organized manner.

Table 1: Example of Lexile Measures for Different Text Types

| Text Type | Sample Document | Lexile Measure |
|---|---|---|
| Academic Publication | Journal Article Abstract | 1400L |
| Standard Informed Consent | Original Clinical Trial Consent Form | 1250L |
| Plain Language Informed Consent | Revised Clinical Trial Consent Form | 950L |
| Patient Education Brochure | "Understanding Your Condition" | 800L |

Table 2: Fictional Data from a Comparative Study of Patient Information Leaflets

| Drug Class | Manufacturer A (Mean Lexile ± SD) | Manufacturer B (Mean Lexile ± SD) | p-value |
|---|---|---|---|
| Antihypertensives (n=10) | 1150L ± 85L | 980L ± 70L | <0.05 |
| Statins (n=10) | 1210L ± 92L | 1020L ± 80L | <0.05 |
| Anticoagulants (n=10) | 1300L ± 110L | 1050L ± 95L | <0.01 |

Visualizations

Workflow for Applying the Lexile Framework in Clinical Research

References

- 1. cdn.lexile.com [cdn.lexile.com]

- 2. youtube.com [youtube.com]

- 3. metametrics.com [metametrics.com]

- 4. metametricsinc.com [metametricsinc.com]

- 5. Assessing the Readability of Clinical Trial Consent Forms for Surgical Specialties - PubMed [pubmed.ncbi.nlm.nih.gov]

- 6. youtube.com [youtube.com]

- 7. scispace.com [scispace.com]

- 8. cdn.technologynetworks.com [cdn.technologynetworks.com]

- 9. education.udel.edu [education.udel.edu]

Unlocking Text Complexity: A Detailed Methodological Guide to Determining Lexile Scores

Application Notes and Protocols for Researchers, Scientists, and Drug Development Professionals

These application notes provide a comprehensive overview and detailed protocols for understanding and applying the methodology behind Lexile® scores, a widely used measure of text complexity. This guide is designed for researchers, scientists, and professionals who require a thorough understanding of the quantitative underpinnings and procedural steps involved in assigning Lexile measures to written texts.

Introduction to the Lexile Framework for Reading

The Lexile Framework for Reading is a scientific approach to measuring both the complexity of a text and a reader's ability on the same scale.[1][2][3] This allows for a precise matching of readers with texts that are appropriately challenging, fostering comprehension and skill development. The framework is underpinned by a proprietary algorithm that analyzes the semantic and syntactic characteristics of a text to produce a Lexile score.[1][2]

A Lexile text measure is a number followed by an "L" (e.g., 1050L), which represents the difficulty of a text. The Lexile scale is a developmental scale ranging from below 0L for beginning reader texts to over 1700L for advanced and complex materials.[1]

Core Principles of Lexile Text Measurement

The determination of a Lexile score for a text is based on two primary and well-established predictors of text difficulty:

- Syntactic Complexity: This refers to the complexity of sentence structure. Longer, more complex sentences are generally more difficult to process and comprehend. In the Lexile framework, this is quantified by mean sentence length.[2]

- Semantic Difficulty: This relates to the complexity of the vocabulary used in a text. Words that appear less frequently in a language are typically more challenging for a reader. This is quantified by mean log word frequency.[2]

The proprietary Lexile equation combines these two factors to calculate the final Lexile score. While the exact formula is not public, the relationship is such that longer sentences and lower frequency words result in a higher Lexile measure.[2]

Quantitative Data Summary

The following tables summarize the key quantitative parameters involved in the Lexile scoring methodology.

| Parameter | Description | Unit of Measurement | Role in Lexile Score |
|---|---|---|---|
| Mean Sentence Length | The average number of words per sentence in a given text sample. | Words | A primary indicator of syntactic complexity. Longer sentences increase the Lexile score. |
| Mean Log Word Frequency | The logarithm of the frequency with which words in the text appear in the MetaMetrics Corpus. | Logits | A primary indicator of semantic difficulty. Lower frequency words (and thus a lower mean log frequency) increase the Lexile score. |
| Lexile Score | The final calculated measure of text complexity. | Lexiles (L) | The output of the Lexile equation, representing the text's position on the Lexile scale. |

Table 1: Core Quantitative Parameters in Lexile Text Measurement

| Component | Description | Key Statistics |
|---|---|---|
| MetaMetrics Corpus | A large, curated body of written English text used as a reference for determining word frequencies. | Comprises over 1.4 billion words from a wide range of K-12 educational materials. |
| Lexile Analyzer | The proprietary software that performs the automated analysis of a text to determine its Lexile score. | Processes text to calculate mean sentence length and mean log word frequency. |

Table 2: Key Components of the Lexile Analysis Infrastructure

Experimental Protocols

The following protocols outline the standardized methodology for determining the Lexile score of a text.

Protocol for Text Preparation

Objective: To prepare a text file for analysis by the Lexile Analyzer.

Materials:

- Digital text file (e.g., .txt, .doc, .pdf)

- Word processing software (e.g., Microsoft Word, Google Docs)

Procedure:

- Obtain Digital Text: Secure a digital version of the text to be analyzed.

- Ensure Text Integrity: Verify that the digital text is a faithful representation of the original, with no significant omissions or additions of content.

- Format as Plain Text:

  - Open the text in a word processor.

  - Remove any elements that are not part of the main body of the text, such as:

    - Tables of contents

    - Indexes

    - Bibliographies

    - Footnotes and endnotes

    - Page numbers and headers/footers

  - Save the cleaned text as a plain text file (.txt). This ensures that no formatting codes interfere with the analysis.
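
Part of this clean-up can be scripted once the text has been exported. The sketch below is a hypothetical helper, not part of any MetaMetrics tool: it drops lines that look like page numbers and any running header or footer lines the user lists, then writes a UTF-8 plain text file. Removing tables of contents, indexes, bibliographies, and notes still needs human judgment and is not attempted here.

```python
import re
from pathlib import Path

# Lines such as "12", "Page 12", or "Page 12 of 40" (assumed page-number styles).
PAGE_NUMBER = re.compile(r"^\s*(page\s+)?\d+(\s+of\s+\d+)?\s*$", re.IGNORECASE)


def clean_text(raw: str, repeated_lines=()) -> str:
    """Drop page-number lines and the listed running headers/footers."""
    skip = {line.strip() for line in repeated_lines}
    kept = []
    for line in raw.splitlines():
        stripped = line.strip()
        if PAGE_NUMBER.match(stripped) or (stripped and stripped in skip):
            continue
        kept.append(line.rstrip())
    return "\n".join(kept).strip() + "\n"


def save_plain_text(raw: str, destination: str, repeated_lines=()) -> Path:
    """Write the cleaned text to `destination` as UTF-8 plain text."""
    path = Path(destination)
    path.write_text(clean_text(raw, repeated_lines), encoding="utf-8")
    return path


raw_export = (
    "Consent Form Header\n"
    "You may stop at any time.\n"
    "Page 2 of 5\n"
    "Consent Form Header\n"
    "Ask questions freely.\n"
    "12\n"
)
print(clean_text(raw_export, ["Consent Form Header"]))
```

Note that a body line consisting only of a number would also be removed by this pattern, so the output should be checked against the original before analysis.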

Protocol for Lexile Analysis using the Lexile Analyzer

Objective: To obtain a Lexile score for a prepared text using the Lexile Analyzer software.

Materials:

- Prepared plain text file (.txt)

- Access to the Lexile Analyzer software (typically through a licensed MetaMetrics product or service)

Procedure:

- Initiate the Lexile Analyzer: Launch the Lexile Analyzer software.

- Load the Text File: Import the prepared plain text file into the analyzer.

- Execute Analysis: Initiate the analysis process. The software will perform the following automated steps:

  - Text Slicing: The text is divided into smaller, manageable segments for analysis.

  - Sentence Boundary Detection: The software identifies the beginning and end of each sentence.

  - Word Tokenization: The text is broken down into individual words (tokens).

  - Sentence Length Calculation: The number of words in each sentence is counted, and the mean sentence length for the entire text is calculated.

  - Word Frequency Analysis: Each word is compared against the MetaMetrics Corpus to determine its frequency of occurrence. The logarithm of this frequency is taken.

  - Mean Log Word Frequency Calculation: The average of the log word frequencies for all words in the text is calculated.

- Lexile Score Calculation: The Lexile Analyzer applies the proprietary Lexile equation to the calculated mean sentence length and mean log word frequency to generate the final Lexile score.

- Review Results: The output will display the Lexile score for the text, along with the mean sentence length and mean log word frequency.

Visualizing the Methodology

The following diagrams, generated using the DOT language, illustrate the key workflows and relationships in the Lexile scoring methodology.

Caption: Workflow for determining the Lexile score of a text.

Caption: Conceptual relationship of factors influencing a Lexile score.


Applying Lexile Measures in Educational Research: Application Notes and Protocols

For Researchers, Scientists, and Drug Development Professionals

These application notes provide a comprehensive guide for incorporating Lexile® measures into educational research studies. The Lexile Framework for Reading offers a scientific approach to measuring both a reader's ability and the complexity of a text on the same scale, making it a valuable tool for quantitative research in reading comprehension, literacy interventions, and educational program evaluation.

Introduction to the Lexile Framework

The Lexile Framework is a widely used methodology that quantifies both reader ability (Lexile reader measure) and text complexity (Lexile text measure) on a single, developmental scale.[1] Lexile measures are expressed as a number followed by an "L" (e.g., 850L). This dual-measurement system allows for the precise matching of readers with texts that are at an appropriate level of difficulty to foster reading growth.[2]

The determination of a text's Lexile measure is based on two key linguistic features:

- Word Frequency: The prevalence of words in a large corpus of text.

- Sentence Length: The number of words per sentence.

A reader's Lexile measure is typically determined through a standardized reading assessment that has been linked to the Lexile scale.[3]

Data Presentation

In educational research utilizing Lexile measures, clear and structured presentation of quantitative data is crucial for interpretation and comparison. The following tables are examples of how Lexile data can be presented in a research context.

Table 1: Participant Demographics and Baseline Lexile Measures

| Characteristic | Intervention Group (n=50) | Control Group (n=50) | Total (N=100) |
|---|---|---|---|
| Age (Mean ± SD) | 10.2 ± 1.5 | 10.1 ± 1.6 | 10.15 ± 1.55 |
| Gender (% Female) | 52% | 48% | 50% |
| Baseline Lexile (Mean ± SD) | 650L ± 50L | 645L ± 55L | 647.5L ± 52.5L |

Table 2: Pre- and Post-Intervention Lexile Measures by Group

| Group | Pre-Intervention Lexile (Mean ± SD) | Post-Intervention Lexile (Mean ± SD) | Mean Lexile Gain (L) |
|---|---|---|---|
| Intervention | 650L ± 50L | 780L ± 60L | 130L |
| Control | 645L ± 55L | 665L ± 58L | 20L |

Table 3: Longitudinal Lexile Growth Over Three Time Points

| Time Point | Intervention Group Lexile (Mean ± SD) | Control Group Lexile (Mean ± SD) |
|---|---|---|
| Baseline (T1) | 650L ± 50L | 645L ± 55L |
| Mid-Point (T2 - 6 months) | 715L ± 55L | 655L ± 56L |
| End-Point (T3 - 12 months) | 780L ± 60L | 665L ± 58L |

Experimental Protocols

The following are detailed methodologies for two common types of research studies that utilize Lexile measures: a reading intervention study and a longitudinal reading growth study.

Protocol for a Lexile-Based Reading Intervention Study

This protocol outlines a randomized controlled trial to assess the efficacy of a targeted reading intervention.

3.1.1. Participant Recruitment and Screening

- Define Target Population: Specify the age or grade level of participants (e.g., 4th-grade students).
- Inclusion Criteria:
  - Students within the defined age/grade range.
  - Students with a baseline Lexile measure within a specified range (e.g., 400L to 700L) as determined by a standardized reading assessment (e.g., MAP Growth Reading Test, STAR Reading Test).[3]
- Exclusion Criteria:
  - Students with diagnosed learning disabilities that would require specialized instruction beyond the scope of the intervention.
  - Students who are not proficient in the language of the intervention.
- Informed Consent: Obtain informed consent from parents or legal guardians and assent from the student participants.

3.1.2. Randomization and Blinding

- Randomization: Randomly assign participants to either the intervention group or a control group using a random number generator.
- Blinding: If possible, blind the assessors who administer the pre- and post-intervention reading assessments to the group allocation of the participants.
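The randomization step can be sketched as follows. This is a minimal example that assumes simple 1:1 allocation with a fixed seed; the function name `randomize`, the seed value, and the participant IDs are hypothetical.

```python
import random

def randomize(participant_ids, seed=2024):
    """Shuffle participants and split them into two equal-sized arms."""
    rng = random.Random(seed)  # fixed seed so the allocation can be reproduced
    shuffled = list(participant_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {
        "intervention": sorted(shuffled[:half]),
        "control": sorted(shuffled[half:]),
    }

# Hypothetical participant IDs 1..100
allocation = randomize(range(1, 101))
```

Keeping the allocation table separate from the assessment records is one way to support the blinding of assessors described above.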

3.1.3. Intervention Design

- Intervention Group:
  - Text Selection: Provide students with a collection of reading materials that are within their "Lexile range," which is typically defined as 100L below to 50L above their current Lexile reader measure.[1][3] This ensures the texts are challenging but not frustrating.
  - Intervention Delivery: Implement a structured reading program for a set duration (e.g., 30 minutes daily for 12 weeks). This could involve independent reading, small group instruction, or the use of a specific reading software program.
- Control Group:
  - The control group will continue with the standard reading curriculum without the targeted Lexile-based intervention.
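The text selection rule (100L below to 50L above the reader measure) can be expressed as a small filter. The function names and the library of titles and measures below are hypothetical and serve only to illustrate the rule.

```python
def lexile_range(reader_measure):
    """Return the (low, high) Lexile range: 100L below to 50L above."""
    return reader_measure - 100, reader_measure + 50

def texts_in_range(reader_measure, library):
    """Select titles whose text measure falls inside the reader's range."""
    low, high = lexile_range(reader_measure)
    return [title for title, measure in library.items() if low <= measure <= high]

# Hypothetical text measures (numeric part of the Lexile measure)
library = {"Text A": 520, "Text B": 640, "Text C": 700, "Text D": 760}
selected = texts_in_range(650, library)  # reader at 650L, so the range is 550L to 700L
```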

3.1.4. Data Collection

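- Baseline (Pre-Intervention): Administer a standardized reading assessment to all participants to obtain their initial Lexile reader measure.
- Post-Intervention: At the end of the intervention period, re-administer the same assessment to all participants to obtain their post-intervention Lexile reader measure.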

3.1.5. Statistical Analysis

- Descriptive Statistics: Calculate the mean and standard deviation for baseline and post-intervention Lexile scores for both groups.
- Inferential Statistics:
  - Use an independent samples t-test to compare the mean Lexile gain (post-intervention score minus pre-intervention score) between the intervention and control groups.
  - Alternatively, use an Analysis of Covariance (ANCOVA) with the post-intervention Lexile score as the dependent variable, group (intervention vs. control) as the independent variable, and the pre-intervention Lexile score as a covariate to control for initial differences.
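The gain-score comparison can be sketched with the standard library alone. The example below computes the pooled-variance (Student's) t statistic and its degrees of freedom, assuming equal variances in the two groups. The function name and all scores are hypothetical. In practice a statistics package such as SciPy (`scipy.stats.ttest_ind`) would also supply the p-value.

```python
import math
from statistics import mean, variance

def pooled_t_test(gains_a, gains_b):
    """Independent-samples t statistic and degrees of freedom (equal variances assumed)."""
    n_a, n_b = len(gains_a), len(gains_b)
    df = n_a + n_b - 2
    pooled_var = ((n_a - 1) * variance(gains_a) + (n_b - 1) * variance(gains_b)) / df
    std_err = math.sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return (mean(gains_a) - mean(gains_b)) / std_err, df

# Hypothetical (pre, post) Lexile reader measures
intervention = [(640, 770), (655, 790), (660, 775), (645, 785)]
control = [(650, 668), (640, 662), (648, 670), (642, 660)]

# Gain = post-intervention score minus pre-intervention score
gains_i = [post - pre for pre, post in intervention]
gains_c = [post - pre for pre, post in control]
t_stat, df = pooled_t_test(gains_i, gains_c)
```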

Protocol for a Longitudinal Study of Reading Growth

This protocol outlines a study to track the natural progression of reading ability over time using Lexile measures.

3.2.1. Participant Cohort

- Define Cohort: Select a cohort of students at a specific starting point (e.g., the beginning of 3rd grade).
- Recruitment: Recruit a representative sample of students from the target population. Obtain informed consent and assent.

3.2.2. Data Collection Timeline

- Time Point 1 (Baseline): At the beginning of the academic year, administer a standardized reading assessment to obtain the initial Lexile reader measure for each participant.
- Time Point 2: At the end of the same academic year, re-administer the same assessment to measure Lexile growth.
- Subsequent Time Points: Continue to administer the assessment at regular intervals (e.g., at the beginning and end of each school year) for the duration of the study (e.g., three years).

3.2.3. Data Analysis

- Descriptive Statistics: Calculate the mean and standard deviation of Lexile scores at each time point.
- Growth Modeling:
  - Use repeated measures ANOVA to analyze the change in Lexile scores over time.
  - Employ hierarchical linear modeling (HLM) or latent growth curve modeling (LGCM) to model individual and group-level growth trajectories. These models can account for variability in initial reading ability and growth rates, and can also incorporate time-varying and time-invariant covariates (e.g., socioeconomic status, instructional practices).

Visualizations

The following diagrams, created using Graphviz (DOT language), illustrate the experimental workflow and logical relationships described in the protocols.

Caption: Workflow for a Lexile-Based Reading Intervention Study.

Caption: Workflow for a Longitudinal Study of Reading Growth Using Lexile Measures.

Limitations and Considerations

While Lexile measures are a powerful tool for educational research, it is important to be aware of their limitations:

- Quantitative Focus: The Lexile Framework is a quantitative measure of text complexity and does not account for qualitative factors such as text structure, genre, author's purpose, or the background knowledge of the reader.
- Not a Diagnostic Tool: A Lexile score indicates a student's reading comprehension level but does not diagnose the underlying reasons for reading difficulties.[2]
- Potential for Misuse: Lexile measures should not be used as the sole factor in determining what a student should read, because student interest and motivation are also critical components of reading development. The framework is intended as a guide, not a prescription, and it has been criticized for being overly simplistic and for potentially limiting readers' choices.

References

Application Notes and Protocols: Utilizing the Lexile Framework for Differentiated Instruction in Research

For Researchers, Scientists, and Drug Development Professionals

Introduction

The Lexile framework measures text complexity based on two key predictors: sentence length and word frequency.[2][4] The resulting Lexile measure is a number followed by an "L" (e.g., 1200L), which indicates the reading demand of the text.[2][3] By knowing both the Lexile measures of scientific documents and the reading abilities of research personnel, organizations can communicate scientific information more effectively and efficiently.

Application in a Research Context

Differentiated instruction in a research setting involves providing scientific information in multiple formats or at varying levels of complexity to accommodate the diverse expertise of the audience. This can range from giving new scientists onboarding materials written at lower Lexile measures to presenting highly complex data to seasoned principal investigators in texts with higher Lexile measures. The goal is to ensure that all personnel can understand and act upon the information presented to them.

Quantitative Data Summary

To illustrate the potential impact of applying the Lexile framework in a research setting, the following tables summarize hypothetical data from studies assessing the effect of text complexity on researcher performance.

Table 1: Impact of Lexile-Adapted Protocols on Experimental Execution Time

| Researcher Experience Level | Average Lexile Measure of Original Protocols | Average Lexile Measure of Adapted Protocols | Average Completion Time for Original Protocols (minutes) | Average Completion Time for Adapted Protocols (minutes) | Percentage Improvement in Completion Time |
|---|---|---|---|---|---|
| New Hire (0-1 year) | 1650L | 1300L | 120 | 90 | 25.0% |
| Mid-Level (2-5 years) | 1650L | 1450L | 95 | 85 | 10.5% |
| Senior Scientist (5+ years) | 1650L | N/A | 75 | N/A | N/A |
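The last column of Table 1 appears to be the relative reduction in completion time, which can be reproduced as below. The function name is hypothetical; the inputs are the hypothetical values from the table.

```python
def percent_improvement(original_minutes, adapted_minutes):
    """Relative reduction in completion time, as a percentage rounded to one decimal."""
    return round((original_minutes - adapted_minutes) / original_minutes * 100, 1)

new_hire = percent_improvement(120, 90)   # New Hire row of Table 1
mid_level = percent_improvement(95, 85)   # Mid-Level row of Table 1
```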

Table 2: Effect of Text Complexity on Protocol Comprehension and Error Rates

| Document Lexile Measure | Target Audience Experience | Average Comprehension Score (%) | Average Rate of Critical Errors per Protocol |
|---|---|---|---|
| 1700L | New Hire | 65 | 4.2 |
| 1500L | New Hire | 85 | 1.5 |
| 1300L | New Hire | 95 | 0.5 |
| 1700L | Senior Scientist | 92 | 0.8 |
| 1500L | Senior Scientist | 98 | 0.2 |

Experimental Protocols

The following protocols provide a framework for implementing and evaluating the use of the Lexile framework for differentiated instruction within a research organization.

Experiment 1: Baseline Readability Assessment of Internal Scientific Documents

Objective: To determine the current range of text complexity for a sample of internal scientific and technical documents.

Methodology:

- Document Sampling:
  - Randomly select a representative sample of 50 internal documents, including standard operating procedures (SOPs), research protocols, and internal reports.
- Text Preparation:
  - For each document, extract the main body of text, excluding tables, figures, and references.
  - Save each text sample as a plain text file (.txt).
- Lexile Analysis:
  - Utilize the Lexile® Analyzer tool to determine the Lexile measure for each text sample.
  - Record the Lexile measure, word count, and mean sentence length for each document.
- Data Analysis:
  - Calculate the mean, median, and range of Lexile measures for the entire sample of documents.
  - Categorize the documents by type (e.g., SOP, protocol, report) and calculate the average Lexile measure for each category.
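The data analysis step can be sketched with the standard library. The records below are hypothetical (document type, Lexile measure) pairs standing in for values recorded from the analyzer; the variable names are likewise illustrative.

```python
from collections import defaultdict
from statistics import mean, median

# Hypothetical (document type, Lexile measure) records
records = [
    ("SOP", 1450), ("SOP", 1550),
    ("Protocol", 1600), ("Protocol", 1700),
    ("Report", 1350),
]

# Overall summary: mean, median, and range across all documents
measures = [measure for _, measure in records]
summary = {
    "mean": mean(measures),
    "median": median(measures),
    "range": (min(measures), max(measures)),
}

# Average Lexile measure per document category
by_type = defaultdict(list)
for doc_type, measure in records:
    by_type[doc_type].append(measure)
mean_by_type = {doc_type: mean(values) for doc_type, values in by_type.items()}
```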

Experiment 2: Comprehension and Performance Study with Lexile-Adapted Protocols

Objective: To assess the impact of Lexile-adapted research protocols on comprehension, task performance, and error rates among scientists with varying levels of experience.

Methodology:

- Participant Recruitment:
  - Recruit a cohort of 30 scientists: 10 new hires (<1 year experience), 10 mid-level scientists (2-5 years experience), and 10 senior scientists (>5 years experience).
- Protocol Selection and Adaptation:
  - Select a standard laboratory protocol with a high baseline Lexile measure (e.g., 1600L).
  - Create two adapted versions of the protocol: one at a lower Lexile measure (e.g., 1400L) and one at a significantly lower measure (e.g., 1200L) by simplifying sentence structure and substituting less frequent words with more common synonyms where scientifically appropriate.
- Experimental Design:
  - Randomly assign participants within each experience level to one of three groups, each receiving one version of the protocol (original, adapted 1, or adapted 2).
  - Each participant will be asked to perform the protocol.
- Data Collection:
  - Measure the time taken to complete the protocol.
  - Record the number and severity of any errors made during the execution of the protocol.
  - Administer a post-task comprehension quiz consisting of 10 multiple-choice questions about the key steps and principles of the protocol.
- Data Analysis:

-