JPL

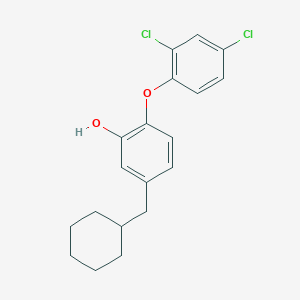


Properties

| Property | Value |
|---|---|
| Molecular Formula | C19H20Cl2O2 |
| Molecular Weight | 351.3 g/mol |
| IUPAC Name | 5-(cyclohexylmethyl)-2-(2,4-dichlorophenoxy)phenol |
| InChI | InChI=1S/C19H20Cl2O2/c20-15-7-9-18(16(21)12-15)23-19-8-6-14(11-17(19)22)10-13-4-2-1-3-5-13/h6-9,11-13,22H,1-5,10H2 |
| InChI Key | AUJNRGORQMIJCP-UHFFFAOYSA-N |
| Canonical SMILES | C1CCC(CC1)CC2=CC(=C(C=C2)OC3=C(C=C(C=C3)Cl)Cl)O |
| Origin of Product | United States |

Foundational & Exploratory

A Technical History of JPL's Robotic Exploration of Mars

For decades, NASA's Jet Propulsion Laboratory (JPL) has been at the forefront of robotic exploration of Mars. A succession of increasingly sophisticated orbiters, landers, and rovers has transformed our understanding of the Red Planet, revealing a world with a complex geological history and a past that may have been conducive to life. This technical guide provides an in-depth overview of JPL's key Mars missions, detailing their scientific objectives, instrument payloads, and experimental methodologies for an audience of researchers, scientists, and drug development professionals.

Early Reconnaissance: The Mariner Flybys

JPL's journey to Mars began with the Mariner program, a series of flyby missions that provided the first close-up views of the planet. These early missions were crucial for gathering fundamental data about Mars's atmosphere and surface, paving the way for future, more complex explorations.

Mariner 4, launched in 1964, was the first successful flyby of Mars.[1][2] Its primary objective was to capture and transmit the first close-up images of the Martian surface.[1][2] The spacecraft also conducted measurements of interplanetary space and the Martian environment.[1][3]

Orbital Surveillance: Charting the Red Planet from Above

Following the initial flybys, JPL developed a series of orbiters designed for long-term observation of Mars. These missions have been instrumental in mapping the planet's surface, studying its climate, and identifying potential landing sites for future missions.

Mariner 9, which arrived at Mars in 1971, became the first spacecraft to orbit another planet.[4][5][6] Despite arriving during a global dust storm, it went on to map 85% of the Martian surface, revealing features like the vast Valles Marineris canyon system and the towering Olympus Mons volcano.[6]

Subsequent orbiters have continued to build upon this legacy. Mars Global Surveyor provided high-resolution imaging and topographic data that revolutionized our understanding of Martian geology.[7][8] Mars Odyssey has been instrumental in mapping the distribution of water ice and has served as a crucial communications relay for surface missions.[9][10][11][12][13] The Mars Reconnaissance Orbiter carries a powerful high-resolution camera and other instruments to study the Martian climate and geology in unprecedented detail.[14][15][16][17][18]

On the Ground: Landers and Rovers

JPL's landers and rovers have provided a close-up view of the Martian surface, conducting detailed analyses of rocks and soil to search for evidence of past water activity and assess the planet's habitability.

The Viking Program, while managed by NASA's Langley Research Center, included two orbiters built and operated by JPL.[19] The Viking 1 lander was the first U.S. mission to successfully land on Mars and conduct experiments on the surface.[20]

The modern era of Mars surface exploration began with Mars Pathfinder and its small rover, Sojourner, in 1997.[21][22][23][24] This mission demonstrated the feasibility of a low-cost landing system and the value of a mobile platform for exploration.[21][23]

This success was followed by the twin Mars Exploration Rovers, Spirit and Opportunity, which landed in 2004.[25][26] Designed for a 90-day mission, both rovers far exceeded their operational lifetimes, making significant discoveries about the history of water on Mars.[25][27]

The Mars Science Laboratory mission delivered the car-sized rover Curiosity to Gale Crater in 2012.[28][29][30][31] Curiosity's advanced suite of instruments is designed to assess Mars's past and present habitability.[28][30]

The most recent addition to JPL's Martian fleet is the Perseverance rover, which landed in Jezero Crater in 2021 as part of the Mars 2020 mission.[32][33] Perseverance is searching for signs of ancient microbial life and collecting rock and soil samples for potential future return to Earth.[32][33][34][35][36]

Quantitative Mission Data

The following tables summarize key quantitative data for JPL's Mars exploration missions.

| Flyby and Orbiter Missions | Launch Date | Mars Arrival Date | Mission Type | Key Scientific Instruments |
|---|---|---|---|---|
| Mariner 4 | Nov 28, 1964 | Jul 15, 1965 | Flyby | Imaging System, Helium Magnetometer, Plasma Probe, Cosmic Ray Telescope, Cosmic Dust Detector[1][2][37][38] |
| Mariner 9 | May 30, 1971 | Nov 14, 1971 | Orbiter | Imaging System, Infrared Interferometer Spectrometer, Ultraviolet Spectrometer, Infrared Radiometer[4][5][6][39] |
| Viking 1 Orbiter | Aug 20, 1975 | Jun 19, 1976 | Orbiter | Imaging System, Atmospheric Water Detector, Infrared Thermal Mapper[20] |
| Viking 2 Orbiter | Sep 9, 1975 | Aug 7, 1976 | Orbiter | Imaging System, Atmospheric Water Detector, Infrared Thermal Mapper |
| Mars Global Surveyor | Nov 7, 1996 | Sep 12, 1997 | Orbiter | Mars Orbiter Camera (MOC), Mars Orbiter Laser Altimeter (MOLA), Thermal Emission Spectrometer (TES), Magnetometer/Electron Reflectometer[7][8][40][41] |
| Mars Odyssey | Apr 7, 2001 | Oct 24, 2001 | Orbiter | Thermal Emission Imaging System (THEMIS), Gamma Ray Spectrometer (GRS), Martian Radiation Environment Experiment (MARIE)[9][10][11][12][13] |
| Mars Reconnaissance Orbiter | Aug 12, 2005 | Mar 10, 2006 | Orbiter | High Resolution Imaging Science Experiment (HiRISE), Context Camera (CTX), Mars Color Imager (MARCI), Compact Reconnaissance Imaging Spectrometer for Mars (CRISM), Mars Climate Sounder (MCS), Shallow Radar (SHARAD)[15][16] |

| Lander and Rover Missions | Launch Date | Mars Landing Date | Mission Type | Key Scientific Instruments |
|---|---|---|---|---|
| Viking 1 Lander | Aug 20, 1975 | Jul 20, 1976 | Lander | Imaging System, Gas Chromatograph-Mass Spectrometer, X-ray Fluorescence Spectrometer, Seismometer, Meteorology Instrument Package, Biology Instrument[20] |
| Viking 2 Lander | Sep 9, 1975 | Sep 3, 1976 | Lander | Imaging System, Gas Chromatograph-Mass Spectrometer, X-ray Fluorescence Spectrometer, Seismometer, Meteorology Instrument Package, Biology Instrument |
| Mars Pathfinder & Sojourner | Dec 4, 1996 | Jul 4, 1997 | Lander & Rover | Imager for Mars Pathfinder (IMP), Atmospheric Structure Instrument/Meteorology Package (ASI/MET), Alpha Proton X-ray Spectrometer (APXS) (on rover)[21][24] |
| Spirit (MER-A) | Jun 10, 2003 | Jan 4, 2004 | Rover | Panoramic Camera (Pancam), Microscopic Imager (MI), Mini-Thermal Emission Spectrometer (Mini-TES), Mössbauer Spectrometer, Alpha Particle X-ray Spectrometer (APXS), Rock Abrasion Tool (RAT)[25][26][27] |
| Opportunity (MER-B) | Jul 7, 2003 | Jan 25, 2004 | Rover | Panoramic Camera (Pancam), Microscopic Imager (MI), Mini-Thermal Emission Spectrometer (Mini-TES), Mössbauer Spectrometer, Alpha Particle X-ray Spectrometer (APXS), Rock Abrasion Tool (RAT)[25][26][42] |
| Curiosity (MSL) | Nov 26, 2011 | Aug 6, 2012 | Rover | Mast Camera (Mastcam), Chemistry and Camera (ChemCam), Alpha Particle X-ray Spectrometer (APXS), Chemistry and Mineralogy (CheMin), Sample Analysis at Mars (SAM), Radiation Assessment Detector (RAD), Dynamic Albedo of Neutrons (DAN), Rover Environmental Monitoring Station (REMS), Mars Hand Lens Imager (MAHLI), Mars Descent Imager (MARDI)[28][29][30][43] |
| Perseverance (Mars 2020) | Jul 30, 2020 | Feb 18, 2021 | Rover & Helicopter | Mastcam-Z, SuperCam, Planetary Instrument for X-ray Lithochemistry (PIXL), Scanning Habitable Environments with Raman & Luminescence for Organics & Chemicals (SHERLOC), Mars Oxygen In-Situ Resource Utilization Experiment (MOXIE), Mars Environmental Dynamics Analyzer (MEDA), Radar Imager for Mars' Subsurface Experiment (RIMFAX)[32][33][34][35] |

Experimental Protocols and Methodologies

The scientific instruments aboard JPL's Mars missions employ a variety of sophisticated techniques to analyze the Martian environment. Below are detailed methodologies for some of the key experiments.

Rover-Based Remote and Contact Science

JPL's Mars rovers use a two-tiered approach to scientific investigation: remote sensing from the mast and in-situ analysis with an arm-mounted instrument suite.

Remote Sensing Protocol:

- Target Identification: The Panoramic Camera (Pancam) or Mastcam-Z surveys the surrounding terrain to identify geological features of interest. These are multispectral imagers capable of creating high-resolution, panoramic, and stereoscopic images.

- Elemental and Mineralogical Analysis: The Chemistry and Camera (ChemCam) instrument on Curiosity and the SuperCam on Perseverance use Laser-Induced Breakdown Spectroscopy (LIBS). A high-powered laser vaporizes a small amount of a rock or soil target from a distance. The resulting plasma is analyzed by a spectrometer to determine the elemental composition. SuperCam also incorporates Raman and infrared spectroscopy for mineralogical analysis.

Contact Science Protocol:

- Surface Preparation: For rock targets, the Rock Abrasion Tool (RAT) on the Mars Exploration Rovers or the drill on Curiosity and Perseverance can remove the weathered outer layer to expose fresh material.

- Microscopic Imaging: The Microscopic Imager (MI) on Spirit and Opportunity and the Mars Hand Lens Imager (MAHLI) on Curiosity provide close-up, high-resolution images of the rock and soil texture. Perseverance carries a comparable close-up camera, WATSON, as part of the SHERLOC instrument.

- Elemental Composition: The Alpha Particle X-ray Spectrometer (APXS), present on Sojourner, the Mars Exploration Rovers, and Curiosity, bombards the target with alpha particles and X-rays. The resulting backscattered alpha particles and X-ray fluorescence are measured to determine the elemental composition of the sample. Perseverance does not carry an APXS; it uses the Planetary Instrument for X-ray Lithochemistry (PIXL), an X-ray fluorescence spectrometer, for this purpose.

- Mineralogical Analysis:

  - The Mössbauer Spectrometer on Spirit and Opportunity was used to identify iron-bearing minerals.

  - The Chemistry and Mineralogy (CheMin) instrument on Curiosity uses X-ray diffraction to identify and quantify the minerals in a powdered rock or soil sample delivered by the rover's drill.

  - The Scanning Habitable Environments with Raman & Luminescence for Organics & Chemicals (SHERLOC) instrument on Perseverance uses an ultraviolet laser to perform fine-scale mapping of minerals and organic molecules.

Orbiter-Based Surface and Atmospheric Analysis

JPL's Mars orbiters employ a suite of instruments to study the planet's surface and atmosphere from a global perspective.

Surface Composition and Topography:

- Thermal Emission Spectrometry: The Thermal Emission Spectrometer (TES) on Mars Global Surveyor and the Thermal Emission Imaging System (THEMIS) on Mars Odyssey measure the infrared energy emitted from the Martian surface. Different minerals radiate heat at characteristic wavelengths, allowing scientists to create maps of surface mineralogy.

- Laser Altimetry: The Mars Orbiter Laser Altimeter (MOLA) on Mars Global Surveyor used a laser to measure the round-trip travel time of a light pulse from the spacecraft to the surface and back. These data were used to create a highly accurate topographic map of Mars.

- High-Resolution Imaging: The High Resolution Imaging Science Experiment (HiRISE) on the Mars Reconnaissance Orbiter is a powerful telescopic camera capable of imaging the Martian surface with resolutions as fine as a few tens of centimeters per pixel.
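The laser altimetry principle described above reduces to a time-of-flight calculation: the one-way range is half the round-trip travel time multiplied by the speed of light. The following is a minimal sketch of that arithmetic, not MOLA's actual processing. It assumes a nadir-pointed pulse and a spherical reference surface, and the function names and example radii are hypothetical.

```python
# Speed of light in vacuum (m/s), exact by SI definition.
C = 299_792_458.0


def range_from_round_trip(t_round_trip_s: float) -> float:
    """One-way spacecraft-to-surface distance (m) from round-trip pulse time (s)."""
    return C * t_round_trip_s / 2.0


def surface_elevation(spacecraft_radius_m: float,
                      t_round_trip_s: float,
                      reference_radius_m: float) -> float:
    """Elevation (m) of the illuminated spot above a spherical reference surface.

    Assumes the laser points straight down (nadir), so the surface radius is
    the spacecraft's distance from the planet center minus the measured range.
    """
    surface_radius_m = spacecraft_radius_m - range_from_round_trip(t_round_trip_s)
    return surface_radius_m - reference_radius_m


if __name__ == "__main__":
    # Hypothetical numbers: a 2 ms round trip corresponds to roughly 300 km.
    print(range_from_round_trip(2.0e-3))
```

In practice the spacecraft position comes from precise orbit determination, and off-nadir pointing and pulse-shape effects must be corrected; none of that is modeled here.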

Atmospheric Profiling:

- The Mars Climate Sounder (MCS) on the Mars Reconnaissance Orbiter observes the Martian atmosphere in the infrared to measure temperature, pressure, humidity, and dust content at different altitudes.

Visualizing JPL's Mars Exploration

The following diagrams illustrate key aspects of JPL's Mars exploration history and methodologies.

Caption: A timeline of key JPL Mars exploration missions.

Caption: A simplified workflow for Mars rover scientific investigations.

Caption: The evolving science goals of JPL's Mars Exploration Program.

References

- 1. Mariner 4 - Wikipedia [en.wikipedia.org]

- 2. Mariner 4 - Mars Missions - NASA Jet Propulsion Laboratory | NASA Jet Propulsion Laboratory (JPL) [jpl.nasa.gov]

- 3. Khan Academy [khanacademy.org]

- 4. Mariner 9 - Wikipedia [en.wikipedia.org]

- 5. Mariner 9 - Marspedia [marspedia.org]

- 6. science.nasa.gov [science.nasa.gov]

- 7. Mars Global Surveyor | U.S. Geological Survey [usgs.gov]

- 8. britannica.com [britannica.com]

- 9. researchgate.net [researchgate.net]

- 10. hou.usra.edu [hou.usra.edu]

- 11. Mars Odyssey [astronautix.com]

- 12. science.nasa.gov [science.nasa.gov]

- 13. jpl.nasa.gov [jpl.nasa.gov]

- 14. Mars Reconnaissance Orbiter - Wikipedia [en.wikipedia.org]

- 15. planetary.org [planetary.org]

- 16. science.nasa.gov [science.nasa.gov]

- 17. science.nasa.gov [science.nasa.gov]

- 18. Mars Reconnaissance Orbiter - Marspedia [marspedia.org]

- 19. Viking program - Wikipedia [en.wikipedia.org]

- 20. Viking 1 - Mars Missions - NASA Jet Propulsion Laboratory | NASA Jet Propulsion Laboratory (JPL) [jpl.nasa.gov]

- 21. planetary.org [planetary.org]

- 22. astronomy.com [astronomy.com]

- 23. Mars Pathfinder - Mars Missions - NASA Jet Propulsion Laboratory | NASA Jet Propulsion Laboratory (JPL) [jpl.nasa.gov]

- 24. Mars Pathfinder - Wikipedia [en.wikipedia.org]

- 25. Mars Exploration Rover | Facts, Spirit, & Opportunity | Britannica [britannica.com]

- 26. Missions to Mars - Spirit and Opportunity [astronomyonline.org]

- 27. Spirit (rover) - Wikipedia [en.wikipedia.org]

- 28. science.nasa.gov [science.nasa.gov]

- 29. Mars Science Laboratory - Wikipedia [en.wikipedia.org]

- 30. Curiosity (rover) - Wikipedia [en.wikipedia.org]

- 31. lpi.usra.edu [lpi.usra.edu]

- 32. science.nasa.gov [science.nasa.gov]

- 33. Perseverance – a high-tech laboratory on wheels [dlr.de]

- 34. science.nasa.gov [science.nasa.gov]

- 35. planetary.org [planetary.org]

- 36. newatlas.com [newatlas.com]

- 37. Mariner 4 - Marspedia [marspedia.org]

- 38. honeysucklecreek.net [honeysucklecreek.net]

- 39. General Information - Mariner 9 [lasp.colorado.edu]

- 40. Mars Global Surveyor - Wikipedia [en.wikipedia.org]

- 41. science.nasa.gov [science.nasa.gov]

- 42. science.nasa.gov [science.nasa.gov]

- 43. Mars Science Laboratory & Curiosity Rover | Dawnbreaker MRR [mrr.dawnbreaker.com]

A Technical Guide to JPL's Earth Science Research Programs

Pasadena, CA - The Jet Propulsion Laboratory (JPL), managed by the California Institute of Technology for NASA, stands at the forefront of Earth science research, employing a sophisticated suite of spaceborne and airborne instruments to monitor and understand our dynamic planet. This in-depth guide provides researchers, scientists, and drug development professionals with a technical overview of JPL's core Earth science research programs, detailing the experimental protocols of key missions and presenting quantitative data in a structured format for comparative analysis.

Core Research Areas

JPL's Earth science endeavors are broadly categorized into four thematic areas: the cryosphere, the global water and energy cycle, atmospheric composition and dynamics, and Earth's surface and interior. Research within these areas is synergistic, with data from multiple missions often integrated to provide a holistic understanding of Earth as a system.

Key Earth Science Missions and Instrumentation

JPL manages a diverse portfolio of Earth-observing missions, each equipped with specialized instrumentation to measure key geophysical parameters. The following table summarizes the quantitative specifications of instruments for several key missions.

| Mission | Instrument | Measurement Principle | Key Parameters Measured | Spatial Resolution | Temporal Resolution | Data Products |
|---|---|---|---|---|---|---|
| OCO-2 | Three-channel imaging grating spectrometer | Measures reflected sunlight in the O2 A-band and two CO2 bands to determine column-averaged dry-air mole fraction of CO2 (XCO2). | XCO2 | 1.29 km x 2.25 km | 16-day repeat cycle | Level 1B: Calibrated radiances; Level 2: Georeferenced XCO2 retrievals |
| SMAP | L-band radar and radiometer | Active and passive microwave remote sensing to measure soil moisture and freeze/thaw state. | Soil moisture, freeze/thaw state | Radiometer: 36 km; Radar (inactive): 1-3 km; Combined product: 9 km | 2-3 days | Level 1: Calibrated instrument data; Level 2: Soil moisture retrievals; Level 3: Daily composites; Level 4: Model-derived root zone soil moisture |
| NISAR | L-band and S-band Synthetic Aperture Radar (SAR) | Dual-frequency radar interferometry to measure surface deformation and changes in land cover. | Surface deformation, ice velocity, biomass, soil moisture | 3-10 meters | 12-day repeat cycle | Level 1: Raw and calibrated SAR data; Level 2: Geocoded products (interferograms, polarimetric data) |
| SWOT | Ka-band Radar Interferometer (KaRIn) | Radar interferometry to measure water surface elevation. | Water surface elevation, slope, width of rivers, lakes, and oceans | 2 km (ocean), 50-100 m (rivers) | 21-day repeat cycle | River and lake vector products, ocean surface topography grids |
| GRACE-FO | Microwave Ranging Instrument, Laser Ranging Interferometer | Measures changes in the distance between two twin satellites to map variations in Earth's gravity field. | Time-variable gravity field, mass changes (water, ice) | ~300 km | Monthly | Level 1B: Inter-satellite range and acceleration data; Level 2: Gravity field models; Level 3: Gridded mass concentration blocks (mascons) |
| ECOSTRESS | Multispectral thermal infrared radiometer | Measures the temperature of plants and the surface. | Land surface temperature, evapotranspiration | 70 m | Variable (due to ISS orbit) | Level 1: Radiances; Level 2: Land surface temperature and emissivity; Level 3: Evapotranspiration; Level 4: Evaporative stress index |
| AIRS | Hyperspectral infrared sounder | Measures infrared energy emitted from the Earth's surface and atmosphere. | Atmospheric temperature and water vapor profiles, trace gases (O3, CO, CH4) | 13.5 km at nadir | Daily global coverage | Level 1B: Calibrated radiances; Level 2: Retrieved geophysical profiles |
| MAIA | Spectropolarimetric camera | Measures the radiance and polarization of sunlight scattered by atmospheric aerosols. | Particulate matter (PM) size, composition, and quantity | 1 km | ~3-4 times per week over primary target areas | Level 1: Calibrated radiances and polarimetry; Level 2: Aerosol and PM properties |

Experimental Protocols and Methodologies

The acquisition and processing of data from JPL's Earth science missions follow rigorous and well-defined protocols to ensure data quality and scientific validity. These protocols, from instrument calibration to the generation of high-level data products, are crucial for the interpretation of scientific results.

Orbiting Carbon Observatory 2 (OCO-2): XCO2 Retrieval

The experimental protocol for retrieving the column-averaged dry-air mole fraction of carbon dioxide (XCO2) from OCO-2 observations involves a multi-step process that begins with the measurement of reflected sunlight and culminates in the generation of calibrated XCO2 data products.

- Data Acquisition: The OCO-2 instrument's three-channel imaging grating spectrometer measures the intensity of sunlight reflected from the Earth's surface and atmosphere in the Oxygen A-band (around 0.765 µm) and two carbon dioxide bands (a weak absorption band around 1.61 µm and a strong absorption band around 2.06 µm).[1][2][3] These measurements are taken in a push-broom fashion across a narrow swath.[2]

- Spectral Calibration: The raw data from the spectrometer are spectrally calibrated to precisely determine the wavelength of the measured light. This is crucial for identifying the absorption features of O2 and CO2.

- Radiometric Calibration: The data are then radiometrically calibrated to convert the instrument's digital numbers into physical units of radiance.[4] This step involves using on-board calibration sources and vicarious calibration techniques.[4]

- Geolocation: The precise geographic location of each measurement is determined through geometric calibration, which uses information about the spacecraft's orbit and attitude.[4]

- Cloud Screening: A critical step is the identification and filtering of data contaminated by clouds, as clouds significantly alter the light path and interfere with the accurate retrieval of XCO2.

- Full-Physics Retrieval Algorithm: The cloud-screened, calibrated radiances are then processed using a "full-physics" retrieval algorithm. This algorithm models the transfer of solar radiation through the atmosphere and compares the modeled radiances to the observed radiances. By iteratively adjusting the atmospheric parameters in the model, including the CO2 profile, the algorithm finds the best fit to the observations.

- XCO2 Calculation: The retrieved CO2 profile is then used to calculate the column-averaged dry-air mole fraction of CO2 (XCO2). The simultaneous measurement of the O2 A-band is used to determine the total column of dry air, which is necessary to calculate the mole fraction.[3]

- Bias Correction and Validation: The retrieved XCO2 values are compared with ground-based measurements from the Total Carbon Column Observing Network (TCCON) to identify and correct for any systematic biases.[3]
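The retrieval and XCO2 calculation steps above can be illustrated with a toy example. This is a sketch under strong simplifying assumptions, not the OCO-2 full-physics algorithm: the forward model is a single-layer Beer-Lambert attenuation with one unknown (the CO2 column), the fit is a plain Gauss-Newton iteration without priors or noise weighting, and the cross sections, airmass, and column values are made-up illustrative numbers. Columns are expressed in units of 1e21 molecules/cm² and cross sections in 1e-21 cm² to keep the arithmetic well scaled.

```python
import numpy as np

O2_DRY_AIR_FRACTION = 0.2095  # mole fraction of O2 in dry air


def forward_model(n_co2, sigma, airmass):
    """Toy forward model: transmitted fraction of sunlight per spectral channel."""
    return np.exp(-sigma * n_co2 * airmass)


def retrieve_co2_column(observed, sigma, airmass, first_guess, n_iter=20):
    """Fit the CO2 column by iteratively matching modeled to observed radiances."""
    n = first_guess
    for _ in range(n_iter):
        modeled = forward_model(n, sigma, airmass)
        jacobian = -sigma * airmass * modeled        # d(modeled) / d(n_co2)
        residual = observed - modeled
        step = (jacobian @ residual) / (jacobian @ jacobian)  # Gauss-Newton, one unknown
        n = n + step
        if abs(step) < 1e-9 * abs(n):
            break
    return n


def xco2_ppm(n_co2, n_o2):
    """Column-averaged dry-air mole fraction of CO2, in parts per million."""
    n_dry_air = n_o2 / O2_DRY_AIR_FRACTION  # dry-air column inferred from the O2 column
    return 1e6 * n_co2 / n_dry_air


if __name__ == "__main__":
    sigma = np.array([0.01, 0.03, 0.05, 0.08])    # hypothetical cross sections
    observed = forward_model(8.6, sigma, 2.0)     # synthetic, noise-free "observation"
    n_co2 = retrieve_co2_column(observed, sigma, 2.0, first_guess=8.0)
    print(xco2_ppm(n_co2, n_o2=4500.0))
```

The operational algorithm retrieves many more state variables (surface pressure, aerosols, albedo, and others) with a full radiative transfer model, so this sketch only conveys the "adjust the model until it matches the observation, then divide by the dry-air column" structure.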

Soil Moisture Active Passive (SMAP): Data Product Generation

The Soil Moisture Active Passive (SMAP) mission utilizes both an L-band radar (now inactive) and an L-band radiometer to provide global measurements of soil moisture and freeze/thaw state. The data processing for SMAP is structured in a hierarchical manner, progressing from raw instrument data to sophisticated, model-derived products.

- Level 1 Data Products: These are the most fundamental data products, containing calibrated and geolocated instrument measurements.

  - L1B_TB: Calibrated brightness temperatures from the radiometer.

  - L1C_S0_HiRes: High-resolution radar backscatter cross-sections.

- Level 2 Data Products: These products contain geophysical retrievals of soil moisture derived from the Level 1 data.

  - L2_SM_P: Soil moisture derived from the passive radiometer data at a 36 km resolution.

  - L2_SM_A: Soil moisture derived from the active radar data at a 3 km resolution (based on early mission data).

  - L2_SM_AP: A combined active-passive soil moisture product at a 9 km resolution.

- Level 3 Data Products: These are daily global composites of the Level 2 data, providing a consistent daily snapshot of global soil moisture and freeze/thaw conditions.

  - L3_SM_P: Daily global composite of the L2_SM_P product.

  - L3_FT_A: Daily freeze/thaw state derived from radar data.

- Level 4 Data Products: These are model-derived, value-added products that provide estimates of root zone soil moisture and carbon net ecosystem exchange. These products are generated by assimilating SMAP observations into land surface models.
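To make the Level 2 to Level 3 step concrete, the sketch below combines several gridded swaths from one day into a single daily composite. It is an illustration only: it assumes each swath has already been resampled onto a common grid with NaN marking cells without a retrieval, and it averages overlapping retrievals, which is a simplifying assumption and not necessarily how the SMAP project resolves overlaps. The function name `daily_composite` is hypothetical.

```python
import numpy as np


def daily_composite(swaths):
    """Combine same-day gridded swaths into one grid.

    swaths: list of 2-D arrays on a common grid, NaN where a swath has no retrieval.
    Returns a 2-D array holding the mean of the valid retrievals in each cell,
    or NaN where no swath covered the cell that day.
    """
    stack = np.stack(swaths)                          # shape (n_swaths, ny, nx)
    valid = ~np.isnan(stack)
    count = valid.sum(axis=0)                         # valid retrievals per cell
    total = np.where(valid, stack, 0.0).sum(axis=0)   # sum over valid retrievals only
    return np.where(count > 0, total / np.maximum(count, 1), np.nan)


if __name__ == "__main__":
    # Two hypothetical swaths of volumetric soil moisture (m^3/m^3).
    morning = np.array([[0.1, np.nan], [0.3, np.nan]])
    evening = np.array([[0.3, 0.2], [np.nan, np.nan]])
    print(daily_composite([morning, evening]))
```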

References

- 1. JPL Science: Water & Ecosystems [science.jpl.nasa.gov]

- 2. GRACE-FO Mission Documentation | PO.DAAC / JPL / NASA [podaac.jpl.nasa.gov]

- 3. Ecosystem Spaceborne Thermal Radiometer Experiment on Space Station | NASA Earthdata [earthdata.nasa.gov]

- 4. AIRS | AIRS Project Instrument Suite – AIRS [airs.jpl.nasa.gov]

JPL's Enduring Legacy in the Quest for New Worlds: A Technical Guide to Exoplanet Detection and Characterization

Pasadena, CA - For decades, NASA's Jet Propulsion Laboratory (JPL) has been at the forefront of humanity's search for planets beyond our solar system.[1] From pioneering missions that revealed the sheer abundance of exoplanets to developing cutting-edge technologies that will one day characterize Earth-like worlds, JPL's contributions have been pivotal in transforming exoplanetology from a nascent field into a cornerstone of modern astrophysics. This technical guide provides an in-depth overview of JPL's key contributions to exoplanet detection and characterization, tailored for researchers, scientists, and drug development professionals interested in the methodologies and technologies driving this exciting frontier.

Key JPL Missions in Exoplanet Science

JPL has played a crucial role in the management and scientific operations of several landmark space telescopes that have revolutionized our understanding of exoplanets.

Kepler and K2: A Statistical Revolution

The Kepler Space Telescope, with its development managed by JPL, was a game-changer in exoplanet science.[1] Launched in 2009, its primary mission was to continuously monitor a fixed patch of the sky to detect the subtle dimming of starlight caused by a planet transiting, or passing in front of, its star.[1] This "transit method" allowed for the discovery of thousands of exoplanets, providing the first robust statistics on the prevalence of planets in our galaxy.[1][2]
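As a rough guide to how subtle this dimming is, the fractional drop in brightness during a transit is approximately the ratio of the planet's disk area to the star's disk area, (Rp/Rs)². The short sketch below evaluates that approximation; it ignores limb darkening and grazing geometries, and the radii are rounded reference values included for illustration.

```python
# Rounded reference radii in kilometers (illustrative values).
R_SUN_KM = 695_700.0
R_JUPITER_KM = 69_911.0
R_EARTH_KM = 6_371.0


def transit_depth(planet_radius_km: float, star_radius_km: float) -> float:
    """Approximate fractional dimming: ratio of planet to star disk areas."""
    return (planet_radius_km / star_radius_km) ** 2


if __name__ == "__main__":
    # A Jupiter-sized planet blocks about 1% of a Sun-like star's light,
    # while an Earth-sized planet blocks on the order of 0.01%.
    print(f"Jupiter-size: {transit_depth(R_JUPITER_KM, R_SUN_KM):.4%}")
    print(f"Earth-size:   {transit_depth(R_EARTH_KM, R_SUN_KM):.4%}")
```

The two orders of magnitude between these cases are the reason detecting Earth-sized planets demands very precise, continuous photometry.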

Following the failure of two reaction wheels, mission engineers repurposed the spacecraft for the K2 mission, which continued to discover exoplanets by observing different fields along the ecliptic plane.[3][4] The Kepler and K2 missions together have confirmed over 2,800 exoplanets.[1]

Spitzer Space Telescope: A Versatile Observer

The JPL-managed Spitzer Space Telescope, an infrared observatory, proved to be a surprisingly powerful tool for exoplanet characterization.[1] While not initially designed for exoplanet science, its sensitive infrared detectors were instrumental in studying the atmospheres of "hot Jupiters" and famously characterized the seven Earth-sized planets of the TRAPPIST-1 system.[1][5] Spitzer's observations allowed scientists to create the first "weather maps" of an exoplanet and to detect molecules in exoplanet atmospheres.

Nancy Grace Roman Space Telescope: The Next Generation

Looking to the future, JPL is a key partner in the development of the Nancy Grace Roman Space Telescope, slated for launch by May 2027.[6] Roman will conduct a large-scale survey of exoplanets using gravitational microlensing, a technique that can detect planets much farther from their stars than the transit method allows.[7][8] This will provide a more complete census of planetary systems.

Pioneering Technologies for Direct Imaging

One of the greatest challenges in exoplanetology is directly imaging a planet, as the light from its host star is typically billions of times brighter. JPL has been a leader in developing technologies to overcome this "starlight suppression" problem.

The Coronagraph: Blocking the Glare

A coronagraph is an instrument designed to block the light from a star to reveal the faint objects orbiting it. JPL has been at the forefront of developing advanced coronagraph technology. The Coronagraph Instrument (CGI) on the Nancy Grace Roman Space Telescope, designed and built by JPL, will be a technology demonstrator for future missions.[9][10] It is expected to be 100 to 1,000 times more powerful than previous space-based coronagraphs and will feature "active" optics, such as deformable mirrors, to compensate for tiny imperfections in the telescope's optics.[11][12]

Starshade: A Deployable Occulter

Another innovative concept developed at JPL is the starshade, a large, flower-shaped spacecraft that would fly tens of thousands of kilometers in front of a space telescope.[13][14][15] The starshade would precisely block the light from a target star, allowing the telescope to directly image any orbiting planets. This technology is currently at a Technology Readiness Level (TRL) of 5.[13]

Quantitative Data from JPL-led Missions and Technologies

The following table summarizes key quantitative data from JPL's contributions to exoplanet science.

| Mission/Instrument | Primary Detection/Characterization Method | Key Quantitative Achievements/Specifications |
|---|---|---|
| Kepler/K2 | Transit Photometry | Discovered over 2,800 confirmed exoplanets.[1] |
| Spitzer Space Telescope | Transit Photometry, Secondary Eclipse, Phase Curves | Characterized the seven Earth-sized planets of the TRAPPIST-1 system.[1] First detection of thermal emission from a "hot Jupiter".[5] |
| Nancy Grace Roman Space Telescope (Coronagraph) | Direct Imaging | Technology demonstration aiming for a contrast ratio of ≲ 10⁻⁸.[9] Will be 100 to 1,000 times more powerful than previous space-based coronagraphs.[11] |
| Starshade (Technology Concept) | Direct Imaging | Aims to suppress starlight to enable direct imaging of Earth-like planets. Currently at TRL 5.[13] |

Experimental Protocols

A detailed understanding of the methodologies employed in exoplanet detection and characterization is crucial for researchers in the field.

Transit Photometry Data Analysis Pipeline

The Kepler and K2 missions utilized a sophisticated data processing pipeline to identify transiting exoplanet candidates from the vast amount of photometric data collected.

The process begins with the Calibration (CAL) module, which converts the raw pixel data from the spacecraft's photometer into calibrated pixel values.[13] The Photometric Analysis (PA) module then extracts the brightness of the target stars over time, creating light curves.[13] These light curves are then passed to the Pre-search Data Conditioning (PDC) module, which removes systematic errors and instrumental noise.[13] The Transiting Planet Search (TPS) algorithm then scours these corrected light curves for periodic dips in brightness that could indicate a planetary transit, flagging them as Threshold-Crossing Events (TCEs). Finally, the Data Validation (DV) module performs a series of tests on these TCEs to produce a list of Kepler Objects of Interest (KOIs), which are then prioritized for follow-up observations to confirm their planetary nature.[16]
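The search step can be illustrated with a brute-force box search on a synthetic light curve. This is a deliberately simple stand-in and not the Kepler TPS algorithm: it assumes a fixed, known transit duration, folds the light curve at each trial period, slides a box over trial epochs, and keeps the combination with the highest signal-to-noise ratio. The function names, cadence, and injected signal are all hypothetical.

```python
import numpy as np


def synthetic_light_curve(period, epoch, depth, duration, noise, seed=0):
    """Noisy flat light curve (90 days) with box-shaped transits injected."""
    rng = np.random.default_rng(seed)
    time = np.arange(0.0, 90.0, 0.0204)              # days, roughly 29-minute cadence
    flux = 1.0 + rng.normal(0.0, noise, time.size)
    flux[((time - epoch) % period) < duration] -= depth
    return time, flux


def box_transit_search(time, flux, trial_periods, duration):
    """Return the trial (period, epoch) whose in-box dip has the highest SNR."""
    best = {"period": None, "epoch": None, "depth": 0.0, "snr": -np.inf}
    for period in trial_periods:
        for epoch in np.arange(0.0, period, duration / 2.0):
            in_box = ((time - epoch) % period) < duration
            n_in = np.count_nonzero(in_box)
            if n_in == 0 or n_in == time.size:
                continue
            out = flux[~in_box]
            depth = out.mean() - flux[in_box].mean()      # positive for a dip
            snr = depth / (out.std() / np.sqrt(n_in))     # depth over its standard error
            if snr > best["snr"]:
                best = {"period": float(period), "epoch": float(epoch),
                        "depth": float(depth), "snr": float(snr)}
    return best


if __name__ == "__main__":
    time, flux = synthetic_light_curve(period=7.0, epoch=1.3, depth=0.002,
                                       duration=0.2, noise=0.0005)
    print(box_transit_search(time, flux, np.arange(5.0, 10.0, 0.05), duration=0.2))
```

A candidate passing an SNR threshold in a search like this plays the role of a Threshold-Crossing Event; the validation tests that follow in the real pipeline (for example, checks against eclipsing binaries) are not represented here.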

Direct Imaging Data Reduction

Directly imaging an exoplanet requires sophisticated data reduction techniques to subtract the overwhelming glare of the host star. A key technique is Point Spread Function (PSF) subtraction.

The process begins with standard pre-processing of the raw coronagraphic images, including dark subtraction and flat-fielding. The images are then precisely aligned. A model of the star's Point Spread Function (PSF) is then created, either from observations of a reference star or by using advanced algorithms like Karhunen-Loève Image Projection (KLIP). This model PSF is then subtracted from the science images, leaving behind the faint signal of any orbiting exoplanets. Finally, the residual images are derotated and stacked to enhance the signal-to-noise ratio of any detected planets, which can then be further characterized.
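The PSF modeling and subtraction step can be sketched with a principal-component projection in the spirit of KLIP. This is a simplified illustration, not a reference implementation: it assumes the images are already calibrated and aligned, uses the mean reference image for centering, builds the basis with an SVD of the reference library, and omits derotation and stacking. The function name and the synthetic test setup are hypothetical.

```python
import numpy as np


def klip_subtract(science, references, n_modes):
    """Subtract a PSF model built from reference images.

    science:    2-D array (ny, nx), aligned science frame.
    references: 3-D array (n_ref, ny, nx), aligned reference frames.
    n_modes:    number of principal components kept in the PSF model.
    Returns the residual image after PSF subtraction.
    """
    n_ref = references.shape[0]
    ref = references.reshape(n_ref, -1).astype(float)
    sci = science.ravel().astype(float)

    ref_mean = ref.mean(axis=0)
    ref_centered = ref - ref_mean

    # Rows of vt are orthonormal basis images ordered by explained variance.
    _, _, vt = np.linalg.svd(ref_centered, full_matrices=False)
    basis = vt[:n_modes]

    sci_centered = sci - ref_mean
    psf_model = basis.T @ (basis @ sci_centered)   # projection onto the basis
    return (sci_centered - psf_model).reshape(science.shape)


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    patterns = rng.normal(size=(3, 16, 16))                     # fake speckle patterns
    refs = np.tensordot(rng.normal(size=(10, 3)), patterns, axes=1)
    science = np.tensordot(rng.normal(size=3), patterns, axes=1)
    science[4, 11] += 5.0                                       # injected point source
    residual = klip_subtract(science, refs, n_modes=3)
    print(np.unravel_index(np.argmax(residual), residual.shape))
```

Keeping more modes removes more starlight but also subtracts more of the planet's own signal, so the number of modes is a tuning choice in practice.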

Exoplanet Atmosphere Characterization through Spectroscopy

Spectroscopy is a powerful technique for probing the composition of exoplanet atmospheres. By analyzing the light that passes through or is emitted from an exoplanet's atmosphere, scientists can identify the presence of specific molecules.

References

- 1. Exploring Exoplanets | NASA Jet Propulsion Laboratory (JPL) [jpl.nasa.gov]

- 2. Exploring exoplanet populations with NASA’s Kepler Mission - PMC [pmc.ncbi.nlm.nih.gov]

- 3. planetary.org [planetary.org]

- 4. arxiv.org [arxiv.org]

- 5. [2005.11331] Highlights of Exoplanetary Science from Spitzer [arxiv.org]

- 6. arxiv.org [arxiv.org]

- 7. science.nasa.gov [science.nasa.gov]

- 8. [2505.10621] Exoplanet Detection with Microlensing [arxiv.org]

- 9. Science Team : Roman Space Telescope/NASA [roman.gsfc.nasa.gov]

- 10. Roman Space Telescope/NASA [roman.gsfc.nasa.gov]

- 11. JPL Science: Planetary And Exoplanetary Atmospheres [science.jpl.nasa.gov]

- 12. [2012.12119] A review of simulation and performance modeling for the Roman coronagraph instrument [arxiv.org]

- 13. Kepler and K2 data processing pipeline - Kepler & K2 Science Center [keplergo.github.io]

- 14. Roman Space Telescope Coronagraph Instrument Public Simulated Images [roman.ipac.caltech.edu]

- 15. [PDF] A New Algorithm for Point-Spread Function Subtraction in High-Contrast Imaging: A Demonstration with Angular Differential Imaging | Semantic Scholar [semanticscholar.org]

- 16. Kepler Data Products Overview [exoplanetarchive.ipac.caltech.edu]

A Technical Guide to Research Opportunities for Visiting Scientists at the Jet Propulsion Laboratory (JPL)

For Researchers, Scientists, and Drug Development Professionals

This in-depth guide provides a comprehensive overview of the various programs and opportunities for visiting scientists and researchers at the Jet Propulsion Laboratory (JPL). Managed by Caltech for NASA, JPL is a world-leading center for robotic exploration of the solar system and Earth science.[1][2] This document outlines the key research areas, summarizes available programs with quantitative data, and provides detailed workflows for select research processes to aid prospective visiting scientists in identifying and preparing for research collaborations.

Core Research Areas at JPL

JPL's research is broadly categorized into several key directorates, offering a wide spectrum of opportunities for visiting scientists. These areas are at the forefront of scientific discovery and technological innovation.[3]

- Earth Science: Studying our home planet to understand its systems and predict changes.

- Planetary Science: Exploring the planets, moons, and other bodies within our solar system.

- Astrophysics and Space Science: Investigating the universe, from the heliosphere to distant galaxies.

- Technology and Engineering: Developing cutting-edge technologies and autonomous robotic systems for space exploration.[4]

Visiting Scientist and Researcher Programs

JPL offers a variety of programs for researchers at different stages of their careers to collaborate with JPL scientists and engineers. The following tables provide a summary of these opportunities.

Table 1: Programs for Established Researchers and Faculty

| Program Name | Target Audience | Purpose | Duration | Funding |
|---|---|---|---|---|
| JPL Visiting Researcher Program | Faculty, faculty research associates, tenured researchers at research centers and laboratories.[5] | To foster collaborations and exchange of ideas in research areas of interest to JPL.[5] | Varies based on collaboration | Typically, this is an honorary title; funding is not directly provided by the program. |
| Distinguished Visiting Scientist Program | Senior researchers who are recognized authorities in their fields.[5] | To promote interchange between senior researchers and JPL personnel through consultation or collaboration.[5] | Varies | Determined by the JPL Director.[5] |
| JPL Joint Faculty Appointments | Typically for faculty members of universities proximal to JPL. | To formalize collaborations between JPL and academic institutions. | Typically two years, with yearly renewals. | Determined by the JPL Director. |
| JPL Faculty Research Program (JFRP) | Full-time STEM faculty at accredited U.S. universities.[6] | To engage faculty in research of mutual interest and to provide them with experience of the JPL/NASA research culture.[6] | 10 weeks, full-time.[6] | Stipend provided, but contingent on the availability of a JPL host's funding.[6] |

Table 2: Postdoctoral and Student Research Programs

| Program Name | Target Audience | Key Requirements | Duration | Funding/Stipend |
|---|---|---|---|---|
| JPL Visiting Postdoctoral Scholar Program | Postdoctoral candidates with access to funding from non-NASA institutions (e.g., Fulbright). | Must have an established relationship with a JPL researcher or be responding to an existing postdoctoral opportunity.[5] | Varies based on fellowship | Supported by the visiting postdoc's institution or fellowship grant.[7] |
| NASA Postdoctoral Program (NPP) | New and senior Ph.D. recipients. | Application deadlines are November 1, March 1, and July 1, annually. | One year, renewable up to a maximum of three years. | Stipend rates start at $70,000 per year, with supplements for high-cost-of-living areas and certain specialties. Also includes a $10,000 per year travel allowance.[8] |
| JPL Visiting Student Research Program (JVSRP) | Undergraduate and graduate students in STEM fields.[9] | Must have secured third-party funding.[9] | Flexible, with full-time and part-time options available.[9] | Requires proof of financial support of at least $2,400 per month.[9] |
| Summer Undergraduate Research Fellowships (SURF@JPL) | Undergraduate students. | Students collaborate with a mentor to develop a research project and write a proposal as part of the application. | 10 weeks during the summer.[10] | In 2026, the award is $9,600 for the ten-week period.[10] |

Research in Astrobiology and Drug Discovery

A notable area of research with relevance to drug development professionals is JPL's work in astrobiology, which includes the search for life and habitable environments beyond Earth. A key example is the collaboration between JPL and the University of Southern California (USC) to study the effects of the space environment on fungi to potentially develop new medicines.[11]

The experiment involves sending samples of Aspergillus nidulans to the International Space Station (ISS) to investigate whether the stressful conditions of space, such as microgravity and increased radiation, can trigger the production of novel secondary metabolites.[11] These compounds, which include molecules like penicillin and lovastatin, are not essential for the fungus's growth but can have significant pharmaceutical applications.[11] Research has shown that Aspergillus niger, a similar fungus, undergoes genomic, proteomic, and metabolomic alterations in the ISS environment.[12]

Generalized Experimental Workflow for Fungal Research on the ISS

The following diagram illustrates a generalized workflow for an experiment studying the effects of the space environment on fungi, based on similar research conducted on the ISS. This provides a conceptual framework for the type of research visiting scientists could engage in.

Autonomous Systems in Scientific Research

JPL is a leader in the development and deployment of autonomous systems for scientific research. A prime example is the Autonomous Exploration for Gathering Increased Science (AEGIS) system used on the Mars rovers.[13] AEGIS enables the rovers to autonomously identify and select geological targets for analysis, significantly increasing the scientific return of the missions.[14]

AEGIS Workflow for Autonomous Target Selection

The following diagram illustrates the logical workflow of the AEGIS system. This represents a key area of research in robotics and artificial intelligence that visiting scientists could contribute to.

How to Pursue a Visiting Research Opportunity at JPL

Prospective visiting scientists should begin by identifying a research area and potential collaborators at JPL. The JPL Science website is a valuable resource for exploring current research projects and identifying scientists working in specific fields. For most programs, establishing contact with a JPL researcher is a crucial first step.

The application processes for the various programs differ. For instance, the JVSRP requires applicants to email their documents directly to the program coordinator, while the NASA Postdoctoral Program has a formal application process with specific deadlines.[9][15] It is essential to carefully review the requirements and application procedures for each program of interest on the official JPL and NASA websites.

JPL's strategic plan emphasizes strengthening partnerships with academia and other research institutions, indicating a continued commitment to fostering a collaborative research environment.[4] For researchers in fields such as biotechnology and drug development, interdisciplinary opportunities may exist within JPL's astrobiology and planetary protection research groups.

References

- 1. Partnering with JPL — Data Science [datascience.jpl.nasa.gov]

- 2. NASA Jet Propulsion Laboratory (JPL) - Robotic Space Exploration | NASA Jet Propulsion Laboratory (JPL) [jpl.nasa.gov]

- 3. Research at JPL | Home [jpl.nasa.gov]

- 4. JPL SIP - Vision [jpl.nasa.gov]

- 5. Research at JPL | Visiting Researcher Programs [jpl.nasa.gov]

- 6. JPL Faculty Research Program – Explore Programs & Apply | NASA JPL Education [jpl.nasa.gov]

- 7. Postdoctoral Programs [postdocs.jpl.nasa.gov]

- 8. Benefits | NASA Postdoctoral Program [npp.orau.org]

- 9. JPL Visiting Student Research Program – Explore Programs & Apply | NASA JPL Education [jpl.nasa.gov]

- 10. SURF@JPL - Student-Faculty Programs [sfp.caltech.edu]

- 11. Nasa and USC to send fungi into space for developing new medicine - Airport Technology [airport-technology.com]

- 12. The International Space Station Environment Triggers Molecular Responses in Aspergillus niger - PMC [pmc.ncbi.nlm.nih.gov]

- 13. ai.jpl.nasa.gov [ai.jpl.nasa.gov]

- 14. researchgate.net [researchgate.net]

- 15. Postdoctoral Programs [postdocs.jpl.nasa.gov]

Accessing JPL's Planetary Data Archives: A Technical Guide for Researchers

Pasadena, CA - The Jet Propulsion Laboratory (JPL), a leader in robotic space exploration, manages a vast and diverse collection of planetary science data. These archives are a critical resource for researchers, scientists, and drug development professionals seeking to understand our solar system and beyond. This technical guide provides a comprehensive overview of the primary data archives, access methods, and data processing protocols to enable effective utilization of these invaluable resources.

Overview of JPL's Planetary Data Archives

JPL's planetary data is primarily managed and distributed through two key entities: the Planetary Data System (PDS) and the Solar System Dynamics (SSD) group.

The Planetary Data System (PDS) is a long-term archive of digital data products from NASA's planetary missions, as well as other flight and ground-based data acquisitions.[1] The PDS is a federated system composed of eight nodes, six of which are science discipline nodes that specialize in specific areas of planetary science.[2][3] All data curated by the PDS is peer-reviewed to ensure its usability by the global planetary science community.[1]

The Solar System Dynamics (SSD) group at JPL provides key solar system data and ephemeris computation services.[4] This includes the highly accurate Horizons ephemeris system and the Small-Body Database (SBDB).[4][5]

The Planetary Data System (PDS)

The PDS archives a wide array of data types, from raw instrument data to high-level processed scientific products. The data is organized into a hierarchical structure of bundles, collections, and products, adhering to the PDS4 data standards.[6]
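The bundle, collection, and product hierarchy is reflected in PDS4 logical identifiers (LIDs), which take the form `urn:nasa:pds:<bundle>:<collection>:<product>`, optionally followed by `::<version>`. The sketch below splits such an identifier into its parts. The helper name `parse_lidvid` and the example identifier are hypothetical, and the code assumes the NASA `urn:nasa:pds` prefix.

```python
from typing import NamedTuple, Optional

class PdsIdentifier(NamedTuple):
    bundle: str
    collection: Optional[str]
    product: Optional[str]
    version: Optional[str]

def parse_lidvid(identifier: str) -> PdsIdentifier:
    """Split a PDS4 LID (or LIDVID) into bundle, collection, product, version."""
    lid, _, version = identifier.partition("::")
    parts = lid.split(":")
    if parts[:3] != ["urn", "nasa", "pds"] or not 4 <= len(parts) <= 6:
        raise ValueError(f"not a NASA PDS4 logical identifier: {identifier!r}")
    # Pad with None so bundle-level and collection-level LIDs also parse.
    bundle, collection, product = (parts[3:] + [None, None])[:3]
    return PdsIdentifier(bundle, collection, product, version or None)

# Hypothetical identifier, used only to show the structure.
example = parse_lidvid("urn:nasa:pds:example_bundle:data_raw:image_0001::1.0")
```

A bundle-level identifier such as `urn:nasa:pds:example_bundle` parses with `collection` and `product` set to `None`.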

PDS Science Discipline Nodes

The PDS comprises several discipline nodes, each responsible for archiving and distributing specific types of planetary data.[2] Researchers should direct their queries to the node most relevant to their area of study.

| Node Name | Data Specialization | Representative Data Types |
|---|---|---|
| Atmospheres Node (ATM) | Non-imaging atmospheric data from planetary missions.[2][7] | Temperature and pressure profiles, atmospheric composition, and meteorological data.[7] |
| Cartography and Imaging Sciences Node (IMG) | Digital image collections from planetary missions.[2][8] The archive totals approximately 2.6 PB of data.[9] | Raw and calibrated images, mosaics, and cartographic products.[8] |
| Geosciences Node (GEO) | Data related to the surfaces and interiors of terrestrial planetary bodies.[2][4][10] | Spectral data, geophysical measurements, radar data, and laser altimetry.[11] |
| Planetary Plasma Interactions (PPI) | Fields and particles data from planetary missions.[2][12][13] | Magnetic field data, plasma wave observations, and energetic particle measurements.[12] |
| Ring-Moon Systems Node (RMS) | Data relevant to outer planetary systems containing rings and moons.[2] | Images, spectral data, and occultation data of Jupiter, Saturn, Uranus, and Neptune systems.[2] |
| Small Bodies Node (SBN) | Data related to asteroids, comets, and interplanetary dust.[2][6] | Mission data, ground-based observations, and laboratory measurements of small bodies.[6] |
| Navigation and Ancillary Information Facility (NAIF) | Ancillary data required to understand the geometry of space science observations.[3] | Spacecraft and planetary ephemerides, instrument orientation, and shape models (SPICE kernels).[3] |
| Engineering Node | Provides systems engineering support to the entire PDS. | Manages PDS standards, software, and system-wide tools. |

Accessing PDS Data

The PDS offers several methods for accessing its data archives, ranging from web-based portals to programmatic interfaces.

The primary entry point for accessing PDS data is the main PDS website, which provides links to the various discipline nodes. Each node maintains its own website with specialized search tools and data access interfaces.[2] For example, the Imaging Node offers the Planetary Image Atlas for searching image data.[8]

For more advanced and automated data retrieval, the PDS provides a RESTful Application Programming Interface (API).[14] This API allows users to programmatically search for and download PDS4 data.

A key resource for learning how to use the PDS API is the collection of sample Jupyter notebooks provided by the PDS.[15] These notebooks offer practical examples of how to query the API using Python.

Experimental Protocol: Accessing PDS Data with the PDS API in Python

This protocol outlines the basic steps for querying the PDS API using Python.

1. Objective: To programmatically search for and retrieve information about PDS data products.

2. Materials:

- Python 3.x

- requests library (pip install requests)

3. Methodology:

4. Example Python Code:
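A minimal sketch of such a query, assuming the public PDS Search API is served at `https://pds.nasa.gov/api/search/1/products`, that it accepts `keywords` and `limit` query parameters, and that it returns JSON with a top-level `data` list. These details are assumptions and should be checked against the current PDS API documentation. The helper names are hypothetical.

```python
# Assumed endpoint of the PDS Search API; verify against the PDS API docs.
PDS_SEARCH_URL = "https://pds.nasa.gov/api/search/1/products"

def build_search_params(keywords, limit=5):
    """Query parameters for a keyword search (parameter names are assumptions)."""
    return {"keywords": keywords, "limit": limit}

def search_products(keywords, limit=5, timeout=30):
    """Return the list of matching product records as dictionaries."""
    # Imported here so build_search_params can be used without the dependency.
    import requests

    response = requests.get(
        PDS_SEARCH_URL,
        params=build_search_params(keywords, limit),
        headers={"Accept": "application/json"},
        timeout=timeout,
    )
    response.raise_for_status()          # surface HTTP errors early
    return response.json().get("data", [])

# Example (requires network access):
# for product in search_products("insight"):
#     print(product.get("id"), "-", product.get("title"))
```

Keeping the parameter construction separate from the HTTP call makes the query easy to inspect or adapt before any request is sent.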

Solar System Dynamics (SSD) Group Data

The SSD group provides crucial data for mission planning, astronomical observations, and scientific research.

Horizons Ephemeris System

The JPL Horizons system provides highly accurate ephemerides for solar system objects, including planets, moons, asteroids, and comets.[4]

Horizons data can be accessed through several interfaces:

- Web Interface: A user-friendly web form for generating ephemerides.[4]

- Command-Line Interface: A text-based interface accessible via telnet.[4]

- Email Interface: Batch requests can be submitted via email.[4]

- API: A RESTful API for programmatic access to Horizons data.[4][16]
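As an illustration of the API route, the sketch below builds a request URL for an observer ephemeris. It assumes the endpoint `https://ssd.jpl.nasa.gov/api/horizons.api` and the parameter names used in the Horizons API documentation (`COMMAND`, `EPHEM_TYPE`, `CENTER`, `START_TIME`, `STOP_TIME`, `STEP_SIZE`); the helper name `horizons_url` is hypothetical, and the parameter set should be verified against the current documentation before use.

```python
from urllib.parse import quote, urlencode

# Assumed endpoint of the Horizons API.
HORIZONS_API = "https://ssd.jpl.nasa.gov/api/horizons.api"

def horizons_url(command, start, stop, step, center="500@399"):
    """Build a Horizons request URL for an observer-table ephemeris.

    command: target body ID (e.g. "499" for Mars); center: observing site
    (the default used here is the geocenter). Values are wrapped in single
    quotes, following the style of the Horizons API examples.
    """
    params = {
        "format": "text",
        "COMMAND": f"'{command}'",
        "OBJ_DATA": "'NO'",
        "MAKE_EPHEM": "'YES'",
        "EPHEM_TYPE": "'OBSERVER'",
        "CENTER": f"'{center}'",
        "START_TIME": f"'{start}'",
        "STOP_TIME": f"'{stop}'",
        "STEP_SIZE": f"'{step}'",
    }
    # quote_via=quote encodes spaces as %20 rather than "+".
    return f"{HORIZONS_API}?{urlencode(params, quote_via=quote)}"

url = horizons_url("499", "2030-01-01", "2030-01-10", "1 d")
```

The resulting URL can be fetched with any HTTP client; the response is the plain-text ephemeris that the web interface would produce for the same settings.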

Small-Body Database (SBDB)

The SBDB is a comprehensive database of all known asteroids and comets in our solar system, containing orbital and physical data.[5]

- SBDB Lookup Tool: A web-based tool for retrieving data for a specific small body.

- SBDB Query API: A RESTful API for programmatically querying the database.[17] The astroquery Python package provides a convenient interface to this API.[18]

Experimental Protocol: Retrieving Asteroid Data with the SBDB Query API and Astroquery

This protocol demonstrates how to use the astroquery library to fetch data for a specific asteroid from the SBDB.

1. Objective: To retrieve orbital and physical data for a given asteroid.

2. Materials:

- Python 3.x

- astroquery library (pip install astroquery)

3. Methodology:

4. Example Python Code:
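A minimal sketch, assuming the `astroquery.jplsbdb` module and its `SBDB.query` method, which returns a nested dictionary with `object` and `orbit` sections. The helper names `fetch_sbdb_record` and `summarize` are hypothetical, and the exact keys present in a response can vary by object.

```python
def fetch_sbdb_record(target, phys=True):
    """Query the SBDB for one object (requires astroquery and network access)."""
    # Imported here so summarize() can be used without astroquery installed.
    from astroquery.jplsbdb import SBDB

    return SBDB.query(target, phys=phys)

def summarize(record):
    """Pick a few orbital quantities out of an SBDB-style nested dictionary."""
    elements = record["orbit"]["elements"]
    return {
        "name": record["object"]["fullname"],
        "semi_major_axis": elements["a"],   # typically an astropy Quantity in AU
        "eccentricity": elements["e"],
        "inclination": elements["i"],       # typically an astropy Quantity in degrees
    }

# Example (requires network access):
# print(summarize(fetch_sbdb_record("Ceres")))
```

Setting `phys=True` asks the service to include physical parameters, which then appear in a separate section of the returned dictionary.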

Data Processing and Analysis

Once data has been acquired, it often needs to be processed and analyzed to be scientifically useful. JPL provides and supports several tools and methodologies for this purpose.

VICAR Image Processing System

The Video Image Communication and Retrieval (VICAR) system is a general-purpose image processing software system developed at JPL.[19][20] It is widely used to process images from JPL's unmanned planetary spacecraft.[20] VICAR consists of a library of programs that can be combined to perform complex image processing tasks, such as radiometric correction, geometric correction, and mosaicking.[21]

SPICE Toolkit

The Navigation and Ancillary Information Facility (NAIF) at JPL develops and maintains the SPICE toolkit.[3] SPICE is an information system that provides the geometric and other ancillary data needed to interpret space science observations.[3] The SPICE toolkit is a library of software that allows scientists and engineers to read SPICE data files (kernels) and compute observation geometry, such as the position and orientation of a spacecraft and its instruments.[3][22] Tutorials and documentation for using the SPICE toolkit are available on the NAIF website.[23]

Visualizing Data Access and Workflows

Understanding the flow of data from the archives to the researcher is crucial for efficient data utilization. The following diagrams, generated using the DOT language, illustrate key workflows.

Caption: High-level overview of accessing JPL's planetary data archives.

Caption: A typical workflow for programmatic data access using JPL's APIs.

Caption: The federated structure of the Planetary Data System (PDS).

References

- 1. atmospheres node data [pds-atmospheres.nmsu.edu]

- 2. PDS: Node Descriptions [pds.nasa.gov]

- 3. A Very Brief Introduction to SPICE — NAIF PDS4 Bundler 1.8.0 documentation [nasa-pds.github.io]

- 4. PDS Geosciences Node Data and Services [pds-geosciences.wustl.edu]

- 5. core.ac.uk [core.ac.uk]

- 6. PDS: Small Bodies Node Home [pds-smallbodies.astro.umd.edu]

- 7. PDS Atmospheres Node [pds-atmospheres.nmsu.edu]

- 8. Cartography and Imaging Sciences Discipline Node [pds-imaging.jpl.nasa.gov]

- 9. hou.usra.edu [hou.usra.edu]

- 10. NASA Planetary Data System Geosciences Node | Research | WashU [research.washu.edu]

- 11. lpi.usra.edu [lpi.usra.edu]

- 12. PDS/PPI Home Page [pds-ppi.igpp.ucla.edu]

- 13. PDS/PPI Home Page [pds-ppi.igpp.ucla.edu]

- 14. orbital mechanics - Python API for JPL Horizons? - Space Exploration Stack Exchange [space.stackexchange.com]

- 15. Tutorials/Cookbooks — PDS APIs B15.1 documentation [nasa-pds.github.io]

- 16. google.com [google.com]

- 17. youtube.com [youtube.com]

- 18. JPL SBDB Queries (astroquery.jplsbdb/astroquery.solarsystem.jpl.sbdb) — astroquery v0.4.12.dev198 [astroquery.readthedocs.io]

- 19. ntrs.nasa.gov [ntrs.nasa.gov]

- 20. VICAR - Video Image Communication And Retrieval(NPO-49845-1) | NASA Software Catalog [software.nasa.gov]

- 21. scispace.com [scispace.com]

- 22. NAIF [naif.jpl.nasa.gov]

- 23. SPICE Tutorials [naif.jpl.nasa.gov]

A Technical Introduction to the Deep Space Network: Core Capabilities and Scientific Applications

An In-depth Guide for Researchers, Scientists, and Drug Development Professionals

The Deep Space Network (DSN) stands as the largest and most sensitive scientific telecommunications system in the world, serving as the primary conduit for receiving invaluable data from interplanetary spacecraft.[1] Managed by NASA's Jet Propulsion Laboratory (JPL), the DSN provides the critical two-way communication link that enables the guidance and control of robotic explorers and the return of their scientific discoveries.[2] This technical guide provides a comprehensive overview of the DSN's architecture, core capabilities, and its application in pioneering scientific experiments.

Global Architecture and Core Functions

The DSN's strategic global placement ensures continuous communication with spacecraft as the Earth rotates.[2] It consists of three deep-space communications complexes located approximately 120 degrees apart in longitude:

- Goldstone, California, USA

- Madrid, Spain

- Canberra, Australia [1]

This configuration guarantees that any spacecraft in deep space is always within the line of sight of at least one of the ground stations.[1]

The primary functions of the DSN are to:

- Acquire Telemetry Data: Receive scientific and engineering data from spacecraft.[1][3]

- Transmit Commands: Send instructions and software modifications to spacecraft.[1][3]

- Track Spacecraft Position and Velocity: Perform precise measurements of a spacecraft's trajectory.[1][3]

- Perform Radio and Radar Astronomy Observations: Explore the solar system and the universe.[1]

- Conduct Radio Science Experiments: Utilize the spacecraft-to-Earth radio link as a scientific instrument.[3]

DSN Antenna and Frequency Specifications

Each DSN complex is equipped with a variety of large, steerable, high-gain parabolic reflector antennas. The network's antenna inventory includes 70-meter, 34-meter, and 26-meter diameter antennas, each with specific capabilities.[3] The DSN operates across several frequency bands, with a general trend toward higher frequencies to support increased data return.[3]

Antenna Performance Characteristics

The performance of a DSN antenna is a critical factor in the design of deep space communication links. Key parameters include antenna gain and system noise temperature, which are often combined into a figure of merit known as G/T. The following tables summarize the typical performance characteristics of the DSN's primary antennas.

| Antenna | Frequency Band | Antenna Gain (dBi) | System Noise Temperature at Zenith (K) | G/T at Zenith (dB/K) |
|---|---|---|---|---|
| 70-meter | S-Band (2.295 GHz) | ~63 | ~18 | ~50.4 |
| 70-meter | X-Band (8.42 GHz) | ~74 | ~20 | ~61.0 |
| 70-meter | Ka-Band (32 GHz) | ~81 | ~40 | ~65.0 |
| 34-meter (HEF) | S-Band (2.295 GHz) | ~56 | ~25 | ~42.0 |
| 34-meter (HEF) | X-Band (8.42 GHz) | ~68 | ~28 | ~53.5 |
| 34-meter (BWG) | S-Band (2.295 GHz) | ~56 | ~25 | ~42.0 |
| 34-meter (BWG) | X-Band (8.42 GHz) | ~68 | ~30 | ~53.2 |
| 34-meter (BWG) | Ka-Band (32 GHz) | ~75 | ~50 | ~58.0 |

Note: Values are approximate and can vary based on specific antenna, elevation angle, and weather conditions.
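The figure of merit follows directly from the other two quantities: in decibel terms, G/T equals the antenna gain in dBi minus ten times the base-10 logarithm of the system noise temperature in kelvin. A short sketch of that arithmetic, with a hypothetical function name:

```python
import math

def g_over_t_db(gain_dbi, system_noise_temp_k):
    """Receive figure of merit G/T in dB/K from gain (dBi) and noise temperature (K)."""
    return gain_dbi - 10.0 * math.log10(system_noise_temp_k)

# Approximate 70-meter X-band values: 74 dBi gain, 20 K system noise temperature.
x_band_70m = g_over_t_db(74.0, 20.0)
```

For those inputs the result is about 61 dB/K. Because the noise temperature enters logarithmically, halving the system noise temperature improves G/T by about 3 dB, the same as doubling the effective collecting area.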

Frequency Bands and Data Rates

The DSN utilizes specific frequency bands allocated for deep space communication by the International Telecommunication Union (ITU).[3] The choice of frequency band has a significant impact on the achievable data rates.

| Frequency Band | Uplink Frequency Range (MHz) | Downlink Frequency Range (MHz) | Typical Maximum Data Rates | Primary Use |
|---|---|---|---|---|
| S-Band | 2110 - 2120 | 2290 - 2300 | Up to 256 kbps | Telemetry, Tracking, and Command for older missions and near-Earth operations. |
| X-Band | 7145 - 7190 | 8400 - 8450 | Up to 10 Mbps | Primary band for modern deep space missions, offering a good balance of data rate and weather resilience. |
| Ka-Band | 34200 - 34700 | 31800 - 32300 | Over 100 Mbps | High-rate data return for science-intensive missions. More susceptible to weather effects. |
| K-Band (Near-Earth) | N/A | 25500 - 27000 | High | Used for missions in near-Earth space, such as the James Webb Space Telescope.[3] |

DSN Services and Data Flow

The DSN provides a suite of services to support space missions, broadly categorized into uplink and downlink capabilities. These services handle seven distinct types of data.

The Seven DSN Data Types

- Telemetry (TLM): Scientific and engineering data transmitted from the spacecraft.

- Tracking (TRK): Data used to determine the spacecraft's position and velocity, including Doppler and ranging measurements.

- Command (CMD): Instructions sent from mission control to the spacecraft.

- Radio Science (RS): Data from experiments that use the radio link itself as the instrument.

- Very Long Baseline Interferometry (VLBI): High-resolution positional data obtained by correlating signals from two widely separated antennas.

- Monitor (MON): Data on the status and performance of the DSN itself.

- Frequency and Timing (F&T): High-precision frequency and timing references that are essential for all DSN operations.[4]

Data Flow and Signal Processing

The flow of data through the DSN is a complex process involving multiple stages of signal reception, processing, and distribution.

Experimental Protocols: Radio Science

The DSN, in conjunction with spacecraft radio systems, enables a class of experiments known as radio science. These experiments use the properties of the radio waves to probe the physical characteristics of celestial bodies and the interplanetary medium.

Gravity Field Mapping

Objective: To determine the gravity field of a planet or moon, providing insights into its internal structure.

Methodology:

- A coherent, two-way radio link is established between a DSN station and the spacecraft orbiting the target body.

- The DSN transmits a highly stable uplink signal to the spacecraft.

- The spacecraft's transponder receives the uplink signal and immediately retransmits it back to the DSN station.

- The DSN's precision receivers measure the Doppler shift of the downlink signal. This shift is the change in frequency of the radio waves caused by the relative motion between the spacecraft and the ground station.

- Minute variations in the spacecraft's velocity, caused by local variations in the gravitational field of the body it is orbiting, induce tiny changes in the Doppler shift.

- By precisely measuring these Doppler shifts over many orbits, scientists can create a detailed map of the body's gravity field.
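The scale of the measurement can be sketched with the first-order two-way Doppler relation, in which the frequency shift is approximately twice the line-of-sight velocity divided by the speed of light, times the signal frequency. The code below is a simplified, non-relativistic illustration that ignores the transponder turnaround ratio, Earth rotation, and media effects; the function names are hypothetical.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s

def two_way_doppler_shift(radial_velocity, frequency_hz):
    """First-order two-way Doppler shift in Hz.

    radial_velocity: line-of-sight velocity in m/s, positive when receding.
    """
    return -2.0 * radial_velocity / SPEED_OF_LIGHT * frequency_hz

def radial_velocity_from_shift(shift_hz, frequency_hz):
    """Invert the relation above to recover line-of-sight velocity in m/s."""
    return -shift_hz * SPEED_OF_LIGHT / (2.0 * frequency_hz)

# A 0.1 mm/s velocity change observed on an 8.42 GHz (X-band) link.
shift = two_way_doppler_shift(1e-4, 8.42e9)
```

For this example the shift is only a few millihertz on a carrier of several gigahertz, which is why the frequency stability of the uplink and of the station's timing references matters so much for gravity science.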

Radio Occultation

Objective: To study the structure, composition, and dynamics of a planet's atmosphere and ionosphere.

Methodology:

- The experiment is conducted when the spacecraft passes behind the target planet as viewed from Earth (an occultation).

- As the spacecraft is occulted, its radio signals to the DSN pass through the planet's atmosphere.

- The atmosphere refracts (bends) the radio waves and alters their frequency and amplitude.

- The DSN's open-loop receivers record these changes in the signal with high precision.

- By analyzing the changes in the signal's properties as it traverses different layers of the atmosphere, scientists can derive vertical profiles of atmospheric temperature, pressure, density, and electron content in the ionosphere.[5][6][7][8]

Logical Relationships and System Interdependencies

The various subsystems of the DSN are intricately linked to provide a seamless and reliable communication and data acquisition service. The Frequency and Timing Subsystem, for instance, is fundamental to the operation of all other systems.

References

- 1. descanso.jpl.nasa.gov [descanso.jpl.nasa.gov]

- 2. semanticscholar.org [semanticscholar.org]

- 3. NASA Deep Space Network - Wikipedia [en.wikipedia.org]

- 4. Ground antennas in NASA's deep space telecommunications | IEEE Journals & Magazine | IEEE Xplore [ieeexplore.ieee.org]

- 5. researchgate.net [researchgate.net]

- 6. cris.unibo.it [cris.unibo.it]

- 7. deepblue.lib.umich.edu [deepblue.lib.umich.edu]

- 8. cris.unibo.it [cris.unibo.it]

JPL's Blueprint for Cosmic Discovery: A Technical Roadmap for Future Deep Space Exploration

Pasadena, CA - The Jet Propulsion Laboratory (JPL), a leading center for robotic exploration of the solar system, is charting an ambitious course for the coming decades. This technical guide delves into JPL's strategic roadmap, outlining the key scientific questions, technological advancements, and groundbreaking missions that will define the next era of deep space exploration. This document is intended for researchers, scientists, and engineers, providing a comprehensive overview of the quantitative data, experimental protocols, and logical frameworks that underpin JPL's vision.

Strategic Imperatives: Guiding the Future of Exploration

JPL's strategic direction is guided by a set of imperatives that prioritize transformational science, technology infusion, and a robust and innovative workforce. The "JPL Plan 2023–2026" builds upon the foundation of the 2018 Strategic Implementation Plan, outlining seven key imperatives to guide the laboratory's focus.[1][2][3] These imperatives emphasize the importance of delivering on current commitments while investing in the technologies and methodologies that will enable the groundbreaking missions of the future. A core aspect of this strategy is the pursuit of a diverse and bold portfolio of missions that push the boundaries of space exploration technology by developing and fielding increasingly capable autonomous robotic systems.[4][5]

A central theme in JPL's future is the continued quest to answer fundamental questions about the universe: "Where did we come from?" and "Are we alone?".[6] This is reflected in the priorities set by the Planetary Science Decadal Survey, which heavily influences JPL's mission portfolio.[7][8][9][10] Key recommendations include the Mars Sample Return mission, a Uranus Orbiter and Probe, and the Enceladus Orbilander, all of which are central to JPL's long-term planning.[7][8]

Flagship Missions: Charting a Course for New Frontiers

JPL's roadmap is anchored by a series of flagship missions designed to investigate some of the most compelling targets in our solar system. These missions are characterized by their ambitious scientific goals and the development of cutting-edge technologies.

Europa Clipper: Unveiling the Secrets of an Ocean World

The Europa Clipper mission is designed to investigate the habitability of Jupiter's moon Europa, which is believed to harbor a global subsurface ocean of liquid water. The spacecraft will perform dozens of close flybys to study the moon's ice shell, ocean, and composition.

| Europa Clipper Instrument | Key Measurement Capabilities |
|---|---|
| Plasma Instrument for Magnetic Sounding (PIMS) & Europa Clipper Magnetometer (ECM) | Characterize the magnetic field to confirm the existence of and characterize the ocean. |
| Europa Imaging System (EIS) | Provide high-resolution images to study the geology and identify potential landing sites. |
| Mapping Imaging Spectrometer for Europa (MISE) | Map the distribution of ices, salts, and organic molecules on the surface. |
| Radar for Europa Assessment and Sounding: Ocean to Near-surface (REASON) | Sound the ice shell to determine its thickness and search for subsurface water. |
| Europa Thermal Emission Imaging System (E-THEMIS) | Detect thermal anomalies that may indicate active plumes or thin ice. |
| MAss SPectrometer for Planetary EXploration/Europa (MASPEX) | Analyze the composition of the tenuous atmosphere and any potential plumes. |
| SUrface Dust Mass Analyzer (SUDA) | Analyze the composition of tiny particles ejected from Europa's surface. |
| Europa Ultraviolet Spectrograph (UVS) | Search for and characterize plumes erupting from the moon's interior. |
| Gravity/Radio Science | Determine the thickness of the ice shell and the depth of the ocean. |

The Gravity/Radio Science investigation will utilize the spacecraft's telecommunications system to precisely measure Europa's gravity field. The experiment will proceed as follows:

- Signal Transmission: A radio signal is transmitted from the Deep Space Network (DSN) on Earth to the Europa Clipper spacecraft.
- Coherent Transponding: The spacecraft receives the signal and transmits a new signal back to Earth at a frequency that is coherent with the received signal.
- Doppler Shift Measurement: By analyzing the Doppler shift in the returned signal, scientists can precisely determine the spacecraft's velocity relative to Earth.
- Orbital Perturbation Analysis: As the spacecraft flies by Europa, the moon's gravity will slightly alter its trajectory. These perturbations are reflected in the Doppler shift of the radio signal.
- Gravity Field Mapping: By making these measurements during multiple flybys at different orientations and altitudes, a detailed map of Europa's gravity field can be constructed. These data will be used to infer the thickness of the ice shell and the depth of the subsurface ocean.
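The Doppler step above can be illustrated with a short calculation. The following is a minimal sketch, not the mission's actual processing chain: it uses the first-order two-way Doppler relation, and the function name `two_way_range_rate`, the 7.19 GHz uplink frequency, and the 880/749 turnaround ratio are illustrative assumptions that do not come from the source text.

```python
# Minimal sketch: line-of-sight velocity from a two-way coherent Doppler
# measurement. All names and numeric values are illustrative assumptions.

C = 299_792_458.0  # speed of light in m/s


def two_way_range_rate(f_uplink_hz, f_received_hz, turnaround_ratio):
    """Return the range rate in m/s (positive = spacecraft receding).

    Uses the first-order (v << c) two-way relation:
        f_received ~= ratio * f_uplink * (1 - 2 * v / c)
    """
    # Frequency the ground station would receive if the spacecraft were at rest.
    f_expected = turnaround_ratio * f_uplink_hz
    doppler_shift = f_received_hz - f_expected
    return -C * doppler_shift / (2.0 * f_expected)


if __name__ == "__main__":
    f_up = 7.19e9            # assumed X-band uplink frequency, Hz
    ratio = 880.0 / 749.0    # assumed coherent turnaround ratio
    # Simulate a spacecraft receding at 10 m/s and recover that velocity.
    f_rx = ratio * f_up * (1.0 - 2.0 * 10.0 / C)
    print(two_way_range_rate(f_up, f_rx, ratio))
```

In practice a gravity solution would fit many such velocity measurements per flyby against a trajectory model; the sketch covers only the conversion from frequency shift to velocity.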

SPHEREx: A Spectroscopic Survey of the Cosmos

The Spectro-Photometer for the History of the Universe, Epoch of Reionization, and Ices Explorer (SPHEREx) is a two-year astrophysics mission that will survey the entire sky in optical and near-infrared light. The mission aims to address three key scientific themes: the origin of the universe, the origin and history of galaxies, and the origin of water in planetary systems.

| SPHEREx Mission Parameter | Value |
|---|---|
| Wavelength Range | 0.75 to 5.0 micrometers |
| Spectral Channels | 96 |
| Field of View | 3.5° x 7° |
| Aperture Diameter | 20 cm |
| Survey Duration | 2 years |
| Number of All-Sky Maps | 4 |

SPHEREx will perform its all-sky survey using a series of overlapping exposures. The observational strategy is as follows:

- Orbital Scan: The spacecraft will be in a near-polar, sun-synchronous orbit, allowing it to scan a continuous strip of the sky as the Earth rotates.
- Step and Integrate: The telescope will observe a patch of sky for a set integration time, then slew to the next adjacent patch.
- Spectral Mapping: For each patch of sky, the instrument will obtain a spectrum in 96 different wavelength bands.
- All-Sky Coverage: Over a period of six months, the spacecraft's orbital precession will allow it to observe the entire celestial sphere.
- Multiple Surveys: The mission will complete four full all-sky surveys over its two-year primary mission, allowing for the co-addition of data to increase signal-to-noise and the detection of transient events.
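The benefit of co-adding the four surveys can be sketched numerically. The example below assumes independent Gaussian noise in each survey, which is an idealization; the function names `coadded_snr` and `inverse_variance_mean` are hypothetical and are not part of any SPHEREx pipeline.

```python
import math


def coadded_snr(single_survey_snr, n_surveys):
    """SNR after co-adding n equal-depth surveys with independent noise."""
    return single_survey_snr * math.sqrt(n_surveys)


def inverse_variance_mean(values, sigmas):
    """Combine repeated measurements of one source, weighting each by 1/sigma^2.

    Returns the weighted mean and its 1-sigma uncertainty.
    """
    weights = [1.0 / (s * s) for s in sigmas]
    total_weight = sum(weights)
    mean = sum(w * v for w, v in zip(weights, values)) / total_weight
    return mean, math.sqrt(1.0 / total_weight)


if __name__ == "__main__":
    # Four surveys double the SNR of a single pass under these assumptions.
    print(coadded_snr(5.0, 4))
    # Four equal-noise flux measurements (arbitrary units) of the same source.
    print(inverse_variance_mean([1.0, 2.0, 3.0, 4.0], [1.0, 1.0, 1.0, 1.0]))
```

With equal uncertainties the weighted mean reduces to a plain average, and the combined uncertainty shrinks by the square root of the number of surveys.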

Enabling Technologies: Powering the Next Generation of Discovery

JPL's ambitious mission portfolio is enabled by continuous investment in new technologies. These advancements are crucial for increasing mission capability, reducing costs, and enabling new scientific investigations.

Advanced Propulsion

JPL is a leader in the development of advanced propulsion systems that enable missions to reach distant targets faster and with greater payload capacity.[11]

- Electric Propulsion: Hall thrusters and ion propulsion systems offer significantly higher specific impulse than traditional chemical rockets, allowing for more efficient deep space travel.[12][13][14] The Dawn mission successfully used ion propulsion to orbit two different main-belt asteroids.[11][13] Future advancements in high-power electric propulsion are a key technology priority.[15][16]
- Advanced Concepts: JPL continues to explore novel propulsion concepts that could revolutionize deep space exploration, though these are at earlier stages of development.[12]
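The efficiency advantage of a higher specific impulse follows from the Tsiolkovsky rocket equation. The sketch below compares the propellant mass fraction needed for the same velocity change; the 10 km/s delta-v and the specific impulse values (320 s for a chemical engine, 3,100 s for an ion engine) are illustrative assumptions, not figures taken from the source.

```python
import math

G0 = 9.80665  # standard gravity in m/s^2, used to convert Isp to exhaust velocity


def propellant_fraction(delta_v_m_s, isp_s):
    """Fraction of initial mass that must be propellant (Tsiolkovsky equation).

    m_propellant / m_initial = 1 - exp(-delta_v / (Isp * g0))
    """
    exhaust_velocity = isp_s * G0
    return 1.0 - math.exp(-delta_v_m_s / exhaust_velocity)


if __name__ == "__main__":
    delta_v = 10_000.0  # assumed mission delta-v in m/s
    for label, isp in [("chemical (assumed 320 s)", 320.0),
                       ("ion (assumed 3100 s)", 3100.0)]:
        print(f"{label}: {propellant_fraction(delta_v, isp):.1%} propellant")
```

The comparison ignores thrust level and trip time: electric propulsion delivers its velocity change slowly, which is the trade-off that mission designers weigh against the propellant savings.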

Autonomous Systems and Artificial Intelligence

- Cooperative Autonomy: The Cooperative Autonomous Distributed Robotic Exploration (CADRE) technology demonstration will feature a team of small rovers that will work together to explore the Moon, showcasing the potential of multi-robot missions.

The CADRE rovers will demonstrate a cooperative, autonomous mapping capability. The experimental protocol will involve the following steps:

- Leader Election: The rovers will autonomously elect a leader for a given task.
- Task Allocation: The leader will assign specific areas of interest to each rover.
- Coordinated Navigation: The rovers will navigate to their assigned locations while maintaining communication and avoiding collisions.
- Distributed Sensing: Each rover will use its ground-penetrating radar to collect subsurface data.
- Data Fusion: The data from all rovers will be combined to create a 3D map of the lunar subsurface.
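The election, allocation, and fusion steps can be sketched in a few lines. This is a toy illustration only and does not represent CADRE's flight software: the `Rover` class, the "highest battery wins" election rule, the round-robin allocation, and the cell-averaging fusion are all hypothetical choices made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rover:
    rover_id: int
    battery: float  # hypothetical state of charge, 0.0 to 1.0


def elect_leader(rovers):
    """Pick the rover with the most battery; ties go to the lowest id."""
    return max(rovers, key=lambda r: (r.battery, -r.rover_id))


def allocate_tasks(rovers, areas):
    """Assign areas of interest to rovers round-robin, in rover-id order."""
    ordered = sorted(rovers, key=lambda r: r.rover_id)
    assignment = {r.rover_id: [] for r in ordered}
    for index, area in enumerate(areas):
        assignment[ordered[index % len(ordered)].rover_id].append(area)
    return assignment


def fuse_maps(maps):
    """Merge per-rover {grid_cell: reading} maps, averaging overlapping cells."""
    sums, counts = {}, {}
    for rover_map in maps:
        for cell, value in rover_map.items():
            sums[cell] = sums.get(cell, 0.0) + value
            counts[cell] = counts.get(cell, 0) + 1
    return {cell: sums[cell] / counts[cell] for cell in sums}


if __name__ == "__main__":
    team = [Rover(1, 0.8), Rover(2, 0.9), Rover(3, 0.9)]
    print(elect_leader(team).rover_id)
    print(allocate_tasks(team, ["A", "B", "C", "D"]))
    print(fuse_maps([{(0, 0): 1.0}, {(0, 0): 3.0, (0, 1): 2.0}]))
```

A real distributed system would also have to handle lost messages and rovers that drop out mid-task, which this single-process sketch does not attempt.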

References

- 1. d2pn8kiwq2w21t.cloudfront.net
- 2. d2pn8kiwq2w21t.cloudfront.net
- 3. JPL Plan – David Rager [davidrager.co]
- 4. jpl.nasa.gov
- 5. JPL SIP - Vision [jpl.nasa.gov]
- 6. NASA Selects Future Mission Concepts for Study | NASA Jet Propulsion Laboratory (JPL) [jpl.nasa.gov]
- 7. Planetary Science Decadal Survey - Wikipedia [en.wikipedia.org]
- 8. 2022 Planetary Science Decadal Survey: Recommendations for Major Missions - AIP.ORG [aip.org]
- 9. planetary.org
- 10. space.com
- 11. Advanced Propulsion for JPL Deep Space Missions | NASA Jet Propulsion Laboratory (JPL) [jpl.nasa.gov]
- 12. Electric Propulsion Laboratory | NASA Jet Propulsion Laboratory (JPL) [jpl.nasa.gov]
- 13. youtube.com
- 14. Deep Space 1: Advanced Technologies: Solar Electric Propulsion [jpl.nasa.gov]
- 15. Advanced Electric Propulsion System - Wikipedia [en.wikipedia.org]
- 16. ntrs.nasa.gov
- 17. AI at Work: How NASA's JPL Uses Artificial Intelligence to Explore Mars and Understand Earth - Crescenta Valley Weekly [crescentavalleyweekly.com]
- 18. AI-Enhanced Spacecraft Navigation and Anomaly Detection: How NASA Uses Machine Learning to Improve Space Operations – Millennial Partners [millennial.ae]
- 19. A.I. Will Prepare Robots for the Unknown | NASA Jet Propulsion Laboratory (JPL) [jpl.nasa.gov]
- 20. JPL Artificial Intelligence Group [ai.jpl.nasa.gov]
- 21. m.youtube.com

A Technical Guide to the Jet Propulsion Laboratory's Central Role in Climate Change Monitoring

Issued: November 20, 2025

This document provides a comprehensive technical overview of the Jet Propulsion Laboratory's (JPL) pivotal contributions to monitoring global climate change. Managed by Caltech for NASA, JPL is at the forefront of Earth science, developing and operating a suite of advanced satellite missions and instruments.[1] These technologies provide critical data to the scientific community for understanding the complex Earth system, including its oceans, cryosphere, water and energy cycles, and carbon cycle.[2] This guide details the key missions, experimental methodologies, and data products relevant to researchers and scientists.

JPL's Thematic Approach to Climate Science

JPL's climate change research is structured around four principal themes, creating a holistic view of the planet's interconnected systems.[3]

- Icy Regions: Studying the dynamics of Earth's ice sheets and glaciers is crucial because their meltwater is a primary contributor to sea-level rise. JPL uses advanced technologies to monitor changes in the most remote parts of the globe.[3]
- Water and Energy Cycles: JPL employs radar, thermal, and moisture-sensing instruments to track the movement of water between the sea, air, and land.[3] Understanding these processes is vital for predicting precipitation patterns, freshwater availability, and the intensification of storms.[3][4]
- Greenhouse Gases: JPL missions are designed to identify the sources and sinks of greenhouse gases like carbon dioxide and methane on a global scale.[5][6] This research is fundamental to understanding how atmospheric concentrations of these gases will evolve.[3]
- Ecosystems: Technologies used for water cycle analysis are also applied to monitor the health and transformation of natural and agricultural ecosystems.[3] This includes tracking droughts and plant respiration from space.[3]

Key Missions and Instrumentation

JPL's contributions to climate monitoring are enabled by a portfolio of sophisticated instruments and satellite missions. The quantitative specifications for several key ongoing and future missions are summarized below.

Ocean and Sea Level Monitoring

Continuous and precise measurement of sea surface height is a cornerstone of climate monitoring. JPL has been a key contributor to this effort for over three decades.[7]

| Mission/Instrument | Key Climate Variables | Launch Date | Key Specifications | Status |
|---|---|---|---|---|
| Sentinel-6B | Sea Surface Height, Atmospheric Water Vapor, Air Temperature & Humidity | Nov 2025 (Scheduled)[8] | Accuracy: centimeter-level for 90% of oceans; Orbit: 1,336 km altitude, 66° inclination, 10-day repeat cycle; Mass: 2,623 lbs (1,190 kg).[7] | Future[8] |
| COWVR (Compact Ocean Wind Vector Radiometer) | Ocean Surface Wind Speed & Direction, Cloud Water Content, Water Vapor | Dec 21, 2021[9] | Weight: 130 lbs (58.7 kg); Power: 47 watts; Frequency: 34 gigahertz.[9] | Current[8] |
| GNSS-RO (on Sentinel-6) | Atmospheric Temperature, Density, & Moisture Content | Nov 2020 (on Sentinel-6A)[7] | Measures refraction of navigation satellite radio signals passing through the atmosphere.[4] | Current |
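To put the orbit altitude listed for Sentinel-6B in context, the orbital period of a circular orbit follows from Kepler's third law. The sketch below assumes a perfectly circular orbit and uses standard values for Earth's gravitational parameter and equatorial radius; the function name `circular_orbit_period_minutes` is hypothetical.

```python
import math

MU_EARTH_KM3_S2 = 398_600.4418  # Earth's gravitational parameter, km^3/s^2
R_EARTH_KM = 6_378.137          # Earth's equatorial radius, km


def circular_orbit_period_minutes(altitude_km):
    """Period of a circular orbit at the given altitude (Kepler's third law)."""
    semi_major_axis = R_EARTH_KM + altitude_km
    period_s = 2.0 * math.pi * math.sqrt(semi_major_axis ** 3 / MU_EARTH_KM3_S2)
    return period_s / 60.0


if __name__ == "__main__":
    # Altitude taken from the table above; the circular orbit is an assumption.
    print(f"{circular_orbit_period_minutes(1336.0):.1f} minutes per orbit")
```

At roughly 112 minutes per revolution, the satellite completes about 13 orbits per day, which is what allows the ground track to repeat on the cycle given in the table.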

Greenhouse Gas and Ecosystem Monitoring

Identifying and quantifying emissions of methane and carbon dioxide is a critical area of focus for JPL.

| Mission/Instrument | Key Climate Variables | Launch Date | Key Specifications | Status |
|---|---|---|---|---|
| OCO-2 (Orbiting Carbon Observatory 2) | Atmospheric Carbon Dioxide (CO2) sources and sinks | July 2, 2014 | Designed to provide global, high-resolution CO2 measurements.[5] | Current |
| EMIT (Earth Surface Mineral Dust Source Investigation) | Mineral Dust Composition, Methane (CH4), Carbon Dioxide (CO2) | July 2022[10][11] | Imaging spectrometer on the International Space Station (ISS); identifies point-source emissions.[11] | Current |
| Carbon-I | Greenhouse Gas Emissions (Methane, CO2) | Early 2030s (Proposed) | Aims for unprecedented high-resolution, continuous global mapping of emission sources like power plants and pipeline leaks.[6] | Proposed |
| ASTER (Advanced Spaceborne Thermal Emission and Reflection Radiometer) | High-resolution images of land surface, water, ice, and clouds | Dec 18, 1999 (on Terra) | Captures images across 14 spectral bands.[5] | Current |

Atmospheric and Weather Dynamics

JPL instruments provide multi-layered data on atmospheric composition, which is essential for improving weather forecasts and long-term climate models.[4]

| Mission/Instrument | Key Climate Variables | Launch Date | Key Specifications | Status |
|---|---|---|---|---|
| AIRS (Atmospheric Infrared Sounder) | Atmospheric Temperature, Water Vapor, Greenhouse Gases (CO2) | May 4, 2002 (on Aqua) | A key tool for climate studies on greenhouse gas distribution and weather forecasts.[5] | Current |
| TEMPEST (Temporal Experiment for Storms and Tropical Systems) | Atmospheric Humidity | Dec 21, 2021[9] | Weight: < 3 lbs (1.3 kg); Antenna: ~6 inches (15 cm) diameter; microwave radiometer designed to study storm growth.[9] | Current |
| MISR (Multi-angle Imaging SpectroRadiometer) | Aerosols, Cloud Properties, Surface Reflectance | Dec 18, 1999 (on Terra) | Views Earth at nine different angles simultaneously to provide detailed imagery.[5][12] | Current |

Experimental Protocols and Methodologies

The data generated by JPL instruments are based on sophisticated measurement principles.

- Radio Occultation (GNSS-RO): This technique uses radio signals from navigation satellites (like GPS).[4] As a signal passes through Earth's atmosphere, it slows down and its path bends, a phenomenon called refraction.[4] By precisely measuring this effect, scientists can derive detailed profiles of atmospheric density, temperature, and humidity.[4] This methodology provides high-vertical-resolution data critical for weather and climate models.

-