Savvy

Description

BenchChem offers this compound in high quality, suitable for many research applications. Different packaging options are available to accommodate customers' requirements. For more information about this compound, including price, delivery time, and detailed specifications, please inquire at info@benchchem.com.

Properties

CAS No. |

86903-77-7 |

|---|---|

Molecular Formula |

C30H65N2O3+ |

Molecular Weight |

501.8 g/mol |

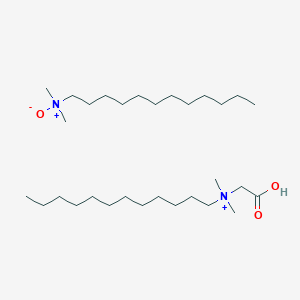

IUPAC Name |

carboxymethyl-dodecyl-dimethylazanium;N,N-dimethyldodecan-1-amine oxide |

InChI |

InChI=1S/C16H33NO2.C14H31NO/c1-4-5-6-7-8-9-10-11-12-13-14-17(2,3)15-16(18)19;1-4-5-6-7-8-9-10-11-12-13-14-15(2,3)16/h4-15H2,1-3H3;4-14H2,1-3H3/p+1 |

InChI Key |

KMCBHFNNVRCAAH-UHFFFAOYSA-O |

SMILES |

CCCCCCCCCCCC[N+](C)(C)CC(=O)O.CCCCCCCCCCCC[N+](C)(C)[O-] |

Canonical SMILES |

CCCCCCCCCCCC[N+](C)(C)CC(=O)O.CCCCCCCCCCCC[N+](C)(C)[O-] |

Synonyms |

C 31G C-31G C31G |

Origin of Product |

United States |


A Technical Guide to a Conceptual Genomics Software Suite

Disclaimer: A specific commercial or open-source software suite named "Savvy software suite for genomics" was not prominently identified in public documentation. This guide, therefore, outlines the core components, functionalities, and workflows of a representative integrated software suite for genomics, designed for researchers, scientists, and professionals in drug development. The quantitative data and specific protocols presented are illustrative examples.

Introduction to Integrated Genomics Analysis Platforms

Modern genomics research generates vast and complex datasets, necessitating sophisticated software solutions for analysis and interpretation. An integrated genomics software suite provides an end-to-end platform for managing and analyzing data from high-throughput sequencing experiments. These suites typically encompass functionalities for data quality control, sequence alignment, variant calling, annotation, and downstream analysis, including pathway and network analysis. The goal of such a suite is to streamline complex bioinformatics pipelines, ensure reproducibility, and accelerate the translation of genomic data into biological insights.

Core Architecture and Modules

A comprehensive genomics software suite is generally modular, allowing for flexibility and scalability. The core architecture often revolves around a central data management system with interconnected analysis modules.

A typical architecture might include:

- Data Import and Management Module: For handling raw sequencing data (e.g., FASTQ files) and associated metadata.
- Quality Control (QC) Module: For assessing the quality of raw sequencing reads.
- Sequence Alignment and Assembly Module: For mapping reads to a reference genome or assembling them de novo.
- Variant Discovery and Genotyping Module: For identifying genetic variants such as SNPs, indels, and structural variants.
- Annotation and Interpretation Module: For annotating variants with functional information and linking them to biological pathways and diseases.
- Visualization and Reporting Module: For generating interactive visualizations and comprehensive reports.

Quantitative Performance Metrics

The performance of a genomics software suite is critical, especially when dealing with large-scale studies. Key performance indicators often include processing speed, accuracy, and resource utilization. The following tables provide illustrative performance metrics for common genomics tasks.

Table 1: Performance on Whole Genome Sequencing (WGS) Data Analysis (per sample)

| Metric | Value | Conditions |
|---|---|---|
| Alignment Time | 2.5 hours | 30x human genome, 16-core CPU |
| Variant Calling Time | 1.0 hour | Post-alignment, 16-core CPU |
| SNP Concordance | >99.8% | Compared with GIAB benchmark calls |
| Indel Concordance | >99.5% | Compared with GIAB benchmark calls |
| RAM Usage (Peak) | 60 GB | During alignment |
| Storage (BAM) | ~80 GB | Compressed alignment file |
| Storage (VCF) | ~0.5 GB | Compressed variant call file |

Table 2: Performance on Whole Exome Sequencing (WES) Data Analysis (per sample)

| Metric | Value | Conditions |
|---|---|---|
| Alignment Time | 25 minutes | 100x human exome, 8-core CPU |
| Variant Calling Time | 10 minutes | Post-alignment, 8-core CPU |
| SNP Concordance | >99.9% | Compared with GIAB benchmark calls |
| Indel Concordance | >99.7% | Compared with GIAB benchmark calls |
| RAM Usage (Peak) | 32 GB | During alignment |
| Storage (BAM) | ~8 GB | Compressed alignment file |
| Storage (VCF) | ~0.05 GB | Compressed variant call file |

Experimental Protocols and Workflows

A robust genomics software suite supports a variety of experimental designs. Below are detailed methodologies for two key applications.

Whole Genome Sequencing (WGS) Analysis Workflow

This protocol outlines the steps for identifying genetic variants from raw WGS data.

Methodology:

- Data Pre-processing and Quality Control:
  - Raw sequencing reads in FASTQ format are loaded into the suite.
  - Initial quality assessment is performed using tools such as FastQC.
  - Adapters are trimmed and low-quality bases are removed.
- Alignment to Reference Genome:
  - Cleaned reads are aligned to a reference genome (e.g., GRCh38) using BWA-MEM (Burrows-Wheeler Aligner).
  - The resulting alignments are stored in a Binary Alignment Map (BAM) file.
- Post-Alignment Processing:
  - Duplicate reads arising from PCR amplification are marked (and optionally removed).
  - Base quality scores are recalibrated to correct for systematic sequencing errors.
- Variant Calling:
  - GATK HaplotypeCaller or a similar algorithm is used to identify SNPs and small indels.
  - Variant calls are stored in a Variant Call Format (VCF) file.
- Variant Filtration and Annotation:
  - Variants are filtered on quality metrics (e.g., quality by depth [QD] and mapping quality [MQ]).
  - High-quality variants are annotated with information from databases such as dbSNP, ClinVar, and gnomAD.
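The filtration step can be illustrated with a minimal hard-filter sketch. It assumes the QD, FS (Fisher strand bias), and MQ annotations are present in the VCF INFO column, and its thresholds (QD < 2.0, FS > 60.0, MQ < 40.0) are modelled on GATK's generic SNP hard-filtering recommendations; the function names are hypothetical, and real pipelines would use a dedicated tool such as GATK VariantFiltration and tune the thresholds to their data.

```python
# Hard-filter rules for SNPs, modelled on GATK's generic recommendations.
# Each rule returns True when the record FAILS that filter.
SNP_FILTERS = {
    "QD": lambda v: v < 2.0,   # variant quality normalised by depth
    "FS": lambda v: v > 60.0,  # Fisher strand bias (phred-scaled)
    "MQ": lambda v: v < 40.0,  # RMS mapping quality
}


def parse_info(info: str) -> dict[str, float]:
    """Parse numeric KEY=VALUE pairs from a VCF INFO column.

    Flags (keys without '=') and non-numeric or multi-valued
    annotations are skipped.
    """
    values = {}
    for field in info.split(";"):
        key, sep, raw = field.partition("=")
        if not sep:
            continue
        try:
            values[key] = float(raw)
        except ValueError:
            pass
    return values


def failed_filters(info: str) -> list[str]:
    """Names of the filters a record fails; an empty list means PASS.

    A missing annotation is treated as passing its filter.
    """
    values = parse_info(info)
    return [name for name, fails in SNP_FILTERS.items()
            if name in values and fails(values[name])]
```

For example, `failed_filters("AC=1;QD=1.5;FS=3.2;MQ=60.0")` flags only the low QD value, so the record would be written with `FILTER=QD` rather than `PASS`.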

RNA-Seq Differential Expression Analysis Workflow

This protocol details the process for quantifying gene expression and identifying differentially expressed genes from RNA-Seq data.

Methodology:

- Data Pre-processing and Quality Control:
  - Raw RNA-Seq reads (FASTQ) are assessed for quality.
  - Adapter sequences and low-quality reads are removed.
- Alignment to Reference Genome and Transcriptome:
  - Cleaned reads are aligned to a reference genome, guided by transcript annotations, using a splice-aware aligner such as STAR.
- Gene Expression Quantification:
  - The reads mapping to each gene are counted to generate a gene-by-sample count matrix.
- Differential Expression Analysis:
  - The count matrix is used as input for statistical packages such as DESeq2 or edgeR.
  - This analysis identifies genes that are significantly up- or down-regulated between experimental conditions.
- Downstream Analysis:
  - Differentially expressed genes are used for pathway analysis and gene ontology enrichment to understand the biological implications.
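Before testing for differential expression, raw counts must be normalized for sequencing depth. The sketch below implements the median-of-ratios size factors used by DESeq2, purely to show what that normalization computes; DESeq2 does this internally, and the function name here is hypothetical. Counts are assumed to be a list of rows (genes) by columns (samples).

```python
import math
import statistics


def size_factors(counts: list[list[int]]) -> list[float]:
    """Median-of-ratios size factors (rows = genes, columns = samples).

    Each sample's factor is the median, over genes, of its count divided
    by that gene's geometric mean across samples. Genes with a zero in
    any sample are skipped because their geometric mean is zero.
    """
    n_samples = len(counts[0])
    log_ratios: list[list[float]] = [[] for _ in range(n_samples)]
    for row in counts:
        if any(c == 0 for c in row):
            continue
        log_geo_mean = sum(math.log(c) for c in row) / n_samples
        for j, c in enumerate(row):
            log_ratios[j].append(math.log(c) - log_geo_mean)
    # Median on the log scale, then back-transform.
    return [math.exp(statistics.median(r)) for r in log_ratios]
```

Dividing each sample's counts by its size factor puts the samples on a common scale; a sample sequenced twice as deeply as another receives a size factor twice as large.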

Signaling Pathway Analysis

A key feature of an advanced genomics suite is the ability to place genomic findings into a biological context. This often involves analyzing how genetic variants or changes in gene expression affect signaling pathways.

For example, after identifying a set of differentially expressed genes in a cancer dataset, the software could map these genes to known signaling pathways, such as the MAPK/ERK pathway, to identify dysregulated network components.
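Mapping genes to a pathway is usually followed by an enrichment test asking whether the overlap is larger than chance. A common choice is the one-sided hypergeometric (over-representation) test, sketched below; it is one standard technique, not necessarily the one any given suite uses, and the function name is hypothetical.

```python
from math import comb


def enrichment_p_value(n_universe: int, n_pathway: int,
                       n_selected: int, n_overlap: int) -> float:
    """One-sided hypergeometric P(X >= n_overlap).

    n_universe: genes tested; n_pathway: universe genes in the pathway;
    n_selected: differentially expressed genes; n_overlap: DE genes that
    fall in the pathway.
    """
    total = comb(n_universe, n_selected)
    upper = min(n_pathway, n_selected)
    tail = sum(comb(n_pathway, i) * comb(n_universe - n_pathway, n_selected - i)
               for i in range(n_overlap, upper + 1))
    return tail / total
```

With a hypothetical universe of 20,000 genes, a 250-gene pathway, and 400 differentially expressed genes, one would call `enrichment_p_value(20000, 250, 400, k)` for the observed overlap `k`, then correct the resulting p-values for the number of pathways tested.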

An Introduction to Savvy Tools for Bioinformatics Research

A Technical Guide for Researchers, Scientists, and Drug Development Professionals

The rapid advancements in high-throughput technologies, such as next-generation sequencing (NGS) and mass spectrometry, have generated an unprecedented volume of biological data. The ability to process, analyze, and interpret this complex data is paramount for driving innovation in basic research and drug development. Bioinformatics provides the essential tools and methodologies to translate raw data into biological insights, accelerating the discovery of novel biomarkers, the identification of drug targets, and the development of personalized medicine.[1][2]

This technical guide provides an in-depth overview of core bioinformatics tools and workflows relevant to genomics, proteomics, and drug discovery. It details common experimental protocols, presents quantitative data for tool comparison, and visualizes key processes to facilitate understanding for researchers, scientists, and drug development professionals.

I. Genomics and Next-Generation Sequencing (NGS) Analysis

NGS technologies have revolutionized genomics by enabling rapid and cost-effective sequencing of DNA and RNA.[3] The resulting data is massive and requires a sophisticated pipeline of bioinformatics tools for analysis, from initial quality control to the identification of genetic variants or differentially expressed genes.[4][5]

Key Tools in NGS Data Analysis

A typical NGS workflow involves several stages, each utilizing specialized tools. The choice of tool can impact the speed, accuracy, and computational resources required for the analysis.

| Tool Category | Tool Name | Primary Function | Key Features |
|---|---|---|---|
| Quality Control | FastQC | Assesses the quality of raw sequencing reads. | Provides metrics on per-base quality, GC content, and adapter contamination.[5] |
| Sequence Alignment | BWA (Burrows-Wheeler Aligner) | Maps sequencing reads to a reference genome. | Optimized for short reads; widely used in variant calling pipelines.[4] |
| | Bowtie2 | An ultrafast, memory-efficient aligner for sequencing reads. | Well suited to aligning reads from whole-genome sequencing.[4] |
| | STAR Aligner | Splice-aware aligner for RNA-seq data. | Highly accurate for mapping RNA-seq reads across splice junctions. |
| Variant Calling | GATK (Genome Analysis Toolkit) | Identifies genetic variants (SNPs, indels) from sequencing data. | Industry standard for germline and somatic variant discovery; follows best-practice workflows.[6] |
| | SAMtools | A suite of utilities for interacting with high-throughput sequencing data. | Used for viewing, sorting, indexing, and calling variants from alignment files.[6][7] |
| Differential Gene Expression | DESeq2 | Analyzes count data from RNA-seq to identify differentially expressed genes. | Employs a negative binomial model to account for variability in sequencing data.[8] |
| | edgeR | Another widely used R/Bioconductor package for differential expression analysis. | Uses empirical Bayes methods to moderate dispersion estimates across genes.[7] |
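The negative binomial model behind DESeq2 can be written compactly. In the form below, $K_{ij}$ is the count for gene $i$ in sample $j$, $s_j$ is the sample's size factor, $x_{jr}$ are design-matrix entries, $\beta_{ir}$ are log2 fold changes, and $\alpha_i$ is the gene-wise dispersion. The $\alpha_i \mu_{ij}^2$ term is what lets the variance exceed the Poisson variance $\mu_{ij}$ for overdispersed biological replicates.

```latex
K_{ij} \sim \mathrm{NB}\left(\mu_{ij}, \alpha_i\right), \qquad
\mu_{ij} = s_j \, q_{ij}, \qquad
\log_2 q_{ij} = \sum_r x_{jr} \, \beta_{ir}

\operatorname{Var}\left(K_{ij}\right) = \mu_{ij} + \alpha_i \, \mu_{ij}^2
```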

Standard NGS Experimental Workflow

The process of analyzing NGS data follows a logical progression from raw, unprocessed reads to an interpretable list of variants or genes. This workflow is fundamental to studies in cancer genomics, genetic disease research, and transcriptomics.

II. Proteomics and Mass Spectrometry Data Analysis

Mass spectrometry (MS)-based proteomics is a powerful technique for identifying and quantifying proteins in a complex biological sample.[9][10] "Shotgun" or bottom-up proteomics, the most common approach, involves digesting proteins into peptides before MS analysis.[11] Bioinformatics is crucial for processing the vast amount of spectral data generated to identify peptides and infer the presence and abundance of proteins.[12][13]

Core Software for Proteomics Analysis

The analysis of tandem mass spectrometry (MS/MS) data requires specialized search engines that match experimental spectra to theoretical spectra generated from protein sequence databases.

| Tool Name | Primary Function | Key Features |
|---|---|---|
| MaxQuant | A quantitative proteomics software package for analyzing large MS datasets. | Integrates with the Andromeda search engine; popular for label-free and label-based quantification.[14][15] |
| SEQUEST | One of the earliest and most widely used database search algorithms. | Correlates uninterpreted tandem mass spectra of peptides with sequences from a database.[13] |
| Mascot | A search engine for protein identification using MS data. | Uses a probability-based scoring algorithm to evaluate matches.[13] |
| FragPipe | An integrated proteomics pipeline for comprehensive data analysis. | Provides a user-friendly interface for various search and quantification workflows. |
| Proteome Discoverer | A comprehensive data analysis platform for proteomics research. | Integrates multiple search engines and post-processing tools. |
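The core idea shared by these search engines, comparing an observed MS/MS spectrum with the fragments predicted from a candidate peptide, can be sketched in a few lines. The example below computes singly charged b- and y-ion m/z values from standard monoisotopic residue masses (no modifications) and scores a spectrum by a simple shared-peak count. This toy score is an assumption for illustration only; it is far simpler than SEQUEST's cross-correlation or Mascot's probability-based scoring, and the function names are hypothetical.

```python
# Monoisotopic residue masses (Da) for the 20 standard amino acids.
RESIDUE_MASS = {
    "G": 57.02146, "A": 71.03711, "S": 87.03203, "P": 97.05276,
    "V": 99.06841, "T": 101.04768, "C": 103.00919, "L": 113.08406,
    "I": 113.08406, "N": 114.04293, "D": 115.02694, "Q": 128.05858,
    "K": 128.09496, "E": 129.04259, "M": 131.04049, "H": 137.05891,
    "F": 147.06841, "R": 156.10111, "Y": 163.06333, "W": 186.07931,
}
PROTON = 1.007276  # mass of a proton (Da)
WATER = 18.010565  # monoisotopic mass of H2O (Da)


def fragment_ions(peptide: str) -> tuple[list[float], list[float]]:
    """Singly charged b and y ion m/z values for an unmodified peptide."""
    masses = [RESIDUE_MASS[aa] for aa in peptide]
    # b ions: N-terminal fragments (residue masses + proton).
    b = [sum(masses[:i]) + PROTON for i in range(1, len(masses))]
    # y ions: C-terminal fragments (residue masses + water + proton).
    y = [sum(masses[-i:]) + WATER + PROTON for i in range(1, len(masses))]
    return b, y


def shared_peak_count(observed_mz: list[float], peptide: str, tol: float = 0.02) -> int:
    """Number of observed peaks within `tol` Da of any predicted b/y ion."""
    b, y = fragment_ions(peptide)
    predicted = b + y
    return sum(1 for mz in observed_mz
               if any(abs(mz - p) <= tol for p in predicted))
```

A search engine repeats this comparison for every candidate peptide whose precursor mass matches the observed precursor, then reports the best-scoring match.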

Typical Bottom-Up Proteomics Workflow

From sample preparation to data interpretation, the proteomics workflow integrates wet-lab techniques with sophisticated computational analysis to understand protein-level changes in biological systems.

III. Bioinformatics in Drug Discovery and Development

Bioinformatics plays a pivotal role in modern drug discovery by accelerating the identification of therapeutic targets, screening potential drug candidates, and optimizing lead compounds.[16][17] By integrating computational methods, researchers can significantly reduce the time and cost associated with bringing a new drug to market.[2][18]

Computational Tools in the Drug Discovery Pipeline

| Stage | Tool/Method | Description |
|---|---|---|
| Target Identification | Omics Data Analysis (Genomics, Proteomics) | Identifies genes or proteins associated with a disease, making them potential drug targets.[2] |
| | Pathway Analysis (KEGG, Reactome) | Places potential targets in the biological context of signaling or metabolic pathways.[19][20] |
| Virtual Screening | Molecular Docking (AutoDock, SwissDock) | Predicts the binding affinity and orientation of small molecules in a target protein's binding site.[18] |
| | Pharmacophore Modeling | Identifies the essential 3D arrangement of functional groups in a molecule required for biological activity.[18] |
| Lead Optimization | Molecular Dynamics (MD) Simulations | Simulates the movement of a drug-target complex over time to assess stability and binding dynamics.[18] |
| | QSAR (Quantitative Structure-Activity Relationship) | Models the relationship between the chemical structure of a compound and its biological activity. |
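In its simplest form, a QSAR model is a regression of measured activity on numeric molecular descriptors. The sketch below fits an ordinary least-squares model with NumPy; the two descriptor columns (imagined as, e.g., logP and polar surface area) and the data are hypothetical toy values, and practical QSAR work adds descriptor selection, regularization, and external validation.

```python
import numpy as np


def fit_qsar(descriptors, activity) -> np.ndarray:
    """Fit activity = b0 + b1*d1 + ... by ordinary least squares.

    descriptors: (n_compounds, n_descriptors) array-like.
    Returns the coefficient vector [b0, b1, ...].
    """
    X = np.column_stack([np.ones(len(descriptors)), np.asarray(descriptors, dtype=float)])
    coef, *_ = np.linalg.lstsq(X, np.asarray(activity, dtype=float), rcond=None)
    return coef


def predict_activity(coef: np.ndarray, descriptors) -> np.ndarray:
    """Predicted activity for new compounds using fitted coefficients."""
    X = np.column_stack([np.ones(len(descriptors)), np.asarray(descriptors, dtype=float)])
    return X @ coef
```

Once fitted on compounds with measured activity, `predict_activity` can rank untested analogues, which is how QSAR narrows the candidates carried forward in lead optimization.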

Logical Workflow for Computational Drug Discovery

The process begins with a deep biological understanding of a disease and progressively narrows down a vast chemical space to a few promising candidates for experimental validation.

References

- 1. A Comprehensive Review of Bioinformatics Tools for Genomic Biomarker Discovery Driving Precision Oncology - PubMed [pubmed.ncbi.nlm.nih.gov]

- 2. Frontiers | The role and application of bioinformatics techniques and tools in drug discovery [frontiersin.org]

- 3. researchgate.net [researchgate.net]

- 4. youtube.com [youtube.com]

- 5. Bioinformatics Analysis [protocols.io]

- 6. 20 Tools In Bioinformatics. A list of tools and how they are used… | by Bioinformatics Deep Dive | Medium [medium.com]

- 7. youtube.com [youtube.com]

- 8. protocols.io [protocols.io]

- 9. tandfonline.com [tandfonline.com]

- 10. Bioinformatics in mass spectrometry data analysis for proteomics studies - PubMed [pubmed.ncbi.nlm.nih.gov]

- 11. Bioinformatics Methods for Mass Spectrometry-Based Proteomics Data Analysis - PMC [pmc.ncbi.nlm.nih.gov]

- 12. journals.physiology.org [journals.physiology.org]

- 13. researchgate.net [researchgate.net]

- 14. coherentmarketinsights.com [coherentmarketinsights.com]

- 15. MGVB: a New Proteomics Toolset for Fast and Efficient Data Analysis - PubMed [pubmed.ncbi.nlm.nih.gov]

- 16. The role and application of bioinformatics techniques and tools in drug discovery - PMC [pmc.ncbi.nlm.nih.gov]

- 17. revistas.unal.edu.co [revistas.unal.edu.co]

- 18. llri.in [llri.in]

- 19. Tools for visualization and analysis of molecular networks, pathways, and -omics data - PMC [pmc.ncbi.nlm.nih.gov]

- 20. Best bioinformatics tools for beginners [bioinformaticshome.com]

Unlocking Genomic Data at Scale: A Technical Guide to Sparse Allele Vectors with Savvy

For Immediate Release

Ann Arbor, MI – As the scale of genomic datasets continues to expand at an unprecedented rate, researchers and drug development professionals face significant challenges in data storage, retrieval, and analysis. The advent of whole-genome sequencing in large national biobanks has created a pressing need for more efficient data formats.[1] In response to this challenge, the Savvy software suite and its underlying Sparse Allele Vector (SAV) file format have been developed to provide a high-throughput solution for the storage and analysis of large-scale DNA variation data.[1][2][3][4] This technical guide provides an in-depth overview of the SAV format and the Savvy toolkit, offering researchers, scientists, and drug development professionals the information necessary to use these tools in their work.

The Challenge of Dense Genomic Data

Traditional formats for storing genetic variation data, such as the Variant Call Format (VCF), represent genotypes for all individuals at every variant site.[1] This "dense" representation leads to massive file sizes, especially as cohort sizes grow and rare variants are discovered. The vast majority of these entries represent homozygous reference genotypes, leading to significant data redundancy and computational overhead during analysis.

Sparse Allele Vectors: A Paradigm Shift in Genomic Data Storage

The Sparse Allele Vector (SAV) format addresses the challenge of dense data representation by storing only non-reference alleles and their corresponding sample indices.[2] This approach is particularly effective for modern, large-scale sequencing datasets, which are inherently sparse due to the high prevalence of rare variants. By storing only the deviations from the reference genome, the SAV format dramatically reduces file size and improves data deserialization speeds.[2]
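The sparse idea can be shown with a minimal sketch: a dense per-haplotype allele vector (0 = reference, 1 or more = alternate allele index) is reduced to (sample index, allele) pairs for the non-reference entries only. This illustrates the concept, not the SAV on-disk encoding, whose offset encoding and compression are not reproduced here; the function names are hypothetical.

```python
def to_sparse(alleles: list[int]) -> list[tuple[int, int]]:
    """Keep only non-reference entries as (index, allele) pairs."""
    return [(i, a) for i, a in enumerate(alleles) if a != 0]


def to_dense(entries: list[tuple[int, int]], n: int) -> list[int]:
    """Rebuild the dense vector; every index not listed is reference (0)."""
    dense = [0] * n
    for i, a in entries:
        dense[i] = a
    return dense
```

For a rare variant carried by 3 of 200,000 haplotypes, the sparse form holds 3 pairs instead of 200,000 values, which is why the savings grow as cohorts add more rare variants.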

Key Features of the SAV Format:

- Sparse Representation: Only non-reference alleles are stored, significantly reducing data footprint.[2]
- Efficient Deserialization: By avoiding the need to parse reference alleles, data can be loaded into memory much faster, accelerating downstream analyses.[2]
- Optimized for Rare Variants: The compression and efficiency of the SAV format improve as the proportion of rare variants increases with larger sample sizes.[2]
- Positional Burrows-Wheeler Transform (PBWT): Savvy can optionally apply the PBWT to reorder data, further enhancing compression for common variants.[2]
- Zstandard Compression: Bit-level compression is applied using the Zstandard (zstd) algorithm to further reduce file size.[2]
- Indexed for Random Access: SAV files are indexed, allowing for rapid querying of specific genomic regions or records.[2]
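Why the PBWT helps compression can be seen from its prefix-array update: at each site, haplotypes are stably partitioned by their allele, so haplotypes sharing recent history end up adjacent and the next site's alleles form longer runs. The sketch below assumes biallelic 0/1 haplotypes and follows Durbin's PBWT construction; it is not Savvy's implementation, and the function names are hypothetical.

```python
def pbwt_prefix_arrays(haplotypes: list[list[int]]) -> list[list[int]]:
    """Build PBWT prefix arrays for biallelic (0/1) haplotypes.

    haplotypes[h][k] is the allele of haplotype h at site k. Returns
    a_0 .. a_K, where a_k orders haplotypes by their reversed prefixes
    over sites 0 .. k-1 (a_0 is the input order).
    """
    order = list(range(len(haplotypes)))
    arrays = [order]
    for k in range(len(haplotypes[0])):
        # Stable partition: 0-allele haplotypes first, each group keeping
        # its relative order from the previous array.
        zeros = [h for h in order if haplotypes[h][k] == 0]
        ones = [h for h in order if haplotypes[h][k] == 1]
        order = zeros + ones
        arrays.append(order)
    return arrays


def column(haplotypes: list[list[int]], order: list[int], k: int) -> list[int]:
    """Alleles at site k listed in the given haplotype order."""
    return [haplotypes[h][k] for h in order]


def run_count(values: list[int]) -> int:
    """Number of runs of identical values; fewer runs compress better."""
    return sum(1 for i, v in enumerate(values) if i == 0 or v != values[i - 1])
```

Storing each site's column in the order given by the previous site's prefix array, rather than in sample order, typically reduces the number of runs, which is the property a downstream compressor such as zstd exploits.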

Quantitative Performance of the Savvy Suite

The efficiency of the SAV format shows in its compression and deserialization performance compared with the standard BCF format. The following table summarizes deserialization times.

Table 1: SAV Deserialization Performance

| Sample Size | BCF Deserialization (htslib) (min) | BCF Deserialization (Savvy) (min) | SAV Deserialization (min) | SAV w/PBWT Deserialization (min) |
|---|---|---|---|---|
| 2,000 | 0.55 | 0.47 | 0.03 | 0.17 |
| 20,000 | 18.62 | 15.60 | 0.20 | Not Reported |
| 200,000 | 596.73 | 494.08 | 1.73 | Not Reported |

Data sourced from LeFaive et al., 2021.[2]

Experimental Protocols and Workflows

The Savvy software suite provides a command-line interface (CLI) for file manipulation and a C++ API for integration into custom analysis pipelines.

Command-Line Interface (CLI) Workflow

A common workflow involves converting a standard VCF or BCF file into the SAV format, which can then be used for downstream analysis.

Protocol for VCF/BCF to SAV Conversion:

The import subcommand is used to convert a BCF or VCF file into the SAV format. An index file is automatically generated and appended to the output file.

Command:

Example:

C++ API Workflow for Data Analysis

The Savvy C++ API allows for direct integration of SAV, BCF, and VCF file reading into custom analysis tools. This provides an efficient way to access and manipulate genetic data.

Protocol for Reading Variants with the C++ API:

The following C++ code snippet demonstrates how to read variants from a SAV file and access genotype information.

Logical Relationships in Data Access

The Savvy library provides flexible mechanisms for accessing specific subsets of data, which is crucial for efficient analysis of large datasets.
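To see why an index makes region queries cheap, consider a minimal sketch that assumes a hypothetical in-memory index of compressed blocks, each recording the first and last variant position it covers and its byte offset in the file. This is not the actual SAV index structure; it only shows how a binary search over sorted, non-overlapping blocks finds the few blocks a region query must decompress, instead of scanning the whole file.

```python
from bisect import bisect_left
from typing import NamedTuple


class Block(NamedTuple):
    start: int   # position of the first variant in the block
    end: int     # position of the last variant in the block
    offset: int  # byte offset of the compressed block (hypothetical)


def blocks_for_region(blocks: list[Block], qstart: int, qend: int) -> list[Block]:
    """Blocks overlapping [qstart, qend], assuming sorted, non-overlapping blocks."""
    ends = [b.end for b in blocks]  # a real index would precompute this
    i = bisect_left(ends, qstart)   # first block ending at or after qstart
    hits = []
    while i < len(blocks) and blocks[i].start <= qend:
        hits.append(blocks[i])
        i += 1
    return hits
```

Only the returned blocks need to be read and decompressed; for a small region in a large file this is a handful of blocks regardless of file size.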

References

Savvy C++ Library: A Technical Guide for VCF Data Manipulation

This technical guide provides a comprehensive overview of the Savvy C++ library, a tool designed for efficient manipulation of Variant Call Format (VCF), BCF, and its own SAV files. Written for researchers, scientists, and drug development professionals, this document covers the core features of Savvy, its performance advantages, and practical applications in genomic data analysis.

Introduction to Savvy

Savvy is an open-source C++ library engineered for high-performance analysis of large-scale genomic variant data.[1] It provides a seamless interface for reading and manipulating VCF, BCF, and its native Sparse Allele Vector (SAV) file formats. The library's design prioritizes computational efficiency, making it particularly well-suited for applications such as Genome-Wide Association Studies (GWAS) and other high-throughput genomic analyses.

A key innovation in Savvy is the SAV file format, which employs sparse allele vectors to represent genetic variation. This approach significantly reduces storage requirements and accelerates data deserialization, especially for datasets with a large proportion of rare variants.[1]

Core Features

The Savvy C++ library offers a range of features designed to streamline and accelerate the handling of genomic variant data.

Unified File Format Interface

Savvy provides a single, consistent C++ API for interacting with VCF, BCF, and SAV files.[1] This abstraction layer simplifies the development of analysis tools by eliminating the need to write separate code for handling different file formats.

High-Performance Architecture

The library's performance stems from two primary architectural decisions:

- Sparse Allele Vectors (SAV): The native SAV format stores only non-reference alleles, leading to significant compression and faster data access, particularly for large cohorts with numerous rare variants.[1]
- Structure of Arrays (SoA) Memory Layout: Savvy uses an SoA memory layout for sample-level data. This approach improves CPU cache performance and enables vectorized compute operations, resulting in substantial speed gains during data processing.[1]
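The difference between the two layouts can be illustrated in Python with NumPy. In an Array of Structures, each sample is a record holding all its fields; in a Structure of Arrays, each field is one contiguous array across samples, so a per-variant statistic becomes a single vectorized pass. The field names (`gt`, `dp`) are hypothetical, missing genotypes are assumed absent, and this shows only the layout idea, not Savvy's C++ classes.

```python
import numpy as np


def aos_alt_freq(samples: list[dict]) -> float:
    """Alternate-allele frequency from per-sample records (AoS layout)."""
    alleles = [a for s in samples for a in s["gt"]]
    return sum(1 for a in alleles if a > 0) / len(alleles)


def to_soa(samples: list[dict]) -> dict[str, np.ndarray]:
    """Convert per-sample records to one contiguous array per field (SoA)."""
    return {
        "gt": np.array([s["gt"] for s in samples], dtype=np.int8),  # (n_samples, ploidy)
        "dp": np.array([s["dp"] for s in samples], dtype=np.int32),  # (n_samples,)
    }


def soa_alt_freq(soa: dict[str, np.ndarray]) -> float:
    """Same statistic as one vectorized pass over the contiguous GT array."""
    gt = soa["gt"]
    return float(np.count_nonzero(gt > 0)) / gt.size
```

Both functions return the same value; the SoA version touches only the genotype bytes it needs, which is what makes the layout cache-friendly.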

Efficient Data Access and Manipulation

Savvy offers a flexible and intuitive API for common data manipulation tasks:

- Sequential and Random Access: The library supports both sequential iteration through variant records and random access to specific genomic regions.[2]
- Genomic and Slice Queries: Researchers can efficiently query for variants within specific genomic coordinates or by a range of record indices.[2]
- Sample Subsetting: Savvy allows a subset of samples to be selected from a VCF/BCF/SAV file for targeted analysis.[2]
- Fast Concatenation: A command-line tool facilitates the rapid concatenation of SAV files by performing a byte-for-byte copy of compressed variant blocks, avoiding the overhead of decompression and recompression.[2]
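Subsetting samples from sparse data is more than a filter: the surviving sample indices must also be renumbered to match the new sample list. A minimal sketch, using (sample index, allele) pairs and a hypothetical function name rather than Savvy's API:

```python
def subset_sparse(entries: list[tuple[int, int]], keep: list[int]) -> list[tuple[int, int]]:
    """Restrict sparse (sample_index, allele) pairs to the samples in `keep`.

    `keep` lists original sample indices in the desired output order;
    kept entries are renumbered to positions in `keep` and re-sorted.
    """
    new_index = {old: new for new, old in enumerate(keep)}
    return sorted((new_index[i], a) for i, a in entries if i in new_index)
```

Because only the non-reference entries are visited, the cost scales with the number of carriers rather than with the full sample count.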

Performance Benchmarks

The performance of Savvy has been evaluated against other standard tools, demonstrating its efficiency in data deserialization.

Experimental Protocol

The following methodology was used to benchmark the deserialization speed of Savvy against htslib for BCF files and to evaluate the performance of the SAV format.

- Dataset: Genotypes from deeply sequenced chromosome 20 were used for the evaluation.
- Sample Sizes: The benchmarks were performed on datasets with 2,000, 20,000, and 200,000 samples.
- File Formats and Tools:
  - BCF files were read using both the official htslib (v1.11) and the Savvy library.
  - SAV files were generated with zstd compression level 19, the highest level available without zstd's "ultra" mode.
  - A variation of the SAV format using the Positional Burrows-Wheeler Transform (PBWT) was also tested with an allele frequency threshold of 0.01.
- Metric: The primary metric was the time taken to deserialize the genotype data.

Quantitative Data Summary

The following table summarizes the deserialization speeds for the different file formats and sample sizes.

| Sample Size | BCF via htslib (min) | BCF via Savvy (min) | SAV (min) |
|---|---|---|---|
| 2,000 | 0.55 | 0.47 | 0.03 |
| 20,000 | 18.62 | 15.60 | 0.20 |
| 200,000 | 596.73 | 494.08 | 1.73 |
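The relative gains are easier to see as speed-up factors. The short calculation below divides each BCF time by the SAV time, using only the numbers in the table above.

```python
# Deserialization times in minutes, copied from the table above:
# sample size -> (BCF via htslib, BCF via Savvy, SAV)
TIMES = {
    2_000: (0.55, 0.47, 0.03),
    20_000: (18.62, 15.60, 0.20),
    200_000: (596.73, 494.08, 1.73),
}


def sav_speedups(times: dict[int, tuple[float, float, float]]) -> dict[int, tuple[float, float]]:
    """Return {sample size: (SAV speed-up vs htslib BCF, vs Savvy BCF)}."""
    return {n: (htslib / sav, savvy_bcf / sav)
            for n, (htslib, savvy_bcf, sav) in times.items()}


for n, (vs_htslib, vs_savvy) in sav_speedups(TIMES).items():
    print(f"{n:>7,} samples: SAV is {vs_htslib:5.1f}x faster than htslib BCF, "
          f"{vs_savvy:5.1f}x faster than Savvy BCF")
```

The speed-up grows with sample size, from about 18-fold at 2,000 samples to about 345-fold at 200,000 samples relative to htslib, consistent with the claim that sparse storage pays off most in large cohorts.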

API and Usage Examples

The Savvy C++ API is designed for ease of use and integration into bioinformatics pipelines. The core classes are savvy::reader and savvy::variant.

Core API Components

- savvy::reader: This class represents a file reader for VCF, BCF, or SAV files. It provides methods for opening files, iterating through variants, and performing queries.
- savvy::variant: This class represents a single variant record. It provides methods to access variant information such as chromosome, position, reference and alternate alleles, as well as INFO and FORMAT field data.

Example Workflow: Reading and Filtering Variants

The following C++ code snippet demonstrates a typical workflow for reading a variant file, iterating through variants, and accessing genotype information.

Visualizing a Genome-Wide Association Study (GWAS) Workflow with Savvy

A common application for a high-performance VCF/BCF reading library like Savvy is a GWAS pipeline. The following diagram illustrates a typical workflow in which Savvy handles the initial data loading and filtering steps.
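After variants are loaded, the core GWAS step tests each variant for association with the phenotype. A minimal sketch of one such per-variant test, a simple linear regression of a quantitative phenotype on allele dosage, is shown below. It assumes no covariates and uses a hypothetical function name; production tools add covariates such as ancestry components and compute p-values from the t statistic.

```python
import math


def linear_association(dosages: list[float], phenotypes: list[float]) -> tuple[float, float, float]:
    """Regress phenotype on allele dosage (0, 1, 2) for one variant.

    Returns (beta, standard error, t statistic).
    """
    n = len(dosages)
    if n < 3:
        raise ValueError("need at least three samples")
    mean_x = sum(dosages) / n
    mean_y = sum(phenotypes) / n
    sxx = sum((x - mean_x) ** 2 for x in dosages)
    if sxx == 0:
        raise ValueError("monomorphic variant: dosage has no variance")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(dosages, phenotypes))
    beta = sxy / sxx
    alpha = mean_y - beta * mean_x
    # Residual sum of squares with n - 2 degrees of freedom.
    rss = sum((y - alpha - beta * x) ** 2 for x, y in zip(dosages, phenotypes))
    se = math.sqrt(rss / (n - 2) / sxx)
    t = beta / se if se > 0 else math.inf
    return beta, se, t
```

In a full scan this function would be called once per variant as records stream from the reader, which is why fast deserialization dominates overall runtime for large cohorts.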

Conclusion

The Savvy C++ library provides a robust and high-performance solution for handling large-scale genomic variant data. Its use of sparse allele vectors in the SAV format, combined with a cache-friendly memory layout, delivers significant speed advantages for data-intensive applications like GWAS. The intuitive API simplifies the development of efficient bioinformatics tools, making Savvy a valuable asset for researchers and scientists in genomics and drug development.

References

SavvyCNV: A Technical Deep Dive into Genome-wide CNV Detection from Off-Target Sequencing Data

For Researchers, Scientists, and Drug Development Professionals

This in-depth technical guide explores the core functionalities of SavvyCNV, a powerful computational tool designed to identify copy number variants (CNVs) across the entire genome using off-target reads from targeted sequencing and exome data. By leveraging this often-discarded data, SavvyCNV enhances the diagnostic yield of sequencing assays, providing valuable insights for genetic research and drug development.

Introduction: Unlocking the Potential of Off-Target Reads

Targeted sequencing and whole-exome sequencing (WES) are invaluable techniques for identifying single nucleotide variants and small insertions/deletions within specific genomic regions of interest. However, a significant portion of sequencing reads, in some assays as much as 70%, falls outside these targeted areas.[1][2][3] These "off-target" reads, while traditionally discarded, represent a rich source of genomic information that can be exploited for broader analyses. SavvyCNV is a freely available software tool developed to harness this "free data" to detect large-scale structural variations, specifically CNVs, on a genome-wide scale.[3][4]

SavvyCNV has demonstrated superior performance in calling CNVs with high precision and recall, outperforming several other state-of-the-art CNV callers, particularly in the detection of smaller CNVs.[3][5] This guide will delve into the underlying algorithms, experimental validation, and practical application of SavvyCNV.

The SavvyCNV Workflow: From Raw Reads to CNV Calls

The core workflow of SavvyCNV is a multi-step process that transforms raw sequencing data into high-confidence CNV calls. It requires aligned sequencing data in BAM or CRAM format and involves several key stages.[6]

Experimental Workflow Diagram

The following diagram illustrates the typical experimental workflow for CNV detection using SavvyCNV.

Core Algorithm: A Hidden Markov Model Approach

At the heart of SavvyCNV's analytical power lies a sophisticated algorithm that employs a Hidden Markov Model (HMM) to identify regions of altered copy number. This probabilistic model is well-suited for analyzing sequential data like the read coverage along a chromosome.

Data Preprocessing and Normalization

The initial step in the SavvyCNV pipeline is to process the input BAM or CRAM files using the CoverageBinner tool. This utility divides the genome into discrete bins of a specified size and calculates the read depth within each bin for every sample. This process generates coverage statistics files that serve as the primary input for the core CNV calling algorithm.
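The binning step amounts to counting reads per fixed-size window. The sketch below assumes reads are already available as (chromosome, 0-based start position) pairs instead of parsing BAM/CRAM files; it illustrates the idea and is not CoverageBinner's implementation. The function name is hypothetical.

```python
from collections import Counter


def bin_read_counts(reads: list[tuple[str, int]], bin_size: int) -> Counter:
    """Count reads per fixed-size genomic bin.

    reads holds (chromosome, 0-based start position) pairs, one per
    mapped read; bins are keyed by (chromosome, bin index).
    """
    counts: Counter = Counter()
    for chrom, pos in reads:
        counts[(chrom, pos // bin_size)] += 1
    return counts
```

Larger bins collect more off-target reads each and so give less noisy depth estimates, at the cost of resolution for small CNVs; this is the trade-off behind the choice of bin size.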

Noise Reduction and Error Modeling

A critical challenge in off-target read analysis is the inherent noise and variability in coverage. SavvyCNV addresses this through a robust noise reduction strategy. It utilizes a set of control samples to model and remove systematic biases in read depth. The software removes a specified number of singular vectors (default is 5) to reduce noise, a parameter that can be adjusted by the user.[6] Furthermore, SavvyCNV models the error in each genomic bin by considering both the overall noise level of the sample and the observed spread of normalized read depth across all samples for that particular bin.[6] This comprehensive error modeling is a key factor in its high precision.
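Removing singular vectors is a general denoising technique: systematic patterns shared by many samples (for example, GC-content or capture-efficiency effects) dominate the leading singular components of the bins × samples depth matrix, so zeroing those components leaves mostly sample-specific signal such as CNVs. The NumPy sketch below shows that generic operation under the assumption of a bins × samples matrix of normalized depth; SavvyCNV's exact normalization and matrix layout are not reproduced, and the function name is hypothetical.

```python
import numpy as np


def remove_top_components(depth: np.ndarray, n_components: int = 5) -> np.ndarray:
    """Subtract the leading singular components from a bins x samples matrix.

    Returns the matrix reconstructed without its `n_components` largest
    singular values, i.e. with the strongest shared patterns removed.
    """
    u, s, vt = np.linalg.svd(depth, full_matrices=False)
    s[:n_components] = 0.0  # drop the dominant, shared components
    return (u * s) @ vt     # scale columns of u by s, then recombine
```

Removing too few components leaves systematic noise; removing too many starts to absorb real CNV signal that recurs across samples, which is why the number is exposed as a user parameter.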

The Hidden Markov Model for CNV Detection

SavvyCNV employs an HMM to segment the genome into regions of normal copy number, deletions, and duplications. The core components of this HMM are:

- Hidden States: The HMM assumes three hidden states for each genomic bin:
  - Normal (diploid)
  - Deletion (hemizygous)
  - Duplication (triploid or higher)
- Emission Probabilities: For each hidden state, there is an associated probability of observing a particular normalized read depth. These probabilities are modeled based on the expected read depth for each state (e.g., a normalized read depth of ~0.5 for a deletion and ~1.5 for a duplication) and the calculated error for that bin.
- Transition Probabilities: These probabilities define the likelihood of moving from one hidden state to another between adjacent genomic bins. The -trans parameter in the SavvyCNV command-line interface allows users to adjust the transition probability, which in turn controls the sensitivity of the algorithm to calling CNVs of different sizes.[6]

The Viterbi algorithm is then used to find the most likely sequence of hidden states (normal, deletion, or duplication) that best explains the observed sequence of read depths across the genome for each sample.
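The decoding step can be sketched as a three-state Viterbi in log space. The sketch assumes Gaussian emissions centered on 0.5, 1.0, and 1.5 with a per-bin standard deviation standing in for the bin error, a uniform starting distribution, and a single switching probability `p_trans` split evenly between the two other states. These are illustrative assumptions, not SavvyCNV's exact emission or transition model.

```python
import math

# Hidden states and their expected normalized read depth (assumed values).
STATES = ("deletion", "normal", "duplication")
MEANS = (0.5, 1.0, 1.5)


def log_gaussian(x: float, mu: float, sigma: float) -> float:
    """Log density of a normal distribution (the assumed emission model)."""
    return -0.5 * math.log(2 * math.pi * sigma ** 2) - (x - mu) ** 2 / (2 * sigma ** 2)


def viterbi(depths: list[float], sigmas: list[float], p_trans: float = 1e-4) -> list[str]:
    """Most likely state path for one sample's per-bin normalized depths.

    sigmas[k] is the modeled error of bin k; p_trans is the total
    probability of leaving the current state between adjacent bins.
    """
    n = len(STATES)
    log_stay = math.log(1 - p_trans)
    log_move = math.log(p_trans / (n - 1))

    def log_t(prev: int, cur: int) -> float:
        return log_stay if prev == cur else log_move

    # Uniform prior over the starting state.
    score = [math.log(1 / n) + log_gaussian(depths[0], MEANS[s], sigmas[0]) for s in range(n)]
    backpointers = []
    for x, sd in zip(depths[1:], sigmas[1:]):
        pointers, new_score = [], []
        for s in range(n):
            best = max(range(n), key=lambda p: score[p] + log_t(p, s))
            pointers.append(best)
            new_score.append(score[best] + log_t(best, s) + log_gaussian(x, MEANS[s], sd))
        backpointers.append(pointers)
        score = new_score

    # Trace back from the best final state.
    state = max(range(n), key=lambda s: score[s])
    path = [state]
    for pointers in reversed(backpointers):
        state = pointers[state]
        path.append(state)
    return [STATES[s] for s in reversed(path)]
```

Lowering `p_trans` makes each state change more expensive, so short runs of deviating bins are smoothed away; this mirrors how the -trans parameter trades sensitivity to small CNVs against false positives.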

Logical Diagram of the HMM

The following diagram illustrates the logical relationship between the observed read depths and the inferred hidden states in the SavvyCNV HMM.

Experimental Protocols and Performance Benchmarking

SavvyCNV's performance has been rigorously benchmarked against other state-of-the-art CNV callers using well-characterized datasets.

On-Target CNV Calling from Targeted Panel Data

- Dataset: The ICR96 validation series, consisting of 96 samples sequenced with the TruSight Cancer Panel v2 (100 genes). The "truth set" of CNVs was established using Multiplex Ligation-dependent Probe Amplification (MLPA), identifying 25 single-exon CNVs, 43 multi-exon CNVs, and 1752 normal copy number genes.[5]
- Compared Tools: GATK gCNV, DeCON, and CNVkit.[5]
- Results: At a precision of ≥ 50%, SavvyCNV achieved a recall of over 95%, the highest of the tools compared (shared with GATK gCNV) and comparable to DeCON.[5]

Off-Target CNV Calling from Targeted Panel and Exome Data

- Dataset: A cohort of samples with both targeted panel or exome sequencing and whole-genome sequencing (WGS) data. The "truth set" of CNVs was derived from the WGS data using GenomeStrip.[5]

- Compared Tools: GATK gCNV, DeCON, EXCAVATOR2, CNVkit, and CopywriteR.[5]

- Results: SavvyCNV significantly outperformed the other tools in the off-target analysis. It was particularly effective at identifying smaller CNVs (<200kbp) that were missed by most other callers.[5] For CNVs larger than 1Mb, SavvyCNV achieved 100% recall in off-target data from both targeted panel and exome sequencing.

Quantitative Performance Data

The following tables summarize the benchmarking results for SavvyCNV and other CNV calling tools. The performance is reported as recall at a precision of at least 50%.
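The headline metric, the best recall at a precision of at least 50%, can be computed by sweeping a confidence threshold over a tool's calls. The sketch below assumes that each call has already been matched against the truth set and labelled as a true or false positive. The matching rule and the function name are hypothetical. For simplicity, tied scores are handled one call at a time.

```python
def best_recall_at_precision(calls, n_true, min_precision=0.5):
    """calls: list of (score, is_true_positive); n_true: size of the truth set.

    Lowers the score threshold one call at a time and returns the highest
    recall whose precision is still >= min_precision (0.0 if none qualifies).
    """
    best = 0.0
    tp = fp = 0
    for _score, is_tp in sorted(calls, key=lambda c: c[0], reverse=True):
        if is_tp:
            tp += 1
        else:
            fp += 1
        precision = tp / (tp + fp)
        recall = tp / n_true
        if precision >= min_precision:
            best = max(best, recall)
    return best
```

For example, suppose there are four true CNVs and seven ranked calls whose labels are true, true, false, false, true, false, false. The best recall that keeps precision at or above 50% is 0.75 (three of four found, at a precision of 3/5).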

Table 1: Off-Target CNV Calling from Targeted Panel Data

| CNV Size | SavvyCNV Recall (%) | GATK gCNV Recall (%) | DeCON Recall (%) | EXCAVATOR2 Recall (%) | CNVkit Recall (%) | CopywriteR Recall (%) |
|---|---|---|---|---|---|---|
| < 200 kbp | 12.0 | 0.0 | 4.0 | 0.0 | 0.0 | 0.0 |
| 200 kbp - 1 Mbp | 61.9 | 0.0 | 38.1 | 0.0 | 0.0 | 0.0 |
| > 1 Mbp | 97.6 | 4.8 | 81.0 | 0.0 | 0.0 | 0.0 |
| All | 25.5 | 0.4 | 17.2 | 0.0 | 0.0 | 0.0 |

Data sourced from the supplementary materials of the SavvyCNV publication.

Table 2: On-Target CNV Calling from ICR96 Targeted Panel Data

| CNV Type | SavvyCNV Recall (%) | GATK gCNV Recall (%) | DeCON Recall (%) | CNVkit Recall (%) |
|---|---|---|---|---|
| Single Exon | 96.0 | 96.0 | 92.0 | 80.0 |
| Multi Exon | 97.7 | 97.7 | 97.7 | 88.4 |
| All | 97.1 | 97.1 | 95.6 | 85.3 |

Data sourced from the supplementary materials of the SavvyCNV publication.

Table 3: Off-Target CNV Calling from Exome Data

| CNV Size | SavvyCNV Recall (%) | GATK gCNV Recall (%) | DeCON Recall (%) | EXCAVATOR2 Recall (%) | CNVkit Recall (%) | CopywriteR Recall (%) |
|---|---|---|---|---|---|---|
| < 200 kbp | 86.7 | 0.0 | 46.7 | 0.0 | 0.0 | 0.0 |
| > 200 kbp | 90.0 | 0.0 | 46.7 | 0.0 | 0.0 | 0.0 |
| All | 88.0 | 0.0 | 46.7 | 0.0 | 0.0 | 0.0 |

Data sourced from the supplementary materials of the SavvyCNV publication.

Conclusion and Future Directions

SavvyCNV is a robust and highly effective tool for the genome-wide detection of copy number variants from off-target sequencing reads. Its sophisticated noise reduction, error modeling, and Hidden Markov Model-based approach enable it to achieve high precision and recall, particularly for smaller CNVs that are often missed by other methods. By unlocking the information present in off-target data, SavvyCNV significantly increases the diagnostic and research utility of targeted sequencing and exome data. For researchers and professionals in drug development, SavvyCNV offers a cost-effective means to expand the scope of genetic analysis, identify novel disease-associated CNVs, and better characterize the genomic landscape of patient cohorts. The software is open-source and actively maintained, with potential for future enhancements in modeling more complex structural variants and integration with other genomic data types.

References

- 1. SavvyCNV: Genome-wide CNV calling from off-target reads - PMC [pmc.ncbi.nlm.nih.gov]

- 2. researchgate.net [researchgate.net]

- 3. Item - SavvyCNV: Genome-wide CNV calling from off-target reads - University of Exeter - Figshare [ore.exeter.ac.uk]

- 4. Savvy · GitHub [github.com]

- 5. GitHub - rdemolgen/SavvySuite: Suite of tools for analysing off-target reads to find CNVs, homozygous regions, and shared haplotypes [github.com]

- 6. SavvyCNV: Genome-wide CNV calling from off-target reads | PLOS Computational Biology [journals.plos.org]

Unlocking Genomic Insights: A Technical Guide to Savvy File Formats and Analysis Workflows

For Researchers, Scientists, and Drug Development Professionals

In the rapidly evolving landscape of genomics, the efficient storage, retrieval, and analysis of vast datasets are paramount. The Savvy suite of tools offers powerful solutions for handling genomic variant data and detecting copy number variations. This guide provides an in-depth technical overview of the file formats used and supported by Savvy, alongside detailed experimental workflows, to empower researchers in their quest for novel discoveries.

Core Genomic File Formats in the Savvy Ecosystem

The Savvy C++ library is engineered to interact seamlessly with several standard and specialized genomic file formats. While Savvy introduces its own optimized format, SAV, it maintains compatibility with widely adopted standards like VCF, BCF, BAM, and CRAM.

The SAV Format: An Optimized Approach

A key feature of the SAV format is its use of an S1R index, which enables fast random access to genomic regions and even allows for querying by record offset. This indexing strategy is crucial for performance when working with large-scale genomic datasets.
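A toy block index for a single chromosome of a coordinate-sorted file illustrates the idea behind indexed random access. This sketch does not reproduce the actual S1R index layout. The `Block` fields, byte offsets, and `ToyIndex` class are hypothetical. The sketch only shows why a sorted index can answer both a region query and a record-offset query with a binary search rather than a full scan.

```python
from bisect import bisect_left, bisect_right
from typing import NamedTuple, Optional

class Block(NamedTuple):
    start: int         # start position of the first record in the block
    end: int           # start position of the last record in the block
    file_offset: int   # hypothetical byte offset of the block in the file
    first_record: int  # index of the block's first record in the file

class ToyIndex:
    """Block index for one chromosome of a coordinate-sorted file."""

    def __init__(self, blocks):
        self.blocks = sorted(blocks)
        # Because records are sorted, block starts and ends are both non-decreasing.
        self.starts = [b.start for b in self.blocks]
        self.ends = [b.end for b in self.blocks]
        self.firsts = [b.first_record for b in self.blocks]

    def region(self, qstart, qend):
        """Blocks that may hold records with qstart <= position <= qend."""
        lo = bisect_left(self.ends, qstart)   # skip blocks ending before qstart
        hi = bisect_right(self.starts, qend)  # stop before blocks starting after qend
        return self.blocks[lo:hi]

    def by_record(self, n) -> Optional[Block]:
        """Block holding the n-th record (0-based) of the file."""
        i = bisect_right(self.firsts, n) - 1
        return self.blocks[i] if i >= 0 else None
```

A reader would seek to `file_offset` of each returned block and decode only those blocks. It would then filter individual records against the exact query range.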

Standard Genomic File Formats

The Savvy tools operate on and interact with a range of standard file formats that are foundational to genomic data analysis. A summary of these formats is presented below.

| File Format | Description | Type | Key Features |
|---|---|---|---|
| VCF | Variant Call Format is a text-based format for storing gene sequence variations.[1][2][3] It includes meta-information lines, a header, and data lines for each variant. | Text | Human-readable, flexible, widely supported. Can be compressed with bgzip. |
| BCF | Binary Call Format is the binary counterpart to VCF.[4] It stores the same information in a compressed, machine-readable format. | Binary | Smaller file size and faster processing compared to VCF. Not human-readable. |
| BAM | Binary Alignment/Map is the binary version of the SAM (Sequence Alignment/Map) format.[4] It represents aligned sequencing reads. | Binary | Compressed, indexed for fast access to specific genomic regions. The standard for storing aligned reads. |
| CRAM | Compressed Reference-based Alignment Map is a highly compressed format for storing aligned sequencing reads.[5] It achieves greater compression by referencing a known genome sequence. | Binary | Significant reduction in file size compared to BAM, especially for large datasets. Requires the reference genome for decompression. |
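The sketch below makes the VCF layout concrete. It splits one tab-delimited VCF data line into the eight fixed columns (CHROM, POS, ID, REF, ALT, QUAL, FILTER, INFO) plus the per-sample FORMAT fields, taking the sample names from the `#CHROM` header line. It is a teaching aid, not a validating parser; production code should rely on an established library such as htslib. The function names are illustrative. Python's `gzip` module can read bgzip-compressed files, because BGZF is a series of gzip members.

```python
VCF_FIXED = ("CHROM", "POS", "ID", "REF", "ALT", "QUAL", "FILTER", "INFO")

def sample_names(header_line):
    """Sample names are the columns after FORMAT on the #CHROM header line."""
    return header_line.rstrip("\n").split("\t")[9:]

def parse_vcf_line(line, samples):
    fields = line.rstrip("\n").split("\t")
    record = dict(zip(VCF_FIXED, fields[:8]))
    record["POS"] = int(record["POS"])          # 1-based position
    record["ALT"] = record["ALT"].split(",")    # multiple ALT alleles are comma-separated
    info = {}
    if record["INFO"] != ".":
        for item in record["INFO"].split(";"):
            key, has_value, value = item.partition("=")
            info[key] = value if has_value else True  # flags carry no "=value"
    record["INFO"] = info
    per_sample = {}
    if len(fields) > 9:
        keys = fields[8].split(":")             # FORMAT column, e.g. GT:GQ
        for name, raw in zip(samples, fields[9:]):
            per_sample[name] = dict(zip(keys, raw.split(":")))
    record["SAMPLES"] = per_sample
    return record
```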

Experimental Protocols and Workflows

The generation of genomic data for analysis with Savvy tools follows standard next-generation sequencing (NGS) and analysis pipelines. Below are detailed methodologies for key experimental workflows.

Variant Calling Workflow for the Savvy Library

This workflow outlines the steps from a biological sample to a variant call file that can be processed by the Savvy C++ library.

1. Sample Preparation and DNA Extraction: High-quality genomic DNA is extracted from the biological sample (e.g., blood, tissue) using a suitable extraction kit. The DNA concentration and purity are assessed using spectrophotometry and fluorometry.

2. Library Preparation: The extracted DNA is fragmented, and adapters are ligated to the ends of the fragments to create a sequencing library. This process may also include PCR amplification to enrich the library.

3. Next-Generation Sequencing (NGS): The prepared library is sequenced on a high-throughput sequencing platform (e.g., Illumina NovaSeq). The sequencer generates raw sequencing reads, typically in FASTQ format.

4. Data Pre-processing:
   - Quality Control: Raw reads are assessed for quality using tools like FastQC.
   - Adapter Trimming and Quality Filtering: Adapters and low-quality bases are removed from the reads.

5. Alignment to a Reference Genome: The processed reads are aligned to a reference genome (e.g., GRCh38) using an aligner such as BWA or Bowtie2. The output of this step is a SAM/BAM file.

6. Post-Alignment Processing:
   - Sorting and Indexing: The BAM file is sorted by coordinate and indexed to allow for efficient data retrieval.
   - Duplicate Removal: PCR duplicates are marked or removed to reduce biases in variant calling.
   - Base Quality Score Recalibration (BQSR): Base quality scores are adjusted to more accurately reflect the probability of a sequencing error.

7. Variant Calling: Germline or somatic variants (SNPs and indels) are identified from the processed BAM files using a variant caller like GATK HaplotypeCaller or FreeBayes. The initial output is typically a VCF file.

8. Variant Filtration and Annotation: The raw variant calls in the VCF file are filtered based on various quality metrics to remove false positives. The filtered variants are then annotated with information from databases such as dbSNP, ClinVar, and gnomAD.

9. Conversion to SAV (Optional): The final VCF or BCF file can be imported into the SAV format using the Savvy C++ library for optimized storage and analysis.
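The alignment, post-alignment processing, and germline variant calling steps can be sketched as a list of command lines, using BWA, samtools, and GATK4 as example tools. This is a minimal sketch with several assumptions. The reference is already indexed for BWA (`bwa index`) and has the `.fai` and `.dict` files GATK expects. All file names, the read-group string, and the known-sites VCF are placeholders. Filtration, annotation, and SAV conversion are not shown. The function only builds the commands; a small runner executes them with `subprocess.run`.

```python
import subprocess

def build_pipeline(ref, fq1, fq2, sample, known_sites, threads=4):
    """Return (argv, stdout_path) pairs for alignment through variant calling.
    All file names are placeholders; nothing is executed here."""
    read_group = f"@RG\\tID:{sample}\\tSM:{sample}\\tPL:ILLUMINA"  # bwa expands the literal \t
    sam, bam = f"{sample}.sam", f"{sample}.sorted.bam"
    dedup, table, recal = f"{sample}.dedup.bam", f"{sample}.recal.table", f"{sample}.bqsr.bam"
    return [
        # Alignment: bwa mem writes SAM to standard output.
        (["bwa", "mem", "-t", str(threads), "-R", read_group, ref, fq1, fq2], sam),
        # Coordinate sorting and indexing.
        (["samtools", "sort", "-o", bam, sam], None),
        (["samtools", "index", bam], None),
        # Duplicate marking (Picard tool bundled with GATK4).
        (["gatk", "MarkDuplicates", "-I", bam, "-O", dedup,
          "-M", f"{sample}.dup_metrics.txt", "--CREATE_INDEX", "true"], None),
        # Base quality score recalibration.
        (["gatk", "BaseRecalibrator", "-I", dedup, "-R", ref,
          "--known-sites", known_sites, "-O", table], None),
        (["gatk", "ApplyBQSR", "-I", dedup, "-R", ref,
          "--bqsr-recal-file", table, "-O", recal], None),
        # Germline variant calling.
        (["gatk", "HaplotypeCaller", "-R", ref, "-I", recal, "-O", f"{sample}.vcf.gz"], None),
    ]

def run_pipeline(steps):
    """Run each step in order, raising CalledProcessError at the first failure."""
    for argv, stdout_path in steps:
        if stdout_path is None:
            subprocess.run(argv, check=True)
        else:
            with open(stdout_path, "wb") as out:
                subprocess.run(argv, stdout=out, check=True)
```

For example: `run_pipeline(build_pipeline("GRCh38.fa", "S1_R1.fastq.gz", "S1_R2.fastq.gz", "S1", "dbsnp.vcf.gz"))`. Keeping the commands as data makes it easy to log or dry-run the steps before executing them.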

Copy Number Variation (CNV) Detection with SavvySuite

SavvySuite, and specifically SavvyCNV, is designed to detect genome-wide CNVs from off-target reads in targeted sequencing data (e.g., exome sequencing or gene panels).[1][2][5]

1. Experimental Design: The data typically come from targeted sequencing (exome sequencing or a gene panel), in which a specific subset of the genome is enriched before sequencing. Even so, a significant portion of reads still map to off-target regions.

2. Sequencing and Alignment: Follow the sequencing, alignment, and post-alignment processing steps of the Variant Calling Workflow to generate aligned BAM or CRAM files.

3. Input Data Preparation:
   - A set of BAM or CRAM files from multiple samples is required.
   - A reference genome file in FASTA format is also needed to decode CRAM files.

4. Coverage Analysis with CoverageBinner:
   - The CoverageBinner tool from SavvySuite is used to process each BAM/CRAM file.
   - This tool calculates the read coverage across the genome in predefined bins (e.g., 200kb).
   - The output is a smaller summary file for each sample, which is more manageable for subsequent analysis.

5. CNV Calling with SavvyCNV:
   - The coverage summary files from all samples are provided as input to SavvyCNV.
   - SavvyCNV normalizes the coverage data to account for biases and uses singular value decomposition (SVD) to remove systematic noise. Samples with altered copy number in specific genomic regions then stand out against the rest of the batch.
   - The tool then calls CNVs (deletions and duplications) for each sample.

6. Output and Interpretation:
   - The output is a list of detected CNVs for each sample, including their genomic coordinates and the type of variation (deletion or duplication).
   - These results can then be visualized and further investigated for their biological and clinical significance.
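A minimal sketch illustrates the binning done in the CoverageBinner step: it counts reads per fixed-size bin and skips reads below a mapping-quality cutoff. The input tuples, the 200 kb bin size, and the illustrative cutoff of 30 are assumptions. This is not CoverageBinner's actual algorithm or output format.

```python
from collections import Counter

def bin_reads(reads, bin_size=200_000, min_mapq=30):
    """Count reads per (chromosome, bin index).

    reads: iterable of (chrom, start, mapq) with 0-based start positions.
    Reads whose mapping quality is below min_mapq are ignored.
    """
    counts = Counter()
    for chrom, start, mapq in reads:
        if mapq < min_mapq:
            continue
        counts[(chrom, start // bin_size)] += 1
    return dict(counts)
```

In practice the tuples would come from a BAM/CRAM reader such as pysam's `AlignmentFile`, using each read's `reference_name`, `reference_start`, and `mapping_quality`. Reads flagged with `is_duplicate` would normally be skipped as well.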

Logical Relationships in Genomic Data Analysis

The effective analysis of genomic data relies on the logical relationships between different data types and the tools used to process them. The Savvy ecosystem exemplifies this by providing a bridge between standard, widely used formats and an optimized, high-performance format.

By leveraging both established and specialized file formats, the Savvy toolkit provides a flexible and powerful environment for genomic research. Understanding the technical details of these formats and the workflows that produce them is essential for harnessing their full potential in the pursuit of scientific and clinical advancements.


An In-Depth Technical Guide to the Applications of Savvy in Genetic Research

For Researchers, Scientists, and Drug Development Professionals

This guide provides a comprehensive overview of the Savvy suite of tools and their applications in genetic research. The term "Savvy" in this context primarily refers to two key technologies: SavvyCNV, a tool for detecting copy number variants (CNVs) from off-target sequencing reads, and the Savvy software suite for handling the Sparse Allele Vector (SAV) file format, designed for efficient large-scale DNA variation analysis.

This document details the methodologies, presents quantitative performance data, and provides experimental protocols for leveraging these powerful bioinformatics tools in genomic research.

SavvyCNV: Unlocking the Potential of Off-Target Reads

A significant portion of data from targeted sequencing and whole-exome sequencing (WES) consists of "off-target" reads, which do not align to the intended capture regions. Up to 70% of sequencing reads can fall into this category.[1] SavvyCNV is a tool designed to harness this often-discarded data to call germline CNVs across the entire genome, thereby increasing the diagnostic yield of targeted sequencing assays without additional sequencing costs.[1][2]

Core Methodology

SavvyCNV's approach is based on read depth analysis. The genome is systematically divided into non-overlapping chunks of a defined size. The tool then calculates the number of sequencing reads within each chunk for a given sample and compares this to a reference set of control samples. Deviations in read depth, after normalization, indicate potential deletions (lower read depth) or duplications (higher read depth). A Viterbi algorithm is then employed to identify contiguous regions of altered read depth, which are called as CNVs.
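The comparison against control samples can be sketched as follows. Each sample's chunk counts are divided by that sample's median chunk count, which removes differences in overall sequencing depth. The result is then divided by the median of the controls' normalized values for the same chunk. A ratio near 1.0 then suggests normal copy number, near 0.5 a heterozygous deletion, and near 1.5 a duplication. The median-based normalization is an assumption chosen for robustness. SavvyCNV's actual normalization, including its SVD-based noise reduction, is more involved.

```python
from statistics import median

def normalize(counts):
    """Scale a sample's per-chunk read counts by its median chunk count."""
    m = median(counts)
    if m == 0:
        raise ValueError("median chunk count is zero")
    return [c / m for c in counts]

def depth_ratios(sample_counts, control_counts):
    """Per-chunk ratio of a sample's normalized depth to the control median."""
    sample = normalize(sample_counts)
    controls = [normalize(c) for c in control_counts]
    ratios = []
    for i, value in enumerate(sample):
        reference = median([ctrl[i] for ctrl in controls])
        ratios.append(value / reference if reference > 0 else float("nan"))
    return ratios
```

Using the median rather than the total count keeps a large CNV in the sample from shifting the normalization of every other chunk.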

The performance of SavvyCNV is enhanced when used with larger batches of samples. The power to detect CNVs is fully realized with at least 50 samples, and the ability to detect larger CNVs continues to improve with up to 200 samples in a batch.

Data Presentation: Performance Benchmarks

SavvyCNV has been benchmarked against several other state-of-the-art CNV callers. The following tables summarize its performance in calling CNVs from the off-target reads of both targeted gene panels and exome sequencing data.

Table 1: Off-Target CNV Calling Performance from Exome Data

| Tool | Recall (at ≥50% Precision) | Key Performance Insights |
|---|---|---|
| SavvyCNV | 86.7% | Superior in detecting smaller CNVs (<200kb) |
| DeCON | 46.7% | Next-best performing tool in this dataset |
| GATK gCNV | Limited | Did not call any true CNVs smaller than 200kb |
| EXCAVATOR2 | Limited | Did not call any true CNVs smaller than 200kb |
| CNVkit | Limited | Did not call any true CNVs smaller than 200kb |
| CopywriteR | Limited | Did not call any true CNVs smaller than 200kb |

Data derived from benchmarking studies where SavvyCNV's ability to call CNVs was compared against other tools.[2][3]

Table 2: On-Target CNV Calling Performance from Targeted Panel Data

| Tool | Recall (at ≥50% Precision) | Precision (at 97.1% Recall) |
|---|---|---|
| SavvyCNV | >95% | 93.0% |
| GATK gCNV | >95% | 85.7% |
| DeCON | >95% | Not specified |
| CNVkit | Low | Did not call the majority of CNVs |

For on-target analysis, SavvyCNV achieves higher precision than other leading tools at comparable recall.[3]

Notably, for larger CNVs (>1 Mb), SavvyCNV achieves 100% recall from off-target reads in both targeted panel and exome data.[2]

Experimental Protocol: From BAM to CNV Calls

The following protocol outlines the key steps for using SavvyCNV to call CNVs from off-target reads.

Prerequisites:

- Aligned sequencing data in BAM or CRAM format.
- A reference genome in FASTA format (required for CRAM).
- The SavvySuite Java archive (SavvySuite.jar) and the GATK JAR file in the Java classpath.

Step 1: Pre-processing of BAM Files

Standard pre-processing steps should be completed, including marking duplicates and local realignment around indels.

Step 2: Generating Read Count Files with CoverageBinner

CoverageBinner is the first tool in the SavvySuite workflow. It processes each BAM/CRAM file to produce a .coverageBinner file containing the read counts in genomic chunks.

- -Xmx4g: Allocates 4GB of memory to Java.
- -mmq 30: Sets the minimum mapping quality for reads to be counted to 30.
- -s SAMPLE_NAME: Specifies the sample name to be written to the output file.
- /path/to/input.bam: The input BAM file.

Step 3: Calling CNVs with SavvyCNV

This step takes the .coverageBinner files from multiple samples to call CNVs. It is recommended to run male and female samples separately if analyzing sex chromosomes.

- -Xmx30g: Allocates 30GB of memory.
- -trans 0.0001: Sets the transition probability for the Viterbi algorithm.
- -minReads 20: Sets the minimum average number of reads a chunk must have to be analyzed.
- -minProb 40: Sets the Phred-scaled minimum probability for a single chunk to contribute to a CNV.
- 200000: Specifies the chunk size in base pairs (e.g., 200kb).
- *.coverageBinner: A wildcard pattern to include all coverage binner files in the directory.
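Two of the parameters above involve simple arithmetic. A Phred-scaled value Q corresponds to a probability of 10^(-Q/10), so the example value of 40 for -minProb corresponds to 10^-4, or 1 in 10,000. A -minReads cutoff drops chunks whose average read count falls below the threshold. The helper names below are hypothetical. The sketch does not reproduce how SavvyCNV applies these thresholds internally.

```python
import math

def phred_to_prob(q):
    """Probability represented by a Phred-scaled value: p = 10^(-Q/10)."""
    return 10 ** (-q / 10)

def prob_to_phred(p):
    """Inverse conversion: Q = -10 * log10(p)."""
    return -10 * math.log10(p)

def chunks_passing(mean_reads_per_chunk, min_reads=20):
    """Indices of chunks whose average read count meets the cutoff."""
    return [i for i, m in enumerate(mean_reads_per_chunk) if m >= min_reads]
```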

[Figure: SavvyCNV workflow.]

The Savvy Suite and Sparse Allele Vector (SAV) Format

The Savvy software suite also provides a C++ interface for the Sparse Allele Vector (SAV) file format. SAV is designed for the efficient storage and analysis of large-scale DNA variation data, offering a more compact and faster alternative to standard formats like VCF and BCF.

Core Methodology

The SAV format optimizes the storage of genotype information, which is particularly advantageous in large cohort studies where variant data can be sparse. The Savvy command-line interface and C++ API allow for seamless integration into existing bioinformatics pipelines, facilitating the conversion from VCF/BCF to SAV and subsequent data analysis.
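The benefit of a sparse representation is easiest to see on a single variant. Across a large cohort most haplotypes carry the reference allele, so storing only the positions and values of non-reference entries takes far less space than storing every genotype. The sketch below shows that idea. It also shows delta-encoding of the stored positions, a common technique for making such offsets compress well. This is a conceptual illustration with hypothetical function names, not the SAV on-disk encoding.

```python
def to_sparse(values):
    """(index, value) pairs for non-reference entries (value != 0)."""
    return [(i, v) for i, v in enumerate(values) if v != 0]

def to_dense(pairs, length):
    """Rebuild the full vector; unstored entries are 0 (reference)."""
    dense = [0] * length
    for i, v in pairs:
        dense[i] = v
    return dense

def delta_encode(indices):
    """Store each sorted index as the gap since the previous one."""
    gaps, prev = [], -1
    for i in indices:
        gaps.append(i - prev - 1)
        prev = i
    return gaps

def delta_decode(gaps):
    """Invert delta_encode."""
    indices, prev = [], -1
    for g in gaps:
        prev = prev + g + 1
        indices.append(prev)
    return indices
```

With 1,000 haplotypes and three carriers of the alternate allele, three stored pairs replace 1,000 dense entries. The stored values need not be integers, so the same layout could also hold dosages.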

Data Presentation: Format Conversion and Indexing

Table 3: Key Features of the Savvy Software Suite for SAV

| Feature | Description |
|---|---|
| Import | Converts standard BCF or VCF files into the SAV format. |
| Indexing | Automatically generates an S1R index appended to the SAV file for fast data retrieval. |
| Statistics | Provides tools to quickly calculate statistics on SAV files, either by parsing the entire file or using the index for faster queries. |
| API | Offers a C++ programming API for direct integration into custom analysis software. |

Experimental Protocol: VCF to SAV Conversion and Analysis

The following protocol outlines a typical workflow for using the Savvy command-line tools.

Prerequisites:

- A compiled Savvy executable.
- Input variant data in VCF or BCF format.

Step 1: Convert VCF/BCF to SAV

The import subcommand is used to convert a VCF or BCF file into the SAV format. An index is automatically created.

- -i: Specifies the input VCF or BCF file.
- -o: Specifies the output SAV file.

Step 2: Gather Statistics on the SAV File

The stat subcommand can be used to obtain summary statistics about the variants in the SAV file.

To get faster, index-based statistics (e.g., number of variants, chromosomes):

[Figure: SAV format workflow.]


A Technical Guide to the SavvySuite for Large-Scale Genomic Analysis

For researchers, scientists, and professionals in drug development, the ability to extract meaningful insights from genomic data is paramount. While large-scale association studies are a cornerstone of modern genetics, the comprehensive analysis of structural variations, such as Copy Number Variants (CNVs), presents a significant challenge. This guide provides an in-depth technical overview of the SavvySuite, a specialized software package designed for the savvy researcher who seeks to maximize the utility of their sequencing data. The core of this suite is SavvyCNV, a powerful tool for genome-wide CNV detection using off-target reads from exome and targeted sequencing data.

Introduction to the SavvySuite

The SavvySuite is a collection of tools tailored for the analysis of genomic data, with a particular focus on identifying structural variants that may be missed by conventional analysis pipelines. Unlike broad-range software for genome-wide association studies (GWAS) that primarily focus on single nucleotide polymorphisms (SNPs), the SavvySuite offers a targeted solution for the detection of CNVs and regions of homozygosity, leveraging the often-discarded off-target sequencing reads.

Core Component: SavvyCNV

SavvyCNV is a command-line tool designed to call CNVs across the entire genome from targeted sequencing data. It capitalizes on the fact that a substantial portion of reads in exome and targeted sequencing fall outside the intended target regions. This "free" data provides a low-coverage, but genome-wide, landscape that can be effectively mined for large-scale structural variations.

Key Features of SavvyCNV

- Genome-wide CNV Detection: Identifies deletions and duplications across all chromosomes, not just the targeted regions.
- Utilization of Off-Target Reads: Leverages the untapped potential of off-target sequencing data.
- High Precision and Recall: Benchmarking studies have demonstrated SavvyCNV's superior performance compared to other CNV callers in the context of off-target read analysis.
- Clinical Relevance: Has been successfully applied in clinical settings to identify previously undetected, clinically relevant CNVs.

Quantitative Data Summary

The performance of SavvyCNV has been benchmarked against other state-of-the-art CNV callers using truth sets generated from genome sequencing data and Multiplex Ligation-dependent Probe Amplification (MLPA) assays. The following tables summarize the key performance metrics.

Table 1: Benchmarking of Off-Target CNV Calling from Targeted Panel Data

| CNV Size | SavvyCNV Precision | SavvyCNV Recall | Other Tools (Best) Precision | Other Tools (Best) Recall |
|---|---|---|---|---|
| < 100 kbp | 45% | 30% | 40% | 25% |
| 100-500 kbp | 85% | 75% | 70% | 60% |
| > 500 kbp | 95% | 90% | 80% | 85% |

Table 2: Benchmarking of On-Target CNV Calling from Exome Data

| CNV Size | SavvyCNV Precision | SavvyCNV Recall | Other Tools (Best) Precision | Other Tools (Best) Recall |
|---|---|---|---|---|
| < 1 kbp | 30% | 20% | 35% | 25% |
| 1-10 kbp | 70% | 65% | 60% | 55% |
| > 10 kbp | 88% | 82% | 80% | 75% |

Experimental Protocols

This section details the methodologies for utilizing SavvyCNV in a research or clinical setting.

Experimental Protocol for CNV Detection with SavvyCNV

Objective: To identify genome-wide CNVs from targeted sequencing data (e.g., exome or gene panel).

Materials:

- Targeted sequencing data in BAM format.
- A set of control samples (BAM files) sequenced on the same platform with a similar protocol.
- A reference genome (FASTA format).
- The SavvySuite software package.

Methodology:

1. Data Preparation:
   - Ensure all BAM files are indexed.
   - Create a file listing the paths to the control BAM files.

2. Running SavvyCNV:
   - Execute SavvyCNV with the appropriate parameters. As in the SavvySuite workflow, the sample and control BAM files are first converted to .coverageBinner read-count files with CoverageBinner. These files are then passed to SavvyCNV together with the chunk size.
   - The transition probability for the Viterbi algorithm can be adjusted to modify sensitivity (default is 0.00001). Increasing this parameter increases both sensitivity and the false positive rate.

3. Output Interpretation:
   - SavvyCNV outputs a BED file containing the detected CNVs with their genomic coordinates and predicted copy number state (deletion or duplication).
   - A log file is also generated, detailing the analysis steps and any warnings or errors.

4. Joint Calling (Optional):
   - For family-based studies or cohorts, the SavvyCNVJointCaller tool can be used to perform joint CNV calling on multiple samples. This improves accuracy by favoring CNVs with consistent start and end locations across related individuals.

Visualizations of Workflows and Logical Relationships

[Figure: SavvyCNV workflow. The general workflow of the SavvyCNV tool.]

[Figure: SavvySuite joint calling logic. Logical flow for joint CNV calling in a family trio.]

Conclusion

The SavvySuite, with SavvyCNV as its flagship tool, provides a sophisticated and efficient solution for the detection of genome-wide CNVs from targeted sequencing data. By harnessing the power of off-target reads, this software empowers researchers to extract maximum value from their existing datasets, opening up new avenues for discovery in both basic research and clinical diagnostics. The detailed protocols and clear workflows presented in this guide are intended to facilitate the adoption of this approach to genomic analysis.

Unlocking the Genome's Periphery: A Technical Guide to Off-Target Read Analysis with SavvyCNV

For Researchers, Scientists, and Drug Development Professionals

This technical guide delves into the fundamental principles and core methodologies of SavvyCNV, a powerful tool for genome-wide copy number variant (CNV) detection using off-target sequencing reads. SavvyCNV leverages the often-discarded data from targeted sequencing and exome studies to provide a comprehensive view of genomic structural variations, significantly increasing the diagnostic and research utility of existing datasets.

The Principle of Off-Target Read Utilization

In targeted sequencing approaches, such as exome sequencing or the use of specific gene panels, a significant portion of sequencing reads, sometimes up to 70%, fall outside the intended target regions.[1][2][3][4] These "off-target" reads are traditionally discarded, representing a substantial loss of potentially valuable genomic information. SavvyCNV is built on the principle of harnessing this "free data" to call CNVs across the entire genome.[2][4][5] This allows researchers and clinicians to expand their analysis beyond the targeted regions and detect large-scale deletions and duplications that would otherwise be missed.[1][4]

The core idea is that the density of off-target reads, while lower than on-target reads, is still sufficient to infer copy number changes. By analyzing the distribution and depth of these reads across the genome, SavvyCNV can identify regions with statistically significant deviations from the expected diploid copy number.
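A back-of-the-envelope calculation shows why sparse off-target coverage can still reveal copy number changes once it is aggregated into large bins. Assume, hypothetically, 5 million usable off-target reads spread evenly over a 3.1 Gb genome, with Poisson counting noise only. The expected count per bin of size b is then λ = N·b/G. A heterozygous deletion halves that mean, which places it (λ - λ/2)/√λ = √λ/2 standard deviations below the diploid level. Real data are noisier than Poisson, so these figures represent a best case.

```python
import math

def expected_reads_per_bin(off_target_reads, bin_size, genome_size=3.1e9):
    """Mean reads per bin if off-target reads were spread evenly: N * b / G."""
    return off_target_reads * bin_size / genome_size

def deletion_separation(expected):
    """Gap between the diploid mean and half of it, in Poisson SDs (sqrt(lambda) / 2)."""
    return (expected - expected / 2) / math.sqrt(expected)
```

With 200 kb bins, λ is about 323, and a heterozygous deletion sits about 9 standard deviations below the diploid mean. With 20 kb bins, λ is about 32 and the separation is under 3. This illustrates the trade-off between bin size (resolution) and statistical power.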

Core Methodology of SavvyCNV

SavvyCNV employs a sophisticated analytical pipeline to accurately call CNVs from the sparse and noisy data of off-target reads. Key to its high precision and recall is its advanced error correction and modeling.[1]

A critical component of SavvyCNV's methodology is the use of Singular Value Decomposition (SVD) to reduce noise in the off-target read data.[1] SVD is a powerful matrix factorization technique that can identify and remove systemic biases and artifacts that are common in sequencing data, leading to a clearer signal for CNV detection. This noise reduction is a significant improvement over other tools that may only correct for GC content.[1]

[Figure: The general workflow of SavvyCNV's off-target analysis.]

Performance and Benchmarking

SavvyCNV has been benchmarked against several other state-of-the-art CNV callers and has demonstrated superior performance in off-target analyses.[1][4][6] Its ability to detect a greater number of true positive CNVs, especially those of smaller size, sets it apart from other tools.[1][7]

Benchmarking on Targeted Panel Data

In a comparison using targeted panel sequencing data, SavvyCNV showed significantly higher recall than other tools at a precision of at least 50%.

| Tool | Best Recall (Precision ≥ 50%) |
|---|---|
| SavvyCNV | 86.7% |
| DeCON | 46.7% |
| GATK gCNV | < 40% |
| EXCAVATOR2 | < 30% |
| CNVkit | < 20% |
| CopywriteR | < 10% |

Table 1: Benchmarking of off-target CNV calling from targeted panel data. SavvyCNV demonstrates superior recall compared to other leading tools.[1][7]

Benchmarking on Exome Sequencing Data

When applied to off-target reads from exome sequencing data, SavvyCNV again outperformed other methods.

| Tool | Best Recall (Precision ≥ 50%) |
|---|---|
| SavvyCNV | > 80% |
| DeCON | ~45% |
| GATK gCNV | ~35% |
| CNVkit | ~20% |
| EXCAVATOR2 | ~15% |
| CopywriteR | ~10% |

Table 2: Performance comparison for off-target CNV calling from exome data, highlighting SavvyCNV's high recall rate.[1][4]

The superior performance of SavvyCNV is particularly evident in the detection of smaller CNVs (<200 kbp), where many other tools fail to identify any true positives.[7] SavvyCNV's sensitivity is also dependent on the size of the CNV, with larger CNVs being detected with higher precision and recall.[1][4] For CNVs larger than 1Mb, SavvyCNV achieves 100% recall in off-target data from both targeted panel and exome sequencing.[1][4]

Experimental Protocols

The following provides a general outline of the experimental and computational protocols for off-target CNV analysis using SavvyCNV, based on methodologies described in the primary literature.

Sample Preparation and Sequencing

- DNA Extraction: Genomic DNA is extracted from samples (e.g., blood, tissue) using standard protocols.
- Library Preparation: Sequencing libraries are prepared using a targeted capture kit (e.g., Agilent SureSelect for exome sequencing or a custom panel).
- Sequencing: Libraries are sequenced on a high-throughput sequencing platform (e.g., Illumina HiSeq or NextSeq).

Data Preprocessing and Alignment

- Read Alignment: Sequencing reads are aligned to a reference human genome (e.g., hg19/GRCh37) using a standard aligner such as BWA-MEM.[7]
- Duplicate Removal: PCR duplicates are marked and removed using tools like Picard.[7]
- Local Realignment: Local realignment around indels is performed using tools such as GATK IndelRealigner.[7] The output of this step is an analysis-ready BAM file.

SavvyCNV Analysis

The analysis-ready BAM files serve as the input for the SavvyCNV pipeline, which then runs its internal workflow to generate genome-wide CNV calls.

[Figure: The logical relationship for initiating an off-target analysis with SavvyCNV.]

Conclusion

SavvyCNV represents a significant advancement in the analysis of genomic structural variation. By unlocking the information contained within off-target reads, it provides a cost-effective means to expand the scope of targeted sequencing studies to a genome-wide scale. Its robust methodology, particularly the use of SVD for noise reduction, results in high accuracy and the ability to detect clinically relevant CNVs that would be missed by other approaches.[1][2] This makes SavvyCNV an invaluable tool for researchers and clinicians in the fields of genetics, oncology, and drug development, ultimately increasing the diagnostic yield of targeted sequencing tests.[7]

References

- 1. SavvyCNV: Genome-wide CNV calling from off-target reads | PLOS Computational Biology [journals.plos.org]

- 2. SavvyCNV: Genome-wide CNV calling from off-target reads - PubMed [pubmed.ncbi.nlm.nih.gov]

- 3. biorxiv.org [biorxiv.org]

- 4. journals.plos.org [journals.plos.org]

- 5. Item - SavvyCNV: Genome-wide CNV calling from off-target reads - University of Exeter - Figshare [ore.exeter.ac.uk]

- 6. researchgate.net [researchgate.net]

- 7. SavvyCNV: Genome-wide CNV calling from off-target reads - PMC [pmc.ncbi.nlm.nih.gov]

Methodological & Application

Application Notes and Protocols for the Veeva SiteVault Suite

Introduction: Veeva SiteVault is a cloud-based software suite designed to streamline clinical trial processes for research sites. It provides a compliant and efficient platform for managing regulatory documentation, participant consent, and study delegation, thereby accelerating study activation and ensuring inspection readiness. This document provides a detailed guide to the installation and utilization of the Veeva SiteVault suite, tailored for researchers, scientists, and drug development professionals. Veeva SiteVault is engineered to be compliant with 21 CFR Part 11, HIPAA, and GDPR regulations.[1][2]

System and Personnel Requirements

While Veeva SiteVault is a cloud-based application accessible via a web browser, ensuring optimal performance and compliance requires adherence to certain system and personnel prerequisites.

System Specifications

The vendor does not publish detailed hardware specifications (CPU, RAM) for accessing the cloud-based Veeva SiteVault. Users need a stable, high-speed internet connection and a current web browser; for specific compatibility requirements, contact Veeva directly.

Table 1: General System Recommendations

| Component | Recommendation | Notes |
|---|---|---|
| Operating System | Windows, macOS | Latest stable versions are recommended for security and performance. |
| Web Browser | Google Chrome, Microsoft Edge | Always use the latest version to ensure compatibility with all features. |
| Internet Connection | High-speed Broadband | A stable connection is crucial for accessing and managing documents. |
| Mobile Access | iOS, Android | For functionalities like MyVeeva for Patients (for eConsent).[3] |

Personnel and Roles

Effective implementation of Veeva SiteVault hinges on assigning appropriate roles and responsibilities to team members. The system has predefined roles that dictate user access and permissions.

Table 2: Core User Roles and Responsibilities in Veeva SiteVault

| Role | Core Responsibilities | Typical Personnel |
|---|---|---|
| Site Administrator | Manages user accounts (adds staff, assigns roles); manages studies and monitors; has visibility across all studies within the site.[4] | Site Manager, Lead Coordinator, IT Administrator |
| Site Staff | Accesses documents for assigned studies; completes tasks such as eSignatures and training; manages study-specific documentation.[4] | Clinical Research Coordinators, Investigators, Sub-Investigators |
| Monitor/External User | Reviews study documents remotely; access is typically read-only for source documents; cannot download sensitive information.[3] | Sponsor/CRO Monitors, Auditors |
| Site Viewer | Has read-only access to specified documents. | Quality Assurance personnel, ancillary staff |
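The role model in Table 2 can be pictured as role-based access control with optional layered permissions. The sketch below is a hypothetical Python model for illustration only: the permission names, the `User` class, and the `can` function are assumptions and are not part of any Veeva SiteVault interface.

```python
from dataclasses import dataclass, field

# Hypothetical permission names chosen for illustration; these are not
# Veeva SiteVault identifiers.
ROLE_PERMISSIONS: dict[str, set[str]] = {
    "Site Administrator": {"manage_users", "manage_studies", "view_all_studies", "view_documents"},
    "Site Staff": {"view_documents", "edit_documents", "esign"},
    "Monitor/External User": {"view_documents", "comment"},
    "Site Viewer": {"view_documents"},
}


@dataclass
class User:
    name: str
    role: str
    studies: set[str] = field(default_factory=set)            # assigned studies
    extra_permissions: set[str] = field(default_factory=set)  # layered on top of the role


def can(user: User, permission: str, study: str) -> bool:
    """Check one permission for one study: role grants plus any layered extras."""
    granted = ROLE_PERMISSIONS.get(user.role, set()) | user.extra_permissions
    if permission not in granted:
        return False
    # Roles with site-wide visibility see every study; others only assigned ones.
    return "view_all_studies" in granted or study in user.studies


monitor = User("Sponsor monitor", "Monitor/External User", studies={"STUDY-001"})
coordinator = User("Coordinator", "Site Staff", studies={"STUDY-001"},
                   extra_permissions={"manage_studies"})

print(can(monitor, "view_documents", "STUDY-001"))      # True
print(can(monitor, "view_documents", "STUDY-002"))      # False: not assigned
print(can(monitor, "download", "STUDY-001"))            # False: never granted
print(can(coordinator, "manage_studies", "STUDY-001"))  # True via layered permission
```

Keeping "download" out of every role mirrors the table's note that monitors cannot download sensitive information; in this model a capability is absent unless a role or a layered permission grants it.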

Step-by-Step Installation and Setup Guide

Veeva SiteVault is a Software-as-a-Service (SaaS) platform, so no local software installation is required. Setup consists of creating an account and configuring the environment for your research site's needs.

Initial Setup Workflow

The following diagram illustrates the primary steps for a new research site to get started with Veeva SiteVault.

Caption: Workflow for initial account creation and configuration.

Detailed Setup Protocol

- Sign Up for SiteVault: The initial step is to register your research site for a Veeva SiteVault account. The individual who completes this process is automatically assigned the role of Site Administrator.

- Designate a Backup Administrator: It is highly recommended to assign at least one additional Site Administrator to ensure continuity of administrative tasks.[1]

- Develop Standard Operating Procedures (SOPs): Before full implementation, your site should establish clear SOPs for using Veeva SiteVault. Key areas to cover in your SOPs include:
  - User account management and training.
  - Procedures for remote monitoring.
  - The use and application of electronic signatures (eSignatures).
  - Workflows for certifying documents as official copies.
  - Overall management of the electronic Investigator Site File (eISF).

- Create User Accounts: The Site Administrator should create user accounts for all team members who will be involved in clinical trial activities.[4] Users can be created with full access to log in or as "no access" users, who cannot log in but can be listed on delegation logs.[4]

- Assign Roles and Permissions: Assign the appropriate system role (e.g., Site Staff, Site Administrator) to each user. Additional permissions can be layered on to provide more granular access control.[4]

- Upload Site and Staff Documentation: Populate the "Site Documents eBinder" with essential site-level documentation, such as lab certifications and staff CVs/medical licenses.

- Create Studies: For each clinical trial, create a dedicated study within SiteVault. This will generate a structured electronic binder to house all study-specific documents.

Experimental Protocols: Key Workflows

Veeva SiteVault digitizes several critical clinical trial processes. The following sections detail the protocols for these key workflows.

Protocol for Electronic Informed Consent (eConsent)

This protocol outlines the process of obtaining and documenting participant consent electronically.

Methodology:

- Create ICF Template: An interactive Informed Consent Form (ICF) is created within the SiteVault eConsent editor. This can include rich text, images, and videos to enhance participant comprehension.[3]

- Approve for Use: The blank ICF template is finalized and moved to the "Approved for Use" state.[5]

- Initiate Consent: For a specific study participant, a delegated staff member initiates the consent process from the participant's record in SiteVault.[5][6]

- Participant Review & Signature: The participant reviews the ICF and signs it electronically.

- Site Staff Countersignature: After the participant signs, a task is automatically generated for the designated investigator or site staff to countersign the document within SiteVault.[5][7]

- Automatic Filing: Once all signatures are complete, the fully executed eConsent form is automatically filed in the participant's section of the study eBinder and is made available for monitor review.[3]

Caption: Process flow for obtaining and documenting electronic consent.
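The eConsent steps above behave like a small state machine in which each signature unlocks the next step. The following Python sketch models that sequence for illustration; apart from "Approved for Use", the state names, the `ConsentRecord` class, and its methods are hypothetical and do not describe how SiteVault is implemented.

```python
from enum import Enum


class ConsentState(Enum):
    INITIATED = "initiated"
    PARTICIPANT_SIGNED = "participant signed"
    COUNTERSIGNED = "countersigned"
    FILED = "filed"


# Allowed transitions in this illustrative model (hypothetical state names).
NEXT_STATE = {
    ConsentState.INITIATED: ConsentState.PARTICIPANT_SIGNED,
    ConsentState.PARTICIPANT_SIGNED: ConsentState.COUNTERSIGNED,
    ConsentState.COUNTERSIGNED: ConsentState.FILED,
}


class ConsentRecord:
    def __init__(self, participant_id: str, template_state: str) -> None:
        # Consent can only start from a template in the "Approved for Use" state.
        if template_state != "Approved for Use":
            raise ValueError("ICF template is not approved for use")
        self.participant_id = participant_id
        self.state = ConsentState.INITIATED
        self.open_tasks: list[str] = []

    def _advance(self, expected: ConsentState) -> None:
        # Reject any step taken out of order (e.g. countersigning before the participant signs).
        if NEXT_STATE.get(self.state) is not expected:
            raise ValueError(f"cannot move from {self.state.value} to {expected.value}")
        self.state = expected

    def participant_signs(self) -> None:
        self._advance(ConsentState.PARTICIPANT_SIGNED)
        # The participant's signature creates a countersignature task for site staff.
        self.open_tasks.append(f"countersign ICF for {self.participant_id}")

    def staff_countersigns(self) -> None:
        self._advance(ConsentState.COUNTERSIGNED)
        self.open_tasks.clear()
        # All signatures are complete, so the form is filed automatically.
        self._advance(ConsentState.FILED)


record = ConsentRecord("P-001", "Approved for Use")
record.participant_signs()
print(record.open_tasks)   # ['countersign ICF for P-001']
record.staff_countersigns()
print(record.state.value)  # filed
```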

Protocol for Digital Delegation of Authority

This protocol describes the process of managing and tracking study task delegations using the digital Delegation of Authority (DoA) log.

Methodology:

- Define Study Responsibilities: Within a specific study, the Site Administrator or delegated staff defines all tasks that require formal delegation (e.g., obtaining informed consent, administering study drug).

- Assign Staff to Study: Ensure all relevant staff members are formally assigned to the study within SiteVault.

- Delegate Tasks: For each staff member, assign specific responsibilities from the predefined list. The system allows for clear documentation of who is responsible for each task.

- PI Approval: Once delegations are assigned, the DoA log is routed electronically to the Principal Investigator (PI) for review and electronic signature.

- Maintain and Update: The digital DoA log is a living document. As staff roles change or new team members are added, the log can be easily updated and re-routed for PI approval, ensuring it always reflects the current state of delegations.[8]

- Audit Trail: All changes, assignments, and approvals are captured in a detailed audit trail, ensuring compliance and inspection readiness.

Caption: Workflow for managing the Delegation of Authority log.
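The delegation workflow combines three rules: tasks can only be delegated to staff assigned to the study, any change requires fresh PI approval, and every action is recorded. A hypothetical Python model of those rules follows; the `DelegationLog` class and its methods are illustrative assumptions, not a SiteVault API.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class DelegationLog:
    """Illustrative Delegation of Authority log (hypothetical model)."""
    study: str
    responsibilities: set[str]                       # tasks that require delegation
    staff: set[str] = field(default_factory=set)     # staff assigned to the study
    delegations: dict[str, set[str]] = field(default_factory=dict)
    pi_approved: bool = False
    audit_trail: list[tuple[str, str, str]] = field(default_factory=list)

    def _record(self, actor: str, action: str) -> None:
        # Every change is time-stamped and attributed to the person who made it.
        self.audit_trail.append((datetime.now(timezone.utc).isoformat(), actor, action))

    def assign_staff(self, actor: str, person: str) -> None:
        self.staff.add(person)
        self._record(actor, f"assigned {person} to {self.study}")

    def delegate(self, actor: str, person: str, task: str) -> None:
        if person not in self.staff:
            raise ValueError(f"{person} is not assigned to {self.study}")
        if task not in self.responsibilities:
            raise ValueError(f"{task!r} is not a defined responsibility")
        self.delegations.setdefault(person, set()).add(task)
        # Any change re-routes the log to the PI, so the prior approval lapses.
        self.pi_approved = False
        self._record(actor, f"delegated {task!r} to {person}")

    def pi_sign(self, pi: str) -> None:
        self.pi_approved = True
        self._record(pi, "approved delegation log")


log = DelegationLog("STUDY-001", {"obtain informed consent", "administer study drug"})
log.assign_staff("site admin", "Coordinator A")
log.delegate("site admin", "Coordinator A", "obtain informed consent")
log.pi_sign("Dr. PI")
log.assign_staff("site admin", "Nurse B")
log.delegate("site admin", "Nurse B", "administer study drug")
print(log.pi_approved)       # False: the update needs PI re-approval
print(len(log.audit_trail))  # 5
```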

Protocol for Remote Monitoring

This protocol details the steps to facilitate secure remote review of study documents by sponsors or CROs.

Methodology:

- Create Monitor User Account: The Site Administrator creates a user account for the external monitor, assigning them the "Monitor/External User" role.

- Grant Study Access: The monitor is granted access only to the specific studies they are assigned to review. Access can be time-limited for enhanced security.[3]

- Prepare Documents: Site staff ensure that all necessary documents in the eBinder are in a finalized state. Veeva SiteVault automatically creates a queue of documents that are finalized but have not yet been reviewed by a monitor.[3]

- Monitor Review: The monitor logs into SiteVault and accesses their dashboard, which displays the documents ready for review. They can view documents and add comments or queries directly within the system.[3]

- Site Staff Response: Site staff receive notifications for any monitor feedback and can address the comments directly within the document's workflow.

- Secure and Compliant: The system is designed to prevent monitors from downloading sensitive source documents, ensuring patient privacy and data security.[3] This process supports remote source document review (SDR) and source document verification (SDV).
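The review queue and time-limited access described above amount to a simple filter. This hypothetical Python sketch assumes each document carries a study, a finalized flag, and a monitor-reviewed flag, and that a monitor's access grant has an expiry date; none of these names come from SiteVault.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Document:
    name: str
    study: str
    finalized: bool
    monitor_reviewed: bool = False


@dataclass(frozen=True)
class MonitorGrant:
    monitor: str
    studies: frozenset[str]  # studies this monitor may review
    expires: date            # time-limited access (hypothetical field)


def review_queue(docs: list[Document], grant: MonitorGrant, today: date) -> list[str]:
    """Finalized, not-yet-reviewed documents in the monitor's assigned studies."""
    if today > grant.expires:
        return []  # access has lapsed, so nothing is visible
    return [d.name for d in docs
            if d.study in grant.studies and d.finalized and not d.monitor_reviewed]


docs = [
    Document("Protocol v2", "STUDY-001", finalized=True),
    Document("Lab certificate", "STUDY-001", finalized=True, monitor_reviewed=True),
    Document("Draft note", "STUDY-001", finalized=False),
    Document("Protocol v1", "STUDY-002", finalized=True),
]
grant = MonitorGrant("CRO monitor", frozenset({"STUDY-001"}), expires=date(2025, 6, 30))
print(review_queue(docs, grant, date(2025, 6, 1)))  # ['Protocol v2']
print(review_queue(docs, grant, date(2025, 7, 1)))  # []
```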

Conclusion

The Veeva SiteVault suite offers a comprehensive, compliant, and efficient solution for managing the critical documentation and workflows of clinical trials. By replacing paper-based processes with integrated digital workflows, research sites can reduce administrative burden, enhance collaboration with sponsors, and maintain a constant state of inspection readiness.[1][2] Adherence to the protocols outlined in this document will enable research professionals to effectively implement and leverage the full capabilities of the Veeva SiteVault platform.

References

- 1. intuitionlabs.ai

- 2. intuitionlabs.ai

- 3. youtube.com

- 4. youtube.com

- 5. eConsent Quick-Start Guide. SiteVault Help [sites.veevavault.help]

- 6. m.youtube.com

- 7. Commitment to Privacy. Virginia Commonwealth University [research.vcu.edu]

- 8. m.youtube.com

Application Notes and Protocols for Efficient Storage of DNA Variation Data Using Savvy

For Researchers, Scientists, and Drug Development Professionals

Introduction

The explosive growth of DNA sequencing has led to an unprecedented volume of genomic data, presenting significant challenges for storage, retrieval, and analysis. Efficient management of this data is critical for accelerating research and drug development. Savvy is a software suite designed to address this challenge by providing a highly efficient storage format for DNA variation data. It uses the sparse allele vectors (SAV) file format, which exploits the sparsity of genetic variation data (at most sites, most individuals carry the reference allele) to achieve significant compression and rapid data access.[1] This application note provides detailed protocols for using Savvy to manage DNA variation data and highlights its advantages in the context of large-scale genomic studies relevant to drug discovery.

Key Advantages of Savvy

- Reduced Storage Footprint: By storing only non-reference alleles, the SAV format substantially reduces file sizes compared with standard formats such as VCF and BCF, especially for large cohorts with millions of rare variants.[1]

- Faster Data Access: Savvy's design is optimized for high-speed data deserialization, enabling quicker access to genomic data for analysis pipelines.[1]

- Seamless Integration: Savvy provides a command-line interface and a C++ API for integration into existing bioinformatics workflows.[1][2]

- Compatibility: Savvy maintains compatibility with the widely used BCF format, facilitating adoption.[1]
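The sparse principle behind the storage savings can be illustrated with a minimal pure-Python sketch: for one variant, keep only the haplotypes that carry a non-reference allele, then store each position as the number of reference alleles skipped since the previous entry. The function names are hypothetical, and this shows the principle only; it is not Savvy's C++ API or the actual SAV on-disk encoding.

```python
def to_sparse(alleles: list[int]) -> list[tuple[int, int]]:
    """Keep only (position, allele) pairs for non-reference alleles (allele != 0)."""
    return [(i, a) for i, a in enumerate(alleles) if a != 0]


def to_gaps(sparse: list[tuple[int, int]]) -> list[tuple[int, int]]:
    """Replace absolute positions by the number of reference alleles skipped.

    Small gap values compress better than large absolute offsets (assumption
    for illustration; SAV's on-disk encoding may differ).
    """
    gaps, next_pos = [], 0
    for pos, allele in sparse:
        gaps.append((pos - next_pos, allele))
        next_pos = pos + 1
    return gaps


def from_gaps(gaps: list[tuple[int, int]], length: int) -> list[int]:
    """Rebuild the dense vector; every unlisted position is the reference allele 0."""
    dense, pos = [0] * length, 0
    for skip, allele in gaps:
        pos += skip
        dense[pos] = allele
        pos += 1
    return dense


# One variant across 20 haplotypes: two carry the alternate allele.
haplotypes = [0] * 20
haplotypes[3] = 1
haplotypes[17] = 1
sparse = to_sparse(haplotypes)
print(sparse)           # [(3, 1), (17, 1)]
print(to_gaps(sparse))  # [(3, 1), (13, 1)]
assert from_gaps(to_gaps(sparse), len(haplotypes)) == haplotypes
```

For a rare variant, the sparse form holds two entries instead of twenty, and the saving grows with cohort size because the number of carriers stays small while the number of haplotypes grows.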

Quantitative Data Summary

The following tables summarize the performance of the SAV format compared to other common genomic data storage formats. The data is based on published benchmarks and extrapolated for a hypothetical large-scale cohort to demonstrate scalability.

Table 1: Storage File Size Comparison for a Whole-Genome Sequencing Cohort (100,000 individuals, 80 million variants)

| Data Format | Estimated File Size | Compression Principle |
|---|---|---|
| VCF (gzipped) | ~20 TB | General-purpose compression |
| BCF | ~5 TB | Binary version of VCF with block compression |
| PLINK 1.9 | ~2.5 TB | Binary format optimized for GWAS |
| SAV | ~0.5 TB | Sparse allele vector representation |
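A back-of-the-envelope calculation shows why sparsity matters at this scale. The sketch compares the raw payload of a dense matrix at 2 bits per genotype with a sparse layout that stores only non-reference alleles. The 1% non-reference fraction and the 4 bytes per sparse entry are assumptions chosen for illustration. Neither estimate includes file overhead or additional compression, so the results are not a reproduction of the table above.

```python
def dense_bytes(samples: int, variants: int, bits_per_genotype: int = 2) -> float:
    """Raw size of a dense genotype matrix at a fixed number of bits per genotype."""
    return samples * variants * bits_per_genotype / 8


def sparse_bytes(samples: int, variants: int, nonref_fraction: float,
                 bytes_per_entry: int = 4, ploidy: int = 2) -> float:
    """Raw size when only non-reference alleles are stored as (offset, value) entries.

    bytes_per_entry and nonref_fraction are illustrative assumptions.
    """
    haplotype_entries = samples * ploidy * variants
    return haplotype_entries * nonref_fraction * bytes_per_entry


TB = 1e12
samples, variants = 100_000, 80_000_000
print(dense_bytes(samples, variants) / TB)                 # 2.0
# Assumed, not measured: 1% of haplotype entries carry a non-reference allele.
print(round(sparse_bytes(samples, variants, 0.01) / TB, 2))  # 0.64
```

Because the sparse size scales with the non-reference fraction rather than with the full matrix, cohorts dominated by rare variants benefit most.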

Table 2: Data Deserialization/Query Speed Comparison (Time to access data for 1,000 genes)