Dlpts

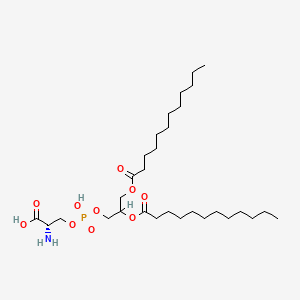

Description

BenchChem offers this compound in high quality, suitable for a range of research applications. Different packaging options are available to accommodate customers' requirements. Please inquire at info@benchchem.com for more information about this compound, including price, delivery time, and more detailed specifications.

Structure

2D Structure

Properties

| Property | Value |
|---|---|
| CAS No. | 2954-46-3 |
| Molecular Formula | C30H58NO10P |
| Molecular Weight | 623.8 g/mol |
| IUPAC Name | (2S)-2-amino-3-[2,3-di(dodecanoyloxy)propoxy-hydroxyphosphoryl]oxypropanoic acid |
| InChI | InChI=1S/C30H58NO10P/c1-3-5-7-9-11-13-15-17-19-21-28(32)38-23-26(24-39-42(36,37)40-25-27(31)30(34)35)41-29(33)22-20-18-16-14-12-10-8-6-4-2/h26-27H,3-25,31H2,1-2H3,(H,34,35)(H,36,37)/t26?,27-/m0/s1 |
| InChI Key | RHODCGQMKYNKED-GEVKEYJPSA-N |
| SMILES | CCCCCCCCCCCC(=O)OCC(COP(=O)(O)OCC(C(=O)O)N)OC(=O)CCCCCCCCCCC |
| Isomeric SMILES | CCCCCCCCCCCC(=O)OCC(COP(=O)(O)OC[C@@H](C(=O)O)N)OC(=O)CCCCCCCCCCC |
| Canonical SMILES | CCCCCCCCCCCC(=O)OCC(COP(=O)(O)OCC(C(=O)O)N)OC(=O)CCCCCCCCCCC |
| Synonyms | dilauroylphosphatidylserine; DLPTS |
| Origin of Product | United States |


A Technical Examination of the Defense Language Proficiency Test (DLPT)

The Defense Language Proficiency Test (DLPT) is a suite of examinations developed and administered by the Defense Language Institute Foreign Language Center (DLIFLC) to assess the foreign language proficiency of United States Department of Defense (DoD) personnel. This guide provides a detailed technical overview of the DLPT, focusing on its core components, psychometric underpinnings, and developmental methodologies, intended for an audience of researchers, scientists, and drug development professionals interested in language proficiency assessment.

Core Purpose and Assessment Domains

The primary objective of the DLPT is to measure the general language proficiency of native English speakers in a specific foreign language. The test is designed to evaluate how well an individual can function in real-world situations, assessing their ability to understand written and spoken material. The DLPT system is a critical tool for the DoD in determining the readiness of its forces by measuring and validating language capabilities. The skills assessed are primarily reading and listening comprehension. While speaking proficiency is also a crucial skill for military linguists, it is typically assessed via a separate Oral Proficiency Interview (OPI) and is not a component of the standard DLPT.

Test Structure and Formats

The current iteration of the test is the DLPT5, which is primarily delivered via computer. A significant technical feature of the DLPT5 is its use of two distinct test formats, the selection of which is determined by the size of the linguist population for a given language.

- Multiple-Choice (MC): This format is used for languages with large populations of test-takers, such as Russian, Chinese, and Arabic. The MC format allows for automated scoring, which is more efficient for large-scale testing.

- Constructed-Response (CR): This format is employed for less commonly taught languages with smaller populations of examinees, such as Hindi and Albanian. The CR format requires human scorers to evaluate examinee responses.

The DLPT5 is also structured into two proficiency ranges:

- Lower-Range Test: This test assesses proficiency from Interagency Language Roundtable (ILR) levels 0+ to 3.

- Upper-Range Test: This test is designed for examinees who have already achieved a score of ILR level 3 on the lower-range test and assesses proficiency from ILR levels 3 to 4.

Data Presentation: Scoring and Proficiency Levels

The DLPT5 reports scores based on the Interagency Language Roundtable (ILR) scale, which is the standard for describing language proficiency within the U.S. federal government. The scale ranges from ILR 0 (No Proficiency) to ILR 5 (Native or Bilingual Proficiency), with intermediate "plus" levels.

Because of test security, no raw-score-to-ILR-level conversion table is publicly available. In general terms, however, the proficiency level awarded depends on the percentage of questions answered correctly at each ILR level.

| Test Format | Percentage of Correct Answers Required to Achieve ILR Level |
|---|---|
| Multiple-Choice (MC) | 70% |
| Constructed-Response (CR) | 75% |

Source: DLIFLC
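The percentage rule in the table above can be made concrete with a small sketch. It is hypothetical: DLIFLC's actual conversion tables are not public, multiple-choice cut scores are in practice set with IRT rather than raw percentages, and the rule that a level counts only if every lower level is also met is an assumption made here for illustration. The names `award_level`, `LEVELS`, and `THRESHOLDS` are invented.

```python
# Hypothetical sketch of the percentage rule; not DLIFLC's scoring code.
# Assumption: a level is awarded only if its threshold is met at that level
# and at every lower level; the reported level is the highest such level.

LEVELS = ["0+", "1", "1+", "2", "2+", "3"]  # lower-range levels, ascending
THRESHOLDS = {"MC": 0.70, "CR": 0.75}       # from the table above


def award_level(fmt, percent_correct):
    """Return the highest sustained ILR level for one skill.

    fmt: "MC" or "CR".
    percent_correct: maps each level to the fraction (0.0 to 1.0) of that
    level's items answered correctly. Missing levels count as 0.0.
    Returns "0" when even the 0+ threshold is not met.
    """
    threshold = THRESHOLDS[fmt]
    awarded = "0"
    for level in LEVELS:
        if percent_correct.get(level, 0.0) >= threshold:
            awarded = level
        else:
            break  # the first unmet level ends the climb
    return awarded


if __name__ == "__main__":
    scores = {"0+": 1.0, "1": 0.95, "1+": 0.90, "2": 0.80, "2+": 0.72, "3": 0.50}
    print(award_level("MC", scores))  # 0.72 at 2+ meets the 70% MC threshold
    print(award_level("CR", scores))  # 0.72 at 2+ misses the 75% CR threshold
```

With the same per-level percentages, the sketch awards 2+ under the MC threshold but only 2 under the stricter CR threshold.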

The following table provides a general overview of the ILR proficiency levels and their corresponding descriptions for the skills assessed by the DLPT.

| ILR Level | Designation | General Description (Reading/Listening) |
|---|---|---|
| 0 | No Proficiency | No practical ability to read or understand the spoken language. |
| 0+ | Memorized Proficiency | Can recognize some basic words or phrases. |
| 1 | Elementary Proficiency | Can understand simple, predictable texts and conversations on familiar topics. |
| 1+ | Elementary Proficiency, Plus | Can understand the main ideas and some details in simple, authentic texts and conversations. |
| 2 | Limited Working Proficiency | Can understand the main ideas of most factual, non-technical texts and conversations. |
| 2+ | Limited Working Proficiency, Plus | Can understand most factual information and some abstract concepts in non-technical texts and conversations. |
| 3 | General Professional Proficiency | Able to read and understand a variety of authentic prose on unfamiliar subjects with near-complete comprehension. Able to understand the essentials of all speech in a standard dialect, including technical discussions within a special field. |
| 3+ | General Professional Proficiency, Plus | Comprehends most of the content and intent of a variety of forms and styles of speech and text pertinent to professional needs. |
| 4 | Advanced Professional Proficiency | Able to read fluently and accurately all styles and forms of the language pertinent to professional needs, including understanding inferences and cultural references. Able to understand all forms and styles of speech pertinent to professional needs. |
| 4+ | Advanced Professional Proficiency, Plus | Near-native ability to read and understand extremely difficult or abstract prose. Increased ability to understand extremely difficult and abstract speech. |
| 5 | Functionally Native Proficiency | Reading and listening proficiency is functionally equivalent to that of a well-educated native speaker. |

Source: Interagency Language Roundtable

Experimental Protocols: Test Development and Validation

The DLPT is described as a reliable and scientifically validated tool for assessing language ability. The development and validation process is a multi-stage, rigorous procedure involving linguistic and psychometric experts.

Item Development

Test items are created by a team of at least two speakers of the target language (typically native speakers) and a project manager who is an experienced test developer. The process emphasizes authentic materials, such as news articles, radio broadcasts, and other real-world sources. Each test item, consisting of a passage (for reading) or an audio clip (for listening) and a corresponding question, is assigned an ILR proficiency rating.

Psychometric Validation

A key aspect of the DLPT's technical framework is its reliance on psychometric principles to ensure the validity and reliability of the test scores.

- Item Response Theory (IRT): For multiple-choice tests, the DLIFLC employs Item Response Theory, a sophisticated statistical method used in large-scale, high-stakes testing. IRT models the relationship between a test-taker's proficiency level and the probability of them answering a specific question correctly. This allows for a more precise measurement of proficiency than classical test theory. During the validation phase for languages with large linguist populations, multiple-choice items are administered to a large number of examinees at varying proficiency levels. The response data are then analyzed to identify and remove any items that are not functioning appropriately.

- Cut Score Determination: The process of establishing the "cut scores" that differentiate one ILR level from the next is a critical step. For multiple-choice tests, this is done using IRT. A psychometrician calculates an "ability indicator" that corresponds to the ability to answer 70% of the questions at a given ILR level correctly. This computation is then applied to the operational test forms to generate the final cut scores.

- Constructed-Response Scoring: For constructed-response tests, where statistical analysis on a large scale is not feasible, a rigorous review process is still implemented. Each question has a detailed scoring protocol, and two trained scorers independently rate each test. In cases of disagreement, a third expert rater makes the final determination.
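The two-rater rule for constructed-response tests reduces to a simple resolution step. The sketch below is hypothetical (the function name and the use of ILR labels as plain strings are assumptions); it captures only the decision logic described above: agreement stands, and disagreement requires a third, expert rating that becomes final.

```python
from typing import Optional


def final_rating(rater1: str, rater2: str, expert: Optional[str] = None) -> str:
    """Resolve one constructed-response test's rating from two independent raters.

    If the raters agree, their shared rating stands. If they disagree, a third
    (expert) rating is required and is taken as the final determination.
    """
    if rater1 == rater2:
        return rater1
    if expert is None:
        raise ValueError("raters disagree: an expert third rating is required")
    return expert


if __name__ == "__main__":
    print(final_rating("2", "2"))         # agreement: the shared rating stands
    print(final_rating("2", "2+", "2+"))  # disagreement resolved by the expert
```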


The Evolution of the Defense Language Proficiency Test: A Technical Deep Dive

For Immediate Release

This technical guide provides a comprehensive overview of the history, development, and psychometric underpinnings of the Defense Language Proficiency Test (DLPT) series. Designed for researchers, scientists, and drug development professionals, this document details the evolution of the DLPT, focusing on its core methodologies, experimental protocols, and the transition to its current iteration, the DLPT 5.

A History of Measuring Proficiency: From Early Iterations to the DLPT 5

The Defense Language Proficiency Test (DLPT) is a suite of examinations produced by the Defense Language Institute Foreign Language Center (DLIFLC) to assess the language proficiency of Department of Defense (DoD) personnel. The primary purpose of the DLPT is to measure how well an individual can function in real-world situations using a foreign language, with assessments focused on reading and listening skills. Test scores are a critical component in determining Foreign Language Proficiency Pay (FLPP) and for qualifying for specific roles requiring language capabilities.

The DLPT series has undergone periodic updates every 10 to 15 years to reflect advancements in our understanding of language testing and to meet the evolving needs of the government. The most significant recent evolution was the transition from the DLPT IV to the DLPT 5. A key criticism of the DLPT IV was its lack of authentic materials and the short length of its passages, which limited its ability to comprehensively assess the full spectrum of skills described in the Interagency Language Roundtable (ILR) skill level descriptions. The DLPT 5 was developed to address these shortcomings, with a greater emphasis on using authentic materials to provide a more accurate assessment of a test-taker's true proficiency.

The introduction of the DLPT 5 was not without controversy, as it resulted in an average drop of approximately half a point in proficiency scores across all languages and skills. This led to speculation that the new version was a cost-saving measure to reduce FLPP payments.

Core Test Design and Scoring

The DLPT is not a single test but a series of assessments tailored to different languages and proficiency ranges. The scoring of all DLPT versions is based on the Interagency Language Roundtable (ILR) scale, which provides a standardized measure of language ability.

Test Formats

The DLPT 5 employs two primary test formats, the selection of which is determined by the size of the test-taker population for a given language.

- Multiple-Choice (MC): This format is used for languages with large populations of linguists, such as Russian and Chinese. The use of multiple-choice questions allows for automated scoring and robust statistical analysis.

- Constructed-Response (CR): For less commonly taught languages with smaller test-taker populations, a constructed-response format is utilized. This format requires human raters to score the responses, which is more time- and personnel-intensive but does not require the large-scale statistical calibration needed for multiple-choice tests.

Scoring and the ILR Scale

The DLPT measures proficiency from ILR level 0 (No Proficiency) to 4 (Full Professional Proficiency), with "+" designations indicating proficiency that exceeds a base level but does not fully meet the criteria for the next. For most basic program students at DLIFLC, the graduation requirement is an ILR level 2 in both reading and listening (L2/R2).

The DLPT 5 is available in two ranges: a lower-range test covering ILR levels 0+ to 3, and an upper-range test for levels 3 to 4. To be eligible for the upper-range test, an examinee must first achieve a score of level 3 on the lower-range test in that skill.

Quantitative Data Summary

The following tables summarize key quantitative data related to the DLPT 5 and historical performance.

| Test Modality | Number of Items | Number of Passages | Maximum Passage Length | Time Limit |
|---|---|---|---|---|
| Lower Range Reading (Constructed Response) | 60 | 30 | 300 words | 3 hours |
| Lower Range Reading (Multiple-Choice) | 60 | 36 | 400 words | 3 hours |
| Lower Range Listening (Constructed Response) | 60 | 30 (played twice) | 2 minutes | 3 hours |
| Lower Range Listening (Multiple-Choice) | 60 | 40 (played once or twice) | 2 minutes | 3 hours |

Source: The Defense Language Institute Foreign Language Center's DLPT-5

| Skill | Percentage of Scores Maintained After One Year |
|---|---|
| Listening | 75.5% |
| Reading | 78.2% |

Source: An Analysis of Factors Predicting Retention and Language Atrophy Over Time for Successful DLI Graduates

Experimental Protocols

The development and validation of the DLPT series is a rigorous process overseen by the Evaluation and Standards (ES) division of DLIFLC, with input from the Defense Language Testing Advisory Board, a group of nationally recognized psychometricians and testing experts.

Test Item Development

DLPT5 test items are developed by teams consisting of at least two speakers of the target language (typically native speakers) and a project manager with expertise in test development. All test materials are reviewed by at least one native English speaker. The passages are selected based on text typology, considering factors such as the purpose of the passage (e.g., to persuade or inform) and its linguistic features (e.g., lexicon and syntax).

Validation Protocol for Multiple-Choice Tests

For languages with a sufficient number of test-takers (generally 100-200 or more), a validation form of the test is administered. The response data from this administration are then analyzed using Item Response Theory (IRT) to identify and remove test questions that are not functioning appropriately. For instance, questions on which high-ability examinees split between two or more answer options are not used in the operational test forms.
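As a rough illustration of the kind of screening described above, the sketch flags an item when the highest-scoring examinees do not converge on the keyed answer. This is a simpler, classical item-analysis check swapped in for clarity, not DLIFLC's IRT procedure; the size of the high-ability group (`top_fraction`) and the minimum share that must choose the key (`min_key_share`) are assumed values, and `flag_split_item` is a hypothetical name.

```python
from collections import Counter


def flag_split_item(responses, total_scores, key, top_fraction=0.27, min_key_share=0.5):
    """Flag a multiple-choice item whose high-ability examinees split across options.

    responses: option chosen by each examinee on this item, e.g. "A".."D"
    total_scores: each examinee's total test score, in the same order
    key: the keyed (intended correct) option
    top_fraction: share of examinees treated as the high-ability group (assumed)
    min_key_share: smallest share of that group that must choose the key (assumed)
    Returns True if the item should be reviewed or dropped.
    """
    if len(responses) != len(total_scores) or not responses:
        raise ValueError("responses and total_scores must be equal-length and non-empty")
    n_top = max(1, int(len(responses) * top_fraction))
    # Rank examinees by total score, highest first, and keep the top group.
    ranked = sorted(range(len(responses)), key=lambda i: total_scores[i], reverse=True)
    top_choices = Counter(responses[i] for i in ranked[:n_top])
    return top_choices[key] / n_top < min_key_share


if __name__ == "__main__":
    totals = [58, 55, 54, 51, 47, 40, 33, 29, 25, 20]  # hypothetical examinees
    answers = ["A", "B", "A", "B", "B", "C", "D", "A", "C", "D"]
    print(flag_split_item(answers, totals, key="A", top_fraction=0.5))
```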

The cut scores for each ILR level are determined based on the judgment of ILR experts that a person at a given level should be able to answer at least 70% of the multiple-choice questions at that level correctly. A DLIFLC psychometrician uses IRT to calculate an ability indicator corresponding to this 70% threshold for each level.

Validation Protocol for Constructed-Response Tests

Constructed-response tests undergo a rigorous review process by experts in testing and the ILR proficiency scale. Each question has a detailed scoring protocol that outlines the range of acceptable answers. Examinees are not required to match the exact wording of the protocol but must convey the correct idea.

To ensure scoring reliability, each test is independently scored by two trained raters. If the two raters disagree on a score, a third, expert rater scores the test to make the final determination. Raters are continuously monitored, and those who are inconsistent are either retrained or removed from the pool of raters.
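The source says raters are continuously monitored for consistency but does not name a statistic. One common choice, assumed here, is to track each rater pair's percent exact agreement alongside Cohen's kappa, which discounts the agreement expected by chance. The function name and the example ratings are hypothetical.

```python
from collections import Counter


def agreement_stats(r1, r2):
    """Percent exact agreement and Cohen's kappa for two raters' paired ratings.

    r1, r2: ratings of the same tests, in the same order (e.g. ILR labels).
    kappa = (p_o - p_e) / (1 - p_e), where p_o is observed agreement and
    p_e is the agreement expected by chance from each rater's rating mix.
    """
    if len(r1) != len(r2) or not r1:
        raise ValueError("need two equal-length, non-empty rating lists")
    n = len(r1)
    p_o = sum(a == b for a, b in zip(r1, r2)) / n
    c1, c2 = Counter(r1), Counter(r2)
    p_e = sum(c1[label] * c2[label] for label in c1.keys() | c2.keys()) / (n * n)
    if p_e == 1:
        return p_o, 1.0  # both raters used one identical label throughout
    return p_o, (p_o - p_e) / (1 - p_e)


if __name__ == "__main__":
    rater_a = ["2", "2", "3", "3", "2+", "1+"]
    rater_b = ["2", "2+", "3", "3", "2+", "1+"]
    print(agreement_stats(rater_a, rater_b))
```

A rater whose kappa against peers stays low over time would be a candidate for the retraining or removal described above; the cutoff for "low" is a local policy choice.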

Comparability Studies

To ensure that changes in the test delivery platform do not affect scores, DLIFLC conducts comparability studies. For example, before the full implementation of the computer-based DLPT 5, a study was conducted with the Russian test where examinees took one form on paper and another on the computer. The results of this study showed no significant differences in scores between the two delivery methods.
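A comparability study like the Russian paper-versus-computer study can be analyzed by pairing each examinee's two scores. The sketch computes a paired t statistic; the source reports only that no significant differences were found, so the choice of test, the score scale, and the data are assumptions, and `paired_t` is a hypothetical name. Form and order effects, which a real study would need to control, are ignored here.

```python
import math
import statistics


def paired_t(paper, computer):
    """Paired t statistic for the same examinees tested in two delivery modes.

    Returns (mean difference computer - paper, t statistic, degrees of freedom).
    Compare |t| with a t distribution on df degrees of freedom to judge significance.
    """
    if len(paper) != len(computer) or len(paper) < 2:
        raise ValueError("need two equal-length score lists with at least 2 examinees")
    diffs = [c - p for p, c in zip(paper, computer)]
    mean_d = statistics.mean(diffs)
    sd_d = statistics.stdev(diffs)  # sample standard deviation of the differences
    if sd_d == 0:
        raise ValueError("differences have zero variance; t is undefined")
    t = mean_d / (sd_d / math.sqrt(len(diffs)))
    return mean_d, t, len(diffs) - 1


if __name__ == "__main__":
    paper_scores = [41, 38, 50, 45, 33, 47]     # hypothetical raw scores
    computer_scores = [42, 37, 49, 46, 34, 47]
    print(paired_t(paper_scores, computer_scores))
```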

Visualizing the DLPT Development and Validation Workflow

The following diagrams illustrate the logical workflow for the development and validation of the two types of DLPT 5 tests.

Caption: Workflow for DLPT 5 Multiple-Choice Test Development and Validation.

Caption: Workflow for DLPT 5 Constructed-Response Test Development and Scoring.

The Defense Language Proficiency Test (DLPT): A Technical Overview of Military Language Assessment


MONTEREY, CA – The Defense Language Proficiency Test (DLPT) system represents a cornerstone of the U.S. Department of Defense's (DoD) efforts to assess the foreign language capabilities of its personnel. This in-depth guide provides researchers, scientists, and drug development professionals with a comprehensive overview of the DLPT's core purpose, technical design, and psychometric underpinnings. The DLPT is a battery of scientifically validated tests developed by the Defense Language Institute Foreign Language Center (DLIFLC) to measure the general language proficiency of native English speakers in various foreign languages.[1][2]

The primary purpose of the DLPT is to evaluate how well an individual can function in real-world situations using a foreign language.[1] These assessments are critical for several reasons, including:

- Qualifying Personnel: Ensuring that military linguists and other personnel in language-dependent roles possess the necessary skills to perform their duties.[1]

- Determining Compensation: DLPT scores are a key factor in determining Foreign Language Proficiency Pay (FLPP) for service members.[1]

- Assessing Readiness: The scores provide a measure of the language readiness of military units.[1]

- Informing Training: The results help to gauge the effectiveness of language training programs and identify areas for improvement.

This document will delve into the test's structure, the methodologies behind its development and validation, and the scoring framework that underpins its utility.

Core Framework: The Interagency Language Roundtable (ILR) Scale

The DLPT's scoring and proficiency levels are benchmarked against the Interagency Language Roundtable (ILR) scale, the standard for language ability assessment within the U.S. federal government.[1] The ILR scale provides a common metric for describing language performance across different government agencies. It consists of six base levels, from 0 (No Proficiency) to 5 (Native or Bilingual Proficiency), with intermediate "plus" levels denoting proficiency that is more than a base level but does not fully meet the criteria for the next level.

The DLPT primarily assesses the receptive skills of listening and reading.[1] The following table summarizes the ILR proficiency levels for these two skills.

| ILR Level | Designation | General Description of Listening and Reading Proficiency |
|---|---|---|
| 0 | No Proficiency | No practical understanding of the spoken or written language. |
| 0+ | Memorized Proficiency | Can recognize and understand a number of isolated words and phrases. |
| 1 | Elementary Proficiency | Sufficient comprehension to understand simple questions, statements, and basic survival needs. |
| 1+ | Elementary Proficiency, Plus | Can understand the main ideas and some supporting details in routine social conversations and simple texts. |
| 2 | Limited Working Proficiency | Able to understand the main ideas of most conversations and texts on familiar topics. |
| 2+ | Limited Working Proficiency, Plus | Can comprehend the main ideas and most supporting details in conversations and texts on a variety of topics. |
| 3 | General Professional Proficiency | Able to understand the essentials of all speech in a standard dialect and can read with almost complete comprehension a variety of authentic prose on unfamiliar subjects.[3] |
| 3+ | General Professional Proficiency, Plus | Comprehends most of the content and intent of a variety of forms and styles of speech and text, including many sociolinguistic and cultural references.[3] |
| 4 | Advanced Professional Proficiency | Able to understand all forms and styles of speech and text pertinent to professional needs with a high degree of accuracy.[3] |
| 4+ | Advanced Professional Proficiency, Plus | Near-native ability to understand the language in all its complexity. |
| 5 | Native or Bilingual Proficiency | Reading and listening proficiency is functionally equivalent to that of a well-educated native speaker. |

Test Structure and Administration

The current iteration of the DLPT is the DLPT5, which is primarily a computer-based test.[3] It is designed to assess proficiency regardless of how the language was acquired and is not tied to any specific curriculum.[2] The test materials are sampled from authentic, real-world sources such as newspapers, radio broadcasts, and websites, covering a broad range of topics including social, cultural, political, and military subjects.

The DLPT system employs two main test formats, the choice of which depends on the size of the test-taker population for a given language:

- Multiple-Choice (MC): Used for languages with large numbers of test-takers. This format allows for automated scoring and robust statistical analysis.

- Constructed-Response (CR): Implemented for less commonly taught languages with smaller test-taker populations. This format requires human scorers to evaluate short-answer responses.

Each test is allotted three hours for completion.[4]

Experimental Protocols and Methodologies

The development and validation of the DLPT are guided by rigorous psychometric principles to ensure the reliability and validity of the test scores. While specific internal validation data is not publicly released, the methodologies employed are based on established best practices in language assessment.[5]

Test Development Workflow

The creation of a DLPT is a multi-stage process involving language experts, testing specialists, and psychometricians.

Item Development and Review

The foundation of the DLPT lies in the quality of its test items. The process for developing and vetting these items is meticulous.

Passage Selection: Test developers select authentic passages from a variety of sources. These passages are then rated by language experts to determine their corresponding ILR level.

Item Writing: A team of trained item writers, typically native or near-native speakers of the target language, create questions based on the selected passages. For multiple-choice tests, this includes writing a single correct answer (the key) and several plausible but incorrect options (distractors). For constructed-response tests, a detailed scoring rubric is developed.

Item Review: All test items undergo a multi-layered review process. This involves scrutiny by language experts, testing specialists, and native English speakers to ensure clarity, accuracy, and fairness.

Psychometric Analysis and Scoring

The psychometric soundness of the DLPT is established through statistical analysis, with Item Response Theory (IRT) being a key methodology for multiple-choice tests.

Item Response Theory (IRT): IRT is a sophisticated statistical framework that models the relationship between a test-taker's proficiency level and their probability of answering an item correctly.[6] Unlike classical test theory, IRT considers the properties of each individual item, such as its difficulty and its ability to discriminate between test-takers of different proficiency levels. This allows for more precise measurement of language ability.
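To illustrate what an IRT model describes, the sketch below implements the two-parameter logistic (2PL) item response function. The source does not say which IRT model DLIFLC uses; 2PL is chosen here only because it exposes the two item properties named above, difficulty (`b`) and discrimination (`a`). A three-parameter model would add a lower asymptote for guessing on multiple-choice items. The item parameters are invented.

```python
import math


def p_correct(theta, a, b):
    """Two-parameter logistic (2PL) item response function.

    theta: examinee ability on the latent scale
    a: item discrimination (slope; larger values separate examinees more sharply)
    b: item difficulty (the ability at which P(correct) = 0.5)
    """
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))


if __name__ == "__main__":
    # Two hypothetical items with equal discrimination but different difficulty.
    easy, hard = (1.2, -1.0), (1.2, 1.0)
    for theta in (-2.0, 0.0, 2.0):
        print(theta, round(p_correct(theta, *easy), 3), round(p_correct(theta, *hard), 3))
```

At every ability level the easier item has the higher probability of a correct answer, and both probabilities rise as ability increases.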

Cut-Score Determination: For multiple-choice tests, cut-scores (the scores required to achieve a certain ILR level) are determined using IRT. Experts judge that a test-taker at a given ILR level should be able to answer at least 70% of the questions at that level correctly. A psychometrician then uses IRT to calculate the ability level at which a test-taker would be expected to answer 70% of that level's questions correctly, and this value is used to set the cut-scores for operational test forms.
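The cut-score computation can be sketched as a root-finding problem: find the ability at which the expected proportion correct on the items rated at a level equals 0.70. This is a minimal illustration assuming the 2PL model and invented item parameters; the source describes only the 70% criterion, not the model or solver DLIFLC's psychometricians use. Bisection works here because, with every discrimination positive, the expected proportion correct rises monotonically with ability.

```python
import math


def p_correct(theta, a, b):
    """2PL probability of a correct response (assumed model)."""
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))


def expected_proportion(theta, items):
    """Expected proportion correct at ability theta over items given as (a, b) pairs."""
    return sum(p_correct(theta, a, b) for a, b in items) / len(items)


def cut_score(items, target=0.70, lo=-6.0, hi=6.0, tol=1e-6):
    """Ability at which the expected proportion correct on `items` equals `target`."""
    if not (expected_proportion(lo, items) < target < expected_proportion(hi, items)):
        raise ValueError("target is not bracketed by [lo, hi]")
    while hi - lo > tol:
        mid = (lo + hi) / 2.0
        if expected_proportion(mid, items) < target:
            lo = mid  # too few expected correct: the cut lies higher
        else:
            hi = mid
    return (lo + hi) / 2.0


if __name__ == "__main__":
    # Hypothetical (a, b) parameters for items rated at ILR 2 and ILR 3.
    level_2 = [(1.1, -0.5), (0.9, 0.0), (1.3, 0.3)]
    level_3 = [(1.1, 0.8), (0.9, 1.2), (1.3, 1.5)]
    print(round(cut_score(level_2), 3), round(cut_score(level_3), 3))
```

Because level-3 items are harder, the ability needed to expect 70% correct on them is higher, so the level-3 cut falls above the level-2 cut on the ability scale.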

For constructed-response tests, scoring is based on a detailed protocol. Each response is independently scored by two trained raters. If there is a disagreement, a third expert rater makes the final determination. Generally, a test-taker needs to answer approximately 75% of the questions at a given level correctly to be awarded that proficiency level.[4]

Quantitative Data Summary

While specific psychometric data for the DLPT is not publicly available, the following tables illustrate the types of quantitative analyses that are conducted to ensure the test's reliability and validity.

Table 1: Illustrative Reliability and Validity Metrics

| Metric Type | Specific Metric | Description | Typical Target Value |
|---|---|---|---|
| Reliability | Internal Consistency (e.g., Cronbach's Alpha) | The degree to which items on a test measure the same underlying construct. | > 0.80 |
| Reliability | Test-Retest Reliability | The consistency of scores over time when the same test is administered to the same individuals. | High positive correlation |
| Reliability | Inter-Rater Reliability (for CR tests) | The level of agreement between different raters scoring the same responses. | High percentage of agreement or correlation |
| Validity | Content Validity | The extent to which the test content is representative of the language skills it aims to measure. | Assessed through expert review |
| Validity | Construct Validity | The degree to which the test measures the theoretical construct of language proficiency. | Assessed through statistical methods such as factor analysis |
| Validity | Criterion-Related Validity | The correlation of DLPT scores with other measures of language ability or job performance. | Statistically significant positive correlation |
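The internal-consistency row of Table 1 can be illustrated with Cronbach's alpha, computed from an examinee-by-item score matrix as alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores), where k is the number of items. The sketch uses only the Python standard library; the score matrix is hypothetical, and the "> 0.80" target is the table's illustrative value, not a published DLPT figure.

```python
import statistics


def cronbach_alpha(scores):
    """Cronbach's alpha for an examinee-by-item score matrix.

    scores: one row per examinee, each row holding k item scores (e.g. 0/1).
    Population variances are used throughout; the ratio is the same as with
    sample variances as long as one kind is used consistently.
    """
    k = len(scores[0])
    if k < 2 or any(len(row) != k for row in scores):
        raise ValueError("need a rectangular matrix with at least 2 items")
    item_var = sum(statistics.pvariance(col) for col in zip(*scores))
    total_var = statistics.pvariance([sum(row) for row in scores])
    if total_var == 0:
        raise ValueError("total scores have zero variance; alpha is undefined")
    return k / (k - 1) * (1 - item_var / total_var)


if __name__ == "__main__":
    # Hypothetical 0/1 responses: 6 examinees by 4 items.
    matrix = [
        [1, 1, 1, 1],
        [1, 1, 1, 0],
        [1, 1, 0, 0],
        [1, 0, 0, 0],
        [0, 0, 0, 0],
        [1, 1, 1, 1],
    ]
    print(round(cronbach_alpha(matrix), 3))
```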

Table 2: Illustrative Score Distribution (Hypothetical Data for a Single Language)

| ILR Level | Percentage of Test-Takers (Listening) | Percentage of Test-Takers (Reading) |
|---|---|---|
| 0/0+ | 5% | 4% |
| 1/1+ | 15% | 12% |
| 2/2+ | 45% | 48% |
| 3/3+ | 30% | 32% |
| 4/4+/5 | 5% | 4% |

Note: The data in these tables are for illustrative purposes only and do not represent actual DLPT results.

Conclusion

The Defense Language Proficiency Test is a comprehensive and technically sophisticated system for assessing the language capabilities of U.S. military personnel. Its foundation in the Interagency Language Roundtable scale, coupled with rigorous test development and psychometric methodologies like Item Response Theory, ensures that the DLPT provides reliable and valid measures of language proficiency. For researchers and professionals in related fields, an understanding of the DLPT's core principles offers valuable insights into the large-scale assessment of language skills in a high-stakes environment. The continuous development and refinement of the DLPT underscore the DoD's commitment to maintaining a linguistically ready force.

References

The Interagency Language Roundtable (ILR) Scale and the Defense Language Proficiency Test (DLPT): A Technical Overview

An In-depth Guide for Researchers and Professionals in Drug Development

This technical guide provides a comprehensive overview of the Interagency Language Roundtable (ILR) scale, the U.S. government's standard for describing and measuring language proficiency, and the Defense Language Proficiency Test (DLPT), a key instrument for assessing language capabilities within the Department of Defense (DoD). This document is intended for researchers, scientists, and drug development professionals who require a deep understanding of these language assessment frameworks for contexts such as clinical trial site selection, multilingual data analysis, and effective communication with diverse patient populations.

The Interagency Language Roundtable (ILR) Scale

The ILR scale is a system used by the United States federal government to uniformly describe and measure the language proficiency of its employees.[1] Developed in the mid-20th century to meet diplomatic and intelligence needs, it has become the standard for evaluating language skills across various government agencies.[2][3] The scale is not a test itself but a set of detailed descriptions of language ability across four primary skills: speaking, listening, reading, and writing.[4]

The ILR scale consists of six base levels, ranging from 0 (No Proficiency) to 5 (Native or Bilingual Proficiency).[4] Additionally, "plus" levels (e.g., 0+, 1+, 2+, 3+, 4+) are used to indicate proficiency that substantially exceeds a given base level but does not fully meet the criteria for the next higher level.[5] This results in a more granular 11-point scale.[6]
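The 11-point scale is easiest to handle in software as an ordered list of labels. The encoding below is an assumption made for illustration, not an official data format, and the helper names are hypothetical. The positions are ranks: they support comparison and sorting, but they should not be read as equal-interval measurements.

```python
# Hypothetical encoding of the ILR scale, lowest to highest (11 points).
ILR_LEVELS = ["0", "0+", "1", "1+", "2", "2+", "3", "3+", "4", "4+", "5"]


def ilr_rank(level: str) -> int:
    """Ordinal position of a level: 0 for "0" up to 10 for "5"."""
    return ILR_LEVELS.index(level)  # raises ValueError for unknown labels


def base_level(level: str) -> int:
    """Base level with any plus designation removed, e.g. "2+" -> 2."""
    return int(level.rstrip("+"))


def is_plus(level: str) -> bool:
    """True for plus levels such as "3+"."""
    return level.endswith("+")


if __name__ == "__main__":
    print(sorted(["3", "1+", "2+", "0+"], key=ilr_rank))
```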

ILR Skill Level Descriptions

The following table summarizes the abilities associated with each base level of the ILR scale.

| ILR Level | Designation | General Description of Abilities |
|---|---|---|
| 0 | No Proficiency | Has no practical ability in the language. May know a few isolated words.[7] |
| 1 | Elementary Proficiency | Can satisfy basic travel and courtesy requirements. Can use simple questions and answers to communicate on familiar topics.[7][8] |
| 2 | Limited Working Proficiency | Able to handle routine social demands and limited work requirements. Can engage in conversations about everyday topics.[5] |
| 3 | Professional Working Proficiency | Can speak the language with sufficient accuracy to participate effectively in most formal and informal conversations on practical, social, and professional topics.[5][7] |
| 4 | Full Professional Proficiency | Able to use the language fluently and accurately on all levels pertinent to professional needs. Can understand and participate in any conversation with a high degree of precision.[7][8] |
| 5 | Native or Bilingual Proficiency | Has a speaking proficiency equivalent to that of an educated native speaker.[5][7] |


The Defense Language Proficiency Test (DLPT)

The Defense Language Proficiency Test (DLPT) is a suite of examinations produced by the Defense Language Institute Foreign Language Center (DLIFLC) to assess the foreign language proficiency of DoD personnel.[1] These tests are designed to measure how well an individual can function in real-world situations using a foreign language and are critical for determining job qualifications, special pay, and unit readiness. The DLPT primarily assesses the receptive skills of reading and listening.[1] An Oral Proficiency Interview (OPI) is used to assess speaking skills but is a separate examination.[1]

DLPT Versions and Formats

The most current version of the DLPT is the DLPT5, which was introduced to provide a more accurate and comprehensive assessment of language proficiency, often using authentic materials like news articles and broadcasts.[1][9] The DLPT5 is delivered via computer and is available in two main formats depending on the language being tested:[10]

- Multiple-Choice (MC): Used for languages with larger populations of test-takers.

- Constructed-Response (CR): Used for less commonly taught languages. In this format, examinees type short answers to questions.[10]

The DLPT5 is also available in lower-range (testing ILR levels 0+ to 3) and upper-range (testing ILR levels 3 to 4) versions for some languages.[5][11]
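The choice of DLPT5 format and range can be sketched as a small decision function. It is hypothetical throughout: the source gives no population cutoff for choosing multiple-choice over constructed-response, so `mc_threshold` is a placeholder value rather than an official rule, and the function name is invented. The range logic follows the requirement that an examinee first score level 3 on the lower-range test in the same skill, and that upper-range tests exist only for some languages.

```python
from typing import Optional, Tuple


def select_dlpt5_test(
    expected_examinees: int,
    lower_range_score: Optional[str] = None,
    upper_range_available: bool = True,
    mc_threshold: int = 200,  # placeholder cutoff, not an official DLIFLC rule
) -> Tuple[str, str]:
    """Return (format, range) for one examinee in one skill.

    Format: "MC" for languages with many expected examinees, else "CR".
    Range: "upper" only if the lower-range score in this skill is ILR 3
    and an upper-range test exists for the language; otherwise "lower".
    """
    fmt = "MC" if expected_examinees >= mc_threshold else "CR"
    if lower_range_score == "3" and upper_range_available:
        return fmt, "upper"
    return fmt, "lower"


if __name__ == "__main__":
    print(select_dlpt5_test(5000))                                  # first attempt
    print(select_dlpt5_test(5000, lower_range_score="3"))           # earned ILR 3
    print(select_dlpt5_test(40, "3", upper_range_available=False))  # no upper range
```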


Scoring and Correlation with the ILR Scale

DLPT5 scores are reported directly in terms of ILR levels.[2] The scoring methodology, however, differs between the multiple-choice and constructed-response formats.

| Test Format | Scoring Methodology | ILR Level Correlation |
|---|---|---|
| Multiple-Choice (MC) | Based on Item Response Theory (IRT), a statistical model. A candidate must generally answer at least 70% of the questions at a given ILR level correctly to be awarded that level.[11] | Scores are calculated to correspond to ILR levels 0+ through 3 for lower-range tests and 3 through 4 for upper-range tests.[5] |
| Constructed-Response (CR) | Human-scored based on a detailed protocol. Each response is evaluated for the correct information it contains. Two independent raters score each test, with a third adjudicating any disagreements.[12] | ILR ratings are determined by the number of questions answered correctly at each level.[5] |
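The 70% rule in the multiple-choice row can be illustrated with a simplified sketch. It is not the operational DLPT5 algorithm, which the table describes as IRT-based. The sketch assumes that items are tagged with a base ILR level (1, 2 or 3 on the lower range), ignores plus levels, and assumes an examinee must meet the threshold at every level up to the one awarded.

```python
from typing import Dict, Tuple

# Base levels targeted by lower-range items in this simplified model;
# plus levels (0+, 1+, 2+) are deliberately left out.
LOWER_RANGE_LEVELS = ("1", "2", "3")


def award_level(results: Dict[str, Tuple[int, int]], threshold: float = 0.70) -> str:
    """Return the highest base level at which the examinee answered at least
    `threshold` of the items correctly, checking levels in ascending order.

    `results` maps a level to (number correct, number of items at that level).
    """
    awarded = "0"
    for level in LOWER_RANGE_LEVELS:
        correct, total = results.get(level, (0, 0))
        if total == 0 or correct / total < threshold:
            break  # assumption: failing a level caps the award below it
        awarded = level
    return awarded


if __name__ == "__main__":
    print(award_level({"1": (9, 10), "2": (8, 10), "3": (5, 10)}))  # 2
```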

Experimental Protocols: Test Development and Validation

The development and validation of the DLPT is a rigorous, multi-stage process designed to ensure the tests are reliable and accurately measure language proficiency according to the ILR standards. While a detailed, universal experimental protocol is not publicly available, the key stages of the process can be outlined as follows.

Test Item Development Protocol

- Content Specification: Test content is designed to reflect real-world language use and covers a range of topics including social, cultural, political, economic, and military subjects. Passages are often sourced from authentic materials.[1]

- Item Writing: For each passage, questions are developed to target specific ILR skill levels.[5]

- Expert Review: All test items undergo review by multiple experts, including specialists in the target language and in language testing, to ensure they are accurate, appropriate, and correctly aligned with the intended ILR level.[11]

- Translation and Rendering: For scoring and review purposes, accurate English renderings of all foreign language test items are created.

The general workflow for DLPT5 item development is depicted below.

Test Validation Protocol

The validation process for the DLPT aims to ensure that the test scores provide a meaningful and accurate indication of a test-taker's language proficiency. This involves gathering evidence for different types of validity.

- Content Validity: Experts review the test to ensure that the questions and tasks are representative of the language skills described in the ILR standards.

- Construct Validity: This is assessed by ensuring the test measures the theoretical construct of language proficiency as defined by the ILR scale. Statistical analysis, such as determining the correlation between different sections of the test, is used to support construct validity.

- Empirical Validation (for MC tests):

  - Pre-testing: Multiple-choice items are administered to a large and diverse group of examinees with varying proficiency levels.[11]

  - Statistical Analysis: The response data is analyzed using psychometric models like Item Response Theory (IRT). This analysis helps to identify and remove questions that do not perform as expected (e.g., are too easy, too difficult, or do not differentiate between proficiency levels).[11]

  - Cut Score Determination: The statistical analysis is used to establish the "cut scores," or the number of correct answers needed to achieve each ILR level.[11]

- Reliability: The consistency of the test is evaluated. For constructed-response tests, this includes measuring inter-rater reliability to ensure that different scorers assign the same scores to the same responses.
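Inter-rater reliability for constructed-response scoring is often summarized with Cohen's kappa, which corrects raw agreement for the agreement expected by chance. The sources do not state which statistic the DLPT program uses, so the sketch below illustrates the general technique only. The credit labels `"C"`/`"N"` and the `adjudicate` callback are hypothetical stand-ins for the two-rater, third-adjudicator protocol described in the scoring table.

```python
from collections import Counter
from typing import Callable, Sequence


def cohens_kappa(rater_a: Sequence[str], rater_b: Sequence[str]) -> float:
    """Chance-corrected agreement between two raters over the same responses."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two non-empty rating sequences of equal length")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    # Probability that both raters pick the same category by chance.
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical category throughout
    return (observed - expected) / (1.0 - expected)


def final_rating(a: str, b: str, adjudicate: Callable[[str, str], str]) -> str:
    """Keep an agreed rating; send disagreements to a third rater."""
    return a if a == b else adjudicate(a, b)


if __name__ == "__main__":
    first = ["C", "C", "N", "N"]   # C = credit, N = no credit
    second = ["C", "N", "N", "N"]
    print(cohens_kappa(first, second))  # 0.5
```

Here the raters agree on 75% of responses, but half of that agreement would be expected by chance, so kappa is 0.5.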

This combination of development and validation procedures is intended to keep the DLPT a scientifically sound and reliable tool for measuring language proficiency within the DoD.[2]

References

- 1. Defense Language Proficiency Tests - Wikipedia [en.wikipedia.org]

- 2. Defense Language Proficiency Test (DLPT) [broward.edu]

- 3. robins.af.mil [robins.af.mil]

- 4. apps.dtic.mil [apps.dtic.mil]

- 5. uscg.mil [uscg.mil]

- 6. govtilr.org [govtilr.org]

- 7. SAM.gov [sam.gov]

- 8. languagetesting.com [languagetesting.com]

- 9. sydney.edu.au [sydney.edu.au]

- 10. govtribe.com [govtribe.com]

- 11. ets.org [ets.org]

- 12. pdfs.semanticscholar.org [pdfs.semanticscholar.org]


A Technical Guide to the Scope and Structure of the Defense Language Proficiency Test (DLPT)

For Researchers, Scientists, and Drug Development Professionals

Abstract

The Defense Language Proficiency Test (DLPT) is a comprehensive suite of examinations developed by the Defense Language Institute (DLI) to assess the foreign language capabilities of United States Department of Defense (DoD) personnel.[1] This guide provides an in-depth analysis of the DLPT system, detailing its structure, the extensive range of languages it covers, and the proficiency scoring methodology. While the subject matter diverges from the typical focus of drug development, the principles of standardized assessment, rigorous validation, and tiered evaluation presented here may offer analogous insights into complex system analysis and qualification. This document outlines the test's architecture, presents a categorized list of assessed languages, and provides logical workflows to illustrate the assessment process, adhering to a structured, scientific format.

Introduction: The DLPT Framework

The Defense Language Proficiency Test (DLPT) is a critical tool used by the U.S. Department of Defense to measure the language proficiency of native English speakers and other personnel with strong English skills in a variety of foreign languages.[2] Produced by the Defense Language Institute Foreign Language Center (DLIFLC), these tests are designed to evaluate how well an individual can function in real-world situations using a foreign language.[1] The primary skills assessed are reading and listening comprehension.[1]

The results of the DLPT have significant implications for service members: they influence eligibility for specific roles, determine the amount of Foreign Language Proficiency Pay (FLPP), and factor into the overall readiness assessment of military linguist units.[1] The current iteration of the test is the DLPT5, which has largely replaced previous versions and is increasingly delivered by computer.[1][3] Most federal government agencies use the DLPT and the associated Oral Proficiency Interview (OPI) as reliable, scientifically validated instruments for evaluating the language ability of DoD personnel worldwide.[4]

Test Protocol and Methodology

The administration and design of the DLPT follow a standardized protocol to ensure consistent and objective assessment across all languages and candidates. This methodology can be broken down into its core components: test structure, scoring, and format variations.

Assessment Structure

- Skills Evaluated: The DLPT battery primarily assesses the receptive language skills of reading and listening.[1] Speaking proficiency is typically evaluated by a separate test, the Oral Proficiency Interview (OPI), which is not an official part of the DLPT battery but is often used alongside it.[1]

- Test Content: Passages and audio clips used in the DLPT are drawn from authentic, real-life materials.[2][5] These sources include newspapers, radio broadcasts, television, and internet content, covering a wide range of topics such as social, cultural, political, military, and scientific subjects.[2][5]

- Administration: Tests are administered annually to maintain currency.[1] Each section (listening and reading) typically takes about three hours to complete.[3]

Scoring and Proficiency Levels

DLPT scoring is based on the Interagency Language Roundtable (ILR) scale, which measures proficiency from Level 0 (no proficiency) to Level 5 (native or bilingual proficiency).[6][7] The scale includes intermediate "plus" levels (e.g., 0+, 1+, 2+), resulting in 11 possible grades.[7] Scores for the current DLPT5 can extend up to Level 3 for lower-range tests and up to Level 4 for some upper-range tests.[1][8] To qualify for FLPP, personnel must typically meet or exceed a minimum score, such as L2/R2 (Level 2 in Listening, Level 2 in Reading).[1]
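Because plus levels sit between base levels, comparing scores is easiest with an explicit ordering of the 11 grades. The sketch below encodes that ordering and checks a listening/reading pair against a minimum. The L2/R2 default mirrors the example above, but actual FLPP criteria vary, and the function names are hypothetical.

```python
# The 11 ILR grades in ascending order, including the "plus" levels.
ILR_LEVELS = ("0", "0+", "1", "1+", "2", "2+", "3", "3+", "4", "4+", "5")


def ilr_rank(level: str) -> int:
    """Position of a grade on the scale; raises ValueError for unknown grades."""
    return ILR_LEVELS.index(level)


def meets_minimum(listening: str, reading: str,
                  min_listening: str = "2", min_reading: str = "2") -> bool:
    """True when both modality scores meet or exceed their minimums."""
    return (ilr_rank(listening) >= ilr_rank(min_listening)
            and ilr_rank(reading) >= ilr_rank(min_reading))


if __name__ == "__main__":
    print(len(ILR_LEVELS))           # 11
    print(meets_minimum("2", "2+"))  # True
    print(meets_minimum("1+", "3"))  # False
```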

Test Formats

The DLPT5 utilizes two primary formats, the choice of which often depends on the size of the test-taker population for a given language.

- Multiple-Choice (MC): Used for languages with large numbers of linguists, such as Russian, Chinese, and Modern Standard Arabic.[1] This format allows for automated scoring.

- Constructed-Response Test (CRT): Employed for less commonly taught languages where the test-taker population is smaller, such as Hindi and Albanian.[1] In this format, the examinee must write out their answers, which are then graded by trained scorers.[8]

Results: Scope of Languages Covered

The DLPT program covers a wide and diverse array of languages, reflecting the strategic interests and operational needs of the Department of Defense. Whether a test exists for a given language can change as requirements evolve; the Defense Language Institute may need test items for as many as approximately 450 languages and dialects.[7]

Table of Assessed Languages

The following table summarizes languages for which DLPTs are known to be available, compiled from various official and informational sources.

| Language | Dialect/Variant | Language | Dialect/Variant |
|---|---|---|---|
| Albanian | | Korean | |
| Amharic | | Kurdish | Kurmanji |
| Arabic | Modern Standard (MSA)[2][4][9] | Kurdish | Sorani[2][4] |
| Arabic | Algerian[4][5] | Levantine | |
| Arabic | Egyptian[5] | Norwegian | [2][4][9] |
| Arabic | Iraqi[5][9] | Pashto | Afghan[2][4][9] |
| Arabic | Saudi[4][5] | Persian | Farsi / Dari[2][4][9] |
| Arabic | Sudanese[4][5] | Polish | [2][5] |
| Arabic | Yemeni[4][5] | Portuguese | Brazilian[2][5] |
| Azerbaijani | [2][4] | Portuguese | European[2][5] |
| Buano | [4] | Punjabi | Western[2][5] |
| Burmese | [10] | Romanian | [2][5] |
| Cebuano | [5] | Russian | [2][4][9] |
| Chavacano | [4][5] | Serbian/Croatian | [2][4] |
| Chinese | Cantonese[4][5][10] | Somali | [2][4] |
| Chinese | Mandarin[2][4][9] | Spanish | [2][4][9] |
| Czech | [2][5] | Swahili | [4] |
| French | [2][4][10] | Tagalog | [2][4][10] |
| German | [2][4][10] | Tausug | [4][5] |
| Greek | [2][4][9] | Thai | [2][4] |
| Haitian Creole | [2][4] | Turkish | [2][4] |
| Hebrew | [2][4] | Ukrainian | [2][5] |
| Hindi | [2][4][9] | Urdu | [2][4][9] |
| Indonesian | [2][4] | Uzbek | [2][4] |
| Italian | [2][5] | Vietnamese | [2][4] |
| Japanese | [2][4] | Yoruba | [2][4] |

Note: This list is not exhaustive and is subject to change. It is compiled from multiple sources indicating test availability or the existence of test preparation materials.[2][4][5][9]

Language Difficulty Categorization

The Defense Language Institute groups languages into four categories based on the approximate time required for a native English speaker to achieve proficiency. This categorization influences the length of DLI training courses.[11]

| Category | Description | Representative Languages | DLI Course Length |
|---|---|---|---|
| I | Languages more closely related to English. | French, Italian, Portuguese, Spanish[11] | 26 Weeks[11] |
| II | Languages with significant differences from English. | German, Indonesian[11] | 35 Weeks[11] |
| III | "Hard" languages with substantial linguistic and/or cultural differences. | Dari, Hebrew, Hindi, Russian, Serbian, Tagalog, Thai, Turkish, Urdu[11] | 48 Weeks[11] |
| IV | "Super-hard" languages that are exceptionally difficult for native English speakers. | Arabic, Chinese (Mandarin), Japanese, Korean, Pashto[11] | 64 Weeks[11] |

Logical and Workflow Visualizations

To better illustrate the processes and relationships within the DLPT system, the following diagrams are provided. They serve as logical models analogous to experimental workflows or signaling pathways.

Caption: Workflow of the DLPT assessment process.

Caption: Logical relationship of DLI language categories.

References

- 1. Defense Language Proficiency Tests - Wikipedia [en.wikipedia.org]

- 2. Course [acenet.edu]

- 3. robins.af.mil [robins.af.mil]

- 4. veteran.com [veteran.com]

- 5. Course [acenet.edu]

- 6. Defense Language Proficiency Test (DLPT) [broward.edu]

- 7. DLPT5 Full Range Test Item Development [usfcr.com]

- 8. mezzoguild.com [mezzoguild.com]

- 9. af.mil [af.mil]

- 10. navy.mil [navy.mil]

- 11. DLI's language guidelines | AUSA [ausa.org]

A Technical Guide to the Evolution of the Defense Language Proficiency Test: DLPT IV vs. DLPT 5

For Immediate Distribution to Researchers, Scientists, and Drug Development Professionals

This technical guide provides an in-depth comparison of the Defense Language Proficiency Test (DLPT) IV and its successor, the DLPT 5. The transition to the DLPT 5 marked a significant evolution in the Department of Defense's methodology for assessing foreign language proficiency, driven by a greater understanding of language testing principles and the changing needs of the government.[1] This document outlines the core technical differences, summarizes quantitative data, and describes the methodological shifts between these two generations of the DLPT.

Executive Summary

The Defense Language Proficiency Test (DLPT) is a standardized testing system used by the U.S. Department of Defense to evaluate the language proficiency of its members.[2] The fifth generation of this test, the DLPT 5, was introduced to provide a more comprehensive, effective, and reliable assessment of language capabilities compared to its predecessor, the DLPT IV. Key enhancements in the DLPT 5 include the use of longer, more authentic passages, a multi-question format for each passage, and a tiered testing structure with lower and upper proficiency ranges.[3] The DLPT 5 is administered via computer, a shift from the paper-and-pencil format of the DLPT IV.[3][4]

Key Technical and Structural Differences

The transition from DLPT IV to DLPT 5 involved fundamental changes to the test's design and content. These changes were instituted to better align the test with the Interagency Language Roundtable (ILR) skill level descriptions and to assess a test-taker's ability to function in real-world situations.[1][3]

Test Format and Content

A primary criticism of the DLPT IV was its use of very short passages, which limited its ability to comprehensively assess the full spectrum of language skills described in the ILR guidelines.[1][5] The DLPT 5 addresses this by incorporating longer passages and presenting multiple questions related to a single passage, a departure from the one-question-per-passage format of its predecessor.

Furthermore, the DLPT 5 places a strong emphasis on the use of "authentic materials."[2] While the DLPT IV also used authentic materials, the DLPT 5 development process formalized and prioritized the inclusion of real-world source materials such as live radio and television broadcasts, telephone conversations, and online articles to a greater extent.[5] This shift was intended to create a more accurate assessment of an individual's ability to comprehend language as it is used by native speakers.

Test Structure and Delivery

The DLPT 5 introduced a more complex and nuanced structure compared to the DLPT IV. A significant innovation is the implementation of lower-range and upper-range examinations. The lower-range test assesses proficiency from ILR levels 0+ to 3, while the upper-range test is designed for ILR levels 3 to 4.[3][4] Eligibility to take the upper-range test generally requires a score of ILR level 3 on the corresponding lower-range test.[5]

Another key structural difference is the introduction of two distinct test formats for the DLPT 5: multiple-choice (MC) and constructed-response test (CRT).[2] The MC format is typically used for languages with a large number of test-takers, while the CRT format, which requires examinees to type short answers in English, is used for less commonly taught languages.[2] The DLPT IV, in contrast, primarily utilized a consistent multiple-choice format across different languages.[2]

The delivery method also represents a major advancement. The DLPT 5 is a computer-based test, which allows for more efficient administration and scoring.[3][6] The DLPT IV was originally a paper-and-pencil test, though web-based versions of older DLPT forms were introduced as an interim measure during the transition to the DLPT 5.[4][7]

Quantitative Data Summary

The following tables summarize the available quantitative data for the DLPT IV and DLPT 5.

| Feature | DLPT IV | DLPT 5 (Lower Range) |
|---|---|---|
| Delivery Method | Paper-and-pencil (initially), with later web-based versions | Computer-based |
| Test Sections | Listening, Reading | Listening, Reading |
| Number of Items | 100 items per section (Listening and Reading) | Approximately 60 questions per section |
| Passage Length | Very short[1][5] | Longer passages |
| Questions per Passage | Typically one | Multiple questions per passage (up to 4 for MC, up to 3 for CRT)[8][9] |
| Test Duration | Listening: ~75 minutes | 3 hours per section (Listening and Reading)[1][9] |
| Scoring Basis | Interagency Language Roundtable (ILR) Scale | Interagency Language Roundtable (ILR) Scale[2] |
| Proficiency Range | Up to ILR Level 3[10] | Lower Range: 0+ to 3; Upper Range: 3 to 4[3][4] |
| Test Formats | Primarily Multiple-Choice[2] | Multiple-Choice (MC) and Constructed Response Test (CRT)[2] |

Table 1: Comparison of DLPT IV and DLPT 5 (Lower Range) Specifications

| DLPT 5 Lower Range - Reading | Multiple-Choice (MC) | Constructed Response (CRT) |
|---|---|---|
| Number of Passages | ~36 | ~30 |
| Maximum Passage Length | 400 words | 300 words |
| Questions per Passage | Up to 4 | Up to 3 |

Table 2: DLPT 5 Lower Range Reading Section Specifications by Format [8][9]

| DLPT 5 Lower Range - Listening | Multiple-Choice (MC) | Constructed Response (CRT) |
|---|---|---|
| Number of Passages | ~40 | 30 |
| Maximum Passage Length | 2 minutes | 2 minutes |
| Passage Plays | Once for lower levels, twice for level 2 and above | Twice for all passages |
| Questions per Passage | 2 | 2 |

Table 3: DLPT 5 Lower Range Listening Section Specifications by Format [8][9]

Experimental Protocols and Methodologies

The development and validation protocols for the DLPT 5 represent a more systematic and scientifically grounded approach compared to earlier generations.

DLPT 5 Development and Validation

The creation of the DLPT 5 is a multidisciplinary effort, involving teams of target language experts (often native speakers) and foreign language testing specialists.[3] The process for developing test items is rigorous and includes the following key stages:

- Passage Selection: Test developers select authentic passages from a wide range of sources, covering topics such as politics, economics, culture, science, and military affairs.[8] The passages are chosen based on text typology to ensure they are representative of the language used in real-world contexts.[3]

- Item Writing and Review: For multiple-choice questions, item writers create a single correct answer and several plausible distractors. For constructed-response items, a detailed scoring rubric is developed. All items undergo a thorough review by both language and testing experts to ensure their validity and alignment with the targeted ILR level.[3]

- Validation: The validation process for the DLPT 5 is extensive, particularly for the multiple-choice versions. New test items are piloted with a large number of examinees at varying proficiency levels.[5] The response data is then statistically analyzed using Item Response Theory (IRT) to ensure that each question functions appropriately and accurately measures the intended proficiency level.[1] Items that do not meet the required statistical criteria are removed from the pool.[5] For constructed-response tests, which are used for languages with smaller populations of test-takers, large-scale statistical analysis is not feasible. Instead, the validation relies on the rigorous review process and the standardized training of human raters who use a detailed protocol to score the tests.[8]
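The item-screening step can be illustrated with classical test statistics, which are simpler to show than a full IRT calibration. For each item, the sketch computes the proportion of examinees answering correctly (difficulty) and the corrected point-biserial correlation with the rest of the test (discrimination). This is a swapped-in technique for illustration, not the DLPT's documented procedure, and the flagging thresholds `p_min`, `p_max` and `r_min` are hypothetical.

```python
from typing import List, Sequence, Tuple


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation; returns 0.0 when either variable is constant."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0
    return sxy / (sxx * syy) ** 0.5


def item_statistics(responses: List[List[int]]) -> List[Tuple[float, float]]:
    """responses[examinee][item] is 1 (correct) or 0 (incorrect).

    Returns (proportion correct, corrected point-biserial) for each item.
    """
    stats = []
    for j in range(len(responses[0])):
        item = [row[j] for row in responses]
        rest = [sum(row) - row[j] for row in responses]  # total without item j
        stats.append((sum(item) / len(item), pearson(item, rest)))
    return stats


def flag_items(stats: List[Tuple[float, float]],
               p_min: float = 0.2, p_max: float = 0.9, r_min: float = 0.2) -> List[int]:
    """Indices of items that are too easy, too hard, or poorly discriminating."""
    return [j for j, (p, r) in enumerate(stats)
            if not p_min <= p <= p_max or r < r_min]


if __name__ == "__main__":
    data = [[1, 1, 1], [1, 1, 1], [0, 1, 0], [0, 1, 0]]
    print(flag_items(item_statistics(data)))  # [1]
```

In the toy data, item 1 is answered correctly by everyone, so it cannot separate stronger from weaker examinees and is flagged.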

DLPT IV Methodological Shortcomings

The impetus for developing the DLPT 5 stemmed from identified limitations in the DLPT IV. The primary methodological shortcoming was the test's structure, which, with its very short passages, did not adequately allow for the assessment of higher-order comprehension skills as defined by the ILR scale.[1][5] While the DLPT IV was based on the ILR standards, its design did not fully capture the complexity of language use that the DLPT 5 aims to measure through its use of longer, more contextually rich passages.[1] The development of the DLPT 5 was a direct response to the need for a more robust and valid measure of language proficiency.[1]

Visualizing the DLPT Evolution and Structure

The following diagrams illustrate the key relationships and workflow changes between the DLPT IV and DLPT 5.

Caption: Evolutionary path from DLPT IV to DLPT 5.

Caption: Hierarchical structure of the DLPT 5.

Caption: DLPT 5 multiple-choice item validation workflow.

References

- 1. robins.af.mil [robins.af.mil]

- 2. govtribe.com [govtribe.com]

- 3. internetmor92: DLI Launches New DLPT Generation-DLPT 5: Measuring Language Capabilities in the 21st Century [internetmor92.blogspot.com]

- 4. Defense Language Proficiency Tests - Wikipedia [en.wikipedia.org]

- 5. Course [acenet.edu]

- 6. esd.whs.mil [esd.whs.mil]

- 7. SAM.gov [sam.gov]

- 8. govtribe.com [govtribe.com]

- 9. Course [acenet.edu]

- 10. DLPT5 Full Range Test Item Development [highergov.com]


An In-depth Technical Guide to the Structure of the Defense Language Proficiency Test (DLPT) Reading Section

Introduction

The Defense Language Proficiency Test (DLPT) is a comprehensive suite of examinations produced by the Defense Language Institute (DLI) to assess the foreign language reading and listening proficiency of United States Department of Defense (DoD) personnel. This guide provides a detailed technical overview of the structure and underlying principles of the DLPT reading section, with a particular focus on the DLPT5, the current iteration of the test. The DLPT is designed to measure how well an individual can comprehend written language in real-world situations, and its results are used for critical decisions regarding career placement, special pay, and readiness of military units. The test is not tied to a specific curriculum but rather assesses general language proficiency acquired through any means.

Test Architecture and Scoring Framework

The DLPT reading section is a computer-based examination designed to evaluate a test-taker's ability to understand authentic written materials in a target language. The scoring of the DLPT is based on the Interagency Language Roundtable (ILR) scale, a standard for describing language proficiency within the U.S. federal government. The ILR scale ranges from Level 0 (No Proficiency) to Level 5 (Native or Bilingual Proficiency), with intermediate "plus" levels (e.g., 0+, 1+, 2+) indicating proficiency that substantially exceeds a base level but does not fully meet the criteria for the next.

Test Versions and Formats

The DLPT5 reading test is available in two primary ranges, each targeting a different spectrum of proficiency:

- Lower-Range Test: Measures ILR proficiency levels from 0+ to 3.

- Upper-Range Test: Measures ILR proficiency levels from 3 to 4.

Examinees who achieve a score of 3 on the lower-range test may be eligible to take the upper-range test.

The format of the DLPT5 reading section varies depending on the prevalence of the language being tested:

- Multiple-Choice (MC): Used for languages with a large population of test-takers, such as Russian, Chinese, and Arabic. This format allows for automated scoring.

- Constructed-Response (CR): Employed for less commonly taught languages with smaller test-taker populations, like Hindi and Dari. This format requires human scorers to evaluate short-answer responses written in English.

The following tables summarize the key quantitative aspects of the DLPT5 reading section formats.

Table 1: DLPT5 Lower-Range Reading Test Specifications

| Feature | Multiple-Choice Format | Constructed-Response Format |
|---|---|---|
| ILR Levels Assessed | 0+ to 3 | 0+ to 3 |
| Number of Questions | Approximately 60 | 60 |
| Number of Passages | Approximately 36 | Approximately 30 |
| Maximum Passage Length | Approximately 400 words | Approximately 300 words |
| Questions per Passage | Up to 4 | Up to 3 |
| Time Limit | 3 hours | 3 hours |

Source: Defense Language Institute Foreign Language Center

Table 2: DLPT5 Upper-Range Reading Test Specifications

| Feature | Multiple-Choice/Constructed-Response Format |
|---|---|
| ILR Levels Assessed | 3 to 4 |
| Number of Questions | Varies |
| Number of Passages | Varies |
| Maximum Passage Length | Varies |
| Questions per Passage | Varies |
| Time Limit | 3 hours |

Note: Specific quantitative data for the upper-range test is less publicly available but follows a similar structure to the lower-range test, with content targeted at higher proficiency levels.

Experimental Protocols: Test Development and Validation

The development of the DLPT is a rigorous, multi-faceted process designed to ensure the scientific validity and reliability of the examination. This process is overseen by the Evaluation and Standards (ES) division of the DLI.

Test Development Workflow

The creation of DLPT items follows a systematic workflow involving a multidisciplinary team of target language experts, linguists, and psychometricians.

Caption: A high-level overview of the DLPT test development workflow.

Passage Selection and Content

The passages used in the DLPT are sourced from authentic, real-world materials to reflect the types of language an individual would encounter in the target country. These sources include newspapers, magazines, websites, and official documents. The content covers a broad range of topics, including social, cultural, political, economic, scientific, and military subjects.

The selection of passages is guided by text typology, considering the purpose of the text (e.g., to inform, persuade), its linguistic features (lexicon and syntax), and its alignment with the ILR proficiency level descriptions.

Item Writing and Review

For each passage, a team of target language experts and testing specialists develops a set of questions. All questions and, for the multiple-choice format, the answer options are presented in English. This is to ensure that the test is measuring comprehension of the target language passage, not the test-taker's ability to understand questions in the target language.

The development of test items adheres to strict guidelines:

- Single Correct Answer: For multiple-choice questions, there is only one correct answer.

- Plausible Distractors: The incorrect options (distractors) are designed to be plausible to test-takers who have not fully understood the passage.

- Level-Appropriate Targeting: Questions are written to target specific ILR proficiency levels.

- Avoiding Reliance on Background Knowledge: Test items are designed to be answerable solely based on the information provided in the passage.

A rigorous review process involving multiple experts ensures the quality and validity of each test item before it is included in a pilot test.
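Some of the item-writing guidelines above lend themselves to automated pre-review checks on how an item is stored. The data structure below is hypothetical: the field names and the three-option minimum are assumptions, not DLPT specifications. It can confirm that an item has exactly one keyed answer, distinct options and a valid target level. Distractor plausibility and independence from background knowledge still require the expert review described above.

```python
from dataclasses import dataclass
from typing import List

ILR_LEVELS = ("0", "0+", "1", "1+", "2", "2+", "3", "3+", "4", "4+", "5")
MIN_OPTIONS = 3  # hypothetical: one key plus at least two distractors


@dataclass
class MultipleChoiceItem:
    passage_id: str
    target_level: str    # ILR level the item is written to target
    stem: str            # question text, in English
    options: List[str]   # answer options, in English
    key: int             # index of the single correct option

    def problems(self) -> List[str]:
        """Return mechanical guideline violations; empty if none are found."""
        issues = []
        if self.target_level not in ILR_LEVELS:
            issues.append(f"unknown target level {self.target_level!r}")
        if len(self.options) < MIN_OPTIONS:
            issues.append("too few options to include plausible distractors")
        if not 0 <= self.key < len(self.options):
            issues.append("key does not point at an option")
        normalized = [o.strip().lower() for o in self.options]
        if len(set(normalized)) != len(normalized):
            issues.append("duplicate options make more than one answer correct")
        return issues
```

Storing the key as a single index makes "exactly one correct answer" true by construction; the duplicate-option check guards against the same answer being offered twice under different wording or capitalization.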

Psychometric Validation

For multiple-choice tests, the DLPT program employs psychometric analysis, including Item Response Theory (IRT), to calibrate the difficulty of test items and ensure the reliability of the overall test. This requires a large sample of test-takers (ideally 200 or more) to take a validation form of the test. The data from this validation testing is used to identify and remove questions that are not performing as expected.

Constructed-response tests, due to the smaller populations of test-takers, do not undergo the same large-scale statistical analysis. However, they are subject to the same rigorous review process by language and testing experts to ensure their validity.
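IRT models the probability that an examinee of ability θ answers an item correctly as a function of the item's parameters. The two-parameter logistic (2PL) model below is one common choice. The sources do not say which IRT model the DLPT uses, so treat the model choice, the grid-search ability estimate and all parameter values as illustrative assumptions.

```python
import math
from typing import Sequence, Tuple


def p_correct(theta: float, a: float, b: float) -> float:
    """2PL probability of a correct answer; a = discrimination, b = difficulty."""
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))


def item_information(theta: float, a: float, b: float) -> float:
    """Fisher information an item contributes at ability theta (peaks at theta = b)."""
    p = p_correct(theta, a, b)
    return a * a * p * (1.0 - p)


def estimate_theta(responses: Sequence[int], items: Sequence[Tuple[float, float]],
                   lo: float = -4.0, hi: float = 4.0, steps: int = 801) -> float:
    """Maximum-likelihood ability estimate by grid search over [lo, hi].

    `items` holds calibrated (a, b) pairs; `responses` holds 1/0 scores.
    """
    best_theta, best_ll = lo, -math.inf
    for k in range(steps):
        theta = lo + (hi - lo) * k / (steps - 1)
        ll = 0.0
        for u, (a, b) in zip(responses, items):
            p = p_correct(theta, a, b)
            ll += math.log(p) if u else math.log(1.0 - p)
        if ll > best_ll:
            best_theta, best_ll = theta, ll
    return best_theta


if __name__ == "__main__":
    bank = [(1.0, -1.0), (1.0, 0.0), (1.0, 1.0)]
    print(round(estimate_theta([1, 1, 0], bank), 2))
```

Calibration runs this logic in the other direction: with a large response sample, item parameters `a` and `b` are estimated first, which is why the text calls for roughly 200 or more examinees. A perfect score has no finite maximum-likelihood estimate, so the grid search simply returns the upper bound `hi`.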

Structure of the DLPT Reading Section

The DLPT reading section presents the test-taker with a series of passages in the target language, each followed by one or more questions in English.

Logical Flow of the Examination

The following diagram illustrates the logical structure of the DLPT reading section from the perspective of the test-taker.

Caption: Logical flow of the DLPT reading section for a single passage.

An "Orientation" statement in English precedes each passage to provide context. The test-taker then reads the authentic passage in the target language and answers the corresponding questions in English. This cycle repeats for each passage in the test.

Interplay of Test Components

The relationship between the different components of the DLPT reading section is hierarchical, with the overall proficiency score being derived from performance on individual items that are linked to specific passages and targeted ILR levels.

Caption: Hierarchical relationship of components in the DLPT reading section.
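The hierarchy described above (test, passage, item, targeted ILR level) maps naturally onto nested records. In the sketch below, the class and field names are hypothetical. It groups scored items by their targeted level and yields per-level (correct, total) tallies of the kind a level-award rule would consume.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Item:
    target_level: str  # ILR level the question targets
    correct: bool      # whether the examinee answered it correctly


@dataclass
class Passage:
    orientation: str   # English context statement shown before the passage
    items: List[Item]  # questions that belong to this passage


def tally_by_level(passages: List[Passage]) -> Dict[str, Tuple[int, int]]:
    """Map each targeted level to (number correct, number of items)."""
    counts = defaultdict(lambda: [0, 0])
    for passage in passages:
        for item in passage.items:
            counts[item.target_level][0] += int(item.correct)
            counts[item.target_level][1] += 1
    return {level: (c, t) for level, (c, t) in counts.items()}


if __name__ == "__main__":
    test = [
        Passage("A notice posted at a train station.", [Item("1", True)]),
        Passage("An editorial on trade policy.", [Item("2", True), Item("3", False)]),
    ]
    print(tally_by_level(test))  # {'1': (1, 1), '2': (1, 1), '3': (0, 1)}
```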

Conclusion

The DLPT reading section is a sophisticated and robust instrument for measuring language proficiency. Its structure is deeply rooted in the principles of scientific measurement, with a clear and logical architecture designed to provide a reliable and valid assessment of a test-taker's ability to comprehend authentic written materials. The use of a multi-faceted test development process, including expert review and psychometric analysis, ensures that the DLPT remains a cornerstone of the DoD's language proficiency assessment program. For researchers and professionals in fields requiring precise language skill evaluation, an understanding of the DLPT's technical underpinnings is essential for interpreting its results and appreciating its role in maintaining a high level of linguistic readiness.

Navigating the Aural Maze: A Technical Guide to the Defense Language Proficiency Test (DLPT) Listening Comprehension Format

For Immediate Release

This technical guide provides a comprehensive analysis of the listening comprehension section of the Defense Language Proficiency Test (DLPT), a crucial instrument for assessing the auditory linguistic capabilities of U.S. Department of Defense personnel. Tailored for an audience of researchers, scientists, and drug development professionals who may encounter language proficiency data in their work, this document details the test's structure, methodologies, and scoring, presenting a clear framework for understanding this critical assessment tool.

Test Structure and Design

The DLPT is designed to measure how well a person can understand a foreign language in real-world situations.[1] The listening comprehension component, a key modality of the assessment, utilizes authentic audio materials to gauge a test-taker's proficiency.[2][3] These materials are drawn from a variety of real-life sources, including news broadcasts, radio shows, and conversations, covering a wide range of topics from social and cultural to political and military subjects.[2][3]

The DLPT listening comprehension test is administered via computer and is offered in two main formats, contingent on the prevalence of the language being tested.[1][4] For widely spoken languages such as Russian, Chinese, and Arabic, a multiple-choice format is employed.[4] In contrast, less commonly taught languages utilize a constructed-response format where test-takers provide short written answers in English.[4]

The test is further divided into lower-range and upper-range examinations. The lower-range test assesses proficiency levels from 0+ to 3 on the Interagency Language Roundtable (ILR) scale, while the upper-range test is designed for those who have already achieved a level 3 and measures proficiency from levels 3 to 4.[2]
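The range rule above can be expressed as a small routing helper. This sketch assumes that the lower-range test is open to anyone and that the upper-range test requires a previously achieved level of 3 or higher. The names `ILR_ORDER`, `RANGE_LEVELS`, and `eligible_ranges` are hypothetical and are not part of any DLPT system.

```python
from typing import Optional

# Ordinal ILR scale, up to the highest level the listening test reports.
ILR_ORDER = ["0", "0+", "1", "1+", "2", "2+", "3", "3+", "4"]

# Levels each exam can report, following the ranges described above.
RANGE_LEVELS = {
    "lower": ILR_ORDER[ILR_ORDER.index("0+"): ILR_ORDER.index("3") + 1],
    "upper": ILR_ORDER[ILR_ORDER.index("3"):],
}


def eligible_ranges(current_level: Optional[str]) -> list[str]:
    """Return the exam ranges an examinee may sit (assumed routing rule).

    Anyone may take the lower-range test; the upper-range test is assumed
    to require a previously achieved level of 3 or higher.
    """
    ranges = ["lower"]
    if current_level is not None and ILR_ORDER.index(current_level) >= ILR_ORDER.index("3"):
        ranges.append("upper")
    return ranges
```

The two ranges share level 3, which is what allows an examinee who tops out the lower-range test to continue on the upper-range test.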

Quantitative Data Summary

To facilitate a clear understanding of the test's quantitative parameters, the following table summarizes the key metrics for the lower and upper-range listening comprehension exams.

| Feature | Lower-Range Listening Test | Upper-Range Listening Test |
|---|---|---|
| ILR Proficiency Levels Measured | 0+ to 3[2] | 3 to 4[2] |
| Number of Questions | Approximately 60[2] | Approximately 60[2] |
| Number of Audio Passages | Approximately 30-37[2] | Approximately 30[2] |
| Questions per Passage | Up to 2[2] | 2[2] |
| Passage Repetition | Each passage is played twice[2] | Each passage is played twice[2] |
| Maximum Passage Length | Approximately 2.5 minutes[2] | Not explicitly stated, but likely similar to lower-range |
| Total Test Time | 3 hours[2][5] | 3 hours[2] |
| Mandatory Break | 15 minutes (does not count towards test time)[2][6] | 15 minutes (does not count towards test time)[2] |

Experimental Protocol: Test Administration and Response

The administration of the DLPT listening comprehension test follows a standardized protocol to ensure consistency and validity. The following outlines the key steps in the experimental workflow from the test-taker's perspective.

Pre-Test Orientation

Before the commencement of the scored portion of the exam, test-takers are presented with an orientation. This includes:

- Instructions: Detailed guidance on how to navigate the test interface and respond to questions.
- Sample Questions: Examples of the types of questions and audio passages to be expected, allowing for familiarization with the format.

Test Section Workflow

Each question set within the test follows a structured sequence:

- Contextual Orientation: A brief statement in English is provided to set the context for the upcoming audio passage.[2]
- Audio Passage Presentation: The audio passage in the target language is played automatically. The passage is played a total of two times.[2]
- Question and Response: Following the audio, one or more multiple-choice or constructed-response questions are presented in English.[2][4] The test-taker then selects the correct option or types a short answer in English.[2][4] While the audio playback is computer-controlled, examinees can manage their own time for answering the questions.[2]

Scoring Methodology

The DLPT is scored based on the Interagency Language Roundtable (ILR) scale, which ranges from 0 (no proficiency) to 5 (native or bilingual proficiency).[7] The listening test specifically provides scores for ILR levels 0+, 1, 1+, 2, 2+, 3, 3+, and 4.[2][8] To be awarded a specific proficiency level, a test-taker must generally answer a certain percentage of questions at that level correctly, while also demonstrating proficiency at all lower levels.[2]
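The cumulative rule in the preceding paragraph can be written compactly. In this sketch, $\tau$ stands for the unspecified "certain percentage"; its value is an assumption here, not a published DLPT cut score. Let $\ell_1 < \ell_2 < \dots < \ell_8$ be the reported listening levels 0+, 1, 1+, 2, 2+, 3, 3+, 4, let $n_k$ be the number of items targeting $\ell_k$, and let $c_k$ be the number of those items answered correctly:

$$
p_k = \frac{c_k}{n_k}, \qquad
L = \max\{\, \ell_j : p_k \ge \tau \ \text{for all } k \le j \,\}
$$

If $p_1 < \tau$, the set is empty and no level in the range is awarded. Because each exam contains items only for its own range (0+ to 3 or 3 to 4), the index $k$ in practice runs over that range's levels.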

Visualizing the Process

To further elucidate the structure and flow of the DLPT listening comprehension test, the following diagrams have been generated using Graphviz.

Caption: Logical flow for DLPT listening test progression.

Caption: Standard workflow for a single question set.

Conclusion

The DLPT listening comprehension test is a robust and multifaceted assessment tool. Its reliance on authentic materials and its tiered structure of lower and upper-range exams allow for a nuanced evaluation of a wide spectrum of language proficiencies. For researchers and professionals in fields where linguistic competence is a factor, understanding the technical specifications and methodologies of the DLPT is essential for the accurate interpretation and application of its results. This guide provides a foundational overview to support such endeavors.

References

- 1. Defense Language Proficiency Tests - Wikipedia [en.wikipedia.org]

- 2. uscg.mil [uscg.mil]

- 3. Course [acenet.edu]

- 4. uscg.mil [uscg.mil]

- 5. robins.af.mil [robins.af.mil]

- 6. robins.af.mil [robins.af.mil]

- 7. Master Your Skills with the Ultimate DLPT Spanish Practice Test Guide - Talkpal [talkpal.ai]

- 8. Defense Language Proficiency Test (DLPT) [broward.edu]

Methodological & Application

Defense Language Proficiency Test (DLPT): Application Notes on Scoring and Interpretation for Researchers and Drug Development Professionals

Introduction

The Defense Language Proficiency Test (DLPT) is a suite of standardized assessments developed by the Defense Language Institute Foreign Language Center (DLIFLC) to measure the language proficiency of Department of Defense (DoD) personnel. These tests are crucial for determining job assignments, special pay, and overall readiness of military and civilian employees. This document provides detailed application notes on the scoring and interpretation of the DLPT, with a particular focus on the DLPT5, the current computer-based version. The information is intended for researchers, scientists, and drug development professionals who may encounter or utilize DLPT scores in their work, particularly in contexts where linguistic competence is a relevant variable.

The DLPT assesses two primary skills: reading and listening comprehension. The scoring is based on the Interagency Language Roundtable (ILR) scale, a standardized system used by the U.S. federal government to describe language proficiency.

Scoring Methodology

The DLPT5 employs a criterion-referenced scoring system, meaning that an individual's performance is measured against a set of predefined criteria for each proficiency level, rather than against the performance of other test-takers.

The Interagency Language Roundtable (ILR) Scale

The foundation of DLPT scoring is the ILR scale, which ranges from Level 0 (No Proficiency) to Level 5 (Native or Bilingual Proficiency). The scale also includes "plus" designations (0+, 1+, 2+, 3+, 4+) to indicate proficiency that substantially exceeds one base level but does not fully meet the criteria for the next. The DLPT5 lower-range test reports scores from ILR 0+ to 3, and the upper-range test reports scores from ILR 3 to 4.
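Because plus levels sit between base levels, ILR ratings are easy to mis-sort as strings or to mis-parse as decimals. A minimal sketch, assuming a hypothetical helper `ilr_rank`, maps each label to an integer rank so that scores can be sorted and compared:

```python
def ilr_rank(label: str) -> int:
    """Map an ILR label to an ordinal rank: 0 -> 0, 0+ -> 1, 1 -> 2, ..., 4+ -> 9, 5 -> 10.

    Raises ValueError for anything outside 0..5 (and for "5+", which does not exist).
    """
    plus = label.endswith("+")
    base_text = label[:-1] if plus else label
    if not base_text.isdigit():
        raise ValueError(f"not an ILR level: {label!r}")
    base = int(base_text)
    if base > 5 or (plus and base == 5):
        raise ValueError(f"not an ILR level: {label!r}")
    # Each base level occupies an even rank; its plus level takes the next odd rank.
    return 2 * base + (1 if plus else 0)
```

For example, `max(["2+", "3", "1+"], key=ilr_rank)` returns `"3"`. The ranks are ordinal only: a plus level "substantially exceeds" its base level without being a fixed midpoint, so differences between ranks should not be treated as equal-interval distances in statistical analyses.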

Determination of ILR Level

An examinee's ILR level is determined by the proportion of questions answered correctly at each level. Each question on the DLPT5 is designed to assess a specific ILR level. To be awarded a particular ILR level, an examinee must generally answer at least 70-75% of the questions at that level correctly.[1] The test is designed so that an individual must demonstrate proficiency at each lower level before being credited with a higher one.
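The level-determination rule can be sketched as follows. Because responses are compared against a fixed cut score rather than against other examinees, the function is criterion-referenced in the sense described above. The 0.70 default is an assumption taken from the low end of the 70-75% range; `award_level` is a hypothetical name, and treating a level with no items as a failed level is a conservative choice of this sketch, not documented DLPT behavior.

```python
from collections import defaultdict
from typing import Iterable, Optional

# Lower-range ILR levels in ascending order.
LEVELS = ["0+", "1", "1+", "2", "2+", "3"]


def award_level(responses: Iterable[tuple[str, bool]], threshold: float = 0.70) -> Optional[str]:
    """Return the highest level L such that every level up to and including L
    meets the threshold; return None if even the lowest level fails.

    responses: (target_level, is_correct) pairs, one per item.
    """
    total: dict[str, int] = defaultdict(int)
    correct: dict[str, int] = defaultdict(int)
    for level, is_correct in responses:
        total[level] += 1
        correct[level] += int(is_correct)

    awarded = None
    for level in LEVELS:
        # A missing or failed level blocks credit for every higher level.
        if total[level] == 0 or correct[level] / total[level] < threshold:
            break
        awarded = level
    return awarded
```

For example, an examinee who scores 75% at level 1 but only 50% at level 1+ is credited with level 1 even if every level 2 item is answered correctly, because the gap at 1+ blocks credit for the higher levels.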

Data Presentation: ILR Skill Level Descriptions

The following tables provide a detailed breakdown of the abilities associated with each ILR level for reading and listening comprehension. This information is essential for the accurate interpretation of DLPT scores.

Table 1: ILR Skill Level Descriptions for Reading Proficiency

| ILR Level | Designation | Description of Abilities |
|---|---|---|
| 0 | No Proficiency | No practical ability to read the language. Consistently misunderstands or cannot comprehend written text. |
| 0+ | Memorized Proficiency | Able to recognize and read a limited number of isolated words and phrases, such as names, street signs, and simple notices. Understanding is often inaccurate. |
| 1 | Elementary Proficiency | Sufficient comprehension to understand very simple, connected written material in a predictable context. Can understand material such as announcements of public events, simple biographical information, and basic instructions. |
| 1+ | Elementary Proficiency, Plus | Sufficient comprehension to understand simple discourse in printed form for informative social purposes. Can read material such as straightforward newspaper headlines and simple prose on familiar subjects. Can guess the meaning of some unfamiliar words from context. |
| 2 | Limited Working Proficiency | Sufficient comprehension to read simple, authentic written material in a variety of contexts on familiar subjects. Can read uncomplicated but authentic prose on familiar subjects that are normally presented in a predictable sequence. Texts may include news items describing frequently occurring events, simple biographical information, social notices, and formulaic business letters. |
| 2+ | Limited Working Proficiency, Plus | Sufficient comprehension to understand most factual material in non-technical prose as well as some discussions on concrete topics related to special professional interests. Is markedly more proficient at reading materials on a familiar topic. Can separate main ideas and details from subordinate information. |
| 3 | General Professional Proficiency | Able to read within a normal range of speed and with almost complete comprehension a variety of authentic prose material on unfamiliar subjects. Reading ability is not dependent on subject matter knowledge. Can understand the main and subsidiary ideas of texts, including those with complex structure and vocabulary. |
| 3+ | General Professional Proficiency, Plus | Able to comprehend a wide variety of texts, including those with highly colloquial or specialized language. Can understand many sociolinguistic and cultural references, though some nuances may be missed. |
| 4 | Advanced Professional Proficiency | Able to read fluently and accurately all styles and forms of the language pertinent to professional needs. Can readily understand highly abstract and specialized texts, as well as literary works. Has a strong sensitivity to sociolinguistic and cultural references. |

Table 2: ILR Skill Level Descriptions for Listening Proficiency

| ILR Level | Designation | Description of Abilities |
|---|---|---|
| 0 | No Proficiency | No practical understanding of the spoken language. Understanding is limited to occasional isolated words with essentially no ability to comprehend communication. |
| 0+ | Memorized Proficiency | Sufficient comprehension to understand a number of memorized utterances in areas of immediate needs. Requires frequent pauses and repetition. |
| 1 | Elementary Proficiency | Sufficient comprehension to understand utterances about basic survival needs and minimum courtesy and travel requirements. Can understand simple questions and answers, statements, and face-to-face conversations in a standard dialect. |
| 1+ | Elementary Proficiency, Plus | Sufficient comprehension to understand short conversations about all survival needs and limited social demands. Can understand face-to-face speech in a standard dialect, delivered at a normal rate with some repetition and rewording. |
| 2 | Limited Working Proficiency | Sufficient comprehension to understand conversations on routine social demands and limited job requirements. Can understand a fair amount of detail from conversations and short talks on familiar topics. |
| 2+ | Limited Working Proficiency, Plus | Sufficient comprehension to understand the main points of most conversations on non-technical subjects and conversations on special fields of competence. Can get the gist of some radio broadcasts and formal speeches. |
| 3 | General Professional Proficiency | Able to understand the essentials of all speech in a standard dialect including technical discussions within a special field. Can follow discourse that is structurally complex and contains abstract or unfamiliar vocabulary. |
| 3+ | General Professional Proficiency, Plus | Comprehends most of the content and intent of a variety of forms and styles of speech pertinent to professional needs, as well as general topics and social conversation. Can understand many sociolinguistic and cultural references but may miss some subtleties. |