AIAP

Description

BenchChem offers high-quality this compound suitable for many research applications. Different packaging options are available to accommodate customers' requirements. Please inquire for more information about this compound including the price, delivery time, and more detailed information at info@benchchem.com.

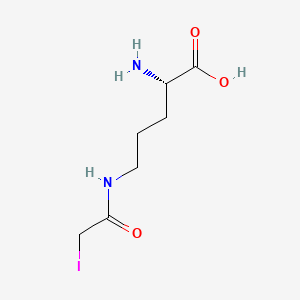

Structure


Properties

| Property | Value | Source |
|---|---|---|
| IUPAC Name | (2S)-2-amino-5-[(2-iodoacetyl)amino]pentanoic acid | PubChem |
| InChI | InChI=1S/C7H13IN2O3/c8-4-6(11)10-3-1-2-5(9)7(12)13/h5H,1-4,9H2,(H,10,11)(H,12,13)/t5-/m0/s1 | PubChem |
| InChI Key | ZDWGLSKCVZNFLT-YFKPBYRVSA-N | PubChem |
| Canonical SMILES | C(CC(C(=O)O)N)CNC(=O)CI | PubChem |
| Isomeric SMILES | C(C[C@@H](C(=O)O)N)CNC(=O)CI | PubChem |
| Molecular Formula | C7H13IN2O3 | PubChem |
| DSSTOX Substance ID | DTXSID90957150 (record name: N~5~-(1-Hydroxy-2-iodoethylidene)ornithine) | EPA DSSTox |
| Molecular Weight | 300.09 g/mol | PubChem |
| CAS No. | 35748-65-3 (record names: 2-Amino-5-iodoacetamidopentanoic acid; N~5~-(1-Hydroxy-2-iodoethylidene)ornithine) | ChemIDplus; EPA DSSTox |

Sources:
- PubChem (https://pubchem.ncbi.nlm.nih.gov): Data deposited in or computed by PubChem.
- EPA DSSTox (https://comptox.epa.gov/dashboard/DTXSID90957150): DSSTox provides a high-quality public chemistry resource for supporting improved predictive toxicology.
- ChemIDplus (https://pubchem.ncbi.nlm.nih.gov/substance/?source=chemidplus&sourceid=0035748653): ChemIDplus is a free web search system that provides access to the structure and nomenclature authority files used for the identification of chemical substances cited in National Library of Medicine (NLM) databases, including the TOXNET system.

Foundational & Exploratory

AIAP: A Deep Dive into the ATAC-seq Integrative Analysis Package

The Assay for Transposase-Accessible Chromatin with high-throughput sequencing (ATAC-seq) has become a cornerstone technique for investigating genome-wide chromatin accessibility, providing critical insights into gene regulation. The ATAC-seq Integrative Analysis Package (AIAP) is a computational tool designed to streamline and improve the analysis of ATAC-seq data. It covers quality control, improved peak calling, and downstream differential analysis in a single package.[1][2][3] This technical guide provides an in-depth exploration of AIAP for researchers, scientists, and drug development professionals.

Core Concepts and Workflow

AIAP is designed to process paired-end ATAC-seq data and has been reported to improve sensitivity by 20%-60% in both peak calling and differential analysis.[2][3] The tool is packaged in Docker/Singularity, so a single command line generates a comprehensive quality control (QC) report.[1][2][3] The software, source code, and documentation are publicly available.[1][2][3]

The AIAP workflow is structured into four main stages: Data Processing, Quality Control (QC), Integrative Analysis, and Data Visualization.

Experimental Protocols

AIAP was developed and benchmarked using publicly available ATAC-seq datasets from the Encyclopedia of DNA Elements (ENCODE) project. The following describes the data processing and analysis steps implemented in the AIAP pipeline.

Data Processing Protocol

- Adapter Trimming: Raw paired-end FASTQ reads are trimmed to remove adapter sequences using the cutadapt tool.
- Alignment: The trimmed reads are then aligned to a reference genome (e.g., hg19, hg38, mm9, mm10) using the Burrows-Wheeler Aligner (bwa).[3]
- Post-Alignment Processing: The resulting BAM files are processed using methylQA in ATAC-seq mode. This step involves:
  - Filtering out unmapped and low-quality mapped reads.
  - Identifying the Tn5 transposase insertion sites by shifting the 5' ends of the read alignments by +4 bp on the positive strand and -5 bp on the negative strand.[3]
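The insertion-site correction described above can be sketched in a few lines of Python. This is a minimal illustration that assumes 0-based, half-open (BED-style) read coordinates. The `Read` type and the `tn5_insertion_site` function are hypothetical names and are not part of AIAP or methylQA.

```python
from typing import NamedTuple


class Read(NamedTuple):
    """An aligned read in 0-based, half-open (BED-style) coordinates."""
    chrom: str
    start: int   # leftmost aligned base, inclusive
    end: int     # one past the rightmost aligned base
    strand: str  # "+" or "-"


def tn5_insertion_site(read: Read) -> int:
    """Return the 0-based Tn5 insertion site for one read.

    The read's 5' end is shifted +4 bp on the positive strand and
    -5 bp on the negative strand.
    """
    if read.strand == "+":
        # A forward read's 5' end is its leftmost base.
        return read.start + 4
    if read.strand == "-":
        # A reverse read's 5' end is its rightmost base (end - 1).
        return (read.end - 1) - 5
    raise ValueError(f"unknown strand: {read.strand!r}")


if __name__ == "__main__":
    print(tn5_insertion_site(Read("chr1", 1000, 1050, "+")))  # 1004
    print(tn5_insertion_site(Read("chr1", 1150, 1200, "-")))  # 1194
```

Other tools define the shifted coordinate slightly differently, for example 1-based positions or a shifted read start rather than a single base. Check the convention before comparing outputs.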

Quality Control (QC) Protocol

AIAP calculates a series of QC metrics to assess the quality of ATAC-seq data. These metrics help identify potential issues in the experimental procedure and support the reliability of downstream analysis.

The core QC metrics include:

- Reads Under Peak Ratio (RUPr): The fraction of total reads that fall within the identified accessible chromatin regions (peaks). A higher RUPr generally indicates a better signal-to-noise ratio.
- Background (BG): AIAP estimates background noise by randomly sampling genomic regions and measuring the signal within them. A lower background value indicates a cleaner ATAC-seq signal.
- Promoter Enrichment (ProEn): The enrichment of ATAC-seq signal in promoter regions, which are expected to be accessible. Higher promoter enrichment suggests a successful experiment.
- Subsampling Enrichment (SubEn): To account for variability in sequencing depth, AIAP subsamples the reads to a fixed number and assesses the enrichment of signal in peaks.
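Here is a minimal sketch of the RUPr idea. It assumes that Tn5 insertion sites lie on a single chromosome and that peaks are sorted, non-overlapping, half-open intervals. The function name `rupr` is hypothetical. AIAP's own implementation may differ, for example in how it handles multiple chromosomes or counts reads versus insertion sites.

```python
from bisect import bisect_right
from typing import Sequence, Tuple


def rupr(sites: Sequence[int], peaks: Sequence[Tuple[int, int]]) -> float:
    """Percentage of insertion sites that fall inside any peak.

    `peaks` must be sorted, non-overlapping, half-open [start, end) intervals.
    """
    if not sites:
        return 0.0
    starts = [start for start, _ in peaks]
    inside = 0
    for pos in sites:
        # Index of the last peak whose start is <= pos.
        i = bisect_right(starts, pos) - 1
        if i >= 0 and pos < peaks[i][1]:
            inside += 1
    return 100.0 * inside / len(sites)


# Four of six sites fall inside a peak, so this prints roughly 66.67.
print(rupr([5, 15, 25, 120, 150, 400], [(10, 30), (100, 200)]))
```

Binary search keeps each lookup at O(log n) in the number of peaks, which matters when a sample has tens of millions of insertion sites.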

Data Presentation

The performance of AIAP was benchmarked using 70 mouse ENCODE ATAC-seq datasets. The following table summarizes the key QC metrics obtained from this analysis and serves as a reference for expected values in high-quality ATAC-seq experiments.

| Quality Control Metric | Description | Recommended Value |
|---|---|---|
| Non-redundant uniquely mapped reads | Percentage of reads that uniquely map to the reference genome after removing duplicates. | > 80% |
| ChrM contamination rate | Percentage of reads mapping to the mitochondrial chromosome. | < 5% |
| Reads Under Peak Ratio (RUPr) | Percentage of reads located in called peak regions. | > 20% |
| Promoter Enrichment (ProEn) | Fold enrichment of ATAC-seq signal at transcription start sites (TSSs). | > 5 |
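The recommended values in the table can be applied as simple pass/fail checks. In this sketch the metric keys are hypothetical names chosen for illustration, not fields from an AIAP report. The thresholds are copied from the table.

```python
from typing import Dict, List

# (comparison, threshold) pairs copied from the recommended values table.
# The dictionary keys are hypothetical metric names for this sketch.
RECOMMENDED = {
    "nonredundant_uniquely_mapped_pct": (">", 80.0),
    "chrM_pct": ("<", 5.0),
    "rupr_pct": (">", 20.0),
    "promoter_enrichment": (">", 5.0),
}


def failed_metrics(sample: Dict[str, float]) -> List[str]:
    """Return the names of metrics that miss their recommended value."""
    failures = []
    for name, (op, threshold) in RECOMMENDED.items():
        value = sample[name]
        ok = value > threshold if op == ">" else value < threshold
        if not ok:
            failures.append(name)
    return failures


sample = {
    "nonredundant_uniquely_mapped_pct": 85.0,
    "chrM_pct": 12.0,
    "rupr_pct": 25.0,
    "promoter_enrichment": 7.0,
}
print(failed_metrics(sample))  # ['chrM_pct']
```

A sample that fails one check is not automatically unusable. The checks are most useful for flagging which part of the experiment (lysis, library complexity, enrichment) deserves a closer look.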


AIAP Quality Control Logic

The quality control module of AIAP assesses several key metrics together to determine the overall quality of the ATAC-seq data.

AIAP Differential Accessibility Analysis

AIAP identifies differentially accessible regions (DARs) between experimental conditions. This analysis is central to understanding the dynamic changes in chromatin accessibility associated with biological processes.

References

- 1. AIAP: A Quality Control and Integrative Analysis Package to Improve ATAC-seq Data Analysis - PMC [pmc.ncbi.nlm.nih.gov]

- 2. AIAP: A Quality Control and Integrative Analysis Package to Improve ATAC-seq Data Analysis - PubMed [pubmed.ncbi.nlm.nih.gov]

- 3. researchgate.net [researchgate.net]

Unveiling Chromatin Accessibility: An In-depth Technical Guide to ATAC-seq Quality Control Metrics

For Researchers, Scientists, and Drug Development Professionals

The Assay for Transposase-Accessible Chromatin with high-throughput sequencing (ATAC-seq) has become a cornerstone technique for investigating genome-wide chromatin accessibility, providing critical insights into gene regulation and cellular states. The quality of ATAC-seq data is paramount for the accuracy and reliability of these insights. This technical guide provides a comprehensive overview of the core quality control (QC) metrics essential for evaluating the success of an ATAC-seq experiment, with a focus on the standards and methodologies that ensure robust and reproducible results.

The ATAC-seq Experimental Workflow: From Nuclei to Insights

The ATAC-seq workflow begins with the isolation of nuclei, followed by transposition using a hyperactive Tn5 transposase. This enzyme simultaneously fragments the DNA in open chromatin regions and inserts sequencing adapters, a process called "tagmentation". The tagged DNA fragments are then amplified and subjected to high-throughput sequencing. The resulting reads are aligned to a reference genome to identify regions of accessible chromatin.

Core Quality Control Metrics for ATAC-seq Data

A series of well-defined QC metrics are essential for assessing the quality of ATAC-seq libraries. These metrics provide insights into the efficiency of the transposition reaction, the complexity of the library, and the overall signal-to-noise ratio. The following tables summarize the key QC metrics, their descriptions, and the generally accepted values based on guidelines from consortia such as ENCODE.

Table 1: Library Composition and Quality Metrics

| Metric | Description | Acceptable/Good Values | Interpretation |
|---|---|---|---|
| Total Reads | The total number of sequenced reads. | Application-dependent | Provides a general measure of sequencing depth. |
| Alignment Rate | The percentage of reads that successfully map to the reference genome. | >80% (acceptable), >95% (good)[1] | A low alignment rate may indicate sample contamination or poor sequencing quality. |
| Non-duplicate, Non-mitochondrial Reads | The number of unique reads that do not map to the mitochondrial genome. | >25 million fragments for paired-end sequencing[1] | High mitochondrial DNA contamination can indicate excessive cell lysis. High duplication rates suggest low library complexity. |
| Library Complexity (NRF, PBC1, PBC2) | Measures the diversity of the DNA fragment library. Non-Redundant Fraction (NRF), PCR Bottlenecking Coefficient 1 (PBC1), and PCR Bottlenecking Coefficient 2 (PBC2) are key indicators. | NRF > 0.9, PBC1 > 0.9, PBC2 > 3[2] | Low complexity indicates that a large fraction of reads are PCR duplicates, suggesting that the library was over-amplified or started with too little material. |

Table 2: Signal and Enrichment Metrics

| Metric | Description | Acceptable/Good Values (ENCODE) | Interpretation |
|---|---|---|---|
| Fraction of Reads in Peaks (FRiP) | The proportion of all mapped reads that fall within the called peak regions.[3] | >0.2 (acceptable), >0.3 (good)[2][3] | A primary indicator of signal-to-noise ratio. Higher FRiP scores indicate better enrichment of signal in open chromatin regions. |
| Reads Under Peak Ratio (RUPr) | A metric defined by the AIAP package to assess signal enrichment. | Benchmark-dependent | Similar to FRiP, a higher RUPr suggests better signal quality. |
| TSS Enrichment Score | The ratio of reads centered at transcription start sites (TSSs) compared to flanking regions. | Varies by annotation, but generally >6 is acceptable and >10 is ideal for human samples.[2] | A strong TSS enrichment indicates successful targeting of open chromatin associated with regulatory regions. |
| Number of Peaks | The total number of distinct accessible chromatin regions identified. | >150,000 (replicated peaks), >70,000 (IDR peaks) for human samples.[1] | Reflects the complexity of the accessible chromatin landscape captured. |
| Irreproducible Discovery Rate (IDR) | A statistical measure of consistency between biological replicates. | Rescue and self-consistency ratios < 2[2] | Ensures that the identified peaks are reproducible across experiments. |

Table 3: Fragment and Read Characteristics

| Metric | Description | Expected Pattern | Interpretation |
|---|---|---|---|
| Fragment Size Distribution | The distribution of the lengths of the sequenced DNA fragments. | A periodic pattern with a prominent peak at <100 bp (nucleosome-free) and subsequent peaks at ~200 bp intervals (mono-, di-, tri-nucleosomes).[1] | A clear nucleosomal pattern is a hallmark of a successful ATAC-seq experiment and confirms the capture of both nucleosome-free and nucleosome-occupied accessible regions. |
| Blacklist Fraction | The proportion of reads mapping to genomic regions known to produce artifactual signals. | As low as possible | A high blacklist fraction can indicate technical artifacts, and these reads may need to be filtered. |
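The fragment size distribution can be summarized as a binned histogram plus the share of short, nucleosome-free fragments. This sketch assumes that fragment lengths were already extracted from properly paired reads, for example as the absolute template length. The 100 bp cutoff mirrors the nucleosome-free peak in the table. The 10 bp bin width and the function names are illustrative choices, not ENCODE or AIAP definitions.

```python
from collections import Counter
from typing import Dict, Iterable


def length_histogram(lengths: Iterable[int], bin_width: int = 10) -> Dict[int, int]:
    """Count fragments per length bin; keys are bin lower edges in bp."""
    counts = Counter((n // bin_width) * bin_width for n in lengths)
    return dict(sorted(counts.items()))


def nucleosome_free_fraction(lengths: Iterable[int], cutoff: int = 100) -> float:
    """Fraction of fragments shorter than `cutoff` bp."""
    values = list(lengths)
    if not values:
        raise ValueError("no fragment lengths given")
    return sum(1 for n in values if n < cutoff) / len(values)


lengths = [50, 55, 80, 190, 210, 390]
print(length_histogram(lengths))  # {50: 2, 80: 1, 190: 1, 210: 1, 390: 1}
print(nucleosome_free_fraction(lengths))  # 0.5
```

Plotting the histogram on a log-scaled count axis usually makes the mono-, di-, and tri-nucleosome peaks easier to see than the raw counts do.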

Logical Relationships of Key QC Metrics

The various QC metrics are interconnected and together provide a holistic view of data quality. A successful ATAC-seq experiment is a prerequisite for obtaining good QC metrics, which in turn are necessary for reliable downstream biological interpretation.

Methodologies for Key QC Metric Generation

Detailed protocols for generating these QC metrics are often embedded within standardized bioinformatics pipelines, such as the ENCODE ATAC-seq pipeline.

Fraction of Reads in Peaks (FRiP) Calculation

- Input: BAM file (aligned reads) and a BED file of called peaks.
- Procedure:
  - Count the total number of mapped reads in the BAM file, for example with samtools view -c -F 4 (the -F 4 flag excludes unmapped reads).
  - Intersect the reads in the BAM file with the peak regions defined in the BED file. Tools such as bedtools intersect are commonly used for this purpose.
  - Count the number of reads that overlap the peak regions.
- Calculation:
  - FRiP Score = (Number of reads in peaks) / (Total number of mapped reads)
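The FRiP calculation above can be sketched in pure Python. A read counts as "in peaks" if it overlaps any peak by at least 1 bp, which is similar to what bedtools intersect -u reports. The sketch assumes half-open intervals on one chromosome and sorted, non-overlapping peaks. The function name `frip` is hypothetical.

```python
from bisect import bisect_right
from typing import Sequence, Tuple

Interval = Tuple[int, int]  # half-open [start, end)


def frip(reads: Sequence[Interval], peaks: Sequence[Interval]) -> float:
    """Fraction of reads overlapping at least one peak by >= 1 bp."""
    if not reads:
        raise ValueError("no reads given")
    # Peaks are sorted and non-overlapping, so their ends are sorted too.
    ends = [end for _, end in peaks]
    in_peaks = 0
    for start, end in reads:
        # First peak that ends after the read starts.
        i = bisect_right(ends, start)
        # Overlap if that peak also starts before the read ends.
        if i < len(peaks) and peaks[i][0] < end:
            in_peaks += 1
    return in_peaks / len(reads)


peaks = [(100, 200), (300, 400)]
reads = [(50, 100), (150, 160), (190, 250), (200, 300), (350, 450), (500, 550)]
print(frip(reads, peaks))  # 0.5
```

Unlike the insertion-site counting used for RUPr, this counts whole read intervals, so a read that only touches a peak edge still contributes.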

TSS Enrichment Score Calculation

- Input: BAM file and a file with TSS coordinates.
- Procedure:
  - For each TSS, calculate the read coverage in a window centered on the TSS (e.g., +/- 2000 bp).
  - Normalize the coverage at each position relative to the TSS by the average coverage in the flanking regions (e.g., +/- 1900-2000 bp).
- Calculation:
  - The TSS enrichment score is the highest point of the normalized coverage profile, aggregated across all TSSs, at or near the TSS.
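Below is a minimal NumPy sketch of the TSS enrichment score. It assumes the per-base coverage around each TSS has already been extracted into a matrix (4001 positions for +/- 2000 bp) and oriented by strand. It averages coverage across TSSs first, then normalizes by the outermost 100 bp on each side, which corresponds to the +/- 1900-2000 bp flanks above. Published pipelines may additionally smooth the profile or normalize per TSS. This sketch does neither, and `tss_enrichment` is a hypothetical name.

```python
import numpy as np


def tss_enrichment(coverage: np.ndarray, flank: int = 100) -> float:
    """TSS enrichment from an (n_tss, window) per-base coverage matrix.

    Each row is the coverage in a window centered on one TSS, already
    oriented by strand. The outermost `flank` positions on each side
    serve as the background.
    """
    if coverage.ndim != 2 or coverage.shape[1] <= 2 * flank:
        raise ValueError("coverage must be 2-D and wider than both flanks")
    # Aggregate profile: mean coverage at each offset across all TSSs.
    profile = coverage.mean(axis=0)
    background = (profile[:flank].mean() + profile[-flank:].mean()) / 2.0
    if background == 0:
        raise ValueError("flanking coverage is zero; cannot normalize")
    normalized = profile / background
    # Score: the highest point of the normalized aggregate profile.
    return float(normalized.max())


cov = np.ones((3, 4001))   # three TSSs, +/- 2000 bp window
cov[:, 2000] = 10.0        # a sharp peak exactly at the TSS
print(tss_enrichment(cov))  # 10.0
```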

Library Complexity Estimation

- Input: BAM file.
- Procedure:
  - Preseq, or tools that implement its methods such as ATACseqQC::estimateLibComplexity, estimates the number of unique fragments that would be observed at a given sequencing depth.
  - This is achieved by analyzing read duplication, interpolating by subsampling at depths below the observed one and extrapolating beyond it.
- Output:
  - A library complexity curve (unique fragments versus sequencing depth). Metrics such as NRF, PBC1, and PBC2 are computed directly from the observed duplicate counts and provide complementary, quantitative measures of library complexity.
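Unlike Preseq's extrapolation, NRF, PBC1, and PBC2 can be computed directly from how often each read position occurs. This minimal sketch uses the ENCODE definitions:

- NRF = distinct positions / total reads.
- PBC1 = positions seen exactly once / distinct positions.
- PBC2 = positions seen exactly once / positions seen exactly twice.

What counts as a "position" is an assumption left to the caller. For paired-end data it is commonly the (chrom, start, end, strand) of the fragment. `library_complexity` is a hypothetical name.

```python
from collections import Counter
from collections.abc import Hashable, Iterable
from typing import Tuple


def library_complexity(positions: Iterable[Hashable]) -> Tuple[float, float, float]:
    """Return (NRF, PBC1, PBC2) from one entry per read or fragment."""
    counts = Counter(positions)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no positions given")
    distinct = len(counts)
    m1 = sum(1 for c in counts.values() if c == 1)  # seen exactly once
    m2 = sum(1 for c in counts.values() if c == 2)  # seen exactly twice
    nrf = distinct / total
    pbc1 = m1 / distinct
    # PBC2 is undefined when no position is seen exactly twice.
    pbc2 = m1 / m2 if m2 else float("inf")
    return nrf, pbc1, pbc2


reads = ["a", "b", "c", "c", "d", "d", "e", "e", "e"]
print(library_complexity(reads))  # NRF = 5/9, PBC1 = 0.4, PBC2 = 1.0
```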


The Advent of AI-Accelerated Platforms in Genomics Research: A Technical Guide

An In-depth Technical Guide for Researchers, Scientists, and Drug Development Professionals on the Integration of Artificial Intelligence in Genomics.

While the acronym "AIAP" can refer to specific software, such as the package described in "AIAP: A Quality Control and Integrative Analysis Package to Improve ATAC-seq Data Analysis," this guide addresses the broader concept of Artificial Intelligence Accelerated Platforms. These platforms encompass a range of computational tools and methodologies that are revolutionizing how genomic data is generated, analyzed, and translated into actionable insights.

Core Concepts: The Engine of AI in Genomics

At its core, AI in genomics leverages machine learning (ML) and deep learning (DL) algorithms to identify complex patterns in vast and high-dimensional genomic datasets. These algorithms can be broadly categorized into supervised, unsupervised, and reinforcement learning approaches, each suited for different analytical tasks.

Supervised learning models are trained on labeled data to make predictions on new, unlabeled data.[1] In genomics, this approach is applied to tasks such as predicting the pathogenicity of genetic variants or classifying tumor subtypes based on gene expression profiles.[2] Unsupervised learning, by contrast, uncovers hidden structure in unlabeled data, for example by identifying novel cell populations from single-cell RNA sequencing (scRNA-seq) data.[3] Deep learning, a subset of machine learning, uses neural networks with multiple layers to model intricate patterns in data. It has proven particularly effective in medical image analysis and protein structure prediction.[4][5]

Revolutionizing the Genomics Workflow

Data Acquisition and Quality Control

Data Processing and Analysis

This is where AI has made its most significant impact to date. AI algorithms excel at tasks that are challenging for traditional bioinformatics pipelines:

- Variant Calling: AI models, particularly deep learning-based approaches like DeepVariant, have demonstrated superior accuracy in identifying single nucleotide polymorphisms (SNPs) and insertions/deletions (indels) from sequencing data.[3]
- Gene Expression Analysis: Machine learning can be used to analyze RNA-seq data to identify differentially expressed genes, classify cell types, and reconstruct gene regulatory networks.
- Functional Genomics: AI helps in predicting the function of non-coding genomic regions, identifying enhancers, and understanding the impact of genetic variations on gene regulation.[7]

Quantitative Data Summary

The application of AI in genomics has yielded significant quantifiable improvements across various tasks. The following tables summarize key performance metrics of AI models in genomics and their impact on drug discovery timelines.

| AI Application in Genomics | Model Type | Performance Metric | Reported Value | Reference |
|---|---|---|---|---|
| Patient Outcome Prediction | Machine Learning | AUROC | > 0.8 | [8] |
| Gene Set Function Identification | GPT-4 (LLM) | Accuracy | 73% | [7] |
| Somatic Mutation Detection | DeepSomatic (Deep Learning) | F1 Score (SNPs) | 0.983 | |
| Somatic Mutation Detection | DeepSomatic (Deep Learning) | F1 Score (Indels) | ~0.90 (Illumina), >0.80 (PacBio) | |
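The F1 scores in the table are the harmonic mean of precision and recall, where precision = TP / (TP + FP) and recall = TP / (TP + FN). The small sketch below computes them from true-positive, false-positive, and false-negative counts. It does not reproduce any specific benchmark's variant-matching rules, which decide what counts as a true positive.

```python
def f1_score(tp: int, fp: int, fn: int) -> float:
    """F1 = 2 * precision * recall / (precision + recall); 0.0 if tp is 0."""
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Equivalent closed form: 2*TP / (2*TP + FP + FN) = 16 / 26 here.
print(f1_score(tp=8, fp=2, fn=8))
```

Because F1 is a harmonic mean, a caller with high precision but low recall (or the reverse) still gets a low score.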

| Impact of AI on Drug Discovery | Metric | Reported Impact | Reference |
|---|---|---|---|
| Market Growth | Projected Market Size (2032) | USD 12.02 billion | [9] |
| Market Growth | CAGR (2024-2032) | 27.8% | [9] |
| Development Timeline | Reduction in Timeline | up to 35% | [10] |
| R&D Cost | Average Cost per New Drug | > USD 2 billion | [9] |

Experimental Protocols: A Practical Guide

The integration of AI into genomics research necessitates a shift in experimental design and data analysis protocols. Below are detailed methodologies for key experiments leveraging AI.

Protocol 1: AI-Enhanced Variant Calling and Annotation

1. Data Preprocessing:

- Raw sequencing reads (FASTQ files) are subjected to quality control using tools like FastQC to assess read quality, GC content, and other metrics.

- Adapter sequences are trimmed, and low-quality reads are filtered out.

2. Genome Alignment:

- The processed reads are aligned to a reference genome (e.g., GRCh38) using an aligner like BWA-MEM.

- The resulting alignment files (BAM format) are sorted and indexed.

3. AI-Based Variant Calling:

- A deep learning-based variant caller, such as Google's DeepVariant, is used to identify SNPs and indels from the aligned reads.

- DeepVariant transforms the read alignments around a candidate variant into an image-like representation and uses a convolutional neural network (CNN) to classify the genotype.

4. Variant Annotation and Prioritization:

- The identified variants (VCF file) are annotated with information from various databases (e.g., dbSNP, ClinVar, gnomAD) to predict their functional impact.

- Machine learning models can be applied to prioritize variants based on their predicted pathogenicity, integrating features such as conservation scores, allele frequency, and functional annotations.

Protocol 2: AI-Driven Analysis of Single-Cell RNA Sequencing Data

This protocol describes the workflow for analyzing scRNA-seq data to identify cell types and states using machine learning.

1. Data Preprocessing and Quality Control:

- Raw scRNA-seq data is processed to generate a gene-cell count matrix.

- Cells with low library size or high mitochondrial gene content are filtered out.

2. Normalization and Feature Selection:

- The count data is normalized to account for differences in library size between cells.

- Highly variable genes are identified for downstream analysis.

3. Dimensionality Reduction and Clustering:

- Principal Component Analysis (PCA) is performed to reduce the dimensionality of the data.

- Unsupervised clustering algorithms, such as k-means or graph-based clustering, are applied to the principal components to group cells with similar expression profiles.[3]

4. Cell Type Annotation:

- Known marker genes are used to annotate the identified cell clusters.

- Supervised machine learning classifiers can be trained on reference datasets to automatically assign cell type labels to the clusters.

5. Trajectory Inference (Optional):

- For developmental or dynamic processes, pseudotime analysis algorithms can be used to order cells along a trajectory and identify gene expression changes over time.
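Steps 2 and 3 of this protocol can be sketched with NumPy alone:

- Library-size normalization.
- log1p transform.
- Selection of the most variable genes.
- PCA by singular value decomposition.

Ranking genes by raw variance of log-normalized expression is a simple stand-in for the variance-stabilized selection used by libraries such as Scanpy or Seurat. The function name and default parameters are illustrative assumptions.

```python
import numpy as np


def preprocess(counts: np.ndarray, n_hvg: int = 2000, n_pcs: int = 50,
               target_sum: float = 1e4):
    """Normalize, log-transform, select variable genes, and run PCA.

    `counts` is a cells x genes matrix of raw counts that has already
    passed cell-level QC (no cell has zero total counts).
    Returns (pc_scores, hvg_indices).
    """
    library_size = counts.sum(axis=1, keepdims=True).astype(float)
    if np.any(library_size == 0):
        raise ValueError("cells with zero counts should be filtered first")
    # Scale each cell to `target_sum` total counts, then apply log1p.
    logged = np.log1p(counts / library_size * target_sum)
    # Simple stand-in for HVG selection: genes with the highest variance.
    n_hvg = min(n_hvg, logged.shape[1])
    hvg = np.argsort(logged.var(axis=0))[::-1][:n_hvg]
    x = logged[:, hvg]
    x = x - x.mean(axis=0)  # center each gene before PCA
    # PCA via thin SVD: principal component scores are U * S.
    u, s, _ = np.linalg.svd(x, full_matrices=False)
    n_pcs = min(n_pcs, s.size)
    return u[:, :n_pcs] * s[:n_pcs], hvg


rng = np.random.default_rng(0)
counts = rng.poisson(2.0, size=(50, 100))
pcs, hvg = preprocess(counts, n_hvg=20, n_pcs=5)
print(pcs.shape, hvg.shape)  # (50, 5) (20,)
```

The resulting principal component scores are the input that the clustering and annotation steps operate on.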


Caption: AI workflow for PI3K/Akt pathway analysis in drug discovery.[13][14]

AI in Signaling Pathway Analysis: Unraveling Complexity

AI is proving to be a powerful tool for dissecting the complexity of cellular signaling pathways, which are often dysregulated in diseases like cancer.

- PI3K-AKT Signaling Pathway: Machine learning algorithms are used to build prognostic signatures based on the expression of genes in the PI3K-AKT pathway.[13] By integrating multi-omics data, these models can predict patient survival and sensitivity to different drugs, paving the way for personalized treatment strategies.[13][14]

The Future of AI in Genomics and Drug Development

The integration of AI into genomics is still in its early stages, but its potential is vast. Future developments are likely to focus on:

References

- 1. A primer on deep learning in genomics - PMC [pmc.ncbi.nlm.nih.gov]

- 2. A review of model evaluation metrics for machine learning in genetics and genomics - PMC [pmc.ncbi.nlm.nih.gov]

- 3. 10 Cutting-Edge Strategies for Genomic Data Analysis: A Comprehensive Guide - Omics tutorials [omicstutorials.com]

- 4. Deep Learning for Genomics: From Early Neural Nets to Modern Large Language Models - PMC [pmc.ncbi.nlm.nih.gov]

- 5. Machine Learning in Genomic Data Analysis for Personalized Medicine [ijraset.com]

- 6. AI in Genomics | Role of AI in genome sequencing [illumina.com]

- 7. How Artificial Intelligence Could Automate Genomics Research [today.ucsd.edu]

- 8. Can AI predict patient outcomes based on genomic data? [synapse.patsnap.com]

- 9. pharmiweb.com [pharmiweb.com]

- 10. orfonline.org [orfonline.org]

- 11. medrxiv.org [medrxiv.org]

- 12. researchgate.net [researchgate.net]

- 13. Machine learning developed a PI3K/Akt pathway-related signature for predicting prognosis and drug sensitivity in ovarian cancer - PMC [pmc.ncbi.nlm.nih.gov]

- 14. mdpi.com [mdpi.com]

- 15. cusabio.com [cusabio.com]

- 16. AI in Health Care and Biotechnology: Promise, Progress, and Challenges | Foley & Lardner LLP - JDSupra [jdsupra.com]

For Researchers, Scientists, and Drug Development Professionals

An In-depth Technical Guide to the AIAP Bioinformatics Package for Chromatin Accessibility Analysis

Core Features of AIAP

AIAP offers a complete system for ATAC-seq data analysis, encompassing quality assurance, enhanced peak calling, and downstream differential analysis.[1][2][3] The package is distributed as a Docker/Singularity image, which supports reproducibility and allows single-command execution across different computing environments.[1][2][3][4]

Key Quality Control Metrics

A central feature of AIAP is a series of QC metrics designed to ensure high-quality data for downstream analysis.[2][3][5][6] These include:

- Reads Under Peak Ratio (RUPr): This metric assesses the fraction of reads that fall within identified accessible chromatin regions (peaks), providing an indication of signal-to-noise ratio.
- Background (BG): AIAP evaluates the background signal to gauge the level of noise in the ATAC-seq experiment.[2][3][5][6]
- Promoter Enrichment (ProEn): This metric measures the enrichment of ATAC-seq signal at promoter regions, which are expected to be accessible in active cells.[2][3][5][6]
- Subsampling Enrichment (SubEn): To assess the robustness of the signal, AIAP subsamples the reads and evaluates the consistency of enrichment.[2][3][5][6]

In addition to these metrics, AIAP also performs alignment QC, peak calling QC, saturation analysis, and signal ranking analysis.[1][5]

AIAP Workflow

The AIAP workflow consists of four main stages: Data Processing, Quality Control, Integrative Analysis, and Data Visualization.[4][5]

Quantitative Performance Improvements

AIAP has been shown to enhance the sensitivity of ATAC-seq data analysis. On paired-end ATAC-seq datasets, AIAP achieves a 20%–60% improvement in the sensitivity of both peak calling and differential analysis.[1][2][3][4][6] Benchmarking with ENCODE ATAC-seq data validated the performance of AIAP and was used to establish recommended QC standards.[1][2][3][4][6]

| Performance Metric | Improvement with AIAP |
|---|---|
| Peak Calling Sensitivity | 20% - 60% increase |
| Differential Analysis Sensitivity | 20% - 60% increase |

Experimental Protocols

The methodologies employed by AIAP underlie its improved performance. The following outlines the key computational steps in the AIAP workflow.

Data Processing

- Adapter Trimming: Raw FASTQ files are processed to remove adapter sequences.
- Alignment: Trimmed reads are aligned to a reference genome.
- Read Filtering: After alignment, reads are filtered to remove duplicates and reads with low mapping quality.

Quality Control

AIAP calculates a suite of QC metrics from the filtered reads, including RUPr, BG, ProEn, and SubEn. These metrics are compiled into a comprehensive JSON report.

Integrative Analysis

- Peak Calling: AIAP identifies regions of open chromatin (peaks) from the aligned reads.
- Differential Accessibility Analysis: The package identifies differentially accessible regions (DARs) between experimental conditions.
- Transcription Factor Binding Region Discovery: AIAP can be used to pinpoint transcription factor binding regions (TFBRs) within the accessible chromatin.[4]

Visualization of Analysis Outputs

The results of the integrative analysis can be visualized to relate accessible regions to other genomic features. The logical flow runs from identified accessible regions to potential regulatory insights.

References

- 1. academic.oup.com [academic.oup.com]

- 2. profiles.wustl.edu [profiles.wustl.edu]

- 3. AIAP: A Quality Control and Integrative Analysis Package to Improve ATAC-seq Data Analysis - PubMed [pubmed.ncbi.nlm.nih.gov]

- 4. researchgate.net [researchgate.net]

- 5. AIAP: A Quality Control and Integrative Analysis Package to Improve ATAC-seq Data Analysis - PMC [pmc.ncbi.nlm.nih.gov]

- 6. biorxiv.org [biorxiv.org]

AIAP: A Technical Guide to Enhancing ATAC-seq Data Analysis

For Researchers, Scientists, and Drug Development Professionals

This technical guide explores the core functionality of the ATAC-seq Integrative Analysis Package (AIAP). AIAP is a computational workflow designed to improve the quality control and analysis of Assay for Transposase-Accessible Chromatin with sequencing (ATAC-seq) data. Through new quality control metrics and an optimized analysis pipeline, AIAP improves the sensitivity and accuracy of chromatin accessibility studies, providing a robust platform for genomics research and drug discovery.

Introduction to ATAC-seq and the Need for Improved Analysis

ATAC-seq has become a cornerstone technique for investigating genome-wide chromatin accessibility, offering insights into gene regulation and cellular states with advantages in speed and sample input requirements over previous methods.[1] The analysis of ATAC-seq data, however, presents challenges in ensuring data quality and in the sensitive detection of accessible chromatin regions. Traditional analysis pipelines, often adapted from ChIP-seq workflows, may not fully address the unique characteristics of ATAC-seq data, such as the pattern of Tn5 transposase insertion.

To address these challenges, the ATAC-seq Integrative Analysis Package (AIAP) was developed. AIAP is a complete system for ATAC-seq analysis, encompassing quality assurance, improved peak calling, and downstream differential analysis.[2] This guide details the methodologies and improvements AIAP brings to ATAC-seq data analysis.

The AIAP Workflow: A Four-Step Process

AIAP streamlines ATAC-seq data analysis through a four-step workflow, packaged within a Docker/Singularity image to ensure reproducibility and ease of use.[2]

Core Improvements of AIAP

AIAP enhances ATAC-seq data analysis primarily through a dedicated quality control module and an optimized peak-calling strategy.

Advanced Quality Control Metrics

AIAP introduces several new QC metrics to assess the quality of ATAC-seq data.[1][2] These metrics evaluate signal enrichment and background noise more directly than standard alignment statistics.

| Metric | Description | Purpose |
|---|---|---|
| Reads Under Peak Ratio (RUPr) | The percentage of total Tn5 insertion sites that fall within called peak regions. | Measures the signal-to-noise ratio. A higher RUPr indicates better enrichment of accessible chromatin regions. |
| Promoter Enrichment (ProEn) | The enrichment of ATAC-seq signal in promoter regions, which are expected to be accessible. | Provides a positive control for signal enrichment and data quality. |
| Background (BG) | The percentage of randomly sampled genomic regions (outside of peaks) that show a high ATAC-seq signal. | Directly quantifies the level of background noise in the experiment. |
| Subsampling Enrichment (SubEn) | Signal enrichment in peaks called from a down-sampled dataset (10 million reads). | Assesses signal enrichment independent of sequencing depth. |

These key QC metrics, particularly RUPr, ProEn, and BG, have been shown to be effective indicators of ATAC-seq data quality and are not dependent on sequencing depth.[1]
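SubEn relies on down-sampling every library to the same depth before measuring enrichment. This minimal sketch covers only the subsampling step, drawing reads uniformly without replacement with a fixed seed so the result is reproducible. The 10 million default comes from the table. The function name is hypothetical, and AIAP may subsample differently, for example by sampling fragments rather than reads.

```python
import random
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def subsample_reads(reads: Sequence[T], target: int = 10_000_000,
                    seed: int = 0) -> List[T]:
    """Keep `target` reads chosen uniformly without replacement.

    Libraries at or below the target depth are returned unchanged.
    """
    if len(reads) <= target:
        return list(reads)
    rng = random.Random(seed)  # fixed seed for reproducible subsampling
    return rng.sample(reads, target)


sub = subsample_reads(list(range(100)), target=10, seed=1)
print(len(sub))  # 10
```

Peaks would then be called on the subsampled reads, and enrichment measured on them, so that deeply and shallowly sequenced libraries are compared at equal depth.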

Enhanced Peak Calling Sensitivity

A key innovation in AIAP is how it processes paired-end ATAC-seq reads. Instead of treating the entire fragment as the signal, AIAP identifies the Tn5 insertion sites at both ends of each fragment by shifting positive-strand reads by +4 bp and negative-strand reads by -5 bp.[3] This "pseudo single-end" (PE-asSE) mode, in which paired-end reads are processed as single-end reads, more accurately represents transposase activity and substantially improves peak-calling sensitivity.

Studies have demonstrated that this compound's methodology can lead to a 20% to 60% increase in the number of identified peaks and a more than 30% increase in the detection of differentially accessible regions (DARs) compared to standard analysis methods.[2]

Experimental Protocols

The following sections detail the methods implemented in each step of the AIAP workflow.

Data Processing

- Adapter Trimming: Raw paired-end FASTQ files are processed with Cutadapt to remove sequencing adapters.
- Alignment: The trimmed reads are aligned to a reference genome using the BWA-MEM algorithm.[1]
- BAM Processing: The resulting BAM files are processed using methylQA in "ATAC mode". This step filters for uniquely mapped, non-redundant reads.[1]
- Tn5 Insertion Site Correction: To pinpoint the exact location of the Tn5 insertion event, the 5' ends of the aligned reads are shifted. Reads mapped to the positive strand are shifted by +4 bp, and reads mapped to the negative strand are shifted by -5 bp.[3]
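The shift step can be sketched in Python as follows. This is a minimal illustration, not AIAP's code: it assumes BED-style half-open coordinates, takes the 5' end of a negative-strand read to be its `end` coordinate, and uses hypothetical helper names (`shift_read_5prime`, `fragment_to_insertions`). Tools differ by one base in how they treat the negative-strand end, so the exact off-by-one convention is an assumption. The second function shows the pseudo single-end idea: each paired-end fragment contributes two independent insertion events, one per end.

```python
from typing import List, Tuple

PLUS_SHIFT = 4    # positive-strand 5' ends move +4 bp
MINUS_SHIFT = -5  # negative-strand 5' ends move -5 bp

def shift_read_5prime(strand: str, start: int, end: int) -> int:
    """Shifted 5'-end coordinate of one aligned read.

    Assumes half-open [start, end) coordinates; the 5' end of a '+' read is
    `start` and that of a '-' read is taken to be `end` (conventions vary).
    """
    if strand == "+":
        return start + PLUS_SHIFT
    if strand == "-":
        return end + MINUS_SHIFT
    raise ValueError(f"unknown strand: {strand!r}")

def fragment_to_insertions(chrom: str, frag_start: int, frag_end: int) -> List[Tuple[str, int]]:
    """Pseudo single-end view: one paired-end fragment yields two insertion events.

    The left end comes from the '+' mate and the right end from the '-' mate.
    """
    return [
        (chrom, frag_start + PLUS_SHIFT),
        (chrom, frag_end + MINUS_SHIFT),
    ]

print(shift_read_5prime("+", 100, 150))            # 104
print(shift_read_5prime("-", 100, 150))            # 145
print(fragment_to_insertions("chr1", 1000, 1200))  # [('chr1', 1004), ('chr1', 1195)]
```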

Quality Control

AIAP calculates a comprehensive set of QC metrics:

- Alignment QC:
  - Non-redundant Uniquely Mapped Reads: The total number of non-redundant reads that map to a single location in the genome.
  - Chromosome M (ChrM) Contamination Rate: The percentage of reads mapping to the mitochondrial genome, which can indicate cell stress or over-lysis.
- Peak-Calling QC:
  - Reads Under Peak Ratio (RUPr): Calculated as the fraction of total Tn5 insertion sites located within the boundaries of called peaks.
  - Background (BG): 50,000 genomic regions of 500 bp each are randomly selected from outside the called peak regions, and the ATAC-seq signal of each is calculated in reads per kilobase per million mapped reads (RPKM). Regions with an RPKM above a theoretical threshold are considered high-background, and the percentage of such regions is reported.[1]
  - Promoter Enrichment (ProEn): Measures the enrichment of ATAC-seq signal over promoter regions that overlap with called peaks.
  - Subsampling Enrichment (SubEn): Peaks are called from a subset of 10 million reads, and the signal enrichment in these peaks is calculated to provide a sequencing-depth-independent measure of enrichment.
- Saturation Analysis: Peaks are called from incrementally larger subsets of the data to assess whether the sequencing depth is sufficient to identify the majority of accessible regions.[1]
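The Background (BG) calculation described above can be illustrated with the Python sketch below. It samples 500 bp windows outside peaks, converts read counts to RPKM, and reports the fraction of windows above a threshold. AIAP's theoretical threshold is not specified here, so `threshold_rpkm` is a placeholder parameter, and all function names are hypothetical. The rejection sampler scans every peak on the chromosome for each draw, which is acceptable for a sketch but would need an interval index at genome scale.

```python
import random

def rpkm(count: int, region_len_bp: int, total_mapped: int) -> float:
    """Reads per kilobase of region per million mapped reads."""
    return count / (region_len_bp / 1_000) / (total_mapped / 1_000_000)

def sample_background_regions(chrom_sizes, peaks, n=50_000, size=500, seed=0):
    """Draw n random windows of `size` bp that do not overlap any peak.

    chrom_sizes: dict chrom -> length; peaks: dict chrom -> list of (start, end).
    Uses rejection sampling and assumes enough peak-free sequence exists.
    """
    rng = random.Random(seed)
    chroms = [c for c, length in chrom_sizes.items() if length > size]
    weights = [chrom_sizes[c] for c in chroms]  # longer chromosomes are drawn more often
    regions = []
    while len(regions) < n:
        chrom = rng.choices(chroms, weights=weights)[0]
        start = rng.randrange(0, chrom_sizes[chrom] - size)
        end = start + size
        # Reject the window if it overlaps any peak on this chromosome.
        if all(end <= p_start or start >= p_end for p_start, p_end in peaks.get(chrom, [])):
            regions.append((chrom, start, end))
    return regions

def background_fraction(region_counts, region_len_bp, total_mapped, threshold_rpkm):
    """Fraction of background windows whose RPKM exceeds threshold_rpkm (a placeholder)."""
    if not region_counts:
        return 0.0
    high = sum(1 for c in region_counts if rpkm(c, region_len_bp, total_mapped) > threshold_rpkm)
    return high / len(region_counts)
```

In use, the read counts for each sampled window would come from the processed insertion-site file; the counting step is left out to keep the sketch short.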

Integrative Analysis

- Peak Calling: Open chromatin regions (peaks) are identified with MACS2 using the --nomodel --shift -75 --extsize 150 parameters on the processed BAM file containing the corrected Tn5 insertion sites.
- Differential Accessibility Region (DAR) Analysis: For comparative studies, AIAP uses DESeq2 to identify statistically significant differences in chromatin accessibility between conditions.
- Transcription Factor Binding Region (TFBR) Discovery: The Wellington algorithm is used to identify transcription factor footprints within the called peaks, marking potential regulatory protein binding sites.[4]
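A peak-calling command using the MACS2 parameters quoted above might be assembled in Python as follows. This sketch assumes the corrected insertion sites have been exported to a strand-aware BED file and that MACS2 is on the `PATH`; the file name, output name, output directory, and genome size (`mm`, the MACS2 shortcut for mouse) are placeholders, and the helper name is hypothetical. With `--nomodel`, `--shift -75` moves each 5' cut site 75 bp upstream and `--extsize 150` then extends it 150 bp, so each insertion is represented by a 150 bp window centered on the cut site.

```python
import shlex

def macs2_callpeak_cmd(insertions_bed: str, name: str,
                       genome_size: str = "mm", outdir: str = "macs2_out") -> list:
    """Argument list for MACS2 peak calling on Tn5 insertion sites in BED format."""
    return [
        "macs2", "callpeak",
        "-t", insertions_bed,   # strand-aware BED of shifted insertion sites
        "-f", "BED",
        "-g", genome_size,      # effective genome size or shortcut (mm, hs, ...)
        "-n", name,             # prefix for output files
        "--outdir", outdir,
        "--nomodel",            # skip building the ChIP-seq shifting model
        "--shift", "-75",       # move each cut site 75 bp upstream ...
        "--extsize", "150",     # ... then extend 150 bp: a window centered on the site
    ]

cmd = macs2_callpeak_cmd("sample.insertions.bed", "sample")
print(shlex.join(cmd))
```

Returning an argument list rather than a single string lets it be passed directly to `subprocess.run(cmd, check=True)` without shell quoting issues.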

Data Visualization

AIAP generates a user-friendly, interactive QC report using qATACViewer,[2] which allows intuitive exploration of the various quality metrics. AIAP also produces standard file formats for visualization in genome browsers, including:

- bigWig files: For visualizing normalized signal density and Tn5 insertion sites.
- BED files: For representing the locations of called peaks and identified transcription factor footprints.[4]

Quantitative Improvements with AIAP

The methods implemented in AIAP lead to tangible improvements in ATAC-seq data analysis. The following table summarizes the recommended QC metric ranges derived from the analysis of 70 mouse ENCODE ATAC-seq datasets.

| QC Metric | Poor | Acceptable | Good |
|---|---|---|---|
| Reads Under Peak Ratio (RUPr) | < 0.1 | 0.1 - 0.2 | > 0.2 |
| Promoter Enrichment (ProEn) | < 5 | 5 - 10 | > 10 |
| Background (BG) | > 0.2 | 0.1 - 0.2 | < 0.1 |
| ChrM Contamination | > 0.2 | 0.1 - 0.2 | < 0.1 |

Table adapted from the analysis of ENCODE datasets presented in the AIAP publication.
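To apply the ranges above programmatically, a small Python helper could grade each metric. The thresholds are copied from the table; the boundary handling (values exactly at 0.1, 0.2, 5, or 10 count as Acceptable) is an assumption, since the table does not specify it, and the metric keys and function name are illustrative.

```python
# metric -> (lower bound, upper bound, higher_is_better), from the table above
QC_THRESHOLDS = {
    "RUPr": (0.1, 0.2, True),
    "ProEn": (5.0, 10.0, True),
    "BG": (0.1, 0.2, False),
    "ChrM": (0.1, 0.2, False),
}

def grade(metric: str, value: float) -> str:
    """Return 'Good', 'Acceptable', or 'Poor' for one QC metric value."""
    low, high, higher_is_better = QC_THRESHOLDS[metric]
    if higher_is_better:
        if value > high:
            return "Good"
        return "Acceptable" if value >= low else "Poor"
    # Lower is better (background noise, mitochondrial reads).
    if value < low:
        return "Good"
    return "Acceptable" if value <= high else "Poor"

sample_qc = {"RUPr": 0.25, "ProEn": 7.2, "BG": 0.05, "ChrM": 0.31}
for metric, value in sample_qc.items():
    print(metric, value, grade(metric, value))
```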

Furthermore, a direct comparison of peak calling between a standard MACS2 approach and AIAP's PE-asSE mode on the same dataset shows a substantial increase in the number of high-confidence peaks.

| Peak Calling Method | Number of Peaks |
|---|---|
| Standard MACS2 | ~100,000 |
| AIAP (PE-asSE mode) | ~120,000 |

Illustrative data based on the reported ~20% increase in peak identification.

Logical Relationships in AIAP's QC Metrics

The key QC metrics in AIAP are interconnected and together provide a holistic view of data quality.

Conclusion

AIAP represents a significant advance in ATAC-seq data analysis. Its comprehensive workflow, novel quality control metrics, and optimized peak-calling strategy yield a more sensitive and accurate characterization of the chromatin accessibility landscape. For researchers and drug development professionals, AIAP offers a reliable, reproducible pipeline for generating high-quality, actionable insights from ATAC-seq experiments, ultimately accelerating discoveries in gene regulation and epigenomics. The software, source code, and documentation for AIAP are freely available from the official AIAP GitHub repository.[5][2]


AIAP: A Technical Guide to Integrative Analysis of Open Chromatin

For Researchers, Scientists, and Drug Development Professionals

This technical guide provides an in-depth overview of AIAP (ATAC-seq Integrative Analysis Package), a comprehensive computational workflow for the quality control (QC) and integrative analysis of Assay for Transposase-Accessible Chromatin with high-throughput sequencing (ATAC-seq) data. It details the core functionalities of AIAP, presents standardized experimental protocols for generating high-quality ATAC-seq data, and offers guidance on interpreting the analytical outputs.

Introduction to AIAP

AIAP is a robust bioinformatics pipeline designed to streamline ATAC-seq data analysis and to identify open chromatin regions with high sensitivity and accuracy.[1][2] Developed to address the need for standardized QC metrics and an integrated analysis framework, AIAP processes raw sequencing data to deliver comprehensive quality assessment, improved peak calling, and downstream differential accessibility analysis.[1][2] The package is distributed as a Docker/Singularity image, enabling reproducible analysis with a single command-line execution.[1]

The core philosophy of AIAP is to provide a unified system that not only processes ATAC-seq data but also reports the quality metrics needed to ensure reliable downstream biological interpretation. When processing paired-end ATAC-seq datasets, AIAP improves sensitivity by 20% to 60% in both peak calling and differential analysis.[1][2]

Data Presentation: Key Quality Control Metrics

AIAP introduces and formalizes several key QC metrics for assessing ATAC-seq data quality. These metrics help identify potential issues in the experimental workflow and ensure the reliability of the results.[1][2]

| Metric | Description | Recommended Value/Interpretation |
|---|---|---|
| Reads Under Peaks Ratio (RUPr) | The proportion of non-redundant, uniquely mapped reads that fall within the identified ATAC-seq peaks. This metric reflects the signal-to-noise ratio of the experiment.[1][2] | A higher RUPr indicates better signal enrichment. The ENCODE consortium suggests that at least 20% of reads should fall in peaks.[3] |
| Promoter Enrichment (ProEn) | Measures the enrichment of ATAC-seq signal in promoter regions, which are expected to be accessible in most cell types. This serves as a positive control for open chromatin detection.[1][2][3] | A higher ProEn value indicates a successful ATAC-seq experiment with a good signal-to-noise ratio.[3] |
| Background (BG) | Estimates the overall background noise level in the ATAC-seq data.[1][2] | A lower BG value is desirable and indicates less random transposition and a cleaner signal. |
| Subsampling Enrichment (SubEn) | Evaluates the enrichment of ATAC-seq signal on called peaks using a subsampled dataset of 10 million reads to avoid sequencing-depth bias.[2] | Provides a standardized measure of signal enrichment across datasets of varying sequencing depths. |
| Mitochondrial DNA (mtDNA) Contamination | The percentage of reads mapping to the mitochondrial genome. High levels can indicate excessive cell lysis or problems with nuclear isolation. | Lower mtDNA contamination is preferred. The Omni-ATAC-seq protocol is designed to reduce mitochondrial reads by approximately 20%.[4] |
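SubEn depends on drawing a fixed-size random subset (10 million reads). The subsampling method AIAP uses is not described here, so the Python sketch below swaps in one standard option, reservoir sampling (Algorithm R), which draws a uniform sample in a single streaming pass without holding every read in memory. The function name, the fixed seed, and the idea of sampling whole fragment IDs (so that mates stay together) are assumptions.

```python
import random
from typing import Iterable, List, TypeVar

T = TypeVar("T")

def reservoir_sample(items: Iterable[T], k: int = 10_000_000, seed: int = 42) -> List[T]:
    """Uniformly sample up to k items from a stream in one pass (Algorithm R)."""
    rng = random.Random(seed)  # fixed seed makes the subset reproducible
    reservoir: List[T] = []
    for i, item in enumerate(items):
        if i < k:
            reservoir.append(item)  # fill the reservoir with the first k items
        else:
            j = rng.randint(0, i)  # inclusive bounds: i + 1 possible slots
            if j < k:
                reservoir[j] = item  # item i is kept with probability k / (i + 1)
    return reservoir
```

For example, `reservoir_sample(fragment_ids, k=10_000_000)` would return a reproducible 10-million-fragment subset, or every fragment if the library is smaller than that.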

Experimental Protocol: Omni-ATAC-seq

AIAP is optimized for data generated with the Omni-ATAC-seq protocol, which improves the signal-to-noise ratio and reduces mtDNA contamination compared with the original ATAC-seq method.[1][4] The following is a detailed protocol for performing Omni-ATAC-seq on 50,000 viable cells.

Materials and Reagents

- Cells: 50,000 viable cells (viability >90%)
- Buffers and Solutions:
  - ATAC-Resuspension Buffer (RSB): 10 mM Tris-HCl pH 7.4, 10 mM NaCl, 3 mM MgCl₂ in nuclease-free water
  - Lysis Buffer: ATAC-RSB with 0.1% NP40, 0.1% Tween-20, and 0.01% Digitonin
  - Wash Buffer: ATAC-RSB with 0.1% Tween-20
  - 1x PBS, cold
- Enzymes and Kits:
  - Illumina Nextera DNA Library Prep Kit (or Vazyme Trueprep DNA Library Prep Kit V2)
  - QIAGEN MinElute PCR Purification Kit
  - AMPure XP beads
  - KAPA HiFi HotStart ReadyMix

Procedure

- Cell Preparation:
  - Harvest 50,000 viable cells and centrifuge at 500 x g for 5 minutes at 4°C.
  - Carefully aspirate the supernatant.
  - Wash the cell pellet with 50 µl of cold 1x PBS and centrifuge again under the same conditions.
  - Aspirate the supernatant completely.
- Cell Lysis:
  - Resuspend the cell pellet in 50 µl of cold Lysis Buffer.
  - Pipette gently up and down 3 times to mix.
  - Incubate on ice for 3 minutes.[5]
- Lysis Washout:
  - Add 1 ml of cold Wash Buffer to the lysed cells.
  - Invert the tube 3 times to mix.
  - Centrifuge at 500 x g for 10 minutes at 4°C to pellet the nuclei.[5]
  - Carefully aspirate the supernatant in two steps to avoid disturbing the nuclear pellet.
- Tagmentation:
  - Prepare the transposition mix:
    - 25 µl 2x TD Buffer (from Nextera kit)
    - 2.5 µl Transposase (from Nextera kit)
    - 16.5 µl PBS
    - 0.5 µl 1% Digitonin
    - 0.5 µl 10% Tween-20
    - 5 µl Nuclease-free water
  - Resuspend the nuclear pellet in 50 µl of the transposition mix.
  - Pipette gently up and down 6 times to mix.
  - Incubate at 37°C for 30 minutes in a thermomixer with shaking at 1,000 rpm.[5]
- DNA Purification:
  - Immediately after tagmentation, purify the DNA using a QIAGEN MinElute Reaction Cleanup Kit.
  - Elute the DNA in 10 µl of Elution Buffer (EB).
- Library Amplification:
  - Amplify the tagmented DNA using the KAPA HiFi HotStart ReadyMix and indexed primers.
  - Perform an initial 5 cycles of PCR.
  - To determine the additional number of cycles needed, perform a qPCR side reaction.
- Library Purification and Quality Control:
  - Purify the amplified library using AMPure XP beads to remove primer dimers and large fragments.
  - Assess the library quality, including fragment size distribution, using an Agilent Bioanalyzer.
  - Quantify the library concentration using a Qubit fluorometer.
- Sequencing:
  - Perform 50 bp paired-end sequencing on an Illumina platform. For transcription factor footprinting, a higher sequencing depth of >200 million reads is recommended.[6]
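The qPCR side reaction in the amplification step is commonly read by choosing the cycle at which fluorescence reaches about one-third of its maximum. That rule of thumb comes from published ATAC-seq protocols and is an assumption here, as are the baseline subtraction and the function name. The Python sketch takes per-cycle linear fluorescence values and returns the suggested number of additional cycles.

```python
def additional_pcr_cycles(fluorescence, fraction=1/3):
    """First cycle (1-based) at which the baseline-subtracted qPCR signal
    reaches `fraction` of its maximum; used as the number of extra cycles."""
    if not fluorescence:
        raise ValueError("empty amplification curve")
    baseline = min(fluorescence)
    plateau = max(fluorescence) - baseline
    if plateau <= 0:
        raise ValueError("no amplification detected")
    target = fraction * plateau
    for cycle, value in enumerate(fluorescence, start=1):
        if value - baseline >= target:
            return cycle
    raise ValueError("signal never reaches the target fraction")

# Hypothetical linear fluorescence readings, one per qPCR cycle.
curve = [0.1, 0.1, 0.12, 0.2, 0.5, 1.2, 2.0, 2.4, 2.5, 2.5]
print(additional_pcr_cycles(curve))  # 6
```

Stopping at one-third of the plateau is meant to keep the library in the exponential phase, which limits over-amplification and the duplicate reads it produces.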

AIAP Workflow and Analysis

The AIAP package integrates the entire bioinformatic workflow, from raw sequencing reads to differential accessibility analysis.

AIAP Computational Workflow

The AIAP workflow is composed of four main stages: Data Processing, Quality Control, Integrative Analysis, and Data Visualization.[2][3]

Caption: The AIAP computational workflow, from raw data to visualization.

Downstream Integrative Analysis

The "integrative" aspect of AIAP lies in its unified approach to quality control and differential accessibility analysis. After QC, AIAP identifies differentially accessible regions (DARs) between experimental conditions, a critical step in understanding the regulatory changes associated with cellular processes, disease states, or drug treatments. The improved peak-calling sensitivity of AIAP translates directly into a more than 30% increase in the number of identified DARs.[2]

Caption: Logical flow of differential accessibility analysis in AIAP.

Conclusion

The AIAP package provides a much-needed standardized and integrative solution for ATAC-seq data analysis. By incorporating a suite of robust QC metrics and an optimized analysis pipeline, AIAP enhances the reliability and sensitivity of open chromatin studies. This technical guide is intended as a resource for researchers and professionals using AIAP to investigate gene regulation and chromatin architecture, ultimately accelerating discoveries in basic research and therapeutic development. The software, source code, and detailed documentation for AIAP are freely available on GitHub.[7][1]

References

- 1. AIAP: A Quality Control and Integrative Analysis Package to Improve ATAC-seq Data Analysis - PubMed [pubmed.ncbi.nlm.nih.gov]

- 2. biorxiv.org [biorxiv.org]

- 3. researchgate.net [researchgate.net]

- 4. lfz100.ust.hk [lfz100.ust.hk]

- 5. Omni-ATAC protocol [bio-protocol.org]

- 6. med.upenn.edu [med.upenn.edu]

- 7. github.com [github.com]

AIAP: An Apprenticeship Program, Not Installable Software

Setting Up a Linux Environment for AI Development

This guide details the installation and configuration of essential tools and libraries for a comprehensive AI development environment on a Linux-based system. The focus is on creating a reproducible and powerful platform for machine learning experimentation, data analysis, and model deployment.

System Recommendations

| Component | Recommendation | Rationale |
|---|---|---|
| Operating System | Ubuntu 22.04 LTS or later | Long-Term Support (LTS) versions provide stability and extended security updates. |
| Processor (CPU) | 8-core processor or higher | Facilitates faster data preprocessing and model training for non-GPU intensive tasks. |
| Memory (RAM) | 32 GB or more | Large datasets and complex models can be memory-intensive. |
| Storage | 1 TB NVMe SSD or more | Fast storage is crucial for quick loading of large datasets and efficient disk I/O operations. |
| Graphics Card (GPU) | NVIDIA RTX 30-series or higher with at least 12 GB of VRAM | Essential for accelerating the training of deep learning models. CUDA and cuDNN support is critical. |
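A quick way to compare a machine against the CPU, memory, and storage rows of this table is a short Python check that uses only the standard library. It is Linux-specific (physical memory is read via `os.sysconf`), `os.cpu_count()` reports logical CPUs, which can exceed physical cores on processors with simultaneous multithreading, and the 0.9 tolerance for reserved memory and filesystem overhead is an assumption. GPU and VRAM are not checked here.

```python
import os
import shutil

# Minimums from the recommendations table (RAM in GiB, disk in GB).
RECOMMENDED = {"cpu_cores": 8, "ram_gib": 32, "disk_gb": 1000}

def read_system(path: str = "/") -> dict:
    """Collect logical CPUs, physical RAM, and total disk size for `path` (Linux)."""
    ram_bytes = os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES")
    return {
        "cpu_cores": os.cpu_count() or 0,
        "ram_gib": ram_bytes / 2**30,
        "disk_gb": shutil.disk_usage(path).total / 1e9,
    }

def check(system: dict, recommended: dict = RECOMMENDED, tolerance: float = 0.9) -> dict:
    """True per component if it reaches `tolerance` x the recommended minimum."""
    return {key: system[key] >= tolerance * minimum for key, minimum in recommended.items()}

if __name__ == "__main__":
    for component, ok in check(read_system()).items():
        print(f"{component}: {'OK' if ok else 'below recommendation'}")
```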

Core Environment Setup Workflow

The following diagram illustrates the logical workflow for establishing the AI development environment on a fresh Linux installation.

Experimental Protocols: Step-by-Step Installation

The following protocols provide detailed command-line instructions for installing the necessary components. These commands are intended for an Ubuntu-based Linux distribution.

1. System Preparation

First, ensure your system's package list and installed packages are up to date. Then, install essential build tools.

2. GPU Driver and CUDA Installation

For GPU acceleration in deep learning tasks, installing the appropriate NVIDIA drivers and CUDA toolkit is crucial.

- NVIDIA Driver Installation: Installing the drivers from the official Ubuntu repositories is recommended for ease of installation and compatibility.
- CUDA Toolkit and cuDNN: These can be installed from the NVIDIA repository to obtain the latest compatible versions.

3. Python Environment with Miniconda

Using a virtual environment manager like Conda is highly recommended to manage dependencies for different projects.

- Install Miniconda:
- Create a Conda Environment:

4. Installation of Core AI Libraries

With the Conda environment activated, you can now install the primary AI and machine learning libraries.

- PyTorch: For GPU-accelerated tensor computations and deep learning.
- TensorFlow: An end-to-end open-source platform for machine learning.
- Jupyter Notebook/Lab: For interactive computing and development.

Verification Workflow

After completing the installation, it is essential to verify that all components are functioning correctly. The following diagram outlines the verification process.
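One way to carry out this verification is a short Python script that reports which core libraries are importable and whether PyTorch and TensorFlow can see a GPU. It relies only on `importlib` plus the public `torch.cuda.is_available()` and `tf.config.list_physical_devices("GPU")` calls, skips any framework that is not installed, and the default module list and function names are assumptions.

```python
import importlib
import importlib.util

def library_versions(modules=("numpy", "torch", "tensorflow", "jupyterlab")) -> dict:
    """Map each module name to its version string, or 'missing' if not importable."""
    report = {}
    for name in modules:
        if importlib.util.find_spec(name) is None:
            report[name] = "missing"
            continue
        module = importlib.import_module(name)
        report[name] = getattr(module, "__version__", "installed")
    return report

def gpu_visibility() -> dict:
    """Report whether installed frameworks can see a CUDA GPU (None = not installed)."""
    status = {"torch": None, "tensorflow": None}
    if importlib.util.find_spec("torch") is not None:
        import torch
        status["torch"] = torch.cuda.is_available()
    if importlib.util.find_spec("tensorflow") is not None:
        import tensorflow as tf
        status["tensorflow"] = len(tf.config.list_physical_devices("GPU")) > 0
    return status

if __name__ == "__main__":
    print(library_versions())
    print(gpu_visibility())
```

Run inside the activated Conda environment, a `False` for a framework that is installed usually points to a driver or CUDA mismatch rather than to the library itself.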


AIAP: A Technical Guide to Enhancing Chromatin Accessibility Analysis

For Researchers, Scientists, and Drug Development Professionals

Introduction

The study of chromatin accessibility provides a window into the regulatory landscape of the genome, revealing how DNA is packaged and which regions are open for transcription factor binding and gene expression. Assay for Transposase-Accessible Chromatin with high-throughput sequencing (ATAC-seq) has emerged as a powerful technique for mapping these accessible regions. However, ATAC-seq data quality can vary, which affects the reliability of downstream analysis. The ATAC-seq Integrative Analysis Package (AIAP) is a comprehensive software solution designed to address this challenge by providing robust quality control (QC) and integrative analysis of ATAC-seq data. This guide provides an in-depth technical overview of the AIAP software, including its core functionalities, underlying methods, and practical applications in chromatin accessibility studies.

Core Concepts of AIAP

AIAP is a command-line tool, packaged in a Docker/Singularity container for ease of use and reproducibility, that streamlines ATAC-seq data analysis. Its primary goal is to improve the sensitivity of peak calling and of the identification of differentially accessible regions between conditions. AIAP achieves this through rigorous quality control, optimized data processing, and integrated downstream analysis.

A key innovation of AIAP is a set of novel QC metrics that assess ATAC-seq data quality more accurately. These metrics go beyond standard sequencing quality scores to evaluate the signal-to-noise ratio and the enrichment of accessible chromatin regions.

Quantitative Data Summary

AIAP has been shown to substantially improve the sensitivity of ATAC-seq data analysis, most visibly as an increase in the number of called peaks and differentially accessible regions (DARs) compared with standard analysis pipelines. The following tables summarize the performance of AIAP on a set of publicly available ATAC-seq datasets.

| Metric | Standard Pipeline | AIAP Pipeline | Percentage Improvement |
|---|---|---|---|
| Number of Called Peaks | Varies by dataset | Varies by dataset | 20% - 60% increase |
| Number of DARs Identified | Varies by dataset | Varies by dataset | More than 30% increase |

Table 1: Improvement in Peak Calling and DAR Identification with AIAP. AIAP can substantially increase the number of identified accessible chromatin regions and differentially accessible regions, enhancing the discovery potential of ATAC-seq experiments.

The quality of ATAC-seq data is paramount for obtaining reliable results. AIAP provides a suite of QC metrics to assess data quality; the table below outlines these key metrics and their significance.

| QC Metric | Description | Recommended Value |
|---|---|---|
| Reads Under Peaks Ratio (RUPr) | The fraction of total reads that fall within called peak regions. A higher RUPr indicates a better signal-to-noise ratio. | > 20% |
| Promoter Enrichment (ProEn) | The enrichment of ATAC-seq signal in promoter regions compared with background. High ProEn suggests good data quality. | Varies by cell type |
| Background (BG) | The level of background signal in the ATAC-seq data. Lower background is desirable. | Varies by experiment |
| Subsampling Enrichment (SubEn) | Assesses signal enrichment in peaks when the number of reads is reduced, indicating the robustness of the called peaks. | Consistent across subsamples |

Table 2: Key Quality Control Metrics in AIAP. These metrics provide a comprehensive overview of the quality of an ATAC-seq experiment, enabling researchers to identify and troubleshoot problematic datasets.

Experimental Protocols

A successful ATAC-seq experiment is the foundation for high-quality data and meaningful biological insights. Although AIAP is a computational tool for data analysis, this section provides a detailed protocol for the Omni-ATAC-seq method, which is recommended for its improved signal-to-noise ratio.

Omni-ATAC-seq Protocol

This protocol is adapted from published methods and is suitable for 50,000 cells.

I. Nuclei Isolation

- Start with a single-cell suspension of 50,000 viable cells.
- Pellet the cells by centrifugation at 500 x g for 5 minutes at 4°C.
- Wash the cells once with 50 µL of ice-cold 1x PBS. Centrifuge at 500 x g for 5 minutes at 4°C.
- Resuspend the cell pellet in 50 µL of cold lysis buffer (10 mM Tris-HCl pH 7.4, 10 mM NaCl, 3 mM MgCl2, 0.1% IGEPAL CA-630, 0.1% Tween-20, and 0.01% Digitonin).
- Incubate on ice for 3 minutes.
- Wash out the lysis buffer by adding 1 mL of cold wash buffer (10 mM Tris-HCl pH 7.4, 10 mM NaCl, 3 mM MgCl2, and 0.1% Tween-20).
- Centrifuge at 500 x g for 10 minutes at 4°C to pellet the nuclei.
- Carefully remove the supernatant.

II. Transposition Reaction

- Resuspend the nuclei pellet in 50 µL of transposition mix (25 µL 2x TD Buffer, 2.5 µL TDE1 Tn5 Transposase, 16.5 µL PBS, 0.5 µL 1% Digitonin, 0.5 µL 10% Tween-20, and 5 µL Nuclease-free water).
- Incubate the reaction at 37°C for 30 minutes in a thermomixer with shaking at 1000 rpm.
- Immediately after incubation, purify the transposed DNA using a Qiagen MinElute PCR Purification Kit.
- Elute the DNA in 10 µL of elution buffer.
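The transposition mix volumes listed above can be checked with simple dilution arithmetic: they should sum to 50 µL, the 2x TD buffer should end at 1x, and the detergent stocks should dilute to the same concentrations used in the lysis buffer (0.01% digitonin, 0.1% Tween-20). The Python sketch below performs that check and scales the mix for several reactions; the 10% pipetting overage and the helper names are assumptions, not part of the protocol.

```python
# Transposition mix for one 50 uL reaction (volumes in uL, from the protocol text).
MIX_UL = {
    "2x TD Buffer": 25.0,
    "TDE1 Tn5 Transposase": 2.5,
    "PBS": 16.5,
    "1% Digitonin": 0.5,
    "10% Tween-20": 0.5,
    "Nuclease-free water": 5.0,
}

def final_concentration(stock: float, volume_ul: float, total_ul: float) -> float:
    """C1 * V1 / V_total: concentration after dilution into the full reaction."""
    return stock * volume_ul / total_ul

def scale_mix(n_reactions: int, overage: float = 0.10) -> dict:
    """Master-mix volumes for n reactions plus a pipetting overage (assumed 10%)."""
    factor = n_reactions * (1 + overage)
    return {name: round(vol * factor, 2) for name, vol in MIX_UL.items()}

total = sum(MIX_UL.values())
td_x = final_concentration(2.0, MIX_UL["2x TD Buffer"], total)          # 1x
digitonin_pct = final_concentration(1.0, MIX_UL["1% Digitonin"], total)  # 0.01 %
tween_pct = final_concentration(10.0, MIX_UL["10% Tween-20"], total)     # 0.1 %

print(f"total = {total} uL, TD = {td_x}x, digitonin = {digitonin_pct:.3f}%, Tween-20 = {tween_pct:.2f}%")
print(scale_mix(4))
```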

III. Library Amplification

- Amplify the transposed DNA using a suitable PCR master mix and custom Nextera primers.
- Perform an initial PCR amplification for 5 cycles.
- To determine the optimal number of additional PCR cycles, perform a qPCR side reaction.
- Based on the qPCR results, perform the remaining PCR cycles on the main library.
- Purify the amplified library using AMPure XP beads to remove primer-dimers and large fragments. A double-sided bead purification is recommended.
- Assess the quality and concentration of the final library using a Bioanalyzer and Qubit fluorometer.
- The library is now ready for high-throughput sequencing.

AIAP Software Workflow

The AIAP software is structured as a pipeline that takes raw ATAC-seq sequencing data (in FASTQ format) and produces a comprehensive set of results, including quality control reports, processed data files, and downstream analysis outputs. The workflow has four main stages: Data Processing, Quality Control, Integrative Analysis, and Data Visualization.

Caption: The AIAP software workflow, from raw data to analysis and visualization.

Conclusion

The AIAP software package provides a powerful and user-friendly solution for the quality control and integrative analysis of ATAC-seq data. By implementing novel QC metrics and an optimized analysis pipeline, AIAP enhances the sensitivity and reliability of chromatin accessibility studies. This technical guide has provided an overview of the core functionalities of AIAP, detailed experimental protocols for generating high-quality ATAC-seq data, and a summary of the software's workflow. For researchers and drug development professionals, AIAP is a valuable tool for realizing the full potential of ATAC-seq in studying the regulatory genome. For more detailed information, including the source code and full documentation, refer to the official AIAP GitHub repository.


AIAP for ATAC-seq Peak Calling: Application Notes and Protocols

For Researchers, Scientists, and Drug Development Professionals

Introduction

The Assay for Transposase-Accessible Chromatin with high-throughput sequencing (ATAC-seq) has become a cornerstone technique for investigating genome-wide chromatin accessibility. The quality of ATAC-seq data is paramount for the accurate identification of open chromatin regions and for subsequent downstream analyses. The ATAC-seq Integrative Analysis Package (AIAP) is a comprehensive bioinformatics pipeline designed to streamline and improve ATAC-seq data analysis.[1][2][3][4][5] AIAP provides a complete system for quality control (QC), enhanced peak calling, and differential accessibility analysis.[1][3][4] This document provides detailed application notes and protocols for using AIAP for ATAC-seq peak calling.

Core Features of AIAP

AIAP distinguishes itself through a series of optimized analysis strategies and defined QC metrics.[1][2][3][4] The key features include:

- Optimized Data Processing: AIAP processes paired-end ATAC-seq data in a pseudo single-end mode to improve peak-calling sensitivity.[6]
- Comprehensive Quality Control: AIAP introduces several key QC metrics to assess the quality of ATAC-seq data, including Reads Under Peak Ratio (RUPr), Promoter Enrichment (ProEn), and Background (BG).[2][3][4][7]
- Improved Peak Calling Sensitivity: By optimizing data preparation for the MACS2 peak caller, AIAP substantially improves the sensitivity of peak detection.[2][8]
- Integrated Downstream Analysis: AIAP facilitates the identification of differentially accessible regions (DARs) and transcription factor binding regions (TFBRs).[2][6]
- Reproducibility and Ease of Use: AIAP is distributed as a Docker/Singularity container, ensuring reproducibility and simplifying installation and execution.[1][3][4][5]

Quantitative Performance of AIAP

AIAP has been shown to substantially enhance the sensitivity of ATAC-seq analysis. The following table summarizes the performance improvements reported in the original publication.

| Performance Metric | Improvement with AIAP | Description |
|---|---|---|
| Peak Calling Sensitivity | 20% - 60% increase | AIAP identifies more true-positive peaks than standard ATAC-seq analysis pipelines.[3][4][5] |
| Differentially Accessible Regions (DARs) | Over 30% more DARs identified | The enhanced peak-calling sensitivity leads to the discovery of more regions with statistically significant differences in chromatin accessibility between conditions.[6] |

AIAP Workflow for ATAC-seq Peak Calling

The AIAP pipeline follows a structured workflow from raw sequencing reads to peak calls and downstream analysis.

References

- 1. files.core.ac.uk [files.core.ac.uk]

- 2. AIAP: A Quality Control and Integrative Analysis Package to Improve ATAC-seq Data Analysis - PubMed [pubmed.ncbi.nlm.nih.gov]

- 3. profiles.wustl.edu [profiles.wustl.edu]

- 4. researchgate.net [researchgate.net]

- 5. biorxiv.org [biorxiv.org]

- 6. ATAC-seq Data Standards and Processing Pipeline – ENCODE [encodeproject.org]

- 7. bioinformatics-core-shared-training.github.io [bioinformatics-core-shared-training.github.io]

- 8. youtube.com [youtube.com]

Evaluating ATAC-seq Library Complexity with AIAP: Application Notes and Protocols

For Researchers, Scientists, and Drug Development Professionals

Introduction

The Assay for Transposase-Accessible Chromatin with sequencing (ATAC-seq) has become a cornerstone technique for investigating chromatin accessibility, providing critical insights into gene regulation and cellular identity. The quality of ATAC-seq data is paramount for the accuracy of downstream analyses, such as transcription factor footprinting and differential accessibility analysis. A key determinant of data quality is the complexity of the sequencing library, which reflects the diversity of the initial pool of DNA fragments. Low-complexity libraries, often arising from insufficient starting material or excessive PCR amplification, can lead to a high proportion of duplicate reads and a reduced signal-to-noise ratio, ultimately compromising the biological interpretation of the data.

This document provides detailed application notes and protocols for evaluating ATAC-seq library complexity with the ATAC-seq Integrative Analysis Package (AIAP). AIAP is a computational pipeline designed to streamline the quality control (QC) and analysis of ATAC-seq data.[1][2] It offers a suite of metrics tailored to assessing the quality and complexity of ATAC-seq libraries, enabling researchers to make informed decisions about their data.

I. Experimental Protocol: ATAC-seq Library Preparation

This protocol outlines the key steps for generating ATAC-seq libraries from cell suspensions.

Materials:

- Freshly harvested cells (50,000–100,000 cells per reaction)
- Lysis buffer (e.g., 10 mM Tris-HCl pH 7.4, 10 mM NaCl, 3 mM MgCl2, 0.1% IGEPAL CA-630)
- Tagmentation buffer and enzyme (Tn5 transposase)
- PCR amplification mix
- DNA purification beads (e.g., AMPure XP)
- Nuclease-free water

Procedure:

- Cell Lysis and Nuclei Isolation:
  - Start with a single-cell suspension of 50,000 to 100,000 cells.
  - Pellet the cells by centrifugation and resuspend in 50 µL of ice-cold lysis buffer.
  - Incubate on ice for 10 minutes to lyse the cell membrane while keeping the nuclear membrane intact.
  - Centrifuge the lysate to pellet the nuclei.
  - Carefully remove the supernatant.
- Tagmentation:
  - Resuspend the nuclear pellet in the tagmentation reaction mix containing the Tn5 transposase and tagmentation buffer.
  - Incubate the reaction at 37°C for 30 minutes. The Tn5 transposase simultaneously fragments the DNA in open chromatin regions and inserts sequencing adapters at the ends of these fragments.
- DNA Purification:
  - Purify the tagmented DNA using DNA purification beads to remove the Tn5 transposase and other reaction components.
- PCR Amplification:
  - Amplify the tagmented DNA using a PCR mix containing primers that anneal to the inserted adapters.
  - Optimize the number of PCR cycles to minimize amplification bias. A typical range is 5-12 cycles.
- Library Purification and Quantification:
  - Purify the amplified library using DNA purification beads.
  - Assess the quality and quantity of the library using a DNA analyzer (e.g., Agilent Bioanalyzer) and a fluorometric quantification method (e.g., Qubit).

II. Computational Protocol: Library Complexity Evaluation with AIAP

AIAP is a computational pipeline that takes raw ATAC-seq sequencing data (FASTQ files) as input and generates a comprehensive QC report.

Software Requirements:

- Docker or Singularity
- AIAP Singularity image (available from the AIAP GitHub repository)[3]

Procedure:

-

Data Preprocessing:

-

This compound first performs adapter trimming on the raw FASTQ files using tools like Cutadapt.

-

The trimmed reads are then aligned to a reference genome using an aligner such as BWA.[4]

-

-

Read Filtering and Processing:

- The aligned reads (in BAM format) are filtered to remove unmapped reads, reads with low mapping quality, and PCR duplicates.
- For paired-end reads, AIAP identifies the Tn5 insertion sites by shifting the reads (+4 bp for the positive strand and -5 bp for the negative strand) to account for the 9-bp duplication created by the transposase.[4]
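The two operations above can be sketched on simplified alignment records. This is an illustration, not AIAP's code. The `Alignment` record is hypothetical (a real pipeline would read BAM records with a library such as pysam), the MAPQ cutoff of 30 is an assumed example value, and the flag bits are the standard SAM values for unmapped (0x4), reverse strand (0x10), and duplicate (0x400) reads. Tools differ by one base on whether the minus-strand shift is applied to the alignment end or to the last aligned base; this sketch uses the last aligned base.

```python
from dataclasses import dataclass

FLAG_UNMAPPED = 0x4      # SAM flag: segment unmapped
FLAG_REVERSE = 0x10      # SAM flag: read aligned to the reverse (minus) strand
FLAG_DUPLICATE = 0x400   # SAM flag: PCR or optical duplicate
MIN_MAPQ = 30            # assumed example cutoff, not a documented AIAP default


@dataclass
class Alignment:
    """Hypothetical, simplified alignment record; [start, end) is 0-based, half-open."""
    chrom: str
    start: int
    end: int
    mapq: int
    flag: int


def passes_filters(aln: Alignment, min_mapq: int = MIN_MAPQ) -> bool:
    """Drop unmapped reads, duplicates, and reads below the MAPQ cutoff."""
    if aln.flag & (FLAG_UNMAPPED | FLAG_DUPLICATE):
        return False
    return aln.mapq >= min_mapq


def tn5_insertion_site(aln: Alignment) -> int:
    """Shift the read's 5' end to the estimated Tn5 insertion site.

    Plus strand: 5' end is `start`, shifted by +4 bp.
    Minus strand: 5' end is the last aligned base (`end - 1`), shifted by -5 bp.
    """
    if aln.flag & FLAG_REVERSE:
        return (aln.end - 1) - 5
    return aln.start + 4


def insertion_sites(alignments):
    """Yield (chrom, insertion_site) for every alignment that passes the filters."""
    for aln in alignments:
        if passes_filters(aln):
            yield aln.chrom, tn5_insertion_site(aln)


if __name__ == "__main__":
    reads = [
        Alignment("chr1", 100, 150, 60, 0),               # kept, plus strand
        Alignment("chr1", 200, 250, 60, FLAG_REVERSE),    # kept, minus strand
        Alignment("chr1", 300, 350, 5, 0),                # dropped: low MAPQ
        Alignment("chr1", 400, 450, 60, FLAG_DUPLICATE),  # dropped: duplicate
    ]
    print(list(insertion_sites(reads)))  # [('chr1', 104), ('chr1', 244)]
```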

QC Metrics Calculation:

- AIAP calculates a suite of QC metrics to assess library quality and complexity and summarizes them in a JSON file.
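As a sketch of what such a summary might contain, the snippet below turns a few read counts into percentage-based metrics and serializes them to JSON. The function, its argument names, and the output keys are hypothetical; AIAP's actual JSON schema is not described here, and the counts in the example are illustrative, not real data.

```python
import json


def qc_summary(total_reads: int, uniquely_mapped: int, non_redundant: int,
               chrM_reads: int, reads_in_peaks: int) -> dict:
    """Turn raw read counts into percentage-based QC metrics.

    Argument names and output keys are hypothetical, not AIAP's JSON schema.
    """
    if total_reads <= 0 or uniquely_mapped <= 0:
        raise ValueError("total_reads and uniquely_mapped must be positive")
    return {
        "uniquely_mapped_pct": 100.0 * uniquely_mapped / total_reads,
        # Share of uniquely mapped reads that remain after duplicate removal.
        "non_redundant_pct": 100.0 * non_redundant / uniquely_mapped,
        "chrM_pct": 100.0 * chrM_reads / total_reads,
        "reads_in_peaks_pct": 100.0 * reads_in_peaks / total_reads,
    }


if __name__ == "__main__":
    # Illustrative counts only, not data from a real experiment.
    metrics = qc_summary(total_reads=40_000_000, uniquely_mapped=34_000_000,
                         non_redundant=24_000_000, chrM_reads=3_000_000,
                         reads_in_peaks=14_000_000)
    print(json.dumps(metrics, indent=2))
```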

Report Generation:

- The QC metrics in the JSON report can be visualized using the qATACViewer.[5]

III. Key Metrics for ATAC-seq Library Complexity

A comprehensive evaluation of ATAC-seq library complexity involves assessing several QC metrics. The following tables summarize key metrics, including those generated by AIAP, and provide general guidelines for interpreting their values.[2][5][6]

Table 1: Standard ATAC-seq Quality Control Metrics

| Metric | Description | Good Quality | Poor Quality |
|---|---|---|---|
| Uniquely Mapped Reads | Percentage of reads that map to a single location in the genome. | > 80% | < 70% |
| Mitochondrial Read Contamination | Percentage of reads mapping to the mitochondrial genome. | < 15% | > 30% |
| Library Complexity | Estimated number of unique DNA fragments in the library. Higher is better. | Varies by experiment, but should not be saturated at the sequencing depth. | Saturation at low sequencing depths. |
| Fraction of Reads in Peaks (FRiP) | The proportion of reads that fall into called peak regions. A measure of signal-to-noise. | > 0.3 (ENCODE guideline)[2] | < 0.2 |
| TSS Enrichment Score | Enrichment of reads around transcription start sites compared to flanking regions. | > 6 | < 4 |
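The numeric cutoffs in Table 1 lend themselves to an automated check. A minimal sketch, assuming the cutoffs exactly as tabulated and hypothetical metric keys: values between the good and poor cutoffs are reported as borderline, and the library complexity row is left out because it has no fixed numeric cutoff.

```python
# (good_cutoff, poor_cutoff, higher_is_better), copied from Table 1.
THRESHOLDS = {
    "uniquely_mapped_pct": (80.0, 70.0, True),
    "chrM_pct": (15.0, 30.0, False),
    "frip": (0.3, 0.2, True),
    "tss_enrichment": (6.0, 4.0, True),
}


def classify(metric: str, value: float) -> str:
    """Return 'good', 'poor', or 'borderline' for one metric."""
    good, poor, higher_is_better = THRESHOLDS[metric]
    if higher_is_better:
        if value > good:
            return "good"
        if value < poor:
            return "poor"
    else:
        if value < good:
            return "good"
        if value > poor:
            return "poor"
    return "borderline"


def classify_all(metrics: dict) -> dict:
    """Classify every metric that has a tabulated cutoff; ignore the rest."""
    return {k: classify(k, v) for k, v in metrics.items() if k in THRESHOLDS}


if __name__ == "__main__":
    sample = {"uniquely_mapped_pct": 85.2, "chrM_pct": 22.0, "frip": 0.35, "tss_enrichment": 3.1}
    print(classify_all(sample))
    # {'uniquely_mapped_pct': 'good', 'chrM_pct': 'borderline', 'frip': 'good', 'tss_enrichment': 'poor'}
```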

Table 2: AIAP-Specific Quality Control Metrics

| Metric | Description | Good Quality | Poor Quality |
|---|---|---|---|
| Reads Under Peak Ratio (RUPr) | A measure of the fraction of reads within identified peaks, similar to FRiP. | High | Low |
| Background (BG) | An estimate of the background noise level in the data. | Low | High |
| Promoter Enrichment (ProEn) | The enrichment of ATAC-seq signal specifically at promoter regions. | High | Low |
| Subsampling Enrichment (SubEn) | Assesses the stability of enrichment signals when the data are downsampled. | Stable enrichment | Unstable enrichment |
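Promoter-level enrichment is often summarized as a TSS enrichment score. Below is a simplified, ENCODE-style sketch: it aggregates Tn5 insertion sites at each offset within ±`flank` bp of strand-oriented TSSs, divides by the mean count in the outermost `end_window` bp on both sides, and reports the maximum, with no smoothing. It illustrates the enrichment idea only and is not AIAP's ProEn formula; the default windows (±2,000 bp and 100 bp) are assumptions.

```python
import bisect
from collections import defaultdict


def tss_enrichment(insertions, tss_list, flank: int = 2000, end_window: int = 100) -> float:
    """Simplified TSS enrichment score (no smoothing).

    insertions: iterable of (chrom, pos) Tn5 insertion sites.
    tss_list: iterable of (chrom, pos, strand) with strand "+" or "-".
    """
    if not 0 < end_window <= flank:
        raise ValueError("need 0 < end_window <= flank")
    positions = defaultdict(list)
    for chrom, pos in insertions:
        positions[chrom].append(pos)
    for plist in positions.values():
        plist.sort()

    # profile[i] counts insertions at offset (i - flank) from a TSS, oriented 5'->3'.
    profile = [0] * (2 * flank + 1)
    for chrom, tss, strand in tss_list:
        plist = positions.get(chrom, [])
        lo = bisect.bisect_left(plist, tss - flank)
        hi = bisect.bisect_right(plist, tss + flank)
        for pos in plist[lo:hi]:
            offset = pos - tss if strand == "+" else tss - pos
            profile[offset + flank] += 1

    # Background: mean count per position in the outermost windows on both sides.
    background = (sum(profile[:end_window]) + sum(profile[-end_window:])) / (2 * end_window)
    if background == 0:
        raise ValueError("no insertions in the flanking background windows")
    return max(profile) / background
```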

IV. Visualizations

Experimental and Computational Workflow

The following diagram illustrates the complete workflow from sample preparation to data analysis with AIAP.

Caption: ATAC-seq and AIAP workflow.

Conceptual Diagram of Library Complexity

This diagram illustrates the concept of high versus low library complexity in ATAC-seq.

Caption: High vs. Low Library Complexity.
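Library complexity can be quantified as well as pictured. The sketch below computes the non-redundant fraction (NRF) and PCR bottlenecking coefficients (PBC1, PBC2) that ENCODE reports for ATAC-seq, using fragment coordinates as the unit. It also estimates total library size from the Lander-Waterman model C = X(1 - e^(-N/X)), where N is the number of read pairs, C the number of distinct pairs, and X the library size; Picard's duplication metrics use the same model. The input format and function names are assumptions for illustration, and AIAP's own complexity calculations may differ.

```python
import math
from collections import Counter


def complexity_metrics(fragments) -> dict:
    """NRF, PBC1 and PBC2 from fragment coordinates.

    fragments: iterable of (chrom, start, end) tuples, one per read pair.
    NRF  = distinct fragment positions / total fragments
    PBC1 = positions seen exactly once / distinct positions
    PBC2 = positions seen exactly once / positions seen exactly twice
    """
    counts = Counter(fragments)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no fragments supplied")
    distinct = len(counts)
    m1 = sum(1 for c in counts.values() if c == 1)
    m2 = sum(1 for c in counts.values() if c == 2)
    return {
        "NRF": distinct / total,
        "PBC1": m1 / distinct,
        "PBC2": m1 / m2 if m2 else math.inf,
    }


def estimate_library_size(total: int, unique: int) -> float:
    """Solve unique = X * (1 - exp(-total / X)) for X (Lander-Waterman) by bisection."""
    if not 0 < unique < total:
        raise ValueError("requires 0 < unique < total")

    def f(x: float) -> float:
        # Positive while x is below the solution, negative above it.
        return unique / x - 1.0 + math.exp(-total / x)

    lo = hi = float(unique)        # f(unique) = exp(-total / unique) > 0
    while f(hi) > 0:               # widen the bracket until the sign changes
        hi *= 2.0
    for _ in range(100):
        mid = (lo + hi) / 2.0
        if f(mid) > 0:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2.0


if __name__ == "__main__":
    frags = [("chr1", 10, 210)] + [("chr1", 50, 400)] * 2 + [("chr2", 5, 180)] * 3
    print(complexity_metrics(frags))
    print(round(estimate_library_size(total=2_000_000, unique=864_665)))
```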

Example Signaling Pathway: Glucocorticoid Receptor Signaling

ATAC-seq is frequently used to study how signaling pathways modulate chromatin accessibility and gene expression. The following diagram depicts a simplified glucocorticoid receptor (GR) signaling pathway, a common subject of ATAC-seq studies.

Caption: Glucocorticoid Receptor Pathway.

Conclusion

References

1. researchgate.net
2. ATAC-seq Data Standards and Processing Pipeline – ENCODE [encodeproject.org]
3. GitHub - Zhang-lab/ATAC-seq_QC_analysis: Atac-seq QC matrix [github.com]
4. researchgate.net
5. AIAP: A Quality Control and Integrative Analysis Package to Improve ATAC-seq Data Analysis - PubMed [pubmed.ncbi.nlm.nih.gov]
6. 24. Quality Control — Single-cell best practices [sc-best-practices.org]

Unlocking Chromatin Accessibility: A Step-by-Step Guide to the AIAP Pipeline for ATAC-seq Data Analysis

For Researchers, Scientists, and Drug Development Professionals

This application note provides a detailed protocol for utilizing the ATAC-seq Integrative Analysis Package (AIAP), a comprehensive bioinformatics pipeline designed for the quality control (QC) and analysis of Assay for Transposase-Accessible Chromatin using sequencing (ATAC-seq) data.[1][2][3][4] By following this guide, researchers can effectively process raw ATAC-seq data to identify open chromatin regions, a critical step in understanding gene regulation and its role in disease and drug response.

Introduction to AIAP

The AIAP pipeline streamlines the analysis of ATAC-seq data, from raw sequencing reads to peak calling and quality control.[2][3][4] Its main strength is a set of QC metrics tailored specifically to ATAC-seq data, which help ensure the reliability and reproducibility of results.[1][2][3][5] AIAP is distributed as a Docker/Singularity image, which makes it easy to deploy on high-performance computing clusters.[1][2][3][4]

Core Features of the AIAP Pipeline:

- Comprehensive Quality Control: AIAP calculates a suite of ATAC-seq-specific QC metrics, including Reads Under the Peak Ratio (RUPr), Background (BG), Promoter Enrichment (ProEn), and Subsampling Enrichment (SubEn).[1][2][3][5]
- Optimized Data Processing: The pipeline includes steps for adapter trimming, alignment, and removal of duplicate reads to ensure high-quality data for downstream analysis.
- Robust Peak Calling: AIAP uses MACS2 to identify accessible chromatin regions (peaks).
- Reproducibility: Because AIAP is packaged as a Singularity image, the same analysis environment can be recreated, leading to reproducible results.

Experimental Protocol: Data Analysis Workflow

This protocol outlines the computational steps for running the AIAP pipeline. It assumes that raw ATAC-seq data (in FASTQ format) have already been generated by a sequencing experiment.

Prerequisites:

- Singularity installed on your Linux-based high-performance computing (HPC) cluster.
- The AIAP Singularity image file (.simg), which can be downloaded from the official repository.
- Reference genome files (e.g., hg38, mm10) in the appropriate format.
- Paired-end ATAC-seq FASTQ files (e.g., read1.fastq.gz and read2.fastq.gz).

Step-by-Step Pipeline Execution:

- Download the AIAP Singularity Image and Reference Files: First, obtain the files needed to run the pipeline. The Singularity image contains all the software and dependencies required for the analysis, and reference genome files are needed for alignment.
- Prepare Your Workspace: Navigate to the directory containing your FASTQ files. Running the pipeline in the same directory as your data is recommended.
- Execute the AIAP Pipeline: The pipeline runs from a single command that specifies the input files, the reference genome, and other parameters. Assembled from the options explained below, an example command is:

  `singularity run -B ./:/process -B /path/to/reference:/atac_seq/Resource/Genome /path/to/AIAP.simg -r PE -g mm10 -o read1.fastq.gz -p read2.fastq.gz`

  - `singularity run`: Executes the Singularity image.
  - `-B ./:/process`: Binds the current directory to the /process directory inside the container.
  - `-B /path/to/reference:/atac_seq/Resource/Genome`: Binds your reference genome directory to the location the pipeline expects inside the container.
  - `/path/to/AIAP.simg`: The path to your downloaded AIAP Singularity image.
  - `-r PE`: Specifies that the data are paired-end.
  - `-g mm10`: Specifies the reference genome to use (here, mouse mm10).
  - `-o read1.fastq.gz`: Specifies the first-read FASTQ file.
  - `-p read2.fastq.gz`: Specifies the second-read FASTQ file.
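When many samples need processing, it can help to build the pipeline command programmatically from the options listed above. The sketch below is hypothetical: the helper names and parameters are not part of AIAP, it does not validate paths, and it only hands the argument list to `subprocess.run` when `dry_run=False` is passed explicitly.

```python
import shlex
import subprocess


def build_aiap_command(image: str, reference_dir: str, genome: str,
                       read1: str, read2: str, workdir: str = "./") -> list:
    """Assemble a paired-end AIAP command from the documented options."""
    return [
        "singularity", "run",
        "-B", f"{workdir}:/process",                         # data directory
        "-B", f"{reference_dir}:/atac_seq/Resource/Genome",  # reference genome
        image,
        "-r", "PE",
        "-g", genome,
        "-o", read1,
        "-p", read2,
    ]


def run_aiap(cmd: list, dry_run: bool = True) -> None:
    """Print the shell-quoted command; execute it only when dry_run is False."""
    print(shlex.join(cmd))
    if not dry_run:
        subprocess.run(cmd, check=True)  # raises CalledProcessError on failure


if __name__ == "__main__":
    cmd = build_aiap_command("/path/to/AIAP.simg", "/path/to/reference", "mm10",
                             "read1.fastq.gz", "read2.fastq.gz")
    run_aiap(cmd)
```

Passing a list rather than a single string to `subprocess.run` avoids shell interpretation of file names; `shlex.join` (Python 3.8+) is used only to display the command.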

Data Presentation: Key Quality Control Metrics

AIAP generates a comprehensive QC report as a JSON file, which can be visualized using the qATACViewer.[5] The following tables summarize the key QC metrics and their typical ranges for high-quality ATAC-seq data.

Table 1: Alignment and Library Complexity Metrics

| Metric | Description | Recommended Value |
|---|---|---|
| Uniquely Mapped Reads | Percentage of reads that map to a single location in the genome. | > 80% |
| Non-redundant Uniquely Mapped Reads | Percentage of uniquely mapped reads remaining after removing PCR duplicates. | > 50% |
| Mitochondrial Contamination Rate | Percentage of reads mapping to the mitochondrial genome. | < 15% |

Table 2: ATAC-seq-Specific QC Metrics

| Metric | Description | Recommended Value |
|---|---|---|
| Reads Under the Peak Ratio (RUPr) | The percentage of total reads that fall within the called peaks.[5] | > 30% |
| Background (BG) | A measure of the background noise in the data, calculated from random genomic regions.[5] | < 30% |
| Promoter Enrichment (ProEn) | The enrichment of ATAC-seq signal around transcription start sites (TSSs). | > 6 |
| Subsampling Enrichment (SubEn) | Signal enrichment on peaks identified from a subsample of the data.[5] | > 1.5 |
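RUPr is defined in Table 2 as the percentage of total reads that fall within the called peaks. Assuming each read is represented by a single position (for example its Tn5 insertion site) and peaks are 0-based, half-open intervals such as those in a MACS2 narrowPeak file, the ratio can be sketched with sorted, merged intervals and binary search. This mirrors the table's definition, not necessarily AIAP's exact counting rules; the function names are hypothetical.

```python
import bisect
from collections import defaultdict


def merge_intervals(intervals):
    """Merge overlapping or touching half-open [start, end) intervals."""
    merged = []
    for start, end in sorted(intervals):
        if merged and start <= merged[-1][1]:
            merged[-1][1] = max(merged[-1][1], end)
        else:
            merged.append([start, end])
    return merged


def reads_under_peak_ratio(read_sites, peaks) -> float:
    """Percentage of reads whose position falls inside any peak.

    read_sites: iterable of (chrom, pos), 0-based.
    peaks: iterable of (chrom, start, end), 0-based half-open.
    """
    by_chrom = defaultdict(list)
    for chrom, start, end in peaks:
        by_chrom[chrom].append((start, end))
    index = {}
    for chrom, ivs in by_chrom.items():
        merged = merge_intervals(ivs)
        index[chrom] = ([s for s, _ in merged], [e for _, e in merged])

    total = inside = 0
    for chrom, pos in read_sites:
        total += 1
        if chrom not in index:
            continue
        starts, ends = index[chrom]
        i = bisect.bisect_right(starts, pos) - 1  # last peak starting at or before pos
        if i >= 0 and pos < ends[i]:
            inside += 1
    if total == 0:
        raise ValueError("no reads supplied")
    return 100.0 * inside / total
```

Merging overlapping peaks first guarantees that a read overlapping two peaks is counted once, and the binary search keeps each lookup logarithmic in the number of peaks.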

Visualizing the AIAP Workflow

To clarify the logical flow of the AIAP pipeline, the following diagram was generated using the DOT language.

Caption: High-level overview of the AIAP data processing and analysis workflow.

References

AI-Powered Analysis of Differential Chromatin Accessibility: Application Notes and Protocols

For Researchers, Scientists, and Drug Development Professionals

Introduction to Chromatin Accessibility and its Importance

Chromatin accessibility refers to the physical availability of DNA to regulatory proteins, such as transcription factors.[1][2][3] Regions of open chromatin are often associated with active regulatory elements like promoters and enhancers, playing a crucial role in gene expression.[4][5][6] The study of differential chromatin accessibility between different cell types, disease states, or treatment conditions provides a powerful lens to understand the dynamics of gene regulation.[7][8][9] In drug development, identifying changes in chromatin accessibility can reveal how a compound modulates gene regulatory networks, offering valuable information on its mechanism of action and potential off-target effects.[1]
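To make differential accessibility concrete: at its simplest, a peak is compared between two conditions by normalizing read counts for sequencing depth and taking a log2 fold change. The sketch below uses counts-per-million (CPM) normalization with a pseudocount of 1, both assumptions chosen for illustration. It performs no statistical testing, unlike dedicated tools such as DESeq2 or edgeR, and it is not the AI-assisted method discussed in this note. The counts in the example are illustrative, not real data.

```python
import math


def cpm(counts):
    """Counts-per-million normalization of per-peak read counts for one sample."""
    total = sum(counts)
    if total == 0:
        raise ValueError("sample has no reads")
    return [c * 1e6 / total for c in counts]


def log2_fold_changes(counts_a, counts_b, pseudocount: float = 1.0):
    """Per-peak log2((CPM_b + pc) / (CPM_a + pc)) for two equally ordered peak lists."""
    if len(counts_a) != len(counts_b):
        raise ValueError("peak lists must have the same length")
    norm_a, norm_b = cpm(counts_a), cpm(counts_b)
    return [math.log2((b + pseudocount) / (a + pseudocount)) for a, b in zip(norm_a, norm_b)]


if __name__ == "__main__":
    control = [100, 50, 850]   # illustrative counts for three peaks
    treated = [400, 50, 550]
    for i, lfc in enumerate(log2_fold_changes(control, treated)):
        print(f"peak_{i}: log2FC = {lfc:+.2f}")
```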

The AI-Assisted Pipeline (AIAP) for Differential Analysis

Key advantages of AIAP include:

- Enhanced Peak Calling: AI models can be trained to identify regions of open chromatin (peaks) in ATAC-seq data more accurately, reducing false positives and improving the detection of subtle changes.[10]
- Improved Cell Type Identification (for single-cell ATAC-seq): In complex tissues, machine learning (ML) algorithms can classify cell types based on their characteristic chromatin accessibility profiles.[11]
- Predictive Modeling: AI can be used to build predictive models that link chromatin accessibility patterns to gene expression, disease phenotypes, or drug responses.

Experimental Workflow: ATAC-seq

The foundation of AIAP is high-quality ATAC-seq data. The following diagram and protocol outline the key steps in the ATAC-seq experimental workflow.

References

1. m.youtube.com
2. m.youtube.com
3. In vivo profiling of chromatin accessibility with CATaDa - the Node [thenode.biologists.com]
4. Pipelines for ATAC-Seq Data Analysis [scidap.com]
5. rosalind.bio
6. m.youtube.com
7. Comparison of differential accessibility analysis strategies for ATAC-seq data - PubMed [pubmed.ncbi.nlm.nih.gov]
8. Improved sensitivity and resolution of ATAC-seq differential DNA accessibility analysis | bioRxiv [biorxiv.org]
9. Chromatin accessibility profiling by ATAC-seq - PMC [pmc.ncbi.nlm.nih.gov]
10. youtube.com