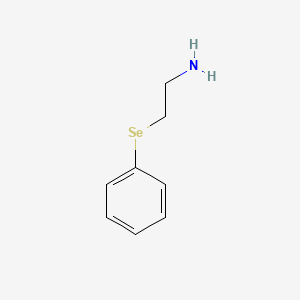

PAESe

Description

Properties

| CAS No. | 81418-58-8 |
|---|---|
| Molecular Formula | C8H11NSe |
| Molecular Weight | 200.15 g/mol |
| IUPAC Name | 2-phenylselanylethanamine |
| InChI | InChI=1S/C8H11NSe/c9-6-7-10-8-4-2-1-3-5-8/h1-5H,6-7,9H2 |
| InChI Key | ZVWHXJQEPOSKDN-UHFFFAOYSA-N |
| SMILES | C1=CC=C(C=C1)[Se]CCN |
| Canonical SMILES | C1=CC=C(C=C1)[Se]CCN |
| Other CAS No. | 81418-58-8 |
| Synonyms | PAESe; phenyl 2-aminoethyl selenide; phenyl-2-aminoethylselenide |
| Origin of Product | United States |

Foundational & Exploratory

The Bedrock of Discovery: A Technical Guide to Provenance Context Entities in RDF for Drug Development

A Whitepaper for Researchers, Scientists, and Drug Development Professionals

Abstract

In the intricate landscape of drug discovery and development, the ability to trace the origin, evolution, and context of data is not merely a matter of good practice—it is a cornerstone of scientific rigor, regulatory compliance, and innovation. This technical guide delves into the critical role of provenance in the semantic web, specifically focusing on the representation of provenance, context, and entities within the Resource Description Framework (RDF). We provide an in-depth analysis of the Provenance Context Entity (PaCE) approach, a scalable method for tracking the lineage of scientific data. This guide contrasts PaCE with traditional methods such as RDF reification and named graphs, offering a comprehensive overview for researchers, scientists, and drug development professionals. Through detailed explanations, practical use cases in drug development, and comparative data, this whitepaper aims to equip the reader with the knowledge to implement robust provenance tracking in their research and development workflows.

The Imperative of Provenance in Drug Development

Provenance, the documented history of an object or data, is fundamental to assessing its authenticity and quality. In drug development, where data underpins decisions with profound human and financial implications, a complete and transparent provenance trail is indispensable. Consider the following scenarios:

- Preclinical Studies: A surprising result in a toxicology study could be an anomaly, an experimental artifact, or a breakthrough. Without detailed provenance (the exact protocol, the batch of reagents, the operator, and the instrument's calibration), it is impossible to reliably distinguish between these possibilities.
- High-Throughput Screening (HTS): An HTS campaign generates millions of data points. A "hit" compound's activity is only meaningful in the context of the specific assay conditions, cell line passage number, and data analysis pipeline used. Provenance ensures that these crucial details are inextricably linked to the results.
- Clinical Trials: The integrity of clinical trial data is paramount. Regulatory bodies like the FDA demand a clear audit trail for every data point, from patient-reported outcomes to biomarker measurements.

The challenge lies in capturing this rich contextual information in a machine-readable and interoperable format. The Semantic Web, with RDF as its foundational data model, offers a powerful framework for this task.

Representing Statements About Statements in RDF

At its core, provenance information consists of "statements about statements." For example, "The statement 'Compound-X inhibits Kinase-Y with an IC50 of 50nM' was asserted by 'Assay-ID-123'." In RDF, a simple statement is a triple (subject-predicate-object). The question then becomes: how do we make this entire triple the subject of another triple? Several approaches have been developed to address this.

RDF Reification: The Standard but Flawed Approach

The earliest proposed solution is RDF reification, which uses a built-in vocabulary to describe a triple as a resource of type rdf:Statement with three associated properties: rdf:subject, rdf:predicate, and rdf:object. Together with the rdf:type statement, these make up the four triples of a reification.

While standardized, reification is widely criticized for its verbosity and semantic ambiguity. It requires four additional triples to make a statement about a single triple, leading to a significant increase in data size.[1] Moreover, asserting the reified statement does not, by itself, assert the original triple, a semantic gap that can lead to misinterpretation.[2]
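As a rough illustration of that overhead, the sketch below expands one triple into the reification vocabulary and attaches a single provenance statement. It uses plain Python tuples rather than an RDF library, and the `ex:` names, the `ex:assertedBy` predicate, and the `reify` helper are hypothetical.

```python
# Illustrative only: triples are plain tuples of prefixed names.

def reify(statement_id, triple):
    """Return the four triples that describe `triple` as an rdf:Statement."""
    s, p, o = triple
    return [
        (statement_id, "rdf:type", "rdf:Statement"),
        (statement_id, "rdf:subject", s),
        (statement_id, "rdf:predicate", p),
        (statement_id, "rdf:object", o),
    ]

original = ("ex:CompoundX", "ex:inhibits", "ex:KinaseY")
reified = reify("ex:stmt1", original)

# One provenance statement about the reified statement (hypothetical predicate).
provenance = [("ex:stmt1", "ex:assertedBy", "ex:Assay-ID-123")]
graph = reified + provenance

print(len(reified), len(graph))  # 4 5
print(original in graph)         # False: the original triple is not asserted
```

The last line mirrors the semantic gap described above: the reification triples describe the statement without asserting it, so the original triple has to be added separately if it is meant to hold.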

Named Graphs: Grouping Triples by Context

A more popular and practical approach is the use of named graphs. A named graph is a set of RDF triples identified by a URI.[3] This URI can then be used as the subject of other RDF statements to describe the context of the triples within that graph, such as their source or creation date.[4][5] This method is less verbose than reification when annotating multiple triples that share the same provenance. However, for annotating individual triples, it can still be cumbersome and may lead to a large number of named graphs, which can be challenging for some triple stores to manage efficiently.[5]
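A minimal sketch of the idea, assuming quads are modeled as plain Python tuples with the graph name in the fourth position. All `ex:` identifiers are hypothetical; `dcterms:source` is used here only as one plausible choice of provenance property.

```python
from collections import defaultdict

# Quads: (subject, predicate, object, graph). Identifiers are hypothetical.
quads = [
    ("ex:CompoundX", "ex:inhibits", "ex:KinaseY", "ex:graph/pub1"),
    ("ex:CompoundX", "ex:inhibits", "ex:KinaseZ", "ex:graph/pub1"),
    ("ex:CompoundW", "ex:inhibits", "ex:KinaseY", "ex:graph/pub2"),
]

# Provenance is stated once per graph, with the graph URI as the subject.
graph_metadata = [
    ("ex:graph/pub1", "dcterms:source", "ex:publication/1"),
    ("ex:graph/pub2", "dcterms:source", "ex:publication/2"),
]

def triples_by_graph(quads):
    """Group triples under the name of the graph that contains them."""
    grouped = defaultdict(list)
    for s, p, o, g in quads:
        grouped[g].append((s, p, o))
    return dict(grouped)

grouped = triples_by_graph(quads)
source_of = {g: o for g, p, o in graph_metadata if p == "dcterms:source"}

for graph_name, triples in grouped.items():
    print(graph_name, source_of[graph_name], len(triples))
```

Here two provenance triples cover three data triples. If every data triple needed its own graph, the number of graphs and provenance triples would grow one for one with the data, which is the scaling concern raised above.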

Provenance Context Entity (PaCE): A Scalable Alternative

The Provenance Context Entity (PaCE) approach was introduced to overcome the limitations of reification and named graphs.[1][6] PaCE creates "provenance-aware" RDF triples by embedding provenance context directly into the URIs of the entities themselves.[7] The intuition behind PaCE is that the provenance of a statement provides the necessary context to interpret it correctly.[8]

The structure of a PaCE URI typically includes a base URI, a "provenance context string," and the entity name. Instead of a generic URI for a protein, PaCE would create a more specific URI that also identifies the source of the information.[8] This approach avoids the need for extra triples to represent provenance, thus reducing storage overhead and simplifying queries.
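The three-part structure can be sketched as a small helper. The function name `pace_uri`, the `http://example.org/kb` base, and the sample context and entity names are assumptions made for illustration, not identifiers from the PaCE publications.

```python
from urllib.parse import quote

def pace_uri(base, context, entity):
    """Join base URI, provenance context string, and entity name with slashes."""
    return "/".join([
        base.rstrip("/"),
        quote(context, safe=""),  # percent-encode anything unsafe in a path segment
        quote(entity, safe=""),
    ])

generic = "http://example.org/kb/proteinX"  # no provenance context
aware = pace_uri("http://example.org/kb", "PUBMED_123456", "proteinX")

print(generic)
print(aware)  # http://example.org/kb/PUBMED_123456/proteinX
```

Percent-encoding each segment is a design choice of this sketch: it keeps a context string that contains a slash or space from changing the number of path segments.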

Quantitative Comparison of Provenance Models

The choice of a provenance model has significant implications for storage efficiency and query performance. While the original PaCE research claimed substantial improvements, accessing the full dataset for a direct reproduction of those results is challenging. However, a study by Fu et al. (2015) provides a valuable comparison of different RDF provenance models, including the N-ary model (conceptually similar to reification in creating an intermediate node) and the Singleton Property model, against the Nanopublication model (which, like named graphs, groups triples).

The following table summarizes the total number of triples generated by different models for a dataset of chemical-gene-disease relationships.

| Model | Total Number of Triples |
|---|---|
| N-ary with cardinal assertion (Model I) | 21,387,709 |
| N-ary without cardinal assertion (Model II) | 28,158,829 |
| Singleton Property with cardinal assertion (Model III) | 18,228,889 |
| Singleton Property without cardinal assertion (Model IV) | 25,000,009 |
| Nanopublication (Model V) | 21,387,709 |

Data from "Exposing Provenance Metadata Using Different RDF Models" by Fu et al. (2015).

As the table shows, models that avoid the redundancy of reification-like structures (such as the Singleton Property model) can be more efficient in terms of the total number of triples. The PaCE approach, by embedding provenance in the URI, aims for even greater efficiency, claiming a minimum of 49% reduction in provenance-specific triples compared to RDF reification.[1][6][8]
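The size of the difference between the N-ary and Singleton Property models can be read directly off the triple counts quoted from Fu et al. The short calculation below uses only those five numbers; the helper name `percent_fewer` is ours.

```python
# Triple counts as quoted from Fu et al. (2015) for Models I to V.
counts = {
    "I": 21_387_709,
    "II": 28_158_829,
    "III": 18_228_889,
    "IV": 25_000_009,
    "V": 21_387_709,
}

def percent_fewer(smaller, larger):
    """Percentage by which `smaller` undercuts `larger`."""
    return 100 * (1 - smaller / larger)

# Singleton Property vs. N-ary, with and without cardinal assertion.
print(round(percent_fewer(counts["III"], counts["I"]), 1))   # 14.8
print(round(percent_fewer(counts["IV"], counts["II"]), 1))   # 11.2

# Extra triples incurred by dropping the cardinal assertion.
print(counts["II"] - counts["I"], counts["IV"] - counts["III"])  # 6771120 6771120
```

On these figures the Singleton Property model saves roughly 11 to 15 percent of the triples relative to the N-ary model, and dropping the cardinal assertion costs the same number of triples in both.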

Query performance is another critical factor. The original PaCE evaluation reported that for complex provenance queries, its performance improved by three orders of magnitude over RDF reification, while remaining comparable for simpler queries.[1][6][8]

Experimental Protocols and Methodologies

To understand how these different models are evaluated, we can outline a general experimental protocol for comparing their performance.

Dataset Preparation

A dataset relevant to the drug development domain would be selected, for instance, a collection of protein-ligand binding assays from a public repository like ChEMBL. The data would include the entities (protein, compound), the relationship (binding affinity), the value (e.g., IC50), and the source of the data (e.g., publication DOI).

RDF Model Construction

The dataset would be converted into RDF using each of the competing models:

- RDF Reification: Each binding affinity statement would be reified, and provenance triples would be attached to the rdf:Statement resource.
- Named Graphs: All triples from a single source (e.g., a specific publication) would be placed in a named graph, and provenance would be attached to the graph's URI.
- PaCE: URIs for the compounds and proteins would be created to include the source information directly within the URI.

Query Formulation

A set of SPARQL queries would be designed to test different aspects of provenance tracking. These would range from simple to complex:

- Simple Query (SQ): "Retrieve the IC50 value for the interaction between Compound X and Protein Y from any source."
- Provenance Query (PQ): "Retrieve the IC50 value for the interaction between Compound X and Protein Y, and also retrieve the publication it was reported in."
- Complex Query (CQ): "Find all compounds that inhibit proteins targeted by Drug Z, and for each inhibition event, retrieve the source publication and the assay type, but only include results from publications after 2020."

Performance Evaluation

The RDF datasets for each model would be loaded into a triple store (e.g., Virtuoso, GraphDB, or Stardog). Each query would be executed multiple times against each dataset, and the average execution time would be recorded. The total number of triples and the on-disk size of each dataset would also be measured.
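A timing harness for this step might look like the sketch below. It is a stand-in under stated assumptions: the "datasets" are equal-sized in-memory lists rather than loaded triple stores, and `make_query` replaces what would be a SPARQL request in a real evaluation, so the printed times say nothing about the actual models.

```python
import statistics
import time

def mean_runtime(run_query, repeats=5):
    """Run `run_query` `repeats` times and return the mean wall-clock seconds."""
    durations = []
    for _ in range(repeats):
        start = time.perf_counter()
        run_query()
        durations.append(time.perf_counter() - start)
    return statistics.mean(durations)

# Stand-in datasets: equal-sized lists of tuples, one per model (arbitrary size).
datasets = {
    model: [(f"s{i}", "p", f"o{i}") for i in range(1000)]
    for model in ("reification", "named_graphs", "pace")
}

def make_query(triples):
    # Placeholder for a SPARQL call: count the triples whose predicate is "p".
    return lambda: sum(1 for _, p, _ in triples if p == "p")

results = {model: mean_runtime(make_query(t)) for model, t in datasets.items()}
for model, seconds in results.items():
    print(f"{model}: {seconds:.6f} s mean, {len(datasets[model])} triples")
```

In practice each query would also be run a few times before measurement starts, so that caching effects in the triple store do not dominate the first timings.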

The logical workflow for such an evaluation is depicted below:

Applying Provenance Models in Drug Development: A Use Case

Let's consider a concrete use case: representing the result of a high-throughput screening (HTS) assay.

The Statement: "Compound CHEMBL123 showed 85% inhibition of Target_Gene_ABC in assay AID_456 performed on 2025-10-29 by Lab_XYZ."

The W3C PROV Ontology (PROV-O)

To model this provenance information in a structured way, we use the PROV Ontology, a W3C recommendation. PROV-O provides a set of classes and properties to represent provenance. The core classes are:

- prov:Entity: A physical, digital, or conceptual thing. (e.g., our HTS result, the compound).
- prov:Activity: Something that occurs over a period of time and acts upon or with entities. (e.g., the HTS assay).
- prov:Agent: Something that bears some form of responsibility for an activity taking place, for an entity existing, or for another agent's activity. (e.g., the laboratory).
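To make the three classes concrete, the HTS statement can be broken into PROV-O relations as in the sketch below. The `prov:` terms are taken from PROV-O; every `ex:` identifier and the `objects` helper are hypothetical, and the assay date is left out for brevity.

```python
# PROV-O terms are real; every ex: identifier is a hypothetical name.
triples = [
    ("ex:result_1", "rdf:type", "prov:Entity"),
    ("ex:CHEMBL123", "rdf:type", "prov:Entity"),
    ("ex:assay_AID_456", "rdf:type", "prov:Activity"),
    ("ex:Lab_XYZ", "rdf:type", "prov:Agent"),
    ("ex:result_1", "prov:wasGeneratedBy", "ex:assay_AID_456"),     # Entity -> Activity
    ("ex:assay_AID_456", "prov:used", "ex:CHEMBL123"),              # Activity -> Entity
    ("ex:assay_AID_456", "prov:wasAssociatedWith", "ex:Lab_XYZ"),   # Activity -> Agent
    ("ex:result_1", "prov:wasAttributedTo", "ex:Lab_XYZ"),          # Entity -> Agent
]

def objects(subject, predicate):
    """Return all objects of triples matching the given subject and predicate."""
    return [o for s, p, o in triples if s == subject and p == predicate]

# Walk the trail from the result to the responsible lab via the assay.
assay = objects("ex:result_1", "prov:wasGeneratedBy")[0]
print(assay)                                      # ex:assay_AID_456
print(objects(assay, "prov:wasAssociatedWith"))   # ['ex:Lab_XYZ']
```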

The relationship between these core classes is visualized below:

RDF Representations of the HTS Result

Below are the representations of our HTS result using the three different approaches, written in the Turtle RDF syntax.

Prefixes:

a) RDF Reification

b) Named Graphs

c) Provenance Context Entity (PaCE)
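The three approaches can be sketched for the HTS statement as follows. This is a simplified reconstruction, not the original listings: it keeps only the core finding and one provenance fact (the assay), omits the 85% value, the date, and the lab, and uses a hypothetical `http://example.org/hts/` namespace with hypothetical `ex:` names.

```python
EX = "http://example.org/hts/"  # hypothetical namespace

core = ("ex:CHEMBL123", "ex:inhibits", "ex:Target_Gene_ABC")

# a) RDF reification: four triples describe the statement, one adds provenance.
reification = [
    ("ex:stmt1", "rdf:type", "rdf:Statement"),
    ("ex:stmt1", "rdf:subject", core[0]),
    ("ex:stmt1", "rdf:predicate", core[1]),
    ("ex:stmt1", "rdf:object", core[2]),
    ("ex:stmt1", "prov:wasGeneratedBy", "ex:AID_456"),
]

# b) Named graph: (subject, predicate, object, graph); None means default graph.
named_graph = [
    core + ("ex:graph1",),
    ("ex:graph1", "prov:wasGeneratedBy", "ex:AID_456", None),
]

# c) PaCE: the assay identifier is part of every URI in the triple.
def pace(name, context="AID_456"):
    return f"<{EX}{context}/{name}>"

pace_triples = [(pace("CHEMBL123"), pace("inhibits"), pace("Target_Gene_ABC"))]

def to_lines(statements):
    """Render statements as Turtle-like lines, wrapping graph members in braces."""
    lines = []
    for statement in statements:
        s, p, o = statement[:3]
        graph = statement[3] if len(statement) == 4 else None
        line = f"{s} {p} {o} ."
        lines.append(f"{graph} {{ {line} }}" if graph else line)
    return lines

for label, statements in [("a", reification), ("b", named_graph), ("c", pace_triples)]:
    print(f"{label}) {len(statements)} statement(s)")
    print("\n".join(to_lines(statements)))
```

Under these simplifications the counts come out as five, two, and one statement respectively.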

Compared side by side, the PaCE representation is the most concise: it expresses the core finding in a single triple, whereas reification requires five, and named graphs (for a single statement) require at least two plus the graph block syntax.

Conclusion and Recommendations

For organizations in the drug development sector, establishing a robust and scalable provenance framework is not a luxury but a necessity. The choice of RDF model for representing provenance has profound implications on data interoperability, storage costs, and query performance.

- RDF Reification, while a W3C standard, is generally not recommended for large-scale applications due to its verbosity and semantic limitations.
- Named Graphs offer a pragmatic and widely supported solution, particularly effective for grouping statements that share a common context, such as all data from a single publication or experimental run.
- The Provenance Context Entity (PaCE) approach presents a highly efficient and scalable alternative by embedding provenance directly into the identifiers of the data entities. This significantly reduces the number of triples required to store provenance information and can lead to dramatic improvements in query performance, especially for complex queries that traverse provenance trails.

For new projects, particularly those building large-scale knowledge graphs in areas like genomics, proteomics, and high-throughput screening, the PaCE approach is a compelling choice that warrants serious consideration. Its design principles align well with the need for performance and scalability in data-intensive scientific domains. For existing systems that already leverage named graphs, a hybrid approach could be adopted, using named graphs for coarse-grained provenance and considering a PaCE-like URI strategy for new, high-volume data streams.

Ultimately, the ability to trust, verify, and reproduce scientific findings is the bedrock of drug discovery. By adopting powerful and efficient provenance models like PaCE within an RDF framework, we can build a more transparent, integrated, and reliable data ecosystem to accelerate the development of new medicines.

References

- 1. w3.org [w3.org]

- 2. Provenance Information for Biomedical Data and Workflows: Scoping Review - PMC [pmc.ncbi.nlm.nih.gov]

- 3. fabriziorlandi.net [fabriziorlandi.net]

- 4. w3.org [w3.org]

- 5. researchgate.net [researchgate.net]

- 6. researchgate.net [researchgate.net]

- 7. chemrxiv.org [chemrxiv.org]

- 8. m.youtube.com [m.youtube.com]

PaCE for Scientific Data Provenance: An In-depth Technical Guide

For Researchers, Scientists, and Drug Development Professionals

In the realms of scientific research and drug development, the ability to trace the origin and evolution of data—its provenance—is paramount for ensuring reproducibility, establishing trust, and enabling collaboration. The Provenance Context Entity (PaCE) is a scalable and efficient approach for managing scientific data provenance, particularly within the Resource Description Framework (RDF), a standard for data interchange on the Web. This guide provides a comprehensive technical overview of the PaCE framework, its core principles, and its practical implementation, offering a robust solution for the challenges of data provenance in complex scientific workflows.

The Challenge of Scientific Data Provenance

Scientific datasets are often an amalgamation of information from diverse sources, including experimental results, computational analyses, and public databases. This heterogeneity makes it crucial to track the lineage of each piece of data to understand its context, quality, and reliability. Traditional methods for tracking provenance in RDF, such as RDF reification, have been criticized for their verbosity, lack of formal semantics, and performance issues, especially with large-scale datasets.

Introducing the Provenance Context Entity (PaCE) Approach

The PaCE approach addresses the shortcomings of traditional methods by introducing the concept of a "provenance context." Instead of creating complex and numerous statements about statements, PaCE directly associates a provenance context with each element of an RDF triple (subject, predicate, and object). This is achieved by creating provenance-aware URIs for each entity.

The core idea is to embed contextual information, such as the data source or experimental conditions, directly into the URI of the data entity. This creates a self-describing data model where the provenance is an intrinsic part of the data itself.

The Logical Model of PaCE

The PaCE model avoids the use of blank nodes and the RDF reification vocabulary.[1][2] It establishes a direct link between the data and its origin. A provenance-aware URI in the PaCE model typically has three parts: a base URI, a provenance context string, and the entity name.

For instance, a piece of data extracted from a specific publication in PubMed could have a URI like:

http://example.com/bkr/PUBMED_123456/proteinX

Here, PUBMED_123456 serves as the provenance context, immediately informing any user or application that "proteinX" is described in the context of that specific publication.
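Reading the context back out of such a URI is a matter of splitting the path. The sketch below assumes the base/context/entity layout of the example above; the function name `split_pace_uri` and the `base_path` parameter are ours.

```python
from urllib.parse import urlparse

def split_pace_uri(uri, base_path="/bkr/"):
    """Return (provenance_context, entity) for a URI laid out as base/context/entity."""
    path = urlparse(uri).path
    if not path.startswith(base_path):
        raise ValueError(f"URI is not under {base_path!r}: {uri}")
    context, _, entity = path[len(base_path):].partition("/")
    if not context or not entity:
        raise ValueError(f"URI lacks a context or entity segment: {uri}")
    return context, entity

print(split_pace_uri("http://example.com/bkr/PUBMED_123456/proteinX"))
# ('PUBMED_123456', 'proteinX')
```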

Below is a diagram illustrating the logical relationship of the PaCE model.

Quantitative Performance: PaCE vs. Other Methods

The efficiency of PaCE becomes evident when compared to other RDF provenance tracking methods. The primary advantages are a significant reduction in the number of triples required to store provenance information and a substantial improvement in query performance.

Storage Efficiency

The following table summarizes the number of RDF triples generated by different provenance tracking methods for the Biomedical Knowledge Repository (BKR) dataset. The first three rows come from a benchmark study comparing Standard Reification, Singleton Property, and RDF*. PaCE was not included in that benchmark, so no directly comparable triple count is available; the figure reported in the original PaCE study is listed in the last row for reference only.

| Provenance Method | Total Triples (in millions) |
|---|---|
| Standard Reification | 175.6[3] |
| Singleton Property | 100.9[3] |
| RDF* | 61.0[3] |
| PaCE Approach | Results in a minimum of 49% reduction compared to RDF Reification [1][2] |

Query Performance

The performance of complex queries is dramatically improved with PaCE. Because the provenance context is part of the URI, a query can restrict results by matching URIs directly instead of joining through additional provenance triples.

| Query Type | RDF Reification | PaCE Approach |
|---|---|---|
| Simple Provenance Queries | Comparable Performance | Comparable Performance[1][2] |
| Complex Provenance Queries | High Execution Time | Up to three orders of magnitude faster [1][2] |

Experimental Protocol: Implementing PaCE in a Scientific Workflow

While a universal, step-by-step protocol for implementing PaCE depends on the specific scientific domain and existing data infrastructure, the following provides a generalized methodology based on its application in biomedical research, such as in the Biomedical Knowledge Repository (BKR) project.[4]

Step 1: Define the Provenance Context

- Objective: Identify the essential provenance information to be captured.
- Procedure:
  - Determine the granularity of provenance required. For example, in drug discovery, this could be the specific experiment ID, the batch of a compound, the date of the assay, or the source publication.
  - Establish a consistent and unique identifier for each provenance context. For instance, for a publication, this would be its PubMed ID. For an internal experiment, a unique internal identifier should be used.

Step 2: Design the Provenance-Aware URI Structure

- Objective: Create a URI structure that incorporates the defined provenance context.
- Procedure:
  - Define a base URI for your project or organization.
  - Establish a clear and consistent pattern for appending the provenance context and the entity name to the base URI.
  - Example: http://.com/data/ /

Step 3: Data Ingestion and Transformation

- Objective: Convert existing and new data into PaCE-compliant RDF triples.
- Procedure:
  - Develop scripts or use ETL (Extract, Transform, Load) tools to process incoming data.
  - For each data point, extract the relevant entity and its associated provenance context.
  - Generate the provenance-aware URIs for the subject, predicate, and object of each RDF triple.
  - Serialize the generated triples into an RDF format (e.g., Turtle, N-Triples).
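The transformation step can be sketched with the standard library alone. The column names, the sample rows, the `http://example.org/data` base, and the helper names are all assumptions made for illustration; a production pipeline would also validate and escape the incoming values.

```python
import csv
import io

BASE = "http://example.org/data"  # hypothetical base URI

# Hypothetical input: one relationship per row, with its source as the context.
RAW = """compound,relation,target,source
CHEMBL123,inhibits,Target_Gene_ABC,AID_456
CHEMBL999,binds,Target_Gene_DEF,PUBMED_123456
"""

def pace_uri(context, name):
    """Build a provenance-aware URI and wrap it in N-Triples angle brackets."""
    return f"<{BASE}/{context}/{name}>"

def rows_to_ntriples(text):
    """Turn each CSV row into one N-Triples line with provenance-aware URIs."""
    lines = []
    for row in csv.DictReader(io.StringIO(text)):
        context = row["source"]
        subject = pace_uri(context, row["compound"])
        predicate = pace_uri(context, row["relation"])
        obj = pace_uri(context, row["target"])
        lines.append(f"{subject} {predicate} {obj} .")
    return lines

for line in rows_to_ntriples(RAW):
    print(line)
```

This sketch puts the context into the subject, predicate, and object alike, following the procedure above.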

Step 4: Storing and Querying PaCE Data

- Objective: Load the PaCE-formatted data into a triple store and perform provenance-based queries.
- Procedure:
  - Choose a triple store that can efficiently handle a large number of URIs (e.g., Virtuoso, Stardog, GraphDB).
  - Load the generated RDF data into the triple store.
  - Formulate SPARQL queries that leverage the structure of the provenance-aware URIs. For example, to retrieve all data from a specific experiment, a query can filter URIs that contain the experiment ID.
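A URI-based filter of this kind might be written as below. The SPARQL text uses the standard `STRSTARTS` and `STR` functions; the base URI, the sample triples, and both helper functions are hypothetical, and the in-memory filter only mimics what the triple store would do.

```python
BASE = "http://example.org/data/"  # hypothetical base URI

def sparql_for_context(context):
    """Build a SPARQL query selecting triples whose subject lies under a context."""
    prefix = f"{BASE}{context}/"
    return (
        "SELECT ?s ?p ?o WHERE {\n"
        "  ?s ?p ?o .\n"
        f'  FILTER(STRSTARTS(STR(?s), "{prefix}"))\n'
        "}"
    )

def filter_local(triples, context):
    """In-memory equivalent of the filter, for checking the logic without a store."""
    prefix = f"{BASE}{context}/"
    return [t for t in triples if t[0].startswith(prefix)]

triples = [
    (BASE + "AID_456/CHEMBL123", BASE + "AID_456/inhibits", BASE + "AID_456/Target_Gene_ABC"),
    (BASE + "AID_789/CHEMBL123", BASE + "AID_789/inhibits", BASE + "AID_789/Target_Gene_ABC"),
]

print(sparql_for_context("AID_456"))
print(len(filter_local(triples, "AID_456")))  # 1
```

Matching on the full prefix, including the trailing slash, avoids accidental hits such as `AID_4567` when the intent is `AID_456`. The context value is interpolated into the query string without escaping, so it should come from a trusted list of identifiers.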

The following diagram illustrates a typical experimental workflow for implementing PaCE in a biomedical research context.

Application in Drug Development

In the drug development pipeline, maintaining a clear and comprehensive audit trail is not just a matter of good scientific practice but also a regulatory requirement. PaCE can be instrumental in this process.

- Preclinical Research: Tracking the source of cell lines, reagents, and experimental protocols.
- Clinical Trials: Managing data from different clinical sites, ensuring patient data integrity, and tracking sample provenance.
- Regulatory Submissions: Providing a clear and verifiable lineage of all data submitted to regulatory bodies like the FDA.

By adopting PaCE, pharmaceutical companies and research institutions can build a more robust and transparent data infrastructure, accelerating the pace of discovery and ensuring the integrity of their scientific findings.

Conclusion

The Provenance Context Entity (PaCE) approach offers a powerful and efficient solution for managing scientific data provenance.[1][5] By embedding provenance information directly into the data's identifiers, PaCE simplifies the data model, reduces storage overhead, and dramatically improves query performance for complex provenance-related questions.[1][2] For researchers, scientists, and drug development professionals, adopting PaCE can lead to more reproducible research, greater trust in data, and a more streamlined approach to managing the ever-growing volume of scientific information.

References

- 1. Provenance Context Entity (PaCE): Scalable Provenance Tracking for Scientific RDF Data - PMC [pmc.ncbi.nlm.nih.gov]

- 2. research.wright.edu [research.wright.edu]

- 3. fabriziorlandi.net [fabriziorlandi.net]

- 4. researchgate.net [researchgate.net]

- 5. "Provenance Context Entity (PaCE): Scalable Provenance Tracking for Sci" by Satya S. Sahoo, Olivier Bodenreider et al. [corescholar.libraries.wright.edu]

A Technical Guide to Provenance Tracking with PaCE RDF

In the intricate landscape of scientific research and drug development, the ability to meticulously track the origin and transformation of data—its provenance—is paramount for ensuring data quality, reproducibility, and trustworthiness. The Provenance Context Entity (PaCE) approach offers a scalable and efficient method for tracking the provenance of scientific data within the Resource Description Framework (RDF), a standard for data interchange on the Web. This guide provides an in-depth technical overview of the PaCE approach, tailored for researchers, scientists, and drug development professionals who increasingly rely on large-scale RDF datasets.

Core Concepts of PaCE

The PaCE approach introduces the concept of a "provenance context" to create provenance-aware RDF triples.[1][2] Unlike traditional methods like RDF reification, which can be verbose and lack formal semantics, PaCE provides a more streamlined and semantically grounded way to associate provenance information with RDF data.[1][2]

At its core, PaCE treats a collection of RDF triples that share the same provenance as a single conceptual entity. This "provenance context" is then linked to the relevant triples, effectively creating a direct association between the data and its origin without the overhead of reification. This approach is particularly beneficial in scientific domains where large volumes of data are generated from various sources and experiments.

The formal semantics of PaCE are defined as a simple extension of the existing RDF(S) semantics, which ensures compatibility with existing Semantic Web tools and implementations.[1][2] This allows for easier adoption within established research and development workflows.

PaCE vs. RDF Reification: A Comparative Overview

The standard mechanism for making statements about other statements in RDF is reification. However, it is known to have several drawbacks, including the generation of a large number of auxiliary triples and the use of blank nodes, which can complicate query processing.[1][2] PaCE was designed to overcome these limitations.

The key difference lies in how provenance is attached to an RDF statement. In RDF reification, a statement is broken down into its subject, predicate, and object, and each part is linked to a new resource that represents the statement itself. Provenance information is then attached to this new resource. PaCE, on the other hand, directly links the components of the RDF triple (or the entire triple) to a provenance context entity.

The following diagrams illustrate the structural differences between the two approaches.

References

The PaCE Approach: A Technical Guide to Phage-Assisted Continuous Evolution for Accelerated Drug Discovery

A Note on Terminology: The user's request specified the "PaCE approach for RDF." Our comprehensive research has identified "PaCE" primarily as Phage-Assisted Continuous Evolution, a powerful laboratory technique for directed evolution with significant applications in drug development. In parallel, "PaCE" also stands for "Provenance Context Entity," a method for managing Resource Description Framework (RDF) data. Given the request's focus on an in-depth technical guide for researchers in drug development, including experimental protocols and signaling pathways, this document will focus exclusively on Phage-Assisted Continuous Evolution. The inclusion of "RDF" in the original query is assessed to be a likely incongruity.

Executive Summary

Phage-Assisted Continuous Evolution (PACE) is a revolutionary directed evolution technique that harnesses the rapid lifecycle of bacteriophages to evolve biomolecules with desired properties at an unprecedented speed. This method allows for hundreds of rounds of mutation, selection, and replication to occur in a continuous, automated fashion, dramatically accelerating the discovery and optimization of proteins, enzymes, and other macromolecules for therapeutic and research applications. For researchers, scientists, and drug development professionals, PACE offers a powerful tool to overcome the limitations of traditional, labor-intensive directed evolution methods. This guide provides an in-depth overview of the core concepts of PACE, detailed experimental protocols, quantitative data from key experiments, and visual workflows to facilitate its implementation in the laboratory.

Core Concepts of Phage-Assisted Continuous Evolution (PACE)

The fundamental principle of PACE is to link the desired activity of a target biomolecule to the propagation of an M13 bacteriophage. This is achieved through a cleverly designed biological circuit where the survival and replication of the phage are contingent upon the evolved function of the protein of interest.

The PACE system consists of several key components:

- Selection Phage (SP): The M13 phage is engineered to carry the gene encoding the protein of interest (POI) in place of an essential phage gene, typically gene III (gIII). The gIII gene encodes the pIII protein, which is crucial for the phage's ability to infect E. coli host cells.
- Host E. coli: A continuous culture of E. coli serves as the host for phage replication. These host cells are engineered to contain two critical plasmids:
  - Accessory Plasmid (AP): This plasmid contains the gIII gene under the control of a promoter that is activated by the desired activity of the POI. Thus, only when the POI performs its intended function is the essential pIII protein produced, allowing the phage to create infectious progeny.
  - Mutagenesis Plasmid (MP): This plasmid expresses genes that induce a high mutation rate in the selection phage genome as it replicates within the host cell. This continuous introduction of genetic diversity is the source of the evolutionary process.
- The "Lagoon": This is a fixed-volume vessel where the continuous evolution takes place. It is constantly supplied with fresh host cells from a chemostat and is also subject to a constant outflow. This setup ensures that only phages that can replicate faster than they are washed out will survive, creating a strong selective pressure.

The PACE cycle begins with the infection of the host E. coli by the selection phage. Inside the host, the POI gene on the phage genome is expressed. If the POI possesses the desired activity, it activates the promoter on the accessory plasmid, leading to the production of the pIII protein. Simultaneously, the mutagenesis plasmid introduces random mutations into the replicating phage genome. The newly assembled phages, now carrying mutated versions of the POI gene, are released from the host cell. Phages with improved POI activity will lead to higher levels of pIII production and, consequently, a higher rate of replication. These more "fit" phages will outcompete their less active counterparts and come to dominate the population in the lagoon over time.
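The washout argument can be illustrated with a deliberately simplified model in which each phage variant grows exponentially at its own rate while the lagoon is diluted at a constant rate. The growth rates, dilution rate, and starting titer below are assumed values chosen for illustration, not measurements, and the model ignores mutation and host-cell limits.

```python
import math

def phage_titer(initial_titer, growth_rate, dilution_rate, hours):
    """Titer after `hours` when growth and washout are both exponential."""
    return initial_titer * math.exp((growth_rate - dilution_rate) * hours)

dilution_rate = 1.0  # lagoon volumes per hour (assumed)

# Assumed per-hour propagation rates for three hypothetical variants.
variants = {"inactive": 0.2, "weak": 0.9, "improved": 1.5}

after_24h = {
    name: phage_titer(1e6, rate, dilution_rate, hours=24)
    for name, rate in variants.items()
}

for name, titer in after_24h.items():
    trend = "persists" if variants[name] > dilution_rate else "washes out"
    print(f"{name}: {titer:.3g} ({trend})")
```

Only the variant whose propagation rate exceeds the dilution rate increases in titer; the other two decline toward zero, which is the selection pressure the lagoon is designed to apply.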

Data Presentation: Quantitative Outcomes of PACE

PACE has been successfully applied to evolve a wide range of biomolecules with dramatically improved properties. The following tables summarize key quantitative data from published PACE experiments, showcasing the power and versatility of this technique.

| Parameter | Value | Reference Context |
|---|---|---|
| General Performance Metrics | | |
| Acceleration over Conventional Directed Evolution | ~100-fold | General estimate based on the ability to perform many rounds of evolution per day. |
| Rounds of Evolution per Day | Dozens | The continuous nature of PACE allows for numerous generations of mutation and selection within a 24-hour period.[1] |
| In Vivo Mutagenesis Rate Increase (with MP6) | >300,000-fold | The MP6 mutagenesis plasmid dramatically increases the mutation rate over the basal level in E. coli.[2] |
| Time to Evolve Novel T7 RNAP Activity | < 1 week | Starting from undetectable activity, PACE evolved T7 RNA polymerase variants with novel promoter specificities.[1] |

| Evolved Protein | Target Property | Starting Material | Evolved Variant(s) | Fold Improvement / Key Result | Reference |
|---|---|---|---|---|---|
| T7 RNA Polymerase | Altered Promoter Specificity (recognize T3 promoter) | Wild-type T7 RNAP | Multiple evolved variants | ~10,000-fold change in specificity (PT3 vs. PT7) in under 3 days. | [3] |
| TEV Protease | Altered Substrate Specificity | Wild-type TEV Protease | Multiple evolved variants | Successfully evolved to cleave 11 different non-canonical substrates. | |
| TALENs | Improved DNA-binding Specificity | A TALEN with off-target activity | Evolved TALEN variants | Significant reduction in off-target cleavage while maintaining on-target activity. | |
| Adenine Base Editor (ABE) | Expanded Targeting Scope | ABE7.10 | ABE8e | Faster deamination and broader compatibility with different Cas proteins. | |

Experimental Protocols

The following provides a generalized, high-level protocol for a PACE experiment. Specific parameters will need to be optimized for the particular protein and desired activity. For a comprehensive, step-by-step guide, it is highly recommended to consult detailed protocols such as those published in Nature Protocols.

Materials and Reagents

- E. coli strain: Typically a strain that supports M13 phage propagation and is compatible with the plasmids used (e.g., E. coli 1059).
- Plasmids:
  - Selection Phage (SP) vector (with gIII replaced by the gene of interest).
  - Accessory Plasmid (AP) with the appropriate selection circuit.
  - Mutagenesis Plasmid (MP), e.g., MP6.
- Media:
  - Luria-Bertani (LB) medium for general cell growth.
  - Davis Rich Medium for PACE experiments.
  - Appropriate antibiotics for plasmid maintenance.
- Inducers: e.g., Arabinose for inducing the mutagenesis plasmid.
- Phage stocks: A high-titer stock of the initial selection phage.
- PACE apparatus:
  - Chemostat for continuous culture of host cells.
  - Lagoon vessels for the evolution experiment.
  - Peristaltic pumps for fluid transfer.
  - Tubing and connectors.
  - Waste container.

Experimental Workflow

- Preparation of Host Cells: Transform the E. coli host strain with the Accessory Plasmid (AP) and the Mutagenesis Plasmid (MP). Grow an overnight culture of the host cells.
- Assembly of the PACE Apparatus: Assemble the chemostat, lagoon(s), and waste container with sterile tubing. Calibrate the peristaltic pumps to achieve the desired flow rates for the chemostat and lagoons.
- Initiation of the Chemostat: Inoculate the chemostat with the host cell culture. Grow the cells to a steady state (a constant optical density).
- Initiation of the PACE Experiment:
  - Fill the lagoon(s) with fresh media and host cells from the chemostat.
  - Inoculate the lagoon(s) with the starting selection phage population.
  - Begin the continuous flow of fresh host cells from the chemostat into the lagoon(s) and the outflow from the lagoon(s) to the waste container. The dilution rate of the lagoon is a critical parameter for controlling the selection stringency.
  - Induce the mutagenesis plasmid (e.g., by adding arabinose to the media) to initiate the evolution process.
- Monitoring the Experiment: Periodically take samples from the lagoon(s) to monitor the phage titer and the evolution of the desired activity. This can be done through plaque assays, sequencing of the evolved genes, and in vitro assays of the protein of interest.
- Analysis of Evolved Phage: After a sufficient number of generations, isolate individual phage clones from the lagoon. Sequence the gene of interest to identify mutations. Characterize the properties of the evolved proteins to confirm the desired improvements.
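The workflow above names the lagoon dilution rate as the parameter that sets selection stringency. The sketch below uses the standard continuous-culture definition (dilution rate = flow rate / vessel volume) to show how that number follows from pump calibration. The 40 mL volume and 80 mL/h flow are hypothetical values chosen for illustration, not recommendations from the protocol.

```python
def dilution_rate(flow_ml_per_h: float, lagoon_volume_ml: float) -> float:
    """Return the lagoon dilution rate in lagoon volumes per hour (flow / volume)."""
    if lagoon_volume_ml <= 0:
        raise ValueError("lagoon volume must be positive")
    return flow_ml_per_h / lagoon_volume_ml


def mean_residence_time_h(flow_ml_per_h: float, lagoon_volume_ml: float) -> float:
    """Return the average time a particle spends in the lagoon (1 / dilution rate)."""
    return 1.0 / dilution_rate(flow_ml_per_h, lagoon_volume_ml)


# Hypothetical setup: a 40 mL lagoon fed at 80 mL/h.
rate = dilution_rate(80.0, 40.0)
residence = mean_residence_time_h(80.0, 40.0)
print(f"dilution rate: {rate:.2f} volumes/h, mean residence time: {residence:.2f} h")
```

A higher dilution rate shortens the residence time, so phage must replicate faster to avoid being washed out; that is the sense in which the flow rate tunes stringency.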

Mandatory Visualizations

The Phage-Assisted Continuous Evolution (PACE) Workflow

Caption: A schematic of the PACE workflow.

Selection Circuit for Evolving a DNA-Binding Protein

Caption: A selection circuit for evolving a DNA-binding protein.


The Indispensable Role of Provenance Context Entity (PaCE) in Scientific and Drug Development Research

In the intricate landscape of modern scientific research, particularly within drug discovery and development, the ability to trace the origin and transformation of data is not merely a matter of good practice but a cornerstone of reproducibility, trust, and innovation. This technical guide delves into the purpose and application of the Provenance Context Entity (PaCE) approach, a sophisticated method for capturing and managing data provenance. Designed for researchers, scientists, and drug development professionals, this document elucidates the core principles of PaCE, its advantages over traditional methods, and its practical implementation in scientific workflows.

The Essence of Provenance in Research

Provenance, in the context of scientific data, refers to the complete history of a piece of data—from its initial creation to all subsequent modifications and analyses.[1][2] It provides a transparent and auditable trail that is crucial for:

- Verifying the quality and reliability of data: By understanding the lineage of a dataset, researchers can assess its trustworthiness and make informed decisions about its use.[1][2]
- Ensuring the reproducibility of experimental results: Detailed provenance allows other researchers to replicate experiments and validate findings, a fundamental tenet of the scientific method.
- Facilitating data integration and reuse: When combining datasets from various sources, provenance information is essential for understanding the context and resolving potential inconsistencies.
- Assigning appropriate credit to data creators and contributors: Proper attribution is vital for fostering collaboration and acknowledging intellectual contributions.

In the high-stakes environment of drug development, where data integrity is paramount, robust provenance tracking is indispensable for regulatory compliance and ensuring patient safety.

Limitations of Traditional Provenance Tracking: RDF Reification

The Resource Description Framework (RDF) is a standard model for data interchange on the Web. However, the traditional method for representing statement-level provenance in RDF, known as RDF Reification, has significant drawbacks.[1][2] This approach involves creating four additional triples for each original data triple to describe its provenance, leading to a substantial increase in the size of the dataset.[1] This "triple bloat" not only escalates storage requirements but also significantly degrades query performance, making it an inefficient solution for large-scale scientific datasets.[1]
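To make the "four additional triples" concrete, here is a minimal sketch of RDF reification using plain Python tuples. The `rdf:type`, `rdf:subject`, `rdf:predicate`, and `rdf:object` terms are the standard reification vocabulary; the `example.org` URIs and the `derivedFrom` property are placeholders invented for this illustration.

```python
from typing import List, Tuple

Triple = Tuple[str, str, str]

RDF = "http://www.w3.org/1999/02/22-rdf-syntax-ns#"


def reify(triple: Triple, statement_uri: str) -> List[Triple]:
    """Return the four reification triples that describe `triple`."""
    s, p, o = triple
    return [
        (statement_uri, RDF + "type", RDF + "Statement"),
        (statement_uri, RDF + "subject", s),
        (statement_uri, RDF + "predicate", p),
        (statement_uri, RDF + "object", o),
    ]


# Hypothetical data triple; all example.org names are placeholders.
data = (
    "http://example.org/lipoprotein",
    "http://example.org/affects",
    "http://example.org/inflammatory_cells",
)
stmt = "http://example.org/statement/1"
reified = reify(data, stmt)

# One more triple attaches the provenance to the reified statement.
provenance = (stmt, "http://example.org/derivedFrom", "http://example.org/source/article_1")

print(len(reified))          # reification triples for one data triple
print(len(reified) + 2)      # plus the data triple itself and one provenance triple
```

The overhead is paid per statement, which is why the cost grows with the size of the dataset rather than with the number of distinct sources.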

The Provenance Context Entity (PaCE) Approach

To overcome the limitations of RDF reification, the Provenance Context Entity (PaCE) approach was developed.[1][2] PaCE offers a more scalable and efficient method for tracking provenance by creating "provenance-aware" RDF triples without the need for reification.[1] The core idea behind PaCE is to embed contextual provenance information directly within the Uniform Resource Identifiers (URIs) of the entities in an RDF triple (the subject, predicate, and object).[3]

This is achieved by defining a "provenance context" for a specific application or experiment. This context can include information such as the data source, the time of data creation, the experimental conditions, and the software version used.[2][4] By incorporating this context into the URIs, each triple inherently carries its own provenance, eliminating the need for additional descriptive triples.
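One way to picture a provenance context carried inside a URI is a helper that mints context-specific identifiers. This is a sketch under assumptions: the namespace, the path layout (`base/context/name`), and the context label are all hypothetical, and the published PaCE implementation may structure its URIs differently.

```python
from urllib.parse import quote


def contextual_uri(base: str, context: str, local_name: str) -> str:
    """Build a URI whose path carries the provenance context before the entity name."""
    return f"{base.rstrip('/')}/{quote(context, safe='')}/{quote(local_name, safe='')}"


def contextual_triple(base: str, context: str, subject: str, predicate: str, obj: str):
    """Return a (subject, predicate, object) tuple of context-specific URIs."""
    return tuple(contextual_uri(base, context, name) for name in (subject, predicate, obj))


BASE = "http://example.org/kb"    # hypothetical namespace
CONTEXT = "PUBMED_article_1"      # hypothetical provenance context (a source document)

triple = contextual_triple(BASE, CONTEXT, "lipoprotein", "affects", "inflammatory_cells")
for uri in triple:
    print(uri)
```

Because the context is part of each identifier, a query can filter by source with a simple URI prefix or a single link from the contextual entity to its source, with no statement-level bookkeeping.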

The PaCE approach was notably implemented in the Biomedical Knowledge Repository (BKR) project at the U.S. National Library of Medicine, which integrates vast amounts of biomedical data from sources like PubMed, Entrez Gene, and the Unified Medical Language System (UMLS).[1][4]

Quantitative Advantages of PaCE

The implementation of PaCE within the BKR project demonstrated significant quantitative advantages over the traditional RDF reification method.[1]

Reduction in Provenance-Specific Triples

The PaCE approach dramatically reduces the number of triples required to store provenance information.[1] The original research on PaCE reported a minimum of a 49% reduction in the total number of provenance-specific RDF triples compared to RDF reification.[1] The level of reduction can be tailored by choosing different implementations of PaCE, each offering a different granularity of provenance tracking:

- Exhaustive PaCE: Explicitly links the subject, predicate, and object to the source, providing the most detailed provenance.
- Intermediate PaCE: Links a subset of the triple's components to the source.
- Minimalist PaCE: Links only one component of the triple (e.g., the subject) to the source.
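The three granularities can be sketched as a choice of which triple components receive a link to the source. The `derives_from` property URI and the choice of subject plus object for the intermediate variant are assumptions made for illustration; the text only says that the intermediate variant links "a subset".

```python
from typing import List, Tuple

Triple = Tuple[str, str, str]

DERIVES_FROM = "http://example.org/derives_from"  # hypothetical provenance property

# Which components of a triple are linked to the source in each variant.
COMPONENTS = {
    "exhaustive": ("subject", "predicate", "object"),
    "intermediate": ("subject", "object"),  # assumption: one possible subset
    "minimalist": ("subject",),
}


def provenance_links(triple: Triple, source: str, variant: str) -> List[Triple]:
    """Return the provenance triples linking the chosen components to `source`."""
    parts = dict(zip(("subject", "predicate", "object"), triple))
    return [(parts[name], DERIVES_FROM, source) for name in COMPONENTS[variant]]


t = (
    "http://example.org/ctx1/lipoprotein",
    "http://example.org/ctx1/affects",
    "http://example.org/ctx1/inflammatory_cells",
)
src = "http://example.org/source/article_1"

for variant in COMPONENTS:
    print(variant, len(provenance_links(t, src, variant)))
```

These per-triple counts are upper bounds: in a real dataset, an entity shared by many triples from the same source needs its link only once.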

The following table summarizes the relative number of provenance-specific triples generated by each approach compared to a baseline dataset with no provenance and the RDF reification method.

| Provenance Approach | Relative Number of Triples (Approximate) | Percentage Reduction vs. RDF Reification |
|---|---|---|
| No Provenance (Baseline) | 1x | - |
| Minimalist PaCE | 1.5x | 72% |
| Intermediate PaCE | 2x | 59% |
| Exhaustive PaCE | 3x | 49% |
| RDF Reification | 6x | 0% |

Query Performance Improvement

The reduction in the number of triples directly translates to a significant improvement in query performance. For complex provenance queries, the PaCE approach has been shown to be up to three orders of magnitude faster than RDF reification.[1] This enhanced performance is critical for interactive data exploration and analysis in large-scale research projects.

Experimental Protocol: Evaluating PaCE Performance

While the original papers provide a high-level overview of the evaluation, a detailed, step-by-step experimental protocol can be outlined as follows for a comparative analysis of PaCE and RDF reification:

- Dataset Preparation:
  - Select a representative scientific dataset (e.g., a subset of the Biomedical Knowledge Repository).
  - Create a baseline version of the dataset in RDF format without any provenance information.
  - Generate four additional versions of the dataset, each incorporating provenance information using a different method:
    - Minimalist PaCE
    - Intermediate PaCE
    - Exhaustive PaCE
    - RDF Reification
- Storage Analysis:
  - For each of the five datasets, measure the total number of RDF triples.
  - Calculate the percentage increase in triples for each provenance-aware dataset relative to the baseline.
  - Calculate the percentage reduction in triples for each PaCE implementation relative to the RDF reification dataset.
- Query Performance Evaluation:
  - Develop a set of representative SPARQL queries that retrieve data based on provenance information. These queries should vary in complexity, from simple lookups to complex pattern matching.
  - Execute each query multiple times against each of the four provenance-aware datasets.
  - Measure the average query execution time for each query on each dataset.
  - Analyze the performance differences between the PaCE implementations and RDF reification, particularly for complex queries.
- Data Loading and Indexing:
  - Measure the time required to load and index each of the five datasets into a triple store. This provides an indication of the overhead associated with each provenance approach.
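The storage and query-timing steps of this protocol reduce to a few small calculations, sketched here. The `run_query` callable is a stand-in for whatever client executes a SPARQL query against the triple store under test; it and the triple counts in the demo are hypothetical.

```python
import statistics
import time
from typing import Callable


def percent_increase(candidate: int, baseline: int) -> float:
    """Percentage growth of `candidate` over the no-provenance `baseline`."""
    return 100.0 * (candidate - baseline) / baseline


def percent_reduction(candidate: int, reference: int) -> float:
    """Percentage by which `candidate` is smaller than `reference` (e.g., reification)."""
    return 100.0 * (reference - candidate) / reference


def average_runtime(run_query: Callable[[], object], repeats: int = 5) -> float:
    """Execute `run_query` `repeats` times and return the mean wall-clock seconds."""
    if repeats < 1:
        raise ValueError("repeats must be at least 1")
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        run_query()
        timings.append(time.perf_counter() - start)
    return statistics.mean(timings)


# Hypothetical triple counts for a baseline and two provenance-aware variants.
baseline, pace_variant, reification = 1_000, 1_500, 4_000
print(percent_increase(pace_variant, baseline))      # growth over the baseline
print(percent_reduction(pace_variant, reification))  # saving versus reification

# Stand-in workload in place of a real SPARQL call.
print(average_runtime(lambda: sum(range(1000)), repeats=3) >= 0.0)
```

For a fair comparison, the same query set and repeat count should be used for every dataset, and caches should be handled consistently (all warm or all cold) across runs.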

Visualizing Scientific Workflows with PaCE and Graphviz

To illustrate the practical application of PaCE, we can model a hypothetical drug discovery workflow and a signaling pathway, and then visualize their provenance using Graphviz.

Drug Discovery Workflow: High-Throughput Screening

This workflow outlines the initial stages of identifying a potential drug candidate through high-throughput screening. The provenance of each step is crucial for understanding the experimental context and the reliability of the results.

Caption: A simplified high-throughput screening workflow in drug discovery.

Signaling Pathway: MAPK/ERK Pathway Activation

This diagram illustrates the provenance of data related to the activation of the MAPK/ERK signaling pathway, a crucial pathway in cell proliferation and differentiation.

Caption: Provenance of an experiment studying MAPK/ERK pathway activation.

Conclusion

The Provenance Context Entity approach represents a significant advancement in the management of scientific data provenance. By providing a scalable and efficient alternative to traditional methods, PaCE empowers researchers to maintain the integrity and reproducibility of their work, particularly in data-intensive fields like drug discovery. The ability to effectively track the lineage of data not only enhances the reliability of scientific findings but also accelerates the pace of innovation by fostering greater trust and collaboration within the research community. As scientific datasets continue to grow in size and complexity, the adoption of robust provenance frameworks like PaCE will be increasingly critical for unlocking the full potential of scientific data.

References

- 1. Provenance Context Entity (PaCE): Scalable Provenance Tracking for Scientific RDF Data - PMC [pmc.ncbi.nlm.nih.gov]

- 2. researchgate.net [researchgate.net]

- 3. A unified framework for managing provenance information in translational research - PMC [pmc.ncbi.nlm.nih.gov]

- 4. researchgate.net [researchgate.net]

The PaCE Platform: A Technical Guide to Accelerating Scientific Data Management in Drug Development

For Immediate Release

In the data-intensive landscape of pharmaceutical research and development, the ability to efficiently manage, analyze, and derive insights from vast and complex datasets is paramount. The PaCE Platform by PharmaACE has emerged as a comprehensive solution designed to address these challenges, offering a suite of tools for advanced business analytics and reporting. This technical guide provides an in-depth overview of the PaCE Platform, its core components, and its application in streamlining scientific data management for researchers, scientists, and drug development professionals.

Executive Summary

The PaCE Platform is an integrated suite of tools designed to enhance productivity, automate reporting, and facilitate collaboration in the management and analysis of pharmaceutical and healthcare data. By leveraging a centralized data management system and incorporating artificial intelligence and machine learning (AI/ML) capabilities, PaCE aims to transform raw data into actionable insights, thereby accelerating data-driven decision-making in the drug development lifecycle. The platform's modular design allows for flexibility, including the integration of existing user models ("Bring Your Own Model").

Core Platform Components and Functionalities

The PaCE Platform comprises several key tools, each tailored to specific analytical and reporting needs within the pharmaceutical industry.

| Component | Function | Key Features |
|---|---|---|
| PACE Tool | Excel-Based Analytics | Automation of waterfall and sensitivity analyses; simulation and trending capabilities; ETL (Extract, Transform, Load) for linking assumptions to external sources |
| PACE Point | Presentation & Reporting | Automated report generation; integration with PACE Tool for seamless data-to-presentation workflows |
| PACEBI | Business Intelligence | Self-service, web-based data visualization; interactive report building; data pipeline development |
| InsightACE | Market Intelligence | AI-enabled analysis of structured and unstructured data (e.g., clinical trials, patents, news); continuous surveillance and proactive alerts |
| ForecastACE | Predictive Analytics | Cloud-based forecasting with scenario testing; trending and simulation utilities |
| HCPACE | Customer Analytics | AI-powered 360-degree view of Healthcare Professionals (HCPs); integration of deep data and behavioral insights |
| PatientACE | Real-World Data (RWD) | "No-code" approach to RWD aggregation and transformation |

Platform Architecture and Data Workflow

The PaCE Platform is built on a cloud-based architecture that emphasizes data consolidation and standardized processes. The general workflow facilitates a seamless transition from data integration to insight generation.

Data Ingestion and Integration Workflow

The platform's ETL capabilities allow for the integration of data from diverse sources, a critical function in the fragmented landscape of scientific and clinical data.

Analytics and Reporting Workflow

Once data is centralized, the PaCE tools enable a streamlined process for analysis, visualization, and reporting, designed to support cross-functional teams.

Methodologies for Key Platform Applications

The following sections outline standardized methodologies for leveraging the PaCE Platform in common drug development scenarios.

Protocol for Competitive Landscape Analysis using InsightACE

Objective: To continuously monitor and analyze the competitive landscape for a drug candidate in Phase II clinical trials.

Methodology:

- Data Source Configuration:
  - Connect InsightACE to public and licensed databases for clinical trials (e.g., ClinicalTrials.gov), patent offices, regulatory agencies (e.g., FDA, EMA), and financial news sources.
  - Define keywords and concepts for surveillance, including drug class, mechanism of action, target indications, and competitor company names.
-
  - Utilize the platform's Natural Language Processing (NLP) to ingest and categorize unstructured data from press releases, earnings call transcripts, and scientific publications.[1]
  - Establish alert triggers for key events such as new clinical trial initiations, trial data readouts, regulatory filings, and patent challenges.
- Impact Analysis and Reporting:
  - Use PACEBI to create a dynamic dashboard visualizing competitor activities, timelines, and potential market disruptions.
  - Integrate findings with ForecastACE to model the potential impact of competitor actions on market penetration and revenue forecasts.[1]
  - Generate automated weekly intelligence briefings using PACE Point for dissemination to stakeholders.

Protocol for Real-World Evidence (RWE) Generation using PatientACE

Objective: To analyze real-world data to identify patient subgroups with optimal response to a newly marketed therapeutic.

Methodology:

- Data Aggregation and Transformation:
  - Utilize PatientACE's "no-code" interface to ingest anonymized patient-level data from electronic health records (EHRs), claims databases, and patient registries.
  - Define data transformation rules to standardize variables across disparate datasets (e.g., diagnosis codes, medication names, lab values).
- Cohort Building and Analysis:
  - Define the patient cohort based on inclusion/exclusion criteria (e.g., diagnosis, treatment initiation date, demographics).
  - Leverage the platform's analytical tools to stratify the cohort based on baseline characteristics and clinical outcomes.
- Insight Visualization and Interpretation:
  - Use PACEBI to generate interactive visualizations, such as Kaplan-Meier curves for survival analysis or heatmaps to show treatment response by patient segment.
  - Collaborate with biostatisticians and clinical researchers through the platform's user management system to interpret the findings and generate hypotheses for further investigation.

The Future of Scientific Data Management with PaCE

The trajectory of scientific data management in drug development is toward greater integration, automation, and predictive capability. The PaCE Platform is positioned to contribute to this future by:

- Democratizing Data Science: By providing "no-code" and self-service tools, the platform empowers bench scientists and clinical researchers to perform complex data analyses without extensive programming knowledge.
- Enhancing Collaboration: Centralized data and user management systems break down data silos between functional areas (e.g., R&D, clinical operations, commercial), fostering a more integrated approach to drug development.[2]
- Accelerating Timelines: Automation of routine analytical and reporting tasks frees up researchers to focus on higher-value activities such as experimental design and data interpretation.[2]

As the volume and complexity of scientific data continue to grow, platforms like PaCE will be instrumental in harnessing this information to bring new therapies to patients faster and more efficiently.


Harnessing Linked Data in Pharmaceutical R&D: A Technical Guide to the PaCE Framework

For Researchers, Scientists, and Drug Development Professionals

Abstract

The explosion of biomedical data presents both a monumental challenge and an unprecedented opportunity for the pharmaceutical industry. The integration of vast, heterogeneous datasets is paramount to accelerating the discovery and development of new therapies. Linked data, built upon semantic web standards, provides a powerful paradigm for creating a unified, machine-readable web of interconnected knowledge. However, the successful implementation of linked data initiatives requires a structured and agile methodology. This technical guide introduces the PaCE (Plan, Analyze, Construct, Execute) framework as a foundational methodology for managing linked data projects in drug discovery. We provide a detailed walkthrough of how this framework can be applied to a typical drug discovery project, from initial planning to the generation of actionable insights. This guide also includes quantitative data on the impact of structured data initiatives and a practical example of visualizing a key signaling pathway using linked data principles.

The Foundational Principles of Linked Data in Pharmaceutical Research

Linked data is a set of principles and technologies that enable the creation of a global data space where data from diverse sources can be connected and queried as a single information system. The core principles, as defined by Tim Berners-Lee, are:

- Use URIs (Uniform Resource Identifiers) as names for things: Every entity, be it a gene, a protein, a chemical compound, or a clinical trial, is assigned a unique URI.
- Use HTTP URIs so that these names can be looked up: This allows anyone to access information about an entity by simply using a web browser or other web-enabled tools.
- Provide useful information when a URI is looked up, using standard formats like RDF (Resource Description Framework) and SPARQL (SPARQL Protocol and RDF Query Language): RDF is a data model that represents information in the form of subject-predicate-object "triples," forming a graph of interconnected data. SPARQL is the query language used to retrieve information from this graph.
- Include links to other URIs, thereby enabling the discovery of more information: This is the "linked" aspect, creating a web of data that can be traversed to uncover new relationships and insights.
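The triple model and the idea of following links can be shown with a tiny in-memory graph. This is a teaching sketch, not a triple store: the `example.org` URIs and predicate names are placeholders, and `match` mimics only the simplest kind of SPARQL triple pattern, with `None` acting as a wildcard.

```python
from typing import Iterable, Iterator, Optional, Tuple

Triple = Tuple[str, str, str]

EX = "http://example.org/"  # hypothetical namespace

GRAPH = [
    (EX + "gene/CTNNB1", EX + "encodes", EX + "protein/beta-catenin"),
    (EX + "protein/beta-catenin", EX + "participatesIn", EX + "pathway/Wnt"),
    (EX + "compound/C1", EX + "targets", EX + "protein/beta-catenin"),
]


def match(
    triples: Iterable[Triple],
    s: Optional[str] = None,
    p: Optional[str] = None,
    o: Optional[str] = None,
) -> Iterator[Triple]:
    """Yield triples that fit the pattern; None matches anything."""
    for t in triples:
        if (s is None or t[0] == s) and (p is None or t[1] == p) and (o is None or t[2] == o):
            yield t


# Follow links: gene -> encoded protein -> pathways the protein participates in.
proteins = [o for _, _, o in match(GRAPH, s=EX + "gene/CTNNB1", p=EX + "encodes")]
pathways = [o for prot in proteins for _, _, o in match(GRAPH, s=prot, p=EX + "participatesIn")]
print(proteins)
print(pathways)
```

Each hop reuses the object URI of one triple as the subject of the next, which is the traversal that the fourth principle makes possible across datasets.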

In the pharmaceutical domain, these principles are being used to break down data silos and create comprehensive knowledge graphs that integrate public and proprietary data sources.[1] This integrated view is crucial for understanding complex disease mechanisms and identifying novel therapeutic targets.

The PaCE Framework: A Structured Approach for Linked Data Projects

The PaCE framework is a flexible and iterative methodology developed by Google for data analysis projects. It provides a structured workflow that is well-suited for the complexities of implementing linked data in a research environment. The four stages of PaCE are:

- Plan: This initial stage focuses on defining the project's objectives, scope, and stakeholders. It involves identifying the key scientific questions and the data sources required to answer them.
- Analyze: In this phase, the data is explored, cleaned, and pre-processed. The quality and structure of the data are assessed to ensure its suitability for the project.
- Construct: This is the core implementation phase where the linked data knowledge graph is built. This includes data modeling, ontology development, and the integration of various data sources.
- Execute: In the final stage, the constructed knowledge graph is utilized to answer the initial research questions, generate new hypotheses, and communicate the findings to stakeholders.

The cyclical nature of the PaCE framework allows for continuous learning and refinement throughout the project lifecycle.

A Detailed Methodology: Applying PaCE to a Target Identification and Validation Project

The following section provides a detailed experimental protocol for a linked data project aimed at identifying and validating new drug targets, structured according to the PaCE framework.

Plan Phase: Laying the Groundwork for Discovery

Objective: To identify and prioritize potential drug targets for a specific cancer subtype by integrating internal genomics data with public domain knowledge.

Experimental Protocol:

- Define the Core Scientific Question: Formulate a precise question, for example: "Which genes are overexpressed in our patient cohort for cancer X, are known to be part of the Wnt signaling pathway, and have been targeted by existing compounds?"
- Assemble a Cross-Functional Team: Include researchers, bioinformaticians, data scientists, and clinicians to ensure all perspectives are considered.
- Inventory and Profile Data Sources:
  - Internal Data: Patient-derived gene expression data (e.g., RNA-seq), compound screening results.
  - External Data: Public databases such as UniProt (protein information), ChEMBL (bioactivity data), DrugBank (drug information), and pathway databases like Reactome.
  - Ontologies: Gene Ontology (GO) for gene function, Disease Ontology (DO) for disease classification.
- Establish Success Criteria: Define measurable outcomes, such as the identification of at least three novel targets with strong evidence for further investigation.

Analyze Phase: Preparing the Data for Integration

Objective: To ensure the quality and consistency of the data and to map entities to a common vocabulary.

Experimental Protocol:

- Data Quality Assessment: Profile each dataset to identify missing values, inconsistencies, and potential biases.
- Data Cleansing and Normalization: Correct errors and standardize data formats. For example, normalize gene expression values across different experimental batches.
- Entity Mapping and URI Assignment: Map all identified entities (genes, proteins, diseases, compounds) to canonical URIs from selected ontologies and public databases. This is a critical step for ensuring data interoperability.
- Preliminary Data Exploration: Perform initial analyses on individual datasets to understand their characteristics and to inform the data modeling process in the next phase.
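The entity mapping step above can be sketched as a lookup that normalizes free-text labels before resolving them to canonical URIs, and that reports what it could not resolve. The lookup table and its `example.org` URIs are hypothetical; in practice the table would be built from the ontologies and public databases chosen in the Plan phase.

```python
from typing import Dict, Iterable, List, Tuple


def assign_uris(
    labels: Iterable[str], canonical: Dict[str, str]
) -> Tuple[Dict[str, str], List[str]]:
    """Map each raw label to a canonical URI; return (mapped, unmapped)."""
    mapped: Dict[str, str] = {}
    unmapped: List[str] = []
    for label in labels:
        key = label.strip().upper()  # simple normalization: trim and upper-case
        if key in canonical:
            mapped[label] = canonical[key]
        else:
            unmapped.append(label)
    return mapped, unmapped


# Hypothetical canonical lookup keyed by normalized gene symbol.
CANONICAL = {
    "CTNNB1": "http://example.org/gene/CTNNB1",
    "APC": "http://example.org/gene/APC",
}

mapped, unmapped = assign_uris([" ctnnb1", "APC", "unknown_gene"], CANONICAL)
print(mapped)
print(unmapped)
```

Keeping the unmapped labels visible, rather than silently dropping them, gives the team a concrete worklist for manual curation.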

Construct Phase: Building the Knowledge Graph

Objective: To create an integrated knowledge graph that combines internal and external data.

Experimental Protocol:

- Ontology Selection and Extension: Utilize existing ontologies like the Gene Ontology and create a custom ontology to model the specific relationships and entities in the project, such as "isOverexpressedIn" or "isTargetedBy."[2][3]
- RDF Transformation: Convert the cleaned and mapped data into RDF triples. This can be done using various tools and custom scripts.
- Data Loading and Integration: Load the RDF triples into a triple store (e.g., GraphDB, Stardog).
- Link Discovery: Use link discovery tools or custom algorithms to identify and create owl:sameAs links between equivalent entities from different datasets (e.g., linking a gene in an internal database to its corresponding UniProt entry).
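As one example of the "custom scripts" mentioned for RDF transformation, the sketch below turns tabular rows into N-Triples lines and emits an `owl:sameAs` link when a row carries an external identifier. The row layout, the `example.org` namespace, and the `external_uri` field are assumptions for illustration; only the `owl:sameAs` URI and the N-Triples line shape are standard.

```python
from typing import Dict, Iterable, List

EX = "http://example.org/"  # hypothetical project namespace
OWL_SAME_AS = "http://www.w3.org/2002/07/owl#sameAs"


def uri_triple(s: str, p: str, o: str) -> str:
    """Serialize a triple of URIs as one N-Triples line."""
    return f"<{s}> <{p}> <{o}> ."


def rows_to_ntriples(rows: Iterable[Dict[str, str]], cohort_uri: str) -> List[str]:
    """Emit an overexpression triple per row, plus owl:sameAs when a match is known."""
    lines: List[str] = []
    for row in rows:
        gene = EX + "gene/" + row["gene"]
        lines.append(uri_triple(gene, EX + "isOverexpressedIn", cohort_uri))
        external = row.get("external_uri")
        if external:
            lines.append(uri_triple(gene, OWL_SAME_AS, external))
    return lines


rows = [
    {"gene": "CTNNB1", "external_uri": "http://example.org/external/P1"},
    {"gene": "APC"},  # no equivalent entity discovered yet
]
for line in rows_to_ntriples(rows, EX + "cohort/cancerX"):
    print(line)
```

The resulting text can be written to a `.nt` file and bulk-loaded into a triple store; literal values (such as expression levels) would need their own quoting and datatype handling, which this sketch omits.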

Execute Phase: Deriving Insights from the Knowledge Graph

Objective: To query the knowledge graph to answer the scientific question and to visualize the results for interpretation.

Experimental Protocol:

- SPARQL Querying for Target Identification: Write and execute SPARQL queries to traverse the knowledge graph and identify entities that meet the criteria defined in the planning phase. For example, a query could retrieve all genes that are overexpressed in the patient cohort, are part of the Wnt signaling pathway, and are the target of a compound with known bioactivity.
- Hypothesis Generation: The results of the SPARQL queries will provide a list of potential drug targets.
- Visualization of Biological Context: Use Graphviz to visualize the sub-networks of the knowledge graph that are relevant to the identified targets, such as the protein-protein interactions around a potential target.
- Prioritization and Experimental Validation: Prioritize the identified targets based on the strength of the evidence in the knowledge graph and design follow-up wet lab experiments for validation.
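The logic of the target identification query is a conjunction of three conditions, which can be sketched without a triple store as an intersection of sets. The short names below stand in for full URIs, and the graph contents are invented; a production query would be written in SPARQL against the store built in the Construct phase.

```python
from typing import Iterable, Set, Tuple

Triple = Tuple[str, str, str]


def subjects(triples: Iterable[Triple], predicate: str, obj: str) -> Set[str]:
    """Subjects that have `predicate` pointing at exactly `obj`."""
    return {s for s, p, o in triples if p == predicate and o == obj}


def subjects_with(triples: Iterable[Triple], predicate: str) -> Set[str]:
    """Subjects that have `predicate` pointing at anything."""
    return {s for s, p, _ in triples if p == predicate}


# Hypothetical knowledge graph; short names stand in for URIs.
GRAPH = [
    ("geneA", "isOverexpressedIn", "cohortX"),
    ("geneA", "participatesIn", "WntPathway"),
    ("geneA", "isTargetedBy", "compound1"),
    ("geneB", "isOverexpressedIn", "cohortX"),
    ("geneB", "participatesIn", "WntPathway"),
    ("geneC", "isOverexpressedIn", "cohortX"),
    ("geneC", "isTargetedBy", "compound2"),
]

candidates = (
    subjects(GRAPH, "isOverexpressedIn", "cohortX")
    & subjects(GRAPH, "participatesIn", "WntPathway")
    & subjects_with(GRAPH, "isTargetedBy")
)
print(sorted(candidates))
```

Only entities that satisfy all three patterns survive the intersection, which mirrors how a SPARQL basic graph pattern joins on a shared variable.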

Quantitative Impact of Structured Data Initiatives in Pharma

The adoption of structured data methodologies and advanced analytics is having a measurable impact on the efficiency of pharmaceutical R&D. While a comprehensive industry-wide ROI for linked data is still emerging, case studies from various organizations demonstrate significant improvements in key areas.

| Metric | Impact | Context |
|---|---|---|
| Reduction in Tumor Board Preparation Time | 43% - 44% reduction in preparation time for tumor boards.[4] | Implementation of a digital solution for urogenital and gynecology cancer tumor boards.[4] |
| Reduction in Case Postponements | Nearly 50% reduction in postponement rates for urology tumor boards.[4] | Implementation of a digital solution for urogenital and gynecology cancer tumor boards.[4] |
| AI Adoption in Life Sciences | 63% of life sciences organizations are interested in using AI for R&D data analysis.[5] | Menlo Ventures survey on AI adoption in healthcare.[5] |
| Acceleration of Procurement Cycles for AI Tools | 18% acceleration for health systems.[5] | Menlo Ventures survey on AI adoption in healthcare.[5] |

These figures highlight the potential for significant gains in efficiency and speed in the drug development lifecycle through the adoption of more structured and integrated data practices.

Visualizing Signaling Pathways with Linked Data and Graphviz

A key advantage of representing biological data as a graph is the ability to visualize complex networks. The Wnt signaling pathway, a critical pathway in many cancers, can be modeled as a set of RDF triples and then visualized using Graphviz.[6]

Caption: A simplified representation of the canonical Wnt signaling pathway.

This diagram illustrates the key molecular interactions in the Wnt pathway, providing a clear visual representation that can aid in understanding its role in disease and in identifying potential points of therapeutic intervention.
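A minimal sketch of the triples-to-Graphviz step: each triple becomes a labeled edge in DOT text, which the `dot` command-line tool can render. The five interactions listed are a heavily simplified, illustrative summary of canonical Wnt signaling and are not taken from the original diagram; node and edge names are chosen for readability.

```python
from typing import Iterable, Tuple

Triple = Tuple[str, str, str]

# Simplified, illustrative interactions (subject, relation, object).
WNT_TRIPLES = [
    ("Wnt", "binds", "Frizzled"),
    ("Frizzled", "activates", "Dishevelled"),
    ("Dishevelled", "inhibits", "Destruction complex"),
    ("Destruction complex", "degrades", "beta-catenin"),
    ("beta-catenin", "activates", "TCF/LEF"),
]


def to_dot(triples: Iterable[Triple], name: str = "pathway") -> str:
    """Render triples as a Graphviz digraph: nodes are entities, edge labels are predicates."""
    lines = [f"digraph {name} {{"]
    for s, p, o in triples:
        lines.append(f'  "{s}" -> "{o}" [label="{p}"];')
    lines.append("}")
    return "\n".join(lines)


dot_text = to_dot(WNT_TRIPLES, name="wnt")
print(dot_text)
```

Saving the output as `wnt.dot` and running `dot -Tpng wnt.dot -o wnt.png` produces an image, provided Graphviz is installed.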

Conclusion

The convergence of the PaCE framework and linked data technologies presents a powerful opportunity for the pharmaceutical industry to overcome the challenges of data integration and to accelerate the pace of innovation. By adopting a structured, iterative, and semantically-rich approach to data management and analysis, research organizations can unlock the full potential of their data assets, leading to the faster discovery and development of novel, life-saving therapies. This technical guide provides a roadmap for embarking on this journey, empowering researchers and scientists to build the future of data-driven drug discovery.

References

- 1. What Are Ontologies and How Are They Creating a FAIRer Future for the Life Sciences? | Technology Networks [technologynetworks.com]

- 2. scitepress.org [scitepress.org]

- 3. How to Develop a Drug Target Ontology – KNowledge Acquisition and Representation Methodology (KNARM) - PMC [pmc.ncbi.nlm.nih.gov]

- 4. youtube.com [youtube.com]

- 5. menlovc.com [menlovc.com]

- 6. Drug Discovery Approaches to Target Wnt Signaling in Cancer Stem Cells - PMC [pmc.ncbi.nlm.nih.gov]

Getting Started with Provenance Context Entity: An In-depth Technical Guide

Audience: Researchers, scientists, and drug development professionals.

Introduction to Provenance in Scientific Research

In the realm of data-intensive scientific research, particularly within drug development, the ability to trust, reproduce, and verify experimental and computational results is paramount. Data provenance, defined as a record that describes the people, institutions, entities, and activities involved in producing, influencing, or delivering a piece of data or a thing, serves as this foundation of trust.[1][2] For researchers and drug development professionals, robust provenance tracking ensures data integrity, facilitates the reproducibility of complex analyses, and is increasingly critical for regulatory compliance.[3][4]

This guide provides a technical deep-dive into the core concepts of data provenance, with a specific focus on the Provenance Context Entity (PaCE) approach, a scalable method for tracking provenance in scientific RDF data.[5][6] We will explore the underlying data models, present quantitative data on performance, detail experimental protocols where provenance is critical, and provide visualizations of complex scientific workflows and signaling pathways.

Core Concepts: From W3C PROV to the Provenance Context Entity (PaCE)

The W3C PROV Data Model

The World Wide Web Consortium (W3C) has established a standard for provenance information called PROV. This model is built upon a few core concepts:

- Entity: A digital or physical object. In a scientific context, this could be a dataset, a chemical compound, a biological sample, or a research paper.
- Activity: A process that acts on or with entities. Examples include running a simulation, performing a laboratory assay, or curating a dataset.
- Agent: An entity that is responsible for an activity. This can be a person, a software tool, or an organization.

These core components are interconnected through a series of defined relationships, allowing for a detailed and machine-readable description of how a piece of data came to be.
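As a minimal sketch of how these three concepts and their relationships could be written down, the following Python snippet emits N-Triples using terms from the W3C PROV-O vocabulary (`prov:Entity`, `prov:Activity`, `prov:Agent`, `prov:used`, `prov:wasGeneratedBy`, `prov:wasAssociatedWith`). The `http://example.org/` resources (a ligand library, a hit list, a docking run, a researcher) are hypothetical and chosen only for illustration.

```python
PROV = "http://www.w3.org/ns/prov#"
RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"
EX = "http://example.org/"  # hypothetical namespace for illustration


def triple(s: str, p: str, o: str) -> str:
    """Format one N-Triples statement from three absolute IRIs."""
    return f"<{s}> <{p}> <{o}> ."


def describe_docking_run() -> list[str]:
    """Return PROV statements for a hypothetical docking run."""
    library = EX + "ligand-library"   # Entity: input dataset
    hits = EX + "hit-list"            # Entity: output dataset
    run = EX + "docking-run-1"        # Activity: the computation
    researcher = EX + "researcher-a"  # Agent: responsible party
    return [
        triple(library, RDF_TYPE, PROV + "Entity"),
        triple(hits, RDF_TYPE, PROV + "Entity"),
        triple(run, RDF_TYPE, PROV + "Activity"),
        triple(researcher, RDF_TYPE, PROV + "Agent"),
        triple(run, PROV + "used", library),
        triple(hits, PROV + "wasGeneratedBy", run),
        triple(run, PROV + "wasAssociatedWith", researcher),
    ]


if __name__ == "__main__":
    print("\n".join(describe_docking_run()))
```

The first four statements type each resource; the last three are the relationships that make the record traceable from the hit list back to the library and the person responsible.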

The Challenge of Provenance in RDF and the PaCE Solution

The Resource Description Framework (RDF) is a standard model for data interchange on the Web, often used in scientific applications. However, traditional methods for tracking provenance in RDF, such as RDF reification, have known issues, including a lack of formal semantics and the generation of a large number of additional statements, which can impact storage and query performance.[5][6]

The Provenance Context Entity (PaCE) approach was developed to address these challenges.[5][6] PaCE uses the notion of a "provenance context" to create provenance-aware RDF triples without the need for reification. This results in a more scalable and efficient representation of provenance information.[5][6]
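The difference in triple count can be illustrated with a small sketch. The code below is not the PaCE implementation: the IRI pattern that embeds the source in the resource name, the `derives_from` property, and the `http://example.org/` resources are all assumptions made for illustration. It contrasts standard RDF reification of one statement with a context-aware form that links only the subject to its source.

```python
RDF = "http://www.w3.org/1999/02/22-rdf-syntax-ns#"
EX = "http://example.org/"          # hypothetical namespace
DERIVES_FROM = EX + "derives_from"  # assumed provenance-linking property

Triple = tuple[str, str, str]


def reified(s: str, p: str, o: str, source: str, stmt_id: str) -> list[Triple]:
    """Base triple plus standard RDF reification and one provenance triple."""
    stmt = EX + stmt_id
    return [
        (s, p, o),                                # the base assertion
        (stmt, RDF + "type", RDF + "Statement"),  # four reification triples
        (stmt, RDF + "subject", s),
        (stmt, RDF + "predicate", p),
        (stmt, RDF + "object", o),
        (stmt, DERIVES_FROM, source),             # provenance of the statement
    ]


def context_aware(s_name: str, p: str, o_name: str, source_name: str) -> list[Triple]:
    """Base triple over context-specific IRIs plus one link to the source."""
    source = EX + source_name
    s = f"{source}/{s_name}"  # subject IRI embeds the provenance context
    o = f"{source}/{o_name}"  # object IRI embeds the provenance context
    return [
        (s, p, o),                  # provenance-aware base assertion
        (s, DERIVES_FROM, source),  # single provenance-specific triple
    ]


if __name__ == "__main__":
    inhibits = EX + "inhibits"
    a = reified(EX + "compound_x", inhibits, EX + "target_y",
                EX + "source_doc_1", "stmt1")
    b = context_aware("compound_x", inhibits, "target_y", "source_doc_1")
    print(len(a) - 1, "provenance-specific triples with reification")
    print(len(b) - 1, "provenance-specific triples with the context-aware form")
```

In this sketch the reified form needs five extra triples per statement, while the context-aware form needs one, because the provenance context is carried by the resource IRIs themselves.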

Data Presentation: PaCE Performance Evaluation

The primary advantage of the PaCE approach lies in its efficiency. The following tables summarize the quantitative data from a study that implemented PaCE in the Biomedical Knowledge Repository (BKR) project at the US National Library of Medicine, comparing it to the standard RDF reification approach.[5][6][7][8]

Storage Overhead: Provenance-Specific Triples

This table illustrates the reduction in the number of additional RDF triples required to store provenance information when using different PaCE strategies compared to RDF reification. The base dataset contained 23,433,657 triples.[7]

| Provenance Approach | Total Triples | Provenance-Specific Triples | % Increase from Base |
|---|---|---|---|
| RDF Reification | 152,321,002 | 128,887,345 | 550% |
| PaCE (Exhaustive) | 46,867,314 | 23,433,657 | 100% |
| PaCE (Intermediate) | 35,150,486 | 11,716,829 | 50% |
| PaCE (Minimalist) | 24,605,340 | 1,171,683 | 5% |

Data sourced from the paper "Provenance Context Entity (PaCE): Scalable Provenance Tracking for Scientific RDF Data".[5][6][7][8]
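The last two columns of the storage table follow directly from the total triple counts and the base dataset size. The short sketch below recomputes them; the numbers are copied from the table, and the function name `overhead` is only an illustrative choice.

```python
BASE_TRIPLES = 23_433_657  # size of the base dataset, from the text above

TOTAL_TRIPLES = {
    "RDF Reification": 152_321_002,
    "PaCE (Exhaustive)": 46_867_314,
    "PaCE (Intermediate)": 35_150_486,
    "PaCE (Minimalist)": 24_605_340,
}


def overhead(total: int, base: int = BASE_TRIPLES) -> tuple[int, float]:
    """Return (provenance-specific triples, percent increase over the base)."""
    extra = total - base
    return extra, 100.0 * extra / base


if __name__ == "__main__":
    for approach, total in TOTAL_TRIPLES.items():
        extra, pct = overhead(total)
        print(f"{approach:<20} {extra:>12,} {pct:6.1f}%")
```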

Query Performance Comparison

This table shows the execution time for four different types of provenance queries, comparing the performance of the PaCE approach (Intermediate strategy) against RDF reification.

| Query Type | Description | RDF Reification (seconds) | PaCE (Intermediate) (seconds) | Performance Improvement |
|---|---|---|---|---|
| PQ1 | Retrieve all triples from a specific source. | 2.1 | 2.3 | ~ -9% |
| PQ2 | Retrieve triples asserted by a specific curator. | 1.9 | 2.1 | ~ -10% |
| PQ3 | Retrieve triples with a specific assertion method. | 1.8 | 2.0 | ~ -11% |
| PQ4 | Retrieve triples based on a combination of provenance attributes. | 3,456 | 2.9 | ~ 119,000% (3 orders of magnitude) |

Data sourced from the paper "Provenance Context Entity (PaCE): Scalable Provenance Tracking for Scientific RDF Data".[5][6][8] As the data shows, for simple queries, the performance is comparable, but for complex queries that require joining across multiple provenance attributes, the PaCE approach is significantly faster.[5][6]
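One way to read the timing table is as a speedup factor, the reification time divided by the PaCE time, together with its base-10 logarithm. The sketch below uses the timings from the table; the helper name `speedup` is an illustrative choice.

```python
import math

# (reification seconds, PaCE Intermediate seconds), copied from the table above
TIMINGS = {
    "PQ1": (2.1, 2.3),
    "PQ2": (1.9, 2.1),
    "PQ3": (1.8, 2.0),
    "PQ4": (3456.0, 2.9),
}


def speedup(reification_s: float, pace_s: float) -> float:
    """Ratio > 1 means PaCE is faster; < 1 means reification is faster."""
    return reification_s / pace_s


if __name__ == "__main__":
    for query, (reif, pace) in TIMINGS.items():
        factor = speedup(reif, pace)
        print(f"{query}: {factor:8.2f}x  (10^{math.log10(factor):.1f})")
```

For PQ1 to PQ3 the factor is slightly below 1, so reification is marginally faster. For PQ4 it is above 1,000, which is where the "three orders of magnitude" figure comes from.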

Experimental Protocols with Provenance in Mind

Detailed and reproducible protocols are the bedrock of good science. Integrating provenance tracking into these protocols ensures that every step, parameter, and dependency is captured.

Protocol: Structure-Based Virtual Screening for Drug Discovery

This protocol outlines a typical workflow for identifying novel inhibitors for a protein target. Capturing the provenance of this workflow is crucial for understanding the results and reproducing the screening campaign.

Objective: To identify potential small molecule inhibitors of a target protein through a computational screening process.

Methodology:

- Target Protein Preparation:
  - Activity: Obtain the 3D structure of the target protein.
  - Entity (Input): Protein Data Bank (PDB) ID or a locally generated homology model.
  - Agent: Researcher, Protein Preparation Wizard (e.g., in Maestro software).[9]
  - Details: The protein structure is pre-processed to add hydrogens, assign bond orders, create disulfide bonds, and remove any co-crystallized ligands or water molecules that are not relevant to the binding site. The protonation states of residues are optimized at a defined pH. Finally, the structure is minimized to relieve any steric clashes.
- Binding Site Identification:
  - Activity: Define the binding pocket for docking.
  - Entity (Input): Prepared protein structure.
  - Agent: Researcher, SiteMap or FPocket software.[10]
  - Details: A grid box is generated around the identified binding site. The dimensions of this box are critical parameters that are recorded in the provenance.
- Ligand Library Preparation:
  - Activity: Prepare a library of small molecules for screening.
  - Entity (Input): A collection of compounds in a format like SDF or SMILES (e.g., from the Enamine REAL library).[11]
  - Agent: LigPrep or a similar tool.
  - Details: Ligands are processed to generate different ionization states, tautomers, and stereoisomers. Energy minimization is performed on each generated structure.
- Molecular Docking:
  - Activity: Dock the prepared ligands into the target's binding site.
  - Entity (Input): Prepared protein structure, prepared ligand library, grid definition file.
  - Agent: Docking software (e.g., AutoDock Vina, Glide).[10]
  - Details: Each ligand is flexibly docked into the rigid receptor binding site. The docking algorithm samples different conformations and orientations of the ligand.
- Scoring and Ranking:
  - Activity: Score the docking poses and rank the ligands.
  - Entity (Input): Docked ligand poses.
  - Agent: Scoring function within the docking software.
  - Details: A scoring function is used to estimate the binding affinity of each ligand. The ligands are ranked based on their scores.
- Post-processing and Hit Selection:
  - Activity: Filter and select promising candidates.
  - Entity (Input): Ranked list of ligands.
  - Agent: Researcher, filtering scripts.
  - Details: The top-ranked compounds are visually inspected. Further filtering based on properties like ADMET (Absorption, Distribution, Metabolism, Excretion, and Toxicity) can be applied.[10] The final selection of hits for experimental validation is recorded.
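The Activity, Entity, Agent, and Details fields of this protocol map naturally onto a small record type. The following is a minimal sketch, not a prescribed schema: the `Step` class, the entity names such as `prepared_protein`, and the parameter values (pH, grid box size) are hypothetical placeholders. It also shows one simple provenance check, namely that every input of a step is either an external input or the output of an earlier step.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class Step:
    """One protocol step described with the Activity/Entity/Agent fields above."""
    name: str
    activity: str
    inputs: list[str]
    agents: list[str]
    outputs: list[str]
    parameters: dict[str, object] = field(default_factory=dict)


def unresolved_inputs(steps: list[Step], external: set[str]) -> list[str]:
    """List inputs that are neither external nor produced by an earlier step."""
    available = set(external)
    missing = []
    for step in steps:
        missing += [f"{step.name}: {i}" for i in step.inputs if i not in available]
        available.update(step.outputs)
    return missing


# Entity names and parameter values below are hypothetical placeholders.
WORKFLOW = [
    Step("Target Protein Preparation", "Obtain the 3D structure of the target protein",
         inputs=["pdb_structure"], agents=["Researcher", "Protein Preparation Wizard"],
         outputs=["prepared_protein"], parameters={"ph": 7.4}),
    Step("Binding Site Identification", "Define the binding pocket for docking",
         inputs=["prepared_protein"], agents=["Researcher", "SiteMap"],
         outputs=["grid_definition"], parameters={"grid_box_angstrom": [20, 20, 20]}),
    Step("Molecular Docking", "Dock the prepared ligands into the binding site",
         inputs=["prepared_protein", "prepared_ligands", "grid_definition"],
         agents=["AutoDock Vina"], outputs=["docked_poses"]),
]

if __name__ == "__main__":
    print(json.dumps([asdict(s) for s in WORKFLOW], indent=2))
    # Ligand preparation is omitted above, so its output is reported as unresolved.
    print(unresolved_inputs(WORKFLOW, external={"pdb_structure"}))
```

A gap reported by `unresolved_inputs` indicates a step whose origin was not recorded, which is exactly the kind of omission that makes a screening campaign hard to reproduce.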

Protocol: A Reproducible Genomics Workflow for Variant Calling

This protocol describes a common bioinformatics pipeline for identifying genetic variants from raw sequencing data. Given the multi-step nature and the numerous software tools involved, provenance is essential for reproducibility.[12]

Objective: To identify single nucleotide polymorphisms (SNPs) and short insertions/deletions (indels) from raw DNA sequencing reads.

Methodology:

- Data Acquisition:
  - Activity: Download raw sequencing data.
  - Entity (Input): Accession number from a public repository (e.g., SRA).
  - Agent: SRA Toolkit.
  - Details: Raw reads are downloaded in FASTQ format.
- Quality Control:
  - Activity: Assess the quality of the raw reads.
  - Entity (Input): FASTQ files.
  - Agent: FastQC.
  - Details: Generate a quality report to check for issues like low-quality bases, adapter contamination, etc.
- Read Trimming and Filtering:
  - Activity: Remove low-quality bases and adapters.
  - Entity (Input): FASTQ files.
  - Agent: Trimmomatic or similar tool.
  - Details: Specify parameters for trimming (e.g., quality score threshold, adapter sequences). The output is a set of cleaned FASTQ files.
- Alignment to Reference Genome:
  - Activity: Align the cleaned reads to a reference genome.
  - Entity (Input): Cleaned FASTQ files, reference genome in FASTA format.
  - Agent: BWA (Burrows-Wheeler Aligner).
  - Details: The alignment process generates a SAM (Sequence Alignment/Map) file.
- Post-Alignment Processing:
  - Activity: Convert SAM to BAM, sort, and index.
  - Entity (Input): SAM file.
  - Agent: SAMtools.
  - Details: The SAM file is converted to its binary equivalent (BAM), sorted by coordinate, and indexed for efficient access.
- Variant Calling:
  - Activity: Identify variants from the aligned reads.
  - Entity (Input): Sorted and indexed BAM file, reference genome.
  - Agent: GATK (Genome Analysis Toolkit) or bcftools.
  - Details: Variants are called and stored in a VCF (Variant Call Format) file.
- Variant Filtering and Annotation:
  - Activity: Filter low-quality variants and annotate the remaining ones.
  - Entity (Input): VCF file.
  - Agent: VCFtools, SnpEff, or ANNOVAR.
  - Details: Filters are applied based on criteria like read depth, mapping quality, and variant quality score. Variants are then annotated with information about their genomic location and predicted functional impact.
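Once each step records which tool produced which file from which inputs, the lineage of any result can be reconstructed by walking those records backwards. The sketch below illustrates this with a plain dictionary. The file names are hypothetical, and where the protocol offers alternatives, one tool (GATK, SnpEff) is picked arbitrarily.

```python
# Each derived file maps to (tool, inputs). File names are hypothetical.
DERIVATIONS: dict[str, tuple[str, list[str]]] = {
    "reads.fastq": ("SRA Toolkit", ["SRA accession"]),
    "qc_report.html": ("FastQC", ["reads.fastq"]),
    "reads.trimmed.fastq": ("Trimmomatic", ["reads.fastq"]),
    "aligned.sam": ("BWA", ["reads.trimmed.fastq", "reference.fasta"]),
    "aligned.sorted.bam": ("SAMtools", ["aligned.sam"]),
    "variants.vcf": ("GATK", ["aligned.sorted.bam", "reference.fasta"]),
    "variants.annotated.vcf": ("SnpEff", ["variants.vcf"]),
}


def lineage(artifact: str,
            derivations: dict[str, tuple[str, list[str]]] = DERIVATIONS
            ) -> list[tuple[str, str]]:
    """Return (tool, output) pairs, in execution order, needed to produce artifact."""
    ordered: list[tuple[str, str]] = []
    seen: set[str] = set()

    def visit(name: str) -> None:
        if name in seen or name not in derivations:
            return  # already handled, or a primary input with no recorded origin
        seen.add(name)
        tool, inputs = derivations[name]
        for parent in inputs:
            visit(parent)  # depth-first: ancestors are emitted before descendants
        ordered.append((tool, name))

    visit(artifact)
    return ordered


if __name__ == "__main__":
    for tool, output in lineage("variants.annotated.vcf"):
        print(f"{tool:<12} -> {output}")
```

The quality report does not appear in the lineage of the annotated VCF because no later step consumes it. It is still worth recording, but it is a side branch rather than an ancestor of the final result.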

Visualization with Graphviz (DOT language)

Visualizing the provenance of complex workflows and the logical relationships in biological pathways is crucial for understanding and communication. The following diagrams were created using the DOT language.

Virtual Screening Workflow

Caption: A high-level overview of a structure-based virtual screening workflow.
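A DOT description of such a workflow can be generated from the protocol steps. The sketch below is an illustration under stated assumptions, not the source of the diagram captioned above: the step names come from the virtual screening protocol, the edges follow the inputs each step lists, and the node identifiers and layout attributes are arbitrary choices.

```python
STEPS = [
    "Target Protein Preparation",
    "Binding Site Identification",
    "Ligand Library Preparation",
    "Molecular Docking",
    "Scoring and Ranking",
    "Post-processing and Hit Selection",
]

# (from, to) index pairs; each edge mirrors an input listed in the protocol.
EDGES = [(0, 1), (0, 3), (1, 3), (2, 3), (3, 4), (4, 5)]


def to_dot(name: str, steps: list[str], edges: list[tuple[int, int]]) -> str:
    """Render steps and their dependencies as a Graphviz DOT digraph."""
    lines = [f"digraph {name} {{", "  rankdir=TB;", "  node [shape=box];"]
    lines += [f'  n{i} [label="{label}"];' for i, label in enumerate(steps)]
    lines += [f"  n{a} -> n{b};" for a, b in edges]
    lines.append("}")
    return "\n".join(lines)


if __name__ == "__main__":
    print(to_dot("VirtualScreening", STEPS, EDGES))
```

The printed text can be saved to a `.dot` file and rendered with the Graphviz `dot` command-line tool, for example `dot -Tpng workflow.dot -o workflow.png`.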

Reproducible Genomics Analysis Pipeline

Caption: A typical workflow for genomic variant calling and annotation.

EGFR Signaling Pathway (Simplified)

Caption: A simplified representation of the EGF/EGFR signaling cascade.

Conclusion

The adoption of robust provenance tracking mechanisms is not merely a technical exercise but a fundamental requirement for advancing reproducible and trustworthy science. The Provenance Context Entity (PaCE) approach offers a scalable and efficient solution for managing provenance in RDF-based scientific datasets, demonstrating significant improvements in storage and query performance over traditional methods. By integrating detailed provenance capture into experimental and computational workflows, such as those in virtual screening and genomics, researchers can enhance the reliability and transparency of their findings. The visualization of these complex processes further aids in their comprehension and communication. For drug development professionals, embracing these principles and technologies is essential for accelerating discovery, ensuring data integrity, and meeting the evolving standards of regulatory bodies.

References

- 1. Graphviz [graphviz.org]

- 2. How can you ensure data provenance and accurate data analysis? – Research Support Handbook [rdm.vu.nl]

- 3. The Importance of Data Provenance and Context in Clinical Data Registries - IQVIA [iqvia.com]

- 4. mmsholdings.com [mmsholdings.com]

- 5. "Provenance Context Entity (PaCE): Scalable Provenance Tracking for Sci" by Satya S. Sahoo, Olivier Bodenreider et al. [scholarcommons.sc.edu]

- 6. research.wright.edu [research.wright.edu]

- 7. researchgate.net [researchgate.net]

- 8. researchgate.net [researchgate.net]

- 9. researchgate.net [researchgate.net]

- 10. Frontiers | Drugsniffer: An Open Source Workflow for Virtually Screening Billions of Molecules for Binding Affinity to Protein Targets [frontiersin.org]

- 11. An artificial intelligence accelerated virtual screening platform for drug discovery - PMC [pmc.ncbi.nlm.nih.gov]

- 12. Investigating reproducibility and tracking provenance – A genomic workflow case study | Semantic Scholar [semanticscholar.org]

Methodological & Application

Application Notes and Protocols: Implementing Provenance Context Entity in RDF

For Researchers, Scientists, and Drug Development Professionals

Introduction to Provenance in Scientific Workflows