BNadd

Description

BNadd is an auxiliary BatchNorm layer added to pre-trained audio-visual models to reactivate "dead channels" (inactive neural pathways) and improve model performance. Key characteristics include:

- Function: Balances original and additional channels via a trainable parameter (αₖ).

- Efficiency: Adds only 1.5× the original BatchNorm parameters, minimizing GPU memory overhead.

- Versatility: Applicable across modalities (e.g., vision, audio) without architectural constraints.
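The balancing role of αₖ and the 1.5× parameter figure can be illustrated with a small sketch. The description above does not give the exact formula, so everything here is an assumption: the blend `alpha * original + (1 - alpha) * additional`, the function names `batch_norm`, `bnadd_channel`, and `added_parameter_ratio`, and the reading that the added layer carries its own scale, shift, and α per channel (three parameters against the original two, which would match the 1.5× figure).

```python
import math


def batch_norm(values, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize one channel's activations across a batch, then scale and shift."""
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    return [gamma * (v - mean) / math.sqrt(var + eps) + beta for v in values]


def bnadd_channel(values, alpha, gamma=1.0, beta=0.0, gamma_add=1.0, beta_add=0.0):
    """Hypothetical blend for one channel (assumed form, not from the source):
    alpha weights the original BatchNorm branch, (1 - alpha) the added branch."""
    original = batch_norm(values, gamma, beta)
    additional = batch_norm(values, gamma_add, beta_add)
    return [alpha * o + (1.0 - alpha) * a for o, a in zip(original, additional)]


def added_parameter_ratio(num_channels):
    """Added parameters relative to the original BatchNorm parameters,
    assuming the added layer has a scale, a shift, and alpha per channel."""
    original = 2 * num_channels  # gamma and beta
    added = 3 * num_channels     # gamma_add, beta_add, and alpha
    return added / original


batch = [1.0, 2.0, 3.0, 4.0]

# A "dead" channel: the original scale is zero, so the original branch outputs zeros.
dead = bnadd_channel(batch, alpha=1.0, gamma=0.0)

# With alpha below 1, the added branch contributes and the channel varies again.
revived = bnadd_channel(batch, alpha=0.5, gamma=0.0)

print(dead)
print(revived)
print(added_parameter_ratio(64))
```

In this sketch a channel whose original scale has collapsed to zero stays silent when α is 1 and carries signal again once α moves toward the added branch, which is one plausible way to read "reactivating" a dead channel.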

Properties

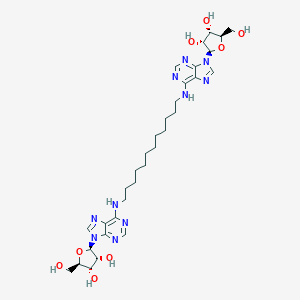

| CAS No. | 111863-65-1 |
|---|---|
| Molecular Formula | C32H48N10O8 |
| Molecular Weight | 700.8 g/mol |
| IUPAC Name | (2R,3R,4S,5R)-2-[6-[12-[[9-[(2R,3R,4S,5R)-3,4-dihydroxy-5-(hydroxymethyl)oxolan-2-yl]purin-6-yl]amino]dodecylamino]purin-9-yl]-5-(hydroxymethyl)oxolane-3,4-diol |
| InChI | InChI=1S/C32H48N10O8/c43-13-19-23(45)25(47)31(49-19)41-17-39-21-27(35-15-37-29(21)41)33-11-9-7-5-3-1-2-4-6-8-10-12-34-28-22-30(38-16-36-28)42(18-40-22)32-26(48)24(46)20(14-44)50-32/h15-20,23-26,31-32,43-48H,1-14H2,(H,33,35,37)(H,34,36,38)/t19-,20-,23-,24-,25-,26-,31-,32-/m1/s1 |
| InChI Key | UDFZRMFWXQQYTB-YHOFCQOESA-N |
| SMILES | C1=NC(=C2C(=N1)N(C=N2)C3C(C(C(O3)CO)O)O)NCCCCCCCCCCCCNC4=C5C(=NC=N4)N(C=N5)C6C(C(C(O6)CO)O)O |
| Isomeric SMILES | C1=NC(=C2C(=N1)N(C=N2)[C@H]3[C@@H]([C@@H]([C@H](O3)CO)O)O)NCCCCCCCCCCCCNC4=C5C(=NC=N4)N(C=N5)[C@H]6[C@@H]([C@@H]([C@H](O6)CO)O)O |
| Canonical SMILES | C1=NC(=C2C(=N1)N(C=N2)C3C(C(C(O3)CO)O)O)NCCCCCCCCCCCCNC4=C5C(=NC=N4)N(C=N5)C6C(C(C(O6)CO)O)O |
| Synonyms | Bis(N(6)-adenosyl)dodecane; BNADD |
| Origin of Product | United States |
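As a quick consistency check, the listed molecular weight can be recomputed from the listed molecular formula. The sketch below is illustrative only: the helper `molecular_weight` and the `ATOMIC_WEIGHTS` table are names introduced here, the atomic weights are standard values rounded to three decimals, and the parser handles only simple formulas without parentheses or charges.

```python
import re

# Standard atomic weights in g/mol, rounded to three decimals.
ATOMIC_WEIGHTS = {"C": 12.011, "H": 1.008, "N": 14.007, "O": 15.999}


def molecular_weight(formula):
    """Sum atomic weights for a simple formula such as 'C32H48N10O8'."""
    total = 0.0
    # Each match is an element symbol followed by an optional count.
    for symbol, count in re.findall(r"([A-Z][a-z]?)(\d*)", formula):
        total += ATOMIC_WEIGHTS[symbol] * (int(count) if count else 1)
    return total


weight = molecular_weight("C32H48N10O8")
print(f"{weight:.1f} g/mol")  # prints "700.8 g/mol"
```

The result agrees with the 700.8 g/mol given in the properties above.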

Comparison with Similar Components

BNadd is compared below with analogous normalization techniques and ML components:

Table 1: Comparative Analysis of BNadd and Related Techniques

Key Findings:

- BNadd vs. standard BatchNorm: BNadd introduces a lightweight, adaptive mechanism to revive inactive channels, whereas standard BatchNorm focuses solely on stabilizing activations.

- BNadd vs. LayerNorm: LayerNorm addresses sequence-level normalization (e.g., in Transformers), while BNadd targets channel-specific inefficiencies in pre-trained models.

- BNadd vs. RoBERTa optimizations: RoBERTa emphasizes data and hyperparameter tuning, whereas BNadd modifies architectural components for better parameter utilization.

Research Implications

