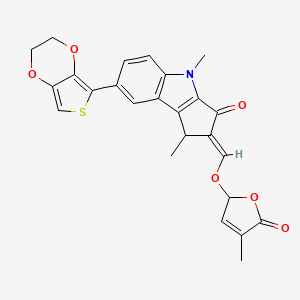

ST362

Description

BenchChem offers high-quality ST362 suitable for many research applications. Different packaging options are available to accommodate customers' requirements. For more information about this compound, including price, delivery time, and further details, please inquire at info@benchchem.com.

Properties

| Property | Value |
|---|---|
| Molecular Formula | C25H21NO6S |
| Molecular Weight | 463.5 g/mol |
| IUPAC Name | (2E)-7-(2,3-dihydrothieno[3,4-b][1,4]dioxin-5-yl)-1,4-dimethyl-2-[(4-methyl-5-oxo-2H-furan-2-yl)oxymethylidene]-1H-cyclopenta[b]indol-3-one |
| InChI | InChI=1S/C25H21NO6S/c1-12-8-19(32-25(12)28)31-10-16-13(2)20-15-9-14(4-5-17(15)26(3)21(20)22(16)27)24-23-18(11-33-24)29-6-7-30-23/h4-5,8-11,13,19H,6-7H2,1-3H3/b16-10+ |
| InChI Key | GJUAGRMNTHWYBB-MHWRWJLKSA-N |
| Isomeric SMILES | CC1/C(=C\OC2C=C(C(=O)O2)C)/C(=O)C3=C1C4=C(N3C)C=CC(=C4)C5=C6C(=CS5)OCCO6 |
| Canonical SMILES | CC1C(=COC2C=C(C(=O)O2)C)C(=O)C3=C1C4=C(N3C)C=CC(=C4)C5=C6C(=CS5)OCCO6 |
| Origin of Product | United States |

Foundational & Exploratory

Core Assumptions of Linear Regression: A Technical Guide for Researchers

The Four Principal Assumptions

For the results of a linear regression model to be considered valid for inference and prediction, four principal assumptions concerning the model's residuals (the differences between observed and predicted values) must be met.[7][8] These are often remembered by the acronym LINE: Linearity, Independence, Normality, and Equal variance.

- Linearity: The most fundamental assumption is that a linear relationship exists between the independent and dependent variables.[1][9] This means that a change in an independent variable is associated with a proportional change in the dependent variable.[1]
- Independence: The errors (or residuals) of the model are assumed to be independent of each other.[1][10] This implies that the residual for one observation does not predict the residual for another.[10] This is particularly important for time-series data where consecutive observations may be correlated (a condition known as autocorrelation).[3][11]
- Normality: The residuals of the model are assumed to be normally distributed.[12][13] This assumption is crucial for the validity of hypothesis tests, p-values, and the construction of confidence intervals.[1][14]
- Homoscedasticity (Equal Variance): The variance of the residuals should be constant across all levels of the independent variables.[1][3][15] The opposite condition, where the variance of the residuals changes, is called heteroscedasticity.[15]

Additionally, for multiple linear regression, two other assumptions are critical:

- No Multicollinearity: The independent variables should not be highly correlated with each other.[13][16] High multicollinearity can make it difficult to determine the individual effect of each predictor on the outcome variable.[6][12]
- No Endogeneity: The independent variables should not be correlated with the error term.[9] Violation of this, often due to an omitted variable, can cause biased and inconsistent parameter estimates.[9][17]

Logical Relationship of Core Assumptions

The following diagram illustrates how these core assumptions underpin a valid Ordinary Least Squares (OLS) regression model, which is the most common method for estimating the parameters of a linear regression.

Caption: Core assumptions for a valid OLS regression model.

Methodologies for Verifying Assumptions

A systematic workflow is essential to validate these assumptions. This typically involves a combination of visual inspection of plots and formal statistical tests.

Experimental Protocol: A Step-by-Step Validation Workflow

- Initial Data Exploration:
- Model Fitting:
  - Action: Fit the linear regression model to the data using a statistical software package (e.g., R, Python, SPSS).
  - Purpose: To obtain the predicted values and, most importantly, the residuals, which are the basis for testing the remaining assumptions.
- Residual Analysis:
  - Action 1 (Homoscedasticity & Linearity): Plot the residuals against the predicted (fitted) values.
    - Purpose: This is a key diagnostic plot. For the homoscedasticity assumption to hold, the residuals should be randomly scattered around the zero line without any discernible pattern (like a cone or fan shape, which indicates heteroscedasticity).[12][15][18] This plot can also reveal non-linearity if the residuals show a curved pattern.[7]
  - Action 2 (Normality): Create a Quantile-Quantile (Q-Q) plot of the residuals or a histogram.[14][16]
  - Action 3 (Independence): For time-series data, plot the residuals against the observation order.
    - Purpose: To check for autocorrelation. There should be no clear pattern; the residuals should appear random.
- Formal Statistical Testing:
  - Action: Conduct formal statistical tests to complement the visual diagnostics.
  - Purpose: To obtain quantitative evidence for or against the assumptions. (See table below for specific tests).
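The residual-analysis steps above can be sketched numerically as well as visually. The following is a minimal illustration in plain Python, assuming the observed and fitted values are already available from a fitted model; the `observed` and `fitted` numbers are made up for the example. The `spread_ratio` helper is a crude check in the spirit of a Goldfeld-Quandt comparison, not one of the formal tests named in this guide.

```python
from statistics import pvariance


def residuals(observed, fitted):
    """Residual = observed value minus the value predicted by the model."""
    return [o - f for o, f in zip(observed, fitted)]


def durbin_watson(resid):
    """Durbin-Watson statistic: sum of squared successive differences of the
    residuals divided by the sum of squared residuals. It lies between 0 and 4;
    values near 2 suggest no first-order autocorrelation."""
    num = sum((resid[t] - resid[t - 1]) ** 2 for t in range(1, len(resid)))
    den = sum(e ** 2 for e in resid)
    return num / den


def spread_ratio(fitted, resid):
    """Variance of residuals in the upper half of fitted values divided by the
    variance in the lower half. A ratio far from 1 hints at a fan shape."""
    order = sorted(range(len(fitted)), key=lambda i: fitted[i])
    half = len(order) // 2
    low = [resid[i] for i in order[:half]]
    high = [resid[i] for i in order[-half:]]
    return pvariance(high) / pvariance(low)


# Hypothetical values, listed in observation order.
observed = [2.3, 3.8, 6.1, 8.2, 9.7, 12.1, 14.2, 15.8]
fitted = [2.2, 4.1, 6.0, 8.0, 9.9, 11.9, 13.8, 15.8]

resid = residuals(observed, fitted)
dw = durbin_watson(resid)
ratio = spread_ratio(fitted, resid)
print(f"Durbin-Watson = {dw:.2f}, spread ratio = {ratio:.2f}")
```

In practice a statistical package would also report a p-value for these diagnostics; the sketch only shows how the quantities are formed from the residuals.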

Diagnostic Workflow Diagram

The diagram below outlines the logical flow for testing the assumptions of a linear regression model after it has been fitted.

Caption: Workflow for residual analysis in linear regression.

Summary of Assumptions, Diagnostics, and Implications

The following table summarizes each core assumption, provides common diagnostic methods, and outlines the consequences of violation, which is critical for researchers in fields like drug development where model accuracy is paramount.

| Assumption | Description | Diagnostic Methods (Visual & Formal) | Consequences of Violation |
|---|---|---|---|
| Linearity | The relationship between independent (X) and dependent (Y) variables is linear.[1][16] | Visual: Scatter plot of Y vs. X shows a linear pattern.[16] Residuals vs. Predicted plot shows no curved pattern.[7] | Biased and inaccurate coefficient estimates. The model systematically over- or under-predicts, rendering it unreliable.[4] |
| Independence of Errors | The residuals are independent and not correlated with each other.[1][10] | Visual: Residuals vs. Time/Order plot shows no pattern. Formal: Durbin-Watson test (a statistic close to 2, or a p-value > 0.05, gives no evidence of first-order autocorrelation).[10][19] | Standard errors of the coefficients become incorrect, leading to unreliable hypothesis tests and confidence intervals.[10][20] |
| Normality of Errors | The residuals follow a normal distribution.[13][16] | Visual: Histogram of residuals is bell-shaped. Q-Q plot of residuals follows the diagonal line.[12][16] Formal: Shapiro-Wilk test or Kolmogorov-Smirnov test (p-value > 0.05 gives no evidence against normality).[16][21][22] | Invalidates p-values and confidence intervals, making statistical inference unreliable, especially with small sample sizes.[6][14] |
| Homoscedasticity | The variance of the residuals is constant across all levels of the predictors.[3][15] | Visual: Residuals vs. Predicted plot shows a random scatter with constant vertical spread (no "cone" shape).[15][18] Formal: Breusch-Pagan test or White test (p-value > 0.05 gives no evidence against homoscedasticity).[16][23] | Standard errors are biased, making hypothesis tests untrustworthy. The model's efficiency is reduced.[20] |
| No Multicollinearity | Independent variables are not highly correlated with each other.[13][16] | Formal: Variance Inflation Factor (VIF). (VIF > 10 is often considered problematic).[16] Correlation matrix (coefficients should be < 0.8).[16] | Inflates the variance of coefficient estimates, making them unstable and difficult to interpret. Weakens the statistical power of the model.[12][17] |
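To make the multicollinearity row concrete, here is a small sketch of the Variance Inflation Factor for the special case of exactly two predictors, where the R² from regressing one predictor on the other equals the squared Pearson correlation, so VIF = 1 / (1 − r²). The `age` and `bmi` values are hypothetical; with more than two predictors, each VIF requires a separate auxiliary regression, which this sketch does not cover.

```python
from math import sqrt


def pearson_r(a, b):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(a)
    a_bar, b_bar = sum(a) / n, sum(b) / n
    cov = sum((ai - a_bar) * (bi - b_bar) for ai, bi in zip(a, b))
    var_a = sum((ai - a_bar) ** 2 for ai in a)
    var_b = sum((bi - b_bar) ** 2 for bi in b)
    return cov / sqrt(var_a * var_b)


def vif_two_predictors(x1, x2):
    """VIF shared by both predictors in a two-predictor model: 1 / (1 - r^2)."""
    r = pearson_r(x1, x2)
    return 1.0 / (1.0 - r ** 2)


# Hypothetical predictor values for eight subjects.
age = [34, 45, 52, 61, 29, 48, 57, 40]
bmi = [22.1, 27.4, 24.8, 30.2, 25.3, 23.9, 28.8, 26.0]

r = pearson_r(age, bmi)
vif = vif_two_predictors(age, bmi)
print(f"r = {r:.2f}, VIF = {vif:.2f}")
```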

In clinical research and drug development, the rigorous validation of these assumptions is not merely a statistical formality; it is a prerequisite for generating reliable evidence, making sound decisions, and ensuring the integrity of scientific findings.[24] Failure to do so can have significant consequences, impacting everything from preclinical analysis to the interpretation of clinical trial results.

References

- 1. medium.com [medium.com]

- 2. An introduction to linear regression - Advanced Pharmacy Australia [adpha.au]

- 3. jmp.com [jmp.com]

- 4. youtube.com [youtube.com]

- 5. quora.com [quora.com]

- 6. Do Violations in Linear Regression Affect ML? [finalroundai.com]

- 7. Testing the assumptions of linear regression [people.duke.edu]

- 8. youtube.com [youtube.com]

- 9. Assumptions of Linear Regression - GeeksforGeeks [geeksforgeeks.org]

- 10. Independence of Errors: A Guide to Validating Linear Regression Assumptions - DEV Community [dev.to]

- 11. rittmanmead.com [rittmanmead.com]

- 12. statisticssolutions.com [statisticssolutions.com]

- 13. analyticsvidhya.com [analyticsvidhya.com]

- 14. 3.6 Normality of the Residuals [jpstats.org]

- 15. Learn Homoscedasticity and Heteroscedasticity | Vexpower [vexpower.com]

- 16. statisticssolutions.com [statisticssolutions.com]

- 17. towardsdatascience.com [towardsdatascience.com]

- 18. 12.3.2 - Assumptions | STAT 200 [online.stat.psu.edu]

- 19. godatadrive.com [godatadrive.com]

- 20. medium.com [medium.com]

- 21. analyse-it.com [analyse-it.com]

- 22. 7.5 - Tests for Error Normality | STAT 501 [online.stat.psu.edu]

- 23. Heteroscedasticity in Regression Analysis - GeeksforGeeks [geeksforgeeks.org]

- 24. unanijournal.com [unanijournal.com]

Unlocking Insights: A Technical Guide to Regression Analysis in Biomedical Research

For Immediate Release

[City, State] – December 4, 2025 – In the intricate landscape of biomedical research and drug development, the ability to discern meaningful relationships from complex datasets is paramount. Regression analysis stands as a cornerstone statistical methodology, enabling researchers to model, predict, and understand the interplay between variables. This in-depth technical guide provides a comprehensive overview of regression analysis, tailored for researchers, scientists, and drug development professionals. From fundamental concepts to practical applications, this whitepaper serves as a vital resource for harnessing the power of regression to drive scientific discovery.

Introduction to Regression Analysis in a Biomedical Context

Regression analysis is a powerful statistical tool used to examine the relationship between a dependent variable (the outcome of interest) and one or more independent variables (predictors or explanatory variables).[1] In biomedical research, this technique is indispensable for a myriad of applications, from identifying risk factors for a disease to evaluating the efficacy of a new therapeutic intervention.[2] The core idea is to understand how the outcome variable changes as the predictor variables change, allowing for both the quantification of relationships and the prediction of future outcomes.[2]

Regression models are instrumental in advancing medical knowledge by translating raw data into actionable insights.[3] They are employed in all phases of clinical trials and preclinical studies to analyze and interpret results, ensuring the validity and reproducibility of research findings.[3][4]

Core Types of Regression Analysis in Biomedical Research

The choice of regression model is dictated by the nature of the dependent variable.[5] Understanding the different types of regression is crucial for selecting the appropriate analytical approach.

| Regression Type | Dependent Variable Type | Common Biomedical Applications |
|---|---|---|
| Linear Regression | Continuous (e.g., blood pressure, tumor volume) | Assessing the relationship between a biomarker and a physiological measurement; dose-response analysis.[2] |
| Multiple Linear Regression | Continuous | Modeling the effect of multiple factors (e.g., age, weight, dosage) on a continuous outcome.[2] |
| Logistic Regression | Binary (e.g., disease presence/absence, patient survival) | Identifying risk factors for a disease; predicting the likelihood of treatment success.[6] |
| Cox Proportional Hazards Regression | Time-to-event (e.g., time to disease recurrence, time to death) | Analyzing survival data in clinical trials to compare the efficacy of different treatments. |
| Poisson Regression | Count (e.g., number of lesions, number of adverse events) | Modeling the frequency of events, such as the number of asthma attacks in a given period. |

Methodologies for Key Experiments

The successful application of regression analysis hinges on a well-designed experimental protocol and a robust statistical analysis plan.

Experimental Protocol: Preclinical Dose-Response Study

This protocol outlines a typical preclinical study to assess the dose-dependent efficacy of a novel anti-cancer compound (Compound X) on tumor growth in a mouse xenograft model.

- Objective: To determine the relationship between the dose of Compound X and the reduction in tumor volume.
- Animal Model: Immunocompromised mice (e.g., NOD/SCID) will be used.
- Procedure:
  - Human cancer cells will be implanted subcutaneously into the flank of each mouse.
  - Once tumors reach a palpable size (e.g., 100-150 mm³), mice will be randomized into treatment and control groups (n=10 mice per group).
  - Treatment groups will receive varying doses of Compound X (e.g., 1 mg/kg, 5 mg/kg, 10 mg/kg, 25 mg/kg) administered daily via intraperitoneal injection.
  - The control group will receive a vehicle control.
  - Tumor volume will be measured every three days for a period of 21 days using calipers (Volume = 0.5 * length * width²).
- Statistical Analysis:
  - The primary endpoint will be the tumor volume at day 21.
  - A simple linear regression model will be used to assess the relationship between the dose of Compound X (independent variable) and the final tumor volume (dependent variable).
  - The assumptions of the linear regression model (linearity, independence of errors, homoscedasticity, and normality of residuals) will be checked.
  - The regression equation and the coefficient of determination (R²) will be reported.
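The caliper formula in the procedure above (Volume = 0.5 * length * width²) is simple enough to sketch directly. The function names and the example measurements below are illustrative assumptions; the 100-150 mm³ window is the example randomization range given in the protocol.

```python
def tumor_volume_mm3(length_mm, width_mm):
    """Caliper-based tumor volume: 0.5 * length * width^2 (result in mm^3)."""
    if length_mm <= 0 or width_mm <= 0:
        raise ValueError("caliper measurements must be positive")
    return 0.5 * length_mm * width_mm ** 2


def ready_for_randomization(volume_mm3, low=100.0, high=150.0):
    """True when the tumor falls inside the protocol's example size window."""
    return low <= volume_mm3 <= high


# Hypothetical caliper reading: 8.0 mm long, 6.0 mm wide.
volume = tumor_volume_mm3(8.0, 6.0)
print(volume, ready_for_randomization(volume))
```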

Methodology: Building and Validating a Regression Model

A systematic approach to model building is essential for generating reliable and interpretable results.

- Data Preparation and Exploration:
  - Clean the dataset to handle missing values and outliers.
  - Visualize the data using scatter plots to explore the relationships between variables.
  - Transform variables if necessary (e.g., log transformation) to meet model assumptions.
- Model Specification and Fitting:
  - Choose the appropriate regression model based on the research question and the nature of the dependent variable.
  - Specify the independent variables to be included in the model based on prior knowledge and exploratory data analysis.
  - Fit the model to the data using statistical software (e.g., R, SAS, SPSS).
- Checking Model Assumptions:
- Model Interpretation and Reporting:
  - Interpret the regression coefficients to understand the magnitude and direction of the relationship between each predictor and the outcome.
  - Report the p-values to assess the statistical significance of each predictor.
  - For logistic regression, report the odds ratios and their 95% confidence intervals.
  - Present the overall model fit using metrics like R-squared for linear regression.

Data Presentation

Clear and concise presentation of regression analysis results is crucial for effective communication of research findings. Quantitative data should be summarized in well-structured tables.

Table 1: Multiple Linear Regression Analysis of Factors Associated with Systolic Blood Pressure

| Variable | Coefficient (β) | Standard Error | t-statistic | p-value |
|---|---|---|---|---|
| (Intercept) | 80.50 | 5.20 | 15.48 | <0.001 |
| Age (years) | 0.65 | 0.10 | 6.50 | <0.001 |
| BMI (kg/m²) | 1.20 | 0.25 | 4.80 | <0.001 |
| Daily Sodium Intake (g) | 2.50 | 0.50 | 5.00 | <0.001 |
| Model Summary | | | | |
| R-squared | 0.72 | | | |
| Adjusted R-squared | 0.70 | | | |
| F-statistic | 35.6 | | | <0.001 |
| N | 150 | | | |
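As a reading aid for Table 1, the sketch below shows how each t-statistic follows from its coefficient and standard error (t = β / SE) and how the fitted equation produces a prediction. The coefficient values are copied from the table; the dictionary keys, the function name, and the example subject are illustrative assumptions.

```python
# (coefficient, standard error) pairs copied from Table 1.
coefficients = {
    "intercept": (80.50, 5.20),
    "age_years": (0.65, 0.10),
    "bmi": (1.20, 0.25),
    "sodium_g": (2.50, 0.50),
}

# Each t-statistic is the coefficient divided by its standard error.
t_stats = {name: beta / se for name, (beta, se) in coefficients.items()}


def predict_sbp(age_years, bmi, sodium_g):
    """Predicted systolic blood pressure (mmHg) from the Table 1 coefficients."""
    return (
        coefficients["intercept"][0]
        + coefficients["age_years"][0] * age_years
        + coefficients["bmi"][0] * bmi
        + coefficients["sodium_g"][0] * sodium_g
    )


for name, t in t_stats.items():
    print(f"{name}: t = {t:.2f}")

# Hypothetical subject: 50 years old, BMI 25 kg/m², 3 g sodium per day.
example = predict_sbp(50, 25, 3)
print(f"Predicted SBP: {example:.1f} mmHg")
```

Each coefficient is read holding the other predictors fixed: for instance, one additional year of age is associated with a 0.65 mmHg higher predicted systolic pressure at the same BMI and sodium intake.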

Table 2: Logistic Regression Analysis of Risk Factors for Disease X

| Variable | Odds Ratio (OR) | 95% Confidence Interval | p-value |
|---|---|---|---|
| Age (per year) | 1.05 | 1.02 - 1.08 | 0.002 |
| Smoking Status (Smoker vs. Non-smoker) | 2.50 | 1.50 - 4.17 | <0.001 |
| Presence of Biomarker Y (Positive vs. Negative) | 3.20 | 1.80 - 5.71 | <0.001 |
| Model Summary | | | |
| N | 500 | | |
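Odds ratios such as those in Table 2 are typically obtained by exponentiating the logistic regression coefficient, with a Wald interval of exp(β ± z·SE). The sketch below illustrates that relationship for the smoking row. The table does not report β or its standard error, so both are back-calculated here from the reported odds ratio and interval; treat them as assumptions for illustration only.

```python
from math import exp, log

Z_95 = 1.959964  # standard normal quantile for a two-sided 95% interval


def odds_ratio_ci(beta, se, z=Z_95):
    """Return (odds ratio, lower bound, upper bound) for a log-odds coefficient."""
    return exp(beta), exp(beta - z * se), exp(beta + z * se)


def se_from_ci(lower, upper, z=Z_95):
    """Back-calculate the log-odds standard error from a reported Wald interval."""
    return (log(upper) - log(lower)) / (2 * z)


# Smoking row of Table 2: OR 2.50, 95% CI 1.50 - 4.17.
beta_smoking = log(2.50)              # assumed coefficient on the log-odds scale
se_smoking = se_from_ci(1.50, 4.17)   # assumed standard error

odds_ratio, ci_low, ci_high = odds_ratio_ci(beta_smoking, se_smoking)
print(f"OR = {odds_ratio:.2f}, 95% CI {ci_low:.2f} - {ci_high:.2f}")
```

The interval is symmetric on the log scale rather than on the odds-ratio scale, which is why the upper bound sits further from 2.50 than the lower bound does.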

Mandatory Visualizations

Visual representations of workflows and pathways can greatly enhance the understanding of complex analytical processes and biological systems.

References

- 1. content.csbs.utah.edu [content.csbs.utah.edu]

- 2. m.youtube.com [m.youtube.com]

- 3. researchgate.net [researchgate.net]

- 4. youtube.com [youtube.com]

- 5. researchgate.net [researchgate.net]

- 6. creative-diagnostics.com [creative-diagnostics.com]

- 7. Understanding and checking the assumptions of linear regression: a primer for medical researchers - PubMed [pubmed.ncbi.nlm.nih.gov]

- 8. Statistical notes for clinical researchers: simple linear regression 3 – residual analysis - PMC [pmc.ncbi.nlm.nih.gov]

The Cornerstone of Discovery: A Technical Guide to Independent and Dependent Variables in Research

An in-depth exploration for researchers, scientists, and drug development professionals on the fundamental principles of experimental design, data interpretation, and the critical interplay of variables in scientific inquiry.

Defining the Core Concepts: Independent and Dependent Variables

At its most fundamental level, an experiment is a structured procedure designed to test a hypothesis. This is achieved by manipulating one variable to observe its effect on another.[1][2][3]

- Independent Variable (IV): This is the variable that the researcher intentionally manipulates or changes.[1][2][3][4] It is the presumed "cause" in a cause-and-effect relationship.[1][2] In drug development, the independent variable is often the dosage of a new medication, the frequency of its administration, or the type of therapeutic intervention.[5][6]
- Dependent Variable (DV): This is the variable that is measured or observed to see how it is affected by the changes in the independent variable.[1][2][3] It represents the "effect" or outcome of the experiment.[1][2] In a clinical trial, dependent variables could include changes in blood pressure, tumor size, or the concentration of a specific biomarker in the blood.[5]

The relationship between these two types of variables is the central focus of most quantitative research. The goal is to determine if a change in the independent variable leads to a predictable and significant change in the dependent variable.[7][8]

A Case Study in Drug Development: The Antihypertensive Drug Trial

To illustrate the practical application of these concepts, let's consider a hypothetical Phase II clinical trial for a new antihypertensive drug, "Hypotensaril."

Research Hypothesis: Administration of Hypotensaril will lead to a dose-dependent reduction in systolic blood pressure in patients with grade 1 hypertension.

In this scenario:

- Independent Variable: The daily dosage of Hypotensaril administered to the participants. This is manipulated by the researchers, with different groups of patients receiving different doses.
- Dependent Variable: The change in systolic blood pressure (SBP) from the baseline measurement. This is the outcome that is measured to assess the drug's efficacy.

Data Presentation: Summarized Results of the Hypotensaril Phase II Trial

The following tables summarize the quantitative data from this hypothetical trial, demonstrating the relationship between the independent and dependent variables.

Table 1: Baseline Characteristics of Study Participants

| Characteristic | Placebo (n=50) | Hypotensaril 25 mg (n=50) | Hypotensaril 50 mg (n=50) | Hypotensaril 100 mg (n=50) |
|---|---|---|---|---|
| Age (years), mean ± SD | 55.2 ± 8.1 | 54.9 ± 7.8 | 55.5 ± 8.3 | 55.1 ± 7.9 |
| Sex (Male/Female) | 27/23 | 26/24 | 28/22 | 27/23 |
| Baseline Systolic BP (mmHg), mean ± SD | 145.3 ± 4.2 | 145.8 ± 4.5 | 145.1 ± 4.1 | 145.6 ± 4.3 |
| Baseline Diastolic BP (mmHg), mean ± SD | 92.1 ± 3.1 | 92.5 ± 3.3 | 91.9 ± 3.0 | 92.3 ± 3.2 |

Table 2: Change in Systolic Blood Pressure (SBP) after 12 Weeks of Treatment

| Treatment Group | Mean Change in SBP from Baseline (mmHg) | Standard Deviation of Change | p-value vs. Placebo |
|---|---|---|---|
| Placebo | -2.5 | 3.1 | - |
| Hypotensaril 25 mg | -8.7 | 4.2 | <0.01 |
| Hypotensaril 50 mg | -15.4 | 4.8 | <0.001 |
| Hypotensaril 100 mg | -19.2 | 5.1 | <0.001 |

The data indicate a dose-dependent effect of Hypotensaril on systolic blood pressure: the mean reduction in SBP (dependent variable) grows from 2.5 mmHg on placebo to 8.7, 15.4, and 19.2 mmHg as the dosage (independent variable) increases from 25 mg to 50 mg to 100 mg.
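The comparison with placebo in Table 2 can be roughly reproduced from the summary statistics alone. The sketch below computes placebo-adjusted mean changes and an unadjusted Welch t-statistic for each dose group, using the means and standard deviations from Table 2 and the group size of 50 from Table 1. This is a simplification chosen for illustration: it is not the baseline-adjusted analysis described in the trial's statistical plan, and it needs only summary data rather than patient-level records.

```python
from math import sqrt

# (mean change in SBP, SD of change, n) per arm, from Tables 1 and 2.
groups = {
    "Placebo": (-2.5, 3.1, 50),
    "25 mg": (-8.7, 4.2, 50),
    "50 mg": (-15.4, 4.8, 50),
    "100 mg": (-19.2, 5.1, 50),
}


def welch_t(mean_a, sd_a, n_a, mean_b, sd_b, n_b):
    """Welch t-statistic for the difference in means of two independent groups."""
    standard_error = sqrt(sd_a ** 2 / n_a + sd_b ** 2 / n_b)
    return (mean_a - mean_b) / standard_error


placebo = groups["Placebo"]
placebo_adjusted = {}
t_vs_placebo = {}
for arm, stats in groups.items():
    if arm == "Placebo":
        continue
    placebo_adjusted[arm] = stats[0] - placebo[0]
    t_vs_placebo[arm] = welch_t(*stats, *placebo)

for arm in placebo_adjusted:
    print(f"{arm}: difference = {placebo_adjusted[arm]:.1f} mmHg, "
          f"t = {t_vs_placebo[arm]:.2f}")
```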

Experimental Protocols: Ensuring Rigor and Reproducibility

The validity of the relationship between independent and dependent variables hinges on the quality of the experimental design and protocol. A well-defined protocol minimizes bias and ensures that the observed effects can be confidently attributed to the manipulation of the independent variable.

Detailed Methodology for the Hypotensaril Clinical Trial

3.1. Study Design: A 12-week, multi-center, randomized, double-blind, placebo-controlled Phase II clinical trial.

3.2. Participant Selection:

- Inclusion Criteria:
  - Male and female participants aged 18-70 years.
  - Diagnosed with grade 1 hypertension (Systolic BP 140-159 mmHg or Diastolic BP 90-99 mmHg) according to the latest clinical guidelines.[9]
  - Willing and able to provide informed consent.
- Exclusion Criteria:
  - Secondary hypertension.
  - History of significant cardiovascular events (e.g., myocardial infarction, stroke) within the past 6 months.
  - Severe renal or hepatic impairment.
  - Use of other antihypertensive medications that cannot be safely discontinued.

3.3. Randomization and Blinding:

- Participants are randomly assigned in a 1:1:1:1 ratio to one of the four treatment arms (Placebo, 25 mg, 50 mg, or 100 mg Hypotensaril).
- Randomization is stratified by study center to ensure a balanced distribution.
- Both participants and investigators are blinded to the treatment allocation.
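One common way to achieve a 1:1:1:1 allocation that stays balanced within each center is permuted-block randomization run separately per center. The sketch below illustrates the idea; the block size of four, the fixed seed, the center names, and the enrollment counts are all assumptions made for the example, since the protocol does not specify them.

```python
import random

ARMS = ["Placebo", "25 mg", "50 mg", "100 mg"]


def block_randomize(n_participants, rng, arms=ARMS):
    """Permuted blocks of size len(arms): every block contains each arm once,
    so allocation is exactly balanced after each completed block."""
    schedule = []
    while len(schedule) < n_participants:
        block = list(arms)
        rng.shuffle(block)
        schedule.extend(block)
    return schedule[:n_participants]


def stratified_schedule(center_sizes, seed=2025):
    """Generate an independent block-randomized list for each study center."""
    rng = random.Random(seed)  # fixed seed so the schedule is reproducible
    return {center: block_randomize(n, rng) for center, n in center_sizes.items()}


# Hypothetical centers and enrollment targets.
schedule = stratified_schedule({"Center A": 8, "Center B": 12})
for center, allocations in schedule.items():
    print(center, allocations)
```

In a blinded trial the generated list would be held by an unblinded third party or a randomization system, so that neither participants nor investigators can see the allocation sequence.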

3.4. Intervention:

- The investigational drug (Hypotensaril or matching placebo) is administered orally once daily.
- Participants are instructed to take the medication at the same time each day.

3.5. Measurement of the Dependent Variable (Blood Pressure):

- Blood pressure is measured at baseline and at weeks 4, 8, and 12.
- Measurements are taken using a validated automated oscillometric device.[10]
- To ensure accuracy, the following standardized procedure is followed:
  - Participants rest in a quiet room for at least 5 minutes before measurement.[11]
  - Measurements are taken in the seated position with the back supported and feet flat on the floor.[11]
  - The arm is supported at the level of the heart.[10]
  - Three readings are taken at 1-minute intervals, and the average of the last two readings is recorded.[3]
- 24-hour ambulatory blood pressure monitoring (ABPM) is performed at baseline and at week 12 to assess the drug's effect over a full day.[3][12]
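The recording rule in the procedure above (three readings, average of the last two) and the resulting dependent variable (change from baseline) can be expressed in a few lines. The function names and the example readings are hypothetical.

```python
def recorded_bp(readings):
    """Visit value: mean of the second and third of three consecutive readings."""
    if len(readings) != 3:
        raise ValueError("exactly three readings are expected per visit")
    return (readings[1] + readings[2]) / 2


def change_from_baseline(baseline_value, follow_up_value):
    """Negative values indicate a reduction relative to baseline."""
    return follow_up_value - baseline_value


# Hypothetical systolic readings (mmHg) for one participant.
baseline = recorded_bp([148, 144, 142])
week_12 = recorded_bp([133, 130, 128])
delta = change_from_baseline(baseline, week_12)
print(baseline, week_12, delta)
```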

3.6. Statistical Analysis:

- The primary endpoint is the change in mean sitting systolic blood pressure from baseline to week 12.
- An Analysis of Covariance (ANCOVA) model is used to compare the mean change in SBP between each Hypotensaril dose group and the placebo group, with baseline SBP as a covariate.

Visualizing the Relationships: Diagrams and Pathways

Visual representations are powerful tools for understanding the logical flow of an experiment and the biological mechanisms at play.

Experimental Workflow

The following diagram illustrates the workflow of the Hypotensaril clinical trial, from patient recruitment to data analysis.

Logical Relationship of Variables

This diagram illustrates the fundamental cause-and-effect relationship being tested in the experiment.

Signaling Pathway: Mechanism of Action of Beta-Blockers

To provide a more in-depth biological context, the following diagram illustrates the signaling pathway of beta-blockers, a common class of antihypertensive drugs. This demonstrates how understanding the underlying mechanism is crucial in drug development. Beta-blockers work by blocking the effects of catecholamines like epinephrine and norepinephrine on beta-adrenergic receptors.[7] This leads to a decrease in heart rate and blood pressure.[7][8]

Conclusion

The meticulous identification, manipulation, and measurement of independent and dependent variables are the cornerstones of robust scientific research. As demonstrated through the hypothetical "Hypotensaril" clinical trial, a clear understanding of these variables, coupled with rigorous experimental protocols and data analysis, is essential for advancing our knowledge and developing new therapeutic interventions. For researchers, scientists, and drug development professionals, a mastery of these fundamental principles is not merely academic—it is the very essence of their contribution to science and medicine.

References

- 1. Beta blocker - Wikipedia [en.wikipedia.org]

- 2. Beta-blockers: Historical Perspective and Mechanisms of Action - Revista Española de Cardiología (English Edition) [revespcardiol.org]

- 3. clario.com [clario.com]

- 4. Recommended Standards for Assessing Blood Pressure in Human Research Where Blood Pressure or Hypertension Is a Major Focus - PMC [pmc.ncbi.nlm.nih.gov]

- 5. Study: Potential Therapy for Uncontrolled Hypertension [health.ucsd.edu]

- 6. drug-interaction-research.jp [drug-interaction-research.jp]

- 7. Beta Blockers - StatPearls - NCBI Bookshelf [ncbi.nlm.nih.gov]

- 8. my.clevelandclinic.org [my.clevelandclinic.org]

- 9. Effects of Qingda granule on patients with grade 1 hypertension at low-medium risk: study protocol for a randomized, controlled, double-blind clinical trial - PMC [pmc.ncbi.nlm.nih.gov]

- 10. ahajournals.org [ahajournals.org]

- 11. Methods of Blood Pressure Assessment Used in Milestone Hypertension Trials - PMC [pmc.ncbi.nlm.nih.gov]

- 12. newsroom.clevelandclinic.org [newsroom.clevelandclinic.org]

Unveiling Relationships: A Technical Guide to Simple Linear Regression for Experimental Data

For Researchers, Scientists, and Drug Development Professionals

In the realm of experimental science and drug development, understanding the relationship between two continuous variables is a frequent necessity. Simple linear regression is a powerful statistical method that provides a framework to model and quantify these relationships. This in-depth technical guide delves into the core concepts of simple linear regression, offering practical insights into its application for analyzing experimental data. We will explore the underlying principles, essential assumptions, and the interpretation of model outputs, all illustrated with relevant examples from laboratory settings.

Core Concepts of Simple Linear Regression

Simple linear regression is a statistical method used to model the relationship between a single independent variable (predictor or explanatory variable, denoted as x) and a single dependent variable (response or outcome variable, denoted as y).[1] The relationship is modeled using a straight line.[1]

The Linear Regression Model

The fundamental equation of a simple linear regression model is:

y = β₀ + β₁x + ε

Where:

- y is the dependent variable.[1]
- x is the independent variable.[1]
- β₀ is the y-intercept of the regression line, representing the predicted value of y when x is 0.[1]
- β₁ is the slope of the regression line, indicating the change in y for a one-unit change in x.[1]
- ε is the error term, which accounts for the variability in y that cannot be explained by the linear relationship with x.[2]

The goal of simple linear regression is to find the best-fit line that minimizes the error between the observed data points and the line itself.

Assumptions of Simple Linear Regression

For the results of a simple linear regression to be valid, several assumptions about the data must be met:

- Linearity: The relationship between the independent and dependent variables must be linear.[1][3][4] This can be visually assessed with a scatter plot.
- Independence of Errors: The errors (residuals) should be independent of each other.[3][4] This means there should be no correlation between consecutive residuals.
- Homoscedasticity (Constant Variance): The variance of the errors should be constant across all levels of the independent variable.[1][3][4] A plot of residuals against predicted values can help check this assumption.
- Normality of Errors: The errors should be normally distributed.[1][4] This can be checked using a histogram of the residuals or a Q-Q plot.

The logical flow of performing a simple linear regression analysis is depicted in the following diagram.

Parameter Estimation: The Method of Least Squares

The most common method for estimating the parameters (β₀ and β₁) of a linear regression model is the method of least squares.[5][6] This method aims to find the line that minimizes the sum of the squared differences between the observed values of the dependent variable (y) and the values predicted by the regression line (ŷ).[5][7] These differences are known as residuals.

The formulas for calculating the slope (β₁) and the y-intercept (β₀) using the least squares method are derived from minimizing the sum of squared residuals. For a set of n data points (xᵢ, yᵢ):

Slope (β₁):

β₁ = Σ((xᵢ - x̄)(yᵢ - ȳ)) / Σ((xᵢ - x̄)²)

Y-intercept (β₀):

β₀ = ȳ - β₁x̄

Where:

- xᵢ and yᵢ are the individual data points.
- x̄ and ȳ are the means of the independent and dependent variables, respectively.
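The slope and intercept formulas above translate directly into code. The following sketch implements them in plain Python; the function name and the five data points are illustrative assumptions, not data from this guide.

```python
def least_squares(x, y):
    """Return (intercept, slope) from the closed-form least-squares formulas:
    slope = sum((x_i - x_bar)(y_i - y_bar)) / sum((x_i - x_bar)^2)
    intercept = y_bar - slope * x_bar
    """
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2 points)")
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("x values must not all be identical")
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return intercept, slope


# Hypothetical data points.
x = [1, 2, 3, 4, 5]
y = [2.1, 3.9, 6.2, 7.8, 10.1]

b0, b1 = least_squares(x, y)
print(f"y = {b0:.2f} + {b1:.2f} x")
```

Because the intercept is computed as ȳ − β₁x̄, the fitted line always passes through the point of means (x̄, ȳ).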

The logical relationship for calculating the least squares estimates is as follows:

Model Evaluation

Once the regression model is fitted, it is crucial to evaluate how well it represents the data. Several metrics are used for this purpose.

| Metric | Description | Interpretation |
|---|---|---|
| R-squared (R²) | Also known as the coefficient of determination, it represents the proportion of the variance in the dependent variable that is predictable from the independent variable.[8] | Values range from 0 to 1. A higher R² indicates a better fit of the model to the data. For example, an R² of 0.85 means that 85% of the variation in the dependent variable can be explained by the independent variable.[8] |
| Adjusted R-squared | A modified version of R-squared that adjusts for the number of predictors in a model. | It is more suitable for comparing models with different numbers of independent variables. |
| p-value | The p-value for the slope coefficient (β₁) tests the null hypothesis that there is no linear relationship between the independent and dependent variables. | A small p-value (typically < 0.05) indicates that you can reject the null hypothesis and conclude that there is a statistically significant linear relationship between the variables. |
| Residual Plots | A graphical tool used to assess the assumptions of the linear regression model. | Patterns in the residual plot can indicate violations of assumptions like non-linearity or heteroscedasticity. Ideally, the residuals should be randomly scattered around zero.[8] |
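The first two metrics in the table can be computed from observed and predicted values with the usual definitions, R² = 1 − SS_res / SS_tot and adjusted R² = 1 − (1 − R²)(n − 1) / (n − p − 1), where p is the number of predictors. The sketch below assumes predictions from an already fitted one-predictor model; the data values are hypothetical.

```python
def r_squared(observed, predicted):
    """Coefficient of determination: 1 - residual sum of squares / total sum of squares."""
    mean_obs = sum(observed) / len(observed)
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    return 1 - ss_res / ss_tot


def adjusted_r_squared(r2, n_observations, n_predictors):
    """Penalize R-squared for the number of predictors in the model."""
    return 1 - (1 - r2) * (n_observations - 1) / (n_observations - n_predictors - 1)


# Hypothetical observations and predictions from a fitted line y = 0.05 + 1.99x
# evaluated at x = 1, 2, 3, 4, 5.
observed = [2.1, 3.9, 6.2, 7.8, 10.1]
predicted = [2.04, 4.03, 6.02, 8.01, 10.00]

r2 = r_squared(observed, predicted)
adj_r2 = adjusted_r_squared(r2, n_observations=len(observed), n_predictors=1)
print(f"R-squared = {r2:.4f}, adjusted R-squared = {adj_r2:.4f}")
```

The adjusted value is never larger than R² itself, and the gap widens as predictors are added relative to the number of observations.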

Application in Experimental Data Analysis

Simple linear regression is widely used in various experimental contexts within drug development and scientific research. Below are detailed examples of its application.

Experiment 1: Protein Quantification using the Bradford Assay

Objective: To determine the concentration of an unknown protein sample by creating a standard curve using a series of known protein concentrations.

Experimental Protocol:

- Preparation of Standards: A series of bovine serum albumin (BSA) standards with known concentrations (e.g., 0, 0.1, 0.2, 0.4, 0.6, 0.8, 1.0 mg/mL) are prepared by diluting a stock solution.[3]
- Sample Preparation: The unknown protein sample is diluted to fall within the linear range of the assay.[3]
- Assay Procedure:
  - Aliquots of each standard and the diluted unknown sample are added to separate test tubes or microplate wells.[9]
  - Bradford reagent is added to each tube/well and mixed.[9]
  - After a short incubation period (e.g., 5 minutes), the absorbance of each sample is measured at 595 nm using a spectrophotometer.[3]

Data Presentation:

| BSA Concentration (mg/mL) (x) | Absorbance at 595 nm (y) |
|---|---|
| 0.0 | 0.050 |
| 0.1 | 0.152 |
| 0.2 | 0.251 |
| 0.4 | 0.448 |
| 0.6 | 0.653 |
| 0.8 | 0.851 |
| 1.0 | 1.049 |

Regression Analysis:

A simple linear regression is performed with BSA concentration as the independent variable (x) and absorbance as the dependent variable (y).

| Parameter | Estimate | Standard Error | t-value | p-value |
|---|---|---|---|---|
| Intercept (β₀) | 0.051 | 0.008 | 6.375 | <0.001 |
| Slope (β₁) | 0.998 | 0.015 | 66.533 | <0.001 |

R-squared: 0.9989

The resulting regression equation is: Absorbance = 0.051 + 0.998 * (BSA Concentration).

To determine the concentration of the unknown protein sample, its absorbance is measured and the concentration is calculated using the regression equation. For example, if the unknown sample has an absorbance of 0.550, its concentration would be calculated as: (0.550 - 0.051) / 0.998 ≈ 0.500 mg/mL.
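The standard-curve calculation above can be sketched in plain Python as follows. This is a minimal illustration using the tabulated standards; the helper names are assumptions, and a fresh least-squares fit may differ from the reported coefficients in the third decimal place.

```python
def fit_line(x, y):
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


# Standard-curve data from the table above
bsa_mg_per_ml = [0.0, 0.1, 0.2, 0.4, 0.6, 0.8, 1.0]
absorbance_595 = [0.050, 0.152, 0.251, 0.448, 0.653, 0.851, 1.049]

intercept, slope = fit_line(bsa_mg_per_ml, absorbance_595)


def concentration_from_absorbance(a595):
    """Invert the standard curve to estimate concentration in mg/mL."""
    return (a595 - intercept) / slope


unknown_conc = concentration_from_absorbance(0.550)
print(f"slope = {slope:.3f}, intercept = {intercept:.3f}")
print(f"estimated concentration = {unknown_conc:.3f} mg/mL")
```

The inversion is only trustworthy for absorbances that fall inside the range spanned by the standards.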

The workflow for creating a standard curve using linear regression is illustrated below.

References

- 1. Linearization of the Bradford Protein Assay - PMC [pmc.ncbi.nlm.nih.gov]

- 2. escholarship.org [escholarship.org]

- 3. Protocol for Bradford Protein Assay - Creative Proteomics [creative-proteomics.com]

- 4. stats.stackexchange.com [stats.stackexchange.com]

- 5. scribd.com [scribd.com]

- 6. teachmephysiology.com [teachmephysiology.com]

- 7. sites.chem.utoronto.ca [sites.chem.utoronto.ca]

- 8. Example of Regression Analysis and Its Application in Stability Testing o.. [askfilo.com]

- 9. researchgate.net [researchgate.net]

A-Z of Regression Analysis in Psychology Research: A Technical Guide

For Researchers, Scientists, and Drug Development Professionals

This guide provides a comprehensive overview of regression analysis, a powerful statistical tool used in psychological research to model and understand the relationships between variables.[1][2][3] It is designed to equip researchers with the knowledge to appropriately apply regression techniques to answer complex research questions.

Core Principle: When to Employ Regression Analysis

At its core, regression analysis is used for two primary purposes: prediction and explanation .[3][4] It is the appropriate method when a researcher seeks to understand how one or more independent variables (IVs), or predictors, relate to a single dependent variable (DV), or outcome.[1][3]

Use regression analysis to answer questions such as:

-

Prediction/Forecasting: Can we predict a future or current outcome based on a set of known factors? For example, can college GPA be predicted from high school SAT scores and hours of study per week?[1][3]

-

Etiology and Explanation: What are the key factors that contribute to a particular psychological phenomenon? For instance, what is the relationship between levels of perceived stress, social support, and the severity of depressive symptoms?[3]

-

Controlling for Variables: How does a primary relationship of interest hold up after accounting for the influence of other, potentially confounding, variables? A researcher might want to examine the effect of a new therapeutic intervention on anxiety levels while statistically controlling for the patient's age and initial symptom severity.[2][5]

-

Theory Testing: Does a theoretical model hold true? Regression is essential for testing complex psychological theories, such as models of mediation and moderation, which examine the how and when of an effect.[6][7][8]

Common Regression Models in Psychological Science

The choice of regression model depends on the nature of the research question and the type of variables being studied.[9][10][11][12]

| Model Type | Description | Typical Research Question | Variable Types |
|---|---|---|---|
| Simple Linear Regression | Models the linear relationship between a single IV and a single DV.[9][10][11] | How does the number of hours slept predict next-day mood rating? | IV: Continuous; DV: Continuous |
| Multiple Linear Regression | Extends simple regression by including two or more IVs to predict a single DV.[1][9] This allows for the assessment of the unique contribution of each predictor.[13] | How do IQ, motivation, and socioeconomic status collectively predict academic achievement? | IVs: Continuous or Categorical; DV: Continuous |
| Logistic Regression | Used when the dependent variable is dichotomous (i.e., has only two possible outcomes).[9][14][15] It models the probability of an outcome occurring.[9][16] | What is the likelihood of a patient responding to a specific treatment (yes/no) based on their demographic and clinical characteristics? | IVs: Continuous or Categorical; DV: Dichotomous (e.g., 0/1, Pass/Fail) |
| Hierarchical Regression | The researcher, based on theory, dictates the order in which predictor variables are entered into the model in a series of steps or "blocks".[5][17] This method is used to determine if newly added variables provide a significant improvement in prediction over the variables already in the model.[5][18][19] | After controlling for demographic factors like age and gender, does a measure of psychological resilience still significantly predict levels of burnout in healthcare workers? | IVs: Continuous or Categorical; DV: Continuous or Dichotomous |
| Mediation & Moderation Analysis | These are advanced applications of regression used to test theoretical pathways.[7][20] Mediation explains how or why an IV affects a DV through an intermediary variable (the mediator).[8][20] Moderation identifies when or for whom an IV affects a DV, by examining how a third variable (the moderator) changes the strength or direction of the primary relationship.[8][21] | Mediation: Does a new mindfulness intervention (IV) reduce stress (DV) by increasing self-awareness (mediator)? Moderation: Is the relationship between stress (IV) and depression (DV) stronger for individuals with low social support (moderator)? | IVs: Continuous or Categorical; DV: Continuous or Dichotomous |
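For reference, the mediation and moderation rows in the table are commonly written as regression equations of the following form. The notation (X for the IV, Y for the DV, M for the mediator, W for the moderator, and the coefficient names) is a conventional choice assumed here, not taken from the source.

```latex
% Mediation: X influences Y through the mediator M
\begin{align}
M &= a_0 + a\,X + \varepsilon_M \\
Y &= c_0 + c'\,X + b\,M + \varepsilon_Y
\end{align}
% indirect effect: a \cdot b; direct effect: c'

% Moderation: the effect of X on Y depends on the moderator W
\begin{equation}
Y = b_0 + b_1 X + b_2 W + b_3 (X \times W) + \varepsilon
\end{equation}
```

In the moderation model, a coefficient b₃ that differs reliably from zero is what indicates that the X-Y relationship changes with W.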

The Foundational Assumptions of Linear Regression

-

Linearity: The relationship between the independent variable(s) and the dependent variable is assumed to be linear.[22][24][25][26] This means a straight line should best describe the relationship between the variables.

-

Independence of Errors: The residuals (the differences between the observed and predicted values) are independent of one another.[23][24][25] This is particularly important in data with a time-series component.

-

Homoscedasticity: The variance of the residuals is constant at every level of the independent variable(s).[23][24][25][26] In other words, the spread of the residuals should be roughly the same across the entire range of predicted values.

-

Normality of Errors: The residuals of the model are assumed to be normally distributed.[22][23][25][26] It is a common misconception that the variables themselves must be normally distributed; only the errors of the model need to follow a normal distribution.[22][23]

-

No Multicollinearity: In multiple regression, the independent variables should not be too highly correlated with each other.[25][26] High multicollinearity can make it difficult to determine the unique contribution of each predictor.
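One way to screen the independence-of-errors assumption is the Durbin-Watson statistic, a common diagnostic that the text does not name explicitly. The sketch below is a plain-Python version; the two residual series are made up purely to show how the statistic behaves. Values near 2 suggest little first-order autocorrelation, values well below 2 suggest positive autocorrelation, and values well above 2 suggest negative autocorrelation.

```python
def durbin_watson(residuals):
    """Sum of squared successive differences divided by the sum of squares."""
    numerator = sum(
        (residuals[i] - residuals[i - 1]) ** 2 for i in range(1, len(residuals))
    )
    denominator = sum(e ** 2 for e in residuals)
    return numerator / denominator


# Hypothetical residuals ordered in time (illustration only)
trending = [-3.0, -2.0, -1.0, 1.0, 2.0, 3.0]      # neighbours resemble each other
alternating = [1.0, -1.0, 1.0, -1.0, 1.0, -1.0]   # neighbours flip sign

dw_trending = durbin_watson(trending)
dw_alternating = durbin_watson(alternating)
print(f"trending: {dw_trending:.2f}, alternating: {dw_alternating:.2f}")
```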

Example Experimental Protocol

Research Question: To what extent do prior academic achievement (high school GPA) and study habits (average weekly study hours) predict final exam scores in an undergraduate psychology course, after controlling for a student's pre-existing anxiety levels?

Methodology:

- Participants: 250 undergraduate students enrolled in an introductory psychology course at a large university.

- Materials:

  - Demographic Questionnaire: Collects basic information and self-reported high school GPA.

  - Study Habits Log: Participants log their average weekly hours spent studying for the course over the semester.

  - Generalized Anxiety Disorder 7-item (GAD-7) Scale: A validated self-report measure to assess baseline anxiety symptoms at the beginning of the semester.

  - Final Exam: A standardized, 100-point final examination for the course.

- Procedure:

  - At the beginning of the semester, students complete the demographic questionnaire and the GAD-7 scale.

  - Throughout the semester, students are prompted weekly to log their study hours.

  - At the end of the semester, final exam scores are collected from the course instructor.

- Proposed Analysis: Hierarchical Multiple Regression

  - Model 1: The control variable, GAD-7 score, is entered into the regression model to predict the final exam score.

  - Model 2: High school GPA and average weekly study hours are added to the model.

  - The analysis will determine if the addition of the academic variables in Model 2 significantly improves the prediction of the final exam score, over and above the variance explained by anxiety alone.[5]

Data Presentation: Interpreting Regression Output

The output of a regression analysis is typically summarized in a table. The following is a hypothetical result from the experiment described above.

Table 1: Hierarchical Regression Predicting Final Exam Score

| Model | Variable | B | SE | β | t | p-value |
|---|---|---|---|---|---|---|
| 1 | (Constant) | 85.12 | 2.45 | | 34.74 | < .001 |
| | GAD-7 Score | -0.75 | 0.25 | -0.19 | -3.00 | .003 |
| | Model 1 Summary | F(1, 248) = 9.00, p = .003, R² = .035 | | | | |
| 2 | (Constant) | 40.30 | 4.88 | | 8.26 | < .001 |
| | GAD-7 Score | -0.41 | 0.20 | -0.10 | -2.05 | .041 |
| | High School GPA | 8.55 | 1.50 | 0.32 | 5.70 | < .001 |
| | Weekly Study Hours | 1.20 | 0.30 | 0.23 | 4.00 | < .001 |
| | Model 2 Summary | F(3, 246) = 25.67, p < .001, R² = .238 | | | | |
| | Model 2 Change Statistics | ΔF(2, 246) = 31.25, p < .001, ΔR² = .203 | | | | |

-

B (Unstandardized Coefficient): The change in the DV for a one-unit change in the IV. For every one-hour increase in weekly study, the exam score is predicted to increase by 1.20 points, holding other variables constant.

-

β (Standardized Coefficient): Standardized coefficients allow for comparison of the relative strength of the predictors. Here, High School GPA (β = 0.32) is the strongest predictor.

-

p-value: Indicates statistical significance (typically < .05). All predictors in Model 2 are significant.

-

R² (R-squared): The proportion of variance in the DV that is explained by the IVs in the model. Model 1 (anxiety) explains 3.5% of the variance in exam scores. Model 2 explains 23.8%.

-

ΔR² (R-squared Change): The change in R-squared between models. The addition of GPA and study hours explained an additional 20.3% of the variance in exam scores, a significant improvement.[5]
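The test behind ΔR² can be sketched with the standard F-change formula, which compares the gain in R² per added predictor with the unexplained variance per residual degree of freedom. The function name is an assumption. Because the table reports R² to only three decimals, the value computed from those rounded entries (about 32.8) need not match the tabulated ΔF exactly.

```python
def f_change(r2_reduced, r2_full, n_obs, k_full, k_added):
    """F statistic for the R² gain when k_added predictors join the model."""
    df_residual = n_obs - k_full - 1          # residual df of the full model
    delta_r2 = r2_full - r2_reduced
    return (delta_r2 / k_added) / ((1.0 - r2_full) / df_residual)


# Rounded values from Table 1 (n = 250, Model 2 has 3 predictors, 2 of them new)
delta_f = f_change(r2_reduced=0.035, r2_full=0.238, n_obs=250, k_full=3, k_added=2)
print(f"F-change on (2, 246) df = {delta_f:.2f}")
```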

Visualizing Complex Relationships

Graphviz diagrams can illustrate the theoretical pathways tested with regression.

Conclusion

Regression analysis is a versatile and indispensable tool in psychology research, enabling scientists to move beyond simple descriptions of data to build and test predictive and explanatory models.[1][2] A thorough understanding of its different forms, underlying assumptions, and proper application is critical for producing robust and meaningful scientific insights. When used correctly, regression provides a powerful framework for dissecting the complex interplay of variables that define human behavior and psychological processes.

References

- 1. fiveable.me [fiveable.me]

- 2. statisticsbyjim.com [statisticsbyjim.com]

- 3. m.youtube.com [m.youtube.com]

- 4. Regression analysis - Wikipedia [en.wikipedia.org]

- 5. Section 5.4: Hierarchical Regression Explanation, Assumptions, Interpretation, and Write Up – Statistics for Research Students [usq.pressbooks.pub]

- 6. statisticssolutions.com [statisticssolutions.com]

- 7. Section 7.1: Mediation and Moderation Models – Statistics for Research Students [usq.pressbooks.pub]

- 8. martinlea.com [martinlea.com]

- 9. fiveable.me [fiveable.me]

- 10. 7 Common Types of Regression (And When to Use Each) [statology.org]

- 11. theknowledgeacademy.com [theknowledgeacademy.com]

- 12. analyticsvidhya.com [analyticsvidhya.com]

- 13. researchgate.net [researchgate.net]

- 14. APA Dictionary of Psychology [dictionary.apa.org]

- 15. Logistic regression - Wikipedia [en.wikipedia.org]

- 16. Logistic Regression: Overview and Applications | Keylabs [keylabs.ai]

- 17. APA Dictionary of Psychology [dictionary.apa.org]

- 18. files.eric.ed.gov [files.eric.ed.gov]

- 19. researchgate.net [researchgate.net]

- 20. researchgate.net [researchgate.net]

- 21. A General Model for Testing Mediation and Moderation Effects - PMC [pmc.ncbi.nlm.nih.gov]

- 22. Regression assumptions in clinical psychology research practice—a systematic review of common misconceptions - PMC [pmc.ncbi.nlm.nih.gov]

- 23. Testing the assumptions of linear regression [people.duke.edu]

- 24. statology.org [statology.org]

- 25. analyticsvidhya.com [analyticsvidhya.com]

- 26. statisticssolutions.com [statisticssolutions.com]

Foundational Principles of Multiple Regression Analysis: An In-depth Technical Guide for Researchers and Drug Development Professionals

An in-depth technical guide on the core principles of multiple regression analysis, tailored for researchers, scientists, and professionals in drug development. This guide delves into the fundamental assumptions, methodological workflow, and interpretation of multiple regression, providing a robust framework for its application in scientific research.

Introduction to Multiple Regression Analysis

Multiple regression analysis is a powerful statistical technique used to understand the relationship between a single dependent variable and two or more independent variables.[1][2] In the context of drug development and clinical research, it is an indispensable tool for identifying factors that may influence a particular outcome, such as treatment efficacy or patient response.[3] For instance, researchers might use multiple regression to determine how factors like drug dosage, patient age, and biomarker levels collectively predict a change in a clinical endpoint.[1] It is important to remember that regression analysis reveals relationships between variables but does not inherently imply causation.[1]

Core Principles and Assumptions

Key Assumptions of Multiple Regression:

-

Linearity: The relationship between each independent variable and the dependent variable is assumed to be linear. This can be visually inspected using scatterplots of each predictor against the outcome.[2]

-

Independence of Errors: The errors (the differences between the observed and predicted values) are assumed to be independent of one another. This assumption is particularly important in studies with repeated measures or clustered data.

-

Homoscedasticity: The variance of the errors is constant across all levels of the independent variables. A plot of residuals against predicted values should show no discernible pattern, such as a funnel shape.

-

Normality of Errors: The errors are assumed to be normally distributed. This can be checked by examining a histogram or a Q-Q plot of the residuals.

-

No Multicollinearity: The independent variables should not be too highly correlated with each other. High correlation, known as multicollinearity, can make it difficult to determine the individual effect of each predictor. This can be assessed using metrics like the Variance Inflation Factor (VIF).[4]
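The Variance Inflation Factor for predictor j is 1 / (1 − R²ⱼ), where R²ⱼ comes from regressing that predictor on all the others. The sketch below covers only the two-predictor special case, where R²ⱼ reduces to the squared Pearson correlation between the two predictors. The data are hypothetical and the function names are illustrative.

```python
def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def vif_two_predictors(x1, x2):
    """VIF shared by both predictors when the model has exactly two of them."""
    r = pearson_r(x1, x2)
    return 1.0 / (1.0 - r ** 2)


# Hypothetical predictor values (illustration only); their correlation is 0.8
predictor_a = [1, 2, 3, 4, 5]
predictor_b = [2, 1, 4, 3, 5]

vif = vif_two_predictors(predictor_a, predictor_b)
print(f"VIF = {vif:.2f}")
```

With more than two predictors, each R²ⱼ has to come from a full auxiliary regression rather than a single pairwise correlation.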

Methodological Workflow for Multiple Regression Analysis

A systematic approach to multiple regression analysis ensures robustness and reproducibility. The following diagram outlines a typical workflow for conducting such an analysis in a research setting.

Experimental Protocol: A Hypothetical Case Study in Drug Efficacy

To illustrate the application of multiple regression in drug development, we present a detailed protocol for a hypothetical clinical study.

Study Title: A Phase II, Randomized, Double-Blind, Placebo-Controlled Study to Evaluate the Efficacy of "LogiStat" in Reducing LDL Cholesterol in Patients with Hypercholesterolemia.

Objective: To determine the relationship between the dosage of LogiStat, patient age, and baseline LDL cholesterol levels on the percentage reduction in LDL cholesterol after 12 weeks of treatment.

Methodology:

- Participant Recruitment: A total of 150 participants diagnosed with hypercholesterolemia, aged between 40 and 70 years, were recruited for the study. Participants were randomized into three arms: Placebo, LogiStat 10 mg, and LogiStat 20 mg.

- Data Collection:

  - Dependent Variable: Percentage change in LDL cholesterol from baseline to week 12.

  - Independent Variables:

    - Drug Dosage (0 mg for Placebo, 10 mg, 20 mg)

    - Patient Age (in years)

    - Baseline LDL Cholesterol (in mg/dL)

- Statistical Analysis Plan:

  - A multiple linear regression model will be fitted to the data.

  - Model Equation: LDL_Reduction (%) = β₀ + β₁(Dosage) + β₂(Age) + β₃(Baseline_LDL) + ε

  - Assumption Checks: All core assumptions of multiple regression (linearity, independence, homoscedasticity, normality of errors, and no multicollinearity) will be formally tested.

  - Model Evaluation: The overall significance of the model will be assessed using the F-statistic. The proportion of variance in LDL reduction explained by the model will be determined by the R-squared value.

  - Interpretation of Coefficients: The regression coefficients (β) for each independent variable will be interpreted to understand their individual contribution to the change in LDL cholesterol, while holding other variables constant. A p-value of < 0.05 will be considered statistically significant.
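Fitting the model equation in the analysis plan can be sketched with the normal equations, (XᵀX)β = Xᵀy, solved here in plain Python. Everything in the example is an assumption made for illustration: the six participant rows are invented, and the outcome is generated without noise from arbitrarily chosen coefficients so that the fit can be checked against known values. A real analysis would normally use a statistics package.

```python
def solve_linear_system(a, b):
    """Solve a·x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]   # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):                       # back substitution
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x


def fit_multiple_regression(rows, y):
    """Return [β0, β1, ...] for y regressed on the columns of `rows`."""
    design = [[1.0] + [float(v) for v in row] for row in rows]  # add intercept
    p = len(design[0])
    xtx = [[sum(d[i] * d[j] for d in design) for j in range(p)] for i in range(p)]
    xty = [sum(d[i] * yi for d, yi in zip(design, y)) for i in range(p)]
    return solve_linear_system(xtx, xty)


# Hypothetical participants: (dosage in mg, age in years, baseline LDL in mg/dL)
rows = [(0, 45, 150), (0, 60, 180), (10, 50, 160),
        (10, 65, 140), (20, 42, 175), (20, 68, 155)]
assumed_beta = [5.0, 1.5, -0.2, 0.1]   # arbitrary values used to simulate y
ldl_reduction = [
    assumed_beta[0] + sum(b * v for b, v in zip(assumed_beta[1:], row))
    for row in rows
]

beta_hat = fit_multiple_regression(rows, ldl_reduction)
print([round(b, 4) for b in beta_hat])
```

Because the simulated outcome contains no noise, the recovered coefficients should match the assumed ones up to floating-point error. With real trial data they would carry standard errors, which this sketch does not compute.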

Data Presentation: Summarized Results of the Hypothetical Study

The following table summarizes the results of the multiple regression analysis from our hypothetical study on "LogiStat".

| Variable | Unstandardized Coefficient (B) | Standard Error | Standardized Coefficient (β) | t-statistic | p-value |
|---|---|---|---|---|---|
| (Intercept) | 5.25 | 2.10 | | 2.50 | 0.013 |
| Drug Dosage (mg) | 1.50 | 0.25 | 0.60 | 6.00 | <0.001 |
| Age (years) | -0.15 | 0.05 | -0.18 | -3.00 | 0.003 |
| Baseline LDL (mg/dL) | 0.10 | 0.04 | 0.15 | 2.50 | 0.014 |

Model Summary:

-

R-squared (R²): 0.72

-

Adjusted R-squared: 0.71

-

F-statistic: 118.5

-

p-value (F-statistic): <0.001

Interpretation of Results

The results from the multiple regression analysis indicate that the overall model is statistically significant (F = 118.5, p < 0.001), explaining approximately 71% of the variance in LDL cholesterol reduction (Adjusted R² = 0.71).

-

Drug Dosage: For each 1 mg increase in the dosage of LogiStat, the percentage reduction in LDL cholesterol is expected to increase by 1.50 percentage points, holding age and baseline LDL constant. This effect is statistically significant (p < 0.001).

-

Age: For each one-year increase in age, the percentage reduction in LDL cholesterol is expected to decrease by 0.15 percentage points, holding dosage and baseline LDL constant. This effect is statistically significant (p = 0.003).

-

Baseline LDL: For each 1 mg/dL increase in baseline LDL cholesterol, the percentage reduction in LDL cholesterol is expected to increase by 0.10 percentage points, holding dosage and age constant. This effect is statistically significant (p = 0.014).
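To make the coefficient interpretations concrete, the sketch below plugs one patient profile into the fitted equation. The coefficients are those reported in the results table; the patient (55 years old, 20 mg dose, baseline LDL of 160 mg/dL) is a hypothetical example chosen within the study's stated ranges.

```python
# Coefficients as reported in the hypothetical LogiStat results table
COEFFICIENTS = {
    "intercept": 5.25,
    "dosage_mg": 1.50,
    "age_years": -0.15,
    "baseline_ldl_mg_dl": 0.10,
}


def predict_ldl_reduction(dosage_mg, age_years, baseline_ldl_mg_dl):
    """Predicted percentage reduction in LDL cholesterol at week 12."""
    return (
        COEFFICIENTS["intercept"]
        + COEFFICIENTS["dosage_mg"] * dosage_mg
        + COEFFICIENTS["age_years"] * age_years
        + COEFFICIENTS["baseline_ldl_mg_dl"] * baseline_ldl_mg_dl
    )


# Hypothetical patient: 5.25 + 30.00 - 8.25 + 16.00 = 43.00
predicted = predict_ldl_reduction(dosage_mg=20, age_years=55, baseline_ldl_mg_dl=160)
print(f"predicted LDL reduction = {predicted:.2f}%")
```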

Visualization of Logical Relationships

The following diagram illustrates the logical relationship between the independent and dependent variables in our hypothetical multiple regression model.

Conclusion

Multiple regression analysis is a versatile and powerful tool for researchers and professionals in drug development. By understanding its foundational principles, adhering to a systematic workflow, and carefully interpreting the results, it is possible to gain significant insights into the complex interplay of factors that influence clinical outcomes. This guide provides a foundational understanding to aid in the robust application of this essential statistical method.


Interpreting the Y-Intercept and Slope in Linear Regression: A Technical Guide for Researchers

An in-depth guide for researchers, scientists, and drug development professionals on the core principles of linear regression analysis, focusing on the practical interpretation of the y-intercept and slope. This document provides detailed experimental protocols, quantitative data summaries, and visual workflows to facilitate a deeper understanding of this fundamental statistical method.

Introduction to Linear Regression in Scientific Research

Linear regression is a powerful statistical tool used to model the relationship between a dependent variable and one or more independent variables.[1] In the context of life sciences and drug development, it is frequently employed to analyze experimental data, identify trends, and make predictions.[1] This guide will delve into the two key components of a simple linear regression equation—the y-intercept and the slope—providing a foundational understanding for their correct interpretation in various research applications.

The fundamental linear regression equation is expressed as:

Y = β₀ + β₁X + ε

Where:

-

Y is the dependent (response) variable.

-

X is the independent (predictor) variable.

-

β₀ is the y-intercept.

-

β₁ is the slope.

-

ε is the error term, representing the variability in Y that cannot be explained by X.
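For completeness, the ordinary least squares estimates of the two coefficients have closed forms. These are the standard textbook formulas; the bar notation for sample means and the hat notation for estimates are assumed here rather than taken from the source.

```latex
\begin{align}
\hat{\beta}_1 &= \frac{\sum_{i=1}^{n} (X_i - \bar{X})(Y_i - \bar{Y})}{\sum_{i=1}^{n} (X_i - \bar{X})^2} \\
\hat{\beta}_0 &= \bar{Y} - \hat{\beta}_1 \bar{X}
\end{align}
```

The second line shows that the fitted line always passes through the point of means.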

Core Concepts: The Y-Intercept (β₀) and the Slope (β₁)

Interpreting the Y-Intercept (β₀)

The y-intercept is the predicted value of the dependent variable (Y) when the independent variable (X) is equal to zero.[2][3][4] While mathematically straightforward, its practical interpretation depends heavily on the context of the experiment.

-

Meaningful Interpretation: In some scenarios, a value of zero for the independent variable is experimentally valid and meaningful. For example, in a dose-response study, a zero dose represents a control condition (no drug administered). In this case, the y-intercept would represent the baseline response of the biological system in the absence of the treatment.[5]

-

Meaningless Interpretation (Extrapolation): In many experiments, an X value of zero lies outside the range of the collected data or is physically impossible, so the y-intercept is an extrapolation. Even when zero is attainable, the fitted intercept may not match theory: in a study relating drug concentration to absorbance, a zero concentration should theoretically yield zero absorbance, yet the regression line may intersect the y-axis at a non-zero value because of background noise or other experimental factors. Interpreting the y-intercept literally in such cases can be misleading.[6] The intercept should be given a substantive interpretation only when X = 0 falls within the range of the observed data.[6]

Interpreting the Slope (β₁)

The slope represents the change in the dependent variable (Y) for a one-unit change in the independent variable (X).[2][6][7] The sign of the slope (positive or negative) indicates the direction of the relationship.

-

Positive Slope: A positive slope signifies a direct relationship, where an increase in the independent variable leads to a predicted increase in the dependent variable.

-

Negative Slope: A negative slope indicates an inverse relationship, where an increase in the independent variable leads to a predicted decrease in the dependent variable.

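The magnitude of the slope quantifies the steepness of the line: a larger absolute value means a larger predicted change in Y for each unit change in X.[7] Because the slope depends on the units of X and Y, it does not by itself measure the strength of the linear relationship; that is conveyed by the correlation coefficient or R².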
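A short sketch of how the slope depends on measurement units: re-expressing X in µg/mL instead of mg/mL shrinks the slope a thousandfold, while the correlation coefficient is unchanged. The readings are hypothetical and the function name is illustrative.

```python
def slope_and_correlation(x, y):
    """Return the OLS slope and the Pearson correlation for paired data."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    return sxy / sxx, sxy / (sxx * syy) ** 0.5


# Hypothetical readings (illustration only)
conc_mg_per_ml = [0.0, 0.1, 0.2, 0.4, 0.6, 0.8, 1.0]
signal = [0.05, 0.15, 0.25, 0.46, 0.66, 0.86, 1.05]
conc_ug_per_ml = [c * 1000 for c in conc_mg_per_ml]   # same data, new units

slope_mg, r_mg = slope_and_correlation(conc_mg_per_ml, signal)
slope_ug, r_ug = slope_and_correlation(conc_ug_per_ml, signal)
print(f"slope per mg/mL = {slope_mg:.4f}, slope per µg/mL = {slope_ug:.7f}")
print(f"correlation in both cases = {r_mg:.4f}")
```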

Practical Applications and Experimental Protocols

To illustrate the interpretation of the y-intercept and slope, we will explore two common applications in drug development and research: the Bradford protein assay and a dose-response analysis for IC₅₀ determination.

Example 1: Protein Quantification using the Bradford Assay

The Bradford assay is a widely used colorimetric method to determine the total protein concentration in a sample.[2] The assay relies on the binding of Coomassie Brilliant Blue G-250 dye to proteins, which results in a color change that can be measured by a spectrophotometer at 595 nm. A standard curve is generated using a series of known protein concentrations (e.g., Bovine Serum Albumin - BSA) and their corresponding absorbance readings. This standard curve is then used to determine the concentration of an unknown protein sample.

- Preparation of Reagents:

  - Prepare a 1 mg/mL stock solution of Bovine Serum Albumin (BSA).

  - Prepare a series of BSA standards with concentrations ranging from 0.1 to 1.0 mg/mL by diluting the stock solution.

  - Prepare the Bradford reagent (commercially available or prepared in the lab).

- Assay Procedure:

  - Pipette 20 µL of each BSA standard, the unknown protein sample, and a blank (buffer with no protein) into separate cuvettes or wells of a microplate.

  - Add 1 mL of Bradford reagent to each cuvette/well and mix thoroughly.

  - Incubate at room temperature for 5 minutes.

  - Measure the absorbance of each sample at 595 nm using a spectrophotometer.

- Data Analysis:

  - Subtract the absorbance of the blank from the absorbance readings of all standards and the unknown sample.

  - Plot the corrected absorbance values (Y-axis) against the known BSA concentrations (X-axis).

  - Perform a linear regression analysis on the standard curve data to obtain the equation of the line (Y = β₁X + β₀).

| BSA Concentration (mg/mL) (X) | Absorbance at 595 nm (Y) |
|---|---|
| 0.0 | 0.050 |
| 0.1 | 0.150 |
| 0.2 | 0.255 |
| 0.4 | 0.460 |
| 0.6 | 0.655 |
| 0.8 | 0.860 |
| 1.0 | 1.050 |

Linear Regression Equation: Absorbance = 1.005 * [BSA] + 0.052

-

Slope (β₁ = 1.005): For every 1 mg/mL increase in BSA concentration, the absorbance at 595 nm is predicted to increase by 1.005 units. The positive sign indicates that absorbance rises with protein concentration across this range.

-

Y-Intercept (β₀ = 0.052): The y-intercept of 0.052 is the predicted absorbance when the BSA concentration is zero. This small positive value most likely reflects background absorbance from the reagent and the cuvette rather than any protein in the sample.
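The blank-subtraction step from the data analysis can be sketched as follows, under the assumption that the 0.0 mg/mL reading in the table serves as the blank. Subtracting a constant from every reading leaves the slope untouched and shifts the intercept by exactly that constant. A fresh fit to the tabulated values may differ slightly from the reported equation in the third decimal.

```python
def fit_line(x, y):
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


bsa_mg_per_ml = [0.0, 0.1, 0.2, 0.4, 0.6, 0.8, 1.0]
raw_absorbance = [0.050, 0.150, 0.255, 0.460, 0.655, 0.860, 1.050]

blank = raw_absorbance[0]   # assumption: the zero-protein reading is the blank
corrected_absorbance = [a - blank for a in raw_absorbance]

raw_intercept, raw_slope = fit_line(bsa_mg_per_ml, raw_absorbance)
corr_intercept, corr_slope = fit_line(bsa_mg_per_ml, corrected_absorbance)
print(f"raw:       slope = {raw_slope:.3f}, intercept = {raw_intercept:.3f}")
print(f"corrected: slope = {corr_slope:.3f}, intercept = {corr_intercept:.3f}")
```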

Caption: Logical flow for estimating IC₅₀ using linear regression on dose-response data.

Quantitative Structure-Activity Relationship (QSAR) Modeling

Quantitative Structure-Activity Relationship (QSAR) is a computational modeling method used in drug discovery to predict the biological activity of chemical compounds based on their physicochemical properties.[3] Linear regression is a fundamental technique used in developing QSAR models.

In QSAR, the biological activity (e.g., pIC₅₀) is the dependent variable (Y), and various molecular descriptors (e.g., molecular weight, logP, polar surface area) are the independent variables (X). A multiple linear regression model can be built to establish a mathematical relationship between the descriptors and the activity.

-

Data Collection: Compile a dataset of chemical structures and their corresponding experimentally determined biological activities.

-

Descriptor Calculation: Calculate a wide range of molecular descriptors for each compound in the dataset.

-

Data Splitting: Divide the dataset into a training set (for model building) and a test set (for model validation).

-

Model Building: Use multiple linear regression to build a model that relates the selected descriptors to the biological activity for the training set.

-

Model Validation: Evaluate the predictive performance of the model on the test set.

Caption: A simplified workflow for developing a QSAR model using linear regression.
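The split, build, and validate steps of the workflow can be sketched with a deliberately tiny example. All numbers are invented for illustration: a single hypothetical descriptor (called logP here) stands in for the descriptor set, and a simple linear fit stands in for the multiple regression. A real QSAR study would use many compounds, several descriptors, and a randomized or stratified split.

```python
def fit_line(x, y):
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def rmse(actual, predicted):
    """Root mean squared error between observed and predicted values."""
    return (sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)) ** 0.5


# Hypothetical dataset: one descriptor value and one activity per compound
logp = [1.0, 1.5, 2.0, 2.5, 3.0, 3.5, 4.0, 4.5]
pic50 = [4.9, 5.1, 5.7, 5.9, 6.5, 6.7, 7.3, 7.5]

# Data splitting: first six compounds for training, last two held out
train_x, test_x = logp[:6], logp[6:]
train_y, test_y = pic50[:6], pic50[6:]

# Model building on the training set only
intercept, slope = fit_line(train_x, train_y)

# Model validation on the held-out compounds
test_predictions = [intercept + slope * x for x in test_x]
test_rmse = rmse(test_y, test_predictions)
print(f"pIC50 = {intercept:.2f} + {slope:.2f} * logP, test RMSE = {test_rmse:.2f}")
```

Keeping the test compounds out of the fitting step is what makes the reported error an estimate of predictive, rather than descriptive, performance.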

Conclusion

References

- 1. superchemistryclasses.com [superchemistryclasses.com]

- 2. Linearization of the Bradford Protein Assay - PMC [pmc.ncbi.nlm.nih.gov]

- 3. neovarsity.org [neovarsity.org]

- 4. researchgate.net [researchgate.net]

- 5. 2.1 Dose-Response Modeling | The inTelligence And Machine lEarning (TAME) Toolkit for Introductory Data Science, Chemical-Biological Analyses, Predictive Modeling, and Database Mining for Environmental Health Research [uncsrp.github.io]

- 6. scribd.com [scribd.com]

- 7. bio-rad.com [bio-rad.com]

Exploring different types of regression models for scientific data.

For Researchers, Scientists, and Drug Development Professionals

In the realm of scientific discovery and drug development, the ability to model and interpret complex data is paramount. Regression analysis serves as a powerful statistical toolkit for understanding the relationships between variables, predicting outcomes, and ultimately driving informed decisions. This in-depth technical guide explores a range of regression models, from foundational linear approaches to more sophisticated nonlinear and machine learning techniques, providing a roadmap for their application to scientific data.

Fundamental Regression Models

Linear Regression: Unveiling Linear Relationships

Linear regression is a foundational statistical method used to model the relationship between a dependent variable and one or more independent variables by fitting a linear equation to the observed data.[1][2] It is often the first step in data analysis to understand trends and make predictions when a linear relationship is suspected.[1]

Experimental Protocol: Correlating Drug Concentration with Efficacy

A common application of linear regression in pre-clinical research is to assess the relationship between the concentration of a drug and its measured efficacy in an in-vitro assay.

-

Cell Culture and Treatment: A specific cell line relevant to the disease of interest is cultured under standard conditions. The cells are then treated with a range of concentrations of the investigational drug.

-

Efficacy Measurement: After a predetermined incubation period, a biochemical assay is performed to measure a specific biological response indicative of the drug's efficacy. This could be, for example, the inhibition of a particular enzyme or the reduction in cell viability.

-

Data Collection: The drug concentration (independent variable) and the corresponding efficacy measurement (dependent variable) are recorded for each treatment condition.

-

Regression Analysis: Simple linear regression is applied to the collected data to model the relationship between drug concentration and efficacy. The output of the analysis includes the regression equation (Y = bX + A), where Y is the predicted efficacy, X is the drug concentration, b is the slope of the line, and A is the y-intercept.[2] The coefficient of determination (R-squared) is also calculated to assess the goodness of fit of the model.

Table 1: Linear Regression Analysis of Drug Concentration and Efficacy

| Drug Concentration (nM) | Measured Efficacy (% Inhibition) | Predicted Efficacy (% Inhibition) |
|---|---|---|
| 1 | 5.2 | 5.5 |
| 2 | 10.8 | 10.3 |
| 5 | 24.5 | 24.5 |
| 10 | 48.7 | 48.5 |
| 20 | 95.3 | 96.5 |

Logical Relationship: Linear Regression Workflow

Caption: Workflow for applying linear regression to experimental data.

Polynomial Regression: Modeling Non-Linear Trends

When the relationship between variables is not a straight line, polynomial regression can be employed to fit a curvilinear relationship.[3][4] This method extends linear regression by adding polynomial terms (e.g., squared, cubed) of the independent variable to the model, allowing it to capture more complex patterns in the data.[5][6]

Experimental Protocol: Optimizing Fertilizer Concentration for Crop Yield

In agricultural science, polynomial regression is often used to model the non-linear relationship between fertilizer concentration and crop yield, where increasing fertilizer initially boosts yield, but excessive amounts can become detrimental.[7][8]

- Experimental Plot Setup: A field is divided into multiple plots, and a specific crop is planted under uniform conditions.
- Fertilizer Application: Different concentrations of a fertilizer are applied to the plots, with each concentration level replicated across multiple plots to ensure statistical robustness.
- Crop Growth and Harvest: The crops are allowed to grow to maturity under controlled environmental conditions. At the end of the growing season, the yield from each plot is harvested and measured.
- Data Collection: The fertilizer concentration (independent variable) and the corresponding crop yield (dependent variable) are recorded for each plot.
- Regression Analysis: A polynomial regression model is fitted to the data. The degree of the polynomial (e.g., quadratic, cubic) is chosen based on the observed trend in the data and statistical measures of model fit.[9] The resulting equation describes the curved relationship between fertilizer and yield, allowing for the determination of the optimal fertilizer concentration for maximum yield.[7]

Table 2: Polynomial Regression Analysis of Fertilizer Concentration and Crop Yield

| Fertilizer Concentration (kg/ha) | Observed Crop Yield (tons/ha) | Predicted Crop Yield (tons/ha) |
|---|---|---|
| 0 | 1.5 | 1.6 |
| 50 | 3.2 | 3.1 |
| 100 | 4.5 | 4.6 |
| 150 | 4.8 | 4.7 |
| 200 | 4.1 | 4.2 |
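As a rough sketch of how an optimum can be read off a fitted polynomial, the example below fits a quadratic to the observed yields in Table 2 and locates the vertex of the parabola. It assumes NumPy and a degree-2 model; the choice of degree and the variable names are assumptions made for illustration, not the method used to produce the table.

```python
import numpy as np

# Observed values taken from Table 2
fertilizer_kg_ha = np.array([0, 50, 100, 150, 200], dtype=float)
yield_t_ha = np.array([1.5, 3.2, 4.5, 4.8, 4.1])

# Quadratic model: yield = a*x^2 + b*x + c
a, b, c = np.polyfit(fertilizer_kg_ha, yield_t_ha, deg=2)

# A negative 'a' means the parabola opens downward, so its vertex is a maximum
optimal_rate = -b / (2 * a)
max_yield = np.polyval([a, b, c], optimal_rate)

print(f"Estimated optimum: {optimal_rate:.0f} kg/ha, yield {max_yield:.2f} tons/ha")
```

The vertex formula applies only to a quadratic; for a cubic or higher-degree fit the maximum would have to be found from the roots of the derivative within the tested range.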

Figure: Workflow for applying polynomial regression to agricultural data.

Regression for Categorical and Time-to-Event Data

Logistic Regression: Predicting Binary Outcomes

Logistic regression is a powerful statistical method for modeling the relationship between a set of independent variables and a binary dependent variable (an outcome with two categories, such as presence/absence of a disease).[6][10] Instead of predicting the value of the variable itself, logistic regression predicts the probability of the outcome occurring.[11]

Experimental Protocol: Developing a Diagnostic Model for a Disease

In clinical research, logistic regression is frequently used to develop diagnostic models that predict the likelihood of a patient having a particular disease based on various clinical and demographic factors.

- Patient Cohort Selection: A cohort of patients is recruited, including individuals with a confirmed diagnosis of the disease of interest and a control group of healthy individuals.
- Data Collection: For each patient, a set of predictor variables is collected. These can include demographic information (e.g., age, sex), clinical measurements (e.g., blood pressure, cholesterol levels), and biomarker data. The binary outcome variable (disease present/absent) is also recorded for each patient.
- Model Building: A logistic regression model is built using the collected data. The model estimates the relationship between each predictor variable and the log-odds of having the disease.[12]
- Model Evaluation and Interpretation: The performance of the model is evaluated using metrics such as accuracy, sensitivity, and specificity. The exponentiated coefficients of the model are interpreted as odds ratios, which quantify the multiplicative change in the odds of having the disease for a one-unit increase in a predictor variable, holding other variables constant.[13][14]
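The evaluation metrics named in the last step can be computed directly from the counts of correct and incorrect classifications. The sketch below uses plain Python; the function name `diagnostic_metrics` and the example labels are hypothetical and serve only to show the arithmetic.

```python
def diagnostic_metrics(y_true, y_pred):
    """Accuracy, sensitivity and specificity for binary labels (1 = disease present).

    Assumes both classes occur in y_true; otherwise a denominator would be zero.
    """
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
    return {
        "accuracy": (tp + tn) / len(pairs),
        "sensitivity": tp / (tp + fn),  # share of diseased patients detected
        "specificity": tn / (tn + fp),  # share of healthy patients cleared
    }


# Hypothetical outcomes for ten patients (not data from this guide)
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 0, 1, 0]
metrics = diagnostic_metrics(y_true, y_pred)
print(metrics)
```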

Table 3: Logistic Regression Analysis for Disease Diagnosis

| Predictor Variable | Coefficient (log-odds) | Odds Ratio | 95% Confidence Interval for Odds Ratio | p-value |
|---|---|---|---|---|
| Age (per year) | 0.05 | 1.05 | (1.02, 1.08) | <0.001 |
| Biomarker X (per unit) | 1.20 | 3.32 | (2.50, 4.40) | <0.001 |
| Treatment (Active vs. Placebo) | -0.69 | 0.50 | (0.35, 0.71) | <0.001 |
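The link between the coefficient and odds-ratio columns of Table 3 is simply exponentiation. The short sketch below reproduces the odds ratios from the listed coefficients and shows how a log-odds value maps to a probability; the helper name `predicted_probability` is an illustrative assumption.

```python
import math

# Coefficients (log-odds) taken from Table 3
coefficients = {
    "Age (per year)": 0.05,
    "Biomarker X (per unit)": 1.20,
    "Treatment (Active vs. Placebo)": -0.69,
}

# Odds ratio = exp(coefficient)
odds_ratios = {name: math.exp(beta) for name, beta in coefficients.items()}


def predicted_probability(log_odds):
    """Logistic (inverse logit) function: converts log-odds to a probability."""
    return 1.0 / (1.0 + math.exp(-log_odds))


for name, ratio in odds_ratios.items():
    print(f"{name}: OR = {ratio:.2f}")
```

A log-odds of 0 corresponds to a probability of 0.5, which is why a coefficient of 0 (odds ratio of 1) indicates no association.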

Figure: Workflow for developing a diagnostic model using logistic regression.

Cox Proportional Hazards Model: Analyzing Time-to-Event Data

The Cox proportional hazards model is a regression method used for investigating the relationship between the survival time of patients and one or more predictor variables.[15][16] It is particularly useful in clinical trials and observational studies where the outcome of interest is the time until an event occurs, such as death, disease recurrence, or recovery.[17]

Experimental Protocol: Evaluating a New Cancer Therapy in a Clinical Trial

A common application of the Cox model is in oncology clinical trials to assess the efficacy of a new treatment compared to a standard treatment in terms of patient survival.[15]

- Patient Enrollment and Randomization: A cohort of patients with a specific type of cancer is enrolled in the clinical trial. Patients are randomly assigned to receive either the new investigational therapy or the standard of care.
- Follow-up and Event Monitoring: Patients in both treatment arms are followed over a specified period. The time from randomization until the occurrence of a predefined event (e.g., death, disease progression) is recorded for each patient. For patients who do not experience the event by the end of the study or are lost to follow-up, the data are "censored," meaning that their survival time is known only to be at least as long as their follow-up time.
- Data Collection: In addition to time-to-event data, baseline characteristics of the patients (e.g., age, sex, tumor stage, biomarker status) are collected as covariates.
- Cox Regression Analysis: A Cox proportional hazards model is fitted to the data. The model estimates the hazard ratio for the treatment effect, which is the ratio of the instantaneous event rate (hazard) in the investigational treatment group to that in the standard treatment group, adjusted for the effects of other covariates.[18] A hazard ratio less than 1 indicates that the new treatment is associated with a lower hazard of the event.[19]

Table 4: Cox Proportional Hazards Model for Survival Analysis in an Oncology Trial

| Covariate | Hazard Ratio | 95% Confidence Interval | p-value |
|---|---|---|---|
| Treatment (New vs. Standard) | 0.75 | (0.60, 0.94) | 0.012 |
| Age (per 10-year increase) | 1.20 | (1.05, 1.37) | 0.008 |
| Tumor Stage (Advanced vs. Early) | 2.50 | (1.80, 3.47) | <0.001 |
| Biomarker Status (Positive vs. Negative) | 0.60 | (0.45, 0.80) | <0.001 |
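The columns of Table 4 are connected through the log hazard ratio. The sketch below assumes the intervals are Wald-type (log hazard ratio plus or minus 1.96 standard errors) and that the p-value comes from a normal approximation; under those assumptions the standard error and p-value can be recovered from a reported hazard ratio and its interval. The function name `wald_from_hr` is hypothetical, and because the table entries are rounded the recovered p-values agree only approximately.

```python
import math


def wald_from_hr(hr, ci_low, ci_high, z_crit=1.96):
    """Recover log-HR, standard error, z statistic and two-sided p-value.

    Assumes a Wald interval on the log scale: log(HR) +/- z_crit * SE.
    """
    log_hr = math.log(hr)
    se = (math.log(ci_high) - math.log(ci_low)) / (2 * z_crit)
    z = log_hr / se
    # Two-sided p-value under the standard normal: 2 * (1 - Phi(|z|))
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return log_hr, se, z, p_value


# Treatment row of Table 4: HR 0.75, 95% CI (0.60, 0.94)
log_hr, se, z, p_value = wald_from_hr(0.75, 0.60, 0.94)
print(f"log(HR) = {log_hr:.3f}, SE = {se:.3f}, z = {z:.2f}, p = {p_value:.3f}")
```

A hazard ratio below 1 gives a negative log hazard ratio, and an interval that excludes 1 corresponds to a p-value below 0.05.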

Figure: Workflow of a clinical trial with time-to-event data analysis.

Advanced and Machine Learning-Based Regression Models

Non-Linear Regression: Modeling Complex Biological Processes

Non-linear regression is used when the relationship between the independent and dependent variables cannot be described by a model that is linear in its parameters.[20] In drug development, it is frequently used to model dose-response curves and enzyme kinetics.[16]

Experimental Protocol: Determining the IC50 of a Drug Candidate

A crucial step in drug discovery is determining the half-maximal inhibitory concentration (IC50) of a compound, which is the concentration of a drug that is required for 50% inhibition in vitro. This is typically done using non-linear regression to fit a sigmoidal dose-response curve.[21]

- Assay Setup: A biological assay is set up to measure the activity of a target (e.g., an enzyme or a cell line).
- Serial Dilution and Treatment: The drug candidate is serially diluted to create a range of concentrations. The target is then treated with these different concentrations of the drug.
- Response Measurement: The biological response (e.g., enzyme activity, cell viability) is measured at each drug concentration.
- Data Analysis: The responses are plotted against the drug concentrations (typically log-transformed). A non-linear regression model, often a four-parameter logistic model, is fitted to the data to generate a sigmoidal curve.[20] The IC50 value is then derived from this curve.

Table 5: Non-Linear Regression for IC50 Determination

| Log(Drug Concentration [M]) | % Inhibition (Observed) | % Inhibition (Predicted) |
|---|---|---|
| -9 | 2.1 | 1.8 |
| -8 | 15.4 | 16.2 |
| -7 | 48.9 | 50.1 |
| -6 | 85.2 | 84.5 |
| -5 | 98.7 | 98.9 |
| Derived IC50 (nM) | - | ≈ 100 |
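A four-parameter logistic fit of the kind described above might look as follows. This is a sketch assuming NumPy and SciPy are available; the parameterization (bottom, top, log IC50, Hill slope), the starting values, and the function name `four_pl` are illustrative assumptions, and the data are the observed values from Table 5.

```python
import numpy as np
from scipy.optimize import curve_fit


def four_pl(log_conc, bottom, top, log_ic50, hill):
    """Four-parameter logistic curve for a response that rises with concentration."""
    return bottom + (top - bottom) / (1.0 + 10.0 ** ((log_ic50 - log_conc) * hill))


# Observed values taken from Table 5 (log10 of molar concentration)
log_conc = np.array([-9, -8, -7, -6, -5], dtype=float)
inhibition = np.array([2.1, 15.4, 48.9, 85.2, 98.7])

# Starting guesses: 0-100% range, midpoint near the centre of the tested range
initial_guess = [0.0, 100.0, -7.0, 1.0]
params, covariance = curve_fit(four_pl, log_conc, inhibition, p0=initial_guess)
bottom, top, log_ic50, hill = params

# Convert log10(M) to nM
ic50_nm = 10.0 ** log_ic50 * 1e9
print(f"IC50 = {ic50_nm:.0f} nM, Hill slope = {hill:.2f}")
```

The fitted midpoint is the concentration halfway between the fitted bottom and top plateaus. With only five points and four parameters the estimate is weakly constrained, so real assays typically use more concentrations and replicates.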

Figure: Simplified signaling pathway of drug-target inhibition.

Support Vector Regression (SVR): A Machine Learning Approach

Support Vector Regression (SVR) is a supervised learning algorithm that adapts the support vector machine to regression tasks.[22] It is particularly useful for high-dimensional data and can model non-linear relationships through kernel functions such as the radial basis function (RBF) or polynomial kernel.[23]

Experimental Protocol: Quantitative Structure-Activity Relationship (QSAR) Modeling

In drug discovery, SVR is often employed in Quantitative Structure-Activity Relationship (QSAR) studies to predict the biological activity of chemical compounds based on their molecular descriptors.[13][24]

- Dataset Compilation: A dataset of chemical compounds with known biological activities (e.g., binding affinity, toxicity) is compiled.
- Molecular Descriptor Calculation: For each compound, a set of numerical features, known as molecular descriptors, is calculated. These descriptors represent various physicochemical and structural properties of the molecules.
- Model Training: An SVR model is trained on a subset of the data (the training set). The model learns the relationship between the molecular descriptors and the biological activity.
- Model Validation: The trained SVR model is then used to predict the biological activity of the remaining compounds (the test set). The performance of the model is evaluated by comparing the predicted activities with the experimentally determined values.

Table 6: Support Vector Regression for QSAR Modeling

| Compound ID | Molecular Weight | LogP | Number of Hydrogen Bond Donors | Predicted Bioactivity (pIC50) |
|---|---|---|---|---|
| Cmpd-001 | 350.4 | 2.5 | 2 | 7.8 |
| Cmpd-002 | 412.5 | 3.1 | 3 | 6.5 |
| Cmpd-003 | 298.3 | 1.8 | 1 | 8.2 |
| Cmpd-004 | 450.6 | 4.2 | 2 | 5.9 |
| Cmpd-005 | 388.4 | 2.9 | 4 | 7.1 |
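The train-and-validate pattern described above can be sketched with scikit-learn. Everything in this example is an assumption made for illustration: the descriptors mirror the column names of Table 6, but the compounds and activities are synthetic values generated from an arbitrary formula, and the kernel and hyperparameters are untuned defaults rather than recommended settings.

```python
import numpy as np
from sklearn.metrics import r2_score
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVR

# Synthetic descriptors for 200 hypothetical compounds (not real measurements)
rng = np.random.default_rng(0)
n_compounds = 200
mol_weight = rng.uniform(250, 500, n_compounds)
logp = rng.uniform(0, 5, n_compounds)
h_bond_donors = rng.integers(0, 6, n_compounds)
X = np.column_stack([mol_weight, logp, h_bond_donors])

# Synthetic pIC50: an arbitrary non-linear function of the descriptors plus noise
pic50 = 9.0 - 0.005 * mol_weight - 0.3 * (logp - 2.5) ** 2 + rng.normal(0, 0.2, n_compounds)

# Hold out 25% of the compounds as the test set
X_train, X_test, y_train, y_test = train_test_split(X, pic50, test_size=0.25, random_state=0)

# Scale descriptors before SVR, since the RBF kernel is sensitive to feature scale
model = make_pipeline(StandardScaler(), SVR(kernel="rbf", C=10.0, epsilon=0.1))
model.fit(X_train, y_train)

# Compare predictions with the held-out activities
y_pred = model.predict(X_test)
test_r2 = r2_score(y_test, y_pred)
print(f"Test-set R^2 = {test_r2:.2f}")
```

Putting the scaler inside the pipeline means it is fitted on the training set only, so no information from the test compounds leaks into the model.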

Figure: Workflow for QSAR modeling using Support Vector Regression.

Ridge and Lasso Regression: Handling High-Dimensional Data

Ridge and Lasso regression are regularized forms of linear regression used to handle multicollinearity and prevent overfitting in models with a large number of predictor variables, which is common in genomics and other high-throughput biological studies.[25] Lasso has the additional property of performing feature selection by shrinking the coefficients of less important variables to exactly zero.[26]
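The difference between the two penalties is easiest to see in the idealized case of orthonormal predictors, where both estimators have closed forms. Assuming the objectives ½‖y − Xβ‖² + (λ/2)‖β‖² for ridge and ½‖y − Xβ‖² + λ‖β‖₁ for lasso, ridge rescales every least-squares coefficient by 1/(1 + λ), while lasso applies soft thresholding. The coefficients and λ below are hypothetical numbers chosen for illustration.

```python
import numpy as np


def ridge_shrink(ols_coef, lam):
    """Ridge solution for orthonormal predictors: proportional shrinkage."""
    return ols_coef / (1.0 + lam)


def lasso_shrink(ols_coef, lam):
    """Lasso solution for orthonormal predictors: soft thresholding at lam."""
    return np.sign(ols_coef) * np.maximum(np.abs(ols_coef) - lam, 0.0)


# Hypothetical least-squares coefficients and penalty strength
ols_coef = np.array([2.0, -1.5, 0.3, -0.1])
lam = 0.5

ridge_coef = ridge_shrink(ols_coef, lam)
lasso_coef = lasso_shrink(ols_coef, lam)

print("ridge:", ridge_coef)  # all four coefficients shrink but stay non-zero
print("lasso:", lasso_coef)  # coefficients smaller than lam in magnitude become zero
```

With correlated predictors, as in real gene expression data, no such closed form exists and the estimates are obtained numerically, but the qualitative behavior is the same.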

Experimental Protocol: Identifying Prognostic Genes from Gene Expression Data

In cancer research, Lasso regression can be used to identify a small subset of genes from a large gene expression dataset that are most predictive of patient prognosis.[9]

- Patient Cohort and Sample Collection: Tumor samples and corresponding clinical data (including survival information) are collected from a cohort of cancer patients.
- Gene Expression Profiling: The expression levels of thousands of genes in each tumor sample are measured using techniques such as microarray or RNA-sequencing.
- Data Preprocessing: The gene expression data is preprocessed and normalized.

-