DAPCy

Description

BenchChem offers high-quality DAPCy suitable for many research applications. Different packaging options are available to accommodate customers' requirements. Please contact info@benchchem.com for more information about this compound, including price, delivery time, and detailed specifications.

Properties

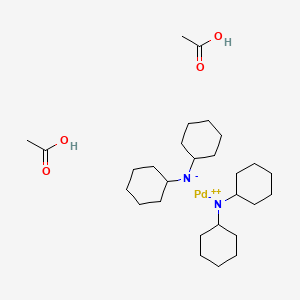

| Property | Value |
|---|---|
| Molecular formula | C28H52N2O4Pd |
| Molecular weight | 587.1 g/mol |
| IUPAC name | acetic acid;dicyclohexylazanide;palladium(2+) |
| InChI | InChI=1S/2C12H22N.2C2H4O2.Pd/c2*1-3-7-11(8-4-1)13-12-9-5-2-6-10-12;2*1-2(3)4;/h2*11-12H,1-10H2;2*1H3,(H,3,4);/q2*-1;;;+2 |
| InChI key | LAYDWGNLLRXNPH-UHFFFAOYSA-N |
| Canonical SMILES | CC(=O)O.CC(=O)O.C1CCC(CC1)[N-]C2CCCCC2.C1CCC(CC1)[N-]C2CCCCC2.[Pd+2] |
| Product origin | United States |


DAPCy for Population Genetics: An In-depth Technical Guide

For Researchers, Scientists, and Drug Development Professionals

Introduction to Discriminant Analysis of Principal Components (DAPC)

Discriminant Analysis of Principal Components (DAPC) is a multivariate statistical method used to identify and describe clusters of genetically related individuals. It is particularly well-suited for population genetics as it makes no assumptions about the underlying population genetic model, such as Hardy-Weinberg equilibrium or linkage equilibrium. This makes it a robust tool for analyzing the genetic structure of a wide variety of organisms, including those that are clonal or partially clonal.[1][2] The core principle of DAPC is to maximize the genetic variation between predefined groups while minimizing the variation within those groups.[3]

DAPC is a two-step process:

- Principal Component Analysis (PCA): Initially, the genetic data, typically in the form of single nucleotide polymorphisms (SNPs) or other genetic markers, is transformed using PCA. This step reduces the dimensionality of the data and removes the correlation between variables (alleles), which is a prerequisite for the subsequent discriminant analysis.[4][5]
- Discriminant Analysis (DA): The principal components retained from the PCA are then used as input for a linear discriminant analysis. The DA identifies linear combinations of these principal components that best separate the predefined clusters of individuals.[4][5]
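The two steps can be sketched with scikit-learn on simulated genotypes. This is a minimal illustration, not DAPCy's or adegenet's code: `TruncatedSVD` applied to explicitly centred data stands in for the PCA step (it does not centre its input itself), `LinearDiscriminantAnalysis` performs the DA step, and the group sizes, allele frequencies and the choice of 20 PCs are arbitrary assumptions. The sketch also checks that the retained PC scores are uncorrelated.

```python
import numpy as np
from sklearn.decomposition import TruncatedSVD
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis

rng = np.random.default_rng(0)

# Simulated genotypes (0/1/2 copies of the alternative allele): 3 groups x 50
# individuals x 200 SNPs, each group with its own allele frequencies.
n_per_group, n_snps, n_groups = 50, 200, 3
freqs = rng.uniform(0.1, 0.9, size=(n_groups, n_snps))
X = np.vstack([rng.binomial(2, freqs[g], size=(n_per_group, n_snps))
               for g in range(n_groups)]).astype(float)
y = np.repeat(np.arange(n_groups), n_per_group)

# Step 1 (PCA): centre each SNP column, then keep the leading 20 components.
X_centred = X - X.mean(axis=0)
pcs = TruncatedSVD(n_components=20, random_state=0).fit_transform(X_centred)

# The retained PC scores are mutually uncorrelated: off-diagonal covariances ~ 0.
cov = np.cov(pcs, rowvar=False)
off_diag = cov - np.diag(np.diag(cov))

# Step 2 (DA): LDA on the PC scores; at most n_groups - 1 discriminant functions.
lda = LinearDiscriminantAnalysis(n_components=n_groups - 1)
dfs = lda.fit_transform(pcs, y)
train_accuracy = lda.score(pcs, y)
print(dfs.shape, round(train_accuracy, 3))
```

The discriminant functions in `dfs` are the coordinates usually shown in DAPC scatterplots.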

A key feature of DAPC is its ability to be used both when population groups are known a priori and when they are unknown.[6][7] In cases where groups are not predefined, DAPC employs a preliminary clustering step using the k-means algorithm to identify the optimal number of genetic clusters within the data.[1] The Bayesian Information Criterion (BIC) is often used to assess the best-supported number of clusters.[1]
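A common way to score k-means solutions with a BIC is BIC = n * ln(WSS / n) + k * ln(n), where n is the number of individuals and WSS the within-cluster sum of squares. To our understanding this is the form adegenet's `find.clusters` uses, but treat that as an assumption; the sketch below is not adegenet's code. It applies the formula with scikit-learn's `KMeans` to simulated PC scores (the data, the range of k and `n_init=10` are arbitrary choices). In practice the BIC curve is often inspected for the point where it stops decreasing sharply.

```python
import numpy as np
from sklearn.cluster import KMeans

def kmeans_bic(X, k_values, random_state=0):
    """BIC-style score n*ln(WSS/n) + k*ln(n) for k-means at each k (lower is better)."""
    n = X.shape[0]
    scores = {}
    for k in k_values:
        km = KMeans(n_clusters=k, n_init=10, random_state=random_state).fit(X)
        wss = km.inertia_  # within-cluster sum of squared distances
        scores[k] = n * np.log(wss / n) + k * np.log(n)
    return scores

# Stand-in for PC scores: four well-separated groups of 60 individuals.
rng = np.random.default_rng(1)
centres = np.array([[0, 0], [8, 0], [0, 8], [8, 8]], dtype=float)
pcs = np.vstack([c + rng.normal(size=(60, 2)) for c in centres])

bic = kmeans_bic(pcs, range(1, 9))
for k, score in bic.items():
    print(k, round(score, 1))
```

Here the BIC falls steeply up to k = 4 and only marginally (if at all) afterwards, which is the "elbow" a user would look for.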

DAPCy: A Python Implementation for Enhanced Performance

DAPCy is a Python package that re-implements the DAPC method originally available in the R package adegenet.[6][7] DAPCy is specifically designed for the analysis of large-scale genomic datasets, offering significant improvements in speed and memory efficiency.[7] This is achieved through the use of sparse matrices and truncated singular value decomposition (SVD) for the PCA step.[7] Furthermore, DAPCy integrates with the popular scikit-learn library, providing additional machine learning functionality such as various cross-validation schemes and hyperparameter tuning options.[7]

Core Concepts and Advantages

The primary goal of DAPC is to provide a clear description of genetic clusters using a few synthetic variables known as discriminant functions. These functions are linear combinations of the original alleles, and the contribution of each allele to these functions is quantified by "loadings."[6] This allows researchers to identify the specific genetic markers that are most responsible for differentiating between populations.
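Because each discriminant function is a linear combination of PCs, and each PC is a linear combination of alleles, allele loadings can be recovered by multiplying the PCA axes by the DA coefficients. The sketch below does this with scikit-learn on simulated data in which only SNPs 0-4 differ between two groups, then normalises squared loadings to sum to 1. We believe this normalisation mirrors adegenet's `var.contr`, but that is an assumption, and this is not DAPCy's code.

```python
import numpy as np
from sklearn.decomposition import TruncatedSVD
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis

rng = np.random.default_rng(2)
n_per_group, n_snps = 60, 100
# Two groups that differ strongly only at SNPs 0-4 (illustrative data).
base = rng.uniform(0.3, 0.7, size=n_snps)
f0, f1 = base.copy(), base.copy()
f0[:5], f1[:5] = 0.05, 0.95
X = np.vstack([rng.binomial(2, f0, size=(n_per_group, n_snps)),
               rng.binomial(2, f1, size=(n_per_group, n_snps))]).astype(float)
y = np.repeat([0, 1], n_per_group)

Xc = X - X.mean(axis=0)
svd = TruncatedSVD(n_components=10, random_state=0).fit(Xc)
lda = LinearDiscriminantAnalysis().fit(svd.transform(Xc), y)

# Allele loadings on DF1: map the DF1 coefficients (defined on PCs) back to SNPs.
# svd.components_ has shape (n_pcs, n_snps); lda.scalings_[:, 0] has shape (n_pcs,).
loadings = svd.components_.T @ lda.scalings_[:, 0]

# Relative contributions: squared loadings normalised to sum to 1.
contrib = loadings ** 2 / np.sum(loadings ** 2)
top5 = np.argsort(contrib)[::-1][:5]
print(sorted(top5.tolist()))
```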

Compared to other popular methods for population structure analysis, such as STRUCTURE, DAPC offers several advantages:

- No Assumption of Panmixia: DAPC does not assume that populations are in Hardy-Weinberg or linkage equilibrium, making it suitable for a wider range of biological systems.[8]
- Computational Efficiency: DAPC, and particularly DAPCy, is much faster than Bayesian clustering methods, making it feasible to analyze large genomic datasets with thousands of individuals and markers.[1][7]
- Handling of Clonal Organisms: Its model-free nature makes it a more appropriate choice for studying the population structure of clonal or partially clonal organisms.[8]

However, it is also important to be aware of the potential for overfitting when the number of retained principal components is too high relative to the number of individuals.[6]

Logical Framework: The Interplay of PCA and DA in DAPC

The following diagram illustrates the logical relationship between the key components of the DAPC method.

Experimental Protocols

This section outlines detailed methodologies for performing a DAPC analysis with both the adegenet package in R and the DAPCy package in Python.

DAPC Analysis Workflow

The general workflow for a DAPC analysis can be broken down into the following key steps:

Detailed Methodologies

1. Data Preparation and Input

- adegenet (R): Genetic data can be imported from various formats, such as GENEPOP, FSTAT, or VCF files, into a genind or genlight object. The vcfR package can read VCF files and convert them to the genlight format, which stores large SNP datasets efficiently.[8]
- DAPCy (Python): DAPCy is optimized for large genomic datasets and can read data directly from VCF or BED files. It internally converts the genotype data into a compressed sparse row (CSR) matrix to minimize memory consumption.[7]
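Why a CSR matrix saves memory can be shown with SciPy: only non-zero genotype calls and their column positions are stored. The sketch assumes a hypothetical matrix in which about 90% of calls are 0 (homozygous for the reference allele); real sparsity depends on the data and on MAF filtering. Note that centring a sparse matrix, as a true PCA requires, would make it dense; how DAPCy handles this is not described here.

```python
import numpy as np
from scipy import sparse

rng = np.random.default_rng(3)
n_samples, n_snps = 500, 2000
# Hypothetical genotype matrix (0/1/2 copies of the alternative allele)
# in which ~90% of calls are 0.
G = rng.choice([0.0, 1.0, 2.0], size=(n_samples, n_snps), p=[0.90, 0.08, 0.02])

G_csr = sparse.csr_matrix(G)  # stores non-zero values, their column indices, row pointers
dense_mb = G.nbytes / 1e6
csr_mb = (G_csr.data.nbytes + G_csr.indices.nbytes + G_csr.indptr.nbytes) / 1e6
print(f"dense: {dense_mb:.1f} MB, CSR: {csr_mb:.1f} MB, "
      f"non-zero fraction: {G_csr.nnz / G.size:.2f}")
```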

2. De Novo Cluster Identification (if groups are unknown)

- adegenet (R): The find.clusters function identifies the optimal number of genetic clusters. It runs successive k-means clustering with an increasing number of clusters (k) and uses the Bayesian Information Criterion (BIC) to identify the best-supported k. A lower BIC value generally indicates a better fit.[6]
- DAPCy (Python): DAPCy provides a k-means clustering pipeline with automated model optimization. By default, it uses the sum of squared errors (SSE) or silhouette scores to evaluate different cluster solutions.[9]
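The two criteria named above can be computed with scikit-learn as sketched below: SSE corresponds to `KMeans.inertia_` and the silhouette score to `sklearn.metrics.silhouette_score`. This illustrates the criteria on simulated PC scores; it is not DAPCy's implementation, and DAPCy's own function names and defaults are not reproduced here.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.metrics import silhouette_score

rng = np.random.default_rng(4)
# Stand-in for PC scores: three groups of 80 individuals in 5 dimensions.
centres = np.array([[0, 0, 0, 0, 0], [10, 0, 0, 0, 0], [0, 10, 0, 0, 0]], dtype=float)
pcs = np.vstack([c + rng.normal(size=(80, 5)) for c in centres])

sse, sil = {}, {}
for k in range(2, 8):
    km = KMeans(n_clusters=k, n_init=10, random_state=0).fit(pcs)
    sse[k] = km.inertia_                        # within-cluster sum of squared errors
    sil[k] = silhouette_score(pcs, km.labels_)  # mean silhouette width, in [-1, 1]

best_k = max(sil, key=sil.get)
print("SSE:", {k: round(v) for k, v in sse.items()})
print("silhouette:", {k: round(v, 3) for k, v in sil.items()})
```

SSE always tends to fall as k grows, so it is read for an elbow; the silhouette score peaks at the best-separated solution.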

3. Cross-Validation for Principal Component Selection

A crucial step in DAPC is to determine the optimal number of principal components (PCs) to retain for the discriminant analysis. Retaining too few PCs can lead to a loss of important information, while retaining too many can result in overfitting.[6]

- adegenet (R): The xvalDapc function performs stratified cross-validation. It repeatedly partitions the data into a training set (e.g., 90%) and a validation set (e.g., 10%), performs DAPC on the training set with a varying number of PCs, and predicts the group membership of the individuals in the validation set. The optimal number of PCs is the one that yields the highest proportion of successful assignments and the lowest root mean squared error.[6]
- DAPCy (Python): DAPCy leverages the cross-validation functionality of scikit-learn, offering schemes such as k-fold and stratified k-fold cross-validation for more robust model evaluation and hyperparameter tuning.[7]
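A sketch of cross-validating the number of retained PCs with scikit-learn's `GridSearchCV` and `StratifiedKFold`. The pipeline (centring with `StandardScaler(with_std=False)`, then `TruncatedSVD`, then `LinearDiscriminantAnalysis`), the candidate PC counts and the simulated data are assumptions for illustration, not DAPCy's API. Centring inside the pipeline means column means are learned from each training fold only.

```python
import numpy as np
from sklearn.decomposition import TruncatedSVD
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis
from sklearn.model_selection import GridSearchCV, StratifiedKFold
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(5)
n_per_group, n_snps, n_groups = 40, 300, 3
freqs = rng.uniform(0.2, 0.8, size=(n_groups, n_snps))
X = np.vstack([rng.binomial(2, freqs[g], size=(n_per_group, n_snps))
               for g in range(n_groups)]).astype(float)
y = np.repeat(np.arange(n_groups), n_per_group)

pipe = Pipeline([
    ("centre", StandardScaler(with_std=False)),  # subtract per-SNP means only
    ("pca", TruncatedSVD(random_state=0)),
    ("da", LinearDiscriminantAnalysis()),
])
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)
search = GridSearchCV(pipe, {"pca__n_components": [2, 5, 10, 20, 40, 80]},
                      cv=cv, scoring="accuracy")
search.fit(X, y)
print(search.best_params_, round(search.best_score_, 3))
```

Inspecting `search.cv_results_["mean_test_score"]` across PC counts plays a role similar to the assignment-success curve from xvalDapc.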

4. Performing the DAPC

- adegenet (R): The dapc function performs the main analysis. It takes the genetic data and the group assignments (either predefined or from find.clusters) as input, and the user specifies the number of PCs and discriminant functions to retain.[8]
- DAPCy (Python): DAPCy implements the DAPC algorithm within a scikit-learn-compatible pipeline. The user can specify the number of components to use, and the analysis is performed in a computationally efficient manner.[7]

5. Interpretation of Results

The output of a DAPC analysis provides several key pieces of information for understanding population structure:

- Scatterplots: These plots visualize the first few discriminant functions, showing the separation between the identified genetic clusters.
- Assignment Probabilities: DAPC provides the probability of each individual belonging to each of the identified clusters. These can be visualized in a "structure-like" plot to assess the clarity of the clustering and identify potentially admixed individuals.[6]
- Allele Loadings: These values indicate the contribution of each allele to the discriminant functions, allowing for the identification of the genetic markers that are most important for differentiating between populations.[6]

Quantitative Data Presentation

Performance Benchmarking: this compound vs. adegenet

The following table summarizes the performance of DAPCy compared with the R package adegenet on the Plasmodium falciparum Pf7 dataset (6,385 SNPs). These data are based on the findings of the official DAPCy publication.[9]

| Performance metric | DAPCy | adegenet |
|---|---|---|
| Runtime (seconds) | ~10 | ~60 |
| Memory usage (GB) | ~1 | ~4 |
| Mean accuracy | Comparable | Comparable |

Note: The values are approximate and intended for comparative purposes.

Example: DAPC Assignment Probabilities

The following table provides a hypothetical example of assignment probabilities for a small number of individuals to three different genetic clusters as would be generated by a DAPC analysis.

| Individual ID | Cluster 1 probability | Cluster 2 probability | Cluster 3 probability | Assigned cluster |
|---|---|---|---|---|
| Ind_001 | 0.98 | 0.01 | 0.01 | 1 |
| Ind_002 | 0.95 | 0.03 | 0.02 | 1 |
| Ind_003 | 0.05 | 0.92 | 0.03 | 2 |
| Ind_004 | 0.10 | 0.88 | 0.02 | 2 |
| Ind_005 | 0.45 | 0.50 | 0.05 | 2 |
| Ind_006 | 0.01 | 0.02 | 0.97 | 3 |
| Ind_007 | 0.03 | 0.01 | 0.96 | 3 |

Individuals with high probabilities for a single cluster are clearly assigned, while individuals with more evenly distributed probabilities (like Ind_005) may be indicative of admixture.
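Assignment and admixture screening of this kind can be expressed in a few lines. The sketch below uses the probabilities from the example table; the 0.8 threshold for flagging a possibly admixed individual is a hypothetical choice, not a DAPC default.

```python
import numpy as np

# Assignment probabilities from the example table (rows: individuals; columns: clusters 1-3).
ids = ["Ind_001", "Ind_002", "Ind_003", "Ind_004", "Ind_005", "Ind_006", "Ind_007"]
probs = np.array([
    [0.98, 0.01, 0.01],
    [0.95, 0.03, 0.02],
    [0.05, 0.92, 0.03],
    [0.10, 0.88, 0.02],
    [0.45, 0.50, 0.05],
    [0.01, 0.02, 0.97],
    [0.03, 0.01, 0.96],
])

assigned = probs.argmax(axis=1) + 1  # cluster with the highest probability (1-based)
max_prob = probs.max(axis=1)
threshold = 0.8                      # hypothetical cut-off for a "clear" assignment
possibly_admixed = [i for i, p in zip(ids, max_prob) if p < threshold]
print(assigned.tolist(), possibly_admixed)
```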

Example: Allele Loading Analysis

This table illustrates how the results of an allele loading analysis might be presented, highlighting the top SNPs contributing to the separation of clusters along the first discriminant function.

| SNP ID | Chromosome | Position | Allele | Loading on DF1 |
|---|---|---|---|---|
| rs12345 | 1 | 100234 | A | 0.085 |
| rs67890 | 3 | 543210 | G | -0.079 |
| rs11223 | 5 | 987654 | T | 0.072 |
| rs44556 | 1 | 234567 | C | -0.068 |
| rs77889 | 8 | 876543 | A | 0.065 |

Alleles with high positive or negative loadings are the primary drivers of differentiation along that particular discriminant axis.
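Ranking SNPs by the magnitude of their loading, regardless of sign, can be sketched as follows using the hypothetical values from the table. The sign only indicates the direction along DF1, so the SNPs are also split by side.

```python
# Hypothetical DF1 loadings copied from the example table.
loadings = {
    "rs12345": 0.085,
    "rs67890": -0.079,
    "rs11223": 0.072,
    "rs44556": -0.068,
    "rs77889": 0.065,
}

# Rank by absolute value: the sign gives the direction along DF1, not the strength.
ranked = sorted(loadings, key=lambda snp: abs(loadings[snp]), reverse=True)
positive_side = [snp for snp in ranked if loadings[snp] > 0]
negative_side = [snp for snp in ranked if loadings[snp] < 0]

for snp in ranked:
    print(f"{snp}: {loadings[snp]:+.3f}")
```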

Conclusion

DAPC, together with its high-performance Python implementation DAPCy, provides a powerful and flexible framework for analyzing population genetic structure. Its freedom from the assumptions of traditional population genetics models, coupled with its computational efficiency, makes it an invaluable tool for researchers, scientists, and drug development professionals working with large and complex genomic datasets. By revealing population structure, identifying admixed individuals, and pinpointing the genetic loci driving differentiation, DAPC and DAPCy can contribute significantly to our understanding of evolutionary processes and the genetic basis of traits, and to the design of effective conservation and management strategies.

References

1. Genomic architecture and population structure of Boreogadus saida from Canadian waters. PMC [pmc.ncbi.nlm.nih.gov]
2. mdpi.com [mdpi.com]
3. The influence of a priori grouping on inference of genetic clusters: simulation study and literature review of the DAPC method. PMC [pmc.ncbi.nlm.nih.gov]
4. tandfonline.com [tandfonline.com]
5. researchgate.net [researchgate.net]
6. academic.oup.com [academic.oup.com]
7. cdnsciencepub.com [cdnsciencepub.com]
8. researchgate.net [researchgate.net]
9. DAPCy [uhasselt-bioinfo.gitlab.io]

DAPCy: A Technical Guide to a High-Performance Python Package for Population Genetic Analysis

An In-depth Technical Guide for Researchers, Scientists, and Drug Development Professionals

Introduction

In the era of large-scale genomic data, the ability to efficiently analyze population structure is paramount for advancements in fields ranging from evolutionary biology to human health. For professionals in drug development, understanding the genetic landscape of human populations and disease vectors is critical for pharmacogenomics, biomarker discovery, and the development of targeted therapies. The Discriminant Analysis of Principal Components (DAPC) method is a powerful multivariate approach for inferring population structure from genetic markers. However, the canonical implementation in the R package adegenet can face performance bottlenecks with the vast datasets common in modern genomics.

To address this challenge, DAPCy was developed as a high-performance, memory-efficient Python package that re-implements the DAPC method.[1] By leveraging the scikit-learn library, sparse matrices, and truncated singular value decomposition, DAPCy offers a significant gain in computational efficiency, making the analysis of large-scale genomic data more accessible and robust.[2][3] This guide provides a technical overview of DAPCy, its core functionality, and its application in population genetic analyses, with a particular focus on its relevance to the drug development pipeline.

Core Concepts: Discriminant Analysis of Principal Components (DAPC)

DAPC is a multivariate statistical method that integrates two fundamental techniques: Principal Component Analysis (PCA) and Discriminant Analysis (DA).[1] The primary goal of DAPC is to identify and describe clusters of genetically related individuals. The workflow of DAPC can be conceptualized as a two-stage process:

- Data Transformation with PCA: Genetic data, such as Single Nucleotide Polymorphisms (SNPs), is often high-dimensional. PCA is first employed to reduce the dimensionality of the data while retaining the majority of the genetic variation. This step is crucial for stabilizing the subsequent discriminant analysis.
- Clustering with Discriminant Analysis: Following PCA, discriminant analysis is performed on the retained principal components to maximize the separation between predefined or inferred groups. DA constructs discriminant functions that are linear combinations of the principal components, optimally separating the clusters.

A key feature of DAPC is its ability to be used both when population groups are known a priori and when they need to be inferred de novo from the data, typically using clustering algorithms like K-means.[1]

The this compound Package: Architecture and Advantages

DAPCy is engineered to overcome the computational limitations of its R-based predecessor, particularly for large genomic datasets.[2] Its architecture is built on the widely used scikit-learn machine learning library in Python.

Key Architectural Features:

- Sparse Matrix Representation: DAPCy uses compressed sparse row (CSR) matrices to store genotype data. This significantly reduces memory consumption, as genomic datasets are often sparse (i.e., contain many zero-valued entries).
- Truncated Singular Value Decomposition (SVD): For the PCA step, DAPCy employs a truncated SVD algorithm, which is a more computationally efficient method for dimensionality reduction on large, sparse matrices than traditional eigendecomposition.[3]
- Integration with scikit-learn: By adhering to the scikit-learn API, DAPCy integrates seamlessly into machine learning workflows, including options for hyperparameter tuning and various cross-validation schemes.[1]
- Model Persistence: Trained DAPC models can be saved and loaded as pickle files, facilitating model deployment and reproducibility without the need for retraining.[2]
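Pickle-based persistence of a fitted scikit-learn model can be sketched with the standard library. The pipeline, simulated data and file name `dapc_model.pkl` are hypothetical stand-ins, not DAPCy's own save/load functions. Unpickling can execute arbitrary code, so only load model files from trusted sources.

```python
import os
import pickle
import tempfile

import numpy as np
from sklearn.decomposition import TruncatedSVD
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(6)
X = rng.binomial(2, 0.5, size=(60, 50)).astype(float)
X[30:, :5] += 1.5  # hypothetical group difference at five SNPs
y = np.repeat([0, 1], 30)

model = make_pipeline(StandardScaler(with_std=False),
                      TruncatedSVD(n_components=5, random_state=0),
                      LinearDiscriminantAnalysis()).fit(X, y)

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "dapc_model.pkl")  # hypothetical file name
    with open(path, "wb") as fh:
        pickle.dump(model, fh)
    with open(path, "rb") as fh:
        restored = pickle.load(fh)              # only unpickle trusted files

same_predictions = np.array_equal(model.predict(X), restored.predict(X))
print(same_predictions)
```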

These features result in a faster and more memory-efficient implementation of the DAPC method, making it a powerful tool for population genetic analysis in the age of big data.

Quantitative Performance Benchmarks

To evaluate its computational performance, DAPCy was benchmarked against the R adegenet package using two publicly available genomic datasets: the Plasmodium falciparum Pf7 dataset from MalariaGEN and the 1000 Genomes Project dataset.[2] The benchmarks assessed both computation time and memory usage.

Table 1: Benchmarking Results for the Plasmodium falciparum Pf7 Dataset

| Metric | DAPCy | R (adegenet) |
|---|---|---|
| Computation time (seconds) | 15.2 | 123.5 |
| Memory usage (GB) | 1.2 | 4.8 |

Table 2: Benchmarking Results for the 1000 Genomes Project Dataset

| Metric | DAPCy | R (adegenet) |
|---|---|---|
| Computation time (seconds) | 345.8 | 2160.7 |
| Memory usage (GB) | 8.5 | 32.1 |

The results show that DAPCy is faster and uses less memory than adegenet on both datasets. The relative speedup is similar on the two datasets (about 8x on Pf7 and about 6x on 1000 Genomes), but the absolute time saved grows sharply with dataset size, from about 108 seconds to about 1,815 seconds.
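The relative and absolute differences in Tables 1 and 2 can be checked with a few lines of arithmetic. The numbers below are copied from the tables; relative speedup is t_adegenet / t_DAPCy and absolute saving is t_adegenet - t_DAPCy.

```python
# (DAPCy, adegenet) values copied from Tables 1 and 2.
benchmarks = {
    "Pf7": {"time_s": (15.2, 123.5), "memory_gb": (1.2, 4.8)},
    "1000 Genomes": {"time_s": (345.8, 2160.7), "memory_gb": (8.5, 32.1)},
}

summary = {}
for dataset, m in benchmarks.items():
    (t_dapcy, t_r), (mem_dapcy, mem_r) = m["time_s"], m["memory_gb"]
    summary[dataset] = {
        "speedup": t_r / t_dapcy,        # relative: how many times faster
        "seconds_saved": t_r - t_dapcy,  # absolute difference in seconds
        "memory_ratio": mem_r / mem_dapcy,
    }
    s = summary[dataset]
    print(f"{dataset}: {s['speedup']:.1f}x faster, {s['seconds_saved']:.1f} s saved, "
          f"{s['memory_ratio']:.1f}x less memory")
```

Ratios and absolute differences can point in different directions when datasets differ in size, so both are worth reporting.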

Experimental Protocols

The following sections detail the methodologies used in the benchmarking analyses of DAPCy.

Experimental Protocol 1: Analysis of the Plasmodium falciparum Pf7 Dataset

This protocol describes the steps taken to analyze the P. falciparum Pf7 dataset, a quality-controlled genotype set comprising 6,385 SNPs and 16,203 samples from 33 countries.

- Data Acquisition: The raw VCF files for the Pf7 dataset were obtained from the MalariaGEN website.
- Data Preprocessing:
  - The VCF files were converted to BED format using PLINK.
  - SNPs with a minor allele frequency (MAF) below 10% were removed.
  - Linkage disequilibrium (LD) pruning was performed to remove SNPs with an r² value greater than 0.3, resulting in a set of 6,385 SNPs in approximate linkage equilibrium.
- DAPC Analysis with DAPCy:
  - The preprocessed genotype data were loaded into DAPCy.
  - A PCA was performed using truncated SVD.
  - K-means clustering was applied to the principal components to infer the optimal number of genetic clusters. The sum of squared errors (SSE) was used to identify the "elbow" point, suggesting an optimal k.
  - A DAPC model was trained using the inferred clusters.
- DAPC Analysis with R adegenet:
  - The same preprocessed dataset was loaded into R.
  - The adegenet package was used to perform the DAPC analysis following the standard workflow, including PCA and discriminant analysis.
- Performance Measurement: Computation time and peak memory usage were recorded for both the DAPCy and adegenet analyses.
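The two preprocessing filters can be sketched in NumPy. This is an illustration under stated assumptions (diploid 0/1/2 allele-count coding and a greedy, whole-matrix pruning pass), not PLINK's algorithm: PLINK's LD pruning (e.g. `--indep-pairwise`) works in sliding windows and may keep a different SNP from each correlated pair. `maf_mask` and `greedy_ld_prune` are hypothetical helper names.

```python
import numpy as np

def maf_mask(G, min_maf=0.10):
    """True for SNP columns with minor allele frequency >= min_maf.
    G: individuals x SNPs, coded 0/1/2 (diploid allele counts, an assumption)."""
    p = G.mean(axis=0) / 2.0        # alternative-allele frequency per SNP
    maf = np.minimum(p, 1.0 - p)
    return maf >= min_maf

def greedy_ld_prune(G, r2_max=0.3):
    """Keep SNPs in column order, dropping any whose r^2 with an already-kept SNP
    exceeds r2_max. Simplified genome-wide version; PLINK uses sliding windows."""
    kept = []
    for j in range(G.shape[1]):
        x = G[:, j]
        if x.std() == 0:
            continue  # monomorphic SNP: r^2 undefined
        if all(np.corrcoef(x, G[:, i])[0, 1] ** 2 <= r2_max for i in kept):
            kept.append(j)
    return np.array(kept, dtype=int)

rng = np.random.default_rng(9)
G = rng.binomial(2, 0.5, size=(200, 20)).astype(float)
G[:, 0] = rng.binomial(2, 0.02, size=200)  # rare variant: should fail the MAF filter
G[:, 2] = G[:, 1]                          # perfect LD with SNP 1: should be pruned

mask = maf_mask(G)
kept_after_filter = greedy_ld_prune(G[:, mask])
kept_original_idx = np.flatnonzero(mask)[kept_after_filter]
print(kept_original_idx)
```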

Experimental Protocol 2: Analysis of the 1000 Genomes Project Dataset

This protocol outlines the analysis of a subset of the 1000 Genomes Project dataset, consisting of 359,130 SNPs and 2,805 samples.

- Data Acquisition: The VCF files for the 1000 Genomes Project were downloaded from the project's data portal.
- Data Preprocessing:
  - VCF files were converted to BED format using PLINK.
  - SNPs with a MAF below 10% were filtered out.
  - LD pruning was conducted, removing SNPs with an r² greater than 0.3, resulting in 359,130 SNPs in approximate linkage equilibrium.
- DAPC Analysis with DAPCy:
  - The processed genotype data were loaded into DAPCy.
  - PCA was performed via truncated SVD.
  - K-means clustering was used to determine the number of population groups.
  - The DAPC model was trained on the identified clusters.
- DAPC Analysis with R adegenet:
  - The identical preprocessed data were analyzed using the adegenet package in R, following its standard DAPC workflow.
- Performance Measurement: Execution time and maximum memory allocation were measured for both analyses.
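Wall-clock time and peak memory for a Python step can be measured with the standard library, as sketched below (`profile` is a hypothetical helper, not part of DAPCy). `tracemalloc` only counts memory allocated through Python's allocator, which includes NumPy arrays; memory allocated inside BLAS/LAPACK, and anything in an R session, is not included. A cross-language comparison would need a process-level measure such as the maximum resident set size reported by GNU `time -v`.

```python
import time
import tracemalloc

import numpy as np
from sklearn.decomposition import TruncatedSVD

def profile(fn, *args, **kwargs):
    """Call fn once; return (result, wall-clock seconds, peak traced memory in MB)."""
    tracemalloc.start()
    try:
        start = time.perf_counter()
        result = fn(*args, **kwargs)
        elapsed = time.perf_counter() - start
        _, peak_bytes = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    return result, elapsed, peak_bytes / 1e6

rng = np.random.default_rng(10)
X = rng.normal(size=(500, 200))
pcs, seconds, peak_mb = profile(TruncatedSVD(n_components=10, random_state=0).fit_transform, X)
print(f"{seconds:.3f} s, peak {peak_mb:.2f} MB (Python/NumPy allocations only)")
```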

Visualizing Workflows and Logical Relationships

To provide a clearer understanding of the processes involved, the following diagrams, generated using the DOT language, illustrate the core DAPC workflow and the experimental design for the benchmarking study.

Conclusion

DAPCy represents a significant advance in the tools available for population genetic analysis. By providing a Python-native, high-performance implementation of the DAPC method, it enables researchers to analyze large-scale genomic datasets with greater speed and efficiency. For professionals in drug development, DAPCy offers a valuable tool for exploring the genetic architecture of human populations and disease vectors, which can inform strategies for personalized medicine, drug target identification, and understanding drug resistance. Its integration with the scikit-learn ecosystem further enhances its utility, allowing it to be incorporated into broader machine learning pipelines for a deeper understanding of the genetic basis of health and disease.

References

Navigating Population Structure: A Technical Comparison of DAPCy and adegenet for DAPC Analysis

A deep dive into the computational and methodological nuances of two key software packages for Discriminant Analysis of Principal Components (DAPC) in genetic analysis.

In population genetics and genomics, Discriminant Analysis of Principal Components (DAPC) is a powerful multivariate method for identifying and describing genetic clusters without prior knowledge of population boundaries. This technique is pivotal for understanding population structure, identifying hybrids, and informing conservation and drug development efforts. The R package adegenet has long been the gold standard for performing DAPC. However, the ever-increasing scale of genomic datasets has necessitated more computationally efficient tools. Enter DAPCy, a Python-based reimplementation of DAPC designed to handle large-scale genomic data with greater speed and lower memory overhead.[1][2] This technical guide provides an in-depth comparison of DAPCy and adegenet, offering researchers, scientists, and drug development professionals an overview to inform their choice of software for DAPC analysis.

Core Methodological Distinctions

At its core, DAPC is a two-step process. First, a Principal Component Analysis (PCA) is performed on the genetic data to reduce its dimensionality while retaining most of the variance. Second, a Discriminant Analysis (DA) is applied to the retained principal components to maximize the separation between groups.[3][4][5] While both adegenet and DAPCy follow this fundamental workflow, their underlying computational approaches differ significantly, leading to substantial performance differences, particularly with large datasets.

The primary distinction lies in how they handle the initial PCA step. adegenet's DAPC implementation traditionally relies on eigendecomposition for PCA, a method that can be computationally intensive and memory-demanding, especially for datasets with many more features (e.g., SNPs) than samples.[2] In contrast, DAPCy uses sparse matrices and a more scalable dimensionality-reduction technique, truncated singular value decomposition (SVD).[1][2][6] This approach is significantly more efficient for the large, sparse datasets common in genomics, leading to faster computation and lower memory consumption.[1][6]
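Both routes yield the same principal components; they differ in cost. The sketch compares the top-k eigenvalues of the covariance matrix with the squared singular values of the centred data divided by n - 1, using scikit-learn's `TruncatedSVD` with its ARPACK solver. The data are simulated, and this illustrates the linear algebra, not adegenet's or DAPCy's code. For scale, a naive p x p float64 covariance matrix for 359,130 SNPs would need about 359,130² x 8 bytes, roughly 1 TB, whereas truncated SVD computes only the k leading components.

```python
import numpy as np
from sklearn.decomposition import TruncatedSVD

rng = np.random.default_rng(7)
X = rng.binomial(2, rng.uniform(0.1, 0.9, size=300), size=(120, 300)).astype(float)
Xc = X - X.mean(axis=0)  # PCA requires centred data
n, k = Xc.shape[0], 5

# Route 1: eigendecomposition of the full p x p covariance matrix (p = number of SNPs).
cov = Xc.T @ Xc / (n - 1)
eigvals = np.linalg.eigvalsh(cov)[::-1][:k]  # eigvalsh returns ascending order

# Route 2: truncated SVD of the centred data, never forming the covariance matrix.
svd = TruncatedSVD(n_components=k, algorithm="arpack", random_state=0).fit(Xc)
svd_vals = svd.singular_values_ ** 2 / (n - 1)

print(np.allclose(eigvals, svd_vals))
```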

Quantitative Performance Comparison

The theoretical advantages of DAPCy's approach are borne out in direct benchmarks. Studies comparing the two packages on large genomic datasets, such as the Plasmodium falciparum dataset from MalariaGEN and the 1000 Genomes Project, have demonstrated DAPCy's superior performance.[1][2] The following tables summarize the key differences in computation time, memory usage, and implementation.

| Performance metric | adegenet (R) | DAPCy (Python) | Notes |
|---|---|---|---|
| Computation time | Slower, especially as dataset size increases. | Significantly faster, particularly for large genomic datasets. | DAPCy's use of truncated SVD on sparse matrices reduces computational complexity.[1][2] |
| Memory usage | Higher; can be a limiting factor for very large datasets. | Lower, with more efficient memory management. | DAPCy's reliance on sparse matrices minimizes the amount of data held in memory.[1][2] |

| Feature | adegenet (R) | DAPCy (Python) |
|---|---|---|
| Core PCA algorithm | Eigendecomposition | Truncated singular value decomposition (SVD)[1][6] |
| Data structure | genind and genlight objects | Compressed sparse row (CSR) matrices[2] |
| Primary language | R | Python |
| Integration | ade4, MASS packages | scikit-learn library[1] |

Experimental Protocols: A Side-by-Side View

To provide a practical understanding of the user experience with each package, the following section outlines the typical experimental workflow for conducting a DAPC analysis.

adegenet DAPC Workflow

The protocol in adegenet generally involves loading genetic data into a specific object format (genind or genlight), identifying the optimal number of clusters if unknown, and then performing the DAPC.

- Data Import and Preparation:
  - Load genetic data from various formats (e.g., GENEPOP, FSTAT, STRUCTURE) into a genind or genlight object.
  - Handle missing data as required.
- Cluster Identification (if groups are unknown):
  - Use the find.clusters function to identify the optimal number of genetic clusters.
  - This function applies a k-means clustering algorithm to the principal components of the data.
  - The Bayesian Information Criterion (BIC) is typically used to assess the optimal number of clusters.
- DAPC Execution:
  - Run the dapc function, specifying the genetic data and the group assignments (either predetermined or from find.clusters).
  - Select the number of principal components (PCs) to retain. Cross-validation (xvalDapc) can be used to determine the optimal number of PCs and avoid overfitting.
- Visualization and Interpretation:
  - Visualize the results using scatterplots of the discriminant functions to observe cluster separation.
  - Analyze the contribution of alleles to the discriminant functions to identify loci driving population differentiation.

DAPCy DAPC Workflow

DAPCy leverages the scikit-learn ecosystem, providing a more machine-learning-oriented workflow.

- Data Import and Preparation:
  - Read genotype data from VCF or BED files; DAPCy stores the genotypes internally as a compressed sparse row (CSR) matrix.
- Cluster Identification (if groups are unknown):
  - Perform k-means clustering on the principal components of the genotype data to infer the number of effective populations.
- DAPC Execution using a Machine Learning Pipeline:
  - Create a DAPC model as an instance of the DAPC class.
  - Build a pipeline that combines truncated SVD for the PCA step with a linear discriminant analysis classifier from scikit-learn.[6]
  - The dataset can be split into training and testing sets for model validation.
- Model Evaluation and Visualization:
  - Evaluate the performance of the classifier using metrics such as accuracy scores and confusion matrices.
  - Visualize the results with scatter plots of the discriminant functions.
  - The trained classifier can be saved and deployed for future use.
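The validation and evaluation steps above can be sketched with scikit-learn: a stratified train/test split, accuracy, and a confusion matrix. The pipeline and simulated data are assumptions; in DAPCy the model would be built through its own DAPC class, whose interface is not reproduced here.

```python
import numpy as np
from sklearn.decomposition import TruncatedSVD
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis
from sklearn.metrics import accuracy_score, confusion_matrix
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(8)
n_per_group, n_snps, n_groups = 50, 250, 3
freqs = rng.uniform(0.2, 0.8, size=(n_groups, n_snps))
X = np.vstack([rng.binomial(2, freqs[g], size=(n_per_group, n_snps))
               for g in range(n_groups)]).astype(float)
y = np.repeat(np.arange(n_groups), n_per_group)

# Hold out 30% of individuals, keeping group proportions equal in both sets.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, stratify=y, random_state=0)

model = make_pipeline(StandardScaler(with_std=False),       # centre SNPs
                      TruncatedSVD(n_components=10, random_state=0),
                      LinearDiscriminantAnalysis())
model.fit(X_train, y_train)

y_pred = model.predict(X_test)
acc = accuracy_score(y_test, y_pred)
cm = confusion_matrix(y_test, y_pred)  # rows: true group, columns: predicted group
print(round(acc, 3))
print(cm)
```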

Visualizing the Workflows

To further clarify the logical flow of a DAPC analysis in both packages, the following diagrams, generated using the DOT language, illustrate the key steps and decision points.

Conclusion: Choosing the Right Tool for the Job

Both adegenet and DAPCy are powerful tools for conducting DAPC analysis, each with its own strengths. adegenet remains a robust and well-established package within the R ecosystem, with extensive documentation and a strong user community. For researchers working with moderately sized datasets who are comfortable in the R environment, adegenet is an excellent choice.

However, for those working with large-scale genomic data, such as genome-wide SNP datasets, DAPCy offers a clear advantage in computational performance. Its Python-based, scikit-learn-integrated framework is not only faster and more memory-efficient but also aligns well with modern machine learning workflows, including model training, validation, and deployment. The choice between DAPCy and adegenet will ultimately depend on the scale of the data, the computational resources available, and the researcher's preferred programming environment. As genomic datasets continue to grow in size and complexity, tools like DAPCy will become increasingly indispensable for timely and efficient analysis of population structure.

References

The "DAPCy" Library: A Technical Guide to DAP12 and DAP3 Signaling Pathways in Drug Development


This technical guide provides an in-depth overview of the core signaling pathways mediated by the adaptor protein DAP12 and the pro-apoptotic protein DAP3. Mistakenly referred to as the "DAPCy library," this collection of molecular interactions represents a critical resource for researchers and scientists in the field of drug development, particularly in the areas of neuroinflammation, oncology, and immunology. This document outlines the key features of these pathways, presents quantitative data from relevant studies, details experimental protocols for their investigation, and provides visual diagrams of the core signaling cascades.

Introduction to DAP Signaling Pathways

The "DAPCy library" encompasses two distinct but crucial signaling hubs centered on two proteins: DNAX-activating protein of 12 kDa (DAP12, also known as TYROBP) and Death-Associated Protein 3 (DAP3). These pathways are integral to fundamental cellular processes, and their dysregulation is implicated in a range of human diseases, making them attractive targets for therapeutic intervention.

- DAP12 Signaling: Primarily involved in the regulation of the immune system, DAP12 is a transmembrane signaling adaptor that associates with various receptors on myeloid cells, such as microglia and dendritic cells. The TREM2/DAP12 signaling axis is of particular interest in neurodegenerative diseases such as Alzheimer's, where it plays a complex, dual role in modulating inflammatory responses.[1][2]
- DAP3 Signaling: DAP3 is a mitochondrial ribosomal protein that also functions as a positive mediator of apoptosis (programmed cell death).[3] It is a key component of the extrinsic apoptosis pathway, which is triggered through death receptors, for example in response to TNF-α or Fas activation.[3][4] Its role in cancer is multifaceted, with its expression levels correlating with prognosis in various tumor types.[5][6][7][8][9][10][11][12]

Quantitative Data Summary

The following tables summarize key quantitative findings from studies on DAP12 and DAP3 signaling, providing insights into their functional roles and therapeutic potential.

Table 1: Quantitative Effects of DAP12 Modulation on Inflammatory Cytokine Expression

| Cell type | Condition | Modulation | Cytokine | Change in mRNA/protein level | Reference |
|---|---|---|---|---|---|
| Primary Microglia | LPS Stimulation | Dap12 knockdown | IL-1β | Significant Increase | [13] |
| Primary Microglia | LPS Stimulation | Dap12 knockdown | IL-6 | Significant Increase | [13] |
| Primary Microglia | Aβ42 oligomer treatment | Dap12 knockdown | IL-1β | Elevated | [13] |
| Primary Microglia | Aβ42 oligomer treatment | Dap12 knockdown | TNF-α | Elevated | [13] |
| Tauopathy Mouse Brain | Tau Pathology | Dap12 deletion | CXCL10/IP-10 | Significantly Reduced | [14] |
| Tauopathy Mouse Brain | Tau Pathology | Dap12 deletion | IL-6 | Significantly Reduced | [14] |
| Tauopathy Mouse Brain | Tau Pathology | Dap12 deletion | MCP-1 (Ccl2) | Significantly Reduced | [14] |
| Tauopathy Mouse Brain | Tau Pathology | Dap12 deletion | MIG (Cxcl9) | Significantly Reduced | [14] |

Table 2: Correlation of DAP3 Expression with Clinical Outcomes in Cancer

| Cancer Type | DAP3 Expression Level | Associated Clinical Outcome | Statistical Significance (p-value) | Reference |
|---|---|---|---|---|
| Hepatocellular Carcinoma | High | Shorter Overall Survival | <0.05 | [5] |
| Hepatocellular Carcinoma | High | Larger Tumor Size | 0.024 | [5] |
| Hepatocellular Carcinoma | High | Higher AFP Levels | 0.044 | [5] |
| Breast Cancer | Low | Local Recurrence | 0.013 | [6] |
| Breast Cancer | Low | Distant Metastasis | 0.0057 | [6] |
| Breast Cancer | Low | Higher Mortality | 0.019 | [6] |
| Gastric Cancer | High | Better Overall Survival | 0.013 | [8] |
| Gastric Cancer | Low | Higher Incidence of Recurrence | 0.0005 | [8] |
| Pancreatic Cancer | High | Shorter Overall Survival | 0.012 | [9] |

Key Signaling Pathways and Visualizations

The following diagrams, generated using the DOT language, illustrate the core signaling cascades of DAP12 and DAP3.

TREM2-DAP12 Signaling Pathway in Microglia

This pathway is initiated by the binding of ligands to the TREM2 receptor, which is associated with the DAP12 adaptor protein. This triggers a signaling cascade that influences microglial activation, phagocytosis, and inflammatory responses.[1]

DAP3-Mediated Extrinsic Apoptosis Pathway

DAP3 plays a crucial role in the extrinsic apoptosis pathway by facilitating the activation of caspase-8 in response to signals from death receptors like the TNF receptor.[4]
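As a sketch, the relationships described in the two paragraphs above can be written out as Graphviz DOT graphs. The helper `pathway_dot` and the node labels are illustrative assumptions. The edges restate only what the text says: ligand binding to TREM2, association with DAP12, and downstream microglial responses for the first pathway; death receptor signaling through DAP3 to caspase-8 for the second. Intermediate kinases and adaptors are deliberately omitted.

```python
def pathway_dot(name, edges):
    """Render a list of (source, target) pairs as a Graphviz DOT digraph string."""
    lines = [f'digraph "{name}" {{', "  rankdir=LR;"]
    for src, dst in edges:
        lines.append(f'  "{src}" -> "{dst}";')
    lines.append("}")
    return "\n".join(lines)


# Hypothetical node labels; each edge restates a relationship from the text.
TREM2_DAP12 = [
    ("Ligand", "TREM2"),
    ("TREM2", "DAP12"),
    ("DAP12", "Signaling cascade"),
    ("Signaling cascade", "Microglial activation"),
    ("Signaling cascade", "Phagocytosis"),
    ("Signaling cascade", "Inflammatory responses"),
]
DAP3_APOPTOSIS = [
    ("TNF receptor (death receptor)", "DAP3"),
    ("DAP3", "Caspase-8 activation"),
    ("Caspase-8 activation", "Apoptosis"),
]

if __name__ == "__main__":
    print(pathway_dot("TREM2-DAP12", TREM2_DAP12))
    print(pathway_dot("DAP3 extrinsic apoptosis", DAP3_APOPTOSIS))
```

Saved to a file, the printed text can be rendered with Graphviz, for example `dot -Tpng trem2.dot -o trem2.png`.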

Experimental Protocols

This section provides detailed methodologies for key experiments used to investigate DAP12 and DAP3 signaling pathways.

Co-Immunoprecipitation (Co-IP) to Detect TREM2-DAP12 Interaction

This protocol is designed to verify the physical interaction between the TREM2 receptor and the DAP12 adaptor protein in a cellular context.

Materials:

- Cell Lysis Buffer: 20 mM Tris (pH 7.5), 150 mM NaCl, 1 mM EDTA, 1 mM EGTA, 1% Triton X-100, 2.5 mM Sodium pyrophosphate, 1 mM β-glycerophosphate, 1 mM Na3VO4, 1 µg/ml Leupeptin. Add 1 mM PMSF immediately before use.[15]
- Phosphate Buffered Saline (PBS), ice-cold.
- Primary Antibodies: Anti-TREM2 antibody, Anti-DAP12 antibody.
- Protein A/G Agarose Beads.
- 3X SDS Sample Buffer.
- Microcentrifuge tubes.
- Cell scraper.
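The lysis buffer volume and the PMSF spike both scale with the number of plates. The sketch below shows that arithmetic using C1V1 = C2V2. It assumes a hypothetical 200 mM PMSF stock, since the stock concentration is not specified above; the helper name is also hypothetical.

```python
def lysis_buffer_plan(n_plates, ml_per_plate=0.5, pmsf_stock_mm=200.0, pmsf_final_mm=1.0):
    """Return (total lysis buffer in mL, PMSF stock to add in µL) for n 10 cm plates.

    Uses C1*V1 = C2*V2 and ignores the negligible volume added by the spike.
    The 200 mM stock concentration is an assumption, not part of the protocol."""
    total_ml = n_plates * ml_per_plate                             # 0.5 mL per 10 cm plate
    pmsf_ul = pmsf_final_mm * total_ml * 1000.0 / pmsf_stock_mm    # mL -> µL
    return total_ml, pmsf_ul


if __name__ == "__main__":
    total, pmsf = lysis_buffer_plan(4)
    print(f"{total} mL lysis buffer + {pmsf} µL PMSF stock")
```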

Procedure:

- Cell Lysis:
  - Culture cells to the desired confluency.
  - Wash cells once with ice-cold PBS.
  - Add 0.5 ml of ice-cold 1X cell lysis buffer per 10 cm plate and incubate on ice for 5 minutes.[15]
  - Scrape the cells and transfer the lysate to a microcentrifuge tube.
  - Sonicate the lysate three times for 5 seconds each on ice.
  - Centrifuge at 14,000 x g for 10 minutes at 4°C, then transfer the supernatant to a new tube.[15]
- Immunoprecipitation:
- Washing:
  - Centrifuge the tubes at low speed (e.g., 1,000 x g) for 30 seconds at 4°C.
  - Carefully remove the supernatant.
  - Wash the pellet five times with 500 µl of 1X cell lysis buffer.[15]
- Elution:
  - Resuspend the final pellet in 20 µl of 3X SDS sample buffer.
  - Heat the sample at 95-100°C for 5 minutes.
  - Centrifuge for 1 minute at 14,000 x g.
- Analysis:
  - Load the supernatant onto an SDS-PAGE gel.
  - Perform a Western blot using an antibody against the "prey" protein (e.g., anti-DAP12) to detect the interaction.

Flow Cytometry Analysis of Apoptosis using Annexin V Staining

This protocol allows for the quantification of apoptotic cells following manipulation of the DAP3 pathway.

Materials:

- Annexin V-FITC Apoptosis Detection Kit (contains Annexin V-FITC, Propidium Iodide (PI), and 10X Binding Buffer).
- Phosphate Buffered Saline (PBS).
- Flow cytometer.

Procedure:

- Cell Preparation:
  - Induce apoptosis in your target cells (e.g., through TNF-α treatment to activate the DAP3 pathway). Include a negative control of untreated cells.
  - Collect 1-5 x 10^5 cells by centrifugation.[16]
  - Wash the cells once with cold 1X PBS.
- Staining:
  - Prepare 1X Binding Buffer by diluting the 10X stock with deionized water.
  - Resuspend the cell pellet in 1X Binding Buffer at approximately 1 x 10^6 cells/mL.[16]
  - Transfer 100 µL of the cell suspension (about 1 x 10^5 cells) to a new tube.
  - Add 5 µL of Annexin V-FITC and 5 µL of Propidium Iodide (PI) staining solution.[16]
  - Gently vortex the cells and incubate for 15 minutes at room temperature in the dark.
- Analysis:
  - Add 400 µL of 1X Binding Buffer to each tube.[16]
  - Analyze the samples by flow cytometry within one hour.
  - Interpretation:
    - Annexin V-negative, PI-negative: live cells.
    - Annexin V-positive, PI-negative: early apoptotic cells.
    - Annexin V-positive, PI-positive: late apoptotic or necrotic cells.
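The quadrant logic in the interpretation list can be sketched as a small classifier over per-event fluorescence values. The gate thresholds are hypothetical inputs; in practice they are usually set from unstained and single-stained controls. The Annexin V-negative, PI-positive quadrant, which the list above does not interpret, is simply reported by its staining pattern.

```python
def classify_event(annexin, pi, annexin_gate, pi_gate):
    """Assign one event to a quadrant using fixed (hypothetical) fluorescence gates."""
    a_pos = annexin >= annexin_gate
    p_pos = pi >= pi_gate
    if not a_pos and not p_pos:
        return "live"
    if a_pos and not p_pos:
        return "early apoptotic"
    if a_pos and p_pos:
        return "late apoptotic/necrotic"
    return "Annexin V-negative, PI-positive"  # not interpreted in the protocol above


def quadrant_percentages(events, annexin_gate, pi_gate):
    """Percentage of (annexin, pi) events falling in each quadrant."""
    counts = {}
    for annexin, pi in events:
        label = classify_event(annexin, pi, annexin_gate, pi_gate)
        counts[label] = counts.get(label, 0) + 1
    return {label: 100.0 * n / len(events) for label, n in counts.items()}


if __name__ == "__main__":
    demo = [(10, 10), (500, 10), (500, 500), (10, 500)]
    print(quadrant_percentages(demo, annexin_gate=100, pi_gate=100))
```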

Conclusion and Future Directions

The signaling pathways orchestrated by DAP12 and DAP3 are critical regulators of cellular function with profound implications for human health. A thorough understanding of these pathways, supported by the experimental and analytical approaches outlined in this guide, is essential for developing novel therapeutics. Future research should focus on identifying specific small-molecule modulators of these pathways and on elucidating their complex interplay in various disease contexts. Continued exploration of the "DAPCy library" may help pave the way for new treatments for a wide range of debilitating diseases.

References

- 1. Frontiers | Microglial TREM2/DAP12 Signaling: A Double-Edged Sword in Neural Diseases [frontiersin.org]

- 2. Microglial TREM2/DAP12 Signaling: A Double-Edged Sword in Neural Diseases - PMC [pmc.ncbi.nlm.nih.gov]

- 3. researchgate.net [researchgate.net]

- 4. Frontiers | Death-associated protein 3 in cancer—discrepant roles of DAP3 in tumours and molecular mechanisms [frontiersin.org]

- 5. Identification of DAP3 as candidate prognosis marker and potential therapeutic target for hepatocellular carcinoma - PMC [pmc.ncbi.nlm.nih.gov]

- 6. researchgate.net [researchgate.net]

- 7. researchgate.net [researchgate.net]

- 8. Death-associated protein-3, DAP-3, correlates with preoperative chemotherapy effectiveness and prognosis of gastric cancer patients following perioperative chemotherapy and radical gastrectomy - PMC [pmc.ncbi.nlm.nih.gov]

- 9. ar.iiarjournals.org [ar.iiarjournals.org]

- 10. Death-associated protein 3 in cancer—discrepant roles of DAP3 in tumours and molecular mechanisms - PMC [pmc.ncbi.nlm.nih.gov]

- 11. DAP3 promotes mitochondrial activity and tumour progression in hepatocellular carcinoma by regulating MT-ND5 expression - PubMed [pubmed.ncbi.nlm.nih.gov]

- 12. Death-associated protein 3 in cancer-discrepant roles of DAP3 in tumours and molecular mechanisms - PubMed [pubmed.ncbi.nlm.nih.gov]

- 13. TREM2/DAP12 Complex Regulates Inflammatory Responses in Microglia via the JNK Signaling Pathway - PMC [pmc.ncbi.nlm.nih.gov]

- 14. DAP12 deficiency alters microglia-oligodendrocyte communication and enhances resilience against tau toxicity - PMC [pmc.ncbi.nlm.nih.gov]

- 15. ulab360.com [ulab360.com]

- 16. Annexin V and PI Staining Protocol for Apoptosis by Flow Cytometry | Bio-Techne [bio-techne.com]

A Technical Guide to Discriminant Analysis of Principal Components (DAPC) for Genetic Structure Analysis

Audience: Researchers, scientists, and drug development professionals.

Note on Terminology: This guide focuses on the widely established statistical method, Discriminant Analysis of Principal Components (DAPC). A Python implementation of this method is available under the name DAPCy, which leverages machine learning libraries for enhanced performance on large datasets.[1] The principles and methodologies described herein are fundamental to both.

Introduction to DAPC

Discriminant Analysis of Principal Components (DAPC) is a multivariate statistical method designed to identify and describe clusters of genetically related individuals.[2] It is particularly effective for analyzing large and complex genetic datasets, such as those generated by single nucleotide polymorphism (SNP) arrays, microsatellites, or whole-genome sequencing.[2] Unlike some other population genetics methods, DAPC does not assume that populations are panmictic or in Hardy-Weinberg equilibrium, which makes it a versatile tool for a wide range of organisms and population histories.[3]

The core strength of DAPC lies in its two-step process. It first transforms the genetic data using Principal Component Analysis (PCA) to reduce dimensionality and remove correlation between variables.[4][5] Subsequently, it applies Discriminant Analysis (DA) to the retained principal components to maximize the separation between groups while minimizing variation within them.[2][3][6] This approach makes DAPC highly effective at identifying subtle genetic structures and providing a clear visual representation of population differentiation.[2][7]

Core Principles of DAPC

DAPC is built upon a combination of two classical multivariate analysis techniques:

- Principal Component Analysis (PCA): PCA is used as a preliminary step to transform the raw genetic data (e.g., allele frequencies).[4][5] It summarizes the total genetic variation into a set of uncorrelated variables called principal components (PCs). This step is crucial because it overcomes a key limitation of traditional DA, which requires the number of variables (alleles) to be less than the number of individuals.[2] By retaining a subset of PCs, DAPC can be applied to virtually any genetic dataset, regardless of its size.[2]
- Discriminant Analysis (DA): Following PCA, DA is performed on the retained principal components. The goal of DA is to find linear combinations of these PCs, known as discriminant functions, that best separate the predefined or inferred clusters of individuals.[2][6] By maximizing the between-group variance and minimizing the within-group variance, DA provides a much clearer separation of populations than PCA alone.[2][7]
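The two stages can be sketched in NumPy as follows. This is a minimal illustration of PCA followed by Fisher's linear discriminant analysis on the retained PC scores; it is not the adegenet or DAPCy implementation. The function `dapc` and its arguments are hypothetical names. The input is assumed to be a numeric individuals x alleles (or SNPs) matrix with no missing values.

```python
import numpy as np


def dapc(X, groups, n_pca):
    """Minimal DAPC sketch: PCA on centred data, then Fisher LDA on the PC scores.

    Returns individual coordinates on the discriminant functions and their
    eigenvalues (at most K-1 functions for K groups)."""
    X = np.asarray(X, dtype=float)
    groups = np.asarray(groups)
    # Stage 1 (PCA): centre each allele/SNP column and keep the first n_pca PCs.
    Xc = X - X.mean(axis=0)
    U, s, _ = np.linalg.svd(Xc, full_matrices=False)
    pcs = U[:, :n_pca] * s[:n_pca]  # individual scores on the retained PCs
    # Stage 2 (DA): within-group (Sw) and between-group (Sb) scatter of the scores.
    labels = np.unique(groups)
    grand_mean = pcs.mean(axis=0)
    Sw = np.zeros((pcs.shape[1], pcs.shape[1]))
    Sb = np.zeros_like(Sw)
    for g in labels:
        Z = pcs[groups == g]
        mu = Z.mean(axis=0)
        Sw += (Z - mu).T @ (Z - mu)
        d = (mu - grand_mean)[:, None]
        Sb += len(Z) * (d @ d.T)
    # Discriminant axes maximise between/within variance: Sw^-1 Sb v = lambda v.
    evals, evecs = np.linalg.eig(np.linalg.solve(Sw, Sb))
    order = np.argsort(evals.real)[::-1][: len(labels) - 1]
    return pcs @ evecs[:, order].real, evals.real[order]
```

Because the discriminant analysis only ever sees `n_pca` columns, the within-group scatter matrix stays invertible even when there are far more alleles than individuals. This is the limitation of plain DA that the PCA step is meant to remove.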

Experimental and Computational Protocol

The application of DAPC is a computational workflow, primarily performed using software packages such as adegenet in R.[8][9] The following protocol outlines the key steps from data preparation to interpretation of results.

Data Preparation and Formatting

- Input Data: The genetic data should be in a matrix format where rows represent individuals and columns represent alleles (for microsatellites) or SNPs.
- Data Conversion: Convert your raw genetic data (e.g., from VCF or Genepop files) into a format suitable for the analysis software. In R, the adegenet package provides tools to import and convert various data formats into genind or genlight objects.[10]
- Handling Missing Data: Address any missing data in your dataset. Options include imputation, removal of individuals or loci with high rates of missingness, or methods that can accommodate missing data.
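One way to build the individuals x SNPs matrix and handle missing calls is sketched below. It assumes unphased, biallelic VCF-style genotype strings (`0/0`, `0/1`, `1/1`, `./.`) and codes each call as an alternate-allele count. It then drops SNPs above a hypothetical missingness threshold and mean-imputes the remaining gaps, which is one of the imputation options listed above. The names `GT_TO_DOSAGE` and `encode_genotypes` are illustrative.

```python
import numpy as np

# Hypothetical mapping from unphased VCF-style GT strings to alternate-allele counts.
# Phased ("0|1") and multiallelic calls are not handled and become missing.
GT_TO_DOSAGE = {"0/0": 0.0, "0/1": 1.0, "1/0": 1.0, "1/1": 2.0}


def encode_genotypes(gt_rows, max_missing=0.2):
    """Return (individuals x SNPs dosage matrix, mask of kept SNPs).

    SNPs whose missing-call rate exceeds max_missing are dropped; remaining
    missing calls are replaced by the SNP's mean dosage."""
    X = np.array([[GT_TO_DOSAGE.get(gt, np.nan) for gt in row] for row in gt_rows])
    keep = np.isnan(X).mean(axis=0) <= max_missing
    X = X[:, keep]
    col_means = np.nanmean(X, axis=0)
    rows, cols = np.where(np.isnan(X))
    X[rows, cols] = col_means[cols]
    return X, keep
```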

Identifying Genetic Clusters (a priori unknown groups)

If the population structure is unknown, the first step is to infer the number of clusters (K).

- Run find.clusters: Use a function such as find.clusters in adegenet. This function uses a sequential K-means clustering approach.[2][8] It runs K-means for a range of possible numbers of clusters.
- Determine the Optimal K: The optimal number of clusters is typically identified by examining the Bayesian Information Criterion (BIC) for each value of K. The value of K after which the BIC decreases only negligibly or starts to increase is often chosen as the optimal number of clusters.[2]
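The K-selection step can be sketched as k-means run for each candidate K and scored with a BIC. The BIC form used here, n*log(WSS/n) + K*d*log(n), is a spherical-Gaussian approximation with d coordinates per centroid. It is an assumption for illustration and may differ from the statistic computed by `find.clusters`. In a DAPC workflow the input would be the retained PC scores, and the function names are hypothetical.

```python
import numpy as np


def kmeans_wss(X, k, n_init=25, n_iter=100, seed=0):
    """Lloyd's k-means with random restarts; returns the lowest within-cluster SS."""
    rng = np.random.default_rng(seed)
    best = np.inf
    for _ in range(n_init):
        centers = X[rng.choice(len(X), size=k, replace=False)]
        for _ in range(n_iter):
            labels = ((X[:, None, :] - centers[None]) ** 2).sum(axis=2).argmin(axis=1)
            # Move each centre to the mean of its points (keep it if the cluster empties).
            new = np.array([X[labels == j].mean(axis=0) if np.any(labels == j)
                            else centers[j] for j in range(k)])
            if np.allclose(new, centers):
                break
            centers = new
        labels = ((X[:, None, :] - centers[None]) ** 2).sum(axis=2).argmin(axis=1)
        best = min(best, float(((X - centers[labels]) ** 2).sum()))
    return best


def choose_k(X, k_max):
    """Pick K with the lowest BIC = n*log(WSS/n) + K*d*log(n) (assumed form)."""
    X = np.asarray(X, dtype=float)
    n, d = X.shape
    bic = {k: n * np.log(kmeans_wss(X, k) / n) + k * d * np.log(n)
           for k in range(1, k_max + 1)}
    return min(bic, key=bic.get), bic
```

Inspecting the whole `bic` dictionary, rather than only its minimum, corresponds to the "elbow" reading described above.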

Performing the Discriminant Analysis

Once the groups are defined (either from the previous step or based on prior knowledge, e.g., sampling locations), the core DAPC can be performed.

- Choosing the Number of Principal Components (PCs): The number of PCs to retain is a critical parameter. Retaining too few PCs may discard valuable information, while retaining too many can introduce noise and overfit the model.
  - Cross-Validation: A robust approach is to use cross-validation (e.g., the xvalDapc function in adegenet) to assess the performance of DAPC with different numbers of retained PCs.[3] This helps identify the number of PCs that gives the best predictive accuracy.
  - k-1 Criterion: A recommended guideline is not to exceed k-1 PCs, where k is the number of effective populations. This yields a more parsimonious and biologically meaningful model.[11][12]
- Running the DAPC Function: Execute the main DAPC function (e.g., dapc in adegenet) with the genetic data, the defined groups, and the chosen number of PCs.[3][8]
- Choosing the Number of Discriminant Functions: At most K-1 discriminant functions can be retained, where K is the number of groups. Typically, the first few discriminant functions capture the vast majority of the between-group variation.
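A cross-validation loop in the spirit of `xvalDapc` is sketched below. For each candidate number of PCs, a stratified random training set is drawn, PCA and a linear discriminant classifier are fitted on it, and the held-out individuals are predicted. The function names, the 90% training fraction, the equal class priors, and the number of repetitions are assumptions; this is not adegenet's code. PCA is fitted on the training individuals only, so that held-out samples do not influence the axes.

```python
import numpy as np


def lda_fit_predict(Z_train, y_train, Z_test):
    """Gaussian LDA with a pooled covariance and equal priors (assumed)."""
    classes = np.unique(y_train)
    means = np.array([Z_train[y_train == c].mean(axis=0) for c in classes])
    resid = Z_train - means[np.searchsorted(classes, y_train)]
    inv = np.linalg.pinv(resid.T @ resid / (len(Z_train) - len(classes)))
    # Linear discriminant score x' S^-1 mu_k - 0.5 mu_k' S^-1 mu_k for each class.
    scores = Z_test @ inv @ means.T - 0.5 * np.sum((means @ inv) * means, axis=1)
    return classes[scores.argmax(axis=1)]


def xval_n_pca(X, y, candidates, n_rep=20, train_frac=0.9, seed=0):
    """Mean held-out accuracy of PCA + LDA for each candidate number of PCs."""
    rng = np.random.default_rng(seed)
    X, y = np.asarray(X, dtype=float), np.asarray(y)
    acc = {n: [] for n in candidates}
    for _ in range(n_rep):
        # Stratified split: sample train_frac of each group for training.
        train = np.zeros(len(y), dtype=bool)
        for c in np.unique(y):
            idx = np.flatnonzero(y == c)
            train[rng.choice(idx, max(1, int(round(train_frac * len(idx)))), replace=False)] = True
        mu = X[train].mean(axis=0)
        _, _, Vt = np.linalg.svd(X[train] - mu, full_matrices=False)
        for n in candidates:
            P = Vt[:n].T  # first n PCA axes learned from the training set only
            pred = lda_fit_predict((X[train] - mu) @ P, y[train], (X[~train] - mu) @ P)
            acc[n].append(np.mean(pred == y[~train]))
    return {n: float(np.mean(a)) for n, a in acc.items()}
```

The candidate with the highest mean accuracy (or the smallest number of PCs within noise of it) would then be passed to the DAPC fit.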

Interpretation of Results

- Scatterplots: Visualize the results by plotting the individuals on the first two discriminant functions. This shows the genetic relationships between the clusters.[13][14]
- Allele Contributions: Analyze the contribution of different alleles or SNPs to the discriminant functions. This can help identify the genetic variants most responsible for differentiating the populations.[2]
- Assignment Probabilities: Examine the posterior assignment probabilities of each individual to the different clusters. This can provide insights into potential admixture or misclassification.[8]
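Each discriminant function is a linear combination of PCs, and each PC is a linear combination of alleles. Allele loadings on a discriminant function can therefore be recovered by multiplying the two coefficient matrices. The sketch below squares those loadings and scales them to sum to 1 per function. That normalization is an assumed convention, and the function names are hypothetical.

```python
import numpy as np


def allele_contributions(pca_axes, da_coefs):
    """Contribution of each allele/SNP to each discriminant function.

    pca_axes: (n_alleles, n_pcs) PCA loadings; da_coefs: (n_pcs, n_dfs) DA
    coefficients. Squared back-projected loadings are scaled to sum to 1 per DF."""
    loadings = np.asarray(pca_axes) @ np.asarray(da_coefs)
    sq = loadings ** 2
    return sq / sq.sum(axis=0, keepdims=True)


def top_alleles(contrib, names, df=0, n=5):
    """The n alleles with the largest contribution to discriminant function df."""
    order = np.argsort(contrib[:, df])[::-1][:n]
    return [(names[i], float(contrib[i, df])) for i in order]
```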

Data Presentation

The quantitative outputs of a DAPC analysis are typically summarized in tables to facilitate comparison and interpretation. The example tables below use illustrative values.

Table 1: Summary of DAPC Eigenvalues

| Discriminant Function | Eigenvalue | Percentage of Variance | Cumulative Percentage |
|---|---|---|---|
| 1 | 250.7 | 65.2% | 65.2% |
| 2 | 85.3 | 22.2% | 87.4% |
| 3 | 48.1 | 12.5% | 99.9% |
| ... | ... | ... | ... |

This table summarizes the importance of each discriminant function in explaining the between-group genetic variation.
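The percentage columns follow directly from the eigenvalues. Each eigenvalue is divided by the sum of all eigenvalues, so every discriminant function, including any rows elided with "...", must be part of the total. A minimal sketch with a hypothetical helper:

```python
def variance_table(eigenvalues):
    """Rows of (index, eigenvalue, % of variance, cumulative %), rounded to 0.1."""
    total = sum(eigenvalues)
    rows, cumulative = [], 0.0
    for i, ev in enumerate(eigenvalues, start=1):
        pct = 100.0 * ev / total
        cumulative += pct
        rows.append((i, ev, round(pct, 1), round(cumulative, 1)))
    return rows


if __name__ == "__main__":
    for row in variance_table([2.0, 1.0, 1.0]):
        print(row)
```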

Table 2: Individual Assignment to Clusters

| Individual ID | Prior Population | Posterior Assignment | Probability of Assignment |
|---|---|---|---|
| Ind_001 | Pop_A | Cluster 1 | 0.98 |
| Ind_002 | Pop_A | Cluster 1 | 0.95 |
| Ind_003 | Pop_B | Cluster 2 | 0.99 |
| Ind_004 | Pop_B | Cluster 1 | 0.65 |
| ... | ... | ... | ... |

This table shows the assignment of individuals to the inferred genetic clusters and the associated probabilities, which can be used to assess the clarity of the population structure.
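A quick way to screen an assignment table like Table 2 is to cross-tabulate prior populations against posterior clusters and to list individuals with low assignment probabilities. The 0.9 cutoff below is an arbitrary illustrative choice, not a published threshold. The rows simply mirror the example table, and the function names are hypothetical.

```python
from collections import Counter

# Rows mirror Table 2: (individual, prior population, posterior cluster, probability).
ROWS = [
    ("Ind_001", "Pop_A", "Cluster 1", 0.98),
    ("Ind_002", "Pop_A", "Cluster 1", 0.95),
    ("Ind_003", "Pop_B", "Cluster 2", 0.99),
    ("Ind_004", "Pop_B", "Cluster 1", 0.65),
]


def cross_tabulate(rows):
    """Count how individuals from each prior population were assigned."""
    return Counter((prior, cluster) for _, prior, cluster, _ in rows)


def uncertain(rows, threshold=0.9):
    """IDs whose assignment probability falls below an arbitrary threshold."""
    return [ind for ind, _, _, prob in rows if prob < threshold]


if __name__ == "__main__":
    print(cross_tabulate(ROWS))
    print(uncertain(ROWS))
```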

Visualizations

Diagram 1: DAPC Experimental Workflow

Caption: Workflow for genetic structure analysis using DAPC.

Diagram 2: Logical Relationship within DAPC

Caption: The two-stage logical structure of DAPC.

Applications in Research and Drug Development

DAPC is a powerful tool with a wide range of applications:

- Population Genetics and Conservation: Identifying distinct population units for conservation management, understanding gene flow, and detecting hybridization.
- Medical Genetics and Epidemiology: Stratifying patient populations by genetic background to reduce spurious associations in genome-wide association studies (GWAS).[7] DAPC can also be used to study the genetic structure of pathogen populations to understand disease transmission dynamics.[2]
- Drug Development: In pharmacogenomics, DAPC can help identify genetic subgroups that may respond differently to a particular drug, aiding the development of targeted therapies and personalized medicine.
- Agrigenomics: Characterizing the genetic diversity of crop varieties and livestock breeds to inform breeding programs.[13]

Conclusion

DAPC is a robust and computationally efficient method for the analysis of genetic structure.[2] Its ability to handle large datasets and its freedom from demographic assumptions make it an invaluable tool for researchers in population genetics, molecular ecology, and medicine. By providing clear visualizations of population clusters and identifying the alleles that drive differentiation, DAPC offers deep insights into the complex patterns of genetic variation within and between populations. Proper parameterization, particularly the number of principal components retained, is crucial for obtaining biologically meaningful results.[11][12]

References

- 1. DAPCy Tutorial: MalariaGEN Plasmodium falciparum - DAPCy [uhasselt-bioinfo.gitlab.io]

- 2. Discriminant analysis of principal components: a new method for the analysis of genetically structured populations - PMC [pmc.ncbi.nlm.nih.gov]

- 3. Discriminant analysis of principal components (DAPC) [grunwaldlab.github.io]

- 4. HTTP redirect [search.r-project.org]

- 5. dapc function - RDocumentation [rdocumentation.org]

- 6. RPubs - DAPC [rpubs.com]

- 7. Comparison of principal component analysis (PCA) and discriminant analysis of principal component (DAPC) methods for analysis of population structure in Akhal-Take, Arabian and Caspian horse breeds using genomic data [ijasr.um.ac.ir]

- 8. adegenet.r-forge.r-project.org [adegenet.r-forge.r-project.org]

- 9. semanticscholar.org [semanticscholar.org]

- 10. GitHub - laurabenestan/DAPC: Discriminant Analysis in Principal Components (DAPC) [github.com]

- 11. researchgate.net [researchgate.net]

- 12. biorxiv.org [biorxiv.org]

- 13. Discriminant analysis of principal components and pedigree assessment of genetic diversity and population structure in a tetraploid potato panel using SNPs - PMC [pmc.ncbi.nlm.nih.gov]

- 14. researchgate.net [researchgate.net]

Getting Started with DAPCy for Genomics: An In-depth Technical Guide

For Researchers, Scientists, and Drug Development Professionals

This guide provides a comprehensive overview of Discriminant Analysis of Principal Components (DAPC), a powerful multivariate method for exploring the genetic structure of populations, with a focus on its scalable implementation in the Python package, DAPCy. This document details the core concepts, a step-by-step computational workflow, data presentation strategies, and the theoretical underpinnings of the methodology.

Introduction to Discriminant Analysis of Principal Components (DAPC)

Discriminant Analysis of Principal Components (DAPC) is a statistical method designed to identify and describe clusters of genetically related individuals.[1][2][3] It is a two-step process that combines the dimensionality reduction of Principal Component Analysis (PCA) with the group discrimination power of Discriminant Analysis (DA).[2][3] The primary goal of DAPC is to maximize the separation between groups while minimizing the variation within each group.[3][4] This makes it particularly effective for visualizing population structures, even when genetic differentiation is subtle.[5][6]

Initially implemented in the R package adegenet, DAPC has become a widely used tool in population genetics.[3][7] However, the growing size of genomic datasets has presented computational challenges for the original implementation.[7][8]

Introducing DAPCy: A Scalable Python Implementation

DAPCy is a Python package that re-implements the DAPC method for fast and robust analysis of large-scale genomic datasets.[1][9] It builds on the scikit-learn machine learning library, using sparse matrices and truncated singular value decomposition (SVD) to handle large data with low memory consumption.[1][7] DAPCy is well suited to modern genomic research, where datasets can contain thousands of samples and millions of genetic markers.[8]

Key Advantages of DAPCy:

- Scalability: Efficiently analyzes large genomic datasets that are computationally prohibitive for the original R implementation.[7][8]
- Performance: Uses truncated SVD and sparse matrices for faster computation and reduced memory usage.[7][10]
- Flexibility: Integrates with the scikit-learn ecosystem, offering advanced options for model training, hyperparameter tuning, and cross-validation.[1][7]
- User-Friendly: Accepts common genomic data formats such as VCF and BED files.[7][10]
- Reproducibility: Allows trained models to be exported and deployed in different environments without retraining.[7]

Core Concepts and Theoretical Background

DAPC partitions genetic variation into two components: between-group and within-group variation. The method then seeks to maximize the between-group component while minimizing the within-group component.[3]

The DAPC process involves two main stages:

- Principal Component Analysis (PCA): In the first step, the genomic data are transformed using PCA. PCA is a dimensionality-reduction technique that converts a set of correlated variables (e.g., allele frequencies at different loci) into a set of linearly uncorrelated variables called principal components (PCs). This step reduces the dimensionality of the data while retaining most of the variance. Importantly, it ensures that the variables submitted to Discriminant Analysis are uncorrelated.[1][3]
- Discriminant Analysis (DA): The retained principal components are then used as input for a Linear Discriminant Analysis (LDA). LDA seeks the linear combinations of these PCs that best separate the predefined groups. These linear combinations are known as discriminant functions. The number of discriminant functions is at most the number of groups minus one.

The DAPCy Computational Workflow

The following section details the step-by-step computational protocol for performing a DAPC analysis with the DAPCy package.

Experimental Protocol: A Step-by-Step Guide

This protocol outlines the typical workflow for a DAPC analysis, from data input to visualization and interpretation.

Step 1: Data Preparation and Loading

- Input Data: DAPCy accepts genomic data in Variant Call Format (VCF) or PLINK binary format (BED/BIM/FAM).[10]
- Data Conversion: The input data are transformed into a compressed sparse row (CSR) matrix, an efficient format for storing large, sparse matrices and performing calculations on them.[7]
- Group Definition: If prior knowledge of population groups exists (e.g., sampling locations or known subspecies), these groups are provided as labels for the samples.
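The CSR layout mentioned above stores only the non-zero entries, spread across three arrays. The pure-Python sketch below builds those arrays from a small dosage matrix to show the format; it is not DAPCy's conversion code, and `to_csr` is a hypothetical name. `scipy.sparse.csr_matrix` exposes the same three arrays as `.data`, `.indices`, and `.indptr`. Under alternate-allele dosage coding, homozygous-reference calls are zeros, which is why such matrices are often sparse.

```python
def to_csr(dense):
    """Build CSR arrays (data, indices, indptr) from a list-of-lists matrix."""
    data, indices, indptr = [], [], [0]
    for row in dense:
        for j, value in enumerate(row):
            if value != 0:          # store only non-zero dosages
                data.append(value)
                indices.append(j)   # column index of each stored value
        indptr.append(len(data))    # row i spans data[indptr[i]:indptr[i + 1]]
    return data, indices, indptr


if __name__ == "__main__":
    print(to_csr([[0, 2, 0], [1, 0, 0], [0, 0, 2]]))
```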

Step 2: De Novo Clustering (Optional)

- If population groups are not known beforehand, DAPCy can infer them using a de novo clustering approach.[1]
- K-means Clustering: This is typically done by running the k-means algorithm on the principal components of the genetic data.[7][11]
- Choosing the Optimal 'k': The optimal number of clusters (k) is often determined by running k-means with different values of k and selecting the one that minimizes a criterion such as the Bayesian Information Criterion (BIC), or that marks an "elbow" in the plot of the sum of squared errors.[3][10][12]
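For the "elbow" option, one simple heuristic picks the k whose error lies farthest below the straight line joining the first and last points of the curve. The sketch below implements that heuristic under a hypothetical name. It is not necessarily the procedure DAPCy uses, and other elbow rules can give different answers on flat curves.

```python
def elbow_k(ks, sse):
    """Return the k whose SSE lies farthest below the chord from the first to the
    last point (vertical gap; proportional to perpendicular distance)."""
    k0, k1 = ks[0], ks[-1]
    s0, s1 = sse[0], sse[-1]
    best_k, best_gap = ks[0], float("-inf")
    for k, s in zip(ks, sse):
        chord = s0 + (s1 - s0) * (k - k0) / (k1 - k0)
        gap = chord - s
        if gap > best_gap:
            best_k, best_gap = k, gap
    return best_k


if __name__ == "__main__":
    print(elbow_k([1, 2, 3, 4, 5, 6], [100, 40, 15, 12, 10, 9]))
```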

Step 3: Principal Component Analysis

- Dimensionality Reduction: PCA is performed on the genotype matrix to obtain the principal components. DAPCy uses a truncated singular value decomposition (SVD) for this, which is computationally efficient for large matrices.[7][10]
- Selecting the Number of PCs: The number of PCs to retain is a critical parameter. Retaining too few may discard important information, while retaining too many can lead to overfitting. A common approach is to use cross-validation to find the number of PCs that maximizes the predictive accuracy of the discriminant analysis.[2] Another guideline suggests using no more than k-1 PCs, where k is the number of effective populations.[13]
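A truncated SVD computes only the leading singular vectors. The NumPy sketch below uses a randomized range finder with power iterations, the same family of method as scikit-learn's `TruncatedSVD` with `algorithm="randomized"`. It is an illustration rather than DAPCy's code, and the oversampling and iteration counts are assumed defaults. `TruncatedSVD` does not center its input, so a PCA-equivalent result requires centered data.

```python
import numpy as np


def randomized_svd(A, k, n_oversample=10, n_iter=4, seed=0):
    """Approximate the top-k singular triplets of A with a randomized range finder."""
    rng = np.random.default_rng(seed)
    A = np.asarray(A, dtype=float)
    # Sketch the column space of A with a Gaussian test matrix.
    Y = A @ rng.standard_normal((A.shape[1], k + n_oversample))
    # Power iterations push the sketch toward the leading singular vectors;
    # re-orthonormalising each round limits loss of precision.
    for _ in range(n_iter):
        Q, _ = np.linalg.qr(Y)
        Y = A @ (A.T @ Q)
    Q, _ = np.linalg.qr(Y)
    # Exact SVD of the small projected matrix B = Q^T A.
    Ub, s, Vt = np.linalg.svd(Q.T @ A, full_matrices=False)
    return (Q @ Ub)[:, :k], s[:k], Vt[:k]
```

For PCA scores, one would centre first, for example `Xc = X - X.mean(axis=0)`, then `U, s, Vt = randomized_svd(Xc, k=10)` and `pcs = U * s`.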

Step 4: Discriminant Analysis

- Model Training: A linear discriminant analysis model is trained using the selected principal components as predictors and the group labels as the response variable.
- Hyperparameter Tuning: DAPCy supports hyperparameter tuning, for instance through grid-search cross-validation, to optimize the performance of the DA model.[7]

Step 5: Model Evaluation

- Cross-Validation: The performance of the DAPC model is assessed using cross-validation. DAPCy implements several k-fold cross-validation schemes, such as stratified k-fold, to provide robust estimates of model accuracy.[7][8]
- Performance Metrics: The model's performance is typically evaluated using metrics such as overall accuracy and confusion matrices, which show the proportion of individuals correctly and incorrectly assigned to each group.[7]
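The evaluation quantities above can be sketched in plain Python. The sketch covers three pieces: a deterministic stratified fold assignment, a confusion matrix, and overall accuracy. The fold assignment deals each group's members round-robin across folds, whereas library implementations usually shuffle first. The confusion matrix has actual groups as rows and predicted groups as columns. All names are hypothetical.

```python
from collections import defaultdict


def stratified_folds(labels, n_folds):
    """Fold index per sample, dealing each group's members round-robin."""
    seen = defaultdict(int)
    folds = []
    for lab in labels:
        folds.append(seen[lab] % n_folds)
        seen[lab] += 1
    return folds


def confusion_matrix(actual, predicted, classes):
    """Counts with rows = actual group, columns = predicted group."""
    idx = {c: i for i, c in enumerate(classes)}
    m = [[0] * len(classes) for _ in classes]
    for a, p in zip(actual, predicted):
        m[idx[a]][idx[p]] += 1
    return m


def accuracy(actual, predicted):
    """Fraction of individuals assigned to their actual group."""
    return sum(a == p for a, p in zip(actual, predicted)) / len(actual)
```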

Step 6: Visualization and Interpretation

- Scatter Plots: The results of the DAPC are visualized by plotting the individuals on the first few discriminant functions. This allows a visual assessment of the separation between the inferred or predefined genetic clusters.[7][12][14]
- Allele Contributions: The contribution of each allele to the discriminant functions can be examined to identify the genetic variants most responsible for the observed population structure.[3][15]

Data Presentation

Quantitative data from a DAPCy analysis should be summarized in clear, concise tables to facilitate interpretation and comparison. The example tables below use illustrative values.

Table 1: Summary of Principal Component Analysis

| Principal Component | Eigenvalue | Variance Explained (%) | Cumulative Variance Explained (%) |
|---|---|---|---|
| 1 | 150.7 | 15.1 | 15.1 |
| 2 | 120.3 | 12.0 | 27.1 |
| 3 | 95.2 | 9.5 | 36.6 |
| ... | ... | ... | ... |

Table 2: Discriminant Analysis Eigenvalues

| Discriminant Function | Eigenvalue |
|---|---|
| 1 | 85.6 |
| 2 | 52.1 |
| 3 | 23.9 |
| ... | ... |

Table 3: Individual Coordinates on Discriminant Functions

| Individual ID | Group | DF1 | DF2 | DF3 |
|---|---|---|---|---|
| Ind_001 | A | 2.54 | -1.23 | 0.87 |
| Ind_002 | A | 2.89 | -1.56 | 0.91 |
| Ind_003 | B | -3.12 | 2.45 | -1.02 |
| ... | ... | ... | ... | ... |

Table 4: Posterior Membership Probabilities

| Individual ID | Assigned Group | P(Group A) | P(Group B) | P(Group C) |
|---|---|---|---|---|
| Ind_001 | A | 0.98 | 0.01 | 0.01 |
| Ind_002 | A | 0.99 | 0.01 | 0.00 |
| Ind_003 | B | 0.02 | 0.97 | 0.01 |
| ... | ... | ... | ... | ... |

Table 5: Model Performance from Cross-Validation

| Metric | Value |
|---|---|
| Overall Accuracy | 98.5% |

| Confusion Matrix | Predicted A | ... |
|---|---|---|
| Actual A | 99 | ... |
| Actual B | 2 | ... |
| Actual C | 0 | ... |

Visualizations

Visualizing the results of a DAPC analysis is crucial for understanding the relationships between genetic clusters.

References

- 1. DAPCy [uhasselt-bioinfo.gitlab.io]

- 2. Discriminant analysis of principal components (DAPC) [grunwaldlab.github.io]

- 3. Discriminant analysis of principal components: a new method for the analysis of genetically structured populations - PMC [pmc.ncbi.nlm.nih.gov]

- 4. adegenet.r-forge.r-project.org [adegenet.r-forge.r-project.org]

- 5. DAP-seq: Principles, Workflow and Analysis - CD Genomics [cd-genomics.com]

- 6. GitHub - laurabenestan/DAPC: Discriminant Analysis in Principal Components (DAPC) [github.com]

- 7. academic.oup.com [academic.oup.com]

- 8. DAPCy: a Python package for the discriminant analysis of principal components method for population genetic analyses - PubMed [pubmed.ncbi.nlm.nih.gov]

- 9. gitlab.com [gitlab.com]

- 10. DAPCy Tutorial: MalariaGEN Plasmodium falciparum - DAPCy [uhasselt-bioinfo.gitlab.io]

- 11. Discriminant Analysis of Principal Components (DAPC) · Xianping Li [xianpingli.github.io]

- 12. researchgate.net [researchgate.net]

- 13. biorxiv.org [biorxiv.org]

- 14. dapc graphics function - RDocumentation [rdocumentation.org]

- 15. HTTP redirect [search.r-project.org]

Principles of Discriminant Analysis of Principal Components (DAPC) in Population Genetics: An In-depth Technical Guide

For Researchers, Scientists, and Drug Development Professionals

Executive Summary

Discriminant Analysis of Principal Components (DAPC) is a powerful multivariate statistical method used in population genetics to identify and describe clusters of genetically related individuals.[1][2] This technique is particularly advantageous as it does not rely on the assumptions of Hardy-Weinberg equilibrium or linkage equilibrium, making it suitable for analyzing data from a wide range of organisms, including those that are clonal or partially clonal.[3] DAPC is a two-step process that first transforms the genetic data using Principal Component Analysis (PCA) to reduce dimensionality and remove correlation between variables. Subsequently, it employs Discriminant Analysis (DA) to maximize the separation between predefined or inferred groups while minimizing variation within them.[2][3] This guide provides a comprehensive overview of the core principles of DAPC, detailed experimental protocols for data generation, and a guide to the interpretation of its outputs.

Core Principles of DAPC

DAPC is designed to overcome the limitations of traditional methods for analyzing population structure. While Principal Component Analysis (PCA) is effective at summarizing the overall genetic variation, it may not be optimal for distinguishing between predefined groups.[2] Conversely, Discriminant Analysis (DA) is adept at separating groups but is constrained by the requirement that the number of variables (e.g., alleles) not vastly exceed the number of individuals and that these variables be uncorrelated.[2]

DAPC elegantly resolves these issues by first using PCA to transform the raw genetic data into a smaller set of uncorrelated principal components (PCs). These PCs, which capture a significant portion of the total genetic variance, are then used as the input variables for a DA. This approach allows for the identification of linear combinations of the original genetic variables (alleles) that best separate the defined clusters.[2][4]

Key Advantages of DAPC:

- No Assumption of Panmixia: Unlike model-based clustering methods such as STRUCTURE, DAPC does not assume that populations are in Hardy-Weinberg or linkage equilibrium, making it applicable to a broader range of biological systems.[3]
- Computational Efficiency: DAPC is computationally fast, enabling the analysis of large genomic datasets such as those generated by high-throughput sequencing.[2]
- Identification of Key Discriminant Alleles: The method identifies the specific alleles that contribute most to the differentiation between populations, providing insights into the genetic basis of population structure.[2][4]
- Visualization of Population Structure: DAPC provides clear and intuitive graphical representations of population structure, facilitating the interpretation of complex genetic data.[4]

Experimental Protocols

The successful application of DAPC begins with the generation of high-quality genetic data. The following sections outline the key experimental stages, from sample collection to genotyping.

Sample Collection and DNA Extraction

The choice of sample material and DNA extraction protocol is critical for obtaining DNA of sufficient quality and quantity for downstream genotyping applications.

3.1.1 Sample Collection and Storage:

- Tissue Samples: For animal studies, tissue samples (e.g., muscle, ear punches, blood) should be collected and immediately stored in ethanol or frozen at -80°C to prevent DNA degradation. For plant studies, young leaf tissue is often preferred; it can be desiccated in silica gel or frozen at -80°C.
- Non-invasive Samples: Buccal swabs, hair follicles, or fecal samples can be used for non-invasive sampling. These should be stored in appropriate buffers or dried to preserve the DNA.

3.1.2 DNA Extraction:

A variety of commercial kits and manual protocols are available for DNA extraction. The choice of method will depend on the sample type and desired throughput. High-throughput DNA extraction can be performed in a 96-well plate format.[5]

Table 1: Comparison of Common DNA Extraction Methods

| Method | Principle | Advantages | Disadvantages |
|---|---|---|---|
| CTAB (Cetyltrimethylammonium bromide) | Uses a cationic detergent to lyse cells and precipitate DNA. | Cost-effective, yields high molecular weight DNA. | Time-consuming, involves hazardous chemicals (phenol-chloroform). |
| Silica-based Spin Columns | DNA binds to a silica membrane in the presence of chaotropic salts. | Fast, high-purity DNA, amenable to high-throughput formats. | More expensive than manual methods. |
| Magnetic Beads | DNA binds to magnetic beads, which are then separated using a magnet. | Easily automated for high-throughput applications, yields high-purity DNA. | Can be more expensive than other methods. |

3.1.3 DNA Quantification and Quality Control:

Accurate quantification and quality assessment of the extracted DNA are essential for successful genotyping.

Table 2: DNA Quantification and Quality Control Methods

| Method | Principle | Measurement |
|---|---|---|
| UV Spectrophotometry (e.g., NanoDrop) | Measures the absorbance of UV light at 260 nm (for DNA) and 280 nm (for protein). | DNA concentration and purity (A260/A280 ratio; about 1.8 for pure DNA). |
| Fluorometry (e.g., Qubit, PicoGreen) | Uses fluorescent dyes that bind specifically to double-stranded DNA. | Highly sensitive and specific DNA concentration measurement. |
| Agarose Gel Electrophoresis | Separates DNA fragments by size. | Assesses DNA integrity (high molecular weight bands vs. smearing). |
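The spectrophotometric readings in Table 2 turn into numbers with simple arithmetic. The sketch below assumes the widely used conversion factor for double-stranded DNA (an A260 of 1.0 in a 1 cm path corresponds to roughly 50 µg/mL) and treats an A260/A280 ratio near 1.8 as pure DNA. The function names and the 1.7 to 2.0 acceptance window are illustrative assumptions, not a standard; laboratories set their own criteria.

```python
# Conversion factor for double-stranded DNA: A260 = 1.0 (1 cm path) ~ 50 µg/mL.
DSDNA_UG_PER_ML_PER_A260 = 50.0


def dsdna_ng_per_ul(a260: float, dilution_factor: float = 1.0) -> float:
    """Estimate dsDNA concentration; µg/mL and ng/µL are the same unit."""
    return a260 * DSDNA_UG_PER_ML_PER_A260 * dilution_factor


def a260_a280_ratio(a260: float, a280: float) -> float:
    """Protein-contamination indicator; pure DNA is typically close to 1.8."""
    if a280 <= 0:
        raise ValueError("A280 must be positive")
    return a260 / a280


def passes_purity(a260: float, a280: float, low: float = 1.7, high: float = 2.0) -> bool:
    """Hypothetical acceptance window around 1.8; adjust to your lab's criteria."""
    return low <= a260_a280_ratio(a260, a280) <= high


print(dsdna_ng_per_ul(0.5))     # 25.0
print(passes_purity(0.9, 0.5))  # True (ratio 1.8)
```

Remember to multiply by the dilution factor when the sample was diluted before measurement.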

Genotyping

DAPC can be applied to various types of genetic markers, with Single Nucleotide Polymorphisms (SNPs) and microsatellites being the most common.

3.2.1 Microsatellite Genotyping:

Microsatellites, also called simple sequence repeats (SSRs) or short tandem repeats (STRs), are highly polymorphic markers that are amplified using the polymerase chain reaction (PCR).

-

Primer Design: Locus-specific primers are designed to flank the microsatellite repeat region.[6]

-

PCR Amplification: The microsatellite loci are amplified using fluorescently labeled primers. A typical PCR protocol involves an initial denaturation step, followed by multiple cycles of denaturation, annealing, and extension, and a final extension step.[6]

-

Fragment Analysis: The fluorescently labeled PCR products are separated by size using capillary electrophoresis on an automated DNA sequencer. The resulting electropherograms are then analyzed to determine the allele sizes for each individual at each locus.
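Allele calling from fragment sizes is normally done in dedicated software using empirically defined bins. As a simplified illustration only, the sketch below snaps each raw peak size to the nearest multiple of the repeat unit, counted from a hypothetical anchor (reference) allele size, and rejects peaks that fall between bins. The function names, the anchor size, and the 0.5 bp tolerance are all assumptions.

```python
import math


def bin_allele(size_bp, anchor_bp, repeat_len, tolerance_bp=0.5):
    """Snap a raw peak size to the nearest repeat-unit bin; None if it falls between bins."""
    # Round half up explicitly (Python's round() uses banker's rounding).
    steps = math.floor((size_bp - anchor_bp) / repeat_len + 0.5)
    bin_bp = anchor_bp + steps * repeat_len
    return bin_bp if abs(size_bp - bin_bp) <= tolerance_bp else None


def call_genotype(peak_sizes_bp, anchor_bp, repeat_len):
    """Diploid call from one or two peaks; a single peak is treated as a homozygote."""
    alleles = sorted(b for b in (bin_allele(p, anchor_bp, repeat_len) for p in peak_sizes_bp)
                     if b is not None)
    if not alleles or len(alleles) > 2:
        return None  # no callable peak, or too many peaks: needs manual review
    if len(alleles) == 1:
        alleles *= 2
    return "/".join(str(a) for a in alleles)


# Hypothetical dinucleotide locus with a 150 bp reference allele.
print(call_genotype([152.3, 156.1], anchor_bp=150, repeat_len=2))  # 152/156
print(call_genotype([149.8], anchor_bp=150, repeat_len=2))         # 150/150
```

Fragments do not always size at exact base-pair intervals, which is why production workflows rely on reference alleles and lab-specific bin sets rather than strict arithmetic spacing.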

3.2.2 SNP Genotyping:

SNPs are the most abundant type of genetic variation and can be genotyped using a variety of high-throughput methods.

-

Genotyping-by-Sequencing (GBS): GBS is a reduced-representation sequencing method that uses restriction enzymes to digest the genome, followed by ligation of barcoded adapters and high-throughput sequencing.[7][8] The resulting sequence data is then processed through a bioinformatics pipeline to call SNPs.[9][10]

-

SNP Arrays: Commercially available or custom-designed microarrays can be used to genotype thousands to millions of known SNPs simultaneously.

-

PCR-based methods (e.g., KASP): Kompetitive Allele-Specific PCR (KASP) is a cost-effective method for genotyping a smaller number of targeted SNPs.
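The restriction-digest step of GBS can be made concrete with an in silico digest. This sketch assumes ApeKI, the enzyme used in the original GBS protocol, which recognises GCWGC (W = A or T) and cuts after the first G. Because that site is palindromic, scanning a single strand finds every site. The size-selection window is a hypothetical parameter, and the function names are ours.

```python
import re

# ApeKI site G^CWGC (W = A or T); the lookahead lets overlapping sites match.
APEKI_SITE = re.compile(r"(?=GC[AT]GC)")
APEKI_CUT_OFFSET = 1  # cut falls after the first G of the site


def digest(seq, site=APEKI_SITE, cut_offset=APEKI_CUT_OFFSET):
    """Return the fragments produced by cutting `seq` at every site occurrence."""
    seq = seq.upper()
    cuts = [m.start() + cut_offset for m in site.finditer(seq)]
    bounds = [0] + cuts + [len(seq)]
    return [seq[a:b] for a, b in zip(bounds, bounds[1:]) if b > a]


def size_select(fragments, min_len, max_len):
    """Keep fragments within a (hypothetical) sequencing-friendly size window."""
    return [f for f in fragments if min_len <= len(f) <= max_len]


frags = digest("AAGCAGCTTTGCTGCAA")
print(frags)                      # ['AAG', 'CAGCTTTG', 'CTGCAA']
print(size_select(frags, 5, 10))  # ['CAGCTTTG', 'CTGCAA']
```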

DAPC Analysis Workflow

The DAPC analysis is typically performed using the adegenet package in the R statistical environment.[4][11] The workflow involves several key steps, from data preparation to the interpretation of results.

Data Input and Formatting

Genetic data from various formats (e.g., VCF, Genepop) needs to be imported into R and converted into a genind or genlight object, which are the standard data structures used by adegenet.[12]
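A genind object stores, for each individual, a count of every allele at every locus (0, 1, or 2 for diploid codominant markers). The sketch below builds that kind of table from "allele/allele" genotype strings to show the data layout. It is not adegenet's importer; the input format and function name are assumptions, and missing genotypes are kept as None rather than imputed.

```python
def allele_count_table(genotypes, sep="/"):
    """genotypes: {individual: {locus: "a/b" or None}} -> (columns, {individual: {column: count}})."""
    columns = sorted({f"{locus}.{allele}"
                      for calls in genotypes.values()
                      for locus, call in calls.items() if call
                      for allele in call.split(sep)})
    table = {}
    for ind, calls in genotypes.items():
        row = {}
        for col in columns:
            locus = col.rsplit(".", 1)[0]
            # A missing genotype at this locus marks all of its allele columns as None.
            row[col] = 0 if calls.get(locus) else None
        for locus, call in calls.items():
            if call:
                for allele in call.split(sep):
                    row[f"{locus}.{allele}"] += 1
        table[ind] = row
    return columns, table


genos = {"ind1": {"locA": "150/152", "locB": "88/88"},
         "ind2": {"locA": "152/152", "locB": None}}
columns, table = allele_count_table(genos)
print(columns)        # ['locA.150', 'locA.152', 'locB.88']
print(table["ind2"])  # {'locA.150': 0, 'locA.152': 2, 'locB.88': None}
```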

Identification of Genetic Clusters

If there are no a priori defined populations, the find.clusters() function can be used to infer the number of genetic clusters (k). It runs k-means clustering on the principal components of the genetic data for a range of k values and compares them with the Bayesian Information Criterion (BIC).[2][4] A plot of BIC against k is generated. Ideally the best k has the lowest BIC, but in practice it is often chosen at the "elbow" of the curve, beyond which adding clusters no longer lowers BIC appreciably.[4]
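A minimal pure-Python sketch of the k-means plus BIC idea, assuming PC scores have already been computed. Several things here are assumptions rather than adegenet's code: BIC is taken as n·ln(WSS/n) + k·ln(n), a common k-means approximation that we believe matches find.clusters() but have not reproduced; initialisation is a deterministic farthest-point rule instead of the random restarts used by R's kmeans(); and the toy scores are hypothetical.

```python
import math


def sq_dist(a, b):
    """Squared Euclidean distance between two points."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def farthest_point_init(points, k):
    """Deterministic start: first point, then repeatedly the point farthest from those chosen."""
    centroids = [list(points[0])]
    while len(centroids) < k:
        nxt = max(points, key=lambda p: min(sq_dist(p, c) for c in centroids))
        centroids.append(list(nxt))
    return centroids


def kmeans_wss(points, k, max_iter=100):
    """Lloyd's k-means; returns (labels, within-cluster sum of squares)."""
    centroids = farthest_point_init(points, k)
    labels = None
    for _ in range(max_iter):
        new_labels = [min(range(k), key=lambda c: sq_dist(p, centroids[c])) for p in points]
        if new_labels == labels:
            break
        labels = new_labels
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # keep the old centroid if a cluster empties
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    wss = sum(sq_dist(p, centroids[lab]) for p, lab in zip(points, labels))
    return labels, wss


def bic(points, k):
    """Assumed BIC form: n * ln(WSS / n) + k * ln(n)."""
    n = len(points)
    _, wss = kmeans_wss(points, k)
    return n * math.log(max(wss, 1e-12) / n) + k * math.log(n)


def toy_pc_scores(centres, per_group=5, spread=0.1):
    """Hypothetical PC scores: group centre plus a small offset on each individual's own axis."""
    dims = 2 + per_group
    scores = []
    for cx, cy in centres:
        for i in range(per_group):
            p = [0.0] * dims
            p[0], p[1], p[2 + i] = cx, cy, spread
            scores.append(p)
    return scores


scores = toy_pc_scores([(0, 0), (5, 0), (0, 5)])
bics = {k: bic(scores, k) for k in range(1, 7)}
best_k = min(bics, key=bics.get)
print(best_k)  # 3 for this toy data
```

Because each toy group is spread over several axes, as real PC scores usually are, splitting a group lowers WSS only slightly and the ln(n) penalty keeps k = 3 as the minimum. In low-dimensional or very tight data the curve can keep drifting down slowly, which is why the BIC curve should be inspected rather than read off mechanically.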

Selecting the Number of Principal Components

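A critical step in DAPC is choosing how many PCs to retain for the discriminant analysis (DA). Retaining too few PCs discards useful information, while retaining too many can lead to overfitting, making group assignments look more reliable than the data support. The xvalDapc() function addresses this with stratified cross-validation: DAPC is repeatedly fitted to a training subset of individuals with different numbers of PCs, and group membership is predicted for the held-out individuals. The number of PCs giving the highest predictive success and the lowest root mean squared error (RMSE) is then selected.[3]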
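The bookkeeping around cross-validation can be sketched without the DAPC fit itself. Assumptions: a stratified split with a 90% training fraction (what we understand xvalDapc()'s default to be), and RMSE measured against perfect (100%) assignment success. The replicate success rates are hypothetical inputs standing in for the DAPC fits, which are not reproduced here, and the function names are ours.

```python
import math
import random
from collections import defaultdict


def stratified_split(groups, training_fraction=0.9, seed=1):
    """Split individual indices so each group keeps ~training_fraction of its members in training."""
    rng = random.Random(seed)
    by_group = defaultdict(list)
    for idx, g in enumerate(groups):
        by_group[g].append(idx)
    train, test = [], []
    for members in by_group.values():
        members = members[:]
        rng.shuffle(members)
        n_train = max(1, round(training_fraction * len(members)))
        train.extend(members[:n_train])
        test.extend(members[n_train:])
    return sorted(train), sorted(test)


def rmse_vs_perfect(success_rates):
    """RMSE of replicate assignment-success rates relative to 100% success."""
    return math.sqrt(sum((1 - s) ** 2 for s in success_rates) / len(success_rates))


def best_n_pca(success_by_n_pca):
    """Pick the number of PCs whose replicates have the lowest RMSE."""
    return min(success_by_n_pca, key=lambda n: rmse_vs_perfect(success_by_n_pca[n]))


groups = ["A"] * 10 + ["B"] * 20
train, test = stratified_split(groups)
print(len(train), len(test))  # 27 3

# Hypothetical success rates from three cross-validation replicates per candidate.
success = {10: [0.80, 0.82, 0.78], 20: [0.90, 0.92, 0.91], 40: [0.88, 0.86, 0.87]}
print(best_n_pca(success))  # 20
```

Stratifying keeps small groups represented in every validation set, so a poor prediction for a rare group is not hidden by chance.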

Running the DAPC

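The dapc() function performs the main analysis. It takes as input the genetic data, the group assignments (either a priori or inferred with find.clusters()), the number of PCs to retain (n.pca), and the number of discriminant functions to retain (n.da). With k groups, at most k - 1 discriminant functions can be computed. The output is a dapc object containing the results of the analysis.[11]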
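dapc() passes the retained PCs to a linear discriminant analysis. We understand it relies on lda() from the MASS package, but treat that as an assumption. To show only the core idea, the sketch computes Fisher's discriminant direction w = S_w^-1 (m1 - m2) for two groups on two hypothetical PCs and classifies by the midpoint of the projected group means. It ignores priors and handles only two groups; with k groups the full method yields up to k - 1 such functions. All names and data are illustrative.

```python
def mean_vec(rows):
    """Column means of a list of 2-D points."""
    return [sum(col) / len(rows) for col in zip(*rows)]


def pooled_within_cov(groups):
    """Pooled within-group 2x2 covariance matrix."""
    s = [[0.0, 0.0], [0.0, 0.0]]
    dof = 0
    for rows in groups:
        m = mean_vec(rows)
        for r in rows:
            d = (r[0] - m[0], r[1] - m[1])
            for i in range(2):
                for j in range(2):
                    s[i][j] += d[i] * d[j]
        dof += len(rows) - 1
    return [[v / dof for v in row] for row in s]


def fisher_direction(g1, g2):
    """w = S_w^-1 (m1 - m2): the axis that best separates the two group means."""
    s = pooled_within_cov([g1, g2])
    det = s[0][0] * s[1][1] - s[0][1] * s[1][0]
    inv = [[s[1][1] / det, -s[0][1] / det],
           [-s[1][0] / det, s[0][0] / det]]
    m1, m2 = mean_vec(g1), mean_vec(g2)
    diff = (m1[0] - m2[0], m1[1] - m2[1])
    return [inv[0][0] * diff[0] + inv[0][1] * diff[1],
            inv[1][0] * diff[0] + inv[1][1] * diff[1]]


def discriminant_score(w, x):
    """Project an individual's PC scores onto the discriminant axis."""
    return w[0] * x[0] + w[1] * x[1]


# Hypothetical scores of two groups on PC1 and PC2.
g1 = [(2, 1), (3, 1.5), (2.5, 0.5), (3.5, 1)]
g2 = [(-2, -1), (-3, -1.5), (-2.5, -0.5), (-3.5, -1)]
w = fisher_direction(g1, g2)
cut = (discriminant_score(w, mean_vec(g1)) + discriminant_score(w, mean_vec(g2))) / 2
print([round(v, 6) for v in w])  # [12.0, 6.0]
```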

Data Presentation and Interpretation

The results of a DAPC analysis are typically presented through a combination of quantitative tables and graphical plots.

Quantitative Data Summary

Table 3: Example DAPC Summary Statistics

| Parameter | Value | Description |
|---|---|---|
| Number of Individuals | 237 | Total number of individuals in the analysis. |
| Number of Loci | 9 | Number of microsatellite loci genotyped. |
| Number of Alleles | 75 | Total number of alleles across all loci. |
| Number of Clusters (k) | 3 | Optimal number of clusters identified by find.clusters(). |
| Number of PCs Retained | 40 | Number of principal components used in the discriminant analysis. |
| Proportion of Variance Conserved by PCs | 0.85 | The proportion of the total genetic variance explained by the retained PCs. |

Table 4: Eigenvalues of the Discriminant Analysis

| Discriminant Function | Eigenvalue | Proportion of Variance | Cumulative Proportion |
|---|---|---|---|
| 1 | 250.3 | 0.75 | 0.75 |
| 2 | 83.4 | 0.25 | 1.00 |
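The proportions in Table 4 follow directly from the eigenvalues: each eigenvalue is divided by their sum, and the results are accumulated in order. Applied to the full list of PCA eigenvalues, the same arithmetic gives the "Proportion of Variance Conserved by PCs" in Table 3, read as the cumulative value at the number of PCs retained. A minimal sketch; the function name is ours.

```python
from itertools import accumulate


def variance_proportions(eigenvalues):
    """Per-axis and cumulative share of variance from a list of eigenvalues."""
    total = sum(eigenvalues)
    props = [e / total for e in eigenvalues]
    return props, list(accumulate(props))


props, cumulative = variance_proportions([250.3, 83.4])  # eigenvalues from Table 4
print([round(p, 2) for p in props], [round(c, 2) for c in cumulative])
# [0.75, 0.25] [0.75, 1.0]
```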

Visualization of DAPC Results

-

Scatterplot: The primary graphical output of DAPC is a scatterplot of the individuals along the first two discriminant functions. This plot visually represents the genetic differentiation between the identified clusters. Each point represents an individual, and clusters are typically color-coded.[13]

-

Assignment Plot: This plot displays the posterior membership probability of each individual for each of the identified clusters. It shows how confidently each individual is assigned; individuals whose probability is split between clusters may be admixed, although membership probabilities should not be interpreted as admixture coefficients.

-

Loading Plot: The loading plot shows the contribution of each allele to a given discriminant function. Alleles with the largest contributions are the most influential in discriminating between the clusters.[14] They can point to genomic regions that may be under selection or involved in local adaptation, which then warrant targeted follow-up.
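The assignment and loading plots summarise two small computations, sketched here under stated assumptions. Posterior membership is computed for a single discriminant score with Gaussian groups that share one standard deviation, whereas adegenet's posteriors come from the full multivariate discriminant analysis. The 0.9 uncertainty threshold is hypothetical. Alleles are flagged when their contribution exceeds the 75th percentile of all contributions, which we believe mirrors the default threshold of adegenet's loadingplot() but have not verified. All names and values are illustrative.

```python
import math


def posterior_membership(score, group_means, sigma, priors=None):
    """Posterior group probabilities for one discriminant score, assuming Gaussian
    groups with a shared standard deviation (a 1-D simplification of LDA)."""
    k = len(group_means)
    priors = priors or [1.0 / k] * k
    log_w = [math.log(p) - (score - mu) ** 2 / (2 * sigma ** 2)
             for p, mu in zip(priors, group_means)]
    top = max(log_w)  # subtract the max before exponentiating for numerical stability
    w = [math.exp(v - top) for v in log_w]
    total = sum(w)
    return [v / total for v in w]


def is_uncertain(posteriors, threshold=0.9):
    """Flag an individual whose best posterior is below a hypothetical threshold."""
    return max(posteriors) < threshold


def quantile_type7(values, q):
    """Linear-interpolation quantile, the same rule as R's default quantile()."""
    s = sorted(values)
    h = (len(s) - 1) * q
    lo = math.floor(h)
    hi = min(lo + 1, len(s) - 1)
    return s[lo] + (h - lo) * (s[hi] - s[lo])


def influential_alleles(contributions, q=0.75):
    """Alleles whose contribution exceeds the q-th quantile of all contributions."""
    cut = quantile_type7(list(contributions.values()), q)
    return sorted(a for a, c in contributions.items() if c > cut)


print(posterior_membership(0.0, [-2.0, 2.0], sigma=1.0))  # [0.5, 0.5]
contrib = {"L1.150": 0.01, "L1.152": 0.30, "L2.88": 0.05, "L2.90": 0.40, "L3.120": 0.02}
print(influential_alleles(contrib))  # ['L2.90']
```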

Conclusion

DAPC is a versatile and powerful tool for analyzing population genetic structure. Because it does not depend on the assumptions of traditional population genetic models, is computationally efficient, and produces informative graphics, it is well suited to research in population genetics, molecular ecology, and conservation biology. By following the experimental and analytical protocols outlined in this guide, researchers can apply DAPC to their own data and gain new insight into the genetic structure of their study populations.

References

- 1. A tutorial for Discriminant Analysis of Principal Components (DAPC) using adegenet 1.4-0. Semantic Scholar [semanticscholar.org]

- 2. Discriminant analysis of principal components: a new method for the analysis of genetically structured populations. PMC [pmc.ncbi.nlm.nih.gov]

- 3. Discriminant analysis of principal components (DAPC) [grunwaldlab.github.io]

- 4. adegenet [adegenet.r-forge.r-project.org]

- 5. Taylor & Francis Online [tandfonline.com]

- 6. Introduction to Microsatellite and Microsatellite Genotyping. CD Genomics [cd-genomics.com]

- 7. Genotyping By Sequencing: Principles, Workflow, and Applications. CD Genomics [cd-genomics.com]

- 8. Genotyping By Sequencing Analysis. Bodega GBS Workshop [bodega-gbs.readthedocs.io]

- 9. GBS-DP: a bioinformatics pipeline for processing data coming from genotyping by sequencing. PMC [pmc.ncbi.nlm.nih.gov]

- 10. Genome-Wide SNP Calling from Genotyping by Sequencing (GBS) Data: A Comparison of Seven Pipelines and Two Sequencing Technologies. PLOS One [journals.plos.org]

- 11. dapc: Discriminant Analysis of Principal Components (DAPC). adegenet: Exploratory Analysis of Genetic and Genomic Data [rdrr.io]

- 12. laurabenestan/DAPC: Discriminant Analysis in Principal Components (DAPC). GitHub [github.com]

- 13. ResearchGate [researchgate.net]

- 14. DAPC. RPubs [rpubs.com]

An In-depth Technical Guide to Daptomycin and the DAP12 Signaling Pathway

For Researchers, Scientists, and Drug Development Professionals

This technical guide provides a comprehensive overview of the lipopeptide antibiotic Daptomycin and its mechanism of action, as well as the DAP12 signaling pathway. The content is tailored for researchers, scientists, and professionals involved in drug development, offering detailed experimental protocols, quantitative data summaries, and visualizations of key biological processes.

Daptomycin: Core Concepts

Daptomycin is a cyclic lipopeptide antibiotic effective against a range of Gram-positive bacteria, including multidrug-resistant strains. Its unique mechanism of action, which targets the bacterial cell membrane, makes it a critical tool in combating serious infections.

Mechanism of Action

Daptomycin's bactericidal effect is achieved through a multi-step process that ultimately disrupts the bacterial cell membrane and kills the cell. The process is calcium-dependent, and its activity is restricted to Gram-positive bacteria, which lack the outer membrane that shields the cytoplasmic membrane of Gram-negative species.

The key steps in Daptomycin's mechanism of action are:

-

Calcium-Dependent Binding: In the presence of calcium ions, Daptomycin undergoes a conformational change that facilitates its binding to the bacterial cell membrane. The lipid tail of the Daptomycin molecule plays a crucial role in anchoring it to the cell surface.

-

Oligomerization: Once bound to the membrane, Daptomycin molecules oligomerize, forming a complex that inserts into the lipid bilayer.

-

Membrane Depolarization: Insertion of the Daptomycin complex disrupts the membrane's structure and is thought to form ion-conducting channels or pores. The resulting rapid efflux of potassium ions depolarizes the membrane.

-

Inhibition of Macromolecule Synthesis: The loss of membrane potential disrupts essential cellular processes, including the synthesis of DNA, RNA, and proteins, and interferes with cell wall synthesis.

This cascade of events leads to rapid bacterial cell death without causing cell lysis.

Quantitative Data: In Vitro Activity

The in vitro activity of Daptomycin is typically measured by its Minimum Inhibitory Concentration (MIC), which is the lowest concentration of the antibiotic that prevents visible growth of a bacterium.

| Organism | Daptomycin MIC₅₀ (μg/mL) | Daptomycin MIC₉₀ (μg/mL) | Reference(s) |
|---|---|---|---|
| Staphylococcus aureus (MRSA) | 0.38 | 0.75 | |
| Staphylococcus aureus (hGISA) | 0.5 | 1.0 | |
| Enterococcus faecalis (VRE) | 1.0 | 4.0 | |
| Enterococcus faecium (VRE) | 4.0 | 4.0 | |
| Streptococcus pneumoniae | 0.12 - 0.25 | - | |

MIC₅₀ and MIC₉₀ are the lowest concentrations that inhibit at least 50% and 90% of the tested isolates, respectively.
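The MIC definitions above reduce to two short procedures. This sketch assumes a dilution-series readout stored as concentration-to-growth flags. It reads the MIC conservatively, as the lowest concentration with no visible growth there and at every higher concentration, so a skipped well does not lower the result. MIC₅₀ and MIC₉₀ are taken at position ceil(p·n/100) of the sorted isolate MICs, one common convention. Function names and all data values are hypothetical.

```python
def mic_from_series(growth_by_conc):
    """growth_by_conc: {concentration in µg/mL: visible growth?}.
    Returns the lowest concentration at which neither it nor any higher one shows growth,
    or None if the highest concentration tested still shows growth."""
    mic = None
    for conc in sorted(growth_by_conc, reverse=True):
        if growth_by_conc[conc]:
            break
        mic = conc
    return mic


def mic_percentile(mics, percent):
    """MIC50 / MIC90: value at position ceil(percent * n / 100) of the sorted MICs."""
    s = sorted(mics)
    position = -(-percent * len(s) // 100)  # integer ceiling division
    return s[max(position, 1) - 1]


plate = {0.125: True, 0.25: True, 0.5: False, 1.0: False, 2.0: False}
print(mic_from_series(plate))  # 0.5

isolate_mics = [0.25, 0.5, 0.5, 1.0, 0.5, 0.25, 1.0, 0.5, 2.0, 1.0]  # hypothetical
print(mic_percentile(isolate_mics, 50), mic_percentile(isolate_mics, 90))  # 0.5 1.0
```

Integer arithmetic for the position avoids floating-point surprises such as 0.9 × n landing just above a whole number.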

Quantitative Data: Clinical Efficacy

Clinical trial data provides insights into the effectiveness of Daptomycin in treating various infections.

| Infection Type | Pathogen | Daptomycin Dose | Clinical Success Rate (%) | Reference(s) |
|---|---|---|---|---|
| Complicated Skin and Soft Tissue Infections | S. aureus (MRSA and MSSA) | 4 mg/kg/day | 83.9 | |
| Bacteremia | S. aureus (MRSA and MSSA) | 6 mg/kg/day | 83.9 | |
| Right-Sided Infective Endocarditis | S. aureus | 6 mg/kg/day | 83.0 (MRSA) | |
| Bacteremia and Endocarditis | MRSA | 10 mg/kg/day | 42.0 | |