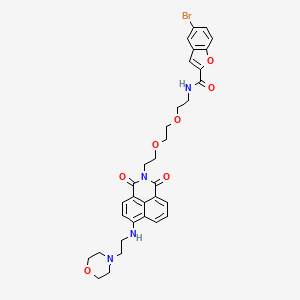

NDBM

Description

BenchChem offers this compound in high quality, suitable for many research applications. Different packaging options are available to meet customers' requirements. For more information about this compound, including price, delivery time, and further details, please contact info@benchchem.com.

Properties

| Property | Value |
|---|---|
| Molecular formula | C33H35BrN4O7 |
| Molecular weight | 679.6 g/mol |
| IUPAC name | 5-bromo-N-[2-[2-[2-[6-(2-morpholin-4-ylethylamino)-1,3-dioxobenzo[de]isoquinolin-2-yl]ethoxy]ethoxy]ethyl]-1-benzofuran-2-carboxamide |
| InChI | InChI=1S/C33H35BrN4O7/c34-23-4-7-28-22(20-23)21-29(45-28)31(39)36-9-14-42-18-19-44-17-13-38-32(40)25-3-1-2-24-27(6-5-26(30(24)25)33(38)41)35-8-10-37-11-15-43-16-12-37/h1-7,20-21,35H,8-19H2,(H,36,39) |
| InChI key | IZCMYYUXRATCET-UHFFFAOYSA-N |
| Canonical SMILES | C1COCCN1CCNC2=C3C=CC=C4C3=C(C=C2)C(=O)N(C4=O)CCOCCOCCNC(=O)C5=CC6=C(O5)C=CC(=C6)Br |
| Product origin | United States |


An In-depth Technical Guide to the ndbm Database

For Researchers, Scientists, and Drug Development Professionals

This guide provides a comprehensive technical overview of the ndbm (New Database Manager) library, a key-value database system. It is written for technically minded readers, such as researchers, scientists, and drug development professionals, who may encounter ndbm or consider using it to manage experimental data, metadata, or other key-addressable information.

Core Concepts of ndbm

ndbm is a high-performance, file-based database library that stores data as key-value pairs. It evolved from the original dbm (Database Manager) and adds enhancements such as the ability to have multiple databases open simultaneously. At its core, ndbm is a library of functions that an application calls to manipulate a database.

The fundamental principle of ndbm is its use of a hashing algorithm to locate data on disk quickly. When a key-value pair is stored, a hash of the key is calculated, and that hash determines where the pair is stored. This allows very fast data retrieval, typically in one or two disk accesses, which makes ndbm suitable for applications that need rapid lookups of relatively static data.[1]

An ndbm database is physically stored as two separate files:

- .dir file: This file acts as a directory or index, containing a bitmap of hash values.[1]
- .pag file: This file contains the actual data, the key-value pairs themselves.[1]

This two-file structure separates the index from the data, which can contribute to efficient data retrieval operations.

Data Presentation: Quantitative Analysis

The following tables summarize key quantitative aspects of ndbm and related dbm-family databases.

Table 1: Key and Value Size Limitations

| Database implementation | Typical key size limit | Typical value size limit | Notes |
|---|---|---|---|
| Original dbm | ~512 bytes (combined key and value) | ~512 bytes (combined key and value) | Considered obsolete. |
| ndbm | Varies by implementation | Varies by implementation | Often cited with a combined key-value size limit of roughly 1008 to 4096 bytes.[2] |
| gdbm (GNU dbm) | No limit | No limit | Offers an ndbm compatibility mode without these size limits. |
| Berkeley DB | No practical limit | No practical limit | Also provides an ndbm emulation layer with enhanced capabilities. |

Table 2: Performance Benchmarks of dbm-like Databases

The following data is based on a benchmark test storing 1,000,000 records with 8-byte keys and 8-byte values.

| Database | Write time (s) | Read time (s) | File size (KB) |
|---|---|---|---|
| NDBM 5.1 | 8.07 | 7.79 | 814,457 |
| GDBM 1.8.3 | 14.01 | 5.36 | 82,788 |
| Berkeley DB 4.4.20 | 9.62 | 5.62 | 40,956 |
| SDBM 1.0.2 | 11.32 | N/A* | 606,720 |
| QDBM 1.8.74 | 1.89 | 1.58 | 55,257 |

*Read time for SDBM was not available due to database corruption during the test.

Source: Adapted from Huihoo, Benchmark Test of DBM Brothers.

Internal Mechanics: The Hashing Algorithm

A widely cited hash function in the dbm family comes from sdbm, Ozan Yigit's public-domain reimplementation of ndbm. It is known for distributing hash values well, which helps minimize collisions and keeps data retrieval efficient.[3][4][5]

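The core of the sdbm algorithm is an iterative pass over the bytes of the key: for each byte c, the running hash is updated as hash = c + (hash << 6) + (hash << 16) - hash, which is equivalent to hash = hash * 65599 + c.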
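A minimal C sketch of that update rule follows. It uses a fixed-width uint32_t accumulator, which is our own assumption for reproducible results; the widely circulated version uses unsigned long, whose width differs between platforms.

```c
#include <stddef.h>
#include <stdint.h>

/* sdbm string hash: for each byte c, hash = hash * 65599 + c.
 * Because 65599 = 2^16 + 2^6 - 1, the multiply is done with shifts:
 * (hash << 6) + (hash << 16) - hash == hash * 65599.
 * uint32_t arithmetic wraps modulo 2^32. */
uint32_t sdbm_hash(const char *key, size_t len)
{
    uint32_t hash = 0;
    for (size_t i = 0; i < len; i++) {
        unsigned char c = (unsigned char)key[i];  /* avoid sign extension */
        hash = c + (hash << 6) + (hash << 16) - hash;
    }
    return hash;
}
```

For example, sdbm_hash("ab", 2) is 98 + 97 × 65599 = 6363201.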

This simple yet effective algorithm contributes to the fast lookup times characteristic of dbm-family databases such as ndbm.

Experimental Protocols: Core ndbm Operations

The following section details the standard procedures for interacting with an ndbm database through its C API. These protocols are fundamental for storing and retrieving experimental data.

Data Structures

The primary data structure for interacting with ndbm is the datum, which represents both keys and values. It is typically defined with two members:

- dptr: A pointer to the data.
- dsize: The size of the data in bytes.

Key Experimental Steps

- Opening a Database Connection:
  - Protocol: Use the dbm_open() function.
  - Synopsis: DBM *dbm_open(const char *file, int flags, mode_t mode);
  - Description: This function opens the database whose base path name is file. The flags argument determines the mode of operation (e.g., O_RDWR for read/write, O_CREAT to create the database if it doesn't exist). The mode argument specifies the file permissions if the database is created.[6][7]
  - Returns: A pointer to a DBM object on success, or NULL on failure.
- Storing Data:
  - Protocol: Use the dbm_store() function.
  - Synopsis: int dbm_store(DBM *db, datum key, datum content, int store_mode);
  - Description: This function stores a key-value pair in the database. The store_mode can be DBM_INSERT (insert only if the key does not exist) or DBM_REPLACE (overwrite the value if the key exists).[6][8]
  - Returns: 0 on success; 1 if store_mode is DBM_INSERT and the key already exists; a negative value on error.
- Retrieving Data:
  - Protocol: Use the dbm_fetch() function.
  - Synopsis: datum dbm_fetch(DBM *db, datum key);
  - Description: This function looks up key and returns the associated value. The returned data belongs to the library and may be overwritten by later calls, so it should be copied if it is needed afterwards.
  - Returns: A datum pointing to the value, or a datum whose dptr is NULL if the key is not found.
- Deleting Data:
  - Protocol: Use the dbm_delete() function.
  - Synopsis: int dbm_delete(DBM *db, datum key);
  - Description: This function removes a key-value pair from the database.[9]
  - Returns: 0 on success, a negative value on failure.
- Closing the Database Connection:
  - Protocol: Use the dbm_close() function.
  - Synopsis: void dbm_close(DBM *db);
  - Description: This function closes the database files and releases the DBM handle.

Visualizations

ndbm High-Level Data Flow

The following diagram illustrates the basic workflow of storing and retrieving data using the ndbm library.

Figure: ndbm data storage and retrieval workflow.

ndbm Internal Hashing and Lookup

This diagram provides a conceptual view of how ndbm uses hashing to locate data within its file structure.

Figure: ndbm hashing and data lookup process.

Conclusion

The ndbm database provides a simple, robust, and high-performance solution for key-value data storage. Although its original form limits the size of stored data, its API has been emulated and extended by more modern libraries such as gdbm and Berkeley DB, which overcome these constraints. For researchers and scientists who need a fast, local, and straightforward database for managing structured data, ndbm and its successors remain viable and relevant. Its simple API and file-based nature make it easy to integrate into scientific computing workflows.

References

- 1. IBM Documentation [ibm.com]

- 2. NDBM_File - Tied access to ndbm files - Perldoc Browser [perldoc.perl.org]

- 3. matlab.algorithmexamples.com [matlab.algorithmexamples.com]

- 4. cse.yorku.ca [cse.yorku.ca]

- 5. sdbm [doc.riot-os.org]

- 6. The ndbm library [infolab.stanford.edu]

- 7. ndbm (GDBM manual) [gnu.org.ua]

- 8. dbm/ndbm [docs.oracle.com]

- 9. ndbm Tutorial [franz.com]

An In-depth Technical Guide to the ndbm File Format

For researchers, scientists, and drug development professionals who rely on robust data storage, understanding the underlying architecture of database systems is paramount. This guide provides a detailed technical exploration of the ndbm (new database manager) file format, a foundational key-value store that has influenced numerous subsequent database technologies.

Core Concepts of ndbm

The ndbm library, a successor to the original dbm, provides a simple yet efficient method for storing and retrieving key-value pairs. It was a standard feature in early Unix-like operating systems, including 4.3BSD.[1][2][3] Unlike many modern database systems, which use a single file, ndbm uses a two-file structure to manage data: a directory file (.dir) and a data page file (.pag).[1] This design relies on a hashing algorithm to provide fast access to data, typically in one or two file system accesses.[1]

The fundamental unit of data in ndbm is a datum, a structure containing a pointer to the data (dptr) and its size (dsize). This allows arbitrary binary data to be stored as both keys and values.[3][4]

The On-Disk File Format: A Deep Dive

The ndbm file format is intrinsically tied to its dynamic hashing scheme, commonly described as a form of extendible hashing. This scheme allows the database to grow gracefully as more data is added, without requiring a complete reorganization of the file.

The Directory File (.dir)

The .dir file acts as the directory for the extendible hash table. It does not contain the actual key-value data but rather pointers to the data pages in the .pag file. The core of the .dir file is a hash table, which is an array of page indices.

A simplified view of the .dir file's logical structure reveals its role as an index. It contains a bitmap that is used to keep track of used pages in the .pag file.[1]
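To make the bitmap concrete, here is a sketch modeled on the lookup loop of the classic V7 and 4.3BSD dbm code as we understand it, not a transcription of it. Each bit records whether one bucket has been split, and a lookup widens a hash mask one bit at a time until it reaches an unsplit bucket; the resulting bucket number is the .pag block. The in-memory 1024-bit map and the names dir_getbit(), dir_setbit(), and page_for_hash() are our own assumptions; real implementations read the bits from .dir blocks on disk.

```c
#include <stdint.h>

/* Toy in-memory stand-in for the .dir file (assumed size: 1024 bits). */
#define DIR_BITS 1024u
static unsigned char dir_map[DIR_BITS / 8];

/* Bits past the end of the map read as 0, i.e. "not split". */
static int dir_getbit(uint32_t bitno)
{
    if (bitno >= DIR_BITS)
        return 0;
    return (dir_map[bitno / 8] >> (bitno % 8)) & 1;
}

/* Mark a bucket as split (what the store path does after a page split). */
static void dir_setbit(uint32_t bitno)
{
    if (bitno < DIR_BITS)
        dir_map[bitno / 8] |= (unsigned char)(1u << (bitno % 8));
}

/* Return the .pag block number for a hash value. Start with a mask of 0
 * (a single bucket) and widen it by one bit while the bucket selected at
 * the current level is marked as split. Bit number blkno + hmask gives
 * every (level, bucket) pair its own bit: level 0 uses bit 0, level 1
 * uses bits 1-2, level 2 uses bits 3-6, and so on. */
uint32_t page_for_hash(uint32_t hash)
{
    uint32_t hmask = 0;
    for (;;) {
        uint32_t blkno = hash & hmask;
        if (!dir_getbit(blkno + hmask))
            return blkno;
        hmask = (hmask << 1) + 1;
    }
}
```

With an empty bitmap every hash maps to block 0. After dir_setbit(0) records that bucket 0 has split, page_for_hash(5) returns 1 while page_for_hash(4) still returns 0.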

The Page File (.pag)

The .pag file is a collection of fixed-size pages, where each page stores one or more key-value pairs. The structure of a page is designed for efficient storage and retrieval. Key-value pairs that hash to the same logical bucket are stored on the same page.

When a page becomes full, a split occurs. A new page is allocated in the .pag file, and the key-value pairs from the full page whose hash values have one additional bit set are moved to the new page. The .dir file is then updated to reflect the split; in a textbook extendible-hashing directory, this can double the directory so that one more bit of the hash is used to select a page.

The Hashing Mechanism

The efficiency of ndbm hinges on its hashing algorithm, which determines the initial placement of keys within the .pag file. The original 4.3BSD ndbm source code is the definitive reference for ndbm's own hash function; a widely cited and influential alternative comes from sdbm, a public-domain reimplementation of ndbm.

The sdbm hash function processes the key one byte at a time. For each byte c, it updates the running value as hash = c + (hash << 6) + (hash << 16) - hash, which is equivalent to hash = hash * 65599 + c, modulo the word size.

This simple iterative function was found to provide good distribution and scrambling of bits, which is crucial for minimizing collisions and ensuring efficient data retrieval.

Collision Resolution: In ndbm, keys whose hashes select the same bucket are stored together on the same data page. When a page overflows, it is split and the directory is updated. This is bucket-based collision resolution rather than open addressing: colliding keys are never probed into other slots; they share a page until a split separates them.

Key Operations and Experimental Protocols

The ndbm interface provides a set of functions for interacting with the database. Understanding them is key to appreciating its operational workflow.

| Function | Description |
|---|---|
| dbm_open() | Opens or creates a database, returning a handle to the two-file structure.[4][5] |
| dbm_store() | Stores a key-value pair in the database.[4][5] |
| dbm_fetch() | Retrieves the value associated with a given key.[4][5] |
| dbm_delete() | Removes a key-value pair from the database. |
| dbm_firstkey() | Retrieves the first key in the database for iteration. |
| dbm_nextkey() | Retrieves the next key in the database for iteration. |
| dbm_close() | Closes the database files.[4][5] |

Experimental Protocol for a dbm_store Operation:

- Key Hashing: The key is passed through the ndbm hash function to generate a hash value.
- Directory Lookup: The hash value is used to calculate an index into the directory in the .dir file.
- Page Identification: The directory entry gives the page number within the .pag file where the key-value pair should be stored.
- Page Retrieval: The corresponding page is read from the .pag file into memory.
- Key-Value Insertion: The new key-value pair is appended to the data on the page.
- Overflow Check: If the pair does not fit within the page's capacity, a page split is triggered.
- Page Split (if necessary):
  a. A new page is allocated in the .pag file.
  b. The key-value pairs on the original page are redistributed between the original and the new page using one more bit of each key's hash value.
  c. The directory in the .dir file is updated to point to the new page. This may involve doubling the size of the directory.
- Page Write: The modified page(s) are written back to the .pag file.
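The store protocol above can be traced with a small in-memory model. This is a sketch under stated assumptions, not the library's code: pages hold bare 32-bit hash values rather than key/value bytes, a page holds only two entries so that splits happen quickly, and the directory is modeled as a split bitmap (as in the classic dbm code) rather than as a doubling array, so step c amounts to setting one bit. toy_store() and toy_contains() are hypothetical names.

```c
#include <stdint.h>

/* Toy model (assumptions): a page holds at most PAGE_CAP hash values
 * instead of variable-length key/value bytes; there are MAX_PAGES pages. */
#define PAGE_CAP 2
#define MAX_PAGES 64u
#define DIR_BITS MAX_PAGES   /* enough: split bits stay below MAX_PAGES */

struct page { int n; uint32_t h[PAGE_CAP]; };

static struct page pages[MAX_PAGES];          /* stands in for .pag */
static unsigned char dir_bits[DIR_BITS / 8];  /* stands in for .dir */

static int dir_get(uint32_t b)
{
    return b < DIR_BITS && ((dir_bits[b / 8] >> (b % 8)) & 1);
}

static void dir_set(uint32_t b)
{
    dir_bits[b / 8] |= (unsigned char)(1u << (b % 8));
}

/* Directory lookup and page identification (hashing is assumed done):
 * widen the mask while the selected bucket is marked as split. */
static uint32_t locate(uint32_t hash, uint32_t *hmask_out)
{
    uint32_t hmask = 0;
    while (dir_get((hash & hmask) + hmask))
        hmask = (hmask << 1) + 1;
    *hmask_out = hmask;
    return hash & hmask;
}

/* Insertion, overflow check, and page split. Returns 0 on success or -1
 * when the toy runs out of pages (e.g. too many identical hashes). */
int toy_store(uint32_t hash)
{
    for (;;) {
        uint32_t hmask;
        uint32_t blk = locate(hash, &hmask);
        struct page *p = &pages[blk];
        if (p->n < PAGE_CAP) {
            p->h[p->n++] = hash;   /* fits: the page would now be written back */
            return 0;
        }
        /* Split: entries whose next hash bit (hmask + 1) is set move to
         * the sibling page blk + hmask + 1; the rest stay on blk. */
        uint32_t sibling = blk + hmask + 1;
        if (sibling >= MAX_PAGES)
            return -1;
        dir_set(blk + hmask);      /* record the split in the "directory" */
        struct page old = *p;
        p->n = 0;
        for (int i = 0; i < old.n; i++) {
            struct page *dst = (old.h[i] & (hmask + 1)) ? &pages[sibling] : p;
            dst->h[dst->n++] = old.h[i];
        }
        /* Retry: the new entry may land on either page or need another split. */
    }
}

/* Lookup: locate the page and scan it. */
int toy_contains(uint32_t hash)
{
    uint32_t hmask;
    const struct page *p = &pages[locate(hash, &hmask)];
    for (int i = 0; i < p->n; i++)
        if (p->h[i] == hash)
            return 1;
    return 0;
}
```

Storing the hashes 0 through 7 in order forces three splits and ends with four pages holding {0, 4}, {1, 5}, {2, 6}, and {3, 7}.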

Visualizing the ndbm Architecture and Workflow

To better illustrate the concepts described, the following diagrams are provided in the DOT language for Graphviz.

The diagram above illustrates the fundamental architecture of an ndbm database. The .dir file contains a hash table that maps hash values to page numbers in the .pag file. Multiple directory entries can point to the same page. The .pag file itself is a collection of pages, each containing the actual key-value pairs.

This workflow diagram shows the steps involved in retrieving a value for a given key. The process begins with hashing the key, followed by a lookup in the .dir file to identify the correct data page in the .pag file. The relevant page is then read and searched for the key.

Modern Implementations and Compatibility

While the original ndbm is now largely of historical and academic interest, its API has been preserved in modern database libraries such as GNU gdbm and Oracle Berkeley DB.[6][7] These libraries provide an ndbm compatibility interface, allowing older software to be compiled and run on modern systems. However, the on-disk file formats of these implementations differ from the original ndbm format and are generally not compatible with each other.[7]

| Feature | Original ndbm | GNU gdbm (ndbm mode) | Berkeley DB (ndbm mode) |
|---|---|---|---|
| File structure | .dir and .pag files | .dir and .pag files (may be hard links) | Single .db file |
| On-disk format | Specific to the original implementation | gdbm's own format | Berkeley DB's own format |
| Data size limits | Key/value pair size limits (e.g., 1024 bytes)[2] | No inherent limits | No inherent limits |
| Concurrency | No built-in locking | Optional locking | Full transactional support (through the native Berkeley DB API) |

Conclusion

The ndbm file format represents a significant step in the evolution of key-value database systems. Its two-file, extendible hashing design provided a robust and efficient solution for data storage in early Unix environments. Although it has been superseded by more advanced database technologies, its core concepts and API have proved remarkably durable and survive in modern database libraries. For professionals in data-intensive fields, understanding the principles of ndbm offers valuable insight into the foundational techniques of data management.

References

- 1. grokipedia.com [grokipedia.com]

- 2. Introduction to dbm | KOSHIGOE.Write(something) [koshigoe.github.io]

- 3. dbm/ndbm [docs.oracle.com]

- 4. The ndbm library [infolab.stanford.edu]

- 5. RonDB - World's fastest Key-Value Store [rondb.com]

- 6. DBM (computing) - Wikipedia [en.wikipedia.org]

- 7. Unix Incompatibility Notes: DBM Hash Libraries [unixpapa.com]


An In-depth Technical Guide to the Core of dbm and ndbm

Introduction

In the history of Unix-like operating systems, the need for a simple, efficient, and persistent key-value storage system led to the development of the Database Manager (dbm). This library and its successors became foundational components for applications requiring fast data retrieval without the complexity of a full-fledged relational database. This document provides a technical overview of the original dbm library, its direct successor ndbm, and the subsequent evolution of this database family, written for readers with a technical background.

Historical Development and Evolution

The dbm family of libraries represents one of the earliest forms of NoSQL databases, providing a straightforward associative array (key-value) storage mechanism on disk.[1]

The Genesis: dbm

The original dbm library was written by Ken Thompson at AT&T Bell Labs and first appeared in Version 7 (V7) Unix in 1979.[1][2][3] It was designed as a simple, disk-based hash table, offering fast access to data records via string keys.[1][3] A dbm database consisted of two files:

- .dir file: A directory file containing the hash table indices.
- .pag file: A data file containing the actual key-value pairs.[2][4][5]

This initial implementation had significant limitations: it only allowed one database to be open per process and was not designed for concurrent access by multiple processes.[2][4] The pointers to data returned by the library were stored in static memory, meaning they could be overwritten by subsequent calls, requiring developers to immediately copy the results.[2]

The Successor: ndbm

To address the limitations of the original, the New Database Manager (ndbm) was developed and introduced with 4.3BSD Unix in 1986.[2][3] While keeping the core concepts of dbm, ndbm introduced several crucial enhancements:

- Multiple Open Databases: It modified the API to allow a single process to have multiple databases open simultaneously.[1][2]
- File Locking: It incorporated file locking mechanisms to enable safe, concurrent read access.[2] However, write access was still typically limited to a single process at a time.[6]
- Standardization: The ndbm API was later standardized in POSIX and the X/Open Portability Guide (XPG4).[2]

Despite these improvements, ndbm retained the two-file structure (.dir and .pag) and had its own limits on key and data size.[4][5]

The Family Expands

The influence of dbm and this compound led to a variety of reimplementations, each aiming to improve upon the original formula by removing limitations or changing licensing.

- sdbm: Written in 1987 by Ozan Yigit, sdbm ("substitute dbm") was a public-domain clone of ndbm, created to avoid the AT&T license restrictions.[1][3]
- gdbm: The GNU Database Manager (gdbm) was released in 1990 by the Free Software Foundation.[2][3] It implemented the ndbm interface and added features such as unlimited key/value sizes, a different single-file database format, and, in much later releases, crash tolerance.[1][3][7]
- Berkeley DB (BDB): Originating in 1991 to replace the license-encumbered BSD ndbm, Berkeley DB became the most advanced successor.[1] It offered significant enhancements, including transactions, journaling for crash recovery, and support for multiple access methods beyond hashing, while still providing an ndbm compatibility interface.[4]

The evolutionary path of these libraries shows a clear progression towards greater stability, fewer limitations, and more flexible licensing.

Core Technical Details and Methodology

The fundamental principle behind dbm and its variants is the use of a hash table stored on disk. This allows for very fast data retrieval based on a key.

Data Structure and Hashing

The core methodology involves a hashing function to map a given key to a specific location ("bucket") within the database files.[1][3]

- Hashing: When a key-value pair is to be stored, the library applies a hash function to the key, which computes an integer value.
- Bucket Location: This hash value is used to determine the bucket where the key-value pair should reside.
- Storage: The key and its associated data are written into the appropriate block in the .pag file, while the .dir file holds the directory information used to find that block again.
- Collision Handling: Since different keys can produce the same hash value (a "collision"), the library must handle this. The dbm implementation uses a form of extendible hashing.[1] If a bucket becomes full, it is split, and the hash directory is updated to accommodate the growing data.

This approach ensures that, on average, retrieving any value requires only one or two disk accesses, making it significantly faster than sequentially scanning a flat file.[5]
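The following is a compact sketch of textbook extendible hashing, the technique named above, operating on bare 32-bit hash values. It is not the dbm source code (the classic implementations encode their directory as a split bitmap rather than an explicit pointer array). The bucket capacity of 2, the depth cap of 16, and all function names are arbitrary assumptions chosen so that splits and directory doubling are easy to observe.

```c
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

#define BUCKET_CAP 2    /* assumption: tiny buckets so splits are frequent */
#define MAX_DEPTH 16    /* assumption: cap on directory growth */

struct bucket {
    int local_depth;            /* number of low hash bits this bucket owns */
    int n;
    uint32_t h[BUCKET_CAP];
};

static struct bucket **dir;     /* 2^global_depth entries */
static int global_depth;

static struct bucket *new_bucket(int depth)
{
    struct bucket *b = calloc(1, sizeof *b);
    if (b != NULL)
        b->local_depth = depth;
    return b;
}

int eh_init(void)
{
    global_depth = 0;
    dir = malloc(sizeof *dir);
    if (dir == NULL)
        return -1;
    dir[0] = new_bucket(0);
    return dir[0] != NULL ? 0 : -1;
}

/* The directory index is the low global_depth bits of the hash. */
static struct bucket *eh_find(uint32_t hash)
{
    return dir[hash & ((1u << global_depth) - 1u)];
}

int eh_contains(uint32_t hash)
{
    const struct bucket *b = eh_find(hash);
    for (int i = 0; i < b->n; i++)
        if (b->h[i] == hash)
            return 1;
    return 0;
}

/* Insert a hash value; returns 0 on success, -1 on failure. */
int eh_insert(uint32_t hash)
{
    for (;;) {
        struct bucket *b = eh_find(hash);
        if (b->n < BUCKET_CAP) {
            b->h[b->n++] = hash;
            return 0;
        }
        if (b->local_depth == global_depth) {
            /* Double the directory: new entry old + i mirrors entry i. */
            if (global_depth == MAX_DEPTH)
                return -1;
            size_t old = (size_t)1 << global_depth;
            struct bucket **nd = realloc(dir, 2 * old * sizeof *nd);
            if (nd == NULL)
                return -1;
            for (size_t i = 0; i < old; i++)
                nd[old + i] = nd[i];
            dir = nd;
            global_depth++;
        }
        /* Split b on hash bit d: entries with that bit set go to sib. */
        int d = b->local_depth;
        struct bucket *sib = new_bucket(d + 1);
        if (sib == NULL)
            return -1;
        b->local_depth = d + 1;
        struct bucket full = *b;
        b->n = 0;
        for (int i = 0; i < full.n; i++) {
            struct bucket *dst = ((full.h[i] >> d) & 1u) ? sib : b;
            dst->h[dst->n++] = full.h[i];
        }
        /* Repoint the directory slots that used b and have bit d set. */
        size_t size = (size_t)1 << global_depth;
        for (size_t i = 0; i < size; i++)
            if (dir[i] == b && ((i >> d) & 1u))
                dir[i] = sib;
    }
}
```

Inserting the hashes 0 through 9 leaves a directory of at least 8 slots. Ten values in buckets of two need at least five buckets, and the directory size is always a power of 2 no smaller than the bucket count.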

Quantitative Data and Specifications

The various dbm implementations can be compared by their technical limitations and features. While formal benchmarks of these legacy systems are scarce, their documented specifications provide a clear comparison.

| Feature | dbm (original V7) | ndbm (4.3BSD) | gdbm (GNU) | Berkeley DB (modern) |
|---|---|---|---|---|
| Release date | 1979[1][2][3] | 1986[2][3] | 1990[2][3] | 1991 (initial)[1] |
| File structure | Two files (.dir, .pag)[2][4] | Two files (.dir, .pag)[4][5] | Single file[6] | Single file[4] |
| Key/value size limit | ~512 bytes (total per entry)[2][3] | ~1024 to 4096 bytes (implementation dependent)[3][4] | No limit[3] | No practical limit |
| Concurrent access | 1 process max[2][4] | Multiple readers, single writer[2] | Multiple readers, single writer[6] | Full transactional (multiple writers) |
| Crash recovery | None | None | Yes (crash tolerance)[1][7] | Yes (journaling, transactions) |
| API header | [2] | [2] | [8] | |

Conclusion

The dbm library and its direct descendant ndbm were pioneering technologies in the Unix ecosystem. They established a simple yet powerful paradigm for on-disk key-value storage that influenced countless applications and spawned a family of more advanced database engines. While modern applications often rely on more sophisticated systems such as Berkeley DB, GDBM, or other NoSQL databases, the foundational idea introduced by dbm, hashing for fast and direct data access, remains a cornerstone of database design. Understanding their history and technical underpinnings provides valuable insight into the evolution of data storage technology.

References

- 1. DBM (computing) - Wikipedia [en.wikipedia.org]

- 2. grokipedia.com [grokipedia.com]

- 3. Introduction to dbm | KOSHIGOE.Write(something) [koshigoe.github.io]

- 4. Unix Incompatibility Notes: DBM Hash Libraries [unixpapa.com]

- 5. IBM Documentation [ibm.com]

- 6. gdbm [edoras.sdsu.edu]

- 7. dbm - Interfaces to Unix "databases" - Python 3.14.2 documentation [docs.python.org]

- 8. dbm/ndbm [docs.oracle.com]

The NDBM Key-Value Store: A Technical Guide for Scientific Data Management

For researchers, scientists, and drug development professionals, managing vast and complex datasets is a daily challenge. While large-scale relational databases have their place, simpler, more lightweight solutions can be highly effective for specific tasks. This in-depth technical guide explores the ndbm (New Database Manager) key-value store, a classic and efficient library for managing key-data pairs, and its applicability to scientific data workflows.

Core Concepts of the ndbm Key-Value Store

ndbm is a library that provides a simple yet powerful way to store and retrieve data. It is a type of non-relational database, often referred to as a NoSQL database, that uses a key-value model.[1][2] Think of it as a dictionary or hash table on disk, where each piece of data (the "value") is associated with a unique identifier (the "key").[3] This simplicity allows extremely fast data access, making it suitable for applications where quick lookups are essential.[4]

The ndbm library stores data in two files, typically with .dir and .pag extensions.[5] The .dir file acts as an index, while the .pag file contains the actual data.[5] This structure lets ndbm handle large databases and access data in just one or two file system accesses.[5]

Key Operations

The core functionality of this compound revolves around a few fundamental operations:

- Opening a database: The dbm_open() function opens an existing database or creates a new one.
- Storing data: dbm_store() takes a key and a value and stores them in the database.
- Retrieving data: dbm_fetch() retrieves the value associated with a given key.
- Deleting data: dbm_delete() removes a key-value pair from the database.
- Closing a database: dbm_close() closes the database files, ensuring that all changes are written to disk.

ndbm in the Context of Scientific Data

While modern, more feature-rich key-value stores have emerged, the principles of this compound remain relevant for certain scientific applications. Its lightweight nature and straightforward API make it a good choice for:

- Storing metadata: Associating metadata with experimental data files, samples, or simulations.
- Caching frequently accessed data: Improving the performance of larger applications by keeping frequently used data in a fast key-value store.
- Managing configuration data: Storing and retrieving configuration parameters for scientific software and pipelines.
- Indexing large datasets: Creating an index of large files to allow quick lookups of specific data points.

However, it is important to be aware of ndbm's limitations. It is an older library and may restrict both the size of the database and the size of individual key-value pairs.[6] It also lacks built-in support for transactions and concurrent write access, which can be a drawback in multi-user or multi-process environments.[6][7]

Comparative Analysis of DBM-style Databases

Several implementations and successors to the original dbm library exist, each with its own features and trade-offs. The following table provides a qualitative comparison of ndbm with two of its common relatives: gdbm (GNU Database Manager) and Berkeley DB.

| Feature | ndbm | gdbm | Berkeley DB |
|---|---|---|---|
| Data storage | Two files (.dir, .pag)[5] | Single file[6] | Single file[6] |
| Key/value size limits | Limited (e.g., 1024 bytes)[8] | No practical limit[6] | Limited by available memory[8] |
| Database size limit | Can be limited (e.g., 2 GB on some systems)[6] | Generally very large | Up to 256 TB[8] |
| Concurrency | No built-in locking for concurrent writes[6] | Supports multiple readers or one writer[7] | Full support for concurrent access and transactions |
| Licensing | Varies by system (often part of the OS) | GPL[8] | Sleepycat Public License or commercial[9]; AGPLv3 or commercial from version 6.0 |
| Portability | Widely available on Unix-like systems | Portable across many platforms | Highly portable |
| Features | Basic key-value operations | Extends ndbm with more features | Rich feature set including transactions, replication, etc. |

Experimental Protocol: Using ndbm for Storing Gene Annotations

This section outlines a detailed methodology for a hypothetical experiment in which ndbm is used to store and retrieve gene annotations. This protocol demonstrates a practical application of ndbm in a bioinformatics workflow.

Objective: To create a local, fast-lookup database of gene annotations, mapping gene IDs to their functional descriptions.

Materials:

- A C compiler (e.g., GCC)
- The ndbm.h header and its library (part of the C library on BSD-derived systems such as macOS; on Linux it is usually provided by gdbm's compatibility library, linked with -lgdbm_compat -lgdbm)
- A tab-separated values (TSV) file containing gene annotations (gene_annotations.tsv) in the format: GeneID\tAnnotation

Methodology:

- Data Preparation:
  - Ensure the gene_annotations.tsv file is clean and properly formatted. Each line should contain a unique gene ID and its corresponding annotation, separated by a tab.
- Database Creation and Population (C Program):
  - Write a C program that performs the following steps:
    - Include the headers the program needs, such as stdio.h, string.h, fcntl.h (for the O_* open flags), and ndbm.h.
    - Open the gene_annotations.tsv file for reading.
    - Open an ndbm database named "gene_db" in read/write and create mode (O_RDWR | O_CREAT) using dbm_open().
    - Read the annotation file line by line.
    - For each line, parse the gene ID and the annotation.
    - Create datum structures for the key (gene ID) and the value (annotation). The dptr member points to the data, and dsize holds its length in bytes.
    - Use dbm_store() to insert the key-value pair into the database.
    - After processing all lines, close the ndbm database using dbm_close().
    - Close the input file.
- Data Retrieval (C Program):
  - Write a separate C program, or a function in the same program, to demonstrate data retrieval.
  - Open the "gene_db" ndbm database in read-only mode.
  - Take a gene ID as input from the user or as a command-line argument.
  - Create a datum structure for the input gene ID to be used as the key.
  - Use dbm_fetch() to retrieve the annotation associated with the input gene ID.
  - If the fetch is successful, print the retrieved annotation.
  - If the key is not found, dbm_fetch() returns a datum with a NULL dptr. Handle this case by printing a "gene not found" message.
  - Close the ndbm database.

Sample C Code for Database Population

Visualization of a Scientific Workflow

To illustrate how this compound can fit into a larger scientific workflow, consider a scenario in drug discovery where researchers are screening a library of small molecules against a protein target. A key-value store can be used to manage the mapping of compound IDs to their screening results.

The following diagram, generated using the Graphviz DOT language, visualizes this workflow.

Figure: A drug discovery screening workflow using an ndbm key-value store.

In this workflow, the ndbm store provides a fast and efficient way to look up the activity of a specific compound, which supports the subsequent hit identification and analysis steps.

Conclusion

The ndbm key-value store, although a mature technology, still offers a viable and efficient solution for specific data management tasks in scientific research. Its simplicity, speed, and low overhead make it an attractive option for applications that require rapid lookups of key-value pairs. By understanding its core functions, its limitations, and how it compares to other DBM-style databases, researchers can use ndbm effectively to streamline their data workflows and focus on what matters most: scientific discovery.

References

- 1. What is a Key Value Database? - Key Value DB and Pairs Explained - AWS [aws.amazon.com]

- 2. How to use key-value stores [byteplus.com]

- 3. medium.com [medium.com]

- 4. hazelcast.com [hazelcast.com]

- 5. IBM Documentation [ibm.com]

- 6. Unix Incompatibility Notes: DBM Hash Libraries [unixpapa.com]

- 7. gdbm [edoras.sdsu.edu]

- 8. Introduction to dbm | KOSHIGOE.Write(something) [koshigoe.github.io]

- 9. DBM (computing) - Wikipedia [en.wikipedia.org]

An In-depth Technical Guide to NDBM Data Structures

For Researchers, Scientists, and Drug Development Professionals

This guide provides a comprehensive technical overview of the New Database Manager (NDBM), a foundational key-value data store. Although it has largely been superseded by more modern libraries, ndbm's core principles offer valuable insight into the evolution of database technologies and the fundamental concepts of on-disk hash tables. This document is intended for researchers and professionals who need a deep understanding of data storage mechanisms for managing scientific and experimental data.

Core Concepts of ndbm

ndbm is a library of subroutines that provides a simple and efficient interface for managing key-value databases stored on disk.[1] It was developed as an enhancement to the original DBM library, offering improvements such as the ability to have multiple databases open simultaneously.[2] The primary function of ndbm is to store and retrieve arbitrary data based on a unique key, making it an early example of a NoSQL data store.

At its core, ndbm implements an on-disk hash table. This structure allows for fast data retrieval, typically in one or two file system accesses, without the overhead of a full relational database system.[1] Data is organized into key-value pairs, where both the key and the value can be arbitrary binary data. This flexibility is particularly useful for storing heterogeneous scientific data.

ndbm On-Disk Structure

An ndbm database consists of two separate files:

-

The Directory File (.dir): This file acts as an index for the data file.[1] In the classic dbm/ndbm design it holds a bitmap recording which buckets have been split; together with a key's hash value, this bitmap determines which block of the page file holds the key.

-

The Page File (.pag): This file stores the actual key-value pairs.[1]

This two-file structure separates the index from the data, which can improve performance by allowing the potentially smaller directory file to be more easily cached in memory. Note that modern emulations of ndbm, such as those provided by Berkeley DB, may use a single file with a .db extension.[2][3][4]

The Hashing Mechanism: Extendible Hashing

ndbm uses a form of extendible hashing to dynamically manage the on-disk hash table.[5] This technique allows the hash table to grow as more data is added, avoiding the need for costly full-table reorganizations.

The components below describe extendible hashing in its textbook form; the original dbm/ndbm code records the same split history compactly, as a bitmap in the .dir file, rather than as an explicit array of pointers:

-

Directory: An in-memory array of pointers to data buckets on disk. The size of the directory is a power of 2.

-

Global Depth (d): An integer that determines the size of the directory (2^d). The first 'd' bits of a key's hash value are used as an index into the directory.

-

Buckets (Pages): Fixed-size blocks in the .pag file that store the key-value pairs.

-

Local Depth (d'): An integer stored with each bucket, indicating the number of bits of the hash value shared by all keys in that bucket.

Data Insertion and Splitting Logic:

-

A key is hashed, and the first d (global depth) bits of the hash are used to find an entry in the directory.

-

The directory entry points to a bucket in the .pag file.

-

The key-value pair is inserted into the bucket.

-

If the bucket is full:

-

If the bucket's local depth d' is less than the directory's global depth d, the bucket is split: its contents are redistributed between the old bucket and a new one based on the (d'+1)-th bit of each key's hash, both buckets take local depth d'+1, and the directory pointers are updated to point to the correct buckets.

-

If the bucket's local depth d' is equal to the global depth d, the directory itself must first be doubled in size. The global depth d is incremented, and the bucket is then split as in the previous case.


This dynamic resizing of the directory and splitting of buckets allows ndbm to handle growing datasets efficiently.
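To make the insertion and splitting rules concrete, here is a small in-memory Python model of textbook extendible hashing. It is an illustrative sketch, not ndbm's on-disk implementation. Buckets live in a Python list rather than in .pag blocks, and the bucket capacity is deliberately tiny so that splits happen quickly. The hash function is `zlib.crc32`, an assumption chosen because it is deterministic. The directory is indexed with the d low-order bits of the hash, which behaves the same as taking d bits from the front.

```python
import zlib


class Bucket:
    def __init__(self, local_depth, capacity):
        self.local_depth = local_depth  # d': number of hash bits shared by all keys here
        self.capacity = capacity
        self.items = {}


class ExtendibleHash:
    """Toy extendible hash table; illustrative only, not ndbm's actual code."""

    def __init__(self, bucket_capacity=2):
        self.global_depth = 0  # d: the directory has 2**d slots
        self.directory = [Bucket(0, bucket_capacity)]

    @staticmethod
    def _hash(key):
        return zlib.crc32(key.encode("utf-8"))  # deterministic 32-bit hash

    def _slot(self, key):
        # Index the directory with the d low-order bits of the hash.
        return self._hash(key) & ((1 << self.global_depth) - 1)

    def get(self, key):
        return self.directory[self._slot(key)].items.get(key)

    def put(self, key, value):
        while True:
            bucket = self.directory[self._slot(key)]
            if key in bucket.items or len(bucket.items) < bucket.capacity:
                bucket.items[key] = value
                return
            self._split(bucket)  # bucket full: split it, then retry the insert

    def _split(self, bucket):
        if bucket.local_depth == self.global_depth:
            if self.global_depth == 32:
                raise RuntimeError("all 32 hash bits used; too many colliding keys")
            # d' == d: double the directory; slot i + 2**d aliases slot i.
            self.directory = self.directory + self.directory
            self.global_depth += 1
        # Now d' < d: redistribute on hash bit index d' (0-based), i.e. the (d'+1)-th bit.
        bit = 1 << bucket.local_depth
        bucket.local_depth += 1
        sibling = Bucket(bucket.local_depth, bucket.capacity)
        for key in list(bucket.items):
            if self._hash(key) & bit:
                sibling.items[key] = bucket.items.pop(key)
        # Repoint directory slots that referenced the old bucket and have that bit set.
        for i, target in enumerate(self.directory):
            if target is bucket and i & bit:
                self.directory[i] = sibling


table = ExtendibleHash(bucket_capacity=2)
for n in range(20):
    table.put(f"gene{n}", n)
print(table.global_depth, len(table.directory))
```

Only the full bucket is touched on a split; doubling the directory copies pointers, never key-value pairs, which is why growth avoids a full-table reorganization.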

Experimental Protocols: Algorithmic Procedures

While specific experimental protocols from scientific literature using the original ndbm are scarce due to its age, we can detail the algorithmic protocols for the primary ndbm operations. These can be considered the "experimental" procedures for interacting with the data structure.

Protocol for Storing a Key-Value Pair

-

Initialization: Open the database using dbm_open(), passing the database base name (without the .dir/.pag suffix), open(2)-style flags such as O_RDWR | O_CREAT, and a file mode. It returns a DBM * handle, or NULL on failure.

-

Data Preparation: Prepare the key and content in datum structures. A datum is a simple struct containing a pointer to the data (dptr) and its size (dsize).

-

Hashing: The ndbm library internally computes a hash of the key.

-

Directory Lookup: The first d (global depth) bits of the hash are used to index into the in-memory directory.

-

Bucket Retrieval: The directory entry provides the address of the data bucket in the .pag file. This bucket is read from disk.

-

Insertion and Overflow Check: The new key-value pair is added to the bucket. If the bucket exceeds its capacity, the bucket splitting and/or directory doubling procedure described above is initiated.

-

Write to Disk: The modified bucket(s) and, if necessary, the directory file are written back to disk.

-

Return Status: dbm_store() returns 0 on success and a negative value on error. When called with the DBM_INSERT flag for a key that already exists, it leaves the stored value unchanged and returns 1; with DBM_REPLACE, it overwrites the existing value instead.[6][7]
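As a hedged illustration in Python, the standard-library `dbm.ndbm` wrapper (with a `dbm.dumb` fallback if ndbm is not compiled in) hides the insert/replace flag. Item assignment always replaces, and failures surface as exceptions rather than negative return codes. The hypothetical helper `store()` below reproduces the DBM_INSERT/DBM_REPLACE return convention on top of that; the sample IDs and values are made up.

```python
import os
import tempfile

try:
    import dbm.ndbm as backend  # C ndbm interface, present only if Python was built with it
except ImportError:
    import dbm.dumb as backend  # pure-Python fallback exposing the same mapping operations


def store(db, key, value, replace=True):
    """Hypothetical helper mimicking dbm_store() return codes.

    replace=True  behaves like DBM_REPLACE: overwrite any existing value, return 0.
    replace=False behaves like DBM_INSERT: keep an existing value and return 1.
    Errors are raised as exceptions by the Python module instead of returning < 0.
    """
    if not replace and key in db:
        return 1
    db[key] = value  # Python's item assignment always replaces
    return 0


with tempfile.TemporaryDirectory() as tmp:
    with backend.open(os.path.join(tmp, "samples"), "c") as db:
        first = store(db, "S-01", "buffer=PBS;temp=37C")
        duplicate = store(db, "S-01", "buffer=TBS", replace=False)  # rejected, value kept
        after_insert = db["S-01"]
        overwrite = store(db, "S-01", "buffer=TBS")  # replaced
        final_value = db["S-01"]

print(first, duplicate, overwrite)  # 0 1 0
print(after_insert, final_value)    # b'buffer=PBS;temp=37C' b'buffer=TBS'
```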

Protocol for Retrieving a Value by Key

-

Initialization: Open the database using dbm_open().

-

Key Preparation: Prepare the key to be fetched in a datum structure.

-

Hashing and Directory Lookup: The key is hashed, and the first d bits are used to find the corresponding directory entry.

-

Bucket Retrieval: The directory entry's pointer is used to locate and read the appropriate bucket from the .pag file.

-

Key Search: The keys within the bucket are linearly scanned to find a match.

-

Data Return: If a matching key is found, a datum structure containing a pointer to the corresponding value and its size is returned. If the key is not found, the dptr field of the returned datum will be NULL.[6]
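In Python's `dbm.ndbm` wrapper, the NULL-`dptr` case becomes a `KeyError` for `db[key]` or a `None` result from `db.get(key)`. The sketch below shows both forms. `fetch_text` is a hypothetical helper, the annotation value is illustrative, and `dbm.dumb` is used as a fallback when ndbm is unavailable.

```python
import os
import tempfile

try:
    import dbm.ndbm as backend  # C ndbm interface, present only if Python was built with it
except ImportError:
    import dbm.dumb as backend  # pure-Python fallback exposing the same mapping operations


def fetch_text(db, key):
    """Return the value for key decoded as UTF-8, or None if the key is absent.

    C's dbm_fetch() signals a missing key with a NULL dptr; the Python wrapper
    raises KeyError for db[key] and returns None from db.get(key).
    """
    raw = db.get(key)
    return None if raw is None else raw.decode("utf-8")


with tempfile.TemporaryDirectory() as tmp:
    with backend.open(os.path.join(tmp, "annotations"), "c") as db:
        db["BRCA1"] = "DNA repair"
        found = fetch_text(db, "BRCA1")
        missing = fetch_text(db, "GENE-X")  # hypothetical absent key
        try:
            db["GENE-X"]
            raised_key_error = False
        except KeyError:
            raised_key_error = True

print(found, missing, raised_key_error)  # DNA repair None True
```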

Quantitative Data Summary

| Feature | ndbm | GDBM (GNU DBM) | Berkeley DB |
|---|---|---|---|
| Primary Use | Simple key-value storage | A more feature-rich replacement for ndbm | High-performance, transactional embedded database |
| File Structure | Two files (.dir, .pag) | Can emulate the two-file structure but is a single file internally | Typically a single file |
| Concurrency | Generally not safe for concurrent writers | Provides file locking for safe concurrent access | Full transactional support with fine-grained locking |
| Key/Value Size Limits | Limited (e.g., 1018 to 4096 bytes)[2] | No inherent limits | No inherent limits |
| API | dbm_open, dbm_store, dbm_fetch, etc. | Native API and ndbm compatibility API | Rich API with support for transactions, cursors, etc. |
| In-memory Caching | Basic, relies on OS file caching | Internal bucket cache | Sophisticated in-memory cache management |
| Crash Recovery | Not guaranteed | Offers some crash tolerance | Full ACID-compliant crash recovery |

Visualizations

ndbm File Structure

Caption: The two-file architecture of an ndbm database.

ndbm Data Storage Workflow

Caption: Logical workflow for storing data in an ndbm database.

ndbm Data Retrieval Workflow

Caption: Logical workflow for retrieving data from an ndbm database.

Conclusion

ndbm represents a significant step in the evolution of simple, efficient on-disk data storage. For researchers and scientists, understanding its architecture provides a solid foundation for appreciating the trade-offs involved in modern data management systems. While direct use of the original ndbm is uncommon today, its principles of key-value storage and extendible hashing are still relevant in the design of high-performance databases. When choosing a data storage solution for research applications, the principles embodied by ndbm (simplicity, direct key-based access, and predictable performance) remain valuable considerations. For new projects, however, modern libraries such as Berkeley DB or GDBM are recommended, as they provide ndbm-compatible interfaces with enhanced features, performance, and robustness.

References

- 1. IBM Documentation [ibm.com]

- 2. Unix Incompatibility Notes: DBM Hash Libraries [unixpapa.com]

- 3. dbm/ndbm [docs.oracle.com]

- 4. Berkeley DB: dbm/ndbm [ucalgary.ca]

- 5. DBM (computing) - Wikipedia [en.wikipedia.org]

- 6. ndbm(3) - OpenBSD manual pages [man.openbsd.org]

- 7. The ndbm library [infolab.stanford.edu]

Unraveling NDBM: A Technical Guide for Data Management in Bioinformatics and Drug Development

An In-depth Technical Guide for Researchers, Scientists, and Drug Development Professionals

The term "NDBM" in the context of bioinformatics does not refer to a specific, publicly documented bioinformatics tool or platform. Rather, NDBM stands for New Database Manager, a key-value database library. This guide will, therefore, provide a comprehensive overview of the core concepts of ndbm and similar database management systems, and then explore their potential applications within bioinformatics and drug development, a field that increasingly relies on robust data management.

While a direct "ndbm for bioinformatics" tutorial is not feasible because no such specific tool appears to exist, this whitepaper will equip researchers with the foundational knowledge of key-value databases and how they can be leveraged for managing complex biological data.

Core Concepts of ndbm (New Database Manager)

ndbm and its predecessor, dbm, are simple, high-performance embedded database libraries that allow for the storage and retrieval of data as key-value pairs.[1][2][3] This is analogous to a physical dictionary where each word (the key) has a corresponding definition (the value).

Key Characteristics:

-

Key-Value Store: The fundamental data model is a set of unique keys, each associated with a value.[1][2]

-

Embedded Library: It is not a standalone database server but a library that is linked into an application.

-

On-Disk Storage: Data is persistently stored in files, typically a .dir file for the directory/index and a .pag file for the data itself.[3]

-

Fast Access: Designed for quick lookups of data based on a given key.[3]

Basic Operations in an ndbm-like System

The core functionalities of an ndbm library revolve around a few fundamental operations. The following table summarizes these common functions, though specific implementations may vary.

| Operation | Description |
|---|---|
| dbm_open | Opens or creates a database file.[4][5] |
| dbm_store | Stores a key-value pair in the database.[4][5] |
| dbm_fetch | Retrieves the value associated with a given key.[4][5] |
| dbm_delete | Removes a key-value pair from the database.[4] |
| dbm_firstkey | Retrieves the first key in the database for iteration.[4] |
| dbm_nextkey | Retrieves the subsequent key during an iteration.[4] |
| dbm_close | Closes the database file.[4][5] |
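For readers working in Python, the sketch below maps each operation in the table onto the standard-library `dbm.ndbm` module, falling back to `dbm.dumb` if ndbm is not compiled in. The UniProt-to-gene-symbol pairs are only example data. Python does not expose `dbm_firstkey`/`dbm_nextkey` for ndbm; `keys()` returns all keys in hash order, so they are sorted here (the `dbm.gnu` module does offer `firstkey()`/`nextkey()`).

```python
import os
import tempfile

try:
    import dbm.ndbm as backend  # C ndbm interface, present only if Python was built with it
except ImportError:
    import dbm.dumb as backend  # pure-Python fallback exposing the same mapping operations

with tempfile.TemporaryDirectory() as tmp:
    db = backend.open(os.path.join(tmp, "idmap"), "c")  # dbm_open (read-write, create)
    db["P04637"] = "TP53"                               # dbm_store (replace semantics)
    db["P38398"] = "BRCA1"
    db["P00533"] = "EGFR"
    egfr = db["P00533"]                                 # dbm_fetch
    del db["P38398"]                                    # dbm_delete
    remaining = sorted(db.keys())                       # stands in for a firstkey/nextkey loop
    db.close()                                          # dbm_close

print(egfr, remaining)  # b'EGFR' [b'P00533', b'P04637']
```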

Potential Applications of Key-Value Databases in Bioinformatics

While there isn't a specific "ndbm bioinformatics tool," the principles of key-value databases are highly relevant to managing the large and diverse datasets common in bioinformatics. Here are some potential applications:

-

Genomic Data Storage: Storing genetic sequences or annotations where the key could be a gene ID, a chromosome location, or a sequence identifier, and the value would be the corresponding sequence, functional annotation, or other relevant data.

-

Mapping Identifiers: Efficiently mapping between different biological database identifiers (e.g., mapping UniProt IDs to Ensembl IDs).

-

Storing Experimental Metadata: Associating experimental sample IDs (as keys) with detailed metadata (as values), such as experimental conditions, sample source, and processing dates.

-

Caching Frequent Queries: Storing the results of computationally expensive analyses (like BLAST searches or sequence alignments) with the query parameters as the key and the results as the value to speed up repeated queries.

Experimental Workflow: Using a Key-Value Store for Gene Annotation

This hypothetical workflow illustrates how an ndbm-like database could be used to create a simple gene annotation database.

Detailed Methodology for the Workflow:

-

Data Acquisition: Obtain gene sequences in a standard format like FASTA and functional annotations from public databases (e.g., NCBI, Ensembl) in a parsable format like GFF or CSV.

-

Database Creation:

-

Write a script (e.g., in Python using a library like dbm) to open a new database file.

-

The script should parse the FASTA file, using the gene identifier from the header as the key and the nucleotide or amino acid sequence as the value. For each gene, store this key-value pair in the database.

-

The script should then parse the annotation file, associating each gene identifier (key) with its corresponding functional annotation (value). This could be stored as a separate key-value pair or appended to the existing value for that key.


-

Data Retrieval:

-

Create a query script that takes a list of gene identifiers as input.

-

For each identifier, the script opens the database and uses the fetch operation to retrieve the corresponding sequence and/or annotation.


-

Downstream Analysis: The retrieved data can then be used for various bioinformatics analyses, such as sequence alignment, motif finding, or pathway analysis.
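A compact sketch of the creation and retrieval steps is shown below. It assumes the simpler of the two storage options described above: separate key-value pairs, distinguished by hypothetical `seq:` and `ann:` key prefixes. The FASTA text and annotations are made-up stand-ins for real NCBI/Ensembl downloads. `read_fasta` is a minimal hand-written parser, not a library function. The code uses `dbm.ndbm`, with `dbm.dumb` as a fallback.

```python
import io
import os
import tempfile

try:
    import dbm.ndbm as backend  # C ndbm interface, present only if Python was built with it
except ImportError:
    import dbm.dumb as backend  # pure-Python fallback exposing the same mapping operations

# Hypothetical inputs: a tiny FASTA text and annotations standing in for a parsed GFF/CSV.
FASTA_TEXT = """>geneA demo record
ATGGCC
TTGA
>geneB
ATGAAA
"""
ANNOTATIONS = {"geneA": "kinase", "geneB": "transporter"}


def read_fasta(handle):
    """Minimal FASTA reader yielding (identifier, sequence); identifier = first header word."""
    ident, chunks = None, []
    for line in handle:
        line = line.strip()
        if line.startswith(">"):
            if ident is not None:
                yield ident, "".join(chunks)
            ident, chunks = line[1:].split()[0], []
        elif line and ident is not None:
            chunks.append(line)
    if ident is not None:
        yield ident, "".join(chunks)


def build_annotation_db(path, fasta_handle, annotations):
    with backend.open(path, "c") as db:
        for gene_id, sequence in read_fasta(fasta_handle):
            db["seq:" + gene_id] = sequence  # prefixes keep the two record types apart
        for gene_id, note in annotations.items():
            db["ann:" + gene_id] = note


def query_genes(path, gene_ids):
    def text(raw):
        return None if raw is None else raw.decode("utf-8")

    with backend.open(path, "r") as db:
        return {g: (text(db.get("seq:" + g)), text(db.get("ann:" + g))) for g in gene_ids}


with tempfile.TemporaryDirectory() as tmp:
    db_path = os.path.join(tmp, "genes")
    build_annotation_db(db_path, io.StringIO(FASTA_TEXT), ANNOTATIONS)
    results = query_genes(db_path, ["geneA", "geneC"])

print(results)  # {'geneA': ('ATGGCCTTGA', 'kinase'), 'geneC': (None, None)}
```

Keeping sequences and annotations under separate keys avoids rewriting a long sequence value whenever an annotation changes.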

Signaling Pathways in Drug Development

While ndbm is a data management tool, a key area of bioinformatics and drug development is the study of signaling pathways. Understanding these pathways is crucial for identifying therapeutic targets.[6] For instance, in the context of diseases like Glioblastoma (GBM), several signaling pathways are often dysregulated.[7][8][9]

Example: Simplified NF-κB Signaling Pathway

The NF-κB signaling pathway is frequently implicated in cancer development and therapeutic resistance.[8][10][11] The following diagram illustrates a simplified representation of this pathway.

In the context of drug development, researchers might use a key-value database to store information about compounds that inhibit various stages of this pathway. For example, the key could be a compound ID, and the value could be a data structure containing its target (e.g., "IKK Complex"), its IC50 value, and links to relevant publications.
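One way to hold such a structured value in a bytes-only store is to serialize it as JSON, as in the hedged sketch below. The compound IDs, targets, IC50 values, the nM unit convention, and the placeholder reference strings are all hypothetical. A query by target needs a full scan, because the store indexes only the key. The code uses `dbm.ndbm`, with `dbm.dumb` as a fallback.

```python
import json
import os
import tempfile

try:
    import dbm.ndbm as backend  # C ndbm interface, present only if Python was built with it
except ImportError:
    import dbm.dumb as backend  # pure-Python fallback exposing the same mapping operations


def put_compound(db, compound_id, target, ic50_nm, refs):
    """Store one compound record as JSON; IC50 in nM is an assumed unit convention."""
    db[compound_id] = json.dumps({"target": target, "ic50_nM": ic50_nm, "refs": refs})


def compounds_for_target(db, target):
    """Full scan over all keys: the store has no index on fields inside the value."""
    matches = []
    for key in db.keys():
        if json.loads(db[key])["target"] == target:
            matches.append(key.decode("utf-8"))
    return sorted(matches)


with tempfile.TemporaryDirectory() as tmp:
    with backend.open(os.path.join(tmp, "inhibitors"), "c") as db:
        # Hypothetical records; reference strings are placeholders, not real citations.
        put_compound(db, "CMPD-1", "IKK Complex", 120.0, ["ref-placeholder-1"])
        put_compound(db, "CMPD-2", "IKK Complex", 45.5, [])
        put_compound(db, "CMPD-3", "Proteasome", 8.0, [])
        ikk_inhibitors = compounds_for_target(db, "IKK Complex")
        cmpd2 = json.loads(db["CMPD-2"])

print(ikk_inhibitors, cmpd2["ic50_nM"])  # ['CMPD-1', 'CMPD-2'] 45.5
```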

Conclusion

While the initial premise of an "ndbm for bioinformatics" tutorial appears to be based on a misunderstanding of the term "ndbm," the underlying principles of key-value databases are highly applicable to the data management challenges in bioinformatics and drug development. These simple, high-performance databases can be powerful tools for storing, retrieving, and managing the vast amounts of data generated in modern biological research. By understanding the core concepts of ndbm-like systems, researchers can build efficient and scalable data management solutions to support their scientific discoveries.

References

- 1. ndbm Tutorial [franz.com]

- 2. The ndbm library [infolab.stanford.edu]

- 3. IBM Documentation [ibm.com]

- 4. dbm/ndbm [docs.oracle.com]

- 5. ndbm (GDBM manual) [gnu.org.ua]

- 6. pharmdbm.com [pharmdbm.com]

- 7. mdpi.com [mdpi.com]

- 8. Signaling pathways and therapeutic approaches in glioblastoma multiforme (Review) - PMC [pmc.ncbi.nlm.nih.gov]

- 9. Deregulated Signaling Pathways in Glioblastoma Multiforme: Molecular Mechanisms and Therapeutic Targets - PMC [pmc.ncbi.nlm.nih.gov]

- 10. Natural Small Molecules Targeting NF-κB Signaling in Glioblastoma - PMC [pmc.ncbi.nlm.nih.gov]

- 11. medrxiv.org [medrxiv.org]

Introduction to ndbm: A Lightweight Database for Scientific Data

An In-depth Technical Guide to the ndbm Module in Python for Researchers

For researchers, scientists, and drug development professionals, managing data efficiently is paramount. While complex relational databases have their place, many research workflows benefit from a simpler, faster solution for storing key-value data. The dbm package in Python's standard library provides a lightweight, dictionary-like interface to several file-based database engines; dbm.ndbm is the one based on the traditional Unix ndbm library.[1][2]

This guide provides an in-depth look at the dbm.ndbm module, its performance characteristics, and practical applications in a research context. It is designed for professionals who need a straightforward, persistent data storage solution without the overhead of a full-fledged database server.

The ndbm module, like other dbm interfaces, stores keys and values as bytes.[2] This makes it well suited to scenarios where you need to map unique identifiers (like a sample ID, a gene accession number, or a filename) to a piece of data (like experimental parameters, sequence metadata, or cached analysis results).

Core Concepts of ndbm

The dbm.ndbm module provides a persistent, dictionary-like object. The fundamental data structure is a key-value pair, where a unique key maps to an associated value.[3] Unlike in-memory Python dictionaries, ndbm databases are stored on disk, ensuring data persists between script executions.

Key characteristics and limitations include:

-

Persistence: Data is saved to a file and is not lost when your program terminates.

-

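Dictionary-like API: It supports familiar mapping operations such as [] for access and assignment, del, the in operator, len(), get(), setdefault(), and keys(), making it easy to learn for Python users.[4][5] Unlike a dict, a dbm.ndbm object is not directly iterable; loop over db.keys() instead.

-

Byte Storage: Keys and values are always stored as bytes. Strings are encoded to UTF-8 automatically when stored, but values (and the results of keys()) always come back as bytes, so decode them (e.g., with .decode('utf-8')) after retrieval. Other types, such as integers, must be serialized to str or bytes first.

-

Non-portability: The database files created by dbm.ndbm are not guaranteed to be compatible with other dbm implementations like dbm.gnu or dbm.dumb.[1] Furthermore, the file format may not be portable between different operating systems.[6]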

-

Single-process Access: dbm databases are generally not safe for concurrent access from multiple processes without external locking mechanisms.
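The byte-handling rules above can be checked directly with the short sketch below. It uses `dbm.ndbm`, falling back to `dbm.dumb`, whose store, lookup, and type checks behave the same way for these calls. The run IDs and parameter strings are hypothetical.

```python
import os
import tempfile

try:
    import dbm.ndbm as backend  # C ndbm interface, present only if Python was built with it
except ImportError:
    import dbm.dumb as backend  # pure-Python fallback exposing the same mapping operations

with tempfile.TemporaryDirectory() as tmp:
    with backend.open(os.path.join(tmp, "params"), "c") as db:
        db["run-42"] = "temperature=310K"     # str is encoded to UTF-8 on the way in
        db[b"run-43"] = b"temperature=298K"   # bytes are stored unchanged
        raw = db["run-42"]                    # values always come back as bytes
        text = raw.decode("utf-8")            # decode explicitly to get a str
        same_entry = db[b"run-42"] == raw     # str and bytes keys address the same entry
        keys = sorted(db.keys())              # keys() also returns bytes
        try:
            db["n-replicates"] = 3            # ints are rejected; serialize them first
            rejected_int = False
        except TypeError:
            rejected_int = True

print(raw, text, same_entry, keys, rejected_int)
# b'temperature=310K' temperature=310K True [b'run-42', b'run-43'] True
```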

Quantitative Performance Analysis

The choice of a database often involves trade-offs between speed, features, and simplicity. The dbm package in Python can use several backends, and their performance can vary significantly. While direct, standardized benchmarks for ndbm are scarce, we can infer its performance from benchmarks of its close relatives: gdbm (whose ndbm compatibility layer dbm.ndbm often wraps on Linux systems) and dbm.dumb (the pure-Python fallback).

The following table summarizes performance data from an independent benchmark of various Python key-value stores. The tests involved writing and then reading 100,000 key-value pairs.

| Database Backend | Write Time (seconds) | Read Time (seconds) | Notes |
|---|---|---|---|
| GDBM (dbm.gnu) | 0.20 | 0.38 | C-based library, generally very fast for writes. Preferred by dbm.open() over dbm.ndbm when both are available. |
| SQLite (dbm.sqlite3) | 0.88 | 0.65 | A newer, portable, and feature-rich backend. Slower for simple writes but more robust.[6] |
| BerkeleyDB (hash) | 0.30 | 0.38 | High-performance C library; not part of Python 3's standard library (requires third-party bindings). |
| DumbDBM (dbm.dumb) | 1.99 | 1.11 | Pure Python implementation. Significantly slower but always available as a fallback.[7] |

Data is adapted from a benchmark performed by Charles Leifer.[1] The values represent the time elapsed for 100,000 operations.

From this data, it's clear that C-based implementations like gdbm significantly outperform the pure-Python dbm.dumb. Given that dbm.ndbm is also a C-library interface, its performance is expected to be in a similar range to gdbm, making it a fast option for many research applications.
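To check these expectations on your own machine, a minimal timing harness is sketched below. It assumes that writing then reading N short string pairs is representative of your workload. It is not Leifer's benchmark code, and it uses a smaller N (1,000) to keep runs short, so absolute times will not match the table. Backends that are missing from the interpreter (for example `dbm.sqlite3` before Python 3.13) are skipped.

```python
import importlib
import os
import tempfile
import time


def time_backend(module, n):
    """Time n writes, then n reads, against a fresh database; returns (write_s, read_s)."""
    with tempfile.TemporaryDirectory() as tmp:
        with module.open(os.path.join(tmp, "bench"), "c") as db:
            start = time.perf_counter()
            for i in range(n):
                db[f"key{i}"] = f"value{i}"
            write_s = time.perf_counter() - start
            start = time.perf_counter()
            for i in range(n):
                db[f"key{i}"]
            read_s = time.perf_counter() - start
    return write_s, read_s


results = {}
for name in ("dbm.gnu", "dbm.ndbm", "dbm.sqlite3", "dbm.dumb"):
    try:
        module = importlib.import_module(name)
    except ImportError:
        continue  # backend not compiled into / shipped with this interpreter
    results[name] = time_backend(module, n=1_000)

for name, (write_s, read_s) in results.items():
    print(f"{name:12s} write {write_s:.4f}s  read {read_s:.4f}s")
```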

Experimental Protocols & Methodologies

Here we detail specific research-oriented workflows where ndbm is a suitable tool.

Protocol 1: Caching Intermediate Results in a Bioinformatics Pipeline

Objective: To accelerate a multi-step bioinformatics pipeline by caching the results of a computationally expensive step, avoiding re-computation on subsequent runs.

Methodology:

-

Identify the Bottleneck: Profile the pipeline to identify a function that is computationally intensive and produces a deterministic output for a given input (e.g., a function that aligns a DNA sequence to a reference genome).

-

Create a Cache Database: Before the main processing loop, open an ndbm database. This file will store the results.

-

Implement the Caching Logic:

-

For each input (e.g., a sequence ID), generate a unique key.

-

Check if this key exists in the ndbm database.

-

Cache Hit: If the key exists, retrieve the pre-computed result from the database and decode it.

-

Cache Miss: If the key does not exist, execute the computationally expensive function.

-

Store the result in the ndbm database. The key should be the unique input identifier, and the value should be the result, both encoded as bytes.


-

Close the Database: After the pipeline completes, ensure the ndbm database is closed to write any pending changes to disk.

Python Code Example:
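A minimal sketch of the caching protocol follows, assuming the expensive step is deterministic and its result fits in a short string. `expensive_step` is a hypothetical stand-in for a real alignment and simply reports length and GC count. The sequence IDs serve as cache keys, as the protocol suggests. The code uses `dbm.ndbm`, with `dbm.dumb` as a fallback.

```python
import os
import tempfile

try:
    import dbm.ndbm as backend  # C ndbm interface, present only if Python was built with it
except ImportError:
    import dbm.dumb as backend  # pure-Python fallback exposing the same mapping operations

calls = []  # records real computations, to show that the second run hits the cache


def expensive_step(sequence):
    """Hypothetical stand-in for a slow, deterministic step such as an alignment."""
    calls.append(sequence)
    gc = sum(base in "GC" for base in sequence)
    return f"length={len(sequence)};gc={gc}"


def cached_call(cache, key, sequence, compute):
    hit = cache.get(key)
    if hit is not None:
        return hit.decode("utf-8")   # cache hit: reuse the stored result
    result = compute(sequence)       # cache miss: compute ...
    cache[key] = result              # ... and store it under the input identifier
    return result


inputs = {"seq1": "ATGCGC", "seq2": "ATATAT"}
with tempfile.TemporaryDirectory() as tmp:
    cache_path = os.path.join(tmp, "step_cache")
    for run in range(2):  # the second pass simulates re-running the pipeline
        with backend.open(cache_path, "c") as cache:  # closing flushes pending writes
            outputs = {sid: cached_call(cache, sid, seq, expensive_step)
                       for sid, seq in inputs.items()}

print(outputs, len(calls))  # {'seq1': 'length=6;gc=4', 'seq2': 'length=6;gc=0'} 2
```

If the step's parameters can change between runs (reference genome, scoring options), fold them into the key as well; otherwise a stale result would be served as a hit.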

Protocol 2: Creating a Metadata Index for Large Genomic Datasets

Objective: To create a fast, searchable index of metadata for a large collection of FASTA files without loading all files into memory. This is common in genomics and drug discovery where datasets can contain thousands or millions of small files.

Methodology:

-

Define Metadata Schema: Determine the essential metadata to extract from each file (e.g., sequence ID, description, length, GC content).

-

Initialize the Index Database: Open an ndbm database file that will serve as the index.

-

Iterate and Index:

-

Loop through each FASTA file in the dataset directory.

-

Use the filename or an internal identifier as the key for the database.

-

Parse the FASTA file to extract the required metadata. The Biopython library is excellent for this.[8][9]

-

Serialize the metadata into a string format (e.g., JSON or a simple delimited string).

-

Encode both the key and the serialized metadata value to bytes.

-

Store the key-value pair in the ndbm database.


-

Querying the Index: To retrieve metadata for a specific file, open the ndbm database, access the entry using the file's key, and deserialize the metadata string.

-

Close the Database: Ensure the database is closed upon completion of indexing or querying.

Python Code Example (requires biopython):
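The sketch below implements the indexing protocol, using Biopython's `SimpleFastaParser` (from `Bio.SeqIO.FastaIO`) when it is installed. A minimal fallback parser with the same `(title, sequence)` output lets it run without Biopython. Several choices here are assumptions: the key is the sequence identifier (the first word of the header), the metadata is serialized as JSON, and the two tiny FASTA files are hypothetical. The code uses `dbm.ndbm`, with `dbm.dumb` as a fallback.

```python
import json
import os
import tempfile

try:
    from Bio.SeqIO.FastaIO import SimpleFastaParser  # Biopython's lightweight FASTA parser
except ImportError:
    def SimpleFastaParser(handle):
        """Fallback yielding (title, sequence) pairs, like Biopython's parser."""
        title, chunks = None, []
        for line in handle:
            line = line.strip()
            if line.startswith(">"):
                if title is not None:
                    yield title, "".join(chunks)
                title, chunks = line[1:].strip(), []
            elif line and title is not None:
                chunks.append(line)
        if title is not None:
            yield title, "".join(chunks)

try:
    import dbm.ndbm as backend  # C ndbm interface, present only if Python was built with it
except ImportError:
    import dbm.dumb as backend  # pure-Python fallback exposing the same mapping operations


def index_fasta_dir(fasta_dir, index_path):
    """Key: sequence identifier; value: JSON metadata (file, description, length, GC)."""
    with backend.open(index_path, "c") as index:
        for name in sorted(os.listdir(fasta_dir)):
            if not name.endswith((".fa", ".fasta")):
                continue
            with open(os.path.join(fasta_dir, name)) as handle:
                for title, seq in SimpleFastaParser(handle):
                    seq_id, _, description = title.partition(" ")
                    gc = sum(seq.upper().count(base) for base in "GC")
                    meta = {"file": name, "description": description, "length": len(seq),
                            "gc": round(gc / len(seq), 4) if seq else 0.0}
                    index[seq_id] = json.dumps(meta)


def lookup(index_path, seq_id):
    with backend.open(index_path, "r") as index:
        raw = index.get(seq_id)
    return None if raw is None else json.loads(raw)


# Hypothetical dataset: two tiny FASTA files in a temporary directory.
with tempfile.TemporaryDirectory() as tmp:
    with open(os.path.join(tmp, "a.fasta"), "w") as fh:
        fh.write(">frag1 demo fragment\nGGCCAT\n")
    with open(os.path.join(tmp, "b.fa"), "w") as fh:
        fh.write(">frag2\nATAT\nATGC\n")
    index_path = os.path.join(tmp, "index")
    index_fasta_dir(tmp, index_path)
    meta1 = lookup(index_path, "frag1")
    meta2 = lookup(index_path, "frag2")
    missing = lookup(index_path, "frag9")

print(meta1)  # {'file': 'a.fasta', 'description': 'demo fragment', 'length': 6, 'gc': 0.6667}
```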

Visualizing Workflows with Graphviz

Diagrams can clarify the logical flow of data and operations. Below are Graphviz representations of the concepts and protocols described.

Caption: Logical structure of a key-value store like ndbm.

Caption: Workflow for caching intermediate results.

Caption: Experimental workflow for metadata indexing.

Conclusion

The dbm.ndbm module is a powerful yet simple tool in a researcher's data management toolkit. While it lacks the advanced features of relational databases, its speed, simplicity, and dictionary-like interface make it an excellent choice for a wide range of applications, including result caching, metadata indexing, and managing experimental parameters. For scientific and drug discovery professionals working in a Python environment, ndbm offers a pragmatic, file-based solution for persisting key-value data with minimal overhead.

References

- 1. charles leifer | Completely un-scientific benchmarks of some embedded databases with Python [charlesleifer.com]

- 2. Benchmarking Semidbm — semidbm 0.5.1 documentation [semidbm.readthedocs.io]

- 3. Key-value database systems - Python for Data Science [python4data.science]

- 4. Tips for Managing and Analyzing Large Data Sets with Python [statology.org]

- 5. youtube.com [youtube.com]

- 6. discuss.python.org [discuss.python.org]

- 7. 11.12. dumbdbm — Portable DBM implementation — Stackless-Python 2.7.15 documentation [stackless.readthedocs.io]

- 8. kaggle.com [kaggle.com]

- 9. medium.com [medium.com]

NDBM vs. GDBM: A Technical Guide for Research Applications

For researchers, scientists, and drug development professionals managing vast and complex datasets, the choice of a database management system is a critical decision that can significantly impact the efficiency and scalability of their work. This guide provides an in-depth technical comparison of two key-value store database libraries, ndbm (New Database Manager) and gdbm (GNU Database Manager), with a focus on their applicability in research environments.

Core Architectural and Feature Comparison

Both ndbm and gdbm are lightweight, file-based database libraries that store data as key-value pairs. They originate from the original dbm library and provide a simple and efficient way to manage data without the overhead of a full-fledged relational database system. However, they differ significantly in their underlying architecture, feature sets, and performance characteristics.

Data Storage and File Format

A fundamental distinction lies in how each library physically stores data on disk.

-

ndbm: Employs a two-file system. For a database named mydatabase, ndbm creates mydatabase.dir and mydatabase.pag. The .dir file acts as a directory or index, containing a bitmap for the hash table, while the .pag file stores the actual key-value data pairs.[1] This separation of index and data can have implications for data retrieval performance and file management.

-

gdbm: Utilizes a single file for storing the entire database.[2] This approach simplifies file management and can be more efficient in certain I/O scenarios. gdbm also supports different file formats, including a standard format and an "extended" format that offers enhanced crash tolerance.[3][4]

Key and Value Size Limitations

A critical consideration for scientific data, which can vary greatly in size, is the limitation on the size of keys and values.

-

ndbm: Historically, ndbm has limitations on the size of the key-value pair, typically ranging from 1018 to 4096 bytes in total.[5] This can be a significant constraint when storing large data objects such as gene sequences, protein structures, or complex chemical compound information.

-

gdbm: A major advantage of gdbm is that it imposes no inherent limits on the size of keys or values.[5] This flexibility makes it a more suitable choice for applications dealing with large and variable-sized data records.

Concurrency and Locking

In collaborative research environments, concurrent access to databases is often a necessity.

-

ndbm: The original ndbm has limited built-in support for concurrent access, making it risky for multiple processes to write to the database simultaneously.[5] Some implementations may offer file locking mechanisms.[6]

-

gdbm: Provides a more robust locking mechanism by default, allowing multiple readers to access the database concurrently or a single writer to have exclusive access.[5][7] This makes gdbm a safer choice for multi-user or multi-process applications.
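When ndbm itself offers no locking, one common workaround is an advisory lock held on a side file for as long as the database is open. The sketch below is a hypothetical wrapper, `LockedDBM`, built on `fcntl.flock`. It mimics gdbm's "many readers or one writer" rule: writers take an exclusive lock and readers a shared one. It is Unix-only and protects only processes that all go through the same wrapper. It uses `dbm.ndbm`, with `dbm.dumb` as a fallback, and the `.lock` suffix is an arbitrary choice.

```python
import fcntl
import os
import tempfile

try:
    import dbm.ndbm as backend  # C ndbm interface, present only if Python was built with it
except ImportError:
    import dbm.dumb as backend  # pure-Python fallback exposing the same mapping operations


class LockedDBM:
    """Hypothetical wrapper: hold an advisory flock() on a side file while the DBM is open."""

    def __init__(self, path, write=False):
        self.path = path
        self.write = write

    def __enter__(self):
        self._lock_file = open(self.path + ".lock", "a")
        fcntl.flock(self._lock_file, fcntl.LOCK_EX if self.write else fcntl.LOCK_SH)
        try:
            self._db = backend.open(self.path, "c" if self.write else "r")
        except Exception:
            self._lock_file.close()  # closing the descriptor releases the lock
            raise
        return self._db

    def __exit__(self, *exc_info):
        self._db.close()  # flush writes before other processes may proceed
        fcntl.flock(self._lock_file, fcntl.LOCK_UN)
        self._lock_file.close()
        return False


with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "shared")
    with LockedDBM(path, write=True) as db:
        db["plate-7"] = "imaged"
        # A second, independent open of the lock file cannot get even a shared lock now.
        with open(path + ".lock", "a") as probe:
            try:
                fcntl.flock(probe, fcntl.LOCK_SH | fcntl.LOCK_NB)
                contended = False
            except BlockingIOError:
                contended = True
    with LockedDBM(path) as db:
        status = db["plate-7"].decode("utf-8")

print(contended, status)  # True imaged
```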

Quantitative Data Summary

The following table summarizes the key quantitative and feature-based differences between ndbm and gdbm.

| Feature | ndbm | gdbm |
|---|---|---|
| File Structure | Two files (.dir, .pag)[1] | Single file[2] |
| Key Size Limit | Limited (varies by implementation)[5] | No limit[5] |
| Value Size Limit | Limited (varies by implementation)[5] | No limit[5] |
| Concurrency | Limited, typically no built-in locking[5] | Multiple readers or one writer (locking by default)[5][7] |
| Crash Tolerance | Basic | Enhanced, with "extended" file format option[3][4][8] |
| API | Standardized by POSIX | Native API with more features, also provides an ndbm compatibility layer[9] |
| In-memory Caching | Implementation dependent | Internal bucket cache for improved read performance[6] |
| Data Traversal | Sequential key traversal[10] | Sequential key traversal[9] |

Experimental Protocols: Use Case Scenarios

To illustrate the practical implications of choosing between ndbm and gdbm, we present two hypothetical experimental protocols for common research tasks.

Experiment 1: Small Molecule Library Management

Objective: To create and manage a local database of small molecules for a drug discovery project, storing chemical identifiers (e.g., SMILES strings) as keys and associated metadata (e.g., molecular weight, logP, in-house ID) as values.

Methodology with ndbm:

-

Database Initialization: A new ndbm database is created using the dbm_open() function with the O_RDWR | O_CREAT flags.

-

Data Ingestion: A script reads a source file (e.g., a CSV or SDF file) containing the small molecule data. For each molecule, a key is generated from the SMILES string, and the associated metadata is concatenated into a single string to serve as the value.

-

Data Storage: The dbm_store() function is used to insert each key-value pair into the database. A check is performed to ensure the total size of the key and value does not exceed the implementation's limit.

-

Data Retrieval: A separate script allows users to query the database by providing a SMILES string. The dbm_fetch() function is used to retrieve the corresponding metadata.

-

Concurrency Test: An attempt is made to have two concurrent processes write to the database simultaneously to observe potential data corruption issues.

Expected Outcome with ndbm: The database creation and data retrieval for a small number of compounds with concise metadata will likely be successful and performant. However, issues are expected to arise if the metadata is extensive, potentially exceeding the key-value size limit. The concurrency test is expected to fail or lead to an inconsistent database state.
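The concatenation and size check in steps 2 and 3 can be sketched as follows. The sketch compares the combined UTF-8 length of key and value against a fixed budget. `ASSUMED_PAIR_LIMIT = 1018` is taken from the low end of the historical range cited earlier, not read from any library. Real ndbm pages also spend bytes on bookkeeping, so treat the check as an approximation. The molecular weight and logP values are illustrative, and the internal ID is hypothetical. The code uses `dbm.ndbm`, with `dbm.dumb` as a fallback.

```python
import os
import tempfile

try:
    import dbm.ndbm as backend  # C ndbm interface, present only if Python was built with it
except ImportError:
    import dbm.dumb as backend  # pure-Python fallback exposing the same mapping operations

ASSUMED_PAIR_LIMIT = 1018  # assumption: low end of the historical ndbm range, in bytes


def encode_metadata(mol_weight, logp, internal_id):
    """Concatenate metadata into one delimited value string, as in step 2."""
    return f"{mol_weight:.2f}|{logp:.2f}|{internal_id}"


def checked_store(db, smiles, value, limit=ASSUMED_PAIR_LIMIT):
    """Refuse pairs whose combined UTF-8 size exceeds the assumed limit."""
    key_bytes, value_bytes = smiles.encode("utf-8"), value.encode("utf-8")
    size = len(key_bytes) + len(value_bytes)
    if size > limit:
        raise ValueError(f"{size}-byte pair exceeds the assumed {limit}-byte limit")
    db[key_bytes] = value_bytes


aspirin = "CC(=O)OC1=CC=CC=C1C(=O)O"
with tempfile.TemporaryDirectory() as tmp:
    with backend.open(os.path.join(tmp, "library"), "c") as db:
        checked_store(db, aspirin, encode_metadata(180.16, 1.19, "INH-0001"))
        mw, logp, internal_id = db[aspirin].decode("utf-8").split("|")
        try:
            checked_store(db, "C" * 1200, encode_metadata(1.0, 0.0, "TOO-BIG"))
            oversized_rejected = False
        except ValueError:
            oversized_rejected = True

print(mw, logp, internal_id, oversized_rejected)  # 180.16 1.19 INH-0001 True
```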

Methodology with gdbm:

-

Database Initialization: A gdbm database is created using gdbm_open(). The "extended" format can be specified for improved crash tolerance.

-

Data Ingestion: Similar to the ndbm protocol, a script processes the source file. The metadata can be stored in a more structured format (e.g., JSON) as the value, given the absence of size limitations.

-

Data Storage: The gdbm_store() function is used for data insertion.

-

Data Retrieval: The gdbm_fetch() function retrieves the metadata for a given SMILES key.

-

Concurrency Test: Two processes will be initiated: one writing new entries to the database and another reading existing entries simultaneously, leveraging gdbm's reader-writer locking.

Expected Outcome with gdbm: The process is expected to be more robust. The ability to store larger, more structured metadata (like JSON) is a significant advantage. The concurrency test should demonstrate that the reading process can continue uninterrupted while the writing process is active, without data corruption.

Experiment 2: Storing and Indexing Genomic Sequencing Data

Objective: To store and quickly retrieve short DNA sequences and their corresponding annotations from a large FASTA file.

Methodology with ndbm:

-

Database Design: The sequence identifier from the FASTA file will be used as the key, and the DNA sequence itself as the value.

-

Data Ingestion: A parser reads the FASTA file. For each entry, it extracts the identifier and the sequence.

-

Data Storage: The dbm_store() function is called to store the identifier-sequence pair. A check is implemented to handle sequences that might exceed the value size limit, potentially by truncating them or storing a file path to the sequence.

-

Performance Benchmark: The time taken to ingest a large FASTA file (e.g., >1GB) is measured. Subsequently, the time to perform a batch of random key lookups is also measured.

Expected Outcome with ndbm: For FASTA files containing many short sequences, ndbm might perform adequately. However, for genomes with long contigs or chromosomes, the value size limitation will be a major obstacle, requiring workarounds that add complexity. The ingestion process for very large files might be slow due to the overhead of managing two separate files.
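The "store a file path instead" workaround from step 3 might look like the sketch below. `INLINE_LIMIT = 512` is a hypothetical threshold chosen to stay well under small ndbm page sizes. The `seq:`/`file:` value prefixes are an assumed convention, and sequence IDs are assumed to be safe to use as file names. The code uses `dbm.ndbm`, with `dbm.dumb` as a fallback.

```python
import os
import tempfile

try:
    import dbm.ndbm as backend  # C ndbm interface, present only if Python was built with it
except ImportError:
    import dbm.dumb as backend  # pure-Python fallback exposing the same mapping operations

INLINE_LIMIT = 512  # hypothetical threshold, kept well under small ndbm page sizes


def store_sequence(db, seq_id, sequence, spill_dir):
    """Store short sequences inline; write long ones to a side file and store its path."""
    if len(sequence) <= INLINE_LIMIT:
        db[seq_id] = "seq:" + sequence
    else:
        path = os.path.join(spill_dir, seq_id + ".seq")  # assumes seq_id is filename-safe
        with open(path, "w") as fh:
            fh.write(sequence)
        db[seq_id] = "file:" + path


def load_sequence(db, seq_id):
    kind, _, payload = db[seq_id].decode("utf-8").partition(":")
    if kind == "seq":
        return payload
    with open(payload) as fh:
        return fh.read()


with tempfile.TemporaryDirectory() as tmp:
    with backend.open(os.path.join(tmp, "reads"), "c") as db:
        short_read, contig = "ACGTTGCA", "ACGT" * 500  # hypothetical sequences
        store_sequence(db, "read_001", short_read, tmp)
        store_sequence(db, "contig_01", contig, tmp)
        contig_is_spilled = db["contig_01"].startswith(b"file:")
        round_trip_ok = (load_sequence(db, "read_001") == short_read
                         and load_sequence(db, "contig_01") == contig)

print(contig_is_spilled, round_trip_ok)  # True True
```

The cost of this design is that the database no longer holds all of the data. Moving or deleting the side files silently breaks the stored paths, which is part of the added complexity the expected outcome describes.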

Methodology with gdbm:

-

Database Design: The sequence identifier is the key, and the full, untruncated DNA sequence is the value.

-

Data Ingestion: A parser reads the FASTA file and uses gdbm_store() to populate the database.

-

Performance Benchmark: The same performance metrics as in the ndbm protocol (ingestion time and random lookup time) are measured.

-

Feature Test: The gdbm_reorganize() function is called after a large number of deletions to observe the effect on the database file size.

Expected Outcome with gdbm: gdbm is expected to handle the large sequencing data without issues because it imposes no fixed size limits. Ingestion and retrieval performance are anticipated to be competitive with, or superior to, ndbm, especially for larger datasets. The ability to reclaim space with gdbm_reorganize() is an added benefit for managing dynamic datasets where entries are frequently added and removed.

Signaling Pathways, Experimental Workflows, and Logical Relationships

The following diagrams illustrate the conceptual workflows and relationships discussed.

Conclusion and Recommendations

For modern research applications in fields such as bioinformatics, genomics, and drug discovery, gdbm emerges as the superior choice over ndbm. Its key advantages, including the absence of size limitations for keys and values, a more robust concurrency model, and features like crash tolerance, directly address the challenges posed by large and complex scientific datasets. While ndbm can be adequate for simpler, smaller-scale tasks with well-defined data sizes and single-process access, its limitations make it less suitable for the evolving demands of data-intensive research.

Researchers and developers starting new projects that require a simple, efficient key-value store are strongly encouraged to opt for gdbm. For legacy systems currently using ndbm that are encountering limitations, migrating to gdbm is a viable and often necessary step to enhance scalability, data integrity, and performance. gdbm's ndbm compatibility layer can facilitate such a migration.

References

- 1. researchgate.net [researchgate.net]

- 2. The ndbm library [infolab.stanford.edu]

- 3. GDBM [gnu.org.ua]

- 4. Unix Incompatibility Notes: DBM Hash Libraries [unixpapa.com]

- 5. chemrxiv.org [chemrxiv.org]

- 6. Integrated data-driven biotechnology research environments - PMC [pmc.ncbi.nlm.nih.gov]

- 7. grokipedia.com [grokipedia.com]

- 8. chimia.ch [chimia.ch]

- 9. ahmettsoner.medium.com [ahmettsoner.medium.com]

- 10. Introduction to dbm | KOSHIGOE.Write(something) [koshigoe.github.io]

An In-depth Technical Guide to the Core Principles of NDBM File Organization

Audience: Researchers, scientists, and drug development professionals.

This guide provides a comprehensive technical overview of the NDBM (New Database Manager) file organization, a key-value storage system that has been a foundational component in various data management systems. We will delve into its core principles, file structure, hashing mechanisms, and operational workflows.

Introduction to NDBM

ndbm is a library of routines that manages data files containing key-value pairs.[1] It is designed for efficient storage and retrieval of data by key, offering a significant performance advantage over flat-file databases for direct lookups.[2] ndbm is a successor to the original DBM library and introduces several enhancements, including the ability to handle larger databases.[2][3] An ndbm database is physically stored in two separate files that work together to provide rapid access to data.[3]

Core Principles of NDBM File Organization

The fundamental principle behind ndbm is the use of a hash table to store and retrieve data. This gives O(1) lookup time on average, meaning that the time it takes to find a piece of data does not grow with the total size of the database.[4] This is achieved by converting a variable-length key into a fixed-size hash value, which is then used to determine the location of the corresponding data.

ndbm employs a dynamic hashing scheme known as extendible hashing.[2][4] This technique lets the hash table grow as more data is added, avoiding the costly process of rehashing the entire database when it becomes full.[5][6]

NDBM File Structure

An ndbm database consists of two distinct files:

-

The Directory File (.dir): This file acts as the directory or index for the database.[3][7] It contains a hash table that maps hash values of keys to locations within the page file.[3] In some implementations, this file also contains a bitmap to manage free space.[3]

-

The Page File (.pag): This file stores the actual key-value pairs.[3][7] It is organized into "pages" or "buckets," which are fixed-size blocks of data. Multiple key-value pairs can be stored within a single page.

Because the directory is kept separate from the data, it can be small enough to be cached in memory, which makes lookups very fast. Finding the value associated with a key typically involves one disk access to the directory file and one disk access to the page file.[3]

| File Component | Extension | Purpose |
|---|---|---|
| Directory File | .dir | Contains the hash table (directory) that maps keys to page file locations. |
| Page File | .pag | Stores the actual key-value data pairs in fixed-size blocks. |

The Hashing Mechanism: SDBM Hash Function

While the specific hash function varies between implementations, a commonly associated algorithm is the sdbm hash function.[8][9][10] The name comes from sdbm ("substitute dbm"), a public-domain reimplementation of ndbm. The hash is non-cryptographic and designed for speed and good key distribution.[8][9] Good distribution is crucial for minimizing hash collisions (where two different keys produce the same hash value), which in turn keeps the database efficient.[10]

The core principle of the sdbm algorithm is to iterate through each character of the key, applying a simple transformation to a running hash value.[8] The formula can be expressed as:

$$\mathrm{hash}(i) = \mathrm{hash}(i-1) \times 65599 + \mathrm{str}[i]$$

Here hash(i) is the hash value after the i-th character, str[i] is the numeric value of the i-th character (its ASCII code for ASCII text), and the running hash starts at 0.[9][10] The constant 65599 is a prime number chosen to help ensure a more even distribution of hash values.[8][9]

The following diagram illustrates the logical flow of hashing a key to find the corresponding data in an ndbm database.

Experimental Protocols: Core NDBM Operations

This section details the methodologies for performing fundamental operations on an ndbm database. These protocols are based on the standard ndbm library functions.

-

Objective: To create a new ndbm database or open an existing one.

-

Methodology:

-

Include the ndbm.h header file in your C application.

-

Use the dbm_open() function, providing the base filename for the database, flags to indicate the mode of operation (e.g., read-only, read-write, create if not existing), and file permissions for creation.[1][7]

-

The function returns a DBM pointer, which is a handle to the opened database. This handle is used for all subsequent operations.[1][7]

-

If the function fails, it returns NULL.

-

-

Objective: To insert a new key-value pair or update an existing one.

-

Methodology:

-

Ensure the database is opened in a writable mode.

-

Define the key and value as datum structures. A datum has two members: dptr (a pointer to the data) and dsize (the size of the data).[1]

-

Call the dbm_store() function, passing the database handle, the key datum, the value datum, and a flag indicating the desired behavior (DBM_INSERT to only insert if the key doesn't exist, or DBM_REPLACE to overwrite an existing entry).[1][7]

-

The function returns 0 on success, 1 if DBM_INSERT was used and the key already exists, and a negative value on error.[3]

-

-

Objective: To fetch the value associated with a given key.

-

Methodology:

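-

Define the key to be retrieved as a datum structure.

-

Call the dbm_fetch() function with the database handle and the key datum.

-

The function returns a datum containing the value. If the key is not found, the dptr member of the returned datum is NULL.

-

-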

Objective: To remove a key and its associated value from the database.

-

Methodology:

-

Define the key to be deleted as a datum structure.

-

Call the dbm_delete() function with the database handle and the key datum.[1]

-

The function returns 0 on success and a negative value on failure.

-

-

Objective: To properly close the ndbm database and release its resources.

-

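Methodology:

-

Call the dbm_close() function with the database handle. The function does not return a value, and the handle must not be used after the call.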

| Function | Purpose | Key Parameters | Return Value |
|---|---|---|---|
| dbm_open() | Opens or creates a database. | filename, flags, mode | DBM* handle, or NULL on error. |
| dbm_store() | Stores a key-value pair. | DBM*, key datum, value datum, mode | 0 on success, 1 if the key exists and DBM_INSERT was used, negative on error. |
| dbm_fetch() | Retrieves a value by key. | DBM*, key datum | datum holding the value, or a datum with dptr = NULL if the key is not found. |
| dbm_delete() | Deletes a key-value pair. | DBM*, key datum | 0 on success, negative on error. |
| dbm_close() | Closes the database. | DBM* | None (void). |

The following diagram illustrates a typical workflow for using an ndbm database in an application.

Dynamic Growth: Extendible Hashing

A key feature of ndbm is its ability to handle growing datasets efficiently through extendible hashing.[2] This mechanism avoids the performance degradation that static hash tables suffer when they become too full.

The core idea is to keep a directory of pointers to data buckets. The hash function produces a binary string, and a certain number of bits from this string (the "global depth") are used as an index into the directory.[4][6] Each bucket also has a "local depth": the number of hash bits that all keys in that bucket have in common, which is never greater than the global depth. When a bucket's local depth is smaller, several directory entries point to it, so the directory only has to double in size when a full bucket's local depth has already reached the global depth.

When a bucket overflows:

-

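If the bucket's local depth is less than the directory's global depth, the bucket is split into two buckets, each with a local depth one greater than before. Its keys are redistributed according to the next hash bit, and the directory entries that pointed to the old bucket are updated to point to the appropriate new bucket.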

-

If the bucket's local depth is equal to the global depth, the directory itself is doubled in size (the global depth is incremented), and then the bucket is split.[6][11]

This process ensures that only the necessary parts of the hash table are expanded, making it a very efficient way to manage dynamic data.
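A small in-memory simulation makes the two overflow rules above concrete. It is a sketch of textbook extendible hashing under stated assumptions, not of ndbm's on-disk format: the directory is indexed by the low-order global-depth bits of a caller-supplied 32-bit hash, each bucket holds a hypothetical `BUCKET_CAP` of two hash values (no payloads), and the directory is capped at `MAX_DEPTH` bits so that identical hashes cannot force unbounded doubling.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

#define BUCKET_CAP 2   /* tiny, so splits happen after a few inserts */
#define MAX_DEPTH 16   /* directory is capped at 2^16 slots */

typedef struct {
    int local_depth;             /* hash bits shared by every key in here */
    int count;
    uint32_t keys[BUCKET_CAP];   /* hash values only, no payloads */
} Bucket;

typedef struct {
    int global_depth;            /* the directory has 2^global_depth slots */
    Bucket **dir;                /* several slots may share one bucket */
} ExtHash;

/* Directory index = low-order `depth` bits of the hash. */
static size_t dir_index(uint32_t h, int depth)
{
    return (size_t)(h & ((1u << depth) - 1u));
}

int eh_init(ExtHash *t)
{
    t->global_depth = 0;
    t->dir = malloc(sizeof *t->dir);
    if (t->dir == NULL || (t->dir[0] = calloc(1, sizeof(Bucket))) == NULL) {
        free(t->dir);
        return -1;
    }
    return 0;
}

/* Insert hash h. Returns 0 on success, -1 on allocation failure or when the
 * directory would need more than MAX_DEPTH bits. */
int eh_insert(ExtHash *t, uint32_t h)
{
    for (;;) {
        Bucket *b = t->dir[dir_index(h, t->global_depth)];
        assert(b->local_depth <= t->global_depth);
        if (b->count < BUCKET_CAP) {
            b->keys[b->count++] = h;
            return 0;
        }
        if (b->local_depth == t->global_depth) {
            /* No spare index bit: double the directory first. */
            if (t->global_depth == MAX_DEPTH)
                return -1;
            size_t old = (size_t)1 << t->global_depth;
            Bucket **d = realloc(t->dir, 2 * old * sizeof *d);
            if (d == NULL)
                return -1;
            for (size_t i = 0; i < old; i++)
                d[old + i] = d[i];       /* slot i + old mirrors slot i */
            t->dir = d;
            t->global_depth++;
        }
        /* Split b on hash bit number local_depth; keys with that bit set move. */
        Bucket *nb = calloc(1, sizeof *nb);
        if (nb == NULL)
            return -1;
        uint32_t bit = 1u << b->local_depth;
        nb->local_depth = ++b->local_depth;
        int kept = 0;
        for (int i = 0; i < b->count; i++) {
            if (b->keys[i] & bit)
                nb->keys[nb->count++] = b->keys[i];
            else
                b->keys[kept++] = b->keys[i];
        }
        b->count = kept;
        for (size_t i = 0; i < ((size_t)1 << t->global_depth); i++)
            if (t->dir[i] == b && (i & bit))
                t->dir[i] = nb;          /* repoint slots that have the bit set */
        /* Loop again: the target bucket can still be full if no key moved. */
    }
}

int eh_contains(const ExtHash *t, uint32_t h)
{
    const Bucket *b = t->dir[dir_index(h, t->global_depth)];
    for (int i = 0; i < b->count; i++)
        if (b->keys[i] == h)
            return 1;
    return 0;
}

void eh_free(ExtHash *t)
{
    for (size_t i = 0; i < ((size_t)1 << t->global_depth); i++)
        if (i < ((size_t)1 << t->dir[i]->local_depth))   /* first slot of each bucket */
            free(t->dir[i]);
    free(t->dir);
}
```

Inserting the hashes 0 through 7 with this capacity doubles the directory twice (global depth 2) and ends with four buckets of local depth 2. The split of the bucket holding 1 and 3 happens without doubling, because its local depth (1) is already below the global depth (2) at that point.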

This diagram illustrates the logic of splitting a bucket in an extendible hashing scheme.

Conclusion

The ndbm file organization provides a robust and efficient mechanism for key-value data storage and retrieval. Its two-file structure, combined with extendible hashing, allows for fast lookups and graceful handling of database growth. For researchers and developers who require a simple yet high-performance embedded database solution, understanding the core principles of ndbm is invaluable. While newer and more feature-rich database systems exist, the foundational concepts of ndbm remain relevant in the design of modern data storage systems.

References

- 1. The ndbm library [infolab.stanford.edu]

- 2. DBM (computing) - Wikipedia [en.wikipedia.org]

- 3. IBM Documentation [ibm.com]

- 4. delab.csd.auth.gr [delab.csd.auth.gr]

- 5. studyglance.in [studyglance.in]

- 6. Extendible Hashing (Dynamic approach to DBMS) - GeeksforGeeks [geeksforgeeks.org]

- 7. NDBM (GDBM manual) [gnu.org.ua]

- 8. matlab.algorithmexamples.com [matlab.algorithmexamples.com]

- 9. cse.yorku.ca [cse.yorku.ca]

- 10. sdbm [doc.riot-os.org]