BBD

Description

Properties

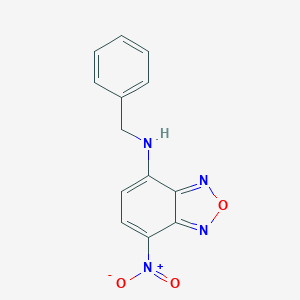

| Property | Value | Source |
|---|---|---|
| IUPAC Name | N-benzyl-4-nitro-2,1,3-benzoxadiazol-7-amine | PubChem |
| InChI | InChI=1S/C13H10N4O3/c18-17(19)11-7-6-10(12-13(11)16-20-15-12)14-8-9-4-2-1-3-5-9/h1-7,14H,8H2 | PubChem |
| InChI Key | GZFKJMWBKTUNJS-UHFFFAOYSA-N | PubChem |
| Canonical SMILES | C1=CC=C(C=C1)CNC2=CC=C(C3=NON=C23)[N+](=O)[O-] | PubChem |
| Molecular Formula | C13H10N4O3 | PubChem |
| DSSTox Substance ID | DTXSID90171475 | EPA DSSTox |
| Molecular Weight | 270.24 g/mol | PubChem |
| CAS No. | 18378-20-6 | CAS Common Chemistry, ChemIDplus, DTP/NCI, EPA DSSTox, ECHA, FDA GSRS |

| Source | Record name | URL |
|---|---|---|
| PubChem | (data deposited in or computed by PubChem) | https://pubchem.ncbi.nlm.nih.gov |
| EPA DSSTox | 7-Nitro-N-(benzyl)benzofurazan-4-amine | https://comptox.epa.gov/dashboard/DTXSID90171475 |
| CAS Common Chemistry | 7-Benzylamino-4-nitro-2,1,3-benzoxadiazole | https://commonchemistry.cas.org/detail?cas_rn=18378-20-6 |
| ChemIDplus | 7-Nitro-N-(benzyl)benzofurazan-4-amine | https://pubchem.ncbi.nlm.nih.gov/substance/?source=chemidplus&sourceid=0018378206 |
| DTP/NCI | 18378-20-6 | https://dtp.cancer.gov/dtpstandard/servlet/dwindex?searchtype=NSC&outputformat=html&searchlist=240867 |
| European Chemicals Agency (ECHA) | 7-nitro-N-(benzyl)benzofurazan-4-amine | https://echa.europa.eu/substance-information/-/substanceinfo/100.038.404 |
| FDA Global Substance Registration System (GSRS) | 7-Nitro-N-(benzyl)benzofurazan-4-amine | https://gsrs.ncats.nih.gov/ginas/app/beta/substances/MKU6C7CU72 |

Licensing notes from the data sources:

- CAS Common Chemistry: data are provided under a CC-BY-NC 4.0 license, unless otherwise stated.
- DTP/NCI: unless otherwise indicated, all text within NCI products is free of copyright and may be reused without permission; credit the National Cancer Institute as the source.
- ECHA: information, documents and data from the ECHA website may be reproduced, distributed and/or used, totally or in part, for non-commercial purposes provided that ECHA is acknowledged as the source ("Source: European Chemicals Agency, http://echa.europa.eu/"). This acknowledgement must be included in each copy of the material.
- FDA GSRS: unless otherwise noted, the contents of the FDA website (www.fda.gov) are not copyrighted. They are in the public domain and may be republished freely. Credit to the U.S. Food and Drug Administration as the source is appreciated but not required.

Foundational & Exploratory

Box-Behnken Design: A Technical Guide for Experimental Optimization in Scientific Research

For Researchers, Scientists, and Drug Development Professionals

In experimental research, particularly in drug development and process optimization, efficiency and precision are paramount. The Box-Behnken Design (BBD) is a powerful statistical tool that offers a strategic approach to understanding and optimizing complex processes. This technical guide explores the core principles of BBD, its practical applications, and detailed methodologies, tailored for scientists and researchers seeking to improve their experimental designs.

Core Principles of Box-Behnken Design

Developed by George E. P. Box and Donald Behnken in 1960, the Box-Behnken design is a type of response surface methodology (RSM) design that offers an efficient alternative to other designs, such as central composite and full factorial designs.[1] BBD is specifically structured to fit a quadratic model, which makes it highly effective for optimization studies where curvature in the response surface is expected.[2]

The fundamental structure of a BBD involves testing each experimental factor at three distinct, equally spaced levels, typically coded as -1 (low), 0 (center), and +1 (high).[3] A key characteristic and significant advantage of BBD is that it deliberately avoids experimental runs where all factors are simultaneously at their extreme (high or low) levels.[2][4] This "no corners" approach is particularly beneficial when extreme factor combinations could lead to undesirable, unsafe, or impractical experimental conditions.[4][5]

The design is constructed by combining two-level factorial designs with incomplete block designs.[6] For three factors, the experimental points are placed at the midpoints of the edges of the cubic design space and at the center.[4] This arrangement allows efficient estimation of the linear, interaction, and quadratic effects of the factors on the response variable.[1]
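The construction can be sketched in a few lines of Python. This is a minimal sketch that assumes the pairwise layout: each pair of factors is run as a 2² factorial while the remaining factors stay at 0. That layout reproduces the standard designs for three to five factors. `box_behnken` is a hypothetical helper, not a function from any particular library. Designs with six or more factors use different block layouts, and the sketch does not attempt them.

```python
from itertools import combinations, product

def box_behnken(k, n_center=3):
    """Coded Box-Behnken design built pairwise (sketch for k = 3, 4 or 5).

    Every pair of factors receives a 2x2 factorial at -1/+1 while all other
    factors stay at 0; n_center center points are appended at the end.
    """
    if k not in (3, 4, 5):
        raise ValueError("this pairwise sketch only covers k = 3, 4 or 5")
    runs = []
    for i, j in combinations(range(k), 2):        # each pair of factors
        for a, b in product((-1, 1), repeat=2):   # 2^2 factorial on that pair
            point = [0] * k
            point[i], point[j] = a, b
            runs.append(tuple(point))
    runs.extend([tuple([0] * k)] * n_center)      # replicated center points
    return runs

design = box_behnken(3)
for run in design:
    print(run)

# No run has every factor at an extreme level (no cube corners).
assert not any(all(abs(v) == 1 for v in run) for run in design)
```

For three factors this yields 12 edge-midpoint runs plus 3 center points. Calling `box_behnken(4)` or `box_behnken(5, n_center=6)` gives the 27- and 46-run designs.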

The Logical Structure of a Box-Behnken Design

The following diagram illustrates the conceptual structure of a Box-Behnken Design for three factors. Each axis represents a factor, and the points represent the experimental runs. Notice the absence of points at the corners of the cube.

References

1. researchgate.net
2. Applying Box–Behnken Design for Formulation and Optimization of PLGA-Coffee Nanoparticles and Detecting Enhanced Antioxidant and Anticancer Activities. PMC (pmc.ncbi.nlm.nih.gov)
3. Box-Behnken Design for Optimization of Formulation Variables for Fast Dissolving Tablet of Urapidil (wisdomlib.org)
4. ijper.org
5. Box-Behnken Design for Optimization of Formulation Variables for Fast Dissolving Tablet of Urapidil. Asian Journal of Pharmaceutics (AJP) (asiapharmaceutics.info)
6. researchgate.net

Principles of Box-Behnken Design: An In-depth Guide for Researchers

In experimental design, particularly in pharmaceutical development and process optimization, the Box-Behnken Design (BBD) is a highly efficient and economical approach. As a cornerstone of Response Surface Methodology (RSM), BBD provides a framework for modeling and analyzing processes where the response of interest is influenced by multiple variables. This guide gives a comprehensive overview of the core principles of BBD, its practical applications, and a detailed protocol for its implementation, tailored for professionals in scientific research and drug development.

Core Principles of Box-Behnken Design

Developed by George E. P. Box and Donald Behnken in 1960, the Box-Behnken design is a type of response surface design that is structured to fit a quadratic model.[1] It is particularly advantageous when the experimental region is known, and the primary goal is to understand the relationships between quantitative experimental variables and a response variable to find the optimal conditions.

At its core, BBD is a three-level incomplete factorial design.[1][2] For each factor, or independent variable, three levels are examined: a low level (coded as -1), a central level (coded as 0), and a high level (coded as +1).[1] A key characteristic of BBD is that it includes no experimental runs at the vertices (corners) of the cubic design space, where all factors are simultaneously at their extreme high or low levels.[3][4] Instead, for three factors, the design points are located at the midpoints of the edges of the experimental space and at the center.[3][5] This feature is a significant advantage when extreme factor combinations could lead to undesirable outcomes, such as unsafe operating conditions or compromised product quality.[3][4]

BBDs are designed to be rotatable or nearly rotatable. In a rotatable design, the variance of the predicted response is the same at all points equidistant from the center of the design; in a nearly rotatable design, it is approximately the same.[6] As a result, the precision of a prediction depends mainly on its distance from the center rather than on its direction.

Box-Behnken Design vs. Central Composite Design

A common alternative to BBD is the Central Composite Design (CCD). While both are used for response surface modeling, they differ in structure and in the number of experimental runs they require.

| Number of Factors | Box-Behnken Design (Number of Runs) | Central Composite Design (Number of Runs) |
|---|---|---|
| 3 | 15 (including 3 center points) | 20 (including 6 center points) |
| 4 | 27 (including 3 center points) | 30 (including 6 center points) |
| 5 | 46 (including 6 center points) | 52 (full factorial, including 10 center points) |
| 6 | 54 (including 6 center points) | 91 (full factorial, including 15 center points) |

As the table illustrates, for a small number of factors (typically 3 or 4), BBD is often more efficient in terms of the number of required experiments.[7][8] However, as the number of factors increases, the efficiency advantage of BBD may diminish.[8]
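The CCD column follows from a simple count. A CCD needs 2^k factorial points, 2k axial (star) points, and the chosen number of center points. The sketch below assumes a full factorial portion unless `fraction` is set; `ccd_runs` is a hypothetical helper. It recomputes the CCD figures. For example, the six-factor figure of 91 corresponds to 64 factorial + 12 axial + 15 center points. The sketch also shows how a half-fraction changes the six-factor comparison.

```python
def ccd_runs(k, n_center, fraction=0):
    """Runs in a central composite design (sketch).

    2**(k - fraction) factorial points + 2k axial (star) points + center points.
    fraction=1 means a half-fraction factorial portion.
    """
    return 2 ** (k - fraction) + 2 * k + n_center

# (k, BBD total from the table, center points assumed for the CCD)
table = [(3, 15, 6), (4, 27, 6), (5, 46, 10), (6, 54, 15)]
for k, bbd_total, ccd_center in table:
    print(f"k={k}: BBD {bbd_total} runs vs CCD {ccd_runs(k, ccd_center)} runs")

# A half-fraction CCD for six factors with 10 center points needs 54 runs,
# the same as the six-factor BBD.
print(ccd_runs(6, 10, fraction=1))
```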

Advantages and Disadvantages of Box-Behnken Design

Advantages:

- Efficiency: BBD often requires fewer experimental runs than CCD for the same number of factors, especially for 3 and 4 factors, saving time and resources.[7]
- Safety: By avoiding combinations where all factors are at their extremes, BBD is particularly useful when such conditions are dangerous, expensive, or could lead to equipment failure.[3][4]
- Quadratic Model Fitting: The design is specifically structured to efficiently estimate the coefficients of a second-order (quadratic) model.[6]

Disadvantages:

- Not for Sequential Experimentation: A CCD can be built up by adding axial and center points to an existing factorial or fractional factorial design. A BBD contains no embedded factorial design, so it cannot be assembled sequentially from an earlier screening experiment.
- Limited to Second-Order Models: The design is primarily intended for fitting quadratic models and may not be suitable for higher-order polynomial models.[6]
- Poor Coverage of Corners: The absence of experimental points at the corners of the design space can lead to higher prediction variance in these regions.[1]

Experimental Protocol for Implementing a Box-Behnken Design

The following is a generalized protocol for conducting an experiment using a Box-Behnken design, particularly relevant for drug development and process optimization.

Phase 1: Planning and Design

- Define the Objective: Clearly state the goal of the experiment, such as optimizing a formulation to maximize drug release or minimizing impurities in a synthesis process.
- Identify Factors and Ranges:
  - Select the critical independent variables (factors) believed to influence the response(s).
  - Determine the low (-1), medium (0), and high (+1) levels for each factor based on preliminary experiments, literature review, or prior knowledge.
- Select the Responses: Identify the dependent variables (responses) that will be measured to assess the outcome of the experiment (e.g., dissolution rate, particle size, yield).
- Generate the Design Matrix: Use statistical software (e.g., JMP, Minitab, Design-Expert) to generate the Box-Behnken design matrix, which also provides a randomized run order for the experiments. A typical BBD for three factors consists of 15 runs, including three center points.
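Statistical packages handle this step. The two operations involved, shuffling the run order and converting coded levels to real settings, are simple to sketch. The example below is a minimal sketch that assumes linear coding (actual = center + coded × half-range). `decode`, `run_sheet`, and the factor ranges are hypothetical illustrations.

```python
import random
from itertools import combinations, product

def decode(coded, low, high):
    """Convert a coded level (-1, 0, +1) to actual units on [low, high]."""
    center = (low + high) / 2
    half_range = (high - low) / 2
    return center + coded * half_range

def run_sheet(design, ranges, seed=None):
    """Randomize the run order and translate each coded run to actual settings.

    Returns (run number, standard-order index, actual settings) tuples.
    """
    order = list(range(len(design)))
    random.Random(seed).shuffle(order)
    sheet = []
    for run_no, idx in enumerate(order, start=1):
        actual = tuple(decode(c, lo, hi) for c, (lo, hi) in zip(design[idx], ranges))
        sheet.append((run_no, idx, actual))
    return sheet

# Three-factor BBD in standard order: 12 edge runs + 3 center points.
design = []
for i, j in combinations(range(3), 2):
    for a, b in product((-1, 1), repeat=2):
        point = [0, 0, 0]
        point[i], point[j] = a, b
        design.append(tuple(point))
design += [(0, 0, 0)] * 3

# Hypothetical factor ranges: polymer (mg), stirring speed (rpm), time (min).
ranges = [(10, 30), (500, 1500), (5, 15)]
for row in run_sheet(design, ranges, seed=42):
    print(row)
```

Passing a fixed `seed` makes the randomized order reproducible, so it can be recorded alongside the run sheet.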

Phase 2: Experimentation

- Prepare Materials and Equipment: Ensure that all equipment is calibrated and that the necessary materials and reagents are ready for the experiments.
- Conduct the Experimental Runs: Perform the experiments in the randomized run order provided by the design matrix, adhering strictly to the specified factor levels for each run.
- Measure and Record Responses: Accurately measure the defined responses for each experimental run and record the data systematically.

Phase 3: Data Analysis and Optimization

- Fit the Model: Analyze the experimental data using the same statistical software, fitting a quadratic model to each response. The general form of the second-order polynomial equation is:

  Y = β₀ + ΣβᵢXᵢ + ΣβᵢᵢXᵢ² + ΣβᵢⱼXᵢXⱼ

  where Y is the predicted response, β₀ is the intercept, βᵢ are the linear coefficients, βᵢᵢ are the quadratic coefficients, and βᵢⱼ are the interaction coefficients. The linear and quadratic sums run over all factors i, and the interaction sum runs over all pairs of factors i < j.
- Assess Model Significance and Fit:
  - Use Analysis of Variance (ANOVA) to check the statistical significance of the model.
  - Evaluate the goodness of fit using metrics such as the coefficient of determination (R²), adjusted R², and predicted R².
- Interpret the Results:
  - Examine the model coefficients to understand the effect of each factor and of their interactions on the response.
  - Generate response surface plots (3D) and contour plots (2D) to visualize the relationship between the factors and the response.
- Optimization:
  - Define the optimization criteria (e.g., maximize, minimize, or target a specific value for each response).
  - Use the model to determine the factor settings that achieve the desired response, for example with the numerical optimization functions in the statistical software.
- Validation: Conduct a confirmation experiment at the predicted optimal conditions to verify the model's predictive accuracy.
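Statistical software performs the model fit automatically. To show what happens underneath, here is a minimal pure-Python sketch of ordinary least squares for the three-factor quadratic model. It is an illustration, not production code; a numerical library's least-squares routine would be the usual choice. The helper names are hypothetical. The responses are synthetic values generated from made-up coefficients, so the recovered coefficients can be checked against them.

```python
from itertools import combinations, product

def quadratic_terms(x):
    """Full quadratic model terms: 1, x_i, x_i^2, then x_i*x_j for i < j."""
    terms = [1.0] + [float(v) for v in x] + [float(v) ** 2 for v in x]
    terms += [float(x[i] * x[j]) for i, j in combinations(range(len(x)), 2)]
    return terms

def solve(a, b):
    """Solve a square linear system by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[r]] for r, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x

def fit_quadratic(design, y):
    """Ordinary least squares via the normal equations (X^T X) beta = X^T y."""
    X = [quadratic_terms(run) for run in design]
    p = len(X[0])
    xtx = [[sum(row[i] * row[j] for row in X) for j in range(p)] for i in range(p)]
    xty = [sum(row[i] * yi for row, yi in zip(X, y)) for i in range(p)]
    return solve(xtx, xty)

# Three-factor BBD (12 edge runs + 3 center points).
design = []
for i, j in combinations(range(3), 2):
    for a, b in product((-1, 1), repeat=2):
        point = [0, 0, 0]
        point[i], point[j] = a, b
        design.append(tuple(point))
design += [(0, 0, 0)] * 3

# Synthetic, noise-free responses from made-up coefficients, ordered
# [b0, b1, b2, b3, b11, b22, b33, b12, b13, b23].
true_beta = [50.0, 2.0, -3.0, 1.0, -4.0, -2.0, -1.0, 1.5, 0.0, 0.5]
y = [sum(b * t for b, t in zip(true_beta, quadratic_terms(run))) for run in design]

beta = fit_quadratic(design, y)
print([round(b, 6) for b in beta])
```

The center points matter here. In a three-factor BBD, every edge run satisfies x₁² + x₂² + x₃² = 2. Without center points, the intercept column would therefore be a linear combination of the squared-term columns, and the normal equations would be singular.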

Applications in Drug Development

Box-Behnken designs are widely applied in various stages of drug development:

- Formulation Optimization: In the development of extended-release matrix tablets, BBD has been used to optimize the amounts of different polymers to achieve a desired drug release profile.[9]
- Nanoparticle Formulation: BBD is used to study how process parameters, such as homogenization speed and time, and formulation parameters, such as surfactant concentration, affect the particle size and encapsulation efficiency of nanoparticles.[3]
- Analytical Method Development: The design is used to optimize RP-HPLC (Reverse-Phase High-Performance Liquid Chromatography) conditions, such as buffer pH, the percentage of organic phase, and the flow rate, to achieve optimal separation of drug substances.[1]
- Drug Delivery Process Optimization: BBD can also be used to optimize delivery processes, such as the iontophoretic delivery of drugs, by studying the effects of variables like current density and pH.

Visualizing Box-Behnken Design Principles

To better understand the structure and workflow of a Box-Behnken design, the following diagrams are provided.

Caption: Logical structure of a 3-factor Box-Behnken Design.

Caption: General experimental workflow for a Box-Behnken Design.

References

1. Application of Box-Behnken experimental design and response surface methodology for selecting the optimum RP-HPLC conditions for the simultaneous determination of methocarbamol, indomethacin and betamethasone in their pharmaceutical dosage form. PMC (pmc.ncbi.nlm.nih.gov)
2. researchgate.net
3. Applying Box–Behnken Design for Formulation and Optimization of PLGA-Coffee Nanoparticles and Detecting Enhanced Antioxidant and Anticancer Activities. PMC (pmc.ncbi.nlm.nih.gov)
4. Central Composite Design vs. Box-Behnken Design (experimentaldesignhub.com)
5. Box–Behnken Designs and Their Applications in Pharmaceutical Product Development (ouci.dntb.gov.ua)
6. The Open Educator, 4. Box-Behnken Response Surface Methodology (theopeneducator.com)
7. 5.3.3.6.3. Comparisons of response surface designs (itl.nist.gov)
8. 11.2.2 - Box-Behnken Designs, STAT 503 (online.stat.psu.edu)
9. mdpi.com

An In-depth Technical Guide to the Geometry of Box-Behnken Design

The Box-Behnken design (BBD), conceived by George E. P. Box and Donald Behnken in 1960, is a highly efficient, second-order response surface methodology (RSM) design for modeling and optimizing processes.[1][2] Its unique geometric structure offers distinct advantages, particularly in scenarios where extreme combinations of factor levels are undesirable or infeasible. This guide provides a detailed exploration of the core geometry of the Box-Behnken design, its construction, and its practical implications in experimental design.

Core Principles of Box-Behnken Design

The Box-Behnken design is a three-level incomplete factorial design.[1][2] Each factor, or independent variable, is studied at three equally spaced levels, typically coded as -1 (low), 0 (central), and +1 (high).[1][2] A key characteristic of BBD is that it does not contain an embedded full or fractional factorial design.[3] Consequently, it avoids experimental runs at the vertices of the cubic experimental region, where all factors are simultaneously at their highest or lowest levels.[4] This feature is particularly advantageous in drug development and other sensitive research areas where extreme conditions could lead to safety concerns, equipment failure, or undesirable side effects.[4]

The design is structured to efficiently estimate the parameters of a second-order (quadratic) model, which is often necessary to capture the curvature in the response surface and identify optimal process conditions.[1][2] BBDs are considered to be rotatable or nearly rotatable, meaning the variance of the predicted response is approximately constant at all points equidistant from the center of the design space.[3][5]

The Geometric Structure: A Sphere Within a Cube

Geometrically, the non-center points of a three-factor Box-Behnken design all lie at the same distance from the center (√2 in coded units), so they can be visualized as lying on a common sphere centered in the cubic design space.[3] The design points are located at the midpoints of the edges of this cube and at its center.[3] This arrangement ensures that no experimental runs are performed at the corners of the cube, which lie farther out, at a distance of √3.

This "sphere-like" distribution of points is a direct consequence of the design's construction, which combines two-level factorial designs with incomplete block designs.[1] For three factors, BBD is constructed from three blocks. In each block, a 2² factorial design is applied to two of the factors, while the third factor is held at its central level (0).[1] This structure is rotated systematically through all factor pairs and then supplemented with center points.
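Both geometric claims can be checked numerically. This is a minimal sketch that assumes the three-block construction described above; `bbd3_blocks` is a hypothetical helper, and `math.dist` requires Python 3.8 or later. Every non-center run sits at distance √2 from the center in coded units. The cube corners, which the design never uses, are at √3.

```python
import math
from itertools import product

def bbd3_blocks():
    """Three-factor BBD as three blocks; block h holds factor h at 0."""
    blocks = {}
    for held in range(3):                          # factor held at center level
        varied = [f for f in range(3) if f != held]
        runs = []
        for a, b in product((-1, 1), repeat=2):    # 2^2 factorial on the others
            point = [0, 0, 0]
            point[varied[0]], point[varied[1]] = a, b
            runs.append(tuple(point))
        blocks[held] = runs
    return blocks

blocks = bbd3_blocks()
edge_runs = [run for runs in blocks.values() for run in runs]
center_runs = [(0, 0, 0)] * 3                      # supplemented center points

# Distance of each non-center run from the design center (coded units).
radii = {round(math.dist(run, (0, 0, 0)), 6) for run in edge_runs}
print(len(edge_runs) + len(center_runs))  # 15
print(radii)                              # {1.414214}, i.e. sqrt(2)
print(round(math.sqrt(3), 6))             # 1.732051: distance of a cube corner, never used
```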

Logical Structure of a Three-Factor Box-Behnken Design

The following diagram illustrates the logical construction of a three-factor Box-Behnken design. The design is partitioned into three blocks, where each block corresponds to holding one factor at its center level (0) while the other two factors are varied across their high (+1) and low (-1) levels. The center point runs, where all factors are at their central level, are also included.

Caption: Logical construction of a 3-factor Box-Behnken design.

Quantitative Comparison of Box-Behnken Designs

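The number of experimental runs required for a Box-Behnken design depends on the number of factors (k) and the number of center points (C₀). For k = 3 to 5, where every pair of factors is run as a 2² factorial, the total number of runs is N = 2k(k-1) + C₀. For k = 6, the standard design varies three factors at a time instead, so it has 48 non-center runs rather than the 60 the formula would give. The table below summarizes the design characteristics for different numbers of factors.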

| Number of Factors (k) | Factorial Points | Center Points (Typical) | Total Runs (N) |
|---|---|---|---|
| 3 | 12 | 3 | 15 |
| 4 | 24 | 3 | 27 |
| 5 | 40 | 6 | 46 |
| 6 | 48 | 6 | 54 |

Table 1: Quantitative summary of Box-Behnken designs for varying numbers of factors.
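Table 1 can be reproduced with a short function. This is a minimal sketch that assumes the pairwise construction for three to five factors. For six factors, it assumes the standard layout of six blocks of three factors, each run as a 2³ factorial (6 × 8 = 48 non-center runs). `bbd_run_count` is a hypothetical helper. The last column of the printout shows where the closed-form 2k(k − 1) + C₀ count stops matching.

```python
from math import comb

def bbd_run_count(k, n_center):
    """Total runs for the tabulated Box-Behnken designs (sketch).

    k = 3..5: each of the C(k, 2) factor pairs gets a 2^2 factorial,
    giving 4 * C(k, 2) = 2k(k - 1) non-center runs.
    k = 6: six blocks of three factors, each a 2^3 factorial (48 runs).
    """
    if 3 <= k <= 5:
        non_center = 4 * comb(k, 2)
    elif k == 6:
        non_center = 6 * 2 ** 3
    else:
        raise ValueError("only k = 3..6 are covered by this sketch")
    return non_center + n_center

# Columns: k, tabulated total, closed-form 2k(k - 1) + C0 (overshoots at k = 6)
for k, c0 in [(3, 3), (4, 3), (5, 6), (6, 6)]:
    print(k, bbd_run_count(k, c0), 2 * k * (k - 1) + c0)
```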

Experimental Protocols

The successful implementation of a Box-Behnken design relies on a well-defined experimental protocol. A typical workflow is as follows:

- Factor and Level Selection: Identify the critical process parameters (factors) and define their respective low (-1), medium (0), and high (+1) levels. These levels should be chosen based on prior knowledge, literature review, and preliminary screening experiments.
- Design Generation: Generate the experimental design matrix using statistical software. This matrix will specify the combination of factor levels for each experimental run, including the randomized run order to minimize the impact of systematic errors.
- Experimentation: Conduct the experiments according to the randomized run order specified in the design matrix. It is crucial to maintain consistency in experimental procedures and to accurately measure the response variable(s).
- Data Analysis: After completing all experimental runs, analyze the data using response surface methodology. This involves fitting a second-order polynomial equation to the experimental data and evaluating the statistical significance of the model and its individual terms through analysis of variance (ANOVA).
- Model Validation and Optimization: Validate the fitted model to ensure its predictive accuracy. Once validated, the model can be used to generate response surface plots and contour plots to visualize the relationship between the factors and the response, and to identify the optimal operating conditions.

Experimental Workflow for a Box-Behnken Design

The following diagram outlines the typical workflow for conducting an experiment using a Box-Behnken design, from the initial planning stages to the final optimization.

Caption: A typical experimental workflow using a Box-Behnken design.


The Genesis and Evolution of Box-Behnken Design: A Technical Guide for Modern Research

A cornerstone of response surface methodology (RSM), the Box-Behnken Design (BBD) stands as a testament to statistical ingenuity, offering an efficient and economical approach to optimizing complex processes. Conceived in 1960 by George E. P. Box and Donald Behnken, this experimental design has become an indispensable tool for researchers, scientists, and drug development professionals seeking to understand and refine multi-variable systems.[1][2][3] This in-depth technical guide explores the history, mathematical underpinnings, and practical application of Box-Behnken Design, providing a comprehensive resource for its effective implementation.

A Historical Perspective: The Dawn of an Efficient Design

In the mid-20th century, the field of experimental design was rapidly evolving, driven by the need for more efficient methods to explore the relationships between multiple input variables and a given response. It was within this context that George E. P. Box, a pioneering British statistician, and Donald Behnken, his collaborator, introduced their novel three-level incomplete factorial design.[1][2][3] Their seminal 1960 paper laid the groundwork for a design that would offer a compelling alternative to existing methods like the Central Composite Design (CCD).

The primary motivation behind the development of BBD was to create a design that could fit a second-order (quadratic) model with a reasonable number of experimental runs.[1][2] A key innovation of the Box-Behnken design is its avoidance of extreme corner points, where all factors are at their highest or lowest levels simultaneously.[4][5] This feature is particularly advantageous when such extreme combinations could lead to undesirable or unsafe experimental outcomes, a common concern in industrial and pharmaceutical research.[5][6]

The Mathematical Foundation of Box-Behnken Design

At its core, the Box-Behnken Design is a strategic combination of two-level factorial designs with incomplete block designs.[2][3] This construction allows for the estimation of all the coefficients of a second-order polynomial model:

Y = β₀ + Σβᵢxᵢ + Σβᵢᵢxᵢ² + Σβᵢⱼxᵢxⱼ + ε

where Y is the observed response, β₀ is the intercept, βᵢ are the linear coefficients, βᵢᵢ are the quadratic coefficients, βᵢⱼ are the interaction coefficients, xᵢ and xⱼ are the coded independent variables, and ε is the random error. The linear and quadratic sums run over all factors, and the interaction sum runs over all pairs i < j.
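Counting the coefficients in this model shows why the Box-Behnken run counts are sufficient. With k factors, the model has one intercept, k linear terms, k pure quadratic terms, and one interaction term per pair of factors:

```latex
p = 1 + k + k + \binom{k}{2} = \frac{(k+1)(k+2)}{2},
\qquad
k = 3:\; p = 10 \le N = 12 + C_0 = 15 \quad (C_0 = 3)
```

A three-factor BBD with three center points therefore leaves 15 − 10 = 5 residual degrees of freedom. Two of these come from the replicated center points as pure error.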

For three factors, the design consists of points at the midpoints of the edges of the cubic experimental space plus center points.[2][7] Each factor is studied at three levels, typically coded as -1, 0, and +1, representing the low, middle, and high values of the factor.[1][3]

Comparing Box-Behnken Design with Central Composite Design

The choice between Box-Behnken Design and Central Composite Design often depends on the specific objectives and constraints of the experiment. The following table provides a quantitative comparison of these two popular response surface designs for a varying number of factors.

| Number of Factors (k) | Design | Total Runs (without center points) | Number of Levels per Factor | Extreme (Corner) Points |
|---|---|---|---|---|
| 3 | Box-Behnken | 12 | 3 | No |
| 3 | Central Composite (rotatable) | 14 | 5 | Yes |
| 4 | Box-Behnken | 24 | 3 | No |
| 4 | Central Composite (rotatable) | 24 | 5 | Yes |
| 5 | Box-Behnken | 40 | 3 | No |
| 5 | Central Composite (rotatable) | 42 | 5 | Yes |
| 6 | Box-Behnken | 48 | 3 | No |
| 6 | Central Composite (rotatable) | 76 | 5 | Yes |

Note: The CCD counts assume a full 2^k factorial portion (2^k factorial points plus 2k axial points). The number of center points is chosen by the experimenter (e.g., 3-5) and added to the total runs.

As the table illustrates, for a small number of factors (k = 3), BBD can be more efficient in terms of the number of runs required.[6][8] For k = 4 and 5, the counts of the two designs are nearly identical (24 vs. 24 and 40 vs. 42), and beyond that the comparison depends on whether the CCD uses a full or fractional factorial portion.[5] A key advantage of BBD is its consistent three-level structure for each factor, whereas a CCD requires five levels to achieve rotatability, which may not always be practical.[1][6]
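The five CCD levels come from the axial distance needed for rotatability, α = F^(1/4), where F is the number of factorial points. This is a minimal sketch that assumes a full factorial portion by default; `rotatable_alpha` and `ccd_levels` are hypothetical helpers.

```python
def rotatable_alpha(k, fraction=0):
    """Axial distance giving a rotatable CCD: alpha = F ** (1/4),
    with F = 2 ** (k - fraction) factorial points."""
    return (2 ** (k - fraction)) ** 0.25

def ccd_levels(k, fraction=0):
    """The five coded levels a rotatable CCD asks of every factor."""
    alpha = rotatable_alpha(k, fraction)
    return [-alpha, -1.0, 0.0, 1.0, alpha]

BBD_LEVELS = [-1, 0, 1]  # a BBD only ever needs these three

for k in (3, 4, 5):
    print(k, [round(v, 3) for v in ccd_levels(k)])
```

For k = 3, this gives α ≈ 1.682. A factor studied between coded -1 and +1 would therefore also have to be set at about ±1.68, outside the original range. A face-centered CCD (α = 1) keeps three levels but is no longer rotatable.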

Experimental Protocol: A Step-by-Step Guide to Applying Box-Behnken Design

The successful implementation of a Box-Behnken Design involves a systematic approach, from planning the experiment to analyzing the results. The following protocol outlines the key steps:

Step 1: Define the Experimental Objective and Identify Key Factors and Responses.

- Clearly state the goal of the optimization study.
- Identify the independent variables (factors) that are believed to influence the process and the dependent variables (responses) that need to be optimized.

Step 2: Determine the Experimental Range and Levels for Each Factor.

- Based on prior knowledge and preliminary experiments, define the low (-1), middle (0), and high (+1) levels for each factor.

Step 3: Generate the Box-Behnken Design Matrix.

- Use statistical software (e.g., JMP, Minitab, Design-Expert) to generate the experimental runs based on the number of factors.[1][9] The software will create a randomized run order to minimize the effect of nuisance variables.

Step 4: Conduct the Experiments.

- Perform the experimental runs according to the generated design matrix in the specified randomized order.
- Carefully record the observed response values for each run.

Step 5: Fit the Second-Order Polynomial Model.

- Use the experimental data to fit a quadratic model that describes the relationship between the factors and the response.

Step 6: Analyze the Model and Assess its Adequacy.

- Perform an Analysis of Variance (ANOVA) to determine the statistical significance of the model and its individual terms (linear, quadratic, and interaction).[4]
- Key metrics to evaluate include the p-value (a value < 0.05 typically indicates significance), the coefficient of determination (R²), and the adjusted R².[4] A high R² value suggests that the model explains a large proportion of the variability in the response.
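Both fit statistics are simple to compute from the observed and predicted responses. This is a minimal sketch with hypothetical helper names, where `p` counts every model coefficient, including the intercept. It also shows why adjusted R² matters for a small design. A full quadratic in three factors has p = 10 coefficients, so with only n = 15 runs the adjustment is substantial.

```python
def r_squared(y, y_hat):
    """Coefficient of determination: 1 - SS_residual / SS_total."""
    mean = sum(y) / len(y)
    ss_tot = sum((v - mean) ** 2 for v in y)
    ss_res = sum((v - f) ** 2 for v, f in zip(y, y_hat))
    return 1 - ss_res / ss_tot

def adjusted_r_squared(r2, n, p):
    """Penalize R^2 for the number of fitted coefficients p (intercept included)."""
    return 1 - (1 - r2) * (n - 1) / (n - p)

# Illustrative numbers only.
print(r_squared([1, 2, 3, 4, 5], [1.1, 1.9, 3.0, 4.2, 4.8]))   # about 0.99
print(round(adjusted_r_squared(0.95, n=15, p=10), 2))           # 0.86
```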

Step 7: Visualize the Response Surface.

- Generate contour plots and 3D surface plots to visualize the relationship between the factors and the response. These plots are crucial for understanding the interaction effects and identifying the optimal operating conditions.
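Contour and surface plots are drawn from the fitted model evaluated on a grid of two factors, with the remaining factors held fixed. This is a minimal sketch that assumes a three-factor model with hypothetical coefficients; `predict` and `surface_grid` are hypothetical helpers. The resulting grid can be passed to a contour routine such as matplotlib's `contourf`, with `levels` as the x and y coordinates.

```python
def predict(beta, x):
    """Evaluate a three-factor quadratic model. beta is ordered
    [b0, b1, b2, b3, b11, b22, b33, b12, b13, b23]."""
    x1, x2, x3 = x
    terms = [1, x1, x2, x3, x1 * x1, x2 * x2, x3 * x3, x1 * x2, x1 * x3, x2 * x3]
    return sum(b * t for b, t in zip(beta, terms))

def surface_grid(beta, steps=21, fixed_x3=0.0):
    """Predicted response on a grid over coded x1, x2 in [-1, 1], x3 held fixed.

    Returns (levels, grid) with grid[row][col] = prediction at
    x2 = levels[row], x1 = levels[col].
    """
    levels = [-1 + 2 * i / (steps - 1) for i in range(steps)]
    grid = [[predict(beta, (x1, x2, fixed_x3)) for x1 in levels] for x2 in levels]
    return levels, grid

# Hypothetical fitted coefficients, for illustration only.
beta = [50.0, 2.0, -3.0, 1.0, -4.0, -2.0, -1.0, 1.5, 0.0, 0.5]
levels, grid = surface_grid(beta, steps=5)
best = max((grid[r][c], levels[c], levels[r]) for r in range(5) for c in range(5))
print(best)  # (highest grid prediction, x1, x2)
```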

Step 8: Determine the Optimal Conditions and Validate the Model.

- Use the model to predict the combination of factor levels that will result in the desired optimal response.
- Conduct confirmation experiments at the predicted optimal conditions to validate the model's predictive ability.
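For a quadratic model, the unconstrained optimum can be found analytically by setting the gradient to zero (canonical analysis). This is a minimal sketch for two factors, with hypothetical coefficients and a hypothetical helper name. It solves the 2 × 2 linear system, classifies the point from the Hessian, and checks whether the point lies inside the coded region. If the point is a saddle or falls outside [-1, +1], a constrained numerical search, such as the optimization tools in statistical software, is the appropriate route.

```python
def stationary_point_2d(b1, b2, b11, b22, b12):
    """Stationary point of y = b0 + b1*x1 + b2*x2 + b11*x1^2 + b22*x2^2 + b12*x1*x2.

    Setting both partial derivatives to zero gives the linear system
        2*b11*x1 +   b12*x2 = -b1
          b12*x1 + 2*b22*x2 = -b2
    The Hessian [[2*b11, b12], [b12, 2*b22]] classifies the point.
    """
    det = 4 * b11 * b22 - b12 ** 2
    if det == 0:
        raise ValueError("no unique stationary point (ridge system)")
    x1 = (-2 * b1 * b22 + b2 * b12) / det
    x2 = (-2 * b11 * b2 + b12 * b1) / det
    if det < 0:
        kind = "saddle point"
    elif b11 < 0:
        kind = "maximum"
    else:
        kind = "minimum"
    return (x1, x2), kind

# Hypothetical fitted coefficients (coded units), for illustration only.
point, kind = stationary_point_2d(b1=4.0, b2=-2.0, b11=-5.0, b22=-3.0, b12=1.0)
inside = all(-1 <= v <= 1 for v in point)
print(point, kind, inside)
```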

Visualizations

Experimental Workflow for Box-Behnken Design

Caption: A flowchart illustrating the systematic workflow of a Box-Behnken Design experiment.

Logical Relationship in Response Surface Optimization

Caption: A diagram illustrating the logical flow of response surface methodology for process optimization.

Application in Drug Development: A Case Study

Box-Behnken Design has found extensive application in the pharmaceutical industry, particularly in the formulation and optimization of drug delivery systems. A common application is in the development of nanoparticle-based drug delivery systems, where factors such as polymer concentration, sonication time, and surfactant concentration can significantly impact critical quality attributes like particle size, encapsulation efficiency, and drug release profile.

Case Study: Optimization of Polymeric Nanoparticles for Drug Delivery

In a study aimed at optimizing the formulation of irinotecan hydrochloride-loaded polycaprolactone (PCL) nanoparticles, researchers employed a Box-Behnken design.[7]

-

Factors:

-

Amount of PCL (polymer)

-

Amount of Irinotecan Hydrochloride (drug)

-

Concentration of PVA (surfactant)

-

-

Responses:

-

Particle Size

-

Zeta Potential

-

Encapsulation Efficiency

-

The this compound with 15 experimental runs was used to investigate the effects of these three factors at three levels.[7][10] The experimental data was then fitted to a second-order polynomial model. The ANOVA results revealed the significant factors and interactions influencing the responses. For instance, the amount of PCL and the concentration of PVA were found to have a significant impact on the particle size of the nanoparticles.

Through the analysis of response surface plots, the researchers were able to identify the optimal combination of factor levels to achieve the desired nanoparticle characteristics (e.g., smallest particle size and highest encapsulation efficiency).[7] This case study highlights the power of this compound in efficiently navigating the complex formulation landscape to develop robust and effective drug delivery systems.

Conclusion: The Enduring Legacy of Box-Behnken Design

More than six decades after its inception, the Box-Behnken Design continues to be a powerful and widely used tool in the arsenal of researchers and scientists. Its efficiency, economy, and ability to fit a second-order model without resorting to extreme experimental conditions make it a highly practical choice for a wide range of applications. For professionals in drug development and other scientific fields, a thorough understanding of the principles and application of Box-Behnken Design is essential for accelerating the optimization of products and processes, ultimately leading to more robust and reliable outcomes.

References

- 1. m.youtube.com [m.youtube.com]

- 2. m.youtube.com [m.youtube.com]

- 3. How to create a Box-Behnken Design in Python [experimentaldesignhub.com]

- 4. Box-Behnken Designs: Analysis Results [help.synthesisplatform.net]

- 5. Central Composite Design vs. Box-Behnken Design [experimentaldesignhub.com]

- 6. What are response surface designs, central composite designs, and Box-Behnken designs? - Minitab [support.minitab.com]

- 7. mdpi.com [mdpi.com]

- 8. 5.3.3.6.3. Comparisons of response surface designs [itl.nist.gov]

- 9. The Open Educator - 4. Box-Behnken Response Surface Methodology [theopeneducator.com]

- 10. Applying Box–Behnken Design for Formulation and Optimization of PLGA-Coffee Nanoparticles and Detecting Enhanced Antioxidant and Anticancer Activities - PMC [pmc.ncbi.nlm.nih.gov]


Setting Up a Box-Behnken Design Experiment: Application Notes and Protocols for Pharmaceutical Researchers

Optimizing Drug Formulation with Statistical Precision

For researchers, scientists, and professionals in drug development, the optimization of formulation and process parameters is critical to ensuring product quality, efficacy, and reproducibility. The Box-Behnken Design (BBD), a type of response surface methodology (RSM), offers an efficient and powerful statistical approach for this purpose. BBD is particularly advantageous because it allows quadratic relationships between variables and responses to be investigated without an excessive number of experimental runs.[1][2]

These application notes provide a detailed guide on how to set up a Box-Behnken Design experiment, with a specific focus on the formulation of drug-loaded nanoparticles, a common application in modern drug delivery systems.

Core Principles of Box-Behnken Design

A Box-Behnken design is a three-level response surface design (a subset of the full three-level factorial) that is used to fit a quadratic model, making it well suited to optimization studies.[1] Key characteristics include:

- Three Levels per Factor: Each independent variable (factor) is studied at three equally spaced levels, typically coded as -1 (low), 0 (intermediate), and +1 (high).[1]

- Efficiency: BBD requires fewer experimental runs than a three-level full factorial design (15 runs, including three center points, versus 27 runs for a 3³ full factorial), and the savings grow as the number of factors increases.[2]

- Avoidance of Extreme Conditions: A significant advantage of BBD is that it does not include experimental runs where all factors are at their extreme (high or low) levels simultaneously.[3] This is particularly useful in drug formulation, where such extreme combinations could lead to undesirable outcomes, such as precipitation or degradation.
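The "no extreme corners" property follows from how a BBD is built. Each pair of factors is run through a 2² factorial at ±1 while every other factor stays at its center, and replicated center points are appended. The minimal sketch below generates the coded matrix this way. The function name `box_behnken` is hypothetical, and this pairwise construction reproduces the standard BBD only for three to five factors, since larger designs use different block structures.

```python
import random
from itertools import combinations, product


def box_behnken(k: int, center_points: int = 3) -> list[tuple[int, ...]]:
    """Coded Box-Behnken design built from a 2^2 factorial on every factor pair."""
    if not 3 <= k <= 5:
        raise ValueError("this pairwise construction matches the standard BBD only for 3-5 factors")
    runs = []
    for i, j in combinations(range(k), 2):       # every pair of factors...
        for a, b in product((-1, 1), repeat=2):  # ...at all four +/-1 combinations
            row = [0] * k                        # remaining factors at the center
            row[i], row[j] = a, b
            runs.append(tuple(row))
    runs.extend([(0,) * k] * center_points)      # replicated center points
    return runs


design = box_behnken(3)
print(len(design))  # 15
# No run places all three factors at an extreme level at the same time.
print(any(all(x != 0 for x in run) for run in design))  # False

# Randomize the run order before executing the experiments.
random.Random(42).shuffle(design)
```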

Application Spotlight: Optimization of PLGA Nanoparticle Formulation

This protocol outlines the use of a Box-Behnken design to optimize the formulation of Poly(lactic-co-glycolic acid) (PLGA) nanoparticles, a widely used biodegradable polymer for controlled drug delivery.[4][5] The objective is to determine the optimal combination of formulation variables to achieve desired nanoparticle characteristics, such as particle size and drug encapsulation efficiency.

Experimental Factors and Responses

For this example, we will consider a three-factor, two-response BBD.

| Independent Variables (Factors) | Levels | Dependent Variables (Responses) |
|---|---|---|
| A: PLGA Concentration (mg/mL) | Low (-1), Intermediate (0), High (+1) | Y₁: Particle Size (nm) |
| B: Surfactant Concentration (%) | Low (-1), Intermediate (0), High (+1) | Y₂: Encapsulation Efficiency (%) |
| C: Drug Amount (mg) | Low (-1), Intermediate (0), High (+1) | |

Experimental Protocol: Preparation of PLGA Nanoparticles by Emulsion-Solvent Evaporation

The following is a generalized protocol for preparing PLGA nanoparticles. The specific values for the low, intermediate, and high levels of each factor would be determined based on preliminary studies and prior knowledge.

- Organic Phase Preparation: Dissolve the specified amount of PLGA (Factor A) and the drug (Factor C) in an appropriate water-immiscible organic solvent (e.g., dichloromethane).

- Aqueous Phase Preparation: Prepare an aqueous solution containing the specified concentration of a surfactant, such as polyvinyl alcohol (PVA) (Factor B).

- Emulsification: Add the organic phase to the aqueous phase under constant homogenization or sonication to form an oil-in-water (o/w) emulsion. Keep the homogenization parameters (e.g., speed and time) constant for all experimental runs.

- Solvent Evaporation: Stir the resulting emulsion at room temperature for several hours to allow the organic solvent to evaporate completely, which leads to the formation of solid nanoparticles.

- Nanoparticle Collection: Collect the nanoparticles by centrifugation, wash them with deionized water to remove excess surfactant and un-encapsulated drug, and then freeze-dry the nanoparticles for storage and characterization.

- Characterization: For each experimental run, measure the particle size (Y₁) using a technique such as dynamic light scattering (DLS). Determine the encapsulation efficiency (Y₂) with a suitable analytical method (e.g., UV-Vis spectrophotometry or HPLC) after lysing the nanoparticles to release the encapsulated drug.

Box-Behnken Experimental Design and Data Collection

A three-factor BBD typically consists of 15 experimental runs: 12 runs at the midpoints of the edges of the design cube plus three center-point replicates used to estimate the experimental error.[3] The design matrix in coded and actual values, along with hypothetical response data, is presented below.

| Run | Factor A (Coded) | Factor B (Coded) | Factor C (Coded) | Factor A: PLGA Conc. (mg/mL) | Factor B: Surfactant Conc. (%) | Factor C: Drug Amount (mg) | Y₁: Particle Size (nm) | Y₂: Encapsulation Efficiency (%) |
|---|---|---|---|---|---|---|---|---|
| 1 | -1 | -1 | 0 | 5 | 0.5 | 10 | 250 | 65 |
| 2 | 1 | -1 | 0 | 15 | 0.5 | 10 | 350 | 75 |
| 3 | -1 | 1 | 0 | 5 | 1.5 | 10 | 220 | 80 |
| 4 | 1 | 1 | 0 | 15 | 1.5 | 10 | 320 | 88 |
| 5 | -1 | 0 | -1 | 5 | 1.0 | 5 | 230 | 60 |
| 6 | 1 | 0 | -1 | 15 | 1.0 | 5 | 330 | 70 |
| 7 | -1 | 0 | 1 | 5 | 1.0 | 15 | 260 | 72 |
| 8 | 1 | 0 | 1 | 15 | 1.0 | 15 | 360 | 82 |
| 9 | 0 | -1 | -1 | 10 | 0.5 | 5 | 280 | 68 |
| 10 | 0 | 1 | -1 | 10 | 1.5 | 5 | 260 | 78 |
| 11 | 0 | -1 | 1 | 10 | 0.5 | 15 | 290 | 75 |
| 12 | 0 | 1 | 1 | 10 | 1.5 | 15 | 270 | 85 |
| 13 | 0 | 0 | 0 | 10 | 1.0 | 10 | 275 | 81 |
| 14 | 0 | 0 | 0 | 10 | 1.0 | 10 | 280 | 82 |
| 15 | 0 | 0 | 0 | 10 | 1.0 | 10 | 278 | 81.5 |

Data Analysis and Model Fitting

The collected data is then analyzed using statistical software. The primary tool for analysis is the analysis of variance (ANOVA).[6] The goal is to fit the response data to a second-order polynomial equation of the following form:

Y = β₀ + β₁A + β₂B + β₃C + β₁₂AB + β₁₃AC + β₂₃BC + β₁₁A² + β₂₂B² + β₃₃C²

Where Y is the predicted response, β₀ is the model constant; β₁, β₂, and β₃ are the linear coefficients; β₁₂, β₁₃, and β₂₃ are the interaction coefficients; and β₁₁, β₂₂, and β₃₃ are the quadratic coefficients. The significance of each coefficient is determined by its p-value.
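The coefficients of this equation are ordinary least-squares estimates. Statistical software would normally compute them. As a dependency-free sketch, the code below builds the model matrix from the coded design, solves the normal equations (XᵀX)β = Xᵀy by Gaussian elimination, and applies them to the hypothetical particle-size (Y₁) data in the design matrix above. The helper names are illustrative.

```python
def model_row(a: float, b: float, c: float) -> list[float]:
    """Terms in the order b0, b1..b3 (linear), b12, b13, b23, b11, b22, b33."""
    return [1.0, a, b, c, a * b, a * c, b * c, a * a, b * b, c * c]


def solve(m: list[list[float]], v: list[float]) -> list[float]:
    """Solve m x = v by Gaussian elimination with partial pivoting."""
    n = len(v)
    aug = [row[:] + [v[i]] for i, row in enumerate(m)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for j in range(col, n + 1):
                aug[r][j] -= factor * aug[col][j]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (aug[r][n] - sum(aug[r][j] * x[j] for j in range(r + 1, n))) / aug[r][r]
    return x


def fit_quadratic(design, y):
    """Least-squares coefficients from the normal equations (X'X) beta = X'y."""
    X = [model_row(*run) for run in design]
    p = len(X[0])
    xtx = [[sum(row[i] * row[j] for row in X) for j in range(p)] for i in range(p)]
    xty = [sum(row[i] * yi for row, yi in zip(X, y)) for i in range(p)]
    return solve(xtx, xty)


# Coded levels (A, B, C) and particle size Y1 (nm) for runs 1-15 above.
design = [(-1, -1, 0), (1, -1, 0), (-1, 1, 0), (1, 1, 0),
          (-1, 0, -1), (1, 0, -1), (-1, 0, 1), (1, 0, 1),
          (0, -1, -1), (0, 1, -1), (0, -1, 1), (0, 1, 1),
          (0, 0, 0), (0, 0, 0), (0, 0, 0)]
particle_size = [250, 350, 220, 320, 230, 330, 260, 360,
                 280, 260, 290, 270, 275, 280, 278]

names = ["b0", "b1", "b2", "b3", "b12", "b13", "b23", "b11", "b22", "b33"]
beta = fit_quadratic(design, particle_size)
for name, value in zip(names, beta):
    print(f"{name} = {value:8.3f}")
```

For this data the fit gives β₀ ≈ 277.67 (the mean of the three center points), β₁ = 50, β₂ = -12.5 and β₃ = 10. All three interaction coefficients are 0, and the quadratic coefficients are β₁₁ ≈ 13.67, β₂₂ ≈ -6.33 and β₃₃ ≈ 3.67.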

| Source | Sum of Squares | df | Mean Square | F-value | p-value |
|---|---|---|---|---|---|
| Model | 28950.0 | 9 | 3216.7 | 25.73 | < 0.001 |
| A-PLGA Conc. | 16900.0 | 1 | 16900.0 | 135.20 | < 0.001 |
| B-Surfactant Conc. | 4900.0 | 1 | 4900.0 | 39.20 | < 0.001 |
| C-Drug Amount | 2500.0 | 1 | 2500.0 | 20.00 | 0.004 |
| AB | 400.0 | 1 | 400.0 | 3.20 | 0.134 |
| AC | 100.0 | 1 | 100.0 | 0.80 | 0.408 |
| BC | 900.0 | 1 | 900.0 | 7.20 | 0.044 |
| A² | 1600.0 | 1 | 1600.0 | 12.80 | 0.012 |
| B² | 400.0 | 1 | 400.0 | 3.20 | 0.134 |
| C² | 250.0 | 1 | 250.0 | 2.00 | 0.217 |
| Residual | 625.0 | 5 | 125.0 | | |
| Lack of Fit | 587.5 | 3 | 195.8 | 10.45 | 0.088 |
| Pure Error | 37.5 | 2 | 18.8 | | |
| Cor Total | 29575.0 | 14 | | | |

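From this ANOVA table, the significant factors affecting particle size can be identified (those with p-values < 0.05). In this hypothetical example, the linear effects of all three factors, the interaction between surfactant concentration and drug amount (BC), and the quadratic effect of PLGA concentration (A²) are significant. The lack-of-fit test is not significant (p = 0.088), so there is no evidence that the quadratic model fits the data poorly. The ANOVA values are illustrative only: they were not computed from the hypothetical particle-size data in the design matrix above.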
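The derived columns of an ANOVA table come from two divisions. Each mean square is its sum of squares divided by its degrees of freedom. Each F-value is a term's mean square divided by the residual mean square, except lack of fit, which is divided by the pure-error mean square. The short check below recomputes a few rows of the illustrative table from its sums of squares; the helper name is hypothetical. P-values additionally require the F distribution, for example `scipy.stats.f.sf(F, df_term, df_error)` if SciPy is available.

```python
def mean_square_and_f(ss: float, df: int, ms_error: float) -> tuple[float, float]:
    """Mean square (SS/df) and F-value (MS / error mean square) for one source."""
    ms = ss / df
    return ms, ms / ms_error


ms_residual = 625.0 / 5  # residual SS / residual df = 125.0

for source, ss, df in [("Model", 28950.0, 9), ("A-PLGA Conc.", 16900.0, 1),
                       ("BC", 900.0, 1), ("A²", 1600.0, 1)]:
    ms, f = mean_square_and_f(ss, df, ms_residual)
    print(f"{source}: MS = {ms:.1f}, F = {f:.2f}")

# Lack of fit is tested against pure error (the replicated center points).
ms_pure_error = 37.5 / 2
ms_lof, f_lof = mean_square_and_f(587.5, 3, ms_pure_error)
print(f"Lack of fit: MS = {ms_lof:.1f}, F = {f_lof:.2f}")
```

Recomputing reproduces the tabulated F-values of 25.73, 135.20, 7.20 and 12.80. The lack-of-fit F comes out at 10.44, a rounding-level difference from the 10.45 shown in the table.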

Visualizing the Experimental Workflow

A clear workflow is essential for planning and executing a BBD experiment.

Conclusion

The Box-Behnken design is a highly effective tool for optimizing complex formulations and processes in drug development. By systematically varying key parameters and modeling their effects on critical quality attributes, researchers can efficiently identify optimal conditions with a minimal number of experiments. This leads to the development of robust and reproducible drug delivery systems. The application of BBD, as demonstrated in the optimization of PLGA nanoparticles, exemplifies a data-driven approach to pharmaceutical formulation that is both resource-efficient and scientifically rigorous.

References

- 1. Box–Behnken design - Wikipedia [en.wikipedia.org]

- 2. Box–Behnken Design of Experiments of Polycaprolactone Nanoparticles Loaded with Irinotecan Hydrochloride - PMC [pmc.ncbi.nlm.nih.gov]

- 3. 11.2.2 - Box-Behnken Designs | STAT 503 [online.stat.psu.edu]

- 4. Formulation and optimization of polymeric nanoparticles for intranasal delivery of lorazepam using Box-Behnken design: in vitro and in vivo evaluation - PubMed [pubmed.ncbi.nlm.nih.gov]

- 5. Applying Box–Behnken Design for Formulation and Optimization of PLGA-Coffee Nanoparticles and Detecting Enhanced Antioxidant and Anticancer Activities - PMC [pmc.ncbi.nlm.nih.gov]

- 6. Box-Behnken Designs: Analysis Results [help.synthesisplatform.net]

Optimizing Chemical Reactions: A Guide to Box-Behnken Design

Application Notes and Protocols for Researchers, Scientists, and Drug Development Professionals

In the pursuit of efficient and robust chemical processes, optimization is a critical step. Traditional one-variable-at-a-time approaches are often time-consuming and fail to capture the interactions between different reaction parameters. Box-Behnken Design (BBD), a type of response surface methodology (RSM), offers a statistically rigorous and efficient alternative for optimizing chemical reactions. This powerful tool allows for the simultaneous study of multiple variables, leading to a comprehensive understanding of the reaction landscape and the identification of optimal conditions with a minimal number of experiments.

This document provides detailed application notes and protocols for utilizing Box-Behnken Design in the optimization of chemical reactions, tailored for professionals in research, scientific, and drug development fields.

Core Principles of Box-Behnken Design

Box-Behnken designs are a class of rotatable or nearly rotatable second-order designs that are highly efficient for fitting a quadratic model.[1][2] Key characteristics include:

- Three Levels per Factor: Each experimental factor is investigated at three equally spaced levels, typically coded as -1 (low), 0 (central), and +1 (high).[1]

- Factorial and Incomplete Block Combination: The design can be conceptualized as a combination of two-level factorial designs with an incomplete block design. In each block, a subset of factors is run through all combinations of the two-level factorial while the other factors are held at their central values.[1]

- Efficiency: With the same number of center points, BBD requires fewer experimental runs than a central composite design (CCD) when three factors are studied (12 versus 14 non-center runs), making it a resource-efficient option.[3][4]

- Quadratic Model Fitting: The design is specifically structured to efficiently estimate the coefficients of a second-order polynomial model, which can capture curvature in the response surface.[1]
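The run-count comparison can be made concrete. The sketch below counts runs for the same number of center points under two stated assumptions. First, the BBD is built from all factor pairs, which matches the standard design for three to five factors. Second, the CCD uses a full 2^k factorial core, although fractional cores are common for larger k. The function names are illustrative.

```python
from math import comb


def bbd_runs(k: int, center_points: int = 3) -> int:
    """BBD for k = 3..5: a 2^2 factorial (4 runs) for each of the C(k, 2) factor pairs."""
    return 4 * comb(k, 2) + center_points


def ccd_runs(k: int, center_points: int = 3) -> int:
    """CCD with a full 2^k factorial core plus 2k axial (star) points."""
    return 2 ** k + 2 * k + center_points


def full_three_level_runs(k: int) -> int:
    """Full 3^k factorial, without replicates."""
    return 3 ** k


print("k  BBD  CCD  3^k")
for k in (3, 4, 5):
    print(k, bbd_runs(k), ccd_runs(k), full_three_level_runs(k))
```

With three center points this gives 15, 17 and 27 runs for three factors and 27, 27 and 81 runs for four factors. The saving over a CCD is therefore modest and disappears at k = 4, while the saving over the full three-level factorial is large.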

The general workflow for implementing a Box-Behnken Design is illustrated below:

Application Case Study 1: Optimization of Esterification of Acrylic Acid

This section details the optimization of the esterification of acrylic acid with ethanol using sulfuric acid as a catalyst, based on a study utilizing Box-Behnken Design.[5][6]

Experimental Factors and Levels

The key factors influencing the conversion of acrylic acid were identified as reaction temperature, initial molar ratio of reactants, and catalyst concentration.

| Factor | Code | Low (-1) | Medium (0) | High (+1) |
|---|---|---|---|---|
| Temperature (°C) | X₁ | 60 | 70 | 80 |
| Molar Ratio (Ethanol:Acrylic Acid) | X₂ | 1:1 | 2:1 | 3:1 |
| Catalyst Concentration (wt%) | X₃ | 1 | 2 | 3 |

Box-Behnken Design Matrix and Results

A 15-run experiment was designed, including three center points to estimate the experimental error.

| Run | Temperature (°C) | Molar Ratio | Catalyst Conc. (wt%) | Acrylic Acid Conversion (%) |
|---|---|---|---|---|
| 1 | 60 | 1:1 | 2 | 45.2 |
| 2 | 80 | 1:1 | 2 | 65.8 |
| 3 | 60 | 3:1 | 2 | 68.4 |
| 4 | 80 | 3:1 | 2 | 85.2 |
| 5 | 60 | 2:1 | 1 | 55.6 |
| 6 | 80 | 2:1 | 1 | 72.3 |
| 7 | 60 | 2:1 | 3 | 75.1 |
| 8 | 80 | 2:1 | 3 | 90.5 |
| 9 | 70 | 1:1 | 1 | 50.1 |
| 10 | 70 | 3:1 | 1 | 70.2 |
| 11 | 70 | 1:1 | 3 | 70.8 |
| 12 | 70 | 3:1 | 3 | 88.9 |
| 13 | 70 | 2:1 | 2 | 82.1 |
| 14 | 70 | 2:1 | 2 | 82.5 |
| 15 | 70 | 2:1 | 2 | 82.3 |
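In a BBD every linear column is orthogonal to all other columns of the quadratic model. Each linear coefficient in coded units can therefore be read off as Σxy / Σx² without a full regression. The sketch applies this to the conversions tabulated above and codes each factor from its low and high levels. It assumes the molar ratio is entered as the ethanol multiple (1, 2 or 3). Quadratic and interaction coefficients still require the full fit.

```python
def to_coded(value: float, low: float, high: float) -> float:
    """Map an actual level onto the coded scale (-1 at low, +1 at high)."""
    center, half_range = (high + low) / 2, (high - low) / 2
    return (value - center) / half_range


# (temperature °C, ethanol:acrylic acid ratio, catalyst wt%, conversion %)
runs = [(60, 1, 2, 45.2), (80, 1, 2, 65.8), (60, 3, 2, 68.4), (80, 3, 2, 85.2),
        (60, 2, 1, 55.6), (80, 2, 1, 72.3), (60, 2, 3, 75.1), (80, 2, 3, 90.5),
        (70, 1, 1, 50.1), (70, 3, 1, 70.2), (70, 1, 3, 70.8), (70, 3, 3, 88.9),
        (70, 2, 2, 82.1), (70, 2, 2, 82.5), (70, 2, 2, 82.3)]
ranges = [(60, 80), (1, 3), (1, 3)]  # (low, high) for X1, X2, X3

coded = [[to_coded(v, lo, hi) for v, (lo, hi) in zip(run[:3], ranges)] for run in runs]
conversion = [run[3] for run in runs]

linear = {}
for name, column in zip(["X1", "X2", "X3"], zip(*coded)):
    # Orthogonality lets each linear coefficient be estimated on its own.
    linear[name] = (sum(x * y for x, y in zip(column, conversion))
                    / sum(x * x for x in column))
print({name: round(b, 3) for name, b in linear.items()})
```

For these tabulated values the estimates are roughly 8.69 for temperature, 10.1 for molar ratio and 9.64 for catalyst. Each is the predicted change in conversion, in percentage points, for a one-level step away from the center.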

Protocol: Esterification of Acrylic Acid

- Reactor Setup: The esterification reactions are conducted in a batch reactor equipped with a stirrer and a temperature controller.[5][6]

- Reactant Charging: Charge the reactor with the specified amounts of acrylic acid and ethanol according to the molar ratios in the design matrix.

- Catalyst Addition: Add the designated weight percentage of sulfuric acid catalyst to the reaction mixture.

- Reaction: Heat the mixture to the specified reaction temperature and maintain it for a predetermined reaction time with constant stirring.

- Sampling and Analysis: After the reaction is complete, cool the mixture and take a sample for analysis. The conversion of acrylic acid is determined using gas chromatography.[5][6]

Logical Relationship of BBD Factors

Application Case Study 2: Optimization of Photocatalytic Degradation of 2,4-D

This case study focuses on the optimization of the photocatalytic degradation of 2,4-dichlorophenoxyacetic acid (2,4-D) using a TiO₂/H₂O₂ system.[7][8][9]

Experimental Factors and Levels

Two models were developed. Model 2, which included the effect of hydrogen peroxide, is presented here.

| Factor | Code | Low (-1) | Medium (0) | High (+1) |
|---|---|---|---|---|
| pH | X₁ | 3 | 6 | 9 |
| TiO₂ Concentration (g/L) | X₂ | 0.5 | 1.0 | 1.5 |
| H₂O₂ Concentration (mg/L) | X₃ | 50 | 150 | 250 |

Box-Behnken Design Matrix and Results

The study employed a BBD to investigate the relationship between the factors and the degradation rate of 2,4-D.

| Run | pH | TiO₂ (g/L) | H₂O₂ (mg/L) | 2,4-D Degradation Rate (%) |
|---|---|---|---|---|
| 1 | 3 | 0.5 | 150 | 65.2 |
| 2 | 9 | 0.5 | 150 | 45.8 |
| 3 | 3 | 1.5 | 150 | 80.1 |
| 4 | 9 | 1.5 | 150 | 60.5 |
| 5 | 3 | 1.0 | 50 | 72.3 |
| 6 | 9 | 1.0 | 50 | 50.7 |
| 7 | 3 | 1.0 | 250 | 78.9 |
| 8 | 9 | 1.0 | 250 | 58.4 |
| 9 | 6 | 0.5 | 50 | 55.6 |
| 10 | 6 | 1.5 | 50 | 75.3 |
| 11 | 6 | 0.5 | 250 | 62.1 |
| 12 | 6 | 1.5 | 250 | 82.4 |
| 13 | 6 | 1.0 | 150 | 83.2 |
| 14 | 6 | 1.0 | 150 | 83.5 |
| 15 | 6 | 1.0 | 150 | 83.6 |

Note: The degradation rates are illustrative and based on the trends reported in the source material.
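The three replicated center points (runs 13-15) supply the pure-error estimate. Because their conditions are identical, their scatter reflects experimental noise only. A minimal sketch using the illustrative degradation rates above:

```python
from statistics import mean, variance

center_runs = [83.2, 83.5, 83.6]  # runs 13-15: pH 6, 1.0 g/L TiO2, 150 mg/L H2O2

center_mean = mean(center_runs)
ss_pure_error = sum((y - center_mean) ** 2 for y in center_runs)
df_pure_error = len(center_runs) - 1
ms_pure_error = ss_pure_error / df_pure_error  # same as the sample variance

print(round(ss_pure_error, 4), df_pure_error, round(ms_pure_error, 4))  # 0.0867 2 0.0433
print(round(variance(center_runs), 4))                                  # 0.0433
```

In the ANOVA, this pure-error mean square is the denominator of the lack-of-fit F-test.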

Protocol: Photocatalytic Degradation of 2,4-D

- Reactor Setup: The experiments are performed in a laboratory-scale photoreactor equipped with a UV lamp and a stirring mechanism.[7][9]

- Sample Preparation: Prepare an aqueous solution of 2,4-D at a specific initial concentration.

- Parameter Adjustment: Adjust the pH of the solution to the desired level using acid or base.

- Catalyst and Oxidant Addition: Add the specified amounts of TiO₂ photocatalyst and H₂O₂ to the solution.[9]

- Photoreaction: Irradiate the mixture with the UV lamp for a set duration while continuously stirring.

- Analysis: After the reaction, filter the sample to remove the TiO₂ particles and analyze the concentration of 2,4-D using a suitable analytical technique, such as high-performance liquid chromatography (HPLC), to determine the degradation rate.

Application Case Study 3: Microwave-Assisted Esterification of Succinic Acid

This example illustrates the optimization of the esterification of succinic acid with methanol using a heterogeneous catalyst in a microwave reactor.[10][11]

Experimental Factors and Levels

The key parameters optimized were reaction time, microwave power, and catalyst dosing.

| Factor | Code | Low (-1) | Medium (0) | High (+1) |
|---|---|---|---|---|
| Reaction Time (min) | X₁ | 30 | 60 | 90 |
| Microwave Power (W) | X₂ | 100 | 200 | 300 |
| Catalyst Dosing (wt%) | X₃ | 1 | 2 | 3 |

Box-Behnken Design Matrix and Results

The BBD was used to optimize the conversion of succinic acid.

| Run | Reaction Time (min) | Microwave Power (W) | Catalyst Dosing (wt%) | Succinic Acid Conversion (%) |
|---|---|---|---|---|
| 1 | 30 | 100 | 2 | 65 |
| 2 | 90 | 100 | 2 | 80 |
| 3 | 30 | 300 | 2 | 85 |
| 4 | 90 | 300 | 2 | 98 |
| 5 | 30 | 200 | 1 | 70 |
| 6 | 90 | 200 | 1 | 85 |
| 7 | 30 | 200 | 3 | 90 |
| 8 | 90 | 200 | 3 | 99 |
| 9 | 60 | 100 | 1 | 75 |
| 10 | 60 | 300 | 1 | 92 |
| 11 | 60 | 100 | 3 | 88 |
| 12 | 60 | 300 | 3 | 99 |
| 13 | 60 | 200 | 2 | 95 |
| 14 | 60 | 200 | 2 | 96 |
| 15 | 60 | 200 | 2 | 95 |

Note: The conversion percentages are representative values based on the reported study.

Protocol: Microwave-Assisted Esterification

- Reactant and Catalyst Loading: In a microwave-safe reaction vessel, combine succinic acid, methanol, and the heterogeneous catalyst (D-Hβ) according to the experimental design.[10]

- Microwave Irradiation: Place the vessel in the microwave reactor and irradiate at the specified power for the designated reaction time.[10][11]

- Product Recovery: After the reaction, cool the vessel and separate the solid catalyst from the liquid product mixture, typically by filtration.

- Analysis: Analyze the product mixture using techniques like gas chromatography to determine the conversion of succinic acid and the selectivity for dimethyl succinate.[10]

Data Analysis and Model Interpretation

Once the experiments are completed, the data is analyzed to fit a second-order polynomial equation:

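Y = β₀ + Σᵢ βᵢXᵢ + Σᵢ βᵢᵢXᵢ² + Σᵢ<ⱼ βᵢⱼXᵢXⱼ

Where Y is the predicted response, Xᵢ are the coded factor levels, β₀ is the intercept, βᵢ are the linear coefficients, βᵢᵢ are the quadratic coefficients, and βᵢⱼ are the interaction coefficients. The linear and quadratic sums run over all k factors (i = 1, …, k), and the interaction sum runs over every pair of factors with i < j. The significance of the model and each term is evaluated using Analysis of Variance (ANOVA).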
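Written out, the sums expand into a fixed set of terms: one intercept, k linear, k quadratic and k(k - 1)/2 interaction terms, or (k + 1)(k + 2)/2 coefficients in total. The sketch below lists them for the three-factor case studies and shows how many residual degrees of freedom a 15-run design leaves for the ANOVA. The function name is illustrative.

```python
from itertools import combinations


def quadratic_terms(factors: list[str]) -> list[str]:
    """Terms of the full second-order model: intercept, linear, quadratic, interactions."""
    linear = list(factors)
    quadratic = [f"{f}^2" for f in factors]
    interactions = [f"{a}*{b}" for a, b in combinations(factors, 2)]
    return ["1"] + linear + quadratic + interactions


terms = quadratic_terms(["X1", "X2", "X3"])
print(len(terms), terms)
# 10 ['1', 'X1', 'X2', 'X3', 'X1^2', 'X2^2', 'X3^2', 'X1*X2', 'X1*X3', 'X2*X3']
print("residual df for 15 runs:", 15 - len(terms))  # 5
```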

Response surface plots and contour plots are then generated from the fitted model to visualize the relationship between the factors and the response, allowing for the identification of the optimal conditions.

Conclusion

Box-Behnken Design is a powerful and efficient statistical tool for the optimization of chemical reactions. By systematically exploring the effects of multiple variables and their interactions, researchers can significantly reduce the number of experiments required to identify optimal process conditions. As the case studies demonstrate, applying BBD can lead to improved reaction yields, enhanced degradation efficiencies, and more robust and efficient chemical processes overall. This makes it an invaluable methodology for scientists and professionals in the chemical and pharmaceutical industries.

References

- 1. Box–Behnken design - Wikipedia [en.wikipedia.org]

- 2. 5.3.3.6.2. Box-Behnken designs [itl.nist.gov]

- 3. researchgate.net [researchgate.net]

- 4. jchpe.ut.ac.ir [jchpe.ut.ac.ir]

- 5. Optimization of esterification of acrylic acid and ethanol by box-behnken design of response surface methodology | Jyoti | Indian Journal of Chemical Technology (IJCT) [op.niscair.res.in]

- 6. researchgate.net [researchgate.net]

- 7. A Box-Behnken design (BBD) optimization of the photocatalytic degradation of 2,4-dichlorophenoxyacetic acid (2,4-D) using TiO2/H2O2 | Semantic Scholar [semanticscholar.org]

- 8. deswater.com [deswater.com]

- 9. gcris.iyte.edu.tr [gcris.iyte.edu.tr]

- 10. researchgate.net [researchgate.net]

- 11. Optimization of microwave-assisted esterification of succinic acid using Box-Behnken design approach - PubMed [pubmed.ncbi.nlm.nih.gov]

Application of Box-Behnken Design in Pharmaceutical Formulation Development: Notes and Protocols

Introduction

Box-Behnken Design (BBD) is a highly efficient statistical tool used in pharmaceutical formulation development to optimize drug delivery systems. As a response surface methodology (RSM) design, BBD lets researchers understand how multiple variables influence the critical quality attributes of a formulation with a minimal number of experimental runs.[1] This methodology is particularly advantageous for developing complex formulations such as nanoparticles, tablets, and microspheres, where interactions between formulation components and process parameters can significantly impact therapeutic efficacy and stability. BBD is a three-level design that avoids extreme vertex points, making it a cost-effective and reliable approach for achieving robust and optimized pharmaceutical products.[2][3]

Application Note 1: Optimization of Polymeric Nanoparticles for Enhanced Drug Delivery

The development of nanoparticle-based drug delivery systems is a promising strategy for improving the therapeutic efficacy of various drugs by enhancing their stability, solubility, and bioavailability.[4] This application note details the use of a Box-Behnken design to optimize the formulation of drug-loaded polymeric blend nanoparticles.

Case Study Overview: The objective of this study was to develop an optimized formulation of polycaprolactone (PCL) and poly(lactic-co-glycolic acid) (PLGA) blend nanoparticles to enhance the encapsulation efficiency of a hydrophilic drug.[5] A three-factor, three-level BBD was employed to systematically investigate the effects of key formulation variables on the physicochemical properties of the nanoparticles.[5]

Experimental Design and Variables: A Box-Behnken design consisting of 15 experimental runs was utilized to evaluate the impact of three independent variables on three key responses. The independent variables and their levels are summarized in the table below.

| Independent Variables | Low Level (-1) | Medium Level (0) | High Level (+1) |
|---|---|---|---|
| X1: Polymer Blend Amount (mg) | 100 | 150 | 200 |
| X2: Drug Amount (mg) | 5 | 7.5 | 10 |
| X3: Surfactant Concentration (%) | 4 | 6 | 8 |

The dependent variables (responses) measured were particle size (Y1), zeta potential (Y2), and encapsulation efficiency (Y3).

Optimized Formulation: The BBD model predicted an optimal formulation with desirable characteristics. The predicted optimal formulation consisted of 162 mg of the polymer blend, 8.37 mg of the drug, and 8% surfactant.[5] This formulation was expected to yield nanoparticles with a size of 283.06 nm, a zeta potential of -31.54 mV, and an encapsulation efficiency of 70%.[5]
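Converting the predicted optimum back to coded units shows where it sits inside the design space. This small sketch assumes the linear coding used throughout (coded = (actual - center) / half-range) and the factor levels in the table above. The helper names are illustrative.

```python
def to_coded(actual: float, low: float, high: float) -> float:
    """Actual level -> coded level (-1 = low, 0 = center, +1 = high)."""
    center, half_range = (low + high) / 2, (high - low) / 2
    return (actual - center) / half_range


def to_actual(coded: float, low: float, high: float) -> float:
    """Coded level -> actual level; the inverse of to_coded."""
    center, half_range = (low + high) / 2, (high - low) / 2
    return center + coded * half_range


levels = {"X1 polymer blend (mg)": (100, 200),
          "X2 drug (mg)": (5, 10),
          "X3 surfactant (%)": (4, 8)}
optimum = {"X1 polymer blend (mg)": 162, "X2 drug (mg)": 8.37, "X3 surfactant (%)": 8}

for name, (low, high) in levels.items():
    print(f"{name}: {to_coded(optimum[name], low, high):+.3f}")
```

The output places the polymer blend at +0.24, the drug at about +0.35 and the surfactant at +1. The predicted optimum therefore lies on the upper boundary of the studied surfactant range.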

Experimental Protocol: Preparation of Polymeric Blend Nanoparticles using the Double Emulsion Solvent Evaporation Method

- Preparation of the Primary Emulsion (w/o):
  - Dissolve the specified amounts of PCL and PLGA (polymer blend) in a suitable organic solvent (e.g., dichloromethane).
  - Dissolve the hydrophilic drug in an aqueous solution (e.g., deionized water).
  - Add the aqueous drug solution to the polymer solution.
  - Emulsify the mixture using a high-speed homogenizer or sonicator to form a water-in-oil (w/o) primary emulsion.

- Preparation of the Double Emulsion (w/o/w):
  - Prepare an aqueous solution of the surfactant (e.g., polyvinyl alcohol, PVA) at the specified concentration.
  - Add the primary emulsion to the surfactant solution.
  - Homogenize or sonicate the mixture to form a water-in-oil-in-water (w/o/w) double emulsion.

- Solvent Evaporation:
  - Stir the double emulsion at room temperature for a sufficient time (e.g., 3-4 hours) to allow the organic solvent to evaporate completely.
  - As the solvent evaporates, the polymers precipitate, forming solid nanoparticles.

- Nanoparticle Recovery:
  - Centrifuge the nanoparticle suspension at a high speed (e.g., 15,000 rpm) for a specified time (e.g., 30 minutes).
  - Discard the supernatant and wash the nanoparticle pellet with deionized water to remove any unencapsulated drug and excess surfactant.
  - Repeat the centrifugation and washing steps twice.
  - Resuspend the final nanoparticle pellet in deionized water and lyophilize for long-term storage.

- Characterization of Nanoparticles:
  - Particle Size and Zeta Potential: Analyze the nanoparticle suspension using a dynamic light scattering (DLS) instrument.
  - Encapsulation Efficiency: Determine the amount of encapsulated drug by separating the nanoparticles from the aqueous phase and quantifying the free drug in the supernatant using a suitable analytical method (e.g., UV-Vis spectrophotometry or HPLC). The encapsulation efficiency is calculated using the following formula:
    - EE (%) = [(Total Drug Amount - Free Drug Amount) / Total Drug Amount] x 100
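The indirect encapsulation-efficiency formula above translates directly into a helper. The drug amounts in the example are hypothetical, the function name is illustrative, and the range check is an added safeguard rather than part of the original protocol.

```python
def encapsulation_efficiency(total_drug_mg: float, free_drug_mg: float) -> float:
    """Indirect EE (%): drug not found in the supernatant is taken as encapsulated."""
    if total_drug_mg <= 0 or not 0 <= free_drug_mg <= total_drug_mg:
        raise ValueError("need total > 0 and 0 <= free drug <= total drug")
    return (total_drug_mg - free_drug_mg) / total_drug_mg * 100


# Hypothetical run: 8.0 mg drug added, 2.4 mg quantified in the supernatant.
print(f"EE = {encapsulation_efficiency(8.0, 2.4):.1f} %")  # EE = 70.0 %
```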

Visualization of the BBD Experimental Workflow for Nanoparticle Optimization

Caption: Workflow for optimizing nanoparticle formulation using Box-Behnken Design.

Application Note 2: Formulation and Optimization of Fast Dissolving Tablets

Fast dissolving tablets (FDTs) are an important oral dosage form for patients who have difficulty swallowing conventional tablets. The rapid disintegration of FDTs in the oral cavity allows for pre-gastric absorption and can lead to a faster onset of action. This application note describes the use of BBD to optimize the formulation of FDTs.

Case Study Overview: The objective of this study was to formulate and optimize fast dissolving tablets of urapidil by investigating the effect of different formulation variables on the tablet's disintegration time.[6] A three-factor, three-level Box-Behnken design was employed to systematically evaluate the influence of key excipients.[6]

Experimental Design and Variables: A BBD with 17 experimental runs (the 12 edge-midpoint runs of a three-factor BBD plus five center points) was used to assess the impact of three independent variables on the disintegration time of the tablets.[6] The independent variables and their levels are presented in the table below.

| Independent Variables | Low Level (-1) | Medium Level (0) | High Level (+1) |
|---|---|---|---|
| X1: Croscarmellose Sodium (%) | 2 | 4 | 6 |
| X2: Spray Dried Lactose (%) | 20 | 30 | 40 |
| X3: HPMC K4M (%) | 5 | 10 | 15 |

The primary dependent variable (response) measured was the disintegration time (Y1).

Optimized Formulation: The study concluded that an optimized formulation with desirable disintegration characteristics could be achieved by controlling the levels of the selected excipients.[7] The BBD model successfully predicted the relationship between the variables and the response, allowing an optimal formulation to be identified.[7]

Experimental Protocol: Preparation of Fast Dissolving Tablets by Direct Compression

- Sifting and Blending:
  - Sift the active pharmaceutical ingredient (urapidil) and all the excipients (croscarmellose sodium, spray dried lactose, HPMC K4M, and other standard excipients like microcrystalline cellulose, magnesium stearate, and talc) through a suitable mesh sieve to ensure uniformity.
  - Accurately weigh the required quantities of each ingredient according to the experimental design.
  - Blend the drug and excipients (except the lubricant and glidant) in a suitable blender for a specified time (e.g., 15 minutes) to achieve a homogeneous mixture.

- Lubrication:
  - Add the lubricant (magnesium stearate) and glidant (talc) to the powder blend.
  - Blend for a shorter period (e.g., 3-5 minutes) to ensure adequate lubrication without over-lubrication.

- Compression:
  - Compress the final powder blend into tablets using a tablet compression machine fitted with appropriate punches and dies.
  - Ensure that the tablet press is set to produce tablets of a consistent weight, hardness, and thickness.

- Evaluation of Fast Dissolving Tablets:
  - Hardness and Friability: Measure the hardness of the tablets using a hardness tester and the friability using a friabilator.
  - Drug Content: Determine the amount of urapidil in the tablets using a suitable analytical method (e.g., UV-Vis spectrophotometry or HPLC) to ensure content uniformity.
  - Disintegration Time: Measure the time taken for the tablets to disintegrate completely in a specified medium (e.g., 0.1N HCl) using a disintegration test apparatus.[6]

Visualization of the Logical Relationships in FDT Formulation using BBD

Caption: Relationship between independent variables and the response in FDT formulation.

Application Note 3: Optimization of PLGA-Coffee Nanoparticles for Enhanced Biological Activity

This application note illustrates the use of BBD to optimize the formulation of poly(lactic-co-glycolic acid) (PLGA) nanoparticles encapsulating coffee extract, with the aim of enhancing its antioxidant and anticancer activities.[8]

Case Study Overview: The study aimed to investigate the impact of formulation and process parameters on the physicochemical properties of PLGA-coffee nanoparticles prepared by the single emulsion-solvent evaporation method.[8] A three-factor, three-level BBD was employed to optimize the formulation.[8]

Experimental Design and Variables: The BBD consisted of 15 experimental runs to evaluate the effects of three independent variables on five responses.[8] The independent variables and their levels are detailed below.

| Independent Variables | Low Level (-1) | Medium Level (0) | High Level (+1) |
|---|---|---|---|
| X1: PVA Concentration (%) | 0.5 | 1.0 | 1.5 |
| X2: Homogenization Speed (rpm) | 10,000 | 15,000 | 20,000 |
| X3: Homogenization Time (min) | 2 | 4 | 6 |

The dependent variables measured were particle size (Y1), zeta potential (Y2), polydispersity index (PDI) (Y3), encapsulation efficiency (Y4), and loading capacity (Y5).[8]

Optimized Formulation: The optimized formulation was selected based on achieving a small particle size, low PDI, high absolute zeta potential, and high encapsulation efficiency and loading capacity.[8] The study found that nano-encapsulation significantly enhanced the antioxidant and anticancer activities of the coffee extract.[8]

Experimental Protocol: Preparation of PLGA-Coffee Nanoparticles by Single Emulsion-Solvent Evaporation

Preparation of the Organic Phase:

- Dissolve a fixed amount of PLGA and coffee extract in a suitable water-immiscible organic solvent (e.g., ethyl acetate).

Preparation of the Aqueous Phase:

- Prepare an aqueous solution of polyvinyl alcohol (PVA) at the concentration specified for the BBD run.

Emulsification:

- Add the organic phase to the aqueous phase.

- Emulsify the mixture using a high-speed homogenizer at the speed and for the duration specified for the BBD run to form an oil-in-water (o/w) emulsion.

Solvent Evaporation:

- Stir the emulsion at room temperature for several hours to allow complete evaporation of the organic solvent.

Nanoparticle Recovery and Purification:

- Centrifuge the nanoparticle suspension to separate the nanoparticles from the aqueous phase.

- Wash the nanoparticle pellet with deionized water to remove unencapsulated coffee extract and excess PVA.

- Resuspend the washed nanoparticles in deionized water and lyophilize for storage.

Characterization of PLGA-Coffee Nanoparticles:

- Particle Size, PDI, and Zeta Potential: Determine using a DLS instrument.

- Encapsulation Efficiency (EE%) and Loading Capacity (LC%): Quantify the encapsulated coffee extract using a suitable analytical technique (e.g., UV-Vis spectrophotometry) after lysing the nanoparticles, then calculate (the "drug" here is the coffee extract):

  EE (%) = (Weight of Drug in Nanoparticles / Initial Weight of Drug) x 100

  LC (%) = (Weight of Drug in Nanoparticles / Weight of Nanoparticles) x 100
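The two formulas above translate directly into code. This is a minimal sketch; the function names and the example masses (10 mg of extract loaded, 7.5 mg recovered in 50 mg of nanoparticles) are hypothetical and are not data from the cited study.

```python
def encapsulation_efficiency(drug_in_np_mg, initial_drug_mg):
    """EE (%) = weight of drug in nanoparticles / initial weight of drug x 100."""
    if initial_drug_mg <= 0:
        raise ValueError("initial drug mass must be positive")
    return 100.0 * drug_in_np_mg / initial_drug_mg

def loading_capacity(drug_in_np_mg, nanoparticle_mg):
    """LC (%) = weight of drug in nanoparticles / weight of nanoparticles x 100."""
    if nanoparticle_mg <= 0:
        raise ValueError("nanoparticle mass must be positive")
    return 100.0 * drug_in_np_mg / nanoparticle_mg

# Hypothetical example: 7.5 mg of extract recovered from 50 mg of lyophilized
# nanoparticles prepared with 10 mg of extract.
ee = encapsulation_efficiency(7.5, 10.0)   # 75 %
lc = loading_capacity(7.5, 50.0)           # 15 %
```

Note the different denominators: EE measures how much of the input extract was captured, while LC measures how much of the final particle mass is extract.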

Visualization of the BBD Optimization Cycle for PLGA-Coffee Nanoparticles

Caption: Iterative optimization cycle for PLGA-coffee nanoparticles using BBD.

References

- 1. researchgate.net [researchgate.net]

- 2. seejph.com [seejph.com]

- 3. Box–Behnken Design of Experiments of Polycaprolactone Nanoparticles Loaded with Irinotecan Hydrochloride - PMC [pmc.ncbi.nlm.nih.gov]

- 4. dovepress.com [dovepress.com]

- 5. Formulation and Systematic Optimisation of Polymeric Blend Nanoparticles via Box–Behnken Design [mdpi.com]

- 6. researchgate.net [researchgate.net]

- 7. Box-Behnken Design for Optimization of Formulation Variables for Fast Dissolving Tablet of Urapidil | Asian Journal of Pharmaceutics (AJP) [asiapharmaceutics.info]

- 8. Applying Box–Behnken Design for Formulation and Optimization of PLGA-Coffee Nanoparticles and Detecting Enhanced Antioxidant and Anticancer Activities - PMC [pmc.ncbi.nlm.nih.gov]

Application Notes and Protocols for Process Optimization in Biotechnology using Box-Behnken Design

Audience: Researchers, scientists, and drug development professionals.

Introduction to Box-Behnken Design in Biotechnology

The Box-Behnken Design (BBD) is a response surface methodology (RSM) design that is widely used to optimize complex biotechnological processes.[1][2] Unlike conventional one-factor-at-a-time methods, BBD varies multiple factors simultaneously, so their interactions can be estimated with fewer experimental runs and at lower cost.[3][4] It is particularly well suited to fitting quadratic models and identifying the factor settings that optimize a response.[1]

In biotechnology, BBD has been applied to the optimization of fermentation processes, the enhancement of enzyme production, and the development of drug delivery systems.[4][5][6][7] It helps quantify the interactions between process parameters and identify the factor settings that give the best outcome.[2]

The Box-Behnken Design Methodology

The BBD is a three-level incomplete factorial design that supports estimation of a second-order polynomial model.[8] Each factor is varied over three levels, typically coded as -1 (low), 0 (center), and +1 (high).[1] Its runs lie at the midpoints of the edges of the cubic design region, plus replicated center points; it contains no runs at the vertices of the cube, which is advantageous when those extreme factor combinations are expensive or difficult to perform.[9][10]
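Because each edge-midpoint run sets exactly two factors to ±1, the run count follows from counting factor pairs. For the pairwise construction used with k = 3 to 5 factors and C₀ center points, a worked count is:

$$N = 4\binom{k}{2} + C_0 = 2k(k-1) + C_0$$

For k = 3 this gives 12 + C₀ runs: 15 runs with three center points or 17 runs with five, compared with 27 runs for a full 3³ factorial. Standard BBDs for six or more factors are built from blocks of three or more factors, so this formula does not apply to them.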

The relationship between the response and the independent variables is described by the following second-order polynomial equation[5]:

$$Y = \beta_0 + \sum_{i=1}^{k} \beta_i X_i + \sum_{i=1}^{k} \beta_{ii} X_i^2 + \sum_{i=1}^{k-1} \sum_{j=i+1}^{k} \beta_{ij} X_i X_j$$

Where:

- Y is the predicted response.

- β₀ is the model constant.

- βᵢ, βᵢᵢ, and βᵢⱼ are the linear, quadratic, and interaction coefficients, respectively.

- Xᵢ and Xⱼ are the independent variables (usually in coded units), and k is the number of factors.
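To make the terms of the model concrete, the sketch below expands one run's coded levels into the regressors of the equation (1, Xᵢ, Xᵢ², and XᵢXⱼ for i < j) and evaluates Y for a given coefficient vector. The function names are illustrative; the coefficient order is a convention chosen for this sketch.

```python
from itertools import combinations

def quadratic_terms(x):
    """Regressors of the full second-order model for one run of coded levels x:
    [1, X_1..X_k, X_1^2..X_k^2, X_i*X_j for i < j]."""
    k = len(x)
    row = [1.0]                                      # intercept (beta_0)
    row += [float(xi) for xi in x]                   # linear terms (beta_i)
    row += [float(xi) ** 2 for xi in x]              # quadratic terms (beta_ii)
    row += [float(x[i]) * float(x[j])                # interaction terms (beta_ij)
            for i, j in combinations(range(k), 2)]
    return row

def n_parameters(k):
    """Number of coefficients in the full quadratic model: (k + 1)(k + 2) / 2."""
    return (k + 1) * (k + 2) // 2

def predict(coefs, x):
    """Predicted response Y; coefs must follow the order of quadratic_terms()."""
    return sum(b * t for b, t in zip(coefs, quadratic_terms(x)))
```

For three factors the model has n_parameters(3) = 10 coefficients, so a 15-run BBD leaves 5 degrees of freedom for estimating error and a 17-run BBD leaves 7.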

The analysis of the experimental data is typically performed using analysis of variance (ANOVA) to determine the significance of the model and the individual factors.[11]

Logical Workflow of Box-Behnken Design

Caption: Logical workflow of the Box-Behnken Design methodology.

Application Example: Optimization of Fibrinolytic Enzyme Production

This section details the application of Box-Behnken Design to optimize the production of a novel fibrinolytic enzyme from Bacillus altitudinis S-CSR 0020.[5][12]

Experimental Factors and Levels

The key factors influencing enzyme production were identified as temperature, pH, and substrate concentration. A three-level, three-factor Box-Behnken design was employed to investigate their effects.

| Independent Variable | Code | Level -1 | Level 0 | Level +1 |
|---|---|---|---|---|
| Temperature (°C) | A | 37 | 47 | 57 |
| pH | B | 8.5 | 9.5 | 10.5 |
| Substrate Concentration (g/L) | C | 2 | 3 | 4 |
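The coded levels in the table are obtained from the actual settings by centering and scaling. Writing ξᵢ for the actual value of factor i, ξᵢ,₀ for its center level, and Δξᵢ for the step between levels, the coded value used in the model is:

$$X_i = \frac{\xi_i - \xi_{i,0}}{\Delta \xi_i}$$

For example, a temperature of 57 °C gives X_A = (57 - 47)/10 = +1, a pH of 8.5 gives X_B = (8.5 - 9.5)/1.0 = -1, and a substrate concentration of 3 g/L gives X_C = (3 - 3)/1 = 0.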

Experimental Design and Results

A total of 17 experimental runs were conducted, with the results summarized in the table below.

| Run | Temperature (°C) | pH | Substrate Conc. (g/L) | Enzyme Activity (U/mL) |
|---|---|---|---|---|
| 1 | 37 | 9.5 | 2 | 200 |
| 2 | 57 | 9.5 | 2 | 220 |
| 3 | 37 | 9.5 | 4 | 240 |
| 4 | 57 | 9.5 | 4 | 260 |
| 5 | 37 | 8.5 | 3 | 180 |
| 6 | 57 | 8.5 | 3 | 200 |
| 7 | 37 | 10.5 | 3 | 280 |
| 8 | 57 | 10.5 | 3 | 300 |
| 9 | 47 | 8.5 | 2 | 190 |
| 10 | 47 | 10.5 | 2 | 290 |
| 11 | 47 | 8.5 | 4 | 210 |
| 12 | 47 | 10.5 | 4 | 310 |
| 13 | 47 | 9.5 | 3 | 250 |
| 14 | 47 | 9.5 | 3 | 255 |
| 15 | 47 | 9.5 | 3 | 252 |
| 16 | 47 | 9.5 | 3 | 248 |
| 17 | 47 | 9.5 | 3 | 253 |

Note: Data is representative and based on the trends reported in the cited literature.

Model and Optimization

A second-order polynomial equation was fitted to the experimental data. The optimized conditions were found to be a temperature of 47°C, a pH of 10.5, and a substrate concentration of 4 g/L.[5][12] These conditions resulted in a significant increase in enzyme activity to 306.88 U/mL and a specific activity of 780 U/mg, which was a 2-fold increase compared to the initial levels.[5][12]
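To illustrate the fitting and optimization steps, the sketch below fits the full second-order model by ordinary least squares to the representative 17-run table, in coded units A = (T - 47)/10, B = (pH - 9.5)/1, C = (S - 3)/1, reports R², and searches a grid with 0.1 coded-unit spacing inside the design cube for the predicted maximum. It assumes NumPy is available, and the grid search is a simple stand-in, not necessarily the optimizer used in the cited study. Because the table values are representative rather than the published raw data, the optimum this sketch finds is not expected to reproduce the reported 47°C, pH 10.5, and 4 g/L exactly.

```python
import itertools
import numpy as np

# Representative data from the 17-run table: coded A, B, C and activity (U/mL).
data = np.array([
    [-1, 0, -1, 200], [1, 0, -1, 220], [-1, 0, 1, 240], [1, 0, 1, 260],
    [-1, -1, 0, 180], [1, -1, 0, 200], [-1, 1, 0, 280], [1, 1, 0, 300],
    [0, -1, -1, 190], [0, 1, -1, 290], [0, -1, 1, 210], [0, 1, 1, 310],
    [0, 0, 0, 250], [0, 0, 0, 255], [0, 0, 0, 252], [0, 0, 0, 248], [0, 0, 0, 253],
], dtype=float)
X_coded, y = data[:, :3], data[:, 3]

def model_matrix(X):
    """Columns: 1, A, B, C, A^2, B^2, C^2, AB, AC, BC (full quadratic model)."""
    cols = [np.ones(len(X))]
    cols += [X[:, i] for i in range(3)]
    cols += [X[:, i] ** 2 for i in range(3)]
    cols += [X[:, i] * X[:, j] for i, j in itertools.combinations(range(3), 2)]
    return np.column_stack(cols)

# Ordinary least-squares estimates of the ten coefficients.
M = model_matrix(X_coded)
beta, *_ = np.linalg.lstsq(M, y, rcond=None)

# Coefficient of determination of the fitted model.
y_hat = M @ beta
r_squared = 1.0 - np.sum((y - y_hat) ** 2) / np.sum((y - y.mean()) ** 2)

# Grid search restricted to the coded cube [-1, 1]^3 (no extrapolation).
grid = np.linspace(-1.0, 1.0, 21)
G = np.array(list(itertools.product(grid, grid, grid)))
pred = model_matrix(G) @ beta
best = G[np.argmax(pred)]

# Decode the optimum back to temperature (°C), pH, and substrate (g/L).
center = np.array([47.0, 9.5, 3.0])
step = np.array([10.0, 1.0, 1.0])
best_actual = center + best * step

print("coefficients:", np.round(beta, 2))
print("R^2:", round(float(r_squared), 3))
print("predicted optimum (T, pH, S):", best_actual, "->", round(float(pred.max()), 1), "U/mL")
```

In practice the fit would be accompanied by an ANOVA (significance of each term and a lack-of-fit test using the replicated center points) before the model is used for optimization.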

Experimental Protocols

Protocol for Fibrinolytic Enzyme Production

This protocol is based on the methodology for producing fibrinolytic enzyme from Bacillus altitudinis S-CSR 0020.[5][12]

Inoculum Preparation:

- Inoculate a single colony of Bacillus altitudinis S-CSR 0020 into 50 mL of nutrient broth.

- Incubate at 37°C for 24 hours with shaking at 150 rpm.

Fermentation:

- Prepare the fermentation medium according to the experimental design matrix. The basal medium contains a nitrogen source (e.g., fibrin) and other essential nutrients.

- Adjust the pH of the medium to the specified level using sterile 1N HCl or 1N NaOH.

- Inoculate the fermentation medium with 1% (v/v) of the seed culture.

- Incubate the flasks at the designated temperature for 48 hours with shaking at 150 rpm.

Enzyme Extraction:

- After incubation, centrifuge the culture broth at 10,000 rpm for 15 minutes at 4°C.

- The cell-free supernatant contains the crude fibrinolytic enzyme.
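Centrifugation speeds given in rpm translate into different forces on different rotors. When transferring the protocol to another centrifuge, the standard relation RCF = 1.118 × 10⁻⁵ × r × rpm² (with r, the rotor radius, in cm) can be used. The sketch below applies it; the 8 cm radius in the example is a hypothetical value, not one from the source.

```python
def rpm_to_rcf(rpm, radius_cm):
    """Relative centrifugal force (x g): RCF = 1.118e-5 * r(cm) * rpm^2."""
    return 1.118e-5 * radius_cm * rpm ** 2

def rcf_to_rpm(rcf, radius_cm):
    """Inverse of rpm_to_rcf: rotor speed needed for a target RCF."""
    return (rcf / (1.118e-5 * radius_cm)) ** 0.5

# Hypothetical rotor with an 8 cm radius: 10,000 rpm is roughly 8,944 x g.
force = rpm_to_rcf(10000, 8.0)
```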

Protocol for Fibrinolytic Activity Assay

This assay is used to determine the activity of the produced enzyme.[5]

Substrate Preparation:

- Prepare a 0.6% (w/v) solution of fibrinogen in 0.1 M phosphate buffer (pH 7.4).

- Add 0.1 mL of thrombin solution (10 NIH units/mL) to 0.9 mL of the fibrinogen solution to form a fibrin clot.

- Incubate at 37°C for 10 minutes.

Enzyme Reaction:

- Add 0.1 mL of the crude enzyme supernatant to the fibrin clot.

- Incubate at 37°C for 60 minutes.

Measurement:

- Stop the reaction by adding 2 mL of 0.4 M trichloroacetic acid (TCA).

- Centrifuge at 5,000 rpm for 10 minutes.

- Measure the absorbance of the supernatant at 280 nm.

One unit of fibrinolytic activity is defined as the amount of enzyme required to cause an increase in absorbance (at 280 nm) of 0.01 per minute.
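Applying the unit definition to the assay conditions above (60-minute reaction, 0.1 mL of enzyme) gives activity in U/mL. The sketch below is an illustration under stated assumptions: the blank reading (a control without active enzyme) and the dilution_factor parameter are hypothetical additions not described in the protocol, and specific activity additionally assumes a separately measured protein concentration in mg/mL.

```python
def fibrinolytic_activity(a280_sample, a280_blank, minutes=60.0, enzyme_ml=0.1,
                          dilution_factor=1.0, delta_per_unit=0.01):
    """Activity in U/mL, where 1 U raises A280 by 0.01 per minute.
    a280_blank and dilution_factor are hypothetical assumptions of this sketch."""
    delta_a = a280_sample - a280_blank                  # absorbance increase due to the enzyme
    units = delta_a / (delta_per_unit * minutes)        # units present in the reaction
    return units / enzyme_ml * dilution_factor          # per mL of (undiluted) enzyme

def specific_activity(activity_u_per_ml, protein_mg_per_ml):
    """Specific activity in U/mg = activity (U/mL) / protein concentration (mg/mL)."""
    return activity_u_per_ml / protein_mg_per_ml

# Hypothetical readings: sample A280 = 0.70, blank A280 = 0.10, undiluted enzyme.
activity = fibrinolytic_activity(0.70, 0.10)   # 10 U/mL
```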

Experimental Workflow for Enzyme Production Optimization

Caption: Experimental workflow for optimizing enzyme production using BBD.

Optimization of a Cellular Process: A Conceptual Pathway

In many biotechnological applications, the goal is to optimize a cellular process, such as the production of a specific metabolite or protein. The following diagram illustrates a conceptual signaling pathway that could be the target of such optimization. The factors optimized using BBD (e.g., nutrient concentrations, pH, temperature) can act at various points in this pathway to enhance the desired output.

Caption: Conceptual diagram of a cellular process targeted for optimization.

References

- 1. Box–Behnken design - Wikipedia [en.wikipedia.org]

- 2. m.youtube.com [m.youtube.com]

- 3. mathsjournal.com [mathsjournal.com]

- 4. Optimization of Formulation using Box-Behnken Design - ProQuest [proquest.com]

- 5. microbiologyjournal.org [microbiologyjournal.org]

- 6. Utilizing Box-Behnken Design to Improve the Production of α-amylase via Bacillus Velezensis | International Journal of Pharmaceutical Sciences and Nanotechnology(IJPSN) [ijpsnonline.com]

- 7. Implementation of the Box-Behnken Design in the Development and Optimization of Methotrexate-Loaded Microsponges for Colon Cancer - PubMed [pubmed.ncbi.nlm.nih.gov]

- 8. researchgate.net [researchgate.net]

- 9. Box-Behnken Designs for Optimizing Product Performance [help.reliasoft.com]

- 10. 5.3.3.6.2. Box-Behnken designs [itl.nist.gov]

- 11. mdpi.com [mdpi.com]

- 12. pdfs.semanticscholar.org [pdfs.semanticscholar.org]

Optimizing Mammalian Cell Culture Processes with Box-Behnken Design: A Practical Guide

Application Note & Protocol

Audience: Researchers, scientists, and drug development professionals.

Introduction

In biopharmaceutical production and drug development, optimizing mammalian cell culture processes is essential for maximizing product yield, ensuring consistent quality, and reducing manufacturing costs. The Box-Behnken Design (BBD), a type of response surface methodology (RSM), offers a statistically robust and efficient approach to process optimization.[1][2] Unlike traditional one-factor-at-a-time (OFAT) methods, BBD allows multiple process parameters to be investigated simultaneously, revealing interactions between them and identifying optimal conditions with fewer experimental runs.[3][4]